
Cisco SFS InfiniBand Host Drivers User Guide for Linux

Release 3.2.0

June 2007

Americas Headquarters

Cisco Systems, Inc. 170 West Tasman Drive

San Jose, CA 95134-1706 USA http://www.cisco.com Tel: 408 526-4000

800 553-NETS (6387) Fax: 408 527-0883

Text Part Number: OL-12309-01

THE SPECIFICATIONS AND INFORMATION REGARDING THE PRODUCTS IN THIS MANUAL ARE SUBJECT TO CHANGE WITHOUT NOTICE. ALL STATEMENTS, INFORMATION, AND RECOMMENDATIONS IN THIS MANUAL ARE BELIEVED TO BE ACCURATE BUT ARE PRESENTED WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED. USERS MUST TAKE FULL RESPONSIBILITY FOR THEIR APPLICATION OF ANY PRODUCTS.

THE SOFTWARE LICENSE AND LIMITED WARRANTY FOR THE ACCOMPANYING PRODUCT ARE SET FORTH IN THE INFORMATION PACKET THAT SHIPPED WITH THE PRODUCT AND ARE INCORPORATED HEREIN BY THIS REFERENCE. IF YOU ARE UNABLE TO LOCATE THE SOFTWARE LICENSE OR LIMITED WARRANTY, CONTACT YOUR CISCO REPRESENTATIVE FOR A COPY.

The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB’s public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California.

NOTWITHSTANDING ANY OTHER WARRANTY HEREIN, ALL DOCUMENT FILES AND SOFTWARE OF THESE SUPPLIERS ARE PROVIDED “AS IS” WITH ALL FAULTS. CISCO AND THE ABOVE-NAMED SUPPLIERS DISCLAIM ALL WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING, WITHOUT LIMITATION, THOSE OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE.

IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THIS MANUAL, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

CCVP, the Cisco logo, and the Cisco Square Bridge logo are trademarks of Cisco Systems, Inc.; Changing the Way We Work, Live, Play, and Learn is a service mark of Cisco Systems, Inc.; and Access Registrar, Aironet, BPX, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unity, Enterprise/Solver, EtherChannel, EtherFast, EtherSwitch, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, IP/TV, iQ Expertise, the iQ logo, iQ Net Readiness Scorecard, iQuick Study, LightStream, Linksys, MeetingPlace, MGX, Networking Academy, Network Registrar, Packet, PIX, ProConnect, ScriptShare, SMARTnet, StackWise, The Fastest Way to Increase Your Internet Quotient, and TransPath are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or Website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0705R)

Any Internet Protocol (IP) addresses used in this document are not intended to be actual addresses. Any examples, command display output, and figures included in the document are shown for illustrative purposes only. Any use of actual IP addresses in illustrative content is unintentional and coincidental.

Cisco SFS InfiniBand Host Drivers User Guide for Linux

© 2007 Cisco Systems, Inc. All rights reserved.

Contents

Preface
    Audience
    Organization
    Conventions
    Root and Non-root Conventions in Examples
    Related Documentation
    Obtaining Documentation, Obtaining Support, and Security Guidelines

Chapter 1 About Host Drivers
    Introduction
    Architecture
    Supported Protocols
    IPoIB
    SRP
    SDP
    Supported APIs
    MVAPICH MPI
    uDAPL
    Intel MPI
    HP MPI
    HCA Utilities and Diagnostics

Chapter 2 Installing Host Drivers
    Introduction
    Contents of ISO Image
    Installing Host Drivers from an ISO Image
    Uninstalling Host Drivers from an ISO Image

Chapter 3 IP over IB Protocol
    Introduction
    Manually Configuring IPoIB for Default IB Partition
    Subinterfaces
    Creating a Subinterface Associated with a Specific IB Partition
    Removing a Subinterface Associated with a Specific IB Partition
    Verifying IPoIB Functionality
    IPoIB Performance
    Sample Startup Configuration File
    IPoIB High Availability
    Merging Physical Ports
    Unmerging Physical Ports

Chapter 4 SCSI RDMA Protocol
    Introduction
    Configuring SRP
    Configuring ITLs when Using Fibre Channel Gateway
    Configuring ITLs with Element Manager while No Global Policy Restrictions Apply
    Configuring ITLs with Element Manager while Global Policy Restrictions Apply
    Configuring SRP Host
    Verifying SRP
    Verifying SRP Functionality
    Verifying with Element Manager

Chapter 5 Sockets Direct Protocol
    Introduction
    Configuring IPoIB Interfaces
    Converting Sockets-Based Application
    Explicit/Source Code Conversion Type
    Automatic Conversion Type
    Log Statement
    Match Statement
    SDP Performance
    Netperf Server with IPoIB and SDP

Chapter 6 uDAPL
    Introduction
    uDAPL Test Performance
    uDAPL Throughput Test Performance
    uDAPL Latency Test Performance
    Compiling uDAPL Programs

Chapter 7 MVAPICH MPI
    Introduction
    Initial Setup
    Configuring SSH
    Editing Environment Variables
    Setting Environment Variables in System-Wide Startup Files
    Editing Environment Variables in the Users Shell Startup Files
    Editing Environment Variables Manually
    MPI Bandwidth Test Performance
    MPI Latency Test Performance
    Intel MPI Benchmarks (IMB) Test Performance
    Compiling MPI Programs

Chapter 8 HCA Utilities and Diagnostics
    Introduction
    hca_self_test Utility
    tvflash Utility
    Viewing Card Type and Firmware Version
    Upgrading Firmware
    Diagnostics

Appendix A Acronyms and Abbreviations

Index

Preface

This preface describes who should read the Cisco SFS InfiniBand Host Drivers User Guide for Linux, how it is organized, and its document conventions. It includes the following sections:

•Audience, page vii

•Organization, page vii

•Conventions, page viii

•Root and Non-root Conventions in Examples, page ix

•Related Documentation, page ix

•Obtaining Documentation, Obtaining Support, and Security Guidelines, page ix

Audience

The intended audience is the administrator responsible for installing, configuring, and managing host drivers and host card adapters. This administrator should have experience administering similar networking or storage equipment.

Organization

This publication is organized as follows:

Chapter | Title | Description
Chapter 1 | About Host Drivers | Describes the Cisco commercial host driver.
Chapter 2 | Installing Host Drivers | Describes the installation of host drivers.
Chapter 3 | IP over IB Protocol | Describes how to configure IPoIB to run IP traffic over an IB network.
Chapter 4 | SCSI RDMA Protocol | Describes how to configure SRP.
Chapter 5 | Sockets Direct Protocol | Describes how to configure and run SDP.
Chapter 6 | uDAPL | Describes how to build and configure uDAPL.
Chapter 7 | MVAPICH MPI | Describes the setup and configuration information for MVAPICH MPI.
Chapter 8 | HCA Utilities and Diagnostics | Describes the fundamental HCA utilities and diagnostics.
Appendix A | Acronyms and Abbreviations | Defines the acronyms and abbreviations that are used in this publication.

Conventions

This document uses the following conventions:

boldface font
    Commands, command options, and keywords are in boldface. Bold text indicates Chassis Manager elements or text that you must enter as-is.

italic font
    Arguments in commands for which you supply values are in italics. Italics not used in commands indicate emphasis.

Menu1 > Menu2 > Item…
    Series indicate a pop-up menu sequence to open a form or execute a desired function.

[ ]
    Elements in square brackets are optional.

{ x | y | z }
    Alternative keywords are grouped in braces and separated by vertical bars. Braces can also be used to group keywords and/or arguments; for example, {interface interface type}.

[ x | y | z ]
    Optional alternative keywords are grouped in brackets and separated by vertical bars.

string
    A nonquoted set of characters. Do not use quotation marks around the string or the string will include the quotation marks.

screen font
    Terminal sessions and information the system displays are in screen font.

boldface screen font
    Information you must enter is in boldface screen font.

italic screen font
    Arguments for which you supply values are in italic screen font.

^
    The symbol ^ represents the key labeled Control. For example, the key combination ^D in a screen display means hold down the Control key while you press the D key.

< >
    Nonprinting characters, such as passwords are in angle brackets.

!, #
    An exclamation point (!) or a pound sign (#) at the beginning of a line of code indicates a comment line.


Notes use the following convention:

Note Means reader take note. Notes contain helpful suggestions or references to material not covered in the manual.

Cautions use the following convention:

Caution Means reader be careful. In this situation, you might do something that could result in equipment damage or loss of data.

Root and Non-root Conventions in Examples

This document uses the following conventions to signify root and non-root accounts:

Convention | Description
host1#, host2# | When this prompt appears in an example, it indicates that you are in a root account.
host1$, host2$ | When this prompt appears in an example, it indicates that you are in a non-root account.

Related Documentation

For additional information related to the Cisco SFS IB host drivers, see the following documents:

•Cisco InfiniBand Host Channel Adapter Hardware Installation Guide

•Release Notes for Linux Host Drivers Release 3.2.0

•Release Notes for Cisco OFED, Release 1.1

•Cisco OpenFabrics Enterprise Distribution InfiniBand Host Drivers User Guide for Linux

•Cisco SFS Product Family Element Manager User Guide

•Cisco SFS InfiniBand Fibre Channel Gateway User Guide

Obtaining Documentation, Obtaining Support, and Security Guidelines

For information on obtaining documentation, obtaining support, providing documentation feedback, security guidelines, and also recommended aliases and general Cisco documents, see the monthly What’s New in Cisco Product Documentation, which also lists all new and revised Cisco technical documentation, at:

http://www.cisco.com/en/US/docs/general/whatsnew/whatsnew.html


Chapter 1

About Host Drivers

This chapter describes host drivers and includes the following sections:

•Introduction, page 1-1

•Architecture, page 1-2

•Supported Protocols, page 1-3

•Supported APIs, page 1-4

•HCA Utilities and Diagnostics, page 1-4

Note For expansions of acronyms and abbreviations used in this publication, see Appendix A, “Acronyms and Abbreviations.”

Introduction

The Cisco IB HCA offers high-performance 10-Gbps and 20-Gbps IB connectivity to PCI-X and PCI-Express-based servers. As an integral part of the Cisco SFS solution, the Cisco IB HCA enables you to create a unified fabric for consolidating clustering, networking, and storage communications.

After you physically install the HCA in the server, install the drivers to run IB-capable protocols. HCAs support the following protocols in the Linux environment:

•IPoIB

•SRP

•SDP

HCAs support the following APIs in the Linux environment:

•MVAPICH MPI

•uDAPL API

•Intel MPI

•HP MPI

Host drivers also provide utilities to help you configure and verify your HCA. These utilities provide upgrade and diagnostic features.


Note See the “Root and Non-root Conventions in Examples” section on page ix for details about the significance of prompts used in the examples in this chapter.

Architecture

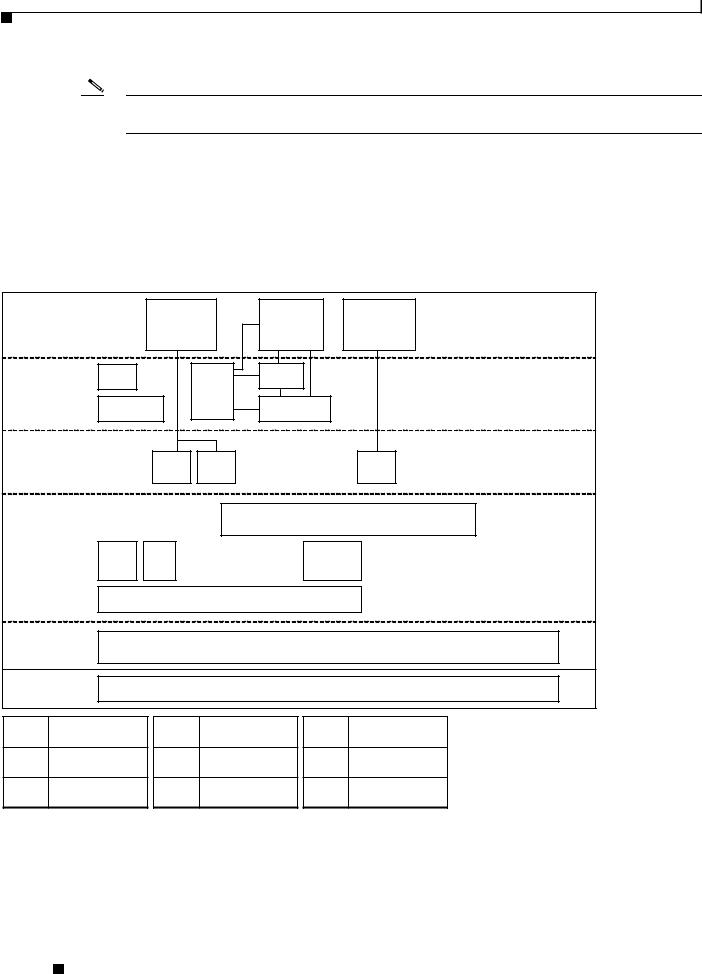

Figure 1-1 displays the software architecture of the protocols and APIs that HCAs support. The figure displays ULPs and APIs in relation to other IB software elements.

Figure 1-1 HCA Supported Protocols and API Architecture

[Figure 1-1: layered diagram of the host software stack, from top to bottom.]

User space:
Application Level: diagnostic tools, IP-based application access, MPI-based application access (various MPIs), block storage access
User APIs: uDAPL, user-level MAD API, user-level verbs / API

Kernel space:
Upper Layer Protocol: IPoIB, SDP, SRP
Mid-Layer: Connection Manager Abstraction (CMA), SA client, SMA, connection manager, InfiniBand verbs / API
Provider: hardware-specific driver

Hardware: InfiniBand HCA

Legend:
IPoIB: IP over InfiniBand
SDP: Sockets Direct Protocol
SRP: SCSI RDMA Protocol (Initiator)
MPI: Message Passing Interface
UDAPL: User Direct Access Programming Lib
SA: Subnet Administrator
MAD: Management Datagram
SMA: Subnet Manager Agent
HCA: Host Channel Adapter


Supported Protocols

This section describes the supported protocols and includes the following topics:

•IPoIB

•SRP

•SDP

Protocol here refers to software in the networking layer in kernel space.

IPoIB

The IPoIB protocol passes IP traffic over the IB network. Configuring IPoIB requires similar steps to configuring IP on an Ethernet network. SDP relies on IPoIB to resolve IP addresses. (See the “SDP” section on page 1-3.)

To configure IPoIB, you assign an IP address and subnet mask to each IB port. IPoIB automatically adds IB interface names to the IP network configuration. To configure IPoIB, see Chapter 3, “IP over IB Protocol.”

SRP

SRP runs SCSI commands across RDMA-capable networks so that IB hosts can communicate with Fibre Channel storage devices and IB-attached storage devices. SRP requires an SFS with a Fibre Channel gateway to connect the host to Fibre Channel storage. In conjunction with an SFS, SRP disguises IB-attached hosts as Fibre Channel-attached hosts. The topology transparency feature lets Fibre Channel storage communicate seamlessly with IB-attached hosts (known as SRP hosts). For configuration instructions, see Chapter 4, “SCSI RDMA Protocol.”

SDP

SDP is an IB-specific upper-layer protocol that defines a standard wire protocol to support stream-socket networking over an IB fabric. SDP enables sockets-based applications to take advantage of the enhanced performance features provided by IB, and it achieves lower latency and higher bandwidth than the same applications running over IPoIB. You can configure the driver to automatically translate TCP to SDP based on a source IP, a destination, or an application name. For configuration instructions, see Chapter 5, “Sockets Direct Protocol.”


Supported APIs

This section describes the supported APIs and includes the following topics:

•MVAPICH MPI

•uDAPL

•Intel MPI

•HP MPI

API refers to software in the networking layer in user space.

MVAPICH MPI

MPI is a standard library interface for C, C++, and Fortran that is used to implement message-passing programs. MPI allows the coordination of a program running as multiple processes in a distributed memory environment. This document includes setup and configuration information for MVAPICH MPI. For more information, see Chapter 7, “MVAPICH MPI.”

uDAPL

uDAPL defines a single set of user-level APIs for all RDMA-capable transports. The uDAPL mission is to define a transport-independent and platform-standard set of APIs that exploits RDMA capabilities such as those present in IB. For more information, see Chapter 6, “uDAPL.”

Intel MPI

Cisco tests and supports the SFS IB host drivers with Intel MPI. The Intel MPI implementation is available for separate purchase from Intel. For more information, visit the following URL:

http://www.intel.com/go/mpi

HP MPI

Cisco tests and supports the SFS IB host drivers with HP MPI for Linux. The HP MPI implementation is available for separate purchase from Hewlett Packard. For more information, visit the following URL:

http://www.hp.com/go/mpi

HCA Utilities and Diagnostics

The HCA utilities provide basic tools to view HCA attributes and run preliminary troubleshooting tasks. For more information, see Chapter 8, “HCA Utilities and Diagnostics.”


Chapter 2

Installing Host Drivers

This chapter describes how to install host drivers and includes the following sections:

•Introduction, page 2-1

•Contents of ISO Image, page 2-2

•Installing Host Drivers from an ISO Image, page 2-2

•Uninstalling Host Drivers from an ISO Image, page 2-3

Note See the “Root and Non-root Conventions in Examples” section on page ix for details about the significance of prompts used in the examples in this chapter.

Introduction

The Cisco Linux IB driver is delivered as an ISO image. The ISO image contains the binary RPMs for selected Linux distributions. The Cisco Linux IB drivers distribution contains an installation script called tsinstall. The install script performs the necessary steps to accomplish the following:

•Discover the currently installed kernel

•Uninstall any IB stacks that are part of the standard operating system distribution

•Install the Cisco binary RPMs if they are available for the current kernel

•Identify the currently installed IB HCA and perform the required firmware updates

Note For specific details about which binary RPMs are included and which standard Linux distributions and kernels are currently supported, see the Release Notes for Linux Host Drivers Release 3.2.0.
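The kernel check that tsinstall performs can be approximated by hand, for example after a kernel upgrade. The sketch below assumes that kernel-module packages follow the naming pattern seen in the installation example in this chapter (topspin-ib-mod-, then the distribution, the kernel release, and the driver version). The function name has_kernel_module_rpm is hypothetical and is not part of the Cisco distribution.

```shell
# Hypothetical helper, not part of tsinstall.
# Reads package names on stdin and succeeds if one of them is a
# kernel-module package built for the kernel release given as $1.
has_kernel_module_rpm() {
    grep '^topspin-ib-mod-' | grep -qF -- "-$1-"
}

# Example use on an installed host (not run here):
#   rpm -qa | has_kernel_module_rpm "$(uname -r)" \
#       || echo "no host driver modules for the running kernel"
```

If the check fails for the running kernel, the drivers probably need to be reinstalled for that kernel.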


Contents of ISO Image

The ISO image contains the following directories and files:

•docs/

This directory contains the related documents.

•tsinstall

This is the installation script.

•redhat/

This directory contains the binary RPMs for Red Hat Enterprise Linux.

•suse/

This directory contains the binary RPMs for SUSE Linux Enterprise Server.

Installing Host Drivers from an ISO Image

See the Cisco InfiniBand Host Channel Adapter Hardware Installation Guide to correctly install HCAs. To install host drivers from an ISO image, perform the following steps:

Note If you upgrade your Linux kernel after installing these host drivers, you need to reinstall the host drivers.

Step 1 Verify that the system has a viable HCA installed by ensuring that you can see the InfiniHost entries in the display.

The following example shows that the installed HCA is viable:

host1# lspci -v | grep Mellanox

06:01.0 PCI bridge: Mellanox Technologies MT23108 PCI Bridge (rev a0) (prog-if 00 [Normal decode])

07:00.0 InfiniBand: Mellanox Technologies MT23108 InfiniHost (rev a0) Subsystem: Mellanox Technologies MT23108 InfiniHost

Step 2 Download an ISO image, and copy it to your network.

You can download an ISO image from http://www.cisco.com/cgi-bin/tablebuild.pl/sfs-linux

Step 3 Use the md5sum utility to confirm the file integrity of your ISO image.

Step 4 Install drivers from an ISO image on your network.

The following example shows how to install host drivers from an ISO image:

host1# mount -o ro,loop topspin-host-3.2.0-136.iso /mnt
host1# /mnt/tsinstall

The following kernels are installed, but do not have drivers available:
    2.6.9-34.EL.x86_64

The following installed packages are out of date and will be upgraded:
    topspin-ib-rhel4-3.2.0-118.x86_64
    topspin-ib-mpi-rhel4-3.2.0-118.x86_64
    topspin-ib-mod-rhel4-2.6.9-34.ELsmp-3.2.0-118.x86_64

The following packages will be installed:
    topspin-ib-rhel4-3.2.0-136.x86_64 (libraries, binaries, etc)
    topspin-ib-mpi-rhel4-3.2.0-136.x86_64 (MPI libraries, source code, docs, etc)
    topspin-ib-mod-rhel4-2.6.9-34.ELsmp-3.2.0-136.x86_64 (kernel modules)

installing 100% ###############################################################

Upgrading HCA 0 HCA.LionMini.A0 to firmware build 3.2.0.136
New Node GUID = 0005ad0000200848
New Port1 GUID = 0005ad0000200849
New Port2 GUID = 0005ad000020084a
Programming HCA firmware... Flash Image Size = 355076
Flashing - EFFFFFFFEPPPPPPPEWWWWWWWEWWWWWWWEWWWWWVVVVVVVVVVVVVVVVVVVVVVVVVVVVVV
Flash verify passed!

Step 5 Run a test to verify whether or not the IB link is established between the respective host and the IB switch.

The following example shows a test run that verifies an established IB link:

host1# /usr/local/topspin/sbin/hca_self_test

---- Performing InfiniBand HCA Self Test ----
Number of HCAs Detected ................ 1
PCI Device Check ....................... PASS
Kernel Arch ............................ x86_64
Host Driver Version .................... rhel4-2.6.9-34.ELsmp-3.2.0-136
Host Driver RPM Check .................. PASS
HCA Type of HCA #0 ..................... LionMini
HCA Firmware on HCA #0 ................. v5.2.000 build 3.2.0.136 HCA.LionMini.A0
HCA Firmware Check on HCA #0 ........... PASS
Host Driver Initialization ............. PASS
Number of HCA Ports Active ............. 2
Port State of Port #0 on HCA #0 ........ UP 4X
Port State of Port #1 on HCA #0 ........ UP 4X
Error Counter Check on HCA #0 .......... PASS
Kernel Syslog Check .................... PASS
Node GUID .............................. 00:05:ad:00:00:20:08:48
------------------ DONE ---------------------

The HCA test script, as shown in the example above, checks for the HCA firmware version, verifies that proper kernel modules are loaded on the IP drivers, shows the state of the HCA ports, shows the counters that are associated with each IB port, and indicates whether or not there are any error messages in the host operating system log files.
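When the self test runs from a script, it can help to reduce the report to the checks that did not pass. The following is a minimal sketch, assuming each result is printed on one line with the status as the last field, as in the example output. The text printed for a failing check is not documented here, so the helper flags anything other than PASS. The function name is hypothetical.

```shell
# Hypothetical helper: reads hca_self_test output on stdin, prints every
# "... Check ..." line whose last field is not PASS, and returns non-zero
# if at least one such line was found.
failed_self_test_checks() {
    awk '/Check/ && $NF != "PASS" { print; bad = 1 } END { exit bad + 0 }'
}

# Example use on the host (not run here):
#   /usr/local/topspin/sbin/hca_self_test | failed_self_test_checks \
#       || echo "one or more self-test checks did not pass"
```

Informational lines such as the port state or the node GUID are ignored because they do not contain the word Check.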

Note To troubleshoot the results of this test, see Chapter 8, “HCA Utilities and Diagnostics.”
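Steps 1 and 3 of the installation procedure can also be scripted. The sketch below is illustrative only: the function names are hypothetical, and the expected MD5 value is assumed to be a checksum published alongside the ISO download, which this guide does not specify.

```shell
# Hypothetical pre-installation helpers; the names are illustrative only.

# Succeeds if the lspci listing on stdin contains an InfiniHost entry (Step 1).
has_infinihost() {
    grep -q 'InfiniHost'
}

# Succeeds if the MD5 digest of file $1 equals the expected digest $2 (Step 3).
verify_md5() {
    actual=$(md5sum "$1" | awk '{ print $1 }')
    [ "$actual" = "$2" ]
}

# Example use on the host (not run here):
#   lspci -v | has_infinihost || echo "no InfiniHost HCA found"
#   verify_md5 topspin-host-3.2.0-136.iso "<published checksum>" \
#       || echo "checksum mismatch, download the image again"
```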

Uninstalling Host Drivers from an ISO Image

The following example shows how to uninstall a host driver from a device:

host1# rpm -e `rpm -qa | grep topspin`
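The one-line command above passes an empty argument list to rpm -e when no matching package is installed, which produces an error. A slightly more defensive variant is sketched below. It uses only the rpm -qa and rpm -e options shown in this guide; the wrapper function names are hypothetical.

```shell
# Hypothetical wrapper around the documented uninstall command.

# Filters a package list on stdin down to the host driver packages.
topspin_packages() {
    grep topspin || true
}

# Lists the installed host driver packages, then removes them (run as root).
uninstall_topspin() {
    pkgs=$(rpm -qa | topspin_packages)
    if [ -z "$pkgs" ]; then
        echo "No topspin packages are installed."
        return 0
    fi
    echo "Removing:"
    echo "$pkgs"
    # Unquoted on purpose so that each package name becomes one argument.
    rpm -e $pkgs
}
```

Printing the list before removal makes it easier to confirm that only the host driver packages are affected.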


Chapter 3

IP over IB Protocol

This chapter describes IP over IB protocol and includes the following sections:

•Introduction, page 3-1

•Manually Configuring IPoIB for Default IB Partition, page 3-2

•Subinterfaces, page 3-2

•Verifying IPoIB Functionality, page 3-5

•IPoIB Performance, page 3-6

•Sample Startup Configuration File, page 3-8

•IPoIB High Availability, page 3-8

Note See the “Root and Non-root Conventions in Examples” section on page ix for details about the significance of prompts used in the examples in this chapter.

Introduction

Configuring IPoIB is similar to configuring IP on an Ethernet network. When you configure IPoIB, you assign an IP address and a subnet mask to each HCA port. The first HCA port on the first HCA in the host is the ib0 interface, the second port is ib1, and so on.

Note To enable these IPoIB settings across reboots, you must explicitly add these settings to the networking interface startup configuration file. For a sample configuration file, see the “Sample Startup Configuration File” section on page 3-8.

See your Linux distribution documentation for additional information about configuring IP addresses.
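On a host with several IB ports, the per-port assignments can be generated from a short list. The sketch below only prints the ifconfig commands, in the form used by the manual configuration procedure in this chapter, so that they can be reviewed before they are run as root. The function name and the example addresses are hypothetical.

```shell
# Hypothetical helper: prints one ifconfig command per interface=address pair.
# $1 is the subnet mask; the remaining arguments look like ib0=192.168.0.1.
ipoib_commands() {
    mask=$1
    shift
    for pair in "$@"; do
        # ${pair%%=*} is the interface name, ${pair#*=} is the IP address.
        printf 'ifconfig %s %s netmask %s\n' "${pair%%=*}" "${pair#*=}" "$mask"
    done
}

# Example (addresses are placeholders):
#   ipoib_commands 255.255.255.0 ib0=192.168.0.1 ib1=192.168.1.1
```

Settings applied this way do not persist across reboots unless they are also added to the startup configuration file.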


Manually Configuring IPoIB for Default IB Partition

To manually configure IPoIB for the default IB partition, perform the following steps:

Step 1 Log in to your Linux host.

Step 2 To configure the interface, enter the ifconfig command with the following items:

•The appropriate IB interface (ib0 or ib1 on a host with one HCA)

•The IP address that you want to assign to the interface

•The netmask keyword

•The subnet mask that you want to assign to the interface

The following example shows how to configure an IB interface:

host1# ifconfig ib0 192.168.0.1 netmask 255.255.255.0

Step 3 (Optional) Verify the configuration by entering the ifconfig command with the appropriate port identifier ib# argument.

The following example shows how to verify the configuration:

host1# ifconfig ib0
ib0       Link encap:Ethernet  HWaddr F8:79:D1:23:9A:2B
          inet addr:192.168.0.1  Bcast:192.168.0.255  Mask:255.255.255.0
          inet6 addr: fe80::9879:d1ff:fe20:f4e7/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:2044  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:9 overruns:0 carrier:0
          collisions:0 txqueuelen:1024
          RX bytes:0 (0.0 b)  TX bytes:0 (0.0 b)

Step 4 Repeat Step 2 and Step 3 on the remaining interface(s).
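When verifying the configuration, the Bcast and Mask fields reported by ifconfig should agree with the address and subnet mask that were entered. A minimal sketch of that arithmetic follows: the broadcast address is the IP address with every host bit set to 1. The function name is hypothetical, and the code assumes well-formed dotted-decimal input.

```shell
# Hypothetical helper: prints the IPv4 broadcast address for address $1
# and subnet mask $2, both in dotted-decimal notation.
# The body runs in a subshell so that changing IFS does not leak out.
ipv4_broadcast() (
    IFS=.
    # Split both arguments on dots: $1..$4 are the address octets,
    # $5..$8 are the mask octets.
    set -- $1 $2
    # For each octet, OR the address with the inverted mask octet.
    printf '%d.%d.%d.%d\n' \
        $(( $1 | (255 - $5) )) \
        $(( $2 | (255 - $6) )) \
        $(( $3 | (255 - $7) )) \
        $(( $4 | (255 - $8) ))
)

# Example:
#   ipv4_broadcast 192.168.0.1 255.255.255.0
```

For 192.168.0.1 with mask 255.255.255.0 this gives 192.168.0.255, which matches the ifconfig output above. With mask 255.255.252.0 the same address would have the broadcast address 192.168.3.255.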

Subinterfaces

This section describes subinterfaces. Subinterfaces divide primary (parent) interfaces to provide traffic isolation. Partition assignments distinguish subinterfaces from parent interfaces. The default Partition Key (p_key), ff:ff, applies to the primary (parent) interface.

This section includes the following topics:

•Creating a Subinterface Associated with a Specific IB Partition

•Removing a Subinterface Associated with a Specific IB Partition
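This guide does not describe the bit layout of a p_key. The following sketch assumes the InfiniBand architecture convention in which the most significant bit of the 16-bit key marks full membership and the remaining 15 bits identify the partition. The function name pkey_decode is hypothetical.

```shell
#!/bin/sh
# pkey_decode is a hypothetical helper. It assumes the InfiniBand convention
# that bit 15 of a p_key is the membership bit (1 = full, 0 = limited) and
# that bits 0-14 hold the partition number.
pkey_decode() {
    # Accept the colon-separated form used in this guide, for example 80:02.
    hex=$(printf '%s' "$1" | tr -d ':')
    value=$(( 0x$hex ))
    if [ $(( value & 0x8000 )) -ne 0 ]; then
        membership=full
    else
        membership=limited
    fi
    printf 'partition=0x%04x membership=%s\n' $(( value & 0x7fff )) "$membership"
}

pkey_decode ff:ff
pkey_decode 80:02
```

Under that assumption, the default p_key ff:ff decodes to partition 0x7fff with full membership, and 80:02 decodes to partition 0x0002 with full membership.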


Creating a Subinterface Associated with a Specific IB Partition

To create a subinterface associated with a specific IB partition, perform the following steps:

Step 1 Create a partition on an IB SFS. Alternatively, you can choose to create the partition of the IB interface on the host first, and then create the partition for the ports on the IB SFS. See the Cisco SFS Product Family Element Manager User Guide for information regarding valid partitions on the IB SFS.

Step 2 Log in to your host.

Step 3 As the root user, add the partition key value to the primary interface with the ipoibcfg add command.

The following example shows how to add partition 80:02 to the primary interface ib0:

host1# /usr/local/topspin/sbin/ipoibcfg add ib0 80:02

Step 4 Verify that the interface is set up by ensuring that ib0.8002 is displayed.

The following example shows how to verify the interface:

host1# ls /sys/class/net

eth0 ib0 ib0.8002 ib1 lo sit0

Step 5 Verify that the interface was created by entering the ifconfig -a command.

The following example shows how to enter the ifconfig -a command:

host1# ifconfig -a
eth0      Link encap:Ethernet  HWaddr 00:30:48:20:D5:D1
          inet addr:172.29.237.206  Bcast:172.29.239.255  Mask:255.255.252.0
          inet6 addr: fe80::230:48ff:fe20:d5d1/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:9091465 errors:0 dropped:0 overruns:0 frame:0
          TX packets:505050 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:1517373743 (1.4 GiB)  TX bytes:39074067 (37.2 MiB)
          Base address:0x3040 Memory:dd420000-dd440000

ib0       Link encap:Ethernet  HWaddr F8:79:D1:23:9A:2B
          inet addr:192.168.0.1  Bcast:192.168.0.255  Mask:255.255.255.0
          inet6 addr: fe80::9879:d1ff:fe20:f4e7/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:2044  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:9 overruns:0 carrier:0
          collisions:0 txqueuelen:1024
          RX bytes:0 (0.0 b)  TX bytes:0 (0.0 b)

ib0.8002  Link encap:Ethernet  HWaddr 00:00:00:00:00:00
          BROADCAST MULTICAST  MTU:2044  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1024
          RX bytes:0 (0.0 b)  TX bytes:0 (0.0 b)

lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING  MTU:16436  Metric:1
          RX packets:378 errors:0 dropped:0 overruns:0 frame:0
          TX packets:378 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:45730 (44.6 KiB)  TX bytes:45730 (44.6 KiB)

sit0      Link encap:IPv6-in-IPv4
          NOARP  MTU:1480  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:0 (0.0 b)  TX bytes:0 (0.0 b)

Verify that you see the ib0.8002 output.

Step 6 Configure the new interface just as you would the parent interface. (See the “Manually Configuring IPoIB for Default IB Partition” section on page 3-2.)

The following example shows how to configure the new interface:

host1# ifconfig ib0.8002 192.168.12.1 netmask 255.255.255.0
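The check in Step 4 can be scripted. The following sketch derives the subinterface name from the parent interface and the partition key, following the naming that this guide shows (ib0 with 80:02 becomes ib0.8002), and then tests for a matching entry under /sys/class/net. The function names subif_name and subif_exists are hypothetical and are not part of ipoibcfg.

```shell
#!/bin/sh
# Hypothetical helpers; neither is part of ipoibcfg.

# Print the subinterface name for a parent interface and partition key,
# following the naming seen in this guide (ib0 + 80:02 -> ib0.8002).
subif_name() {
    printf '%s.%s\n' "$1" "$(printf '%s' "$2" | tr -d ':')"
}

# Succeed if the subinterface has an entry under the given directory.
# The optional third argument overrides the default of /sys/class/net.
subif_exists() {
    [ -e "${3:-/sys/class/net}/$(subif_name "$1" "$2")" ]
}

# Typical use on the host:
#   subif_exists ib0 80:02 && echo "ib0.8002 is present"
```

The optional directory argument exists only so that the check can be exercised on a machine without IB hardware.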

Removing a Subinterface Associated with a Specific IB Partition

To remove a subinterface, perform the following steps:

Step 1 Take the subinterface offline. You cannot remove a subinterface until you bring it down.

The following example shows how to take the subinterface offline:

host1# ifconfig ib0.8002 down

Step 2 As the root user, remove the partition key value from the primary interface with the ipoibcfg del command.

The following example shows how to remove the partition 80:02 from the primary interface ib0:

host1# /usr/local/topspin/sbin/ipoibcfg del ib0 80:02

Step 3 (Optional) Verify that the subinterface no longer appears in the interface list by entering the ifconfig -a command.

The following example shows how to verify that the subinterface no longer appears in the interface list:

host1# ifconfig -a
eth0      Link encap:Ethernet  HWaddr 00:30:48:20:D5:D1
          inet addr:172.29.237.206  Bcast:172.29.239.255  Mask:255.255.252.0
          inet6 addr: fe80::230:48ff:fe20:d5d1/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:9091465 errors:0 dropped:0 overruns:0 frame:0
          TX packets:505050 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:1517373743 (1.4 GiB)  TX bytes:39074067 (37.2 MiB)
          Base address:0x3040 Memory:dd420000-dd440000

ib0       Link encap:Ethernet  HWaddr F8:79:D1:23:9A:2B
          inet addr:192.168.0.1  Bcast:192.168.0.255  Mask:255.255.255.0
          inet6 addr: fe80::9879:d1ff:fe20:f4e7/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:2044  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:9 overruns:0 carrier:0
          collisions:0 txqueuelen:1024
          RX bytes:0 (0.0 b)  TX bytes:0 (0.0 b)

ib0.8002  Link encap:Ethernet  HWaddr 00:00:00:00:00:00
          BROADCAST MULTICAST  MTU:2044  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1024
          RX bytes:0 (0.0 b)  TX bytes:0 (0.0 b)

lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING  MTU:16436  Metric:1
          RX packets:378 errors:0 dropped:0 overruns:0 frame:0
          TX packets:378 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:45730 (44.6 KiB)  TX bytes:45730 (44.6 KiB)

sit0      Link encap:IPv6-in-IPv4
          NOARP  MTU:1480  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:0 (0.0 b)  TX bytes:0 (0.0 b)
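Because a subinterface must be down before you remove it, a script can check the interface state first. The following sketch assumes that the Linux kernel exposes the interface flags in /sys/class/net/<interface>/flags and that the lowest bit (IFF_UP, 0x1) indicates an interface that is up. The function name iface_is_up is hypothetical.

```shell
#!/bin/sh
# iface_is_up is a hypothetical helper. It reads the interface flags from
# sysfs and tests the IFF_UP bit (0x1). The optional second argument
# overrides the default directory of /sys/class/net.
iface_is_up() {
    # Return 2 if the flags file cannot be read (for example, no such interface).
    flags=$(cat "${2:-/sys/class/net}/$1/flags") || return 2
    [ $(( $flags & 0x1 )) -ne 0 ]
}

# Typical use before removing a partition (run as root on the host):
#   if iface_is_up ib0.8002; then ifconfig ib0.8002 down; fi
#   /usr/local/topspin/sbin/ipoibcfg del ib0 80:02
```

The function returns 0 when the interface is up, 1 when it is down, and 2 when the flags cannot be read.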

Verifying IPoIB Functionality

To verify your configuration and your IPoIB functionality, perform the following steps:

Step 1 Log in to your hosts.

Step 2 Assign an IP address to the IB interface on each host by using the ifconfig command.

The following example shows how two IB nodes are used to verify IPoIB functionality. In the following example, IB node 1 is at 192.168.0.1, and IB node 2 is at 192.168.0.2:

host1# ifconfig ib0 192.168.0.1 netmask 255.255.252.0
host2# ifconfig ib0 192.168.0.2 netmask 255.255.252.0

Step 3 Enter the ping command from 192.168.0.1 to 192.168.0.2.

The following example shows how to enter the ping command:

host1# ping -c 5 192.168.0.2
PING 192.168.0.2 (192.168.0.2) 56(84) bytes of data.
64 bytes from 192.168.0.2: icmp_seq=0 ttl=64 time=0.079 ms
64 bytes from 192.168.0.2: icmp_seq=1 ttl=64 time=0.044 ms
64 bytes from 192.168.0.2: icmp_seq=2 ttl=64 time=0.055 ms
64 bytes from 192.168.0.2: icmp_seq=3 ttl=64 time=0.049 ms
64 bytes from 192.168.0.2: icmp_seq=4 ttl=64 time=0.065 ms
--- 192.168.0.2 ping statistics ---
5 packets transmitted, 5 received, 0% packet loss, time 3999ms
rtt min/avg/max/mdev = 0.044/0.058/0.079/0.014 ms, pipe 2
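The ping result can be checked in a script instead of by eye. The following sketch assumes the summary line format shown in this guide, with packet loss reported as a whole-number percentage. Other ping versions may format the line differently. The function name ping_loss is hypothetical.

```shell
#!/bin/sh
# ping_loss is a hypothetical helper. It reads ping output on standard input
# and prints the packet-loss percentage from the summary line. It prints
# nothing if no matching summary line is found.
ping_loss() {
    sed -n 's/.* \([0-9][0-9]*\)% packet loss.*/\1/p'
}

# Typical use from host1 (requires a reachable peer at 192.168.0.2):
#   loss=$(ping -c 5 192.168.0.2 | ping_loss)
#   [ "$loss" = "0" ] && echo "IPoIB connectivity verified"
```

A value of 0 corresponds to the successful result shown in the example, in which all five packets are received.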
