VMware ESXi 6.5.1 Resource Management

vSphere Resource Management

Update 1

VMware vSphere 6.5

VMware ESXi 6.5

vCenter Server 6.5

This document supports the version of each product listed and supports all subsequent versions until the document is replaced by a new edition. To check for more recent editions of this document, see http://www.vmware.com/support/pubs.

EN-002644-00


You can find the most up-to-date technical documentation on the VMware Web site at: http://www.vmware.com/support/

The VMware Web site also provides the latest product updates.

If you have comments about this documentation, submit your feedback to: docfeedback@vmware.com

Copyright © 2006–2017 VMware, Inc. All rights reserved. Copyright and trademark information.

VMware, Inc.

3401 Hillview Ave. Palo Alto, CA 94304 www.vmware.com


Contents

About vSphere Resource Management 7

1 Getting Started with Resource Management 9
Resource Types 9
Resource Providers 9
Resource Consumers 10
Goals of Resource Management 10

2 Configuring Resource Allocation Settings 11
Resource Allocation Shares 11
Resource Allocation Reservation 12
Resource Allocation Limit 12
Resource Allocation Settings Suggestions 13
Edit Resource Settings 13
Changing Resource Allocation Settings Example 14
Admission Control 15

3 CPU Virtualization Basics 17
Software-Based CPU Virtualization 17
Hardware-Assisted CPU Virtualization 18
Virtualization and Processor-Specific Behavior 18
Performance Implications of CPU Virtualization 18

4 Administering CPU Resources 19
View Processor Information 19
Specifying CPU Configuration 19
Multicore Processors 20
Hyperthreading 20
Using CPU Affinity 22
Host Power Management Policies 23

5 Memory Virtualization Basics 27
Virtual Machine Memory 27
Memory Overcommitment 28
Memory Sharing 28
Types of Memory Virtualization 29

6 Administering Memory Resources 33
Understanding Memory Overhead 33
How ESXi Hosts Allocate Memory 34
Memory Reclamation 35
Using Swap Files 36
Sharing Memory Across Virtual Machines 40
Memory Compression 41
Measuring and Differentiating Types of Memory Usage 42
Memory Reliability 43
About System Swap 43

7 Configuring Virtual Graphics 45
View GPU Statistics 45
Add an NVIDIA GRID vGPU to a Virtual Machine 45
Configuring Host Graphics 46
Configuring Graphics Devices 47

8 Managing Storage I/O Resources 49
About Virtual Machine Storage Policies 50
About I/O Filters 50
Storage I/O Control Requirements 50
Storage I/O Control Resource Shares and Limits 51
Set Storage I/O Control Resource Shares and Limits 52
Enable Storage I/O Control 52
Set Storage I/O Control Threshold Value 53
Storage DRS Integration with Storage Profiles 54

9 Managing Resource Pools 55
Why Use Resource Pools? 56
Create a Resource Pool 57
Edit a Resource Pool 58
Add a Virtual Machine to a Resource Pool 58
Remove a Virtual Machine from a Resource Pool 59
Remove a Resource Pool 60
Resource Pool Admission Control 60

10 Creating a DRS Cluster 63
Admission Control and Initial Placement 63
Virtual Machine Migration 65
DRS Cluster Requirements 67
Configuring DRS with Virtual Flash 68
Create a Cluster 68
Edit Cluster Settings 69
Set a Custom Automation Level for a Virtual Machine 71
Disable DRS 72
Restore a Resource Pool Tree 72

11 Using DRS Clusters to Manage Resources 73
Adding Hosts to a Cluster 73
Adding Virtual Machines to a Cluster 75
Removing Virtual Machines from a Cluster 75
Removing a Host from a Cluster 76
DRS Cluster Validity 77
Managing Power Resources 82
Using DRS Affinity Rules 86

12 Creating a Datastore Cluster 91
Initial Placement and Ongoing Balancing 92
Storage Migration Recommendations 92
Create a Datastore Cluster 92
Enable and Disable Storage DRS 93
Set the Automation Level for Datastore Clusters 93
Setting the Aggressiveness Level for Storage DRS 94
Datastore Cluster Requirements 95
Adding and Removing Datastores from a Datastore Cluster 96

13 Using Datastore Clusters to Manage Storage Resources 97
Using Storage DRS Maintenance Mode 97
Applying Storage DRS Recommendations 99
Change Storage DRS Automation Level for a Virtual Machine 100
Set Up Off-Hours Scheduling for Storage DRS 100
Storage DRS Anti-Affinity Rules 101
Clear Storage DRS Statistics 104
Storage vMotion Compatibility with Datastore Clusters 105

14 Using NUMA Systems with ESXi 107
What is NUMA? 107
How ESXi NUMA Scheduling Works 108
VMware NUMA Optimization Algorithms and Settings 109
Resource Management in NUMA Architectures 110
Using Virtual NUMA 110
Specifying NUMA Controls 111

15 Advanced Attributes 115
Set Advanced Host Attributes 115
Set Advanced Virtual Machine Attributes 118
Latency Sensitivity 120
About Reliable Memory 120

16 Fault Definitions 123
Virtual Machine is Pinned 124
Virtual Machine not Compatible with any Host 124
VM/VM DRS Rule Violated when Moving to another Host 124
Host Incompatible with Virtual Machine 124
Host Has Virtual Machine That Violates VM/VM DRS Rules 124
Host has Insufficient Capacity for Virtual Machine 124
Host in Incorrect State 124
Host Has Insufficient Number of Physical CPUs for Virtual Machine 125
Host has Insufficient Capacity for Each Virtual Machine CPU 125
The Virtual Machine Is in vMotion 125
No Active Host in Cluster 125
Insufficient Resources 125
Insufficient Resources to Satisfy Configured Failover Level for HA 125
No Compatible Hard Affinity Host 125
No Compatible Soft Affinity Host 125
Soft Rule Violation Correction Disallowed 125
Soft Rule Violation Correction Impact 126

17 DRS Troubleshooting Information 127
Cluster Problems 127
Host Problems 130
Virtual Machine Problems 133

Index 137


About vSphere Resource Management

vSphere Resource Management describes resource management for VMware® ESXi and vCenter® Server environments.

This documentation focuses on the following topics.

• Resource allocation and resource management concepts

• Virtual machine attributes and admission control

• Resource pools and how to manage them

• Clusters, vSphere® Distributed Resource Scheduler (DRS), vSphere Distributed Power Management (DPM), and how to work with them

• Datastore clusters, Storage DRS, Storage I/O Control, and how to work with them

• Advanced resource management options

• Performance considerations

Intended Audience

This information is for system administrators who want to understand how the system manages resources and how they can customize the default behavior. It’s also essential for anyone who wants to understand and use resource pools, clusters, DRS, datastore clusters, Storage DRS, Storage I/O Control, or vSphere DPM.

This documentation assumes you have a working knowledge of VMware ESXi and of vCenter Server.

Task instructions in this guide are based on the vSphere Web Client. You can also perform most of the tasks in this guide by using the new vSphere Client. The new vSphere Client user interface terminology, topology, and workflow are closely aligned with the same aspects and elements of the vSphere Web Client user interface. You can apply the vSphere Web Client instructions to the new vSphere Client unless otherwise instructed.

Note: Not all functionality in the vSphere Web Client has been implemented for the vSphere Client in the vSphere 6.5 release. For an up-to-date list of unsupported functionality, see Functionality Updates for the vSphere Client Guide at http://www.vmware.com/info?id=1413.


1 Getting Started with Resource Management

To understand resource management, you must be aware of its components, its goals, and how best to implement it in a cluster setting.

Resource allocation settings for a virtual machine (shares, reservation, and limit) are discussed, including how to set and view them. Admission control, the process whereby resource allocation settings are validated against existing resources, is also explained.

Resource management is the allocation of resources from resource providers to resource consumers.

The need for resource management arises from the overcommitment of resources (that is, more demand than capacity) and from the fact that demand and capacity vary over time. Resource management allows you to dynamically reallocate resources so that you can use available capacity more efficiently.

This chapter includes the following topics:

• “Resource Types,” on page 9

• “Resource Providers,” on page 9

• “Resource Consumers,” on page 10

• “Goals of Resource Management,” on page 10

Resource Types

Resources include CPU, memory, power, storage, and network resources.

Note: ESXi manages network bandwidth and disk resources on a per-host basis, using network traffic shaping and a proportional share mechanism, respectively.

Resource Providers

Hosts and clusters, including datastore clusters, are providers of physical resources.

For hosts, available resources are the host’s hardware specification minus the resources used by the virtualization software.

A cluster is a group of hosts. You can create a cluster by using the vSphere Web Client and add multiple hosts to it. vCenter Server manages these hosts’ resources jointly: the cluster owns all of the CPU and memory of all hosts. You can enable the cluster for joint load balancing or failover. See Chapter 10, “Creating a DRS Cluster,” on page 63 for more information.

A datastore cluster is a group of datastores. As with DRS clusters, you can create a datastore cluster by using the vSphere Web Client and add multiple datastores to it. vCenter Server manages the datastore resources jointly. You can enable Storage DRS to balance I/O load and space utilization. See Chapter 12, “Creating a Datastore Cluster,” on page 91.


Resource Consumers

Virtual machines are resource consumers.

The default resource settings assigned during creation work well for most machines. You can later edit the virtual machine settings to allocate a share-based percentage of the total CPU, memory, and storage I/O of the resource provider or a guaranteed reservation of CPU and memory. When you power on that virtual machine, the server checks whether enough unreserved resources are available and allows power on only if there are enough resources. This process is called admission control.

A resource pool is a logical abstraction for flexible management of resources. Resource pools can be grouped into hierarchies and used to hierarchically partition available CPU and memory resources. Accordingly, resource pools can be considered both resource providers and consumers. They provide resources to child resource pools and virtual machines, but are also resource consumers because they consume their parents’ resources. See Chapter 9, “Managing Resource Pools,” on page 55.

ESXi hosts allocate each virtual machine a portion of the underlying hardware resources based on a number of factors:

• Resource limits defined by the user.

• Total available resources for the ESXi host (or the cluster).

• Number of virtual machines powered on and resource usage by those virtual machines.

• Overhead required to manage the virtualization.

Goals of Resource Management

When managing your resources, you must be aware of what your goals are.

In addition to resolving resource overcommitment, resource management can help you accomplish the following:

• Performance Isolation: Prevent virtual machines from monopolizing resources and guarantee predictable service rates.

• Efficient Usage: Exploit undercommitted resources and overcommit with graceful degradation.

• Easy Administration: Control the relative importance of virtual machines, provide flexible dynamic partitioning, and meet absolute service-level agreements.


2 Configuring Resource Allocation Settings

When available resource capacity does not meet the demands of the resource consumers (and virtualization overhead), administrators might need to customize the amount of resources that are allocated to virtual machines or to the resource pools in which they reside.

Use the resource allocation settings (shares, reservation, and limit) to determine the amount of CPU, memory, and storage resources provided for a virtual machine. In particular, administrators have several options for allocating resources.

• Reserve the physical resources of the host or cluster.

• Set an upper bound on the resources that can be allocated to a virtual machine.

• Guarantee that a particular virtual machine is always allocated a higher percentage of the physical resources than other virtual machines.

This chapter includes the following topics:

• “Resource Allocation Shares,” on page 11

• “Resource Allocation Reservation,” on page 12

• “Resource Allocation Limit,” on page 12

• “Resource Allocation Settings Suggestions,” on page 13

• “Edit Resource Settings,” on page 13

• “Changing Resource Allocation Settings Example,” on page 14

• “Admission Control,” on page 15

Resource Allocation Shares

Shares specify the relative importance of a virtual machine (or resource pool). If a virtual machine has twice as many shares of a resource as another virtual machine, it is entitled to consume twice as much of that resource when these two virtual machines are competing for resources.

Shares are typically specified as High, Normal, or Low, and these values specify share values in a 4:2:1 ratio, respectively. You can also select Custom to assign a specific number of shares (which expresses a proportional weight) to each virtual machine.

Specifying shares makes sense only with regard to sibling virtual machines or resource pools, that is, virtual machines or resource pools with the same parent in the resource pool hierarchy. Siblings share resources according to their relative share values, bounded by the reservation and limit. When you assign shares to a virtual machine, you always specify the priority for that virtual machine relative to other powered-on virtual machines.


The following table shows the default CPU and memory share values for a virtual machine. For resource pools, the default CPU and memory share values are the same, but must be multiplied as if the resource pool were a virtual machine with four virtual CPUs and 16 GB of memory.

Table 2-1. Share Values

Setting | CPU share values | Memory share values
High | 2000 shares per virtual CPU | 20 shares per megabyte of configured virtual machine memory
Normal | 1000 shares per virtual CPU | 10 shares per megabyte of configured virtual machine memory
Low | 500 shares per virtual CPU | 5 shares per megabyte of configured virtual machine memory

For example, an SMP virtual machine with two virtual CPUs and 1GB RAM with CPU and memory shares set to Normal has 2x1000=2000 shares of CPU and 10x1024=10240 shares of memory.

Note: Virtual machines with more than one virtual CPU are called SMP (symmetric multiprocessing) virtual machines. ESXi supports up to 128 virtual CPUs per virtual machine.
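The arithmetic behind Table 2-1 can be sketched in a few lines of Python. The function names and lowercase level keys below are illustrative assumptions, not a vSphere API; the resource pool helper applies the rule stated above that a pool is valued as if it were a virtual machine with four virtual CPUs and 16 GB of memory.

```python
# Per-unit share values taken from Table 2-1 (illustrative lookup tables).
CPU_SHARES_PER_VCPU = {"high": 2000, "normal": 1000, "low": 500}
MEM_SHARES_PER_MB = {"high": 20, "normal": 10, "low": 5}


def vm_default_shares(level: str, num_vcpus: int, memory_mb: int) -> tuple[int, int]:
    """Return (cpu_shares, memory_shares) for a preset level (hypothetical helper)."""
    key = level.lower()
    return CPU_SHARES_PER_VCPU[key] * num_vcpus, MEM_SHARES_PER_MB[key] * memory_mb


def pool_default_shares(level: str) -> tuple[int, int]:
    """A resource pool is valued like a VM with 4 vCPUs and 16 GB (16384 MB)."""
    return vm_default_shares(level, num_vcpus=4, memory_mb=16 * 1024)


print(vm_default_shares("Normal", 2, 1024))  # (2000, 10240)
print(pool_default_shares("Normal"))         # (4000, 163840)
```

Because CPU shares scale with the vCPU count, a one-vCPU virtual machine at Normal gets 1000 CPU shares, half of the 2000 that a two-vCPU virtual machine gets at the same level.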

The relative priority represented by each share changes when a new virtual machine is powered on. This affects all virtual machines in the same resource pool. In the following examples, assume that all of the virtual machines have the same number of virtual CPUs.

• Two CPU-bound virtual machines run on a host with 8 GHz of aggregate CPU capacity. Their CPU shares are set to Normal, and each gets 4 GHz.

• A third CPU-bound virtual machine is powered on. Its CPU shares value is set to High, which means it has twice as many shares as the machines set to Normal. The new virtual machine receives 4 GHz and the two other machines get only 2 GHz each. The same result occurs if the user specifies a custom share value of 2000 for the third virtual machine.
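When every sibling is CPU-bound and no reservation or limit applies, the examples above reduce to dividing capacity in proportion to shares. A minimal sketch, assuming single-vCPU virtual machines so that Normal is 1000 shares and High is 2000; `split_by_shares` is a hypothetical helper, not part of ESXi:

```python
def split_by_shares(capacity_mhz: float, shares: list[int]) -> list[float]:
    """Divide capacity among competing siblings in proportion to their shares."""
    total = sum(shares)
    return [capacity_mhz * s / total for s in shares]


# Two Normal virtual machines on an 8 GHz host.
print(split_by_shares(8000, [1000, 1000]))        # [4000.0, 4000.0]
# A third virtual machine powers on with High (or Custom = 2000) shares.
print(split_by_shares(8000, [1000, 1000, 2000]))  # [2000.0, 2000.0, 4000.0]
```

No virtual machine's share count changed between the two calls; only the total grew from 2000 to 4000, which is why each Normal share is worth half as much after the third machine powers on.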

Resource Allocation Reservation

A reservation specifies the guaranteed minimum allocation for a virtual machine.

vCenter Server or ESXi allows you to power on a virtual machine only if there are enough unreserved resources to satisfy the reservation of the virtual machine. The server guarantees that amount even when the physical server is heavily loaded. The reservation is expressed in concrete units (megahertz or megabytes).

For example, assume you have 2GHz available and specify a reservation of 1GHz for VM1 and 1GHz for VM2. Now each virtual machine is guaranteed to get 1GHz if it needs it. However, if VM1 is using only 500MHz, VM2 can use 1.5GHz.

Reservation defaults to 0. You can specify a reservation if you need to guarantee that the minimum required amounts of CPU or memory are always available for the virtual machine.

Resource Allocation Limit

Limit specifies an upper bound for CPU, memory, or storage I/O resources that can be allocated to a virtual machine.

A server can allocate more than the reservation to a virtual machine, but never allocates more than the limit, even if there are unused resources on the system. The limit is expressed in concrete units (megahertz, megabytes, or I/O operations per second).

CPU, memory, and storage I/O resource limits default to unlimited. When the memory limit is unlimited, the amount of memory configured for the virtual machine when it was created becomes its effective limit.


In most cases, it is not necessary to specify a limit. There are benefits and drawbacks:

• Benefits: Assigning a limit is useful if you start with a small number of virtual machines and want to manage user expectations, because performance deteriorates as you add more virtual machines. You can simulate having fewer resources available by specifying a limit.

• Drawbacks: You might waste idle resources if you specify a limit. The system does not allow virtual machines to use more resources than the limit, even when the system is underutilized and idle resources are available. Specify the limit only if you have good reasons for doing so.
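The interplay of reservation, limit, and shares can be illustrated with the simplified model below. It is not the ESXi scheduler. It assumes a single pool of CPU capacity in MHz and a known demand for each virtual machine, grants reservations first (never beyond what a virtual machine actually needs), then hands out the remaining capacity by shares using weighted water-filling, capping every virtual machine at the smaller of its demand and its limit. The `VM` class and `allocate` function are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    demand: float             # MHz the VM would consume if unconstrained
    shares: int = 1000
    reservation: float = 0    # guaranteed minimum (MHz)
    limit: float = math.inf   # upper bound (MHz); math.inf models "Unlimited"


def allocate(capacity: float, vms: list[VM]) -> dict[str, float]:
    # A VM never gets more than it demands, and never more than its limit.
    cap = {vm.name: min(vm.demand, vm.limit) for vm in vms}
    # Step 1: satisfy reservations, but only as far as the VM needs them.
    alloc = {vm.name: min(vm.reservation, cap[vm.name]) for vm in vms}
    remaining = capacity - sum(alloc.values())
    # Step 2: distribute the rest by shares among VMs still below their cap.
    active = [vm for vm in vms if alloc[vm.name] < cap[vm.name]]
    while remaining > 1e-9 and active:
        total = sum(vm.shares for vm in active)
        full = {vm.name for vm in active
                if alloc[vm.name] + remaining * vm.shares / total >= cap[vm.name]}
        if not full:
            # Nobody reaches a cap: give each VM its proportional slice and stop.
            for vm in active:
                alloc[vm.name] += remaining * vm.shares / total
            break
        # Cap the saturated VMs, keep their unused slice in the pool, and repeat.
        for name in full:
            remaining -= cap[name] - alloc[name]
            alloc[name] = cap[name]
        active = [vm for vm in active if vm.name not in full]
    return alloc


# Reservation example: VM1 uses only 500 MHz of its 1 GHz reservation.
print(allocate(2000, [VM("VM1", demand=500, reservation=1000),
                      VM("VM2", demand=2000, reservation=1000)]))
# {'VM1': 500, 'VM2': 1500.0}

# Limit drawback: a 2 GHz limit leaves 6 GHz idle on an otherwise empty 8 GHz host.
print(allocate(8000, [VM("Solo", demand=8000, limit=2000)]))
# {'Solo': 2000}
```

The first call reproduces the reservation example earlier in this chapter; the second shows the drawback described above, where the limit holds even though the host has idle capacity.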

Resource Allocation Settings Suggestions

Select resource allocation settings (reservation, limit and shares) that are appropriate for your ESXi environment.

The following guidelines can help you achieve better performance for your virtual machines.

• Use Reservation to specify the minimum acceptable amount of CPU or memory, not the amount you want to have available. The amount of concrete resources represented by a reservation does not change when you change the environment, such as by adding or removing virtual machines. The host assigns additional resources as available based on the limit for your virtual machine, the number of shares and estimated demand.

• When specifying the reservations for virtual machines, do not commit all resources (plan to leave at least 10% unreserved). As you move closer to fully reserving all capacity in the system, it becomes increasingly difficult to make changes to reservations and to the resource pool hierarchy without violating admission control. In a DRS-enabled cluster, reservations that fully commit the capacity of the cluster or of individual hosts in the cluster can prevent DRS from migrating virtual machines between hosts.

• If you expect frequent changes to the total available resources, use Shares to allocate resources fairly across virtual machines. If you use Shares, and you upgrade the host, for example, each virtual machine stays at the same priority (keeps the same number of shares) even though each share represents a larger amount of memory, CPU, or storage I/O resources.

Edit Resource Settings

Use the Edit Resource Settings dialog box to change allocations for memory and CPU resources.

Procedure

1 Browse to the virtual machine in the vSphere Web Client navigator.

2 Right-click and select Edit Resource Settings.

3 Edit the CPU Resources.

Option | Description
Shares | CPU shares for this resource pool with respect to the parent’s total. Sibling resource pools share resources according to their relative share values bounded by the reservation and limit. Select Low, Normal, or High, which specify share values respectively in a 1:2:4 ratio. Select Custom to give each virtual machine a specific number of shares, which expresses a proportional weight.
Reservation | Guaranteed CPU allocation for this resource pool.
Limit | Upper limit for this resource pool’s CPU allocation. Select Unlimited to specify no upper limit.


4 Edit the Memory Resources.

Option | Description
Shares | Memory shares for this resource pool with respect to the parent’s total. Sibling resource pools share resources according to their relative share values bounded by the reservation and limit. Select Low, Normal, or High, which specify share values respectively in a 1:2:4 ratio. Select Custom to give each virtual machine a specific number of shares, which expresses a proportional weight.
Reservation | Guaranteed memory allocation for this resource pool.
Limit | Upper limit for this resource pool’s memory allocation. Select Unlimited to specify no upper limit.

5 Click OK.
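The fields in this dialog can be captured in a small data structure. The sketch below is a hypothetical model, not a vSphere API object. It encodes only rules stated in this chapter: preset Low, Normal, and High levels, Custom requiring an explicit share count, a reservation that defaults to 0, and Unlimited meaning no upper bound. Rejecting a reservation above the limit is an assumption that follows from the definitions, because a guaranteed minimum cannot exceed an upper bound.

```python
from dataclasses import dataclass
from typing import Optional

# Preset levels; their share values are in a 1:2:4 ratio (Low:Normal:High).
PRESET_LEVELS = ("low", "normal", "high")


@dataclass
class ResourceSetting:
    """One resource (CPU in MHz or memory in MB) as edited in the dialog."""
    shares: str = "normal"               # "low", "normal", "high", or "custom"
    custom_shares: Optional[int] = None  # only used when shares == "custom"
    reservation: int = 0                 # guaranteed minimum; defaults to 0
    limit: Optional[int] = None          # None models "Unlimited"

    def problems(self) -> list[str]:
        """Return validation errors; an empty list means the setting is usable."""
        errors = []
        if self.shares not in PRESET_LEVELS and self.shares != "custom":
            errors.append(f"unknown shares level: {self.shares}")
        if self.shares == "custom" and not (self.custom_shares and self.custom_shares > 0):
            errors.append("Custom shares need a positive share count")
        if self.reservation < 0:
            errors.append("reservation cannot be negative")
        if self.limit is not None and self.reservation > self.limit:
            errors.append("reservation exceeds the limit")
        return errors


print(ResourceSetting(shares="high", reservation=500).problems())  # []
print(ResourceSetting(reservation=1000, limit=500).problems())     # ['reservation exceeds the limit']
```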

Changing Resource Allocation Settings—Example

The following example illustrates how you can change resource allocation settings to improve virtual machine performance.

Assume that on an ESXi host, you have created two new virtual machines—one each for your QA (VM-QA) and Marketing (VM-Marketing) departments.

Figure 2-1. Single Host with Two Virtual Machines

[Figure: one host running two virtual machines, VM-QA and VM-Marketing]

In the following example, assume that VM-QA is memory intensive and accordingly you want to change the resource allocation settings for the two virtual machines to:

• Specify that, when system memory is overcommitted, VM-QA can use twice as much CPU and memory resources as the Marketing virtual machine. Set the CPU shares and memory shares for VM-QA to High and for VM-Marketing set them to Normal.

• Ensure that the Marketing virtual machine has a certain amount of guaranteed CPU resources. You can do so by using a reservation setting.

Procedure

1 Browse to the virtual machines in the vSphere Web Client navigator.

2 Right-click VM-QA, the virtual machine for which you want to change shares, and select Edit Settings.

3 Under Virtual Hardware, expand CPU and select High from the Shares drop-down menu.

4 Under Virtual Hardware, expand Memory and select High from the Shares drop-down menu.

5 Click OK.

6 Right-click the marketing virtual machine (VM-Marketing) and select Edit Settings.

7 Under Virtual Hardware, expand CPU and change the Reservation value to the desired number.

8 Click OK.


If you select the cluster’s Resource Reservation tab and click CPU, you should see that the shares for VM-QA are twice those of the other virtual machine. Also, because the virtual machines have not been powered on, the Reservation Used fields have not changed.

Admission Control

When you power on a virtual machine, the system checks the amount of CPU and memory resources that have not yet been reserved. Based on the available unreserved resources, the system determines whether it can guarantee the reservation for which the virtual machine is configured (if any). This process is called admission control.

If enough unreserved CPU and memory are available, or if there is no reservation, the virtual machine is powered on. Otherwise, an Insufficient Resources warning appears.

Note: In addition to the user-specified memory reservation, each virtual machine also has an amount of overhead memory. This extra memory commitment is included in the admission control calculation.

When the vSphere DPM feature is enabled, hosts might be placed in standby mode (that is, powered off) to reduce power consumption. The unreserved resources provided by these hosts are considered available for admission control. If a virtual machine cannot be powered on without these resources, a recommendation to power on sufficient standby hosts is made.
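The admission control check described above can be sketched as follows. This is a simplified model under stated assumptions, not the vCenter Server implementation: it compares aggregate unreserved capacity (ignoring how that capacity is split across individual hosts), adds the virtual machine's overhead memory to its memory reservation as the note above describes, and, when vSphere DPM is enabled, counts the unreserved capacity of standby hosts before giving up. The `Unreserved` class, the `admit` function, the 150 MB overhead figure, and every return string other than Insufficient Resources are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Unreserved:
    cpu_mhz: float
    mem_mb: float


def admit(powered_on: Unreserved, standby: Unreserved, cpu_reservation_mhz: float,
          mem_reservation_mb: float, mem_overhead_mb: float) -> str:
    # Overhead memory counts toward the memory commitment being checked.
    need_cpu = cpu_reservation_mhz
    need_mem = mem_reservation_mb + mem_overhead_mb
    if need_cpu <= powered_on.cpu_mhz and need_mem <= powered_on.mem_mb:
        return "power on"
    # With vSphere DPM, unreserved resources on standby hosts also count.
    if (need_cpu <= powered_on.cpu_mhz + standby.cpu_mhz
            and need_mem <= powered_on.mem_mb + standby.mem_mb):
        return "recommend powering on standby hosts"
    return "Insufficient Resources"


active = Unreserved(cpu_mhz=3000, mem_mb=4096)
standby = Unreserved(cpu_mhz=8000, mem_mb=32768)
print(admit(active, standby, 1000, 2048, 150))           # power on
print(admit(active, standby, 1000, 4096, 150))           # recommend powering on standby hosts
print(admit(active, Unreserved(0, 0), 1000, 4096, 150))  # Insufficient Resources
```

In the second call the 4096 MB reservation alone would fit, but adding the 150 MB of overhead pushes the request past the powered-on hosts' unreserved memory.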


3 CPU Virtualization Basics

CPU virtualization emphasizes performance and runs directly on the processor whenever possible. The underlying physical resources are used whenever possible and the virtualization layer runs instructions only as needed to make virtual machines operate as if they were running directly on a physical machine.

CPU virtualization is not the same thing as emulation. ESXi does not use emulation to run virtual CPUs. With emulation, all operations are run in software by an emulator. A software emulator allows programs to run on a computer system other than the one for which they were originally written. The emulator does this by emulating, or reproducing, the original computer’s behavior by accepting the same data or inputs and achieving the same results. Emulation provides portability and runs software designed for one platform across several platforms.

When CPU resources are overcommitted, the ESXi host time-slices the physical processors across all virtual machines so that each virtual machine runs as if it has its specified number of virtual processors. When an ESXi host runs multiple virtual machines, it allocates to each virtual machine a share of the physical resources.

With the default resource allocation settings, all virtual machines associated with the same host receive an equal share of CPU per virtual CPU. This means that a single-processor virtual machine is assigned only half of the resources of a dual-processor virtual machine.

This chapter includes the following topics:

• “Software-Based CPU Virtualization,” on page 17

• “Hardware-Assisted CPU Virtualization,” on page 18

• “Virtualization and Processor-Specific Behavior,” on page 18

• “Performance Implications of CPU Virtualization,” on page 18

Software-Based CPU Virtualization

With software-based CPU virtualization, the guest application code runs directly on the processor, while the guest privileged code is translated and the translated code runs on the processor.

The translated code is slightly larger and usually runs more slowly than the native version. As a result, guest applications, which have a small privileged code component, run with speeds very close to native. Applications with a significant privileged code component, such as system calls, traps, or page table updates, can run slower in the virtualized environment.


Hardware-Assisted CPU Virtualization

Certain processors provide hardware assistance for CPU virtualization.

When using this assistance, the guest can use a separate mode of execution called guest mode. The guest code, whether application code or privileged code, runs in the guest mode. On certain events, the processor exits out of guest mode and enters root mode. The hypervisor executes in the root mode, determines the reason for the exit, takes any required actions, and restarts the guest in guest mode.

When you use hardware assistance for virtualization, there is no need to translate the code. As a result, system calls or trap-intensive workloads run very close to native speed. Some workloads, such as those involving updates to page tables, lead to a large number of exits from guest mode to root mode. Depending on the number of such exits and total time spent in exits, hardware-assisted CPU virtualization can speed up execution significantly.

Virtualization and Processor-Specific Behavior

Although VMware software virtualizes the CPU, the virtual machine detects the specific model of the processor on which it is running.

Processor models might differ in the CPU features they offer and applications running in the virtual machine can make use of these features. Therefore, it is not possible to use vMotion® to migrate virtual machines between systems running on processors with different feature sets. You can avoid this restriction, in some cases, by using Enhanced vMotion Compatibility (EVC) with processors that support this feature. See the vCenter Server and Host Management documentation for more information.

Performance Implications of CPU Virtualization

CPU virtualization adds varying amounts of overhead depending on the workload and the type of virtualization used.

An application is CPU-bound if it spends most of its time executing instructions rather than waiting for external events such as user interaction, device input, or data retrieval. For such applications, the CPU virtualization overhead includes the additional instructions that must be executed. This overhead takes CPU processing time that the application itself can use. CPU virtualization overhead usually translates into a reduction in overall performance.

For applications that are not CPU-bound, CPU virtualization likely translates into an increase in CPU use. If spare CPU capacity is available to absorb the overhead, such applications can still deliver comparable performance in terms of overall throughput.
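A rough worked example of the two cases above follows. It uses a hypothetical model, not an ESXi formula: virtualization adds a fixed fraction of extra CPU work per unit of application work, and the application can use at most one full physical CPU.

```python
def virtualized_outcome(native_cpu_util: float, overhead: float) -> tuple[float, float]:
    """Return (relative_throughput, cpu_util) after adding virtualization overhead.

    native_cpu_util: fraction of one CPU the application uses natively (0..1].
    overhead: extra CPU work per unit of application work (0.1 means 10%).
    Hypothetical model: required CPU = native_cpu_util * (1 + overhead),
    capped at 1.0. Throughput falls only when the cap is reached.
    """
    required = native_cpu_util * (1 + overhead)
    if required <= 1.0:
        # Spare capacity absorbs the overhead: same throughput, more CPU use.
        return 1.0, required
    # CPU-bound: the CPU is saturated, so throughput drops proportionally.
    return 1.0 / required, 1.0


print(virtualized_outcome(1.0, 0.10))  # CPU-bound: throughput drops to about 91%
print(virtualized_outcome(0.5, 0.10))  # not CPU-bound: throughput unchanged, CPU use rises
```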

ESXi supports up to 128 virtual processors (CPUs) for each virtual machine.

Note Deploy single-threaded applications on uniprocessor virtual machines, instead of on SMP virtual machines that have multiple CPUs, for the best performance and resource use.

Single-threaded applications can take advantage only of a single CPU. Deploying such applications in dual-processor virtual machines does not speed up the application. Instead, it causes the second virtual CPU to use physical resources that other virtual machines could otherwise use.


4 Administering CPU Resources

You can configure virtual machines with one or more virtual processors, each with its own set of registers and control structures.

When a virtual machine is scheduled, its virtual processors are scheduled to run on physical processors. The VMkernel Resource Manager schedules the virtual CPUs on physical CPUs, thereby managing the virtual machine’s access to physical CPU resources. ESXi supports virtual machines with up to 128 virtual CPUs.

This chapter includes the following topics:

• “View Processor Information,” on page 19

• “Specifying CPU Configuration,” on page 19

• “Multicore Processors,” on page 20

• “Hyperthreading,” on page 20

• “Using CPU Affinity,” on page 22

• “Host Power Management Policies,” on page 23

View Processor Information

You can access information about current CPU configuration in the vSphere Web Client.

Procedure

1 Browse to the host in the vSphere Web Client navigator.

2 Click Configure and expand Hardware.

3 Select Processors to view the information about the number and type of physical processors and the number of logical processors.

Note In hyperthreaded systems, each hardware thread is a logical processor. For example, a dual-core processor with hyperthreading enabled has two cores and four logical processors.
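The logical processor count shown under Processors follows directly from the topology. Below is a minimal sketch. It assumes a uniform host where every socket has the same number of cores and every core has the same number of hardware threads: two with hyperthreading enabled, one otherwise.

```python
def logical_processors(sockets: int, cores_per_socket: int, hyperthreading: bool) -> int:
    """Number of logical processors the host reports (uniform topology assumed)."""
    threads_per_core = 2 if hyperthreading else 1
    return sockets * cores_per_socket * threads_per_core


# The dual-core example from the note: 1 socket, 2 cores.
print(logical_processors(1, 2, hyperthreading=True))   # 4
print(logical_processors(1, 2, hyperthreading=False))  # 2
```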

Specifying CPU Configuration

You can specify CPU configuration to improve resource management. However, if you do not customize CPU configuration, the ESXi host uses defaults that work well in most situations.

You can specify CPU configuration in the following ways:

• Use the attributes and special features available through the vSphere Web Client. The vSphere Web Client allows you to connect to the ESXi host or a vCenter Server system.


• Use advanced settings under certain circumstances.

• Use the vSphere SDK for scripted CPU allocation.

• Use hyperthreading.

Multicore Processors

Multicore processors provide many advantages for a host performing multitasking of virtual machines.

Intel and AMD have developed processors which combine two or more processor cores into a single integrated circuit (often called a package or socket). VMware uses the term socket to describe a single package which can have one or more processor cores with one or more logical processors in each core.

A dual-core processor, for example, provides almost double the performance of a single-core processor, by allowing two virtual CPUs to run at the same time. Cores within the same processor are typically configured with a shared last-level cache used by all cores, potentially reducing the need to access slower main memory. A shared memory bus that connects a physical processor to main memory can limit performance of its logical processors when the virtual machines running on them are running memory-intensive workloads which compete for the same memory bus resources.

Each logical processor of each processor core is used independently by the ESXi CPU scheduler to run virtual machines, providing capabilities similar to SMP systems. For example, a two-way virtual machine can have its virtual processors running on logical processors that belong to the same core, or on logical processors on different physical cores.

The ESXi CPU scheduler can detect processor topology, including the relationships between sockets, processor cores, and the logical processors on them. The scheduler uses this information to schedule virtual machines and to optimize the placement of virtual CPUs onto different sockets. This optimization can maximize overall cache usage and improve cache affinity by minimizing virtual CPU migrations.

Hyperthreading

Hyperthreading technology allows a single physical processor core to behave like two logical processors. The processor can run two independent applications at the same time. To avoid confusion between logical and physical processors, Intel refers to a physical processor as a socket, and the discussion in this chapter uses that terminology as well.

Intel Corporation developed hyperthreading technology to enhance the performance of its Pentium IV and Xeon processor lines. Hyperthreading technology allows a single processor core to execute two independent threads simultaneously.

While hyperthreading does not double the performance of a system, it can increase performance by better utilizing idle resources, leading to greater throughput for certain important workload types. An application running on one logical processor of a busy core can expect slightly more than half of the throughput that it obtains while running alone on a non-hyperthreaded processor. Hyperthreading performance improvements are highly application-dependent, and some applications might see performance degradation with hyperthreading because many processor resources (such as the cache) are shared between logical processors.

Note On processors with Intel Hyper-Threading technology, each core can have two logical processors that share most of the core's resources, such as memory caches and functional units. Such logical processors are usually called threads.


Many processors do not support hyperthreading and as a result have only one thread per core. For such processors, the number of cores also matches the number of logical processors. The following processors support hyperthreading and have two threads per core.

• Processors based on the Intel Xeon 5500 processor microarchitecture.

• Intel Pentium 4 (HT-enabled)

• Intel Pentium EE 840 (HT-enabled)

Hyperthreading and ESXi Hosts

A host that is enabled for hyperthreading should behave similarly to a host without hyperthreading. You might need to consider certain factors if you enable hyperthreading, however.

ESXi hosts manage processor time intelligently to guarantee that load is spread smoothly across processor cores in the system. Logical processors on the same core have consecutive CPU numbers, so that CPUs 0 and 1 are on the first core together, CPUs 2 and 3 are on the second core, and so on. Virtual machines are preferentially scheduled on two different cores rather than on two logical processors on the same core.

If there is no work for a logical processor, it is put into a halted state, which frees its execution resources and allows the virtual machine running on the other logical processor on the same core to use the full execution resources of the core. The VMware scheduler properly accounts for this halt time, and charges a virtual machine running with the full resources of a core more than a virtual machine running on a half core. This approach to processor management ensures that the server does not violate any of the standard ESXi resource allocation rules.

Consider your resource management needs before you enable CPU affinity on hosts using hyperthreading. For example, if you bind a high priority virtual machine to CPU 0 and another high priority virtual machine to CPU 1, the two virtual machines have to share the same physical core. In this case, it can be impossible to meet the resource demands of these virtual machines. Ensure that any custom affinity settings make sense for a hyperthreaded system.
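The numbering rule described above makes it easy to check whether pinned virtual machines would share a physical core: CPUs 0 and 1 are on the first core, CPUs 2 and 3 on the second, and so on. The helper below is a hypothetical sketch, not an ESXi tool. It assumes two logical processors per core with the consecutive numbering described in the text.

```python
from collections import defaultdict

THREADS_PER_CORE = 2  # assumption: hyperthreading enabled, two threads per core


def core_of(logical_cpu: int) -> int:
    """Physical core index for a logical CPU under consecutive numbering."""
    return logical_cpu // THREADS_PER_CORE


def shared_cores(pinning: dict[str, int]) -> dict[int, list[str]]:
    """Return the cores that more than one pinned virtual machine would share.

    pinning maps a virtual machine name to the single logical CPU it is bound to.
    """
    by_core: dict[int, list[str]] = defaultdict(list)
    for vm, cpu in pinning.items():
        by_core[core_of(cpu)].append(vm)
    return {core: vms for core, vms in by_core.items() if len(vms) > 1}


# The example from the text: two high-priority VMs bound to CPU 0 and CPU 1.
print(shared_cores({"vm-a": 0, "vm-b": 1}))  # {0: ['vm-a', 'vm-b']}
print(shared_cores({"vm-a": 0, "vm-b": 2}))  # {}
```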

Enable Hyperthreading

To enable hyperthreading, you must first enable it in your system's BIOS settings and then turn it on in the vSphere Web Client. Hyperthreading is enabled by default.

Consult your system documentation to determine whether your CPU supports hyperthreading.

Procedure

1 Ensure that your system supports hyperthreading technology.

2 Enable hyperthreading in the system BIOS.

Some manufacturers label this option Logical Processor, while others call it Enable Hyperthreading.

3 Ensure that hyperthreading is enabled for the ESXi host.

a Browse to the host in the vSphere Web Client navigator.

b Click Configure.

c Under System, click Advanced System Settings and select VMkernel.Boot.hyperthreading.

You must restart the host for the setting to take effect. Hyperthreading is enabled if the value is true.

4 Under Hardware, click Processors to view the number of logical processors. If hyperthreading is enabled, the host reports two logical processors for each core.


Using CPU Affinity

By specifying a CPU affinity setting for each virtual machine, you can restrict the assignment of virtual machines to a subset of the available processors in multiprocessor systems. By using this feature, you can assign each virtual machine to processors in the specified affinity set.

CPU affinity specifies virtual machine-to-processor placement constraints. It is different from the relationship created by a VM-VM or VM-Host affinity rule, which specifies virtual machine-to-virtual machine or virtual machine-to-host placement constraints.

In this context, the term CPU refers to a logical processor on a hyperthreaded system and refers to a core on a non-hyperthreaded system.

The CPU affinity setting for a virtual machine applies to all of the virtual CPUs associated with the virtual machine and to all other threads (also known as worlds) associated with the virtual machine. Such virtual machine threads perform processing required for emulating mouse, keyboard, screen, CD-ROM, and miscellaneous legacy devices.

In some cases, such as display-intensive workloads, significant communication might occur between the virtual CPUs and these other virtual machine threads. Performance might degrade if the virtual machine's affinity setting prevents these additional threads from being scheduled concurrently with the virtual machine's virtual CPUs. Examples of this include a uniprocessor virtual machine with affinity to a single CPU or a two-way SMP virtual machine with affinity to only two CPUs.

For the best performance, when you use manual affinity settings, VMware recommends that you include at least one additional physical CPU in the affinity setting. This allows at least one of the virtual machine's threads to be scheduled at the same time as its virtual CPUs. Examples of this include a uniprocessor virtual machine with affinity to at least two CPUs or a two-way SMP virtual machine with affinity to at least three CPUs.
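The recommendation above amounts to a simple rule: the affinity set should contain at least one more CPU than the virtual machine has virtual CPUs. Below is a minimal sketch of that check. The function name is illustrative and is not part of any VMware API.

```python
def affinity_leaves_headroom(num_vcpus: int, affinity_set: set[int]) -> bool:
    """True if the affinity set has at least one CPU beyond the vCPU count.

    The extra CPU lets the virtual machine's other threads (mouse, keyboard,
    screen, and other device emulation) run at the same time as its vCPUs.
    """
    return len(affinity_set) >= num_vcpus + 1


print(affinity_leaves_headroom(1, {0}))        # False: uniprocessor VM, one CPU
print(affinity_leaves_headroom(1, {0, 2}))     # True
print(affinity_leaves_headroom(2, {0, 2}))     # False: two-way SMP VM, two CPUs
print(affinity_leaves_headroom(2, {0, 2, 4}))  # True
```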

Assign a Virtual Machine to a Specific Processor

Using CPU affinity you can assign a virtual machine to a specific processor. This allows you to restrict the assignment of virtual machines to a specific available processor in multiprocessor systems.

Procedure

1 Find the virtual machine in the vSphere Web Client inventory.

a To find a virtual machine, select a data center, folder, cluster, resource pool, or host.

b Click the Related Objects tab and click Virtual Machines.

2 Right-click the virtual machine and click Edit Settings.

3 Under Virtual Hardware, expand CPU.

4 Under Scheduling Affinity, select physical processor affinity for the virtual machine. Use '-' for ranges and ',' to separate values.

For example, "0, 2, 4-7" would indicate processors 0, 2, 4, 5, 6, and 7.

5 Select the processors where you want the virtual machine to run and click OK.
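The affinity syntax in step 4 can be expanded programmatically, for example to review settings before you apply them. This is a minimal sketch that assumes the format described above: comma-separated values, inclusive '-' ranges, and optional spaces. The parser is illustrative and is not taken from vSphere.

```python
def parse_affinity(spec: str) -> list[int]:
    """Expand an affinity string such as "0, 2, 4-7" into a sorted CPU list."""
    cpus: set[int] = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            start_text, end_text = part.split("-", 1)
            start, end = int(start_text), int(end_text)
            if start > end:
                raise ValueError(f"invalid range: {part!r}")
            cpus.update(range(start, end + 1))  # ranges are inclusive
        else:
            cpus.add(int(part))
    return sorted(cpus)


print(parse_affinity("0, 2, 4-7"))  # [0, 2, 4, 5, 6, 7]
```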


Potential Issues with CPU Affinity

Before you use CPU affinity you might need to consider certain issues.

Potential issues with CPU affinity include:

• For multiprocessor systems, ESXi systems perform automatic load balancing. Avoid manual specification of virtual machine affinity to improve the scheduler’s ability to balance load across processors.

• Affinity can interfere with the ESXi host’s ability to meet the reservation and shares specified for a virtual machine.

• Because CPU admission control does not consider affinity, a virtual machine with manual affinity settings might not always receive its full reservation.

  Virtual machines that do not have manual affinity settings are not adversely affected by virtual machines with manual affinity settings.

• When you move a virtual machine from one host to another, affinity might no longer apply because the new host might have a different number of processors.

• The NUMA scheduler might not be able to manage a virtual machine that is already assigned to certain processors using affinity.

• Affinity can affect the host's ability to schedule virtual machines on multicore or hyperthreaded processors to take full advantage of resources shared on such processors.
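The migration issue above can be checked before a move. This hypothetical sketch assumes that logical CPUs are numbered 0 through N-1 on the destination host. Any CPU in the affinity set at or beyond N does not exist there.

```python
def invalid_affinity_cpus(affinity_set: set[int], host_logical_cpus: int) -> list[int]:
    """Return affinity entries that do not exist on a host with the given
    number of logical CPUs (numbered 0 .. host_logical_cpus - 1)."""
    return sorted(cpu for cpu in affinity_set if not 0 <= cpu < host_logical_cpus)


# A VM pinned to CPUs 8-11 on a 16-CPU host, then moved to an 8-CPU host.
print(invalid_affinity_cpus({8, 9, 10, 11}, 16))  # []
print(invalid_affinity_cpus({8, 9, 10, 11}, 8))   # [8, 9, 10, 11]
```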

Host Power Management Policies

You can apply several power management features in ESXi that the host hardware provides to adjust the balance between performance and power. You can control how ESXi uses these features by selecting a power management policy.

Selecting a high-performance policy provides more absolute performance, but at lower efficiency and performance per watt. Low-power policies provide less absolute performance, but at higher efficiency.

You can select a policy for the host that you manage by using the VMware Host Client. If you do not select a policy, ESXi uses Balanced by default.

Table 4-1. CPU Power Management Policies

Power Management Policy | Description
High Performance | Do not use any power management features.
Balanced (Default) | Reduce energy consumption with minimal performance compromise.
Low Power | Reduce energy consumption at the risk of lower performance.
Custom | User-defined power management policy. Advanced configuration becomes available.

When a CPU runs at a lower frequency, it can also run at a lower voltage, which saves power. This type of power management is typically called Dynamic Voltage and Frequency Scaling (DVFS). ESXi attempts to adjust CPU frequencies so that virtual machine performance is not affected.

When a CPU is idle, ESXi can apply deep halt states, also known as C-states. The deeper the C-state, the less power the CPU uses, but it also takes longer for the CPU to start running again. When a CPU becomes idle, ESXi applies an algorithm to predict the idle state duration and chooses an appropriate C-state to enter. In power management policies that do not use deep C-states, ESXi uses only the shallowest halt state for idle CPUs, C1.


Select a CPU Power Management Policy

You set the CPU power management policy for a host using the vSphere Web Client.

Prerequisites

Verify that the BIOS settings on the host system allow the operating system to control power management (for example, OS Controlled).

Note Some systems have Processor Clocking Control (PCC) technology, which allows ESXi to manage power on the host system even if the host BIOS settings do not specify OS Controlled mode. With this technology, ESXi does not manage P-states directly. Instead, the host cooperates with the BIOS to determine the processor clock rate. HP systems that support this technology have a BIOS setting called Cooperative Power Management that is enabled by default.

If the host hardware does not allow the operating system to manage power, only the Not Supported policy is available. (On some systems, only the High Performance policy is available.)

Procedure

1 Browse to the host in the vSphere Web Client navigator.

2 Click Configure.

3 Under Hardware, select Power Management and click the Edit button.

4 Select a power management policy for the host and click OK.

The policy selection is saved in the host configuration and can be used again at boot time. You can change it at any time, and it does not require a server reboot.

Configure Custom Policy Parameters for Host Power Management

When you use the Custom policy for host power management, ESXi bases its power management policy on the values of several advanced configuration parameters.

Prerequisites

Select Custom for the power management policy, as described in “Select a CPU Power Management Policy,” on page 24.

Procedure

1 Browse to the host in the vSphere Web Client navigator.

2 Click Configure.

3 Under System, select Advanced System Settings.

4 In the right pane, you can edit the power management parameters that affect the Custom policy.

Power management parameters that affect the Custom policy have descriptions that begin with In Custom policy. All other power parameters affect all power management policies.

5 Select the parameter and click the Edit button.

Note The default values of power management parameters match the Balanced policy.

Parameter | Description
Power.UsePStates | Use ACPI P-states to save power when the processor is busy.
Power.MaxCpuLoad | Use P-states to save power on a CPU only when the CPU is busy for less than the given percentage of real time.
Power.MinFreqPct | Do not use any P-states slower than the given percentage of full CPU speed.
Power.UseStallCtr | Use a deeper P-state when the processor is frequently stalled waiting for events such as cache misses.
Power.TimerHz | Controls how many times per second ESXi reevaluates which P-state each CPU should be in.
Power.UseCStates | Use deep ACPI C-states (C2 or below) when the processor is idle.
Power.CStateMaxLatency | Do not use C-states whose latency is greater than this value.
Power.CStateResidencyCoef | When a CPU becomes idle, choose the deepest C-state whose latency multiplied by this value is less than the host's prediction of how long the CPU will remain idle. Larger values make ESXi more conservative about using deep C-states, while smaller values are more aggressive.
Power.CStatePredictionCoef | A parameter in the ESXi algorithm for predicting how long a CPU that becomes idle will remain idle. Changing this value is not recommended.
Power.PerfBias | Performance Energy Bias Hint (Intel-only). Sets an MSR on Intel processors to an Intel-recommended value. Intel recommends 0 for high performance, 6 for balanced, and 15 for low power. Other values are undefined.

6 Click OK.
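The Power.UseCStates, Power.CStateMaxLatency, and Power.CStateResidencyCoef descriptions above imply a selection rule. The sketch below illustrates that documented rule and is not ESXi source code. The C-state list, the latencies, and the predicted idle time are hypothetical inputs, and the host's own idle-time prediction (governed by Power.CStatePredictionCoef) is not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CState:
    name: str
    latency_us: float  # wake-up latency; deeper states take longer to exit


def choose_cstate(states: list[CState], predicted_idle_us: float,
                  residency_coef: float, max_latency_us: float,
                  use_cstates: bool = True) -> CState:
    """Pick a C-state for a CPU that has just become idle.

    states is ordered from shallowest (C1) to deepest. States whose latency
    exceeds max_latency_us (Power.CStateMaxLatency) are skipped. Among the
    rest, the deepest state whose latency * residency_coef
    (Power.CStateResidencyCoef) is below the predicted idle time wins.
    C1 is the fallback in this sketch.
    """
    chosen = states[0]
    if not use_cstates:  # Power.UseCStates off: shallowest halt state only
        return chosen
    for state in states[1:]:
        if state.latency_us > max_latency_us:
            continue
        if state.latency_us * residency_coef < predicted_idle_us:
            chosen = state
    return chosen


# Hypothetical latencies, for illustration only.
STATES = [CState("C1", 1), CState("C2", 50), CState("C3", 200)]
print(choose_cstate(STATES, 1000, residency_coef=2, max_latency_us=500).name)   # C3
print(choose_cstate(STATES, 1000, residency_coef=10, max_latency_us=500).name)  # C2
print(choose_cstate(STATES, 1000, residency_coef=2, max_latency_us=100).name)   # C2
```

Raising residency_coef from 2 to 10 moves the choice from C3 to C2. This matches the table: larger values make ESXi more conservative about deep C-states.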


5 Memory Virtualization Basics

Before you manage memory resources, you should understand how they are being virtualized and used by ESXi.

The VMkernel manages all physical RAM on the host. The VMkernel dedicates part of this managed physical RAM for its own use. The rest is available for use by virtual machines.

The virtual and physical memory space is divided into blocks called pages. When physical memory is full, the data for virtual pages that are not present in physical memory is stored on disk. Depending on processor architecture, pages are typically 4 KB or 2 MB. See “Advanced Memory Attributes,” on page 116.
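Because memory is managed in fixed-size pages, any address splits into a page number and an offset within that page. Below is a minimal sketch for the two page sizes mentioned above, 4 KB and 2 MB. The address used is arbitrary.

```python
PAGE_4K = 4 * 1024
PAGE_2M = 2 * 1024 * 1024


def split_address(addr: int, page_size: int) -> tuple[int, int]:
    """Return (page_number, offset) for an address and a page size."""
    return addr // page_size, addr % page_size


addr = 0x403A10
print(split_address(addr, PAGE_4K))  # (1027, 2576)
print(split_address(addr, PAGE_2M))  # (2, 14864)
```

With 2 MB pages, the same address range needs far fewer page numbers, which is why large pages reduce the number of mappings to track.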

This chapter includes the following topics:

• “Virtual Machine Memory,” on page 27

• “Memory Overcommitment,” on page 28

• “Memory Sharing,” on page 28

• “Types of Memory Virtualization,” on page 29

Virtual Machine Memory

Each virtual machine consumes memory based on its configured size, plus additional overhead memory for virtualization.

The configured size is the amount of memory that is presented to the guest operating system. This is different from the amount of physical RAM that is allocated to the virtual machine. The latter depends on the resource settings (shares, reservation, limit) and the level of memory pressure on the host.

For example, consider a virtual machine with a configured size of 1GB. When the guest operating system boots, it detects that it is running on a dedicated machine with 1GB of physical memory. In some cases, the virtual machine might be allocated the full 1GB. In other cases, it might receive a smaller allocation. Regardless of the actual allocation, the guest operating system continues to behave as though it is running on a dedicated machine with 1GB of physical memory.

Shares | Specify the relative priority for a virtual machine if more than the reservation is available.

Reservation | Is a guaranteed lower bound on the amount of physical RAM that the host reserves for the virtual machine, even when memory is overcommitted. Set the reservation to a level that ensures the virtual machine has sufficient memory to run efficiently, without excessive paging.

After a virtual machine consumes all of the memory within its reservation, it is allowed to retain that amount of memory, and this memory is not reclaimed, even if the virtual machine becomes idle. Some guest operating systems (for example, Linux) might not access all of the configured memory immediately after booting. Until the virtual machine consumes all of the memory within its reservation, the VMkernel can allocate any unused portion of its reservation to other virtual machines. However, after the guest’s workload increases and the virtual machine consumes its full reservation, it is allowed to keep this memory.

Limit | Is an upper bound on the amount of physical RAM that the host can allocate to the virtual machine. The virtual machine’s memory allocation is also implicitly limited by its configured size.
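The relationship between configured size, reservation, and limit can be summarized as a range. This is a simplified sketch for a single virtual machine that ignores overhead memory. Shares are not modeled because they only matter when virtual machines compete for memory above their reservations. Rejecting a reservation above the effective limit is an assumption of this sketch.

```python
from typing import Optional


def allocation_range(configured_mb: int, reservation_mb: int,
                     limit_mb: Optional[int] = None) -> tuple[int, int]:
    """Return (guaranteed_mb, max_mb) of physical RAM for one virtual machine.

    max_mb: the limit, implicitly capped by the configured size.
    guaranteed_mb: the reservation, which the host honors even when memory
    is overcommitted.
    """
    max_mb = configured_mb if limit_mb is None else min(limit_mb, configured_mb)
    if reservation_mb > max_mb:
        # This sketch treats a reservation above the effective limit as invalid.
        raise ValueError("reservation exceeds the effective upper bound")
    return reservation_mb, max_mb


print(allocation_range(1024, 256))        # (256, 1024): capped by configured size
print(allocation_range(1024, 256, 512))   # (256, 512): the limit is tighter
print(allocation_range(1024, 256, 4096))  # (256, 1024): a limit above configured size has no effect
```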

Memory Overcommitment

For each running virtual machine, the system reserves physical RAM for the virtual machine’s reservation (if any) and for its virtualization overhead.

The total configured memory sizes of all virtual machines may exceed the amount of available physical memory on the host. However, this does not necessarily mean that memory is overcommitted. Memory is overcommitted when the combined working memory footprint of all virtual machines exceeds the host memory size.

Because of the memory management techniques the ESXi host uses, your virtual machines can use more virtual RAM than there is physical RAM available on the host. For example, you can have a host with 2GB memory and run four virtual machines with 1GB memory each. Whether memory is overcommitted in that case depends on how much memory the virtual machines actively use. If all four virtual machines are idle, the combined consumed memory may be well below 2GB. However, if all four 1GB virtual machines are actively consuming memory, their memory footprint may exceed 2GB, and the ESXi host becomes overcommitted.
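The 2GB example above distinguishes configured memory from the memory that virtual machines actively use. Below is a minimal sketch of that distinction. The working-set figures are hypothetical, and overhead memory and reservations are ignored.

```python
def memory_status(host_mb: int, configured_mb: list[int],
                  working_set_mb: list[int]) -> dict[str, bool]:
    """Compare configured and actively used memory against host memory."""
    return {
        # Configured sizes can exceed host memory without real pressure...
        "configured_exceeds_host": sum(configured_mb) > host_mb,
        # ...memory is overcommitted only when the combined working sets do.
        "overcommitted": sum(working_set_mb) > host_mb,
    }


host = 2048
vms = [1024] * 4  # four 1GB virtual machines
print(memory_status(host, vms, [200, 150, 300, 250]))   # {'configured_exceeds_host': True, 'overcommitted': False}
print(memory_status(host, vms, [900, 800, 1000, 700]))  # {'configured_exceeds_host': True, 'overcommitted': True}
```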

Overcommitment makes sense because, typically, some virtual machines are lightly loaded while others are more heavily loaded, and relative activity levels vary over time.

To improve memory utilization, the ESXi host transfers memory from idle virtual machines to virtual machines that need more memory. Use the Reservation or Shares parameter to preferentially allocate memory to important virtual machines. This memory remains available to other virtual machines if it is not in use. ESXi implements various mechanisms such as ballooning, memory sharing, memory compression, and swapping to provide reasonable performance even when host memory is overcommitted.

An ESXi host can run out of memory if virtual machines consume all reservable memory in a memory overcommitted environment. Although the powered-on virtual machines are not affected, a new virtual machine might fail to power on due to lack of memory.

Note All virtual machine memory overhead is also considered reserved.

In addition, memory compression is enabled by default on ESXi hosts to improve virtual machine performance when memory is overcommitted as described in “Memory Compression,” on page 41.

Memory Sharing

Memory sharing is a proprietary ESXi technique that can help achieve greater memory density on a host.

Memory sharing relies on the observation that several virtual machines might be running instances of the same guest operating system. These virtual machines might have the same applications or components loaded, or contain common data. In such cases, a host uses a proprietary Transparent Page Sharing (TPS) technique to eliminate redundant copies of memory pages. With memory sharing, a workload running on a virtual machine often consumes less memory than it might when running on physical machines. As a result, higher levels of overcommitment can be supported efficiently. The amount of memory saved by memory sharing depends on the workload. A workload that consists of nearly identical virtual machines might free up more memory, while a more diverse workload might result in a lower percentage of memory savings.

Note Due to security concerns, inter-virtual machine transparent page sharing is disabled by default, and page sharing is restricted to intra-virtual machine memory sharing. Page sharing does not occur across virtual machines; it occurs only inside a virtual machine. See “Sharing Memory Across Virtual Machines,” on page 40 for more information.
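The idea behind transparent page sharing can be sketched in simplified form. Pages with identical contents are found by hashing, confirmed by a full comparison, and backed by a single copy. This is an illustrative model, not VMware's implementation. It omits copy-on-write handling for when a shared page is later written. It also works on the pages of one virtual machine, matching the default intra-VM behavior described in the note.

```python
import hashlib


def share_pages(pages: list[bytes]) -> tuple[list[int], int]:
    """Map each guest page to a backing copy, deduplicating identical contents.

    Returns (backing_index_per_page, number_of_backing_copies).
    """
    by_hash: dict[bytes, list[int]] = {}  # content hash -> indexes into backing
    backing: list[bytes] = []
    mapping: list[int] = []
    for page in pages:
        digest = hashlib.sha256(page).digest()
        candidates = by_hash.setdefault(digest, [])
        # A hash match is only a hint; confirm with a full byte comparison.
        match = next((i for i in candidates if backing[i] == page), None)
        if match is None:
            backing.append(page)
            match = len(backing) - 1
            candidates.append(match)
        mapping.append(match)
    return mapping, len(backing)


PAGE = 4096
zero = bytes(PAGE)
code = b"\x90" * PAGE
mapping, copies = share_pages([zero, code, zero, zero, code])
print(mapping, copies)  # [0, 1, 0, 0, 1] 2
```

Five guest pages are backed by two physical copies here. A workload with fewer identical pages would show a smaller saving.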

Types of Memory Virtualization

There are two types of memory virtualization: software-based and hardware-assisted memory virtualization.

Because of the extra level of memory mapping introduced by virtualization, ESXi can effectively manage memory across all virtual machines. Some of the physical memory of a virtual machine might be mapped to shared pages or to pages that are unmapped, or swapped out.

A host performs virtual memory management without the knowledge of the guest operating system and without interfering with the guest operating system’s own memory management subsystem.

The VMM for each virtual machine maintains a mapping from the guest operating system's physical memory pages to the physical memory pages on the underlying machine. (VMware refers to the underlying host physical pages as “machine” pages and the guest operating system’s physical pages as “physical” pages.)

Each virtual machine sees a contiguous, zero-based, addressable physical memory space. The underlying machine memory on the server used by each virtual machine is not necessarily contiguous.

For both software-based and hardware-assisted memory virtualization, the guest virtual-to-guest physical mappings are managed by the guest operating system. The hypervisor is only responsible for translating guest physical addresses to machine addresses. Software-based memory virtualization combines the guest's virtual-to-physical mappings with the hypervisor's physical-to-machine mappings in software. It saves the resulting virtual-to-machine mappings in shadow page tables managed by the hypervisor. Hardware-assisted memory virtualization uses a hardware facility to generate the combined mappings from the guest's page tables and the nested page tables maintained by the hypervisor.
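The composition that software-based memory virtualization performs can be shown with page-number dictionaries. This toy sketch assumes single-level tables keyed by page number; real x86 page tables are multi-level. The page numbers are arbitrary.

```python
def build_shadow_table(guest_pt: dict[int, int], pmap: dict[int, int]) -> dict[int, int]:
    """Compose guest virtual->guest physical with guest physical->machine.

    guest_pt: maintained by the guest OS (virtual page -> "physical" page).
    pmap: maintained by the VMM ("physical" page -> machine page).
    In this toy model, entries whose physical page has no machine page
    (for example, a swapped-out page) are left out of the shadow table.
    """
    return {gva: pmap[gpa] for gva, gpa in guest_pt.items() if gpa in pmap}


guest_pt = {0: 5, 1: 6, 2: 7}
pmap = {5: 102, 6: 340}  # guest physical page 7 has no machine page
print(build_shadow_table(guest_pt, pmap))  # {0: 102, 1: 340}
```

Whenever the guest edits guest_pt or the VMM edits pmap, the shadow table must be rebuilt or patched. That is the coherency overhead described below.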

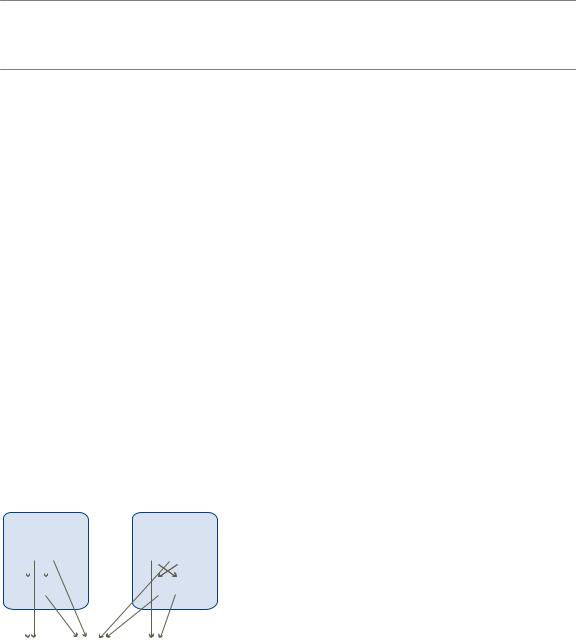

The diagram illustrates the ESXi implementation of memory virtualization.

Figure 5-1. ESXi Memory Mapping

[Diagram: virtual machine 1 and virtual machine 2, with pages labeled a, b, and c shown at three levels: guest virtual memory, guest physical memory, and machine memory.]

• The boxes represent pages, and the arrows show the different memory mappings.

• The arrows from guest virtual memory to guest physical memory show the mapping maintained by the page tables in the guest operating system. (The mapping from virtual memory to linear memory for x86-architecture processors is not shown.)

• The arrows from guest physical memory to machine memory show the mapping maintained by the VMM.


• The dashed arrows show the mapping from guest virtual memory to machine memory in the shadow page tables, also maintained by the VMM. The underlying processor running the virtual machine uses the shadow page table mappings.

Software-Based Memory Virtualization

ESXi virtualizes guest physical memory by adding an extra level of address translation.

• The VMM maintains the combined virtual-to-machine page mappings in the shadow page tables. The shadow page tables are kept up to date with the guest operating system's virtual-to-physical mappings and physical-to-machine mappings maintained by the VMM.

• The VMM intercepts virtual machine instructions that manipulate guest operating system memory management structures so that the actual memory management unit (MMU) on the processor is not updated directly by the virtual machine.

• The shadow page tables are used directly by the processor's paging hardware.

• There is non-trivial computation overhead for maintaining the coherency of the shadow page tables. The overhead is more pronounced when the number of virtual CPUs increases.

This approach to address translation allows normal memory accesses in the virtual machine to execute without adding address translation overhead, after the shadow page tables are set up. Because the translation look-aside buffer (TLB) on the processor caches direct virtual-to-machine mappings read from the shadow page tables, no additional overhead is added by the VMM to access the memory. Note that software MMU has a higher overhead memory requirement than hardware MMU. Hence, to support software MMU, the maximum overhead supported for virtual machines in the VMkernel needs to be increased. In some cases, software memory virtualization may have some performance benefit over the hardware-assisted approach if the workload induces a large number of TLB misses.

Performance Considerations

The use of two sets of page tables has these performance implications.

• No overhead is incurred for regular guest memory accesses.

• Additional time is required to map memory within a virtual machine, which happens when:

  ◦ The virtual machine operating system is setting up or updating virtual address to physical address mappings.

  ◦ The virtual machine operating system is switching from one address space to another (context switch).

• Like CPU virtualization, memory virtualization overhead depends on workload.

Hardware-Assisted Memory Virtualization

Some CPUs, such as AMD SVM-V and the Intel Xeon 5500 series, provide hardware support for memory virtualization by using two layers of page tables.

The first layer of page tables stores guest virtual-to-physical translations, while the second layer of page tables stores guest physical-to-machine translation. The TLB (translation look-aside buffer) is a cache of translations maintained by the processor's memory management unit (MMU) hardware. A TLB miss is a miss in this cache and the hardware needs to go to memory (possibly many times) to find the required translation. For a TLB miss to a certain guest virtual address, the hardware looks at both page tables to translate guest virtual address to machine address. The first layer of page tables is maintained by the guest operating system. The VMM only maintains the second layer of page tables.
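The two-layer lookup on a TLB miss can be sketched the same way. This toy model is an assumption and does not describe any specific processor. It uses single-level tables and a dictionary as the TLB. Real hardware walks multi-level tables in both layers, which is why one miss can require many memory accesses.

```python
class NestedMMU:
    """Toy model of hardware-assisted translation with a TLB."""

    def __init__(self, guest_pt: dict[int, int], nested_pt: dict[int, int]):
        self.guest_pt = guest_pt    # first layer, maintained by the guest OS
        self.nested_pt = nested_pt  # second layer, maintained by the VMM
        self.tlb: dict[int, int] = {}  # guest virtual page -> machine page
        self.misses = 0

    def translate(self, gva_page: int) -> int:
        if gva_page in self.tlb:
            return self.tlb[gva_page]  # TLB hit: no table walk
        self.misses += 1
        # A missing entry raises KeyError, standing in for a page fault.
        gpa_page = self.guest_pt[gva_page]       # walk the guest page table
        machine_page = self.nested_pt[gpa_page]  # then the nested page table
        self.tlb[gva_page] = machine_page        # cache the combined result
        return machine_page


mmu = NestedMMU(guest_pt={0: 5, 1: 6}, nested_pt={5: 102, 6: 340})
print(mmu.translate(0), mmu.translate(0), mmu.translate(1), mmu.misses)  # 102 102 340 2
```

Unlike the shadow-table approach, the VMM never builds a combined table here. The hardware composes the two layers only on a miss.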
