CentricStor V3.1D

User Guide

Edition July 2007

Comments… Suggestions… Corrections…

The User Documentation Department would like to know your opinion on this manual. Your feedback helps us optimize our documentation to suit your individual needs.

Feel free to send us your comments by e-mail to:

manuals@fujitsu-siemens.com

Certified documentation according to DIN EN ISO 9001:2000

To ensure a consistently high quality standard and user-friendliness, this documentation was created to meet the regulations of a quality management system which complies with the requirements of the standard DIN EN ISO 9001:2000.

cognitas. Gesellschaft für Technik-Dokumentation mbH

www.cognitas.de

Copyright and Trademarks

Copyright © Fujitsu Siemens Computers GmbH 2007.

All rights reserved.

Delivery subject to availability; right of technical modification reserved.

All hardware and software names used are trademarks of their respective manufacturers.

This manual was produced by

cognitas. Gesellschaft für Technik-Dokumentation mbH

www.cognitas.de

This manual is printed on paper treated with chlorine-free bleach.

Contents

1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .19

1.1 Objective and target group for the manual . . . . . . . . . . . . . . . . . . . . . . 20

1.2 Concept of the manual . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

1.3 Notational conventions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

1.4 Note . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

2 CentricStor - Virtual Tape Library . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

2.1 The CentricStor principle . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

2.2 Hardware architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

2.2.1 ISP (Integrated Service Processor) . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

2.2.1.1 VLP (Virtual Library Processor) . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

2.2.1.2 ICP (Integrated Channel Processor) . . . . . . . . . . . . . . . . . . . . . . . . . 28

2.2.1.3 IDP (Integrated Device Processor) . . . . . . . . . . . . . . . . . . . . . . . . . 29

2.2.1.4 ICP_IDP or IUP (Integrated Universal Processor) . . . . . . . . . . . . . . . . . . 29

2.2.2 RAID systems for the Tape Volume Cache . . . . . . . . . . . . . . . . . . . . . . . 30

2.2.3 FibreChannel (FC) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .31

2.2.4 FC switch (fibre channel switch) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

2.2.5 Host connection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .32

2.3 Software architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

2.4 Operation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

2.5 Administering the tape cartridges . . . . . . . . . . . . . . . . . . . . . . . . . . 35

2.5.1 Writing the tape cartridges according to the stacked volume principle . . . . . . . . . 35

2.5.2 Repeated writing of a logical volume onto tape . . . . . . . . . . . . . . . . . . . . . 36

2.5.3 Creating a directory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .36

2.5.4 Reorganization of the tape cartridges . . . . . . . . . . . . . . . . . . . . . . . . . . 37

U41117-J-Z125-7-76

2.6 Procedures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .38

2.6.1 Creating the CentricStor data maintenance . . . . . . . . . . . . . . . . . . . . . . . 38

2.6.2 Issuing a mount job from the host . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

2.6.3 Scratch mount . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

2.7 New system functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

2.8 Standard system functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

2.8.1 Partitioning by volume groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

2.8.2 “Call Home” in the event of an error . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

2.8.3 SNMP support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .45

2.8.4 Exporting and importing tape cartridges . . . . . . . . . . . . . . . . . . . . . . . . . 45

2.8.4.1 Vault attribute and vault status . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

2.8.4.2 Transfer PVG . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .46

2.9 Optional system functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

2.9.1 Compression . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

2.9.2 Multiple library support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .49

2.9.3 Dual Save . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

2.9.4 Extending virtual drives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .52

2.9.5 System administrator’s edition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

2.9.6 Fibre channel connection for load balancing and redundancy . . . . . . . . . . . . . . 52

2.9.7 Automatic VLP failover . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

2.9.8 Cache Mirroring Feature . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

2.9.8.1 General . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

2.9.8.2 Hardware requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

2.9.8.3 Software requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

2.9.8.4 Mirrored RAID systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

2.9.8.5 Presentation of the mirror function in GXCC . . . . . . . . . . . . . . . . . . . . 58

2.9.9 Accounting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

3 Switching CentricStor on/off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

3.1 Switching CentricStor on . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

3.2 Switching CentricStor off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

4 Selected system administrator activities . . . . . . . . . . . . . . . . . . . . . . . 63

4.1 Partitioning on the basis of volume groups . . . . . . . . . . . . . . . . . . . . . 63

4.1.1 General . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

4.1.2 Rules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

4.1.3 System administrator activities . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

4.1.3.1 Adding a logical volume group . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

4.1.3.2 Adding a physical volume group . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

4.1.3.3 Adding logical volumes to a logical volume group . . . . . . . . . . . . . . . . . . 66

4.1.3.4 Adding physical volumes to a physical volume group . . . . . . . . . . . . . . . . 67

4.1.3.5 Assigning an LVG to a PVG . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

4.1.3.6 Removing an assignment between an LVG and a PVG . . . . . . . . . . . . . . . 67

4.1.3.7 Changing logical volumes to another group . . . . . . . . . . . . . . . . . . . . . 68

4.1.3.8 Removing logical volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

4.1.3.9 Removing logical volume groups . . . . . . . . . . . . . . . . . . . . . . . . . . 68

4.1.3.10 Removing physical volumes from a physical volume group . . . . . . . . . . . . . 69

4.1.3.11 Removing physical volume groups . . . . . . . . . . . . . . . . . . . . . . . . . 69

4.2 Cache management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

4.3 Dual Save . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

4.3.1 General . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

4.3.2 System administrator activities . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

4.3.2.1 Assigning a logical volume group to two physical volume groups . . . . . . . . . . 72

4.3.2.2 Removing a Dual Save assignment . . . . . . . . . . . . . . . . . . . . . . . . . 72

4.4 Reorganization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

4.4.1 Why do we need reorganization? . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

4.4.2 How is a physical volume reorganized? . . . . . . . . . . . . . . . . . . . . . . . . . 74

4.4.3 When is a reorganization performed? . . . . . . . . . . . . . . . . . . . . . . . . . . 75

4.4.4 Which physical volume is selected for reorganization? . . . . . . . . . . . . . . . . . 76

4.4.5 Own physical volumes for reorganization backup . . . . . . . . . . . . . . . . . . . . 78

4.4.6 Starting the reorganization of a physical volume . . . . . . . . . . . . . . . . . . . . 78

4.4.7 Configuration parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

4.5 Cleaning physical drives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

4.6 Synchronization of the system time using NTP . . . . . . . . . . . . . . . . . . . 82

5 Operating and monitoring CentricStor . . . . . . . . . . . . . . . . . . . . . . . . 83

5.1 Technical design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

5.1.1 General . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

5.1.2 Principles of operation of GXCC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

5.1.3 Monitoring structure within a CentricStor ISP . . . . . . . . . . . . . . . . . . . . . . 87

5.1.4 Operating modes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .90

5.2 Operator configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

5.2.1 Basic configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .91

5.2.2 Expansion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

5.2.3 GXCC in other systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

5.2.4 Screen display requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

5.2.5 Managing CentricStor via SNMP . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

5.2.5.1 Connection to SNMP management systems . . . . . . . . . . . . . . . . . . . . 92

5.2.5.2 SNMP and GXCC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

5.3 Starting GXCC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 95

5.3.1 Differences to earlier CentricStor versions . . . . . . . . . . . . . . . . . . . . . . . 95

5.3.2 Command line . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .95

5.3.2.1 Explanation of the start parameter -aspect . . . . . . . . . . . . . . . . . . . . . 97

5.3.3 Environment variable XTCC_CLASS . . . . . . . . . . . . . . . . . . . . . . . . . . 98

5.3.4 Passwords . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

5.3.4.1 Optional access control for Observe mode . . . . . . . . . . . . . . . . . . . . . 99

5.3.4.2 Authentication . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .99

5.3.4.3 Suppressing the password query . . . . . . . . . . . . . . . . . . . . . . . . . 100

5.3.4.4 Additional password query . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

5.3.5 Starting the CentricStor console . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

5.3.6 Starting from an X11 server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

5.3.6.1 General notes on the X11 server architecture . . . . . . . . . . . . . . . . . . . 102

5.3.6.2 Using the direct XDMCP interface . . . . . . . . . . . . . . . . . . . . . . . . . 104

5.3.6.3 Starting from a UNIX system . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

5.3.6.4 Starting from a Windows system via Exceed . . . . . . . . . . . . . . . . . . . 105

5.3.6.5 Starting from a Windows/NT system via XVision . . . . . . . . . . . . . . . . . 108

5.3.7 GXCC welcome screen . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

5.3.8 Selecting the CentricStor system . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

5.3.9 Establishing a connection after clicking on OK . . . . . . . . . . . . . . . . . . . . 116

5.3.10 Authentication . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

5.3.11 Software updates . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

6 GXCC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

6.1 Main window . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

6.1.1 Standard . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

6.1.2 Loss of a connection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

6.1.3 Elements of the GXCC main window . . . . . . . . . . . . . . . . . . . . . . . . . 121

6.1.3.1 Title bar . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

6.1.3.2 Footer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

6.1.3.3 Function buttons and displays in the button bar . . . . . . . . . . . . . . . . . . 123

6.1.3.4 System information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

6.1.3.5 Console messages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

6.1.3.6 Function bar . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

6.1.4 Message window . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

6.1.5 Asynchronous errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

6.1.6 Block diagram . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 126

6.1.6.1 Status information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

6.1.6.2 Object information and object-related functions . . . . . . . . . . . . . . . . . . 133

6.1.7 ICP object information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

6.1.8 IDP object information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

6.1.9 Functions of an ISP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

6.1.9.1 Show Details (XTCC) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

6.1.10 Functions for all ISPs of a particular class . . . . . . . . . . . . . . . . . . . . . . . 135

6.1.11 Information about the RAID systems . . . . . . . . . . . . . . . . . . . . . . . . . 136

6.1.12 RAID system functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

6.1.12.1 Show complete RAID status . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

6.1.13 Information on Fibre Channel fabric . . . . . . . . . . . . . . . . . . . . . . . . . . 138

6.1.14 Functions of the Fibre Channel fabric . . . . . . . . . . . . . . . . . . . . . . . . . 138

6.1.14.1 Controller Color Scheme . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

6.1.14.2 Show data fcswitch <Name of the switch> [(trap)] . . . . . . . . . . . . . . . . . 139

6.1.15 Information about the FC connections . . . . . . . . . . . . . . . . . . . . . . . . . 140

6.1.16 Information on the archive systems . . . . . . . . . . . . . . . . . . . . . . . . . . 140

6.1.17 ISP system messages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

6.1.18 SNMP messages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

6.1.19 Configuration Changed . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 142

6.2 Function bar . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

6.2.1 Overview of GXCC functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

6.2.2 File . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

6.2.2.1 Save . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

6.2.2.2 Open . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 146

6.2.2.3 Show . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 146

6.2.2.4 Print . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 146

6.2.2.5 Exit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

6.2.3 Unit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

6.2.3.1 Select . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

6.2.4 Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

6.2.4.1 Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

6.2.4.2 Show Current Aspect . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

6.2.5 Autoscan . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 152

6.2.5.1 Start Autoscan/Stop Autoscan . . . . . . . . . . . . . . . . . . . . . . . . . . . 152

6.2.5.2 Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 153

6.2.6 Tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 154

6.2.6.1 Global Status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 154

6.2.6.2 Get Remote/Expand Local File . . . . . . . . . . . . . . . . . . . . . . . . . . 154

6.2.6.3 Show Remote File . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 156

6.2.6.4 Show System Messages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 158

6.2.6.5 GXCC Update/Revert Tool . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

6.2.7 Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 166

6.2.7.1 RAID Filesystems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 171

6.2.7.2 Logical Volume Groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 173

6.2.7.3 Physical Volume Groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 181

6.2.7.4 Distribute and Activate . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 188

6.2.8 Profile . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 191

6.2.8.1 Add/Select Profile . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 191

6.2.9 Administration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 193

6.2.9.1 Show WWN’s . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 195

6.2.9.2 Show Optional Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 196

6.2.9.3 Show CS Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 197

6.2.9.4 Diagnostic Snapshots . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 197

6.2.9.5 Logical Volume Operations . . . . . . . . . . . . . . . . . . . . . . . . . . . . 202

6.2.9.6 Logical Volume Operations » Show Logical Volumes . . . . . . . . . . . . . . . 203

6.2.9.7 Logical Volume Operations » Show Logical Volumes (physical view) . . . . . . . 207

6.2.9.8 Logical Volume Operations » Change Volume Group . . . . . . . . . . . . . . . 209

6.2.9.9 Logical Volume Operations » Add Logical Volumes . . . . . . . . . . . . . . . . 211

6.2.9.10 Logical Volume Operations » Erase Logical Volumes . . . . . . . . . . . . . . . 213

6.2.9.11 Physical Volume Operations . . . . . . . . . . . . . . . . . . . . . . . . . . . . 215

6.2.9.12 Physical Volume Operations » Show Physical Volumes . . . . . . . . . . . . . . 215

6.2.9.13 Physical Volume Operations » Link/Unlink Volume Groups . . . . . . . . . . . . 221

6.2.9.14 Physical Volume Operations » Add Physical Volumes . . . . . . . . . . . . . . . 223

6.2.9.15 Physical Volume Operations » Erase Physical Volumes . . . . . . . . . . . . . . 226

6.2.9.16 Physical Volume Operations » Reorganize Physical Volumes . . . . . . . . . . . 228

6.2.9.17 Setup for accounting mails . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

6.2.10 Help . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

6.2.10.1 Readme / LIESMICH . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

6.2.10.2 Direct Help / Direkthilfe . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

6.2.10.3 System Messages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

6.2.10.4 About GXCC... . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

6.2.10.5 Revision Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

6.2.10.6 Hardware Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234

6.2.10.7 Online Manual . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 235

7 Global Status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 237

7.1 General . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 237

7.2 Operation of the Global Status Monitor . . . . . . . . . . . . . . . . . . . . . . . 239

7.3 Function bar of the Global Status Monitor . . . . . . . . . . . . . . . . . . . . . 239

7.3.1 File . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

7.3.1.1 Print . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

7.3.1.2 Exit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 240

7.3.2 Config . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 241

7.3.3 Tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 242

7.3.3.1 Global eXtended Control Center . . . . . . . . . . . . . . . . . . . . . . . . . . 242

7.3.3.2 Show Balloon Help Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . 242

7.3.4 Statistics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245

7.3.5 Help . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245

7.4 Global Status button bar . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 246

7.5 Display of the Global Status Monitor . . . . . . . . . . . . . . . . . . . . . . . . 247

7.5.1 Performance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 249

7.5.2 Virtual Components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 251

7.5.3 Physical Components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 254

7.6 History data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 257

7.6.1 General . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 258

7.6.1.1 Recording analog operating data . . . . . . . . . . . . . . . . . . . . . . . . . 258

7.6.1.2 Overview of the displays . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 258

7.6.1.3 Selecting the time period . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 262

7.6.1.4 Selecting the presentation mode . . . . . . . . . . . . . . . . . . . . . . . . . 263

7.6.2 Data which can be called via the function bar . . . . . . . . . . . . . . . . . . . . . 264

7.6.2.1 Statistics » History of . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 264

7.6.2.2 Statistics » History of » Cache Usage . . . . . . . . . . . . . . . . . . . . . . . 265

7.6.2.3 Statistics » History of » Channel/Device Performance . . . . . . . . . . . . . . 266

7.6.2.4 Statistics » Logical Components . . . . . . . . . . . . . . . . . . . . . . . . . . 267

7.6.2.5 Statistics » Logical Components » Logical Drives . . . . . . . . . . . . . . . . . 268

7.6.2.6 Statistics » Logical Components » Logical Volumes (physical view) . . . . . . . . 271

7.6.2.7 Statistics » Logical Components » Logical Volumes (logical view) . . . . . . . . 272

7.6.2.8 Statistics » Logical Components » Logical Volume Groups . . . . . . . . . . . . 273

7.6.2.9 Statistics » Logical Components » Jobs of Logical Volume Groups . . . . . . . . 275

7.6.2.10 Statistics » Physical Components . . . . . . . . . . . . . . . . . . . . . . . . . 276

7.6.2.11 Statistics » Physical Components » Physical Drives . . . . . . . . . . . . . . . 277

7.6.2.12 Statistics » Physical Components » Physical Volumes . . . . . . . . . . . . . . 279

7.6.2.13 Statistics » Physical Components » Physical Volume Groups . . . . . . . . . . . 283

7.6.2.14 Statistics » Physical Components » Jobs of Physical Vol. Groups . . . . . . . . 289

7.6.2.15 Statistics » Physical Components » Reorganization Status . . . . . . . . . . . . 291

7.6.2.16 Statistics » Usage (Accounting) . . . . . . . . . . . . . . . . . . . . . . . . . . 293

7.6.3 Data which can be called via objects of the Global Status . . . . . . . . . . . . . . 297

7.7 History diagrams . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 298

7.7.1 Function/menu bar . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 298

7.7.1.1 File . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 298

7.7.1.2 Date . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 300

7.7.1.3 Time . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 301

7.7.1.4 Range . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 301

7.7.1.5 Run . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 301

7.7.1.6 Mode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 301

7.7.1.7 Profile . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 302

7.7.1.8 Help . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 302

7.7.2 Toolbar . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 302

7.7.3 Status bar . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 303

7.7.4 Diagrams for the throughput (left-hand part of the screen) . . . . . . . . . . . . . . 303

7.7.5 Diagrams for virtual components (central part of the screen) . . . . . . . . . . . . . 305

7.7.5.1 ICP emulations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 305

7.7.5.2 Cache Usage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 309

7.7.6 Diagrams of the physical components (right-hand part of the screen) . . . . . . . . 310

7.7.6.1 IDP statistics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 310

7.7.6.2 Tape pool values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 313

7.7.7 Exporting history data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 314

7.7.8 Command line tool for generating the history data . . . . . . . . . . . . . . . . . . 316

8 XTCC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 325

8.1 General . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 325

8.2 Margins of the main XTCC window . . . . . . . . . . . . . . . . . . . . . . . . . 328

8.2.1 Title bar . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 328

8.2.2 Status bar . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 328

8.3 Function bar . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 330

8.3.1 File . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 331

8.3.1.1 Select . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 331

8.3.1.2 Save . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 331

8.3.1.3 Show . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 331

8.3.1.4 Print . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 332

8.3.1.5 Exit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 334

8.3.2 Unit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 335

8.3.2.1 Select . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 335

8.3.3 Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 336

8.3.3.1 Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 336

8.3.3.2 Toggle Size . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 337

8.3.3.3 Toggle Aspect . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 337

8.3.3.4 Show Current Aspect . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 337

8.3.3.5 Apply Current Aspect . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 337

8.3.4 Autoscan . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 338

8.3.4.1 Start . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 338

8.3.4.2 Stop . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 338

8.3.4.3 Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 339

8.3.4.4 Scan Now . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 340

8.3.4.5 Interaction Timeout . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 340

8.3.5 Tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 341

8.3.5.1 XTCC Communications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 341

8.3.5.2 Get Remote/Expand Local File . . . . . . . . . . . . . . . . . . . . . . . . . . 342

8.3.5.3 Show Remote File . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 342

8.3.5.4 Compare Local Files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 343

8.3.5.5 XTCC Update/Revert . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 343

8.3.6 Profile . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 344

8.3.6.1 Select . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 346

8.3.7 Help . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 348

8.3.7.1 README / LIESMICH . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 348

8.3.7.2 Direct Help / Direkthilfe . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 348

8.3.7.3 Mouse Functions / Maus-Funktionen . . . . . . . . . . . . . . . . . . . . . . . 348

8.3.7.4 About XTCC... . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 349

8.3.7.5 CentricStor User Guide . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 350

8.3.7.6 CentricStor Service Manual . . . . . . . . . . . . . . . . . . . . . . . . . . . . 351

8.4 Elements of the XTCC window . . . . . . . . . . . . . . . . . . . . . . . . . . . 352

8.4.1 Display . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 352

8.4.2 Unexpected errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 354

8.4.3 Message window . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 354

8.4.4 Object-related functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 355

8.4.5 Group display . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 356

8.5 File viewer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 360

8.5.1 Opening the file viewer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 360

8.5.2 Function bar . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 360

8.5.3 File . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 361

8.5.3.1 Open (Text)/Open (Hex) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 361

8.5.3.2 Save As . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 361

8.5.3.3 Re-read . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 361

8.5.3.4 Print . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 361

8.5.3.5 Exit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 361

8.5.4 AutoUpdate . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 362

8.5.4.1 Start . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 362

8.5.4.2 Stop . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 362

8.5.5 AutoPopup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 362

8.5.5.1 Enable . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 362

8.5.5.2 Disable . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 362

8.5.6 Highlight . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 363

8.5.7 Search down/up . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 364

8.5.8 Mode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 365

8.5.8.1 1st Line -> Ruler/Selection -> Ruler . . . . . . . . . . . . . . . . . . . . . . . . 365

8.5.8.2 Text/Hex . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 365

8.5.8.3 Abort . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 365

8.5.8.4 Enlarge Font / Reduce Font . . . . . . . . . . . . . . . . . . . . . . . . . . . . 365

8.5.8.5 Tab Stop Interval . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 366

8.5.9 Help . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 366

8.6 ISP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 367

8.6.1 Object information on the ISP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 367

8.6.2 ISP functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 368

8.6.2.1 Show Revision History . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 369

8.6.2.2 Version Consistency Check . . . . . . . . . . . . . . . . . . . . . . . . . . . . 370

8.6.2.3 Show Diff. Curr./Prev. Version . . . . . . . . . . . . . . . . . . . . . . . . . . . 371

8.6.2.4 Show Node Element Descriptors . . . . . . . . . . . . . . . . . . . . . . . . . 372

8.6.2.5 Show Configuration Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 373

8.6.2.6 Show System Log . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 375

8.6.2.7 Show SNMP Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 375

8.6.2.8 Clean File System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 375

8.7 Internal objects of the ISP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 376

8.7.1 Representation of internal objects . . . . . . . . . . . . . . . . . . . . . . . . . . . 376

8.7.1.1 Hard disk drives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 376

8.7.1.2 CD-ROM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 377

8.7.1.3 Streamer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 377

8.7.1.4 SCSI controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 378

8.7.1.5 RAID controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 378

8.7.2 Functions of the ISP-internal objects . . . . . . . . . . . . . . . . . . . . . . . . . 378

8.7.2.1 Hard disk, CD-ROM, streamer, all internal objects . . . . . . . . . . . . . . . . 378

8.7.2.2 SCSI controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 378

8.7.2.3 RAID controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 378

8.8 ESCON/FICON host adapter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 379

8.8.1 Object information for the ESCON/FICON host adapter . . . . . . . . . . . . . . . . 379

8.8.2 ESCON/FICON host adapter functions . . . . . . . . . . . . . . . . . . . . . . . . 381

8.8.2.1 Show Node ID Details . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 381

8.8.2.2 Show Node Element Descriptors . . . . . . . . . . . . . . . . . . . . . . . . . 382

8.8.2.3 Show Dump (prkdump) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 383

8.9 Emulations of drives connected to OS/390 host adapters . . . . . . . . . . . . 384

8.9.1 Information on emulations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 384

8.9.2 Functions for individual 3490 emulations . . . . . . . . . . . . . . . . . . . . . . . 385

8.9.2.1 Show Error/Transfer Statistics . . . . . . . . . . . . . . . . . . . . . . . . . . . 386

8.9.2.2 Show Short Trace . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 387

8.9.2.3 Show Path Trace . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 388

8.9.2.4 Show Error Log . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 389

8.9.2.5 Show Memory Log . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 390

8.9.3 Functions for all 3490 emulations . . . . . . . . . . . . . . . . . . . . . . . . . . . 390

8.10 Virtual 3490 drives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 391

8.10.1 Object information and error messages for virtual 3490 drives . . . . . . . . . . . . 391

8.10.1.1 Error conditions indicated on the display . . . . . . . . . . . . . . . . . . . . . 392

8.10.1.2 Object information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 393

8.10.1.3 SIM/MIM error messages on virtual devices . . . . . . . . . . . . . . . . . . . . 393

8.10.2 Virtual drive functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 394

8.10.2.1 Show SCSI Sense . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 395

8.10.2.2 Show Medium Info (MIM) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 396

8.10.2.3 Show Service Info (SIM) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 397

8.10.2.4 Unload and Unmount . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 397

8.11 FC-SCSI host adapter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 398

8.11.1 Object information on FC-SCSI host adapters . . . . . . . . . . . . . . . . . . . . . 398

8.11.2 FC-SCSI host adapter functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . 399

8.11.2.1 Perform Link Down/Up Sequence . . . . . . . . . . . . . . . . . . . . . . . . . 399

8.12 Emulations of SCSI drives (VTD) . . . . . . . . . . . . . . . . . . . . . . . . . . 399

8.12.1 Object information on emulations of SCSI devices . . . . . . . . . . . . . . . . . . 399

8.12.2 Functions for individual VTD emulations . . . . . . . . . . . . . . . . . . . . . . . . 401

8.12.2.1 Show Trace . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 401

8.12.3 Functions for all VTD emulations . . . . . . . . . . . . . . . . . . . . . . . . . . . 401

8.13 Virtual SCSI drives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 402

8.13.1 Object information on virtual tape drives . . . . . . . . . . . . . . . . . . . . . . . 402

8.13.2 Virtual generic drive functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 404

8.13.2.1 Show SCSI Sense . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 404

8.13.2.2 Show Medium Info (MIM) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 404

8.13.2.3 Show Service Info (SIM) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 404

8.13.2.4 Unload and Unmount . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 404

8.14 VLS (Virtual Library Service) . . . . . . . . . . . . . . . . . . . . . . . . . . . . 405

8.14.1 Object information on VLSs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 405

8.14.2 Functions for individual VLSs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 406

8.14.2.1 Show Trace . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 406

8.14.3 Global functions for all VLSs of an ISP . . . . . . . . . . . . . . . . . . . . . . . . 406

8.15 VMD (Virtual Mount Daemon) . . . . . . . . . . . . . . . . . . . . . . . . . . . . 407

8.15.1 Object information on the Virtual Mount Daemon (VMD) . . . . . . . . . . . . . . . 407

8.15.2 VMD functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 407

8.16 VLM (Virtual Library Manager) . . . . . . . . . . . . . . . . . . . . . . . . . . . . 408

8.16.1 Object information for the VLM . . . . . . . . . . . . . . . . . . . . . . . . . . . . 408

8.16.2 VLM functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 408

8.16.2.1 Show Cache Status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 408

8.16.2.2 Set HALT Mode/Set RUN Mode . . . . . . . . . . . . . . . . . . . . . . . . . . 410

8.17 RAID systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 411

8.17.1 Object information on RAID systems . . . . . . . . . . . . . . . . . . . . . . . . . 411

8.17.2 Functions of RAID systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 414

8.17.2.1 Show Complete RAID Status (all types) . . . . . . . . . . . . . . . . . . . . . . 414

8.17.2.2 Show Mode Pages (CX500/CX3-20 and FCS80) . . . . . . . . . . . . . . . . . 415

8.17.2.3 Show Mode Page Details . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 415

8.17.2.4 Show Log Pages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 415

8.17.2.5 Show Log Page Details . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 415

8.18 PLM (Physical Library Manager) . . . . . . . . . . . . . . . . . . . . . . . . . . 416

8.18.1 Object information on the PLM . . . . . . . . . . . . . . . . . . . . . . . . . . . . 416

8.18.2 PLM functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 416

8.19 PLS (Physical Library Service) . . . . . . . . . . . . . . . . . . . . . . . . . . . 417

8.19.1 Object information on the PLS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 417

8.19.2 Functions for individual PLSs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 417

8.19.3 Functions for all PLSs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 417

8.20 SCSI archive systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 418

8.20.1 Object information on archive systems . . . . . . . . . . . . . . . . . . . . . . . . 418

8.20.2 SCSI Archive system functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . 419

8.20.2.1 Show Mode Pages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 419

8.20.2.2 Show Mode Page Details . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 419

8.20.2.3 Show Log Pages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 419

8.20.2.4 Show Log Page Details . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 419

8.21 PDS (Physical Device Service) . . . . . . . . . . . . . . . . . . . . . . . . . . . 420

8.21.1 Object information on PDS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 420

8.21.2 PDS functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 420

8.22 SCSI controllers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 421

8.22.1 Object information on SCSI controllers . . . . . . . . . . . . . . . . . . . . . . . . 421

8.22.2 SCSI controller functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 422

8.22.2.1 Rescan own Bus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 422

8.22.2.2 Rescan all Busses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 422

8.23 Cartridge drives (real) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 423

8.23.1 Object information on tape drives . . . . . . . . . . . . . . . . . . . . . . . . . . . 423

8.23.2 Tape drive functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 425

8.23.2.1 Show SCSI Sense . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 426

8.23.2.2 Show Log Pages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 426

8.23.2.3 Show Log Page Details . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 427

8.23.2.4 Show Mode Pages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 427

8.23.2.5 Show Mode Page Details . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 428

8.23.2.6 Show Vital Product Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 429

8.23.2.7 Show Medium Info (MIM) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 431

8.23.2.8 Show Service Info (SIM) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 432

8.23.3 Global functions for tape drives . . . . . . . . . . . . . . . . . . . . . . . . . . . . 432

8.23.3.1 Remove Symbols of all Drives . . . . . . . . . . . . . . . . . . . . . . . . . . . 433

8.24 MSGMGR (Message Manager) . . . . . . . . . . . . . . . . . . . . . . . . . . . . 433

8.24.1 Object information on the Message Manager (MSGMGR) . . . . . . . . . . . . . . 433

8.24.2 MSGMGR functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 433

8.24.2.1 Show Trace . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 434

8.24.2.2 Show Trap Trace . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 434

8.25 PERFLOG . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 435

8.25.1 Object information of PERFLOG . . . . . . . . . . . . . . . . . . . . . . . . . . . . 435

8.25.2 PERFLOG functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 436

8.25.2.1 Show Trace & Logging . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 436

8.26 ACCOUNTD (Account Daemon) . . . . . . . . . . . . . . . . . . . . . . . . . . . 437

8.26.1 Object information of ACCOUNTD . . . . . . . . . . . . . . . . . . . . . . . . . . 437

8.26.2 Functions of the ACCOUNTD . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 437

8.27 MIRRORD (mirror daemon) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 438

8.27.1 Object information of MIRRORD . . . . . . . . . . . . . . . . . . . . . . . . . . . . 438

8.27.2 Functions of MIRRORD . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 438

8.28 S80D (S80 daemon) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 439

8.28.1 Object information of S80D . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 439

8.28.2 Functions of S80D . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 439

8.29 VLPWATCH (VLPwatch daemon) . . . . . . . . . . . . . . . . . . . . . . . . . . 440

8.29.1 Object information of VLPWATCH . . . . . . . . . . . . . . . . . . . . . . . . . . . 440

8.29.2 Functions of VLPWATCH . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 440

9 Explanation of console messages . . . . . . . . . . . . . . . . . . . . . . . . . 441

9.1 General . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 441

9.2 Message lines . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 445

9.2.1 SXCF... (CMF: Cache Mirroring Feature) . . . . . . . . . . . . . . . . . . . . . . . 445

9.2.2 SXCH... (Channel: pcib/pcea) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 447

9.2.3 SXCM... (CHIM) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 449

9.2.4 SXDN... (DNA: Distribute and Activate) . . . . . . . . . . . . . . . . . . . . . . . . 450

9.2.5 SXDT... (DTV File System) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 450

9.2.6 SXFC... (FibreChannel Driver) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 452

9.2.7 SXFP... (FibreChannel Driver) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 454

9.2.8 SXFW... (Firmware) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 455

9.2.9 SXIB... (Info Broker) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 456

9.2.10 SXLA... (LANWATCH) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 458

9.2.11 SXLV... (Log Volume) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 458

9.2.12 SXMM... (Message Manager) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 459

9.2.13 SXPL... (PLM: Physical Library Manager) . . . . . . . . . . . . . . . . . . . . . . . 465

9.2.14 SXPS... (PLS: Physical Library Server) . . . . . . . . . . . . . . . . . . . . . . . . 482

9.2.15 SXRD... (FibreCAT: RAID) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 485

9.2.15.1 Messages of the monitoring daemon for the internal RAID . . . . . . . . . . . . 485

9.2.15.2 FibreCAT S80 messages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 488

9.2.15.3 FibreCAT CX500 and CX3-20 messages . . . . . . . . . . . . . . . . . . . . . 489

9.2.15.4 FibreCAT CX500 and CX3-20 messages . . . . . . . . . . . . . . . . . . . . . 490

9.2.16 SXRP... (RPLM: Recovery Physical Library Manager) . . . . . . . . . . . . . . . . . 491

9.2.17 SXSB... (Sadm Driver: SCSI bus error) . . . . . . . . . . . . . . . . . . . . . . . . 494

9.2.18 SXSC... (Savecore: organize coredump) . . . . . . . . . . . . . . . . . . . . . . . 495

9.2.19 SXSD... (SCSI Disks: driver shd) . . . . . . . . . . . . . . . . . . . . . . . . . . . 495

9.2.20 SXSE... (EXABYTE Tapes) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 496

9.2.21 SXSM... (Server Management) . . . . . . . . . . . . . . . . . . . . . . . . . . . . 497

9.2.22 SXSW... (Software Mirror) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 510

9.2.23 SXTF... (Tape File System) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 510

9.2.24 SXVD... (Distributed Tape Volume Driver) . . . . . . . . . . . . . . . . . . . . . . . 516

9.2.25 SXVL... (VLM: Virtual Library Manager) . . . . . . . . . . . . . . . . . . . . . . . . 517

9.2.26 SXVLS... (VT_LS: Virtual Tape and Library System) . . . . . . . . . . . . . . . . . 521

9.2.27 SXVS... (VLS: Virtual Library Server) . . . . . . . . . . . . . . . . . . . . . . . . . 522

9.2.28 SXVW... (VLPWATCH) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 523

9.2.29 SXVX... (Veritas File System) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 537

9.3 Message complexes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 538

9.3.1 Timeout on the RAID disk array . . . . . . . . . . . . . . . . . . . . . . . . . . . . 538

9.3.2 Timeout on the MTC drives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 539

9.3.3 Failure of RAID systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 540

9.3.4 Failover at the RAID system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 541

9.3.5 Bus Reset for SCSI Controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 542

10 Waste disposal and recycling . . . . . . . . . . . . . . . . . . . . . . . . . . . . 543

11 Contacting the Help Desk . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 545

12 Appendix . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 547

12.1 Integration of CentricStor V3.1 in SNMP . . . . . . . . . . . . . . . . . . . . . . 547

12.1.1 Structure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 547

12.1.2 Activating SNMP on CentricStor . . . . . . . . . . . . . . . . . . . . . . . . . . . . 548

12.1.2.1 Configuring SNMP under CentricStor . . . . . . . . . . . . . . . . . . . . . . . 548

12.1.2.2 Activating the configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . 548

12.1.2.3 Changes in central files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 548

12.1.3 Monitoring CentricStor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 549

12.1.3.1 GXCC as a monitoring tool without SNMP . . . . . . . . . . . . . . . . . . . . 549

12.1.3.2 Monitoring using any SNMP Management Station . . . . . . . . . . . . . . . . 550

12.1.3.3 CentricStor Global System State . . . . . . . . . . . . . . . . . . . . . . . . . 551

12.1.3.4 GXCC on the SNMP Management Station . . . . . . . . . . . . . . . . . . . . 551

12.1.3.5 Sending a trap to the Management Station . . . . . . . . . . . . . . . . . . . . 551

12.1.3.6 Monitoring of CentricStor V2/V3.0 and V3.1 . . . . . . . . . . . . . . . . . . . . 552

12.1.4 Installation on the Management Station CA Unicenter . . . . . . . . . . . . . . . . 552

12.1.4.1 Reading in the GUI CD . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 552

12.1.4.2 Installation of the CA Unicenter extensions for CentricStor . . . . . . . . . . . . 553

12.1.4.3 Identification and editing of the CentricStor traps . . . . . . . . . . . . . . . . . 553

12.1.5 Working with CA Unicenter and CentricStor . . . . . . . . . . . . . . . . . . . . . . 554

12.1.5.1 CentricStor icon under CA Unicenter . . . . . . . . . . . . . . . . . . . . . . . 554

12.1.5.2 Identifying a CentricStor and assigning the icon . . . . . . . . . . . . . . . . . . 555

12.1.5.3 Receipt and preparation of a CentricStor trap . . . . . . . . . . . . . . . . . . . 556

12.1.5.4 Monitoring CentricStor using ping and MIB-II . . . . . . . . . . . . . . . . . . . 557

12.1.5.5 Calling the GXCC from the pop-up menu of CA Unicenter . . . . . . . . . . . . 557

12.1.6 Monitoring of CentricStor V2/V3.0 and V3.1 with CA Unicenter . . . . . . . . . . . . 557

12.1.7 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 557

12.2 E-mail support in CentricStor . . . . . . . . . . . . . . . . . . . . . . . . . . . . 558

12.2.1 Sendmail configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 558

12.2.2 Setting up the DNS domain service . . . . . . . . . . . . . . . . . . . . . . . . . . 558

12.2.3 Configuring the e-mail template . . . . . . . . . . . . . . . . . . . . . . . . . . . . 560

12.2.4 Description of the e-mail formats . . . . . . . . . . . . . . . . . . . . . . . . . . . 561

12.3 Transferring volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 562

12.3.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 562

12.3.2 Export procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 563

12.3.3 Import procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 564

12.3.4 Special features of the PVG TR-PVG . . . . . . . . . . . . . . . . . . . . . . . . . 565

12.3.5 Additional command line interface (CLI) . . . . . . . . . . . . . . . . . . . . . . . . 566

12.3.5.1 Transfer-out . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 566

12.3.5.2 Removing PVs and LVs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 568

12.3.5.3 Adding a PV to the transfer-in . . . . . . . . . . . . . . . . . . . . . . . . . . . 568

12.3.5.4 Removing an LV from a transfer list . . . . . . . . . . . . . . . . . . . . . . . . 570

12.3.5.5 Skipping an LV / removing a PV . . . . . . . . . . . . . . . . . . . . . . . . . . 570

12.3.6 Special situations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 570

12.3.7 Library commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 571

12.3.7.1 ADIC library with DAS server . . . . . . . . . . . . . . . . . . . . . . . . . . . 571

12.3.7.2 StorageTek Library with ACSLS server . . . . . . . . . . . . . . . . . . . . . . 571

12.3.7.3 Fujitsu Library with LMF server (PLP) . . . . . . . . . . . . . . . . . . . . . . . 571

12.4 Licenses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 572

12.4.1 Xpdf, gzip . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 572

12.4.1.1 Preamble . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 572

12.4.1.2 GNU GENERAL PUBLIC LICENSE . . . . . . . . . . . . . . . . . . . . . . . . 573

12.4.1.3 Appendix: How to Apply These Terms to Your New Programs . . . . . . . . . . 577

12.4.2 Firebird . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 579

12.4.3 Sendmail . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 589

12.4.4 XML . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 590

12.4.4.1 Licence for libxslt except libexslt . . . . . . . . . . . . . . . . . . . . . . . . . . 590

12.4.4.2 Licence for libexslt . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 591

12.4.5 NTP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 592

12.4.6 tcpd . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 596

12.4.7 PRNGD . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 596

12.4.8 openssh . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 597

12.4.9 openssl . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 604

12.4.10 tcl . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 607

12.4.11 tk . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 608

Glossary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 609

Figures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 619

Related publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 627

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 629

1 Introduction

With CentricStor, a virtual tape robot system is placed in front of the real tape robot system (with the real drives and cartridges). This fully decouples the host from the real archive. The virtual tape robot system works with what are referred to as virtual (logical) drives and virtual (logical) volumes. Its core element is a disk system that serves as a data cache. This cache provides extremely fast access to the data and, together with the large number of virtual drives (up to 512) and logical volumes (up to 500 000) that can be generated, eliminates the bottlenecks that occur in a real robot system.
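As a rough illustration of the two limits quoted above, the following Python sketch checks a planned library size against them. The class `VirtualLibraryConfig` and the function `validate` are hypothetical names introduced here and are not CentricStor interfaces. Only the maxima (512 virtual drives, 500 000 logical volumes) come from this manual; the lower bound of 1 is an assumption.

```python
from dataclasses import dataclass

# Upper limits quoted in this manual; the lower bound of 1 is an assumption.
MAX_LOGICAL_DRIVES = 512
MAX_LOGICAL_VOLUMES = 500_000


@dataclass
class VirtualLibraryConfig:
    """Hypothetical description of a planned virtual tape library."""
    logical_drives: int
    logical_volumes: int


def validate(config: VirtualLibraryConfig) -> list[str]:
    """Return a list of problems; an empty list means the plan fits the limits."""
    problems = []
    if not 1 <= config.logical_drives <= MAX_LOGICAL_DRIVES:
        problems.append(
            f"logical_drives={config.logical_drives} outside 1..{MAX_LOGICAL_DRIVES}"
        )
    if not 1 <= config.logical_volumes <= MAX_LOGICAL_VOLUMES:
        problems.append(
            f"logical_volumes={config.logical_volumes} outside 1..{MAX_LOGICAL_VOLUMES}"
        )
    return problems


if __name__ == "__main__":
    print(validate(VirtualLibraryConfig(logical_drives=256, logical_volumes=100_000)))
    # prints []
    print(validate(VirtualLibraryConfig(logical_drives=600, logical_volumes=100_000)))
    # prints ['logical_drives=600 outside 1..512']
```

A plan with 600 logical drives is reported as exceeding the drive limit, while 256 drives and 100 000 volumes pass.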

The host is connected using the following connection technologies:

● ESCON channels

● FibreChannel

● FICON

Within CentricStor, the individual control units communicate with each other via the LAN, while user data is transported to and from the RAID system via FibreChannel.

The physical drives can be connected to the back end via either FibreChannel or SCSI.

1.1 Objective and target group for the manual

This manual provides all the information you need to operate CentricStor. It is therefore intended for operators and system administrators.

1.2 Concept of the manual

This manual describes how to use CentricStor in conjunction with a BS2000/MVS system

and Open Systems.

It supplies all the information you need to commission and administer CentricStor:

CentricStor - Virtual Tape Library

This chapter describes the CentricStor hardware and software architecture. It details the

operating procedures, so that you can gain an understanding of the way the system works.

It also contains information on the technical implementation, and a description of new and

optional components.

Switching CentricStor on/off

This chapter describes how to power up and shut down CentricStor.

Selected system administrator activities

This chapter contains information on selected system administrator activities in GXCC and XTCC, the graphical user interfaces of CentricStor.

Operating and monitoring CentricStor

This chapter describes the technical concept for operating and monitoring CentricStor, and

explains how GXCC and XTCC are started.

GXCC

This chapter describes the GXCC program used to operate and monitor CentricStor.

Global Status

The Global Status Monitor provides a graphical display of all important operating data in a

window.

XTCC

The XTCC program is used mainly to monitor the individual CentricStor computers (ISPs), including the peripheral devices connected to them.

Explanation of console messages

This chapter describes the most important console messages and, where possible, suggests a solution to the problem.

Appendix

The Appendix contains additional information concerning CentricStor.

Glossary

This chapter explains the most important CentricStor-specific terms.

1.3 Notational conventions

This manual uses the following symbols and notational conventions to draw your attention

to certain passages of text:

► This symbol indicates actions that must be performed by the user (e.g. keyboard input).

! This symbol indicates important information (e.g. warnings).

i This symbol indicates information which is particularly important for the functionality of the product.

[ ... ] Square brackets are used to enclose cross-references to related publications and to indicate optional parameters in command descriptions.

Names, commands, and messages appear throughout the manual in typewriter font (e.g. the SET-LOGON-PARAMETERS command).

1.4 Note

CentricStor is subject to constant development. The information contained in this manual is subject to change without notice.

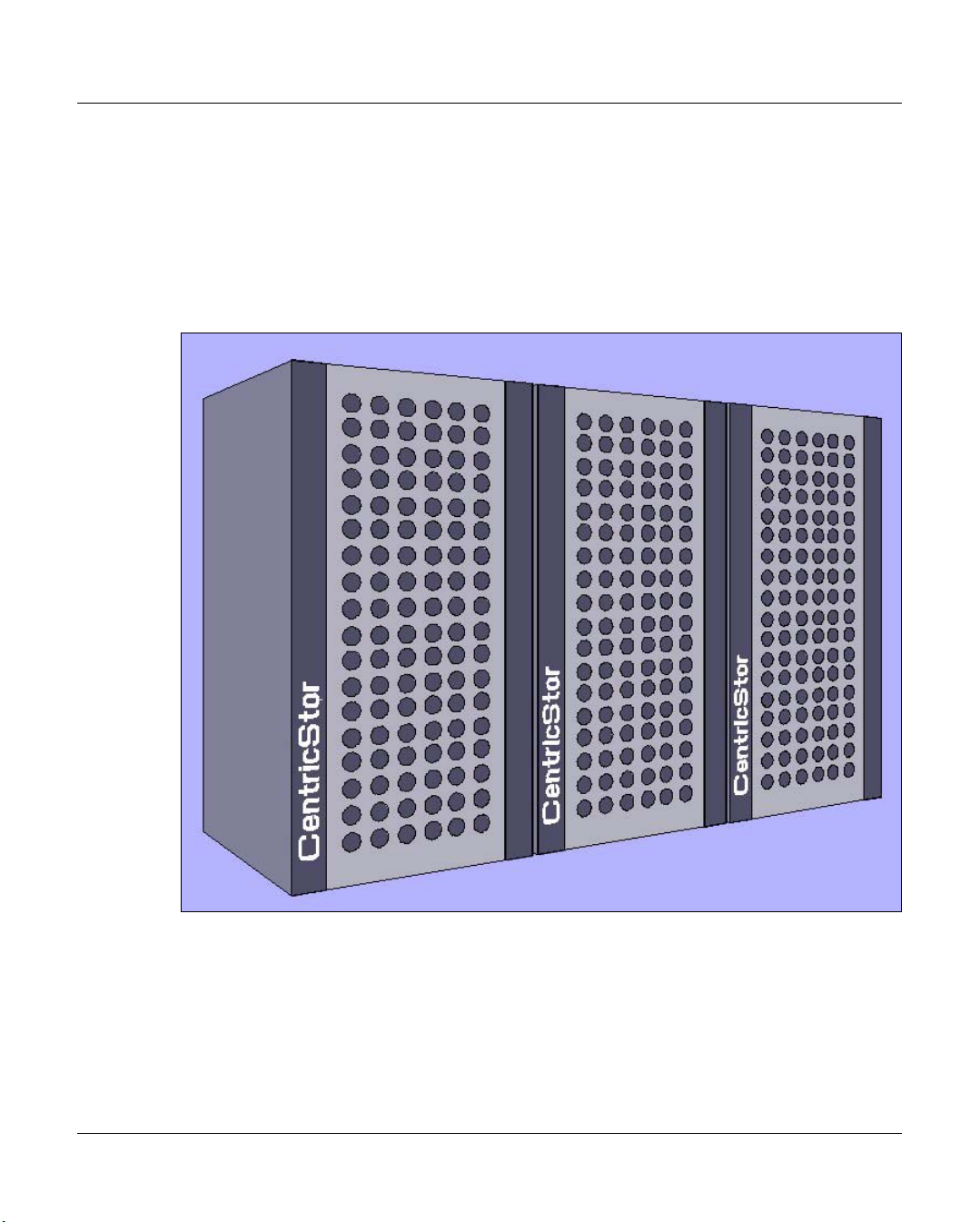

2 CentricStor - Virtual Tape Library

2.1 The CentricStor principle

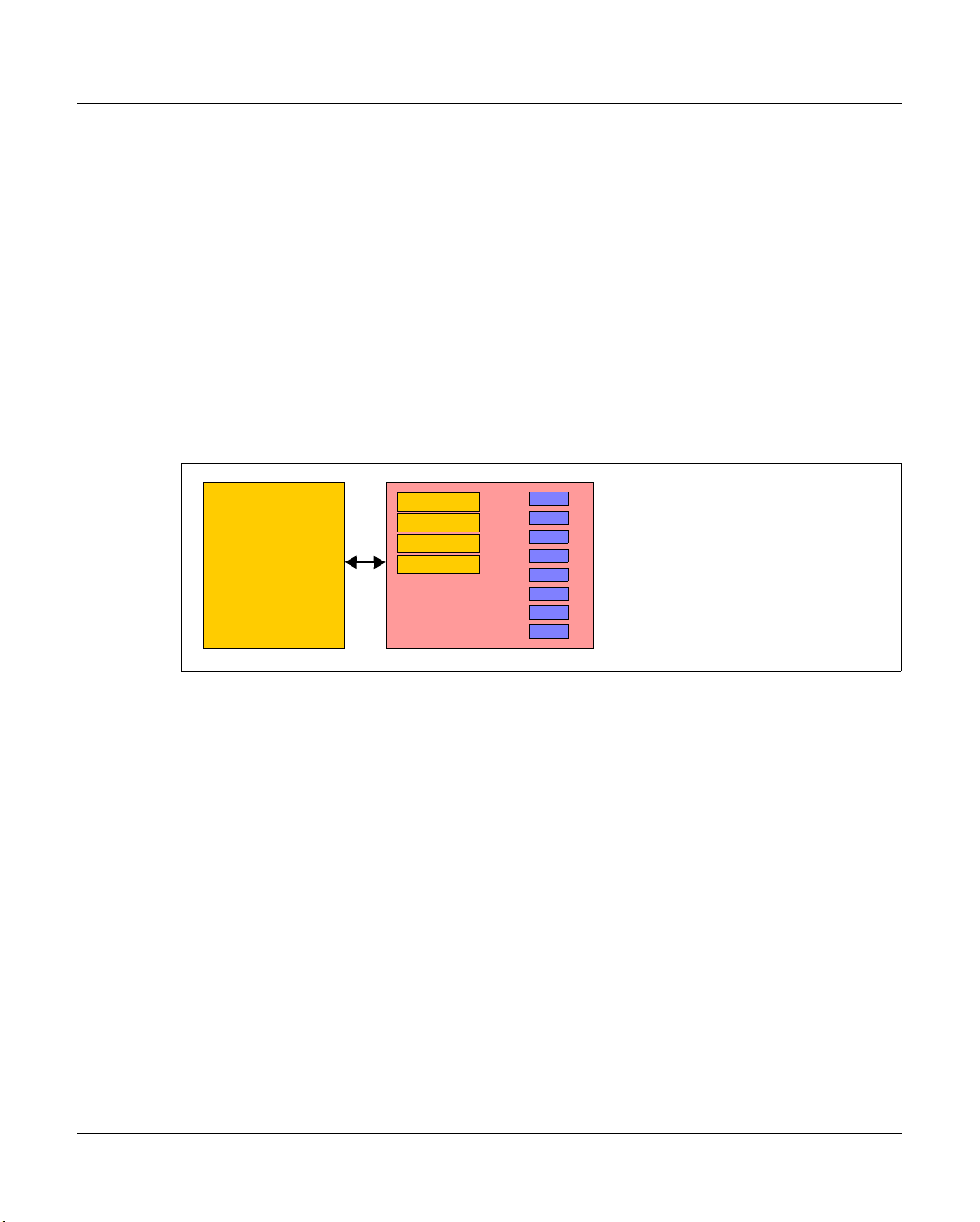

Conventional host robot system

Drive

Drive

Drive

Drive

Host

Figure 1: Conventional host robot system

In a conventional host robot system, the host requests that specific data cartridges be mounted in a specific real tape drive. Data transfer can begin as soon as the storage peripherals (robots, drives) report that the mount has been completed successfully. The host has direct, exclusive access to the drive in the archive system, and a completely static association must be defined between the application and the physical drive.


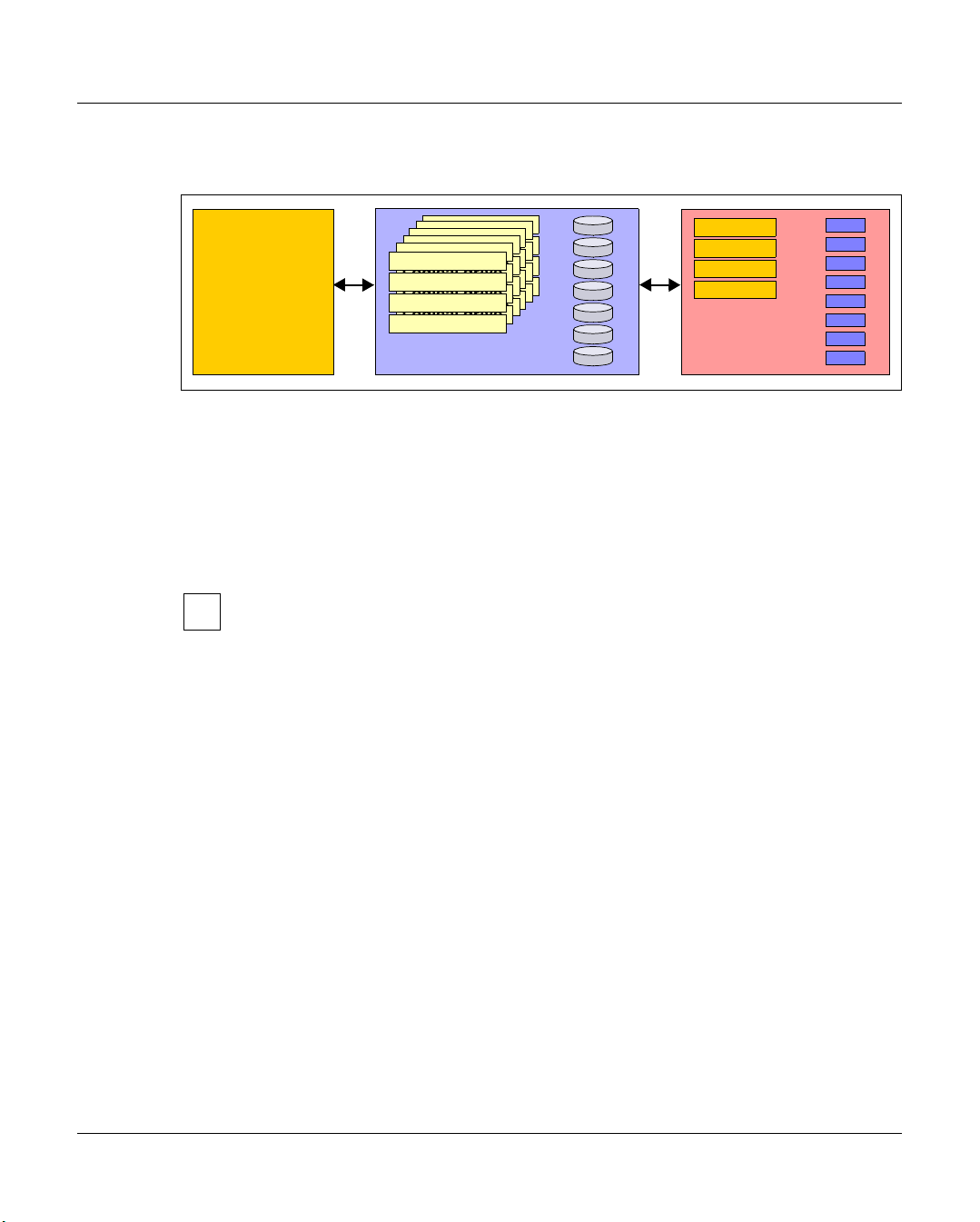

Figure 2: Host robot system with CentricStor

With CentricStor, a virtual archive system is installed upstream of the real archive system with the physical drives and data cartridges. This completely isolates the host from the real archive. The virtual archive system contains a series of logical drives and volumes. At its heart is a data buffer, known as the disk cache, in which the logical volumes are made available. This provides very fast access to the data and, in most cases, allows both read and write operations to be performed much more efficiently than in conventional operation.

i Instead of the term logical drives (or volumes), the term virtual drives (or volumes) is sometimes also used. These terms should be regarded as synonyms. In this manual the term logical is used consistently when drives and volumes in CentricStor are meant, and physical when the real peripherals are meant.

The virtual archive system is particularly attractive because it provides a large number of logical drives compared to the number of physical drives. As a result, bottlenecks that exist in a real archive can be eliminated or avoided.

From the host’s viewpoint, the logical drives and volumes act like real storage peripherals. When a mount job is issued by a mainframe application or an open systems server, for example, the requested logical volume is loaded into the disk cache. If the application then writes data to the logical drive, the incoming data stream is written to the logical volume created in the disk cache.

The Library Manager of the virtual archive system then issues a mount job to the real archive system asynchronously and completely transparently to the host. The data is read directly from the disk cache and written to a physical tape cartridge. The physical volume is thus updated with optimum resource utilization.
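The decoupling described above (the host's write completes in the disk cache, the copy to tape happens later) can be sketched as a pending queue that is drained separately. This is a minimal illustration, not CentricStor code: the names `DiskCache`, `host_write` and `library_manager_step` and the volume names are hypothetical, a plain dict stands in for the physical cartridge, and the physical mount job itself is not modelled.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class DiskCache:
    """Hypothetical model of the disk cache: logical volume name -> contents."""
    volumes: dict[str, bytearray] = field(default_factory=dict)
    # Logical volumes written by the host but not yet copied to tape.
    pending: deque[str] = field(default_factory=deque)

    def host_write(self, volser: str, data: bytes) -> None:
        """The host's write completes as soon as the data is in the cache."""
        self.volumes.setdefault(volser, bytearray()).extend(data)
        if volser not in self.pending:
            self.pending.append(volser)


def library_manager_step(cache: DiskCache, physical_tape: dict[str, bytes]) -> str | None:
    """Copy one pending logical volume from the cache to 'physical tape'.

    Called independently of host writes; the dict stands in for a
    physical cartridge and the physical mount job is not modelled.
    """
    if not cache.pending:
        return None
    volser = cache.pending.popleft()
    physical_tape[volser] = bytes(cache.volumes[volser])
    return volser
```

Two host writes to the same logical volume leave the tape untouched until `library_manager_step` runs, which then copies the complete volume once.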

Logical volumes in the disk cache are not erased immediately. Instead, data is displaced in accordance with the LRU principle (Least Recently Used). Sufficient space for this must be allocated in the disk cache.
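The LRU principle mentioned above can be illustrated with an ordered mapping: every access moves a volume to the "most recently used" end, and volumes are displaced from the other end only when a new volume would not fit. This is a generic LRU sketch under assumed names (`LruDiskCache`, a capacity in bytes); it does not reflect how CentricStor actually accounts for space or which volumes it considers eligible for displacement.

```python
from collections import OrderedDict


class LruDiskCache:
    """Minimal LRU model of the disk cache (capacity in bytes is hypothetical)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.used = 0
        self._volumes: OrderedDict[str, int] = OrderedDict()  # volser -> size

    def access(self, volser: str, size: int) -> list[str]:
        """Use a volume (loading it if absent); return the volumes displaced."""
        if volser in self._volumes:
            self._volumes.move_to_end(volser)  # now the most recently used
            return []
        displaced = []
        # Displace least recently used volumes until the new one fits.
        while self._volumes and self.used + size > self.capacity:
            old, old_size = self._volumes.popitem(last=False)
            self.used -= old_size
            displaced.append(old)
        self._volumes[volser] = size
        self.used += size
        return displaced

    def __contains__(self, volser: str) -> bool:
        return volser in self._volumes
```

With room for three equally sized volumes, touching "A" again after loading A, B and C means that loading D displaces B, not A. The required cache size therefore depends on how many recently used volumes should remain resident.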


As soon as a mount job is issued, the Library Manager checks whether the requested volume is already in the disk cache. If so, the volume is immediately released for processing by the application. If not, CentricStor requests the corresponding cartridge to be mounted onto a physical drive and reads the logical volume into the disk cache.
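The hit/miss decision described above amounts to a simple branch. The following sketch uses hypothetical names (`handle_mount`, `read_from_physical_tape`) and represents the disk cache as a dict; the physical mount and read are hidden behind a callable because their details are not part of this illustration.

```python
from collections.abc import Callable


def handle_mount(
    volser: str,
    disk_cache: dict[str, bytes],
    read_from_physical_tape: Callable[[str], bytes],
) -> str:
    """Sketch of the Library Manager's decision when a mount job arrives."""
    if volser in disk_cache:
        # Cache hit: the volume can be released to the application at once.
        return "cache hit"
    # Cache miss: a physical cartridge must be mounted on a real drive and
    # the logical volume read back into the disk cache before release.
    disk_cache[volser] = read_from_physical_tape(volser)
    return "staged from tape"
```

Only a cache miss involves a physical drive, which is why a well-sized disk cache reduces the load on the real archive.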

CentricStor thus operates as a very large, extremely powerful, highly intelligent data buffer between the host level and the real archive system.

It offers the following advantages:

● removal of device bottlenecks through virtualization

● transparency to the host, because the existing interfaces are retained unchanged

● support for future technologies by isolating the host from the archive system

CentricStor thus provides a long-term, cost-effective basis for modern storage management.


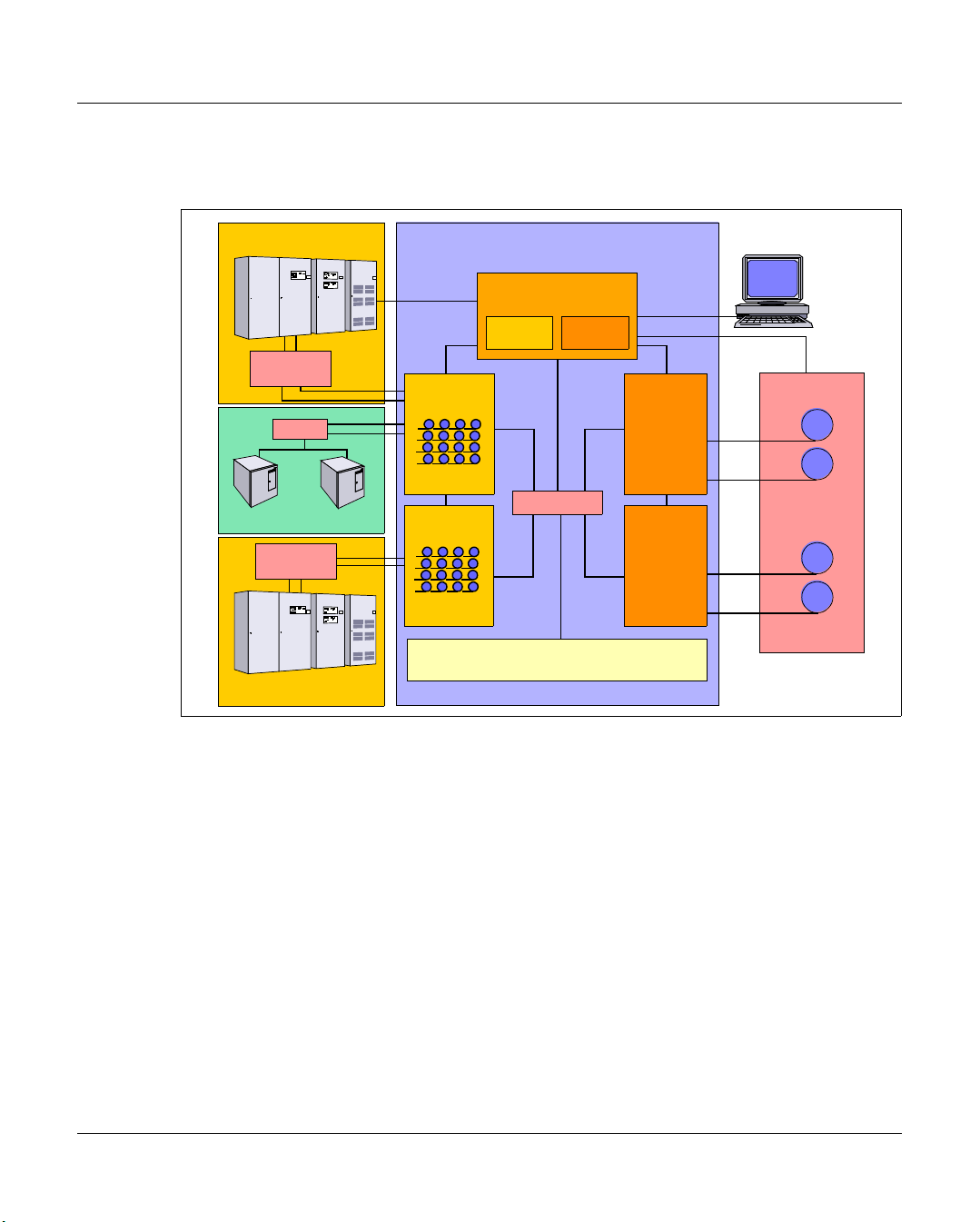

2.2 Hardware architecture

Figure 3: Example of a CentricStor configuration

In this example, CentricStor comprises the following hardware components:

● a VLP (Virtual Library Processor), which monitors and controls the CentricStor hardware and software components

● two ICPs (Integrated Channel Processors), which communicate with the hosts via ESCON (via ESCON Director), FICON (via FICON switch) or FC (via FC switch)

● two IDPs (Integrated Device Processors), which communicate with the tape drives in the robot system via SCSI or FC

● one or more RAID systems for the TVC (Tape Volume Cache) for buffering logical volumes

● an FC switch, which is used by the ICP, IDP, and VLP to transfer data

● a CentricStor console for performing configuration and administration tasks

● a LAN connection between CentricStor and the robot system

● a LAN connection, which is used by the ICP, IDP, and VLP for communication

The PLM (Physical Library Manager) and VLM (Virtual Library Manager) are software components which are particularly important for system operation (see page 34).


2.2.1 ISP (Integrated Service Processor)

CentricStor is a group of several processors, each running a special UNIX-derived operating system. These processors are referred to collectively as the ISP (Integrated Service Processor). Depending on the peripheral connection, the hardware configuration, the software configuration, and the task in the CentricStor system, a distinction is made between the following processor types:

– VLPs (optional: SVLP = standby VLP)

– ICPs

– IDPs

– ICP_IDP

To permit communication between the processors, they are interconnected by an internal LAN. The distinguishing characteristics of these processors are described in the following sections.

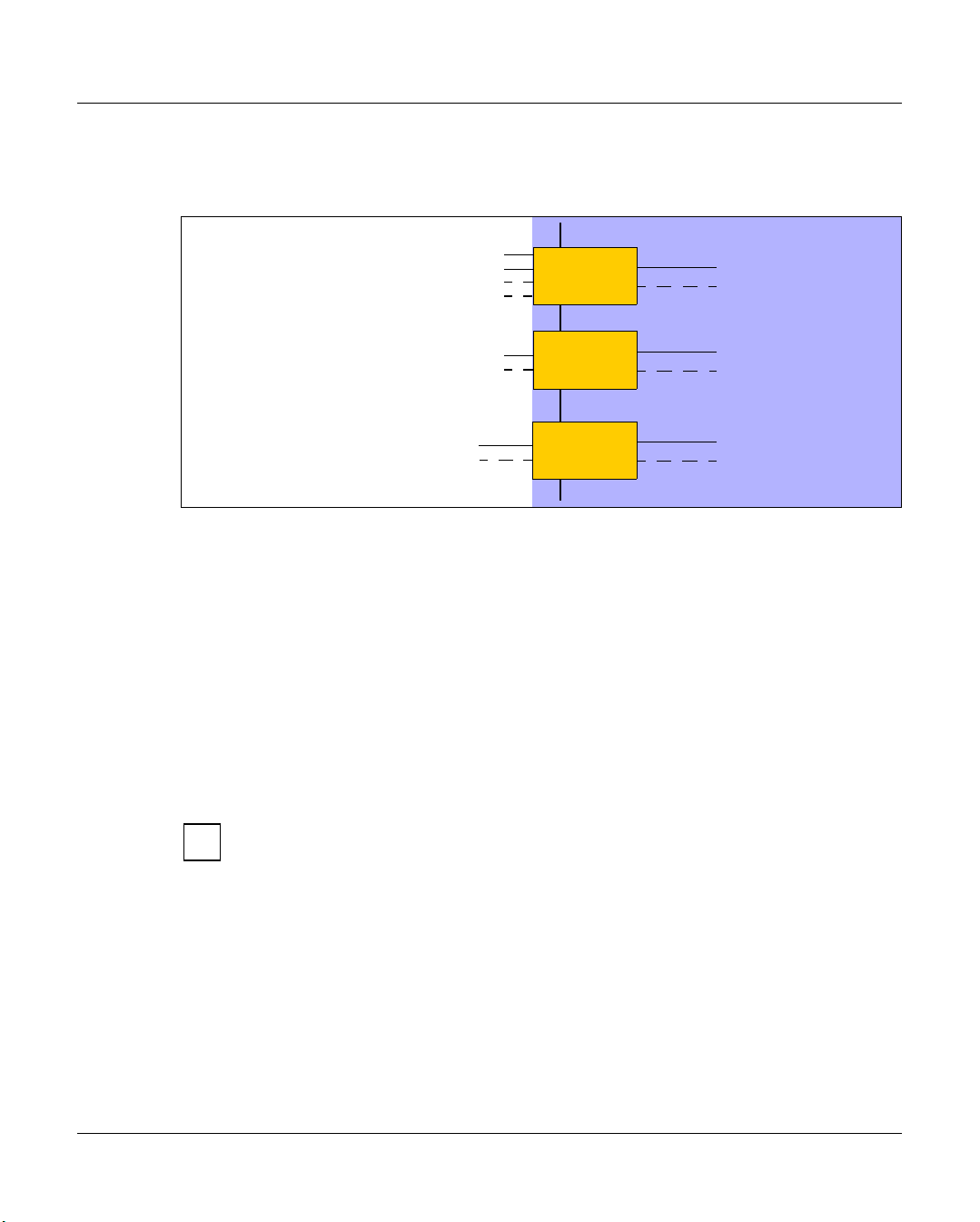

2.2.1.1 VLP (Virtual Library Processor)

A VLP can be installed twice to provide failsafe operation. Only one of the two plays an active role at any given time: the VLP Master. The other, the Standby VLP (SVLP), is ready to take over the role of the VLP Master should the VLP Master fail (see section “Automatic VLP failover” on page 52). The two VLPs are connected to each other and to the ICPs, IDPs and TVC via FC.
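The master/standby relationship described above can be pictured as a small state model: only one VLP is active, and the SVLP is promoted when the master is found to have failed. This is a hypothetical illustration (the classes `Vlp` and `VlpPair` and the `alive` flag are assumptions); how CentricStor actually detects the failure and performs the takeover is described in “Automatic VLP failover” and is not modelled here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Vlp:
    name: str
    alive: bool = True  # hypothetical health flag


class VlpPair:
    """Sketch: one active VLP Master plus an optional Standby VLP (SVLP)."""

    def __init__(self, master: Vlp, standby: Vlp | None = None) -> None:
        self.master = master
        self.standby = standby

    def check(self) -> str:
        """Return the name of the VLP acting as master after a health check."""
        if not self.master.alive and self.standby is not None and self.standby.alive:
            # The SVLP takes over the master role; no standby remains afterwards.
            self.master, self.standby = self.standby, None
        return self.master.name
```

Without an SVLP, a failed master is simply reported as before, which reflects why the second VLP is needed for failsafe operation.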

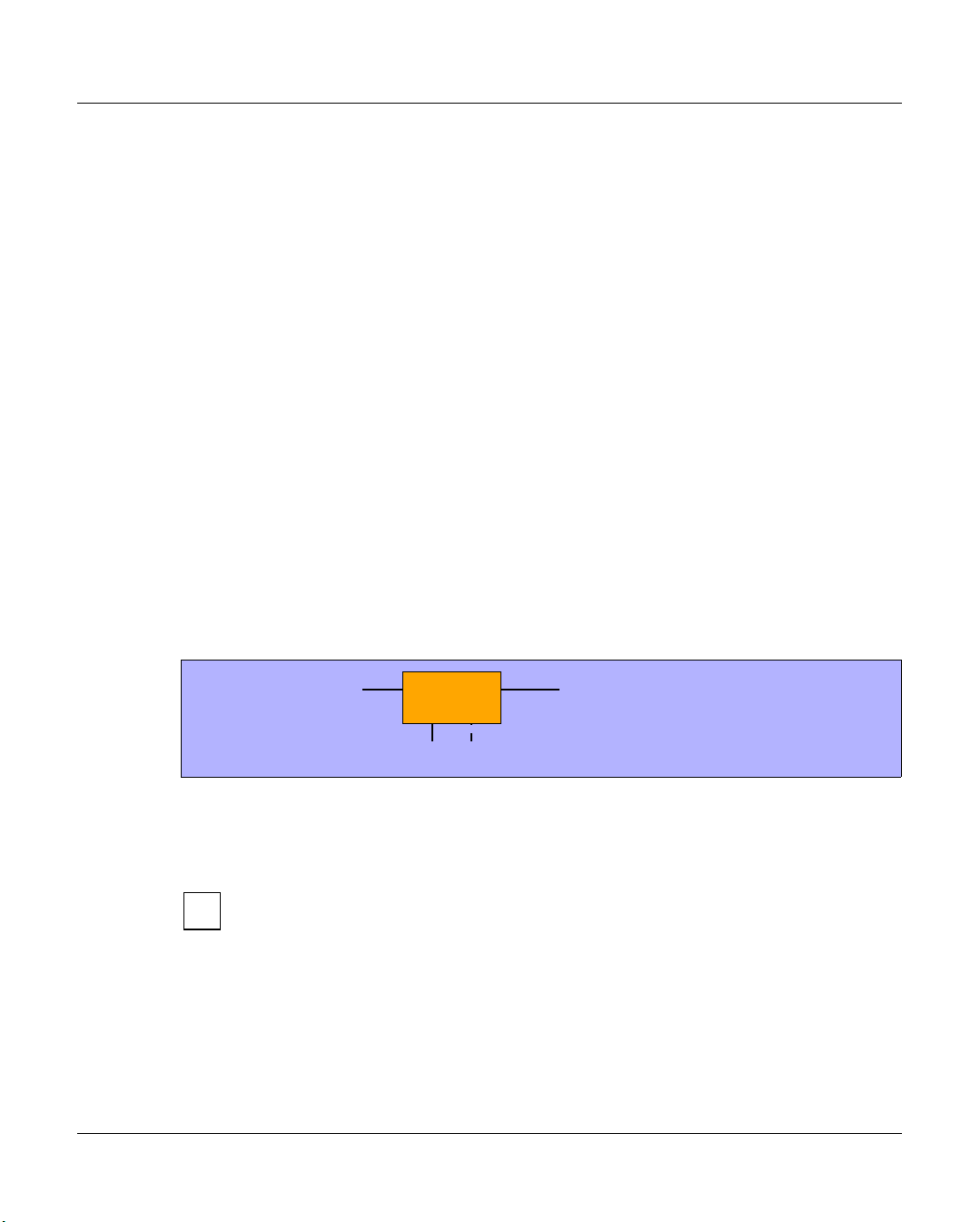

Figure 4: Internal VLP connections

The main task of the VLP Master is to supervise and control the hardware and software components, including the data maintenance of the VLM and the PLM. Communication takes place via the LAN connection.

i The software which controls CentricStor (in particular, the VLM and PLM) is installed on all the processors (VLP, ICP, and IDP) but is only activated on one processor (the VLP Master).


2.2.1.2 ICP (Integrated Channel Processor)

The ICP is the interface to the host systems connected in the overall system.

Figure 5: External and internal ICP connections

Depending on the type of host system used, an ICP can be equipped on the host side with up to 4 ESCON boards (connection to BS2000/OSD, z/OS or OS/390), one or two FICON ports (connection to z/OS or OS/390), or one or two FC boards (connection to BS2000/OSD or open systems). A mixed configuration is also possible. The ICP also has an internal FC board (or two in the case of redundancy) for connecting to the RAID disk system.
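The board limits listed above can be expressed as a simple configuration check. This is an illustrative sketch, not a CentricStor tool: `IcpConfig` and `validate_icp` are hypothetical names, each limit is checked independently because the text does not state a combined limit for mixed configurations, and requiring at least one host-side interface is an assumption based on the ICP's role as the host interface.

```python
from dataclasses import dataclass

# Host-side limits taken from the text. Any additional combined limit for
# mixed configurations is not stated and is therefore not modelled.
MAX_ESCON_BOARDS = 4
MAX_FICON_PORTS = 2
MAX_FC_BOARDS = 2


@dataclass
class IcpConfig:
    """Hypothetical description of one ICP's board population."""
    escon_boards: int = 0
    ficon_ports: int = 0
    fc_boards: int = 0
    internal_fc_boards: int = 1  # 2 when configured for redundancy


def validate_icp(cfg: IcpConfig) -> list[str]:
    """Return a list of problems; an empty list means the config looks valid."""
    problems = []
    if not 0 <= cfg.escon_boards <= MAX_ESCON_BOARDS:
        problems.append(f"ESCON boards must be 0..{MAX_ESCON_BOARDS}")
    if not 0 <= cfg.ficon_ports <= MAX_FICON_PORTS:
        problems.append(f"FICON ports must be 0..{MAX_FICON_PORTS}")
    if not 0 <= cfg.fc_boards <= MAX_FC_BOARDS:
        problems.append(f"host-side FC boards must be 0..{MAX_FC_BOARDS}")
    if cfg.escon_boards + cfg.ficon_ports + cfg.fc_boards == 0:
        problems.append("at least one host-side interface is required")
    if cfg.internal_fc_boards not in (1, 2):
        problems.append("internal FC boards must be 1 or 2 (redundant)")
    return problems
```

For example, `validate_icp(IcpConfig(escon_boards=2, fc_boards=1))` accepts a mixed ESCON/FC configuration, while five ESCON boards are rejected.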

The main task of the ICP is to emulate physical drives to the connected host systems.

The host application issues a logical mount job for a logical drive in an ICP connected to a host system (see section “Issuing a mount job from the host” on page 39). The data transferred for the associated logical volume is then stored by the ICP directly in the RAID disk system.


i The virtual CentricStor drives support a maximum block size of 256 KB.

Communication with the other processors takes place over a LAN connection.
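Because the logical drives accept blocks of at most 256 KB, an application writing to them must not choose a larger block size. The sketch below shows such a check on the host side; `split_into_blocks` is a hypothetical helper, and it assumes 1 KB = 1024 bytes, so the limit is 262,144 bytes.

```python
MAX_BLOCK_SIZE = 256 * 1024  # 256 KB, assuming 1 KB = 1024 bytes


def split_into_blocks(data: bytes, block_size: int) -> list[bytes]:
    """Split data into tape blocks no larger than the logical drive allows."""
    if not 0 < block_size <= MAX_BLOCK_SIZE:
        raise ValueError(
            f"block size {block_size} exceeds the {MAX_BLOCK_SIZE}-byte maximum"
        )
    # The last block may be shorter than block_size.
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]
```

With the maximum block size, 600,000 bytes of data yield two full blocks of 262,144 bytes and one final block of 75,712 bytes.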


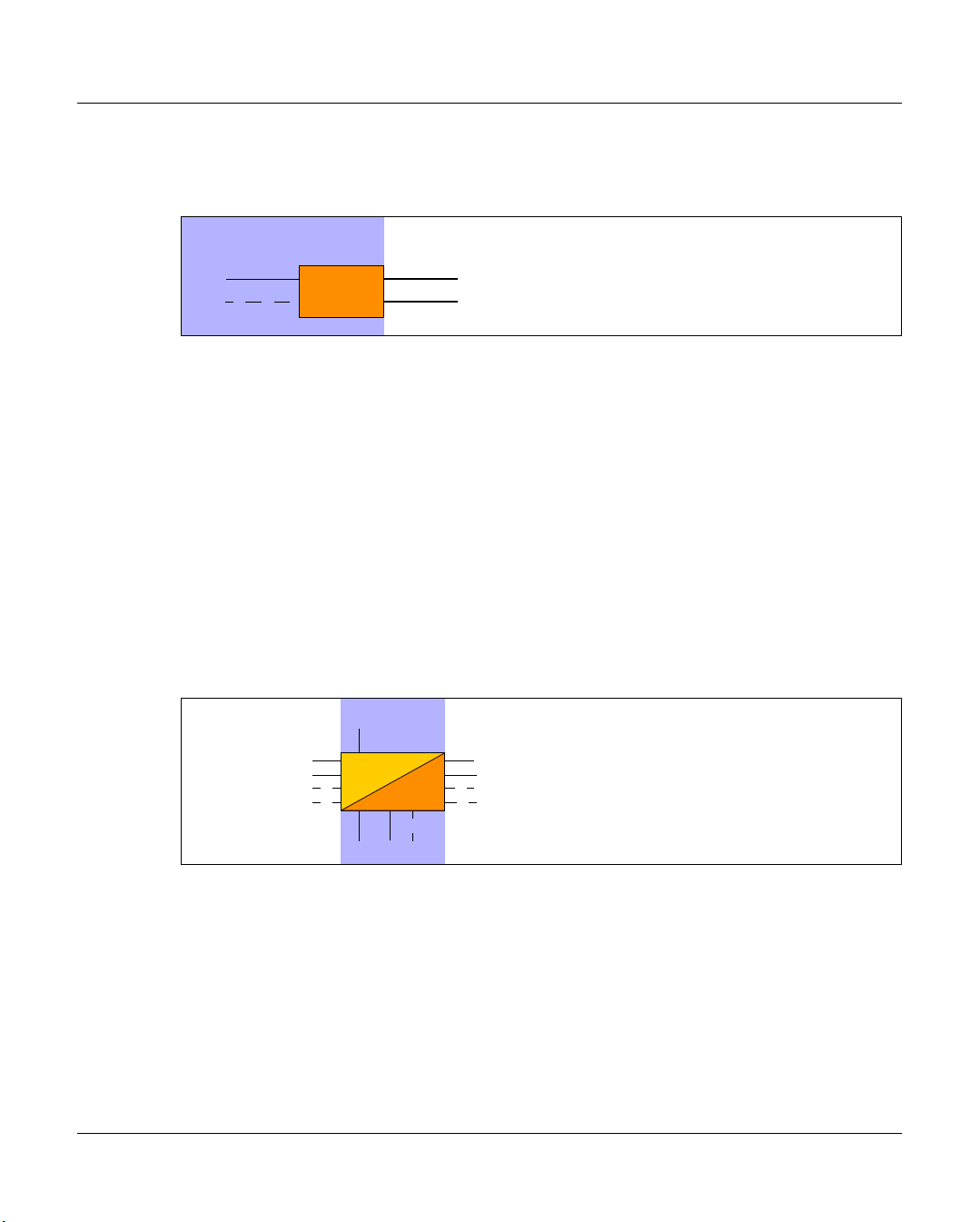

2.2.1.3 IDP (Integrated Device Processor)

The IDP is the interface to the connected tape drives.

Figure 6: Internal and external IDP connections

The IDP is responsible for communication with real tape drives. To optimize performance, only two real tape drives should be configured per IDP.
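The recommendation above translates directly into a sizing rule: the number of IDPs is the number of real drives divided by two, rounded up. The sketch reads "only two" as "at most two" (an assumption) and uses a hypothetical function name.

```python
DRIVES_PER_IDP = 2  # performance recommendation from the text


def idps_for_drives(real_drives: int) -> int:
    """Smallest number of IDPs that keeps at most two real drives on each."""
    if real_drives < 0:
        raise ValueError("number of drives cannot be negative")
    # Integer ceiling division: ceil(real_drives / DRIVES_PER_IDP)
    return (real_drives + DRIVES_PER_IDP - 1) // DRIVES_PER_IDP
```

For example, 5 real tape drives call for 3 IDPs and 8 drives for 4.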

Because a SCSI cable can only be relatively short (approx. 25 m), the CentricStor IDPs are typically installed close to the robot archive if the drives are to be connected via SCSI.

The IDP can also update tape cartridges that already contain data by appending a further logical volume after the last one.

A cartridge filled in this way with a number of logical volumes is also referred to as a stacked volume (see section “Administering the tape cartridges” on page 35).

Communication with the other processors takes place over a LAN connection.
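The stacked-volume principle described above, appending each new logical volume after the last one on a cartridge, can be modelled as an append-only list of segments. This is a hypothetical sketch: `StackedVolume`, the byte-based capacity and offsets, and the identifiers are assumptions, and the real on-tape layout used by CentricStor is not shown.

```python
from dataclasses import dataclass, field


@dataclass
class StackedVolume:
    """Hypothetical model of a physical cartridge holding several logical volumes."""
    cartridge_id: str
    capacity: int  # bytes (assumed unit)
    # (volser, start offset, size) in the order written on tape
    segments: list[tuple[str, int, int]] = field(default_factory=list)

    @property
    def end_of_data(self) -> int:
        """Offset just after the last logical volume on the cartridge."""
        if not self.segments:
            return 0
        _, start, size = self.segments[-1]
        return start + size

    def append(self, volser: str, size: int) -> int:
        """Append a logical volume after the last one; return its start offset."""
        start = self.end_of_data
        if start + size > self.capacity:
            raise ValueError(f"{volser} does not fit on {self.cartridge_id}")
        self.segments.append((volser, start, size))
        return start
```

Writing 400 and then 300 bytes to an empty 1000-byte cartridge places the second logical volume at offset 400; a further 400-byte volume no longer fits.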

2.2.1.4 ICP_IDP or IUP (Integrated Universal Processor)


An ICP_IDP provides the features of a VLP, an ICP and an IDP. This processor has interfaces to the hosts and to the tape drives.

However, its performance is considerably lower than when these functions are distributed across separate processors of the types VLP, ICP and IDP.

IUP (Integrated Universal Processor) is a synonym for ICP_IDP.
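The processor types of the ISP can be summarized as combinations of three functions, with ICP_IDP (IUP) combining all of them. The following sketch is only an illustrative data model; the `Role` flag and the helper functions are hypothetical and say nothing about how CentricStor itself identifies processor types.

```python
from enum import Flag, auto


class Role(Flag):
    """Functions a CentricStor processor can provide (illustrative)."""
    VLP = auto()  # supervision and control of components
    ICP = auto()  # interface to the hosts, emulates drives
    IDP = auto()  # interface to the real tape drives


PROCESSOR_TYPES: dict[str, Role] = {
    "VLP": Role.VLP,
    "ICP": Role.ICP,
    "IDP": Role.IDP,
    "ICP_IDP": Role.VLP | Role.ICP | Role.IDP,
}
# IUP is a synonym for ICP_IDP.
PROCESSOR_TYPES["IUP"] = PROCESSOR_TYPES["ICP_IDP"]


def has_host_interface(processor_type: str) -> bool:
    return Role.ICP in PROCESSOR_TYPES[processor_type]


def has_drive_interface(processor_type: str) -> bool:
    return Role.IDP in PROCESSOR_TYPES[processor_type]
```

Using a flag type makes the ICP_IDP case explicit: it answers "yes" to both the host-interface and the drive-interface query, whereas an ICP or IDP answers "yes" to only one.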


2.2.2 RAID systems for the Tape Volume Cache

The TVC (Tape Volume Cache) is the heart of the entire virtual archive system. It comprises all of the Tape File Systems in which the logical volumes can be stored temporarily. One or more RAID systems (up to 8) are used for this.

Each RAID system contains at least the basic configuration, which consists of FC disks and 2 RAID controllers. It can also be equipped with up to 7 extensions, each of which is a fully equipped shelf with FC or ATA disks. A RAID system consists of shelves which in CentricStor are always fully equipped with disks. The TVC illustrated in the figure below