VMware ESXi 6.7 Instruction Manual

Setup for Failover Clustering and Microsoft Cluster Service

17 APR 2018

VMware vSphere 6.7

VMware ESXi 6.7

vCenter Server 6.7


You can find the most up-to-date technical documentation on the VMware website at:

https://docs.vmware.com/

If you have comments about this documentation, submit your feedback to docfeedback@vmware.com.

VMware, Inc.

3401 Hillview Ave.

Palo Alto, CA 94304

www.vmware.com

Copyright © 2006–2018 VMware, Inc. All rights reserved. Copyright and trademark information.


Contents

About Setup for Failover Clustering and Microsoft Cluster Service

1 Getting Started with MSCS
Clustering Configuration Overview
Hardware and Software Requirements for Clustering
Supported Shared Storage Configurations
PSP_RR Support for MSCS
iSCSI Support for MSCS
FCoE Support for MSCS
vMotion support for MSCS
VVol Support for MSCS
vSphere MSCS Setup Limitations
MSCS and Booting from a SAN
Setting up Clustered Continuous Replication or Database Availability Groups with Exchange
Setting up AlwaysOn Availability Groups with SQL Server 2012

2 Cluster Virtual Machines on One Physical Host
Create the First Node for Clusters on One Physical Host
Create Additional Nodes for Clusters on One Physical Host
Add Hard Disks to the First Node for Clusters on One Physical Host
Add Hard Disks to Additional Nodes for Clusters on One Physical Host

3 Cluster Virtual Machines Across Physical Hosts
Create the First Node for MSCS Clusters Across Physical Hosts
Create Additional Nodes for Clusters Across Physical Hosts
Add Hard Disks to the First Node for Clusters Across Physical Hosts
Add Hard Disks to the First Node for Clusters Across Physical Hosts with VVol
Add Hard Disks to Additional Nodes for Clusters Across Physical Hosts

4 Cluster Physical and Virtual Machines
Create the First Node for a Cluster of Physical and Virtual Machines
Create the Second Node for a Cluster of Physical and Virtual Machines
Add Hard Disks to the Second Node for a Cluster of Physical and Virtual Machines
Install Microsoft Cluster Service
Create Additional Physical-Virtual Pairs

5 Use MSCS in a vSphere HA and vSphere DRS Environment
Enable vSphere HA and vSphere DRS in a Cluster (MSCS)


Create VM-VM Affinity Rules for MSCS Virtual Machines
Enable Strict Enforcement of Affinity Rules (MSCS)
Set DRS Automation Level for MSCS Virtual Machines
Using vSphere DRS Groups and VM-Host Affinity Rules with MSCS Virtual Machines

6 vSphere MSCS Setup Checklist


About Setup for Failover Clustering and Microsoft Cluster Service

Setup for Failover Clustering and Microsoft Cluster Service describes the types of clusters you can implement using virtual machines with Microsoft Cluster Service for Windows Server 2003 and Failover Clustering for Windows Server 2008, Windows Server 2012, and later releases. It provides step-by-step instructions for each type of cluster and a checklist of clustering requirements and recommendations.

Unless stated otherwise, the term Microsoft Cluster Service (MSCS) applies to Microsoft Cluster Service with Windows Server 2003 and to Failover Clustering with Windows Server 2008 and later releases.

Setup for Failover Clustering and Microsoft Cluster Service covers ESXi and VMware® vCenter® Server.

Intended Audience

This information is for system administrators who are familiar with VMware technology and Microsoft Cluster Service.

Note This is not a guide to using Microsoft Cluster Service or Failover Clustering. Use your Microsoft documentation for information about installation and configuration of Microsoft Cluster Service or Failover Clustering.

Note In this document, references to Microsoft Cluster Service (MSCS) also apply to Windows Server Failover Clustering (WSFC) on corresponding Windows Server versions.

vSphere Client and vSphere Web Client

The instructions in this guide apply primarily to the vSphere Client (an HTML5-based GUI). Most instructions also apply to the vSphere Web Client (a Flex-based GUI).

For workflows that differ significantly between the two clients, this guide provides duplicate procedures. Each such procedure indicates whether it is intended exclusively for the vSphere Client or for the vSphere Web Client.

Note In vSphere 6.7, most of the vSphere Web Client functionality is implemented in the vSphere Client. For an up-to-date list of the unsupported functionality, see Functionality Updates for the vSphere Client.


1 Getting Started with MSCS

VMware® vSphere® supports clustering using MSCS across virtual machines. Clustering virtual machines can reduce the hardware costs of traditional high-availability clusters.

Note vSphere High Availability (vSphere HA) supports a clustering solution in conjunction with vCenter Server clusters. vSphere Availability describes vSphere HA functionality.

This chapter includes the following topics:

• Clustering Configuration Overview
• Hardware and Software Requirements for Clustering
• Supported Shared Storage Configurations
• PSP_RR Support for MSCS
• iSCSI Support for MSCS
• FCoE Support for MSCS
• vMotion support for MSCS
• VVol Support for MSCS
• vSphere MSCS Setup Limitations
• MSCS and Booting from a SAN
• Setting up Clustered Continuous Replication or Database Availability Groups with Exchange
• Setting up AlwaysOn Availability Groups with SQL Server 2012

Clustering Configuration Overview

Several kinds of applications use clustering, including stateless applications such as Web servers and applications with built-in recovery features such as database servers. You can set up MSCS clusters in several configurations, depending on your environment.


A typical clustering setup includes:

• Disks that are shared between nodes. A shared disk is required as a quorum disk. In a cluster of virtual machines across physical hosts, the shared disk must be on Fibre Channel (FC) SAN, FCoE, or iSCSI storage. The cluster disks, including the quorum disk, must all be of the same storage type. For example, if the configuration uses FC SAN, all cluster disks must be on FC SAN. Mixed mode is not supported.
• A private heartbeat network between nodes.

You can set up the shared disks and private heartbeat using one of several clustering configurations.

In ESXi 6.7, MSCS pass-through support for VVols (Virtual Volumes) allows the shared disk to be on VVol storage that supports SCSI Persistent Reservations for VVols.
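The rule that all cluster disks, including the quorum disk, must use one storage type lends itself to a simple pre-deployment check. The following Python sketch is illustrative only: the `ClusterDisk` record, its field names, and the protocol labels are hypothetical and not part of any VMware API, and a real disk inventory would have to come from your own tooling.

```python
from dataclasses import dataclass

# Hypothetical labels for the shared-storage types named in this guide.
SUPPORTED_PROTOCOLS = {"FC", "FCoE", "iSCSI", "VVol"}


@dataclass(frozen=True)
class ClusterDisk:
    """Hypothetical record describing one shared cluster disk."""
    name: str
    protocol: str          # expected to be one of SUPPORTED_PROTOCOLS
    is_quorum: bool = False


def check_homogeneous(disks: list[ClusterDisk]) -> list[str]:
    """Return a list of problems; an empty list means the disk set passes."""
    problems: list[str] = []
    if not any(d.is_quorum for d in disks):
        problems.append("no quorum disk defined")
    unknown = sorted({d.protocol for d in disks} - SUPPORTED_PROTOCOLS)
    if unknown:
        problems.append(f"unsupported storage type(s): {', '.join(unknown)}")
    protocols = sorted({d.protocol for d in disks})
    if len(protocols) > 1:
        # Mixed mode (for example, FC and iSCSI disks in one cluster) is not supported.
        problems.append(f"mixed storage types: {', '.join(protocols)}")
    return problems


if __name__ == "__main__":
    disks = [
        ClusterDisk("quorum", "FC", is_quorum=True),
        ClusterDisk("data1", "FC"),
        ClusterDisk("data2", "iSCSI"),
    ]
    print(check_homogeneous(disks))
```

For the sample disks, the check reports a single problem, `mixed storage types: FC, iSCSI`, because one data disk is on iSCSI while the quorum disk is on FC.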

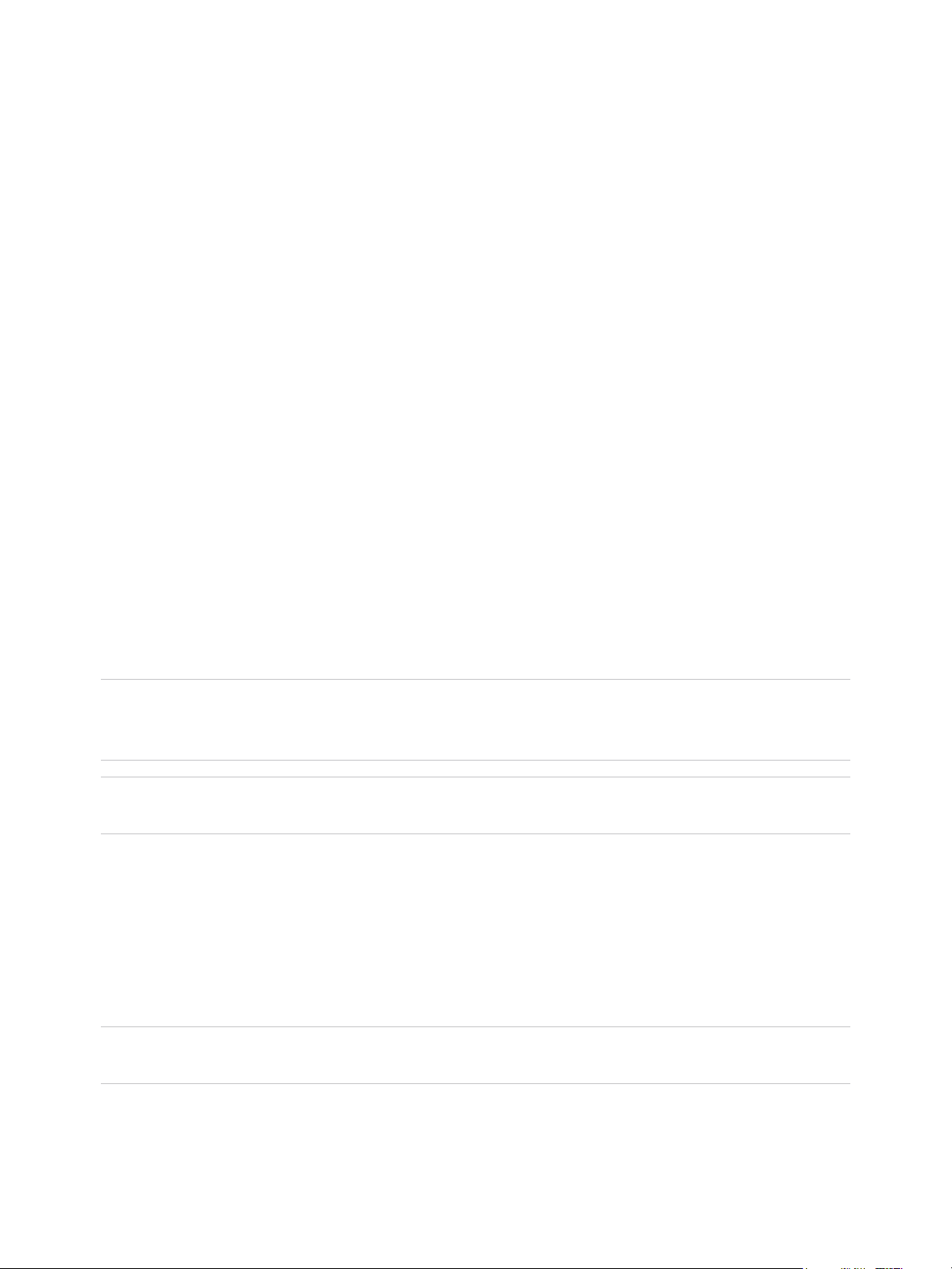

Clustering MSCS Virtual Machines on a Single Host

A cluster of MSCS virtual machines on a single host (also known as a cluster in a box) consists of clustered virtual machines on the same ESXi host. The virtual machines are connected to the same storage, either local or remote. This configuration protects against failures at the operating system and application level, but it does not protect against hardware failures.

Note Windows Server 2008 R2 and later releases support up to five nodes (virtual machines). Windows Server 2003 SP2 systems support two nodes.

The following figure shows a cluster-in-a-box setup.

• Two virtual machines on the same physical machine (ESXi host) run clustering software.
• The virtual machines share a private network connection for the private heartbeat and a public network connection.
• Each virtual machine is connected to shared storage, which can be local or on a SAN.

Figure 1‑1. Virtual Machines Clustered on a Single Host


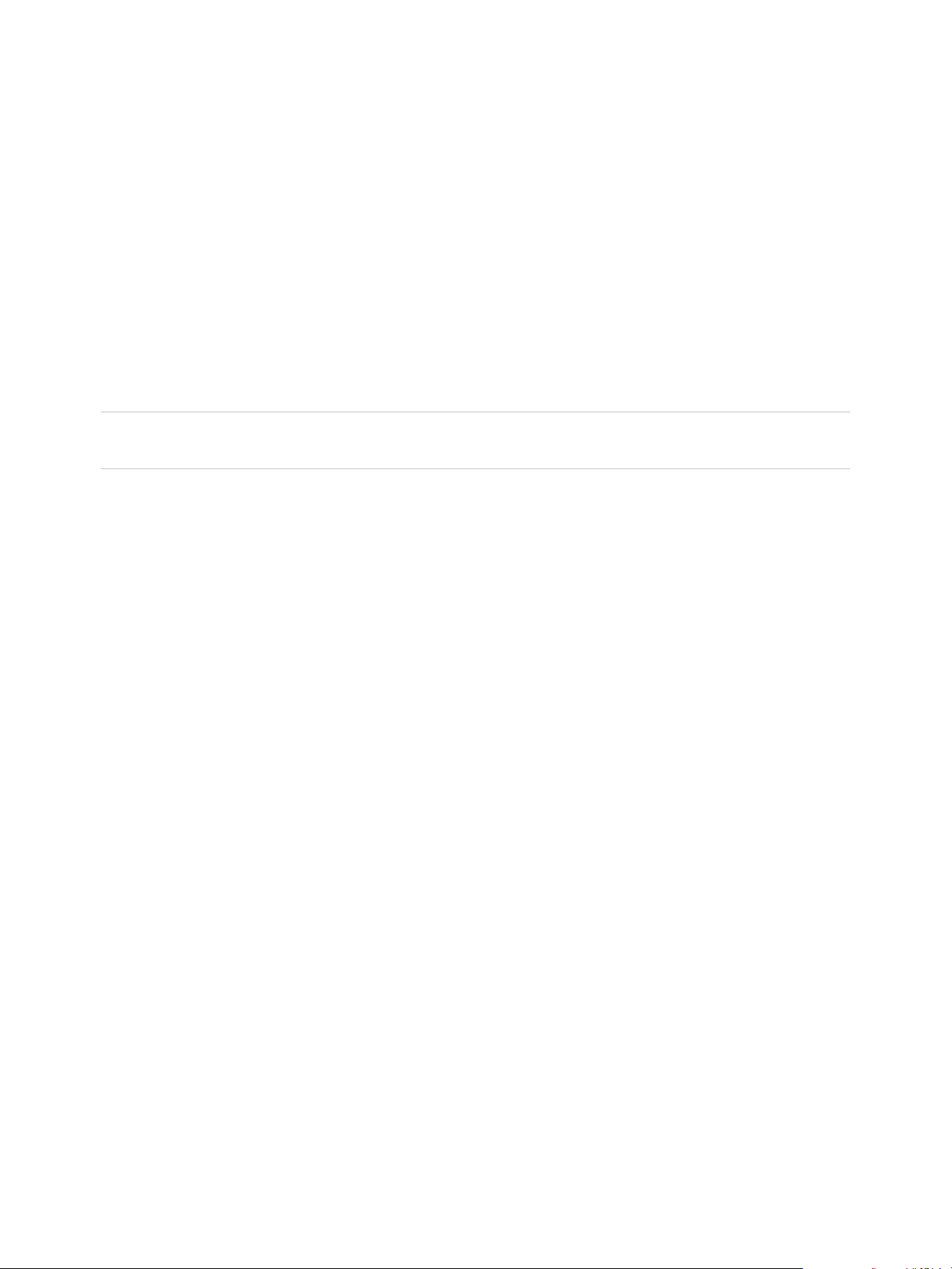

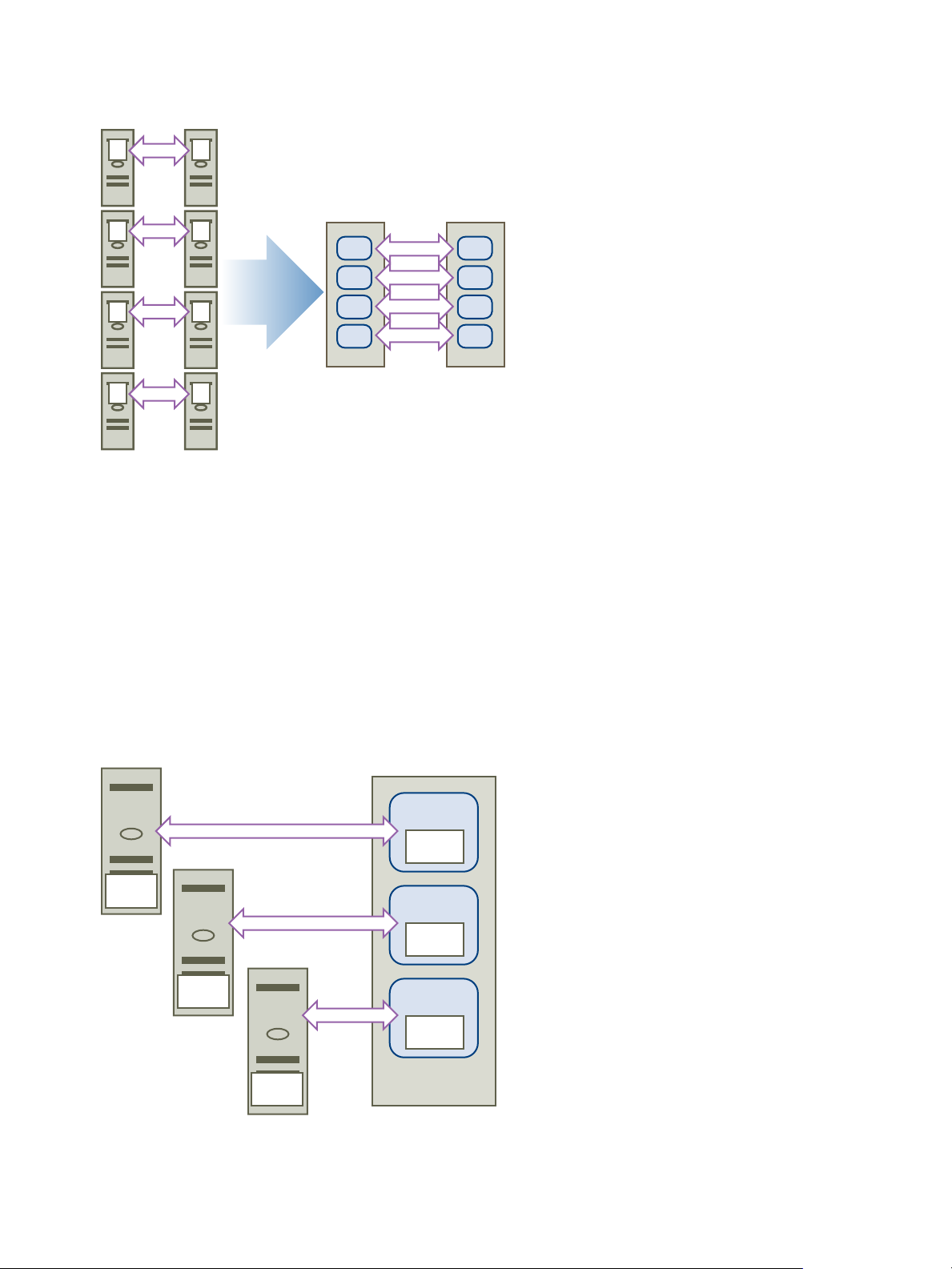

Clustering Virtual Machines Across Physical Hosts

A cluster of virtual machines across physical hosts (also known as a cluster across boxes) protects against software failures and hardware failures on the physical machine by placing the cluster nodes on separate ESXi hosts. This configuration requires shared storage on a Fibre Channel SAN for the quorum disk.

The following figure shows a cluster-across-boxes setup.

• Two virtual machines on two different physical machines (ESXi hosts) run clustering software.
• The virtual machines share a private network connection for the private heartbeat and a public network connection.
• Each virtual machine is connected to shared storage, which must be on a SAN.

Note A quorum disk can be configured with iSCSI, FC SAN, or FCoE. The cluster disks, including the quorum disk, must all be of the same storage type. For example, if the configuration uses FC SAN, all cluster disks must be on FC SAN. Mixed mode is not supported.

Figure 1‑2. Virtual Machines Clustered Across Hosts

Note Windows Server 2008 SP2 and later systems support up to five nodes (virtual machines). Windows Server 2003 SP1 and SP2 systems support two nodes (virtual machines). For supported guest operating systems, see Table 6‑2.

This setup provides significant hardware cost savings.

You can expand the cluster-across-boxes model and place multiple virtual machines on multiple physical machines. For example, you can consolidate four clusters of two physical machines each onto two physical machines with four virtual machines each.

The following figure shows how you can move four two-node clusters from eight physical machines to two.
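The consolidation example above is an anti-affinity placement problem: the two nodes of each cluster must land on different hosts, or a single host failure takes down both nodes. The Python sketch below assigns node k of every cluster to host k; the function name, host names, and this simple round-robin rule are illustrative assumptions, not a vSphere feature (in a vSphere environment, VM-VM anti-affinity rules are what keep cluster nodes apart).

```python
def place_clusters(num_clusters: int, nodes_per_cluster: int, hosts: list[str]) -> dict[str, list[str]]:
    """Spread the nodes of several clusters across hosts (illustrative sketch).

    Node k of every cluster goes to hosts[k], so no two nodes of one cluster
    share a host. This simple rule needs at least one host per node and does
    not balance load when there are more hosts than nodes per cluster.
    """
    if nodes_per_cluster > len(hosts):
        raise ValueError("need at least one host per cluster node to keep nodes apart")
    placement: dict[str, list[str]] = {host: [] for host in hosts}
    vm_number = 1
    for _cluster in range(num_clusters):
        for node in range(nodes_per_cluster):
            # VMs are numbered consecutively, so with two-node clusters
            # cluster c (1-based) owns VM(2c-1) and VM(2c).
            placement[hosts[node]].append(f"VM{vm_number}")
            vm_number += 1
    return placement


if __name__ == "__main__":
    # Four two-node clusters consolidated onto two hypothetical hosts.
    for host, vms in place_clusters(4, 2, ["host-a", "host-b"]).items():
        print(host, vms)
```

With four two-node clusters and two hosts, host-a receives VM1, VM3, VM5, and VM7, and host-b receives VM2, VM4, VM6, and VM8, so each host carries exactly one node of every cluster.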


Figure 1‑3. Clustering Multiple Virtual Machines Across Hosts

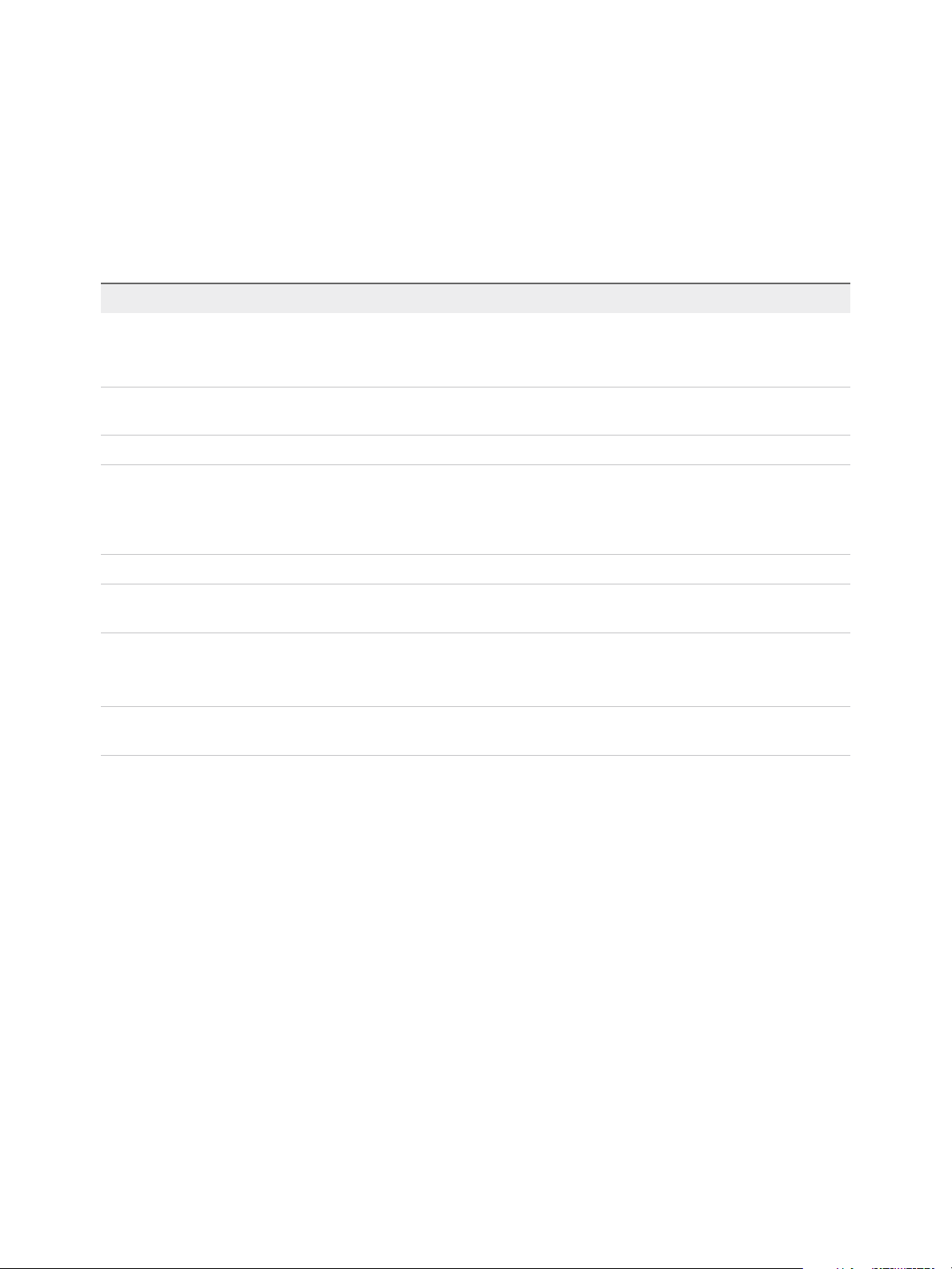

Clustering Physical Machines with Virtual Machines

For a simple MSCS clustering solution with low hardware requirements, you might choose to have one standby host.

On the standby host, set up one virtual machine for each physical machine, and create one cluster for each physical machine and its corresponding virtual machine. If one of the physical machines has a hardware failure, its virtual machine on the standby host can take over for it.

The following figure shows a standby host using three virtual machines on a single physical machine. Each virtual machine runs clustering software.

Figure 1‑4. Clustering Physical and Virtual Machines


Hardware and Software Requirements for Clustering

All vSphere MSCS configurations require certain hardware and software components.

The following table lists hardware and software requirements that apply to all vSphere MSCS configurations.

Table 1‑1. Clustering Requirements

| Component | Requirement |
|---|---|
| Virtual SCSI adapter | LSI Logic Parallel for Windows Server 2003. LSI Logic SAS for Windows Server 2008 SP2 and later. VMware Paravirtual for Windows Server 2008 SP2 and later. |
| Operating system | Windows Server 2003 SP1 and SP2, and Windows Server 2008 SP2 and later releases. For supported guest operating systems, see Table 6‑2. |
| Virtual NIC | Use the default type for all guest operating systems. |
| I/O timeout | Set to 60 seconds or more. Modify HKEY_LOCAL_MACHINE\System\CurrentControlSet\Services\Disk\TimeOutValue. The system might reset this I/O timeout value if you re-create a cluster; in that case, you must reset the value. |
| Disk format | Select Thick Provision to create disks in eagerzeroedthick format. |
| Disk and networking setup | Add networking before disks. If you encounter any errors, refer to the VMware knowledge base article at http://kb.vmware.com/kb/1513. |
| Number of nodes | Windows Server 2003 SP1 and SP2: two-node clustering. Windows Server 2008 SP2 and later: up to five-node clustering. For supported guest operating systems, see Table 6‑2. |
| NTP server | Synchronize domain controllers and cluster nodes with a common NTP server, and disable host-based time synchronization when using clustering in the guest. |
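The I/O timeout requirement refers to the `TimeOutValue` entry under the registry key named in the table. The following Python sketch, meant to run inside the Windows guest, reads and optionally sets that entry with the standard-library `winreg` module. It assumes the entry is a REG_DWORD holding seconds and that the script runs with administrator rights; the helper names are hypothetical. Because the value might be reset when a cluster is re-created, a check like this can be re-run afterwards.

```python
import sys
from typing import Optional

# Registry location from the clustering requirements table, relative to HKEY_LOCAL_MACHINE.
DISK_KEY = r"System\CurrentControlSet\Services\Disk"
VALUE_NAME = "TimeOutValue"
MIN_TIMEOUT_SECONDS = 60


def timeout_ok(seconds: Optional[int]) -> bool:
    """True if the configured disk timeout meets the 60-second minimum."""
    return seconds is not None and seconds >= MIN_TIMEOUT_SECONDS


def read_timeout() -> Optional[int]:
    """Read TimeOutValue from the guest registry; None if the entry is absent."""
    import winreg  # Windows-only standard-library module

    try:
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, DISK_KEY) as key:
            value, _value_type = winreg.QueryValueEx(key, VALUE_NAME)
            return int(value)
    except FileNotFoundError:
        return None


def write_timeout(seconds: int = MIN_TIMEOUT_SECONDS) -> None:
    """Set TimeOutValue as a REG_DWORD (assumption: requires administrator rights)."""
    import winreg

    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, DISK_KEY, 0, winreg.KEY_SET_VALUE) as key:
        winreg.SetValueEx(key, VALUE_NAME, 0, winreg.REG_DWORD, seconds)


if __name__ == "__main__" and sys.platform == "win32":
    current = read_timeout()
    if not timeout_ok(current):
        print(f"TimeOutValue is {current}; setting it to {MIN_TIMEOUT_SECONDS}")
        write_timeout()
```

The registry access is imported lazily inside the helpers, so the validation function can also be used on non-Windows systems, for example when auditing values collected from guests.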

Supported Shared Storage Configurations

Different MSCS cluster setups support different types of shared storage configurations. Some setups support more than one type. Select the recommended type of shared storage for best results.


Table 1‑2. Shared Storage Requirements

| Storage Type | Clusters on One Physical Machine (Cluster in a Box) | Clusters Across Physical Machines (Cluster Across Boxes) | Clusters of Physical and Virtual Machines (Standby Host Clustering) |
|---|---|---|---|
| Virtual disks | Yes (recommended) | No | No |
| Pass-through RDM (physical compatibility mode) | No | Yes (recommended) | Yes |
| Non-pass-through RDM (virtual compatibility mode) | Yes | No | No |

Use of software iSCSI initiators within guest operating systems configured with MSCS, in any configuration supported by Microsoft, is transparent to ESXi hosts, and there is no need for explicit support statements from VMware.
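Table 1‑2 can be encoded as a lookup table so that a provisioning script rejects unsupported combinations early. In this Python sketch the configuration keys and storage-type labels are hypothetical shorthand for the table's columns and rows; the values simply transcribe the table.

```python
# Transcription of Table 1-2: for each cluster configuration, the supported
# shared-storage types mapped to whether that type is the recommended one.
# Keys and labels are hypothetical shorthand, not VMware identifiers.
SUPPORTED_STORAGE: dict[str, dict[str, bool]] = {
    "cluster_in_a_box": {"virtual_disk": True, "non_passthrough_rdm": False},
    "cluster_across_boxes": {"passthrough_rdm": True},
    "standby_host": {"passthrough_rdm": False},
}


def storage_status(configuration: str, storage_type: str) -> str:
    """Return 'recommended', 'supported', or 'not supported' for a combination."""
    options = SUPPORTED_STORAGE.get(configuration)
    if options is None:
        raise KeyError(f"unknown cluster configuration: {configuration}")
    if storage_type not in options:
        return "not supported"
    return "recommended" if options[storage_type] else "supported"


if __name__ == "__main__":
    print(storage_status("cluster_across_boxes", "passthrough_rdm"))
```

Storing only the supported combinations keeps the table small: anything absent from a configuration's entry is treated as not supported, which matches the "No" cells in the table.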

PSP_RR Support for MSCS

ESXi 6.0 supports PSP_RR for MSCS.

• ESXi 6.0 supports PSP_RR for Windows Server 2008 SP2 and later releases. Windows Server 2003 is not supported.
• PSPs configured in mixed mode are supported. In a two-node cluster, one ESXi host can be configured to use PSP_FIXED and the other ESXi host can use PSP_RR.
• Shared disks (quorum or data) must be provisioned to the guest in pass-through RDM mode only.
• All hosts must be running ESXi 6.0.
• Mixed-mode configurations of ESXi 6.0 with previous ESXi releases are not supported.
• Rolling upgrades of cluster hosts from previous versions of ESXi to ESXi 6.0 builds are not supported.
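The PSP_RR rules above can be checked per cluster before you change any path selection policy. The Python sketch below models each node with a hypothetical `NodeConfig` record (the field names and value labels are illustrative, not a VMware API) and reports which rules a proposed configuration breaks. Mixed PSPs across hosts are deliberately not flagged, because the list above allows them. The policy itself is set on each host, for example with `esxcli storage nmp device set --device <device> --psp VMW_PSP_RR`, which this sketch does not attempt.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeConfig:
    """Hypothetical description of one MSCS node and the host it runs on."""
    esxi_version: str      # for example "6.0"
    guest_os: str          # for example "Windows Server 2012"
    psp: str               # "PSP_RR" or "PSP_FIXED"
    shared_disk_mode: str  # "passthrough_rdm", "non_passthrough_rdm", or "virtual_disk"


def psp_rr_violations(nodes: list[NodeConfig], required_esxi: str = "6.0") -> list[str]:
    """List the PSP_RR support rules that a cluster configuration breaks."""
    if not any(n.psp == "PSP_RR" for n in nodes):
        return []  # these rules apply only when PSP_RR is in use
    problems: list[str] = []
    for i, node in enumerate(nodes, start=1):
        if node.esxi_version != required_esxi:
            problems.append(f"node {i}: host runs ESXi {node.esxi_version}, not {required_esxi}")
        if node.guest_os.startswith("Windows Server 2003"):
            problems.append(f"node {i}: Windows Server 2003 is not supported with PSP_RR")
        if node.shared_disk_mode != "passthrough_rdm":
            problems.append(f"node {i}: shared disks must use pass-through RDM")
    return problems


if __name__ == "__main__":
    rr = NodeConfig("6.0", "Windows Server 2012", "PSP_RR", "passthrough_rdm")
    fixed = NodeConfig("6.0", "Windows Server 2012", "PSP_FIXED", "passthrough_rdm")
    print(psp_rr_violations([rr, fixed]))  # mixed PSPs: allowed
```

The required ESXi version is a parameter rather than a constant so that the same check can be reused if your support matrix names a different release.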

iSCSI Support for MSCS

ESXi 6.0 supports iSCSI storage and MSCS clusters of up to five nodes using QLogic, Emulex, and Broadcom adapters.

• ESXi 6.0 supports iSCSI for Windows Server 2008 SP2 and later releases. Windows Server 2003 is not supported.
• Cluster-across-box (CAB) and cluster-in-a-box (CIB) are supported. A mixture of CAB and CIB is not supported.
• No qualification is needed for the software iSCSI (SWiSCSI) initiator in a guest operating system.
• An N+1 cluster configuration, consisting of a cluster between N virtual machines on separate ESXi hosts and one physical machine running Windows natively, is supported.


• All hosts must be running ESXi 6.0.
• Cluster nodes that mix FC or FCoE with iSCSI are not supported.
• Mixed-mode iSCSI configurations are supported. For example, Node A on ESXi with the iSCSI software initiator and Node B on ESXi with a QLogic, Emulex, or Broadcom hardware adapter.
• Mixed-mode configurations of ESXi 6.0 with previous ESXi releases are not supported.
• Rolling upgrades of cluster hosts from previous versions of ESXi to ESXi 6.0 builds are not supported.
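Several of the iSCSI rules concern which combinations of nodes may appear in one cluster. This Python sketch checks three of them: the five-node limit, the ban on mixing FC or FCoE nodes with iSCSI nodes, and the ban on mixing CAB and CIB placement. Nodes are hypothetical `(protocol, adapter, host)` tuples with illustrative labels. As an assumption, "mixed CAB and CIB" is interpreted as some hosts carrying more than one node while the cluster also spans several hosts. Mixing software and hardware iSCSI adapters is intentionally allowed, as stated above.

```python
from collections import Counter

MAX_NODES = 5
FC_FAMILY = {"FC", "FCoE"}


def iscsi_cluster_violations(nodes: list[tuple[str, str, str]]) -> list[str]:
    """Check (protocol, adapter, host) tuples against selected iSCSI rules.

    Hypothetical labels: protocol is "iSCSI", "FC", or "FCoE"; adapter is a
    free-form name such as "software" or "QLogic"; host identifies the ESXi
    host (or physical machine) that runs the node.
    """
    problems: list[str] = []
    if len(nodes) > MAX_NODES:
        problems.append(f"{len(nodes)} nodes exceeds the {MAX_NODES}-node limit")
    protocols = {protocol for protocol, _adapter, _host in nodes}
    if "iSCSI" in protocols and protocols & FC_FAMILY:
        problems.append("nodes mix iSCSI with FC or FCoE")
    nodes_per_host = Counter(host for _protocol, _adapter, host in nodes)
    is_cib = len(nodes_per_host) == 1                           # every node on one host
    is_cab = all(count == 1 for count in nodes_per_host.values())  # one node per host
    if not (is_cib or is_cab):
        problems.append("placement mixes cluster-in-a-box and cluster-across-boxes")
    return problems


if __name__ == "__main__":
    # Software initiator on one host, hardware adapter on the other: allowed.
    print(iscsi_cluster_violations([("iSCSI", "software", "esx1"), ("iSCSI", "QLogic", "esx2")]))
```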

FCoE Support for MSCS

ESXi 6.0 supports FCoE storage and MSCS clusters of up to five nodes using Cisco FNIC and Emulex FCoE adapters.

• ESXi 6.0 supports FCoE for Windows Server 2008 SP2 and later releases. Windows Server 2003 is not supported.
• Cluster-across-box (CAB) and cluster-in-a-box (CIB) are supported. A mixture of CAB and CIB is not supported.
• CAB configurations are supported with some cluster nodes on physical hosts. In a CAB configuration, at most one virtual machine on a host can see a given LUN.
• In a CIB configuration, all virtual machines must be on the same host.
• No qualification is needed for SWiSCSI and FCoE initiators in a guest operating system.
• An N+1 cluster configuration, in which one ESXi host has virtual machines that are secondary nodes and the primary node is a physical machine, is supported.
• Standard affinity and anti-affinity rules apply for MSCS virtual machines.
• All hosts must be running ESXi 6.0.
• All hosts must be running FCoE initiators. Cluster nodes that mix FC and FCoE are not supported.
• Mixed-mode FCoE configurations are supported. For example, Node A on ESXi with an FCoE software adapter on an Intel-based card and Node B on ESXi with an Emulex or Cisco FCoE hardware adapter.
• Mixed-mode configurations of ESXi 6.0 with previous ESXi releases are not supported.
• Rolling upgrades of cluster hosts from previous versions of ESXi to ESXi 6.0 builds are not supported.
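The CAB rule that at most one virtual machine on a host can see a given LUN can be verified from an inventory of which LUNs each virtual machine sees. In this Python sketch the inventory format (virtual machine name mapped to a host name and a set of LUN identifiers) and the sample names are hypothetical; gather the real data with your own tooling.

```python
from collections import defaultdict


def cab_lun_conflicts(inventory: dict[str, tuple[str, set[str]]]) -> dict[tuple[str, str], list[str]]:
    """Find (host, LUN) pairs that more than one virtual machine can see.

    inventory maps a VM name to (host name, set of LUN IDs the VM can see).
    In a cluster-across-boxes configuration the result should be empty.
    """
    seen: dict[tuple[str, str], list[str]] = defaultdict(list)
    # Sorting by VM name makes the order of names in each conflict deterministic.
    for vm, (host, luns) in sorted(inventory.items()):
        for lun in luns:
            seen[(host, lun)].append(vm)
    return {key: vms for key, vms in seen.items() if len(vms) > 1}


if __name__ == "__main__":
    inventory = {
        "node1": ("esx1", {"lun-quorum", "lun-data"}),
        "node2": ("esx2", {"lun-quorum", "lun-data"}),
    }
    print(cab_lun_conflicts(inventory))  # one node per host: no conflicts
```

A non-empty result names the host and LUN together with the virtual machines that share it, which is the information needed to move one of those nodes to another host.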

vMotion support for MSCS

vSphere 6.0 adds support for vMotion of MSCS clustered virtual machines.
