VMware ESXi 6.7 Instruction Manual

vSphere Storage

17 APR 2018

VMware vSphere 6.7

VMware ESXi 6.7

vCenter Server 6.7


You can find the most up-to-date technical documentation on the VMware website at:

https://docs.vmware.com/

If you have comments about this documentation, submit your feedback to

docfeedback@vmware.com

VMware, Inc.

3401 Hillview Ave.

Palo Alto, CA 94304

www.vmware.com

Copyright © 2009–2018 VMware, Inc. All rights reserved. Copyright and trademark information.


Contents

About vSphere Storage

1 Introduction to Storage

Traditional Storage Virtualization Models

Software-Defined Storage Models

vSphere Storage APIs

2 Getting Started with a Traditional Storage Model

Types of Physical Storage

Supported Storage Adapters

Datastore Characteristics

Using Persistent Memory

3 Overview of Using ESXi with a SAN

ESXi and SAN Use Cases

Specifics of Using SAN Storage with ESXi

ESXi Hosts and Multiple Storage Arrays

Making LUN Decisions

Selecting Virtual Machine Locations

Third-Party Management Applications

SAN Storage Backup Considerations

4 Using ESXi with Fibre Channel SAN

Fibre Channel SAN Concepts

Using Zoning with Fibre Channel SANs

How Virtual Machines Access Data on a Fibre Channel SAN

5 Configuring Fibre Channel Storage

ESXi Fibre Channel SAN Requirements

Installation and Setup Steps

N-Port ID Virtualization

6 Configuring Fibre Channel over Ethernet

Fibre Channel over Ethernet Adapters

Configuration Guidelines for Software FCoE

Set Up Networking for Software FCoE

Add Software FCoE Adapters

7 Booting ESXi from Fibre Channel SAN

Boot from SAN Benefits

Requirements and Considerations when Booting from Fibre Channel SAN

Getting Ready for Boot from SAN

Configure Emulex HBA to Boot from SAN

Configure QLogic HBA to Boot from SAN

8 Booting ESXi with Software FCoE

Requirements and Considerations for Software FCoE Boot

Set Up Software FCoE Boot

Troubleshooting Boot from Software FCoE for an ESXi Host

9 Best Practices for Fibre Channel Storage

Preventing Fibre Channel SAN Problems

Disable Automatic Host Registration

Optimizing Fibre Channel SAN Storage Performance

10 Using ESXi with iSCSI SAN

About iSCSI SAN

11 Configuring iSCSI Adapters and Storage

ESXi iSCSI SAN Recommendations and Restrictions

Configuring iSCSI Parameters for Adapters

Set Up Independent Hardware iSCSI Adapters

Configure Dependent Hardware iSCSI Adapters

Configure the Software iSCSI Adapter

Configure iSER Adapters

Modify General Properties for iSCSI or iSER Adapters

Setting Up Network for iSCSI and iSER

Using Jumbo Frames with iSCSI

Configuring Discovery Addresses for iSCSI Adapters

Configuring CHAP Parameters for iSCSI Adapters

Configuring Advanced Parameters for iSCSI

iSCSI Session Management

12 Booting from iSCSI SAN

General Recommendations for Boot from iSCSI SAN

Prepare the iSCSI SAN

Configure Independent Hardware iSCSI Adapter for SAN Boot

iBFT iSCSI Boot Overview

13 Best Practices for iSCSI Storage

Preventing iSCSI SAN Problems

Optimizing iSCSI SAN Storage Performance

Checking Ethernet Switch Statistics

14 Managing Storage Devices

Storage Device Characteristics

Understanding Storage Device Naming

Storage Rescan Operations

Identifying Device Connectivity Problems

Enable or Disable the Locator LED on Storage Devices

Erase Storage Devices

15 Working with Flash Devices

Using Flash Devices with ESXi

Marking Storage Devices

Monitor Flash Devices

Best Practices for Flash Devices

About Virtual Flash Resource

Configuring Host Swap Cache

16 About VMware vSphere Flash Read Cache

DRS Support for Flash Read Cache

vSphere High Availability Support for Flash Read Cache

Configure Flash Read Cache for a Virtual Machine

Migrate Virtual Machines with Flash Read Cache

17 Working with Datastores

Types of Datastores

Understanding VMFS Datastores

Upgrading VMFS Datastores

Understanding Network File System Datastores

Creating Datastores

Managing Duplicate VMFS Datastores

Increasing VMFS Datastore Capacity

Administrative Operations for Datastores

Set Up Dynamic Disk Mirroring

Collecting Diagnostic Information for ESXi Hosts on a Storage Device

Checking Metadata Consistency with VOMA

Configuring VMFS Pointer Block Cache

18 Understanding Multipathing and Failover

Failovers with Fibre Channel

Host-Based Failover with iSCSI

Array-Based Failover with iSCSI

Path Failover and Virtual Machines

Pluggable Storage Architecture and Path Management

Viewing and Managing Paths

Using Claim Rules

Scheduling Queues for Virtual Machine I/Os

19 Raw Device Mapping

About Raw Device Mapping

Raw Device Mapping Characteristics

Create Virtual Machines with RDMs

Manage Paths for a Mapped LUN

20 Storage Policy Based Management

Virtual Machine Storage Policies

Workflow for Virtual Machine Storage Policies

Populating the VM Storage Policies Interface

About Rules and Rule Sets

Creating and Managing VM Storage Policies

About Storage Policy Components

Storage Policies and Virtual Machines

Default Storage Policies

21 Using Storage Providers

About Storage Providers

Storage Providers and Data Representation

Storage Provider Requirements and Considerations

Register Storage Providers

View Storage Provider Information

Manage Storage Providers

22 Working with Virtual Volumes

About Virtual Volumes

Virtual Volumes Concepts

Virtual Volumes and Storage Protocols

Virtual Volumes Architecture

Virtual Volumes and VMware Certificate Authority

Snapshots and Virtual Volumes

Before You Enable Virtual Volumes

Configure Virtual Volumes

Provision Virtual Machines on Virtual Volumes Datastores

Virtual Volumes and Replication

Best Practices for Working with vSphere Virtual Volumes

Troubleshooting Virtual Volumes

23 Filtering Virtual Machine I/O

About I/O Filters

Using Flash Storage Devices with Cache I/O Filters

System Requirements for I/O Filters

Configure I/O Filters in the vSphere Environment

Enable I/O Filter Data Services on Virtual Disks

Managing I/O Filters

I/O Filter Guidelines and Best Practices

Handling I/O Filter Installation Failures

24 Storage Hardware Acceleration

Hardware Acceleration Benefits

Hardware Acceleration Requirements

Hardware Acceleration Support Status

Hardware Acceleration for Block Storage Devices

Hardware Acceleration on NAS Devices

Hardware Acceleration Considerations

25 Thin Provisioning and Space Reclamation

Virtual Disk Thin Provisioning

ESXi and Array Thin Provisioning

Storage Space Reclamation

26 Using vmkfstools

vmkfstools Command Syntax

The vmkfstools Command Options

About vSphere Storage

vSphere Storage describes virtualized and software-defined storage technologies that VMware ESXi™ and VMware vCenter Server® offer, and explains how to configure and use these technologies.

Intended Audience

This information is for experienced system administrators who are familiar with virtual machine and storage virtualization technologies, data center operations, and SAN storage concepts.

vSphere Client and vSphere Web Client

The instructions in this guide apply primarily to the vSphere Client (an HTML5-based GUI). Most instructions also apply to the vSphere Web Client (a Flex-based GUI).

For workflows that differ significantly between the two clients, vSphere Storage provides duplicate procedures. The procedures indicate when they are intended exclusively for the vSphere Client or the vSphere Web Client.

Note In vSphere 6.7, most of the vSphere Web Client functionality is implemented in the vSphere Client. For an up-to-date list of the unsupported functionality, see Functionality Updates for the vSphere Client.


1 Introduction to Storage

vSphere supports various storage options and functionalities in traditional and software-defined storage environments. A high-level overview of vSphere storage elements and aspects helps you plan a proper storage strategy for your virtual data center.

This chapter includes the following topics:

- Traditional Storage Virtualization Models
- Software-Defined Storage Models
- vSphere Storage APIs

Traditional Storage Virtualization Models

Generally, storage virtualization refers to a logical abstraction of physical storage resources and capacities from virtual machines and their applications. ESXi provides host-level storage virtualization.

In a vSphere environment, a traditional model is built around the following storage technologies and ESXi and vCenter Server virtualization functionalities.

Local and Networked Storage

In traditional storage environments, the ESXi storage management process starts with storage space that your storage administrator preallocates on different storage systems. ESXi supports local storage and networked storage.

See Types of Physical Storage.

Storage Area Networks

A storage area network (SAN) is a specialized high-speed network that connects computer systems, or ESXi hosts, to high-performance storage systems. ESXi can use Fibre Channel or iSCSI protocols to connect to storage systems.

See Chapter 3 Overview of Using ESXi with a SAN.

Fibre Channel

Fibre Channel (FC) is a storage protocol that the SAN uses to transfer data traffic from ESXi host servers to shared storage. The protocol packages SCSI commands into FC frames. To connect to the FC SAN, your host uses Fibre Channel host bus adapters (HBAs).

See Chapter 4 Using ESXi with Fibre Channel SAN.

Internet SCSI

Internet SCSI (iSCSI) is a SAN transport that can use Ethernet connections between computer systems, or ESXi hosts, and high-performance storage systems. To connect to the storage systems, your hosts use hardware iSCSI adapters or software iSCSI initiators with standard network adapters.

See Chapter 10 Using ESXi with iSCSI SAN.

Storage Device or LUN

In the ESXi context, the terms device and LUN are used interchangeably. Typically, both terms mean a storage volume that is presented to the host from a block storage system and is available for formatting.

See Target and Device Representations and Chapter 14 Managing Storage Devices.

Virtual Disks

A virtual machine on an ESXi host uses a virtual disk to store its operating system, application files, and other data associated with its activities. Virtual disks are large physical files, or sets of files, that can be copied, moved, archived, and backed up like any other files. You can configure virtual machines with multiple virtual disks.

To access virtual disks, a virtual machine uses virtual SCSI controllers. These virtual controllers include BusLogic Parallel, LSI Logic Parallel, LSI Logic SAS, and VMware Paravirtual. These controllers are the only types of SCSI controllers that a virtual machine can see and access.

Each virtual disk resides on a datastore that is deployed on physical storage. From the standpoint of the virtual machine, each virtual disk appears as if it were a SCSI drive connected to a SCSI controller. Whether the physical storage is accessed through storage or network adapters on the host is typically transparent to the VM guest operating system and applications.
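The transparency described above can be modeled in a few lines. This is a minimal conceptual sketch, not VMware code: the `VirtualDisk` class, the `guest_sees` helper, and the file and datastore names are hypothetical. Only the four controller types come from the text; the point is that the backing transport never reaches the guest's view.

```python
from dataclasses import dataclass

# Virtual SCSI controller types listed in this section; the guest sees only these.
VIRTUAL_SCSI_CONTROLLERS = frozenset({
    "BusLogic Parallel",
    "LSI Logic Parallel",
    "LSI Logic SAS",
    "VMware Paravirtual",
})


@dataclass(frozen=True)
class VirtualDisk:
    path: str       # hypothetical virtual disk file on a datastore
    datastore: str  # hypothetical datastore name
    backing: str    # how the host reaches physical storage, e.g. "FC", "iSCSI", "NFS"


def guest_sees(disk: VirtualDisk, controller: str) -> tuple[str, str]:
    """Return (controller, device type) as seen from inside the guest OS."""
    if controller not in VIRTUAL_SCSI_CONTROLLERS:
        raise ValueError(f"not a virtual SCSI controller: {controller}")
    # disk.backing is deliberately ignored: the transport stays hidden from the guest.
    return (controller, "SCSI disk")


fc_disk = VirtualDisk("vm1/vm1.vmdk", "ds-fc", "FC")
nfs_disk = VirtualDisk("vm1/vm1_1.vmdk", "ds-nfs", "NFS")
print(guest_sees(fc_disk, "VMware Paravirtual") == guest_sees(nfs_disk, "VMware Paravirtual"))  # True
```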

VMware vSphere® VMFS

The datastores that you deploy on block storage devices use the native vSphere Virtual Machine File System (VMFS) format. It is a special high-performance file system format that is optimized for storing virtual machines.

See Understanding VMFS Datastores.

NFS

An NFS client built into ESXi uses the Network File System (NFS) protocol over TCP/IP to access an NFS volume that is located on a NAS server. The ESXi host can mount the volume and use it as an NFS datastore.

See Understanding Network File System Datastores.

Raw Device Mapping

In addition to virtual disks, vSphere offers a mechanism called raw device mapping (RDM). RDM is useful when a guest operating system inside a virtual machine requires direct access to a storage device. For information about RDMs, see Chapter 19 Raw Device Mapping.

Software-Defined Storage Models

In addition to abstracting underlying storage capacities from VMs, as traditional storage models do, software-defined storage abstracts storage capabilities.

With the software-defined storage model, a virtual machine becomes a unit of storage provisioning and can be managed through a flexible policy-based mechanism. The model involves the following vSphere technologies.

Storage Policy Based Management

Storage Policy Based Management (SPBM) is a framework that provides a single control panel across various data services and storage solutions, including vSAN and Virtual Volumes. Using storage policies, the framework aligns application demands of your virtual machines with capabilities provided by storage entities.

See Chapter 20 Storage Policy Based Management.

VMware vSphere Virtual Volumes™

The Virtual Volumes functionality changes the storage management paradigm from managing space inside datastores to managing abstract storage objects handled by storage arrays. With Virtual Volumes, an individual virtual machine, not the datastore, becomes a unit of storage management, and storage hardware gains complete control over virtual disk content, layout, and management.

See Chapter 22 Working with Virtual Volumes.

VMware vSAN®

vSAN is a distributed layer of software that runs natively as a part of the hypervisor. vSAN aggregates local or direct-attached capacity devices of an ESXi host cluster and creates a single storage pool shared across all hosts in the vSAN cluster.

See Administering VMware vSAN.

I/O Filters

I/O filters are software components that can be installed on ESXi hosts and can offer additional data services to virtual machines. Depending on implementation, the services might include replication, encryption, caching, and so on.

See Chapter 23 Filtering Virtual Machine I/O.
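The policy-to-capability alignment that SPBM performs can be pictured as a filter over datastores. The sketch below is a simplified, hypothetical model: the capability names (`encryption`, `replication`), the datastore names, and the exact-match rule are assumptions for illustration. In a real environment, capabilities are advertised by storage providers and the matching logic belongs to vCenter Server.

```python
def compatible_datastores(
    policy: dict[str, object],
    datastores: dict[str, dict[str, object]],
) -> list[str]:
    """Return names of datastores whose advertised capabilities satisfy every policy rule.

    Each rule is treated as an exact-match requirement (a simplifying assumption).
    """
    matches = []
    for name, capabilities in datastores.items():
        if all(capabilities.get(rule) == value for rule, value in policy.items()):
            matches.append(name)
    return sorted(matches)


# Hypothetical policy and capability data.
policy = {"encryption": True, "replication": True}
datastores = {
    "ds-gold": {"encryption": True, "replication": True, "tier": "flash"},
    "ds-silver": {"encryption": True, "replication": False},
    "ds-bronze": {},
}
print(compatible_datastores(policy, datastores))  # ['ds-gold']
```

An empty policy places no constraints, so every datastore qualifies; real policies can also express ranges and rule sets, which this sketch omits.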

vSphere Storage APIs

Storage APIs is a family of APIs used by third-party hardware, software, and storage providers to develop components that enhance several vSphere features and solutions.

This vSphere Storage publication describes several Storage APIs that contribute to your storage environment. For information about other APIs from this family, including vSphere APIs - Data Protection, see the VMware website.

vSphere APIs for Storage Awareness

Also known as VASA, these APIs, either supplied by third-party vendors or offered by VMware, enable communication between vCenter Server and underlying storage. Through VASA, storage entities can inform vCenter Server about their configurations, capabilities, and storage health and events. In return, VASA can deliver VM storage requirements from vCenter Server to a storage entity and ensure that the storage layer meets the requirements.

VASA becomes essential when you work with Virtual Volumes, vSAN, vSphere APIs for I/O Filtering (VAIO), and VM storage policies. See Chapter 21 Using Storage Providers.

vSphere APIs for Array Integration

These APIs, also known as VAAI, include the following components:

- Hardware Acceleration APIs. Help arrays to integrate with vSphere, so that vSphere can offload certain storage operations to the array. This integration significantly reduces CPU overhead on the host. See Chapter 24 Storage Hardware Acceleration.
- Array Thin Provisioning APIs. Help to monitor space use on thin-provisioned storage arrays to prevent out-of-space conditions, and to perform space reclamation. See ESXi and Array Thin Provisioning.
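Why thin-provisioned arrays need space monitoring becomes clear with a little arithmetic: the logical size promised to hosts can exceed the physical capacity, so trouble starts when written data approaches that capacity. This is an illustrative sketch only; the `ThinPool` class, its field names, and the 75% warning threshold are hypothetical and are not the values or interfaces that VAAI uses.

```python
from dataclasses import dataclass


@dataclass
class ThinPool:
    physical_capacity_gb: float  # usable capacity on the array (hypothetical figure)
    used_gb: float               # space actually written by hosts
    provisioned_gb: float        # sum of the logical sizes of all thin LUNs

    def used_ratio(self) -> float:
        """Fraction of physical capacity that already holds data."""
        return self.used_gb / self.physical_capacity_gb

    def overcommit_ratio(self) -> float:
        """How many times the physical capacity has been promised to hosts."""
        return self.provisioned_gb / self.physical_capacity_gb


def space_warning(pool: ThinPool, threshold: float = 0.75) -> bool:
    """True when written data crosses the (hypothetical) warning threshold."""
    return pool.used_ratio() >= threshold


pool = ThinPool(physical_capacity_gb=1000, used_gb=800, provisioned_gb=3000)
print(pool.overcommit_ratio())  # 3.0
print(space_warning(pool))      # True
```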

vSphere APIs for Multipathing

Known as the Pluggable Storage Architecture (PSA), these APIs allow storage partners to create and deliver multipathing and load-balancing plug-ins that are optimized for each array. Plug-ins communicate with storage arrays and determine the best path selection strategy to increase I/O performance and reliability from the ESXi host to the storage array. For more information, see Pluggable Storage Architecture and Path Management.
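To make "path selection strategy" concrete, here is a minimal sketch of one common strategy, round robin, which rotates I/O across the active paths to a device. It is not the PSA interface: the `Path` and `RoundRobinSelector` names are hypothetical, the runtime names only imitate the vmhbaN:C:T:L style for readability, and real plug-ins also handle failover, which is omitted here.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass(frozen=True)
class Path:
    name: str   # illustrative runtime name, e.g. "vmhba1:C0:T0:L0"
    state: str  # "active", "standby", or "dead"


class RoundRobinSelector:
    """Rotate I/O across active paths; one simple strategy a path selection plug-in might use."""

    def __init__(self, paths: list[Path]):
        active = [p for p in paths if p.state == "active"]
        if not active:
            raise RuntimeError("no active path to the device")
        self._next = cycle(active)  # endless iterator over the active paths

    def select(self) -> Path:
        """Return the path for the next I/O."""
        return next(self._next)


paths = [
    Path("vmhba1:C0:T0:L0", "active"),
    Path("vmhba2:C0:T0:L0", "active"),
    Path("vmhba1:C0:T1:L0", "standby"),  # never chosen while in standby
]
```

Usage: `RoundRobinSelector(paths).select()` returns vmhba1:C0:T0:L0, then vmhba2:C0:T0:L0, then vmhba1:C0:T0:L0 again on successive calls.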


2 Getting Started with a Traditional Storage Model

Setting up your ESXi storage in traditional environments includes configuring your storage systems and devices, enabling storage adapters, and creating datastores.

This chapter includes the following topics:

- Types of Physical Storage
- Supported Storage Adapters
- Datastore Characteristics
- Using Persistent Memory

Types of Physical Storage

In traditional storage environments, the ESXi storage management process starts with storage space that your storage administrator preallocates on different storage systems. ESXi supports local storage and networked storage.

Local Storage

Local storage can be internal hard disks located inside your ESXi host. It can also include external storage systems located outside and connected to the host directly through protocols such as SAS or SATA.

Local storage does not require a storage network to communicate with your host. You need a cable connected to the storage unit and, when required, a compatible HBA in your host.

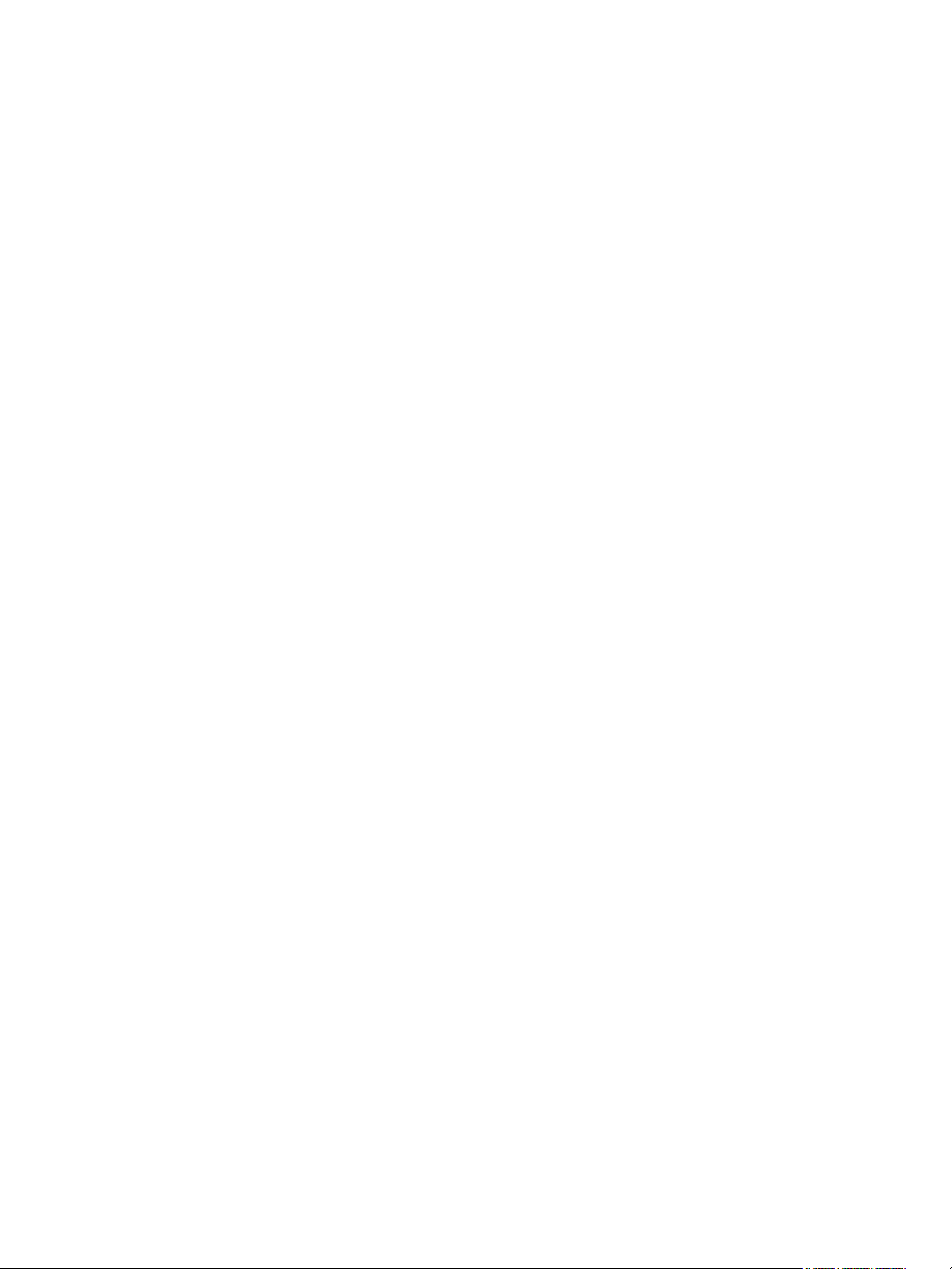

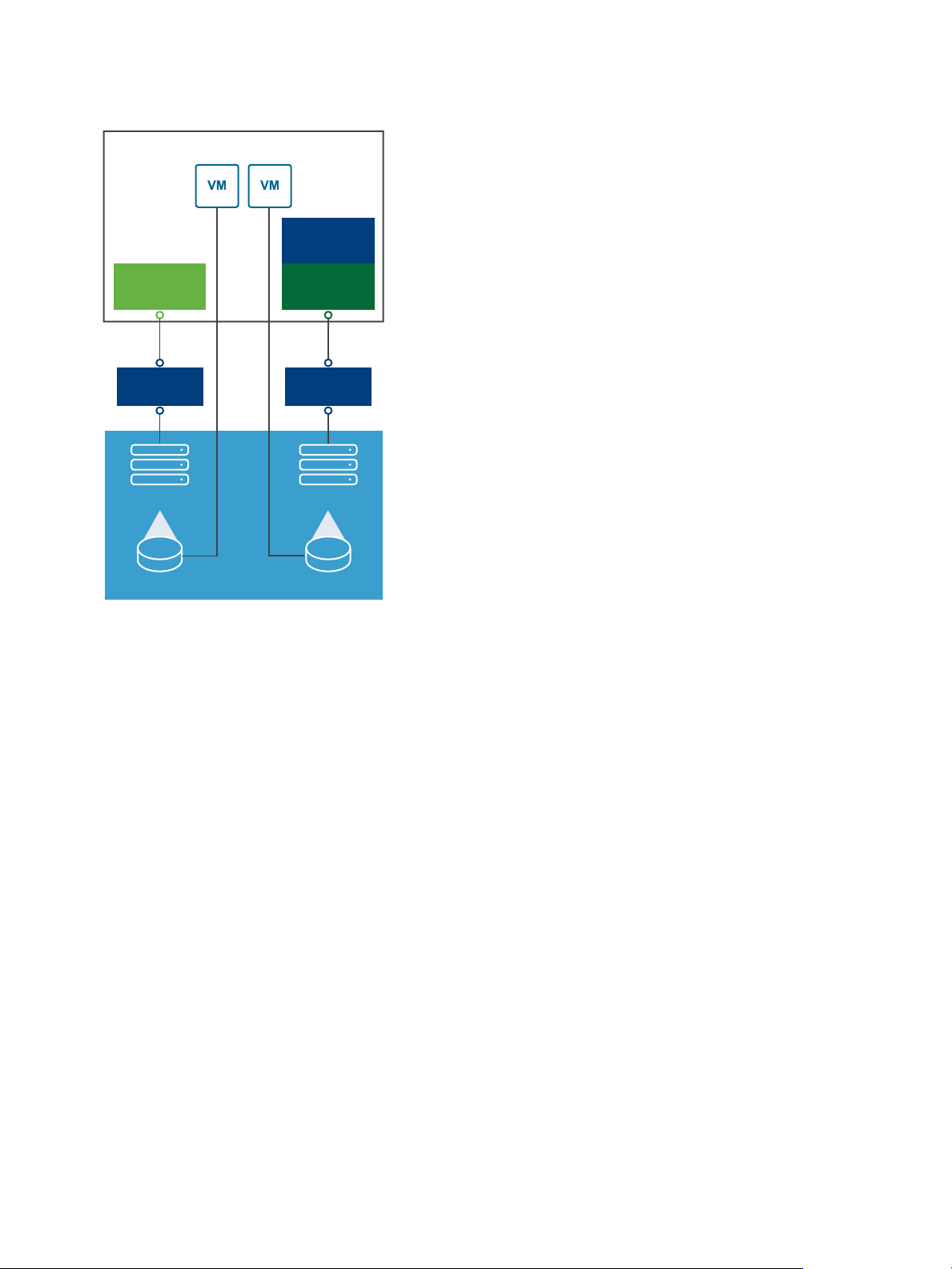

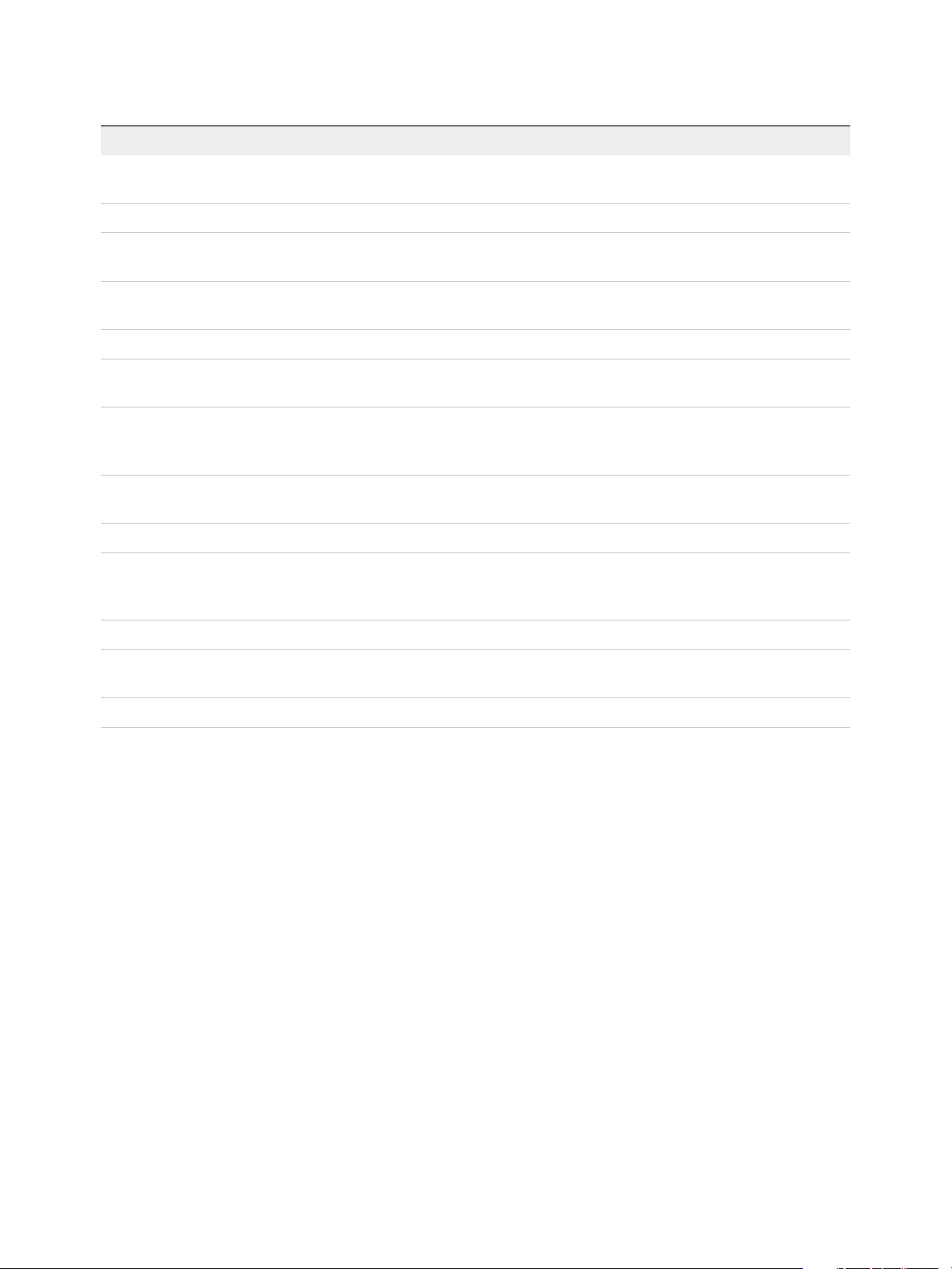

The following illustration depicts a virtual machine using local SCSI storage.

Figure 2‑1. Local Storage (diagram labels: ESXi Host, SCSI Device, VMFS, vmdk)

In this example of a local storage topology, the ESXi host uses a single connection to a storage device. On that device, you can create a VMFS datastore, which you use to store virtual machine disk files.

Although this storage configuration is possible, it is not a best practice. Using single connections between storage devices and hosts creates single points of failure (SPOF) that can cause interruptions when a connection becomes unreliable or fails. However, because most local storage devices do not support multiple connections, you cannot use multiple paths to access local storage.

ESXi supports various local storage devices, including SCSI, IDE, SATA, USB, SAS, flash, and NVMe devices.

Note You cannot use IDE/ATA or USB drives to store virtual machines.

Local storage does not support sharing across multiple hosts. Only one host has access to a datastore on a local storage device. As a result, although you can use local storage to create VMs, you cannot use VMware features that require shared storage, such as HA and vMotion.

However, if you use a cluster of hosts that have only local storage devices, you can implement vSAN. vSAN transforms local storage resources into software-defined shared storage. With vSAN, you can use features that require shared storage. For details, see the Administering VMware vSAN documentation.
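The two constraints above, a single connection being a single point of failure and local datastores being unavailable to shared-storage features unless vSAN pools them, can be expressed as two small checks. This is a simplified, hypothetical model: the `Datastore` fields, the backing labels, and the rule that every non-local backing counts as shared are assumptions for illustration, not ESXi behavior checks.

```python
from dataclasses import dataclass


@dataclass
class Datastore:
    name: str
    backing: str     # hypothetical labels: "local", "vsan", "fc", "iscsi", "nfs", ...
    path_count: int  # number of physical connections from the host to the device


def is_single_point_of_failure(ds: Datastore) -> bool:
    # With one connection, a single failed cable or HBA interrupts access.
    return ds.path_count < 2


def supports_shared_storage_features(ds: Datastore) -> bool:
    # HA and vMotion need storage that multiple hosts can reach. A local device is
    # visible to one host only; vSAN turns local devices into shared storage.
    return ds.backing != "local"


local_ds = Datastore("local-ds", "local", 1)
fc_ds = Datastore("ds-fc", "fc", 2)
print(is_single_point_of_failure(local_ds), supports_shared_storage_features(local_ds))  # True False
```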

Networked Storage

Networked storage consists of external storage systems that your ESXi host uses to store virtual machine files remotely. Typically, the host accesses these systems over a high-speed storage network.

Networked storage devices are shared. Datastores on networked storage devices can be accessed by multiple hosts concurrently. ESXi supports multiple networked storage technologies.


In addition to traditional networked storage that this topic covers, VMware supports virtualized shared storage, such as vSAN. vSAN transforms internal storage resources of your ESXi hosts into shared storage that provides such capabilities as High Availability and vMotion for virtual machines. For details, see the Administering VMware vSAN documentation.

Note The same LUN cannot be presented to an ESXi host or multiple hosts through different storage protocols. To access the LUN, hosts must always use a single protocol, for example, either Fibre Channel only or iSCSI only.
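The single-protocol rule in the note lends itself to a simple inventory check. The sketch below assumes a hypothetical list of (host, LUN ID, protocol) records gathered by some other means; the record format, the host names, and the `luns_with_mixed_protocols` helper are illustrative and not part of any VMware tool.

```python
from collections import defaultdict


def luns_with_mixed_protocols(presentations: list[tuple[str, str, str]]) -> dict[str, set[str]]:
    """Return every LUN that is reached through more than one protocol.

    presentations: (host, lun_id, protocol) records from a hypothetical inventory.
    """
    protocols: dict[str, set[str]] = defaultdict(set)
    for _host, lun_id, protocol in presentations:
        protocols[lun_id].add(protocol)
    # A LUN must be accessed through a single protocol only; flag the rest.
    return {lun: protos for lun, protos in protocols.items() if len(protos) > 1}


inventory = [
    ("esx01", "lun-01", "FC"),
    ("esx02", "lun-01", "FC"),
    ("esx03", "lun-01", "iSCSI"),  # violates the rule: same LUN, different protocol
    ("esx01", "lun-02", "iSCSI"),
]
print(luns_with_mixed_protocols(inventory))  # lun-01 flagged with both FC and iSCSI
```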

Fibre Channel (FC)

Stores virtual machine files remotely on an FC storage area network (SAN). FC SAN is a specialized high-speed network that connects your hosts to high-performance storage devices. The network uses Fibre Channel protocol to transport SCSI traffic from virtual machines to the FC SAN devices.

To connect to the FC SAN, your host should be equipped with Fibre Channel host bus adapters (HBAs). Unless you use Fibre Channel direct connect storage, you need Fibre Channel switches to route storage traffic. If your host contains FCoE (Fibre Channel over Ethernet) adapters, you can connect to your shared Fibre Channel devices by using an Ethernet network.

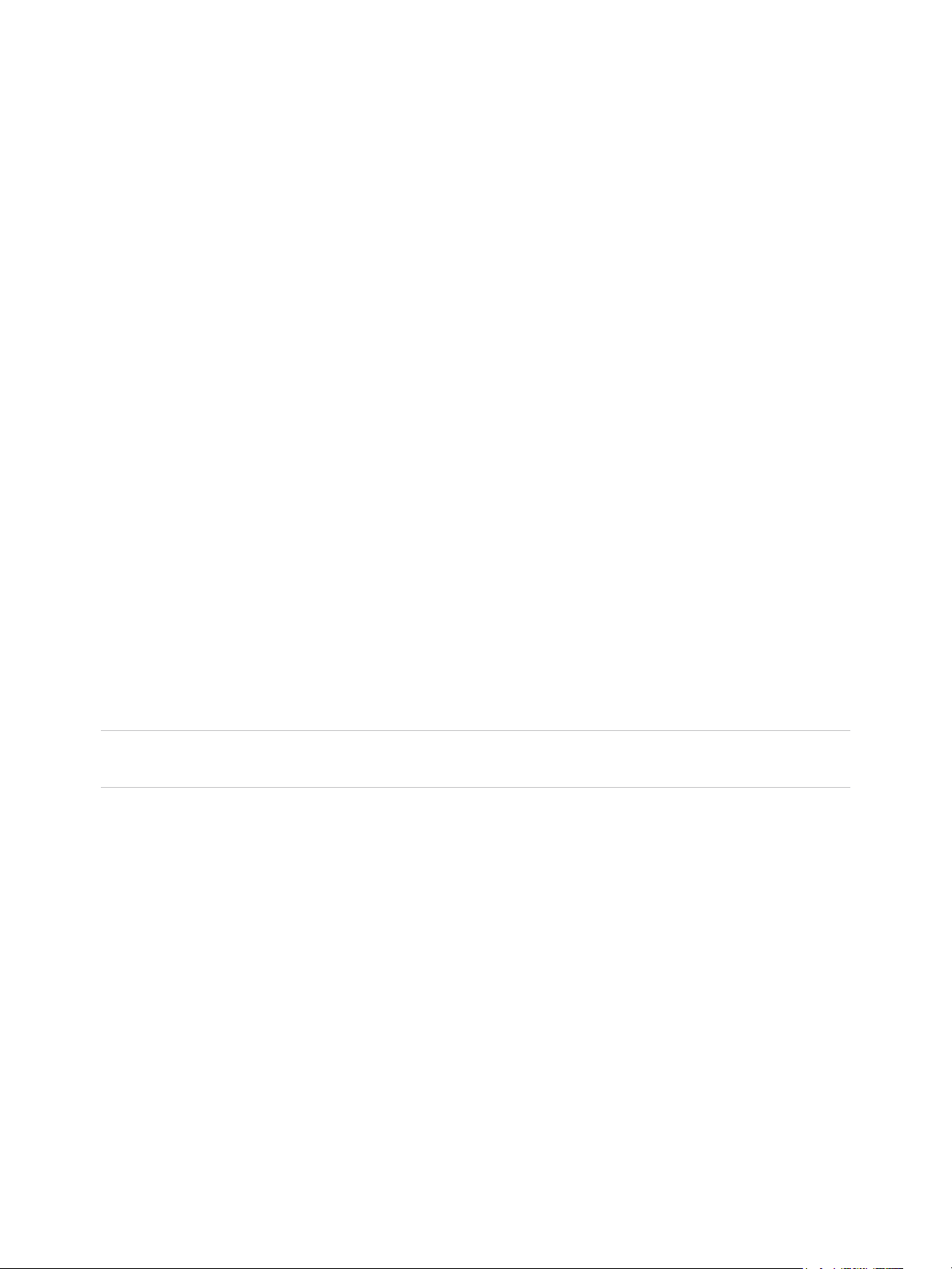

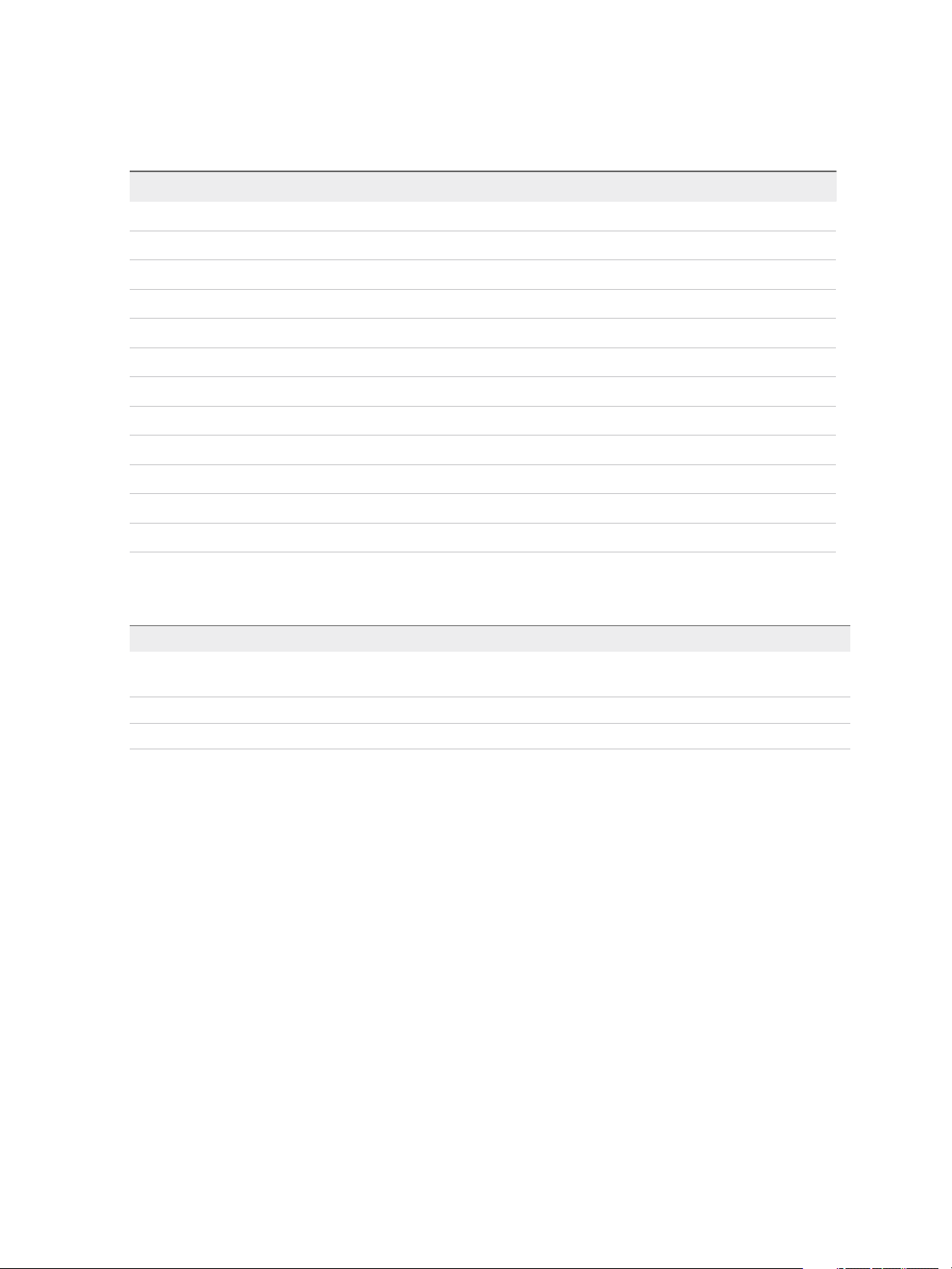

Fibre Channel Storage depicts virtual machines using Fibre Channel storage.

Figure 2‑2. Fibre Channel Storage (diagram labels: ESXi Host, Fibre Channel HBA, SAN, Fibre Channel Array, VMFS, vmdk)


In this configuration, a host connects to a SAN fabric, which consists of Fibre Channel switches and storage arrays, using a Fibre Channel adapter. LUNs from a storage array become available to the host. You can access the LUNs and create datastores for your storage needs. The datastores use the VMFS format.

For specific information on setting up the Fibre Channel SAN, see Chapter 4 Using ESXi with Fibre Channel SAN.

Internet SCSI (iSCSI)

Stores virtual machine files on remote iSCSI storage devices. iSCSI packages SCSI storage traffic into the TCP/IP protocol, so that it can travel through standard TCP/IP networks instead of the specialized FC network. With an iSCSI connection, your host serves as the initiator that communicates with a target, located in remote iSCSI storage systems.

ESXi offers the following types of iSCSI connections:

Hardware iSCSI

Your host connects to storage through a third-party adapter capable of offloading the iSCSI and network processing. Hardware adapters can be dependent or independent.

Software iSCSI

Your host uses a software-based iSCSI initiator in the VMkernel to connect to storage. With this type of iSCSI connection, your host needs only a standard network adapter for network connectivity.

You must configure iSCSI initiators for the host to access and display iSCSI storage devices.

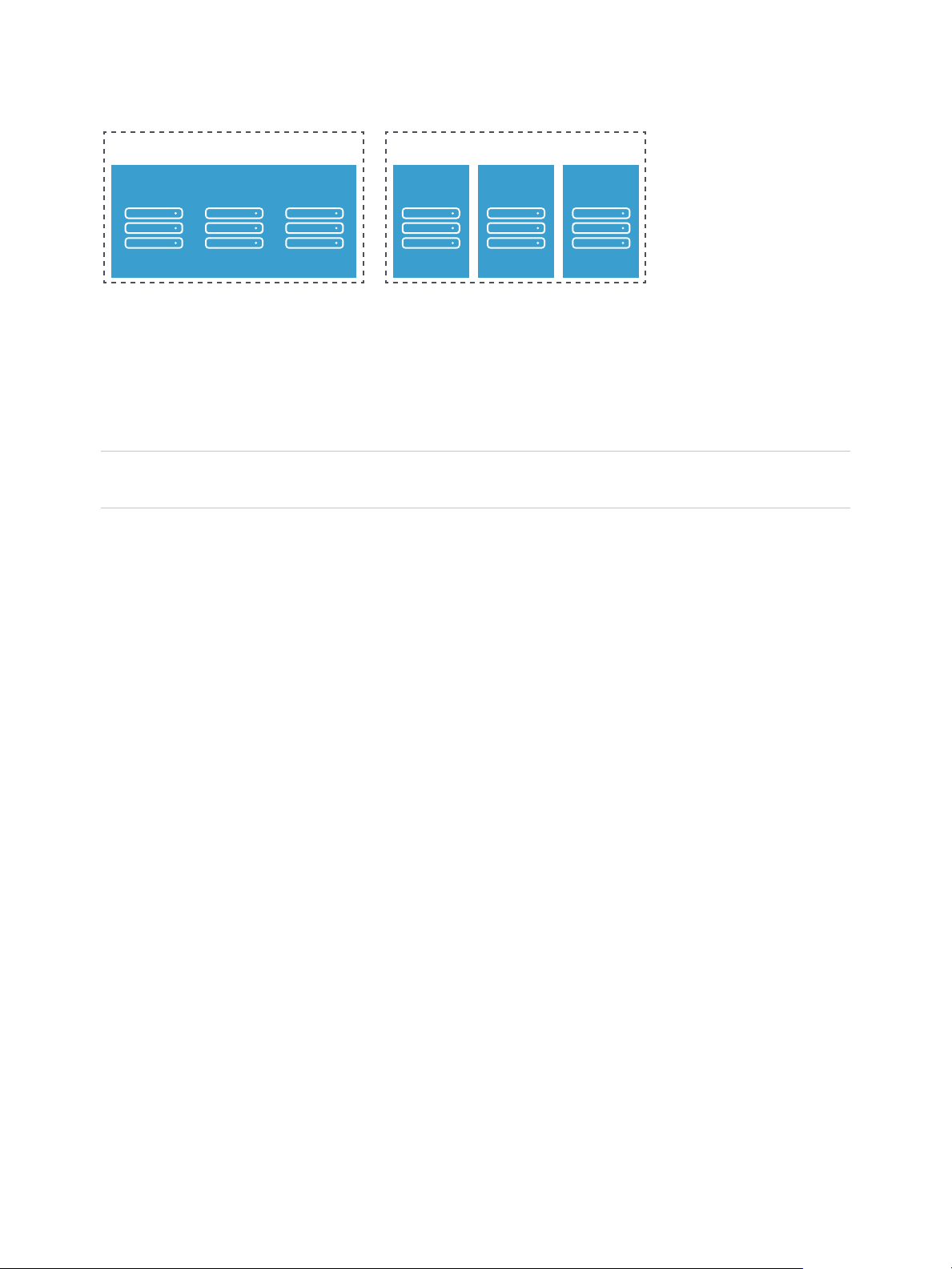

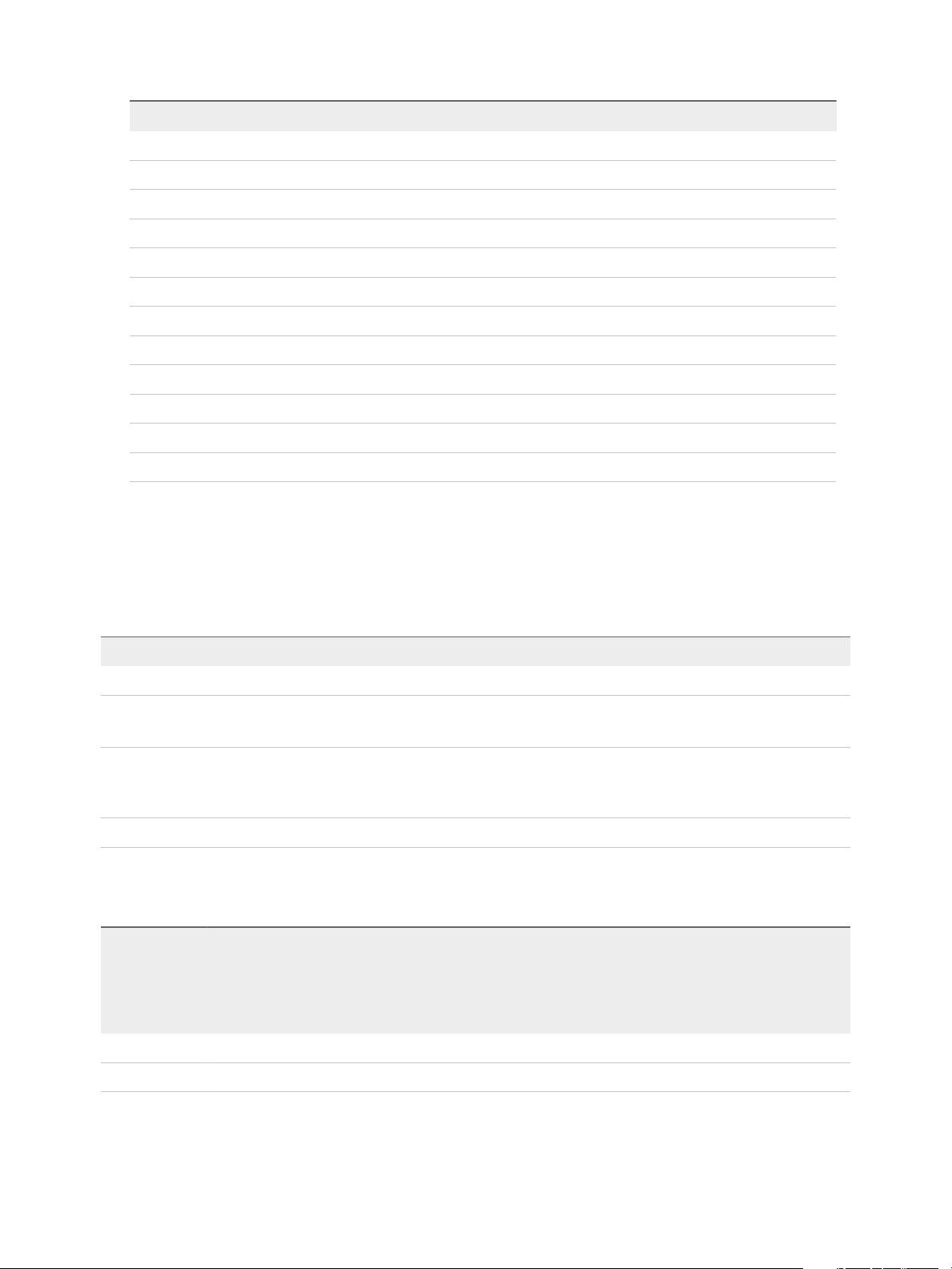

iSCSI Storage depicts different types of iSCSI initiators.

Figure 2‑3. iSCSI Storage (diagram labels: ESXi Host, iSCSI HBA, Software Adapter, Ethernet NIC, LAN, iSCSI Array, VMFS, vmdk)

In the left example, the host uses the hardware iSCSI adapter to connect to the iSCSI storage system.

In the right example, the host uses a software iSCSI adapter and an Ethernet NIC to connect to the iSCSI storage.

iSCSI storage devices from the storage system become available to the host. You can access the storage devices and create VMFS datastores for your storage needs.

For specific information on setting up the iSCSI SAN, see Chapter 10 Using ESXi with iSCSI SAN.

Network-attached Storage (NAS)

Stores virtual machine files on remote file servers accessed over a standard TCP/IP network. The NFS client built into ESXi uses Network File System (NFS) protocol versions 3 and 4.1 to communicate with the NAS/NFS servers. For network connectivity, the host requires a standard network adapter.

You can mount an NFS volume directly on the ESXi host. You then use the NFS datastore to store and manage virtual machines in the same way that you use the VMFS datastores.

NFS Storage depicts a virtual machine using the NFS datastore to store its files. In this configuration, the host connects to the NAS server, which stores the virtual disk files, through a regular network adapter.

Figure 2‑4. NFS Storage (diagram labels: ESXi Host, Ethernet NIC, LAN, NFS, NAS Appliance, vmdk)

For specific information on setting up NFS storage, see Understanding Network File System Datastores.

Shared Serial Attached SCSI (SAS)

Stores virtual machines on direct-attached SAS storage systems that offer shared access to multiple hosts. This type of access permits multiple hosts to access the same VMFS datastore on a LUN.

Target and Device Representations

In the ESXi context, the term target identifies a single storage unit that the host can access. The terms storage device and LUN describe a logical volume that represents storage space on a target. In the ESXi context, both terms also mean a storage volume that is presented to the host from a storage target and is available for formatting. Storage device and LUN are often used interchangeably.

Different storage vendors present the storage systems to ESXi hosts in different ways. Some vendors present a single target with multiple storage devices or LUNs on it, while others present multiple targets with one LUN each.

VMware, Inc. 18

Storage Array

Target

LUN LUN LUN

Storage Array

Target TargetTarget

LUN LUN LUN

vSphere Storage

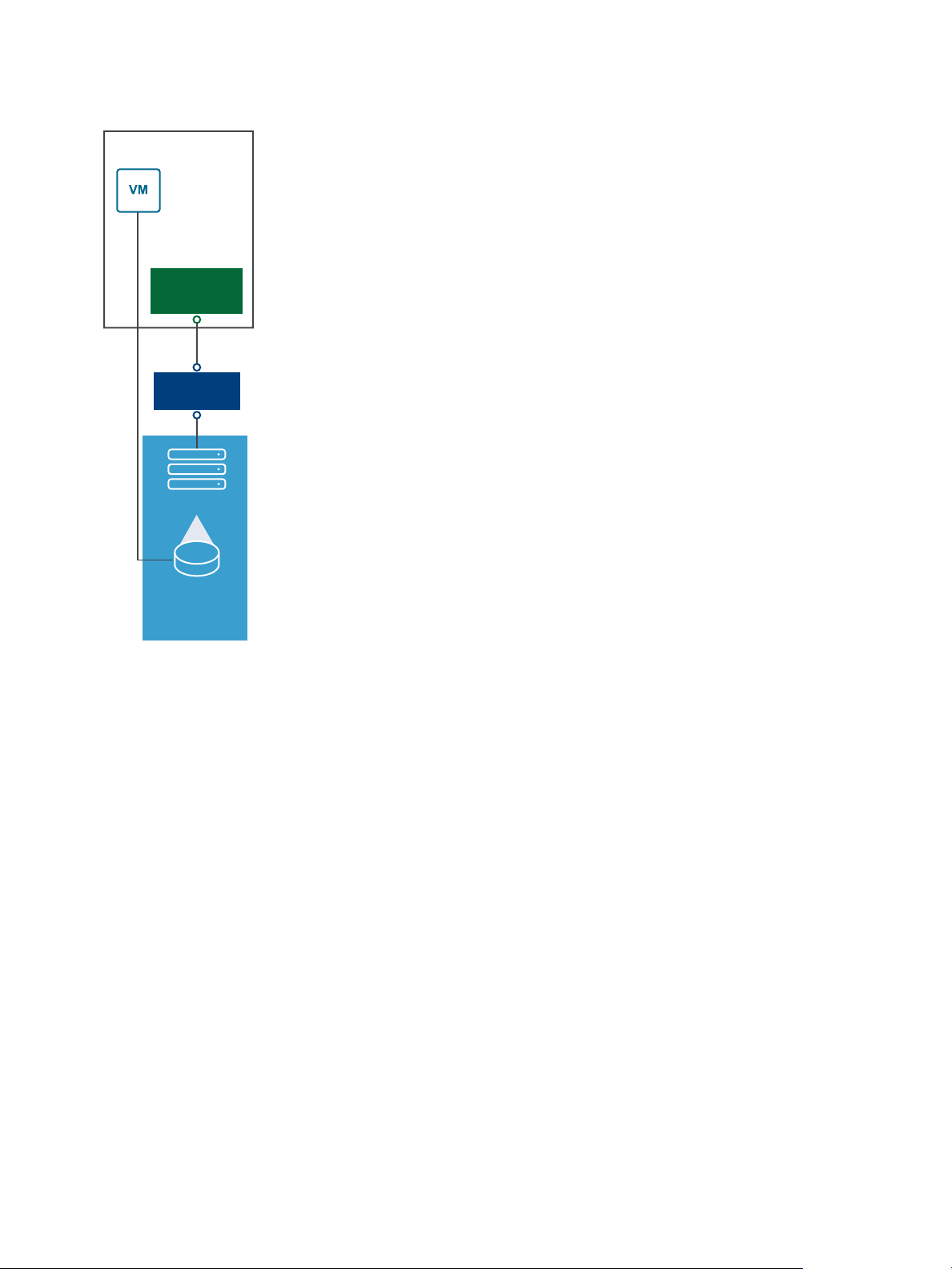

Figure 2‑5. Target and LUN Representations

In this illustration, three LUNs are available in each configuration. In one case, the host connects to one target, but that target has three LUNs that can be used. Each LUN represents an individual storage volume. In the other example, the host detects three different targets, each having one LUN.
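Both presentations in the illustration yield the same three usable volumes; only the (target, LUN number) pairs differ. The sketch below models each presentation as a hypothetical mapping from target name to LUN numbers. Because a target with only one LUN always uses LUN number 0, every LUN in the multi-target case is numbered 0.

```python
# Two hypothetical presentations of three storage volumes.
single_target = {"target-A": [0, 1, 2]}                             # one target, three LUNs
multi_target = {"target-A": [0], "target-B": [0], "target-C": [0]}  # three targets, one LUN each


def volumes(presentation: dict[str, list[int]]) -> list[tuple[str, int]]:
    """List every (target, LUN number) pair the host can use as a storage device."""
    return [(target, lun) for target, luns in presentation.items() for lun in luns]


print(len(volumes(single_target)), len(volumes(multi_target)))  # 3 3
```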

Targets that are accessed through the network have unique names that are provided by the storage systems. The iSCSI targets use iSCSI names. Fibre Channel targets use World Wide Names (WWNs).

Note ESXi does not support accessing the same LUN through different transport protocols, such as iSCSI and Fibre Channel.

A device, or LUN, is identified by its UUID name. If a LUN is shared by multiple hosts, it must be presented to all hosts with the same UUID.
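The same-UUID requirement can be verified by comparing what each host reports for a shared volume. This sketch assumes a hypothetical data set that maps each host to {volume label: UUID}, where the label is some host-independent name for the volume, such as an array-side volume name. The labels, hosts, and UUID strings are placeholders, not real device identifiers.

```python
def inconsistent_shared_luns(views: dict[str, dict[str, str]]) -> set[str]:
    """Return volume labels whose UUID differs between hosts.

    views maps host -> {volume label: UUID reported to that host} (hypothetical data).
    """
    seen: dict[str, set[str]] = {}
    for lun_map in views.values():
        for label, uuid in lun_map.items():
            seen.setdefault(label, set()).add(uuid)
    # A shared LUN must carry the same UUID on every host that sees it.
    return {label for label, uuids in seen.items() if len(uuids) > 1}


views = {
    "esx01": {"vol1": "uuid-aaa", "vol2": "uuid-bbb"},
    "esx02": {"vol1": "uuid-aaa", "vol2": "uuid-ccc"},  # vol2 presented with a different UUID
}
print(inconsistent_shared_luns(views))  # {'vol2'}
```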

How Virtual Machines Access Storage

When a virtual machine communicates with its virtual disk stored on a datastore, it issues SCSI commands. Because datastores can exist on various types of physical storage, these commands are encapsulated into other forms, depending on the protocol that the ESXi host uses to connect to a storage device.

ESXi supports Fibre Channel (FC), Internet SCSI (iSCSI), Fibre Channel over Ethernet (FCoE), and NFS protocols. Regardless of the type of storage device your host uses, the virtual disk always appears to the virtual machine as a mounted SCSI device. The virtual disk hides the physical storage layer from the virtual machine’s operating system. This allows you to run operating systems that are not certified for specific storage equipment, such as SAN, inside the virtual machine.
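The encapsulation idea above can be summarized in a small lookup table. This is a simplified sketch, not a protocol implementation: the layer names are coarse, the assumption that iSCSI and NFS traffic travels over Ethernet reflects a typical deployment rather than a requirement, and the helpers `wire_format` and `guest_view` are hypothetical. For NFS, the host turns the guest's SCSI command into file operations on the virtual disk file.

```python
# Simplified layering of a guest SCSI command for each protocol named in this section.
ENCAPSULATION = {
    "FC": ["SCSI command", "FC frame"],
    "FCoE": ["SCSI command", "FC frame", "Ethernet frame"],
    "iSCSI": ["SCSI command", "iSCSI PDU", "TCP/IP", "Ethernet frame"],
    "NFS": ["SCSI command", "NFS file operation", "TCP/IP", "Ethernet frame"],
}


def wire_format(protocol: str) -> str:
    """Describe, outermost last, how the command is wrapped on its way to storage."""
    return " -> ".join(ENCAPSULATION[protocol])


def guest_view(protocol: str) -> str:
    """Whatever the transport, the virtual machine sees a SCSI device."""
    if protocol not in ENCAPSULATION:
        raise ValueError(f"unsupported protocol: {protocol}")
    return "SCSI device"


print(wire_format("FCoE"))  # SCSI command -> FC frame -> Ethernet frame
```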

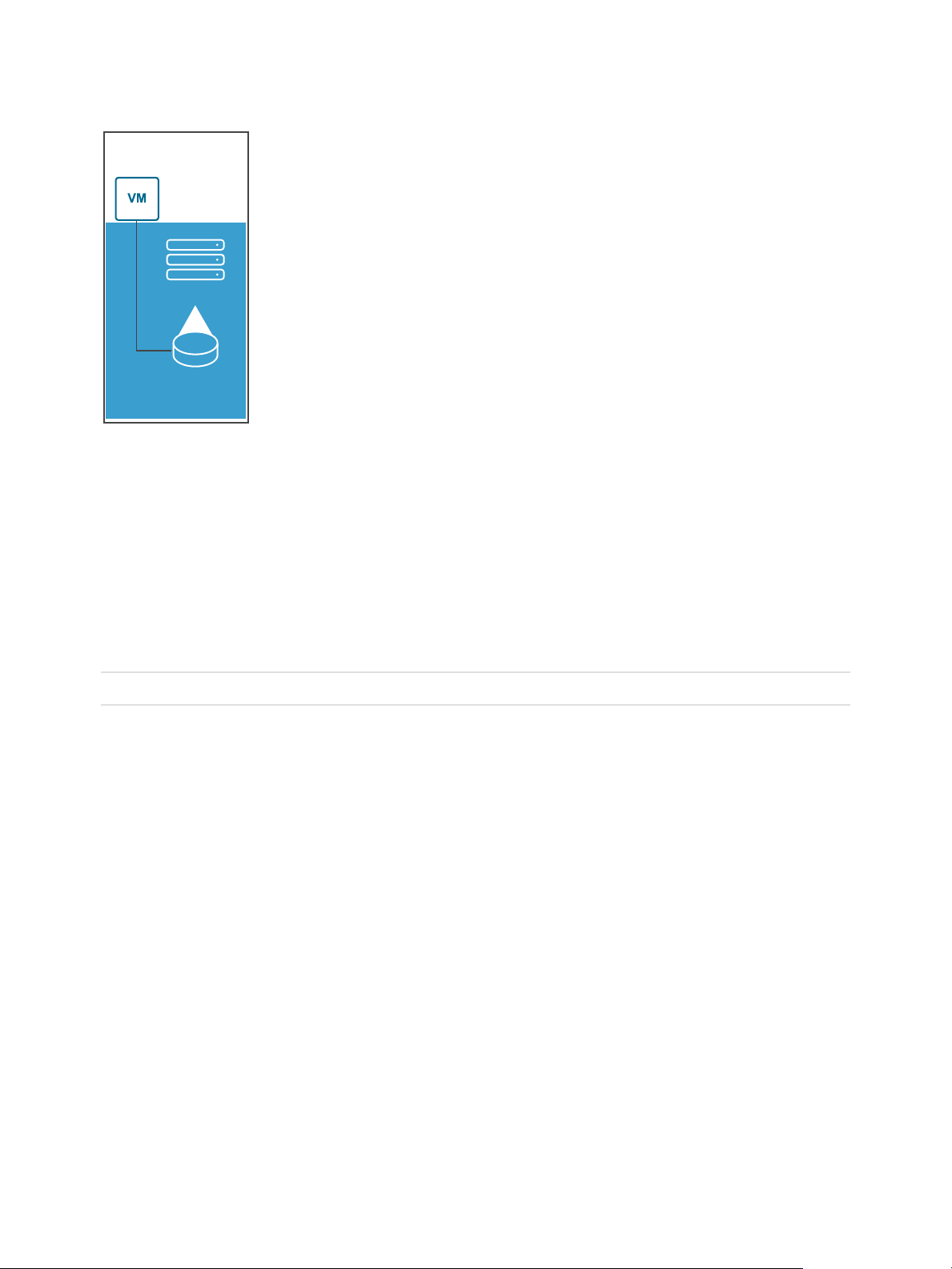

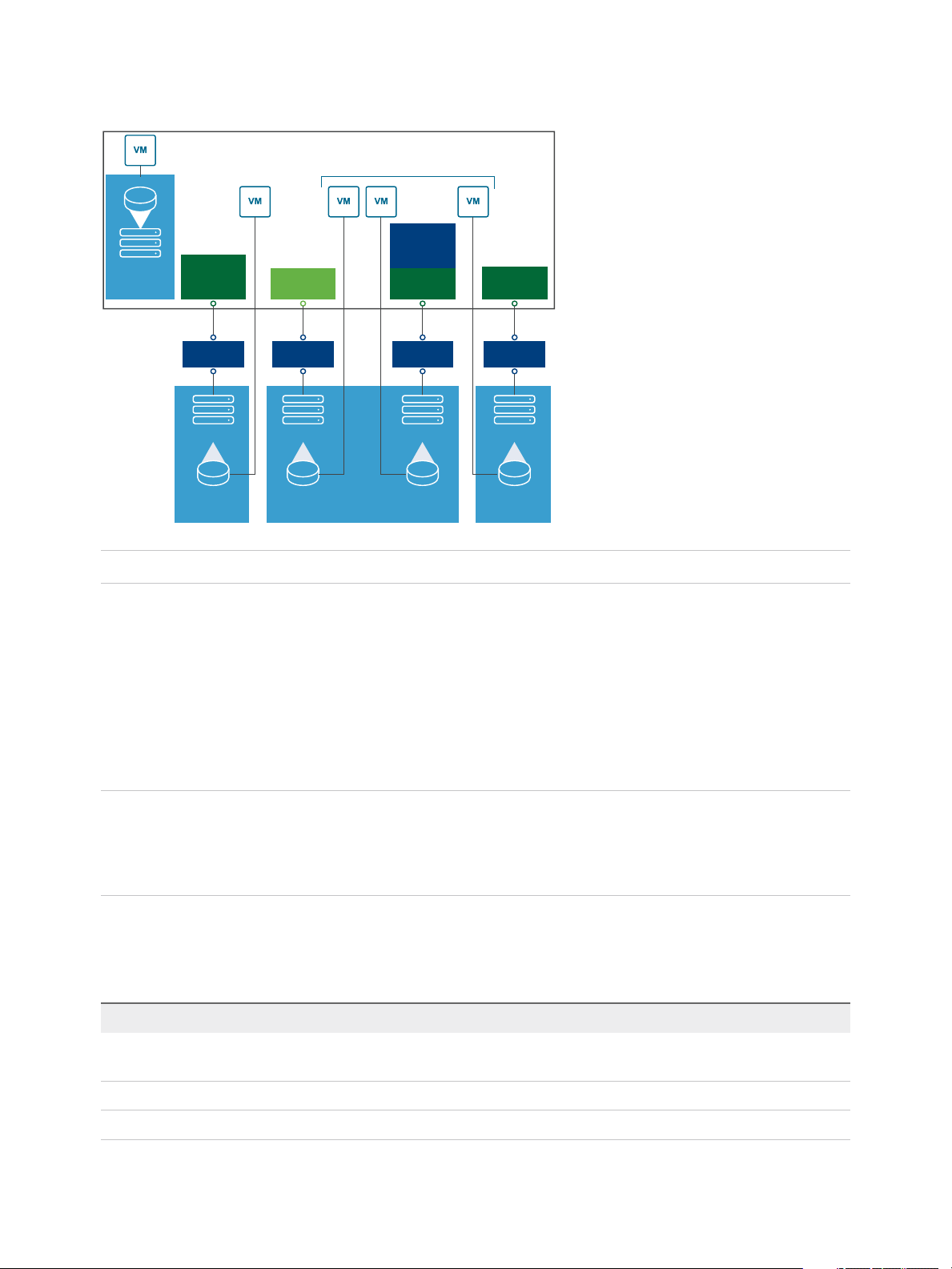

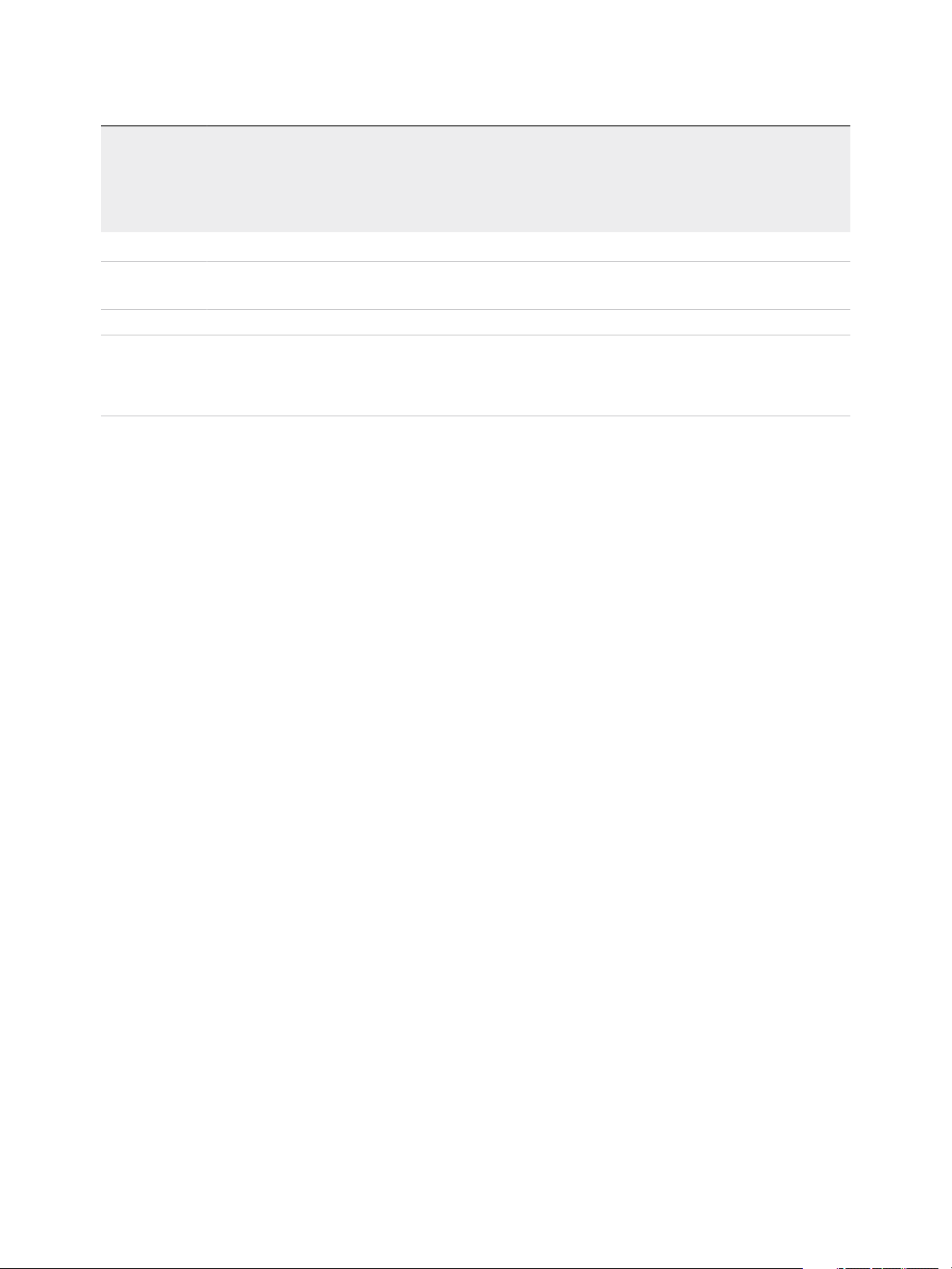

The following graphic depicts five virtual machines using different types of storage to illustrate the differences between each type.

Figure 2‑6. Virtual machines accessing different types of storage (diagram labels: ESXi Host, SCSI Device, Fibre Channel HBA, SAN, Fibre Channel Array, iSCSI HBA, Software iSCSI Adapter, Ethernet NIC, LAN, iSCSI Array, NAS Appliance, NFS, VMFS, vmdk; the software iSCSI and NFS connections require TCP/IP connectivity)

Note This diagram is for conceptual purposes only. It is not a recommended configuration.

Storage Device Characteristics

When your ESXi host connects to block-based storage systems, LUNs or storage devices that support ESXi become available to the host.

After the devices get registered with your host, you can display all available local and networked devices and review their information. If you use third-party multipathing plug-ins, the storage devices available through the plug-ins also appear on the list.

Note If an array supports implicit asymmetric logical unit access (ALUA) and has only standby paths, the registration of the device fails. The device can register with the host after the target activates a standby path and the host detects it as active. The advanced system parameter /Disk/FailDiskRegistration controls this behavior of the host.
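The registration rule in the note can be sketched as a single predicate. This models only the behavior the note describes and assumes the default setting; it does not model the /Disk/FailDiskRegistration parameter or any other registration checks. The `DevicePaths` class and the path-state strings are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DevicePaths:
    implicit_alua: bool           # array supports implicit ALUA
    path_states: tuple[str, ...]  # e.g. ("active", "standby")


def can_register(dev: DevicePaths) -> bool:
    """Implicit-ALUA devices whose paths are all standby fail registration."""
    only_standby = bool(dev.path_states) and all(s == "standby" for s in dev.path_states)
    return not (dev.implicit_alua and only_standby)


before = DevicePaths(True, ("standby", "standby"))
after = DevicePaths(True, ("active", "standby"))  # target activated a standby path
print(can_register(before), can_register(after))  # False True
```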

For each storage adapter, you can display a separate list of storage devices available for this adapter.

Generally, when you review storage devices, you see the following information.

Table 2‑1. Storage Device Information

| Storage Device Information | Description |
|---|---|
| Name | Also called Display Name. It is a name that the ESXi host assigns to the device based on the storage type and manufacturer. You can change this name to a name of your choice. |
| Identifier | A universally unique identifier that is intrinsic to the device. |
| Operational State | Indicates whether the device is attached or detached. For details, see Detach Storage Devices. |
| LUN | Logical Unit Number (LUN) within the SCSI target. The LUN number is provided by the storage system. If a target has only one LUN, the LUN number is always zero (0). |
| Type | Type of device, for example, disk or CD-ROM. |
| Drive Type | Information about whether the device is a flash drive or a regular HDD. For information about flash drives and NVMe devices, see Chapter 15 Working with Flash Devices. |
| Transport | Transport protocol your host uses to access the device. The protocol depends on the type of storage being used. See Types of Physical Storage. |
| Capacity | Total capacity of the storage device. |
| Owner | The plug-in, such as the NMP or a third-party plug-in, that the host uses to manage paths to the storage device. For details, see Pluggable Storage Architecture and Path Management. |
| Hardware Acceleration | Information about whether the storage device assists the host with virtual machine management operations. The status can be Supported, Not Supported, or Unknown. For details, see Chapter 24 Storage Hardware Acceleration. |
| Sector Format | Indicates whether the device uses a traditional, 512n, or advanced sector format, such as 512e or 4Kn. For more information, see Device Sector Formats. |
| Location | A path to the storage device in the /vmfs/devices/ directory. |
| Partition Format | A partition scheme used by the storage device. It can be of a master boot record (MBR) or GUID partition table (GPT) format. The GPT devices can support datastores greater than 2 TB. For more information, see Device Sector Formats. |
| Partitions | Primary and logical partitions, including a VMFS datastore, if configured. |
| Multipathing Policies | Path Selection Policy and Storage Array Type Policy the host uses to manage paths to storage. For more information, see Chapter 18 Understanding Multipathing and Failover. |
| Paths | Paths used to access storage and their status. |
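Two rows of Table 2‑1 rest on simple arithmetic. The sketch below classifies sector formats by logical and physical sector size (512n, 512e, 4Kn). It also shows where the 2 TB ceiling for MBR comes from: MBR records sector addresses in 32-bit fields, so with 512-byte sectors it can address at most 2^32 × 512 bytes, which is 2 TiB. The function names are hypothetical helpers for illustration; ESXi does not expose them.

```python
# (logical, physical) sector sizes in bytes for the formats named in the table.
SECTOR_FORMATS = {
    (512, 512): "512n",   # traditional native 512-byte sectors
    (512, 4096): "512e",  # 4 KiB physical sectors emulating 512-byte logical sectors
    (4096, 4096): "4Kn",  # native 4 KiB sectors
}

TIB = 2 ** 40  # one tebibyte in bytes


def sector_format(logical: int, physical: int) -> str:
    """Classify a device by its logical and physical sector sizes."""
    return SECTOR_FORMATS.get((logical, physical), "unknown")


def mbr_max_bytes() -> int:
    """Largest size addressable through MBR: 32-bit sector numbers x 512-byte sectors."""
    return (2 ** 32) * 512


print(sector_format(512, 4096))  # 512e
print(mbr_max_bytes() / TIB)     # 2.0
```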

Display Storage Devices for a Host

Display all storage devices available to a host. If you use any third-party multipathing plug-ins, the storage devices available through the plug-ins also appear on the list.

The Storage Devices view allows you to list the host's storage devices, analyze their information, and modify properties.

Procedure

1 Navigate to the host.

2 Click the Configure tab.

3 Under Storage, click Storage Devices.

All storage devices available to the host are listed in the Storage Devices table.

4 To view details for a specific device, select the device from the list.


5 Use the icons to perform basic storage management tasks.

Availability of specific icons depends on the device type and configuration.

Icon Description

Refresh Refresh information about storage adapters, topology, and file systems.

Rescan Rescan all storage adapters on the host to discover newly added storage devices or VMFS datastores.

Detach Detach the selected device from the host.

Attach Attach the selected device to the host.

Rename Change the display name of the selected device.

Turn On LED Turn on the locator LED for the selected devices.

Turn Off LED Turn off the locator LED for the selected devices.

Mark as Flash Disk Mark the selected devices as flash disks.

Mark as HDD Disk Mark the selected devices as HDD disks.

Mark as Local Mark the selected devices as local for the host.

Mark as Remote Mark the selected devices as remote for the host.

Erase Partitions Erase partitions on the selected devices.

6 Use tabs under Device Details to access additional information and modify properties for the selected device.

Tab Description

Properties View device properties and characteristics. View and modify multipathing policies for the device.

Paths Display paths available for the device. Disable or enable a selected path.

Partition Details Display information about partitions and their formats.

Display Storage Devices for an Adapter

Display a list of storage devices accessible through a specific storage adapter on the host.

Procedure

1 Navigate to the host.

2 Click the Configure tab.

3 Under Storage, click Storage Adapters.

All storage adapters installed on the host are listed in the Storage Adapters table.

4 Select the adapter from the list and click the Devices tab.

Storage devices that the host can access through the adapter are displayed.

5 Use the icons to perform basic storage management tasks.

Availability of specific icons depends on the device type and configuration.


Icon Description

Refresh Refresh information about storage adapters, topology, and file systems.

Rescan Rescan all storage adapters on the host to discover newly added storage devices or VMFS datastores.

Detach Detach the selected device from the host.

Attach Attach the selected device to the host.

Rename Change the display name of the selected device.

Turn On LED Turn on the locator LED for the selected devices.

Turn Off LED Turn off the locator LED for the selected devices.

Mark as Flash Disk Mark the selected devices as flash disks.

Mark as HDD Disk Mark the selected devices as HDD disks.

Mark as Local Mark the selected devices as local for the host.

Mark as Remote Mark the selected devices as remote for the host.

Erase Partitions Erase partitions on the selected devices.

Comparing Types of Storage

Whether certain vSphere functionality is supported might depend on the storage technology that you use.

The following table compares networked storage technologies that ESXi supports.

Table 2‑2. Networked Storage that ESXi Supports

Technology | Protocols | Transfers | Interface

Fibre Channel | FC/SCSI | Block access of data/LUN | FC HBA

Fibre Channel over Ethernet | FCoE/SCSI | Block access of data/LUN | Converged Network Adapter (hardware FCoE); NIC with FCoE support (software FCoE)

iSCSI | IP/SCSI | Block access of data/LUN | iSCSI HBA or iSCSI-enabled NIC (hardware iSCSI); Network adapter (software iSCSI)

NAS | IP/NFS | File (no direct LUN access) | Network adapter

The following table compares the vSphere features that different types of storage support.

Table 2‑3. vSphere Features Supported by Storage

Storage Type | Boot VM | vMotion | Datastore | RDM | VM Cluster | VMware HA and DRS | Storage APIs - Data Protection

Local Storage | Yes | No | VMFS | No | Yes | No | Yes

Fibre Channel | Yes | Yes | VMFS | Yes | Yes | Yes | Yes

iSCSI | Yes | Yes | VMFS | Yes | Yes | Yes | Yes

NAS over NFS | Yes | Yes | NFS 3 and NFS 4.1 | No | No | Yes | Yes

Note Local storage supports a cluster of virtual machines on a single host (also known as a cluster in a box). A shared virtual disk is required. For more information about this configuration, see the vSphere Resource Management documentation.
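To query the feature comparison from a script, for example when planning which datastores can host VMs that need vMotion or RDMs, one option is to encode Table 2-3 as a lookup table. The sketch below is a hypothetical Python encoding that copies the Yes/No values from the table; the feature keys and function names are illustrative, not vSphere identifiers.

```python
# Hypothetical encoding of Table 2-3. Values are copied from the table;
# the feature keys are illustrative names, not vSphere API identifiers.
FEATURES = ("boot_vm", "vmotion", "rdm", "vm_cluster", "ha_drs", "storage_apis_dp")

TABLE_2_3 = {
    # storage type: (datastore format, Yes/No flags in FEATURES order)
    "Local Storage": ("VMFS", (True, False, False, True, False, True)),
    "Fibre Channel": ("VMFS", (True, True, True, True, True, True)),
    "iSCSI": ("VMFS", (True, True, True, True, True, True)),
    "NAS over NFS": ("NFS 3 and NFS 4.1", (True, True, False, False, True, True)),
}


def supports(storage_type: str, feature: str) -> bool:
    """Return True if Table 2-3 lists the feature as Yes for the storage type."""
    _, flags = TABLE_2_3[storage_type]
    return flags[FEATURES.index(feature)]


def types_supporting(feature: str) -> list:
    """List the storage types, in table order, that support a feature."""
    return [name for name in TABLE_2_3 if supports(name, feature)]


if __name__ == "__main__":
    print(types_supporting("rdm"))
```

For example, types_supporting("rdm") returns ['Fibre Channel', 'iSCSI'], matching the RDM column of the table.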

Supported Storage Adapters

Storage adapters provide connectivity for your ESXi host to a specific storage unit or network.

ESXi supports different classes of adapters, including SCSI, iSCSI, RAID, Fibre Channel, Fibre Channel over Ethernet (FCoE), and Ethernet. ESXi accesses the adapters directly through device drivers in the VMkernel.

Depending on the type of storage you use, you might need to enable and configure a storage adapter on your host.

For information on setting up software FCoE adapters, see Chapter 6 Configuring Fibre Channel over Ethernet.

For information on configuring different types of iSCSI adapters, see Chapter 11 Configuring iSCSI Adapters and Storage.

View Storage Adapters Information

The host uses storage adapters to access different storage devices. You can display details for the available storage adapters and review their information.

Prerequisites

You must enable certain adapters, for example software iSCSI or FCoE, before you can view their information. To configure adapters, see the following:

- Chapter 11 Configuring iSCSI Adapters and Storage
- Chapter 6 Configuring Fibre Channel over Ethernet

Procedure

1 Navigate to the host.

2 Click the Configure tab.


3 Under Storage, click Storage Adapters.

4 Use the icons to perform storage adapter tasks.

Availability of specific icons depends on the storage configuration.

Icon Description

Add Software Adapter Add a storage adapter. Applies to software iSCSI and software FCoE.

Refresh Refresh information about storage adapters, topology, and file systems on the host.

Rescan Storage Rescan all storage adapters on the host to discover newly added storage devices or VMFS datastores.

Rescan Adapter Rescan the selected adapter to discover newly added storage devices.

5 To view details for a specific adapter, select the adapter from the list.

6 Use tabs under Adapter Details to access additional information and modify properties for the selected adapter.

Tab Description

Properties Review general adapter properties that typically include a name and model of the adapter and unique identifiers formed according to specific storage standards. For iSCSI and FCoE adapters, use this tab to configure additional properties, for example, authentication.

Devices View storage devices the adapter can access. Use the tab to perform basic device management tasks. See Display Storage Devices for an Adapter.

Paths List and manage all paths the adapter uses to access storage devices.

Targets (Fibre Channel and iSCSI) Review and manage targets accessed through the adapter.

Network Port Binding (iSCSI only) Configure port binding for software and dependent hardware iSCSI adapters.

Advanced Options (iSCSI only) Configure advanced parameters for iSCSI.
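The unique identifiers on the Properties tab include, for example, an iSCSI qualified name (IQN) for iSCSI adapters or World Wide Names (WWNs) for Fibre Channel adapters. As a rough illustration of identifiers "formed according to specific storage standards", the following Python sketch checks the general shape of an iqn-format name as described in RFC 3720 and of a 64-bit WWN written as 16 hexadecimal digits. It is a simplified, assumption-based check, not the validation ESXi performs: it ignores the eui. and naa. iSCSI name formats and expects IQNs already normalized to lowercase. The function names and sample identifiers are hypothetical.

```python
import re

# Simplified shape of an iqn-format iSCSI name (RFC 3720):
# "iqn." + yyyy-mm + "." + reversed domain name, optionally ":" + a unique string.
# Assumes the name is already normalized to lowercase.
IQN_PATTERN = re.compile(r"iqn\.\d{4}-(0[1-9]|1[0-2])\.[a-z0-9.-]+(:\S+)?")

# A 64-bit WWN: 16 hex digits, either contiguous or as 8 colon-separated bytes.
WWN_PATTERN = re.compile(r"[0-9a-f]{16}|([0-9a-f]{2}:){7}[0-9a-f]{2}")


def looks_like_iqn(name: str) -> bool:
    """Hypothetical helper: True if the whole string has the iqn-format shape."""
    return IQN_PATTERN.fullmatch(name) is not None


def looks_like_wwn(text: str) -> bool:
    """Hypothetical helper: True if the whole string is a 64-bit WWN in hex."""
    return WWN_PATTERN.fullmatch(text.lower()) is not None


if __name__ == "__main__":
    # Sample identifiers below are made up for illustration.
    print(looks_like_iqn("iqn.1998-01.com.vmware:host01-1a2b3c4d"))  # True
    print(looks_like_wwn("20:00:00:25:b5:00:00:01"))  # True
```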

Datastore Characteristics

Datastores are logical containers, analogous to file systems, that hide specifics of each storage device and provide a uniform model for storing virtual machine files. You can display all datastores available to your hosts and analyze their properties.

Datastores are added to vCenter Server in the following ways:

- You can create a VMFS datastore, an NFS version 3 or 4.1 datastore, or a Virtual Volumes datastore using the New Datastore wizard. A vSAN datastore is automatically created when you enable vSAN.
- When you add an ESXi host to vCenter Server, all datastores on the host are added to vCenter Server.

The following table describes datastore details that you can see when you review datastores through the vSphere Client. Certain characteristics might not be available or applicable to all types of datastores.


Table 2‑4. Datastore Information

Datastore Information | Applicable Datastore Type | Description

Name | VMFS, NFS, vSAN, Virtual Volumes | Editable name that you assign to a datastore. For information on renaming a datastore, see Change Datastore Name.

Type | VMFS, NFS, vSAN, Virtual Volumes | File system that the datastore uses. For information about VMFS and NFS datastores and how to manage them, see Chapter 17 Working with Datastores. For information about vSAN datastores, see the Administering VMware vSAN documentation. For information about Virtual Volumes, see Chapter 22 Working with Virtual Volumes.

Device Backing | VMFS, NFS, vSAN | Information about underlying storage, such as a storage device on which the datastore is deployed (VMFS), server and folder (NFS), or disk groups (vSAN).

Protocol Endpoints | Virtual Volumes | Information about corresponding protocol endpoints. See Protocol Endpoints.

Extents | VMFS | Individual extents that the datastore spans and their capacity.

Drive Type | VMFS | Type of the underlying storage device, such as a flash drive or a regular HDD drive. For details, see Chapter 15 Working with Flash Devices.

Capacity | VMFS, NFS, vSAN, Virtual Volumes | Includes total capacity, provisioned space, and free space.

Mount Point | VMFS, NFS, vSAN, Virtual Volumes | A path to the datastore in the /vmfs/volumes/ directory of the host.

Capability Sets | VMFS, NFS, vSAN, Virtual Volumes | Information about storage data services that the underlying storage entity provides. You cannot modify them.

Note A multi-extent VMFS datastore assumes capabilities of only one of its extents.

Storage I/O Control | VMFS, NFS | Information on whether cluster-wide storage I/O prioritization is enabled. See the vSphere Resource Management documentation.

Hardware Acceleration | VMFS, NFS, vSAN, Virtual Volumes | Information on whether the underlying storage entity supports hardware acceleration. The status can be Supported, Not Supported, or Unknown. For details, see Chapter 24 Storage Hardware Acceleration.

Note NFS 4.1 does not support Hardware Acceleration.

Tags | VMFS, NFS, vSAN, Virtual Volumes | Datastore capabilities that you define and associate with datastores in the form of tags. For information, see Assign Tags to Datastores.

Connectivity with Hosts | VMFS, NFS, Virtual Volumes | Hosts where the datastore is mounted.

Multipathing | VMFS, Virtual Volumes | Path selection policy the host uses to access storage. For more information, see Chapter 18 Understanding Multipathing and Failover.
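The Capacity entry reports three numbers. Used space is not listed separately, but it follows from them as total capacity minus free space. Provisioned space can exceed total capacity, for example when thin-provisioned virtual disks are allocated more space than the datastore physically holds. The Python sketch below shows this arithmetic with a hypothetical helper class; it does not read any values from vSphere.

```python
from dataclasses import dataclass


@dataclass
class DatastoreCapacity:
    """Hypothetical holder for the Capacity values shown for a datastore (bytes)."""
    total: int
    provisioned: int
    free: int

    @property
    def used(self) -> int:
        # Space actually consumed on the datastore.
        return self.total - self.free

    @property
    def used_percent(self) -> float:
        return 100.0 * self.used / self.total

    @property
    def overprovisioned(self) -> bool:
        # True when more space is promised to virtual disks than the datastore holds.
        return self.provisioned > self.total


if __name__ == "__main__":
    gib = 2 ** 30
    ds = DatastoreCapacity(total=1000 * gib, provisioned=1500 * gib, free=400 * gib)
    print(ds.used // gib, ds.used_percent, ds.overprovisioned)  # 600 60.0 True
```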

Display Datastore Information

Access the Datastores view with the vSphere Client navigator.

Use the Datastores view to list all datastores available in the vSphere infrastructure inventory, analyze the information, and modify properties.

Procedure

1 Navigate to any inventory object that is a valid parent object of a datastore, such as a host, a cluster, or a data center, and click the Datastores tab.

Datastores that are available in the inventory appear in the center panel.

2 Use the icons from a datastore right-click menu to perform basic tasks for a selected datastore.

Availability of specific icons depends on the type of the datastore and its configuration.

Icon Description

Register an existing virtual machine in the inventory.

Increase datastore capacity.

Navigate to the datastore file browser.

Manage storage providers.

Mount a datastore to certain hosts.

Remove a datastore.

Unmount a datastore from certain hosts.


3 To view specific datastore details, click a selected datastore.

4 Use tabs to access additional information and modify datastore properties.

Tab Description

Summary View statistics and configuration for the selected datastore.

Monitor View alarms, performance data, resource allocation, events, and other status information for the datastore.

Configure View and modify datastore properties. Menu items that you can see depend on the datastore type.

Permissions Assign or edit permissions for the selected datastore.

Files Navigate to the datastore file browser.

Hosts View hosts where the datastore is mounted.

VMs View virtual machines that reside on the datastore.

Using Persistent Memory

ESXi supports next-generation persistent memory devices, also known as Non-Volatile Memory (NVM) devices. These devices combine the performance and speed of memory with the persistence of traditional storage. They can retain stored data through reboots or power source failures.

Virtual machines that require high bandwidth, low latency, and persistence can benefit from this technology. Examples include VMs running database acceleration and analytics workloads.

To use persistent memory with your ESXi host, you must be familiar with the following concepts.

PMem Datastore After you add persistent memory to your ESXi host, the host detects the hardware, and then formats and mounts it as a local PMem datastore. ESXi uses VMFS-L as a file system format. Only one local PMem datastore per host is supported.

Note When you manage physical persistent memory, make sure to evacuate all VMs from the host and place the host into maintenance mode.

To reduce administrative overhead, the PMem datastore offers a simplified management model. Traditional datastore tasks do not generally apply to the datastore because the host automatically performs all the required operations in the background. As an administrator, you cannot display the datastore in the Datastores view of the vSphere Client or perform other regular datastore actions. The only operation available to you is monitoring statistics for the PMem datastore.


The PMem datastore is used to store virtual NVDIMM devices and traditional virtual disks of a VM. The VM home directory with the vmx and vmware.log files cannot be placed on the PMem datastore.

PMem Access Modes ESXi exposes persistent memory to a VM in two different modes. PMem-aware VMs can have direct access to persistent memory. Traditional VMs can use fast virtual disks stored on the PMem datastore.

Direct-Access Mode In this mode, a PMem region can be presented to a VM as a virtual non-volatile dual in-line memory module (NVDIMM). The VM uses the NVDIMM module as standard byte-addressable memory that can persist across power cycles.

You can add one or several NVDIMM modules when provisioning the VM.

The VMs must use the ESXi 6.7 hardware version and have a PMem-aware guest OS. The NVDIMM device is compatible with the latest guest OSes that support persistent memory, for example, Windows Server 2016.

Each NVDIMM device is automatically stored on the PMem datastore.

Virtual Disk Mode This mode is available to any traditional VM and supports any hardware version, including all legacy versions. VMs are not required to be PMem-aware. When you use this mode, you create a regular SCSI virtual disk and attach a PMem VM storage policy to the disk. The policy automatically places the disk on the PMem datastore.

PMem Storage Policy To place the virtual disk on the PMem datastore, you must apply the host-local PMem default storage policy to the disk. The policy is not editable.

The policy can be applied only to virtual disks. Because the VM home directory does not reside on the PMem datastore, make sure to place it on any standard datastore.

After you assign the PMem storage policy to the virtual disk, you cannot change the policy through the VM Edit Settings dialog box. To change the policy, migrate or clone the VM.
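The placement rules above can be summarized as a checklist. The following Python sketch encodes them against a hypothetical VM description; the VmSpec and VirtualDisk classes, the check_pmem_rules function, and the "pmem" datastore label are illustrative assumptions, not vSphere objects or APIs. It also assumes that the ESXi 6.7 hardware version corresponds to virtual hardware version 14.

```python
from dataclasses import dataclass, field
from typing import List

PMEM_DATASTORE = "pmem"  # hypothetical label for the host-local PMem datastore
ESXI_67_HW_VERSION = 14  # assumption: ESXi 6.7 compatibility = virtual hardware version 14


@dataclass
class VirtualDisk:
    datastore: str
    pmem_policy: bool = False  # host-local PMem default storage policy applied


@dataclass
class VmSpec:
    home_datastore: str
    hardware_version: int
    pmem_aware_guest: bool
    nvdimm_count: int = 0
    disks: List[VirtualDisk] = field(default_factory=list)


def check_pmem_rules(vm: VmSpec) -> List[str]:
    """Return rule violations; an empty list means the spec follows the rules."""
    problems = []
    # The VM home directory (vmx, vmware.log) must stay on a standard datastore.
    if vm.home_datastore == PMEM_DATASTORE:
        problems.append("VM home directory is on the PMem datastore")
    # Direct-access mode: NVDIMMs need the ESXi 6.7 hardware version and a PMem-aware guest.
    if vm.nvdimm_count > 0:
        if vm.hardware_version < ESXI_67_HW_VERSION:
            problems.append("NVDIMM requires the ESXi 6.7 hardware version")
        if not vm.pmem_aware_guest:
            problems.append("NVDIMM requires a PMem-aware guest OS")
    # Virtual disk mode: a disk is on the PMem datastore only through the PMem policy.
    for index, disk in enumerate(vm.disks):
        if disk.pmem_policy != (disk.datastore == PMEM_DATASTORE):
            problems.append(f"disk {index}: PMem policy and PMem placement do not match")
    return problems
```

For example, a traditional VM with an older hardware version and no NVDIMMs passes the check when its only disk has the PMem policy and sits on the PMem datastore, because virtual disk mode works with any hardware version.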

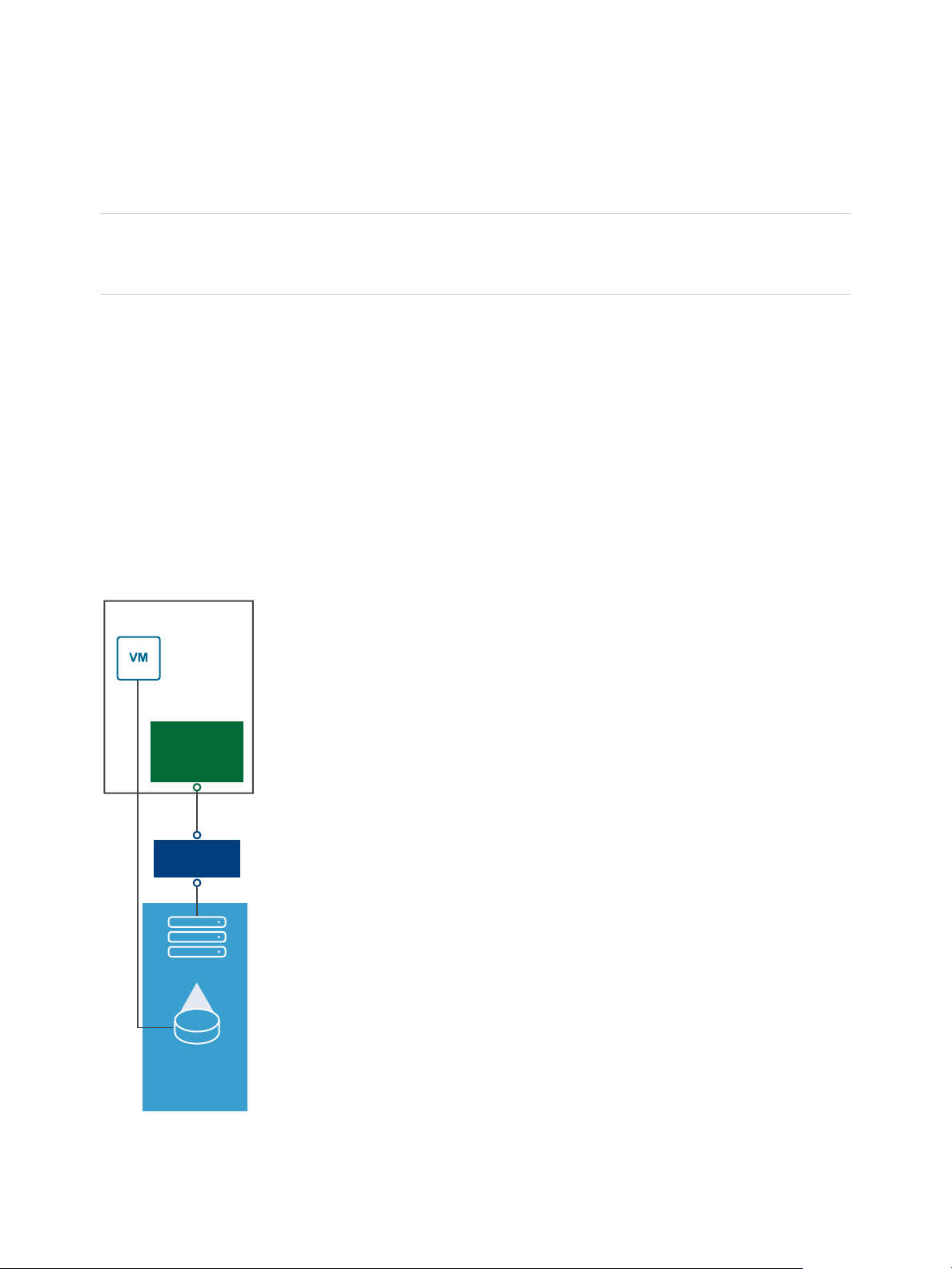

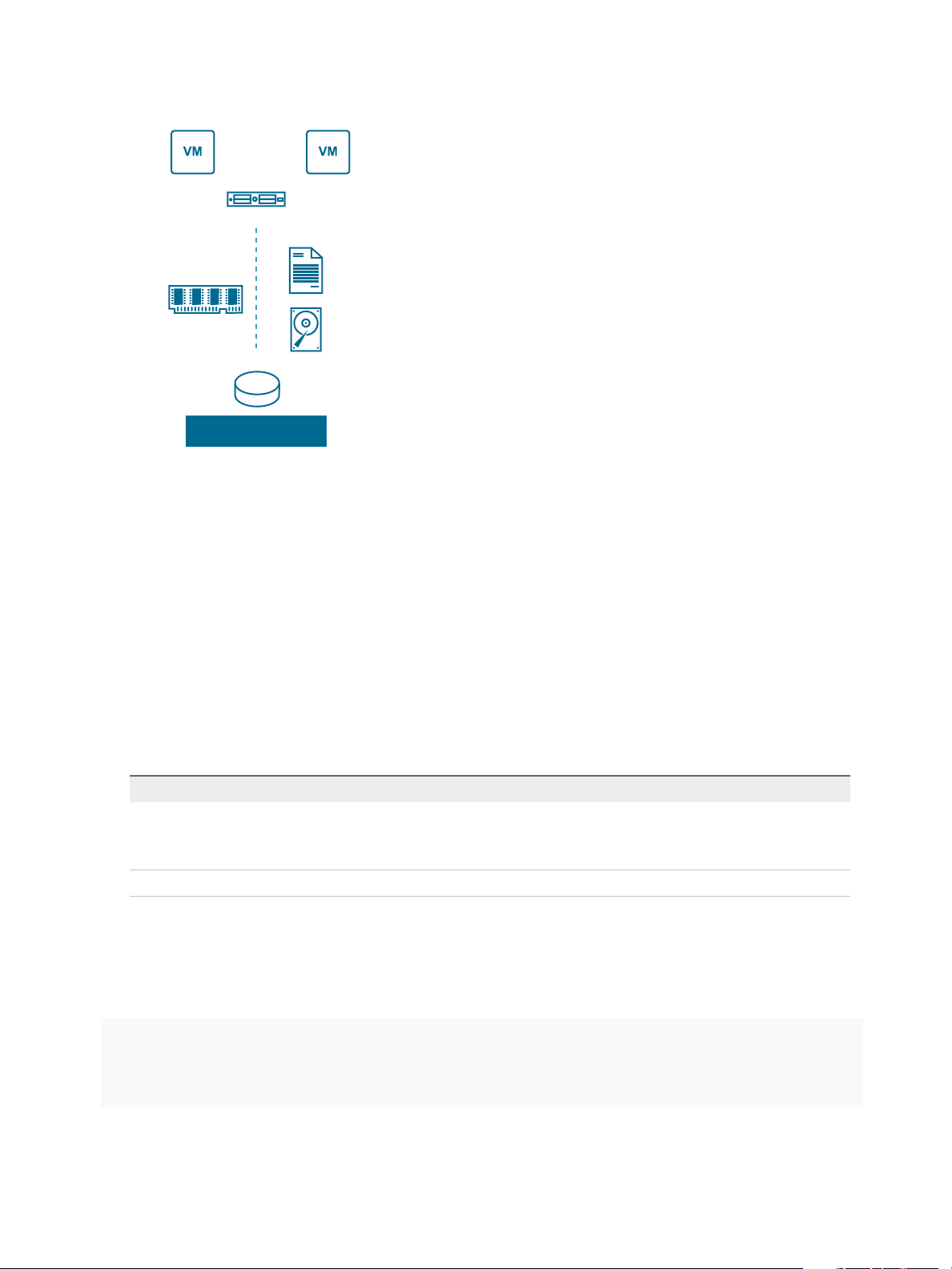

The following graphic illustrates how the persistent memory components interact.

Figure: A PMem-aware VM uses direct-access mode through an NVDIMM device. A traditional VM uses virtual disk mode through a virtual disk with the PMem storage policy. Both are backed by the PMem datastore on persistent memory.

For information about how to configure and manage VMs with NVDIMMs or virtual persistent memory disks, see the vSphere Resource Management documentation.

Monitor PMem Datastore Statistics

You can use the vSphere Client and the esxcli command to review the capacity of the PMem datastore and some of its other attributes.

However, unlike regular datastores, such as VMFS or VVols, the PMem datastore does not appear in the Datastores view of the vSphere Client. Regular datastore administrative tasks do not apply to it.

Procedure

Review PMem datastore information.

Option Description

vSphere Client a Navigate to the ESXi host and click Summary.

b In the Hardware panel, verify that Persistent Memory is displayed and review its capacity.

esxcli command Use the esxcli storage filesystem list command to list the PMem datastore.

Example: Viewing the PMem Datastore

The following sample output appears when you use the esxcli storage filesystem list command to list the datastore.

# esxcli storage filesystem list

Mount Point Volume Name UUID Mounted Type Size Free

---------------------- ---------------- ------------- -------- ------- ------------ ------------
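If you collect this output in a script, note that column names such as Mount Point and Volume Name contain spaces, so splitting each line on whitespace does not work. The following Python sketch assumes the default fixed-width layout, with a header line followed by a dashed separator line, and uses the separator to find where each column starts. The exact Type string that ESXi reports for the PMem datastore does not appear in the sample above, so filter_by_type takes it as a parameter. Both function names are hypothetical. esxcli also accepts a --formatter option (for example, --formatter=csv) whose output might be easier to parse.

```python
from typing import Dict, List


def parse_esxcli_table(text: str) -> List[Dict[str, str]]:
    """Parse esxcli's fixed-width table output into one dict per data row.

    Assumes the first non-empty line is the header and the second is the
    dashed separator; column names may contain spaces.
    """
    lines = [line.rstrip() for line in text.splitlines() if line.strip()]
    if len(lines) < 2:
        raise ValueError("expected a header line followed by a dashed separator line")
    header, dashes, rows = lines[0], lines[1], lines[2:]
    # Each run of dashes marks where one column starts.
    starts = [i for i, ch in enumerate(dashes)
              if ch == "-" and (i == 0 or dashes[i - 1] != "-")]
    # A column extends to the start of the next one; the last takes the rest of the line.
    bounds = list(zip(starts, starts[1:] + [None]))
    names = [header[start:end].strip() for start, end in bounds]
    return [
        {name: row[start:end].strip() for name, (start, end) in zip(names, bounds)}
        for row in rows
    ]


def filter_by_type(rows: List[Dict[str, str]], fs_type: str) -> List[Dict[str, str]]:
    """Keep only rows whose Type column equals fs_type."""
    return [row for row in rows if row.get("Type") == fs_type]
```

Slicing each row by the separator positions, rather than by the header words, keeps values such as mount paths intact even when they are longer than the header text above them.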
