Voice API

Programming Guide

June 2005

05-2377-002

INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. Intel products are not intended for use in medical, life saving, or life sustaining applications.

Intel may make changes to specifications and product descriptions at any time, without notice.

This Voice API Programming Guide as well as the software described in it is furnished under license and may only be used or copied in accordance with the terms of the license. The information in this manual is furnished for informational use only, is subject to change without notice, and should not be construed as a commitment by Intel Corporation. Intel Corporation assumes no responsibility or liability for any errors or inaccuracies that may appear in this document or any software that may be provided in association with this document.

Except as permitted by such license, no part of this document may be reproduced, stored in a retrieval system, or transmitted in any form or by any means without express written consent of Intel Corporation.

Copyright © 2004-2005, Intel Corporation

BunnyPeople, Celeron, Chips, Dialogic, EtherExpress, ETOX, FlashFile, i386, i486, i960, iCOMP, InstantIP, Intel, Intel Centrino, Intel Centrino logo, Intel logo, Intel386, Intel486, Intel740, IntelDX2, IntelDX4, IntelSX2, Intel Inside, Intel Inside logo, Intel NetBurst, Intel NetMerge, Intel NetStructure, Intel SingleDriver, Intel SpeedStep, Intel StrataFlash, Intel Xeon, Intel XScale, IPLink, Itanium, MCS, MMX, MMX logo, Optimizer logo, OverDrive, Paragon, PDCharm, Pentium, Pentium II Xeon, Pentium III Xeon, Performance at Your Command, skoool, Sound Mark, The Computer Inside., The Journey Inside, VTune, and Xircom are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

* Other names and brands may be claimed as the property of others.

Publication Date: June 2005

Document Number: 05-2377-002

Intel Converged Communications, Inc.

1515 Route 10

Parsippany, NJ 07054

For Technical Support, visit the Intel Telecom Support Resources website at:

http://developer.intel.com/design/telecom/support

For Products and Services Information, visit the Intel Telecom Products website at:

http://www.intel.com/design/network/products/telecom

For Sales Offices and other contact information, visit the Where to Buy Intel Telecom Products page at:

http://www.intel.com/buy/networking/telecom.htm

Voice API Programming Guide – June 2005

Contents

Revision History
About This Publication
Purpose
Applicability
Intended Audience
How to Use This Publication
Related Information
1 Product Description
1.1 Overview
1.2 R4 API
1.3 Call Progress Analysis
1.4 Tone Generation and Detection Features
1.4.1 Global Tone Detection (GTD)
1.4.2 Global Tone Generation (GTG)
1.4.3 Cadenced Tone Generation
1.5 Dial Pulse Detection
1.6 Play and Record Features
1.6.1 Play and Record Functions
1.6.2 Speed and Volume Control
1.6.3 Transaction Record
1.6.4 Silence Compressed Record
1.6.5 Streaming to Board
1.6.6 Echo Cancellation Resource
1.7 Send and Receive FSK Data
1.8 Caller ID
1.9 R2/MF Signaling
1.10 TDM Bus Routing
2 Programming Models
2.1 Standard Runtime Library
2.2 Asynchronous Programming Models
2.3 Synchronous Programming Model
3 Device Handling
3.1 Device Concepts
3.2 Voice Device Names
4 Event Handling
4.1 Overview of Event Handling
4.2 Event Management Functions
5 Error Handling
6 Application Development Guidelines
6.1 General Considerations
6.1.1 Busy and Idle States
6.1.2 Setting Termination Conditions for I/O Functions
6.1.3 Setting Termination Conditions for Digits
6.1.4 Clearing Structures Before Use
6.1.5 Working with User-Defined I/O Functions
6.2 Fixed and Flexible Routing Configurations
6.3 Fixed Routing Configuration Restrictions
6.4 Additional DM3 Considerations
6.4.1 Call Control Through Global Call API Library
6.4.2 Multithreading and Multiprocessing
6.4.3 DM3 Media Loads
6.4.4 Device Discovery for DM3 and Springware
6.4.5 Device Initialization Hint
6.4.6 TDM Bus Time Slot Considerations
6.4.7 Tone Detection Considerations
6.5 Using Wink Signaling
6.5.1 Setting Delay Prior to Wink
6.5.2 Setting Wink Duration
6.5.3 Receiving an Inbound Wink
7 Call Progress Analysis
7.1 Call Progress Analysis Overview
7.2 Call Progress and Call Analysis Terminology
7.3 Call Progress Analysis Components
7.4 Using Call Progress Analysis on DM3 Boards
7.4.1 Call Progress Analysis Rules on DM3 Boards
7.4.2 Overview of Steps to Initiate Call Progress Analysis
7.4.3 Setting Up Call Progress Analysis Parameters in DX_CAP
7.4.4 Executing a Dial Function
7.4.5 Determining the Outcome of a Call
7.4.6 Obtaining Additional Call Outcome Information
7.5 Call Progress Analysis Tone Detection on DM3 Boards
7.5.1 Tone Detection Overview
7.5.2 Types of Tones
7.5.3 Ringback Detection
7.5.4 Busy Tone Detection
7.5.5 Fax or Modem Tone Detection
7.5.6 SIT Frequency Detection
7.6 Media Tone Detection on DM3 Boards
7.6.1 Positive Voice Detection (PVD)
7.6.2 Positive Answering Machine Detection (PAMD)
7.7 Default Call Progress Analysis Tone Definitions on DM3 Boards
7.8 Modifying Default Call Progress Analysis Tone Definitions on DM3 Boards
7.8.1 API Functions for Manipulating Tone Definitions
7.8.2 TONE_DATA Data Structure
7.8.3 Rules for Modifying a Tone Definition on DM3 Boards
7.8.4 Rules for Using a Single Tone Proxy for a Dual Tone
7.8.5 Steps to Modify a Tone Definition on DM3 Boards
7.9 Call Progress Analysis Errors
7.10 Using Call Progress Analysis on Springware Boards
7.10.1 Overview of Steps to Initiate Call Progress Analysis
7.10.2 Setting Up Call Progress Analysis Features in DX_CAP
7.10.3 Enabling Call Progress Analysis
7.10.4 Executing a Dial Function
7.10.5 Determining the Outcome of a Call
7.10.6 Obtaining Additional Call Outcome Information
7.11 Call Progress Analysis Tone Detection on Springware Boards
7.11.1 Tone Detection Overview
7.11.2 Types of Tones
7.11.3 Dial Tone Detection
7.11.4 Ringback Detection
7.11.5 Busy Tone Detection
7.11.6 Fax or Modem Tone Detection
7.11.7 Loop Current Detection
7.12 Media Tone Detection on Springware Boards
7.12.1 Positive Voice Detection (PVD)
7.12.2 Positive Answering Machine Detection (PAMD)
7.13 Default Call Progress Analysis Tone Definitions on Springware Boards
7.14 Modifying Default Call Progress Analysis Tone Definitions on Springware Boards
7.15 SIT Frequency Detection (Springware Only)
7.15.1 Tri-Tone SIT Sequences
7.15.2 Setting Tri-Tone SIT Frequency Detection Parameters
7.15.3 Obtaining Tri-Tone SIT Frequency Information
7.15.4 Global Tone Detection Tone Memory Usage
7.15.5 Frequency Detection Errors
7.15.6 Setting Single Tone Frequency Detection Parameters
7.15.7 Obtaining Single Tone Frequency Information
7.16 Cadence Detection in Basic Call Progress Analysis (Springware Only)
7.16.1 Overview
7.16.2 Typical Cadence Patterns
7.16.3 Elements of a Cadence
7.16.4 Outcomes of Cadence Detection
7.16.5 Setting Selected Cadence Detection Parameters
7.16.6 Obtaining Cadence Information
8 Recording and Playback
8.1 Overview of Recording and Playback
8.2 Digital Recording and Playback
8.3 Play and Record Functions
8.4 Play and Record Convenience Functions
8.5 Voice Encoding Methods
8.6 G.726 Voice Coder
8.7 Transaction Record
8.8 Silence Compressed Record
8.8.1 Overview
8.8.2 Enabling
8.8.3 Encoding Methods Supported
8.9 Recording with the Voice Activity Detector
8.9.1 Overview
8.9.2 Enabling
8.9.3 Encoding Methods Supported
8.10 Streaming to Board
8.10.1 Streaming to Board Overview
8.10.2 Streaming to Board Functions
8.10.3 Implementing Streaming to Board
8.10.4 Streaming to Board Hints and Tips
8.11 Pause and Resume Play
8.11.1 Pause and Resume Play Overview
8.11.2 Pause and Resume Play Functions
8.11.3 Implementing Pause and Resume Play
8.11.4 Pause and Resume Play Hints and Tips
8.12 Echo Cancellation Resource
8.12.1 Overview of Echo Cancellation Resource
8.12.2 Echo Cancellation Resource Operation
8.12.3 Modes of Operation
8.12.4 Echo Cancellation Resource Application Models
9 Speed and Volume Control
9.1 Speed and Volume Control Overview
9.2 Speed and Volume Convenience Functions
9.3 Speed and Volume Adjustment Functions
9.4 Speed and Volume Modification Tables
9.5 Play Adjustment Digits
9.6 Setting Play Adjustment Conditions
9.7 Explicitly Adjusting Speed and Volume
10 Send and Receive FSK Data
10.1 Overview of ADSI and Two-Way FSK Support
10.2 ADSI Protocol
10.3 ADSI Operation
10.4 One-Way ADSI
10.5 Two-Way ADSI
10.5.1 Transmit to On-Hook CPE
10.5.2 Two-Way FSK
10.6 Fixed-Line Short Message Service (SMS)
10.7 ADSI and Two-Way FSK Voice Library Support
10.7.1 Library Support on DM3 Boards
10.7.2 Library Support on Springware Boards
10.8 Developing ADSI Applications
10.8.1 Technical Overview of One-Way ADSI Data Transfer
10.8.2 Implementing One-Way ADSI Using dx_TxIottData( )
10.8.3 Technical Overview of Two-Way ADSI Data Transfer
10.8.4 Implementing Two-Way ADSI Using dx_TxIottData( )
10.8.5 Implementing Two-Way ADSI Using dx_TxRxIottData( )
10.9 Modifying Older One-Way ADSI Applications
11 Caller ID
11.1 Overview of Caller ID
11.2 Caller ID Formats
11.3 Accessing Caller ID Information
11.4 Enabling Channels to Use the Caller ID Feature
11.5 Error Handling
11.6 Caller ID Technical Specifications
12 Cached Prompt Management
12.1 Overview of Cached Prompt Management
12.2 Using Cached Prompt Management
12.2.1 Discovering Cached Prompt Capability
12.2.2 Downloading Cached Prompts to a Board
12.2.3 Playing Cached Prompts
12.2.4 Recovering from Errors
12.2.5 Cached Prompt Management Hints and Tips
12.3 Cached Prompt Management Example Code
13 Global Tone Detection and Generation, and Cadenced Tone Generation
13.1 Global Tone Detection (GTD)
13.1.1 Overview of Global Tone Detection
13.1.2 Global Tone Detection on DM3 Boards versus Springware Boards
13.1.3 Defining Global Tone Detection Tones
13.1.4 Building Tone Templates
13.1.5 Working with Tone Templates
13.1.6 Retrieving Tone Events
13.1.7 Setting GTD Tones as Termination Conditions
13.1.8 Maximum Amount of Memory for Tone Templates
13.1.9 Estimating Memory
13.1.10 Guidelines for Creating User-Defined Tones
13.1.11 Global Tone Detection Application
13.2 Global Tone Generation (GTG)
13.2.1 Using GTG
13.2.2 GTG Functions
13.2.3 Building and Implementing a Tone Generation Template
13.3 Cadenced Tone Generation
13.3.1 Using Cadenced Tone Generation
13.3.2 How To Generate a Custom Cadenced Tone
13.3.3 How To Generate a Non-Cadenced Tone
13.3.4 TN_GENCAD Data Structure - Cadenced Tone Generation
13.3.5 How To Generate a Standard PBX Call Progress Signal
13.3.6 Predefined Set of Standard PBX Call Progress Signals
13.3.7 Important Considerations for Using Predefined Call Progress Signals
14 Global Dial Pulse Detection
14.1 Key Features
14.2 Global DPD Parameters
14.3 Enabling Global DPD
14.4 Global DPD Programming Considerations
14.5 Retrieving Digits from the Digit Buffer
14.6 Retrieving Digits as Events
14.7 Dial Pulse Detection Digit Type Reporting
14.8 Defines for Digit Type Reporting
14.9 Global DPD Programming Procedure
14.10 Global DPD Example Code
15 R2/MF Signaling
15.1 R2/MF Overview
15.2 Direct Dialing-In Service
15.3 R2/MF Multifrequency Combinations
15.4 R2/MF Signal Meanings
15.5 R2/MF Compelled Signaling
15.6 R2/MF Voice Library Functions
15.7 R2/MF Tone Detection Template Memory Requirements
16 Syntellect License Automated Attendant
16.1 Overview of Automated Attendant Function
16.2 Syntellect License Automated Attendant Functions
16.3 How to Use the Automated Attendant Function Call
17 Building Applications
17.1 Voice and SRL Libraries
17.2 Compiling and Linking
17.2.1 Include Files
17.2.2 Required Libraries
17.2.3 Run-time Linking
17.2.4 Variables for Compiling and Linking

Glossary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 193

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 201


Figures

1 Cluster Configurations for Fixed and Flexible Routing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

2 Basic Call Progress Analysis Components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

3 PerfectCall Call Progress Analysis Components. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

4 Call Outcomes for Call Progress Analysis (DM3) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

5 Call Outcomes for Call Progress Analysis (Springware) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

6 A Standard Busy Signal . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

7 A Standard Single Ring . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

8 A Type of Double Ring. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

9 Cadence Detection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

10 Elements of Established Cadence . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

11 No Ringback Due to Continuous No Signal. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

12 No Ringback Due to Continuous Nonsilence. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

13 Cadence Detection Salutation Processing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

14 Silence Compressed Record Parameters Illustrated . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

15 Echo Canceller with Relevant Input and Output Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

16 Echo Canceller Operating over a TDM bus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

17 ECR Bridge Example Diagram . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

18 An ECR Play Over the TDM bus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

19 Example of Custom Cadenced Tone Generation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

20 Standard PBX Call Progress Signals (Part 1) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 163

21 Standard PBX Call Progress Signals (Part 2) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 164

22 Forward and Backward Interregister Signals. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 175

23 Multiple Meanings for R2/MF Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 178

24 R2/MF Compelled Signaling Cycle . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

25 Example of R2/MF Signals for 4-digit DDI Application . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 185

26 Voice and SRL Libraries . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 189


Tables

1 Voice Device Inputs for Event Management Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

2 Voice Device Returns from Event Management Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

3 API Function Restrictions in a Fixed Routing Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

4 Call Progress Analysis Support with dx_dial( ). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

5 Special Information Tone Sequences (DM3) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

6 Default Call Progress Analysis Tone Definitions (DM3) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

7 Default Call Progress Analysis Tone Definitions (Springware). . . . . . . . . . . . . . . . . . . . . . . . . . 71

8 Special Information Tone Sequences (Springware) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

9 Voice Encoding Methods (DM3). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

10 Voice Encoding Methods (Springware) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

11 Default Speed Modification Table. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

12 Default Volume Modification Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

13 Supported CLASS Caller ID Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

14 Standard Bell System Network Call Progress Tones . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

15 Asynchronous/Synchronous Tone Event Handling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

16 Maximum Memory Available for User-Defined Tone Templates (Springware). . . . . . . . . . . . . 152

17 Maximum Memory Available for Tone Templates for Tone-Creating Voice Features (Springware) . . . . . . . 152

18 Maximum Tone Templates for Dual Tones (Springware) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 154

19 Standard PBX Call Progress Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 162

20 TN_GENCAD Definitions for Standard PBX Call Progress Signals . . . . . . . . . . . . . . . . . . . . . 165

21 Forward Signals, CCITT Signaling System R2/MF tones. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 176

22 Backward Signals, CCITT Signaling System R2/MF tones . . . . . . . . . . . . . . . . . . . . . . . . . . . 177

23 Purpose of Signal Groups and Changeover in Meaning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 178

24 Meanings for R2/MF Group I Forward Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 180

25 Meanings for R2/MF Group II Forward Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 181

26 Meanings for R2/MF Group A Backward Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 182

27 Meanings for R2/MF Group B Backward Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 183


Revision History

This revision history summarizes the changes made in each published version of this document.

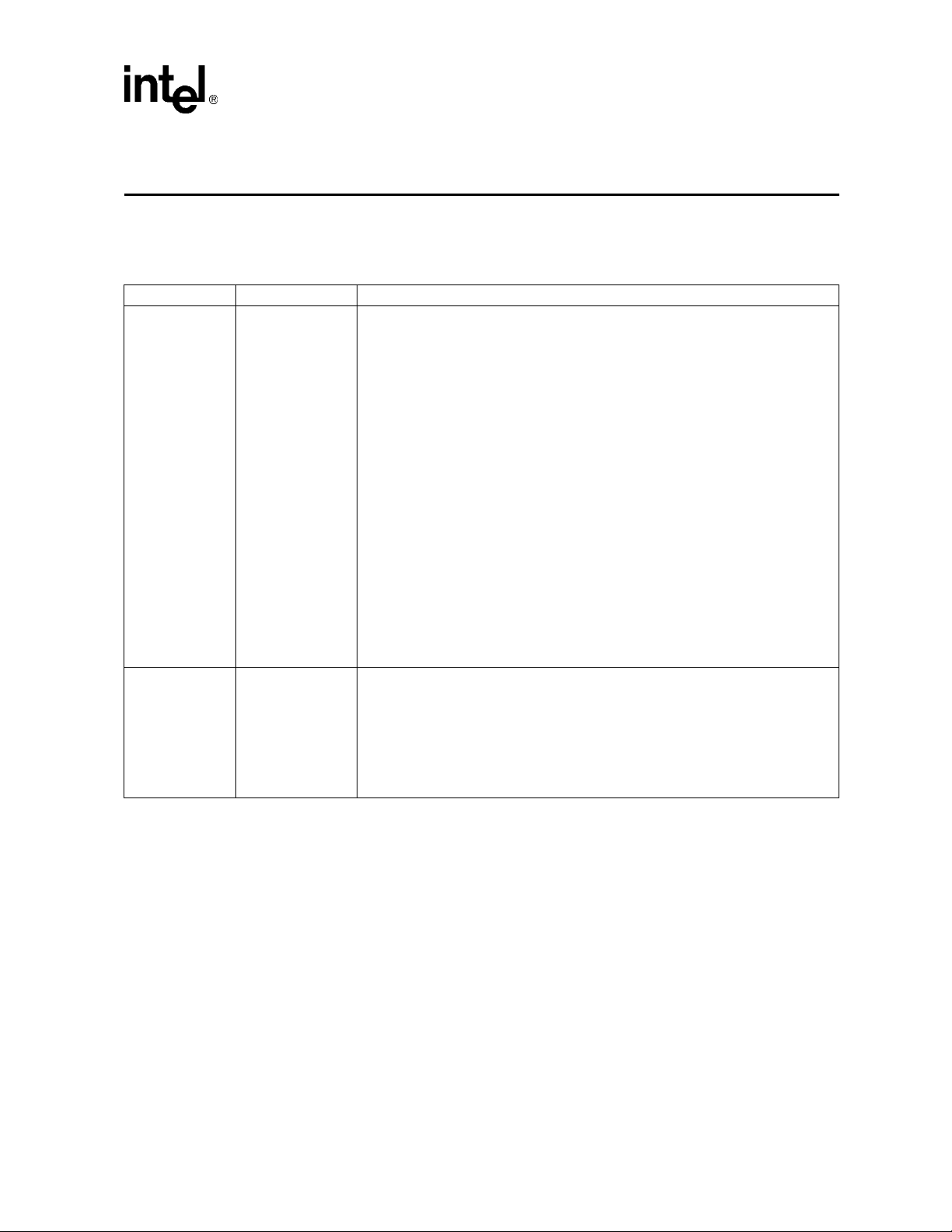

Document No.: 05-2377-002

Publication Date: June 2005

Description of Revisions:

• Application Development Guidelines chapter: Added bullet about digits not always being cleared by dx_clrdigbuf( ) in Tone Detection Considerations section [PTR 33806].

• Call Progress Analysis chapter: Added eight new SIT sequences that can be returned by ATDX_CRTNID( ) for DM3 boards in Types of Tones section. Revised values of TID_SIT_NC (Freq of first segment changed from 950/1001 to 950/1020) and TID_SIT_VC (Freq of first segment changed from 950/1001 to 950/1020) in table of Special Information Tone Sequences (DM3); also added four new SIT sequences to this table. Added note about SRL device mapper functions in Steps to Modify a Tone Definition on DM3 Boards section.

• Recording and Playback chapter: Added Recording with the Voice Activity Detector section that describes new modes for dx_reciottdata( ).

• Send and Receive FSK Data chapter: Updated Fixed-Line Short Message Service (SMS) section to indicate that fixed-line short message service (SMS) is supported on Springware boards. Updated Library Support on Springware Boards section to indicate that Springware boards in Linux support ADSI two-way FSK and SMS.

• Cached Prompt Management chapter: Added sentence to second paragraph about flushing cached prompts in Overview of Cached Prompt Management section. Added second paragraph about flushing cached prompts in Cached Prompt Management Hints and Tips section.

Document No.: 05-2377-001

Publication Date: November 2004

Description of Revisions:

Initial version of document. Much of the information contained in this document was previously published in the Voice API for Linux Operating System Programming Guide (document number 05-1829-001) and the Voice API for Windows Operating Systems Programming Guide (document number 05-1831-002).

This document now supports both Linux and Windows operating systems. When information is specific to an operating system, it is noted.


About This Publication

The following topics provide information about this publication:

• Purpose

• Applicability

• Intended Audience

• How to Use This Publication

• Related Information

Purpose

This publication provides guidelines for building computer telephony applications on Windows* and Linux* operating systems using the Intel® voice API. Such applications include, but are not limited to, call routing, voice messaging, interactive voice response, and call center applications.

This publication is a companion guide to the Voice API Library Reference, which provides details on the functions and parameters in the voice library.

Applicability

This document version (05-2377-002) is published for Intel® Dialogic® System Release 6.1 for

Linux operating system.

This document may also be applicable to later Intel Dialogic system releases, including service

updates, on Linux or Windows. Check the Release Guide for your software release to determine

whether this document is supported.

This document is applicable to Intel Dialogic system releases only. It is not applicable to Intel NetStructure® Host Media Processing (HMP) software releases. A separate set of voice API documentation specific to HMP is provided. Check the Release Guide for your software release to determine what documents are provided with the release.

Intended Audience

This information is intended for:

• Distributors

• System Integrators

• Toolkit Developers


• Independent Software Vendors (ISVs)

• Value Added Resellers (VARs)

• Original Equipment Manufacturers (OEMs)

How to Use This Publication

This document assumes that you are familiar with and have prior experience with Windows or

Linux operating systems and the C programming language. Use this document together with the

following: the Voice API Library Reference, the Standard Runtime Library API Programming

Guide, and the Standard Runtime Library API Library Reference.

The information in this guide is organized as follows:

• Chapter 1, “Product Description” introduces the key features of the voice library and provides

a brief description of each feature.

• Chapter 2, “Programming Models” provides a brief overview of supported programming

models.

• Chapter 3, “Device Handling” discusses topics related to devices such as device naming

concepts, how to open and close devices, and how to discover whether a device is Springware

or DM3.

• Chapter 4, “Event Handling” provides information on functions used to handle events.

• Chapter 5, “Error Handling” provides information on handling errors in your application.

• Chapter 6, “Application Development Guidelines” provides programming guidelines and

techniques for developing an application using the voice library. This chapter also discusses

fixed and flexible routing configurations.

• Chapter 7, “Call Progress Analysis” describes the components of call progress analysis in

detail. This chapter also covers differences between Basic Call Progress Analysis and

PerfectCall Call Progress Analysis.

• Chapter 8, “Recording and Playback” discusses playback and recording features, such as

encoding algorithms, play and record API functions, transaction record, and silence

compressed record.

• Chapter 9, “Speed and Volume Control” explains how to control speed and volume of

playback recordings through API functions and data structures.

• Chapter 10, “Send and Receive FSK Data” describes the two-way frequency shift keying

(FSK) feature, the Analog Display Services Interface (ADSI), and API functions for use with

this feature.

• Chapter 11, “Caller ID” describes the caller ID feature, supported formats, and how to enable

it.

• Chapter 12, “Cached Prompt Management” provides information on cached prompts and how

to use cached prompt management in your application.

• Chapter 13, “Global Tone Detection and Generation, and Cadenced Tone Generation”

describes these tone detection and generation features in detail.

• Chapter 14, “Global Dial Pulse Detection” discusses the Global DPD feature, the API

functions for use with this feature, programming guidelines, and example code.


• Chapter 15, “R2/MF Signaling” describes the R2/MF signaling protocol, the API functions for

use with this feature, and programming guidelines.

• Chapter 16, “Syntellect License Automated Attendant” describes Intel hardware and software

that include a license for the Syntellect Technology Corporation (STC) patent portfolio.

• Chapter 17, “Building Applications” discusses compiling and linking requirements such as

include files and library files.

Related Information

See the following for more information:

• For details on all voice functions, parameters and data structures in the voice library, see the

Voice API Library Reference.

• For details on the Standard Runtime Library (SRL), supported programming models, and

programming guidelines for building all applications, see the Standard Runtime Library API

Programming Guide. The SRL is a device-independent library that consists of event

management functions and standard attribute functions.

• For details on all functions and data structures in the Standard Runtime Library (SRL) library,

see the Standard Runtime Library API Library Reference.

• For information on the system release, system requirements, software and hardware features,

supported hardware, and release documentation, see the Release Guide for the system release

you are using.

• For details on compatibility issues, restrictions and limitations, known problems, and late-

breaking updates or corrections to the release documentation, see the Release Update.

Be sure to check the Release Update for the system release you are using for any updates or

corrections to this publication. Release Updates are available on the Telecom Support

Resources website at http://resource.intel.com/telecom/support/releases/.

• For details on installing the system software, see the System Release Installation Guide.

• For guidelines on building applications using Global Call software (a common signaling

interface for network-enabled applications, regardless of the signaling protocol needed to

connect to the local telephone network), see the Global Call API Programming Guide.

• For details on all functions and data structures in the Global Call library, see the Global Call

API Library Reference.

• For details on configuration files (including FCD/PCD files) and instructions for configuring

products, see the Configuration Guide for your product or product family.


1. Product Description

This chapter provides information on key voice library features and capability. The following

topics are covered:

• Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

• R4 API . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

• Call Progress Analysis. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

• Tone Generation and Detection Features. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

• Dial Pulse Detection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

• Play and Record Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

• Send and Receive FSK Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

• Caller ID . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

• R2/MF Signaling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

• TDM Bus Routing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

1.1 Overview


The voice software provides a high-level interface to Intel telecom media processing boards and is

a building block for creating computer telephony applications. It offers a comprehensive set of

features such as dual-tone multifrequency (DTMF) detection, tone signaling, call progress analysis,

playing and recording that supports a number of encoding methods, and much more.

The voice software consists of a C language library of functions, device drivers, and firmware.

The voice library is well integrated with other technology libraries provided by Intel such as fax,

conferencing, and continuous speech processing. This architecture enables you to add new

capability to your voice application over time.

For a list of voice features by product, see the Release Guide for your system release.

1.2 R4 API

The term R4 API (“System Software Release 4 Application Programming Interface”) describes the

direct interface used for creating computer telephony application programs. The R4 API is a rich

set of proprietary APIs for building computer telephony applications on Intel telecom products.

These APIs encompass technologies that include voice, conferencing, fax, and speech. This

document describes the voice API.


In addition to original Springware products (also known as earlier-generation products), the R4

API supports a new generation of hardware products that are based on the DM3 mediastream

architecture. Feature differences between these two categories of products are noted.

DM3 boards is a collective name used in this document to refer to products that are based on the DM3 mediastream architecture. DM3 board names typically are prefaced with “DM,” such as the Intel NetStructure® DM/V2400A. Springware boards refer to boards based on earlier-generation architecture. Springware boards typically are prefaced with “D,” such as the Intel® Dialogic® D/240JCT-T1.

In this document, the term voice API is used to refer to the R4 voice API.

1.3 Call Progress Analysis

Call progress analysis monitors the progress of an outbound call after it is dialed into the Public Switched Telephone Network (PSTN).

There are two forms of call progress analysis: basic and PerfectCall. PerfectCall call progress analysis uses an improved method of signal identification and can detect fax machines and answering machines. Basic call progress analysis provides backward compatibility for older applications written before PerfectCall call progress analysis became available.

Note: PerfectCall call progress analysis was formerly called enhanced call analysis.

See Chapter 7, “Call Progress Analysis” for detailed information about this feature.

1.4 Tone Generation and Detection Features

In addition to DTMF and MF tone detection and generation, the following signaling features are

provided by the voice library:

• Global Tone Detection (GTD)

• Global Tone Generation (GTG)

• Cadenced Tone Generation

1.4.1 Global Tone Detection (GTD)

Global tone detection allows you to define single- or dual-frequency tones for detection on a

channel-by-channel basis. Global tone detection and GTD tones are also known as user-defined

tone detection and user-defined tones.

Use global tone detection to detect single- or dual-frequency tones outside the standard DTMF

range of 0-9, a-d, *, and #. The characteristics of a tone can be defined and tone detection can be

enabled using GTD functions and data structures provided in the voice library.


See Chapter 13, “Global Tone Detection and Generation, and Cadenced Tone Generation” for

detailed information about global tone detection.
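The idea of a user-defined single- or dual-frequency tone can be pictured with a small, self-contained sketch. It is not the voice library's GTD API: the tone_template structure, the per-frequency deviation fields, and the tone_matches( ) function are hypothetical names chosen for illustration, and the sketch assumes the measured frequencies have already been extracted from the signal.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical tone template; NOT a voice library data structure. */
typedef struct {
    int freq1_hz;  /* first frequency of the tone */
    int dev1_hz;   /* accepted deviation around freq1_hz (assumption) */
    int freq2_hz;  /* second frequency, or 0 for a single-frequency tone */
    int dev2_hz;   /* accepted deviation around freq2_hz (assumption) */
} tone_template;

/* True when measured_hz lies within dev_hz of center_hz. */
static bool within(int measured_hz, int center_hz, int dev_hz)
{
    return abs(measured_hz - center_hz) <= dev_hz;
}

/* Returns true when the measured frequencies fit the template.
 * measured2_hz is 0 when only one frequency is present. */
bool tone_matches(const tone_template *t, int measured1_hz, int measured2_hz)
{
    assert(t != NULL);
    if (t->freq2_hz == 0) {
        /* Single-frequency tone: exactly one frequency may be present. */
        return measured2_hz == 0 &&
               within(measured1_hz, t->freq1_hz, t->dev1_hz);
    }
    if (measured2_hz == 0)
        return false;  /* dual-frequency template needs two frequencies */
    /* Dual-frequency tone: accept the two frequencies in either order. */
    return (within(measured1_hz, t->freq1_hz, t->dev1_hz) &&
            within(measured2_hz, t->freq2_hz, t->dev2_hz)) ||
           (within(measured2_hz, t->freq1_hz, t->dev1_hz) &&
            within(measured1_hz, t->freq2_hz, t->dev2_hz));
}
```

In an application, one such template would be kept per tone of interest on each channel; the actual functions and data structures for defining and enabling tones are described in the Voice API Library Reference.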

1.4.2 Global Tone Generation (GTG)

Global tone generation allows you to define a single- or dual-frequency tone in a tone generation

template and to play the tone on a specified channel.

See Chapter 13, “Global Tone Detection and Generation, and Cadenced Tone Generation” for

detailed information about global tone generation.

1.4.3 Cadenced Tone Generation

Cadenced tone generation is an enhancement to global tone generation. It allows you to generate a

tone with up to 4 single- or dual-tone elements, each with its own on/off duration, which creates the

signal pattern or cadence. You can define your own custom cadenced tone or take advantage of the

built-in set of standard PBX call progress signals, such as dial tone, ringback, and busy.

See Chapter 13, “Global Tone Detection and Generation, and Cadenced Tone Generation” for

detailed information about cadenced tone generation.
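A cadence built from up to 4 tone elements, each with its own on/off duration, can be modeled as follows. This is a minimal sketch only: the cadence and cadence_element types and both functions are hypothetical and are not the voice library's own structures, and the frequencies and durations used in the usage note are illustrative values.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_CADENCE_ELEMENTS 4  /* the text allows up to 4 tone elements */

/* Hypothetical cadence element; NOT a voice library data structure. */
typedef struct {
    int freq1_hz;  /* first frequency */
    int freq2_hz;  /* second frequency, or 0 for a single tone */
    int on_ms;     /* time the tone sounds */
    int off_ms;    /* silence that follows the tone */
} cadence_element;

typedef struct {
    size_t count;                               /* elements in use, 1..4 */
    cadence_element elem[MAX_CADENCE_ELEMENTS];
} cadence;

/* Length in milliseconds of one full pass through the pattern. */
int cadence_cycle_ms(const cadence *c)
{
    assert(c != NULL && c->count >= 1 && c->count <= MAX_CADENCE_ELEMENTS);
    int total = 0;
    for (size_t i = 0; i < c->count; i++)
        total += c->elem[i].on_ms + c->elem[i].off_ms;
    return total;
}

/* Index of the element sounding at time t_ms in a repeating pattern,
 * or -1 if t_ms falls in one of the silent (off) periods. */
int cadence_element_at(const cadence *c, int t_ms)
{
    int cycle = cadence_cycle_ms(c);
    assert(cycle > 0 && t_ms >= 0);
    int t = t_ms % cycle;
    for (size_t i = 0; i < c->count; i++) {
        if (t < c->elem[i].on_ms)
            return (int)i;
        t -= c->elem[i].on_ms;
        if (t < c->elem[i].off_ms)
            return -1;
        t -= c->elem[i].off_ms;
    }
    return -1;  /* not reached: t is always inside the cycle */
}
```

For example, a one-element cadence of 500 ms on and 500 ms off repeats every 1000 ms, while a two-element cadence such as {400 on, 200 off} followed by {400 on, 2000 off} produces a double-ring style pattern.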


1.5 Dial Pulse Detection

Dial pulse detection (DPD) allows applications to detect dial pulses from rotary or pulse phones by

detecting the audible clicks produced when a number is dialed, and to use these clicks as if they

were DTMF digits. Global dial pulse detection, called global DPD, is a software-based dial pulse

detection method that can use country-customized parameters for extremely accurate performance.

See Chapter 14, “Global Dial Pulse Detection” for more information about this feature.

1.6 Play and Record Features

The following play and record features are provided by the voice library:

• Play and Record Functions

• Speed and Volume Control

• Transaction Record

• Silence Compressed Record

• Streaming to Board

• Echo Cancellation Resource


1.6.1 Play and Record Functions

The voice library includes several functions and data structures for recording and playing audio

data. These allow you to digitize and store human voice; then retrieve, convert, and play this digital

information. In addition, you can pause a play currently in progress and resume that same play.

For more information about play and record features, see Chapter 8, “Recording and Playback”.

This chapter also includes information about voice encoding methods supported; see Section 8.5,

“Voice Encoding Methods”, on page 89. For detailed information about play and record functions,

see the Voice API Library Reference.

1.6.2 Speed and Volume Control

The speed and volume control feature allows you to control the speed and volume of a message

being played on a channel, for example, by entering a DTMF tone.

See Chapter 9, “Speed and Volume Control” for more information about this feature.

1.6.3 Transaction Record

The transaction record feature allows voice activity on two channels to be summed and stored in a

single file, or in a combination of files, devices, and memory. This feature is useful in call center

applications where it is necessary to archive a verbal transaction or record a live conversation.

See Chapter 8, “Recording and Playback” for more information on the transaction record feature.

1.6.4 Silence Compressed Record

The silence compressed record (SCR) feature enables recording with silent pauses eliminated. This

results in smaller recorded files with no loss of intelligibility.

When the audio level is at or falls below the silence threshold for a minimum duration of time,

silence compressed record begins. If a short burst of noise (glitch) is detected, the compression

does not end unless the glitch is longer than a specified period of time.

See Chapter 8, “Recording and Playback” for more information.
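The threshold, minimum-duration, and glitch rules described above can be sketched as a small state machine. This is an illustration under stated assumptions, not the board firmware's algorithm: the scr_params structure and scr_count_kept_frames( ) are hypothetical names, the audio is assumed to arrive as one level value per fixed-length frame, and a noise burst that turns out to be longer than a glitch is counted as recorded in full, as if it had been buffered.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical parameters; NOT voice library or configuration names. */
typedef struct {
    int    silence_threshold;   /* level at or below this is silence */
    size_t min_silence_frames;  /* silence needed before compression starts */
    size_t max_glitch_frames;   /* noise bursts up to this length are ignored */
} scr_params;

/* Returns how many of the n input frames would be kept in the recording. */
size_t scr_count_kept_frames(const scr_params *p, const int *levels, size_t n)
{
    assert(p != NULL && (levels != NULL || n == 0));
    size_t kept = 0, silence_run = 0, noise_run = 0;
    int compressing = 0;

    for (size_t i = 0; i < n; i++) {
        int silent = levels[i] <= p->silence_threshold;
        if (!compressing) {
            kept++;  /* normal recording, including the leading silence */
            silence_run = silent ? silence_run + 1 : 0;
            if (silent && silence_run >= p->min_silence_frames) {
                compressing = 1;  /* silence lasted long enough */
                noise_run = 0;
            }
        } else if (silent) {
            noise_run = 0;  /* any glitch in progress has ended; drop it */
        } else if (++noise_run > p->max_glitch_frames) {
            kept += noise_run;  /* longer than a glitch: keep the burst */
            compressing = 0;
            silence_run = 0;
        }
    }
    return kept;
}
```

With a threshold of 10, a minimum silence of 3 frames, and a maximum glitch of 2 frames, a 1-frame burst inside a long pause is discarded along with the pause, while a 3-frame burst ends compression and is kept.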

1.6.5 Streaming to Board

The streaming to board feature allows you to stream data to a network interface in real time. Unlike

the standard voice play feature (store and forward), data can be streamed in real time with little

delay as the amount of initial data required to start the stream is configurable. The streaming to

board feature is essential for applications such as text-to-speech, distributed prompt servers, and IP

gateways.

For more information about this feature, see Chapter 8, “Recording and Playback”.


1.6.6 Echo Cancellation Resource

The echo cancellation resource (ECR) feature enables a voice channel to dynamically perform echo

cancellation on any external TDM bus time slot signal.

Note: The ECR feature has been replaced with continuous speech processing (CSP). Although the CSP

API is related to the voice API, it is provided as a separate product. The continuous speech

processing software is a significant enhancement to ECR. The continuous speech processing

library provides many features such as high-performance echo cancellation, voice energy detection,

barge-in, voice event signaling, pre-speech buffering, full-duplex operation and more. For more

information on this API, see the Continuous Speech Processing documentation.

See Chapter 8, “Recording and Playback” for more information about the ECR feature.

1.7 Send and Receive FSK Data

The send and receive frequency shift keying (FSK) data interface is used for Analog Display Services Interface (ADSI) and fixed-line short message service, also called small message service, or SMS. Frequency shift keying is a frequency modulation technique used to send digital data over voice-band telephone lines. ADSI allows information to be transmitted for display on a display-based telephone connected to an analog loop start line, and to store and forward SMS messages in the Public Switched Telephone Network (PSTN). The telephone must be a true ADSI-compliant or fixed-line SMS-compliant device.


See Chapter 10, “Send and Receive FSK Data” for more information on ADSI, FSK, and SMS.

1.8 Caller ID

An application can enable the caller ID feature on specific channels to process caller ID

information as it is received with an incoming call. Caller ID information can include the calling

party’s directory number (DN), the date and time of the call, and the calling party’s subscriber

name.

See Chapter 11, “Caller ID” for more information about this feature.

1.9 R2/MF Signaling

R2/MF signaling is an international signaling system that is used in Europe and Asia to permit the

transmission of numerical and other information relating to the called and calling subscribers’

lines.

R2/MF signaling is typically accomplished through the Global Call API. For more information, see

the Global Call documentation set. Chapter 15, “R2/MF Signaling” is provided for reference only.


1.10 TDM Bus Routing

Time division multiplexing (TDM) is a technique for transmitting a number of separate digitized signals simultaneously over a communication medium. The TDM bus includes the CT Bus and SCbus.

The CT Bus is an implementation of the computer telephony bus standard developed by the

Enterprise Computer Telephony Forum (ECTF) and accepted industry-wide. The H.100 hardware

specification covers CT Bus implementation using the PCI form factor. The H.110 hardware

specification covers CT Bus implementation using the CompactPCI (cPCI) form factor. The CT

Bus has 4096 bi-directional time slots.

The SCbus or signal computing bus connects Signal Computing System Architecture (SCSA)

resources. The SCbus has 1024 bi-directional time slots.

A TDM bus connects voice, telephone network interface, fax, and other technology resource

boards together. TDM bus boards are treated as board devices with on-board voice and/or

telephone network interface devices that are identified by a board and channel (time slot for digital

network channels) designation, such as a voice channel, analog channel, or digital channel.

For information on TDM bus routing functions, see the Voice API Library Reference.

Note: When you see a reference to the SCbus or SCbus routing, the information also applies to the CT

Bus on DM3 products. That is, the physical interboard connection can be either SCbus or CT Bus.

The SCbus protocol is used and the TDM routing API (previously called the SCbus routing API)

applies to all the boards regardless of whether they use an SCbus or CT Bus physical interboard

connection.


2. Programming Models

This chapter briefly discusses the Standard Runtime Library and supported programming models:

• Standard Runtime Library

• Asynchronous Programming Models

• Synchronous Programming Model

2.1 Standard Runtime Library


The Standard Runtime Library (SRL) provides a set of common system functions that are device independent and are applicable to all Intel® telecom devices. The SRL consists of a data structure, event management functions, device management functions (called standard attribute functions), and device mapper functions. You can use the SRL to simplify application development, such as by writing common event handlers to be used by all devices.

When developing voice processing applications, refer to the Standard Runtime Library documentation in tandem with the voice library documentation. For more information on the Standard Runtime Library, see the Standard Runtime Library API Library Reference and Standard Runtime Library API Programming Guide.

2.2 Asynchronous Programming Models

Asynchronous programming enables a single program to control multiple voice channels within a

single process. This allows the development of complex applications where multiple tasks must be

coordinated simultaneously.

The asynchronous programming model uses functions that do not block thread execution; that is, the function returns control to the calling thread right away and continues processing in the background. A Standard Runtime Library (SRL) event later indicates function completion.

Generally, if your application must support any significant channel density, use the asynchronous programming model to develop field solutions.

For complete information on asynchronous programming models, see the Standard Runtime

Library API Programming Guide.
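The start-then-notify pattern described above can be sketched in plain C. This sketch does not call the voice library or the SRL: `demo_start_play`, `demo_wait_event`, `demo_run_channels`, and the `DEMO_EVT_PLAY_DONE` code are hypothetical stand-ins, and the event queue is a simple in-memory array. In a real application the corresponding pair would be a voice function run in asynchronous mode, such as dx_play( ), and the termination event it later produces, such as TDX_PLAY. The sketch only illustrates how a single thread can keep several channels in flight at once.

```c
#include <assert.h>
#include <stddef.h>

#define DEMO_MAX_EVENTS    16
#define DEMO_EVT_PLAY_DONE 1   /* hypothetical code, not a voice library value */

/* One completion event: which channel finished, and what finished. */
typedef struct {
    int channel;
    int type;
} demo_event;

/* Simple FIFO standing in for the event queue maintained by the SRL. */
static demo_event demo_queue[DEMO_MAX_EVENTS];
static size_t demo_head = 0, demo_tail = 0;

/* Start a "play" on a channel and return at once. In this sketch the
 * completion event is queued immediately; a real driver would queue it
 * later, when the operation finishes. Returns 0, or -1 if the queue is full. */
int demo_start_play(int channel)
{
    if (demo_tail - demo_head >= DEMO_MAX_EVENTS)
        return -1;
    demo_queue[demo_tail % DEMO_MAX_EVENTS] =
        (demo_event){ channel, DEMO_EVT_PLAY_DONE };
    demo_tail++;
    return 0;
}

/* Fetch the next completion event. Returns 0 and fills *out if an
 * event is pending, or -1 if the queue is empty. */
int demo_wait_event(demo_event *out)
{
    assert(out != NULL);
    if (demo_head == demo_tail)
        return -1;
    *out = demo_queue[demo_head % DEMO_MAX_EVENTS];
    demo_head++;
    return 0;
}

/* Single-threaded control loop: start work on every channel first,
 * then service completions as they arrive. Returns the number of
 * channels whose completion event was handled. */
int demo_run_channels(int nchannels)
{
    int done = 0;
    demo_event ev;

    for (int ch = 1; ch <= nchannels; ch++)
        if (demo_start_play(ch) != 0)
            break;

    while (demo_wait_event(&ev) == 0)
        if (ev.type == DEMO_EVT_PLAY_DONE)
            done++;

    return done;
}
```

Calling `demo_run_channels(4)` starts four operations before handling any completion, which is the property that lets one process control many channels.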

2.3 Synchronous Programming Model

The synchronous programming model uses functions that block application execution until the

function completes. This model requires that each channel be controlled from a separate process.

This allows you to assign distinct applications to different channels dynamically in real time.


Synchronous programming models allow you to scale an application by simply instantiating more threads or processes (one per channel). This programming model may be easy to code and manage, but it relies on the system to manage scalability. Applying the synchronous programming model can incur a large amount of system overhead, which reduces the achievable densities and delays the servicing of both hardware and software interrupts. Using this model, a developer can solve system performance issues only by adding memory, increasing CPU speed, or both. The synchronous programming models may be useful for testing or for very low-density solutions.

For complete information on synchronous programming models, see the Standard Runtime Library

API Programming Guide.


3. Device Handling

This chapter describes the concept of a voice device and how voice devices are named and used.

• Device Concepts

• Voice Device Names

3.1 Device Concepts

The following concepts are key to understanding devices and device handling:

device

A device is a computer component controlled through a software device driver. A resource board (such as a voice, fax, or conferencing resource board) or a network interface board contains one or more logical board devices. Each channel or time slot on the board is also considered a device.

device channel

A device channel refers to a data path that processes one incoming or outgoing call at a time

(equivalent to the terminal equipment terminating a phone line). The first two numbers in the

product naming scheme identify the number of device channels for a given product. For

example, there are 24 voice device channels on a D/240JCT-T1 board, 30 on a D/300JCT-E1.


device name

A device name is a literal reference to a device, used to gain access to the device via an

xx_open( ) function, where “xx” is the prefix defining the device to be opened. For example,

“dx” is the prefix for voice device and “fx” for fax device.

device handle

A device handle is a numerical reference to a device, obtained when a device is opened using

xx_open( ), where “xx” is the prefix defining the device to be opened. The device handle is

used for all operations on that device.

physical and virtual boards

The API functions distinguish between physical boards and virtual boards. The device driver

views a single physical voice board with more than four channels as multiple emulated D/4x

boards. These emulated boards are called virtual boards. For example, a D/120JCT-LS with 12

channels of voice processing contains three virtual boards. A DM/V480A-2T1 board with 48

channels of voice processing and two T1 trunk lines contains 12 virtual voice boards and two

virtual network interface boards.
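The arithmetic in the definitions above can be checked with a short sketch. It rests on two assumptions that go slightly beyond the text: that every virtual voice board groups four channels (as in the D/120JCT-LS and DM/V480A-2T1 examples), rounding up for a partial group, and that the channel count can be read as the first pair of consecutive digits in product names shaped like the examples given. Both function names are hypothetical helpers, not part of the voice library.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

#define CHANNELS_PER_VIRTUAL_BOARD 4   /* each virtual board emulates a D/4x */

/* Number of virtual voice boards for a given voice channel count.
 * Rounds up so that a partial group still counts as one board
 * (an assumption; the text only shows exact multiples of four). */
int virtual_voice_boards(int voice_channels)
{
    assert(voice_channels >= 0);
    return (voice_channels + CHANNELS_PER_VIRTUAL_BOARD - 1)
           / CHANNELS_PER_VIRTUAL_BOARD;
}

/* Read the device channel count from a product name by taking the
 * first pair of consecutive digits, e.g. "D/240JCT-T1" gives 24.
 * Returns -1 if the name contains no such pair. */
int channels_from_product_name(const char *name)
{
    assert(name != NULL);
    for (const char *p = name; *p != '\0'; p++) {
        /* p[1] is at worst the terminating '\0', so this read is safe. */
        if (isdigit((unsigned char)p[0]) && isdigit((unsigned char)p[1]))
            return (p[0] - '0') * 10 + (p[1] - '0');
    }
    return -1;
}
```

For instance, `virtual_voice_boards(channels_from_product_name("DM/V480A-2T1"))` evaluates to 12, matching the 12 virtual voice boards stated for that board.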

3.2 Voice Device Names

The software assigns a device name to each device or each component on a board. A voice device is

named dxxxBn, where n is the device number assigned in sequential order down the list of sorted

voice boards. A device corresponds to a grouping of two or four voice channels.


For example, a D/240JCT-T1 board provides 24 voice channels, so the software divides the board into six voice board devices of four channels each. Examples of board device names for voice boards are dxxxB1 and dxxxB2.

A device name can be appended with a channel or component identifier. A voice channel device is

named dxxxBnCy, where y corresponds to one of the voice channels. Examples of channel device

names for voice boards are dxxxB1C1 and dxxxB1C2.
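The naming scheme above lends itself to a small formatting sketch. The helpers below are hypothetical (they are not voice library functions) and assume four channels per board device, with boards and channels numbered from 1 as in the examples dxxxB1C1 and dxxxB1C2. They only build and parse name strings; opening a device still requires the library's own open function.

```c
#include <assert.h>
#include <stdio.h>

#define CHANNELS_PER_BOARD_DEVICE 4   /* assumption: four-channel grouping */

/* Write a voice board device name (dxxxBn) into buf.
 * Returns the name length, or -1 if buf is too small. */
int voice_board_name(char *buf, size_t size, int board)
{
    assert(buf != NULL && board >= 1);
    int n = snprintf(buf, size, "dxxxB%d", board);
    return (n < 0 || (size_t)n >= size) ? -1 : n;
}

/* Write a voice channel device name (dxxxBnCy) into buf.
 * Returns the name length, or -1 if buf is too small. */
int voice_channel_name(char *buf, size_t size, int board, int channel)
{
    assert(buf != NULL && board >= 1 && channel >= 1);
    int n = snprintf(buf, size, "dxxxB%dC%d", board, channel);
    return (n < 0 || (size_t)n >= size) ? -1 : n;
}

/* Name the index-th voice channel (1-based), counting across
 * consecutive board devices that start at dxxxB1. */
int voice_channel_name_by_index(char *buf, size_t size, int index)
{
    assert(index >= 1);
    int board   = (index - 1) / CHANNELS_PER_BOARD_DEVICE + 1;
    int channel = (index - 1) % CHANNELS_PER_BOARD_DEVICE + 1;
    return voice_channel_name(buf, size, board, channel);
}

/* Split a channel device name back into board and channel numbers.
 * Returns 0 on success, or -1 if the name is not of the form dxxxBnCy. */
int parse_voice_channel_name(const char *name, int *board, int *channel)
{
    assert(name != NULL && board != NULL && channel != NULL);
    return sscanf(name, "dxxxB%dC%d", board, channel) == 2 ? 0 : -1;
}
```

Under these assumptions, the 24th voice channel of a system whose only voice board is a D/240JCT-T1 maps to board device 6, channel 4.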

A physical board device handle is a numerical reference to a physical board. The concept was introduced in System Release 6.0; previously, only the virtual boards that make up a physical board could be identified, not the physical board itself. A physical board device handle enables API functions to act on all devices on the physical board. The physical board device is named brdBn, where n is the device number. For example, the physical board device handle is used in cached prompt management.

Use the Standard Runtime Library device mapper functions to retrieve information on all devices in

a system, including a list of physical boards, virtual boards on a physical board, and subdevices on

a virtual board.

For complete information on device handling, see the Standard Runtime Library API Programming

Guide.


4. Event Handling

This chapter provides information on functions used to retrieve and handle events. Topics include:

• Overview of Event Handling

• Event Management Functions

4.1 Overview of Event Handling

An event indicates that a specific activity has occurred on a channel. The voice driver reports

channel activity to the application program in the form of events, which allows the program to

identify and respond to a specific occurrence on a channel. Events provide feedback on the

progress and completion of functions and indicate the occurrence of other channel activities. Voice

library events are defined in the dxxxlib.h header file.

For a list of events that may be returned by the voice software, see the Voice API Library Reference.

4.2 Event Management Functions


Event management functions are used to retrieve and handle events being sent to the application from the firmware. These functions are contained in the Standard Runtime Library (SRL) and defined in srllib.h. The SRL provides a set of common system functions that are device independent and are applicable to all Intel® telecom devices. For more information on event management and event handling, see the Standard Runtime Library API Programming Guide.

Event management functions include:

• sr_enbhdlr( )

• sr_dishdlr( )

• sr_getevtdev( )

• sr_getevttype( )

• sr_getevtlen( )

• sr_getevtdatap( )

For details on SRL functions, see the Standard Runtime Library API Library Reference.

The event management functions retrieve and handle voice device termination events for functions that run in asynchronous mode, such as dx_dial( ) and dx_play( ). For complete function reference information, see the Voice API Library Reference.


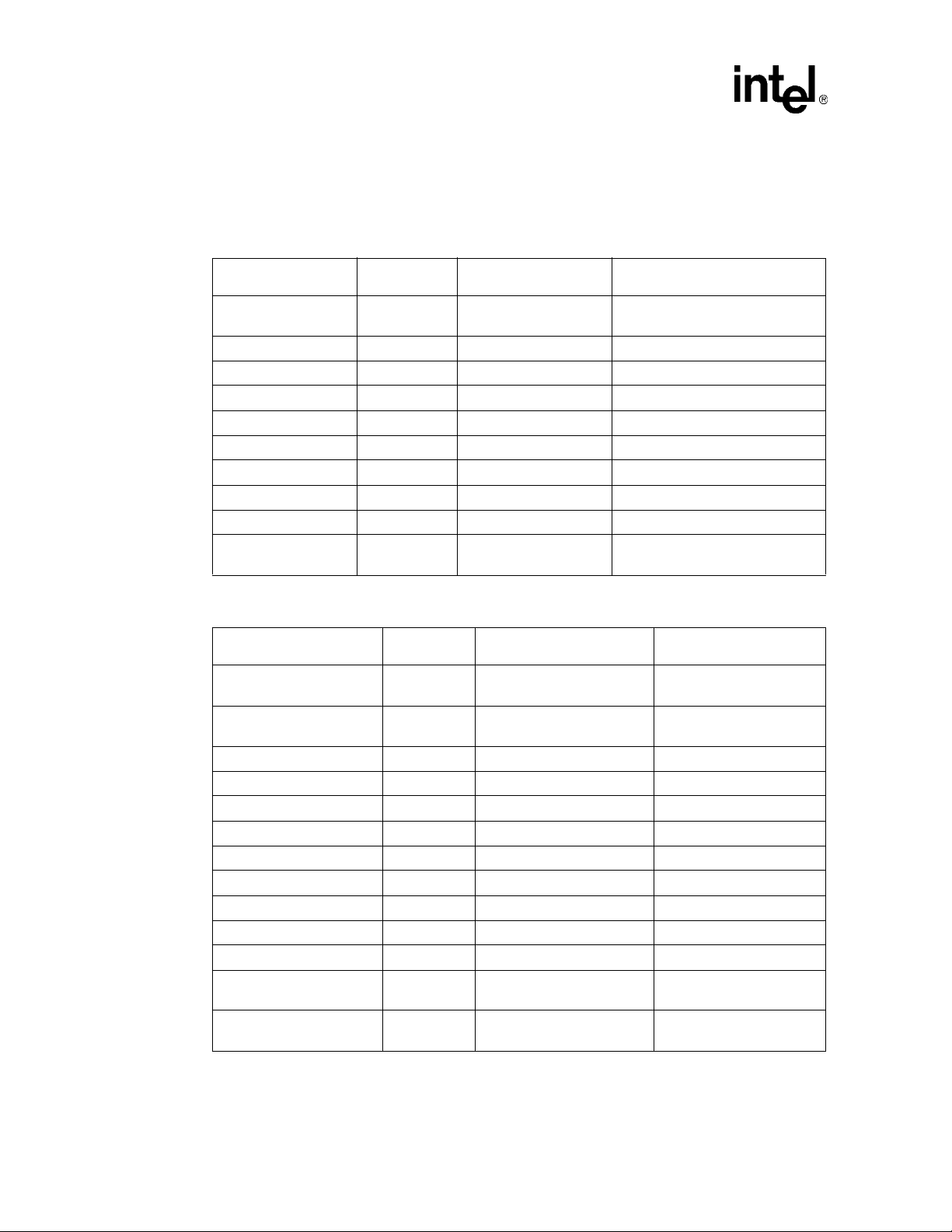

The event management functions applicable to voice boards are listed in the following tables. Table 1 lists values that are required by event management functions. Table 2 lists values that are returned by event management functions that are used with voice devices.

Table 1. Voice Device Inputs for Event Management Functions

| Event Management Function | Voice Device Input | Valid Value | Related Voice Functions |
|---|---|---|---|
| sr_enbhdlr( ) (Enable event handler) | evt_type | TDX_PLAY | dx_play( ) |
| | | TDX_PLAYTONE | dx_playtone( ) |
| | | TDX_RECORD | dx_rec( ) |
| | | TDX_GETDIG | dx_getdig( ) |
| | | TDX_DIAL | dx_dial( ) |
| | | TDX_CALLP | dx_dial( ) |
| | | TDX_SETHOOK | dx_sethook( ) |
| | | TDX_WINK | dx_wink( ) |
| | | TDX_ERROR | All asynchronous functions |
| sr_dishdlr( ) (Disable event handler) | evt_type | As above | As above |

Table 2. Voice Device Returns from Event Management Functions

| Event Management Function | Return Description | Returned Value | Related Voice Functions |
|---|---|---|---|
| sr_getevtdev( ) (Get device handle) | device | voice device handle | |
| sr_getevttype( ) (Get event type) | event type | TDX_PLAY | dx_play( ) |
| | | TDX_PLAYTONE | dx_playtone( ) |
| | | TDX_RECORD | dx_rec( ) |
| | | TDX_GETDIG | dx_getdig( ) |
| | | TDX_DIAL | dx_dial( ) |
| | | TDX_CALLP | dx_dial( ) |
| | | TDX_CST | dx_setevtmsk( ) |
| | | TDX_SETHOOK | dx_sethook( ) |
| | | TDX_WINK | dx_wink( ) |
| | | TDX_ERROR | All asynchronous functions |
| sr_getevtlen( ) (Get event data length) | event length | sizeof (DX_CST) | |
| sr_getevtdatap( ) (Get pointer to event data) | event data | pointer to DX_CST structure | |
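The enable/disable roles that Table 1 assigns to sr_enbhdlr( ) and sr_dishdlr( ) can be illustrated with a small handler registry. This is a sketch under stated assumptions, not the SRL implementation: every name beginning with `demo_` or `DEMO_` is hypothetical, the event codes are arbitrary integers rather than the TDX_ values defined in dxxxlib.h, and the real function signatures should be taken from the Standard Runtime Library API Library Reference.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical event codes; the real TDX_ values come from dxxxlib.h. */
enum { DEMO_PLAY = 1, DEMO_RECORD, DEMO_GETDIG, DEMO_ERROR };

typedef int (*demo_handler)(int device, int evt_type);

#define DEMO_MAX_HANDLERS 8

typedef struct {
    int          device;
    int          evt_type;
    demo_handler fn;        /* NULL marks a free slot */
} demo_slot;

static demo_slot demo_table[DEMO_MAX_HANDLERS];

/* Enable a handler for one event type on one device.
 * Returns 0 on success, or -1 if the table is full. */
int demo_enable_handler(int device, int evt_type, demo_handler fn)
{
    assert(fn != NULL);
    for (size_t i = 0; i < DEMO_MAX_HANDLERS; i++) {
        if (demo_table[i].fn == NULL) {
            demo_table[i] = (demo_slot){ device, evt_type, fn };
            return 0;
        }
    }
    return -1;
}

/* Disable a previously enabled handler.
 * Returns 0 on success, or -1 if no matching entry exists. */
int demo_disable_handler(int device, int evt_type, demo_handler fn)
{
    for (size_t i = 0; i < DEMO_MAX_HANDLERS; i++) {
        if (demo_table[i].fn == fn && demo_table[i].device == device &&
            demo_table[i].evt_type == evt_type) {
            demo_table[i].fn = NULL;
            return 0;
        }
    }
    return -1;
}

/* Deliver an event to every enabled handler that matches the device
 * and event type. Returns the number of handlers called. */
int demo_dispatch(int device, int evt_type)
{
    int called = 0;
    for (size_t i = 0; i < DEMO_MAX_HANDLERS; i++) {
        if (demo_table[i].fn != NULL && demo_table[i].device == device &&
            demo_table[i].evt_type == evt_type) {
            demo_table[i].fn(device, evt_type);
            called++;
        }
    }
    return called;
}

/* Example handler that counts play completions. */
int demo_play_count = 0;

int demo_on_play(int device, int evt_type)
{
    (void)device;
    (void)evt_type;
    demo_play_count++;
    return 0;
}
```

Keying the registry on both device and event type mirrors the evt_type input in Table 1 and lets one handler serve many channels, which is the "common event handlers" use that the SRL is described as supporting.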


5. Error Handling

This chapter discusses how to handle errors that can occur when running an application.

All voice library functions return a value to indicate success or failure of the function. A return

value of zero or a non-negative number indicates success. A return value of -1 indicates failure.

If a voice library function fails, call the standard attribute functions ATDV_LASTERR( ) and

ATDV_ERRMSGP( ) to determine the reason for failure. For more information on these

functions, see the Standard Runtime Library API Library Reference.

If an extended attribute function fails, two types of errors can be generated. An extended attribute

function that returns a pointer will produce a pointer to the ASCIIZ string “Unknown device” if it

fails. An extended attribute function that does not return a pointer will produce a value of

AT_FAILURE if it fails. Extended attribute functions for the voice library are prefaced with

“ATDX_”.
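The checking conventions above can be sketched as caller-side code. The sketch does not use the voice library: `demo_device`, the `demo_` functions, the error code 2, and `DEMO_AT_FAILURE` are hypothetical stand-ins for a device, a voice library call, the roles of ATDV_LASTERR( ) and ATDV_ERRMSGP( ), and AT_FAILURE. The only behavior taken from the text is the -1 failure return, the "Unknown device" string for pointer-returning attribute functions, and a failure value for the non-pointer ones.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define DEMO_AT_FAILURE (-1L)   /* hypothetical stand-in for AT_FAILURE */

typedef struct {
    int         valid;       /* nonzero if the device is open */
    long        last_error;  /* code recorded by the most recent call */
    const char *last_msg;    /* text for that code */
} demo_device;

/* Stand-in for a voice library call: 0 on success, -1 on failure.
 * On failure the reason is recorded for later retrieval. */
int demo_play(demo_device *dev, const char *file)
{
    assert(dev != NULL);
    if (file == NULL) {
        dev->last_error = 2;             /* arbitrary demo error code */
        dev->last_msg   = "bad parameter";
        return -1;
    }
    dev->last_error = 0;
    dev->last_msg   = "no error";
    return 0;
}

/* Stand-ins for the roles of the standard attribute functions. */
long demo_last_error(const demo_device *dev)       { return dev->last_error; }
const char *demo_error_msg(const demo_device *dev) { return dev->last_msg; }

/* Pointer-returning attribute stand-in: yields "Unknown device" on failure. */
const char *demo_attr_name(const demo_device *dev)
{
    return (dev != NULL && dev->valid) ? "dxxxB1C1" : "Unknown device";
}

/* Non-pointer attribute stand-in: yields DEMO_AT_FAILURE on failure. */
long demo_attr_state(const demo_device *dev)
{
    return (dev != NULL && dev->valid) ? 0L : DEMO_AT_FAILURE;
}

/* Caller-side test for a failed pointer-returning attribute call. */
int demo_attr_failed(const char *result)
{
    return strcmp(result, "Unknown device") == 0;
}

/* Caller-side pattern: check for -1, then ask why the call failed.
 * Returns 0 on success, or the recorded error code on failure. */
long demo_checked_play(demo_device *dev, const char *file)
{
    if (demo_play(dev, file) == -1)
        return demo_last_error(dev);
    return 0;
}
```

The point of the pattern is that the return value only signals that a failure occurred; the reason is fetched in a second step, so the check and the query belong together at every call site.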