Page 1

HP X5000 G2 Network Storage System

Administrator Guide

Abstract

This document explains how to install, configure, and maintain all models of the HP X5000 G2 Network Storage System and is intended for system administrators. For the latest version of this guide, go to www.hp.com/support/manuals. Select NAS Systems in the storage group, and then select an X5000 G2 product.

HP Part Number: QW919-96035

Published: July 2013

Edition: 4


© Copyright 2011, 2012 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Acknowledgments

Microsoft®, Windows®, and Windows Server® are registered trademarks of Microsoft Corporation in the United States and other countries.

Revision History

| Description | Software version | Date | Edition |
|---|---|---|---|
| First release. | 2.01.0a | November 2011 | First |
| Corrected network addresses for iLO connections; clarified that network connections to both server blades are required during initial configuration; added details to the Known issues section; corrected minimum supported Insight Remote Support software version; added details about the 1210m Online Volume Tool; revised content in the System recovery chapter; added SHOW CSR commands for EMU CLI and corrected instructions for using the Enclosure Manager physical interface. | 2.02.0a | March 2012 | Second |
| Added details to the Known issues section; documented new Storage Viewer feature; documented Alerts and Email enhancements; revised instructions for using the Enclosure Manager physical interface; corrected syntax for SHOW SERVER BOOT and POWEROFF SERVER Enclosure Manager commands. | 2.03.0a | May 2012 | Third |


Contents

1 HP X5000 G2 Network Storage System........................................................7

Features..................................................................................................................................7

Hardware components..............................................................................................................7

Software components................................................................................................................8

2 Installing the storage system.........................................................................9

Verify the kit contents................................................................................................................9

Locate and record the product number, serial number, and SAID number.........................................9

Unpack and rack the storage system hardware.............................................................................9

Cable disk enclosures.............................................................................................................10

Network connections..............................................................................................................12

Power on the storage system.....................................................................................................14

Configure the EMU and iLO management processors..................................................................15

3 Configuring the storage system...................................................................19

Accessing the storage system...................................................................................................19

Set up Windows and discover the second node..........................................................................19

Completing initial configuration................................................................................................20

Networking options................................................................................................................21

Network teaming...............................................................................................................21

Multi-home........................................................................................................................21

Dedicated networks...........................................................................................................21

10 GbE versus 1 GbE........................................................................................................21

4 Monitoring and troubleshooting the storage system.......................................22

Using notification alerts...........................................................................................................22

Configuring Alerts and Email...................................................................................................22

HP System Management Homepage.........................................................................................26

Starting the System Management Homepage application........................................................26

System Management Homepage main page.........................................................................26

Using the System Manager......................................................................................................30

Component LEDs....................................................................................................................34

EMU CLI SHOW commands....................................................................................................42

Known issues.........................................................................................................................43

Using Storage Viewer..............................................................................................................47

HP Support websites...............................................................................................................49

HP Insight Remote Support software..........................................................................................50

Microsoft Systems Center Operations Manager...........................................................................51

Windows Recovery Environment ..............................................................................................51

Startup Repair...................................................................................................................51

Memory Diagnostic............................................................................................................53

HP 1210m Volume Online Tool.................................................................................................53

Obtaining the Service Agreement ID.........................................................................................54

Locating the storage system warranty entitlement label.................................................................54

5 Upgrading the storage system....................................................................55

Maintaining your storage system...............................................................................................55

Determining the current storage system software version...............................................................55

Upgrading X5000 G2 software...............................................................................................56

Upgrading a component's firmware..........................................................................................56

Resolving errors after the HP 1210m controller upgrade...........................................................59

Resolving errors after a disk drive firmware upgrade...............................................................59

Resolving an EMU upgrade issue.........................................................................................60

Contents 3


Upgrading hardware components.............................................................................................60

Powering the storage system off and on.....................................................................................60

6 Removing and replacing hardware components............................................61

Customer self repair................................................................................................................61

Best practices for replacing components....................................................................................61

During replacement of the failed component..........................................................................61

Accessing component replacement videos.............................................................................61

Identifying the spare part....................................................................................................62

Replaceable parts...................................................................................................................62

Hot, warm, and cold swap components.....................................................................................65

Preventing electrostatic discharge..............................................................................................65

Verifying component failure......................................................................................................66

Verifying proper operation.......................................................................................................66

Wait times for hard disks.........................................................................................................66

Removing the system enclosure from the rack..............................................................................67

Inserting the system enclosure into the rack.................................................................................68

Removing and replacing the server interposer board...................................................................68

Removing and replacing the midplane board.............................................................................70

Removing and replacing a SAS cable .......................................................................................73

Removing and replacing the SAS I/O module............................................................................73

Removing and replacing the fan module....................................................................................75

Removing and replacing the power UID button assembly.............................................................76

Removing and replacing the power supply.................................................................................77

Removing and replacing the HP Ethernet I/O module..................................................................78

Removing and replacing the PCIe module (with card)..................................................................79

Removing and replacing the EMU module.................................................................................81

Removing and replacing the server blade backplane...................................................................82

Removing and replacing the server airflow baffle........................................................................84

Removing and replacing the front bezel (standard)......................................................................85

Removing and replacing the front bezel (full)..............................................................................87

Removing and replacing the front LED display board in the rack (standard)....................................88

Removing and replacing the front LED display board (full)............................................................89

Removing and replacing a drive drawer....................................................................................91

Removing and replacing the drive drawer hard drive...................................................................96

Removing and replacing the drive drawer rails (side or bottom)....................................................98

Removing and replacing the enclosure rails..............................................................................103

Removing and replacing the rack rails.....................................................................................108

Removing and replacing server blades....................................................................................108

Removing and replacing the server blade hard drive.................................................................109

Removing and replacing the 1210m controller board components...............................................111

Removing and replacing the 1210m cache module...............................................................113

Removing and replacing the capacitor pack........................................................................116

Removing and replacing the Mezzanine NIC...........................................................................118

7 Storage system recovery..........................................................................120

System Recovery DVD...........................................................................................................120

Using a downloaded version of the System Recovery DVD.....................................................120

Drive letters are not assigned after a restore........................................................................121

Restoring the factory image with a DVD or USB flash device.......................................................121

Using a USB flash drive for storage system recovery..................................................................121

Recovering both servers.........................................................................................................122

Recovering a single server.....................................................................................................122

Restoring the system with Windows Recovery Environment..........................................................125


8 Support and other resources....................................................................128

Contacting HP......................................................................................................................128

HP technical support........................................................................................................128

Subscription service..........................................................................................................128

Related information...............................................................................................................128

HP websites....................................................................................................................128

Rack stability........................................................................................................................129

9 Documentation feedback.........................................................................130

A Managing the EMU................................................................................131

CLI reference........................................................................................................................131

Command line conventions....................................................................................................131

Operational groups..............................................................................................................131

Authentication......................................................................................................................132

Time functions......................................................................................................................135

Inventory and status..............................................................................................................138

Internet control.....................................................................................................................143

Server management..............................................................................................................146

Enclosure control..................................................................................................................149

Forensic...............................................................................................................................153

Session...............................................................................................................................155

Using the Enclosure Manager physical interface.......................................................................157

Activate Button Menu............................................................................................................158

Reboot EM (bE)....................................................................................................................158

Restore Factory Defaults (Fd)..................................................................................................158

Recover Lost Password (Fp).....................................................................................................159

Set DHCP IP Address (dH).....................................................................................................159

Set Link Local IP Address (LL)..................................................................................................159

Display Current IP Address (IP)...............................................................................................159

Exit Button Menu..................................................................................................................160

B Regulatory compliance notices.................................................................161

Regulatory compliance identification numbers..........................................................................161

Federal Communications Commission notice............................................................................161

FCC rating label..............................................................................................................161

Class A equipment......................................................................................................161

Class B equipment......................................................................................................161

Modification...................................................................................................................162

Cables...........................................................................................................................162

Canadian notice (Avis Canadien)...........................................................................................162

Class A equipment...........................................................................................................162

Class B equipment...........................................................................................................162

European Union notice..........................................................................................................162

Japanese notices..................................................................................................................163

Japanese VCCI-A notice....................................................................................................163

Japanese VCCI-B notice....................................................................................................163

Japanese VCCI marking...................................................................................................163

Japanese power cord statement.........................................................................................163

Korean notices.....................................................................................................................163

Class A equipment...........................................................................................................163

Class B equipment...........................................................................................................163

Taiwanese notices.................................................................................................................164

BSMI Class A notice.........................................................................................................164

Taiwan battery recycle statement........................................................................................164

Vietnamese notice............................................................................................................164


Laser compliance notices.......................................................................................................165

English laser notice..........................................................................................................165

Dutch laser notice............................................................................................................165

French laser notice...........................................................................................................165

German laser notice.........................................................................................................166

Italian laser notice............................................................................................................166

Japanese laser notice.......................................................................................................166

Spanish laser notice.........................................................................................................167

Recycling notices..................................................................................................................167

English recycling notice....................................................................................................167

Bulgarian recycling notice.................................................................................................168

Czech recycling notice......................................................................................................168

Danish recycling notice.....................................................................................................168

Dutch recycling notice.......................................................................................................168

Estonian recycling notice...................................................................................................169

Finnish recycling notice.....................................................................................................169

French recycling notice.....................................................................................................169

German recycling notice...................................................................................................169

Greek recycling notice......................................................................................................170

Hungarian recycling notice...............................................................................................170

Italian recycling notice......................................................................................................170

Latvian recycling notice.....................................................................................................170

Lithuanian recycling notice................................................................................................171

Polish recycling notice.......................................................................................................171

Portuguese recycling notice...............................................................................................171

Romanian recycling notice................................................................................................171

Slovak recycling notice.....................................................................................................172

Spanish recycling notice...................................................................................................172

Swedish recycling notice...................................................................................................172

Turkish recycling notice.....................................................................................................172

Battery replacement notices...................................................................................................173

Dutch battery notice.........................................................................................................173

French battery notice........................................................................................................173

German battery notice......................................................................................................174

Italian battery notice........................................................................................................174

Japanese battery notice....................................................................................................175

Spanish battery notice......................................................................................................175

Glossary..................................................................................................176

Index.......................................................................................................177


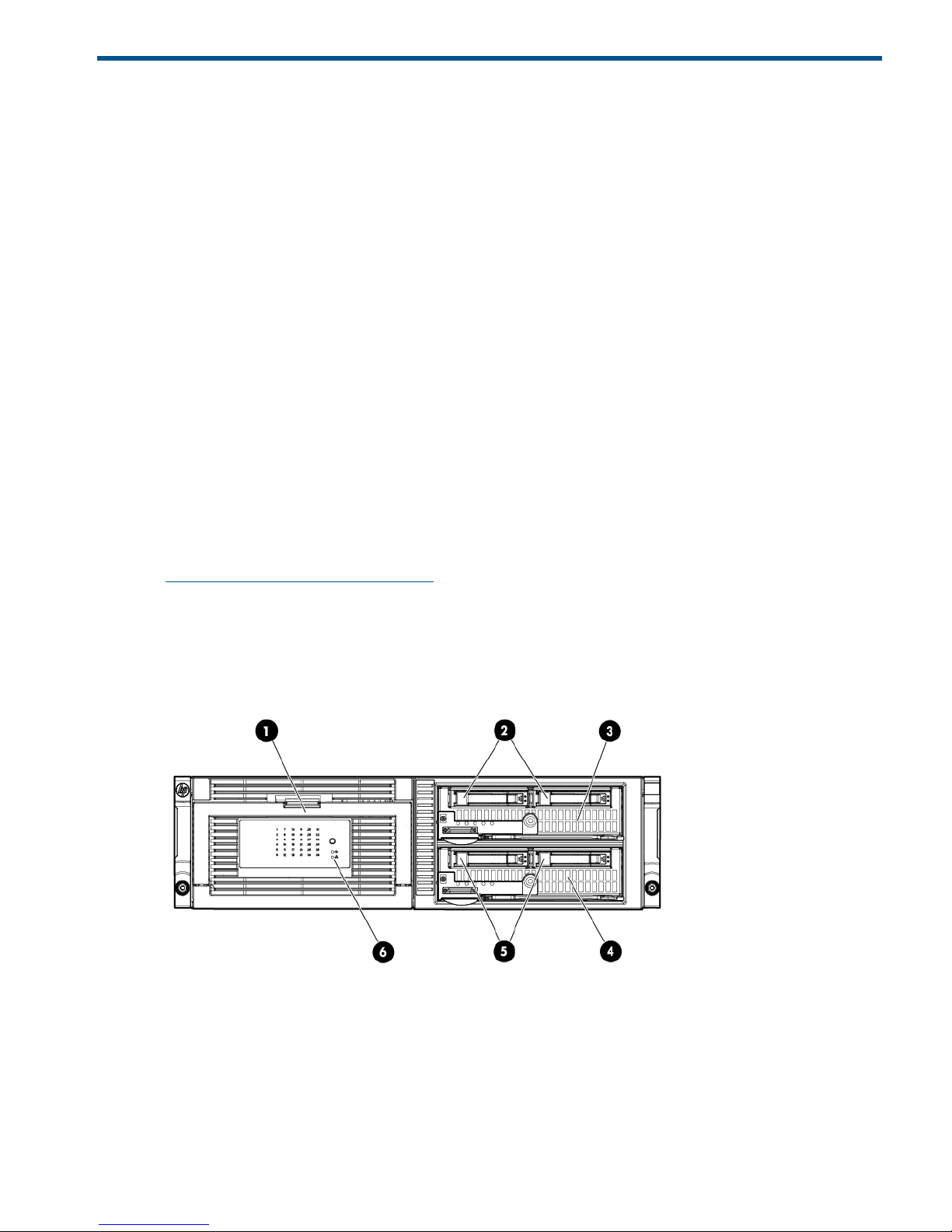

1 HP X5000 G2 Network Storage System

The HP X5000 G2 Network Storage System (“storage system”) is an integrated hardware-software solution that provides highly available file and block storage on a Windows failover cluster. Each storage system features HP server blades and dense disk storage in a single 3U enclosure (Figure 1 (page 7)).

Features

The HP X5000 G2 Network Storage System provides the following advantages:

• Each system ships from the factory with preintegrated hardware and preloaded software to significantly reduce the time and complexity of deploying clusters.

• Built on the HP converged application platform, which combines two server blades and a dense storage drawer in a single enclosure

• Lower overall total cost of ownership (TCO) with reduced footprint and lower energy consumption

• Specially developed setup tools (setup wizards) provide guided setup assistance, performing many of the complex and time-consuming tasks needed to configure and deploy a high-availability storage system. The setup tools make it easy to get both Windows and a two-node cluster configured and running quickly.

• HP and Microsoft management integration, including Microsoft Server Manager and System Center, and HP Systems Insight Manager and Integrated Lights-Out (iLO)

For more information about X5000 G2 Network Storage System features, go to:

http://www.hp.com/go/X5000-G2

Hardware components

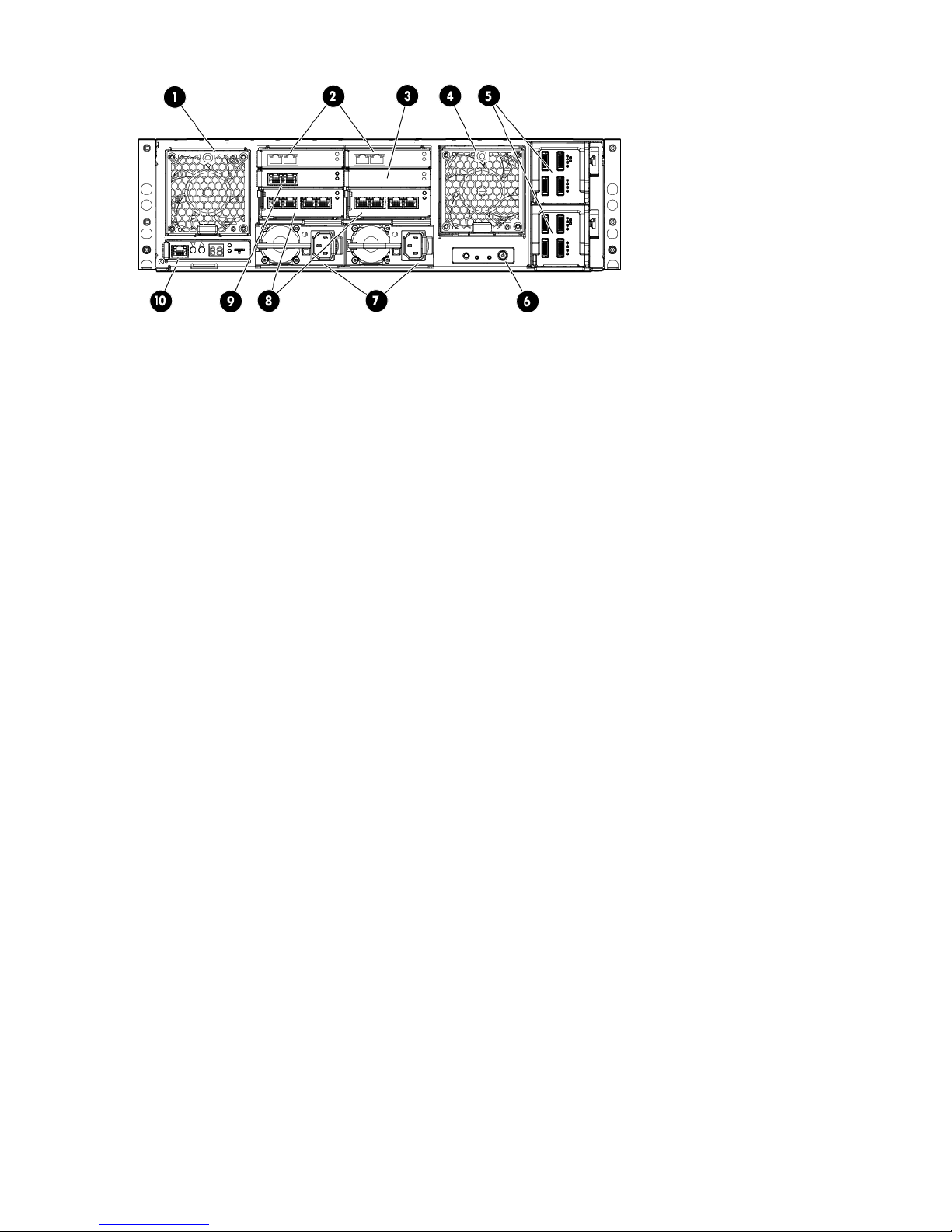

Figure 1 (page 7) and Figure 2 (page 8) show front and rear views of the storage system.

Figure 1 Front view

1. Disk drawer

2. Server blade 1, OS drives

3. Server blade 1, Bay 1

4. Server blade 2, Bay 2

5. Server blade 2, OS drives

6. Chassis fault LED


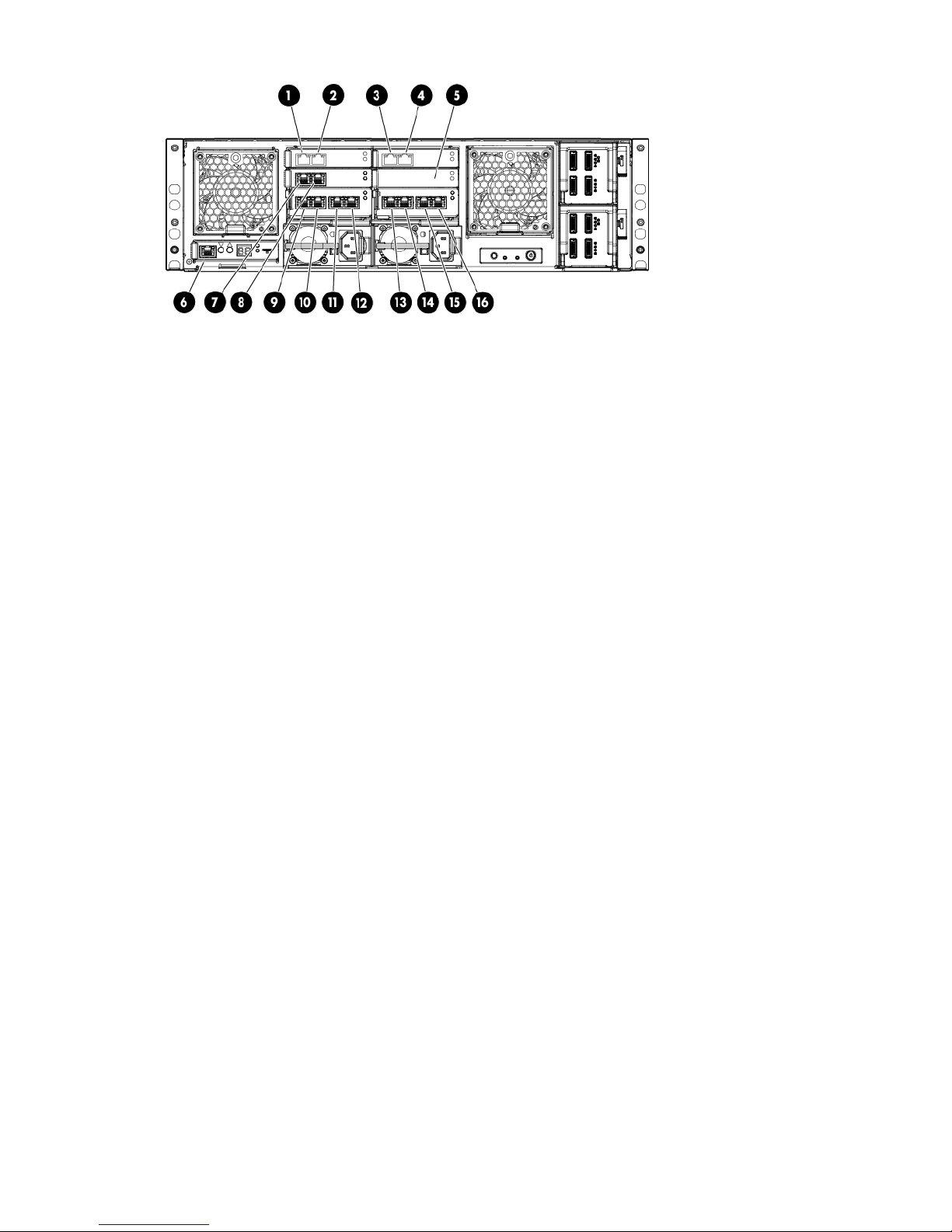

Figure 2 Rear view

1. System fan

2. HP 2-port 10 Gb I/O module (2). These modules connect to the NIC located on the server blade motherboard.

3. Intraconnect (internal switch connecting servers and EMU)

4. Drive fan

5. SAS I/O module (2)

6. Power button

7. Power supply (2)

8. HP 4-port, 1 Gb Ethernet I/O PCIe module (2)

9. HP 2-port, 1 Gb Ethernet I/O module (connects to the mezzanine NIC in each server blade)

10. Management port for iLO (servers 1 and 2) and the Enclosure Manager Unit (EMU)

Software components

Windows Storage Server 2008 R2 SP1 comes preinstalled and activated on the HP X5000 G2 Network Storage System. The operating system software contains the Microsoft iSCSI Software Target and a Microsoft Cluster Service license. The storage system configuration also includes the HP Initial Configuration Tasks window and HP Server Manager, which are used to set up and manage your storage system.

The Initial Configuration Tasks window assists with the initial out-of-box setup by configuring the network, configuring both nodes from a single node, and deploying the cluster. Use HP Server Manager to further customize the storage system, for example, to manage volumes and spare drives.

To provide ongoing monitoring and facilitate management, the storage system includes the System Manager, which provides a snapshot view of the health and status of the storage system, as well as tools to manage firmware updates.


2 Installing the storage system

This chapter explains how to install the storage system hardware.

Verify the kit contents

Remove the contents, ensuring that you have all of the following components. If components are missing, contact HP technical support.

Hardware

• HP X5000 G2 Network Storage System

NOTE: External disk enclosures are not included with the storage system, but up to four D2600 or D2700 disk enclosures may be connected to the storage system.

• Rail kit

• Power cords

Media and documentation

• HP X5000 G2 Network Storage System Quick Start Guide

• HP ProLiant Essentials Integrated Lights-Out Advanced Pack

• End User License Agreement

• HP X5000 G2 System Recovery DVD

• Certificate of Authenticity Card

• Safety and Disposal Documentation CD

Locate and record the product number, serial number, and SAID number

Before you begin installation, locate and record the storage system's product number, serial number, and support contract service agreement ID (SAID) number.

The product number and serial number of the storage system are located in three places:

• Top of the storage system

• Back of the storage system on a pull-out tab

• Storage system shipping box

The SAID number is listed on your service contract agreement (see “Obtaining the Service Agreement ID” (page 54)).

Unpack and rack the storage system hardware

WARNING! The storage system enclosure is heavy. Always use at least two people to move the storage system into the rack.


1. If your storage system is delivered in a rack, proceed to Step 2. If you ordered the storage system without the rack, install the rail kit and enclosure in the rack using the installation instructions that are included with the rail kit.

IMPORTANT: Ensure that cabling in the back of the rack system does not interfere with system operation or maintenance. Bind cables loosely with cable ties and route the excess out of the way, along the side of the rack, to keep system components and indicators visible and accessible.

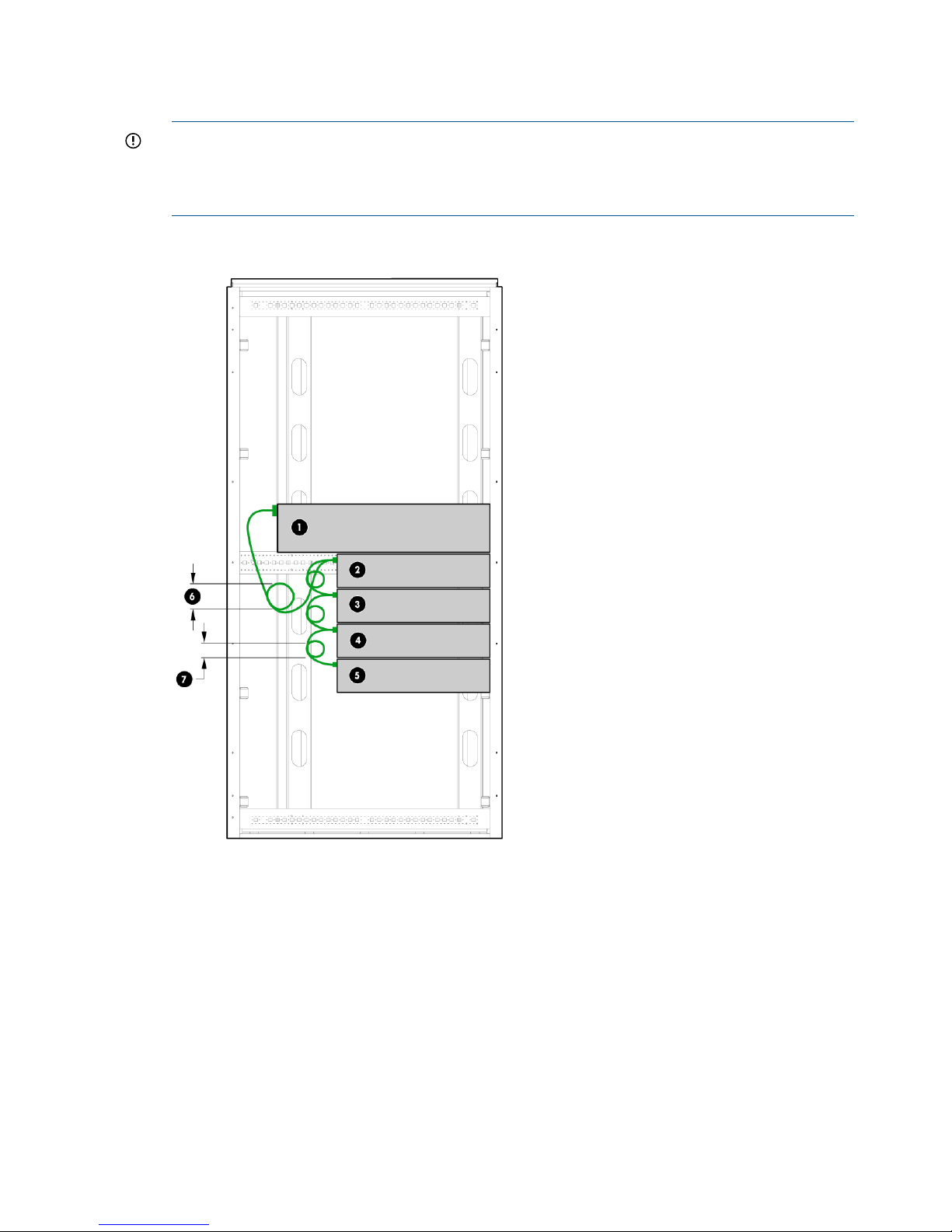

Figure 3 Storage system installed in a rack

1. Storage system enclosure

2-5. Disk enclosures (optional)

6-7. Cable connection, with no bend radius smaller than 5 cm

2. If you purchased disk enclosures, rack and cable the disk enclosures before moving to the

next step.

3. Cable the storage system to your network and attach the power cords. See “Rear view”

(page 8) for connecting the power cables.

Cable disk enclosures

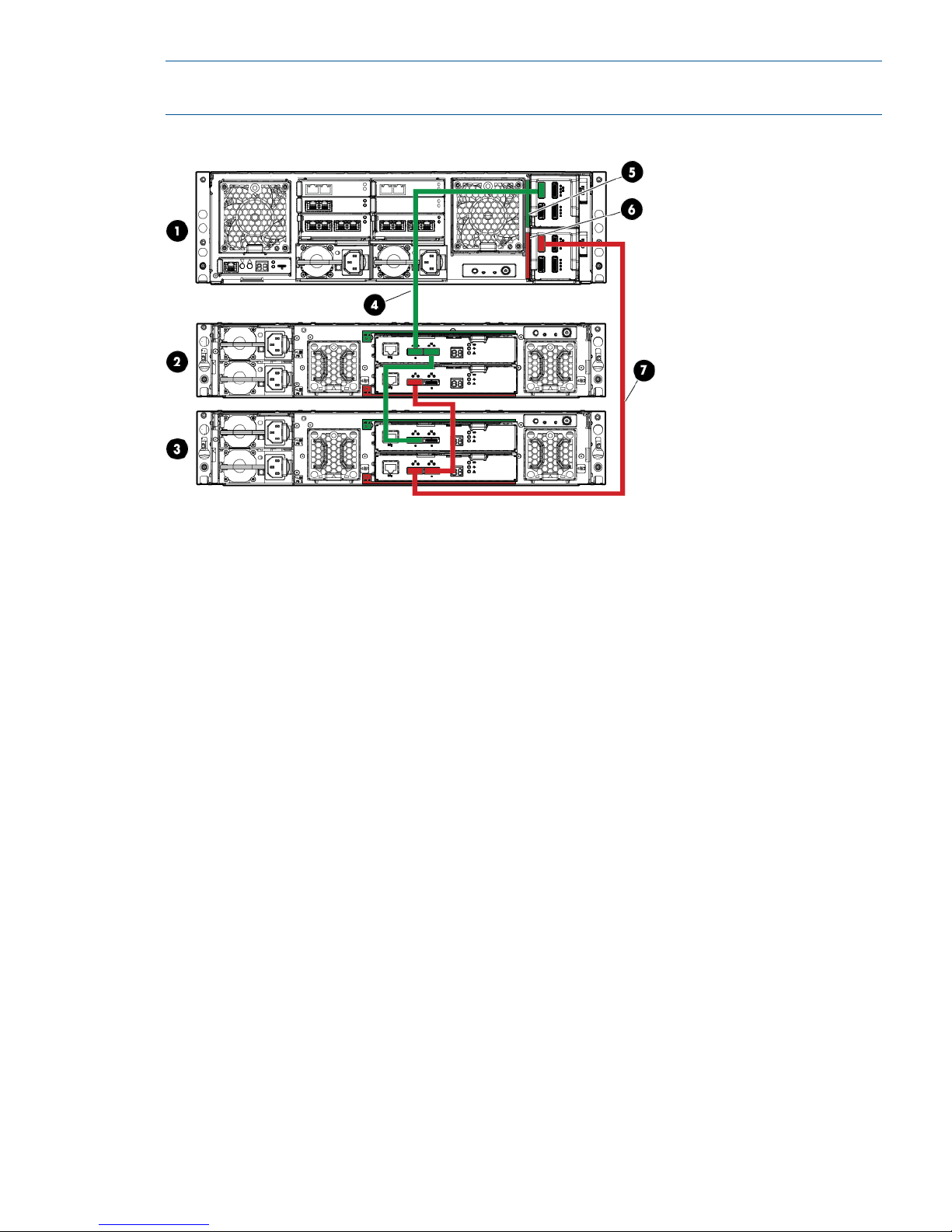

The following figures show the correct cabling of disk enclosures to the storage system chassis.

Numbers represent the order of attachment. Figure 4 (page 11) shows an HP X5000 G2 Network

Storage System with two disk enclosures.


NOTE: Up to four HP D2600 or HP D2700 disk enclosures are supported. Mixing HP D2600 and HP D2700 disk enclosures is not supported.

Figure 4 X5000 G2 with two disk enclosures

1. X5000 G2

2–3. Disk enclosures

4. SAS cable connecting disk enclosure 1 (green cable)

5. Green color code for upper SAS I/O module

6. Red color code for lower SAS I/O module

7. SAS cable connecting disk enclosure 2 (red cable)

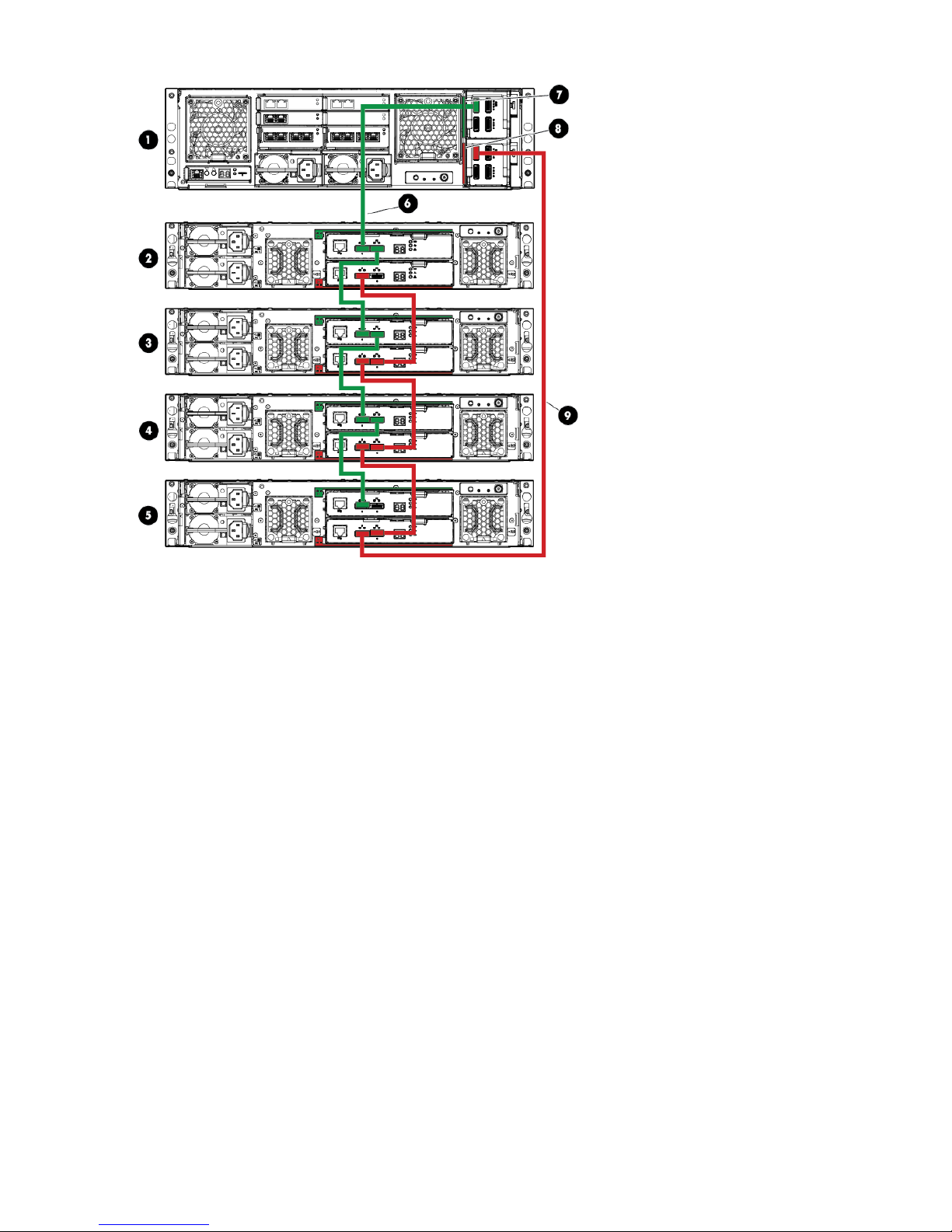

Figure 5 (page 12) shows an X5000 G2 Network Storage System with four disk enclosures.


Figure 5 X5000 G2 with four disk enclosures

1. X5000 G2

2–5. Disk enclosures

6. SAS cable connecting disk enclosure 1 (green cable)

7. Green color code for upper SAS I/O module

8. Red color code for lower SAS I/O module

9. SAS cable connecting disk enclosure 2 (red cable)

Network connections

Each of the two servers has eight network adapters. One of the adapters, Cluster Internal, is already

connected to the corresponding adapter on the second node. This is done through an internal

switch located in the Mezz B slot in the rear of the enclosure (5, Figure 6).


Figure 6 Network ports

1. 10 GbE Public 1 (Blade 1)

2. 10 GbE Public 1 (Blade 2)

3. 10 GbE Public 2 (Blade 1)

4. 10 GbE Public 2 (Blade 2)

5. Cluster Internal

6. Enclosure Manager, iLO (Blades 1 and 2)

7. Server Management (Blade 1)

8. Server Management (Blade 2)

9. 1 GbE Public 4 (Blade 1)

10. 1 GbE Public 3 (Blade 1)

11. 1 GbE Public 2 (Blade 1)

12. 1 GbE Public 1 (Blade 1)

13. 1 GbE Public 4 (Blade 2)

14. 1 GbE Public 3 (Blade 2)

15. 1 GbE Public 2 (Blade 2)

16. 1 GbE Public 1 (Blade 2)

Because the two Cluster Internal adapters are connected only to each other, with no DHCP server on that network, they are automatically assigned IPv4 link-local addresses from the 169.254.0.0/16 address block. In a later step, this network is used to configure the second node from the first node; after the cluster is deployed, it also serves as the private cluster heartbeat network. HP recommends that you do not change the configuration of the Cluster Internal network adapter.
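If you want to confirm from a script that an adapter address is in the 169.254.0.0/16 link-local block described above, Python's standard ipaddress module is enough. This is a minimal sketch; the function name is hypothetical, and how you obtain the adapter address (for example, from ipconfig output) is left to you.

```python
import ipaddress

# IPv4 link-local block from which the Cluster Internal adapters get addresses.
LINK_LOCAL_V4 = ipaddress.ip_network("169.254.0.0/16")


def is_link_local_v4(address: str) -> bool:
    """Return True if `address` is an IPv4 address inside 169.254.0.0/16."""
    ip = ipaddress.ip_address(address)
    return ip.version == 4 and ip in LINK_LOCAL_V4


# Example: a link-local address versus a factory iLO address.
print(is_link_local_v4("169.254.12.7"))  # True
print(is_link_local_v4("10.0.0.11"))     # False
```

The explicit version check keeps an IPv6 address from ever being reported as part of the IPv4 block.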

The remaining network adapters are intended for use in your network infrastructure. Each adapter

is labeled according to a suggested use (for example, 1 GbE Public 1), but you may rename the

adapters in later configuration steps and use them in a way best suited to your environment.

In the network infrastructure that connects the cluster nodes, avoid having single points of failure.

One way to do this is to have at least two distinct networks. The HP X5000 G2 already provides

one network between the nodes—the Cluster Internal network. You must add at least one more

network. As you connect the HP X5000 G2 to your network infrastructure, consider the following

requirements:

• Since deploying the cluster requires that both servers be joined to an Active Directory domain,

you must have a route to the domain controller from each server on the storage system.

• Servers in a cluster must use DNS for name resolution, so you must have a route to a DNS

server from each server on the storage system.

• If you are adding more than one adapter per server to your network infrastructure, each

adapter should be on a different subnet.
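When you plan addresses for the adapters you connect, you can catch the same-subnet mistake by comparing the networks implied by each address and prefix length. The sketch below assumes you list one server's planned adapters in CIDR form; the adapter names follow the labels in Figure 6, but the addresses and the helper name are hypothetical.

```python
import ipaddress
from itertools import combinations


def same_subnet_pairs(adapters):
    """Return pairs of adapter names whose addresses fall in overlapping subnets.

    `adapters` maps an adapter name to an address in CIDR form, for example
    "192.168.10.21/24". An empty list means each adapter is on its own subnet.
    """
    networks = {name: ipaddress.ip_interface(cidr).network
                for name, cidr in adapters.items()}
    return [(a, b) for a, b in combinations(sorted(networks), 2)
            if networks[a].overlaps(networks[b])]


# Hypothetical plan for one server: two adapters on different subnets.
plan = {
    "10 GbE Public 1": "192.168.10.21/24",
    "1 GbE Public 4": "192.168.20.21/24",
}
print(same_subnet_pairs(plan))  # []
```

Using `overlaps` rather than strict equality also flags cases where one adapter's subnet is contained in another's, for example a /24 inside a /16.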

Figure 7 (page 14) shows two possibilities for adding network cables for an additional network.


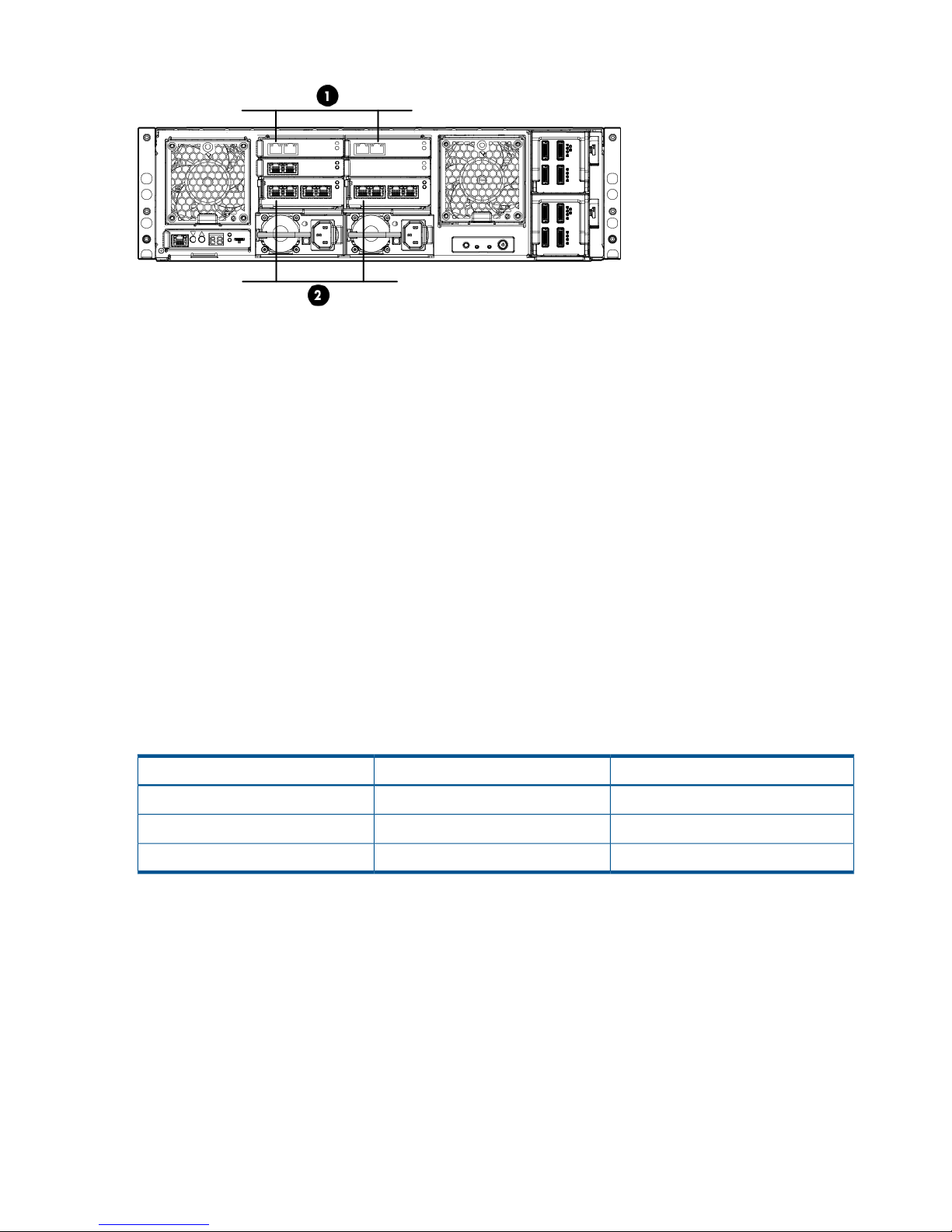

Figure 7 Cabling an additional network

1. Connect 10 GbE Public 1 (Blade 1) and 10 GbE Public 2 (Blade 2) to the same subnet in your network infrastructure. Note that the two adapters are on different pass-through modules. This prevents a single pass-through module from becoming a single point of failure for the connection between the two nodes.

or

2. Connect 1 GbE Public 4 (Blade 1) and 1 GbE Public 4 (Blade 2) to the same subnet in your

network infrastructure.

In later configuration steps you can configure the adapters you have connected to your network.

If you have connected to a DHCP-enabled network, no further configuration is necessary. Otherwise,

you must assign static addresses to the adapters. You may also want to rename the adapters to

reflect their use in your environment. Also note that these are only two examples out of many

networking possibilities. NIC teaming may also be used. It is not necessary to make all these

decisions now, because you can always add more networks after the system has been deployed.

The Enclosure Manager and iLO port (6, Figure 6 (page 13)) provides a connection from your network infrastructure to the Enclosure Manager Unit (EMU) and to the iLO on each blade. For ease of setup, the EMU and each iLO processor are assigned static IP addresses at the factory. Use these addresses to make an initial connection, and then configure each processor for your network. The factory-configured addresses are as follows:

Table 1 Factory configured EMU and iLO addresses

| Component | IP address | Subnet mask |
|---|---|---|
| EMU | 10.0.0.10 | 255.255.255.0 |
| Server 1 iLO | 10.0.0.11 | 255.255.255.0 |
| Server 2 iLO | 10.0.0.12 | 255.255.255.0 |

“Configure the EMU and iLO management processors” (page 15) describes how you can directly

connect a laptop or other local system to reconfigure these addresses.
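Before making the direct connection, you can confirm that the temporary address given to the configuration system (10.0.0.20 with mask 255.255.255.0, as used in the procedure that follows) shares a subnet with every factory address in Table 1. Without a router, any address outside that subnet will not answer the ping test. This is a minimal sketch using Python's ipaddress module; the helper name is hypothetical.

```python
import ipaddress

# Factory-configured addresses from Table 1 (all use mask 255.255.255.0).
FACTORY_ADDRESSES = {
    "EMU": "10.0.0.10",
    "Server 1 iLO": "10.0.0.11",
    "Server 2 iLO": "10.0.0.12",
}

# Temporary settings for the configuration system, from step 2d.
CONFIG_SYSTEM = ipaddress.ip_interface("10.0.0.20/255.255.255.0")


def off_subnet(config_interface, targets):
    """Return names of targets that are not on the configuration system's subnet."""
    network = config_interface.network
    return [name for name, addr in targets.items()
            if ipaddress.ip_address(addr) not in network]


print(CONFIG_SYSTEM.network)                         # 10.0.0.0/24
print(off_subnet(CONFIG_SYSTEM, FACTORY_ADDRESSES))  # []
```

An empty list means all three management processors are directly reachable from the configuration system.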

Power on the storage system

1. Power on disk enclosures, if any.

2. Power on the storage system by pushing the power button on the back of the chassis.

Once the storage system power is on, power on the server blades if they do not automatically

power on.


Configure the EMU and iLO management processors

Before configuring the management processors, verify the following:

• You have determined whether the network ports on the server are to use DHCP or static

addresses. If the network ports are to use static addresses, you must provide the addresses.

• For this step, the EMU port should not be connected to a switch. You can connect the EMU

port to a switch after the EMU and iLO NICs are configured.

Configure the EMU and iLO management processors for both servers as follows:

1. Connect a local system or a laptop (the configuration system) to the EMU port (Figure 8 (page 15)). You can use either a crossover cable or a regular Ethernet cable.

Figure 8 EMU NIC port connection

2. Configure the networking properties for the local system:

a. Open Control Panel, select Network and Sharing Center or Network Connections, and navigate to Local Area Connection.

b. Select Properties→Internet Protocol Version 4 (TCP/IPv4), and then select Properties.

c. If Use the following IP address: is selected, record values for the following items and

restore them after completing the EMU and iLO setup:

• IP address

• Subnet mask

• Default gateway

d. Enter the following values:

• IP address: 10.0.0.20

• Subnet mask: 255.255.255.0

e. Before continuing, ping the following IP addresses to test connectivity to the EMU and

the iLO located in each of the servers: 10.0.0.10, 10.0.0.11, and 10.0.0.12. The EMU

and iLO interfaces have been assigned IP addresses during factory setup. You must either

update the factory values with site-specific static IP addresses or configure the management

processors to use DHCP IP addressing.


3. Configure iLO on the server blades:

a. Open a web browser and log in to iLO at http://10.0.0.11. When prompted, enter the user name and password. The password for the Administrator account is located on a pull-out tag on the front of the server blade.

After you have logged into iLO, HP recommends that you change the administrator

password. To do so, select User Administration under Administration in the iLO

management interface.

b. Configure the network as required for your environment. Select Network under

Administration in the iLO management interface. You can either enable DHCP or edit the

IP address details and enter site-specific network settings. Click Apply to save your settings.

c. Repeat the process on the other server blade. Open a web browser and log in to iLO

using the address: http://10.0.0.12.


4. Configure the EMU:

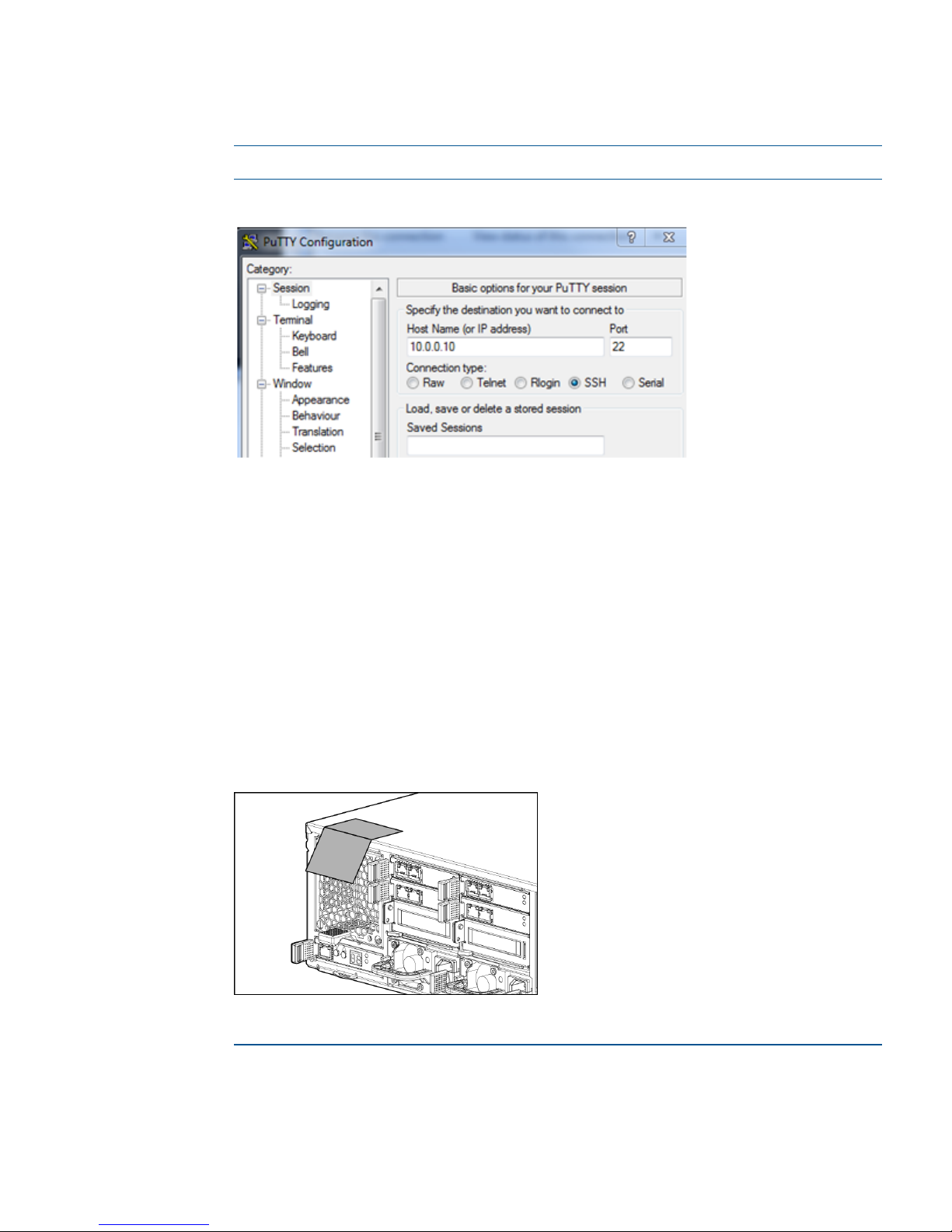

a. Connect to the Enclosure Manager software using an SSH-compatible tool such as PuTTY. In the PuTTY session basic options, enter the EMU IP address (10.0.0.10) and port (22), and select SSH for the connection type (Figure 9 (page 17)).

NOTE: See “Managing the EMU” (page 131) for information on using CLI commands.

Figure 9 Connecting to the Enclosure Manager software

b. After you have connected to the EMU port, set the following attributes:

• EMU (DNS) name

• Rack name

• EMU password (located on the tear-away label on the back of the server blade; see

Figure 10 (page 17))

• IP addressing method

◦ To change the static IP address, type the command set ipconfig static at the command line prompt and follow the instructions.

◦ To change the EMU addressing to DHCP, type set ipconfig dhcp at the command line prompt.

Figure 10 Tear-away label location

Example 1 Setting attributes

CustomerEMU-dnsName> set em name CustomerEMU-dnsName

CSP Enclosure Manager name changed to CustomerEMU-dnsName.

CustomerEMU-dnsName> set rack name CustomerRackName

Changed rack name to "CustomerRackName".


CustomerEMU-dnsName> set password

New Password: ********

Confirm : ********

Changed password for the "Administrator" user account.

CustomerEMU-dnsName>

NOTE: You will not be able to connect to iLO or the EMU from the configuration system until

you change the network settings on the configuration system.

5. Complete the configuration:

a. Connect the EMU port to the appropriate switch/VLAN/subnet.

b. Log in to the EMU using SSH and the newly assigned EMU name, and validate connectivity. This step assumes that the EMU name is registered in DNS.

Example 2 Verifying connectivity

CustomerEMU-dnsName> show server list all

Bay iLO Name iLO IP Address Status Power UID

--- ----------------------------- --------------- -------- ------- --

1 ILOMXQ0110FJ9 16.78.90.51 OK On Off

2 ILOMXQ0110FHU 16.78.90.113 OK On Off

Totals: 2 server blades installed, 2 powered on.
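The connectivity test in step 2e (pinging 10.0.0.10, 10.0.0.11, and 10.0.0.12 from the configuration system) can also be scripted, which is convenient if you repeat it after changing addresses. This Python sketch calls the operating system's own ping command; the function names are hypothetical, and the only platform difference it handles is the count option (-n on Windows, -c elsewhere).

```python
import platform
import subprocess
from typing import List, Optional

# Factory-configured EMU and iLO addresses from Table 1.
TARGETS = ["10.0.0.10", "10.0.0.11", "10.0.0.12"]


def ping_command(host: str, system: Optional[str] = None) -> List[str]:
    """Build a one-echo ping command; Windows uses -n for the count, others -c."""
    system = system or platform.system()
    count_flag = "-n" if system == "Windows" else "-c"
    return ["ping", count_flag, "1", host]


def replies(host: str) -> bool:
    """Send one echo request and report whether ping exited with status 0."""
    result = subprocess.run(ping_command(host),
                            stdout=subprocess.DEVNULL,
                            stderr=subprocess.DEVNULL)
    return result.returncode == 0
```

For example, `for host in TARGETS: print(host, "reply" if replies(host) else "no reply")`. Exit status semantics vary between ping implementations (Windows ping, for instance, can exit with 0 after a "Destination host unreachable" reply), so confirm any surprising result by running ping manually.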


3 Configuring the storage system

This chapter explains the out-of-box experience that occurs when you first power on the storage system. This includes setup tasks such as selecting the language and regional settings for the OS, configuring the network, setting the time zone, provisioning the storage required for the cluster, and deploying the two-node cluster. All configuration can be done from a single server; there is no need to log on to the second server.

Accessing the storage system

For initial configuration of the storage system, you must have console access to one of the server

blades. You can use either a local I/O diagnostic (SUV) cable or an iLO connection. The iLO

connection is the preferred method because it allows for remote access. If you are using the direct

connect method, connect the supplied SUV cable to the front of the storage system server blades

in the following sequence: keyboard, mouse, monitor cable, and monitor power cable. Regardless

of which access method you use, perform the configuration from only one of the server blades.

The server blade you choose for configuration will be designated the first node, and the other

server blade will be designated the second node.

Figure 11 Keyboard, mouse, and monitor

1. Storage system enclosure

2. Monitor

3. Keyboard (USB)

4. Mouse (USB)

NOTE: The keyboard, mouse, and monitor are not provided with the storage system.

For remote access, open a web browser and enter the iLO name or IP address for a server blade

located in either bay. Log in using the iLO administrator name and newly created password for

that blade.

For instructions on using iLO, see the Integrated Lights-Out user guide available from http://www.hp.com/go/ilo. On the iLO web page, select More iLO Documentation.

Set up Windows and discover the second node

When the storage system starts, the servers will begin a first time setup procedure that takes

approximately 10 to 15 minutes, including the Set Up Windows wizard. Use only one node to

complete the setup procedure.


In the Set Up Windows wizard, you are asked to choose a language, regional settings, and

keyboard layout. After you accept the EULA, the server you are connected to attempts to discover

the second server. This is done over the internal switch (5, Figure 6 (page 13)). If the second node

is not ready, you may see a message stating Cannot establish communication with

the second node. Click Retry to attempt discovery, and repeat the retry until the second node

is discovered. After the second node is discovered, there will be a few more installation steps that

occur automatically on each server, and then both servers will reboot.

NOTE: If you click Cancel instead of Retry, you must access the second node from iLO or a direct

(SUV) connection and manually perform the Set Up Windows wizard on the second node. Because

the discovery process has not completed, there will also be an extra step later to establish a

connection between the two nodes. You will find instructions for this, if needed, in the online help

of the Initial Configuration Tasks (ICT).

Completing initial configuration

After the servers reboot, continue the configuration using the first node. A default administrator

password (HPinvent!) has been set and this is used to log on automatically. Leave this

administrator password unchanged until you are prompted for a new password in a later

configuration step. After logon, the HP ICT window is launched automatically.

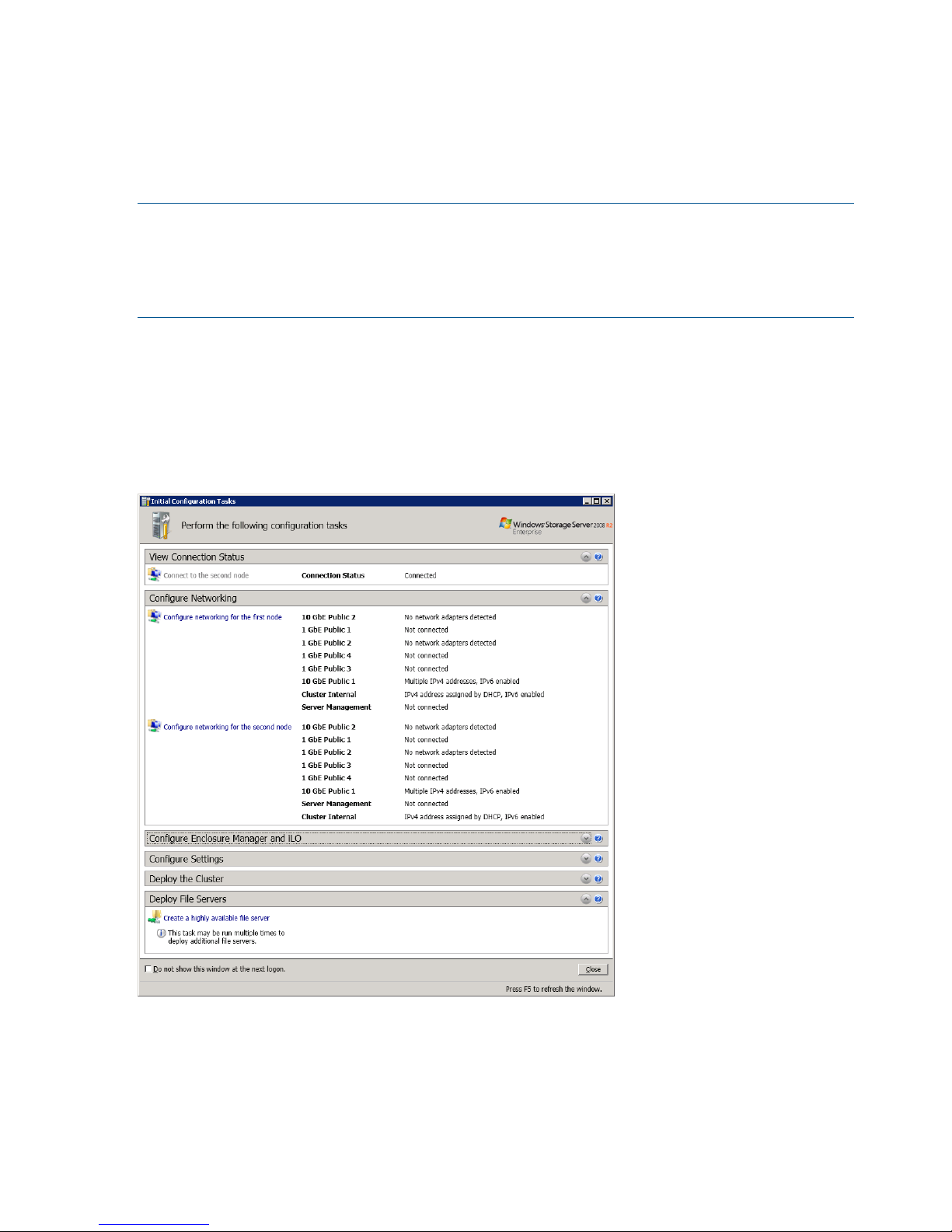

Figure 12 ICT window

Use the HP ICT to perform setup tasks in the order they appear. See the online help provided for each group of tasks for more information. After you complete the “Provide cluster name and domain” task, both nodes reboot. After allowing time for the reboot, log on to the first node again. This time, rather than logging on as the local Administrator, log on using the domain account that was specified in the “Provide cluster name and domain” task. You can now complete the remaining tasks, which include creating the two-node cluster. The final task is an optional step to deploy one or more file servers on the cluster. You can also create file servers later using Server Manager.

The ICT is intended for initial setup, so once it is complete, you may select the Do not show this

window at the next logon box. If you do want to launch the ICT at a later time, you may do so

from Server Manager or by typing oobe from a Windows command prompt.

When the HP ICT window is closed, Server Manager is launched automatically. Use Server Manager

for further customization of the storage system, such as adding roles and features, and share and

storage management. See the Getting Started node in the navigation tree of Server Manager for

more help on using the storage system.

NOTE: Although BitLocker is supported by the Windows operating system, it is not supported on

the X5000 G2 Network Storage System because BitLocker is not supported on clustered volumes.

For more information, see the following Microsoft article:

http://support.microsoft.com/kb/947302

If encryption is required, the Encrypting File System (EFS) is supported on clustered volumes. For

more information on EFS, see the following Microsoft article:

http://support.microsoft.com/kb/223316

Networking options

The large number of network adapters on each server in the X5000 G2 provides a number of

different options for networking. The network adapter named "Cluster Internal" is pre-configured

as a private cluster heartbeat and should be left as is, but all other adapters are available for use.

Use the guidelines below as an aid in making configuration choices.

Network teaming

Network teaming is a common use of multiple network adapters. Teaming is used to increase

available network bandwidth and provide fault tolerance. Teaming can be across multiple ports

in the same network adapter or across network adapters.

Multi-home

Distributing the network workload across multiple network adapters is another common approach. Placing each network interface on a different subnet allows the workload on each subnet to be serviced in parallel rather than through a single interface.

Dedicated networks

Implementing a unified storage solution requires that different protocols be used to access a storage system. For example, a block protocol such as iSCSI is used to present storage to a virtual machine host, while a file protocol such as SMB is used to share files for departments or user home directories. A dedicated storage network for each protocol keeps the network traffic separate to maximize performance. Similarly, one network interface can be used for system management and monitoring while another is used for data traffic.

10 GbE versus 1 GbE

Other than the obvious difference in speed, 10 GbE provides an order of magnitude lower latency. Lower latency is ideal for transactional database applications and virtualization. Combining a dedicated 10 GbE storage network for a virtual machine infrastructure with a 1 GbE network for shared folders makes the best use of the available network ports. A classic example is thin clients whose resources are hosted on virtual machines (for example, the Citrix model).
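One latency component that scales directly with link speed is serialization delay: the time needed to clock a frame onto the wire. The sketch below computes it for a 1500-byte frame, an assumed example size matching a common Ethernet MTU. It covers only this one component; switch, adapter, and host-stack latency also contribute, so it illustrates the comparison above rather than proving it.

```python
def serialization_delay_us(frame_bytes: int, link_gbps: float) -> float:
    """Microseconds needed to transmit `frame_bytes` at `link_gbps` Gbit/s.

    1 Gbit/s carries 1,000 bits per microsecond, so delay = bits / (rate * 1000).
    """
    return frame_bytes * 8 / (link_gbps * 1000)


for rate in (1, 10):
    print(f"{rate:>2} GbE: {serialization_delay_us(1500, rate):.1f} us")
# prints:
#  1 GbE: 12.0 us
# 10 GbE: 1.2 us
```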


4 Monitoring and troubleshooting the storage system

The storage system provides several monitoring and troubleshooting options. You can use the following alerts, tools, and resources to maintain system health:

• Notification alerts

• System Management Homepage (SMH)

• System Manager

• Hardware component LEDs

• EMU CLI SHOW commands

• HP and Microsoft support websites

• HP Insight Remote Support software

• Microsoft System Center Operations Manager (SCOM) and Microsoft websites

• HP SIM 6.3 or later, which is required for proper storage system/HP SIM integration.

NOTE: Integration with HP SIM is only supported using the WBEM/WMI interfaces. Do not

attempt to configure HP SIM to use the ProLiant SNMP agents, because the configuration is

untested and unsupported. The ProLiant SNMP agents are enabled on the storage system by

default and should not be disabled as they are used for internal management functions. If they

are enabled for external client consumption, HP SIM must be configured so it does not attempt

to communicate with these agents.

NOTE: WBEM events for storage are logged in the Windows Application log, and WBEM events for the server and enclosure are logged in the Windows System log.
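To review recent entries in those two logs from a script, you can call the Windows wevtutil tool. This sketch builds a query for the newest events in one log and returns the text output; it does not filter by event source, because the source names used by the WBEM providers are not given here. The function names are hypothetical, and the sketch runs only on Windows.

```python
import subprocess
from typing import List


def latest_events_command(log_name: str, count: int = 10) -> List[str]:
    """Build a wevtutil query for the newest `count` events in a Windows event log.

    qe = query events, /c = maximum count, /rd:true = newest first, /f:text = text output.
    """
    return ["wevtutil", "qe", log_name, f"/c:{count}", "/rd:true", "/f:text"]


def latest_events(log_name: str, count: int = 10) -> str:
    """Run the query (Windows only) and return its text output."""
    result = subprocess.run(latest_events_command(log_name, count),
                            capture_output=True, text=True, check=True)
    return result.stdout
```

Following the note above, `latest_events("Application", 20)` would show recent entries that may include storage events, and `latest_events("System", 20)` entries that may include server and enclosure events.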

If you are unable to resolve a storage system operation issue after using the various options, contact

HP Support. You must provide your SAID and your warranty and entitlement labels. See “Obtaining

the Service Agreement ID” (page 54) and “Locating the storage system warranty entitlement label”

(page 54).

Using notification alerts

When you receive an alert, open the System Manager (described in “Using the System Manager”

(page 30)) to view a high-level description of the issue. You may then choose to open the System

Management Homepage or HP SIM to obtain more detailed information.

IMPORTANT: While the notification alerts report issues as they arise, it is still important to monitor

the storage system regularly to ensure optimal operation.

Configuring Alerts and Email

Configure Alerts and Email in the System Manager to send email notification of system events.

IMPORTANT: HP recommends that you configure Alerts and Email (and also install HP Insight

Remote Support) to ensure that you are proactively alerted to issues. Proactive notification enables

you to address issues before they become serious problems.

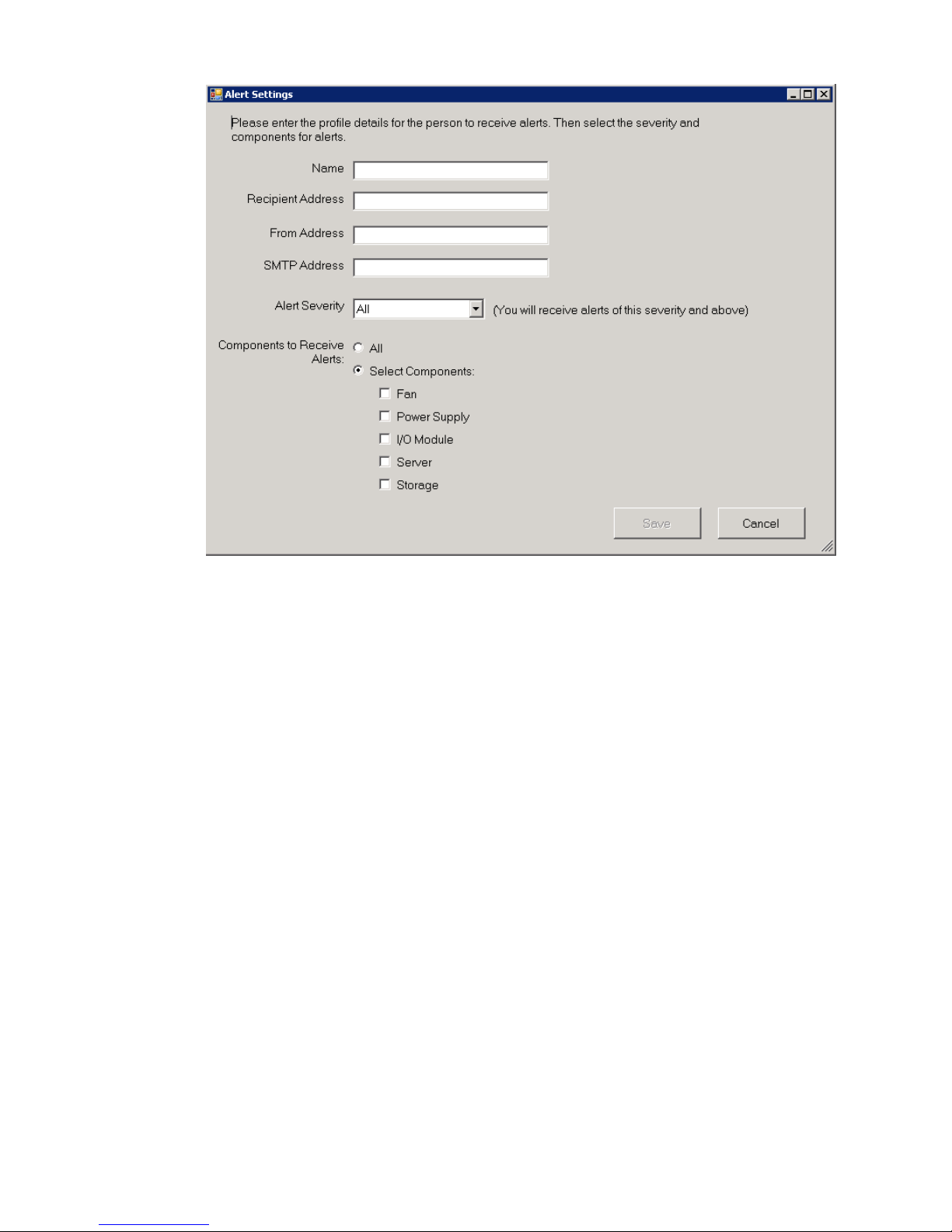

To create an alert for a recipient:

1. Open the Server Manager by clicking the icon located to the right of the Start button on the

Windows taskbar.

2. Expand the tree under System Manager.

3. In the tree, select Alerts and Email.


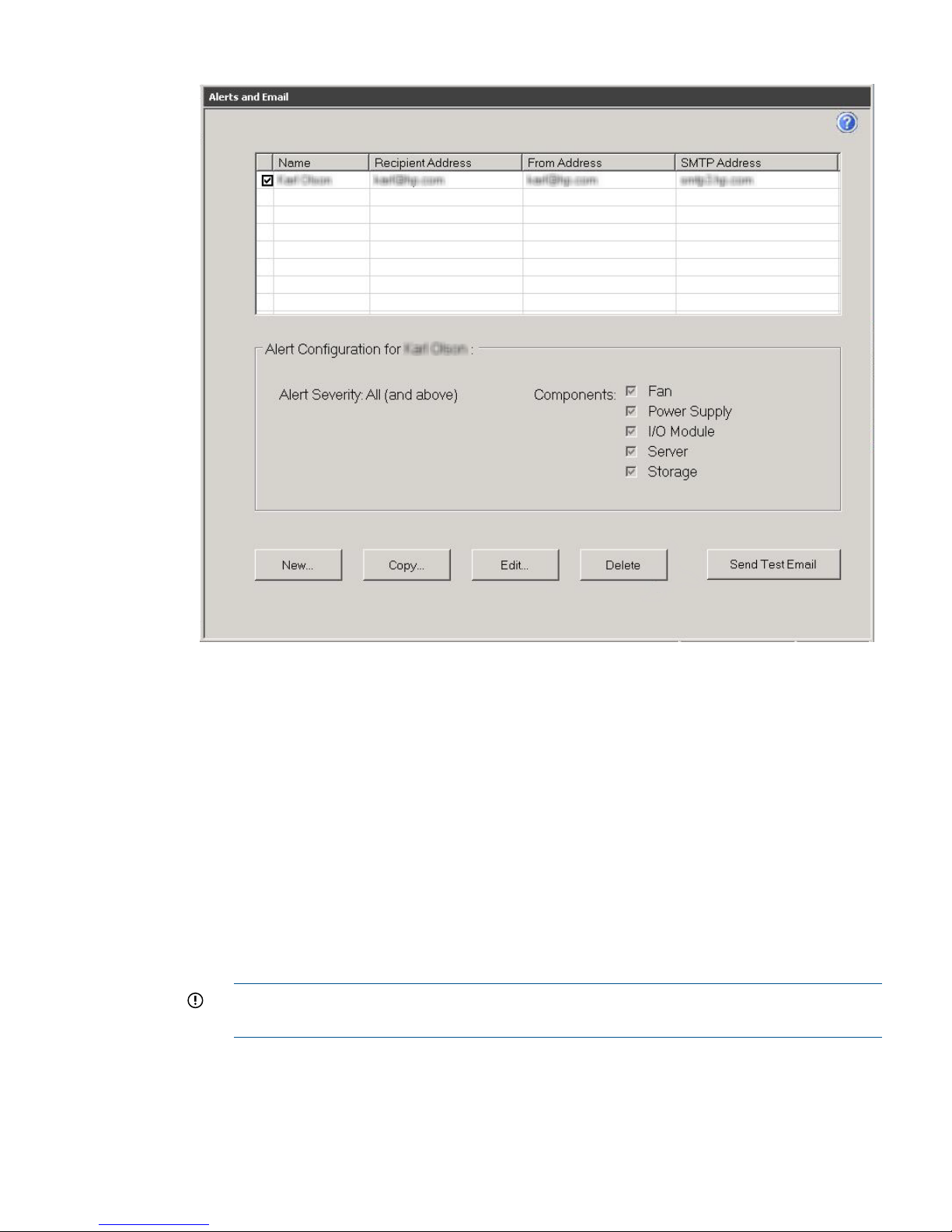

Figure 13 Configuring Alerts and Email

4. Do one of the following:

• Select New to create a profile.

• Select Copy or Edit to modify an existing profile.

The Alert Settings window appears.


Figure 14 Alert and Email settings

5. Complete the following fields:

• Name—Enter the name of a recipient (for example, John Doe).

• Recipient address—Enter the email address of the recipient (for example,

John.Doe@company.com).

• From address—Enter an email address that will display to the recipient indicating where

the message originated. It can be the same as the recipient address, if desired.

• SMTP address—Enter the address of a valid SMTP server (for example, SMTP.company.com).

• Alerts Severity—Select the severity for which you want to receive alerts. You will also

receive alerts for any severity higher than the one you select. Select All to receive alerts

for all severities.

• Components Alerts—Select the components for which you want to receive alerts, or select

All to receive alerts for all components.

6. To verify that the recipient can receive email alerts, click Send Test Email. If the recipient receives the test email, no further action is required. If the test email is not received, check that the information entered for the recipient is correct.


Figure 15 Send test email

7. Click Save. The name of the recipient is displayed on the main Alerts and Email window.
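If Send Test Email fails and the recipient details look correct, it can help to check whether the SMTP server accepts mail from the storage system independently of System Manager. This Python sketch builds and sends a plain test message using the same From address, recipient address, and SMTP server fields. It assumes an unauthenticated relay on port 25; the subject text, sender address, and function names are hypothetical.

```python
import smtplib
from email.message import EmailMessage


def build_test_message(sender: str, recipient: str) -> EmailMessage:
    """Create a plain-text test message using the From and Recipient addresses."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = "Storage system alert test"
    msg.set_content("Test message to confirm SMTP delivery of storage system alerts.")
    return msg


def send_test_message(smtp_server: str, msg: EmailMessage, port: int = 25) -> None:
    """Deliver `msg` through `smtp_server`.

    Assumes an unauthenticated relay; relays that require TLS or a login
    would need smtp.starttls() and/or smtp.login() before sending.
    """
    with smtplib.SMTP(smtp_server, port, timeout=30) as smtp:
        smtp.send_message(msg)
```

For example: `send_test_message("SMTP.company.com", build_test_message("x5000-alerts@company.com", "John.Doe@company.com"))`. If this raises a connection or relay-refused error, the problem is likely on the SMTP server side rather than in the alert profile.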

To configure the SNMP settings:

1. In the Server Manager navigation pane, select System and Network Settings.

2. Select SNMP Settings in the lower-right pane.

3. Provide the contact and location information for the System Administrator, and then click OK.

4. To make SNMP visible externally:

a. Select Start→Administrative Tools→Services.

b. Select SNMP Service.

c. Right-click and select Properties to display the SNMP Service properties.

d. Select the Security tab and specify the following items:

• The external hosts that may use the SNMP protocol.

• The SNMP Community string. HP recommends that you use something other than the

typical ‘Public’ string.

IMPORTANT: Configure HP SIM security to prevent the SIM management server from

gaining access to SNMP.

The SNMP trap function in the storage system is enabled by default. Any SNMP client on localhost that listens on the default port, 171, can receive traps. You can configure the destination IP address in the configuration file \Program Files\HPWBEM\Tools\snmp.xml.
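To check whether traps are arriving on the local host, you can temporarily bind a UDP socket to the trap port described above and capture whatever datagrams arrive. This is a diagnostic sketch, not an SNMP manager: it assumes traps are sent to 127.0.0.1 on port 171 as stated in this section, and it does not decode the SNMP payload. The function names are hypothetical. The bind typically fails if another trap receiver already holds the port, so stop that receiver first.

```python
import socket


def open_trap_listener(host: str = "127.0.0.1", port: int = 171) -> socket.socket:
    """Bind a UDP socket on which trap datagrams can be received.

    Port 171 is the default stated in this guide; on Unix-like systems,
    ports below 1024 may need elevated privileges to bind.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    return sock


def receive_one(sock: socket.socket, timeout: float = 60.0):
    """Wait for one datagram and return (raw_bytes, sender_address).

    The payload is BER-encoded SNMP; this sketch does not decode it.
    Raises socket.timeout (an OSError) if nothing arrives in time.
    """
    sock.settimeout(timeout)
    return sock.recvfrom(65535)
```

For example, `data, sender = receive_one(open_trap_listener())` blocks until one trap arrives and returns its raw bytes.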


HP System Management Homepage

The HP System Management Homepage (SMH) is a web-based interface that consolidates and

simplifies single system management for HP servers. The SMH is the primary tool for identifying

and troubleshooting hardware issues in the storage system. You may choose this option to diagnose

a suspected hardware problem. Go to the SMH main page and open the Overall System Health

Status and the Component Status Summary sections to review the status of the storage system

hardware.

By aggregating the data from HP web-based agents and management utilities, the SMH provides

a common, easy-to-use interface for displaying the following information:

• Hardware fault and status monitoring

• System thresholds

• Diagnostics

• Software and firmware version control for an individual server

• HP Storage 1210m firmware information

The SMH Help menu provides documentation for using, maintaining, and troubleshooting the

application. For more information about the SMH software, go to www.hp.com/support/manuals

and enter System Management Homepage in the Search box. Select HP System Management

Homepage Software. A list of documents and advisories is displayed. To view SMH user guides,

select User Guide.

Starting the System Management Homepage application

To start the application, double-click the HP System Management Homepage desktop shortcut or

enter https://hostname:2381/ in Internet Explorer. The hostname can be localhost or

the IP address of the server you want to monitor. To log in to SMH, enter the same user name and

password you use to log in to the server. Users who have administrative privileges on the server

have the same privileges in the SMH application.

To view the SMH of one server from another server, you must modify the Windows firewall settings

as follows:

1. Open the Control Panel and select System and Security→Windows Firewall→Allowed Programs.

2. Select Allow another program and click Browse in the Add a Program dialog box.

3. Navigate to C:\hp\hpsmh\bin and select hpsmhd. Click Open and then click Add. HP

System Management Homepage displays in the Allowed Programs and Features window.

4. Select Home/work (Private) and Public and click OK.

5. To access the SMH on another server, enter the following URL:

https://<server IP address>:2381
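After adding the firewall exception, you can check from the other server that the SMH port accepts connections before opening a browser. This Python sketch builds the SMH URL and tests whether TCP port 2381 is reachable. It does not validate the HTTPS certificate or log in, and the function names are hypothetical.

```python
import socket

SMH_PORT = 2381  # HTTPS port used by the System Management Homepage


def smh_url(host: str) -> str:
    """Return the SMH URL for a host name or IP address."""
    if ":" in host and not host.startswith("["):
        host = f"[{host}]"  # IPv6 literals must be bracketed in URLs
    return f"https://{host}:{SMH_PORT}/"


def smh_port_open(host: str, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to the SMH port on `host` succeeds."""
    try:
        with socket.create_connection((host, SMH_PORT), timeout=timeout):
            return True
    except OSError:
        return False
```

If `smh_port_open` returns False from the remote server but True when run on the server itself with "localhost", the firewall exception is the most likely cause.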

System Management Homepage main page

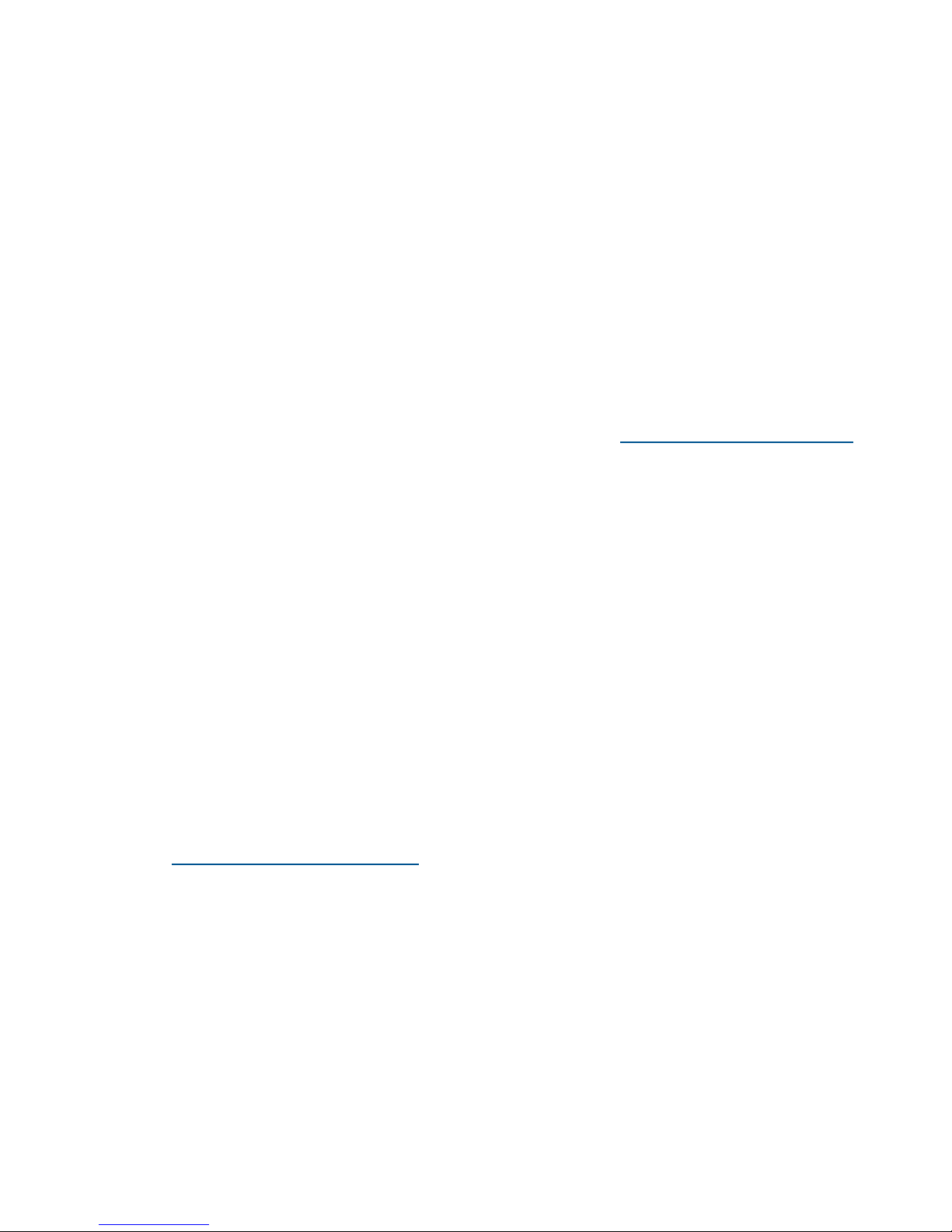

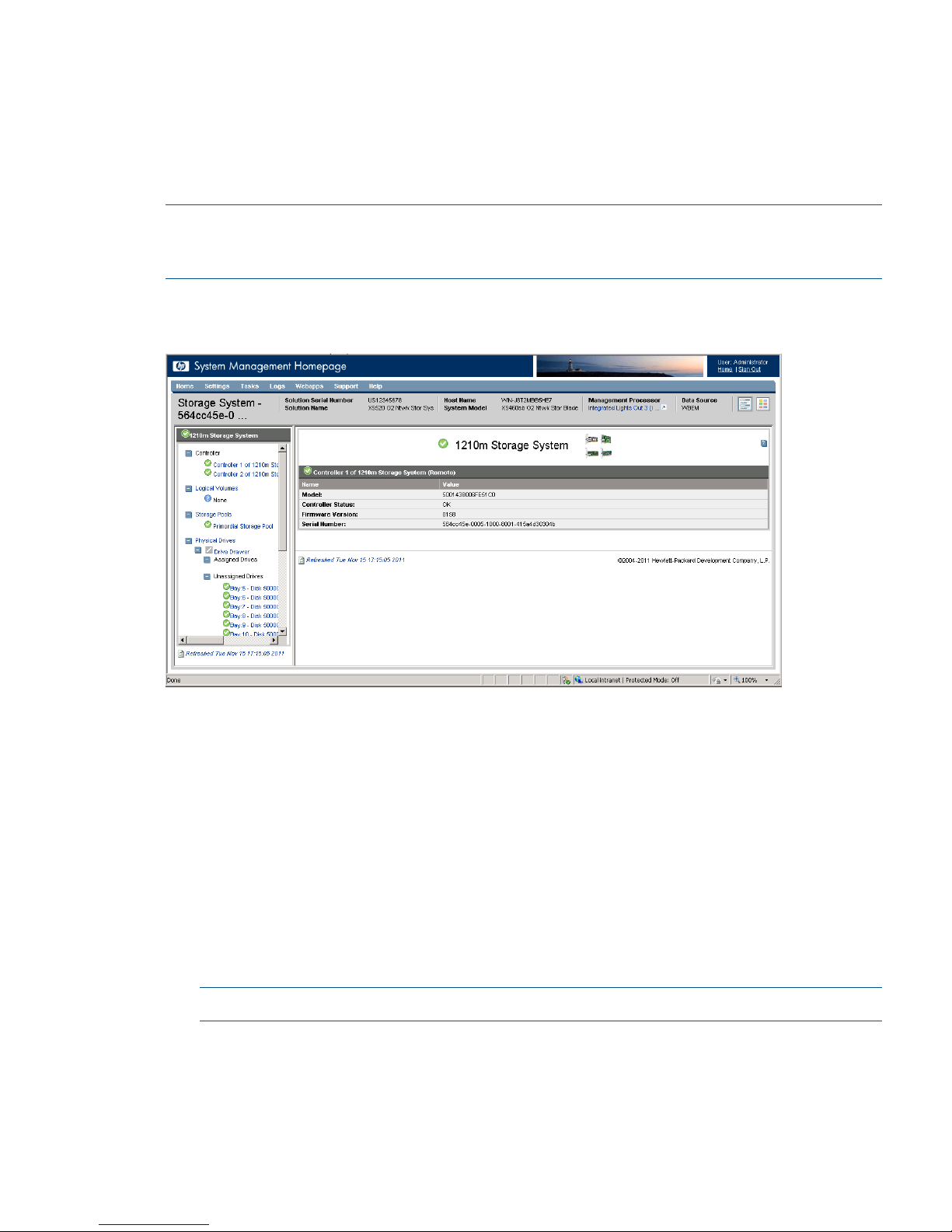

Figure 16 (page 27) shows the SMH main page.


Figure 16 System Management Homepage main page

The page provides system, subsystem, and status views of the server and displays groupings of

systems and their status.

NOTE:

• NICs will display with a failed status (red icon) if they are unplugged. To remove unused NICs

from the system status, you can disable them by selecting Control Panel→Hardware→Device

Manager, right-click on the specific NIC, and then select Disable.

• When you remove a disk or disconnect a cable, the SMH interface might not display alerts when you click the Refresh button. You can force a hard refresh by clicking the Home button or by navigating to the problem area. The default refresh interval is two minutes. To change the interval, select Autorefresh from the Settings menu, and then select Configure Page refresh settings. The minimum interval is five seconds and the maximum is 30 minutes.

Overall System Health Status

A webapp sets the value of the Overall System Health Status icon by using a predefined heuristic.

If no webapp can determine the status, the worst possible status is displayed in the Component

Status Summary section.

Component Status summary

The Component Status Summary section displays links to all subsystems that have a critical, major,

minor, or warning status. If there are no critical, major, minor or warning items, the Component

Status Summary section displays no items.

Enclosure

This section provides information about the enclosure cooling, IDs, power, Unit Identification LED,

PCIe devices, and I/O modules.

NOTE: A large number of disk errors may indicate that an I/O module has failed. Inspect the

I/O module LEDs on the storage system and any disk enclosures, and replace any failed component.

• Because both a system fan and a drive fan are required, the storage system always requires exactly two fans. If either fan becomes degraded, the system could shut down quickly. Because the fans are not mutually redundant, any change in the status of a single fan is reported immediately in the Component Status Summary section on the SMH main page.

• When the Enclosure Manager IP address is set incorrectly, the enclosure status displayed is

Lost communication. Because the Enclosure Manager has lost communication with the external

network, none of the other items in the Enclosure Information section can be displayed.

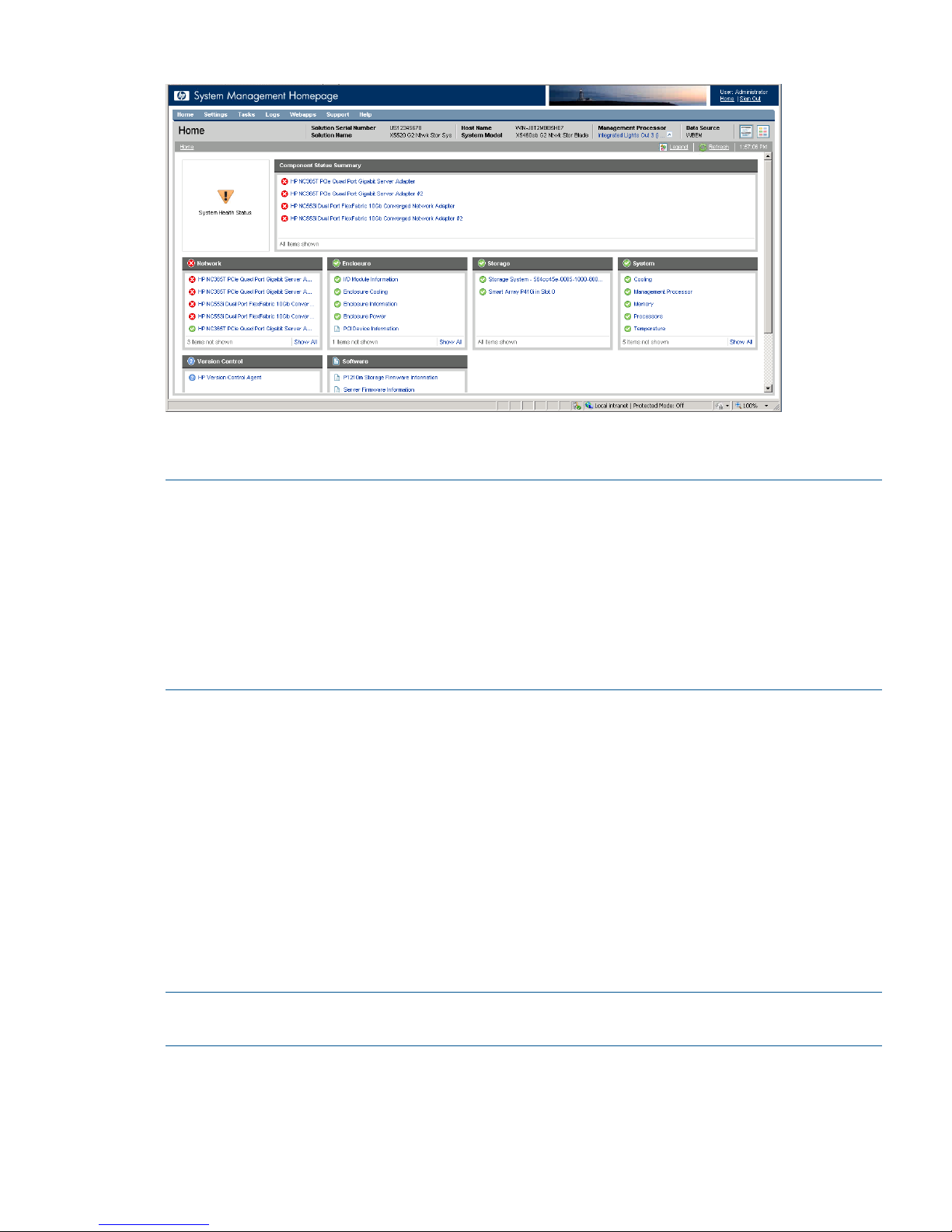

The enclosure I/O ports are numbered from 1 to 8 in the SMH.

Figure 17 I/O module

These numbers correspond to the I/O modules in the enclosure bays.

Figure 18 I/O module bays

1. LOM module

2. LOM module

3. MEZZ module

4. Intraconnect (internal switch)

5. PCIe module

6. PCIe module

7. SAS I/O module

8. SAS I/O module

Network

This section shows the status of the network connections.


Storage

This section displays information about the following components:

• Storage System—Links to the page that displays information about storage in the drive drawer

and any external disk enclosures. This storage is managed by the 1210m controller.

• Smart array subsystem—Links to the page that displays information about operating system

drives and smart array controllers.

NOTE: The SMH will display a total of four power supplies for each External Storage Enclosure.

If there is more than one External Storage Enclosure connected, the SMH may not show the correct

number of power supplies for each of these enclosures.

The Storage System page is organized as a left panel and a main page:

Figure 19 Storage system

The left panel provides links to information about the following items:

• Controller

Select a storage controller to view its type, status, firmware version, and serial number.

• Logical Drives

A list of logical drives associated with the controller appears in the left panel tree view. Select

one of the logical drive entries to display the status of the drive, fault tolerance (RAID level),

and capacity (volume size). A link to the logical drive storage pool is also displayed.

• Storage Pools

A list of storage pools associated with the controller displays in the left panel tree view. Select

one of the pool entries to display its status, capacity, communication status with the controller,

primordial state, and cache properties.

NOTE: If read or write cache is enabled, the value displayed is 2; otherwise, the value is 3.

The Storage Pools page also displays a list of disk drives and storage volumes present in the

pool.

Under the Physical Drives tree, the list of disk enclosures is displayed. Under each enclosure, the list of disk drives present in that enclosure is displayed. When a bay contains no drive, the display shows Bay <bay number> – Empty. Select one of the disk enclosures or disk drives to see information for that enclosure or drive.

• Physical Drives

This section provides an overview of all disk drives attached to the controller. Drives are

identified and grouped as assigned, unassigned, and spare drives. Each physical drive is

listed as a separate entry in the Storage System submenu. Select any of the physical drives to

display more information about the drive.

NOTE: Spare drives are only used when a disk drive fails. Until a spare drive is used, it

remains offline and its LEDs will remain off.

System

This section displays status for various system components.

Version Control

This section provides information about the Version Control Agent.

Software

This section provides information about system firmware and software.

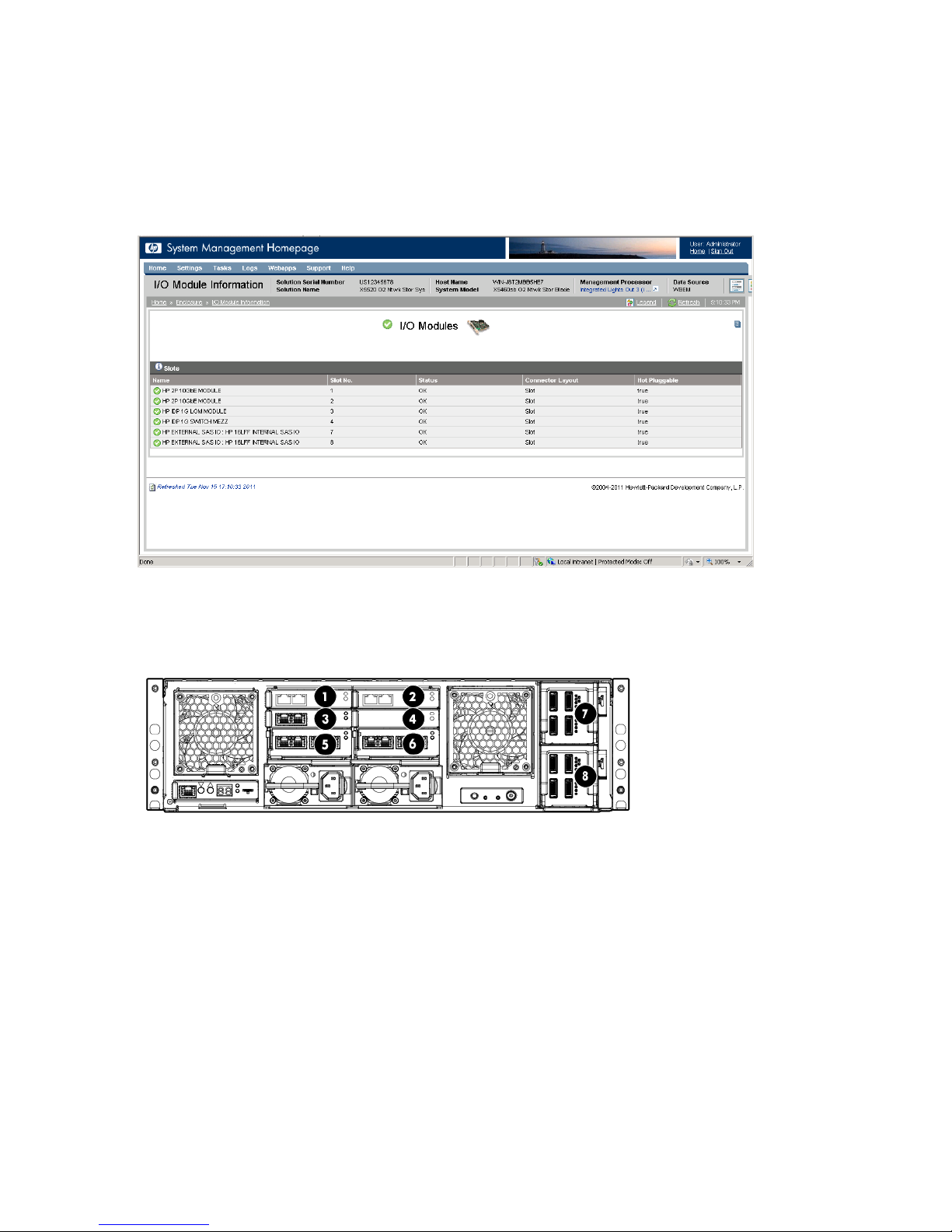

Using the System Manager

The System Manager provides the status of each server blade that is configured in the storage system. When you open the System Manager, be sure to note which server blade you are assessing. Log in to each server blade to evaluate its status.

To use the System Manager, which is preinstalled and configured, use Remote Desktop or iLO to access the server blade. Click the Server Manager icon in the taskbar to the right of the Start button, or select Start→Administrative Tools→Server Manager. When Server Manager appears, select System Manager in the left navigation pane.

To troubleshoot using the System Manager:

1. Open the System Manager.

2. Open the System Summary tab to review the overall health of the storage system hardware and firmware.

If the status icon is green, the system is running properly. A yellow icon warns of conditions that might cause a problem. A red icon indicates that a problem exists in the storage system.

3. Open each tab in the System Manager to assess the status of the storage system.

4. Follow the instructions provided on the System Manager tabs for any reported issue.

System Summary

The System Summary tab displays information such as the enclosure name, IP address, firmware revision, and serial number. The lower part of the System Summary also shows the hardware status and whether your current firmware revision is up to date. If a green check mark does not appear beside the configuration status, go to the related tab for information about the issue.

Figure 20 System summary

Hardware Status

The Hardware Status tab provides the health status of each storage system component. The System section displays information for the server blade that you are logged in to. If a problem is reported in the System section, check the Hardware Status tab on each server blade.

NOTE: If the System Manager shows that a LUN has an error, open the System Management Homepage and determine whether the LUN is degraded due to a disk failure. If so, also use the System Management Homepage to determine which disk needs to be replaced.

Figure 21 Hardware status

Firmware

The Firmware tab indicates whether the firmware of a component is outdated. If a firmware update requires a reboot after installation, a message appears instructing you to reboot the storage system. Because the tool does not connect to the Internet to check for new firmware, you must periodically check the HP support web page and download new firmware when it is available. Be sure to check the Firmware tab on each server blade, because some firmware updates must be made on both server blades.

IMPORTANT: If a firmware update requires a reboot, you must reboot your storage system manually. For more information about firmware updates, see "Upgrading a component's firmware" (page 56).

Figure 22 Firmware

Reports

The Reports tab gathers logs for the hardware, software, Microsoft Windows system configuration, and Microsoft Exchange diagnostics in one place. HP support engineers use these logs to help diagnose your system if needed; you do not need to view or interpret the logs yourself.

To generate reports:

1. Consult with HP support to determine what type of report is required.

- If complete reports are required, go to step 2.

- If an abbreviated report is sufficient, select the Run Quick Report Only option. A quick report contains less information but takes much less time to create, and it may contain all the information HP support needs.

2. Click Generate Support File. A license agreement window appears.

3. Select Yes to accept the license.

The report generation process begins and may take up to 45 minutes. When the process is complete, the lower portion of the screen indicates that HP System Reports have been generated.

4. Click Open Reports Folder to access the .cab file containing the report results.

This file is ready to forward to HP support engineers.

Figure 23 Reports

Component LEDs

LEDs indicate the status of hardware components. This section shows the location of each component LED and describes what each LED behavior indicates. To obtain additional information about some status indicators, you can use the EMU CLI SHOW commands described in "Managing the EMU" (page 131).

Figure 24 Server blade LEDs

Table 2 Server blade LEDs status

| Item | Description | Status |
|---|---|---|
| 1 | UID LED | Blue = Needs service check |
| | | Blue flashing = Remote management (remote console in use through iLO) |
| | | OFF = No remote management |
| 2 | Health LED | Green = Normal |
| | | Flashing = Booting |
| | | Amber = Degraded condition |
| | | Red = Critical condition |
| 3 | NIC 1 LED* | Green = Network linked |
| | | Green flashing = Network activity |
| | | OFF = No link or activity |
| 4 | Flex-10 NIC 2 LED* | Green = Network linked |
| | | Green flashing = Network activity |
| | | OFF = No link or activity |
| 5 | Reserved | N/A |
| 6 | System power LED | Green = On |
| | | Amber = Standby (auxiliary power available) |
| | | OFF = Off |

*Actual NIC numbers depend on several factors, including the operating system installed on the server blade.
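For runbooks or monitoring scripts, Table 2 can be transcribed into a lookup table. The following Python sketch is illustrative only: the LED keys and state labels (for example, "green flashing") are hypothetical names chosen here, not values reported by iLO or any HP tool, and, as the footnote notes, the actual NIC numbering depends on the installed operating system.

```python
# Hypothetical lookup table transcribed from Table 2 (server blade LEDs).
# LED names and state labels are illustrative strings chosen for this sketch;
# they are not values reported by iLO or any other HP tool.

# NIC 1 and Flex-10 NIC 2 share the same documented states.
NIC_STATES = {
    "green": "Network linked",
    "green flashing": "Network activity",
    "off": "No link or activity",
}

SERVER_BLADE_LEDS = {
    "UID": {
        "blue": "Needs service check",
        "blue flashing": "Remote management (remote console in use through iLO)",
        "off": "No remote management",
    },
    "Health": {
        "green": "Normal",
        "flashing": "Booting",
        "amber": "Degraded condition",
        "red": "Critical condition",
    },
    "NIC 1": NIC_STATES,
    "Flex-10 NIC 2": NIC_STATES,
    "System power": {
        "green": "On",
        "amber": "Standby (auxiliary power available)",
        "off": "Off",
    },
}


def describe_blade_led(led: str, state: str) -> str:
    """Return the documented meaning of an observed LED state.

    Raises KeyError if the LED name or state is not listed in Table 2.
    """
    return SERVER_BLADE_LEDS[led][state.strip().lower()]


if __name__ == "__main__":
    print(describe_blade_led("Health", "Amber"))  # prints: Degraded condition
```

Raising KeyError for unlisted combinations keeps the sketch from guessing at states the table does not document.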

Figure 25 Front LED display board

Table 3 Front LED status

| Item | Description | Status |
|---|---|---|
| 1 | Hard drive LEDs, normal mode (UID LED is solid) | Green = The drive is online but is not currently active. |
| | | Flashing irregularly green = The drive is online and operating normally. |
| | | Flashing green (1 Hz) = Do not remove the drive. Removing the drive may terminate the current operation and cause data loss. The drive is rebuilding, or it is part of an array that is undergoing expansion, logical drive extension, stripe size migration, or RAID migration. |
| | | Flashing amber/green = The drive is configured and indicating a predictive failure. The drive may also be undergoing a rebuild, expansion, extension, or migration. |
| | | Flashing amber (1 Hz) = A predictive failure alert has been received for this drive. Replace the drive as soon as possible. |
| | | Amber = Drive failure, link failure, or mismatched configuration. |
| | | OFF = The drive is offline, a spare, or not configured as part of an array. |
| 1 | Hard drive LEDs, drive locate mode (UID LED is flashing) | Green = The drive has been selected by a management application and is operating normally. |
| | | Flashing amber (1 Hz) = The drive is not selected and is indicating a predictive failure. |
| | | Flashing amber/green = The drive has been selected by a management application and is indicating a predictive failure. |
| | | Amber = The drive might or might not be selected and is indicating drive failure, link failure, or mismatched configuration. |
| | | OFF = The drive is not selected. |
| 2 | Chassis fault LED | Flashing amber = A component in the system has failed. NOTE: The amber chassis fault LED flashes if the System Management Homepage detects any component fault. A fault can be as minor as a cable unplugged from a NIC port and therefore may not be cause for concern. |
| | | OFF = The system is in good health. |
| 3 | Chassis health LED | Solid green = The system is in good health. |
| | | OFF = A component in the system has failed. |
| 4 | Chassis UID LED | Blue, steady or blinking = Used only for unit identification. |
| | | OFF = The enclosure is functioning normally. |

To set the chassis UID LED, use the following CLI command: SET ENCLOSURE UID { ON | OFF | SLOW | FAST }

NOTE: All these LEDs are off if the enclosure has power but is turned off (see Table 11 (page 40)). In that case, only the equivalent chassis LEDs (2, 3, and 4) on the rear Power Pod show status.
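If you script enclosure identification, it can help to validate the argument before sending the SET ENCLOSURE UID command. The Python sketch below only builds and checks the command string from the syntax shown above; the function name is hypothetical, and how the string reaches the EMU CLI is outside its scope. The guide does not define SLOW and FAST here; the assumption is that they select a blink rate, since the UID LED can be steady or blinking.

```python
# Hypothetical helper that builds the chassis UID LED command documented in
# Table 3: SET ENCLOSURE UID { ON | OFF | SLOW | FAST }.
# It only validates and formats the string; sending it to the EMU CLI is
# not part of this sketch.

VALID_UID_MODES = ("ON", "OFF", "SLOW", "FAST")


def build_uid_command(mode: str) -> str:
    """Return the CLI command string for the requested UID LED mode."""
    normalized = mode.strip().upper()
    if normalized not in VALID_UID_MODES:
        raise ValueError(
            f"mode must be one of {', '.join(VALID_UID_MODES)}; got {mode!r}"
        )
    return f"SET ENCLOSURE UID {normalized}"


if __name__ == "__main__":
    print(build_uid_command("slow"))  # prints: SET ENCLOSURE UID SLOW
```

Rejecting unknown modes locally gives a clearer error than an unrecognized command at the CLI prompt.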

Figure 26 Hard drive LEDs

1. Fault/UID LED (amber/blue)

2. Online LED (green)

Table 4 SAS hard drive LED combinations

| Item | Description | Status |
|---|---|---|
| 1 | Activity/Online LED | OFF (overrides drive activity output) = The drive is not a member of any RAID volume, OR the drive is configured but is in a replacement or failed state for at least one volume that is a member of a RAID volume, OR the drive is a spare drive that is or has been activated but has not been rebuilt; AND the drive is not rebuilding AND the drive is not a member of a volume undergoing capacity expansion or RAID migration. |
| | | Solid green = The drive is a member of a RAID volume AND is not a spare drive AND is not in a replacement or failed state for any volume that is a member of a RAID volume AND is not currently performing I/O activity. |
| | | Blinking green (4 Hz, 50% duty cycle) = The drive is currently performing I/O activity AND is a member of a RAID volume AND is not in a replacement or failed state for any volume that is a member of a RAID volume (the drive is online) AND is not rebuilding AND is not a member of a volume undergoing capacity expansion or RAID migration. |
| | | Blinking green (1 Hz, 50% duty cycle; overrides drive activity output) = The drive is rebuilding OR is a member of a volume undergoing capacity expansion or RAID migration. |
| 2 | Fault/Identification LED (bicolor amber/blue) | OFF = The drive is not failed AND is not selected (unit identification). |
| | | Solid blue = The drive is not failed AND is selected (unit identification). |
| | | Solid amber = The drive is failed AND is not selected. |
| | | Blinking amber (1 Hz, 50% duty cycle) = The drive is in a predictive failure state AND is not failed AND is not selected. |
| | | Blinking alternate amber/blue (1 Hz, 50% duty cycle) = The drive is failed OR is in a predictive failure state, AND the drive is selected. |

NOTE: Spare drives are used only when a disk drive fails. Until a spare drive is used, it remains offline and its LEDs remain off.
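The AND/OR conditions in Table 4 amount to two small decision functions. The Python sketch below is a simplified reading of the table under stated assumptions: each condition is modeled as an independent boolean with hypothetical field names, the 1 Hz green pattern takes precedence because the table says it overrides drive activity output, and for the fault LED a failed drive shows solid amber even if it also has a predictive failure, because the blinking-amber row requires that the drive is not failed. It is not firmware logic from the Smart Array controller.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DriveState:
    """Simplified drive state; field names are hypothetical labels for the
    conditions listed in Table 4."""
    raid_member: bool = False                   # member of a RAID volume
    spare: bool = False                         # configured as a spare drive
    volume_failed_or_replacement: bool = False  # replacement/failed state for a volume
    rebuilding: bool = False
    expanding_or_migrating: bool = False        # volume in capacity expansion or RAID migration
    io_active: bool = False                     # currently performing I/O
    drive_failed: bool = False                  # input to the Fault/Identification LED
    predictive_failure: bool = False
    selected: bool = False                      # selected for unit identification


def activity_online_led(d: DriveState) -> str:
    # The 1 Hz pattern overrides drive activity output, so check it first.
    if d.rebuilding or d.expanding_or_migrating:
        return "blinking green (1 Hz)"
    # Online RAID member that is not a spare: solid when idle, 4 Hz during I/O.
    if d.raid_member and not d.spare and not d.volume_failed_or_replacement:
        return "blinking green (4 Hz)" if d.io_active else "solid green"
    # Not a member, failed/replacement state, or unrebuilt spare.
    return "off"


def fault_identification_led(d: DriveState) -> str:
    if d.selected:
        if d.drive_failed or d.predictive_failure:
            return "blinking alternate amber/blue (1 Hz)"
        return "solid blue"
    if d.drive_failed:
        return "solid amber"
    if d.predictive_failure:
        return "blinking amber (1 Hz)"
    return "off"


if __name__ == "__main__":
    busy = DriveState(raid_member=True, io_active=True)
    print(activity_online_led(busy))  # prints: blinking green (4 Hz)
```

Keeping the two LEDs in separate functions mirrors the table, where each LED is driven by its own set of conditions.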

Figure 27 1210m Cache module controller LEDs

Table 5 1210m Cache module controller LED status

| Item | Description | Status |
|---|---|---|
| Green LED upper left; amber LED lower right | Controller LEDs | Green off, amber on = A backup is in progress. |
| | | Green flashing (1 Hz), amber on = A restore is in progress. |
| | | Green flashing (1 Hz), amber off = The capacitor pack is charging. |
| | | Green on, amber off = The capacitor pack has completed charging. |
| | | Green and amber flashing alternately (2 Hz) = One of the following conditions exists: the charging process has timed out, or the capacitor pack is not connected. |
| | | Green on, amber on = The flash code image failed to load. |
| | | Green off, amber off = The flash code is corrupt. |
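Because each 1210m cache module status is identified by the combination of the two LEDs, Table 5 maps naturally to a dictionary keyed by (green, amber) pairs. The Python sketch below assumes hypothetical state labels ("off", "on", "flash-1hz", "alternate-2hz") chosen for illustration; they are not output from any HP utility.

```python
# Hypothetical decoder for Table 5 (1210m cache module controller LEDs).
# Keys are (green LED state, amber LED state) using illustrative labels:
# "off", "on", "flash-1hz" (flashing at 1 Hz), "alternate-2hz"
# (green and amber flashing alternately at 2 Hz).
CACHE_MODULE_LEDS = {
    ("off", "on"): "A backup is in progress.",
    ("flash-1hz", "on"): "A restore is in progress.",
    ("flash-1hz", "off"): "The capacitor pack is charging.",
    ("on", "off"): "The capacitor pack has completed charging.",
    ("alternate-2hz", "alternate-2hz"): (
        "The charging process has timed out, or the capacitor pack is not connected."
    ),
    ("on", "on"): "The flash code image failed to load.",
    ("off", "off"): "The flash code is corrupt.",
}


def decode_cache_module_leds(green: str, amber: str) -> str:
    """Return the Table 5 meaning for an observed green/amber LED pair."""
    key = (green.strip().lower(), amber.strip().lower())
    try:
        return CACHE_MODULE_LEDS[key]
    except KeyError:
        raise ValueError(
            f"combination not listed in Table 5: green={green!r}, amber={amber!r}"
        ) from None


if __name__ == "__main__":
    print(decode_cache_module_leds("OFF", "On"))  # prints: A backup is in progress.
```

Note that "green off, amber off" means corrupt flash code rather than an idle module, which is why the decoder treats it as a listed state instead of a default.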


Figure 28 Enclosure Manager unit LEDs