HP Insight Cluster Management Utility v7.2

User Guide

Abstract

This guide describes how to install, configure, and use HP Insight Cluster Management Utility (CMU) v7.2 on HP systems. HP Insight CMU is software dedicated to the administration of HPC and large Linux clusters. This guide is intended primarily for administrators who install and manage a large collection of systems. This document assumes you have access to the documentation that comes with the hardware platform where the HP Insight CMU cluster will be installed, and you are familiar with installing and administering Linux operating systems.

HP Part Number: 5900-3115

Published: November 2013

Edition: 1

© Copyright 2013 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license. The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Microsoft® and Windows® are U.S. registered trademarks of Microsoft Corporation. Linux™ is a trademark of Linus Torvalds in the U.S. Red Hat™ and RPM™ are trademarks of Red Hat, Inc. Java® is a registered trademark of Oracle and/or its affiliates.

Contents

1 Overview................................................................................................11

1.1 Features...........................................................................................................................11

1.1.1 Compute node monitoring............................................................................................11

1.1.2 HP Insight CMU configuration......................................................................................11

1.1.3 Compute node administration......................................................................................12

1.1.4 System disk replication................................................................................................12

2 Installing and upgrading HP Insight CMU....................................................13

2.1 Installing HP Insight CMU...................................................................................................13

2.1.1 Management node hardware requirements....................................................................13

2.1.2 Disk space requirements..............................................................................................14

2.1.3 Support for non-HP servers..........................................................................................14

2.1.4 Planning for compute node installation..........................................................................14

2.1.5 Firmware upgrade requirements...................................................................................15

2.1.6 Configuring the local smart array card..........................................................................15

2.1.7 Configuring the management cards..............................................................................15

2.1.8 Configuring the BIOS..................................................................................................15

2.1.8.1 DL3xx, DL5xx, DL7xx, Blades...............................................................................16

2.1.8.1.1 OA IP address: Blades only...........................................................................16

2.1.8.1.2 Configuring iLO cards from the OA: Blades only..............................................16

2.1.8.1.3 Disabling server automatic power on: Blades only............................................16

2.1.8.2 DL160 G5, DL165c G5, DL165c G6, and DL180 G5 Servers...................................17

2.1.8.3 DL160 G6 Servers..............................................................................................18

2.1.8.4 SL2x170z G6 and DL170h G6 Servers BIOS setting................................................18

2.2 Preparing for installation....................................................................................................20

2.2.1 HP Insight CMU kit delivery.........................................................................................20

2.2.2 Preinstallation limitations............................................................................................20

2.2.3 Operating system support...........................................................................................21

2.2.3.1 RHEL 6 support..................................................................................................21

2.2.4 HP Insight CMU CD-ROM directory structure.................................................................21

2.2.5 HP Insight CMU installation checklist............................................................................22

2.2.6 Login privileges.........................................................................................................22

2.2.7 SELinux and HP Insight CMU......................................................................................22

2.3 Installation procedures.......................................................................................................22

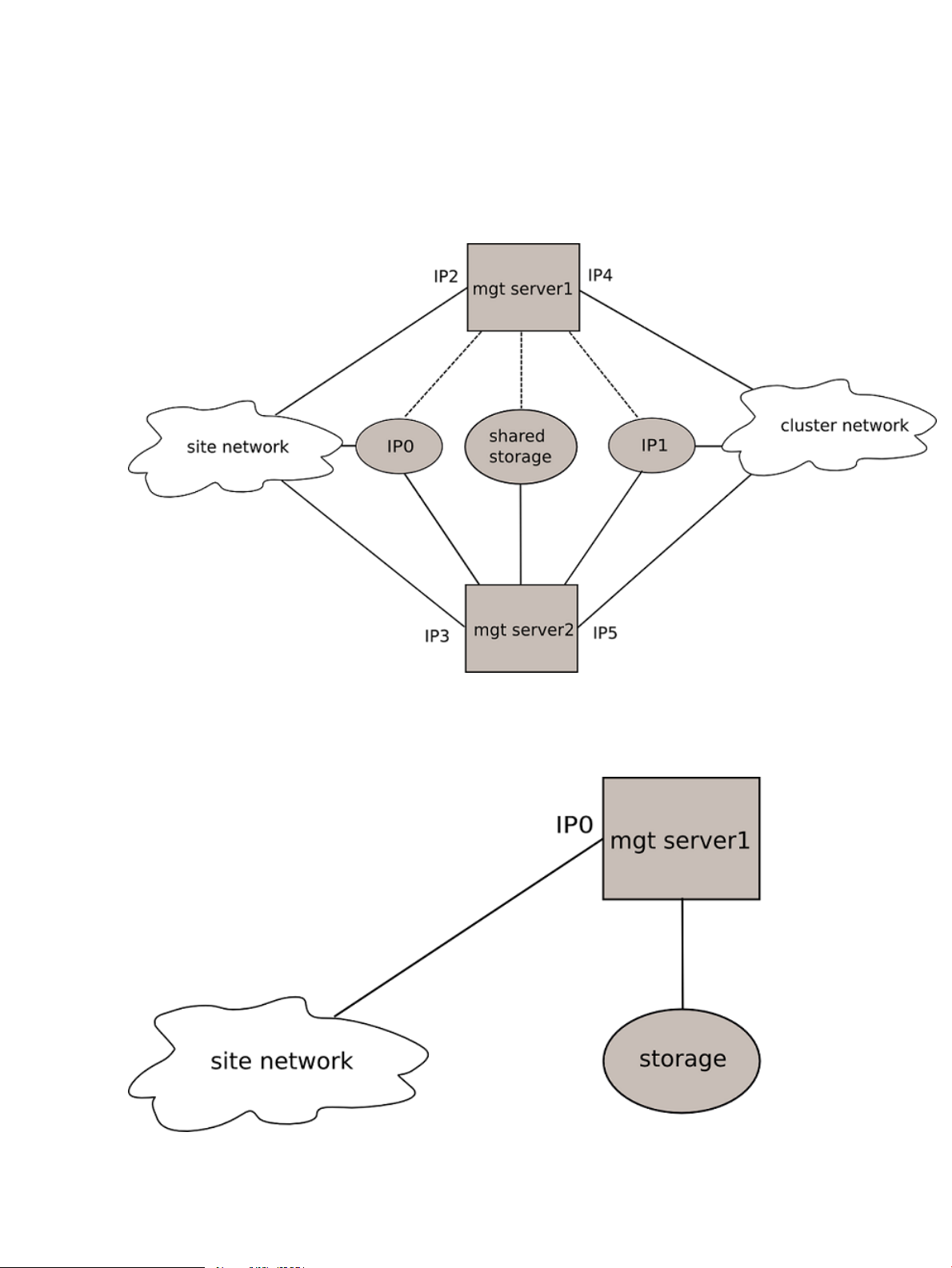

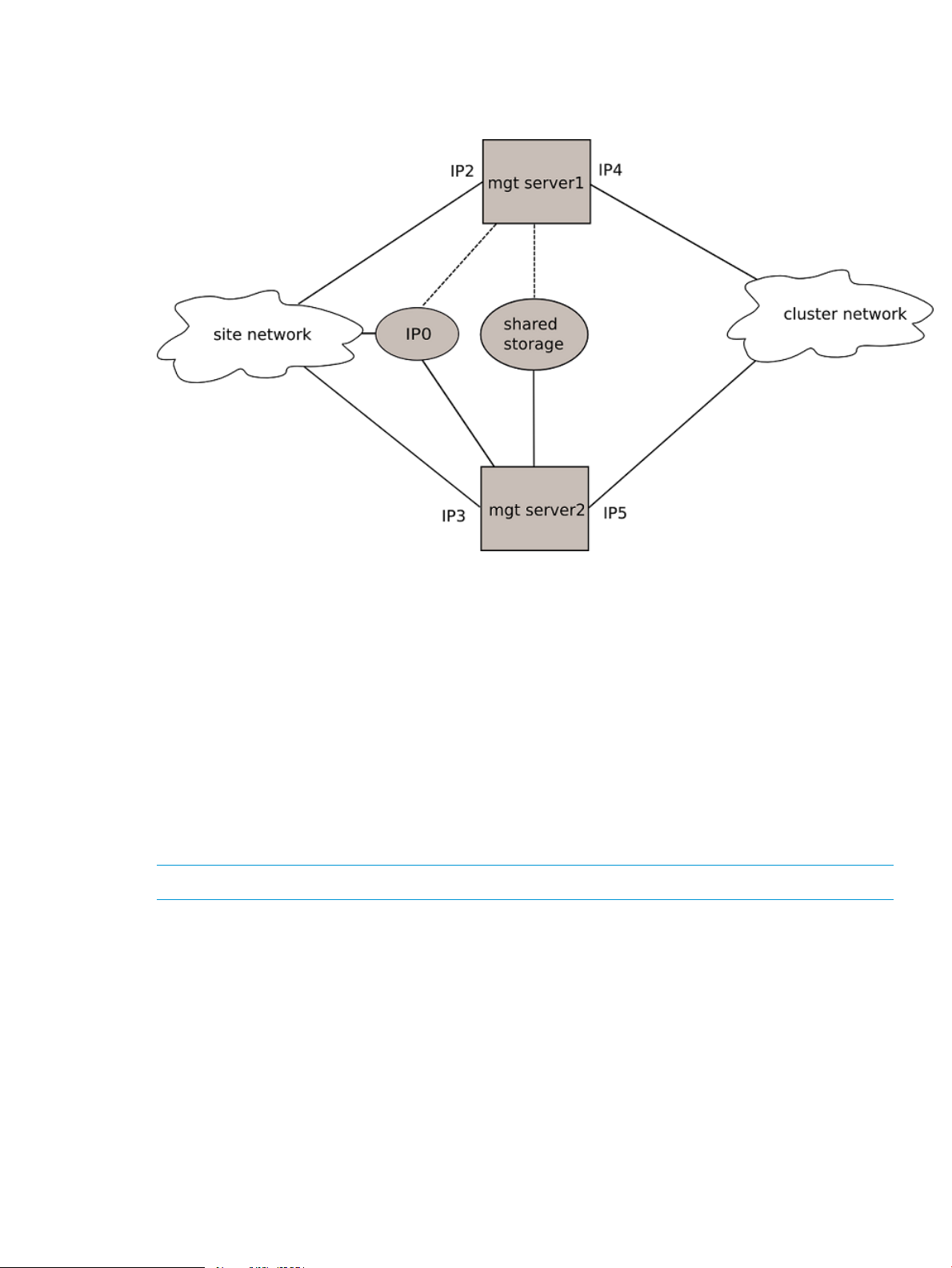

2.4 Installing HP Insight CMU with high availability....................................................................27

2.4.1 HA hardware requirements.........................................................................................29

2.4.2 Software prerequisites................................................................................................29

2.4.3 Installing HP Insight CMU under HA............................................................................29

2.4.3.1 Overview .........................................................................................................29

2.4.3.2 HP Insight CMU HA service requirements..............................................................30

2.4.3.3 Installing and testing..........................................................................................30

2.4.4 Configuring HA control of HP Insight CMU...................................................................30

2.4.5 HP Insight CMU configuration considerations................................................................33

2.4.6 Upgrading HP Insight CMU HA service........................................................................33

2.5 Upgrading HP Insight CMU................................................................................................34

2.5.1 Upgrading to v7.2 important information......................................................................34

2.5.2 Dependencies...........................................................................................................34

2.5.2.1 64-bit versions on management node...................................................................34

2.5.2.2 tftp client..........................................................................................................34

2.5.2.3 Java version dependency....................................................................................35

2.5.2.4 Monitoring clients..............................................................................................35

2.5.3 Stopping the HP Insight CMU service...........................................................................35

2.5.4 Upgrading Java Runtime Environment...........................................................................35

2.5.5 Removing the previous HP Insight CMU package...........................................................35

2.5.6 Installing the HP Insight CMU v7.2 package..................................................................35

2.5.7 Installing your HP Insight CMU license.........................................................................36

2.5.8 Restoring the previous HP Insight CMU configuration......................................................36

2.5.9 Configuring the updated HP Insight CMU.....................................................................37

2.5.10 Starting HP Insight CMU...........................................................................................38

2.5.11 Deploying the monitoring client..................................................................................39

2.6 Saving the HP Insight CMU database..................................................................................39

2.7 Restoring the HP Insight CMU database...............................................................................39

3 Launching the HP Insight CMU GUI............................................................40

3.1 HP Insight CMU GUI.........................................................................................................40

3.2 HP Insight CMU main window............................................................................................40

3.3 Administrator mode...........................................................................................................41

3.4 Quitting administrator mode..............................................................................................41

3.5 Changing X display address..............................................................................................41

3.6 Launching the HP Insight CMU GUI.....................................................................................41

3.6.1 Launching the HP Insight CMU GUI using a web browser...............................................41

3.6.2 Launching the HP Insight CMU GUI from the Java file.....................................................42

3.6.3 Configuring the GUI client on Linux workstations............................................................42

3.6.4 Launching the HP Insight CMU Time View GUI..............................................................43

4 Defining a cluster with HP Insight CMU.......................................................44

4.1 HP Insight CMU service status.............................................................................................44

4.2 High-level checklist for building an HP Insight CMU cluster.....................................................44

4.3 Cluster administration........................................................................................................44

4.3.1 Node management....................................................................................................45

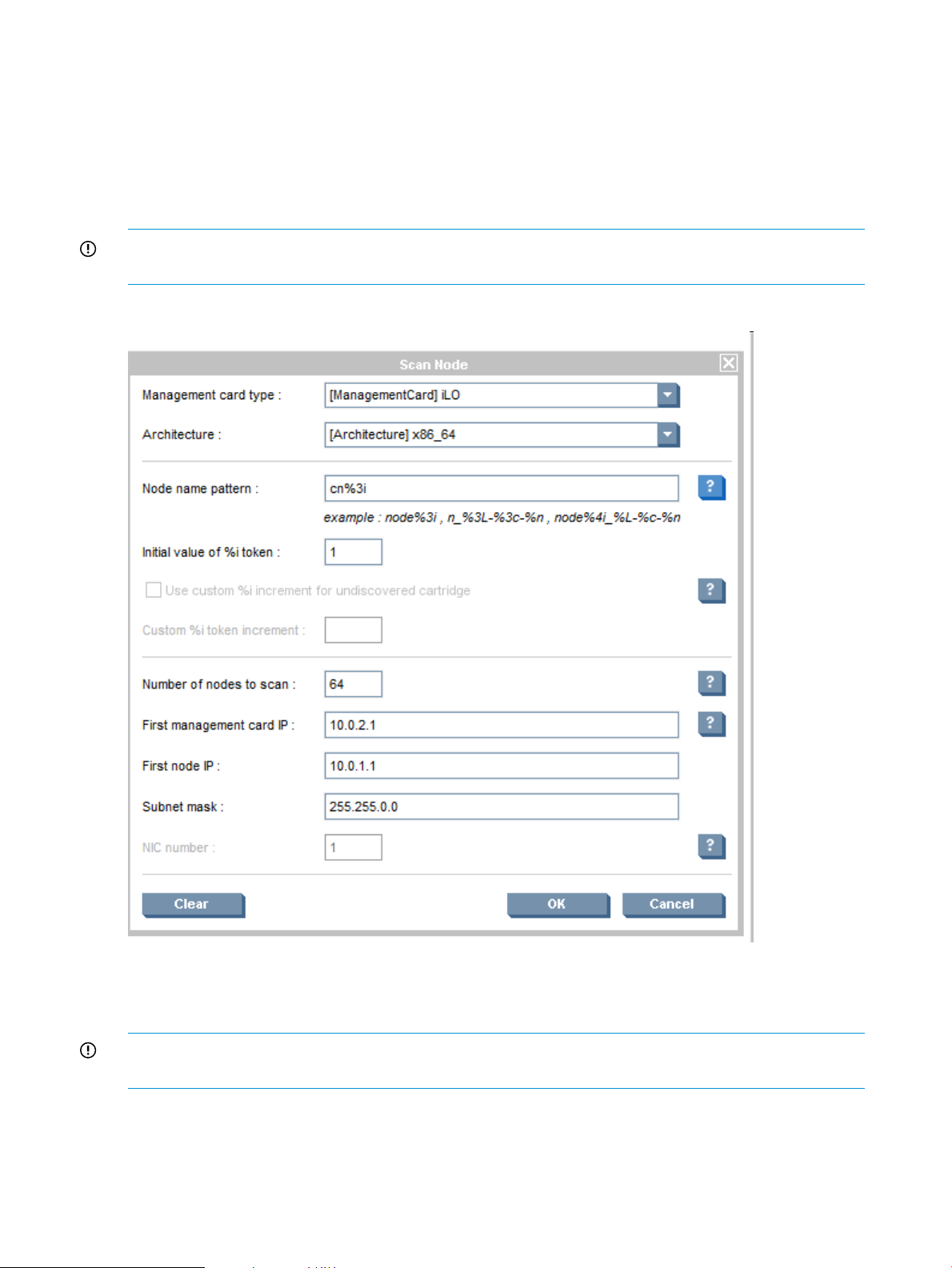

4.3.1.1 Scanning nodes..................................................................................................46

4.3.1.2 Adding nodes....................................................................................................47

4.3.1.3 Modifying nodes................................................................................................48

4.3.1.4 Importing nodes.................................................................................................49

4.3.1.5 Deleting nodes...................................................................................................49

4.3.1.6 Exporting nodes.................................................................................................49

4.3.1.7 Contextual menu................................................................................................49

4.3.2 Network entity management.......................................................................................49

4.3.2.1 Adding network entities......................................................................................50

4.3.2.2 Deleting network entities.....................................................................................50

5 Provisioning a cluster with HP Insight CMU..................................................51

5.1 Logical group management................................................................................................51

5.1.1 Modifying logical groups.............................................................................................52

5.1.2 Deleting logical groups...............................................................................................52

5.1.3 Renaming logical groups.............................................................................................52

5.2 Autoinstall........................................................................................................................53

5.2.1 Autoinstall requirements..............................................................................................53

5.2.2 Autoinstall templates..................................................................................................53

5.2.3 Autoinstall calling methods ........................................................................................54

5.2.4 Using autoinstall from GUI..........................................................................................54

5.2.4.1 Creating an autoinstall logical group....................................................................54

5.2.4.2 Registering compute nodes.................................................................................55

5.2.4.3 Autoinstall compute nodes..................................................................................55

5.2.5 Using autoinstall from CLI...........................................................................................56

5.2.5.1 Registering an autoinstall logical group.................................................................56

5.2.5.2 Adding nodes to autoinstall logical group.............................................................56

5.2.5.3 Autoinstall compute nodes..................................................................................57

5.2.6 Customization...........................................................................................................57

5.2.6.1 RHEL autoinstall customization for nodes configured with Dynamic Smart Array RAID (B120i, B320i RAID mode).............................................................................................58

5.2.7 Restrictions...............................................................................................................58

5.3 Backing up......................................................................................................................58

5.3.1 Backing up a disk from a compute node in a logical group.............................................58

5.4 Cloning...........................................................................................................................61

5.4.1 Preconfiguration........................................................................................................62

5.4.2 Reconfiguration.........................................................................................................63

5.4.3 Cloning Windows images..........................................................................................64

5.5 Node static info................................................................................................................64

5.6 Rescan MAC....................................................................................................................65

5.7 HP Insight CMU image editor.............................................................................................66

5.7.1 Expanding an image..................................................................................................66

5.7.2 Modifying an image..................................................................................................67

5.7.3 Saving a modified cloning image................................................................................67

5.8 HP Insight CMU diskless environments.................................................................................68

5.8.1 Overview.................................................................................................................68

5.8.1.1 Enabling diskless support in HP Insight CMU..........................................................69

5.8.2 The system-config-netboot diskless method....................................................................70

5.8.2.1 Operating systems supported...............................................................................70

5.8.2.2 Installing the operating system on the management node and the golden node.........70

5.8.2.3 Modifying the TFTP server configuration ...............................................................70

5.8.2.4 Populating the HP Insight CMU database.............................................................71

5.8.2.5 Creating a diskless image..................................................................................71

5.8.2.6 Creating a diskless logical group.........................................................................71

5.8.2.7 Adding nodes into the logical group....................................................................72

5.8.2.8 Booting the compute nodes.................................................................................73

5.8.2.9 Understanding the structure of a diskless image.....................................................74

5.8.2.10 Customizing your diskless image........................................................................74

5.8.2.10.1 files.custom...............................................................................................74

5.8.2.10.2 Using reconf-diskless-image.sh....................................................................74

5.8.2.10.3 Using reconf-diskless-snapshot.sh................................................................75

5.8.2.10.4 Templates and image file...........................................................................76

5.8.2.11 Best practices for diskless clusters........................................................................76

5.8.3 The HP Insight CMU oneSIS diskless method.................................................................76

5.8.3.1 Operating systems supported...............................................................................77

5.8.3.2 Enabling oneSIS support....................................................................................77

5.8.3.3 Preparing the HP Insight CMU management node.................................................77

5.8.3.4 Preparing the golden node.................................................................................77

5.8.3.5 Capturing and customizing a oneSIS diskless image..............................................78

5.8.3.5.1 Creating an HP Insight CMU oneSIS diskless image........................................78

5.8.3.5.2 Customizing an HP Insight CMU oneSIS diskless image..................................80

5.8.3.6 Manage the writeable memory usage by the oneSIS diskless clients.........................81

5.8.3.7 Adding nodes and booting the diskless compute nodes..........................................81

5.8.4 Scaling out an HP Insight CMU diskless solution with multiple NFS servers........................82

5.8.4.1 Comments on High Availability (HA)....................................................................84

6 Monitoring a cluster with HP Insight CMU....................................................85

6.1 Installing the HP Insight CMU monitoring client......................................................................85

6.2 Deploying the monitoring client..........................................................................................85

6.3 Monitoring the cluster........................................................................................................86

6.3.1 Node and group status...............................................................................................87

6.3.2 Selecting the central frame display..............................................................................87

6.3.3 Global cluster view in the central frame........................................................................88

6.3.4 Resource view in the central frame...............................................................................89

6.3.4.1 Resource view overview......................................................................................89

6.3.4.2 Detail mode in resource view..............................................................................90

6.3.5 Gauge widget..........................................................................................................90

6.3.6 Node view in the central frame...................................................................................91

6.3.7 Using Time View.......................................................................................................92

6.3.7.1 Tagging nodes...................................................................................................92

6.3.7.2 Adaptive stacking..............................................................................................93

6.3.7.3 Bindings and options..........................................................................................93

6.3.7.3.1 Mouse control.............................................................................................93

6.3.7.3.2 Keyboard control........................................................................................94

6.3.7.3.3 Custom cameras.........................................................................................94

6.3.7.3.4 Options.....................................................................................................94

6.3.7.4 Technical dependencies......................................................................................94

6.3.7.5 Troubleshooting.................................................................................................95

6.3.8 Archiving user groups................................................................................................95

6.3.8.1 Visualizing history data......................................................................................96

6.3.8.2 Limitations........................................................................................................96

6.4 Stopping HP Insight CMU monitoring..................................................................................96

6.5 Customizing HP Insight CMU monitoring, alerting, and reactions............................................96

6.5.1 Action and alert files..................................................................................................96

6.5.2 Actions....................................................................................................................97

6.5.3 Alerts.......................................................................................................................98

6.5.4 Alert reactions..........................................................................................................99

6.5.5 Modifying the sensors, alerts, and alert reactions monitored by HP Insight CMU..............100

6.5.6 Using collectl for gathering monitoring data...............................................................100

6.5.6.1 Installing and starting collectl on compute nodes..................................................100

6.5.6.2 Modifying the ActionAndAlerts.txt file................................................................101

6.5.6.3 Installing and configuring colplot for plotting collectl data.....................................102

6.5.6.3.1 Plotting data............................................................................................103

6.5.7 Monitoring GPUs and coprocessors...........................................................................105

6.5.7.1 Monitoring NVIDIA GPUs..................................................................................105

6.5.7.2 Monitoring AMD GPUs.....................................................................................106

6.5.7.3 Monitoring Intel coprocessors............................................................................107

6.5.8 Monitoring HP Insight CMU alerts in HP Systems Insight Manager.................................108

6.5.9 Extended metric support...........................................................................................109

6.5.9.1 Configuring iLO4 AMS extended metric support...................................................112

6.5.9.1.1 Configuring the HP iLO SNMP port..............................................................113

6.5.9.1.2 Accessing and viewing the HP iLO data via SNMP........................................114

6.5.9.1.3 Configuring HP iLO SNMP metrics in HP Insight CMU....................................115

7 Managing a cluster with HP Insight CMU..................................................118

7.1 Unprivileged user menu....................................................................................................118

7.2 Administrator menu.........................................................................................................118

7.3 SSH connection..............................................................................................................118

7.4 Management card connection..........................................................................................119

7.5 Virtual serial port connection............................................................................................119

7.6 Shutdown.......................................................................................................................119

7.7 Power off.......................................................................................................................119

7.8 Boot..............................................................................................................................120

7.9 Reboot...........................................................................................................................120

7.10 Change UID LED status...................................................................................................120

7.11 Multiple windows broadcast............................................................................................121

7.12 Single window pdsh.......................................................................................................121

7.12.1 cmudiff examples....................................................................................................122

7.13 Parallel distributed copy (pdcp)........................................................................................125

7.14 User group management.................................................................................................125

7.14.1 Adding user groups.................................................................................................125

7.14.2 Deleting user groups...............................................................................................126

7.14.3 Renaming user groups.............................................................................................126

7.15 HP Insight firmware management.....................................................................................126

7.15.1 Viewing and analyzing BIOS settings........................................................................126

7.15.2 Checking BIOS versions..........................................................................................127

7.15.3 Installing and upgrading firmware............................................................................127

7.16 Customizing the GUI menu..............................................................................................127

7.16.1 Saving user settings.................................................................................................128

7.17 HP Insight CMU CLI........................................................................................................128

7.17.1 Starting a CLI interactive session................................................................................128

7.17.2 Basic commands.....................................................................................................128

7.17.3 Specifying nodes....................................................................................................130

7.17.4 Administration and cloning commands......................................................................132

7.17.5 Administration utilities pdcp and pdsh.......................................................................138

7.17.6 HP Insight CMU Linux shell commands.......................................................................138

8 Advanced topics....................................................................................139

8.1 Accessing the GUI for non-root users..................................................................................139

8.1.1 Custom menu options for non-root users.......................................................................140

8.1.2 Configuring sudo support .........................................................................................140

8.1.3 Examples................................................................................................................141

8.2 HP Insight CMU diskless API............................................................................................141

8.2.1 Build diskless image.................................................................................................142

8.2.2 Delete diskless image..............................................................................................143

8.2.3 Configure diskless node...........................................................................................143

8.2.4 Post node configuration............................................................................................143

8.2.5 Unconfigure diskless node........................................................................................143

8.2.6 Boot diskless node..................................................................................................144

8.2.7 Diskless check.........................................................................................................144

8.3 HP Insight CMU remote hardware control API.....................................................................145

8.4 Customizing kernel arguments for the HP Insight CMU provisioning kernel..............................146

8.4.1 PXE-boot configuration file keywords..........................................................................147

8.5 Support for ScaleMP.......................................................................................................148

8.6 Cloning mechanisms.......................................................................................................148

8.7 Support for Intel Xeon Phi cards........................................................................................150

8.7.1 Intel Xeon Phi card IP address and host name assignment algorithm...............................151

8.7.2 Cloning an image with Intel Xeon Phi cards configured with independent IP addresses.....152

8.7.3 HP Insight CMU oneSIS diskless file system support for independent addressing of Intel Xeon Phi cards................................................................................................................154

9 Support and other resources....................................................................159

9.1 Contacting HP.................................................................................................................159

9.1.1 Before you contact HP...............................................................................................159

9.1.2 HP contact information..............................................................................................159

9.1.3 Subscription service..................................................................................................159

9.1.4 Documentation feedback...........................................................................................159

9.2 Related information.........................................................................................................159

9.3 Typographic conventions..................................................................................................160

A Troubleshooting.....................................................................................162

A.1 HP Insight CMU logs.......................................................................................................162

A.1.1 cmuserver log files....................................................................................................162

A.1.2 Cloning log files......................................................................................................162


Page 8

A.1.3 Backup log files.......................................................................................................162

A.1.4 Monitoring log files..................................................................................................162

A.2 Network boot issues.......................................................................................................162

A.2.1 Troubleshooting network boot...................................................................................163

A.3 Backup issues................................................................................................................163

A.4 Cloning issues...............................................................................................................164

A.5 Administration command problems...................................................................................164

A.6 GUI problems................................................................................................................164

1 HP Insight CMU manpages......................................................................167

cmu_boot(8)........................................................................................................................168

cmu_show_nodes(8).............................................................................................................169

cmu_show_logical_groups(8).................................................................................................171

cmu_show_network_entities(8)................................................................................................172

cmu_show_user_groups(8).....................................................................................................173

cmu_show_archived_user_groups(8)........................................................................................174

cmu_add_node(8)................................................................................................................175

cmu_add_network_entity(8)...................................................................................................177

cmu_add_logical_group(8)....................................................................................................178

cmu_add_to_logical_group_candidates(8)...............................................................................179

cmu_add_user_group(8)........................................................................................................180

cmu_add_to_user_group(8)....................................................................................................181

cmu_change_active_logical_group(8)......................................................................................182

cmu_change_network_entity(8)...............................................................................................183

cmu_del_from_logical_group_candidates(8).............................................................................184

cmu_del_from_network_entity(8).............................................................................................185

cmu_del_archived_user_groups(8)...........................................................................................186

cmu_del_from_user_group(8).................................................................................................187

cmu_del_logical_group(8).....................................................................................................188

cmu_del_network_entity(8).....................................................................................................189

cmu_del_node(8)..................................................................................................................190

cmu_del_snapshots(8)...........................................................................................................191

cmu_del_user_group(8).........................................................................................................192

cmu_console(8)....................................................................................................................193

cmu_power(8)......................................................................................................................194

cmu_custom_run(8)...............................................................................................................196

cmu_clone(8).......................................................................................................................197

cmu_backup(8)....................................................................................................................198

cmu_scan_macs(8)...............................................................................................................199

cmu_rescan_mac(8)..............................................................................................................203

cmu_mod_node(8)................................................................................................................204

cmu_monstat(8)....................................................................................................................207

cmu_image_open(8).............................................................................................................209

cmu_image_commit(8)..........................................................................................................210

cmu_config_nvidia(8)............................................................................................................211

cmu_config_amd(8)..............................................................................................................212

cmu_config_intel(8)...............................................................................................................213

cmu_mgt_config(8)...............................................................................................................214

cmu_firmware_mgmt(8).........................................................................................................216

cmu_monitoring_dump(8)......................................................................................................217

cmu_rename_archived_user_group(8)......................................................................................218

Glossary..................................................................................................219

Index.......................................................................................................221


Page 9

Figures

1 Typical HPC cluster...........................................................................................................13

2 iLO server power controls..................................................................................................17

3 NIC2 on the SL2x170z G6 Server......................................................................................18

4 HP Insight CMU main window...........................................................................................40

5 Change X display address dialog box................................................................................41

6 Cluster administration menu...............................................................................................44

7 Node management window..............................................................................................45

8 Scan node dialog............................................................................................................46

9 Management card password window.................................................................................47

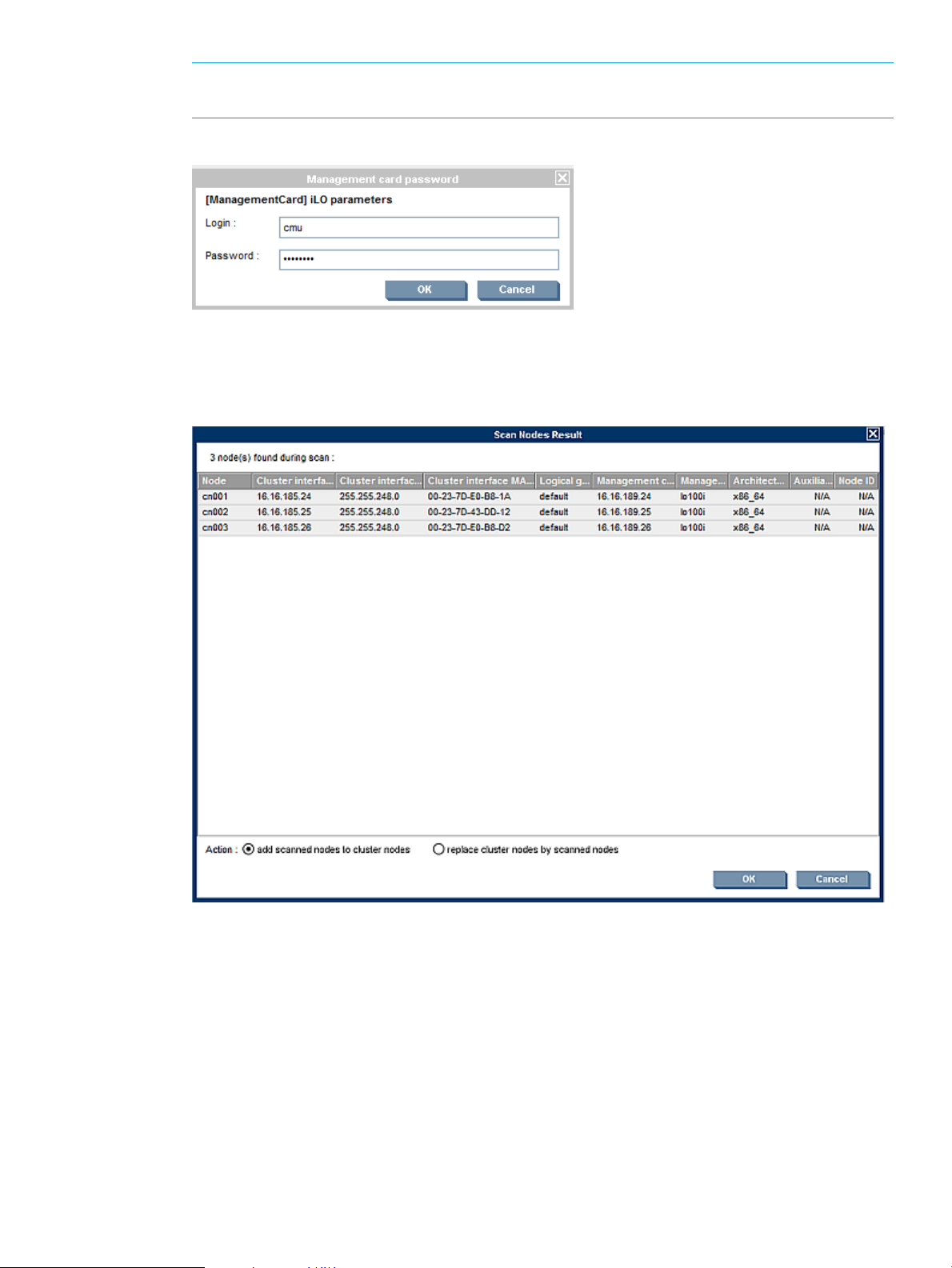

10 Scan node result..............................................................................................................47

11 Add node dialog.............................................................................................................48

12 Populated database node management window..................................................................48

13 Network entity management..............................................................................................50

14 Create a logical group ....................................................................................................51

15 Add logical group............................................................................................................51

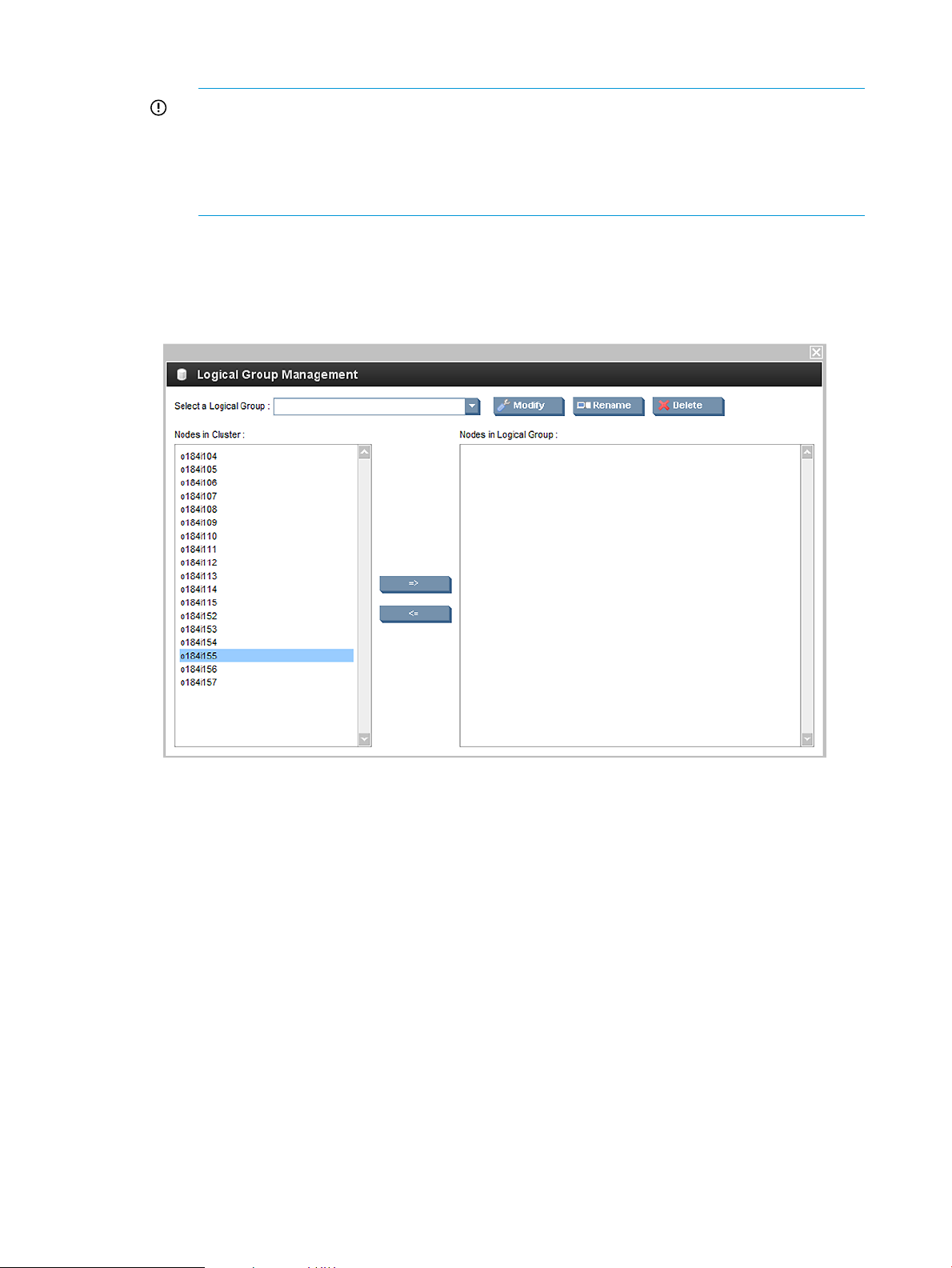

16 Logical group management...............................................................................................52

17 Logical group management autoinstall................................................................................54

18 New autoinstall logical group............................................................................................55

19 Autoinstall log.................................................................................................................56

20 Backup dialog box...........................................................................................................59

21 Backup status window......................................................................................................60

22 Cloning procedure...........................................................................................................61

23 Cloning status..................................................................................................................62

24 Node static info...............................................................................................................65

25 Rescan MAC...................................................................................................................66

26 Naming a logical group...................................................................................................72

27 Adding nodes to logical groups.........................................................................................73

28 Booting the compute nodes...............................................................................................73

29 Monitoring client installation..............................................................................................85

30 Main window..................................................................................................................86

31 Node status....................................................................................................................87

32 Monitoring window..........................................................................................................88

33 Resource view overview....................................................................................................89

34 Alert messages................................................................................................................90

35 Resource view details.......................................................................................................90

36 Memory used summary....................................................................................................91

37 Node details...................................................................................................................92

38 Time view.......................................................................................................................93

39 Archiving deleted user groups............................................................................................95

40 Archived user groups........................................................................................................96

41 ColPlot window.............................................................................................................104

42 ColPlot results................................................................................................................105

43 HP Insight CMU alert converted to SIM event.....................................................................109

44 cmu_config_ams command.........................................................................................112

45 Verify AMS submenu......................................................................................................113

46 Configure iLO SNMP port...............................................................................................113

47 Configure iLO finished....................................................................................................114

48 SNMP query.................................................................................................................114

49 Get/Refresh SNMP data.................................................................................................115

50 View/Compare SNMP data............................................................................................115

51 cmu_ams_metrics......................................................................................................116

Page 10

52 cmu_get_ams_metrics..............................................................................................116

53 Instant view display........................................................................................................117

54 Contextual menu for administrator....................................................................................118

55 Halt dialog ..................................................................................................................119

56 Power off dialog box......................................................................................................120

57 Boot dialog box.............................................................................................................120

58 Reboot dialog box.........................................................................................................120

59 Multiple windows broadcast command.............................................................................121

60 pdsh window................................................................................................................122

61 Parallel distributed copy window......................................................................................125

62 User group management.................................................................................................126

63 Certificate error.............................................................................................................165

64 Java control panel..........................................................................................................165

Tables

1 Directory structure............................................................................................................21

2 Valid archived user group parameters.................................................................................96

3 Extended metric fields.....................................................................................................111

4 Operational HP Insight CMU GUI features available by default for non-root users ..................139

5 HP Insight CMU GUI features and their corresponding commands .......................................141

Examples

1 date command.............................................................................................................122

2 dmidecode command...................................................................................................123

Page 11

1 Overview

HP Insight Cluster Management Utility (CMU) is a collection of tools that manage and monitor a

large group of compute nodes, specifically HPC and large Linux clusters. You can use HP Insight

CMU to lower the total cost of ownership (TCO) of this architecture. HP Insight CMU helps manage,

install, and monitor the compute nodes of your cluster from a single interface. You can access this

utility through a GUI or a CLI.

1.1 Features

HP Insight CMU is scalable and can be used for any size cluster.

The HP Insight CMU GUI:

• Monitors all the nodes of your cluster at a glance.

• Configures HP Insight CMU according to your actual cluster.

• Manages your cluster by sending commands to any number of compute nodes.

• Replicates the disk of a compute node on any number of compute nodes.

The HP Insight CMU CLI:

• Manages your cluster by sending commands to any number of compute nodes.

• Replicates the disk of a compute node on any number of compute nodes.

• Saves and restores your HP Insight CMU database.

1.1.1 Compute node monitoring

You can monitor many nodes using a single window. HP Insight CMU provides the connectivity

status of each node as well as sensors. HP Insight CMU provides a default set of sensors such as

CPU load, memory usage, I/O performance, and network performance. You can customize this

list or create your own sensors. You can display sensor values for any number of nodes.

Information provided by HP Insight CMU is used to ensure optimum performance and for

troubleshooting. You can set thresholds to trigger alerts. All information is transmitted across the

network at time intervals, using a scalable protocol for real-time monitoring.

1.1.2 HP Insight CMU configuration

HP Insight CMU requires a dedicated management server running RHEL or SLES. CentOS and

Scientific Linux are supported on the management node, but require active approval and verification

from HP. The management node can run a different OS from the compute nodes. However, HP

recommends running the same OS on the compute nodes and on the management node.

IMPORTANT: HP Insight CMU does not qualify management nodes. Any server with a supported

operating system can become an HP Insight CMU management node.

For details on specific operating systems supported, see the HP Insight CMU release notes for your

version of the product.

All cluster nodes must be connected to the management node through an Ethernet network. Each

compute node must have a management card. These management cards must be connected to an

Ethernet network. This network must be accessible by the management node.

HP Insight CMU is configured and customized using the HP Insight CMU GUI. Tasks include:

• Manually adding, removing, or modifying nodes in the HP Insight CMU database

• Invoking the scan node procedure to automatically add several nodes

• Adding, deleting, or customizing HP Insight CMU groups


Page 12

• Managing the system images stored by HP Insight CMU

• Configuring actions performed when a node status changes, such as displaying a warning, executing

a command, or sending an email

• Exporting the HP Insight CMU node list in a simple text file for reuse by other applications

• Importing nodes from a simple text file into the HP Insight CMU database

1.1.3 Compute node administration

The HP Insight CMU GUI and CLI enable you to perform actions on any number of selected compute

nodes. Tasks include:

• Halting

• Rebooting

• Booting and powering off, using the compute node management card

• Broadcasting a command to selected compute nodes, using a secure shell connection or a

management card connection

• Direct node connection by clicking a node to open a secure shell connection or a management

card connection

1.1.4 System disk replication

The HP Insight CMU GUI and CLI enable you to replicate a system disk image on any number of

selected compute nodes. Tasks include:

• Creating a new image (While backing up a compute node system disk, you can dynamically

choose which partitions to back up.)

• Replicating available images on any number of compute nodes in the cluster

• Managing as many different images as needed for different software stacks, different operating

systems, or different hardware

• Cloning from one to many nodes at a time with a scalable algorithm which is reliable and

does not stop the entire cloning process if any nodes are broken

• Customizing reconfiguration scripts associated with each image to execute specific tasks on

compute nodes after cloning


Page 13

2 Installing and upgrading HP Insight CMU

2.1 Installing HP Insight CMU

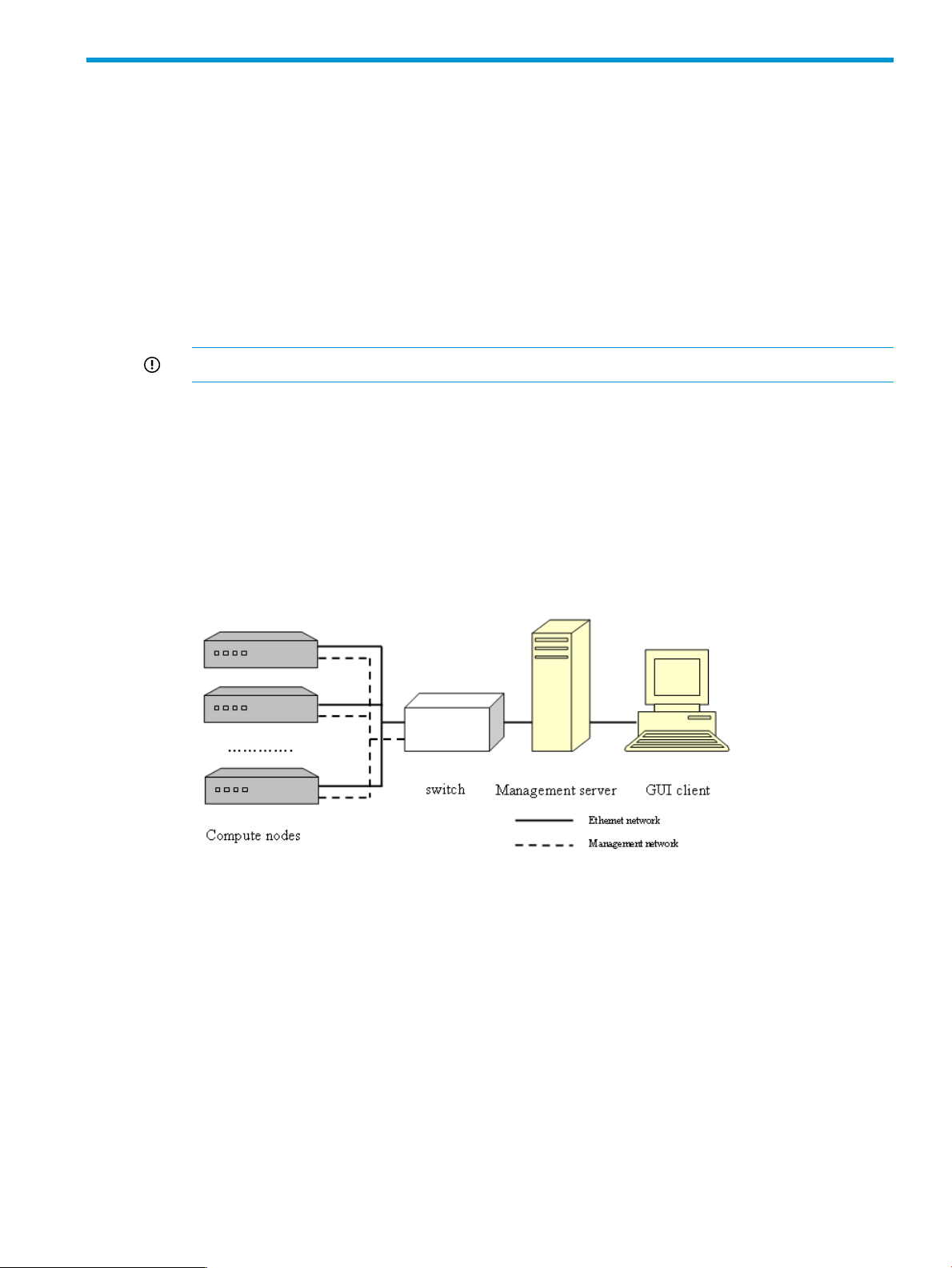

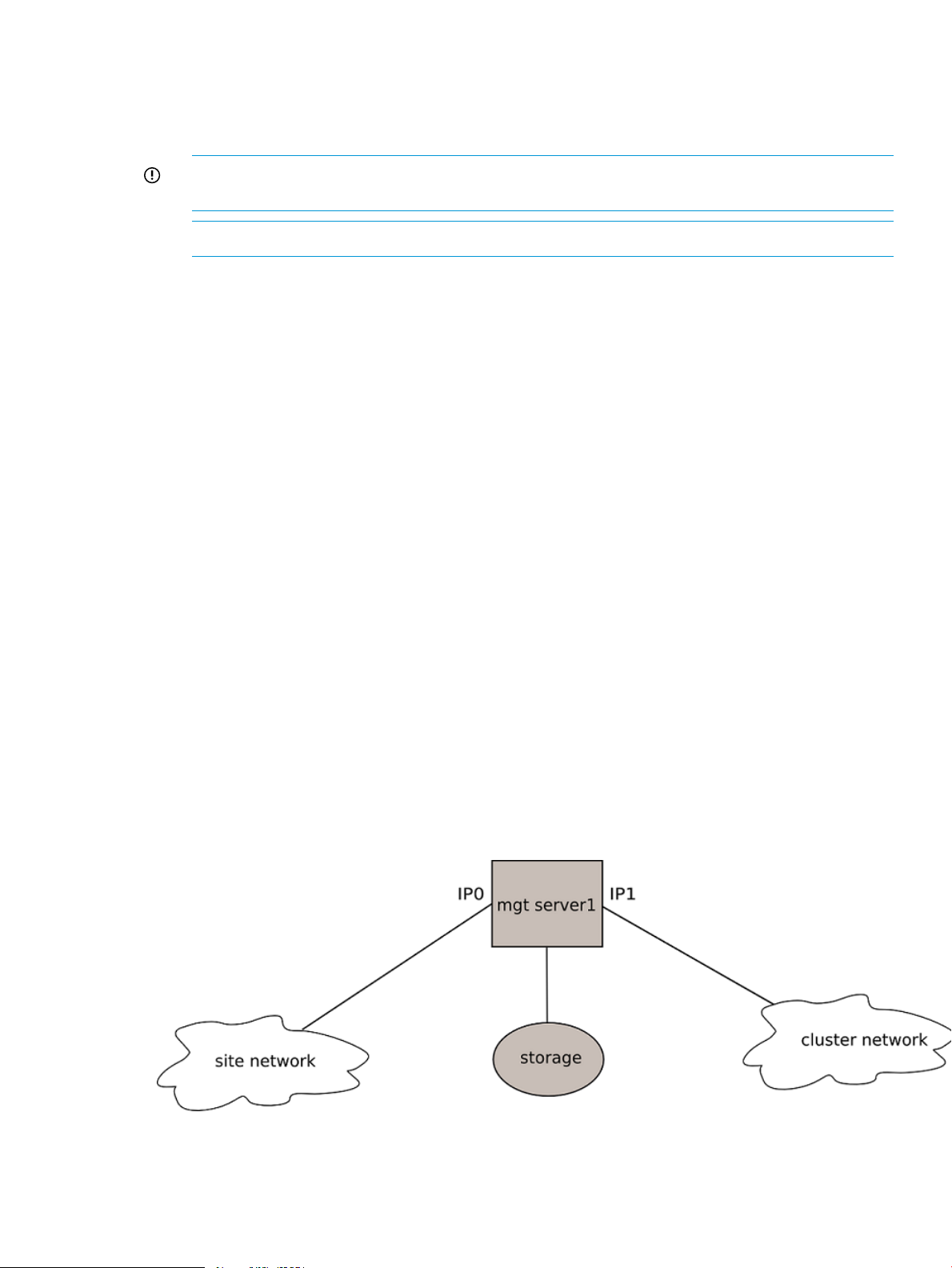

A typical HP Insight CMU cluster contains three kinds of nodes. Figure 1 (page 13) shows a typical

HPC cluster.

• The management node is the central point that connects all the compute nodes and the GUI

clients. Installation, management, and monitoring are performed from the management node.

The package cmu-v7.2-1.x86_64.rpm must be installed on the management node. All

HP Insight CMU files are installed under the /opt/cmu directory.

• The compute nodes are dedicated to user applications. A small software application that

provides a monitoring report is installed on the compute nodes.

IMPORTANT: All compute nodes must be connected to an Ethernet network.

• The client workstations are any PC systems running Linux or Windows operating systems that

display the GUI. The administrator can install, manage, and monitor the entire cluster from a

client workstation. Users can monitor the cluster and access compute nodes from their

workstations.

A management card is required on each node to manage the cluster. These management cards

must be connected to an Ethernet network. The management node must have access to this network.

Figure 1 Typical HPC cluster

2.1.1 Management node hardware requirements

The HP Insight CMU management node needs access to the compute nodes, the compute node

management cards (iLOs), and the HP Insight CMU GUI clients. Each of these components is

typically on a separate network, though that is not strictly required. Using independent networks

ensures good network performance and isolates problems if network failures occur. A recommended

NIC/network configuration for the management node is:

• Connect one NIC to a network established for compute node administration.

• Connect a second NIC to the network connecting the HP Insight CMU management node to

the HP Insight CMU GUI clients.

• A third NIC is typically used to provide access to the network connecting all the compute node

management cards (iLOs).


Page 14

NOTE: The IP address of the NIC connected to the compute node administration network is

needed during configuration of the HP Insight CMU management node.

2.1.2 Disk space requirements

A total of 400 MB of free disk space is necessary to install all the subsets or packages required

for HP Insight CMU. Up to 4 GB of additional space is needed to store each master disk image.
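As a rough sizing aid, the two figures in this section can be combined into a worst-case estimate. The following Python sketch is illustrative only. The function name is hypothetical, the per-image figure is read as 4 gigabytes, and the decimal convention (1 GB = 1000 MB) is an assumption.

```python
INSTALL_MB = 400          # free space needed for the HP Insight CMU packages
MAX_IMAGE_MB = 4 * 1000   # upper bound per master disk image (assumes 1 GB = 1000 MB)


def estimate_disk_space_mb(image_count):
    """Return a worst-case free-space estimate, in MB, for the management node."""
    if image_count < 0:
        raise ValueError("image_count must be non-negative")
    return INSTALL_MB + image_count * MAX_IMAGE_MB
```

For example, `estimate_disk_space_mb(3)` adds the 400 MB installation footprint to three images at the upper bound. Actual image sizes depend on the partitions you choose to back up.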

2.1.3 Support for non-HP servers

IMPORTANT: You must obtain a valid license to run HP Insight CMU on non-HP hardware.

The following section describes how HP Insight CMU functions with non-HP servers.

Provisioning

• Autoinstall works (assumes PXE-boot support).

• Diskless works (assumes PXE-boot support).

• Backup and cloning must be tested. These processes rely on the HP Insight CMU netboot kernel

which needs the network and disk drivers for non-HP hardware. If these drivers for non-HP

hardware exist in the kernel.org source tree, then backup and cloning should work. If

backup and cloning do not work on your specific hardware, contact HP services.

Monitoring

• All monitoring works, including the GUI.

• If provisioning is not used, monitoring requires password-less ssh to be configured for the root

account on all nodes.

NOTE: Backup and cloning configures this automatically.

Remote management

• All xterm-based features work. For example:

◦ single|multi xterm

◦ pdsh with cmudiff

◦ pdcp

• Power control and console access depend on non-HP hardware. HP Insight CMU supports

IPMI. Otherwise, a new power interface can be configured. HP Insight CMU has an API for

power control.

• BIOS and firmware management are HP-specific.

• Custom menu support works.

2.1.4 Planning for compute node installation

Two IP addresses are required for each compute node.

• Determine the IP address for the management card (iLO) on the management network.

• Determine the IP address for the NIC on the administration network.

HP recommends assigning contiguous ranges of static addresses for nodes located in the same

rack. This method eases the discovery of the nodes and makes the cluster management more

convenient.
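The contiguous addressing recommendation can be sketched as a small planning helper. This Python example is a minimal sketch, not part of HP Insight CMU. The function name, the `node` name prefix, and the addresses shown in the usage note are all hypothetical; substitute the ranges reserved on your own management and administration networks.

```python
import ipaddress


def plan_rack_addresses(first_ilo, first_admin, node_count, name_prefix="node"):
    """Pair each node in a rack with consecutive iLO and administration addresses."""
    ilo_start = ipaddress.ip_address(first_ilo)
    admin_start = ipaddress.ip_address(first_admin)
    plan = []
    for offset in range(node_count):
        plan.append({
            "name": f"{name_prefix}{offset + 1}",       # hypothetical naming scheme
            "ilo_ip": str(ilo_start + offset),          # management card address
            "admin_ip": str(admin_start + offset),      # administration NIC address
        })
    return plan
```

Calling `plan_rack_addresses("10.0.1.1", "10.0.0.1", 3)` returns three entries whose iLO and administration addresses increase together, which keeps the two addresses of each node easy to correlate during discovery.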


Page 15

The management cards must be configured with a static IP address. All the compute node

management cards must have a single login and password.

NOTE: HP Insight CMU uses DHCP and PXE. Do not run other DHCP or PXE servers on the HP

Insight CMU management network in the range of ProLiant MAC addresses belonging to the HP

Insight CMU cluster.

NOTE: The settings described in this document are based on the assumption that the administration

network on each compute node is connected to, and will PXE boot from, NIC1. While this

configuration enables all supported hardware to be imaged by HP Insight CMU, some operating

systems might not configure eth0 as NIC1. For example, RHEL5.4 on the ProLiant DL385 G6 Server

defaults eth0 to NIC3. To simplify the installation process for your operating system, HP recommends

wiring your administration network to the NIC that defaults to eth0 and setting that NIC to PXE boot

rather than NIC1.

2.1.5 Firmware upgrade requirements

Depending on the type of compute nodes in the cluster, you might have to upgrade the firmware

on each compute node.

IMPORTANT: All compute nodes must have the same firmware version.

2.1.6 Configuring the local smart array card

HP Insight CMU does not configure local disks. If you have a hardware RAID controller, configure

a logical drive to be used later by the operating system. The same logical drive must be created

on each compute node.

The Compaq Array must be configured on each node of the cluster. You can choose any RAID

level. If you have only one physical drive, before performing the initial operating system installation,

configure it in a logical RAID0 drive. Otherwise, the disk is not detected during the Linux installation

procedure and during the cloning procedure.

2.1.7 Configuring the management cards

To configure the management cards such as iLO:

1. Power on the server.

2. Access the management card.

3. Assign the same username and password to all management cards.

4. Assign a fixed IP address to each management card.

NOTE: On Blade servers, to configure the IP addresses on the iLO cards, you can use the

EBIPA on the OA. For instructions, see “Configuring iLO cards from the OA: Blades only”

(page 16).

NOTE: Blade servers do not use the Single Sign-On capability. You must configure each

Blade individually and create the same username and password. For instructions, see “Disabling

server automatic power on: Blades only” (page 16).

2.1.8 Configuring the BIOS

Generally, BIOS parameters that affect HP Insight CMU are:

• Boot order parameters. (Network boot must have the highest priority.)

• Parameters that enable the BIOS boot process and the Linux boot process to be visualized at

the iLO serial console. (Those parameters must be set for BIOS startup and Linux boot to be

monitored from a remote connection through the iLO port.)


Page 16

• Parameters that affect the behavior of the local disk controller. Parameter names can differ

from one server to another and cannot be documented exhaustively.

IMPORTANT: If the boot order is not correctly set, then cloning and backup fail on the cluster.

Examples are provided in the following sections.
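Because an incorrect boot order causes cloning and backup to fail, it can be useful to check a recorded boot order before provisioning. The following Python sketch assumes you have noted each server's boot order by hand as a list of labels. The function name and the label matching are hypothetical, and the labels are taken from the BIOS examples in this guide; other servers may name their entries differently.

```python
# Labels that the BIOS examples in this guide use for network boot entries.
# Other servers may use different labels; extend this tuple as needed.
NETWORK_LABELS = ("PXE", "NIC", "EMBEDDED NIC", "NETWORK")


def network_boot_first(boot_order):
    """Return True if the first boot device looks like a network boot entry."""
    if not boot_order:
        return False
    first = boot_order[0].strip().upper()
    # str.startswith accepts a tuple and matches any of its prefixes.
    return first.startswith(NETWORK_LABELS)
```

A boot order such as `["PXE", "CD", "DISK"]` passes the check, while one that lists the disk first does not.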

2.1.8.1 DL3xx, DL5xx, DL7xx, Blades

Parameters:

• Virtual serial port COM1

• Embedded NIC

◦ NIC 1 PXE boot or PXE enabled

◦ NIC 2 Disabled

◦ NIC 3 Disabled (not always present)

◦ NIC 4 Disabled (not always present)

• Boot order

1. PXE

2. CD

3. DISK

• BIOS Serial console

◦ BIOS Serial Console Auto

◦ Speed 9600 Bd

◦ EMS Console COM1

◦ Interface Mode Auto

2.1.8.1.1 OA IP address: Blades only

Assign the OA IP address in the same subnet as the administration network.

2.1.8.1.2 Configuring iLO cards from the OA: Blades only

Use the EBIPA to assign consecutive addresses to the iLO:

• 16 addresses on the c7000 Enclosure

• 8 addresses on the c3000 Enclosure

To configure the iLO cards:

1. Open a browser to the OA.

2. In the right window, select Device Bays.

3. Select Bay 1.

4. In the left window, select the Enclosure Setting tab and then Enclosure Bay IP Addressing.

5. Enter the IP address of the first iLO card.

6. Click Auto Fill or the red arrow.

Each iLO is reset and assigned an IP address by the OA.

7. From the HP Insight CMU management node, ping each iLO.
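The final verification step can be scripted from the management node. The following Python sketch is one possible approach, not an HP Insight CMU tool. It assumes the EBIPA assigned consecutive addresses starting from the first iLO address, that the Linux `ping` command is available, and that the starting address you pass in is your own; all function names are hypothetical.

```python
import ipaddress
import subprocess

# Number of consecutive iLO addresses assigned per enclosure type.
BAYS_PER_ENCLOSURE = {"c7000": 16, "c3000": 8}


def ilo_addresses(first_ilo, enclosure):
    """List the consecutive iLO addresses expected for one enclosure."""
    start = ipaddress.ip_address(first_ilo)
    return [str(start + bay) for bay in range(BAYS_PER_ENCLOSURE[enclosure])]


def ping(address, timeout_s=2):
    """Return True if one ICMP echo request is answered (Linux ping syntax)."""
    result = subprocess.run(
        ["ping", "-c", "1", "-W", str(timeout_s), address],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0


def unreachable_ilos(first_ilo, enclosure, probe=ping):
    """Return the expected iLO addresses that did not answer the probe."""
    return [addr for addr in ilo_addresses(first_ilo, enclosure) if not probe(addr)]
```

For example, `unreachable_ilos("192.168.1.101", "c7000")` probes sixteen addresses and returns those that did not reply. An empty list means every iLO answered. The `probe` parameter allows a different reachability check to be substituted.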

2.1.8.1.3 Disabling server automatic power on: Blades only

On each Blade server:


Page 17

1. Access the iLO card.

2. Create the username and password. Each server must have the same username and password.

3. Select the Power Management tab.

4. For Automatically Power On Server, select No.

5. Select Submit.

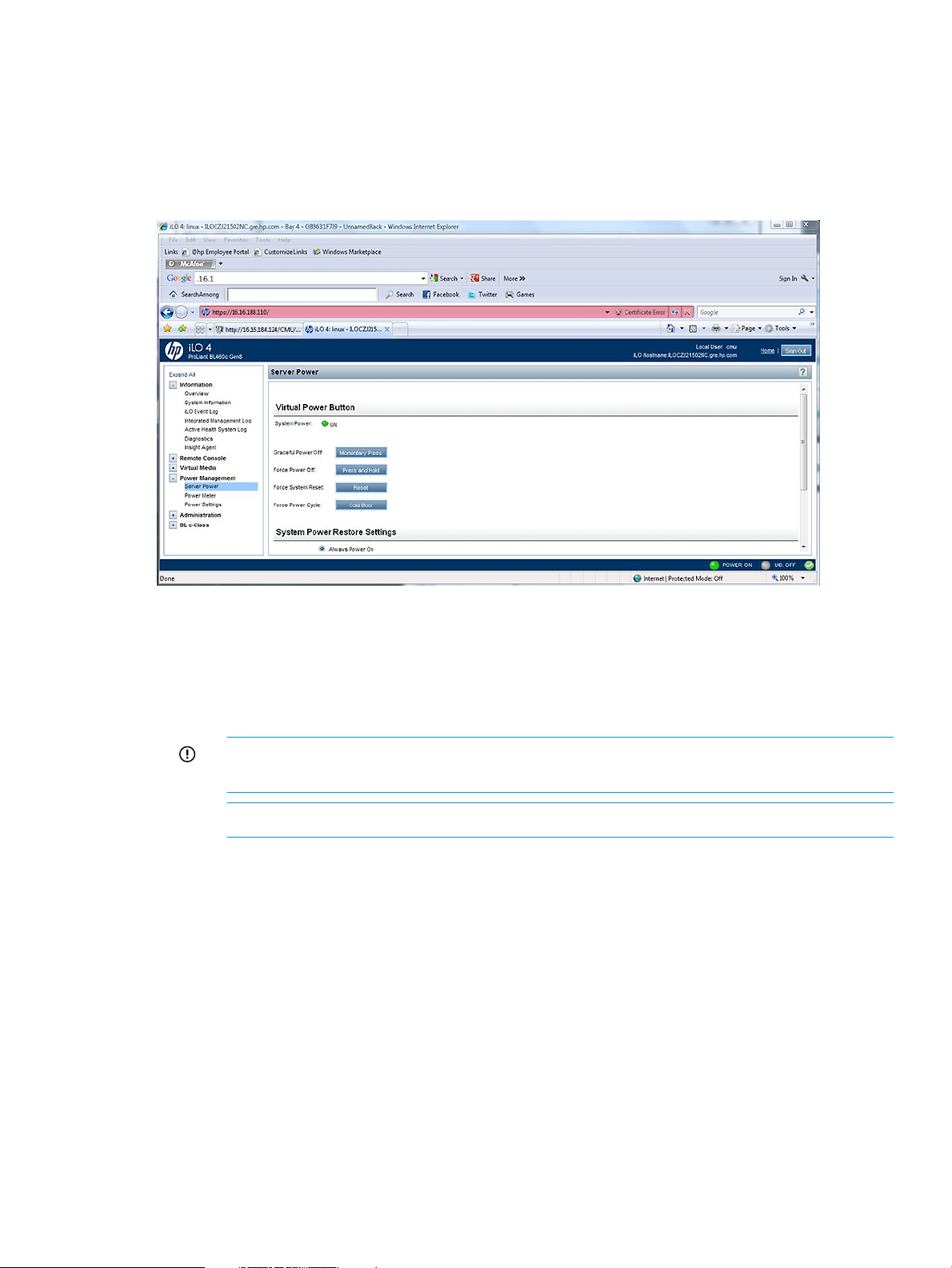

Figure 2 iLO server power controls

2.1.8.2 DL160 G5, DL165c G5, DL165c G6, and DL180 G5 Servers

• IDE

◦ ATA/IDE Enhanced

◦ Configure SATA as IDE

IMPORTANT: The embedded SATA Raid Controller option is not supported. Do not

select this option.

NOTE: These IDE settings only apply to the DL160 G5 Server.

• IPMI

◦ Serial Port assigned to System

◦ Serial Port Switching Disabled

◦ Serial Port Connection Mode Direct

• LAN

◦ Share NIC mode Disabled

◦ DHCP Disabled

• Remote Access

◦ Remote access Enabled

◦ Redirection Always

Page 18

◦ Terminal VT100

• Boot Configuration

◦ Boot Order

1. Embedded NIC

2. Disk or smart array

◦ Embedded NIC1 Enabled

2.1.8.3 DL160 G6 Servers

• IPMI

◦ Serial Port assigned to System

◦ Serial Port Connection Mode Direct

• PCI

◦ NIC1 control Enabled

◦ NIC1 PXE Enabled

• SATA

◦ SATA#1 Controller Mode AHCI

• Boot Configuration

◦ Boot Order

1. NIC

2. CD

3. Disk

2.1.8.4 SL2x170z G6 and DL170h G6 Servers BIOS setting

IMPORTANT: To enable BIOS updates, you must restart the server. You can restart the server

with Ctrl+Alt+Delete immediately after leaving the BIOS, or you can physically restart the server

by using the power switch on the server.

Figure 3 NIC2 on the SL2x170z G6 Server

• BIOS settings

◦ Post speedup Enabled

Page 19

◦ Numlock Enabled

◦ Restore after AC loss Last state

◦ Post F1 prompt Delayed

• CPU setup

◦ Proc hyper threading Disabled

• IDE configuration

◦ SATA controller mode AHCI

◦ Drive cache Enabled

◦ IDE timeout 35

• Chipset ACPI configuration

◦ High Performance Event timer Enabled

• IPMI serial port configuration

◦ Serial port assignment BMC

◦ Serial port switching Enabled

◦ Serial port connection mode Direct

• LAN configuration

If your node is wired with the LO100i management port shared with NIC2:

◦ BMC NIC Allocation Shared

◦ LAN protocol: HTTP, telnet, ping Enabled

Otherwise, if your node is wired with a dedicated management port for LO100i:

◦ BMC NIC Allocation Dedicated

◦ LAN protocol: HTTP, telnet, ping Enabled

• Remote Access

◦ BIOS Serial console Enabled

◦ EMS console support Enabled

◦ Flow control None

◦ Redirection after BIOS POST Enabled

◦ Serial port 9600 8,n,1

• Boot device priority

◦ Network ( 0500 )

◦ Removable device

◦ Hard Disk

• Enable PXE for the NIC that is connected to the administration network.

Page 20

2.2 Preparing for installation

2.2.1 HP Insight CMU kit delivery

The HP Insight CMU kit is delivered on CD-ROM in the appropriate format for your operating system, so HP Insight CMU files can be installed directly from the CD-ROM to your disk. The Linux versions of HP Insight CMU are in the Red Hat Package Manager (RPM) format.

2.2.2 Preinstallation limitations

• HP Insight CMU monitors only the compute nodes and not the infrastructure of the cluster.

• For cloning Linux images:

◦ HP Insight CMU requires that each partition of the golden image node is at least 50% free. Alternatively, if this condition cannot be satisfied, then the largest partition of the golden node must be less than or equal to the compute node memory size.

◦ HP Insight CMU does not support software RAID or LVM on compute nodes.

◦ HP Insight CMU only clones one disk or logical drive per compute node.

• Limitations for backup and cloning Windows images:

◦ The Windows backup and cloning feature is supported only on specific Moonshot cartridges. No other platforms are supported.

◦ HP Insight CMU can back up and clone only one disk per compute node.

◦ The Windows golden node must be shut down gracefully before attempting a backup operation.

◦ The golden image size (the total size of compressed part-archi*.tar.bz2 files) must

be less than 85% of RAM size on the nodes to be cloned. For example, on nodes with

8GB RAM, the maximum image size available for cloning is approximately 7GB. A

simple Windows install image is usually approximately 3GB when compressed.

NOTE: Windows unattended autoinstall does not have this limitation.

◦ Windows dynamic disks are not supported. Only Windows basic disks are supported.

◦ When multiple (>1) primary and (>1) logical partitions are present in a Windows backup

image, drive letters (e.g. D:, E: ) assigned to the partitions on the cloned nodes are not

consistent with the golden node.

◦ The local “Administrator” account password and desktop are reset on cloned nodes. Any

content placed in the “Administrator” desktop directory is lost after cloning.

◦ Cloned nodes reboot twice after the first disk-boot for host specific customizations.

◦ GPT partition table is not supported.

IMPORTANT: HP Insight CMU does not support RAID arrays created by B110i RAID controllers (e.g. SL4545 G7). Any attempt to back up or clone such RAID arrays will fail.

NOTE: You can partially overcome some of these limitations by using a reconfiguration script

after cloning. For more information about reconfiguration, see “Reconfiguration” (page 63).
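The two size rules in the limitations above can be checked before a backup or cloning operation is started. The following is a minimal sketch of one reading of those rules; the function names, the tuple layout, and the use of GB as the unit are assumptions made for illustration, and HP Insight CMU does not provide these functions.

```python
def linux_image_fits(partitions, node_ram_gb):
    """Check the Linux cloning rule for a golden node.

    partitions is a non-empty list of (size_gb, used_gb) tuples.
    The rule passes if every partition is at least 50% free, or,
    failing that, if the largest partition is no bigger than the
    compute node memory size.
    """
    half_free = all(used <= size / 2 for size, used in partitions)
    largest = max(size for size, _ in partitions)
    return half_free or largest <= node_ram_gb


def windows_image_fits(compressed_image_gb, node_ram_gb):
    """Check that the compressed image is below 85% of node RAM."""
    return compressed_image_gb < 0.85 * node_ram_gb


if __name__ == "__main__":
    # 0.85 * 8 GB = 6.8 GB, which the text rounds to approximately 7GB.
    print(windows_image_fits(3, 8))
    print(linux_image_fits([(100, 60)], 16))
```

The Windows check does not apply to Windows unattended autoinstall, which does not have this limitation.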

Page 21

2.2.3 Operating system support

HP Insight CMU software is generally supported on Red Hat Enterprise Linux (RHEL) 5 and 6, and on SUSE Linux Enterprise Server (SLES) 11.

The HP Insight CMU diskless environment is supported on RHEL5, RHEL6, and SLES11.

Ubuntu 12.x and 13.x are supported on the compute nodes only, on HP Ubuntu certified servers.

Debian is supported on the compute nodes only, but requires active approval and verification from

HP. Contact HP for support.

CentOS and Scientific Linux are supported on the compute nodes and the management nodes,

but require active approval and verification from HP. Contact HP for support.

For details on specific operating systems supported, see the HP Insight CMU release notes for your

version of the product.

Windows 7 SP1 is supported only on HP ProLiant m700 Server cartridges.

Windows Server 2012 and Windows Server 2012 R2 are supported only on HP ProLiant m300

Server cartridges.

2.2.3.1 RHEL 6 support

HP Insight CMU v7.2 supports RHEL6 on the management node and compute nodes. HP Insight

CMU continues to support a mix of operating systems. For example, RHEL6 is not required on the

management node if RHEL6 is installed on your compute nodes. However, you must use HP Insight

CMU v7.2 when RHEL6 is installed anywhere in your HP Insight CMU cluster. As with all HP Insight

CMU releases, all backup images from previous HP Insight CMU versions can be used with v7.2.

HP Smart Array warning with RHEL6 and future Linux releases

If your compute nodes have P212, P410, P410i, P411, P711, P712, P812, or any newer controller offered by HP after April 1, 2011, then running RHEL6+ (or SLES 11SP1 with the optional driver) makes them appear as standard /dev/sd* SCSI devices and not as /dev/cciss/c*d*.

Other controllers such as HP Smart Array P400, P800, and P700m will continue to appear as

/dev/cciss/c*d* with RHEL6+.

Having these particular nodes means that HP Insight CMU users might have to create new logical

groups and declare backup devices as /dev/sd* instead of /dev/cciss/c*d*. For example,

you can clone a RHEL5 image on a P410i-based compute node, then clone it with RHEL6 and HP

Insight CMU will switch from /dev/cciss/c*d* to /dev/sd*.
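The device naming described above can be summarized as a small lookup. The following is a minimal sketch that covers only the controller models named in this section; the function name is hypothetical, the RHEL5 result for the newer controllers is inferred from the example above, and controllers that are not listed return None because this section does not enumerate them.

```python
# Controllers named in the text above. Newer models are not enumerated here.
SD_WITH_RHEL6 = {"P212", "P410", "P410i", "P411", "P711", "P712", "P812"}
CCISS_ALWAYS = {"P400", "P800", "P700m"}


def backup_device_pattern(controller, rhel_major):
    """Return the expected device pattern, or None if the model is unknown."""
    if controller in CCISS_ALWAYS:
        return "/dev/cciss/c*d*"
    if controller in SD_WITH_RHEL6:
        # Assumption: these controllers still appear as cciss before RHEL6.
        return "/dev/sd*" if rhel_major >= 6 else "/dev/cciss/c*d*"
    return None


if __name__ == "__main__":
    print(backup_device_pattern("P410i", 5))
    print(backup_device_pattern("P410i", 6))
```

A result that differs between the golden node and the target operating system indicates that a new logical group with a different backup device might be needed.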

As a result of support for RHEL6, HP Insight CMU v7.2 now supports:

• The ext4 file system

• UUID support in fstabs (replaced at backup by HP Insight CMU)

• dhcpd.conf alternate path support

• SHA512 password support for RHEL6 management nodes

• hpsa/cciss support

2.2.4 HP Insight CMU CD-ROM directory structure

The directory structure of the HP Insight CMU CD-ROM is organized as described in Table 1.

Table 1 Directory structure

| Subdirectory | Contents |
| --- | --- |
| Linux | HP Insight CMU kit for X86_64. CMU-<version>.x86_64.rpm (HP Insight CMU v7.2 for X86_64) |
| ConfigFiles | Examples of configuration files required for the HP Insight CMU installation |
| Tools | Useful tools that can be used in conjunction with HP Insight CMU |
| Documentation | Documentation and release notes |
| Licenses | Contains the following licenses: Apache_LICENSE-2_0.txt, gluegen_LICENSE.txt, jogl_LICENSE.txt. Also contains system-config-netboot-legalnotice.html |

Page 22

2.2.5 HP Insight CMU installation checklist

The following list summarizes the steps needed to install HP Insight CMU on your HPC cluster:

Preparing the management node:

1. For hardware requirements, see “Management node hardware requirements” (page 13).

2. Perform a full installation of your base OS on your management node.

3. Install required rpm files. For details, see “Installation procedures” (page 22).

4. Install Oracle Java version 1.6u33 or later.

5. Install HP Insight CMU rpm.

6. Install HP Insight CMU license.

7. Configure HP Insight CMU.

8. Start HP Insight CMU.

9. Configure HP Insight CMU to start automatically.

Preparing the compute nodes:

For instructions on how to prepare the compute nodes for installation, see “Planning for compute node installation” (page 14).

Preparing the GUI client workstation:

1. Install Java Runtime Environment version 1.6u33 or later.

2. Install cmugui.jar (optional).

3. Configure X Window server (optional).
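The Java requirement in the checklist can be verified from the version banner that the java command reports. The following is a minimal sketch, assuming the legacy banner format (for example, java version "1.6.0_33"); the function names are hypothetical, and the check looks only at the version number, not at the vendor.

```python
import re

MINIMUM = (1, 6, 0, 33)  # Java 1.6 update 33, the minimum named above


def parse_java_version(banner):
    """Extract (major, minor, patch, update) from a version banner."""
    match = re.search(r'version "(\d+)\.(\d+)\.(\d+)(?:_(\d+))?', banner)
    if match is None:
        return None
    major, minor, patch, update = match.groups()
    return (int(major), int(minor), int(patch), int(update or 0))


def java_is_supported(banner):
    """True if the banner reports version 1.6 update 33 or later."""
    version = parse_java_version(banner)
    return version is not None and version >= MINIMUM


if __name__ == "__main__":
    print(java_is_supported('java version "1.6.0_33"'))
```

The banner is printed by java -version on standard error, so a caller has to capture that stream rather than standard output.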

2.2.6 Login privileges

To install HP Insight CMU, you must be logged in as root and have administrator privileges on the

installation node. If relevant for the cluster, you must know the password of the management cards

on the compute nodes.

2.2.7 SELinux and HP Insight CMU

HP recommends disabling SELinux on the management node and on the compute node used to create the image. To disable SELinux in RHEL versions, set SELINUX=disabled in the /etc/sysconfig/selinux file and restart the node. If you must use SELinux with HP Insight CMU, contact support.
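The SELINUX setting can be read back from the configuration file to confirm the change before restarting the node. The following is a minimal sketch that parses the file contents passed in as text; the function name is hypothetical, and the sketch reports only what the file says, not the mode that is currently active on the running system.

```python
def selinux_setting(config_text):
    """Return the value of the last SELINUX= line, or None if absent."""
    value = None
    for line in config_text.splitlines():
        line = line.strip()
        # Skip comments and lines that are not key=value pairs.
        if line.startswith("#") or "=" not in line:
            continue
        key, _, raw = line.partition("=")
        if key.strip() == "SELINUX":
            value = raw.strip()
    return value


if __name__ == "__main__":
    sample = "# comment\nSELINUX=disabled\nSELINUXTYPE=targeted\n"
    print(selinux_setting(sample))
```

On a RHEL node, the text would come from reading /etc/sysconfig/selinux, and the expected result after following the recommendation is disabled.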

2.3 Installation procedures

IMPORTANT: All steps in this section must be performed on the node designated as your HP

Insight CMU management node.

1. Install a base operating system on your HP Insight CMU management node and perform any

configuration steps that are necessary for the node to work within your local environment (for example, configure DNS and set up NTP time synchronization). For details on which operating systems

Page 23

are supported on the HP Insight CMU management node, see “Operating system support” (page 21).

The following rpms must be installed on the HP Insight CMU management node. Any missing

rpms are flagged as dependencies when the HP Insight CMU rpm is installed and must be

installed to continue the installation.

a. expect

b. dhcp

c. tftp client

d. tftp server

e. Oracle Java Runtime Environment, update 33 or newer

f. tcl-8

g. OpenSSL

h. NFS

i. xterm

j. libX11

k. libXau

l. libXdmcp

m. perl-IO-Socket-SSL

n. perl-Net-SSLeay

o. Samba (Required only if you intend to use HP Insight CMU Windows support.)
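Missing packages can be identified before the HP Insight CMU rpm is installed. The following is a minimal sketch that queries the rpm database with rpm -q; the function names are hypothetical, and the REQUIRED list contains only those entries from the list above that are written like package names. The exact package names for the other entries (for example, the tftp client and server, NFS, and Samba) depend on the distribution and are not assumed here.

```python
import subprocess

# Entries from the list above that look like package names. The exact
# names on a given distribution are an assumption.
REQUIRED = ["expect", "dhcp", "xterm", "libX11", "libXau", "libXdmcp",
            "perl-IO-Socket-SSL", "perl-Net-SSLeay"]


def missing_packages(required, is_installed):
    """Return the names for which is_installed(name) is false."""
    return [name for name in required if not is_installed(name)]


def rpm_is_installed(name):
    """True if `rpm -q name` exits with status 0."""
    result = subprocess.run(["rpm", "-q", name],
                            stdout=subprocess.DEVNULL,
                            stderr=subprocess.DEVNULL)
    return result.returncode == 0
```

Calling missing_packages(REQUIRED, rpm_is_installed) on the management node returns the packages that still have to be installed. The call requires the rpm command to be present on the system.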

If you are using firewalls on the HP Insight CMU management node or GUI client workstation,

configure them to enable the following ports:

• On the HP Insight CMU management node:

◦ External network interface

– RMI registry traffic (tcp ports 1099, 49150)

– Webserver port (tcp 80)

– ssh server (tcp 22)

◦ Internal (Admin/Compute) network interface

– Allow all incoming and outgoing traffic. The Admin NIC should be a trusted interface or “Internal Zone”.

• On the GUI client workstation:

◦ X Window export (tcp ports 6000 to 6063)
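After the firewall is configured, the TCP ports listed above for the external interface of the management node can be probed from another host. The following is a minimal sketch that uses plain TCP connection attempts; the function names and the default timeout are hypothetical. A port is reported as open only if a service is listening on it, so run the check after the corresponding services are started.

```python
import socket

# TCP ports listed above for the external interface of the management node.
MANAGEMENT_NODE_PORTS = [1099, 49150, 80, 22]


def port_is_open(host, port, timeout=2.0):
    """True if a TCP connection to host:port succeeds within timeout."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        return sock.connect_ex((host, port)) == 0


def closed_ports(host, ports, timeout=2.0):
    """Return the ports on host that did not accept a connection."""
    return [port for port in ports if not port_is_open(host, port, timeout)]
```

Calling closed_ports with the host name of the management node and MANAGEMENT_NODE_PORTS returns the ports that are still blocked or have no listening service.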

2. Download and install a supported version of Oracle Java. HP Insight CMU depends on Oracle

Java version 1.6 update 33 or later. Only the Java Runtime Environment (JRE) is required. To

download a supported JRE, go to: http://www.oracle.com/technetwork/java/index.html.

3. Install HP Insight CMU.

a. Install the HP Insight CMU rpm key:

# rpm --import /mnt/cmuteam-rpm-key.asc

NOTE: If you do not import the cmuteam-rpm-key, a warning message similar to the following is displayed when you install the HP Insight CMU rpm: