Arria V Hard IP for PCI Express User Guide

101 Innovation Drive

San Jose, CA 95134

www.altera.com

UG-01105-1.5

Document last updated for Altera Complete Design Suite version: 13.1

Document publication date: December 2013

© 2013 Altera Corporation. All rights reserved. ALTERA, ARRIA, CYCLONE, HARDCOPY, MAX, MEGACORE, NIOS, QUARTUS and STRATIX words and logos are trademarks of Altera Corporation and registered in the U.S. Patent and Trademark Office and in other countries. All other words and logos identified as trademarks or service marks are the property of their respective holders as described at www.altera.com/common/legal.html. Altera warrants performance of its semiconductor products to current specifications in accordance with Altera's standard warranty, but reserves the right to make changes to any products and services at any time without notice. Altera assumes no responsibility or liability arising out of the application or use of any information, product, or service described herein except as expressly agreed to in writing by Altera. Altera customers are advised to obtain the latest version of device specifications before relying on any published information and before placing orders for products or services.

ISO 9001:2008 Registered

December 2013 Altera Corporation Arria V Hard IP for PCI Express

User Guide

Contents

Chapter 1. Datasheet

Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1–1

Release Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1–4

Device Family Support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1–4

Configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1–4

Debug Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1–5

IP Core Verification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1–6

Performance and Resource Utilization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1–6

Recommended Speed Grades . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1–7

Chapter 2. Getting Started with the Arria V Hard IP for PCI Express

Getting Started with the Arria V Hard IP for PCI Express . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2–1

MegaWizard Plug-In Manager Design Flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2–3

Customizing the Endpoint in the MegaWizard Plug-In Manager Design Flow . . . . . . . . . . . . . . . . . . 2–3

Understanding the Files Generated . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2–6

Qsys Design Flow. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2–9

Generating the Testbench . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2–10

Understanding the Files Generated . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2–11

Simulating the Example Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2–11

Understanding Channel Placement Guidelines . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2–14

Compiling the Design in the MegaWizard Plug-In Manager Design Flow . . . . . . . . . . . . . . . . . . . 2–15

Compiling the Design in the Qsys Design Flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2–15

Modifying the Example Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2–18

Chapter 3. Getting Started with the Avalon-MM Arria V Hard IP for PCI Express

Running Qsys . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–2

Customizing the Arria V Hard IP for PCI Express IP Core . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–3

Adding the Remaining Components to the Qsys System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–5

Completing the Connections in Qsys . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–8

Specifying Clocks and Interrupts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–9

Specifying Exported Interfaces . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–9

Specifying Address Assignments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–10

Simulating the Example Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–10

Simulating the Single DWord Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–15

Understanding Channel Placement Guidelines . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–15

Adding Synopsys Design Constraints . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–16

Creating a Quartus II Project . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–16

Compiling the Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–17

Programming a Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3–17

Chapter 4. Parameter Settings for the Arria V Hard IP for PCI Express

System Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–1

Port Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–3

Parameters Shared Across All Port Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–3

Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–4

Error Reporting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–5

Link . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–6

Slot . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–6

Power Management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–7

Parameters Defined Separately for All Port Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–7

Base Address Registers for Function <n> . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–8

Base and Limit Registers for Root Port Func <n> . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–8

Device ID Registers for Function <n> . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–9

PCI Express/PCI Capabilities for Func <n> . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4–10

Chapter 5. Parameter Settings for the Avalon-MM Arria V Hard IP for PCI Express

System Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–1

Base Address Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–2

Device Identification Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–3

PCI Express/PCI Capabilities . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–3

Device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–4

Error Reporting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–5

Link . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–5

Power Management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–8

Avalon Memory-Mapped System Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–9

Avalon to PCIe Address Translation Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5–10

Chapter 6. IP Core Architecture

Key Interfaces . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–3

Avalon-ST Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–3

RX Datapath . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–3

TX Datapath . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–3

Clocks and Reset . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–4

Local Management Interface (LMI Interface) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–4

Transceiver Reconfiguration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–4

Interrupts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–5

PIPE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–5

Protocol Layers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–5

Transaction Layer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–5

Configuration Space . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–7

Data Link Layer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–7

Physical Layer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–9

Multi-Function Support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–12

PCI Express Avalon-MM Bridge . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–12

Avalon-MM Bridge TLPs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–14

Avalon-MM-to-PCI Express Write Requests . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–14

Avalon-MM-to-PCI Express Upstream Read Requests . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–15

PCI Express-to-Avalon-MM Read Completions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–15

PCI Express-to-Avalon-MM Downstream Write Requests . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–15

PCI Express-to-Avalon-MM Downstream Read Requests . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–16

Avalon-MM-to-PCI Express Read Completions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–16

PCI Express-to-Avalon-MM Address Translation for Endpoints . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–16

Minimizing BAR Sizes and the PCIe Address Space . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–18

Avalon-MM-to-PCI Express Address Translation Algorithm . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–20

Single DWord Completer Endpoint . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–22

RX Block . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–23

Avalon-MM RX Master Block . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–23

TX Block . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–24

Interrupt Handler Block . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6–24

Chapter 7. IP Core Interfaces

Arria V Hard IP for PCI Express . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–3

Avalon-ST Packets to PCI Express TLPs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–4

Avalon-ST RX Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–5

Data Alignment and Timing for the 64-Bit Avalon-ST RX Interface . . . . . . . . . . . . . . . . . . . . . . . . 7–8

Data Alignment and Timing for the 128-Bit Avalon-ST RX Interface . . . . . . . . . . . . . . . . . . . . . . 7–11

Avalon-ST TX Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–15

Data Alignment and Timing for the 64-Bit Avalon-ST TX Interface . . . . . . . . . . . . . . . . . . . . . . . 7–18

Data Alignment and Timing for the 128-Bit Avalon-ST TX Interface . . . . . . . . . . . . . . . . . . . . . . 7–20

Root Port Mode Configuration Requests . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–22

ECRC Forwarding . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–23

Clock Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–23

Reset Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–24

ECC Error Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–27

Interrupts for Endpoints . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–27

Interrupts for Root Ports . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–28

Completion Side Band Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–28

Transaction Layer Configuration Space Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–30

Configuration Space Register Access Timing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–33

Configuration Space Register Access . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–34

LMI Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–38

LMI Read Operation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–39

LMI Write Operation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–39

Power Management Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–40

Avalon-MM Hard IP for PCI Express . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–42

32-Bit Non-Bursting Avalon-MM Control Register Access (CRA) Slave Signals . . . . . . . . . . . . . . . 7–44

RX Avalon-MM Master Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–45

64- or 128-Bit Bursting TX Avalon-MM Slave Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–45

Physical Layer Interface Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–46

Transceiver Reconfiguration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–47

Serial Interface Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–47

PIPE Interface Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–51

Test Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7–54

Chapter 8. Register Descriptions

Configuration Space Register Content . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8–1

Altera-Defined Vendor Specific Extended Capability (VSEC) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8–5

PCI Express Avalon-MM Bridge Control Register Access Content . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8–10

Avalon-MM to PCI Express Interrupt Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8–12

PCI Express Mailbox Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8–13

Avalon-MM-to-PCI Express Address Translation Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8–14

Root Port TLP Data Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8–16

Programming Model for Avalon-MM Root Port . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8–17

Sending a TLP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8–19

Receiving a Completion TLP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8–19

PCI Express to Avalon-MM Interrupt Status and Enable Registers for Root Ports . . . . . . . . . . . . . 8–19

PCI Express to Avalon-MM Interrupt Status and Enable Registers for Endpoints . . . . . . . . . . . . . 8–20

Avalon-MM Mailbox Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8–21

Correspondence between Configuration Space Registers and the PCIe Spec 2.1 . . . . . . . . . . . . . . . . . 8–22

Chapter 9. Reset and Clocks

Reset . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9–1

Clocks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9–4

pclk . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9–5

coreclkout_hip . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9–6

pld_clk . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9–6

Transceiver Clock Signals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9–7

Chapter 10. Transaction Layer Protocol (TLP) Details

Supported Message Types . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10–1

Transaction Layer Routing Rules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10–3

Receive Buffer Reordering . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10–4

Chapter 11. Interrupts

Interrupts for Endpoints Using the Avalon-ST Application Interface . . . . . . . . . . . . . . . . . . . . . . . . . . 11–1

MSI Interrupts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11–1

MSI-X . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11–3

Legacy Interrupts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11–4

Interrupts for Root Ports Using the Avalon-ST Interface to the Application Layer . . . . . . . . . . . . . . . 11–4

Interrupts for Endpoints Using the Avalon-MM Interface to the Application Layer . . . . . . . . . . . . . . 11–5

Enabling MSI or Legacy Interrupts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11–7

Generation of Avalon-MM Interrupts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11–7

Interrupts for Endpoints Using the Avalon-MM Interface with Multiple MSI/MSI-X Support . . . . 11–7

Chapter 12. Optional Features

Configuration via Protocol (CvP) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12–1

ECRC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12–2

ECRC on the RX Path . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12–2

ECRC on the TX Path . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12–3

Lane Initialization and Reversal . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12–4

Chapter 13. Flow Control

Throughput of Posted Writes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13–1

Throughput of Non-Posted Reads . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13–3

Chapter 14. Error Handling

Physical Layer Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14–2

Data Link Layer Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14–2

Transaction Layer Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14–3

Error Reporting and Data Poisoning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14–5

Uncorrectable and Correctable Error Status Bits . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14–6

Chapter 15. Transceiver PHY IP Reconfiguration

Chapter 16. SDC Timing Constraints

SDC Constraints for the Hard IP for PCIe . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16–1

SDC Constraints for the Example Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16–2

Chapter 17. Testbench and Design Example

Endpoint Testbench . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–2

Root Port Testbench . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–4

Chaining DMA Design Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–4

Design Example BAR/Address Map . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–9

Chaining DMA Control and Status Registers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–10

Chaining DMA Descriptor Tables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–12

Test Driver Module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–14

DMA Write Cycles . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–15

DMA Read Cycles . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–17

Root Port Design Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–18

Root Port BFM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–20

BFM Memory Map . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–22

Configuration Space Bus and Device Numbering . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–22

Configuration of Root Port and Endpoint . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–22

Issuing Read and Write Transactions to the Application Layer . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–27

BFM Procedures and Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–28

BFM Read and Write Procedures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–28

ebfm_barwr Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–28

ebfm_barwr_imm Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–29

ebfm_barrd_wait Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–30

ebfm_barrd_nowt Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–30

ebfm_cfgwr_imm_wait Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–31

ebfm_cfgwr_imm_nowt Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–32

ebfm_cfgrd_wait Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–33

ebfm_cfgrd_nowt Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–33

BFM Configuration Procedures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–34

ebfm_cfg_rp_ep Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–34

ebfm_cfg_decode_bar Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–35

BFM Shared Memory Access Procedures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–35

Shared Memory Constants . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–35

shmem_write . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–36

shmem_read Function . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–36

shmem_display Verilog HDL Function . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–36

shmem_fill Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–37

shmem_chk_ok Function . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–37

BFM Log and Message Procedures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–37

ebfm_display Verilog HDL Function . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–39

ebfm_log_stop_sim Verilog HDL Function . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–39

ebfm_log_set_suppressed_msg_mask Verilog HDL Function . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–39

ebfm_log_set_stop_on_msg_mask Verilog HDL Function . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–40

ebfm_log_open Verilog HDL Function . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–40

ebfm_log_close Verilog HDL Function . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–40

Verilog HDL Formatting Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–40

himage1 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–41

himage2 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–41

himage4 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–41

himage8 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–41

himage16 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–42

dimage1 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–42

dimage2 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–42

dimage3 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–43

dimage4 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–43

dimage5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–43

dimage6 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–43

dimage7 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–44

Procedures and Functions Specific to the Chaining DMA Design Example . . . . . . . . . . . . . . . . . . 17–44

chained_dma_test Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–44

dma_rd_test Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–45

dma_wr_test Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–45

dma_set_rd_desc_data Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–45


dma_set_wr_desc_data Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–45

dma_set_header Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–46

rc_mempoll Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–46

msi_poll Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–47

dma_set_msi Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–47

find_mem_bar Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–48

dma_set_rclast Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–48

ebfm_display_verb Procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17–48

Chapter 18. Debugging

Hardware Bring-Up Issues . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18–1

Link Training . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18–1

Link Hangs in L0 Due To Deassertion of tx_st_ready . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18–4

Recommended Reset Sequence to Avoid Link Training Issues . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18–6

Setting Up Simulation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18–6

Use the PIPE Interface for Gen1 and Gen2 Variants . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18–6

Reduce Counter Values for Serial Simulations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18–7

Disable the Scrambler for Gen3 Simulations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18–7

Change between the Hard and Soft Reset Controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18–7

Using the PIPE Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18–7

Use Third-Party PCIe Analyzer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18–10

BIOS Enumeration Issues . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18–10

TLP Packet Format without Data Payload . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A–i

TLP Packet Format with Data Payload . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A–iii

Additional Information

Revision History . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Info–i

How to Contact Altera . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Info–iii

Typographic Conventions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Info–iv


December 2013

UG-01110-1.5

1. Datasheet

This document describes the Altera® Arria® V Hard IP for PCI Express®. PCI Express

is a high-performance interconnect protocol for use in a variety of applications

including network adapters, storage area networks, embedded controllers, graphic

accelerator boards, and audio-video products. The PCI Express protocol is software

backwards-compatible with the earlier PCI and PCI-X protocols, but is significantly

different from its predecessors. It is a packet-based, serial, point-to-point interconnect

between two devices. The performance is scalable based on the number of lanes and

the generation that is implemented. Altera offers a configurable hard IP block in Arria

V devices for both Endpoints and Root Ports that complies with the PCI Express Base

Specification 2.1. Using a configurable hard IP block, rather than programmable logic,

saves significant FPGA resources. The hard IP block is available in ×1, ×2, ×4, and ×8

configurations. Table 1–1 shows the aggregate bandwidth of a PCI Express link for the

available configurations. The protocol specifies 2.5 giga-transfers per second for Gen1

and 5 giga-transfers per second for Gen2. Table 1–1 provides bandwidths for a single

transmit (TX) or receive (RX) channel, so the numbers double for duplex

operation. Because the PCI Express protocol uses 8B/10B encoding, there is a 20%

encoding overhead, which is included in the figures in Table 1–1.

Table 1–1. PCI Express Throughput

| Link Width | ×1 | ×2 | ×4 | ×8 |
|---|---|---|---|---|
| PCI Express Gen1 Gbps (2.5 Gbps) | 2.5 | 5 | 10 | 20 |
| PCI Express Gen2 Gbps (5.0 Gbps) | 5 | 10 | 20 | — |
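The arithmetic behind Table 1–1 can be sketched in a few lines of Python. This is an illustrative sketch only, not part of the IP deliverables. The names `LINE_RATE_GTPS`, `raw_gbps`, and `payload_gbps` are hypothetical. It assumes that the table figures are raw line rates (lane rate multiplied by lane count) and that 8B/10B encoding leaves 8 of every 10 transmitted bits for data.

```python
# Per-lane line rates stated in the text: 2.5 GT/s for Gen1, 5 GT/s for Gen2.
LINE_RATE_GTPS = {"Gen1": 2.5, "Gen2": 5.0}

# 8B/10B encoding carries 8 data bits in every 10 transmitted bits.
ENCODING_EFFICIENCY = 8 / 10


def raw_gbps(generation: str, lanes: int) -> float:
    """Aggregate one-direction line rate, encoding overhead included."""
    return LINE_RATE_GTPS[generation] * lanes


def payload_gbps(generation: str, lanes: int) -> float:
    """One-direction rate left for data after removing the 8B/10B overhead."""
    return raw_gbps(generation, lanes) * ENCODING_EFFICIENCY


if __name__ == "__main__":
    for generation, lanes in (("Gen1", 8), ("Gen2", 4)):
        print(generation, f"x{lanes}",
              "raw:", raw_gbps(generation, lanes),
              "after 8B/10B:", payload_gbps(generation, lanes))
```

The sketch covers the encoding overhead only. Packet headers, flow control, and other protocol overhead reduce the achievable throughput further and are not modeled here.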

f Refer to the PCI Express High Performance Reference Design for more information about

calculating bandwidth for the hard IP implementation of PCI Express in many Altera

FPGAs.

Features

The Arria V Hard IP for PCI Express supports the following key features:

■ Complete protocol stack including the Transaction, Data Link, and Physical Layers

is hardened in the device.

■ Multi-function support for up to eight Endpoint functions.

■ Support for ×1, ×2, ×4, and ×8 Gen1 and Gen2 configurations for Root Ports and

Endpoints.

■ Dedicated 6 KByte receive buffer

■ Dedicated hard reset controller

■ MegaWizard Plug-In Manager and Qsys support using the Avalon® Streaming

(Avalon-ST) with a 64- or 128-bit interface to the Application Layer.

■ Qsys support using the Avalon Memory-Mapped (Avalon-MM) with a 64- or

128-bit interface to the Application Layer.

■ Extended credit allocation settings to better optimize the RX buffer space based on

application type.

■ Qsys example designs demonstrating parameterization, design modules and

connectivity.

■ Optional end-to-end cyclic redundancy code (ECRC) generation and checking and

advanced error reporting (AER) for high reliability applications.

■ Easy to use:

■ Easy parameterization.

■ Substantial on-chip resource savings and guaranteed timing closure.

■ Easy adoption with no license requirement.

■ New features in the 13.1 release

■ Added support for Gen2 Configuration via Protocol (CvP) using an .ini file.

Contact your sales representative for more information.

The Arria V Hard IP for PCI Express offers different features for the variants that use

the Avalon-ST interface to the Application Layer and the variants that use an

Avalon-MM interface to the Application Layer. Variants using the Avalon-ST interface

are available in both the MegaWizard Plug-In Manager and the Qsys design flows.

Variants using the Avalon-MM interface are only available in the Qsys design flow.

Variants using the Avalon-ST interfaces offer a richer feature set; however, if you are

not familiar with the PCI Express protocol, variants using the Avalon-MM interface

may be easier to understand. A PCI Express to Avalon-MM bridge translates the PCI

Express read, write and completion TLPs into standard Avalon-MM read and write

commands typically used by master and slave interfaces. Table 1–2 outlines these

differences in features between variants with Avalon-ST and Avalon-MM interfaces to

the Application Layer.

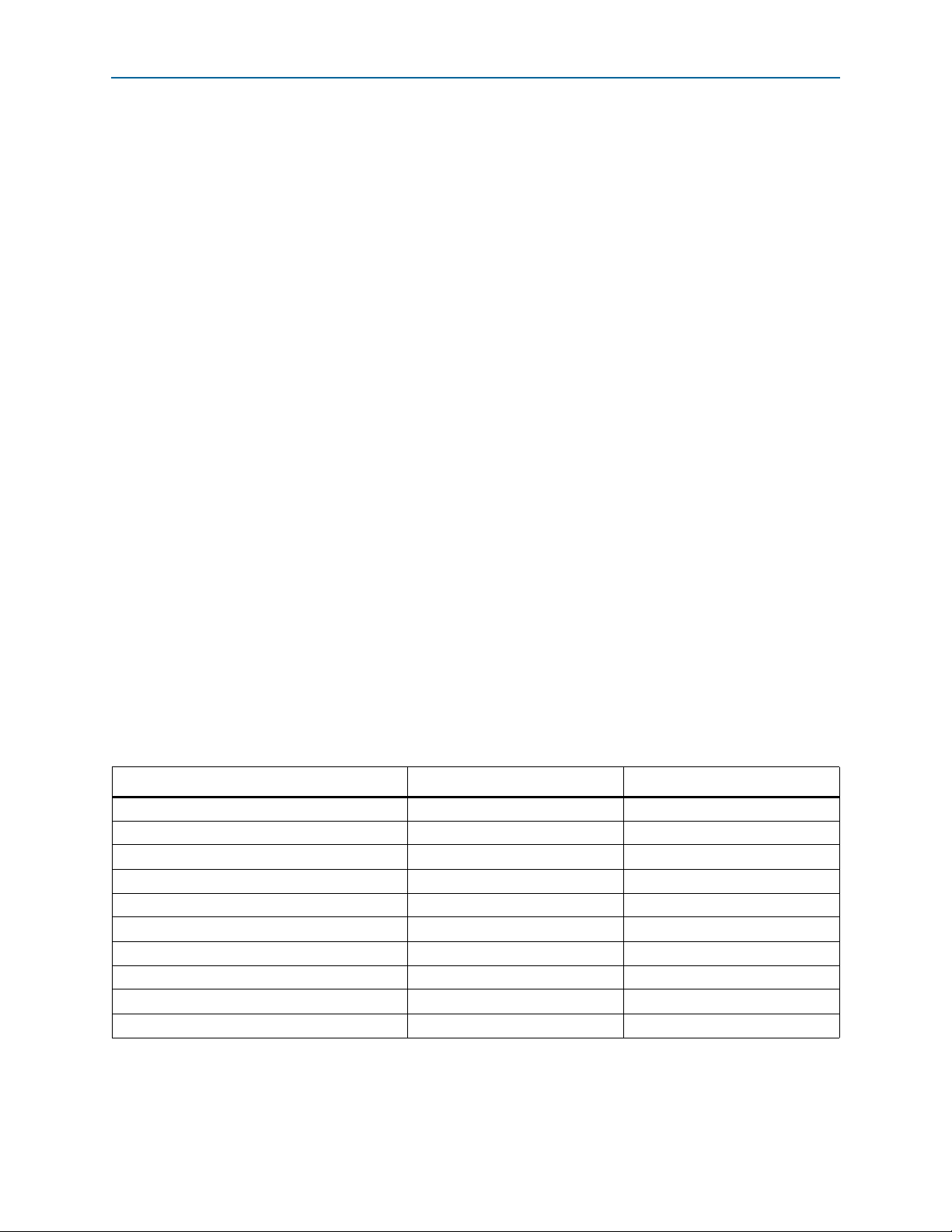

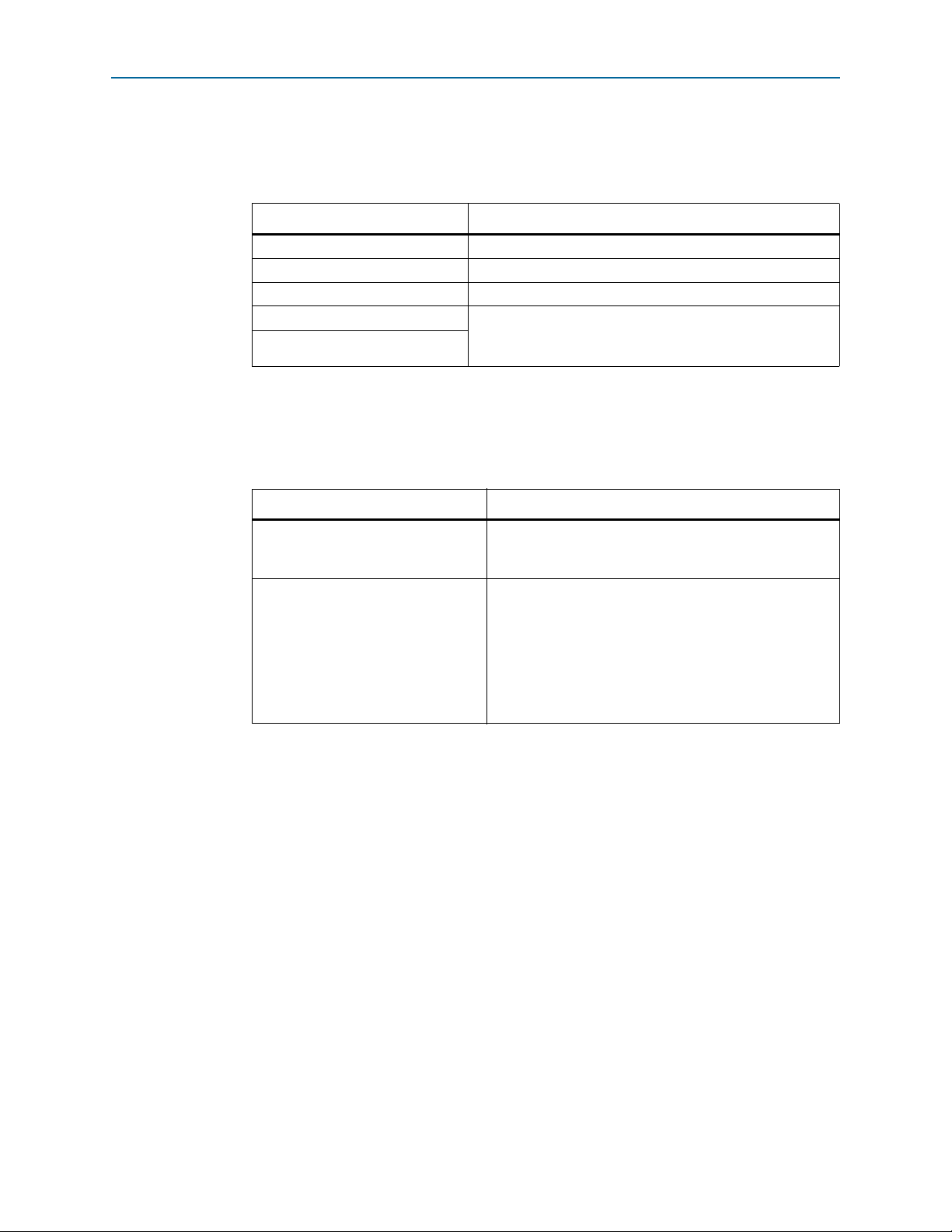

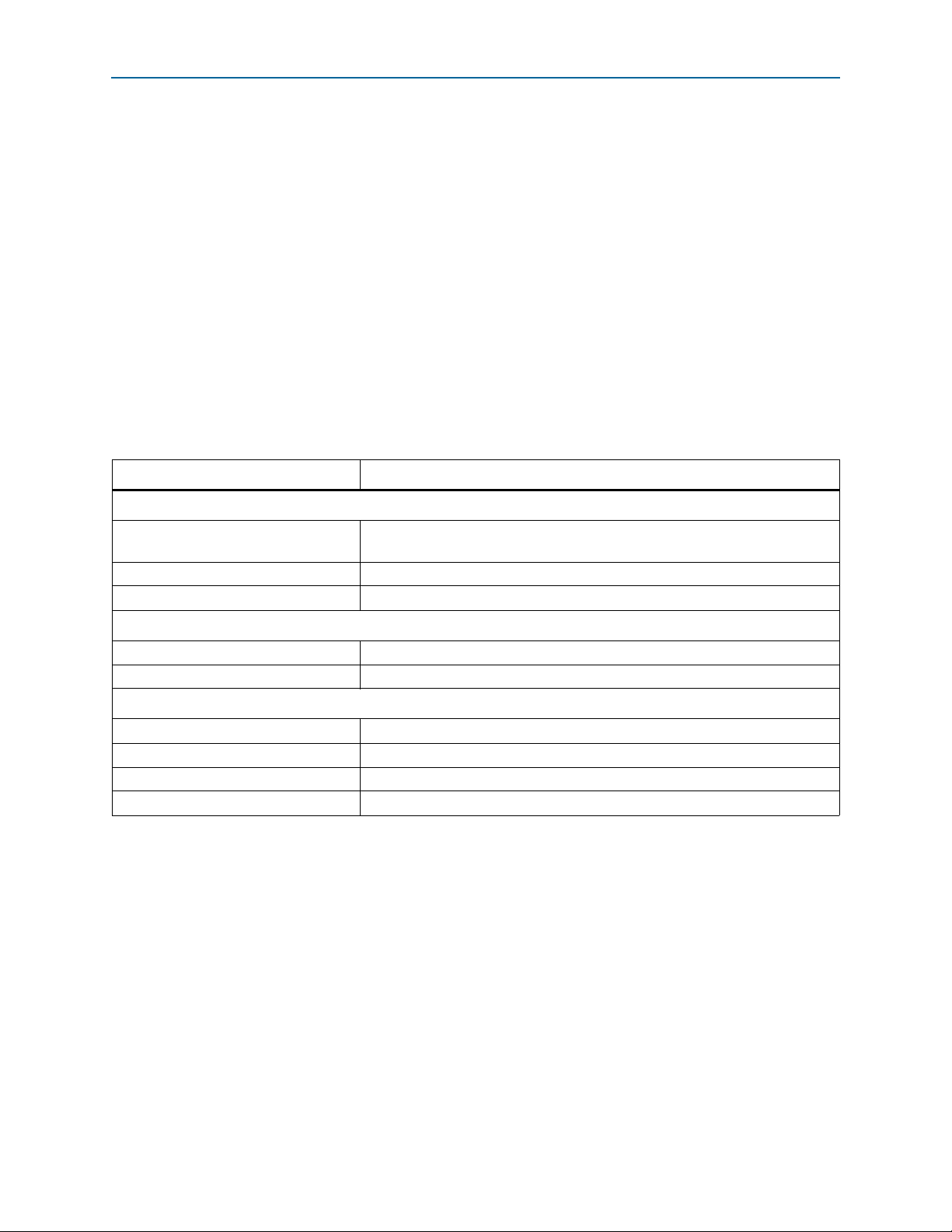

Table 1–2. Differences in Features Available Using the Avalon-MM and Avalon-ST Interfaces

| Feature | Avalon-ST Interface | Avalon-MM Interface |
|---|---|---|
| MegaCore License | Free | Free |
| Native Endpoint | Supported | Supported |
| Legacy Endpoint (1) | Supported | Not Supported |
| Root port | Supported | Supported |
| Gen1 | ×1, ×2, ×4, and ×8 | ×1, ×4, and ×8 (2) |
| Gen2 | ×1, ×2, ×4 | ×1, ×4 (2) |
| MegaWizard Plug-In Manager design flow | Supported | Not supported |
| Qsys design flow | Supported | Supported |
| 64-bit Application Layer interface | Supported | Supported |
| 128-bit Application Layer interface | Supported | Supported |
| Transaction Layer Packet Types (TLP) (3) | Memory Read Request; Memory Read Request-Locked; Memory Write Request; I/O Read Request; I/O Write Request; Configuration Read Request (Root Port); Configuration Write Request (Root Port); Message Request; Message Request with Data Payload; Completion without Data; Completion with Data; Completion for Locked Read without Data | Memory Read Request; Memory Write Request; Configuration Read Request (Root Port); Configuration Write Request (Root Port); Message Request; Message Request with Data Payload; Completion without Data; Completion with Data; Memory Read Request (single dword); Memory Write Request (single dword) |
| Maximum payload size | 128–512 bytes | 128–256 bytes |
| Number of tags supported for non-posted requests | 32 or 64 | 8 |
| 62.5 MHz clock | Supported | Supported |
| Multi-function | Supports up to 8 functions | Supports single function only |
| Polarity inversion of PIPE interface signals | Supported | Supported |
| ECRC forwarding on RX and TX | Supported | Not supported |
| Expansion ROM | Supported | Not supported |
| Number of MSI requests | 16 | 1, 2, 4, 8, or 16 |
| MSI-X | Supported | Supported |
| Multiple MSI, MSI-X, and INTx | Not Supported | Supported |
| Legacy interrupts | Supported | Supported |

Notes to Table 1–2:

(1) Not recommended for new designs.

(2) ×2 is supported by down training from ×4 or ×8 lanes.

(3) Refer to Appendix A, Transaction Layer Packet (TLP) Header Formats for the layout of TLP headers.

f The purpose of the Arria V Hard IP for PCI Express User Guide is to explain how to use

the Arria V Hard IP for PCI Express and not to explain the PCI Express protocol.

Although there is inevitable overlap between these two purposes, this document

should be used in conjunction with an understanding of the following PCI Express

specifications: PHY Interface for the PCI Express Architecture PCI Express 2.0 and PCI

Express Base Specification 2.1.


Release Information

Table 1–3 provides information about this release of the PCI Express Compiler.

Table 1–3. PCI Express Compiler Release Information

| Item | Description |
|---|---|
| Version | 13.1 |
| Release Date | December 2013 |
| Ordering Codes | No ordering code is required |
| Product IDs / Vendor ID | There are no encrypted files for the Arria V Hard IP for PCI Express. The Product ID and Vendor ID are not required because this IP core does not require a license. |

Device Family Support

Table 1–4 shows the level of support offered by the Arria V Hard IP for PCI Express.

Table 1–4. Device Family Support

| Device Family | Support |
|---|---|
| Arria V | Final. The IP core is verified with final timing models. The IP core meets all functional and timing requirements for the device family and can be used in production designs. |
| Other device families | Refer to the following user guides for other device families: IP Compiler for PCI Express User Guide; Arria V GZ Hard IP for PCI Express User Guide; Cyclone V Hard IP for PCI Express User Guide; Stratix V Hard IP for PCI Express User Guide; Arria 10 Hard IP for PCI Express User Guide |

Configurations

The Arria V Hard IP for PCI Express includes a full hard IP implementation of the

PCI Express stack including the following layers:

■ Physical (PHY)

■ Physical Media Attachment (PMA)

■ Physical Coding Sublayer (PCS)

■ Media Access Control (MAC)

■ Data Link Layer (DL)

■ Transaction Layer (TL)

Optimized for Altera devices, the Arria V Hard IP for PCI Express supports all

memory, I/O, configuration, and message transactions. It has a highly optimized

Application Layer interface to achieve maximum effective throughput. You can

customize the Hard IP to meet your design requirements using either the

MegaWizard Plug-In Manager or the Qsys design flow.


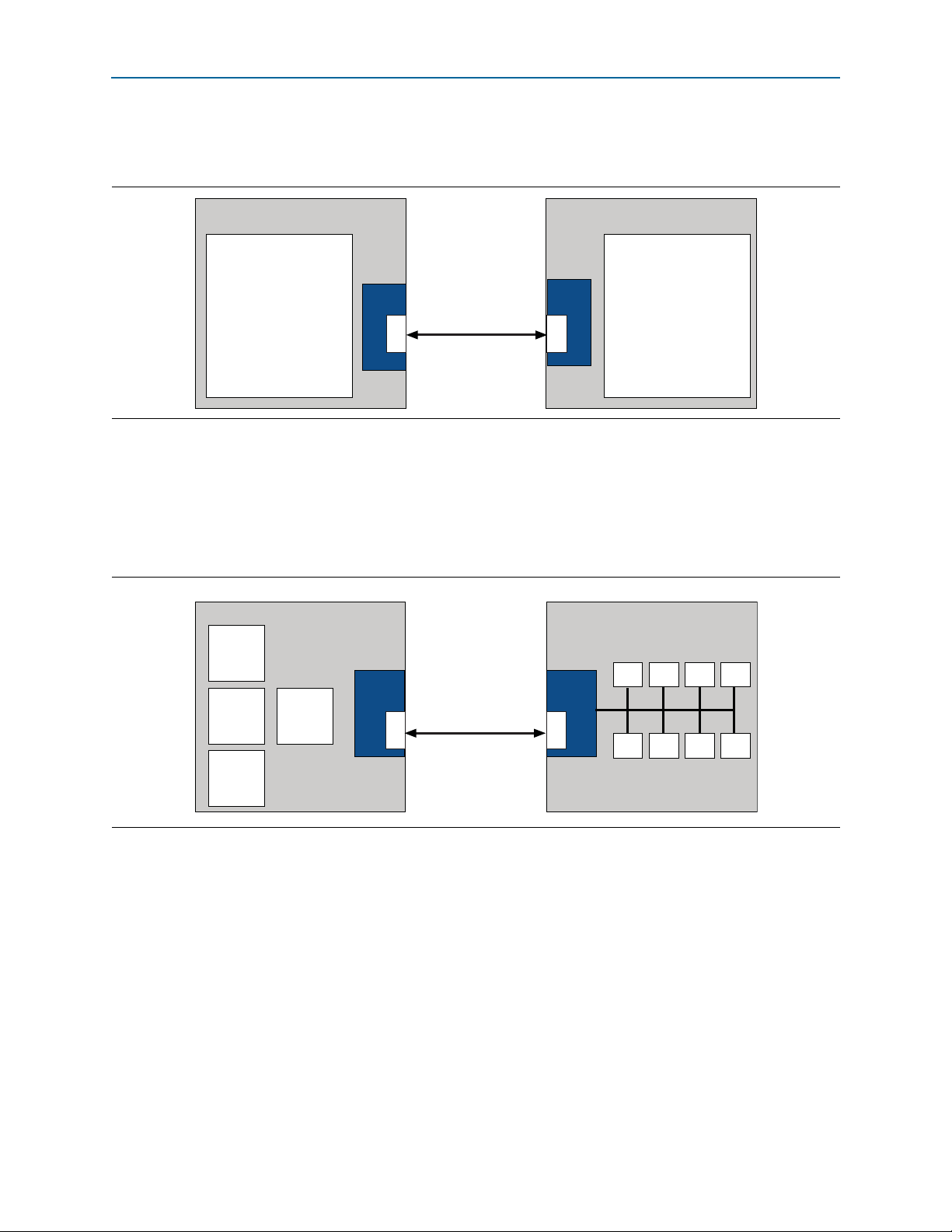

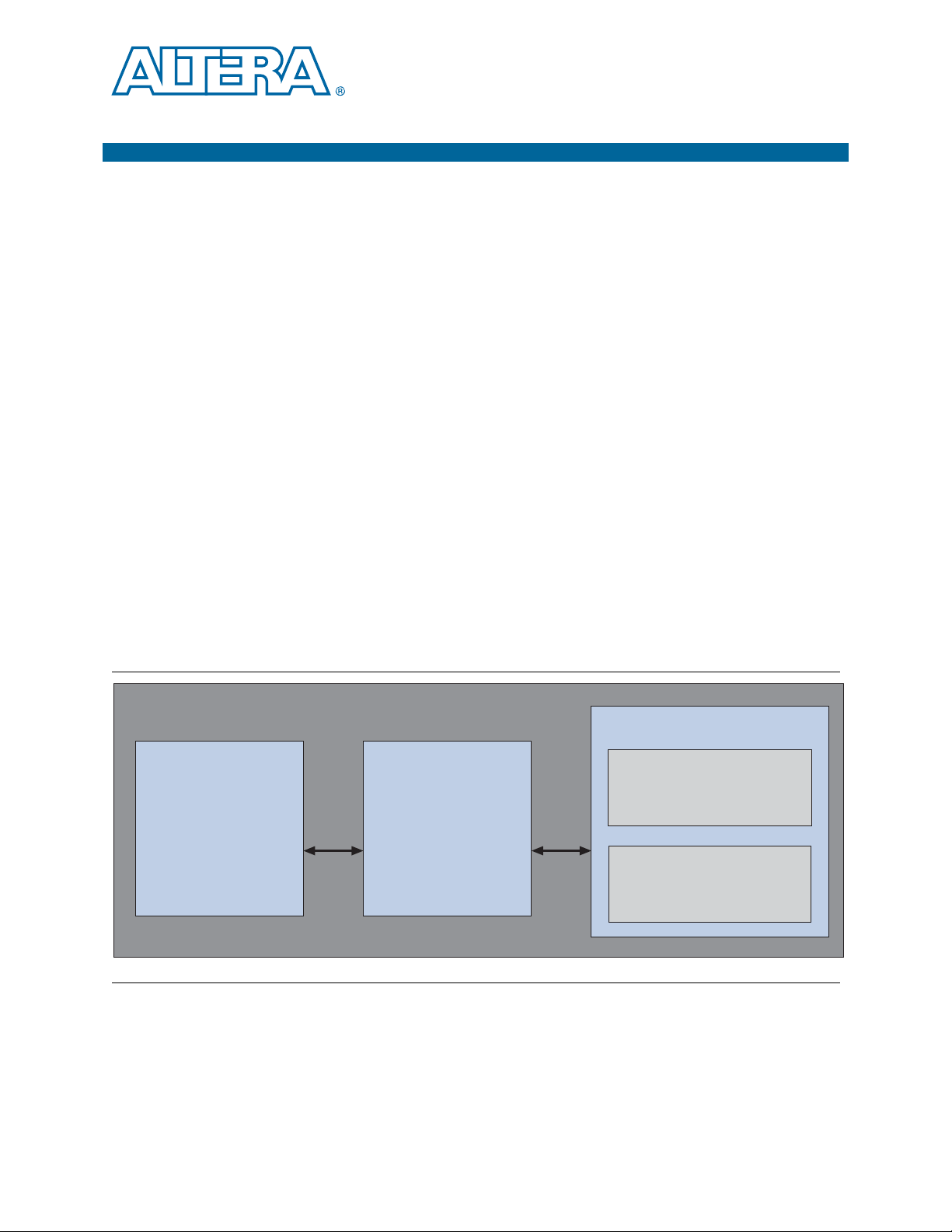

Figure 1–1 shows a PCI Express link between two Arria V FPGAs. One is configured

as a Root Port and the other as an Endpoint.

Figure 1–1. PCI Express Application with a Single Root Port and Endpoint

Figure 1–2 shows a PCI Express link between two Altera FPGAs. One is configured as

a Root Port and the other as a multi-function Endpoint. The FPGA serves as a custom

I/O hub for the host CPU. In the Arria V FPGA, each peripheral is treated as a

function with its own set of Configuration Space registers. Eight multiplexed

functions operate using a single PCI Express link.

Figure 1–2. PCI Express Application with an Endpoint Using the Multi-Function Capability

Debug Features

The Arria V Hard IP for PCI Express includes debug features that allow observation

and control of the Hard IP for faster debugging of system-level problems. For more

information about debugging, refer to Chapter 18, Debugging.


IP Core Verification

To ensure compliance with the PCI Express specification, Altera performs extensive

validation of the Arria V Hard IP Core for PCI Express. The Gen1 ×8 and Gen2 ×4

Endpoints were certified PCI Express compliant at PCI-SIG Compliance Workshop

#79 in February 2012.

The simulation environment uses multiple testbenches that consist of

industry-standard BFMs driving the PCI Express link interface. A custom BFM

connects to the application-side interface.

Altera performs the following tests in the simulation environment:

■ Directed and pseudo random stimuli are applied to test the Application

Layer interface, Configuration Space, and all types and sizes of TLPs.

■ Error injection tests that inject errors in the link, TLPs, and Data Link Layer

Packets (DLLPs), and check for the proper responses

■ PCI-SIG® Compliance Checklist tests that specifically test the items in the checklist

■ Random tests that test a wide range of traffic patterns

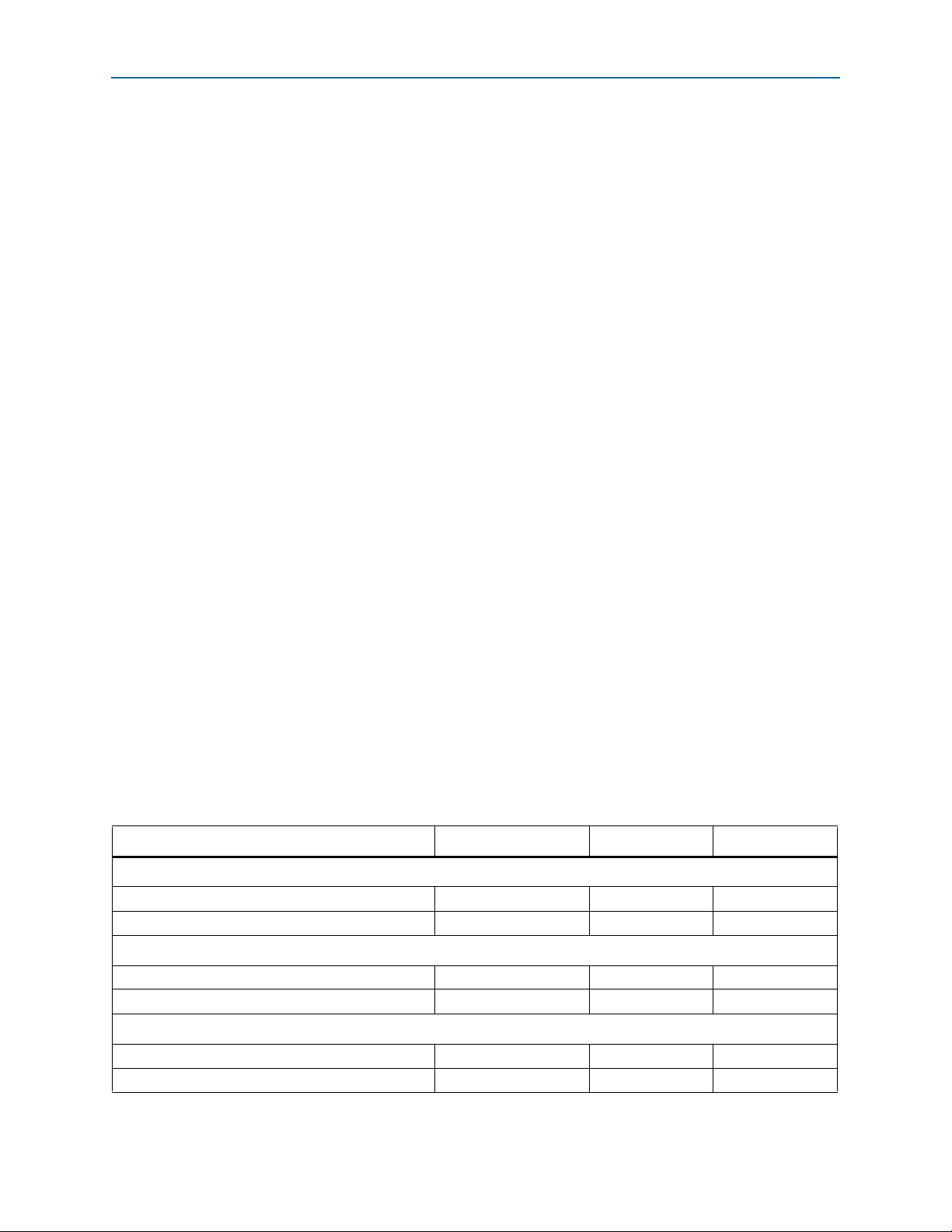

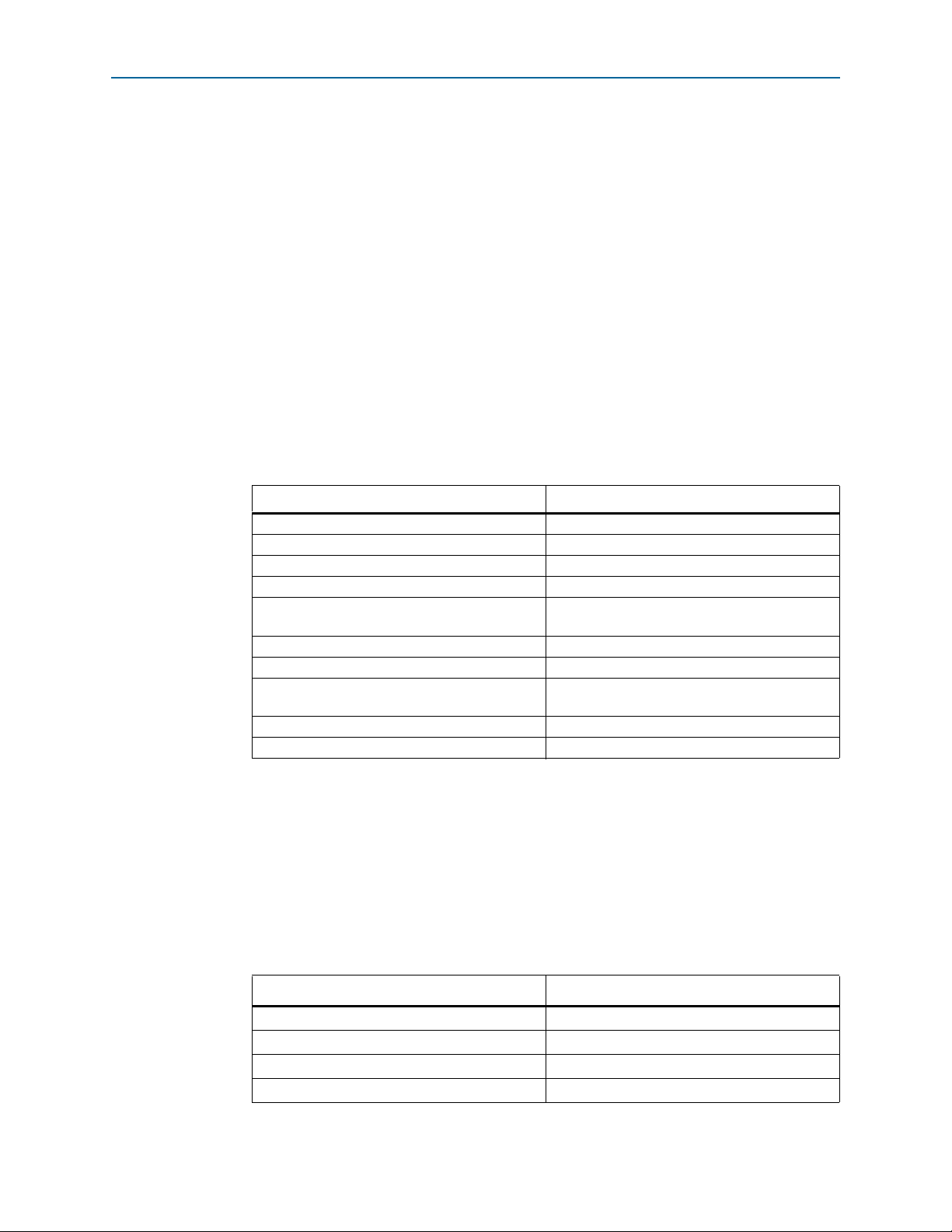

Performance and Resource Utilization

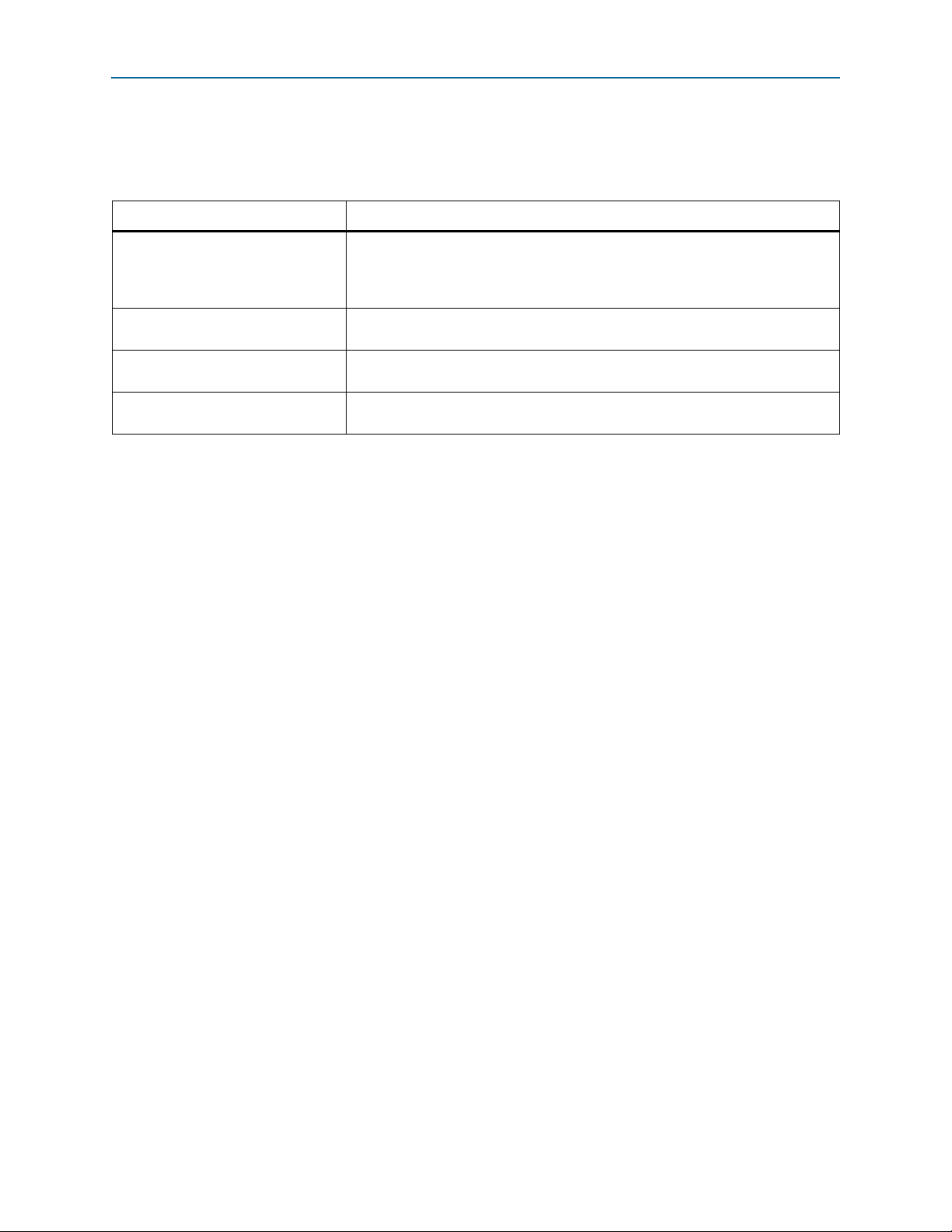

Because the Arria V Hard IP for PCI Express IP core is implemented in hardened

logic, it uses less than 1% of Arria V resources. The Avalon-MM Arria V Hard IP for

PCI Express includes a bridge implemented in soft logic. Table 1–5 shows the typical

expected device resource utilization for selected configurations of the Avalon-MM

Arria V Hard IP for PCI Express using the current version of the Quartus II software

targeting an Arria V (5AGXFB3H6F35C6ES) device. With the exception of M10K

memory blocks, the numbers of ALMs and logic registers in Table 1–5 are rounded up

to the nearest 100. Resource utilization numbers reflect changes to the resource

utilization reporting starting in the Quartus II software v12.1 release for 28 nm device

families and upcoming device families.

f For information about Quartus II resource utilization reporting, refer to Fitter

Resources Reports in the Quartus II Help.

Table 1–5. Performance and Resource Utilization

| Variant | Data Rate or Interface Width | ALMs | Memory M10K | Logic Registers |
|---|---|---|---|---|
| Avalon-MM Bridge | Gen1 ×4 | 1250 | 27 | 1700 |
| Avalon-MM Bridge | Gen2 ×8 | 2100 | 35 | 3050 |
| Avalon-MM Interface–Burst Capable Requester/Single DWord Completer | 64 | 1150 | 23 | 1700 |
| Avalon-MM Interface–Burst Capable Requester/Single DWord Completer | 128 | 1600 | 29 | 2550 |
| Avalon-MM Interface–Burst Capable Completer Only | 64 | 600 | 11 | 900 |
| Avalon-MM Interface–Burst Capable Completer Only | 128 | 1350 | 22 | 2300 |
| Avalon-MM Interface–Completer Only | 64 | 160 | 0 | 230 |

Soft calibration of the transceiver module requires additional logic. The amount of

logic required depends upon the configuration.

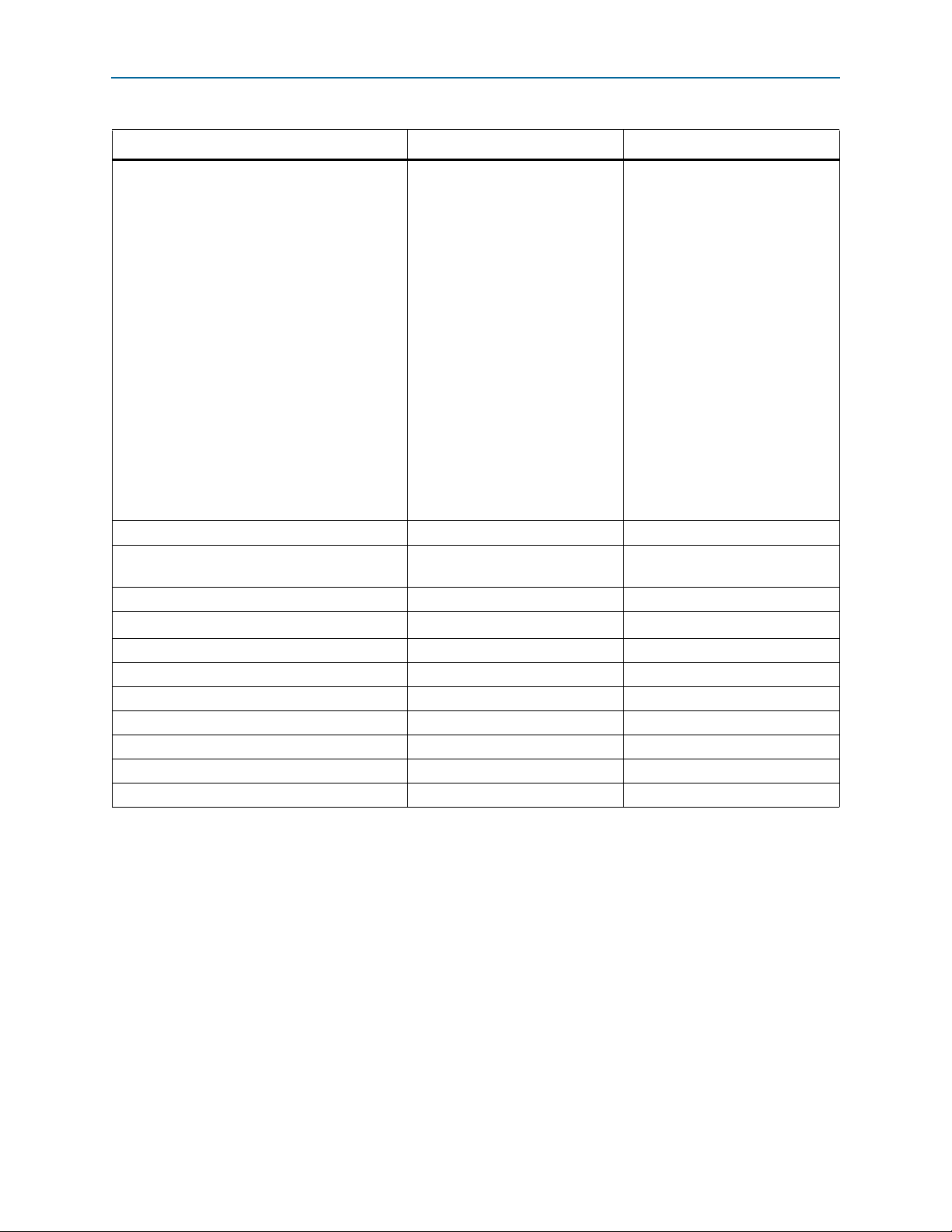

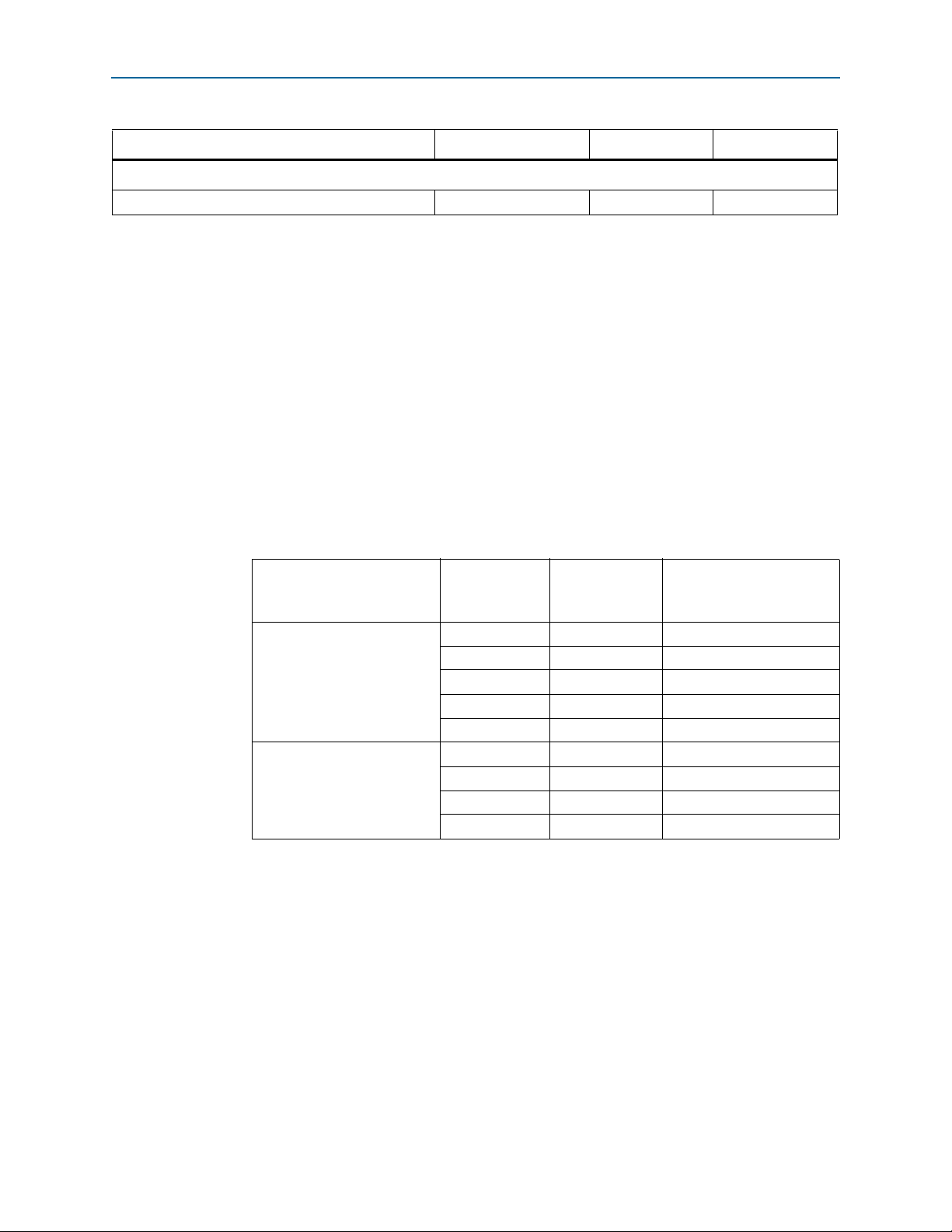

Recommended Speed Grades

Table 1–6 lists the recommended speed grades for the supported link widths and

Application Layer clock frequencies. The speed grades listed are the only speed

grades that close timing. Altera recommends setting the Quartus II Analysis &

Synthesis Settings Optimization Technique to Speed.

h For information about optimizing synthesis, refer to Setting Up and Running Analysis

and Synthesis in Quartus II Help.

For more information about how to apply the Optimization Technique settings, refer

to Area and Timing Optimization in volume 2 of the Quartus II Handbook.

Table 1–6. Device Family Link Width Application Frequency Recommended Speed Grades

| Link Speed | Link Width | Application Clock Frequency (MHz) | Recommended Speed Grades (2) |
|---|---|---|---|
| Gen1–2.5 Gbps | ×1 | 62.5 (1) | –4, –5, –6 |
| Gen1–2.5 Gbps | ×1 | 125 | –4, –5, –6 |
| Gen1–2.5 Gbps | ×2 | 125 | –4, –5, –6 |
| Gen1–2.5 Gbps | ×4 | 125 | –4, –5, –6 |
| Gen1–2.5 Gbps | ×8 | 125 | –4, –5, –6 |
| Gen2–5.0 Gbps | ×1 | 62.5 (1) | –4, –5 |
| Gen2–5.0 Gbps | ×1 | 125 | –4, –5 |
| Gen2–5.0 Gbps | ×2 | 125 | –4, –5 |
| Gen2–5.0 Gbps | ×4 | 125 | –4, –5 |

Notes to Table 1–6:

(1) This is a power-saving mode of operation.

(2) Final results pending characterization by Altera. Refer to the fit.rpt file generated by the Quartus II software.

f For details on installation, refer to the Altera Software Installation and Licensing Manual.


2. Getting Started with the Arria Hard IP for PCI Express

This section provides step-by-step instructions to help you quickly customize,

simulate, and compile the Arria Hard IP for PCI Express using either the

MegaWizard Plug-In Manager or Qsys design flow. When you install the Quartus II

software, you also install the IP Library. This installation includes design examples for

the Hard IP for PCI Express in the

<install_dir>/ip/altera/altera_pcie/altera_pcie_hip_ast_ed/example_design/<device>

directory.

1 If you have an existing Arria design created in version 12.1 or earlier, you must

regenerate it in 13.1 before compiling with the 13.1 version of the Quartus II software.

After you install the Quartus II software for 13.1, you can copy the design examples

from the <install_dir>/ip/altera/altera_pcie/altera_pcie_hip_ast_ed/example_design/<device>

directory. This walkthrough uses the Gen1 ×4 Endpoint.
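The copy step can be sketched as a small Python helper. This is a hedged illustration rather than an Altera-supplied script. The function name `copy_example_design` and its arguments are hypothetical, the caller must supply the actual `<install_dir>` and `<device>` values, and the destination `<working_dir>/example_design` is an assumption based on the working directory used later in this walkthrough.

```python
import shutil
from pathlib import Path


def copy_example_design(install_dir, device, working_dir):
    """Copy the installed example design for one device into a working directory.

    install_dir and device stand for the <install_dir> and <device>
    placeholders in the path given above; the caller supplies real values.
    """
    source = (Path(install_dir) / "ip" / "altera" / "altera_pcie"
              / "altera_pcie_hip_ast_ed" / "example_design" / device)
    if not source.is_dir():
        raise FileNotFoundError(f"example design not found: {source}")

    # Assumed destination: <working_dir>/example_design.
    destination = Path(working_dir) / "example_design"
    # dirs_exist_ok requires Python 3.8 or later.
    shutil.copytree(source, destination, dirs_exist_ok=True)
    return destination
```

Copying the files keeps the installed example unmodified, so you can return to a clean copy after editing the design.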

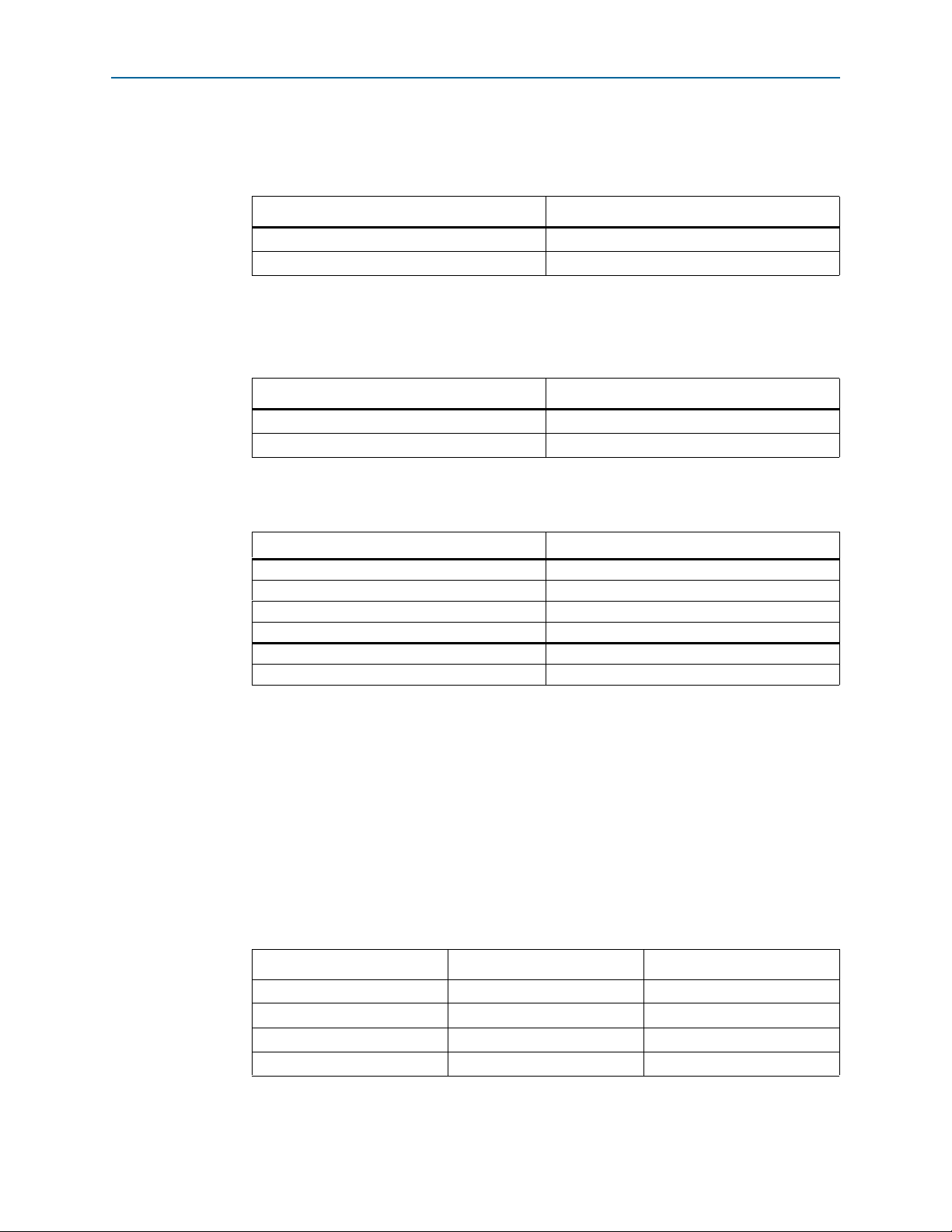

The following figure illustrates the top-level modules of the testbench in which the

DUT, a Gen1 ×4 Endpoint, connects to a chaining DMA engine, labeled APPS in the

following figure, and a Root Port model. The Transceiver Reconfiguration Controller

dynamically reconfigures analog settings to optimize signal quality of the serial

interface. The pcie_reconfig_driver drives the Transceiver Reconfiguration Controller.

The simulation can use the parallel PHY Interface for PCI Express (PIPE) or serial

interface.

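Figure 2–1. Testbench for an Endpoint

The figure labels the following blocks: APPS (altpcied_sv_hwtcl.v); DUT (altpcie_sv_hip_ast_hwtcl.v); Root Port Model (altpcie_tbed_sv_hwtcl.v); Root Port BFM (altpcietb_bfm_rpvar_64b_x8_pipen1b); and Root Port Driver and Monitor (altpcietb_bfm_vc_intf). APPS and the DUT are each labeled with Avalon-ST TX, Avalon-ST RX, reset, and status interfaces, and the DUT connects to the Root Port Model through the PIPE or serial interface.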

For a detailed explanation of this example design, refer to Chapter 17, Testbench and

Design Example. If you choose the parameters specified in this chapter, you can run

all of the tests included in Chapter 17.


The Arria Hard IP for PCI Express offers exactly the same feature set in both the

MegaWizard and Qsys design flows. Consequently, your choice of design flow

depends on whether you want to integrate the Arria Hard IP for PCI Express using

RTL instantiation or using Qsys, which is a system integration tool available in the

Quartus II software.

f For more information about Qsys, refer to System Design with Qsys in the Quartus II

Handbook.

h For more information about the Qsys GUI, refer to About Qsys in Quartus II Help.

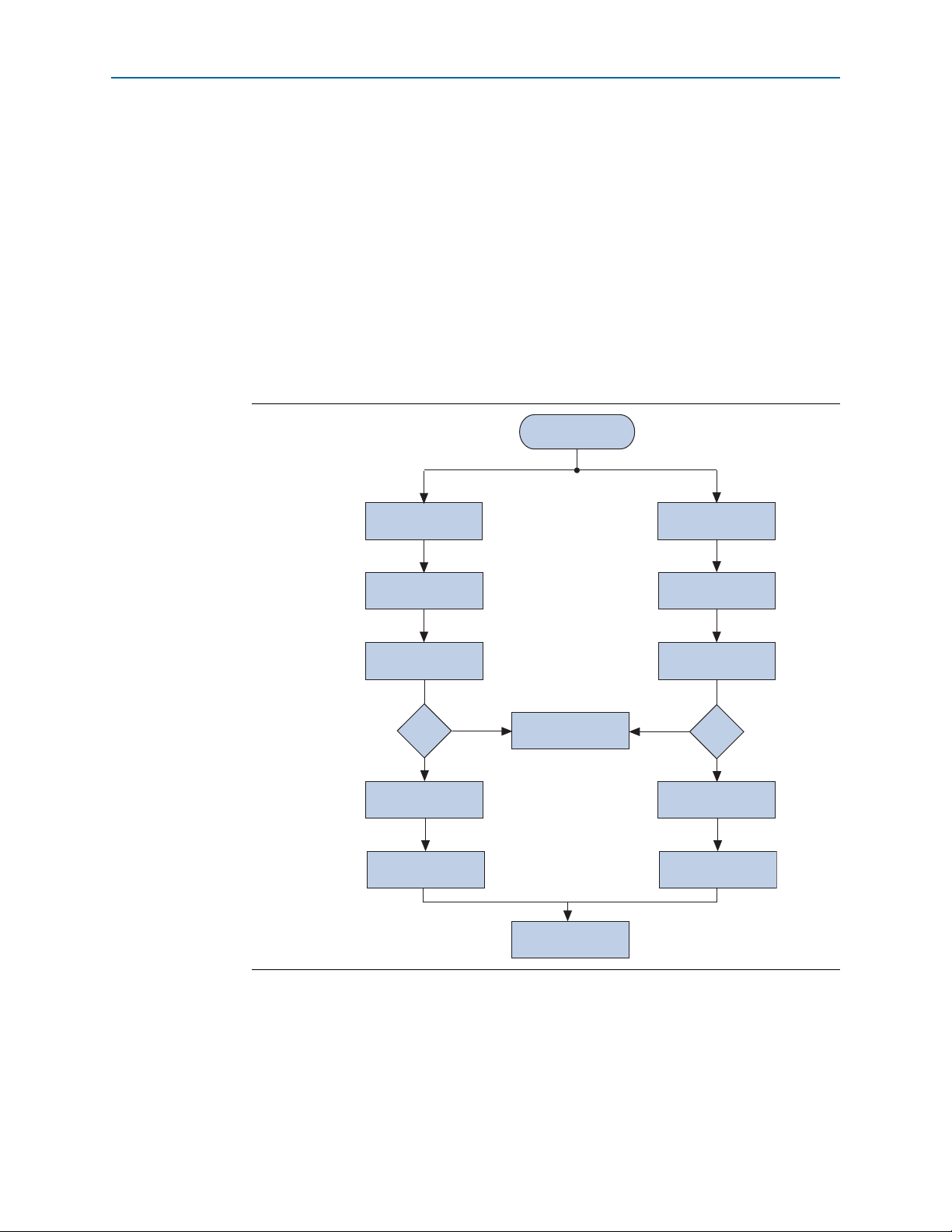

The following figure illustrates the steps necessary to customize the Arria Hard IP for

PCI Express and run the example design.

Figure 2–2. MegaWizard Plug-In Manager and Qsys Design Flows


MegaWizard Plug-In Manager Design Flow

This section guides you through the steps necessary to customize the Arria Hard IP

for PCI Express and run the example testbench, starting with the creation of a

Quartus II project.

Follow these steps to copy the example design files and create a Quartus II project.

1. Choose Programs > Altera > Quartus II <version> (Windows Start menu) to run

the Quartus II software.

2. On the Quartus II File menu, click New, then New Quartus II Project, then OK.

3. Click Next in the New Project Wizard: Introduction. (The introduction does not

display if you previously turned it off.)

4. On the Directory, Name, Top-Level Entity page, enter the following information:

a. The working directory for your project. This design example uses

<working_dir>/example_design.

b. The name of the project. This design example uses pcie_de_gen1_x4_ast64.

1 The Quartus II software specifies a top-level design entity that has the same

name as the project automatically. Do not change this name.

5. Click Next to display the Add Files page.

6. Click Yes, if prompted, to create a new directory.

7. Click Next to display the Family & Device Settings page.

8. On the Family & Device Settings page, choose the following target device family

and options:

a. In the Family list, select Arria V (GX/GT/ST/SX)

b. In the Devices list, select Arria V GX Extended Features GX PCIe

c. In the Available devices list, select 5AGXFB3H6F35C6ES.

9. Click Next to close this page and display the EDA Tool Settings page.

10. From the Simulation list, select ModelSim®. From the Format list, select the HDL

language you intend to use for simulation.

11. Click Next to display the Summary page.

12. Check the Summary page to ensure that you have entered all the information

correctly.

13. Click Finish to create the Quartus II project.
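The steps above use the New Project Wizard. As a hedged alternative sketch, the same project, family, and device choices could be written into a Quartus II Tcl script. This guide does not describe a scripted flow, so treat the following as an assumption. The Python helper `quartus_project_tcl` is hypothetical and only builds the script text. The Tcl commands it emits (`project_new`, `set_global_assignment`, and `project_close` from the `::quartus::project` package) are standard Quartus II scripting commands, but verify the assignment values against your software version.

```python
def quartus_project_tcl(project: str, family: str, device: str) -> str:
    """Return the text of a Tcl script that creates a Quartus II project.

    The top-level entity is set to the project name, matching the default
    chosen by the New Project Wizard.
    """
    lines = [
        "package require ::quartus::project",
        f"project_new {project} -overwrite",
        f'set_global_assignment -name FAMILY "{family}"',
        f"set_global_assignment -name DEVICE {device}",
        f"set_global_assignment -name TOP_LEVEL_ENTITY {project}",
        "project_close",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Values taken from this walkthrough.
    print(quartus_project_tcl("pcie_de_gen1_x4_ast64", "Arria V",
                              "5AGXFB3H6F35C6ES"))
```

You could save the generated text to a file and run it with `quartus_sh -t <script>.tcl` from the working directory. The simulation tool selection from step 10 is not covered by this sketch.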

Customizing the Endpoint in the MegaWizard Plug-In Manager Design Flow

This section guides you through the process of customizing the Endpoint in the

MegaWizard Plug-In Manager design flow. It specifies the same options that are

chosen in Chapter 17, Testbench and Design Example.

Follow these steps to customize your variant in the MegaWizard Plug-In Manager:


1. On the Tools menu, click MegaWizard Plug-In Manager. The MegaWizard

Plug-In Manager appears.

2. Select Create a new custom megafunction variation and click Next.

3. For Which device family will you be using?, select the Arria device family.

4. Expand the Interfaces directory under Installed Plug-Ins by clicking the + icon

left of the directory name, expand PCI Express, then click Arria Hard IP for PCI

Express <version_number>.

5. Select the output file type for your design. This walkthrough supports VHDL and

Verilog HDL. For this example, select Verilog HDL.

6. Specify a variation name for output files <working_dir>/example_design/

<variation name>. For this walkthrough, specify <working_dir>/example_design/

gen1_x4.

7. Click Next to open the parameter editor for the Arria Hard IP for PCI Express.

8. Specify the System Settings values listed in the following table.

Table 2–1. System Settings Parameters

| Parameter | Value |
|---|---|
| Number of Lanes | x4 |
| Lane Rate | Gen 1 (2.5 Gbps) |
| Port type | Native endpoint |
| Application Layer interface | Avalon-ST 64-bit |
| RX buffer credit allocation - performance for received requests | Low |
| Reference clock frequency | 100 MHz |
| Use 62.5 MHz Application Layer clock for ×1 | Leave this option off |
| Use deprecated RX Avalon-ST data byte enable port (rx_st_be) | Leave this option off |
| Enable configuration via the PCIe link | Leave this option off |
| Number of functions | 1 |

1 Each function shares the parameter settings on the Device, Error Reporting, Link,

Slot, and Power Management tabs. Each function has separate parameter settings for

the Base Address Registers, Base and Limit Registers for Root Ports, Device

Identification Registers, and the PCI Express/PCI Capabilities parameters. When

you click on a Func<n> tab under the Port Functions heading, the tabs automatically

reflect the Func<n> tab selected.

9. Specify the Device parameters listed in Table 2–2.

Table 2–2. Device

| Parameter | Value |
|---|---|
| Maximum payload size | 128 bytes |
| Number of tags supported | 32 |
| Completion timeout range | ABCD |
| Implement completion timeout disable | On |

Arria V Hard IP for PCI Express December 2013 Altera Corporation

User Guide

Page 21

Chapter 2: Getting Started with the Arria Hard IP for PCI Express 2–5

Customizing the Endpoint in the MegaWizard Plug-In Manager Design Flow

10. On the Error Reporting tab, leave all options off.

11. Specify the Link settings listed in Tab le 2 –7 .

Table 2–3. Link Tab

Parameter Value

Link port number 1

Slot clock configuration On

12. On the Slot Capabilities tab, leave the Slot register turned off.

13. Specify the Power Management parameters listed in Ta bl e 2–4 .

Table 2–4. Power Management Parameters

Parameter Value

Endpoint L0s acceptable exit latency Maximum of 64 ns

Endpoint L1 acceptable latency Maximum of 1 µs

14. Specify the BAR settings for Func0 listed in Table 2–5.

Table 2–5. Base Address Registers for Func0

Parameter Value

BAR0 Type 64-bit prefetchable memory

BAR0 Size 256 MBytes - 28 bits

BAR1 Type Disabled

BAR1 Size N/A

BAR2 Type 32-bit non-prefetchable memory

BAR2 Size 1 KByte - 10 bits

15. You can leave Func0 BAR3 through Func0 BAR5 and the Func0 Expansion ROM Disabled.

17. Under the Base and Limit Registers heading, disable both the Input/Output and

Prefetchable memory options. (These options are for Root Ports.)

18. For the Device ID Registers for Func0, specify the values listed in the center

column of Table 2–6. The right-hand column of this table lists the value assigned to

Altera devices. You must use the Altera values to run the reference design

described in AN 456 PCI Express High Performance Reference Design. Be sure to use

your company’s values for your final product.

Table 2–6. Device ID Registers for Func0

Register Name Value Altera Value

Vendor ID 0x00000000 0x00001172

Device ID 0x00000001 0x0000E001

Revision ID 0x00000001 0x00000001

Class Code 0x00000000 0x00FF0000

Subsystem Vendor ID 0x00000000 0x00001172

Subsystem Device ID 0x00000000 0x0000E001

19. On the Func0 Device tab, under PCI Express/PCI Capabilities for Func0, turn Function Level Reset (FLR) Off.

20. Table 2–7 lists settings for the Func0 Link tab.

Table 2–7. Link Capabilities

Parameter Value

Data link layer active reporting Off

Surprise down reporting Off

21. On the Func0 MSI tab, for Number of MSI messages requested, select 4.

22. On the Func0 MSI-X tab, turn Implement MSI-X off.

23. On the Func0 Legacy Interrupt tab, select INTA.

24. Click Finish. The Generation dialog box appears.

25. Turn on Generate Example Design to generate the Endpoint, testbench, and

supporting files.

26. Click Exit.

27. Click Yes if you are prompted to add the Quartus II IP File (.qip) to the project.

The .qip file, which the parameter editor generates, contains all of the necessary assignments and information required to process the IP core in the Quartus II compiler. Generally, a single .qip file is generated for each IP core.
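Table 2–5 pairs each BAR size with a bit count (256 MBytes with 28 bits, 1 KByte with 10 bits). The bit count is the base-2 logarithm of the size in bytes. The following Python sketch is not part of the Altera tools, and the helper name is hypothetical; it only illustrates the arithmetic.

```python
def bar_address_bits(size_bytes: int) -> int:
    """Return the number of address bits for a power-of-two BAR size."""
    # A power of two has exactly one bit set, so n & (n - 1) is zero.
    if size_bytes <= 0 or size_bytes & (size_bytes - 1):
        raise ValueError("BAR size must be a positive power of two")
    return size_bytes.bit_length() - 1


KBYTE = 1024
MBYTE = 1024 * KBYTE

print(bar_address_bits(256 * MBYTE))  # BAR0 size from Table 2-5
print(bar_address_bits(1 * KBYTE))    # BAR2 size from Table 2-5
```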

Understanding the Files Generated

The following table provides an overview of directories and files generated.

Table 2–8. Qsys Generation Output Files

Directory Description

<working_dir>/<variant_name>/ Includes the files for synthesis.

<working_dir>/<variant_name>_sim/altera_pcie_<device>_hip_ast Includes the simulation files.

<working_dir>/<variant_name>_example_design/altera_pcie_<device>_hip_ast Includes a Qsys testbench that connects the Endpoint to a chaining DMA engine, Transceiver Reconfiguration Controller, and driver for the Transceiver Reconfiguration Controller.

Follow these steps to generate the chaining DMA testbench from the Qsys system

design example.

1. On the Quartus II File menu, click Open.

2. Navigate to the Qsys system in the altera_pcie_<device>_hip_ast subdirectory.


3. Click pcie_de_gen1_x4_ast64.qsys to bring up the Qsys design. The following

figure illustrates this Qsys system.

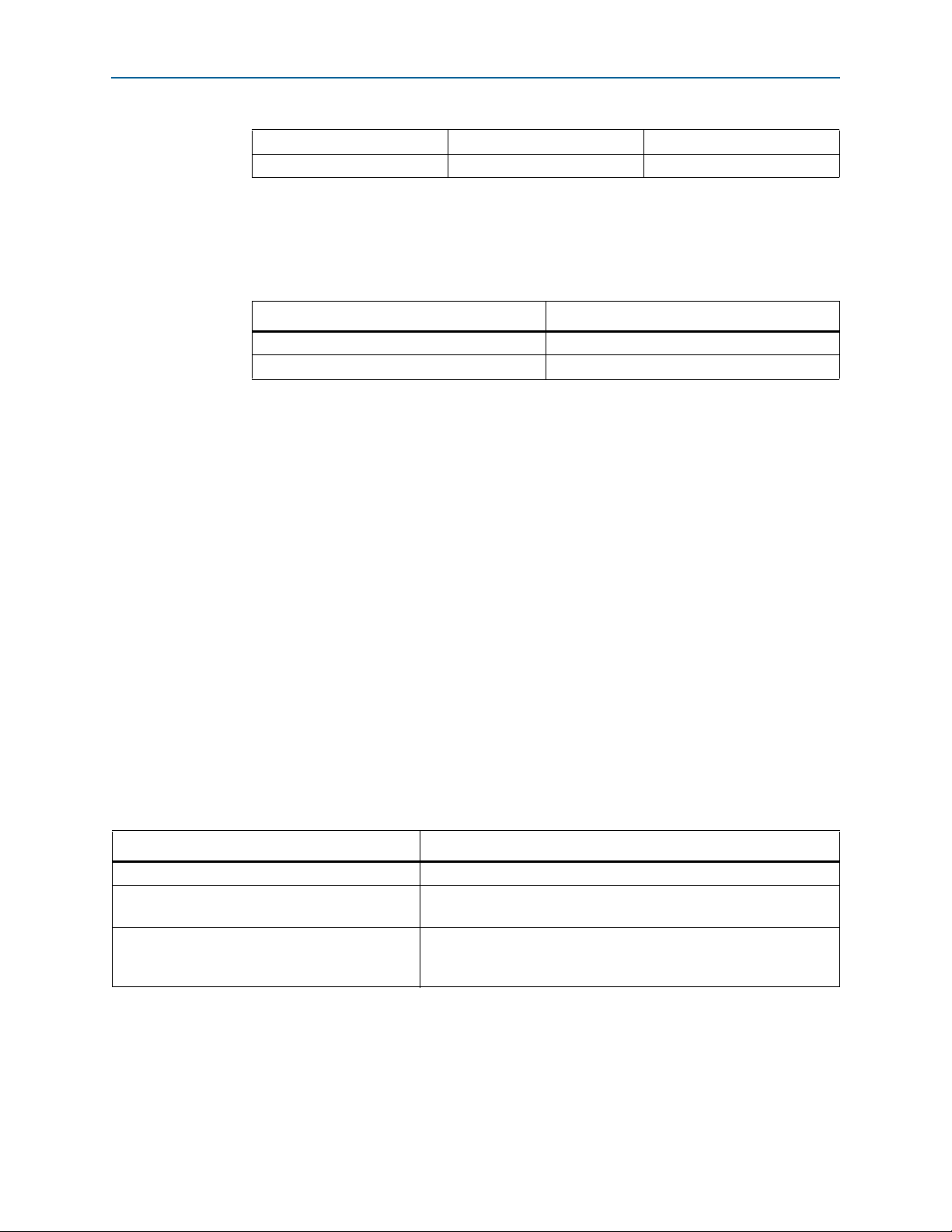

Figure 2–3. Qsys System Connecting the Endpoint Variant and Chaining DMA Testbench


4. To display the parameters of the APPS component shown in the previous figure, click it and then select Edit from the right-click menu. Figure 2–4 illustrates this component. Note that the values for the following parameters match those set in the DUT component:

■ Targeted Device Family

■ Lanes

■ Lane Rate

■ Application Clock Rate

■ Port

■ Application interface

■ Tags supported

■ Maximum payload size

■ Number of Functions

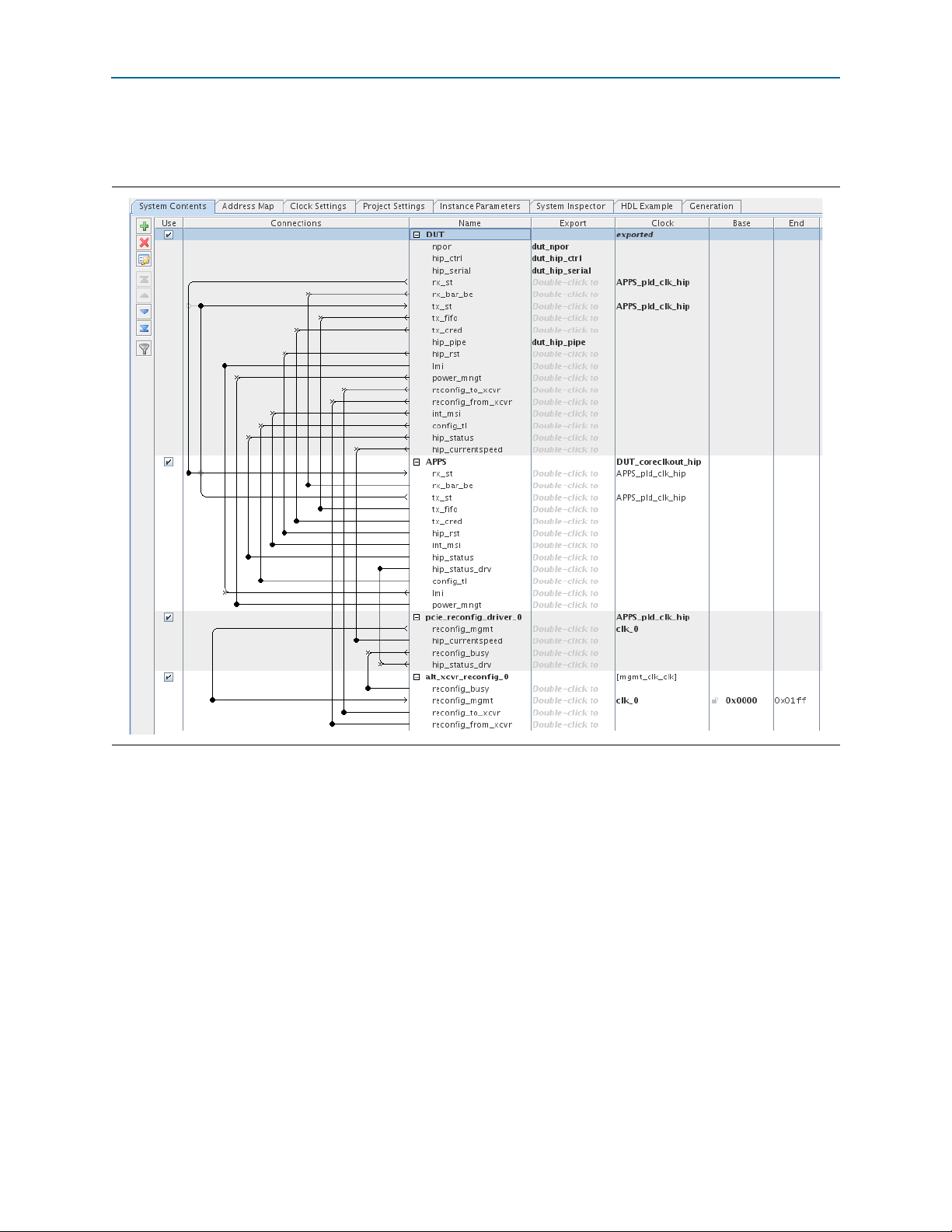

Figure 2–4. Qsys Component Representing the Chaining DMA Design Example

Note: You can use this Qsys APPS component to test any Endpoint variant with

compatible values for these parameters.

5. To close the APPS component, click the X in the upper right-hand corner of the

parameter editor.

Go to “Simulating the Example Design” on page 2–11 for instructions

on system simulation.
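Step 4 lists the APPS parameters whose values match the DUT component. As an illustration only, the following Python sketch compares two parameter dictionaries on those names. The dictionaries and the helper are hypothetical and do not correspond to any Qsys API. The values come from Tables 2–1 and 2–2 and from the device family this guide covers; Application Clock Rate is omitted because this walkthrough does not state it.

```python
# Parameter names taken from the list in step 4.
MATCHED_PARAMETERS = [
    "Targeted Device Family",
    "Lanes",
    "Lane Rate",
    "Application Clock Rate",
    "Port",
    "Application interface",
    "Tags supported",
    "Maximum payload size",
    "Number of Functions",
]


def mismatched_parameters(dut: dict, apps: dict) -> list:
    """Return the names from MATCHED_PARAMETERS whose values differ."""
    return [name for name in MATCHED_PARAMETERS
            if dut.get(name) != apps.get(name)]


# Hypothetical DUT settings; Application Clock Rate is left out on purpose.
dut = {
    "Targeted Device Family": "Arria V",
    "Lanes": "x4",
    "Lane Rate": "Gen1 (2.5 Gbps)",
    "Port": "Native endpoint",
    "Application interface": "Avalon-ST 64-bit",
    "Tags supported": 32,
    "Maximum payload size": 128,
    "Number of Functions": 1,
}

apps = dict(dut)          # start from an identical copy
apps["Lanes"] = "x8"      # introduce one deliberate mismatch
print(mismatched_parameters(dut, apps))
```

An empty result means that the two components agree on every listed parameter.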


Qsys Design Flow

This section guides you through the steps necessary to customize the Arria V Hard IP for PCI Express and run the example testbench in Qsys.

Reviewing the Qsys Example Design for PCIe

For this example, copy the Gen1 x4 Endpoint example design from the <install_dir>/ip/altera/altera_pcie/altera_pcie_hip_ast_ed/example_design/<device> directory to a working directory.
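The copy can also be scripted. The following Python sketch is one possible approach, not an Altera-supplied utility. The function name and its arguments are hypothetical; you supply the installation directory, the device directory name, and the working directory for your own system.

```python
import shutil
from pathlib import Path


def copy_example_design(install_dir: str, device: str, working_dir: str) -> Path:
    """Copy the example design for one device directory into working_dir."""
    source = (Path(install_dir) / "ip" / "altera" / "altera_pcie"
              / "altera_pcie_hip_ast_ed" / "example_design" / device)
    if not source.is_dir():
        raise FileNotFoundError(f"example design not found: {source}")
    destination = Path(working_dir) / device
    # copytree raises FileExistsError if the destination already exists,
    # which protects an earlier working copy from being overwritten.
    shutil.copytree(source, destination)
    return destination
```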

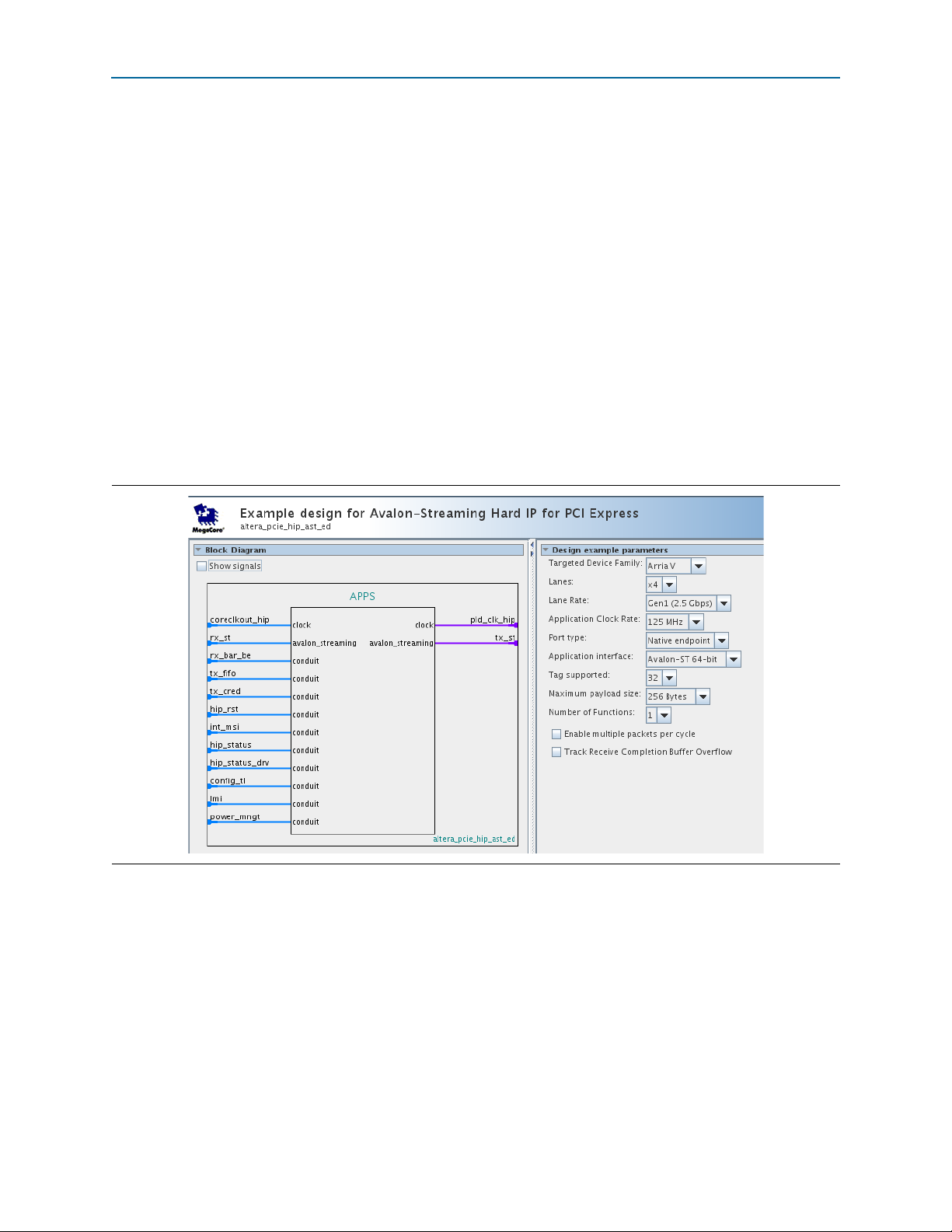

The following figure illustrates this Qsys system.

Figure 2–5. Complete Gen1 ×4 Endpoint (DUT) Connected to Example Design (APPS)

The example design includes the following four components:

■ DUT—This is the Gen1 x4 Endpoint. For your own design, you can select the data

rate, number of lanes, and either Endpoint or Root Port mode.

■ APPS—This Root Port BFM configures the DUT and drives read and write TLPs to

test DUT functionality. An Endpoint BFM is available if your PCI Express design

implements a Root Port.

■ pcie_reconfig_driver_0—This Avalon-MM master drives the Transceiver Reconfiguration Controller. The pcie_reconfig_driver_0 is implemented in clear text that you can modify if your design requires different reconfiguration functions. After you generate your Qsys system, the Verilog HDL for this component is available as: <working_dir>/<variant_name>/testbench/<variant_name>_tb/simulation/submodules/altpcie_reconfig_driver.sv.

■ Transceiver Reconfiguration Controller—The Transceiver Reconfiguration

Controller dynamically reconfigures analog settings to improve signal quality. For

Gen1 and Gen2 data rates, the Transceiver Reconfiguration Controller must

perform offset cancellation and PLL calibration.

Generating the Testbench

Follow these steps to generate the chaining DMA testbench:

1. On the Qsys Generation tab, specify the parameters listed in the following table.

Table 2–9. Parameters to Specify on the Generation Tab in Qsys

Parameter Value

Simulation

Create simulation model None. (This option generates a simulation model you can include in your own custom testbench.)

Create testbench Qsys system Standard, BFMs for standard Avalon interfaces

Create testbench simulation model Verilog

Synthesis

Create HDL design files for synthesis Turn this option on

Create block symbol file (.bsf) Turn this option on

Output Directory

Path pcie_qsys/gen1_x4_example_design

Simulation Leave this option blank

Testbench (1) pcie_qsys/gen1_x4_example_design/testbench

Synthesis (2) pcie_qsys/gen1_x4_example_design/synthesis

Notes to Table 2–9:

(1) Qsys automatically creates this path by appending testbench to the output directory/.

(2) Qsys automatically creates this path by appending synthesis to the output directory/.

2. Click the Generate button at the bottom of the Generation tab to create the

chaining DMA testbench.
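The notes to Table 2–9 state that Qsys derives the Testbench and Synthesis paths by appending testbench and synthesis to the output directory. The following Python sketch only reproduces that path arithmetic for the value used in this walkthrough. The function name is hypothetical and the sketch does not call Qsys.

```python
from pathlib import PurePosixPath


def generation_paths(output_dir: str) -> dict:
    """Derive the testbench and synthesis paths from the output directory."""
    base = PurePosixPath(output_dir)
    return {
        "testbench": str(base / "testbench"),
        "synthesis": str(base / "synthesis"),
    }


paths = generation_paths("pcie_qsys/gen1_x4_example_design")
print(paths["testbench"])
print(paths["synthesis"])
```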


Understanding the Files Generated

The following table provides an overview of the files and directories Qsys generates.

Table 2–10. Qsys Generation Output Files

Directory Description

<testbench_dir>/<variant_name>/synthesis Includes the top-level HDL file for the Hard IP for PCI Express and the .qip file that lists all of the necessary assignments and information required to process the IP core in the Quartus II compiler. Generally, a single .qip file is generated for each IP core.

<testbench_dir>/<variant_name>/synthesis/submodules Includes the HDL files necessary for Quartus II synthesis.

<testbench_dir>/<variant_name>/testbench/ Includes testbench subdirectories for the Aldec, Cadence and Mentor simulation tools with the required libraries and simulation scripts.

<testbench_dir>/<variant_name>/testbench/<cad_vendor> Includes the HDL source files and scripts for the simulation testbench.
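After generation, you may want to confirm that the directories in Table 2–10 exist before you start simulation. The following Python sketch shows one way to do so. The helper name is hypothetical, and the <cad_vendor> subdirectory is not checked because its name depends on the simulator you use.

```python
from pathlib import Path

# Subdirectories from Table 2-10, relative to <testbench_dir>/<variant_name>/.
EXPECTED_SUBDIRS = ["synthesis", "synthesis/submodules", "testbench"]


def missing_output_dirs(variant_dir: str) -> list:
    """Return the expected subdirectories that are absent under variant_dir."""
    root = Path(variant_dir)
    return [sub for sub in EXPECTED_SUBDIRS if not (root / sub).is_dir()]
```

An empty list indicates that all three directories are present.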

Simulating the Example Design

Follow these steps to compile the testbench for simulation and run the chaining DMA

testbench.

1. Start your simulation tool. This example uses the ModelSim® software.

2. From the ModelSim transcript window, in the testbench directory

(./example_design/altera_pcie_<device>_hip_ast/<variant>/testbench/mentor)

type the following commands:

a. do msim_setup.tcl

b. h (This is the ModelSim help command.)

c. ld_debug (This command compiles all design files and elaborates the top-level design without any optimization.)

d. run -all
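If you run this sequence often, you can collect the commands in a ModelSim macro (.do) file. The following Python sketch writes such a file. The file name run_chaining_dma.do and the helper are hypothetical, and the interactive help command (h) is left out because it is not needed for a scripted run.

```python
from pathlib import Path

# Commands from step 2, in the order given; the help command (h) is omitted.
SIM_COMMANDS = ["do msim_setup.tcl", "ld_debug", "run -all"]


def write_do_file(directory: str, name: str = "run_chaining_dma.do") -> Path:
    """Write the simulation commands to a ModelSim macro (.do) file."""
    path = Path(directory) / name
    path.write_text("\n".join(SIM_COMMANDS) + "\n")
    return path
```

Assuming the file is written to the mentor testbench directory, typing do run_chaining_dma.do in the ModelSim transcript window should then run the three commands in order.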

The following example shows a partial transcript from a successful simulation. As this

transcript illustrates, the simulation includes the following stages:

■ Link training

■ Configuration

■ DMA reads and writes

■ Root Port to Endpoint memory reads and writes

Example 2–1. Excerpts from Transcript of Successful Simulation Run

Time: 56000 Instance: top_chaining_testbench.ep.epmap.pll_250mhz_to_500mhz.

# Time: 0 Instance: pcie_de_gen1_x8_ast128_tb.dut_pcie_tb.genblk1.genblk1.altpcietb_bfm_top_rp.rp.rp.nl00O0i.Arria ii_pll.pll1