Page 1

vSphere Resource Management

Update 1

VMware vSphere 6.5

VMware ESXi 6.5

vCenter Server 6.5

This document supports the version of each product listed and

supports all subsequent versions until the document is

replaced by a new edition. To check for more recent editions of

this document, see http://www.vmware.com/support/pubs.

EN-002644-00

Page 2


You can find the most up-to-date technical documentation on the VMware Web site at:

http://www.vmware.com/support/

The VMware Web site also provides the latest product updates.

If you have comments about this documentation, submit your feedback to:

docfeedback@vmware.com

Copyright © 2006–2017 VMware, Inc. All rights reserved. Copyright and trademark information.

VMware, Inc.

3401 Hillview Ave.

Palo Alto, CA 94304

www.vmware.com


Page 3

Contents

About vSphere Resource Management 7

1 Getting Started with Resource Management 9
    Resource Types 9
    Resource Providers 9
    Resource Consumers 10
    Goals of Resource Management 10

2 Configuring Resource Allocation Settings 11
    Resource Allocation Shares 11
    Resource Allocation Reservation 12
    Resource Allocation Limit 12
    Resource Allocation Settings Suggestions 13
    Edit Resource Settings 13
    Changing Resource Allocation Settings—Example 14
    Admission Control 15

3 CPU Virtualization Basics 17
    Software-Based CPU Virtualization 17
    Hardware-Assisted CPU Virtualization 18
    Virtualization and Processor-Specific Behavior 18
    Performance Implications of CPU Virtualization 18

4 Administering CPU Resources 19
    View Processor Information 19
    Specifying CPU Configuration 19
    Multicore Processors 20
    Hyperthreading 20
    Using CPU Affinity 22
    Host Power Management Policies 23

5 Memory Virtualization Basics 27
    Virtual Machine Memory 27
    Memory Overcommitment 28
    Memory Sharing 28
    Types of Memory Virtualization 29

6 Administering Memory Resources 33
    Understanding Memory Overhead 33
    How ESXi Hosts Allocate Memory 34

Page 4

    Memory Reclamation 35
    Using Swap Files 36
    Sharing Memory Across Virtual Machines 40
    Memory Compression 41
    Measuring and Differentiating Types of Memory Usage 42
    Memory Reliability 43
    About System Swap 43

7 Configuring Virtual Graphics 45
    View GPU Statistics 45
    Add an NVIDIA GRID vGPU to a Virtual Machine 45
    Configuring Host Graphics 46
    Configuring Graphics Devices 47

8 Managing Storage I/O Resources 49
    About Virtual Machine Storage Policies 50
    About I/O Filters 50
    Storage I/O Control Requirements 50
    Storage I/O Control Resource Shares and Limits 51
    Set Storage I/O Control Resource Shares and Limits 52
    Enable Storage I/O Control 52
    Set Storage I/O Control Threshold Value 53
    Storage DRS Integration with Storage Profiles 54

9 Managing Resource Pools 55
    Why Use Resource Pools? 56
    Create a Resource Pool 57
    Edit a Resource Pool 58
    Add a Virtual Machine to a Resource Pool 58
    Remove a Virtual Machine from a Resource Pool 59
    Remove a Resource Pool 60
    Resource Pool Admission Control 60

10 Creating a DRS Cluster 63
    Admission Control and Initial Placement 63
    Virtual Machine Migration 65
    DRS Cluster Requirements 67
    Configuring DRS with Virtual Flash 68
    Create a Cluster 68
    Edit Cluster Settings 69
    Set a Custom Automation Level for a Virtual Machine 71
    Disable DRS 72
    Restore a Resource Pool Tree 72

11 Using DRS Clusters to Manage Resources 73
    Adding Hosts to a Cluster 73
    Adding Virtual Machines to a Cluster 75
    Removing Virtual Machines from a Cluster 75

Page 5

    Removing a Host from a Cluster 76
    DRS Cluster Validity 77
    Managing Power Resources 82
    Using DRS Affinity Rules 86

12 Creating a Datastore Cluster 91
    Initial Placement and Ongoing Balancing 92
    Storage Migration Recommendations 92
    Create a Datastore Cluster 92
    Enable and Disable Storage DRS 93
    Set the Automation Level for Datastore Clusters 93
    Setting the Aggressiveness Level for Storage DRS 94
    Datastore Cluster Requirements 95
    Adding and Removing Datastores from a Datastore Cluster 96

13 Using Datastore Clusters to Manage Storage Resources 97
    Using Storage DRS Maintenance Mode 97
    Applying Storage DRS Recommendations 99
    Change Storage DRS Automation Level for a Virtual Machine 100
    Set Up Off-Hours Scheduling for Storage DRS 100
    Storage DRS Anti-Affinity Rules 101
    Clear Storage DRS Statistics 104
    Storage vMotion Compatibility with Datastore Clusters 105

14 Using NUMA Systems with ESXi 107
    What is NUMA? 107
    How ESXi NUMA Scheduling Works 108
    VMware NUMA Optimization Algorithms and Settings 109
    Resource Management in NUMA Architectures 110
    Using Virtual NUMA 110
    Specifying NUMA Controls 111

15 Advanced Attributes 115
    Set Advanced Host Attributes 115
    Set Advanced Virtual Machine Attributes 118
    Latency Sensitivity 120
    About Reliable Memory 120

16 Fault Definitions 123
    Virtual Machine is Pinned 124
    Virtual Machine not Compatible with any Host 124
    VM/VM DRS Rule Violated when Moving to another Host 124
    Host Incompatible with Virtual Machine 124
    Host Has Virtual Machine That Violates VM/VM DRS Rules 124
    Host has Insufficient Capacity for Virtual Machine 124
    Host in Incorrect State 124
    Host Has Insufficient Number of Physical CPUs for Virtual Machine 125

Page 6

    Host has Insufficient Capacity for Each Virtual Machine CPU 125
    The Virtual Machine Is in vMotion 125
    No Active Host in Cluster 125
    Insufficient Resources 125
    Insufficient Resources to Satisfy Configured Failover Level for HA 125
    No Compatible Hard Affinity Host 125
    No Compatible Soft Affinity Host 125
    Soft Rule Violation Correction Disallowed 125
    Soft Rule Violation Correction Impact 126

17 DRS Troubleshooting Information 127
    Cluster Problems 127
    Host Problems 130
    Virtual Machine Problems 133

Index 137

Page 7

About vSphere Resource Management

vSphere Resource Management describes resource management for VMware® ESXi and vCenter® Server

environments.

This documentation focuses on the following topics.

■ Resource allocation and resource management concepts
■ Virtual machine attributes and admission control
■ Resource pools and how to manage them
■ Clusters, vSphere® Distributed Resource Scheduler (DRS), vSphere Distributed Power Management (DPM), and how to work with them
■ Datastore clusters, Storage DRS, Storage I/O Control, and how to work with them
■ Advanced resource management options
■ Performance considerations

Intended Audience

This information is for system administrators who want to understand how the system manages resources

and how they can customize the default behavior. It’s also essential for anyone who wants to understand

and use resource pools, clusters, DRS, datastore clusters, Storage DRS, Storage I/O Control, or vSphere

DPM.


This documentation assumes you have a working knowledge of VMware ESXi and of vCenter Server.

Task instructions in this guide are based on the vSphere Web Client. You can also perform most of the tasks

in this guide by using the new vSphere Client. The new vSphere Client user interface terminology, topology,

and workflow are closely aligned with the same aspects and elements of the vSphere Web Client user

interface. You can apply the vSphere Web Client instructions to the new vSphere Client unless otherwise

instructed.

NOTE Not all functionality in the vSphere Web Client has been implemented for the vSphere Client in the

vSphere 6.5 release. For an up-to-date list of unsupported functionality, see Functionality Updates for the

vSphere Client Guide at http://www.vmware.com/info?id=1413.

Page 8


Page 9

Chapter 1 Getting Started with Resource Management

To understand resource management, you must be aware of its components, its goals, and how best to implement it in a cluster setting.

This chapter discusses the resource allocation settings for a virtual machine (shares, reservation, and limit), including how to set them and how to view them. It also explains admission control, the process by which resource allocation settings are validated against existing resources.

Resource management is the allocation of resources from resource providers to resource consumers.

The need for resource management arises from the overcommitment of resources (that is, more demand than capacity) and from the fact that demand and capacity vary over time. Resource management allows you to dynamically reallocate resources, so that you can use available capacity more efficiently.

This chapter includes the following topics:

■ “Resource Types,” on page 9
■ “Resource Providers,” on page 9
■ “Resource Consumers,” on page 10
■ “Goals of Resource Management,” on page 10

Resource Types

Resources include CPU, memory, power, storage, and network resources.

NOTE ESXi manages network bandwidth and disk resources on a per-host basis, using network traffic

shaping and a proportional share mechanism, respectively.

Resource Providers

Hosts and clusters, including datastore clusters, are providers of physical resources.

For hosts, available resources are the host’s hardware specification, minus the resources used by the

virtualization software.

A cluster is a group of hosts. You can create a cluster using vSphere Web Client, and add multiple hosts to

the cluster. vCenter Server manages these hosts’ resources jointly: the cluster owns all of the CPU and

memory of all hosts. You can enable the cluster for joint load balancing or failover. See Chapter 10, “Creating

a DRS Cluster,” on page 63 for more information.

A datastore cluster is a group of datastores. As with DRS clusters, you can create a datastore cluster using the

vSphere Web Client, and add multiple datastores to the cluster. vCenter Server manages the datastore

resources jointly. You can enable Storage DRS to balance I/O load and space utilization. See Chapter 12,

“Creating a Datastore Cluster,” on page 91.

Page 10

Resource Consumers

Virtual machines are resource consumers.

The default resource settings assigned during creation work well for most machines. You can later edit the

virtual machine settings to allocate a share-based percentage of the total CPU, memory, and storage I/O of

the resource provider or a guaranteed reservation of CPU and memory. When you power on that virtual

machine, the server checks whether enough unreserved resources are available and allows power on only if

there are enough resources. This process is called admission control.

A resource pool is a logical abstraction for flexible management of resources. Resource pools can be grouped

into hierarchies and used to hierarchically partition available CPU and memory resources. Accordingly,

resource pools can be considered both resource providers and consumers. They provide resources to child

resource pools and virtual machines, but are also resource consumers because they consume their parents’

resources. See Chapter 9, “Managing Resource Pools,” on page 55.

ESXi hosts allocate each virtual machine a portion of the underlying hardware resources based on a number

of factors:

■ Resource limits defined by the user.
■ Total available resources for the ESXi host (or the cluster).
■ Number of virtual machines powered on and resource usage by those virtual machines.
■ Overhead required to manage the virtualization.

Goals of Resource Management

When managing your resources, you must be aware of what your goals are.

In addition to resolving resource overcommitment, resource management can help you accomplish the

following:

■ Performance Isolation: Prevent virtual machines from monopolizing resources and guarantee predictable service rates.
■ Efficient Usage: Exploit undercommitted resources and overcommit with graceful degradation.
■ Easy Administration: Control the relative importance of virtual machines, provide flexible dynamic partitioning, and meet absolute service-level agreements.

Page 11

Chapter 2 Configuring Resource Allocation Settings

When available resource capacity does not meet the demands of the resource consumers (and virtualization

overhead), administrators might need to customize the amount of resources that are allocated to virtual

machines or to the resource pools in which they reside.

Use the resource allocation settings (shares, reservation, and limit) to determine the amount of CPU,

memory, and storage resources provided for a virtual machine. In particular, administrators have several

options for allocating resources.

■ Reserve the physical resources of the host or cluster.
■ Set an upper bound on the resources that can be allocated to a virtual machine.
■ Guarantee that a particular virtual machine is always allocated a higher percentage of the physical resources than other virtual machines.

This chapter includes the following topics:

■ “Resource Allocation Shares,” on page 11
■ “Resource Allocation Reservation,” on page 12
■ “Resource Allocation Limit,” on page 12
■ “Resource Allocation Settings Suggestions,” on page 13
■ “Edit Resource Settings,” on page 13
■ “Changing Resource Allocation Settings—Example,” on page 14
■ “Admission Control,” on page 15

Resource Allocation Shares

Shares specify the relative importance of a virtual machine (or resource pool). If a virtual machine has twice

as many shares of a resource as another virtual machine, it is entitled to consume twice as much of that

resource when these two virtual machines are competing for resources.

Shares are typically specified as High, Normal, or Low and these values specify share values with a 4:2:1

ratio, respectively. You can also select Custom to assign a specific number of shares (which expresses a

proportional weight) to each virtual machine.

Specifying shares makes sense only with regard to sibling virtual machines or resource pools, that is, virtual

machines or resource pools with the same parent in the resource pool hierarchy. Siblings share resources

according to their relative share values, bounded by the reservation and limit. When you assign shares to a

virtual machine, you always specify the priority for that virtual machine relative to other powered-on

virtual machines.

Page 12

The following table shows the default CPU and memory share values for a virtual machine. For resource

pools, the default CPU and memory share values are the same, but must be multiplied as if the resource

pool were a virtual machine with four virtual CPUs and 16 GB of memory.

Table 2‑1. Share Values

Setting   CPU share values              Memory share values
High      2000 shares per virtual CPU   20 shares per megabyte of configured virtual machine memory.
Normal    1000 shares per virtual CPU   10 shares per megabyte of configured virtual machine memory.
Low       500 shares per virtual CPU    5 shares per megabyte of configured virtual machine memory.

For example, an SMP virtual machine with two virtual CPUs and 1GB RAM with CPU and memory shares set to Normal has 2x1000=2000 shares of CPU and 10x1024=10240 shares of memory.

NOTE Virtual machines with more than one virtual CPU are called SMP (symmetric multiprocessing) virtual machines. ESXi supports up to 128 virtual CPUs per virtual machine.

The relative priority represented by each share changes when a new virtual machine is powered on. This affects all virtual machines in the same resource pool. Consider the following examples, in which all of the virtual machines have the same number of virtual CPUs.

■ Two CPU-bound virtual machines run on a host with 8GHz of aggregate CPU capacity. Their CPU shares are set to Normal and they get 4GHz each.
■ A third CPU-bound virtual machine is powered on. Its CPU shares value is set to High, which means it has twice as many shares as the machines set to Normal. The new virtual machine receives 4GHz and the two other machines get only 2GHz each. The same result occurs if the user specifies a custom share value of 2000 for the third virtual machine.
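The share arithmetic in this section can be sketched in a few lines of Python. This is an illustrative model only, not a VMware API: the function names `default_shares` and `split_by_shares` are hypothetical, and the split assumes that every sibling is CPU-bound and that no reservation or limit applies.

```python
# Illustrative model only (not a VMware API): default share values from
# Table 2-1 and a proportional split among fully CPU-bound siblings.
CPU_SHARES_PER_VCPU = {"high": 2000, "normal": 1000, "low": 500}
MEM_SHARES_PER_MB = {"high": 20, "normal": 10, "low": 5}


def default_shares(level, vcpus, memory_mb):
    """Return (cpu_shares, memory_shares) for a virtual machine."""
    return CPU_SHARES_PER_VCPU[level] * vcpus, MEM_SHARES_PER_MB[level] * memory_mb


def split_by_shares(capacity_mhz, shares_by_vm):
    """Divide capacity among competing siblings in proportion to their shares."""
    total = sum(shares_by_vm.values())
    return {vm: capacity_mhz * s / total for vm, s in shares_by_vm.items()}


# Two virtual CPUs and 1GB RAM with shares set to Normal.
print(default_shares("normal", vcpus=2, memory_mb=1024))  # (2000, 10240)

# Two Normal virtual machines on 8GHz, then a third set to High.
two_vms = split_by_shares(8000, {"vm1": 1000, "vm2": 1000})
three_vms = split_by_shares(8000, {"vm1": 1000, "vm2": 1000, "vm3": 2000})
print(two_vms)    # 4000 MHz each
print(three_vms)  # vm3 gets 4000 MHz, vm1 and vm2 get 2000 MHz each
```

Because only the ratio of shares matters, a custom value of 2000 for the third virtual machine produces the same split as selecting High for a single-vCPU virtual machine.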

Resource Allocation Reservation

A reservation specifies the guaranteed minimum allocation for a virtual machine.

vCenter Server or ESXi allows you to power on a virtual machine only if there are enough unreserved

resources to satisfy the reservation of the virtual machine. The server guarantees that amount even when the

physical server is heavily loaded. The reservation is expressed in concrete units (megahertz or megabytes).

For example, assume you have 2GHz available and specify a reservation of 1GHz for VM1 and 1GHz for

VM2. Now each virtual machine is guaranteed to get 1GHz if it needs it. However, if VM1 is using only

500MHz, VM2 can use 1.5GHz.

Reservation defaults to 0. You can specify a reservation if you need to guarantee that the minimum required

amounts of CPU or memory are always available for the virtual machine.

Resource Allocation Limit

Limit specifies an upper bound for CPU, memory, or storage I/O resources that can be allocated to a virtual

machine.

A server can allocate more than the reservation to a virtual machine, but never allocates more than the limit,

even if there are unused resources on the system. The limit is expressed in concrete units (megahertz,

megabytes, or I/O operations per second).

CPU, memory, and storage I/O resource limits default to unlimited. When the memory limit is unlimited,

the amount of memory configured for the virtual machine when it was created becomes its effective limit.
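The interaction of reservation, limit, and shares can be illustrated with a simplified allocation model. The sketch below is an assumption-laden approximation and does not reproduce the actual ESXi scheduler: the `VM` class, the `allocate` function, and the explicit `demand` value are all hypothetical. Each virtual machine first receives the smaller of its reservation and its demand, and the remaining capacity is then offered in proportion to shares, never exceeding the demand or the limit.

```python
# Simplified model only: this is NOT the ESXi scheduling algorithm.
# All names (VM, allocate, demand) are hypothetical and for illustration.
import math
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    shares: int
    demand: float              # what the VM would use if unconstrained
    reservation: float = 0.0   # guaranteed minimum, defaults to 0
    limit: float = math.inf    # upper bound, defaults to unlimited


def allocate(capacity, vms):
    """Return {name: allocation} honoring reservation, limit, and shares."""
    # A VM never receives more than it demands or more than its limit.
    cap = {vm.name: min(vm.demand, vm.limit) for vm in vms}
    # Guaranteed part: the reservation, if the VM actually needs it.
    alloc = {vm.name: min(vm.reservation, cap[vm.name]) for vm in vms}
    remaining = capacity - sum(alloc.values())
    active = [vm for vm in vms if alloc[vm.name] < cap[vm.name]]
    # Offer the surplus by shares until it is used up or every VM is capped.
    while remaining > 1e-9 and active:
        total_shares = sum(vm.shares for vm in active)
        granted = 0.0
        for vm in active:
            offer = remaining * vm.shares / total_shares
            take = min(offer, cap[vm.name] - alloc[vm.name])
            alloc[vm.name] += take
            granted += take
        remaining -= granted
        active = [vm for vm in active if cap[vm.name] - alloc[vm.name] > 1e-9]
    return alloc


# Reservation example: 2GHz available, 1GHz reserved for each VM,
# but VM1 is using only 500MHz, so VM2 can use 1.5GHz.
example = allocate(2000, [
    VM("VM1", shares=1000, demand=500, reservation=1000),
    VM("VM2", shares=1000, demand=2000, reservation=1000),
])
print(example)
```

In this model a virtual machine with a 1000MHz limit receives at most 1000MHz even when it is alone on a 2000MHz host, which mirrors the statement that a limit is enforced even if there are unused resources on the system.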


Page 13

In most cases, it is not necessary to specify a limit. There are benefits and drawbacks:

■ Benefits: Assigning a limit is useful if you start with a small number of virtual machines and want to manage user expectations. Performance deteriorates as you add more virtual machines. You can simulate having fewer resources available by specifying a limit.
■ Drawbacks: You might waste idle resources if you specify a limit. The system does not allow virtual machines to use more resources than the limit, even when the system is underutilized and idle resources are available. Specify the limit only if you have good reasons for doing so.

Resource Allocation Settings Suggestions

Select resource allocation settings (reservation, limit and shares) that are appropriate for your ESXi

environment.

The following guidelines can help you achieve better performance for your virtual machines.

■ Use Reservation to specify the minimum acceptable amount of CPU or memory, not the amount you want to have available. The amount of concrete resources represented by a reservation does not change when you change the environment, such as by adding or removing virtual machines. The host assigns additional resources as available based on the limit for your virtual machine, the number of shares and estimated demand.
■ When specifying the reservations for virtual machines, do not commit all resources (plan to leave at least 10% unreserved). As you move closer to fully reserving all capacity in the system, it becomes increasingly difficult to make changes to reservations and to the resource pool hierarchy without violating admission control. In a DRS-enabled cluster, reservations that fully commit the capacity of the cluster or of individual hosts in the cluster can prevent DRS from migrating virtual machines between hosts.
■ If you expect frequent changes to the total available resources, use Shares to allocate resources fairly across virtual machines. If you use Shares, and you upgrade the host, for example, each virtual machine stays at the same priority (keeps the same number of shares) even though each share represents a larger amount of memory, CPU, or storage I/O resources.
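The guideline to leave at least 10% of capacity unreserved can be checked with simple arithmetic. The following sketch is a planning aid under stated assumptions, not a VMware tool: the function names and the sample capacity and reservation figures are hypothetical.

```python
# Planning aid only; names and sample figures are hypothetical.
def unreserved_fraction(capacity, reservations):
    """Fraction of capacity that is not covered by reservations."""
    return (capacity - sum(reservations)) / capacity


def has_headroom(capacity, reservations, min_unreserved=0.10):
    """True if at least min_unreserved of the capacity stays unreserved."""
    return unreserved_fraction(capacity, reservations) >= min_unreserved


host_mhz = 20000
current = [6000, 6000, 5000]            # existing CPU reservations in MHz
print(has_headroom(host_mhz, current))            # 15% unreserved
print(has_headroom(host_mhz, current + [2000]))   # only 5% would remain
```

The same check applies to memory reservations in megabytes, and to a cluster if the capacity figure is the aggregate capacity of its hosts.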

Edit Resource Settings

Use the Edit Resource Settings dialog box to change allocations for memory and CPU resources.

Procedure

1 Browse to the virtual machine in the vSphere Web Client navigator.

2 Right-click and select Edit Resource Settings.

3 Edit the CPU Resources.

Option        Description
Shares        CPU shares for this resource pool with respect to the parent’s total. Sibling resource pools share resources according to their relative share values bounded by the reservation and limit. Select Low, Normal, or High, which specify share values respectively in a 1:2:4 ratio. Select Custom to give each virtual machine a specific number of shares, which expresses a proportional weight.
Reservation   Guaranteed CPU allocation for this resource pool.
Limit         Upper limit for this resource pool’s CPU allocation. Select Unlimited to specify no upper limit.

Page 14

4 Edit the Memory Resources.

Option        Description
Shares        Memory shares for this resource pool with respect to the parent’s total. Sibling resource pools share resources according to their relative share values bounded by the reservation and limit. Select Low, Normal, or High, which specify share values respectively in a 1:2:4 ratio. Select Custom to give each virtual machine a specific number of shares, which expresses a proportional weight.
Reservation   Guaranteed memory allocation for this resource pool.
Limit         Upper limit for this resource pool’s memory allocation. Select Unlimited to specify no upper limit.

5 Click OK.

Changing Resource Allocation Settings—Example

The following example illustrates how you can change resource allocation settings to improve virtual

machine performance.

Assume that on an ESXi host, you have created two new virtual machines—one each for your QA (VM-QA)

and Marketing (VM-Marketing) departments.

Figure 2‑1. Single Host with Two Virtual Machines

In the following example, assume that VM-QA is memory intensive and accordingly you want to change the resource allocation settings for the two virtual machines to:

■ Specify that, when system memory is overcommitted, VM-QA can use twice as much CPU and memory resources as the Marketing virtual machine. Set the CPU shares and memory shares for VM-QA to High and for VM-Marketing set them to Normal.
■ Ensure that the Marketing virtual machine has a certain amount of guaranteed CPU resources. You can do so using a reservation setting.

Procedure

1 Browse to the virtual machines in the vSphere Web Client navigator.

2 Right-click VM-QA, the virtual machine for which you want to change shares, and select Edit Settings.

3 Under Virtual Hardware, expand CPU and select High from the Shares drop-down menu.

4 Under Virtual Hardware, expand Memory and select High from the Shares drop-down menu.

5 Click OK.

6 Right-click the marketing virtual machine (VM-Marketing) and select Edit Settings.

7 Under Virtual Hardware, expand CPU and change the Reservation value to the desired number.

8 Click OK.


Page 15

If you select the cluster’s Resource Reservation tab and click CPU, you should see that shares for VM-QA

are twice that of the other virtual machine. Also, because the virtual machines have not been powered on,

the Reservation Used fields have not changed.

Admission Control

When you power on a virtual machine, the system checks the amount of CPU and memory resources that

have not yet been reserved. Based on the available unreserved resources, the system determines whether it

can guarantee the reservation for which the virtual machine is configured (if any). This process is called

admission control.

If enough unreserved CPU and memory are available, or if there is no reservation, the virtual machine is

powered on. Otherwise, an Insufficient Resources warning appears.

NOTE In addition to the user-specified memory reservation, for each virtual machine there is also an

amount of overhead memory. This extra memory commitment is included in the admission control

calculation.

When the vSphere DPM feature is enabled, hosts might be placed in standby mode (that is, powered off) to

reduce power consumption. The unreserved resources provided by these hosts are considered available for

admission control. If a virtual machine cannot be powered on without these resources, a recommendation to

power on sufficient standby hosts is made.
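The admission control check described in this section can be summarized as a small decision function. This is a conceptual sketch, not the vCenter Server implementation: the function name, the dictionary keys `cpu_mhz` and `mem_mb`, and the returned strings are assumptions chosen for illustration.

```python
# Conceptual sketch of the admission control decision; not VMware code.
# The keys "cpu_mhz" and "mem_mb" and the result strings are hypothetical.
def admission_control(unreserved, reservation, overhead_mem_mb, standby_unreserved=None):
    """Decide whether a virtual machine's reservation can be guaranteed."""
    # Overhead memory is added to the user-specified memory reservation.
    required = {
        "cpu_mhz": reservation.get("cpu_mhz", 0),
        "mem_mb": reservation.get("mem_mb", 0) + overhead_mem_mb,
    }
    if all(required[k] <= unreserved[k] for k in required):
        return "power on"
    # With vSphere DPM, unreserved resources of standby hosts also count.
    standby = standby_unreserved or {}
    if all(required[k] <= unreserved[k] + standby.get(k, 0) for k in required):
        return "recommend powering on standby hosts"
    return "insufficient resources"


free = {"cpu_mhz": 1000, "mem_mb": 2048}
print(admission_control(free, {"cpu_mhz": 500, "mem_mb": 1024}, overhead_mem_mb=100))
print(admission_control(free, {"cpu_mhz": 500, "mem_mb": 2000}, overhead_mem_mb=100))
print(admission_control(free, {"cpu_mhz": 500, "mem_mb": 2000}, overhead_mem_mb=100,
                        standby_unreserved={"cpu_mhz": 4000, "mem_mb": 8192}))
```

In the second call the 2000MB reservation alone would fit, but the added overhead memory pushes the requirement past the 2048MB that is unreserved, which is why overhead must be part of the calculation.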

Page 16

Page 17

Chapter 3 CPU Virtualization Basics

CPU virtualization emphasizes performance and runs directly on the processor whenever possible. The

underlying physical resources are used whenever possible and the virtualization layer runs instructions

only as needed to make virtual machines operate as if they were running directly on a physical machine.

CPU virtualization is not the same thing as emulation. ESXi does not use emulation to run virtual CPUs.

With emulation, all operations are run in software by an emulator. A software emulator allows programs to

run on a computer system other than the one for which they were originally written. The emulator does this

by emulating, or reproducing, the original computer’s behavior by accepting the same data or inputs and

achieving the same results. Emulation provides portability and runs software designed for one platform

across several platforms.

When CPU resources are overcommitted, the ESXi host time-slices the physical processors across all virtual

machines so each virtual machine runs as if it has its specified number of virtual processors. When an ESXi

host runs multiple virtual machines, it allocates to each virtual machine a share of the physical resources.

With the default resource allocation settings, all virtual machines associated with the same host receive an

equal share of CPU per virtual CPU. This means that a single-processor virtual machine is assigned only

half of the resources of a dual-processor virtual machine.

This chapter includes the following topics:

■ “Software-Based CPU Virtualization,” on page 17
■ “Hardware-Assisted CPU Virtualization,” on page 18
■ “Virtualization and Processor-Specific Behavior,” on page 18
■ “Performance Implications of CPU Virtualization,” on page 18

Software-Based CPU Virtualization

With software-based CPU virtualization, the guest application code runs directly on the processor, while the

guest privileged code is translated and the translated code runs on the processor.

The translated code is slightly larger and usually runs more slowly than the native version. As a result,

guest applications, which have a small privileged code component, run with speeds very close to native.

Applications with a significant privileged code component, such as system calls, traps, or page table updates,

can run slower in the virtualized environment.

Page 18

Hardware-Assisted CPU Virtualization

Certain processors provide hardware assistance for CPU virtualization.

When using this assistance, the guest can use a separate mode of execution called guest mode. The guest

code, whether application code or privileged code, runs in the guest mode. On certain events, the processor

exits out of guest mode and enters root mode. The hypervisor executes in the root mode, determines the

reason for the exit, takes any required actions, and restarts the guest in guest mode.

When you use hardware assistance for virtualization, there is no need to translate the code. As a result,

system calls or trap-intensive workloads run very close to native speed. Some workloads, such as those

involving updates to page tables, lead to a large number of exits from guest mode to root mode. Depending

on the number of such exits and total time spent in exits, hardware-assisted CPU virtualization can speed up

execution significantly.

Virtualization and Processor-Specific Behavior

Although VMware software virtualizes the CPU, the virtual machine detects the specific model of the

processor on which it is running.

Processor models might differ in the CPU features they offer, and applications running in the virtual

machine can make use of these features. Therefore, it is not possible to use vMotion® to migrate virtual

machines between systems running on processors with different feature sets. You can avoid this restriction,

in some cases, by using Enhanced vMotion Compatibility (EVC) with processors that support this feature.

See the vCenter Server and Host Management documentation for more information.

Performance Implications of CPU Virtualization

CPU virtualization adds varying amounts of overhead depending on the workload and the type of

virtualization used.

An application is CPU-bound if it spends most of its time executing instructions rather than waiting for

external events such as user interaction, device input, or data retrieval. For such applications, the CPU

virtualization overhead includes the additional instructions that must be executed. This overhead takes CPU

processing time that the application itself can use. CPU virtualization overhead usually translates into a

reduction in overall performance.

For applications that are not CPU-bound, CPU virtualization likely translates into an increase in CPU use. If

spare CPU capacity is available to absorb the overhead, it can still deliver comparable performance in terms

of overall throughput.

ESXi supports up to 128 virtual processors (CPUs) for each virtual machine.

NOTE Deploy single-threaded applications on uniprocessor virtual machines, instead of on SMP virtual

machines that have multiple CPUs, for the best performance and resource use.

Single-threaded applications can take advantage only of a single CPU. Deploying such applications in dual-processor virtual machines does not speed up the application. Instead, it causes the second virtual CPU to

use physical resources that other virtual machines could otherwise use.


Page 19

Chapter 4 Administering CPU Resources

You can configure virtual machines with one or more virtual processors, each with its own set of registers

and control structures.

When a virtual machine is scheduled, its virtual processors are scheduled to run on physical processors. The

VMkernel Resource Manager schedules the virtual CPUs on physical CPUs, thereby managing the virtual

machine’s access to physical CPU resources. ESXi supports virtual machines with up to 128 virtual CPUs.

This chapter includes the following topics:

■ “View Processor Information,” on page 19
■ “Specifying CPU Configuration,” on page 19
■ “Multicore Processors,” on page 20
■ “Hyperthreading,” on page 20
■ “Using CPU Affinity,” on page 22
■ “Host Power Management Policies,” on page 23

View Processor Information

You can access information about current CPU configuration in the vSphere Web Client.

Procedure

1 Browse to the host in the vSphere Web Client navigator.

2 Click Configure and expand Hardware.

3 Select Processors to view the information about the number and type of physical processors and the

number of logical processors.

NOTE In hyperthreaded systems, each hardware thread is a logical processor. For example, a dual-core

processor with hyperthreading enabled has two cores and four logical processors.
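The relationship between sockets, cores, and logical processors in the note above reduces to a multiplication. The helper below is a hypothetical illustration and assumes two hardware threads per core when hyperthreading is enabled.

```python
# Hypothetical helper; assumes two hardware threads per core with
# hyperthreading enabled and one thread per core otherwise.
def logical_processors(sockets, cores_per_socket, hyperthreading):
    threads_per_core = 2 if hyperthreading else 1
    return sockets * cores_per_socket * threads_per_core


# One dual-core processor with hyperthreading enabled.
print(logical_processors(sockets=1, cores_per_socket=2, hyperthreading=True))  # 4
```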

Specifying CPU Configuration

You can specify CPU configuration to improve resource management. However, if you do not customize

CPU configuration, the ESXi host uses defaults that work well in most situations.

You can specify CPU configuration in the following ways:

■ Use the attributes and special features available through the vSphere Web Client. The vSphere Web Client allows you to connect to the ESXi host or a vCenter Server system.

Page 20

■ Use advanced settings under certain circumstances.
■ Use the vSphere SDK for scripted CPU allocation.
■ Use hyperthreading.

Multicore Processors

Multicore processors provide many advantages for a host performing multitasking of virtual machines.

Intel and AMD have developed processors that combine two or more processor cores into a single integrated circuit (often called a package or socket). VMware uses the term socket to describe a single package that can have one or more processor cores with one or more logical processors in each core.

A dual-core processor, for example, provides almost double the performance of a single-core processor, by allowing two virtual CPUs to run at the same time. Cores within the same processor are typically configured with a shared last-level cache used by all cores, potentially reducing the need to access slower main memory. A shared memory bus that connects a physical processor to main memory can limit performance of its logical processors when the virtual machines running on them are running memory-intensive workloads that compete for the same memory bus resources.

Each logical processor of each processor core is used independently by the ESXi CPU scheduler to run virtual machines, providing capabilities similar to SMP systems. For example, a two-way virtual machine can have its virtual processors running on logical processors that belong to the same core, or on logical processors on different physical cores.

The ESXi CPU scheduler can detect the processor topology and the relationships between processor cores

and the logical processors on them. It uses this information to schedule virtual machines and optimize

performance.

The ESXi CPU scheduler can interpret processor topology, including the relationship between sockets, cores, and logical processors. The scheduler uses topology information to optimize the placement of virtual CPUs onto different sockets. This optimization can maximize overall cache usage and improve cache affinity by minimizing virtual CPU migrations.

Hyperthreading

Hyperthreading technology allows a single physical processor core to behave like two logical processors.

The processor can run two independent applications at the same time. To avoid confusion between logical

and physical processors, Intel refers to a physical processor as a socket, and the discussion in this chapter

uses that terminology as well.

Intel Corporation developed hyperthreading technology to enhance the performance of its Pentium 4 and Xeon processor lines. Hyperthreading technology allows a single processor core to execute two independent threads simultaneously.

While hyperthreading does not double the performance of a system, it can increase performance by better utilizing idle resources, leading to greater throughput for certain important workload types. An application running on one logical processor of a busy core can expect slightly more than half of the throughput that it obtains while running alone on a non-hyperthreaded processor. Hyperthreading performance improvements are highly application-dependent, and some applications might see performance degradation with hyperthreading because many processor resources (such as the cache) are shared between logical processors.

NOTE On processors with Intel Hyper-Threading technology, each core can have two logical processors which share most of the core's resources, such as memory caches and functional units. Such logical processors are usually called threads.

Page 21

Many processors do not support hyperthreading and as a result have only one thread per core. For such processors, the number of cores also matches the number of logical processors. The following processors support hyperthreading and have two threads per core.

- Processors based on the Intel Xeon 5500 processor microarchitecture.
- Intel Pentium 4 (HT-enabled)
- Intel Pentium EE 840 (HT-enabled)

Hyperthreading and ESXi Hosts

A host that is enabled for hyperthreading should behave similarly to a host without hyperthreading. You

might need to consider certain factors if you enable hyperthreading, however.

ESXi hosts manage processor time intelligently to guarantee that load is spread smoothly across processor cores in the system. Logical processors on the same core have consecutive CPU numbers, so that CPUs 0 and 1 are on the first core together, CPUs 2 and 3 are on the second core, and so on. Virtual machines are preferentially scheduled on two different cores rather than on two logical processors on the same core.
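The consecutive numbering described above can be illustrated with a minimal Python sketch. The function names are hypothetical and are not part of any VMware API; the sketch assumes two threads per core, as on the hyperthreaded processors discussed in this chapter.

```python
def core_of(logical_cpu: int, threads_per_core: int = 2) -> int:
    """Return the index of the core that hosts a logical CPU.

    Assumes logical CPUs on the same core have consecutive numbers,
    so CPUs 0 and 1 map to core 0, CPUs 2 and 3 to core 1, and so on.
    """
    if logical_cpu < 0 or threads_per_core < 1:
        raise ValueError("CPU number must be >= 0 and threads per core >= 1")
    return logical_cpu // threads_per_core


def share_core(cpu_a: int, cpu_b: int, threads_per_core: int = 2) -> bool:
    """Return True if two logical CPUs sit on the same physical core."""
    return core_of(cpu_a, threads_per_core) == core_of(cpu_b, threads_per_core)


def logical_processor_count(cores: int, threads_per_core: int = 2) -> int:
    """Number of logical processors exposed by a given number of cores."""
    return cores * threads_per_core
```

For example, `share_core(0, 1)` is true while `share_core(1, 2)` is false, which is why binding two busy virtual machines to CPUs 0 and 1 forces them onto one core.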

If there is no work for a logical processor, it is put into a halted state, which frees its execution resources and

allows the virtual machine running on the other logical processor on the same core to use the full execution

resources of the core. The VMware scheduler properly accounts for this halt time, and charges a virtual

machine running with the full resources of a core more than a virtual machine running on a half core. This

approach to processor management ensures that the server does not violate any of the standard ESXi

resource allocation rules.

Consider your resource management needs before you enable CPU affinity on hosts using hyperthreading. For example, if you bind a high priority virtual machine to CPU 0 and another high priority virtual machine to CPU 1, the two virtual machines have to share the same physical core. In this case, it can be impossible to meet the resource demands of these virtual machines. Ensure that any custom affinity settings make sense for a hyperthreaded system.

Enable Hyperthreading

To enable hyperthreading, you must first enable it in your system's BIOS settings and then turn it on in the vSphere Web Client. Hyperthreading is enabled by default.

Consult your system documentation to determine whether your CPU supports hyperthreading.

Procedure

1 Ensure that your system supports hyperthreading technology.

2 Enable hyperthreading in the system BIOS.

Some manufacturers label this option Logical Processor, while others call it Enable Hyperthreading.

3 Ensure that hyperthreading is enabled for the ESXi host.

a Browse to the host in the vSphere Web Client navigator.

b Click Configure.

c Under System, click Advanced System Settings and select VMkernel.Boot.hyperthreading.

You must restart the host for the setting to take effect. Hyperthreading is enabled if the value is true.

4 Under Hardware, click Processors to view the number of Logical processors.

Hyperthreading is enabled.

Page 22

Using CPU Affinity

By specifying a CPU affinity setting for each virtual machine, you can restrict the assignment of virtual machines to a subset of the available processors in multiprocessor systems. By using this feature, you can assign each virtual machine to processors in the specified affinity set.

CPU affinity specifies virtual machine-to-processor placement constraints and is different from the relationship created by a VM-VM or VM-Host affinity rule, which specifies virtual machine-to-virtual machine host placement constraints.

In this context, the term CPU refers to a logical processor on a hyperthreaded system and refers to a core on a non-hyperthreaded system.

The CPU affinity setting for a virtual machine applies to all of the virtual CPUs associated with the virtual machine and to all other threads (also known as worlds) associated with the virtual machine. Such virtual machine threads perform processing required for emulating mouse, keyboard, screen, CD-ROM, and miscellaneous legacy devices.

In some cases, such as display-intensive workloads, significant communication might occur between the virtual CPUs and these other virtual machine threads. Performance might degrade if the virtual machine's affinity setting prevents these additional threads from being scheduled concurrently with the virtual machine's virtual CPUs. Examples of this include a uniprocessor virtual machine with affinity to a single CPU or a two-way SMP virtual machine with affinity to only two CPUs.

For the best performance, when you use manual affinity settings, VMware recommends that you include at least one additional physical CPU in the affinity setting to allow at least one of the virtual machine's threads to be scheduled at the same time as its virtual CPUs. Examples of this include a uniprocessor virtual machine with affinity to at least two CPUs or a two-way SMP virtual machine with affinity to at least three CPUs.

Assign a Virtual Machine to a Specific Processor

Using CPU affinity, you can assign a virtual machine to a specific processor. This allows you to restrict the assignment of virtual machines to a specific available processor in multiprocessor systems.

Procedure

1 Find the virtual machine in the vSphere Web Client inventory.

a To find a virtual machine, select a data center, folder, cluster, resource pool, or host.

b Click the Related Objects tab and click Virtual Machines.

2 Right-click the virtual machine and click Edit Settings.

3 Under Virtual Hardware, expand CPU.

4 Under Scheduling Affinity, select physical processor affinity for the virtual machine.

Use '-' for ranges and ',' to separate values.

For example, "0, 2, 4-7" would indicate processors 0, 2, 4, 5, 6 and 7.

5 Select the processors where you want the virtual machine to run and click OK.
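The affinity syntax used in step 4 can be checked before you enter it. The following Python sketch is an illustration only: `parse_affinity` and `meets_recommendation` are hypothetical helper names, not part of the vSphere SDK. The second helper applies the recommendation, stated earlier in this section, to include at least one more CPU than the virtual machine has virtual CPUs.

```python
from typing import List


def parse_affinity(spec: str) -> List[int]:
    """Expand an affinity string such as "0, 2, 4-7" into CPU numbers.

    Uses '-' for ranges and ',' to separate values, as in the
    Scheduling Affinity field. Returns a sorted list without duplicates.
    """
    cpus = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue  # tolerate stray commas or surrounding spaces
        if "-" in part:
            start_text, end_text = part.split("-", 1)
            start, end = int(start_text), int(end_text)
            if start > end:
                raise ValueError(f"descending range: {part!r}")
            cpus.update(range(start, end + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def meets_recommendation(spec: str, num_vcpus: int) -> bool:
    """True if the affinity set holds at least one CPU beyond the vCPU count."""
    return len(parse_affinity(spec)) >= num_vcpus + 1
```

With this sketch, `parse_affinity("0, 2, 4-7")` yields processors 0, 2, 4, 5, 6, and 7, and a two-way SMP virtual machine with the affinity set "0-1" does not meet the recommendation.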


Page 23

Potential Issues with CPU Affinity

Before you use CPU affinity, you might need to consider certain issues.

Potential issues with CPU affinity include:

- For multiprocessor systems, ESXi systems perform automatic load balancing. Avoid manual specification of virtual machine affinity to improve the scheduler’s ability to balance load across processors.
- Affinity can interfere with the ESXi host’s ability to meet the reservation and shares specified for a virtual machine.
- Because CPU admission control does not consider affinity, a virtual machine with manual affinity settings might not always receive its full reservation.
  Virtual machines that do not have manual affinity settings are not adversely affected by virtual machines with manual affinity settings.
- When you move a virtual machine from one host to another, affinity might no longer apply because the new host might have a different number of processors.
- The NUMA scheduler might not be able to manage a virtual machine that is already assigned to certain processors using affinity.
- Affinity can affect the host's ability to schedule virtual machines on multicore or hyperthreaded processors to take full advantage of resources shared on such processors.

Host Power Management Policies

You can apply several power management features in ESXi that the host hardware provides to adjust the

balance between performance and power. You can control how ESXi uses these features by selecting a power

management policy.

Selecting a high-performance policy provides more absolute performance, but at lower efficiency and performance per watt. Low-power policies provide less absolute performance, but at higher efficiency.

You can select a policy for the host that you manage by using the VMware Host Client. If you do not select a

policy, ESXi uses Balanced by default.

Table 4‑1. CPU Power Management Policies

| Power Management Policy | Description |
| --- | --- |
| High Performance | Do not use any power management features. |
| Balanced (Default) | Reduce energy consumption with minimal performance compromise. |
| Low Power | Reduce energy consumption at the risk of lower performance. |
| Custom | User-defined power management policy. Advanced configuration becomes available. |

When a CPU runs at lower frequency, it can also run at lower voltage, which saves power. This type of power management is typically called Dynamic Voltage and Frequency Scaling (DVFS). ESXi attempts to adjust CPU frequencies so that virtual machine performance is not affected.

When a CPU is idle, ESXi can apply deep halt states, also known as C-states. The deeper the C-state, the less

power the CPU uses, but it also takes longer for the CPU to start running again. When a CPU becomes idle,

ESXi applies an algorithm to predict the idle state duration and chooses an appropriate C-state to enter. In

power management policies that do not use deep C-states, ESXi uses only the shallowest halt state for idle

CPUs, C1.

Page 24

Select a CPU Power Management Policy

You set the CPU power management policy for a host using the vSphere Web Client.

Prerequisites

Verify that the BIOS settings on the host system allow the operating system to control power management (for example, OS Controlled).

NOTE Some systems have Processor Clocking Control (PCC) technology, which allows ESXi to manage power on the host system even if the host BIOS settings do not specify OS Controlled mode. With this technology, ESXi does not manage P-states directly. Instead, the host cooperates with the BIOS to determine the processor clock rate. HP systems that support this technology have a BIOS setting called Cooperative Power Management that is enabled by default.

If the host hardware does not allow the operating system to manage power, only the Not Supported policy

is available. (On some systems, only the High Performance policy is available.)

Procedure

1 Browse to the host in the vSphere Web Client navigator.

2 Click Configure.

3 Under Hardware, select Power Management and click the Edit button.

4 Select a power management policy for the host and click OK.

The policy selection is saved in the host configuration and can be used again at boot time. You can change it at any time, and it does not require a server reboot.

Configure Custom Policy Parameters for Host Power Management

When you use the Custom policy for host power management, ESXi bases its power management policy on the values of several advanced configuration parameters.

Prerequisites

Select Custom for the power management policy, as described in “Select a CPU Power Management Policy,”

on page 24.

Procedure

1 Browse to the host in the vSphere Web Client navigator.

2 Click Configure.

3 Under System, select Advanced System Settings.

4 In the right pane, you can edit the power management parameters that affect the Custom policy.

Power management parameters that affect the Custom policy have descriptions that begin with In Custom policy. All other power parameters affect all power management policies.

5 Select the parameter and click the Edit button.

NOTE The default values of power management parameters match the Balanced policy.

| Parameter | Description |
| --- | --- |
| Power.UsePStates | Use ACPI P-states to save power when the processor is busy. |
| Power.MaxCpuLoad | Use P-states to save power on a CPU only when the CPU is busy for less than the given percentage of real time. |
| Power.MinFreqPct | Do not use any P-states slower than the given percentage of full CPU speed. |
| Power.UseStallCtr | Use a deeper P-state when the processor is frequently stalled waiting for events such as cache misses. |
| Power.TimerHz | Controls how many times per second ESXi reevaluates which P-state each CPU should be in. |
| Power.UseCStates | Use deep ACPI C-states (C2 or below) when the processor is idle. |
| Power.CStateMaxLatency | Do not use C-states whose latency is greater than this value. |
| Power.CStateResidencyCoef | When a CPU becomes idle, choose the deepest C-state whose latency multiplied by this value is less than the host's prediction of how long the CPU will remain idle. Larger values make ESXi more conservative about using deep C-states, while smaller values are more aggressive. |
| Power.CStatePredictionCoef | A parameter in the ESXi algorithm for predicting how long a CPU that becomes idle will remain idle. Changing this value is not recommended. |
| Power.PerfBias | Performance Energy Bias Hint (Intel-only). Sets an MSR on Intel processors to an Intel-recommended value. Intel recommends 0 for high performance, 6 for balanced, and 15 for low power. Other values are undefined. |

6 Click OK.
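The interaction of Power.UseCStates, Power.CStateMaxLatency, and Power.CStateResidencyCoef can be sketched in Python. This is a simplified illustration of the selection rule as the parameter descriptions state it, not the ESXi implementation. The function name, the microsecond units, and the sample latencies in the usage note are assumptions made for the example.

```python
from typing import Dict


def choose_c_state(
    predicted_idle_us: float,
    latencies_us: Dict[str, float],
    residency_coef: float,
    max_latency_us: float,
    use_c_states: bool = True,
) -> str:
    """Pick an idle state from a table of state name -> exit latency.

    Assumes a deeper state always has a longer latency, so the
    shallowest state is the one with the lowest latency.
    """
    by_latency = sorted(latencies_us.items(), key=lambda item: item[1])
    chosen = by_latency[0][0]  # shallowest halt state, the fallback
    if not use_c_states:
        return chosen  # policy does not use deep C-states
    for state, latency in by_latency[1:]:
        if latency > max_latency_us:
            continue  # excluded by the maximum latency setting
        if latency * residency_coef < predicted_idle_us:
            chosen = state  # deepest qualifying state seen so far
    return chosen
```

With hypothetical latencies of 1, 50, and 200 for C1, C2, and C3, a coefficient of 5, and a predicted idle time of 400, the sketch selects C2. Lowering the coefficient to 1 selects C3, which matches the statement that smaller values are more aggressive.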

Page 26

Page 27

Chapter 5 Memory Virtualization Basics

Before you manage memory resources, you should understand how they are being virtualized and used by

ESXi.

The VMkernel manages all physical RAM on the host. The VMkernel dedicates part of this managed

physical RAM for its own use. The rest is available for use by virtual machines.

The virtual and physical memory space is divided into blocks called pages. When physical memory is full, the data for virtual pages that are not present in physical memory is stored on disk. Depending on processor architecture, pages are typically 4 KB or 2 MB. See “Advanced Memory Attributes,” on page 116.

This chapter includes the following topics:

- “Virtual Machine Memory,” on page 27
- “Memory Overcommitment,” on page 28
- “Memory Sharing,” on page 28
- “Types of Memory Virtualization,” on page 29

Virtual Machine Memory

Each virtual machine consumes memory based on its configured size, plus additional overhead memory for virtualization.

The configured size is the amount of memory that is presented to the guest operating system. This is different from the amount of physical RAM that is allocated to the virtual machine. The latter depends on the resource settings (shares, reservation, limit) and the level of memory pressure on the host.

For example, consider a virtual machine with a configured size of 1GB. When the guest operating system boots, it detects that it is running on a dedicated machine with 1GB of physical memory. In some cases, the virtual machine might be allocated the full 1GB. In other cases, it might receive a smaller allocation. Regardless of the actual allocation, the guest operating system continues to behave as though it is running on a dedicated machine with 1GB of physical memory.

Shares

Specify the relative priority for a virtual machine if more than the reservation is available.

Reservation

Is a guaranteed lower bound on the amount of physical RAM that the host reserves for the virtual machine, even when memory is overcommitted. Set the reservation to a level that ensures the virtual machine has sufficient memory to run efficiently, without excessive paging.

After a virtual machine consumes all of the memory within its reservation, it is allowed to retain that amount of memory and this memory is not reclaimed, even if the virtual machine becomes idle. Some guest operating systems (for example, Linux) might not access all of the configured memory immediately after booting. Until the virtual machine consumes all of the memory within its reservation, VMkernel can allocate any unused portion of its reservation to other virtual machines. However, after the guest’s workload increases and the virtual machine consumes its full reservation, it is allowed to keep this memory.

Limit

Is an upper bound on the amount of physical RAM that the host can allocate to the virtual machine. The virtual machine’s memory allocation is also implicitly limited by its configured size.

Memory Overcommitment

For each running virtual machine, the system reserves physical RAM for the virtual machine’s reservation (if

any) and for its virtualization overhead.

The total configured memory sizes of all virtual machines may exceed the amount of available physical memory on the host. However, this does not necessarily mean that memory is overcommitted. Memory is overcommitted when the combined working memory footprint of all virtual machines exceeds the host memory size.

Because of the memory management techniques the ESXi host uses, your virtual machines can use more virtual RAM than there is physical RAM available on the host. For example, you can have a host with 2GB memory and run four virtual machines with 1GB memory each. In that case, the memory is overcommitted. For instance, if all four virtual machines are idle, the combined consumed memory may be well below 2GB. However, if all four virtual machines are actively consuming memory, then their memory footprint may exceed 2GB and the ESXi host will become overcommitted.
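The distinction between configured size and working footprint in the example above can be expressed as a short Python sketch. The function names and the per-machine footprint values in the usage note are hypothetical, and the sketch ignores virtualization overhead and the memory that the VMkernel keeps for its own use.

```python
from typing import Sequence


def configured_exceeds_host(host_mb: int, vm_configured_mb: Sequence[int]) -> bool:
    """True if the configured sizes add up to more than host memory."""
    return sum(vm_configured_mb) > host_mb


def is_overcommitted(host_mb: int, vm_footprint_mb: Sequence[int]) -> bool:
    """True if the combined working footprint exceeds host memory."""
    return sum(vm_footprint_mb) > host_mb
```

For a 2048 MB host with four 1024 MB virtual machines, `configured_exceeds_host` is true in every case. With assumed idle footprints of 256 MB each, `is_overcommitted` is false; with assumed active footprints of 900 MB each, it is true.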

Overcommitment makes sense because, typically, some virtual machines are lightly loaded while others are

more heavily loaded, and relative activity levels vary over time.

To improve memory utilization, the ESXi host transfers memory from idle virtual machines to virtual machines that need more memory. Use the Reservation or Shares parameter to preferentially allocate memory to important virtual machines. This memory remains available to other virtual machines if it is not in use. ESXi implements various mechanisms such as ballooning, memory sharing, memory compression and swapping to provide reasonable performance even if the host is not heavily memory overcommitted.

An ESXi host can run out of memory if virtual machines consume all reservable memory in a memory overcommitted environment. Although the powered on virtual machines are not affected, a new virtual machine might fail to power on due to lack of memory.

NOTE All virtual machine memory overhead is also considered reserved.

In addition, memory compression is enabled by default on ESXi hosts to improve virtual machine performance when memory is overcommitted as described in “Memory Compression,” on page 41.

Memory Sharing

Memory sharing is a proprietary ESXi technique that can help achieve greater memory density on a host.

Memory sharing relies on the observation that several virtual machines might be running instances of the

same guest operating system. These virtual machines might have the same applications or components

loaded, or contain common data. In such cases, a host uses a proprietary Transparent Page Sharing (TPS)

technique to eliminate redundant copies of memory pages. With memory sharing, a workload running on a virtual machine often consumes less memory than it might when running on physical machines. As a result, higher levels of overcommitment can be supported efficiently. The amount of memory saved by memory sharing depends on how similar the virtual machines are: a workload that consists of nearly identical machines might free up more memory, while a more diverse workload might result in a lower percentage of memory savings.

NOTE Due to security concerns, inter-virtual machine transparent page sharing is disabled by default and page sharing is restricted to intra-virtual machine memory sharing. Page sharing does not occur across virtual machines and only occurs inside a virtual machine. See “Sharing Memory Across Virtual Machines,” on page 40 for more information.
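The effect of eliminating redundant page copies, and of restricting sharing to a single virtual machine, can be illustrated with a small Python sketch. It models pages as byte strings and counts distinct contents. This is an assumption made for illustration and does not describe how ESXi detects identical pages; the function name is hypothetical.

```python
from typing import Dict, List


def machine_pages_needed(
    vms: Dict[str, List[bytes]], share_across_vms: bool = False
) -> int:
    """Count the machine pages needed to back all guest pages.

    Identical pages inside one virtual machine are always stored once.
    Identical pages in different virtual machines are stored once only
    when share_across_vms is True.
    """
    if share_across_vms:
        unique = set()
        for pages in vms.values():
            unique.update(pages)
        return len(unique)
    return sum(len(set(pages)) for pages in vms.values())
```

For two virtual machines whose pages hold the contents a, b, b and b, c, b, six guest pages need four machine pages with intra-virtual machine sharing and three machine pages when sharing across virtual machines is allowed.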

Types of Memory Virtualization

There are two types of memory virtualization: software-based and hardware-assisted memory virtualization.

Because of the extra level of memory mapping introduced by virtualization, ESXi can effectively manage memory across all virtual machines. Some of the physical memory of a virtual machine might be mapped to shared pages or to pages that are unmapped, or swapped out.

A host performs virtual memory management without the knowledge of the guest operating system and

without interfering with the guest operating system’s own memory management subsystem.

The VMM for each virtual machine maintains a mapping from the guest operating system's physical

memory pages to the physical memory pages on the underlying machine. (VMware refers to the underlying

host physical pages as “machine” pages and the guest operating system’s physical pages as “physical”

pages.)


Each virtual machine sees a contiguous, zero-based, addressable physical memory space. The underlying

machine memory on the server used by each virtual machine is not necessarily contiguous.

For both software-based and hardware-assisted memory virtualization, the guest virtual to guest physical

addresses are managed by the guest operating system. The hypervisor is only responsible for translating the

guest physical addresses to machine addresses. Software-based memory virtualization combines the guest's

virtual to machine addresses in software and saves them in the shadow page tables managed by the

hypervisor. Hardware-assisted memory virtualization utilizes the hardware facility to generate the

combined mappings with the guest's page tables and the nested page tables maintained by the hypervisor.

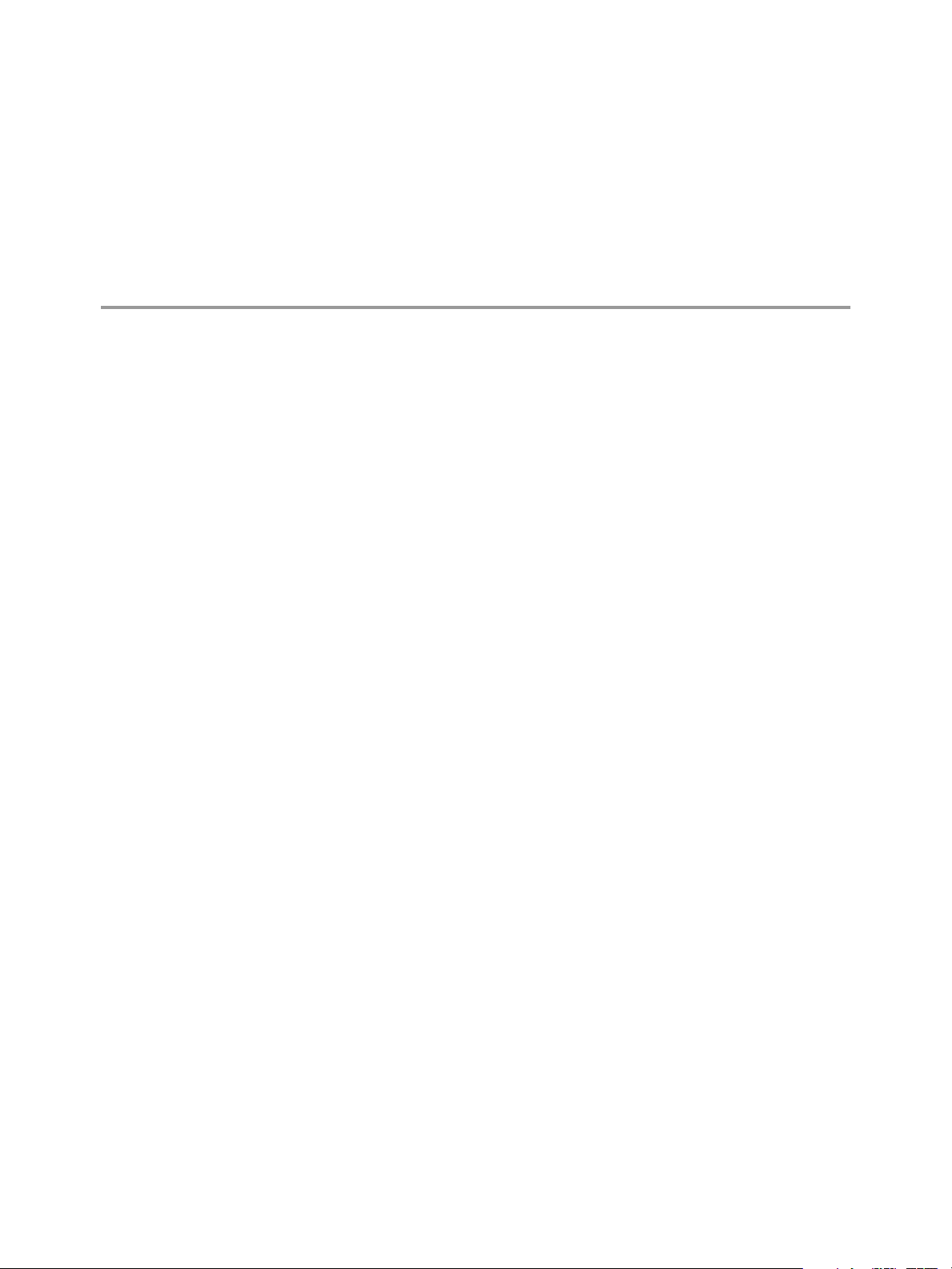

The diagram illustrates the ESXi implementation of memory virtualization.

Figure 5‑1. ESXi Memory Mapping

- The boxes represent pages, and the arrows show the different memory mappings.
- The arrows from guest virtual memory to guest physical memory show the mapping maintained by the page tables in the guest operating system. (The mapping from virtual memory to linear memory for x86-architecture processors is not shown.)
- The arrows from guest physical memory to machine memory show the mapping maintained by the VMM.
- The dashed arrows show the mapping from guest virtual memory to machine memory in the shadow page tables also maintained by the VMM. The underlying processor running the virtual machine uses the shadow page table mappings.

Software-Based Memory Virtualization

ESXi virtualizes guest physical memory by adding an extra level of address translation.

- The VMM maintains the combined virtual-to-machine page mappings in the shadow page tables. The shadow page tables are kept up to date with the guest operating system's virtual-to-physical mappings and physical-to-machine mappings maintained by the VMM.
- The VMM intercepts virtual machine instructions that manipulate guest operating system memory management structures so that the actual memory management unit (MMU) on the processor is not updated directly by the virtual machine.
- The shadow page tables are used directly by the processor's paging hardware.
- There is non-trivial computation overhead for maintaining the coherency of the shadow page tables. The overhead is more pronounced when the number of virtual CPUs increases.

This approach to address translation allows normal memory accesses in the virtual machine to execute without adding address translation overhead, after the shadow page tables are set up. Because the translation look-aside buffer (TLB) on the processor caches direct virtual-to-machine mappings read from the shadow page tables, no additional overhead is added by the VMM to access the memory. Note that software MMU has a higher overhead memory requirement than hardware MMU. Hence, in order to support software MMU, the maximum overhead supported for virtual machines in the VMkernel needs to be increased. In some cases, software memory virtualization may have some performance benefit over the hardware-assisted approach if the workload induces a large number of TLB misses.
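The way a shadow page table combines the two mappings can be sketched in Python with dictionaries that stand in for page tables. This is a conceptual model under simplifying assumptions (single-level tables keyed by page number) and not the VMM's data structures; the function name is hypothetical.

```python
from typing import Dict


def build_shadow_table(
    guest_page_table: Dict[int, int], physical_to_machine: Dict[int, int]
) -> Dict[int, int]:
    """Compose guest virtual -> guest physical with physical -> machine.

    The result maps guest virtual pages directly to machine pages.
    Guest physical pages with no machine page (for example, unmapped
    or swapped out) get no shadow entry.
    """
    shadow = {}
    for virtual_page, physical_page in guest_page_table.items():
        if physical_page in physical_to_machine:
            shadow[virtual_page] = physical_to_machine[physical_page]
    return shadow
```

In this model, the coherency overhead mentioned above corresponds to rebuilding or patching the shadow entries whenever either input mapping changes.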

Performance Considerations

The use of two sets of page tables has these performance implications.

- No overhead is incurred for regular guest memory accesses.
- Additional time is required to map memory within a virtual machine, which happens when:
  - The virtual machine operating system is setting up or updating virtual address to physical address mappings.
  - The virtual machine operating system is switching from one address space to another (context switch).
- Like CPU virtualization, memory virtualization overhead depends on workload.

Hardware-Assisted Memory Virtualization

Some CPUs, such as AMD SVM-V and the Intel Xeon 5500 series, provide hardware support for memory

virtualization by using two layers of page tables.

The first layer of page tables stores guest virtual-to-physical translations, while the second layer of page tables stores guest physical-to-machine translation. The TLB (translation look-aside buffer) is a cache of translations maintained by the processor's memory management unit (MMU) hardware. A TLB miss is a miss in this cache and the hardware needs to go to memory (possibly many times) to find the required translation. For a TLB miss to a certain guest virtual address, the hardware looks at both page tables to translate guest virtual address to machine address. The first layer of page tables is maintained by the guest operating system. The VMM only maintains the second layer of page tables.
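The two-layer lookup and the role of the TLB can be modeled with a short Python sketch. The class is hypothetical and deliberately simplified: real hardware walks multi-level page tables, and each guest page table access itself requires a second-layer translation, which is why a miss can touch memory many times.

```python
from typing import Dict


class NestedMMU:
    """Toy model of hardware-assisted translation with a TLB."""

    def __init__(self, guest_page_table: Dict[int, int],
                 nested_page_table: Dict[int, int]) -> None:
        self.guest = guest_page_table    # maintained by the guest OS
        self.nested = nested_page_table  # maintained by the VMM
        self.tlb: Dict[int, int] = {}    # cached virtual -> machine
        self.misses = 0

    def translate(self, virtual_page: int) -> int:
        """Return the machine page for a guest virtual page."""
        if virtual_page in self.tlb:
            return self.tlb[virtual_page]  # TLB hit, no table walk
        self.misses += 1
        physical_page = self.guest[virtual_page]    # first layer
        machine_page = self.nested[physical_page]   # second layer
        self.tlb[virtual_page] = machine_page
        return machine_page
```

Translating the same page twice costs one miss and one hit, which shows why workloads with good TLB locality see little overhead from the extra layer.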

Page 31

Performance Considerations

When you use hardware assistance, you eliminate the overhead for software memory virtualization. In particular, hardware assistance eliminates the overhead required to keep shadow page tables in synchronization with guest page tables. However, the TLB miss latency when using hardware assistance is significantly higher. By default the hypervisor uses large pages in hardware assisted modes to reduce the cost of TLB misses. As a result, whether or not a workload benefits by using hardware assistance primarily depends on the overhead the memory virtualization causes when using software memory virtualization. If a workload involves a small amount of page table activity (such as process creation, mapping the memory, or context switches), software virtualization does not cause significant overhead. Conversely, workloads with a large amount of page table activity are likely to benefit from hardware assistance.

The performance of hardware MMU has improved since it was first introduced with extensive caching implemented in hardware. With software memory virtualization techniques, context switches in a typical guest may happen from 100 to 1000 times per second. Each context switch traps to the VMM in software MMU. Hardware MMU approaches avoid this issue.

The best performance is achieved by using large pages in both guest virtual to guest physical and guest physical to machine address translations.

The option LPage.LPageAlwaysTryForNPT can change the policy for using large pages in guest physical to machine address translations. For more information, see “Advanced Memory Attributes,” on page 116.

NOTE Binary translation only works with software-based memory virtualization.

Page 32

Page 33

Chapter 6 Administering Memory Resources

Using the vSphere Web Client, you can view information about and make changes to memory allocation settings. To administer your memory resources effectively, you must also be familiar with memory overhead, idle memory tax, and how ESXi hosts reclaim memory.

When administering memory resources, you can specify memory allocation. If you do not customize

memory allocation, the ESXi host uses defaults that work well in most situations.

You can specify memory allocation in several ways.

- Use the attributes and special features available through the vSphere Web Client. The vSphere Web Client allows you to connect to the ESXi host or vCenter Server system.
- Use advanced settings.
- Use the vSphere SDK for scripted memory allocation.

This chapter includes the following topics:

- “Understanding Memory Overhead,” on page 33
- “How ESXi Hosts Allocate Memory,” on page 34
- “Memory Reclamation,” on page 35
- “Using Swap Files,” on page 36
- “Sharing Memory Across Virtual Machines,” on page 40
- “Memory Compression,” on page 41
- “Measuring and Differentiating Types of Memory Usage,” on page 42
- “Memory Reliability,” on page 43
- “About System Swap,” on page 43

Understanding Memory Overhead

Virtualization of memory resources has some associated overhead.

ESXi virtual machines can incur two kinds of memory overhead.

The additional time to access memory within a virtual machine.

n

The extra space needed by the ESXi host for its own code and data structures, beyond the memory

n

allocated to each virtual machine.

VMware, Inc.

33

Page 34

vSphere Resource Management

ESXi memory virtualization adds little time overhead to memory accesses. Because the processor's paging hardware uses page tables directly (shadow page tables for the software-based approach or two-level page tables for the hardware-assisted approach), most memory accesses in the virtual machine can execute without address translation overhead.

The memory space overhead has two components.

- A fixed, system-wide overhead for the VMkernel.
- Additional overhead for each virtual machine.

Overhead memory includes space reserved for the virtual machine frame buffer and various virtualization data structures, such as shadow page tables. Overhead memory depends on the number of virtual CPUs and the configured memory for the guest operating system.

Overhead Memory on Virtual Machines

Virtual machines require a certain amount of available overhead memory to power on. You should be aware

of the amount of this overhead.

The following table lists the amount of overhead memory a virtual machine requires to power on. After a virtual machine is running, the amount of overhead memory it uses might differ from the amount listed in the table. The sample values were collected with VMX swap enabled and hardware MMU enabled for the virtual machine. (VMX swap is enabled by default.)

NOTE The table provides a sample of overhead memory values and does not attempt to provide information about all possible configurations. You can configure a virtual machine to have up to 64 virtual CPUs, depending on the number of licensed CPUs on the host and the number of CPUs that the guest operating system supports.

Table 6‑1. Sample Overhead Memory on Virtual Machines

Memory (MB)    1 VCPU    2 VCPUs    4 VCPUs    8 VCPUs
256            20.29     24.28      32.23      48.16
1024           25.90     29.91      37.86      53.82
4096           48.64     52.72      60.67      76.78
16384          139.62    143.98     151.93     168.60
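As a rough planning aid, the sample values in Table 6‑1 can be kept in a small lookup structure and summed for a group of virtual machines. The following is a minimal sketch, not a VMware tool: the function names are hypothetical, the overhead values are assumed to be in MB like the first column, and only the exact configurations listed in the table are covered.

```python
# Sample power-on overhead values copied from Table 6-1.
# Outer key: configured memory in MB. Inner key: number of virtual CPUs.
# Assumption: overhead values are in MB, like the configured memory column.
SAMPLE_OVERHEAD_MB = {
    256: {1: 20.29, 2: 24.28, 4: 32.23, 8: 48.16},
    1024: {1: 25.90, 2: 29.91, 4: 37.86, 8: 53.82},
    4096: {1: 48.64, 2: 52.72, 4: 60.67, 8: 76.78},
    16384: {1: 139.62, 2: 143.98, 4: 151.93, 8: 168.60},
}


def sample_overhead_mb(memory_mb, vcpus):
    """Return the sample overhead for an exact table entry.

    Raises KeyError for configurations the table does not list,
    rather than guessing at values between table rows.
    """
    return SAMPLE_OVERHEAD_MB[memory_mb][vcpus]


def total_sample_overhead_mb(vms):
    """Sum the sample overhead for an iterable of (memory_mb, vcpus) pairs."""
    return sum(sample_overhead_mb(memory_mb, vcpus) for memory_mb, vcpus in vms)
```

For example, `total_sample_overhead_mb([(1024, 1), (4096, 4)])` adds the 1024 MB, 1 VCPU entry to the 4096 MB, 4 VCPU entry. Because a running virtual machine can use a different amount of overhead than the table lists, treat the result as an estimate only.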

How ESXi Hosts Allocate Memory

A host allocates the memory specified by the Limit parameter to each virtual machine, unless memory is overcommitted. ESXi never allocates more memory to a virtual machine than its specified physical memory size.

For example, a 1GB virtual machine might have the default limit (unlimited) or a user-specified limit (for example, 2GB). In both cases, the ESXi host never allocates more than 1GB, the physical memory size that was specified for it.
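The rule in this example amounts to taking the smaller of the configured memory size and the limit. The sketch below illustrates that arithmetic only; the function name is hypothetical, and `None` is used here as a stand-in for the default (unlimited) setting.

```python
def max_allocation_mb(configured_mb, limit_mb=None):
    """Return the most memory a host would allocate to a virtual machine.

    configured_mb: the physical memory size specified for the virtual machine.
    limit_mb: the Limit setting, or None for the default (unlimited).
    """
    if limit_mb is None:
        # Unlimited: the configured size is the only cap.
        return configured_mb
    # A limit above the configured size has no additional effect.
    return min(configured_mb, limit_mb)
```

With a 1GB virtual machine, both `max_allocation_mb(1024)` and `max_allocation_mb(1024, 2048)` yield 1024, matching the example above.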

When memory is overcommitted, each virtual machine is allocated an amount of memory somewhere between what is specified by Reservation and what is specified by Limit. The amount of memory granted to a virtual machine above its reservation usually varies with the current memory load.

A host determines allocations for each virtual machine based on the number of shares allocated to it and an

estimate of its recent working set size.

- Shares: ESXi hosts use a modified proportional-share memory allocation policy. Memory shares entitle a virtual machine to a fraction of available physical memory.
- Working set size: ESXi hosts estimate the working set for a virtual machine by monitoring memory activity over successive periods of virtual machine execution time. Estimates are smoothed over several time periods using techniques that respond rapidly to increases in working set size and more slowly to decreases in working set size.

  This approach ensures that a virtual machine from which idle memory is reclaimed can ramp up quickly to its full share-based allocation when it starts using its memory more actively.

  Memory activity is monitored to estimate the working set sizes for a default period of 60 seconds. To modify this default, adjust the Mem.SamplePeriod advanced setting. See “Set Advanced Host Attributes,” on page 115.
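The smoothing behavior described for working set estimates (fast response to increases, slow response to decreases) can be illustrated with an asymmetric moving average. This is a sketch under stated assumptions, not the estimator that ESXi actually uses: the function name and both weights are hypothetical, and each sample stands for the activity measured in one sampling period.

```python
def smooth_working_set(samples, rise_weight=0.9, fall_weight=0.2):
    """Smooth per-period working set samples asymmetrically.

    A sample above the current estimate pulls the estimate up quickly
    (rise_weight), while a sample below it pulls the estimate down
    slowly (fall_weight). Both weights are illustrative values only.
    Returns the list of estimates, one per sample.
    """
    if not samples:
        return []
    estimate = samples[0]
    history = [estimate]
    for sample in samples[1:]:
        weight = rise_weight if sample > estimate else fall_weight
        # Move the estimate part of the way toward the new sample.
        estimate += weight * (sample - estimate)
        history.append(estimate)
    return history
```

With these weights, a jump in activity is reflected almost fully after one period, while a drop of the same size lowers the estimate only gradually, so a virtual machine that becomes active again is not held to a stale, low estimate for long.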

Memory Tax for Idle Virtual Machines

If a virtual machine is not actively using all of its currently allocated memory, ESXi charges more for idle

memory than for memory that is in use. This is done to help prevent virtual machines from hoarding idle

memory.

The idle memory tax is applied in a progressive fashion. The effective tax rate increases as the ratio of idle memory to active memory for the virtual machine rises. (In earlier versions of ESXi that did not support hierarchical resource pools, all idle memory for a virtual machine was taxed equally.)

You can modify the idle memory tax rate with the Mem.IdleTax option. Use this option, together with the Mem.SamplePeriod advanced attribute, to control how the system determines target memory allocations for virtual machines. See “Set Advanced Host Attributes,” on page 115.

NOTE In most cases, changes to Mem.IdleTax are neither necessary nor appropriate.

VMX Swap Files

Virtual machine executable (VMX) swap files allow the host to greatly reduce the amount of overhead memory reserved for the VMX process.

NOTE VMX swap files are not related to the swap to host swap cache feature or to regular host-level swap files.

ESXi reserves memory per virtual machine for a variety of purposes. Memory for the needs of certain components, such as the virtual machine monitor (VMM) and virtual devices, is fully reserved when a virtual machine is powered on. However, some of the overhead memory that is reserved for the VMX process can be swapped. The VMX swap feature reduces the VMX memory reservation significantly (for example, from about 50MB or more per virtual machine to about 10MB per virtual machine). This allows the remaining memory to be swapped out when host memory is overcommitted, reducing overhead memory reservation for each virtual machine.
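To get a feel for the effect across a host, the approximate per-virtual-machine figures quoted above can be multiplied by the number of powered-on virtual machines. The sketch below does only that arithmetic; the function name is hypothetical, and the defaults are the rough figures from this section (about 50MB and about 10MB), not measured values.

```python
def vmx_reservation_savings_mb(vm_count, without_swap_mb=50, with_swap_mb=10):
    """Estimate the VMX reservation saved by VMX swap across a host.

    The defaults are the approximate per-virtual-machine figures quoted
    in the text; actual reservations vary by configuration.
    """
    return vm_count * (without_swap_mb - with_swap_mb)
```

For a host running 100 virtual machines, this estimate gives roughly 4000MB of reservation that no longer has to be held for VMX processes.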

The host creates VMX swap files automatically, provided there is sufficient free disk space at the time a virtual machine is powered on.

Memory Reclamation

ESXi hosts can reclaim memory from virtual machines.

A host allocates the amount of memory specified by a reservation directly to a virtual machine. Anything beyond the reservation is allocated using the host’s physical resources or, when physical resources are not available, handled using special techniques such as ballooning or swapping. Hosts can use two techniques for dynamically expanding or contracting the amount of memory allocated to virtual machines.

- ESXi systems use a memory balloon driver (vmmemctl), loaded into the guest operating system running in a virtual machine. See “Memory Balloon Driver,” on page 36.
- The ESXi system swaps out a page from a virtual machine to a server swap file without any involvement by the guest operating system. Each virtual machine has its own swap file.

Memory Balloon Driver

The memory balloon driver (vmmemctl) collaborates with the server to reclaim pages that are considered least

valuable by the guest operating system.

The driver uses a proprietary ballooning technique that provides predictable performance that closely

matches the behavior of a native system under similar memory constraints. This technique increases or

decreases memory pressure on the guest operating system, causing the guest to use its own native memory

management algorithms. When memory is tight, the guest operating system determines which pages to

reclaim and, if necessary, swaps them to its own virtual disk.

Figure 6‑1. Memory Ballooning in the Guest Operating System

NOTE You must configure the guest operating system with sufficient swap space. Some guest operating systems have additional limitations.

If necessary, you can limit the amount of memory vmmemctl reclaims by setting the sched.mem.maxmemctl parameter for a specific virtual machine. This option specifies the maximum amount of memory that can be reclaimed from a virtual machine in megabytes (MB). See “Set Advanced Virtual Machine Attributes,” on page 118.

Using Swap Files

You can specify the location of your guest swap file, reserve swap space when memory is overcommitted, and delete a swap file.

ESXi hosts use swapping to forcibly reclaim memory from a virtual machine when the vmmemctl driver is not

available or is not responsive.

- It was never installed.
- It is explicitly disabled.
- It is not running (for example, while the guest operating system is booting).
- It is temporarily unable to reclaim memory quickly enough to satisfy current system demands.
- It is functioning properly, but maximum balloon size is reached.

Standard demand-paging techniques swap pages back in when the virtual machine needs them.
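The relationship between ballooning and swapping described above can be pictured as a simple split of a reclamation target. The following sketch is a simplified model, not the host's actual decision logic: the function and parameter names are hypothetical, and `balloon_max_mb` plays the role of a per-virtual-machine cap such as sched.mem.maxmemctl.

```python
def plan_reclamation(target_mb, balloon_available, balloon_max_mb,
                     already_ballooned_mb=0):
    """Split a reclamation target between ballooning and swapping.

    target_mb: memory the host wants to reclaim from the virtual machine.
    balloon_available: False if the balloon driver was never installed,
        is disabled, or is not running.
    balloon_max_mb: maximum balloon size for the virtual machine.
    already_ballooned_mb: memory the balloon currently holds.

    Returns a (by_balloon_mb, by_swap_mb) tuple.
    """
    if balloon_available:
        # The balloon can only grow up to its maximum size.
        headroom_mb = max(balloon_max_mb - already_ballooned_mb, 0)
        by_balloon_mb = min(target_mb, headroom_mb)
    else:
        by_balloon_mb = 0
    # Whatever the balloon cannot cover falls back to host-level swapping.
    return by_balloon_mb, target_mb - by_balloon_mb
```

For instance, `plan_reclamation(300, True, 200)` assigns 200MB to the balloon and the remaining 100MB to swapping, while `plan_reclamation(300, False, 200)` leaves the entire target to swapping. The model ignores timing, so it does not capture the case where the driver is simply too slow to meet current demand.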

Swap File Location

By default, the swap file is created in the same location as the virtual machine's configuration file, which can be on a VMFS datastore, a vSAN datastore, or a VVol datastore. On a vSAN datastore or a VVol datastore, the swap file is created as a separate vSAN or VVol object.

The ESXi host creates a swap file when a virtual machine is powered on. If this file cannot be created, the virtual machine cannot power on. Instead of accepting the default, you can also:

- Use per-virtual machine configuration options to change the datastore to another shared storage location.
- Use host-local swap, which allows you to specify a datastore stored locally on the host. This allows you to swap at a per-host level, saving space on the SAN. However, it can lead to a slight degradation in