
HP IBRIX X9720/StoreAll 9730 Storage

Administrator Guide

Abstract

This guide describes tasks related to cluster configuration and monitoring, system upgrade and recovery, hardware component replacement, and troubleshooting. It does not document StoreAll file system features or standard Linux administrative tools and commands. For information about configuring and using StoreAll file system features, see the HP StoreAll Storage File System User Guide.

This guide is intended for system administrators and technicians who are experienced with installing and administering networks, and with performing Linux operating and administrative tasks. For the latest StoreAll guides, browse to http://www.hp.com/support/StoreAllManuals.

HP Part Number: AW549-96073

Published: July 2013

Edition: 14


© Copyright 2009, 2013 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Acknowledgments

Microsoft® and Windows® are U.S. registered trademarks of Microsoft Corporation.

UNIX® is a registered trademark of The Open Group.

Warranty

WARRANTY STATEMENT: To obtain a copy of the warranty for this product, see the warranty information website:

http://www.hp.com/go/storagewarranty

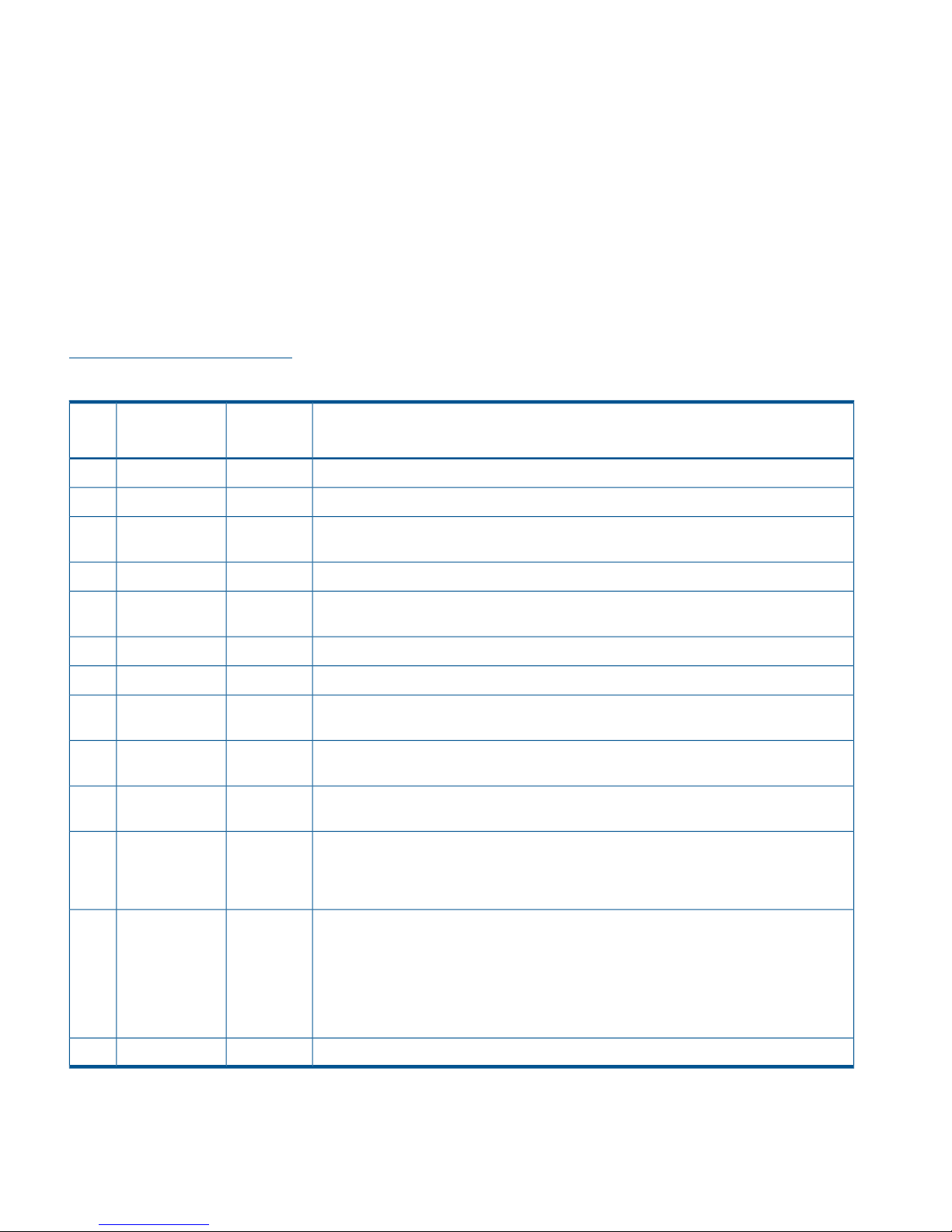

Revision History

| Edition | Date | Software Version | Description |
|---|---|---|---|
| 1 | December 2009 | 5.3.1 | Initial release of the IBRIX X9720 Storage. |
| 2 | April 2010 | 5.4 | Added network management and Support ticket. |
| 3 | August 2010 | 5.4.1 | Added Fusion Manager backup, migration to an agile Fusion Manager configuration, software upgrade procedures, and system recovery procedures. |
| 4 | August 2010 | 5.4.1 | Revised upgrade procedure. |
| 5 | December 2010 | 5.5 | Added information about NDMP backups and configuring virtual interfaces, and updated cluster procedures. |
| 6 | March 2011 | 5.5 | Updated segment evacuation information. |
| 7 | April 2011 | 5.6 | Revised upgrade procedure. |
| 8 | September 2011 | 6.0 | Added or updated information about the agile Fusion Manager, Statistics tool, Ibrix Collect, event notification, capacity block installation, NTP servers, upgrades. |
| 9 | June 2012 | 6.1 | Added or updated information about 9730 systems, hardware monitoring, segment evacuation, HP Insight Remote Support, software upgrades, events, Statistics tool. |
| 10 | December 2012 | 6.2 | Added or updated information about High Availability, failover, server tuning, VLAN tagging, segment migration and evacuation, upgrades, SNMP. |
| 11 | March 2013 | 6.3 | Updated information on upgrades, remote support, collection logs, phone home and troubleshooting. Now point users to website for the latest spare parts list instead of shipping the list. Added before and after upgrade steps for Express Query when going from 6.2 to 6.3. |
| 12 | April 2013 | 6.3 | Removed post upgrade step that tells users to modify the /etc/hosts file on every StoreAll node. Changed firmware version to 4.0.0-13 in “Upgrading IBRIX X9720 chassis firmware.” In the “Cascading Upgrades” appendix, added a section that tells users to ensure that the NFS exports option subtree_check is the default export option for every NFS export when upgrading from a StoreAll 5.x release. Also changed ibrix_fm -m nofmfailover -A to ibrix_fm -m maintenance -A in the “Cascading Upgrades” appendix. Updated information about SMB share creation. |
| 13 | June 2013 | 6.3 | Updated the example in the section “Enabling collection and synchronization.” |


Contents

1 Upgrading the StoreAll software to the 6.3 release

Upgrading 9720 chassis firmware

Online upgrades for StoreAll software

Preparing for the upgrade

Performing the upgrade

After the upgrade

Automated offline upgrades for StoreAll software 6.x to 6.3

Preparing for the upgrade

Performing the upgrade

After the upgrade

Manual offline upgrades for StoreAll software 6.x to 6.3

Preparing for the upgrade

Performing the upgrade manually

After the upgrade

Upgrading Linux StoreAll clients

Installing a minor kernel update on Linux clients

Upgrading Windows StoreAll clients

Upgrading pre-6.3 Express Query enabled file systems

Required steps before the StoreAll Upgrade for pre-6.3 Express Query enabled file systems

Required steps after the StoreAll Upgrade for pre-6.3 Express Query enabled file systems

Troubleshooting upgrade issues

Automatic upgrade

Manual upgrade

Offline upgrade fails because iLO firmware is out of date

Node is not registered with the cluster network

File system unmount issues

File system in MIF state after StoreAll software 6.3 upgrade

2 Product description

System features

System components

HP StoreAll software features

High availability and redundancy

3 Getting started

Setting up the X9720/9730 Storage

Installation steps

Additional configuration steps

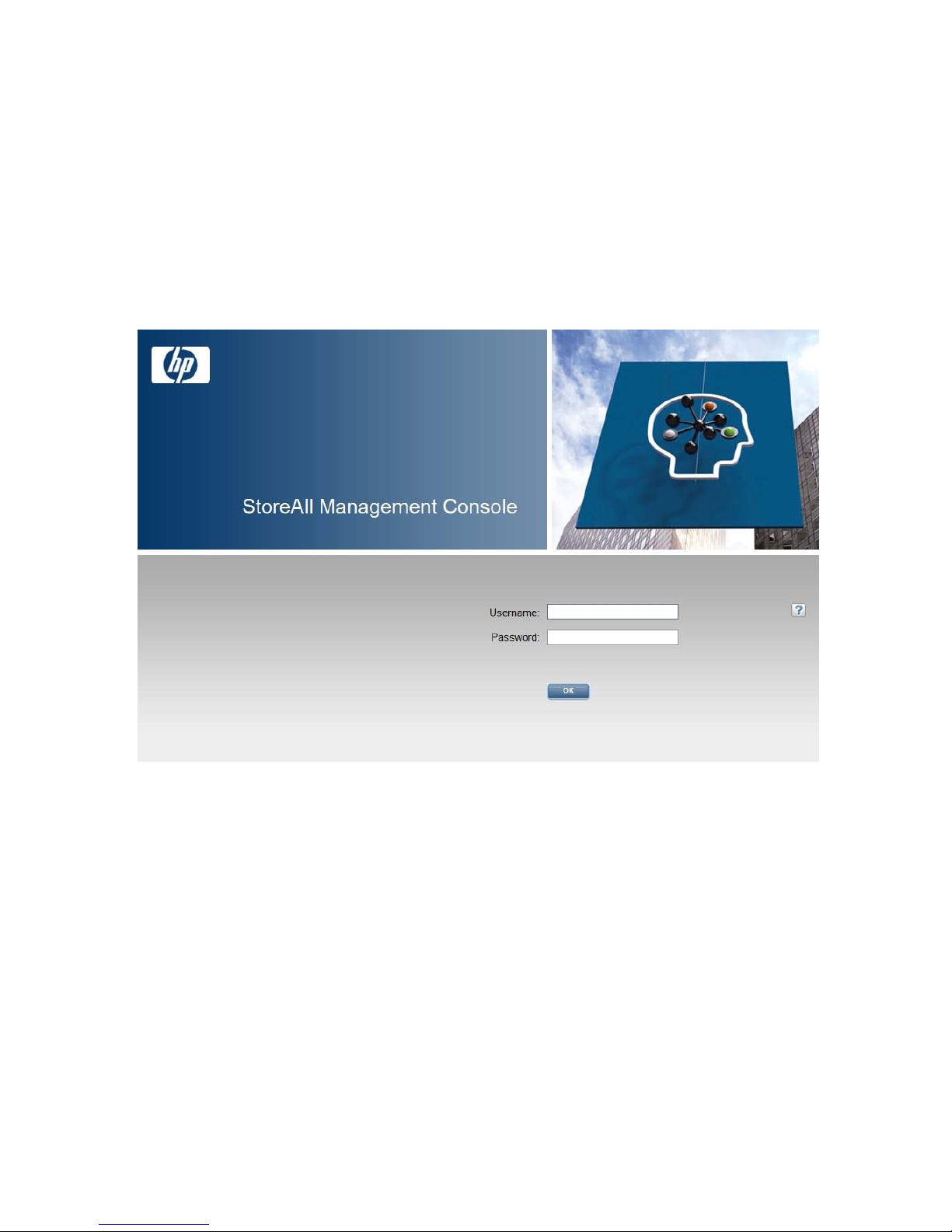

Logging in to the system

Using the network

Using the TFT keyboard/monitor

Using the serial link on the Onboard Administrator

Booting the system and individual server blades

Management interfaces

Using the StoreAll Management Console

Customizing the GUI

Adding user accounts for Management Console access

Using the CLI

Starting the array management software

StoreAll client interfaces

StoreAll software manpages

Changing passwords


Configuring ports for a firewall

Configuring NTP servers

Configuring HP Insight Remote Support on StoreAll systems

Configuring the StoreAll cluster for Insight Remote Support

Configuring Insight Remote Support for HP SIM 7.1 and IRS 5.7

Configuring Insight Remote Support for HP SIM 6.3 and IRS 5.6

Testing the Insight Remote Support configuration

Updating the Phone Home configuration

Disabling Phone Home

Troubleshooting Insight Remote Support

4 Configuring virtual interfaces for client access

Network and VIF guidelines

Creating a bonded VIF

Configuring backup servers

Configuring NIC failover

Configuring automated failover

Example configuration

Specifying VIFs in the client configuration

Configuring VLAN tagging

Support for link state monitoring

5 Configuring failover

Agile management consoles

Agile Fusion Manager modes

Agile Fusion Manager and failover

Viewing information about Fusion Managers

Configuring High Availability on the cluster

What happens during a failover

Configuring automated failover with the HA Wizard

Configuring automated failover manually

Changing the HA configuration manually

Failing a server over manually

Failing back a server

Setting up HBA monitoring

Checking the High Availability configuration

Capturing a core dump from a failed node

Prerequisites for setting up the crash capture

Setting up nodes for crash capture

6 Configuring cluster event notification

Cluster events

Setting up email notification of cluster events

Associating events and email addresses

Configuring email notification settings

Dissociating events and email addresses

Testing email addresses

Viewing email notification settings

Setting up SNMP notifications

Configuring the SNMP agent

Configuring trapsink settings

Associating events and trapsinks

Defining views

Configuring groups and users

Deleting elements of the SNMP configuration

Listing SNMP configuration information


7 Configuring system backups

Backing up the Fusion Manager configuration

Using NDMP backup applications

Configuring NDMP parameters on the cluster

NDMP process management

Viewing or canceling NDMP sessions

Starting, stopping, or restarting an NDMP Server

Viewing or rescanning tape and media changer devices

NDMP events

8 Creating host groups for StoreAll clients

How host groups work

Creating a host group tree

Adding a StoreAll client to a host group

Adding a domain rule to a host group

Viewing host groups

Deleting host groups

Other host group operations

9 Monitoring cluster operations

Monitoring X9720/9730 hardware

Monitoring servers

Monitoring hardware components

Monitoring blade enclosures

Obtaining server details

Monitoring storage and storage components

Monitoring storage clusters

Monitoring drive enclosures for a storage cluster

Monitoring pools for a storage cluster

Monitoring storage controllers for a storage cluster

Monitoring storage switches in a storage cluster

Managing LUNs in a storage cluster

Monitoring the status of file serving nodes

Monitoring cluster events

Viewing events

Removing events from the events database table

Monitoring cluster health

Health checks

Health check reports

Viewing logs

Viewing operating statistics for file serving nodes

10 Using the Statistics tool

Installing and configuring the Statistics tool

Installing the Statistics tool

Enabling collection and synchronization

Upgrading the Statistics tool from StoreAll software 6.0

Using the Historical Reports GUI

Generating reports

Deleting reports

Maintaining the Statistics tool

Space requirements

Updating the Statistics tool configuration

Changing the Statistics tool configuration

Fusion Manager failover and the Statistics tool configuration

Checking the status of Statistics tool processes


Controlling Statistics tool processes

Troubleshooting the Statistics tool

Log files

Uninstalling the Statistics tool

11 Maintaining the system

Shutting down the system

Shutting down the StoreAll software

Powering off the system hardware

Starting up the system

Powering on the system hardware

Powering on after a power failure

Starting the StoreAll software

Powering file serving nodes on or off

Performing a rolling reboot

Starting and stopping processes

Tuning file serving nodes and StoreAll clients

Managing segments

Migrating segments

Evacuating segments and removing storage from the cluster

Removing a node from a cluster

Maintaining networks

Cluster and user network interfaces

Adding user network interfaces

Setting network interface options in the configuration database

Preferring network interfaces

Unpreferring network interfaces

Making network changes

Changing the IP address for a Linux StoreAll client

Changing the cluster interface

Managing routing table entries

Adding a routing table entry

Deleting a routing table entry

Deleting a network interface

Viewing network interface information

12 Licensing

Viewing license terms

Retrieving a license key

Using AutoPass to retrieve and install permanent license keys

13 Upgrading firmware

Components for firmware upgrades

Steps for upgrading the firmware

Finding additional information on FMT

Adding performance modules on 9730 systems

Adding new server blades on 9720 systems

14 Troubleshooting

Collecting information for HP Support with the IbrixCollect

Collecting logs

Downloading the archive file

Deleting the archive file

Configuring Ibrix Collect

Obtaining custom logging from ibrix_collect add-on scripts

Creating an add-on script

Running an add-on script


Viewing the output from an add-on script

Viewing data collection information

Adding/deleting commands or logs in the XML file

Viewing software version numbers

Troubleshooting specific issues

Software services

Failover

Windows StoreAll clients

Synchronizing information on file serving nodes and the configuration database

Troubleshooting an Express Query Manual Intervention Failure (MIF)

15 Recovering the X9720/9730 Storage

Obtaining the latest StoreAll software release

Preparing for the recovery

Restoring an X9720 node with StoreAll 6.1 or later

Recovering an X9720 or 9730 file serving node

Completing the restore

Troubleshooting

Manually recovering bond1 as the cluster

iLO remote console does not respond to keystrokes

The ibrix_auth command fails after a restore

16 Support and other resources

Contacting HP

Related information

Obtaining spare parts

HP websites

Rack stability

Product warranties

Subscription service

17 Documentation feedback

A Cascading Upgrades

Upgrading the StoreAll software to the 6.1 release

Upgrading 9720 chassis firmware

Online upgrades for StoreAll software 6.x to 6.1

Preparing for the upgrade............................................................................................175

Performing the upgrade................................................................................................176

After the upgrade........................................................................................................176

Offline upgrades for StoreAll software 5.6.x or 6.0.x to 6.1...................................................176

Preparing for the upgrade............................................................................................176

Performing the upgrade................................................................................................178

After the upgrade........................................................................................................178

Upgrading Linux StoreAll clients.........................................................................................179

Installing a minor kernel update on Linux clients..............................................................179

Upgrading Windows StoreAll clients..................................................................................180

Upgrading pre-6.0 file systems for software snapshots..........................................................180

Upgrading pre-6.1.1 file systems for data retention features....................................................181

Troubleshooting upgrade issues.........................................................................................182

Automatic upgrade......................................................................................................182

Manual upgrade.........................................................................................................182

Offline upgrade fails because iLO firmware is out of date.................................................182

Node is not registered with the cluster network ...............................................................183

File system unmount issues............................................................................................183

Moving the Fusion Manager VIF to bond1......................................................................184

Upgrading the StoreAll software to the 5.6 release...................................................................185


Page 8

Automatic upgrades.........................................................................................................185

Manual upgrades............................................................................................................186

Preparing for the upgrade............................................................................................186

Saving the node configuration......................................................................................186

Performing the upgrade................................................................................................186

Restoring the node configuration...................................................................................187

Completing the upgrade..............................................................................................187

Troubleshooting upgrade issues.........................................................................................188

Automatic upgrade......................................................................................................188

Manual upgrade.........................................................................................................188

Upgrading the StoreAll software to the 5.5 release....................................................................188

Automatic upgrades.........................................................................................................189

Manual upgrades............................................................................................................190

Standard upgrade for clusters with a dedicated Management Server machine or blade........190

Standard online upgrade.........................................................................................190

Standard offline upgrade.........................................................................................192

Agile upgrade for clusters with an agile management console configuration.......................194

Agile online upgrade..............................................................................................194

Agile offline upgrade..............................................................................................198

Troubleshooting upgrade issues.........................................................................................200

B StoreAll 9730 component and cabling diagrams........................................201

Back view of the main rack....................................................................................................201

Back view of the expansion rack.............................................................................................202

StoreAll 9730 CX I/O modules and SAS port connectors...........................................................202

StoreAll 9730 CX 1 connections to the SAS switches.................................................................203

StoreAll 9730 CX 2 connections to the SAS switches.................................................................204

StoreAll 9730 CX 3 connections to the SAS switches.................................................................205

StoreAll 9730 CX 7 connections to the SAS switches in the expansion rack..................................206

C The IBRIX X9720 component and cabling diagrams....................................207

Base and expansion cabinets.................................................................................................207

Front view of a base cabinet..............................................................................................207

Back view of a base cabinet with one capacity block...........................................................208

Front view of a full base cabinet.........................................................................................209

Back view of a full base cabinet.........................................................................................210

Front view of an expansion cabinet ...................................................................................211

Back view of an expansion cabinet with four capacity blocks.................................................212

Performance blocks (c-Class Blade enclosure)............................................................................212

Front view of a c-Class Blade enclosure...............................................................................212

Rear view of a c-Class Blade enclosure...............................................................................213

Flex-10 networks...............................................................................................................213

Capacity blocks...................................................................................................................214

X9700c (array controller with 12 disk drives).......................................................................215

Front view of an X9700c..............................................................................................215

Rear view of an X9700c..............................................................................................215

X9700cx (dense JBOD with 70 disk drives)..........................................................................215

Front view of an X9700cx............................................................................................216

Rear view of an X9700cx.............................................................................................216

Cabling diagrams................................................................................................................216

Capacity block cabling—Base and expansion cabinets........................................................216

Virtual Connect Flex-10 Ethernet module cabling—Base cabinet.............................................217

SAS switch cabling—Base cabinet.....................................................................................218

SAS switch cabling—Expansion cabinet..............................................................................218


Page 9

D Warnings and precautions......................................................................220

Electrostatic discharge information..........................................................................................220

Preventing electrostatic discharge.......................................................................................220

Grounding methods.....................................................................................................220

Grounding methods.........................................................................................................220

Equipment symbols...............................................................................................................221

Weight warning...................................................................................................................221

Rack warnings and precautions..............................................................................................221

Device warnings and precautions...........................................................................................222

E Regulatory information............................................................................224

Belarus Kazakhstan Russia marking.........................................................................................224

Turkey RoHS material content declaration.................................................................................224

Ukraine RoHS material content declaration..............................................................................224

Warranty information............................................................................................................224

Glossary..................................................................................................225

Index.......................................................................................................227


Page 10

1 Upgrading the StoreAll software to the 6.3 release

This chapter describes how to upgrade to the 6.3 StoreAll software release. You can also use this

procedure for any subsequent 6.3.x patches.

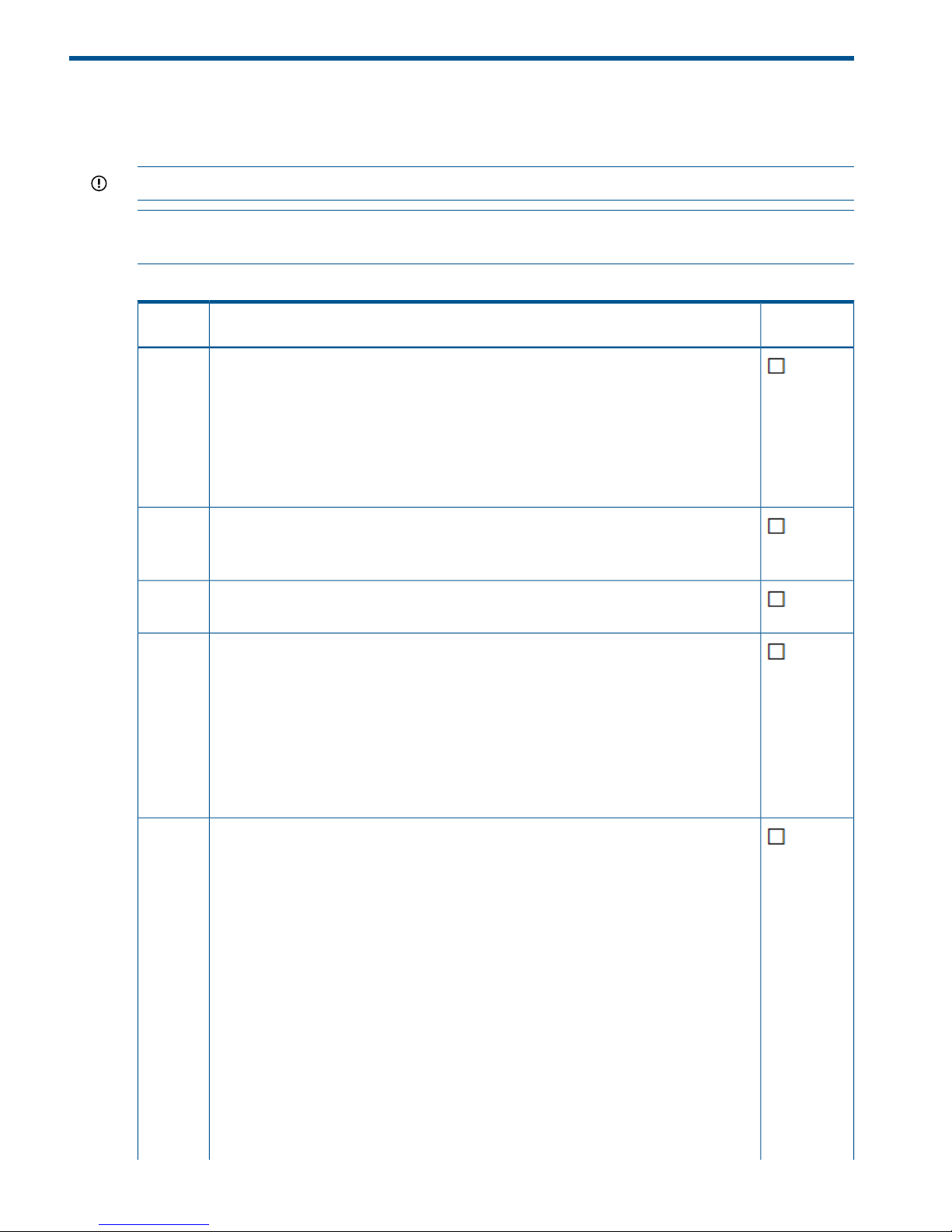

IMPORTANT: Print the following table and check off each step as you complete it.

NOTE: (Upgrades from version 6.0.x) CIFS share permissions are granted on a global basis in version 6.0.x. When upgrading from version 6.0.x, confirm that the correct share permissions are in place.

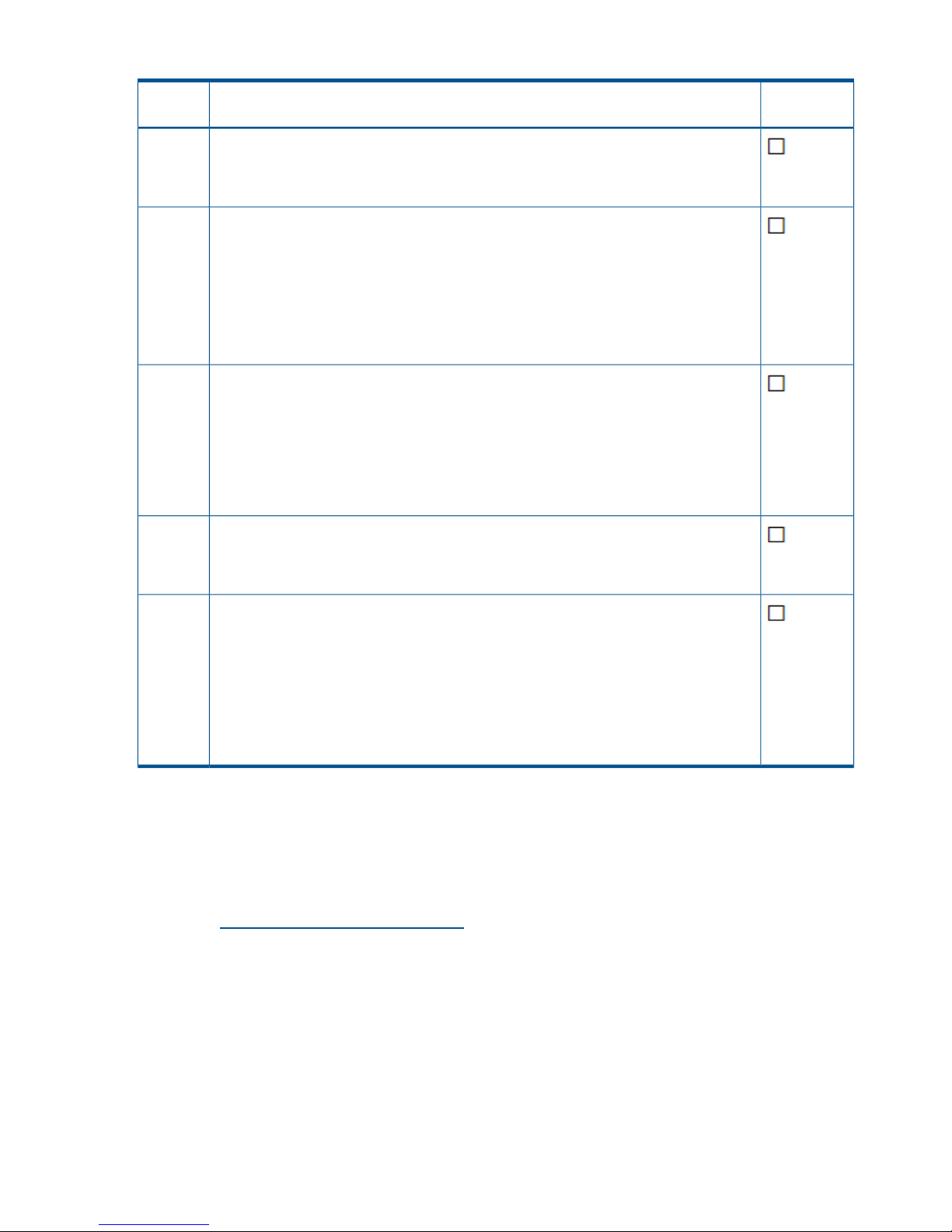

Table 1 Prerequisites checklist for all upgrades

Step    Description    Step completed?

Step 1. Verify that the entire cluster is currently running StoreAll 6.0 or later by entering the following command:

ibrix_version -l

IMPORTANT: All the StoreAll nodes must be at the same release.

• If you are running a version of StoreAll earlier than 6.0, upgrade the product as

described in “Cascading Upgrades” (page 174).

• If you are running StoreAll 6.0 or later, proceed with the upgrade steps in this section.

Step 2. Verify that the /local partition contains at least 4 GB of free space for the upgrade by using the following command:

df -kh /local

Step 3. For 9720 systems, enable password-less access among the cluster nodes before starting the upgrade.

Step 4. The 6.3 release requires that nodes hosting the agile Fusion Manager be registered on the cluster network. Run the following command to verify that nodes hosting the agile Fusion Manager have IP addresses on the cluster network:

ibrix_fm -l

If a node is configured on the user network, see “Node is not registered with the cluster network” (page 22) for a workaround.

NOTE: The Fusion Manager and all file serving nodes must be upgraded to the new

release at the same time. Do not change the active/passive Fusion Manager configuration

during the upgrade.

Step 5. Verify that the crash kernel parameter on all nodes has been set to 256M by viewing the default boot entry in the /etc/grub.conf file, as shown in the following example:

kernel /vmlinuz-2.6.18-194.el5 ro root=/dev/vg1/lv1 crashkernel=256M@16M

The /etc/grub.conf file might contain multiple instances of the crash kernel parameter. Make sure you modify each instance that appears in the file.

If you must modify the /etc/grub.conf file, follow the steps in this section:

1. Use SSH to access the active Fusion Manager (FM).

2. Do one of the following:

• (Versions 6.2 and later) Place all passive FMs into nofmfailover mode:

ibrix_fm -m nofmfailover -A

• (Versions earlier than 6.2) Place all passive FMs into maintenance mode:

ibrix_fm -m maintenance -A

3. Disable Segment Server Failover on each node in the cluster:

ibrix_server -m -U -h <node>


Page 11

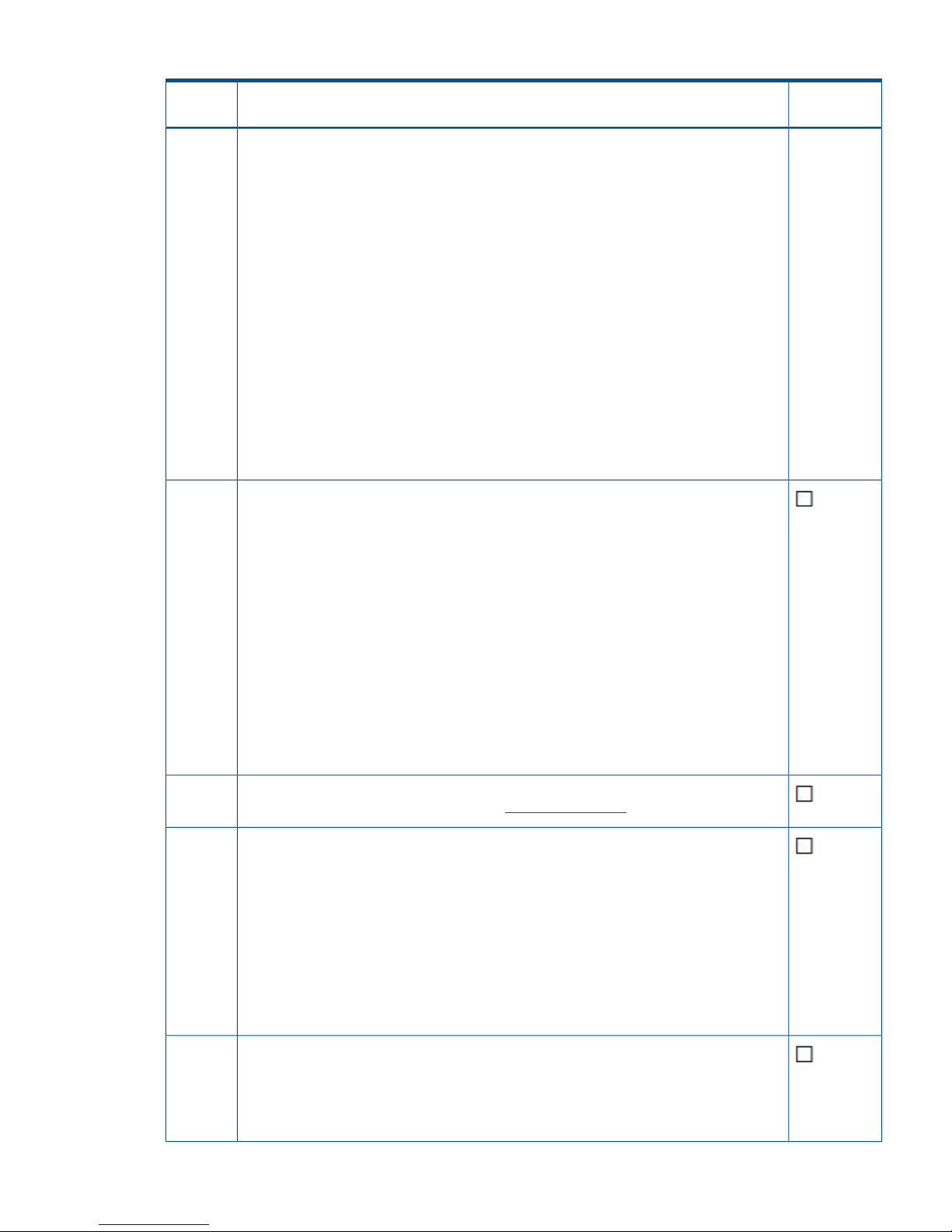


4. Set the crash kernel to 256M in the /etc/grub.conf file. The /etc/grub.conf

file might contain multiple instances of the crash kernel parameter. Make sure you modify

each instance that appears in the file.

NOTE: Save a copy of the /etc/grub.conf file before you modify it.

The following example shows the crash kernel set to 256M:

kernel /vmlinuz-2.6.18-194.el5 ro root=/dev/vg1/lv1 crashkernel=256M@16M

5. Reboot the active FM.

6. Use SSH to access each passive FM and do the following:

a. Modify the /etc/grub.conf file as described in the previous steps.

b. Reboot the node.

7. After all nodes in the cluster are back up, use SSH to access the active FM.

8. Place all disabled FMs back into passive mode:

ibrix_fm -m passive -A

9. Re-enable Segment Server Failover on each node:

ibrix_server -m -h <node>

Step 6. If your cluster includes G6 servers, check the iLO2 firmware version. This requirement does not apply to G7 servers. The firmware must be at version 2.05 for HA to function properly. If your servers have an earlier version of the iLO2 firmware, run the CP014256.scexe script as described in the following steps:

1. Ensure that the /local/ibrix/ folder is empty prior to copying the contents of pkgfull.

When you upgrade the StoreAll software later in this chapter, this folder must contain

only .rpm packages listed in the build manifest for the upgrade or the upgrade will

fail.

2. Mount the pkg-full ISO image and copy the entire directory structure to the /local/

ibrix/ directory, as shown in the following example:

mount -o loop /local/pkg/ibrix-pkgfull-FS_6.3.72+IAS_6.3.72-x86_64.signed.iso /mnt/

3. Execute the firmware binary at the following location:

/local/ibrix/distrib/firmware/CP014256.scexe

Step 7. Make sure StoreAll is running the latest firmware. For information on how to find the version of firmware that StoreAll is running, see the Administrator Guide for your release.

Step 8. Verify that all file system nodes can “see” and “access” every segment logical volume that the file system node is configured for as either the owner or the backup by entering the following commands:

1. To view all segments, logical volume name, and owner, enter the following command on one line:

ibrix_fs -i | egrep -e OWNER -e MIXED | awk '{ print $1, $3, $6, $2, $14, $5 }' | tr " " "\t"

2. To verify the visibility of the correct segments on the current file system node, enter the following command on each file system node:

lvm lvs | awk '{print $1}'

Step 9. Ensure that no active tasks are running. Stop any active remote replication, data tiering, or rebalancer tasks running on the cluster. (Use ibrix_task -l to list active tasks.) When the upgrade is complete, you can start the tasks again.

For additional information on how to stop a task, enter the ibrix_task command to display its help.


Page 12


Step 10. For 9720 systems, delete the existing vendor storage by entering the following command:

ibrix_vs -d -n EXDS

The vendor storage is registered automatically after the upgrade.

Step 11. Record all host tunings, FS tunings, and FS mounting options by using the following commands:

1. To display file system tunings, enter:

ibrix_fs_tune -l >/local/ibrix_fs_tune-l.txt

2. To display default StoreAll tunings and settings, enter:

ibrix_host_tune -L >/local/ibrix_host_tune-L.txt

3. To display all non-default configuration tunings and settings, enter:

ibrix_host_tune -q >/local/ibrix_host_tune-q.txt

Step 12. Ensure that the "ibrix" local user account exists and that it has the same UID number on all the servers in the cluster. If the account is missing or the UIDs differ, create the account and change the UIDs as needed to make them the same on all the servers. Similarly, ensure that the "ibrix-user" local user group exists and has the same GID number on all servers.

Enter the following commands on each node:

grep ibrix /etc/passwd

grep ibrix-user /etc/group

Step 13. Ensure that all nodes are up and running. To determine the status of your cluster nodes, check the health of each server by either using the dashboard on the Management Console or entering the ibrix_health -S -i -h <hostname> command for each node in the cluster. At the top of the output, look for “PASSED.”

Step 14. If you are running StoreAll 6.2.x or earlier and you have one or more Express Query enabled file systems, each one must be manually upgraded as described in “Upgrading pre-6.3 Express Query enabled file systems” (page 19).

IMPORTANT: Run the steps in “Required steps before the StoreAll Upgrade for pre-6.3

Express Query enabled file systems” (page 19) before the upgrade. This section provides

steps for saving your custom metadata and audit log. After you upgrade the StoreAll

software, run the steps in “Required steps after the StoreAll Upgrade for pre-6.3 Express

Query enabled file systems” (page 20). These post-upgrade steps are required for you to

preserve your custom metadata and audit log data.
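Several of the checklist items lend themselves to scripting. The following is a minimal sketch, not an HP-supplied tool: the function names, the SSH_BIN test hook, and the node names in the usage comments are hypothetical. It checks that every crashkernel setting in a grub file is 256M@16M (step 5), that password-less SSH works to each node (step 3), and that an ID collected from each node is identical (step 12).

```shell
#!/bin/sh
# Hypothetical pre-upgrade helpers; none of these functions ship with StoreAll.

# Step 5: succeed only if FILE contains at least one crashkernel= setting
# and every such setting on a non-comment line is exactly 256M@16M.
check_crashkernel() {
    settings=$(grep -v '^[[:space:]]*#' "$1" | grep -o 'crashkernel=[^[:space:]]*') || return 1
    if printf '%s\n' "$settings" | grep -qvx 'crashkernel=256M@16M'; then
        return 1
    fi
    return 0
}

# Step 3: succeed only if every node named in the arguments accepts a
# key-based login without prompting. SSH_BIN is a test hook (default: ssh).
passwordless_ok() {
    rc=0
    for node in "$@"; do
        if ! "${SSH_BIN:-ssh}" -o BatchMode=yes -o ConnectTimeout=5 "$node" true; then
            echo "password-less login failed: $node" >&2
            rc=1
        fi
    done
    return "$rc"
}

# Step 12: read "node id" pairs on stdin; succeed only if all ids are equal.
ids_match() {
    awk '{ seen[$2] = 1 } END { n = 0; for (k in seen) n++; exit (n == 1 ? 0 : 1) }'
}

# Example usage (node1 and node2 are placeholder host names):
#   check_crashkernel /etc/grub.conf || echo "fix crashkernel in /etc/grub.conf"
#   passwordless_ok node1 node2
#   for n in node1 node2; do echo "$n $(ssh "$n" id -u ibrix)"; done | ids_match
```

The helpers only report problems; correcting /etc/grub.conf or the account IDs remains a manual task, as described in the checklist.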

Upgrading 9720 chassis firmware

Before upgrading 9720 systems to StoreAll software 6.3, the 9720 chassis firmware must be at

version 4.0.0-13. If the firmware is not at this level, upgrade it before proceeding with the StoreAll

upgrade.

To upgrade the firmware, complete the following steps:

1. Go to http://www.hp.com/go/StoreAll.

2. On the HP StoreAll Storage page, select HP Support & Drivers from the Support section.

3. On the Business Support Center, select Download Drivers and Software and then select HP

9720 Base Rack > Red Hat Enterprise Linux 5 Server (x86-64).

4. Click HP 9720 Storage Chassis Firmware version 4.0.0-13.

5. Download the firmware and install it as described in the HP 9720 Network Storage System

4.0.0-13 Release Notes.

Online upgrades for StoreAll software

Online upgrades are supported only from the StoreAll 6.x release. Upgrades from earlier StoreAll

releases must use the appropriate offline upgrade procedure.


Page 13

When performing an online upgrade, note the following:

• File systems remain mounted and client I/O continues during the upgrade.

• The upgrade process takes approximately 45 minutes, regardless of the number of nodes.

• The total I/O interruption per node IP is four minutes, allowing for a failover time of two minutes

and a failback time of two additional minutes.

• Client I/O having a timeout of more than two minutes is supported.

Preparing for the upgrade

To prepare for the upgrade, ensure that high availability is enabled on each node in the cluster by running the following command:

ibrix_haconfig -l

If the command displays an Overall HA Configuration Checker Results - PASSED

status, high availability is enabled on each node in the cluster. If the command returns Overall

HA Configuration Checker Results - FAILED, complete the following list items based

on the result returned for each component:

1. Make sure you have completed all steps in the upgrade checklist (Table 1 (page 10)).

2. If Failed was displayed for the HA Configuration or Auto Failover columns or both, perform

the steps described in the section “Configuring High Availability on the cluster” in the

administrator guide for your current release.

3. If Failed was displayed for the NIC or HBA Monitored columns, see the sections for

ibrix_nic -m -h <host> -A node_2/node_interface and ibrix_hba -m -h

<host> -p <World_Wide_Name> in the CLI guide for your current release.
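The PASSED/FAILED check above can be automated in a wrapper script. The sketch below is an illustration only: the function name is hypothetical, and it assumes the command prints the overall result string exactly as quoted in this section.

```shell
#!/bin/sh
# Hypothetical helper: read `ibrix_haconfig -l` output on stdin and succeed
# only if it reports an overall PASSED result. The matched string is the one
# quoted in this guide; the exact spacing of real output is an assumption.
ha_overall_passed() {
    grep -q 'Overall HA Configuration Checker Results - PASSED'
}

# Example usage:
#   ibrix_haconfig -l | ha_overall_passed || echo "HA check failed; review the per-column results"
```

A failure here only signals that one of the per-column results needs attention; the list items above still determine which corrective procedure applies.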

Performing the upgrade

The online upgrade is supported only from the StoreAll 6.x releases.

IMPORTANT: Complete all steps provided in Table 1 (page 10).

Complete the following steps:

1. This release is only available through the registered release process. To obtain the ISO image,

contact HP Support to register for the release and obtain access to the software dropbox.

2. Ensure that the /local/ibrix/ folder is empty prior to copying the contents of pkgfull.

The upgrade will fail if the /local/ibrix/ folder contains leftover .rpm packages not listed

in the build manifest.

3. Mount the pkg-full ISO image and copy the entire directory structure to the /local/ibrix/

directory, as shown in the following example:

mount -o loop /local/pkg/ibrix-pkgfull-FS_6.3.72+IAS_6.3.72-x86_64.signed.iso /mnt/

4. Change the permissions of all components in the /local/ibrix/ directory structure by

entering the following command:

chmod -R 777 /local/ibrix/

5. Change to the /local/ibrix/ directory.

cd /local/ibrix/

6. Run the upgrade script and follow the on-screen directions:

./auto_online_ibrixupgrade

7. Upgrade Linux StoreAll clients. See “Upgrading Linux StoreAll clients” (page 18).

8. If you received a new license from HP, install it as described in “Licensing” (page 135).
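The example in step 3 shows only the mount command; the copy itself is not shown. A minimal sketch of steps 2 through 4 follows. The function names, the DRY_RUN switch, and the cp invocation are assumptions rather than part of this guide. In particular, the sketch assumes that the top level of the mounted ISO is what belongs in /local/ibrix/, so verify the layout of the mounted image before relying on it.

```shell
#!/bin/sh
# Hypothetical staging helper; not part of the StoreAll distribution.

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# stage_iso ISO [DEST] [MOUNTPOINT]
stage_iso() {
    iso=$1
    dest=${2:-/local/ibrix}
    mnt=${3:-/mnt}
    # Step 2: stop if the destination already contains anything.
    if [ -d "$dest" ] && [ -n "$(ls -A "$dest")" ]; then
        echo "error: $dest is not empty" >&2
        return 1
    fi
    # Step 3: loop-mount the ISO, then copy its contents.
    run mount -o loop "$iso" "$mnt" || return 1
    run mkdir -p "$dest" || return 1
    # Assumption: the ISO's top-level contents belong directly in DEST.
    run cp -a "$mnt/." "$dest/" || return 1
    # Step 4: open up permissions as the procedure instructs.
    run chmod -R 777 "$dest/"
}

# Example usage (prints the commands without running them):
#   DRY_RUN=1 stage_iso /local/pkg/ibrix-pkgfull-FS_6.3.72+IAS_6.3.72-x86_64.signed.iso
```

Running with DRY_RUN=1 first lets you review the exact commands before anything is mounted or copied.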


Page 14

After the upgrade

Complete these steps:

1. If your cluster nodes contain any 10Gb NICs, reboot these nodes to load the new driver. You

must do this step before you upgrade the server firmware, as requested later in this procedure.

2. Upgrade your firmware as described in “Upgrading firmware” (page 136).

3. Start any remote replication, rebalancer, or data tiering tasks that were stopped before the

upgrade.

4. If you have a file system version prior to version 6, you might have to make changes for

snapshots and data retention, as mentioned in the following list:

• Snapshots. Files used for snapshots must either be created on StoreAll software 6.0 or

later, or the pre-6.0 file system containing the files must be upgraded for snapshots. To

upgrade a file system, use the upgrade60.sh utility. For more information, see

“Upgrading pre-6.0 file systems for software snapshots” (page 180).

• Data retention. Files used for data retention (including WORM and auto-commit) must be

created on StoreAll software 6.1.1 or later, or the pre-6.1.1 file system containing the

files must be upgraded for retention features. To upgrade a file system, use the

ibrix_reten_adm -u -f FSNAME command. Additional steps are required before

and after you run the ibrix_reten_adm -u -f FSNAME command. For more

information, see “Upgrading pre-6.1.1 file systems for data retention features” (page 181).

5. If you have an Express Query enabled file system prior to version 6.3, manually complete

each file system upgrade as described in “Required steps after the StoreAll Upgrade for pre-6.3

Express Query enabled file systems” (page 20).

Automated offline upgrades for StoreAll software 6.x to 6.3

Preparing for the upgrade

To prepare for the upgrade, complete the following steps:

1. Make sure you have completed all steps in the upgrade checklist (Table 1 (page 10)).

2. Stop all client I/O to the cluster or file systems. On the Linux client, use lsof </mountpoint>

to show open files belonging to active processes.

3. Verify that all StoreAll file systems can be successfully unmounted from all FSN servers:

ibrix_umount -f fsname

Performing the upgrade

This upgrade method is supported only for upgrades from StoreAll software 6.x to the 6.3 release.

Complete the following steps:

1. This release is only available through the registered release process. To obtain the ISO image,

contact HP Support to register for the release and obtain access to the software dropbox.

2. Ensure that the /local/ibrix/ folder is empty prior to copying the contents of pkgfull.

The upgrade will fail if the /local/ibrix/ folder contains leftover .rpm packages not listed

in the build manifest.

3. Mount the pkg-full ISO image and copy the entire directory structure to the /local/ibrix/

directory, as shown in the following example:

mount -o loop /local/pkg/ibrix-pkgfull-FS_6.3.72+IAS_6.3.72-x86_64.signed.iso /mnt/

4. Change the permissions of all components in the /local/ibrix/ directory structure by

entering the following command:

chmod -R 777 /local/ibrix/


Page 15

5. Change to the /local/ibrix/ directory.

cd /local/ibrix/

6. Run the following upgrade script:

./auto_ibrixupgrade

The upgrade script automatically stops the necessary services and restarts them when the

upgrade is complete. The upgrade script installs the Fusion Manager on all file serving nodes.

The Fusion Manager is in active mode on the node where the upgrade was run, and is in

passive mode on the other file serving nodes. If the cluster includes a dedicated Management

Server, the Fusion Manager is installed in passive mode on that server.

7. Upgrade Linux StoreAll clients. See “Upgrading Linux StoreAll clients” (page 18).

8. If you received a new license from HP, install it as described in “Licensing” (page 135).

After the upgrade

Complete the following steps:

1. If your cluster nodes contain any 10Gb NICs, reboot these nodes to load the new driver. You

must do this step before you upgrade the server firmware, as requested later in this procedure.

2. Upgrade your firmware as described in “Upgrading firmware” (page 136).

3. Mount file systems on Linux StoreAll clients.

4. If you have a file system version prior to version 6, you might have to make changes for

snapshots and data retention, as mentioned in the following list:

• Snapshots. Files used for snapshots must either be created on StoreAll software 6.0 or

later, or the pre-6.0 file system containing the files must be upgraded for snapshots. To

upgrade a file system, use the upgrade60.sh utility. For more information, see

“Upgrading pre-6.0 file systems for software snapshots” (page 180).

• Data retention. Files used for data retention (including WORM and auto-commit) must be

created on StoreAll software 6.1.1 or later, or the pre-6.1.1 file system containing the

files must be upgraded for retention features. To upgrade a file system, use the

ibrix_reten_adm -u -f FSNAME command. Additional steps are required before

and after you run the ibrix_reten_adm -u -f FSNAME command. For more

information, see “Upgrading pre-6.1.1 file systems for data retention features” (page 181).

5. If you have an Express Query enabled file system prior to version 6.3, manually complete

each file system upgrade as described in “Required steps after the StoreAll Upgrade for pre-6.3

Express Query enabled file systems” (page 20).

Manual offline upgrades for StoreAll software 6.x to 6.3

Preparing for the upgrade

To prepare for the upgrade, complete the following steps:

1. Make sure you have completed all steps in the upgrade checklist (Table 1 (page 10)).

2. Verify that SSH shared keys have been set up. To do this, run the following command on the node hosting the active instance of the agile Fusion Manager:

ssh <server_name>

Repeat this command for each node in the cluster.

3. Verify that all file system node servers have separate file systems mounted on the following

partitions by using the df command:

• /

• /local


Page 16

• /stage

• /alt

4. Verify that all FSN servers have a minimum of 4 GB of free/available storage on the /local partition by using the df command.

5. Verify that no FSN server reports any partition as 100% full (each partition should have at least 5% free space) by using the df command.

6. Note any custom tuning parameters, such as file system mount options. When the upgrade is

complete, you can reapply the parameters.

7. Stop all client I/O to the cluster or file systems. On the Linux client, use lsof </mountpoint>

to show open files belonging to active processes.

8. On the active Fusion Manager, enter the following command to place the Fusion Manager

into maintenance mode:

<ibrixhome>/bin/ibrix_fm -m nofmfailover -P -A

9. On the active Fusion Manager node, disable automated failover on all file serving nodes:

<ibrixhome>/bin/ibrix_server -m -U

10. Run the following command to verify that automated failover is off. In the output, the HA column

should display off.

<ibrixhome>/bin/ibrix_server -l

11. Unmount file systems on Linux StoreAll clients:

ibrix_umount -f MOUNTPOINT

12. Stop the SMB, NFS and NDMP services on all nodes. Run the following commands on the

node hosting the active Fusion Manager:

ibrix_server -s -t cifs -c stop

ibrix_server -s -t nfs -c stop

ibrix_server -s -t ndmp -c stop

If you are using SMB, verify that all likewise services are down on all file serving nodes:

ps -ef | grep likewise

Use kill -9 to stop any likewise services that are still running.

If you are using NFS, verify that all NFS processes are stopped:

ps -ef | grep nfs

If necessary, use the following command to stop NFS services:

/etc/init.d/nfs stop

Use kill -9 to stop any NFS processes that are still running.

If necessary, run the following command on all nodes to find any open file handles for the

mounted file systems:

lsof </mountpoint>

Use kill -9 to stop any processes that still have open file handles on the file systems.

13. Unmount each file system manually:

ibrix_umount -f FSNAME

Wait up to 15 minutes for the file systems to unmount.

Troubleshoot any issues with unmounting file systems before proceeding with the upgrade.

See “File system unmount issues” (page 23).
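The disk-space checks in steps 3 through 5 can be scripted. The following is a minimal sketch, not part of the StoreAll software: check_df is a hypothetical helper name, and it assumes the portable output format of df -P -k (available space in column 4, capacity in column 5, mount point in column 6). It prints one line for each problem it finds and prints nothing when all three checks pass.

```shell
# Hypothetical helper (not a StoreAll command). Reads `df -P -k` output on
# stdin and reports violations of the checks in steps 3, 4, and 5.
check_df() {
    awk '
        NR == 1 { next }                        # skip the df header line
        {
            avail_kb = $4 + 0; use = $5; mount = $6
            sub(/%/, "", use)
            seen[mount] = 1
            # Step 4: /local needs at least 4 GB available (4194304 KB)
            if (mount == "/local" && avail_kb < 4 * 1024 * 1024)
                print "LOW_LOCAL " mount " " avail_kb
            # Step 5: flag any partition with less than 5% free space
            if (use + 0 > 95)
                print "NEARLY_FULL " mount " " use "%"
        }
        END {
            # Step 3: each of these must be a separately mounted file system
            n = split("/ /local /stage /alt", required, " ")
            for (i = 1; i <= n; i++)
                if (!(required[i] in seen))
                    print "MISSING " required[i]
        }
    '
}

# Example (run from the active Fusion Manager, once per node):
#   ssh <server_name> df -P -k | check_df
```

Mount points that contain spaces are not handled by this sketch. Because the ssh shared keys were verified in step 2, the example command should not prompt for a password.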

Performing the upgrade manually

This upgrade method is supported only for upgrades from StoreAll software 6.x to the 6.3 release.

Complete the following steps first for the server running the active Fusion Manager and then for

the servers running the passive Fusion Managers:

1. This release is only available through the registered release process. To obtain the ISO image,

contact HP Support to register for the release and obtain access to the software dropbox.

2. Ensure that the /local/ibrix/ folder is empty prior to copying the contents of pkgfull.

The upgrade will fail if the /local/ibrix/ folder contains leftover .rpm packages not listed

in the build manifest.

3. Mount the pkgfull ISO image and copy the entire directory structure to the /local/ibrix/

directory, as shown in the following example:

mount -o loop /local/pkg/ibrix-pkgfull-FS_6.3.72+IAS_6.3.72-x86_64.signed.iso /mnt/

4. Change the permissions of all components in the /local/ibrix/ directory structure by

entering the following command:

chmod -R 777 /local/ibrix/

5. Change to the /local/ibrix/ directory, and then run the upgrade script:

cd /local/ibrix/

./ibrixupgrade -f

The upgrade script automatically stops the necessary services and restarts them when the

upgrade is complete. The upgrade script installs the Fusion Manager on the server.

6. After completing the previous steps for the server running the active Fusion Manager, repeat

the steps for each of the servers running the passive Fusion Manager.

7. Upgrade Linux StoreAll clients. See “Upgrading Linux StoreAll clients” (page 18).

8. If you received a new license from HP, install it as described in “Licensing” (page 135).
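Steps 2 through 4 can be combined into a small wrapper. The following is a sketch under stated assumptions: dir_is_empty and stage_pkgfull are hypothetical names, the copy command (cp -pR) and the final umount are assumptions because the guide shows only the mount command, and the ISO path is supplied by the caller. The wrapper stops before mounting anything if the target directory is not empty.

```shell
# Succeeds only if the directory exists and contains no entries
# (hidden files count as entries).
dir_is_empty() {
    [ -d "$1" ] && [ -z "$(ls -A "$1")" ]
}

# Hypothetical wrapper around steps 2-4 (not a StoreAll command).
# Usage: stage_pkgfull <iso_path> [mount_point] [target_dir]
stage_pkgfull() {
    iso=$1
    mnt=${2:-/mnt}
    target=${3:-/local/ibrix}

    # Step 2: leftover .rpm packages in the target cause the upgrade to fail
    if ! dir_is_empty "$target"; then
        echo "$target is missing or not empty; clear it first" >&2
        return 1
    fi

    # Step 3: mount the ISO, then copy its whole directory structure.
    # The cp and umount commands are assumptions, not taken from the guide.
    mount -o loop "$iso" "$mnt" || return 1
    if ! cp -pR "$mnt"/. "$target"/; then
        umount "$mnt"
        return 1
    fi
    umount "$mnt"

    # Step 4: open up permissions on everything that was copied
    chmod -R 777 "$target"
}
```

After the wrapper returns successfully, step 5 (running the upgrade script from /local/ibrix/) proceeds as documented.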

After the upgrade

Complete the following steps:

1. If your cluster nodes contain any 10Gb NICs, reboot these nodes to load the new driver. You

must do this step before you upgrade the server firmware, as requested later in this procedure.

2. Upgrade your firmware as described in “Upgrading firmware” (page 136).

3. Run the following command to rediscover physical volumes:

ibrix_pv -a

4. Apply any custom tuning parameters, such as mount options.

5. Remount all file systems:

ibrix_mount -f <fsname> -m </mountpoint>

6. Re-enable High Availability if used:

ibrix_server -m

7. Start any remote replication, rebalancer, or data tiering tasks that were stopped before the

upgrade.

8. If you are using SMB, set the following parameters to synchronize the SMB software and the

Fusion Manager database:

• smb signing enabled

• smb signing required

• ignore_writethru

Use ibrix_cifsconfig to set the parameters, specifying the value appropriate for your

cluster (1=enabled, 0=disabled). The following examples set the parameters to the default

values for the 6.3 release:

ibrix_cifsconfig -t -S "smb_signing_enabled=0, smb_signing_required=0"

ibrix_cifsconfig -t -S "ignore_writethru=1"

The SMB signing feature specifies whether clients must support SMB signing to access SMB

shares. See the HP StoreAll Storage File System User Guide for more information about this

feature. When ignore_writethru is enabled, StoreAll software ignores writethru buffering

to improve SMB write performance on some user applications that request it.

9. Mount file systems on Linux StoreAll clients.

10. If you have a file system version prior to version 6, you might have to make changes for

snapshots and data retention, as mentioned in the following list:

• Snapshots. Files used for snapshots must either be created on StoreAll software 6.0 or

later, or the pre-6.0 file system containing the files must be upgraded for snapshots. To

upgrade a file system, use the upgrade60.sh utility. For more information, see

“Upgrading pre-6.0 file systems for software snapshots” (page 180).

• Data retention. Files used for data retention (including WORM and auto-commit) must be

created on StoreAll software 6.1.1 or later, or the pre-6.1.1 file system containing the

files must be upgraded for retention features. To upgrade a file system, use the

ibrix_reten_adm -u -f FSNAME command. Additional steps are required before

and after you run the ibrix_reten_adm -u -f FSNAME command. For more

information, see “Upgrading pre-6.1.1 file systems for data retention features” (page 181).

11. If you have an Express Query enabled file system prior to version 6.3, manually complete

each file system upgrade as described in “Required steps after the StoreAll Upgrade for pre-6.3

Express Query enabled file systems” (page 20).
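When a cluster has several file systems, the remount in step 5 has to be repeated for each one. The following sketch generates the ibrix_mount commands from a list recorded before the upgrade. The helper name remount_plan and the input format (one "FSNAME MOUNTPOINT" pair per line) are assumptions made for illustration. The helper only prints the commands so they can be reviewed before being run.

```shell
# Hypothetical helper (not a StoreAll command). Reads "FSNAME MOUNTPOINT"
# pairs on stdin and prints the matching ibrix_mount command for each pair.
remount_plan() {
    while read -r fs mountpoint; do
        # Skip blank or incomplete lines rather than emit a broken command
        [ -n "$fs" ] && [ -n "$mountpoint" ] || continue
        echo "ibrix_mount -f $fs -m $mountpoint"
    done
}

# Example, with a hypothetical list file saved before the upgrade:
#   remount_plan < /tmp/fs_mounts.txt
```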

Upgrading Linux StoreAll clients

Be sure to upgrade the cluster nodes before upgrading Linux StoreAll clients. Complete the following

steps on each client:

1. Download the latest HP StoreAll client 6.3 package.

2. Expand the tar file.

3. Run the upgrade script:

./ibrixupgrade -tc -f

The upgrade software automatically stops the necessary services and restarts them when the

upgrade is complete.

4. Execute the following command to verify the client is running StoreAll software:

/etc/init.d/ibrix_client status

IBRIX Filesystem Drivers loaded

IBRIX IAD Server (pid 3208) running...

The IAD service should be running, as shown in the previous sample output. If it is not, contact HP

Support.
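When many clients are upgraded, the status check in step 4 can be automated. The following sketch matches the IAD Server line exactly as it appears in the sample output above. The helper name iad_running is hypothetical, and the wording that the status command prints when the service is not running is not documented here, so any other output is treated as a failure.

```shell
# Hypothetical helper (not a StoreAll command). Reads the output of
# `/etc/init.d/ibrix_client status` on stdin and succeeds only if the
# IAD Server line reports a pid and "running".
iad_running() {
    grep -Eq 'IAD Server \(pid [0-9]+\) running'
}

# Example:
#   /etc/init.d/ibrix_client status | iad_running || echo "IAD not running"
```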

Installing a minor kernel update on Linux clients

The StoreAll client software is upgraded automatically when you install a compatible Linux minor

kernel update.

If you are planning to install a minor kernel update, first run the following command to verify that

the update is compatible with the StoreAll client software:

/usr/local/ibrix/bin/verify_client_update <kernel_update_version>

The following example is for a RHEL 4.8 client with kernel version 2.6.9-89.ELsmp:

# /usr/local/ibrix/bin/verify_client_update 2.6.9-89.35.1.ELsmp

Kernel update 2.6.9-89.35.1.ELsmp is compatible.

If the minor kernel update is compatible, install the update with the vendor RPM and reboot the

system. The StoreAll client software is then automatically updated with the new kernel, and StoreAll

client services start automatically. Use the ibrix_version -l -C command to verify the kernel

version on the client.

NOTE: To use the verify_client_update command, the StoreAll client software must be installed.
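The compatibility check can be used as a gate before the kernel RPM is installed. The following sketch looks for the exact "is compatible" message shown in the example above. The helper name kernel_update_ok is hypothetical. The message printed for an incompatible update and the exit status of verify_client_update are not documented in this section, so the sketch relies only on the success message.

```shell
# Hypothetical helper (not a StoreAll command). Reads verify_client_update
# output on stdin and succeeds only if it contains the success message for
# the kernel version given as $1. -F disables regex matching so the dots
# in the version string are compared literally.
kernel_update_ok() {
    grep -Fxq "Kernel update $1 is compatible."
}

# Example:
#   v=2.6.9-89.35.1.ELsmp
#   /usr/local/ibrix/bin/verify_client_update "$v" | kernel_update_ok "$v" \
#       && echo "safe to install kernel $v"
```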

Upgrading Windows StoreAll clients

Complete the following steps on each client:

1. Remove the old Windows StoreAll client software using the Add or Remove Programs utility

in the Control Panel.

2. Copy the Windows StoreAll client MSI file for the upgrade to the machine.

3. Launch the Windows Installer and follow the instructions to complete the upgrade.

4. Register the Windows StoreAll client again with the cluster and check the option to Start Service

after Registration.

5. Check Administrative Tools | Services to verify that the StoreAll client service is started.

6. Launch the Windows StoreAll client. On the Active Directory Settings tab, click Update to

retrieve the current Active Directory settings.

7. Mount file systems using the StoreAll Windows client GUI.

NOTE: If you are using Remote Desktop to perform an upgrade, you must log out and log back

in to see the drive mounted.

Upgrading pre-6.3 Express Query enabled file systems

The internal database schema format of Express Query enabled file systems changed between

releases 6.2.x and 6.3. Each file system with Express Query enabled must be manually upgraded

to 6.3. This section has instructions to be run before and after the StoreAll upgrade, on each of

those file systems.

Required steps before the StoreAll Upgrade for pre-6.3 Express Query enabled file

systems

These steps are required before the StoreAll Upgrade:

1. Mount all Express Query file systems on the cluster to be upgraded if they are not mounted

yet.

2. Save your custom metadata by entering the following command:

/usr/local/ibrix/bin/MDExport.pl --dbconfig /usr/local/Metabox/scripts/startup.xml --database <FSNAME> --outputfile /tmp/custAttributes.csv --user ibrix

3. Save your audit log data by entering the following commands:

ibrix_audit_reports -t time -f <FSNAME>

cp <path to report file printed from previous command> /tmp/auditData.csv

4. Disable auditing by entering the following command:

ibrix_fs -A -f <FSNAME> -oa audit_mode=off

In this instance <FSNAME> is the file system.

5. If any archive API shares exist for the file system, delete them.

NOTE: To list all HTTP shares, enter the following command:

ibrix_httpshare -l

To list only REST API (Object API) shares, enter the following command:

ibrix_httpshare -l -f <FSNAME> -v 1 | grep "objectapi: true" | awk '{ print $2 }'

In this instance <FSNAME> is the file system.

• Delete all HTTP shares, regular or REST API (Object API) by entering the following

command:

ibrix_httpshare -d -f <FSNAME>

In this instance <FSNAME> is the file system.

• Delete a specific REST API (Object API) share by entering the following command:

ibrix_httpshare -d <SHARENAME> -c <PROFILENAME> -t <VHOSTNAME>

In this instance

◦ <SHARENAME> is the share name.

◦ <PROFILENAME> is the profile name.

◦ <VHOSTNAME> is the virtual host name.

6. Disable Express Query by entering the following command:

ibrix_fs -T -D -f <FSNAME>

7. Shut down Archiving daemons for Express Query by entering the following command:

ibrix_archiving -S -F

8. Delete the internal database files for this file system by entering the following command:

rm -rf <FS_MOUNTPOINT>/.archiving/database

In this instance <FS_MOUNTPOINT> is the file system mount point.
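Because these steps are repeated for every Express Query enabled file system and end with a destructive rm -rf, it can help to print the commands for review before running any of them. The following sketch does only that. The helper name eq_pre_upgrade_plan is hypothetical. It covers steps 2 through 4 and 6 through 8. The cp of the audit report (step 3) and the share deletion (step 5) are left out because they depend on values that are known only at run time.

```shell
# Hypothetical helper (not a StoreAll command). Prints, without running
# them, the commands from steps 2-4 and 6-8 for one file system.
# Usage: eq_pre_upgrade_plan <FSNAME> <FS_MOUNTPOINT>
eq_pre_upgrade_plan() {
    fs=$1
    mountpoint=$2
    # Refuse to build an rm -rf line from an empty name or mount point
    if [ -z "$fs" ] || [ -z "$mountpoint" ]; then
        echo "usage: eq_pre_upgrade_plan FSNAME FS_MOUNTPOINT" >&2
        return 1
    fi
    cat <<EOF
/usr/local/ibrix/bin/MDExport.pl --dbconfig /usr/local/Metabox/scripts/startup.xml --database $fs --outputfile /tmp/custAttributes.csv --user ibrix
ibrix_audit_reports -t time -f $fs
ibrix_fs -A -f $fs -oa audit_mode=off
ibrix_fs -T -D -f $fs
ibrix_archiving -S -F
rm -rf $mountpoint/.archiving/database
EOF
}

# Example: eq_pre_upgrade_plan ifs1 /ifs1
```

The output file names are the fixed names used in the steps above. If more than one file system is processed, copy /tmp/custAttributes.csv and /tmp/auditData.csv elsewhere between runs so that a later export does not overwrite an earlier one.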

Required steps after the StoreAll Upgrade for pre-6.3 Express Query enabled file

systems

These steps are required after the StoreAll Upgrade:

1. Restart the Archiving daemons for Express Query:

2. Re-enable Express Query on each file system where you disabled it earlier by entering the

following command:

ibrix_fs -T -E -f <FSNAME>

In this instance <FSNAME> is the file system.

Express Query will begin resynchronizing (repopulating) a new database for this file system.

3. Re-enable auditing if it was enabled before the upgrade (the default) by entering the following command:

ibrix_fs -A -f <FSNAME> -oa audit_mode=on

In this instance <FSNAME> is the file system.
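Steps 2 and 3 are repeated for each file system. The following sketch prints the commands for a list of file system names so they can be reviewed and then run in order. The helper name eq_post_upgrade_plan and its first argument (on or off, recording whether auditing was enabled before the upgrade) are assumptions made for illustration. Step 1 is not included because its command is not given above.

```shell
# Hypothetical helper (not a StoreAll command). Prints the commands from
# steps 2 and 3 for each file system name given.
# Usage: eq_post_upgrade_plan <on|off> <FSNAME>...
eq_post_upgrade_plan() {
    audit=$1      # "on" if auditing was enabled before the upgrade
    shift
    for fs in "$@"; do
        echo "ibrix_fs -T -E -f $fs"
        if [ "$audit" = on ]; then
            echo "ibrix_fs -A -f $fs -oa audit_mode=on"
        fi
    done
    return 0
}

# Example: eq_post_upgrade_plan on ifs1 ifs2
```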