Page 1

HPE Integrity NonStop NS2100 Planning Guide

Part Number: 697513-004R

Published: November 2015

Edition: J06.14 and subsequent J-series RVUs

Page 2

© Copyright 2013, 2015 Hewlett Packard Enterprise Development LP

The information contained herein is subject to change without notice. The only warranties for Hewlett Packard Enterprise products and services

are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting

an additional warranty. Hewlett Packard Enterprise shall not be liable for technical or editorial errors or omissions contained herein.

Confidential computer software. Valid license from Hewlett Packard Enterprise required for possession, use, or copying. Consistent with FAR

12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed

to the U.S. Government under vendor's standard commercial license.

Export of the information contained in this publication may require authorization from the U.S. Department of Commerce.

Links to third-party websites take you outside the Hewlett Packard Enterprise website. Hewlett Packard Enterprise has no control over and is not

responsible for information outside the Hewlett Packard Enterprise website.

Acknowledgments

Intel®, Pentium®, and Celeron® are trademarks of Intel Corporation in the United States and other countries.

Microsoft®, Windows®, and Windows® NT are trademarks of the Microsoft group of companies.

Page 3

Contents

About This Document.............................................................................................8

Supported Release Version Updates (RVUs).......................................................................................8

Intended Audience................................................................................................................................8

New and Changed Information in 697513-004R..................................................................................8

New and Changed Information in 697513-004.....................................................................................8

New and Changed Information in 697513-003.....................................................................................9

New and Changed Information in 697513-002.....................................................................................9

Document Organization........................................................................................................................9

Notation Conventions.........................................................................................................................10

General Syntax Notation...............................................................................................................10

Publishing History...............................................................................................................................12

1 NS2100 System Overview.................................................................................13

NS2100 Hardware .............................................................................................................................14

Blade Element (rx2800 i2) ............................................................................................................15

Versatile I/O (VIO) Enclosure .......................................................................................................15

CLuster I/O Modules (CLIMs)........................................................................................................16

Storage CLuster I/O Module (CLIM)........................................................................................17

IP CLuster I/O Module (CLIM) (Optional).................................................................................19

DL380 G6 IP CLIM Option 1 — Five Ethernet Copper Ports..............................................19

DL380 G6 IP CLIM Option 2 — Three Ethernet Copper and Two Ethernet Optical Ports..........20

DL380p Gen8 IP CLIM Option 1 — Five Ethernet Copper Ports........................................20

DL380p Gen8 IP CLIM Option 2 — Three Ethernet Copper and Two Ethernet Optical Ports..........21

Telco CLuster I/O Module (CLIM) (Optional)............................................................................21

DL380 G6 Telco CLIM — Five Ethernet Copper Ports.......................................................22

DL380p Gen8 Telco CLIM — Option 1 Five Ethernet Copper Ports...................................22

DL380p Gen8 Telco CLIM — Option 2 Three Ethernet Copper and Two Ethernet Optical Ports..........23

CLIM Cable Management Ethernet Patch Panel.....................................................................23

SAS Disk Enclosure......................................................................................................................24

Maintenance Switch .....................................................................................................................24

System Console ...........................................................................................................................24

UPS (Optional)..............................................................................................................................25

UPS for a Single-Phase Power Configuration (Optional).........................................................25

UPS for a Three-Phase Power Configuration (Optional).........................................................25

ERM (Optional with UPS)..............................................................................................................26

Enterprise Storage System — ESS (Optional)..............................................................................26

Tape Drive and Interface Hardware (Optional)..............................................................................27

Preparation for Other Hardware.........................................................................................................27

Component Location and Identification in an NS2100 System..........................................................27

Terminology...................................................................................................................................28

Rack and Offset Physical Location ...............................................................................................29

Blade Element Group-Module-Slot Numbering ............................................................................29

VIO Enclosure Group-Module-Slot Numbering ............................................................................31

CLIM Connection Group-Module-Slot-Port Numbering ................................................................33

System Installation Document Packet ...............................................................................................36

Tech Memo for the Factory-Installed Hardware Configuration .....................................................36

Configuration Forms for the CLIMs and ServerNet Adapters .......................................................37

2 Site Preparation Guidelines for NS2100 Systems.............................................38

Modular Cabinet Power and I/O Cable Entry......................................................................................38


Page 4

Emergency Power-Off (EPO).............................................................................................................38

EPO Switches................................................................................................................................38

EPO Requirement for NS2100 Systems.......................................................................................38

EPO Requirement for HPE R5000 UPS........................................................................................38

EPO Requirement for HPE R12000/3 UPS...................................................................................38

Electrical Power and Grounding Quality.............................................................................................39

Power Quality................................................................................................................................39

Grounding Systems.......................................................................................................................39

Power Consumption......................................................................................................................39

Uninterruptible Power Supply (UPS)..................................................................................................40

Cooling and Humidity Control.............................................................................................................41

Weight.................................................................................................................................................41

Flooring...............................................................................................................................................41

Dust and Pollution Control..................................................................................................................42

Zinc Particulates.................................................................................................................................42

Space for Receiving and Unpacking the System...............................................................................42

Operational Space..............................................................................................................................42

3 System Installation Specifications for NS2100 Systems...................................44

Modular Cabinets................................................................................................................................44

Power Distribution for NS2100 Systems.............................................................................................44

Power Distribution Units (PDUs)...................................................................................................44

AC Power Feeds...........................................................................................................................50

PDU Strapping Configurations......................................................................................................57

Uninterruptible Power Supply (UPS).............................................................................................58

AC Input Power for Modular Cabinets................................................................................................58

Enclosure AC Input........................................................................................................................60

Enclosure Power Loads.................................................................................................................60

AC Power Monitoring..........................................................................................................................62

How OSM Power Failure Support Works......................................................................................62

Considerations for Ride-Through Time Configuration...................................................................63

Considerations for Site UPS Configurations.................................................................................64

AC Power-Fail States.........................................................................................................................64

Dimensions and Weights....................................................................................................................65

Plan View of the Modular Cabinets...............................................................................................65

Service Clearances for the Modular Cabinets...............................................................................65

Unit Sizes......................................................................................................................................65

36U Intelligent Rack Modular Cabinet Physical Specifications.....................................................66

42U Modular Cabinet Physical Specifications...............................................................................66

Enclosure Dimensions ..................................................................................................................66

Modular Cabinet and Enclosure Weights With Worksheet............................................................67

Modular Cabinet Stability....................................................................................................................69

Environmental Specifications..............................................................................................................69

Heat Dissipation Specifications and Worksheet............................................................................69

Operating Temperature, Humidity, and Altitude.............................................................................70

Nonoperating Temperature, Humidity, and Altitude.......................................................................71

Cooling Airflow Direction...............................................................................................................71

Typical Acoustic Noise Emissions.................................................................................................71

Tested Electrostatic Immunity........................................................................................................71

Calculating Specifications for Enclosure Combinations.....................................................................71

4 System Configuration Guidelines for NS2100 Systems....................................73

Internal ServerNet Interconnect Cabling.............................................................................................73

Dedicated Service LAN Cables.....................................................................................................73

Length Restrictions for Cables......................................................................................................73

Cable Product IDs.........................................................................................................................73


Page 5

Blade Element to VIO Enclosure...................................................................................................73

Processor ID Assignment for the Blade Element..........................................................................74

SAS Ports to SAS Disk Enclosures...............................................................................................74

SAS Ports to SAS Tape Devices...................................................................................................74

Fibre Channel Ports to ESS..........................................................................................................74

Fibre Channel Ports to Tape Devices............................................................................................74

Storage CLIM Devices........................................................................................................................74

Factory-Default Disk Volume Locations for SAS Disk Devices.....................................................76

Configuration Restrictions for Storage CLIMs...............................................................................76

Configurations for Storage CLIMs and SAS Disk Enclosures.......................................................76

DL380 G6 Storage CLIM and SAS Disk Enclosure Configurations.........................................77

Two DL380 G6 Storage CLIMs, Two M8381-25 SAS Disk Enclosures..............................77

Two DL380 G6 Storage CLIMs, Four M8381-25 SAS Disk Enclosures.............................77

Four DL380 G6 Storage CLIMs, Four M8381-25 SAS Disk Enclosures............................78

DL380p Gen8 Storage CLIM and SAS Disk Enclosure Configurations...................................79

Two DL380p Gen8 Storage CLIMs, Two M8381-25 SAS Disk Enclosures........................80

Two DL380p Gen8 Storage CLIMs, Four M8381-25 SAS Disk Enclosures.......................81

Four DL380p Gen8 Storage CLIMs, Four M8381-25 SAS Disk Enclosures......................81

Four DL380p Gen8 Storage CLIMs, Eight M8381-25 SAS Disk Enclosures......................82

VIO Enclosure and Disk Storage Considerations...............................................................................84

Ethernet to Networks..........................................................................................................................84

IP CLIM Ethernet Interfaces...............................................................................................................84

Telco CLIM Ethernet Interfaces..........................................................................................................86

VIO Enclosure Ethernet Ports.............................................................................................................87

5 Hardware Configuration in NS2100 Cabinets...................................................88

Maximum Number of Modular Components, NS2100 System...........................................................88

IP and Telco CLIM Coexistence Limits ..............................................................................................88

Typical NS2100 Configurations..........................................................................................................89

6 Support and other resources.............................................................................93

Accessing Hewlett Packard Enterprise Support.................................................................................93

Accessing updates..............................................................................................................................93

Websites.............................................................................................................................................94

Customer self repair...........................................................................................................................94

Remote support..................................................................................................................................94

Documentation feedback....................................................................................................................94

A Maintenance and Support Connectivity............................................................95

Dedicated Service LAN.......................................................................................................................95

Fault-Tolerant LAN Configuration..................................................................................................96

DHCP, TFTP, and DNS Window-Based Services..........................................................................97

IP Addresses.................................................................................................................................98

Ethernet Cables.............................................................................................................................99

SWAN Concentrator Restrictions..................................................................................................99

Dedicated Service LAN Links........................................................................................................99

Dedicated Service LAN Links With Two VIO Enclosures.........................................................99

Dedicated Service LAN Links With IP CLIMs.........................................................................100

Dedicated Service LAN Links With Telco CLIMs....................................................................100

Initial Configuration for a Dedicated Service LAN.......................................................................101

Additional Configuration for OSM................................................................................................101

System Consoles for NS2100 Systems ...........................................................................................101

System Console Configurations..................................................................................................101

Primary and Backup System Console Managing One System..............................................101

Primary and Backup System Consoles Managing Multiple Systems.....................................102


Page 6

B Cables.............................................................................................................103

Cable Types, Connectors, Lengths, and Product IDs.......................................................................103

Cable Length Restrictions.................................................................................................................104

C Operations and Management Using OSM Applications.................................106

System-Down OSM Low-Level Link.................................................................................................106

D Default Startup Characteristics and Naming Conventions..............................108

Default Naming Conventions............................................................................................................109

E UPS and Data Center Power Configurations..................................................111

Supported UPS Configurations.........................................................................................................111

NonStop System With a Fault-Tolerant Data Center...................................................................112

NonStop System With a Rack-Mounted UPS.............................................................................113

SAS Disk Enclosures With a Rack-Mounted UPS......................................................................114

Non-Supported UPS Configurations.................................................................................................115

NonStop System With a Data Center UPS, Single Power Rail...................................................116

NonStop System With Data Center UPS, Both Power Rails.......................................................117

NonStop System With Rack-Mounted UPS and Data Center UPS in Parallel............................119

NonStop System With Two Rack-Mounted UPS in Parallel........................................................121

NonStop System with Cascading Rack-Mounted UPS and Data Center UPS...........................122

F Warranty and regulatory information...............................................................124

Warranty information.........................................................................................................................124

Regulatory information......................................................................................................................124

Belarus Kazakhstan Russia marking...........................................................................................124

Turkey RoHS material content declaration..................................................................................125

Ukraine RoHS material content declaration................................................................................125

Index...................................................................................................................126


Page 7

Figures

1 Example of an NS2100 System, 42U..........................................................................................14

2 Connections Between Storage CLIMs and ESS.........................................................................27

3 VIO Enclosure Slot Locations, NS2100 System..........................................................................32

4 DL380 G6 Storage CLIM Connections to VIO Enclosures .........................................................33

5 DL380 G6 IP or Telco CLIM Connections to VIO Enclosures ....................................................34

6 DL380p Gen8 Storage CLIM Connections to VIO Enclosures....................................................35

7 DL380p Gen8 IP or Telco CLIM Connections to VIO Enclosures...............................................36

8 Intelligent PDU Connections (Without UPS)...............................................................................45

9 Intelligent PDU Connections (With Single-Phase UPS)..............................................................46

10 Intelligent PDU Connections (With Three-Phase UPS)..............................................................47

11 Modular PDU Connections (Without UPS)..................................................................................48

12 Modular PDU Connections (With Single-Phase UPS)................................................................49

13 Modular PDU Connections (With Three-Phase UPS).................................................................50

14 Example of Bottom AC Power Feed Without UPS......................................................................52

15 Example of Top AC Power Feed Without UPS............................................................................53

16 Example of Top AC Power Feed With Single-Phase UPS..........................................................54

17 Example of Bottom AC Power Feed With Single-Phase UPS ...................................................55

18 Example of Top AC Power Feed with Three-Phase UPS ..........................................................56

19 Example of Bottom AC Power Feed With Three-Phase UPS.....................................................57

20 HPE M8381-25 SAS Disk Enclosure, Front and Rear View........................................................76

21 Two DL380 G6 Storage CLIMs, Two M8381-25 SAS Disk Enclosure Configuration..................77

22 Two DL380 G6 Storage CLIMs, Four M8381-25 SAS Disk Enclosure Configuration.................78

23 Four DL380 G6 Storage CLIMs, Four M8381-25 SAS Disk Enclosure Configuration................79

24 Two DL380p Gen8 Storage CLIMs, Two M8381-25 SAS Disk Enclosure Configuration............80

25 Two DL380p Gen8 Storage CLIMs, Four M8381-25 SAS Disk Enclosure Configuration...........81

26 Four DL380p Gen8 Storage CLIMs, Four M8381-25 SAS Disk Enclosure Configuration..........82

27 Four DL380p Gen8 Storage CLIMs, Eight M8381-25 SAS Disk Enclosure Configuration.........83

28 Example 42U Configuration Without UPS and ERM...................................................................89

29 Example 42U Configurations With Possible UPS/ERM Combinations.......................................90

30 Example 36U Configuration Without UPS and ERM...................................................................91

31 Example 36U Configurations With Possible UPS/ERM Combinations.......................................92

32 Example of a Fault-Tolerant LAN Configuration..........................................................................97

33 NonStop System With a Fault-Tolerant Data Center.................................................................112

34 NonStop System With a Rack-Mounted UPS...........................................................................113

35 SAS Disk Enclosures With a Rack-Mounted UPS....................................................................114

36 NonStop System With a Data Center UPS, Single Power Rail.................................................116

37 NonStop System With Data Center UPS, Both Power Rails.....................................................117

38 NonStop System With Rack-Mounted UPS and Data Center UPS in Parallel..........................119

39 NonStop System With Two Rack-Mounted UPS in Parallel......................................................121

40 NonStop System With Cascading UPS.....................................................................................122

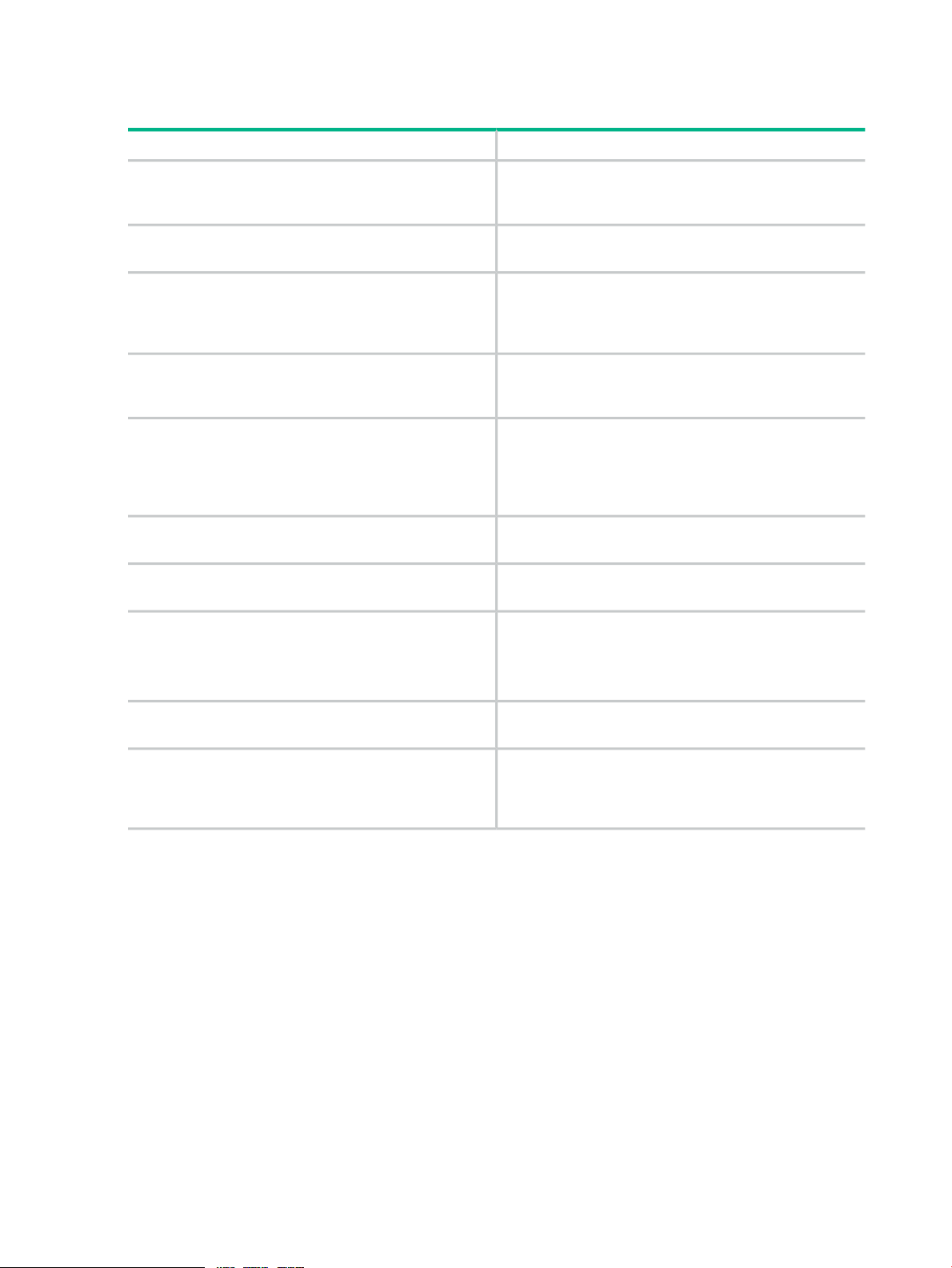

Tables

1 CLIM Models and RVU Requirements........................................................................................16

2 North America/Japan Single-Phase Power Specifications..........................................................58

3 North America/Japan Three-Phase Power Specifications..........................................................59

4 International Single-Phase Power Specifications........................................................................59

5 International Three-Phase Power Specifications........................................................................59

6 Example of Cabinet Load Calculations.......................................................................................71

Page 8

About This Document

This guide describes the HPE Integrity NonStop NS2100 system and provides examples of

system configurations to assist you in planning for installation of a new system.

Supported Release Version Updates (RVUs)

This publication supports J06.14 and all subsequent J-series RVUs until otherwise indicated by

its replacement publication.

Intended Audience

This guide is written for those responsible for planning the installation, configuration, and

maintenance of the server and the software environment at a particular site. Appropriate personnel

must have completed Hewlett Packard Enterprise training courses on system support for Integrity

NS2100 systems.

NOTE: NS2100 systems refers to hardware systems. J-series refers to release version updates

(RVUs).

New and Changed Information in 697513-004R

Updated Hewlett Packard Enterprise references.

New and Changed Information in 697513-004

The DL380p Gen8 IP, Telco, and Storage CLIMs are now supported for NS2100 systems. The

following topics have been added or modified:

• “NS2100 System Overview” (page 13)

• “CLuster I/O Modules (CLIMs)” (page 16)

• “CLIM Connection Group-Module-Slot-Port Numbering” (page 33)

• “Uninterruptible Power Supply (UPS)” (page 40)

• “Enclosure Power Loads” (page 60)

• “Enclosure Dimensions” (page 66)

• “Modular Cabinet and Enclosure Weights With Worksheet” (page 67)

• “Heat Dissipation Specifications and Worksheet” (page 69)

• “SAS Ports to SAS Disk Enclosures” (page 74)

• “Storage CLIM Devices” (page 74)

• “Ethernet to Networks” (page 84)

• “IP CLIM Ethernet Interfaces” (page 84)

• “Telco CLIM Ethernet Interfaces” (page 86)

• “Cable Length Restrictions” (page 104)

Added information for ride-through time for a Hewlett Packard Enterprise-supported UPS:

• “Uninterruptible Power Supply (UPS)” (page 40)

• “AC Power Monitoring” (page 62)

• “UPS and Data Center Power Configurations” (page 111)


Page 9

New and Changed Information in 697513-003

“UPS and Data Center Power Configurations” (page 111) appendix has been added.

New and Changed Information in 697513-002

The following changes have been made to this revision of the manual:

• The 36U Intelligent rack is now supported for NS2100 systems. The following topics have

been added or modified:

◦ “36U Intelligent Rack Modular Cabinet Physical Specifications” (page 66).

◦ “Modular Cabinet and Enclosure Weights With Worksheet” (page 67).

◦ Figure 30 (page 91).

◦ Figure 31 (page 92).

• The PDUs are always located at the lowest location in the rack even if there is a UPS or

UPS/ERM combination present. The following changes have been made to reflect the

changed PDU location:

◦ Figure 1 (page 14) has been modified.

◦ The text and illustrations in “Power Distribution Units (PDUs)” (page 44) have been

modified.

◦ Illustrations in “AC Power Feeds” (page 50) have been modified.

◦ Illustrations in “Typical NS2100 Configurations” (page 89) have been modified.

• The descriptions of the two different versions of the R12000/3 UPS have been corrected in

“UPS for a Three-Phase Power Configuration (Optional)” (page 25).

Document Organization

| Chapters | Contents |
|---|---|
| Chapter 1: NS2100 System Overview (page 13) | This chapter provides an overview of the NS2100 commercial system. |
| Chapter 2: Site Preparation Guidelines for NS2100 Systems (page 38) | This chapter outlines topics to consider when planning or upgrading the installation site for the NS2100 system. |
| Chapter 3: System Installation Specifications for NS2100 Systems (page 44) | This chapter provides the installation specifications for a fully populated NS2100 enclosure. |
| Chapter 4: System Configuration Guidelines for NS2100 Systems (page 73) | This chapter describes the guidelines for implementing the NS2100 modular hardware. |
| Chapter 5: Hardware Configuration in NS2100 Cabinets (page 88) | This chapter shows the required locations for hardware enclosures in the NS2100 cabinets. |
| Appendices | |
| Appendix A: Maintenance and Support Connectivity (page 95) | This appendix describes the connectivity options, including Instant Support Enterprise Edition (ISEE) for maintenance and support of all the NS2100 systems. |
| Appendix B: Cables (page 103) | This appendix identifies the cables used with the NS2100 hardware. |
| Appendix C: Operations and Management Using OSM Applications (page 106) | This appendix describes how to use the OSM applications to manage NS2100 systems. |
| Appendix D: Default Startup Characteristics and Naming Conventions (page 108) | This appendix describes the default startup characteristics and naming conventions for NS2100 systems. |
| Appendix E: UPS and Data Center Power Configurations (page 111) | This appendix provides examples of UPS and data center power configurations. |

Page 10

Notation Conventions

General Syntax Notation

This list summarizes the notation conventions for syntax presentation in this manual.

UPPERCASE LETTERS

Uppercase letters indicate keywords and reserved words. Type these items exactly as shown.

Items not enclosed in brackets are required. For example:

MAXATTACH

Italic Letters

Italic letters, regardless of font, indicate variable items that you supply. Items not enclosed

in brackets are required. For example:

file-name

Computer Type

Computer type letters indicate:

• C and Open System Services ($OSS) keywords, commands, and reserved words. Type

these items exactly as shown. Items not enclosed in brackets are required. For example:

Use the cextdecs.h header file.

• Text displayed by the computer. For example:

Last Logon: 14 May 2006, 08:02:23

• A listing of computer code. For example:

if (listen(sock, 1) < 0)

{

perror("Listen Error");

exit(-1);

}

Bold Text

Bold text in an example indicates user input typed at the terminal. For example:

ENTER RUN CODE

?123

CODE RECEIVED: 123.00

The user must press the Return key after typing the input.

[ ] Brackets

Brackets enclose optional syntax items. For example:

TERM [\system-name.]$terminal-name

INT[ERRUPTS]

A group of items enclosed in brackets is a list from which you can choose one item or none.

The items in the list can be arranged either vertically, with aligned brackets on each side of

the list, or horizontally, enclosed in a pair of brackets and separated by vertical lines. For

example:


Page 11

FC [ num ]

[ -num ]

[ text ]

K [ X | D ] address

{ } Braces

A group of items enclosed in braces is a list from which you are required to choose one item.

The items in the list can be arranged either vertically, with aligned braces on each side of the

list, or horizontally, enclosed in a pair of braces and separated by vertical lines. For example:

LISTOPENS PROCESS { $appl-mgr-name }

{ $process-name }

ALLOWSU { ON | OFF }

| Vertical Line

A vertical line separates alternatives in a horizontal list that is enclosed in brackets or braces.

For example:

INSPECT { OFF | ON | SAVEABEND }

… Ellipsis

An ellipsis immediately following a pair of brackets or braces indicates that you can repeat

the enclosed sequence of syntax items any number of times. For example:

M address [ , new-value ]…

[ - ] {0|1|2|3|4|5|6|7|8|9}…

An ellipsis immediately following a single syntax item indicates that you can repeat that syntax

item any number of times. For example:

"s-char…"

Punctuation

Parentheses, commas, semicolons, and other symbols not previously described must be

typed as shown. For example:

error := NEXTFILENAME ( file-name ) ;

LISTOPENS SU $process-name.#su-name

Quotation marks around a symbol such as a bracket or brace indicate the symbol is a required

character that you must type as shown. For example:

"[" repetition-constant-list "]"

Item Spacing

Spaces shown between items are required unless one of the items is a punctuation symbol

such as a parenthesis or a comma. For example:

CALL STEPMOM ( process-id ) ;

If there is no space between two items, spaces are not permitted. In this example, no spaces

are permitted between the period and any other items:

$process-name.#su-name


Page 12

Line Spacing

If the syntax of a command is too long to fit on a single line, each continuation line is indented

three spaces and is separated from the preceding line by a blank line. This spacing

distinguishes items in a continuation line from items in a vertical list of selections. For example:

ALTER [ / OUT file-spec / ] LINE

[ , attribute-spec ]…

Publishing History

| Part Number | Product Version | Publication Date |
|---|---|---|
| 697513-001 | N.A. | August 2012 |
| 697513-002 | N.A. | November 2012 |
| 697513-003 | N.A. | December 2012 |
| 697513-004 | N.A. | February 2013 |
| 697513-004R | N.A. | November 2015 |


Page 13

1 NS2100 System Overview

The characteristics of an NS2100 system are:

| Characteristic | Value |
|---|---|
| Processor | Intel Itanium |
| Input Power | AC-powered with single-phase and three-phase power configurations |
| Cabinet | 42U and 36U, 19 inch rack |
| Main memory | 8 GB, 16 GB, or 32 GB |
| Supported processor configurations | 2 or 4 |
| Maximum processors | 4 |
| Supported CLuster I/O Modules (CLIMs) | Storage CLIMs; IP CLIMs for Ethernet; Telco CLIMs for Ethernet (M3UA protocol) |
| Maximum CLIMs¹ | Up to 6 CLIMs using these possible combinations: up to 4 Storage CLIMs (two pairs); up to 2 IP CLIMs if there are 0 Telco CLIMs; up to 2 Telco CLIMs if there are 0 IP CLIMs |
| Minimum CLIMs | 0 IP CLIMs; 0 Telco CLIMs; 2 Storage CLIMs |
| Maximum SAS disk enclosures per Storage CLIM pair | A Storage CLIM pair supports a maximum of 4 SAS disk enclosures. This maximum applies to G6 and Gen8 Storage CLIM types. |
| Maximum SAS disk drives per Storage CLIM pair | 100 |
| Maximum VIO enclosures | 2 required (one each for X and Y fabrics) |
| Maximum embedded Ethernet connectivity per VIO enclosure | 4 embedded Ethernet ports (one port is reserved for OSM) |
| Maximum expanded Ethernet connectivity per VIO enclosure (optional) | 4 optional expanded Ethernet ports (copper/optical) |
| Enterprise Storage System (ESS) support available through Storage CLIM | Supported |
| M8201R Fibre Channel to SCSI router support | Not supported |
| I/O Adapter Module (IOAM) enclosures | Not supported |
| Fibre Channel disk modules (FCDMs) | Not supported |
| Connection to NonStop ServerNet Clusters | Not supported |
| Connection to NonStop S-series I/O | Not supported |

¹ For information about coexistence limits for IP and Telco CLIMs, see IP and Telco CLIM Coexistence Limits (page 88).
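The CLIM and SAS limits listed above can be checked mechanically during planning. The following Python sketch is illustrative only: the function names are hypothetical, the 25-drive enclosure capacity is taken from the SAS disk enclosure description later in this chapter, and a configuration that mixes IP and Telco CLIMs is only flagged for review because the coexistence limits are defined elsewhere in this guide.

```python
def check_clim_counts(storage: int, ip: int, telco: int) -> list[str]:
    """Return a list of problems; an empty list means the counts fit the stated limits."""
    problems = []
    # Storage CLIMs are configured in pairs: minimum 2, maximum 4.
    if storage not in (2, 4):
        problems.append("Storage CLIMs: expected 2 or 4 (one or two pairs)")
    if not 0 <= ip <= 2:
        problems.append("IP CLIMs: expected 0 to 2")
    if not 0 <= telco <= 2:
        problems.append("Telco CLIMs: expected 0 to 2")
    # Assumption: mixed IP/Telco setups are not judged here, only flagged.
    if ip > 0 and telco > 0:
        problems.append("IP and Telco CLIMs together: check the coexistence limits")
    return problems


def max_sas_drives(storage_clims: int,
                   enclosures_per_pair: int = 4,
                   drives_per_enclosure: int = 25) -> int:
    """Upper bound on SAS drives for the given number of Storage CLIMs."""
    return (storage_clims // 2) * enclosures_per_pair * drives_per_enclosure


if __name__ == "__main__":
    print(check_clim_counts(storage=4, ip=2, telco=0))
    print(max_sas_drives(2))
```

With the default arguments, one Storage CLIM pair yields 4 × 25 = 100 drives, which matches the per-pair maximum in the table.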

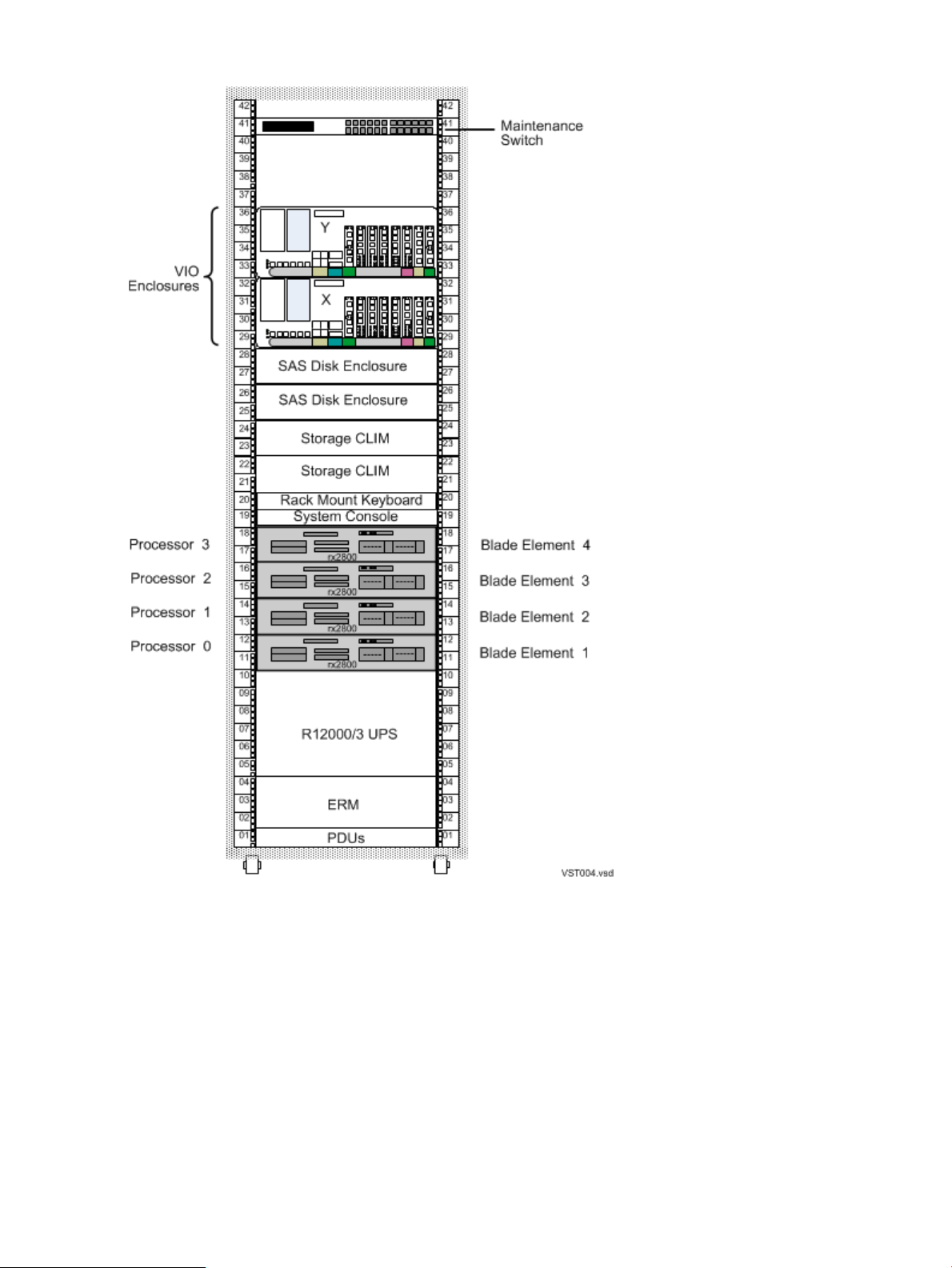

Figure 1 (page 14) shows the rear view of an NS2100 system with four blade elements in a 42U

modular cabinet with the optional extended runtime module (ERM) and UPS for the three-phase

power configuration.


Page 14

Figure 1 Example of an NS2100 System, 42U

NS2100 Hardware

Some variation of enclosure combinations is possible within the modular cabinets of an NS2100

system. The applications and purpose of any NS2100 system determine the number and

combinations of hardware within a modular cabinet.

Standard Hardware for an NS2100 system includes:

• “Blade Element (rx2800 i2) ” (page 15)

• “Versatile I/O (VIO) Enclosure ” (page 15)

• “Storage CLuster I/O Module (CLIM)” (page 17)

• “SAS Disk Enclosure” (page 24)


Page 15

• “Maintenance Switch ” (page 24)

• “System Console ” (page 24)

Optional Hardware for an NS2100 system includes:

• “IP CLuster I/O Module (CLIM) (Optional)” (page 19)

• “CLIM Cable Management Ethernet Patch Panel” (page 23)

• “UPS (Optional)” (page 25)

• “Enterprise Storage System — ESS (Optional)” (page 26)

• “Tape Drive and Interface Hardware (Optional)” (page 27)

All NS2100 system components are field-replaceable units that can only be serviced by service

providers trained by Hewlett Packard Enterprise.

Because of the number of possible configurations, you can calculate the total power consumption,

heat dissipation, and weight of each modular cabinet based on the hardware configuration that

you order from Hewlett Packard Enterprise. For site preparation specifications for the modular

cabinets and the individual enclosures, see Chapter 3 (page 44).

Blade Element (rx2800 i2)

The HPE Integrity rx2800 i2 server is adapted for use as an AC-powered blade element in the

NS2100 system. Each blade element contains an Intel® Itanium® processor with one core

enabled and a ServerNet PCI adapter card to provide connectivity to the ServerNet fabrics.

NOTE: NS2100 blade elements cannot be mixed with NS2200 blade elements in the same

system.

For details about the rx2800 i2 server, see the HPE Integrity rx2800 i2 Server User Service Guide

at:

http://www.hpe.com/support/Integrity_NonStop_rx2800_i2Server_Manuals

An NS2100 system supports up to four blade elements configured in pairs. Because modular

hardware provides flexibility in how hardware is distributed in a rack, up to four blade elements

can be installed in a single footprint.

To reduce ambiguity in identifying proper cable connections to the blade element, an identification

convention uses numbers to refer to each connection. A number (1, 2, 3, or 4) identifies

each blade element. These IDs reference the appropriate blade element for proper connection

of cables.

Versatile I/O (VIO) Enclosure

A VIO enclosure, which is 4U, provides Gigabit Ethernet networking and connectivity to the

processors. Two VIO enclosures, one required for each ServerNet fabric, are installed in a 19-inch

rack.

For a description and illustration of the VIO enclosure slot locations, see Figure 3 (page 32).

Each VIO enclosure contains:

• Connectivity for up to four processors, configured in pairs.

• Connectivity for up to four Storage CLuster I/O Modules (CLIMs) configured in pairs and

used to communicate with storage devices such as Serial Attached SCSI (SAS) disk

enclosures or Enterprise Storage System (ESS) disks.

• Up to eight copper/optical Ethernet ports used for Ethernet connectivity. Additional Ethernet

connectivity is available through IP or Telco CLIM connections with up to two IP or Telco

CLIMs.


Page 16

NOTE: For details about how to connect to the embedded or optional expanded Ethernet

ports on the VIO enclosure, ask your Hewlett Packard Enterprise service provider to refer

to the Versatile I/O Manual.

• Two fans to provide the cooling for components inside a VIO enclosure.

• Two power supplies with universal AC input to provide power to the components in a VIO

enclosure.

CLuster I/O Modules (CLIMs)

CLIMs are rack-mounted servers that can function as ServerNet Ethernet or I/O adapters.

The CLIM complies with Internet Protocol version 6 (IPv6), an Internet Layer protocol for

packet-switched networks, and has passed official certification of IPv6 readiness.

NOTE: All CLIMs use the Cluster I/O Protocols (CIP) subsystem. For more information about

the CIP subsystem, see the Cluster I/O Protocols (CIP) Configuration and Management Manual.

Two models of base servers are used for CLIMs. You can determine a CLIM's model by looking

at the label on the back of the unit (behind the cable arm). This label refers to the number as a

“PID,” but it is not the PID of the CLIM. It is the “Number on Label” in Table 1 (page 16) below.

The same number is listed as the part number in OSM. Below is the mapping for CLIM models

and earliest supported RVUs:

Table 1 CLIM Models and RVU Requirements

| Model | Number on Label | Base Server | Earliest Supported RVU |
|---|---|---|---|
| G6 | 494329-B21 | DL380 | J06.14 and later RVUs |
| Gen8 | 692764-001 | DL380p | J06.14 and later RVUs |

These are the front views of each CLIM model. For an illustration of the back views, refer to each

supported CLIM configuration.


Page 17

These CLIM configurations are supported:

• “Storage CLuster I/O Module (CLIM)” (page 17)

• “IP CLuster I/O Module (CLIM) (Optional)” (page 19)

• “Telco CLuster I/O Module (CLIM) (Optional)” (page 21)

The optional “CLIM Cable Management Ethernet Patch Panel” (page 23) cable management

product is a convenient way to configure Ethernet cables in a NonStop cabinet for IP and Telco

CLIMs.

Storage CLuster I/O Module (CLIM)

The Storage CLuster I/O Module (CLIM) is part of all NS2100 system configurations. For RVU

requirements for DL380 G6 and DL380p Gen8 Storage CLIMs, see Table 1 (page 16). The

Storage CLIM is a rack-mounted server that connects to the VIO enclosure and functions as a

ServerNet I/O adapter providing:

• ServerNet fabric connections.

• A Serial Attached SCSI (SAS) interface for the storage subsystem via a SAS Host Bus

Adapter (HBA) supporting SAS disk drives, solid state drives, and SAS tape devices.

• A Fibre Channel (FC) interface for ESS and FC tape devices via a customer-ordered FC

HBA. A Storage CLIM can have 0, 2, or 4 FC interfaces in an NS2100 system.

Connections to FCDMs are not supported.

DL380 G6 and DL380p Gen8 CLIMs can coexist in the same NS2100 system, and G6 and Gen8 CLIM

pairs can be present in the same system, only if these criteria are met:

• Each CLIM pair must consist of a single CLIM type (for example, a pair of Gen8

CLIMs). G6 and Gen8 CLIMs cannot coexist within the same CLIM pair.

• Coexisting CLIMs in a system must control different SAS disk enclosures.
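The two coexistence criteria above can be expressed as a small planning check. This is a sketch under stated assumptions: the `StorageClimPair` record and the enclosure names are hypothetical, and the second criterion is interpreted here as "pairs of different CLIM types must not share a SAS disk enclosure".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageClimPair:
    name: str                    # hypothetical label for the pair
    clim_types: tuple[str, str]  # for example ("G6", "G6") or ("Gen8", "Gen8")
    enclosures: frozenset[str]   # SAS disk enclosures the pair controls


def check_coexistence(pairs: list[StorageClimPair]) -> list[str]:
    """Return a list of rule violations; an empty list means none were found."""
    problems = []
    # Criterion 1: both CLIMs in a pair must be the same type.
    for pair in pairs:
        if pair.clim_types[0] != pair.clim_types[1]:
            problems.append(f"{pair.name}: mixes {pair.clim_types[0]} and {pair.clim_types[1]}")
    # Criterion 2 (as interpreted here): pairs of different types
    # must control different SAS disk enclosures.
    for i, a in enumerate(pairs):
        for b in pairs[i + 1:]:
            shared = a.enclosures & b.enclosures
            if set(a.clim_types) != set(b.clim_types) and shared:
                problems.append(f"{a.name} and {b.name} share {sorted(shared)}")
    return problems


if __name__ == "__main__":
    plan = [
        StorageClimPair("pair-1", ("G6", "G6"), frozenset({"enc-1", "enc-2"})),
        StorageClimPair("pair-2", ("Gen8", "Gen8"), frozenset({"enc-3"})),
    ]
    print(check_coexistence(plan))
```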

NOTE: All CLIMs use the Cluster I/O Protocols (CIP) subsystem. For more information about

the CIP subsystem, see the Cluster I/O Protocols (CIP) Configuration and Management Manual.

Two storage CLIM configurations are available:

• “DL380 G6 Storage CLIM ” (page 18)

• “DL380p Gen8 Storage CLIM ” (page 18)


Page 18

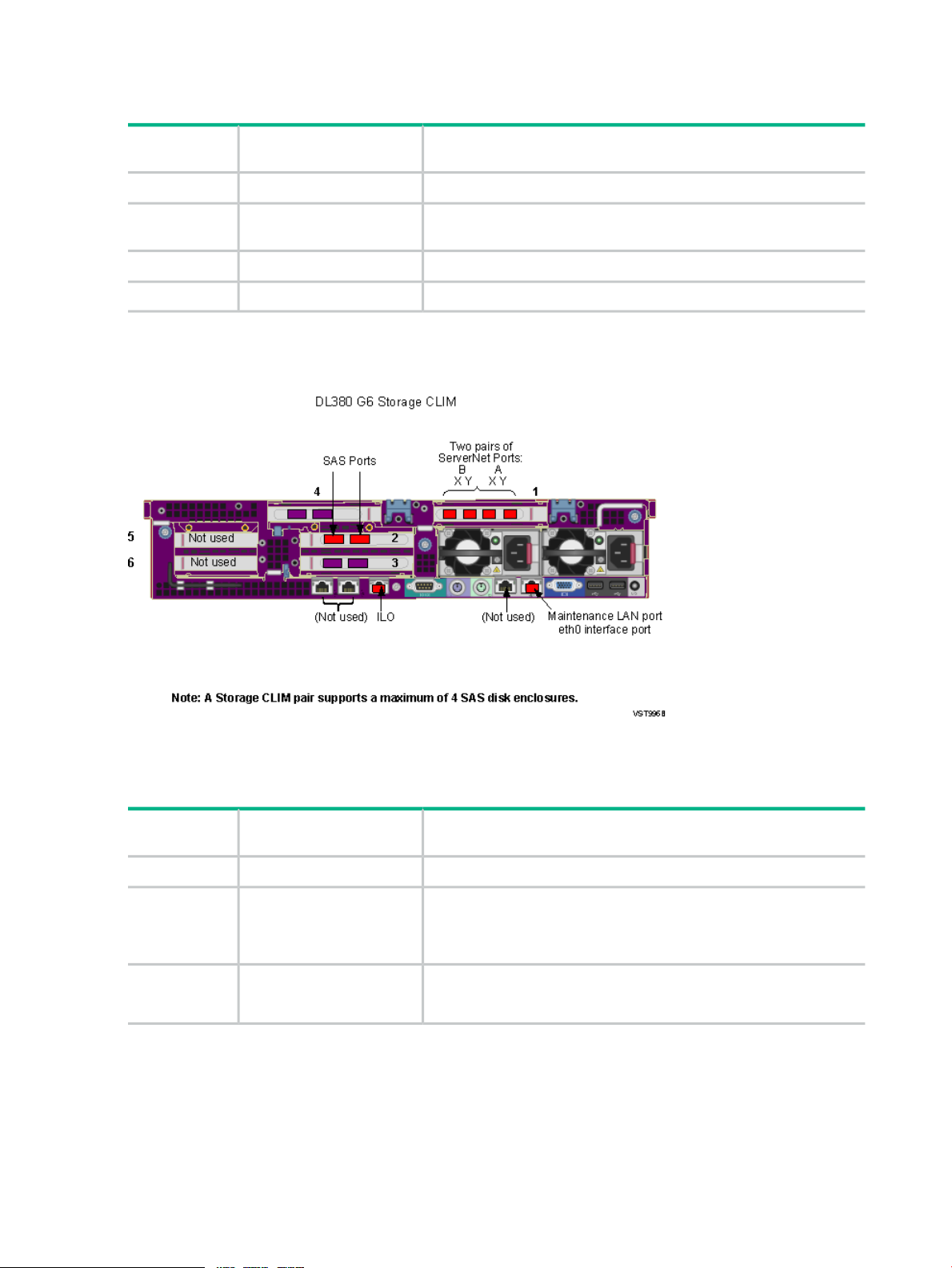

DL380 G6 Storage CLIM

The DL380 G6 Storage CLIM contains 4 PCIe HBA slots with these characteristics:

| Storage CLIM HBA Slot | Configuration | Provides |
|---|---|---|
| 1 | Part of base configuration | ServerNet fabric connections via a PCIe 4x adapter. |
| 2 | Part of base configuration | One SAS external connector with two SAS links per connector and 6 Gbps per link is provided by the PCIe 8x slot |
| 3 | Optional customer order | SAS or Fibre Channel |
| 4 | Optional customer order | Fibre Channel |

The illustration below shows the Storage CLIM HBA slots. For more information about Storage

CLIMs, see “Storage CLIM Devices” (page 74).

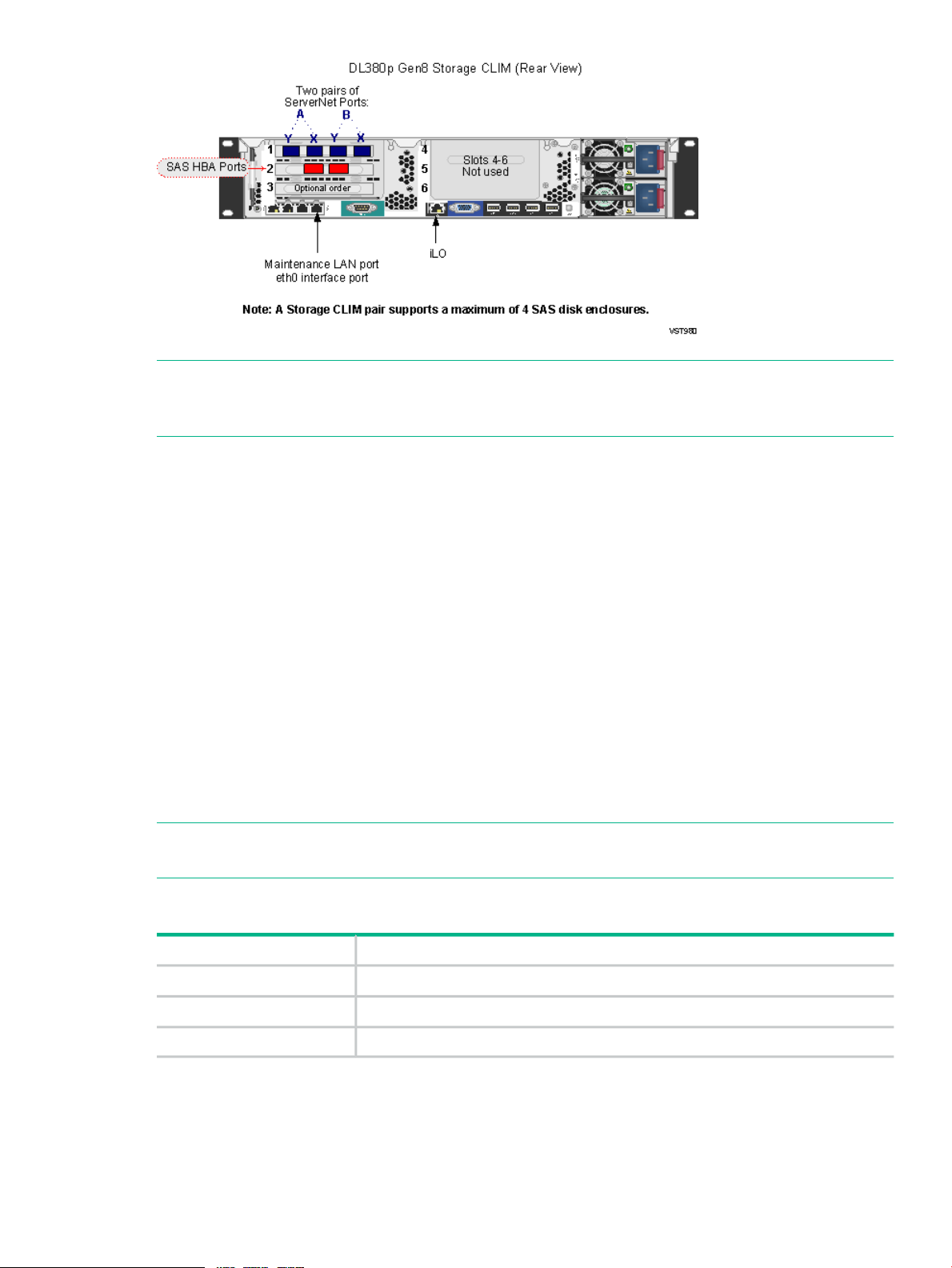

DL380p Gen8 Storage CLIM

The DL380p Gen8 Storage CLIM contains 3 PCIe HBA slots with these characteristics:

| Storage CLIM HBA Slot | Configuration | Provides |
|---|---|---|
| 1 | Part of base configuration | ServerNet fabric connections via a PCIe 4x adapter. |
| 2 | SAS HBA is part of base configuration | Two 6 Gbps SAS ports. NOTE: Fibre Channel HBA is not part of base configuration. An FC HBA in slot 2 is an optional customer order. |
| 3 | Optional customer order | SAS HBA with two 6 Gbps ports or FC HBA with two 8 Gbps ports. NOTE: Not part of base configuration. Optional customer order. |


Page 19

NOTE: The Storage CLIM uses the Cluster I/O Protocols (CIP) subsystem. For more information

about the CIP subsystem, refer to the Cluster I/O Protocols (CIP) Configuration and Management

Manual.

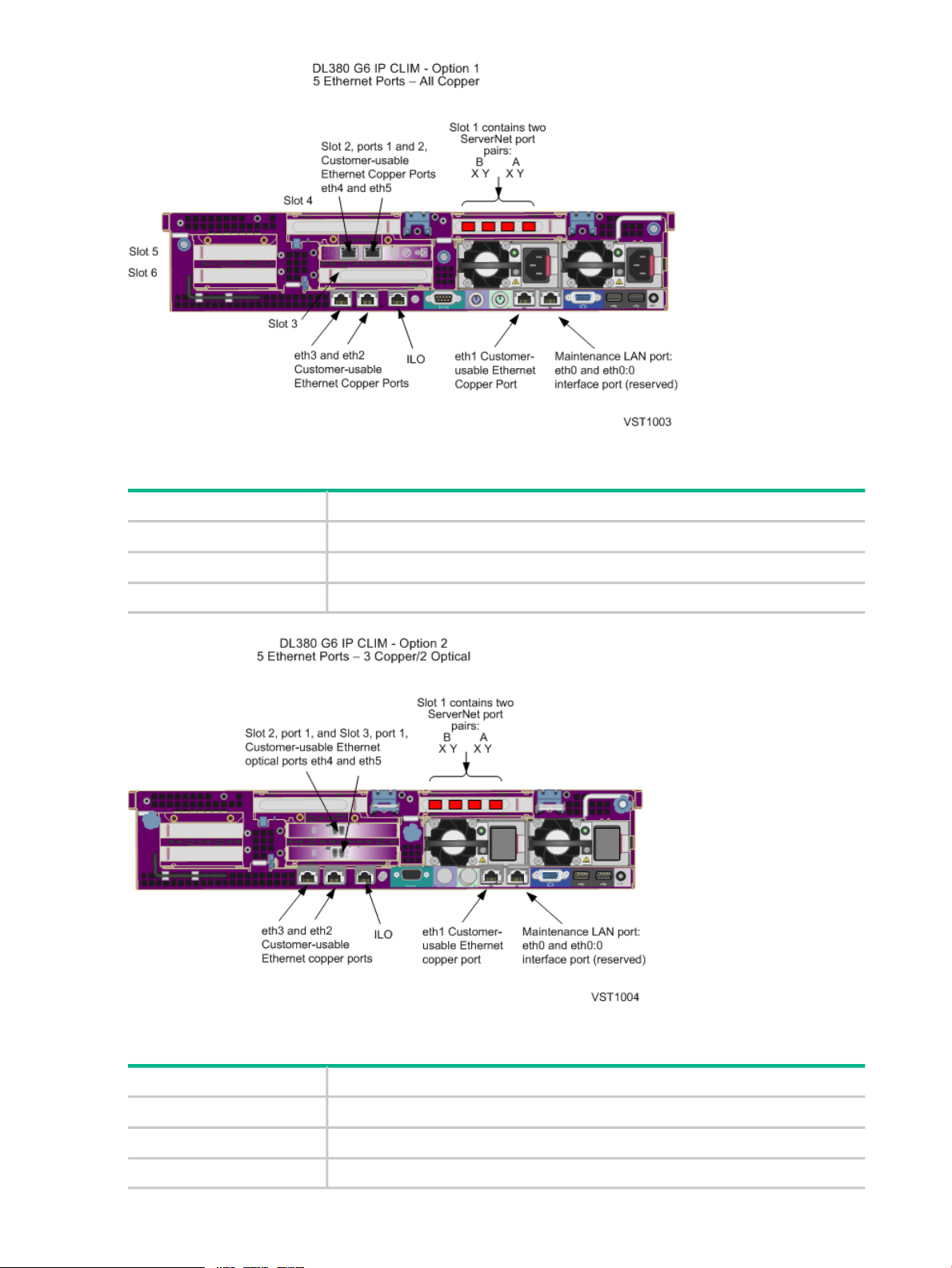

IP CLuster I/O Module (CLIM) (Optional)

The IP CLIM is a rack-mounted server that is part of some NS2100 system configurations. For

RVU requirements for DL380 G6 and DL380p Gen8 IP CLIMs, see Table 1 (page 16). An NS2100

system can have 0, 1, or 2 IP CLIMs. The IP CLIM connects to the VIO enclosure and functions

as a ServerNet Ethernet adapter providing HPE standard Gigabit Ethernet Network Interface

Cards (NICs) to implement one of these IP CLIM configurations:

• “DL380 G6 IP CLIM Option 1 — Five Ethernet Copper Ports” (page 19)

• “DL380 G6 IP CLIM Option 2 — Three Ethernet Copper and Two Ethernet Optical Ports”

(page 20)

• “DL380p Gen8 IP CLIM Option 1 — Five Ethernet Copper Ports” (page 20)

• “DL380p Gen8 IP CLIM Option 2 — Three Ethernet Copper and Two Ethernet Optical Ports”

(page 21)

These illustrations show the Ethernet interfaces and ServerNet fabric connections on the DL380

G6 and DL380p Gen8 IP CLIM with the IP CLIM option 1 and option 2 configurations. For

illustrations of the fronts of these CLIMs, see “CLuster I/O Modules (CLIMs)” (page 16).

NOTE: All CLIMs use the Cluster I/O Protocols (CIP) subsystem. For more information about

the CIP subsystem, see the Cluster I/O Protocols (CIP) Configuration and Management Manual.

DL380 G6 IP CLIM Option 1 — Five Ethernet Copper Ports

| IP CLIM Slot | Provides |
|---|---|
| Slot 1 | ServerNet fabric connections via a PCIe 4x adapter |
| Slot 2 | 2-port 1GbE copper NIC |
| Slots 3, 4, 5 | Not used |


Page 20

DL380 G6 IP CLIM Option 2 — Three Ethernet Copper and Two Ethernet Optical Ports

| IP CLIM Slot | Provides |
|---|---|
| Slot 1 | ServerNet fabric connections via a PCIe 4x adapter |
| Slot 2 and Slot 3 | 1-port 1GbE optical NIC |
| Slot 4 and Slot 5 | Not used |

DL380p Gen8 IP CLIM Option 1 — Five Ethernet Copper Ports


| IP CLIM Slot | Provides |
|---|---|
| Slot 1 | One ServerNet PCIe interface card, which provides the ServerNet fabric connections |
| Slot 2 | 2-port 1GbE copper NIC |
| Slots 3, 4, 5, 6 | Not used |

Page 21

DL380p Gen8 IP CLIM Option 2 — Three Ethernet Copper and Two Ethernet Optical Ports

| IP CLIM Slot | Provides |
|---|---|
| Slot 1 | One ServerNet PCIe interface card, which provides the ServerNet fabric connections |
| Slot 2 | 2-port 1GbE optical NIC |
| Slots 3, 4, 5, 6 | Not used |

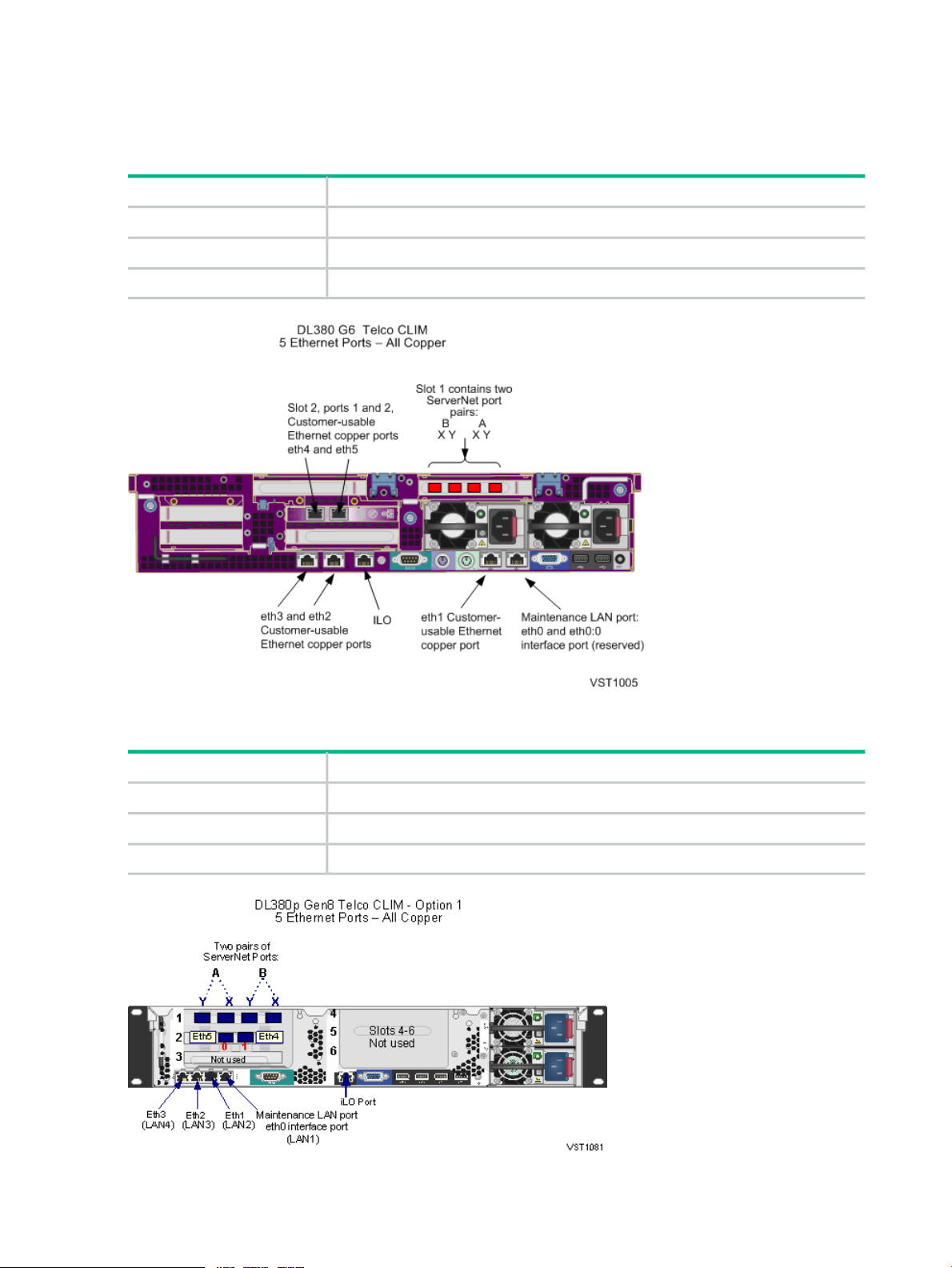

Telco CLuster I/O Module (CLIM) (Optional)

The Telco CLIM is a rack-mounted server that is part of some NS2100 series system

configurations. For RVU requirements for DL380 G6 and DL380p Gen8 Telco CLIMs, see Table 1

(page 16). An NS2100 series system can have 0, 1, or 2 Telco CLIMs. For information about

coexistence limits for IP and Telco CLIMs, see IP and Telco CLIM Coexistence Limits (page 88).

The Telco CLIM connects to the VIO enclosure and utilizes the Message Transfer Part Level 3

User Adaptation layer (M3UA) protocol and functions as a ServerNet Ethernet adapter with one

of these Telco CLIM configurations:

• “DL380 G6 Telco CLIM — Five Ethernet Copper Ports” (page 22)

• “DL380p Gen8 Telco CLIM — Option 1 Five Ethernet Copper Ports” (page 22)

• “DL380p Gen8 Telco CLIM — Option 2 Three Ethernet Copper and Two Ethernet Optical

Ports” (page 23)

NOTE: All CLIMs use the Cluster I/O Protocols (CIP) subsystem. For more information about

the CIP subsystem, see the Cluster I/O Protocols (CIP) Configuration and Management Manual.


Page 22

This illustration shows the Ethernet interfaces and ServerNet fabric connections on a DL380 G6

and DL380p Gen8 Telco CLIM. For illustrations of the front of this CLIM, see “CLuster I/O Modules

(CLIMs)” (page 16).

DL380 G6 Telco CLIM — Five Ethernet Copper Ports

| Telco CLIM Slot | Provides |
|---|---|
| Slot 1 | ServerNet fabric connections via a PCIe 4x adapter |
| Slot 2 | 2-port 1GbE copper NIC |
| Slots 3, 4, 5 | Not used |

DL380p Gen8 Telco CLIM — Option 1 Five Ethernet Copper Ports

| Telco CLIM Slot | Provides |
|---|---|
| Slot 1 | One ServerNet PCIe interface card, which provides the ServerNet fabric connections |
| Slot 2 | 2-port 1GbE copper NIC |
| Slots 3, 4, 5, 6 | Not used |


Page 23

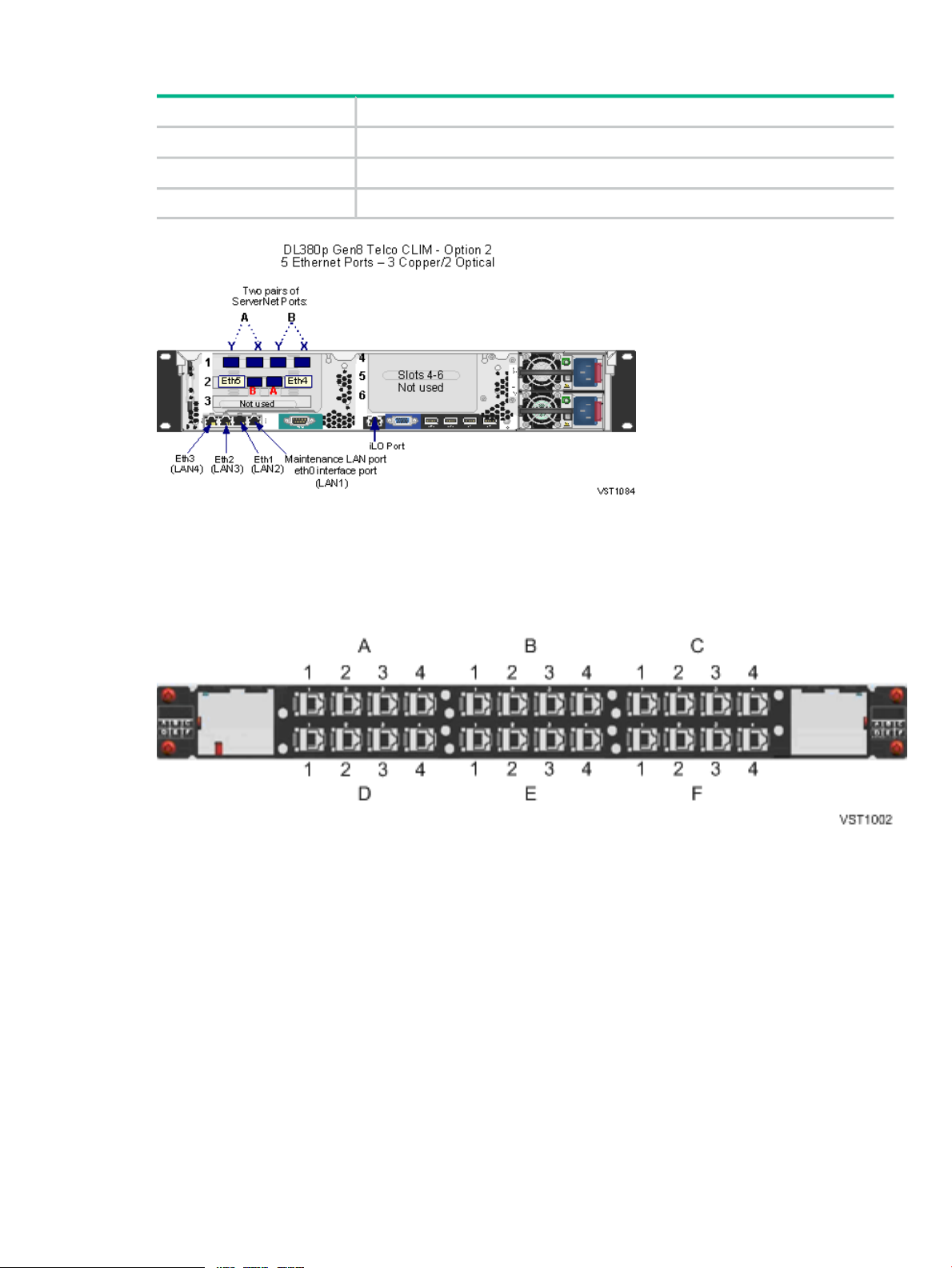

DL380p Gen8 Telco CLIM — Option 2 Three Ethernet Copper and Two Ethernet Optical Ports

| Telco CLIM Slot | Provides |
|---|---|
| Slot 1 | One ServerNet PCIe interface card, which provides the ServerNet fabric connections |
| Slot 2 | 2-port 1GbE optical NIC |
| Slots 3, 4, 5, 6 | Not used |

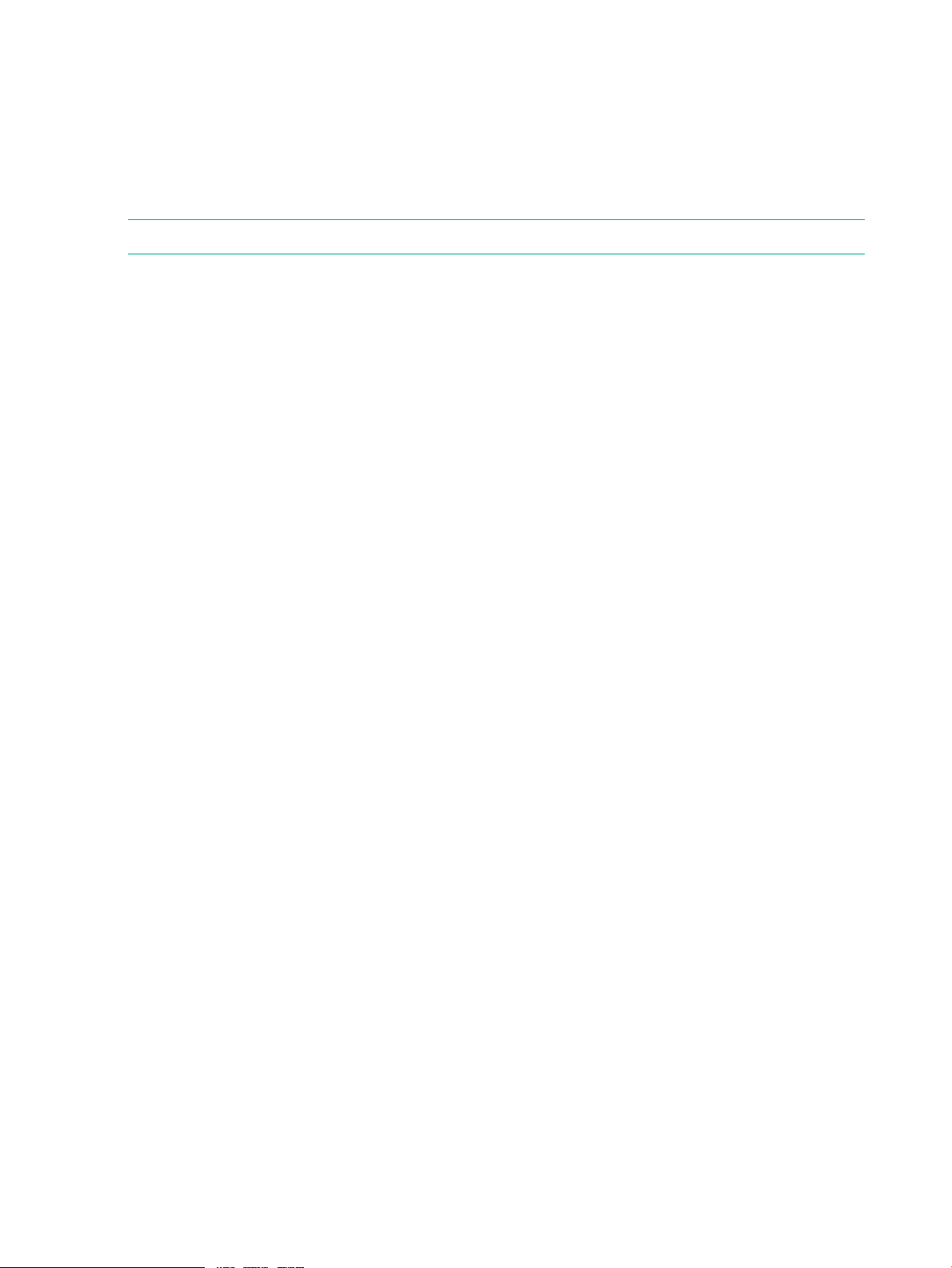

CLIM Cable Management Ethernet Patch Panel

The HPE Ethernet patch panel cable management product is used for cabling the IP and Telco

CLIM connections. The patch panel simplifies and organizes the cable connections to allow easy

access to the CLIM's customer-usable interfaces.

IP CLIMs each have five customer-usable interfaces. The patch panel connects these interfaces

and brings the usable interface ports to the patch panel. Each Ethernet patch panel has 24 slots,

is 1U high, and should be the topmost unit to the rear of the rack. Each Ethernet patch panel can

handle cables for up to five CLIMs. It has no power connection.

Each patch panel has 6 panes labeled A, B, C, D, E, and F. Each pane has 4 RJ-45 ports, and

each port is labeled 1, 2, 3, or 4. The RJ-45 ports in Pane A have port names: A1, A2, A3, and

A4.
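The port naming scheme described above (six panes, four RJ-45 ports per pane) can be enumerated as follows. This is a minimal illustration for labeling or cable-table work; the function name is hypothetical and not part of any HPE tool.

```python
PANES = "ABCDEF"      # six panes per patch panel
PORTS_PER_PANE = 4    # RJ-45 ports labeled 1 through 4


def patch_panel_ports() -> list[str]:
    """Return every port name on one patch panel, in pane order."""
    return [f"{pane}{port}"
            for pane in PANES
            for port in range(1, PORTS_PER_PANE + 1)]


if __name__ == "__main__":
    ports = patch_panel_ports()
    print(len(ports), ports)
```

The result has 6 × 4 = 24 names, consistent with the 24 slots stated for each Ethernet patch panel.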

The factory default configuration depends on how many IP or Telco CLIMs and patch panels are

configured in the system. For a new system, QMS Tech Doc generates a cable table with the

CLIM interface name. This table identifies how the connections between the CLIM physical ports

and patch panel ports were configured at the factory.

If you are adding a patch panel to an existing system, ask your Hewlett Packard Enterprise

service provider to refer to the CLuster I/O (CLIM) Installation and Configuration Guide.


Page 24

SAS Disk Enclosure

The M8381-25 SAS disk enclosure is a rack-mounted disk enclosure that connects to the Storage

CLIM and supports up to 25 SAS disk drives or solid state drives (in any combination), and a

dual SAS domain from the Storage CLIMs to dual port SAS drives. The SAS disk enclosure

supports connections to SAS disk drives. Connections to FCDMs are not supported. You can

find more information about the M8381-25 SAS disk enclosure in the HPE StorageWorks

D2600/D2700 Disk Enclosure User Guide.

NOTE: Solid state drives are supported as of J06.13.

An M8381-25 SAS disk enclosure supports 6Gbps SAS protocol. It contains:

• Twenty-five 2.5” dual-ported disk drive slots

• Two power supplies

• Two fans

• Two independent I/O modules:

◦ SAS Domain A

◦ SAS Domain B

For illustrations of the SAS disk enclosure, see Figure 20 (page 76).

Maintenance Switch

The HPE ProCurve maintenance switch provides communication among the NS2100 system (through

the VIO enclosures), the CLIMs, the optional UPS, and the system console running HPE

NonStop Open System Management (OSM).

The NS2100 system requires multiple connections to the maintenance switch:

• One connection from the ME ENET port on each of the two VIO enclosures

• One connection from slot 6B, port A on each of the two VIO enclosures for the OSM Service

Connection and OSM Notification Director (optional if a dedicated service LAN is implemented

using CLIMs)

• One connection to the iLO port on a CLIM

• One connection to the iLO port on a blade element

• One connection to an eth0 port on a CLIM

• One connection to the optional UPS module

• One connection to the system console running OSM
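When sizing the maintenance switch, the connections listed above can be totaled for a planned configuration. The sketch below assumes that the iLO and eth0 connections apply to every CLIM and that the iLO connection applies to every blade element; the list above does not state this explicitly, so confirm the counts with your service provider. The function and parameter names are hypothetical.

```python
def maintenance_switch_connections(blades: int,
                                   clims: int,
                                   has_ups: bool = False,
                                   osm_via_vio: bool = True,
                                   consoles: int = 1) -> int:
    """Estimate the number of maintenance switch ports a system needs."""
    vio_enclosures = 2                 # one per ServerNet fabric
    total = vio_enclosures             # ME ENET port on each VIO enclosure
    if osm_via_vio:
        total += vio_enclosures        # slot 6B, port A on each VIO enclosure
    total += 2 * clims                 # assumed: iLO and eth0 on every CLIM
    total += blades                    # assumed: iLO on every blade element
    total += 1 if has_ups else 0       # optional UPS module
    total += consoles                  # system console(s) running OSM
    return total


if __name__ == "__main__":
    print(maintenance_switch_connections(blades=4, clims=6, has_ups=True))
```

Setting `osm_via_vio=False` models the case where a dedicated service LAN is implemented using CLIMs, which makes the slot 6B connections optional.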

System Console

A system console is a Windows Server purchased from Hewlett Packard Enterprise that runs

maintenance and diagnostic software for NS2100 systems. When supplied with a new NS2100

system, system consoles have factory-installed Hewlett Packard Enterprise and third-party

software for managing the system. You can install software upgrades from the HPE NonStop

System Console Installer DVD.

Some system console hardware, including the Windows Server system unit, monitor, and

keyboard, can be mounted in the NS2100 system 19-inch rack. Other Windows Servers are

installed outside the rack and require separate provisions or furniture to hold the server hardware.

For more information on the system console, see “System Consoles for NS2100 Systems ”

(page 101).


Page 25

UPS (Optional)

An uninterruptible power supply (UPS) is optional but recommended where a site UPS is not

available.

A UPS can be combined with an extended runtime module (ERM) to extend battery run time.

See “ERM (Optional with UPS)” (page 26).

Depending on your power configuration, Hewlett Packard Enterprise supports these options:

• “UPS for a Single-Phase Power Configuration (Optional)” (page 25)

• “UPS for a Three-Phase Power Configuration (Optional)” (page 25)

WARNING! UPSs and ERMs must be mounted in the lowest portion of the system to avoid

tipping and stability issues. For more information, see the UPS user guide.

UPS for a Single-Phase Power Configuration (Optional)

Hewlett Packard Enterprise supports the HPE model R5000 UPS for the single-phase power

configuration because it utilizes the power fail support provided by OSM for this configuration.

For information about the requirements for installing a UPS, see “Uninterruptible Power Supply

(UPS)” (page 40).

There are two different versions of the R5000 single phase UPS:

• For North America and Japan, the HPE AF460A is utilized and uses a NEMA L6-30P (30A)

input connector with 200 to 208V single-phase.

• For International, the HPE AF461A is utilized and uses an IEC-60309 (32A) input connector

with 220V to 240V AC single-phase power.

NOTE: The AC input power cord for the single-phase UPS is routed to exit the modular cabinet

at either the top or bottom rear corners of the cabinet, depending on what is ordered for the site

power feed (the large output receptacle is unused).

For the UPS power and environmental requirements, see “System Installation Specifications for

NS2100 Systems” (page 44). For planning, installation, and emergency power-off (EPO)

instructions, see the HPE UPS R5000 User Guide and the HPE UPS Network Module User Guide

at http://www.hpe.com/support/UPS_3_Phase_Manuals

For other UPSs, see the documentation shipped with the UPS.

UPS for a Three-Phase Power Configuration (Optional)

Hewlett Packard Enterprise supports the HPE model R12000/3 UPS for the three-phase power

configuration because it utilizes the power fail support provided by OSM for this configuration.

For information about the requirements for installing a UPS, see “Uninterruptible Power Supply

(UPS)” (page 40).

There are two different versions of the R12000/3 UPS:

• For North America and Japan, the HPE AF429A is utilized and uses an IEC309 560P9 (60A)

input connector with 208V three-phase Wye.

• For International, the HPE AF430A is utilized and uses an IEC309 532P6 (32A) input

connector with 400V three-phase Wye.

NOTE: The R12000/3 UPS has two output connectors.

For the R12000/3 UPS power and environmental requirements, see Chapter 3 (page 44). For

planning, installation, and emergency power-off (EPO) instructions, see the HPE 3 Phase UPS

User Guide. This guide is on the Hewlett Packard Enterprise website at:

http://www.hpe.com/support/UPS_3_Phase_Manuals


Page 26

For other UPSs, see the documentation shipped with the UPS.

ERM (Optional with UPS)

Cabinet configurations that include the HPE UPS can also include extended runtime modules

(ERMs). An ERM is a battery module that extends the overall battery-supported system run time.

Up to two ERMs can be used for even longer battery-supported system run time.

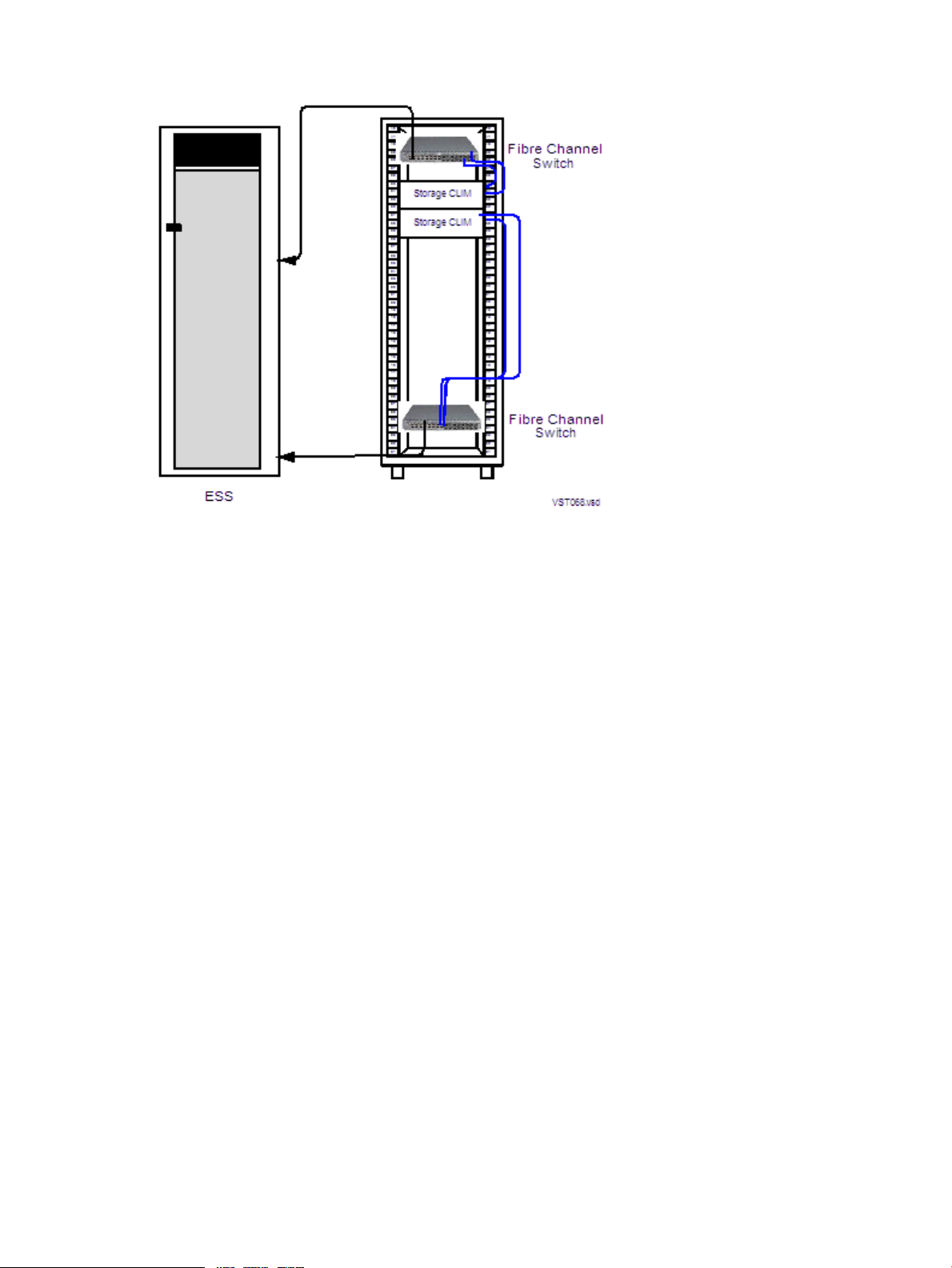

Enterprise Storage System — ESS (Optional)

An Enterprise Storage System (ESS) is a collection of magnetic disks, their controllers, and a

disk cache in one or more standalone cabinets. ESS connects to the Integrity NonStop NS-series

systems either directly via Fibre Channel ports on the Storage CLIM (direct connect) or through

a separate storage area network (SAN) using a Fibre Channel SAN switch (switched connect).

For more information about these connection types, see your Hewlett Packard Enterprise service

provider.

NOTE: The Fibre Channel SAN switch power cords might not be compatible with the modular

cabinet PDU. Contact your Hewlett Packard Enterprise service provider to order replacement

power cords for the SAN switch that are compatible with the modular cabinet PDU.

Cables and switches vary, depending on whether the connection is direct, switched, or a

combination:

| Connection | Cables | Fibre Channel Switches |
|---|---|---|
| Direct connect | 2 Fibre Channel (FC) HBA interfaces¹ on Storage CLIM (LC-MMF) | 0 |
| Switched | 4 FC HBA interfaces on Storage CLIM (LC-MMF) | 1 or more |
| Combination of direct and switched | 2 FC HBA interfaces on Storage CLIM for each direct connection | 1 |
| | 4 FC HBA interfaces on Storage CLIM for each switched connection | 1 |

¹ Customer must order FC HBA interfaces for a pair of Storage CLIMs.

Figure 2 shows an example of connections between two Storage CLIMs and an ESS via separate

Fibre Channel switches:


Page 27

Figure 2 Connections Between Storage CLIMs and ESS

For fault tolerance, the primary and backup paths to an ESS logical device (LDEV) must go

through different Fibre Channel switches.

Some storage area procedures, such as reconfiguration, can cause the affected switches to

pause. If the pause is long enough, I/O failure occurs on all paths connected to that switch. If

both the primary and the backup paths are connected to the same switch, the LDEV goes down.

For more information, see the documentation that accompanies the ESS.
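The fault-tolerance rule above (primary and backup paths to an LDEV must use different Fibre Channel switches) lends itself to a simple check of a planned path table. The sketch below assumes a hypothetical mapping from LDEV name to a (primary switch, backup switch) pair; the names are illustrative and do not come from any NonStop or ESS tool.

```python
def single_switch_ldevs(ldev_paths: dict[str, tuple[str, str]]) -> list[str]:
    """Return the LDEVs whose primary and backup paths share one switch."""
    return sorted(ldev
                  for ldev, (primary, backup) in ldev_paths.items()
                  if primary == backup)


if __name__ == "__main__":
    # Hypothetical plan: LDEV name -> (primary switch, backup switch)
    plan = {
        "ldev-01": ("fc-switch-1", "fc-switch-2"),
        "ldev-02": ("fc-switch-1", "fc-switch-1"),
    }
    print(single_switch_ldevs(plan))
```

Any LDEV the function returns would go down if its one switch paused long enough to cause I/O failure, so its backup path should be moved to a different switch.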

Tape Drive and Interface Hardware (Optional)

For an overview of tape drives and the interface hardware, see “Fibre Channel Ports to Tape

Devices” (page 74) and “SAS Ports to SAS Tape Devices” (page 74).

For a list of supported tape devices, ask your Hewlett Packard Enterprise service provider to

refer to the NonStop Storage Overview.

Preparation for Other Hardware

This guide provides the specifications only for the NS2100 system modular cabinets and

enclosures identified earlier in this section. For site preparation specifications for other Hewlett

Packard Enterprise hardware that will be installed at the site with the NS2100 systems, consult

with your Hewlett Packard Enterprise account team. For site preparation specifications relating

to hardware from other manufacturers, refer to the documentation for those devices.

Component Location and Identification in an NS2100 System

This subsection includes these topics:

• “Terminology” (page 28)

• “Rack and Offset Physical Location ” (page 29)

• “Blade Element Group-Module-Slot Numbering ” (page 29)

• “VIO Enclosure Group-Module-Slot Numbering ” (page 31)

• “CLIM Connection Group-Module-Slot-Port Numbering ” (page 33)


Page 28

Terminology

These are terms used in locating and describing components in an NS2100 commercial system:

Term
    Definition

Cabinet
    Computer system housing that includes a structure of external panels, front and rear doors, internal racking, and dual PDUs.

Rack
    Structure integrated into the cabinet into which rack-mountable components are assembled.

Rack Offset
    The physical location of components installed in a modular cabinet, measured in U values numbered 1 to 42, with 1U at the bottom of the cabinet. A U is 1.75 inches (44 millimeters).

Group
    A subset of a system that contains one or more modules. A group does not necessarily correspond to a single physical object, such as an enclosure.

Module
    A subset of a group that is usually contained in an enclosure. A module contains one or more slots (or bays). A module can consist of components sharing a common interconnect, such as a backplane, or it can be a logical grouping of components performing a particular function.

Slot (or Bay or Position)
    A subset of a module that is the logical or physical location of a component within that module.

Port
    A connector to which a cable can be attached and which transmits and receives data.

Group-Module-Slot (GMS), Group-Module-Slot-Bay (GMSB), Group-Module-Slot-Port (GMSP)
    A notation method used by hardware and software in NonStop systems for organizing and identifying the location of certain hardware components.

Blade complex
    In an NS2100 system, OSM uses this term to hierarchically differentiate between each blade element.

rx2800 i2 blade element
    An HPE Integrity rx2800 i2 server that contains the processor element, power supplies, fan assemblies, and firmware. An NS2100 system includes up to four blade elements.

On NS2100 systems, locations of the modular components are identified by:

• Physical location:

◦ Rack number

◦ Rack offset

• Logical location:

◦ Group, module, and slot (GMS) notation as defined by their position on the ServerNet

rather than the physical location

OSM uses GMS notation in many places, including the Tree view and Attributes window, and it

uses rack and offset information to create displays of the server and its components. For example,

in the Tree view, OSM displays the location of a power supply in a VIO enclosure in group 100,

module 2, slot 15 in this form:

Power Supply (100.2.15)
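As an illustration of the display form shown above, the following Python sketch builds and parses a label of the form Name (group.module.slot). The function names and the regular expression are hypothetical; they only mirror the text format in the example and are not part of OSM.

```python
import re


def format_gms(component, group, module, slot):
    """Build a label in the 'Name (group.module.slot)' form shown in the text."""
    return f"{component} ({group}.{module}.{slot})"


# Hypothetical pattern for reading such a label back into its parts.
_GMS_PATTERN = re.compile(r"^(?P<name>.+) \((?P<g>\d+)\.(?P<m>\d+)\.(?P<s>\d+)\)$")


def parse_gms(label):
    """Split a GMS label into (name, group, module, slot)."""
    match = _GMS_PATTERN.match(label)
    if match is None:
        raise ValueError(f"not a GMS label: {label!r}")
    return match["name"], int(match["g"]), int(match["m"]), int(match["s"])


label = format_gms("Power Supply", 100, 2, 15)
print(label)             # Power Supply (100.2.15)
print(parse_gms(label))  # ('Power Supply', 100, 2, 15)
```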

Page 29

Rack and Offset Physical Location

Rack name and rack offset identify the physical location of components in an NS2100 system.

The rack name is located on an external label affixed to the rack, which includes the system

name plus a 2-digit rack number.

Rack offset is labeled on the rails on each side of the rack. These rails are measured vertically

in units called U, with one U measuring 1.75 inches (44 millimeters). The rack is either 36U, with

1U located at the bottom and 36U at the top, or 42U, with 1U located at the bottom and 42U at

the top. The rack offset is the lowest U number on the rack that the component occupies.
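The rack offset arithmetic can be sketched as follows. This is an illustrative Python example only: the function names are hypothetical, the 2U component height in the usage lines is made up, and the bottom-edge calculation assumes that the 1U position starts at the bottom of the rails with no gap.

```python
U_INCHES = 1.75  # height of one U, as stated in the text


def occupied_units(rack_offset, height_u, rack_size_u=42):
    """List the U positions a component occupies, starting at its rack offset."""
    if rack_size_u not in (36, 42):
        raise ValueError("the text describes 36U and 42U racks only")
    if rack_offset < 1 or height_u < 1:
        raise ValueError("rack offset and height must be at least 1")
    if rack_offset + height_u - 1 > rack_size_u:
        raise ValueError("component does not fit in the rack")
    return list(range(rack_offset, rack_offset + height_u))


def bottom_edge_inches(rack_offset):
    """Distance from the bottom of the 1U position to the component's bottom edge."""
    return (rack_offset - 1) * U_INCHES


# A hypothetical 2U component installed at rack offset 10.
units = occupied_units(10, 2)
print(units)                   # [10, 11]
print(bottom_edge_inches(10))  # 15.75
```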

Blade Element Group-Module-Slot Numbering

• Group:

◦ In OSM Service Connection displays, 400 through 403 relate to blade complexes 0

through 3. Each blade complex includes a blade element and its associated processor.

Example: group 403 = blade complex 3

◦ In the OSM Low-Level Link, 400 relates to all blade complexes.

Example: group 400 = any blade complex

• Module:

◦ In OSM Service Connection displays, a module represents either the blade element or

the processor:

– In an NS2100 system, all blade elements are module 1.

Example: module 1 = any blade element

– 100 through 103 relate to processors 0 through 3.

Example: module 102 = processor 2

◦ In the OSM Low-Level Link, 100 through 103 relates to processors 0 through 3.

Example: module 103 = processor 3

• Slot:

◦ In OSM Service Connection displays:

– 1 represents the ServerNet PCI adapter card.

The ServerNet PCI card is installed in the third PCI slot in the rx2800 i2.

– 3 and 4 represent the power supplies on the blade element.

OSM slot 3 represents power supply 1; OSM slot 4 represents power supply 2.

– 32 through 37 represent the fans on the blade element.

OSM slot 32 represents fan 1, OSM slot 33 represents fan 2, and so on.

◦ In the OSM Low-Level Link, 1 relates to the location of the processor. Because each

blade element contains only one processor, it is always located in slot 1.

Example: slot 1 = any processor in any blade element.

• Port: X and Y relate to the two ServerNet fabric ports in slot 1.
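The numbering rules in the list above can be summarized in a short Python sketch. The function names and dictionary keys are hypothetical; the numbers come from the list, and the sketch assumes all blade elements are present and powered on so that the default numbering applies.

```python
def service_connection_gms(processor):
    """Group and module numbers shown in the OSM Service Connection for processors 0-3."""
    if processor not in range(4):
        raise ValueError("an NS2100 system has processors 0 through 3")
    return {
        "group": 400 + processor,             # blade complex 0-3 -> group 400-403
        "blade_element_module": 1,            # every blade element is module 1
        "processor_module": 100 + processor,  # processor 0-3 -> module 100-103
    }


def low_level_link_gms(processor):
    """Group, module, and slot shown in the OSM Low-Level Link for processors 0-3."""
    if processor not in range(4):
        raise ValueError("an NS2100 system has processors 0 through 3")
    # Group 400 covers all blade complexes; the processor is always in slot 1.
    return {"group": 400, "module": 100 + processor, "slot": 1}


def service_connection_slot(slot):
    """Describe what an OSM Service Connection slot number represents."""
    if slot == 1:
        return "ServerNet PCI adapter card"
    if slot in (3, 4):
        return f"power supply {slot - 2}"  # slot 3 -> supply 1, slot 4 -> supply 2
    if 32 <= slot <= 37:
        return f"fan {slot - 31}"          # slot 32 -> fan 1, ... slot 37 -> fan 6
    raise ValueError(f"slot {slot} is not described for a blade element")


print(service_connection_gms(3))
print(low_level_link_gms(3))
print(service_connection_slot(33))
```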

These tables show the default numbering for the blade elements of an NS2100 system when

blade elements are powered on and functioning:

Page 30

NOTE: In OSM, if a blade element is not present or is powered off, processors might be

renumbered. For example, if processor 2 has been removed, processor 3 becomes processor

2 in OSM displays.

GMS Numbering Displayed in the OSM Service Connection:

Processor ID   Group*   Module (Blade Element)   Module (Processor)
0              400      1                        100
1              401      1                        101
2              402      1                        102
3              403      1                        103

Slot (applies to all rows):
1, PCI adapter card (physical PCI slot 1)
3-4, power supplies (physical power supplies 1 and 2)
32-37, fans (physical fans 1 through 6)

Port (Slot 2 only): X, Y

*In OSM, the term Blade Complex is used for the group.

GMS Numbering Displayed in the OSM Low-Level Link:

Processor ID   Group*   Module   Slot
0              400      100      1
1              400      101      1
2              400      102      1
3              400      103      1

*In OSM, the term Blade Complex is used for the group.

The form of the GMS numbering for a blade element displayed in the OSM Service Connection

is:

This illustration shows the physical GMS numbering for the rear view of a blade element:

Page 31

The X fabric connects to ports BX and AX. The Y fabric connects to ports BY and AY.

VIO Enclosure Group-Module-Slot Numbering

An NS2100 system supports a single pair of VIO enclosures, identified as group 100. For an

illustration of the VIO enclosure slots, see Figure 3 (page 32).

Group (VIO Enclosure, AC-Powered): 100
Module: 2 (X Fabric), 3 (Y Fabric)

Slot             Slot
(Displayed by    (Displayed on
OSM)             Chassis)                       Item                       Ports
1                1                              Not supported              -
2                2                              Not supported              -
3                3                              Storage CLIM               1 and 3 for 2 Storage CLIMs; 1 - 4 for 4 Storage CLIMs
4                4                              IP CLIM                    1 - 2
4                4                              Telco CLIM                 1 - 2
5                5                              Not supported              -
6                6a                             Ethernet ports (optical)   C and D (10/100/1000 Mbps)
6                6b [1]                         Ethernet ports (copper)    A, B (10/100 Mbps); C, D (10/100/1000 Mbps)
7                7a                             Ethernet ports (optical)   C, D (10/100/1000 Mbps)
7                7b                             Ethernet ports (copper)    A, B (10/100 Mbps); C, D (10/100/1000 Mbps)
7                7c                             Not supported              -
14               14.1 - 14.4 (processors 0-3)   Processor ports            1 - 4
15, 18           15, 18                         Power supplies             -
16, 17           16, 17                         Fans                       -

Page 32

[1] Port A in slot 6b is reserved for OSM.

Figure 3 VIO Enclosure Slot Locations, NS2100 System

Page 33

CLIM Connection Group-Module-Slot-Port Numbering

This table lists the default numbering for VIO connections to a CLIM:

CLIM Group   Module   VIO Slot                         PIC Port Numbers
100          2        3 (Storage CLIM)                 1 and 3 for 2 Storage CLIMs, 1 - 4 for 4 Storage CLIMs
100          2        4 (IP CLIM), 4 (Telco CLIM)      1-2
100          3        3 (Storage CLIM)                 1 and 3 for 2 Storage CLIMs, 1 - 4 for 4 Storage CLIMs
100          3        4 (IP CLIM), 4 (Telco CLIM)      1-2
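The default VIO slot and PIC port assignments in the table above can be expressed as a small lookup. This Python sketch is illustrative only: the function name and the "storage", "ip", and "telco" keys are hypothetical, and Storage CLIM counts other than 2 and 4 are rejected because the table does not list them.

```python
def default_clim_connection(clim_type, storage_clim_count=None):
    """Return (VIO slot, PIC port numbers) for a CLIM type, per the default numbering."""
    if clim_type == "storage":
        if storage_clim_count == 2:
            return 3, [1, 3]
        if storage_clim_count == 4:
            return 3, [1, 2, 3, 4]
        raise ValueError("the table lists defaults for 2 or 4 Storage CLIMs only")
    if clim_type in ("ip", "telco"):
        # IP and Telco CLIMs share VIO slot 4 and PIC ports 1-2.
        return 4, [1, 2]
    raise ValueError(f"unknown CLIM type: {clim_type!r}")


print(default_clim_connection("storage", storage_clim_count=2))  # (3, [1, 3])
print(default_clim_connection("telco"))                          # (4, [1, 2])
```

The same assignments apply to module 2 (X fabric) and module 3 (Y fabric) in group 100.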

The illustration below shows the slot and connector locations from the VIO modules to two DL380

G6 Storage CLIMs.

Figure 4 DL380 G6 Storage CLIM Connections to VIO Enclosures

Page 34

The illustration below shows the slot and connector locations from the VIO modules to two DL380

G6 IP or Telco CLIMs.

NOTE: The Telco CLIM connections are the same as the IP CLIM connections.

Figure 5 DL380 G6 IP or Telco CLIM Connections to VIO Enclosures

Page 35

The illustration below shows the slot and connector locations from the VIO modules to two DL380p

Gen8 Storage CLIMs.

Figure 6 DL380p Gen8 Storage CLIM Connections to VIO Enclosures

Page 36

The illustration below shows the slot and connector locations from the VIO modules to two DL380p

Gen8 IP or Telco CLIMs.

NOTE: The Telco CLIM connections are the same as the IP CLIM connections.

Figure 7 DL380p Gen8 IP or Telco CLIM Connections to VIO Enclosures

System Installation Document Packet

To keep track of the hardware configuration, internal and external communications cabling, IP

addresses, and connected networks, assemble an Installation Document Packet and retain it as

part of the system's records. This packet can include:

• “Tech Memo for the Factory-Installed Hardware Configuration” (page 36)

• “Configuration Forms for the CLIMs and ServerNet Adapters” (page 37)

Tech Memo for the Factory-Installed Hardware Configuration

Each new NS2100 system includes a document that describes:

• The cabinet included with the system

• Each hardware enclosure installed in the cabinet

• Cabinet U location of the bottom edge of each enclosure

• Each ServerNet cable with:

◦ Source and destination enclosure, component, and connector

◦ Cable part number

◦ Source and destination connection labels

Page 37

This document is called a tech memo and serves as the physical location and connection map

for the system.

Configuration Forms for the CLIMs and ServerNet Adapters

Ethernet ports on a VIO enclosure or connections to an IP or Telco CLIM provide Gigabit Ethernet

functionality in an NS2100 system. Connections to the Fibre Channel HBA interfaces on a Storage

CLIM provide the Fibre Channel functionality in the system.

To add Fibre Channel and Ethernet configuration forms to your Installation Document Packet,

ask your Hewlett Packard Enterprise service provider to provide the necessary forms from the

Versatile I/O Manual or the Cluster I/O Installation and Configuration Manual (for IP or Telco

CLIM-related configurations) and follow any associated planning instructions.

Page 38

2 Site Preparation Guidelines for NS2100 Systems

This section describes power, environmental, and space considerations for an NS2100 system

at your site.

Modular Cabinet Power and I/O Cable Entry

Power and I/O cables can enter the NS2100 system from either the top or the bottom rear of the

modular cabinets, depending on how the cabinets are ordered from Hewlett Packard Enterprise