
HPE Integrity BL860c i4, BL870c i4 & BL890c i4 Server Blade User Service Guide

Abstract

This document contains specific information that is intended for users of this Hewlett Packard Enterprise product.

Part Number: 5200-2523

Published: September 2017

Edition: 6

© Copyright 2012, 2017 Hewlett Packard Enterprise Development LP

Notices

The information contained herein is subject to change without notice. The only warranties for Hewlett Packard Enterprise products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Hewlett Packard Enterprise shall not be liable for technical or editorial errors or omissions contained herein.

Confidential computer software. Valid license from Hewlett Packard Enterprise required for possession, use, or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license.

Links to third-party websites take you outside the Hewlett Packard Enterprise website. Hewlett Packard Enterprise has no control over and is not responsible for information outside the Hewlett Packard Enterprise website.

Acknowledgments

Intel®, Itanium®, Pentium®, Intel Inside®, and the Intel Inside logo are trademarks of Intel Corporation in the United States and other countries.

Microsoft® and Windows® are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries.

UNIX® is a registered trademark of The Open Group.

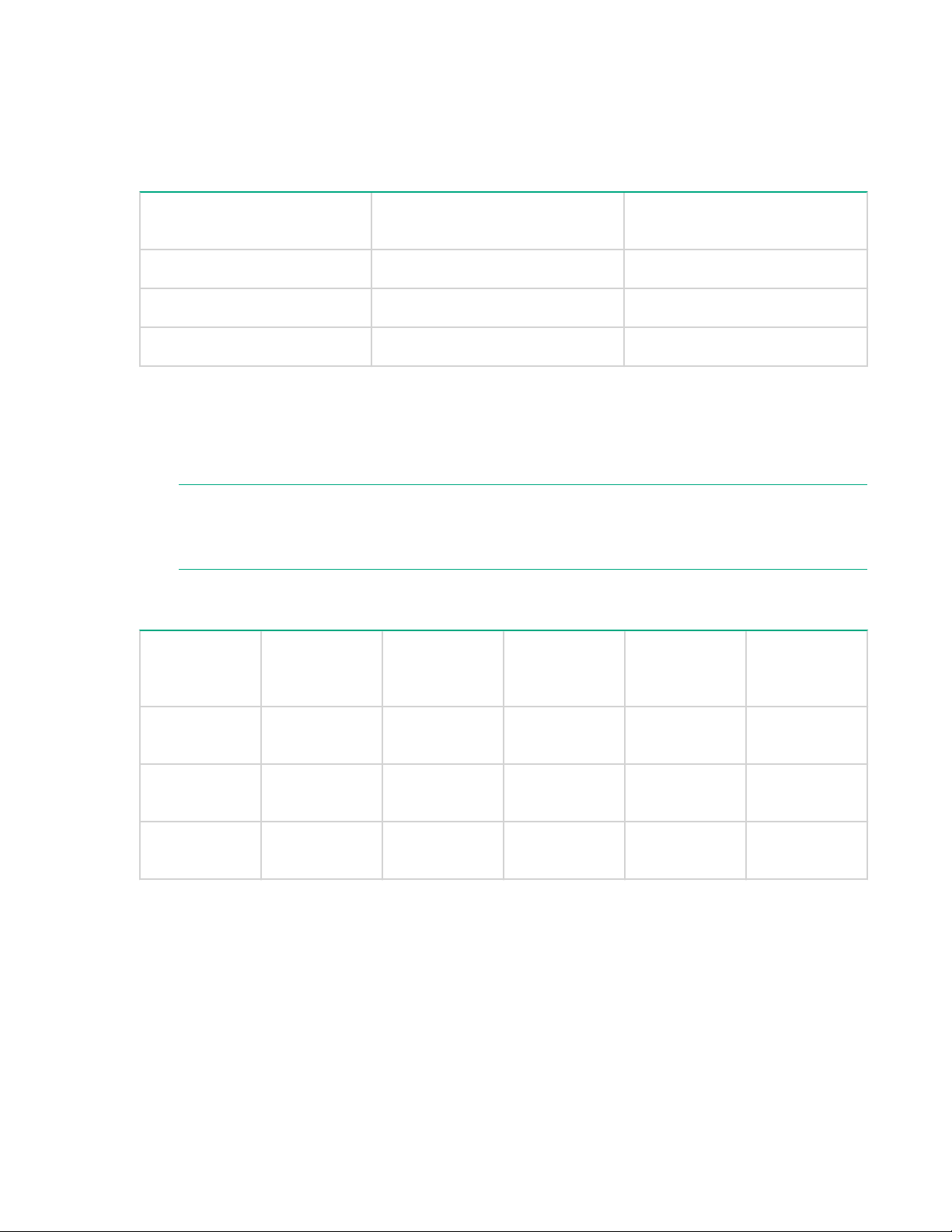

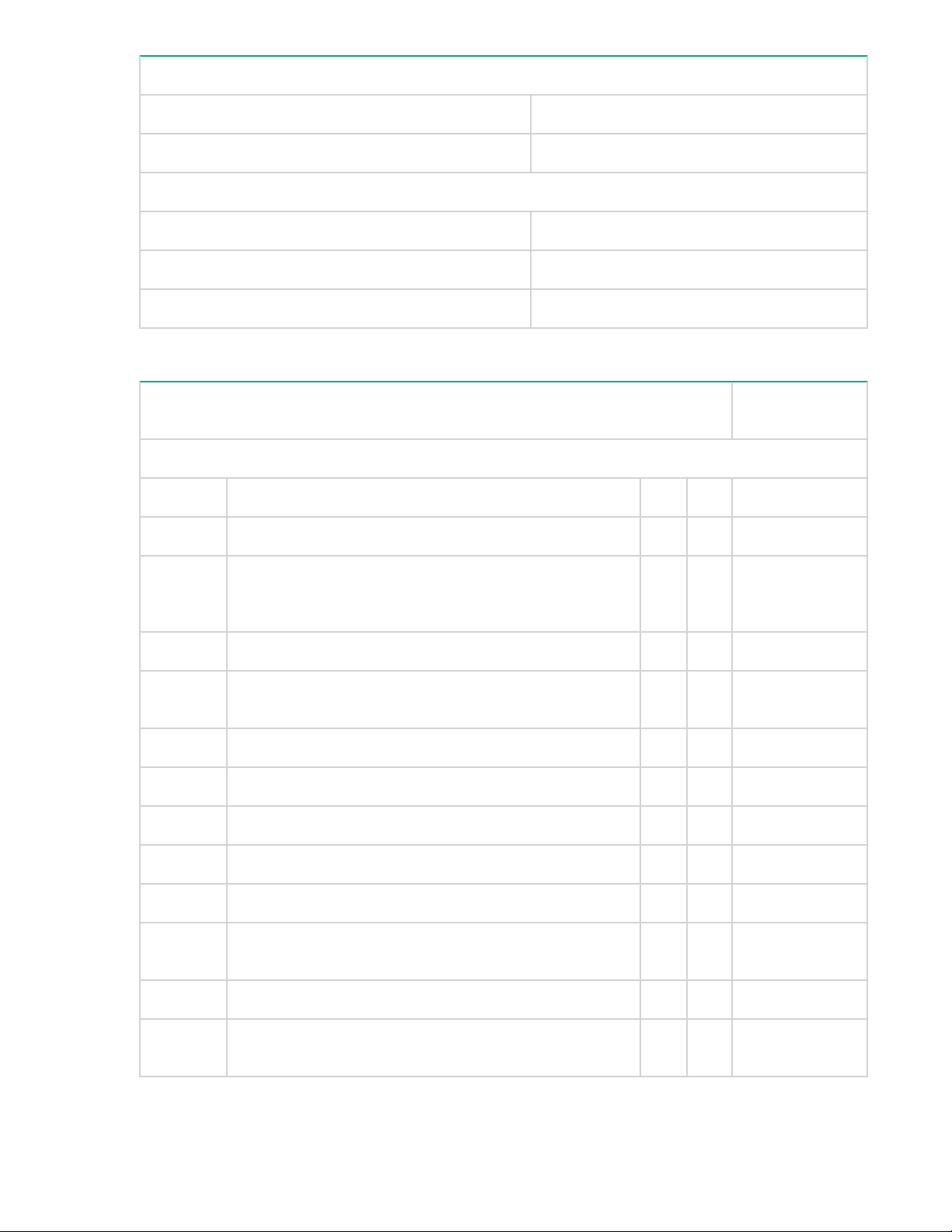

Revision history

Changes in this edition:

Added new part numbers for adding the "SAS disk drive LEDs: HBA mode" table under the "Troubleshooting" chapter.

| Document manufacturing part number | Operating systems supported | Supported product versions | Edition number | Publication date |
|---|---|---|---|---|
| 5992-1083 | HP-UX | BL860c i4, BL870c i4 & BL890c i4 | First | December 2012 |
| 5900-2663 | HP-UX | BL860c i4, BL870c i4 & BL890c i4 | Second | May 2013 |
| 5900-2673 | HP-UX | BL860c i4, BL870c i4 & BL890c i4 | Third | August 2013 |
| 5900-2673R | HP-UX | BL860c i4, BL870c i4 & BL890c i4 | Fourth | November 2015 |
| 5200-2461 | HP-UX | BL860c i4, BL870c i4 & BL890c i4 | Fifth | January 2017 |
| 5200-2523 | HP-UX | BL860c i4, BL870c i4 & BL890c i4 | Sixth | September 2017 |


Contents

Overview

Server blade overview

Server blade components

Site preparation

Server blade dimensions and weight

Enclosure information

Enclosure environmental specifications

Sample Site Inspection Checklist

Power subsystem

ESD handling information

Unpacking and inspecting the server blade

Verifying site preparation

Inspect the shipping containers for damage

Unpacking the server blade

Verifying the inventory

Returning damaged equipment

Installing the server blade into the enclosure

Installation sequence and checklist

Installing and powering on the server blade

Preparing the enclosure

Removing a c7000 device bay divider

Removing a c3000 device bay mini-divider or device bay divider

Installing interconnect modules

Installing the server blade into the enclosure

Server blade power states

Powering on the server blade

Powering off the server blade

Installing the Blade Link for BL860c i4, BL870c i4 or BL890c i4 configurations

Using iLO 3

Accessing UEFI or the OS from iLO 3 MP

UEFI Front Page

Saving UEFI configuration settings

Booting and installing the operating system

Operating system is loaded onto the server blade

Operating system is not loaded onto the server blade

OS login prompt

Installing the latest firmware using HP Smart Update Manager

Operating system procedures

Operating systems supported on the server blade

Installing the operating system onto the server blade

Installing the OS from an external USB DVD device or tape device

Installing the OS using Ignite-UX

Installing the OS using vMedia


Configuring system boot options

Booting and shutting down HP-UX

Adding HP-UX to the boot options list

HP-UX standard boot

Booting HP-UX from the UEFI Boot Manager

Booting HP-UX from the UEFI Shell

Booting HP-UX in single-user mode

Booting HP-UX in LVM-maintenance mode

Shutting down HP-UX

Optional components

Partner blades

Hot-plug SAS disk drives

Installing internal components

Removing the access panel

Processor and heatsink module

DIMMs

Mezzanine cards

HPE Smart Array P711m Controller

Supercap pack mounting kit

Installing the Supercap mounting bracket

Installing the P711m controller board

Installing the Supercap Pack

Replacing the access panel

Upgrading a conjoined configuration

Procedure summary

Upgrade kit contents

Before getting started

Upgrading the original server

Support

Blade link and system information parameters

Operating System Licenses

The Quick Boot option

Possible changes due to VC profile mapping on the upgraded blade server

Preserving VC-assigned MAC addresses in HP-UX by enabling Portable Image

Troubleshooting

Methodology

General troubleshooting methodology

Executing recommended troubleshooting methodology

Basic and advanced troubleshooting tables

Troubleshooting tools

Controls and ports

Front panel view

Rear panel view

Server blade LEDs

Front panel LEDs

SAS disk drive LEDs

Blade Link LEDs

Virtual Front Panel LEDs in the iLO 3 TUI

SUV Cable and Ports

Connecting to the serial port

Diagnostics


General diagnostic tools

Fault management overview

HP-UX Fault management

Errors and error logs

Event log definitions

Event log usage

iLO 3 MP event logs

SEL review

Troubleshooting processors

Processor installation order

Processor module behaviors

Enclosure information

Cooling subsystem

Firmware

Identifying and troubleshooting firmware issues

Verify and install the latest firmware

Troubleshooting the server interface (system console)

Troubleshooting the environment

Removing and replacing components

Server blade components list

Preparing the server blade for servicing

Powering off the server blade

Blade Link for BL870c i4 or BL890c i4 configurations

Removing the Blade Link for BL870c i4 or BL890c i4 configurations

Replacing the Blade Link for BL870c i4 or BL890c i4 configurations

Blade Link for BL860c i4 configurations

Server blade

Access panel

Disk drive blanks

Removing a disk drive blank

Disk drives

DIMM baffle

DIMMs

CPU baffle

CPU and heatsink module

SAS backplane

Server battery

Mezzanine cards

ICH mezzanine board

System board

Blade Link

Support and other resources

Accessing Hewlett Packard Enterprise Support

Accessing updates

Websites

Customer self repair

Remote support

Documentation feedback

RAID configuration and other utilities

Configuring a Smart Array Controller


Using the saupdate command

Get mode

Set mode

Updating the firmware using saupdate

Determining the Driver ID and CTRL ID

Configuring RAID volumes using the ORCA menu-driven interface

Creating a logical drive

Deleting a logical drive

Useful UEFI command checks

UEFI

UEFI Shell and POSSE commands

Drive paths in UEFI

Using the Boot Maintenance Manager

Boot Options

Add Boot Option

Delete Boot Option

Change Boot Order

Driver Options

Add Driver Option

Delete Driver Option

Change Driver Order

Console Configuration

Boot From File

Set Boot Next Value

Set Time Out Value

Reset System

iLO 3 MP

Warranty and regulatory information

Warranty information

Regulatory information

Belarus Kazakhstan Russia marking

Turkey RoHS material content declaration

Ukraine RoHS material content declaration

Standard terms, abbreviations, and acronyms

A B C D E F H I L M N O P Q S T U V W


Overview

The HPE Integrity BL860c i4 Server Blade is a dense, low-cost, Intel Itanium processor server blade. Using a Blade Link hardware assembly, multiple BL860c i4 Server Blades can be conjoined to create dual-blade, four-socket and quad-blade, eight-socket variants.

| Name | Number of conjoined server blades | Number of processor sockets |
|---|---|---|
| BL860c i4 | 1 | 2 |
| BL870c i4 | 2 | 4 |
| BL890c i4 | 4 | 8 |
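The table implies a fixed ratio of two processor sockets per conjoined blade. A minimal sketch of that relationship follows; the names `CONFIGURATIONS`, `SOCKETS_PER_BLADE`, and `describe` are illustrative assumptions and not part of any HPE tool.

```python
# Sockets per blade, inferred from the table: one BL860c i4 blade has two sockets.
SOCKETS_PER_BLADE = 2

# Product name for each supported number of conjoined blades (from the table).
CONFIGURATIONS = {1: "BL860c i4", 2: "BL870c i4", 4: "BL890c i4"}


def describe(blades: int) -> tuple[str, int]:
    """Return (product name, processor socket count) for a blade count."""
    if blades not in CONFIGURATIONS:
        raise ValueError(f"unsupported number of conjoined blades: {blades}")
    return CONFIGURATIONS[blades], blades * SOCKETS_PER_BLADE


for count in CONFIGURATIONS:
    name, sockets = describe(count)
    print(f"{name}: {count} blade(s), {sockets} sockets")
```

Only blade counts of 1, 2, and 4 appear in the table, so the sketch rejects any other value rather than guessing.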

The three blade configurations support the HP-UX operating system and are designed for deployment in HPE c-Class enclosures, specifically the 10U c7000 and the 6U c3000 Enclosures. The nPartition configuration feature is enabled from the factory, enabling the BL870c i4 and BL890c i4 to be partitioned into one or more electrically isolated partitions. See the nPartitions Administrator Users Guide for more information.

NOTE:

For purposes of this guide, make sure that the c-Class server blade enclosure is powered on and running properly and that the OA and iLO 3 are operational.

Server blade overview

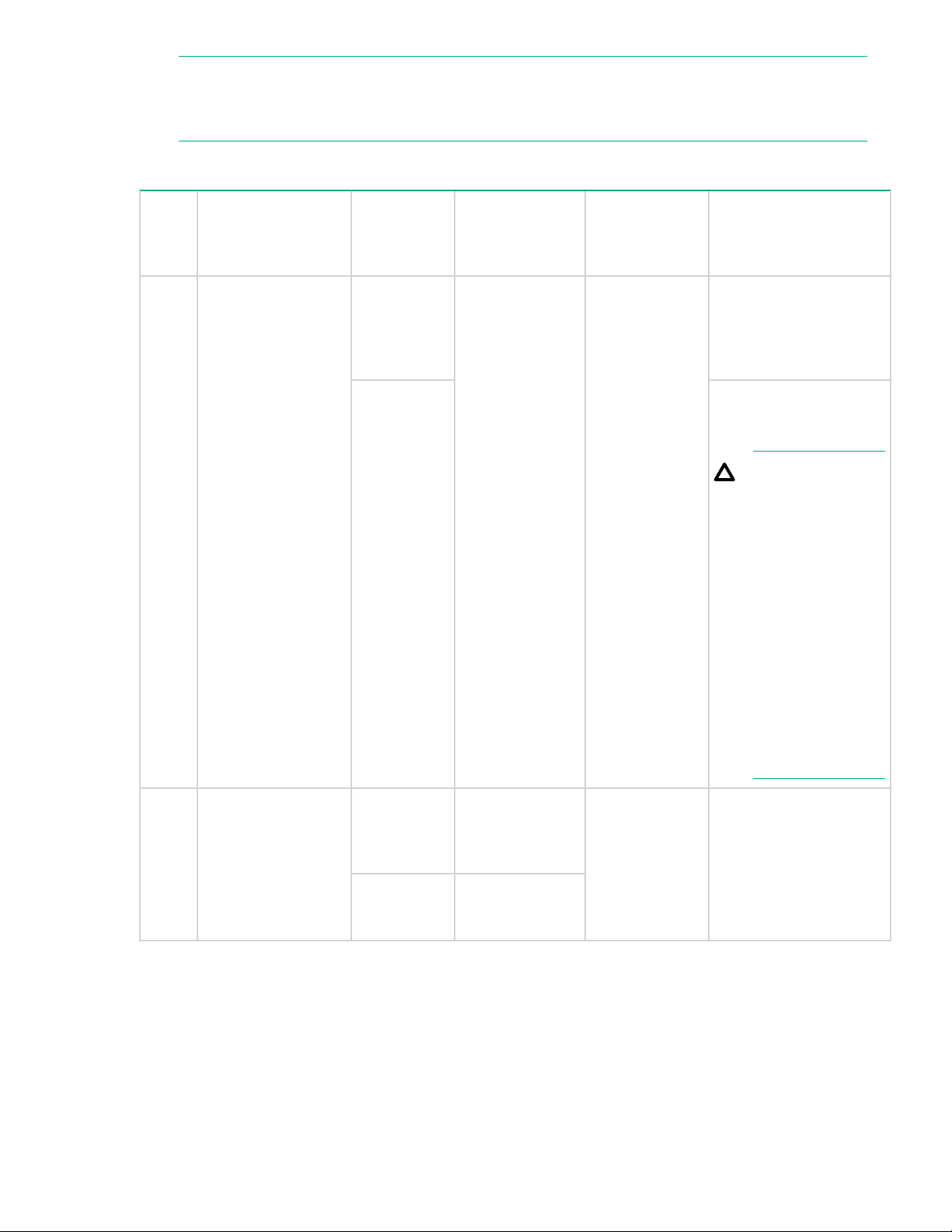

| Product | CPU cores (octo) | DIMM slots | Max memory | PCIe I/O Mezzanine card capacity | SAS Hard Disk Drives |
|---|---|---|---|---|---|
| BL860c i4 | 16 | 24 | 384GB with 16GB DIMMs | 3 | 2 |
| BL870c i4 | 32 | 48 | 768GB with 16GB DIMMs | 6 | 4 |
| BL890c i4 | 64 | 96 | 1.5TB with 16GB DIMMs | 12 | 8 |
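The maximum memory figures follow from multiplying the DIMM slot count by the DIMM size. A minimal sketch of that arithmetic, assuming a 16 GB DIMM in every slot; the names `DIMM_SLOTS` and `max_memory_gb` are illustrative only.

```python
# DIMM slots per configuration, as listed in the server blade overview table.
DIMM_SLOTS = {"BL860c i4": 24, "BL870c i4": 48, "BL890c i4": 96}


def max_memory_gb(product: str, dimm_size_gb: int = 16) -> int:
    """Return total memory in GB when every DIMM slot holds one DIMM."""
    return DIMM_SLOTS[product] * dimm_size_gb


for name in DIMM_SLOTS:
    total = max_memory_gb(name)
    print(f"{name}: {total} GB ({total / 1024:g} TB)")
```

With 16 GB DIMMs this gives 24 x 16 = 384 GB, 48 x 16 = 768 GB, and 96 x 16 = 1536 GB (1.5 TB).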


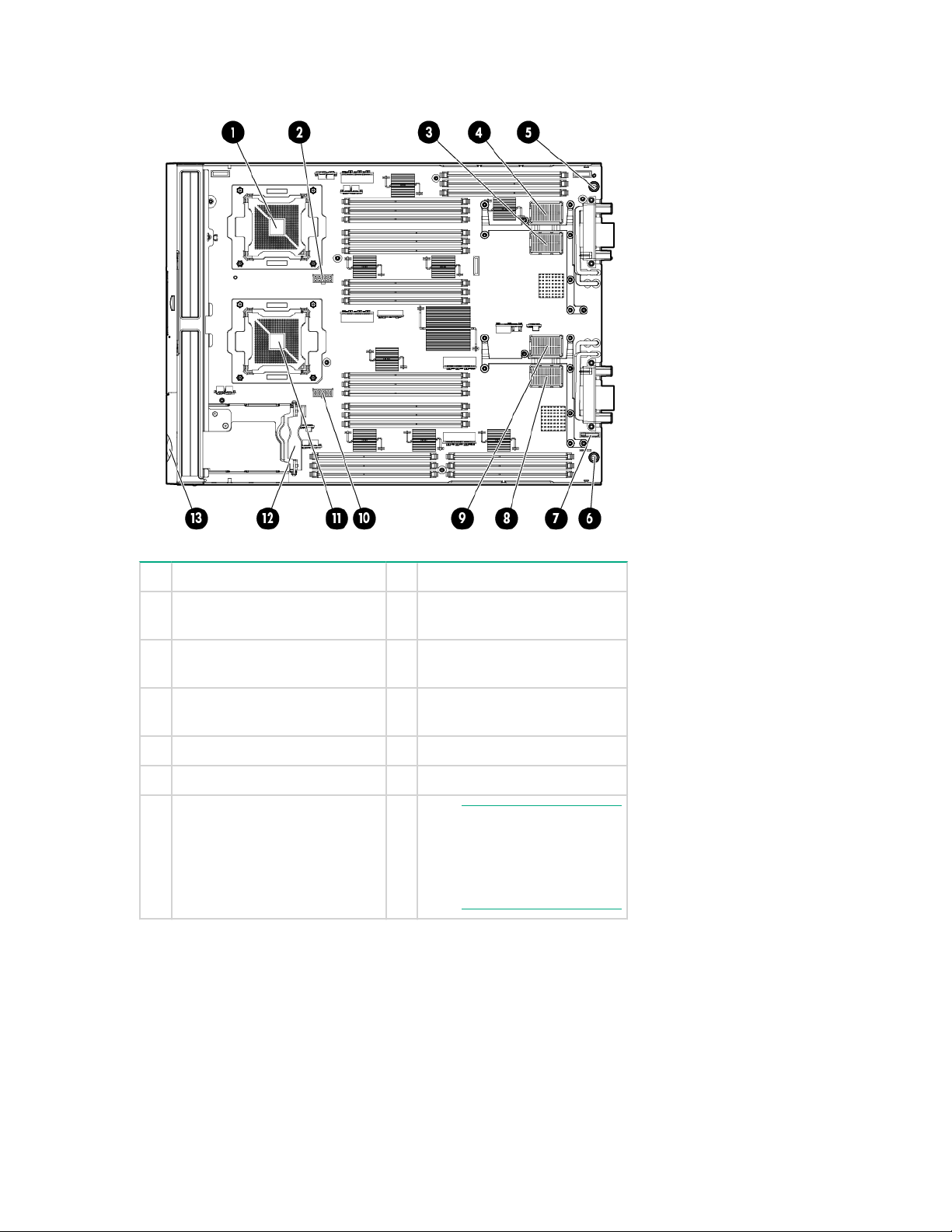

Server blade components

| Item | Description |
|---|---|
| 1 | CPU0 |
| 2 | CPU0 power connector |
| 3 | Mezzanine connector 1 (type 1) |
| 4 | Mezzanine connector 2 (type 1 or 2) |
| 5 | System board thumbscrew |
| 6 | System board thumbscrew |
| 7 | Battery (CR2032) |
| 8 | ICH mezzanine connector |
| 9 | Mezzanine connector 3 (type 1 or 2) |
| 10 | CPU1 power connector |
| 11 | CPU1 |
| 12 | SAS backplane |
| 13 | Pull tab |

NOTE:

The iLO 3 password is located on the pull tab.


Site preparation

The BL860c i4 does not have cooling or power systems. Cooling and power are provided by the c-Class enclosure.

IMPORTANT:

To avoid hardware damage, allow the thermal mass of the product to equalize to the temperature and humidity of the installation facility after removing the shipping materials. A minimum of one hour per 10°C (18°F) of temperature difference between the shipping facility and installation facility is required.
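As a rough illustration of the acclimation rule, the sketch below estimates the minimum waiting time from the two facility temperatures. It assumes the rule scales linearly (one hour per 10°C of difference); the function name `min_acclimation_hours` is hypothetical.

```python
def min_acclimation_hours(shipping_temp_c: float, site_temp_c: float) -> float:
    """Minimum acclimation time, assuming one hour per 10 degrees C of difference."""
    return abs(shipping_temp_c - site_temp_c) / 10.0


# Example: product shipped at -5 degrees C into a 22 degrees C computer room.
hours = min_acclimation_hours(-5.0, 22.0)
print(f"Wait at least {hours:.1f} hours before powering on")
```

For a 27°C difference this yields 2.7 hours. Treat the result as a lower bound.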

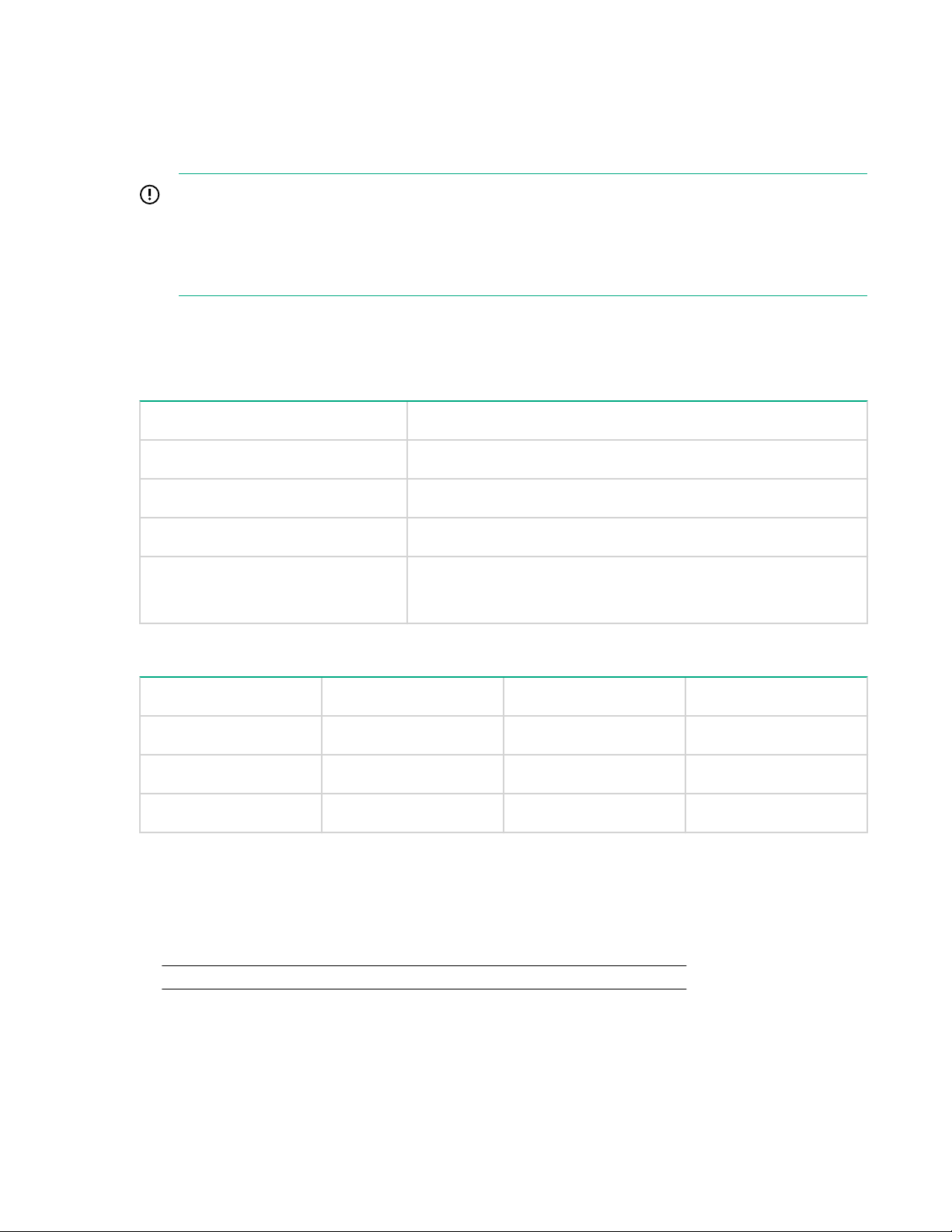

Server blade dimensions and weight

Table 1: Server blade dimensions and weight for the BL860c i4

Dimensions value

Height 36.63 cm (14.42 in.)

Width 5.14 cm (2.025 in.)

Depth 48.51 cm (19.1 in.)

Weight Unloaded: 8.6 kg (19 lb)

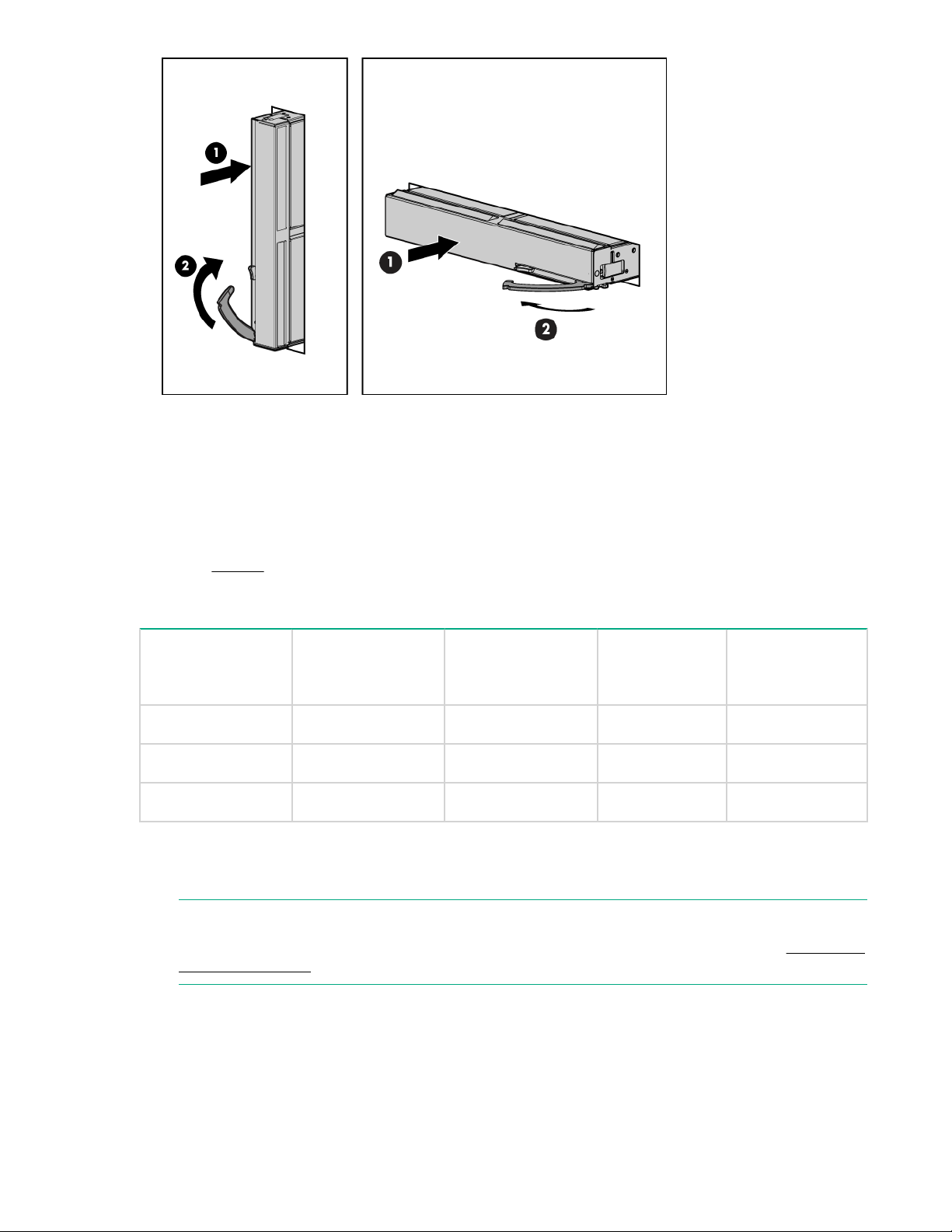

Table 2: Blade Link dimensions and weight

Blade Link type Height Width Weight

BL1 (BL860 i4) 44 mm (1.73 in) 51 mm (2 in) .5 lb (.22 kg)

BL2 (BL870 i4) 44 mm (1.73 in) 106 mm (4.17 in) 1 lb (.45 kg)

BL4 (BL890 i4) 44 mm (1.73 in) 212 mm (8.34 in) 2 lb (.90 kg)

Enclosure information

All three blade configurations are supported in HPE c7000 and c3000 Enclosures.

For more enclosure information see:

• http://www.hpe.com/support/Bladesystem_c3000_Enclosures_Manuals

• http://www.hpe.com/support/Bladesystem_c7000_Enclosures_Manuals

Fully loaded: 11.3 kg (25 lb)


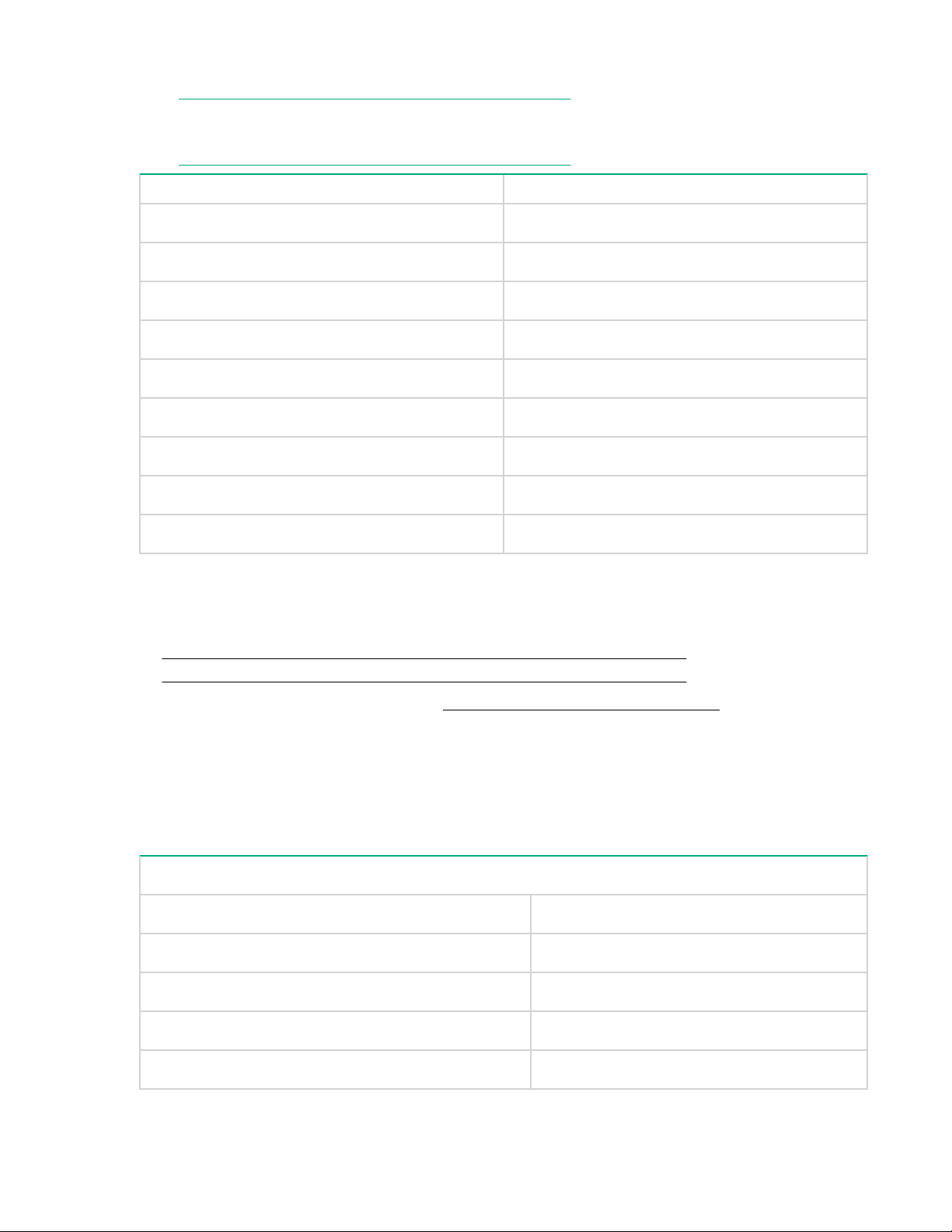

Enclosure environmental specifications

NOTE:

This information is for both c3000 and c7000 Enclosures.

| Specification | Value |
|---|---|
| Temperature range (operating) | 10°C to 35°C (50°F to 95°F) |
| Temperature range (non-operating) | -30°C to 60°C (-22°F to 140°F) |
| Wet bulb temperature (operating) | 28°C (82.4°F) |
| Wet bulb temperature (non-operating) | 38.7°C (101.7°F) |
| Relative humidity, noncondensing (operating) | 20% to 80% |
| Relative humidity, noncondensing (non-operating) | 5% to 95%¹ |

¹ Storage maximum humidity of 95% is based on a maximum temperature of 45°C (113°F). Altitude maximum for storage corresponds to a pressure minimum of 70 kPa.
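The operating limits above can be expressed as a simple range check, for example when validating readings from a room sensor. This is a sketch only: the `Range` class, the constant names, and `within_operating_limits` are assumptions made for illustration, and the check covers dry-bulb temperature and relative humidity but not wet bulb temperature or altitude.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Range:
    """Inclusive numeric range."""
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


# Operating limits copied from the enclosure environmental specifications.
OPERATING_TEMP_C = Range(10.0, 35.0)
OPERATING_RH_PCT = Range(20.0, 80.0)


def within_operating_limits(temp_c: float, rh_pct: float) -> bool:
    """True if both temperature and relative humidity are within operating limits."""
    return OPERATING_TEMP_C.contains(temp_c) and OPERATING_RH_PCT.contains(rh_pct)


print(within_operating_limits(22.0, 45.0))  # typical computer room reading
print(within_operating_limits(36.0, 45.0))  # temperature above the 35 degrees C limit
```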

For more information on the c-Class enclosures, see:

• http://www.hpe.com/support/Bladesystem_c3000_Enclosures_Manuals

• http://www.hpe.com/support/Bladesystem_c7000_Enclosures_Manuals

For more site preparation information, go to http://www.hpe.com/info/Blades-docs, select HPE Integrity BL860c i4 Server Blade in the list of servers, and then select the Generalized Site Preparation Guidelines.

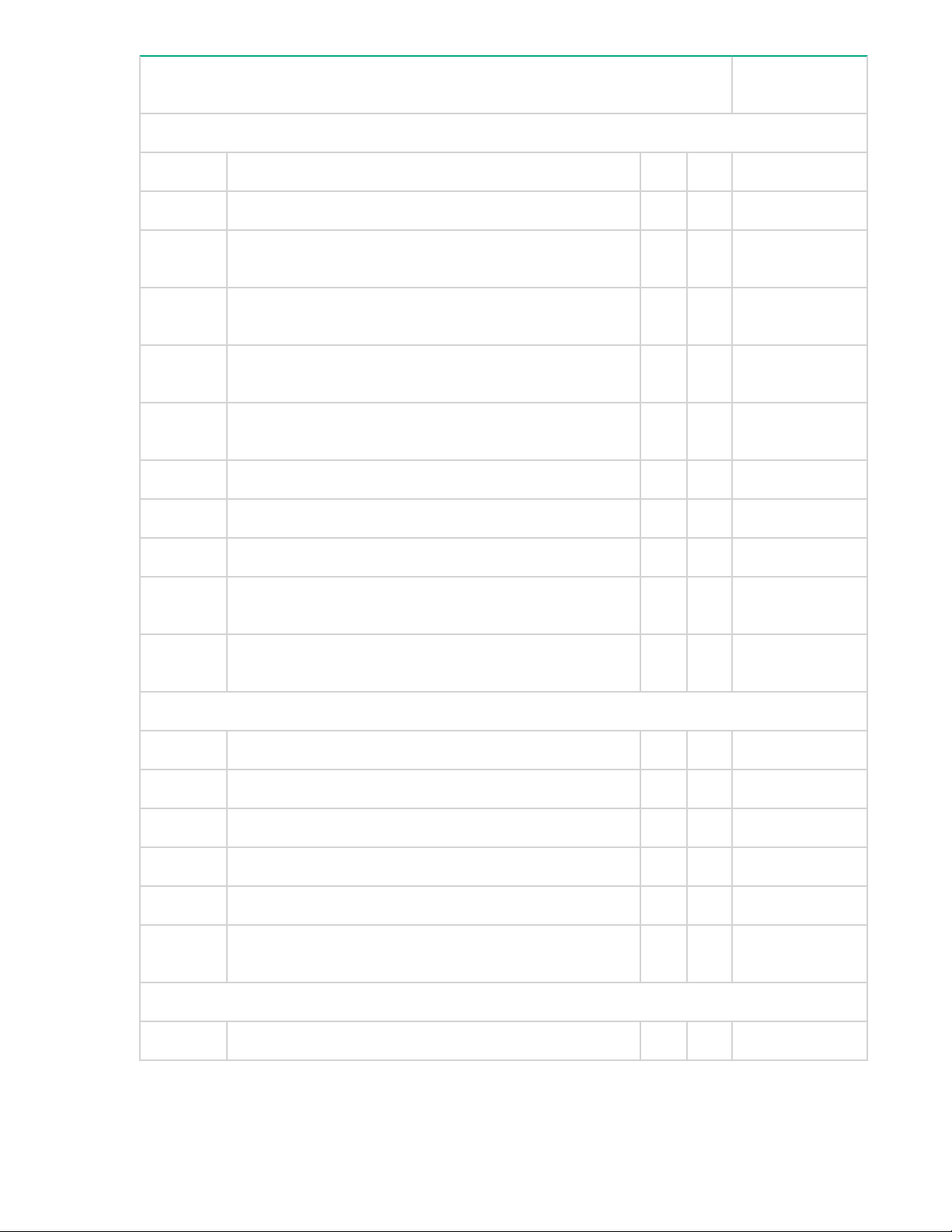

Sample Site Inspection Checklist

Table 3: Customer and Hewlett Packard Enterprise Information

Customer Information

Name: Phone number:

Street address: City or Town:

State or province: Country:

Zip or postal code:

Primary customer contact: Phone number:


Secondary customer contact: Phone number:

Traffic coordinator: Phone number:

Hewlett Packard Enterprise information

Sales representative: Order number:

Representative making survey: Date:

Scheduled delivery date:

Table 4: Site Inspection Checklist

Check either Yes or No. If No, include comment number or date.

Computer Room

Number Area or condition Yes No Comment or Date

1. Is there a completed floor plan?

2. Is adequate space available for maintenance needs?

Front 36 inches (91.4 cm) minimum and rear 36 inches

(91.4 cm) minimum are recommended clearances.

3. Is access to the site or computer room restricted?

4. Is the computer room structurally complete? Expected

date of completion?

5. Is a raised floor installed and in good condition?

6. Is the raised floor adequate for equipment loading?

7. Are channels or cutouts available for cable routing?

8. Is a network line available?

9. Is a telephone line available?

10. Are customer-supplied peripheral cables and LAN cables

available and of the proper type?

11. Are floor tiles in good condition and properly braced?

12. Is floor tile underside shiny or painted? If painted, judge the need for particulate test.

Page 13

Power and Lighting

Number Area or Condition Yes No

13. Are lighting levels adequate for maintenance?

14. Are AC outlets available for servicing needs (for example,

laptop)?

15. Does the input voltage correspond to equipment

specifications?

15a. Is dual source power used? If so, identify types and

evaluate grounding.

16. Does the input frequency correspond to equipment

specifications?

17. Are lightning arrestors installed inside the building?

18. Is power conditioning equipment installed?

19. Is a dedicated branch circuit available for equipment?

20. Is the dedicated branch circuit less than 75 feet (22.86

m)?

21. Are the input circuit breakers adequate for equipment

loads?

Safety

Number Area or Condition Yes No

22. Is an emergency power shutoff switch available?

23. Is a telephone available for emergency purposes?

24. Does the computer room have a fire protection system?

25. Does the computer room have antistatic flooring installed?

26. Do any equipment servicing hazards exist (loose ground

wires, poor lighting, and so on)?

Cooling

Number Area or Condition Yes No

Page 14

27. Can cooling be maintained between 5°C (41°F) and 35°C (95°F) (up to 1,525 m/5,000 ft)? Derate 1°C/305 m (1.8°F/1,000 ft) above 1,525 m/5,000 ft and up to 3,048 m/10,000 ft.

28. Can temperature changes be held to 5°C (9 °F) per hour

with tape media? Can temperature changes be held to

20°C (36 °F) per hour without tape media?

29. Can humidity level be maintained at 40% to 55% at 35°C

(95 °F) noncondensing?

30. Are air-conditioning filters installed and clean?

Storage

Number Area or Condition Yes No

31. Are cabinets available for tape and disc media?

32. Is shelving available for documentation?

Training

Number Area or Condition

33. Are personnel enrolled in the System Administrator’s

Course?

34. Is on-site training required?
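Checklist item 27 describes an altitude derating rule: the 35°C upper cooling limit applies up to 1,525 m, and is reduced by 1°C for every 305 m above that, up to 3,048 m. The following sketch works that arithmetic out. The function and constant names are hypothetical, and a linear (rather than stepped) derating is an assumption.

```python
# Values taken from checklist item 27; the names are illustrative only.
UPPER_LIMIT_C = 35.0           # upper cooling limit up to 1,525 m
DERATE_START_M = 1525.0        # altitude at which derating begins
DERATE_END_M = 3048.0          # highest altitude covered by the checklist
DERATE_C_PER_M = 1.0 / 305.0   # 1 C per 305 m (assumed to apply linearly)


def max_ambient_c(altitude_m: float) -> float:
    """Return the derated upper temperature limit in degrees Celsius."""
    if altitude_m > DERATE_END_M:
        raise ValueError("the checklist gives no limit above 3,048 m")
    excess_m = max(0.0, altitude_m - DERATE_START_M)
    return UPPER_LIMIT_C - excess_m * DERATE_C_PER_M
```

For example, a site at 1,830 m is 305 m above the derating threshold, so the upper limit drops by 1°C to 34°C.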

Power subsystem

The power subsystem is located on the system board. The BL860c i4 Server Blade receives 12 V directly from the enclosure. The voltage passes through an E-fuse circuit, which immediately cuts power to the blade if a short circuit fault or overcurrent condition is detected. The E-fuse can also be power cycled intentionally through the manageability subsystem. The 12 V supply is distributed to various points on the blade and is converted to lower voltages through power converters for use by integrated circuits and loads on the blade.


Page 15

ESD handling information

CAUTION:

Wear an ESD wrist strap when handling internal server components. Acceptable ESD wrist straps

include:

• The wrist strap that is included in the ESD kit with circuit checker (part number 9300-1609).

• The wrist strap that is included in the ESD kit without circuit checker (part number 9300-1608).

If the above options are unavailable, the throwaway (single-use) strap that ships with some Hewlett Packard Enterprise memory products can also be used, with increased risk of electrostatic damage.

When removing and replacing server components, use care to prevent injury and equipment damage.

Many assemblies are sensitive to damage by electrostatic discharge.

Follow the safety precautions listed to ensure safe handling of components, to prevent injury, and to

prevent damage to the server blade:

• When removing or installing a server blade or server blade component, review the instructions

provided in this guide.

• Do not wear loose clothing that might snag or catch on the server or on other items.

• Do not wear clothing subject to static charge build-up, such as wool or synthetic materials.

• If installing an internal assembly, wear an antistatic wrist strap, and use a grounding mat such as those

included in the Electrically Conductive Field Service Grounding Kit.

• Handle components by the edges only. Do not touch any metal-edge connectors or electrical

components on accessory boards.

Unpacking and inspecting the server blade

Be sure that you have adequately prepared your environment for your new server blade, received the

components that you ordered, and verified that the server and the containers are in good condition after

shipment.

Verifying site preparation

Verifying site preparation is essential to a successful server blade installation and includes the following tasks:

• Gather LAN information. Determine the two IP addresses for the iLO 3 MP LAN and the server blade

LAN.

• Establish a method to connect to the server blade console. For more information on console connection methods, see Using iLO 3 on page 29.

• Verify electrical requirements. Be sure that grounding specifications and power requirements are met.

• Confirm environmental requirements.

Inspect the shipping containers for damage

Hewlett Packard Enterprise shipping containers protect their contents under normal shipping conditions.

After the equipment arrives, carefully inspect each carton for signs of shipping damage. Shipping damage

constitutes moderate to severe damage such as punctures in the corrugated carton, crushed boxes, or

large dents. Normal wear or slight damage to the carton is not considered shipping damage. If you find

shipping damage to the carton, contact your Hewlett Packard Enterprise customer service representative

immediately.


Page 16

Unpacking the server blade

Procedure

1. Use the instructions printed on the outside top flap of the carton.

2. Remove inner accessory cartons and the top foam cushions.

IMPORTANT:

Inspect each carton for shipping damage as you unpack the server blade.

3. Place the server blade on an antistatic pad.

Verifying the inventory

The sales order packing slip lists the equipment shipped from Hewlett Packard Enterprise. Use this

packing slip to verify that the equipment has arrived.

NOTE:

To identify each item by part number, see the sales order packing slip.

Returning damaged equipment

If the equipment is damaged, immediately contact your Hewlett Packard Enterprise customer service

representative. The service representative initiates appropriate action through the transport carrier or the

factory and assists you in returning the equipment.


Page 17

Installing the server blade into the enclosure

Installation sequence and checklist

Step Description Completed

1 Perform site preparation (see Site preparation on page 10 for more

information).

2 Unpack and inspect the server shipping container and then inventory the

contents using the packing slip.

3 Install additional components shipped with the server. For these procedures, see the documentation that ships with the component or the user service guide.

4 Install and power on the server blade.

5 Configure iLO 3 MP access.

6 Access iLO 3 MP.

7 Access UEFI from iLO 3 MP.

8 Download latest firmware and update using HP Smart Update Manager.

9 Install and boot the OS.

NOTE:

For more information regarding HPE Integrity Server Blade upgrades, see Upgrading a conjoined configuration on page 59.

Installing and powering on the server blade

Preparing the enclosure

HPE BladeSystem enclosures ship with device bay dividers to support half-height devices. To install a full-height device, remove the blanks and the corresponding device bay divider.

CAUTION:

To prevent improper cooling and thermal damage, do not operate the server blade or the enclosure

unless all hard drive and device bays are populated with either a component or a blank.

Procedure

1. Remove the device bay blank.


Page 18

2. Remove the three adjacent blanks.

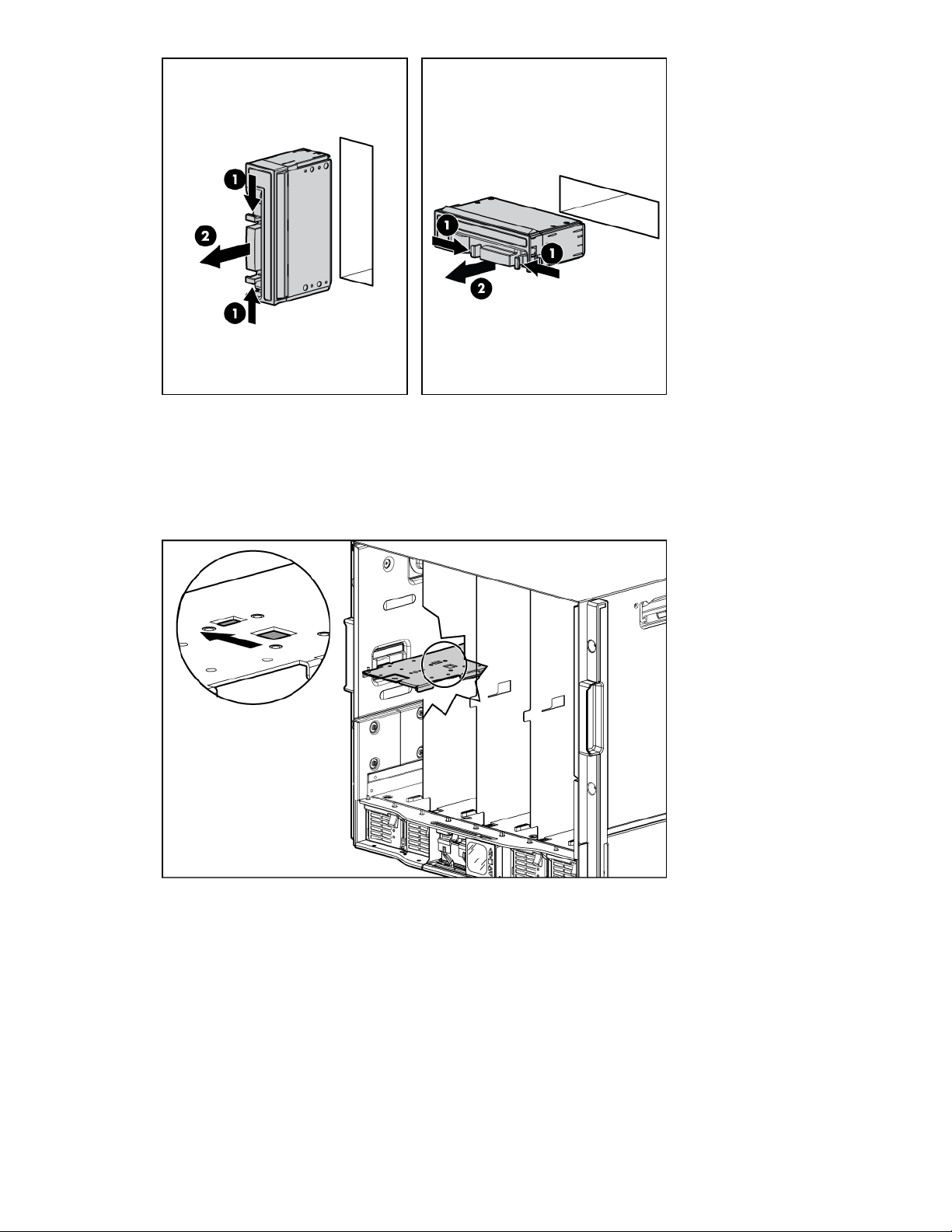

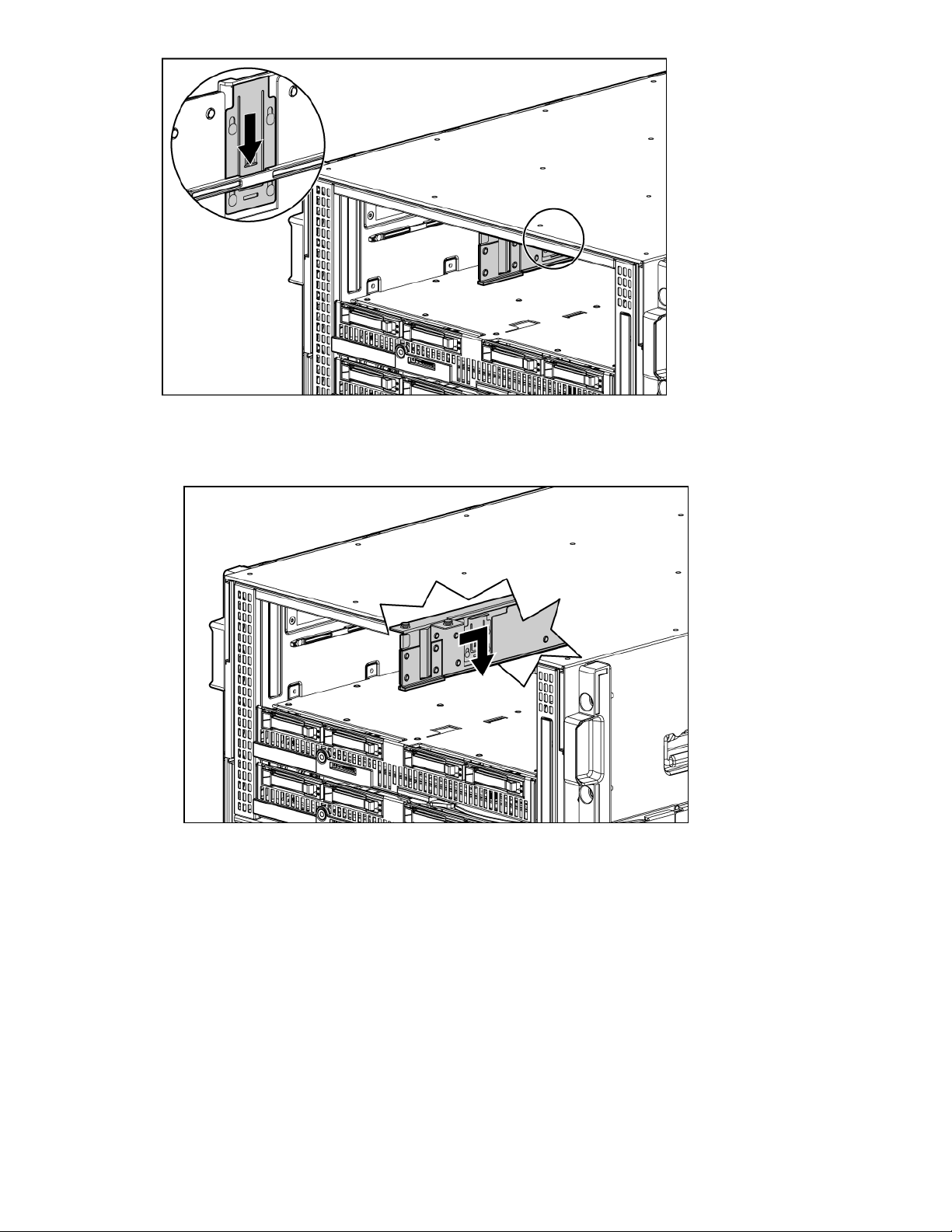

Removing a c7000 device bay divider

Procedure

1. Slide the device bay shelf locking tab to the left to open it.

2. Push the device bay shelf back until it stops, lift the right side slightly to disengage the two tabs from

the divider wall, and then rotate the right edge downward (clockwise).


Page 19

3. Lift the left side of the device bay shelf to disengage the three tabs from the divider wall, and then

remove it from the enclosure.

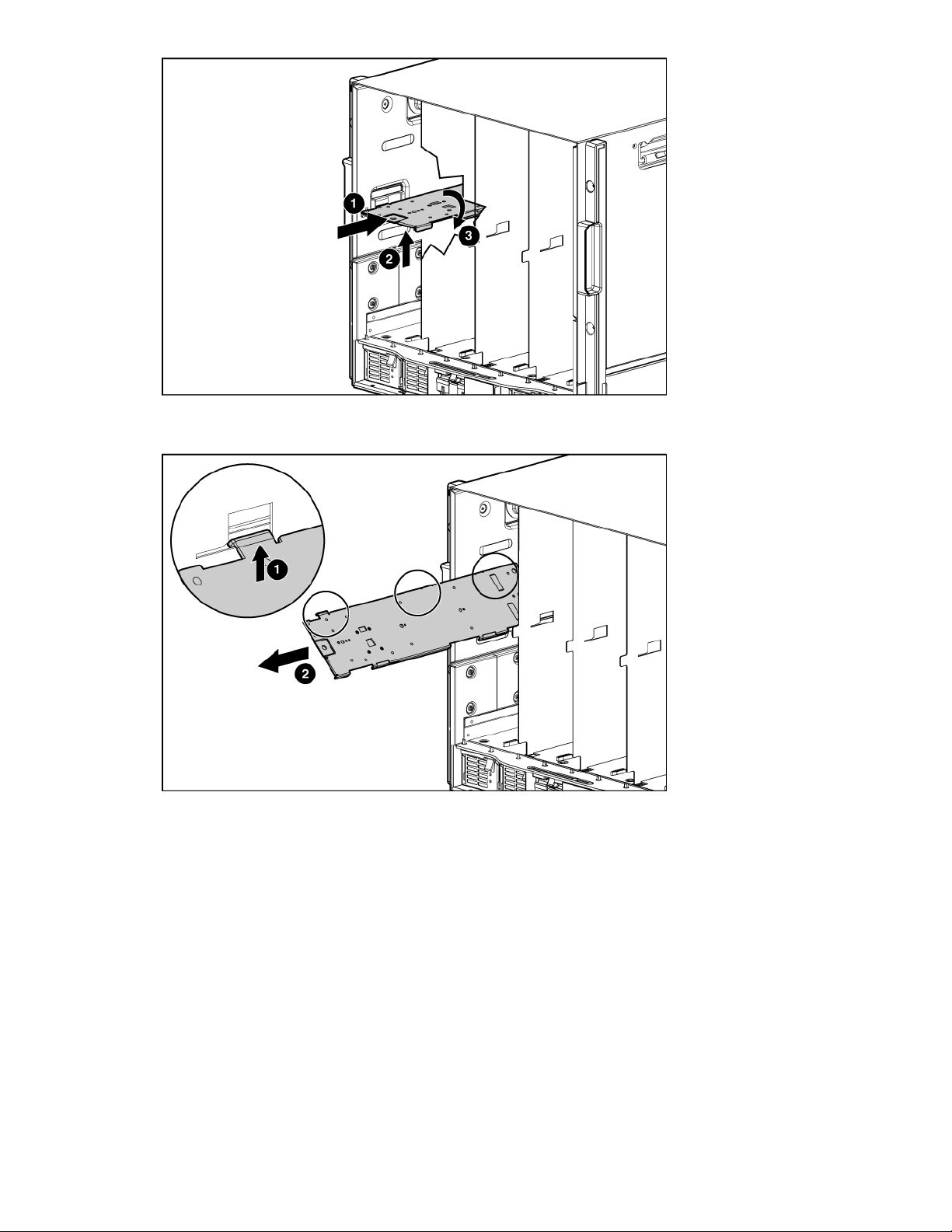

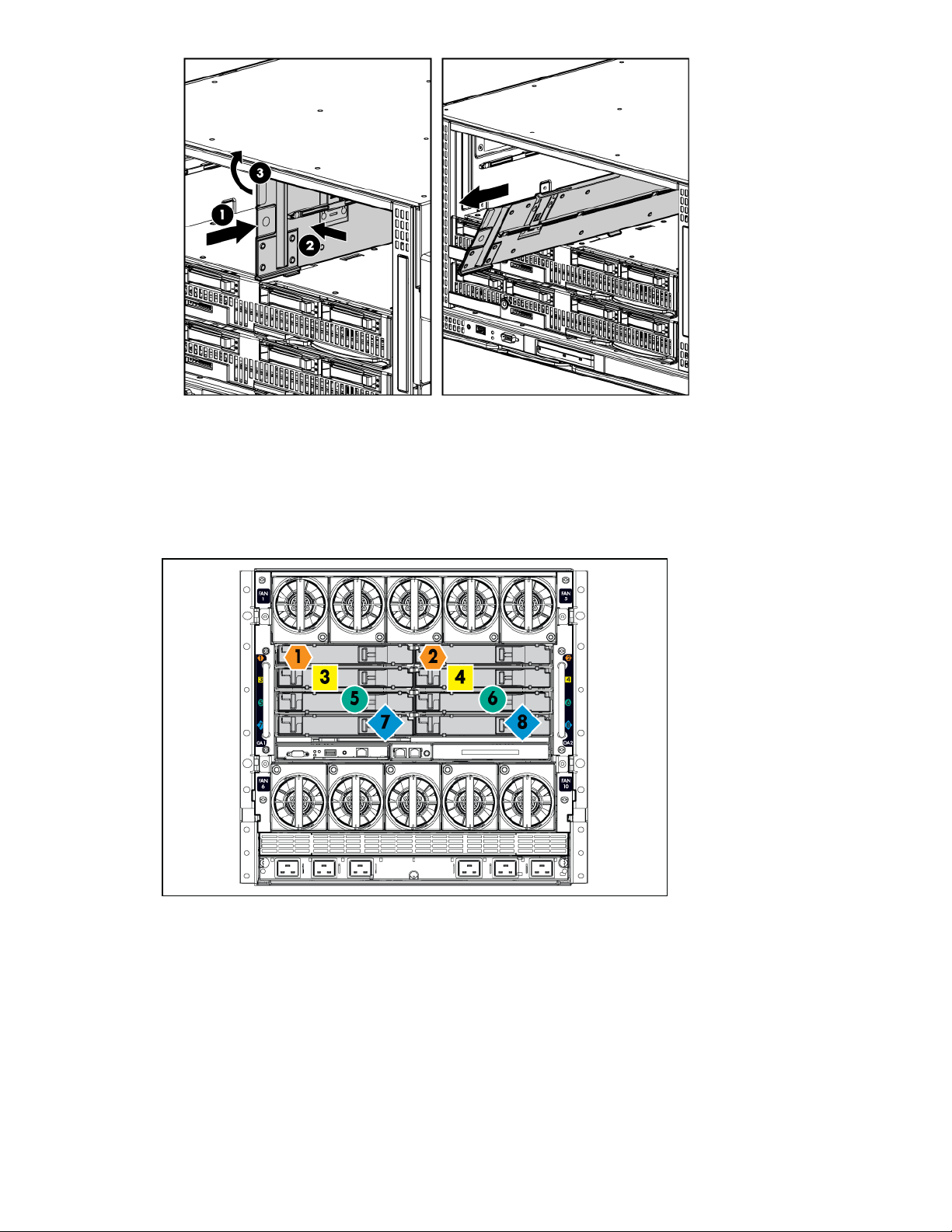

Removing a c3000 device bay mini-divider or device bay divider

Procedure

1. Slide the locking tab down.


Page 20

2. Remove the mini-divider or divider:

a. c3000 mini-divider:

Push the divider toward the back of the enclosure until the divider drops out of the enclosure.

b. c3000 divider

I. Push the divider toward the back of the enclosure until it stops.

II. Slide the divider to the left to disengage the tabs from the wall.

III. Rotate the divider clockwise.

IV. Remove the divider from the enclosure.


Page 21

Installing interconnect modules

For specific steps to install interconnect modules, see the documentation that ships with the interconnect

module.

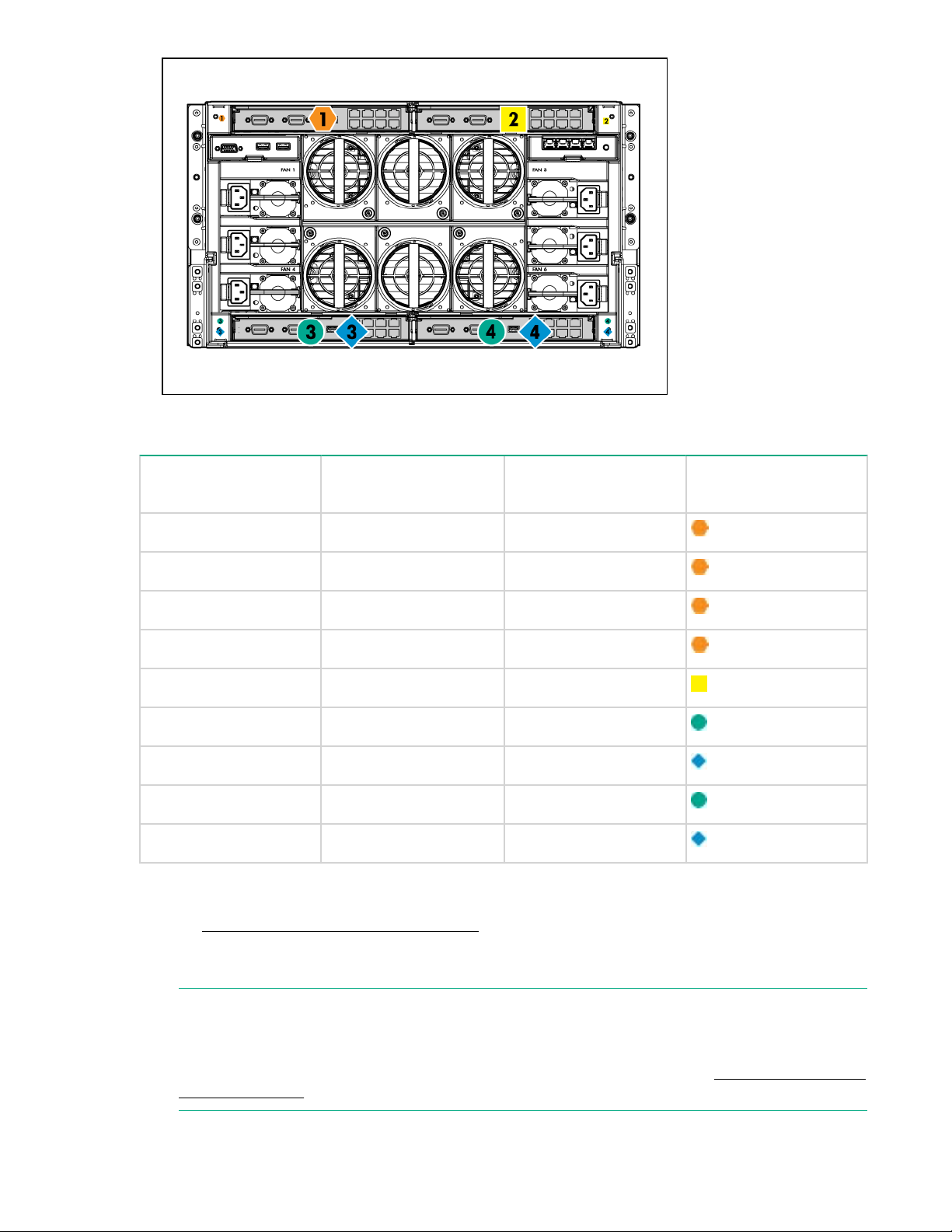

Interconnect bay numbering and device mapping

• HPE BladeSystem c7000 Enclosure

• HPE BladeSystem c3000 Enclosure


Page 22

To support network connections for specific signals, install an interconnect module in the bay

corresponding to the embedded NIC or mezzanine signals.

| Server blade signal | c7000 interconnect bay | c3000 interconnect bay |
| --- | --- | --- |
| NIC 1 (Embedded) | 1 | 1 |
| NIC 2 (Embedded) | 2 | 1 |
| NIC 3 (Embedded) | 1 | 1 |
| NIC 4 (Embedded) | 2 | 1 |
| Mezzanine 1 | 3 and 4 | 2 |
| Mezzanine 2 | 5 and 6 | 3 and 4 |
| | 7 and 8 | 3 and 4 |
| Mezzanine 3 | 5 and 6 | 3 and 4 |
| | 7 and 8 | 3 and 4 |

For detailed port mapping information, see the BladeSystem enclosure installation poster or the

BladeSystem enclosure setup and installation guide for your product on the Hewlett Packard Enterprise

website (http://www.hpe.com/info/Blades-docs).
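The signal-to-bay mapping above lends itself to a lookup table when planning which interconnect bays to populate. The following is a minimal sketch that transcribes the table as printed. The names are hypothetical, and merging the two sub-rows listed for Mezzanine 2 and Mezzanine 3 into a single set of candidate bays is an assumption about how the table should be read; confirm against the detailed port mapping in the enclosure documentation.

```python
# Transcribed from the interconnect bay mapping table; names are illustrative.
INTERCONNECT_BAYS = {
    "NIC 1": {"c7000": (1,), "c3000": (1,)},
    "NIC 2": {"c7000": (2,), "c3000": (1,)},
    "NIC 3": {"c7000": (1,), "c3000": (1,)},
    "NIC 4": {"c7000": (2,), "c3000": (1,)},
    "Mezzanine 1": {"c7000": (3, 4), "c3000": (2,)},
    # Assumption: the "5 and 6" and "7 and 8" sub-rows are merged here.
    "Mezzanine 2": {"c7000": (5, 6, 7, 8), "c3000": (3, 4)},
    "Mezzanine 3": {"c7000": (5, 6, 7, 8), "c3000": (3, 4)},
}


def candidate_bays(signals, enclosure):
    """Return the sorted interconnect bays that can serve the given signals."""
    bays = set()
    for signal in signals:
        bays.update(INTERCONNECT_BAYS[signal][enclosure])
    return sorted(bays)
```

For example, a blade that uses NIC 1, NIC 2, and Mezzanine 1 maps to bays 1 through 4 in a c7000 enclosure, and to bays 1 and 2 in a c3000 enclosure.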

Interconnect bay

labels

Installing the server blade into the enclosure

NOTE:

When installing additional blades into an enclosure, additional power supplies might also be needed

to meet power requirements. For more information, see the BladeSystem enclosure setup and

installation guide for your product on the Hewlett Packard Enterprise website (http://www.hpe.com/

info/Blades-docs).


Page 23

Procedure

1. Remove the connector covers if they are present.

NOTE:

Before installing and initializing the server blade, install any server blade options, such as an

additional processor, hard drive, or mezzanine card.

2. Prepare the server blade for installation.

3. Install the server blade.


Page 24

The server blade should come up to standby power. The server blade is at standby power if the blade

power LED is amber.

Server blade power states

The server blade has three power states: standby power, full power, and off. Install the server blade into

the enclosure to achieve the standby power state. Server blades are set to power on to standby power

when installed in a server blade enclosure. Verify the power state by viewing the LEDs on the front panel,

and using Table 5.

Table 5: Power States

| Power States | Server Blade Installed in Enclosure? | Front Panel Power Button Activated? | Standby Power Applied? | DC Power Applied? |
| --- | --- | --- | --- | --- |
| Standby power | Yes | No | Yes | No |
| Full power | Yes | Yes | Yes | Yes |
| Off | No | No | No | No |

Powering on the server blade

Use one of the following methods to power on the server blade:

NOTE:

To power on blades in a conjoined configuration, power on the Monarch blade only. See Blade Link bay location rules for rules on the definition of the Monarch blade.

• Use a virtual power button selection through iLO 3.

• Press and release the Monarch power button.

When the server blade goes from the standby mode to the full power mode, the blade power LED changes from amber to green.

Page 25

For more information about iLO 3, see Using iLO 3 on page 29.
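Table 5 and the LED colors described above (amber for standby power, green for full power) can be captured as a small lookup, which may be handy in a site checklist script. This is a sketch only; the type and function names are hypothetical, and LED colors other than amber and green are left undefined because this section does not describe them.

```python
from typing import NamedTuple, Optional


class PowerState(NamedTuple):
    """One row of Table 5."""
    installed_in_enclosure: bool
    power_button_activated: bool
    standby_power_applied: bool
    dc_power_applied: bool


# Rows transcribed from Table 5.
POWER_STATES = {
    "standby": PowerState(True, False, True, False),
    "full": PowerState(True, True, True, True),
    "off": PowerState(False, False, False, False),
}

# Blade power LED colors named in this section.
LED_TO_STATE = {"amber": "standby", "green": "full"}


def state_from_led(color: str) -> Optional[str]:
    """Map a blade power LED color to a power state, or None if not described."""
    return LED_TO_STATE.get(color.strip().lower())
```

Calling `state_from_led("Amber")` returns `"standby"`, and `POWER_STATES["standby"]` then shows that standby power is applied while DC power is not.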

Powering off the server blade

Before powering down the server blade for any upgrade or maintenance procedures, perform a backup of

critical server data and programs.

Use one of the following methods to power off the server blade:

NOTE:

To power off blades in a conjoined configuration, only power off the Monarch blade.

• Use a virtual power button selection through the iLO 3 GUI (Power Management, Power & Reset) or

the iLO 3 TUI commands.

This method initiates a controlled remote shutdown of applications and the OS before the server blade enters standby mode.

• Press and release the Monarch power button.

This method initiates a controlled shutdown of applications and the OS before the server blade enters standby mode.

• Press and hold the Monarch power button for more than 4 seconds to force the server blade to enter

standby mode.

This method forces the server blade to enter standby mode without properly exiting applications and

the OS. It provides an emergency shutdown method in the event of a hung application.

Installing the Blade Link for BL860c i4, BL870c i4 or BL890c i4 configurations

IMPORTANT:

Without an attached Blade Link, the server blades will not power on.

NOTE:

Before installing the Blade Link for BL870c i4 or BL890c i4, make sure the following statements are

true:

• All blades have the same CPU SKUs

• All blades have the same hardware revision (only use BL860c i4, BL870c i4, or BL890c i4 Server

Blades)

• All blades have CPU0 installed

• All blades have the same firmware revision set

• All blades follow the memory loading rules for your configuration, see DIMMs on page 48

• The enclosure OA firmware is compatible with the blade firmware

• The Monarch blade has an ICH mezzanine card installed

• The proper Blade Link is being used for your configuration

To check on the blade hardware revisions and CPU SKUs, go to the Command Menu in the iLO 3

TUI and enter the DF command. This dumps the FRU content of the blades.
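Several of the prerequisites above amount to checking that a value is identical on every blade. The following sketch shows one way to compare values transcribed by hand from the DF output. The `Blade` record, its field names, and the sample values used with it are hypothetical; the code does not parse DF output or talk to iLO 3, and it covers only the CPU SKU, hardware revision, firmware revision, and CPU0 checks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Blade:
    """Values recorded by hand for one blade (hypothetical record layout)."""
    bay: int
    cpu_sku: str
    hardware_revision: str
    firmware_revision: str
    cpu0_installed: bool


def conjoin_problems(blades):
    """Return a list of prerequisite violations; empty means none were found."""
    problems = []
    if len({b.cpu_sku for b in blades}) > 1:
        problems.append("CPU SKUs differ between blades")
    if len({b.hardware_revision for b in blades}) > 1:
        problems.append("hardware revisions differ between blades")
    if len({b.firmware_revision for b in blades}) > 1:
        problems.append("firmware revisions differ between blades")
    missing = sorted(b.bay for b in blades if not b.cpu0_installed)
    if missing:
        problems.append(f"CPU0 is not installed in bay(s) {missing}")
    return problems
```

An empty result does not confirm the remaining prerequisites (memory loading rules, OA firmware compatibility, ICH mezzanine card, and Blade Link type), which still need to be verified separately.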


Page 26

NOTE:

If you will be upgrading an initial installation, see the user service guide for more information on

server blade upgrades.

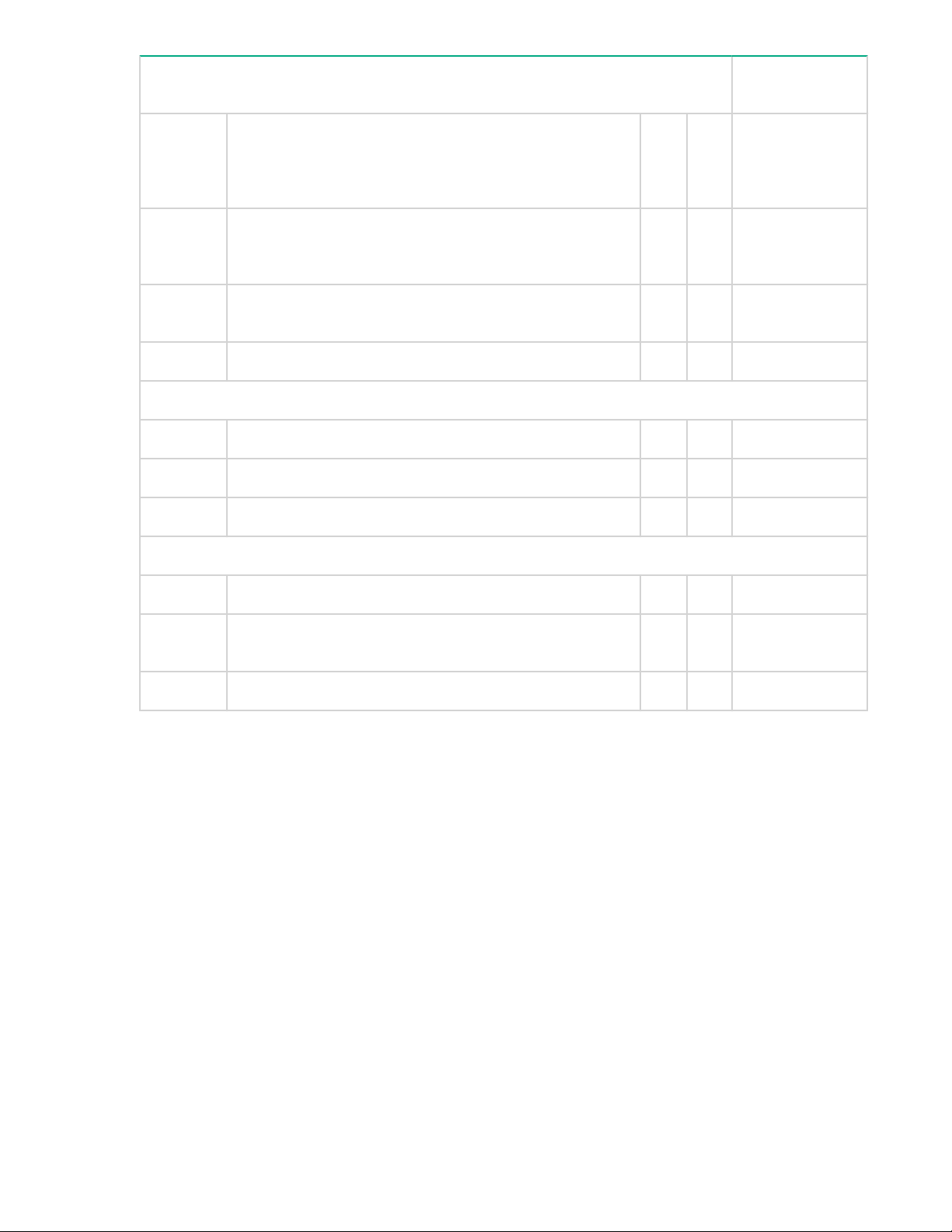

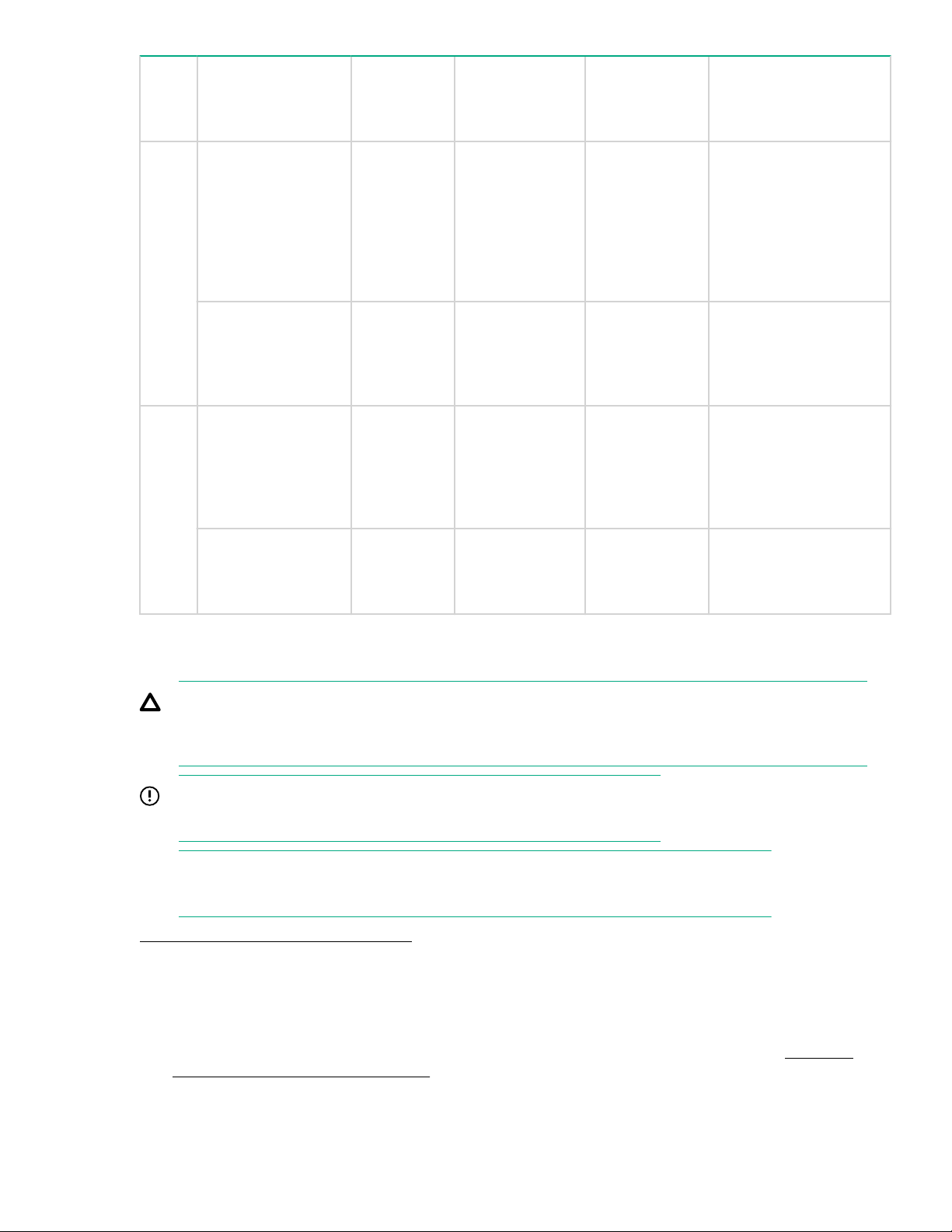

Table 6: Blade Link bay location rules

| Class | Number of conjoined blades | Supported enclosures | Blade location rules | Partner blade support? | Partner blade half-height bay number / Server blade full-height bay number |
| --- | --- | --- | --- | --- | --- |
| BL1 | 1 (standard for BL860c i4) | c7000 | No specific bay location rules for blades | Yes | Bottom half-height adjacent bay, paired with the server blade in full-height bays 1&2, 3&4, 5&6, or 7&8 |
| | | c3000 | | | Half-height bay 8, paired with the server blade in full-height bay 3. CAUTION: The bay mini-divider must be installed in the c3000 enclosure to ensure the partner blade is inserted correctly. Failure to install the bay mini-divider might result in damage to the blade or enclosure when installing the partner blade. (See footnote 1.) |
| BL2 | 2 (BL870c i4) | c7000 | Bays 1&2, 3&4, 5&6, or 7&8 with Monarch blade in odd bay | No | N/A |
| | | c3000 | Bays 1&2, 3&4 with Monarch blade in odd bay | | |
| BL2E | 2 (BL870c i4) | c7000 only | Bays 2&3, 4&5 or 6&7 with Monarch blade in even bay using full-height numbering | Yes | Bottom half-height bay 9 paired with full-height bays 2&3, bottom half-height bay 11 paired with full-height bays 4&5, bottom half-height bay 13 paired with full-height bays 6&7 |
| | 2 (BL870c i4) | c3000 only | Bays 2&3 with Monarch blade in even bay using full-height numbering | No | N/A |
| BL4 | 4 (BL890c i4) | c7000 only | Bays 1&2&3&4 or 5&6&7&8, with Monarch blade defaulting to slot 1 or slot 5, respectively | No | N/A |
| | 4 (BL890c i4) | c3000 only | Bays 1&2&3&4 with Monarch blade defaulting to slot 1 | No | N/A |

Page 27

1

Upgrading a conjoined configuration on page 59

To install the Blade Link:

Procedure

1. Log on to the OA.

2. Install the first blade into the lowest bay number, this blade becomes the Monarch blade (Installing

3. Wait 10 seconds. The IP address of the installed blade appears in the OA.

For information on installing the c3000 bay mini-divider, see the HPE BladeSystem c3000 Enclosure Setup and

Installation Guide.

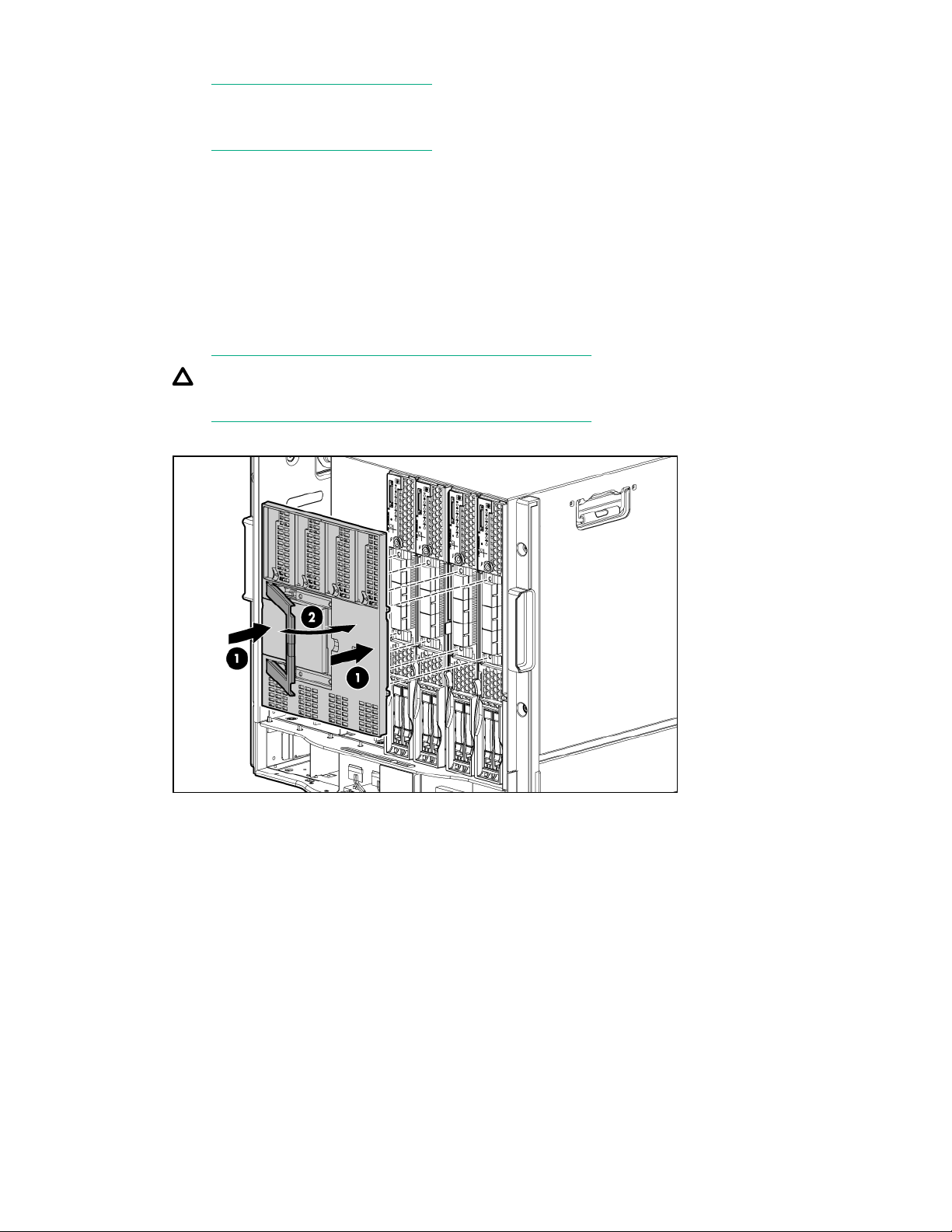

CAUTION:

Using the incorrect Blade Link can cause damage to the Blade Link and to the connectors on both

the Blade Link and the server blades.

IMPORTANT:

Failure to follow bay location rules can prevent server blade power on.

NOTE:

The manufacturing part numbers for the Blade Link is located on a sticker on the PCA.

the server blade into the enclosure on page 22).


Page 28

4. Insert each adjacent blade, waiting 10 seconds between blades.

NOTE:

The blades will go into standby.

5. Using the OA, verify that the rest of the blades that will be conjoined have an IP address and are

powered off.

6. Remove the plastic protectors from the connectors on the back of the Blade Link.

7. Push in the blue release latch on the handle to release the handle.

8. Pull the handle all the way out.

9. Align the guide pins on the back of the Blade Link to the holes on the front of the server blades. As

you insert the pins into the holes, ensure the face on the Blade Link is evenly aligned parallel to the

face of the server blades.

10. Press firmly on the left and right sides of the Blade Link face until the handle naturally starts to close.

CAUTION:

If the Blade Link is not properly aligned, you can damage it.

11. Close the handle when it has engaged.

12. Log in to iLO 3 on the Monarch blade. For more information, see the HPE Integrity iLO3 Operations Guide.

13. In iLO 3, go to the Command Menu and execute xd -r to reboot all of the iLO 3s in the conjoined

set.

14. Run the conjoin checks

Integrity BL870c i4 and BL890c i4 systems go through a process called “conjoining” when the Blade

Link is attached. The system cannot boot until that process is completed properly.

a. Execute the following CM commands in the iLO 3 TUI to show data from all blades. This

information can be used to determine if the blades are successfully conjoined:

I. DF: Lists the FRUs on all of the blades (2 or 4).

II. SR: Shows a table of each blade's firmware revisions.

III. Blade: Shows information about the OA and the bays used.

b. Check to see if the OA shows a properly conjoined system from its GUI.


Page 29

IMPORTANT:

The secondary UUID and other system variables are stored on the Monarch blade. If you do

not put the Monarch blade in the leftmost slot, your system variables will not match. If you ever

change your iLO 3 configuration (such as adding users) that data is also stored on the Monarch

blade.

NOTE:

Auxiliary blades are not slot dependent after being installed and configured. However, conjoined systems ship with A, B, C, and D stickers located under the Blade Links, and Hewlett Packard Enterprise recommends using the shipped order to ensure proper auxiliary blade function.

15. Still in the iLO 3 Command Menu, power on the Monarch blade with the PC -on -nc command.

Powering on the Monarch blade will power the entire conjoined system on.

16. Boot the Monarch blade. Booting the Monarch blade boots the entire conjoined system.
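Because failing to follow the bay location rules can prevent power on, it can help to check a planned layout against Table 6 before seating the Blade Link. The following sketch encodes the conjoined rows of Table 6 (BL2, BL2E, and BL4) as allowed bay sets. The names are hypothetical. Returning the lowest bay as the Monarch bay follows step 2 of the procedure and is consistent with the odd-bay, even-bay, and slot 1 or slot 5 rules in the table.

```python
# Allowed full-height bay sets per Blade Link class and enclosure (from Table 6).
ALLOWED_BAY_SETS = {
    ("BL2", "c7000"): [(1, 2), (3, 4), (5, 6), (7, 8)],
    ("BL2", "c3000"): [(1, 2), (3, 4)],
    ("BL2E", "c7000"): [(2, 3), (4, 5), (6, 7)],
    ("BL2E", "c3000"): [(2, 3)],
    ("BL4", "c7000"): [(1, 2, 3, 4), (5, 6, 7, 8)],
    ("BL4", "c3000"): [(1, 2, 3, 4)],
}


def monarch_bay(blade_link_class, enclosure, bays):
    """Validate a bay set against Table 6 and return the Monarch (lowest) bay."""
    key = (blade_link_class, enclosure)
    if key not in ALLOWED_BAY_SETS:
        raise ValueError(f"no rule for {blade_link_class} in {enclosure}")
    bay_set = tuple(sorted(bays))
    if bay_set not in ALLOWED_BAY_SETS[key]:
        raise ValueError(f"bays {bay_set} are not allowed for {key}")
    return bay_set[0]
```

For example, a BL870c i4 pair with a BL2 Blade Link in c7000 bays 3 and 4 is valid, with the Monarch blade in bay 3. The same pair in bays 4 and 5 would require a BL2E Blade Link instead.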

Using iLO 3

The iLO 3 subsystem is a standard component of selected server blades that monitors blade health and

provides remote server manageability. The iLO 3 subsystem includes an intelligent microprocessor,

secure memory, and a dedicated network interface. This design makes iLO 3 independent of the host

server and operating system. The iLO 3 subsystem provides remote access to any authorized network

client, sends alerts, and provides other server management functions.

Using iLO 3, you can:

• Remotely power on, power off, or reboot the host server.

• Subscribe to WS-Man alerts from iLO 3 regardless of the state of the host server.

• Access advanced troubleshooting features through the iLO 3 interface.

• Access Remote Console and vMedia functionality.

For more information about iLO 3 basic features, see the iLO 3 documentation on the Hewlett Packard Enterprise website (http://www.hpe.com/support/Integrated_Lights-Out3_Manuals).

Accessing UEFI or the OS from iLO 3 MP

UEFI is an architecture that provides an interface between the server blade OS and the server blade

firmware. UEFI provides a standard environment for booting an OS and running preboot applications.

Use this procedure to access UEFI or the OS from the iLO 3 MP. This procedure assumes that your security parameters for remote access are already set.

Procedure

1. Retrieve the factory iLO 3 password from the iLO 3 Network pull tag located on the right side of the

Monarch blade.

2. From the MP Main Menu, enter the co command to access the Console.

NOTE:

Terminal windows should be set to a window size of 80 columns x 25 rows for optimal viewing of

the console at UEFI.

3. After memory test and CPU late self test the following message appears:


Page 30

Press Ctrl-C now to bypass loading option ROM UEFI drivers.

4. The prompt times out if Ctrl-C is not pressed within a few seconds. If Ctrl-C is pressed, you are presented with the two options listed below. After you select an option, boot proceeds.

NOTE:

If no option is selected, normal boot will proceed after ten seconds.

• Bypass loading from I/O slots.

• Bypass loading from I/O slots and core I/O.

The Bypass loading from I/O slots and core I/O option may be useful if a bad core I/O UEFI driver

is preventing system boot. USB drives can still be used at the UEFI shell to update core I/O drivers.

CAUTION:

Pressing Ctrl-C before the prompt appears will not work and might even disable this feature. Be sure to wait for the prompt before pressing Ctrl-C.

NOTE:

It can take several minutes for this prompt to appear, and the window of time when Ctrl-C can

be pressed is very short. For typical boots, Hewlett Packard Enterprise recommends that you

let the prompt time out.

5. Depending on how the server blade was configured from the factory, and if the OS is installed at the

time of purchase, you are taken to:

a. UEFI shell prompt

b. OS login prompt

6. If the server blade has a factory-installed OS, you can interrupt the boot process to configure your

specific UEFI parameters.

7. If you are at the UEFI shell prompt, go to UEFI Front Page on page 30.

8. If you are at the OS login prompt, go to OS login prompt on page 33.

UEFI Front Page

If you are at the UEFI shell prompt, enter exit to get to the UEFI Front Page.


Page 31

To view boot options, or launch a specific boot option, press B or b to launch the Boot Manager.


Page 32

To configure specific devices, press D or d to launch the Device Manager. This is an advanced feature

and should only be performed when directed.

To perform maintenance on the system such as adding, deleting, or reordering boot options, press M or

m to launch the Boot Maintenance Manager.


Page 33

To perform more advanced operations, press S or s to launch the UEFI Shell.

To view the iLO 3 LAN configuration, press I or i to launch the iLO 3 Setup Tool.

Saving UEFI configuration settings

There are other UEFI settings you can configure at this time. For more UEFI configuration options, see

RAID configuration and other utilities on page 133.

Booting and installing the operating system

From the UEFI Front Page prompt, you can boot and install in either of two ways:

• If your OS is loaded onto your server blade, see Operating system is loaded onto the server blade

on page 33.

• If the OS is not installed onto your server blade, see Operating system is not loaded onto the

server blade on page 33.

Operating system is loaded onto the server blade

If the OS is loaded on your server blade, normally UEFI will automatically boot to the OS. If the UEFI

Front Page is loaded, press ENTER to start auto boot, or B or b to select a specific boot option for your

OS.

Use your standard OS logon procedures, or see your OS documentation to log on to your OS.

Operating system is not loaded onto the server blade

There are two options on how to load the OS if it is not loaded onto your server blade.

• To load the OS from a DVD, see Installing the OS from an external USB DVD device or tape

device on page 35.

• To load the OS using HP Ignite-UX, see Installing the OS using Ignite-UX on page 36.

OS login prompt

If your server blade is at the OS login prompt after you establish a connection to the server blade, use

your standard OS log in procedures, or see your OS documentation for the next steps.


Page 34

Installing the latest firmware using HP Smart Update Manager

The HP Smart Update Manager (HP SUM) utility enables you to deploy firmware components from either

an easy-to-use interface or a command line. It has an integrated hardware discovery engine that

discovers the installed hardware and the current versions of firmware in use on target servers. This

prevents extraneous network traffic by only sending the required components to the target. HP SUM also

has logic to install updates in the correct order and ensure all dependencies are met before deployment

of a firmware update. It also contains logic to prevent version-based dependencies from destroying an

installation and ensures updates are handled in a manner that reduces any downtime required for the

update process. HP SUM does not require an agent for remote installations.

Key features of HP SUM are:

• GUI and CLI (command-line interface)

• Dependency checking, which ensures the appropriate installation order between components

• Intelligent deployment, which deploys only the required updates

• Support for updating firmware on network-based targets, such as the OA, iLO (through the Network

Management Port), and VC Ethernet modules

• Improved deployment performance

• Remote command-line deployment

• Windows X86 or Linux X86 support

HP SUM is included in the firmware bundles download from http://www.hpe.com, and is supported on the BL860c i4, BL870c i4, and BL890c i4.

For more information about HP SUM, see the HP Smart Update Manager User Guide (http://www.hpe.com/info/hpsum/documentation).

Page 35

Operating system procedures

Operating systems supported on the server blade

HP-UX 11i v3 HWE 1209

Installing the operating system onto the server blade

The following procedures describe generalized operating system installation. For more details, see the

operating system documentation.

Installing the OS from an external USB DVD device or tape device

NOTE:

Tapeboot requires BL8x0c i4 system firmware bundle 42.06 or later and a partner tape blade, or an

additional 51378-B21 Integrity Smart Array P711m HBA running 6.22 firmware or later to boot from

an Ultrium 6250 tape drive.
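The minimum versions in the NOTE above (system firmware bundle 42.06 and P711m firmware 6.22) can be compared numerically rather than as strings. The following is a minimal sketch with hypothetical names. It assumes that version strings are dot-separated integers with a two-digit minor field, as in the two versions quoted here.

```python
# Minimum versions quoted in the tapeboot NOTE.
MIN_SYSTEM_FIRMWARE = (42, 6)   # bundle 42.06
MIN_P711M_FIRMWARE = (6, 22)    # P711m firmware 6.22


def parse_version(text):
    """Convert a string such as '42.06' into a tuple of integers, (42, 6)."""
    return tuple(int(part) for part in text.strip().split("."))


def meets_minimum(installed, minimum):
    """Return True if the installed version is at or above the minimum."""
    return parse_version(installed) >= minimum
```

Comparing tuples of integers avoids the pitfalls of string comparison, where, for example, "9.10" would sort after "42.06".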

Procedure

1. If using an external USB DVD device:

a. Connect the Integrity SUV cable to the front of the Monarch server blade.

b. Connect the USB DVD cable to one of the USB ports on the SUV cable.

NOTE:

Some DVD drives might also require a separate power connection.

c. Turn on the external USB DVD device.

2. Insert the OS media into the USB DVD device or tape device.

3. Power on the server blade and boot to UEFI. If the server blade is already powered on, then reboot to

UEFI using the reset command at the UEFI prompt.

4. From the UEFI Front Page, press S or s to launch the UEFI Shell.

NOTE:

If the device is already selected or you already know the device name, then skip the following

step.

If you are using a tape device, when the UEFI shell comes up, you should see a message similar to

the following on the console:

HP Smart Array P212 Controller (version 6.22)

Tape Drive(s) Detected:

Port: 1I, box:0, bay: 3 (SAS)

The message may also be similar to the following.

HP Smart Array P711m Controller (version 6.22) 0 Logical Drives

Tape Drive(s) Detected:

Port: 2E, box:1, bay: 9 (SAS)


Page 36

NOTE:

If you do not see a line starting with Port and ending with (SAS), the tape is not connected correctly or it is not responding.

5. Locate the device you want to boot from.

a. For USB DVD, locate the device:

I. Use the map command to list all device names from the UEFI Shell prompt. The map

command displays the following:

fs2:\> map

Device mapping table

fs6 :Removable CDRom - Alias cd66d0a blk6

PcieRoot(0x30304352)/Pci(0x1D,0x7)/USB(0x3,0x0)/CDROM(0x0)

From the list generated by the map command, locate the device name (in this example, fs6)

NOTE:

Your DVD drive might not be named fs6. Make sure you verify the ID appropriate to your

DVD device.

II. At the UEFI shell prompt, specify the device name for the DVD-ROM and then enter the UEFI

install command, as in the following example:

Shell> fs6:

fs6:\> install

b. For tape, locate the device:

I. To boot from tape once you are at the UEFI shell:

Shell> tapeboot select

01 PcieRoot(0x30304352)/Pci(0x8,0x0)/Pci(0x0,0x0)/

SAS(0x50060B00007F6FFC,0x0,0x1,NoTopology,0,0,0,0x0)

Select Desired Tape: 01 <<input 01

• If the correct media is installed, it will boot from tape when you enter the index number.

• If there is no media in the SAS tape drive and you select 1, the following message appears:

tapeboot: Could not load tapeboot image

6. The OS now starts loading onto the server blade. Follow the on-screen instructions to install the OS

fully.

7. Continue with Configuring system boot options on page 37.
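When console output is captured to a log, the two checks in this procedure (finding the DVD device name in the map output, and confirming that a tape drive was detected) can be scripted. The following sketch works on text copied from the console. The function names are hypothetical, and the pattern is based only on the sample output shown in this section, so other firmware versions may format these lines differently.

```python
import re

# Matches lines such as "fs6 :Removable CDRom - Alias cd66d0a blk6".
MAP_CDROM_LINE = re.compile(r"^\s*(fs\d+)\s*:\s*Removable CDRom\b", re.IGNORECASE)


def find_cdrom_devices(map_output):
    """Return the fsN names that the map output lists as removable CD-ROMs."""
    devices = []
    for line in map_output.splitlines():
        match = MAP_CDROM_LINE.match(line)
        if match:
            devices.append(match.group(1))
    return devices


def tape_detected(console_output):
    """Return True if any line starts with 'Port' and ends with '(SAS)'."""
    for line in console_output.splitlines():
        stripped = line.strip()
        if stripped.startswith("Port") and stripped.endswith("(SAS)"):
            return True
    return False
```

With the sample map output from step 5, `find_cdrom_devices` returns a list containing `fs6`, which is the name to enter at the Shell prompt before running `install`.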

Installing the OS using Ignite-UX

Ignite-UX is an HP-UX administration toolset that enables:

• Simultaneous installation of HP-UX on multiple clients

• The creation and use of custom installations

• The creation of recovery media

• The remote recovery of clients

To install the OS onto the server blade using Ignite-UX, go to http://www.hpe.com/info/Blades-docs.


Page 37

Installing the OS using vMedia

NOTE:

Installing the OS using vMedia might be significantly slower than installing using other methods.

vMedia enables connections of a DVD physical device or image file from the local client system to the

remote server. The virtual device or image file can be used to boot the server with an operating system

that supports USB devices.

vMedia depends on a reliable network with good bandwidth. This is especially important when you are

performing tasks such as large file transfers or OS installations.

For more information regarding loading the OS with vMedia, see the vMedia Chapter of the HPE Integrity

Integrated Lights-Out Management Processor Operations Guide.

NOTE:

After the OS is loaded, make sure to save your nonvolatile memory settings to preserve boot entries

in case of blade failure.

Configuring system boot options

• Boot Manager

Contains the list of boot options available. Ordinarily the boot options list includes the UEFI Internal

Shell and one or more operating system loaders.

To manage the boot options list for each server, use the UEFI Shell, the Boot Maintenance Manager,

or operating system utilities.

• Autoboot setting

The autoboot setting determines whether a server automatically loads the first item in the boot

options list or remains at the UEFI Front Page menu. With autoboot enabled, UEFI loads the first item

in the boot options list after a designated timeout period.

Configure the autoboot setting for an Integrity server using either the autoboot UEFI Shell command

or the Set Time Out Value menu item from the Boot Maintenance Manager.

Examples of autoboot commands for HP-UX:

◦ Disable autoboot from the UEFI Shell by issuing autoboot off

◦ Enable autoboot with the default timeout value by issuing autoboot on

◦ Enable autoboot with a timeout of 60 seconds by issuing autoboot 60

◦ Set autoboot from HP-UX using setboot

◦ Enable autoboot from HP-UX using setboot -b on

◦ Disable autoboot from HP-UX using setboot -b off

For more information on the autoboot command, enter help autoboot.
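The autoboot command forms listed above can be collected in a small helper. This is a sketch only: it uses just the command forms given in this section, and the function name and the uefi and hpux target labels are hypothetical. The helper prints the command to enter and does not change any setting itself.

```shell
#!/bin/sh
# Sketch: print the autoboot command for a given environment.
#   autoboot_cmd uefi on|off|SECONDS   -> command for the UEFI Shell
#   autoboot_cmd hpux on|off           -> command for a running HP-UX system

autoboot_cmd() {
    target=$1 value=$2
    case "$target:$value" in
        uefi:on|uefi:off)
            printf 'autoboot %s\n' "$value" ;;
        hpux:on|hpux:off)
            printf 'setboot -b %s\n' "$value" ;;
        uefi:|uefi:*[!0-9]*)
            # Empty or non-numeric timeout
            echo "uefi value must be on, off, or a number of seconds" >&2
            return 1 ;;
        uefi:*)
            # Remaining case: a timeout in whole seconds
            printf 'autoboot %s\n' "$value" ;;
        *)
            echo "usage: autoboot_cmd uefi|hpux VALUE" >&2
            return 1 ;;
    esac
}

# Example: autoboot_cmd uefi 60
```

Rejecting unknown values before printing anything keeps a typing error from turning into an unintended boot setting.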

Booting and shutting down HP-UX

To boot HP-UX, use one of the following procedures:

• To boot HP-UX normally, see HP-UX standard boot on page 38. HP-UX boots in multi-user mode.

• To boot HP-UX in single-user mode, see Booting HP-UX in single-user mode on page 40.

• To boot HP-UX in LVM-maintenance mode, see Booting HP-UX in LVM-maintenance mode on page 40.


Page 38

Adding HP-UX to the boot options list

You can add the \EFI\HPUX\HPUX.EFI loader to the boot options list from the UEFI Shell or the Boot

Maintenance Manager.

NOTE:

On Integrity server blades, the operating system installer automatically adds an entry to the boot

options list.

NOTE:

To add an HP-UX boot option when logged in to HP-UX, use the setboot command. For more

information, see the setboot(1M) manpage.

To add HP-UX to the list:

Procedure

1. Access the UEFI Shell environment.

a. Log in to iLO 3 for Integrity and enter the CO command to access the system console.

When accessing the console, confirm that you are at the UEFI Front Page.

If you are at another UEFI menu, then choose the Exit option or press X or x to exit the menu. Exit

until you return to the screen that lists the keys that can be pressed to launch various Managers.

b. Press S or s to launch the UEFI shell.

2. Access the UEFI System Partition (fsX: where X is the file system number) for the device from which

you want to boot HP-UX.

For example, enter fs2: to access the UEFI System Partition for the bootable file system number 2.

The UEFI Shell prompt changes to reflect the file system currently accessed.

The full path for the HP-UX loader is \EFI\HPUX\HPUX.EFI and it should be on the device you are

accessing.

3. At the UEFI Shell environment, use the bcfg command to manage the boot options list.

The bcfg command includes the following options for managing the boot options list:

a. bcfg boot dump – Display all items in the boot options list for the server.

b. bcfg boot rm # – Remove the item number specified by # from the boot options list.

c. bcfg boot mv #a #b – Move the item number specified by #a to the position specified by #b in

the boot options list.

d. bcfg boot add # file.efi "Description" – Add a new boot option to the position in the

boot options list specified by #. The new boot option references file.efi and is listed with the title

specified by Description.

For example, bcfg boot add 1 \EFI\HPUX\HPUX.EFI "HP-UX 11i v3" adds an HP-UX

11i v3 item as the first entry in the boot options list.

For more information, enter help bcfg.

4. Exit the console and iLO 3 MP interfaces.

Press Ctrl–B to exit the system console and return to the iLO 3 MP Main Menu. To exit the MP, press

X or x at the Main Menu.
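Steps 2 and 3 of the procedure above can be prepared in advance as a short list of UEFI Shell commands. The following sketch prints that list for a given file system number, list position, and description. The function name and argument order are hypothetical, and the loader path is the one given in this section. The output is meant to be reviewed and then entered at the UEFI Shell prompt by hand.

```shell
#!/bin/sh
# Sketch: print the UEFI Shell commands that select a file system, add
# the HP-UX loader to the boot options list, and display the result.
#
#   hpux_boot_entry_cmds FS_NUMBER POSITION DESCRIPTION

hpux_boot_entry_cmds() {
    # Both numeric arguments must be plain non-negative integers.
    for n in "$1" "$2"; do
        case "$n" in
            ''|*[!0-9]*)
                echo "expected a number, got '$n'" >&2
                return 1 ;;
        esac
    done
    printf 'fs%s:\n' "$1"
    printf 'bcfg boot add %s %s "%s"\n' "$2" '\EFI\HPUX\HPUX.EFI' "$3"
    printf 'bcfg boot dump\n'
}

# Example: hpux_boot_entry_cmds 2 1 "HP-UX 11i v3"
```

The final bcfg boot dump line is included so that the new entry can be checked immediately. The sketch does not handle a description that itself contains double quotation marks.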

HP-UX standard boot

Use either of the following procedures to boot HP-UX:


Page 39

• Booting HP-UX from the UEFI Boot Manager on page 39

• Booting HP-UX from the UEFI Shell on page 39

Booting HP-UX from the UEFI Boot Manager

From the UEFI Boot Manager menu, choose an item from the boot options list to boot HP-UX.

Procedure

1. Access the UEFI Boot Manager menu for the server on which you want to boot HP-UX.

a. Log in to iLO 3 MP and enter the CO command to choose the system console.

b. Confirm you are at the UEFI Front Page. If you are at another UEFI menu, then choose the Exit option or press X or x to exit the menu. Exit until you return to the screen that lists the keys that can be pressed to launch various Managers.

c. Press B or b to launch the Boot Manager.

2. At the UEFI Boot Manager menu, choose an item from the boot options list.

Each item in the boot options list references a specific boot device and provides a specific set of boot options or arguments you use when booting the device.

3. Press Enter to initiate booting using your chosen boot option.

4. Exit the console and iLO 3 MP interfaces.

Press Ctrl–B to exit the system console and return to the MP Main Menu. To exit the MP Main Menu, press X or x.

Booting HP-UX from the UEFI Shell

Procedure

1. Access the UEFI Shell.

2. From the UEFI Front Page, press S or s to launch the UEFI shell.

3. Use the map command to list the file systems (fs0, fs1, and so on) that are known and have been mapped.

4. To select a file system to use, enter its mapped name followed by a colon (:). For example, to operate with the boot device that is mapped as fs0, enter fs0: at the UEFI Shell prompt.

5. Enter HPUX at the UEFI Shell command prompt to launch the HPUX.EFI loader from the currently selected boot device.

If needed, specify the full path of the loader by entering \EFI\HPUX\HPUX at the UEFI Shell command prompt.

6. Allow the HPUX.EFI loader to proceed with the boot command specified in the AUTO file, or manually specify the boot command.

By default, the HPUX.EFI loader boots using the loader commands found in the \EFI\HPUX\AUTO

file on the UEFI System Partition of the selected boot device. The AUTO file typically contains the boot

vmunix command.

To interact with the HPUX.EFI loader, interrupt the boot process (for example, type a space) within the

time-out period provided by the loader. To exit the loader, use the exit command, which returns you to

UEFI.


Page 40

Booting HP-UX in single-user mode

Procedure

1. Use steps 1–5 from Booting HP-UX from the UEFI Shell on page 39 to access the UEFI shell and

launch the HPUX.EFI loader.

2. Access the HP-UX Boot Loader prompt (HPUX>) by pressing any key within the 10 seconds given for

interrupting the HP-UX boot process. Use the HPUX.EFI loader to boot HP-UX in single-user mode in

step 3.

After you press a key, the HPUX.EFI interface (the HP-UX Boot Loader prompt, HPUX>) launches. For

help using the HPUX.EFI loader, enter the help command. To return to the UEFI Shell, enter exit.

3. At the HPUX.EFI interface (the HP-UX Boot loader prompt, HPUX>) enter the boot -is vmunix

command to boot HP-UX (the /stand/vmunix kernel) in single-user (-is) mode.

Booting HP-UX in LVM-maintenance mode

The procedure for booting HP-UX into LVM Maintenance Mode is the same as for booting into single user

mode (Booting HP-UX in single-user mode on page 40), except use the -lm boot option instead of the

-is boot option:

HPUX> boot -lm vmunix
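The three boot modes described in this section differ only in the option passed to the boot command at the HPUX> prompt. The following sketch summarizes that mapping. The mode labels multi, single, and lvm and the function name are hypothetical. The loader commands are the ones given in this section, with boot vmunix taken from the typical AUTO file contents.

```shell
#!/bin/sh
# Sketch: print the HPUX.EFI loader command for a given boot mode.
#   hpux_loader_cmd multi|single|lvm

hpux_loader_cmd() {
    case "$1" in
        multi)  echo 'boot vmunix' ;;       # standard multi-user boot
        single) echo 'boot -is vmunix' ;;   # single-user mode
        lvm)    echo 'boot -lm vmunix' ;;   # LVM-maintenance mode
        *)
            echo "unknown boot mode: $1" >&2
            return 1 ;;
    esac
}

# Example: hpux_loader_cmd single
```

Enter the printed command at the HPUX> prompt after interrupting the loader within its time-out period.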

Shutting down HP-UX

For more information, see the shutdown(1M) manpage.

Procedure

1. Log in to HP-UX running on the server that you want to shut down or log in to iLO 3 MP for the server and use the Console menu to access the system console. Accessing the console through iLO 3 MP enables you to maintain console access to the server after HP-UX has shut down.

2. Issue the shutdown command with the appropriate command-line options.

The command-line options you specify determine the way in which HP-UX shuts down and whether the server is rebooted.

Use the following list to choose an HP-UX shutdown option for your server:

a. Shut down HP-UX and halt (power off) the server using the shutdown -h command.

Reboot a halted server by powering on the server using the PC command at the iLO 3 MP

Command menu.

b. Shut down HP-UX and reboot the server by issuing the shutdown -r command.
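The choice between the two shutdown options can be wrapped in a small script on the HP-UX host. This is a sketch under stated assumptions: the function name, the halt and reboot labels, and the DRY_RUN variable are hypothetical, and only the shutdown -h and shutdown -r forms from this section are used. By default the script only prints the command, so that running the example cannot shut down a server by accident.

```shell
#!/bin/sh
# Sketch: select the shutdown option for an HP-UX server.
#   hpux_shutdown halt|reboot
# With DRY_RUN unset or set to 1, the command is printed, not executed.

hpux_shutdown() {
    case "$1" in
        halt)   flag=-h ;;   # shut down and halt the server
        reboot) flag=-r ;;   # shut down and reboot the server
        *)
            echo "usage: hpux_shutdown halt|reboot" >&2
            return 2 ;;
    esac
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "shutdown $flag"
    else
        shutdown "$flag"
    fi
}

# Example (prints the command only): hpux_shutdown halt
```

Set DRY_RUN=0 only after confirming that the printed command is the intended one for the server.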


Page 41

Optional components

If your server blade has no additional components to install, go to Installing and powering on the

server blade on page 17.

Partner blades

The following partner blades are supported:

• Ultrium 448c Tape Blade

• SB920c Tape Blade

• SB1760c Tape Blade

• SB3000c Tape Blade

• SB40c Storage Blade

• D2200sb Storage Blade

IMPORTANT:

In c7000 enclosures, partner blades are supported with BL860c i2 servers and BL870c i2 servers

with BL2E blade links.

In c3000 enclosures, partner blades are supported with BL860c i2 servers.

Partner blades are not supported with BL890c i2 servers.

Partner blade slotting rules are dependent on the conjoined blade configuration. For more

information on partner bay blade locations, see Installing the Blade Link for BL860c i4, BL870c

i4 or BL890c i4 configurations on page 25.

NOTE:

SAS tape boot is now supported with tape blades on HPE Integrity BL860c i4, BL870c i4, and