Page 1

Dell™ PowerEdge™ Expandable

RAID Controller (PERC) 6/i,

PERC 6/E and CERC 6/i

User’s Guide

Page 2

Notes, Cautions, and Warnings

NOTE: A NOTE indicates important information that helps you make better use of

your system.

CAUTION: A CAUTION indicates potential damage to hardware or loss of data if

instructions are not followed.

WARNING: A WARNING indicates a potential for property damage,

personal injury, or death.

____________________

Information in this document is subject to change without notice.

© 2007–2009 Dell Inc. All rights reserved.

Reproduction of these materials in any manner whatsoever without the written permission of Dell Inc.

is strictly forbidden.

Trademarks used in this text: Dell, the DELL logo, PowerEdge, PowerVault, Dell Precision, and

OpenManage are trademarks of Dell Inc.; MegaRAID is a registered trademark of LSI Corporation;

Microsoft, MS-DOS, Windows Server, Windows, and Windows Vista are either trademarks or registered

trademarks of Microsoft Corporation in the United States and/or other countries; Citrix XenServer is

a trademark of Citrix Systems Inc. and/or one or more of its subsidiaries, and may be registered in the

U.S. Patent and Trademark Office and in other countries; VMware is a registered trademark of VMware,

Inc. in the United States and/or other jurisdictions; Solaris is a trademark of Sun Microsystems, Inc.;

Intel is a registered trademark of Intel Corporation or its subsidiaries in the United States or other

countries; Novell and NetWare are registered trademarks, and SUSE is a registered trademark of Novell,

Inc. in the United States and other countries; Red Hat and Red Hat Enterprise Linux are registered

trademarks of Red Hat, Inc.

Other trademarks and trade names may be used in this document to refer to either the entities claiming

the marks and names or their products. Dell Inc. disclaims any proprietary interest in trademarks and

trade names other than its own.

Models UCC-60, UCP-60, UCPM-60, and UCP-61

June 2009 Rev. A01

Page 3

Contents

1 WARNING: Safety Instructions . . . 11
   SAFETY: General . . . 11
   SAFETY: When Working Inside Your System . . . 12
   Protecting Against Electrostatic Discharge . . . 13
   SAFETY: Battery Disposal . . . 14

2 Overview . . . 15
   PERC 6 and CERC 6/i Controller Descriptions . . . 15
   PCI Architecture . . . 15
   Operating System Support . . . 16
   RAID Description . . . 16
   Summary of RAID Levels . . . 17
   RAID Terminology . . . 17

3 About PERC 6 and CERC 6/i Controllers . . . 21
   PERC 6 and CERC 6 Controller Features . . . 21
   Using the SMART Feature . . . 24
   Initializing Virtual Disks . . . 25
   Background Initialization . . . 25
   Full Initialization of Virtual Disks . . . 26
   Fast Initialization of Virtual Disks . . . 26
   Consistency Checks . . . 26
   Disk Roaming . . . 27
   Disk Migration . . . 27
   Compatibility With Virtual Disks Created on PERC 5 Controllers . . . 28
   Compatibility With Virtual Disks Created on SAS 6/iR Controllers . . . 28
   Migrating Virtual Disks from SAS 6/iR to PERC 6 and CERC 6/i . . . 29
   Battery Management . . . 30
   Battery Warranty Information . . . 30
   Battery Learn Cycle . . . 31
   Virtual Disk Write Cache Policies . . . 32
   Write-Back and Write-Through . . . 32
   Conditions Under Which Write-Back is Employed . . . 32
   Conditions Under Which Write-Through is Employed . . . 33
   Conditions Under Which Forced Write-Back With No Battery is Employed . . . 33
   Virtual Disk Read Policies . . . 33
   Reconfiguring Virtual Disks . . . 33
   Fault Tolerance Features . . . 36
   Physical Disk Hot Swapping . . . 36
   Failed Physical Disk Detection . . . 37
   Redundant Path With Static Load Balancing Support . . . 37
   Using Replace Member and Revertible Hot Spares . . . 37
   Patrol Read . . . 38
   Patrol Read Feature . . . 38
   Patrol Read Modes . . . 39

4 Installing and Configuring Hardware . . . 41
   Installing the PERC 6/E and PERC 6/i Adapters . . . 41
   Installing the Transportable Battery Backup Unit (TBBU) on PERC 6/E . . . 46
   Installing the DIMM on a PERC 6/E Adapter . . . 47
   Transferring a TBBU Between Controllers . . . 48
   Removing the PERC 6/E and PERC 6/i Adapters . . . 49
   Removing the DIMM and Battery from a PERC 6/E Adapter . . . 52
   Disconnecting the BBU from a PERC 6/i Adapter or a PERC 6/i Integrated Controller . . . 54
   Setting up Redundant Path Support on the PERC 6/E Adapter . . . 55
   Reverting From Redundant Path Support to Single Path Support on the PERC 6/E Adapter . . . 58
   Removing and Installing the PERC 6/i and CERC 6/i Integrated Storage Controller Cards in Dell Modular Blade Systems (Service-Only Procedure) . . . 59
   Installing the Storage Controller Card . . . 60

5 Driver Installation . . . 63
   Installing Windows Drivers . . . 64
   Creating the Driver Media . . . 64
   Pre-Installation Requirements . . . 64
   Installing the Driver During a Windows Server 2003 or Windows XP Operating System Installation . . . 65
   Installing the Driver During a Windows Server 2008 or Windows Vista Installation . . . 66
   Installing a Windows Server 2003, Windows Server 2008, Windows Vista, or Windows XP Driver for a New RAID Controller . . . 67
   Updating an Existing Windows Server 2003, Windows Server 2008, Windows XP, or Windows Vista Driver . . . 68
   Installing Linux Driver . . . 69
   Installing Red Hat Enterprise Linux Operating System Using the Driver Update Diskette . . . 71
   Installing SUSE Linux Enterprise Server Using the Driver Update Diskette . . . 71
   Installing the RPM Package With DKMS Support . . . 72
   Installing Solaris Driver . . . 74
   Installing Solaris 10 on a PowerEdge System Booting From a PERC 6 or CERC 6/i Controller . . . 74
   Adding/Updating the Driver to an Existing System . . . 75
   Installing NetWare Drivers . . . 75
   Installing the NetWare Driver in a New NetWare System . . . 75
   Installing or Updating the NetWare Driver in an Existing NetWare System . . . 76

6 Configuring and Managing RAID . . . 77
   Dell OpenManage Storage Management . . . 77
   Dell SAS RAID Storage Manager . . . 77
   RAID Configuration Functions . . . 78
   BIOS Configuration Utility . . . 79
   Entering the BIOS Configuration Utility . . . 79
   Exiting the Configuration Utility . . . 80
   Menu Navigation Controls . . . 80
   Setting Up Virtual Disks . . . 83
   Virtual Disk Management . . . 85
   Creating Virtual Disks . . . 85
   Initializing Virtual Disks . . . 88
   Checking Data Consistency . . . 88
   Importing or Clearing Foreign Configurations Using the VD Mgmt Menu . . . 89
   Importing or Clearing Foreign Configurations Using the Foreign Configuration View Screen . . . 90
   Managing Preserved Cache . . . 93
   Managing Dedicated Hot Spares . . . 94
   Deleting Virtual Disks . . . 95
   Deleting Disk Groups . . . 95
   Resetting the Configuration . . . 96
   BIOS Configuration Utility Menu Options . . . 96
   Physical Disk Management . . . 105
   Setting LED Blinking . . . 105
   Creating Global Hot Spares . . . 105
   Removing Global or Dedicated Hot Spares . . . 106
   Replacing an Online Physical Disk . . . 107
   Stopping Background Initialization . . . 108
   Performing a Manual Rebuild of an Individual Physical Disk . . . 108
   Controller Management . . . 109
   Enabling Boot Support . . . 109
   Enabling BIOS Stop on Error . . . 110
   Restoring Factory Default Settings . . . 111

7 Troubleshooting . . . 113
   Post Error Messages . . . 113
   Virtual Disks Degraded . . . 119
   Memory Errors . . . 120
   Pinned Cache State . . . 120
   General Problems . . . 121
   Physical Disk Related Issues . . . 122
   Physical Disk Failures and Rebuilds . . . 123
   SMART Errors . . . 125
   Replace Member Errors . . . 126
   Linux Operating System Errors . . . 127
   Controller LED Indicators . . . 129
   Drive Carrier LED Indicators . . . 130

A Regulatory Notices . . . 133

B Corporate Contact Details (Taiwan Only) . . . 135

Glossary . . . 137

Index . . . 157

Page 11

WARNING: Safety Instructions

Use the following safety guidelines to help ensure your own personal safety

and to help protect your system and working environment from potential

damage.

WARNING: There is a danger of a new battery exploding if it is incorrectly

installed. Replace the battery only with the same or equivalent type recommended

by the manufacturer. See "SAFETY: Battery Disposal" on page 14.

NOTE: For complete information about U.S. Terms and Conditions of Sale, Limited

Warranties, and Returns, Export Regulations, Software License Agreement, Safety,

Environmental and Ergonomic Instructions, Regulatory Notices, and Recycling

Information, see the documentation that was shipped with your system.

SAFETY: General

• Observe and follow service markings. Do not service any product except as

explained in your user documentation. Opening or removing covers that

are marked with the triangular symbol with a lightning bolt may expose

you to electrical shock. Components inside these compartments must be

serviced only by a trained service technician.

• If any of the following conditions occur, unplug the product from the

electrical outlet, and replace the part or contact your trained service

provider:

– The power cable, extension cable, or plug is damaged.

– An object has fallen in the product.

– The product has been exposed to water.

– The product has been dropped or damaged.

– The product does not operate correctly when you follow the operating

instructions.

• Use the product only with approved equipment.


Page 12

• Operate the product only from the type of external power source indicated

on the electrical ratings label. If you are not sure of the type of power

source required, consult your service provider or local power company.

• Handle batteries carefully. Do not disassemble, crush, puncture, short

external contacts, dispose of in fire or water, or expose batteries to

temperatures higher than 60° Celsius (140° Fahrenheit). Do not attempt

to open or service batteries; replace batteries only with batteries designated

for the product.

SAFETY: When Working Inside Your System

Before you remove the system covers, perform the following steps in the

sequence indicated.

WARNING: Except as expressly otherwise instructed in Dell documentation, only

trained service technicians are authorized to remove the system cover and access

any of the components inside the system.

WARNING: To help avoid possible damage to the system board, wait 5 seconds

after turning off the system before removing a component from the system board or

disconnecting a peripheral device.

1 Turn off the system and any connected devices.

2 Disconnect your system and devices from their power sources. To reduce
the potential of personal injury or shock, disconnect any
telecommunication lines from the system.

3 Ground yourself by touching an unpainted metal surface on the chassis
before touching anything inside the system.

4 While you work, periodically touch an unpainted metal surface on the
chassis to dissipate any static electricity that might harm internal
components.


Page 13

In addition, note these safety guidelines when appropriate:

• When you disconnect a cable, pull on its connector or on its strain-relief

loop, not on the cable itself. Some cables have a connector with locking

tabs. If you are disconnecting this type of cable, press in on the locking

tabs before disconnecting the cable. As you pull connectors apart, keep

them evenly aligned to avoid bending any connector pins. Also, when you

connect a cable, make sure both connectors are correctly oriented and

aligned.

• Handle components and cards with care. Do not touch the components or

contacts on a card. Hold a card by its edges or by its metal mounting

bracket. Hold a component such as a microprocessor chip by its edges, not

by its pins.

Protecting Against Electrostatic Discharge

Electrostatic discharge (ESD) events can harm electronic components inside

your system. Under certain conditions, ESD may build up on your body or an

object, such as a peripheral, and then discharge into another object, such as

your system. To prevent ESD damage, you must discharge static electricity from

your body before you interact with any of your system’s internal electronic

components, such as a memory module. You can protect against ESD by

touching a metal grounded object (such as an unpainted metal surface on

your system’s I/O panel) before you interact with anything electronic. When

connecting a peripheral (including handheld digital assistants) to your system,

you should always ground both yourself and the peripheral before connecting

it to the system. Additionally, as you work inside the system, periodically

touch an I/O connector to remove any static charge your body may have

accumulated.


Page 14

You can also take the following steps to prevent damage from electrostatic

discharge:

• When unpacking a static-sensitive component from its shipping carton, do

not remove the component from the antistatic packing material until you

are ready to install the component. Just before unwrapping the antistatic

package, be sure to discharge static electricity from your body.

• When transporting a sensitive component, first place it in an antistatic

container or packaging.

• Handle all electrostatic sensitive components in a static-safe area. If

possible, use antistatic floor pads and work bench pads.

SAFETY: Battery Disposal

Your system may use a nickel-metal hydride (NiMH), lithium

coin-cell, and/or a lithium-ion battery. The NiMH, lithium coin-cell, and lithium-ion batteries are long-life batteries, and it is

possible that you will never need to replace them. However, should

you need to replace them, see the instructions included in the

section "Configuring and Managing RAID" on page 77.

NOTE: Do not dispose of the battery along with household waste. Contact your

local waste disposal agency for the address of the nearest battery deposit site.

NOTE: Your system may also include circuit cards or other components that

contain batteries. These batteries too must be disposed of in a battery deposit site.

For information about such batteries, see the documentation for the specific card or

component.

Taiwan Battery Recycling Mark


Page 15

Overview

The Dell™ PowerEdge™ Expandable RAID Controller (PERC) 6 family of

controllers and the Dell Cost-Effective RAID Controller (CERC) 6/i offer

redundant array of independent disks (RAID) control capabilities. The PERC 6

and CERC 6/i Serial Attached SCSI (SAS) RAID controllers only support

Dell-qualified SAS and SATA hard disk drives (HDD) and solid-state

drives (SSD). The controllers are designed to provide reliability, high

performance, and fault-tolerant disk subsystem management.

PERC 6 and CERC 6/i Controller Descriptions

The following list describes each type of controller:

• The PERC 6/E Adapter with two external x4 SAS ports and a transportable

battery backup unit (TBBU)

• The PERC 6/i Adapter with two internal x4 SAS ports, with or without a

battery backup unit, depending on the system

• The PERC 6/i Integrated controller with two internal x4 SAS ports and a

battery backup unit

• The CERC 6/i Integrated controller with one internal x4 SAS port and no

battery backup unit

Each controller supports up to 64 virtual disks.

NOTE: The number of virtual disks supported by the PERC 6/i and the CERC 6/i

cards is limited by the configuration supported by the system.

PCI Architecture

• PERC 6 controllers support a Peripheral Component Interconnect

Express (PCI-E) x8 host interface.

• CERC 6/i Modular controllers support a PCI-E x4 host interface.

NOTE: PCI-E is a high-performance input/output (I/O) bus architecture designed to

increase data transfers without slowing down the Central Processing Unit (CPU).


Page 16

Operating System Support

The PERC 6 and CERC 6/i controllers support the following operating systems:

• Citrix® XenServer® Dell Edition
• Microsoft® Windows Server® 2003
• Microsoft Windows® XP
• Microsoft Windows Vista®
• Microsoft Windows Server 2008 (including Hyper-V™ virtualization)
• Novell® NetWare® 6.5
• Red Hat® Enterprise Linux® Version 4 and Red Hat Enterprise Linux Version 5
• Solaris™ 10 (64-bit)
• SUSE® Linux Enterprise Server Version 9 (64-bit), Version 10 (64-bit), and Version 11 (64-bit)
• VMware® ESX 3.5 and 3.5i

NOTE: Windows XP and Windows Vista operating systems are supported with a
PERC 6 controller only when the controller is installed in a Dell Precision™
workstation.

NOTE: For the latest list of supported operating systems and driver installation
instructions, see the system documentation on the Dell Support website
at support.dell.com. For specific operating system service pack requirements,
see the Drivers and Downloads section on the Dell Support site at support.dell.com.

RAID Description

RAID is a group of independent physical disks that provides high performance by

increasing the number of drives used for saving and accessing data. A RAID disk

subsystem improves I/O performance and data availability. The physical disk

group appears to the host system either as a single storage unit or multiple

logical units. Data throughput improves because several disks are accessed

simultaneously. RAID systems also improve data storage availability and fault

tolerance. Data loss caused by a physical disk failure can be recovered by rebuilding

missing data from the remaining physical disks containing data or parity.

CAUTION: In the event of a physical disk failure, a RAID 0 virtual disk fails,

resulting in data loss.


Page 17

Summary of RAID Levels

• RAID 0 uses disk striping to provide high data throughput, especially for

large files in an environment that requires no data redundancy.

• RAID 1 uses disk mirroring so that data written to one physical disk is

simultaneously written to another physical disk. RAID 1 is good for small

databases or other applications that require small capacity, but also require

complete data redundancy.

• RAID 5 uses disk striping and parity data across all physical disks

(distributed parity) to provide high data throughput and data redundancy,

especially for small random access.

• RAID 6 is an extension of RAID 5 and uses an additional parity block.

RAID 6 uses block-level striping with two parity blocks distributed across

all member disks. RAID 6 provides protection against double disk failures,

and failures while a single disk is rebuilding. If you are using only one array,

deploying RAID 6 is more effective than deploying a hot spare disk.

• RAID 10, a combination of RAID 0 and RAID 1, uses disk striping across

mirrored disks. It provides high data throughput and complete data

redundancy. RAID 10 can support up to eight spans, and up to 32 physical

disks per span.

• RAID 50, a combination of RAID 0 and RAID 5, uses distributed data

parity and disk striping and works best with data that requires high system

availability, high request rates, high data transfers, and medium to large

capacity.

• RAID 60, a combination of RAID 6 and RAID 0, stripes a RAID 0 array
across RAID 6 elements. RAID 60 requires at least 8 disks.
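The trade-off between capacity and redundancy in the list above can be made concrete with a short calculation. The following Python sketch is illustrative only and is not part of any Dell utility: the function name `usable_disks` is hypothetical, and the minimum disk counts for RAID 5 (three) and RAID 6 (four) are the commonly cited values, not figures taken from this guide. It returns how many disks' worth of space holds user data, assuming all disks are the same size.

```python
def usable_disks(raid_level: int, disks: int, spans: int = 1) -> int:
    """Return how many disks' worth of capacity holds user data.

    Hypothetical helper for illustration; assumes equal-size disks.
    """
    if raid_level == 0:
        # Striping only: every disk holds user data, no redundancy.
        return disks
    if raid_level == 1:
        # Mirroring: half of the disks hold duplicates.
        if disks < 2 or disks % 2:
            raise ValueError("RAID 1 needs an even number of disks")
        return disks // 2
    if raid_level == 5:
        # One disk's worth of distributed parity.
        if disks < 3:
            raise ValueError("RAID 5 needs at least 3 disks")
        return disks - 1
    if raid_level == 6:
        # Two disks' worth of distributed parity.
        if disks < 4:
            raise ValueError("RAID 6 needs at least 4 disks")
        return disks - 2
    if raid_level in (10, 50, 60):
        # Spanned levels: RAID 0 striped across identical RAID 1/5/6 spans.
        if spans < 2 or disks % spans:
            raise ValueError("disks must divide evenly across 2 or more spans")
        base_level = raid_level // 10
        return spans * usable_disks(base_level, disks // spans)
    raise ValueError(f"unsupported RAID level: {raid_level}")


# Eight disks arranged as RAID 60 with two spans of four disks each.
print(usable_disks(60, 8, spans=2))
```

Multiplying the result by the size of the smallest disk gives an approximate usable capacity; the controller's own reported capacity is authoritative.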

RAID Terminology

Disk Striping

Disk striping allows you to write data across multiple physical disks instead of

just one physical disk. Disk striping involves partitioning each physical disk

storage space in stripes of the following sizes: 8 KB, 16 KB, 32 KB, 64 KB,

128 KB, 256 KB, 512 KB, and 1024 KB. These stripes are interleaved in a

repeated sequential manner. The part of the stripe on a single physical disk is

called a stripe element.


Page 18

For example, in a four-disk system using only disk striping (used in RAID 0),
segment 1 is written to disk 1, segment 2 is written to disk 2, and so on. Disk
striping enhances performance because multiple physical disks are accessed
simultaneously, but disk striping does not provide data redundancy.

Figure 2-1 shows an example of disk striping.

Figure 2-1. Example of Disk Striping (RAID 0)

Disk 1: Stripe element 1, Stripe element 5, Stripe element 9
Disk 2: Stripe element 2, Stripe element 6, Stripe element 10
Disk 3: Stripe element 3, Stripe element 7, Stripe element 11
Disk 4: Stripe element 4, Stripe element 8, Stripe element 12
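The round-robin placement in Figure 2-1 can be expressed as simple modular arithmetic. The Python sketch below is a minimal illustration, not controller firmware: the function name `locate` and the 1-based numbering are assumptions chosen to match the figure.

```python
def locate(element: int, disks: int) -> tuple[int, int]:
    """Map a 1-based stripe element to (1-based disk, 0-based stripe row)."""
    if element < 1 or disks < 1:
        raise ValueError("element and disks must be positive")
    index = element - 1
    # Consecutive elements rotate across the disks; after the last disk,
    # placement wraps to the first disk on the next stripe row.
    return index % disks + 1, index // disks


# Rebuild the four-disk layout of Figure 2-1 for stripe elements 1 to 12.
layout = {
    disk: [e for e in range(1, 13) if locate(e, 4)[0] == disk]
    for disk in range(1, 5)
}
for disk, elements in layout.items():
    print(f"Disk {disk}: {elements}")
```

Because consecutive elements land on different disks, a large sequential transfer keeps all four disks busy at once, which is where the throughput gain comes from.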

Disk Mirroring

With mirroring (used in RAID 1), data written to one disk is simultaneously

written to another disk. If one disk fails, the contents of the other disk can be

used to run the system and rebuild the failed physical disk. The primary

advantage of disk mirroring is that it provides complete data redundancy.

Because the contents of the disk are completely written to a second disk, it

does not matter if one of the disks fails. Both disks contain the same data at

all times. Either of the physical disks can act as the operational physical disk.

Disk mirroring provides complete redundancy, but is expensive because each

physical disk in the system must be duplicated.

NOTE: Mirrored physical disks improve read performance through read load balancing.

Page 19

Figure 2-2 shows an example of disk mirroring.

Figure 2-2. Example of Disk Mirroring (RAID 1)

Disk 1: Stripe element 1, Stripe element 2, Stripe element 3, Stripe element 4
Disk 2: Stripe element 1 Duplicated, Stripe element 2 Duplicated, Stripe element 3 Duplicated, Stripe element 4 Duplicated
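The behavior of a mirrored pair (write to both disks, read from either, rebuild a failed disk from the survivor) can be modeled in a few lines. The Python sketch below is a toy model under stated assumptions: the class `MirroredPair` and its methods are hypothetical, and the round-robin read selection is only one possible way to balance reads, not a description of how the controller chooses.

```python
class MirroredPair:
    """Toy model of a two-disk mirror; names and policy are illustrative."""

    def __init__(self, blocks: int):
        self.disks = [[None] * blocks, [None] * blocks]
        self.online = [True, True]
        self._next = 0  # round-robin counter used to spread reads

    def write(self, block: int, data: bytes) -> None:
        # Every write goes to each online disk, so both hold the same data.
        for i in (0, 1):
            if self.online[i]:
                self.disks[i][block] = data

    def read(self, block: int) -> bytes:
        candidates = [i for i in (0, 1) if self.online[i]]
        if not candidates:
            raise IOError("both disks have failed")
        # Alternate between the online disks to balance read load.
        choice = candidates[self._next % len(candidates)]
        self._next += 1
        return self.disks[choice][block]

    def fail(self, disk: int) -> None:
        self.online[disk] = False
        self.disks[disk] = [None] * len(self.disks[disk])

    def rebuild(self, disk: int) -> None:
        source = 1 - disk
        if not self.online[source]:
            raise IOError("no surviving disk to rebuild from")
        # Copy the surviving disk's contents onto the replacement.
        self.disks[disk] = list(self.disks[source])
        self.online[disk] = True


pair = MirroredPair(blocks=4)
pair.write(0, b"payroll")
pair.fail(0)                 # one disk fails
print(pair.read(0))          # data is still served from the other disk
pair.rebuild(0)              # replacement disk is rebuilt from the survivor
```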

Spanned RAID Levels

Spanning is a term used to describe the way in which RAID levels 10, 50,

and 60 are constructed from multiple sets of basic, or simple RAID levels.

For example, a RAID 10 has multiple sets of RAID 1 arrays where each RAID 1

set is considered a span. Data is then striped (RAID 0) across the RAID 1

spans to create a RAID 10 virtual disk. If you are using RAID 50 or RAID 60,

you can combine multiple sets of RAID 5 and RAID 6 together with striping.

Parity Data

Parity data is redundant data that is generated to provide fault tolerance

within certain RAID levels. In the event of a drive failure the parity data can

be used by the controller to regenerate user data. Parity data is present for

RAID 5, 6, 50, and 60.

The parity data is distributed across all the physical disks in the system. If a

single physical disk fails, it can be rebuilt from the parity and the data on the

remaining physical disks. RAID level 5 combines distributed parity with disk

striping, as shown in Figure 2-3. Parity provides redundancy for one physical

disk failure without duplicating the contents of entire physical disks.

RAID 6 combines dual distributed parity with disk striping. This level of

parity allows for two disk failures without duplicating the contents of entire

physical disks.
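Single-disk parity of the kind RAID 5 uses is commonly computed as a byte-wise exclusive-OR (XOR) across the stripe elements of a stripe. The Python sketch below illustrates that idea only: the helper name `xor_blocks` and the sample bytes are made up for the example, and the second parity block that RAID 6 adds requires a different calculation that is not shown here.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, position by position."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Three data stripe elements on three disks, plus parity on a fourth disk.
data = [b"\x01\x02", b"\x10\x20", b"\xaa\x55"]
parity = xor_blocks(data)

# Suppose the disk holding data[1] fails. XOR of the surviving data
# elements and the parity block regenerates the missing element.
rebuilt = xor_blocks([data[0], data[2], parity])
print(rebuilt == data[1])
```

This works because XOR is its own inverse: combining the parity with every surviving element cancels them out and leaves only the missing one.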


Page 20

Figure 2-3. Example of Distributed Parity (RAID 5)

[Figure: stripe elements 1–30 striped across the physical disks, with one parity block per stripe: Parity (1–5), Parity (6–10), Parity (11–15), Parity (16–20), Parity (21–25), and Parity (26–30).]

NOTE: Parity is distributed across multiple physical disks in the disk group.

Figure 2-4. Example of Dual Distributed Parity (RAID 6)

[Figure: stripe elements 1–16 striped across the physical disks, with two parity blocks per stripe: Parity (1–4), Parity (5–8), Parity (9–12), and Parity (13–16).]

NOTE: Parity is distributed across all drives in the array.


Page 21

About PERC 6 and CERC 6/i Controllers

This section describes the features of the Dell™ PowerEdge™ Expandable

RAID Controller (PERC) 6 and the Dell Cost-Effective RAID Controller

(CERC) 6/i such as the configuration options, disk array performance,

RAID management utilities, and operating system software drivers.

PERC 6 and CERC 6 Controller Features

The PERC 6 and CERC 6 family of controllers supports only Dell-qualified
Serial Attached SCSI (SAS) hard disk drives (HDDs), SATA HDDs, and
solid-state disks (SSDs). Mixing SAS and SATA drives within a virtual disk is
not supported. Also, mixing HDDs and SSDs within a virtual disk is not
supported.

Table 3-1 compares the hardware configurations for the PERC 6 and CERC 6/i

controllers.

Table 3-1. PERC 6 and CERC 6/i Controller Comparisons

Specification | PERC 6/E Adapter | PERC 6/i Adapter | PERC 6/i Integrated | CERC 6/i Integrated
RAID Levels | 0, 1, 5, 6, 10, 50, 60 | 0, 1, 5, 6, 10, 50, 60 | 0, 1, 5, 6, 10, 50, 60 | 0, 1, 5, 6, and 10 (a)
Enclosures per Port | Up to 3 enclosures | N/A | N/A | N/A
Ports | 2 x4 external wide port | 2 x4 internal wide port | 2 x4 internal wide port | 1 x4 internal wide port
Processor | LSI adapter SAS RAID-on-Chip, 8-port with 1078 | LSI adapter SAS RAID-on-Chip, 8-port with 1078 | LSI adapter SAS RAID-on-Chip, 8-port with 1078 | LSI adapter SAS RAID-on-Chip, 4-port with 1078
Battery Backup Unit | Yes, Transportable | Yes (b) | Yes | No
Cache Memory | 256-MB DDRII cache memory size. Optional 512-MB DIMM | 256-MB DDRII cache memory size | 256-MB DDRII cache memory size | 128-MB DDRII cache memory size
Cache Function | Write-Back, Write-Through, Adaptive Read Ahead, No-Read Ahead, Read Ahead | Write-Back, Write-Through, Adaptive Read Ahead, No-Read Ahead, Read Ahead | Write-Back, Write-Through, Adaptive Read Ahead, No-Read Ahead, Read Ahead | Write-Back, Write-Through, Adaptive Read Ahead, No-Read Ahead, Read Ahead
Maximum Number of Spans per Disk Group | Up to 8 arrays | Up to 8 arrays | Up to 8 arrays | Up to 2 arrays
Maximum Number of Virtual Disks per Disk Group | Up to 16 virtual disks per disk group for non-spanned RAID levels: 0, 1, 5, and 6. One virtual disk per disk group for spanned RAID levels: 10, 50, and 60. | Up to 16 virtual disks per disk group for non-spanned RAID levels: 0, 1, 5, and 6. One virtual disk per disk group for spanned RAID levels: 10, 50, and 60. | Up to 16 virtual disks per disk group for non-spanned RAID levels: 0, 1, 5, and 6. One virtual disk per disk group for spanned RAID levels: 10, 50, and 60. | Up to 16 virtual disks per disk group for non-spanned RAID levels: 0, 1, 5, and 6. One virtual disk per disk group for spanned RAID level 10.
Multiple Virtual Disks per Controller | Up to 64 virtual disks per controller | Up to 64 virtual disks per controller | Up to 64 virtual disks per controller | Up to 64 virtual disks per controller
Support for x8 PCIe Host Interface | Yes | Yes | Yes | x4 PCIe
Online Capacity Expansion | Yes | Yes | Yes | Yes
Dedicated and Global Hot Spares | Yes | Yes | Yes | Yes
Hot Swap Devices Supported | Yes | Yes | Yes | Yes
Enclosure Hot-Add (c) | Yes | N/A | N/A | N/A
Mixed Capacity Physical Disks Supported | Yes | Yes | Yes | Yes
Hardware Exclusive-OR (XOR) Assistance | Yes | Yes | Yes | Yes
Revertible Hot Spares Supported | Yes | Yes | Yes | Yes
Redundant Path Support | Yes | N/A | N/A | N/A

a. These RAID configurations are only supported on select Dell modular systems.
b. The PERC 6/i adapter supports a battery backup unit (BBU) on selected systems only.
For additional information, see the documentation that shipped with the system.
c. Using the enclosure Hot-Add feature, you can hot plug enclosures to the PERC 6/E adapter
without rebooting the system.

NOTE: The maximum array size is limited by the maximum number of drives per
disk group (32), the maximum number of spans per disk group (8), and the size of the
physical drives.

NOTE: The number of physical disks on a controller is limited by the number of slots
in the backplane on which the card is attached.

Using the SMART Feature

The Self-Monitoring Analysis and Reporting Technology (SMART) feature

monitors the internal performance of all motors, heads, and physical disk

electronics to detect predictable physical disk failures. The SMART feature

helps monitor physical disk performance and reliability.

SMART-compliant physical disks have attributes for which data can be

monitored to identify changes in values and determine whether the values are

within threshold limits. Many mechanical and electrical failures display some

degradation in performance before failure.
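The threshold comparison described above can be sketched as follows. This is an illustration only, not the controller's firmware logic: the attribute names, the field layout, and the rule that a value at or below its threshold predicts a failure are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class SmartAttribute:
    """One monitored attribute; the field names are illustrative assumptions."""
    name: str
    value: int      # current value reported by the physical disk
    threshold: int  # limit at or below which a failure is predicted


def predicted_failures(attributes):
    """Return the names of attributes whose value is at or below its threshold."""
    return [a.name for a in attributes if a.value <= a.threshold]


# Hypothetical readings for one physical disk.
attrs = [
    SmartAttribute("seek_error_rate", value=60, threshold=30),
    SmartAttribute("spin_up_time", value=20, threshold=25),
]
print(predicted_failures(attrs))
```

A disk that returns a non-empty list here corresponds to what this guide calls a predicted failure.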


Page 25

A SMART failure is also referred to as a predicted failure. There are numerous

factors that relate to predicted physical disk failures, such as a bearing failure,

a broken read/write head, and changes in spin-up rate. In addition, there are

factors related to read/write surface failure, such as seek error rate

and excessive bad sectors.

For information on physical disk status, see "Disk Roaming" on page 27.

NOTE: For detailed information on Small Computer System Interface (SCSI)

interface specifications, see www.t10.org, and for detailed information on Serial

ATA (SATA) interface specifications, see www.t13.org.

Initializing Virtual Disks

You can initialize the virtual disks as described in the following sections.

Background Initialization

Background Initialization (BGI) is an automated process that writes the

parity or mirror data on newly created virtual disks. BGI assumes that the data

is correct on all new drives. BGI does not run on RAID 0 virtual disks.

NOTE: You cannot permanently disable BGI. If you cancel BGI, it automatically

restarts within five minutes. For information on stopping BGI, see "Stopping

Background Initialization" on page 108.

You can control the BGI rate in the Dell™ OpenManage™ storage

management application. Any change in the BGI rate does not take effect

until the next BGI run.

NOTE: Unlike full or fast initialization of virtual disks, Background Initialization does

not clear data from the physical disks.

Consistency Check (CC) and BGI perform similar functions in that they

both correct parity errors. However, CC reports data inconsistencies through

an event notification, but BGI does not (BGI assumes the data is correct, as it

is run only on a newly created disk). You can start CC manually, but not BGI.


Page 26

Full Initialization of Virtual Disks

Performing a Full Initialization on a virtual disk overwrites all blocks and

destroys any data that previously existed on the virtual disk. Full Initialization

of a virtual disk eliminates the need for that virtual disk to undergo a

Background Initialization and can be performed directly after the creation of

a virtual disk.

During Full Initialization, the host is not able to access the virtual disk.

You can start a Full Initialization on a virtual disk by using the Slow Initialize

option in the Dell OpenManage storage management application. To use the

BIOS Configuration Utility to perform a Full Initialization, see "Initializing

Virtual Disks" on page 88.

NOTE: If the system reboots during a Full Initialization, the operation aborts and a

BGI begins on the virtual disk.

Fast Initialization of Virtual Disks

A fast initialization on a virtual disk overwrites the first and last 8 MB of the

virtual disk, clearing any boot records or partition information. This operation

takes only 2–3 seconds to complete and is recommended when recreating

virtual disks. To perform a fast initialization using the BIOS Configuration

Utility, see "Initializing Virtual Disks" on page 88.
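To make the scope of a fast initialization concrete, the following sketch computes which byte ranges of a virtual disk would be overwritten. It assumes 1 MB means 2^20 bytes and that a virtual disk smaller than 16 MB is covered entirely; neither detail is stated in this guide, and the function name is hypothetical.

```python
MB = 1024 * 1024          # assumption: binary megabytes
FAST_INIT_SPAN = 8 * MB   # the first and last 8 MB are overwritten


def fast_init_ranges(virtual_disk_bytes):
    """Return (start, end) byte ranges, end exclusive, touched by a fast init."""
    if virtual_disk_bytes <= 2 * FAST_INIT_SPAN:
        # The two regions meet or overlap, so the whole disk is covered.
        return [(0, virtual_disk_bytes)]
    return [
        (0, FAST_INIT_SPAN),
        (virtual_disk_bytes - FAST_INIT_SPAN, virtual_disk_bytes),
    ]


print(fast_init_ranges(100 * MB))
```

Everything between the two ranges is left untouched, which is why the operation completes in seconds while a Full Initialization does not.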

Consistency Checks

CC is a background operation that verifies and corrects the mirror or parity

data for fault tolerant virtual disks. It is recommended that you periodically

run a consistency check on virtual disks.

You can manually start a consistency check using the BIOS Configuration

Utility or an OpenManage storage management application. To start a CC

using the BIOS Configuration Utility, see "Checking Data Consistency" on

page 88. CCs can be scheduled to run on virtual disks using an OpenManage

storage management application.

By default, CC automatically corrects mirror or parity inconsistencies.

However, you can enable the Abort Consistency Check on Error feature on

the controller using the Dell OpenManage storage management application.

With the Abort Consistency Check on Error setting enabled, the consistency

check notifies you if any inconsistency is found and aborts instead of

automatically correcting the error.
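The difference between the default behavior and the Abort Consistency Check on Error setting can be illustrated with a simplified single-parity model. The sketch below assumes XOR parity over equal-length data blocks, as used by RAID 5; the data layout and function names are hypothetical and do not describe the controller firmware.

```python
from functools import reduce


def xor_parity(blocks):
    """XOR equal-length data blocks byte by byte to get the parity block."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def consistency_check(stripes, abort_on_error=False):
    """Check [data_blocks, parity] stripes and return the inconsistent indexes.

    By default a mismatched parity block is corrected in place. With
    abort_on_error=True the check stops at the first mismatch and leaves
    the stored parity untouched.
    """
    inconsistent = []
    for index, stripe in enumerate(stripes):
        data_blocks, parity = stripe
        expected = xor_parity(data_blocks)
        if parity != expected:
            inconsistent.append(index)
            if abort_on_error:
                break
            stripe[1] = expected  # default: correct the parity
    return inconsistent
```

For example, calling `consistency_check(stripes)` on a list whose second stripe holds stale parity returns `[1]` and rewrites that parity block, while `abort_on_error=True` only reports the first mismatch.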


Page 27

Disk Roaming

The PERC 6 and CERC 6/i controllers support moving physical disks from

one cable connection or backplane slot to another on the same controller.

The controller automatically recognizes the relocated physical disks and

logically places them in the proper virtual disks that are part of the disk group.

You can perform disk roaming only when the system is turned off.

CAUTION: Do not attempt disk roaming during RAID level migration (RLM) or

online capacity expansion (OCE). This causes loss of the virtual disk.

Perform the following steps to use disk roaming:

1 Turn off the power to the system, physical disks, enclosures, and system components. Disconnect power cords from the system.

2 Move the physical disks to desired positions on the backplane or the enclosure.

3 Perform a safety check. Make sure the physical disks are inserted properly.

4 Turn on the system.

The controller detects the RAID configuration from the configuration data on the physical disks.

Disk Migration

The PERC 6 and CERC 6/i controllers support migration of virtual disks from

one controller to another without taking the target controller offline.

However, the source controller must be offline prior to performing the

disk migration. The controller can import RAID virtual disks in optimal,

degraded, or partially degraded states. You cannot import a virtual disk that is in

an offline state.
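The import rule above reduces to a simple state check. The sketch below is a minimal illustration; the state strings and the function name are assumptions and are not taken from any Dell utility.

```python
# States in which a foreign virtual disk can be imported, per the text above.
IMPORTABLE_STATES = {"optimal", "degraded", "partially degraded"}


def can_import(virtual_disk_state):
    """Return True if a virtual disk in this state can be imported."""
    return virtual_disk_state.strip().lower() in IMPORTABLE_STATES


for state in ("Optimal", "Partially Degraded", "Offline"):
    print(state, can_import(state))
```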

NOTE: Disks cannot be migrated back to previous Dell PERC RAID controllers.

When a controller detects a physical disk with an existing configuration, it

flags the physical disk as foreign, and it generates an alert indicating that a

foreign disk was detected.

CAUTION: Do not attempt disk migration during RAID level migration (RLM) or

online capacity expansion (OCE). This causes loss of the virtual disk.


Page 28

Perform the following steps to use disk migration:

1 Turn off the system that contains the source controller.

2 Move the appropriate physical disks from the source controller to the target controller.

The system with the target controller can be running while inserting the physical disks.

The controller flags the inserted disks as foreign disks.

3 Use the OpenManage storage management application to import the detected foreign configuration.

NOTE: Ensure that all physical disks that are part of the virtual disk are migrated.

NOTE: You can also use the controller BIOS configuration utility to migrate disks.

Compatibility With Virtual Disks Created on PERC 5 Controllers

Virtual disks that were created on the PERC 5 family of controllers can be

migrated to the PERC 6 and CERC 6/i controllers without risking data or

configuration loss. Migrating virtual disks from PERC 6 and CERC 6/i controllers

to PERC 5 is not supported.

Virtual disks created on the CERC 6/i controller or the PERC 5 family of

controllers can be migrated to PERC 6.

NOTE: For more information about compatibility, contact your Dell technical

support representative.

Compatibility With Virtual Disks Created on SAS 6/iR Controllers

Virtual disks created on the SAS 6/iR family of controllers can be migrated to

PERC 6 and CERC 6/i. However, only virtual disks with boot volumes of the

following Linux operating systems successfully boot after migration:

• Red Hat® Enterprise Linux® 4

• Red Hat Enterprise Linux 5

• SUSE® Linux Enterprise Server 10 (64-bit)

NOTE: The migration of virtual disks with Microsoft® Windows® operating systems

is not supported.

CAUTION: Before migrating virtual disks, back up your data and ensure the

firmware of both controllers is the latest revision. Also ensure you use the SAS 6

firmware version 00.25.47.00.06.22.03.00 or newer.

Page 29

Migrating Virtual Disks from SAS 6/iR to PERC 6 and CERC 6/i

NOTE: The supported operating systems listed in "Compatibility With Virtual Disks

Created on SAS 6/iR Controllers" on page 28 contain the driver for the PERC 6

and CERC 6/i controller family. No additional drivers are needed during the

migration process.

1 If virtual disks with one of the supported Linux operating systems listed in "Compatibility With Virtual Disks Created on SAS 6/iR Controllers" on page 28 are being migrated, open a command prompt and type the following commands:

    modprobe megaraid_sas

    mkinitrd -f --preload megaraid_sas /boot/initrd-`uname -r`.img `uname -r`

2 Turn off the system.

3 Move the appropriate physical disks from the SAS 6/iR controller to the PERC 6 and CERC 6/i. If you are replacing your SAS 6/iR controller with a PERC 6 controller, see the Hardware Owner’s Manual shipped with your system or on the Dell Support website at support.dell.com.

CAUTION: After you have imported the foreign configuration on the PERC 6 or CERC 6/i storage controllers, migrating the storage disks back to the SAS 6/iR controller may result in the loss of data.

4 Boot the system and import the foreign configuration that is detected. You can do this in two ways:

• Press <F> to automatically import the foreign configuration.

• Enter the BIOS Configuration Utility and navigate to the Foreign Configuration View.

NOTE: For more information on accessing the BIOS Configuration Utility, see "Entering the BIOS Configuration Utility" on page 79.

NOTE: For more information on Foreign Configuration View, see "Foreign Configuration View" on page 104.

5 If the migrated virtual disk is the boot volume, ensure that the virtual disk is selected as the bootable volume for the target PERC 6 and CERC 6/i controller. See "Controller Management Actions" on page 104.


Page 30

6 Exit the BIOS Configuration Utility and reboot the system.

7 Ensure all the latest drivers for PERC 6 or CERC 6/i controller (available on the Dell support website at support.dell.com) are installed. For more information, see "Driver Installation" on page 63.

NOTE: For more information about compatibility, contact your Dell technical support representative.

Battery Management

NOTE: Battery management is only applicable to PERC 6 family of controllers.

The Transportable Battery Backup Unit (TBBU) is a cache memory module

with an integrated battery pack that enables you to transport the cache

module with the battery in a new controller. The TBBU protects the integrity

of the cached data on the PERC 6/E adapter by providing backup power

during a power outage.

The Battery Backup Unit (BBU) is a battery pack that protects the integrity of

the cached data on the PERC 6/i adapter and PERC 6/i Integrated controllers

by providing backup power during a power outage.

The battery, when new, provides up to 24 hours of backup power for the

cache memory.

Battery Warranty Information

The BBU offers an inexpensive way to protect the data in cache memory.

The lithium-ion battery provides a way to store more power in a smaller

form factor than previous batteries.

Your PERC 6 battery, when new, provides up to 24 hours of controller cache

memory backup power. Under the 1-year limited warranty, we warrant that

the battery will provide at least 24 hours of backup coverage during the 1-year

limited warranty period. To prolong battery life, do not store or operate the

BBU in temperatures exceeding 60°C.


Page 31

Battery Learn Cycle

Learn cycle is a battery calibration operation performed by the controller

periodically to determine the condition of the battery. This operation cannot

be disabled.

You can start battery learn cycles manually or automatically. In addition,

you can enable or disable automatic learn cycles in the software utility. If you

enable automatic learn cycles, you can delay the start of the learn cycles for

up to 168 hours (7 days). If you disable automatic learn cycles, you can start

the learn cycles manually, and you can choose to receive a reminder to start

a manual learn cycle.

You can put the learn cycle in Warning Only mode. In the Warning Only

mode, a warning event is generated to prompt you to start the learn cycle

manually when it is time to perform the learn cycle operation. You can select

the schedule for initiating the learn cycle. When in Warning Only mode,

the controller continues to prompt you to start the learn cycle every seven

days until it is performed.

NOTE: Virtual disks automatically switch to Write-Through mode when the battery

charge is low because of a learn cycle.

Learn Cycle Completion Time Frame

The time frame for completion of a learn cycle is a function of the battery

charge capacity and the discharge/charge currents used. For PERC 6,

the expected time frame for completion of a learn cycle is approximately

seven hours and consists of the following parts:

• Learn cycle discharge cycle: approximately three hours

• Learn cycle charge cycle: approximately four hours

Learn cycles shorten as the battery capacity deteriorates over time.
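The timing figures above can be combined into a rough schedule. The sketch below uses the approximate three-hour discharge and four-hour charge phases and the 168-hour maximum delay stated in this guide; the function name and the idea of a fixed due time are assumptions for illustration, and real cycles shorten as the battery ages.

```python
from datetime import datetime, timedelta

DISCHARGE_HOURS = 3     # approximate discharge phase
CHARGE_HOURS = 4        # approximate charge phase
MAX_DELAY_HOURS = 168   # automatic learn cycles can be delayed up to 7 days


def learn_cycle_window(due, delay_hours=0):
    """Return (start, discharge_end, end) for a learn cycle due at `due`."""
    if not 0 <= delay_hours <= MAX_DELAY_HOURS:
        raise ValueError("delay must be between 0 and 168 hours")
    start = due + timedelta(hours=delay_hours)
    discharge_end = start + timedelta(hours=DISCHARGE_HOURS)
    end = discharge_end + timedelta(hours=CHARGE_HOURS)
    return start, discharge_end, end


start, discharge_end, end = learn_cycle_window(datetime(2009, 1, 1), delay_hours=24)
print(start, discharge_end, end)
```

Because virtual disks switch to Write-Through mode while the battery charge is low, an estimate like this can help place the learn cycle outside peak I/O hours.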

NOTE: For additional information, see the OpenManage storage

management application.

During the discharge phase of a learn cycle, the PERC 6 battery charger

is disabled and remains disabled until the battery is discharged. After the

battery is discharged, the charger is re-enabled.


Page 32

Virtual Disk Write Cache Policies

The write cache policy of a virtual disk determines how the controller handles

writes to that virtual disk. Write-Back and Write-Through are the two write

cache policies and can be set on virtual disks individually.

All RAID volumes will be presented as Write-Through (WT) to the operating

system (Windows and Linux) independent of the actual write cache policy of

the virtual disk. The PERC/CERC controllers manage the data in cache

independently of the OS or any applications. Use OpenManage or the BIOS

configuration utility to view and manage virtual disk cache settings.

Write-Back and Write-Through

In Write-Through caching, the controller sends a data transfer completion

signal to the host system when the disk subsystem has received all the data

in a transaction.

In Write-Back caching, the controller sends a data transfer completion signal

to the host when the controller cache has received all the data in a

transaction. The controller then writes the cached data to the storage device

in the background.

The risk of using Write-Back cache is that the cached data can be lost if there

is a power failure before it is written to the storage device. This risk is

mitigated by using a BBU on selected PERC 6 controllers. For information

on which controllers support a BBU, see Table 3-1.

Write-Back caching has a performance advantage over Write-Through

caching.

NOTE: The default cache setting for virtual disks is Write-Back caching.

NOTE: Certain data patterns and configurations perform better with a

Write-Through cache policy.

Conditions Under Which Write-Back is Employed

Write-Back caching is used under all conditions in which the battery is

present and in good condition.


Page 33

Conditions Under Which Write-Through is Employed

Write-Through caching is used under all conditions in which the battery is

missing or in a low-charge state. Low-charge state is when the battery is not

capable of maintaining data for at least 24 hours in the case of a power loss.

Conditions Under Which Forced Write-Back With No Battery is Employed

Write-Back mode is available when the user selects Force WB with no

battery. When Forced Write-Back mode is selected, the virtual disk is in

Write-Back mode even if the battery is not present.

CAUTION: It is recommended that you use a power backup system when forcing

Write-Back to ensure there is no loss of data if the system suddenly loses power.
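The three sets of conditions above can be summarized as one decision function. This is a sketch of the rules as described in this section, not firmware code; the parameter names and the "WB"/"WT" strings are assumptions.

```python
def effective_write_policy(configured, battery_present, battery_charged,
                           force_wb_no_battery=False):
    """Return "WB" or "WT", the policy in effect for a virtual disk.

    configured: "WB" or "WT", the policy set on the virtual disk.
    battery_charged: True if the battery can maintain cache data for at
    least 24 hours, that is, it is not in a low-charge state.
    """
    if configured == "WT":
        return "WT"
    if force_wb_no_battery:
        return "WB"   # Forced Write-Back: stays WB even without a battery
    if battery_present and battery_charged:
        return "WB"
    return "WT"       # battery missing or in a low-charge state


print(effective_write_policy("WB", battery_present=True, battery_charged=False))
```

The example call models a virtual disk during a learn cycle: the configured policy is Write-Back, but the low battery charge puts Write-Through in effect.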

Virtual Disk Read Policies

The read policy of a virtual disk determines how the controller handles reads

to that virtual disk. The read policies are:

• Always Read Ahead: Read-Ahead capability allows the controller to read sequentially ahead of requested data and to store the additional data in cache memory, anticipating that the data is required soon. This speeds up reads for sequential data, but there is little improvement when accessing random data.

• No Read Ahead: Disables the Read-Ahead capability.

• Adaptive Read Ahead: When selected, the controller begins using Read-Ahead if the two most recent disk accesses occurred in sequential sectors. If the read requests are random, the controller reverts to No Read Ahead mode.
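The Adaptive Read Ahead rule can be sketched as a small tracker that compares each read with the one before it. The class below is an assumption-laden illustration: it treats two accesses as sequential when the second starts at the sector immediately after the first ends, which is one plausible reading of the rule and not a description of the controller's actual heuristic.

```python
class AdaptiveReadAhead:
    """Decides whether read-ahead is active based on the two latest reads."""

    def __init__(self):
        # Sector that would directly follow the previous read, if any.
        self._next_expected = None

    def record_read(self, start_sector, sector_count):
        """Record a read and return True if read-ahead should be used."""
        sequential = start_sector == self._next_expected
        self._next_expected = start_sector + sector_count
        return sequential


tracker = AdaptiveReadAhead()
for start in (0, 8, 500, 508):
    print(start, tracker.record_read(start, 8))
```

In this run the reads at sectors 8 and 508 continue directly from their predecessors, so read-ahead is active for them; the jump to sector 500 reverts to No Read Ahead behavior.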

Reconfiguring Virtual Disks

There are two methods to reconfigure RAID virtual disks — RAID Level

Migration and Online Capacity Expansion.

RAID Level Migrations (RLM) involve the conversion of a virtual disk to a

different RAID level. Online Capacity Expansions (OCE) refer to increasing

the capacity of a virtual disk by adding drives and/or migrating to a different


Page 34

RAID level. When an RLM/OCE operation is complete, a reboot is not

necessary. For a list of RAID level migrations and capacity expansion

possibilities, see Table 3-2.

The source RAID level column indicates the virtual disk level before the

RAID level migration and the target RAID level column indicates the RAID

level after the operation is complete.

NOTE: If you configure 64 virtual disks on a controller, you cannot perform a

RAID level migration or capacity expansion on any of the virtual disks.

NOTE: The controller changes the write cache policy of all virtual disks undergoing

an RLM/OCE to Write-Through until the RLM/OCE is complete.

Table 3-2. RAID Level Migration

| Source RAID Level | Target RAID Level | Required Number of Physical Disks (Beginning) | Number of Physical Disks (End) | Capacity Expansion Possible | Description |
|---|---|---|---|---|---|
| RAID 0 | RAID 1 | 1 | 2 | No | Converting non-redundant virtual disk into a mirrored virtual disk by adding one drive. |
| RAID 0 | RAID 5 | 1 or more | 3 or more | Yes | At least one drive needs to be added for distributed parity data. |
| RAID 0 | RAID 6 | 1 or more | 4 or more | Yes | At least two drives need to be added for dual distributed parity data. |
| RAID 1 | RAID 0 | 2 | 1 or more | Yes | Removes redundancy while increasing capacity. |
| RAID 1 | RAID 5 | 2 | 3 or more | Yes | Maintains redundancy while doubling capacity. |
| RAID 1 | RAID 6 | 2 | 4 or more | Yes | Two drives are required to be added for distributed parity data. |


Page 35

Table 3-2. RAID Level Migration (continued)

| Source RAID Level | Target RAID Level | Required Number of Physical Disks (Beginning) | Number of Physical Disks (End) | Capacity Expansion Possible | Description |
|---|---|---|---|---|---|
| RAID 5 | RAID 0 | 3 or more | 2 or more | Yes | Converting to a non-redundant virtual disk and reclaiming disk space used for distributed parity data. |
| RAID 5 | RAID 6 | 3 or more | 4 or more | Yes | At least one drive needs to be added for dual distributed parity data. |
| RAID 6 | RAID 0 | 4 or more | 2 or more | Yes | Converting to a non-redundant virtual disk and reclaiming disk space used for distributed parity data. |
| RAID 6 | RAID 5 | 4 or more | 3 or more | Yes | Removing one set of parity data and reclaiming disk space used for it. |

NOTE: The total number of physical disks in a disk group cannot exceed 32.

NOTE: You cannot perform RAID level migration and expansion on RAID levels 10, 50, and 60.
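Table 3-2 and its notes can be expressed as a lookup that a planning script might use to check a proposed migration. The sketch below transcribes the disk counts as they appear in this guide; the data structure and function names are hypothetical, and the 64-virtual-disk restriction mentioned earlier is not modeled.

```python
# (source, target) -> (start rule, end rule, capacity expansion possible)
# Each rule is (count, or_more): (2, False) means exactly 2 disks,
# (3, True) means 3 or more disks.
RLM_TABLE = {
    (0, 1): ((1, False), (2, False), False),
    (0, 5): ((1, True), (3, True), True),
    (0, 6): ((1, True), (4, True), True),
    (1, 0): ((2, False), (1, True), True),
    (1, 5): ((2, False), (3, True), True),
    (1, 6): ((2, False), (4, True), True),
    (5, 0): ((3, True), (2, True), True),
    (5, 6): ((3, True), (4, True), True),
    (6, 0): ((4, True), (2, True), True),
    (6, 5): ((4, True), (3, True), True),
}
MAX_DISKS_PER_GROUP = 32


def _matches(count, rule):
    """Check a disk count against an (n, or_more) rule."""
    n, or_more = rule
    return count >= n if or_more else count == n


def migration_allowed(source, target, disks_before, disks_after):
    """Return True if Table 3-2 lists this migration with these disk counts."""
    rule = RLM_TABLE.get((source, target))
    if rule is None:
        return False  # not listed; this includes RAID 10, 50, and 60
    start_rule, end_rule, _expansion = rule
    return (_matches(disks_before, start_rule)
            and _matches(disks_after, end_rule)
            and disks_after <= MAX_DISKS_PER_GROUP)


print(migration_allowed(5, 6, disks_before=3, disks_after=4))
```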


Page 36

Fault Tolerance Features

Table 3-3 lists the features that provide fault tolerance to prevent data loss in

case of a failed physical disk.

Table 3-3. Fault Tolerance Features

| Specification | PERC | CERC |
|---|---|---|
| Support for SMART | Yes | Yes |
| Support for Patrol Read | Yes | Yes |
| Redundant path support | Yes (a) | N/A |
| Physical disk failure detection | Automatic | Automatic |
| Physical disk rebuild using hot spares | Automatic | Automatic |
| Parity generation and checking (RAID 5, 50, 6, and 60 only) | Yes | Yes |
| Battery backup of controller cache to protect data | Yes (b) | N/A |
| Manual learn cycle mode for battery backup | Yes | N/A |
| Detection of batteries with low charge after boot up | Yes | N/A |

a. Supported only on PERC 6/E adapters.

b. The PERC 6/i adapter supports a BBU on selected systems only. For additional information, see the documentation that was shipped with the system.

Physical Disk Hot Swapping

Hot swapping is the manual replacement of a defective unit in a disk subsystem while the subsystem is performing its normal functions.

NOTE: The system backplane or enclosure must support hot swapping for the

PERC 6 and CERC 6/i controllers to support hot swapping.

NOTE: Replace a physical disk with a new one of the same protocol and

drive technology. For example, only a SAS HDD can replace a SAS HDD;

only a SATA SSD can replace a SATA SSD.

NOTE: The replacement drive must be of equal or greater capacity than the one it

is replacing.


Page 37

Failed Physical Disk Detection

The controller automatically detects and rebuilds failed physical disks when you

place a new drive in the slot where the failed drive resided or when an

applicable hot spare is present. Automatic rebuilds can be performed

transparently with hot spares. If you have configured hot spares, the

controllers automatically try to use them to rebuild failed physical disks.

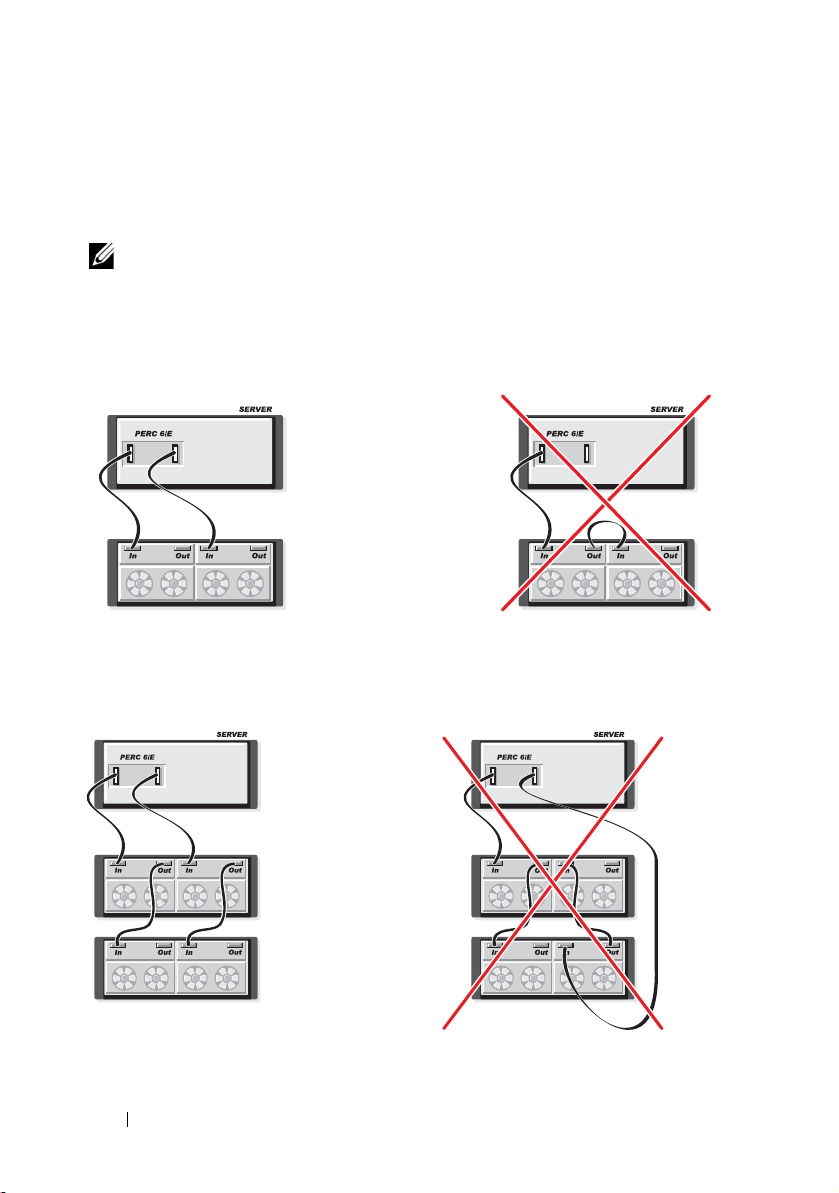

Redundant Path With Static Load Balancing Support

The PERC 6/E adapter can detect and use redundant paths to drives

contained in enclosures. This provides the ability to connect two SAS cables

between a controller and an enclosure for path redundancy. The controller is

able to tolerate the failure of a cable or Enclosure Management

Module (EMM) by utilizing the remaining path.

When redundant paths exist, the controller automatically balances I/O load

through both paths to each disk drive. This load balancing feature increases

throughput to each drive and is automatically turned on when redundant

paths are detected. To set up your hardware to support redundant paths, see

"Setting up Redundant Path Support on the PERC 6/E Adapter" on page 55.

NOTE: This support for redundant paths refers to path-redundancy only and not to

controller-redundancy.

Using Replace Member and Revertible Hot Spares

The Replace Member functionality allows a previously commissioned hot

spare to be reverted back to a usable hot spare. When a drive failure occurs

within a virtual disk, an assigned hot spare (dedicated or global) is

commissioned and begins rebuilding until the virtual disk is optimal.

After the failed drive is replaced (in the same slot) and the rebuild to the hot

spare is complete, the controller automatically starts to copy data from the

commissioned hot spare to the newly-inserted drive. After the data is copied,

the new drive is part of the virtual disk and the hot spare is reverted back to

being a ready hot spare. This allows hot spares to remain in specific enclosure

slots. While the controller is reverting the hot spare, the virtual disk

remains optimal.

NOTE: The controller automatically reverts a hot spare only if the failed drive is

replaced with a new drive in the same slot. If the new drive is not placed in the

same slot, a manual Replace Member operation can be used to revert a previously

commissioned hot spare.


Page 38

Automatic Replace Member with Predicted Failure

A Replace Member operation can occur when there is a SMART predictive

failure reporting on a drive in a virtual disk. The automatic Replace Member

is initiated when the first SMART error occurs on a physical disk that is part

of a virtual disk. The target drive needs to be a hot spare that qualifies as

a rebuild drive. The physical disk with the SMART error is marked as failed

only after the successful completion of the Replace Member. This avoids

putting the array in degraded status.

If an automatic Replace Member occurs using a source drive that was

originally a hot spare (that was used in a rebuild), and a new drive added for

the Replace Member operation as the target drive, the hot spare reverts to the

hot spare state after a successful Replace Member operation.

NOTE: To enable the automatic Replace Member, use the Dell OpenManage

storage management application. For more information on automatic Replace

Member, see "Dell OpenManage Storage Management" on page 77.

NOTE: For information on manual Replace Member, see "Replacing an Online

Physical Disk" on page 107.

Patrol Read

The Patrol Read feature is designed as a preventative measure to ensure

physical disk health and data integrity. Patrol Read scans for and resolves

potential problems on configured physical disks. The OpenManage storage

management application can be used to start Patrol Read and change its

behavior.

Patrol Read Feature

The following is an overview of Patrol Read behavior:

1 Patrol Read runs on all disks on the controller that are configured as part of a virtual disk, including hot spares.

2 Patrol Read does not run on physical disks that are not part of a virtual disk or are in Ready state.

Page 39

3 Patrol Read adjusts the amount of controller resources dedicated to Patrol Read operations based on outstanding disk I/O. For example, if the system is busy processing I/O operations, then Patrol Read uses fewer resources to allow the I/O to take a higher priority.

4 Patrol Read does not run on any disks involved in any of the following operations:

• Rebuild

• Replace Member

• Full or Background Initialization

• Consistency Check

• RAID Level Migration or Online Capacity Expansion

Patrol Read Modes

The following describes each of the modes Patrol Read can be set to:

• Auto (default): Patrol Read is set to the Auto mode by default. In this mode, Patrol Read is enabled to run automatically and start every seven days on SAS and SATA HDDs. Patrol Read is not necessary on SSD and is disabled by default. You can start and stop Patrol Read as well.

• Manual: Patrol Read does not run automatically and you must start it manually.

• Disabled: Patrol Read is not allowed to start on the controller.
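The Patrol Read behavior and modes described in this section can be combined into an eligibility check for a single physical disk. The sketch below is illustrative only: the parameter names, state strings, and operation names are assumptions, and resource throttling under heavy I/O is not modeled.

```python
# Operations during which Patrol Read does not run on the disks involved.
BLOCKING_OPERATIONS = {
    "rebuild",
    "replace member",
    "full initialization",
    "background initialization",
    "consistency check",
    "raid level migration",
    "online capacity expansion",
}


def patrol_read_eligible(configured, state, active_operation=None, mode="auto"):
    """Return True if Patrol Read may scan this physical disk.

    configured: True if the disk is part of a virtual disk or is a hot spare.
    state: the disk state, for example "online" or "ready".
    active_operation: name of an operation the disk is involved in, or None.
    mode: "auto", "manual", or "disabled".
    """
    if mode == "disabled":
        return False
    if not configured or state.lower() == "ready":
        return False
    if active_operation and active_operation.lower() in BLOCKING_OPERATIONS:
        return False
    return True


print(patrol_read_eligible(True, "online", active_operation="Rebuild"))
```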


Page 40


Page 41

Installing and Configuring Hardware

WARNING: Only trained service technicians are authorized to remove the system

cover and access any of the components inside the system. Before performing any

procedure, see the safety and warranty information that shipped with your system

for complete information about safety precautions, working inside the system, and

protecting against electrostatic discharge.

CAUTION: Electrostatic discharge can damage sensitive components.

Always use proper antistatic protection when handling components.

Touching components without using a proper ground can damage the equipment.

NOTE: For a list of compatible controllers, see the documentation that shipped with

the system.

Installing the PERC 6/E and PERC 6/i Adapters

1 Unpack the PERC 6/E adapter and check for damage.

NOTE: Contact Dell technical support if the controller is damaged.

2 Turn off the system and attached peripherals, and disconnect the system from the electrical outlet. For more information on power supplies, see the Hardware Owner’s Manual shipped with your system or on the Dell Support website at support.dell.com.

3 Disconnect the system from the network and remove the system cover. For more information on opening the system, see your system’s Hardware Owner’s Manual.

4 Select an empty PCI Express (PCI-E) slot. Remove the blank filler bracket on the back of the system aligned with the PCI-E slot you have selected.


Page 42

5

4

3

1

2

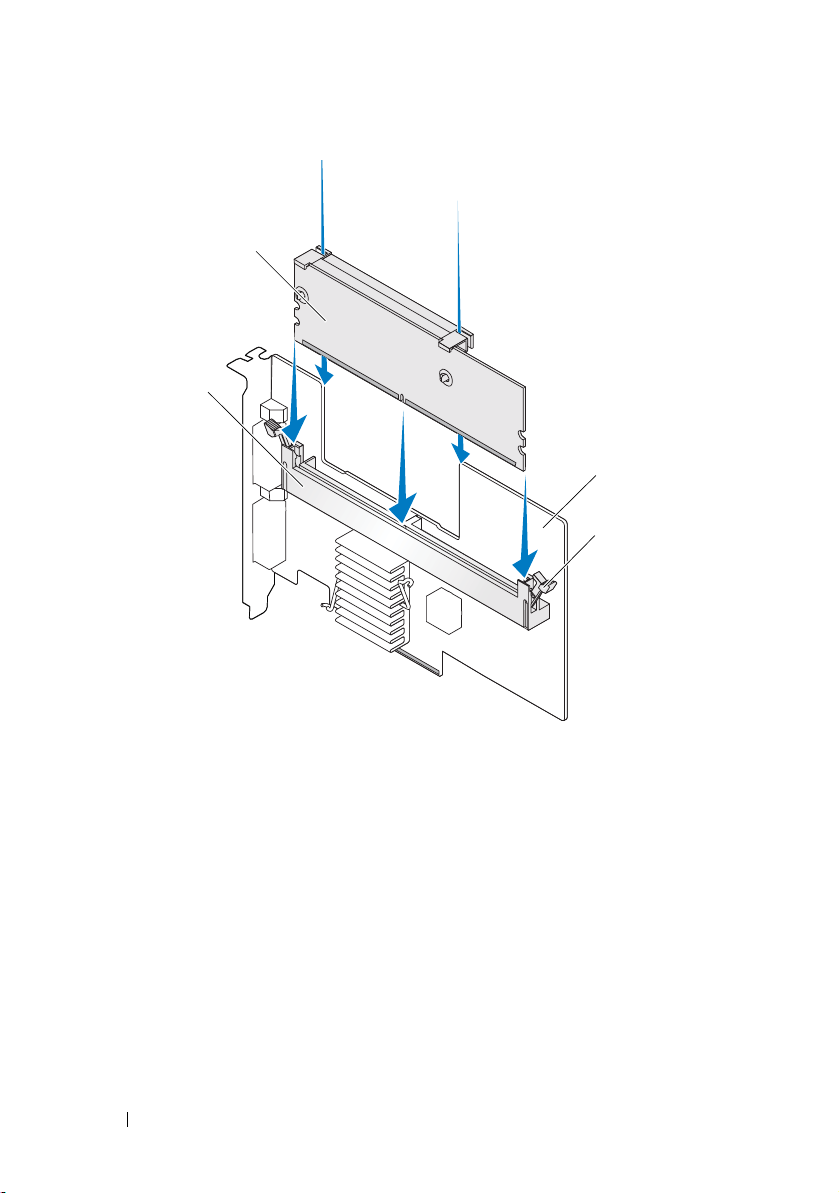

Align the PERC 6/E adapter to the PCI-E slot you have selected.

CAUTION: Never apply pressure to the adapter module while inserting it in the

PCI-E slot. Applying pressure could break the adapter module.

6

Insert the controller gently, but firmly, until the controller is firmly seated

in the PCI-E slot. For more information on installing the PERC 6 adapter,

see Figure 4-1. For more information on installing the PERC 6/i adapter,

see Figure 4-2.

Figure 4-1. Installing a PERC 6/E Adapter

1 PCI-e slot 3 filler bracket

2 PERC 6/i adapter 4 bracket screw


Page 43

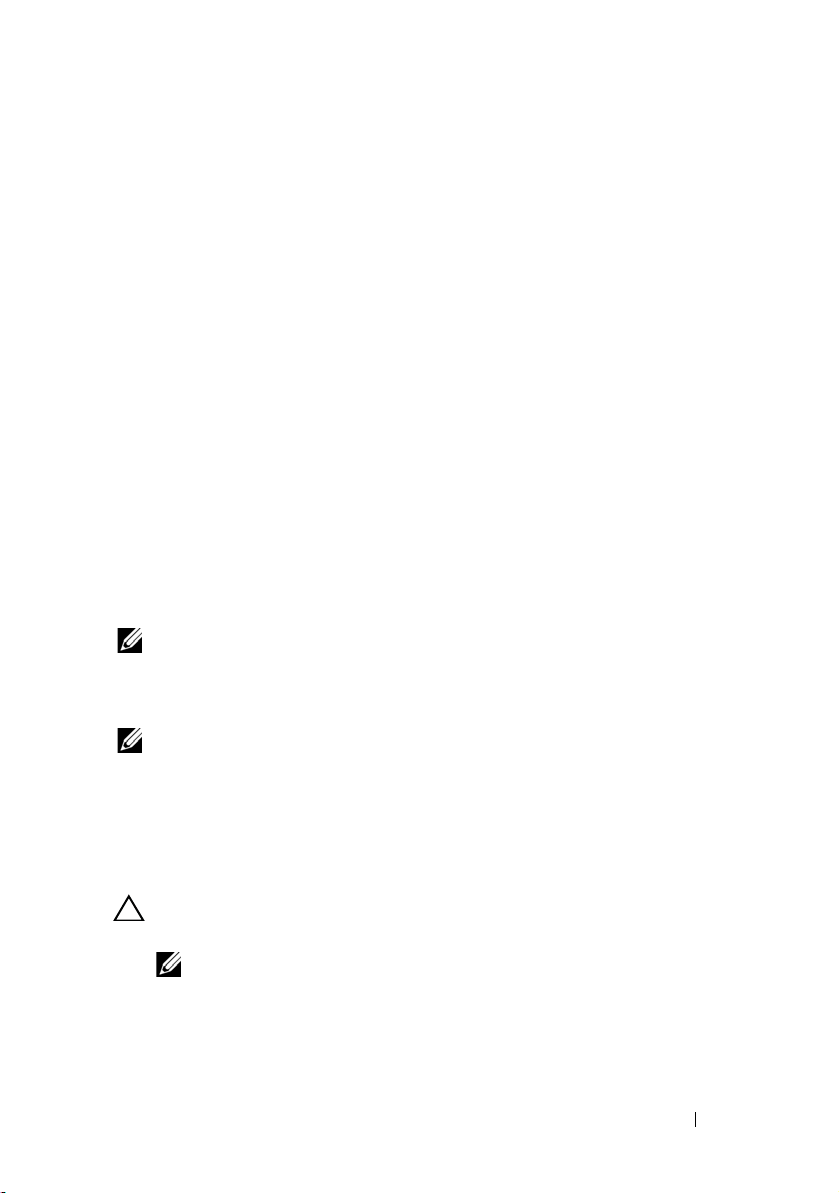

Figure 4-2. Installing a PERC 6/i Adapter


1 PCI-e slot 3 filler brackets

2 PERC 6/i adapter 4 bracket screw

7 Tighten the bracket screw, if any, or use the system’s retention clips to secure the controller to the system’s chassis.

8 For PERC 6/E adapter, replace the cover of the system. For more information on closing the system, see the Hardware Owner’s Manual shipped with your system or on the Dell Support website at support.dell.com.


Page 44

9 Connect the cable from the external enclosure to the controller. For more information, see Figure 4-3.

Figure 4-3. Connecting the Cable From the External Enclosure

1 connector on the controller 3 cable from the external enclosure

2 system

10 For PERC 6/i adapter, connect the cables from the backplane of the system to the controller. The primary SAS connector is white and the secondary SAS connector is black. For more information, see Figure 4-4.


Page 45

Figure 4-4. Connecting Cables to the Controller

1 cable 3 PERC 6/i adapter

2 connector

11 Replace the cover of the system. For more information on closing the system, see the Hardware Owner’s Manual shipped with your system or on the Dell Support website at support.dell.com.

12 Reconnect the power and network cables, and turn on the system.


Page 46

Installing the Transportable Battery Backup Unit (TBBU) on PERC 6/E

CAUTION: The following procedure must be performed at an

Electrostatic Discharge (ESD)-safe workstation to meet the requirements of

EIA-625 – "Requirements For Handling Electrostatic Discharge Sensitive Devices."

The following procedure must be performed following the IPC-A-610 latest

revision ESD recommended practices.

CAUTION: When transporting a sensitive component, first place it in an antistatic

container or packaging.

CAUTION: Handle all sensitive components in a static-safe area. If possible, use

antistatic floor pads and work bench pads.

1

Unpack the TBBU and follow all antistatic procedures.

2

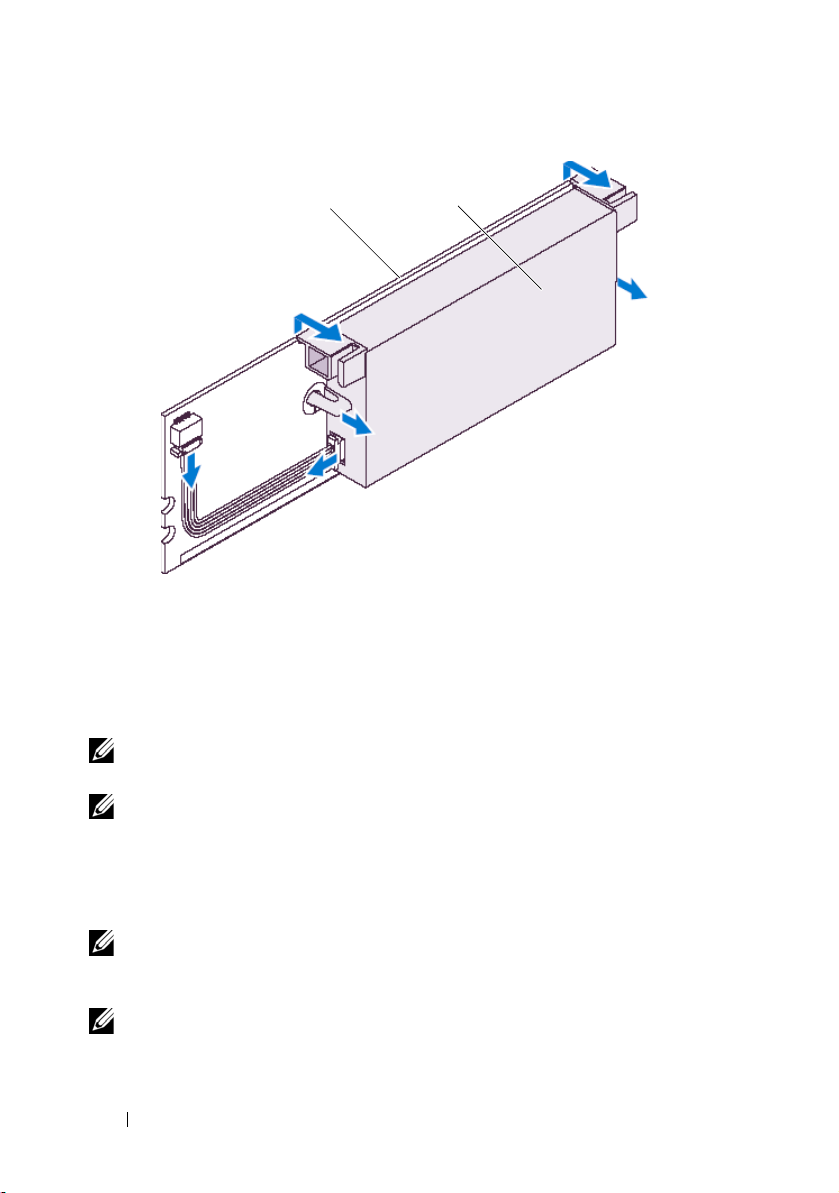

Remove the DIMM from the controller. Insert one end of the battery pack

harness (the red, white, yellow, and green wires) in the connector on the

memory module and the other end in the connector on the battery.

3

Place the top edge of the battery over the top edge of the memory module

so that the arms on the side of the battery fit in their sockets on the

memory module. For more information, see Figure 4-5.

Figure 4-5. Installing a TBBU

1 battery 4 connector on the memory module

2 connector on the battery 5 memory module

3 battery pack harness

Page 47

4

Place the PERC 6/E adapter on a flat, clean, and static-free surface.

5

Mount the memory module in the controller memory socket like a

standard DIMM. For more information, see "Installing the DIMM on a

PERC 6/E Adapter" on page 47.

The memory module is mounted flush with the controller board so that

the memory module is parallel to the board when installed.

6

Press the memory module firmly in the memory socket. As you press the

memory module in the socket, the TBBU clicks in place, indicating that

the memory module is firmly seated in the socket. The arms on the socket fit in

the notches on the memory module to hold it securely.

Installing the DIMM on a PERC 6/E Adapter

CAUTION: When unpacking a static sensitive component from its shipping

carton, do not remove the component from the antistatic packing material until you

are ready to install the component. Before unwrapping the antistatic package,

discharge static electricity from your body. Handle all sensitive

components in a static-safe area. If possible, use antistatic floor pads and work

bench pads.

CAUTION: PERC 6 cards support Dell-qualified 512 MB and 256 MB

DDRII 667 MHz ECC-registered DIMMs with x16 DRAM components.

Installing unsupported memory causes the system to hang at POST.

CAUTION: Do not touch the gold leads and do not bend the memory module.
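
The memory requirements in the caution above can be written down as a simple pre-installation checklist. The following is a minimal Python sketch and not a Dell tool: the Dimm record and the is_supported_dimm function are hypothetical names used only for illustration, and the values must be read by hand from the module label or datasheet. The check cannot confirm that a module is Dell-qualified.

```python
from dataclasses import dataclass

# Values taken from the caution above; everything else is illustrative.
SUPPORTED_SIZES_MB = {256, 512}
REQUIRED_TYPE = "DDR2"        # written as DDRII in this guide
REQUIRED_SPEED_MHZ = 667
REQUIRED_DRAM_WIDTH = 16      # x16 DRAM components


@dataclass(frozen=True)
class Dimm:
    """Hypothetical record of the attributes printed on a module label."""
    size_mb: int
    memory_type: str
    speed_mhz: int
    ecc: bool
    registered: bool
    dram_width: int


def is_supported_dimm(dimm: Dimm) -> bool:
    """Return True only if every attribute matches the listed requirements."""
    return (
        dimm.size_mb in SUPPORTED_SIZES_MB
        and dimm.memory_type.upper() == REQUIRED_TYPE
        and dimm.speed_mhz == REQUIRED_SPEED_MHZ
        and dimm.ecc
        and dimm.registered
        and dimm.dram_width == REQUIRED_DRAM_WIDTH
    )


candidate = Dimm(512, "DDR2", 667, ecc=True, registered=True, dram_width=16)
print(is_supported_dimm(candidate))
```

A module that fails any single attribute is rejected, which mirrors the caution: unsupported memory causes the system to hang at POST.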

1

Remove the memory module from its packaging.

2 Align the keyed edge of the memory module to the physical divider on the memory socket to avoid damage to the module.

3 Insert the memory module in the memory socket. Apply a constant, downward pressure on both ends or the middle of the memory module until the retention clips fall in the allotted slots on either side of the memory module. For more information, see Figure 4-6.

Figure 4-6 displays the installation of a memory module on a PERC 6/E adapter.

Page 48

Figure 4-6. Installing a DIMM

1 PERC 6/E adapter

2 retention clip

3 memory socket

4 memory module

Transferring a TBBU Between Controllers

The TBBU provides an uninterrupted power supply for up to 24 hours to the

cache memory module. If the controller fails as a result of a power failure, you

can move the TBBU to a new controller and recover the data. The controller

that replaces the failed controller should not have any prior configuration.

Page 49

Perform the following steps to replace a failed controller with data in the TBBU:

1

Perform a controlled shutdown on the system in which the PERC 6/E is

installed, as well as any attached storage enclosures.

2

Remove the controller that has the TBBU currently installed from the

system.

3

Remove the TBBU from the controller.

4

Insert the TBBU in the new controller.

For more information on installing the TBBU, see "Installing the

Transportable Battery Backup Unit (TBBU) on PERC 6/E" on page 46.

5

Insert the replacement controller in the system.

See the relevant sections on installing controllers under "Installing the

PERC 6/E and PERC 6/i Adapters" on page 41.

6

Turn on the system.

The controller flushes the cache data to the virtual disks.
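
Because the TBBU holds the cache contents for up to 24 hours, the transfer described above is time-bound. The following minimal Python sketch shows that arithmetic, assuming you know when the system lost power. The function names are hypothetical, and 24 hours is the upper bound stated in this section, so actual retention may be shorter depending on battery charge and condition.

```python
from datetime import datetime, timedelta

# Upper bound stated in this section; real retention may be shorter.
MAX_RETENTION = timedelta(hours=24)


def latest_restore_time(power_lost_at: datetime) -> datetime:
    """Latest time by which the replacement controller should be powered on."""
    return power_lost_at + MAX_RETENTION


def time_remaining(power_lost_at: datetime, now: datetime) -> timedelta:
    """Time left in the retention window, never negative."""
    remaining = latest_restore_time(power_lost_at) - now
    return max(remaining, timedelta(0))


lost = datetime(2009, 3, 1, 22, 30)
print(latest_restore_time(lost))                          # 2009-03-02 22:30:00
print(time_remaining(lost, datetime(2009, 3, 2, 6, 0)))   # 16:30:00
```

Treat the result as a worst-case deadline and complete the transfer well before it.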

Removing the PERC 6/E and PERC 6/i Adapters

NOTE: If the SAS cable is accidentally pulled out while the system is

operational, reconnect the cable and see the online help of your

Dell™ OpenManage™ storage management application for the required recovery

steps.

NOTE: Some PERC 6/i adapters installed on Dell workstations or

Dell PowerEdge™ SC systems do not have a BBU.

1

Perform a controlled shutdown on the system in which the PERC 6/E

is installed, as well as any attached storage enclosures.

2

Disconnect the system from the electrical outlet and remove the system

cover.

CAUTION: Running a system without the system cover installed may cause

damage due to improper cooling.

NOTE: For more information on removing peripherals installed in the system’s

PCI-E slots, see the Hardware Owner’s Manual shipped with your system or

on the Dell Support website at support.dell.com.

For instructions on removing a PERC 6/E adapter, go to step 3.

For instructions on removing a PERC 6/i adapter, go to step 5.

Page 50

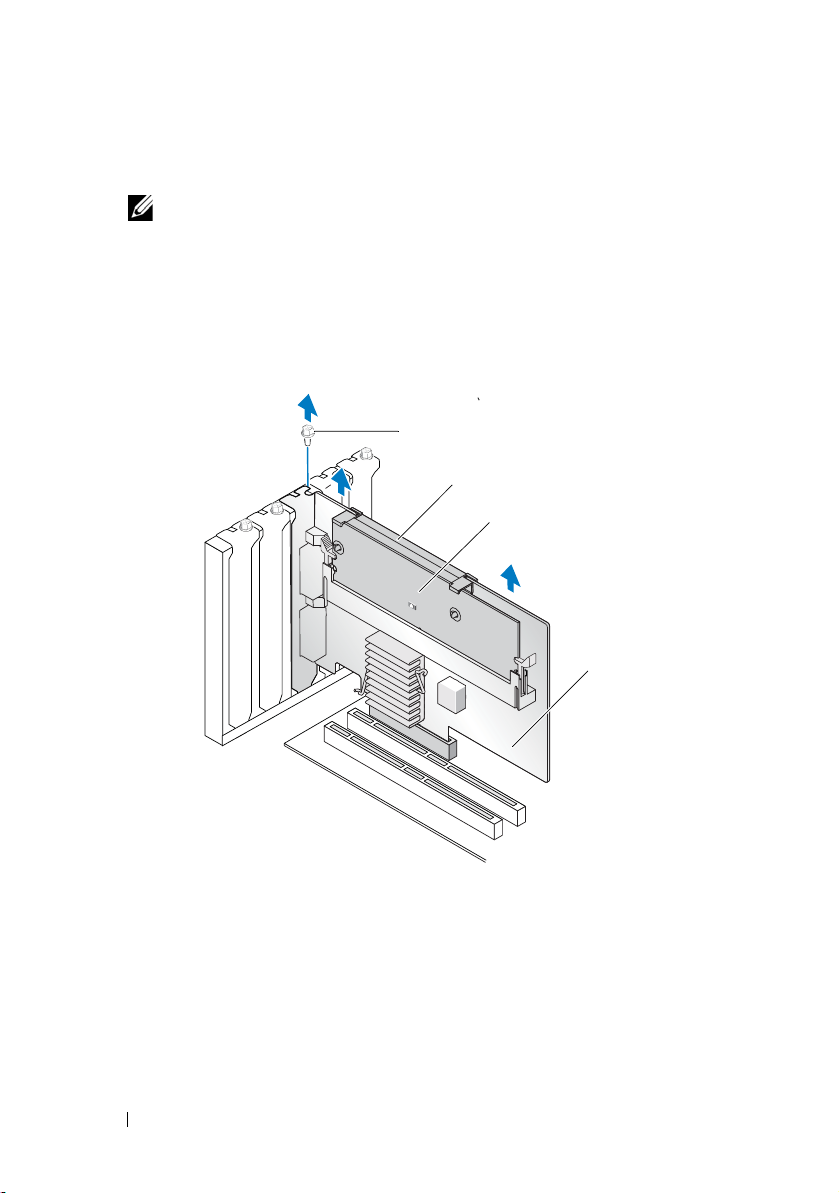

3 Locate the PERC 6/E adapter in the system and disconnect the external cables from the adapter.

NOTE: The location of the PERC 6/i varies from system to system. For

information on PERC 6/i card location, see the Hardware Owner’s Manual

shipped with your system or on the Dell Support website at support.dell.com.

4

Remove any retention mechanism, such as a bracket screw, that may be

holding the PERC 6/E in the system and gently lift the controller from the

system’s PCI-E slot. For more information, see Figure 4-7.

Figure 4-7. Removing the PERC 6/E Adapter

1 bracket screw

2 battery

3 memory module

4 PERC 6/E adapter

Page 51

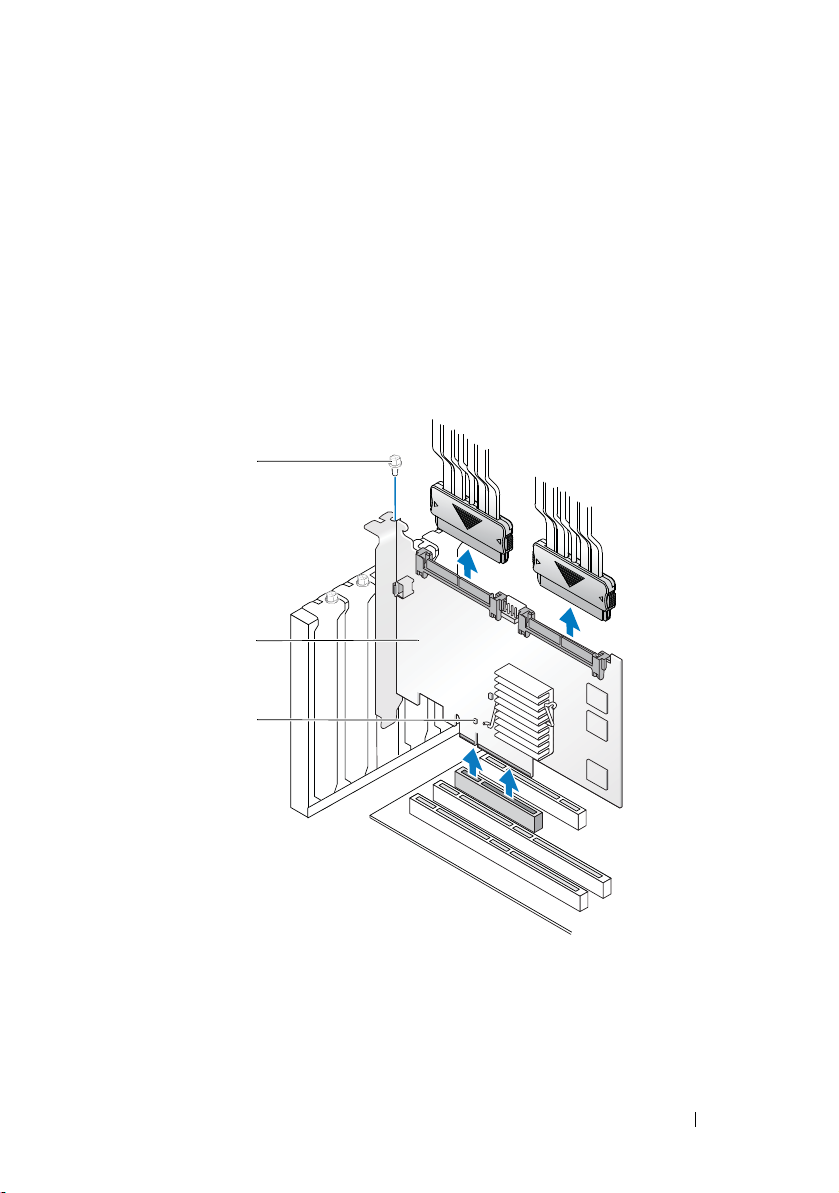

5 Determine whether the dirty cache LED on the controller is illuminated. For the location of the LED, see Figure 4-8.

• If the LED is illuminated, replace the system cover, reconnect the system to power, turn on the system, and repeat step 1 and step 2.

• If the LED is not illuminated, continue with the next step.

6

Disconnect the data cables and battery cable from the PERC 6/i.

Remove any retention mechanism, such as a bracket screw, that might be

holding the PERC 6/i in the system, and gently lift the controller from the

system’s PCI-E slot.

Figure 4-8. Removing the PERC 6/i Adapter

1 bracket screw

2 PERC 6/i controller

3 dirty cache LED
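
Step 5 and step 6 form a small decision loop around the dirty cache LED. The following minimal Python sketch lists the actions in order. The function name, the led_is_lit callback (a stand-in for a person looking at the LED), and the max_cycles limit with its final "stop" action are assumptions added for illustration and are not part of the documented procedure.

```python
from typing import Callable, List


def perc6i_removal_steps(led_is_lit: Callable[[], bool],
                         max_cycles: int = 3) -> List[str]:
    """Return the ordered actions for removing a PERC 6/i (steps 5 and 6)."""
    actions: List[str] = []
    for _ in range(max_cycles):  # max_cycles is an assumed safety limit
        if not led_is_lit():
            # LED off: the cache holds no unwritten data, so continue to step 6.
            actions.append("disconnect data cables and battery cable")
            actions.append("remove retention mechanism and lift controller")
            return actions
        # LED on: power the system back up, then shut it down again.
        actions.append("replace cover, reconnect power, turn on system")
        actions.append("repeat step 1 and step 2")
    # Assumed fallback, not from the guide: do not remove the controller.
    actions.append("LED still lit: stop and investigate")
    return actions


# Example: the LED is lit on the first check and off on the second.
readings = iter([True, False])
for action in perc6i_removal_steps(lambda: next(readings)):
    print(action)
```

The point of the loop is that the controller is never removed while the LED indicates unwritten cache data.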

Page 52

Removing the DIMM and Battery from a PERC 6/E Adapter

NOTE: The TBBU on the PERC 6/E adapter consists of a DIMM and battery

backup unit.

1 Perform a controlled shutdown on the system in which the PERC 6/E

adapter is installed, as well as any attached storage enclosures.

2

Disconnect the system from the electrical outlet and open the

system cover.

CAUTION: Running a system without the system cover installed can cause

damage due to improper cooling.

3

Remove the PERC 6/E adapter from the system. For instructions on

removing the PERC 6/E adapter, see "Removing the PERC 6/E and PERC

6/i Adapters" on page 49.

4

Visually inspect the controller and determine whether the dirty cache LED