
White Paper on GA620/GA620T Gigabit Ethernet Card Performance

Gigabit Ethernet adapters represent the next step in the evolution of network performance. The widespread adoption of Fast Ethernet at the desktop has created a bottleneck at the server, specifically at the server adapter. Some companies have built server adapters with multiple 100 Mbps connections, while others have populated several PCI slots with 100 Mbps adapters to boost server bandwidth beyond Fast Ethernet. Gigabit Ethernet goes beyond these aggregation band-aids, delivering throughput approaching 1 Gbps.

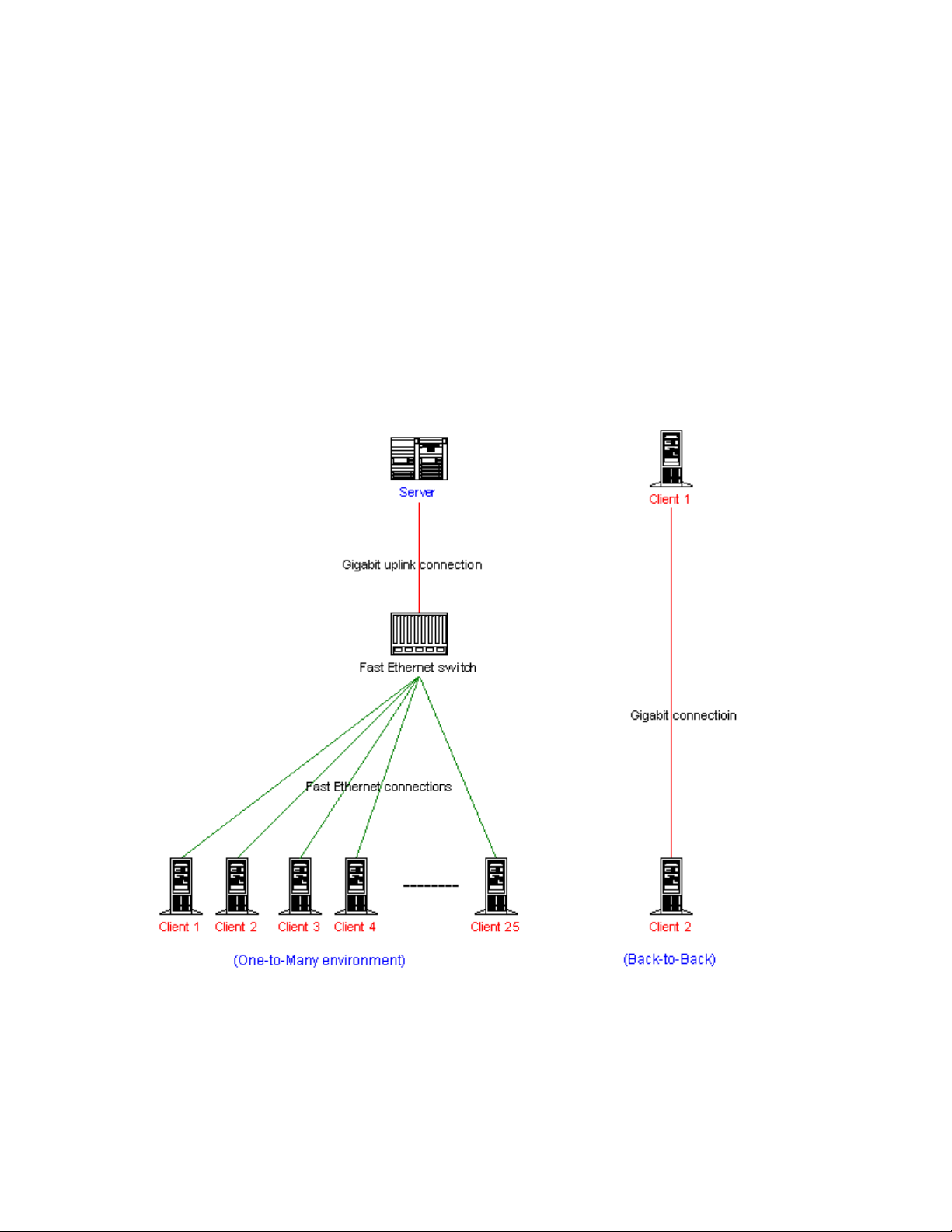

Network Configurations

Because servers benefit most from Gigabit speeds, the adapter drivers are optimized for the server environment. In most networks, a server distributes information to many clients, so the driver has been optimized to perform best in a one-to-many environment. In lab tests using the Chariot test program against twenty-five 100 Mbps clients, Gigabit speeds are consistently reproduced.
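The one-to-many arrangement stresses the Gigabit link because the combined demand of the Fast Ethernet clients exceeds what a single 1 Gbps server connection can carry. The short Python sketch below works through that arithmetic using nominal line rates only. The function name `one_to_many_bound` is hypothetical, and the model ignores protocol overhead, switch limits and client CPU speed, so it gives an upper bound rather than a predicted result.

```python
def one_to_many_bound(num_clients: int, client_mbps: float,
                      server_mbps: float) -> tuple[float, str]:
    """Return the nominal throughput ceiling (Mbps) and which side limits it.

    Assumes every client can run at its full line rate and that the switch
    is non-blocking; real results will be lower.
    """
    client_total = num_clients * client_mbps  # combined client demand
    if client_total >= server_mbps:
        return server_mbps, "server link"
    return client_total, "client links"


# 25 Fast Ethernet clients behind one Gigabit server uplink (as in the lab setup)
ceiling, limit = one_to_many_bound(25, 100, 1000)
print(f"ceiling = {ceiling:.0f} Mbps, limited by the {limit}")
```

Here 25 × 100 Mbps = 2500 Mbps of client capacity exceeds the 1000 Mbps uplink, so the server link is the limit. With only eight clients (8 × 100 = 800 Mbps), the clients would become the limit instead, which is one reason a test with too few clients cannot show Gigabit throughput.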

Test results will vary with a number of factors, including the type of test, the speed and performance of the computers used, and the network topology. To measure the capabilities of the adapter, a test should stress the network rather than the other components of the system. Programs such as Ganymede’s Chariot and Microsoft’s NTTTCP accomplish this goal well: they provide a multi-threaded, asynchronous benchmark for measuring the achievable data transfer rate.
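The Python sketch below illustrates the general idea behind a multi-threaded throughput test: several TCP connections send data in parallel, and the receiver divides the total bits received by the elapsed time. It is not the implementation of Chariot or NTTTCP, and the name `measure_throughput` and its parameters are hypothetical. As written it runs over the loopback interface, so it exercises the host’s network stack rather than an adapter; to test a real link, the sending and receiving halves would have to run on separate machines. Python’s global interpreter lock also limits its speed, so treat it as a description of the method, not as a benchmark.

```python
import socket
import threading
import time


def _drain(conn: socket.socket, counter: list, lock: threading.Lock) -> None:
    """Receive until the sender closes the connection, adding bytes to a shared total."""
    with conn:
        while True:
            chunk = conn.recv(65536)
            if not chunk:
                break
            with lock:
                counter[0] += len(chunk)


def measure_throughput(threads: int = 4, bytes_per_thread: int = 8 * 1024 * 1024,
                       host: str = "127.0.0.1") -> tuple[int, float]:
    """Push data over `threads` parallel TCP connections; return (bytes received, Mbps)."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind((host, 0))  # port 0 lets the OS choose a free port
    server.listen(threads)
    port = server.getsockname()[1]

    received = [0]  # shared byte counter, guarded by `lock`
    lock = threading.Lock()
    receivers: list[threading.Thread] = []

    def accept_all() -> None:
        # One receiving thread per incoming connection.
        for _ in range(threads):
            conn, _addr = server.accept()
            t = threading.Thread(target=_drain, args=(conn, received, lock))
            t.start()
            receivers.append(t)

    payload = b"\x00" * 65536

    def send_stream() -> None:
        # Each sender thread opens its own connection and writes its share.
        with socket.create_connection((host, port)) as sock:
            sent = 0
            while sent < bytes_per_thread:
                n = min(len(payload), bytes_per_thread - sent)
                sock.sendall(payload[:n])
                sent += n

    acceptor = threading.Thread(target=accept_all)
    acceptor.start()
    start = time.perf_counter()
    senders = [threading.Thread(target=send_stream) for _ in range(threads)]
    for t in senders:
        t.start()
    for t in senders:
        t.join()
    acceptor.join()
    for t in receivers:  # safe: the acceptor has finished appending
        t.join()
    elapsed = time.perf_counter() - start
    server.close()

    return received[0], received[0] * 8 / elapsed / 1e6


if __name__ == "__main__":
    total, mbps = measure_throughput(threads=8)
    print(f"{total} bytes received, {mbps:.1f} Mbps")
```

Varying `threads` corresponds loosely to the sessions/threads setting used in the NTTTCP runs; measuring at the receiver after every stream has closed keeps buffered but undelivered data out of the result.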

Back-to-Back Test

One test that does not accurately measure adapter performance is a back-to-back test. Because the driver is optimized for one-to-many scenarios, testing in a back-to-back configuration yields lower results. Even so, more than 500 Mbps of throughput is possible with a multi-threaded network testing program such as Microsoft’s NTTTCP. While a back-to-back test is unlikely to show the full capabilities of a Gigabit Ethernet card, it still gives a feel for what the adapter can do.

File Transfer

Another test that does not accurately measure adapter capability is a file transfer. Copying a file from one computer to another tests the hard drives’ performance rather than network performance. In a test like this, the limiting factor is the performance of the computers, not the performance of the network. The copy adds the overhead of the disk drives, as well as that of the operating system handling the copy, so the results reflect the computers’ ability to handle these additional requirements rather than the capabilities of the connection between them. Results below 20 Mbps are possible with either card, which is slow for both a 100 Mbps card and a 1000 Mbps card. Surprisingly, the Gigabit adapter will probably be the slower of the two cards in this test.
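One way to see why a file copy says little about the adapter is to treat the copy as a chain of stages that every byte must pass through. The Python sketch below assumes the stages overlap, so the slowest one sets the end-to-end rate (if they ran strictly one after another, the rate would be lower still). The function name `copy_throughput`, the stage names and the rates are hypothetical, chosen for illustration; they are not measurements from these tests.

```python
def copy_throughput(stage_mbps: dict[str, float]) -> tuple[float, str]:
    """Rate of a pipelined file copy and the stage that limits it.

    Assumes the stages overlap, so every byte passes through each stage and
    the slowest stage sets the end-to-end rate.
    """
    stage = min(stage_mbps, key=stage_mbps.get)
    return stage_mbps[stage], stage


# Hypothetical stage rates in Mbps, for illustration only
common = {"source disk": 20.0, "OS copy handling": 60.0, "destination disk": 25.0}
for card_mbps in (100.0, 1000.0):
    rate, stage = copy_throughput({**common, "network": card_mbps})
    print(f"{card_mbps:.0f} Mbps card: {rate:.0f} Mbps, limited by {stage}")
```

With these assumed rates both cards report 20 Mbps, because the source disk rather than the network is the limit. The model ignores driver overhead, so it shows the two cards tying rather than the Gigabit card finishing slightly behind.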

The reason a 1000 Mbps adapter can perform below a 100 Mbps adapter in this test is simple. To achieve Gigabit performance, Gigabit adapter drivers contain additional intelligence beyond that of Fast Ethernet drivers, and this intelligence creates more overhead than a standard 10/100 card incurs. When the network is stressed at higher speeds, this overhead is negligible, and the extra intelligence improves network performance. When the cards run under a light load, such as the file copy test, the Gigabit card’s slightly higher overhead causes slower performance. As more stress is placed on the network, for example by video or by file transfers from multiple systems, the Gigabit card starts to outperform a 10/100 card. Ultimately, the Gigabit adapter can deliver 10 times the network throughput of a Fast Ethernet adapter.
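The overhead argument can be illustrated with a toy model in which each operation pays a fixed driver overhead and then moves its data at line rate. Larger operations stand in for heavier load, since the fixed cost is spread over more data. The function name `effective_mbps` and the overhead values are hypothetical, chosen only to show the shape of the effect; they are not measured figures for the GA620 or any Fast Ethernet card.

```python
def effective_mbps(bytes_per_op: int, link_mbps: float, overhead_s: float) -> float:
    """Throughput of one operation that pays a fixed overhead, then moves data at line rate."""
    bits = bytes_per_op * 8
    seconds = overhead_s + bits / (link_mbps * 1e6)
    return bits / seconds / 1e6


# Hypothetical per-operation driver overheads (illustrative, not measured)
FAST_ETHERNET = {"link_mbps": 100, "overhead_s": 100e-6}
GIGABIT = {"link_mbps": 1000, "overhead_s": 200e-6}

for size in (1024, 10 * 1024 * 1024):  # 1 KB vs 10 MB per operation
    fe = effective_mbps(size, **FAST_ETHERNET)
    ge = effective_mbps(size, **GIGABIT)
    print(f"{size:>10} bytes/op: 100 Mbps card {fe:7.1f} Mbps, "
          f"1000 Mbps card {ge:7.1f} Mbps")
```

With these assumed overheads, the Gigabit card comes out slightly behind on 1 KB operations, where the fixed cost dominates, and close to ten times ahead on 10 MB operations, where the fixed cost is negligible next to the transfer time.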


Testing Results

1. Using Ganymede’s (NetIQ) Chariot

One-to-Many Traffic Flow

OS environments Throughput (Mbps)

Windows NT4.0 server to 25 pair clients

Windows 2000 server to 25 pair clients

2. Using Microsoft NTTTCP

Back-to-Back Traffic Flow (Windows 2000 to Windows 2000)

Sessions / Threads Throughput (Mbps)

30

16

8

Back-to-Back Traffic Flow (Windows NT4.0 to Windows NT4.0)

Sessions / Threads Throughput (Mbps)

30

16

8

915.775

529.452

594.843

576.568

509.942

562.813

552.356

555.565

Test Configuration (10/25/2000):

1. One-to-Many:

This configuration consisted of one chassis switch with Fast Ethernet connections to 25 clients (18 Windows NT Workstation, 4 Windows 2000 Professional and 3 Windows 98) and a 1 Gigabit uplink to the server (a Dell PowerEdge 4400/600 running either Microsoft Windows NT 4.0 Server or Windows 2000 Advanced Server).

2. Back-to-Back:

The back-to-back test bed consisted of one Dell PowerEdge 4400/600 server and one IBM Netfinity 6000, both running either Microsoft Windows NT 4.0 Server or Windows 2000 Server.


The latest network interface cards and drivers were used in all tests. Two server machines were used throughout the testing:

1. IBM Netfinity 6000 Server (600 MHz, 2 GB RAM, BIOS version 1.02) with dual boot between Microsoft Windows 2000 Server and Microsoft Windows NT 4.0 SP6;

2. Dell PowerEdge 4400/600 Server (600 MHz, 512 MB RAM, BIOS version A04) with dual boot between Microsoft Windows 2000 Server and Microsoft Windows NT 4.0 SP6.
