
Model 2400 SourceMeter®

Service Manual

A GREATER MEASURE OF CONFIDENCE


WARRANTY

Keithley Instruments, Inc. warrants this product to be free from defects in material and workmanship for a

period of 1 year from date of shipment.

Keithley Instruments, Inc. warrants the following items for 90 days from the date of shipment: probes, cables,

rechargeable batteries, diskettes, and documentation.

During the warranty period, we will, at our option, either repair or replace any product that proves to be defective.

To exercise this warranty, write or call your local Keithley representative, or contact Keithley headquarters in

Cleveland, Ohio. You will be given prompt assistance and return instructions. Send the product, transportation

prepaid, to the indicated service facility. Repairs will be made and the product returned, transportation prepaid.

Repaired or replaced products are warranted for the balance of the original warranty period, or at least 90 days.

LIMITATION OF WARRANTY

This warranty does not apply to defects resulting from product modification without Keithley’s express written

consent, or misuse of any product or part. This warranty also does not apply to fuses, software, non-rechargeable batteries, damage from battery leakage, or problems arising from normal wear or failure to follow instructions.

THIS WARRANTY IS IN LIEU OF ALL OTHER WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING ANY IMPLIED WARRANTY OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR USE.

THE REMEDIES PROVIDED HEREIN ARE BUYER’S SOLE AND EXCLUSIVE REMEDIES.

NEITHER KEITHLEY INSTRUMENTS, INC. NOR ANY OF ITS EMPLOYEES SHALL BE LIABLE FOR

ANY DIRECT, INDIRECT, SPECIAL, INCIDENTAL OR CONSEQUENTIAL DAMAGES ARISING OUT OF

THE USE OF ITS INSTRUMENTS AND SOFTWARE EVEN IF KEITHLEY INSTRUMENTS, INC., HAS

BEEN ADVISED IN ADVANCE OF THE POSSIBILITY OF SUCH DAMAGES. SUCH EXCLUDED DAMAGES SHALL INCLUDE, BUT ARE NOT LIMITED TO: COSTS OF REMOVAL AND INSTALLATION,

LOSSES SUSTAINED AS THE RESULT OF INJURY TO ANY PERSON, OR DAMAGE TO PROPERTY.

Keithley Instruments, Inc. • 28775 Aurora Road • Cleveland, OH 44139 • 440-248-0400 • Fax: 440-248-6168 • http://www.keithley.com

BELGIUM: Keithley Instruments B.V. Bergensesteenweg 709 • B-1600 Sint-Pieters-Leeuw • 02/363 00 40 • Fax: 02/363 00 64

CHINA: Keithley Instruments China Yuan Chen Xin Building, Room 705 • 12 Yumin Road, Dewai, Madian • Beijing 100029 • 8610-62022886 • Fax: 8610-62022892

FRANCE: Keithley Instruments Sarl 3, allée des Garays • 91127 Palaiseau Cedex • 01-64 53 20 20 • Fax: 01-60 11 77 26

GERMANY: Keithley Instruments GmbH Landsberger Strasse 65 • 82110 Germering • 089/84 93 07-40 • Fax: 089/84 93 07-34

GREAT BRITAIN: Keithley Instruments Ltd The Minster • 58 Portman Road • Reading, Berkshire RG30 1EA • 0118-9 57 56 66 • Fax: 0118-9 59 64 69

INDIA: Keithley Instruments GmbH Flat 2B, WILOCRISSA • 14, Rest House Crescent • Bangalore 560 001 • 91-80-509-1320/21 • Fax: 91-80-509-1322

ITALY: Keithley Instruments s.r.l. Viale S. Gimignano, 38 • 20146 Milano • 02-48 39 16 01 • Fax: 02-48 30 22 74

NETHERLANDS: Keithley Instruments B.V. Postbus 559 • 4200 AN Gorinchem • 0183-635333 • Fax: 0183-630821

SWITZERLAND: Keithley Instruments SA Kriesbachstrasse 4 • 8600 Dübendorf • 01-821 94 44 • Fax: 01-820 30 81

TAIWAN: Keithley Instruments Taiwan 1 Fl. 85 Po Ai Street • Hsinchu, Taiwan, R.O.C. • 886-3572-9077 • Fax: 886-3572-903

9/00


Model 2400 SourceMeter®

Service Manual

©1996, Keithley Instruments, Inc.

All rights reserved.

Cleveland, Ohio, U.S.A.

Fourth Printing, November 2000

Document Number: 2400-902-01 Rev. D


Manual Print History

The print history shown below lists the printing dates of all Revisions and Addenda created for this manual. The Revision Level letter increases alphabetically as the manual undergoes subsequent updates. Addenda, which are released between Revisions, contain important change information that the user should incorporate immediately into the manual. Addenda are numbered sequentially. When a new Revision is created, all Addenda associated with the previous Revision of the manual are incorporated into the new Revision of the manual. Each new Revision includes a revised copy of this print history page.

Revision A (Document Number 2400-902-01)............................................................January 1996

Revision B (Document Number 2400-902-01).......................................................... February 1996

Addendum B (Document Number 2400-902-02).................................................... September 1996

Revision C (Document Number 2400-902-01)..................................................................July 2000

Revision D (Document Number 2400-902-01)........................................................November 2000

All Keithley product names are trademarks or registered trademarks of Keithley Instruments, Inc.

Other brand names are trademarks or registered trademarks of their respective holders.


Safety Precautions

The following safety precautions should be observed before using this product and any associated instrumentation. Although some instruments and accessories would normally be used with non-hazardous

voltages, there are situations where hazardous conditions may be present.

This product is intended for use by qualified personnel who recognize shock hazards and are familiar

with the safety precautions required to avoid possible injury. Read the operating information carefully

before using the product.

The types of product users are:

Responsible body is the individual or group responsible for the use and maintenance of equipment, for

ensuring that the equipment is operated within its specifications and operating limits, and for ensuring

that operators are adequately trained.

Operators use the product for its intended function. They must be trained in electrical safety procedures

and proper use of the instrument. They must be protected from electric shock and contact with hazardous

live circuits.

Maintenance personnel perform routine procedures on the product to keep it operating, for example,

setting the line voltage or replacing consumable materials. Maintenance procedures are described in the

manual. The procedures explicitly state if the operator may perform them. Otherwise, they should be

performed only by service personnel.

Service personnel are trained to work on live circuits, and perform safe installations and repairs of products. Only properly trained service personnel may perform installation and service procedures.

Exercise extreme caution when a shock hazard is present. Lethal voltage may be present on cable connector jacks or test fixtures. The American National Standards Institute (ANSI) states that a shock hazard exists when voltage levels greater than 30V RMS, 42.4V peak, or 60VDC are present. A good safety practice is to expect that hazardous voltage is present in any unknown circuit before measuring.

Users of this product must be protected from electric shock at all times. The responsible body must ensure that users are prevented access and/or insulated from every connection point. In some cases, connections must be exposed to potential human contact. Product users in these circumstances must be trained to protect themselves from the risk of electric shock. If the circuit is capable of operating at or above 1000 volts, no conductive part of the circuit may be exposed.

As described in the International Electrotechnical Commission (IEC) Standard IEC 664, digital multimeter measuring circuits (e.g., Keithley Models 175A, 199, 2000, 2001, 2002, and 2010) are Installation Category II. All other instruments’ signal terminals are Installation Category I and must not be connected to mains.

Do not connect switching cards directly to unlimited power circuits. They are intended to be used with impedance limited sources. NEVER connect switching cards directly to AC mains. When connecting sources to switching cards, install protective devices to limit fault current and voltage to the card.

Before operating an instrument, make sure the line cord is connected to a properly grounded power receptacle. Inspect the connecting cables, test leads, and jumpers for possible wear, cracks, or breaks before each use.

For maximum safety, do not touch the product, test cables, or any other instruments while power is applied to the circuit under test. ALWAYS remove power from the entire test system and discharge any capacitors before: connecting or disconnecting cables or jumpers, installing or removing switching cards, or making internal changes, such as installing or removing jumpers.


Do not touch any object that could provide a current path to the common side of the circuit under test or power

line (earth) ground. Always make measurements with dry hands while standing on a dry, insulated surface capable of withstanding the voltage being measured.

The instrument and accessories must be used in accordance with its specifications and operating instructions

or the safety of the equipment may be impaired.

Do not exceed the maximum signal levels of the instruments and accessories, as defined in the specifications

and operating information, and as shown on the instrument or test fixture panels, or switching card.

When fuses are used in a product, replace with same type and rating for continued protection against fire hazard.

Chassis connections must only be used as shield connections for measuring circuits, NOT as safety earth

ground connections.

If you are using a test fixture, keep the lid closed while power is applied to the device under test. Safe operation

requires the use of a lid interlock.

If a screw is present, connect it to safety earth ground using the wire recommended in the user documentation.

The ! symbol on an instrument indicates that the user should refer to the operating instructions located in the manual.

The symbol on an instrument shows that it can source or measure 1000 volts or more, including the combined effect of normal and common mode voltages. Use standard safety precautions to avoid personal contact with these voltages.

The WARNING heading in a manual explains dangers that might result in personal injury or death. Always read the associated information very carefully before performing the indicated procedure.

The CAUTION heading in a manual explains hazards that could damage the instrument. Such damage may invalidate the warranty.

Instrumentation and accessories shall not be connected to humans.

Before performing any maintenance, disconnect the line cord and all test cables.

To maintain protection from electric shock and fire, replacement components in mains circuits, including the

power transformer, test leads, and input jacks, must be purchased from Keithley Instruments. Standard fuses,

with applicable national safety approvals, may be used if the rating and type are the same. Other components

that are not safety related may be purchased from other suppliers as long as they are equivalent to the original

component. (Note that selected parts should be purchased only through Keithley Instruments to maintain accuracy and functionality of the product.) If you are unsure about the applicability of a replacement component,

call a Keithley Instruments office for information.

To clean an instrument, use a damp cloth or mild, water based cleaner. Clean the exterior of the instrument

only. Do not apply cleaner directly to the instrument or allow liquids to enter or spill on the instrument. Products that consist of a circuit board with no case or chassis (e.g., data acquisition board for installation into a

computer) should never require cleaning if handled according to instructions. If the board becomes contaminated and operation is affected, the board should be returned to the factory for proper cleaning/servicing.

Rev. 10/99


Table of Contents

1 Performance Verification

Introduction ................................................................................ 1-2

Verification test requirements ..................................................... 1-2

Environmental conditions ................................................... 1-2

Warm-up period .................................................................. 1-2

Line power .......................................................................... 1-3

Recommended test equipment ............................................ 1-3

Verification limits ................................................................ 1-3

Restoring factory defaults .......................................................... 1-4

Performing the verification test procedures ............................... 1-5

Test summary ...................................................................... 1-5

Test considerations .............................................................. 1-5

Setting the source range and output value .......................... 1-6

Setting the measurement range ........................................... 1-6

Compliance considerations ........................................................ 1-6

Compliance limits ............................................................... 1-6

Types of compliance ........................................................... 1-6

Maximum compliance values ............................................. 1-7

Determining compliance limit ............................................ 1-7

Taking the SourceMeter out of compliance ........................ 1-8

Output voltage accuracy ............................................................. 1-8

Voltage measurement accuracy ................................................ 1-10

Output current accuracy ........................................................... 1-11

Current measurement accuracy ................................................ 1-12

Resistance measurement accuracy ........................................... 1-13

2 Calibration

Introduction ................................................................................ 2-2

Environmental conditions .......................................................... 2-2

Temperature and relative humidity ..................................... 2-2

Warm-up period .................................................................. 2-2

Line power .......................................................................... 2-2

Calibration considerations .......................................................... 2-3

Calibration cycle ................................................................. 2-3

Recommended calibration equipment ................................. 2-4

Unlocking calibration .......................................................... 2-4

Changing the password ....................................................... 2-6

Resetting the calibration password ..................................... 2-6

Viewing calibration dates and calibration count ................. 2-7

Calibration errors ................................................................ 2-7


Front panel calibration ................................................................ 2-8

Remote calibration .................................................................... 2-16

Remote calibration commands .......................................... 2-16

Remote calibration procedure ........................................... 2-18

Single-range calibration ............................................................ 2-22

3 Routine Maintenance

Introduction ................................................................................ 3-2

Line fuse replacement ................................................................. 3-2

4 Troubleshooting

Introduction ................................................................................ 4-2

Repair considerations ................................................................. 4-2

Power-on self-test ....................................................................... 4-2

Front panel tests .......................................................................... 4-3

KEYS test ............................................................................ 4-3

DISPLAY PATTERNS test ................................................. 4-3

CHAR SET test ................................................................... 4-4

Principles of operation ................................................................ 4-4

Analog circuits .................................................................... 4-4

Power supply ....................................................................... 4-6

Output stage ......................................................................... 4-7

A/D converter ...................................................................... 4-8

Active guard ........................................................................ 4-8

Digital circuitry ................................................................... 4-8

Troubleshooting ........................................................................ 4-10

Display board checks ........................................................ 4-10

Power supply checks ......................................................... 4-10

Digital circuitry checks ..................................................... 4-11

Analog circuitry checks ..................................................... 4-11

Battery replacement .................................................................. 4-12

No comm link error .................................................................. 4-13

5 Disassembly

Introduction ................................................................................ 5-2

Handling and cleaning ................................................................ 5-2

Handling PC boards ............................................................ 5-2

Solder repairs ....................................................................... 5-2

Static sensitive devices ............................................................... 5-3

Assembly drawings ..................................................................... 5-3

Case cover removal ..................................................................... 5-4

Analog board removal ................................................................ 5-4

Digital board removal ................................................................. 5-6


Front panel disassembly ............................................................. 5-6

Removing power components .................................................... 5-7

Power supply removal ......................................................... 5-7

Power module removal ....................................................... 5-7

Instrument reassembly ............................................................... 5-7

6 Replaceable Parts

Introduction ................................................................................ 6-2

Parts lists .................................................................................... 6-2

Ordering information ................................................................. 6-2

Factory service ........................................................................... 6-3

Component layouts .................................................................... 6-3

A Specifications

Accuracy calculations ............................................................. A-10

Measure accuracy ............................................................. A-10

Source accuracy ............................................................... A-10

B Command Reference

Introduction ............................................................................... B-2

Command summary .................................................................. B-2

Miscellaneous commands ......................................................... B-3

Detecting calibration errors ....................................................... B-9

Reading the error queue ..................................................... B-9

Error summary ................................................................... B-9

Status byte EAV (Error Available) bit .............................. B-10

Generating an SRQ on error ............................................ B-10

Detecting calibration step completion ..................................... B-11

Using the *OPC? query ................................................... B-11

Using the *OPC command ............................................... B-11

Generating an SRQ on calibration complete ................... B-12

C Calibration Programs

Introduction ............................................................................... C-2

Computer hardware requirements ............................................. C-2

Software requirements .............................................................. C-2

Calibration equipment ............................................................... C-2

General program instructions .................................................... C-3

Program C-1. Model 2400 calibration program ................ C-3

Requesting calibration constants ............................................... C-6

Program C-2. Requesting calibration constants ................ C-6


List of Illustrations

1 Performance Verification

Figure 1-1 Voltage verification front panel connections .......................... 1-9

Figure 1-2 Current verification connections .......................................... 1-11

Figure 1-3 Resistance verification connections ..................................... 1-15

2 Calibration

Figure 2-1 Voltage calibration connections ............................................. 2-9

Figure 2-2 Current calibration connections ........................................... 2-12

3 Routine Maintenance

Figure 3-1 Rear panel .............................................................................. 3-3

4 Troubleshooting

Figure 4-1 Analog circuit block diagram ................................................ 4-5

Figure 4-2 Power supply block diagram .................................................. 4-6

Figure 4-3 Output stage simplified schematic ......................................... 4-7

Figure 4-4 Digital board block diagram .................................................. 4-9

Page 12

Page 13

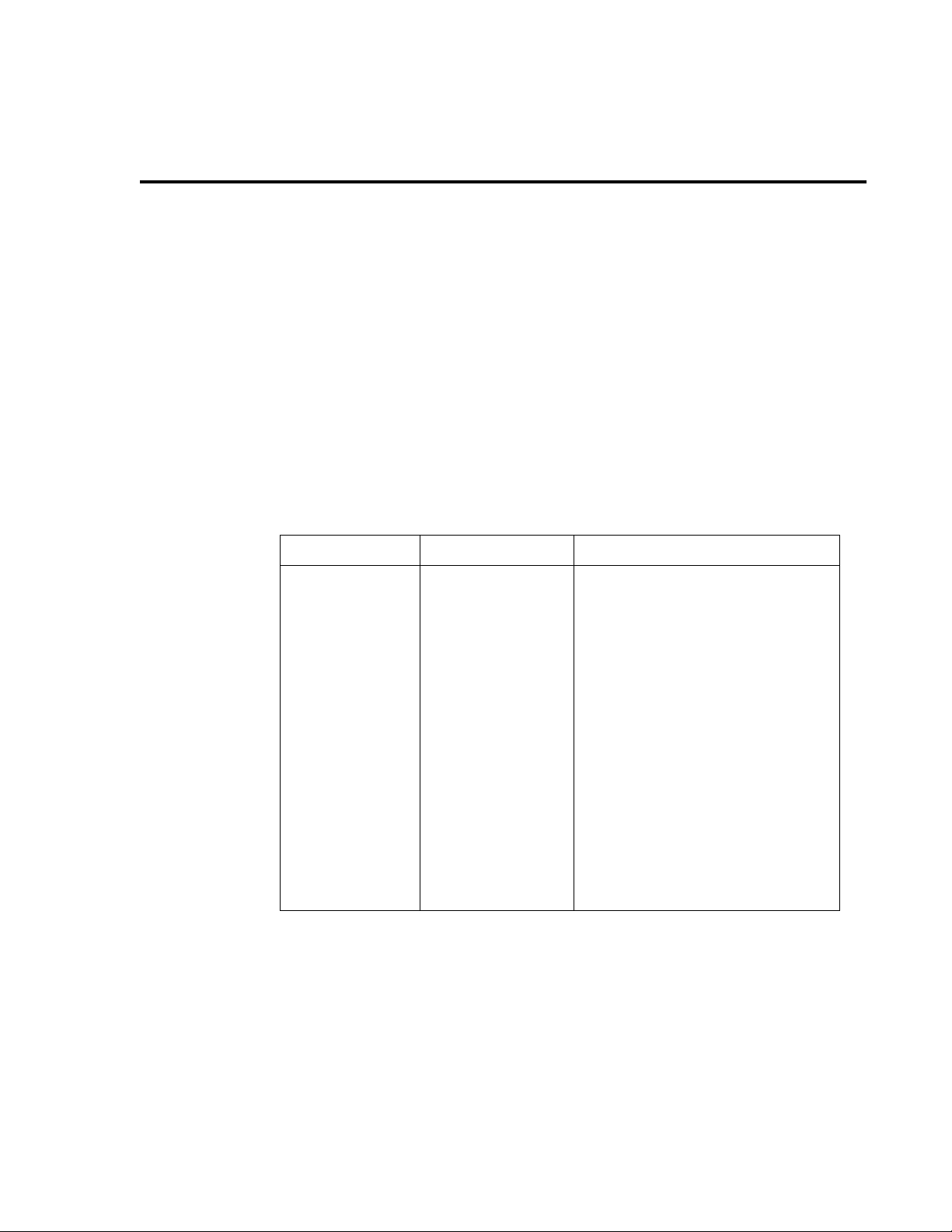

List of Tables

1 Performance Verification

Table 1-1 Recommended verification equipment .................................. 1-3

Table 1-2 Output voltage accuracy limits .............................................. 1-9

Table 1-3 Voltage measurement accuracy limits .................................. 1-10

Table 1-4 Output current accuracy limits ............................................. 1-12

Table 1-5 Current measurement accuracy limits .................................. 1-13

Table 1-6 Ohms measurement accuracy limits .................................... 1-14

2 Calibration

Table 2-1 Recommended calibration equipment ................................... 2-4

Table 2-2 Calibration unlocked states .................................................... 2-5

Table 2-3 Front panel voltage calibration ............................................ 2-11

Table 2-4 Front panel current calibration ............................................. 2-14

Table 2-5 Remote calibration command summary .............................. 2-16

Table 2-6 :CALibration:PROTected:SENSe parameter ranges ............ 2-17

Table 2-7 :CALibration:PROTected:SOURce parameter ranges ......... 2-17

Table 2-8 Voltage calibration initialization commands ........................ 2-18

Table 2-9 Voltage range calibration commands ................................... 2-19

Table 2-10 Current calibration initialization commands ........................ 2-20

Table 2-11 Current range calibration commands ................................... 2-21

3 Routine Maintenance

Table 3-1 Power line fuse ....................................................................... 3-2

4 Troubleshooting

Table 4-1 Display board checks ........................................................... 4-10

Table 4-2 Power supply checks ............................................................ 4-10

Table 4-3 Digital circuitry checks ........................................................ 4-11

Table 4-4 Analog circuitry checks ....................................................... 4-11

6 Replaceable Parts

Table 6-1 Analog board parts list ........................................................... 6-4

Table 6-2 Digital board parts list .......................................................... 6-10

Table 6-3 Display board parts list ........................................................ 6-13

Table 6-4 Mechanical parts list ............................................................ 6-14


B Command Reference

Table B-1 Remote calibration command summary ................................ B-2

Table B-2 :CALibration:PROTected:SENSe parameter ranges ............. B-6

Table B-3 :CALibration:PROTected:SOURce parameter ranges .......... B-8

Table B-4 Calibration errors ................................................................ B-10


1 Performance Verification


Introduction

Use the procedures in this section to verify that Model 2400 accuracy is within the limits stated

in the instrument’s one-year accuracy specifications. You can perform these verification

procedures:

• When you first receive the instrument to make sure that it was not damaged during

shipment.

• To verify that the unit meets factory specifications.

• To determine if calibration is required.

• Following calibration to make sure it was performed properly.

WARNING The information in this section is intended for qualified service personnel

only. Do not attempt these procedures unless you are qualified to do so.

Some of these procedures may expose you to hazardous voltages, which

could cause personal injury or death if contacted. Use standard safety precautions when working with hazardous voltages.

NOTE If the instrument is still under warranty and its performance is outside specified

limits, contact your Keithley representative or the factory to determine the correct

course of action.

Verification test requirements

Be sure that you perform the verification tests:

• Under the proper environmental conditions.

• After the specified warm-up period.

• Using the correct line voltage.

• Using the proper test equipment.

• Using the specified output signal and reading limits.

Environmental conditions

Conduct your performance verification procedures in a test environment with:

• An ambient temperature of 18-28°C (65-82°F).

• A relative humidity of less than 70% unless otherwise noted.

Warm-up period

Allow the Model 2400 to warm up for at least one hour before conducting the verification

procedures. If the instrument has been subjected to temperature extremes (those outside the

ranges stated above), allow additional time for the instrument’s internal temperature to

stabilize. Typically, allow one extra hour to stabilize a unit that is 10°C (18°F) outside the

specified temperature range.

Also, allow the test equipment to warm up for the minimum time specified by the

manufacturer.
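The environmental and warm-up requirements above can be captured in a small pre-test check. The following is only a sketch: the function names are hypothetical, and scaling the extra warm-up time linearly (one hour per 10°C outside the window) is an assumption, since the manual gives only the single 10°C data point.

```python
def environment_ok(temp_c: float, rel_humidity_pct: float) -> bool:
    """Return True if ambient conditions are inside the verification window.

    Window taken from the text: 18-28 degrees C and less than 70% RH.
    """
    return 18.0 <= temp_c <= 28.0 and rel_humidity_pct < 70.0


def warmup_hours(storage_temp_c: float) -> float:
    """Estimate the warm-up time for a unit that was stored at storage_temp_c.

    The base warm-up is one hour. Extra time is added at one hour per
    10 degrees C outside the 18-28 degrees C window (ASSUMED linear scaling).
    """
    if storage_temp_c < 18.0:
        excess = 18.0 - storage_temp_c
    elif storage_temp_c > 28.0:
        excess = storage_temp_c - 28.0
    else:
        excess = 0.0
    return 1.0 + excess / 10.0


if __name__ == "__main__":
    print(environment_ok(23.0, 45.0))   # typical lab conditions
    print(warmup_hours(38.0))           # unit stored 10 degrees C above the window
```

Treat the warm-up estimate as a lower bound and allow more time if readings are still drifting.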


Line power

The Model 2400 requires a line voltage of 88 to 264V and a line frequency of 50 or 60Hz.

Verification tests should be performed within this range.

Recommended test equipment

Table 1-1 summarizes recommended verification equipment. You can use alternate equipment

as long as that equipment has specifications at least as good as those listed in Table 1-1. Keep

in mind, however, that test equipment uncertainty will add to the uncertainty of each measurement. Generally, test equipment uncertainty should be at least four times better than corresponding Model 2400 specifications. Table 1-1 lists the uncertainties of the recommended test

equipment.

Table 1-1
Recommended verification equipment

Description             Manufacturer/Model         Accuracy*

Digital Multimeter      Hewlett Packard HP3458A    DC voltage     200mV:    ±15ppm
                                                                  2V:       ±6ppm
                                                                  20V:      ±9ppm
                                                                  200V:     ±7ppm
                                                   DC current     1µA:      ±55ppm
                                                                  10µA:     ±25ppm
                                                                  100µA:    ±23ppm
                                                                  1mA:      ±20ppm
                                                                  10mA:     ±20ppm
                                                                  100mA:    ±35ppm
                                                                  1A:       ±110ppm

Resistance calibrator   Fluke 5450A                Resistance**   19Ω:      ±23ppm
                                                                  190Ω:     ±10.5ppm
                                                                  1.9kΩ:    ±8ppm
                                                                  19kΩ:     ±7.5ppm
                                                                  190kΩ:    ±8.5ppm
                                                                  1.9MΩ:    ±11.5ppm
                                                                  19MΩ:     ±30ppm
                                                                  100MΩ:    ±120ppm

*90-day specifications show accuracy at specified measurement point.

**Nominal resistance values shown.
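When substituting alternate equipment, the four-times guideline can be checked numerically. The sketch below uses a hypothetical helper name and compares the Model 2400 20V output tolerance, taken from the ±(0.02% of output + 2.4mV) specification quoted in this section, against the ±9ppm figure listed for the multimeter's 20V range.

```python
def uncertainty_ratio(dut_tolerance: float, ref_uncertainty: float) -> float:
    """Ratio of the unit-under-test tolerance to the reference uncertainty.

    Both arguments must be in the same units (volts here).
    """
    return dut_tolerance / ref_uncertainty


# Model 2400 at the 20V point: +/-(0.02% of output + 2.4mV)
dut_tol_v = 20.0 * 0.02 / 100.0 + 2.4e-3   # 6.4mV
# Reference multimeter, 20V range: +/-9ppm of the measured value
ref_unc_v = 20.0 * 9e-6                     # 0.18mV

ratio = uncertainty_ratio(dut_tol_v, ref_unc_v)
adequate = ratio >= 4.0                     # "at least four times better"

if __name__ == "__main__":
    print(f"ratio = {ratio:.1f}, adequate = {adequate}")
```

The same comparison applies to any row of the table once both figures are expressed in the same units.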

Verification limits

The verification limits stated in this section have been calculated using only the Model 2400

one-year accuracy specifications, and they do not include test equipment uncertainty. If a

particular measurement falls outside the allowable range, recalculate new limits based both on

Model 2400 specifications and corresponding test equipment specifications.


Example limits calculation

As an example of how verification limits are calculated, assume you are testing the 20V DC

output range using a 20V output value. Using the Model 2400 one-year accuracy specification

for 20V DC output of ±(0.02% of output + 2.4mV offset), the calculated output limits are:

Output limits = 20V ± [(20V × 0.02%) + 2.4mV]

Output limits = 20V ± (0.004 + 0.0024)

Output limits = 20V ± 0.0064V

Output limits = 19.9936V to 20.0064V
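The same arithmetic can be scripted so that limits are easy to recompute for other test points. This is a minimal sketch with a hypothetical function name. The optional ref_ppm argument widens the limits by the test equipment uncertainty, and it assumes the two contributions are simply added, which is a conservative convention rather than a rule stated in this manual.

```python
def verification_limits(nominal, pct_of_value, offset, ref_ppm=0.0):
    """Return (low, high) limits for a +/-(% of value + offset) specification.

    nominal       test point, in volts, amps, or ohms
    pct_of_value  percentage term of the specification (0.02 means 0.02%)
    offset        fixed offset term, in the same units as nominal
    ref_ppm       optional test equipment uncertainty in ppm of nominal,
                  added linearly to the tolerance (ASSUMED combination rule)
    """
    tolerance = nominal * pct_of_value / 100.0 + offset
    tolerance += nominal * ref_ppm * 1e-6
    return nominal - tolerance, nominal + tolerance


if __name__ == "__main__":
    low, high = verification_limits(20.0, 0.02, 2.4e-3)
    print(f"{low:.4f} V to {high:.4f} V")   # 19.9936 V to 20.0064 V
```

Calling verification_limits(20.0, 0.02, 2.4e-3, ref_ppm=9) widens each limit by a further 0.18mV for a reference specified at ±9ppm.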

Resistance limits calculation

When verifying the ohms function, you may find it necessary to recalculate resistance limits

based on the actual calibrator resistance values. You can calculate resistance reading limits in

the same manner described above, but be sure to use the actual calibrator resistance values and

the Model 2400 normal accuracy specifications for your calculations.

As an example, assume that you are testing the 20kΩ range, and the actual value of the nominal 19kΩ calibrator resistor is 19.025kΩ. Using the Model 2400 one-year normal accuracy specifications of ±(0.063% of reading + 3Ω), the recalculated reading limits are:

Reading limits = 19.025kΩ ± [(19.025kΩ × 0.063%) + 3Ω]

Reading limits = 19.025kΩ ± 15Ω

Reading limits = 19.0100kΩ to 19.0400kΩ

Restoring factory defaults

Before performing the verification procedures, restore the instrument to its factory front panel (bench) defaults as follows:

1. Press the MENU key. The instrument will display the following prompt:

MAIN MENU

SAVESETUP COMMUNICATION CAL

2. Select SAVESETUP, and then press ENTER. The unit then displays:

SETUP MENU

SAVE RESTORE POWERON RESET

3. Select RESET, and then press ENTER. The unit displays:

RESET ORIGINAL DFLTS

BENCH GPIB

4. Select BENCH, and then press ENTER. The unit then displays:

RESETTING INSTRUMENT

ENTER to confirm; EXIT to abort

5. Press ENTER to restore bench defaults, and note the unit displays the following:

RESET COMPLETE

BENCH defaults are now restored

Press ENTER to continue

6. Press ENTER and then EXIT to return to normal display.


Performing the verification test procedures

Test summary

• DC voltage output accuracy

• DC voltage measurement accuracy

• DC current output accuracy

• DC current measurement accuracy

• Resistance measurement accuracy

If the Model 2400 is not within specifications and not under warranty, see the calibration

procedures in Section 2 for information on calibrating the unit.

Test considerations

When performing the verification procedures:

• Be sure to restore factory front panel defaults as outlined above.

• Make sure that the test equipment is properly warmed up and connected to the

Model 2400 INPUT/OUTPUT jacks. Also ensure that the front panel jacks are selected

with the TERMINALS key.

• Make sure the Model 2400 is set to the correct source range.

• Be sure the Model 2400 output is turned on before making measurements.

• Be sure the test equipment is set up for the proper function and range.

• Allow the Model 2400 output signal to settle before making a measurement.

• Do not connect test equipment to the Model 2400 through a scanner, multiplexer, or

other switching equipment.

WARNING The maximum common-mode voltage (voltage between LO and chassis

ground) is 250V peak. Exceeding this value may cause a breakdown in

insulation, creating a shock hazard.

CAUTION The maximum voltage between INPUT/OUTPUT HI and LO or 4-WIRE

SENSE HI and LO is 250V peak. The maximum voltage between INPUT/

OUTPUT HI and 4-WIRE SENSE HI or between INPUT/OUTPUT LO

and 4-WIRE SENSE LO is 5V. Exceeding these voltages may result in

instrument damage.


Setting the source range and output value

Before testing each verification point, you must properly set the source range and output value

as outlined below.

1. Press either the SOURCE V or SOURCE I key to select the appropriate source

function.

2. Press the EDIT key as required to select the source display field. Note that the cursor

will flash in the source field while its value is being edited.

3. With the cursor in the source display field flashing, set the source range to the lowest

possible range for the value to be sourced using the up or down RANGE key. For example, you should use the 20V source range to output a 19V or 20V source value. With a

20V source value and the 20V range selected, the source field display will appear as

follows:

Vsrc:+20.0000 V

4. With the source field cursor flashing, set the source output to the required value using

either:

• The SOURCE adjustment and left and right arrow keys.

• The numeric keys.

5. Note that the source output value will be updated immediately; you need not press

ENTER when setting the source value.
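The range-selection rule in step 3 (use the lowest range that can source the value) can be sketched as follows. The range lists are the source ranges named in this section; the function name is hypothetical, and the sketch assumes a value fits a range when its magnitude does not exceed the nominal full-scale value.

```python
# Nominal source ranges named in this section (volts and amps)
VOLTAGE_RANGES = [0.2, 2.0, 20.0, 200.0]
CURRENT_RANGES = [1e-6, 10e-6, 100e-6, 1e-3, 10e-3, 100e-3, 1.0]


def lowest_range(value, ranges):
    """Return the lowest nominal range whose full scale covers |value|."""
    magnitude = abs(value)
    for full_scale in sorted(ranges):
        if magnitude <= full_scale:
            return full_scale
    raise ValueError(f"{value} exceeds the highest range")


print(lowest_range(19.0, VOLTAGE_RANGES))  # 20.0
print(lowest_range(20.0, VOLTAGE_RANGES))  # 20.0
```

Both a 19V and a 20V source value land on the 20V range, matching the example in step 3.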

Setting the measurement range

When simultaneously sourcing and measuring either voltage or current, the measure range is

coupled to the source range, and you cannot independently control the measure range. Thus, it

is not necessary for you to set the range when testing voltage or current measurement accuracy.

Compliance considerations

Compliance limits

When sourcing voltage, you can set the SourceMeter to limit current from 1nA to 1.05A.

Conversely, when sourcing current, you can set the SourceMeter to limit voltage from 200µV

to 210V. The SourceMeter output will not exceed the programmed compliance limit.

Types of compliance

There are two types of compliance that can occur: “real” and “range.” Depending upon which

value is lower, the output will clamp at either the displayed compliance setting (“real”) or at the

maximum measurement range reading (“range”).


The “real” compliance condition can occur when the compliance setting is less than the highest

possible reading of the measurement range. When in compliance, the source output clamps at

the displayed compliance value. For example, if the compliance voltage is set to 1V and the

measurement range is 2V, the output voltage will clamp (limit) at 1V.

“Range” compliance can occur when the compliance setting is higher than the possible reading

of the selected measurement range. When in compliance, the source output clamps at the

maximum measurement range reading (not the compliance value). For example, if the

compliance voltage is set to 1V and the measurement range is 200mV, the output voltage will

clamp (limit) at 210mV.

Maximum compliance values

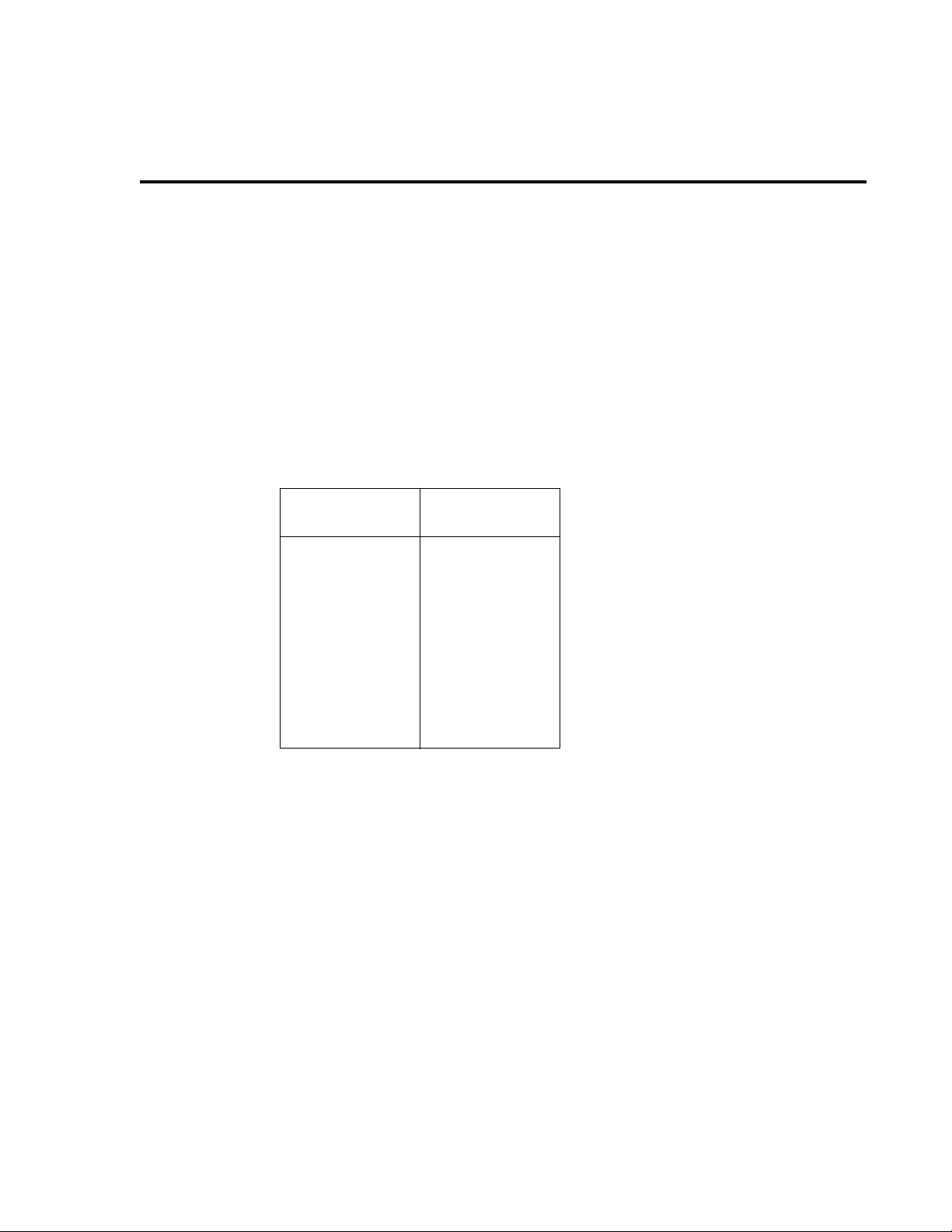

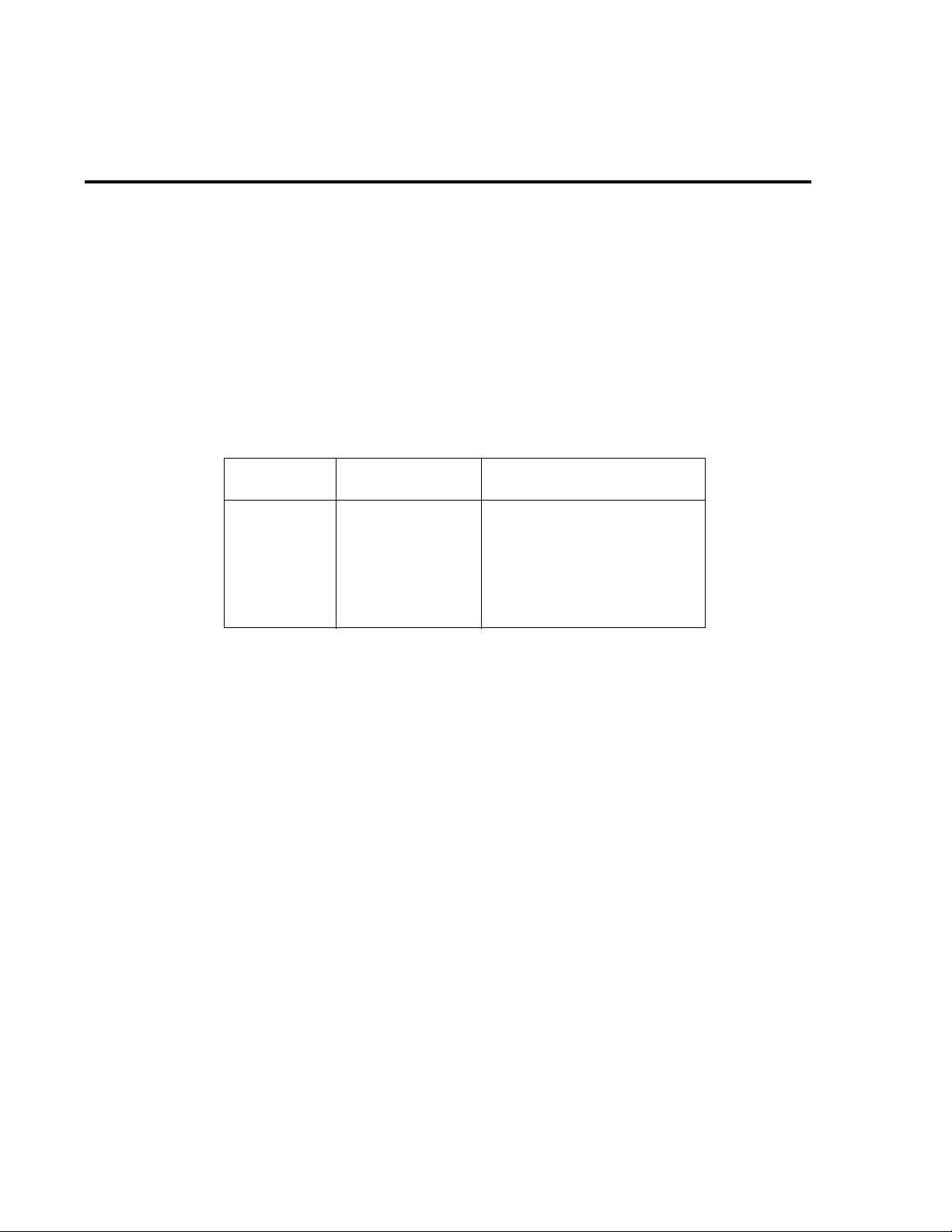

The maximum compliance values for the measurement ranges are summarized as follows:

Measurement range    Maximum compliance value
200mV                210mV
2V                   2.1V
20V                  21V
200V                 210V
1µA                  1.05µA
10µA                 10.5µA
100µA                105µA
1mA                  1.05mA
10mA                 10.5mA
100mA                105mA
1A                   1.05A

When the SourceMeter goes into compliance, the “Cmpl” label or the units label (i.e., “mA”) for the compliance display will flash.

Determining compliance limit

The relationships to determine which compliance is in effect are summarized as follows. They assume the measurement function is the same as the compliance function.

• Compliance Setting < Measurement Range = Real Compliance

• Measurement Range < Compliance Setting = Range Compliance

You can determine the compliance that is in effect by comparing the displayed compliance

setting to the present measurement range. If the compliance setting is lower than the maximum

possible reading on the present measurement range, the compliance setting is the compliance

limit. If the compliance setting is higher than the measurement range, the maximum reading on

that measurement range is the compliance limit.
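The comparison described above can be sketched in Python. The dictionary holds the maximum compliance values listed for each measurement range in this section; the function name and the string keys are hypothetical, and values are expressed in volts or amps.

```python
# Maximum compliance value for each measurement range (volts or amps)
MAX_COMPLIANCE = {
    "200mV": 0.210, "2V": 2.1, "20V": 21.0, "200V": 210.0,
    "1uA": 1.05e-6, "10uA": 10.5e-6, "100uA": 105e-6,
    "1mA": 1.05e-3, "10mA": 10.5e-3, "100mA": 105e-3, "1A": 1.05,
}


def effective_compliance(setting, measurement_range):
    """Return (compliance type, limit) for a setting and a measurement range."""
    range_max = MAX_COMPLIANCE[measurement_range]
    if setting < range_max:
        return "real", setting   # output clamps at the displayed setting
    return "range", range_max    # output clamps at the maximum range reading


print(effective_compliance(1.0, "2V"))     # ('real', 1.0)
print(effective_compliance(1.0, "200mV"))  # ('range', 0.21)
```

The two calls reproduce the 1V compliance examples given earlier for the 2V and 200mV measurement ranges. When the setting equals the maximum range reading, both limits coincide, so the label returned in that case is arbitrary.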


Taking the SourceMeter out of compliance

Verification measurements should not be made when the SourceMeter is in compliance. For

purposes of the verification tests, the SourceMeter can be taken out of compliance by going

into the edit mode and increasing the compliance limit.

NOTE Do not take the unit out of compliance by decreasing the source value or changing

the range. Always use the recommended range and source settings when performing

the verification tests.

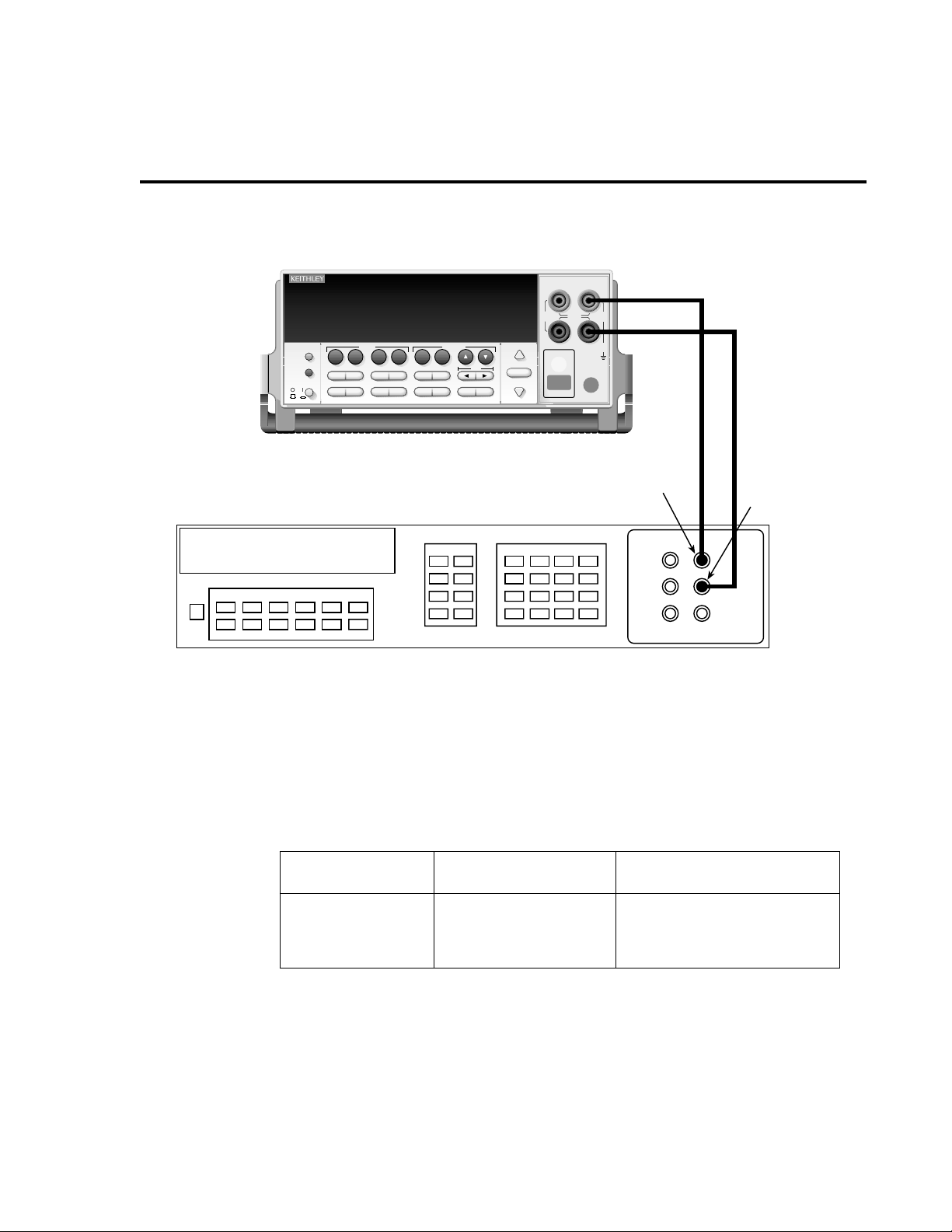

Output voltage accuracy

Follow the steps below to verify that Model 2400 output voltage accuracy is within specified

limits. This test involves setting the output voltage to each full-range value and measuring the

voltages with a precision digital multimeter.

1. With the power off, connect the digital multimeter to the Model 2400 INPUT/OUTPUT

jacks, as shown in Figure 1-1.

2. Select the multimeter DC volts measuring function.

NOTE The default voltage source protection value is 40V. Before testing the 200V range, set

the voltage source protection value to >200V. To do so, press CONFIG then

SOURCE V to access the CONFIGURE V-SOURCE menu, then select PROTECTION and set the limit value to >200V.

3. Press the Model 2400 SOURCE V key to source voltage, and make sure the source output is turned on.

4. Verify output voltage accuracy for each of the voltages listed in Table 1-2. For each test

point:

• Select the correct source range.

• Set the Model 2400 output voltage to the indicated value.

• Verify that the multimeter reading is within the limits given in the table.

Figure 1-1
Voltage verification front panel connections

[Figure: Model 2400 front panel INPUT/OUTPUT jacks connected to the digital multimeter Input HI and Input LO terminals.]

5. Repeat the procedure for negative output voltages with the same magnitudes as those

listed in Table 1-2.

6. Repeat the entire procedure using the rear panel INPUT/OUTPUT jacks. Be sure to

select the rear panel jacks with the front panel TERMINALS key.
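A pass/fail check against the Table 1-2 limits can be sketched as follows. The limits are copied from Table 1-2 and converted to volts; the function name and the `polarity` argument are hypothetical, and the sketch assumes the negative-output limits mirror the positive ones, as step 5 implies.

```python
# Output voltage limits from Table 1-2 (1 year, 18-28 degrees C), in volts
OUTPUT_VOLTAGE_LIMITS = {
    "200mV": (0.199360, 0.200640),
    "2V": (1.99900, 2.00100),
    "20V": (19.9936, 20.0064),
    "200V": (199.936, 200.064),
}


def within_limits(source_range, dmm_reading, polarity=1):
    """Return True if the multimeter reading falls inside the table limits.

    polarity is +1 for positive test points and -1 for negative ones.
    """
    low, high = OUTPUT_VOLTAGE_LIMITS[source_range]
    return low <= polarity * dmm_reading <= high


print(within_limits("20V", 20.0031))               # True
print(within_limits("20V", -19.9990, polarity=-1))  # True
print(within_limits("2V", 2.0015))                 # False
```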

Table 1-2
Output voltage accuracy limits

Model 2400      Model 2400                Output voltage limits
source range    output voltage setting    (1 year, 18°C–28°C)
200mV           200.000mV                 199.360 to 200.640mV
2V              2.00000V                  1.99900 to 2.00100V
20V             20.0000V                  19.9936 to 20.0064V
200V            200.000V                  199.936 to 200.064V

Voltage measurement accuracy

Follow the steps below to verify that Model 2400 voltage measurement accuracy is within

specified limits. The test involves setting the source voltage to 95% of full-range values, as

measured by a precision digital multimeter, and then verifying that the Model 2400 voltage

readings are within required limits.

1. With the power off, connect the digital multimeter to the Model 2400 INPUT/OUTPUT

jacks, as shown in Figure 1-1.

2. Select the multimeter DC volts function.

NOTE The default voltage source protection value is 40V. Before testing the 200V range, set

the voltage source protection value to >200V. To do so, press CONFIG then

SOURCE V to access the CONFIGURE V-SOURCE menu, then select PROTECTION and set the limit value to >200V.

3. Set the Model 2400 to both source and measure voltage by pressing the SOURCE V

and MEAS V keys, and make sure the source output is turned on.

4. Verify voltage measurement accuracy for each of the voltages listed in Table 1-3. For each test

point:

• Select the correct source range.

• Set the Model 2400 output voltage to the indicated value as measured by the digital

multimeter.

• Verify that the Model 2400 voltage reading is within the limits given in the table.

NOTE It may not be possible to set the voltage source to the specified value. Use the closest

possible setting, and modify reading limits accordingly.

5. Repeat the procedure for negative source voltages with the same magnitudes as those

listed in Table 1-3.

6. Repeat the entire procedure using the rear panel INPUT/OUTPUT jacks. Be sure to

select the rear panel jacks with the front panel TERMINALS key.

Table 1-3
Voltage measurement accuracy limits

Model 2400 source and                        Model 2400 voltage reading limits
measure range*           Source voltage**    (1 year, 18°C–28°C)
200mV                    190.000mV           189.677 to 190.323mV
2V                       1.90000V            1.89947 to 1.90053V
20V                      19.0000V            18.9962 to 19.0038V
200V                     190.000V            189.962 to 190.038V

*Measure range coupled to source range when simultaneously sourcing and measuring voltage.
**As measured by precision digital multimeter. Use closest possible value, and modify reading limits accordingly if necessary.

Output current accuracy

Follow the steps below to verify that Model 2400 output current accuracy is within specified limits. The test involves setting the output current to each full-range value and measuring the currents with a precision digital multimeter.

1. With the power off, connect the digital multimeter to the Model 2400 INPUT/OUTPUT jacks, as shown in Figure 1-2.

2. Select the multimeter DC current measuring function.

3. Press the Model 2400 SOURCE I key to source current, and make sure the source output is turned on.

Figure 1-2
Current verification connections

[Figure: Model 2400 front panel INPUT/OUTPUT jacks connected to the digital multimeter Amps and Input LO terminals.]

4. Verify output current accuracy for each of the currents listed in Table 1-4. For each test

point:

• Select the correct source range.

• Set the Model 2400 output current to the correct value.

• Verify that the multimeter reading is within the limits given in the table.

5. Repeat the procedure for negative output currents with the same magnitudes as those

listed in Table 1-4.

6. Repeat the entire procedure using the rear panel INPUT/OUTPUT jacks. Be sure to

select the rear panel jacks with the front panel TERMINALS key.

Table 1-4
Output current accuracy limits

Model 2400      Model 2400 output    Output current limits
source range    current setting      (1 year, 18°C–28°C)
1µA             1.00000µA            0.99905 to 1.00095µA
10µA            10.0000µA            9.9947 to 10.0053µA
100µA           100.000µA            99.949 to 100.051µA
1mA             1.00000mA            0.99946 to 1.00054mA
10mA            10.0000mA            9.9935 to 10.0065mA
100mA           100.000mA            99.914 to 100.086mA
1A              1.00000A             0.99640 to 1.00360A

Current measurement accuracy

Follow the steps below to verify that Model 2400 current measurement accuracy is within specified limits. The procedure involves applying accurate currents from the Model 2400 current source and then verifying that Model 2400 current measurements are within required limits.

1. With the power off, connect the digital multimeter to the Model 2400 INPUT/OUTPUT jacks as shown in Figure 1-2.

2. Select the multimeter DC current function.

3. Set the Model 2400 to both source and measure current by pressing the SOURCE I and MEAS I keys, and make sure the source output is turned on.

4. Verify measure current accuracy for each of the currents listed in Table 1-5. For each measurement:

• Select the correct source range.

• Set the Model 2400 source output to the correct value as measured by the digital multimeter.

• Verify that the Model 2400 current reading is within the limits given in the table.

NOTE It may not be possible to set the current source to the specified value. Use the closest

possible setting, and modify reading limits accordingly.

5. Repeat the procedure for negative calibrator currents with the same magnitudes as those

listed in Table 1-5.

6. Repeat the entire procedure using the rear panel INPUT/OUTPUT jacks. Be sure to

select the rear panel jacks with the front panel TERMINALS key.

Table 1-5
Current measurement accuracy limits

Model 2400 source and                        Model 2400 current reading limits
measure range*           Source current**    (1 year, 18°C–28°C)
1µA                      0.95000µA           0.94942 to 0.95058µA
10µA                     9.5000µA            9.4967 to 9.5033µA
100µA                    95.000µA            94.970 to 95.030µA
1mA                      0.95000mA           0.94968 to 0.95032mA
10mA                     9.5000mA            9.4961 to 9.5039mA
100mA                    95.000mA            94.942 to 95.058mA
1A                       0.95000A            0.94734 to 0.95266A

*Measure range coupled to source range when simultaneously sourcing and measuring current.
**As measured by precision digital multimeter. Use closest possible value, and modify reading limits accordingly if necessary.

Resistance measurement accuracy

Follow the steps below to verify that Model 2400 resistance measurement accuracy is within specified limits. This procedure involves applying accurate resistances from a resistance calibrator and then verifying that Model 2400 resistance measurements are within required limits.

1. With the power off, connect the resistance calibrator to the Model 2400 INPUT/OUTPUT and 4-WIRE SENSE jacks as shown in Figure 1-3. Be sure to use the four-wire connections as shown.

2. Select the resistance calibrator external sense mode.

3. Configure the Model 2400 ohms function for the 4-wire sense mode as follows:

• Press CONFIG then MEAS Ω. The instrument will display the following:

CONFIG OHMS

SOURCE SENSE-MODE GUARD

• Select SENSE-MODE, and then press ENTER. The following will be displayed:

SENSE-MODE

2-WIRE 4-WIRE

• Select 4-WIRE, and then press ENTER.

• Press EXIT to return to normal display.

4. Press MEAS Ω to select the ohms measurement function, and make sure the source output is turned on.

5. Verify ohms measurement accuracy for each of the resistance values listed in Table 1-6.

For each measurement:

• Set the resistance calibrator output to the nominal resistance or closest available

value.

NOTE It may not be possible to set the resistance calibrator to the specified value. Use the

closest possible setting, and modify reading limits accordingly.

• Select the appropriate ohms measurement range with the RANGE keys.

• Verify that the Model 2400 resistance reading is within the limits given in the

table.

6. Repeat the entire procedure using the rear panel INPUT/OUTPUT and 4-WIRE

SENSE jacks. Be sure to select the rear panel jacks with the front panel TERMINALS

key.

Table 1-6
Ohms measurement accuracy limits

                    Calibrator     Model 2400 resistance reading limits**
Model 2400 range    resistance*    (1 year, 18°C–28°C)
20Ω                 19Ω            18.9784 to 19.0216Ω
200Ω                190Ω           189.824 to 190.176Ω
2kΩ                 1.9kΩ          1.89845 to 1.90155kΩ
20kΩ                19kΩ           18.9850 to 19.0150kΩ
200kΩ               190kΩ          189.847 to 190.153kΩ
2MΩ                 1.9MΩ          1.89761 to 1.90239MΩ
20MΩ                19MΩ           18.9781 to 19.0219MΩ
200MΩ               100MΩ          99.020 to 100.980MΩ

*Nominal resistance value.
**Reading limits based on Model 2400 normal accuracy specifications and nominal resistance values. If actual resistance values differ from nominal values shown, recalculate reading limits using actual calibrator resistance values and Model 2400 normal accuracy specifications. See “Verification limits” earlier in this section for details.

Figure 1-3
Resistance verification connections

[Figure: Model 2400 front panel INPUT/OUTPUT and 4-WIRE SENSE jacks connected to the resistance calibrator Output HI, Output LO, Sense HI, and Sense LO terminals.]

Page 31

2

Calibration

Page 32

2-2 Calibration

Introduction

Use the procedures in this section to calibrate the Model 2400. These procedures require

accurate test equipment to measure precise DC voltages and currents. Calibration can be

performed either from the front panel or by sending SCPI calibration commands over the

IEEE-488 bus or RS-232 port with the aid of a computer.

WARNING The information in this section is intended for qualified service personnel

only. Do not attempt these procedures unless you are qualified to do so.

Some of these procedures may expose you to hazardous voltages.

Environmental conditions

Temperature and relative humidity

Conduct the calibration procedures at an ambient temperature of 18-28°C (65-82°F) with

relative humidity of less than 70% unless otherwise noted.

Warm-up period

Allow the Model 2400 to warm up for at least one hour before performing calibration.

If the instrument has been subjected to temperature extremes (those outside the ranges stated

above), allow additional time for the instrument’s internal temperature to stabilize. Typically,

allow one extra hour to stabilize a unit that is 10°C (18°F) outside the specified temperature

range.

Also, allow the test equipment to warm up for the minimum time specified by the

manufacturer.

Line power

The Model 2400 requires a line voltage of 88 to 264V at line frequency of 50 or 60Hz. The

instrument must be calibrated within this range.


Calibration considerations

When performing the calibration procedures:

• Make sure that the test equipment is properly warmed up and connected to the

Model 2400 front panel INPUT/OUTPUT jacks. Also be certain that the front panel

jacks are selected with the TERMINALS switch.

• Always allow the source signal to settle before calibrating each point.

• Do not connect test equipment to the Model 2400 through a scanner or other switching

equipment.

• If an error occurs during calibration, the Model 2400 will generate an appropriate error

message. See Appendix B for more information.

WARNING The maximum common-mode voltage (voltage between LO and chassis

ground) is 250V peak. Exceeding this value may cause a breakdown in

insulation, creating a shock hazard.

CAUTION The maximum voltage between INPUT/OUTPUT HI and LO or 4-WIRE SENSE HI and LO is 250V peak. The maximum voltage between INPUT/OUTPUT HI and 4-WIRE SENSE HI or between INPUT/OUTPUT LO and 4-WIRE SENSE LO is 5V. Exceeding these voltage values may result in instrument damage.

Calibration cycle

Perform calibration at least once a year to ensure the unit meets or exceeds its specifications.

Recommended calibration equipment

Table 2-1 lists the recommended equipment for the calibration procedures. You can use

alternate equipment as long as that equipment has specifications at least as good as those listed

in the table. When possible, test equipment specifications should be at least four times better

than corresponding Model 2400 specifications.

Table 2-1
Recommended calibration equipment

Description           Manufacturer/Model         Accuracy*
Digital Multimeter    Hewlett Packard HP3458A    DC voltage    200mV:    ±15ppm
                                                               2V:       ±6ppm
                                                               20V:      ±9ppm
                                                               200V:     ±7ppm
                                                 DC current    1µA:      ±55ppm
                                                               10µA:     ±25ppm
                                                               100µA:    ±23ppm
                                                               1mA:      ±20ppm
                                                               10mA:     ±20ppm
                                                               100mA:    ±35ppm
                                                               1A:       ±110ppm

*90-day specifications show accuracy at specified measurement point.

Unlocking calibration

Before performing calibration, you must first unlock calibration by entering or sending the calibration password as follows:

Front panel calibration password

1. Press the MENU key, then choose CAL, and press ENTER. The instrument will display the following:

CALIBRATION

UNLOCK EXECUTE VIEW-DATES

SAVE LOCK CHANGE-PASSWORD

2. Select UNLOCK, and then press ENTER. The instrument will display the following:

PASSWORD:

Use ◄, ►, ▲, ▼, ENTER, or EXIT.

3. Use the up and down RANGE keys to select the letter or number, and use the left and right arrow keys to choose the position. (Press down RANGE for letters; up RANGE for numbers.) Enter the current password on the display. (Front panel default: 002400.)

4. Once the correct password is displayed, press the ENTER key. If the password was correctly entered, the following message will be displayed.

CALIBRATION UNLOCKED

Calibration can now be executed

5. Press EXIT to return to normal display. Calibration will be unlocked and assume the

states summarized in Table 2-2. Attempts to change any of the settings listed below

with calibration unlocked will result in an error +510, “Not permitted with cal unlocked.”

NOTE With calibration unlocked, the sense function and range track the source function

and range. That is, when :SOUR:FUNC is set to VOLT, the :SENS:FUNC setting

will be ‘VOLT:DC’. When :SOUR:FUNC is set to CURR, the :SENS:FUNC setting

will be ‘CURR:DC’. A similar command coupling exists for :SOUR:VOLT:RANG/

:SENS:VOLT:RANG and :SOUR:CURR:RANG/:SENS:CURR:RANG.

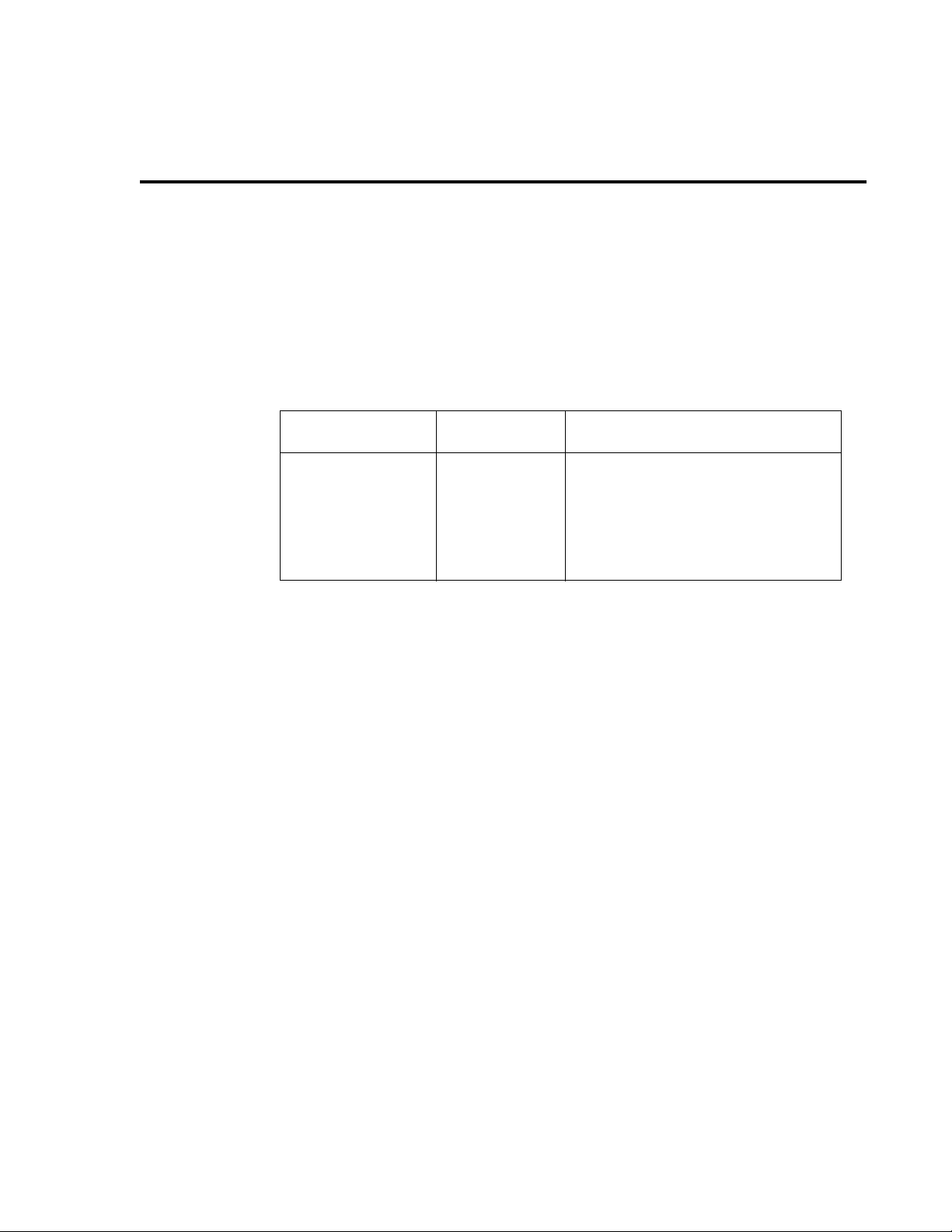

Table 2-2
Calibration unlocked states

Mode                   State        Equivalent remote command
Concurrent Functions   OFF          :SENS:FUNC:CONC OFF
Sense Function         Source       :SENS:FUNC <source_function>
Sense Volts NPLC       1.0          :SENS:VOLT:NPLC 1.0
Sense Volts Range      Source V     :SENS:VOLT:RANG <source_V_range>
Sense Current NPLC     1.0          :SENS:CURR:NPLC 1.0
Sense Current Range    Source I     :SENS:CURR:RANG <source_I_range>
Filter Count           10           :SENS:AVER:COUN 10
Filter Control         REPEAT       :SENS:AVER:TCON REPeat
Filter Averaging       ON           :SENS:AVER:STAT ON
Source V Mode          FIXED        :SOUR:VOLT:MODE FIXED
Volts Autorange        OFF          :SOUR:VOLT:RANGE:AUTO OFF
Source I Mode          FIXED        :SOUR:CURR:MODE FIXED
Current Autorange      OFF          :SOUR:CURR:RANGE:AUTO OFF
Autozero               ON           :SYST:AZERO ON
Trigger Arm Count      1            :ARM:COUNT 1
Trigger Arm Source     Immediate    :ARM:SOUR IMMediate
Trigger Count          1            :TRIG:COUNT 1
Trigger Source         Immediate    :TRIG:SOUR IMMediate

Remote calibration password

To unlock calibration via remote, send the following command:

:CAL:PROT:CODE '<password>'

For example, the following command uses the default password:

:CAL:PROT:CODE 'KI002400'
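As a rough illustration, the unlock command can be built and sent from a controller program as sketched below. The function names are hypothetical, and `write` stands for whatever routine your IEEE-488 or RS-232 interface library provides to send one command string to the instrument; it is not a Keithley API. The eight-character limit is the password length limit stated in this section.

```python
def unlock_command(password="KI002400"):
    """Build the :CAL:PROT:CODE command for the given calibration password."""
    if not 1 <= len(password) <= 8:
        raise ValueError("password must be 1 to 8 characters")
    return f":CAL:PROT:CODE '{password}'"


def unlock_calibration(write, password="KI002400"):
    """Send the unlock command using a caller-supplied write(command) function."""
    write(unlock_command(password))


# Demonstration with a stand-in for the real interface: collect the commands
sent = []
unlock_calibration(sent.append)
print(sent[0])  # :CAL:PROT:CODE 'KI002400'
```

Passing the write routine in as an argument keeps the sketch independent of any particular interface library.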


Changing the password

The default password may be changed from the front panel or via remote as discussed in the

following paragraphs.

Front panel password

Follow the steps below to change the password from the front panel:

1. Press the MENU key, then choose CAL, and press ENTER. The instrument will display

the following:

CALIBRATION

UNLOCK EXECUTE VIEW-DATES

SAVE LOCK CHANGE-PASSWORD

2. Select CHANGE-PASSWORD, and then press ENTER. The instrument will display

the following:

NEW PWD: 002400

Use ◄, ►, ▲, ▼, ENTER, or EXIT.

3. Using the range keys, and the left and right arrow keys, enter the new password on the

display.

4. Once the desired password is displayed, press the ENTER key to store the new

password.

Remote password

To change the calibration password via remote, first send the present password, and then send

the new password. For example, the following command sequence changes the password from

the 'KI002400' remote default to 'KI_CAL':

:CAL:PROT:CODE 'KI002400'

:CAL:PROT:CODE 'KI_CAL'

You can use any combination of letters and numbers up to a maximum of eight characters.

NOTE If you change the first two characters of the password to something other than “KI”,

you will not be able to unlock calibration from the front panel.

Resetting the calibration password

If you lose the calibration password, you can unlock calibration by shorting together the CAL

pads, which are located on the display board. Doing so will also reset the password to the

factory default (KI002400).

See Section 5 for details on disassembling the unit to access the CAL pads. Refer to the

display board component layout drawing at the end of Section 6 for the location of the CAL

pads.


Viewing calibration dates and calibration count

When calibration is locked, only the UNLOCK and VIEW-DATES selections will be

accessible in the calibration menu. To view calibration dates and calibration count at any time:

1. From normal display, press MENU, select CAL, and then press ENTER. The unit will

display the following:

CALIBRATION

UNLOCK EXECUTE VIEW-DATES

2. Select VIEW-DATES, and then press ENTER. The Model 2400 will display the next

and last calibration dates and the calibration count as in the following example:

NEXT CAL: 12/15/96

Last calibration: 12/15/95 Count: 00001

Calibration errors


The Model 2400 checks for errors after each calibration step, minimizing the possibility that

improper calibration may occur due to operator error.

Front panel error reporting

If an error is detected during comprehensive calibration, the instrument will display an

appropriate error message (see Appendix B). The unit will then prompt you to repeat the

calibration step that caused the error.

Remote error reporting

You can detect errors while in remote by testing the state of EAV (Error Available) bit (bit 2) in

the status byte. (Use the *STB? query to request the status byte.) Query the instrument for the

type of error by using the appropriate :SYST:ERR? query. The Model 2400 will respond with

the error number and a text message describing the nature of the error. See Appendix B for

details.
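The remote error check can be sketched as follows. The bit position comes from the text above (EAV is bit 2 of the status byte). The function names are hypothetical, and the parser assumes the :SYST:ERR? response has the form `<number>,"<message>"`, which should be confirmed against Appendix B.

```python
EAV_BIT = 2  # Error Available bit position in the status byte


def error_available(status_byte):
    """Return True if the EAV bit is set in the value returned by *STB?."""
    return bool(int(status_byte) & (1 << EAV_BIT))


def parse_error(response):
    """Split a :SYST:ERR? response into (error number, message text)."""
    number, _, message = response.strip().partition(",")
    return int(number), message.strip().strip('"')


print(error_available("4"))                                    # True
print(parse_error('+510,"Not permitted with cal unlocked"'))
# (510, 'Not permitted with cal unlocked')
```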


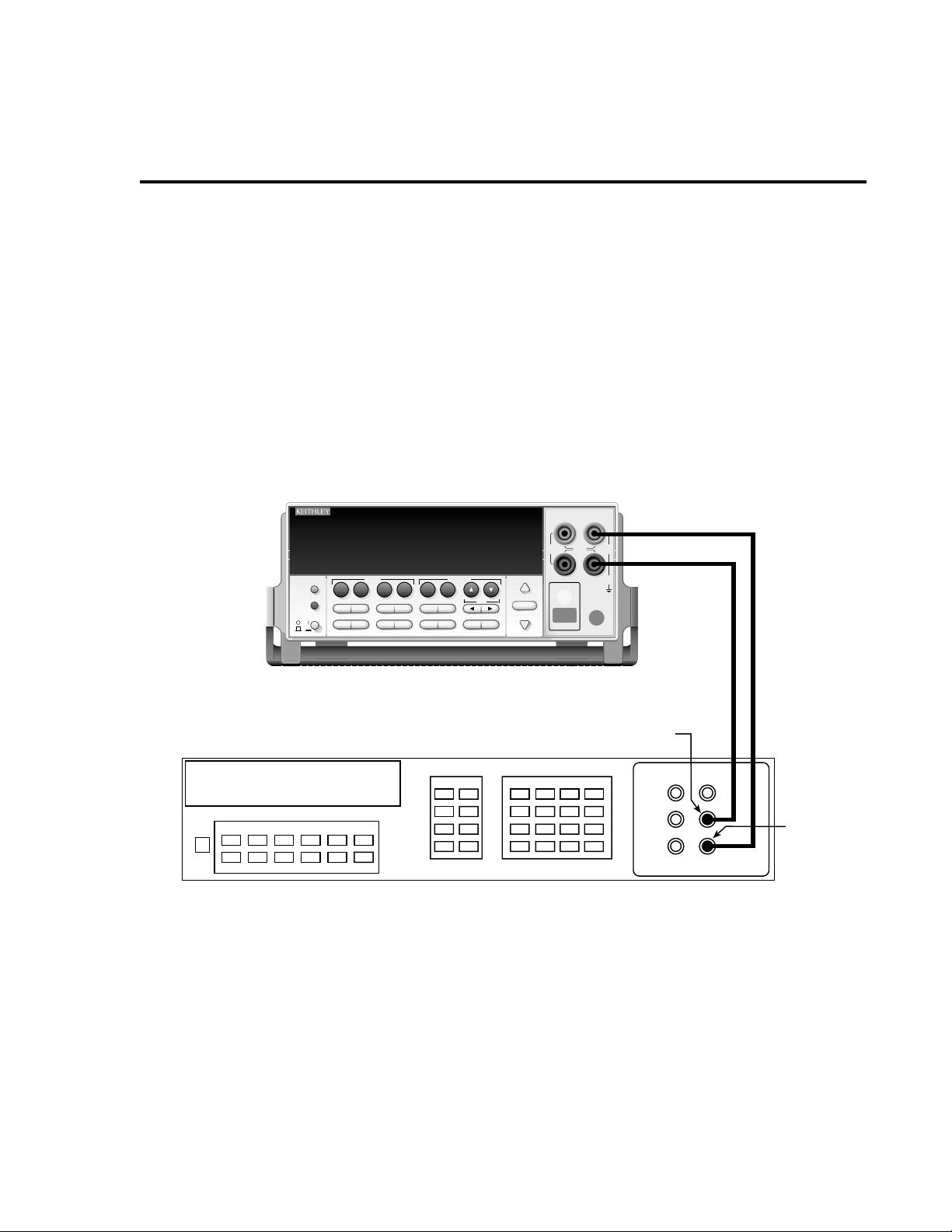

Front panel calibration

The front panel calibration procedure described in the following paragraphs calibrates all

ranges of both the current and voltage source and measure functions. Note that each function is

separately calibrated by repeating the entire procedure for each range.

Step 1. Prepare the Model 2400 for calibration

1. Turn on the Model 2400 and the digital multimeter, and allow them to warm up for at

least one hour before performing calibration.

2. Press the MENU key, then choose CAL, and press ENTER. Select UNLOCK, and then

press ENTER. The instrument will display the following:

PASSWORD:

Use ◄, ►, ▲, ▼, ENTER, or EXIT.

3. Use the up and down keys to select the letter or number, and use the left and right arrow

keys to choose the position. Enter the present password on the display. (Front panel

default: 002400.) Press ENTER to complete the process.

4. Press EXIT to return to normal display. Instrument operating states will be set as summarized in Table 2-2.

Step 2. Voltage calibration

Perform the steps below for each voltage range, using Table 2-3 as a guide.

1. Connect the Model 2400 to the digital multimeter, as shown in Figure 2-1. Select the

multimeter DC volts measurement function.

NOTE The 2-wire connections shown assume that remote sensing is not used. Remote sensing may be used, if desired, but it is not essential when using the recommended digital multimeter.

2. From normal display, press the SOURCE V key.

3. Press the EDIT key to select the source field (cursor flashing in source display field),

and then use the down RANGE key to select the 200mV source range.

4. From normal display, press MENU.

5. Select CAL, and then press ENTER. The unit will display the following:

CALIBRATION

UNLOCK EXECUTE VIEW-DATES

SAVE LOCK CHANGE-PASSWORD

6. Select EXECUTE, and then press ENTER. The instrument will display the following

message:

V-CAL

Press ENTER to Output +200.00mV

7. Press ENTER. The Model 2400 will source +200mV and simultaneously display the

following:

DMM RDG: +200.0000mV

Use ◄, ►, ▲, ▼, ENTER, or EXIT.

Figure 2-1
Voltage calibration connections

[Figure: Model 2400 front panel INPUT/OUTPUT jacks connected to the digital multimeter Input HI and Input LO terminals.]

8. Note and record the DMM reading, and then adjust the Model 2400 display to agree

exactly with the actual DMM reading. (Use the up and down arrow keys to select the

digit value, and use the left and right arrow keys to choose the digit position.) Note that

the display adjustment range is within ±10% of the present range.

9. After adjusting the display to agree with the DMM reading, press ENTER. The instrument will then display the following:

V-CAL

Press ENTER to Output +000.00mV

10. Press ENTER. The Model 2400 will source 0mV and at the same time display the

following:

DMM RDG: +000.0000mV

Use ◄, ►, ▲, ▼, ENTER, or EXIT.

11. Note and record the DMM reading, and then adjust the Model 2400 display to agree

with the actual DMM reading. Note that the display value adjustment limits are within

±1% of the present range.

12. After adjusting the display value to agree with the DMM reading, press ENTER. The

unit will then display the following:

V-CAL

Press ENTER to Output -200.00mV


13. Press ENTER. The Model 2400 will source -200mV and display the following:

DMM RDG: -200.0000mV

Use ◄, ►, ▲, ▼, ENTER, or EXIT.

14. Note and record the DMM reading, and then adjust the Model 2400 display to agree

with the DMM reading. Again, the maximum display adjustment is within ±10% of the

present range.

15. After adjusting the display value to agree with the DMM reading, press ENTER, and

note that the instrument displays:

V-CAL

Press ENTER to Output -000.00mV

16. Press ENTER. The Model 2400 will source -0mV and simultaneously display the

following:

DMM RDG: -000.0000mV

Use ◄, ►, ▲, ▼, ENTER, or EXIT.

17. Note and record the DMM reading, and then adjust the display to agree with the DMM

reading. Once again, the maximum adjustment is within ±1% of the present range.

18. After adjusting the display to agree with the DMM reading, press ENTER to complete

calibration of the present range.

19. Press EXIT to return to normal display, and then select the 2V source range. Repeat

steps 2 through 18 for the 2V range.

20. After calibrating the 2V range, repeat the entire procedure for the 20V and 200V ranges

using Table 2-3 as a guide. Be sure to select the appropriate source range with the EDIT

and RANGE keys before calibrating each range.

21. Press EXIT as necessary to return to normal display.


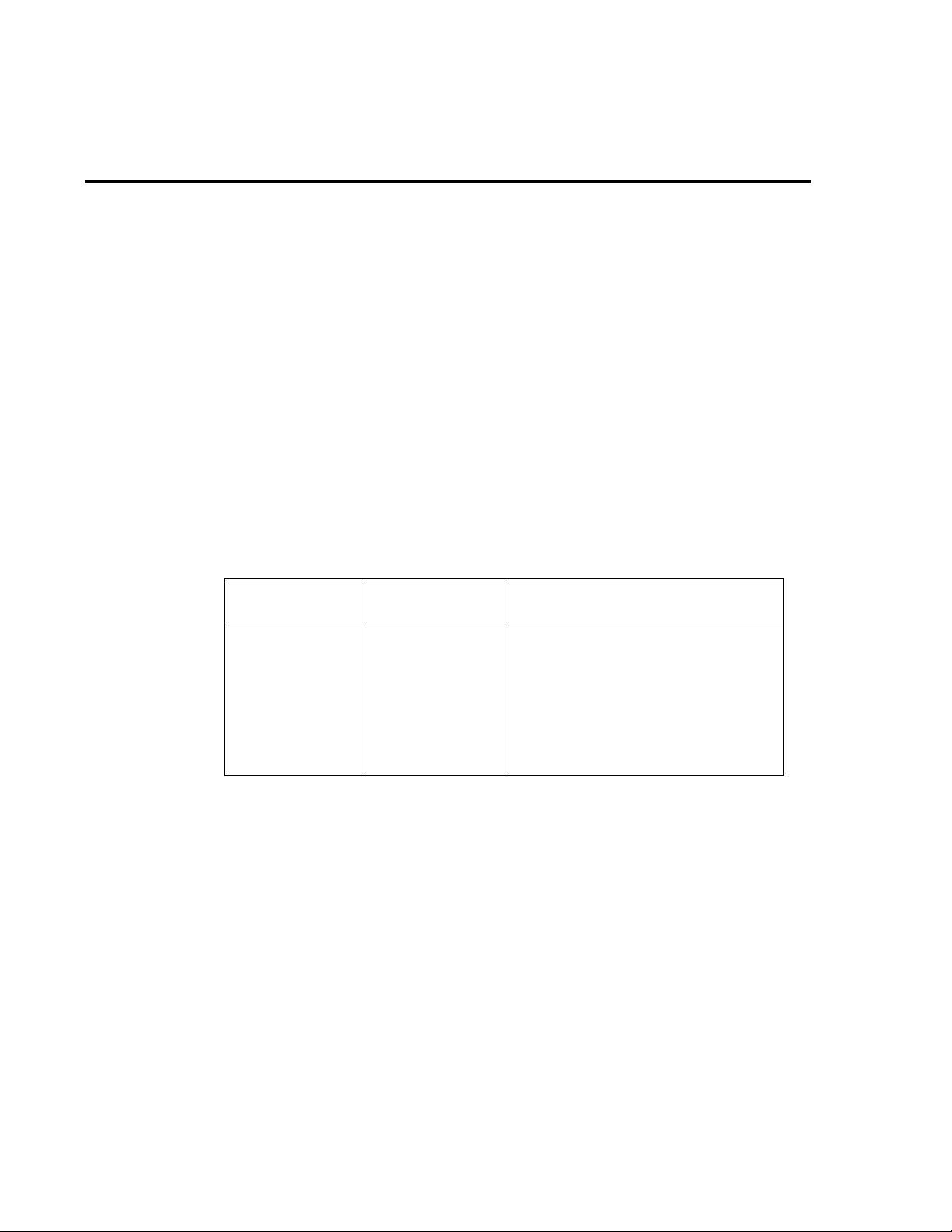

Table 2-3

Front panel voltage calibration

| Source range* | Source voltage | Multimeter voltage reading** |
|---|---|---|
| 0.2V | +200.00mV | ___________mV |
| | +000.00mV | ___________mV |
| | -200.00mV | ___________mV |
| | -000.00mV | ___________mV |
| 2V | +2.0000V | ____________V |
| | +0.0000V | ____________V |
| | -2.0000V | ____________V |
| | -0.0000V | ____________V |
| 20V | +20.000V | ____________V |
| | +00.000V | ____________V |
| | -20.000V | ____________V |
| | -00.000V | ____________V |
| 200V | +200.00V | ____________V |
| | +000.00V | ____________V |
| | -200.00V | ____________V |
| | -000.00V | ____________V |

*Use EDIT and RANGE keys to select source range.

**Multimeter reading used in corresponding calibration step. See procedure.

Page 42


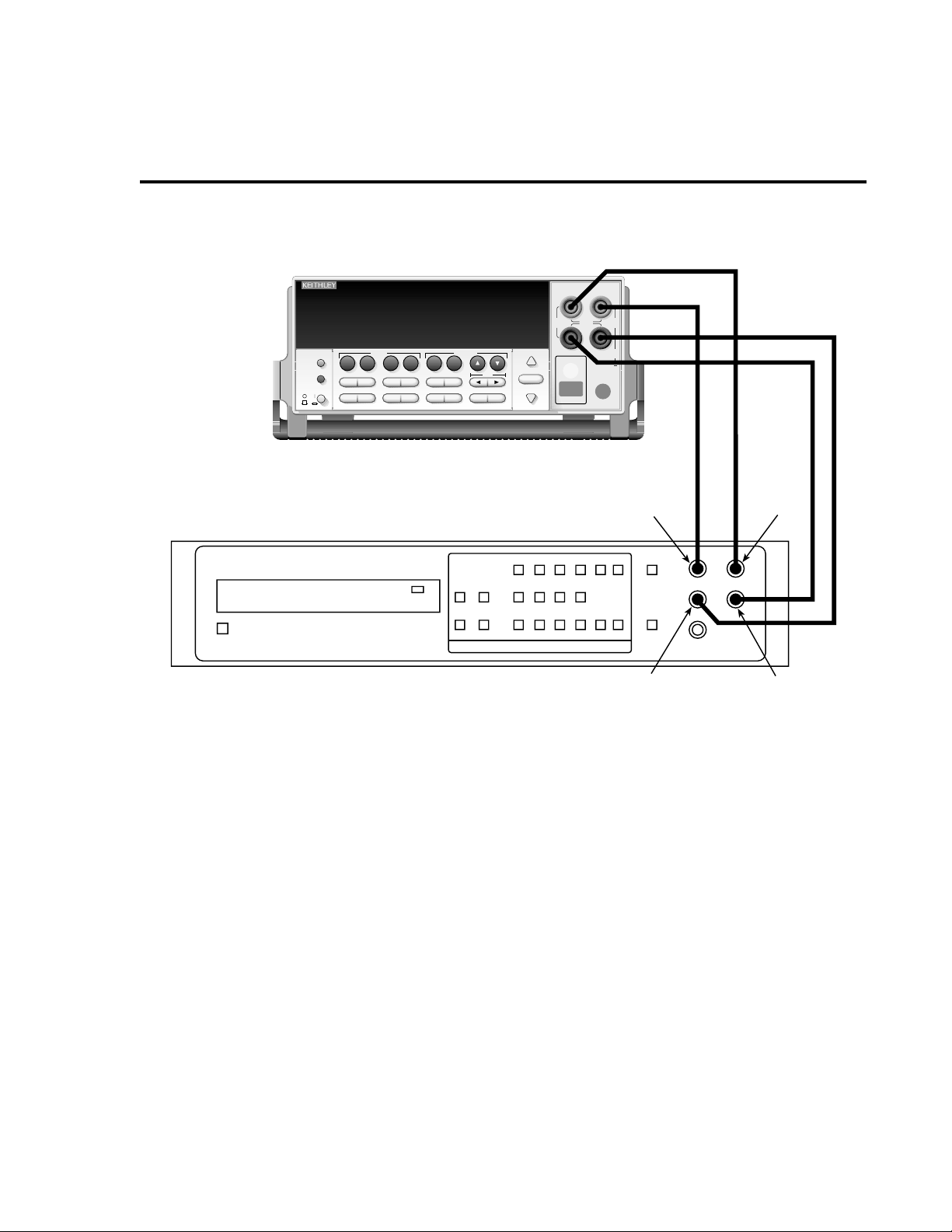


Step 3. Current calibration

Perform the following steps for each current range using Table 2-4 as a guide.

1. Connect the Model 2400 to the digital multimeter as shown in Figure 2-2. Select the

multimeter DC current measurement function.

2. From normal display, press the SOURCE I key.

3. Press the EDIT key to select the source display field, and then use the down RANGE

key to select the 1µA source range.

4. From normal display, press MENU.

5. Select CAL, and then press ENTER. The unit will display the following:

CALIBRATION

UNLOCK EXECUTE VIEW-DATES

SAVE LOCK CHANGE-PASSWORD

6. Select EXECUTE, and then press ENTER. The instrument will display the following

message:

I-CAL

Press ENTER to Output +1.0000µA

Figure 2-2

Current calibration connections

[Figure: Model 2400 front panel INPUT/OUTPUT HI and LO terminals connected to the digital multimeter Amps and Input LO terminals.]

Page 43


7. Press ENTER. The Model 2400 will source +1µA and simultaneously display the

following:

DMM RDG: +1.000000µA

Use ◄, ►, ▲, ▼, ENTER, or EXIT.

8. Note and record the DMM reading, and then adjust the Model 2400 display to agree

exactly with the actual DMM reading. (Use the up and down arrow keys to select the

digit value; use the left and right arrow keys to choose the digit position.) Note that the

display adjustment range is within ±10% of the present range.

9. After adjusting the display to agree with the DMM reading, press ENTER. The instrument will then display the following:

I-CAL

Press ENTER to Output +0.0000µA

10. Press ENTER. The Model 2400 will source 0µA and at the same time display the

following:

DMM RDG: +0.000000µA

Use ◄, ►, ▲, ▼, ENTER, or EXIT.

11. Note and record the DMM reading, and then adjust the Model 2400 display to agree

with the actual DMM reading. Note that the display value adjustment limits are within

±1% of the present range.

12. After adjusting the display value to agree with the DMM reading, press ENTER. The

unit will then display the following:

I-CAL

Press ENTER to Output -1.0000µA

13. Press ENTER. The Model 2400 will source -1µA and display the following:

DMM RDG: -1.000000µA

Use ◄, ►, ▲, ▼, ENTER, or EXIT.

14. Note and record the DMM reading, then adjust the Model 2400 display to agree with

the DMM reading. Again, the maximum display adjustment is within ±10% of the

present range.

15. After adjusting the display value to agree with the DMM reading, press ENTER, and

note that the instrument displays:

I-CAL

Press ENTER to Output -0.0000µA

16. Press ENTER. The Model 2400 will source -0µA and simultaneously display the

following:

DMM RDG: -0.000000µA

Use ◄, ►, ▲, ▼, ENTER, or EXIT.

17. Note and record the DMM reading, and then adjust the display to agree with the DMM

reading. Once again, the maximum adjustment is within ±1% of the present range.

18. After adjusting the display to agree with the DMM reading, press ENTER to complete

calibration of the present range.

19. Press EXIT to return to normal display, then select the 10µA source range using the

EDIT and up RANGE keys. Repeat steps 2 through 18 for the 10µA range.

Page 44


20. After calibrating the 10µA range, repeat the entire procedure for the 100µA through 1A

ranges using Table 2-4 as a guide. Be sure to select the appropriate source range with

the EDIT and up RANGE keys before calibrating each range.

Table 2-4

Front panel current calibration

| Source range* | Source current | Multimeter current reading** |
|---|---|---|
| 1µA | +1.0000µA | ____________µA |
| | +0.0000µA | ____________µA |
| | -1.0000µA | ____________µA |
| | -0.0000µA | ____________µA |
| 10µA | +10.000µA | ____________µA |
| | +00.000µA | ____________µA |
| | -10.000µA | ____________µA |
| | -00.000µA | ____________µA |
| 100µA | +100.00µA | ____________µA |
| | +000.00µA | ____________µA |
| | -100.00µA | ____________µA |
| | -000.00µA | ____________µA |
| 1mA | +1.0000mA | ____________mA |
| | +0.0000mA | ____________mA |
| | -1.0000mA | ____________mA |
| | -0.0000mA | ____________mA |
| 10mA | +10.000mA | ____________mA |
| | +00.000mA | ____________mA |
| | -10.000mA | ____________mA |
| | -00.000mA | ____________mA |
| 100mA | +100.00mA | ____________mA |
| | +000.00mA | ____________mA |
| | -100.00mA | ____________mA |
| | -000.00mA | ____________mA |
| 1A | +1.0000A | _____________A |
| | +0.0000A | _____________A |
| | -1.0000A | _____________A |
| | -0.0000A | _____________A |

*Use EDIT and RANGE keys to select source range.

**Multimeter reading used in corresponding calibration step. See procedure.
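A record sheet for the current calibration readings can also be kept electronically. The Python sketch below builds one empty row per calibration point in the order the procedure visits them (+full scale, +zero, -full scale, -zero for each range). The range list comes from Table 2-4; the function and field names are hypothetical and chosen only for illustration.

```python
# Hypothetical worksheet builder. Range labels and full-scale values (in amps)
# follow Table 2-4; everything else is an illustrative assumption.
CURRENT_RANGES = [
    ("1µA", 1e-6), ("10µA", 10e-6), ("100µA", 100e-6),
    ("1mA", 1e-3), ("10mA", 10e-3), ("100mA", 100e-3), ("1A", 1.0),
]


def calibration_points(full_scale):
    """Four outputs per range, in procedure order."""
    return [
        ("+full scale", full_scale),
        ("+zero", 0.0),
        ("-full scale", -full_scale),
        ("-zero", -0.0),
    ]


def build_worksheet(ranges=CURRENT_RANGES):
    """Return one row per calibration point with an empty DMM reading field."""
    rows = []
    for label, full_scale in ranges:
        for point, value in calibration_points(full_scale):
            rows.append({"range": label, "point": point,
                         "source": value, "dmm_reading": None})
    return rows
```

Each `dmm_reading` field would be filled in as the corresponding reading is noted and recorded during the procedure.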

Page 45


Step 4. Enter calibration dates and save calibration

NOTE For temporary calibration without saving new calibration constants, proceed to

Step 5: Lock out calibration.

1. From normal display, press MENU.

2. Select CAL, and then press ENTER. The Model 2400 will display the following:

CALIBRATION

UNLOCK EXECUTE VIEW-DATES

SAVE LOCK CHANGE-PASSWORD

3. Select SAVE, and then press ENTER. The instrument will display the following:

SAVE CAL

Press ENTER to continue; EXIT to abort calibration sequence.

4. Press ENTER. The unit will prompt you for the calibration date:

CAL DATE: 12/15/95

Use ◄, ►, ▲, ▼, ENTER, or EXIT.

5. Change the displayed date to today’s date, and then press the ENTER key. Press

ENTER again to confirm the date.

6. The unit will then prompt for the calibration due date:

NEXT CAL: 12/15/96

Use ◄, ►, ▲, ▼, ENTER, or EXIT.

7. Set the calibration due date to the desired value, and then press ENTER. Press ENTER

again to confirm the date.

8. Once the calibration dates are entered, calibration is complete. The following message

will be displayed.

CALIBRATION COMPLETE

Press ENTER to confirm; EXIT to abort

9. Press ENTER to save the calibration data (or press EXIT to abort without saving calibration data). The following message will be displayed:

CALIBRATION SUCCESS

Press ENTER or EXIT to continue.

10. Press ENTER or EXIT to complete the process.
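The two dates entered in this step can be worked out ahead of time. The Python sketch below is a minimal illustration: it assumes a one-year calibration interval (matching the 12/15/95 and 12/15/96 example prompts, though any desired due date may be entered) and the MM/DD/YY layout shown on the display. The function names are hypothetical.

```python
from datetime import date


def next_due(cal_date, years=1):
    """Due date `years` after cal_date; February 29 falls back to February 28."""
    try:
        return cal_date.replace(year=cal_date.year + years)
    except ValueError:
        # Raised only when cal_date is Feb 29 and the target year is not a leap year.
        return cal_date.replace(year=cal_date.year + years, day=28)


def as_display(d):
    """Format a date as MM/DD/YY, the layout assumed from the example prompts."""
    return d.strftime("%m/%d/%y")
```

For a calibration performed on December 15, 1995, `as_display(next_due(date(1995, 12, 15)))` gives the `12/15/96` value shown in the NEXT CAL example.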

Step 5. Lock out calibration

1. From normal display, press MENU.

2. Select CAL, and then press ENTER. The Model 2400 will display the following:

CALIBRATION

UNLOCK EXECUTE VIEW-DATES

SAVE LOCK CHANGE-PASSWORD