
Intel® IXP42X Product Line of Network Processors and IXC1100 Control Plane Processor

Developer’s Manual

September 2006

Order Number: 252480-006US


Legal Lines and Disclaimers

INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. Intel products are not intended for use in medical, life saving, life sustaining, critical control or safety systems, or in nuclear facility applications.

Intel may make changes to specifications and product descriptions at any time, without notice.

Intel Corporation may have patents or pending patent applications, trademarks, copyrights, or other intellectual property rights that relate to the presented subject matter. The furnishing of documents and other materials and information does not provide any license, express or implied, by estoppel or otherwise, to any such patents, trademarks, copyrights, or other intellectual property rights.

The Intel® IXP42X Product Line of Network Processors and IXC1100 Control Plane Processor may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request.

Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order.

Copies of documents which have an order number and are referenced in this document, or other Intel literature may be obtained by calling 1-800-548-4725 or by visiting Intel's website at http://www.intel.com.

BunnyPeople, Celeron, Chips, Dialogic, EtherExpress, ETOX, FlashFile, i386, i486, i960, iCOMP, InstantIP, Intel, Intel Centrino, Intel Centrino logo, Intel logo, Intel386, Intel486, Intel740, IntelDX2, IntelDX4, IntelSX2, Intel Inside, Intel Inside logo, Intel NetBurst, Intel NetMerge, Intel NetStructure, Intel SingleDriver, Intel SpeedStep, Intel StrataFlash, Intel Xeon, Intel XScale, IPLink, Itanium, MCS, MMX, MMX logo, Optimizer logo, OverDrive, Paragon, PDCharm, Pentium, Pentium II Xeon, Pentium III Xeon, Performance at Your Command, Sound Mark, The Computer Inside., The Journey Inside, VTune, and Xircom are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

*Other names and brands may be claimed as the property of others.

Copyright © 2006, Intel Corporation. All Rights Reserved.

Intel® IXP42X Product Line of Network Processors and IXC1100 Control Plane Processor

DM September 2006

2 Order Number: 252480-006US



Contents

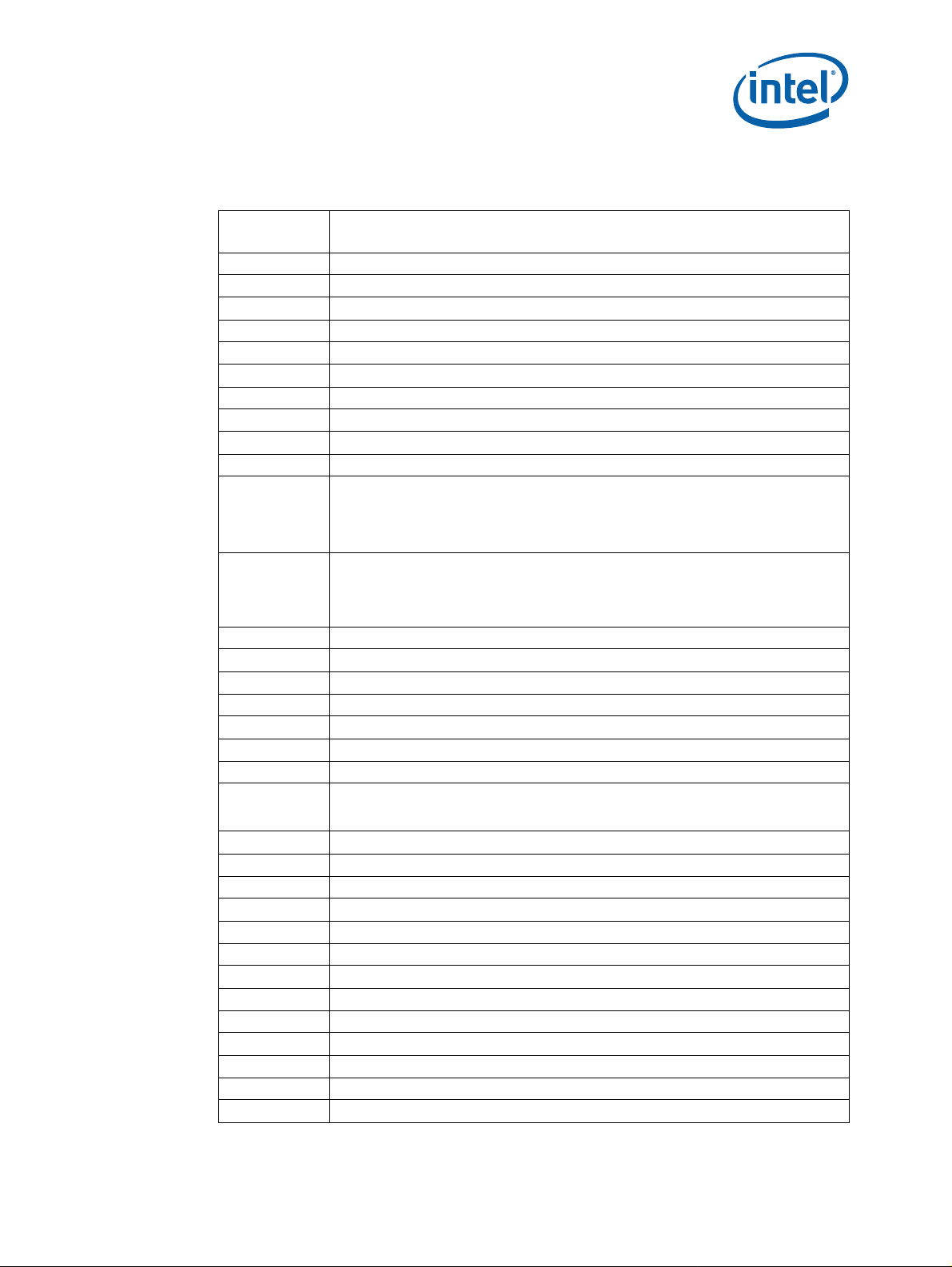

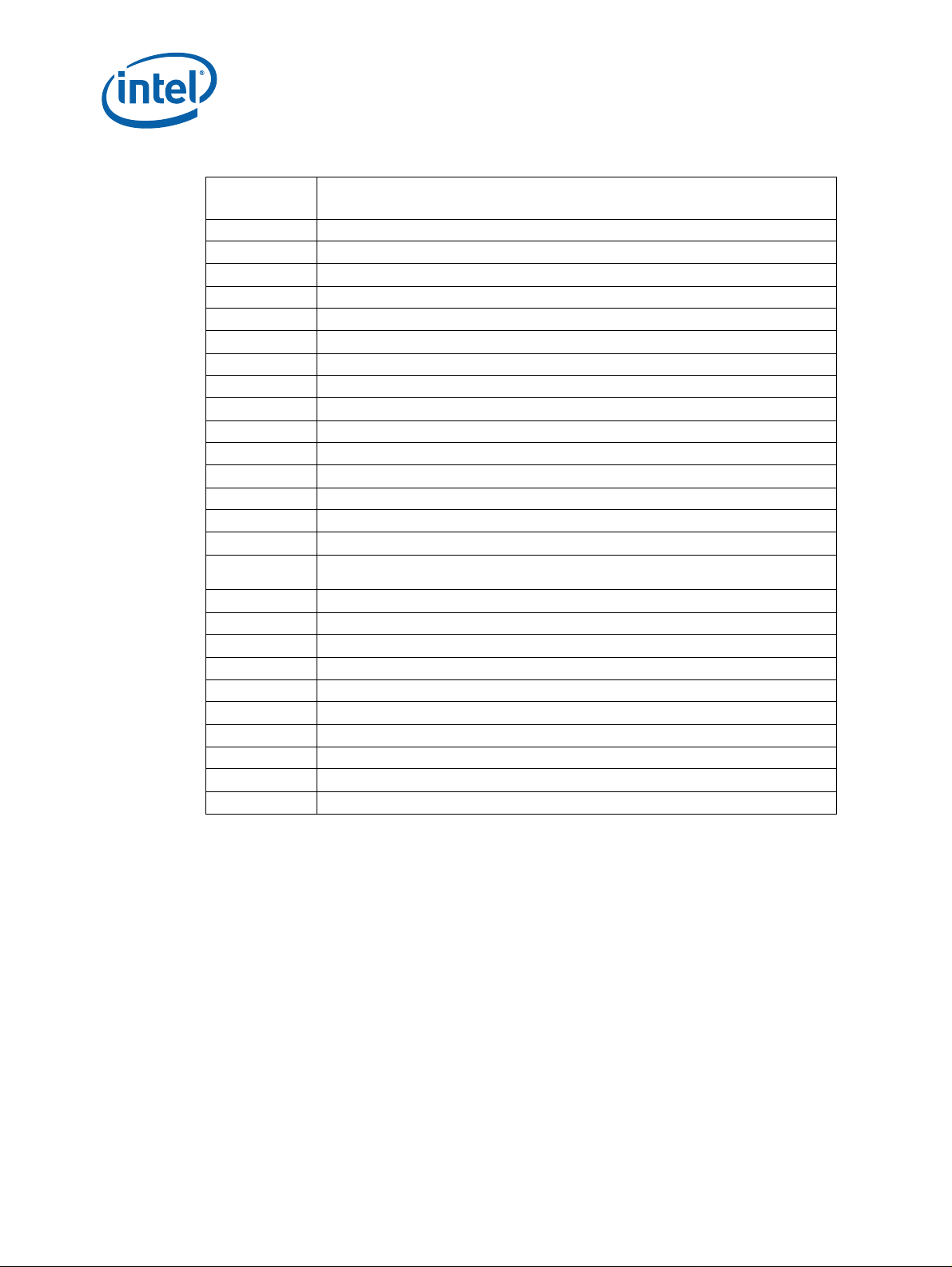

1.0 Introduction............................................................................................................ 26

1.1 About This Document.........................................................................................26

1.1.1 How to Read This Document....................................................................26

1.2 Other Relevant Documents................................................................................. 26

1.3 Terminology and Conventions.............................................................................26

1.3.1 Number Representation...........................................................................26

1.3.2 Acronyms and Terminology......................................................................27

2.0 Overview of Product Line........................................................................................30

2.1 Intel XScale® Microarchitecture Processor.................................................35

2.1.1 Intel XScale® Processor Overview.....................................................36

2.1.1.1 ARM* Compatibility...........................................................36

2.1.1.2 Multiply/Accumulate (MAC)....................................................36

2.1.1.3 Memory Management............................................................37

2.1.1.4 Instruction Cache.............................................................37

2.1.1.5 Branch Target Buffer..........................................................37

2.1.1.6 Data Cache....................................................................37

2.1.1.7 Intel XScale® Processor Performance Monitoring...............................38

2.2 Network Processor Engines (NPE)............................................................38

2.3 Internal Bus................................................................................39

2.4 MII Interfaces..............................................................................39

2.5 AHB Queue Manager..........................................................................39

2.6 UTOPIA 2....................................................................................40

2.7 USB v1.1....................................................................................40

2.8 PCI.........................................................................................40

2.9 Memory Controller............................................................................40

2.10 Expansion Bus...............................................................................41

2.11 High-Speed Serial Interfaces................................................................41

2.12 Universal Asynchronous Receiver Transceiver.................................................42

2.13 GPIO........................................................................................42

2.14 Interrupt Controller.........................................................................42

2.15 Timers......................................................................................42

2.16 JTAG........................................................................................43

3.0 Intel XScale® Processor......................................................................44

3.1 Memory Management Unit.......................................................................44

3.1.1 Memory Attributes.........................................................................45

3.1.1.1 Page (P) Attribute Bit................................................................45

3.1.1.2 Cacheable (C), Bufferable (B), and eXtension (X) Bits..................................45

3.1.2 Interaction of the MMU, Instruction Cache, and Data Cache..................................47

3.1.3 MMU Control...............................................................................48

3.1.3.1 Invalidate (Flush) Operation..........................................................48

3.1.3.2 Enabling/Disabling....................................................................48

3.1.3.3 Locking Entries.......................................................................49

3.1.3.4 Round-Robin Replacement Algorithm......................................................51

3.2 Instruction Cache.............................................................................52

3.2.1 Operation When Instruction Cache is Enabled...............................................52

3.2.1.1 Instruction-Cache ‘Miss’..............................................................53

3.2.1.2 Instruction-Cache Line-Replacement Algorithm...........................................54

3.2.1.3 Instruction-Cache Coherence...........................................................55

3.3 Branch Target Buffer..........................................................................58

3.3.1 Branch Target Buffer (BTB) Operation......................................................58

3.3.1.1 Reset.................................................................................59


3.4 Data Cache.......................................................................................................60

3.4.1 Data Cache Overview..............................................................................60

3.4.2 Cacheability ...........................................................................................63

3.4.3 Reconfiguring the Data Cache as Data RAM................................................68

3.5 Configuration ....................................................................................................73

3.5.1 CP15 Registers.......................................................................................75

3.5.1.1 Register 0: ID and Cache Type Registers......................................76

3.5.1.2 Register 1: Control and Auxiliary Control Registers ........................77

3.5.1.3 Register 2: Translation Table Base Register ..................................79

3.5.1.4 Register 3: Domain Access Control Register..................................80

3.5.1.5 Register 4: Reserved .................................................................80

3.5.1.6 Register 5: Fault Status Register.................................................80

3.5.1.7 Register 6: Fault Address Register...............................................81

3.5.1.8 Register 7: Cache Functions........................................................81

3.5.1.9 Register 8: TLB Operations.........................................................82

3.5.1.10 Register 9: Cache Lock Down......................................................82

3.5.1.11 Register 10: TLB Lock Down.......................................................83

3.5.1.12 Register 11-12: Reserved...........................................................84

3.5.1.13 Register 13: Process ID..............................................................84

3.5.1.14 The PID Register Effect On Addresses..........................................84

3.5.1.15 Register 14: Breakpoint Registers................................................85

3.5.1.16 Register 15: Coprocessor Access Register.....................................85

3.5.2 CP14 Registers.......................................................................................86

3.5.2.1 Performance Monitoring Registers................................................87

3.5.2.2 Clock and Power Management Registers.......................................87

3.5.2.3 Software Debug Registers ..........................................................88

3.6 Software Debug.................................................................................................88

3.6.1 Definitions .............................................................................................89

3.6.2 Debug Registers..................................................................................89

3.6.3 Debug Modes.....................................................................................89

3.6.3.1 Halt Mode ................................................................................90

3.6.3.2 Monitor Mode............................................................................90

3.6.4 Debug Control and Status Register (DCSR) ................................................90

3.6.4.1 Global Enable Bit (GE) ...............................................................91

3.6.4.2 Halt Mode Bit (H) ......................................................................91

3.6.4.3 Vector Trap Bits (TF,TI,TD,TA,TS,TU,TR) ......................................92

3.6.4.4 Sticky Abort Bit (SA)..................................................................92

3.6.4.5 Method of Entry Bits (MOE) ........................................................92

3.6.4.6 Trace Buffer Mode Bit (M)...........................................................92

3.6.4.7 Trace Buffer Enable Bit (E) .........................................................92

3.6.5 Debug Exceptions.................................................................................92

3.6.5.1 Halt Mode ................................................................................93

3.6.5.2 Monitor Mode............................................................................94

3.6.6 HW Breakpoint Resources........................................................................95

3.6.6.1 Instruction Breakpoints..............................................................95

3.6.6.2 Data Breakpoints ......................................................................96

3.6.7 Software Breakpoints ..............................................................................98

3.6.8 Transmit/Receive Control Register ............................................................98

3.6.8.1 RX Register Ready Bit (RR).......................................................99

3.6.8.2 Overflow Flag (OV)..................................................................100

3.6.8.3 Download Flag (D)...................................................................100

3.6.8.4 TX Register Ready Bit (TR)..........................................................100

3.6.8.5 Conditional Execution Using TXRXCTRL.......................................101

3.6.9 Transmit Register .................................................................................101

3.6.10 Receive Register...................................................................................102

3.6.11 Debug JTAG Access...............................................................................102

3.6.11.1 SELDCSR JTAG Command ........................................................102


3.6.11.2 SELDCSR JTAG Register........................................................... 103

3.6.11.3 DBGTX JTAG Command ........................................................... 105

3.6.11.4 DBGTX JTAG Register.............................................................. 105

3.6.11.5 DBGRX JTAG Command........................................................... 106

3.6.11.6 DBGRX JTAG Register.............................................................. 106

3.6.11.7 Debug JTAG Data Register Reset Values..................................... 109

3.6.12 Trace Buffer ........................................................................................ 109

3.6.12.1 Trace Buffer CP Registers......................................................... 109

3.6.13 Trace Buffer Entries.............................................................................. 111

3.6.13.1 Message Byte........................................................................ 111

3.6.13.2 Trace Buffer Usage.................................................................. 114

3.6.14 Downloading Code in ICache.................................................................. 116

3.6.14.1 LDIC JTAG Command .............................................................. 116

3.6.14.2 LDIC JTAG Data Register ......................................................... 117

3.6.14.3 LDIC Cache Functions.............................................................. 118

3.6.14.4 Loading IC During Reset.......................................................... 119

3.6.14.5 Dynamically Loading IC After Reset........................................... 123

3.6.14.6 Mini-Instruction Cache Overview............................................... 126

3.6.15 Halt Mode Software Protocol.................................................................. 126

3.6.15.1 Starting a Debug Session............................................................ 126

3.6.15.2 Implementing a Debug Handler....................................................... 128

3.6.15.3 Ending a Debug Session .......................................................... 131

3.6.16 Software Debug Notes and Errata........................................................... 132

3.7 Performance Monitoring ................................................................................... 133

3.7.1 Overview ............................................................................................ 133

3.7.2 Register Description.............................................................................. 134

3.7.2.1 Clock Counter (CCNT) ............................................................. 134

3.7.2.2 Performance Count Registers.................................................... 134

3.7.2.3 Performance Monitor Control Register........................................ 135

3.7.2.4 Interrupt Enable Register......................................................... 136

3.7.2.5 Overflow Flag Status Register....................................................... 136

3.7.2.6 Event Select Register............................................................... 137

3.7.3 Managing the Performance Monitor......................................................... 138

3.7.4 Performance Monitoring Events.............................................................. 139

3.7.4.1 Instruction Cache Efficiency Mode ............................................. 140

3.7.4.2 Data Cache Efficiency Mode...................................................... 140

3.7.4.3 Instruction Fetch Latency Mode ................................................ 140

3.7.4.4 Data/Bus Request Buffer Full Mode............................................ 141

3.7.4.5 Stall/Write-Back Statistics........................................................ 141

3.7.4.6 Instruction TLB Efficiency Mode ................................................ 142

3.7.4.7 Data TLB Efficiency Mode......................................................... 142

3.7.5 Multiple Performance Monitoring Run Statistics......................................... 142

3.7.6 Examples ............................................................................................ 142

3.8 Programming Model......................................................................................... 144

3.8.1 ARM* Architecture Compatibility............................................................. 144

3.8.2 ARM* Architecture Implementation Options.................................................... 144

3.8.2.1 Big Endian versus Little Endian................................................. 144

3.8.2.2 26-Bit Architecture.............................................................. 145

3.8.2.3 Thumb............................................................................ 145

3.8.2.4 ARM* DSP-Enhanced Instruction Set.................................................. 145

3.8.2.5 Base Register Update.............................................................. 145

3.8.3 Extensions to ARM* Architecture.............................................................. 146

3.8.3.1 DSP Coprocessor 0 (CP0)......................................................... 146

3.8.3.2 New Page Attributes................................................................ 152

3.8.3.3 Additions to CP15 Functionality................................................. 153

3.8.3.4 Event Architecture................................................................. 154

3.9 Performance Considerations.............................................................................. 159


3.9.1 Interrupt Latency..................................................................................159

3.9.2 Branch Prediction..................................................................................160

3.9.3 Addressing Modes.................................................................................160

3.9.4 Instruction Latencies.............................................................................160

3.9.4.1 Performance Terms .................................................................160

3.9.4.2 Branch Instruction Timings.......................................................162

3.9.4.3 Data Processing Instruction Timings...........................................162

3.9.4.4 Multiply Instruction Timings......................................................163

3.9.4.5 Saturated Arithmetic Instructions ..............................................165

3.9.4.6 Status Register Access Instructions............................................165

3.9.4.7 Load/Store Instructions............................................................165

3.9.4.8 Semaphore Instructions............................................................166

3.9.4.9 Coprocessor Instructions..........................................................166

3.9.4.10 Miscellaneous Instruction Timing ...............................................167

3.9.4.11 Thumb Instructions .................................................................167

3.10 Optimization Guide ..........................................................................................167

3.10.1 Introduction.........................................................................................167

3.10.1.1 About This Section ..................................................................168

3.10.2 Processors’ Pipeline...............................................................................168

3.10.2.1 General Pipeline Characteristics.................................................168

3.10.2.2 Instruction Flow Through the Pipeline.........................................170

3.10.2.3 Main Execution Pipeline............................................................171

3.10.2.4 Memory Pipeline.....................................................................172

3.10.2.5 Multiply/Multiply Accumulate (MAC) Pipeline.........................................173

3.10.3 Basic Optimizations...............................................................................173

3.10.3.1 Conditional Instructions ...........................................................173

3.10.3.2 Bit Field Manipulation..............................................................178

3.10.3.3 Optimizing the Use of Immediate Values ....................................178

3.10.3.4 Optimizing Integer Multiply and Divide.......................................178

3.10.3.5 Effective Use of Addressing Modes.............................................179

3.10.4 Cache and Prefetch Optimizations ...........................................................180

3.10.4.1 Instruction Cache....................................................................180

3.10.4.2 Data and Mini Cache................................................................181

3.10.4.3 Cache Considerations................................................................184

3.10.4.4 Prefetch Considerations............................................................185

3.10.5 Instruction Scheduling...........................................................................191

3.10.5.1 Scheduling Loads ....................................................................191

3.10.5.2 Scheduling Data Processing Instructions.....................................195

3.10.5.3 Scheduling Multiply Instructions................................................196

3.10.5.4 Scheduling SWP and SWPB Instructions .....................................197

3.10.5.5 Scheduling the MRA and MAR Instructions (MRRC/MCRR) .............197

3.10.5.6 Scheduling the MIA and MIAPH Instructions................................198

3.10.5.7 Scheduling MRS and MSR Instructions........................................198

3.10.5.8 Scheduling CP15 Coprocessor Instructions..................................199

3.10.6 Optimizing C Libraries ...........................................................................199

3.10.7 Optimizations for Size ...........................................................................199

3.10.7.1 Space/Performance Trade Off ...................................................199

4.0 Network Processor Engines (NPE) .........................................................................202

5.0 Internal Bus...........................................................................................................204

5.1 Internal Bus Arbiters...........................................................................204

5.1.1 Priority Mechanism................................................................................205

5.2 Memory Map...................................................................................................205

6.0 PCI Controller ........................................................................................................208

6.1 PCI Controller Configured as Host......................................................................213

6.1.1 Example: Generating a PCI Configuration Write and Read ..........................216

6.2 PCI Controller Configured as Option ...................................................................218


6.3 Initializing PCI Controller Configuration and Status Registers for Data Transactions.. 219

6.3.1 Example: AHB Memory Base Address Register, AHB I/O Base Address Register, and PCI Memory Base Address Register.......... 220

6.3.2 Example: PCI Memory Base Address Register and South-AHB Translation .... 222

6.4 Initializing the PCI Controller Configuration Registers........................................... 222

6.5 PCI Controller South AHB Transactions ............................................................... 225

6.6 PCI Controller Functioning as Bus Initiator...................................................... 226

6.6.1 PCI Byte Enables.................................................................................. 226

6.6.2 Initiated Type-0 Read Transaction................................................................. 227

6.6.3 Initiated Type-0 Write Transaction.......................................................... 227

6.6.4 Initiated Type-1 Read Transaction................................................................. 228

6.6.5 Initiated Type-1 Write Transaction.......................................................... 229

6.6.6 Initiated Memory Read Transaction................................................................ 229

6.6.7 Initiated Memory Write Transaction ........................................................ 230

6.6.8 Initiated I/O Read Transaction ............................................................... 231

6.6.9 Initiated I/O Write Transaction............................................................... 231

6.6.10 Initiated Burst Memory Read Transaction................................................. 232

6.6.11 Initiated Burst Memory Write Transaction ................................................ 233

6.7 PCI Controller Functioning as Bus Target ............................................................ 234

6.8 PCI Controller DMA Controller ........................................................................... 234

6.8.1 AHB to PCI DMA Channel Operation........................................................ 238

6.8.2 PCI to AHB DMA Channel Operation........................................................ 238

6.9 PCI Controller Door Bell Register....................................................................... 239

6.10 PCI Controller Interrupts.................................................................................. 240

6.10.1 PCI Interrupt Generation....................................................................... 240

6.10.2 Internal Interrupt Generation................................................................. 240

6.11 PCI Controller Endian Control............................................................................ 241

6.12 PCI Controller Clock and Reset Generation.......................................................... 248

6.13 PCI RCOMP Circuitry........................................................................................ 249

6.14 Register Descriptions....................................................................................... 249

6.14.1 PCI Configuration Registers ................................................................... 249

6.14.1.1 Device ID/Vendor ID Register ................................................... 250

6.14.1.2 Status Register/Control Register................................................ 250

6.14.1.3 Class Code/Revision ID Register ............................................... 252

6.14.1.4 BIST/Header Type/Latency Timer/Cache Line Register................. 252

6.14.1.5 Base Address 0 Register.......................................................... 253

6.14.1.6 Base Address 1 Register.......................................................... 254

6.14.1.7 Base Address 2 Register.......................................................... 254

6.14.1.8 Base Address 3 Register.......................................................... 255

6.14.1.9 Base Address 4 Register.......................................................... 255

6.14.1.10 Base Address 5 Register......................................................... 256

6.14.1.11 Subsystem ID/Subsystem Vendor ID Register...................................... 256

6.14.1.12 Max_Lat, Min_Gnt, Interrupt Pin, and Interrupt Line Register................... 257

6.14.1.13 Retry Timeout/TRDY Timeout Register............................................ 257

6.14.2 PCI Controller Configuration and Status Registers..................................... 258

6.14.2.1 PCI Controller Non-pre-fetch Address Register............................ 259

6.14.2.2 PCI Controller Non-pre-fetch Command/Byte Enables Register ...... 259

6.14.2.3 PCI Controller Non-Pre-fetch Write Data Register ........................ 260

6.14.2.4 PCI Controller Non-Pre-fetch Read Data Register......................... 260

6.14.2.5 PCI Controller Configuration Port Address/Command/Byte Enables Register......... 260

6.14.2.6 PCI Controller Configuration Port Write Data Register .................. 261

6.14.2.7 PCI Controller Configuration Port Read Data Register................... 262

6.14.2.8 PCI Controller Control and Status Register ................................. 262

6.14.2.9 PCI Controller Interrupt Status Register..................................... 263

6.14.2.10 PCI Controller Interrupt Enable Register..................................... 264

6.14.2.11 DMA Control Register...............................................................265

6.14.2.12 AHB Memory Base Address Register...........................................266

6.14.2.13 AHB I/O Base Address Register.................................................266

6.14.2.14 PCI Memory Base Address Register............................................267

6.14.2.15 AHB Doorbell Register..............................................................267

6.14.2.16 PCI Doorbell Register...............................................................268

6.14.2.17 AHB to PCI DMA AHB Address Register 0....................................268

6.14.2.18 AHB to PCI DMA PCI Address Register 0.....................................269

6.14.2.19 AHB to PCI DMA Length Register 0 ............................................269

6.14.2.20 AHB to PCI DMA AHB Address Register 1....................................270

6.14.2.21 AHB to PCI DMA PCI Address Register 1.....................................270

6.14.2.22 AHB to PCI DMA Length Register 1 ............................................270

6.14.2.23 PCI to AHB DMA AHB Address Register 0....................................271

6.14.2.24 PCI to AHB DMA PCI Address Register 0.....................................271

6.14.2.25 PCI to AHB DMA Length Register 0 ............................................272

6.14.2.26 PCI to AHB DMA AHB Address Register 1....................................272

6.14.2.27 PCI to AHB DMA PCI Address Register 1.....................................273

6.14.2.28 PCI to AHB DMA Length Register 1 ............................................273

7.0 SDRAM Controller .................................................................................................. 276

7.1 SDRAM Memory Space .....................................................................................279

7.2 Initializing the SDRAM Controller .......................................................................279

7.2.1 Initializing the SDRAM........................................................................... 283

7.3 SDRAM Memory Accesses .................................................................................285

7.3.1 Read Transfer ......................................................................................285

7.3.1.1 Read Cycle Timing (CAS Latency of Two Cycles)..........................285

7.3.1.2 Read Burst Transfer (Interleaved AHB Reads) .............................286

7.3.2 Write Transfer......................................................................................286

7.3.2.1 Write Transfer.........................................................................286

7.4 Register Description.........................................................................287

7.4.1 Configuration Register........................................................................287

7.4.2 Refresh Register..............................................................................288

7.4.3 Instruction Register ..............................................................................288

8.0 Expansion Bus Controller .......................................................................................292

8.1 Expansion Bus Address Space ...........................................................................293

8.2 Chip Select Address Allocation...........................................................................294

8.3 Address and Data Byte Steering ........................................................................295

8.4 Expansion Bus Connections...............................................................................297

8.5 Expansion Bus Interface Configuration................................................................298

8.6 Using I/O Wait ................................................................................................301

8.7 Special Design Knowledge for Using HPI mode.....................................................303

8.8 Expansion Bus Interface Access Timing Diagrams.................................................305

8.8.1 Intel® Multiplexed-Mode Write Access .....................................................305

8.8.2 Intel® Multiplexed-Mode Read Access......................................................306

8.8.3 Intel® Simplex-Mode Write Access..........................................................307

8.8.4 Intel® Simplex-Mode Read Access...........................................................308

8.8.5 Motorola* Multiplexed-Mode Write Access ................................................309

8.8.6 Motorola* Multiplexed-Mode Read Access.................................................310

8.8.7 Motorola* Simplex-Mode Write Access.....................................................311

8.8.8 Motorola* Simplex-Mode Read Access .....................................................312

8.8.9 TI* HPI-8 Write Access..........................................................................313

8.8.10 TI* HPI-8 Read Access ..........................................................................314

8.8.11 TI* HPI-16, Multiplexed-Mode Write Access..............................................315

8.8.12 TI* HPI-16, Multiplexed-Mode Read Access..............................................316

8.8.13 TI* HPI-16 Simplex-Mode Write Access ...................................................317

8.8.14 TI* HPI-16 Simplex-Mode Read Access....................................................318

8.9 Register Descriptions....................................................................................... 319

8.9.1 Timing and Control Registers for Chip Select 0 ......................................... 319

8.9.2 Timing and Control Registers for Chip Select 1 ......................................... 319

8.9.3 Timing and Control Registers for Chip Select 2 ......................................... 320

8.9.4 Timing and Control Registers for Chip Select 3 ......................................... 320

8.9.5 Timing and Control Registers for Chip Select 4 ......................................... 320

8.9.6 Timing and Control Registers for Chip Select 5 ......................................... 321

8.9.7 Timing and Control Registers for Chip Select 6 ......................................... 321

8.9.8 Timing and Control Registers for Chip Select 7 ......................................... 321

8.9.9 Configuration Register 0........................................................................ 322

8.9.9.1 User-Configurable Field............................................................ 324

8.9.10 Configuration Register 1........................................................................ 324

8.10 Expansion Bus Controller Performance ............................................................... 326

9.0 AHB/APB Bridge.................................................................................................... 328

10.0 Universal Asynchronous Receiver Transceiver (UART)........................................... 332

10.1 High Speed UART................................................................................... 333

10.2 Configuring the UART....................................................................................... 335

10.2.1 Setting the Baud Rate........................................................................... 335

10.2.2 Setting Data Bits/Stop Bits/Parity........................................................... 336

10.2.3 Using the Modem Control Signals ............................................................ 338

10.2.4 UART Interrupts................................................................................... 339

10.3 Transmitting and Receiving UART Data............................................................... 342

10.4 Register Descriptions....................................................................................... 344

10.4.1 Receive Buffer Register....................................................................... 345

10.4.2 Transmit Holding Register ..................................................................... 345

10.4.3 Divisor Latch Low Register..................................................................... 346

10.4.4 Divisor Latch High Register.................................................................... 346

10.4.5 Interrupt Enable Register ...................................................................... 346

10.4.6 Interrupt Identification Register ............................................................. 347

10.4.7 FIFO Control Register............................................................................ 349

10.4.8 Line Control Register ............................................................................ 350

10.4.9 Modem Control Register........................................................................ 352

10.4.10 Line Status Register.............................................................................. 353

10.4.11 Modem Status Register ......................................................................... 354

10.4.12 Scratch-Pad Register ............................................................................ 355

10.4.13 Infrared Selection Register .................................................................... 356

10.5 Console UART................................................................................................. 357

10.5.1 Register Description.............................................................................. 357

10.5.1.1 Receive Buffer Register............................................................ 358

10.5.1.2 Transmit Holding Register........................................................ 358

10.5.1.3 Divisor Latch Low Register ....................................................... 359

10.5.1.4 Divisor Latch High Register ...................................................... 359

10.5.1.5 Interrupt Enable Register......................................................... 360

10.5.1.6 Interrupt Identification Register................................................ 360

10.5.1.7 FIFO Control Register.............................................................. 362

10.5.1.8 Line Control Register............................................................... 363

10.5.1.9 Modem Control Register........................................................... 365

10.5.1.10 Line Status Register................................................................. 366

10.5.1.11 Modem Status Register.............................................................. 367

10.5.1.12Scratch-Pad Register............................................................... 368

10.5.1.13Infrared Selection Register....................................................... 369

11.0 Internal Bus Performance Monitoring Unit (IBPMU) .............................................. 372

11.1 Initializing the IBPMU....................................................................................... 372

11.2 Using the IBPMU ................................................................................... 373

11.2.1 Monitored Events South AHB and North AHB ............................................375

11.2.2 Monitored SDRAM Events.......................................................................377

11.2.3 Cycle Count .........................................................................................377

11.3 Register Descriptions.........................................................................378

11.3.1 Event Select Register ............................................................................378

11.3.2 PMU Status Register (PSR).....................................................................381

11.3.3 Programmable Event Counters (PEC1).....................................................381

11.3.4 Programmable Event Counters (PEC2).....................................................382

11.3.5 Programmable Event Counters (PEC3).....................................................382

11.3.6 Programmable Event Counters (PEC4).....................................................382

11.3.7 Programmable Event Counters (PEC5).....................................................383

11.3.8 Programmable Event Counters (PEC6).....................................................383

11.3.9 Programmable Event Counters (PEC7).....................................................384

11.3.10 Previous Master/Slave Register (PSMR) ...................................................384

12.0 General Purpose Input/Output (GPIO) ..................................................................386

12.1 Using GPIO as Inputs/Outputs...........................................................................386

12.2 Using GPIO as Interrupt Inputs..........................................................................387

12.3 Using GPIO 14 and GPIO 15 as Clocks ................................................................389

12.4 Register Description..........................................................................391

12.4.1 GPIO Output Register............................................................................391

12.4.2 GPIO Output Enable Register..................................................................392

12.4.3 GPIO Input Register..............................................................................392

12.4.4 GPIO Interrupt Status Register...............................................................393

12.4.5 GPIO Interrupt Type Register 1................................................................393

12.4.6 GPIO Interrupt Type Register 2...............................................................394

12.4.7 GPIO Clock Register..............................................................................395

13.0 Interrupt Controller ...............................................................................................398

13.1 Interrupt Priority .............................................................................................398

13.2 Assigning FIQ or IRQ Interrupts.........................................................................399

13.3 Enabling and Disabling Interrupts ......................................................................399

13.4 Reading Interrupt Status ..................................................................................400

13.5 Interrupt Controller Register Description.............................................................401

13.5.1 Interrupt Status Register.......................................................................402

13.5.2 Interrupt-Enable Register ......................................................................404

13.5.3 Interrupt Select Register........................................................................404

13.5.4 IRQ Status Register ...........................................................................404

13.5.5 FIQ Status Register............................................................................404

13.5.6 Interrupt Priority Register......................................................................405

13.5.7 IRQ Highest-Priority Register..................................................................405

13.5.8 FIQ Highest-Priority Register ..................................................................406

14.0 Timers ...................................................................................................................408

14.1 Watch-Dog Timer.............................................................................................408

14.2 Time-Stamp Timer...........................................................................................409

14.3 General-Purpose Timers ...................................................................................409

14.4 Timer Register Definition ..................................................................................411

14.4.1 Time-Stamp Timer................................................................................ 411

14.4.2 General-Purpose Timer 0 .......................................................................411

14.4.3 General-Purpose Timer 0 Reload...............................................................412

14.4.4 General-Purpose Timer 1 .......................................................................412

14.4.5 General-Purpose Timer 1 Reload...............................................................413

14.4.6 Watch-Dog Timer .................................................................................413

14.4.7 Watch-Dog Enable Register....................................................................414

14.4.8 Watch-Dog Key Register........................................................................414

14.4.9 Timer Status........................................................................................ 415

15.0 Ethernet MAC A ..................................................................................................... 416

15.1 Ethernet Coprocessor............................................................................. 417

15.1.1 Ethernet Coprocessor APB Interface......................................................... 418

15.1.2 Ethernet Coprocessor NPE Interface........................................................ 418

15.1.3 Ethernet Coprocessor MDIO Interface ..................................................... 418

15.1.4 Transmitting Ethernet Frames with MII Interfaces..................................... 420

15.1.5 Receiving Ethernet Frames with MII Interfaces......................................... 423

15.1.6 General Ethernet Coprocessor Configuration ............................................ 425

15.2 Register Descriptions....................................................................................... 427

15.2.1 Transmit Control 1 ............................................................................... 428

15.2.2 Transmit Control 2 ............................................................................... 429

15.2.3 Receive Control 1................................................................................ 429

15.2.4 Receive Control 2................................................................................ 430

15.2.5 Random Seed...................................................................................... 430

15.2.6 Threshold For Partially Empty................................................................. 431

15.2.7 Threshold For Partially Full..................................................................... 431

15.2.8 Buffer Size For Transmit........................................................................ 431

15.2.9 Transmit Deferral Parameters ................................................................ 432

15.2.10 Receive Deferral Parameters................................................................ 432

15.2.11 Transmit Two Part Deferral Parameters 1 ................................................ 433

15.2.12 Transmit Two Part Deferral Parameters 2 ................................................ 433

15.2.13 Slot Time ............................................................................................ 433

15.2.14 MDIO Commands Registers ................................................................... 434

15.2.15 MDIO Command 1................................................................................ 434

15.2.16 MDIO Command 2................................................................................ 434

15.2.17 MDIO Command 3................................................................................ 435

15.2.18 MDIO Command 4................................................................................ 435

15.2.19 MDIO Status Registers........................................................................ 435

15.2.20 MDIO Status 1.................................................................................... 436

15.2.21 MDIO Status 2.................................................................................... 436

15.2.22 MDIO Status 3.................................................................................... 436

15.2.23 MDIO Status 4.................................................................................... 436

15.2.24 Address Mask Registers....................................................................... 437

15.2.25 Address Mask 1.................................................................................... 437

15.2.26 Address Mask 2.................................................................................... 438

15.2.27 Address Mask 3.................................................................................... 438

15.2.28 Address Mask 4.................................................................................... 438

15.2.29 Address Mask 5.................................................................................... 438

15.2.30 Address Mask 6.................................................................................... 439

15.2.31 Address Registers................................................................................. 439

15.2.32 Address 1............................................................................................ 440

15.2.33 Address 2............................................................................................ 440

15.2.34 Address 3............................................................................................ 440

15.2.35 Address 4............................................................................................ 440

15.2.36 Address 5............................................................................................ 441

15.2.37 Address 6............................................................................................ 441

15.2.38 Threshold for Internal Clock.................................................................. 442

15.2.39 Unicast Address Registers.................................................................... 442

15.2.40 Unicast Address 1................................................................................ 443

15.2.41 Unicast Address 2................................................................................ 443

15.2.42 Unicast Address 3................................................................................ 443

15.2.43 Unicast Address 4................................................................................ 443

15.2.44 Unicast Address 5................................................................................ 444

15.2.45 Unicast Address 6 .................................................................................444

15.2.46 Core Control ........................................................................................444

16.0 Ethernet MAC B ......................................................................................................446

17.0 High-Speed Serial Interfaces .................................................................................448

17.1 High-Speed Serial Interface Receive Operation ....................................................448

17.2 High-Speed Serial Interface Transmit Operation...................................................449

17.3 Configuration of the High-Speed Serial Interface..................................................450

17.4 Obtaining High-Speed, Serial Synchronization......................................................453

17.5 HSS Registers and Clock Configuration ...............................................................454

17.5.1 HSS Clock and Jitter..............................................................................455

17.5.2 Overview of HSS Clock Configuration.......................................................455

17.6 HSS Supported Framing Protocols......................................................................457

17.6.1 T1 ......................................................................................................457

17.6.2 E1 ......................................................................................................459

17.6.3 MVIP...................................................................................................460

17.6.3.1 MVIP Using 2.048-Mbps Backplane..............................................461

17.6.3.2 MVIP Using 4.096-Mbps Backplane ............................................463

17.6.3.3 MVIP Using 8.192-Mbps Backplane ............................................464

18.0 Universal Serial Bus (USB) v1.1 Device Controller..................................................468

18.1 USB Overview.................................................................................................468

18.2 Device Configuration........................................................................................469

18.3 USB Operation ................................................................................................470

18.3.1 Signalling Levels...................................................................................470

18.3.2 Bit Encoding.........................................................................................471

18.3.3 Field Formats.......................................................................................472

18.3.4 Packet Formats .................................................................................... 473

18.3.4.1 Token Packet Type ..................................................................474

18.3.4.2 Start-of-Frame Packet Type......................................................474

18.3.4.3 Data Packet Type....................................................................474

18.3.4.4 Handshake Packet Type ...........................................................475

18.3.5 Transaction Formats..............................................................................475

18.3.5.1 Bulk Transaction Type..............................................................475

18.3.5.2 Isochronous Transaction Type...................................................476

18.3.5.3 Control Transaction Type..........................................................476

18.3.5.4 Interrupt Transaction Type .......................................................477

18.3.6 UDC Device Requests............................................................................477

18.3.7 UDC Configuration ................................................................................478

18.4 UDC Hardware Connections...............................................................................479

18.4.1 Self-Powered Device .............................................................................479

18.4.2 Bus-Powered Devices............................................................................479

18.5 Register Descriptions.........................................................................479

18.5.1 UDC Control Register (UDCCR)...............................................................481

18.5.1.1 UDC Enable.............................................................................481

18.5.1.2 UDC Active.............................................................................481

18.5.1.3 UDC Resume (RSM)...................................................................481

18.5.1.4 Resume Interrupt Request (RESIR)............................................481

18.5.1.5 Suspend Interrupt Request (SUSIR)...........................................482

18.5.1.6 Suspend/Resume Interrupt Mask (SRM).....................................482

18.5.1.7 Reset Interrupt Request (RSTIR)...............................................482

18.5.1.8 Reset Interrupt Mask (REM)......................................................482

18.5.2 UDC Endpoint 0 Control/Status Register (UDCCS0) ...................................483

18.5.2.1 OUT Packet Ready (OPR)..........................................................483

18.5.2.2 IN Packet Ready (IPR) .............................................................483

18.5.2.3 Flush Tx FIFO (FTF).................................................................484

18.5.2.4 Device Remote Wake-Up Feature (DRWF)...................................484

18.5.2.5 Sent Stall (SST)...................................................................... 484

18.5.2.6 Force Stall (FST).................................................................... 484

18.5.2.7 Receive FIFO Not Empty (RNE)................................................. 484

18.5.2.8 Setup Active (SA) ................................................................... 484

18.5.3 UDC Endpoint 1 Control/Status Register (UDCCS1)................................... 485

18.5.3.1 Transmit FIFO Service (TFS)..................................................... 485

18.5.3.2 Transmit Packet Complete (TPC)............................................... 486

18.5.3.3 Flush Tx FIFO (FTF)................................................................. 486

18.5.3.4 Transmit Underrun (TUR)......................................................... 486

18.5.3.5 Sent STALL (SST)................................................................... 486

18.5.3.6 Force STALL (FST) .................................................................. 486

18.5.3.7 Bit 6 Reserved........................................................................ 487

18.5.3.8 Transmit Short Packet (TSP) .................................................... 487

18.5.4 UDC Endpoint 2 Control/Status Register (UDCCS2)................................... 487

18.5.4.1 Receive FIFO Service (RFS)...................................................... 488

18.5.4.2 Receive Packet Complete (RPC)................................................ 488

18.5.4.3 Bit 2 Reserved........................................................................ 488

18.5.4.4 Bit 3 Reserved........................................................................ 488

18.5.4.5 Sent Stall (SST)...................................................................... 488

18.5.4.6 Force Stall (FST)..................................................................... 488

18.5.4.7 Receive FIFO Not Empty (RNE)................................................. 488

18.5.4.8 Receive Short Packet (RSP)...................................................... 489

18.5.5 UDC Endpoint 3 Control/Status Register (UDCCS3)................................... 490

18.5.5.1 Transmit FIFO Service (TFS)..................................................... 490

18.5.5.2 Transmit Packet Complete (TPC)............................................... 490

18.5.5.3 Flush Tx FIFO (FTF)................................................................. 490

18.5.5.4 Transmit Underrun (TUR)......................................................... 490

18.5.5.5 Bit 4 Reserved........................................................................ 490

18.5.5.6 Bit 5 Reserved........................................................................ 490

18.5.5.7 Bit 6 Reserved........................................................................ 490

18.5.5.8 Transmit Short Packet (TSP) .................................................... 491

18.5.6 UDC Endpoint 4 Control/Status Register (UDCCS4)................................... 491

18.5.6.1 Receive FIFO Service (RFS)...................................................... 491

18.5.6.2 Receive Packet Complete (RPC)................................................ 492

18.5.6.3 Receive Overflow (ROF)........................................................... 492

18.5.6.4 Bit 3 Reserved........................................................................ 492

18.5.6.5 Bit 4 Reserved........................................................................ 492

18.5.6.6 Bit 5 Reserved........................................................................ 492

18.5.6.7 Receive FIFO Not Empty (RNE)................................................. 492

18.5.6.8 Receive Short Packet (RSP)...................................................... 492

18.5.7 UDC Endpoint 5 Control/Status Register (UDCCS5)................................... 493

18.5.7.1 Transmit FIFO Service (TFS)..................................................... 493

18.5.7.2 Transmit Packet Complete (TPC)............................................... 493

18.5.7.3 Flush Tx FIFO (FTF)................................................................. 494

18.5.7.4 Transmit Underrun (TUR)......................................................... 494

18.5.7.5 Sent STALL (SST)................................................................... 494

18.5.7.6 Force STALL (FST).................................................................. 494

18.5.7.7 Bit 6 Reserved........................................................................ 494

18.5.7.8 Transmit Short Packet (TSP) .................................................... 495

18.5.8 UDC Endpoint 6 Control/Status Register (UDCCS6)................................... 495

18.5.8.1 Transmit FIFO Service (TFS)..................................................... 496

18.5.8.2 Transmit Packet Complete (TPC)............................................... 496

18.5.8.3 Flush Tx FIFO (FTF)................................................................. 496

18.5.8.4 Transmit Underrun (TUR)......................................................... 496

18.5.8.5 Sent STALL (SST)................................................................... 496

18.5.8.6 Force STALL (FST).................................................................. 496

18.5.8.7 Bit 6 Reserved........................................................................ 497

18.5.8.8 Transmit Short Packet (TSP) .................................................... 497

18.5.9 UDC Endpoint 7 Control/Status Register (UDCCS7)................................... 498

18.5.9.1 Receive FIFO Service (RFS)..................................................... 498

18.5.9.2 Receive Packet Complete (RPC)............................................... 498

18.5.9.3 Bit 2 Reserved........................................................................ 498

18.5.9.4 Bit 3 Reserved........................................................................ 498

18.5.9.5 Sent Stall (SST)...................................................................... 498

18.5.9.6 Force Stall (FST)..................................................................... 498

18.5.9.7 Receive FIFO Not Empty (RNE)................................................. 499

18.5.9.8 Receive Short Packet (RSP)...................................................... 499

18.5.10 UDC Endpoint 8 Control/Status Register (UDCCS8)................................. 500

18.5.10.1 Transmit FIFO Service (TFS)...................................................500

18.5.10.2 Transmit Packet Complete (TPC) ............................................500

18.5.10.3 Flush Tx FIFO (FTF)..............................................................500

18.5.10.4 Transmit Underrun (TUR)......................................................500

18.5.10.5 Bit 4 Reserved.....................................................................500

18.5.10.6 Bit 5 Reserved.....................................................................501

18.5.10.7 Bit 6 Reserved.....................................................................501

18.5.10.8 Transmit Short Packet (TSP)...................................................501

18.5.11 UDC Endpoint 9 Control/Status Register (UDCCS9).................................502

18.5.11.1 Receive FIFO Service (RFS) ...................................................502

18.5.11.2 Receive Packet Complete (RPC)..............................................502

18.5.11.3 Receive Overflow (ROF) ........................................................502

18.5.11.4 Bit 3 Reserved.....................................................................502

18.5.11.5 Bit 4 Reserved.....................................................................502

18.5.11.6 Bit 5 Reserved......................................................................502

18.5.11.7 Receive FIFO Not Empty (RNE)...............................................502

18.5.11.8 Receive Short Packet (RSP)....................................................502

18.5.12 UDC Endpoint 10 Control/Status Register (UDCCS10)..............................503

18.5.12.1 Transmit FIFO Service (TFS)...................................................503

18.5.12.2 Transmit Packet Complete (TPC) .............................................503

18.5.12.3 Flush Tx FIFO (FTF).............................................................. 504

18.5.12.4 Transmit Underrun (TUR)........................................................ 504

18.5.12.5 Sent STALL (SST)................................................................. 504

18.5.12.6 Force STALL (FST)................................................................ 504

18.5.12.7 Bit 6 Reserved......................................................................504

18.5.12.8 Transmit Short Packet (TSP)...................................................505

18.5.13 UDC Endpoint 11 Control/Status Register (UDCCS11)............................... 505

18.5.13.1 Transmit FIFO Service (TFS)................................................... 506

18.5.13.2 Transmit Packet Complete (TPC).............................................. 506

18.5.13.3 Flush Tx FIFO (FTF).............................................................. 506

18.5.13.4 Transmit Underrun (TUR)........................................................ 506

18.5.13.5 Sent STALL (SST)................................................................. 506

18.5.13.6 Force STALL (FST)................................................................ 506

18.5.13.7 Bit 6 Reserved......................................................................507

18.5.13.8 Transmit Short Packet (TSP)...................................................507

18.5.14 UDC Endpoint 12 Control/Status Register (UDCCS12)..............................508

18.5.14.1 Receive FIFO Service (RFS) ....................................................508

18.5.14.2 Receive Packet Complete (RPC)...............................................508

18.5.14.3 Bit 2 Reserved......................................................................508

18.5.14.4 Bit 3 Reserved......................................................................508

18.5.14.5 Sent Stall (SST).................................................................... 508

18.5.14.6 Force Stall (FST)................................................................... 509

18.5.14.7 Receive FIFO Not Empty (RNE)................................................509

18.5.14.8 Receive Short Packet (RSP)....................................................509

18.5.15 UDC Endpoint 13 Control/Status Register (UDCCS13)..............................510

18.5.15.1 Transmit FIFO Service (TFS)...................................................510

18.5.15.2 Transmit Packet Complete (TPC) .............................................510

18.5.15.3 Flush Tx FIFO (FTF)........................... .. .. .............................. ..510

18.5.15.4 Transmit Underrun (TUR) .......................................................511

18.5.15.5 Bit 4 Reserved......................................................................511

18.5.15.6 Bit 5 Reserved...................................................................... 511

18.5.15.7 Bit 6 Reserved...................................................................... 511

18.5.15.8 Transmit Short Packet (TSP) .................................................. 511

18.5.16 UDC Endpoint 14 Control/Status Register (UDCCS14).............................. 512

18.5.16.1 Receive FIFO Service (RFS).................................................... 512

18.5.16.2 Receive Packet Complete (RPC).............................................. 512

18.5.16.3 Receive Overflow (ROF)......................................................... 512

18.5.16.4 Bit 3 Reserved...................................................................... 512

18.5.16.5 Bit 4 Reserved...................................................................... 512

18.5.16.6 Bit 5 Reserved...................................................................... 512

18.5.16.7 Receive FIFO Not Empty (RNE)............................................... 513

18.5.16.8 Receive Short Packet (RSP).................................................... 513

18.5.17 UDC Endpoint 15 Control/Status Register (UDCCS15).............................. 514

18.5.17.1 Transmit FIFO Service (TFS)................................................... 514

18.5.17.2 Transmit Packet Complete (TPC)............................................. 514

18.5.17.3 Flush Tx FIFO (FTF)............................................................... 514