Intel Itanium 2 9052 (NE80549KE025LK) Dual-Core Itanium 2 Reference Manual For Software Developement and Optimization (0.9)

Dual-Core Update

to the Intel® Itanium®2 Processor

Reference Manual

For Software Development and Optimization

Revision 0.9

January 2006

Document Number: 308065-001

Notice: This document contains information on products in the design phase of development. The information here is subject to change without

notice. Do not finalize a design with this information.

INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY

ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN

INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS

ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES

RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER

INTELLECTUAL PROPERTY RIGHT. Intel products are not intended for use in medical, life saving, or life sustaining applications.

Intel may make changes to specifications and product descriptions at any time, without notice.

Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for

future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them.

The Itanium 2 processor may contain design defects or errors known as errata which may cause the product to deviate from published specifications.

Current characterized errata are available on request.

The code name “Montecito” presented in this document is only for use by Intel to identify a product, technology, or service in development, that has not

been made commercially available to the public, i.e., announced, launched or shipped. It is not a “commercial” name for products or services and is

not intended to function as a trademark.

Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order.

Copies of documents which have an order number and are referenced in this document, or other Intel literature, may be obtained by calling 1-800-

548-4725, or by visiting Intel's web site at http://www.intel.com.

Intel, Itanium, Pentium, VTune and the Intel logo are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States

and other countries.

Copyright © 2006, Intel Corporation. All rights reserved.

*Other names and brands may be claimed as the property of others.

2 Reference Manual for Software Development and Optimization

Contents

1 Introduction.........................................................................................................................9

1.1 Terminology...........................................................................................................9

1.2 Related Documentation.........................................................................................9

2 The Dual-Core Itanium 2 Processor.................................................................................11

2.1 Overview .............................................................................................................11

2.1.1 Identifying the Dual-Core Itanium 2 Processor.......................................11

2.1.2 Introducing Montecito.............................................................................12

2.2 New Instructions..................................................................................................14

2.3 Core.....................................................................................................................15

2.3.1 Instruction Slot to Functional Unit Mapping............................................15

2.3.2 Instruction Latencies and Bypasses.......................................................17

2.3.3 Caches and Cache Management Changes ...........................................18

2.4 Threading ............................................................................................................20

2.4.1 Sharing Core Resources........................................................................21

2.4.2 Tailoring Thread Switch Behavior ..........................................................23

2.4.3 Sharing Cache and Memory Resources ................................................24

2.5 Dual Cores ..........................................................................................................25

2.5.1 Fairness and Arbitration .........................................................................27

2.6 Intel® Virtualization Technology ..........................................................................27

2.7 Tips and Tricks....................................................................................................27

2.7.1 Cross Modifying Code............................................................................27

2.7.2 ld.bias and lfetch.excl.............................................................................27

2.7.3 L2D Victimization Optimization...............................................................27

2.7.4 Instruction Cache Coherence Optimization............................................28

2.8 IA-32 Execution...................................................................................................28

2.9 Brand Information................................................................................................28

3 Performance Monitoring...................................................................................................31

3.1 Introduction to Performance Monitoring ..............................................................31

3.2 Performance Monitor Programming Models........................................................31

3.2.1 Workload Characterization.....................................................................32

3.2.2 Profiling ..................................................................................................35

3.2.3 Event Qualification .................................................................................37

3.2.4 References.............................................................................................43

3.3 Performance Monitor State .................................................................................43

3.3.1 Performance Monitor Control and Accessibility......................................46

3.3.2 Performance Counter Registers.............................................................46

3.3.3 Performance Monitor Event Counting Restrictions Overview ................49

3.3.4 Performance Monitor Overflow Status Registers (PMC0,1,2,3).............49

3.3.5 Instruction Address Range Matching .....................................................50

3.3.6 Opcode Match Check (PMC32,33,34,35,36) .........................................53

3.3.7 Data Address Range Matching (PMC41)...............................................56

3.3.8 Instruction EAR (PMC37/PMD32,33,36)................................................57

3.3.9 Data EAR (PMC40, PMD32,33,36)........................................................60

Reference Manual for Software Development and Optimization 3

3.3.10 Execution Trace Buffer (PMC

3.3.11 Interrupts................................................................................................72

3.3.12 Processor Reset, PAL Calls, and Low Power State...............................73

4 Performance Monitor Events............................................................................................75

4.1 Introduction .........................................................................................................75

4.2 Categorization of Events.....................................................................................75

4.2.1 Hyper-Threading and Event Types ........................................................76

4.3 Basic Events .......................................................................................................77

4.4 Instruction Dispersal Events................................................................................77

4.5 Instruction Execution Events...............................................................................78

4.6 Stall Events .........................................................................................................79

4.7 Branch Events.....................................................................................................80

4.8 Memory Hierarchy...............................................................................................81

4.8.1 L1 Instruction Cache and Prefetch Events.............................................83

4.8.2 L1 Data Cache Events ...........................................................................84

4.8.3 L2 Instruction Cache Events ..................................................................86

4.8.4 L2 Data Cache Events ...........................................................................87

4.8.5 L3 Cache Events....................................................................................91

4.9 System Events ....................................................................................................92

4.10 TLB Events..........................................................................................................93

4.11 System Bus Events.............................................................................................95

4.11.1 System Bus Conventions .......................................................................98

4.11.2 Extracting Memory Latency from Montecito Performance Counters......98

4.12 RSE Events.......................................................................................................100

4.13 Hyper-Threading Events ...................................................................................101

4.14 Performance Monitors Ordered by Event Code................................................102

4.15 Performance Monitor Event List........................................................................108

39,42

,PMD

48-63,38,39

)................................65

Figures

2-1 The Montecito Processor ....................................................................................14

2-2 Urgency and Thread Switching...........................................................................23

2-3 The Arbiter and Queues......................................................................................26

3-1 Time-Based Sampling.........................................................................................32

3-2 Itanium® Processor Family Cycle Accounting.....................................................34

3-3 Event Histogram by Program Counter ................................................................36

3-4 Montecito Processor Event Qualification ............................................................ 38

3-5 Instruction Tagging Mechanism in the Montecito Processor...............................39

3-6 Single Process Monitor .......................................................................................42

3-7 Multiple Process Monitor.....................................................................................42

3-8 System Wide Monitor..........................................................................................43

3-9 Montecito Processor Performance Monitor Register Mode ................................45

3-10 Processor Status Register (PSR) Fields for Performance Monitoring ................46

3-11 Montecito Processor Generic PMC Registers (PMC4-15) ..................................47

3-12 Montecito Processor Generic PMD Registers (PMD4-15) ..................................48

4 Reference Manual for Software Development and Optimization

3-13 Montecito Processor Performance Monitor Overflow Status

Registers (PMC0,1,2,3).......................................................................................49

3-14 Instruction Address Range Configuration Register (PMC38)..............................51

3-15 Opcode Match Registers (PMC32,34) ................................................................54

3-16 Opcode Match Registers (PMC33,35) ................................................................54

3-17 Opcode Match Configuration Register (PMC36).................................................55

3-18 Memory Pipeline Event Constraints Configuration Register (PMC41)................57

3-19 Instruction Event Address Configuration Register (PMC37) ...............................58

3-20 Instruction Event Address Register Format (PMD34,35) ....................................58

3-21 Data Event Address Configuration Register (PMC40) ........................................60

3-22 Data Event Address Register Format (PMD32,d3,36) ........................................61

3-23 Execution Trace Buffer Configuration Register (PMC39)....................................65

3-24 Execution Trace Buffer Register Format (PMD48-63, where

PMC39.ds == 0)...................................................................................................67

3-25 Execution Trace Buffer Index Register Format (PMD38)....................................68

3-26 Execution Trace Buffer Extension Register Format (PMD39)

(PMC42.mode=‘1xx) ...........................................................................................68

3-27 IP-EAR Configuration Register (PMC42) ............................................................69

3-28 IP-EAR data format (PMD48-63, where PMC42.mode == 100 and

PMD48-63.ef =0).................................................................................................70

3-29 IP-EAR data format (PMD48-63, where PMC42.mode == 100 and

PMD48-63.ef =1).................................................................................................70

3-30 IP Trace Buffer Index Register Format (PMD38)(PMC42.mode=‘1xx) ...............71

3-31 IP Trace Buffer Extension Register Format (PMD39)

(PMC42.mode=‘1xx) ...........................................................................................71

4-1 Event Monitors in the Itanium® 2 Processor Memory Hierarchy.........................82

4-2 Extracting Memory Latency from PMUs............................................................100

Tables

2-1 Itanium® Processor Family and Model Values....................................................11

2-2 Definition Table ...................................................................................................12

2-3 New Instructions Available in Montecito..............................................................14

2-4 A-Type Instruction Port Mapping.........................................................................15

2-5 B-Type Instruction Port Mapping.........................................................................16

2-6 I-Type Instruction Port Mapping ..........................................................................16

2-7 M-Type Instruction Port Mapping ........................................................................16

2-8 Execution with Bypass Latency Summary ..........................................................18

2-9 Montecito Cache Hierarchy Summary.................................................................19

2-10 PAL_BRAND_INFO Implementation-Specific Return Values .............................28

2-11 Montecito Processor Feature Set Return Values................................................29

3-1 Average Latency per Request and Requests per Cycle

Calculation Example33

3-2 Montecito Processor EARs and Branch Trace Buffer.........................................37

3-3 Montecito Processor Event Qualification Modes.................................................40

3-4 Montecito Processor Performance Monitor Register Set ....................................44

3-5 Performance Monitor PMC Register Control Fields (PMC4-15)..........................46

3-6 Montecito Processor Generic PMC Register Fields (PMC4-15) .........................47

Reference Manual for Software Development and Optimization 5

3-7 Montecito Processor Generic PMD Register Fields............................................48

3-8 Montecito Processor Performance Monitor Overflow Register

Fields (PMC0,1,2,3) ............................................................................................49

3-9 Montecito Processor Instruction Address Range Check by

Instruction Set.....................................................................................................51

3-10 Instruction Address Range Configuration Register Fields (PMC38)...................51

3-11 Opcode Match Registers(PMC32,34) .................................................................54

3-12 Opcode Match Registers(PMC33,35) .................................................................55

3-13 Opcode Match Configuration Register Fields (PMC36)......................................55

3-14 Memory Pipeline Event Constraints Fields (PMC41) ..........................................56

3-15 Instruction Event Address Configuration Register Fields (PMC37) ....................58

3-16 Instruction EAR (PMC37) umask Field in Cache Mode

(PMC37.ct=’1x) ...................................................................................................59

3-17 Instruction EAR (PMD34,35) in Cache Mode (PMC37.ct=’1x)............................59

3-18 Instruction EAR (PMC37) umask Field in TLB Mode (PMC37.ct=00).................59

3-19 Instruction EAR (PMD34,35) in TLB Mode (PMC37.ct=‘00) ...............................60

3-20 Data Event Address Configuration Register Fields (PMC40) .............................60

3-21 Data EAR (PMC40) Umask Fields in Data Cache Mode

(PMC40.mode=00)..............................................................................................61

3-22 PMD32,33,36 Fields in Data Cache Load Miss Mode

(PMC40.mode=00)..............................................................................................62

3-23 Data EAR (PMC40) Umask Field in TLB Mode (PMC40.ct=01).........................63

3-24 PMD32,33,36 Fields in TLB Miss Mode (PMC40.mode=‘01)..............................63

3-25 PMD32,33,36 Fields in ALAT Miss Mode (PMC11.mode=‘1x) ...........................64

3-26 Execution Trace Buffer Configuration Register Fields (PMC39).........................66

3-27 Execution Trace Buffer Register Fields (PMD48-63)

(PMC42.mode=‘000)...........................................................................................67

3-28 Execution Trace Buffer Index Register Fields (PMD38) .....................................68

3-29 Execution Trace Buffer Extension Register Fields (PMD39)

(PMC42.mode=‘1xx) ...........................................................................................69

3-30 IP-EAR Configuration Register Fields (PMC42) .................................................70

3-31 IP-EAR Data Register Fields (PMD48-63) (PMC42.mode=‘1xx) ........................70

3-32 IP Trace Buffer Index Register Fields (PMD38) (PMC42.mode=‘1xx)................71

3-33 IP Trace Buffer Extension Register Fields (PMD39)

(PMC42.mode=‘1xx) ...........................................................................................72

3-34 Information Returned by PAL_PERF_MON_INFO for the

Montecito Processor ...........................................................................................73

4-1 Performance Monitors for Basic Events..............................................................77

4-2 Derived Monitors for Basic Events......................................................................77

4-3 Performance Monitors for Instruction Dispersal Events......................................78

4-4 Performance Monitors for Instruction Execution Events .....................................78

4-5 Derived Monitors for Instruction Execution Events .............................................79

4-6 Performance Monitors for Stall Events................................................................80

4-7 Performance Monitors for Branch Events ...........................................................81

4-8 Performance Monitors for L1/L2 Instruction Cache and

Prefetch Events...................................................................................................83

4-9 Derived Monitors for L1 Instruction Cache and Prefetch Events ........................84

4-10 Performance Monitors for L1 Data Cache Events...............................................84

6 Reference Manual for Software Development and Optimization

4-11 Performance Monitors for L1D Cache Set 0 .......................................................85

4-12 Performance Monitors for L1D Cache Set 1 .......................................................85

4-13 Performance Monitors for L1D Cache Set 2 .......................................................85

4-14 Performance Monitors for L1D Cache Set 3 .......................................................85

4-15 Performance Monitors for L1D Cache Set 4 .......................................................86

4-16 Performance Monitors for L1D Cache Set 6 .......................................................86

4-19 Performance Monitors for L2 Data Cache Events...............................................87

4-20 Derived Monitors for L2 Data Cache Events.......................................................88

4-21 Performance Monitors for L2 Data Cache Set 0 .................................................89

4-22 Performance Monitors for L2 Data Cache Set 1 .................................................89

4-23 Performance Monitors for L2 Data Cache Set 2 .................................................89

4-24 Performance Monitors for L2 Data Cache Set 3 .................................................89

4-25 Performance Monitors for L2 Data Cache Set 4 .................................................90

4-26 Performance Monitors for L2 Data Cache Set 5 .................................................90

4-27 Performance Monitors for L2 Data Cache Set 6 .................................................90

4-28 Performance Monitors for L2 Data Cache Set 7 .................................................90

4-29 Performance Monitors for L2 Data Cache Set 8 .................................................91

4-30 Performance Monitors for L2D Cache - Not Set Restricted ................................91

4-31 Performance Monitors for L3 Unified Cache Events ...........................................91

4-32 Derived Monitors for L3 Unified Cache Events ...................................................92

4-33 Performance Monitors for System Events...........................................................93

4-34 Derived Monitors for System Events...................................................................93

4-35 Performance Monitors for TLB Events ................................................................93

4-36 Derived Monitors for TLB Events ........................................................................94

4-37 Performance Monitors for System Bus Events....................................................95

4-38 Derived Monitors for System Bus Events............................................................97

4-39 Performance Monitors for RSE Events .............................................................100

4-40 Derived Monitors for RSE Events......................................................................101

4-41 Performance Monitors for Multi-thread Events..................................................101

4-42 All Performance Monitors Ordered by Code .....................................................102

Reference Manual for Software Development and Optimization 7

Revision History

Document

Number

308065-001 0.9 • Initial release of the document. January 2006

Revision

Number

Description Date

8 Reference Manual for Software Development and Optimization

1 Introduction

This document is an update to the Intel® Itanium® 2 Processor Reference Manual for Software

Development and Optimization. This update is meant to give guidance on the changes that the dual-

core Intel®Itanium® 2 processor, code named Montecito, brings to the existing Itanium 2

processor family.

1.1 Terminology

The following definitions are for terms that will be used throughout this document:

Term Definition

Dispersal The process of mapping instructions within bundles to functional units

Bundle rotation The process of bringing new bundles into the two-bundle issue

Split issue Instruction execution when an instruction does not issue at the same

Advanced load address table (ALAT) The ALAT holds the state necessary for advanced load and check

Translation lookaside buffer (TLB) The TLB holds virtual to physical address mappings

Virtual hash page table (VHPT) The VHPT is an extension of the TLB hierarchy, which resides in the

Hardware page walker (HPW) The HPW is the third level of address translation. It is an engine that

Register stack engine (RSE) The RSE moves registers between the register stack and the backing

Event address registers (EARs) The EARs record the instruction and data addresses of data cache

window

time as the instruction immediately before it.

operations.

virtual memory space, is designed to enhance virtual address

translation performance.

performs page look-ups from the VHPT and seeks opportunities to

insert translations into the processor TLBs.

store in memory.

misses.

1.2 Related Documentation

The reader of this document should also be familiar with the material and concepts presented in the

following documents:

®

• Intel

• Intel

• Intel

Reference Manual for Software Development and Optimization 9

Itanium®Architecture Software Developer’s Manual, Volume 1: Application

Architecture

®

Itanium®Architecture Software Developer’s Manual, Volume 2: System Architecture

®

Itanium®Architecture Software Developer’s Manual, Volume 3: Instruction Set

Reference

§

Reference Manual for Software Development and Optimization 10

2 The Dual-Core Itanium 2

Processor

2.1 Overview

The first dual-core Itanium 2 processor, code named Montecito, is the fourth generation of the

Itanium 2 processor. Montecito builds on the strength of the previous Itanium 2 processors while

bringing many new key technologies for performance and management to the Itanium processor

family. Key improvements include multiple-cores, multiple-threads, cache hierarchy, and

speculation with the addition of new instructions.

This document describes key Montecito features and how Montecito differs in its implementation

of the Itanium architecture from previous Itanium 2 processors. Some of this information may not

be directly applicable to performance tuning, but is certainly needed to better understand and

interpret changes in application behavior on Montecito versus other Itanium architecture-based

processors. Unless otherwise stated, all of the restrictions, rules, sizes, and capacities described in

this document apply specifically to Montecito and may not apply to other Itanium architecturebased processors. This document assumes a familiarity with the previous Itanium 2 processors and

some of the unique properties and behaviors of those. Furthermore, only differences as they relate

to performance will be included here. Information about Montecito features such as error

protection, Virtualization technology, Hyper-Threading technology, and lockstep support may be

obtained in separate documents.

General understanding of processor components and explicit familiarity with Itanium processor

instructions are assumed. This document is not intended to be used as an architectural reference for

the Itanium architecture. For more information on the Itanium architecture, consult the Intel

Itanium®Architecture Software Developer’s Manual.

2.1.1 Identifying the Dual-Core Itanium 2 Processor

There have now been four generations of the Itanium 2 processor, which can be identified by their

unique CPUID values. For simplicity of documentation, throughout this document we will group

all processors of like model together. Table 2-1details out the CPUID values of all of the Itanium

processor family generations. Table 2-2 lists out all of the varieties of the Itanium processor family

that are available along with their grouping.

Note that the Montecito CPUID family value changes to 0x20.

Table 2-1. Itanium® Processor Family and Model Values

Family Model Description

0x07 0x00 Itanium® Processor

0x1f 0x00 Itanium 2 Processor (up to 3 MB L3 cache)

0x1f 0x01 Itanium 2 Processor (up to 6 MB L3 cache)

0x1f 0x02 Itanium 2 Processor (up to 9 MB L3 cache)

0x20 0x00 Dual-Core Itanium 2 Processor (Montecito)

®

Reference Manual for Software Development and Optimization 11

The Dual-Core Itanium 2 Processor

Table 2-2. Definition Table

Intel® Itanium® 2 Processor 900 MHz with 1.5 MB L3 Cache

Intel® Itanium® 2 Processor 1.0 GHz with 3 MB L3 Cache

Low Voltage Intel® Itanium® 2 Processor 1.0 GHz with 1.5 MB

L3 Cache

Intel® Itanium® 2 Processor 1.40 GHz with 1.5 MB L3 Cache

Intel® Itanium® 2 Processor 1.40 GHz with 3 MB L3 Cache

®

Itanium® 2 Processor 1.60 GHz with 3 MB L3 Cache

Intel

Intel® Itanium® 2 Processor 1.30 GHz with 3 MB L3 Cache

Intel® Itanium® 2 Processor 1.40 GHz with 4 MB L3 Cache

®

Itanium® 2 Processor 1.50 GHz with 6 MB L3 Cache

Intel

Low Voltage Intel® Itanium® 2 Processor 1.30 GHz with 3 MB

L3 Cache

Intel® Itanium® 2 Processor 1.60 GHz with 3 MB L3 Cache at

400 and 533 MHz System Bus (DP Optimized)

Intel® Itanium® 2 Processor 1.50 GHz with 4 MB L3 Cache

Intel® Itanium® 2 Processor 1.60 GHz with 6 MB L3 Cache

Intel® Itanium® 2 Processor 1.60 GHz with 9 MB L3 Cache

®

Itanium® 2 Processor 1.66 GHz with 6 MB L3 Cache

Intel

Intel® Itanium® 2 Processor 1.66 GHz with 9 MB L3 Cache

Individual SKUs TBD Dual-Core Itanium 2 Processor (Montecito)

Processor Abbreviation

Itanium 2 Processor (up to 3 MB L3 cache)

Itanium 2 Processor (up to 6 MB L3 cache)

Itanium 2 Processor (up to 9 MB L3 cache)

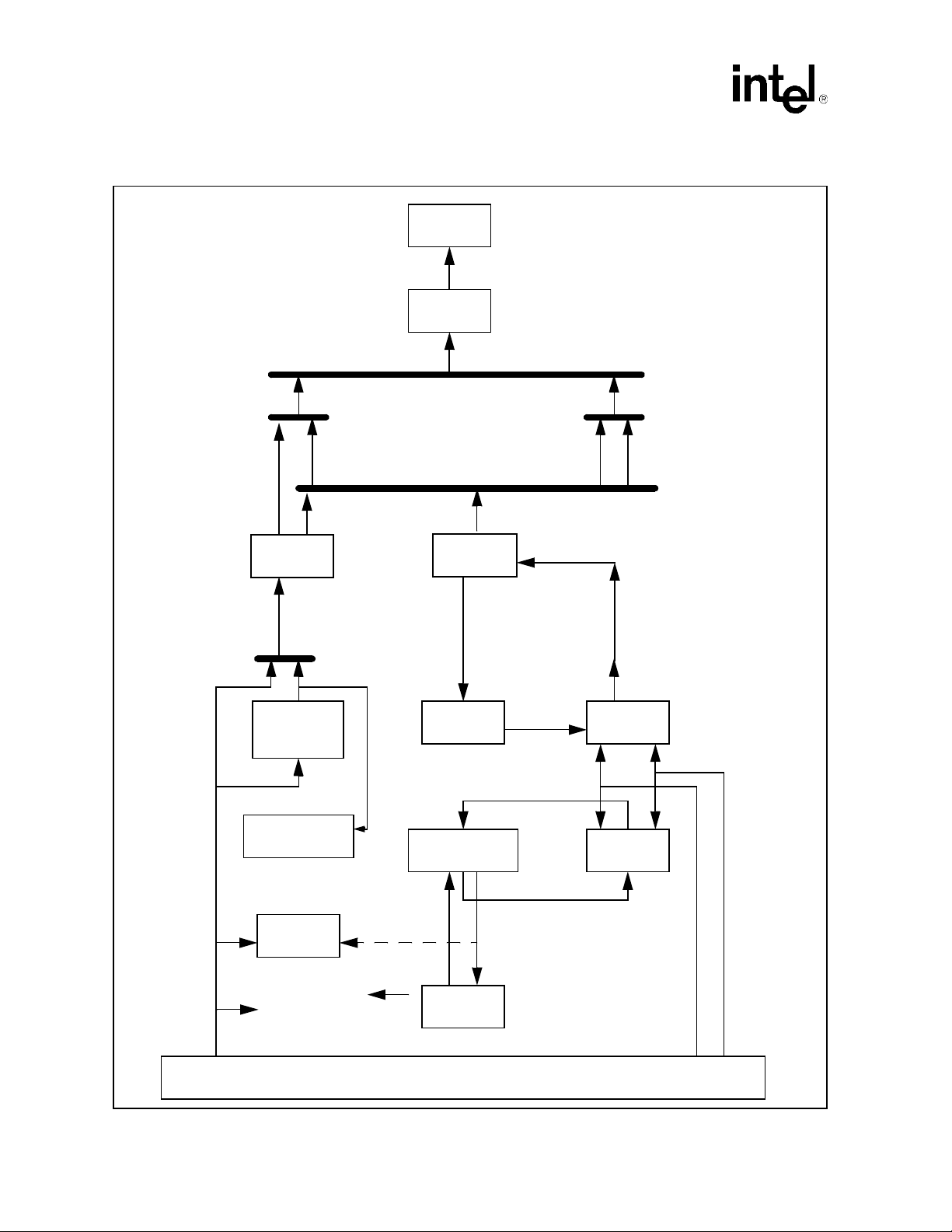

2.1.2 Introducing Montecito

Montecito takes the latest Itanium 2 processor core, improves the memory hierarchy and adds an

enhanced form of temporal multi-threading. A full introduction to the Itanium 2 processor is

available elsewhere but a brief review is provided below.

The front-end, with two levels of branch prediction, two TLBs, and a 0 cycle branch predictor,

feeds two bundles of three instructions each into the instruction buffer every cycle. This 8 entry

queue decouples the front-end from the back-end and delivers up to two bundles, of any alignment,

to the remaining 6 stages of the pipeline. The dispersal logic determines issue groups and allocates

up to 6 instructions to nearly every combination of the 11 available functional units (2 integer, 4

memory, 2 floating point, and 3 branch). The renaming logic maps virtual registers into physical

registers. Actual register (up to 12 integer and 4 floating point) reads are performed just before the

instructions execute or requests are issued to the cache hierarchy. The full bypass network allows

nearly immediate access to previous instruction results while final results are written into the

register file (up to 6 integer and 4 floating point).

Montecito preserves application and operating system investments while providing greater

opportunity for code generators to continue their steady performance push without any destructive

disturbance. This is important since even today, three years after the introduction of the first

Itanium 2 processor, compilers are providing significant performance improvements. The block

diagram of the Montecito processor can be found in Figure 2-1.

Montecito provides a second integer shifter and popcounter to help reduce port asymmetries. The

front-end provides better branching behavior for single cycle branches and cache allocation/

reclamation. Finally, Montecito decreases the time to reach recovery code when speculation fails

12 Reference Manual for Software Development and Optimization

The Dual-Core Itanium 2 Processor

thereby providing a lower cost for speculation. All told, nearly every core block and piece of

control logic includes some optimization to improve small deficiencies.

Exposing additional performance in an already capable cache hierarchy is also challenging and

includes additional capacity, improved coherence architecture, and more efficient cache

organization and queuing. Montecito supports three levels of on-chip cache. The first level (L1)

caches are each 4-way set associative caches and hold 16 KB of instruction or data. These caches

are in-order, like the rest of the pipeline, but are non-blocking allowing high request concurrency.

These L1 caches are accessed in a single cycle using pre-validated tags. The data cache is writethrough and dual-ported to support two integer loads and two stores, while the instruction cache

has dual-ported tags and a single data port to support simultaneous demand and prefetch accesses.

While previous generations of the Itanium 2 processor share the second level (L2) cache with both

data and instructions, Montecito provides a dedicated 1 MB L2 cache for instructions. This cache is

8-way set associative with a 128 byte line size and provides the same 7 cycle instruction access

latency as the previous smaller Itanium 2 processor unified cache. A single tag and data port

supports out-of-order and pipelined accesses to provide a high utilization. The separate instruction

and data L2 caches provide more efficient access to the caches compared to Itanium 2 processors

where instruction requests would contend against data accesses for L2 bandwidth against data

accesses and potentially impact core execution as well as L2 throughput.

This previously shared 256 KB L2 cache is now dedicated to data on Montecito with several

micro-architectural improvements to increase throughput. The instruction and data separation

effectively increase the data hit rate. The L2D hit latency remains at 5 cycles for integer and 6

cycles for floating-point accesses. The tag is true 4-ported and the data is pseudo 4-ported with 16byte banks. Montecito removes some of the code generator challenges found in the Itanium 2

processor L2 cache. Specifically, any accesses beyond the first access to miss the L2 in previous

Itanium 2 processors would access the L2 tags periodically until a hit is detected. The repeated tag

accesses consume bandwidth from the core and increase the miss latency. On Montecito, such

misses are suspended until the L2 fill occurs. The fill awakens and immediately satisfies the

request which greatly reduces bandwidth contention and final latency. The Montecito L2D, like

previous generations of the Itanium 2 processor L2, is out-of-order and pipelined with the ability to

track up to 32 requests in addition to 16 misses and their associated victims. However, Montecito

optimizes allocation of the 32 queue entries providing a higher concurrency level than previously

possible.

The third level (L3) cache remains unified as in previous Itanium 2 processors, but is now 12 MB

in size while maintaining the same 14 cycle integer access latency found on the 6 MB and 9 MB

Itanium 2 processors. The L3 uses an asynchronous interface with the data array to achieve this low

latency; there is no clock, only a read or write valid indication. The read signal is coincident with

index and way values that initiate L3 data array accesses. Four cycles later, the entire 128-byte line

is available and latched. This data is then delivered in 4 cycles to either the L2D or L2I cache in

critical byte order.

The L3 receives requests from both the L2I and L2D but gives priority to the L2I request in the rare

case of a conflict. Moving the arbitration point from the L1-L2 in the Itanium 2 processor to the

L2-L3 cache greatly reduces conflicts thanks to the high hit rates of the L2.

The cache hierarchy is replicated in each core to total more than 13.3 MB for each core and nearly

27 MB for the entire processor.

Reference Manual for Software Development and Optimization 13

The Dual-Core Itanium 2 Processor

Figure 2-1. The Montecito Processor

2.2 New Instructions

Montecito is compliant with the latest revisions of the Itanium architecture in addition to the Intel

Itanium Architecture Virtualization Specification Update. As such, Montecito introduces several

new instructions as summarized below:

Table 2-3. New Instructions Available in Montecito

New Instruction Comment

1

fc.i

2

ld16

2

st16

cmp8xchg16

hint@pause

vmsw.0, vmsw.1 On promote pages, these instructions allow cooperative operating systems to obtain and

NOTES:

1. This instruction behaves as the instruction on Montecito

2. This instruction will fault if issued to UC, UCE, or WC memory

3. This instruction will not initiate a thread switch if it is a B type instruction.

Insures that instruction caches are coherent with data caches

AR.csd and the register specified are the targets for this load.

AR.csd and the value in the register specified are written for this store

2

AR.csd and the value in the register specified are written for this exchange if the 8 byte

compare is true.

3

The current thread is yielding resources to the other thread.

give up VMM privilege

14 Reference Manual for Software Development and Optimization

The Dual-Core Itanium 2 Processor

2.3 Core

The Montecito core is very similar to previous generations of the Itanium 2 processor core from a

code generation point of view. The core has new resources; specifically, an additional integer

shifter and popcounter. The core also removes the rarely needed MMU to Memory Address bypass

path. The core also includes many optimizations, from the front-end to the cache hierarchy, that are

transparent to the code generator and legacy code can see improvements without any code change.

2.3.1 Instruction Slot to Functional Unit Mapping

This information is very similar to previous Itanium 2 processors. Changes between Itanium 2

processors and Montecito will be noted with footnotes.

Each fetched instruction is assigned to a functional unit through an issue port. The numerous

functional units share a smaller number of issue ports. There are 11 functional units: eight for nonbranch instructions and three for branch instructions. They are labeled M0, M1, M2, M3, I0, I1, F0,

F1, B0, B1, and B2. The process of mapping instructions within bundles to functional units is

called dispersal.

An instruction’s type and position within the issue group determine which functional unit the

instruction is assigned. An instruction is mapped to a subset of the functional units based upon the

instruction type (i.e. ALU, Memory, Integer, etc.). Then, based on the position of the instruction

within the instruction group presented for dispersal, the instruction is mapped to a particular

functional unit within that subset.

Table 2-4, Table 2-5, Table 2-6 and Table 2-7 show the mappings of instruction types to ports and

functional units.

Note: Shading in the following tables indicates the instruction type can be issued on the port(s).

A-type instructions can be issued on all M and I ports (M0-M3 and I0 and I1). I-type instructions

can only issue to I0 or I1. The I ports are asymmetric so some I-type instructions can only issue on

port I0. M ports have many asymmetries: some M-type instructions can issue on all ports; some can

only issue on M0 and M1; some can only issue on M2 and M3; some can only issue on M0; some

can only issue on M2.

Table 2-4. A-Type Instruction Port Mapping

Instruction

Type

A1-A5 ALU add, shladd M0-M3, I0, I1

A4, A5 Add Immediate addp4, addl M0-M3, I0, I1

A6,A7,A8 Compare cmp, cmp4 M0-M3, I0, I1

A9 MM ALU pcmp[1 | 2 | 4] M0-M3, I0, I1

A10 MM Shift and Add pshladd2 M0-M3, I0, I1

Description Examples Ports

Reference Manual for Software Development and Optimization 15

The Dual-Core Itanium 2 Processor

Table 2-5. B-Type Instruction Port Mapping

Instruction

Type

Description Examples Ports

B1-B5 Branch br B0-B2

B6-8 Branch Predict brp B0-B2

1

B9

Break, nop, thread switch hint hint B0-B2

NOTES:

1. hint.b is treated as a nop.b -- it does not have any impact on multi-thread control in Montecito.

Table 2-6. I-Type Instruction Port Mapping

Instruction

Type

I1 MM Multiply/Shift pmpy2.[l | r],

I2 MM Mix/Pack mix[1 | 2 | 4].[l | r

I3, I4 MM Mux mux1, mux2

I5 Variable Right Shift shr{.u] =ar,ar

I6 MM Right Shift Fixed pshr[2 | 4] =ar,c

I7 Variable Left Shift shl{.u] =ar,ar

I8 MM Left Shift Fixed pshl[2 | 4] =ar,c

1

I9

1

I10

I11-I17

1

MM Popcount popcnt

Shift Right Pair shrp

Extr, Dep

Test Nat

I18 Hint hint.i

I19 Break, Nop break.i, nop.i

I20 Integer Speculation Check chk.s.i

I21-28 Move to/from BR/PR/IP/AR mov =[br | pr | ip | ar]

I29 Sxt/Zxt/Czx sxt, zxt, czx

NOTES:

1. The I1 issue capability is new to Montecito

Description Examples

I Port

I0 I1

pmpyshr2{.u}

pmin, pmax

pshr[2 | 4] =ar,ar

pshl[2 | 4] =ar,ar

extr{.u}, dep{.z}

tnat

mov [br | pr | ip | ar]=

Table 2-7. M-Type Instruction Port Mapping (Sheet 1 of 2)

Instruction

Type

Description Examples

M1, 2, 3 Integer Load ldsz, ld8.fill

M4, 5 Integer Store stsz, st8.spill

16 Reference Manual for Software Development and Optimization

Memory Port

M0 M1 M2 M3

Table 2-7. M-Type Instruction Port Mapping (Sheet 2 of 2)

The Dual-Core Itanium 2 Processor

Instruction

Type

M6, 7, 8 Floating-point Load ldffsz, ldffsz.s, ldf.fill

Floating-point Advanced Load ldffsz.a, ldffsz.c.[clr | nc]

M9, 10 Floating-point Store stffsz, stf.spill

M11, 12 Floating-point Load Pair ldfpfsz

M13, 14, 15 Line Prefetch lfetch

M16 Compare and Exchange cmpxchgsz.[acq | rel]

M17 Fetch and Add fetchaddsz.[acq | rel]

M18 Set Floating-point Reg setf.[s | d | exp | sig}

M19 Get Floating-point Reg getf.[s | d | exp | sig}

M20, 21 Speculation Check chk.s{.m}

M22, 23 Advanced Load Check chk.a[clr | nc]

M24 Invalidate ALAT invala

Mem Fence, Sync, Serialize fwb, mf{.a}, srlz.[d | i],

M25 RSE Control flushrs, loadrs

M26, 27 Invalidate ALAT invala.e

M28 Flush Cache, Purge TC Entry fc, ptc.e

M29, 30, 31 Move to/from App Reg mov{.m} ar=

M32, 33 Move to/from Control Reg mov cr=, mov =cr

M34 Allocate Register Stack Frame alloc

M35, 36 Move to/from Proc. Status Reg mov psr.[l | um]

M37 Break, Nop.m break.m, nop.m

M38, 39, 40 Probe Access probe.[r | w].{fault}

M41 Insert Translation Cache itc.[d | i]

M42, 43 Move Indirect Reg

Insert TR

M44 Set/Reset User/System Mask sum, rum, ssm, rsm

M45 Purge Translation Cache/Reg ptc.[d | i | g | ga]

M46 Virtual Address Translation tak, thash, tpa, ttag

M47 Purge Translation Cache ptc.e

M48 Thread switch hint hint

Description Examples

sync.li

mov{.m} =ar

mov =psr.[l | m]

mov ireg=, move =ireg,

itr.[d | i]

Memory Port

M0 M1 M2 M3

2.3.2 Instruction Latencies and Bypasses

Table 2-8 lists the Montecito processor operation latencies.

Reference Manual for Software Development and Optimization 17

The Dual-Core Itanium 2 Processor

Table 2-8. Execution with Bypass Latency Summary

Consumer (across)

Producer (down)

Adder: add, cmp, cmp4, shrp,

Qual.

Pred.

Branch

Pred.

ALU

n/a n/a 1 1 3 1 n/a n/a n/a 1

Load

Store

Addr

Multi-

media

Store

Data

Fmac Fmisc getf setf

extr, dep, tbit, addp4, shladd,

shladdp4, zxt, sxt, czx, sum,

logical ops, 64-bit immed.

moves, movl, post-inc ops

(includes post-inc stores,

loads, lfetches)

Multimedia n/a n/a 3 4 or 812 3 n/a n/a n/a 3

thash, ttag, tak, tpa, probe

2

getf

2

setf

2

Fmac: fma, fms, fnma, fpma,

n/a n/a 5 6 6 5 n/a n/a n/a 5

n/a n/a n/a n/a n/a 6 6 6 6 n/a

n/a n/a n/a n/a n/a 4 4 4 4 n/a

5 6 6 5

fpms, fpnma, fadd, fnmpy,

fsub, fpmpy, fpnmpy, fmpy,

fnorm, xma, frcpa, fprcpa,

frsqrta, fpsqrta, fcvt, fpcvt

Fmisc: fselect, fcmp, fclass,

n/a n/a n/a n/a n/a 4 4 4 4 n/a

fmin, fmax, famin, famax,

fpmin, fpmax, fpamin, fpcmp,

fmerge, fmix, fsxt, fpack,

fswap, fand, fandcm, for, fxor,

fpmerge, fneg, fnegabs, fpabs,

fpneg, fpnegabs

Integer side predicate write:

1 0 n/a n/a n/a n/a n/a n/a n/a n/a

cmp, tbit, tnat

FP side predicate write: fcmp 2 1 n/a n/a n/a n/a n/a n/a n/a n/a

FP side predicate write: frcpa,

2 2 n/a n/a n/a n/a n/a n/a n/a n/a

fprcpa, frsqrta, fpsqrta

Integer Load

FP Load

3

4

n/a n/a N N+1 N+2 N N N N N

n/a n/a M+1 M+2 M+3 M+1 M+1 M+1 M+1 M+1

IEU2: move_from_br, alloc n/a n/a 2 2 3 2 n/a n/a n/a 2

Move to/from CR or AR

5

Move to pr 1 0 2 2 3 2

Move indirect

6

n/a n/a C C C C n/a n/a n/a C

n/a n/a n/a n/a

n/a n/a D D D D n/a n/a n/a D

NOTES:

1. The MMU to memory address bypass in Montecito does not exist. If code does not account for the missing bypass, the processor will detect the case

and cause a pipeflush to ensure proper separation between the producer and the consumer.

2. Since these operations are performed by the L2D, they interact with the L2D pipeline. These are the minimum latencies but they could be much larger

because of this interaction.

3. N depends upon which level of cache is hit: N=1 for L1D, N=5 for L2D, N=14-15 for L3, N=~180-225 for main memory. These are minimum latencies

and are likely to be larger for higher levels of cache.

4. M depends upon which level of cache is hit: M=5 for L2D, M=14-15 for L3, M=~180-225 for main memory. These are minimum latencies and are

likely to be larger for higher levels of cache. The +1 in all table entries denotes one cycle needed for format conversion.

5. Best case values of C range from 2 to 35 cycles depending upon the registers accessed. EC and LC accesses are 2 cycles, FPSR and CR accesses

are 10-12 cycles.

6. Best case values of D range from 6 to 35 cycles depending upon the indirect registers accessed. LREGS, PKR, and RR are on the faster side being

6 cycle accesses.

2.3.3 Caches and Cache Management Changes

Montecito, like the previous Itanium 2 processors, supports three levels of on-chip cache. Each

core contains a complete cache hierarchy, with nearly 13.3 Mbytes per core, for a total of nearly 27

Mbytes of processor cache.

18 Reference Manual for Software Development and Optimization

Table 2-9. Montecito Cache Hierarchy Summary

The Dual-Core Itanium 2 Processor

Cache

Data Types

Supported

L1D Integer WT 16 KB 64 Bytes 4 VA[11:6] 8 Fills 1/1

L1I Instruction NA 16 KB 64 Bytes 4 VA[11:6] 1 Demand

L2D Integer,

Floating

Point

L2I Instruction NA 1 MByte 128 Bytes 8 PA[16:7] 8 7/10

L3 Integer,

Floating

Point,

Instruction

2.3.3.1 L1 Caches

The L1I and L1D caches are essentially unchanged from previous generations of the Itanium 2

processor.

2.3.3.2 L2 Caches

Level 2 caches are both different and similar to the Itanium 2 processor L2 cache. The previous

Itanium 2 processor L2 shares both data and instructions, while the Montecito has dedicated

instruction (L2I) and data (L2D) caches. This separation of instruction and data caches makes it

possible to have dedicated access paths to the caches and thus eliminates contention and eases

capacity pressures on the L2 caches.

Write

Through/

Write Back

WB 256 KB 128 Bytes 8 PA[14:7] 32 OzQ/

WB 12 MByte 128 Bytes 12 PA[19:7] 8 14/21

Data

Array

Size

Line Size Ways Index Queuing

+ 7

Prefetch

Fills

16 Fills

Minimum

/Typical

Latency

1/1

5/11

The L2I cache holds 1 Mbyte; is eight-way set associative; and has a 128-byte line size-yet has the

same seven-cycle instruction-access latency as the smaller previous Itanium 2 processor unified

cache. The tag and data arrays are single ported, but the control logic supports out-of-order and

pipelined accesses. This large cache greatly reduces the number of instruction accesses seen at the

L3 cache. Any coherence request to identify whether a cache line is in the processor will invalidate

that line from the L2I cache.

The L2D cache has the same structure and organization as the Itanium 2 processor shared 256 KB

L2 cache but with several microarchitectural improvements to increase throughput. The L2D hit

latency remains at five cycles for integer and six cycles for floating-point accesses. The tag array is

true four-ported-four fully independent accesses in the same cycle-and the data array is pseudo

four-ported with 16-byte banks.

Montecito optimizes several aspects of the L2D. In the Itanium 2 processor, any accesses to the

same cache line beyond the first access that misses L2 will access the L2 tags periodically until the

tags detect a hit. The repeated tag accesses consume bandwidth from the core and increase the L2

miss latency. Montecito suspends such secondary misses until the L2D fill occurs. At that point, the

fill immediately satisfies the suspended request. This approach greatly reduces bandwidth

contention and final latency. The L2D, like the Itanium 2 processor L2, is out of order, pipelined,

and tracks 32 requests (L2D hits or L2D misses not yet passed to the L3 cache) in addition to 16

misses and their associated victims. The difference is that Montecito allocates the 32 queue entries

more efficiently, which provides a higher concurrency level than with the Itanium 2 processor.

Reference Manual for Software Development and Optimization 19

The Dual-Core Itanium 2 Processor

Specifically, the queue allocation policy now supports recovery of empty entries. This allows for

greater availability of the L2 OzQ in light of accesses completed out of order.

The L2D also considers the thread identifier when performing ordering such that an ordered

request from one thread is not needlessly ordered against another thread’s accesses.

2.3.3.3 L3 Cache

Montecito's L3 cache remains unified as in previous Itanium 2 processors, but is now 12 MB. Even

so, it maintains the same 14-cycle integer-access best case latency in the 6M and 9M Itanium 2

processors. Montecito's L3 cache uses an asynchronous interface with the data array to achieve this

low latency; there is no clock, only a read or write valid indication. Four cycles after a read signal,

index, and way, the entire 128-byte line is available and latched. The array then delivers this data in

four cycles to either the L2D or L2I in critical-byte order.

Montecito's L3 receives requests from both the L2I and L2D but gives priority to the L2I request in

the rare case of a conflict. Conflicts are rare because Montecito moves the arbitration point from

the Itanium 2 processor L1-L2 to L2-L3. This greatly reduces conflicts because of L2I and L2D's

high hit rates. The I and D arbitration point also reduces conflict and access pressure within the

core; L1I misses go directly to the L2I and not through the core. L2I misses contend against L2D

request for L3 access.

2.3.3.4 Request Tracking

All L2I and L2D requests are allocated to one of 16 request buffers. Requests are sent to the to the

L3 cache and system from these buffers by the tracking logic. A modified L2D victim or partial

write may be allocated to one of 8 write buffers. This is an increase of 2 over the Itanium 2

processor. The lifetime of the L2D victim buffers is also significantly decreased to further reduce

pressure on them. Lastly, the L3 dirty victim resources has grown by 2 entries to 8 in Montecito.

In terms of write coalescing buffers (WCB), Montecito has 4 128B line WCBs in each core. These

are fully shared between threads.

2.4 Threading

The multiple thread concept starts with the idea that the processor has some resources that cannot

be effectively utilized by a single thread. Therefore, sharing under-utilized resources between

multiple threads will increase utilization and performance. The Montecito processor HyperThreading Technology implementation duplicates and shares resources to create two logical

processors. All architectural state and some micro-architectural state is duplicated.

The duplicated architectural state (general, floating point, predicate, branch, application,

translation, performance monitoring, bank, and interrupt registers) allows each thread to appear as

a complete processor to the operating system thus minimizing the changes needed at the OS level.

The duplicated micro-architectural state of the return stack buffer and the advanced load address

table (ALAT) prevent cross-thread pollution that would occur if these resources were shared

between the two logical processors.

The two logical processors share the parallel execution resources (core) and the memory hierarchy

(caches and TLBs). There are many approaches to sharing resources that vary from fixed time

intervals, temporal multi-threading or TMT, to sharing resources concurrently, simultaneous multithreading or SMT. The Montecito Hyper-Threading Technology approach blends both approaches

such that the cores share threads using a TMT approach while the memory hierarchy shares

resources using a SMT approach. The core TMT approach is further augmented with control

20 Reference Manual for Software Development and Optimization

hardware that monitors the dynamic behavior of the threads and allocates core resources to the

most appropriate thread - an event experienced by the workload may cause a switch before the

thread quantum of TMT would cause a switch. This modification of TMT may be termed switchon-event multi-threading.

2.4.1 Sharing Core Resources

Many processors implementing multi-threading share resources using the SMT paradigm. In SMT,

instructions from different threads compete for and share execution resources such that each

functional resource is dynamically allocated to an available thread. This approach allocates

resources originally meant for instruction level parallelism (ILP), but under-utilized in the single

thread case, to exploit thread level parallelism (TLP). This is common in many out-of-order

execution designs where increased utilization of functional units can be attained for little cost.

Processor resources may also be shared temporally rather than symmetrically. In TMT, a thread is

given exclusive ownership of resources for a small time period. Complexity may be reduced by

expanding the time quantum to at least the pipeline depth and thus ensure that only a single thread

owns any execution or pipeline resources at any moment. Using this approach to multi-threading,

nearly all structures and control logic can be thread agnostic allowing the natural behaviors of the

pipeline, bypass, and stall control logic for execution to be leveraged while orthogonal logic

controls and completes a thread switch is added. However, this approach also means that a pipeline

flush is required at thread switch points.

The Dual-Core Itanium 2 Processor

In the core, one thread has exclusive access to the execution resources (foreground thread) for a

period of time while the other thread is suspended (background thread). Control logic monitors the

workload's behavior and dynamically decreases the time quantum for a thread that is not likely to

make progress. Thus, if the control logic determines that a thread is not making progress, the

pipeline is flushed and the execution resources are given to the background thread. This ensures

better overall utilization of the core resources over strict TMT and effectively hides the cost of long

latency operations such as memory accesses.

A thread switch on Montecito requires 15 cycles from initiation until the background thread retires

an instruction. Given the low latency of the memory hierarchy (1 cycle L1D, 5 cycle L2D, and 14

cycle L3) memory accesses are the only potentially stalling condition that greatly exceeds the

thread switch time and thus is the primary switch event.

A thread switch also has other side effects such as invalidating the Prefetch Virtual Address Buffer

(PVAB) and canceling any prefetch requests in the prefetch pipeline.

2.4.1.1 The Switch Events

There are several events that can lead to a thread switch event. Given that hiding memory latency is

the primary motivation for multi-threading, the most common switch event is based on L3 cache

misses and data returns. Other events, such as the time-out and forward progress event, provide

fairness, while the hint events provide paths for the software to influence thread switches. These

events have an impact on a thread's urgency which indicates a thread's ability to effectively use

core resources. Each event is described below:

• L3 Cache Miss - An L3 miss by the foreground thread is likely to cause that thread to stall

waiting for the return from the system interface. Hence, L3 misses can trigger thread switches

subject to thread urgency comparisons. This event decreases the thread’s urgency. Since there

is some latency between when a thread makes a request and when it is determined to be an L3

miss, it is possible to have multiple requests from a thread miss the L3 cache before a thread

switch occurs.

Reference Manual for Software Development and Optimization 21

The Dual-Core Itanium 2 Processor

• L3 Cache Return - An L3 miss data return for the background thread is likely to resolve data

dependences and is an early indication of execution readiness, hence an L3 miss data return

can trigger thread switch events subject to thread urgency comparisons. This event increases

the thread’s urgency

• Time-out - Thread-quantum counters ensure fairness in access to the pipeline execution

resources for each thread. If the thread-quantum expiration occurs when the thread was not

stalled, its urgency is set to a high value to indicate execution readiness prior to the switch

event.

• Switch Hint - The Itanium architecture provides the instruction which can

trigger a thread switch to yield execution to the background thread. This allows software to

indicate when the current thread has no need of the core resources.

• Low-power Mode - When the active thread has entered into a quiesced low-power mode, a

thread switch is triggered to the background thread so that it may continue execution.

Similarly, if both threads are in a quiesced low-power state, and the background thread is

awakened, a thread switch is triggered.

The L3 miss and data return event can occur for several types of accesses: data or instruction,

prefetch or demand, cacheable or uncacheable, or hardware page walker (HPW). A data demand

access includes loads, stores, and semaphores.

The switch events are intended to enable the control logic to decide the appropriate time to switch

threads without software intervention. Thus, Montecito Hyper-Threading Technology is mostly

transparent to the application and the operating system

2.4.1.2 Software Control of Thread Switching

The instruction is used by software to initiate a thread switch. The intent is to allow

code to indicate that it does not have any useful work to do and that its execution resources should

be given to the other thread. Some later event, such as an interrupt, may change the work for the

thread and should awaken the thread.

The instruction forces a switch from the foreground thread to the background

thread. This instruction can be predicated to conditionally initiate a thread switch. Since the current

issue group retires before the switch is initiated, the following code sequences are equivalent:

2.4.1.3 Urgency

Each thread has an urgency which can take on values from 0 to 7. A value of 0 denotes that a thread

has no useful work to perform. A value of 7 signifies that a thread is actively making forward

progress. The nominal urgency is 5 and indicates that a thread is actively progressing. The urgency

of one thread is compared against the other at every L3 event. If the urgency of the currently

22 Reference Manual for Software Development and Optimization

executing thread is lower than the background thread then the L3 event will initiate a thread switch.

Every L3 miss event decrements the urgency by 1, eventually saturating at 0. Similarly, every L3

return event increments the urgency by 1 as long as the urgency is below 5. Figure 2-2 shows a

typical urgency based switch scenario. The urgency can be set to 7 for a thread that is switched out

due to time-out event. An external interrupt directed at the background thread will set the urgency

for that thread to 6 which increases the probability of a thread switch and provide a reasonable

response time for interrupt servicing.

Figure 2-2. Urgency and Thread Switching

The Dual-Core Itanium 2 Processor

2.4.2 Tailoring Thread Switch Behavior

Montecito allows the behavior of the thread switch control logic to be tailored to meet specific

software requirements. Specifically, thread switch control may emphasize overall performance,

thread fairness or elevate the priority of one thread over the other. These different behaviors are

available through a low latency PAL call, PAL_SET_HW_POLICY. This will allow software to

exert some level of control over how the processor determines the best time to switch. Details on

this call and the parameters can be found in the latest Intel®Itanium®Architecture Software

Developer’s Manual and Intel®Itanium®Architecture Software Developer’s Manual Specification

Update.

Reference Manual for Software Development and Optimization 23

The Dual-Core Itanium 2 Processor

2.4.3 Sharing Cache and Memory Resources

The Montecito memory resources that are concurrently or simultaneously shared between the two

threads include the first and second level TLBs, the first, second, and third level caches, and system

interface resources. Each of these structures are impacted in different ways as a result of their

sharing.

2.4.3.1 Hyper-Threading Technology and the TLBs

The instruction in previous Itanium 2 processors would invalidate the entire Translation

Cache (TC) section of the TLB with one instruction. This same behavior is retained for Montecito

with the caveat that a issued on one thread will invalidate the TC of the other thread at the

same time.

The L2I and L2D TLB on the Itanium 2 processor supported 64 Translation Registers (TR).

Montecito supports 32 TRs for each logical processor.

2.4.3.1.1 Instruction TLBs

The replacement algorithms for the L1I and L2I TLB do not consider thread for replacement vector

updating. However, the L2I TLB will reserve one TLB entry for each thread to meet the

architectural requirements for TCs available to a logical processor.

The TLBs support SMT-based sharing by assigning a thread identifier to the virtual address. Thus,

two threads cannot share the same TLB entry at the same time even if the virtual address is the

same between the two threads.

Since the L1I TLB is key in providing a pseudo-virtual access to the L1I cache, using

prevalidation, when a L1I TLB entry is invalidated, the L1I cache entries associated with that page

(up to 4 K) are invalidated. However, the invalidation of a page (and hence cache contents) can be

suppressed when two threads access the same virtual and physical addresses. This allows the two

threads to share much of the L1I TLB and cache contents. For example, T0 inserts a L1I TLB entry

with VA=0 and PA=0x1001000. T0 then accesses VAs 0x000 to 0xFFF which are allocated to the

L1I cache. A thread switch occurs. Now, T1 initiates an access with VA=0. It will miss in the L1I

TLB because the entry with VA=0 belongs to T0. T1 will insert a L1I TLB entry with VA=0 and

PA=0x1001000. The T1 L1I TLB entry replaces the T0 L1I TLB entry without causing an

invalidation. Thus, the accesses performed by T0 become available to T1 with the exception of the

initial T1 access that inserted the L1I TLB page. Since the L1I cache contents can be shared

between two threads and the L1I cache includes branch prediction information, this optimization

allows one thread to impact the branch information contained in the L1I cache and hence branch

predictions generated for each thread.

2.4.3.1.2 Data TLBs

The replacement algorithms for the L1D and L2D TLB do not consider threads for replacement

vector updating. However, the L2D TLB will reserves 16 TLB entries for each thread to meet the

architectural requirements for TCs available to a logical processor.

The TLBs support SMT based sharing by assigning a thread identifier to the virtual address. Thus,

two threads cannot share the same TLB entry at the same time even if the virtual address is the

same between the two threads.

Despite the fact that both the instruction and data L1 TLBs support prevalidation, the L1I TLB

optimization regarding cache contents is not supported in the L1D TLB.

24 Reference Manual for Software Development and Optimization

The Dual-Core Itanium 2 Processor

2.4.3.2 Hyper-Threading Technology and the Caches

The L2I, L2D, and L3 caches are all physically addressed. Thus, the threads can fully share the

cache contents (i.e. an access allocated by T0 can be accessed by both T0 and T1). The queueing

resources for these cache levels are equally available to each thread. The replacement logic also

ignores threads such that T0 can cause an eviction of T1 allocated data and a hit will cause a cache

line to be considered recently used regardless of the thread that allocated or accessed the line.

A thread identifier is provided with each instruction or data cache request to ensure proper ordering

of requests between threads at the L2D cache in addition to performance monitoring and switch

event calculations at all levels. The thread identifier allows ordered and unordered transactions

from T0 pass ordered transactions from T1.

2.4.3.3 Hyper-Threading Technology and the System Interface

The system interface logic also ignores the thread identifier in allocating queue entries and in

prioritizing system interface requests. The system interface logic tracks L3 miss and fills and as

such, uses the thread identifier to correctly signal to the core which thread missed or filled the

cache for L3 miss/return events. The thread identifier is also used in performance monitor event

collection and counting.

The thread identifier can be made visible on the system interface as part of the agent identifier

through a PAL call. This is for informational purposes only as the bit would appear in a reserved

portion of the agent identifier and Montecito does not require the memory controller to ensure

forward progress and fairness based on the thread identifier -- the L2D cache ensures forward

progress between threads.

2.5 Dual Cores

Montecito is the first dual core Itanium 2 processor. The two cores attach to the system interface

through the arbiter, which provides a low-latency path for each core to initiate and respond to

system events.

Figure 2-3 is a block diagram of the arbiter, which organizes and optimizes each core's request to

the system interface, ensures fairness and forward progress, and collects responses from each core

to provide a unified response. The arbiter maintains each core's unique identity to the system

interface and operates at a fixed ratio to the system interface frequency. The cores are responsible

for thread ordering and fairness so the thread identifier to uniquely identify transactions on the

system interface is not necessary. However, the processor can be configured to provide the thread

identifier for informational purposes only.

Reference Manual for Software Development and Optimization 25

The Dual-Core Itanium 2 Processor

Figure 2-3. The Arbiter and Queues

As the figure shows, the arbiter consists of a set of address queues, data queues, and synchronizers,

as well as logic for core and system interface arbitration. Error-Correction Code (ECC) encoders/

decoders and parity generators exist but are not shown.

The core initiates one of three types of accesses, which the arbiter allocates to the following queues

and buffers:

• Request queue. This is the primary address queue that supports most request types. Each core

has four request queues.

• Write address queue. This queue holds addresses only and handles explicit writebacks and

partial line writes. Each core has two write address queues.

• Clean castout queue. This queue holds the address for the clean castout (directory and snoop

filter update) transactions. The arbiter holds pending transactions until it issues them on the

system interface. Each core has four clean castout queues.

• Write data buffer. This buffer holds outbound data and has a one-to-one correspondence with

addresses in the write address queue. Each core has four write data buffers, with the additional

two buffers holding implicit writeback data.

The number of entries in these buffers are small because they are deallocated once the transaction

is issued on the system interface. System interface responses to the transaction are sent directly to

the core where the overall tracking of a system interface request occurs.

Note that there are no core to core bypasses present. Thus, a cache line that is requested by core 0

but exists modified on core 1 will be issued to the system interface, snoop core 1 which provides

the data and a modified snoop result - all of which is seen on the system interface.

The Snoop queue issues snoop requests to the cores and coalesces the snoop response from each

core into a unified snoop response for the socket. If any core is delayed in delivering its snoop

response, the arbiter will delay the snoop response on the system interface.

The arbiter delivers all data returns directly to the appropriate core using a unique identifier

provided with the initial request. It delivers broadcast transactions, such as interrupts and TLB

purges, to both cores in the same way that delivery would occur if each core were connected

directly to the system interface.

26 Reference Manual for Software Development and Optimization

The Dual-Core Itanium 2 Processor

2.5.1 Fairness and Arbitration

The arbiter interleaves core requests on a one-to-one basis when both cores have transactions to

issue. When only one core has requests, its can issue its requests without the other core having to

issue a transaction. Because read latency is the greatest concern, the read requests are typically the

highest priority, followed by writes, and finally clean castouts. Each core tracks the occupancy of

the arbiter's queues using a credit system for flow control. As requests complete, the arbiter

informs the appropriate core of the type and number of deallocated queue entries. The cores use

this information to determine which, if any, transaction to issue to the arbiter.

2.6 Intel® Virtualization Technology

The Montecito processor is the first Itanium 2 processor to implement Intel® Virtualization

Technology. The full specification as well as further information on Intel Virtualization Technology

can be found at:

http://www.intel.com/technology/computing/vptech/.

2.7 Tips and Tricks

2.7.1 Cross Modifying Code

Section 2.5 in Part 2 of Volume 2 of the Intel®Itanium®Architecture Software Developer’s Manual

specifies specific sequences that must be followed when any instruction code may exist in the data

cache. Many violations of this code may have worked in previous Itanium 2 processors, but such

violations are likely to be exposed by the cache hierarchy found in Montecito. Code in violation of

the architecture should be modified to adhere to the architectural requirements.

The large L2I and the separation of the instruction and data at the L2 level also requires additional

time to ensure coherence if using the PAL_CACHE_FLUSH procedure with the I/D coherence

option. Care should be taken to ensure that previously lower cost uses of the

PAL_CACHE_FLUSH call should be replaced with the architecture required code sequence for

ensuring instruction and data consistency.

2.7.2 ld.bias and lfetch.excl

The and instructions have been enhanced on the Montecito processor.

These instructions can now bring in lines into the cache in a state that is ready to be modified if

supported by the memory controller. This feature allows a single or to

prefetch both the source and destination streams. This feature is enabled by default, but may be

disabled by PAL_SET_PROC_FEATURES bit 7 of the Montecito feature_set (18).

2.7.3 L2D Victimization Optimization

Montecito also improves on the behaviors associated with internal cache line coherence tracking.

The number of false L2D victims will drastically reduce on Montecito over previous Itanium 2

processors. This optimization is enabled by default, but may be disabled by

PAL_SET_PROC_FEATURES.

Reference Manual for Software Development and Optimization 27

The Dual-Core Itanium 2 Processor

2.7.4 Instruction Cache Coherence Optimization

Coherence requests of the L1I and L2I caches will invalidate the line if it is in the cache. Montecito

allows instruction requests on the system interface to be filtered such that they will not initiate

coherence requests of the L1I and L2I caches. This will allow instructions to be cached at the L1I

and L2I levels across multiple processors in a coherent domain. This optimization is enabled by

default, but may be disabled by PAL_SET_PROC_FEATURES bit 5 of the Montecito

feature_set (18).

2.8 IA-32 Execution

IA-32 execution on the Montecito processor is enabled with the IA-32 Execution Layer (IA-32 EL)

and PAL-based IA-32 execution. IA-32 EL is OS-based and is only available after an OS has

booted. PAL-based IA-32 execution is available after PAL_COPY_PAL is called and provides IA32 execution support before the OS has booted. All OSes running on Montecito have a requirement

to have IA-32 EL installed. There is no support for PAL-based IA-32 execution in an OS

environment.

IA-32 EL is a software layer that is currently shipping with Itanium architecture-based operating

systems and will convert IA-32 instructions into Itanium processor instructions via dynamic