Page 1

nPartition Administrator's Guide

HP Part Number: 5991-1247B_ed2

Published: February 2009

Edition: Second Edition

Page 2


Legal Notices

© Copyright 2007–2009 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Intel and Itanium are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

Linux is a U.S. registered trademark of Linus Torvalds. Microsoft, Windows, and Windows NT are U.S. registered trademarks of Microsoft Corporation.

Restricted Rights Legend: Use, duplication or disclosure by the U.S. Government is subject to restrictions as set forth in subparagraph (c) (1) (ii) of the Rights in Technical Data and Computer Software clause at DFARS 252.227-7013 for DOD agencies, and subparagraphs (c) (1) and (c) (2) of the Commercial Computer Software Restricted Rights clause at FAR 52.227-19 for other agencies.

HEWLETT-PACKARD COMPANY

3000 Hanover Street

Palo Alto, California 94304 U.S.A.

Page 3

Table of Contents

About This Document.......................................................................................................11

New and Changed Information in This Edition...................................................................................11

Document Organization.......................................................................................................................11

Typographic Conventions.....................................................................................................................12

Related Information..............................................................................................................................13

Publishing History................................................................................................................................13

HP Encourages Your Comments..........................................................................................................13

1 Getting Started with nPartitions..................................................................................15

Introduction to nPartitions....................................................................................................................15

Operating Systems Supported on nPartitions.................................................................................15

HP Server Support for nPartitions...................................................................................................16

HP Superdome Hybrid Servers: Intel® Itanium® 2 and PA-RISC nPartition Mixing..............17

Hardware Components of nPartition-Capable Servers...................................................................18

Administration Tools for nPartitions....................................................................................................18

Commands for Configuring nPartitions.........................................................................................19

Availability of nPartition Commands........................................................................................21

Enhanced nPartitions Commands for Windows.......................................................................21

Enhanced nPartition Commands for Linux...............................................................................22

Partition Manager............................................................................................................................22

Partition Manager Version 2.0 for Windows..............................................................................23

nPartition Properties.............................................................................................................................23

Partition Numbers...........................................................................................................................24

Assigned and Unassigned Cells......................................................................................................24

Base Cells.........................................................................................................................................24

Core Cells.........................................................................................................................................24

Active and Inactive Cells.................................................................................................................25

Cell Local Memory..........................................................................................................................25

Cell Property Details.......................................................................................................................25

Active and Inactive nPartition Boot States......................................................................................27

Overview of Managing nPartitions......................................................................................................27

Basics of Listing nPartition and Complex Status.............................................................................27

Basics of nPartition Creation...........................................................................................................29

Genesis Partition.........................................................................................................................30

Basics of nPartition Modification....................................................................................................30

nPartition Modification Tasks....................................................................................................31

Basics of nPartition Booting and Resetting......................................................................................32

Boot Process for Cells and nPartitions.......................................................................................32

Common nPartition Boot Commands and Tasks.......................................................................33

Complex Profile....................................................................................................................................36

Changing the Server Complex Profile.............................................................................................37

How the Complex Profile is Updated........................................................................................37

Complex Profile Entry Locking and Unlocking..............................................................................38

Complex Profile Group Details.......................................................................................................39

Remote and Local Management of nPartitions....................................................................................41

Intelligent Platform Management Interface (IPMI).........................................................................41

IPMI Block Transfer (IPMI BT)...................................................................................................42

nPartition Configuration Privilege.......................................................................................42

IPMI over LAN...........................................................................................................................42

Web-Based Enterprise Management (WBEM)................................................................................43


Page 4

Local Management..........................................................................................................................43

Remote Management Using WBEM................................................................................................44

WBEM Remote Management Files............................................................................................44

nPartition Commands Support for Remote Management Using WBEM..................................45

Partition Manager Support for Remote Management Using WBEM........................................45

Remote Management Using IPMI over LAN..................................................................................46

nPartition Commands Support for Remote Management Using IPMI over LAN....................46

Partition Manager Support for Remote Management Using IPMI over LAN...........................47

Licensing Information: Getting Server Product Details.......................................................................47

nPartition and Virtual Partition Unique Identifiers........................................................................47

2 nPartition Server Hardware Overview.......................................................................49

sx1000 Chipset for HP Servers..............................................................................................................49

sx2000 Chipset for HP Servers..............................................................................................................49

Model Identifiers for Machine Hardware.............................................................................................49

Server Hardware Details: Cell-Based HP Servers.................................................................................51

Two-Cell nPartition Server Model...................................................................................................55

Four-Cell nPartition Server Model..................................................................................................56

Superdome Server Models..............................................................................................................57

HP Superdome 16-/32-Way Servers: SD16000, SD16A, and SD16B...........................................58

HP Superdome 32-/64-Way Servers: SD32000, SD32A, and SD32B...........................................58

HP Superdome 64-/128-Way Servers: SD64000, SD64A, and SD64B.........................................59

HP Superdome I/O Expansion Cabinet.....................................................................................59

3 Planning nPartitions......................................................................................................61

nPartition Hardware Requirements for Operating Systems................................................................61

Configuration Requirements for nPartitions........................................................................................62

Recommended nPartition Configurations............................................................................................63

Recommended HP Superdome nPartition Configurations.............................................................64

4 Using Management Interfaces and Tools..................................................................67

SMS (Support Management Station) for HP Superdome Servers........................................................67

Overview of nPartition Service Processor (MP or GSP) Interfaces......................................................67

Service Processor (MP or GSP) Features...............................................................................................68

Service Processor Accounts and Access Levels...............................................................................69

nPartition Console Features..................................................................................................................70

nPartition Console Access versus Direct OS Login.........................................................................71

Boot Console Handler System Boot Environment................................................................................71

Extensible Firmware Interface System Boot Environment...................................................................72

Windows Special Administration Console (SAC)................................................................................72

Accessing and Using the Service Processor..........................................................................................74

Using Service Processor Menus.......................................................................................................76

Navigating through Service Processor Menus...........................................................................76

Network Configuration for a Service Processor..............................................................................77

Viewing Console Logs..........................................................................................................................77

Viewing Chassis Codes or Event Logs.................................................................................................78

Virtual Front Panel (VFP) nPartition Views..........................................................................................79

Command Reference for Service Processor Commands......................................................................80

Command Reference for EFI Shell Commands....................................................................................81

Command Reference for BCH Menu Commands................................................................................84


Page 5

5 Booting and Resetting nPartitions...............................................................................87

Overview of nPartition System Booting...............................................................................................87

Boot Process Differences for nPartitions on HP 9000 servers and HP Integrity servers.................88

Types of Booting and Resetting for nPartitions...............................................................................89

System Boot Configuration Options................................................................................................91

HP 9000 Boot Configuration Options........................................................................................91

HP Integrity Boot Configuration Options..................................................................................91

Tools for Booting nPartitions................................................................................................................95

Task Summaries for nPartition Boot and Reset....................................................................................96

Troubleshooting Boot Problems..........................................................................................................100

Accessing nPartition Console and System Boot Interfaces.................................................................101

Monitoring nPartition Boot Activity...................................................................................................104

Finding Bootable Devices....................................................................................................................106

Performing a Transfer of Control Reset..............................................................................................107

Booting and Shutting Down HP-UX...................................................................................................108

HP-UX Support for Cell Local Memory........................................................................................108

Adding HP-UX to the Boot Options List.......................................................................................109

Booting HP-UX..............................................................................................................................110

HP-UX Booting.........................................................................................................................110

Single-User Mode HP-UX Booting...........................................................................................114

LVM-Maintenance Mode HP-UX Booting...............................................................................116

Shutting Down HP-UX..................................................................................................................117

Booting and Shutting Down HP OpenVMS I64.................................................................................119

HP OpenVMS I64 Support for Cell Local Memory.......................................................................120

Adding HP OpenVMS to the Boot Options List............................................................................120

Booting HP OpenVMS...................................................................................................................122

Shutting Down HP OpenVMS.......................................................................................................123

Booting and Shutting Down Microsoft Windows..............................................................................124

Microsoft Windows Support for Cell Local Memory....................................................................124

Adding Microsoft Windows to the Boot Options List...................................................................125

Booting Microsoft Windows..........................................................................................................126

Shutting Down Microsoft Windows..............................................................................................128

Booting and Shutting Down Linux.....................................................................................................129

Linux Support for Cell Local Memory..........................................................................................129

Adding Linux to the Boot Options List.........................................................................................130

Booting Red Hat Enterprise Linux................................................................................................131

Booting SuSE Linux Enterprise Server..........................................................................................132

Shutting Down Linux....................................................................................................................134

Rebooting and Resetting nPartitions..................................................................................................135

Performing a Reboot for Reconfig.......................................................................................................139

Shutting Down to a Shutdown for Reconfig (Inactive) State..............................................................141

Booting an Inactive nPartition.............................................................................................................146

Booting over a Network......................................................................................................................147

Booting to the HP-UX Initial System Loader (ISL).............................................................................149

Booting to the HP-UX Loader (HPUX.EFI).........................................................................................150

Using HP-UX Loader Commands......................................................................................................151

HPUX.EFI Boot Loader Commands..............................................................................................151

HPUX Boot Loader Commands Issued from ISL..........................................................................151

Booting to the Linux Loader (ELILO.EFI)...........................................................................................152

Linux Boot Option Management...................................................................................................153

Linux Loader Configuration File (elilo.conf)...........................................................................153

Using Linux Loader (ELILO) Commands..........................................................................................154

Configuring Boot Paths and Options..................................................................................................155

Configuring Autoboot Options...........................................................................................................158


Page 6

Configuring Boot-Time System Tests..................................................................................................161

6 Creating and Configuring nPartitions......................................................................165

Tools for Configuring nPartitions.......................................................................................................165

Task Summaries for Creating and Configuring nPartitions...............................................................165

Creating a Genesis Partition................................................................................................................170

Creating a New nPartition..................................................................................................................172

Removing (Deleting) an nPartition.....................................................................................................176

Assigning (Adding) Cells to an nPartition..........................................................................................179

Unassigning (Removing) Cells from an nPartition.............................................................................182

Renaming an nPartition......................................................................................................................185

Renaming a Server Complex...............................................................................................................187

Setting Cell Attributes.........................................................................................................................189

Setting nPartition Core Cell Choices...................................................................................................194

Unlocking Complex Profile Entries....................................................................................................198

Canceling Pending Changes to the Complex Profile..........................................................................199

7 Managing Hardware Resources..............................................................................201

Tools for Managing Hardware............................................................................................................201

Task Summaries for Hardware Resource Management.....................................................................201

Powering Server Cabinets On and Off................................................................................................205

Powering Cells and I/O Chassis On and Off.......................................................................................206

Turning Attention Indicators (LEDs) On and Off...............................................................................209

Configuring and Deconfiguring Cells................................................................................................213

Configuring and Deconfiguring Processors.......................................................................................217

Enabling and Disabling Hyper-Threading on Dual-Core Intel® Itanium® 2 Processors..................218

Configuring and Deconfiguring Memory (DIMMs)...........................................................................220

Complex Health Analysis of a Server.................................................................................................222

8 Listing nPartition and Hardware Status....................................................................223

Tools for Listing Status........................................................................................................................223

Task Summaries for nPartition and Hardware Status........................................................................223

Listing Cell Configurations.................................................................................................................226

Listing Processor Configurations........................................................................................................227

Listing Memory Configurations.........................................................................................................229

Listing Input/Output (I/O) Configurations.........................................................................................231

Listing Cabinets in a Server Complex.................................................................................................234

Listing Product and Serial Numbers..................................................................................................235

Listing nPartition Configurations.......................................................................................................236

Listing the Local nPartition Number..................................................................................................237

Listing Power Status and Power Supplies..........................................................................................238

Listing Fan and Blower Status............................................................................................................240

A nPartition Commands................................................................................................243

Specifying Cells and I/O Chassis to Commands.................................................................................243

Cell Specification Formats.............................................................................................................243

I/O Specification Format................................................................................................................244

Specifying Remote Management Options to Commands..................................................................247

parcreate Command............................................................................................................................249

parmodify Command.........................................................................................................................252

parremove Command.........................................................................................................................256

parstatus Command............................................................................................................................258


Page 7

parunlock Command..........................................................................................................................260

fruled Command.................................................................................................................................262

frupower Command...........................................................................................................................264

cplxmodify Command........................................................................................................................266


Page 8

List of Figures

1-1 Partition Manager Version 2.0 Switch Complexes Dialog............................................................46

2-1 Two-Cell HP Server Cabinet..........................................................................................................55

2-2 Four-Cell HP Server Cabinet.........................................................................................................56

2-3 HP Superdome Server Cabinet......................................................................................................57


Page 9

List of Tables

1-1 nPartition Operating System Support...........................................................................................15

1-2 HP Servers Supporting nPartitions...............................................................................................17

1-3 nPartition Commands Releases.....................................................................................................20

1-4 nPartition Commands Descriptions..............................................................................................20

1-5 Complex Profile Group Details.....................................................................................................40

2-1 Models of Cell-Based HP Servers..................................................................................................51

3-1 Operating System Hardware Requirements.................................................................................61

4-1 Windows SAC Commands............................................................................................................73

4-2 Service Processor (MP or GSP) Command Reference...................................................................80

4-3 EFI Shell Command Reference......................................................................................................81

4-4 Boot Console Handler (BCH) Command Reference.....................................................................84

5-1 nPartition Boot and Reset Task Summaries...................................................................................96

6-1 nPartition Configuration Task Summaries..................................................................................166

7-1 Hardware Management Task Summaries...................................................................................202

7-2 Attention Indicator (LED) States and Meanings.........................................................................209

8-1 Hardware and nPartition Status Task Summaries......................................................................224

A-1 Cell IDs in Global Cell Number Format......................................................................................243

A-2 Cell IDs in Hardware Location Format.......................................................................................244


Page 10

List of Examples

1-1 Unique IDs for an nPartition and Complex..................................................................................48

1-2 Unique IDs for Virtual Partitions (vPars)......................................................................................48

4-1 Overview of a Service Processor Login Session............................................................................75

5-1 Single-User HP-UX Boot..............................................................................................................115

7-1 Turning Attention Indicators On and Off...................................................................................212

7-2 Checking the Hyper-Threading Status for an nPartition............................................................219

7-3 Enabling Hyper-Threading for an nPartition..............................................................................220

A-1 I/O Specification Formats for Cabinets, Bays, and Chassis.........................................................247


Page 11

About This Document

This book describes nPartition system administration procedures, concepts, and principles for

the HP servers that support nPartitions.

New and Changed Information in This Edition

This edition includes changes and additions related to the Superdome SX1000 PA and SX2000

PA.

Document Organization

This book contains the following chapters and appendix.

Chapter 1. “Getting Started with nPartitions” (page 15)

This chapter introduces HP nPartition system features, server models,

supported operating systems, and administration tools, and outlines the basic

information needed for managing nPartitions.

Chapter 2. “nPartition Server Hardware Overview” (page 49)

This chapter describes HP nPartition server models and features.

Chapter 3. “Planning nPartitions” (page 61)

This chapter describes how you can plan nPartition configurations. Details

include the nPartition configuration requirements and recommendations.

Chapter 4. “Using Management Interfaces and Tools” (page 67)

This chapter presents the system management interfaces and tools available

on HP nPartition servers. Also described here are the nPartition boot

environments, management access procedures, and detailed command

references.

Chapter 5. “Booting and Resetting nPartitions” (page 87)

This chapter introduces nPartition system boot and reset concepts,

configuration options, and procedures for booting and resetting nPartitions.

Chapter 6. “Creating and Configuring nPartitions” (page 165)

This chapter presents the procedures for creating, configuring, and managing

nPartitions on HP servers that support them.

Chapter 7. “Managing Hardware Resources” (page 201)

This chapter explains the procedures for managing the hardware resources

in nPartitions and their server complexes. It describes power and LED

(attention indicator) management, hardware configuration and

deconfiguration, and analysis of the current status of the server complex.

Chapter 8. “Listing nPartition and Hardware Status” (page 223)

This chapter describes procedures for listing the current status of nPartitions

and server hardware components.

Appendix A. “nPartition Commands” (page 243)

This appendix contains details and command-line syntax for the HP nPartition

configuration commands.


Page 12

Typographic Conventions

This document uses the following typographical conventions:

audit(5) A manpage. The manpage name is audit, and it is located in

Section 5.

Command

A command name or qualified command phrase.

Computer output

Text displayed by the computer.

Ctrl+x A key sequence. A sequence such as Ctrl+x indicates that you

must hold down the key labeled Ctrl while you press another

key or mouse button.

ENVIRONMENT VARIABLE The name of an environment variable, for example, PATH.

[ERROR NAME]

The name of an error, usually returned in the errno variable.

Key The name of a keyboard key. Return and Enter both refer to the

same key.

User input

Commands and other text that you type.

Variable

The name of a placeholder in a command, function, or other

syntax display that you replace with an actual value.

[] The contents are optional in syntax. If the contents are a list

separated by |, you must choose one of the items.

{} The contents are required in syntax. If the contents are a list

separated by |, you must choose one of the items.

... The preceding element can be repeated an arbitrary number of

times.

| Separates items in a list of choices.

WARNING A warning calls attention to important information that if not

understood or followed will result in personal injury or

nonrecoverable system problems.

CAUTION A caution calls attention to important information that if not

understood or followed will result in data loss, data corruption,

or damage to hardware or software.

IMPORTANT This alert provides essential information to explain a concept or

to complete a task.

NOTE A note contains additional information to emphasize or

supplement important points of the main text.


Page 13

Related Information

You can find information on nPartition server hardware management, operating system

administration, and diagnostic support tools in the following publications and Web sites.

Web Site for HP Technical Documentation: http://docs.hp.com

The HP Technical Documentation Web site is at http://docs.hp.com and has complete information available for free.

Server Hardware Information: http://docs.hp.com/hpux/hw/

The systems hardware portion of the docs.hp.com Web site is at http://docs.hp.com/hpux/hw/. It provides server hardware management information, including site preparation and installation.

Diagnostics and Event Monitoring: Hardware Support Tools

Complete information about HP hardware support tools, including online and offline diagnostics and event monitoring tools, is at the http://docs.hp.com/hpux/diag/ Web site. This site has manuals, tutorials, FAQs, and other reference material.

Web Site for HP Technical Support: http://us-support2.external.hp.com

The HP IT resource center Web site at http://us-support2.external.hp.com/ provides comprehensive support information for IT professionals on a wide variety of topics, including software, hardware, and networking.

Publishing History

This is the second edition of the nPartition Administrator's Guide.

This book replaces the nPartition Administrator's Guide (5991–1247B) and HP System Partitions

Guide (5991–1247).

HP Encourages Your Comments

HP welcomes your feedback on this publication. Address your comments to

edit@presskit.rsn.hp.com and note that you will not receive an immediate reply. All

comments are appreciated.


Page 14


Page 15

1 Getting Started with nPartitions

This chapter introduces cell-based HP server features, server models, supported operating

systems, and administration tools, and outlines the basic information needed for managing

nPartitions.

Introduction to nPartitions

Cell-based HP servers enable you to configure a single server complex as one large system or as

multiple smaller systems by configuring nPartitions.

Each nPartition defines a subset of server hardware resources to be used as an independent

system environment. An nPartition includes one or more cells assigned to it (with processors

and memory) and all I/O chassis connected to those cells.

All processors, memory, and I/O in an nPartition are used exclusively by software running in

the nPartition. Thus, each nPartition has its own system boot interface, and each nPartition boots

and reboots independently.

Each nPartition provides both hardware and software isolation, so that hardware or software

faults in one nPartition do not affect other nPartitions within the same server complex.
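The relationships described above can be pictured with a small data model. The following Python sketch is illustrative only: the class names, the `check_exclusive` helper, and the chassis labels are hypothetical and are not part of any HP tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Cell:
    """A cell (processors and memory) plus the I/O chassis connected to it."""
    cell_id: int
    io_chassis: list[str] = field(default_factory=list)


@dataclass
class NPartition:
    """An nPartition: one or more assigned cells and their I/O chassis."""
    number: int
    cells: list[Cell] = field(default_factory=list)

    def io_chassis(self) -> list[str]:
        # All I/O chassis connected to the cells assigned to this nPartition.
        return [chassis for cell in self.cells for chassis in cell.io_chassis]


def check_exclusive(partitions: list[NPartition]) -> None:
    """Raise ValueError if an nPartition is empty or a cell is shared."""
    owner: dict[int, int] = {}
    for par in partitions:
        if not par.cells:
            raise ValueError(f"nPartition {par.number} has no cells")
        for cell in par.cells:
            if cell.cell_id in owner:
                raise ValueError(
                    f"cell {cell.cell_id} is in nPartitions "
                    f"{owner[cell.cell_id]} and {par.number}"
                )
            owner[cell.cell_id] = par.number
```

The check mirrors the rule that hardware in one nPartition is used exclusively by that nPartition; it says nothing about how the real tools store or enforce this.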

You can reconfigure nPartition definitions for a server without physically modifying the server

hardware configuration by using the HP software-based nPartition management tools.

For procedures for creating and reconfiguring nPartitions, see Chapter 6 (page 165).

Operating Systems Supported on nPartitions

Table 1-1 lists the operating systems that can run on nPartitions.

For an overview of the server models that support nPartitions, see “HP Server Support for

nPartitions” (page 16).

For details on operating system boot and reset procedures, see Chapter 5 (page 87).

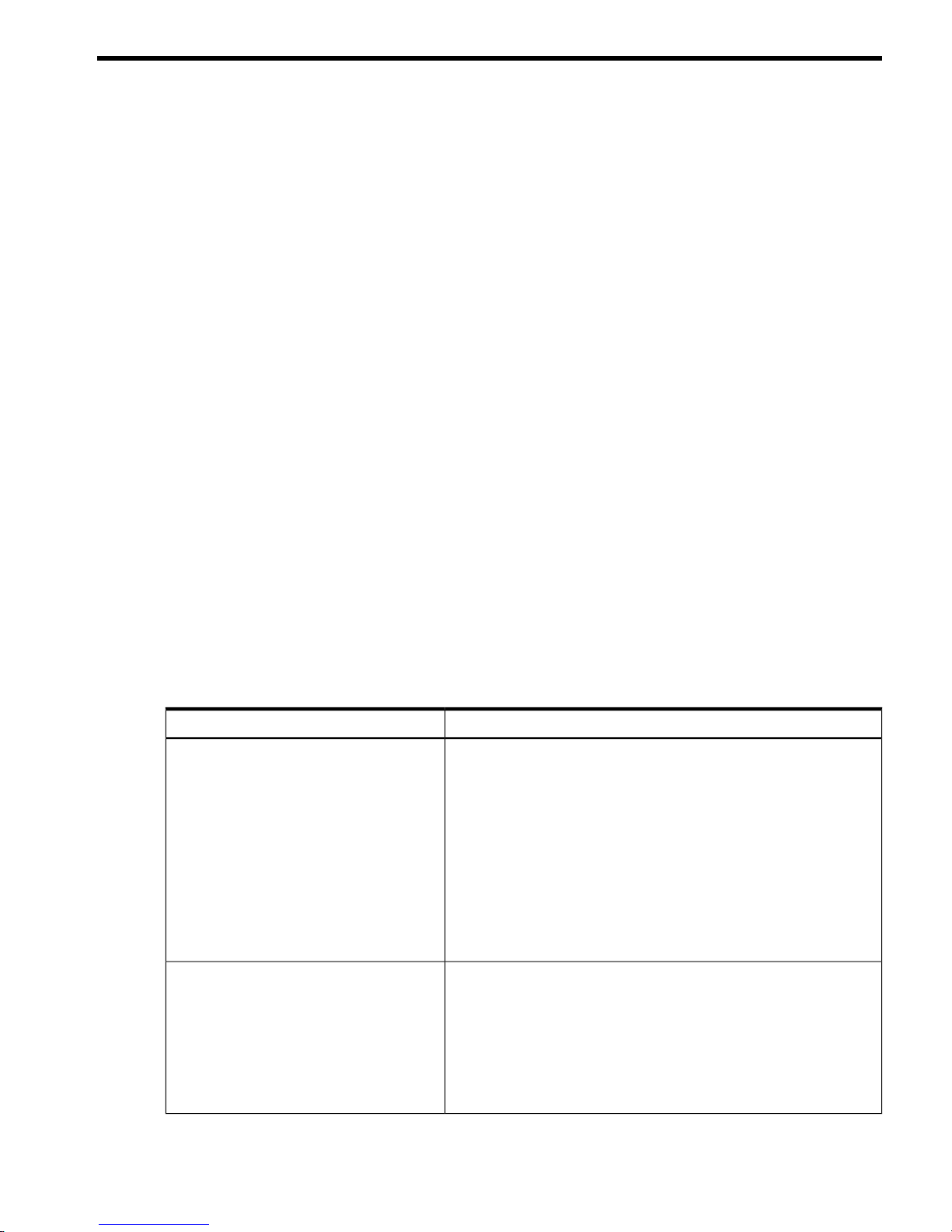

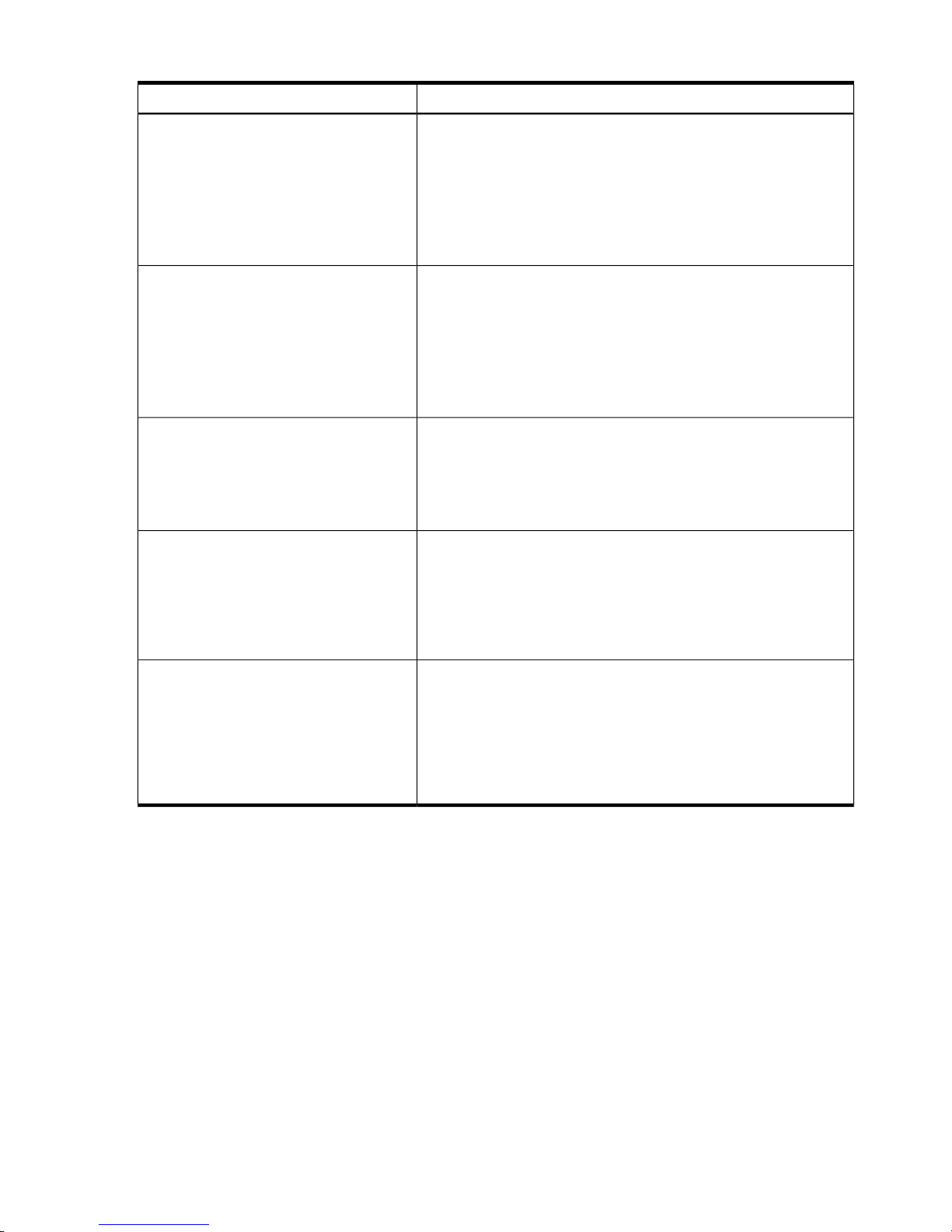

Table 1-1 nPartition Operating System Support

Operating System: HP-UX 11i v1 (B.11.11)

Supported Cell-Based Servers:

HP-UX 11i v1 (B.11.11) is supported on HP 9000 servers, including the cell-based HP 9000 servers.

The HP-UX 11i v1 (B.11.11) December 2003 release and later supports rp7420, rp8420, and HP 9000 Superdome (SD16A, SD32A, SD64A models), based on the HP sx1000 chipset.

The HP-UX 11i v1 (B.11.11) December 2006 release and later supports rp7440, rp8440, and HP 9000 Superdome (SD16B, SD32B, SD64B models), based on the HP sx2000 chipset.

HP-UX 11i v1 does not support cell local memory.

Also see “nPartition Hardware Requirements for Operating Systems” (page 61).

Operating System: HP-UX 11i v2 (B.11.23)

Supported Cell-Based Servers:

HP-UX 11i v2 (B.11.23) is supported on HP Integrity servers, including the cell-based HP Integrity servers.

The HP-UX 11i v2 (B.11.23) September 2004 release and later also supports cell-based HP 9000 servers based on the HP sx1000 chipset.

HP-UX 11i v2 supports cell local memory.

Also see “nPartition Hardware Requirements for Operating Systems” (page 61).


Page 16

Table 1-1 nPartition Operating System Support (continued)

Operating System: HP-UX 11i v3 (B.11.31)

Supported Cell-Based Servers:

HP-UX 11i v3 (B.11.31) is supported on HP Integrity servers and HP 9000 servers.

HP-UX 11i v3 is supported on all servers based on the HP sx1000 chipset, and on HP Integrity servers based on the HP sx2000 chipset.

HP-UX 11i v3 supports cell local memory.

Also see “nPartition Hardware Requirements for Operating Systems” (page 61).

Operating System: HP OpenVMS I64 8.2-1 and 8.3

Supported Cell-Based Servers:

OpenVMS I64 8.2-1 is supported on cell-based HP Integrity servers based on the HP sx1000 chipset.

OpenVMS I64 8.3 is supported on HP Integrity servers based on the HP sx1000 and sx2000 chipsets.

OpenVMS I64 does not support cell local memory.

Also see “nPartition Hardware Requirements for Operating Systems” (page 61).

Operating System: Microsoft® Windows® Server 2003

Supported Cell-Based Servers:

Windows Server 2003 is supported on HP Integrity servers, including the cell-based HP Integrity servers.

Windows Server 2003 supports cell local memory.

Also see “nPartition Hardware Requirements for Operating Systems” (page 61).

Operating System: Red Hat Enterprise Linux 3 Update 2, Red Hat Enterprise Linux 3 Update 3, Red Hat Enterprise Linux 4

Supported Cell-Based Servers:

Red Hat Enterprise Linux 3 and Red Hat Enterprise Linux 4 are supported on HP Integrity servers, including the cell-based HP Integrity servers.

Red Hat Enterprise Linux does not support cell local memory.

Also see “nPartition Hardware Requirements for Operating Systems” (page 61).

Operating System: SuSE Linux Enterprise Server 9, SuSE Linux Enterprise Server 10

Supported Cell-Based Servers:

SuSE Linux Enterprise Server 9 and SuSE Linux Enterprise Server 10 are supported on HP Integrity servers, including the cell-based HP Integrity servers.

SuSE Linux Enterprise Server 9 and SuSE Linux Enterprise Server 10 support cell local memory.

Also see “nPartition Hardware Requirements for Operating Systems” (page 61).
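The cell local memory statements in Table 1-1 can be condensed into a lookup. This is a minimal sketch: the dictionary keys and the function name are hypothetical labels chosen for illustration, while the True/False values are taken from the table.

```python
# Cell local memory support per operating system, as stated in Table 1-1.
CELL_LOCAL_MEMORY_SUPPORT = {
    "HP-UX 11i v1 (B.11.11)": False,
    "HP-UX 11i v2 (B.11.23)": True,
    "HP-UX 11i v3 (B.11.31)": True,
    "HP OpenVMS I64": False,
    "Microsoft Windows Server 2003": True,
    "Red Hat Enterprise Linux": False,
    "SuSE Linux Enterprise Server": True,
}


def supports_cell_local_memory(os_name: str) -> bool:
    """Return the Table 1-1 answer, or raise ValueError for an unlisted OS."""
    try:
        return CELL_LOCAL_MEMORY_SUPPORT[os_name]
    except KeyError:
        raise ValueError(f"{os_name!r} is not listed in Table 1-1") from None
```

Raising an error for an unlisted name, rather than returning False, avoids implying that the table says anything about operating systems it does not mention.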

HP Server Support for nPartitions

HP supports nPartition capabilities on cell-based servers, listed in Table 1-2.

On HP Superdome servers based on the HP sx1000 chipset, you can mix both PA-RISC nPartitions

and Intel® Itanium® 2 nPartitions in the same server complex under specific system

configurations. For details, refer to “HP Superdome Hybrid Servers: Intel® Itanium® 2 and

PA-RISC nPartition Mixing” (page 17).

The same basic nPartition features are supported for cell-based HP 9000 servers and cell-based

HP Integrity servers, though some differences exist in the sets of supported tools and management

capabilities. Where such differences exist, this document notes them.


Page 17

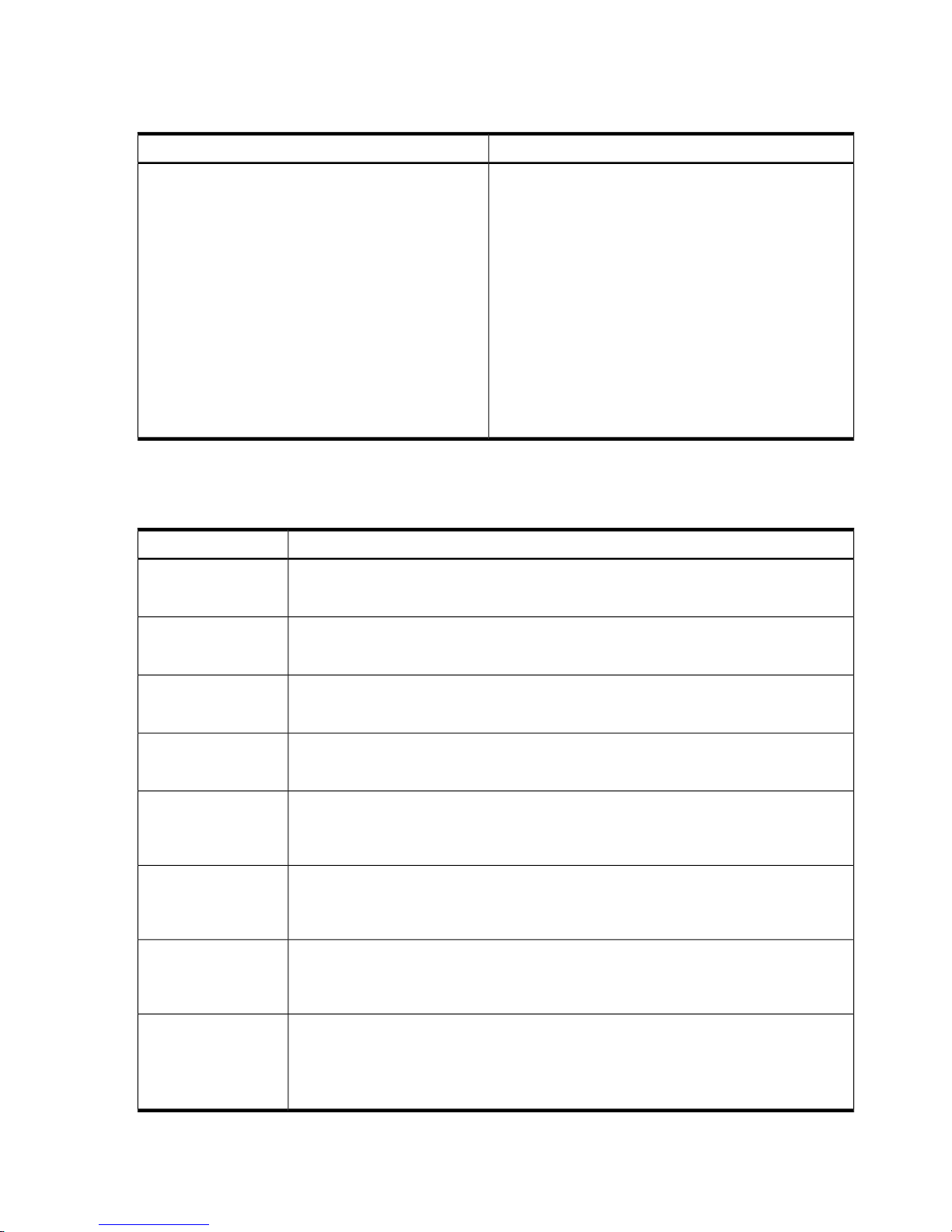

Table 1-2 HP Servers Supporting nPartitions

HP 9000 Servers

HP 9000 servers have PA-RISC processors.

The first-generation cell-based HP 9000 servers include the following models:

• HP 9000 Superdome servers, including the SD16000, SD32000, and SD64000 models. These models support up to 16 cells in a server complex.

• HP 9000 rp8400 model, which supports up to four cells in a server complex.

• HP 9000 rp7405/rp7410, which supports up to two cells in a server complex.

For details see “Server Hardware Details: Cell-Based HP Servers” (page 51).

The following second-generation cell-based HP 9000 servers use the HP sx1000 chipset. For

details see “sx1000 Chipset for HP Servers” (page 49).

• HP 9000 Superdome servers, including the SD16A, SD32A, and SD64A models. These

models support up to 16 cells in a server complex.

• HP 9000 rp8420 model, which supports up to four cells in a server complex.

• HP 9000 rp7420 model, which supports up to two cells in a server complex.

The following third-generation cell-based HP 9000 servers use the HP sx2000 chipset. For

details see “sx2000 Chipset for HP Servers” (page 49).

• HP 9000 Superdome servers, including the SD16B, SD32B, and SD64B models. These

models support up to 16 cells in a server complex.

• HP 9000 rp8440 model, which supports up to four cells in a server complex.

• HP 9000 rp7440 model, which supports up to two cells in a server complex.

For details see “Server Hardware Details: Cell-Based HP Servers” (page 51).

HP Integrity Servers

HP Integrity servers have Intel® Itanium® 2 processors. The Intel® Itanium® processor family architecture was co-developed by Hewlett-Packard and Intel.

Cell-based HP Integrity servers use either the HP sx1000 chipset or the HP sx2000 chipset.

The following cell-based HP Integrity servers use the HP sx1000 chipset; for details see “sx1000 Chipset for HP Servers” (page 49).

• HP Integrity Superdome servers include the SD16A, SD32A, and SD64A models. These models support up to 16 cells in a server complex.

• The HP Integrity rx8620 model supports up to four cells in a server complex.

• The HP Integrity rx7620 model supports up to two cells in a server complex.

The following cell-based HP Integrity servers use the HP sx2000 chipset; for details see “sx2000 Chipset for HP Servers” (page 49).

• HP Integrity Superdome servers include the SD16B, SD32B, and SD64B models. These models support up to 16 cells in a server complex.

• The HP Integrity rx8640 model supports up to four cells in a server complex.

• The HP Integrity rx7640 model supports up to two cells in a server complex.

For details see “Server Hardware Details: Cell-Based HP Servers” (page 51).
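For quick reference, the non-Superdome models in Table 1-2 can be captured in a small lookup keyed by model name. This is a sketch under stated assumptions: the `ServerModel` type and `models_for` helper are hypothetical, and Superdome models are left out because the same model names (for example SD16A) occur in both the HP 9000 and HP Integrity families.

```python
from typing import List, NamedTuple, Optional


class ServerModel(NamedTuple):
    family: str             # "HP 9000" (PA-RISC) or "HP Integrity" (Itanium 2)
    chipset: Optional[str]  # None for first-generation servers
    max_cells: int          # maximum cells in a server complex


# Values transcribed from Table 1-2. All Superdome models listed there
# support up to 16 cells and are intentionally not included here.
SERVER_MODELS = {
    "rp7405/rp7410": ServerModel("HP 9000", None, 2),
    "rp8400": ServerModel("HP 9000", None, 4),
    "rp7420": ServerModel("HP 9000", "sx1000", 2),
    "rp8420": ServerModel("HP 9000", "sx1000", 4),
    "rp7440": ServerModel("HP 9000", "sx2000", 2),
    "rp8440": ServerModel("HP 9000", "sx2000", 4),
    "rx7620": ServerModel("HP Integrity", "sx1000", 2),
    "rx8620": ServerModel("HP Integrity", "sx1000", 4),
    "rx7640": ServerModel("HP Integrity", "sx2000", 2),
    "rx8640": ServerModel("HP Integrity", "sx2000", 4),
}


def models_for(family: str, chipset: Optional[str]) -> List[str]:
    """Return the model names for a family and chipset, sorted by name."""
    return sorted(
        name
        for name, model in SERVER_MODELS.items()
        if model.family == family and model.chipset == chipset
    )
```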

HP Superdome Hybrid Servers: Intel® Itanium® 2 and PA-RISC nPartition Mixing

HP Superdome servers based on the HP sx1000 chipset can support hybrid configurations with

both PA-RISC nPartitions and Intel® Itanium® 2 nPartitions in the same server complex.

NOTE: For details and restrictions on mixing PA-RISC nPartitions and Intel® Itanium® 2

nPartitions on HP Superdome servers based on the HP sx2000 chipset, see the HP Integrity

Superdome/sx2000 Service Guide.

On Superdome hybrid servers based on the HP sx1000 chipset, each nPartition must have only

PA-RISC processors or only Intel® Itanium® 2 processors. However, both types of nPartitions can

reside in the same server complex. Within each PA-RISC nPartition, all cells must have the same

processor revision level. Within each Intel® Itanium® 2 nPartition, all cells must have the same

cell compatibility value.
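The mixing rules above amount to two checks per nPartition. The following sketch expresses them in Python; the `CellInfo` type, its field names, and the string labels are hypothetical, and the `revision` field stands in for the processor revision level (PA-RISC) or the cell compatibility value (Itanium 2).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CellInfo:
    cell_id: int
    processor_type: str  # "PA-RISC" or "Itanium 2"
    revision: str        # revision level or cell compatibility value


def validate_npartition(cells: list[CellInfo]) -> list[str]:
    """Return a list of rule violations; an empty list means none found."""
    types = {cell.processor_type for cell in cells}
    if len(types) > 1:
        # An nPartition must not mix PA-RISC and Itanium 2 cells.
        return ["nPartition mixes processor types: " + ", ".join(sorted(types))]
    if len({cell.revision for cell in cells}) > 1:
        label = (
            "processor revision level"
            if types == {"PA-RISC"}
            else "cell compatibility value"
        )
        return [f"cells differ in {label}"]
    return []
```

Two nPartitions of different processor types in the same complex each pass this check on their own, which matches the statement that both types can reside in one complex.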


Page 18

NOTE: Specific firmware, operating systems, and management tools are required to support

mixing PA-RISC nPartitions and Intel® Itanium® 2 nPartitions on Superdome hybrid servers.

For details, refer to HP Superdome Hybrid Servers, which is available from the

http://docs.hp.com/en/hw.html Web site under the HP 9000 Superdome Server and HP Integrity Superdome

Server links.

Hardware Components of nPartition-Capable Servers

All hardware within a cell-based server—including all cells, I/O chassis, cables, cabinet hardware,

fans, and power and utility components—is considered to be a server complex.

Within each cell-based server cabinet are one or more cells, each of which contains processors

and memory.

Each cell-based server cabinet can have multiple I/O chassis that provide PCI slots for I/O cards.

I/O resources also include any I/O devices attached to I/O cards within the I/O chassis.

Core I/O is required for each nPartition to provide console services and other boot and

management abilities. On first-generation cell-based servers and HP sx1000 chipset-based servers,

core I/O is provided by a PCI card residing in an I/O chassis. On HP sx2000 chipset-based servers,

core I/O is provided on each cell. On all cell-based servers, each nPartition has only one core I/O

active at a time.

Each I/O chassis connects to only one cell in the server. Some cell-based servers also support

optional I/O expansion cabinets to provide additional I/O chassis. An HP Superdome complex

can consist of one or two server cabinets, and can also include one or two I/O expansion

cabinets (to provide additional I/O chassis). The two-cell HP servers consist of a single server

cabinet only. The four-cell servers consist of a single server cabinet and can optionally include

one I/O expansion cabinet to provide two additional I/O chassis.
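The cabinet limits in the preceding paragraph can be checked mechanically. This is a minimal sketch; the server class labels and the function name are hypothetical, and the limits are only those stated in the paragraph above.

```python
# server class: ((min, max) server cabinets, max I/O expansion cabinets)
CABINET_LIMITS = {
    "Superdome": ((1, 2), 2),
    "four-cell": ((1, 1), 1),
    "two-cell": ((1, 1), 0),
}


def valid_cabinet_layout(
    server_class: str, server_cabinets: int, io_expansion_cabinets: int
) -> bool:
    """Return True if the cabinet counts fall within the stated limits."""
    (low, high), max_expansion = CABINET_LIMITS[server_class]
    return (
        low <= server_cabinets <= high
        and 0 <= io_expansion_cabinets <= max_expansion
    )
```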

For details on listing and managing nPartition hardware components, see Chapter 7 (page 201).

Administration Tools for nPartitions

The main administration tools for nPartitions are Partition Manager, which provides a graphical

interface, and the nPartition Commands, which provide a command-line interface.

Some nPartition configuration and management also can be accomplished using the service

processor interface to a cell-based server or by using the boot interface available through an

nPartition console.

Slightly different toolsets and capabilities are available on the different server models. However,

the same basic administration tasks are supported on all cell-based servers.

The following tools can perform nPartition administration tasks:

• Service Processor (MP or GSP) Menus

Service processor menus provide a service interface for the entire complex. They allow access

to all hardware and nPartitions defined within the complex. The service processor is always

available, regardless of whether any nPartitions are configured or booted in the complex.

The service processor includes the Command menu, nPartition consoles, nPartition Virtual

Front Panels, nPartition console logs, and the Chassis Log Viewer or Event Log Viewer (HP

9000 servers with HP PA-8700 processors have chassis logs, and servers based on the HP

sx1000 or sx2000 chipset have event logs).

See Chapter 4 (page 67).


Page 19

For service processor commands, see “Command Reference for Service Processor Commands”

(page 80).

• EFI Boot Manager and EFI Shell Commands

On cell-based HP Integrity servers, the Extensible Firmware Interface (EFI) supports

nPartition management. The EFI is accessible from an nPartition console when the nPartition

is in an active state but has not booted an operating system.

See “Command Reference for EFI Shell Commands” (page 81) for details.

• BCH Menu Commands

On cell-based PA-RISC servers, the Boot Console Handler (BCH) interface supports

management from an nPartition console when the nPartition is in an active state but has not

booted an operating system. See “Command Reference for BCH Menu Commands” (page 84)

for details.

• nPartition Commands

You can configure, manage, and monitor nPartitions and hardware using the nPartition

commands such as parstatus, parcreate, parmodify, and others.

Two versions of the nPartition commands are available: the Original nPartition Commands

and Enhanced nPartition Commands. The Original nPartition Commands are used only on

HP-UX 11i v1 (B.11.11) releases prior to the December 2004 release. The Enhanced nPartition

Commands are supported for HP-UX, Windows, and Linux.

The same base set of features is available in both nPartition commands versions. However,

the Enhanced nPartition Commands include new options, such as remote administration

abilities, and include the cplxmodify command.

See “Commands for Configuring nPartitions” (page 19) for details.

• Partition Manager (/opt/parmgr/bin/parmgr)

Partition Manager provides a graphical interface for configuring, modifying, and managing

nPartitions and hardware within a server complex.

Two versions of Partition Manager are available: Version 1.0 and Version 2.0. Partition

Manager Version 1.0 is used only on HP-UX 11i v1 (B.11.11) releases prior to the December

2004 release and relies in part on the Original nPartition Commands. Partition Manager

Version 2.0 is supported for HP-UX and Windows and relies in part on the Enhanced

nPartition Commands.

Although both Partition Manager versions support a similar set of tasks, the Partition

Manager Version 2.0 release provides a significantly improved graphical interface, a new

Web-based management interface, and remote administration abilities.

See “Partition Manager” (page 22) for details.
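The version rules for HP-UX in the list above can be summarized in a short function. This is an illustrative sketch: the function name, the version strings, and the convention of representing a release as the first day of its release month are assumptions made here, not HP conventions.

```python
from __future__ import annotations

from datetime import date

# The HP-UX 11i v1 December 2004 release, represented as a date (assumption).
ENHANCED_CUTOVER = date(2004, 12, 1)


def hpux_tool_versions(
    hpux_version: str, release: date | None = None
) -> tuple[str, str]:
    """Return (nPartition commands version, Partition Manager version)."""
    if hpux_version in ("11i v2", "11i v3"):
        return ("Enhanced", "2.0")
    if hpux_version == "11i v1":
        if release is None:
            raise ValueError("a release date is needed for HP-UX 11i v1")
        if release < ENHANCED_CUTOVER:
            return ("Original", "1.0")
        return ("Enhanced", "2.0")
    raise ValueError(f"unknown HP-UX version: {hpux_version!r}")
```

For example, `hpux_tool_versions("11i v1", date(2003, 12, 1))` yields the Original commands and Partition Manager Version 1.0, while any 11i v2 or 11i v3 release yields the Enhanced commands and Version 2.0.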

Commands for Configuring nPartitions

You can use the nPartition commands to create, modify, monitor, and remove nPartitions; get

detailed server hardware information; manipulate attention indicators (LEDs) and power; and

modify server complex attributes such as the complex name.

Table 1-3 describes the two nPartition commands releases, the Original nPartition Commands

and the Enhanced nPartition Commands.

The nPartition commands include: parcreate, parmodify, parremove, parstatus,

parunlock, fruled, frupower, and cplxmodify. Table 1-4 “nPartition Commands

Descriptions” briefly describes each of the commands.

When using these commands, specify cells and I/O chassis using the notations in “Specifying

Cells and I/O Chassis to Commands” (page 243).


Page 20

Remote management using the commands is supported as described in “Specifying Remote

Management Options to Commands” (page 247).

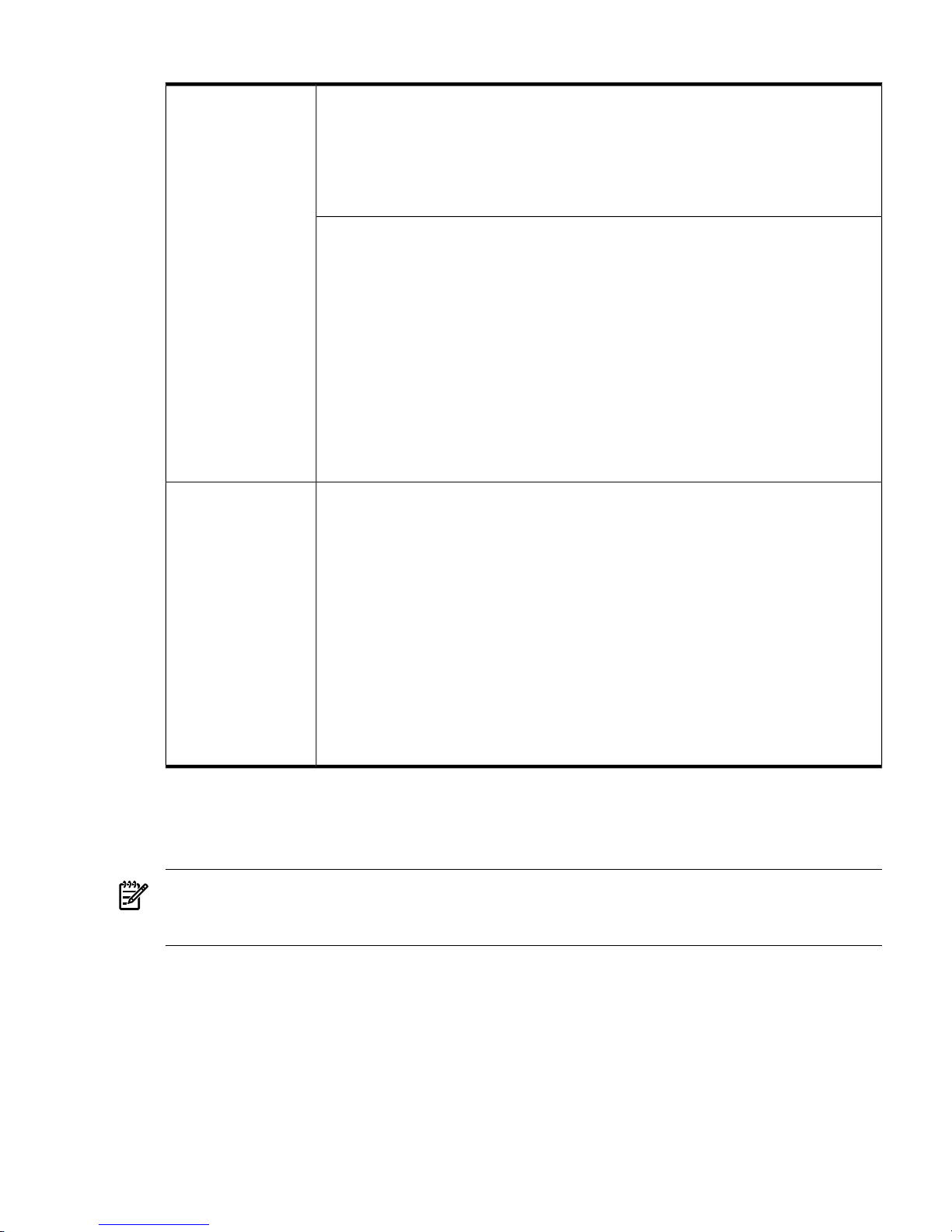

Table 1-3 nPartition Commands Releases

Enhanced nPartition Commands

• Support both local management and remote management of nPartitions and complexes.

• Distributed with the HP-UX 11i v3 (B.11.31) release. Installed and supported for use on all systems that run HP-UX 11i Version 3.

• Distributed with the HP-UX 11i v2 (B.11.23) release. Installed and supported for use on all systems that run HP-UX 11i Version 2.

• Distributed with the HP-UX 11i v1 (B.11.11) December 2004 release and later.

• Available for Windows (32-bit) and Windows (64-bit). Distributed with the Smart Setup CD.

• Available for Red Hat Enterprise Linux and SuSE Linux Enterprise Server. Distributed with the HP Integrity Essentials Foundation Pack for Linux.

Original nPartition Commands

• Support only local management of nPartitions and complexes.

• Were distributed with HP-UX 11i v1 (B.11.11) releases prior to the December 2004 release.

• Supported by HP-UX kernels built with nPartition support enabled (the hd_fabric driver) and use the libfab.1 library.

• Installed as part of the HP-UX 11i Version 1 operating system installation prior to the December 2004 release.

Table 1-4 describes the nPartition configuration commands and lists sections where you can find

each command's syntax and details.

Table 1-4 nPartition Commands Descriptions

Command: parcreate

Description: Create a new nPartition; root or IPMI LAN access is required. See “parcreate Command” (page 249).

Command: parmodify

Description: Modify an existing nPartition; root or IPMI LAN access is required. See “parmodify Command” (page 252).

Command: parremove

Description: Remove an existing nPartition; root or IPMI LAN access is required. See “parremove Command” (page 256).

Command: parstatus

Description: Display nPartition information and hardware details for a server complex. See “parstatus Command” (page 258).

Command: parunlock

Description: Unlock Complex Profile data (use this command with caution); root or IPMI LAN access is required. See “parunlock Command” (page 260).

Command: fruled

Description: Blink the attention indicators (LEDs) or turn them off. This command can control these indicators for cells, I/O chassis, and cabinet numbers. See “fruled Command” (page 262).

Command: frupower

Description: Display status or turn power on or off for cells and I/O chassis; root or IPMI LAN access is required. See “frupower Command” (page 264).

Command: cplxmodify

Description: Only distributed with the Enhanced nPartition Commands. Modify server complex attributes. Supports changing the name of a complex; root or IPMI LAN access is required. See “cplxmodify Command” (page 266).
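Table 1-4 can also be read as a small catalog that records, for each command, whether the table states an access requirement and whether the command ships only with the Enhanced nPartition Commands. The sketch below is illustrative: the `CommandInfo` type and `commands_available` helper are hypothetical, and a False access flag means only that Table 1-4 states no requirement, not that none exists.

```python
from __future__ import annotations

from typing import NamedTuple


class CommandInfo(NamedTuple):
    summary: str
    needs_root_or_ipmi_lan: bool  # True only where Table 1-4 says so
    enhanced_only: bool = False


NPARTITION_COMMANDS = {
    "parcreate": CommandInfo("Create a new nPartition", True),
    "parmodify": CommandInfo("Modify an existing nPartition", True),
    "parremove": CommandInfo("Remove an existing nPartition", True),
    "parstatus": CommandInfo("Display nPartition and hardware details", False),
    "parunlock": CommandInfo("Unlock Complex Profile data", True),
    "fruled": CommandInfo("Blink attention indicators or turn them off", False),
    "frupower": CommandInfo("Display status or turn power on or off", True),
    "cplxmodify": CommandInfo("Modify server complex attributes", True, True),
}


def commands_available(release: str) -> list[str]:
    """List command names for the "Original" or "Enhanced" release."""
    if release not in ("Original", "Enhanced"):
        raise ValueError(f"unknown release: {release!r}")
    return [
        name
        for name, info in NPARTITION_COMMANDS.items()
        if release == "Enhanced" or not info.enhanced_only
    ]
```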


Page 21

Availability of nPartition Commands

The Original nPartition Commands were distributed as part of HP-UX 11i v1 (B.11.11) releases

prior to the December 2004 release.

The Enhanced nPartition Commands are distributed with current HP-UX releases, including the

HP-UX 11i v3 (B.11.31) release, all releases of HP-UX 11i v2 (B.11.23), and releases of HP-UX 11i

v1 (B.11.11) beginning with the December 2004 release.

The Enhanced nPartition Commands also are distributed as bundles on the HP Smart Setup CD

for Windows, and as bundles on the HP Integrity Essentials Foundation Pack for Linux.

You can download the nPartition Commands for Windows and Linux from the

http://www.hp.com/ Web site. See “Downloading Enhanced nPartition Commands for Windows” and

“Downloading the HP Integrity Essentials Foundation Pack for Linux”.

Enhanced nPartition Commands for Windows

The Enhanced nPartition Commands for Windows are available in both 32-bit and 64-bit versions.

• The Windows (32-bit) Enhanced nPartition Commands are designed for any 32-bit system

running Windows XP, Windows 2000 with Service Pack 3, or Windows Server 2003 (32-bit).

The 32-bit nPartition Commands enable you to use a 32-bit system as a remote management

station for nPartition administration.

• The Windows (64-bit) Enhanced nPartition Commands are designed for HP Integrity servers

running Windows Server 2003, 64-bit, Enterprise Edition or Datacenter Edition.

The 64-bit nPartition Commands enable you to perform local and remote management of

nPartitions when running on a cell-based server with Windows Server 2003, and enable you

to perform remote management when running on other HP Integrity servers with Windows

Server 2003.
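The two bullets above reduce to a simple rule about which kinds of management a Windows system can perform. The sketch below states that rule in Python; the function name and its parameters are hypothetical and chosen for illustration only.

```python
from typing import Set


def windows_management_scope(bits: int, on_cell_based_server: bool) -> Set[str]:
    """Return the management modes available to a Windows system.

    bits: 32 or 64, the version of the Enhanced nPartition Commands in use.
    on_cell_based_server: True when the commands run on a cell-based server
    with Windows Server 2003 (only meaningful for the 64-bit version).
    """
    if bits == 32:
        # A 32-bit system acts only as a remote management station.
        return {"remote"}
    if bits == 64:
        if on_cell_based_server:
            return {"local", "remote"}
        return {"remote"}
    raise ValueError("bits must be 32 or 64")
```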

The Smart Setup CD has both the 32-bit and 64-bit versions of the nPartition Commands.

You also can download the nPartition Commands bundles for Windows from the

http://www.hp.com/ Web site.

Procedure 1-1 Downloading Enhanced nPartition Commands for Windows

You can download the Enhanced nPartition Commands for Windows from the

http://www.hp.com/ Web site.

1. Go to the http://www.hp.com/ Web site and choose Software & Driver Downloads.

2. At the Software & Driver Downloads page, in the for product box, enter the name of a

cell-based HP Integrity server, such as:

• Integrity Superdome

• Integrity rx8620

• Integrity rx7620

3. At the Downloads for HP Business Support Center Web page, choose Microsoft Windows

Server 2003 64-Bit from the select operating system list.

4. At the next Downloads for HP Business Support Center Web page, choose HP nPartition

Commands Bundle (Windows Server 2003 64-Bit) or choose HP nPartition Commands

Bundle (Windows Server 2003 32-Bit) from the Utility Partition Management heading.

After you choose the nPartition commands bundle, the HP nPartition Commands Bundle

Web page displays information about the software bundle and provides options for

downloading the software and for viewing the release notes.

5. To view the release notes, choose the Release Notes tab.

Read the Installation instructions section of the release notes and the features summary before

downloading and installing the software.


Page 22

6. To download the Enhanced nPartition Commands for Windows, choose download from

the Web page.

Enhanced nPartition Commands for Linux

The HP Integrity Essentials Foundation Pack for Linux is a CD that includes Enhanced nPartition

Commands for use with Red Hat Enterprise Linux or SuSE Linux Enterprise Server.

Procedure 1-2 Downloading the HP Integrity Essentials Foundation Pack for Linux

You can download the HP Integrity Essentials Foundation Pack for Linux from the

http://www.hp.com/go/softwaredepot Web site.

The downloadable CD image is an .iso file that you can use to record a usable CD.

1. Go to the http://www.hp.com/go/softwaredepot Web site.

2. At the Software Depot home page, enter Foundation Pack for Linux in the Search

field to search the Software Depot.

3. At the product catalog page that displays the search results, choose the HP Integrity

Essentials Foundation Pack for Linux on Itanium (R) 2-based Servers entry from the

list of products.

4. At the HP Integrity Essentials Foundation Pack for Linux product details page, in the to

order section of the page (where it states "Click here to download"), choose the word here.

5. To download the HP Integrity Essentials Foundation Pack for Linux CD image file, choose

download from the Web page.

You will use the .iso file that you downloaded to create a usable CD.

6. Go to the http://docs.hp.com/linux/ Web site to view documentation for the HP Integrity

Essentials Foundation Pack for Linux.

Under the Linux for Itanium 2-based Servers and Workstations heading of the Linux

documentation Web site, view the HP Integrity Essentials Foundation Pack for Linux

documentation.

7. Record a CD using the HP Integrity Essentials Foundation Pack for Linux CD image file.

The CD image (.iso file) is a complete CD image in one file. Copying the file to a CD does

not create a usable CD. Instead, use a software application that supports recording a CD

from a CD image.
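Before recording the CD, it can be worth confirming that the download is a disc image and not, for example, a truncated file or an HTML error page. The sketch below assumes the image uses the standard ISO 9660 file system, which this guide does not state; the function name is hypothetical. ISO 9660 places its volume descriptors starting at sector 16 of 2048-byte sectors, and each descriptor carries the identifier `CD001` immediately after its first byte.

```python
ISO9660_SECTOR_SIZE = 2048  # bytes per logical sector
FIRST_DESCRIPTOR_SECTOR = 16  # volume descriptors start here


def looks_like_iso9660(path: str) -> bool:
    """Return True if the file carries the ISO 9660 'CD001' identifier."""
    with open(path, "rb") as image:
        # Skip the one-byte descriptor type that precedes the identifier.
        image.seek(FIRST_DESCRIPTOR_SECTOR * ISO9660_SECTOR_SIZE + 1)
        return image.read(5) == b"CD001"
```

A True result only indicates that the file has the expected signature; it does not verify that the download is complete or uncorrupted.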

Partition Manager

Partition Manager provides a graphical interface for managing cell-based servers.

Partition Manager Version 1.0 was distributed with HP-UX 11i v1 (B.11.11) releases prior to the

December 2004 release. Starting with the HP-UX 11i v1 December 2004 release, Partition Manager

Version 2.0 replaces Partition Manager Version 1.0 for HP-UX 11i v1 systems.

Partition Manager Version 2.0 is distributed and installed with the HP-UX 11i v3 (B.11.31) release

and all HP-UX 11i v2 (B.11.23) releases. It also is available for Windows (32-bit) and Windows

(64-bit) as part of the Smart Setup CD, and is available for Linux on the HP Integrity Essentials

Foundation Pack for Linux.

Partition Manager Version 2.0 for HP-UX provides the /opt/parmgr/bin/parmgr command

to run, stop, and restart Partition Manager. Refer to the parmgr -h command or the parmgr(1M)

manpage for command-line options.
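As an illustration, a script on an HP-UX system could retrieve the command-line help mentioned above. This is a minimal sketch: the `parmgr_help` wrapper is hypothetical, only the path and the `-h` option come from this guide, and nothing is assumed about the format of the help text.

```python
import os
import subprocess
from typing import Optional

PARMGR = "/opt/parmgr/bin/parmgr"  # Partition Manager Version 2.0 for HP-UX


def parmgr_help(path: str = PARMGR) -> Optional[str]:
    """Run 'parmgr -h' and return its output, or None if parmgr is absent."""
    if not os.access(path, os.X_OK):
        return None
    result = subprocess.run(
        [path, "-h"], capture_output=True, text=True, check=False
    )
    # Help text may be written to either stream, so return whichever is set.
    return result.stdout or result.stderr
```

Returning None when the executable is missing lets the same script run unchanged on systems where Partition Manager is not installed.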

Also see the Partition Manager Version 2.0 online help available at the following Web site:

http://docs.hp.com/en/PARMGR2/

To view the online help without running Partition Manager, you can open the help files using

a Web browser either on a system where Partition Manager is installed, or on a system that has

a downloaded copy of the help files.


Page 23

Partition Manager Version 2.0 for Windows

The Partition Manager Version 2.0 for Windows can be installed and run on either 32-bit or 64-bit

Windows systems. (A single Partition Manager bundle is provided for both 32-bit and 64-bit

systems.)

• Using Partition Manager for Windows on any 32-bit system running Windows XP, Windows

2000 with Service Pack 3, or Windows Server 2003 (32-bit) enables you to use a 32-bit system

as a remote management station for nPartition administration.

• Using Partition Manager for Windows on an HP Integrity server running Windows Server

2003, 64-bit, Enterprise Edition or Datacenter Edition enables you to perform local and

remote management of nPartitions when running on a cell-based server with Windows

Server 2003, and enables you to perform remote management when running on other HP

Integrity servers with Windows Server 2003.

NOTE: Before installing the Partition Manager bundle for Windows you must download and

install the nPartition Commands bundle (either the 32-bit or 64-bit version, depending on the

platform where the installation occurs).

You also must download and install the Java 2 SE SDK v1.4.2 from http://java.sun.com/downloads.

For details refer to the release notes.

The Smart Setup CD includes Partition Manager Version 2.0 for Windows. You also can download

the Partition Manager bundles for Windows from the http://www.hp.com/ Web site.

Procedure 1-3 Downloading Partition Manager Version 2.0 for Windows

You can download Partition Manager Version 2.0 for Windows from the http://www.hp.com/

Web site.

1. Go to the http://www.hp.com/ Web site and choose Software & Driver Downloads.

2. At the Software & Driver Downloads page, in the for product box, enter the name of a

cell-based HP Integrity server, such as:

• Integrity Superdome

• Integrity rx8620

• Integrity rx7620

3. At the Downloads for HP Business Support Center Web page, choose Microsoft Windows

Server 2003 64-Bit from the select operating system list.

4. At the next Downloads for HP Business Support Center Web page, choose HP Partition

Manager Bundle from the Utility Partition Management heading.

After you choose the Partition Manager bundle, the HP Partition Manager Bundle Web page

displays information about the software bundle and provides options for downloading the

software and for viewing the release notes.

5. To view the release notes, choose the Release Notes tab.

Read the Installation instructions section of the release notes and the features summary before

downloading and installing the software.

6. To download Partition Manager for Windows, choose download from the Web page.

nPartition Properties

This section describes the nPartition properties you work with when performing nPartition

administration tasks.

The following nPartitions details are covered here:

• “Partition Numbers”

• “Assigned and Unassigned Cells”

• “Base Cells”

• “Core Cells”

• “Active and Inactive Cells”

• “Cell Local Memory”

• “Cell Property Details”

• “Active and Inactive nPartition Boot States”

Partition Numbers

Each nPartition has its own unique partition number that the nPartition administration tools use

for identifying the nPartition.

When you create an nPartition, the tool you use assigns the nPartition the lowest available

partition number. For example, the first nPartition always is partition number 0, and the second

nPartition to be created is partition number 1.

After you remove an nPartition, no cells are assigned to the nPartition. As a result, the nPartition

tools can reuse the partition number when creating a new nPartition.

For example, after you remove partition number 2, the next nPartition you create with the

parcreate command or Partition Manager is assigned partition number 2, provided that all

lower-numbered nPartitions (partition numbers 0 and 1) already are

defined.
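The numbering rule described above can be illustrated with a short Python sketch. This is not how parcreate or Partition Manager is implemented; the function name is hypothetical and the sketch only models the "lowest available partition number" behavior.

```python
def lowest_available_partition_number(defined):
    """Return the smallest partition number (starting at 0) that is not in use.

    `defined` is any iterable of partition numbers that currently exist.
    """
    used = set(defined)
    number = 0
    while number in used:
        number += 1
    return number


# Partitions 0, 1, and 3 exist; partition 2 was removed, so 2 is reused.
print(lowest_available_partition_number([0, 1, 3]))  # prints 2
```

With no nPartitions defined, the same function returns 0, which matches the statement that the first nPartition always is partition number 0.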

Assigned and Unassigned Cells

Each cell in a server complex either is assigned to one of the nPartitions in the complex, or it is

unassigned and thus is not used by any of the nPartitions. If an I/O chassis is attached to an

unassigned cell, then the chassis likewise is not assigned to an nPartition.

Cells that are unassigned are considered to be available resources; they are free to be assigned

to any of the existing nPartitions, or can be used to create new nPartitions.

Base Cells

On both HP 9000 servers and HP Integrity servers, all cells within an nPartition are base cells.

The nPartitions administration tools automatically set the cell type to base cell, if you do not

specify the cell type.

Core Cells

One cell in each nPartition must serve as the active core cell. The core cell controls the nPartition

until an operating system has booted, and it provides console services and other boot and

management abilities for the nPartition. The monarch processor on the core cell runs the Boot

Console Handler (BCH) or Extensible Firmware Interface (EFI) code while all other processors

are idle until an operating system is booted.

On first-generation cell-based servers and HP sx1000 chipset-based servers, core I/O is provided

by a PCI card residing in an I/O chassis. On these servers, to be eligible as a core cell, a cell must

be assigned to the nPartition, it must be active, and it must be attached to an I/O chassis containing

functional core I/O.

On HP sx2000 chipset-based servers, core I/O is provided on each cell, so any cell assigned to an

nPartition can be a core cell.

Although an nPartition can have multiple core-capable cells, only one core I/O is actively used

in an nPartition: the core I/O belonging to the active core cell.
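The eligibility rules above can be summarized in a small Python sketch. The Cell class, its field names, and the chipset labels are hypothetical and exist only for illustration; the sketch restates the rules in this section and does not query real hardware.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    assigned: bool                # cell is assigned to the nPartition
    active: bool                  # cell has booted and joined the nPartition
    has_functional_core_io: bool  # attached I/O chassis holds working core I/O


def is_core_cell_eligible(cell, chipset):
    """Apply the core cell eligibility rules described in this section.

    `chipset` is a hypothetical label: "sx2000" for HP sx2000-based servers;
    any other value is treated as a first-generation or sx1000-based server.
    """
    if chipset == "sx2000":
        # Core I/O is on every cell, so assignment alone is enough.
        return cell.assigned
    # Older servers: core I/O is a PCI card in an attached I/O chassis.
    return cell.assigned and cell.active and cell.has_functional_core_io


# An assigned, active cell with no core I/O attached.
cell = Cell(assigned=True, active=True, has_functional_core_io=False)
print(is_core_cell_eligible(cell, "sx1000"))  # prints False
print(is_core_cell_eligible(cell, "sx2000"))  # prints True
```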

For details about setting and using the core cell choices (or "alternates") for an nPartition see

“Setting nPartition Core Cell Choices” (page 194). When none of the core cell choices can serve

as the active core cell, or if no core cell choices are specified, the nPartition attempts to select an

eligible cell using a default process.

Active and Inactive Cells

Cells that are assigned to an nPartition and have booted to form an nPartition are active cells

whose resources (processors, memory, and any attached I/O) can be actively used by software

running in the nPartition.

Cells that are inactive either are not assigned to an nPartition, or they have not participated in

partition rendezvous to form an nPartition with any other cells assigned to the nPartition.

(Partition rendezvous is the point during the nPartition boot process when all available cells in

an nPartition join together to establish which cells are active for the current boot of the nPartition.)

For example, a cell is inactive when it is powered off, has booted with an "n" use-on-next-boot

value, or is assigned to an nPartition that has been reset to the shutdown for reconfig state.

The resources belonging to inactive cells are not actively used by an nPartition. For a cell and its

resources to be actively used the cell must boot and participate in partition rendezvous.
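As a rough illustration, the conditions listed above that keep a cell inactive can be collected in a Python sketch. The CellStatus class and its field names are hypothetical. An empty result does not prove that a cell is active, because the cell still must boot and participate in partition rendezvous.

```python
from dataclasses import dataclass


@dataclass
class CellStatus:
    assigned: bool                         # assigned to an nPartition
    powered_on: bool                       # cell power is on
    use_on_next_boot: bool                 # True for "y", False for "n"
    partition_shutdown_for_reconfig: bool  # nPartition was reset to this state


def inactive_reasons(cell):
    """List the conditions from this section that make the cell inactive."""
    reasons = []
    if not cell.assigned:
        reasons.append("not assigned to an nPartition")
    if not cell.powered_on:
        reasons.append("powered off")
    if not cell.use_on_next_boot:
        reasons.append('use-on-next-boot value is "n"')
    if cell.partition_shutdown_for_reconfig:
        reasons.append("nPartition is in the shutdown for reconfig state")
    return reasons


cell = CellStatus(assigned=True, powered_on=False,
                  use_on_next_boot=True,
                  partition_shutdown_for_reconfig=False)
print(inactive_reasons(cell))  # prints ['powered off']
```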

Cell Local Memory

On cell-based servers that are based on the HP sx1000 or sx2000 chipset, a portion of the memory

in each cell can be configured as cell local memory (CLM), which is non-interleaved memory

that can be quickly accessed by processors residing on the same cell as the memory.

CAUTION: Memory configured as cell local memory only can be used by operating systems

that support it.

Any memory configured as cell local memory is unusable when an nPartition runs an operating

system that does not support it.

The nPartition management tools enable you to configure CLM for each cell either as a percentage

of the total memory in the cell, or as an absolute number of gigabytes of memory.

For details about configuring CLM see Chapter 3 (page 61).
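The following Python sketch illustrates only the arithmetic behind the two ways of expressing a CLM amount. The function and parameter names are hypothetical, and the sketch ignores any rounding or granularity rules that the nPartition tools themselves might apply.

```python
def clm_gigabytes(total_gb, percent=None, absolute_gb=None):
    """Return the CLM amount in GB for one cell.

    Exactly one of `percent` (0 to 100) or `absolute_gb` must be given.
    """
    if (percent is None) == (absolute_gb is None):
        raise ValueError("specify exactly one of percent or absolute_gb")
    if percent is not None:
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        return total_gb * percent / 100
    if not 0 <= absolute_gb <= total_gb:
        raise ValueError("absolute_gb must not exceed the memory in the cell")
    return float(absolute_gb)


# A cell with 8 GB of memory: 25 percent and 2 GB describe the same amount.
print(clm_gigabytes(8.0, percent=25))     # prints 2.0
print(clm_gigabytes(8.0, absolute_gb=2))  # prints 2.0
```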

Cell Property Details

Each cell has various properties that determine how the cell can be used and managed.

To list the properties of cells in a server complex, you can use the parstatus -C command,

parstatus -V -c# command, or Partition Manager.

The parstatus -C command output includes cell property summaries such as the current

assignments, usage, and I/O details for all cells in the complex.

# parstatus -C

[Cell]
                        CPU     Memory                                Use
                        OK/     (GB)                          Core    On
Hardware   Actual       Deconf/ OK/                           Cell    Next Par
Location   Usage        Max     Deconf    Connected To        Capable Boot Num
========== ============ ======= ========= =================== ======= ==== ===
cab0,cell0 active core  4/0/4    8.0/ 0.0 cab 0,bay0,chassis1 yes     yes  0
cab0,cell1 active base  4/0/4    8.0/ 0.0 -                   no      yes  0
cab0,cell2 active base  4/0/4    8.0/ 0.0 cab 0,bay1,chassis3 yes     yes  0
cab0,cell3 absent       -       -         -                   -       -    -
cab0,cell4 active core  2/0/4    4.0/ 0.0 cab 0,bay0,chassis3 yes     yes  1
cab0,cell5 active base  2/0/4    4.0/ 0.0 -                   no      yes  1
cab0,cell6 active base  2/0/4    4.0/ 0.0 cab 0,bay1,chassis1 yes     yes  1
cab0,cell7 absent       -       -         -                   -       -    -

#
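If you capture parstatus -C output in a file or script, a few lines of Python can group cells by partition number. The sketch below is an illustration, not an HP-supplied tool: the function name is hypothetical, the sample text is copied from the listing above, and the parser assumes only that the first field is the cell location and the last field is the partition number.

```python
from collections import defaultdict

SAMPLE = """\
cab0,cell0 active core 4/0/4 8.0/ 0.0 cab 0,bay0,chassis1 yes yes 0
cab0,cell1 active base 4/0/4 8.0/ 0.0 - no yes 0
cab0,cell2 active base 4/0/4 8.0/ 0.0 cab 0,bay1,chassis3 yes yes 0
cab0,cell3 absent - - - - - -
cab0,cell4 active core 2/0/4 4.0/ 0.0 cab 0,bay0,chassis3 yes yes 1
cab0,cell5 active base 2/0/4 4.0/ 0.0 - no yes 1
cab0,cell6 active base 2/0/4 4.0/ 0.0 cab 0,bay1,chassis1 yes yes 1
cab0,cell7 absent - - - - - -
"""


def cells_by_partition(text):
    """Map each partition number to the cell locations assigned to it."""
    result = defaultdict(list)
    for line in text.splitlines():
        fields = line.split()
        # Skip headers, separators, and anything that is not a cell row.
        if len(fields) < 3 or not fields[0].startswith("cab"):
            continue
        location, par_num = fields[0], fields[-1]
        # Absent or unassigned cells show "-" instead of a number.
        if par_num.isdigit():
            result[int(par_num)].append(location)
    return dict(result)


for number, cells in sorted(cells_by_partition(SAMPLE).items()):
    print(number, cells)
```

For the sample listing, partition 0 contains cells 0 through 2 and partition 1 contains cells 4 through 6; the absent cells 3 and 7 are skipped.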

The parstatus -V -c# command gives detailed information about the properties and status

for the cell (-c#) that you specify.

# parstatus -V -c0

[Cell]

Hardware Location : cab0,cell0

Global Cell Number : 0

Actual Usage : active core

Normal Usage : base

Connected To : cab0,bay0,chassis0

Core Cell Capable : yes

Firmware Revision : 20.1

Failure Usage : activate

Use On Next Boot : yes

Partition Number : 0

Partition Name : Partition 0

[CPU Details]

Type : 8820

Speed : 900 MHz

CPU Status

=== ======

0 ok

1 ok

2 ok

3 ok

4 ok

5 ok

6 ok

7 ok

CPUs

===========

OK : 8

Deconf : 0

Max : 8

[Memory Details]

DIMM Size (MB) Status

==== ========= =========

0A 2048 ok

4A 2048 ok

0B 2048 ok

4B 2048 ok

1A 2048 ok

5A 2048 ok

1B 2048 ok

5B 2048 ok

2A 2048 ok

6A 2048 ok

2B 2048 ok

6B 2048 ok

3A 2048 ok

7A 2048 ok

3B 2048 ok

7B 2048 ok

Memory

=========================

DIMM OK : 16

DIMM Deconf : 0

Max DIMMs : 16

Memory OK : 32.00 GB

Memory Deconf : 0.00 GB

#
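The memory summary in this output can be cross-checked from the DIMM rows. The Python sketch below is an illustration under stated assumptions: the function name and regular expression are hypothetical, and the sample text is the [Memory Details] table copied from the listing above.

```python
import re

SAMPLE = """\
DIMM Size (MB) Status
==== ========= =========
0A 2048 ok
4A 2048 ok
0B 2048 ok
4B 2048 ok
1A 2048 ok
5A 2048 ok
1B 2048 ok
5B 2048 ok
2A 2048 ok
6A 2048 ok
2B 2048 ok
6B 2048 ok
3A 2048 ok
7A 2048 ok
3B 2048 ok
7B 2048 ok
"""

# A DIMM row: slot such as "0A", size in MB, then a status word.
DIMM_ROW = re.compile(r"^(\d[A-Z])\s+(\d+)\s+(\w+)$")


def memory_ok_gb(text):
    """Sum the sizes of all DIMMs whose status is "ok", in gigabytes."""
    total_mb = 0
    for line in text.splitlines():
        match = DIMM_ROW.match(line.strip())
        if match and match.group(3) == "ok":
            total_mb += int(match.group(2))
    return total_mb / 1024


print(memory_ok_gb(SAMPLE))  # prints 32.0
```

Sixteen 2048 MB DIMMs total 32768 MB, which agrees with the Memory OK value of 32.00 GB reported in the summary.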

Active and Inactive nPartition Boot States

Each nPartition has a boot state of either active or inactive.

The boot state indicates whether the nPartition has booted so that it may be interactively accessed

through its console (active nPartitions) or cannot be used interactively (inactive nPartitions).

You can use the parstatus -P command or Partition Manager to list all nPartitions and their

boot states (active or inactive status).

# parstatus -P

[Partition]
Par              # of  # of I/O
Num Status       Cells Chassis  Core cell  Partition Name (first 30 chars)
=== ============ ===== ======== ========== ===============================
0   inactive     2     1        ?          feshd5a
1   active       2     1        cab1,cell2 feshd5b

#
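Captured parstatus -P output can be reduced to a table of boot states with a short Python sketch. The function name is hypothetical and the sample rows are copied from the listing above; the parser assumes six whitespace-separated columns, with the partition name as the final column.

```python
SAMPLE = """\
[Partition]
Par # of # of I/O
Num Status Cells Chassis Core cell Partition Name (first 30 chars)
=== ============ ===== ======== ========== ===============================
0 inactive 2 1 ? feshd5a
1 active 2 1 cab1,cell2 feshd5b
"""


def partition_states(text):
    """Map each partition number to a (status, partition name) pair."""
    states = {}
    for line in text.splitlines():
        # Split into at most six fields so a name with spaces stays whole.
        fields = line.strip().split(None, 5)
        if len(fields) == 6 and fields[0].isdigit():
            states[int(fields[0])] = (fields[1], fields[5])
    return states


for number, (status, name) in sorted(partition_states(SAMPLE).items()):
    print(number, status, name)
```

For the sample listing, this reports partition 0 (feshd5a) as inactive and partition 1 (feshd5b) as active.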

Likewise, you can view nPartition boot states using the Virtual Front Panel, which is available

from the service processor Main menu for the server complex.

Active nPartition

An nPartition that is active has at least one core-capable cell that is active (not

in a boot-is-blocked state). When an nPartition is active, one or more of the cells assigned to the

nPartition have completed partition rendezvous and the system boot interface (the BCH or EFI

environment) has loaded and been displayed through the nPartition console. An operating system

can be loaded and run from the system boot interface on an active nPartition.

Inactive nPartition

An inactive nPartition is considered to be in the shutdown for reconfig state,

because all cells assigned to the nPartition either remain at a boot-is-blocked state or are powered

off.
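The two definitions above can be modeled in a few lines of Python. This is a simplified sketch with hypothetical names: it treats an nPartition as active when at least one core-capable cell is powered on and is not at boot-is-blocked, and as inactive otherwise.

```python
from dataclasses import dataclass


@dataclass
class CellState:
    powered_on: bool       # cell power is on
    boot_is_blocked: bool  # cell is held at the boot-is-blocked state
    core_capable: bool     # cell can serve as the core cell


def npartition_boot_state(cells):
    """Return "active" or "inactive" for the cells assigned to an nPartition."""
    for cell in cells:
        if cell.core_capable and cell.powered_on and not cell.boot_is_blocked:
            return "active"
    return "inactive"


# Every cell is either powered off or held at boot-is-blocked.
held = [CellState(True, True, True), CellState(False, True, False)]
print(npartition_boot_state(held))  # prints inactive
```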

To make an inactive nPartition active, use the BO command at the service processor (MP or GSP)

Command menu. The BO command clears the boot-is-blocked flag for all cells assigned to the