Page 1

nPartition Guide

HP Integrity Servers with Microsoft® Windows® Server 2003

Manufacturing Part Number: 5991-3684

September 2006

© Copyright 2006

Hewlett-Packard Development Company, L.P.

All rights reserved.

Page 2

Legal Notices

© 2006 Hewlett-Packard Development Company, L.P.

Microsoft and Windows are trademarks of Microsoft Corporation in the U.S. and other

countries.

Hewlett-Packard Company shall not be liable for technical or editorial errors or

omissions contained herein. The information in this document is provided “as is” without

warranty of any kind and is subject to change without notice. The warranties for HP

products are set forth in the express limited warranty statements accompanying such

products. Nothing herein should be construed as constituting an additional warranty.


Page 3

1. Introduction

Quick Start . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

Getting to know nPartitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

Partitioning continuum . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

Cell structure of nPartitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

Properties of nPartitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

Cell and nPartition boot process . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

Cell boot phase . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

nPartition boot phase . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

Choosing a management tool . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

Management interface options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

Intelligent Platform Management Interface (IPMI). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

IPMI Block Transfer (IPMI BT) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

IPMI over LAN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

Managing nPartitions using IPMI over LAN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

Running Partition Manager using IPMI over LAN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

Running nPartition commands using IPMI over LAN. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Web-Based Enterprise Management (WBEM) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Securing the WBEM connection. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Managing nPartitions using WBEM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

Running Partition Manager using WBEM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

Running nPartition commands using WBEM. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

Choosing a management mode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Local management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Remote management via IPMI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

Remote management via WBEM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

Authentication and authorization issues . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

Local management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

Remote management via IPMI. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

Remote management via WBEM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

Setting up the management station . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

Controlling the management station remotely . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

Remote Control . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

Terminal Services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

Remote Desktop. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

Telnet . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

Performing nPartition management tasks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Listing the status of an nPartition or complex . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Using the Partition Manager . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Using the nPartition commands. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Using the Management Processor Command menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

Using the EFI Shell. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

Creating nPartitions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

Creating the first nPartition in a server complex . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

Creating a Genesis Partition for a server complex . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

Creating additional nPartitions in a server complex . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

Contents


Page 4


Modifying nPartitions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

Booting and resetting nPartitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

Using the Management Processor Command menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

Using the EFI Shell. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

Using Microsoft Windows commands. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

2. Installing nPartition Management Utilities

nPartition Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

Install nPartition Commands bundle from the Smart Setup media . . . . . . . . . . . . . . . . . . . . . . . . . . 35

Partition Manager . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

Install Partition Manager Bundle from the Smart Setup media . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

Verifying the installation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

Verifying nPartition Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

Verifying Partition Manager . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

Miscellaneous installation issues . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

Configuring for secure WBEM communications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

Upgrading service packs later (after installation) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

3. Using Partition Manager

4. Using Other Tools for Managing nPartitions

Complex-level tasks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

Rename a server complex. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

Unlock complex profile entries. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

Cancel pending changes to the complex profile . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

List product and serial numbers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

nPartition-level tasks. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

Boot configuration options for nPartition systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

Find bootable devices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

List nPartition configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

List the local (current) nPartition number . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

List memory configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Configure boot paths and options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Configure autoboot options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

Configure boot-time system tests. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

Boot Windows Server 2003. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

Boot over a network . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

Shut down Windows Server 2003 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

Reboot and reset . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

Reboot for reconfiguration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

Shut down to a shutdown for reconfig (inactive) state . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

Boot an inactive nPartition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

Perform a transfer of control (TOC) reset . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

Create a genesis nPartition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

Create a new nPartition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

Remove (delete) an nPartition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84


Page 5


Add cells to an nPartition. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

Set core cell choices. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

Rename an nPartition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

Cell-level tasks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

List processor configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

List cell configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

Unassign (remove) cells from an nPartition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

Set cell attributes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 95

Power-, status-, and hardware-level tasks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

List input/output (I/O) configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

List cabinets in a server complex. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

List power status and power supplies . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

List fan and blower status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

Turn attention indicators (LEDs) on and off. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

Power server cabinets on and off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

Power cells and I/O chassis on and off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

Configure and deconfigure cells . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

Configure and deconfigure processors (CPUs) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

Configure and deconfigure memory (DIMMs). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

5. nPartition Commands Reference

parcreate. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

parmodify . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

parremove. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

parstatus. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

parunlock . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

fruled. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

frupower . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

cplxmodify. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

6. Troubleshooting

Installation problems. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

Services (WMI Mapper + nPartition Provider) fail to start during installation . . . . . . . . . . . . . . . . 139

nPartition Provider fails to start during installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

Checking component installation and operation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

Verify WMI Mapper installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

Verify WMI Mapper service with HTTP connection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

Verify WMI Mapper service with HTTPS connection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 142

Verify WMI nPartition Provider registration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

Verify WMI nPartition Provider operation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

Operational problems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

All commands: ordinal not found . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

All commands: required data unavailable or locked. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

Fruled: LED error messages (mid-range servers only). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

Frupower: cannot power off error . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

Parcreate and parmodify: cell local memory warnings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 148


Page 6


Parcreate, parmodify, and parremove: failure to update stable complex configuration data (SCCD) . . 148

Parremove: shutdown and reset instructions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 149

Parstatus -c -V: apparent incorrect output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 149

Parstatus -p -V: apparent incorrect output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 149

Parstatus: local partition error . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

Parstatus: unable to get read lock error . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

Using WMIOP.EXE to pinpoint problems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

Error messages and corrective actions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 153

nPartition commands messages. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 153

Application Event Log messages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 155

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159


Page 7

1 Introduction

A cell-based HP Integrity server (such as the rx7620 or rx7640, the rx8620 or rx8640, or

Superdome) can be configured either as a single system or partitioned into several

independent systems. Partitioning provides hardware and software isolation, where each

partition is assigned memory, processors, and I/O resources for its exclusive use, and

Chapter 1

7

Page 8


executes its own operating system image. This chapter introduces node partitions

(nPartitions) on cell-based HP Integrity servers running Microsoft® Windows® Server

2003 and the tools to manage them.


Page 9

Quick Start

This section provides an overview of the installation and configuration steps needed to

get you up and running as quickly as possible.

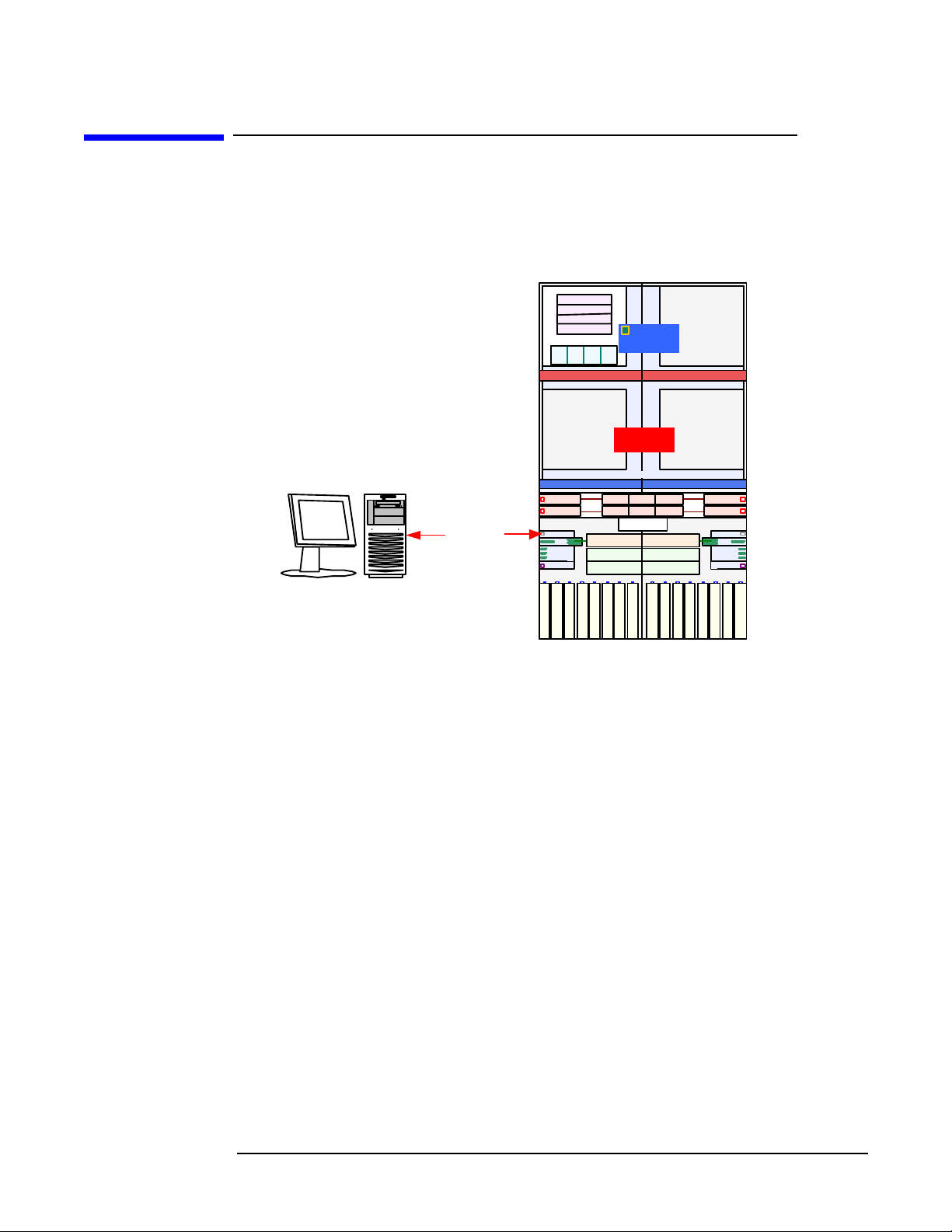

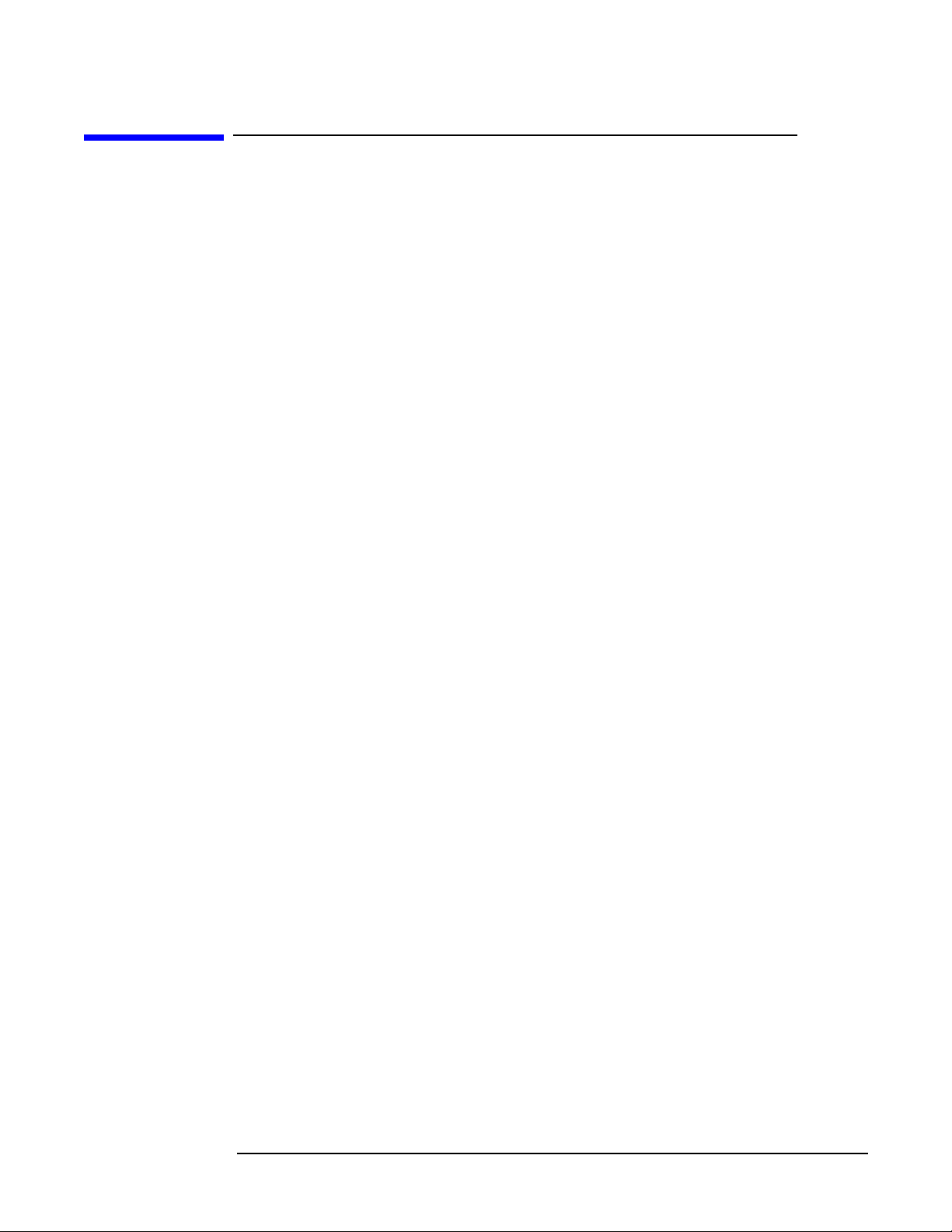

Figure 1-1 System overview

(Figure 1-1 depicts a management station running Partition Manager, the nPartition commands, and MP commands, connected over IPMI/LAN to an HP Integrity rx8620 server. The server diagram shows cell boards 0 through 3, partitions 0 and 1 (labeled HP-UX and Windows), the core I/O for cells 0 and 1, PCI-X slots, disk and DVD/DDS bays, and power supplies.)

Figure 1-1 shows the hardware, software, and most commonly used connection method.

All components are described in greater detail later in this chapter.

The most common setup for nPartition management of HP Integrity servers running

Windows involves a remote management station. This dedicated management station

hosts the management tools and issues commands over a network connection to the

server’s management processor, allowing the complex to be managed even if no

nPartitions exist on the complex yet.

To set up and configure the management station:

Step 1. Establish a dedicated management station for your HP Integrity server.

See “Setting up the management station” on page 25.

Step 2. Install the nPartition tools.

See “Install nPartition Commands bundle from the Smart Setup media” on page 35 and

“Install Partition Manager Bundle from the Smart Setup media” on page 36.

Step 3. Verify installation of the management software.

See “Verifying the installation” on page 38.


Page 10


Getting to know nPartitions

Partitions are physical or logical mechanisms for demarcating operational environments

within a single system or across multiple systems. Partitioning lets you consolidate

systems, allocate computing resources in response to fluctuations in demand, maximize

resource utilization, and protect operating environments from disruptive events.

Partitioning continuum

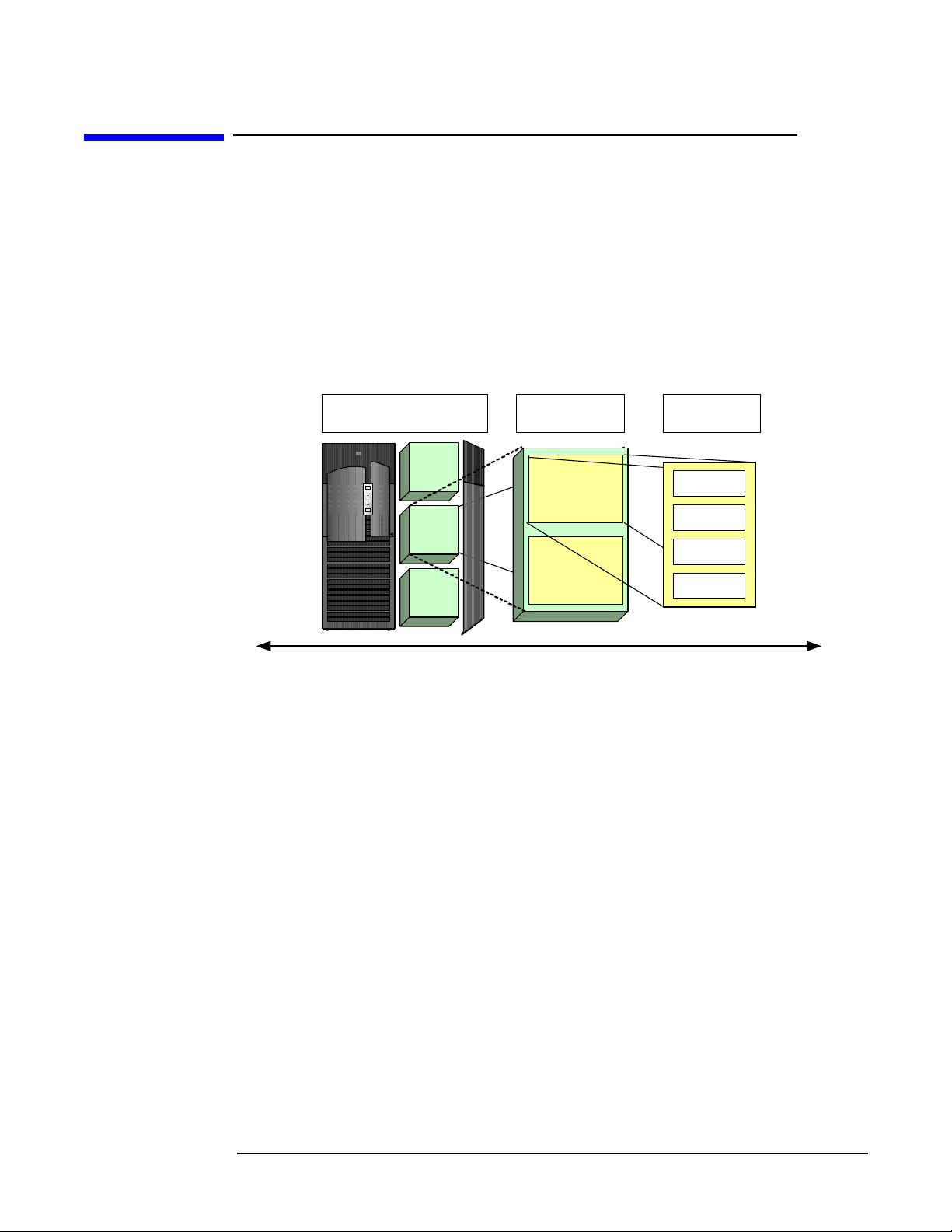

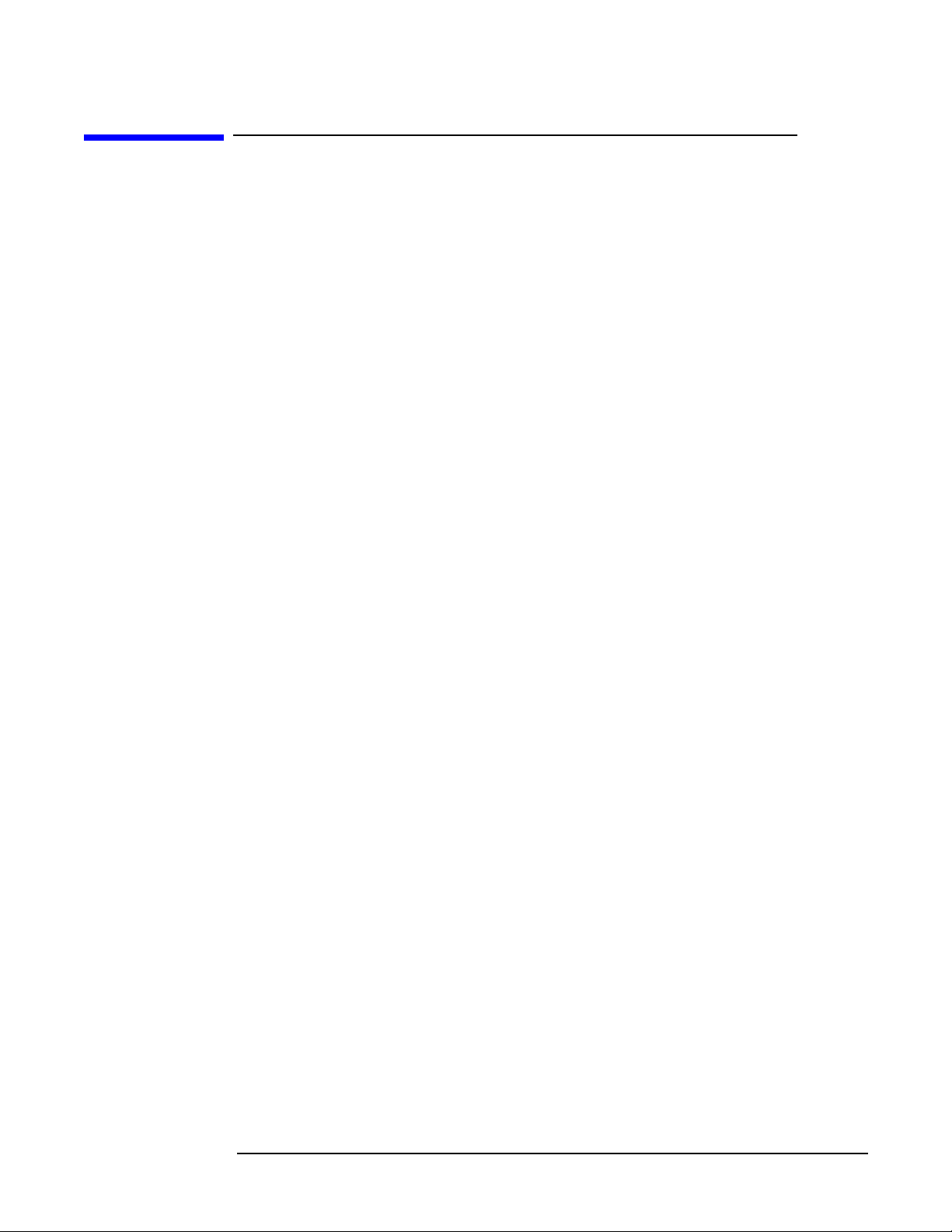

HP offers a continuum of partitioning technologies, as shown in Figure 1-2.

Figure 1-2 Partitioning continuum

(Figure 1-2 depicts the partitioning continuum, running from isolation to flexibility: hard partitions within a node (nPartitions), virtual partitions within a hard partition, and resource partitions within an OS (applications).)

node Partition A node partition (nPartition) is a hard partition within a cell-based

server. Cell-based servers can be configured either as a single large

symmetric multiprocessor or as several independent systems. An

nPartition has exclusive use of the memory, processor, and I/O

resources belonging to the cells that are assigned to that partition, and

can execute its own operating system image.

virtual Partition A virtual partition is a soft partition within a node or an nPartition. A

virtual partition uses a subset of the system processor, memory, and

I/O resources, and includes OS and application-related software.

Windows Server 2003 is not supported in a virtual partition.

resource Partition A resource partition is a soft partition within either hard partitions or

virtual partitions and is controlled by workload management services

within the operating system. Resource partitions allow an OS instance

to allocate resources between competing applications. Windows Server

2003 does not support resource partitions.

This document focuses on nPartitions running Microsoft Windows Server 2003.


Page 11


Cell structure of nPartitions

HP Integrity mid-range and high-end servers (rx7620/rx7640, rx8620/rx8640, and

Superdome) are composed of basic building blocks known as cells or cell boards. A cell

consists of up to four processor modules, contains memory modules (up to 32 DIMMs for

Superdome cells, up to 16 DIMMs for rx7620 and rx8620 cells), and may connect to an

I/O chassis that has I/O cards. A server cabinet may have several I/O chassis. Some

cell-based servers support I/O expansion cabinets to provide additional I/O chassis.

The hardware of a cell-based server—including all cells, I/O expansion cabinets, cables,

cabinet hardware, fans, and power and utilities components—is known as a server

complex.

• A Superdome server complex can consist of one or two server cabinets, and can also

include one or two I/O expansion cabinets (which provide additional I/O chassis).

• An rx8620 or rx8640 server complex consists of a single server cabinet. It may include

one I/O expansion cabinet (which provides two additional I/O chassis).

• An rx7620 or rx7640 server complex consists of a single server cabinet only.
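The cabinet limits in the list above can be captured as a small lookup table. The following is a minimal sketch only: the dictionary layout and the function name `is_valid_complex` are illustrative assumptions, not part of any HP tool.

```python
# Cabinet limits per server family, transcribed from the list above.
# The table layout and function name are hypothetical, for illustration only.
CABINET_LIMITS = {
    "Superdome": {"server_cabinets": (1, 2), "io_expansion_cabinets": (0, 2)},
    "rx8620/rx8640": {"server_cabinets": (1, 1), "io_expansion_cabinets": (0, 1)},
    "rx7620/rx7640": {"server_cabinets": (1, 1), "io_expansion_cabinets": (0, 0)},
}


def is_valid_complex(family, server_cabinets, io_expansion_cabinets=0):
    """Return True if the cabinet counts fit the limits for the given family."""
    limits = CABINET_LIMITS[family]
    min_srv, max_srv = limits["server_cabinets"]
    min_iox, max_iox = limits["io_expansion_cabinets"]
    return (min_srv <= server_cabinets <= max_srv
            and min_iox <= io_expansion_cabinets <= max_iox)
```

For example, `is_valid_complex("Superdome", 2, 2)` is accepted, while `is_valid_complex("rx7620/rx7640", 1, 1)` is rejected because that family has no I/O expansion cabinet.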

An nPartition is a partition of the server complex that you can reconfigure without

physically modifying the server hardware. An nPartition contains one or more cells

communicating coherently over a high-bandwidth, low-latency, crossbar fabric. Special

firmware in each cell defines the boundaries of an nPartition to ensure isolation from

other nPartitions.

Each nPartition has exclusive use of the memory, processor, and I/O resources belonging

to the cells that are assigned to that nPartition. An nPartition must have at least one

core I/O card to support console services, booting, and management operations. Each

nPartition has its own system boot interface, boots independently, and executes its own

operating system image.

Properties of nPartitions

An nPartition has the following properties:

Partition Numbers

Each nPartition is identified by a unique partition number. When you

create an nPartition, it is assigned the lowest available number. That

is, the first nPartition is always partition number 0 and the second

nPartition is partition number 1.

When you remove an nPartition, its partition number is released and

can be reused while creating a new nPartition. Consider a server with

partitions numbered 0, 1, 2, and 3. When you remove partition 2, for

example, and then create a new nPartition, the new nPartition is

identified as partition 2.
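The numbering rule can be illustrated with a short sketch. The function name `next_partition_number` is a hypothetical helper, not an nPartition command; it only models the "lowest available number" behavior described above.

```python
def next_partition_number(in_use):
    """Return the lowest non-negative integer not present in in_use."""
    used = set(in_use)
    number = 0
    while number in used:
        number += 1
    return number


# The example from the text: partitions 0, 1, 2, and 3 exist, then 2 is removed.
partitions = {0, 1, 2, 3}
partitions.remove(2)
new_number = next_partition_number(partitions)  # the released number is reused
```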

Assigned and Unassigned Cells

If a cell in a server complex is not assigned to an nPartition, the cell is

considered unassigned. If an I/O chassis is attached to an assigned cell,

then the chassis is likewise assigned to that nPartition. Cells that are

unassigned are considered to be available resources; they are free to be

assigned to any existing nPartition or used to create a new nPartition.
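As a rough illustration of these rules, the sketch below derives the available cells and the chassis assignments from a cell-to-partition mapping. The data layout (plain dictionaries keyed by cell number) and both function names are assumptions made for this example.

```python
def available_cells(all_cells, cell_to_partition):
    """Cells not assigned to any nPartition are available resources."""
    return sorted(c for c in all_cells if c not in cell_to_partition)


def chassis_assignments(cell_to_chassis, cell_to_partition):
    """An I/O chassis attached to an assigned cell belongs to that cell's nPartition."""
    return {
        chassis: cell_to_partition[cell]
        for cell, chassis in cell_to_chassis.items()
        if cell in cell_to_partition
    }


# Hypothetical complex: cells 0 and 1 are assigned, cells 2 and 3 are not.
cell_to_partition = {0: 0, 1: 1}
cell_to_chassis = {0: "chassis-A", 1: "chassis-B", 2: "chassis-C"}
free = available_cells([0, 1, 2, 3], cell_to_partition)
owned = chassis_assignments(cell_to_chassis, cell_to_partition)
```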


Page 12


Base Cells

All cells within an nPartition are base cells. If you do not specify the cell type, the nPartition administration tools automatically set the cell type to base cell.

Active and Inactive Cells

Active cells are assigned cells that boot to form an nPartition whose resources (processors, memory, and any attached I/O) can be actively used by software running in that nPartition.

Cells that are inactive are either not assigned to an nPartition or have not participated in partition rendezvous to form an nPartition with other cells in the nPartition. (Partition rendezvous is the event during the nPartition boot process when all available cells in an nPartition join to determine which cells are active for the current boot of the nPartition.) The resources belonging to inactive cells are not actively used by an nPartition.

Core Cell

The core cell is an active cell that is attached to an I/O chassis with a functional core I/O. Each nPartition must have one core cell. Although an nPartition can have multiple core-capable cells (any assigned cell that has an I/O chassis with core I/O), only one core I/O is actively used in an nPartition.

The core cell is selected by system firmware in the early stages of the nPartition boot process. When none of the core cell choices can serve as the active core cell, the nPartition attempts to select an eligible cell.

The core I/O in the I/O chassis connected to the core cell provides console access for the nPartition through the management processor. The monarch processor in the core cell runs the Extensible Firmware Interface (EFI) while all other processors are idle until an operating system is booted.
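The core cell selection described above can be sketched as follows. This is not the firmware algorithm: the `Cell` fields are illustrative, and the fallback order (lowest-numbered eligible cell) is an assumption, since the text only says that the nPartition "attempts to select an eligible cell."

```python
from dataclasses import dataclass


@dataclass
class Cell:
    number: int
    active: bool
    has_functional_core_io: bool


def is_eligible(cell):
    """A core cell must be active and attached to functional core I/O."""
    return cell.active and cell.has_functional_core_io


def select_core_cell(cells, core_cell_choices):
    """Try the configured choices in order, then fall back to any eligible cell."""
    by_number = {c.number: c for c in cells}
    for number in core_cell_choices:
        cell = by_number.get(number)
        if cell is not None and is_eligible(cell):
            return cell.number
    # Assumption: the fallback picks the lowest-numbered eligible cell.
    for cell in sorted(cells, key=lambda c: c.number):
        if is_eligible(cell):
            return cell.number
    return None  # no cell can serve as the core cell
```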

Cell Local Memory (CLM)

CLM is a portion of the memory in a cell that can be accessed quickly

by processors residing on the same cell. You can configure CLM for

each cell either as a percentage of the total memory in the cell or as an

absolute number of gigabytes.

For nPartitions running Microsoft Windows, HP recommends

assigning 100% CLM for each cell in the nPartition. However, when

running other operating systems the appropriate CLM assignment will

likely differ, depending on the nPartition configuration and workloads

running.
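The two ways of expressing a CLM setting can be compared with a small calculation. The helper `clm_gigabytes` is hypothetical and ignores any rounding or granularity that the firmware may apply.

```python
def clm_gigabytes(total_gb, percent=None, gigabytes=None):
    """Return the CLM size in GB given either a percentage or an absolute value."""
    if (percent is None) == (gigabytes is None):
        raise ValueError("specify exactly one of percent or gigabytes")
    if percent is not None:
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        return total_gb * percent / 100
    if not 0 <= gigabytes <= total_gb:
        raise ValueError("gigabytes must not exceed the memory in the cell")
    return float(gigabytes)
```

With the recommended Windows setting, `clm_gigabytes(16, percent=100)` makes all 16 GB of a cell's memory cell local.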

Cell Property Details

Cells in an nPartition have various properties that determine how the

cells can be used and managed.

Active and Inactive nPartition Boot States

Each nPartition has a boot state of either active or inactive. The boot

state indicates whether the nPartition has booted so that it may be

interactively accessed through its console (active nPartitions).


An nPartition that is active has at least one core-capable cell that is

active (not in a boot-is-blocked state). When an nPartition is active, one

or more of the cells assigned to the nPartition have completed partition


Page 13


rendezvous, and the system boot interface (EFI) has loaded and been

displayed through the nPartition console. An operating system may be

loaded and run from the system boot interface on an active nPartition.

An inactive nPartition is considered to be in the shutdown for reconfig

state because all cells assigned to the nPartition either remain at a

boot-is-blocked state or are powered off.
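The relationship between cell states and the nPartition boot state can be sketched in Python as follows. This is only a model of the rule described above; the Cell class and its field names are hypothetical and do not correspond to any HP interface.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    core_capable: bool
    powered_on: bool
    boot_is_blocked: bool  # True while the cell waits at BIB

    @property
    def active(self):
        # A cell is active once it is powered on and BIB has been cleared.
        return self.powered_on and not self.boot_is_blocked


def npartition_boot_state(cells):
    """Return "active" if at least one core-capable cell is active."""
    if any(cell.core_capable and cell.active for cell in cells):
        return "active"
    return "inactive"
```

Under this model, an nPartition whose cells are all powered off or waiting at BIB is reported as inactive.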

Cell and nPartition boot process

The nPartition boot process on HP Integrity servers involves two phases:

Cell boot phase

The cell boot phase occurs when cells are powered on or reset. The main activities that

occur during the cell boot phase are power-on-self-test activities. During this phase, each

cell operates independent of other cells in the complex. Cells do not necessarily proceed

through this phase at the same pace, because each cell may have a different amount of

hardware to test and discover, or cells might be reset or powered on at different times.

The main steps that occur during the cell boot phase are as follows:

1. A cell is powered on or reset, and the cell boot-is-blocked (BIB) flag is set. BIB is a

hardware flag on the cell board. When BIB is set, the cell is considered to be inactive.

2. Firmware on the cell performs self-tests and discovery operations on the hardware

components of the cell. Operations at this point include processor self-tests, memory

tests, I/O discovery, and discovery of interconnecting fabric (connections between the

cell and other cells, I/O, and system crossbars).

3. The firmware completes self-tests and discovery, reports the hardware configuration

of the cell to the management processor, informs the management processor the cell

is “waiting at BIB”, and then waits for the cell BIB flag to be cleared.

nPartition boot phase

After its cells have completed their self-tests, the nPartition is booted. The “nPartition

rendezvous” occurs during this phase. Not all cells assigned to the nPartition need to

participate in the rendezvous. Only one core-capable cell that has completed its cell boot

phase is needed for the nPartition boot phase to begin. By default, all cells assigned to

the nPartition that have a “y” use-on-next-boot value are expected to participate in

rendezvous. The management processor waits for up to ten minutes for such cells to

reach the “waiting at BIB” state. Cells that have an “n” use-on-next-boot value do not

participate in rendezvous and remain waiting at BIB. The main steps that occur during

the nPartition boot phase are as follows:

1. The management processor provides a copy of the relevant Complex Profile data to

the cells assigned to the nPartition. This data includes a copy of the Stable Complex

Configuration Data and a copy of the Partition Configuration Data for the nPartition.

(The “Complex Profile” represents the configurable aspects of a server complex. The

Stable Complex Configuration Data contains complex-wide configuration details and

the Partition Configuration Data contains details specific to the nPartition. See the

HP System Partitions Guide for more information.)

2. The management processor releases BIB for all cells assigned to the nPartition that

have a “y” use-on-next-boot value and complete the cell boot phase in time. The

management processor does not release BIB for any cell with an “n” use-on-next-boot

value, or for any cell that did not complete the cell boot phase within ten minutes of

the first cell to do so. Once BIB is released for a cell, the cell is considered to be active.

3. nPartition rendezvous begins, with the system firmware on each active cell using its

copy of complex profile data to contact other active cells in the nPartition.

4. The active cells in the nPartition negotiate to select a core cell.

5. The chosen core cell manages the rest of the nPartition boot process. A processor on

the core cell runs the nPartition system boot environment (EFI). The core cell hands

off control to an operating system loader when the OS boot process is initiated.
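The BIB release rule in step 2 can be sketched in Python as shown below. This is a simplified model, not management processor firmware: the Cell fields are hypothetical, times are plain seconds, and the sketch assumes the ten-minute window is measured from the first “y” cell to reach the “waiting at BIB” state.

```python
from dataclasses import dataclass
from typing import Optional

RENDEZVOUS_WINDOW_SECONDS = 10 * 60  # the ten-minute wait described above


@dataclass
class Cell:
    number: int
    use_on_next_boot: str            # "y" or "n"
    core_capable: bool
    bib_reached_at: Optional[float]  # None if the cell never reached BIB


def cells_released_from_bib(cells):
    """Return the cells whose BIB flag would be released for rendezvous."""
    candidates = [c for c in cells
                  if c.use_on_next_boot == "y" and c.bib_reached_at is not None]
    if not candidates:
        return []
    deadline = min(c.bib_reached_at for c in candidates) + RENDEZVOUS_WINDOW_SECONDS
    return [c for c in candidates if c.bib_reached_at <= deadline]


def boot_phase_can_begin(released):
    """At least one released cell must be core-capable."""
    return any(c.core_capable for c in released)
```

For example, a cell with a “y” value that reaches BIB eleven minutes after the first cell is left waiting at BIB, as is any cell with an “n” value.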

Choosing a management tool

You can manage nPartitions using the following tools:

Partition Manager

Partition Manager provides a graphical interface for managing

nPartitions. You can run Partition Manager on the complex itself, or

on management stations used to remotely manage the complex.

nPartition commands

You can manage nPartitions using commands such as parcreate,

parmodify, parremove, parstatus, parunlock, fruled, frupower,

and cplxmodify. As with Partition Manager, nPar commands can be

run directly on the complex nPartitions, or from a management station

used to remotely manage the complex. Also, setting the admin IP

address for individual nPartitions must be done using the nPar

commands. It cannot be done with any other tool.

EFI Boot Manager and EFI Shell commands

Extensible Firmware Interface (EFI) provides support for nPartition

management. The EFI interfaces are accessible from an nPartition

console when the nPartition is in an active state but has not booted an

operating system.

Management Processor (MP) menus

Management processor menus provide a service interface that allows

access to all hardware and nPartitions in the complex. The MP is

always available, whether or not any nPartitions are configured or

booted in the server complex.

Management interface options

You can manage nPartitions remotely using one of two management interfaces:

Intelligent Platform Management Interface (IPMI) and Web-Based Enterprise

Management infrastructure (WBEM). This section provides a brief overview of these

interfaces.

Intelligent Platform Management Interface (IPMI)

On cell-based HP Integrity servers, the management processor supports the Intelligent

Platform Management Interface (IPMI). IPMI is an industry-standard interface that

defines common, message-based interfaces to platform management hardware and

common records for describing devices and their characteristics. The IPMI specification

supports extensions, such as the nPartition and server complex management features

introduced by HP. On cell-based servers, the management processor supports two of the

communication paths defined by the IPMI specification: Block Transfer and IPMI

over LAN.

IPMI Block Transfer (IPMI BT)

IPMI Block Transfer (IPMI BT) provides a communication path between the MP and the

operating system running on an nPartition. IPMI BT uses the MP device driver and a

hardware buffer on each cell to establish a private path (using the block transfer

hardware on the core cell) from each nPartition to the MP. On Windows, the device

driver that provides the IPMI BT interface is called the “HP Health Driver”.

The nPartition Configuration Privilege setting allows you to control the access of a

user managing an nPartition on a complex. To set the nPartition Configuration

Privilege, run the PARPERM command at the MP. The nPartition Configuration Privilege

has two settings:

Unrestricted The default, which allows the user to manage other nPartitions in the

complex.

Restricted Restricts use of the IPMI BT interface to the following capabilities:

• Retrieving information about the server, including everything

displayed by Partition Manager and the parstatus command.

• Changing the local nPartition’s Partition Configuration Data.

• Manipulating any of the attention indicators (LEDs).

• Powering cells and I/O chassis belonging to the local nPartition.

The nPartition Configuration Privilege does not restrict deallocation of processors across

nPartition boundaries. When the nPartition Configuration Privilege is restricted, some

management tasks require IPMI over LAN instead.

IPMI over LAN

IPMI over LAN enables the management tool to communicate directly (without going

through an nPartition) with the MP via its LAN port. To enable IPMI LAN access to the

MP, use the SA command at the MP Command menu. The MP accepts IPMI requests at

its LAN port only if the request is accompanied by the correct password. To set the

IPMI password use the SO command at the MP Command menu.

To secure communication between the client and the server, the IPMI specification uses

the MD5 algorithm (RFC1321) to encrypt the IPMI password and to authenticate both

the server and the client. Other security mechanisms protect against replay attacks.

When the management tool sends a request, the nPartition Provider first downloads a

large data structure containing static configuration information about the server from

the MP. This allows the Provider to request dynamic information about the server.

When an nPartition command accesses a particular complex for the first time using

IPMI over LAN, it can take a long time (two minutes or more) to complete, depending on

network latency between the PC and the server MP.

The nPartition Provider caches the static data and reuses it for subsequent requests so

that future command usage will not incur that initial overhead. If the PC is rebooted or

the nPartition Provider is restarted, the cached data is lost. The first request to the MP

after the restart requires the same initial overhead.
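The caching behavior described above can be illustrated with a short Python sketch. It is not the nPartition Provider's implementation; the class, the fetch function, and the mp.example.com hostname are hypothetical stand-ins.

```python
class StaticDataCache:
    """Keep static complex data in memory, keyed by MP hostname."""

    def __init__(self, fetch):
        self._fetch = fetch  # callable that downloads static data from an MP
        self._cache = {}     # lost when the process (or the PC) restarts

    def get(self, mp_host):
        if mp_host not in self._cache:
            # Only the first request pays the slow download.
            self._cache[mp_host] = self._fetch(mp_host)
        return self._cache[mp_host]


calls = []


def slow_fetch(mp_host):
    # Stand-in for the large initial download from the MP.
    calls.append(mp_host)
    return {"mp": mp_host}


cache = StaticDataCache(slow_fetch)
cache.get("mp.example.com")
cache.get("mp.example.com")
print(len(calls))  # number of downloads actually performed
```

Creating a new StaticDataCache object corresponds to restarting the Provider: the next request downloads the static data again.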

Also, IPMI specifies that data be sent as UDP datagrams over the LAN. Note that UDP

does not guarantee delivery of individual datagrams. If datagrams fail to arrive in a

reasonable amount of time, the nPartition Provider retries a number of times. But if the

network connection between the PC and MP is too unreliable or too slow, the provider

eventually times out and returns an error. The error is often seen as the message: [X]

data is not available, where [X] is a cell, I/O chassis, cabinet, or other element in

the complex.
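The retry-then-fail behavior can be sketched in Python as follows. The retry count and the function names are assumptions chosen for illustration; the actual number of retries and timeout values used by the nPartition Provider are not stated in this guide.

```python
def request_with_retries(send_once, element, retries=3):
    """Call send_once() until it returns a response or the retries run out.

    send_once is a hypothetical callable that returns None when no
    datagram arrives in time.
    """
    for _ in range(1 + retries):
        response = send_once()
        if response is not None:
            return response
    # Mirrors the form of the error message described above.
    raise TimeoutError(f"{element} data is not available")
```

A caller on an unreliable network would see the TimeoutError only after every attempt has failed, which is why a slow link shows up as long delays before the error appears.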

The speed and reliability of the network between the management station and the MP

has a large effect on the reliability of command execution. When managing remotely via

IPMI over a LAN, the management station should be on the same subnet as and

physically close to the target complex. If you cannot access the management station

directly, you can use a Remote Desktop application or equivalent tool to connect to it

from a remote terminal.

Managing nPartitions using IPMI over LAN

Using IPMI over LAN, you can manage remote nPartitions via the MP. The

management tool on the management station communicates directly with the local

nPartition Provider. The nPartition Provider then communicates with the MP on the

remote complex using IPMI over LAN.

NOTE You must use IPMI over LAN to manage nPartitions remotely if none of the nPartitions

on the target server have been booted or configured yet.

To use IPMI over LAN, you must enable IPMI LAN Access at the MP, set the MP IPMI

password, and install the nPar tools on your management station. You can then use any

of the nPartition management tools to manage your remote nPartitions.

Running Partition Manager using IPMI over LAN You can run Partition Manager using IPMI over LAN in one of two ways:

• If running Partition Manager on an nPartition:

From the Tools menu, select the Switch Complexes task. In the Switch Complexes dialog, enter the hostname or IP address of the MP in the target complex, and type that MP’s IPMI password.

• If running Partition Manager on another system (not an nPartition):

Partition Manager automatically displays the Switch Complexes dialog. Enter the hostname or IP address of the MP in the target complex, and type that MP’s IPMI password.

Running nPartition commands using IPMI over LAN To run nPartition commands remotely using IPMI over LAN, you must include two command-line options with each command:

• -g [password]

The password is the management processor’s IPMI password.

• -h hostname | IPaddress

The -h option specifies the hostname or IP address of the management processor in the target complex.

When you use the -g... -h... set of options, the command sends appropriate WBEM requests to the local nPartition Provider, which in turn uses IPMI over LAN to communicate with the management processor in the target complex.

Web-Based Enterprise Management (WBEM)

Web-Based Enterprise Management (WBEM) is an industry initiative that establishes

management infrastructure standards and provides a way to combine data from various

hardware and software management systems. WBEM specifies standards that enable

access to data from various technologies and platforms, and the presentation of that data

in a consistent fashion. Client applications can then use this information to manage an

enterprise computing environment.

Because WBEM supports a distributed management architecture, client applications

(nPartition management tools, for example) can run on a remote system and use the

WBEM infrastructure to send requests to the managed servers.

Partition Manager is one such WBEM client application. Partition Manager uses WBEM

when retrieving information about a server complex. Partition Manager uses the

nPartition commands for all other operations. The nPartition commands are also WBEM

client applications.

The nPartition commands for Windows are supported by several software components.

The Windows operating system provides the Windows Management Instrumentation

(WMI) software, which is an implementation of the WBEM standards. HP provides a

WMI-based nPartition Provider and WMI Mapper for converting CIM/XML WBEM

requests from clients (like the nPar Commands and Partition Manager) into WMI

requests.

The nPartition commands and Partition Manager send management messages to the

nPartition Provider. The nPartition Provider handles all communication with the MP

using the IPMI protocol, either locally via an IPMI/BT device driver, or remotely using

the MP's IPMI/LAN interface.

Securing the WBEM connection

WBEM secures the management connection using an SSL authentication process, which

involves the following files:

18

Chapter 1

Page 19

Introduction

Management interface options

• WBEM SSL Certificate (cert.pem file)

The WBEM SSL Certificate file resides on the system that is being managed and

contains the local WBEM server’s certificate.

On a Windows system, the WBEM SSL Certificate file is in the location specified by

the sslCertificateFilePath entry in the

%PEGASUS_HOME%\cimserver_current.conf file, and is usually

%SystemDrive%\hp\sslshare\cert.pem.

• WBEM Trusted Certificate Store (known_hosts.pem file)

The Trusted Certificate Store file resides on the system from which WBEM remote

management commands are issued. On a Windows system, the WBEM Trusted

Certificate Store file resides in the %SystemDrive%\hp\sslshare directory.

• Partition Manager Trusted Certificate Store (parmgr.keystore file)

The Partition Manager Certificate Store file resides on the system from which

Partition Manager is run. It is used by Partition Manager to validate server

certificates. On a Windows system, the Partition Manager Trusted Certificate Store

file resides in the %SystemDrive%\hp\sslshare directory.

In order for remote WBEM SSL connections to succeed, the WBEM SSL server

certificate from the remote system you are connecting to (the WBEM server) must first

be imported into the trusted certificate store(s) on the system where the remote WBEM

commands are issued from (the client system). For a description of how to do this, see

“Configuring for secure WBEM communications” on page 40.

Managing nPartitions using WBEM

Using WBEM, you can manage remote nPartitions indirectly, via an existing nPartition

on the server.

NOTE You cannot use WBEM to manage nPartitions remotely if none of the nPartitions on the

target server have been booted or configured yet, or if the nPar Provider or MP device

driver components have not been installed yet.

To use WBEM, install the WMI Mapper and the nPartition commands software on your

management station. After you install the tools, enable secure WBEM communications

by referring to “Configuring for secure WBEM communications” on page 40. You can

then use Partition Manager or the nPartition commands to manage your remote

nPartitions.

Running Partition Manager using WBEM You can run Partition Manager with WBEM in one of two ways:

• If running Partition Manager on an nPartition:

Select the Switch Complexes task from the Tools menu. In the resulting dialog enter the hostname or IP address of the remote nPartition, and supply a username and that user’s password.

To merely display information about the target complex, specify any user defined on the remote nPartition. To make changes to the target complex, specify a user with superuser privileges on the remote nPartition.

• If running Partition Manager on another system (not an nPartition):

Partition Manager automatically displays the Switch Complexes dialog.

Running nPartition commands using WBEM To manage nPartitions remotely using WBEM, you must include two command-line options with each nPartition command:

• -u username:[password]

The -u option specifies a valid username on the remote nPartition.

For the parstatus and fruled commands, you can specify any username on the remote nPartition, whereas for the other commands you must select a user with superuser privileges on the remote nPartition. If the password is not specified, you are prompted to enter a password after executing the command. This is more secure, since the password is not displayed on the command line.

• -h hostname | IPaddress

The -h option specifies either the hostname or IP address of the remote nPartition.

Choosing a management mode

As stated previously, the most common method for managing nPartitions involves a

remote management station. This dedicated management station hosts the management

tools and issues commands over a network connection to the server’s management

processor, allowing the complex to be managed even if no nPartitions exist on the

complex yet. This is called the “remote management” mode.

The other option is to install the nPar tools onto an existing nPartition on the server,

and then use them to manage the other nPartitions in the system. This is called the

“local management” mode.

When the nPar tools run on an nPartition in the local mode, they default to managing

the local complex using the IPMI/BT interface to the MP. This may be the simplest mode

to use. Since the tools run on the system being managed, there is no need to provide

additional authentication/remote connection information to make them work.

For example, when running the nPartition Commands in the local mode, no -g, -u, or -h

options are required to manage the local complex. And when running Partition Manager

in the local mode, the connection to the local complex is made automatically (no

additional connection/authentication information is required).
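The difference between the modes can be sketched as a small Python helper that assembles the argument list for an nPartition command. The helper itself, the mode names, and the example hostnames are hypothetical. Only the -g, -u, and -h options come from this guide, and the handling of an omitted password (a bare -g, or a username followed by a colon) is an assumption based on the bracketed syntax shown earlier.

```python
def build_npar_command(command, args=(), mode="local",
                       host=None, user=None, password=None):
    """Return an argument list for local, IPMI over LAN, or WBEM use."""
    argv = [command, *args]
    if mode == "local":
        return argv  # no -g, -u, or -h options are needed locally
    if not host:
        raise ValueError("remote modes need a hostname or IP address for -h")
    if mode == "ipmi":
        argv.append("-g")
        if password:
            argv.append(password)  # the MP's IPMI password
    elif mode == "wbem":
        if not user:
            raise ValueError("WBEM mode needs a username for -u")
        # Assumption: the colon is kept when the password is omitted.
        argv += ["-u", f"{user}:{password or ''}"]
    else:
        raise ValueError(f"unknown mode: {mode}")
    argv += ["-h", host]
    return argv


print(build_npar_command("parstatus", ["-P"]))
print(build_npar_command("parstatus", ["-P"], mode="ipmi",
                         host="mp.example.com", password="secret"))
```

Leaving the password out of the argument list keeps it off the command line, which matches the more secure prompting behavior described for the -u option.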

It is also possible to manage another complex remotely from an nPartition configured to run in the local mode, by using the -g or -u options with the nPartition commands, or by using the Tools > Switch Complexes menu path in Partition Manager.

A list of the advantages and disadvantages of the different management modes and connection methods follows. Also included is a listing of the authentication and authorization issues associated with each mode.

Local management

Some of the advantages of local management are:

• Simple installation: install all components on an nPartition (also, fewer components

need to be installed manually when reinstall media is used).

• Simple connection: log in to SMH and run Partition Manager; or, run nPar

Commands with no -g/-u options. No additional authentication/login steps are

required to manage the local complex.

• Allows configuration of specific nPartitions to be controlled by use of the nPartition

Config Privilege option (users can be restricted to configuration of certain

nPartitions).

Some of the disadvantages of local management are:

• Requires at least one nPar to be configured already and booted to Windows (or other

OS supporting the nPar tools).

• Requires nPar tools to be installed on at least one nPar in each partitionable complex.

• Requires login to an nPar in each complex to be managed, either via the SMH web (in

the case of Partition Manager) or telnet or Remote Desktop (in the case of the

nPartition Commands).

Remote management via IPMI

Some of the advantages of remote management via IPMI are:

• Simple installation: install all components onto a remote management station and

you are ready to manage all complexes in the data center.

• Allows a complex to be managed even if no nPars are currently configured in the

complex.

• Allows all complexes in the data center to be managed from a single management

station.

Some of the disadvantages of remote management via IPMI are:

• Does not allow configuration of specific nPartitions to be controlled by use of the

nPartition Config Privilege option (all users logging in via remote IPMI will have

configuration privileges for all nPartitions in the complex).

• Requires the additional login step of providing the MP hostname/address and IPMI

password in order to connect to the MP remotely.

• IPMI/LAN connections are slightly less secure than remote WBEM connections

(using SSL). For this reason, HP recommends that the network connection between

the management station and the MP be on a private network.

Remote management via WBEM

Some of the advantages of remote management via WBEM are:

• Secure HTTPS/SSL connection between the remote management station and the

nPartition.

• Allows configuration of specific nPartitions to be controlled by use of the nPartition

Config Privilege option (users can be restricted to configuration of certain

nPartitions).

• Allows all complexes in the data center to be managed from a single management

station.

Some of the disadvantages of remote management via WBEM are:

• Requires at least one nPar to be configured already and booted to Windows (or other

OS) and running the WBEM/WMI nPartition Provider.

• Requires copying SSL certificates from the nPartition to the management station’s

trusted certificate store (currently not automated, but a series of manual steps).

• Requires the additional login step of providing a username and password for the

remote nPartition being connected to.

Authentication and authorization issues

A list of the authentication and authorization issues associated with each of the

management modes and connection methods follows.

Local management

Authentication issues associated with the local management mode include:

• Authentication is implemented solely by the OS login to the nPartition (the account used to log in to Windows on the nPartition where the tools are run).

• In the case of Partition Manager (a web application), you are asked for login credentials when first accessing an SMH or Partition Manager web page (either locally or from a remote browser).

• The credentials you provide are the same as the OS credentials used to log in to the nPartition directly (for example, in Windows, you would use your local or domain Windows account to log in to SMH).

Authorization issues associated with the local management mode include:

• Authorization is based on the OS authorization “group” that the account used to log in

belongs to.

• In Windows, if the login account is a member of the local or domain “Administrators”

group, you are given full permission to view and modify nPartition configurations.

Accounts that do not belong to the local or domain Administrators group are given

read-only access to the nPar tools.

• If the nPartition Config Privilege option is enabled for the complex, users logging into

a given nPartition with full privileges are only able to modify the configuration of

that nPartition. All other nPartition configurations are read-only for them.

• HP SMH lets you grant read-only and read/write permissions to any OS group. Only

Windows Administrators are automatically given full permissions. Other groups

must be configured in SMH on the local nPartition, in order to give those groups

read-only or read/write permissions (applies to Partition Manager only – when using

the nPartition Commands, the rule is this: Administrators have full access, and all

other authenticated accounts have read-only access).

Remote management via IPMI

Authentication issues associated with the remote via IPMI management mode include:

• You must first log in to the local OS in order to access the tools (however, local OS

permissions do not apply when using the remote modes of the nPar tools).

• Next, you must specify a remote MP hostname/IP address and IPMI password in

order to make the remote IPMI connection to the MP.

Authorization issues associated with the remote via IPMI management mode include:

• Since there is only one remote IPMI account (which is fixed as “Admin”, and therefore

not specified in the tools) and password, this account always has full (read/write)

access to all nPartition configurations in the complex.

• The nPartition Config Privilege setting has no effect when using remote IPMI to

manage the complex (users logged in via remote IPMI have full access to all

nPartitions, regardless of the current nPartition Config Privilege setting).

Remote management via WBEM

Authentication issues associated with the remote via WBEM management mode include:

• You must first log in to the local OS in order to access the tools (however, local OS

permissions do not apply when using the remote modes of the nPar tools).

• Next, you must specify a remote nPartition’s hostname/IP address and an OS account

and password that are used to log in to that remote nPartition.

Authorization issues associated with the remote via WBEM management mode include:

• Authorization is based on the OS authorization “group” that the account used for the

remote login belongs to.

• In Windows, if the login account is a member of the local or domain “Administrators”

group of the remote nPartition, you are given full permission to view and modify

nPartition configurations. Accounts that do not belong to the local or domain

Administrators group are given read-only access to the nPar tools.

• If the nPartition Config Privilege option is enabled for the complex, users logging into

a given nPartition with full privileges are only able to modify the configuration of

that nPartition. All other nPartition configurations are read-only for them.
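The authorization rules for the three management modes can be summarized in a short Python sketch. It models only the rule stated for the nPartition commands (Administrators have full access, all other authenticated accounts have read-only access) and ignores the additional group permissions that can be configured in SMH for Partition Manager. The function and parameter names are hypothetical.

```python
def access_level(mode, is_administrator, config_privilege_restricted,
                 target_is_login_npartition):
    """Return "read/write" or "read-only" for a target nPartition.

    mode is "local", "ipmi", or "wbem". target_is_login_npartition is True
    when the nPartition being changed is the one the user logged in to.
    """
    if mode == "ipmi":
        # The single remote IPMI account always has full access.
        return "read/write"
    if not is_administrator:
        return "read-only"
    if config_privilege_restricted and not target_is_login_npartition:
        return "read-only"
    return "read/write"


# An Administrator connected through WBEM, with the privilege restricted,
# trying to change a different nPartition in the complex.
print(access_level("wbem", True, True, False))
```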

Setting up the management station

A management station is often used to configure and administer nPartitions on an HP

Integrity server.

NOTE The nPar tools can also be installed on, and run from, an nPartition on the server being

managed.

If your management station was provided by HP (for example, the PC-SMS management

system supplied with Superdome servers), then all of the nPartition tools and support

components have already been installed on that system.

However, if you are providing your own PC as a management station (for example, you

have an HP Integrity mid-range server) then you must install the tools and components

on that system, using the Smart Setup media provided with your server. Refer to

Chapter 2, “Installing nPartition Management Utilities,” on page 33, for more

information on how to do this.

nPartition tools and components are supported on management stations running one of

the following operating systems:

• Windows 2000, SP3 or later

• Windows XP

• Windows Server 2003 (both 32- and 64-bit editions)

Controlling the management station remotely

The management station running the nPartition tools should be located close to the

server being managed in order to minimize the likelihood of UDP datagrams being lost

in transmission. If, as a result, you cannot access the management station directly, you

can use a remote desktop control or telnet application to access the management station

remotely. To connect to the management station remotely, you can use any of the

following applications.

Remote Control

To view the desktop of the management station from a remote terminal running an OS

other than Windows Server 2003, Windows XP, or Windows Server 2000, use third-party

remote control software such as the free VNC (www.realvnc.com) or Symantec's

pcAnywhere™.

Terminal Services

Windows Server 2000 and Windows Server 2003 include a Terminal Services feature

that allows you to create a login session different from the console, leaving the console

available for other administration tasks.

To enable Terminal Services on the management station, click its checkbox in Add

Windows Components. Windows 2000 Professional and some Windows Server

editions do not include a client for Terminal Services. Download the free client from

www.microsoft.com.

Remote Desktop

If you are running Windows Server 2000 with Terminal Services, Windows Server 2003,

or Windows XP on the management station and Windows Server 2003 or Windows XP on

the remote terminal, you can take advantage of the remote desktop display feature

provided by these operating systems.

The Remote Desktop Connection feature is not enabled by default in Windows XP or

Windows Server 2003. To enable Remote Desktop Connections on the management

station:

Step 1. Click My Computer > System Properties > Remote > Remote Desktop.

Step 2. Select Allow users to connect remotely to this computer.

To connect to the management station from the remote terminal:

• On Windows XP, click Start > All Programs > Accessories > Communications > Remote Desktop Connection.

• On Windows Server 2003, click Start > Administrative Tools > Remote Desktops.

To connect to a Windows Server 2003 console, use the /console switch with the Remote

Desktop Connection application. This switch is present in the latest version available at

www.microsoft.com and in the version on Windows Server 2003 (this version is a 32-bit program

available on both the 32-bit and 64-bit Windows Server 2003 operating systems).

Telnet

Because the nPartition commands are executed from a command prompt, you can use a

telnet application (either the telnet command in Windows or a third-party application

such as Reflection®) to open a command prompt on the management station. The

management station must have the Telnet service installed and started.

To execute nPartition commands via a telnet connection to the management station:

Step 1. Invoke telnet on the remote terminal, providing the hostname or IP address of the

management station.

Step 2. Log in to the telnet server with a valid username and password.

Step 3. From the command prompt, execute nPartition commands as usual.

NOTE There may be limitations on the number of telnet connections permitted into the

management station by its host operating system. Also, you cannot use nPartition

management tools with a GUI such as Partition Manager using this method.

Performing nPartition management tasks

This section provides an overview of common nPartition management tasks.

Listing the status of an nPartition or complex

To list server complex hardware details and nPartition configuration details:

Using the Partition Manager

Nearly every aspect of the complex — the status of its nPartitions, cells, and other

hardware — can be viewed on a single page from within Partition Manager. To access

this information:

Step 1. Open a web browser and enter the URL for the web server supporting the target system.

Step 2. At the login page, enter a username and password and click Login to access the System

Management Homepage (SMH). This may take several minutes as information about

the complex is loaded.

Step 3. Go to the SMH Tools tab and click the Partition Manager link in the “nPartition

Management” box.

Step 4. In the Partition Manager’s Home tab, use a menu path of Complex > Show Complex Details to produce a summary of the status and configuration of the complex, its nPartitions, cells, and hardware.

Using the nPartition commands

The nPartition commands for listing hardware and nPartition status are as follows:

parstatus -C
List cell configurations.

parstatus -V -c#
List detailed cell information.

parstatus -I
List I/O chassis and card slot details.

parstatus -B
List server cabinet summaries for the complex.

parstatus -V -b#
List detailed server cabinet status.

parstatus -X
List product and serial numbers.

parstatus -P
List a configuration summary for all nPartitions.

parstatus -V -p#
List detailed nPartition configuration information.

parstatus -w
List the local nPartition number.

frupower -d -C or frupower -d -I
List power status for all cells (-C) or all I/O chassis (-I).

Using the Management Processor Command menu

You can list hardware and nPartition status with the following commands, which are available from the management processor Command menu.

CP List nPartition configurations, including all assigned cells.

PS List cabinet, power, cell, processor, memory, I/O, and other details.

IO List connections from cells to I/O chassis on HP Superdome servers.

ID List product and serial numbers.

Using the EFI Shell

EFI Shell methods for listing hardware and nPartition status include the following commands. Hardware and nPartition information displayed by the EFI Shell is limited to the local nPartition.

info sys List the local nPartition number and active cell details.

info io List the I/O configuration.

info mem List memory details.

info cpu List processor details.
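The parstatus listings described under "Using the nPartition commands" can be scripted from a management station. The following Python sketch is illustrative only: the helper name parstatus_args and its view labels are hypothetical, and the code only assembles argument lists from the options named in this section. Passing such a list to subprocess.run assumes that parstatus is installed and on the PATH.

```python
# Hypothetical helper, not part of the HP tools: it only builds argument
# lists from the parstatus options named in this section.

# Summary listings that take no number.
SUMMARY_OPTIONS = {
    "cells": "-C",        # cell configurations
    "io": "-I",           # I/O chassis and card slot details
    "cabinets": "-B",     # server cabinet summaries
    "product": "-X",      # product and serial numbers
    "partitions": "-P",   # configuration summary for all nPartitions
    "local": "-w",        # local nPartition number
}

# Detailed listings of the form "parstatus -V -c#", "-b#", or "-p#".
DETAIL_OPTIONS = {"cell": "-c", "cabinet": "-b", "partition": "-p"}


def parstatus_args(view, number=None):
    """Return the argument list for one parstatus listing."""
    if number is None:
        return ["parstatus", SUMMARY_OPTIONS[view]]
    if number < 0:
        raise ValueError("cell, cabinet, and partition numbers are not negative")
    return ["parstatus", "-V", f"{DETAIL_OPTIONS[view]}{number}"]


if __name__ == "__main__":
    # Print the commands instead of running them. On a management station the
    # lists could be passed to subprocess.run() (assumes parstatus is on PATH).
    print(" ".join(parstatus_args("partitions")))
    print(" ".join(parstatus_args("cell", 2)))
```

Building the argument list separately from running it keeps the sketch usable for review or logging before any command touches the complex.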

Creating nPartitions

Creating an nPartition involves using an nPartition administration tool to assign one or

more cells in a complex to the new nPartition. At the time an nPartition is created you

also can optionally specify various configuration options for the nPartition, such as its

name, cell use-on-next-boot values, and other details.

NOTE When creating an nPartition, follow the HP nPartition requirements and guidelines. HP

recommends only specific sets of nPartition configurations. For nPartition configuration

requirements and recommendations, see the chapter Planning nPartitions in the HP

System Partitions Guide.

The method you choose for creating an nPartition depends on whether you are creating

the first nPartition in a complex, creating a “Genesis Partition” for a complex, or creating

an additional nPartition in a complex that already has one or more nPartitions defined.

Creating the first nPartition in a server complex

To create the first nPartition in a complex you can do one of the following tasks:

• From a remote management station, run Partition Manager (connected to the remote

management processor) and use the

nPartition > Create nPartition task.

• From a remote management station, use the parcreate command (with the

-g and -h options to connect to the remote management processor).

• Create a Genesis partition (as described below) from the management processor

console.


Creating a Genesis Partition for a server complex

To create a Genesis Partition, use the management processor CC command to specify that an initial, one-cell nPartition be created within the server complex. The complex must either have no nPartitions defined or have all of its nPartitions in the shutdown for reconfig (inactive) state.

Creating additional nPartitions in a server complex

You can use one of two methods to create nPartitions in a complex where one or more

nPartitions already are defined:

Create a new nPartition locally

Log in to an nPartition in the same complex where the new nPartition will be created and use Partition Manager’s Create Partition task, or issue the parcreate command.

Create a new nPartition remotely

Log in to an nPartition in the same complex where the new nPartition will be created and use Partition Manager’s Create Partition task. You can also execute the parcreate command using either the WBEM or the IPMI-over-LAN method. For remote administration using WBEM, the tool accesses the nPar Provider running on an nPartition in the target complex (for example, with the -u... -h... options). For remote administration using IPMI over LAN, the tool accesses the management processor (for example, with the -g... -h... options).
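The two remote methods differ only in the options that tell the command where to connect. The following Python sketch illustrates that difference; it assembles argument lists and runs nothing. The function names are hypothetical. The forms "-u username:" and a bare "-g" (both assumed to make the command prompt for the password) and the -c cell specification are assumptions based on the general nPartition command syntax rather than on this section, so check them against the command reference before use.

```python
# Hypothetical helpers, not part of the HP tools. They build argument lists
# only; nothing is executed.


def remote_options(method, host, username=None):
    """Return the connection options for a remote nPartition command.

    method "wbem" targets the nPar Provider on an nPartition (-u ... -h ...).
    method "ipmi" targets the management processor (-g ... -h ...).
    Assumption: omitting the password makes the command prompt for it.
    """
    if method == "wbem":
        if not username:
            raise ValueError("WBEM access needs a username")
        return ["-u", f"{username}:", "-h", host]
    if method == "ipmi":
        return ["-g", "-h", host]
    raise ValueError(f"unknown method: {method}")


def parcreate_args(cell_specs, name, remote=()):
    """Return a parcreate argument list.

    cell_specs are passed through unchanged, one -c option per cell.
    Assumption: the caller supplies each spec in the form the command
    reference requires (for example "4:base:y:ri").
    """
    if not cell_specs:
        raise ValueError("an nPartition needs at least one cell")
    args = ["parcreate"]
    for spec in cell_specs:
        args += ["-c", spec]
    return args + ["-P", name] + list(remote)


if __name__ == "__main__":
    # "mp-host" and "npar1" are placeholder host names.
    print(" ".join(parcreate_args(["4:base:y:ri"], "test",
                                  remote_options("ipmi", "mp-host"))))
    print(" ".join(parcreate_args(["4:base:y:ri"], "test",
                                  remote_options("wbem", "npar1", "admin"))))
```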

Modifying nPartitions

Modifying an nPartition involves using an nPartition administration tool to revise one or

more parts of the server Complex Profile data, which determines how hardware is

assigned to and used by nPartitions.

• Use parmodify or Partition Manager from an nPartition running in the same

complex as the nPartition. Some nPartition details can also be modified locally from

an nPartition console by using EFI Shell commands.

• Use parmodify or Partition Manager running on a remote management station or

Windows system.

— Using WBEM, the tool accesses the nPar Provider running on an nPartition in the

target complex. Use the -u... -h... set of parmodify options (or equivalent

Partition Manager login options).

— Using IPMI over LAN, the tool accesses the management processor of the target

complex. Use the -g... -h... set of parmodify options (or equivalent Partition

Manager login options).

Modifying nPartitions includes the following tasks:

Assign (add) or unassign (remove) cells from an nPartition:

In the Partition Manager Hardware tab, select the desired nPartition and cell and use a menu path of nPartition > Assign cell(s) / Cell > Assign cell(s) or nPartition > Unassign cell(s) / Cell > Unassign cell(s).

Or, use the parmodify -p# -a#... command to add a cell or the parmodify -p# -d#... command to remove a cell from the specified nPartition (-p#, where # is the partition number).

Remove (delete) an nPartition:

Shut down all open applications on the target nPartition, and then shut down Windows on the target nPartition. Then, in the Partition Manager Hardware tab, select the desired nPartition and use a menu path of nPartition > Delete nPartition.

Or, use the parremove -p# command to remove a specified nPartition (-p#, where # is the partition number).

Renaming an nPartition:

In the Partition Manager Hardware tab, select the desired nPartition and use a menu path of nPartition > Modify nPartition. Then, in the General tab, enter a new name in the nPartition Name text box and click OK.

Or, use the parmodify -p# -P name command to set the name for a specified nPartition (-p#, where # is the partition number).

Setting cell attributes:

In the Partition Manager Hardware tab, select the nPartition that the target cell belongs to. Use a menu path of nPartition > Modify nPartition. Click on the Set Cell Options tab, change the Use On Next Boot attribute as desired, and click OK.

Or, use the parmodify -p# -m#... command to modify cell attributes for a specified nPartition (-p#, where # is the partition number). Or, use the EFI Shell cellconfig command to set use-on-next-boot values.

Setting core cell choices:

In the Partition Manager Hardware tab, select the nPartition that the target cell belongs to. Use a menu path of nPartition > Modify nPartition. Click on the Set Cell Options tab, change the Core Cell Choice attribute as desired, and click OK.

Or, use the parmodify -p# -r# -r#... command to specify up to four core cell choices in priority order for a specified nPartition (-p#, where # is the partition number). Or, use the EFI Shell rootcell command to set core cell choices.

Setting cell local memory (vs. interleaved memory) values:

In the Partition Manager Hardware tab, select the nPartition that the target cell belongs to. Use a menu path of nPartition > Modify nPartition. Click on the Configure Memory tab, change the Requested CLM attribute as desired (either as a fraction of total available memory, or as a fixed amount), and click OK.

Or, use the parmodify -p# -m#::::% command (-p#, where # is the partition number, and -m#, where # is the cell number and % is the desired cell local memory value, expressed as a percentage).

Setting nPartition boot paths:

You must use the EFI Shell bcfg command to configure boot paths.
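Several of the command forms in this list are spelled out completely, so they can be generated by a script. The following Python sketch assembles argument lists for renaming an nPartition, setting core cell choices, setting a cell local memory percentage, and removing an nPartition. The function names are hypothetical and nothing is executed; the option spellings follow the forms given in this section.

```python
# Hypothetical helpers, not part of the HP tools. They build argument lists
# for the parmodify and parremove forms given in this section.


def rename_args(partition, name):
    """parmodify -p# -P name"""
    return ["parmodify", f"-p{partition}", "-P", name]


def core_cell_args(partition, cells):
    """parmodify -p# -r# -r#... (up to four choices, in priority order)"""
    if not 1 <= len(cells) <= 4:
        raise ValueError("specify between one and four core cell choices")
    return ["parmodify", f"-p{partition}"] + [f"-r{cell}" for cell in cells]


def clm_percent_args(partition, cell, percent):
    """parmodify -p# -m#::::% with the cell local memory value as a percentage"""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return ["parmodify", f"-p{partition}", f"-m{cell}::::{percent}%"]


def remove_args(partition):
    """parremove -p#"""
    return ["parremove", f"-p{partition}"]


if __name__ == "__main__":
    # Print the commands for review instead of running them.
    for args in (
        rename_args(1, "payroll"),
        core_cell_args(1, [0, 2]),
        clm_percent_args(1, 2, 50),
        remove_args(1),
    ):
        print(" ".join(args))
```

Rejecting more than four core cell choices in the helper mirrors the limit stated for the -r option, so a mistake surfaces before any command is issued.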

Booting and resetting nPartitions

This section gives a brief overview of the boot process for cells and nPartitions and lists

the main nPartition boot commands and tasks.


Using the Management Processor Command menu

The management processor provides the following commands, available from the

Command menu, to support boot and reset operations:

RS Reset an nPartition. Reset an nPartition only after all self tests and

partition rendezvous have completed.

RR Perform a shutdown for reconfig of an nPartition. Reset an nPartition

only after all self tests and partition rendezvous have completed.

BO Boot the cells assigned to an nPartition past the “waiting at BIB” state

and thus begin the nPartition boot phase.

TC Perform a transfer of control reset of an nPartition.

PE Power on or power off a cabinet, cell, or I/O chassis. On HP Integrity mid-range servers, nPartition power on and power off are also supported, so that you can manage power for all cells and I/O chassis assigned to the nPartition with a single command.

Using the EFI Shell