Dell PowerEdge Cluster FE100, PowerEdge Cluster FL100 User Manual

Dell™ PowerEdge™ Cluster FE100/FL100 Datacenter Server

USER’S GUIDE

www.dell.com

support.dell.com

Notes, Notices, Cautions, and Warnings

Throughout this guide, blocks of text may be accompanied by an icon and printed in bold type or in italic type. These blocks are notes, notices, cautions, and warnings, and they are used as follows:

NOTE: A NOTE indicates important information that helps you make better use of your computer system.

NOTICE: A NOTICE indicates either potential damage to hardware or loss of data and tells you how to avoid the problem.

CAUTION: A CAUTION indicates a potentially hazardous situation which, if not avoided, may result in minor or moderate injury.

WARNING: A WARNING indicates a potentially hazardous situation which, if not avoided, could result in death or serious bodily injury.

____________________

Information in this document is subject to change without notice.

© 2001 Dell Computer Corporation. All rights reserved.

Reproduction in any manner whatsoever without the written permission of Dell Computer Corporation is strictly forbidden.

Trademarks used in this text: Dell, the DELL logo, PowerEdge, PowerVault, and Dell OpenManage are trademarks of Dell Computer Corporation; ClusterX is a registered trademark and VERITAS is a trademark of VERITAS Corporation; Microsoft, Windows NT, and Windows are registered trademarks of Microsoft Corporation; Intel and Pentium are registered trademarks of Intel Corporation; Computer Associates and ARCserve are registered trademarks of Computer Associates International, Inc.

Other trademarks and trade names may be used in this document to refer to either the entities claiming the marks and names or their products. Dell Computer Corporation disclaims any proprietary interest in trademarks and trade names other than its own.

April 2001 P/N 9E926 Rev. A00

Preface

This guide provides information about the Dell PowerEdge Cluster FE100/FL100 Datacenter Server solution. This information includes procedures for installing, configuring, and troubleshooting the hardware and software components of PowerEdge Cluster FE100/FL100 Datacenter Server configurations.

The chapters and appendixes in this guide are summarized as follows:

• Chapter 1, “Getting Started,” provides an overview of PowerEdge Cluster FE100/FL100 Datacenter Server.

• Chapter 2, “Installation Overview,” provides an overview of the installation process for PowerEdge Cluster FE100/FL100 Datacenter Server configurations. Procedures are located throughout this guide.

• Chapter 3, “Preparing PowerEdge and PowerVault Systems for Clustering,” provides information on adding peripherals and Fibre Channel host bus adapters.

• Chapter 4, “Cabling the Cluster Hardware,” provides instructions for properly cabling the system hardware components.

• Chapter 5, “Configuring Storage Systems (Low-Level Configuration),” provides information for configuring software and external shared storage.

• Chapter 6, “Configuring the System Software,” provides the software configuration options necessary for setting up the cluster.

• Chapter 7, “Installing Cluster Management and Systems Management Software,” provides information on software tools for configuring and administering a cluster, including how to run Cluster Administrator locally on a cluster node and how to install it on a remote console.

• Chapter 8, “Upgrading Your PowerEdge System to a Cluster Configuration,” provides procedures for upgrading your noncluster system to a PowerEdge Cluster FE100/FL100 Datacenter Server configuration if the components of the cluster hardware are already present.

• Chapter 9, “Maintaining the Cluster,” provides information on maintaining PowerEdge Cluster FE100/FL100 Datacenter Server configurations.

• Chapter 10, “SAN Components,” provides detailed information on storage area network (SAN)-attached cluster configurations for the Dell PowerEdge Cluster FE100/FL100 Datacenter Server configuration.


• Appendix A, “Troubleshooting,” provides information to help you troubleshoot problems with installing and configuring clusters.

• Appendix B, “Cluster Data Sheets,” provides worksheets on which to record your specific configurations.

Warranty and Return Policy Information

Dell Computer Corporation (“Dell”) manufactures its hardware products from parts and components that are new or equivalent to new in accordance with industry-standard practices. See your Dell PowerEdge System Information document for complete warranty information for your system.

Other Documents You May Need

You may need to refer to the following documentation when performing the procedures in this guide:

• The Dell PowerVault SAN documentation for more information on storage area networks.

• The User's Guide for your system, which describes system features and technical specifications, small computer system interface (SCSI) device drivers, the System Setup program, software support, and the system configuration utility.

• The Installation and Service Guide for your PowerVault storage system, which provides installation and operation instructions for the PowerVault 65xF storage system.

• The Dell OpenManage Data Agent Installation and Operation Guide, the Dell OpenManage Data Supervisor Installation and Operation Guide, the Dell OpenManage Data Administrator Installation and Operation Guide, and the Dell OpenManage ATF Installation and Operation Guide, which provide installation instructions for the Dell OpenManage Data Agent, Dell OpenManage Data Supervisor, Dell OpenManage Data Administrator, and Dell OpenManage ATF.

You may also have one or more of the following documents:

• The Rack Installation Guide for your PowerVault storage system and the rack installation documentation for your Dell PowerEdge servers provide detailed instructions for installing the cluster components in a rack.

• The Dell OpenManage Cluster Assistant With ClusterX Getting Started Guide provides installation and configuration instructions for the ClusterX software.

• The Hardware Installation Guide for the QLA2x00 and the Software Installation Guide for the QLA2x00 provide installation instructions for the QLogic host bus adapter.

• The Dell PowerVault Storage Area Network (SAN) Administrator’s Guide provides information on installing the QLogic software.


• The Dell PowerEdge Expandable RAID Controller Battery Backup Module User's Guide.

• The Microsoft Cluster Server Administrator's Guide for the Windows 2000 Cluster Service documentation describes the clustering software used on PowerEdge Cluster FE100/FL100 Datacenter Server configurations.

• The Microsoft Windows 2000 Datacenter Server documentation describes how to install (if necessary), configure, and use the Windows 2000 Datacenter Server operating system.

Documentation is included with any options you purchase separately from the system. This documentation includes information that you need to configure and install these options in the Dell computer.

Technical information files, sometimes called “readme” files, may be installed on the hard-disk drive to provide last-minute updates about technical changes to the system or advanced technical reference material intended for experienced users or technicians.

NOTE: Documentation updates are sometimes included with your system to describe changes to your system or software. Always read these updates before consulting any other documentation because the updates often contain the latest information. Also consult Dell's Web site at http://support.dell.com for updates.

Typographical Conventions

The following list defines (where appropriate) and illustrates typographical conventions used as visual cues for specific elements of text throughout this document:

• Interface components are window titles, button and icon names, menu names and selections, and other options that appear on the monitor screen or display. They are presented in bold.

Example: Click OK.

• Keycaps are labels that appear on the keys on a keyboard. They are enclosed in angle brackets.

Example: <Enter>

• Key combinations are series of keys to be pressed simultaneously (unless otherwise indicated) to perform a single function.

Example: <Ctrl><Alt><Enter>

• Commands presented in lowercase bold are for reference purposes only and are not intended to be typed when referenced.

Example: “Use the format command to . . . .”

• In contrast, commands presented in the Courier New font are part of an instruction and intended to be typed.

Example: “Type format a: to format the diskette in drive A.”


• Filenames and directory names are presented in lowercase bold.

Examples: autoexec.bat and c:\windows

• Syntax lines consist of a command and all its possible parameters. Commands are presented in lowercase bold; variable parameters (those for which you substitute a value) are presented in lowercase italics; constant parameters are presented in lowercase bold. The brackets indicate items that are optional.

Example: del [drive:] [path] filename [/p]

• Command lines consist of a command and may include one or more of the command's possible parameters. Command lines are presented in the Courier New font.

Example: del c:\myfile.doc

• Screen text is a message that appears on the screen or text that you are instructed to type as part of a command (referred to as a command line). Screen text is presented in the Courier New font.

Example: The following message appears on your screen:

No boot device available

Example: “Type md c:\Programs and press <Enter>.”

• Variables are placeholders for which you substitute a value. They are presented in italics.

Example: DIMM_x (where x represents the DIMM socket designation).


Contents

Chapter 1 Getting Started

Overview of Microsoft Windows 2000 Datacenter Server

Overview of a Dell PowerEdge Cluster FE100/FL100 Datacenter Server Configuration

SAN-Attached Cluster Configuration

PowerEdge Cluster FE100/FL100 Identification

Activeⁿ/Active and Activeⁿ/Passive Configurations

Failover and Failback Support

PowerEdge Cluster FE100/FL100 Datacenter Server Failover Options

N+1 Failover

Multiway Failover

Cascading Failover

N-Way Migration

PowerEdge Cluster FE100/FL100 Datacenter Server Minimum System Requirements

Cluster Nodes

Cluster Storage

Cluster Interconnect Connections (Private Network)

Cluster Client Network Connections (Public Network)

Operating System and System Management Software

PowerEdge Cluster FE100/FL100 Datacenter Server Support Configuration Requirements

Required Configuration Requirements for the PowerEdge Cluster FE100/FL100 Datacenter Server

Shared Storage Requirements for the PowerEdge Cluster FE100/FL100 Datacenter Server Configuration

SAN-Attached Cluster Requirements


Chapter 2 Installation Overview

Chapter 3 Preparing PowerEdge and PowerVault Systems for Clustering

Adding Peripherals to Your Cluster

Configuring Fibre Channel HBAs on Separate PCI Buses

Configuring PowerVault DPE and DAE Enclosure Addresses

Chapter 4 Cabling the Cluster Hardware

Cluster Cabling Components

Fibre Channel Copper Connectors

Cabling Your Public Network

Using NICs in Your Public Network

Cabling Your Private Network

Using Broadcom NetExtreme Gigabit Ethernet Controllers in Your Private Network

Using Giganet cLAN for the Private Network

Protecting Your Cluster From Power Failure

Connecting Standby Power Supplies in the PowerVault System

Cabling Your Mouse, Keyboard, and Monitor in a Dell Rack

Chapter 5 Configuring Storage Systems (Low-Level Configuration)

Configuring PowerVault Shared Storage Hard-Disk Drives

Configuring the LUNs and RAID Level for the Shared Storage Subsystem

Configuring Internal SCSI Hard-Disk Drives

RAID Level for the Internal SCSI Hard-Disk Drives

Chapter 6 Configuring the System Software

Preparing for Microsoft Windows 2000 Datacenter Server Installation

Configuring the Cluster Nodes in a Windows 2000 Domain

Configuring the Windows 2000 Cluster Service

Configuring the Public and Private Networks

Static IP Address

Configuring the IP Addresses for the Private Network


Installing and Configuring Your Windows 2000 Datacenter Server Network

Overview of a Windows 2000 Datacenter Server Network Installation

Updating the Host Bus Adapter Driver

Installing the Dell OpenManage Storage Management Software for the PowerVault Storage System

Configuring Shared Drives Using the Windows 2000 Disk Management Tool

Assigning Drive Letters and Formatting Drives on the Shared Storage System

Using the Windows 2000 Dynamic Disks and Volumes

Verifying Cluster Readiness

Installing and Configuring the Windows 2000 Cluster Service

Cluster Quorum Resource

Cluster Resource Group

Adding Additional Applications and Data to the Quorum Disk

Verifying Cluster Functionality

Verifying Cluster Service

Verifying Cluster Resource Availability

Configuring the Dell OpenManage Manage Node (Data Agent) for a Cluster Environment

Configuring the Data Agent for Cluster Failover

Configuring Failover and Failback Support

Failover Support Through Four-Node Clustering

Modifying the Failover Sequence of the Disk Groups

Chapter 7 Installing Cluster Management and Systems Management Software

Microsoft Cluster Administrator

Running Cluster Administrator on a Cluster Node

Installing Cluster Administrator on a Remote Console Running Windows 2000 Professional

Installing the Cluster Administrator on a Remote Console Running Windows 2000 Advanced Server or Server Editions

Installing Cluster Administrator for Windows 2000 on a Remote Console Running Windows NT 4.0 Server, Enterprise Edition

Installing Dell OpenManage Cluster Assistant With ClusterX (Optional)

Chapter 8 Upgrading Your PowerEdge System to a Cluster Configuration

Upgrading Your PowerEdge System to a PowerEdge Cluster FE100/FL100 Datacenter Server Configuration

Installing the Appropriate Version of Windows 2000 on Your PowerEdge System


Chapter 9 Maintaining the Cluster

Connecting to Your PowerVault Storage Systems Using Dell OpenManage Storage Management Software

Connecting to the PowerVault Shared Storage Systems Using Data Agent

Connecting to Data Agent Using Data Administrator

Identifying the Cluster Name

Connecting to Data Agent Using Data Supervisor

Identifying the Device Name of the Storage Processor

Restoring Communications to a Failed Storage Device Using Dell OpenManage ATF

Using the QLogic Fibre Channel Configuration Software for PowerVault 65xF Storage Processor Replacement

Determining the RAID Levels of the Shared Disk Volumes

Configuring Your Cluster Nodes Using Windows 2000 Datacenter Server

Adding a Third NIC to a Cluster Node

Changing the IP Address of a Cluster Node

Uninstalling Cluster Service

Running chkdsk /f on a Quorum Disk

Replacing a Cluster Node

Chapter 10 SAN Components

Overview of a PowerVault SAN

PowerVault SAN Components for PowerEdge Cluster FE100/FL100 Datacenter Server Configurations

SAN-Attached Clusters

Fibre Channel Fabrics

Attaching a SAN-Attached Cluster Configuration to a Network

Using Dell PowerVault Fibre Channel Switches

Attaching a PowerVault Storage System to a SAN-Attached Cluster

Connecting the PowerEdge Cluster to a Single PowerVault Storage System

Connecting a PowerVault 130T DLT Library and PowerVault 35F Fibre Channel Bridge to a Cluster-Attached PowerVault SAN

Configuring Hard-Disk Drive Letters Using Multiple Shared Storage Units

Additional PowerEdge Cluster Maintenance Procedures

Using the QLogic Fibre Channel Configuration Utility for Storage Processor Failure

Appendix A Troubleshooting

Troubleshooting Windows 2000


Appendix B Cluster Data Sheets

PowerEdge Cluster FE100/FL100 Datacenter Server Configuration Matrix

Cluster Data Sheets

Index

Figures

Figure 1-1. SAN-Attached Cluster Configuration

Figure 1-2. N+1 Failover

Figure 1-3. Example of a 4-Node Multiway Failover

Figure 1-4. Example of a 4-Node Cascading Failover

Figure 1-5. Example of a 4-Node N-Way Migration Solution

Figure 4-1. DB-9 Connector

Figure 4-2. HSSDC Connector

Figure 4-3. Configuration Using Broadcom NetExtreme Gigabit NICs for the Private Network

Figure 4-4. Configuration Using a Giganet cLAN NIC for the Private Network

Figure 4-5. Cable Configuration of PowerVault 65xF Power Supplies

Figure 10-1. SAN-Attached Cluster Configuration

Figure 10-2. SAN-Attached Clusters Using a Public, Private, and SAN Network

Tables

Table 2-1. System-Setup Navigation Keys

Table 2-2. Device-List Screen Navigation Keys

Table 2-3. Power Time-Out Periods

Table 3-1. ICU Keys

Table 5-1. System-Board Jumper Settings

Table 5-2. System Board and Riser Board Connectors and Sockets

Table 6-1. Sample DIMM Configuration Options

Table A-1. Technical Specifications

Table B-1. Configuration Utility Messages

Table B-2. Configuration Manager Messages


CHAPTER 1

Getting Started

This chapter provides an overview of the following information for the Dell™ PowerEdge™ Cluster FE100/FL100 Datacenter Server configuration:

• Microsoft® Windows® 2000 Datacenter Server operating system

• Configuration and operation

• Cluster identification

• Failover options

• Minimum system requirements

• Support configuration requirements

Overview of Microsoft Windows 2000 Datacenter Server

Windows 2000 Datacenter Server is designed specifically for organizations that implement and manage enterprise applications requiring high availability and high performance. Typical uses include large data warehouses, server consolidation, online transaction processing (OLTP), and modeling and simulation.

Windows 2000 Datacenter Server provides all of the features included with Microsoft Windows 2000 Advanced Server, along with the following additional features:

• Two-to-four-node clustering: provides high availability of applications through failover to another node in the cluster

• Support for up to 32-way symmetric multiprocessing (SMP): the operating system distributes the processing workload across up to 32 central processing units (CPUs)

• Support for up to 32 gigabytes (GB) of random access memory (RAM) on the physical address extension (PAE)-capable PowerEdge 8450 system: improves performance when running multiple applications

• Winsock Direct: optimizes Internet Protocol (IP) performance

• Process Control management tool: improves server resource management


NOTE: Because Datacenter Server is one of four operating systems in the Windows 2000 platform, some of the core services incorporated within Datacenter Server are common to all Windows 2000 platforms. In the following sections, “Windows 2000” identifies the services common to all Windows 2000 platforms, and “Windows 2000 Datacenter Server” identifies services and components specific to the Datacenter Server platform.

Overview of a Dell PowerEdge Cluster FE100/FL100 Datacenter Server Configuration

The PowerEdge Cluster FE100/FL100 Datacenter Server is a cluster solution that implements 2-node to 4-node clustering technology based on the Microsoft Windows 2000 Cluster Service (MSCS) software incorporated within the Windows 2000 Datacenter Server operating system.

NOTE: In this guide and in other cluster documentation, MSCS is also referred to as Cluster Service.

PowerEdge Cluster FE100/FL100 Datacenter Server solutions provide the following benefits in meeting the needs of mission-critical network application programs:

• High availability of system services and resources to network clients

• Redundant storage for application program data

• Failure recovery for cluster application programs

• Flexible maintenance capabilities, allowing you to repair, maintain, or upgrade a cluster node without taking the entire cluster offline

• Load balancing between the cluster nodes

A PowerEdge cluster consists of two or more PowerEdge 8450 server systems (referred to as cluster nodes) that are interconnected with hardware and software components. Together they provide network clients with a single point of continuous access to network services, including file systems, databases, enterprise resource planning (ERP), and other application programs and services. Each cluster node is configured with software, storage, and network resources that enable it to monitor and interact with the other nodes to provide mutually redundant operation. Because the cluster nodes interact in this way, they appear as a single system to the network clients.

In a standard client/server environment, a user accesses a network resource by connecting to a physical server with a unique Internet Protocol (IP) address and network name. If the server fails for any reason, the user can no longer access the resource. In a cluster environment, a user does not access a physical server but a virtual server: a network resource that is managed by the Cluster Service, is not associated with a specific physical server, and can fail over to another cluster node. Virtual servers are designed to dynamically reconfigure user resources during a connection or hardware failure, providing higher availability of network resources than a nonclustered PowerEdge system.
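The following Python sketch is a simplified, hypothetical model of the virtual-server idea described above. It is not the Cluster Service API. Clients always look up the same virtual name and IP address. When the node hosting the virtual server fails, ownership moves to the next online node in an assumed preferred-owner list. The class names, the preferred-owner policy, and the sample address (from the 192.0.2.0/24 documentation range) are illustrative assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class VirtualServer:
    """A network name and IP address that are not bound to one physical node."""
    name: str
    ip: str
    preferred_owners: list[str]  # hypothetical failover order
    owner: str | None = None     # node currently hosting the virtual server


@dataclass
class Cluster:
    online_nodes: set[str]
    virtual_servers: dict[str, VirtualServer] = field(default_factory=dict)

    def add(self, vs: VirtualServer) -> None:
        # Bring the virtual server online on its first available preferred owner.
        vs.owner = self._first_online(vs)
        self.virtual_servers[vs.name] = vs

    def _first_online(self, vs: VirtualServer) -> str | None:
        for node in vs.preferred_owners:
            if node in self.online_nodes:
                return node
        return None  # no surviving owner: the virtual server stays offline

    def fail_node(self, node: str) -> None:
        # Mark the node as failed and move every virtual server it hosted.
        self.online_nodes.discard(node)
        for vs in self.virtual_servers.values():
            if vs.owner == node:
                vs.owner = self._first_online(vs)

    def resolve(self, name: str) -> tuple[str, str | None]:
        # Clients keep using the same name and IP; only the hosting node changes.
        vs = self.virtual_servers[name]
        return vs.ip, vs.owner


cluster = Cluster(online_nodes={"node1", "node2", "node3", "node4"})
cluster.add(VirtualServer("FILESRV", "192.0.2.50", ["node1", "node2"]))
print(cluster.resolve("FILESRV"))  # ('192.0.2.50', 'node1')
cluster.fail_node("node1")
print(cluster.resolve("FILESRV"))  # ('192.0.2.50', 'node2')
```

The client-facing address never changes in this model. Only the `owner` field moves, which mirrors the text's point that users connect to the virtual server rather than to a physical one.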


NOTE: For more information on failover, failback, and groups, see “Configuring Failover and Failback Support” in Chapter 6, “Configuring the System Software.”

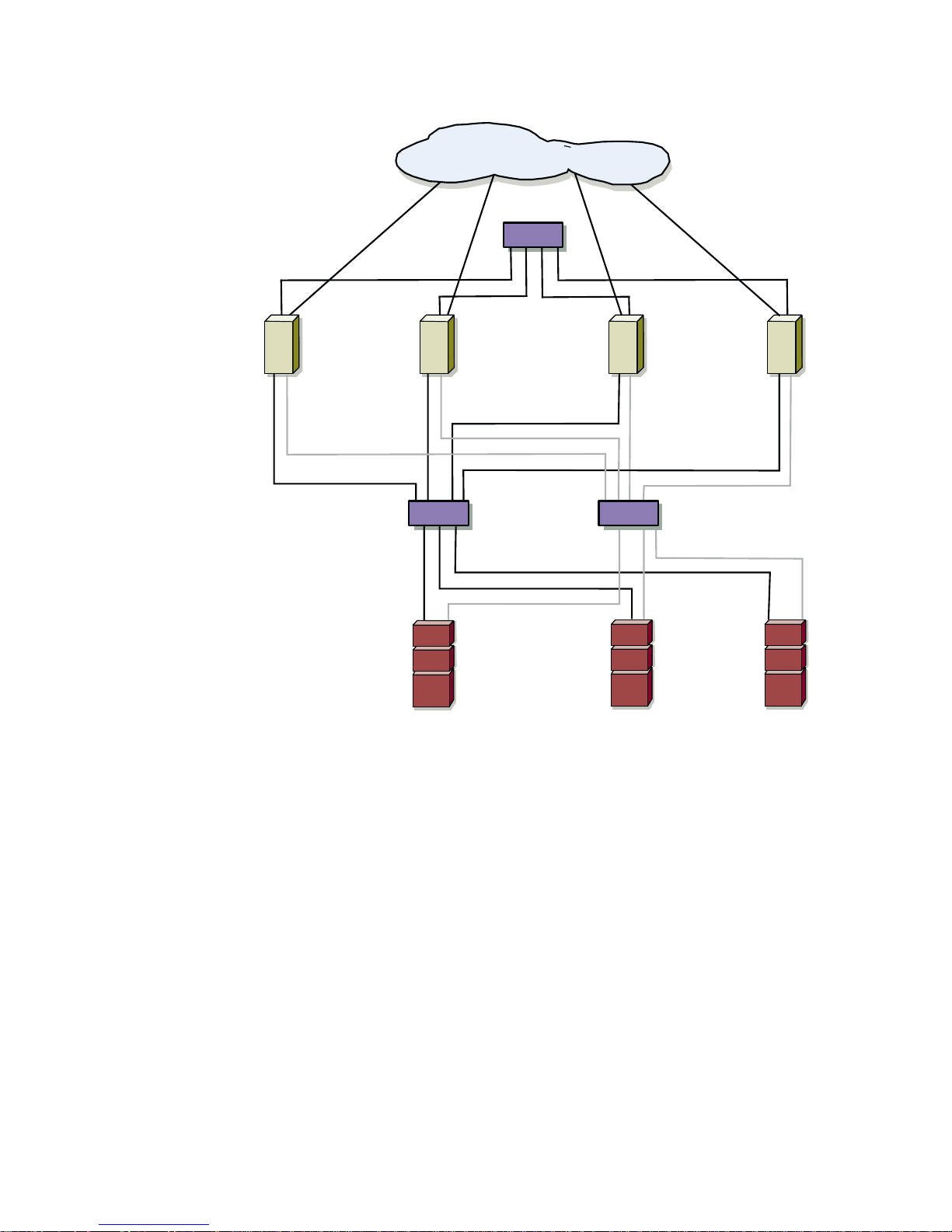

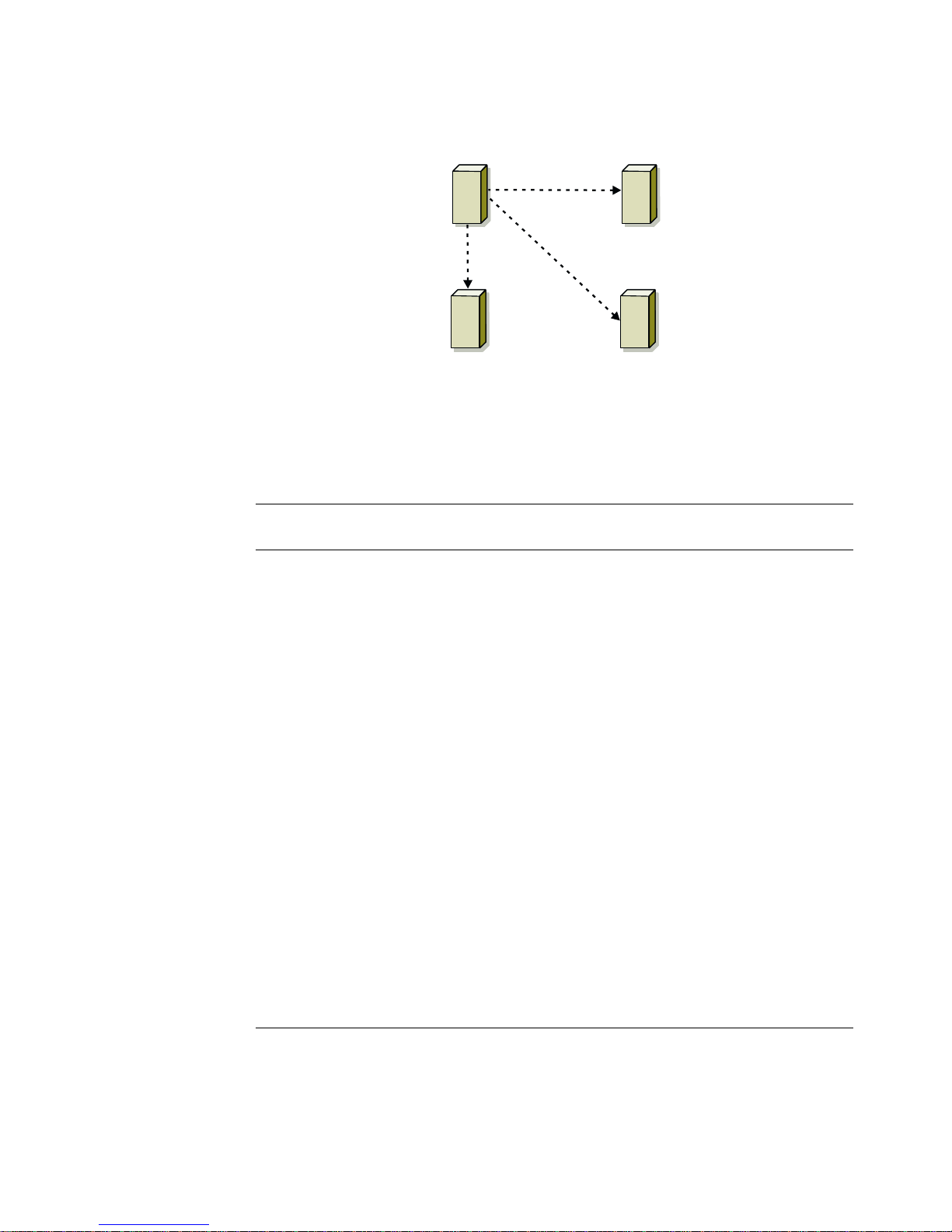

SAN-Attached Cluster Configuration

A PowerEdge Cluster FE100/FL100 Datacenter Server configuration is a SAN-attached cluster configuration in which all four cluster nodes are attached to a single PowerVault™ storage system or to multiple PowerVault storage systems through a Dell PowerVault SAN using a redundant Fibre Channel switch fabric.

NOTE: A PowerEdge Cluster FE100/FL100 Datacenter Server configuration cannot coexist on the Fibre Channel switch fabric with other clusters or stand-alone servers.

A Fibre Channel switch fabric is an active, intelligent, and private connection of one or more Fibre Channel switches that provides high-speed, point-to-point connections between servers and storage devices. Using instructions that are programmed into the switch, the switches in a Fibre Channel fabric provide point-to-point connections through inbound and outbound ports from one device (the sender) to another device or switch (the receiver) on the network. If the data is sent to another switch, the process repeats until a connection is established between the sender and the receiver. One or more PowerVault Fibre Channel switches make up a Fibre Channel fabric.
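The hop-by-hop behavior described above can be sketched as a toy lookup loop. Each switch either finds the receiver attached locally or hands the request to a neighboring switch, and the lookup repeats until a connection is established. This is a hypothetical Python illustration only. It does not model real Fibre Channel routing, frame handling, or zoning. The switch names, device names, and table layout are assumptions.

```python
from __future__ import annotations

# Hypothetical two-switch fabric. Each switch knows which devices are attached
# to it locally and which neighboring switch leads to devices it does not own.
FABRIC: dict[str, dict] = {
    "switch_a": {
        "local": {"node1", "node2"},
        "next_hop": {"storage1": "switch_b", "storage2": "switch_b"},
    },
    "switch_b": {
        "local": {"storage1", "storage2"},
        "next_hop": {"node1": "switch_a", "node2": "switch_a"},
    },
}


def route(ingress_switch: str, receiver: str, max_hops: int = 8) -> list[str]:
    """Return the switches traversed from ingress_switch until receiver is reached."""
    path = [ingress_switch]
    current = ingress_switch
    for _ in range(max_hops):
        table = FABRIC[current]
        if receiver in table["local"]:
            return path  # receiver is attached here: connection established
        nxt = table["next_hop"].get(receiver)
        if nxt is None:
            raise LookupError(f"{current} has no route to {receiver}")
        current = nxt  # hand off to the next switch and repeat the lookup
        path.append(current)
    raise LookupError(f"no route to {receiver} within {max_hops} hops")


print(route("switch_a", "storage1"))  # ['switch_a', 'switch_b']
```

The `max_hops` limit is a defensive choice so that a misconfigured table in this sketch cannot loop forever.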

Figure 1-1 shows an advanced SAN-attached cluster configuration.


[Figure 1-1 diagram: LAN/WAN, private network switch, four PowerEdge servers, two Fibre Channel switches, and three PowerVault storage systems]

Figure 1-1. SAN-Attached Cluster Configuration

PowerEdge Cluster FE100/FL100 Identification

The Dell PowerEdge Fibre Channel clusters are configured and identified by two things: the private network connection (cluster interconnect) that connects the cluster nodes together, and the type of storage devices in the cluster configuration. The interconnect determines the cluster designation: FE (Fibre Channel Ethernet) or FL (Fibre Channel Low Latency).


Table 1-1 provides an overview of the differences between PowerEdge Cluster FE100 and FL100 Datacenter Server configurations.

Table 1-1. PowerEdge Cluster FE100/FL100 Configurations

| Cluster Solution | Cluster Interconnect Type | Cluster Interconnect Network Interface Controller (NIC) |
|---|---|---|
| PowerEdge Cluster FE100 | Fast Ethernet | Broadcom NetExtreme Gigabit Ethernet NIC |
| PowerEdge Cluster FL100 | Giganet | Giganet cLAN 1000 |

Activeⁿ/Active and Activeⁿ/Passive Configurations

MSCS and PowerEdge Clusters support multiple variations of activeⁿ/active and activeⁿ/passive configurations (where n = the number of active cluster nodes). Table 1-2 describes the configuration types and their definitions.

Table 1-2. Activeⁿ/Active and Activeⁿ/Passive Configuration Types

| Configuration Type | Number of Active Cluster Nodes | Definition |
|---|---|---|
| Active/Active | 2 | The active nodes process requests and provide failover for each other, depending on cluster node resources and your configuration. |
| Active/Active/Active | 3 | The active nodes process requests and provide failover for each other, depending on cluster node resources and your configuration. |
| Active/Active/Active/Active | 4 | The active nodes process requests and provide failover for each other, depending on cluster node resources and your configuration. |
| Active/Passive | 1 | The active node processes requests while the passive node waits for the active node to fail. |
| Active/Active/Passive | 2 | The active nodes process requests while the passive node waits for an active node to fail. |
| Active/Active/Active/Passive | 3 | The active nodes process requests while the passive node waits for an active node to fail. |

An activeⁿ/active configuration refers to a cluster with virtual servers running on each node. When an application is running on node 1, the remaining cluster nodes do not have to wait for node 1 to fail. Instead, they can run their own cluster-aware applications (or another instance of the same application) while providing failover capabilities for the resources on node 1. However, each node in an activeⁿ/active cluster must be configured to handle the workload of the other cluster nodes in case one cluster node fails.

Activeⁿ/passive refers to failover cluster configurations in which one cluster node actively processes requests for a clustered application while another cluster node simply waits for the active node to fail. An activeⁿ/passive configuration is more costly in terms of price and performance because one cluster node sits idle all of the time. However, this configuration is appropriate for business-critical systems: if one cluster node fails, the application can use the full power of another cluster node.

NOTE: For clarity, future references to activeⁿ/active and activeⁿ/passive configurations use “n” to equal the number of active cluster nodes. For example, an active/active/active/active configuration consisting of four active cluster nodes is referred to as an active⁴ configuration.

Failover and Failback Support

One of the key features of Cluster Service is failover and failback support. Failover is the process of moving resources from a failed cluster node to one or more healthy nodes in the cluster. Failback is the process of returning the resources to the original cluster node. Both failover and failback can be automatic or manual, depending on how you configure the Cluster Service.

NOTE: For more information on failover, failback, and cluster groups, see “Configuring Failover and Failback Support” in Chapter 6, “Configuring the System Software.”

PowerEdge Cluster FE100/FL100 Datacenter Server Failover Options

The PowerEdge Cluster FE100/FL100 Datacenter Server configuration provides the following failover options:

• N+1
• Multiway
• Cascading
• N-Way migration

The following subsections describe each of these failover options.

N+1 Failover

N+1 failover is an activeⁿ/passive failover solution in which one cluster node provides backup for multiple cluster nodes. This solution provides the following features:

Advantage:

• One cluster node is a dedicated backup node for all other cluster nodes, providing the best solution for critical applications.

Disadvantages:

• High expense, because one node is not used to provide network resources.

• The backup cluster node may lack the resources to support multiple cluster node failures.

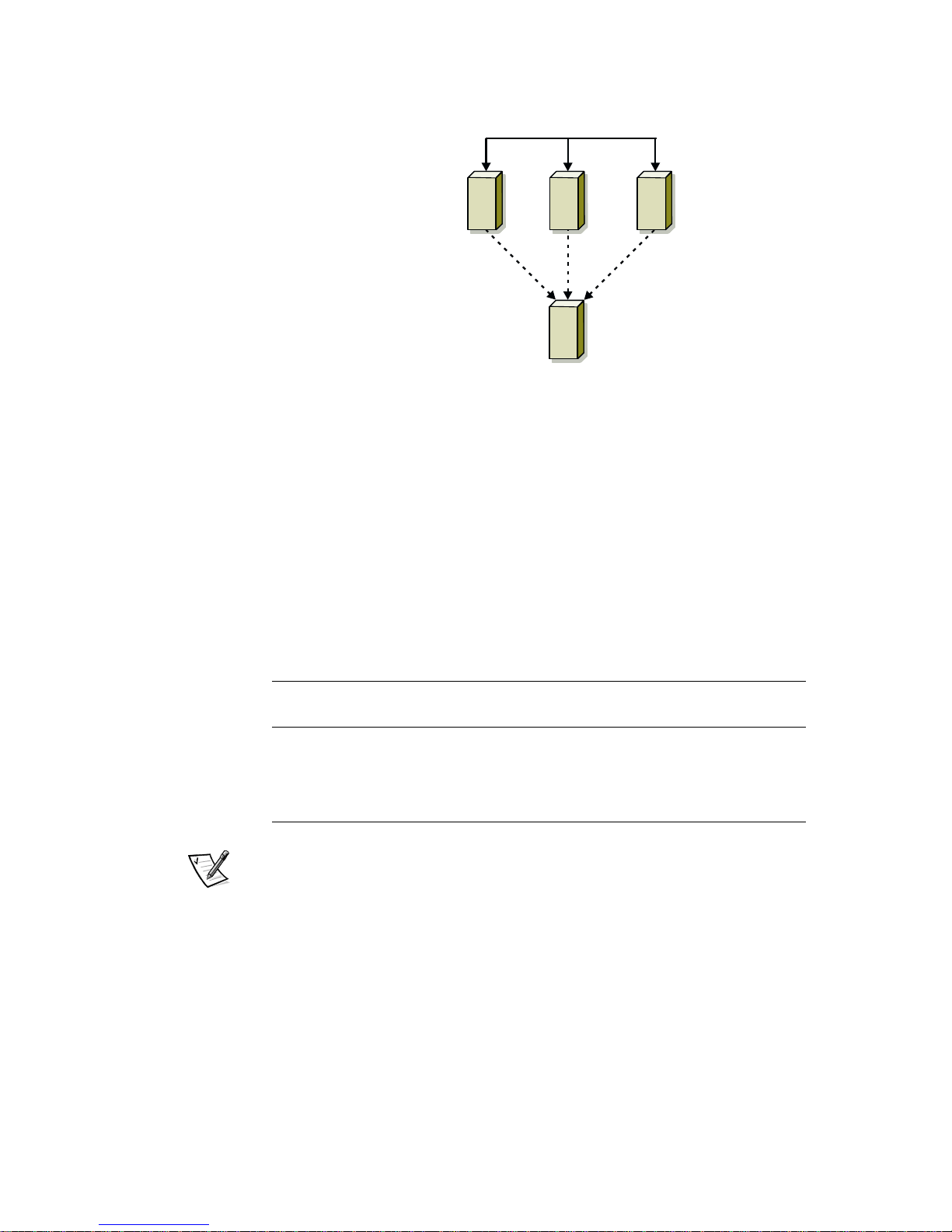

Figure 1-2 shows an example of an N+1 failover configuration.

1-6 User’s Guide

(Figure 1-2 diagram labels: cluster nodes 1, 2, and 3; cluster node 4 (backup).)

Figure 1-2. N+1 Failover

Table 1-3 provides an N+1 failover configuration for the cluster shown in Figure 1-2. For each cluster resource group, the failover order in the Preferred Owners list specifies the order in which you want that resource group to fail over. If that resource group or its cluster node fails, the cluster tries to fail that resource group over to the first available node in the list.

In this example, node 1 owns cluster resource group A, node 2 owns cluster resource group B, and node 3 owns cluster resource group C. If cluster node 1, 2, or 3 fails, cluster resource group A, B, or C, respectively, fails over to cluster node 4.

Table 1-3. N+1 Failover Configuration for a 4-Node Cluster

| Cluster Resource Group | Failover Order in the Preferred Owners List |
|---|---|
| A | 1, 4 |
| B | 2, 4 |
| C | 3, 4 |

NOTE: When implementing this type of failover solution, configure failback if the backup cluster node lacks the resources (such as memory or processing power) to support one or more cluster node failures.

Multiway Failover

Multiway failover is an activeⁿ/active failover solution in which running applications from a failed node migrate to multiple nodes in the cluster. This activeⁿ/active type of failover provides the following features:

Advantage:

• Automatic failover and load balancing between the cluster nodes.

Disadvantage:

• You must ensure that the failover cluster nodes have ample resources available to handle the additional workload.

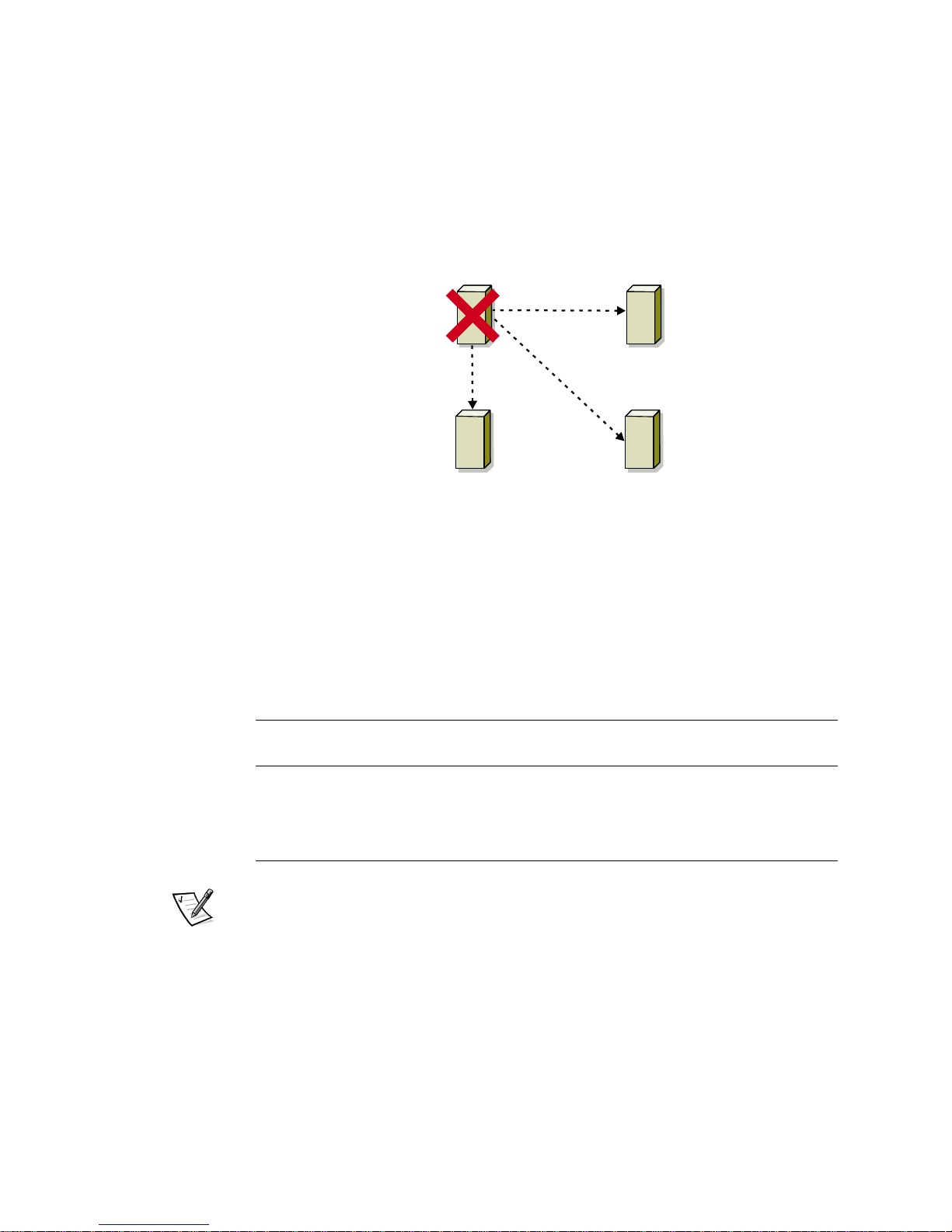

Figure 1-3 shows an example of a multiway failover configuration.

(Figure 1-3 diagram labels: cluster nodes 1 through 4; Applications A, B, and C.)

Figure 1-3. Example of a 4-Node Multiway Failover

Table 1-4 provides an example of a multiway failover configuration for the cluster shown in Figure 1-3. For each cluster resource group, the failover order in the Preferred Owners list specifies the order in which you want that resource group to fail over.

In this example, node 1 owns cluster resource groups A, B, and C. If node 1 fails, cluster resource groups A, B, and C fail over to cluster nodes 2, 4, and 3, respectively. The cluster resource groups on cluster nodes 2, 3, and 4 must be configured similarly.

Table 1-4. Example of a 4-Node Multiway Failover Configuration

| Cluster Resource Group | Failover Order in the Preferred Owners List |
|---|---|
| A | 1, 2, 3, 4 |
| B | 1, 4, 2, 3 |
| C | 1, 3, 4, 2 |

NOTE: When implementing this type of failover solution, failback should be configured to avoid performance degradation.
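The Preferred Owners rule used in Tables 1-3 and 1-4 is that a failed resource group moves to the first available node in its list. That rule can be checked with a short Python sketch.

The function names and dictionaries below are hypothetical; they are not part of Cluster Service or any Dell OpenManage tool. The sketch assumes that "available" simply means "still running." The real Cluster Service may consider additional conditions that are not modeled here.

```python
from typing import Dict, List, Optional, Set


def pick_failover_node(preferred_owners: List[int], up_nodes: Set[int]) -> Optional[int]:
    """Return the first node in the Preferred Owners list that is still running."""
    for node in preferred_owners:
        if node in up_nodes:
            return node
    return None  # no surviving node in the list


def placement_after_failure(groups: Dict[str, List[int]], failed: int,
                            all_nodes: Set[int]) -> Dict[str, Optional[int]]:
    """Node on which each resource group runs after node `failed` goes down."""
    up = all_nodes - {failed}
    return {name: pick_failover_node(order, up) for name, order in groups.items()}


NODES = {1, 2, 3, 4}

# Table 1-3 (N+1): node 4 is the dedicated backup.
n_plus_1 = {"A": [1, 4], "B": [2, 4], "C": [3, 4]}

# Table 1-4 (multiway): node 1 owns A, B, and C.
multiway = {"A": [1, 2, 3, 4], "B": [1, 4, 2, 3], "C": [1, 3, 4, 2]}

if __name__ == "__main__":
    print(placement_after_failure(multiway, failed=1, all_nodes=NODES))
    # {'A': 2, 'B': 4, 'C': 3}
    print(placement_after_failure(n_plus_1, failed=2, all_nodes=NODES))
    # {'A': 1, 'B': 4, 'C': 3}
```

The first call reproduces the Table 1-4 example: when node 1 fails, groups A, B, and C move to nodes 2, 4, and 3. The second call shows the N+1 case from Table 1-3: only group B moves (to node 4), and groups A and C stay on their owning nodes.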

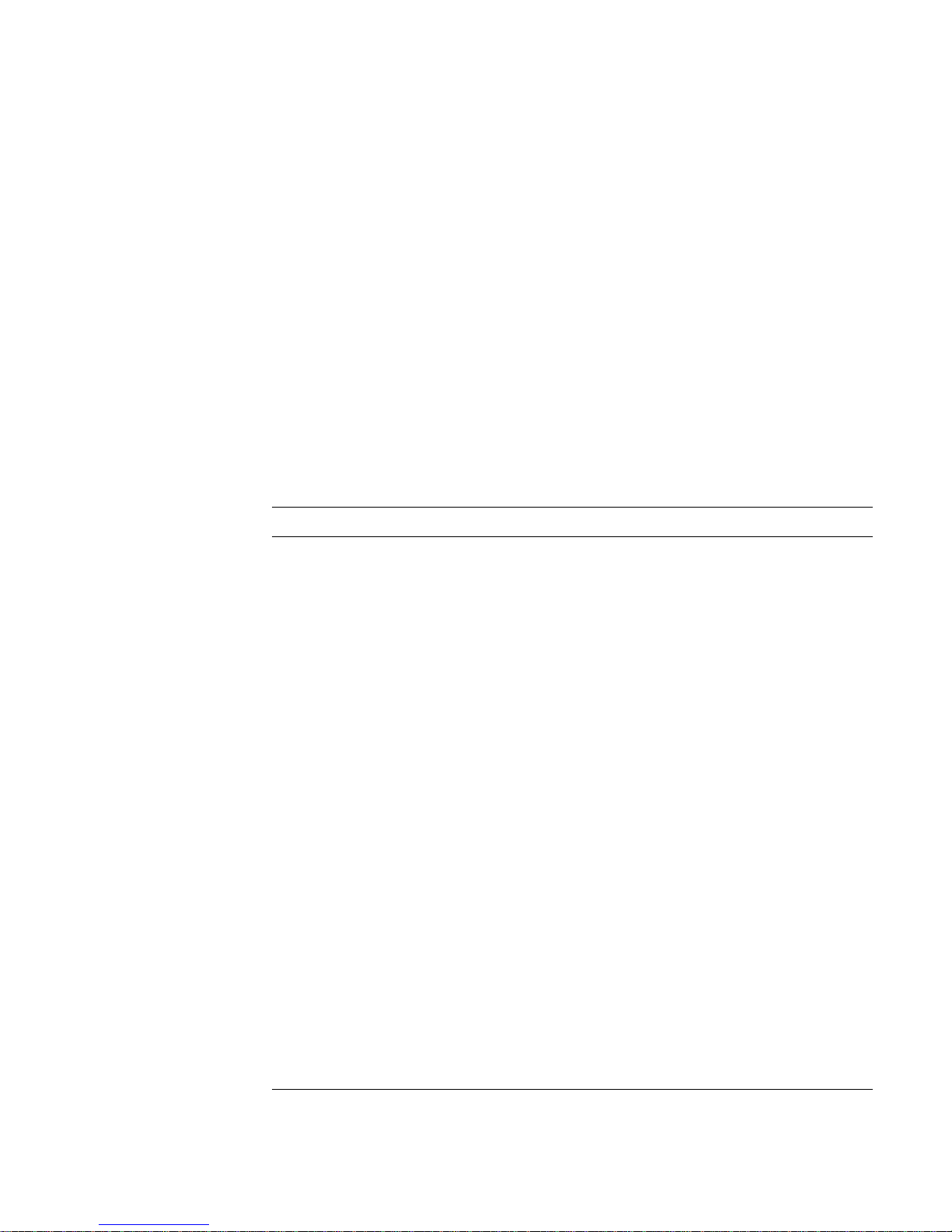

Cascading Failover

With cascading failover, all running applications migrate from the failed node to the next preassigned cluster node. If you do not make a failover selection, cascading failover is the default failover type. This type of failover provides the following features:

Advantage:

• High resource availability to users.

Disadvantage:

• The cluster node next in line for failover may not have ample resources available to handle the additional workload of the failed node.

Figure 1-4 shows an example of a cascading failover configuration.

(Figure 1-4 diagram labels: applications; failed cluster node 1; cluster nodes 2, 3, and 4.)

Figure 1-4. Example of a 4-Node Cascading Failover
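Cascading failover can be sketched as moving everything on the failed node to the next running node in a fixed chain. The following Python model is hypothetical and not a Cluster Service API. It also makes three assumptions that the manual does not state:

- the preassigned order is a simple list of nodes;
- the set `up` holds the nodes still running;
- the search wraps around to the start of the chain when the failed node is last.

```python
from typing import Dict, List, Set


def cascade(chain: List[int], up: Set[int], apps: Dict[int, List[str]],
            failed: int) -> Dict[int, List[str]]:
    """Move every application on `failed` to the next running node in `chain`.

    Assumptions (not from the manual): `chain` is the preassigned order,
    `up` holds the nodes still running, and the search wraps around.
    """
    start = chain.index(failed)
    # Nodes after the failed one, in chain order, wrapping around.
    candidates = [chain[(start + i) % len(chain)] for i in range(1, len(chain))]
    target = next((n for n in candidates if n in up), None)
    if target is None:
        raise RuntimeError("no running node left in the failover chain")
    result = {node: list(names) for node, names in apps.items()}
    moved = result.pop(failed, [])
    result.setdefault(target, []).extend(moved)
    return result


if __name__ == "__main__":
    chain = [1, 2, 3, 4]
    placement = {1: ["App A", "App B"], 2: ["App C"]}   # hypothetical apps
    placement = cascade(chain, {2, 3, 4}, placement, failed=1)
    print(placement)  # {2: ['App C', 'App A', 'App B']}
    placement = cascade(chain, {3, 4}, placement, failed=2)
    print(placement)  # {3: ['App C', 'App A', 'App B']}
```

The second call shows the disadvantage listed above: each failure piles the whole workload onto the next node in line.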

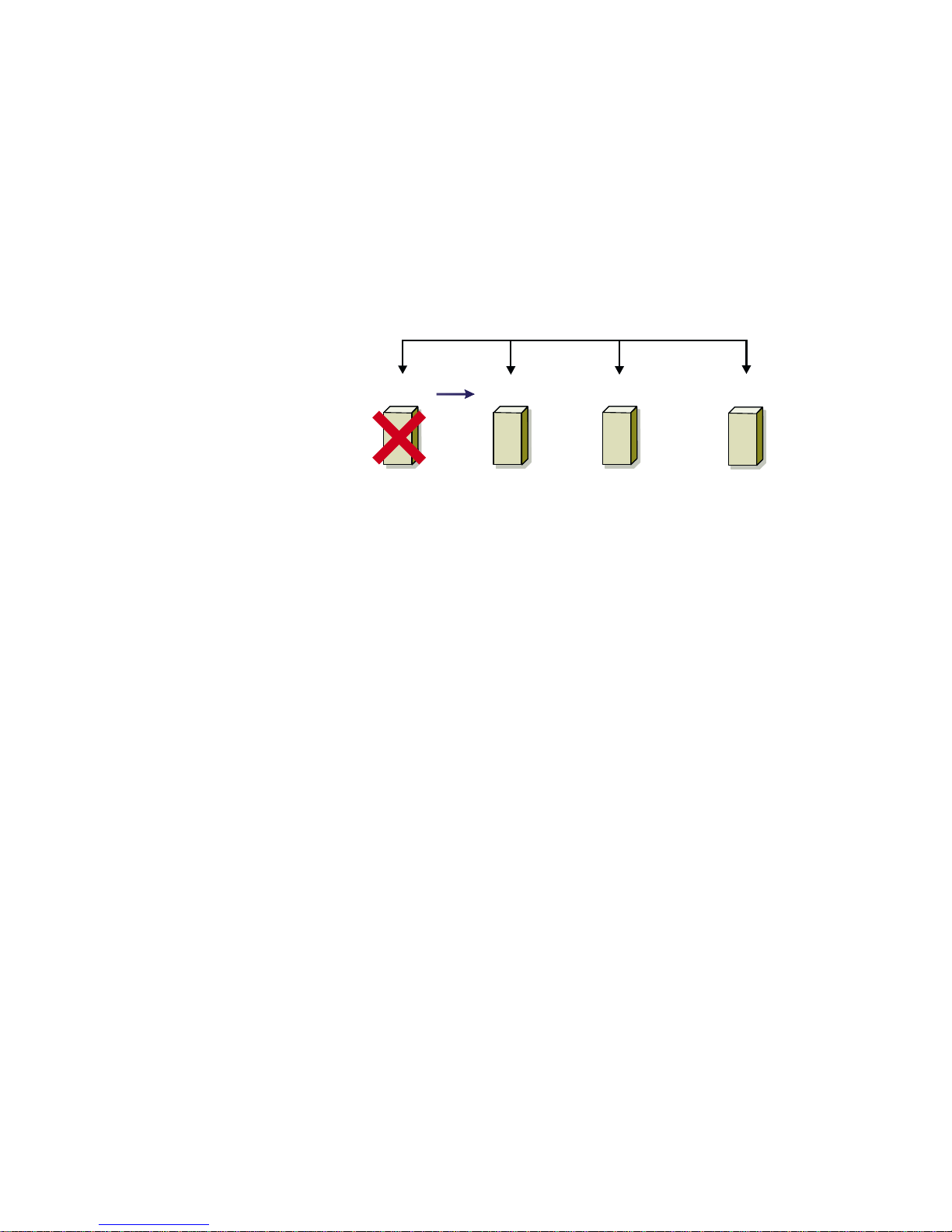

N-Way Migration

N-Way migration is the ability to manually migrate an application from one node to any other node in the cluster (based on cluster node resource availability). This type of solution provides the following features:

Advantages:

• Adjustable resource allocation.

• Added flexibility.

Disadvantage:

• The solution is not automatic.

Figure 1-5 shows an example of an N-Way migration solution.

(Figure 1-5 diagram labels: cluster nodes 1 through 4; Application A.)

Figure 1-5. Example of a 4-Node N-Way Migration Solution

Table 1-5 provides an overview of the failover types implemented with Datacenter Server.

Table 1-5. Failover Configurations

| Failover Type | Description | Advantage | Disadvantage |
|---|---|---|---|
| N+1 | One server provides backup for multiple servers in the cluster | High resource availability | Backup cluster node may lack the resources to support multiple cluster node failures |
| Multiway | Running applications migrate to multiple nodes in the cluster | Application load balancing | Must ensure that the failover cluster nodes have ample resources available to handle the additional workload |
| Cascading | Running applications migrate to the next preassigned cluster node | Higher availability | The cluster node next in line for failover may not have ample resources available to handle the additional workload of the failed node |
| N-Way migration | Any running applications are manually migrated to any node in the cluster | Added flexibility and adjustable resource allocation | Solution is not automatic |

PowerEdge Cluster FE100/FL100 Datacenter Server Minimum System Requirements

Dell PowerEdge Cluster FE100/FL100 Datacenter Server configurations require the following hardware and software components:

• Cluster nodes
• Cluster storage
• Cluster interconnects
• Operating system and system management software

Cluster Nodes

Cluster nodes require the following hardware resources:

• Two to four supported Dell PowerEdge systems, each with at least two microprocessors.
• For each server, a minimum of 2 GB of random access memory (RAM) and two host bus adapters (HBAs).
• For each server, at least one network interface controller (NIC) for client local area network (LAN) connections (public network).
• For each server, one NIC for the cluster interconnect (private network).
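A site that keeps an inventory of its servers could check the minimums above with a script. The following Python sketch is hypothetical: the `NodeSpec` record and `check_cluster_nodes` function are illustrative names, not a Dell tool.

The script checks only the counts listed in the bullets above. It does not check which PowerEdge models, processors, or adapters are supported; see Table 1-6 for those details.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class NodeSpec:
    """Hypothetical inventory record for one PowerEdge server."""
    name: str
    cpus: int
    ram_gb: float
    hbas: int
    public_nics: int
    private_nics: int


def check_cluster_nodes(nodes: List[NodeSpec]) -> List[str]:
    """Return a list of problems; an empty list means the minimums are met."""
    problems: List[str] = []
    if not 2 <= len(nodes) <= 4:
        problems.append(f"cluster has {len(nodes)} node(s); two to four are required")
    for n in nodes:
        if n.cpus < 2:
            problems.append(f"{n.name}: needs at least two microprocessors")
        if n.ram_gb < 2:
            problems.append(f"{n.name}: needs at least 2 GB of RAM")
        if n.hbas < 2:
            problems.append(f"{n.name}: needs two HBAs")
        if n.public_nics < 1:
            problems.append(f"{n.name}: needs a NIC for the public network")
        if n.private_nics < 1:
            problems.append(f"{n.name}: needs a NIC for the cluster interconnect")
    return problems


if __name__ == "__main__":
    cluster = [NodeSpec("node1", 4, 4, 2, 1, 1), NodeSpec("node2", 2, 1, 2, 1, 1)]
    print(check_cluster_nodes(cluster))  # ['node2: needs at least 2 GB of RAM']
```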

Cluster Storage

Cluster storage requires the following:

• For each cluster, at least one supported PowerVault 65xF/630F Fibre Channel storage system with dual standby power supplies (SPSs).
• For each PowerVault 65xF disk processor enclosure (DPE), at least ten hard-disk drives.
• Each PowerVault 630F disk array enclosure (DAE) attached to the cluster can contain five or ten hard-disk drives.

Cluster Interconnect Connections (Private Network)

The cluster connections for the cluster interconnect (the private network that connects the cluster nodes together) require the following hardware components:

• For each server, either a Broadcom NetExtreme Gigabit Ethernet NIC or a Giganet cLAN 1000 adapter.
• For each server, one Category 5 (CAT 5) Ethernet cable or Giganet cLAN cable to connect the nodes.
• For each cluster, a network switch or Giganet cLAN cluster switch to connect the cluster nodes.

NOTE: If you have a two-node PowerEdge Cluster FE100/FL100 Datacenter Server configuration that you will not expand to a three- or four-node cluster, you can connect the nodes with a crossover cable or cLAN cable instead of a private network switch.

Cluster Client Network Connections (Public Network)

The cluster connections to the client network (the public network for client access to cluster resources) require the following hardware components:

• For each server, a Broadcom NetExtreme Gigabit Ethernet NIC or an Intel® PRO/1000 Gigabit Server Adapter.

Operating System and System Management Software

Dell PowerEdge Cluster FE100 and FL100 Datacenter Server systems require the following operating system and system management software:

• Microsoft Windows 2000 Datacenter Server

  NOTE: One licensed copy of Windows 2000 Datacenter Server is required for each cluster node.

• QLogic Fibre Channel Configuration Utility
• Dell OpenManage Application Transparent Failover
• Dell OpenManage Managed Node (Data Agent)
• Dell OpenManage Data Administrator or Dell OpenManage Data Supervisor
• Dell OpenManage Cluster Assistant With ClusterX® version 3.0.1 with Service Pack 2 or later (optional)

PowerEdge Cluster FE100/FL100 Datacenter Server Supported Configuration Requirements

The following tables provide configuration requirements for these cluster components and configurations:

• Cluster nodes
• Shared storage systems
• SAN-attached clusters

Cluster Node Requirements for the PowerEdge Cluster FE100/FL100 Datacenter Server

Table 1-6 provides the cluster node requirements for a PowerEdge Cluster FE100/FL100 Datacenter Server configuration.

Table 1-6. Cluster Node Requirements

| Rule/Guideline | Description |
|---|---|
| Platform | Microsoft Windows 2000 Datacenter Server with two to four homogeneous PowerEdge 8450 systems with basic input/output system (BIOS) version A04 per cluster |
| Processors | Two to eight Pentium® III 700 megahertz (MHz) central processing units (CPUs) with 2 megabytes (MB) of cache per system |
| Memory (RAM) | Minimum of 2 GB, up to 32 GB, of random access memory (RAM) per system |
| Host bus adapters (HBA) | Two identical QLogic 2200/66 HBAs per cluster node. Both copper and Fibre Channel HBAs are supported. |
| Internal hard-disk drives | Minimum of two logical drives with a minimum of 9 GB of storage on each logical volume |
| Cluster interconnect (private network) | Giganet cLAN with card level A01 and driver version 4.1.1; Broadcom NetExtreme Gigabit Ethernet NIC with driver version 1.29.0.0 |
| Public network | Intel PRO/1000 Gigabit Server Adapter with driver version 2.19.219.0; Broadcom NetExtreme Gigabit Ethernet NIC with driver version 1.29.0.0 |
| RAID controller | One PowerEdge Expandable RAID Controller 2/DC (PERC 2/DC) with firmware version 1.01 and driver version 2.62 |
| Cluster management (optional) | Dell OpenManage™ Cluster Assistant With ClusterX®, version 3.0.1 with Service Pack 2 or later |
| Remote server management (optional) | Dell OpenManage Remote Assistant Card (DRAC) with firmware version 2.3 and driver version 2.3.0.4 |
| Operating systems | Microsoft Windows 2000 Datacenter Server with the latest Service Pack and hotfixes (one licensed copy per cluster node) |

Shared Storage Requirements for the PowerEdge Cluster FE100/FL100 Datacenter Server Configuration

Table 1-7 provides the shared storage requirements for the PowerEdge Cluster FE100/FL100 Datacenter Server.

Table 1-7. PowerEdge Cluster FE100/FL100 Shared Storage Requirements

| Rule/Guideline | Description |
|---|---|
| Disk array | PowerVault 650F with firmware version 5.11.09 |
| Storage processors | Two identical PowerVault storage processors, each with a minimum of 2 GB RAM |
| Standby power supplies | Dual standby power supplies required |
| Supported RAID levels | RAID 0, RAID 1, RAID 3, RAID 5, and RAID 1/0 |
| Tape library (optional) | PowerVault 130T digital linear tape (DLT) library with driver version 1.0 |

NOTICE: Dell recommends that you use a redundant array of independent disks (RAID) level other than RAID 0 for your PowerVault shared storage system. RAID 0 does not provide the level of availability required for the quorum resource. See the Installation and Troubleshooting Guide for your PowerVault storage system for more information about setting up RAID levels for the system.

SAN-Attached Cluster Requirements

Table 1-8 provides the requirements for a SAN-attached cluster configuration.

Table 1-8. SAN-Attached Cluster Requirements

| Rule/Guideline | Description |
|---|---|
| SAN version | SAN 3.0 |
| HBA | QLogic QLA2200/66 with firmware version 1.45 and driver version 7.04.08.02 |
| HBA failover driver | Dell OpenManage ATF version 2.3.2.5 |
| Fibre Channel switch | PowerVault 51F Fibre Channel switch with firmware version 2.1.7; PowerVault 56F Fibre Channel switch with firmware version 2.1.7 |
| Fibre Channel-to-SCSI bridge | Up to four PowerVault 35F Fibre Channel-to-SCSI bridges with firmware version d99908e |
| SAN tape backup | Up to two PowerVault 130T tape libraries for each PowerVault 35F bridge |
| PowerSuite | Computer Associates® ARCserve® 2000; VERITAS™ BackupExec 8.0 Build 3316 |
| SAN configuration guidelines | Follow SAN 3.0 rules; a redundant switch fabric is required |


CHAPTER 2

Installation Overview

This chapter provides an overview of installing and implementing a Dell PowerEdge Cluster FE100/FL100 Datacenter Server configuration. More detailed instructions are provided later in this document.

NOTICE: Before installing the cluster, ensure that your site can handle the power requirements of the cluster equipment. Contact your Dell sales representative for information about your region's power requirements.

WARNING: Hardware installation should be performed only by trained service technicians. Before working inside the system, see the safety instructions in your Dell PowerEdge System Information document to avoid a situation that could cause serious injury or death.

The following procedure provides an overview of installing Microsoft Windows 2000 Datacenter Server on the PowerEdge Cluster FE100/FL100 Datacenter Server. To install Windows 2000 Datacenter Server on the cluster, perform the following steps:

1. Add network interface controllers (NICs), host bus adapters (HBAs), redundant array of independent disks (RAID) controllers, small computer system interface (SCSI) hard-disk drives, Fibre Channel hard-disk drives, and other components to the existing system hardware to meet the requirements for a Dell PowerEdge Cluster FE100/FL100 Datacenter Server configuration.

2. Cable the system hardware for clustering.

3. Configure RAID for the internal SCSI hard-disk drives using the RAID controller’s basic input/output system (BIOS) utility.

4. Create the low-level configurations and configure the hardware settings for the HBAs. Ensure that the sum of the execution throttle values does not exceed 240 and that no individual value exceeds 90.

   NOTE: See the Dell PowerVault SAN Administrator’s Guide for critical information on performing low-level configurations and setting the execution throttle values on your HBAs.

5. Install and configure the Windows 2000 Datacenter Server operating system with the latest Service Pack and hotfixes (if applicable) on each node.

6. During the installation, when prompted, select the check box to install the Cluster Service files.

   NOTICE: Do not configure the Cluster Service in this step.

7. Configure the public and private networks in each node, and place each network on a separate subnet with static Internet protocol (IP) addresses.

   NOTE: The public network refers to the NIC used for client connections. The private network refers to the cluster interconnect that connects the cluster nodes together.

8. Update the miniport driver for the Fibre Channel HBAs in each node.

9. Install the QLogic Fibre Channel configuration software on each node and reboot.

10. Install Dell OpenManage Application Transparent Failover (ATF) on each node and reboot.

11. Install Dell OpenManage Managed Node (Data Agent) on each node.

12. Install Dell OpenManage Data Supervisor or Dell OpenManage Data Administrator on node 1.

13. Reboot node 1 and shut down all other nodes.

14. From node 1, configure the RAID level on the storage system, and then reboot node 1.

15. Run Microsoft Windows 2000 Disk Management to format the Fibre Channel hard-disk drives in the shared storage system and assign drive letters to them.

16. Verify disk access and functionality on all new hard-disk drives in the shared storage system.

17. Power off the node.

18. Repeat steps 15 through 17 on all other nodes for each shared volume.

   NOTE: You do not have to reformat the disks.

19. Power on node 1 and configure the Cluster Service.

20. After the Cluster Service has started on node 1, power on all other cluster nodes, and then install the Cluster Service on these nodes.

21. Verify the functionality of the cluster.

22. Configure failover for each cluster node.

23. Install and set up your application programs.

24. Record the configuration of the cluster using the data sheets in Appendix B, “Cluster Data Sheets.”
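Two of the numeric rules in the procedure above can be checked before installation:

- the execution throttle limits in step 4 (sum no greater than 240, each value no greater than 90);
- the separate-subnet rule for the public and private networks in step 7.

The following Python sketch uses only the standard `ipaddress` module. The function names and example addresses are hypothetical. The sketch does not decide which HBAs belong in the throttle sum; that scope is defined in the Dell PowerVault SAN Administrator’s Guide, and the function simply sums whatever values you pass in. It also cannot tell whether an address is static.

```python
import ipaddress
from typing import List

MAX_THROTTLE_SUM = 240   # step 4: limit for the sum of execution throttle values
MAX_THROTTLE_EACH = 90   # step 4: limit for any single value


def throttle_ok(values: List[int]) -> bool:
    """True if the execution throttle values passed in meet the step 4 limits."""
    return sum(values) <= MAX_THROTTLE_SUM and all(v <= MAX_THROTTLE_EACH for v in values)


def separate_subnets(public_if: str, private_if: str) -> bool:
    """True if two 'address/prefix' strings are on non-overlapping subnets (step 7)."""
    public_net = ipaddress.ip_interface(public_if).network
    private_net = ipaddress.ip_interface(private_if).network
    return not public_net.overlaps(private_net)


if __name__ == "__main__":
    print(throttle_ok([90, 90]))       # True  (sum 180, each value <= 90)
    print(throttle_ok([90, 90, 90]))   # False (sum 270 > 240)
    print(separate_subnets("192.168.1.10/24", "10.0.0.1/24"))      # True
    print(separate_subnets("192.168.1.10/24", "192.168.1.11/24"))  # False
```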

