REVISION 1.37

APRIL 2017

INTEL® SERVER PRODUCTS AND SOLUTIONS

Intel® Server Board S2600KP Product Family and Intel® Compute Module HNS2600KP Product Family

Technical Product Specification

A document providing an overview of product features, functions, architecture, and support specifications

Revision History

Date             Revision Number  Modifications

September, 2014  1.00             1st External Public Release

January, 2015    1.10             Added 12G SAS bridge boards
                                  Updated BMC Sensor Table
                                  Removed Appendix Node Manager 2.0 IPMI Integrated Sensors
                                  Added POST Error Beep Codes

March, 2015      1.11             Added maximum RPM for BMC fan control

August, 2015     1.20             Corrected the InfiniBand* Connect-IB* name
                                  Removed the 6G SAS bridge board (option1) from the support list

November, 2015   1.21             Updated the product code
                                  Corrected pin-out tables references

March, 2016      1.30             Added support for Intel® Xeon® processor E5-2600 v4 product family
                                  Added AHWKPTP12GBGBIT

April, 2016      1.31             Added FXX2130PCRPS Power Supply

April, 2016      1.32             Added J6B4 jumper

May, 2016        1.33             Added BBS2600KPTR TPM references

June, 2016       1.34             Added H2312XXLR2 and H2216XXLR2 references

August, 2016     1.35             Updated embedded RAID description
                                  Updated 6Gbps Bridge Board description

October, 2016    1.36             Updated the E5-2600 v4 memory speed supported reference
                                  Added AXXKPTPM2IOM and M.2 device references
                                  Intel® ESRT2 SATA DOM support for RAID-0 and RAID-1
                                  Typographical corrections

April, 2017      1.37             Added note that the S2600KPFR Mellanox IB card has no driver support for Windows OS
                                  Errata: Removed “ED2 – 4: CATERR due to CPU 3-strike timeout” from CATERR Sensor section

Disclaimers

Information in this document is provided in connection with Intel® products. No license, express or implied, by estoppel or otherwise, to any intellectual property rights is granted by this document. Except as provided in Intel's Terms and Conditions of Sale for such products, Intel assumes no liability whatsoever, and Intel disclaims any express or implied warranty, relating to sale and/or use of Intel products including liability or warranties relating to fitness for a particular purpose, merchantability, or infringement of any patent, copyright or other intellectual property right. Intel products are not intended for use in medical, lifesaving, or life sustaining applications. Intel may make changes to specifications and product descriptions at any time, without notice.

A "Mission Critical Application" is any application in which failure of the Intel Product could result, directly or indirectly, in personal injury or death. SHOULD YOU PURCHASE OR USE INTEL'S PRODUCTS FOR ANY SUCH MISSION CRITICAL APPLICATION, YOU SHALL INDEMNIFY AND HOLD INTEL AND ITS SUBSIDIARIES, SUBCONTRACTORS AND AFFILIATES, AND THE DIRECTORS, OFFICERS, AND EMPLOYEES OF EACH, HARMLESS AGAINST ALL CLAIMS, COSTS, DAMAGES, AND EXPENSES AND REASONABLE ATTORNEYS' FEES ARISING OUT OF, DIRECTLY OR INDIRECTLY, ANY CLAIM OF PRODUCT LIABILITY, PERSONAL INJURY, OR DEATH ARISING IN ANY WAY OUT OF SUCH MISSION CRITICAL APPLICATION, WHETHER OR NOT INTEL OR ITS SUBCONTRACTOR WAS NEGLIGENT IN THE DESIGN, MANUFACTURE, OR WARNING OF THE INTEL PRODUCT OR ANY OF ITS PARTS.

Designers must not rely on the absence or characteristics of any features or instructions marked "reserved" or "undefined." Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them.

The Intel® Server Board S2600KP Product Family and Intel® Compute Module HNS2600KP Product Family may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request.

This document and the software described in it are furnished under license and may only be used or copied in accordance with the terms of the license. The information in this manual is furnished for informational use only, is subject to change without notice, and should not be construed as a commitment by Intel Corporation. Intel Corporation assumes no responsibility or liability for any errors or inaccuracies that may appear in this document or any software that may be provided in association with this document.

Except as permitted by such license, no part of this document may be reproduced, stored in a retrieval system, or transmitted in any form or by any means without the express written consent of Intel Corporation.

Copies of documents which have an order number and are referenced in this document, or other Intel® Literature, may be obtained by calling 1-800-548-4725 or by visiting http://www.intel.com/design/Literature.htm.

Intel and Xeon are trademarks or registered trademarks of Intel Corporation.

*Other brands and names may be claimed as the property of others.

Copyright © 2016 Intel Corporation. All rights reserved.

Table of Contents

1 Introduction ........................................................................................................................................ 1

1.1 Chapter Outline .................................................................................................................................... 1

1.2 Server Board Use Disclaimer .......................................................................................................... 2

2 Product Features Overview ............................................................................................................. 3

2.1 Components and Features Identification ................................................................................. 6

2.2 Rear Connectors and Back Panel Feature Identification .................................................... 7

2.3 Intel® Light Guided Diagnostic LED .............................................................................................. 8

2.4 Jumper Identification ........................................................................................................................ 8

2.5 Mechanical Dimension and Weight ............................................................................................. 9

2.6 Product Architecture Overview .................................................................................................. 10

2.7 Power Docking Board ..................................................................................................................... 13

2.8 Bridge Board ....................................................................................................................................... 14

2.8.1 6G SATA Bridge Board ................................................................................................................... 14

2.8.2 12G SAS Bridge Board with IT mode ....................................................................................... 15

2.8.3 12G SAS Bridge Board with RAID 0, 1 and 10 ...................................................................... 15

2.8.4 12G SAS Bridge Board with RAID 0, 1, 5 and 10 ................................................................. 16

2.9 Riser Card ............................................................................................................................................ 16

2.9.1 Riser Slot 1 Riser Card .................................................................................................................... 16

2.9.2 Riser Slot 2 Riser Card .................................................................................................................... 17

2.10 I/O Module Carrier ........................................................................................................................... 17

2.11 Compute Module Fans ................................................................................................................... 20

2.12 Air Duct ................................................................................................................................................. 20

2.13 Intel® RAID C600 Upgrade Key .................................................................................................... 21

2.14 Intel® Remote Management Module 4 (Intel® RMM4) Lite ................................................ 21

2.15 Breakout Board ................................................................................................................................. 22

2.16 System Software Overview .......................................................................................................... 23

2.16.1 System BIOS ....................................................................................................................................... 24

2.16.2 Field Replaceable Unit (FRU) and Sensor Data Record (SDR) Data ............................. 28

2.16.3 Baseboard Management Controller (BMC) Firmware ....................................................... 29

3 Processor Support.......................................................................................................................... 30

3.1 Processor Socket Assembly ........................................................................................................ 30

3.2 Processor Thermal Design Power (TDP) Support .............................................................. 31

3.3 Processor Population Rules ......................................................................................................... 31

3.4 Processor Initialization Error Summary .................................................................................. 32

3.5 Processor Function Overview ..................................................................................................... 35

3.5.1 Processor Core Features ............................................................................................................... 35

3.5.2 Supported Technologies .............................................................................................................. 35

3.6 Processor Heat Sink ........................................................................................................................ 38

4 Memory Support ............................................................................................................................. 39

4.1 Memory Subsystem Architecture .............................................................................................. 39

4.1.1 IMC Modes of Operation ............................................................................................................... 40

4.1.2 Memory RASM Features ................................................................................................................ 41

4.2 Supported DDR4-2400 memory for Intel® Xeon processor v4 Product Family .... 42

4.3 Supported DDR4-2133 memory for Intel® Xeon processor v4 Product Family .... 43

4.4 Memory Slot Identification and Population Rules ............................................................. 43

4.5 System Memory Sizing and Publishing ................................................................................... 45

4.5.1 Effects of Memory Configuration on Memory Sizing ........................................................ 45

4.5.2 Publishing System Memory ......................................................................................................... 46

4.6 Memory Initialization ...................................................................................................................... 47

5 Server Board I/O ............................................................................................................................. 51

5.1 PCI Express* Support...................................................................................................................... 51

5.1.1 PCIe Enumeration and Allocation ............................................................................................. 51

5.1.2 PCIe Non-Transparent Bridge (NTB) ........................................................................................ 52

5.2 Add-in Card Support ...................................................................................................................... 53

5.2.1 Riser Card Support .......................................................................................................................... 53

5.3 Serial ATA (SATA) Support ........................................................................................................... 55

5.3.1 Staggered Disk Spin-Up ................................................................................................................ 57

5.4 Embedded SATA RAID Support ................................................................................................. 57

5.4.1 Intel® Rapid Storage Technology (RSTe) 4.0 ......................................................................... 57

5.4.2 Intel® Embedded Server RAID Technology 2 (ESRT2) ....................................................... 59

5.5 Network Interface ............................................................................................................................. 60

5.5.1 MAC Address Definition ................................................................................................................ 61

5.5.2 LAN Manageability ........................................................................................................................... 61

5.6 Video Support ................................................................................................................................... 61

5.7 Universal Serial Bus (USB) Ports ................................................................................................ 62

5.8 Serial Port ............................................................................................................................................ 63

5.9 InfiniBand* Adapter ......................................................................................................................... 63

5.9.1 Device Interfaces .............................................................................................................................. 64

5.9.2 Quad Small Form-factor Pluggable (QSFP+) Connector ................................................. 65

6 Connector and Header .................................................................................................................. 66

6.1 Power Connectors ........................................................................................................................... 66

6.1.1 Main Power Connector .................................................................................................................. 66

6.1.2 Backup Power Connector ............................................................................................................. 66

6.2 System Management Headers .................................................................................................... 67

6.2.1 Intel® Remote Management Module 4 (Intel® RMM4) Lite Connector .......................... 67

6.2.2 IPMB Header ....................................................................................................................................... 67

6.2.3 Control Panel Connector .............................................................................................................. 67

6.3 Bridge Board Connector ................................................................................................................ 68

6.3.1 Power Button ..................................................................................................................................... 69

6.4 I/O Connectors .................................................................................................................................. 70

6.4.1 PCI Express* Connectors ............................................................................................................... 70

6.4.2 VGA Connector .................................................................................................................................. 78

6.4.3 NIC Connectors ................................................................................................................................. 79

6.4.4 SATA Connectors ............................................................................................................................. 79

6.4.5 SATA SGPIO Connectors ............................................................................................................... 80

6.4.6 Hard Drive Activity LED Header .................................................................................................. 80

6.4.7 Intel® RAID C600 Upgrade Key Connector ............................................................................. 80

6.4.8 Serial Port Connectors ................................................................................................................... 81

6.4.9 USB Connectors ................................................................................................................................ 81

6.4.10 QSFP+ for InfiniBand* .................................................................................................................... 82

6.4.11 UART Header ...................................................................................................................................... 82

6.5 Fan Headers ........................................................................................................................................ 83

6.5.1 FAN Control Cable Connector .................................................................................................... 83

6.5.2 Discrete System FAN Connector ................................................................................................ 83

6.6 Power Docking Board Connectors ............................................................................................ 84

6.7 Breakout Board Connector .......................................................................................................... 85

7 Configuration Jumpers ................................................................................................................. 86

7.1 PS All Node off (J6B4) .................................................................................................................... 87

7.2 BMC Force Update (J6B6)............................................................................................................. 87

7.3 ME Force Update (J5D2) ................................................................................................................ 88

7.4 Password Clear (J6B8) ................................................................................................................... 89

7.5 BIOS Recovery Mode (J6B9) ........................................................................................................ 90

7.6 BIOS Default (J6B7) ......................................................................................................................... 92

8 Intel® Light-Guided Diagnostics................................................................................................... 94

8.1 Status LED ........................................................................................................................................... 94

8.2 ID LED .................................................................................................................................................... 97

8.3 BMC Boot/Reset Status LED Indicators .................................................................................. 97

8.4 InfiniBand* Link/Activity LED ...................................................................................................... 98

8.5 POST Code Diagnostic LEDs ....................................................................................................... 98

9 Platform Management ................................................................................................................. 100

9.1 Management Feature Set Overview ...................................................................................... 100

9.1.1 IPMI 2.0 Features Overview ...................................................................................................... 100

9.1.2 Non IPMI Features Overview .................................................................................................... 101

9.2 Platform Management Features and Functions ............................................................... 103

9.2.1 Power Subsystem ......................................................................................................................... 103

9.2.2 Advanced Configuration and Power Interface (ACPI) .................................................... 103

9.2.3 System Initialization ..................................................................................................................... 104

9.2.4 System Event Log (SEL) .............................................................................................................. 105

9.3 Sensor Monitoring ........................................................................................................................ 105

9.3.1 Sensor Scanning ............................................................................................................................ 105

9.3.2 Sensor Rearm Behavior .............................................................................................................. 105

9.3.3 BIOS Event-Only Sensors .......................................................................................................... 106

9.3.4 Margin Sensors ............................................................................................................................... 107

9.3.5 IPMI Watchdog Sensor ............................................................................................................... 107

9.3.6 BMC Watchdog Sensor ............................................................................................................... 107

9.3.7 BMC System Management Health Monitoring .................................................................. 107

9.3.8 VR Watchdog Timer ..................................................................................................................... 107

9.3.9 System Airflow Monitoring ........................................................................................................ 107

9.3.10 Thermal Monitoring ..................................................................................................................... 108

9.3.11 Processor Sensors ........................................................................................................................ 111

9.3.12 Voltage Monitoring ....................................................................................................................... 114

9.3.13 Fan Monitoring ............................................................................................................................... 114

9.3.14 Standard Fan Management ....................................................................................................... 116

9.3.15 Power Management Bus (PMBus*) ......................................................................................... 122

9.3.16 Power Supply Dynamic Redundancy Sensor .................................................................... 122

9.3.17 Component Fault LED Control ................................................................................................ 123

9.3.18 CMOS Battery Monitoring .......................................................................................................... 124

9.4 Intel® Intelligent Power Node Manager (NM)...................................................................... 124

9.4.1 Hardware Requirements ............................................................................................................ 124

9.4.2 Features ............................................................................................................................................. 125

9.4.3 ME System Management Bus (SMBus*) Interface............................................................ 125

9.4.4 PECI 3.0 ............................................................................................................................................. 125

9.4.5 NM “Discovery” OEM SDR .......................................................................................................... 125

9.4.6 SmaRT/CLST ................................................................................................................................... 125

9.5 Basic and Advanced Server Management Features ....................................................... 126

9.5.1 Dedicated Management Port ................................................................................................... 127

9.5.2 Embedded Web Server ............................................................................................................... 127

9.5.3 Advanced Management Feature Support (RMM4 Lite) ................................................. 129

10 Thermal Management ................................................................................................................. 134

11 System Security ............................................................................................................................ 136

11.1 Password Setup ............................................................................................................................. 136

11.1.1 System Administrator Password Rights .............................................................................. 137

11.1.2 Authorized System User Password Rights and Restrictions ....................................... 137

11.2 Front Panel Lockout ..................................................................................................................... 138

11.3 Trusted Platform Module (TPM) support ............................................................................ 138

11.3.1 TPM security BIOS ........................................................................................................................ 139

11.3.2 Physical presence ......................................................................................................................... 139

11.3.3 TPM security setup options ...................................................................................................... 139

12 Environmental Limits Specification ......................................................................................... 141

13 Power Supply Specification Guidelines .................................................................................. 142

13.1 Power Supply DC Output Connector .................................................................................... 142

13.2 Power Supply DC Output Specification ............................................................................... 142

13.2.1 Output Power/Currents .............................................................................................................. 142

13.2.2 Standby Output ............................................................................................................................. 143

13.2.3 Voltage Regulation ....................................................................................................................... 143

13.2.4 Dynamic Loading ........................................................................................................................... 143

13.2.5 Capacitive Loading ....................................................................................................................... 143

13.2.6 Grounding......................................................................................................................................... 144

13.2.7 Closed-loop Stability ................................................................................................................... 144

13.2.8 Residual Voltage Immunity in Standby Mode ................................................................... 144

13.2.9 Common Mode Noise .................................................................................................................. 144

13.2.10 Soft Starting .................................................................................................................................... 144

13.2.11 Zero Load Stability Requirements ......................................................................................... 145

13.2.12 Hot Swap Requirements ............................................................................................................. 145

13.2.13 Forced Load Sharing .................................................................................................................... 145

13.2.14 Ripple/Noise .................................................................................................................................... 145

13.2.15 Timing Requirement .................................................................................................................... 145

Appendix A: Integration and Usage Tips ........................................................................................ 149

Appendix B: Integrated BMC Sensor Tables .................................................................................. 150

Appendix C: BIOS Sensors and SEL Data ........................................................................................ 167

Appendix D: POST Code Diagnostic LED Decoder ....................................................................... 174

Appendix E: POST Code Errors ......................................................................................................... 181

POST Error Beep Codes .................................................................................................................................... 183

Appendix F: Statement of Volatility ................................................................................................ 185

Glossary ................................................................................................................................................. 187

Reference Documents ......................................................................................................................... 189

List of Figures

Figure 1. Intel® Server Board S2600KPFR (demo picture) ............................................................................. 3

Figure 2. Intel® Compute Module HNS2600KPR (demo picture) ................................................................ 3

Figure 3. Server Board Components (S2600KPFR).......................................................................................... 6

Figure 4. Compute Module Components ............................................................................................................. 6

Figure 5. Server Board Rear Connectors .............................................................................................................. 7

Figure 6. Compute Module Back Panel ................................................................................................................. 7

Figure 7. Intel® Light Guided Diagnostic LED ...................................................................................................... 8

Figure 8. Jumper Identification ................................................................................................................................ 8

Figure 9. Server Board Dimension .......................................................................................................................... 9

Figure 10. Compute Module Dimension ............................................................................................................... 9

Figure 11. Intel® Server Board S2600KPR Block Diagram .......................................................................... 11

Figure 12. Intel® Server Board S2600KPFR Block Diagram ........................................................................ 12

Figure 13. Intel® Server Board S2600KPTR Block Diagram ......................................................................... 13

Figure 14. Power Docking Board Overview ...................................................................................................... 14

Figure 15. 6G SATA Bridge Board Overview .................................................................................................... 15

Figure 16. 12G SAS Bridge Board with IT mode Overview ........................................................................ 15

Figure 17. 12G SAS Bridge Board with RAID 0, 1 and 10 Overview ....................................................... 16

Figure 18. 12G SAS Bridge Board with RAID 0, 1, 5 and 10 Overview .................................................. 16

Figure 19. Riser Card for Riser Slot #1 ............................................................................................................... 16

Figure 20. Riser Card for Riser Slot #2 ............................................................................................................... 17

Figure 21. I/O Module Carrier Installation ........................................................................................................ 17

Figure 22. Installing the M.2 Device .................................................................................................................... 18

Figure 23. AXXKPTPM2IOM I/O Module Carrier Connectors ................................................................... 18

Figure 24. Connecting the M.2 SATA cable ...................................................................................................... 19

Figure 25. Compute Module Fans ........................................................................................................................ 20

Figure 26. Air Duct ...................................................................................................................................................... 21

Figure 27. Intel® RAID C600 Upgrade Key ......................................................................................................... 21

Figure 28. Intel® RMM4 Lite ..................................................................................................................................... 22

Figure 29. Breakout Board Front and Rear View ............................................................................................ 23

Figure 30. Breakout Board Mechanical Drawing (Unit: mm)...................................................................... 23

Figure 31. Processor Socket Assembly .............................................................................................................. 30

Figure 32. Processor Socket ILM .......................................................................................................................... 30

Figure 33. Processor Heat Sink Overview ......................................................................................................... 38

Figure 34. Integrated Memory Controller Functional Block Diagram ................................................... 39

Figure 35. DIMM Slot Identification ..................................................................................................................... 44


Figure 36. Add-in Card Support Block Diagram (S2600KPR) ................................................................... 53

Figure 37. Server Board Riser Slots (S2600KPFR) ......................................................................................... 53

Figure 38. SATA Support ......................................................................................................................................... 55

Figure 39. SATA RAID 5 Upgrade Key................................................................................................................. 59

Figure 40. Network Interface Connectors ......................................................................................................... 60

Figure 41. RJ45 NIC Port LED................................................................................................................................. 61

Figure 42. USB Ports Block Diagram ................................................................................................................... 63

Figure 43. Serial Port A Location .......................................................................................................................... 63

Figure 44. Jumper Location .................................................................................................................................... 86

Figure 45. Status LED (E) and ID LED (D) ........................................................................................................... 94

Figure 46. InfiniBand* Link LED (K) and InfiniBand* Activity LED (J) ..................................................... 98

Figure 47. Rear Panel Diagnostic LEDs .............................................................................................................. 99

Figure 48. High-level Fan Speed Control Process ...................................................................................... 119

Figure 49. Air Flow and Fan Identification ..................................................................................................... 134

Figure 50. Turn On/Off Timing (Power Supply Signals – 5VSB) ........................................................... 148

Figure 51. Turn On/Off Timing (Power Supply Signals – 12VSB) ........................................................ 148

Figure 52. Diagnostic LED Placement Diagram ........................................................................................... 174


List of Tables

Table 1. Intel® Server Board S2600KP Product Family Feature Set .......................................................... 4

Table 2. Intel® Compute Module HNS2600KP Product Family Feature Set .......................................... 5

Table 3. Product Weight and Packaging ........................................................................................................... 10

Table 4. POST Hot-Keys ........................................................................................................................................... 26

Table 5. Mixed Processor Configurations Error Summary ......................................................................... 33

Table 6. DDR4-2400 DIMM Support Guidelines for Intel® Xeon processor v4 Product Family . 42

Table 8. DIMM Nomenclature ................................................................................................................................ 44

Table 9. Supported DIMM Populations .............................................................................................................. 44

Table 10. PCIe* Port Routing – CPU 1 ................................................................................................................ 54

Table 11. PCIe* Port Routing – CPU 2 ................................................................................................................ 54

Table 12. SATA and sSATA Controller BIOS Utility Setup Options ....................................................... 55

Table 13. SATA and sSATA Controller Feature Support ............................................................................ 56

Table 14. Onboard Video Resolution and Refresh Rate (Hz) .................................................................... 62

Table 15. Network Port Configuration ............................................................................................................... 64

Table 16. Main Power Supply Connector 6-pin 2x3 Connector .............................................................. 66

Table 17. Backup Power Connector .................................................................................................................... 66

Table 18. Intel® RMM4 Lite Connector ................................................................................................................ 67

Table 19. IPMB Header ............................................................................................................................................. 67

Table 20. Control Panel Connector ..................................................................................................................... 67

Table 21. Bridge Board Connector ...................................................................................................................... 68

Table 22. SATA DOM Connector Pin-out .......................................................................................................... 69

Table 23. USB 2.0 Type-A Connector Pin-out ................................................................................................ 70

Table 24. 5V_AUX Power Connector Pin-out.................................................................................................. 70

Table 25. CPU1 and CPU2 PCIe Bus Connectivity ......................................................................................... 70

Table 26. PCI Express* x16 Riser Slot 1 Connector ...................................................................................... 71

Table 27. PCI Express* x24 Riser Slot 2 Connector ...................................................................................... 73

Table 28. PCI Express* x24 Riser Slot 3 Connector ...................................................................................... 76

Table 29. PCI Express* Riser ID Assignment .................................................................................................... 78

Table 30. PCI Express* Clock Source by Slot .................................................................................................. 78

Table 31. VGA External Video Connector ......................................................................................................... 78

Table 32. RJ-45 10/100/1000 NIC Connector ............................................................................................... 79

Table 33. SATA Connector ...................................................................................................................................... 80

Table 34. SATA SGPIO Connector ....................................................................................................................... 80

Table 35. SATA HDD Activity LED Header ........................................................................................................ 80

Table 36. Storage Upgrade Key Connector ..................................................................................................... 81


Table 37. Internal 9-pin Serial A ........................................................................................................................... 81

Table 38. External USB port Connector............................................................................................................. 81

Table 39. Internal USB Connector ....................................................................................................................... 81

Table 40. QSFP+ Connector ................................................................................................................................... 82

Table 41. UART Header ............................................................................................................................................ 82

Table 42. Baseboard Fan Connector................................................................................................................... 83

Table 43. Baseboard Fan Connector................................................................................................................... 83

Table 44. Main Power Input Connector ............................................................................................................. 84

Table 45. Fan Control Signal Connector ........................................................................................................... 84

Table 46. Compute Module Fan Connector ..................................................................................................... 84

Table 47. Main Power Output Connector ......................................................................................................... 84

Table 48. Miscellaneous Signal Connector ...................................................................................................... 85

Table 49. Jumper Modes Selection ..................................................................................................................... 86

Table 50. PS All Node off (J6B4) .......................................................................................................................... 87

Table 51. Force Integrated BMC Update Jumper (J6B6) ............................................................................ 87

Table 52. Force ME Update Jumper (J5D2) ..................................................................................................... 88

Table 53. Password Clear Jumper (J6B8) ......................................................................................................... 90

Table 54. BIOS Recovery Mode Jumper (J6B9) ............................................................................................. 91

Table 55. BIOS Default Jumper ............................................................................................................................. 92

Table 56. Status LED State Definitions .............................................................................................................. 95

Table 57. ID LED .......................................................................................................................................................... 97

Table 58. BMC Boot/Reset Status LED Indicators ......................................................................................... 97

Table 59. InfiniBand* Link/Activity LED ............................................................................................................. 98

Table 60. ACPI Power States ............................................................................................................................... 103

Table 61. Processor Sensors ............................................................................................................................... 111

Table 62. Processor Status Sensor Implementation ................................................................................. 112

Table 63. Component Fault LEDs ...................................................................................................................... 123

Table 64. Intel® Remote Management Module 4 (RMM4) Options ...................................................... 126

Table 65. Basic and Advanced Server Management Features Overview .......................................... 127

Table 66. Air Flow .................................................................................................................................................... 134

Table 67. TPM Setup Utility – Security Configuration Screen Fields ................................................. 140

Table 68. Server Board Design Specifications ............................................................................................. 141

Table 69. Power Supply DC Power Input Connector Pin-out ................................................................ 142

Table 70. Minimum 1200W/1600W Load Ratings..................................................................................... 142

Table 71. Minimum 2130W Load Ratings ...................................................................................................... 142

Table 72. Voltage Regulation Limits ................................................................................................................ 143

Table 73. Transient Load Requirements ........................................................................................................ 143


Table 74. Capacitive Loading Conditions ....................................................................................................... 144

Table 75. Ripples and Noise ................................................................................................................................ 145

Table 76. Timing Requirements ......................................................................................................................... 146

Table 77. Timing Requirements (12VSB) ....................................................................................................... 147

Table 78. BMC Sensor Table ............................................................................................................................... 152

Table 79. BIOS Sensor and SEL Data ............................................................................................................... 167

Table 80. POST Code LED Example ................................................................................................................. 175

Table 81. MRC Fatal Error Codes ....................................................................................................................... 175

Table 82. MRC Progress Codes .......................................................................................................................... 176

Table 83. POST Progress Codes ........................................................................................................................ 177

Table 84. POST Error Codes and Messages.................................................................................................. 181

Table 85. POST Error Beep Codes .................................................................................................................... 183

Table 86. Glossary ................................................................................................................................................... 187


1 Introduction

This Technical Product Specification (TPS) provides specific information detailing the features,

functionality, and high-level architecture of the Intel® Server Board S2600KP product family

and the Intel® Compute Module HNS2600KP product family.

Design-level information related to specific server board components and subsystems can be

obtained by ordering External Product Specifications (EPS) or External Design Specifications

(EDS) related to this server generation. EPS and EDS documents are made available under

NDA with Intel and must be ordered through your local Intel representative. See the Reference

Documents section for a list of available documents.

1.1 Chapter Outline

This document is divided into the following chapters:

Chapter 1 – Introduction

Chapter 2 – Product Features Overview

Chapter 3 – Processor Support

Chapter 4 – Memory Support

Chapter 5 – Server Board I/O

Chapter 6 – Connector and Header

Chapter 7 – Configuration Jumpers

Chapter 8 – Intel® Light-Guided Diagnostics

Chapter 9 – Platform Management

Chapter 10 – Thermal Management

Chapter 11 – System Security

Chapter 12 – Environmental Limits Specification

Chapter 13 – Power Supply Specification Guidelines

Appendix A – Integration and Usage Tips

Appendix B – Integrated BMC Sensor Tables

Appendix C – BIOS Sensors and SEL Data

Appendix D – POST Code Diagnostic LED Decoder

Appendix E – POST Code Errors

Appendix F – Statement of Volatility

Glossary

Reference Documents


1.2 Server Board Use Disclaimer

Intel Corporation server boards contain a number of high-density VLSI (Very Large Scale Integration) and power delivery components that need adequate airflow to cool. Intel ensures through its own chassis development and testing that when Intel server building blocks are used together, the fully integrated system will meet the intended thermal requirements of these components. It is the responsibility of the system integrator who chooses not to use Intel-developed server building blocks to consult vendor datasheets and operating parameters to determine the amount of airflow required for their specific application and environmental conditions. Intel Corporation cannot be held responsible if components fail or the server board does not operate correctly when used outside any of their published operating or non-operating limits.


2 Product Features Overview

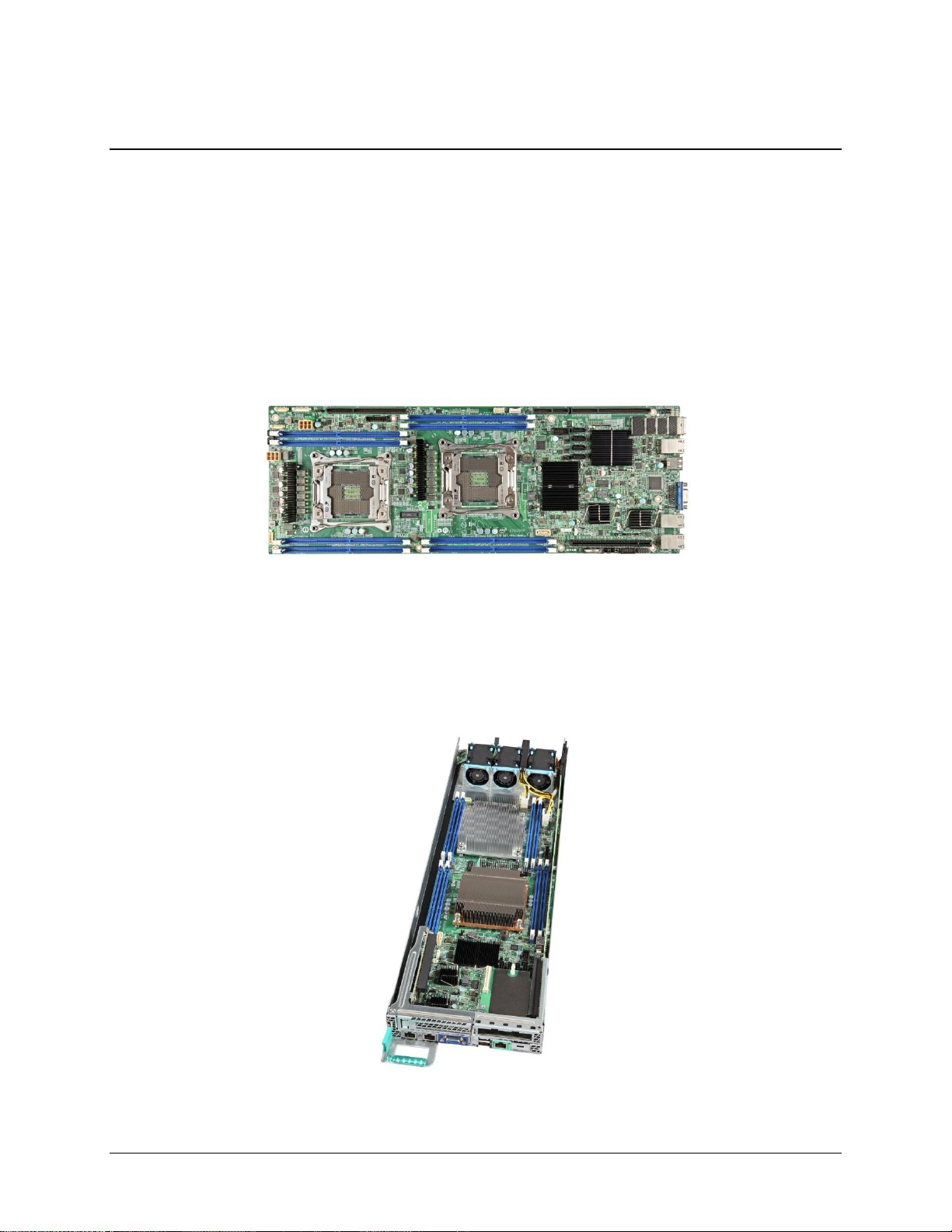

The Intel® Server Board S2600KP product family is a monolithic printed circuit board (PCB) assembly with features designed to support the high-performance and high-density computing markets. This server board is designed to support the Intel® Xeon® processor E5-2600 v3/v4 product family. Previous generation Intel® Xeon® processors are not supported.

The Intel® Server Board S2600KP product family contains two server board options. Many of the features and functions are common to both boards. Where a feature or function is unique to one board, that board is identified by name.

S2600KPFR – With onboard InfiniBand* controller providing one external rear QSFP+ port

Figure 1. Intel® Server Board S2600KPFR (demo picture)

S2600KPR – Without onboard InfiniBand* controller, no external rear QSFP+ port

The Intel® Compute Module HNS2600KP product family provides two compute module options, each integrated with one of the server boards from the Intel® Server Board S2600KP product family.

Figure 2. Intel® Compute Module HNS2600KPR (demo picture)


The following table provides a high-level product feature list.

Table 1. Intel® Server Board S2600KP Product Family Feature Set

| Feature | Description |
|---|---|
| Processor Support | Two LGA2011-3 (Socket R3) processor sockets; support for one or two Intel® Xeon® processors E5-2600 v3/v4 product family; maximum supported Thermal Design Power (TDP) of up to 160 W |
| Memory Support | Eight DIMM slots in total across eight memory channels; Registered DDR4 (RDIMM), Load Reduced DDR4 (LRDIMM); memory DDR4 data transfer rates of 1600/1866/2133/2400 MT/s |
| Chipset | Intel® C612 chipset |
| External I/O Connections | DB-15 video connector; two RJ-45 1GbE Network Interface Controller (NIC) ports; one dedicated RJ-45 port for remote server management; one stacked two port USB 2.0 (port 0/1) connector; one InfiniBand* FDR QSFP+ port (S2600KPFR only) |
| Internal I/O connectors/headers | Bridge slot to extend board I/O (four SATA 6Gb/s signals to backplane, front control panel signals, one SATA 6Gb/s port for SATA DOM, one USB 2.0 connector (port 10)); one internal USB 2.0 connector (port 6/7); one 2x7 pin header for system fan module; one 1x12 pin control panel header; one DH-10 serial Port A connector; one SATA 6Gb/s port for SATA DOM; four SATA 6Gb/s connectors (port 0/1/2/3); one 2x4 pin header for Intel® RMM4 Lite; one 1x4 pin header for Storage Upgrade Key; one 1x8 pin backup power connector |
| PCIe Support | PCIe* 3.0 (2.5, 5, 8 GT/s) |
| Power Connections | Two sets of 2x3 pin connectors (main power 1/2) |
| System Fan Support | One 2x7 pin fan control connector for Intel compute module and chassis; three 1x8 pin fan connectors for third-party chassis |
| Video | Integrated 2D video graphics controller; 16MB DDR3 memory |
| Riser Support | Three riser slots: riser slot 1 provides x16 PCIe* 3.0 lanes; riser slot 2 provides x24 PCIe* 3.0 lanes for S2600KPR and x16 PCIe* 3.0 lanes for S2600KPFR; riser slot 3 provides x24 PCIe* 3.0 lanes. One bridge board slot for board I/O expansion |
| On-board storage controllers and options | 5 on-board SATA 6Gb/s ports, one of which is SATA DOM compatible; 5 SATA 6Gb/s signals to backplane via bridge slot |
| RAID Support | Intel® Rapid Storage RAID Technology (RSTe) 4.0; Intel® Embedded Server RAID Technology 2 (ESRT2) with optional Intel® RAID C600 Upgrade Key to enable SATA RAID 5 |
| Server Management | Onboard Emulex* Pilot III* Controller; support for Intel® Remote Management Module 4 Lite solutions; Intel® Light-Guided Diagnostics on field replaceable units; support for Intel® System Management Software; support for Intel® Intelligent Power Node Manager (requires a PMBus*-compliant power supply) |
| Security | Intel® Trusted Platform Module (TPM) v1.2 for BBS2600KPTR only |
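The lane counts and transfer rates in Table 1 translate into peak theoretical bandwidth figures with simple arithmetic. The following is a minimal sketch, not part of the Intel specification. The function and dictionary names are hypothetical. It assumes the standard PCIe line encodings (8b/10b at 2.5 and 5 GT/s, 128b/130b at 8 GT/s) and a 64-bit data path per DDR4 channel, and it ignores protocol overhead, so real throughput will be lower.

```python
# Line-encoding efficiency per PCIe transfer rate (GT/s).
# Assumption: standard PCIe encodings, 8b/10b for Gen1/Gen2 and 128b/130b for Gen3.
ENCODING_EFFICIENCY = {2.5: 8 / 10, 5.0: 8 / 10, 8.0: 128 / 130}

# Riser slot lane counts as listed in Table 1 (hypothetical dictionary name).
RISER_LANES = {
    "S2600KPR": {1: 16, 2: 24, 3: 24},
    "S2600KPFR": {1: 16, 2: 16, 3: 24},
}


def pcie_bandwidth_gbytes(lanes: int, rate_gt_s: float = 8.0) -> float:
    """Peak one-direction bandwidth in GB/s after line encoding."""
    return lanes * rate_gt_s * ENCODING_EFFICIENCY[rate_gt_s] / 8


def ddr4_bandwidth_gbytes(channels: int, rate_mt_s: int) -> float:
    """Peak bandwidth in GB/s, assuming 8 bytes per transfer per channel."""
    return channels * rate_mt_s * 8 / 1000


if __name__ == "__main__":
    for board, slots in RISER_LANES.items():
        for slot, lanes in slots.items():
            bw = pcie_bandwidth_gbytes(lanes)
            print(f"{board} riser slot {slot}: x{lanes} at 8 GT/s = {bw:.2f} GB/s")
    for rate in (1600, 1866, 2133, 2400):
        bw = ddr4_bandwidth_gbytes(8, rate)
        print(f"8 channels at {rate} MT/s = {bw:.1f} GB/s")
```

These figures are upper bounds for sizing purposes only. The memory speed actually reached depends on the processor and DIMM population, which the Memory Support chapter covers.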

Table 2. Intel® Compute Module HNS2600KP Product Family Feature Set

| Feature¹ | Description |
|---|---|
| Server Board | Intel® Server Board S2600KP product family: HNS2600KPR includes Intel® Server Board S2600KPR; HNS2600KPFR includes Intel® Server Board S2600KPFR |
| Processor Support | Maximum supported Thermal Design Power (TDP) of up to 145 W |
| Heat Sink | One Cu/Al 91.5x91.5mm heat sink for CPU 1; one Ex-Al 91.5x91.5mm heat sink for CPU 2 |
| Fan | Three sets of 40x56mm dual rotor system fans |
| Riser Support | One riser card with bracket on riser slot 1 to support one PCIe* 3.0 x16 low profile card (default)²; one I/O module riser and carrier kit on riser slot 2 to support an Intel® I/O Expansion Module (optional). Note: Riser slot 3 cannot be used with the bridge board installed. |
| Compute Module Board | Three types of bridge boards: 6G SATA Bridge Board (Default), 12G SAS Bridge Board (Optional), 12G SAS Bridge Board with RAID 5 (Optional); one compute module power docking board |
| Air Duct | One transparent air duct |

Warning! The riser slot 1 on the server board is designed for plugging in ONLY the riser card. Plugging in any PCIe* card may cause permanent server board and PCIe* card damage.

Notes:

1. The table only lists features that are unique to the compute module or different from the server board.

2. ONLY a low profile PCIe* card can be installed on the riser slot 1 riser card of the compute module.

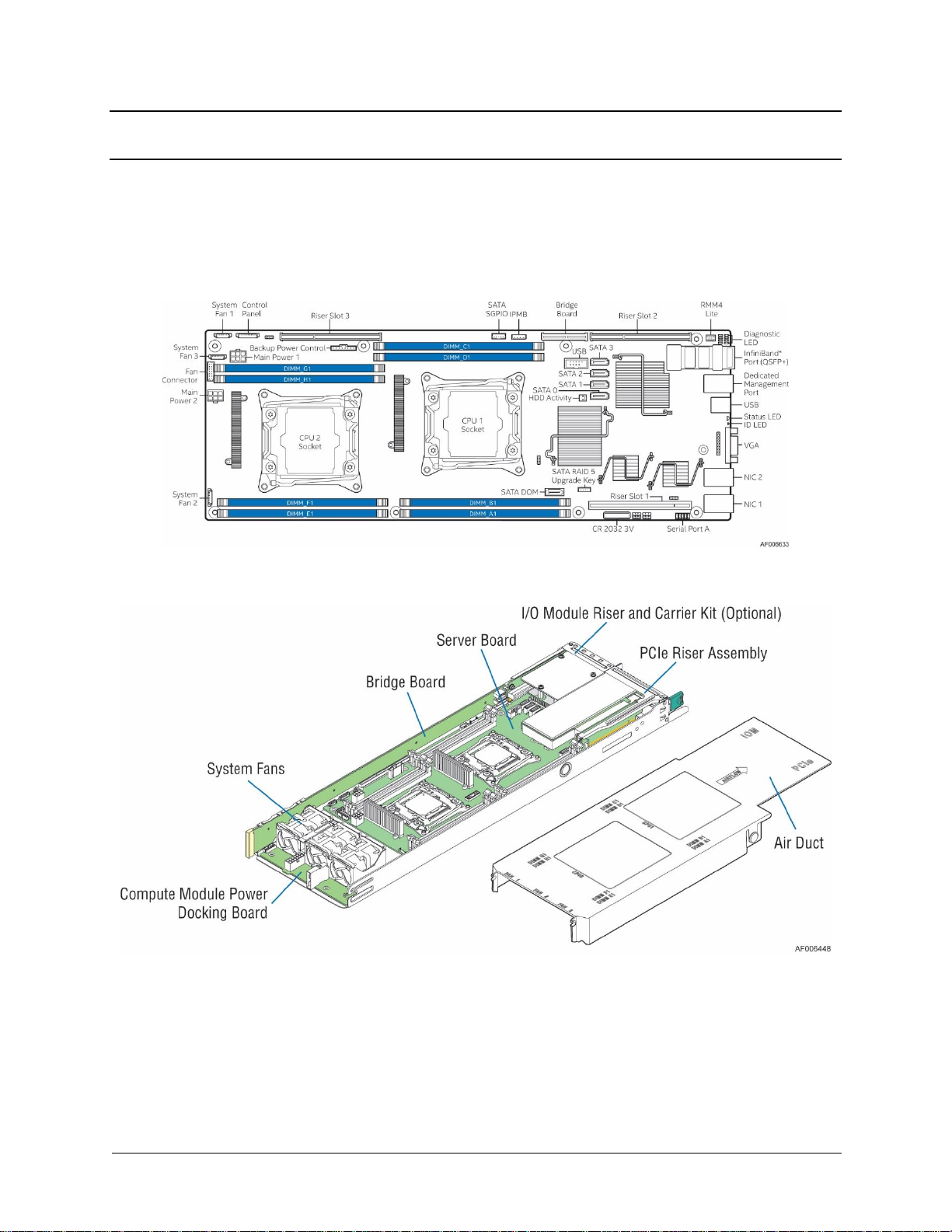

2.1 Components and Features Identification

This section provides a general overview of the server board and compute module, identifying

key features and component locations. The majority of the items identified are common in the

product family.

Figure 3. Server Board Components (S2600KPFR)

Figure 4. Compute Module Components


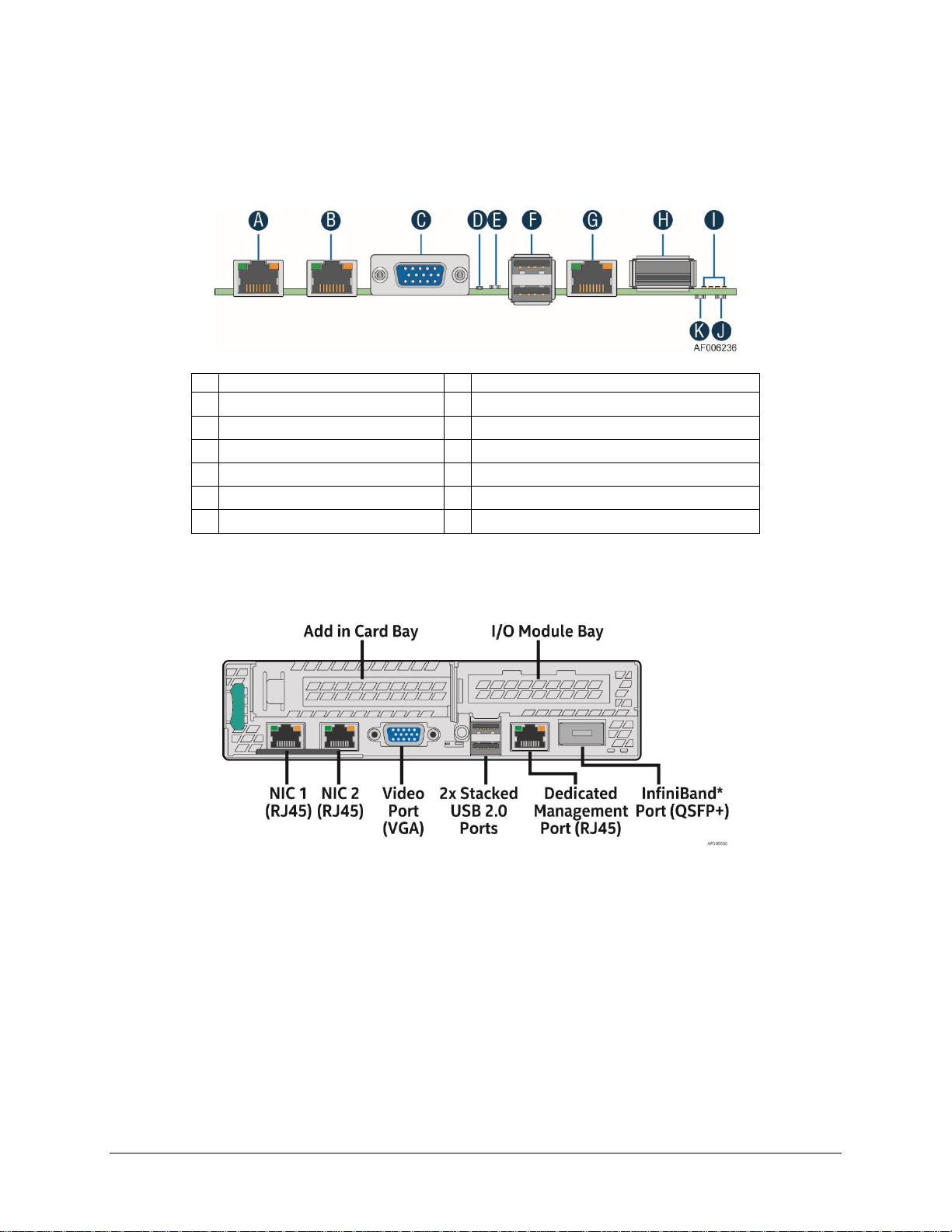

| Label | Description | Label | Description |
|---|---|---|---|
| A | NIC port 1 (RJ45) | G | Dedicated Management Port (RJ45) |
| B | NIC port 2 (RJ45) | H | InfiniBand* Port (QSFP+, S2600KPFR only) |
| C | Video out (DB-15) | I | POST Code LEDs (8 LEDs) |
| D | ID LED | J | InfiniBand* Activity LED (S2600KPFR only) |
| E | Status LED | K | InfiniBand* Link LED (S2600KPFR only) |
| F | Dual port USB | | |

2.2 Rear Connectors and Back Panel Feature Identification

The Intel® Server Board S2600KP product family has the following board rear connector

placement.

Figure 5. Server Board Rear Connectors

The Intel® Compute Module HNS2600KP product family has the following back panel features.

Figure 6. Compute Module Back Panel


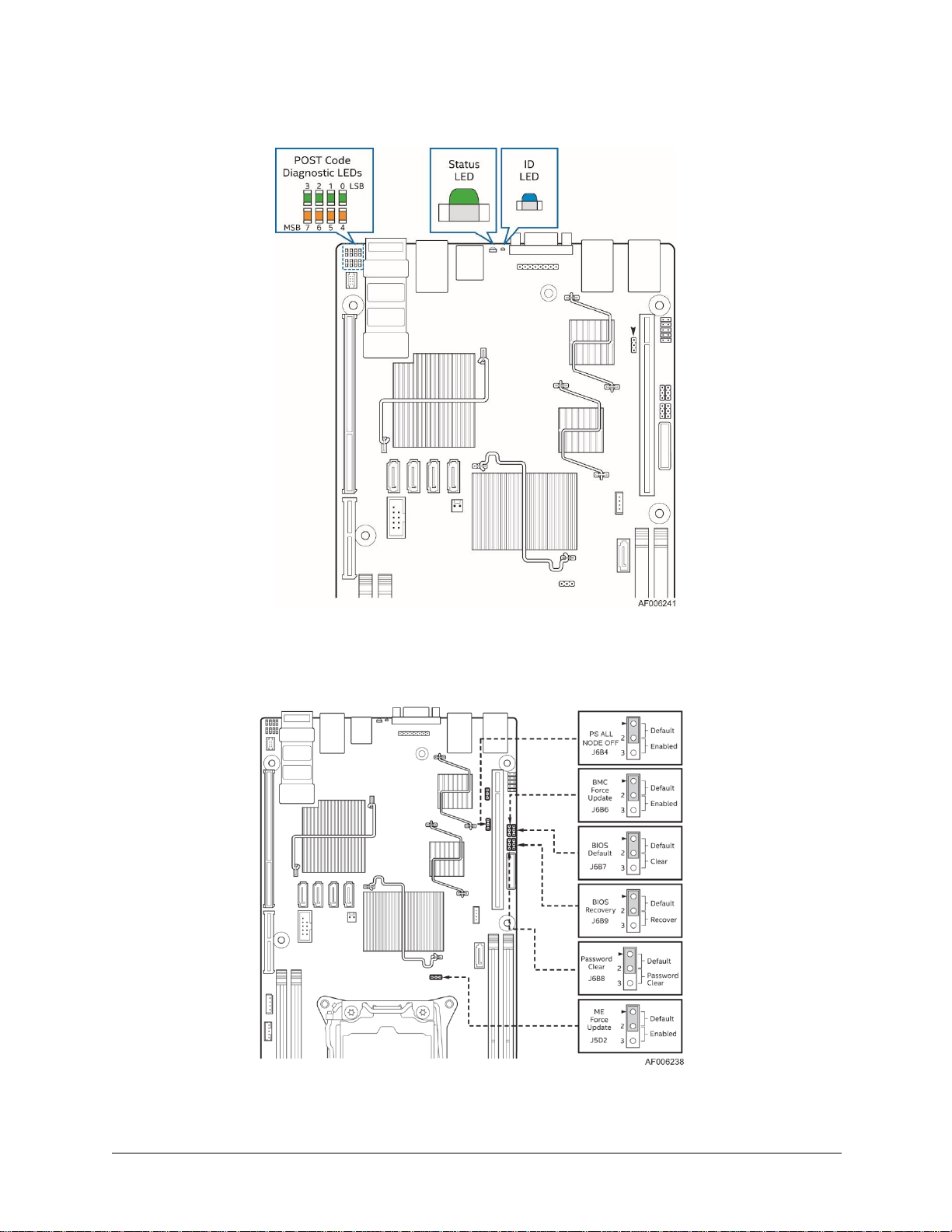

2.3 Intel® Light Guided Diagnostic LED

Figure 7. Intel® Light Guided Diagnostic LED

2.4 Jumper Identification

Figure 8. Jumper Identification


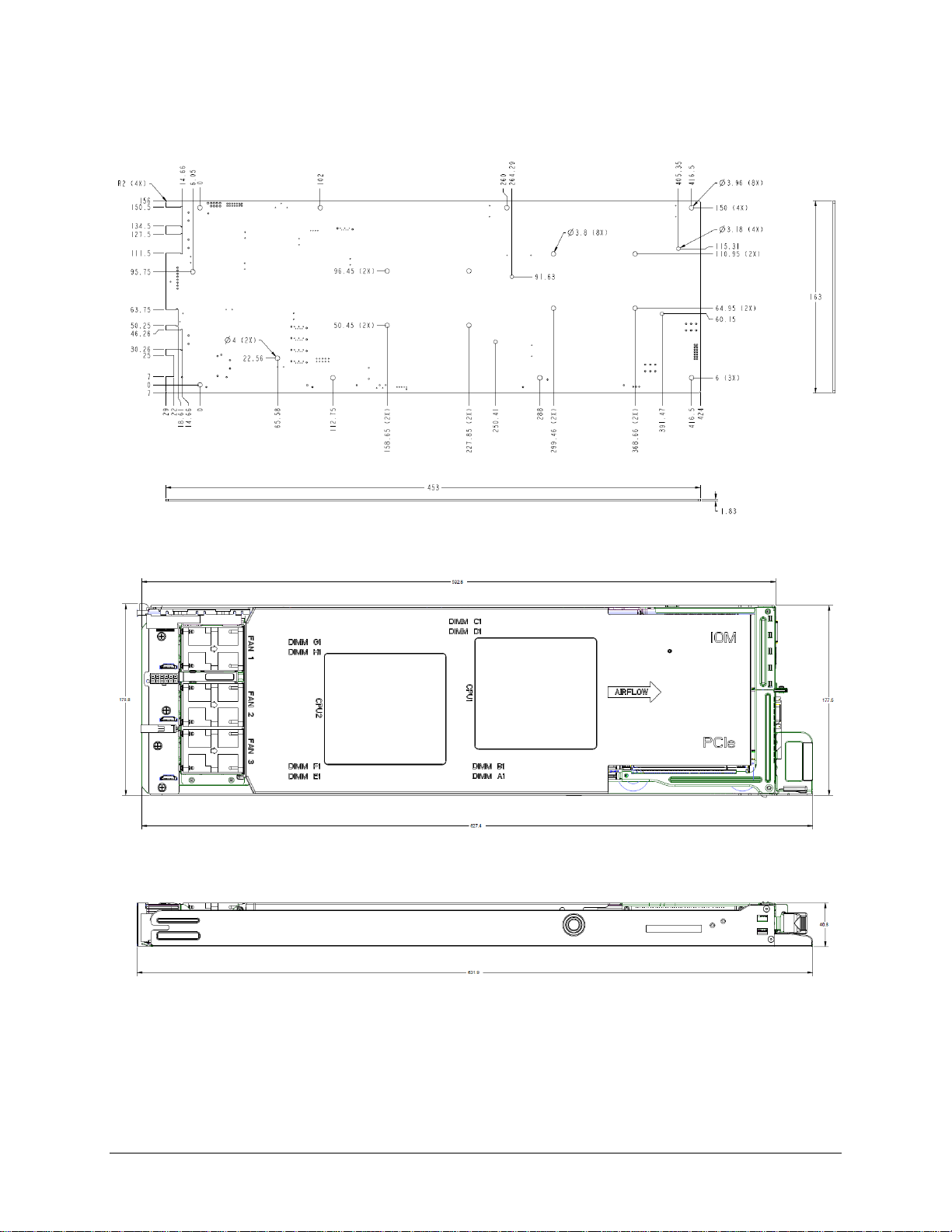

2.5 Mechanical Dimension and Weight

Figure 9. Server Board Dimension

Figure 10. Compute Module Dimension


Approximate product weight is listed in the following table for reference. Variations are expected with real shipping products.

Table 3. Product Weight and Packaging

| Product Code | Quantity per Box | Box Dimension (mm) | Net Weight | Package Weight |
|---|---|---|---|---|
| BBS2600KPR | 10 | 553 x 242 x 463 | 10.0 kg | 12.8 kg |
| BBS2600KPFR | 10 | 553 x 242 x 463 | 10.4 kg | 13.2 kg |
| BBS2600KPTR | 10 | 553 x 242 x 463 | 0.98 kg | 12.6 kg |
| HNS2600KPR | 1 | 716 x 269 x 158 | 3.4 kg | 4.6 kg |
| HNS2600KPFR | 1 | 716 x 269 x 158 | 3.44 kg | 4.64 kg |
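For shipping estimates, the packaging overhead per box can be derived from Table 3 by subtracting net weight from package weight. The sketch below is illustrative only. The type and function names are hypothetical, and it assumes that net weight is stated per box, which the table does not say explicitly. Under that assumption the BBS2600KPTR row gives a very different overhead from the other board rows, which may mean its net weight is stated per board. The value is reproduced here as printed.

```python
from typing import NamedTuple


class PackRow(NamedTuple):
    quantity: int       # units per box
    net_kg: float       # net weight as printed in Table 3
    package_kg: float   # package weight as printed in Table 3


# Values copied from Table 3 without correction.
TABLE_3 = {
    "BBS2600KPR": PackRow(10, 10.0, 12.8),
    "BBS2600KPFR": PackRow(10, 10.4, 13.2),
    "BBS2600KPTR": PackRow(10, 0.98, 12.6),
    "HNS2600KPR": PackRow(1, 3.4, 4.6),
    "HNS2600KPFR": PackRow(1, 3.44, 4.64),
}


def packaging_overhead_kg(row: PackRow) -> float:
    """Package weight minus net weight, assuming both refer to one box."""
    return round(row.package_kg - row.net_kg, 2)


def per_unit_net_kg(row: PackRow) -> float:
    """Net weight divided by box quantity, under the same per-box assumption."""
    return round(row.net_kg / row.quantity, 3)


if __name__ == "__main__":
    for code, row in TABLE_3.items():
        print(f"{code}: overhead {packaging_overhead_kg(row)} kg, "
              f"per unit {per_unit_net_kg(row)} kg")
```

Because the table values are approximate, treat the derived numbers as rough planning figures and confirm them against actual shipments.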

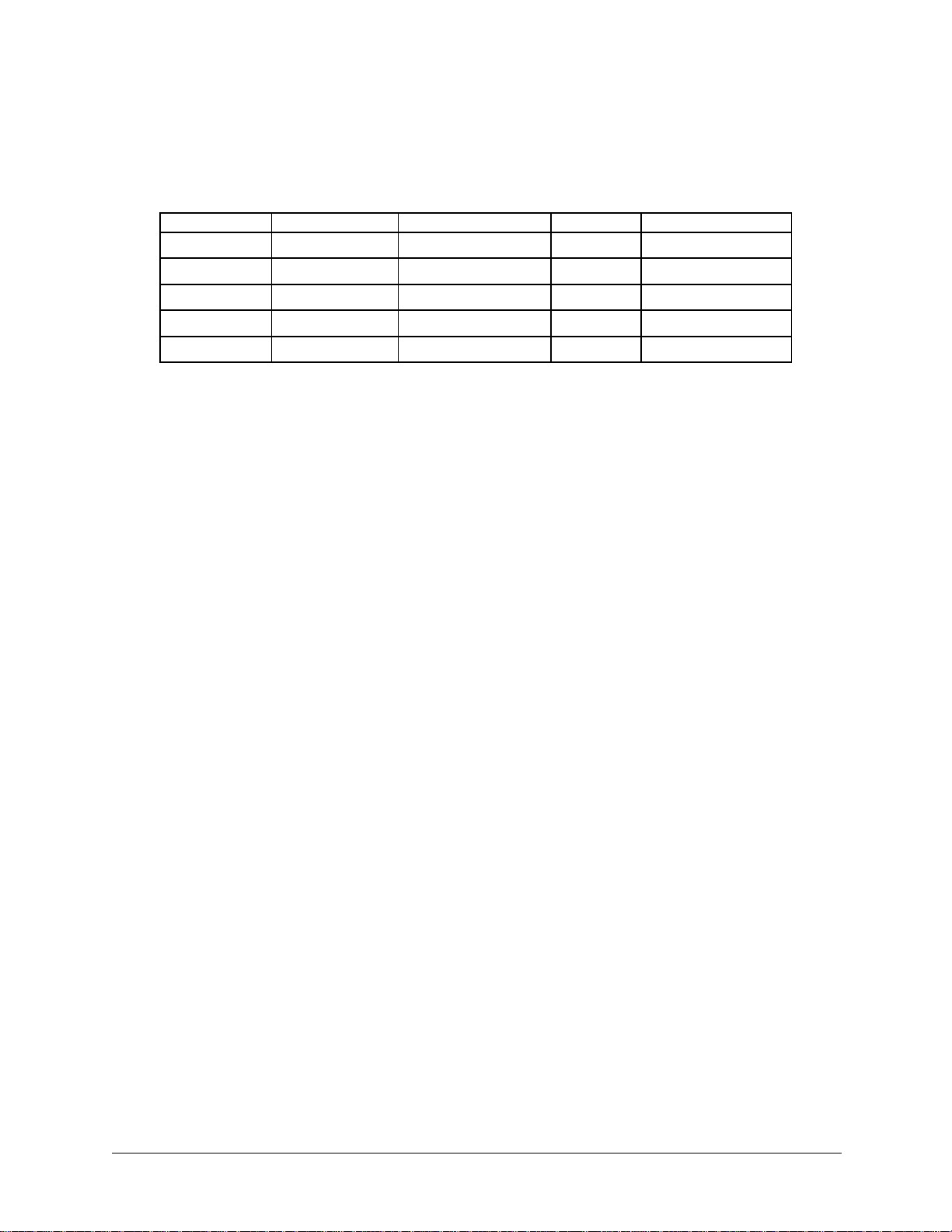

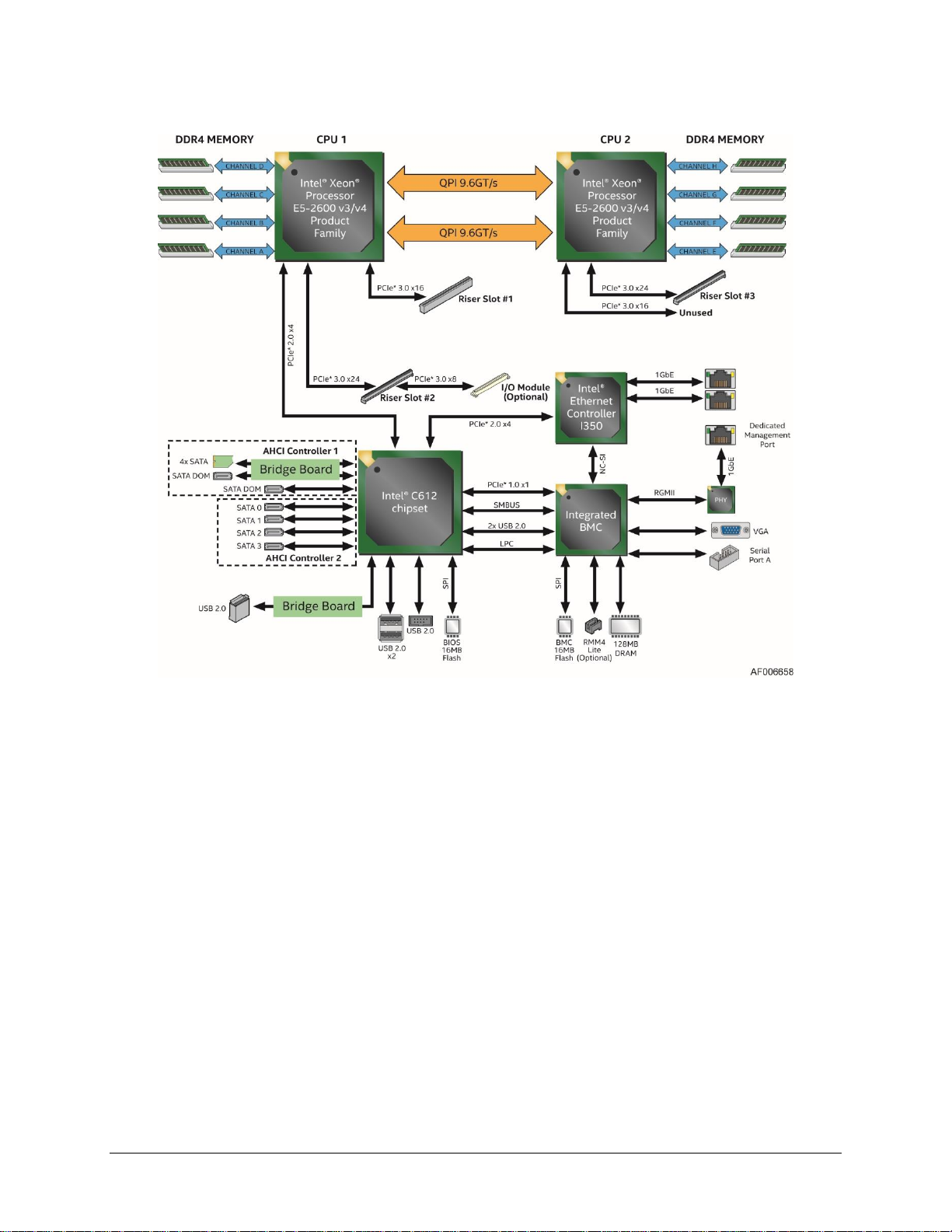

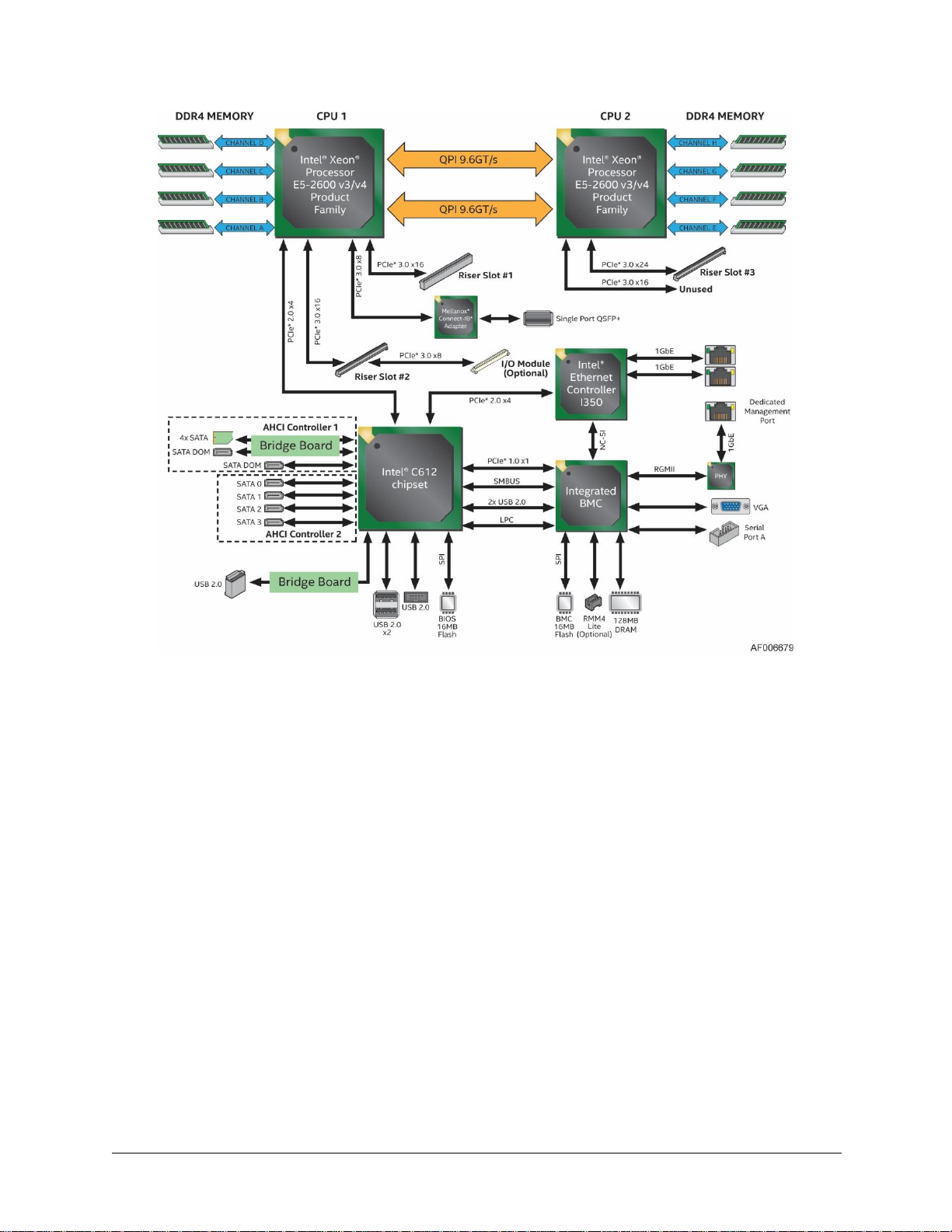

2.6 Product Architecture Overview

The Intel® Server Board S2600KP product family is a purpose-built, rack-optimized, liquid-cooling-friendly server board used in a high-density rack system. It is designed around the integrated features and functions of the Intel® Xeon® processor E5-2600 v3/v4 product family, the Intel® C612 chipset, and other supporting components including the Integrated BMC, the Intel® I350 network interface controller, and the Mellanox* Connect-IB* adapter (S2600KPFR only).

The half-width board size allows four boards to reside in a standard multi-compute module

2U Intel® Server Chassis H2000G product family, for high-performance and high-density

computing platforms.

The following diagram provides an overview of the server board architecture, showing the

features and interconnects of each of the major subsystem components.


Figure 11. Intel® Server Board S2600KPR Block Diagram


Figure 12. Intel® Server Board S2600KPFR Block Diagram


Label

Description

A

2x7-pin fan control connector

Figure 13. Intel® Server Board S2600KPTR Block Diagram

The Intel® Compute Module HNS2600KP product family provides a series of features including

the power docking board, bridge boards, riser cards, fans, and the air duct.

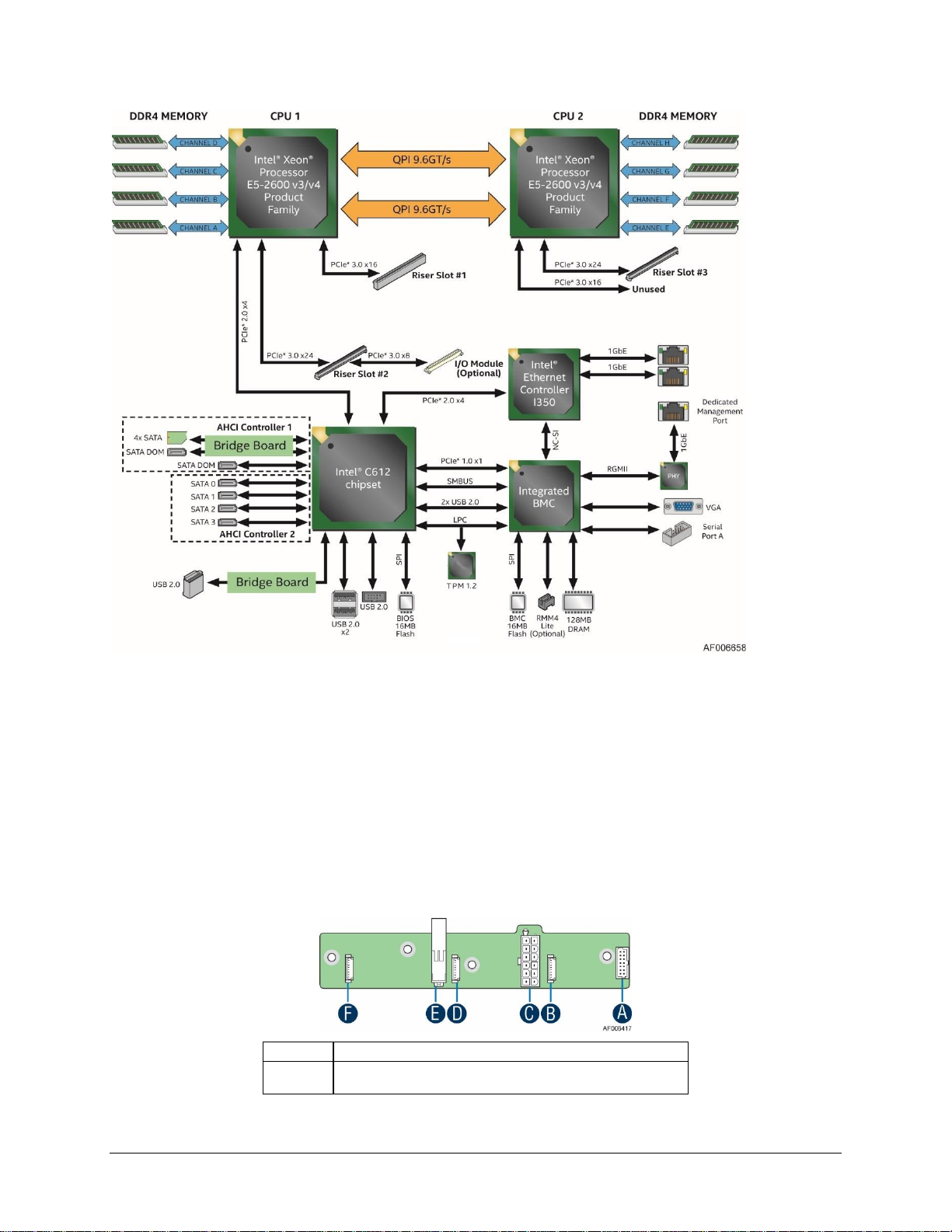

2.7 Power Docking Board

The power docking board provides hot swap docking of 12V main power between the

compute module and the server. It supports three dual rotor fan connections, 12V main power

hot swap controller, and current sensing. The power docking board is intended to support the

usage of the compute module with the Intel® Server Board S2600KP product family.

Revision 1.37 13

Page 30

Product Features Overview Technical Product Specification

14

B

8-pin connector for fan 1

C

2x6-pin main power output connector

D

8-pin connector for fan 2

E

12-pin connector for main power input

F

8-pin connector for fan 3

Label

Description

A

2x40-pin card edge connector (to the backplane)

B

USB 2.0 Type-A connector

C

2-pin 5V power

D

SATA DOM port connector

Figure 14. Power Docking Board Overview

2.8 Bridge Board

There are four types of bridge boards that implement different features and functions.

6G SATA bridge board (Default)

12G SAS bridge board with IT mode (Optional)

12G SAS bridge board with RAID 0, 1 and 10 (Optional)

12G SAS bridge board with RAID 0, 1, 5 and 10 (Optional)

Note: All 12G SAS bridge boards require two processors installed to be functional.

2.8.1 6G SATA Bridge Board

The 6G SATA bridge board provides hot swap interconnect of all electrical signals to the

backplane of the server chassis (except for main 12V power). It supports up to 4x lanes of

SATA, a 7-pin SATA connector for SATA DOM devices, and a type-A USB connector for USB

flash device. One bridge board is used per one compute module. The bridge board is secured

with screws to the compute module. The bridge board support embedded SATA RAID

Support.

Revision 1.37

Page 31

Technical Product Specification Product Features Overview

Label

Description

E

2x40-pin card edge connector (to the bridge board

connector on the server board)

Label

Description

A

2x40-pin card edge connector (to the backplane)

B

UART header

C

2-pin 5V power

D

SATA DOM port connector

E

2x40-pin card edge connector (to the bridge board

connector on the server board)

F

200-pin connector (to Riser Slot 3 on the server board)

Label

Description

A

2x40-pin card edge connector (to the backplane)

B

UART header

C

2-pin 5V power

D

SATA DOM port connector

Figure 15. 6G SATA Bridge Board Overview

2.8.2 12G SAS Bridge Board with IT mode

The optional 12G SAS bridge board with IT mode has one embedded LSI* SAS 3008 controller

to support up to four SAS/SATA, a 7-pin SATA connector for SATA DOM devices, and a UART

(Universal Asynchronous Receiver/Transmitter) header. One bridge board is used per one

compute module, connecting to the bridge board slot and Riser Slot 3.

Figure 16. 12G SAS Bridge Board with IT mode Overview

2.8.3 12G SAS Bridge Board with RAID 0, 1 and 10

The optional 12G SAS bridge board has one embedded LSI* SAS 3008 controller to support

up to four SAS/SATA ports with RAID 0, 1, and 10 support, a 7-pin SATA connector for SATA

DOM devices, and a type-A USB connector for USB flash device. One bridge board is used per

one compute module, connecting to the bridge board slot and Riser Slot 3.

Revision 1.37 15

Page 32

Product Features Overview Technical Product Specification

16

E

2x40-pin card edge connector (to the bridge board

connector on the server board)

F

200-pin connector (to Riser Slot 3 on the server board)

Label

Description

A

2x40-pin card edge connector (to the backplane)

B

UART header

C

2-pin 5V power

D

SATA DOM port connector

E

2x40-pin card edge connector (to the bridge board

connector on the server board)

F

200-pin connector (to Riser Slot 3 on the server board)

Figure 17. 12G SAS Bridge Board with RAID 0, 1 and 10 Overview

2.8.4 12G SAS Bridge Board with RAID 0, 1, 5 and 10

The optional 12G SAS bridge board with RAID 5 has one embedded LSI* SAS 3008 controller

to support up to four SAS/SATA ports with RAID 0, 1, 10, and RAID 5 support, a 7-pin SATA

connector for SATA DOM devices, and a UART (Universal Asynchronous Receiver/Transmitter)

header. One bridge board is used per one compute module, connecting to the bridge board

slot and Riser Slot 3.

Figure 18. 12G SAS Bridge Board with RAID 0, 1, 5 and 10 Overview
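As an illustration only, the bridge board options described in this section can be captured in a small lookup that answers which boards list a given RAID level on their SAS controller for a given processor count. This is a minimal sketch, not an Intel tool: the names `BridgeBoard`, `BRIDGE_BOARDS`, and `boards_with_sas_raid` are hypothetical, and the single-processor minimum for the 6G SATA board is an assumption, since the text states a two-processor requirement only for the 12G SAS boards. The embedded SATA RAID of the 6G SATA board is deliberately not modeled.

```python
from typing import NamedTuple


class BridgeBoard(NamedTuple):
    name: str
    sas_raid_levels: frozenset  # RAID levels the text lists for the SAS 3008 controller
    min_processors: int         # processors that must be installed


# Transcribed from the bridge board descriptions. min_processors=1 for the
# 6G SATA board is an assumption; the text only states that all 12G SAS
# bridge boards require two processors.
BRIDGE_BOARDS = (
    BridgeBoard("6G SATA", frozenset(), 1),
    BridgeBoard("12G SAS, IT mode", frozenset(), 2),
    BridgeBoard("12G SAS, RAID 0/1/10", frozenset({0, 1, 10}), 2),
    BridgeBoard("12G SAS, RAID 0/1/5/10", frozenset({0, 1, 5, 10}), 2),
)


def boards_with_sas_raid(level, installed_processors):
    """Return names of boards whose SAS controller lists `level` and whose
    processor requirement is met by `installed_processors`."""
    return [
        board.name
        for board in BRIDGE_BOARDS
        if level in board.sas_raid_levels
        and installed_processors >= board.min_processors
    ]
```

For example, `boards_with_sas_raid(5, 2)` selects only the RAID 0/1/5/10 board, while the same query with one processor installed returns an empty list.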

2.9 Riser Card

There are two types of riser cards:

- Riser slot 1 riser card (for riser slot 1 only)
- Riser slot 2 riser card (for riser slot 2 only)

2.9.1 Riser Slot 1 Riser Card

The riser card for riser slot 1 has one PCIe* 3.0 x16 slot.

Figure 19. Riser Card for Riser Slot #1

Revision 1.37

Page 33

Technical Product Specification Product Features Overview

I/O Module Carrier

M.2 Support

Supported Computer Modules

AXXKPTPM2IOM

Yes

HNS2600KPR

AXXKPTPIOM

No

HNS2600KPR

HNS2600KPFR

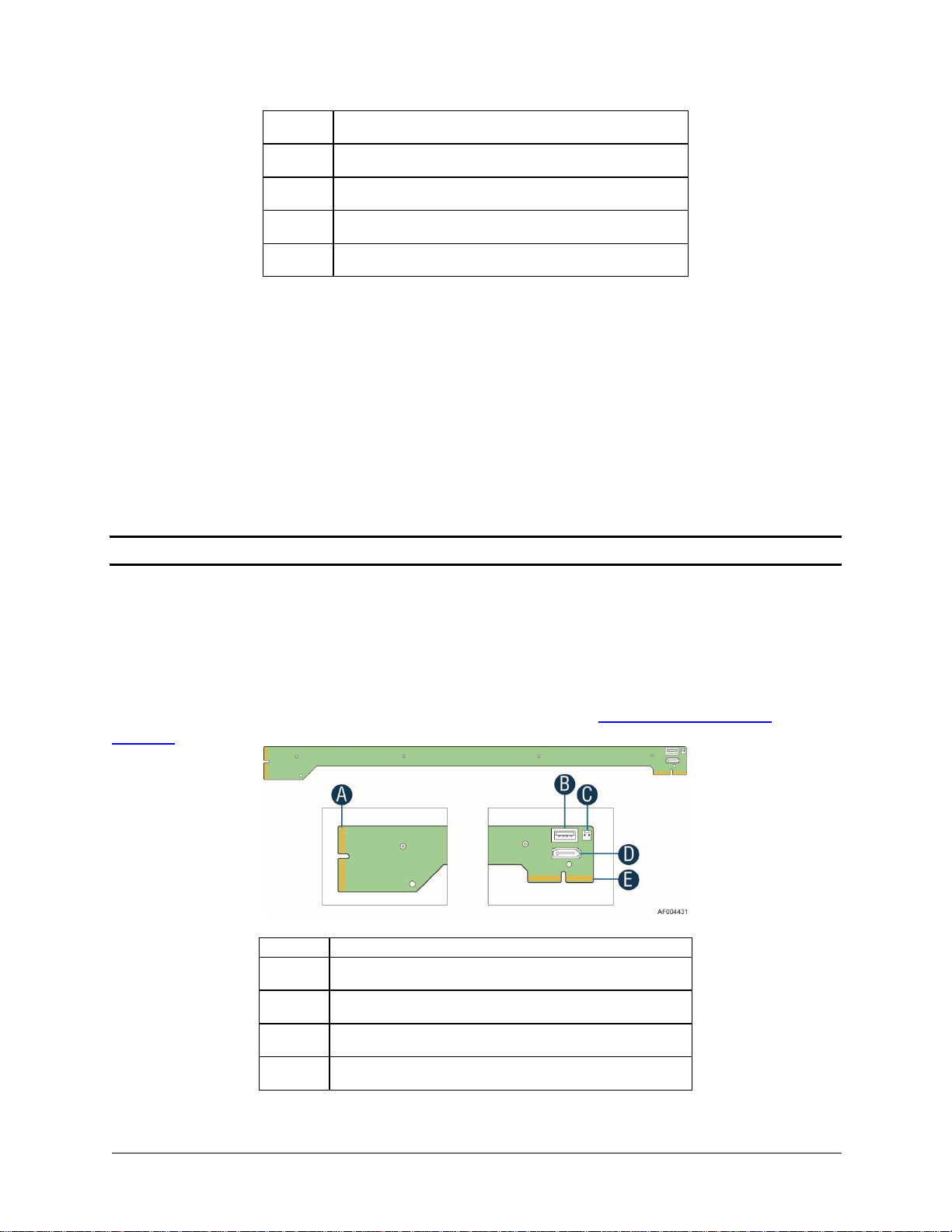

2.9.2 Riser Slot 2 Riser Card

The riser card for riser slot 2 has one PCIe* 3.0 x16 slot (x8 lanes are for I/O module carrier)

which can only support Intel® I/O Module carrier.

Figure 20. Riser Card for Riser Slot #2

2.10 I/O Module Carrier

To broaden the standard on-board feature set, the server board supports the option of adding

a single I/O module providing external ports for a variety of networking interfaces. The I/O

module attaches to a high density 80-pin connector of the I/O module carrier on the riser slot

2 riser card.

Figure 21. I/O Module Carrier Installation

The I/O module carrier board is included in the optional accessory kit. It is

horizontallyinstalled to the riser slot 2 riser card. The board provides electrical connectivity for

installing an Intel® I/O Expansion Module and a SATA based M.2 form factor (NGFF, Next

Generation Form Factor) storage device. It supports up to x8 lanes of PCIe* 3.0 for the I/O

module, and a 7 pin SATA header for the M.2 device. The I/O module carrier has two types

AXXKPTPM2IOM and AXXKPTPIOM, only AXXKPTPM2IOM can support SATA based M.2. But

due to mechanical limitation, the AXXKPTPM2IOM cannot support the computer module with

onboard IB* module.
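The carrier support data given in this specification can be expressed as a simple compatibility check. The following is a minimal sketch for illustration; the names `CARRIERS` and `compatible_carriers` are hypothetical and are not part of any Intel utility.

```python
# Carrier data transcribed from this specification: which carriers support
# a SATA-based M.2 device and which compute modules they can be used in.
CARRIERS = {
    "AXXKPTPM2IOM": {"m2": True, "modules": {"HNS2600KPR"}},
    "AXXKPTPIOM": {"m2": False, "modules": {"HNS2600KPR", "HNS2600KPFR"}},
}


def compatible_carriers(compute_module, need_m2=False):
    """Return the carriers usable in `compute_module`, sorted by name.

    When `need_m2` is True, only carriers with M.2 support are returned.
    """
    return sorted(
        name
        for name, info in CARRIERS.items()
        if compute_module in info["modules"] and (info["m2"] or not need_m2)
    )
```

With this data, a request for M.2 support on an HNS2600KPFR returns an empty list, reflecting the mechanical limitation with the onboard IB* module.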

The M.2 slot is on the back side of the AXXKPTPM2IOM. It supports an M.2 2280 SSD measuring 80.0 mm x 22.0 mm x 3.8 mm. Install the M.2 device in the M.2 slot (see A in Figure 22) and secure it with the screw (see B in Figure 22).

Figure 22. Installing the M.2 Device

The SATA connector on the AXXKPTPM2IOM (see B in Figure 23) must also be connected to the SATA connector on the server board with a SATA cable.

| Label | Description |
|---|---|
| A | 2x40-pin Mezzanine connector (for I/O module) |
| B | 7-pin SATA connector (to server board, for M.2 device) |

Figure 23. AXXKPTPM2IOM I/O Module Carrier Connectors


Figure 24. Connecting the M.2 SATA cable


2.11 Compute Module Fans

The cooling subsystem for the compute module consists of three 40 x 40 x 56 mm dual rotor fans. These components provide the necessary cooling and airflow.

Figure 25. Compute Module Fans

Note: The Intel® Compute Module HNS2600KP product family does not support redundant cooling. If one of the compute module fans fails, it is recommended to replace the failed fan as soon as possible.

Each fan within the compute module can support multiple speeds. Fan speed may change automatically when any temperature sensor reading changes. The fan speed control algorithm is programmed into the server board’s BMC.

Each fan connector within the module supplies a tachometer signal that allows the BMC to monitor the status of each fan. If one of the fans fails, the status LED on the server board will light up.

The fan control signal runs from the BMC on the server board to the power docking board, which distributes it to the three dual rotor fans. The expected maximum RPM is 25,000.
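To illustrate the kind of tachometer check described above, the following minimal sketch flags rotors whose readings look failed or implausible. It is not the BMC's actual algorithm, which this document does not detail. The per-rotor readings, the `min_rpm` threshold, and the name `suspect_rotors` are assumptions made for the example; only the fan count, the dual rotor arrangement, and the 25,000 RPM expected maximum come from the text.

```python
MAX_EXPECTED_RPM = 25_000  # expected maximum RPM stated in the text
FAN_COUNT = 3              # three dual rotor fans per compute module
ROTORS_PER_FAN = 2


def suspect_rotors(tach_rpm, min_rpm=1000):
    """Return (fan, rotor) pairs whose reading is missing, below `min_rpm`,
    or above the expected maximum.

    `tach_rpm` maps (fan, rotor) to an RPM reading. One reading per rotor
    and the default `min_rpm` are assumptions made for this sketch.
    """
    suspects = []
    for fan in range(1, FAN_COUNT + 1):
        for rotor in range(1, ROTORS_PER_FAN + 1):
            rpm = tach_rpm.get((fan, rotor), 0)  # a missing reading counts as stopped
            if rpm < min_rpm or rpm > MAX_EXPECTED_RPM:
                suspects.append((fan, rotor))
    return suspects
```

Because the compute module does not support redundant cooling, any non-empty result from a check like this would correspond to the condition where the failed fan should be replaced as soon as possible.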

2.12 Air Duct

Each compute module requires the use of a transparent plastic air duct to direct airflow over critical areas within the compute module. To maintain the necessary airflow, the air duct must be properly installed. Before sliding the compute module into the chassis, make sure the air duct is installed properly.


Figure 26. Air Duct

2.13 Intel® RAID C600 Upgrade Key

The Intel® RAID C600 Upgrade Key RKSATA4R5 is supported. With the optional key installed on the server board, Intel® ESRT2 SATA RAID 5 is enabled.

Figure 27. Intel® RAID C600 Upgrade Key

2.14 Intel® Remote Management Module 4 (Intel® RMM4) Lite

The optional Intel® RMM4 Lite is a small board that unlocks the advanced management features when installed on the server board.

Figure 28. Intel® RMM4 Lite

2.15 Breakout Board

Intel provides a breakout board that supports I/O peripherals when only the server board is used in a third-party chassis. It is not a standard accessory of the Intel® Compute Module HNS2600KP product family or the Intel® Server Chassis H2000G product family.

The breakout board provides:

- One 7-pin SATA connector for 6Gb/s SATA DOM
- One mini-SAS HD SFF-8643 connector for four lanes of 6Gb/s SATA
- One 7-pin connector for miscellaneous signals:
  - Status LED
  - NMI switch
  - SMBus
  - 3.3V auxiliary power (maximum current 50mA)

| Label | Description |
|---|---|
| A | SATA DOM port connector |
| B | Mini-SAS connector |
| C | 7-pin miscellaneous signals connector |

Figure 29. Breakout Board Front and Rear View
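The 3.3V auxiliary power on the miscellaneous signals connector is limited to 50mA, so anything powered from it in a custom chassis design needs to fit that budget. The following minimal sketch adds up load currents and reports the headroom. The function name `aux_budget` and the example load values are hypothetical; only the 3.3V rail and the 50mA limit come from this section.

```python
AUX_VOLTAGE_V = 3.3       # auxiliary rail voltage from the breakout board description
AUX_MAX_CURRENT_MA = 50   # maximum current stated for the auxiliary rail


def aux_budget(load_currents_ma):
    """Summarize a set of load currents (in mA) against the 50mA limit."""
    total_ma = sum(load_currents_ma)
    return {
        "total_ma": total_ma,
        "headroom_ma": AUX_MAX_CURRENT_MA - total_ma,
        "within_limit": total_ma <= AUX_MAX_CURRENT_MA,
        "power_mw": AUX_VOLTAGE_V * total_ma,  # P = V * I, in milliwatts
    }
```

For instance, two hypothetical loads of 20mA and 15mA total 35mA, leaving 15mA of headroom, whereas loads of 30mA and 25mA would exceed the limit.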

The breakout board has reserved holes so that users can design their own bracket to secure the board in the server system. See the following mechanical drawing for details.

2.16 System Software Overview

The server board includes an embedded software stack to enable, configure, and support various system functions. This software stack includes the System BIOS, Baseboard Management Controller (BMC) Firmware, Management Engine (ME) Firmware, and management support data including Field Replaceable Unit (FRU) data and Sensor Data Record (SDR) data.

The system software is pre-programmed on the server board during factory assembly, making

the server board functional at first power-on after system integration. Typically, as part of the

initial system integration process, FRU and SDR data will have to be installed onto the server