Intel® NetStructure™

MPCMM0001 Chassis Management Module

Software Technical Product Specification

April 2005

Order Number: 273888-007

INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. Intel products are not intended for use in medical, life saving, life sustaining applications.

Intel may make changes to specifications and product descriptions at any time, without notice.

Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them.

The Intel® NetStructure™ MPCMM0001 Chassis Management Module may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request.

This Software Technical Product Specification as well as the software described in it is furnished under license and may only be used or copied in accordance with the terms of the license. The information in this manual is furnished for informational use only, is subject to change without notice, and should not be construed as a commitment by Intel Corporation. Intel Corporation assumes no responsibility or liability for any errors or inaccuracies that may appear in this document or any software that may be provided in association with this document.

Except as permitted by such license, no part of this document may be reproduced, stored in a retrieval system, or transmitted in any form or by any means without the express written consent of Intel Corporation.

Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order.

Copies of documents which have an ordering number and are referenced in this document, or other Intel literature may be obtained by calling 1-800-548-4725 or by visiting Intel's website at http://www.intel.com.

AnyPoint, AppChoice, BoardWatch, BunnyPeople, CablePort, Celeron, Chips, CT Media, Dialogic, DM3, EtherExpress, ETOX, FlashFile, i386, i486, i960, iCOMP, InstantIP, Intel, Intel Centrino, Intel logo, Intel386, Intel486, Intel740, IntelDX2, IntelDX4, IntelSX2, Intel Create & Share, Intel GigaBlade, Intel InBusiness, Intel Inside, Intel Inside logo, Intel NetBurst, Intel NetMerge, Intel NetStructure, Intel Play, Intel Play logo, Intel SingleDriver, Intel SpeedStep, Intel StrataFlash, Intel TeamStation, Intel Xeon, Intel XScale, IPLink, Itanium, MCS, MMX, MMX logo, Optimizer logo, OverDrive, Paragon, PC Dads, PC Parents, PDCharm, Pentium, Pentium II Xeon, Pentium III Xeon, Performance at Your Command, RemoteExpress, SmartDie, Solutions960, Sound Mark, StorageExpress, The Computer Inside., The Journey Inside, TokenExpress, VoiceBrick, VTune, and Xircom are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

*Other names and brands may be claimed as the property of others.

Copyright © 2005, Intel Corporation. All rights reserved.

2 MPCMM0001 Chassis Management Module Software Technical Product Specification

Contents

1 Introduction....................................................................................................................................16

1.1 Overview.............................................................................................................................16

1.2 Terms Used in this Document ............................................................................................16

2 Software Specifications .................................................................................................................18

2.1 Red Hat* Embedded Debug and Bootstrap (Redboot).......................................................18

2.2 Operating System................................................................................18

2.3 Command Line Interface (CLI) ...........................................................................................18

2.4 SNMP/UDP.........................................................................................................................18

2.5 Remote Procedural Call (RPC) Interface............................................................................19

2.6 RMCP .................................................................................................................................19

2.7 Ethernet Interfaces .............................................................................................................19

2.8 Sensor Event Logs (SEL) ...................................................................................................19

2.8.1 CMM SEL Architecture ..........................................................................................19

2.8.2 Retrieving a SEL....................................................................................................19

2.8.3 Clearing the SEL....................................................................................................20

2.8.4 Retrieving the Raw SEL.........................................................................................20

2.9 Blade OverTemp Shutdown Script .....................................................................................20

3 Redundancy, Synchronization, and Failover.................................................................................21

3.1 Overview.............................................................................................................................21

3.2 Synchronization ..................................................................................................................21

3.3 Heterogeneous Synchronization.........................................................................................23

3.3.1 SDR/SIF Synchronization......................................................................................23

3.3.2 User Scripts Synchronization and Configuration................................................................................23

3.3.3 Synchronization Requirements..............................................................................24

3.4 Initial Data Synchronization................................................................................................24

3.4.1 Initial Data Sync Failure.........................................................................................24

3.5 Datasync Status Sensor .....................................................................................................25

3.5.1 Sensor bitmap........................................................................................................25

3.5.2 Event IDs ...............................................................................................................25

3.5.3 Querying the Datasync Status...............................................................................25

3.5.4 SEL Event..............................................................................................................27

3.5.5 SNMP Trap............................................................................................................27

3.5.6 System Health................................................................................28

3.6 CMM Failover .....................................................................................................................28

3.6.1 Scenarios That Prevent Failover................................................................................28

3.6.2 Scenarios That Failover to a Healthier Standby CMM...........................................28

3.6.3 Manual Failover .....................................................................................................29

3.6.4 Scenarios That Force a Failover................................................................................29

3.7 CMM Ready Event..............................................................................................................30

4 Built-In Self Test (BIST).................................................................................................................31

4.1 BIST Test Flow ...................................................................................................................31

4.2 Boot-BIST ...........................................................................................................................33

4.3 Early-BIST ..........................................................................................................................33

4.4 Mid-BIST.............................................................................................................................33

4.5 Late-BIST............................................................................................................................33

4.6 QuickBoot Feature..............................................................................................................34

4.6.1 Configuring QuickBoot................................................................................34

4.7 Event Log Area and Event Management............................................................................35

4.8 OS Flash Corruption Detection and Recovery Design .......................................................35

4.8.1 Monitoring the Static Images.................................................................................35

4.8.2 Monitoring the Dynamic Images............................................................................36

4.8.3 CMM Failover................................................................................36

4.9 BIST Test Descriptions.......................................................................................................36

4.9.1 Flash Checksum Test............................................................................................36

4.9.2 Base Memory Test.................................................................................................36

4.9.3 Extended Memory Tests................................................................................36

4.9.4 FPGA Version Check.............................................................................................37

4.9.5 DS1307 RTC (Real-Time Clock) Test ...................................................................37

4.9.6 NIC Presence/Local PCI Bus Test.........................................................................37

4.9.7 OS Image Checksum Test................................................................................37

4.9.8 CRC32 Checksum.................................................................................................37

4.9.9 IPMB Bus Busy/Not Ready Test............................................................................38

5 Re-enumeration.............................................................................................................................39

5.1 Overview.............................................................................................................................39

5.2 Re-enumeration on Failover...............................................................................................39

5.3 Re-enumeration of M5 FRU................................................................................................40

5.4 Resolution of EKeys................................................................................40

5.5 Events Regeneration..........................................................................................................40

6 Process Monitoring and Integrity...................................................................................................41

6.1 Overview.............................................................................................................................41

6.1.1 Process Existence Monitoring................................................................................41

6.1.2 Thread Watchdog Monitoring................................................................................41

6.1.3 Process Integrity Monitoring................................................................................42

6.2 Processes Monitored..........................................................................................................42

6.3 Process Monitoring Targets................................................................................42

6.4 Process Monitoring Dataitems................................................................................43

6.4.1 Examples...............................................................................................................43

6.5 SNMP MIB Commands.......................................................................................................44

6.6 Process Monitoring CMM Events................................................................................44

6.7 Failure Scenarios and Eventing..........................................................................................45

6.7.1 No Action Recovery................................................................................45

6.7.2 Successful Restart Recovery.................................................................................46

6.7.3 Successful Failover/Restart Recovery...................................................................47

6.7.4 Successful Failover/Reboot Recovery...................................................................48

6.7.5 Failed Failover/Reboot Recovery, Non-Critical......................................................48

6.7.6 Failed Failover/Reboot Recovery, Critical .............................................................49

6.7.7 Excessive Restarts, Escalate No Action................................................................................50

6.7.8 Excessive Restarts, Successful Escalate Failover/Reboot....................................51

6.7.9 Excessive Restarts, Failed Escalate Failover/Reboot, Non-Critical ......................52

6.7.10 Excessive Restarts, Failed Escalate Failover/Reboot, Critical ..............................52

6.7.11 Process Administrative Action................................................................................53

6.7.12 Excessive Failover/Reboots, Administrative Action................................................................................54

6.8 Process Integrity Executable (PIE).....................................................................................54

6.9 Configuring pms.ini.............................................................................................................55

6.9.1 Global Data................................................................................55

6.9.2 Process Specific Data............................................................................................56

6.9.3 Process Definition Section of pms.ini.....................................................................58

6.10 Process Integrity Executable (PIE) Specific Data Config ...................................................64

6.10.1 PIE Section Name................................................................................64

6.10.2 Process Integrity Executable .................................................................................65

6.10.3 Unique ID...............................................................................................................65

6.10.4 Administrative State...............................................................................................65

6.10.5 Process Integrity Interval................................................................................66

6.10.6 Chassis Applicability................................................................................66

6.10.7 PmsPieSnmp Command Line................................................................................66

6.10.8 SNMP PIE Section of pms.ini ................................................................................66

6.11 WP/BPM PIE................................................................................67

6.11.1 WP/BPM Section of pms.ini...................................................................................67

7 Power and Hot Swap Management...............................................................................................68

7.1 Hot Swap States.................................................................................................................68

7.2 FRU Insertion......................................................................................................................68

7.3 Graceful FRU Extraction................................................................................68

7.4 Surprise FRU Extraction/IPMI Failure.................................................................................69

7.5 Forced Power State Changes.............................................................................................69

7.6 Power Management on the Standby CMM................................................................................69

7.7 Power Feed Targets ...........................................................................................................69

7.8 Pinging IPMI Controllers................................................................................70

8 The Command Line Interface (CLI)...............................................................................................71

8.1 CLI Overview ......................................................................................................................71

8.2 Connecting to the CLI.........................................................................................................71

8.2.1 Connecting through a Serial Port Console ............................................................71

8.3 Initial Setup—Logging in for the First Time................................................................................72

8.3.1 Setting IP Address Properties................................................................................72

8.3.2 Setting a Hostname................................................................................75

8.3.3 Setting the Amount of Time for Auto-Logout .........................................................75

8.3.4 Setting the Date and Time................................................................................76

8.3.5 Telnet into the CMM ..............................................................................................76

8.3.6 Connect Through SSH (Secure Shell)...................................................................76

8.3.7 FTP into the CMM................................................................................76

8.3.8 Rebooting the CMM...............................................................................................76

8.4 CLI Command Line Syntax and Arguments .......................................................................77

8.4.1 Cmmget and Cmmset Syntax................................................................................77

8.4.2 Help Parameter: -h................................................................................77

8.4.3 Location Parameter: -l ...........................................................................................77

8.4.4 Target Parameter: -t................................................................................78

8.4.5 Dataitem Parameter: -d................................................................................80

8.4.6 Value Parameter: -v...............................................................................................97

8.4.7 Sample CLI Operations .........................................................................................97

8.5 Generating a System Status Report...................................................................................97

9 Resetting the Password.................................................................................................................99

9.1 Resetting the Password in a Dual CMM System................................................................................99

9.2 Resetting the Password in a Single CMM System ...........................................................100

10 Sensor Types................................................................................101

10.1 CMM Sensor Types................................................................................101

10.2 Threshold-Based Sensors................................................................................................101

10.2.1 Threshold-Based Sensor Events.........................................................................101

10.3 CMM Voltage/Temp Sensor Thresholds...........................................................................102

10.4 Discrete Sensors................................................................................102

10.4.1 Discrete Sensor Events................................................................................103

11 Health Events..............................................................................................................................104

11.1 Syntax of Health Event Strings.........................................................................................104

11.1.1 Healthevents Query Event Syntax.......................................................................104

11.1.2 SEL Event Syntax................................................................................104

11.1.3 SEL Sensor Types................................................................................105

11.1.4 SNMP Trap Event Syntax....................................................................................105

11.2 Sensor Targets................................................................................106

11.3 Healthevents Queries.......................................................................................................107

11.3.1 HealthEvents Queries for Individual Sensors................................................................................107

11.3.2 HealthEvents Queries for All Sensors on a Location...........................................108

11.3.3 No Active Events................................................................................108

11.3.4 Not Present or Non-IPMI Locations................................................................................108

11.4 List of Possible Health Event Strings................................................................................108

11.4.1 All Locations ........................................................................................................109

11.4.2 CMM Location......................................................................................................115

11.4.3 Chassis Location .................................................................................................120

11.5 IPMI Error Completion Codes...........................................................................................120

11.5.1 Configuring IPMI Error Completion Codes................................................................................121

11.5.2 IPMI/IMB Error Message Format.........................................................................121

12 Front Panel LEDs................................................................................123

12.1 LED Types and States......................................................................................................123

12.1.1 Alarm LEDs................................................................................123

12.1.2 Health LED ..........................................................................................................124

12.1.3 Hot Swap LED.....................................................................................................124

12.1.4 User Definable LEDs...........................................................................................124

12.2 Retrieving a Location’s LED properties ............................................................................124

12.3 Retrieving Color Properties of LEDs.................................................................................124

12.4 Retrieving the State of LEDs................................................................................125

12.5 Setting the State of the User LEDs................................................................................125

12.6 LED Boot Sequence.........................................................................................................126

13 Node Power Control....................................................................................................................127

13.1 Node Operational State Management..............................................................................127

13.2 Obtaining the Power State of a Board................................................................................127

13.3 Controlling the Power State of a Board ............................................................................127

13.3.1 Powering Off a Board................................................................................127

13.3.2 Powering On a Board................................................................................127

13.3.3 Resetting a Board................................................................................................128

14 Electronic Keying Manager..........................................................................................................129

14.1 Point-to-Point EKeying......................................................................................................129

14.2 Bused EKeying .................................................................................................................129

14.3 EKeying CLI Commands................................................................................129

15 CDMs and FRU Information................................................................................130

15.1 Chassis Data Module................................................................................130

15.2 FRU/CDM Election Process .............................................................................................130

15.3 FRU Information ...............................................................................................................130

15.4 FRU Query Syntax............................................................................................................131

16 Fan Control and Monitoring.........................................................................................................132

16.1 Automatic Fan Control......................................................................................................132

16.2 Querying Fan Tray Sensors - FantrayN location ..............................................................132

16.3 Fantray Cooling Levels.....................................................................................................132

16.4 CMM Cooling Manager Temperature Status....................................................................132

16.5 CMM Cooling Table..........................................................................................................133

16.5.1 Setting Values in the Cooling Table.....................................................................133

16.6 Control Modes for Fan Trays............................................................................................134

16.6.1 CMM Control Mode..............................................................................................134

16.6.2 Fantray Control Mode..........................................................................................134

16.6.3 Emergency Shutdown Control Mode...................................................................134

16.6.4 User Initiated Mode Change................................................................................135

16.6.5 Automatic Mode Change .....................................................................................135

16.7 Getting Temperature Statuses..........................................................................................135

16.8 Fantray Properties ............................................................................................................136

16.9 Retrieving the Current Cooling Level................................................................................136

16.10 Fantray Insertion...............................................................................................................136

16.11 Default Cooling Values .....................................................................................................137

16.11.1 Vendor Defaults ...................................................................................................137

16.11.2 Structure of /etc/cmm/fantray.cfg.........................................................................138

16.11.3 Code Defaults......................................................................................................138

16.11.4 Restoring Defaults ...............................................................................................138

16.12 Firmware Upgrade/Downgrade.........................................................................................138

16.13 Chassis vs. Fantray.......................................................................................................139

16.14 Legacy Method of Querying/Setting Fan Speed...............................................................139

17 SNMP..........................................................................................................................................140

17.1 CMM MIB..........................................................................................................................141

17.2 MIB Design .......................................................................................................................141

17.2.1 MIB Tree..............................................................................................................141

17.2.2 CMM MIB Objects................................................................................................142

17.3 SNMP Agent.....................................................................................................................158

17.3.1 Configuring the SNMP Agent Port.......................................................................158

17.3.2 Configuring the Agent to Respond to SNMP v3 Requests..................................158

17.3.3 Configuring the Agent Back to SNMP v1.............................................................159

17.3.4 Setting up an SNMP v1 MIB Browser..................................................................159

17.3.5 Setting up an SNMP v3 MIB Browser..................................................................159

17.3.6 Changing the SNMP MD5 and DES Passwords..................................................159

17.4 SNMP Trap Utility.............................................................................................................160

17.4.1 Configuring the SNMP Trap Port.........................................................................160

17.4.2 Configuring the CMM to Send SNMP v3 Traps...................................................160

17.4.3 Configuring the CMM to Send SNMP v1 Traps...................................................160

17.5 Configuring and Enabling SNMP Trap Addresses........................................................160

17.5.1 Configuring an SNMP Trap Address ...................................................................161

17.5.2 Enabling and Disabling SNMP Traps ..................................................................161

17.5.3 Alerts Using SNMP v3...........................................................................................161

17.5.4 Alert Using UDP Alert..........................................................................................161

17.6 SNMP Security .................................................................................................................162

17.6.1 SNMP v1 Security................................................................................................162

17.6.2 SNMP v3 Security - Authentication Protocol and Privacy Protocol .....................162

17.7 SNMP Trap Descriptions..................................................................................................162

17.8 Snmpd.conf File.............................................................................................................163

18 CMM Scripting..................................................................................................................164

18.1 CLI Scripting.....................................................................................................................164

18.1.1 Script Synchronization...........................................................................................164

18.2 Event Scripting................................................................................................................164

18.2.1 Listing Scripts Associated With Events................................................................165

18.2.2 Removing Scripts From an Associated Event.......................................................165

18.3 Setting Scripts for Specific Individual Events....................................................................165

18.3.1 Event Codes...........................................................................................................165

18.3.2 Setting Event Action Scripts ................................................................................166

18.4 Running CMM Event Scripts on CMM State Transitions (Active/Standby/Ready/Not Ready)........166

18.4.1 Sensor Data Bits.....................................................................................................166

18.4.2 Retrieving the Value of the Data Sensor Bits ......................................................167

18.4.3 CMMReadyTimeout Value...................................................................................168

18.4.4 CMM State Transition Model..................................................................................168

18.5 FRU Control Script............................................................................................................169

18.5.1 Command line arguments....................................................................................170

18.5.2 Sample frucontrol file.............................................................................................170

19 Remote Procedure Calls (RPC) ..................................................................................................174

19.1 Setting Up the RPC Interface ...........................................................................................174

19.2 Using the RPC Interface...................................................................................................174

19.2.1 GetAuthCapability() .............................................................................................175

19.2.2 ChassisManagementApi() ...................................................................................175

19.2.3 ChassisManagementApi() Threshold Response Format.....................................181

19.2.4 ChassisManagementApi() String Response Format..............................................181

19.2.5 ChassisManagementApi() Integer Response Format..........................................185

19.2.6 FRU String Response Format .............................................................................186

19.3 RPC Sample Code ...........................................................................................................187

19.4 RPC Usage Examples......................................................................................................187

20 RMCP..........................................................................................................................................190

20.1 RMCP References............................................................................................................190

20.2 RMCP Modes ...................................................................................................................190

20.3 RMCP User Privilege Levels ............................................................................................191

20.4 RMCP Discovery ..............................................................................................................191

20.5 RMCP Session Activation...............................................................................................191

20.6 RMCP Port Numbers........................................................................................................192

20.7 IPMB Slave Addresses.....................................................................................................193

20.8 CMM RMCP Configuration ...............................................................................................193

20.9 IPMI Commands Supported by CMM RMCP.................................................................194

20.10 Configuring IPMI Command Privileges.............................................................................196

20.10.1 Sample cmdPrivillege.ini file................................................................................197

20.11 Completion Codes for the RMCP Messages....................................................................197

21 Command and Error Logging......................................................................................................199

21.1 Command Logging ...........................................................................................................199

21.2 Error Logging....................................................................................................................199

21.2.1 Error.log File ........................................................................................................199

21.2.2 Debug.log File......................................................................................................199

21.3 Cmmdump Utility.............................................................................................................200

22 Application Hosting......................................................................................................................201

22.1 System Details................................................................................................................201

22.2 Startup and Shutdown Scripts ..........................................................................................201

22.3 System Resources Available to User Applications...........................................................201

22.3.1 File System Storage Constraints .........................................................................201

22.3.2 RAM Constraints..................................................................................................202

22.3.3 Interrupt Constraints ............................................................................................203

22.4 RAM Disk Directory Structure...........................................................................................203

23 Updating CMM Software...................................................................................................204

23.1 Key Features of the Firmware Update Process................................................................204

23.2 Update Process Architecture............................................................................................204

23.3 Critical Software Update Files and Directories .................................................................205

23.4 Update Package...............................................................................................................205

23.4.1 Update Package File Validation..............................................................................206

23.4.2 Update Firmware Package Version.....................................................................207

23.4.3 Component Versioning ........................................................................................207

23.5 saveList and Data Preservation........................................................................................207

23.6 Update Mode....................................................................................................................208

23.7 Update_Metadata File ......................................................................................................209

23.8 Firmware Update Synchronization/Failover Support......................................................209

23.9 Automatic/Manual Failover Configuration.........................................................................209

23.9.1 Setting Failover Configuration Flag .....................................................................210

23.9.2 Retrieving the Failover Configuration Flag...........................................................210

23.10 Single CMM System .........................................................................................................210

23.11 Redundant CMM Systems................................................................................................210

23.12 CLI Software Update Procedure.......................................................................................210

23.13 Hooks for User Scripts......................................................................................................211

23.13.1 Update Mode User Scripts...................................................................................211

23.13.2 Data Restore User Scripts...................................................................................212

23.13.3 Example Task—Replace /home/scripts/myScript................................................212

23.14 Update Process ................................................................................................................213

23.15 Update Process Status and Logging ................................................................................215

23.16 Update Process Sensor and SEL Events.........................................................................215

23.17 Redboot* Update Process................................................................................................215

23.17.1 Required Setup.....................................................................................................215

23.17.2 Update Procedure................................................................................................215

24 Updating Shelf Components........................................................................................................217

25 IPMI Pass-Through......................................................................................................................218

25.1 Overview...........................................................................................................................218

25.2 Command Syntax and Interface.......................................................................................218

25.2.1 Command Request String Format.......................................................................218

25.2.2 Response String.....................................................................................................219

25.2.3 Usage Examples..................................................................................................219

25.3 SNMP ...............................................................................................................................219

25.3.1 Usage Example ...................................................................................................219

26 FRU Update Utility.......................................................................................................................221

26.1 Overview...........................................................................................................................221

26.2 FRU Update Architecture..................................................................................................221

26.3 FRU Update Process........................................................................................................222

26.4 FRU Recovery Process....................................................................................................222

26.5 FRU Verification................................................................................................................223

26.6 FRU Display......................................................................................................................223

26.7 Setting the Library Path And Invoking the Utility...............................................................223

26.8 FRU Update Command Line Interface .............................................................................223

26.9 Using the Location Switch ................................................................................................224

26.10 Updating the FRU.............................................................................................................225

26.11 Getting the Inventory ........................................................................................................225

26.12 Viewing the Contents of the FRU .....................................................................................225

26.13 Getting the Contents of the FRU ......................................................................................225

26.14 Dumping the Contents of the FRU....................................................................................225

27 FRU Update Configuration File ...................................................................................................227

27.1 Configuration File Format.................................................................................................227

27.2 File Format........................................................................................................................227

27.3 String Constraints.............................................................................................................227

27.4 Numeric Constraints.........................................................................................................228

27.5 Tags..................................................................................................................................228

27.6 Control Commands..........................................................................................................228

27.6.1 IFSET...................................................................................................................228

27.6.2 ELSE....................................................................................................................229

27.6.3 ENDIF..................................................................................................................229

27.6.4 SET......................................................................................................................229

27.6.5 CLEAR.................................................................................................................230

27.6.6 CFGNAME...........................................................................................................230

27.6.7 ERRORLEVEL.....................................................................................................230

27.7 Probing Commands..........................................................................................................230

27.7.1 PROBE................................................................................................................230

27.7.2 SYSTEM..............................................................................................................231

27.7.3 FRUVER..............................................................................................................231

27.7.4 BMCVER .............................................................................................................232

27.7.5 FOUND................................................................................................................232

27.8 Update Commands ..........................................................................................................233

27.8.1 FRUNAME...........................................................................................................233

27.8.2 FRUADDRESS....................................................................................................234

27.8.3 FRUAREA............................................................................................................234

27.8.4 MULTIREC ..........................................................................................................235

27.8.5 FRUFIELD ...........................................................................................................236

27.8.6 Input of Data ........................................................................................................240

27.9 Display Commands...........................................................................................................240

27.9.1 DISPLAY..............................................................................................................241

27.9.2 CONFIGURATION...............................................................................................241

27.9.3 Input Commands.....................................................................................................241

27.9.4 MENU ..................................................................................................................241

27.9.5 MENUTITLE ........................................................................................................242

27.9.6 MENUPROMPT...................................................................................................242

27.9.7 PROMPT .............................................................................................................242

27.9.8 YES......................................................................................................................243

27.9.9 NO .......................................................................................................................243

27.10 Command Quick Reference .............................................................................................243

27.11 Example Configuration File...............................................................................................246

27.11.1 Chassis Update Version 0 ...................................................................................246

27.11.2 Chassis Update Version 1 ...................................................................................249

28 Unrecognized Sensor Types.......................................................................................................253

28.1 System Events Overview.................................................................................................253

28.2 System Events - SNMP Trap Support.............................................................................254

28.2.1 SNMP Trap Header Format.................................................................................254

28.2.2 SNMP Trap ATCA Trap Text Translation Format................................................254

28.3 SNMP Trap Raw Format ..................................................................................................255

28.3.1 SNMP Trap Control .............................................................................................256

28.3.2 System Events - SEL Support................................................................................256

28.3.3 Configuring SEL Format ......................................................................................257

29 Warranty Information...................................................................................................................259

29.1 Intel® NetStructure™ Compute Boards and Platform Products Limited Warranty...........259

29.2 Returning a Defective Product (RMA) ..............................................................................259

29.3 For the Americas ..............................................................................................................260

29.3.1 For Europe, Middle East, and Africa (EMEA)........................................................260

29.3.2 For Asia and Pacific (APAC)................................................................................260

30 Customer Support .......................................................................................................................262

30.1 Customer Support.............................................................................................................262

30.2 Technical Support and Return for Service Assistance .....................................................262

30.3 Sales Assistance..............................................................................................................262

31 Certifications................................................................................................................................263

32 Agency Information...........................................................................................................264

32.1 North America (FCC Class A)..........................................................................................264

32.2 Canada – Industry Canada (ICES-003 Class A) (English and French-translated below).264

32.3 Safety Instructions (English and French-translated below) ..............................................265

32.3.1 English.................................................................................................................265

32.3.2 French..................................................................................................................265

32.4 Taiwan Class A Warning Statement.................................................................................266

32.5 Japan VCCI Class A.........................................................................................................266

32.6 Korean Class A.................................................................................................................266

32.7 Australia, New Zealand.....................................................................................................266

33 Safety Warnings..........................................................................................................................267

33.1 Mesures de Sécurité.........................................................................................................268

33.2 Sicherheitshinweise..........................................................................................................270

33.3 Norme di Sicurezza..........................................................................................................272

33.4 Instrucciones de Seguridad..............................................................................................274

33.5 Chinese Safety Warning...................................................................................................276

Figures

1 BIST Flow Chart .........................................................................................................................32

2 Timing of BIST Stages................................................................................................................34

3 High Level SNMP/MIB Layout..................................................................................................140

4 CMM Custom MIB Tree............................................................................................................142

5 CMM Status State Diagram......................................................................................................169

6 SNMPTrapFormat = 1 ..............................................................................................................255

7 SNMPTrapFormat = 2 ..............................................................................................................255

8 SNMPTrapFormat = 3 ..............................................................................................................255

Tables

1 Glossary .....................................................................................................................................16

2 CMM Synchronization ................................................................................................................22

3 CMM Status Event Strings (CMM Status) ..................................................................................30

4 BIST Implementation..................................................................................................................32

5 Processes Monitored..................................................................................................................42

6 No Action Recovery....................................................................................................................46

7 Successful Restart Recovery......................................................................................................46

8 Successful Failover/Restart Recovery.........................................................................................47

9 Successful Failover/Reboot Recovery.........................................................................................48

10 Failed Failover/Reboot Recovery, Non-Critical ..........................................................................49

11 Failed Failover/Reboot Recovery, Critical ..................................................................................50

12 Existence Fault, Excessive Restarts, Escalate No Action..........................................................50

13 Excessive Restarts, Successful Escalate Failover/Reboot ........................................................51

14 Excessive Restarts, Failed Escalate Failover/Reboot, Non-Critical...........................................52

15 Excessive Restarts, Failed Escalate Failover/Reboot, Critical...................................................53

16 Administrative Action..................................................................................................................53

17 Excessive Failover/Reboots, Administrative Action....................................................................54

18 Time to Delay and Number of Attempts .....................................................................................70

19 SETIP Interface Assignments when BOOTPROTO="static"......................................................74

20 SETIP Interface Assignments when BOOTPROTO="dhcp".......................................................75

21 Location (-l) Keywords................................................................................................................77

22 CMM Targets..............................................................................................................................79

23 Dataitem Keywords for All Locations..........................................................................................80

24 Dataitem Keywords for All Locations Except System.................................................................80

25 Dataitem Keywords for All Locations Except Chassis and System ............................................81

26 Dataitem Keywords for Chassis Location...................................................................................85

27 Dataitem Keywords for Cmm Location.......................................................................................86

28 Dataitem Keywords for System Location....................................................................................92

29 Dataitem Keywords for FantrayN Location....................................................................93

30 Dataitem Keywords Used with the Target Parameter.................................................................94

31 CMM Voltage and Temp Sensor Thresholds......................................................................102

32 CMM SEL Sensor Information..................................................................................................105

33 Sensor Targets.........................................................................................................................106

34 Threshold-Based Sensors: Voltage, Temp, Current, Fan.........................................................109

35 Hot Swap Sensor: Filter Tray HS, FRU Hot Swap....................................................................110

36 IPMB Link State Sensor: IPMB-0 Snsr [1-16]...........................................................................110

37 System Firmware Progress Event Strings (System Firmware Progress) .................................111

38 Watchdog 2 Sensor Event Strings............................................................................................113

39 CMM Redundancy....................................................................................................................115

40 CMM Trap Connectivity (CMM [1-2] Trap Conn)......................................................................115

41 CMM Failover ...........................................................................................................................115

42 CMM Synchronization...............................................................................................................116

43 BIST Event Strings..........................................................................................117

44 Chassis Data Module (CDM [1,2])............................................................................................118

45 Datasync Status.............................................................................................118

46 CMM Status Event Strings (CMM Status) ................................................................................118

47 Process Monitoring Service Fault Event Strings (PMS Fault) ..................................................119

48 Process Monitoring Service Info Event Strings (PMS Info) ......................................................120

49 Chassis Events.........................................................................................................................120

50 IPMI Error Completion Codes and Enumerations.....................................................................121

51 System Health LED States.......................................................................................................123

52 CMM Health LED States...........................................................................................................124

53 CMM Hot Swap LED States.....................................................................................124

54 Ledstate Functions and Function Options................................................................................125

55 LED Event Sequence ...............................................................................................................126

56 Dataitems Used With FRU Target (-t) to Obtain FRU Information............................................131

57 CMM Cooling Table..................................................................................................................133

58 MIB II Objects - System Group................................................................................141

59 MIB II - Interface Group.....................................................................................141

60 System Location (1.3.6.1.4.1.343.2.14.2.10.1).........................................................................143

61 Shelf Location (Equivalent to Chassis) (1.3.6.1.4.1.343.2.14.2.10.2).......................................144

62 ShelfTable/shelfEntry (1.3.6.1.4.1.343.2.14.2.10.2.50.1).........................................................144

63 Cmm Location (1.3.6.1.4.1.343.2.14.2.10.3)............................................................................146

64 CmmTable/cmmEntry (1.3.6.1.4.1.343.2.14.2.10.3.51.1).........................................................149

65 CmmFruTable/cmmFruEntry (1.3.6.1.4.1.343.2.14.2.10.3.52.1)..............................................151

66 CmmFruTargetTable (1.3.6.1.4.1.343.2.14.2.10.3.53.1)..........................................................151

67 CmmPmsTable/cmmPmsEntry (1.3.6.1.4.1.343.2.14.2.10.3.54.1)..........................................151

68 Blade# Location (1.3.6.1.4.1.343.2.14.2.10.4.[1-16]) ...............................................................152

69 Blade#TargetTable/blade#TargetEntry (1.3.6.1.4.1.343.2.14.2.10.4.[1-16].51.1)....................153

70 Blade#FruTable/blade#FruEntry (1.3.6.1.4.1.343.2.14.2.10.4.[1-16].52.1)..............................154

71 Blade#FruTargetTable/blade#FruTargetEntry (1.3.6.1.4.1.343.2.14.2.10.4.[1-16].53.1).........155

72 [FanTray/pem]Table/[fanTray/pem]Entry (1.3.6.1.4.1.343.2.14.2.10.[5/6].51.1) ......................155

73 [FanTray/pem]TargetTable/[fanTray/pem]TargetEntry (1.3.6.1.4.1.343.2.14.2.10.[5/6].52.1)..156


74 [FanTray/pem]FruTable/[fanTray/pem]FruEntry (1.3.6.1.4.1.343.2.14.2.10.[5/6].53.1) ...........157

75 [FanTray/pem]FruTargetTable/[fanTray/pem]FruTargetEntry

(1.3.6.1.4.1.343.2.14.2.10.[5/6].54.1).......................................................................................158

76 SNMP v3 Security Fields For Traps .........................................................................................162

77 SNMP v3 Security Fields For Queries......................................................................................162

78 CMM State Transition Events and Event IDs ...........................................................................166

79 CMM Status Sensor Data Bits.................................................................................167

80 Error and Return Codes for the RPC Interface.........................................................................177

81 Threshold Response Formats..................................................................................181

82 String Response Formats.........................................................................................................181

83 Integer Response Formats.......................................................................................................185

84 FRU Data Items String Response Format................................................................................186

85 RPC Usage Examples..........................................................................................187

86 RMCP Modes..................................................................................................190

87 RMCP Session Timers.........................................................................................192

88 RMCP Slave Addresses........................................................................................193

89 IPMI Commands Supported by CMM RMCP...........................................................................194

90 RMCP Message Completion Codes.........................................................................................198

91 Flash #1....................................................................................................................................202

92 Flash #2....................................................................................................................................202

93 Flash #3....................................................................................................................................202

94 Flash #4....................................................................................................................................202

95 List of Critical Software Update Files and Directories ..............................................................205

96 Contents of the Update Package..............................................................................................206

97 SaveList Items and Their Priorities..........................................................................208

98 CMM Update Directions ...........................................................................................................209

99 Platform FRU Accessibility of the FRU Update Utility ..............................................................221

100 FruUpdate Utility Command Line Options................................................................................224

101 Probe Command Parameters..................................................................................231

102 FRU Area String Specifications................................................................................................235

103 Multi-Record Selection Parameters..........................................................................................236

104 FRU Field First String Specifications........................................................................................237

105 FRU Field Maximum Allowed Lengths .....................................................................................237

106 FRU Field Second String Specification ....................................................................................238

107 Type Code Specification...........................................................................................................239

108 Command Quick Reference.....................................................................................................243

109 Probe Arguments Quick Reference..........................................................................................246

110 Results of Variable Settings................................................................................256

111 Example CLI Commands..........................................................................................................277

14 MPCMM0001 Chassis Management Module Software Technical Product Specification

Page 15

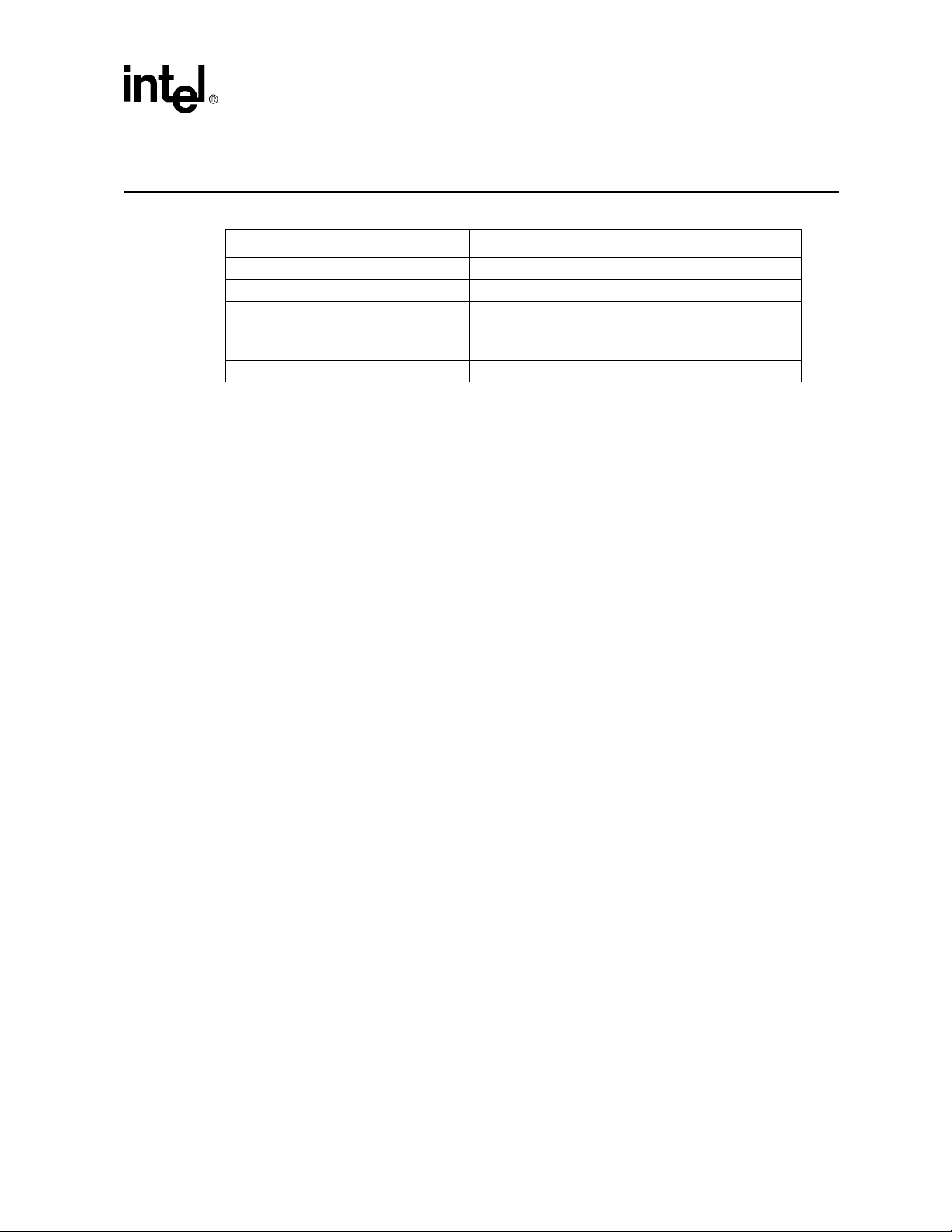

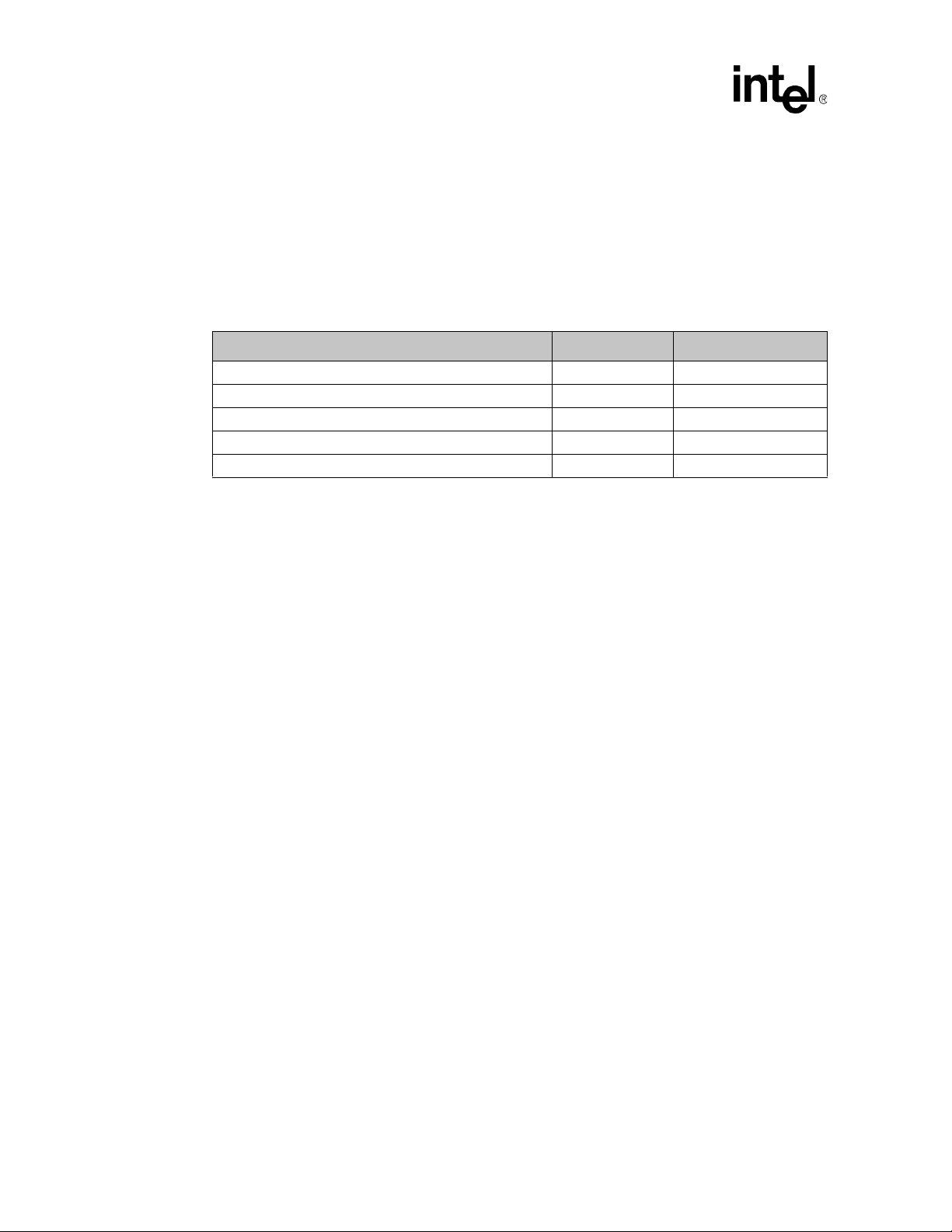

Revision History

| Date | Revision | Description |
|---|---|---|
| April 2005 | 007 | Firmware version 5.2 |
| August 2004 | 006 | Firmware version 5.1.0.757 |
| April 2004 | 005 | Version 5.1 TPS. Added Re-Enumeration Section. Added Process Monitoring Section. |
| January 2004 | 004.1 | Version 4.1 TPS |


1 Introduction

1.1 Overview

The Intel® NetStructure™ MPCMM0001 Chassis Management Module is a 4U, single-slot CMM intended for use with AdvancedTCA* PICMG* 3.0 platforms. This document details the software features and specifications of the CMM. For information on the hardware features of the CMM, refer to the Intel

specifications and other material can be found in Appendix B, “Data Sheet Reference.”

The CMM plugs into a dedicated slot in compatible systems. It provides centralized management

and alarming for up to 16 node and/or fabric slots as well as for system power supplies, fans and