Intel® IXP400 Software

Programmer’s Guide

April 2005

Document Number: 252539, Revision: 007

INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY RELATING TO SALE AND/OR USE OF INTEL PRODUCTS, INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT, OR OTHER INTELLECTUAL PROPERTY RIGHT.

Intel Corporation may have patents or pending patent applications, trademarks, copyrights, or other intellectual property rights that relate to the presented subject matter. The furnishing of documents and other materials and information does not provide any license, express or implied, by estoppel or otherwise, to any such patents, trademarks, copyrights, or other intellectual property rights.

Intel products are not intended for use in medical, life saving, life sustaining, critical control or safety systems, or in nuclear facility applications.

Intel may make changes to specifications and product descriptions at any time, without notice.

Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them.

MPEG is an international standard for video compression/decompression promoted by ISO. Implementations of MPEG CODECs, or MPEG enabled platforms may require licenses from various entities, including Intel Corporation.

This document and the software described in it are furnished under license and may only be used or copied in accordance with the terms of the license. The information in this document is furnished for informational use only, is subject to change without notice, and should not be construed as a commitment by Intel Corporation. Intel Corporation assumes no responsibility or liability for any errors or inaccuracies that may appear in this document or any software that may be provided in association with this document. Except as permitted by such license, no part of this document may be reproduced, stored in a retrieval system, or transmitted in any form or by any means without the express written consent of Intel Corporation.

Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order.

Copies of documents which have an order number and are referenced in this document, or other Intel literature, may be obtained by calling 1-800-548-4725, or by visiting Intel's website at http://www.intel.com.

BunnyPeople, Celeron, Chips, Dialogic, EtherExpress, ETOX, FlashFile, i386, i486, i960, iCOMP, InstantIP, Intel, Intel Centrino, Intel Centrino logo, Intel logo, Intel386, Intel486, Intel740, IntelDX2, IntelDX4, IntelSX2, Intel Inside, Intel Inside logo, Intel NetBurst, Intel NetMerge, Intel NetStructure, Intel SingleDriver, Intel SpeedStep, Intel StrataFlash, Intel Xeon, Intel XScale, IPLink, Itanium, MCS, MMX, MMX logo, Optimizer logo, OverDrive, Paragon, PDCharm, Pentium, Pentium II Xeon, Pentium III Xeon, Performance at Your Command, Sound Mark, The Computer Inside, The Journey Inside, VTune, and Xircom are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

*Other names and brands may be claimed as the property of others.

Copyright © Intel Corporation 2005. All Rights Reserved.

April 2005 IXP400 Software Version 2.0 Programmer’s Guide

Contents

1 Introduction..................................................................................................................................19

1.1 Versions Supported by this Document ...............................................................................19

1.2 Hardware Supported by this Release.................................................................................19

1.3 Intended Audience..............................................................................................................19

1.4 How to Use this Document .................................................................................................20

1.5 About the Processors .........................................................................................................20

1.6 Related Documents ............................................................................................................21

1.7 Acronyms............................................................................................................................22

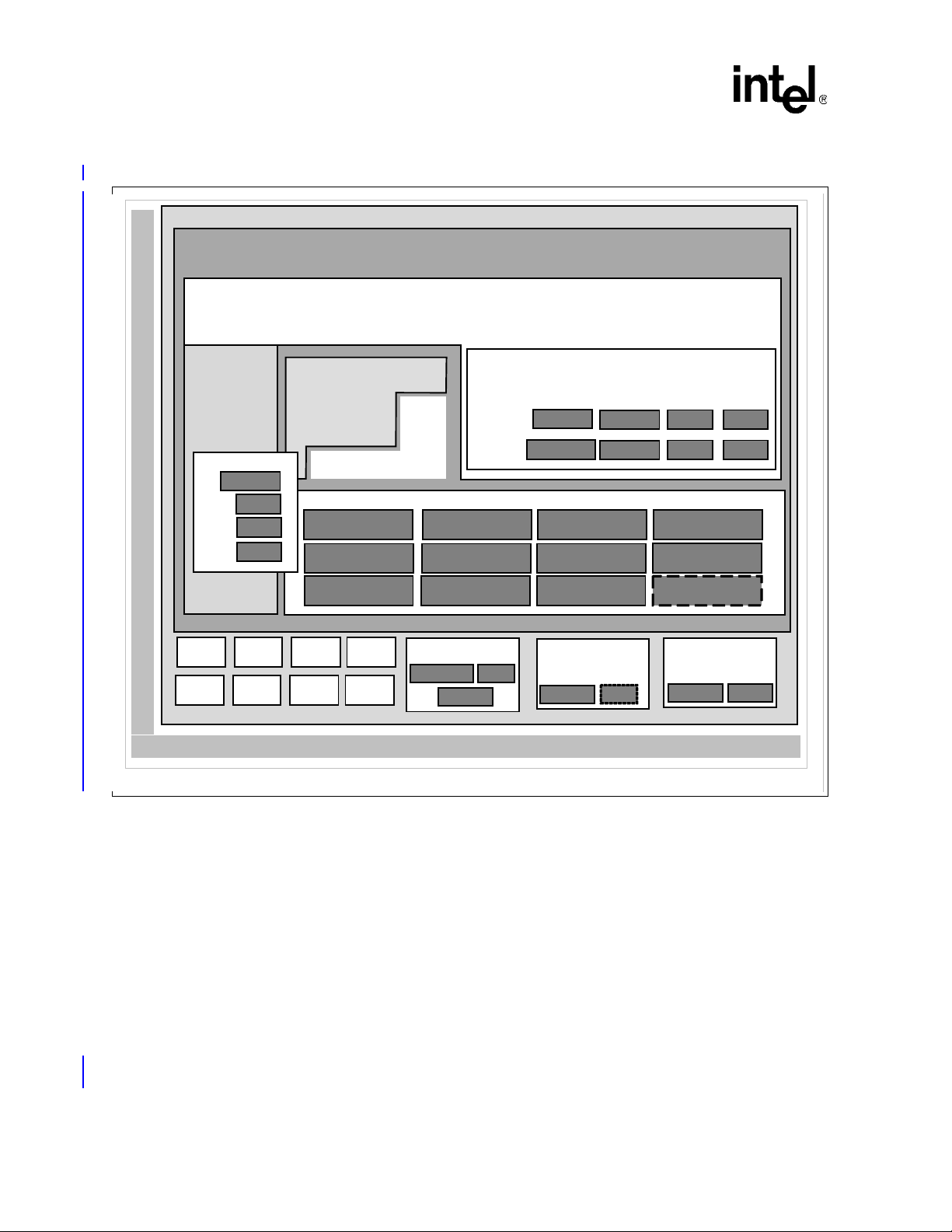

2 Software Architecture Overview ................................................................................................27

2.1 High-Level Overview...........................................................................................................27

2.2 Deliverable Model...............................................................................................................28

2.3 Operating System Support .............................................................................................29

2.4 Development Tools.............................................................................................................29

2.5 Access Library Source Code Documentation.....................................................................29

2.6 Release Directory Structure................................................................................................30

2.7 Threading and Locking Policy.............................................................................................32

2.8 Polled and Interrupt Operation............................................................................................32

2.9 Statistics and MIBs ......................................................................................................32

2.10 Global Dependency Chart ...............................................................................................33

3 Buffer Management.....................................................................................................................35

3.1 What’s New.........................................................................................................................35

3.2 Overview.............................................................................................................................35

3.3 IXP_BUF Structure .............................................................................................................38

3.3.1 IXP_BUF Structure and Macros ............................................................................38

3.4 Mapping of IX_MBUF to Shared Structure .........................................................................43

3.5 IX_MBUF Structure.............................................................................................................44

3.6 Mapping to OS Native Buffer Types ...................................................................................46

3.6.1 VxWorks* M_BLK Buffer........................................................................................46

3.6.2 Linux* skbuff Buffer................................................................................................47

3.7 Caching Strategy ................................................................................................................49

3.7.1 Tx Path ..................................................................................................................49

3.7.2 Rx Path..................................................................................................................50

3.7.3 Caching Strategy Summary...................................................................................50

4 Access-Layer Components:

ATM Driver Access (IxAtmdAcc) API.........................................................................................53

4.1 What’s New.........................................................................................................................53

4.2 Overview.............................................................................................................................53

4.3 IxAtmdAcc Component Features........................................................................................53

4.4 Configuration Services........................................................................................................55

4.4.1 UTOPIA Port-Configuration Service ......................................................................55

4.4.2 ATM Traffic-Shaping Services...............................................................................55

4.4.3 VC-Configuration Services ....................................................................................56

4.5 Transmission Services........................................................................................................57

4.5.1 Scheduled Transmission .......................................................................................58

4.5.1.1 Schedule Table Description....................................................................59

4.5.2 Transmission Triggers (Tx-Low Notification).........................................................60

4.5.2.1 Transmit-Done Processing ....................................................................60

4.5.2.2 Transmit Disconnect..............................................................................62

4.5.3 Receive Services...................................................................................................63

4.5.3.1 Receive Triggers (Rx-Free-Low Notification).........................................64

4.5.3.2 Receive Processing...............................................................................64

4.5.3.3 Receive Disconnect...............................................................................66

4.5.4 Buffer Management...............................................................................................67

4.5.4.1 Buffer Allocation.....................................................................................67

4.5.4.2 Buffer Contents......................................................................................67

4.5.4.3 Buffer-Size Constraints..........................................................................69

4.5.4.4 Buffer-Chaining Constraints...................................................................69

4.5.5 Error Handling........................................................................................................69

4.5.5.1 API-Usage Errors...................................................................................69

4.5.5.2 Real-Time Errors....................................................................................70

5 Access-Layer Components:

ATM Manager (IxAtmm) API .......................................................................................................71

5.1 What’s New.........................................................................................................................71

5.2 IxAtmm Overview................................................................................................................71

5.3 IxAtmm Component Features.............................................................................................71

5.4 UTOPIA Level-2 Port Initialization......................................................................................72

5.5 ATM-Port Management Service Model...............................................................................73

5.6 Tx/Rx Control Configuration ...............................................................................................75

5.7 Dependencies.....................................................................................................................77

5.8 Error Handling.....................................................................................................................77

5.9 Management Interfaces......................................................................................................77

5.10 Memory Requirements .......................................................................................................77

5.11 Performance.......................................................................................................................78

6 Access-Layer Components:

ATM Transmit Scheduler (IxAtmSch) API.................................................................................79

6.1 What’s New.........................................................................................................................79

6.2 Overview.............................................................................................................................79

6.3 IxAtmSch Component Features..........................................................................................79

6.4 Connection Admission Control (CAC) Function..................................................................81

6.5 Scheduling and Traffic Shaping..........................................................................................82

6.5.1 Schedule Table......................................................................................................82

6.5.1.1 Minimum Cells Value (minCellsToSchedule).........................................83

6.5.1.2 Maximum Cells Value (maxCells)..........................................................83

6.5.2 Schedule Service Model........................................................................................83

6.5.3 Timing and Idle Cells.............................................................................................84

6.6 Dependencies.....................................................................................................................84

6.7 Error Handling.....................................................................................................................85

6.8 Memory Requirements .......................................................................................................85

6.8.1 Code Size..............................................................................................................85

6.8.2 Data Memory..........................................................................................................85

6.9 Performance.......................................................................................................................85

6.9.1 Latency..................................................................................................................86

7 Access-Layer Components:

Security (IxCryptoAcc) API.........................................................................................................87

7.1 What’s New.........................................................................................................................87

7.2 Overview.............................................................................................................................87

7.3 IxCryptoAcc API Architecture .............................................................................................88

7.3.1 IxCryptoAcc Interfaces...........................................................................................88

7.3.2 Basic API Flow.......................................................................................................89

7.3.3 Context Registration and the Cryptographic Context Database............................90

7.3.4 Buffer and Queue Management ............................................................................93

7.3.5 Memory Requirements ..........................................................................................93

7.3.6 Dependencies........................................................................................................94

7.3.7 Other API Functionality..........................................................................................95

7.3.8 Error Handling........................................................................................................96

7.3.9 Endianness............................................................................................................96

7.3.10 Import and Export of Cryptographic Technology ...................................................96

7.4 IPSec Services ...................................................................................................................96

7.4.1 IPSec Background and Implementation ................................................................96

7.4.2 IPSec Packet Formats...........................................................................................98

7.4.2.1 Reference ESP Dataflow.......................................................................99

7.4.2.2 Reference AH Dataflow .......................................................................100

7.4.3 Hardware Acceleration for IPSec Services..........................................................101

7.4.4 IPSec API Call Flow.............................................................................................101

7.4.5 Special API Use Cases........................................................................................103

7.4.5.1 HMAC with Key Size Greater Than 64 Bytes ......................................103

7.4.5.2 Performing CCM (AES CTR-Mode Encryption and AES CBC-MAC Authentication) for IPSec ...................................................103

7.4.6 IPSec Assumptions, Dependencies, and Limitations...........................................106

7.5 WEP Services...................................................................................................................106

7.5.1 WEP Background and Implementation................................................................106

7.5.2 Hardware Acceleration for WEP Services ...........................................................107

7.5.3 WEP API Call Flow..............................................................................................108

7.6 SSL and TLS Protocol Usage Models ..............................................................................110

7.7 Supported Encryption and Authentication Algorithms ......................................................111

7.7.1 Encryption Algorithms..........................................................................................111

7.7.2 Cipher Modes ......................................................................................................112

7.7.2.1 Electronic Code Book (ECB)................................................................112

7.7.2.2 Cipher Block Chaining (CBC) ..............................................................112

7.7.2.3 Counter Mode (CTR) ...........................................................................112

7.7.2.4 Counter-Mode Encryption with CBC-MAC Authentication (CCM) for CCMP in 802.11i..............................................112

7.7.3 Authentication Algorithms....................................................................................113

8 Access-Layer Components:

DMA Access Driver (IxDmaAcc) API........................................................................................115

8.1 What’s New.......................................................................................................................115

8.2 Overview...........................................................................................................................115

8.3 Features............................................................................................................................115

8.4 Assumptions .....................................................................................................................115

8.5 Dependencies...................................................................................................................116

8.6 DMA Access-Layer API ....................................................................................................116

8.6.1 IxDmaAccDescriptorManager..............................................................................118

8.7 Parameters Description....................................................................................................118

8.7.1 Source Address....................................................................................................119

8.7.2 Destination Address.............................................................................................119

8.7.3 Transfer Mode .....................................................................................................119

8.7.4 Transfer Width.....................................................................................................119

8.7.5 Addressing Modes...............................................................................................120

8.7.6 Transfer Length ...................................................................................................120

8.7.7 Supported Modes ................................................................................................121

8.8 Data Flow.........................................................................................................................123

8.9 Control Flow.....................................................................................................................123

8.9.1 DMA Initialization.................................................................................................124

8.9.2 DMA Configuration and Data Transfer ................................................................125

8.10 Restrictions of the DMA Transfer......................................................................................127

8.11 Error Handling...................................................................................................................128

8.12 Little Endian.....................................................................................................................128

9 Access-Layer Components:

Ethernet Access (IxEthAcc) API...............................................................................................129

9.1 What’s New.......................................................................................................................129

9.2 IxEthAcc Overview............................................................................................................129

9.3 Ethernet Access Layers: Architectural Overview..............................................................130

9.3.1 Role of the Ethernet NPE Microcode...................................................................130

9.3.2 Queue Manager...................................................................................................131

9.3.3 Learning/Filtering Database.................................................................................131

9.3.4 MAC/PHY Configuration......................................................................................131

9.4 Ethernet Access Layers: Component Features................................................................132

9.5 Data Plane........................................................................................................................133

9.5.1 Port Initialization ..................................................................................................134

9.5.2 Ethernet Frame Transmission .............................................................................134

9.5.2.1 Transmission Flow...............................................................................134

9.5.2.2 Transmit Buffer Management and Priority...........................................135

9.5.2.3 Using Chained IX_OSAL_MBUFs for Transmission / Buffer Sizing ....137

9.5.3 Ethernet Frame Reception...................................................................................137

9.5.3.1 Receive Flow.......................................................................................138

9.5.3.2 Receive Buffer Management and Priority............................................139

9.5.3.3 Additional Receive Path Information....................................................142

9.5.4 Data-Plane Endianness.......................................................................................143

9.5.5 Maximum Ethernet Frame Size...........................................................................143

9.6 Control Path .....................................................................................................................143

9.6.1 Ethernet MAC Control..........................................................................................145

9.6.1.1 MAC Duplex Settings...........................................................................145

9.6.1.2 MII I/O..................................................................................................145

9.6.1.3 Frame Check Sequence......................................................................145

9.6.1.4 Frame Padding....................................................................................145

9.6.1.5 MAC Filtering.......................................................................................146

9.6.1.6 802.3x Flow Control.............................................................................146

9.6.1.7 NPE Loopback.....................................................................................147

9.6.1.8 Emergency Security Port Shutdown....................................................147

9.7 Initialization.......................................................................................................................147

9.8 Shared Data Structures....................................................................................................147

9.9 Management Information..................................................................................................152

10 Access-Layer Components:

Ethernet Database (IxEthDB) API.............................................................................................155

10.1 Overview...........................................................................................................................155

10.2 What’s New.......................................................................................................................155

10.3 IxEthDB Functional Behavior............................................................................................155

10.3.1 MAC Address Learning and Filtering...................................................................156

10.3.1.1 Learning and Filtering..........................................................................156

10.3.1.2 Other MAC Learning/Filtering Usage Models......................................158

10.3.1.3 Learning/Filtering General Characteristics...........................................158

10.3.2 Frame Size Filtering.............................................................................................160

10.3.2.1 Filtering Example Based Upon Maximum Frame Size ........................161

10.3.3 Source MAC Address Firewall.............................................................................161

10.3.4 802.1Q VLAN.......................................................................................................162

10.3.4.1 Background – VLAN Data in Ethernet Frames....................................163

10.3.4.2 Database Records Associated With VLAN IDs....................................164

10.3.4.3 Acceptable Frame Type Filtering.........................................................164

10.3.4.4 Ingress Tagging and Tag Removal......................................................165

10.3.4.5 Port-Based VLAN Membership Filtering..............................................165

10.3.4.6 Port and VLAN-Based Egress Tagging and Tag Removal..................166

10.3.4.7 Port ID Extraction.................................................................................169

10.3.5 802.1Q User Priority / QoS Support ....................................................................169

10.3.5.1 Priority Aware Transmission................................................................169

10.3.5.2 Receive Priority Queuing.....................................................................170

10.3.5.3 Priority to Traffic Class Mapping..........................................................171

10.3.6 802.3 / 802.11 Frame Conversion .......................................................................172

10.3.6.1 Background — 802.3 and 802.11 Frame Formats...............................172

10.3.6.2 How the 802.3 / 802.11 Frame Conversion Feature Works.................174

10.3.6.3 802.3 / 802.11 API Details.................................................................176

10.3.7 Spanning Tree Protocol Port Settings .................................................................177

10.4 IxEthDB API......................................................................................................................177

10.4.1 Initialization..........................................................................................................177

10.4.2 Dependencies......................................................................................................177

10.4.3 Feature Set..........................................................................................................178

10.4.4 Additional Database Features ............................................................................178

10.4.4.1 User-Defined Field...............................................................................178

10.4.4.2 Database Clear....................................................................................179

10.4.5 Dependencies on IxEthAcc Configuration ...........................................................179

10.4.5.1 Promiscuous-Mode Requirement ........................................................179

10.4.5.2 FCS Appending....................................................................................179

11 Access-Layer Components:

Ethernet PHY (IxEthMii) API .....................................................................................................181

11.1 What’s New.......................................................................................................................181

11.2 Overview...........................................................................................................................181

11.3 Features............................................................................................................................181

11.4 Supported PHYs...............................................................................................................181

11.5 Dependencies...................................................................................................................182

Page 8

12 Access-Layer Components:

Feature Control (IxFeatureCtrl) API.........................................................................................183

12.1 What’s New.......................................................................................................................183

12.2 Overview...........................................................................................................................183

12.3 Hardware Feature Control............................................................................183

12.3.1 Using the Product ID-Related Functions .............................................................184

12.3.2 Using the Feature Control Register Functions.....................................................185

12.4 Component Check by Other APIs.....................................................................................186

12.5 Software Configuration.....................................................................................................186

12.6 Dependencies...................................................................................................................187

13 Access-Layer Components:

HSS-Access (IxHssAcc) API.....................................................................................................189

13.1 What’s New.......................................................................................................................189

13.2 Overview...........................................................................................................................189

13.3 IxHssAcc API Overview....................................................................................................190

13.3.1 IxHssAcc Interfaces.............................................................................................190

13.3.2 Basic API Flow.....................................................................................................191

13.3.3 HSS and HDLC Theory and Coprocessor Operation....................................192

13.3.4 High-Level API Call Flow.....................................................................................195

13.3.5 Dependencies......................................................................................................196

13.3.6 Key Assumptions.................................................................................................196

13.3.7 Error Handling......................................................................................................197

13.4 HSS Port Initialization Details...........................................................................................197

13.5 HSS Channelized Operation.............................................................................................199

13.5.1 Channelized Connect and Enable.......................................................................199

13.5.2 Channelized Tx/Rx Methods.....................................................................201

13.5.2.1 CallBack...............................................................................................202

13.5.2.2 Polled...................................................................................................202

13.5.3 Channelized Disconnect..........................................................................204

13.6 HSS Packetized Operation...............................................................................................204

13.6.1 Packetized Connect and Enable..........................................................................204

13.6.2 Packetized Tx........................................................................................206

13.6.3 Packetized Rx........................................................................................208

13.6.4 Packetized Disconnect ...........................................................................211

13.6.5 56-Kbps, Packetized Raw Mode..........................................................................211

13.7 Buffer Allocation Data-Flow Overview..............................................................................211

13.7.1 Data Flow in Packetized Service.............................................................211

13.7.2 Data Flow in Channelized Service...........................................................214

14 Access-Layer Components:

NPE-Downloader (IxNpeDl) API................................................................................................219

14.1 What’s New.......................................................................................................................219

14.2 Overview...........................................................................................................................219

14.3 Microcode Images ............................................................................................................219

14.4 Standard Usage Example.................................................................................220

14.5 Custom Usage Example...................................................................................223

14.6 IxNpeDl Uninitialization.....................................................................................................223

14.7 Deprecated APIs...............................................................................................................224

Page 9

15 Access-Layer Components:

NPE Message Handler (IxNpeMh) API.....................................................................................225

15.1 What’s New.......................................................................................................................225

15.2 Overview...........................................................................................................................225

15.3 Initializing the IxNpeMh.....................................................................................................226

15.3.1 Interrupt-Driven Operation...................................................................................226

15.3.2 Polled Operation..................................................................................................226

15.4 Uninitializing IxNpeMh ......................................................................................................227

15.5 Sending Messages from an Intel XScale® Core Software Client to an NPE....................227

15.5.1 Sending an NPE Message...................................................................................227

15.5.2 Sending an NPE Message with Response..........................................................228

15.6 Receiving Unsolicited Messages from an NPE to Client Software...................................229

15.7 Dependencies...................................................................................................................231

15.8 Error Handling...................................................................................................................231

16 Access-Layer Components:

Parity Error Notifier (IxParityENAcc) API ................................................................................233

16.1 What’s New.......................................................................................................................233

16.2 Introduction.......................................................................................................................233

16.2.1 Background..........................................................................................................233

16.2.2 Parity and ECC Capabilities in the Intel® IXP45X and Intel® IXP46X Product Line ...................................................234

16.2.2.1 Network Processing Engines...............................................................234

16.2.2.2 Switching Coprocessor in NPE B (SWCP) ..........................................235

16.2.2.3 AHB Queue Manager (AQM)...............................................................235

16.2.2.4 DDR SDRAM Memory Controller Unit (MCU)......................................235

16.2.2.5 Expansion Bus Controller ....................................................................235

16.2.2.6 PCI Controller ......................................................................................235

16.2.2.7 Secondary Effects of Parity Interrupts .................................................236

16.2.3 Interrupt Prioritization...........................................................................................236

16.3 IxParityENAcc API Details...............................................................................237

16.3.1 Features...............................................................................................................237

16.3.2 Dependencies......................................................................................................237

16.4 IxParityENAcc API Usage Scenarios................................................................................238

16.4.1 Summary Parity Error Notification Scenario ........................................................239

16.4.2 Summary Parity Error Recovery Scenario...........................................................241

16.4.3 Summary Parity Error Prevention Scenario.........................................................242

16.4.4 Parity Error Notification Detailed Scenarios.....................................................242

17 Access-Layer Components:

Performance Profiling (IxPerfProfAcc) API.............................................................................247

17.1 What’s New.......................................................................................................................247

17.2 Overview...........................................................................................................................247

17.3 Intel XScale® Core PMU...................................................................................................248

17.3.1 Counter Buffer Overflow ......................................................................................249

17.4 Internal Bus PMU..............................................................................................................249

17.5 Idle-Cycle Counter Utilities (‘Xcycle’)................................................................................250

17.6 Dependencies...................................................................................................................250

17.7 Error Handling...................................................................................................................251

17.8 Interrupt Handling.............................................................................................................251

Page 10

17.9 Threading..........................................................................................................................252

17.10 Using the API.................................................................................................252

17.10.1 API Usage for Intel XScale® Core PMU ..............................................................253

17.10.1.1 Event and Clock Counting ...................................................................253

17.10.1.2 Time-Based Sampling..........................................................................255

17.10.1.3 Event-Based Sampling........................................................................257

17.10.1.4 Using Intel XScale® Core PMU to Determine Cache Efficiency ..........260

17.10.2 Internal Bus PMU.................................................................................................261

17.10.2.1 Using the Internal Bus PMU Utility to Monitor Read/Write Activity on the North Bus...................................................262

17.10.3 Xcycle (Idlecycle Counter)...................................................................................263

18 Access-Layer Components:

Queue Manager (IxQMgr) API...................................................................................................265

18.1 What’s New.......................................................................................................................265

18.2 Overview...........................................................................................................................265

18.3 Features and Hardware Interface.....................................................................................266

18.4 IxQMgr Initialization and Uninitialization...........................................................................267

18.5 Queue Configuration.........................................................................................................267

18.6 Queue Identifiers ..............................................................................................................267

18.7 Configuration Values ........................................................................................................268

18.8 Dispatcher.........................................................................................................................268

18.9 Dispatcher Modes.............................................................................................................269

18.10 Livelock Prevention.......................................................................................272

18.11 Threading..........................................................................................................................274

18.12 Dependencies...................................................................................................................274

19 Access-Layer Components:

Synchronous Serial Port (IxSspAcc) API................................................................................275

19.1 What’s New.......................................................................................................................275

19.2 Introduction.......................................................................................................................275

19.3 IxSspAcc API Details........................................................................................................275

19.3.1 Features...............................................................................................................275

19.3.2 Dependencies......................................................................................................276

19.4 IxSspAcc API Usage Models............................................................................................277

19.4.1 Initialization and General Data Model..................................................................277

19.4.2 Interrupt Mode .....................................................................................................277

19.4.3 Polling Mode.........................................................................................280

20 Access-Layer Components:

Time Sync (IxTimeSyncAcc) API..............................................................................................283

20.1 What’s New.......................................................................................................................283

20.2 Introduction.......................................................................................................................283

20.2.1 IEEE 1588 PTP Protocol Overview .....................................................................284

20.2.2 IEEE 1588 Hardware Assist Block.......................................................................285

20.2.3 IxTimeSyncAcc....................................................................................................288

20.2.4 IEEE 1588 PTP Client Application.......................................................................288

20.3 IxTimeSyncAcc API Details..............................................................................288

20.3.1 Features...............................................................................................................288

20.3.2 Dependencies......................................................................................................289

20.3.3 Error Handling......................................................................................................289

Page 11

20.4 IxTimeSyncAcc API Usage Scenarios..............................................................................290

20.4.1 Polling for Transmit and Receive Timestamps ....................................................290

20.4.2 Interrupt Mode Operations...................................................................................290

20.4.3 Polled Mode Operations ......................................................................................291

21 Access-Layer Components:

UART-Access (IxUARTAcc) API...............................................................................................293

21.1 What’s New.......................................................................................................................293

21.2 Overview...........................................................................................................................293

21.3 Interface Description.........................................................................................................293

21.4 UART / OS Dependencies................................................................................................294

21.4.1 FIFO Versus Polled Mode ........................................................................294

21.5 Dependencies...................................................................................................................295

22 Access-Layer Components:

USB Access (ixUSB) API ..........................................................................................................297

22.1 What’s New.......................................................................................................................297

22.2 Overview...........................................................................................................................297

22.3 USB Controller Background.............................................................................297

22.3.1 Packet Formats....................................................................................................298

22.3.2 Transaction Formats............................................................................................299

22.4 ixUSB API Interfaces ........................................................................................................302

22.4.1 ixUSB Setup Requests ........................................................................................302

22.4.1.1 Configuration........................................................................................304

22.4.1.2 Frame Synchronization.................................................................305

22.4.2 ixUSB Send and Receive Requests ....................................................................305

22.4.3 ixUSB Endpoint Stall Feature ..............................................................................305

22.4.4 ixUSB Error Handling...........................................................................................306

22.5 USB Data Flow................................................................................................308

22.6 USB Dependencies ..........................................................................................308

23 Codelets .....................................................................................................................................309

23.1 What’s New.......................................................................................................................309

23.2 Overview...........................................................................................................................309

23.3 ATM Codelet (IxAtmCodelet)............................................................................................309

23.4 Crypto Access Codelet (IxCryptoAccCodelet)..................................................................310

23.5 DMA Access Codelet (IxDmaAccCodelet)........................................................................310

23.6 Ethernet AAL-5 Codelet (IxEthAal5App)...........................................................................310

23.7 Ethernet Access Codelet (IxEthAccCodelet) ....................................................................310

23.8 HSS Access Codelet (IxHssAccCodelet)..........................................................................311

23.9 Parity Error Notifier Codelet (IxParityENAccCodelet).............................................311

23.10 Performance Profiling Codelet (IxPerfProfAccCodelet)....................................................312

23.11 Time Sync Codelet (IxTimeSyncAccCodelet)...................................................................312

23.12 USB RNDIS Codelet (IxUSBRNDIS).....................................................................312

24 Operating System

Abstraction Layer (OSAL).........................................................................................................313

24.1 What’s New.......................................................................................................................313

24.2 Overview...........................................................................................................................313

24.3 OS-Independent Core Module............................................................................315

24.4 OS-Dependent Module.....................................................................................................315

Page 12

24.4.1 Backward Compatibility Module...........................................................................316

24.4.2 Buffer Translation Module....................................................................................316

24.5 OSAL Library Structure.....................................................................................................316

24.6 OSAL Modules and Related Interfaces ............................................................................319

24.6.1 Core Module........................................................................................................319

24.6.2 Buffer Management Module ................................................................................322

24.6.3 I/O Memory and Endianness Support Module.....................................................322

24.7 Supporting a New OS.......................................................................................................324

24.8 Supporting New Platforms................................................................................................325

25 ADSL Driver ...............................................................................................................................327

25.1 What’s New.......................................................................................................................327

25.2 Device Support.................................................................................................................327

25.3 ADSL Driver Overview......................................................................................................327

25.3.1 Controlling STMicroelectronics* ADSL Modem Chipset Through CTRL-E..........328

25.4 ADSL API..........................................................................................................................328

25.5 ADSL Line Open/Close Overview.....................................................................................328

25.6 Limitations and Constraints ..............................................................................................330

26 I2C Driver (IxI2cDrv)...................................................................................................................331

26.1 What’s New.......................................................................................................................331

26.2 Introduction.......................................................................................................................331

26.3 I2C Driver API Details.......................................................................................................331

26.3.1 Features...............................................................................................................331

26.3.2 Dependencies......................................................................................................332

26.3.3 Error Handling......................................................................................................333

26.3.3.1 Arbitration Loss Error...........................................................................333

26.3.3.2 Bus Error..............................................................................................334

26.4 I2C Driver API Usage Models...........................................................................................334

26.4.1 Initialization and General Data Model..................................................................334

26.4.2 Example Sequence Flows for Slave Mode..........................................................336

26.4.3 I2C Using GPIO Versus Dedicated I2C Hardware..................................................339

27 Endianness in Intel® IXP400 Software.....................................................................................341

27.1 Overview...........................................................................................................................341

27.2 The Basics of Endianness................................................................................................341

27.2.1 The Nature of Endianness: Hardware or Software?............................................342

27.2.2 Endianness When Memory is Shared .................................................................342

27.3 Software Considerations and Implications........................................................................343

27.3.1 Coding Pitfalls — Little-Endian/Big-Endian..........................................................343

27.3.1.1 Casting a Pointer Between Types of Different Sizes...........................343

27.3.1.2 Network Stacks and Protocols.............................................................344

27.3.1.3 Shared Data Example: LE Re-Ordering Data for BE Network Traffic..344

27.3.2 Best Practices in Coding of Endian-Independence .............................................345

27.3.3 Macro Examples: Endian Conversion..................................................................345

27.3.3.1 Macro Source Code.............................................................................345

27.4 Endianness Features of the Intel® IXP4XX Product Line of Network Processors and IXC1100 Control Plane Processor.............................................................................346

27.4.1 Supporting Little-Endian Mode .................................................................348

27.4.2 Reasons for Choosing a Particular LE Coherency Mode.................................348

Page 13

27.4.3 Silicon Endianness Controls....................................................................349

27.4.3.1 Hardware Switches.........................................................................349

27.4.3.2 Intel XScale® Core Endianness Mode..............................................350

27.4.3.3 Little-Endian Data Coherence Enable/Disable.....................................351

27.4.3.4 MMU P-Attribute Bit.............................................................................351

27.4.3.5 PCI Bus Swap......................................................................................352

27.4.3.6 Summary of Silicon Controls................................................................352

27.4.4 Silicon Versions .....................................................................................352

27.5 Little-Endian Strategy in Intel® IXP400 Software and Associated BSPs..........................353

27.5.1 APB Peripherals ..................................................................................................354

27.5.2 AHB Memory-Mapped Registers..............................................................355

27.5.3 Intel® IXP400 Software Core Components..........................................................355

27.5.3.1 Queue Manager — IxQMgr..................................................................355

27.5.3.2 NPE Downloader — IxNpeDl...............................................................356

27.5.3.3 NPE Message Handler — IxNpeMh ....................................................356

27.5.3.4 Ethernet Access Component — IxEthAcc ...........................................356

27.5.3.5 ATM and HSS......................................................................................361

27.5.4 PCI.......................................................................................................................361

27.5.5 Intel® IXP400 Software OS Abstraction...............................................................361

27.5.6 VxWorks* Considerations....................................................................................362

27.5.7 Software Versions................................................................................................364

Figures

1 Intel® IXP400 Software v2.0 Architecture Block Diagram ..........................................................28

2 Global Dependencies .................................................................................................................33

3 Intel® IXP400 Software Buffer Flow............................................................................................36

4 IXP_BUF User Interface.............................................................................................................37

5 IXP_BUF Structure.....................................................................................................................38

6 OSAL IXP_BUF structure and macros .......................................................................................39

7 API User Interface to IXP_BUF ..................................................................................................40

8 Access-Layer Component Interface to IXP_BUF .......................................................................40

9 Pool Management Fields............................................................................................................41

10 IXP_BUF: IX_MBUF Structure...................................................................................................41

11 IXP_BUF: ix_ctrl Structure..........................................................................................................42

12 IXP_BUF: NPE Shared Structure ...............................................................................................43

13 Internal Mapping of IX_MBUF to the Shared NPE Structure......................................................44

14 Buffer Transmission for a Scheduled Port..................................................................................58

15 IxAtmdAccScheduleTable Structure and Order Of ATM Cell .....................................................60

16 Tx Done Recycling — Using a Threshold Level .........................................................................61

17 Tx Done Recycling — Using a Polling Mechanism.....................................................................62

18 Tx Disconnect.............................................................................................................................63

19 Rx Using a Threshold Level........................................................................................................65

20 RX Using a Polling Mechanism ..................................................................................................66

21 Rx Disconnect.............................................................................................................................67

22 Services Provided by Ixatmm .....................................................................................................74

23 Configuration of Traffic Control Mechanism ...............................................................................76

24 Component Dependencies of IxAtmm........................................................................................77

25 Multiple VCs for Each Port, Multiplexed onto Single Line by the ATM Scheduler......................82

26 Translation of IxAtmScheduleTable Structure to ATM Tx Cell Ordering ....................................83

27 Basic IxCryptoAcc API Flow.......................................................................................................90

28 IxCryptoAcc API Call Process Flow for CCD Updates ...............................................................92

29 IxCryptoAcc Component Dependencies.....................................................................................94

30 IxCryptoAcc, NPE and IPSec Stack Scope................................................................................97

31 Relationship Between IPSec Protocol and Algorithms...............................................................98

32 ESP Packet Structure.................................................................................................................98

33 Authentication Header................................................................................................................99

34 ESP Data Flow .........................................................................................................................100

35 AH Data Flow ...........................................................................................................................101

36 IPSec API Call Flow .................................................................................................................102

37 CCM Operation Flow................................................................................................................104

38 CCM Operation on Data Packet...............................................................................................104

39 AES CBC Encryption For MIC..................................................................................................105

40 AES CTR Encryption For Payload and MIC.............................................................................105

41 WEP Frame with Request Parameters.....................................................................................107

42 WEP Perform API Call Flow.....................................................................................................109

43 ixDmaAcc Dependencies .........................................................................................................116

44 IxDmaAcc Component Overview..............................................................................................117

45 IxDmaAcc Control Flow............................................................................................................124

46 IxDmaAcc Initialization .............................................................................................................125

47 DMA Transfer Operation ..........................................................................................................126

48 Ethernet Access Layers Block Diagram ...................................................................................133

49 Ethernet Transmit Frame API Overview...................................................................................134

50 Ethernet Transmit Frame Data Buffer Flow..............................................................................136

51 Ethernet Receive Frame API Overview....................................................................................138

52 Ethernet Receive Plane Data Buffer Flow................................................................................142

53 IxEthAcc and Secondary Components.....................................................................................144

54 Example Network Diagram for MAC Address Learning and Filtering with Two Ports..............157

55 Egress VLAN Control Path for Untagged Frames....................................................................168

56 QoS on Receive for 802.1Q Tagged Frames...........................................................................170

57 QoS on Receive for Untagged Frames ....................................................................................171

58 AP-STA and AP-AP Modes......................................................................................................173

59 HSS/HDLC Access Overview...................................................................................................192

60 T1 Tx Signal Format.................................................................................................................194

61 IxHssAcc Component Dependencies.......................................................................................196

62 Channelized Connect ...............................................................................................................201

63 Channelized Transmit and Receive..........................................................................................203

64 Packetized Connect..................................................................................................................206

65 Packetized Transmit.................................................................................................................208

66 Packetized Receive..................................................................................................................210

67 HSS Packetized Receive Buffering ..........................................................................................213

68 HSS Packetized Transmit Buffering .........................................................................................214

69 HSS Channelized Receive Operation ......................................................................................216

70 HSS Channelized Transmit Operation .....................................................................................217

71 Message from Intel XScale® Core Software Client to an NPE.................................................228

72 Message with Response from Intel XScale® Core Software Client to an NPE ........................229

73 Receiving Unsolicited Messages from NPE to Software Client................................................230

74 ixNpeMh Component Dependencies........................................................................................231

75 IxParityENAcc Dependency Diagram.......................................................................................238

76 Parity Error Notification Sequence ...........................................................................................239

77 Data Abort with No Parity Error ................................................................................................243

78 Parity Error with No Data Abort ................................................................................................243

79 Data Abort followed by Unrelated Parity Error Notification.......................................................244

80 Unrelated Parity Error Followed by Data Abort.........................................................................244

81 Data Abort Caused by Parity Error ...........................................................................................245

82 Parity Error Notification Followed by Related Data Abort.........................................................245

83 Data Abort with both Related and Unrelated Parity Errors.......................................................246

84 IxPerfProfAcc Dependencies....................................................................................................251

85 IxPerfProfAcc Component API .................................................................................................253

86 Display Performance Counters.................................................................................................255

87 Display Clock Counter..............................................................................................................256

88 Display Xcycle Measurement ...................................................................................................264