Page 1

Intel® IXP2800 Network

Processor

Hardware Reference Manual

August 2004

Order Number: 278882-010

Page 2

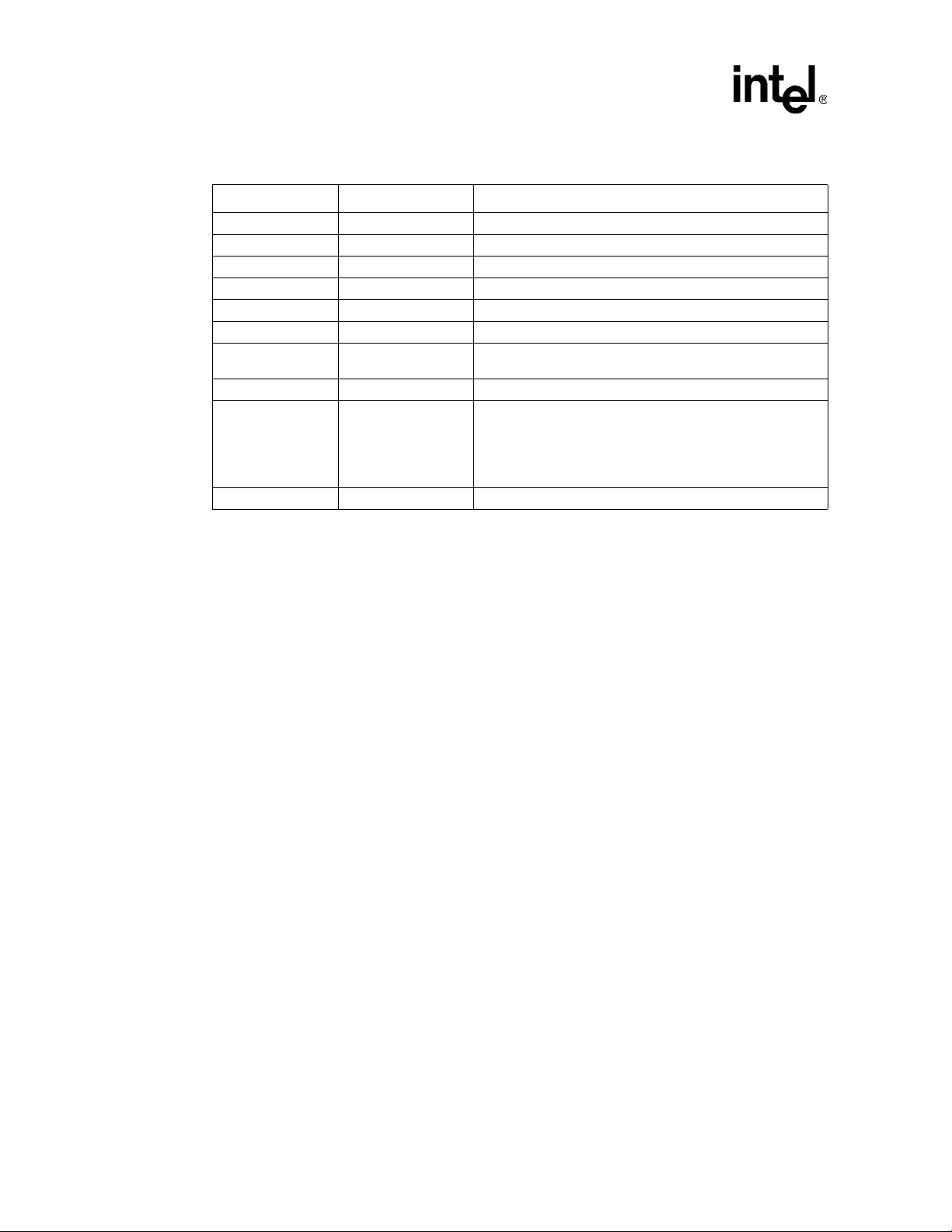

Revision History

Date Revision Description

March 2002 001 First release for IXP2800 Customer Information Book V 0.4

May 2002 002 Update for the IXA SDK 3.0 release.

August 2002 003 Update for the IXA SDK 3.0 Pre-Release 4.

November 2002 004 Update for the IXA SDK 3.0 Pre-Release 5.

May 2003 005 Update for the IXA SDK 3.1 Alpha Release

September 2003 006 Update for the IXA SDK 3.5 Pre-Release 1

October 2003 007 Added information about Receiver and Transmitter Interoperation with Framers and Switch Fabrics.

January 2004 008 Updated for new trademark usage: Intel XScale® technology.

May 2004 009 Updated Sections 6.5.2, 8.5.2.2, 9.2.2.1, 9.3.1, 9.3.3.2, 9.5.1.4, 9.5.3.4, and 10.3.1. Updated Figure 123 and Timing Diagrams in Figures 43, 44, 46, 47, 50, 51, 54, and 55. Added Chapter 11, “Performance Monitor Unit”.

August 2004 010 Preparation for web posting.

INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY RELATING TO SALE AND/OR USE OF INTEL PRODUCTS, INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT, OR OTHER INTELLECTUAL PROPERTY RIGHT.

Intel Corporation may have patents or pending patent applications, trademarks, copyrights, or other intellectual property rights that relate to the presented subject matter. The furnishing of documents and other materials and information does not provide any license, express or implied, by estoppel or otherwise, to any such patents, trademarks, copyrights, or other intellectual property rights.

Intel products are not intended for use in medical, life saving, life sustaining, critical control or safety systems, or in nuclear facility applications.

Intel may make changes to specifications and product descriptions at any time, without notice.

Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them.

The IXP2800 Network Processor may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request.

Except as permitted by such license, no part of this document may be reproduced, stored in a retrieval system, or transmitted in any form or by any means without the express written consent of Intel Corporation.

Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order.

Copies of documents which have an ordering number and are referenced in this document, or other Intel literature may be obtained by calling 1-800-548-4725 or by visiting Intel's website at http://www.intel.com.

Intel and XScale are registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

*Other names and brands may be claimed as the property of others.

Copyright © 2004, Intel Corporation.


Page 3

Contents


1 Introduction.................................................................................................................................. 25

1.1 About This Document ......................................................................................................... 25

1.2 Related Documentation ...................................................................................................... 25

1.3 Terminology ........................................................................................................................26

2 Technical Description ................................................................................................................. 27

2.1 Overview............................................................................................................................. 27

2.2 Intel XScale® Core Microarchitecture ................................................................................. 30

2.2.1 ARM* Compatibility................................................................................................30

2.2.2 Features................................................................................................................. 30

2.2.2.1 Multiply/Accumulate (MAC).................................................................... 30

2.2.2.2 Memory Management ............................................................................ 30

2.2.2.3 Instruction Cache ................................................................................... 30

2.2.2.4 Branch Target Buffer..............................................................................31

2.2.2.5 Data Cache ............................................................................................31

2.2.2.6 Interrupt Controller ................................................................................. 31

2.2.2.7 Address Map.......................................................................................... 32

2.3 Microengines ......................................................................................................................33

2.3.1 Microengine Bus Arrangement ..............................................................................35

2.3.2 Control Store.......................................................................................................... 35

2.3.3 Contexts.................................................................................................................35

2.3.4 Datapath Registers ................................................................................................ 37

2.3.4.1 General-Purpose Registers (GPRs) ......................................................37

2.3.4.2 Transfer Registers ................................................................................. 37

2.3.4.3 Next Neighbor Registers........................................................................38

2.3.4.4 Local Memory ....................................................................................... 39

2.3.5 Addressing Modes ................................................................................................. 41

2.3.5.1 Context-Relative Addressing Mode .......................................................41

2.3.5.2 Absolute Addressing Mode .................................................................... 42

2.3.5.3 Indexed Addressing Mode ..................................................................... 42

2.3.6 Local CSRs............................................................................................................ 43

2.3.7 Execution Datapath ............................................................................................... 43

2.3.7.1 Byte Align............................................................................................... 43

2.3.7.2 CAM ....................................................................................................... 45

2.3.8 CRC Unit................................................................................................................ 48

2.3.9 Event Signals......................................................................................................... 49

2.4 DRAM .................................................................................................................................50

2.4.1 Size Configuration ................................................................................................. 50

2.4.2 Read and Write Access ......................................................................................... 51

2.5 SRAM .................................................................................................................................51

2.5.1 QDR Clocking Scheme ..........................................................................................52

2.5.2 SRAM Controller Configurations............................................................................52

2.5.3 SRAM Atomic Operations ......................................................................................53

2.5.4 Queue Data Structure Commands ........................................................................54

2.5.5 Reference Ordering ............................................................................................... 54

2.5.5.1 Reference Order Tables ........................................................................ 54

2.5.5.2 Microengine Software Restrictions to Maintain Ordering.......................56


Page 4


2.6 Scratchpad Memory............................................................................................................56

2.6.1 Scratchpad Atomic Operations .............................................................................. 57

2.6.2 Ring Commands .................................................................................................... 57

2.7 Media and Switch Fabric Interface ..................................................................................... 59

2.7.1 SPI-4......................................................................................................................60

2.7.2 CSIX ...................................................................................................................... 61

2.7.3 Receive.................................................................................................................. 61

2.7.3.1 RBUF ..................................................................................................... 62

2.7.3.1.1 SPI-4 and the RBUF .............................................................. 62

2.7.3.1.2 CSIX and RBUF..................................................................... 63

2.7.3.2 Full Element List .................................................................................... 63

2.7.3.3 RX_THREAD_FREELIST...................................................................... 63

2.7.3.4 Receive Operation Summary................................................................. 64

2.7.4 Transmit................................................................................................................. 65

2.7.4.1 TBUF...................................................................................................... 65

2.7.4.1.1 SPI-4 and TBUF..................................................................... 66

2.7.4.1.2 CSIX and TBUF ..................................................................... 67

2.7.4.2 Transmit Operation Summary................................................................ 67

2.7.5 The Flow Control Interface .................................................................................... 68

2.7.5.1 SPI-4...................................................................................................... 68

2.7.5.2 CSIX....................................................................................................... 68

2.8 Hash Unit............................................................................................................................ 69

2.9 PCI Controller ..................................................................................................................... 71

2.9.1 Target Access........................................................................................................ 71

2.9.2 Master Access ....................................................................................................... 71

2.9.3 DMA Channels....................................................................................................... 71

2.9.3.1 DMA Descriptor...................................................................................... 72

2.9.3.2 DMA Channel Operation........................................................................ 73

2.9.3.3 DMA Channel End Operation ................................................................ 74

2.9.3.4 Adding Descriptors to an Unterminated Chain....................................... 74

2.9.4 Mailbox and Message Registers............................................................................ 74

2.9.5 PCI Arbiter ............................................................................................................. 75

2.10 Control and Status Register Access Proxy......................................................................... 76

2.11 Intel XScale® Core Peripherals .......................................................................................... 76

2.11.1 Interrupt Controller................................................................................................. 76

2.11.2 Timers....................................................................................................................77

2.11.3 General Purpose I/O.............................................................................................. 77

2.11.4 Universal Asynchronous Receiver/Transmitter...................................................... 77

2.11.5 Slowport................................................................................................................. 77

2.12 I/O Latency ......................................................................................................................... 78

2.13 Performance Monitor .......................................................................................................... 78

3 Intel XScale® Core ....................................................................................................................... 79

3.1 Introduction ......................................................................................................................... 79

3.2 Features.............................................................................................................................. 80

3.2.1 Multiply/ACcumulate (MAC)................................................................................... 80

3.2.2 Memory Management............................................................................................ 80

3.2.3 Instruction Cache................................................................................................... 81

3.2.4 Branch Target Buffer (BTB) ................................................................................... 81

3.2.5 Data Cache............................................................................................................ 81

3.2.6 Performance Monitoring ........................................................................................ 81


Page 5


3.2.7 Power Management...............................................................................................81

3.2.8 Debugging ............................................................................................................. 81

3.2.9 JTAG...................................................................................................................... 81

3.3 Memory Management.........................................................................................................82

3.3.1 Architecture Model ................................................................................................. 82

3.3.1.1 Version 4 versus Version 5 .................................................................... 82

3.3.1.2 Memory Attributes.................................................................................. 82

3.3.1.2.1 Page (P) Attribute Bit ............................................................. 82

3.3.1.2.2 Instruction Cache ................................................................... 83

3.3.1.2.3 Data Cache and Write Buffer .................................................83

3.3.1.2.4 Details on Data Cache and Write Buffer Behavior................. 83

3.3.1.2.5 Memory Operation Ordering ..................................................84

3.3.2 Exceptions .............................................................................................................84

3.3.3 Interaction of the MMU, Instruction Cache, and Data Cache.................................85

3.3.4 Control ...................................................................................................................85

3.3.4.1 Invalidate (Flush) Operation...................................................................85

3.3.4.2 Enabling/Disabling ................................................................................. 85

3.3.4.3 Locking Entries ...................................................................................... 86

3.3.4.4 Round-Robin Replacement Algorithm ...................................................87

3.4 Instruction Cache................................................................................................................ 88

3.4.1 Instruction Cache Operation .................................................................................. 89

3.4.1.1 Operation when Instruction Cache is Enabled.......................................89

3.4.1.2 Operation when Instruction Cache is Disabled ...................................... 90

3.4.1.3 Fetch Policy ........................................................................................... 90

3.4.1.4 Round-Robin Replacement Algorithm ...................................................90

3.4.1.5 Parity Protection..................................................................................... 91

3.4.1.6 Instruction Cache Coherency.................................................................91

3.4.2 Instruction Cache Control ...................................................................................... 92

3.4.2.1 Instruction Cache State at Reset ...........................................................92

3.4.2.2 Enabling/Disabling ................................................................................. 92

3.4.2.3 Invalidating the Instruction Cache.......................................................... 92

3.4.2.4 Locking Instructions in the Instruction Cache ........................................ 92

3.4.2.5 Unlocking Instructions in the Instruction Cache .....................................94

3.5 Branch Target Buffer (BTB) ................................................................................................ 94

3.5.1 Branch Target Buffer Operation.............................................................................94

3.5.1.1 Reset......................................................................................................95

3.5.2 Update Policy......................................................................................................... 96

3.5.3 BTB Control ...........................................................................................................96

3.5.3.1 Disabling/Enabling ................................................................................. 96

3.5.3.2 Invalidation............................................................................................. 96

3.6 Data Cache.........................................................................................................................96

3.6.1 Overviews ..............................................................................................................97

3.6.1.1 Data Cache Overview ............................................................................ 97

3.6.1.2 Mini-Data Cache Overview ....................................................................98

3.6.1.3 Write Buffer and Fill Buffer Overview..................................................... 99

3.6.2 Data Cache and Mini-Data Cache Operation ........................................................ 99

3.6.2.1 Operation when Caching is Enabled...................................................... 99

3.6.2.2 Operation when Data Caching is Disabled ............................................99

3.6.2.3 Cache Policies ..................................................................................... 100

3.6.2.3.1 Cacheability ......................................................................... 100

3.6.2.3.2 Read Miss Policy .................................................................100

3.6.2.3.3 Write Miss Policy..................................................................101


Page 6


3.6.2.3.4 Write-Back versus Write-Through........................................ 101

3.6.2.4 Round-Robin Replacement Algorithm ................................................. 102

3.6.2.5 Parity Protection................................................................................... 102

3.6.2.6 Atomic Accesses.................................................................................. 102

3.6.3 Data Cache and Mini-Data Cache Control .......................................................... 103

3.6.3.1 Data Memory State After Reset........................................................... 103

3.6.3.2 Enabling/Disabling ............................................................................... 103

3.6.3.3 Invalidate and Clean Operations.......................................................... 103

3.6.3.3.1 Global Clean and Invalidate Operation ................................ 104

3.6.4 Reconfiguring the Data Cache as Data RAM ...................................................... 105

3.6.5 Write Buffer/Fill Buffer Operation and Control ..................................................... 106

3.7 Configuration .................................................................................................................... 106

3.8 Performance Monitoring ................................................................................................... 107

3.8.1 Performance Monitoring Events .......................................................................... 107

3.8.1.1 Instruction Cache Efficiency Mode....................................................... 108

3.8.1.2 Data Cache Efficiency Mode................................................................ 109

3.8.1.3 Instruction Fetch Latency Mode........................................................... 109

3.8.1.4 Data/Bus Request Buffer Full Mode .................................................... 109

3.8.1.5 Stall/Writeback Statistics...................................................................... 110

3.8.1.6 Instruction TLB Efficiency Mode .......................................................... 111

3.8.1.7 Data TLB Efficiency Mode ................................................................... 111

3.8.2 Multiple Performance Monitoring Run Statistics .................................................. 111

3.9 Performance Considerations ............................................................................................ 111

3.9.1 Interrupt Latency.................................................................................................. 112

3.9.2 Branch Prediction ................................................................................................ 112

3.9.3 Addressing Modes ............................................................................................... 113

3.9.4 Instruction Latencies............................................................................................ 113

3.9.4.1 Performance Terms ............................................................................. 113

3.9.4.2 Branch Instruction Timings .................................................................. 115

3.9.4.3 Data Processing Instruction Timings ................................................... 115

3.9.4.4 Multiply Instruction Timings.................................................................. 116

3.9.4.5 Saturated Arithmetic Instructions......................................................... 117

3.9.4.6 Status Register Access Instructions .................................................... 118

3.9.4.7 Load/Store Instructions........................................................................ 118

3.9.4.8 Semaphore Instructions....................................................................... 118

3.9.4.9 Coprocessor Instructions ..................................................................... 119

3.9.4.10 Miscellaneous Instruction Timing......................................................... 119

3.9.4.11 Thumb Instructions .............................................................................. 119

3.10 Test Features....................................................................................................................119

3.10.1 IXP2800 Network Processor Endianness............................................................ 120

3.10.1.1 Read and Write Transactions Initiated by the Intel XScale® Core ...... 121

3.10.1.1.1 Reads Initiated by the Intel XScale® Core ........................ 121

3.10.1.1.2 The Intel XScale® Core Writing to the IXP2800 Network Processor .................................................................. 123

3.11 Intel XScale® Core Gasket Unit ....................................................................................... 125

3.11.1 Overview.............................................................................................................. 125

3.11.2 Intel XScale® Core Gasket Functional Description ............................................. 127

3.11.2.1 Command Memory Bus to Command Push/Pull Conversion .............. 127

3.11.3 CAM Operation .................................................................................................... 127

3.11.4 Atomic Operations ............................................................................................... 128

3.11.4.1 Summary of Rules for the Atomic Command Regarding I/O ............... 129

3.11.4.2 Intel XScale® Core Access to SRAM Q-Array..................................... 129


Page 7


3.11.5 I/O Transaction ....................................................................................................130

3.11.6 Hash Access ........................................................................................................ 130

3.11.7 Gasket Local CSR ...............................................................................................131

3.11.8 Interrupt ...............................................................................................................132

3.12 Intel XScale® Core Peripheral Interface........................................................................... 134

3.12.1 XPI Overview .......................................................................................................134

3.12.1.1 Data Transfers ..................................................................................... 135

3.12.1.2 Data Alignment .................................................................................... 135

3.12.1.3 Address Spaces for XPI Internal Devices ............................................ 136

3.12.2 UART Overview ...................................................................................................137

3.12.3 UART Operation .................................................................................................. 138

3.12.3.1 UART FIFO OPERATION....................................................................138

3.12.3.1.1 UART FIFO Interrupt Mode Operation – Receiver Interrupt .................................................................... 138

3.12.3.1.2 FIFO Polled Mode Operation ............................................. 139

3.12.4 Baud Rate Generator...........................................................................................139

3.12.5 General Purpose I/O (GPIO) ............................................................................... 140

3.12.6 Timers.................................................................................................................. 141

3.12.6.1 Timer Operation ...................................................................................141

3.12.7 Slowport Unit ....................................................................................................... 142

3.12.7.1 PROM Device Support.........................................................................143

3.12.7.2 Microprocessor Interface Support for the Framer ................................ 143

3.12.7.3 Slowport Unit Interfaces.......................................................................144

3.12.7.4 Address Space.....................................................................................145

3.12.7.5 Slowport Interfacing Topology ............................................................. 145

3.12.7.6 Slowport 8-Bit Device Bus Protocols ...................................................146

3.12.7.6.1 Mode 0 Single Write Transfer for Fixed-Timed Device ......147

3.12.7.6.2 Mode 0 Single Write Transfer for Self-Timing Device........ 148

3.12.7.6.3 Mode 0 Single Read Transfer for Fixed-Timed Device...... 149

3.12.7.6.4 Single Read Transfer for a Self-Timing Device..................150

3.12.7.7 SONET/SDH Microprocessor Access Support ....................................150

3.12.7.7.1 Mode 1: 16-Bit Microprocessor Interface Support with 16-Bit Address Lines................................................................151

3.12.7.7.2 Mode 2: Interface with 8 Data Bits and 11 Address Bits ....155

3.12.7.7.3 Mode 3: Support for the Intel and AMCC* 2488 Mbps SONET/SDH Microprocessor Interface ...................................157

4 Microengines............................................................................................................................. 167

4.1 Overview........................................................................................................................... 167

4.1.1 Control Store........................................................................................................ 169

4.1.2 Contexts...............................................................................................................169

4.1.3 Datapath Registers .............................................................................................. 171

4.1.3.1 General-Purpose Registers (GPRs) ....................................................171

4.1.3.2 Transfer Registers ............................................................................... 171

4.1.3.3 Next Neighbor Registers......................................................................172

4.1.3.4 Local Memory ...................................................................................... 172

4.1.4 Addressing Modes ............................................................................................... 173

4.1.4.1 Context-Relative Addressing Mode .....................................................173

4.1.4.2 Absolute Addressing Mode .................................................................. 174

4.1.4.3 Indexed Addressing Mode ...................................................................174

4.2 Local CSRs....................................................................................................................... 174

4.3 Execution Datapath .......................................................................................................... 174

Page 8

4.3.1 Byte Align............................................................................................................. 174

4.3.2 CAM..................................................................................................................... 176

4.4 CRC Unit........................................................................................................................... 179

4.5 Event Signals.................................................................................................................... 180

4.5.1 Microengine Endianness ..................................................................................... 181

4.5.1.1 Read from RBUF (64 Bits)................................................................... 181

4.5.1.2 Write to TBUF ...................................................................................... 182

4.5.1.3 Read/Write from/to SRAM ................................................................... 182

4.5.1.4 Read/Write from/to DRAM ................................................................... 182

4.5.1.5 Read/Write from/to SHaC and Other CSRs......................................... 182

4.5.1.6 Write to Hash Unit................................................................................ 183

4.5.2 Media Access ...................................................................................................... 183

4.5.2.1 Read from RBUF ................................................................................. 184

4.5.2.2 Write to TBUF ...................................................................................... 185

4.5.2.3 TBUF to SPI-4 Transfer ....................................................................... 186

5 DRAM.......................................................................................................................................... 187

5.1 Overview........................................................................................................................... 187

5.2 Size Configuration ............................................................................................................188

5.3 DRAM Clocking ................................................................................................................189

5.4 Bank Policy ....................................................................................................................... 190

5.5 Interleaving ....................................................................................................................... 191

5.5.1 Three Channels Active (3-Way Interleave).......................................................... 191

5.5.2 Two Channels Active (2-Way Interleave) ............................................................ 193

5.5.3 One Channel Active (No Interleave) .................................................................... 193

5.5.4 Interleaving Across RDRAMs and Banks ............................................................ 194

5.6 Parity and ECC................................................................................................................. 194

5.6.1 Parity and ECC Disabled ..................................................................................... 194

5.6.2 Parity Enabled ..................................................................................................... 195

5.6.3 ECC Enabled ....................................................................................................... 195

5.6.4 ECC Calculation and Syndrome .......................................................................... 196

5.7 Timing Configuration.........................................................................................................196

5.8 Microengine Signals .........................................................................................................197

5.9 Serial Port......................................................................................................................... 197

5.10 RDRAM Controller Block Diagram.................................................................................... 198

5.10.1 Commands .......................................................................................................... 199

5.10.2 DRAM Write......................................................................................................... 199

5.10.2.1 Masked Write....................................................................................... 199

5.10.3 DRAM Read......................................................................................................... 200

5.10.4 CSR Write............................................................................................................ 200

5.10.5 CSR Read............................................................................................................ 200

5.10.6 Arbitration ............................................................................................................ 201

5.10.7 Reference Ordering ............................................................................................. 201

5.11 DRAM Push/Pull Arbiter ................................................................................................... 201

5.11.1 Arbiter Push/Pull Operation ................................................................................. 202

5.11.2 DRAM Push Arbiter Description .......................................................................... 203

5.12 DRAM Pull Arbiter Description.......................................................................................... 204

6 SRAM Interface.......................................................................................................................... 207

6.1 Overview........................................................................................................................... 207

6.2 SRAM Interface Configurations ........................................................................................ 208

Page 9

6.2.1 Internal Interface..................................................................................................209

6.2.2 Number of Channels............................................................................................209

6.2.3 Coprocessor and/or SRAMs Attached to a Channel............................................ 209

6.3 SRAM Controller Configurations.......................................................................................209

6.4 Command Overview .........................................................................................................211

6.4.1 Basic Read/Write Commands.............................................................................. 211

6.4.2 Atomic Operations ............................................................................................... 211

6.4.3 Queue Data Structure Commands ......................................................................213

6.4.3.1 Read_Q_Descriptor Commands.......................................................... 216

6.4.3.2 Write_Q_Descriptor Commands ..........................................................216

6.4.3.3 ENQ and DEQ Commands .................................................................. 217

6.4.4 Ring Data Structure Commands.......................................................................... 217

6.4.5 Journaling Commands......................................................................................... 217

6.4.6 CSR Accesses ..................................................................................................... 217

6.5 Parity................................................................................................................................. 217

6.6 Address Map..................................................................................................................... 218

6.7 Reference Ordering .......................................................................................................... 219

6.7.1 Reference Order Tables ...................................................................................... 219

6.7.2 Microcode Restrictions to Maintain Ordering ....................................................... 220

6.8 Coprocessor Mode ........................................................................................................... 221

7 SHaC — Unit Expansion ...........................................................................................................225

7.1 Overview........................................................................................................................... 225

7.1.1 SHaC Unit Block Diagram.................................................................................... 225

7.1.2 Scratchpad........................................................................................................... 227

7.1.2.1 Scratchpad Description........................................................................227

7.1.2.2 Scratchpad Interface............................................................................229

7.1.2.2.1 Command Interface .............................................................229

7.1.2.2.2 Push/Pull Interface...............................................................229

7.1.2.2.3 CSR Bus Interface ............................................................... 229

7.1.2.2.4 Advanced Peripherals Bus Interface (APB) ......................... 229

7.1.2.3 Scratchpad Block Level Diagram......................................................... 229

7.1.2.3.1 Scratchpad Commands .......................................................230

7.1.2.3.2 Ring Commands ..................................................................231

7.1.2.3.3 Clocks and Reset.................................................................235

7.1.2.3.4 Reset Registers ...................................................................235

7.1.3 Hash Unit .............................................................................................................236

7.1.3.1 Hashing Operation ............................................................................... 237

7.1.3.2 Hash Algorithm .................................................................................... 239

8 Media and Switch Fabric Interface...........................................................................................241

8.1 Overview........................................................................................................................... 241

8.1.1 SPI-4.................................................................................................................... 243

8.1.2 CSIX ....................................................................................................................246

8.1.3 CSIX/SPI-4 Interleave Mode................................................................................246

8.2 Receive............................................................................................................................. 247

8.2.1 Receive Pins........................................................................................................248

8.2.2 RBUF ...................................................................................................................248

8.2.2.1 SPI-4 .................................................................................................... 250

8.2.2.2 CSIX.....................................................................................................253

8.2.3 Full Element List .................................................................................................. 255

8.2.4 Rx_Thread_Freelist_# ......................................................................................... 255

Page 10

8.2.5 Rx_Thread_Freelist_Timeout_# .......................................................................... 256

8.2.6 Receive Operation Summary............................................................................... 256

8.2.7 Receive Flow Control Status ............................................................................... 258

8.2.7.1 SPI-4.................................................................................................... 258

8.2.7.2 CSIX..................................................................................................... 259

8.2.7.2.1 Link-Level............................................................................. 259

8.2.7.2.2 Virtual Output Queue ........................................................... 260

8.2.8 Parity.................................................................................................................... 260

8.2.8.1 SPI-4.................................................................................................... 260

8.2.8.2 CSIX..................................................................................................... 261

8.2.8.2.1 Horizontal Parity................................................................... 261

8.2.8.2.2 Vertical Parity....................................................................... 261

8.2.9 Error Cases.......................................................................................................... 261

8.3 Transmit............................................................................................................................ 262

8.3.1 Transmit Pins....................................................................................................... 262

8.3.2 TBUF ................................................................................................................... 263

8.3.2.1 SPI-4.................................................................................................... 266

8.3.2.2 CSIX..................................................................................................... 267

8.3.3 Transmit Operation Summary.............................................................................. 268

8.3.3.1 SPI-4.................................................................................................... 268

8.3.3.2 CSIX..................................................................................................... 269

8.3.3.3 Transmit Summary............................................................................... 270

8.3.4 Transmit Flow Control Status .............................................................................. 270

8.3.4.1 SPI-4.................................................................................................... 271

8.3.4.2 CSIX..................................................................................................... 273

8.3.4.2.1 Link-Level............................................................................. 273

8.3.4.2.2 Virtual Output Queue ........................................................... 273

8.3.5 Parity.................................................................................................................... 273

8.3.5.1 SPI-4.................................................................................................... 273

8.3.5.2 CSIX..................................................................................................... 274

8.3.5.2.1 Horizontal Parity................................................................... 274

8.3.5.2.2 Vertical Parity....................................................................... 274

8.4 RBUF and TBUF Summary .............................................................................................. 274

8.5 CSIX Flow Control Interface ............................................................................................. 275

8.5.1 TXCSRB and RXCSRB Signals .......................................................................... 275

8.5.2 FCIFIFO and FCEFIFO Buffers ........................................................................... 276

8.5.2.1 Full Duplex CSIX.................................................................................. 277

8.5.2.2 Simplex CSIX....................................................................................... 278

8.5.3 TXCDAT/RXCDAT, TXCSOF/RXCSOF, TXCPAR/RXCPAR,

and TXCFC/RXCFC Signals................................................................................ 280

8.6 Deskew and Training ........................................................................................................ 280

8.6.1 Data Training Pattern........................................................................................... 282

8.6.2 Flow Control Training Pattern .............................................................................. 282

8.6.3 Use of Dynamic Training ..................................................................................... 283

8.7 CSIX Startup Sequence.................................................................................................... 287

8.7.1 CSIX Full Duplex ................................................................................................. 287

8.7.1.1 Ingress IXP2800 Network Processor................................................... 287

8.7.1.2 Egress IXP2800 Network Processor.................................................... 287

8.7.1.3 Single IXP2800 Network Processor..................................................... 288

8.7.2 CSIX Simplex....................................................................................................... 288

8.7.2.1 Ingress IXP2800 Network Processor................................................... 288

8.7.2.2 Egress IXP2800 Network Processor.................................................... 289

Page 11

8.7.2.3 Single IXP2800 Network Processor.....................................................289

8.8 Interface to Command and Push and Pull Buses ............................................................. 290

8.8.1 RBUF or MSF CSR to Microengine S_TRANSFER_IN Register for Instruction:.291

8.8.2 Microengine S_TRANSFER_OUT Register to TBUF or MSF CSR for Instruction:..................................................................................... 291

8.8.3 Microengine to MSF CSR for Instruction: ............................................................ 291

8.8.4 From RBUF to DRAM for Instruction: .................................................................. 291

8.8.5 From DRAM to TBUF for Instruction:................................................................... 292

8.9 Receiver and Transmitter Interoperation with Framers and Switch Fabrics .....................292

8.9.1 Receiver and Transmitter Configurations ............................................................293

8.9.1.1 Simplex Configuration..........................................................................293

8.9.1.2 Hybrid Simplex Configuration ..............................................................294

8.9.1.3 Dual Network Processor Full Duplex Configuration ............................. 295

8.9.1.4 Single Network Processor Full Duplex Configuration (SPI-4.2)........... 296

8.9.1.5 Single Network Processor, Full Duplex Configuration (SPI-4.2 and CSIX-L1) .........................................................................297

8.9.2 System Configurations.........................................................................................297

8.9.2.1 Framer, Single Network Processor Ingress and Egress, and Fabric Interface Chip............................................................................ 298

8.9.2.2 Framer, Dual Network Processor Ingress, Single Network Processor Egress, and Fabric Interface Chip ........................298

8.9.2.3 Framer, Single Network Processor Ingress and Egress, and CSIX-L1 Chips for Translation and Fabric Interface ............................299

8.9.2.4 CPU Complex, Network Processor, and Fabric Interface Chip ...........299

8.9.2.5 Framer, Single Network Processor, Co-Processor, and Fabric Interface Chip............................................................................ 300

8.9.3 SPI-4.2 Support ................................................................................................... 301

8.9.3.1 SPI-4.2 Receiver..................................................................................301

8.9.3.2 SPI-4.2 Transmitter..............................................................................302

8.9.4 CSIX-L1 Protocol Support ...................................................................................303

8.9.4.1 CSIX-L1 Interface Reference Model: Traffic Manager and Fabric Interface Chip.......................................................................................303

8.9.4.2 Intel® IXP2800 Support of the CSIX-L1 Protocol ................................304

8.9.4.2.1 Mapping to 16-Bit Wide DDR LVDS .................................... 304

8.9.4.2.2 Support for Dual Chip, Full-Duplex Operation ..................... 305

8.9.4.2.3 Support for Simplex Operation............................................. 306

8.9.4.2.4 Support for Hybrid Simplex Operation .................................307

8.9.4.2.5 Support for Dynamic De-Skew Training...............................308

8.9.4.3 CSIX-L1 Protocol Receiver Support ....................................................309

8.9.4.4 CSIX-L1 Protocol Transmitter Support ................................................310

8.9.4.5 Implementation of a Bridge Chip to CSIX-L1 .......................................311

8.9.5 Dual Protocol (SPI and CSIX-L1) Support ...........................................................312

8.9.5.1 Dual Protocol Receiver Support...........................................................312

8.9.5.2 Dual Protocol Transmitter Support....................................................... 312

8.9.5.3 Implementation of a Bridge Chip to CSIX-L1 and SPI-4.2 ................... 313

8.9.6 Transmit State Machine .......................................................................................314

8.9.6.1 SPI-4.2 Transmitter State Machine...................................................... 314

8.9.6.2 Training Transmitter State Machine..................................................... 315

8.9.6.3 CSIX-L1 Transmitter State Machine .................................................... 315

8.9.7 Dynamic De-Skew ...............................................................................................316

8.9.8 Summary of Receiver and Transmitter Signals ...................................................317

Page 12

9 PCI Unit....................................................................................................................................... 319

9.1 Overview........................................................................................................................... 319

9.2 PCI Pin Protocol Interface Block....................................................................................... 321

9.2.1 PCI Commands ................................................................................................... 322

9.2.2 IXP2800 Network Processor Initialization............................................................ 323

9.2.2.1 Initialization by the Intel XScale® Core................................................ 324

9.2.2.2 Initialization by a PCI Host................................................................... 324

9.2.3 PCI Type 0 Configuration Cycles......................................................................... 325

9.2.3.1 Configuration Write .............................................................................. 325

9.2.3.2 Configuration Read.............................................................................. 325

9.2.4 PCI 64-Bit Bus Extension .................................................................................... 325

9.2.5 PCI Target Cycles................................................................................................ 326

9.2.5.1 PCI Accesses to CSR.......................................................................... 326

9.2.5.2 PCI Accesses to DRAM....................................................................... 326

9.2.5.3 PCI Accesses to SRAM ....................................................................... 326

9.2.5.4 Target Write Accesses from the PCI Bus ............................................ 326

9.2.5.5 Target Read Accesses from the PCI Bus ............................................ 327

9.2.6 PCI Initiator Transactions .................................................................................... 327

9.2.6.1 PCI Request Operation........................................................................ 327

9.2.6.2 PCI Commands.................................................................................... 328

9.2.6.3 Initiator Write Transactions .................................................................. 328

9.2.6.4 Initiator Read Transactions.................................................................. 328

9.2.6.5 Initiator Latency Timer ......................................................................... 328

9.2.6.6 Special Cycle ....................................................................................... 329

9.2.7 PCI Fast Back-to-Back Cycles............................................................................. 329

9.2.8 PCI Retry ............................................................................................................. 329

9.2.9 PCI Disconnect .................................................................................................... 329

9.2.10 PCI Built-In System Test...................................................................................... 329

9.2.11 PCI Central Functions......................................................................................... 330

9.2.11.1 PCI Interrupt Inputs.............................................................................. 330

9.2.11.2 PCI Reset Output................................................................................. 330

9.2.11.3 PCI Internal Arbiter .............................................................................. 331

9.3 Slave Interface Block ........................................................................................................332

9.3.1 CSR Interface ...................................................................................................... 332

9.3.2 SRAM Interface ................................................................................................... 333

9.3.2.1 SRAM Slave Writes ............................................................................. 333

9.3.2.2 SRAM Slave Reads ............................................................................. 334

9.3.3 DRAM Interface ................................................................................................... 334

9.3.3.1 DRAM Slave Writes ............................................................................. 334

9.3.3.2 DRAM Slave Reads............................................................................. 335

9.3.4 Mailbox and Doorbell Registers........................................................................... 336

9.3.5 PCI Interrupt Pin .................................................................................................. 339

9.4 Master Interface Block ...................................................................................................... 340

9.4.1 DMA Interface...................................................................................................... 340

9.4.1.1 Allocation of the DMA Channels .......................................................... 341

9.4.1.2 Special Registers for Microengine Channels....................................... 341

9.4.1.3 DMA Descriptor.................................................................................... 342

9.4.1.4 DMA Channel Operation...................................................................... 343

9.4.1.5 DMA Channel End Operation .............................................................. 344

9.4.1.6 Adding Descriptor to an Unterminated Chain ...................................... 344

9.4.1.7 DRAM to PCI Transfer......................................................................... 344

9.4.1.8 PCI to DRAM Transfer......................................................................... 345

Page 13

9.4.2 Push/Pull Command Bus Target Interface........................................................... 345

9.4.2.1 Command Bus Master Access to Local Configuration Registers ........345

9.4.2.2 Command Bus Master Access to Local Control and Status Registers...................................................................................346

9.4.2.3 Command Bus Master Direct Access to PCI Bus ................................ 346

9.4.2.3.1 PCI Address Generation for IO and MEM Cycles................346

9.4.2.3.2 PCI Address Generation for Configuration Cycles...............347

9.4.2.3.3 PCI Address Generation for Special and IACK Cycles........ 347

9.4.2.3.4 PCI Enables ......................................................................... 347

9.4.2.3.5 PCI Command ..................................................................... 347

9.5 PCI Unit Error Behavior .................................................................................................... 348

9.5.1 PCI Target Error Behavior ................................................................................... 348

9.5.1.1 Target Access Has an Address Parity Error ........................................348

9.5.1.2 Initiator Asserts PCI_PERR_L in Response to One of Our Data Phases ................................................................................................. 348

9.5.1.3 Discard Timer Expires on a Target Read.............................................348

9.5.1.4 Target Access to the PCI_CSR_BAR Space Has Illegal Byte Enables........................................................................................ 348

9.5.1.5 Target Write Access Receives Bad Parity PCI_PAR with the Data .....349

9.5.1.6 SRAM Responds with a Memory Error on One or More Data Phases on a Target Read .................................................................................349

9.5.1.7 DRAM Responds with a Memory Error on One or More Data Phases on a Target Read .................................................................................349

9.5.2 As a PCI Initiator During a DMA Transfer ............................................................349

9.5.2.1 DMA Read from DRAM (Memory-to-PCI Transaction) Gets a Memory Error .......................................................................................349

9.5.2.2 DMA Read from SRAM (Descriptor Read) Gets a Memory Error........ 350

9.5.2.3 DMA from DRAM Transfer (Write to PCI) Receives PCI_PERR_L on PCI Bus................................................................................................350

9.5.2.4 DMA To DRAM (Read from PCI) Has Bad Data Parity ....................... 350

9.5.2.5 DMA Transfer Experiences a Master Abort (Time-Out) on PCI ...........351

9.5.2.6 DMA Transfer Receives a Target Abort Response During a Data Phase .......................................................................................... 351

9.5.2.7 DMA Descriptor Has a 0x0 Word Count (Not an Error) .......................351

9.5.3 As a PCI Initiator During a Direct Access from the Intel XScale® Core or Microengine .............................................................................351

9.5.3.1 Master Transfer Experiences a Master Abort (Time-Out) on PCI ........351

9.5.3.2 Master Transfer Receives a Target Abort Response During a Data Phase .......................................................................................351

9.5.3.3 Master from the Intel XScale® Core or Microengine Transfer (Write to PCI) Receives PCI_PERR_L on PCI Bus .............................352

9.5.3.4 Master Read from PCI (Read from PCI) Has Bad Data Parity ............352

9.5.3.5 Master Transfer Receives PCI_SERR_L from the PCI Bus ................352

9.5.3.6 Intel XScale® Core or Microengine Requests Direct Transfer when the PCI Bus is in Reset ........................................................................352

9.6 PCI Data Byte Lane Alignment ......................................................................................... 352

9.6.1 Endian for Byte Enable ........................................................................................ 355

10 Clocks and Reset....................................................................................................................... 359

10.1 Clocks ............................................................................................................................... 359

10.2 Synchronization Between Frequency Domains ................................................................363

10.3 Reset ................................................................................................................................364

10.3.1 Hardware Reset Using nRESET or PCI_RST_L .................................................364

10.3.2 PCI-Initiated Reset............................................................................................... 366

10.3.3 Watchdog Timer-Initiated Reset .......................................................................... 366

10.3.3.1 Slave Network Processor (Non-Central Function)............................... 367

10.3.3.2 Master Network Processor (PCI Host, Central Function) .................... 367

10.3.3.3 Master Network Processor (Central Function)..................................... 367

10.3.4 Software-Initiated Reset ...................................................................................... 367

10.3.5 Reset Removal Operation Based on CFG_PROM_BOOT.................................. 368

10.3.5.1 When CFG_PROM_BOOT is 1 (BOOT_PROM is Present) ................ 368

10.3.5.2 When CFG_PROM_BOOT is 0 (BOOT_PROM is Not Present) .........368

10.3.6 Strap Pins ............................................................................................................ 368

10.3.7 Powerup Reset Sequence ................................................................................... 370

10.4 Boot Mode ........................................................................................................................ 370

10.4.1 Flash ROM........................................................................................................... 372

10.4.2 PCI Host Download ............................................................................................. 372

10.5 Initialization ....................................................................................................................... 373

11 Performance Monitor Unit ........................................................................................................ 375

11.1 Introduction ....................................................................................................................... 375

11.1.1 Motivation for Performance Monitors................................................................... 375

11.1.2 Motivation for Choosing CHAP Counters ............................................................ 376

11.1.3 Functional Overview of CHAP Counters.............................................................. 377

11.1.4 Basic Operation of the Performance Monitor Unit ............................................... 378

11.1.5 Definition of CHAP Terminology .......................................................................... 379

11.1.6 Definition of Clock Domains................................................................................. 380

11.2 Interface and CSR Description ......................................................................................... 380

11.2.1 APB Peripheral .................................................................................................... 381

11.2.2 CAP Description .................................................................................................. 381

11.2.2.1 Selecting the Access Mode.................................................................. 381

11.2.2.2 PMU CSR ............................................................................................ 381

11.2.2.3 CAP Writes .......................................................................................... 381

11.2.2.4 CAP Reads .......................................................................................... 381

11.2.3 Configuration Registers ....................................................................................... 382

11.3 Performance Measurements ............................................................................................ 382

11.4 Events Monitored in Hardware ......................................................................................... 385

11.4.1 Queue Statistics Events....................................................................................... 385

11.4.1.1 Queue Latency..................................................................................... 385

11.4.1.2 Queue Utilization.................................................................................. 385

11.4.2 Count Events ....................................................................................................... 385

11.4.2.1 Hardware Block Execution Count ........................................................ 385

11.4.3 Design Block Select Definitions ........................................................................... 386

11.4.4 Null Event ............................................................................................................ 387

11.4.5 Threshold Events................................................................................................. 388

11.4.6 External Input Events........................................................................................... 389

11.4.6.1 XPI Events Target ID(000001) / Design Block #(0100) ....................... 389

11.4.6.2 SHaC Events Target ID(000010) / Design Block #(0101).................... 393

11.4.6.3 IXP2800 Network Processor MSF Events Target ID(000011) / Design Block #(0110)........................................................................... 396

11.4.6.4 Intel XScale® Core Events Target ID(000100) / Design Block #(0111)........................................................................... 402

11.4.6.5 PCI Events Target ID(000101) / Design Block #(1000) ....................... 405

11.4.6.6 ME00 Events Target ID(100000) / Design Block #(1001).................... 409

11.4.6.7 ME01 Events Target ID(100001) / Design Block #(1001).................... 410

11.4.6.8 ME02 Events Target ID(100010) / Design Block #(1001).................... 411

11.4.6.9 ME03 Events Target ID(100011) / Design Block #(1001).................... 411

11.4.6.10 ME04 Events Target ID(100100) / Design Block #(1001).................... 412

11.4.6.11 ME05 Events Target ID(100101) / Design Block #(1001).................... 412

11.4.6.12 ME06 Events Target ID(100110) / Design Block #(1001).................... 413

11.4.6.13 ME07 Events Target ID(100111) / Design Block #(1001).................... 413

11.4.6.14 ME10 Events Target ID(110000) / Design Block #(1010).................... 414

11.4.6.15 ME11 Events Target ID(110001) / Design Block #(1010).................... 414

11.4.6.16 ME12 Events Target ID(110010) / Design Block #(1010).................... 415

11.4.6.17 ME13 Events Target ID(110011) / Design Block #(1010).................... 415

11.4.6.18 ME14 Events Target ID(110100) / Design Block #(1010).................... 416

11.4.6.19 ME15 Events Target ID(110101) / Design Block #(1010).................... 416

11.4.6.20 ME16 Events Target ID(110110) / Design Block #(1010).................... 417

11.4.6.21 ME17 Events Target ID(110111) / Design Block #(1010).................... 417

11.4.6.22 SRAM DP1 Events Target ID(001001) / Design Block #(0010)...........418

11.4.6.23 SRAM DP0 Events Target ID(001010) / Design Block #(0010)...........418

11.4.6.24 SRAM CH3 Events Target ID(001011) / Design Block #(0010)...........420

11.4.6.25 SRAM CH2 Events Target ID(001100) / Design Block #(0010)...........421

11.4.6.26 SRAM CH1 Events Target ID(001101) / Design Block #(0010)...........421

11.4.6.27 SRAM CH0 Events Target ID(001110) / Design Block #(0010)...........422

11.4.6.28 DRAM DPLA Events Target ID(010010) / Design Block #(0011) ........423

11.4.6.29 DRAM DPSA Events Target ID(010011) / Design Block #(0011)........ 424

11.4.6.30 IXP2800 Network Processor DRAM CH2 Events Target ID(010100) / Design Block #(0011)........................................................................... 425

11.4.6.31 IXP2800 Network Processor DRAM CH1 Events Target ID(010101) / Design Block #(0011)........................................................................... 429

11.4.6.32 IXP2800 Network Processor DRAM CH0 Events Target ID(010110) / Design Block #(0011)........................................................................... 429

Figures

1 IXP2800 Network Processor Functional Block Diagram ............................................................ 28

2 IXP2800 Network Processor Detailed Diagram.......................................................................... 29

3 Intel XScale® Core 4-GB (32-Bit) Address Space ..................................................................... 32

4 Microengine Block Diagram........................................................................................................ 34

5 Context State Transition Diagram .............................................................................................. 36

6 Byte-Align Block Diagram........................................................................................................... 44

7 CAM Block Diagram ................................................................................................................... 46

8 Echo Clock Configuration ........................................................................................................... 52

9 Logical View of Rings ................................................................................................................. 57

10 Example System Block Diagram ................................................................................................ 59

11 Full-Duplex Block Diagram......................................................................................................... 60

12 Simplified MSF Receive Section Block Diagram........................................................................ 61

13 Simplified Transmit Section Block Diagram................................................................................ 65