
IBM System Storage DR550 Version 3.0 ------17 March 2006 Page 1

IBM® System Storage™ DR550 V3.0

Installation, Setup, Operations, and Problem Determination Guide

(P) IBM part number: 23R5892


(2P) EC Level: H81819


IBM Storage Systems Copyright © 2006 by International Business Machines Corporation


Table of Contents

INTRODUCTION............................................................................................................. 6

HARDWARE OVERVIEW............................................................................................... 7

IBM System P5 520...................................................................................................................................................7

Management Console ..............................................................................................................................................9

IBM TotalStorage DS4100 Midrange Disk System and IBM TotalStorage DS4000 EXP100...................9

IBM TotalStorage SAN Switch .............................................................................................................................10

Accessing the Switch(es).................................................................................................................................10

Dual Node Zoning – without Enhanced Remote Volume Mirroring ......................................................11

Single Node Zoning – without Enhanced Remote Volume Mirroring...................................................12

SOFTWARE OVERVIEW ............................................................................................. 14

High Availability Cluster Multi-Processing (HACMP) for AIX ......................................................................14

System Storage Archive Manager ......................................................................................................................14

System Storage Archive Manager API Client..............................................................................................15

DS4000 Storage Manager Version 9.12.65........................................................................................................15

Content Management Applications....................................................................................................................16

IBM DB2 Content Manager ..............................................................................................................................16

Other Management Applications....................................................................................................................16

DR550 OFFERINGS ..................................................................................................... 18

DR550 Single Node Components .......................................................................................................................18

DR550 Dual Node Components...........................................................................................................................21

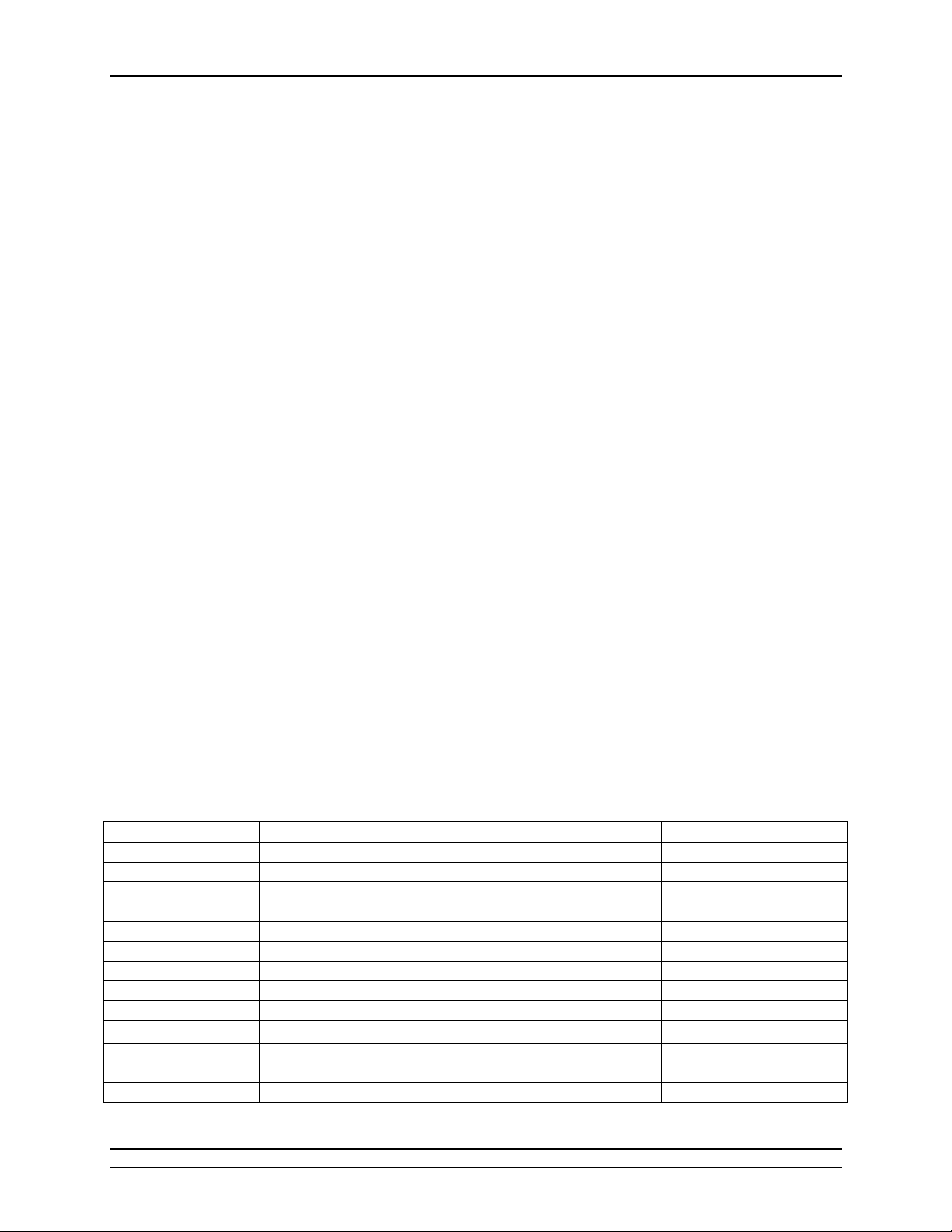

DS4100 Logical Volume Configurations ...........................................................................................................25

AIX settings ..............................................................................................................................................................27

System Storage Archive Server Configuration ...............................................................................................28

System Storage Archive Manager Database and Logs ............................................................................28

System Storage Archive Manager Disk Storage Pool...............................................................................28

System Storage Archive Manager Storage Management Policy............................................................28

Automated System Storage Archive Manager Operations......................................................................28

Routine Operations for System Storage Archive Manager..........................................................................29

Monitoring the Database and Recovery Log Status .................................................................................29

Monitoring Disk Volumes.................................................................................................................................29

Monitoring the Results of Scheduled Operations .....................................................................................29

Installing and Setting Up System Storage Archive Manager API Clients.................................................29


INSTALLATION AND ACTIVATION............................................................................. 30

Safety Notices..........................................................................................................................................................30

Site Preparation and Planning.............................................................................................................................31

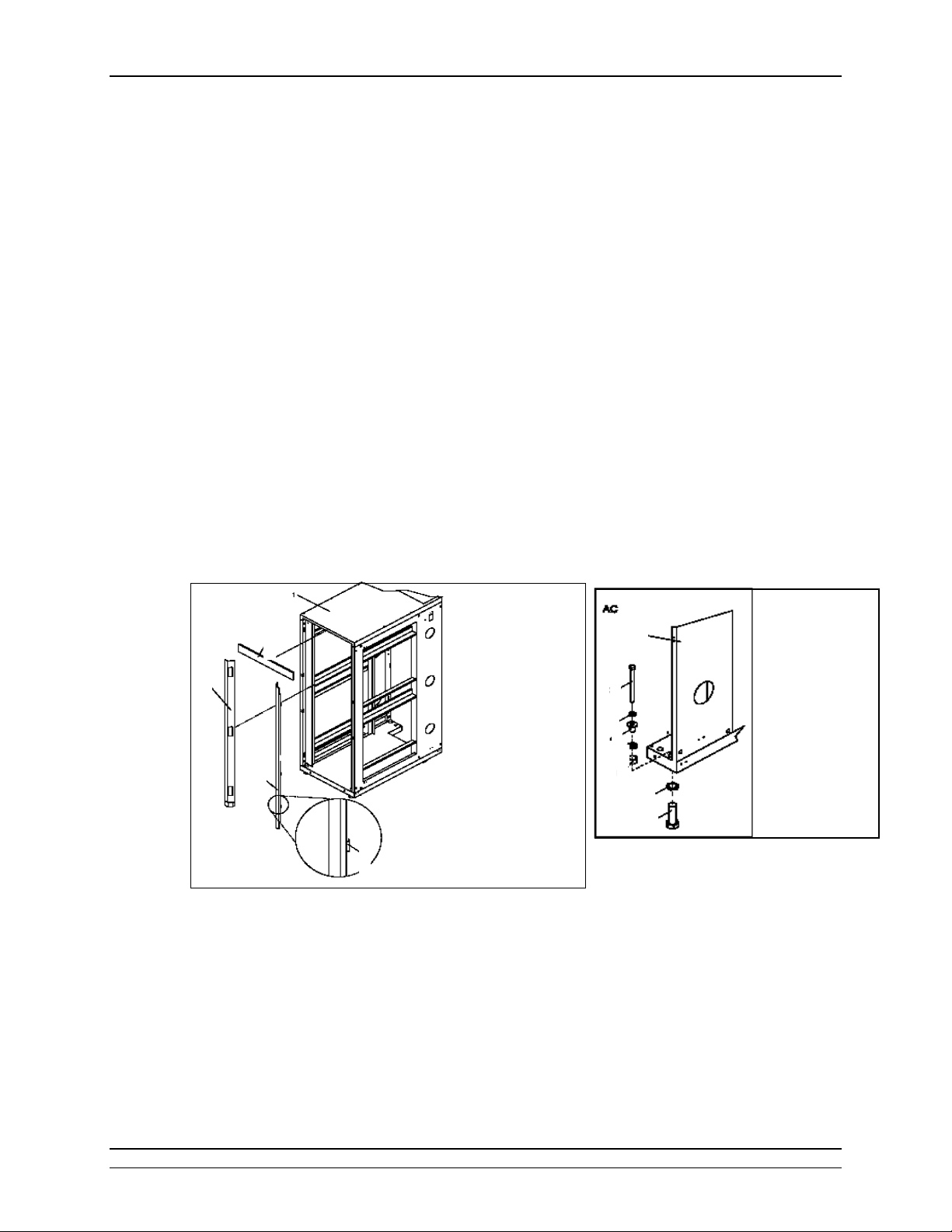

Unpacking and Installing the Rack(s)................................................................................................................32

Positioning the Rack .........................................................................................................................................32

Leveling the Rack...............................................................................................................................................32

Attaching the Rack to a Concrete Floor .......................................................................................................33

Attach the Rack to a Concrete Floor Beneath a Raised Floor................................................................36

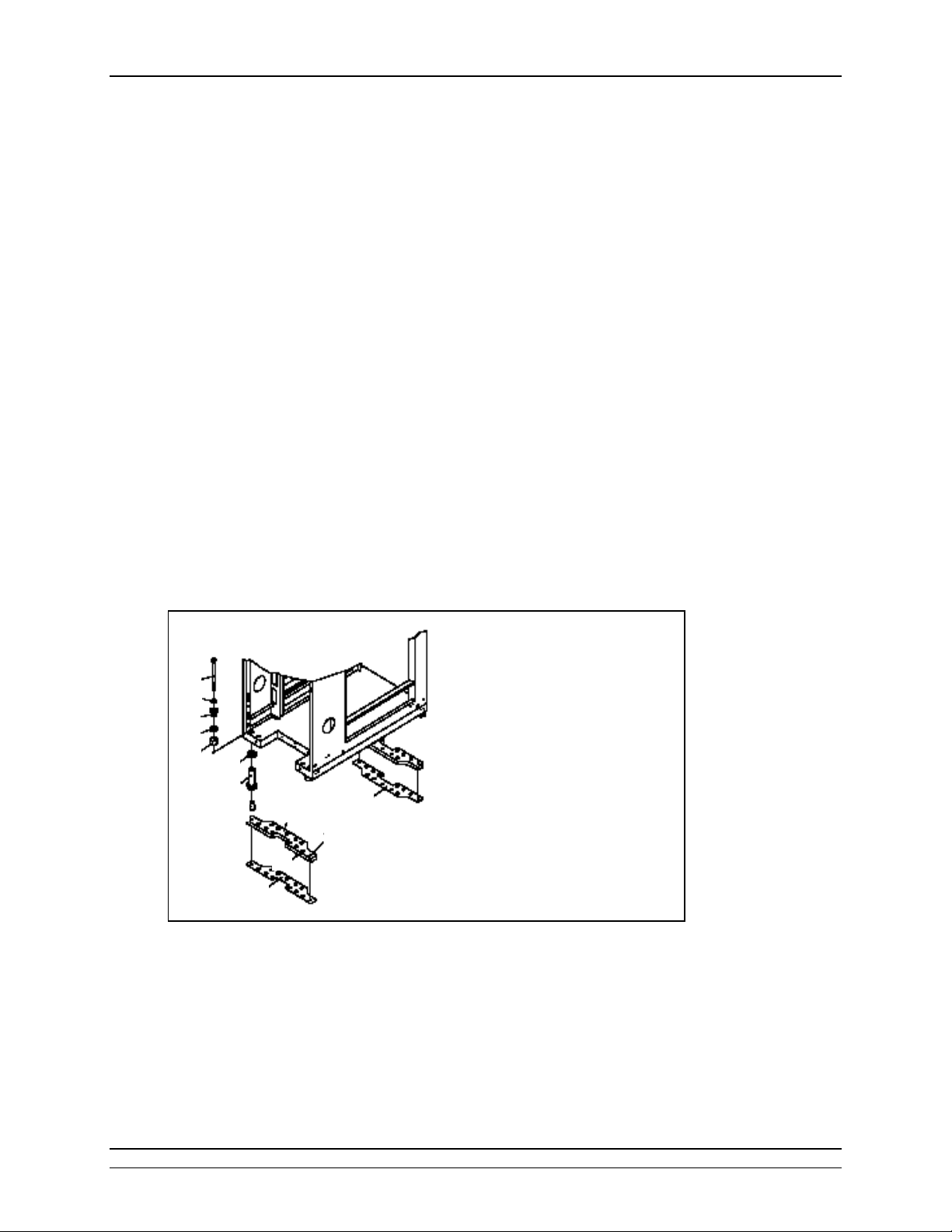

Connecting the Racks in a Suite (applies only to dual node 89.6 TB Configuration) ......................38

Cabling between the Racks .............................................................................................................................40

Cabling to the customer’s Ethernet / IP Network ...........................................................................................41

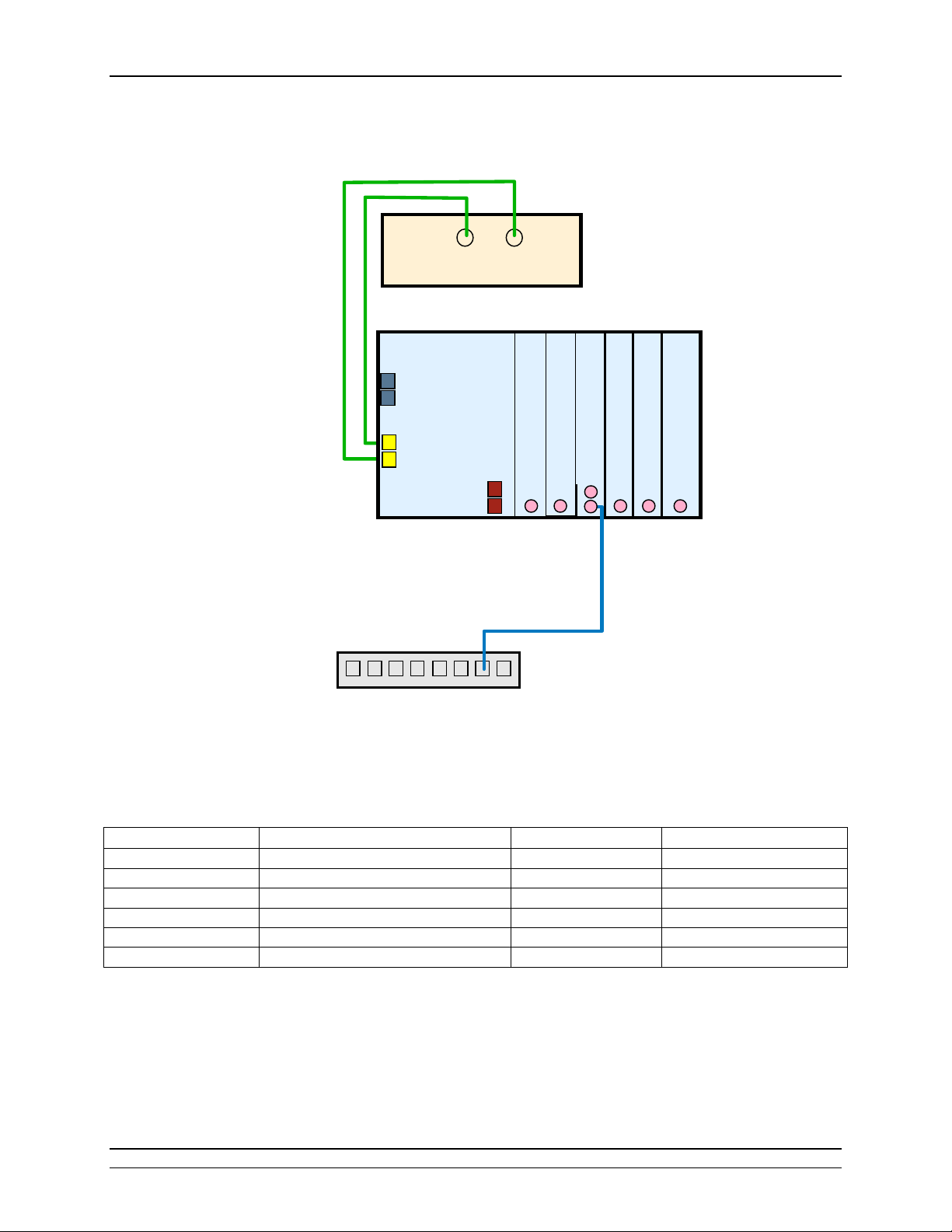

Single Node Configurations.................................................................................................................................41

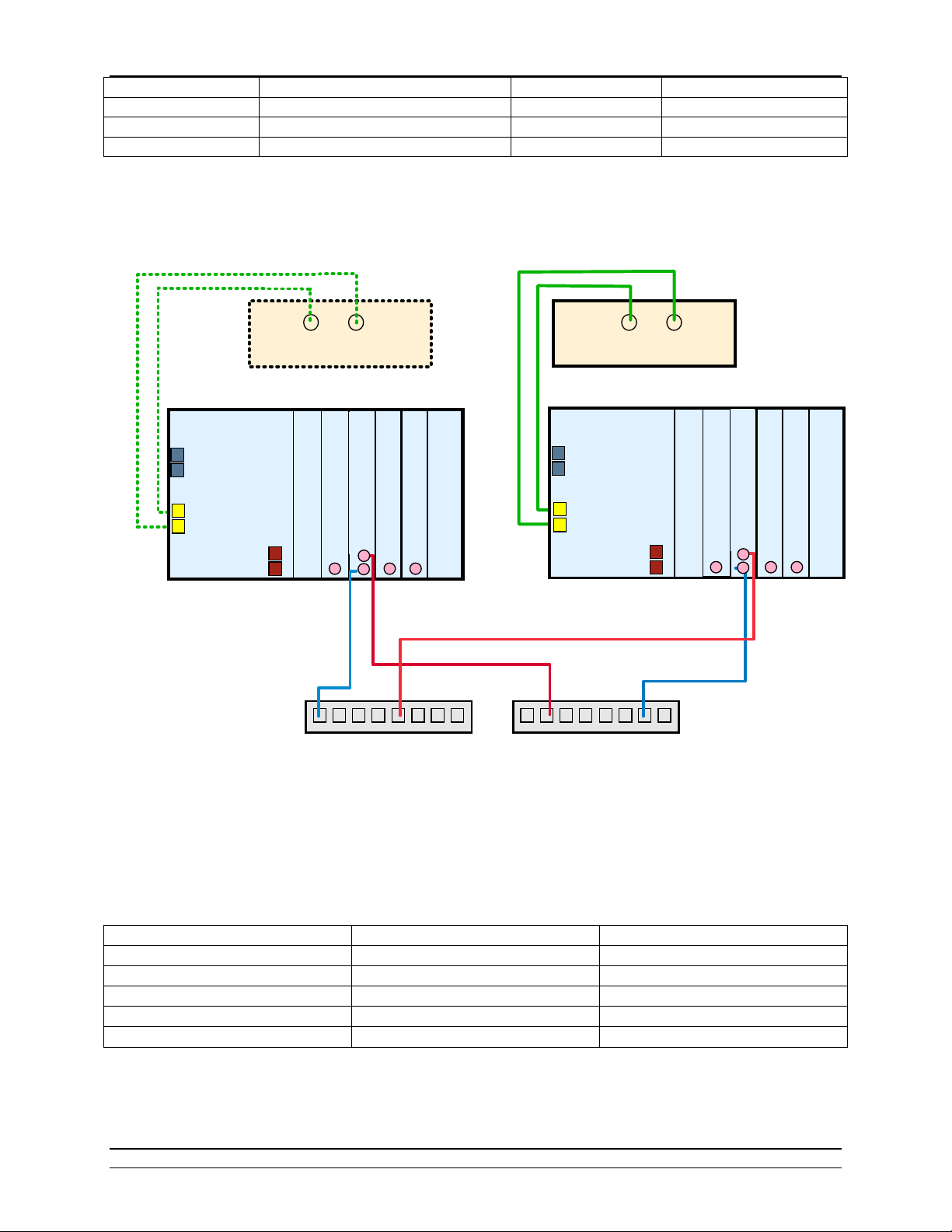

Dual Node Configurations ....................................................................................................................................43

Setting up the Management Console ................................................................................................................44

Userid and Password........................................................................................................................................44

Changing Server Names ..................................................................................................................................44

Connecting AC Power ...........................................................................................................................................47

Configuring the P5 520 Servers ..........................................................................................................................49

User Accounts ....................................................................................................................................................49

Connecting to Gigabit Ethernet (Fibre) Network .......................................................................................50

Connecting to a 10/100/1000 Mbps Ethernet (copper) Network.............................................................51

Procedure for Changing IP Address .............................................................................................................53

Configuring the Dual Node System (P5 520 Servers)....................................................................................55

Connecting to Gigabit Ethernet (Fibre) Network .......................................................................................56

Connecting to 10/100/1000 Ethernet (Copper) Network ...........................................................................58

HACMP Configuration ...........................................................................................................................................59

Procedures for Changing IP Addresses.......................................................................................................59

Cluster Snapshot........................................................................................................................................................72

REMOTE MIRRORING ................................................................................................. 74

Zoning diagrams (single and dual node)..................................................................................................................74

Setting up DR550 for remote mirroring.............................................................................................................77

Recovery from the primary failure......................................................................................................................78

Setting up DR550 for mirroring back to original site.....................................................................................80

OTHER INSTALLATION TOPICS ................................................................................ 81

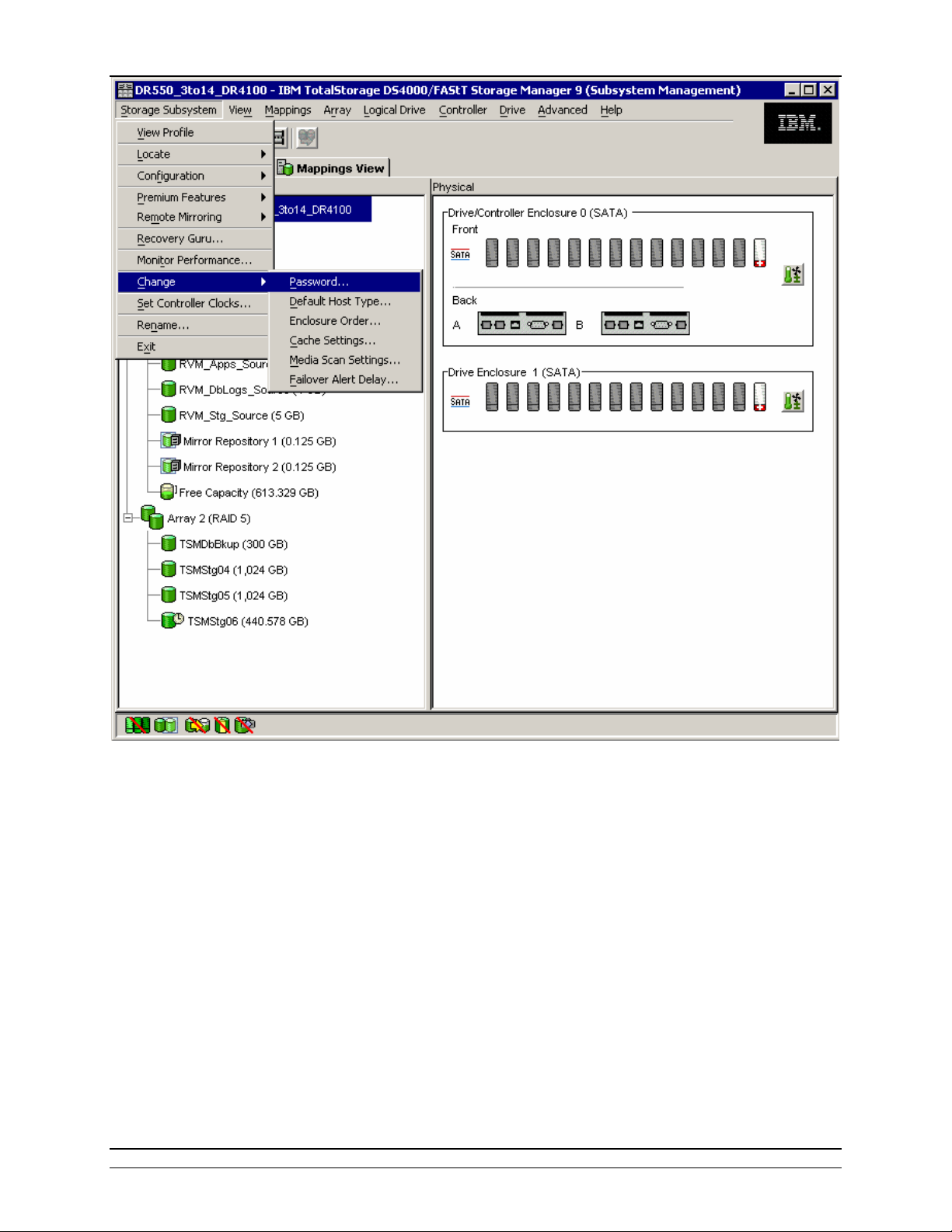

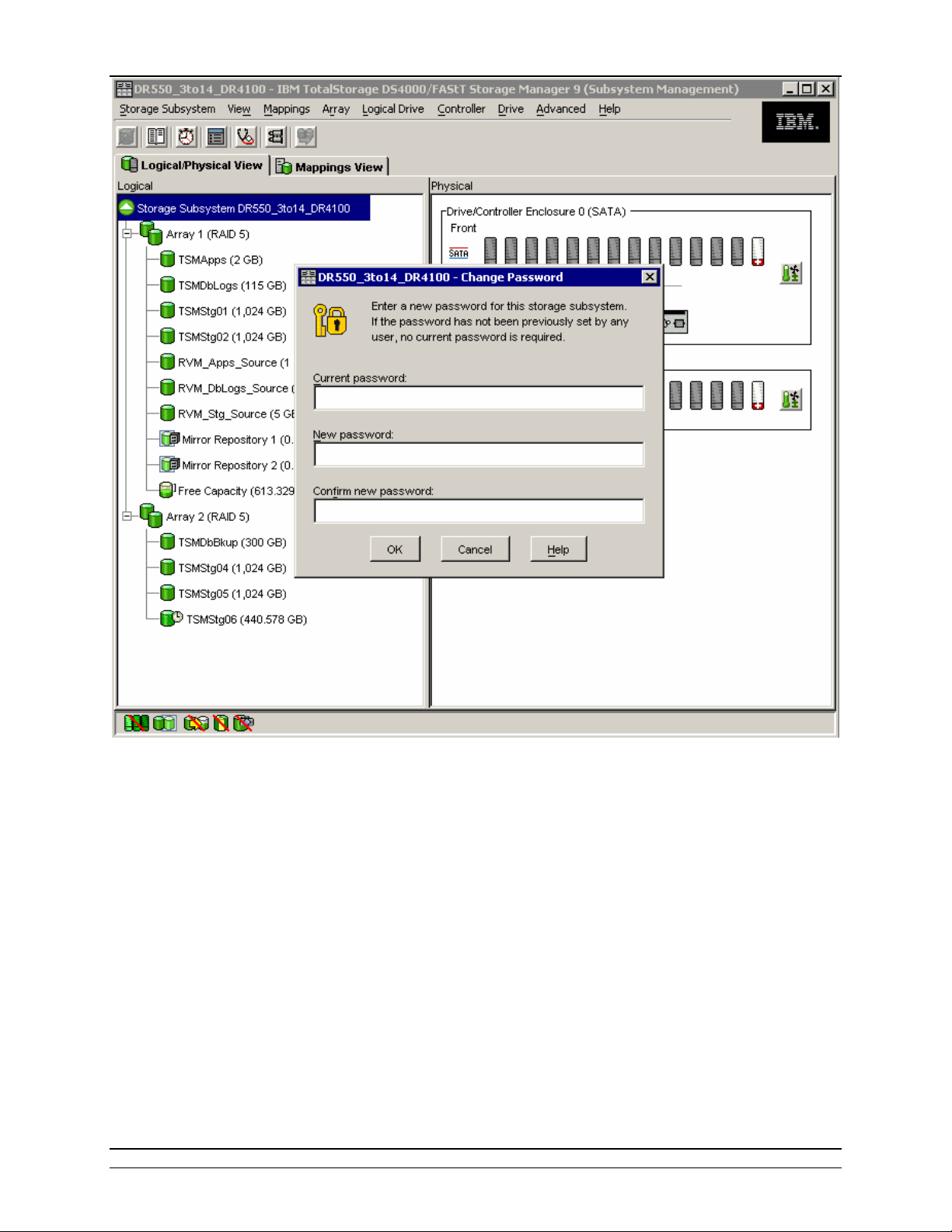

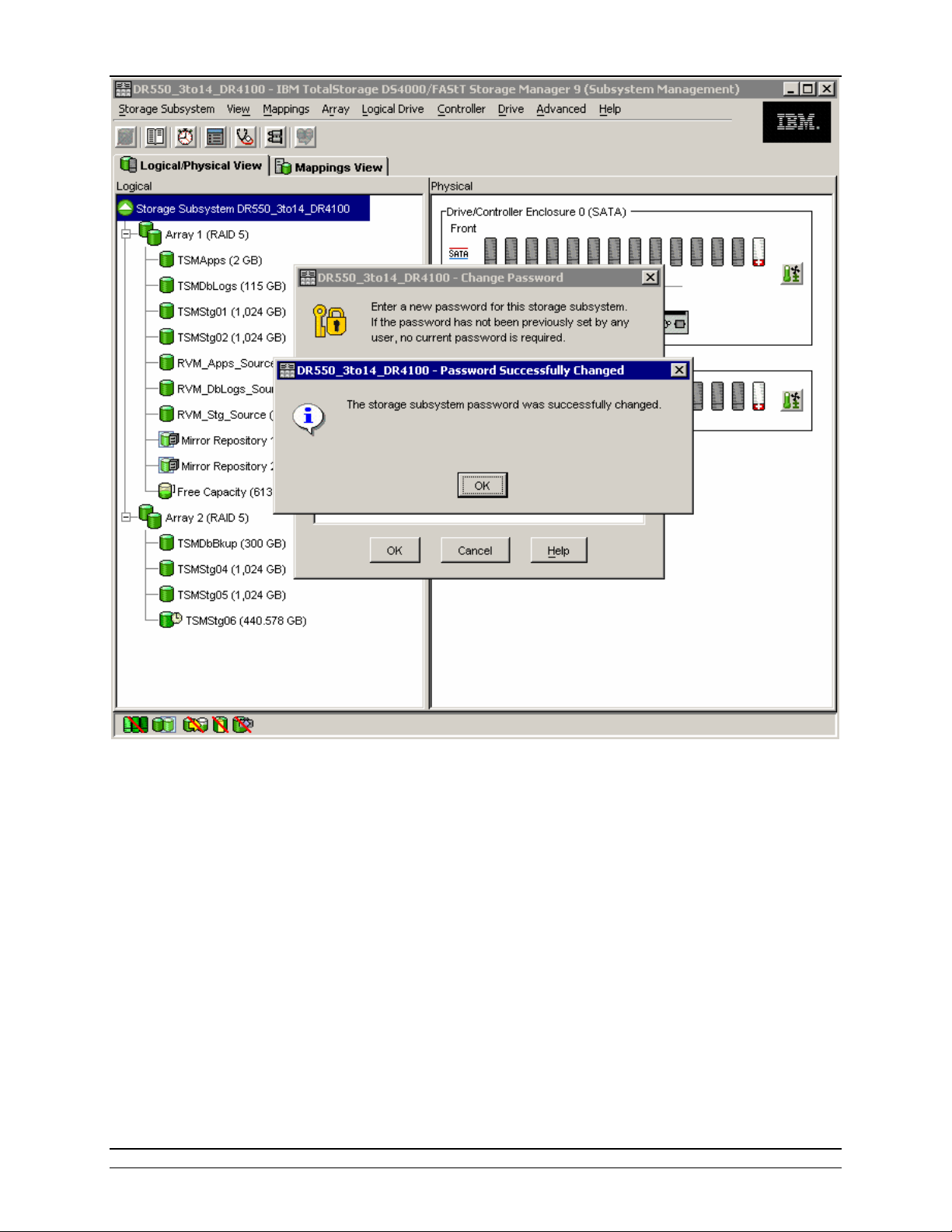

Changing passwords.............................................................................................................................................81


HACMP Network Considerations (p. 85)
HACMP Configuration in Switched Networks (p. 85)
Monitoring the HACMP Cluster (p. 86)
Error Notification and Monitoring (p. 86)
HMC electronic problem reporting (p. 86)
DS4000 SNMP and e-mail setup for error notification (p. 94)
DS4000 Service Alert (p. 99)
Changing TSM HACMPADM password (p. 103)
Creating an AIX Recovery DVD (p. 104)
Creating a Root Volume Group Backup on DVD-RAM with Universal Disk Format (p. 104)
Recovering from a problem using the Recovery DVD (p. 106)
Boot from the Recovery DVD (p. 106)
Set and Verify Installation Settings (p. 107)
Backing Up the DR550 Data (p. 108)
OTHER INFORMATION (p. 110)
Environmental Specifications (p. 110)
P5 520 (p. 110)
DS4100 (p. 110)
DS4000 EXP100 (p. 111)
SAN Switches – 2005-B16 (p. 111)
7310-CR3 (p. 111)
7316-TF3 (p. 112)
7014-T00 Rack (p. 112)
Lost Key Replacement (p. 113)
COMPLEMENTARY PRODUCTS (p. 114)
IBM Tape Solutions (p. 114)
Connecting Tape to the DR550 (p. 114)
Single node tape attachment (p. 114)
Dual node tape attachment (p. 115)
Data Migration (p. 116)
PROBLEM DETERMINATION (p. 118)
Gathering problem information from the user (p. 118)
Additional sources of information (p. 121)
Viewing the AIX runtime error log (p. 122)
Support Web Site (p. 122)


Placing a Service Call (p. 122)
DS4000 Service Alert Notification (p. 124)
REFERENCES (p. 126)
UPDATES TO THIS GUIDE (p. 128)


Introduction

IBM® System Storage™ DR550, one of IBM’s Data Retention offerings, is an integrated offering for clients that need to retain and preserve electronic business records. The DR550 packages storage, server, and software retention components into a lockable cabinet.

Integrating IBM System P5 servers (using POWER5™ processors) with IBM System Storage and TotalStorage products and IBM System Storage Archive Manager software, this system is designed to provide a central point of control to help manage growing compliance and data retention needs.

The powerful system, which fits into a lockable cabinet, supports the ability to retain data and helps prevent tampering and alteration. The system’s compact design can help with fast and easy deployment, and it incorporates an open and flexible architecture.

To help clients better respond to changing business environments as they transform their infrastructure, the DR550 can be shipped with as little as 5.6 terabytes of physical capacity and can expand up to 89.6 terabytes of physical capacity (equal to 89.6 million full-length novels) to help address massive data requirements.

The DR550 is available to be sold by IBM Business Partners certified on all of the solution components as well as by IBM Direct.

Innovative Technology

At the heart of the offering is IBM System Storage Archive Manager. This new, industry-changing software is designed to help customers protect the integrity of data as well as automatically enforce data retention policies. Using policy-based management, data can be stored indefinitely, expired based on a retention event, or given a predetermined expiration date. In addition, the retention enforcement feature may be applied to data using deletion hold and release interfaces, which hold data for an indefinite period of time, regardless of the expiration date or defined event. The policy software is also designed to prevent modifications or deletions after the data is stored. With support for open standards, the new technology is designed to give customers the flexibility to use a variety of content management or archive applications.
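The retention rules described above can be summarized as a single eligibility check. The following Python sketch is a simplified model under stated assumptions: the class `ArchivedObject`, its fields, and the function `may_expire` are hypothetical names for illustration only, not the System Storage Archive Manager API, and details such as minimum retention periods are ignored.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class ArchivedObject:
    """Hypothetical model of one archived object (not the product API)."""
    stored_on: date
    retention_days: Optional[int]       # None means "retain indefinitely"
    event_based: bool = False           # retention clock starts at an event
    event_date: Optional[date] = None   # set once the retention event occurs
    on_hold: bool = False               # deletion hold, cleared by a release


def may_expire(obj: ArchivedObject, today: date) -> bool:
    """Return True if the object is eligible for expiration on `today`."""
    if obj.on_hold:
        # A deletion hold keeps the data regardless of date or event.
        return False
    if obj.retention_days is None:
        return False                    # stored indefinitely
    if obj.event_based:
        if obj.event_date is None:
            return False                # retention event not yet signaled
        start = obj.event_date
    else:
        start = obj.stored_on           # chronological (predetermined) retention
    return today >= start + timedelta(days=obj.retention_days)
```

For example, in this model an object stored on 17 March 2006 with `retention_days=365` becomes eligible on 17 March 2007, unless a deletion hold is in place; an event-based object never becomes eligible until its event date is set.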

The System Storage Archive Manager is embedded on an IBM System P5 520 using POWER5+ processors. This entry-level server has many of the attributes of IBM’s high-end servers, representing outstanding technology advancements.

Tape storage can be critical for long-term data archiving, and IBM provides customers with a comprehensive range of tape solutions. The IBM System Storage DR550 supports the IBM TotalStorage Enterprise Tape Drive 3592, the IBM System Storage TS1120 drive, and the IBM Linear Tape-Open (LTO) family of tape products. Write Once Read Many (WORM) cartridges are recommended. Due to the permanent nature of data stored with the DR550, it is strongly recommended that the 3592 with WORM cartridges be used to take advantage of tape media encoded to enforce non-rewrite and non-erase capability. This complementary capability will be of particular interest to customers that need to store large quantities of electronic records to meet regulatory and internal audit requirements.

The DR550 is available in two basic configurations: single node (one POWER5+ server) and dual node (two clustered POWER5+ servers).


Hardware Overview

The DR550 includes one or two IBM System P5 520 servers running AIX® 5.3. When configured with two 520 servers, the servers are set up in an HACMP™ 5.3 configuration, and both P5 520s have the same hardware configuration. When configured with one 520 server, no HACMP software is included.

IBM System P5 520

The IBM System P5 520 (referred to hereafter as the P5 520 when discussing the DR550) is a cost-effective, high-performance, space-efficient server that uses advanced IBM technology. The P5 520 uses the POWER5+ microprocessor and is designed for use in LAN clustered environments.

The P5 520 is a member of the IBM family of symmetric multiprocessing (SMP) UNIX servers. The P5 520 (product number 9131-52A) is a 4-EIA (4U), 19-inch rack-mounted server. It is configured as a 2-core system with 1.9 GHz processors and 1024 MB of total system memory.

The P5 520 includes six hot-plug PCI-X slots, an integrated dual-channel Ultra320 SCSI controller, two integrated 10/100/1000 Mbps Ethernet controllers, and eight front-accessible disk bays supporting hot-swappable disks (two are populated with 36.4 GB Ultra3 10K RPM disk drives). These disk bays are designed to provide high system availability and growth by allowing the removal or addition of disk drives without disrupting service. The internal disk storage is configured as mirrored disks for high availability.

Figure 1: Front view of P5 520 server

In addition to the disk bays, three media bays are available:

• Media dev0 – not used for the DR550

• Media dev1 – Slimline DVD-RAM (FC 1993)

• SCSI tape drive (not included)

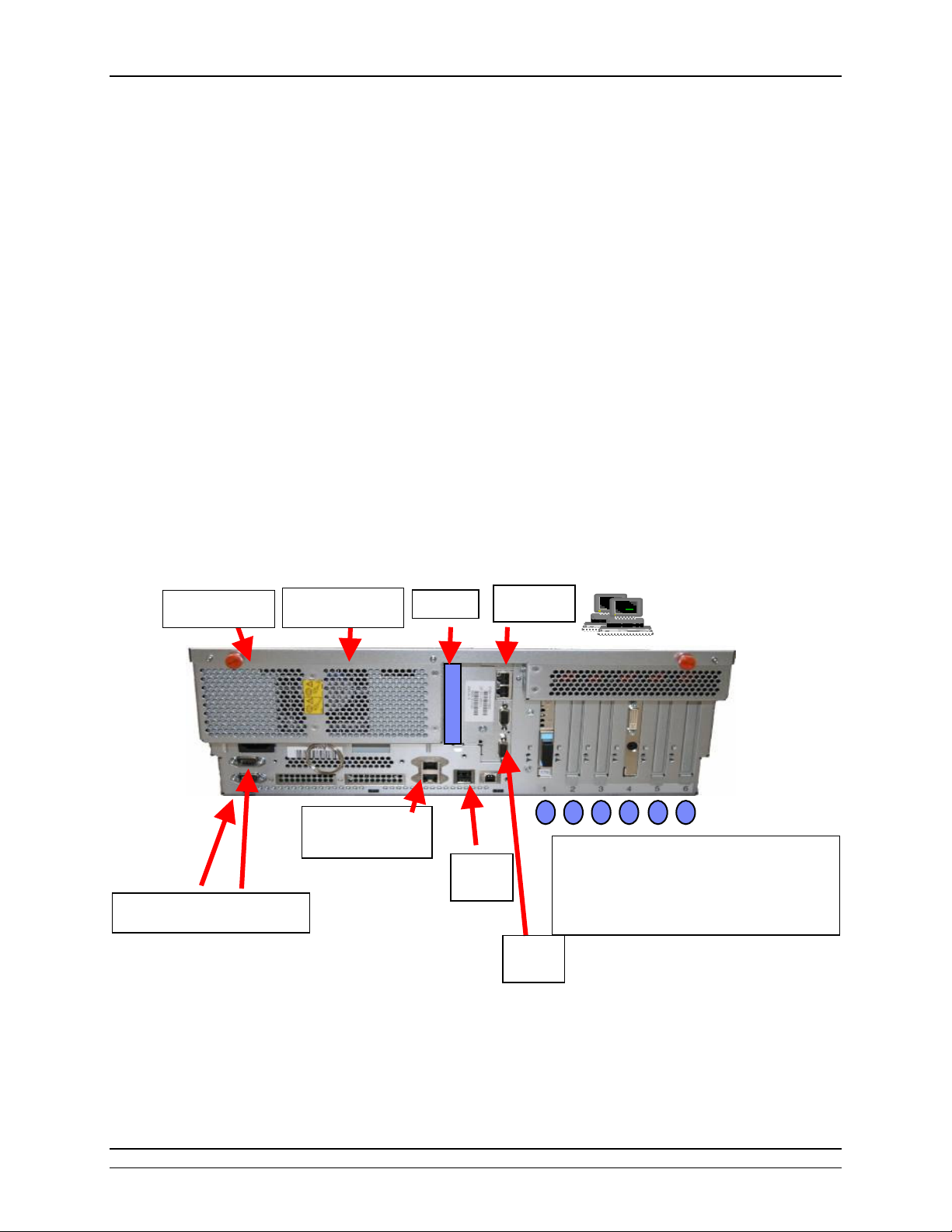

The following ports and slots are accessible from the back of the server.

PCI-X slots: The P5 520 provides six hot-plug PCI-X slots. The number and type of adapters installed depend on the configuration selected.



The following adapters are installed:

• Three 2 Gigabit Fibre Channel PCI-X adapters (FC 5716), located in slots 1, 4, and 5: two connect to the internal SAN for disk attachment and one connects to the internal SAN for tape attachment

• One 10/100/1000 Mbps dual-port Ethernet PCI adapter II (FC 1983 for the TX version or FC 1984 for the SX version), located in slot 3

o Used for connection to the client network

• One POWER GXT135P Graphics Accelerator with Digital support adapter (FC 1980), located in slot 2

I/O ports: The P5 520 includes several native I/O ports as part of the basic configuration:

• Two 10/100/1000 Mbps Ethernet ports (for copper-based connections)

o Both are used for connections to the DS4100 and for management purposes only (no changes should be made to these connections).

• Two serial ports (RS232)

o These are not used by the DR550.

• Two USB ports

o One is used to connect the keyboard and mouse; the other is not used.

• Two RIO ports

o These are not used by the DR550.

• Two HMC (Hardware Management Console) ports

o One is used for connection to the HMC in the rack.

• Two SPCN ports

o These are not used by the DR550.

Figure 2: Back view of P5 520 server

Figure callouts: six hot-plug PCI-X slots (slot 1: short, 64-bit, 133 MHz; slots 2 and 3: short, 32-bit, 66 MHz; slot 4: short, 64-bit, 266 MHz; slots 5 and 6: long, 64-bit, 133 MHz), GX bus, hot-plug power supplies (one optional), two HMC ports, two 10/100/1000 Mbps Ethernet ports, two USB 2.0 ports, two system ports, and two SPCN ports.

The Converged Service Processor (CSP) is on a dedicated card plugged into the main system planar. It is designed to continuously monitor system operations, taking preventive or corrective actions to promote quick problem resolution and high system availability.


pSeries servers are also designed with an extensive set of reliability, availability, and serviceability (RAS) features, such as improved fault isolation, recovery from errors without stopping the system, avoidance of recurring failures, and predictive failure analysis.

Additional information on the P5 520 server is available at www.ibm.com/redbooks.

Management Console

Included in the DR550 is a set of integrated management components. These include the Hardware Management Console (HMC) as well as a flat panel monitor, keyboard, and mouse. The HMC (7310-CR3) is a dedicated rack-mounted workstation that allows the user to configure and manage call home support. The HMC has other capabilities (partitioning, Capacity on Demand) that are not used in the DR550. The HMC includes the management application used to set up call home. To help ensure console functionality, the HMC is not available as a general-purpose computing resource. The HMC offers a service focal point for the attached 520 server(s). It is connected to a dedicated port on the service processor of the POWER5 system via an Ethernet connection. Tools are included for problem determination and service support, such as call home and error log notification, through the Internet or via modem. The customer will need to supply the connection to the network or phone system. The HMC is connected to the keyboard, mouse, and monitor installed in the rack.

The IBM 7316-TF3 is a rack-mounted flat panel console kit consisting of a 17-inch (337.9 mm x 270.3 mm) flat panel color monitor, a rack keyboard tray, an IBM Travel Keyboard (English only) with an integrated “mouse”, and the Netbay LCM switch. This is packaged as a 1U kit and is mounted in the rack along with the other DR550 components; the Netbay LCM switch occupies the same rack space, behind the flat panel monitor. The HMC and the P5 520 server(s) are connected to the Netbay LCM switch so that the monitor and keyboard can access each of them (all three servers in a dual node configuration).

IBM TotalStorage DS4100 Midrange Disk System and IBM TotalStorage DS4000 EXP100

The DR550 includes one IBM TotalStorage DS4100 Midrange Disk System (hereafter referred to as the DS4100) in DR550 configurations of 44.8 TB or less. In 89.6 TB configurations, two DS4100s are included. The disk capacity used by the DS4100(s) is provided by the IBM TotalStorage EXP100 (hereafter referred to as the EXP100). Each EXP100 enclosure packaged with the DR550 includes fourteen 400 GB Serial ATA (SATA) disk drive modules, offering 5.6 terabytes (TB) of raw physical capacity.

The DS4100 is an affordable, scalable storage server for clustering applications such as the Data Retention application. Its modular architecture, which includes Dynamic Capacity Expansion and Dynamic Volume Expansion, is designed to support e-business on demand™ environments by helping to enable storage to grow as demands increase. Autonomic features such as online firmware upgrades also help enhance the system’s usability.

One DS4100 supports up to 44.8 TB of Serial ATA physical disk storage capacity (eight EXP100 enclosures of 5.6 TB each). Thus for 89.6 TB configurations of the DR550, two DS4100s are provided, each capable of supporting up to 44.8 TB of raw disk capacity. Note that the first rack holds the first 44.8 TB and the second rack holds the second 44.8 TB.
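The capacity figures above follow from simple arithmetic: 14 drives × 400 GB = 5.6 TB per EXP100, and 8 EXP100s × 5.6 TB = 44.8 TB per DS4100. The following Python sketch reproduces that arithmetic. The function name `dr550_layout` is hypothetical, decimal units (1 TB = 1000 GB) are assumed to match the product figures, and it assumes that any count above eight enclosures requires a second DS4100; it does not check whether a given enclosure count is an orderable DR550 configuration.

```python
import math

DRIVES_PER_EXP100 = 14
DRIVE_GB = 400               # decimal gigabytes, matching the product figures
MAX_EXP100_PER_DS4100 = 8    # 8 x 14 = 112 drives, 44.8 TB per DS4100
MAX_DS4100 = 2               # 89.6 TB configurations use two DS4100s


def dr550_layout(enclosures: int) -> dict:
    """Return drive count, raw TB, and DS4100s needed for N EXP100s."""
    max_enclosures = MAX_EXP100_PER_DS4100 * MAX_DS4100
    if not 1 <= enclosures <= max_enclosures:
        raise ValueError(f"expected 1 to {max_enclosures} EXP100 enclosures")
    drives = enclosures * DRIVES_PER_EXP100
    return {
        "drives": drives,
        "raw_tb": drives * DRIVE_GB / 1000,   # 1 TB = 1000 GB
        "ds4100s": math.ceil(enclosures / MAX_EXP100_PER_DS4100),
    }
```

For example, `dr550_layout(8)` reports 112 drives, 44.8 TB, and one DS4100, while `dr550_layout(16)` reports 224 drives, 89.6 TB, and two DS4100s.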

The DS4100 is designed to allow upgrades while keeping data intact, helping to minimize disruptions during upgrades. The DS4100 also supports online controller firmware upgrades, to help provide high performance and functionality. Events such as upgrades to support the latest version of DS4000 Storage Manager can also often be executed without stopping operations.

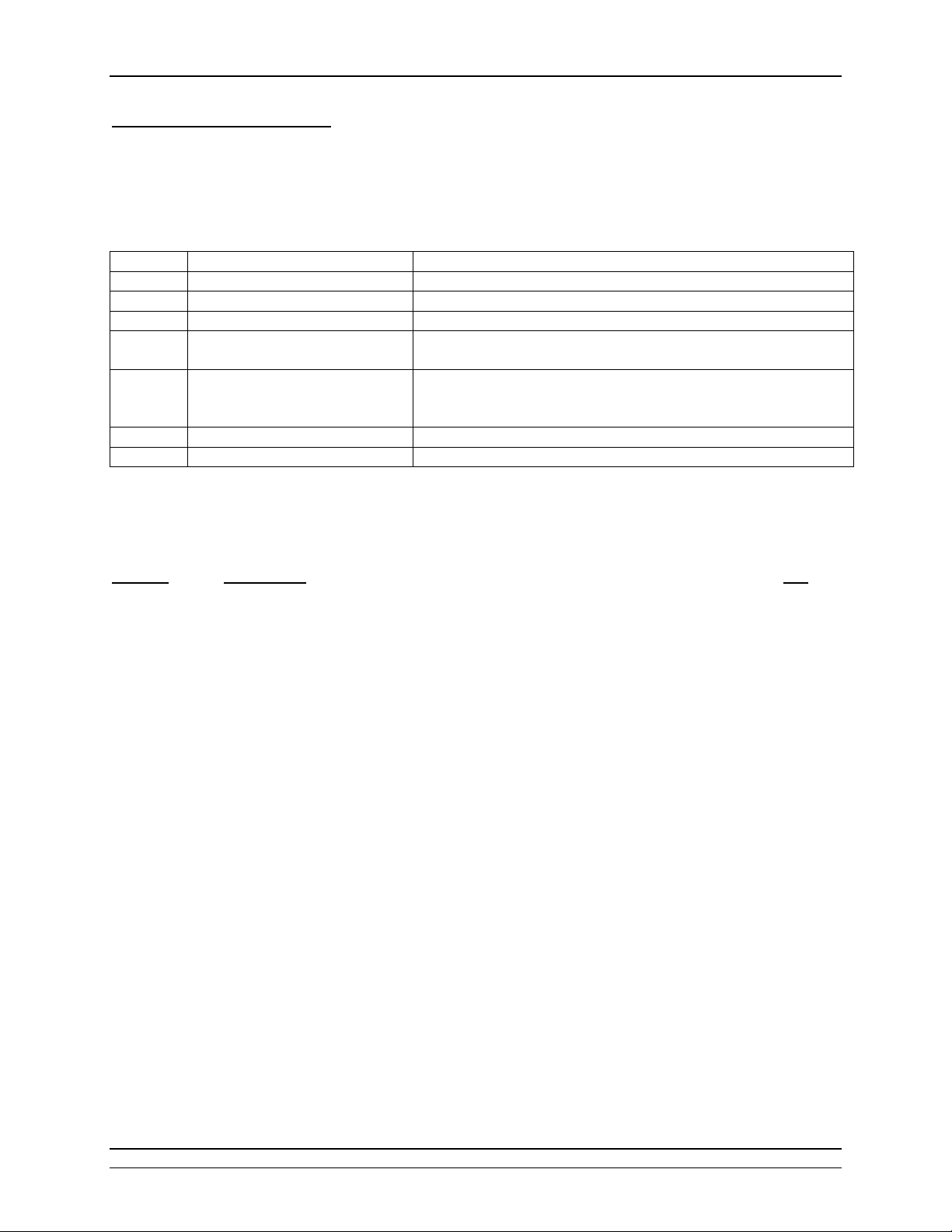

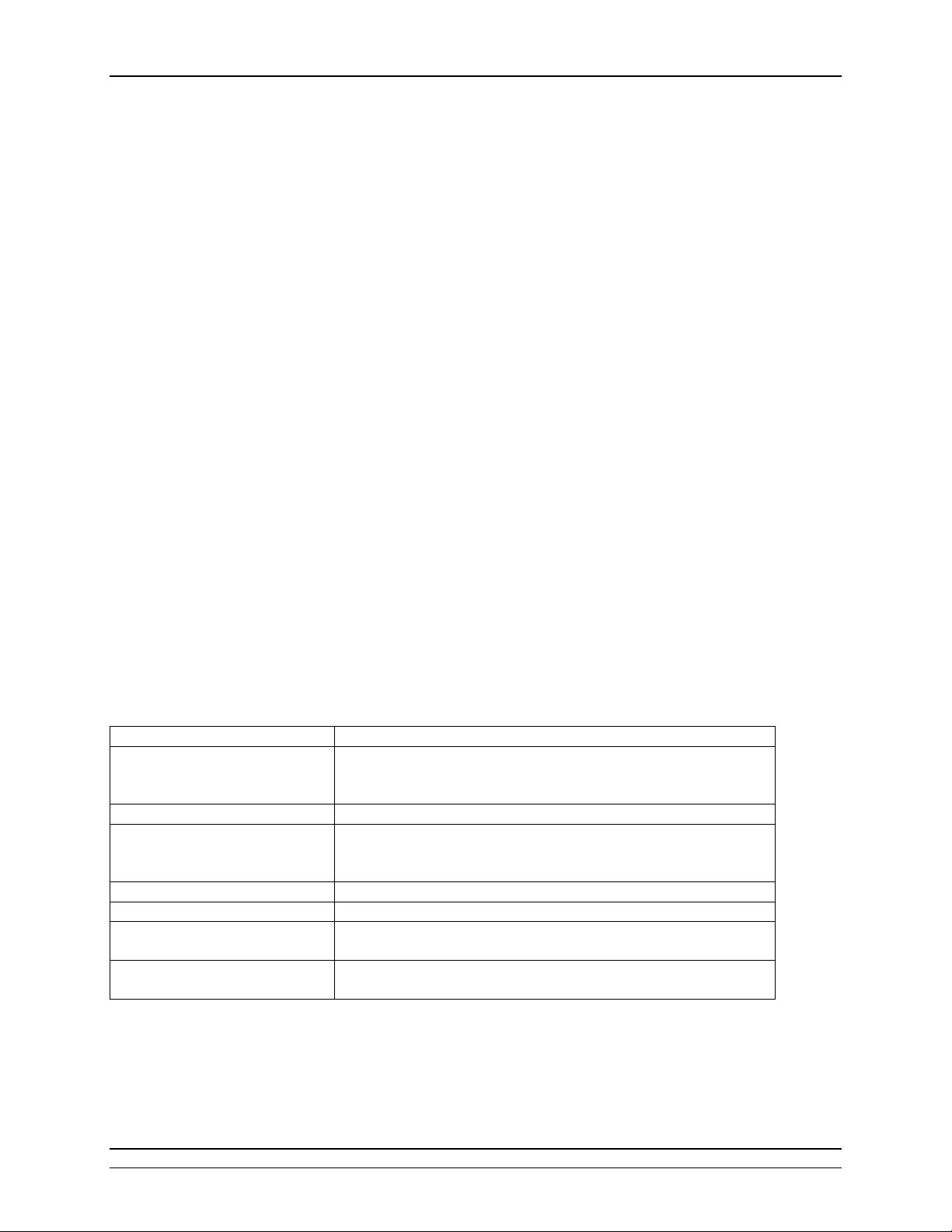

IBM DS4100 Storage Server in the DR550 at a glance

Model: 1724-100
RAID controller: Dual active 2 Gbps RAID controllers
Cache: 512 MB total, battery-backed
Host interface: Four Fibre Channel (FC) host ports; FC switched and FC Arbitrated Loop (FC-AL) standard
Drive interface: Redundant 2 Gbps FC-AL connections
EXP100 drives: 400 GB, 7200 RPM SATA disk drives
EXP100 enclosures: Fourteen 400 GB SATA disk drive modules, offering up to 5.6 TB of physical capacity per enclosure
RAID level: RAID-5 as configured; RAID-10 can be configured at the customer’s site by an optional IBM Services consultant
Maximum drives supported: 112 Serial ATA drives (using 8 EXP100 Expansion Units) per DS4100
Fans: Dual redundant, hot-swappable
Management software: IBM DS4000 Storage Manager version 9.12.65 (special version for exclusive use with the DR550)

IBM TotalStorage SAN Switch

In dual node configurations, two IBM TotalStorage SAN Fibre Channel Switches (2005-B16) interconnect both P5 520 servers with the DS4100(s) to create a SAN. Tape devices such as the 3592, TS1120, or LTO drives can be attached using the additional ports on the switches. The two switches build two independent SANs, which are designed to be fully redundant for high availability. This implementation in the DR550 is designed to provide high performance, scalability, and high fault tolerance.

In single node configurations, only one switch (2005-B16) is included. It creates a single independent SAN that can be used for both disk and tape access.

The 2005-B16 is a 16-port, dual-speed, auto-sensing Fibre Channel switch. Eight ports are populated with 2 Gb shortwave transceivers when the DR550 is configured for single copy mode, and twelve ports are populated when the DR550 is configured for Enhanced Remote Volume Mirroring. In dual node configurations, this two-switch implementation is designed to provide a fault-tolerant fabric topology, to help avoid single points of failure.

IBM SAN Fibre Channel Switch 2005-B16

Each switch in the DR550 comes pre-zoned by port. Each switch is configured with distinct zones, as illustrated below:

Accessing the Switch(es)

If you need to access the switch(es) to review the zoning information, error messages, or other information, connect Ethernet cables (provided by the customer) to the Ethernet port on each switch and to the customer network. You can then access the switch using its IP address. The userid is ADMIN and the password is PASSWORD. You should change this password to conform to your site security guidelines.

If you need to review the configuration or zoning within the switches, the IP address for switch 1 is 192.168.1.31 and the IP address for switch 2 (installed only in dual node configurations) is 192.168.1.32. These addresses should not be changed. To gain access to the switches via the IP network, you will need to provide Ethernet cables and ports on your existing Ethernet network. Once the connections have been made, you can connect to the IP address and use the management tools provided by the switch.
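The switch details above can be captured in a small lookup, for example when scripting a connectivity check from an administrative workstation. The following Python sketch is illustrative only: the names `SanSwitch`, `san_switches`, and `is_reachable` are hypothetical, the labels "switch 1" and "switch 2" follow the text above, and the TCP port to probe is an assumption (Telnet port 23 is used as the default; substitute whichever management interface your site enables). It also assumes the workstation already has a network route to the 192.168.1.x switch addresses.

```python
import socket
from typing import List, NamedTuple


class SanSwitch(NamedTuple):
    name: str
    ip: str
    populated_ports: int  # ports fitted with 2 Gb shortwave transceivers


# Factory management addresses; the text says these should not be changed.
SWITCH_IPS = {"switch 1": "192.168.1.31", "switch 2": "192.168.1.32"}


def san_switches(dual_node: bool, remote_mirroring: bool) -> List[SanSwitch]:
    """List the 2005-B16 switches present in a given DR550 configuration."""
    ports = 12 if remote_mirroring else 8
    names = ["switch 1", "switch 2"] if dual_node else ["switch 1"]
    return [SanSwitch(n, SWITCH_IPS[n], ports) for n in names]


def is_reachable(ip: str, port: int = 23, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to ip:port succeeds (port is assumed)."""
    try:
        with socket.create_connection((ip, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For a dual node system, calling `is_reachable(s.ip)` for each entry of `san_switches(True, False)` checks both management addresses; a `False` result usually points to cabling or routing on the customer side rather than to the switch itself.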

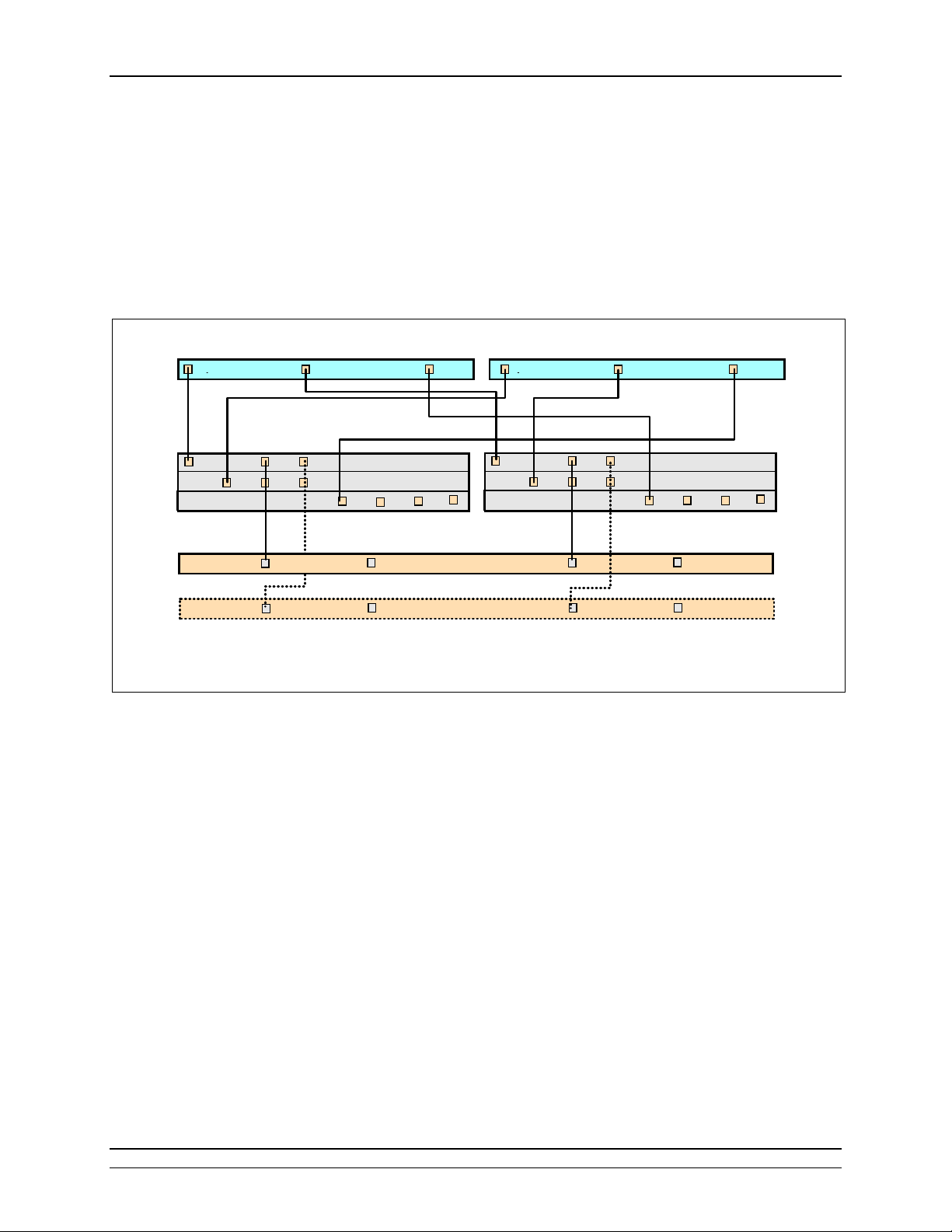

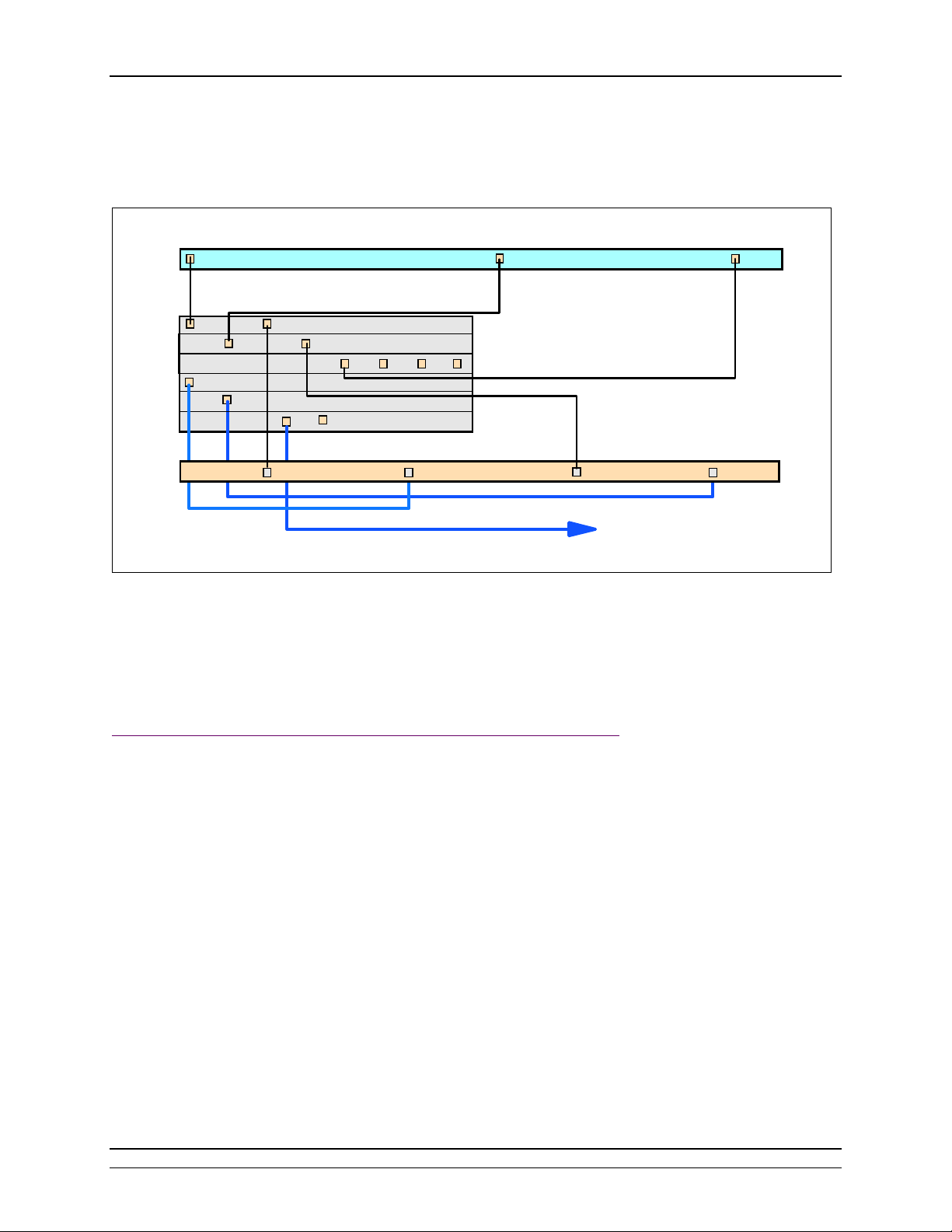

Dual Node Zoning – without Enhanced Remote Volume Mirroring

Diagram: switches 2005-B16_1 and 2005-B16_2 (ports 0 to 7), divided into Zones 1 to 3, connect the Fibre Channel adapters in slots 1, 4, and 5 of each server to controllers A1, A2, B1, and B2 of DS4100_1 and DS4100_2.

Note: Zone 3 is configured for use with tape; TS1120, TS3310, 358x, or 3592 drives with WORM cartridges are recommended. Ports 5-7 are connected to the tape drives.

Dual Node Zoning – with Enhanced Remote Volume Mirroring

Slot 4

4

5

Controller B2

Controller B2

6

Slot 1

7

Engine2Engine1 Slot 5

Zone 1

Zone 2

Zone 3

IBM Storage Systems Copyright © 2006 by International Business Machines Corporation

Page 12

IBM System Storage DR550 Version 3.0 ------17 March 2006 Page 12

Engine1

Slot 5

Slot 4

Slot 1

Slot 5

2005-B16_1

Zone 1

Zone 2

Zone 3

Zone 4

Zone 5

Not

Zoned

DS4100_1

DS4100_2

0 2

1

8

9

3

10

Controller A1

Controller A1

4 5 6 7

11

Controller A2

Controller A2

0 2

1

8

9

3

10

Controller B1

Controller B1

Connects to Switch in

secondary DR550

Note: Zone 3 is configured for use with tape (TS1120, LTO Gen 3, or 3592 drives with WORM cartridges are

recommended - ports 5-7 are available to connect to tape drives)

Zones 4 & 5 are configured for use with data replication (DS4100 enhanced remote volume mirroring)

Per IBM recommendations, the ports used to connect the switches are not included in the zones for remote mirroring

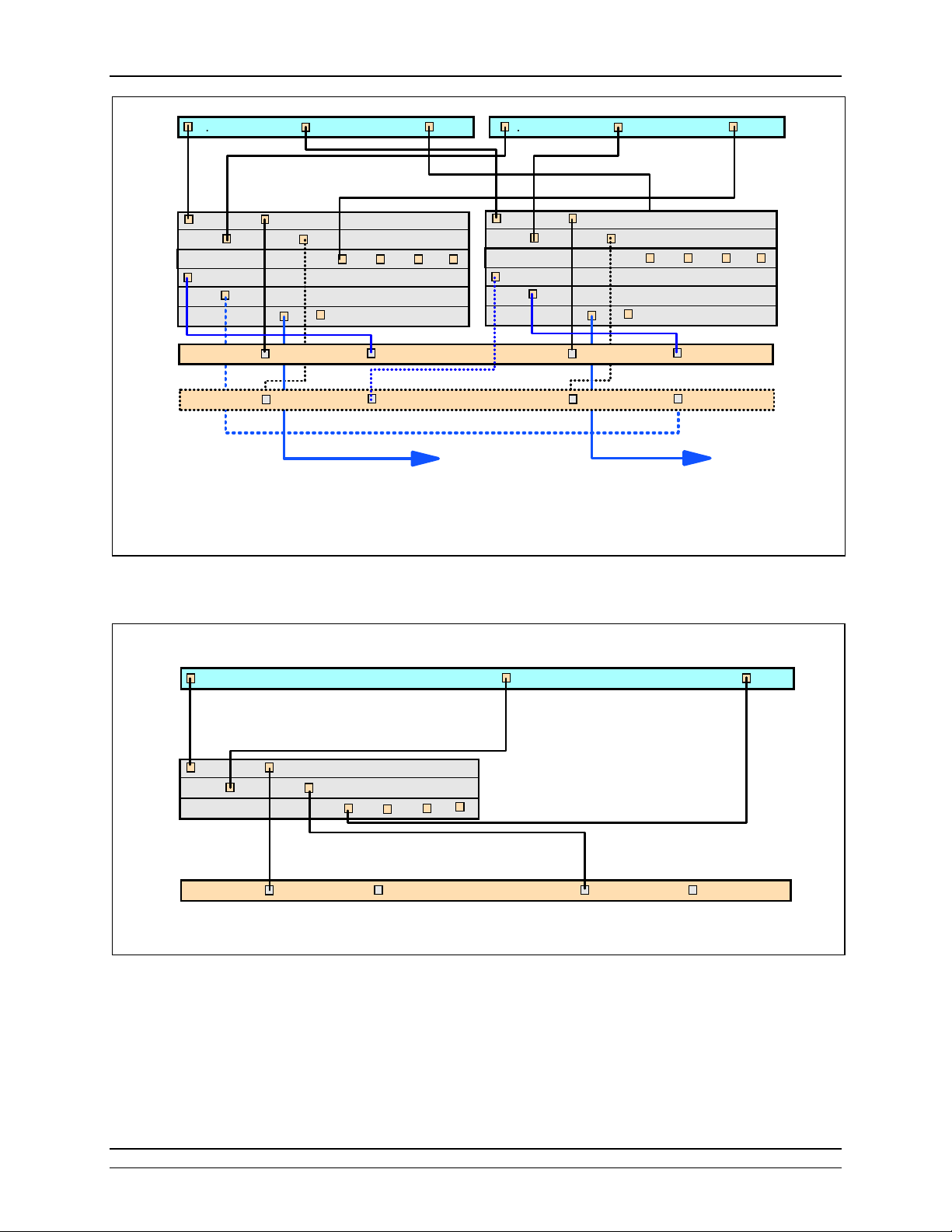

Single Node Zoning – without Enhanced Remote Volume Mirroring

Slot 4

4 5 6 7

11

Controller B2

Controller B2

Slot 1

Engine2

2005-B16_2

Zone 1

Zone 2

Zone 3

Zone 4

Zone 5

Not

Zoned

Connects to Switch in

secondary DR550

9131-52A

Slot 5

Slot 4

2005-B16

Zone 1

Zone 2

Zone 3

DS4100

0 2

1

3

Controller A1

4

6

5

Controller A2

7

Controller B1

Note: Zone 3 is configured for use with the tape (TS1120, TS3310, 358x, 3592 with WORM

cartridges are recommended) - ports 5-7 are connected to the tape drives

Single Node Zoning – with Enhanced Remote Volume Mirroring

Slot 1

Controller B2

IBM Storage Systems Copyright © 2006 by International Business Machines Corporation

Page 13

IBM System Storage DR550 Version 3.0 ------17 March 2006 Page 13

Switch Zoning when Remote Mirroring

is installed (Factory Settings)

9131-52A

Zone 1

Zone 2

Zone 3

Zone 4

Zone 5

Not

Zoned

DS4100

Slot 5

0 2

1

8

9

Controller A-1

Note: Zone 3 is configured for use with tape (TS1120, LTO Gen 3, or 3592 drives with WORM cartridges are

recommended - ports 5-7 are available to connect to tape drives)

Zones 4 & 5 are configured for use with data replication (DS4100 enhanced remote volume mirroring)

Per IBM recommendations, the ports used to connect the switches are not included in the zones for remote mirroring

10

3

4 5 6 7

11

Controller A-2

Slot 4

2005-B16

Controller B-1

Slot 1

Controller B-2

Connects to Switch in

secondary DR550

You may need these zoning configurations if you ever have to rezone the 2005-B16.

For more information, 2005-B16 data sheets can be downloaded from:

http://www-03.ibm.com/servers/storage/san/b_type/san16b-2/express/

Should one of the switches fail (dual node configurations only), the logical volumes within the

DS4100 systems are available through the other controller and switch.
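Before applying a changed zone layout to a 2005-B16, it can help to check it against the rules stated in the zoning notes. The Python sketch below is one way to do that under stated assumptions: the zone layout is a plain dictionary of zone names to port numbers, the port numbers in EXAMPLE_ZONES and EXAMPLE_ISL_PORTS are placeholders rather than the factory assignments, and the function name zoning_problems is hypothetical. It only checks that zoned ports exist on a 16-port switch and that the ports used to connect the switches are not members of any zone; it does not read from or write to the switch.

```python
# Hypothetical zone layout for one 2005-B16: zone name -> switch port numbers.
# These port numbers are placeholders for illustration, not the factory zoning.
EXAMPLE_ZONES = {
    "Zone1": {0, 1},
    "Zone2": {2, 3},
    "Zone3": {4, 5, 6, 7},  # tape zone; tape drives attach to ports 5-7
    "Zone4": {8, 10},       # remote volume mirroring (placeholder ports)
    "Zone5": {9, 11},       # remote volume mirroring (placeholder ports)
}
EXAMPLE_ISL_PORTS = {15}    # hypothetical port cabled to the secondary DR550's switch

SWITCH_PORTS = 16           # the 2005-B16 is a 16-port switch


def zoning_problems(zones: dict[str, set[int]], isl_ports: set[int],
                    port_count: int = SWITCH_PORTS) -> list[str]:
    """List rule violations in a planned zone layout.

    Checks that every zoned port exists on the switch and that no port used
    to connect the switches (inter-switch link) is a member of any zone.
    """
    problems = []
    for name in sorted(zones):
        ports = zones[name]
        missing = sorted(p for p in ports if not 0 <= p < port_count)
        if missing:
            problems.append(f"{name}: ports not on switch {missing}")
        zoned_isl = sorted(ports & isl_ports)
        if zoned_isl:
            problems.append(f"{name}: inter-switch ports zoned {zoned_isl}")
    return problems
```

Take the real port numbers from the factory zoning diagrams and from the switch's own zoning configuration before relying on an empty result from zoning_problems.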


Software Overview

High Availability Cluster Multi-Processing (HACMP) for AIX

The data retention application can be a business-critical application. The DR550 can provide a high availability environment by leveraging the capabilities of AIX and High Availability Cluster Multi-Processing (HACMP) with dual P5 servers and redundant networks. This is referred to as the dual node configuration. IBM also offers a single node configuration that does not include HACMP.

HACMP is designed to keep applications such as System Storage Archive Manager operational if a component in a cluster node fails. In case of a component failure, HACMP is designed to move the application, along with its resources, from the active node to the standby (passive) node in the DR550.

Cluster Nodes

The two P5 520 servers running AIX with HACMP daemons are server nodes that share resources: disks, volume groups, file systems, networks and network IP addresses.

In this HACMP cluster, the two cluster nodes communicate with each other over a private Ethernet IP network. If one of the network interface cards fails, HACMP is designed to preserve communication by transferring the traffic to another physical network interface card on the same node. If a “connection” to the node fails, HACMP is designed to transfer resources to the backup node, which also has access to the shared resources.

In addition, heartbeats are sent between the nodes over the cluster networks to check on the health of the other cluster node. If the passive standby node detects no heartbeats from the active node, the active node is considered to have failed and HACMP is designed to automatically transfer resources to the passive standby node.
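The recovery behavior described above can be summarized as a small decision function. This is a conceptual Python sketch, not HACMP code: the function name, the list-of-booleans model of network adapters and the 10-second heartbeat_timeout default are all assumptions made for illustration, and HACMP's real failure detection is tuned through its own configuration.

```python
def failover_decision(adapters_up: list[bool], seconds_since_heartbeat: float,
                      heartbeat_timeout: float = 10.0) -> str:
    """Conceptual model of the recovery choices described above (not HACMP code).

    adapters_up[0] is the adapter currently carrying cluster traffic on the
    active node; any remaining entries are other network interface cards on
    that same node. heartbeat_timeout is a hypothetical value.
    """
    if seconds_since_heartbeat > heartbeat_timeout:
        # The standby node has heard nothing: treat the active node as failed.
        return "move resources to standby node"
    if adapters_up and adapters_up[0]:
        return "no action"
    if any(adapters_up[1:]):
        # Another NIC on the same node still works: move traffic to it.
        return "move traffic to another adapter"
    # No working adapter remains on the active node.
    return "move resources to standby node"
```

The ordering reflects the text: a local adapter swap is preferred while any adapter on the active node still works, and resources move to the standby node only when the node itself appears lost.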

Within the DR550 (dual node configuration only), HACMP is configured as follows:

• The cluster is set up in Hot Standby (active/passive) mode.

• The resource groups are set up in cascading mode.

• The volume group is set up in enhanced concurrent mode.

System Storage Archive Manager

IBM System Storage Archive Manager (the new name for Tivoli Storage Manager for Data Retention) is designed to provide archive services and to prevent critical data from being erased or rewritten. This software can help address requirements defined by many regulatory agencies for retention and disposition of data. Key features include the following:

• Data retention protection - This feature is designed to prevent deliberate or accidental deletion of data until its specified retention criterion is met. See “Data Retention Protection” for more information.

• Event-based retention policy - In some cases, retention must be based on an external event such as closing a brokerage account. System Storage Archive Manager supports event-based retention policy to allow data retention to be based on an event other than the storage of the data. See “Defining and Updating an Archive Copy Group” for more information. This feature must be enabled via the commands sent by the content management application.

• Deletion hold - To help ensure that records are not deleted when a regulatory retention period has lapsed but other requirements mandate that the records continue to be maintained, System Storage Archive Manager includes deletion hold. Using this feature will help prevent stored data from being deleted until the hold is released. See “Deletion Hold” for more information. This feature must be enabled via the commands sent by the content management application.

• Data encryption - 128-bit Advanced Encryption Standard (AES) encryption is now available for the Archive API Client. Data can be encrypted before it is transmitted to the DR550 and is then stored on disk or tape in encrypted form.
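To make the interaction of these retention features concrete, the Python sketch below models when an archived object becomes eligible for deletion. It is loosely based on the archive copy group parameters RETINIT, RETVER and RETMIN described in the Tivoli Storage Manager documentation; the ArchivedObject class and eligible_for_deletion function are hypothetical, do not call the SSAM API, and ignore details such as RETVER=NOLIMIT and the timing of expiration processing. Check the Administrator's Guide for the exact server behavior.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class ArchivedObject:
    """Hypothetical record of one archived object and its retention settings."""
    archived_on: date
    retinit: str                     # "CREATION" (chronological) or "EVENT"
    retver_days: int                 # days kept after archiving, or after the event
    retmin_days: int = 0             # event-based only: minimum days after archiving
    event_on: Optional[date] = None  # date the retention event was signaled
    on_hold: bool = False            # True while a deletion hold is in effect


def eligible_for_deletion(obj: ArchivedObject, today: date) -> bool:
    """Return True once the retention criteria are met and no hold applies."""
    if obj.on_hold:
        return False  # a deletion hold overrides an expired retention period
    if obj.retinit == "CREATION":
        return today >= obj.archived_on + timedelta(days=obj.retver_days)
    if obj.event_on is None:
        return False  # event not yet signaled: the data is kept indefinitely
    # Keep until RETVER days after the event AND RETMIN days after archiving.
    end = max(obj.event_on + timedelta(days=obj.retver_days),
              obj.archived_on + timedelta(days=obj.retmin_days))
    return today >= end
```

In this model, an event-based object with no event signaled is never eligible, which matches the idea that retention for such data is driven by the external event (for example, closing a brokerage account) rather than by the storage date.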

While the software has been renamed, many of the supporting documents have not yet been renamed. You will see references to both SSAM and TSM throughout this document. Over time, the documentation will be updated to reflect the new naming conventions.

For more information on System Storage Archive Manager, refer to the Tivoli Storage Manager for AIX Version 5.3 Administrator’s Guide, Administrator’s Reference and Quick Start manuals, which can be found on the web at:

http://publib.boulder.ibm.com/infocenter/tivihelp/index.jsp?toc=/com.ibm.itstorage.doc/toc.xml

Select Storage Manager for Data Retention, then Storage Manager for AIX Server, and then the Administrator’s Guide.

System Storage Archive Manager API Client

The System Storage Archive Manager API Client is used, in conjunction with System Storage Archive Manager server code, as the link to applications that produce or manage information to be stored, retrieved and retained. Content management applications, such as IBM DB2® Content Manager, identify information to be retained. The content management application calls the System Storage Archive Manager (SSAM) archive API Client to store, retrieve and communicate retention criteria to the SSAM server. The SSAM API Client must be installed on the application or middleware server that is used to initiate requests to the DR550. The application or middleware server must call the SSAM API to initiate a task within the DR550.

Some applications and middleware include the API client as part of their code. Others require it to

be installed separately.

DS4000 Storage Manager Version 9.12.65

The DS4000 Storage Manager Version 9.12.65 software (hereafter referred to as Storage Manager)

is only available as part of the DR550 and is not available for download from the web. This version

has been enhanced to provide additional protection.

Storage Manager is designed to support centralized management of the DS4100s in the DR550.

Storage Manager is designed to allow administrators to quickly configure and monitor storage from

a Java™-based GUI interface. It is also designed to allow them to customize and change settings as

well as configure new volumes, define mappings, handle routine maintenance and dynamically add

new enclosures and capacity to existing volumes—without interrupting user access to data. Failover

drivers, performance-tuning routines and cluster support are also standard features of Storage

Manager.

Using DS4000 Storage Manager, each DS4100 is configured as a single partition at the factory. As shown in later diagrams, the P5 520 servers are connected to the DS4100s via Ethernet cables. This connection is used to manage the DS4100s. For the single node configuration, DS4000 Storage Manager runs in the P5 520 server. For the dual node configuration, DS4000 Storage Manager runs in both servers: Server #2 is used to manage DS4100 #1, and Server #1 is used to manage DS4100 #2 (if present in the configuration).


Note: only this special version of DS4000 Storage Manager should be used with the DR550.

You should not use this version with other DS4000 or FAStT disk systems and should not

replace this version with a standard version of DS4000 Storage Manager (even if a newer

version is available).

Content Management Applications

For the DR550 to function within a customer IT environment, information appropriate to be retained

must be identified and supplied to the DR550. This can be accomplished with a content

management application. The content management application identifies information appropriate to

be retained, and provides this information to the DR550 via the SSAM API Client.

IBM DB2 Content Manager

To assist customers in addressing needs for data retention, IBM delivers DB2 Content Manager

along with business consulting services (as needed).

IBM® DB2® Content Manager provides a foundation for managing, accessing and integrating

critical business information on demand. It lets you integrate all forms of content - document,

web, image, rich media - across diverse business processes and applications, including Siebel,

PeopleSoft and SAP. Content Manager integrates with existing hardware and software

investments, both IBM and non-IBM, enabling customers to leverage common infrastructure,

achieve a lower cost of ownership, and deliver new, powerful information and services to

customers, partners and employees where and when needed. It comprises two core repository products that are integrated with System Storage Archive Manager for storage of documents into the DR550:

• DB2 Content Manager is optimized for large collections of large objects. It provides imaging, digital asset management, and web content management. When combined with DB2 Records Manager, it also provides a robust records retention repository for managing the retention of all enterprise documents.

• DB2 Content Manager OnDemand is optimized to manage very large collections of

smaller objects such as statements and checks. It provides output and report

management.

More information on the DB2 Content Manager portfolio of products can be found at the following

web site: http://www-306.ibm.com/software/data/cm/

There are a number of applications that work with IBM Content Manager to deliver specific

solutions. These applications are designed to use Content Manager functions and can send data to

be stored in DR550.

IBM offers additional applications that are designed to work with the DR550 API. These include:

IBM CommonStore for Exchange Server

IBM CommonStore for Lotus Domino

IBM CommonStore for SAP

IBM Content Manager for Message Monitoring and Retention (CM MMR) (with iLumin)

BRMS (iSeries) (also via IFS to BRMS)

Other Management Applications

You should consult with your application software vendor to determine if your applications support

the DR550 API. A number of application providers have enhanced their software to include this

support. The current list includes:

AXS-One


BrainTribe (formerly Comprendium)

Caminosoft

Ceyoniq

Easy Software

FileNet

Hummingbird

Hyland Software (OnBase)

Hyperwave

IRIS Software (Documentum Connector)

MBS Technologies (iSeries Connector for IBM CM V5)

OpenText (formerly IXOS)

Princeton Softech Active Archive Solution for PeopleSoft; for Siebel; for Oracle

Saperion

SER Solutions

Symantec Enterprise Vault (formerly KVS)

Waters (Creon Labs, NuGenesis)

Windream

Zantaz

Only applications or middleware using the API can send data to DR550. Information regarding the System Storage Archive Manager API Client may be found at http://publib.boulder.ibm.com/infocenter/tivihelp/index.jsp?toc=/com.ibm.itstorage.doc/toc.xml

Additional information on qualified ISVs may be found at http://www.ibm.com/storage/dr550


DR550 Offerings

DR550 is available in both single and dual node offerings. Each offering can also be customized to

include support for tape, and the appropriate Ethernet connections.

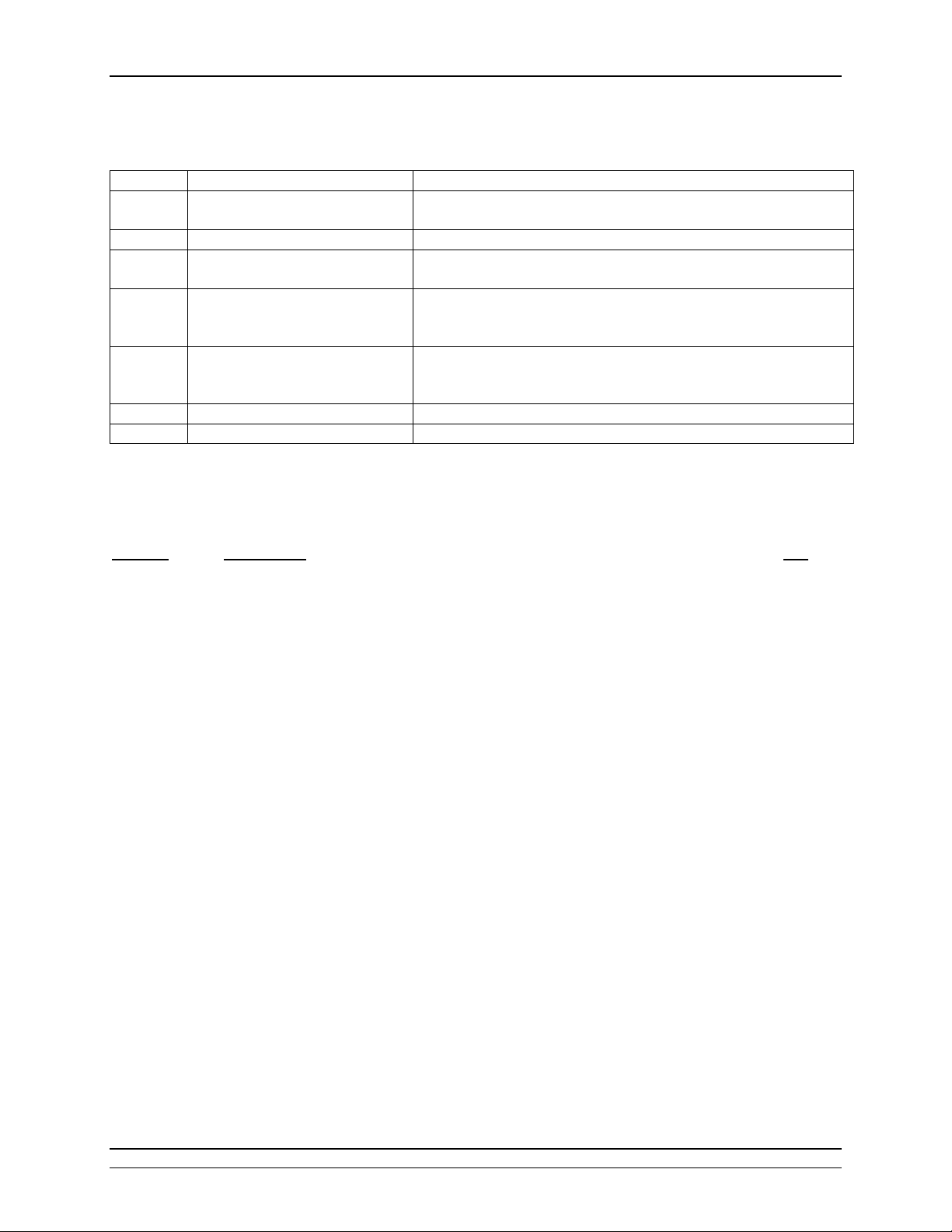

DR550 Single Node Components

The single node DR550 offerings are built with the following components:

Quantity Key Hardware Components

1 7014 Model T00 RS/6000 System Rack

1 9131 Model 52A P5 520

1 1724-100 DS4100 Storage Server (includes 5.6 TB of disk capacity)

0-1 1710-10U DS4000 EXP100 Storage Expansion Unit (only installed in

11.2 TB configuration)

1 2005-B16 TotalStorage SAN Switch - with 8 shortwave transceivers

(there are 12 transceivers when enhanced RVM is included

in the order)

1 7310-CR3 eServer Hardware Management Console

1 7316-TF3 Flat Panel Monitor, Keyboard, and Mouse

The following are the actual features ordered for the DR550 (11.2 TB). This list should not be modified in any way as part of the initial order. Use IBM’s eConfig tool to review any changes to the official configurations. Other configurations are also available.

Product Description Qty

Management Console

7310-CR3 7310-CR3 Rack-mounted Hardware Management Console 1

0706 Integrate with IBM TotalStorage Storage DR550 1

0961 Hardware Management Console for POWER5 Licensed Machine Code 1

4651 Rack Indicator, Rack #1 1

6458 Power Cord (4M – 14Ft) 400V/14A, IEC320/C13, IEC320/C14 1

7801 Ethernet Cable, 6M, Hardware Management Console to System Unit 1

9300 Language Group, US English 1

7316-TF3 IBM 7316-TF3 Rack-Mounted Flat Panel Console Kit 1

0706 Integrate with IBM System Storage DR550 1

4202 Keyboard/Video/Mouse (LCM) Displays 1

4242 6 Foot Extender Cable for Displays 1

4269 USB Conversion Option 1

6350 Travel Keyboard, US English 1

9300 Language Group Specify – US English 1

9911 Power Cord (4M) All (Standard Cord) 1

5771-RS1 Initial Software Support 1 Year 1

0612 Per Processor Software Support 1 Year 1

7000 Agreement for MCRSA 1

DR550 Engine #1

9131-52A 9131 Model 52A

0706 Integrate with DR500 -- IBM TotalStorage 1

Retention Solution single node

1930 1024 MB (2X512MB) DIMMS, 276-PIN, 533 MHZ, 1

DDR-2 SDRAM


1968 73.4 GB 10,000 RPM Ultra320 SCSI Disk Drive 2

Assembly

1977 2 Gigabit Fibre Channel PCI-X Adapter 3

1980 POWER GXT135P Graphics Accelerator With 1

Digital Support

1983 IBM 2-Port 10/100/1000 Base-TX Ethernet 1

PCI-X Adapter

1993 IBM 4.7 GB IDE Slimline DVD-RAM Drive 1

5005 Software Preinstall 1

5159 AC Power Supply, 850 W 1

6458 Power Cable -- Drawer to IBM PDU, 14-foot, 1

250V/10A

6574 Ultra320 SCSI 4-Pack 1

7160 IBM Rack-mount Drawer Rail Kit 1

7190 IBM Rack-mount Drawer Bezel and Hardware 1

7320 One Processor Entitlement for Processor 2

Feature # 8330

7877 Media Backplane Card 1

8330 2-way 1.9 GHz POWER5 Processor Card, 36MB 1

L3 Cache

9300 Language Group Specify - US English 1

5608-ARM IBM System Storage Archive Manager 1

3868 Per Terabytes with 1 Year SW Maintenance 6

5608-AR2 TS - ARM, 1 Yr Maint

3908 Per Terabytes SW Maintenance No Charge Registration 6

5692-A5L System Software 1

0967 MEDIA 5765-G03 AIX V5.3 1

0968 Expansion pack 1

0970 AIX 5.3 Update CD 1

0975 Microcode Upd Files and Disc Tool v1.1 CD 1

1004 CD-ROM Process Charge 1

1403 Preinstall 64 bit Kernel 1

2924 English Language 1

3410 CD-ROM 1

5005 Preinstall 1

5924 English U/L SBCS Secondary Language 1

5765-G03 AIX V5 1

0034 Value Pak per Processor D5 AIX V5.3

5771-SWM Software Maintenance for AIX, 1 Year 1

0484 D5 1 Yr SWMA for AIX per Processor Reg/Ren

DS4100#1

1724-100 DS4100 Midrange Disk System 1

0707 Integrate with IBM System Storage DR550 1

2210 Short Wave SFP GBIC (LC) 2

4603 SATA 400GB/7200 Disk Drive Module 14

5605 LC-LC 5M Fibre Optic Cable 2


7711 DS4100 AIX Host Kit 1

EXP100#1

1710-10U DS4000 EXP100 Storage Expan 1

0707 Integrate with IBM System Storage DR550 1

2210 2 Gb Fibre Channel Short Wave GBIC 2

4603 SATA 400 GB/7200 Drive Module 14

5601 1M 50u Fiber Optic Cable (LC-LC) 2

9001 Attach to DS4100 1

Switch#1

2005-B16 IBM TotalStorage SAN Switch – 16 Port 1

0706 DR550 Integration 1

2414 4 Gb SW SFP Transceiver - 4 Pack 2

5605 Fibre Cable LC/LC 5m multimode 1

Rack

7014-T00 7014-T00 IBM RS/6000 Rack Model T00 1

0233 Rack Content Specify: 7316-TF3 - 1 EIA 1

0234 Rack Content Specify: 1710/10U - 3 EIA 1

0247 Rack Content Specify: 7310/CR2 - 1 EIA 1

0248 Rack Content Specify: 1 EIA 1

0254 Rack Content Specify: 1724/100 - 3 EIA 1

0257 Rack Content Specify: 9131-52A - 4 EIA 1

0706 DR550 Integration (single node) 1

6068 Front Door for 1.8 Meter Rack (Flat Black) 1

6098 Side Panel for 1.8 or 2.0 Meter Rack (Black) 2

6580 Optional Rack Security Kit 1

7188 Power Dist Unit-Side Mount, Universal UTG0247 Connector 1

9188 Power Dist Unit Specify - Base/Side Mount, Universal UTG0247 Connector 1

9300 US English Nomenclature 1

9800 United States/Canada Power Cord 1

The following table highlights the versions used in DR550.

| Component | Software or Firmware Level |
|---|---|
| DS4100 | NVSRAM: N1724F100R912V05; Appware: 06.12.16.00; Bootware: 06.12.16.00 |
| DS4000 EXP100 | ESM Firmware 9563 |
| DS4000 Storage Manager | Version 9.12.6500.00 (Note: this is a special version only available with the DR550. It is not specifically ordered, but is included with the hardware.) |
| 2005-B16 | Firmware 4.4.0e (or later) |
| AIX | 5.3 TL 04, with Atape driver 9.6.0.0 and Atldd 6.3.1.0 |
| System Storage Archive Manager | 5.3.2.0 |
| System Storage Archive Manager Client | 5.3.0.0 |

An integrated management console is part of the DR550 offering. As an option, limited function is available via a TTY type terminal (customer provided) such as a VT100 or an IBM 3151. The TTY terminal is connected to the P5 520 server via the serial port on the front of the server. It is recommended that the integrated management console be used for all management activity.


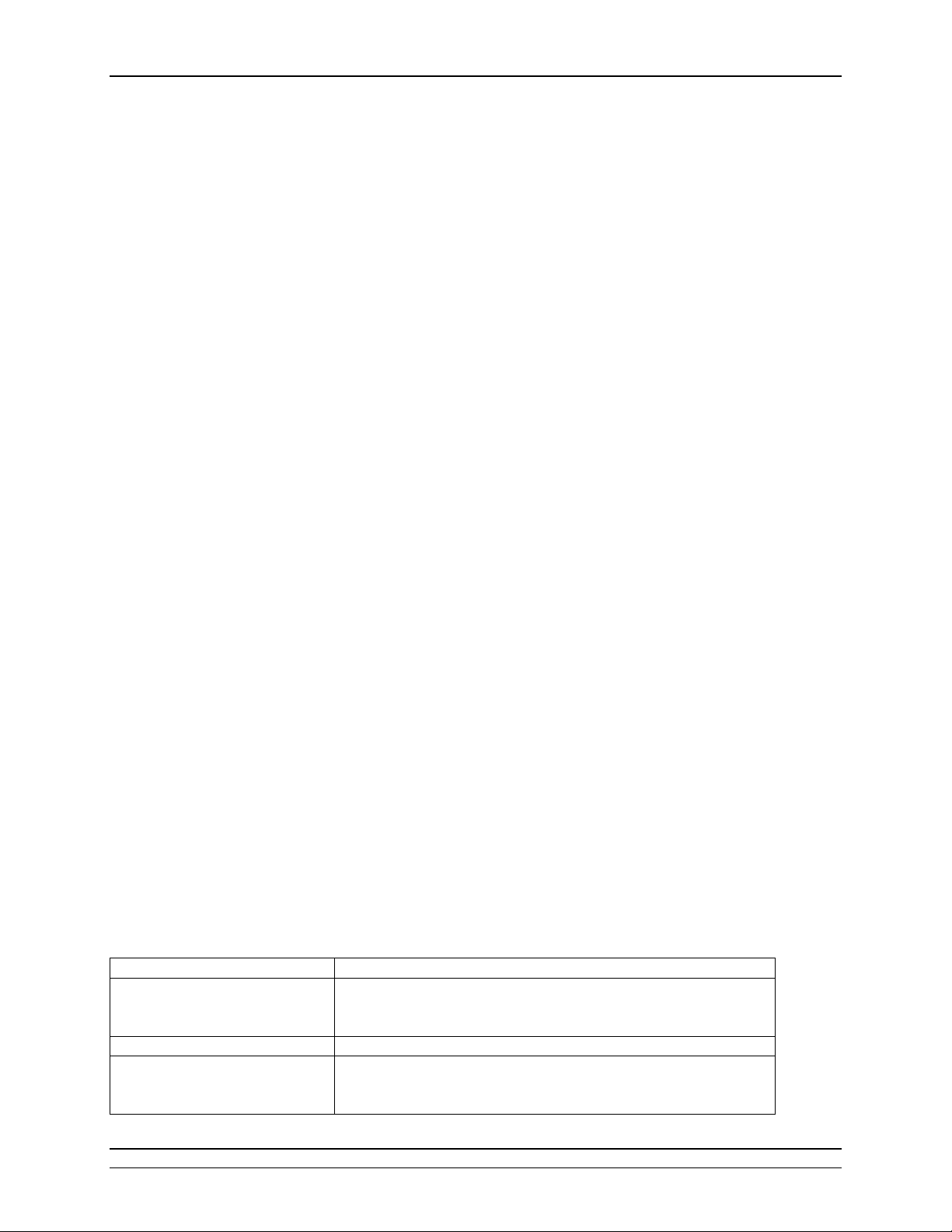

DR550 Dual Node Components

The dual node DR550 offerings are built with the following components:

Quantity Key Hardware Components

1 or 2 7014 Model T00 RS/6000 System Rack (2nd rack only included in 89.6 TB configuration)

2 9131 Model 52A P5 520

1 or 2 1724-100 DS4100 Storage Server (2nd DS4100 included in 89.6 TB

configuration)

0-14 1710-10U DS4000 EXP100 Storage Expansion Unit (1 included for 11.2 TB, 3 included for 22.4 TB, 7 included for 44.8 TB and 14 included for 89.6 TB configurations)

2 2005-B16 TotalStorage SAN Switch - with 8 shortwave transceivers

(there are 12 transceivers when configured for remote

mirroring)

1 7310-CR3 eServer Hardware Management Console

1 7316-TF3 Monitor, Keyboard, and Mouse
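The configuration sizes follow directly from the drive counts in the feature lists: each DS4100 or EXP100 holds 14 SATA drives of 400 GB (feature 4603), or 5.6 TB raw. The Python sketch below derives the enclosure mix for each configuration. It assumes at most seven EXP100 units per DS4100, which is inferred from the 44.8 TB and 89.6 TB configurations rather than stated in this guide, and it computes raw decimal capacity before RAID and formatting overhead, so usable LUN capacity is lower. The function names are hypothetical.

```python
DRIVES_PER_ENCLOSURE = 14   # 400 GB SATA drives per DS4100 or EXP100
DRIVE_GB = 400
GB_PER_ENCLOSURE = DRIVES_PER_ENCLOSURE * DRIVE_GB  # 5600 GB = 5.6 TB raw
# Inferred from the 44.8 TB (1 DS4100 + 7 EXP100) and 89.6 TB (2 + 14) configurations.
MAX_EXP100_PER_DS4100 = 7


def raw_capacity_gb(enclosures: int) -> int:
    """Raw (pre-RAID) capacity in decimal GB for a number of disk enclosures."""
    return enclosures * GB_PER_ENCLOSURE


def enclosure_mix(capacity_gb: int) -> tuple[int, int]:
    """Return (DS4100 count, EXP100 count) for a raw capacity in GB."""
    if capacity_gb <= 0 or capacity_gb % GB_PER_ENCLOSURE:
        raise ValueError("capacity must be a positive multiple of 5600 GB")
    enclosures = capacity_gb // GB_PER_ENCLOSURE
    group_size = MAX_EXP100_PER_DS4100 + 1      # one DS4100 plus its EXP100s
    ds4100 = -(-enclosures // group_size)       # ceiling division
    return ds4100, enclosures - ds4100
```

With these assumptions, 22.4 TB works out to one DS4100 with three EXP100 units and 89.6 TB to two DS4100 units with fourteen EXP100 units, matching the component list above.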

The following are the actual features ordered for the DR550 (11.2 TB). This list should not be modified in any way as part of the initial order. Use IBM’s eConfig tool to review the official configurations and any changes that have been implemented.

Product Description Qty

Management Console

7310-CR3 7310-CR3 Rack-mounted Hardware Management Console 1

0707 Integrate with IBM TotalStorage Storage DR550 1

0961 Hardware Management Console for POWER5 Licensed Machine Code 1

4651 Rack Indicator, Rack #1 1

6458 Power Cord (4M – 14Ft) 400V/14A, IEC320/C13, IEC320/C14 1

7801 Ethernet Cable, 6M, Hardware Management Console to System Unit 1

9300 Language Group, US English 1

7316-TF3 IBM 7316-TF3 Rack-Mounted Flat Panel Console Kit 1

0707 Integrate with IBM System Storage DR550 1

4202 Keyboard/Video/Mouse (LCM) Displays 1

4242 6 Foot Extender Cable for Displays 1

4269 USB Conversion Option 1

6350 Travel Keyboard, US English 1

9300 Language Group Specify – US English 1

9911 Power Cord (4M) All (Standard Cord) 1

5771-RS1 Initial Software Support 1 Year 1

0612 Per Processor Software Support 1 Year 1

7000 Agreement for MCRSA 1

DR550 Engine #1

9131-52A 9131 Model 52A

0706 Integrate with DR500 -- IBM TotalStorage 1

Retention Solution single node

1930 1024 MB (2X512MB) DIMMS, 276-PIN, 533 MHZ, 1

DDR-2 SDRAM

1968 73.4 GB 10,000 RPM Ultra320 SCSI Disk Drive 2


Assembly

1977 2 Gigabit Fibre Channel PCI-X Adapter 3

1980 POWER GXT135P Graphics Accelerator With 1

Digital Support

1983 IBM 2-Port 10/100/1000 Base-TX Ethernet 1

PCI-X Adapter

1993 IBM 4.7 GB IDE Slimline DVD-RAM Drive 1

5005 Software Preinstall 1

5159 AC Power Supply, 850 W 1

6458 Power Cable -- Drawer to IBM PDU, 14-foot, 1

250V/10A

6574 Ultra320 SCSI 4-Pack 1

7160 IBM Rack-mount Drawer Rail Kit 1

7190 IBM Rack-mount Drawer Bezel and Hardware 1

7320 One Processor Entitlement for Processor 2

Feature # 8330

7877 Media Backplane Card 1

8330 2-way 1.9 GHz POWER5 Processor Card, 36MB 1

L3 Cache

9300 Language Group Specify - US English 1

5608-ARM IBM System Storage Archive Manager 1

3868 Per Terabytes with 1 Year SW Maintenance 1

5608-AR2 TS - ARM, 1 Yr Maint

3908 Per Terabytes SW Maintenance No Charge Registration 1

5692-A5L System Software 1

0967 MEDIA 5765-G03 AIX V5.3 1

0968 Expansion pack 1

0970 AIX 5.3 Update CD 1

0975 Microcode Upd Files and Disc Tool v1.1 CD 1

1004 CD-ROM Process Charge 1

1403 Preinstall 64 bit Kernel 1

2924 English Language 1

3410 CD-ROM 1

5005 Preinstall 1

5924 English U/L SBCS Secondary Language 1

5765-F62 HACMP V5 1

0001 Per Processor with 1 Year maintenance 1

5660-HMP HACMP Reg/Ren: 1Yr 1

0719 HACMP Base SW MAINT per proc 1Y Reg 1

5765-G03 AIX V5 1

0034 Value Pak per Processor D5 AIX V5.3

5771-SWM Software Maintenance for AIX, 1 Year 1

0484 D5 1 Yr SWMA for AIX per Processor Reg/Ren

DR550 Engine #2

9131-52A 9131 Model 52A


0706 Integrate with DR500 -- IBM TotalStorage 1

Retention Solution single node

1930 1024 MB (2X512MB) DIMMS, 276-PIN, 533 MHZ, 1

DDR-2 SDRAM

1968 73.4 GB 10,000 RPM Ultra320 SCSI Disk Drive 2

Assembly

1977 2 Gigabit Fibre Channel PCI-X Adapter 3

1980 POWER GXT135P Graphics Accelerator With 1

Digital Support

1983 IBM 2-Port 10/100/1000 Base-TX Ethernet 1

PCI-X Adapter

1993 IBM 4.7 GB IDE Slimline DVD-RAM Drive 1

5005 Software Preinstall 1

5159 AC Power Supply, 850 W 1

6458 Power Cable -- Drawer to IBM PDU, 14-foot, 1

250V/10A

6574 Ultra320 SCSI 4-Pack 1

7160 IBM Rack-mount Drawer Rail Kit 1

7190 IBM Rack-mount Drawer Bezel and Hardware 1

7320 One Processor Entitlement for Processor 2

Feature # 8330

7877 Media Backplane Card 1

8330 2-way 1.9 GHz POWER5 Processor Card,36MB 1

L3 Cache

9300 Language Group Specify - US English 1

5692-A5L System Software 1

0967 MEDIA 5765-G03 AIX V5.3 1

0968 Expansion pack 1

0970 AIX 5.3 Update CD 1

0975 Microcode Upd Files and Disc Tool v1.1 CD 1

1004 CD-ROM Process Charge 1

1403 Preinstall 64 bit Kernel 1

2924 English Language 1

3410 CD-ROM 1

5005 Preinstall 1

5924 English U/L SBCS Secondary Language 1

5765-G03 AIX V5 1

0034 Value Pak per Processor D5 AIX V5.3

5771-SWM Software Maintenance for AIX, 1 Year 1

0484 D5 1 Yr SWMA for AIX per Processor Reg/Ren

5765-F62 HACMP V5 1

0001 Per Processor with 1 Year maintenance 1

5660-HMP HACMP Reg/Ren: 1Yr 1

0719 HACMP Base SW MAINT per proc 1Y Reg 1

DS4100#1

1724-100 DS4100 Midrange Disk System 1

0707 Integrate with IBM System Storage DR550 1


2210 Short Wave SFP GBIC (LC) 2

4603 SATA 400GB/7200 Disk Drive Module 14

5605 LC-LC 5M Fibre Optic Cable 2

7711 DS4100 AIX Host Kit 1

EXP100#1

1710-10U DS4000 EXP100 Storage Expan 1

0707 Integrate with IBM System Storage DR550 1

2210 2 Gb Fibre Channel Short Wave GBIC 2

4603 SATA 400 GB/7200 Drive Module 14

5601 1M 50u Fiber Optic Cable (LC-LC) 2

9001 Attach to DS4100 1

Switch#1

2005-B16 IBM TotalStorage SAN Switch – 16 Port 1

0707 DR550 Integration 1

2414 4 Gb SW SFP Transceiver - 4 Pack 2

5605 Fibre Cable LC/LC 5m multimode 3

Switch#2

2005-B16 IBM TotalStorage SAN Switch – 16 Port 1

0707 DR550 Integration 1

2414 4 Gb SW SFP Transceiver - 4 Pack 2

5605 Fibre Cable LC/LC 5m multimode 3

Rack

7014-T00 7014-T00 IBM RS/6000 Rack Model T00 1

0233 Rack Content Specify: 7316-TF3 - 1 EIA 1

0234 Rack Content Specify: 1710/10U - 3 EIA 1

0247 Rack Content Specify: 7310/CR2 - 1 EIA 1

0248 Rack Content Specify: 1 EIA 2

0254 Rack Content Specify: 1724/100 - 3 EIA 1

0257 Rack Content Specify: 9131-52A - 4 EIA 2

0707 DR550 Integration (dual node) 1

6068 Front Door for 1.8 Meter Rack (Flat Black) 1

6098 Side Panel for 1.8 or 2.0 Meter Rack (Black) 2

6580 Optional Rack Security Kit 1

7188 Power Dist Unit-Side Mount, Universal UTG0247 Connector 1

9188 Power Dist Unit Specify - Base/Side Mount, Universal UTG0247 Connector 1

9300 US English Nomenclature 1

9800 United States/Canada Power Cord 1

Other configurations are available, using different numbers of DS4000 EXP100 and DS4100 units.

The following table highlights the versions used in DR550.

| Component | Software or Firmware Level |
|---|---|
| DS4100 | NVSRAM: N1724F100R912V05; Appware: 06.12.16.00; Bootware: 06.12.16.00 |
| DS4000 EXP100 | ESM Firmware 9563 |
| DS4000 Storage Manager | Version 9.12.6500.00 (Note: this is a special version only available with the DR550. It is not specifically ordered, but is included with the hardware.) |
| 2005-B16 | Firmware 4.4.0e (or later) |
| AIX | 5.3 TL 04, with Atape driver 9.6.0.0 and Atldd 6.3.1.0 |
| HACMP | 5.3 + PTFs |
| System Storage Archive Manager | 5.3.2.0 |
| System Storage Archive Manager Client | 5.3.0.0 |
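When verifying an installed system against the firmware and software table, a simple comparison script can flag mismatches. The Python sketch below assumes you have already collected the installed levels into a dictionary (how you gather them is not shown); the dictionary keys, the function names and the assumption that the switch reports Fabric OS style versions such as v4.4.0e are illustrative. It treats the 2005-B16 firmware as a minimum level, because the table allows 4.4.0e or later, and requires exact matches elsewhere. AIX and HACMP levels are left out because they are not single version strings.

```python
import re

# Expected levels transcribed from the DR550 firmware/software table.
EXPECTED_LEVELS = {
    "DS4100 NVSRAM": "N1724F100R912V05",
    "DS4100 Appware": "06.12.16.00",
    "DS4100 Bootware": "06.12.16.00",
    "EXP100 ESM firmware": "9563",
    "DS4000 Storage Manager": "9.12.6500.00",
    "2005-B16 firmware": "4.4.0e",  # minimum level: "4.4.0e (or later)"
    "System Storage Archive Manager": "5.3.2.0",
}
MINIMUM_ONLY = {"2005-B16 firmware"}


def fabric_os_key(version: str) -> tuple[int, int, int, str]:
    """Turn a version such as '4.4.0e' or 'v5.0.1' into a sortable tuple."""
    match = re.fullmatch(r"v?(\d+)\.(\d+)\.(\d+)([a-z]?)", version.strip())
    if match is None:
        raise ValueError(f"unrecognized version: {version!r}")
    major, minor, patch, letter = match.groups()
    return int(major), int(minor), int(patch), letter


def level_problems(installed: dict[str, str]) -> list[str]:
    """Compare installed levels with EXPECTED_LEVELS and describe mismatches."""
    problems = []
    for component, expected in EXPECTED_LEVELS.items():
        actual = installed.get(component)
        if actual is None:
            problems.append(f"{component}: not reported")
        elif component in MINIMUM_ONLY:
            if fabric_os_key(actual) < fabric_os_key(expected):
                problems.append(f"{component}: {actual} is older than {expected}")
        elif actual != expected:
            problems.append(f"{component}: {actual} (expected {expected})")
    return problems
```

Exact matching is deliberate for the DS4000 components, since this guide states that the special DR550 Storage Manager version should not be replaced even if a newer standard version is available.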

An integrated console is part of the DR550 offering. As an option, limited function is available via a

TTY type terminal (customer provided) such as a VT100 or an IBM 3151. If this option is used, the

TTY terminal is connected to the serial port on the front of the P5 520 server. It is recommended

that the integrated management console be used for all management activity.

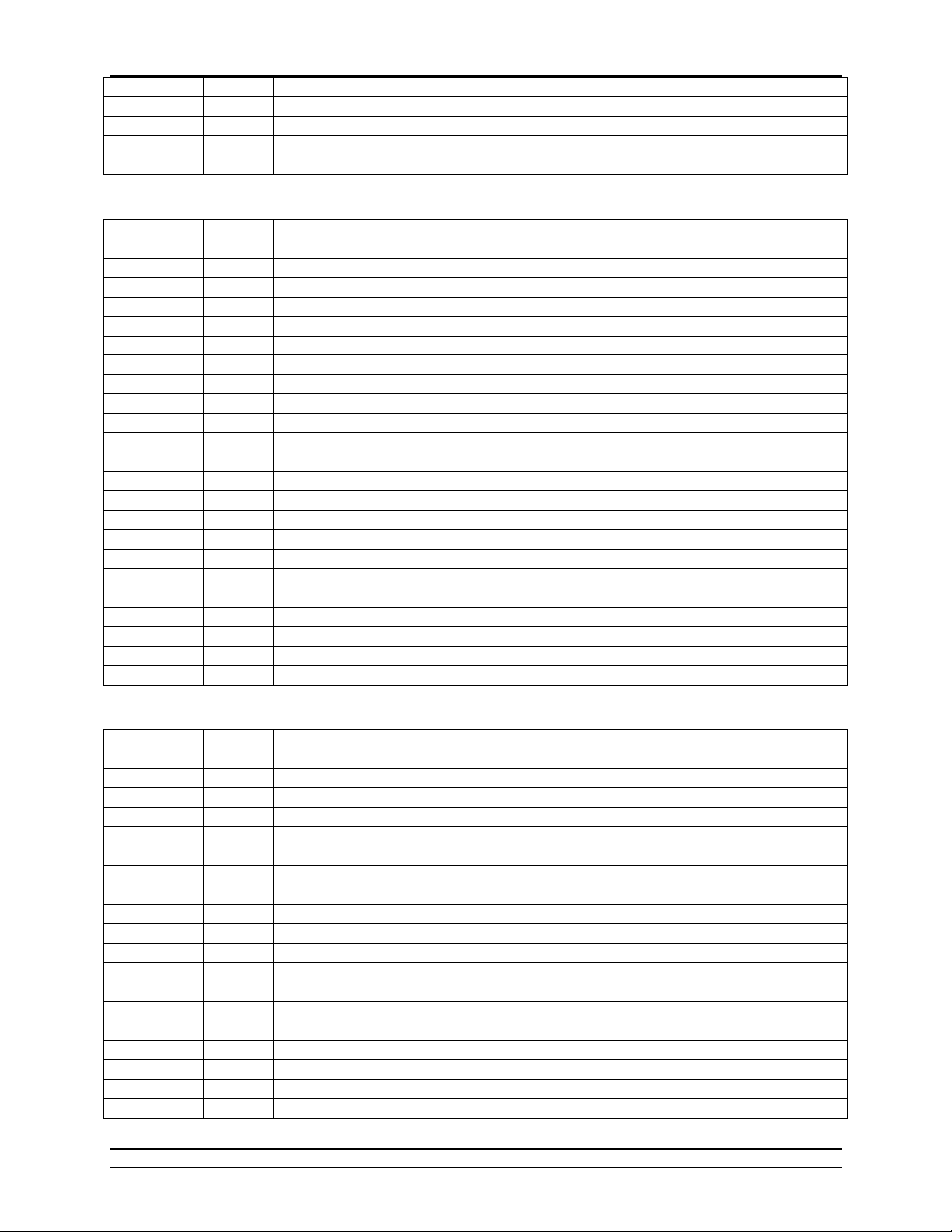

DS4100 Logical Volume Configurations

On the DR550, LUNs will be configured based on the total storage capacity ordered. All disk

capacity is allocated for use by TSM. No disk capacity is set aside for other applications or servers.

Below are the approximate LUN configurations set at the factory. The LUN id column shows the

LUN id as seen from DS4000 Storage Manager. If you view the LUN id from AIX, it will be displayed

as a hexadecimal address.
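The relationship between the LUN ID shown in DS4000 Storage Manager and the value AIX displays can be illustrated with a short Python sketch. It assumes the common AIX convention for Fibre Channel disks, in which `lsattr -El hdiskN -a lun_id` reports the LUN number in the top bytes of a 64-bit value (LUN 1 appears as 0x1000000000000), and it covers only LUNs 0 to 255. The helper names are hypothetical, and the hdisk mapping (LUN n appears as hdisk n+2) is taken from the factory tables in this section; device numbering on a given system can differ, so confirm it with lsattr.

```python
def aix_lun_id(lun: int) -> str:
    """Format a Storage Manager LUN number the way AIX typically shows lun_id.

    Assumes peripheral device addressing, where the LUN number occupies the
    second byte of the 8-byte LUN field (LUN 1 -> 0x1000000000000).
    """
    if not 0 <= lun < 256:
        raise ValueError("this sketch only handles LUN numbers 0-255")
    return hex(lun << 48)


def lun_from_aix(lun_id: str) -> int:
    """Inverse of aix_lun_id: recover the LUN number from an AIX lun_id string."""
    return int(lun_id, 16) >> 48


def factory_hdisk(lun: int) -> str:
    """hdisk name from the factory tables: LUN n appears as hdisk(n + 2)."""
    return f"hdisk{lun + 2}"
```

For example, LUN 31 in Storage Manager would be shown by AIX as 0x1f000000000000 under this convention.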

For 5.6 TB System (single or dual node configurations)

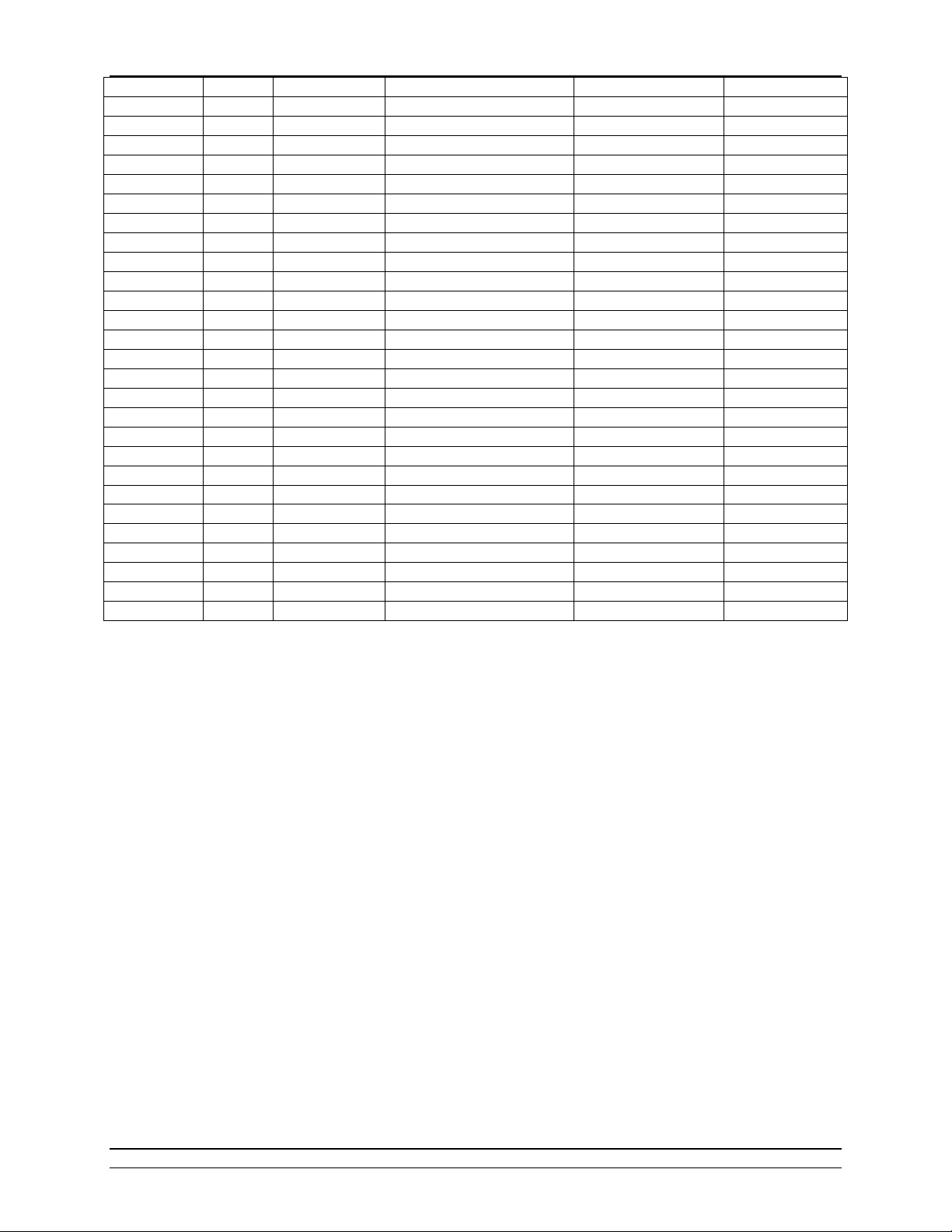

LUN size LUN ID Hdisk on AIX Logical Volume File System VG Name

2 GB 0 hdisk2 tsmappslv /tsm TSMApps

115 GB

300 GB

2035 GB

2035 GB

380* GB

1

2

3

4

5

For 11.2 TB System (single or dual node configurations)

LUN size LUN ID Hdisk on AIX Logical Volume File System VG Name

2 GB 0 hdisk2 tsmappslv /tsm TSMApps

115 GB

300 GB

2035 GB

2035 GB

380* GB

2035 GB

2035 GB

600* GB

1

2

3

4

5

6

7

8

For 22.4 TB System (dual node configurations only)

LUN size LUN ID Hdisk on AIX Logical Volume File System VG Name

2 GB 0 hdisk2 tsmappslv /tsm TSMApps

315 GB

900 GB

2035 GB

2035 GB

380* GB

2035 GB

1900* GB

2035 GB

1

2

3

4

5

6

7

9

hdisk3

hdisk4

hdisk5 tsmstglv1

hdisk6 tsmstglv2

hdisk7 tsmstglv3

hdisk3

hdisk4

hdisk5 tsmstglv1

hdisk6 tsmstglv2

hdisk7 tsmstglv3

hdisk8 tsmstglv4

hdisk9 tsmstglv5

hdisk10 tsmstglv6

hdisk3

hdisk4

hdisk5 tsmstglv1

hdisk6 tsmstglv2

hdisk7 tsmstglv3

hdisk8 tsmstglv4

hdisk9 tsmstglv5

hdisk10 tsmstglv6

tsmdblv tsmloglv /tsmDb /tsmLog TSMDbLogs

tsmdbbkuplv /tsmdbbkup TSMDbBkup

tsmdblv tsmloglv /tsmDb /tsmLog TSMDbLogs

tsmdbbkuplv /tsmdbbkup TSMDbBkup

tsmdblv tsmloglv /tsmDb /tsmLog TSMDbLogs

tsmdbbkuplv /tsmdbbkup TSMDbBkup

IBM Storage Systems Copyright © 2006 by International Business Machines Corporation

Page 26

IBM System Storage DR550 Version 3.0 ------17 March 2006 Page 26

2035 GB

600* GB

2035 GB

2035 GB

600* GB

10

11

11

12

13

hdisk11 tsmstglv7

hdisk12 tsmstglv8

hdisk13 tsmstglv9

hdisk14 tsmstglv10

hdisk15 tsmstglv11

For 44.8 TB System (dual node configurations only)

LUN size  LUN ID  Hdisk on AIX  Logical Volume     File System      VG Name
2 GB      0       hdisk2        tsmappslv          /tsm             TSMApps
415 GB    1       hdisk3        tsmdblv, tsmloglv  /tsmDb, /tsmLog  TSMDbLogs
1200 GB   2       hdisk4        tsmdbbkuplv        /tsmdbbkup       TSMDbBkup
2035 GB   3       hdisk5        tsmstglv1
2035 GB   4       hdisk6        tsmstglv2
280* GB   5       hdisk7        tsmstglv3
2035 GB   6       hdisk8        tsmstglv4
1600* GB  7       hdisk9        tsmstglv5
2035 GB   8       hdisk10       tsmstglv6
2035 GB   9       hdisk11       tsmstglv7
600* GB   10      hdisk12       tsmstglv8
2035 GB   11      hdisk13       tsmstglv9
2035 GB   12      hdisk14       tsmstglv10
600* GB   13      hdisk15       tsmstglv11
2035 GB   14      hdisk16       tsmstglv12
2035 GB   15      hdisk17       tsmstglv13
600* GB   16      hdisk18       tsmstglv14
2035 GB   17      hdisk19       tsmstglv15
2035 GB   18      hdisk20       tsmstglv16
600* GB   19      hdisk21       tsmstglv17
2035 GB   20      hdisk22       tsmstglv18
2035 GB   21      hdisk23       tsmstglv19
600* GB   22      hdisk24       tsmstglv20

For 89.6 TB System (dual node configurations only)

LUN size  LUN ID  Hdisk on AIX  Logical Volume     File System      VG Name
2 GB      0       hdisk2        tsmappslv          /tsm             TSMApps
415 GB    1       hdisk3        tsmdblv, tsmloglv  /tsmDb, /tsmLog  TSMDbLogs
1200 GB   2       hdisk4        tsmdbbkuplv        /tsmdbbkup       TSMDbBkup
2035 GB   3       hdisk5        tsmstglv1
2035 GB   4       hdisk6        tsmstglv2
280* GB   5       hdisk7        tsmstglv3
2035 GB   6       hdisk8        tsmstglv4
1600 GB   7       hdisk9        tsmstglv5
2035 GB   8       hdisk10       tsmstglv6
2035 GB   9       hdisk11       tsmstglv7
600* GB   10      hdisk12       tsmstglv8
2035 GB   11      hdisk13       tsmstglv9
2035 GB   12      hdisk14       tsmstglv10
600* GB   13      hdisk15       tsmstglv11
2035 GB   14      hdisk16       tsmstglv12
2035 GB   15      hdisk17       tsmstglv13
600* GB   16      hdisk18       tsmstglv14
2035 GB   17      hdisk19       tsmstglv15
2035 GB   18      hdisk20       tsmstglv16
600* GB   19      hdisk21       tsmstglv17
2035 GB   20      hdisk22       tsmstglv18
2035 GB   21      hdisk23       tsmstglv19
600* GB   22      hdisk24       tsmstglv20
2035 GB   23      hdisk25       tsmstglv21
2035 GB   24      hdisk26       tsmstglv22
600* GB   25      hdisk27       tsmstglv23
2035 GB   26      hdisk28       tsmstglv24
2035 GB   27      hdisk29       tsmstglv25
600* GB   28      hdisk30       tsmstglv26
2035 GB   29      hdisk31       tsmstglv27
2035 GB   30      hdisk32       tsmstglv28
600* GB   31      hdisk33       tsmstglv29
2035 GB   32      hdisk34       tsmstglv30
2035 GB   33      hdisk35       tsmstglv31
600* GB   34      hdisk36       tsmstglv32
2035 GB   35      hdisk37       tsmstglv33
2035 GB   36      hdisk38       tsmstglv34
600* GB   37      hdisk39       tsmstglv35
2035 GB   38      hdisk40       tsmstglv36
2035 GB   39      hdisk41       tsmstglv37
600* GB   40      hdisk42       tsmstglv38
2035 GB   41      hdisk43       tsmstglv39
2035 GB   42      hdisk44       tsmstglv40
600* GB   43      hdisk45       tsmstglv41
2035 GB   44      hdisk46       tsmstglv42
2035 GB   45      hdisk47       tsmstglv43
600* GB   46      hdisk48       tsmstglv44

* Note: The size of the last LUN in each disk drawer is approximate. The actual size may differ slightly from the published value.
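The LUN tables follow a regular pattern. LUN 0 is hdisk2, each LUN n is hdisk(n + 2), and each storage LUN (LUN 3 and up) holds logical volume tsmstglv(n - 2). The Python sketch below encodes that pattern and adds up the storage LUNs of the 44.8 TB layout. The pattern is inferred from the published tables, not taken from an IBM tool. Because the starred sizes are approximate, the total is only an estimate of the raw space behind ARCHIVEPOOL, not a guaranteed capacity.

```python
"""Sketch: LUN -> hdisk -> logical volume mapping for the 44.8 TB DR550 layout.

The mapping rule is inferred from the published LUN tables (assumption),
and starred (approximate) sizes are entered as plain numbers.
"""
from typing import List, Tuple

# Published LUN sizes in GB for the 44.8 TB layout, indexed by LUN ID.
LUN_SIZES_44_8_TB: List[int] = [
    2, 415, 1200,               # LUN 0-2: /tsm, database + log, database backup
    2035, 2035, 280,            # LUN 3-5
    2035, 1600,                 # LUN 6-7
] + [2035, 2035, 600] * 5       # LUN 8-22


def describe_lun(lun_id: int) -> Tuple[str, str]:
    """Return (hdisk, logical volume) for a LUN ID under the inferred rule."""
    hdisk = f"hdisk{lun_id + 2}"
    if lun_id == 0:
        return hdisk, "tsmappslv"
    if lun_id == 1:
        return hdisk, "tsmdblv, tsmloglv"
    if lun_id == 2:
        return hdisk, "tsmdbbkuplv"
    return hdisk, f"tsmstglv{lun_id - 2}"


def archivepool_capacity_gb(sizes: List[int]) -> int:
    """Sum the storage LUNs (LUN 3 and up) that back ARCHIVEPOOL."""
    return sum(sizes[3:])


if __name__ == "__main__":
    for lun_id, size in enumerate(LUN_SIZES_44_8_TB):
        hdisk, lv = describe_lun(lun_id)
        print(f"LUN {lun_id:2d}  {size:5d} GB  {hdisk:8s}  {lv}")
    print("Approximate ARCHIVEPOOL capacity:",
          archivepool_capacity_gb(LUN_SIZES_44_8_TB), "GB")
```

The same rule also reproduces the 22.4 TB and 89.6 TB tables. Only the list of sizes changes.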

Backup times for the SSAM database vary with the used portion of the allocated space. As the database fills up (which could take years), expect backup times to increase.

AIX settings

The following settings have been configured on the AIX server(s):

• Paging space has been increased by 1536 MB, so the DR550 now has a total of 512 + 1536 = 2048 MB of paging space.

• The AIX TCP network option rfc1323 has been set to 1 (the default value is 0).

In addition, the FTP and Telnet services have been shut down. All other ports and sockets are blocked except those needed by AIX, HACMP, and System Storage Archive Manager.
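To confirm these settings on a running node, compare the output of two AIX commands with the expected values. `lsps -s` gives the paging space summary, and `no -o rfc1323` gives the current value of the network option. The Python sketch below parses output that has already been captured. Keep in mind that the sample strings assume the usual AIX output layout, so verify it on your own system. The `run` helper works only on AIX.

```python
import re
import subprocess
from typing import List

EXPECTED_PAGING_MB = 512 + 1536  # default paging space plus the factory increase
EXPECTED_RFC1323 = 1             # factory value (the AIX default is 0)


def parse_total_paging_mb(lsps_output: str) -> int:
    """Return the total paging space in MB from `lsps -s` output (assumed layout)."""
    match = re.search(r"(\d+)\s*MB", lsps_output)
    if match is None:
        raise ValueError("no paging space total found in lsps output")
    return int(match.group(1))


def parse_no_value(no_output: str, option: str) -> int:
    """Return the value of `option` from `no -o option` output, e.g. 'rfc1323 = 1'."""
    match = re.search(rf"{re.escape(option)}\s*=\s*(\d+)", no_output)
    if match is None:
        raise ValueError(f"{option} not found in no output")
    return int(match.group(1))


def check_outputs(lsps_output: str, no_output: str) -> List[str]:
    """Compare captured command output with the factory settings."""
    problems = []
    if parse_total_paging_mb(lsps_output) != EXPECTED_PAGING_MB:
        problems.append("total paging space is not 2048 MB")
    if parse_no_value(no_output, "rfc1323") != EXPECTED_RFC1323:
        problems.append("rfc1323 is not set to 1")
    return problems


def run(cmd: List[str]) -> str:
    """Run a command and return its standard output (lsps and no exist only on AIX)."""
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


if __name__ == "__main__":
    # Sample output in the usual AIX format; on a DR550 node you would pass
    # run(["lsps", "-s"]) and run(["no", "-o", "rfc1323"]) instead.
    sample_lsps = "Total Paging Space   Percent Used\n      2048MB               1%\n"
    sample_no = "rfc1323 = 1\n"
    print(check_outputs(sample_lsps, sample_no) or "settings match")
```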


System Storage Archive Manager Server Configuration

The following System Storage Archive Manager server settings on the DR550 differ from the default settings. All other parameters use their default values.

ARCHIVERETENTIONPROTECTION ON
TCPWINDOWSIZE 63
TCPNODELAY YES
BUFPOOLSIZE 512000
SELFTUNEBUFPOOLSIZE NO
TXNGROUPMAX 512
COMMTIMEOUT 300
DBPAGESHADOW YES
MAXSESSIONS 100
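Most of these values are server options kept in the server options file (dsmserv.opt). Archive retention protection is, as far as we know, enabled with the SET ARCHIVERETENTIONPROTECTION server command rather than in that file, so the sketch below leaves it out. The Python sketch compares an options file against the expected DR550 values. Three details are assumptions to verify against the Administrator's Reference: the file path, the `*` comment convention, and the option spellings used as dictionary keys.

```python
import sys
from pathlib import Path
from typing import Dict, Optional, Tuple

# Non-default server options expected on the DR550, keyed by the option names
# assumed to be valid in dsmserv.opt (verify spellings in the Administrator's Reference).
EXPECTED_OPTIONS = {
    "TCPWINDOWSIZE": "63",
    "TCPNODELAY": "YES",
    "BUFPOOLSIZE": "512000",
    "SELFTUNEBUFPOOLSIZE": "NO",
    "TXNGROUPMAX": "512",
    "COMMTIMEOUT": "300",
    "DBPAGESHADOW": "YES",
    "MAXSESSIONS": "100",
}


def parse_options(text: str) -> Dict[str, str]:
    """Parse 'OPTION value' lines; blank lines and lines starting with '*' are skipped."""
    options = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("*"):
            continue
        parts = line.split(None, 1)
        options[parts[0].upper()] = parts[1].strip() if len(parts) > 1 else ""
    return options


def diff_options(actual: Dict[str, str]) -> Dict[str, Tuple[str, Optional[str]]]:
    """Map each mismatching option to (expected value, actual value or None if missing)."""
    return {
        name: (expected, actual.get(name))
        for name, expected in EXPECTED_OPTIONS.items()
        if (actual.get(name) or "").upper() != expected
    }


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python check_opts.py /path/to/dsmserv.opt  (the path is site-specific)
    found = parse_options(Path(sys.argv[1]).read_text())
    for option, (want, got) in sorted(diff_options(found).items()):
        print(f"{option}: expected {want}, found {got}")
```

Values are compared case-insensitively, so `yes` and `YES` count as a match.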

The following files have been defined at the factory:

1. /tsm/files/devconfig: the device configuration file, which helps in rebuilding the System Storage Archive Manager server within the DR550 if the server is lost.

2. /tsm/files/volhist: the volume history file, which keeps track of the volumes used by System Storage Archive Manager.

See the Tivoli Storage Manager for AIX Administrator’s Guide and Reference manuals for

information on customizing these settings for your needs.

System Storage Archive Manager Database and Logs

For the 5.6 TB and 11.2 TB configurations, a 100 GB logical volume for the SSAM database and a 15 GB logical volume for the SSAM recovery log have been preconfigured. For the 22.4 TB configuration, the database logical volume is 300 GB and the log logical volume is 15 GB. For the 44.8 TB and 89.6 TB configurations, the database logical volume is 400 GB and the log logical volume is 15 GB. The System Storage Archive Manager database and log volumes are configured on DS4000 RAID 5 storage. System Storage Archive Manager mirroring of the database and log volumes is not configured.
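These sizes also account for LUN 1 (hdisk3, volume group TSMDbLogs) in the configuration tables. That LUN holds both the database and the recovery log logical volumes, so it is sized at database plus log. The short Python check below confirms that arithmetic. It uses only the figures in this section and the published LUN 1 sizes for the 22.4, 44.8, and 89.6 TB tables.

```python
from typing import Dict, Tuple

# Preconfigured SSAM database and recovery log sizes in GB, from this section.
DB_LOG_GB: Dict[str, Tuple[int, int]] = {
    "5.6 TB": (100, 15),
    "11.2 TB": (100, 15),
    "22.4 TB": (300, 15),
    "44.8 TB": (400, 15),
    "89.6 TB": (400, 15),
}

# Published LUN 1 (hdisk3, TSMDbLogs) sizes in GB for the larger configurations.
LUN1_GB: Dict[str, int] = {"22.4 TB": 315, "44.8 TB": 415, "89.6 TB": 415}


def db_log_lun_gb(config: str) -> int:
    """Space needed on the TSMDbLogs LUN: database plus recovery log."""
    db_gb, log_gb = DB_LOG_GB[config]
    return db_gb + log_gb


# Each published LUN 1 size equals database + log for its configuration.
for config, lun_gb in LUN1_GB.items():
    assert db_log_lun_gb(config) == lun_gb, config
```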

System Storage Archive Manager Disk Storage Pool

The DR550 has been preconfigured with a single disk storage pool (ARCHIVEPOOL). The raw storage logical volumes (tsmstglv1, tsmstglv2, and so on) listed in the tables above have been defined as volumes in ARCHIVEPOOL.

System Storage Archive Manager Storage Management Policy

The DR550 default policy is defined with policy objects that are all named STANDARD. The archive copy group in the STANDARD management class specifies the following:

• Data is stored in ARCHIVEPOOL.

• A chronological retention policy retains the data for 365 days.

See the Tivoli Storage Manager for AIX Administrator’s Guide, Administrator’s Reference, and Quick Start manuals for more information on customizing the System Storage Archive Manager policy for your data environment.
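Under chronological retention of 365 days, an archived object becomes eligible for expiration 365 days after it was archived. With archive retention protection enabled, the server should not allow it to be deleted earlier. The minimal Python illustration below covers only the date arithmetic. The archive date is a made-up example, and the constant name only echoes the copy group's RETVER parameter. Actual removal happens when the server runs expiration processing, not on the computed date itself.

```python
from datetime import date, timedelta

RETVER_DAYS = 365  # archive retention in the STANDARD management class


def earliest_expiration(archived_on: date, retain_days: int = RETVER_DAYS) -> date:
    """First date on which a chronologically retained object may expire."""
    return archived_on + timedelta(days=retain_days)


if __name__ == "__main__":
    # Hypothetical example: an object archived on 17 March 2006.
    print(earliest_expiration(date(2006, 3, 17)))
```

Because the period is counted in days, a retention span that crosses 29 February ends one calendar day earlier than the archive date's anniversary.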

Automated System Storage Archive Manager Operations

The DR550 includes schedules that automate System Storage Archive Manager server administrative commands. The schedules specify that the operations start and stop within defined time periods, and you may want to change these start and stop windows to suit your situation. See the Tivoli Storage Manager for AIX Administrator’s Guide and Reference manuals for help in modifying the administrative schedules.

Examples of schedules that are set up on the DR550:

• The System Storage Archive Manager database is backed up daily at 8:00 a.m.

• Three database backup copies are kept. A job scheduled to run daily at 23:59 deletes older versions.

Note: It is the responsibility of the customer to set up and verify schedules and database backups.

You should verify that you have appropriate schedules in place to back up your database.
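The two example schedules reduce to simple time arithmetic plus a "keep the newest three" rule. The Python sketch below models only that logic. It does not contact the server, the dates are hypothetical, and on the DR550 the real work is done by the server's administrative schedules.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

COPIES_TO_KEEP = 3  # number of database backups retained on the DR550


def next_run(now: datetime, hour: int, minute: int) -> datetime:
    """Next time a daily schedule that starts at hour:minute fires after `now`."""
    candidate = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= now:
        candidate += timedelta(days=1)
    return candidate


def split_backups(
    backups: List[datetime], keep: int = COPIES_TO_KEEP
) -> Tuple[List[datetime], List[datetime]]:
    """Return (kept, deleted): the newest `keep` backups and all older ones."""
    ordered = sorted(backups, reverse=True)
    return ordered[:keep], ordered[keep:]


if __name__ == "__main__":
    now = datetime(2006, 3, 17, 9, 30)  # hypothetical current time
    print("next database backup:", next_run(now, 8, 0))
    print("next cleanup job:", next_run(now, 23, 59))
    # Five hypothetical daily 8:00 backups, 13 to 17 March 2006.
    history = [datetime(2006, 3, day, 8, 0) for day in range(13, 18)]
    kept, deleted = split_backups(history)
    print("keep:", kept)
    print("delete:", deleted)
```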

Routine Operations for System Storage Archive Manager

Monitoring the Database and Recovery Log Status

Monitor System Storage Archive Manager database and recovery log usage to ensure that you have enough space available for efficient operation. Pay particular attention to the maximum utilization percentage (%Util) of each. If the database or recovery log needs more room, shift resources by using the administrator functions described in the Tivoli Storage Manager for AIX Administrator’s Guide and Reference manuals.
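One way to automate this check is to retrieve the utilization figures with the administrative command-line client and compare them with a threshold. The Python sketch below makes two assumptions to verify before use. First, `dsmadmc` accepts `-dataonly=yes` and `-commadelimited`. Second, the server's SQL `DB` and `LOG` tables expose `PCT_UTILIZED` and `MAX_PCT_UTILIZED` columns. The administrator ID, the password handling, and the 80% threshold are hypothetical. The offline example exercises only the parsing and threshold logic.

```python
import subprocess
from typing import Tuple

THRESHOLD_PCT = 80.0  # hypothetical alert level; choose one that suits your site


def parse_utilization(csv_line: str) -> Tuple[float, float]:
    """Parse one 'pct_utilized,max_pct_utilized' row of comma-delimited output."""
    pct, max_pct = (float(field) for field in csv_line.strip().split(","))
    return pct, max_pct


def needs_attention(pct: float, max_pct: float, threshold: float = THRESHOLD_PCT) -> bool:
    """True when current or maximum utilization has reached the threshold."""
    return max(pct, max_pct) >= threshold


def fetch_row(table: str, admin_id: str, password: str) -> str:
    """Query DB or LOG through dsmadmc (assumed options; needs a running server)."""
    query = f"select pct_utilized, max_pct_utilized from {table}"
    result = subprocess.run(
        ["dsmadmc", f"-id={admin_id}", f"-password={password}",
         "-dataonly=yes", "-commadelimited", query],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip().splitlines()[0]


if __name__ == "__main__":
    # Offline example with made-up figures; on a live system use fetch_row("db", ...).
    for table, row in (("db", "63.2,71.5"), ("log", "12.0,85.0")):
        pct, max_pct = parse_utilization(row)
        print(table, "needs attention" if needs_attention(pct, max_pct) else "ok")
```

Passing a password on the command line exposes it to other users of the system, so a real script should obtain credentials more safely.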

Monitoring Disk Volumes

Monitor the status of the volumes for the System Storage Archive Manager database, the recovery log, and the ARCHIVEPOOL storage pool. If any volume has a status of Offline or Stale, determine the cause and correct the situation.
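Once volume statuses have been collected as (name, status) pairs, for example from the output of the query commands for database, log, and storage pool volumes, the check reduces to a filter. In the minimal Python sketch below, the volume names are hypothetical. Only the status values Offline and Stale come from this section.

```python
from typing import List, Tuple

PROBLEM_STATUSES = {"OFFLINE", "STALE"}


def problem_volumes(volumes: List[Tuple[str, str]]) -> List[str]:
    """Return names of volumes whose status is Offline or Stale (case-insensitive)."""
    return [name for name, status in volumes
            if status.strip().upper() in PROBLEM_STATUSES]


if __name__ == "__main__":
    # Hypothetical volume names and statuses.
    sample = [
        ("/dev/rtsmstglv1", "Online"),
        ("/dev/rtsmstglv2", "Offline"),
        ("/tsmDb/db01.dsm", "Stale"),
    ]
    print(problem_volumes(sample))
```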

Monitoring the Results of Scheduled Operations

Monitor scheduled administrative operations to ensure that the schedules run successfully. This is particularly important if you have set up scheduled backups of the database.

Installing and Setting Up System Storage Archive Manager API Clients

The DR550 storage interface is the System Storage Archive Manager API Client. You may need to

install the System Storage Archive Manager API Client V5.2.2 or later on the server(s) that are

sending data to the DR550. Your software vendor may offer specific recommendations for defining

System Storage Archive Manager storage management policies, the System Storage Archive

Manager client node names, and System Storage Archive Manager client Include-Exclude lists. You

will also need to point to the network address of the DR550 in the System Storage Archive Manager

Client Communications Options definition.
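On UNIX and Linux clients, the communication options normally go in a server stanza of the client system options file, dsm.sys. The Python sketch below renders such a stanza. The server name, node name, and address are hypothetical placeholders. Port 1500 is the usual default TCP port, not a DR550-specific value. Confirm every value with your DR550 administrator and the client documentation.

```python
def render_dsm_sys_stanza(server_name: str, address: str, node_name: str,
                          port: int = 1500) -> str:
    """Build a dsm.sys server stanza that points an API client at the DR550."""
    lines = [
        f"SERVERNAME        {server_name}",
        "   COMMMETHOD        TCPIP",
        f"   TCPPORT           {port}",
        f"   TCPSERVERADDRESS  {address}",
        f"   NODENAME          {node_name}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical values; replace them with your site's server name, address, and node.
    print(render_dsm_sys_stanza("DR550", "192.0.2.10", "ARCHIVE_APP1"), end="")
```

In an HACMP dual-node setup, the address to use is presumably the cluster's service address rather than a single node's address. Check this against your installation.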

Web-based education from Tivoli can provide more information on System Storage Archive Manager. Web-based training for external customers is available at the Virtual Tivoli Skill Center: cgse1.cgselearning.com/tivoliskills


Installation and Activation

Safety Notices

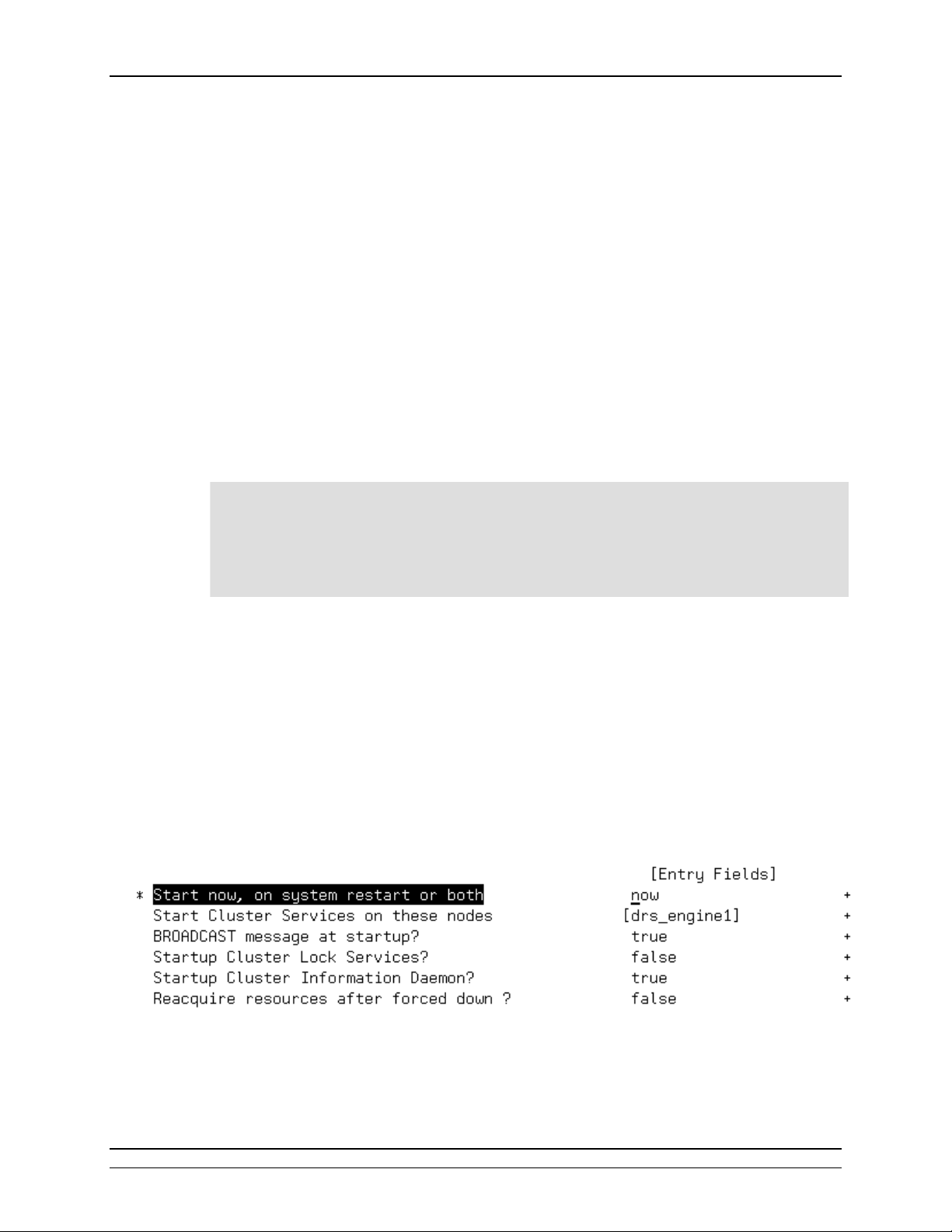

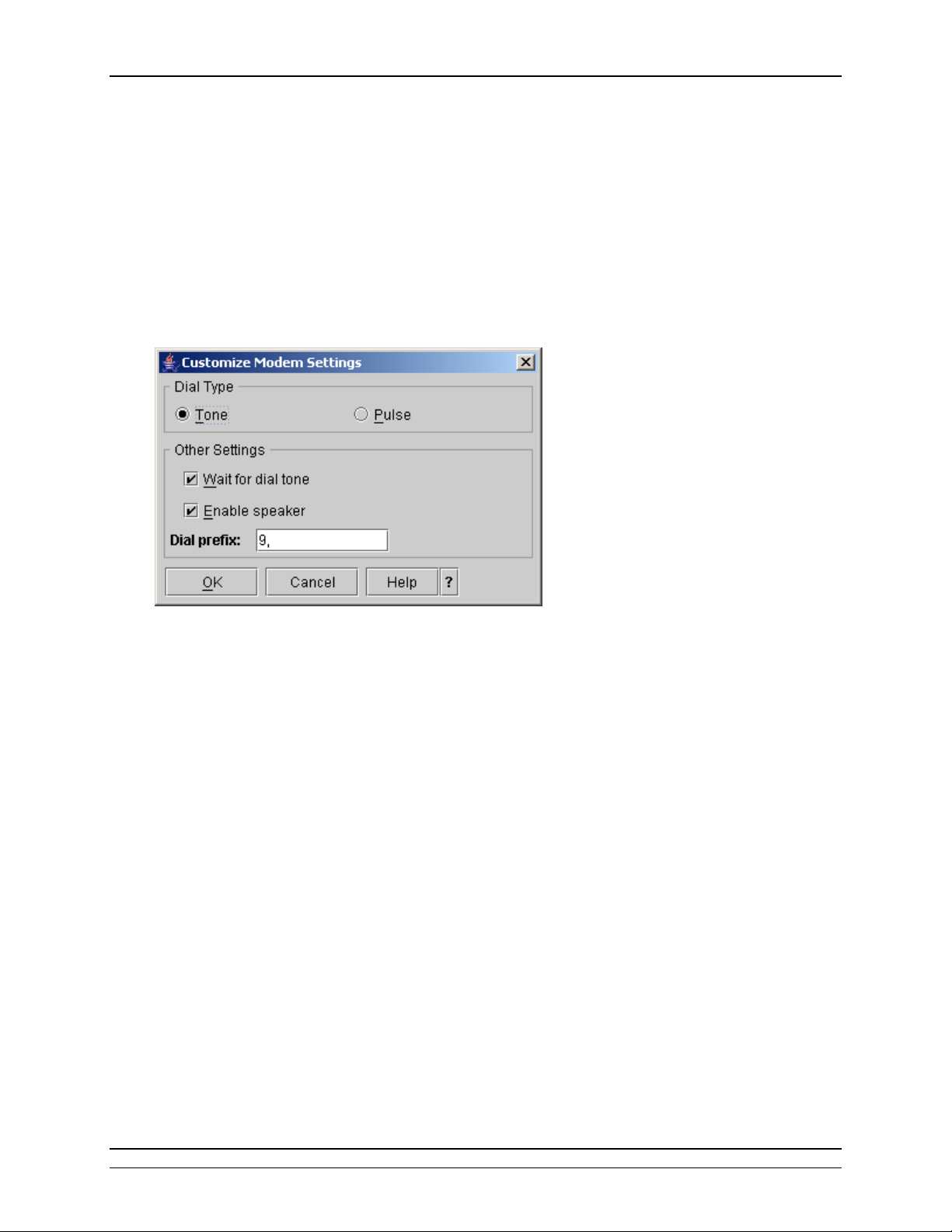

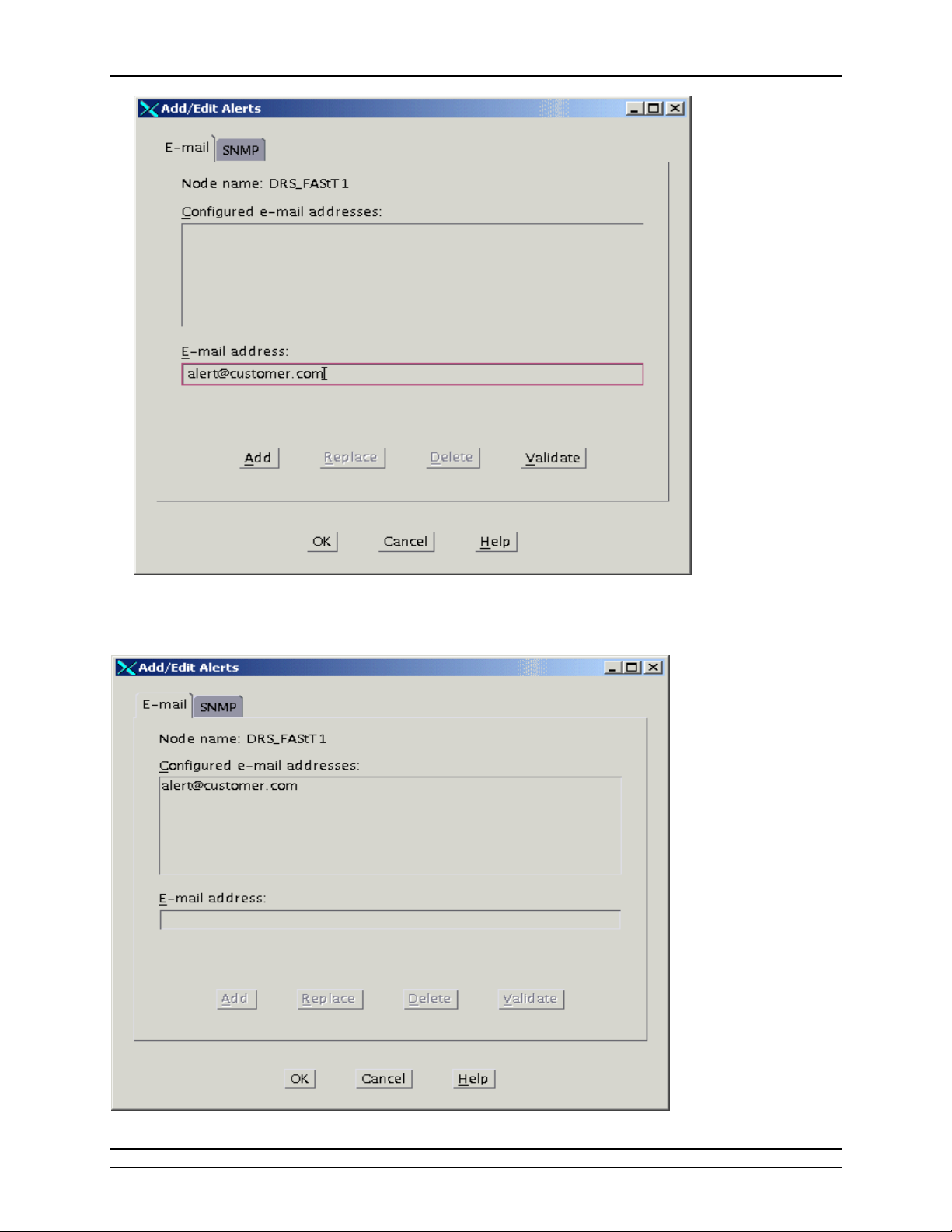

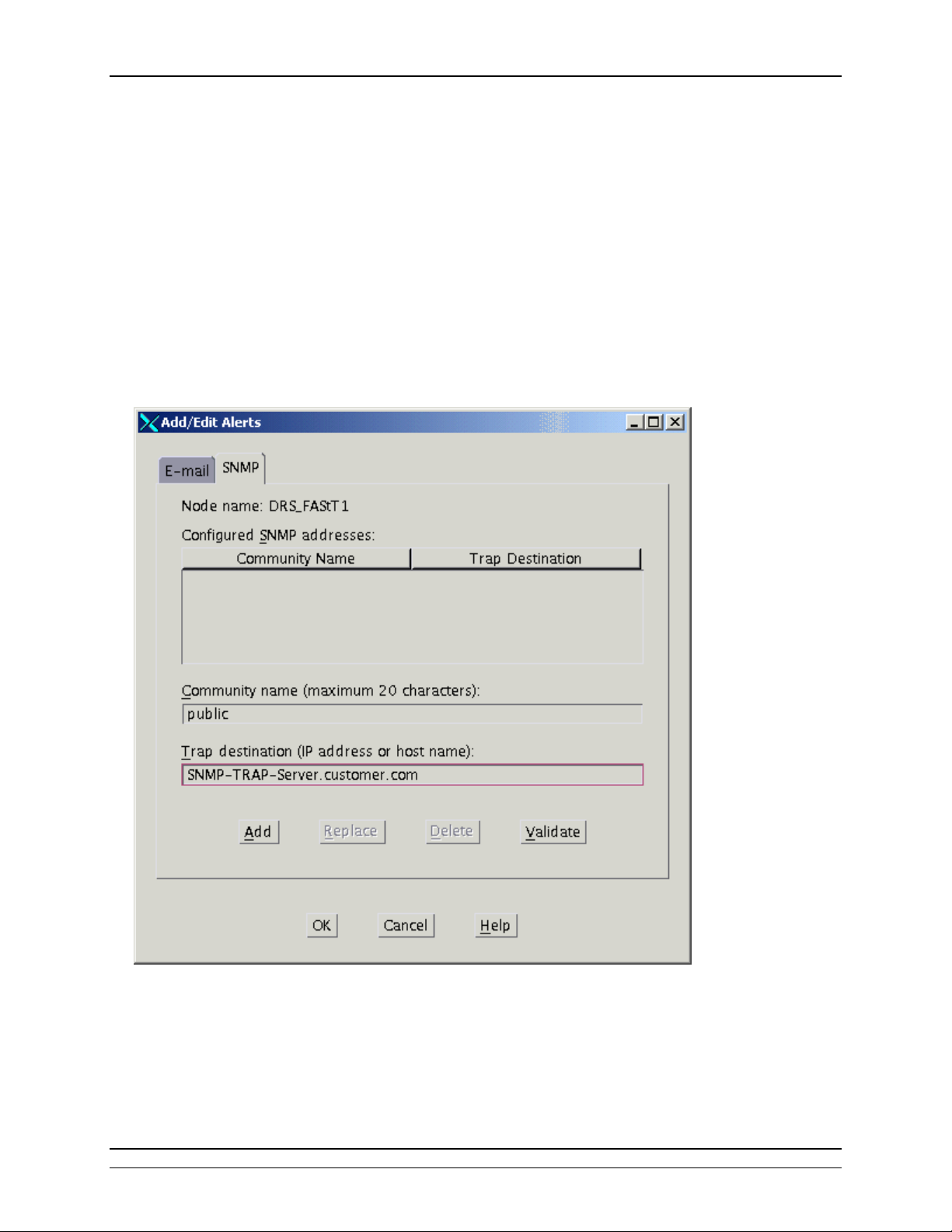

A danger notice indicates the presence of a hazard that has the potential of causing death or