Page 1

Using EMS HA Monitors

B5735-90001

August 1997

© Copyright 1997 Hewlett-Packard Company

Page 2

Legal Notices

The information contained in this document is subject to change without notice.

Hewlett-Packard makes no warranty of any kind with regard to this manual,

including, but not limited to, the implied warranties of merchantability and fitness

for a particular purpose. Hewlett-Packard shall not be liable for errors contained

herein or direct, indirect, special, incidental or consequential damages in connection

with the furnishing, performance, or use of this material.

Copyright © 1997 Hewlett-Packard Company.

This document contains information which is protected by copyright. All rights are

reserved. Reproduction, adaptation, or translation without prior written permission

is prohibited, except as allowed under the copyright laws.

Corporate Offices:

Hewlett-Packard Co.

3000 Hanover St.

Palo Alto, CA 94304

Use, duplication or disclosure by the U.S. Government Department of Defense is

subject to restrictions as set forth in paragraph (b)(3)(ii) of the Rights in Technical

Data and Software clause in FAR 52.227-7013.

Rights for non-DOD U.S. Government Departments and Agencies are as set forth in

FAR 52.227-19(c)(1,2).

Use of this manual and flexible disc(s), compact disc(s), or tape cartridge(s)

supplied for this pack is restricted to this product only. Additional copies of the

programs may be made for security and back-up purposes only. Resale of the

programs in their present form or with alterations, is expressly prohibited.

A copy of the specific warranty terms applicable to your Hewlett-Packard product

and replacement parts can be obtained from your local Sales and Service Office.

2

Page 3

Contents

1. Installing and Using EMS

What are EMS HA Monitors? . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .12

The Role of EMS HA Monitors in a

High Availability Environment. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .14

Installing and Removing EMS HA Monitors . . . . . . . . . . . . . . . . . . . . . .15

Installing EMS HA Monitors. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .15

Removing EMS HA Monitors. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .16

Using EMS HA Monitors. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .17

Configuring EMS Monitoring Requests

Outside of MC/ServiceGuard. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .18

Selecting a Resource to Monitor. . . . . . . . . . . . . . . . . . . . . . . . . . . . .19

Using Wildcards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .20

Creating a Monitoring Request . . . . . . . . . . . . . . . . . . . . . . . . . . . . .21

How Do I Tell EMS When to Send Events?. . . . . . . . . . . . . . . . . . . .22

What is a Polling Interval?. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .23

Which Protocols Can I Use to Send Events? . . . . . . . . . . . . . . . . . . .23

What is a Notification Comment? . . . . . . . . . . . . . . . . . . . . . . . . . . .24

Copying Monitoring Requests . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .25

Modifying Monitoring Requests . . . . . . . . . . . . . . . . . . . . . . . . . . . . .25

Removing Monitoring Requests . . . . . . . . . . . . . . . . . . . . . . . . . . . . .25

Configuring MC/ServiceGuard Package Dependencies . . . . . . . . . . . .26

2. Monitoring Disk Resources

Disk Monitor Reference. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .33

Physical Volume Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .34

Physical Volume and Physical Volume Link Status. . . . . . . . . . . . . . .36

Logical Volume Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .37

Logical Volume Status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .38

Logical Volume Number of Copies. . . . . . . . . . . . . . . . . . . . . . . . . . . . .39

3

Page 4

Contents

Rules for Using the EMS Disk Monitor

with MC/ServiceGuard. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

Rules for RAID Arrays . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

Adding PVGs to Existing Volume Groups . . . . . . . . . . . . . . . . . . . . 43

Creating Volume Groups on Disk Arrays Using PV Links . . . . . . . 44

Creating Logical Volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

Rules for Mirrored Individual Disks . . . . . . . . . . . . . . . . . . . . . . . . . . 46

Creating Disk Monitoring Requests . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

Disk Monitoring Request Suggestions. . . . . . . . . . . . . . . . . . . . . . . . . 48

Resources to Monitor for RAID Arrays . . . . . . . . . . . . . . . . . . . . . . . . 51

Resources to Monitor for Mirrored Disks . . . . . . . . . . . . . . . . . . . . . . 53

Resources to Monitor for Lock Disks . . . . . . . . . . . . . . . . . . . . . . . . . . 55

Resources to Monitor for Root Volumes. . . . . . . . . . . . . . . . . . . . . . . . 56

3. Monitoring Cluster Resources

Cluster Monitor Reference. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

Cluster Status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Node Status. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Package Status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

Creating Cluster Monitoring Requests. . . . . . . . . . . . . . . . . . . . . . . . . . 62

4. Monitoring Network Interfaces

Network Monitor Reference. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

Configuring Network Monitoring Requests . . . . . . . . . . . . . . . . . . . . . . 66

4

Page 5

Contents

5. Monitoring System Resources

System Monitor Reference . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .68

Number of Users. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .69

Job Queues . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .70

Filesystem Available Space . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .71

Creating System Resource Monitoring Requests. . . . . . . . . . . . . . . . . . .72

6. Troubleshooting

EMS Directories and Files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .74

Logging and tracing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .76

EMS Logging . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .76

EMS Tracing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .77

Performance Considerations. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .78

System Performance Issues. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .78

Network Performance Issues. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .78

Testing Monitor Requests. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .79

Testing Disk Monitor Requests . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .79

Testing Cluster Monitor Requests. . . . . . . . . . . . . . . . . . . . . . . . . . . . .79

Testing Network Monitor Requests. . . . . . . . . . . . . . . . . . . . . . . . . . . .79

Testing System Resource Monitor Requests. . . . . . . . . . . . . . . . . . . . .79

Making Sure Monitors are Running . . . . . . . . . . . . . . . . . . . . . . . . . . .80

Glossary

5

Page 6

Contents

6

Page 7

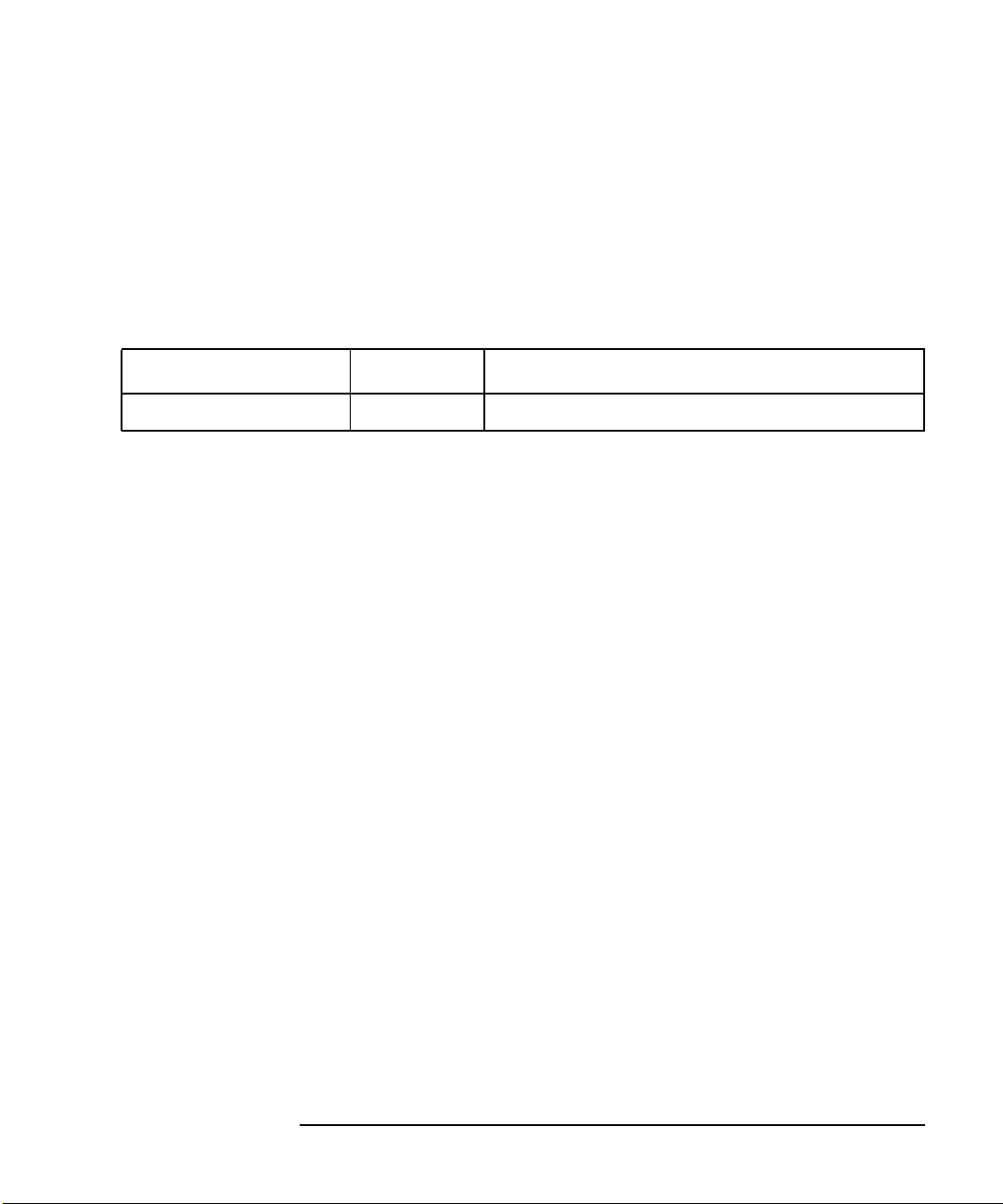

Table 1

Printing History

Printing Date Part Number Edition

August 1997 B5735-90001 Edition 1.

This edition documents material related to installing and configuring the Event

Monitoring Service (EMS).

This printing date and part number indicate the current edition. The printing date

changes when a new edition is printed. (Minor corrections and updates which are

incorporated at reprint do not cause the date to change.) The part number changes

when extensive technical changes are incorporated.

New editions of this manual will incorporate all material updated since the previous

edition.

HP Printing Division:

Enterprise Systems Division

Hewlett-Packard Co.

19111 Pruneridge Ave.

Cupertino, CA 95014

7

Page 8

8

Page 9

Preface

This guide describes how to install and configure the Event Monitoring Service to

monitor system health, and how to use EMS in conjunction with availability

software such as MC/ServiceGuard and IT/O:

• Chapter 1, “Installing and Using EMS” presents the exact steps required to

install and use the software on your system or cluster.

• Chapter 2, “Monitoring Disk Resources”, gives guidelines on using the disk

monitor, including using it with MC/ServiceGuard.

• Chapter 3, “Monitoring Cluster Resources”, gives guidelines on using the

cluster monitor.

• Chapter 4, “Monitoring Network Interfaces”, gives guidelines on using the

network interface monitor.

• Chapter 5, “Monitoring System Resources”, gives guidelines on using the

system resource monitor for monitoring users, job queues and available

filesystem space.

• Chapter 6, “Troubleshooting”, gives guidelines on reading log files, and testing

monitor requests.

Related Publications

The following documents contain additional related information:

• Clusters for High Availability: A Primer of HP-UX Solutions (ISBN

0-13-494758-4). HP Press: Prentice Hall, Inc., 1996.

• Disk and File Manag ement Tasks on HP-UX (ISBN 0-13-518861-X). HP Press;

Prentice Hall, Inc., 1997.

• Managing MC/ServiceGuard (HP Part Number B3936-90019).

• HP OpenView IT/Operations Administrators Task Guide (P/N B4249-90003)

• Configuring OPS Clusters with MC/LockManager (HP Part Number

B5158-90001).

• Managing Highly Available NFS (HP Part Number B5125-90001)

• http://www.hp.com/go/ha external web site for information about

Hewlett-Packard’s high-availability technologies where you can documents

such as Writing Monitors for the Event Monitoring Service (EMS)

9

Page 10

Problem Reporting If you have any problems with the software or documentation, please contact your

local Hewlett-Packard Sales Office or Customer Service Center.

10

Page 11

1 Installing and Using EMS

EMS HA Monitors (Event Monitoring Service High Availability Monitors) aids in

providing high availability in an HP-UX environment by monitoring particular

system resources and then informing target applications (e.g. MC/ServiceGuard)

when the resources they monitor are at critical user-defined values.

11

Page 12

Installing and Using EMS

What are EMS HA Monitors?

What are EMS HA Monitors?

EMS HA Monitors (Event Monitoring Service High Availability Monitors) are a set

of monitors and a monitoring service that polls a local system or application

resource and sends messages when events occur. An event can simply be defined as

something you want to know about. For example, you may want to be alerted when

a disk fails or when available filesystem space falls below a certain level. EMS

allows you to configure what you consider an event for any monitored system

resource.

The advantage EMS has over built-in monitors is that requests can be made to send

events to a wide variety of softw are using multiple protocols (opcmsg, SNMP, TCP,

UDP). For example, you can configure EMS so that when a disk fails a message is

sent to MC/ServiceGuard and IT/Operations. These applications can then use that

message to trigger package failover and to send a message to an administrator to fix

the disk.

EMS HA Monitors consist of a framework, a collection of monitors, and a

configuration interface that runs under SAM (System Administration Manager). The

framework starts and stops the monitors, stores information used by the monitors,

and directs monitors where to send events. A standard API provides a way to add

new monitors as they become available, or to write your own monitors; see the

document Writing Monitors for the Event Monitoring Service (EMS) available from

the high availability web site: http://www.hp.com/go/ha

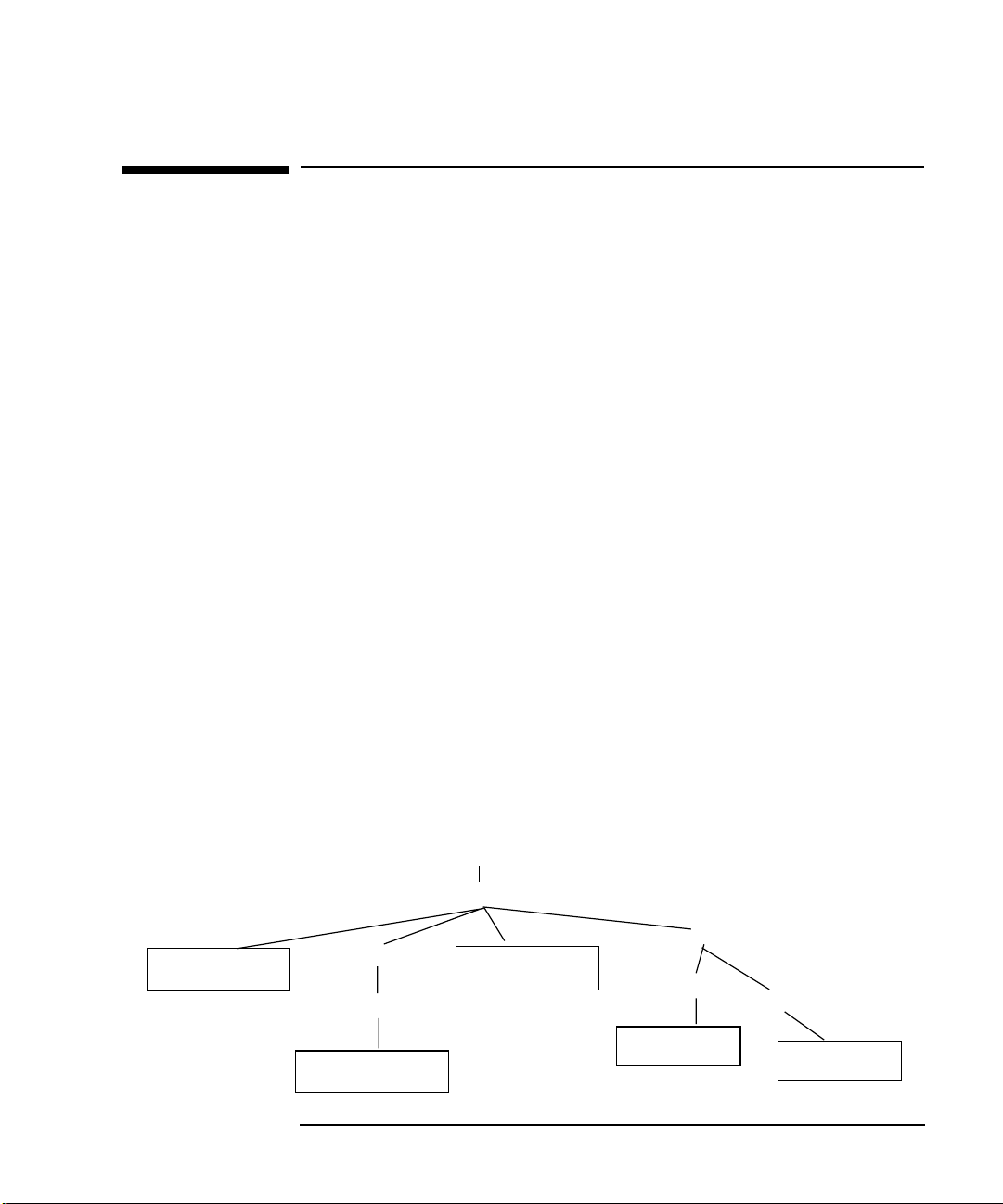

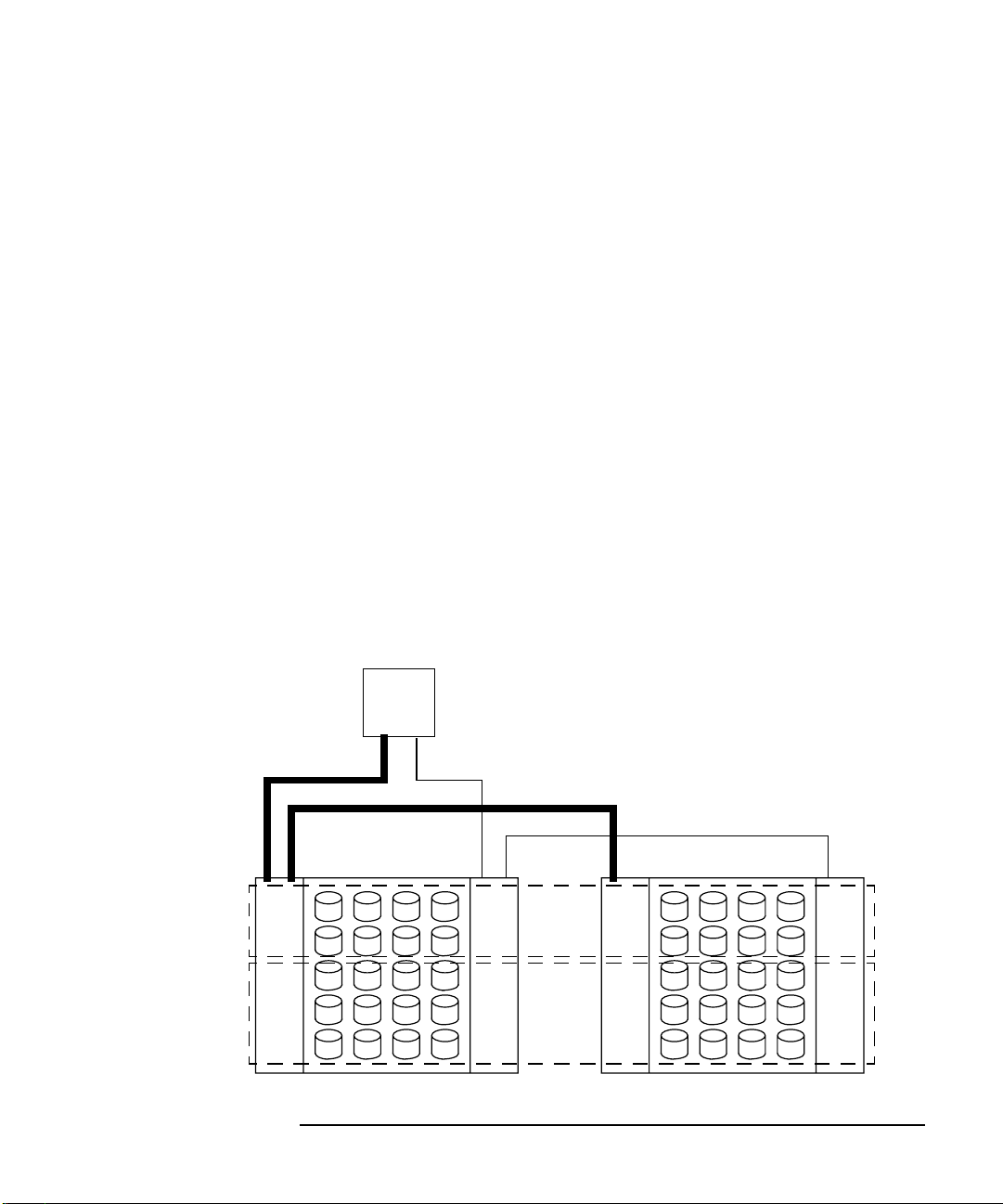

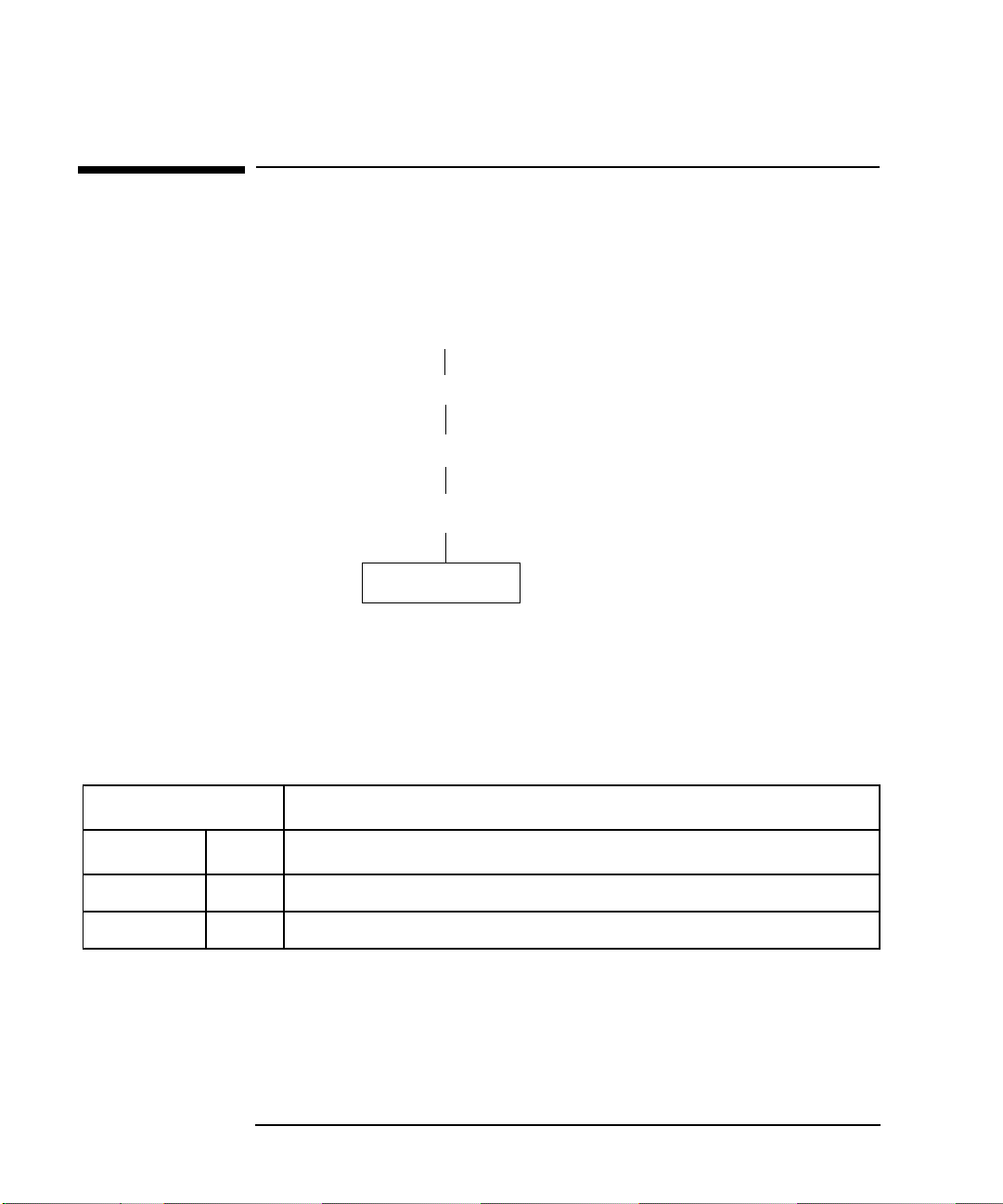

Figure 1-1 Event Monitoring Services High Availability Monitors

Client, such as

SAM interface to EMS,

MC/ServiceGuard package configuration

Target, such as

IT/Operations,

MC/ServiceGuard

12 Chapter 1

Framework

Registrar

API

MONITORS

Resource

dictionary

System and

application

resources

Page 13

Installing and Using EMS

What are EMS HA Monitors?

Monitors are applications written to gather and report information about specific

resources on the system. They use system information stored in places like

/etc/lvmtab and the MIB database. When you make a request to a monitor, it

polls the system information and sends a message to the framework, which then

interprets the data to determine if an event has occurred and sends messages in the

appropriate format.

EMS HA Monitors work best in a high availability environment; it aids in quickly

detecting and eliminating single points of failure, and monitors resources that can be

used as MC/ServiceGuard package dependencies. However, EMS HA Monitors can

also be used outside a high availability environment for monitoring the status of

system resources.

A set of monitors is shipped with EMS: disk, cluster, network interface, and system

resource monitors. Other Hewlett-Packard products are bundled with monitors that

fit into the EMS framework, such as ATM and HP OSI Transport Service 9000. You

can also write your own monitor; see Writing Monitors for the Event Monitoring

Service (EMS).

Chapter 1 13

Page 14

Installing and Using EMS

The Role of EMS HA Monitors in a High Availability Environment

The Role of EMS HA Monitors in a

High Availability Environment

The weakest link in a high availability system is the single point of f ailure. EMS HA

Monitors can be used to report information that helps you detect loss of redundant

resources, thus exposing single points of failure, a threat to data and application

availability.

Because EMS is a monitoring system, and does not do anything to modify the

system, it is best used with additional software that can take action based on the

events sent by EMS. Some examples are:

• EMS HA Monitors and MC/ServiceGuard

MC/ServiceGuard uses the EMS monitors to determine the health of resources,

such as disks, and may fail over packages based on that information.

Configuration of EMS monitoring requests for use with MC/ServiceGuard

packages is done from the Cluster area for Package Configuration in SAM, or by

editing the ASCII package configuration file.

However, if you also want to be alerted to what caused a package to fail over, or

you want to monitor events that affect high availability you need to create

requests from the SAM interface in the Resource Management area as described

in “Using EMS HA Monitors” on page 17, and in subsequent chapters.

MC/ServiceGuard may already be configured to monitor the health of nodes,

services, and subnets, and to make failover decisions based on resources status.

Configuring EMS monitors provides additional MC/ServiceGuard failover

criteria for certain network links and other resources.

• EMS HA Monitors with IT/Operations or Network Node Manager

EM HA Monitors S can be configured to send events to IT/Operations and

Network Node Manager.

• EMS HA Monitors with your choice of system management software

Because EMS can send events in a number of protocols, it can be used with any

system management software that supports either SNMP traps, or TCP, or UDP

messages.

14 Chapter 1

Page 15

Installing and Using EMS

Installing and Removing EMS HA Monitors

Installing and Removing EMS HA Monitors

NOTE T o make best use of EMS HA Monitors, install and configure them on all systems in

your environment. Because EMS monitors resources for the local system only, you

need to install EMS on every system to monitor all systems.

EMS HA Monitors run on HP 9000 Series 800 systems running HP-UX version

10.20 or later.

Hardware, such as disks and LAN cards, should be configured and tested before

installing EMS HA Monitors.

Installing EMS HA Monitors

The EMS HA Monitor bundle (P/N B5735AA-APZ) and license

(P/NB5736AA-APZ) version A.01.00 contains these products:

EMS-Core the EMS framework

EMS-Config the SAM interface to EMS

EMS-Disk Monitor the disk monitor, associated dictionary and files

EMS-MIB Monitor the cluster, network, and system resource monitors,

associated dictionary and files

To install EMS product, use swinstall, or the Software Management area in

SAM.

If you have many systems, it may be easier to install o ver the netw ork from a central

location. Create a network depot according to the instructions in Managing HP-UX

Software with SD-UX, rlogin or telnet to the remote host, and install over the

network from the depot.

When monitors are updated, or you install a monitor on top of an existing monitor,

your requests are retained. This is part of the functionality provided by the

persistence client; see “Making Sure Monitors are Running” in Chapter 6.

Note that updated monitors may have new status values that change the meaning of

your monitoring requests.

Chapter 1 15

Page 16

Installing and Using EMS

Installing and Removing EMS HA Monitors

Removing EMS HA Monitors

Use swremove or the Software Management tools under SAM to remove EMS.

Note that because the monitors are persistent, that is, they are always automatically

started if they are stopped, it is likely you will have warnings in your removal log

file that say, “Could not shut down process” or errors that say “File

/etc/opt/resmon/lbin/p_client could not be removed.” Even if you see these

warnings, monitors are removed and any dirty files are cleaned up on reboot.

16 Chapter 1

Page 17

Installing and Using EMS

Using EMS HA Monitors

Using EMS HA Monitors

There are two ways to use EMS HA Monitors:

• Configure monitoring requests from the EMS interface in the Resource

Management area of SAM.

• Configure package dependencies in MC/ServiceGuard by using the Package

Configuration interface in the High Availability Clusters subarea of SAM or by

editing the package ASCII configuration file.

The following are prerequisites to using EMS:

• Disks need to be configured using the LVM (Logical Volume Manager).

• Network cards need to be configured.

• Filesystems need to have been created and mounted.

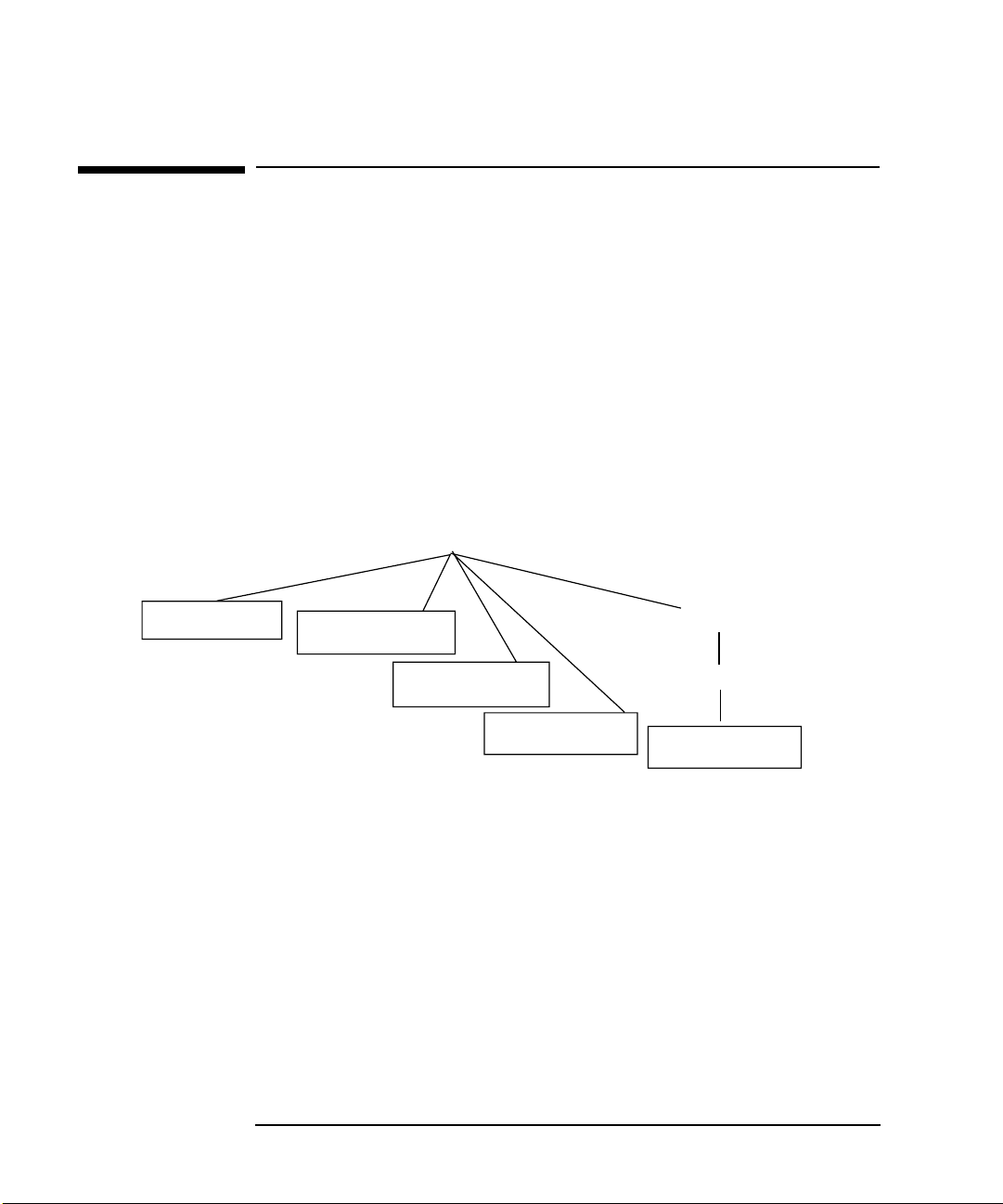

Resource classes are structured hierarchically, similar to a filesystem structure,

although they are not actually files and directories. The classes supplied with this

version of EMS are listed in Figure 1-2. Resource instances are listed in bold, and

instances that are replaced with an actual name are in bold italics.

Figure 1-2 Event Monitoring Service Resource Class Hierarchy

/vg

contains all logical volume, disk

and PVlink, and volume group

summary status

/vgName

/lv

/copies

/status

/lvName

/lvName

/pv_summary

/lv_summary

/pv_pvlink

/status

/deviceName

The full path of a resource includes the class, subclasses, and instance. An example

of a full resource path for the physical volume status of the device

/dev/dsk/c0t1d2 belonging to volume group vgDataBase, would be

/vg/vgDataBase/pv_pvlink/status/c0t1d2.

Chapter 1 17

contains all package,

node, and cluster

status

/package

/localNode

/status

/packageName

/clusterName

/status

/clusterName

/status

/net/cluster

contains network

interface status

/interfaces

/lan

/status

/LANname

/jobQueue1Min

/jobQueue5Min

/jobQueue15Min

/system

contains all job

queue, user, and

filesystem status

/filesystem

/availMB

/numUsers

/fsName

Page 18

Installing and Using EMS

Using EMS HA Monitors

Configuring EMS Monitoring Requests

Outside of MC/ServiceGuard

This section describes the steps from the SAM interface to EMS to create

monitoring requests that notify non-MC/ServiceGuard management applications

such as IT/Operations.This information for creating requests is also valid for

monitors sold with other products (ATM or OTS, for example) and for user-written

monitors written according to developer specifications in Writing Monitors for the

Event Monitoring Service (EMS).

To start the EMS configuration, double-click on the Event Monitoring Service icon

in the Resource Management area in SAM. The main screen, shown in Figure 1-3,

shows all requests configured on that system; if you haven’t created requests, the

screen will be empty.

Figure 1-3 Event Monitoring Service Screen

18 Chapter 1

Page 19

Installing and Using EMS

Using EMS HA Monitors

Selecting a Resource to Monitor

All resources are divided into classes. When you double-click on Add Monitoring

Request in the Actions menu, the top-level classes for all installed monitors are

dynamically discovered and then listed.

Figure 1-4 The Top Level of the Resource Hierarchy in the Add a Monitoring Request

Screen

Chapter 1 19

Page 20

Installing and Using EMS

Using EMS HA Monitors

Some Hewlett-Packard products, such as ATM or HP OTS 9000, provide EMS

monitors. If those products are installed on the system, then their top-level classes

will also appear here. Similarly, top-level classes belonging to user-written

monitors, created using the EMS Developer’s Kit, will be discovered and displayed

here.

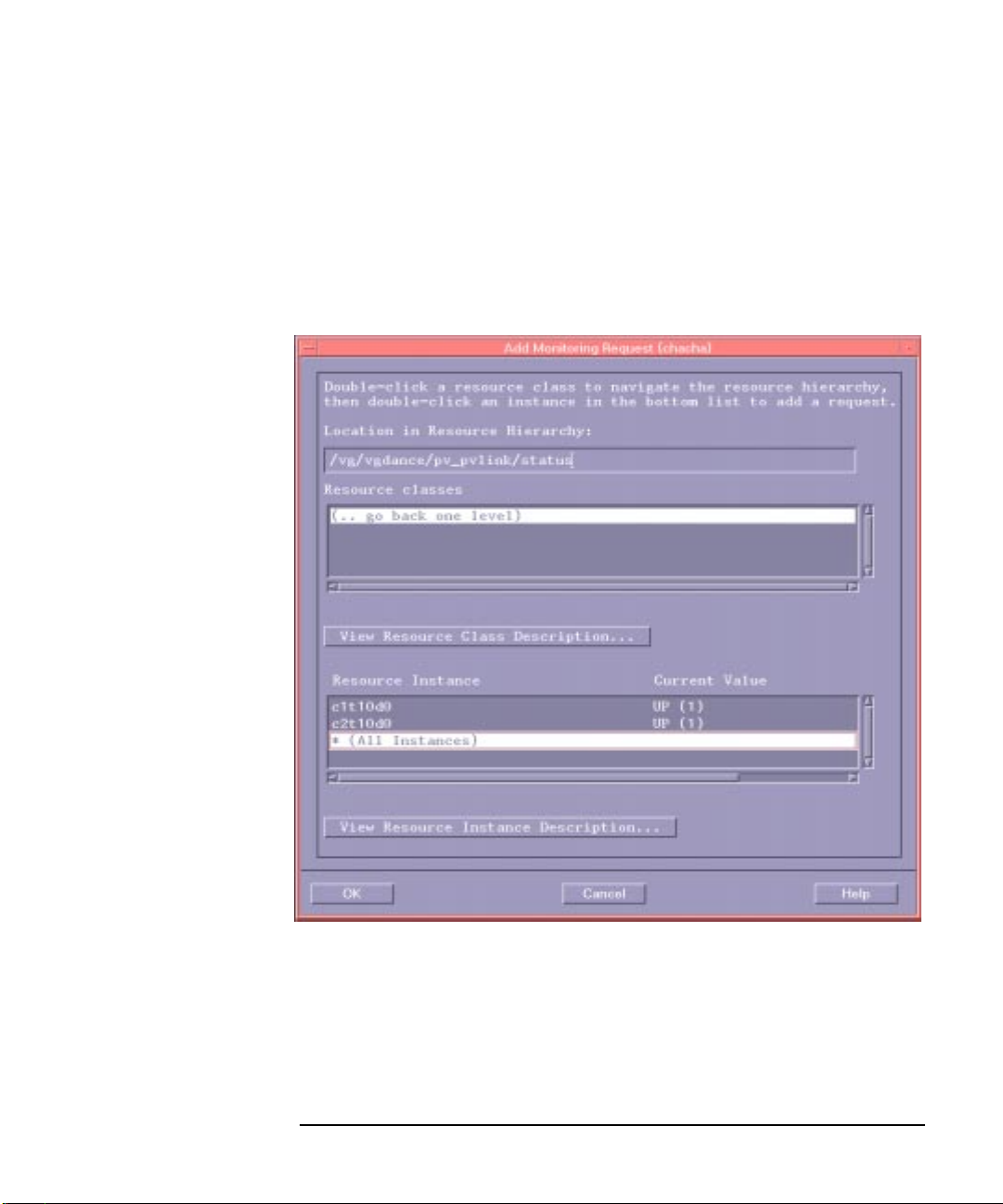

Traverse the hierarchy in the upper part of the screen in Figure 1-4 and select a

resource instance to monitor in the lower part of the screen as in Figure 1-5.

Figure 1-5 Choosing a Resource Instance in the Add a Monitoring Request Screen

Using Wildcards

The * wildcard is a convenient way to create many requests at once. Most systems

have more than one disk or network card, and many have several disks. To avoid

having to create a monitor request for each disk, select * (All Instances) in the

Resource Instance box. See Figure 1-5.

20 Chapter 1

Page 21

Wildcards are available only when all instances of a subclass are the same resource

type.

Wildcards are not available for resource classes. So, for example, a wildcard is

available for the status instances in the /vg/vgName/pv_pvlink/status subclass, but

no wildcard appears for the volume group subclasses under the /vg resource class.

Creating a Monitoring Request

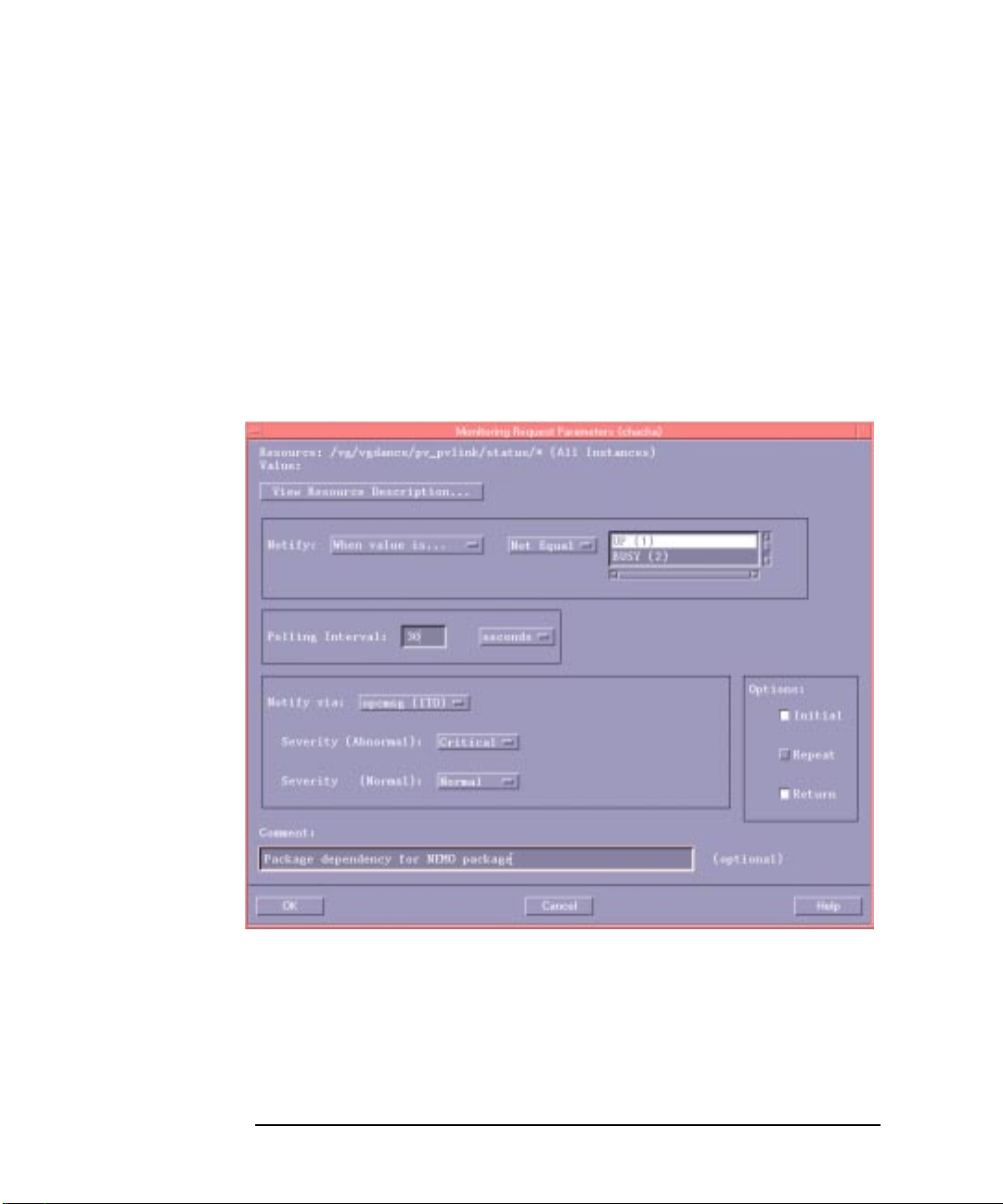

The screen in Figure 1-6 shows where you specify when and how to send events.

The following sections describe the monitoring parameters and some common

applications of them.

Figure 1-6 Monitoring Request Parameters

Installing and Using EMS

Using EMS HA Monitors

Chapter 1 21

Page 22

Installing and Using EMS

Using EMS HA Monitors

How Do I Tell EMS When to Send Events?

While the monitor may be polling disks every 5 minutes, for example, you may only

want to be alerted when something happens that requires your attention. When you

create a request, you specify the conditions under which you receive an alert. Here

are the terms under which you can be notified:

When value is... You define the conditions under which you wish to be

notified for a particular resource using an operator (e.g. =,

not equal, >, >=, <, <=) and a value returned by the

monitor (e.g. UP, DOWN, INACTIVE). Text values are

mapped to numerical values. Specific values are in the

chapters describing the individual monitors.

When value changes This notification might be used for a resource that does

not change frequently, but you need to know each time it

does. For example, you would want notification each time

the number of mirrored copies of data changes from 2 to 1

and back to 2.

At each interval This sends notification at each polling interval. It would

most commonly be used for reminders or gathering data

for system analysis. Use this for only a small number of

resources at a time, and with long polling intervals of

several minutes or hours; there is a risk of affecting

system performance.

If you select conditional notification, you may select one or more of these options:

Initial Use this option as a baseline when monitoring resources such as

available filesystem space or system load. It can also be used to

test that events are being sent for a new request.

Repeat Use this option for urgent alerts. The Repeat option sends an

alert at each polling interval as long as the notify condition is

met. Use this option with caution; there is a risk of high CPU

use or filling log files and alert windows.

Return Use this option to track when emergency situations return to

normal.

22 Chapter 1

Page 23

Installing and Using EMS

Using EMS HA Monitors

NOTE Updated monitors may have new status values that change the meaning of your

monitoring requests, or generate new alerts.

For example, assume you have a request for notification if status > 3 for a resource

with a values range of 1-7. You would get alerts each time the value equaled 4, 5, 6,

or 7. If the updated version of the monitor has a new status value of 8, you w ould see

new alerts when the resource equalled 8.

What is a Polling Interval?

The polling interval determines the maximum amount of elapsed time before a

monitor knows about a change in status for a particular resource. The shorter the

polling interval, the more likely you are to hav e recent data. Ho we v er, depending on

the monitor, a short polling interval may use more CPU and system resources. You

need to weigh the advantages and disadvantages between being able to quickly

respond to events and maintaining good system performance.

The minimum polling interval depends on the monitor’s ability to process quickly.

For most resource monitors the minimum is 30 seconds. Disk monitor requests can

be as short as 1 second.

MC/ServiceGuard monitors resources every few seconds. You may want to use a

short polling interval (30 seconds or less) when it is critical that you make a quick

failover decision.

You may want a polling interval of 5 minutes or so for monitoring less critical

resources.

You may want to set a very long polling interval (4 hours) to monitor failed disks

that are not essential to the system, but which should be replaced in the next few

days.

Which Protocols Can I Use to Send Events?

You specify the protocol the EMS framework uses to send events in the Notify via:

section of the screen in Figure 1-6. The options are:

• opcmsg ITO sends messages to ITO applications via the opcmsg daemon. EMS

defines normal and abnormal differently for each notification type:

• Conditional notification defines all events that meet the condition as

abnormal, and all others as normal.

• Change notification defines all events as abnormal.

• Notification at each polling interval defines all events as normal.

Chapter 1 23

Page 24

Installing and Using EMS

Using EMS HA Monitors

You may specify the ITO message severity for both normal and abnormal events:

• Normal

• W arning

• Critical

• Minor

• Major

The ITO application group is EMS(HP), the message group, HA, and the object

is the full path of the resource being monitored.

See HP OpenView IT/Operations Administrators Task Guide (P/N

B4249-90003) for more information on configuring notification severity.

• SNMP traps

This sends messages to applications using SNMP traps, such as Network Node

Manager. See HP OpenView Using Network Node Manager (P/N J1169-90002)

for more information on configuring SNMP traps. The following traps are used

by EMS:

EMS_NORMAL_OID “1.3.6.1.4.1.11.2.3.1.7.0.1” - Normal notification

EMS_ABNORMAL_OID “1.3.6.1.4.1.11.2.3.1.7.0.2” - Abnormal notification

EMS_RESTART_OID “1.3.6.1.4.1.11.2.3.1.7.0.4” - Restart notification

• TCP and UDP

This sends TCP or UDP encoded events to the target host name and port

indicated for that request. Thus the message can be directed to a user-written

socket program.

Templates for configuring IT/Operations and Network Node Manager to display

EMS events can be found on the Hewlett-P ackard High Availability public web page

at http://www.hp.com/go/ha.

What is a Notification Comment?

The notification comment is useful for sending task reminders to the recipients of an

event. For example, if you have a disk monitor request that reports an alert that an

entire mirror has failed, when that event shows up in IT/Operations, for example,

you may want it to have the name of the person to contact if disks fail. If you have

configured MC/ServiceGuard package dependencies, you may want to enter the

package name as a comment in the corresponding pv_summary request.

24 Chapter 1

Page 25

Installing and Using EMS

Using EMS HA Monitors

Copying Monitoring Requests

There are two ways to use the copy function:

• To create requests for many resources using the same monitoring parameters,

select the monitoring request in the main screen and choose Actions: Copy

Monitoring Request. You need to have configured at least one similar request for

a similar instance. Choose a different resource instance in the Add a Monitoring

Request screen, and click <OK> in the Monitoring Request Parameters screen.

• To create many different requests for the same resource, select the monitoring

request in the main screen and choose Actions: Copy Monitoring Request. You

need to have configured at last one request for that resource. Click <OK> in the

Add a Monitoring Request screen, and modify the parameters in the Monitoring

Request Parameters screen. You may want to do this to create requests that send

events using multiple protocols.

Modifying Monitoring Requests

To change the monitoring parameters of a request, select the monitoring request

from the main screen and select Actions: Modify Monitoring Request.

Removing Monitoring Requests

Select one or more monitoring requests from the main screen and choose Actions:

Remove Monitoring Request. To start monitoring the resource again you must

recreate the request, either by copying a similar request for a similar resource or by

re-entering the data.

Chapter 1 25

Page 26

Installing and Using EMS

Using EMS HA Monitors

Configuring MC/ServiceGuard Package

Dependencies

This section describes how to use SAM to create package dependencies on EMS

resources. This creates an EMS request to monitor that resource and to notify

MC/ServiceGuard when that resource reaches a critical user-defined level.

MC/ServiceGuard will then failover the package. Here are some examples of how

EMS might be used:

• In a cluster where one copy of data is shared between all nodes in a cluster, you

may want to fail over a package if the host adapter has failed on the node

running the package. Because busses, controllers, and disks are shared, package

fail over to another node because of bus, controller, or disk failure would not

successfully run the package. To make sure you have proper failov er in a shared

data environment, you must create identical package dependencies on all nodes

in the cluster. MC/ServiceGuard can then compare the resource “UP” values on

all nodes and fail over to the node that has the correct resources available.

• In a cluster where each node has its own copy of data, you may want to fail o ver

a package to another node for any number of reasons:

• host adapter, bus, controller, or disk failure

• unprotected data (the number of copies is reduced to one)

• performance has degraded because one of the PV links has failed

In this sort of cluster of web servers, where each node has a copy of the data and

users are distributed for load balancing, you can fail over a package to another

node with the correct resources available. Again, the package resource

dependencies should be configured the same on all nodes.

This information for creating requests is also valid for EMS monitors sold with

other products (ATM or OTS, for example) and for user-written monitors written

according to developer specifications in Writing Monitors for the Event Monitoring

Service (EMS).

NOTE You should create the same requests on all nodes in an MC/ServiceGuard cluster.

A package can depend on any resource monitored by an EMS monitor. To create

package dependencies, choose create or modify a package from the Package

Configuration interface under the High Availability Clusters subarea of SAM,

Figure 1-7. You see a new option called “Specify Package Resource Dependencies.”

26 Chapter 1

Page 27

Figure 1-7 Package Configuration Screen

Installing and Using EMS

Using EMS HA Monitors

Click on “Specify Package Resource Dependencies...” to add EMS resources as

package dependencies; you see a screen similar to Figure 1-8. If you click “Add

Resource”, you get a screen similar to Figure 1-7 on page 27.

Chapter 1 27

Page 28

Installing and Using EMS

Using EMS HA Monitors

Figure 1-8 Package Resource Dependencies Screen

When you select a resource, either from the “Add a Resource” screen, or from the

“Package Resource Dependencies” screen by selecting a resource and clicking

“Modify Resource Dependencies...” you get a screen similar to Figure 1-9.

To make a package dependent on an EMS resource, select a Resource Up Value

from the list of A v ailable Resource Values, then click “ Add.” The example in Figure

1-9 shows the possible values for pv_summary. Different resources show different

available “Up” values.

NOTE Make sure you always select UP as one of the UP values. MC/ServiceGuard creates

an EMS request that sends an event if the resource value is not equal to the UP

value.

If you select UP, the package fails over if the value is anything but UP. If you select

UP and PVG-UP, the package fails over if the pv_summary value is not equal to UP

or PVG_UP; in other words, if pv_summary were SUSPECT or DOWN.

The polling interval determines the maximum amount of elapsed time before the

monitor knows about a change in resource status. For critical resources, you may

want to set a short polling interval, for example 30 seconds, which could adversely

affect system performance. With longer polling intervals you gain system

performance, but risk not detecting problems soon enough.

28 Chapter 1

Page 29

Figure 1-9 Resource Parameters Screen

Installing and Using EMS

Using EMS HA Monitors

You can also add resources as package dependencies by modifying the package

configuration file in /etc/cmcluster/pkg.ascii. See Managing

MC/ServiceGuard for details on how to modify this file. A example of the syntax is:

RESOURCE_NAME /vg/vg01/pv_summary

RESOURCE_POLLING_INTERVAL 60

RESOURCE_UP_VALUE = UP

RESOURCE_UP_VALUE = PVG_UP

Chapter 1 29

Page 30

Installing and Using EMS

Using EMS HA Monitors

30 Chapter 1

Page 31

2 Monitoring Disk Resources

This section recommends ways to configure requests to the disk monitor for most

high availability configurations.

31

Page 32

Monitoring Disk Resources

You can monitor the following SE (single-ended) or F/W (fast/wide) SCSI disks:

• Hewlett-Packard High Availability Disk Array, Models 10, and 20

• Hewlett-Packard Disk Array with AutoRAID, Models 12 and 12H

• EMC Symmetrix arrays

• High Availability Storage System

• Single-spindle SCSI disks

HP-IB and HP-FL disks are not supported by the disk monitor. FiberChannel disks

are not yet supported.

You should be familiar with how the physical and logical volumes are configured on

all the nodes in your system and whether disks are configured with redundant PV

links, mirroring, or both, or whether they are standalone disks. See HP-UX System

Administration Tasks. For more information on configuring disks in a high

availability environment, see the technical whitepaper Choosing the Right Disk

Technology in a High A vailability Environment by Bob Sauers. Other information is

available from the high availability web site at http://www.hp.com/go/ha.

32 Chapter 2

Page 33

Monitoring Disk Resources

Disk Monitor Reference

Disk Monitor Reference

The EMS disk monitor reports information on the physical and logical volumes

configured by LVM (Logical Volume Manager). Anything not configured through

LVM is not monitored from the disk monitor. Monitored disk resources are:

• Physical volume summary (/vg/vgName/pv_summary), a summary status of all

physical volumes in a volume group.

• Physical volume and physical volume link status

(/vg/vgname/pv_pvlink/status/deviceName), the status of a given physical

volume or PV links in a volume group.

• Logical volume summary (/vg/vgName/lv_summary), a summary status of all

logical volumes in a volume group.

• Logical volume status (/vg/vgName/lv/status/lvName), the status of a given

logical volume in a volume group.

• Logical volume copies (/vg/vgName/lv/copies/lvName), the number of copies of

data available in a volume group.

Monitoring both the physical and logical volumes allows you to detect failures in

both active and inactive volume groups and logical volumes and correct hardware

problems that put node, application, or data availability at risk.

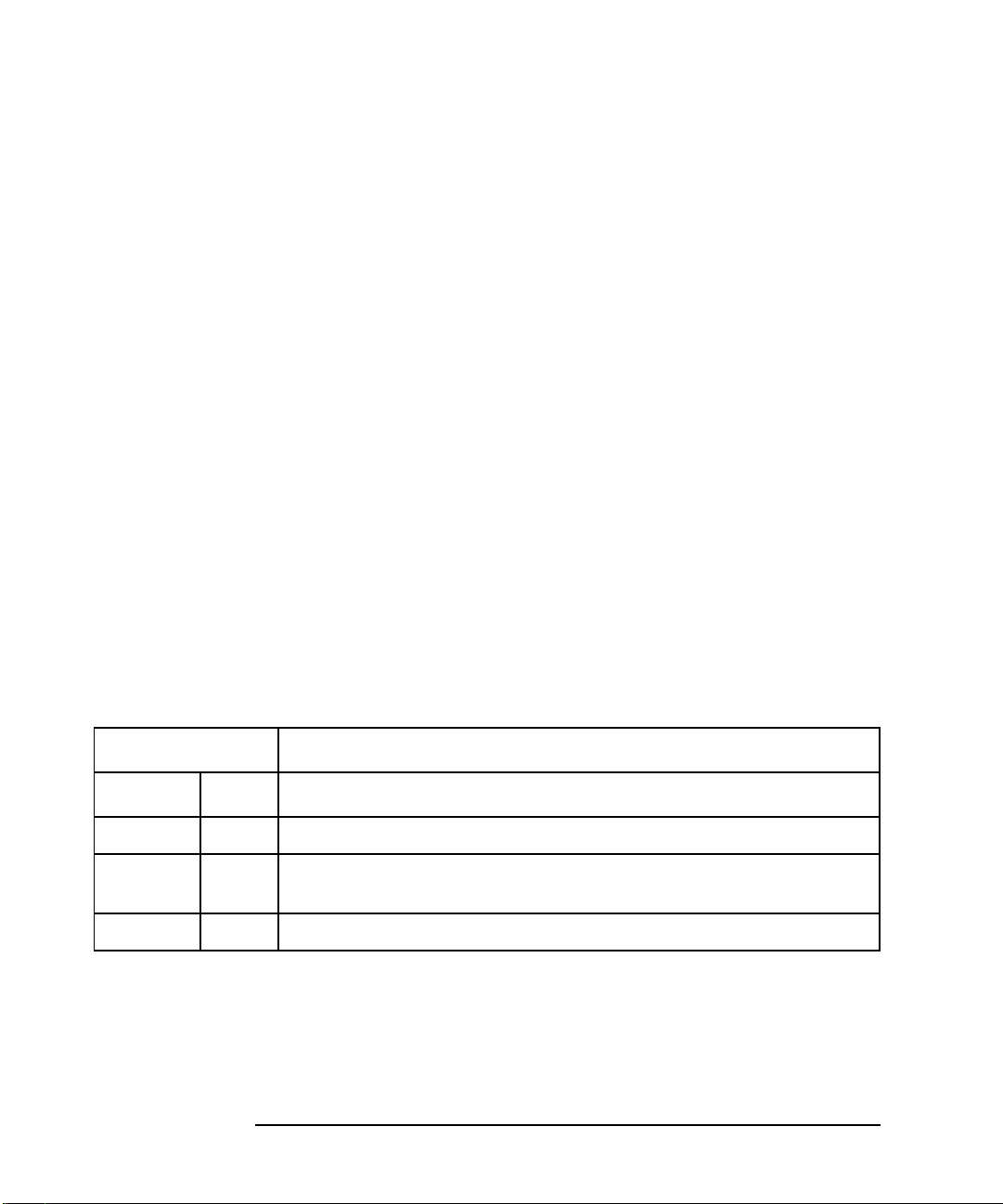

Figure 2-1 shows the class hierarchy for the disk monitor.

Items in boxes are resource instances that can be monitored.

Items in italics change depending on the names of volume groups, devices, and

logical volumes on the system.

Figure 2-1 Disk Monitor Resource Class Hierarchy

/vg

/vgName

/pv_summary

/pv_pvlinks

/status

/deviceName

Chapter 2 33

/lv_summary

/status

/lvName

/lv/

/copies

/lvName

Page 34

Monitoring Disk Resources

Disk Monitor Reference

Physical Volume Summary

The pv_summary is a summary status of all physical volumes in a volume group.

This status is based on the compiled results of SCSI inquiries to all physical

volumes in a volume group; see “Physical Volume and Physical Volume Link

Status” on page 36.

If you have configured package dependencies in MC/ServiceGuard, this resource is

used to determine package failover based on access to physical disks. (See Chapter 1

for information on configuring MC/ServiceGuard package dependencies.) If you are

using the disk monitor with MC/ServiceGuard, it is important that you configure

physical volume groups (PVGs) to give you the most accurate pv_summary for

MC/ServiceGuard package failover. See “Rules for Using the EMS Disk Monitor

with MC/ServiceGuard” on page 40.

The value in Table 2-1 is used by the disk monitor to determine how conditions

compare in logical operations. For example, you may create a request that alerts you

when the condition is greater than or equal to SUSPECT. The numeric value allows

you to tell which conditions qualify.

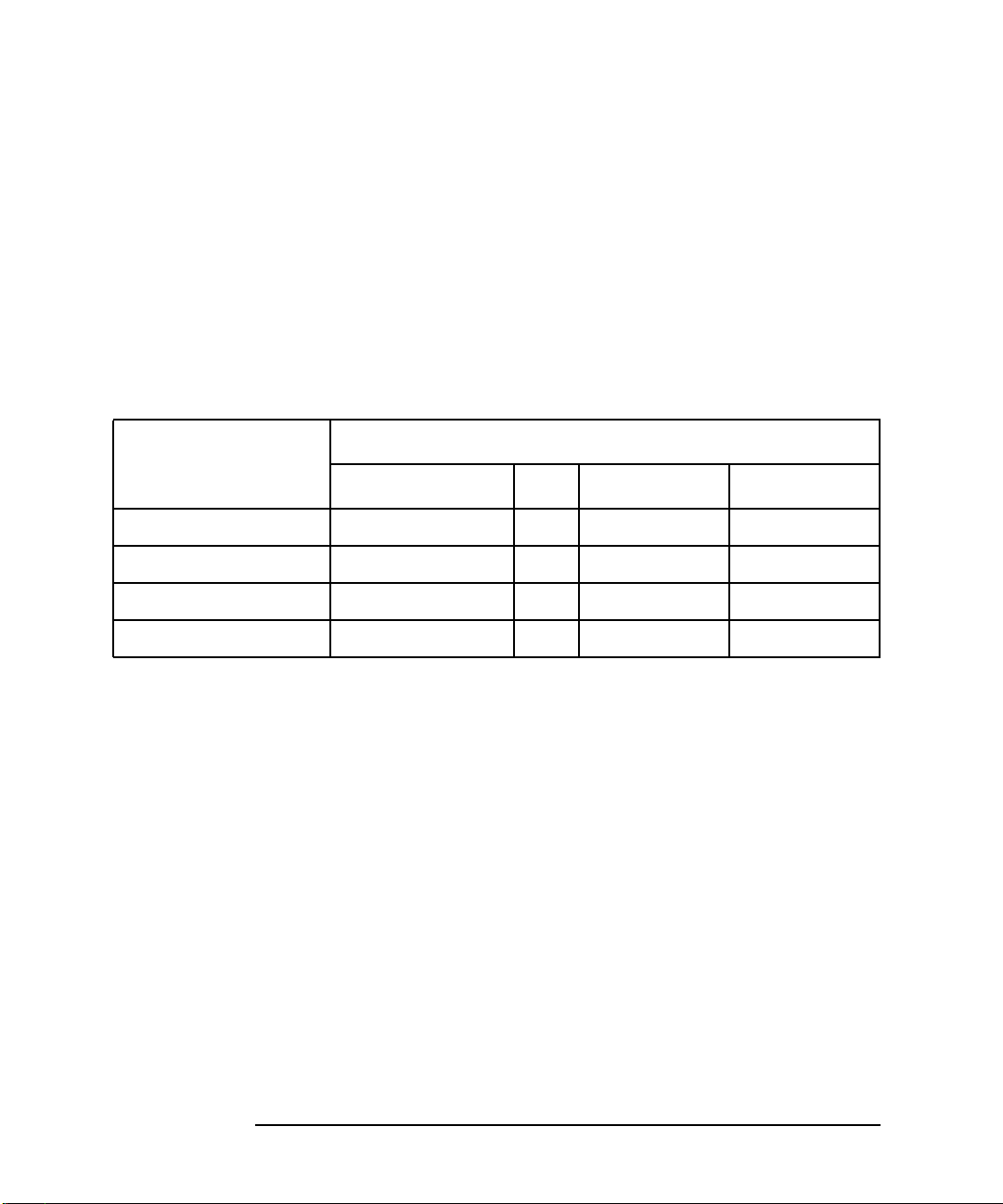

Table 2-1 Interpreting Physical Volume Summary

Resource Name /vg/vgName/pv_summary

Condition Value Interpretation

UP 1 All physical volumes containing data are accessible.

PVG_UP 2 At least 1 PV has f ailed; all data is accessible. If more than 1 is down and the

failed PVs are from the same PVG, all data is still accessible.

This condition can only occur in mirrored set or if PV links in PVGs.

SUSPECT 3 Two or more physical volumes from different PVGs are unav ailable; the disk

monitor cannot conclude that all data is available.

For example, on a 2-way mirrored system, if a physical volume fails on each

side of the mirror, data may be available if the failed volumes are holding

different data. But data may be unavailable if the failed volumes hold the

same data. Because the disk monitor only knows disks have failed, and not

what data is on the disks, it marks the volume group SUSPECT.

DOWN 4 Some data missing or no data accessible.

34 Chapter 2

Page 35

Monitoring Disk Resources

Disk Monitor Reference

The pv_summary resource may not be available for a given volume group in the

following cases:

• Devices are on an unsupported bus (such as HP-IB or HP-FL) or an

unrecognized bus, in the case of a new bus technology. The /etc/syslog

entry would say:

diskmond[5699]: pv_summary will be unavailable for /dev/vg00 because there

are physical volumes in this volume group which are on an unrecognized bus.

(DRM-502)

• PVGs (physical volume groups) exist in a volume group, but not all physical

volumes are assigned to a PVG. The /etc/syslog entry would say:

diskmond[18323]: pv_summary will be unavailable for /dev/vgtest because the

physical volume groups (PVGs) in this volume group do not have an equal

number of PVs or there are PVs not in a PVG. (DRM-503)

• Unequal numbers of physical volumes exist in each PVG in the volume group.

The /etc/syslog entry would say:

diskmond[18323]: pv_summary will be unavailable for /dev/vgtest because the

physical volume groups (PVGs) in this volume group do not have an equal

number of PVs or there are PVs not in a PVG. (DRM-503)

Two cases where this would occur are:.

• There are both 2-way and 3-way mirroring in the same volume group.

• Mirrored disks are a different number of physical disks with the same total

disk mi4Gb drive in one PVG and 2 2G drives in the redundant PVG.

All checks for the validity of pv_summary are logged to both /etc/syslog and

/etc/opt/resmon/log/api.log with the name of the local node and the

identifier diskmond.

Chapter 2 35

Page 36

Monitoring Disk Resources

Disk Monitor Reference

Physical Volume and Physical Volume Link Status

Requests to monitor physical volumes and physical volume links give you status on

the individual physical volumes and PV links in a volume group. In the case of most

RAID arrays, this means the monitor can talk to the physical link to a logical unit

number (LUN) in the array. In the case of stand-alone disks, it means the monitor

can talk to the disk itself.

The pv_pvlink status is used to calculate pv_summary. Although it is somewhat

redundant to use both, you might want to have more specific status sent by

pv_summary, and only have status sent on pv_pvlinks if a device is DOWN.

Pv_pvlinks and pv_summary supplement lv_summary by giving status on the

accessibility of both active and inactive volume groups and logical volumes.

To pinpoint a failure to a particular disk, bus, or I/O card, you need to use the disk

monitor alerts in conjunction with standard troubleshooting methods: reading log

files, inspecting the actual devices. The disk monitor uses the data in

/etc/lvmtab to see what is available for monitoring, and /etc/lvmtab does

not distinguish between physical volumes and physical volume links, so you need to

do additional investigation to detect whether a disk, bus, or I/O card has failed.

The value in Table 2-2 is used by the disk monitor to determine how conditions

compare in logical operations. For example, you may create a request that alerts you

when the condition is greater than or equal to BUSY. The numeric value allows you

to tell which conditions qualify.

Table 2-2 Interpreting Physical Volume and Physical Volume Link Status

Resource Name /vg/vgName/pv_pvlink/status/deviceName

Condition Value Interpretation

UP 1 SCSI inquiry was successful.

BUSY 2 SCSI inquiry returned with DEVICE BUSY; the disk monitor will try 3 times

to see if it gets either an UP or DOWN result before marking a device BUSY.

DOWN 3 SCSI inquiry failed; either the bus or disk are not accessible.

When configuring requests from the SAM interface, a wildcard (*) may be used in

place of deviceName to monitor all physical volumes and physical volume links in a

volume group.

36 Chapter 2

Page 37

Monitoring Disk Resources

Disk Monitor Reference

Logical Volume Summary

The logical volume summary tells you how accessible the data is in all logical

volumes in an active volume group. Sometimes the physical connection may be

working, but the application cannot read or write data on the disk. The disk monitor

determines I/O activity by querying LVM, and marks a logical volume as DOWN if

a portion of its data is unavailable.

NOTE The disk monitor cannot determine data accessibility to logical volumes in an

inactive volume group.

The values in Table 2-3 are used by the disk monitor to determine how conditions

compare in logical operations. For example, you may create a request that alerts you

when the condition is greater than or equal to INACTIVE_DOWN.

Table 2-3 Interpreting Logical Volume Summary

Resource Name /vg/vgName/lv_summary

Condition Value Interpretation

UP 1 All logical volumes are accessible, all data is accessible.

INACTIVE 2 The volume group is inactive. This could be because:

• The volume group is active in e xclusive mode on another node

in an MC/ServiceGuard cluster. (This is not valid for clusters

running MC/LockManager, because it can support a volume

group being active on more than one node.) Note that

MC/ServiceGuard does allow a volume group to be active in

read-only mode, if it is already active on another node.

• The volume group was made inactiv e usingvgchange -a n

for maintenance or other reasons.

• There was not a quorum of active physical volumes at system

boot, i.e. not enough disks in the volume group were working.

INACTIVE_DOWN 3 The last time the inactive volume was activated, it was DOWN; at

least one logical volume in the volume was inaccessible

DOWN 4 At least one logical volume in the volume group reports a status of

either INA CTIVE or DOWN. Note that an inactive logical volume

in an active volume group is rare, but possible. See “Logical

Volume Status” on page 38.

Chapter 2 37

Page 38

Monitoring Disk Resources

Disk Monitor Reference

Logical Volume Status

Logical volume status gives you status on each logical volume in a volume group.

While the lv_summary tells whether data in a volume group is available, the

lv/status/lvName will tell you whether specific logical volumes have failed.

The value in Table 2-4 is used by the disk monitor to determine how conditions

compare in logical operations. For example, you may create a request that alerts you

when the condition is greater than or equal to INA CTIVE. The numeric value allo ws

you to tell which conditions qualify.

Table 2-4 Interpreting Logical Volume Status

Resource Name /vg/vgName/lv/status/lvName

Condition Value Interpretation

UP 1 All logical volumes are accessible, all data is accessible.

INACTIVE 2 The logical volume is inactive.

DOWN 3 The logical volume is DOWN, a complete copy of the data is not

available for this logical volume.

When configuring requests from the SAM interface, a wildcard (*) may be used in

place of lvName to monitor all logical volumes in a volume group.

If you split off mirrors from your mirrored configuration, you will see new logical

volume resource instances when the split mirror is created.

38 Chapter 2

Page 39

Logical Volume Number of Copies

The logical volume number of copies is most useful to monitor in a mirrored disk

configuration. It tells you how many copies of the data are available.

MirrorDisk/UX supports up to 3-way mirroring, so there can be from 0 to 3 copies

(see Table 2-5.) In a RAID configuration that is not mirrored using LVM, the only

possible number is 0 or 1; either the data is accessible or it isn’t.

Note that when you configure mirroring in L VM, it lists 0 mirrors to mean you have

one copy of the data. Likewise, 2 mirrors mean you have 3 copies of the data (one

original plus 2 mirrors). The disk monitor is monitoring all copies of data, and

therefore counts the “original” as part of the total number of copies.

Table 2-5 Interpreting Logical Volume Copies

Resource Name vg/vgName/lv/copies/lvName

Condition Interpretation

0 No copies, either physical parts of the disk array have problems,

the lv is inactive, or a physical extent is stale or unavailable.

Monitoring Disk Resources

Disk Monitor Reference

1 One complete copy of data available; if the data is not mirrored,

then all physical extents are fine, if data is mirrored, all other

copies have problems.

2 Two complete copies of data are available; if the data is two-way

mirrored, then all physical disks are up and data is available, if

3-way mirrored, at least one logical extent has a missing or stale

physical extent .

3 All copies of a 3-way mirror are available.

When configuring requests from the SAM interface, a wildcard (*) may be used in

place of lvName to request status for all logical volumes in a volume group.

If you split off mirrors from your mirrored configuration, you will see the number of

copies reduced by 1 when the split mirror is created.

Chapter 2 39

Page 40

Monitoring Disk Resources

Rules for Using the EMS Disk Monitor with MC/ServiceGuard

Rules for Using the EMS Disk Monitor

with MC/ServiceGuard

The disk monitor is designed especially for use with MC/ServiceGuard to provide

package failover if host adapters, busses, controllers, or disks fail. Here are some

examples:

• In a cluster where one copy of data is shared between all nodes in a cluster, you

may want to fail over a package if the host adapter has failed on the node

running the package. Because busses, controllers, and disks are shared, package

fail over to another node because of bus, controller, or disk failure would not

successfully run the package. To make sure you have proper failov er in a shared

data environment, you must create identical package dependencies on all nodes

in the cluster. MC/ServiceGuard can then compare the resource “UP” values on

all nodes and fail over to the node that has the correct resources available.

• In a cluster where each node has its own copy of data, you may want to fail o ver

a package to another node for any number of reasons:

• host adapter, bus, controller, or disk failure

• unprotected data (the number of copies is reduced to one)

• performance has degraded because one of the PV links has failed

For example, in a cluster of web servers where each node has a copy of the data

and users are distributed for load balancing, you can fail over a package to

another node with the correct resources available. Again, the package resource

dependencies should be configured the same on all nodes.

Disk availability is based on pv_summary. See “Configuring MC/ServiceGuard

Package Dependencies” in Chapter 1 for information on configuring package

dependencies.

In addition to configuring disks as MC/ServiceGuard package dependencies, you

may also want to have alerts sent to a system management tool such as HP

OpenView IT/Operations or Network Node Manager. Although MC/ServiceGuard

and EMS work together to provide package failover, they do not send events or log

the source of the failure. Also, failures may not cause a package to fail over, but may

expose a single point of failure that you want to know about. Therefore, it is

recommended you also configure requests from the SAM interface to EMS.

40 Chapter 2

Page 41

Monitoring Disk Resources

Rules for Using the EMS Disk Monitor with MC/ServiceGuard

The pv_summary is calculated based on the compiled results of SCSI inquiries to all

physical volumes in a volume group. To help you determine the best way to

configure your disks for monitoring, here are the assumptions made when

calculating pv_summary:

• PVGs (physical volume groups) are set up to be bus-specific sides of a mirror or

redundant links and have an equal number of physical volumes.

• All logical volumes within a volume group are mirrored in the same way: all

2-way or all 3-way mirroring.

• A package depends on all logical volumes in the volume group.

• The SCSI inquiry will retry on devices that are BUSY. BUSY devices are not

considered UP when calculating pv_summary.

These rules apply when creating PVGs, if they are not followed, pv_summary will

not be available for monitoring:

• If PVGs are used, all physical volumes in a volume group must be in a PVG.

• All PVGs in a volume group must have the same number of physical volumes.

Chapter 2 41

Page 42

Monitoring Disk Resources

Rules for Using the EMS Disk Monitor with MC/ServiceGuard

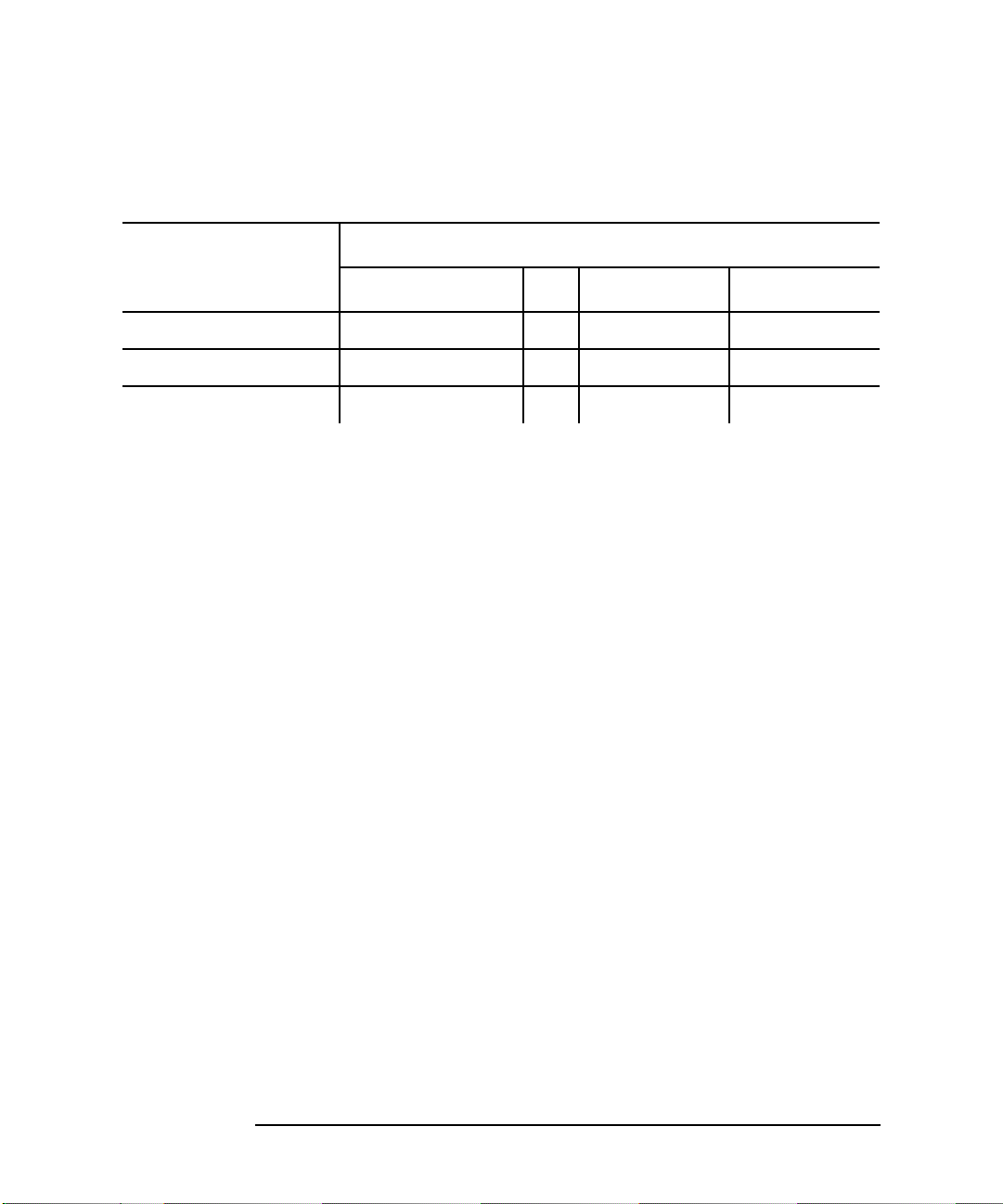

Table 2-6 is a summary of how pv_summary is calculated where

• n is the number of paths for the volume group in /etc/lvmtab, (physical

volumes, paths, or LUNs).

• p is the number of PVGs physical volume groups in the volume group.

• x is the number of paths currently available from a SCSI inquiry.

To give pv_summary the most accurate picture of data availability, you need to use

PVGs to define your physical volumes as separate access points to data: mirroring

should be PVG strict and arrays should have PV links, with redundant links in a

separate PVG. Note that if you do not configure PV links into separate PVGs, p in

T able 2-6 will al w ays be equal to 1. Therefore any SCSI inquiry that does not return

a value of UP for every path will result in a calculation of DOWN for pv_summary.

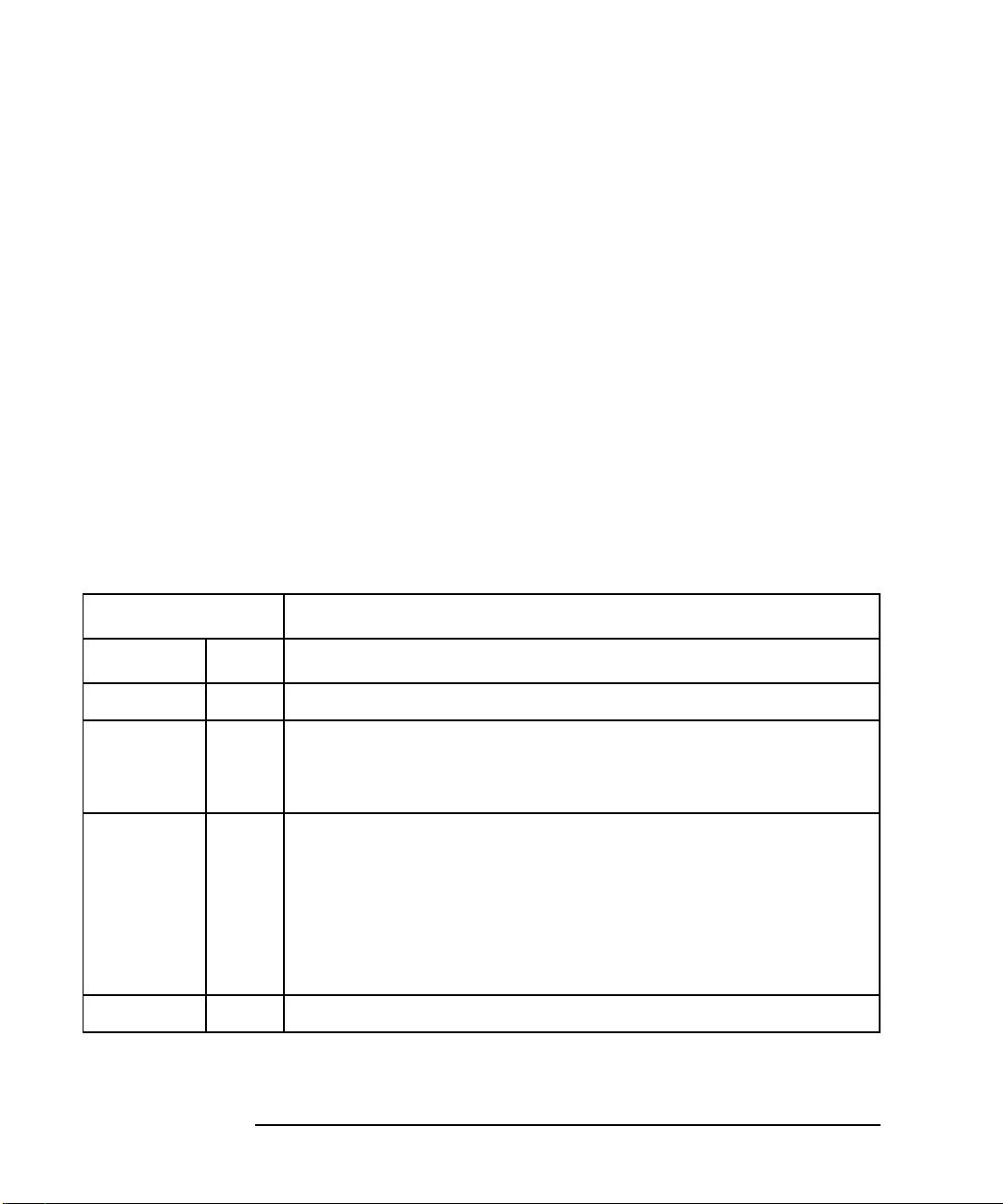

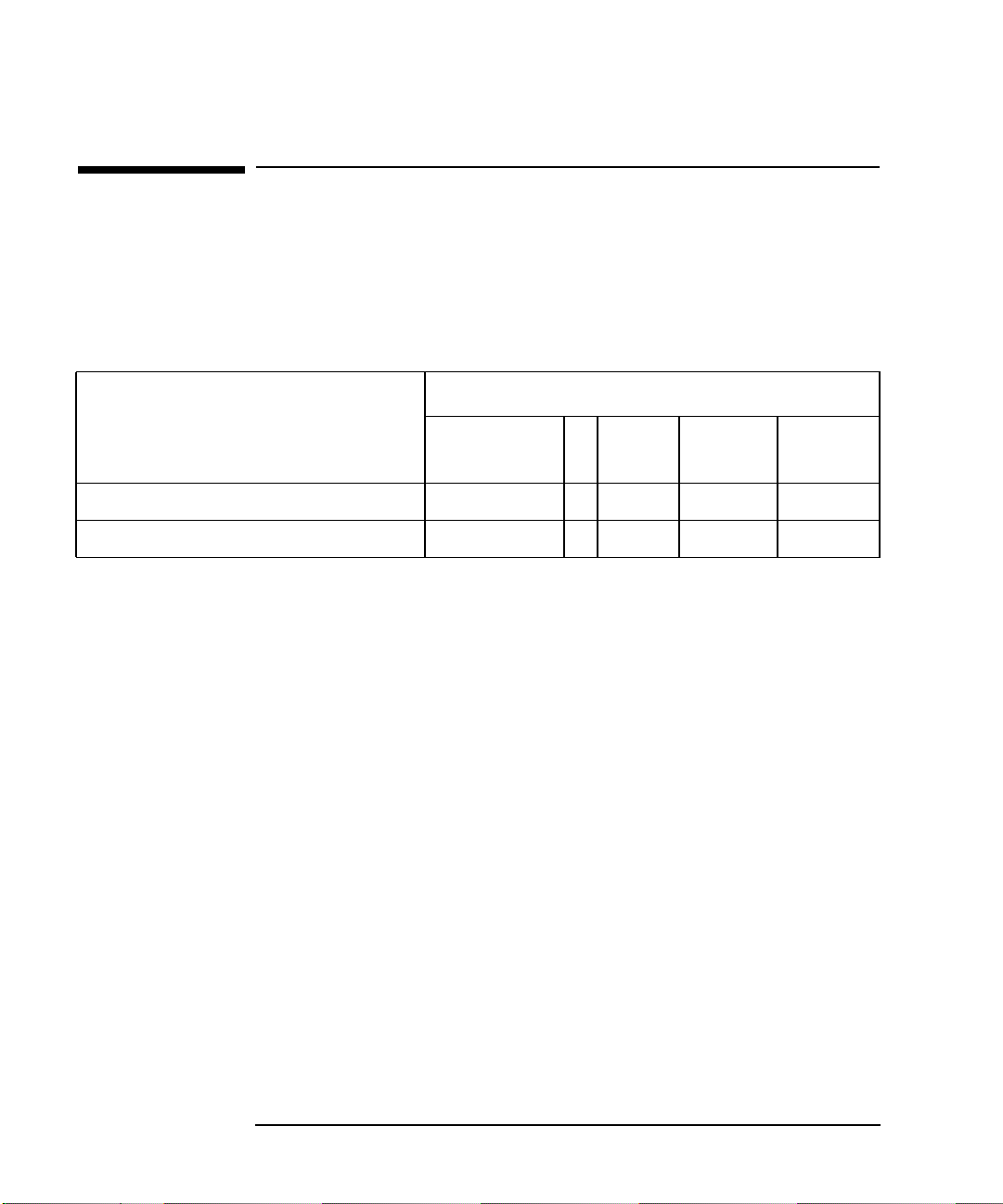

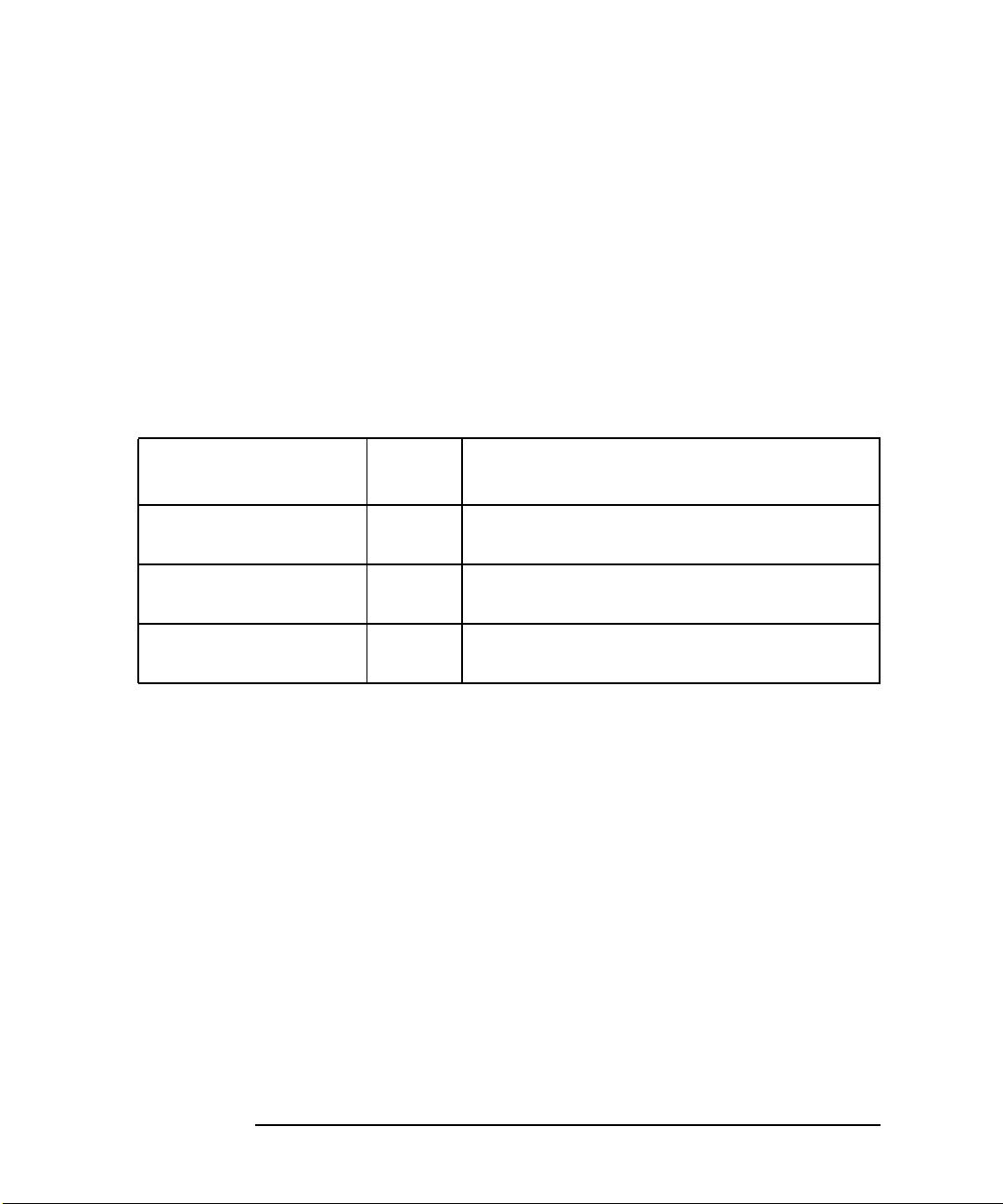

Table 2-6 pv_summary Calculations

Case Conclusion State

x = n All physical volumes and all data are available. UP

x=n-(p-1) All data is available. PVG_UP

If there are PVGs, and one PVG has all paths,

n/p <= x <= n-(p-1)

x < n/p Missing some data.

x=0 No data or physical volumes are available.

then all data is available.

If there are PVGs, and none of the PVGs has all

paths, then the disk monitor cannot determine if

all data is available.

Rules for RAID Arrays

RAID configurations must be configured with PV links. PV links are redundant

links attached to separate controllers on the array. If PV links are configured, LVM

automatically switches to the alternate controller when one fails.

To use the EMS disk monitor with MC/ServiceGuard, PV links must be configured

in a separate PVGS (physical volume groups). This new requirement allows

pv_summary to accurately calculate data availability based on physical volume

availability, thus including both ACTIVE and INACTIVE volume groups. If PV

42 Chapter 2

PVG_UP

SUSPECT

DOWN

Page 43

Monitoring Disk Resources

Rules for Using the EMS Disk Monitor with MC/ServiceGuard

links are not configured in separate PVGs, the disk monitor sees all links to the array

as one physical volume, so if one link fails, pv_summary will register DOWN, and

your package will fail over, even if the other link is still up and data is available.

The following sections describe how to make sure your PV links are in physical

volume groups.

Adding PVGs to Existing Volume Groups

If you have already created volume groups, you can create PVGs and put PV links

into them:

1. Create a file called /etc/lvmpvg with permissions 600. See the lvmpvg man

page and HP-UX System Administration Tasks

2. Create an entry for each volume group and assign a different PVG name to each

PV link. The PVG names can be any arbitrary name of your choosing, but must

be unique on the system For example, an array containing 2 volume groups,

vgdance and vgsing, each containing a single LUN and each with 2 PV links

(see Figure 2-4 on page 51) should have the following /etc/lvmpvg file:

VG /dev/vgdance

PVG busA

/dev/dsk/c1t0d0

/dev/dsk/c1t2d0

PVG busB

/dev/dsk/c2t1d0

/dev/dsk/c2t3d0

VG /dev/vgsing

PVG busA

/dev/dsk/c1t0d1

/dev/dsk/c1t2d1

PVG busB

/dev/dsk/c2t1d1

/dev/dsk/c2t3d1

3. Carefully copy the /etc/lvmpvg to each system connected to the disk array.

NOTE Make sure you edit lvmpvg to contain the correct link names in

/dev/dsk/device for that system.

Creating Volume Groups on Disk Arrays Using PV

Links

If you will be monitoring volume groups that use mass storage on disk arrays, you

should use redundant I/O channels from each node, connecting them to separate

controllers on the array. Then you can define alternate links to the LUNs or logical

disks you have defined on the array. Alternate links (known as PV links) to the same

disk should be assigned to different physical volume groups. In SAM, choose the

Chapter 2 43

Page 44

Monitoring Disk Resources

Rules for Using the EMS Disk Monitor with MC/ServiceGuard

type of disk array you wish to configure, and follow the menus to define alternate

links. Be sure to specify a different physical volume group for each link to the same

disk.

The following example shows how to configure alternate links using LVM

commands. In the example, the following disk configuration is assumed:

8/0.15.0 /dev/dsk/c0t15d0 /* I/O Channel 0 (8/0) SCSI address 15 LUN 0 */

8/0.15.1 /dev/dsk/c0t15d1 /* I/O Channel 0 (8/0) SCSI address 15 LUN 1 */

8/0.15.2 /dev/dsk/c0t15d2 /* I/O Channel 0 (8/0) SCSI address 15 LUN 2 */

8/0.15.3 /dev/dsk/c0t15d3 /* I/O Channel 0 (8/0) SCSI address 15 LUN 3 */

8/0.15.4 /dev/dsk/c0t15d4 /* I/O Channel 0 (8/0) SCSI address 15 LUN 4 */

8/0.15.5 /dev/dsk/c0t15d5 /* I/O Channel 0 (8/0) SCSI address 15 LUN 5 */

10/0.3.0 /dev/dsk/c1t3d0 /* I/O Channel 1 (10/0) SCSI address 3 LUN 0 */

10/0.3.1 /dev/dsk/c1t3d1 /* I/O Channel 1 (10/0) SCSI address 3 LUN 1 */

10/0.3.2 /dev/dsk/c1t3d2 /* I/O Channel 1 (10/0) SCSI address 3 LUN 2 */

10/0.3.3 /dev/dsk/c1t3d3 /* I/O Channel 1 (10/0) SCSI address 3 LUN 3 */

10/0.3.4 /dev/dsk/c1t3d4 /* I/O Channel 1 (10/0) SCSI address 3 LUN 4 */

10/0.3.5 /dev/dsk/c1t3d5 /* I/O Channel 1 (10/0) SCSI address 3 LUN 5 */

Assume that the disk array has been configured, and that both the following device

files appear for the same LUN (logical disk) when you run the ioscan command:

/dev/dsk/c0t15d0

/dev/dsk/c1t3d0

Use the following steps to configure a volume group for this logical disk:

1. First, set up the group directory for vgdatabase:

# mkdir /dev/vgdatabase

2. Next, create a control file named group in the directory /dev/vgdatabase, as

follows:

# mknod /dev/vgdatabase/group c 64 0xhh0000

The major number is always 64, and the hexadecimal minor number has the

form

0xhh0000

where hh must be unique to the volume group you are creating. Use an

appropriate hexadecimal number that is available on your system, after the

volume groups that are already configured. On a single system, this might be the

next hexadecimal number, on a cluster, these number must be assigned

cluster-wide, so it should be one of the hexadecimal numbers used in the cluster .

Use the following command to display a list of existing volume groups:

# ls -l /dev/*/group

3. Use the pvcreate command on one of the device files associated with the

LUN to define the LUN to LVM as a physical volume.

# pvcreate /dev/dsk/c0t15d0

It is only necessary to do this with one of the device file names for the LUN.

44 Chapter 2

Page 45

Monitoring Disk Resources

Rules for Using the EMS Disk Monitor with MC/ServiceGuard

4. Use the following commands to create the volume group itself with the first link

assigned to a physical volume group called bus1and the second link assigned to

a physical volume group called bus2:

# vgcreate -g bus1 /dev/vgdatabase /dev/dsk/c0t15d0

# vgextend -g bus2 /dev/vgdatabase /dev/dsk/c0t3d0

LVM will now recognize the I/O channel represented by /dev/dsk/c0t15d0 as the

primary link to the disk; if the primary link fails, LVM will automatically switch to

the alternate I/O channel represented by /dev/dsk/c1t3d0.

Creating Logical Volumes

Use the following command to create logical volumes (the example is for

/dev/vgdatabase):

# lvcreate -L 120 -m 1 -s g /dev/vgdatabase

This command creates a 120 MB mirrored volume named lvol1. The name is

supplied by default, since no name is specified in the command. The -s g option

means that mirroring is PVG-strict, that is, the mirror copies of data will be in

different physical volume groups.

NOTE If you are using disk arrays in RAID 1 or RAID 5 mode, omit the -m 1 option.

Chapter 2 45

Page 46

Monitoring Disk Resources

Rules for Using the EMS Disk Monitor with MC/ServiceGuard

Rules for Mirrored Individual Disks

The following rules apply to configuring mirrored disks for use with

MC/ServiceGuard and EMS monitoring:

• Mirroring must be PVG-strict.

Mirrored volumes must reside on a different bus from the original volume to

avoid a single point of failure and to obtain the best pv_summary value for that

mirror. This is done automatically by LVM if you created the PVGs while

setting up mirroring. See the lvextend man page and Managing

MC/ServiceGuard for more information.

• Logical volumes that are 2-way mirrored should be in separate volume groups

from those that are 3-way mirrored.

Putting differently mirrored volumes in the same volume group mak es it difficult

to accurately interpret the pv_summary data. Take the example of a volume

group containing both 2- and 3-way mirroring. If 2 host adapters fail on that

volume group, it could mean no data available for the 2-way mirrored logical

volume, but one copy still available for the 3-way mirrored volume. The

pv_summary would be wrong for one of those mirrored disk configurations.

• Volume groups representing the same hardware for failover must be created with

exactly the same name on all nodes.

For example a bus connecting 3 nodes to a disk array must be defined as part of

vg01 on all 3 nodes. We also recommend using the same names for PVGs

containing the same actual disks.

Mirrors that have been split off are treated the same by the disk monitor as they are

by LVM. When you split off a mirror you may see a change in the following

resources:

• /vg/vgName/lv/copies/lvName will be reduced by one when the mirror is split

off. If you created a monitoring request for that resource that alerts you when the

number of copies changes or is reduced, you may see an event.

• /vg/vgName/lv/status will have a new /lvName resource instance that represents

the split off mirror.

• /vg/vgName/lv_summary many change depending on the state of the new logical

volume created by the split mirror.

If you restore the split mirror normally using supported LVM commands, the disk

monitor will detect the merged mirror and reports

46 Chapter 2

Page 47

Monitoring Disk Resources

Creating Disk Monitoring Requests

Creating Disk Monitoring Requests

There are two ways to create disk monitor requests from:

• the SAM interface to EMS to send alerts to HP OpenView ITO, ClusterView, or

Network Node Manager.

• MC/ServiceGuard to configure any disk monitor resource as a package

dependency.

These requests are not exclusive: you can configure the disk monitor from both

MC/ServiceGuard and the SAM interface to EMS. In fact, if you are using EMS to

monitor disks for MC/ServiceGuard package dependencies, it is recommended you

also configure EMS to send events to your system monitoring software, e.g. HP

OpenView IT/Operations, so you are alerted when something threatens data or

application availability.

The following sections take some common disk configurations in a high av ailability

environment and give examples of the types of monitor requests you might want to

create.

Chapter 2 47

Page 48

Monitoring Disk Resources

Creating Disk Monitoring Requests

Disk Monitoring Request Suggestions

The examples listed in Table 2-7 are valid for both RAID and mirrored

configurations. For examples on configuring MC/ServiceGuard dependencies, see

Chapter 1, “Configuring MC/ServiceGuard Package Dependencies”.

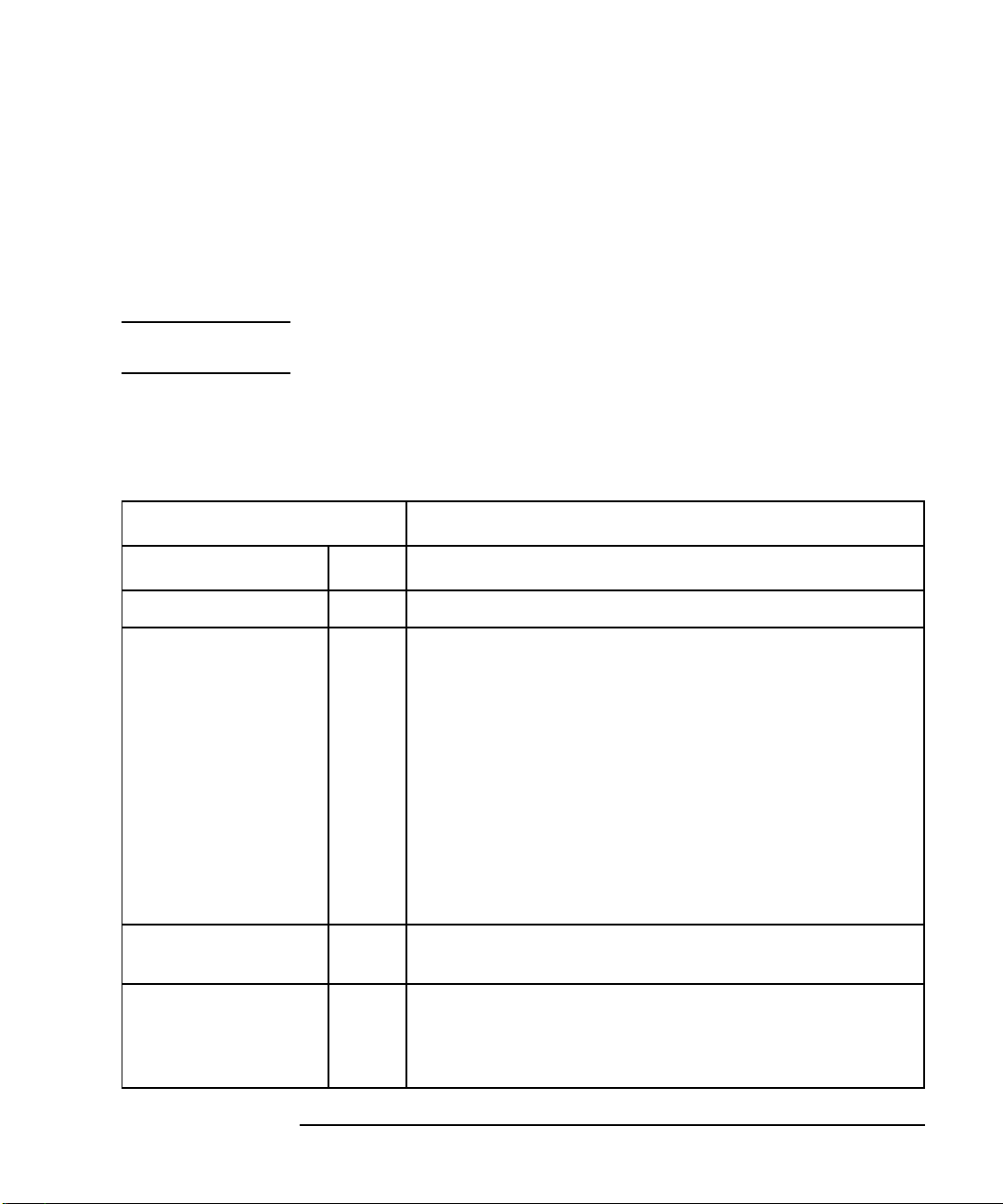

Table 2-7 Suggestions for Creating Disk Monitor Requests

Monitoring Parameters

To be alerted when...

you are at risk for data

Resources to

monitor

Notify Value Option

pv_summary when value is >=

loss (most common for

use with

lv_summary when value is >=

MC/ServiceGuard)

any disks fail pv_pvlink/status/* when value is not

equal

any disks fail, and you

want to know when

pv_pvlink/status/* when value is not

equal

they are back up

you want regular

reminders to fix

inoperative disks,

controllers, busses,

and host adapters, and

pv_pvlink/status/* at each interval

(use a long

polling

interval, 1 hour

or more)

= DOWN

you want notification

when they are fixed

any logical volume

becomes unavailable

lv/status/* when value is... not

equal

SUSPECT

INACTIVE_

DOWN

UP

UP

UP

RETURN

REPEAT

RETURN

you have lost a mirror

in your 2-way

mirroring environment

lv/copies/* when value is... < 2

48 Chapter 2

Page 49

Monitoring Disk Resources

Creating Disk Monitoring Requests

The following screens step you through creating a disk monitor request. Assume

you want to be alerted when any disks fail and when they are back up. Figure 2-2

shows you can select all instances of pv_pvlink, so you only have to enter the

parameters once for each volume group.You still need to create multiple pv_pvlink

requests, one for each volume group on your system. Click OK to set monitoring

parameters.

Figure 2-2 Example: Selecting All Instances of /vg/vgName/pv_pvlink/status

Chapter 2 49

Page 50

Monitoring Disk Resources

Creating Disk Monitoring Requests

Assume you have a great need to know the status of your system at all times. You

would need a short polling interval, perhaps between 30 and 120 seconds. (If you

notice the disk monitor consumes too much CPU, you may want to set a longer

polling interval.) Assume also that you want an Initial event sent to make sure the

request is configured properly. You would want to set the Return option to send an

event when disks come back up. You would configure the events to use opcmsg

(ITO) protocol because you use HP OpenView IT/Operations as your system

management tool. The parameters for your monitoring request would look like

Figure 2-3.

Figure 2-3 Example: Configuring /vg/vgName/pv_pvlink/status Parameters to Notify

When Disks Fail

All requests are created in a similar way. You need to make sure you perform these

steps for all instances in all volume groups you want to monitor.

50 Chapter 2

Page 51

Monitoring Disk Resources

Creating Disk Monitoring Requests

Resources to Monitor for RAID Arrays

These considerations are relevant to all supported RAID configurations listed at the

beginning of this chapter. To adequately monitor a RAID system, create requests to

monitor at least the following resources for all volume groups on a node:

/vg/vgName/pv_summary This gives you an overview of the status of the entire physical volume

group and is recommended when using EMS in conjunction with

MC/ServiceGuard; see “Rules for Using the EMS Disk Monitor with

MC/ServiceGuard” on page 40.

vg/vgName/pv_pvlink/status/* This gives you the status of each PV link in the array and is redundant

to pv_summary. It is recommended when using EMS outside of the

MC/ServiceGuard environment, or if you require specific status on

each physical device.

vg/vgName/lv_summary This gives you the status of data availability on the array.

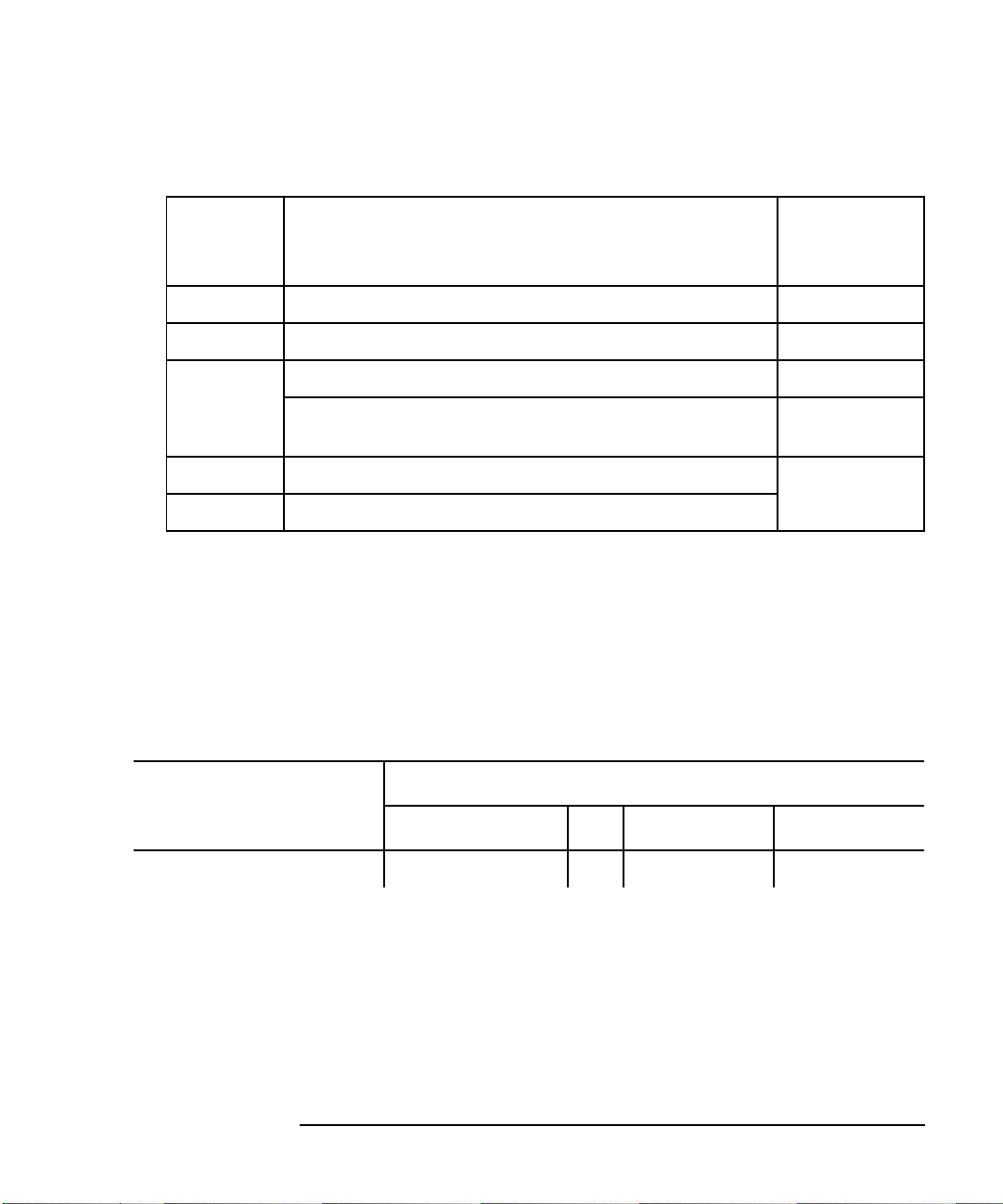

Figure 2-4 represents a node with two RAID arrays and two PV links to each are

Figure 2-4 RAID Array Example

Bus A for vgdance:

/dev/dsk/c1t0d0

/dev/dsk/c1t2d0

Bus A for vgdsing:

/dev/dsk/c1t0d1

/dev/dsk/c1t2d1

t0

vgdance

vgsing

Bus A (c1)

lun 0

lun1

Chapter 2 51

node1

Bus B (c2)

t1

lun 0

lun1

Bus B for vgdance:

/dev/dsk/c2t1d0

/dev/dsk/c2t3d0

Bus B for vgsing:

/dev/dsk/c2t1d1

/dev/dsk/c2t3d1

t2

lun 0

lun1

t3

lun 0

lun1

Page 52

Monitoring Disk Resources

Creating Disk Monitoring Requests

Each LUN on the RAID array is in it own volume group: vgdance and vgsing.

Assume this is one node in a 2-node cluster and you want to be notified when there

is a failover, when any physical device fails, and when any logical volume becomes

unavailable.

T o be notified when a package f ails o v er, you must configure an EMS request that is

the same as the package dependency you configured in MC/ServiceGuard. See

Chapter 1, “Configuring MC/ServiceGuard Package Dependencies”. For this

example, assume the package UP values were set as “UP” and “PVG_UP”.

To configure the EMS alerts, create the following requests:

Table 2-8 Sample Disk Monitoring Requests

Monitoring Parameters

Resource

Notify Condition Option

/vg/vgdance/pv_summary when value is... > PVG_UP RETURN

/vg/vgsing/pv_summary when value is... > PVG_UP RETURN

/vg/dance/lv_summary when value is... >= INACTIVE RETURN

/vg/vgsing/lv_summary when value is... >= INACTIVE RETURN

If pv_summary is SUSPECT, you know a physical device fails. If pv_summary

status is SUSPECT , you may want to look at your lv_summary to see if you can still

access all data. If lv_summary is DOWN or IN ACTIVE_DOWN, you do not hav e a

complete copy of data.

52 Chapter 2

Page 53

Monitoring Disk Resources

Creating Disk Monitoring Requests

Resources to Monitor for Mirrored Disks

This section is valid for mirrored disks created with MirrorDisk/UX. Mirroring is

required to be PVG-strict if you are using the disk monitor. Mirrored configurations

that are not PVG-strict will not give you a correct pv_summary.

To adequately monitor mirrored disks, create requests for the following resources

for all volume groups on a node:

/vg/vgName/pv_summary This gives you summary status of all physical volumes in a volume

group. A high availability system must be configured PVG strict. If

not, pv_summary cannot accurately determine disk availability.

vg/vgName/pv_pvlink/status/* This gives you the status of each physical disk and links.

vg/vgName/lv_summary This gives you the status of data availability for logical volumes.

vg/vgName/lv/copies/* This gives you the total number of copies of data currently available.

Figure 2-5 represents two nodes with 2-way mirrored configuration with 10 disks on

2 busses. Both copies are in a single volume group. Assume you want to be notified

when any physical device fails, and when you only have one copy of data, or when

there is an MC/ServiceGuard failover. To configure this last request, you must

duplicate your MC/ServiceGuard package dependency. See Chapter 1 “Configuring

MC/ServiceGuard Package Dependencies”.

Figure 2-5 Mirrored Disks Example

node1

/dev/dsk/c0t7d0

/dev/vg01

/dev/dsk/c0t8d0

/dev/dsk/c0t9d0

/dev/dsk/c0t10d0

/dev/dsk/c0t11d0

Chapter 2 53

node2

/dev/dsk/c1t7d0

/dev/dsk/c1t8d0

/dev/dsk/c1t9d0

/dev/dsk/c1t10d0

/dev/dsk/c1t11d0

Page 54

Resource

Monitoring Disk Resources

Creating Disk Monitoring Requests

To configure the EMS alerts, create the following requests on each node:

Monitoring Parameters

Notify Condition Option

/vg/vg01/pv_summary when value is... >= PVG_UP

/vg/vg01/lv_summary when value is... >=

INACTIVE RETURN

/vg/vg01/lv/copies/* when value is... <= 1

Alerts need to be interpreted in relation to each other. In the table above, you would

get an alert when PVG_UP is true. Although all data is available, the condition

PVG_UP implies there are physical volumes that are not functioning and need to be

fixed.You may want to examine lv/copies to see how many copies of data are

accessible and determine how urgently you need to repair the failures. If you have

3-way mirroring and only 1 copy of data is available, for example, you may want to

correct the failure immediately to eliminate the single point of failure. Table 2-9 is

an example of how the disk monitor determines whether data is available in a

mirrored configuration with 5 disks on each bus.

RETURN

RETURN

54 Chapter 2

Page 55

Monitoring Disk Resources

Creating Disk Monitoring Requests

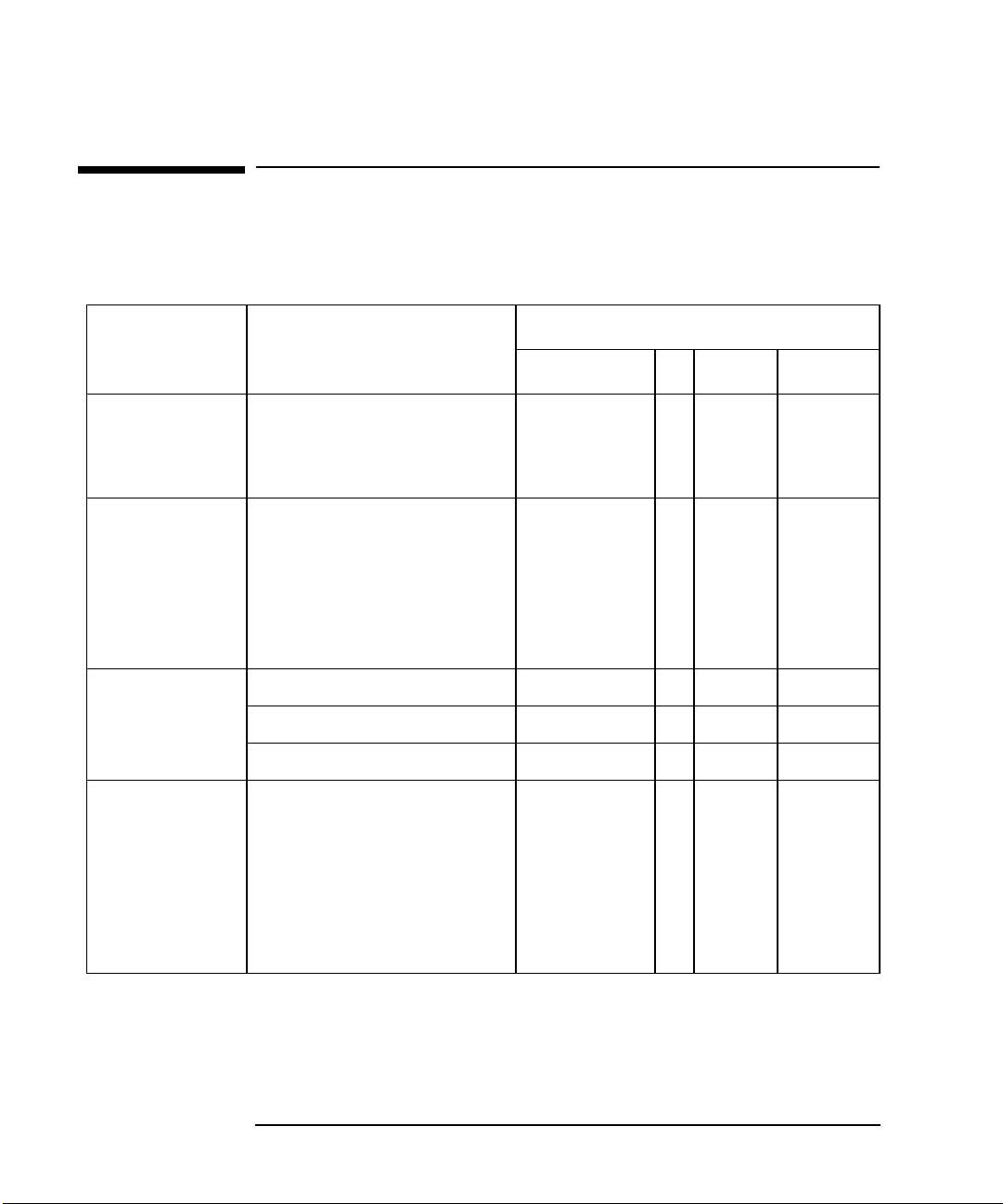

Table 2-9 Example for Interpreting the pv_summary for Mirrored Disks

number of

valid

devices

10 all PVs and data accessible UP

9 1 PV down, all data accessible PVG_UP

8-5 if 5 PVs are from the same PVG, then all data is available PVG_UP

if 2 or more physical volumes from different PVGs are DO WN,

the disk monitor cannot conclude that all data is available

4-1 some data missing

0 no data available

meaning

pv_summary

value

SUSPECT

DOWN

Resources to Monitor for Lock Disks

Lock disks are used as a tie-breaker in a forming or reforming cluster, so if you are

using a lock disk with your cluster, you should request a monitor for that disk and

send an alert to your system management software if the lock disk is unavailable. If

the lock disk is unavailable during cluster formation, the cluster may fail to reform.

Requests to monitor the lock disk might look like this:

Monitoring Parameters

Resource

Notify Condition Option

/vg/vg02/pv_pvlink/c0t0d1 when value is >=

The Repeat value in the Options will send an alert until the lock disk is available.

You need to create a request on each node in the cluster. Because the bus name and