
HP Integrity rx7640 and HP 9000 rp7440 Servers

User Service Guide

HP Part Number: AB312-9010A

Published: November 2007

Edition: Fourth Edition



Legal Notices

© Copyright 2007 Hewlett-Packard Development Company, L.P.

The information contained herein is subject to change without notice.

The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services.

Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Microsoft and Windows are U.S. registered trademarks of Microsoft Corporation. Linux is a U.S. registered trademark of Linus Torvalds. Intel is a trademark or registered trademark of Intel Corporation or its subsidiaries in the United States and other countries.


Table of Contents

About this Document.......................................................................................................15

Book Layout..........................................................................................................................................15

Intended Audience................................................................................................................................15

Publishing History................................................................................................................................15

Related Information..............................................................................................................................16

Typographic Conventions.....................................................................................................................17

HP Encourages Your Comments..........................................................................................................18

1 HP Integrity rx7640 Server and HP 9000 rp7440 Server Overview.....................19

Detailed Server Description..................................................................................................................19

Dimensions and Components.........................................................................................................20

Front Panel.......................................................................................................................................23

Front Panel Indicators and Controls..........................................................................................23

Enclosure Status LEDs...............................................................................................................23

Cell Board........................................................................................................................................24

PDH Riser Board........................................................................................................................25

Central Processor Units..............................................................................................................25

Memory Subsystem....................................................................................................................26

DIMMs........................................................................................................................................27

Cells and nPartitions........................................................................................................................27

Internal Disk Devices for the Server................................................................................................28

System Backplane............................................................................................................................29

System Backplane to PCI-X Backplane Connectivity...................................................................29

Clocks and Reset........................................................................................................................29

I/O Subsystem..................................................................................................................................29

PCI-X/PCIe Backplane................................................................................................................32

PCI-X/PCIe Slot Boot Paths...................................................................................................33

MP/SCSI Board...........................................................................................................................34

LAN/SCSI Board........................................................................................................................34

Mass Storage (Disk) Backplane..................................................................................................34

2 Server Site Preparation................................................................................................35

Dimensions and Weights......................................................................................................................35

Electrical Specifications.........................................................................................................................36

Grounding.......................................................................................................................................36

Circuit Breaker.................................................................................................................................36

System AC Power Specifications.....................................................................................................36

Power Cords...............................................................................................................................36

System Power Specifications......................................................................................................37

Environmental Specifications...............................................................................................................38

Temperature and Humidity............................................................................................................38

Operating Environment.............................................................................................................38

Environmental Temperature Sensor..........................................................................................39

Non-Operating Environment.....................................................................................................39

Cooling.............................................................................................................................................39

Internal Chassis Cooling............................................................................................................39

Bulk Power Supply Cooling.......................................................................................................39

PCI/Mass Storage Section Cooling.............................................................................................39

Standby Cooling.........................................................................................................................39


Typical Power Dissipation and Cooling..........................................................................................39

Acoustic Noise Specification...........................................................................................................40

Airflow.............................................................................................................................................40

System Requirements Summary...........................................................................................................41

Power Consumption and Air Conditioning....................................................................................41

3 Installing the Server......................................................................................................43

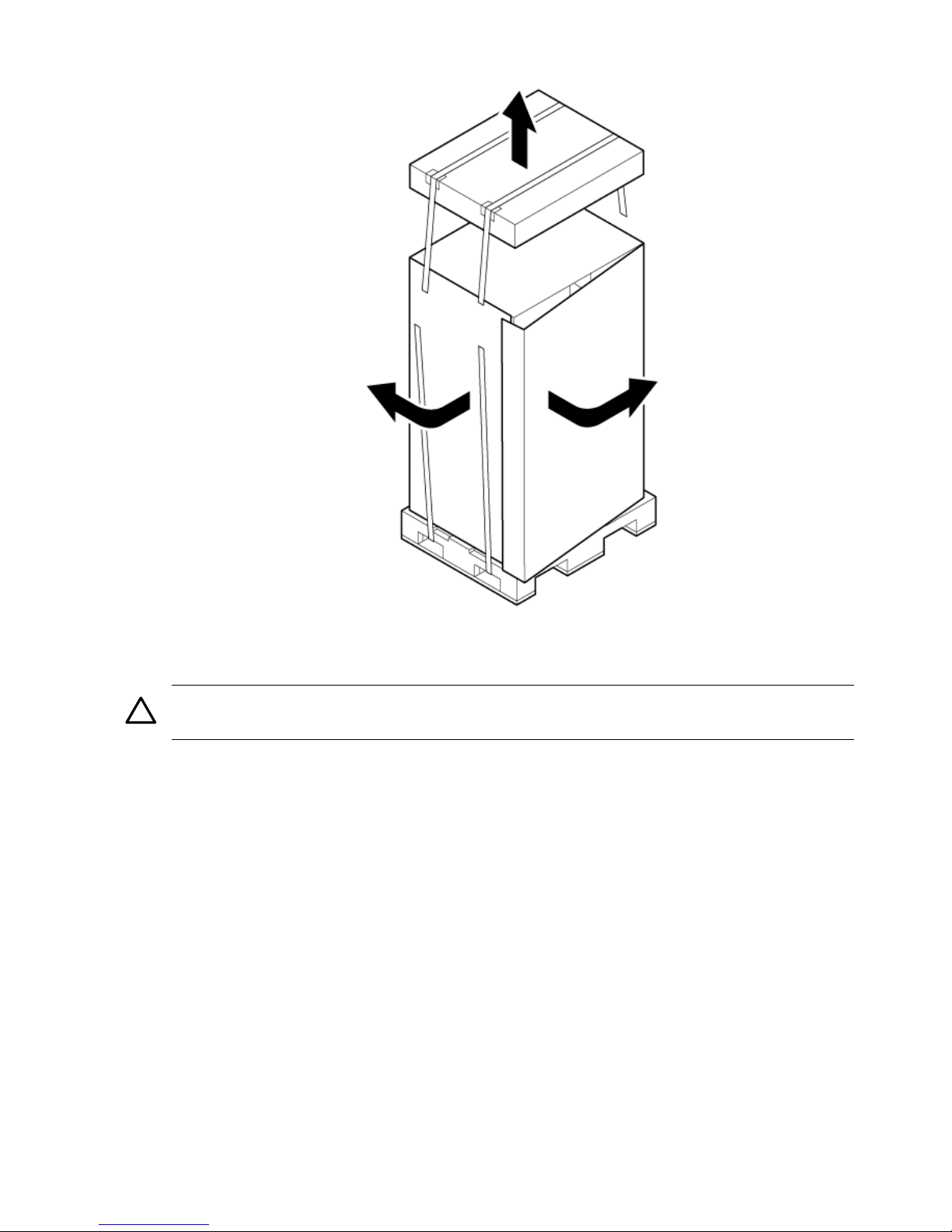

Receiving and Inspecting the Server Cabinet.......................................................................................43

Unpacking the Server Cabinet.........................................................................................................43

Securing the Cabinet........................................................................................................................46

Standalone and To-Be-Racked Systems................................................................................................47

Rack-Mount System Installation.....................................................................................................47

Lifting the Server Cabinet Manually....................................................................................................47

Using the RonI Model 17000 SP 400 Lifting Device.............................................................................49

Wheel Kit Installation...........................................................................................................................52

Installing the Power Distribution Unit.................................................................................................57

Installing Additional Cards and Storage..............................................................................................58

Installing Additional Hard Disk Drives..........................................................................................58

Removable Media Drive Installation...............................................................................................59

PCI-X Card Cage Assembly I/O Cards............................................................................................60

Installing an Additional PCI-X Card..........................................................................................63

Installing an A6869B VGA/USB PCI Card in a Server....................................................................65

Troubleshooting the A6869B VGA/USB PCI Card..........................................................................66

No Console Display...................................................................................................................67

Reference URL............................................................................................................................67

Cabling and Power Up..........................................................................................................................67

Checking the Voltage.......................................................................................................................67

Preface........................................................................................................................................67

Voltage Range Verification of Receptacle...................................................................................67

Verifying the Safety Ground (Single Power Source)..................................................................68

Verifying the Safety Ground (Dual Power Source)....................................................................69

Voltage Check (Additional Procedure)...........................................................................................71

Connecting AC Input Power...........................................................................................................72

Installing The Line Cord Anchor (for rack mounted servers).........................................................73

Two Cell Server Installation (rp7410, rp7420, rp7440, rx7620, rx7640)......................................73

Core I/O Connections......................................................................................................................74

MP/SCSI I/O Connections .........................................................................................................74

LAN/SCSI Connections..............................................................................................................75

Management Processor Access..................................................................................................75

Setting Up the Customer Engineer Tool (PC) .................................................................................75

Setting CE Tool Parameters........................................................................................................75

Connecting the CE Tool to the Local RS232 Port on the MP .....................................................76

Turning on Housekeeping Power and Logging in to the MP.........................................................76

Configuring LAN Information for the MP......................................................................................77

Accessing the Management Processor via a Web Browser.............................................................79

Verifying the Presence of the Cell Boards.......................................................................................80

System Console Selection................................................................................................................81

VGA Consoles............................................................................................................................82

Interface Differences Between Itanium-based Systems.............................................................82

Other Console Types..................................................................................................................82

Additional Notes on Console Selection.....................................................................................82

Configuring the Server for HP-UX Installation...............................................................................83

Booting the Server ...........................................................................................................................83

Selecting a Boot Partition Using the MP ...................................................................................84


Verifying the System Configuration Using the EFI Shell...........................................................84

Booting HP-UX Using the EFI Shell...........................................................................................84

Adding Processors with Instant Capacity.......................................................................................84

Installation Checklist.......................................................................................................................85

4 Booting and Shutting Down the Operating System..................................................89

Operating Systems Supported on Cell-based HP Servers....................................................................89

System Boot Configuration Options.....................................................................................................90

HP 9000 Boot Configuration Options..............................................................................................90

HP Integrity Boot Configuration Options.......................................................................................90

Booting and Shutting Down HP-UX.....................................................................................................94

HP-UX Support for Cell Local Memory..........................................................................................94

Adding HP-UX to the Boot Options List.........................................................................................95

Booting HP-UX................................................................................................................................96

Standard HP-UX Booting...........................................................................................................96

Single-User Mode HP-UX Booting...........................................................................................100

LVM-Maintenance Mode HP-UX Booting...............................................................................102

Shutting Down HP-UX..................................................................................................................103

Booting and Shutting Down HP OpenVMS I64.................................................................................105

HP OpenVMS I64 Support for Cell Local Memory.......................................................................105

Adding HP OpenVMS to the Boot Options List............................................................................105

Booting HP OpenVMS...................................................................................................................107

Shutting Down HP OpenVMS.......................................................................................................108

Booting and Shutting Down Microsoft Windows..............................................................................109

Microsoft Windows Support for Cell Local Memory....................................................................109

Adding Microsoft Windows to the Boot Options List...................................................................110

Booting Microsoft Windows..........................................................................................................111

Shutting Down Microsoft Windows..............................................................................................113

Booting and Shutting Down Linux.....................................................................................................114

Linux Support for Cell Local Memory..........................................................................................114

Adding Linux to the Boot Options List.........................................................................................115

Booting Red Hat Enterprise Linux................................................................................................116

Booting SuSE Linux Enterprise Server .........................................................................................117

Shutting Down Linux....................................................................................................................119

5 Server Troubleshooting..............................................................................................121

Common Installation Problems..........................................................................................................121

The Server Does Not Power On.....................................................................................................121

The Server Powers On But Fails Power-On Self Test.....................................................................122

Server LED Indicators.........................................................................................................................122

Front Panel LEDs...........................................................................................................................122

Bulk Power Supply LEDs..............................................................................................................123

PCI-X Power Supply LEDs............................................................................................................124

System and PCI I/O Fan LEDs.......................................................................................................125

OL* LEDs.......................................................................................................................................126

PCI-X OL* Card Divider LEDs......................................................................................................127

Core I/O LEDs................................................................................................................................128

Core I/O Buttons............................................................................................................................129

PCI-X Hot-Plug LED OL* LEDs....................................................................................................131

Disk Drive LEDs............................................................................................................................131

Interlock Switches..........................................................................................................................132

Server Management Subsystem Hardware Overview.......................................................................132

Server Management Overview...........................................................................................................133


Server Management Behavior.............................................................................................................133

Thermal Monitoring......................................................................................................................134

Fan Control....................................................................................................................................134

Power Control................................................................................................................................135

Updating Firmware.............................................................................................................................135

Firmware Manager .......................................................................................................................135

Using FTP to Update Firmware.....................................................................................................135

Possible Error Messages.................................................................................................................136

PDC Code CRU Reporting..................................................................................................................136

Verifying Cell Board Insertion............................................................................................................138

Cell Board Extraction Levers.........................................................................................................138

6 Removing and Replacing Components...................................................................141

Customer Replaceable Units (CRUs)..................................................................................................141

Hot-plug CRUs..............................................................................................................................141

Hot-Swap CRUs.............................................................................................................................141

Other CRUs....................................................................................................................................141

Safety and Environmental Considerations ........................................................................................142

Communications Interference ......................................................................................................142

Electrostatic Discharge ..................................................................................................................142

Powering Off Hardware Components and Powering On the Server.................................................142

Powering Off Hardware Components...........................................................................................142

Powering On the System...............................................................................................................143

Removing and Replacing the Top Cover............................................................................................144

Removing the Top Cover...............................................................................................................144

Replacing the Top Cover................................................................................................................145

Removing and Replacing a Side Cover...............................................................................................145

Removing a Side Cover.................................................................................................................146

Replacing a Side Cover..................................................................................................................146

Removing and Replacing the Front Bezel...........................................................................................147

Removing the Front Bezel..............................................................................................................147

Replacing the Front Bezel..............................................................................................................147

Removing and Replacing PCA Front Panel Board.............................................................................147

Removing the PCA Front Panel Board..........................................................................................148

Replacing the Front Panel Board...................................................................................................149

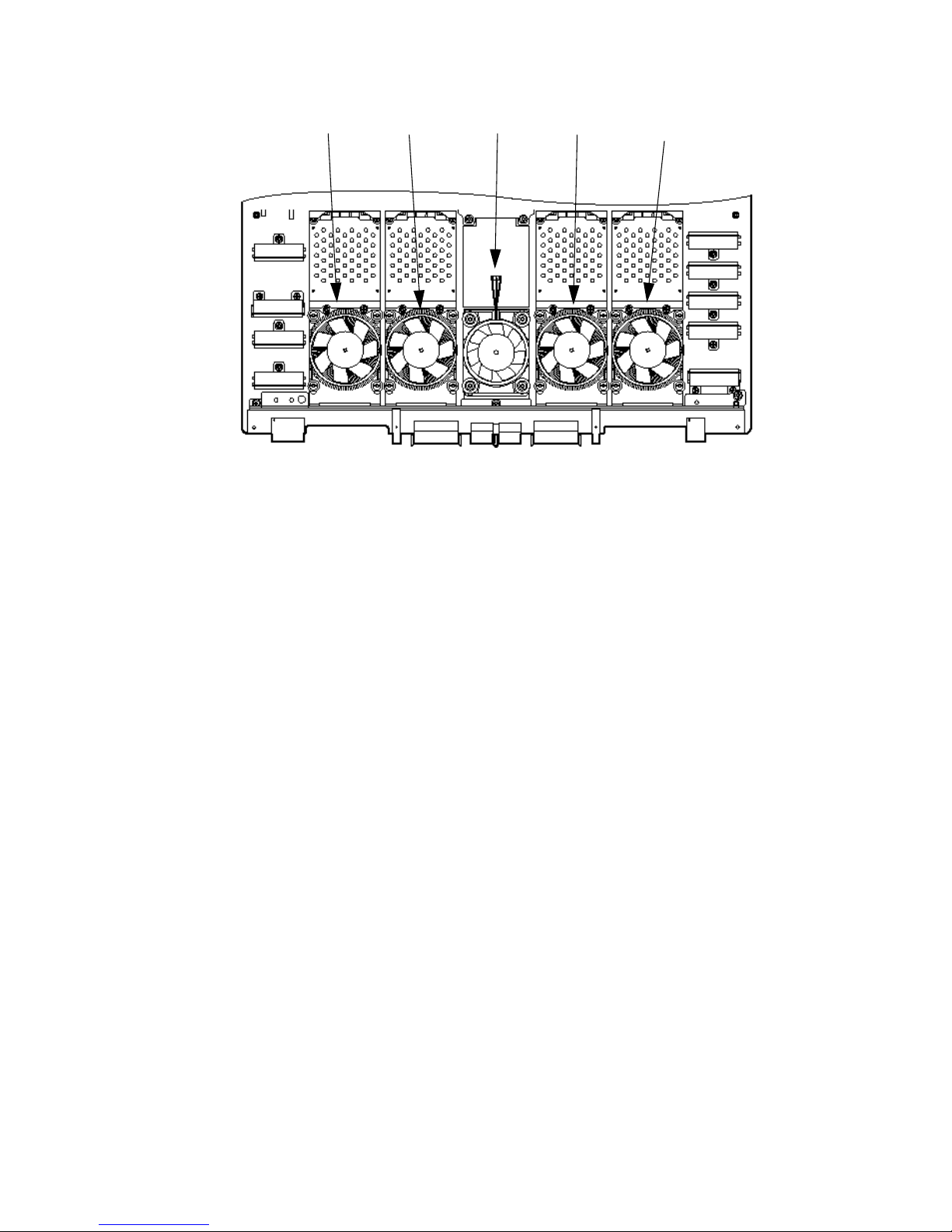

Removing and Replacing a Front Smart Fan Assembly.....................................................................150

Removing a Front Smart Fan Assembly........................................................................................152

Replacing a Front Smart Fan Assembly........................................................................................152

Removing and Replacing a Rear Smart Fan Assembly......................................................................152

Removing a Rear Smart Fan Assembly.........................................................................................154

Replacing a Rear Smart Fan Assembly..........................................................................................154

Removing and Replacing a Disk Drive...............................................................................................154

Removing a Disk Drive..................................................................................................................155

Replacing a Disk Drive..................................................................................................................156

Removing and Replacing a Half-Height DVD/DAT Drive.................................................................156

Removing a DVD/DAT Drive........................................................................................................157

Installing a Half-Height DVD or DAT Drive......................................................................................158

Internal DVD and DAT Devices That Are Not Supported In HP Integrity rx7640.......................158

Removable Media Cable Configuration for a Half-height DVD or DAT Drive............................158

Installing the Half-Height DVD or DAT drive..............................................................................160

Removing and Replacing a Slimline DVD Drive................................................................................161

Removing a Slimline DVD Drive...................................................................................................162

Replacing a Slimline DVD Drive...................................................................................................162

Removing and Replacing a Dual Slimline DVD Carrier....................................................................162


Removing a Slimline DVD Carrier................................................................................................162

Installation of Two Slimline DVD+RW Drives..............................................................................163

Removable Media Cable Configuration for the Slimline DVD+RW Drives............................163

Installing the Slimline DVD+RW Drives..................................................................................165

Removing and Replacing a PCI/PCI-X Card......................................................................................165

Installing the New LAN/SCSI Core I/O PCI-X Card(s).................................................................166

PCI/PCI-X Card Replacement Preliminary Procedures................................................................167

Removing a PCI/PCI-X Card.........................................................................................................167

Replacing the PCI/PCI-X Card.......................................................................................................167

Option ROM..................................................................................................................................168

Removing and Replacing a PCI Smart Fan Assembly........................................................................168

Removing a PCI Smart Fan Assembly...........................................................................................169

Replacing a PCI Smart Fan Assembly...........................................................................................170

Removing and Replacing a PCI-X Power Supply...............................................................................170

Preliminary Procedures ................................................................................................................170

Removing a PCI-X Power Supply .................................................................................................171

Replacing the PCI Power Supply...................................................................................................171

Removing and Replacing a Bulk Power Supply.................................................................................171

Removing a BPS.............................................................................................................................172

Replacing a BPS.............................................................................................................................174

Configuring Management Processor (MP) Network Settings............................................................174

7 HP 9000 rp7440 Server .....................................................................................177

Electrical and Cooling Specifications .................................................................................................177

Boot Console Handler (BCH) for the HP Integrity rx7640 and HP 9000 rp7440 Servers...................178

Booting an HP 9000 sx2000 Server to BCH....................................................................................178

HP-UX for the HP Integrity rx7640 and HP 9000 rp7440 Servers......................................................178

HP 9000 Boot Configuration Options............................................................................................179

Booting and Shutting Down HP-UX.............................................................................................179

Standard HP-UX Booting..............................................................................................................179

Single-User Mode HP-UX Booting................................................................................................180

LVM-Maintenance Mode HP-UX Booting.....................................................................................181

Shutting Down HP-UX..................................................................................................................182

System Verification.............................................................................................................................183

A Replaceable Parts......................................................................................................185

Replaceable Parts................................................................................................................................185

B MP Commands...........................................................................................................187

Server Management Commands.........................................................................................................187

C Templates...................................................................................................................189

Equipment Footprint Templates.........................................................................................................189

Computer Room Layout Plan.............................................................................................................189

Index...............................................................................................................................193


Page 8


Page 9

List of Figures

1-1 8-Socket Server Block Diagram.....................................................................................................20

1-2 Server (Front View With Bezel) ....................................................................................................21

1-3 Server (Front View Without Bezel)................................................................................................21

1-4 Right-Front View...........................................................................................................................22

1-5 Left-Rear View ..............................................................................................................................23

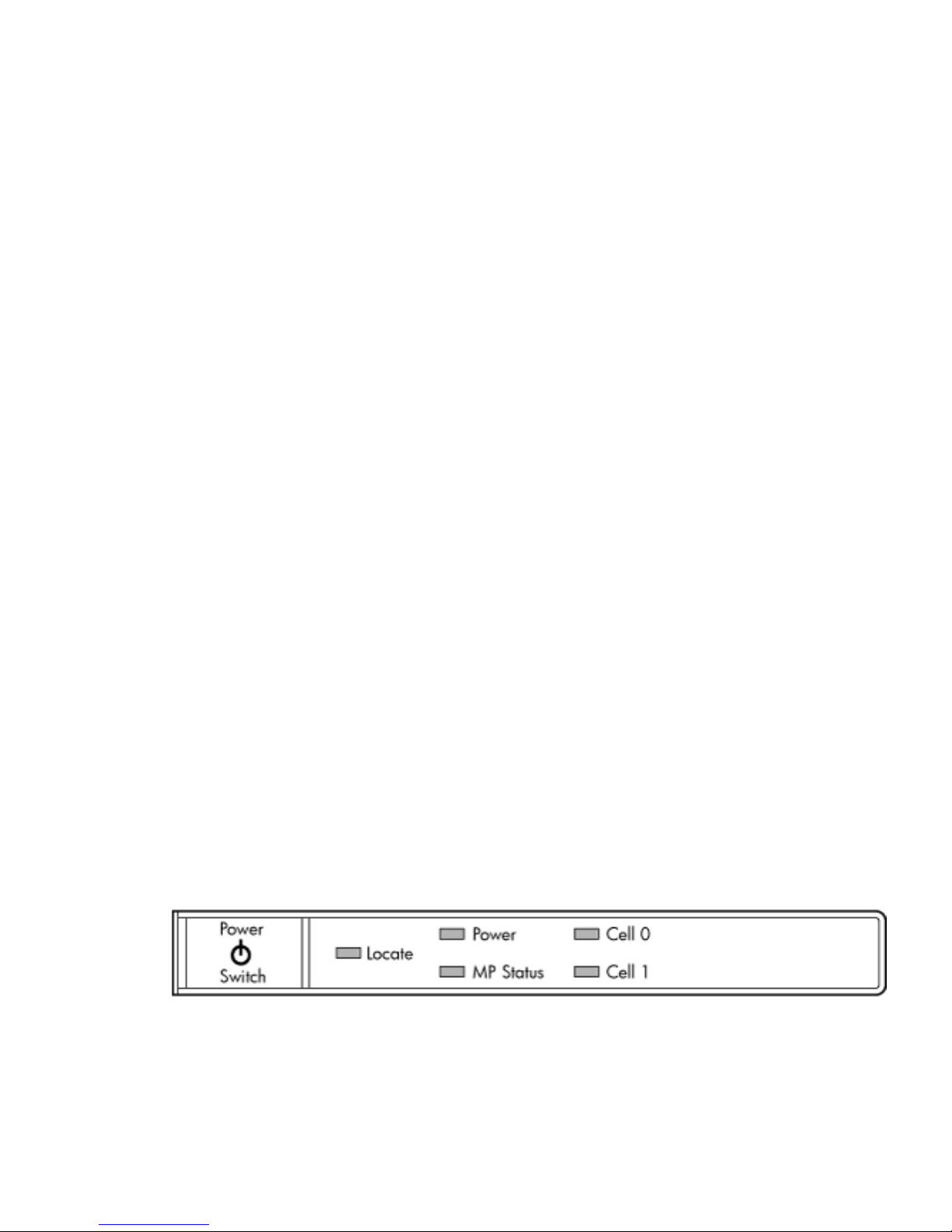

1-6 Front Panel LEDs and Power Switch.............................................................................................24

1-7 Cell Board......................................................................................................................................24

1-8 CPU Locations on Cell Board........................................................................................................26

1-9 Memory Subsystem.......................................................................................................................27

1-10 Disk Drive and DVD Drive Location............................................................................................28

1-11 System Backplane Block Diagram.................................................................................................29

1-12 PCI-X Board to Cell Board Block Diagram....................................................................................30

2-1 Airflow Diagram ..........................................................................................................................41

3-1 Removing the Polystraps and Cardboard.....................................................................................44

3-2 Removing the Shipping Bolts and Plastic Cover...........................................................................45

3-3 Preparing to Roll Off the Pallet.....................................................................................................46

3-4 Securing the Cabinet......................................................................................................................47

3-5 Inserting Rear Handle Tabs into Chassis......................................................................................48

3-6 Attaching the Front of Handle to Chassis.....................................................................................49

3-7 RonI Lifter......................................................................................................................................50

3-8 Positioning the Lifter to the Pallet.................................................................................................51

3-9 Raising the Server Off the Pallet Cushions....................................................................................52

3-10 Component Locations ...................................................................................................................53

3-11 Left Foam Block Position...............................................................................................................54

3-12 Right Foam Block Position............................................................................................................54

3-13 Foam Block Removal.....................................................................................................................55

3-14 Attaching a Caster to the Server....................................................................................................56

3-15 Securing Each Caster Cover to the Server.....................................................................................57

3-16 Completed Server..........................................................................................................................57

3-17 Disk Drive and DVD Drive Location............................................................................................59

3-18 Removable Media Location...........................................................................................................60

3-19 PCI I/O Slot Details........................................................................................................................65

3-20 PCI/PCI-X Card Location..............................................................................................................66

3-21 Voltage Reference Points for IEC 320 C19 Plug.............................................................................68

3-22 Safety Ground Reference Check....................................................................................................69

3-23 Safety Ground Reference Check....................................................................................................70

3-24 Wall Receptacle Pinouts................................................................................................................71

3-25 AC Power Input Labeling..............................................................................................................72

3-26 Distribution of Input Power for Each Bulk Power Supply............................................................73

3-27 Two Cell Line Cord Anchor (rp7410, rp7420, rp7440, rx7620, rx7640).........................................74

3-28 Line Cord Anchor Attach Straps...................................................................................................74

3-29 Front Panel Display ......................................................................................................................76

3-30 MP Main Menu..............................................................................................................................77

3-31 The lc Command Screen................................................................................................................78

3-32 The ls Command Screen................................................................................................................79

3-33 Example sa Command...................................................................................................................80

3-34 Browser Window...........................................................................................................................80

3-35 The du Command Screen..............................................................................................................81

3-36 Console Output Device menu.......................................................................................................82

5-1 Front Panel with LED Indicators.................................................................................................122

5-2 BPS LED Locations......................................................................................................................124

5-3 PCI-X Power Supply LED Locations...........................................................................................125


Page 10

5-4 Front, Rear and PCI I/O Fan LEDs..............................................................................................126

5-5 Cell Board LED Locations...........................................................................................................127

5-6 PCI-X OL* LED Locations...........................................................................................................128

5-7 Core I/O Card Bulkhead LEDs....................................................................................................129

5-8 Core I/O Button Locations...........................................................................................................130

5-9 Disk Drive LED Location.............................................................................................................132

5-10 Temperature States......................................................................................................................134

5-11 Firmware Update Command Sample..........................................................................................136

5-12 Server Cabinet CRUs (Front View)..............................................................................................137

5-13 Server Cabinet CRUs (Rear View)...............................................................................................138

5-14 de Command Output..................................................................................................................139

6-1 Top Cover....................................................................................................................................144

6-2 Top Cover Retaining Screws........................................................................................................144

6-3 Side Cover Locations ..................................................................................................................145

6-4 Side Cover Retaining Screws.......................................................................................................146

6-5 Side Cover Removal Detail..........................................................................................................146

6-6 Bezel hand slots...........................................................................................................................147

6-7 Front Panel Assembly Location...................................................................................................148

6-8 Front Panel Board Detail.............................................................................................................149

6-9 Front Panel Board Cable Location on Backplane........................................................................150

6-10 Front Smart Fan Assembly Locations .........................................................................................151

6-11 Front Fan Detail...........................................................................................................................152

6-12 Rear Smart Fan Assembly Locations ..........................................................................................153

6-13 Rear Fan Detail............................................................................................................................154

6-14 Disk Drive Location ....................................................................................................................155

6-15 Disk Drive Detail ........................................................................................................................155

6-16 DVD/DAT Location ....................................................................................................................157

6-17 DVD/DAT Detail..........................................................................................................................158

6-18 Single SCSI and Power Cable in Drive Bay.................................................................................159

6-19 SCSI and Power Cable Lengths...................................................................................................159

6-20 SCSI and Power Cable Lengths...................................................................................................160

6-21 SCSI and Power Cable Lengths...................................................................................................160

6-22 Power Cable Connection and Routing........................................................................................161

6-23 DVD Drive Location ...................................................................................................................161

6-24 Slimline DVD Carrier Location ..................................................................................................162

6-25 Data and Power Cable Configuration for Slimline DVD Installation.........................................163

6-26 Top DVD/DAT and Bottom DVD Cables Nested Together.........................................................164

6-27 SCSI and Power Cables for Slimline DVD+RW Installation........................................................164

6-28 SCSI and Power Cables for Slimline DVD Installation...............................................................165

6-29 PCI/PCI-X Card Location............................................................................................................166

6-30 PCI Smart Fan Assembly Location .............................................................................................169

6-31 PCI Smart Fan Assembly Detail..................................................................................................169

6-32 PCI-X Power Supply Location ....................................................................................................170

6-33 PCI Power Supply Detail.............................................................................................................171

6-34 BPS Location ...............................................................................................................................172

6-35 Extraction Levers.........................................................................................................................173

6-36 BPS Detail ...................................................................................................................................173

C-1 Server Space Requirements.........................................................................................................189

C-2 Server Cabinet Template..............................................................................................................190

C-3 Planning Grid..............................................................................................................................191

C-4 Planning Grid..............................................................................................................................192


Page 11

List of Tables

1-1 Cell Board CPU Module Load Order............................................................................................25

1-2 Server DIMMs...............................................................................................................................27

1-3 PCI-X paths for Cell 0....................................................................................................................30

1-4 PCI-X Paths Cell 1..........................................................................................................................31

1-5 PCI-X Slot Types............................................................................................................................32

1-6 PCI-X/PCIe Slot Types...................................................................................................................33

2-1 Server Dimensions and Weights...................................................................................................35

2-2 Server Component Weights...........................................................................................................35

2-3 Example Weight Summary............................................................................................................35

2-4 Weight Summary...........................................................................................................................36

2-5 Power Cords..................................................................................................................................37

2-6 AC Power Requirements...............................................................................................................37

2-7 System Power Requirements for the HP 9000 rp7440 Server........................................................37

2-8 Example ASHRAE Thermal Report..............................................................................................38

2-9 Typical Server Configurations for the HP Integrity rx7640 Server...............................................40

3-1 Wheel Kit Packing List..................................................................................................................52

3-2 Caster Part Numbers.....................................................................................................................55

3-3 HP Integrity rx7640 PCI-X and PCIe I/O Cards............................................................................60

3-4 Single Phase Voltage Examples.....................................................................................................68

3-5 Factory-Integrated Installation Checklist......................................................................................85

5-1 Front Panel LEDs.........................................................................................................................122

5-2 BPS LEDs.....................................................................................................................................124

5-3 PCI Power Supply LEDs..............................................................................................................125

5-4 System and PCI I/O Fan LEDs.....................................................................................................126

5-5 Cell Board OL* LED Indicators...................................................................................................127

5-6 Core I/O LEDs..............................................................................................................................129

5-7 Core I/O Buttons..........................................................................................................................131

5-8 OL* LED States............................................................................................................................131

5-9 Disk Drive LEDs..........................................................................................................................132

5-10 Ready Bit States...........................................................................................................................139

6-1 Front Smart Fan Assembly LED Indications...............................................................................151

6-2 Rear Smart Fan Assembly LED Indications................................................................................153

6-3 Unsupported Removable Media Devices....................................................................................158

6-4 Smart Fan Assembly LED Indications.........................................................................................169

6-5 PCI-X Power Supply LEDs..........................................................................................................171

6-6 Default Configuration for Management Processor LAN...........................................................174

7-1 System Power Requirements for the HP Integrity rx7640 and HP 9000 rp7440 Servers............177

7-2 Typical Server Configurations for the HP 9000 rp7440 Server....................................................177

A-1 Server CRU Descriptions and Part Numbers..............................................................................185

B-1 Service Commands......................................................................................................................187

B-2 Status Commands........................................................................................................................187

B-3 System and Access Config Commands.......................................................................................187


Page 12


Page 13

List of Examples

4-1 Single-User HP-UX Boot..............................................................................................................101

7-1 Single-User HP-UX Boot..............................................................................................................181


Page 14


Page 15

About this Document

This document covers the HP Integrity rx7640 and HP 9000 rp7440 Servers.

This document does not describe system software or partition configuration in any detail. For

detailed information concerning those topics, refer to the HP System Partitions Guide:

Administration for nPartitions.

Book Layout

This document contains the following chapters and appendices:

• Chapter 1 - Overview

• Chapter 2 - Site Preparation

• Chapter 3 - Installing the Server

• Chapter 4 - Operating System Boot and Shutdown

• Chapter 5 - Server Troubleshooting

• Chapter 6 - Removal and Replacement

• Chapter 7 - HP 9000 rp7440 Server

• Appendix A - Replaceable Parts

• Appendix B - MP Commands

• Appendix C - Templates

• Index

Intended Audience

This document is intended to be used by customer engineers assigned to support the HP Integrity

rx7640 and HP 9000 rp7440 Servers.

Publishing History

The publishing history below identifies the edition dates of this document. Updates are made to this publication on an unscheduled, as-needed basis. Each update consists of a complete replacement document and pertinent online or CD-ROM documentation.

March 2006: First Edition

September 2006: Second Edition

January 2007: Third Edition. Minor edits throughout. Added Chapter 7 for PA release.

November 2007: Fourth Edition. Minor edits.


Page 16

Related Information

You can access other information on HP server hardware management, Microsoft® Windows®

administration, and diagnostic support tools at the following Web sites:

http://docs.hp.com

The main Web site for HP technical documentation is http://docs.hp.com.

Server Hardware Information: http://docs.hp.com/hpux/hw/

The http://docs.hp.com/hpux/hw/ Web site is the systems hardware portion of docs.hp.com.


Page 17

It provides HP nPartition server hardware management information, including site preparation,

installation, and more.

Windows Operating System Information

You can find information about administration of the Microsoft® Windows® operating system at the following Web sites, among others:

• http://docs.hp.com/windows_nt/

• http://www.microsoft.com/technet/

Diagnostics and Event Monitoring: Hardware Support Tools

Complete information about HP hardware support tools, including online and offline diagnostics and event monitoring tools, is at the http://docs.hp.com/hpux/diag/ Web site. This site has documents, tutorials, FAQs, and other reference material.

Web Site for HP Technical Support: http://us-support2.external.hp.com

The HP IT Resource Center Web site at http://us-support2.external.hp.com/ provides comprehensive support information for IT professionals on a wide variety of topics, including software, hardware, and networking.

Books about HP-UX Published by Prentice Hall

The http://www.hp.com/hpbooks/ Web site lists the HP books that Prentice Hall currently publishes, such as HP-UX books including:

• HP-UX 11i System Administration Handbook and Toolkit: http://www.hp.com/hpbooks/prentice/ptr_0130600814.html

• HP-UX Virtual Partitions: http://www.hp.com/hpbooks/prentice/ptr_0130352128.html

HP books are available worldwide through bookstores, online booksellers, and office and

computer stores.

Typographic Conventions

The following notational conventions are used in this publication.

WARNING! A warning lists requirements that you must meet to avoid personal injury.

CAUTION: A caution provides information required to avoid losing data or avoid losing system

functionality.

NOTE: A note highlights useful information such as restrictions, recommendations, or important

details about HP product features.

• Commands and options are represented using this font.

• Text that you type exactly as shown is represented using this font.

• Text to be replaced with text that you supply is represented using this font.

Example: “Enter the ls -l filename command” means you must replace filename with your

own text.

• Keyboard keys and graphical interface items (such as buttons, tabs, and menu items)

are represented using this font.

Examples: The Control key, the OK button, the General tab, the Options menu.

• Menu —> Submenu represents a menu selection you can perform.

Example: “Select the Partition —> Create Partition action” means you must select the

Create Partition menu item from the Partition menu.

• Example screen output is represented using this font.


Page 18

HP Encourages Your Comments

Hewlett-Packard welcomes your feedback on this publication. Please address your comments

to edit@presskit.rsn.hp.com and note that you will not receive an immediate reply. All

comments are appreciated.


Page 19

1 HP Integrity rx7640 Server and HP 9000 rp7440 Server Overview

The HP Integrity rx7640 and HP 9000 rp7440 Servers are members of HP’s business-critical

computing platform family in the mid-range product line.

The information in chapters one through six of this guide applies to the HP Integrity rx7640 and

HP 9000 rp7440 Servers, except for a few items specifically denoted as applying only to the HP

Integrity rx7640 Server. Chapter seven covers any information specific to the HP 9000 rp7440

Server only.

IMPORTANT: Ensure a valid UUID is either in place or available prior to maintenance of these

servers. This step is vital when performing upgrades and is recommended for existing hardware

service restoration. Specific information for upgrades is found in the Upgrade Guide, Mid-Range

Two-Cell HP Servers to HP Integrity rx7640 Server, located at the following URL: http://docs.fc.hp.com.

The server is a 10U¹ high, 8-socket symmetric multiprocessor (SMP) rack-mount or standalone

server. Features of the server include:

• Up to 256 GB of physical memory provided by dual inline memory modules (DIMMs).

• Dual-core processors.

• Up to 16 processor cores, with a maximum of 4 dual-core processor modules per cell board and a maximum of 2 cell boards.

• One cell controller (CC) per cell board.

• Turbo fans to cool CPUs and CCs on the cell boards.

• Up to four embedded hard disk drives.

• One half-height DVD drive, two slimline DVDs or one DAT drive.

• Two front chassis mounted N+1 fans.

• Two rear chassis mounted N+1 fans.

• Six N+1 PCI-X card cage fans.

• Two N+1 bulk power supplies.

• N+1 hot-swappable system oscillators.

• Sixteen PCI slots divided between two I/O chassis. Each I/O chassis accommodates eight slots supporting PCI/PCI-X/PCI-X 2.0 device adapters, or four PCI/PCI-X/PCI-X 2.0 and four PCIe device adapters.

• Up to two core I/O card sets.

• One management processor (MP) per core I/O card, with failover capability when two core I/O cards are installed and properly configured.

• Four 220 V AC power plugs. Two are required and the other two provide power source

redundancy.
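The maximum figures in the feature list follow from simple multiplication. The sketch below is illustrative only: the variable names are not HP terminology, and it assumes that the figure of 16 counts processor cores across eight dual-core modules.

```python
# Capacity arithmetic based on the feature list.
# All names are hypothetical; the numbers come from the list itself.
CELL_BOARDS = 2
PROCESSOR_MODULES_PER_CELL = 4
CORES_PER_MODULE = 2            # dual-core processors
IO_CHASSIS = 2
SLOTS_PER_IO_CHASSIS = 8
AC_PLUGS_TOTAL = 4
AC_PLUGS_REQUIRED = 2

sockets = CELL_BOARDS * PROCESSOR_MODULES_PER_CELL      # the "8-socket" server
cores = sockets * CORES_PER_MODULE                      # assumed reading of "up to 16"
pci_slots = IO_CHASSIS * SLOTS_PER_IO_CHASSIS
redundant_ac_plugs = AC_PLUGS_TOTAL - AC_PLUGS_REQUIRED

print(f"sockets={sockets} cores={cores} "
      f"pci_slots={pci_slots} redundant_ac_plugs={redundant_ac_plugs}")
```

A server with only one cell board installed would halve the socket and core counts under the same assumptions.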

Detailed Server Description

The following section provides detailed information about the server components.

1. The U is a unit of measurement specifying product height. One U is equal to 1.75 inches.


Page 20

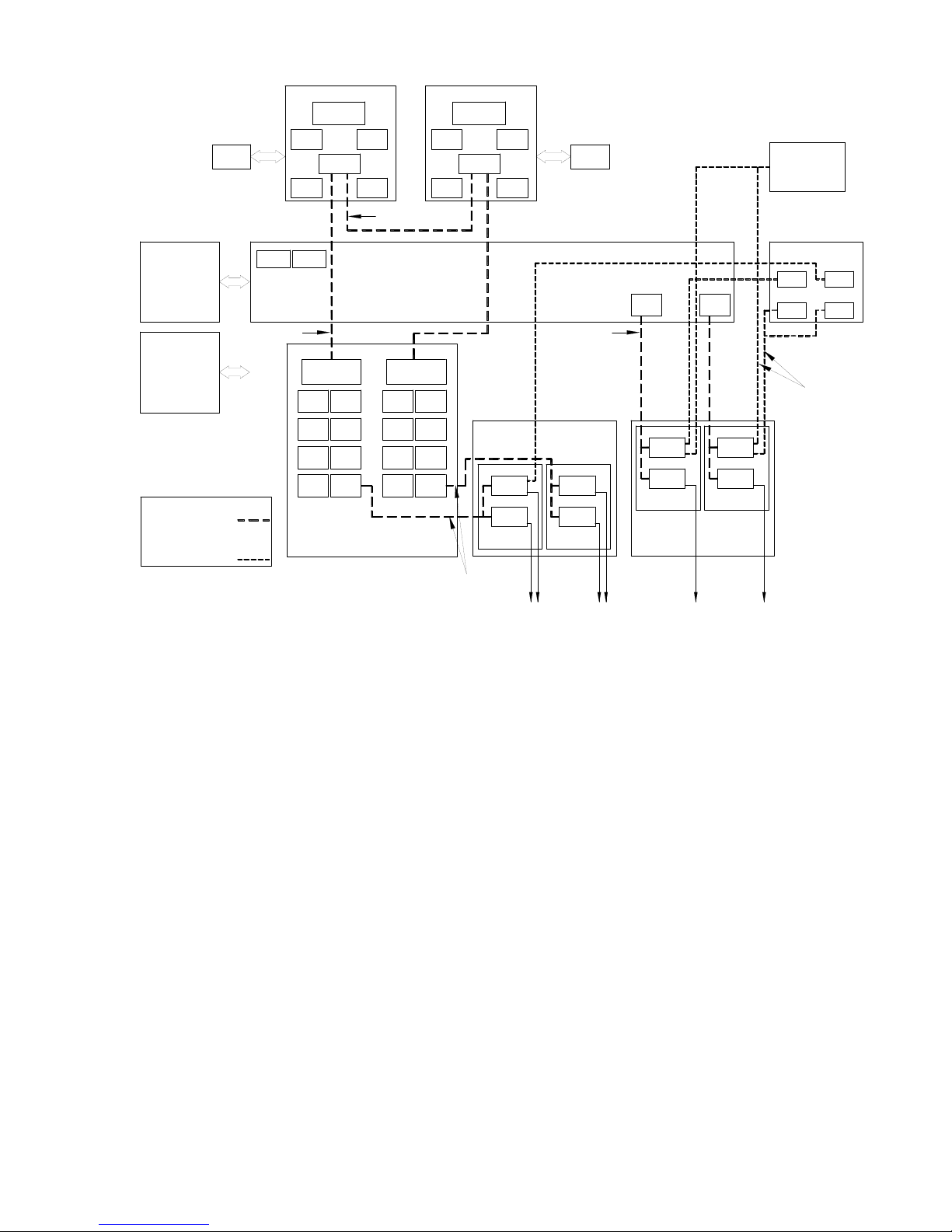

Figure 1-1 8-Socket Server Block Diagram

[Figure 1-1 block labels: cell boards (each with Memory, four CPUs, a cell controller (CC), and PDH); CC Link; SBA Link; SBAs with their LBAs; Backplane with System Clocks, Reset, Bulk Power Supply (x2), and PCI-X Power (x2); PCI-X; LAN/SCSI boards; MP/SCSI boards; Disk Backplane with four disks and DVD/Tape. Legend: one line style indicates a hot-pluggable link or bus; another indicates a cable.]

Dimensions and Components

The following section describes server dimensions and components.


Page 21

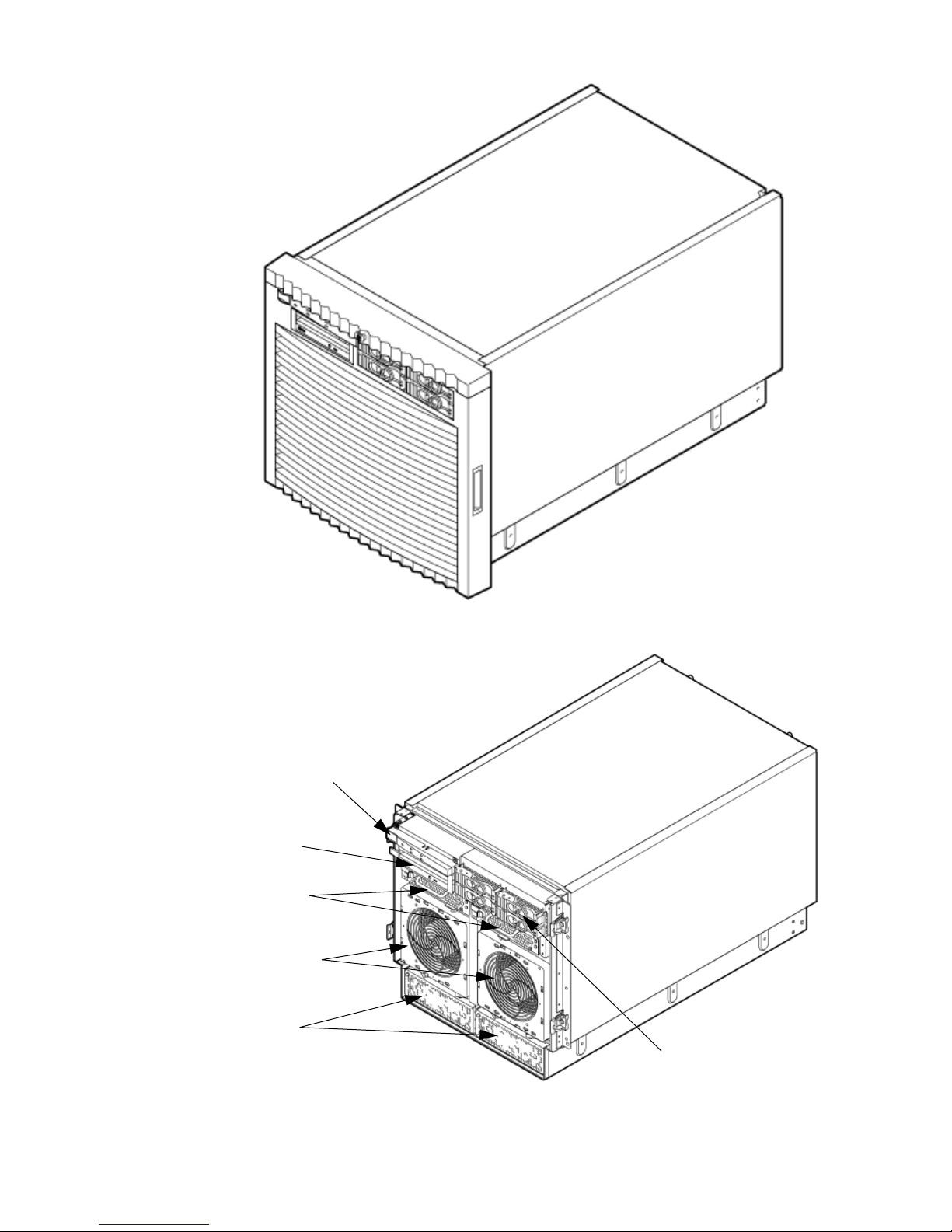

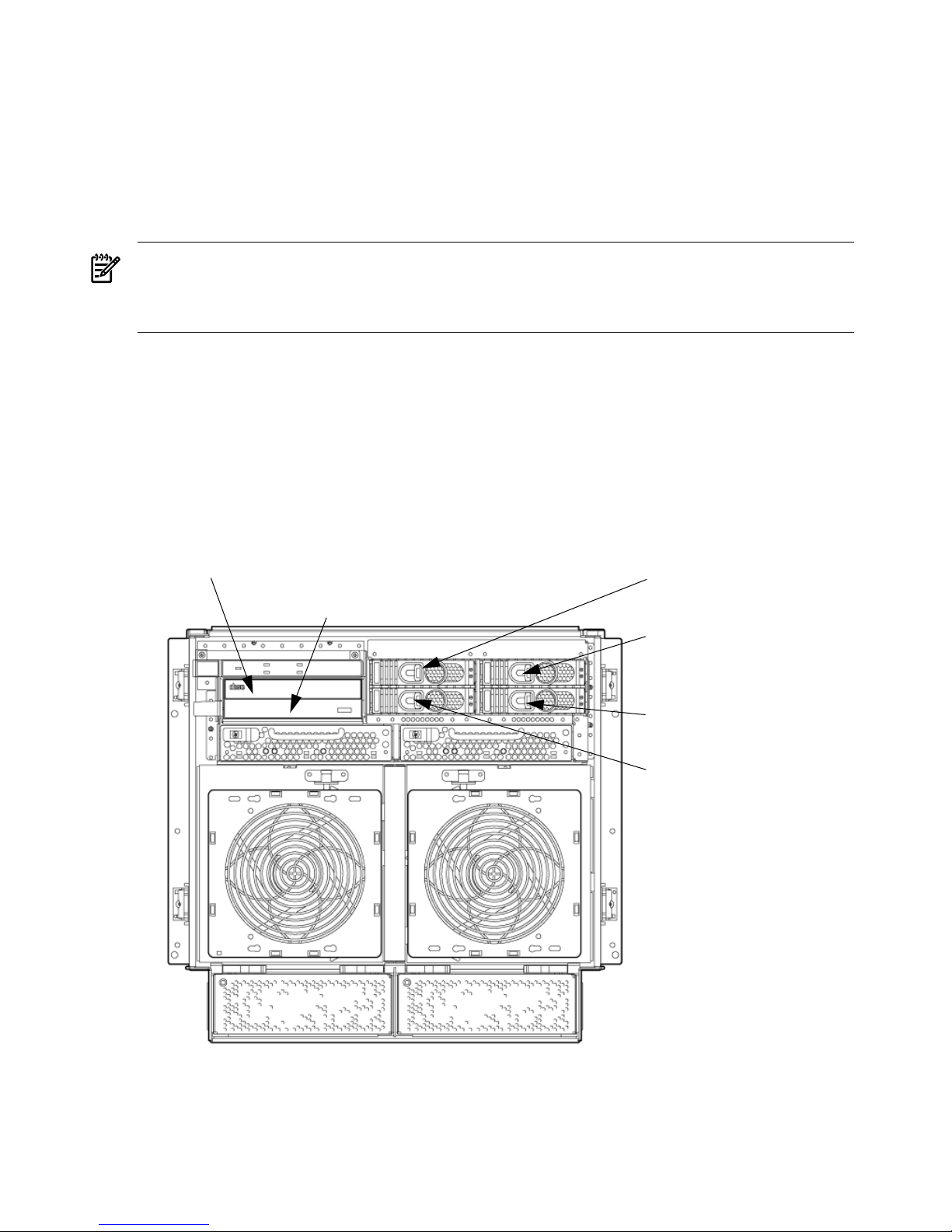

Figure 1-2 Server (Front View With Bezel)

Figure 1-3 Server (Front View Without Bezel)

Callouts: Power Switch, Removable Media Drive, PCI Power Supplies, Front OLR Fans, Bulk Power Supplies, Hard Disk Drives


Page 22

The server has the following dimensions:

• Depth: Defined by cable management constraints to fit into a standard 36-inch deep rack:

25.5 inches from front rack column to PCI connector surface

26.7 inches from front rack column to MP Core I/O connector surface

30 inches overall package dimension, including 2.7 inches protruding in front of the front

rack columns.

• Width: 44.45 cm (17.5 inches), constrained by EIA standard 19-inch racks.

• Height: 10U – 0.54 cm = 43.91 cm (17.287 inches). This is the appropriate height for a product

that consumes 10U of rack height while allowing adequate clearance between products

directly above and below this product. The main height constraints are fitting four server units in a 2 m rack and allowing future upgrades of current 10U-high products.
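The height figure can be checked with a short calculation. The following is a minimal sketch, assuming the standard conversion of 2.54 cm per inch and the 1.75-inch rack unit defined in the footnote; the variable names are illustrative.

```python
# Worked check of the height figures quoted in the dimensions list.
INCH_TO_CM = 2.54        # standard conversion factor
U_INCHES = 1.75          # one rack unit (U), per the footnote
RACK_UNITS = 10          # the server occupies 10U
CLEARANCE_CM = 0.54      # allowance subtracted from the nominal 10U height

nominal_cm = RACK_UNITS * U_INCHES * INCH_TO_CM   # full 10U of rack space
height_cm = nominal_cm - CLEARANCE_CM             # actual product height
height_in = height_cm / INCH_TO_CM

# Four stacked servers, measured at the nominal 10U each
four_servers_cm = 4 * nominal_cm

print(f"nominal 10U: {nominal_cm:.2f} cm")
print(f"product height: {height_cm:.2f} cm ({height_in:.3f} inches)")
print(f"four servers: {four_servers_cm:.1f} cm of a 200 cm rack")
```

The nominal 10U height also matches the 44.45 cm quoted for the width, since both equal 17.5 inches.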

The mass storage section located in the front enables access to the 3.5-inch hard drives without

removal of the bezel. This is especially helpful when the system is mounted in the lowest position

in a rack. The mass storage bay also accommodates one 5.25-inch removable media device. The

front panel display board, containing LEDs and the system power switch, is located directly

above the 5.25-inch removable media bay.

Below the mass storage section and behind the removable front bezel are two N+1 PCI-X power

supplies.

The bulk power supply section is partitioned by a sealed metallic enclosure located in the bottom

of the package. This enclosure houses the N+1 fully redundant BPSs. Install these power supplies

from the front of the server after removing the front bezel.

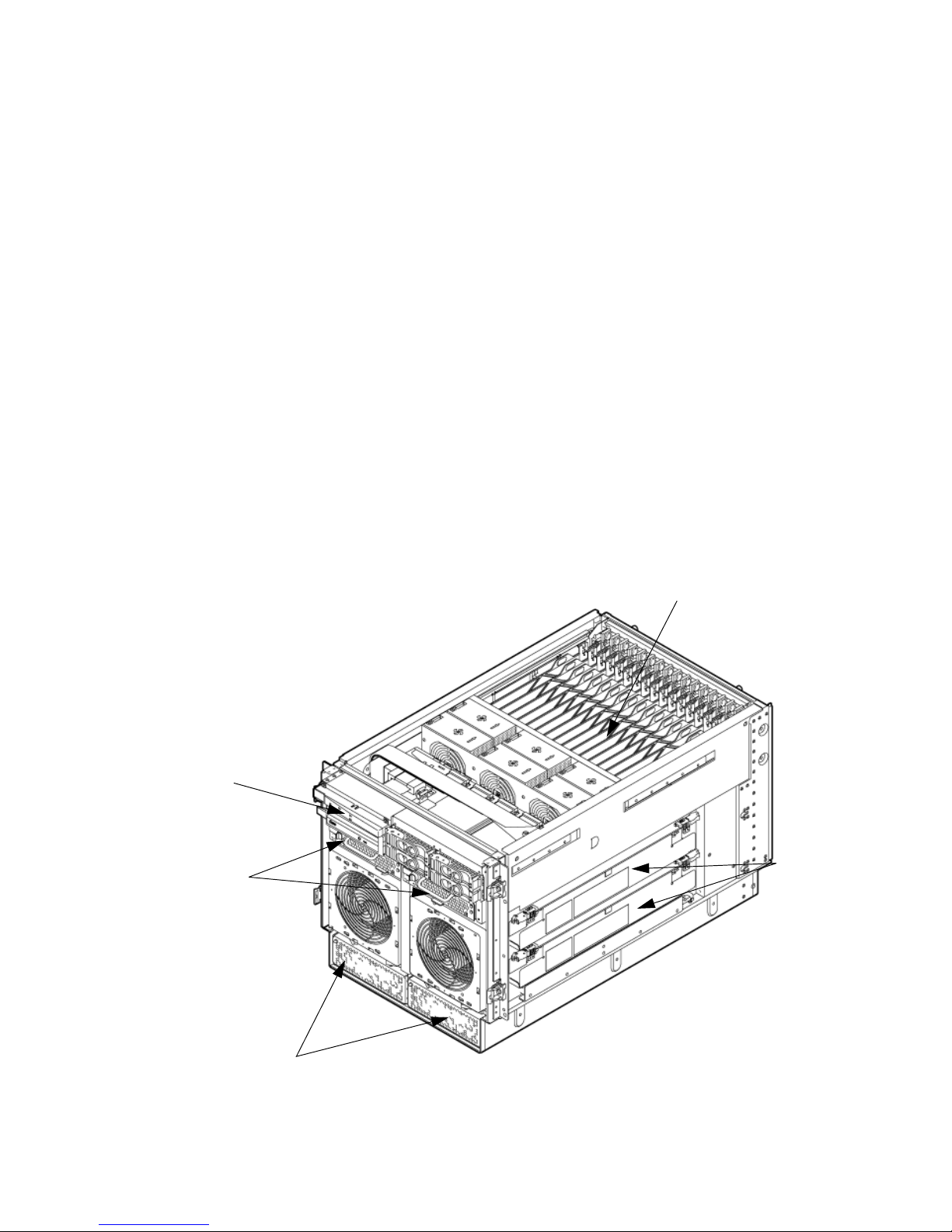

Figure 1-4 Right-Front View

Callouts: PCI Power Supplies, PCI-X Cards, Cell Boards, Bulk Power Supplies, Front Panel Display Board

Access the PCI-X card section, located toward the rear, by removing the top cover.

The PCI card bulkhead connectors are located at the rear top.


The PCI OLR fan modules are located in front of the PCI-X cards. These six 9.2-cm fans are housed

in plastic carriers. They are configured in two rows of three fans.

Four OLR system fan modules, externally attached to the chassis, are 15-cm (6.5-inch) fans. Two

fans are mounted on the front surface of the chassis and two are mounted on the rear surface.

The cell boards are accessed from the right side of the chassis behind a removable side cover.

The two MP/SCSI boards are positioned vertically at the rear of the chassis.

The two hot-pluggable N+1 redundant bulk power supplies provide a wide input voltage range.

They are installed in the front of the chassis, directly under the front fans.

A cable harness that connects from the rear of the BPSs to the system backplane provides DC

power distribution.

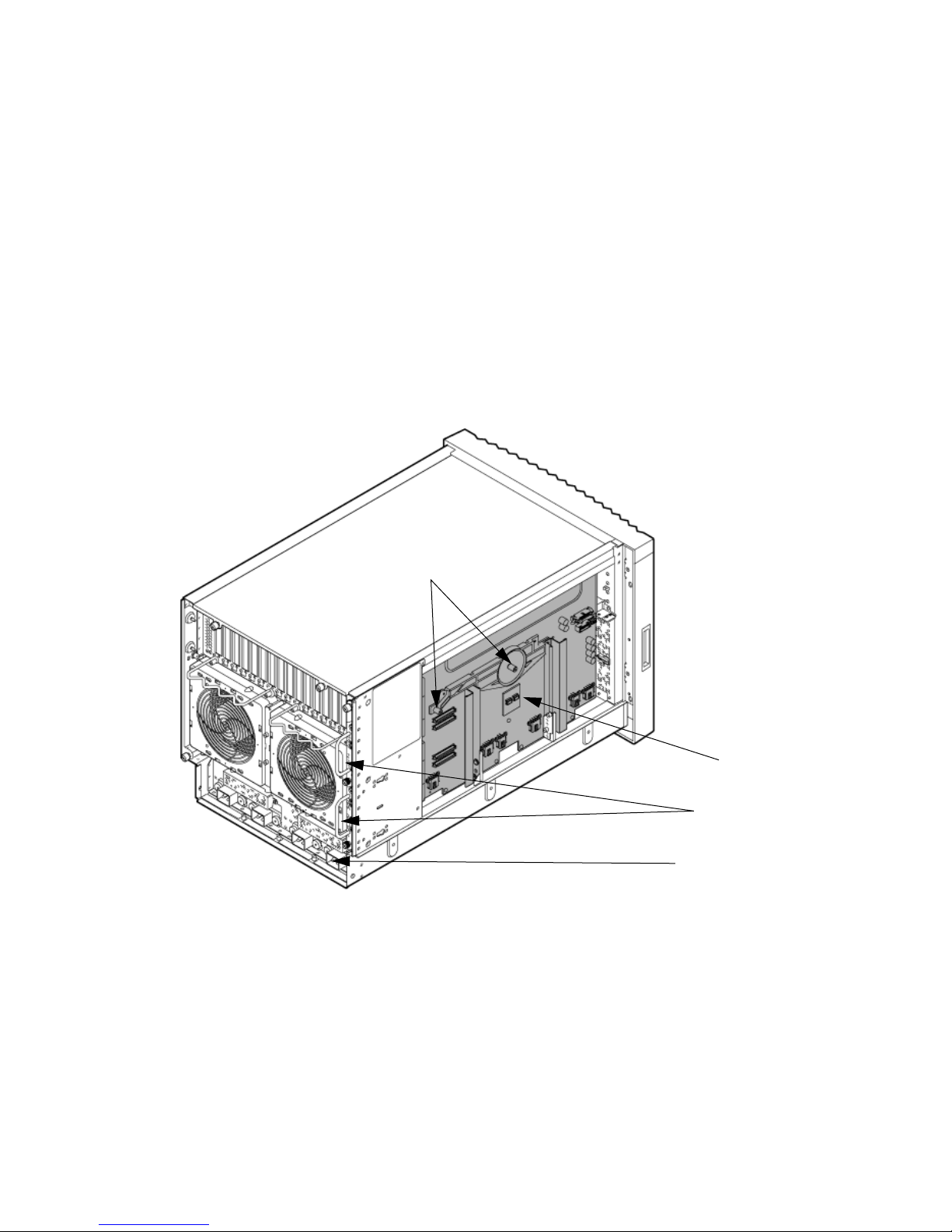

Access the system backplane by removing the left side cover. The system backplane hinges from

the lower edge and is anchored at the top with two jack screws.

The SCSI ribbon-cable assembly routes from the mass storage area to the backside of the system

backplane for connection to the MP/SCSI card, and to the AB290A LAN/SCSI PCI-X cards.

Figure 1-5 Left-Rear View

Callouts: System Backplane, MP/SCSI Core I/O, AC Power Receptacles, Jack Screws

Front Panel

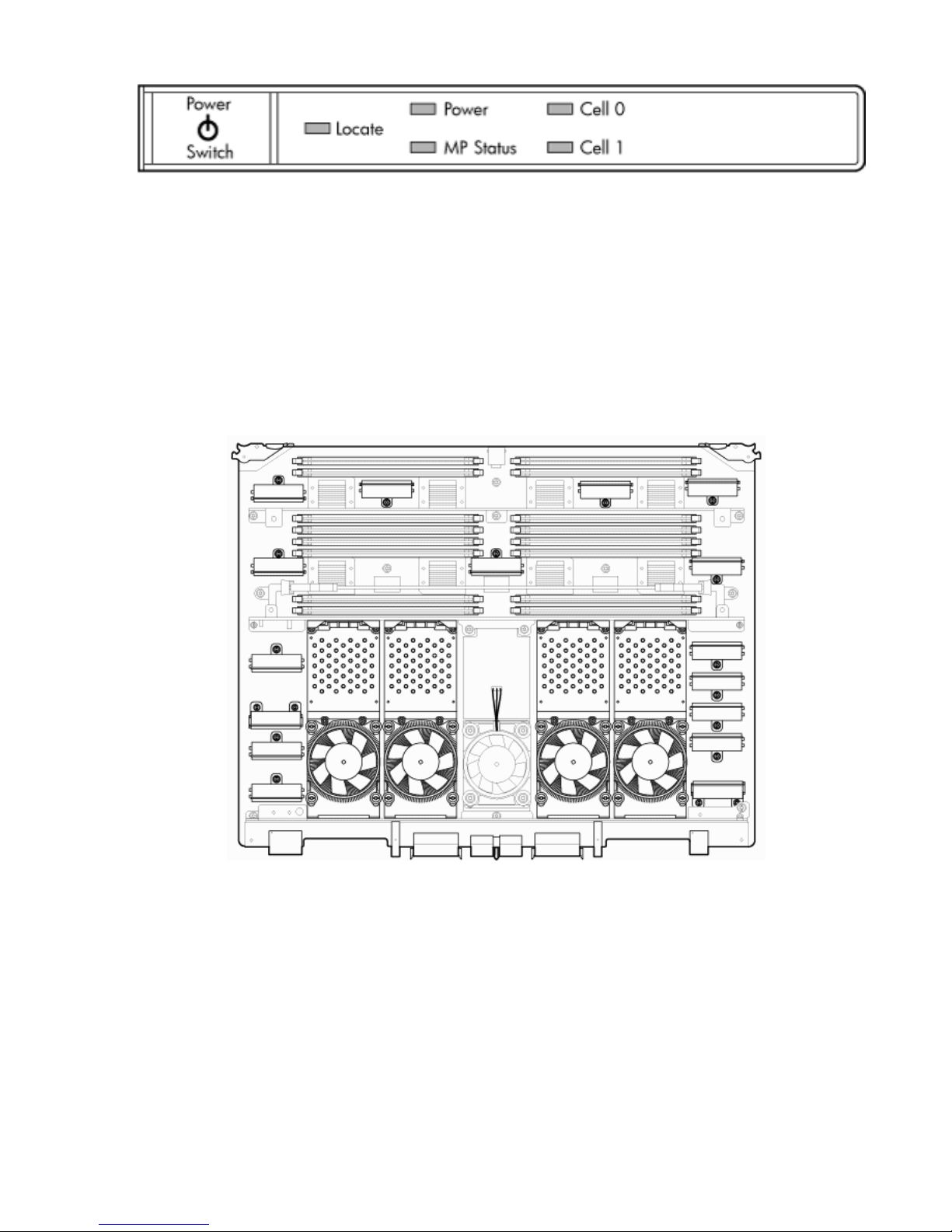

Front Panel Indicators and Controls

The front panel, located on the front of the server, includes the power switch. See Figure 1-6.

Enclosure Status LEDs

The following status LEDs are on the front panel:

• Locate LED (blue)

• Power LED (tri-color)

• Management processor (MP) status LED (tri-color)

• Cell 0 and cell 1 status LEDs (tri-color)


Figure 1-6 Front Panel LEDs and Power Switch

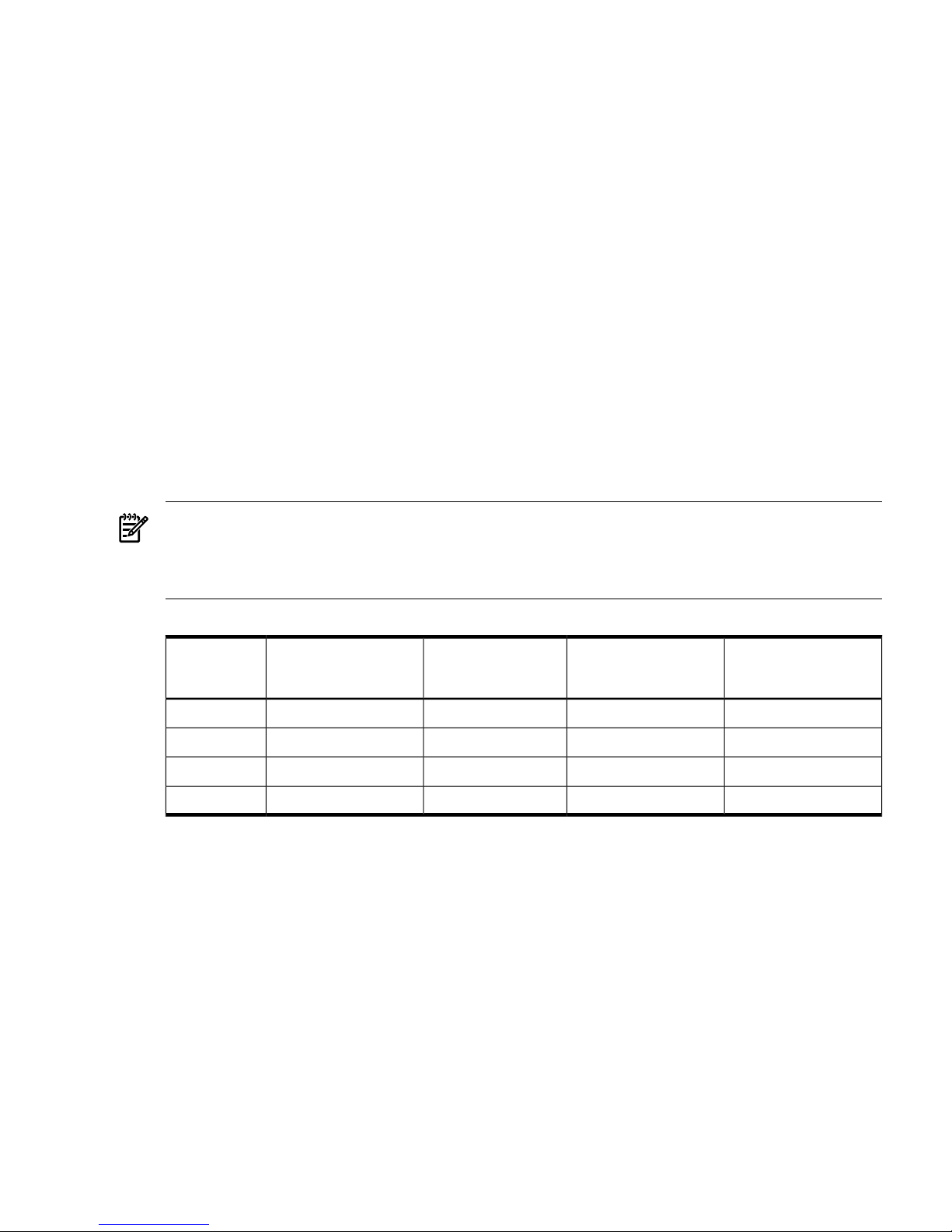

Cell Board

The cell board, illustrated in Figure 1-7, contains the processors, main memory, and the cell controller (CC) application-specific integrated circuit (ASIC), which interfaces the processors and memory with the I/O and with the other cell board in the server. The CC is the heart of the cell board, enabling communication with the other cell board in the system. It connects to the processor dependent hardware (PDH) and microcontroller hardware. Each cell board holds up to four processor modules and 16 memory DIMMs. One or two cell boards can be installed in the server. A cell board can be selectively powered off to add processors or memory, or to perform maintenance on the cell board, without affecting the other cell board in a configured partition.

Figure 1-7 Cell Board

The server has a 48 V distributed power system and receives the 48 V power from the system

backplane board. The cell board contains DC-to-DC converters to generate the required voltage

rails. The DC-to-DC converters on the cell board do not provide N+1 redundancy.

The cell board contains the following major buses:

• Two front side buses (FSB), each with up to two processors

• Four memory buses (one going to each memory quad)

• Incoming and outgoing I/O bus that goes off board to an SBA chip

• Incoming and outgoing crossbar bus that goes off board to the other cell board

• PDH bus that goes to the PDH and microcontroller circuitry

All of these buses come together at the CC chip.


Because of space limitations on the cell board, the PDH and microcontroller circuitry resides on

a riser board that plugs into the cell board at a right angle. The cell board also includes clock

circuits, test circuits, and decoupling capacitors.

PDH Riser Board

The PDH riser board is a small card that plugs into the cell board at a right angle. The PDH riser

interface contains the following components:

• Microprocessor memory interface microcircuit

• Hardware including the processor dependent code (PDH) flash memory

• Manageability microcontroller with associated circuitry

The PDH obtains cell board configuration information from cell board signals and from the cell

board local power module (LPM).

Central Processor Units

The cell board can hold up to four CPU modules. Each CPU module can contain up to two CPU cores on a single socket. Modules are populated in increments of one. On a cell board, the processor modules must be of the same family, type, and clock frequency. Mixing different processors on a cell board or in a partition is not supported. Refer to Table 1-1 for the load order that must be maintained when adding processor modules to the cell board. Refer to Figure 1-8 for the locations on the cell board for installing processor modules.

NOTE: Unlike previous HP cell-based systems, the HP Integrity rx7640 server cell board does not require that a termination module be installed at the end of an unused FSB. System firmware can disable an unused FSB in the CC. This enables both sockets of the unused bus to remain unpopulated.

Table 1-1 Cell Board CPU Module Load Order

| Number of CPU Modules Installed | Socket 2 | Socket 3 | Socket 1 | Socket 0 |
|---|---|---|---|---|
| 1 | Empty slot | Empty slot | Empty slot | CPU installed |
| 2 | CPU installed | Empty slot | Empty slot | CPU installed |
| 3 | CPU installed | Empty slot | CPU installed | CPU installed |
| 4 | CPU installed | CPU installed | CPU installed | CPU installed |
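The load order in Table 1-1 amounts to filling sockets in the sequence 0, 2, 1, 3. The following sketch encodes that sequence so a planned configuration can be checked against the table. The function names are hypothetical and are not part of any HP tool.

```python
# Socket fill sequence read from Table 1-1: socket 0, then 2, then 1, then 3.
LOAD_ORDER = (0, 2, 1, 3)


def populated_sockets(module_count: int) -> list[int]:
    """Return the sockets that should hold a CPU module for a given module count."""
    if not 1 <= module_count <= len(LOAD_ORDER):
        raise ValueError("Table 1-1 covers 1 to 4 CPU modules per cell board")
    return sorted(LOAD_ORDER[:module_count])


def follows_load_order(sockets: set[int]) -> bool:
    """True if the populated sockets match a row of Table 1-1."""
    count = len(sockets)
    return 1 <= count <= len(LOAD_ORDER) and sorted(sockets) == populated_sockets(count)


print(populated_sockets(2))        # sockets used with two modules
print(follows_load_order({0, 1}))  # sockets 0 and 1 alone do not match the table
```

For example, two modules belong in sockets 0 and 2, so a board populated in sockets 0 and 1 does not follow the table.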


Figure 1-8 CPU Locations on Cell Board

Callouts: Socket 2, Socket 3, Socket 1, Socket 0, Cell Controller

Memory Subsystem

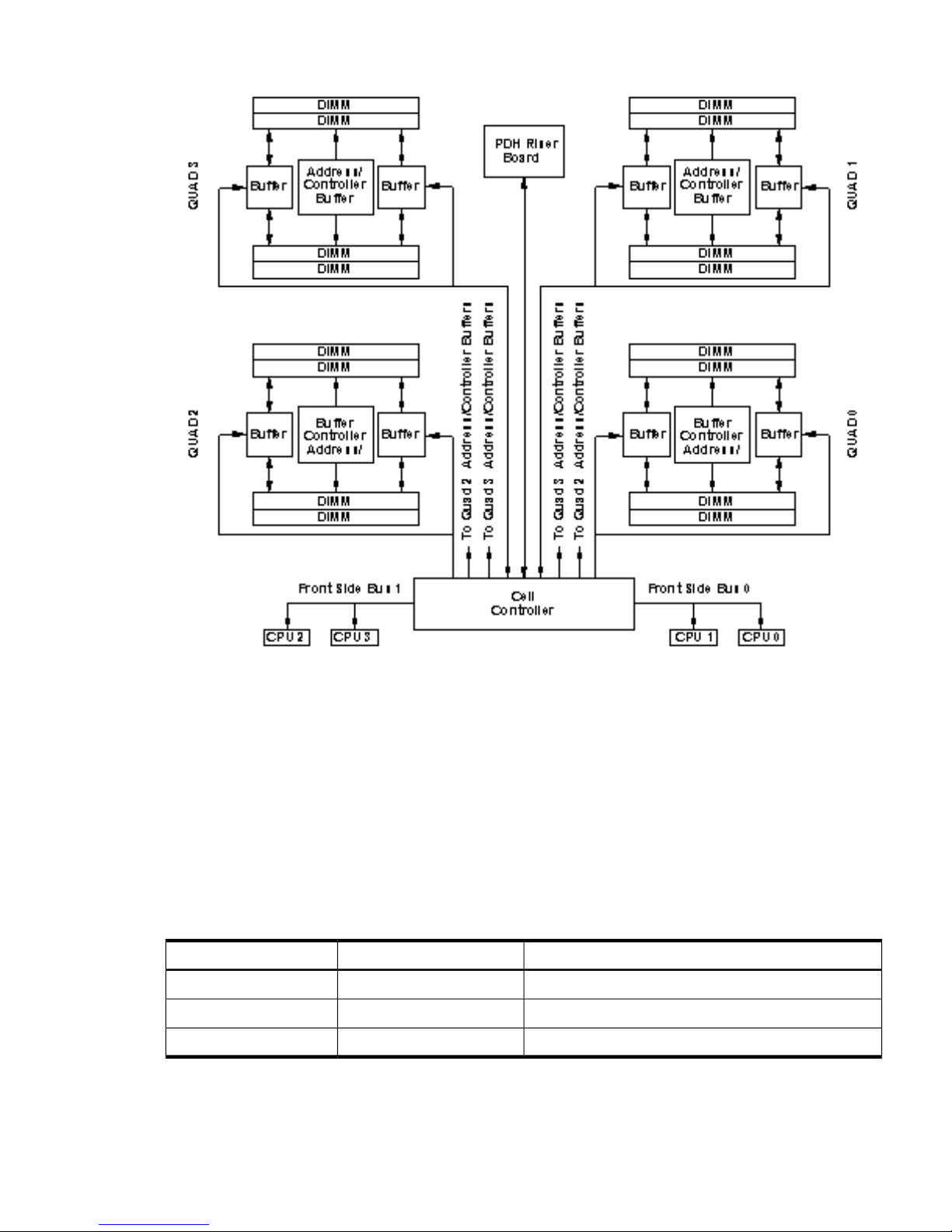

Figure 1-9 shows a simplified view of the memory subsystem. It consists of two independent access paths, each path having its own address bus, control bus, data bus, and DIMMs. Address and control signals are fanned out through register ports to the synchronous dynamic random access memory (SDRAM) on the DIMMs.

The memory subsystem comprises four independent quadrants. Each quadrant has its own

memory data bus connected from the cell controller to the two buffers for the memory quadrant.

Each quadrant also has two memory control buses; one for each buffer.


Figure 1-9 Memory Subsystem

DIMMs

The memory DIMMs used by the server are custom designed by HP. Each DIMM contains DDR-II SDRAM that operates at 533 MT/s. Industry-standard DIMMs do not support the high availability and shared memory features of the server and are therefore not supported.

The server supports DIMMs with capacities of 1, 2, and 4 GB. Table 1-2 (page 27) lists each supported DIMM size, the resulting total system capacity, and the memory component density. Each DIMM is connected to two buffer chips on the cell board.

See Appendix C for more information on DIMM slot mapping and valid memory configurations.

Table 1-2 Server DIMMs

| DIMM Size | Total Capacity | Memory Component Density |
|---|---|---|
| 1 GB | 32 GB | 128 Mb |
| 2 GB | 64 GB | 256 Mb |
| 4 GB | 128 GB | 512 Mb |
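The total capacity column in Table 1-2 follows from the DIMM size and the slot count. This is a minimal sketch, assuming DIMM sizes in gigabytes, a fully populated server with two cell boards, and 16 DIMMs per cell board as stated in the cell board description. The names are illustrative.

```python
CELL_BOARDS = 2        # maximum cell boards in the server
DIMMS_PER_CELL = 16    # memory DIMMs per cell board


def total_capacity_gb(dimm_size_gb: int, cell_boards: int = CELL_BOARDS) -> int:
    """Memory capacity when every DIMM slot holds a DIMM of the given size."""
    return dimm_size_gb * DIMMS_PER_CELL * cell_boards


for size in (1, 2, 4):
    print(f"{size} GB DIMMs -> {total_capacity_gb(size)} GB total")
```

A server with a single cell board would have half the listed capacity, for example `total_capacity_gb(4, cell_boards=1)`.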

Cells and nPartitions

An nPartition comprises one or more cells working as a single system. Any I/O chassis that is

attached to a cell belonging to an nPartition is also assigned to the nPartition. Each I/O chassis

has PCI card slots, I/O cards, attached devices, and a core I/O card assigned to the I/O chassis.


On the server, each nPartition has its own dedicated portion of the server hardware which can

run a single instance of the operating system. Each nPartition can boot, reboot, and operate

independently of any other nPartitions and hardware within the same server complex.

The server complex includes all hardware within an nPartition server: all cabinets, cells, I/O

chassis, I/O devices and racks, management and interconnecting hardware, power supplies, and

fans.

A server complex can contain one or two nPartitions, enabling the hardware to function as a

single system or as multiple systems.
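With at most two cells, the possible nPartition layouts are easy to enumerate. The sketch below is only an illustration of the one-or-two-nPartitions statement: it assumes every installed cell is assigned to an nPartition, and the function name is hypothetical.

```python
def npartition_layouts(cells: list[int]) -> list[list[list[int]]]:
    """List the ways installed cells can be grouped into nPartitions.

    Each layout is a list of nPartitions; each nPartition is a list of cell numbers.
    """
    if len(cells) == 1:
        return [[cells]]                      # one cell, one nPartition
    if len(cells) == 2:
        return [
            [cells],                          # both cells in a single nPartition
            [[cells[0]], [cells[1]]],         # one nPartition per cell
        ]
    raise ValueError("this server holds one or two cell boards")


for layout in npartition_layouts([0, 1]):
    print(len(layout), "nPartition(s):", layout)
```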

NOTE: Partition configuration information is available on the Web at:

http://docs.hp.com

Refer to HP System Partitions Guide: Administration for nPartitions for details.

Internal Disk Devices for the Server

As Figure 1-10 shows, in a server cabinet, the top internal disk drives connect to cell 1 through

the core I/O for cell 1. Both of the bottom disk drives connect to cell 0 through the core I/O for

cell 0.

The DVD/DAT drive connects to cell 1 through the core I/O card for cell 1.

Figure 1-10 Disk Drive and DVD Drive Location

• Drive 1-1: Path 1/0/0/3/0.6.0

• Drive 1-2: Path 1/0/1/1/0/4/1.6.0

• Drive 0-2: Path 0/0/1/1/0/4/1.5.0

• Drive 0-1: Path 0/0/0/3/0.6.0

• DVD/DAT/Slimline DVD Drive: Path 1/0/0/3/1.2.0

• Slimline DVD Drive: Path 0/0/0/3/1.2.0
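The paths listed for Figure 1-10 can be grouped by owning cell. The sketch below assumes that the first field of each hardware path is the cell number, which agrees with the description above (drives labeled 1-x connect to cell 1, drives labeled 0-x to cell 0). The dictionary and function names are illustrative.

```python
# Device labels and hardware paths as listed for Figure 1-10.
DRIVE_PATHS = {
    "Drive 1-1": "1/0/0/3/0.6.0",
    "Drive 1-2": "1/0/1/1/0/4/1.6.0",
    "Drive 0-2": "0/0/1/1/0/4/1.5.0",
    "Drive 0-1": "0/0/0/3/0.6.0",
    "DVD/DAT/Slimline DVD Drive": "1/0/0/3/1.2.0",
    "Slimline DVD Drive": "0/0/0/3/1.2.0",
}


def cell_of(path: str) -> int:
    """Return the leading path field, assumed here to be the cell number."""
    return int(path.split("/", 1)[0])


def devices_by_cell(paths: dict[str, str]) -> dict[int, list[str]]:
    """Group device labels by the cell their hardware path starts with."""
    grouped: dict[int, list[str]] = {}
    for label, path in paths.items():
        grouped.setdefault(cell_of(path), []).append(label)
    return grouped


for cell, labels in sorted(devices_by_cell(DRIVE_PATHS).items()):
    print(f"cell {cell}: {', '.join(labels)}")
```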


System Backplane

The system backplane contains the following components:

• The system clock generation logic

• The system reset generation logic

• DC-to-DC converters

• Power monitor logic

• Two local bus adapter (LBA) chips that create internal PCI buses for communicating with

the core I/O card

The backplane also contains connectors for attaching the cell boards, the PCI-X backplane, the core I/O board set, SCSI cables, bulk power, chassis fans, the front panel display, intrusion switches, and the system scan card. Unlike Superdome or the HP Integrity rx8640, there are no crossbar chips (XBC) on the system backplane. The “crossbar-less” back-to-back CC connection increases performance.

Only half of the core I/O board set connects to the system backplane. The MP/SCSI boards plug

into the backplane, while the LAN/SCSI boards plug into the PCI-X backplane.

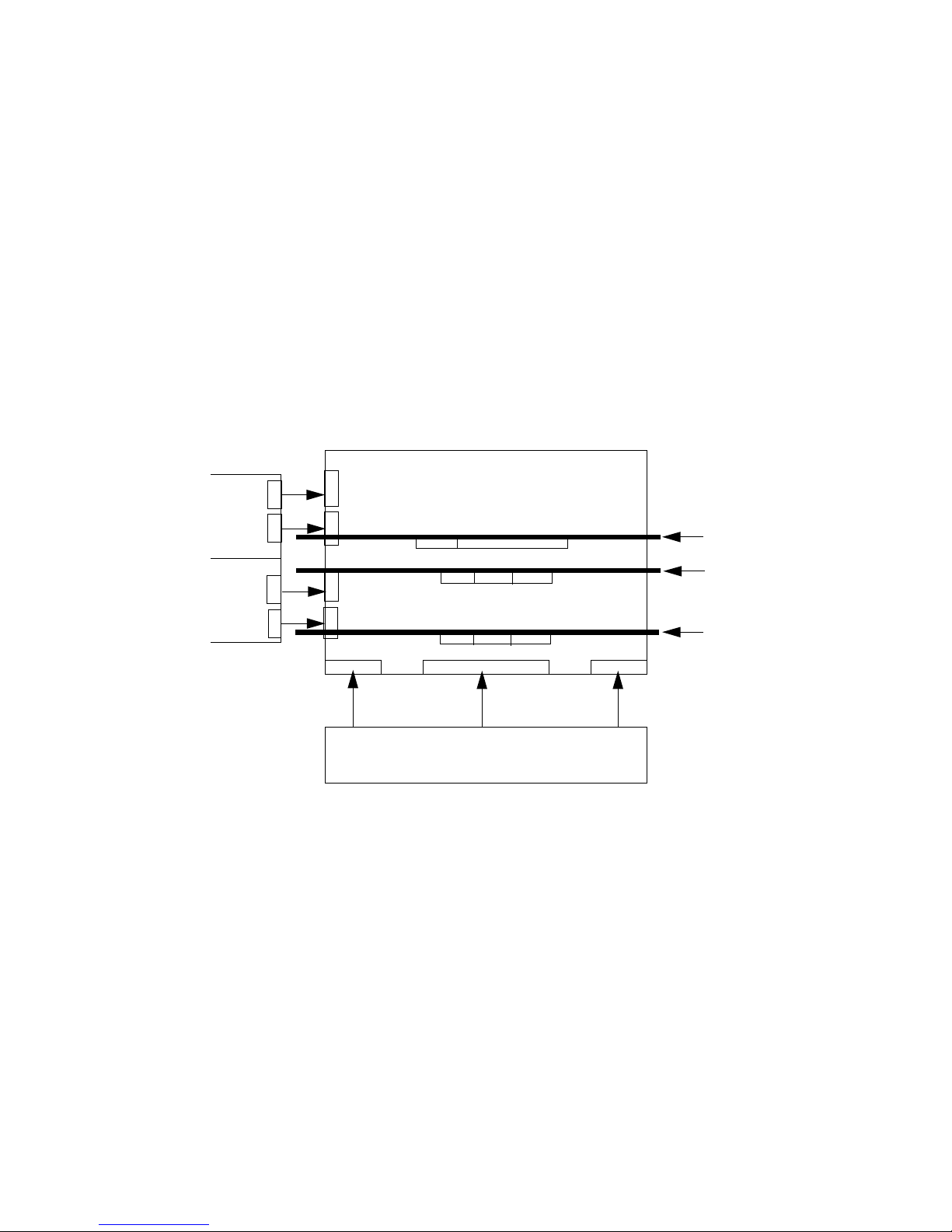

Figure 1-11 System Backplane Block Diagram

Diagram labels: PCI-X backplane, Cell board 1, Cell board 0, System backplane, Bulk power supply, MP Core I/O (MP/SCSI), MP Core I/O (MP/SCSI). Cell boards are perpendicular to the system backplane.

System Backplane to PCI-X Backplane Connectivity

The PCI-X backplane uses two connectors for the SBA link bus and two connectors for the high

speed data signals and the manageability signals.

SBA link bus signals are routed through the system backplane to the cell controller on each

corresponding cell board.

The high speed data signals are routed from the SBA chips on the PCI-X backplane to the two

LBA PCI bus controllers on the system backplane.

Clocks and Reset

The system backplane contains reset and clock circuitry that propagates through the whole

system. The system backplane central clocks drive all major chip set clocks. The system central

clock circuitry features redundant, hot-swappable oscillators.

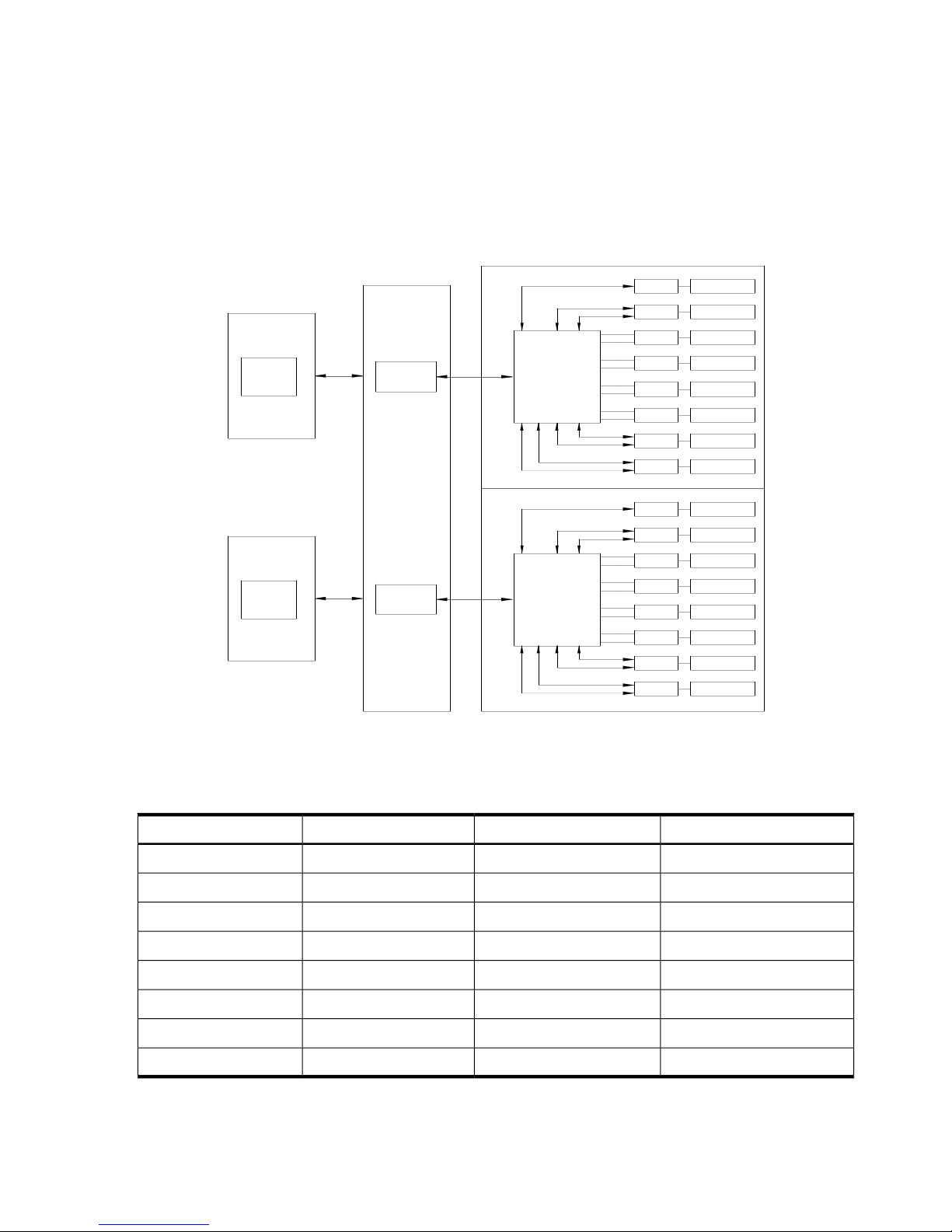

I/O Subsystem

The cell board to the PCI-X board path runs from the CC to the SBA, from the SBA to the ropes,

from the ropes to the LBA, and from the LBA to the PCI slots seen in Figure 1-12. The CC on cell


board 0 and cell board 1 communicates through an SBA over the SBA link. The SBA link consists

of both an inbound and an outbound link with an effective bandwidth of approximately 11.5

GB/sec. The SBA converts the SBA link protocol into “ropes.” A rope is defined as a high-speed,