Using HP-UX Workload Manager: A quick reference

Executive summary

HP-UX Workload Manager in action

Where is HP-UX Workload Manager installed?

Can I see how HP-UX Workload Manager will perform without actually affecting my system?

How do I start HP-UX Workload Manager?

How do I stop HP-UX Workload Manager?

How do I create a configuration file?

What is the easiest way to configure HP-UX Workload Manager?

Where can I find example HP-UX Workload Manager configurations?

How does HP-UX Workload Manager control applications?

How do I put an application under HP-UX Workload Manager control?

Application records: Workload separation by binary name

User records: Workload separation by process owner

Unix group records: Workload separation by Unix group ID

Secure compartments: Workload separation by Secure Resource Partitions

Process maps: Workload separation using your own criteria

prmrun: Starting a process in a workload group

prmmove: Moving an existing process to a workload group

Default: Inheriting workload group of parent process

How do I determine a goal for my workload?

What are some common HP-UX Workload Manager tasks?

Migrating Cores across partitions

Providing a fixed amount of CPU resources

Portions of processors (FSS groups)

Whole processors (pSets)

Providing CPU resources for a given time period

Providing CPU resources as needed

What else can HP-UX Workload Manager do?

Run in passive mode to verify operation

Generate audit data

Optimize the use of HP Temporary Instant Capacity

Integrate with various third-party products

What status information does HP-UX Workload Manager provide?

How do I monitor HP-UX Workload Manager?

ps [-P] [-R workload_group]

wlminfo

wlmgui

prmmonitor

prmlist

HP Glanceplus

wlm_watch.cgi CGI script

Status and message logs

Event Monitoring Service

For more information

Executive summary

Traditional IT environments are often silos in which both technology and human resources are aligned

around an application or business function. Capacity is fixed, resources are over-provisioned to meet

peak demand, and systems are complex and difficult to change. Costs are based on owning and

operating the entire vertical infrastructure—even when it is being underutilized.

Resource optimization is one of the goals of the HP Adaptive Enterprise strategy—a strategy for

helping customers synchronize business and IT to adapt to and capitalize on change. To help you

realize the promise of becoming an Adaptive Enterprise, HP provides virtualization technologies that

pool and share resources to optimize utilization and meet demands automatically.

HP-UX Workload Manager (WLM) is a virtualization solution that helps you achieve a true Adaptive

Enterprise. As a goal-based policy engine in the HP Virtual Server Environment, WLM integrates

virtualization techniques—including partitioning, resource management, utility pricing resources, and

clustering—and links them to your service level objectives (SLOs) and business priorities. WLM

enables a virtual HP-UX server to grow and shrink automatically, based on the demands and SLOs for

each application it hosts. You can consolidate multiple applications onto a single server to receive

greater return on your IT investment while ensuring that end-users receive the service and performance

they expect.

WLM automates many of the features of the Process Resource Manager (PRM) and HP-UX Virtual

Partitions (vPars). WLM manages CPU resources within a single HP-UX instance as well as within and

across hard partitions and virtual partitions. It automatically adapts system or partition CPU resources

(cores) to the demands, SLOs, and priorities of the running applications. (A core is the actual data

processing engine within a processor, where a single processor can have multiple cores.) On systems

with HP Instant Capacity, WLM automatically moves cores among partitions based on the SLOs in the

partitions. Given the physical nature of hard partitions, the “movement” of cores among partitions is

achieved by deactivating a core on one nPartition and then activating a core on another.

This paper presents an overview of the techniques and tools available for using WLM A.03.02 and

WLM A.03.02.02. WLM A.03.02 is available with the following operating system and hardware

combinations:

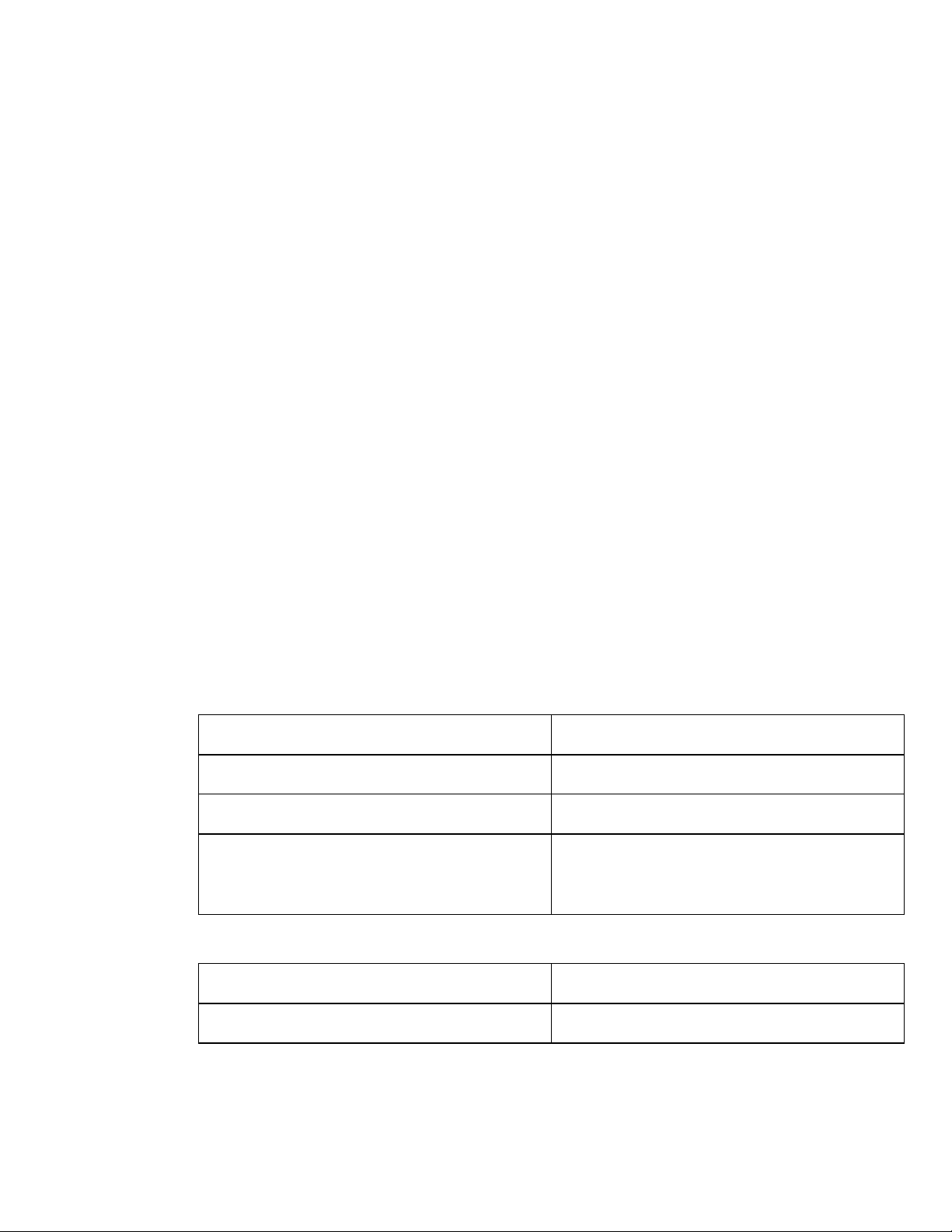

Operating Systems Hardware

HP-UX 11i v1 (B.11.11) HP 9000 servers

HP-UX 11i v2 (B.11.23) HP Integrity servers and HP 9000 servers

HP-UX 11i v1 (B.11.11) and

HP-UX 11i v2 (B.11.23)

WLM A.03.02.02 is available with the following operating system and hardware combinations:

Operating Systems Hardware

HP-UX 11i v3 (B.11.31) HP 9000 servers and HP Integrity servers

(Some of the functionality presented in this paper was available starting with WLM A.02.00.)

This paper assumes you have a basic understanding of WLM terminology and concepts, as well as

WLM configuration file syntax. The paper first gives an overview of a WLM session. Then, it provides

Servers combining HP 9000 partitions and HP

Integrity partitions (in such environments, HP-UX

11i v1 supports HP 9000 partitions only)

3

Page 4

background information on various ways to use WLM, including how to complete several common

WLM tasks. Lastly, it discusses how to monitor WLM and its effects on your workloads.

If you prefer to configure WLM using a graphical wizard, see the white paper, “Getting started with

HP-UX Workload Manager,” available from the information library at:

http://www.hp.com/go/wlm

HP-UX Workload Manager in action

This section provides a quick overview of various commands associated with using WLM. It takes

advantage of some of the configuration files and scripts that are used in the chapter “Learning WLM

by example” in the HP-UX Workload Manager User’s Guide. These files are in the directory

/opt/wlm/examples/userguide/ and at:

http://www.hp.com/go/wlm

To become familiar with WLM, how it works, and some related commands:

1. Log in as root.

2. Start WLM with an example configuration file:

# /opt/wlm/bin/wlmd -a \

/opt/wlm/examples/userguide/multiple_groups.wlm

The file multiple_groups.wlm is shown after the following summary. This configuration:

– Defines two workload groups: g2 and g3.

– Assigns applications (in this case, perl programs) to the groups. (With shell/perl programs, give

the full path of the shell or perl followed by the name of the program.) The two programs loop2.pl

and loop3.pl are copies of loop.pl. The loop.pl script (available in

/opt/wlm/examples/userguide) runs an infinite outer loop, maintains a counter in the inner loop,

and shows the time spent counting.

– Sets bounds on usage of CPU resources. The number of CPU shares for the workload groups can

never go below the gmincpu or above the gmaxcpu values. These values take precedence over

the minimum and maximum values that you can optionally set in the slo structures.

– Defines an SLO for g2. The SLO is priority 1 and requests 15 CPU shares for g2.

– Defines a priority 1 SLO for g3 that requests 20 CPU shares.

# Name:
#   multiple_groups.wlm
#
# Version information:
#
#   $Revision: 1.10 $
#
# Dependencies:
#   This example was designed to run with HP-UX WLM version A.01.02
#   or later. It uses the cpushares keyword introduced in A.01.02
#   and is, consequently, incompatible with earlier versions of
#   HP-UX WLM.
#
# Requirements:
#   To ensure WLM places the perl scripts below in their assigned
#   workload groups, add "/opt/perl/bin/perl" (without the quotes) to
#   the file /opt/prm/shells.

prm {
    groups = g2 : 2,
             g3 : 3;

    apps = g2 : /opt/perl/bin/perl loop2.pl,
           g3 : /opt/perl/bin/perl loop3.pl;

    gmincpu = g2 : 5, g3 : 5;
    gmaxcpu = g2 : 30, g3 : 60;
}

slo test2 {
    pri = 1;
    cpushares = 15 total;
    entity = PRM group g2;
}

slo test3 {
    pri = 1;
    cpushares = 20 total;
    entity = PRM group g3;
}

3. Note what messages a WLM startup produces. Start another session to view the WLM message

log:

# tail -f /var/opt/wlm/msglog

08/29/06 08:35:23 [I] (p6128) wlmd initial command line:
08/29/06 08:35:23 [I] (p6128) argv[0]=/opt/wlm/bin/wlmd
08/29/06 08:35:23 [I] (p6128) argv[1]=-a
08/29/06 08:35:23 [I] (p6128) argv[2]=/opt/wlm/examples/userguide/multiple_groups.wlm
08/29/06 08:35:23 [I] (p6128) what: @(#)HP-UX WLM A.03.02 (2006_08_21_17_04_11) hpux_11.00
08/29/06 08:35:23 [I] (p6128) dir: @(#) /opt/wlm
08/29/06 08:35:23 [I] (p6128) SLO file path: /opt/wlm/examples/userguide/multiple_groups.wlm
08/29/06 08:35:26 [I] (p6128) wlmd 6128 starting

The text in the log shows when the WLM daemon wlmd started, as well as what arguments it was

started with—including the configuration file used.

4. Check that the workload groups are in effect.

The prmlist command shows current configuration information. This HP Process Resource

Manager (PRM) command is available because WLM uses PRM to provide some of the WLM

functionality. For more information on the prmlist command, see the “prmlist” section.

# /opt/prm/bin/prmlist

PRM configured from file: /var/opt/wlm/tmp/wmprmBAAa06335

File last modified: Thu Aug 29 08:35:23 2006

PRM Group          PRMID    CPU            Upper    LCPU
                            Entitlement    Bound    Attr
---------------------------------------------------------------
OTHERS                 1    65.00%
g2                     2    15.00%
g3                     3    20.00%

PRM User           Initial Group       Alternate Group(s)
-------------------------------------------------------------------------------
root               (PRM_SYS)

PRM Application        Assigned Group      Alternate Name(s)
-------------------------------------------------------------------------------
/opt/perl/bin/perl     g2                  loop2.pl
/opt/perl/bin/perl     g3                  loop3.pl

A Web interface to the prmlist command is available. For information, see the wlm_watch(1M)

manpage.

In addition, you can use the wlminfo command, which shows CPU Shares and utilization (CPU

Util) for each workload group. Beginning with WLM A.03.02, the command also shows

memory utilization. (Because memory records are not being managed for any of the groups in this

example, a “-” is displayed in the Mem Shares and Mem Util columns.)

# /opt/wlm/bin/wlminfo group

Thu Aug 29 08:36:38 2006

Workload Group   PRMID   CPU Shares   CPU Util   Mem Shares   Mem Util   State
OTHERS               1        65.00       0.00            -          -   ON
g2                   2        15.00       0.00            -          -   ON
g3                   3        20.00       0.00            -          -   ON

5. Start the scripts referenced in the configuration file, as explained in the following:

a. WLM checks the files /etc/shells and /opt/prm/shells to ensure one of them lists each shell

or interpreter, including perl, used in a script. If the shell or interpreter is not in either of those

files, WLM ignores its application record (the workload group assignment in an apps

statement).

Add the following line to the file /opt/prm/shells so that the application manager can

correctly assign the perl programs to workload groups:

/opt/perl/bin/perl

b. Start the two scripts loop2.pl and loop3.pl. These scripts produce output, so you

might want to start them in a new window.

# /opt/wlm/examples/userguide/loop2.pl &

# /opt/wlm/examples/userguide/loop3.pl &

These scripts start in the PRM_SYS group because you started them as the root user.

However, the application manager soon moves them (within 30 seconds) to their assigned

groups, g2 and g3. After waiting 30 seconds, run the following ps command to see that the

processes have been moved to their assigned workload groups:

# ps -efP | grep loop

The output will include the following items (column headings are included for convenience):

PRMID PID TTY TIME COMMAND

g3 6463 ttyp1 1:42 loop3.pl

g2 6462 ttyp1 1:21 loop2.pl

6. Manage workload group assignments:

a. Start a process in the group g2:

# /opt/prm/bin/prmrun -g g2 \

/opt/wlm/examples/userguide/loop.pl

b. Verify that loop.pl is in g2 with ps:

# ps -efP | grep loop

The output will confirm the group assignment:

g2 6793 ttyp1 0:02 loop.pl

c. Move the process to another group.

Use the process ID (PID) for loop.pl from the last step to move loop.pl to the group g3:

# /opt/prm/bin/prmmove g3 -p loop.pl_PID

In this case, loop.pl_PID is 6793.

7. Verify workload group assignments:

The ps command has two options related to WLM:

-P shows PRM IDs (workload group IDs) for each process.

-R workload_group shows ps listing for only the processes in workload_group.

Looking at all the processes, the output includes the following items (column headings are

included for convenience):

# ps -efP | grep loop

PRMID PID TTY TIME COMMAND

g3 6793 ttyp1 1:52 loop.pl

g3 6463 ttyp1 7:02 loop3.pl

g2 6462 ttyp1 4:34 loop2.pl

Focusing on the group g3, the output is:

# ps -R g3

PID TTY TIME COMMAND

6793 ttyp1 1:29 loop.pl

6463 ttyp1 6:41 loop3.pl

8. Check usage of CPU resources:

The wlminfo command shows usage of CPU resources (CPU utilization) by workload group.

The command output, which might be slightly different on your system, follows:

# /opt/wlm/bin/wlminfo group

Workload Group   PRMID   CPU Shares   CPU Util   Mem Shares   Mem Util   State
OTHERS               1        65.00       0.00            -          -   ON
g2                   2        15.00      14.26            -          -   ON
g3                   3        20.00      19.00            -          -   ON

This output shows that both groups are using CPU resources up to their allocations. If the

allocations were increased, the groups’ usage would probably increase to match the new

allocations.

9. Stop WLM:

# /opt/wlm/bin/wlmd -k

10. Note what messages a WLM shutdown produces:

Run the tail command again:

# tail -f /var/opt/wlm/msglog

You will see messages similar to the following:

08/29/06 09:06:55 [I] (p6128) wlmd 6128 shutting down

08/29/06 09:06:55 [I] (p7235) wlmd terminated (by request)

11. Stop the loop.pl, loop2.pl, and loop3.pl perl programs.
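
The check in step 8 can also be scripted. The following is a minimal sketch, not part of WLM: the helper name near_limit and the 90% threshold are assumptions chosen for illustration. It reads text in the wlminfo group layout shown in step 8 and prints each workload group whose CPU utilization is at least 90% of its CPU shares. The sample input is copied from step 8 so the sketch runs anywhere.

```shell
#!/bin/sh
# Flag workload groups that use at least 90% of their CPU shares.
# Input: text in the `wlminfo group` layout shown in step 8
# (group name, PRMID, CPU shares, CPU util, ...).
near_limit() {
    # Only data rows have a numeric PRMID in field 2 and numeric shares
    # in field 3; header, date, and blank lines are skipped.
    awk '$2 ~ /^[0-9]+$/ && $3 ~ /^[0-9.]+$/ && $3 > 0 {
        if ($4 / $3 >= 0.90) print $1
    }'
}

# Sample data copied from step 8. On a live system, pipe the output of
# /opt/wlm/bin/wlminfo group into near_limit instead.
near_limit <<'EOF'
Workload Group PRMID CPU Shares CPU Util Mem Shares Mem Util State
OTHERS 1 65.00 0.00 - - ON
g2 2 15.00 14.26 - - ON
g3 3 20.00 19.00 - - ON
EOF
```

With the sample data, the sketch prints g2 and g3, which matches the observation that both groups are using CPU resources up to their allocations.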

Where is HP-UX Workload Manager installed?

The following table shows where WLM and some of its components are installed.

Item                                  Installation path
WLM                                   /opt/wlm/
WLM Toolkits                          /opt/wlm/toolkits/
Manpages for WLM and its toolkits     /opt/wlm/share/man/

If you are using WLM configurations that are based on the Process Resource Manager (PRM) product,

you must install PRM.

Can I see how HP-UX Workload Manager will perform

without actually affecting my system?

WLM provides a passive mode that enables you to see approximately how WLM will respond to a

given configuration—without putting WLM in charge of your system resources. Using this mode,

enabled with the -p option to wlmd, you can gain a better understanding of how various WLM

features work. In addition, you can verify that your configuration behaves as expected—with minimal

effect on the system. For example, with passive mode, you can answer the following questions:

• How does a cpushares statement work?

• How do goals work? Is my goal set up correctly?

• How might a particular cntl_convergence_rate value or the values of other tunables affect

allocation change?

• How does a usage goal work?

• Is my global configuration file set up as I wanted? If I used global arbitration on my production

system, what might happen to the CPU layouts?

• Is a user’s default workload set up as I expected?

• Can a user access a particular workload?

• When an application is run, which workload does it run in?

• Can I run an application in a particular workload?

• Are the alternate names for an application set up correctly?

For more information on how to use the passive mode of WLM, as well as explanations of how

passive mode does not always represent actual WLM operations, see the “PASSIVE MODE VERSUS

ACTUAL WLM MANAGEMENT” section in the wlm(5) manpage.

Activate a configuration in passive mode by logging in as root and running the command:

# /opt/wlm/bin/wlmd -p -a config.wlm

where config.wlm is the name of your configuration file.
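
A passive-mode trial can be wrapped in a small script. The following is a sketch under stated assumptions, not an HP-supplied tool: the function name passive_preview, the variables WLMD, WLMINFO, and SETTLE_SECS, and the 60-second wait are all hypothetical. It uses only commands and options that appear in this paper (wlmd -p -a, wlminfo group, and wlmd -k), and it assumes that wlminfo reflects the passive-mode allocations; check the wlm(5) manpage to confirm how passive mode is reported on your system.

```shell
#!/bin/sh
# Sketch: preview a configuration in passive mode, then stop WLM again.
# WLMD, WLMINFO, and SETTLE_SECS are illustrative names, not WLM settings.
WLMD=${WLMD:-/opt/wlm/bin/wlmd}
WLMINFO=${WLMINFO:-/opt/wlm/bin/wlminfo}

passive_preview() {
    config=$1
    "$WLMD" -p -a "$config" || return 1   # passive mode: WLM does not take control
    sleep "${SETTLE_SECS:-60}"             # assumed wait so WLM can run a few intervals
    "$WLMINFO" group                       # review the reported allocations
    "$WLMD" -k                             # stop the passive-mode daemon
}

# Example (run as root on a system with WLM installed):
#   passive_preview config.wlm
```

If the reported allocations look right, activate the same file without the -p option.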

The WLM global arbiter, wlmpard, which is used in managing SLOs across virtual partitions and

nPartitions, also provides a passive mode.

How do I start HP-UX Workload Manager?

Before starting WLM (activating a configuration), you might want to try the configuration in passive

mode, discussed in the previous section. Otherwise, you can activate your configuration by logging in

as root and running the following command:

# /opt/wlm/bin/wlmd -a config.wlm

where config.wlm is the name of your configuration file.

When you run the wlmd -a command, WLM starts the data collectors you specify in the WLM

configuration.

Although data collectors are not necessary in every case, be sure to monitor any data collectors you

do have. Because data collection is a critical link for effectively maintaining your configured SLOs,

you must be aware when a collector exits unexpectedly. One method for monitoring collectors is to

use wlminfo slo.

For information on creating your WLM configuration, see the “How do I create a configuration file?”

section.

WLM automatically logs informational messages to the file /var/opt/wlm/msglog. In addition, WLM

can log data that enables you to verify WLM management and fine-tune your WLM configuration file.

To log this data, use the -l option. This option causes WLM to log data to /var/opt/wlm/wlmdstats.

The following command line starts WLM, logging data for SLOs every third WLM interval:

# /opt/wlm/bin/wlmd -a config.wlm -l slo=3

For more information on the -l option, see the wlmd(1M) manpage.

How do I stop HP-UX Workload Manager?

With WLM running, stop it by logging in as root and running the command:

# /opt/wlm/bin/wlmd -k

How do I create a configuration file?

The WLM configuration file is simply a text file.

To create your own WLM configuration file, use one or more of the following techniques:

• Determine which example configurations can be useful in your environment, and modify them

appropriately. For information on example configurations, see the “Where can I find example

HP-UX Workload Manager configurations?” section.

• Create a new configuration using any of the following:

– vi or any other text editor

– The WLM configuration wizard, which is discussed in the “What is the easiest way to configure

HP-UX Workload Manager?” section

– The WLM graphical user interface, wlmgui

Using the wizard, the configuration syntax is almost entirely hidden. With wlmgui, you must be

familiar with the syntax.

• Enhance your configuration created from any of the previous methods by modifying it based on

ideas from the following sections:

– “How do I put an application under HP-UX Workload Manager control?”

– “What are some common HP-UX Workload Manager tasks?”

For configuration file syntax, see the wlmconf(4) manpage.

What is the easiest way to configure HP-UX Workload

Manager?

The easiest and quickest method to configure WLM is to use the WLM configuration wizard.

NOTE

Set your DISPLAY environment variable before starting the wizard.

Usage of the WLM wizard requires Java™ Runtime Environment version

1.4.2 or later. (Starting with WLM A.03.04, Java 1.5 or later is required.)

For PRM-based configurations, PRM C.03.00 or later is required (to take

advantage of the latest updates to WLM, use the latest version of PRM

available).

To start the wizard, run the following command:

# /opt/wlm/bin/wlmcw

The wizard provides an easy way to create initial WLM configurations. To maintain simplicity, it does

not provide all the functionality available in WLM. Also, the wizard does not allow you to edit

existing configurations.

Where can I find example HP-UX Workload Manager

configurations?

WLM and its toolkits come with example WLM configuration files. These files are located in the

directories indicated in the table below.

For                                               See example WLM configurations in the directory
Examples showing a range of WLM functionality     /opt/wlm/examples/wlmconf/
Examples used in this paper                       /opt/wlm/examples/userguide/
Using WLM with Apache Web servers                 /opt/wlm/toolkits/apache/config/
Using WLM to manage job duration                  /opt/wlm/toolkits/duration/config/
Using WLM with Oracle® databases                  /opt/wlm/toolkits/oracle/config/
Using WLM with SAP® software                      /opt/wlm/toolkits/sap/config
Using WLM with SAS software                       /opt/wlm/toolkits/sas/config/
Using WLM with SNMP agents                        /opt/wlm/toolkits/snmp/config/
Using WLM with BEA WebLogic Server                /opt/wlm/toolkits/weblogic/config/

These configurations are also available at the following location (select “Example configurations”):

http://www.hp.com/go/wlm

How does HP-UX Workload Manager control applications?

WLM controls your applications after you isolate your applications in workloads based on:

• nPartitions that use Instant Capacity

• HP-UX virtual partitions

• HP Integrity Virtual Machines (Integrity VM) hosts

• Resource partitions (also known as workload groups), which can be:

– Whole-core: HP-UX processor sets (pSets)

– Sub-core: Fair Share Scheduler (FSS) groups

You create one or more SLOs for each workload to migrate resources among the workloads as

needed. (In the case of nPartitions, which represent hardware, the movement of cores is simulated

using Instant Capacity to deactivate one or more cores in one nPartition and then activate cores in

another nPartition.) In defining an SLO, you specify the SLO’s priority. You can also specify a usage

goal to attain a targeted resource usage. Or, if a performance measure (metric) is available, you can

specify a metric goal. As the applications run, WLM compares the application usage or metrics

against the goals. To achieve the goals, WLM then automatically adjusts allocations of cores for the

workloads.

For workload groups (which share the resources of a single HP-UX instance), WLM can manage each

group’s real memory and disk bandwidth resources in addition to its CPU allocation.

NOTE

WLM adjusts only a workload group’s CPU allocation in response to SLO

performance. Thus, WLM SLO management is most effective for workloads

that are CPU-bound.

How do I put an application under HP-UX Workload

Manager control?

WLM can treat an nPartition or virtual partition as a workload. The workload consists of all the

processes running in the operating system instance on the partition. WLM also enables you to divide

the resources of a single operating system into pSets or FSS groups. When dividing the resources of a

single operating system, the workloads are known as “workload groups.”

If you will not be using WLM to manage resources within a single HP-UX image, you can omit the rest

of this section and go to the “How do I determine a goal for my workload?” section.

When a system is divided into workload groups, each application must go into a workload group. By

default, processes run by root users go into the PRM_SYS group, and processes run by non-root users

go into the OTHERS group. However, you can change the workload group in which a particular

user’s processes run by adding user records to the WLM configuration file. You can add Unix group

records to the configuration file so that the processes running in a specified Unix group are placed in

a specific workload group. Furthermore, you can specify the workload groups in which processes run

by adding application records to your WLM configuration, or defining secure compartments that

isolate the processes in specified workload groups. You can even define process maps that include

your own criteria for placing processes in workload groups.

When determining the workload groups where particular processes should be placed, WLM gives

application records precedence over user records, and user records precedence over Unix group

records. For example, if the same process is identified in both application records and user records,

the process is placed in the workload group assigned to it by the application record. If you define

secure compartments, they take precedence over application, user, and Unix group records. If you

define process maps, they take precedence over all the WLM records. In addition, you can alter the

workload group of an application using the prmmove and prmrun utilities, which are discussed in

the following sections.
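
The precedence rules above can be summarized in a toy model. The following sketch is not WLM code and does not call any WLM interface: resolve_group is a hypothetical function, and its "type=group" arguments are an invented notation for the records that match a given process. It walks the record types from highest to lowest precedence (process maps, secure compartments, application records, user records, Unix group records) and prints the group from the first type that matches.

```shell
#!/bin/sh
# Toy model of the record precedence described above; not WLM code.
# Each argument is "type=group" for a record that matches the process.
resolve_group() {
    for kind in procmap scomp apps users uxgrp; do
        for rec in "$@"; do
            case $rec in
                "$kind"=*) echo "${rec#*=}"; return 0 ;;
            esac
        done
    done
    # No record matched: default group for a non-root user.
    # (Processes run by root default to PRM_SYS instead.)
    echo OTHERS
}

resolve_group users=coders apps=apache_grp                # application record wins
resolve_group uxgrp=testers scomp=database users=coders   # secure compartment wins
```

The model covers only the automatic placement rules; a later prmmove or prmrun can still change the group of a specific process.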

As you can see, WLM provides several methods for placing processes in workload groups. It is

important to understand these methods because they form the basis of the workload separation.

First, define the workload groups for the workloads. The following snippet from a WLM configuration

file creates three workload groups: servers_grp, apache_grp, and OTHERS. (The OTHERS group

is a reserved workload group and must have ID 1. If you do not explicitly create this group, WLM will

create it for you.)

prm {
    groups = OTHERS : 1,
             servers_grp : 2,
             apache_grp : 3;
}

Each workload group is given a name and a number. Later sections of the WLM configuration file

assign resources to the groups. Processes within the groups then share the resources allocated to that

group.

With the workload groups defined, the remainder of this section explores how processes can be

placed in the workload groups.

Application records: Workload separation by binary name

One mechanism for separating workloads is the apps statement. This statement names a particular

application binary and the group in which it should be placed. You can specify multiple

binary-workload group combinations, separated by commas, in a single apps statement.

In the following prm structure, the apps statement causes the PRM application manager to place any

newly running /opt/hpws/apache/bin/httpd executables in the group apache_grp.

prm {
    groups = OTHERS : 1,
             servers_grp : 2,
             apache_grp : 3;

    apps = apache_grp : /opt/hpws/apache/bin/httpd;
}

NOTE

Because placement relies on polling, a process is not placed in the workload

group immediately after starting. Rather, the PRM application manager

periodically polls for newly started processes that match records in the

apps statement. Each matched process is placed in its designated

workload group at that time.

User records: Workload separation by process owner

You can place processes in workload groups according to the user IDs (UIDs) of the process owners.

Specify your user-workload group mapping in the users statement. For example:

prm {
    groups = OTHERS : 1,
             testers : 2,
             coders : 3,
             surfers : 4;

    users = moe : coders surfers,
            curly : coders surfers,
            larry : testers surfers;
}

Besides the default OTHERS group, this example has three groups of users: testers, coders, and

surfers. The user records cause processes started by users moe and curly to be run in group

coders by default and user larry’s processes to be run in group testers by default. Each user is

also given permission to run jobs in group surfers if they want, using the prmrun or prmmove

commands discussed in subsequent sections. Non-root users who have no user record are placed in

OTHERS by default.

As previously noted, application records take precedence over user records.

For more information on users’ access to workload groups, see the HP-UX Workload Manager User’s

Guide.

Unix group records: Workload separation by Unix group ID

You can place processes in workload groups according to the Unix groups the processes run in.

Specify your Unix group-workload group mapping in the uxgrp statement as in the following

example:

prm {
    groups = OTHERS : 1,
             testers : 2,
             coders : 3,
             surfers : 4;

    uxgrp = sports : surfers,
            shoes : coders,
            appliances : testers;
}

Besides the default OTHERS group, this example has the three workload groups testers, coders,

and surfers. The Unix group records cause processes running in Unix group sports to be placed

in workload group surfers, while processes running in Unix group shoes are placed in workload

group coders, and processes in Unix group appliances are placed in workload group testers.

Processes not running in any of the specified Unix groups are placed in OTHERS by default.

To support Unix group records, WLM must be A.03.02 or later, and PRM C.03.02 or later must be

running on the system.

Secure compartments: Workload separation by Secure Resource Partitions

You can place processes in workload groups according to the secure compartments the processes run

in. The HP-UX feature Security Containment, available starting with HP-UX 11i v2, enables you to

create secure compartments. Specify the mapping between secure compartments and workload

groups in the scomp statement as in the following example:

prm {

groups = OTHERS : 1,

database : 2,

webapp : 3;

scomp = db_comp : database,

wa_comp : webapp;

}

Besides the default OTHERS group, two workload groups are defined in this example: database and

webapp. Processes running in the secure compartment db_comp are placed in workload group

database, while processes running in secure compartment wa_comp are placed in workload group

webapp. Processes not running in either of the two secure compartments defined in this example are

placed in OTHERS by default.

Process maps: Workload separation using your own criteria

Using the procmap statement, you define your own criteria for placing processes in workload

groups. You establish your criteria by specifying a particular workload group plus a script or

command and its arguments that gathers and outputs PIDs of processes to be placed in that group.

WLM spawns the command or script periodically at 30-second intervals. At each interval, WLM

places the identified processes in the specified group. You can use process maps to automatically

place processes that you might otherwise have to move manually by using the prmmove or prmrun

commands.

In the prm structure that follows, the procmap statement causes the PRM application manager to

place in the sales group any processes gathered by the ps command that have PIDs matching the

application pid_app. The application manager places in the mrktg group any processes gathered

by the external script pidsbyapp that have PIDs matching the application mrketpid_app.

prm {

groups = OTHERS : 1,

sales : 2,

mrktg : 3;

procmap = sales : /bin/env UNIX95= /bin/ps -C pid_app -o pid=,

mrktg : /scratch/pidsbyapp mrketpid_app;

}

As noted previously, if application, user, Unix group, or compartment records are also specified in the

prm structure, the process placement specified by a process map has precedence over the placement

specified by the other records. In other words, if a PID gathered by the process map matches an

application, user, Unix group, or compartment record, the process map determines the placement of

the identified process.
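
The source does not show the contents of the external script pidsbyapp. As a rough illustration of what such a PID-gathering script might do, the following Python sketch filters process-listing text by command name and prints the matching PIDs. The function name pids_for_command, the two-column "PID COMMAND" input layout, and the one-PID-per-line output are assumptions made for this example, not documented WLM requirements.

```python
def pids_for_command(ps_output, command):
    """Return the PIDs whose command name equals `command`.

    Expects one process per line in the form 'PID COMMAND' (an assumed
    layout chosen for this sketch).
    """
    pids = []
    for line in ps_output.splitlines():
        fields = line.split(None, 1)
        if len(fields) == 2 and fields[0].isdigit() and fields[1].strip() == command:
            pids.append(int(fields[0]))
    return pids


# Hypothetical process listing used only to exercise the function.
sample = """\
 4065 mrketpid_app
 4070 sh
 4101 mrketpid_app
"""

for pid in pids_for_command(sample, "mrketpid_app"):
    print(pid)
```

In a real script, the listing would come from a ps invocation rather than a hard-coded string.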

prmrun: Starting a process in a workload group

You can explicitly start processes in particular workload groups using the prmrun command. Given

the groups and users statements shown in User records: Workload separation by process owner,

user larry running the following command would cause his job to be run in group testers:

# my_really_big_job &

However, user larry also has permission to run processes in the group surfers. Thus, larry can use

the following prmrun command to run his process in the group surfers:

# /opt/prm/bin/prmrun -g surfers my_really_big_job &

prmmove: Moving an existing process to a workload group

Use the prmmove command to move existing processes to a different workload group. If larry from

the previous example has a job running with PID 4065 in the group testers, he could move that

process to group surfers by running the command:

# /opt/prm/bin/prmmove surfers -p 4065

Default: Inheriting workload group of parent process

If a process is not named in an apps statement, a users statement, a uxgrp statement, or an

scomp statement, or if it has not been identified by a procmap statement, or if it has not been started

with prmrun or moved with prmmove, it starts and runs in the same group as its parent process. So

for a setup like the following, if user jdoe has an interactive shell running in group mygrp, any

process spawned by that shell process would also run in mygrp because its parent process was there:

prm {

groups = OTHERS : 1,

mygrp : 2;

}

Simple inheritance is the mechanism that determines where most processes run, especially for short-lived processes.

How do I determine a goal for my workload?

NOTE

Be aware of the resource interaction for each of your workloads. Limiting a

workload’s memory allocation can also limit its use of CPU resources. For

example, if a workload uses memory and CPU resources (cores) in the ratio

of 1:2, limiting the workload to 5% of the memory implies that it cannot use

more than 10% of the CPU resources—even if it has a 20% CPU allocation.
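
The arithmetic in this note can be checked with a small Python sketch. The function name effective_cpu_cap and its parameters are hypothetical; the sketch assumes the workload's CPU use scales linearly with the memory it can obtain.

```python
def effective_cpu_cap(cpu_alloc_pct, mem_alloc_pct, cpu_per_mem):
    """Upper bound (in percent) on the CPU a workload can actually use.

    cpu_per_mem is the workload's CPU-to-memory usage ratio, for example
    2.0 for a workload that uses memory and CPU in the ratio 1:2.
    """
    return min(cpu_alloc_pct, mem_alloc_pct * cpu_per_mem)


# Values from the note: 20% CPU allocation, 5% memory limit, 1:2 ratio.
print(effective_cpu_cap(cpu_alloc_pct=20, mem_alloc_pct=5, cpu_per_mem=2.0))
```

With the values from the note, the memory limit, not the CPU allocation, is the binding constraint.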

To characterize the behavior of a workload, use the following example WLM configuration:

/opt/wlm/examples/wlmconf/manual_entitlement.wlm

Using this configuration, you can directly set an entitlement (allocation) for a workload using the

wlmsend command. By gradually increasing the workload’s allocation with a series of wlmsend

calls, you can determine how various amounts of CPU resources affect the workload and its

performance with respect to some metric that you might want to use in an SLO for the workload.

NOTE

This configuration file is only for PRM-based configurations. PRM must be

installed on your system. For a similar configuration file that demonstrates

WLM’s ability to migrate CPU resources across partitions, see the

par_manual_allocation.wlm and par_manual_allocation.wlmpar

configuration files in /opt/wlm/examples/wlmconf.

In addition, you can compare these results with similar data for the other workloads that will run on

the system. This comparison gives you insight into which workloads you can combine (based on their

needs for CPU resources) on a single system and still achieve the desired SLOs. Alternatively, if you

cannot give a workload its optimal amount of CPU resources, you will know what kind of

performance to expect with a smaller allocation.

Once you know how the workload behaves, you can decide more easily the type of goal (either

metric or usage) you want for it. You might even decide to just allocate the workload a fixed amount

of CPU resources or an amount of CPU resources that varies directly in relation to some metric. For

information on the different methods for getting CPU resources for your workload, see the “SLO

TYPES” section in the wlm(5) manpage.

Like the manual_entitlement.wlm configuration, the following configuration enables you to adjust a

workload group’s CPU allocation with a series of wlmsend calls:

/opt/wlm/toolkits/weblogic/config/manual_cpucount.wlm

However, manual_cpucount.wlm uses a pSet as the basis for a workload group and changes the

group’s CPU allocation by one whole core at a time.

What are some common HP-UX Workload Manager tasks?

WLM is a powerful tool that enables you to manage your systems in numerous ways. The following

sections explain some of the common tasks that WLM can do for you.

Migrating cores across partitions

WLM can manage SLOs across virtual partitions and nPartitions.

You must use Instant Capacity cores (formerly known as iCOD CPUs) on the nPartitions for WLM

management. (A core is the actual data processing engine within a processor; a processor can have

multiple cores.) WLM provides a global arbiter, wlmpard, that can take input from the WLM

instances on the individual partitions. The global arbiter then moves cores between partitions, if

needed, to better achieve the SLOs specified in the WLM configuration files that are active in the

partitions. These partitions can be nested—and even contain FSS and PSET-based workload groups.

(wlmpard can be running in one of the managed partitions or on a supported platform with network

connectivity to the managed partitions.)

HP-UX Virtual Partitions and Integrity VM hosts are software-based virtual systems, each running its

own instance of the HP-UX operating system. WLM can move processors among virtual partitions to

better achieve the SLOs you define.

nPartitions (nPars) are hardware-based partitions, each running its own instance of the HP-UX

operating system. Using Instant Capacity (formerly known as iCOD) software, WLM can manage your

SLOs across nPartitions by simulating the movement of processors among nPartitions. The processor

movement is simulated by deactivating a core on one nPartition and activating a core on another

nPartition. WLM can move processors among nPartitions on which VMs are running.
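
The simulated movement described above (deactivate a core on one nPartition, activate a core on another) can be pictured with a toy Python model. This is only a bookkeeping sketch: the function name move_core and the partition names are hypothetical, and the rule that a donor keeps at least one active core is an assumption of the sketch, not a statement about Instant Capacity.

```python
def move_core(active_cores, source, target):
    """Return new per-partition core counts after one simulated move."""
    if active_cores[source] <= 1:
        # Assumption for this sketch: a donor keeps at least one active core.
        raise ValueError(f"{source} must keep at least one active core")
    updated = dict(active_cores)
    updated[source] -= 1  # deactivate a core on the donor nPartition
    updated[target] += 1  # activate a core on the receiving nPartition
    return updated


before = {"north": 4, "south": 2}
after = move_core(before, "north", "south")
print(after)
```

The total number of active cores across the partitions is unchanged by the move, which is the point of the simulation.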

Configuring WLM to manage SLOs across virtual partitions and nPartitions requires the following

steps. For more details, see the wlmpard(1M) and wlmparconf(4) manpages and the HP-UX Workload

Manager User’s Guide.

1. (Optional) Set up secure WLM communications.

Follow the procedure HOW TO SECURE COMMUNICATIONS in the wlmcert(1M) manpage—

skipping the step about starting/restarting the WLM daemons. You will do that later in this

procedure.

2. Create a WLM configuration file for each partition.

Each partition on the system must have the WLM daemon wlmd running. Create a WLM

configuration file for each partition, ensuring each configuration uses the primary_host

keyword to reference the partition where the global arbiter is running. For information on the

primary_host syntax, see the wlmconf(4) manpage.

3. (Optional) Activate each partition’s WLM configuration in passive mode.

WLM operates in “passive mode” when you include the -p option in your command to activate a

configuration. With passive mode, you can see approximately how a particular configuration is

going to affect your system—without the configuration actually taking control of your system.

Activate each partition’s WLM configuration file configfile in passive mode as follows:

# wlmd -p -a configfile

For information on passive mode, including its limitations, see PASSIVE MODE VERSUS ACTUAL

WLM MANAGEMENT in the wlm(5) manpage.

4. Activate each partition’s WLM configuration.

After verifying and fine-tuning each partition’s WLM configuration file configfile, activate it as

follows:

# wlmd -a configfile

To use secure communications, activate the file using the -s option:

# wlmd -s -a configfile

The wlmd daemon runs in secure mode by default when you use the /sbin/init.d/wlm script to

start WLM. (If you upgraded WLM, secure mode might not be the default. Ensure that the

appropriate secure mode variables in /etc/rc.config.d/wlm are set correctly. For more

information on these variables, see the wlmcert(1M) manpage.)

5. Create a configuration file for the global arbiter.

On the system running the global arbiter, create a configuration file for the global arbiter. (If this

system is being managed by WLM, it will have both a WLM configuration file and a WLM global

arbiter configuration file. You can set up and run the global arbiter configuration on a system that

is not managed by WLM if needed for the creation of fault-tolerant environments or Serviceguard

environments.)

This global arbiter configuration file is required. In the file, specify the following:

• Global arbiter interval

• Port used for communications between the partitions

• Size at which to truncate the wlmpardstats log file

• Lowest priority at which Temporary Instant Capacity (v6 or later) or Pay per use (v4, v7, or

later) resources are used

NOTE

Specifying this priority ensures WLM maintains compliance with your

usage rights for Temporary Instant Capacity. When your prepaid

amount of temporary capacity expires, WLM no longer attempts to

use the temporary resources.

For information on the syntax of this file, see the wlmparconf(4) manpage.

6. (Optional) Activate the global arbiter configuration file in passive mode.

Similar to the WLM passive mode, the WLM global arbiter has a passive mode (also enabled

with the -p option) that allows you to verify wlmpard settings before letting it control your system.

Activate the global arbiter configuration file configfile in passive mode as follows:

# wlmpard -p -a configfile

Again, to see approximately how the configuration would affect your system, use the WLM utility

wlminfo.

7. Activate the global arbiter.

Activate the global arbiter configuration file as follows:

# wlmpard -a configfile

To use secure communications, activate the file using the -s option:

# wlmpard -s -a configfile

The global arbiter runs in secure mode by default when you use the /sbin/init.d/wlm script to

start WLM. (If you upgraded WLM, secure mode might not be the default. Ensure that the

appropriate secure mode variables in /etc/rc.config.d/wlm are set correctly. For more

information on these variables, see the wlmcert(1M) manpage.)

Providing a fixed amount of CPU resources

WLM enables you to give a workload group a fixed amount of CPU resources. The following figure

shows a group with a fixed allocation of 15 CPU shares.

Figure 1. Fixed allocation

Within a single HP-UX instance, WLM enables you to allocate a fixed amount of CPU resources using:

• Portions of processors (FSS groups)

• Whole processors (pSets)

You can also allocate a fixed amount of CPU resources to virtual partitions and nPartitions. HP

recommends omitting from WLM management any partitions that should have a constant size. In such

cases, WLM’s capability of migrating CPU resources is not needed.

Portions of processors (FSS groups)

One method for providing a fixed amount of CPU resources is to set up an SLO to give a workload

group a portion of each available processor. To set up this type of SLO:

1. Define the workload group and assign a workload to it.

In your WLM configuration file, define your workload group in a prm structure using the groups

keyword. Assign a workload to the group using the apps keyword.

The following example defines a group named sales.

prm {

groups = sales : 2;

apps = sales : /opt/sales/bin/sales_monitor;

}

The sales_monitor application is placed in the sales workload group using the apps

statement. For other methods of placing a workload in a certain group, see the “How do I put an

application under HP-UX Workload Manager control?” section on page 13.

2. Define a fixed-allocation SLO for the workload group.

In the slo structure, use the cpushares keyword with total to request CPU resources for the

workload group. The following SLO requests 15 CPU shares for the workload group sales.

Based on SLO priorities and available CPU resources, WLM attempts to meet the request.

slo fixed_allocation_example {

pri = 2;

entity = PRM group sales;

cpushares = 15 total;

}

3. Activate the configuration:

# /opt/wlm/bin/wlmd -a config.wlm

where config.wlm is the name of your configuration file.

Whole processors (pSets)

Another method for providing a workload group with a fixed amount of CPU resources is to define the

group based on a pSet. If your system is running HP-UX 11i v1 (B.11.11), you can download

software (to support processor sets) from the following location:

http://www.hp.com/go/wlm

Select the “Patches/support” link and then search for “processor sets”.

Processor sets are included with HP-UX 11i v2 (B.11.23), 11i v3 (B.11.31), and later.

As in the previous case, the workload requires a constant amount of CPU resources. When you place the

workload in a workload group based on a pSet, the group has sole access to the processors in the

pSet.

NOTE

PRM must be installed on your system for WLM to be able to

manage workload groups based on pSets.

To place an application in a workload group based on a pSet:

1. Define the workload group based on a pSet, and assign a workload to it.

In your WLM configuration file, define your workload group in a prm structure using the groups

keyword. Assign a workload (application) to the group using the apps keyword. Use the

gmincpu statement to set the minimum CPU usage.

The following example shows the sales group as a pSet that is assigned two cores through a

gmincpu statement. (When using gmincpu for a group based on a pSet, 100 represents a

single core; thus, 200 represents two cores.) The application sales_monitor is assigned to the

group:

prm {

groups = sales : PSET;

apps = sales : /opt/sales/bin/sales_monitor;

gmincpu = sales : 200;

}

NOTE

When WLM is managing pSets, do not change pSet settings by

using the psrset command. Only use WLM to control pSets.

2. Activate the configuration:

# /opt/wlm/bin/wlmd -a config.wlm

where config.wlm is the name of your configuration file.
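
Because gmincpu for a pSet-based group counts 100 per core, the value in step 1 follows directly from the number of cores. The following Python sketch shows the conversion; the helper name gmincpu_line is hypothetical and the output simply mirrors the statement used in the example above.

```python
def gmincpu_line(group, cores):
    """Build a gmincpu statement for a pSet-based group (100 per whole core)."""
    if not isinstance(cores, int) or cores < 1:
        raise ValueError("a pSet-based group needs a whole number of cores")
    return f"gmincpu = {group} : {cores * 100};"


print(gmincpu_line("sales", 2))
```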

Providing CPU resources for a given time period

For workloads that are only needed for a certain time, whether it is once a day, week, or any other

period, WLM provides the condition keyword. This keyword is placed in an slo structure and

enables the SLO when the condition statement is true.

By default, when the SLO is not enabled, its workload group gets its gmincpu value. If this value is

not set, the workload group gets the minimum allocation possible. For FSS groups, this default is 1%

of the total CPU resources in the default pSet. (The default pSet, also referred to as pSet 0, is created

at system initialization, at which time it consists of all of your system’s processors. After initialization,

the default pSet consists of all the processors that were not assigned to other pSet groups.) If the

extended_shares tunable is set to 1, the minimum allocation is 0.2% (with incremental allocations

of 0.1%). For more information on the extended_shares tunable, see the wlmconf(4) manpage.

Setting the transient_groups keyword to 1 also changes the minimum allocation behavior.

The following figure shows the allocation for a workload group whose SLO is disabled initially, at which time

the group is allocated 5 shares. When the SLO is enabled, the allocation increases to 50 shares.

Figure 2. Time-based resource allocation (callouts: "SLO is enabled"; "SLO is disabled; workload gets its gmincpu")

To provide a workload group CPU resources based on a schedule:

NOTE

This procedure applies only to PRM-based configurations (confined within a

single instance of HP-UX). PRM must be installed on your system for WLM

to be able to manage PRM-based workloads.

You can achieve similar functionality for configurations that manage

partitions by using a condition keyword similar to the one shown in step

2. For information on configuring WLM for migrating CPU resources

across partitions, see the wlmpard(1M) and wlmparconf(4) manpages.

1. Define the workload group and assign a workload to it.

In your WLM configuration file, define your workload group in a prm structure using the groups

keyword. Assign a workload to the group using the apps keyword.

In this example, the sales group is the group of interest again.

prm {

groups = sales : 2;

apps = sales : /opt/sales/bin/sales_monitor;

}

2. Define the SLO.

The SLO in your WLM configuration file must specify a priority (pri) for the SLO, the workload

group to which the SLO applies (entity), and either a cpushares statement or a goal

statement so that WLM grants the SLO’s workload group some CPU resources. The condition

keyword determines when the SLO is enabled or disabled.

The following slo structure shows a fixed-allocation SLO for the sales group. When enabled,

this SLO requests 25 CPU shares for the sales group. Based on the condition statement, the

SLO is enabled between 8:00 p.m. and 11:00 p.m. every day.

slo condition_example {

pri = 1;

entity = PRM group sales;

cpushares = 25 total;

condition = 20:00 - 22:59;

}

Whenever the condition statement is false, the SLO is disabled and does not make any CPU

requests for the sales group. When a group has no enabled SLOs, it gets its gmincpu value (if

set); if this value is not set, a group gets the minimum allocation possible. For FSS groups, this

default is 1% of the total CPU resources in the default pSet. If the extended_shares tunable is

set to 1, the minimum allocation is 0.2% (with incremental allocations of 0.1%). For more

information on the extended_shares tunable, see the wlmconf(4) manpage.

You can use times, dates, or metrics to enable or disable an SLO.

For information on the syntax for the condition keyword, see the wlmconf(4) manpage.

3. Activate the configuration:

# /opt/wlm/bin/wlmd -a config.wlm

where config.wlm is the name of your configuration file.
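
To make the time window in step 2 concrete, the following Python sketch tests whether a given time of day falls inside the 20:00 - 22:59 window. It only illustrates the inclusive, minute-resolution reading described in the text (8:00 p.m. up to 11:00 p.m.); the function name slo_enabled is hypothetical, and the sketch does not claim to reproduce how WLM evaluates condition statements.

```python
from datetime import time


def slo_enabled(now, start=time(20, 0), end=time(22, 59)):
    """Return True when `now` falls in the inclusive start..end window.

    Seconds are ignored, so 22:59:30 still counts as inside the window.
    Windows that wrap past midnight are not handled by this sketch.
    """
    current = now.replace(second=0, microsecond=0)
    return start <= current <= end


print(slo_enabled(time(21, 15)))
print(slo_enabled(time(23, 0)))
```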

Providing CPU resources as needed

To ensure a workload gets the CPU resources it needs—without preventing other workloads access to

unused CPU resources—WLM enables you to define usage goals. A major advantage with usage

goals is that you do not need to track and feed a metric to WLM.

The following figure shows a workload that initially uses 20 CPU shares. The usage goal for the

workload is to use between 60% and 90% of its allocation (entitlement). With the workload’s initial

allocation of 32 shares, the workload is using 20/32 or 62.5% of its allocation. Later, the workload

settles to using around 8 or 9 shares. WLM responds by reducing its allocation to 10 shares, still

meeting the usage goal while usage stays between 6 and 9 shares.

Figure 3. CPU usage goal (callout: "Here, WLM adjusts the allocation so that the workload always uses at least 60% but never more than 90% of it")

With a usage goal, you indicate for a workload how much of its CPU allocation it should use. The

workload’s CPU allocation is then reduced if not enough of its current allocation is being consumed,

allowing other workloads to consume more CPU resources if needed. Similarly, if the workload is

using a high percentage of its allocation, it is granted more CPU resources.
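
The numbers from the figure discussion can be worked through with a small Python sketch. It classifies a workload's utilization against the 60% to 90% band and reports the direction of the adjustment. The function name usage_decision is hypothetical, and the sketch deliberately says nothing about how large a step WLM takes, which the source does not describe.

```python
def usage_decision(used_shares, allocated_shares, low_pct=60, high_pct=90):
    """Classify utilization of the current allocation against a usage goal."""
    utilization = 100.0 * used_shares / allocated_shares
    if utilization < low_pct:
        return "decrease", utilization  # too little of the allocation is used
    if utilization > high_pct:
        return "increase", utilization  # the workload is close to its limit
    return "hold", utilization


print(usage_decision(20, 32))   # initial state in the figure
print(usage_decision(8.5, 32))  # workload settles to 8 or 9 shares
print(usage_decision(8.5, 10))  # after the allocation drops to 10 shares
```

With an allocation of 10 shares, any usage between 6 and 9 shares stays inside the band, which matches the range quoted in the text.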

To define a usage goal for a workload group:

NOTE

This procedure applies only to PRM-based configurations (confined within a

single instance of HP-UX). PRM must be installed on your system for WLM to

be able to manage PRM-based workloads.

For information on configuring WLM for migrating CPU resources across

partitions, see the wlmpard(1M) and wlmparconf(4) manpages.

1. Define the workload group and assign a workload to it.

In your WLM configuration file, define your workload group in a prm structure using the groups

keyword. Assign a workload to the group using the apps keyword.

The following example shows the prm structure for the sales group.

prm {

groups = sales : 2;

apps = sales : /opt/sales/bin/sales_monitor;

}

2. Define the SLO.

The SLO in your WLM configuration file must specify a priority (pri) for the SLO, the workload

group to which the SLO applies (entity), and a usage goal statement.

The following slo structure for the sales group shows a usage goal statement.

slo usage_example {

pri = 1;

mincpu = 20;

maxcpu = 60;

entity = PRM group sales;

goal = usage _CPU 80 90;

}

With usage goals, WLM by default adjusts the amount of CPU resources it grants a workload group so that

the group uses between 50% and 75% of its allocated share of CPU resources. However, in the

previous example, with the values of 80 and 90 in the goal statement, WLM would try to

increase or decrease the workload group’s CPU allocation until the group is using between 80%

and 90% of the allocated share of CPU resources. In attempting to meet the usage goal, the new

WLM CPU allocations to the workload group will typically be within the mincpu/maxcpu range.

3. Set your interval to 5.

With a usage goal, you should typically set wlm_interval to 5.

tune {

wlm_interval = 5;

}

4. Activate the configuration:

# /opt/wlm/bin/wlmd -a config.wlm

where config.wlm is the name of your configuration file.

What else can HP-UX Workload Manager do?

Run in passive mode to verify operation

WLM provides a passive mode that enables you to see approximately how WLM will respond to a

given configuration without putting WLM in charge of your system resources. Using this mode, you

can verify that your configuration behaves as expected—with minimal effect on the system. Besides

being useful in understanding and experimenting with WLM, passive mode can be helpful in

capacity-planning activities.

Generate audit data

When you activate a WLM configuration or a WLM global arbiter configuration, you have the option

of generating audit data. You can use the audit data files directly or view them using the wlmaudit

command. For more information, see the wlmaudit(1M), wlmd(1M), and wlmpard(1M) manpages.

Optimize the use of HP Temporary Instant Capacity

HP Temporary Instant Capacity (TiCAP) activates capacity in a temporary “calling-card fashion” such

as in 20-day or 30-day increments, where a day equals 24 hours for one core. With this option, you

can activate and deactivate cores as needed. You purchase a TiCAP codeword to obtain usage rights

for a preset number of days. The TiCAP codeword is applied to a system so that you can turn on and

off any number of Instant Capacity cores on the system as long as your prepaid amount of temporary

capacity has not been fully consumed.
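
Since a TiCAP day equals 24 hours for one core, consumption can be estimated as cores multiplied by hours, divided by 24. The following Python sketch shows that arithmetic; the function names and the 30-day balance are illustrative assumptions, and the sketch does not model whatever accounting granularity the product actually uses.

```python
def ticap_days_consumed(cores, hours):
    """Core-days consumed when `cores` temporary cores run for `hours` hours."""
    return cores * hours / 24.0


def ticap_days_remaining(prepaid_days, cores, hours):
    """Remaining balance, never below zero."""
    return max(0.0, prepaid_days - ticap_days_consumed(cores, hours))


# Four temporary cores active for 12 hours against a 30-day codeword.
print(ticap_days_consumed(4, 12))
print(ticap_days_remaining(30, 4, 12))
```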

If you have WLM on a TiCAP system (using version 6 or later), you can configure WLM to minimize

the costs of using these resources. You do so by optimizing the amount of time the resources are used

to meet the needs of your workloads.

To take advantage of this optimization, use the utilitypri keyword in your global arbiter

configuration as explained in the wlmparconf(4) and wlmpard(1M) manpages.

Integrate with various third-party products

HP has developed several toolkits to assist you in quickly and effectively deploying WLM for use with

your key applications. The freely available HP-UX WLM Toolkits product includes several toolkits, each

with its own example configuration files. The product currently includes Toolkits for:

• Oracle® databases

• HP-UX Apache-based Web Server software

• BEA WebLogic Server

• SAP software

• SNMP agents

WLM Toolkits are included with WLM. They are also freely available at:

http://www.hp.com/go/softwaredepot

For more information on the toolkits, see the wlmtk(5) manpage or the HP-UX Workload Manager

Toolkits User’s Guide at the following location:

http://docs.hp.com/hpux/netsys/

What status information does HP-UX Workload Manager

provide?

WLM has three log files to keep you informed:

• /var/opt/wlm/msglog

WLM prints errors and warnings about configuration file syntax on stderr. For messages

about on-going WLM operations, WLM logs error and informational messages to

/var/opt/wlm/msglog, as shown in the following example:

05/07/06 14:12:44 [I] (p13931) Ready to forward data for metric "m_apache_access_2min"

05/07/06 14:12:44 [I] (p13931) Using "/door00/wlm_cfg/apache_access.pl"

05/07/06 14:12:44 [I] (p13932) Ready to forward data for metric "m_list.cgi_procs"

05/07/06 14:12:44 [I] (p13932) Using "/door00/wlm_cfg/proc_count.sh"

05/07/06 14:12:44 [I] (p13930) Ready to forward data for metric "m_apache_access_10min"

05/07/06 14:12:44 [I] (p13930) Using "/door00/wlm_cfg/apache_access.pl"

05/07/06 14:12:44 [I] (p13929) Ready to forward data for metric "m_nightly_procs"

05/07/06 14:12:44 [I] (p13929) Using "/opt/wlm/lbin/coll/glance_prm"

05/07/06 14:12:44 [I] (p13921) wlmd 13921 starting

05/31/06 03:42:10 [I] (p13921) /var/opt/wlm/wlmdstats has been renamed to /var/opt/wlm/wlmdstats.old.

• /var/opt/wlm/wlmdstats

WLM can optionally produce a log file that includes data useful for verifying WLM management or

for fine-tuning your WLM configuration. This log file, /var/opt/wlm/wlmdstats, is created when you

start the WLM daemon with the -l option:

# /opt/wlm/bin/wlmd -a config.wlm -l slo

For information on the -l option, see the wlmd(1M) manpage.

The following example is a line from a wlmdstats file:

1119864350 SLO=mySLO sloactive=1 goaltype=nogoal goal=nan goalsatis=1 met=nan metfresh=0 mementitl=0 cpuentitl=4 clipped=0 pri=101 ask=30.000000 got=30.000000 grp=4 coll=0

• /var/opt/wlm/wlmpardstats

The WLM global arbiter, used in managing SLOs across virtual partitions and nPartitions and in

optimizing Temporary Instant Capacity (version 6 or later) and HP Pay per use (v4, v7, or later),

can optionally produce a statistics log file. This log file, /var/opt/wlm/wlmpardstats, is created

when you start the WLM global arbiter daemon with the -l option:

# /opt/wlm/bin/wlmpard -a config.wlm -l vpar

For information on the -l option, see the wlmpard(1M) manpage.
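
The wlmdstats sample line shown earlier in this section is a leading number followed by key=value pairs, so it is easy to post-process. The following Python sketch parses that exact sample line. The function name parse_wlmdstats_line is hypothetical, and labeling the leading number as a timestamp is an assumption; see the wlmd(1M) manpage for the authoritative field descriptions.

```python
def parse_wlmdstats_line(line):
    """Turn one wlmdstats line into a dictionary of string values."""
    first, *pairs = line.split()
    # Assumption: the leading number is a timestamp; the source does not say.
    record = {"timestamp": int(first)}
    for pair in pairs:
        key, _, value = pair.partition("=")
        record[key] = value
    return record


line = (
    "1119864350 SLO=mySLO sloactive=1 goaltype=nogoal goal=nan goalsatis=1 "
    "met=nan metfresh=0 mementitl=0 cpuentitl=4 clipped=0 pri=101 "
    "ask=30.000000 got=30.000000 grp=4 coll=0"
)
rec = parse_wlmdstats_line(line)
print(rec["SLO"], float(rec["ask"]), float(rec["got"]))
```

Comparing the ask and got fields across many lines is one way to see how often an SLO received what it requested.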

How do I monitor HP-UX Workload Manager?

There are several methods for monitoring WLM, as described in the following sections.

ps [-P] [-R workload_group]

The ps command has options that are specific to PRM, which WLM uses to define workload groups

when dividing resources within a single HP-UX instance:

• -P

This option adds the column PRMID, showing the workload group for each process.

# ps -P

PRMID  PID   TTY    TIME  COMMAND

g3     6793  ttyp1  1:52  loop.pl

g3     6463  ttyp1  7:02  loop3.pl

g2     6462  ttyp1  4:34  loop2.pl

• -R workload_group

This option lists only the processes in the group named by workload_group. The following output

shows processes in the workload group g3:

# ps -R g3

PID   TTY    TIME  COMMAND

6793  ttyp1  1:29  loop.pl

6463  ttyp1  6:41  loop3.pl
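
Output from ps -P is simple to summarize in a script. The following Python sketch groups PIDs by the PRMID column, using the sample output shown above as input. The function name pids_by_group is hypothetical, and the sketch assumes PRMID and PID are the first two whitespace-separated columns, as in that sample.

```python
from collections import defaultdict


def pids_by_group(ps_output):
    """Map each PRMID to the list of PIDs reported for it."""
    groups = defaultdict(list)
    lines = ps_output.strip().splitlines()
    for line in lines[1:]:  # skip the header row
        fields = line.split()
        if len(fields) >= 2:
            groups[fields[0]].append(int(fields[1]))
    return dict(groups)


sample = """\
PRMID PID TTY TIME COMMAND
g3 6793 ttyp1 1:52 loop.pl
g3 6463 ttyp1 7:02 loop3.pl
g2 6462 ttyp1 4:34 loop2.pl
"""
print(pids_by_group(sample))
```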

wlminfo

The wlminfo command, available in /opt/wlm/bin/, displays information about SLOs, metrics,

workload groups, virtual partitions or nPartitions, and the current host. The following example displays

group information:

# wlminfo group

Workload Group  PRMID  CPU Shares  CPU Util  Mem Shares  Mem Util  State

OTHERS          1      65.00       0.00      6.00        2.10      ON

g2              2      15.00       0.00      64.00       32.43     ON

g3              3      20.00       0.00      30.00       9.17      ON

The following example shows partition information:

% /opt/wlm/bin/wlminfo par

Hostname   Intended Cores  Cores  Cores Used  Interval

north      2               2      1.3         6

south      3               3      2.1         6

east       1               1      0.4         6

west       2               2      1.7         6

northwest  3               3      2.3         6

northeast  2               2      1.4         6
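
One use of the partition listing is to see which partition is busiest relative to its size. The following Python sketch computes Cores Used divided by Cores for each data row of the sample above. The function name partition_utilization is hypothetical, and the five-column row layout is taken from that sample rather than from a documented output format.

```python
def partition_utilization(rows):
    """Return {hostname: cores_used / cores} for wlminfo par data rows."""
    result = {}
    for row in rows.strip().splitlines():
        host, _intended, cores, used, _interval = row.split()
        result[host] = float(used) / int(cores)
    return result


rows = """\
north 2 2 1.3 6
south 3 3 2.1 6
east 1 1 0.4 6
west 2 2 1.7 6
northwest 3 3 2.3 6
northeast 2 2 1.4 6
"""
util = partition_utilization(rows)
busiest = max(util, key=util.get)
print(busiest, round(util[busiest], 2))
```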

The following example shows host information:

Hostname   Cores  Cores Used  Interval

localhost  7      0.03        6

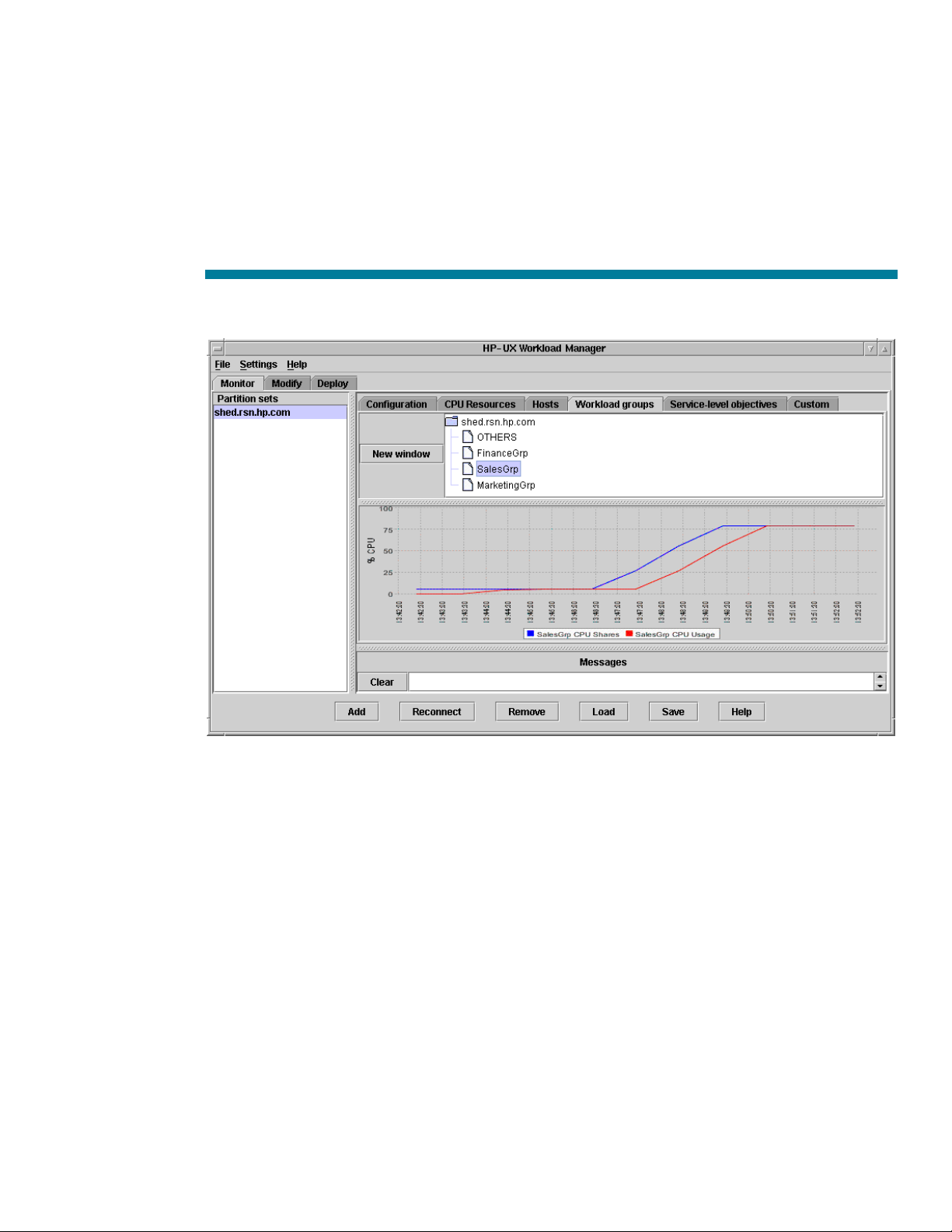

wlmgui

The wlmgui command, available in /opt/wlm/bin/, graphically displays information about SLOs,

metrics, workload groups, partitions, and the current host. The following figure shows graphical

information about the CPU shares allocation and CPU usage for the workload SalesGrp.

Figure 4. WLM graphical user interface

prmmonitor

The prmmonitor command, available in /opt/prm/bin/, displays current configuration and

resource usage information. The CPU Entitle column indicates the CPU entitlement for the group. The

Upper Bound column indicates the per-group consumption cap; the column is blank for each group

because the CPU consumption cap is not available with WLM. The LCPU State column indicates the

Hyper-Threading state (ON or OFF) for a pSet-based group. Starting with HP-UX 11i v3 (B.11.31),

PRM and WLM support the Hyper-Threading feature on systems that support it. Hyper-Threading

provides logical CPUs that are execution threads contained within a core. Each core with Hyper-Threading

enabled can contain multiple logical CPUs. In this prmmonitor example, the LCPU State

column is blank for each group because the system does not support Hyper-Threading. The column

would also be blank for FSS groups.

PRM configured from file: /var/opt/wlm/tmp/wmprmBAAa06335

File last modified: Thu Aug 29 08:35:23 2006

HP-UX shed B.11.23 U 9000/800 08/29/06

Thu Aug 29 08:43:16 2006 Sample: 1 second

CPU scheduler state: Enabled, CPU cap ON

PRM Group  PRMID  CPU Entitle  Upper Bound  CPU Used  LCPU State

____________________________________________________________________

OTHERS     1      65.00%                    0.00%

g2         2      15.00%                    0.00%

g3         3      20.00%                    0.00%

PRM application manager state: Enabled (polling interval: 30 seconds)

prmlist

The prmlist command, available in /opt/prm/bin, displays current CPU allocations plus user and

application configuration information. The LCPU Attr column indicates the setting for a pSet group’s

LCPU attribute, which determines the Hyper-Threading state for cores assigned to the group.

PRM configured from file: /var/opt/wlm/tmp/wmprmBAAa06335

File last modified: Thu Aug 29 08:35:23 2006

PRM Group           PRMID     CPU          Upper    LCPU
                              Entitlement  Bound    Attr
---------------------------------------------------------------
OTHERS                  1     65.00%
g2                      2     15.00%
g3                      3     20.00%

PRM User            Initial Group       Alternate Group(s)
-------------------------------------------------------------------------------
root                (PRM_SYS)

PRM Application     Assigned Group      Alternate Name(s)
-------------------------------------------------------------------------------
/opt/perl/bin/perl  g2                  loop2.pl
/opt/perl/bin/perl  g3                  loop3.pl
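The application records in this listing can be grouped by workload group with a few lines of Python. The sketch below is only an illustration: it assumes that each application row starts with an absolute path followed by the assigned group and any alternate names, separated by whitespace, as in the sample above. The name parse_app_records is hypothetical and is not part of PRM or WLM.

```python
from collections import defaultdict

# Application section copied from the sample prmlist output above.
PRMLIST_SAMPLE = """\
PRM Application Assigned Group Alternate Name(s)
/opt/perl/bin/perl g2 loop2.pl
/opt/perl/bin/perl g3 loop3.pl
"""


def parse_app_records(text):
    """Map each assigned group to a list of (path, alternate names)."""
    by_group = defaultdict(list)
    for line in text.splitlines():
        fields = line.split()
        # Assumption: application rows start with an absolute path;
        # header and rule lines do not, so they are skipped.
        if len(fields) >= 2 and fields[0].startswith("/"):
            path, group, *alternates = fields
            by_group[group].append((path, alternates))
    return dict(by_group)


apps = parse_app_records(PRMLIST_SAMPLE)
for group, records in sorted(apps.items()):
    for path, alternates in records:
        print(group, path, ", ".join(alternates) or "-")
```

In this sample both records share the binary /opt/perl/bin/perl, and the alternate names (loop2.pl and loop3.pl) are what distinguish the g2 workload from the g3 workload.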

HP Glanceplus

The optional product HP Glanceplus enables you to display resource allocations for the workload

groups and list processes for individual workload groups.

wlm_watch.cgi CGI script

This CGI script, available in /opt/wlm/toolkits/apache/bin/, enables you to monitor WLM using a

Web browser interface to prmmonitor, prmlist, and other monitoring tools.

For information on setting up this script, see the wlm_watch(1M) manpage.


Status and message logs

WLM provides the following logs:

• /var/opt/wlm/msglog

• /var/opt/wlm/wlmdstats

• /var/opt/wlm/wlmpardstats

For information on these logs, including sample output, see the “What status information does HP-UX

Workload Manager provide?” section on page 28.
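For a quick look at the most recent entries in these logs, a small script can print the last few lines of each file. The following Python sketch makes no assumption about the log formats and only reads the paths listed above; the helper name tail is illustrative. Some of these files may not exist on a given system, in which case the helper returns an empty list.

```python
from collections import deque

# Log paths as listed in this section.
WLM_LOGS = [
    "/var/opt/wlm/msglog",
    "/var/opt/wlm/wlmdstats",
    "/var/opt/wlm/wlmpardstats",
]


def tail(path, count=5):
    """Return the last `count` lines of `path`, or [] if it cannot be read."""
    try:
        with open(path, "r", errors="replace") as handle:
            # deque with maxlen keeps only the final `count` lines in memory.
            return [line.rstrip("\n") for line in deque(handle, maxlen=count)]
    except OSError:
        return []


for log in WLM_LOGS:
    lines = tail(log)
    print(f"{log}: {len(lines)} line(s) read")
    for line in lines:
        print("  " + line)
```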

Event Monitoring Service

Event Monitoring Service (EMS) polls various system resources and sends messages when events

occur. The WLM wlmemsmon command provides numerous resources for event monitoring. For

information on these resources, see the wlmemsmon(1M) manpage.


For more information

For more information on HP-UX Workload Manager, contact any HP worldwide sales office or see
the HP website at:

http://www.hp.com/go/wlm

To learn more about the Adaptive Enterprise and virtualization, see:

http://www.hp.com/go/virtualization

The following references provide useful background information on related products and topics:

• HP-UX Workload Manager (HP-UX WLM): http://www.hp.com/go/wlm
• HP-UX Workload Manager User’s Guide: http://www.docs.hp.com/hpux/netsys/
• HP-UX Workload Manager Toolkits: http://www.hp.com/go/wlm
  – HP-UX WLM Oracle Database Toolkit (ODBTK)
  – HP-UX WLM Toolkit for Apache (ApacheTK)
  – HP-UX WLM BEA WebLogic Server Toolkit (WebLogicTK)
  – HP-UX WLM Toolkit for SAP (SAPTK)
  – HP-UX WLM SNMP Toolkit (SNMPTK)
• HP-UX Workload Manager Toolkits User’s Guide: http://www.docs.hp.com/hpux/netsys/
• HP Process Resource Manager (PRM): http://www.hp.com/go/prm
• Process Resource Manager User’s Guide: http://www.docs.hp.com/hpux/ha/
• Application Response Measurement (ARM) and Application Response Measurement (ARM) API:
  http://www.opengroup.org/management/arm.htm
• Event Monitoring Service (EMS): http://www.unix.hp.com/highavailability
• HP Systems Insight Manager: http://www.hp.com/go/hpsim
• Instant Capacity: http://www.hp.com/go/utility

© 2008 Hewlett-Packard Development Company, L.P. The information contained herein is subject to
change without notice. The only warranties for HP products and services are set forth in the
express warranty statements accompanying such products and services. Nothing herein should be
construed as constituting an additional warranty. HP shall not be liable for technical or
editorial errors or omissions contained herein.

Oracle is a registered U.S. trademark of Oracle Corporation, Redwood City, California. UNIX is a
registered trademark of The Open Group.
