D-Link DSN-4200, DSN-2100-10, DSN-4100, DSN-1100-10, DSN-3400-10 User Manual

...

D-Link Systems, Inc.™

xStack Storage DSN-Series SAN Arrays

VMware ESX 3.0.2 & 3.5

Rev. A

D-Link Systems, Inc. Page 1

December 2, 2009

© 2009 D-Link Systems, Inc. All Rights Reserved

DISCLAIMER OF LIABILITY: With respect to documents available from this server, neither D-Link Systems, Inc. nor any of their employees, makes any warranty, express or implied,

including the warranties of merchantability and fitness for a particular purpose, or assumes any

legal liability or responsibility for the accuracy, completeness, or usefulness of any

information, apparatus, product, or process disclosed, or represents that its use would not

infringe privately owned rights.

DISCLAIMER OF ENDORSEMENT: Reference herein to any specific commercial products,

process, or service by trade name, trademark, manufacturer, or otherwise, does not

necessarily constitute or imply its endorsement, recommendation, or favoring by D-Link

Systems, Inc. The views and opinions of authors expressed herein do not necessarily state or

reflect those of D-Link Systems, Inc., and shall not be used for advertising or product

endorsement purposes.

COPYRIGHT: VMware, ESX Server and Virtual Infrastructure are copyrights of EMC. xStack

Storage is a trademark of D-Link Systems, Inc. Permission to reproduce may be required.

All other brand or product names are or may be trademarks or service marks, and are used

to identify products or services, of their respective owners.

Copyright © 2009 D-Link Systems, Inc.™

D-Link Systems, Inc.

17595 Mount Herrmann Street

Fountain Valley, CA 92708

www.DLink.com

Executive Summary

The xStack Storage Series iSCSI storage arrays from D-Link provide cost-effective, easy-to-deploy shared storage solutions for applications like the VMware Infrastructure 3 server

virtualization software. This document describes the features and performance of the D-Link xStack

Storage™ Series iSCSI storage arrays, configures them with typical server systems,

gives instructions for use with VMware, and makes recommendations.

Overview

Server virtualization programs such as VMware run best when the datacenter or enterprise is

organized into “farms” of servers that are connected to shared storage. By placing the virtual

machines’ virtual disks on storage area networks accessible to all the virtualized servers, the

virtual machines can be migrated from one server to another within the farm for purposes of load

balancing or failover. VMware Infrastructure 3 uses the VMotion live migration facility in its

Distributed Resource Scheduling feature and provides a High Availability component which

takes advantage of shared storage to quickly boot up a virtual machine on a different ESX server

after the original ESX server fails. Shared storage is key to enabling VMotion because, when a

virtual machine is migrated from one physical server to another, the virtual machine’s virtual disk

doesn’t actually move. Only the virtual disk’s ownership is changed while it continues to reside in

the same place.
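
The point that only ownership changes during a migration can be illustrated with a toy model. This is a minimal sketch, not VMware's implementation: the `SharedDatastore` class, the host names, and the disk path are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class SharedDatastore:
    """Toy model of shared storage visible to every host in the farm."""
    # Maps a virtual disk path to the host currently running its VM.
    owner_of: dict = field(default_factory=dict)

    def register(self, disk_path: str, host: str) -> None:
        """Record which host initially runs the VM backed by this disk."""
        self.owner_of[disk_path] = host

    def migrate(self, disk_path: str, new_host: str) -> None:
        """Hand the VM to another host; the disk path itself never changes."""
        if disk_path not in self.owner_of:
            raise KeyError(f"unknown virtual disk: {disk_path}")
        self.owner_of[disk_path] = new_host


# Hypothetical disk path and host names.
san = SharedDatastore()
disk = "/vmfs/volumes/san-lun0/db-vm/db-vm.vmdk"
san.register(disk, "esx-host-a")
san.migrate(disk, "esx-host-b")
print(san.owner_of)
```

Because the disk stays where it is, the cost of a migration in this model does not depend on the size of the virtual disk.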

The xStack Storage iSCSI storage arrays provide excellent performance, reliability and

functionality and do not require specialized hardware and skills to set up and maintain. By contrast, a Fibre

Channel (FC) storage network starts with the fabric, which involves the use of FC host bus adapters

(HBAs) in each server, connected by fiber cables to one or more FC switches, which in turn can

network multiple storage arrays, each supporting a scalable number of high speed disk

enclosures. An application’s request for an input or output (IO) to storage originates as a SCSI

(Small Computer System Interface) request, is packed into an FC packet by the FC HBA, and

sent down the fiber cable to the FC switch for dispatch to the storage array that contains the

requested data, similar to the way Internet Protocol (IP) packets are sent over Ethernet. For

smaller IT shops or for those that are just starting out in the virtualization arena, an alternate

shared storage paradigm is emerging that employs iSCSI (Internet SCSI) to connect the servers to

the storage. In this case the communication between the server and the data storage uses standard

Ethernet network interface cards (NICs), switches and cables. SCSI IO requests are packed into

standard Internet protocol packets and routed to the iSCSI storage through Ethernet switches and

routers. With iSCSI, customers can leverage existing networking expertise and equipment to

simplify their implementation of a storage network. Like Fibre Channel, iSCSI supports block-level data transmission for all applications, not just VMware. For security, iSCSI provides CHAP

authentication as well as IPsec encryption.
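
As a rough illustration of the CHAP authentication mentioned above, the sketch below computes a CHAP response as defined in RFC 1994: the MD5 digest of the one-octet identifier, the shared secret, and the challenge. It is a minimal sketch of the calculation only, not of the iSCSI login exchange, and the secret and identifier values are hypothetical.

```python
import hashlib
import hmac
import os


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """Return MD5(identifier || secret || challenge), per RFC 1994."""
    if not 0 <= identifier <= 255:
        raise ValueError("identifier must fit in one octet")
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


def target_verifies(identifier: int, secret: bytes,
                    challenge: bytes, response: bytes) -> bool:
    """Recompute the expected response and compare in constant time."""
    expected = chap_response(identifier, secret, challenge)
    return hmac.compare_digest(expected, response)


# Hypothetical values: the target issues a random challenge and the
# initiator answers using the secret both sides were configured with.
secret = b"example-chap-secret"
identifier = 1
challenge = os.urandom(16)
response = chap_response(identifier, secret, challenge)

print(target_verifies(identifier, secret, challenge, response))           # True
print(target_verifies(identifier, b"wrong-secret", challenge, response))  # False
```

The secret itself never crosses the network; only the challenge and the digest do. CHAP authenticates the login but does not encrypt the data, which is the role the text assigns to IPsec.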

Servers can communicate with iSCSI storage using two methods. The first involves the use of an

add-in card called an iSCSI hardware initiator or host bus adapter, analogous to the Fibre Channel

HBA, which connects directly to the datacenter’s Ethernet infrastructure. The second does the

iSCSI conversion in software and sends the Ethernet packets through the standard Ethernet NIC.

The xStack Storage Series iSCSI SAN solution is designed for different sized customers and

varying environments and is easy to configure to provide storage to VMware hosts.

This document describes the xStack Storage Series, explains how to configure it with

VMware, and then demonstrates usage with a database workload running on two virtual

machines.

The configuration shown in this paper represents a single, high-level view of the usage of the

xStack Storage array with a simple virtualization workload running on only 5 disks in each array.

Typical customer use scenarios will employ larger disk arrays supporting a variety of

applications, which may result in varying performance results.

xStack Storage Family

D-Link’s virtualized storage technology is revolutionizing the way small and medium enterprises

will manage their storage. Our virtualized storage pool eliminates the complexities associated

with managing traditional RAID. Best of all, D-Link’s iSCSI storage arrays are affordable.

Provision your storage from a single pool of available storage. There is no need to dedicate drive

groups or entire arrays to RAID types or manage individual LUNs. Wizard-driven provisioning is

as simple as selecting a volume size and the appropriate application type from a menu. Seasoned

storage administrators have the option of defining volumes with more granularity if desired. The

advanced intelligence of the system allows for multiple virtual volumes with mixed RAID types

to coexist on the same drives. Each application can have a Quality of Service tailored to the needs

of the specific application. Application-aware cache algorithms dynamically adjust to each

application, further optimizing performance. By eliminating RAID groups, volumes can span

across the total quantity of disks in the system, thereby further increasing performance. The

benefits of these features become increasingly critical in mixed workload and virtualized server

environments where multiple application types concurrently access the system resources.

All xStack Storage family models include these features, and all targets work with

both VMware ESX Server 3.0.2 and 3.5.

The D-Link DSN-1100-10 iSCSI SAN

The D-Link DSN-2100-10 iSCSI SAN

The D-Link DSN-3200-10 and DSN-3400-10 iSCSI SAN

The D-Link DSN-5110-10, DSN-5210-10 and DSN-5410-10 iSCSI SAN

Figure 1: The xStack Storage family

VMware and iSCSI

This section will describe the steps needed to attach iSCSI storage to a VMware ESX server host.

Once attached and formatted, the storage can be provided to guest virtual machines as virtual

disks that appear as local storage to the guest. An alternate method, in which the guest is attached

directly to iSCSI storage through a software iSCSI initiator supplied with the guest’s operating

system, is not covered in this document.

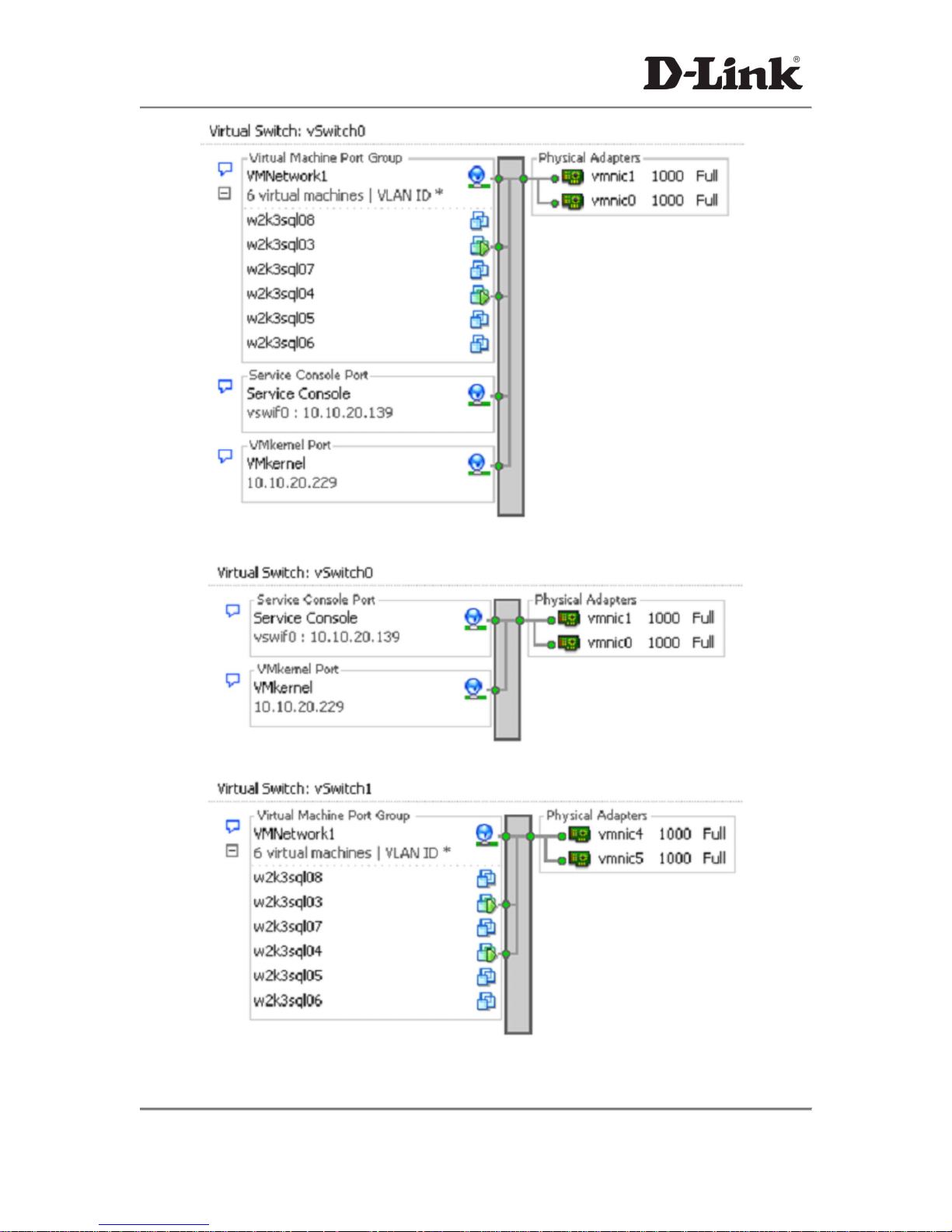

ESX 3.0.2 Configuration

Connectivity from a host running VMware’s ESX server to iSCSI storage is provided through a

built-in software initiator (support for hardware iSCSI initiators will be provided at a later date).

The physical network interface cards (NICs) that connect to the Ethernet network in which the

iSCSI storage is located must be included within a VMware virtual switch that also includes the

ESX Service Console and the VMkernel (which supports VMotion traffic as well as iSCSI

packets). For a two-NIC system it is recommended that the NICs be teamed as shown in Figure 2.

This provides NIC/cable failover and iSCSI traffic load balancing across the NICs to multiple

iSCSI targets with different IP addresses.

Since the VM and iSCSI traffic are mixed in this configuration, CHAP authentication and IPsec

encryption should be employed in the iSCSI connection. (Alternatively, in a two-NIC

configuration the VM and iSCSI traffic can each be placed on its own non-teamed NIC for

total isolation.) The network configuration is created in Virtual Center (VC) using the Virtual

Infrastructure Client. The ESX server to be connected is highlighted, then the Configuration tab is

selected, then Networking.

If there are more than two NICs available in the ESX server host, it is recommended that two

virtual switches be created, one which hosts the Service Console and VMkernel (including iSCSI

and VMotion traffic), and one which is dedicated to virtual machine (VM) traffic. The two NICs

carrying iSCSI traffic should be cabled to redundant Ethernet switches. An example for four

NICs showing two 2-NIC teams is shown in Figure 3.
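
The recommendations above can be summarized as a small planning function. This is a sketch under stated assumptions, not a VMware API: the `VSwitch` class, the `plan_vswitches` function, the switch names, and the choice to give the first two NICs to the iSCSI-carrying switch are all hypothetical. The actual configuration is done in the Virtual Infrastructure Client as described.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VSwitch:
    name: str
    uplinks: List[str]      # physical NICs teamed on this virtual switch
    port_groups: List[str]  # traffic types carried by this virtual switch


def plan_vswitches(nics: List[str]) -> List[VSwitch]:
    """Return the virtual switch layout the text recommends for these NICs."""
    if len(nics) < 2:
        raise ValueError("this sketch covers hosts with at least two NICs")
    if len(nics) == 2:
        # Two NICs: one team carries Service Console, VMkernel (iSCSI and
        # VMotion) and VM traffic together, so the traffic is mixed.
        return [VSwitch("vSwitch0", list(nics),
                        ["Service Console", "VMkernel", "VM Network"])]
    # More than two NICs: one switch hosts Service Console and VMkernel,
    # and a second switch is dedicated to virtual machine traffic.
    return [
        VSwitch("vSwitch0", list(nics[:2]), ["Service Console", "VMkernel"]),
        VSwitch("vSwitch1", list(nics[2:]), ["VM Network"]),
    ]


# Hypothetical NIC names for a four-NIC host.
for vs in plan_vswitches(["vmnic0", "vmnic1", "vmnic2", "vmnic3"]):
    print(vs.name, vs.uplinks, vs.port_groups)
```

In the four-NIC case the two uplinks of the first switch are the ones that should be cabled to redundant Ethernet switches, since they carry the iSCSI traffic.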

Figure 2: ESX Two NIC Configuration for iSCSI

Figure 3: ESX Four NIC Configuration for iSCSI
