Next Generation Storage Tiering with Dell EMC PowerScale SmartPools

© 2020 Dell Inc. or its subsidiaries.

STORAGE TIERING WITH DELL EMC POWERSCALE SMARTPOOLS

Abstract

This white paper provides a technical overview of Dell EMC PowerScale SmartPools software and how it provides a native, policy-based tiering capability, which enables enterprises to reduce storage costs and optimize their storage investment by automatically moving data to the most appropriate storage tier within a OneFS cluster.

February 2021

WHITE PAPER

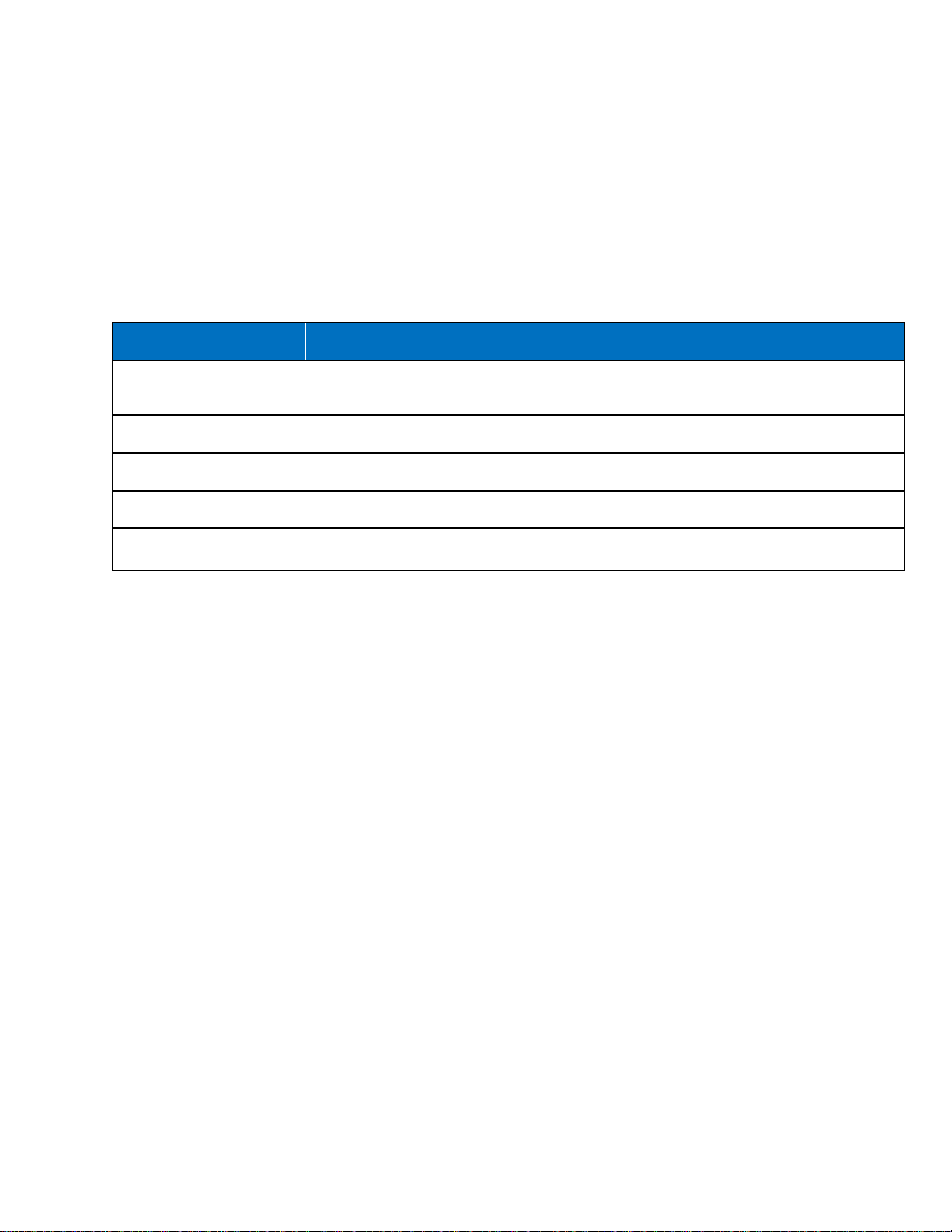

Revisions

| Version | Date | Comment |
|---|---|---|
| 1.0 | November 2013 | Initial release for OneFS 7.1 |
| 2.0 | June 2014 | Updated for OneFS 7.1.1 |
| 3.0 | November 2014 | Updated for OneFS 7.2 |
| 4.0 | June 2015 | Updated for OneFS 7.2.1 |
| 5.0 | November 2015 | Updated for OneFS 8.0 |
| 6.0 | September 2016 | Updated for OneFS 8.0.1 |
| 7.0 | April 2017 | Updated for OneFS 8.1 |
| 8.0 | November 2017 | Updated for OneFS 8.1.1 |
| 9.0 | February 2019 | Updated for OneFS 8.1.3 |
| 10.0 | April 2019 | Updated for OneFS 8.2 |
| 11.0 | August 2019 | Updated for OneFS 8.2.1 |
| 12.0 | December 2019 | Updated for OneFS 8.2.2 |
| 10.0 | June 2020 | Updated for OneFS 9.0 |
| 11.0 | September 2020 | Updated for OneFS 9.1 |

Acknowledgements

This paper was produced by the following:

Author: Nick Trimbee

The information in this publication is provided “as is.” Dell Inc. makes no representations or warranties of any kind with respect to the information in this

publication, and specifically disclaims implied warranties of merchantability or fitness for a particular purpose.

Use, copying, and distribution of any software described in this publication requires an applicable software license.

Copyright © Dell Inc. or its subsidiaries. All Rights Reserved. Dell, EMC, Dell EMC and other trademarks are trademarks of Dell Inc. or its subsidiaries.

Other trademarks may be trademarks of their respective owners.

TABLE OF CONTENTS

Executive Summary

Scale-Out NAS Architecture

Single File System

Data Layout and Protection

Job Engine

Data Rebalancing

Caching

SmartPools

Overview

Hardware Tiers

Storage Pools

Disk Pools

Node Pools

Tiers

Node Compatibility and Equivalence

SSD Compatibility

Data Spill Over

Automatic Provisioning

Manually Managed Node Pools

Global Namespace Acceleration

Virtual Hot Spare

SmartDedupe and Tiering

SmartPools Licensing

File Pools

File Pool Policies

Custom File Attributes

Anatomy of a SmartPools Job

File Pool Policy Engine

FilePolicy Job

Data Location

Node Pool Affinity

Performance with SmartPools

Using SmartPools to Improve Performance

Data Access Settings

Leveraging SSDs for Metadata & Data Performance

Enabling L3 Cache (SmartFlash)

Minimizing the Performance Impact of Tiered Data

SmartPools Best Practices

SmartPools Use Cases

SmartPools Workflow Examples

Example A: Storage Cost Efficiency in Media Post Production

Example B: Data Availability & Protection in Semiconductor Design

Example C: Investment Protection for Financial Market Data

Example D: Metadata Performance for Seismic Interpretation

Conclusion

TAKE THE NEXT STEP

Executive Summary

Dell EMC PowerScale SmartPools software enables multiple levels of performance, protection, and storage density to co-exist within

the same file system and unlocks the ability to aggregate and consolidate a wide range of applications within a single extensible,

ubiquitous storage resource pool. This helps provide granular performance optimization, workflow isolation, higher utilization, and

independent scalability – all with a single point of management.

SmartPools allows you to define the value of the data within your workflows based on policies, and automatically aligns data to the

appropriate price/performance tier over time. Data movement is seamless, and with file-level granularity and control via automated

policies, manual control, or API interface, you can tune performance and layout, storage tier alignment, and protection settings – all

with minimal impact to your end-users.

Storage tiering has a very convincing value proposition, namely separating data according to its business value, and aligning it with the appropriate class of storage and levels of performance and protection. Information Lifecycle Management techniques have been around for a number of years, but have typically suffered from the following inefficiencies: they are complex to install and manage, involve changes to the file system, and require the use of stub files, among others.

SmartPools is a next generation approach to tiering that facilitates the management of heterogeneous clusters. The SmartPools

capability is native to the OneFS scale-out file system, which allows for unprecedented flexibility, granularity, and ease of

management. In order to achieve this, SmartPools leverages many of the components and attributes of OneFS, including data layout

and mobility, protection, performance, scheduling, and impact management.

Intended Audience

This paper presents information for deploying and managing a heterogeneous Dell EMC OneFS cluster. This paper does not intend to

provide a comprehensive background to the OneFS architecture.

Please refer to the OneFS Technical Overview white paper for further details on the OneFS architecture.

The target audience for this white paper is anyone configuring and managing data tiering in a OneFS clustered storage environment. It

is assumed that the reader has a basic understanding of storage, networking, operating systems, and data management.

More information on OneFS commands and feature configuration is available in the OneFS Administration Guide.

Scale-Out NAS Architecture

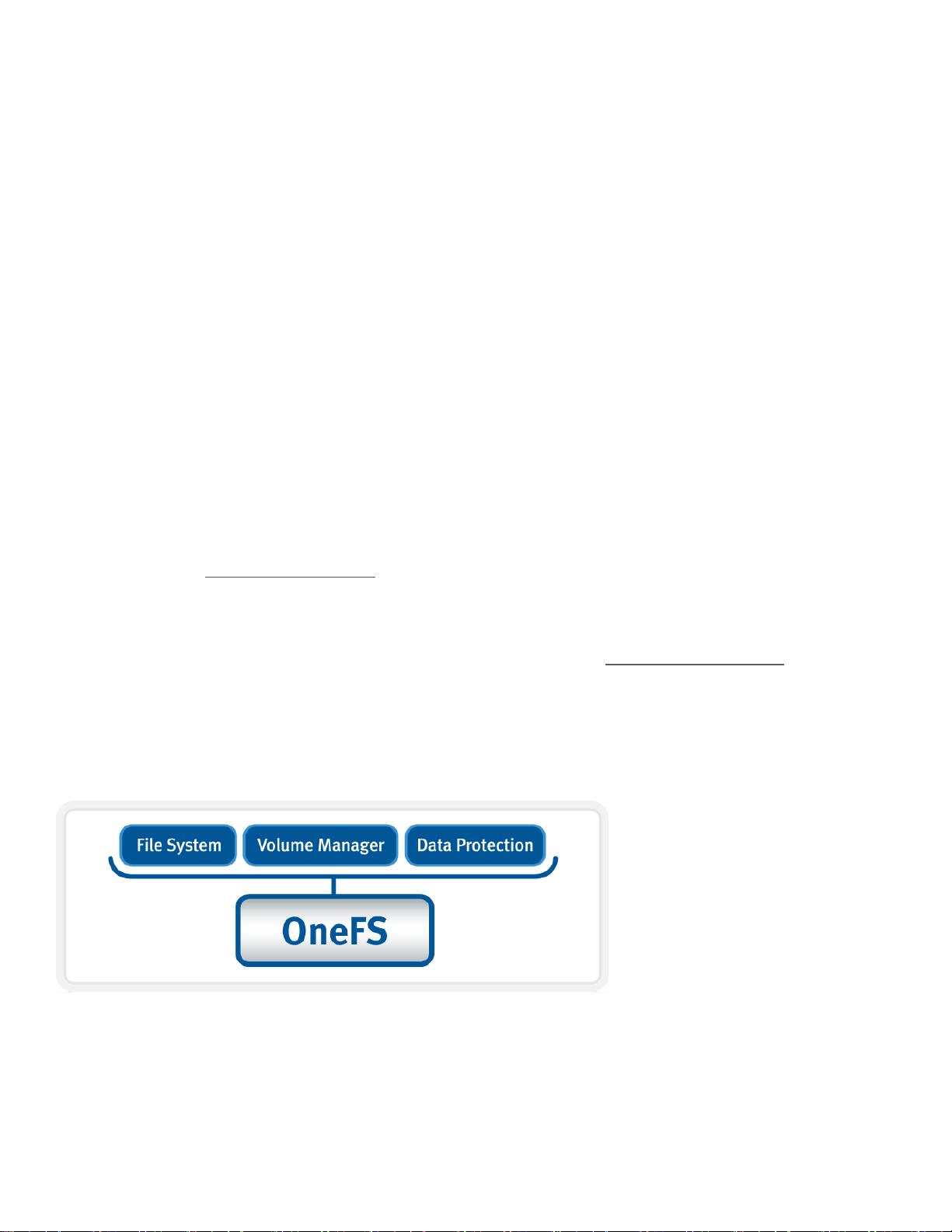

OneFS combines the three layers of traditional storage architectures—file system, volume manager, and data protection—into one

unified software layer, creating a single intelligent distributed file system that runs on a Dell EMC PowerScale storage cluster.

Figure 1. OneFS combines file system, volume manager and data protection into one single intelligent, distributed system.

This is the core innovation that directly enables enterprises to successfully utilize scale-out NAS in their environments today. It adheres

to the key principles of scale-out: intelligent software, commodity hardware, and distributed architecture. OneFS is not only the

operating system, but also the underlying file system that stores and manages data in a cluster.

Single File System

OneFS provides a single file system that operates outside the constraints of traditional scale up constructs like RAID groups, allowing

data to be placed anywhere on the cluster, thereby accommodating a variety of levels of performance and protection.

Each cluster operates within a single volume and namespace with the file system distributed across all nodes. As such, there is no

partitioning, and clients are provided a coherent view of their data from any node in the cluster.

Because all information is shared among nodes across the internal network, data can be written to or read from any node, thus

optimizing performance when multiple users are concurrently reading and writing to the same set of data.

From an application or user perspective, all capacity is available - the storage has been completely virtualized for the users and

administrator. The file system can grow organically without requiring too much planning or oversight. No special thought has to be

applied by the administrator about tiering files to the appropriate disk, because SmartPools will handle that automatically. Additionally,

no special consideration needs to be given to how one might replicate such a large file system, because the OneFS SyncIQ service

automatically parallelizes the transfer of the data to one or more alternate clusters.

Please refer to the OneFS Technical Overview white paper for further details on the OneFS architecture.

Data Layout and Protection

OneFS is designed to withstand multiple simultaneous component failures (currently four per node pool) while still affording unfettered

access to the entire file system and dataset. Data protection is implemented at the file level using Reed-Solomon erasure coding and,

as such, is not dependent on any hardware RAID controllers. This provides many benefits, including the ability to add new data

protection schemes as market conditions or hardware attributes and characteristics evolve. Since protection is applied at the file-level,

a OneFS software upgrade is all that’s required in order to make new protection and performance schemes available.

OneFS employs the popular Reed-Solomon erasure coding algorithm for its protection calculations. Protection is applied at the file level, enabling the cluster to recover data quickly and efficiently. Inodes, directories, and other metadata are protected at the same or

higher level as the data blocks they reference. Since all data, metadata, and forward error correction (FEC) blocks are striped across

multiple nodes, there is no requirement for dedicated parity drives. This guards against single points of failure and bottlenecks, allows

file reconstruction to be highly parallelized, and ensures that all hardware components in a cluster are always in play doing useful

work.

OneFS supports several protection schemes. These include the ubiquitous +2d:1n, which protects against two drive failures or one

node failure.

The best practice is to use the recommended protection level for a particular cluster configuration. This recommended level of

protection is clearly marked as ‘suggested’ in the OneFS WebUI storage pools configuration pages and is typically configured by

default.

The hybrid protection schemes are particularly useful for high-density node configurations, such as the Isilon A2000 chassis, where the

probability of multiple drives failing surpasses that of an entire node failure. In the unlikely event that multiple devices have

simultaneously failed, such that the file is “beyond its protection level”, OneFS will re-protect everything possible and report errors on

the individual files affected to the cluster’s logs.

OneFS also provides a variety of mirroring options ranging from 2x to 8x, allowing from two to eight mirrors of the specified content. This is the method used for protecting OneFS metadata, which is mirrored at one level above FEC by default. For example, if a file is protected at +1n, its associated metadata object will be 3x mirrored.

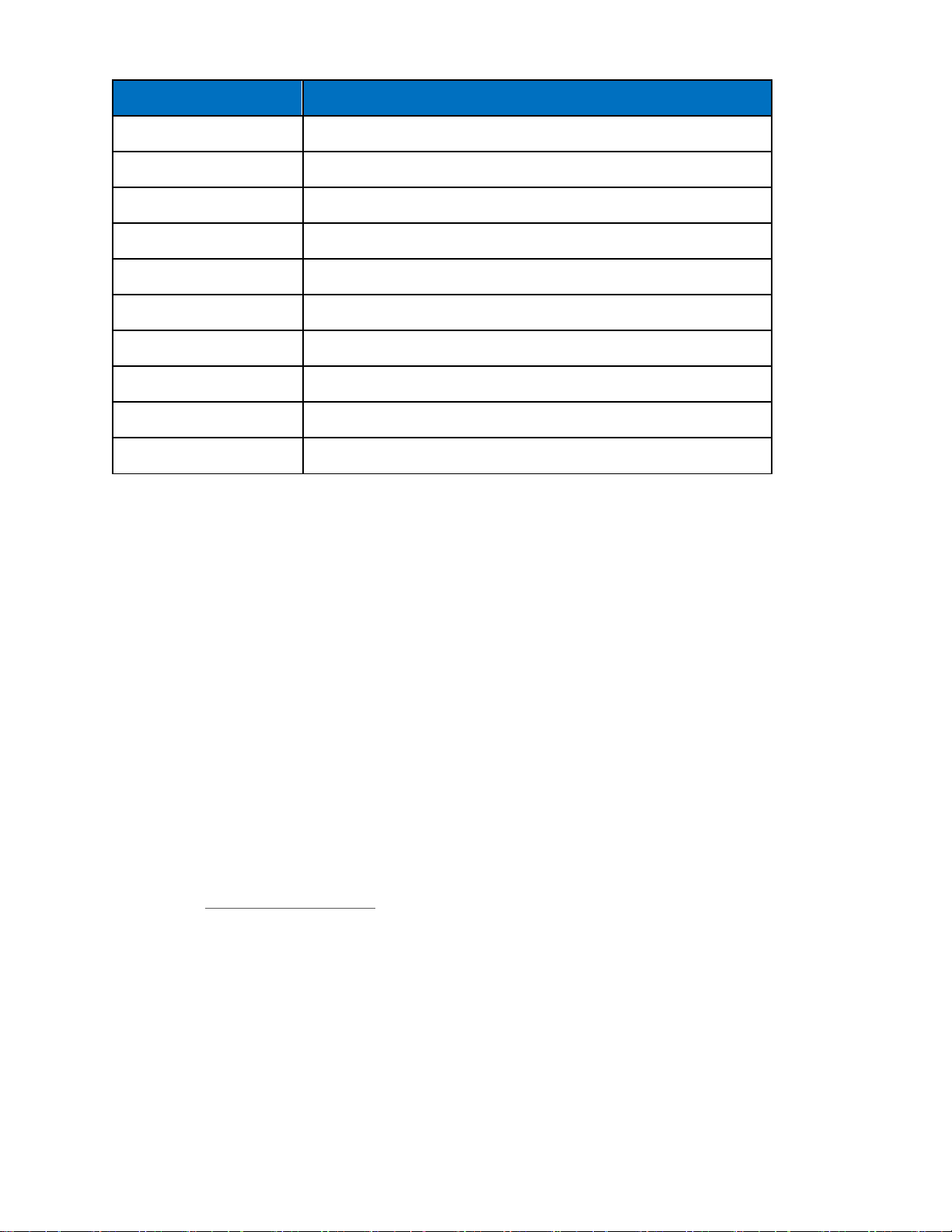

The full range of OneFS protection levels is summarized in the following table:

| Protection Level | Description |
|---|---|
| +1n | Tolerate failure of 1 drive OR 1 node |
| +2d:1n | Tolerate failure of 2 drives OR 1 node |
| +2n | Tolerate failure of 2 drives OR 2 nodes |
| +3d:1n | Tolerate failure of 3 drives OR 1 node |
| +3d:1n1d | Tolerate failure of 3 drives OR 1 node AND 1 drive |
| +3n | Tolerate failure of 3 drives OR 3 nodes |
| +4d:1n | Tolerate failure of 4 drives OR 1 node |
| +4d:2n | Tolerate failure of 4 drives OR 2 nodes |
| +4n | Tolerate failure of 4 nodes |
| 2x to 8x | Mirrored over 2 to 8 nodes, depending on configuration |

Table 1: OneFS FEC Protection Levels
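
The naming convention in Table 1 can be read mechanically. As a minimal sketch (the `ProtectionLevel` class and `parse_protection` function are hypothetical names introduced here for illustration and are not part of OneFS), the following Python parses a protection-level string into the failure counts listed in the table:

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class ProtectionLevel:
    """Illustrative container for the tolerances described in Table 1."""
    drives: int = 0        # simultaneous drive failures tolerated
    nodes: int = 0         # simultaneous node failures tolerated
    extra_drives: int = 0  # drive failures tolerated in addition to the node failures
    mirrors: int = 0       # number of mirrored copies (0 for FEC levels)


# Patterns for the three notations that appear in the table.
_NODE_ONLY = re.compile(r"\+(\d)n")                  # e.g. +2n
_HYBRID = re.compile(r"\+(\d)d:(\d)n(?:(\d)d)?")     # e.g. +2d:1n, +3d:1n1d
_MIRROR = re.compile(r"([2-8])x")                    # e.g. 3x


def parse_protection(level: str) -> ProtectionLevel:
    """Translate a protection-level string into tolerated failure counts."""
    m = _NODE_ONLY.fullmatch(level)
    if m:
        n = int(m.group(1))
        return ProtectionLevel(drives=n, nodes=n)
    m = _HYBRID.fullmatch(level)
    if m:
        extra = int(m.group(3)) if m.group(3) else 0
        return ProtectionLevel(drives=int(m.group(1)),
                               nodes=int(m.group(2)),
                               extra_drives=extra)
    m = _MIRROR.fullmatch(level)
    if m:
        return ProtectionLevel(mirrors=int(m.group(1)))
    raise ValueError(f"unrecognized protection level: {level!r}")
```

For example, `parse_protection("+3d:1n1d")` yields three tolerated drive failures, or one node failure plus one additional drive failure, matching the corresponding table row.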

Additionally, a logical separation of data and metadata structures within the single OneFS file system allows SmartPools to manage

data and metadata objects separately, as we will see.

OneFS stores file and directory metadata in inodes and B-trees, allowing the file system to scale to billions of objects and still provide

very fast lookups of data or metadata. OneFS is a completely symmetric and fully distributed file system with data and metadata

spread across multiple hardware devices. Data are generally protected using erasure coding for space efficiency reasons, enabling

utilization levels of up to 80% and above on clusters of five nodes or more. Metadata (which generally makes up around 2% of the

system) is mirrored for performance and availability. Protection levels are dynamically configurable at a per-file or per-file system

granularity, or anything in between. Data and metadata access and locking are coherent across the cluster, and this symmetry is

fundamental to the simplicity and resiliency of OneFS’ shared nothing architecture.
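
The space efficiency of erasure coding compared with mirroring can be illustrated with simple arithmetic. The sketch below assumes a simplified model in which a stripe consists of N data units plus M FEC units; the function names are illustrative, and actual OneFS stripe widths depend on the pool size and the protection level in use.

```python
def fec_efficiency(data_units: int, fec_units: int) -> float:
    """Fraction of raw capacity that holds user data in an N+M stripe."""
    if data_units < 1 or fec_units < 0:
        raise ValueError("need at least one data unit and a non-negative FEC count")
    return data_units / (data_units + fec_units)


def mirror_efficiency(copies: int) -> float:
    """Fraction of raw capacity that holds unique data when keeping `copies` mirrors."""
    if copies < 1:
        raise ValueError("need at least one copy")
    return 1 / copies
```

Under this model, a stripe of four data units and one FEC unit spread across five nodes gives 4 / 5 = 80% usable capacity, whereas 3x mirroring gives roughly 33%. This is why data is generally erasure coded while the much smaller metadata is mirrored.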

When a client connects to a OneFS-managed node and performs a write operation, files are broken into smaller logical chunks, or stripe units, before being written to disk. These chunks are then striped across the cluster’s nodes and protected either via erasure coding or mirroring. OneFS primarily uses the Reed-Solomon erasure coding system for data protection, and mirroring for metadata.

OneFS’ file level protection typically provides industry leading levels of utilization. And, for nine node and larger clusters, OneFS is able

to sustain up to four full node failures while still providing full access to data.

OneFS uses multiple data layout methods to optimize for maximum efficiency and performance according to the data’s access pattern

– for example, streaming, concurrency, random, etc. And, like protection, these performance attributes can also be applied per file or

per filesystem.

Please refer to the OneFS Technical Overview white paper for further details on OneFS data protection levels.

Job Engine

The Job Engine is OneFS’ parallel task scheduling framework, and is responsible for the distribution, execution, and impact

management of critical jobs and operations across the entire cluster.

The OneFS Job Engine schedules and manages all the data protection and background cluster tasks: creating jobs for each task,

prioritizing them and ensuring that inter-node communication and cluster wide capacity utilization and performance are balanced and

optimized. Job Engine ensures that core cluster functions have priority over less important work and gives applications integrated with

OneFS – add-on software or applications integrating to OneFS via the OneFS API – the ability to control the priority of their various

functions to ensure the best resource utilization.

Each job, for example the SmartPools job, has an “Impact Profile” comprising a configurable Impact Policy and an Impact Schedule, which together characterize how much of the system’s resources the job will take. The amount of work a job has to do is fixed, but the resources dedicated to that work can be tuned to minimize the impact to other cluster functions, like serving client data.

The specific jobs that the SmartPools feature comprises include:

| Job | Description |
|---|---|
| SmartPools | Job that runs and moves data between the tiers of nodes within the same cluster. Also executes the CloudPools functionality if licensed and configured. |
| SmartPoolsTree | Enforces SmartPools file policies on a subtree. |
| FilePolicy | Efficient changelist-based SmartPools file pool policy job. |
| IndexUpdate | Creates and updates an efficient file system index for FilePolicy job. |
| SetProtectPlus | Applies the default file policy. This job is disabled if SmartPools is activated on the cluster. |

When a cluster running the FSAnalyze job is upgraded to OneFS 8.2 or later, the legacy FSAnalyze index and snapshots are

removed and replaced by new snapshots the first time that IndexUpdate is run. The new index stores considerably more file and

snapshot attributes than the old FSA index. Until the IndexUpdate job effects this change, FSA keeps running on the old index and

snapshots.

Data Rebalancing

Another key Job Engine task is AutoBalance. This enables OneFS to reallocate and rebalance, or restripe, data across the nodes in a

cluster, making storage space utilization more uniform and efficient.

OneFS manages protection of file data directly, and when a drive or entire node failure occurs, it rebuilds data in a parallel fashion.

OneFS avoids the requirement for dedicated hot spare drives and serial drive rebuilds, and simply borrows from the available free

space in the file system in order to recover from failures; this technique is called Virtual Hot Spare. This approach allows the cluster to

be self-healing, without human intervention, and with the advantages of fast, parallel data reconstruction. The administrator can create

a virtual hot spare reserve, which prevents users from consuming capacity that is reserved for the virtual hot spare.

The process of choosing a new layout is called restriping, and this mechanism is identical for repair, rebalance, and tiering. Data is

moved in the background with the file available at all times, a process that’s completely transparent to end users and applications.

Further information is available in the OneFS Job Engine white paper.

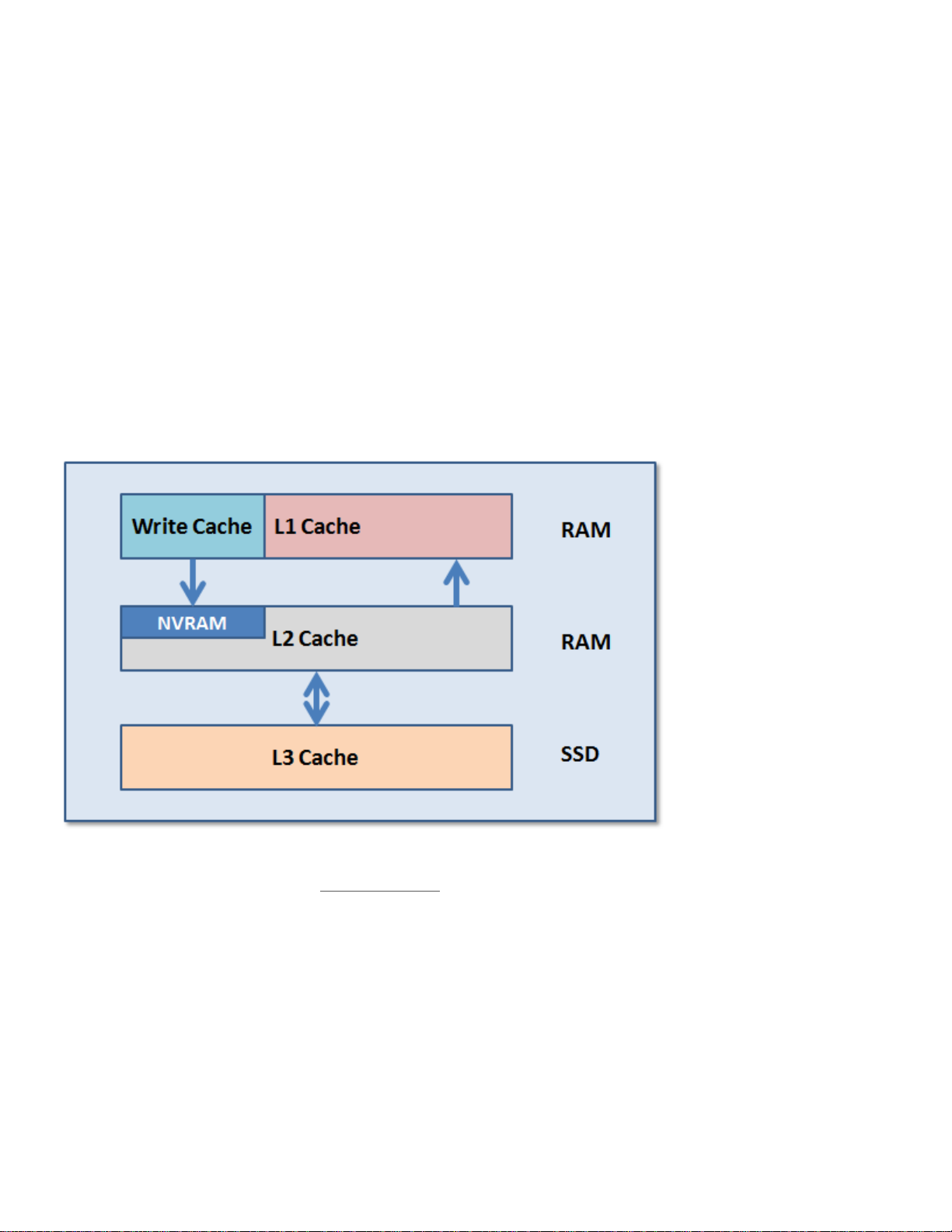

Caching

SmartCache is a globally coherent read and write caching infrastructure that provides low latency access to content. Like other

resources in the cluster, as more nodes are added, the total cluster cache grows in size, enabling OneFS to deliver predictable,

scalable performance within a single filesystem.

A OneFS cluster provides a high cache to disk ratio (multiple GB per node), which is dynamically allocated for read operations as

needed. This cache is unified and coherent across all nodes in the cluster, allowing a user on one node to benefit from I/O already

transacted on another node. OneFS stores only distinct data on each node. The node’s RAM is used as a “level 2” (L2) cache of such

data. These distinct, cached blocks can be accessed across the backplane very quickly and, as the cluster grows, the cache benefit

increases. For this reason, the amount of I/O to disk on a OneFS cluster is generally substantially lower than it is on traditional

platforms, allowing for reduced latencies and a better user experience. For sequentially accessed data, OneFS SmartRead

aggressively pre-fetches data, greatly improving read performance across all protocols.

An optional third tier of read cache, called SmartFlash or Level 3 cache (L3), is also configurable on nodes that contain solid state

drives (SSDs). SmartFlash is an eviction cache that is populated by L2 cache blocks as they are aged out from memory. There are

several benefits to using SSDs for caching rather than as traditional file system storage devices. For example, when reserved for

caching, the entire SSD will be used, and writes will occur in a very linear and predictable way. This provides far better utilization and

also results in considerably reduced wear and increased durability over regular file system usage, particularly with random write

workloads. Using SSD for cache also makes sizing SSD capacity a much more straightforward and less error-prone prospect

compared to using SSDs as a storage tier.
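
The relationship between L2 and L3 described above, where L3 is populated only by blocks aged out of L2, can be sketched as a two-level cache. This is a toy model, not the OneFS implementation: it assumes least-recently-used eviction at both levels and promotion back to L2 on an L3 hit, and the `TwoLevelCache` class and its method names are hypothetical.

```python
from collections import OrderedDict
from typing import Optional


class TwoLevelCache:
    """Toy model: L2 (RAM) evictions populate L3 (SSD). LRU is an assumption."""

    def __init__(self, l2_capacity: int, l3_capacity: int) -> None:
        self.l2 = OrderedDict()  # block_id -> data, oldest entry first
        self.l3 = OrderedDict()
        self.l2_capacity = l2_capacity
        self.l3_capacity = l3_capacity

    def put(self, block_id: str, data: bytes) -> None:
        """Insert a block into L2; blocks aged out of L2 spill into L3."""
        self.l3.pop(block_id, None)  # avoid keeping a stale copy in L3
        self.l2[block_id] = data
        self.l2.move_to_end(block_id)
        while len(self.l2) > self.l2_capacity:
            old_id, old_data = self.l2.popitem(last=False)
            self._admit_l3(old_id, old_data)

    def _admit_l3(self, block_id: str, data: bytes) -> None:
        """Store an evicted L2 block in L3, dropping the oldest L3 block if full."""
        self.l3[block_id] = data
        self.l3.move_to_end(block_id)
        while len(self.l3) > self.l3_capacity:
            self.l3.popitem(last=False)

    def get(self, block_id: str) -> Optional[bytes]:
        """Return cached data, or None on a miss (the caller would read from disk)."""
        if block_id in self.l2:
            self.l2.move_to_end(block_id)
            return self.l2[block_id]
        if block_id in self.l3:
            data = self.l3.pop(block_id)
            self.put(block_id, data)  # assumed behavior: promote back into L2
            return data
        return None
```

Because L3 only ever receives whole blocks as they leave L2, writes to the SSD layer in this model arrive as a steady stream of evictions rather than as scattered in-place updates, which mirrors the wear and predictability argument made above.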

OneFS write caching uses write buffering to aggregate, or coalesce, multiple write operations to the NVRAM file system journals so

that they can be written to disk safely and more efficiently. This form of buffering reduces the disk write penalty which could require

multiple reads and writes for each write operation.

Figure 2: OneFS Caching Hierarchy

Further information is available in the OneFS SmartFlash white paper.

SmartPools

Overview

SmartPools is a next-generation data tiering product that builds directly on the core OneFS attributes described above. These include

the single file system, extensible data layout and protection framework, parallel job execution, and intelligent caching architecture.

SmartPools was originally envisioned as a combination of two fundamental notions:

• The ability to define sub-sets of hardware within a single, heterogeneous cluster.

• A method to associate logical groupings of files with these hardware sub-sets via simple, logical definitions or rules.

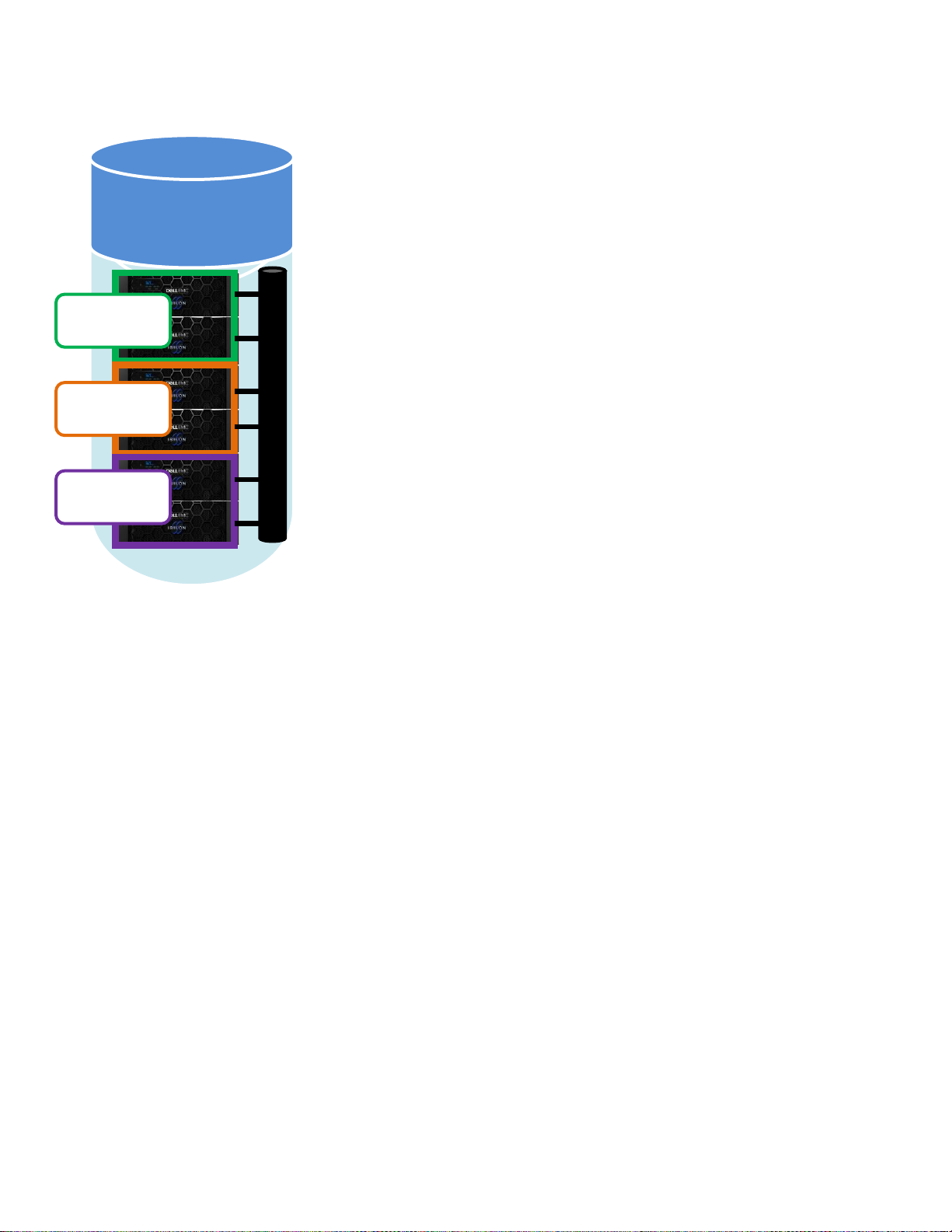

Figure 3. SmartPools Tiering Model

The current SmartPools implementation expands on these two core concepts in several additional ways, while maintaining simplicity

and ease of use as primary goals.

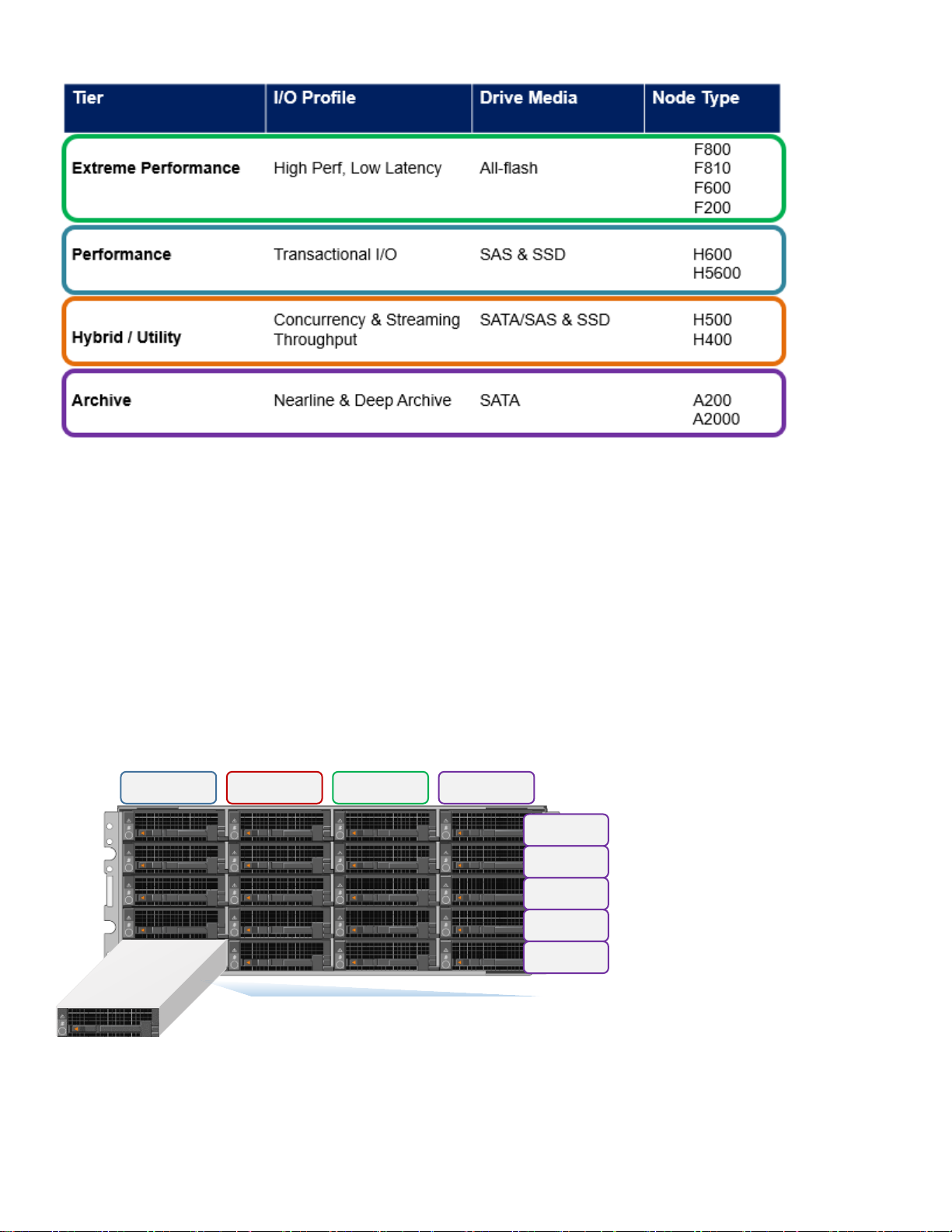

Hardware Tiers

Heterogeneous OneFS clusters can be architected with a wide variety of node styles and capacities, in order to meet the needs of a

varied data set and wide spectrum of workloads. These node styles encompass Isilon Gen6 and PowerScale hardware generations

and fall loosely into four main categories or tiers. The following table illustrates these tiers, and the associated hardware models:

[Table graphic not reproduced. Labels: OneFS with Ethernet or InfiniBand network; Flash (I/Ops), Hybrid (Throughput), Archive (Capacity).]

Table 2: Hardware Tiers and Node Types

Storage Pools

Storage pools provide the ability to define subsets of hardware within a single cluster, allowing file layout to be aligned with specific

sets of nodes through the configuration of storage pool policies. The notion of Storage pools is an abstraction that encompasses disk

pools, node pools, and tiers, all described below.

Disk Pools

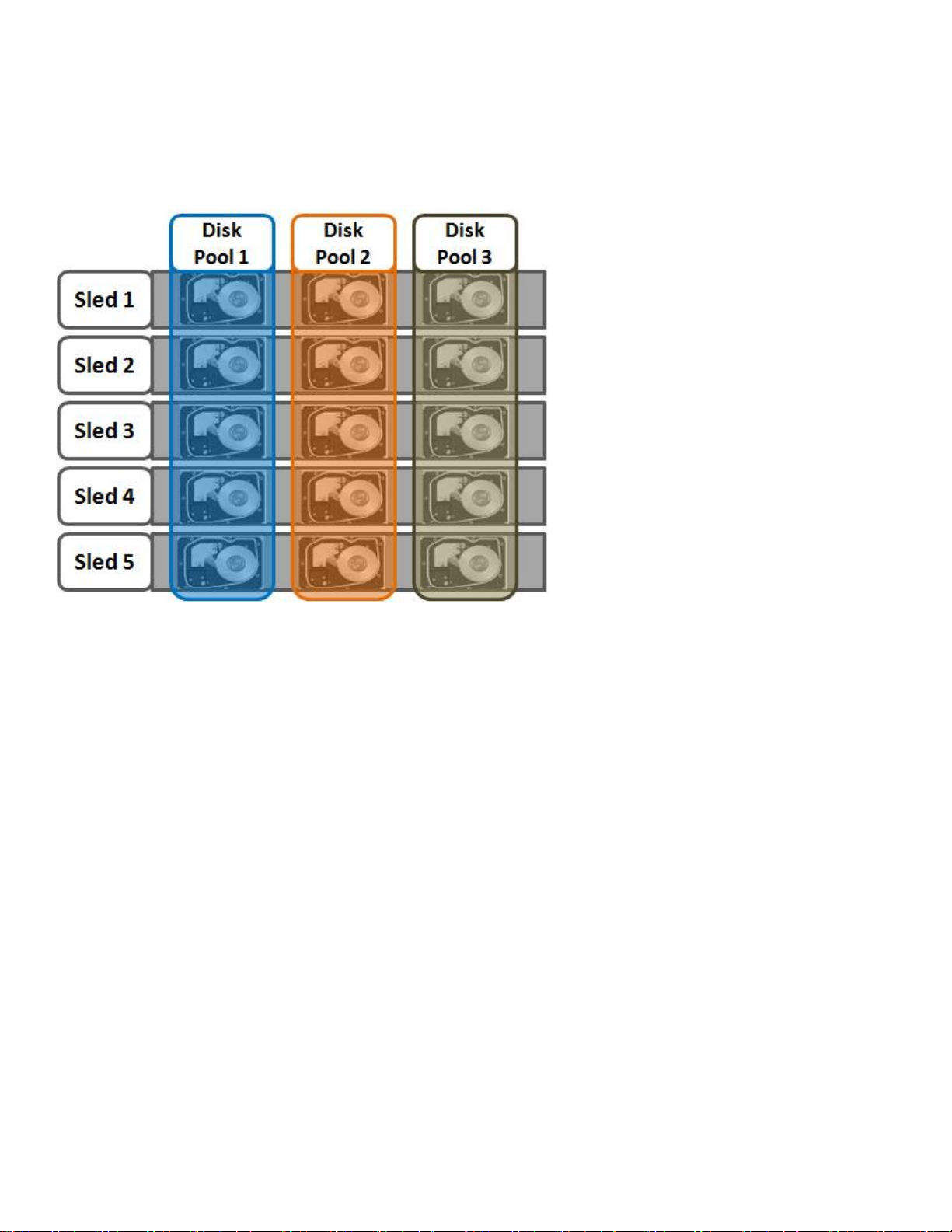

Disk pools are the smallest unit within the storage pools hierarchy, as illustrated in figure 4 below. OneFS provisioning works on the

premise of dividing similar nodes’ drives into sets, or disk pools, with each pool representing a separate failure domain.

These disk pools are protected by default at +2d:1n (the ability to withstand two disk failures or one entire node failure) and span from four to twenty nodes with modular, chassis-based platforms. Each chassis contains four compute modules (one per node), and five drive containers, or ‘sleds’, per node.

Figure 4. Modular Chassis Front View Showing Drive Sleds.

[Figure labels: Node 1 to Node 4; Sled 1 to Sled 5.]

Each ‘sled’ is a tray which slides into the front of the chassis and contains between three and six drives, depending on the

configuration of a particular node chassis. Disk pools are laid out across all five sleds in each node. For example, a chassis-based

node with three drives per sled will have the following disk pool configuration:

Figure 5. Modular Chassis-based Node Disk Pools.
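
A rough sketch of this per-node layout follows. It assumes that each disk pool takes exactly one drive from each of the five sleds in a node, which is consistent with the description above but is an assumption about the exact drive-to-pool mapping; the function name and the (sled, slot) numbering are hypothetical.

```python
from typing import List, Tuple

Drive = Tuple[int, int]  # (sled number, slot number within the sled), both 1-based


def disk_pools_for_node(sleds: int = 5, drives_per_sled: int = 3) -> List[List[Drive]]:
    """Group one node's drives into disk pools, one drive from every sled per pool."""
    if sleds < 1 or drives_per_sled < 1:
        raise ValueError("sleds and drives_per_sled must be positive")
    return [
        [(sled, slot) for sled in range(1, sleds + 1)]
        for slot in range(1, drives_per_sled + 1)
    ]
```

With the defaults (five sleds, three drives per sled), this produces three disk pools of five drives each. Under this assumed mapping, removing a single sled takes only one drive out of each disk pool, so no pool loses more than one drive on that node.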

Earlier generations of Isilon hardware and the new PowerScale nodes utilize six-drive node pools that span from three to forty

nodes.

Each drive may only belong to one disk pool and data protection stripes or mirrors don’t extend across disk pools (the exception being

a Global Namespace Acceleration extra mirror, described below). Disk pools are managed by OneFS and are not user configurable.

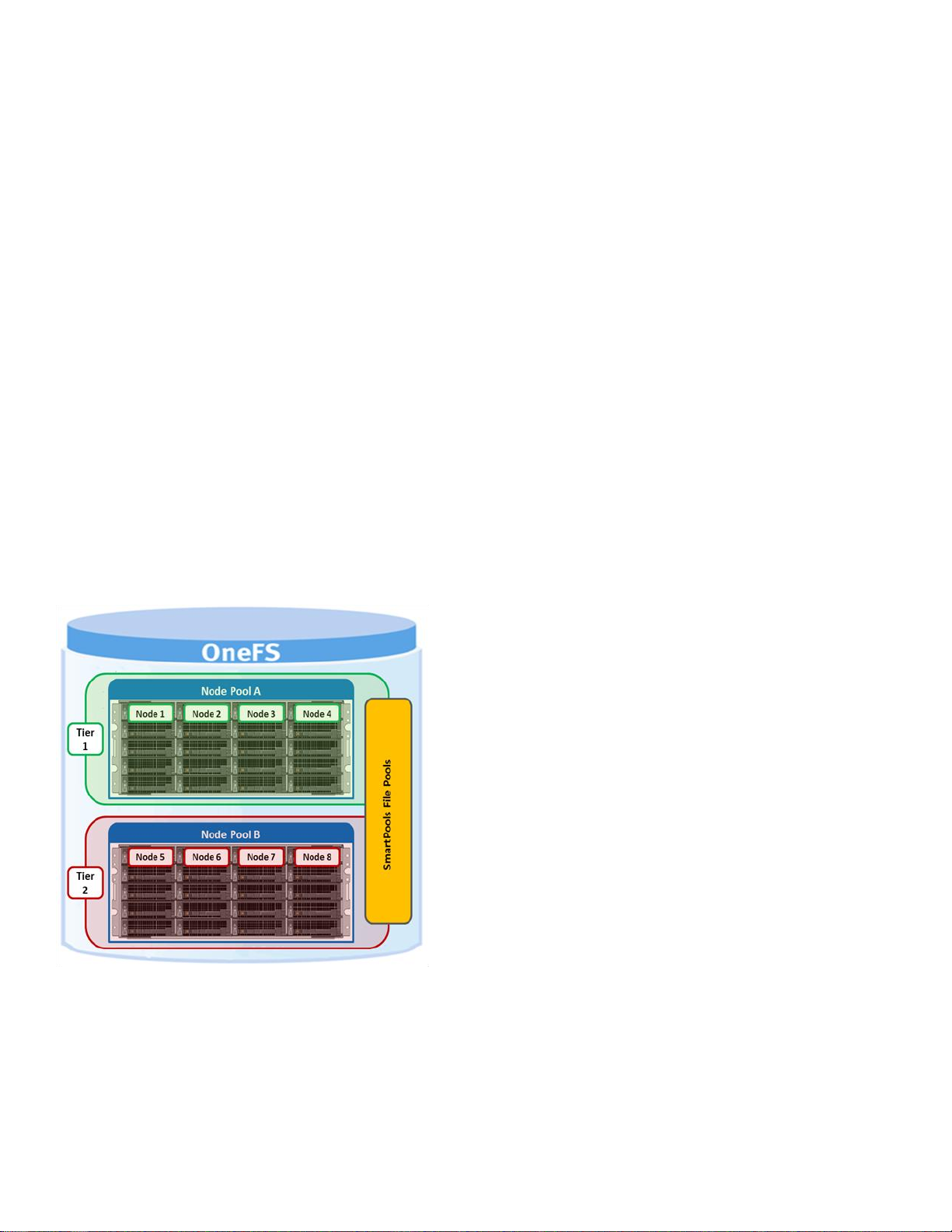

Node Pools

Node pools are groups of disk pools, spread across similar storage nodes (equivalence classes). This is illustrated in figure 5, below.

Multiple groups of different node types can work together in a single, heterogeneous cluster. For example: one node pool of all-flash F-Series nodes for I/Ops-intensive applications, one node pool of H-Series nodes, primarily used for highly concurrent and sequential workloads, and one node pool of A-Series nodes, primarily used for nearline and/or deep archive workloads.

This allows OneFS to present a single storage resource pool comprising multiple drive media types – NVMe, SSD, high speed SAS,

large capacity SATA - providing a range of different performance, protection and capacity characteristics. This heterogeneous storage

pool in turn can support a diverse range of applications and workload requirements with a single, unified point of management. It also

facilitates the mixing of older and newer hardware, allowing for simple investment protection even across product generations, and

seamless hardware refreshes.

Each node pool only contains disk pools from the same type of storage nodes and a disk pool may belong to exactly one node pool.

For example, all-flash F-series nodes would be in one node pool, whereas A-series nodes with high capacity SATA drives would be in

another. Today, a minimum of 4 nodes, or one chassis, are required per node pool for Isilon Gen6 modular chassis-based hardware,

or three previous generation or PowerScale nodes per node pool.

Nodes are not provisioned (not associated with each other and not writable) until at least three nodes from the same compatibility class

are assigned in a node pool. If nodes are removed from a node pool, that pool becomes under-provisioned. In this situation, if two like

nodes remain, they are still writable. If only one remains, it is automatically set to read-only.
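The provisioning rules above can be summarized in a short sketch. This is an illustration only, not OneFS code: the function name, the state labels, and the `was_provisioned` flag are hypothetical, and the three-node threshold is taken from the paragraph above (the four-node minimum for chassis-based hardware is not modeled).

```python
def node_pool_state(current_nodes: int, was_provisioned: bool) -> str:
    """Return a coarse, illustrative state label for a pool of like nodes.

    Assumption: the minimum is three nodes, as stated in the text above.
    """
    if current_nodes >= 3:
        return "provisioned"
    if not was_provisioned:
        # The pool never reached the minimum, so nodes are not yet writable.
        return "unprovisioned"
    if current_nodes == 2:
        # Nodes were removed from a provisioned pool; two like nodes stay writable.
        return "under-provisioned, writable"
    if current_nodes == 1:
        # A single remaining node is set to read-only.
        return "under-provisioned, read-only"
    return "empty"
```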

Once node pools are created, they can be easily modified to adapt to changing requirements. Individual nodes can be reassigned

from one node pool to another. Node pool associations can also be discarded, releasing member nodes so they can be added to new

or existing pools. Node pools can also be renamed at any time without changing any other settings in the node pool configuration.

Any new node added to a cluster is automatically allocated to a node pool and then subdivided into disk pools without any additional

configuration steps, inheriting the SmartPools configuration properties of that node pool. This means the configuration of disk pool data

protection, layout and cache settings only needs to be completed once per node pool and can be done at the time the node pool is first

created. Automatic allocation is determined by the shared attributes of the new nodes with the closest matching node pool. If the new

node is not a close match to the nodes of any existing node pool, it remains un-provisioned until the minimum node pool node

membership for like nodes is met (three nodes of same or similar storage and memory configuration).

When a new node pool is created, and nodes are added, SmartPools associates those nodes with an ID. This ID is also used in file

pool policies and file attributes to dictate file placement within a specific disk pool.

By default, a file which is not covered by a specific file pool policy will go to the default node pool or pools identified during set up. If no

default is specified, SmartPools will write that data to the pool with the most available capacity.
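The default placement behavior described above can be sketched as follows. This is a minimal illustration, not the SmartPools implementation: the function name, parameter names, and pool names are hypothetical.

```python
from typing import Dict, Optional


def choose_target_pool(
    free_bytes_by_pool: Dict[str, int],
    policy_pool: Optional[str] = None,
    default_pool: Optional[str] = None,
) -> str:
    """Pick a target pool: matching policy first, then the default, then most free space."""
    if policy_pool is not None:
        return policy_pool
    if default_pool is not None:
        return default_pool
    # No policy and no default: fall back to the pool with the most available capacity.
    return max(free_bytes_by_pool, key=lambda pool: free_bytes_by_pool[pool])


# Hypothetical pool names and free-space figures.
pools = {"performance": 10_000, "archive": 500_000}
```

With these sample values, a file matched by no policy and with no default pool configured would be directed to `archive`, the pool with the most free space.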

Figure 6. Automatic Provisioning into Node Pools

Tiers

Tiers are groups of node pools combined into a logical superset to optimize data storage, according to OneFS platform type. This is

illustrated in figure 7, below. For example, similar ‘archive’ node pools are often consolidated into a single tier. This tier could

incorporate different styles of archive node pools (e.g. Isilon A200 with 8TB SATA drives and Isilon A2000 with 12TB SATA drives) into

a single, logical container. This is a significant benefit because it allows customers who consistently purchase the highest capacity

nodes available to consolidate a variety of node styles within a single group, or tier, and manage them as one logical group.

Figure 7. SmartPools Tier Configuration

SmartPools users typically deploy 2 to 4 tiers, with the fastest tier often containing all-flash nodes for the most performance demanding

portions of a workflow, and the lowest, capacity-biased tier comprising high capacity SATA drive nodes.

Figure 8. SmartPools WebUI View – Tiers & Node Pools

Node Compatibility and Equivalence

SmartPools allows nodes of any type supported by the particular OneFS version to be combined within the same cluster. The like-nodes will be provisioned into different node pools according to their physical attributes and these node equivalence classes are fairly

stringent. This is in order to avoid disproportionate amounts of work being directed towards a subset of cluster resources. This is often

referred to as “node bullying” and can manifest as severe over-utilization of resources like CPU, network or disks.

However, administrators can safely target specific data to broader classes of storage by creating tiers. For example, if a cluster

includes two different varieties of X nodes, these will automatically be provisioned into two different node pools. These two node pools

can be logically combined into a tier, and file placement targeted to it, resulting in automatic balancing across the node pools.

Criteria governing the creation of a node pool include:

1. A minimum of four nodes for chassis-based nodes, and three nodes for self-contained, of a particular type must be added to the

cluster before a node pool is provisioned.

2. If three or more nodes have been added, but fewer than three nodes are accessible, the cluster will be in a degraded state. The

cluster may or may not be usable, depending on whether there is still cluster quorum (greater than half the total nodes available).

3. All nodes in the cluster must have a current, valid support contract.

SmartPools separates hardware by node type and creates a separate node pool for each distinct hardware variant. To reside in the

same node pool, nodes must have a set of core attributes in common:

1. Family

2. Chassis size

3. Generation

4. RAM capacity

5. SSD/HDD Drive capacity & quantity
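As a rough illustration of how the five core attributes above act as a grouping key, the following sketch buckets nodes into pools by exact attribute match. It is an assumption-laden model rather than OneFS logic: the `Node` type, its field names, and the sample values are hypothetical, and compatibility settings are not modeled.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple


class Node(NamedTuple):
    name: str
    family: str
    chassis_size: str
    generation: str
    ram_gb: int
    drive_config: str  # drive capacity and quantity, e.g. "15x12TB"


def group_into_node_pools(nodes: List[Node]) -> Dict[Tuple, List[str]]:
    """Group node names by their equivalence key (every field except the name)."""
    pools: Dict[Tuple, List[str]] = defaultdict(list)
    for node in nodes:
        key = tuple(node[1:])
        pools[key].append(node.name)
    return dict(pools)


# Hypothetical nodes: three identical, one with different RAM.
nodes = [
    Node("n1", "A", "4U", "6", 16, "15x12TB"),
    Node("n2", "A", "4U", "6", 16, "15x12TB"),
    Node("n3", "A", "4U", "6", 16, "15x12TB"),
    Node("n4", "A", "4U", "6", 64, "15x12TB"),
]
grouped = group_into_node_pools(nodes)
```

In this sample, `n4` lands in its own group because its RAM capacity differs from the other three nodes.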

Node compatibilities can be defined to allow nodes with the same drive types, quantities and capacities and compatible RAM

configurations to be provisioned into the same pools.

Due to significant architectural differences, there are no node compatibilities between the 4-node modular chassis such as the

Isilon H400 platform and self-contained nodes like the PowerScale F600.

SSD Compatibility

OneFS also contains an SSD compatibility option, which allows nodes with dissimilar SSD capacity and count to be provisioned to a

single node pool. The SSD compatibility is created and described in the OneFS WebUI SmartPools Compatibilities list and is also

displayed in the Tiers & Node Pools list.

When creating this SSD compatibility, OneFS automatically checks that the two pools to be merged have the same number of

SSDs (if desired), tier, requested protection, and L3 cache settings. If these settings differ, the OneFS WebUI will prompt for

consolidation and alignment of these settings.

Data Spill Over

If a node pool fills up, writes to that pool will automatically spill over to the next pool. This default behavior ensures that work can

continue even if one type of capacity is full. There are some circumstances in which spillover is undesirable, for example when

different business units within an organization purchase separate pools, or data location has security or protection implications. In

these circumstances, spillover can simply be disabled. Disabling spillover ensures a file exists in one pool and will not move to

another. Keep in mind that reservations for virtual hot sparing will affect spillover – if, for example, VHS is configured to reserve 10%

of a pool’s capacity, spillover will occur at 90% full.
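The arithmetic behind the VHS example above is shown in the sketch below. The function name and the use of terabytes are illustrative choices; the 0-20% bound reflects the configurable VHS percentage range described in this paper.

```python
def spillover_threshold_tb(pool_capacity_tb: float, vhs_reserve_pct: float) -> float:
    """Capacity (in TB) at which writes would start spilling to the next pool."""
    if not 0 <= vhs_reserve_pct <= 20:
        raise ValueError("VHS percentage reservation is expected to be within 0-20%")
    # Space reserved for virtual hot sparing is not available for new writes.
    return pool_capacity_tb * (100 - vhs_reserve_pct) / 100
```

For a hypothetical 100 TB pool with a 10% VHS reservation, this gives a spillover point of 90 TB, matching the 90% figure above.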

Protection settings can be configured outside SmartPools and managed at the cluster level, or within SmartPools at either the node

pool or file pool level. Wherever protection levels exist, they are fully configurable and the default protection setting for a node pool is

+2d:1n.

Automatic Provisioning

Data tiering and management in OneFS is handled by the SmartPools framework. From a data protection and layout efficiency point of

view, SmartPools facilitates the subdivision of large numbers of high-capacity, homogeneous nodes into smaller, more efficiently

protected disk pools. For example, a forty node nearline cluster with 3TB SATA disks would typically run at a +4n protection level.

However, partitioning it into two, twenty node disk pools would allow each pool to run at +2n protection, thereby lowering the protection

overhead and improving space utilization without any net increase in management overhead.
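To make the overhead comparison concrete, the sketch below computes parity overhead as the fraction of each stripe consumed by parity. It rests on a simplifying assumption that is not stated in this paper: a stripe holds at most `max_data_units` data units (16 is used here purely for illustration) plus one parity unit per level of protection. Actual OneFS layout decisions are more involved.

```python
def parity_overhead(nodes: int, parity_units: int, max_data_units: int = 16) -> float:
    """Fraction of a stripe used for parity under the assumptions described above."""
    data_units = min(nodes - parity_units, max_data_units)
    if data_units < 1:
        raise ValueError("pool is too small for the requested protection level")
    return parity_units / (data_units + parity_units)


one_pool_at_4n = parity_overhead(nodes=40, parity_units=4)   # single forty-node pool
two_pools_at_2n = parity_overhead(nodes=20, parity_units=2)  # each twenty-node pool
```

Under these assumptions the forty-node pool at +4n spends 4 of every 20 stripe units on parity, while each twenty-node pool at +2n spends 2 of every 18.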

In keeping with the goal of storage management simplicity, OneFS will automatically calculate and divide the cluster into pools of disks,

which are optimized for both Mean Time to Data Loss (MTTDL) and efficient space utilization. This means that protection level

decisions, such as the forty-node cluster example above, are not left to the customer.

With Automatic Provisioning, every set of equivalent node hardware is automatically divided into disk pools comprising up to forty

nodes and six drives per node. These disk pools are protected against up to two drive failures per disk pool. Multiple similar disk pools

are automatically combined into node pools, which can then be further aggregated into logical tiers and managed with SmartPools file

pool policies. By subdividing a node’s disks into multiple, separately protected disk pools, nodes are significantly more resilient to

multiple disk failures than previously possible.

When initially configuring a cluster, OneFS automatically assigns nodes to node pools. Nodes are not pooled (not associated with

each other) until at least three nodes are assigned to the node pool. A node pool with fewer than three nodes is considered an under-provisioned pool.

Manually Managed Node Pools

If the automatically provisioned node pools that OneFS creates are not appropriate for an environment, they can be manually

reconfigured. This is done by creating a manual node pool and moving nodes from an existing node pool to the newly created one.

The recommendation is to use the default, automatically provisioned node pools. Manually assigned pools may not provide the

same level of performance and storage efficiency as automatically assigned pools, particularly if your changes result in fewer than 20

nodes in the manual node pool.

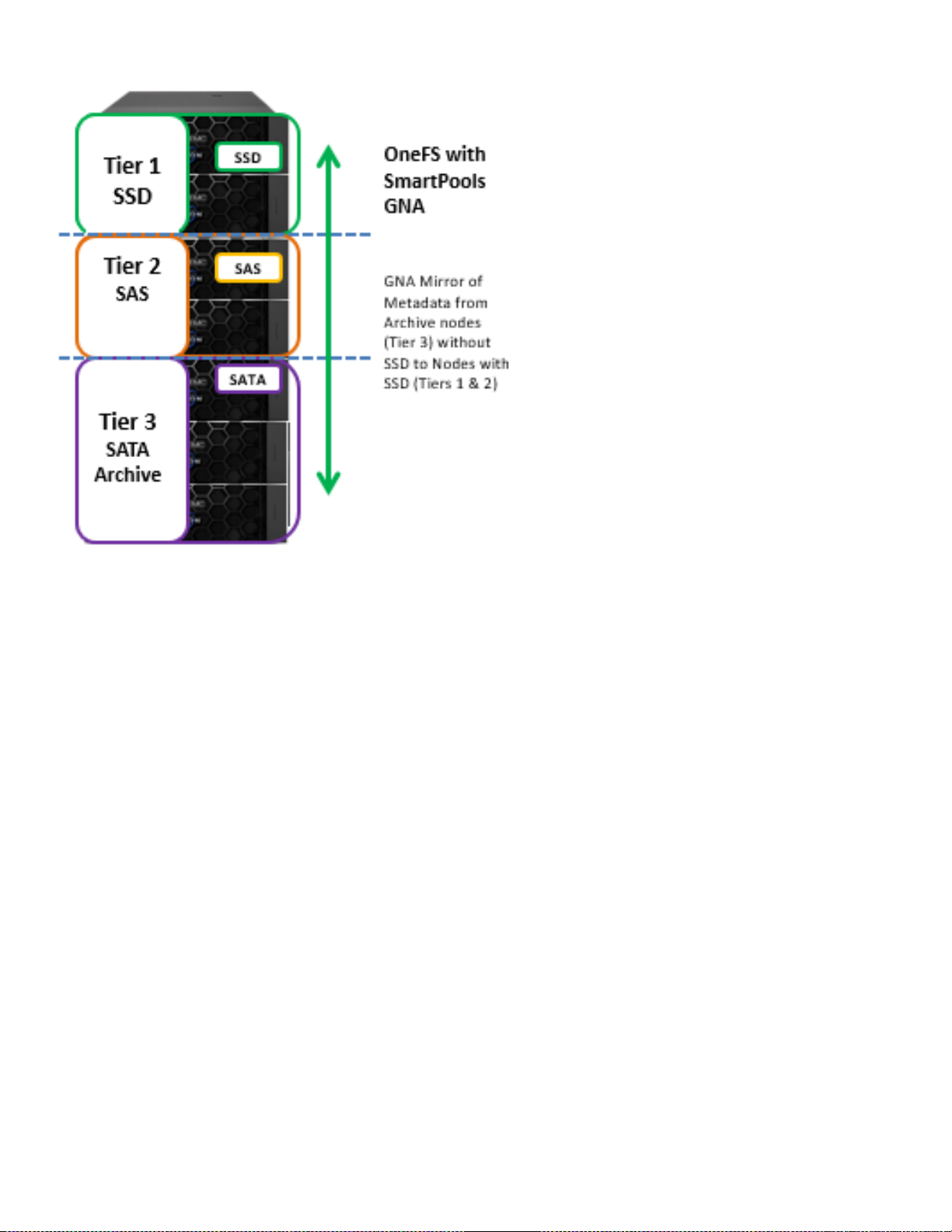

Global Namespace Acceleration

Global Namespace Acceleration, or GNA, is an unlicensed, configurable component of SmartPools. GNA’s principal goal is to help

accelerate metadata read operations (e.g. filename lookups) by keeping a copy of the cluster’s metadata on high performance, low

latency SSD media. This allows customers to increase the performance of certain workloads across the whole file system without

having to upgrade/purchase SSDs for every node in the cluster. This is illustrated in figure 9, below. To achieve this, an extra mirror of

metadata from storage pools that do not contain SSDs is stored on SSDs available anywhere else in the cluster, regardless of node

pool boundaries. As such, metadata read operations are accelerated even for data on node pools that have no SSDs.

Figure 9. Global Namespace Acceleration

The purpose of GNA is to accelerate the performance of metadata-intensive applications and workloads such as home directories,

workflows with heavy enumeration and activities requiring a large number of comparisons. Examples of metadata-read-heavy

workflows exist across the majority of Dell EMC established and emerging scale-out NAS markets. In some, like EDA for example,

such workloads are dominant and the use of SSDs to provide the performance they require is ubiquitous.

Virtual Hot Spare

SmartPools Virtual Hot Spare (VHS) helps ensure that node pools maintain enough free space to successfully re-protect data in the

event of drive failure. Though configured globally, VHS actually operates at the node pool level so that nodes with different size drives

reserve the appropriate VHS space. This helps ensure that, while data may move from one disk pool to another during repair, it

remains on the same class of storage. VHS reservations are cluster wide and configurable as either a percentage of total storage (0-20%) or as a number of virtual drives (1-4). The mechanism of this reservation is to allocate a fraction of the node pool’s VHS space in

each of the node pool’s constituent disk pools.

No space is reserved for VHS on SSDs unless the entire node pool consists of SSDs. This means that a failed SSD may have data

moved to HDDs during repair, but without adding additional configuration settings, the alternative is reserving an unreasonable

percentage of the SSD space in a node pool.

The default for new clusters is for Virtual Hot Spare to have both "reduce amount of available space" and "deny new data writes"

enabled with one virtual drive. On upgrade, existing settings are maintained. All customers are encouraged to enable Virtual Hot

Spare.

SmartDedupe and Tiering

OneFS SmartDedupe maximizes the storage efficiency of a cluster by decreasing the amount of physical storage required to house an

organization’s data. Efficiency is achieved by scanning the on-disk data for identical blocks and then eliminating the duplicates. This

approach is commonly referred to as post-process, or asynchronous, deduplication. After duplicate blocks are discovered,

SmartDedupe moves a single copy of those blocks to a special set of files known as shadow stores. During this process, duplicate

blocks are removed from the actual files and replaced with pointers to the shadow stores.

OneFS SmartDedupe does not deduplicate files that span SmartPools node pools or tiers, or that have different protection levels set.

This is to avoid potential performance or protection asymmetry which could occur if portions of a file live on different classes of storage.

However, a deduped file that is moved to a pool with a different disk pool policy ID will retain the shadow references to the shadow

store on the original pool. This breaks the rule for deduping across different disk pool policies, but it's preferred to do this than

rehydrate files that are moved.

Further dedupe activity on that file will no longer be allowed to reference any blocks in the original shadow store. The file will need to

be deduped against other files in the same disk pool policy. If the file had not yet been deduped, the dedupe index may have

knowledge about the file and will still think it is on the original pool. This will be discovered and corrected when a match is made

against blocks in the file. Because the moved file has already been deduped, the dedupe index will have knowledge of the shadow

store only. Since the shadow store has not moved, it will not cause problems for further matching. However, if the shadow store is

moved as well (but not both files), then a similar situation occurs and the SmartDedupe job will discover this and purge knowledge of

the shadow store from the dedupe index.

Further information is available in the OneFS SmartDedupe white paper.

SmartPools Licensing

The base SmartPools license enables three things:

1. The ability to manually set individual files to a specific storage pool.

2. All aspects of file pool policy configuration.

3. Running the SmartPools job.

The SmartPools job runs on a schedule and applies changes that have occurred through file pool policy configurations, file system

activity or just the passage of time. The schedule is configurable via standard Job Engine means and defaults to daily at 10pm.

The storage pool configuration of SmartPools requires no license. Drives will automatically be provisioned into disk pools and node

pools. Tiers can be created but have little utility since files will be evenly allocated among the disk pools. The "global SmartPools

settings" are all still available, with the caveat that spillover is forcibly enabled to "any" in the unlicensed case. Spillover has little

meaning when any file can be stored anywhere.

The default file pool policy will apply to all files. This can be used to set the protection policy and I/O optimization settings, but the disk

pool policy cannot be changed. The SetProtectPlus job will run to enforce these settings when changes are made.

In the case that a SmartPools license lapses, the disk pool policy set on files' inodes will be ignored and it will be treated as the "ANY"

disk pool policy. However, since the disk pool policy is only evaluated when selecting new disk pool targets, writes to files with valid

targets will continue to be directed to those targets based on the old disk pool policy until a ‘reprotect’ or higher level restripe causes

the disk pool targets to be reevaluated. New files will inherit the disk pool policy of their parents (possibly through the New File

Attributes mechanism), but that disk pool policy will be ignored, and their disk pool targets will be selected according to the "ANY"

policy.

When SmartPools is not licensed, any disk pool policy is ignored. Instead, the AutoBalance job will spread data evenly across node

pools.

File Pools

This is the SmartPools logic layer, where user configurable file pool policies govern where data is placed, protected, accessed, and

how it moves among the node pools and tiers. This is conceptually similar to storage ILM (information lifecycle management) but does

not involve file stubbing or other file system modifications. File pools allow data to be automatically moved from one type of storage to

another within a single cluster to meet performance, space, cost or other requirements, while retaining its data protection settings. For

example, a file pool policy may dictate anything written to path /ifs/foo goes to the H-Series nodes in node pool 1, then moves to the A-Series nodes in node pool 3 when older than 30 days.

To simplify management, there are defaults in place for node pool and file pool settings which handle basic data placement,

movement, protection and performance. All of these can also be configured via the simple and intuitive UI, delivering deep granularity

of control. Also provided are customizable template policies which are optimized for archiving, extra protection, performance and

VMware files.

When a SmartPools job runs, the data may be moved, undergo a protection or layout change, etc. There are no stubs. The file

system itself is doing the work so no transparency or data access risks apply.

Data movement is parallelized with the resources of multiple nodes being leveraged for speedy job completion. While a job is in

progress all data is completely available to users and applications.

The performance of different nodes can also be augmented with the addition of system cache or solid-state drives (SSDs). A OneFS

cluster can leverage up to 37 TB of globally coherent cache. Most nodes can be augmented with up to six SSD drives. Within a file

pool, SSD ‘Strategies’ can be configured to place a copy of that pool’s metadata, or even some of its data, on SSDs in that pool.

Overall system performance impact can be configured to suit the peaks and lulls of an environment’s workload. Change the time or

frequency of any SmartPools job and the amount of resources allocated to SmartPools. For extremely high-utilization environments, a

sample file pool policy can be used to match SmartPools run times to non-peak computing hours. While resources required to execute

SmartPools jobs are low and the defaults work for the vast majority of environments, that extra control can be beneficial when system

resources are heavily utilized.

Figure 10. SmartPools File Pool Policy Engine

File Pool Policies

SmartPools file pool policies can be used to broadly control the three principal attributes of a file, namely:

1. Where a file resides.

• Tier

• Node pool

2. The file performance profile (I/O optimization setting).

• Sequential

• Concurrent

• Random

• SmartCache write caching

3. The protection level of a file.

• Parity protected (+1n to +4n, +2d:1n, etc.)

• Mirrored (2x – 8x)

A file pool policy is built on a file attribute the policy can match on. The attributes a file pool policy can use are any of: File Name, Path,

File Type, File Size, Modified Time, Create Time, Metadata Change Time, Access Time or User Attributes.

Once the file attribute is set to select the appropriate files, the action to be taken on those files can be added – for example: if the

attribute is File Size, additional settings are available to dictate thresholds (all files bigger than… smaller than…). Next, actions are

applied: move to node pool x, set to y protection level and lay out for z access setting.

| File Attribute | Description |
|---|---|
| File Name | Specifies file criteria based on the file name |
| Path | Specifies file criteria based on where the file is stored |
| File Type | Specifies file criteria based on the file-system object type |
| File Size | Specifies file criteria based on the file size |
| Modified Time | Specifies file criteria based on when the file was last modified |
| Create Time | Specifies file criteria based on when the file was created |
| Metadata Change Time | Specifies file criteria based on when the file metadata was last modified |
| Access Time | Specifies file criteria based on when the file was last accessed |
| User Attributes | Specifies file criteria based on custom attributes – see below |

Table 3. OneFS File Pool File Attributes

‘And’ and ‘Or’ operators allow for the combination of criteria within a single policy for extremely granular data manipulation.

File pool policies that dictate placement of data based on its path write data directly to the correct node pool, without a SmartPools

job needing to run. Data matched by file pool policies that use attributes other than path is first written to the disk pool with the

highest available capacity and then moved, if necessary, to match a file pool policy when the next SmartPools job

runs. This ensures that write performance is not sacrificed for initial data placement.

As mentioned above in the node pools section, any data not covered by a file pool policy is moved to a tier that can be selected as a

default for exactly this purpose. If no disk pool has been selected for this purpose, SmartPools will default to the node pool with the

most available capacity.

In practice, default file pool policies are almost always used because they can be very powerful. Most administrators do not want to

set rules to govern all their data. They are generally concerned about some or most of their data in terms of where it sits and how

accessible it is, but there is always data for which location in the cluster is going to be less important. For this data, there is the default

policy, which is used for files for which none of the other policies in the list have applied. Typically, it is set to optimize cost and to

avoid using storage pools that are specifically needed for other data. For example, most default policies are at a lower protection level,

and use only the least expensive tier of storage.

When a file pool policy is created, SmartPools stores it in the OneFS configuration database with any other SmartPools policies.

When a SmartPools job runs, it runs all the policies in order. If a file matches multiple policies, SmartPools will apply only the first rule

it fits. So, for example if there is a rule that moves all jpg files to an A-series archive node pool, and another that moves all files under

2 MB to a performance tier, if the jpg rule appears first in the list, then jpg files under 2 MB will go to nearline, NOT the performance

tier. As mentioned above, criteria can be combined within a single policy using ‘And’ or ‘Or’ so that data can be classified very

granularly. Using this example, if the desired behavior is to have all jpg files over 2 MB moved to the Archive node pool, the file

pool policy can be simply constructed with an ‘And’ operator to cover precisely that condition.
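The first-match behavior and the effect of an ‘And’ condition can be sketched as follows. This is an illustrative model rather than the SmartPools policy engine: the `FileInfo` and `Policy` types, the policy names, and the target labels are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

MB = 1024 * 1024


@dataclass
class FileInfo:
    name: str
    size_bytes: int


@dataclass
class Policy:
    name: str
    matches: Callable[[FileInfo], bool]
    target: str


def is_jpg(f: FileInfo) -> bool:
    return f.name.lower().endswith(".jpg")


def under_2mb(f: FileInfo) -> bool:
    return f.size_bytes < 2 * MB


def over_2mb(f: FileInfo) -> bool:
    return f.size_bytes > 2 * MB


def first_match(policies: List[Policy], f: FileInfo) -> Optional[Policy]:
    """Return the first policy in list order that matches the file, if any."""
    for policy in policies:
        if policy.matches(f):
            return policy
    return None


# Policy order from the example above: the jpg rule appears first.
ordered = [
    Policy("jpg-to-archive", is_jpg, "archive"),
    Policy("small-to-performance", under_2mb, "performance"),
]

# Same intent, but the jpg rule is narrowed with an 'And' condition.
combined = [
    Policy("large-jpg-to-archive", lambda f: is_jpg(f) and over_2mb(f), "archive"),
    Policy("small-to-performance", under_2mb, "performance"),
]

small_jpg = FileInfo("photo.jpg", 1 * MB)
```

With the `ordered` list, the 1 MB jpg matches the jpg rule first and is sent to the archive target. With the `combined` list, the same file no longer matches the narrowed jpg rule and falls through to the performance rule.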

Policy order, and policies themselves, can be easily changed at any time. Specifically, policies can be added, deleted, edited, copied

and re-ordered.

Figure 11. SmartPools File Pool Policy governing data movement

Figure 12. File Pool Policy Example

Figures 11 and 12 illustrate a common file pools use case: An organization wants the active data on their performance nodes in Tier

1 (SAS + SSD) and to move any data not accessed for 6 months to the cost optimized archive Tier 2.

As the list of file pool policies grows (SmartPools currently supports up to 128 policies), it becomes less practical to manually walk

through all of them to see how a file will behave when policies are applied. SmartPools also has some advanced options which are

available on the command line for this kind of scenario testing and troubleshooting.

File pool policies can be created, copied, modified, prioritized or removed at any time. Sample policies are also provided that can be

used as is or as templates for customization.

Custom File Attributes

Custom File Attributes, or user attributes, can be used when more granular control is needed than can be achieved using the standard

file attributes options (File Name, Path, File Type, File Size, Modified Time, Create Time, Metadata Change Time, Access Time). User

Attributes use key value pairs to tag files with additional identifying criteria which SmartPools can then use to apply file pool policies.

While SmartPools has no utility to set file attributes, this can be done easily by using the “setextattr” command.

Custom File Attributes are generally used to designate ownership or create project affinities. For example, a biosciences user

expecting to access a large number of genomic sequencing files as soon as personnel arrive at the lab in the morning might use

Custom File Attributes to ensure these are migrated to the fastest available storage.

Once set, Custom File Attributes are leveraged by SmartPools just as File Name, File Type or any other file attribute to specify

location, protection and performance access for a matching group of files. Unlike other SmartPools file pool policies, Custom File

Attributes can be set from the command line or platform API.

Anatomy of a SmartPools Job

When a SmartPools job runs, SmartPools examines all file attributes and checks them against the list of SmartPools policies. To

maximize efficiency, wherever possible, the SmartPools job utilizes an efficient, parallel metadata scan (logical inode, or LIN, tree

scan) instead of a more expensive directory and file tree-walk. This is even more efficient when one of the SmartPools SSD metadata

acceleration strategies is deployed.

A SmartPools LIN tree scan breaks up the metadata into ranges for each node to work on in parallel. Each node can then dedicate a

one or more threads to execute the scan on its assigned range. A LIN tree walk also ensures each file is opened only once,

which is much more efficient when compared to a directory walk where hard links and other constructs can result in single threading,

multiple opens, etc.
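The idea of dividing the LIN space into per-node ranges can be illustrated with the sketch below. The even, contiguous split is an assumption made for illustration; this paper does not describe how OneFS actually sizes or assigns the ranges, and the function name is hypothetical.

```python
from typing import List, Tuple


def split_lin_range(first_lin: int, last_lin: int, node_count: int) -> List[Tuple[int, int]]:
    """Split an inclusive LIN range into contiguous, near-equal ranges, one per node."""
    if node_count < 1 or last_lin < first_lin:
        raise ValueError("need at least one node and a non-empty range")
    total = last_lin - first_lin + 1
    base, extra = divmod(total, node_count)
    ranges = []
    start = first_lin
    for i in range(node_count):
        # The first `extra` nodes take one additional LIN each.
        size = base + (1 if i < extra else 0)
        if size == 0:
            break  # more nodes than LINs: remaining nodes get no range
        ranges.append((start, start + size - 1))
        start += size
    return ranges
```

Because the ranges do not overlap and together cover the whole space, each LIN is visited exactly once, which mirrors the single-open property described above.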

When the SmartPools file pool policy engine finds a match between a file and a policy, it stops processing policies for that file, since

the first policy match determines what will happen to that file. Next, SmartPools checks the file’s current settings against those the

policy would assign to identify those which do not match. Once SmartPools has the complete list of settings that need to apply to that

file, it sets them all simultaneously, and moves to restripe that file to reflect any and all changes to node pool, protection, SmartCache

use, layout, etc.
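The compare-then-apply step described above can be sketched as a dictionary difference. The setting names and values are hypothetical, and the boolean return value simply stands in for "a restripe is needed"; this is not the OneFS implementation.

```python
from typing import Dict, Tuple


def pending_changes(current: Dict[str, str], desired: Dict[str, str]) -> Dict[str, str]:
    """Return only the policy settings that differ from the file's current settings."""
    return {key: value for key, value in desired.items() if current.get(key) != value}


def apply_policy(current: Dict[str, str], desired: Dict[str, str]) -> Tuple[Dict[str, str], bool]:
    """Apply all differing settings in one step and report whether a restripe is needed."""
    changes = pending_changes(current, desired)
    if not changes:
        return current, False
    return {**current, **changes}, True


# Hypothetical file settings and policy outcome.
current = {"node_pool": "performance", "protection": "+2d:1n", "layout": "concurrent"}
desired = {"node_pool": "archive", "protection": "+2d:1n"}
updated, needs_restripe = apply_policy(current, desired)
```

Here only `node_pool` differs, so a single change is collected and applied, and the file is flagged for restriping.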

File Pool Policy Engine

The SmartPools file pool policy engine falls under the control and management of the Job Engine. The default schedule for this

process is every day at 10pm, and with a low impact policy. The schedule, priority and impact policy can be manually configured and

tailored to a particular environment and workload.

The engine can also be run on-demand using a separate invocation to apply the appropriate file-pool membership settings to an

individual file or subdirectory without having to wait for the background scan to do it.

To test how a new policy will affect file dispositions, a SmartPools job can be run on a subset of the data. This can be either a single

file or directory or group of files or directories. The job can either be run live, to actually make the policy changes, or in a ‘dry-run’

mode to estimate the scope and effect of a policy. This means the end state can be simulated, showing how each file would be

affected by the set of file pool policies in place.

Running a SmartPools job against a directory or group of directories is available as a command line option. The following CLI syntax

will run the engine on-demand for specific files or subtrees:

isi filepool apply [-r] <filename>

For a dry-run assessment that calculates and reports without making changes use:

isi filepool apply -nv [PATH]

For a particular file pool policy, the following information will be returned:

1. Policy Number

2. Files matched

3. Directories matched

4. ADS containers matched

5. ADS streams matched

6. Access changes skipped

7. Protection changes skipped

8. File creation templates matched

9. File data placed on HDDs

10. File data placed on SSDs

When using the CLI utility above, SmartPools will traverse the specified directory tree, as opposed to performing a LIN scan, since

the operation has been specified at the directory level. Also, the command is synchronous and, as such, will wait for completion before

returning the command prompt.

FilePolicy Job

Traditionally, OneFS has used the SmartPools jobs to apply its file pool policies. To accomplish this, the SmartPools job visits every

file, and the SmartPoolsTree job visits a tree of files. However, the scanning portion of these jobs can result in significant random

impact to the cluster and lengthy execution times, particularly in the case of the SmartPools job.

To address this, the FilePolicy job, introduced in OneFS 8.2, provides a faster, lower impact method for applying file pool policies than

the full-blown SmartPools job. In conjunction with the IndexUpdate job, FilePolicy improves job scan performance by using a ‘file

system index’, or changelist, to find files needing policy changes, rather than a full tree scan.
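The difference between a full scan and a changelist-driven pass can be illustrated with the following sketch. It is a conceptual model only: the function name and the representation of the index as a set of paths are assumptions, and the real file system index and IndexUpdate job are not modeled.

```python
from typing import List, Optional, Set


def files_to_evaluate(all_files: Set[str], changelist: Optional[Set[str]] = None) -> List[str]:
    """Select the files a policy pass needs to examine.

    Without a changelist, every file is visited (full scan). With one, only
    changed files that still exist are visited.
    """
    if changelist is None:
        return sorted(all_files)
    return sorted(path for path in changelist if path in all_files)


# Hypothetical paths.
all_files = {"/ifs/a", "/ifs/b", "/ifs/c", "/ifs/d"}
changed = {"/ifs/b", "/ifs/deleted"}
```

In this sample, the changelist-driven pass examines one file instead of four, which is the source of the reduced scanning work.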

Figure 13. File System Index, Jobs, and Consumers.

Avoiding a full treewalk dramatically decreases the amount of locking and metadata scanning work the job is required to perform,

reducing impact on CPU and disk - albeit at the expense of not doing everything that SmartPools does. The FilePolicy job enforces just

the SmartPools file pool policies, as opposed to the storage pool settings. For example, FilePolicy does not deal with changes to

storage pools or storage pool settings, such as:

• Restriping activity due to adding, removing, or reorganizing node pools.

• Changes to storage pool settings or defaults, including protection.

However, most of the time SmartPools and FilePolicy perform the same work. The FilePolicy job can be run in one of four modes:

| FilePolicy Mode | Description |
| --- | --- |
| Default | Enacts full policy changes on files and directories. |
| Dry Run | Calculates and reports on the policy changes that would be made. |
| Directory Only | Processes policies for directories but skips the actual files. |
| Policy Only | Applies the matching file pool policies but skips the actual data restriping. |

Table 4: FilePolicy job execution modes.


These modes can be easily selected from the FilePolicy job configuration WebUI, by navigating to Cluster Management > Job

Operations > File Policy > View/Edit:

Figure 14. FilePolicy job mode selection.

Disabled by default, FilePolicy supports the full range of file pool policy features, reports the same information, and provides the same

configuration options as the SmartPools job. Since FilePolicy is a changelist-based job, it performs best when run frequently - once or

multiple times a day, depending on the configured file pool policies, data size and rate of change.

Job schedules can easily be configured from the OneFS WebUI by navigating to Cluster Management > Job Operations, highlighting the desired job and selecting ‘View/Edit’. The following example illustrates configuring the IndexUpdate job to run every six hours at a LOW impact level with a priority value of 5:

Figure 15. IndexUpdate job schedule configuration via the OneFS WebUI.


When enabling and using the FilePolicy and IndexUpdate jobs, the recommendation is to continue running the SmartPools job as well,

but at a reduced frequency (monthly).

In addition to running on a configured schedule, the FilePolicy job can also be executed manually.

FilePolicy requires access to a current index. If the IndexUpdate job has not yet been run, attempting to start the FilePolicy job will fail with the error below, which prompts the administrator to run the IndexUpdate job first. Once the index has been created, the FilePolicy job will run successfully. The IndexUpdate job can be run several times daily (e.g., every six hours) to keep the index current and prevent the snapshots from getting large.

Figure 16. FilePolicy job error message prompting to run IndexUpdate first.

Consider using the FilePolicy job with the job schedules below for workflows and datasets with the following characteristics:

• Data with long retention times

• Large numbers of small files

• Path-based file pool filters configured

• The FSAnalyze job already running on the cluster (InsightIQ-monitored clusters)

• A SnapshotIQ schedule already configured

• A SmartPools job that typically takes a day or more to run to completion at LOW impact

On clusters without the characteristics described above, the recommendation is to continue running the SmartPools job as usual

and to not activate the FilePolicy job.

The following table provides a suggested job schedule when deploying FilePolicy:

| Job | Schedule | Impact | Priority |
| --- | --- | --- | --- |
| FilePolicy | Every day at 22:00 | LOW | 6 |
| IndexUpdate | Every six hours, every day | LOW | 5 |
| SmartPools | Monthly – Sunday at 23:00 | LOW | 6 |

Table 4: Suggested job schedule, priority and impact configuration when activating the FilePolicy job.

Bear in mind that no two clusters are the same, so this suggested job schedule may require additional tuning to meet the needs of

a specific environment.
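For planning purposes, the suggested schedule can be captured as plain data and reviewed before it is entered in the WebUI. The sketch below is illustrative only: the `JobSchedule` type and helper functions are hypothetical, and the ordering helper assumes that a lower priority value denotes a higher priority, which should be confirmed against the OneFS Job Engine documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JobSchedule:
    job: str
    schedule: str
    impact: str
    priority: int


# Values copied from the suggested schedule table.
SUGGESTED_SCHEDULE = [
    JobSchedule("FilePolicy", "Every day at 22:00", "LOW", 6),
    JobSchedule("IndexUpdate", "Every six hours, every day", "LOW", 5),
    JobSchedule("SmartPools", "Monthly – Sunday at 23:00", "LOW", 6),
]


def by_priority(schedule):
    # Assumption: a lower number means a higher priority.
    # sorted() is stable, so jobs with equal priority keep their table order.
    return sorted(schedule, key=lambda j: j.priority)


def index_updates_per_day(interval_hours: int = 6) -> int:
    # A six-hour interval yields four IndexUpdate runs per day.
    return 24 // interval_hours
```

Under that assumption, IndexUpdate is ordered ahead of FilePolicy and SmartPools, which is consistent with FilePolicy depending on a current index.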

Further information on job configuration is available in the OneFS Job Engine white paper.


Data Location

The file system explorer provides a detailed view of where SmartPools-managed data is at any time by both the actual node pool

location and the file pool policy-dictated location (i.e. where that file will move after the next successful completion of the SmartPools

job).

When data is written to the cluster, SmartPools writes it to a single node pool only. This means that, in almost all cases, a file exists in

its entirety within a node pool, and not across node pools. SmartPools determines which pool to write to based on one of two

scenarios: If a file matches a file pool policy based on directory path, that file will be written into the node pool dictated by the file pool

policy immediately. If that file matches a file pool policy which is based on any other criteria besides path name, SmartPools will write

that file to the node pool with the most available capacity. If the file matches a file pool policy that places it on a different node pool

than the highest capacity node pool, it will be moved when the next scheduled SmartPools job runs.
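The write placement behavior described above can be summarized in a short sketch. It is a simplified model rather than the OneFS implementation: the `FilePoolPolicy` type, the use of a file extension as the only non-path criterion, first-match policy ordering, and the handling of files that match no policy are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FilePoolPolicy:
    name: str
    target_pool: str
    path_prefix: Optional[str] = None  # set for path-based policies
    extension: Optional[str] = None    # stand-in for any non-path criterion

    def matches(self, path: str) -> bool:
        if self.path_prefix is not None:
            return path.startswith(self.path_prefix)
        if self.extension is not None:
            return path.endswith(self.extension)
        return False


def initial_write_pool(path, policies, free_bytes_by_pool):
    """Return (pool written to now, pool expected after the next SmartPools job)."""
    most_free = max(free_bytes_by_pool, key=free_bytes_by_pool.get)
    for policy in policies:  # assumption: the first matching policy wins
        if policy.matches(path):
            if policy.path_prefix is not None:
                # Path-based match: written directly to the policy's node pool.
                return policy.target_pool, policy.target_pool
            # Non-path match: written to the pool with the most available
            # capacity, then moved when the next SmartPools job runs.
            return most_free, policy.target_pool
    # Assumption: with no matching policy, the file stays where it was written.
    return most_free, most_free
```

For example, with a path-based policy targeting a hypothetical `fast` pool and an extension-based policy targeting `archive`, a `.log` file outside the policy path is first written to whichever pool has the most free space and is relocated to `archive` later.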

Figure 16. File Pools and Node Pools

For performance, charge back, ownership or security purposes it is sometimes important to know exactly where a specific file or group

of files is on disk at any given time. While any file in a SmartPools environment typically exists entirely in one Storage Pool, there are

exceptions when a single file may be split (usually only on a temporary basis) across two or more node pools at one time.

Node Pool Affinity

SmartPools generally only allows a file to reside in one node pool. A file may temporarily span several node pools in some situations.

When a file pool policy dictates a file move from one node pool to another, that file will exist partially on the source node pool and

partially on the destination node pool until the move is complete. If the node pool configuration is changed (for example, when splitting

a node pool into two node pools) a file may be split across those two new pools until the next scheduled SmartPools job runs. If a

node pool fills up and data spills over to another node pool so the cluster can continue accepting writes, a file may be split over the

intended node pool and the default spillover node pool. The last circumstance under which a file may span more than one node pool is

for typical restriping activities like cross-node pool rebalances or rebuilds.

Performance with SmartPools

One of the principal goals of storage tiering is to reduce data storage costs without compromising data protection or access. There are several areas in which tiering can have a positive or negative impact on performance. It is important to consider each, and how SmartPools behaves in each situation.

Using SmartPools to Improve Performance

SmartPools can be used to improve performance in several ways:

• Location-based Performance

• Performance Settings

• SSD Strategies

• Performance Isolation


Location-based performance leverages SmartPools file pool policies to classify and direct data to the most appropriate media (SSD,

SAS, or SATA) for its performance requirements.

In addition, SmartPools file pool rules also allow data to be optimized for both performance and protection.

As we have seen, SSDs can also be employed in a variety of ways, accelerating combinations of data, metadata read and write, and

metadata read performance on other tiers.

Another application of location-based performance is for performance isolation goals. Using SmartPools, a specific node pool can be

isolated from all but the highest performance data, and file pool policies can be used to direct all but the most critical data away from

this node pool. This approach is sometimes used to isolate just a few nodes of a certain type out of the cluster for intense work.

Because node pools are easily configurable, a larger node pool can be split, and one of the resulting node pools isolated and used to

meet a temporary requirement. The split pools can then be reconfigured back into a larger node pool.

For example, the administrators of this cluster are meeting a temporary need to provide higher performance support for a specific

application for a limited period of time. They have split their highest performance tier and set policies to migrate all data not related to

their project to other node pools. The servers running their critical application have direct access to the isolated node pool. A default

policy has been set to ensure all other data in the environment is not placed on the isolated node pool. In this way, the newly-created

node pool is completely available to the critical application.

Figure 17. Performance Isolation Example

Data Access Settings

At the file pool (or even the single file) level, Data Access Settings can be configured to optimize data access for the type of application

accessing it. Data can be optimized for Concurrent, Streaming or Random access. Each one of these settings changes how data is

laid out on disk and how it is cached.


| Data Access Setting | Description | On Disk Layout | Caching |
| --- | --- | --- | --- |
| Concurrency | Optimizes for current load on the cluster, featuring many simultaneous clients. This setting provides the best behavior for mixed workloads. | Stripes data across the minimum number of drives required to achieve the data protection setting configured for the file. | Moderate prefetching |
| Streaming | Optimizes for high-speed streaming of a single file, for example to enable very fast reading with a single client. | Stripes data across a larger number of drives. | Aggressive prefetching |
| Random | Optimizes for unpredictable access to the file by performing almost no cache prefetching. | Stripes data across the minimum number of drives required to achieve the data protection setting configured for the file. | Little to no prefetching |

Table 5. SmartPools Data Access Settings

As the settings indicate, the ‘random’ data access setting performs little to no read-cache prefetching to avoid wasted disk access. This works best for small files (< 128KB) and large files with random small block accesses. Streaming access works best for sequentially read medium to large files. This access pattern uses aggressive prefetching to improve overall read throughput, and the on-disk layout spreads the file across a large number of disks to optimize access. Concurrency access (the default setting for all file data) is the middle ground with moderate prefetching. Use this for file sets with a mix of both random and sequential access.
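The guidance above can be expressed as a simple selection heuristic. The sketch below is an illustration rather than a OneFS feature: the function name and the three pattern labels are hypothetical, and treating every file of 128KB or more as "medium to large" is an assumption, since the text gives no upper threshold.

```python
SMALL_FILE_BYTES = 128 * 1024  # the "< 128KB" boundary from the text

VALID_PATTERNS = {"random", "sequential", "mixed"}


def suggest_access_setting(file_size_bytes: int, pattern: str) -> str:
    """Suggest a data access setting from file size and dominant access pattern."""
    if pattern not in VALID_PATTERNS:
        raise ValueError(f"unknown access pattern: {pattern!r}")
    # Small files, and larger files read in random small blocks, gain little
    # from prefetching.
    if file_size_bytes < SMALL_FILE_BYTES or pattern == "random":
        return "random"
    # Sequentially read medium to large files benefit from aggressive prefetching.
    if pattern == "sequential":
        return "streaming"
    # Mixed access falls back to the default setting.
    return "concurrency"
```

A heuristic of this kind is only a starting point; the appropriate setting for a given file pool is best confirmed by measuring the actual workload.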

Leveraging SSDs for Metadata & Data Performance

Adding SSDs within a node pool can boost performance significantly for many workloads. In the OneFS architecture, SSDs can be

used to accelerate performance across the entire cluster using SSD Strategies for data or metadata acceleration.

There are several SSD Strategies to choose from: Metadata Acceleration, Avoid SSDs, Data on SSDs, Global Namespace

Acceleration, and L3 cache (SmartFlash). The default setting, for pools where SSDs are available, is ‘Metadata Acceleration’.

• Metadata read acceleration: Creates a preferred mirror of file metadata on SSD and writes the rest of the metadata, plus all the actual file data, to HDDs.

• Metadata read & write acceleration: Creates all the mirrors of a file’s metadata on SSD.

•