Table of Contents

Cisco Switching Black Book...............................................................................................................................1

Introduction.........................................................................................................................................................4

Overview..................................................................................................................................................4

Is This Book for You?..............................................................................................................................4

How to Use This Book.............................................................................................................................4

The Black Book Philosophy....................................................................................................................5

Chapter 1: Network Switching Fundamentals.................................................................................................6

In Depth...................................................................................................................................................6

Physical Media and Switching Types......................................................................................................6

A Bit of History.......................................................................................................................................7

Networking Architectures.................................................................................................................7

The Pieces of Technology........................................................................................................................9

Repeaters.........................................................................................................................................10

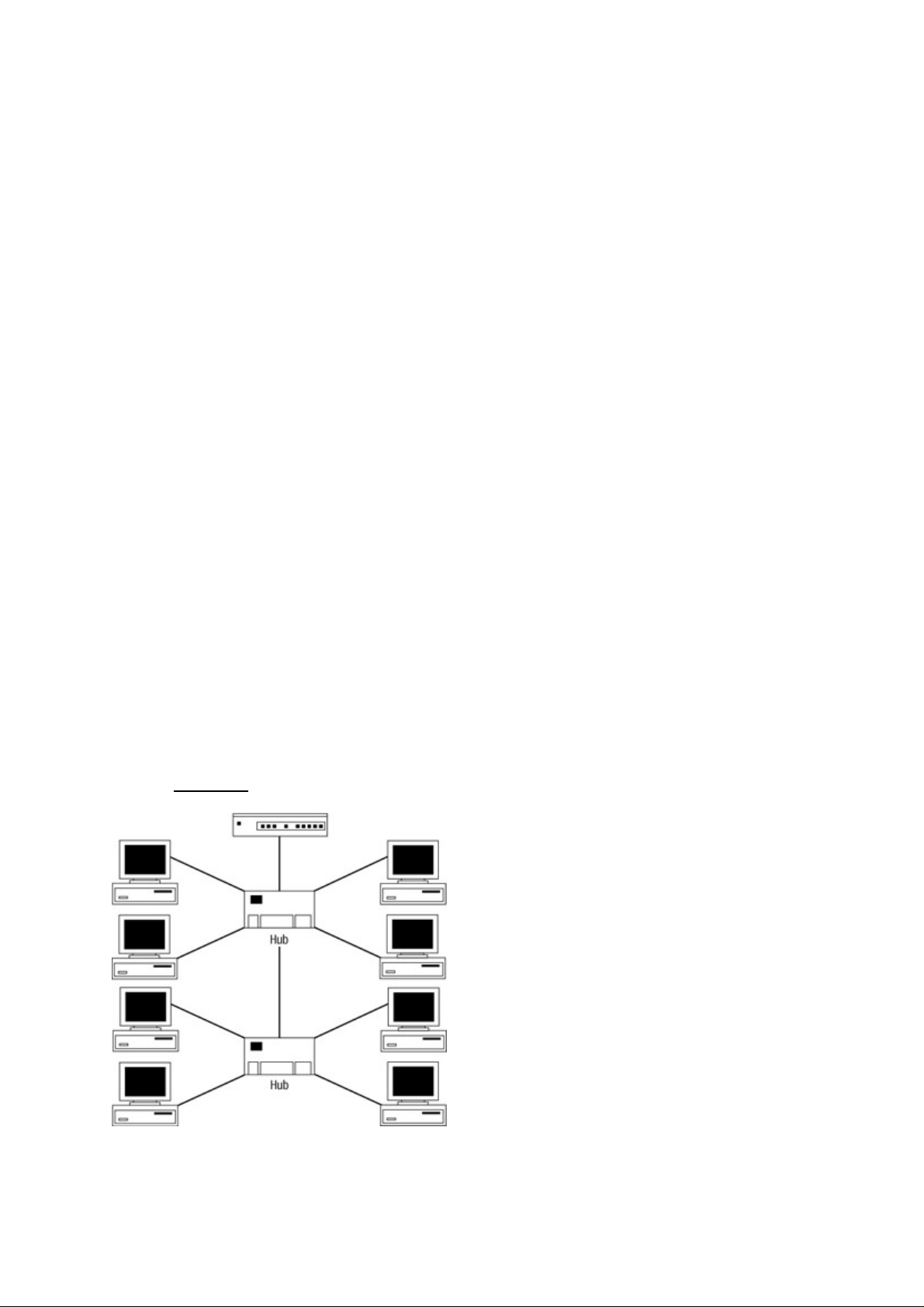

Hubs.................................................................................................................................................10

Bridges.............................................................................................................................................11

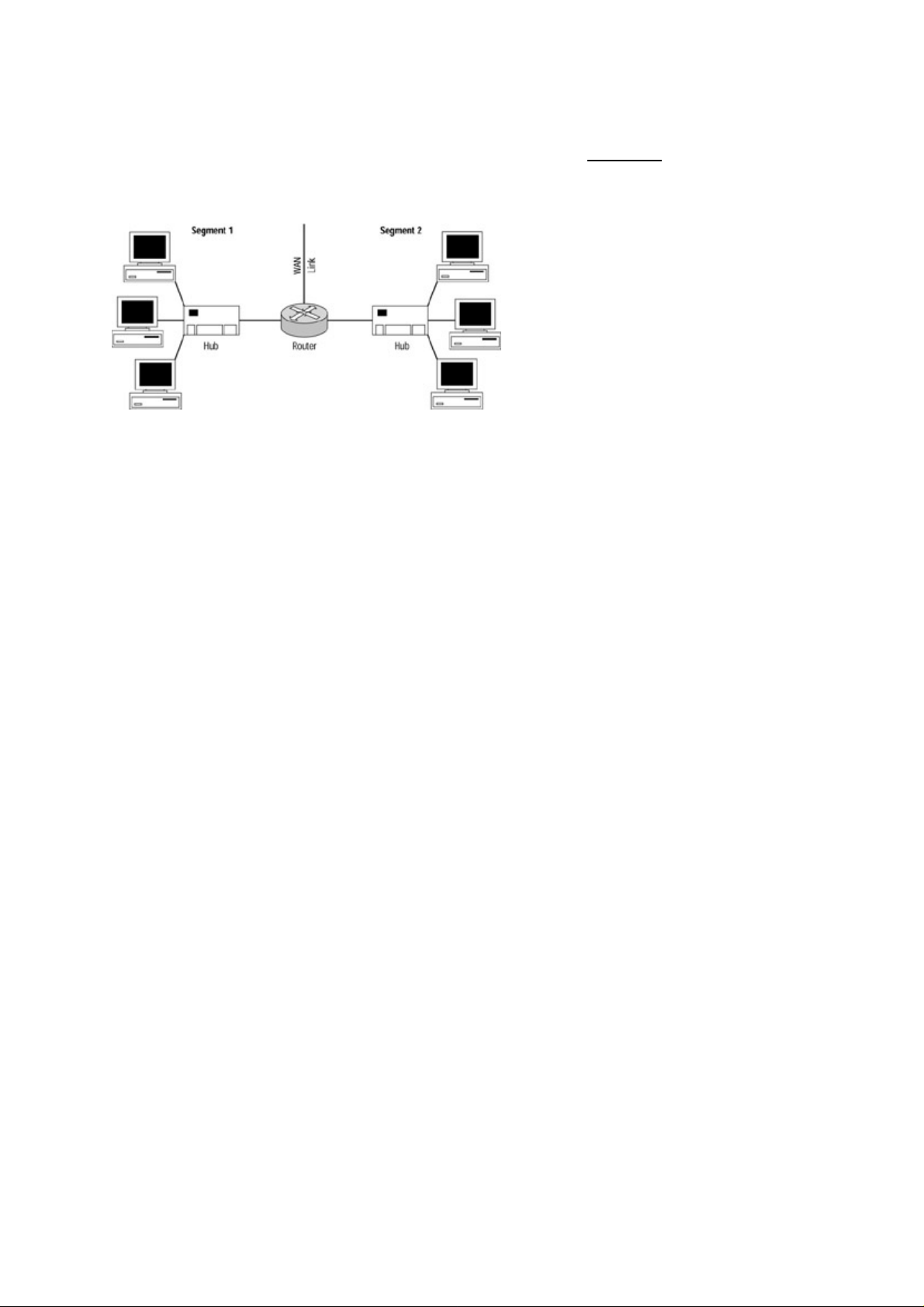

Routers.............................................................................................................................................13

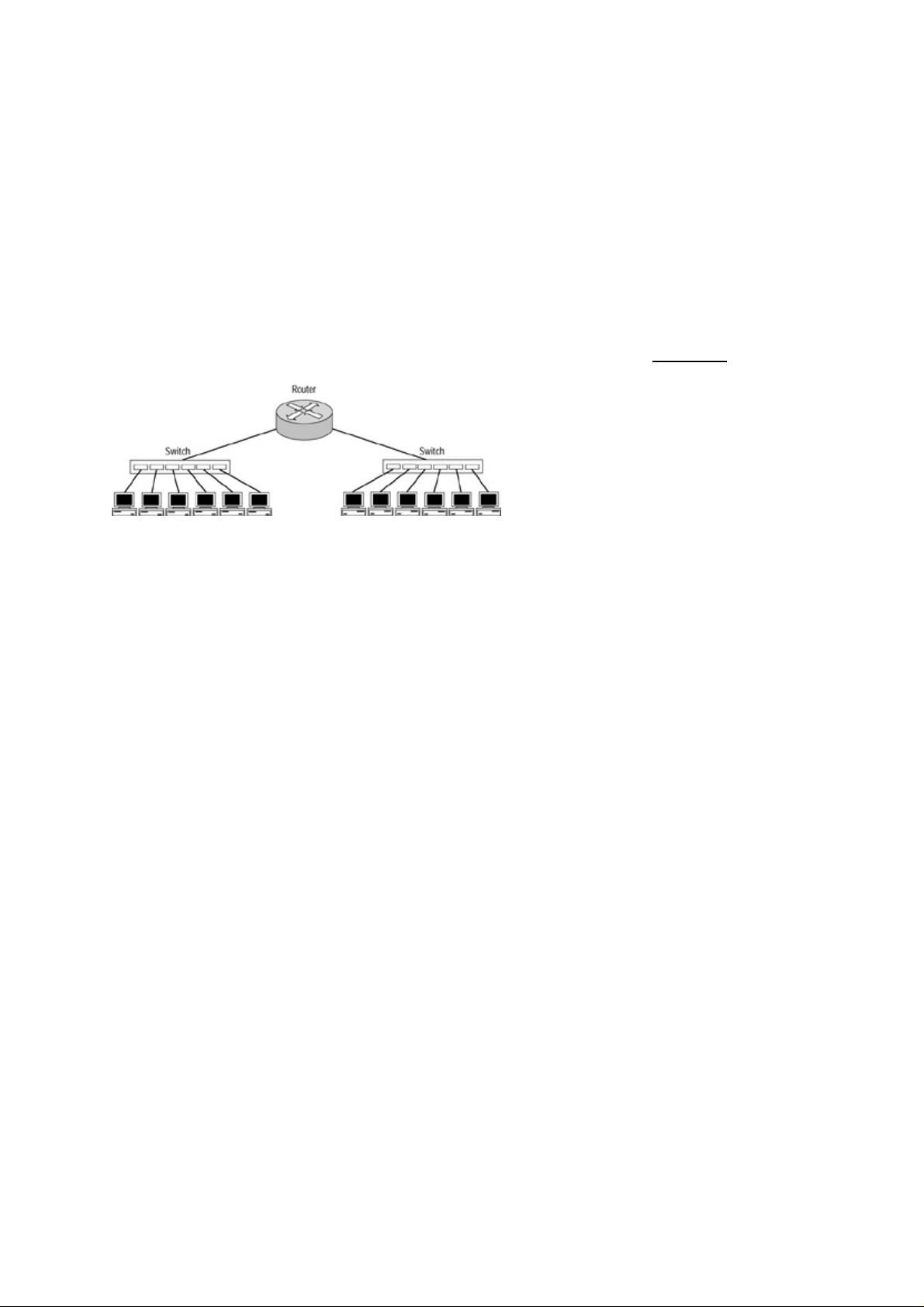

Switches...........................................................................................................................................13

Network Design.....................................................................................................................................14

Collision Domains...........................................................................................................................15

Broadcast Domains..........................................................................................................................16

Why Upgrade to Switches?.............................................................................................................16

Switched Forwarding......................................................................................................................19

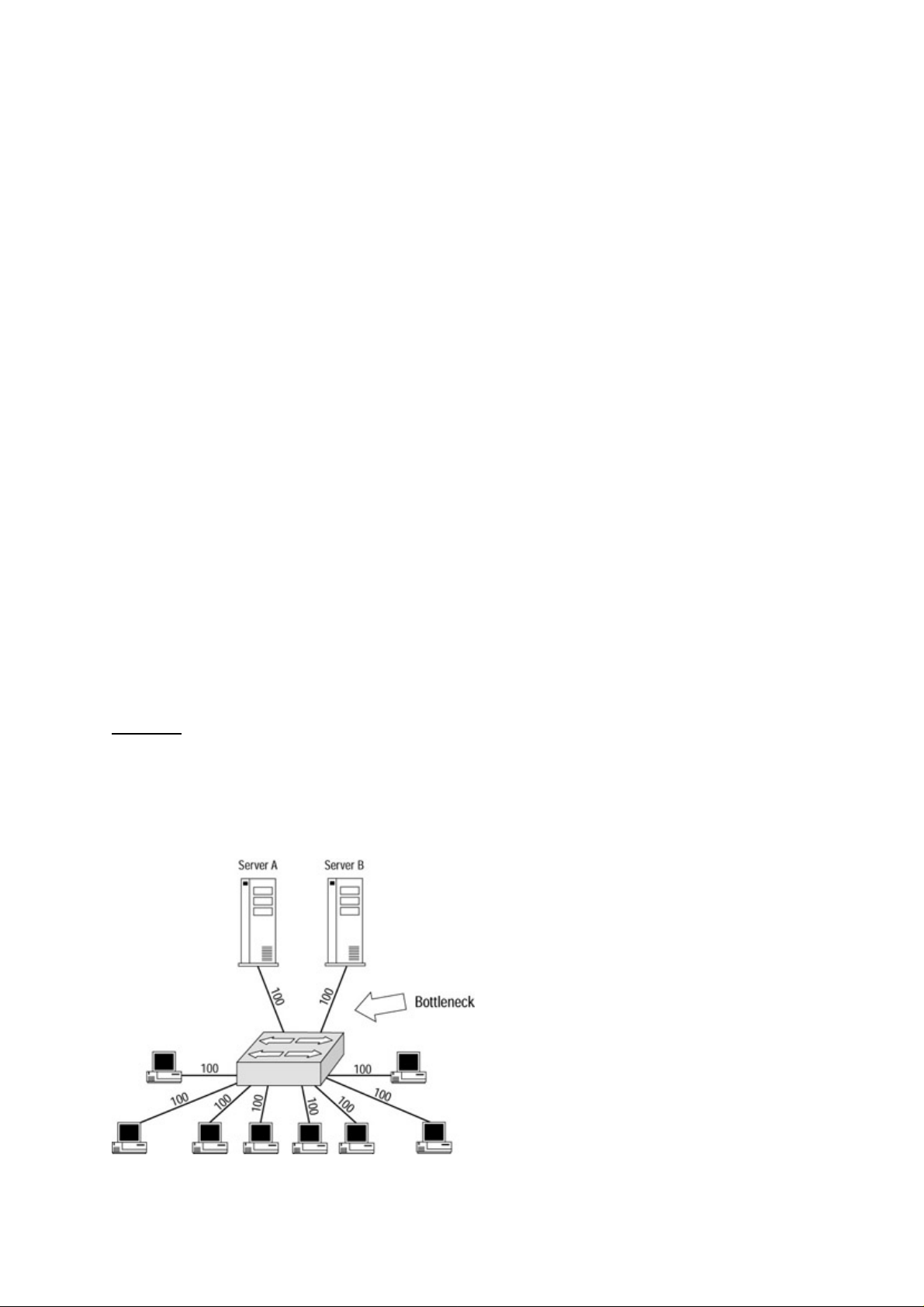

Switched Network Bottlenecks.......................................................................................................20

The Rule of the Network Road........................................................................................................22

Switched Ethernet Innovations..............................................................................................................23

Full−Duplex Ethernet......................................................................................................................23

Fast Ethernet....................................................................................................................................23

Gigabit Ethernet..............................................................................................................................24

The Cisco IOS........................................................................................................................................24

Connecting to the Switch................................................................................................................25

Powering Up the Switch..................................................................................................................25

The Challenges.......................................................................................................................................27

Today’s Trend........................................................................................................................................27

Entering and Exiting Privileged EXEC Mode.......................................................................................28

Entering and Exiting Global Configuration Mode.................................................................................28

Entering and Exiting Interface Configuration Mode.............................................................................28

Entering and Exiting Subinterface Configuration Mode.......................................................................28

Saving Configuration Changes..............................................................................................................29

Chapter 2: Basic Switch Configuration..........................................................................................................30

In Depth.................................................................................................................................................30

Command−Line Interfaces....................................................................................................................30

Campus Hierarchical Switching Model.................................................................................................31

Access Layer...................................................................................................................................32

Distribution Layer...........................................................................................................................32

Core Layer.......................................................................................................................................33

Remote Network Monitoring.................................................................................................................33

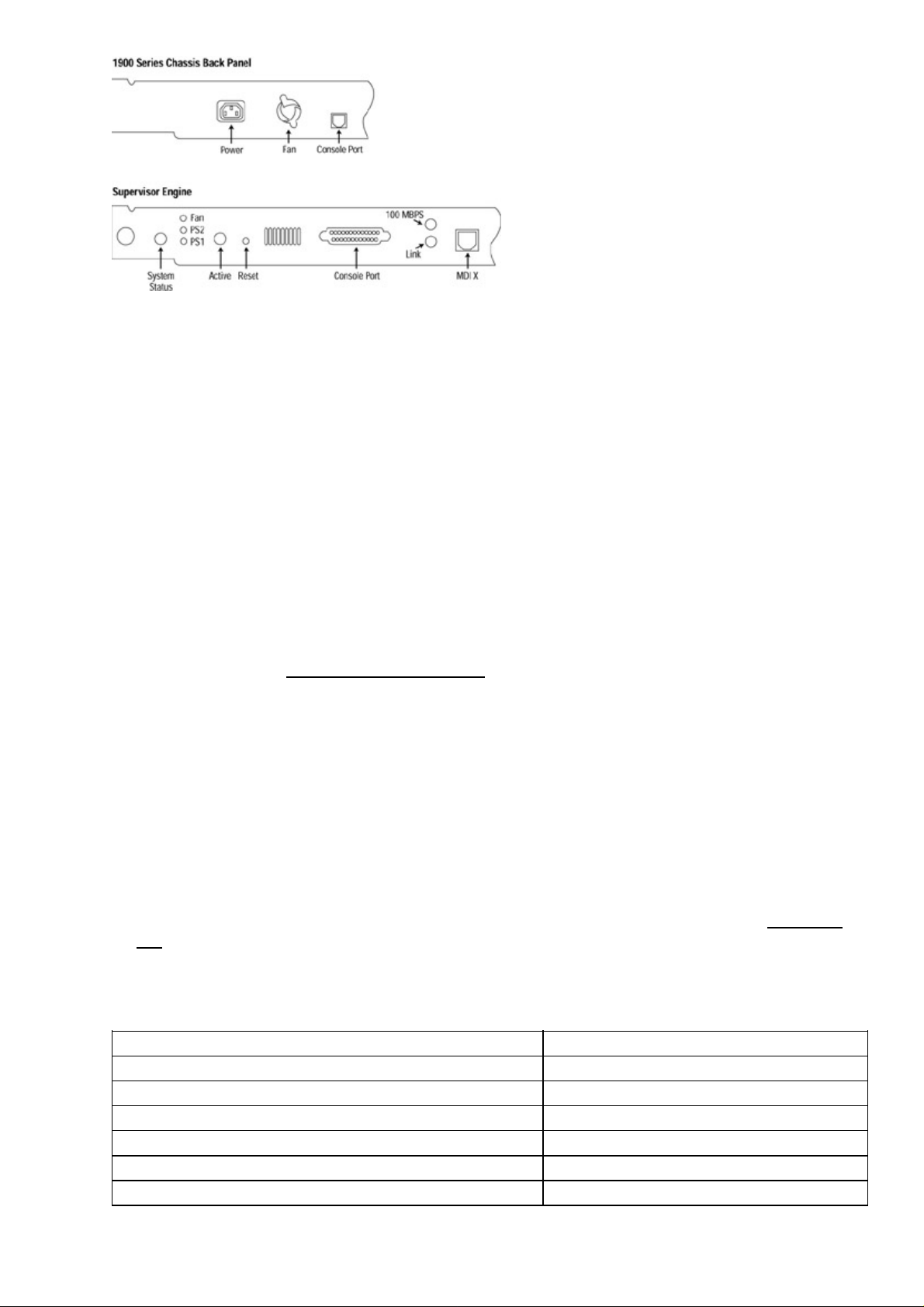

Connecting to the Console Port.............................................................................................................34

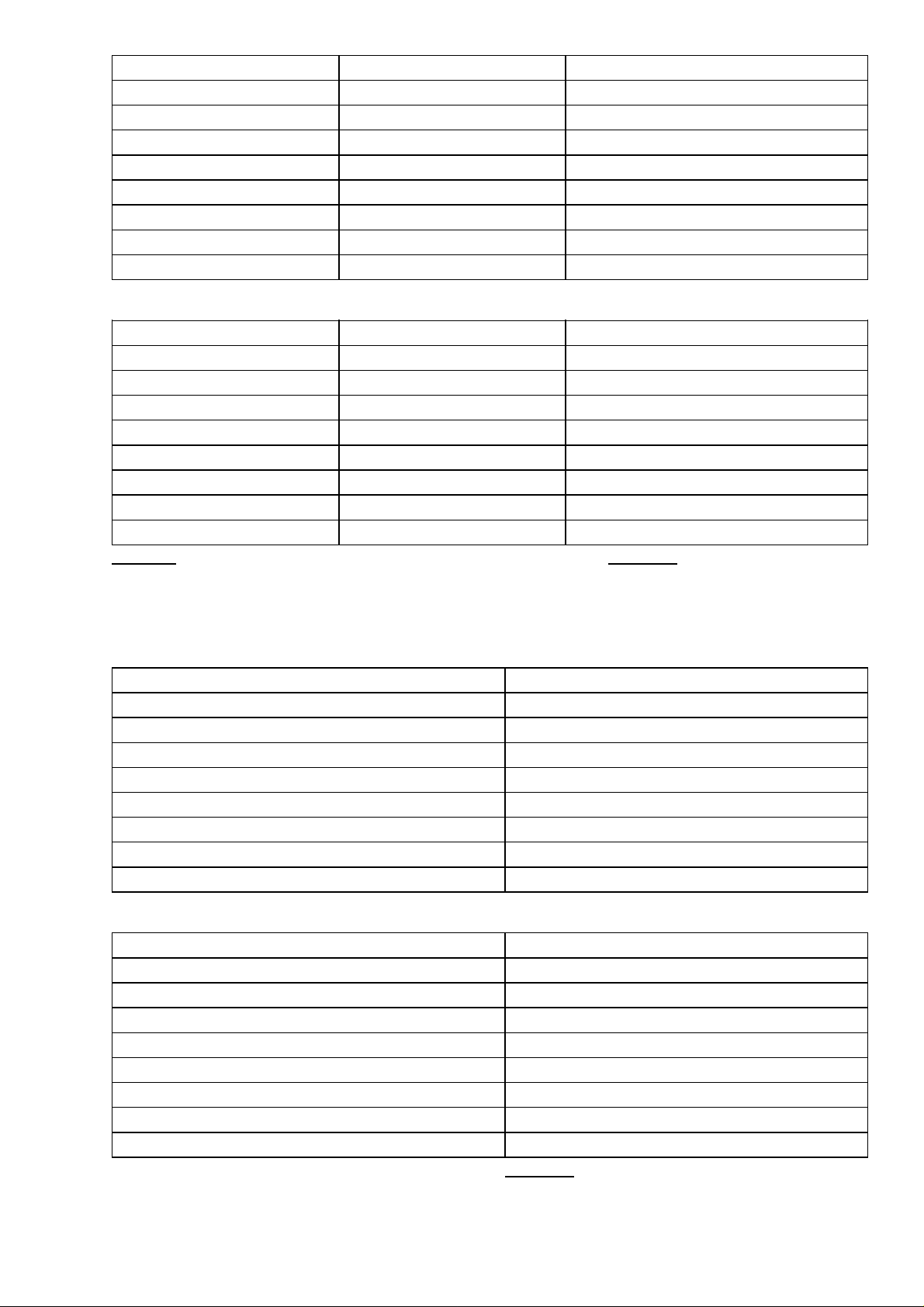

Console Cable Pinouts.....................................................................................................................35

Console Connectors.........................................................................................................................36

The RJ−45−to−AUX Port Console Connector Pinouts...................................................................36

Switch IOSs...........................................................................................................................................38

The IOS Configuration Modes........................................................................................................38

Limiting Telnet Access..........................................................................................................................39

Implementing Privilege Levels..............................................................................................................39

Configuring an IOS−Based CLI Switch................................................................................................39

Setting the Login Passwords...........................................................................................................40

Setting Privilege Levels...................................................................................................................40

Assigning Allowable Commands....................................................................................................40

Setting the Console Port Time−out Value.......................................................................................40

Configuring the Telnet Time−out Value.........................................................................................41

Configuring the Hostname..............................................................................................................41

Configuring the Date and Time.......................................................................................................41

Configuring an IP Address and Netmask........................................................................................41

Configuring a Default Route and Gateway.....................................................................................41

Configuring Port Speed and Duplex...............................................................................................42

Enabling SNMP Contact.................................................................................................................42

Configuring a Set/Clear−Based CLI Switch..........................................................................................42

Logging On to a Switch...................................................................................................................42

Setting the Login and Enable Passwords........................................................................................43

Changing the Console Prompt.........................................................................................................43

Entering a Contact Name and Location Information......................................................................44

Configuring System and Time Information....................................................................................44

Configuring an IP Address and Netmask........................................................................................44

Configuring a Default Route and Gateway.....................................................................................45

Viewing the Default Routes............................................................................................................45

Configuring Port Speed and Duplex...............................................................................................45

Enabling SNMP...............................................................................................................................46

Configuring Trap Message Targets.................................................................................................46

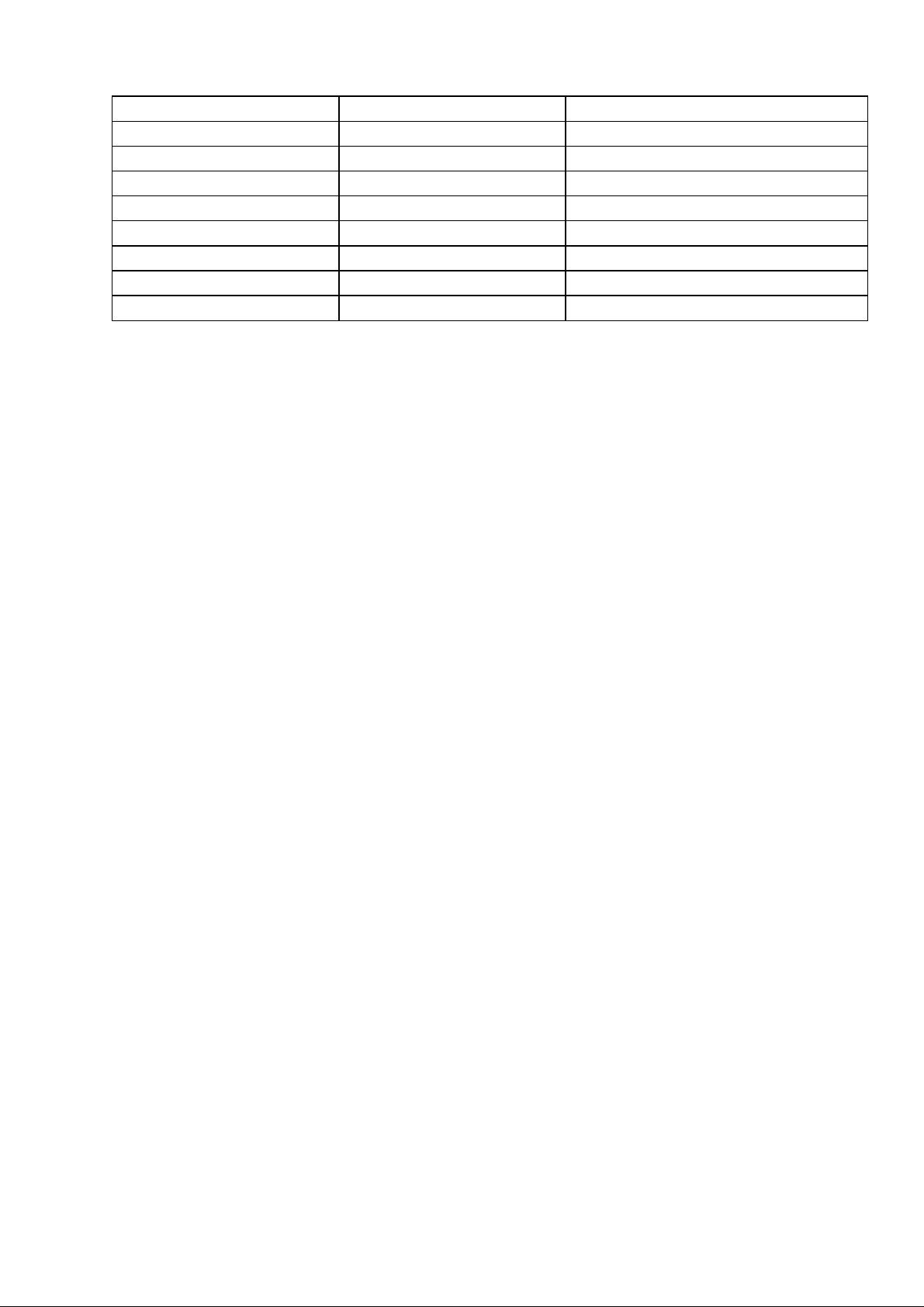

Configuring a Menu−Driven IOS..........................................................................................................47

Configuring the Console Port..........................................................................................................48

Configuring Telnet..........................................................................................................................48

Configuring the Password...............................................................................................................48

Configuring an IP Address and Default Gateway...........................................................................48

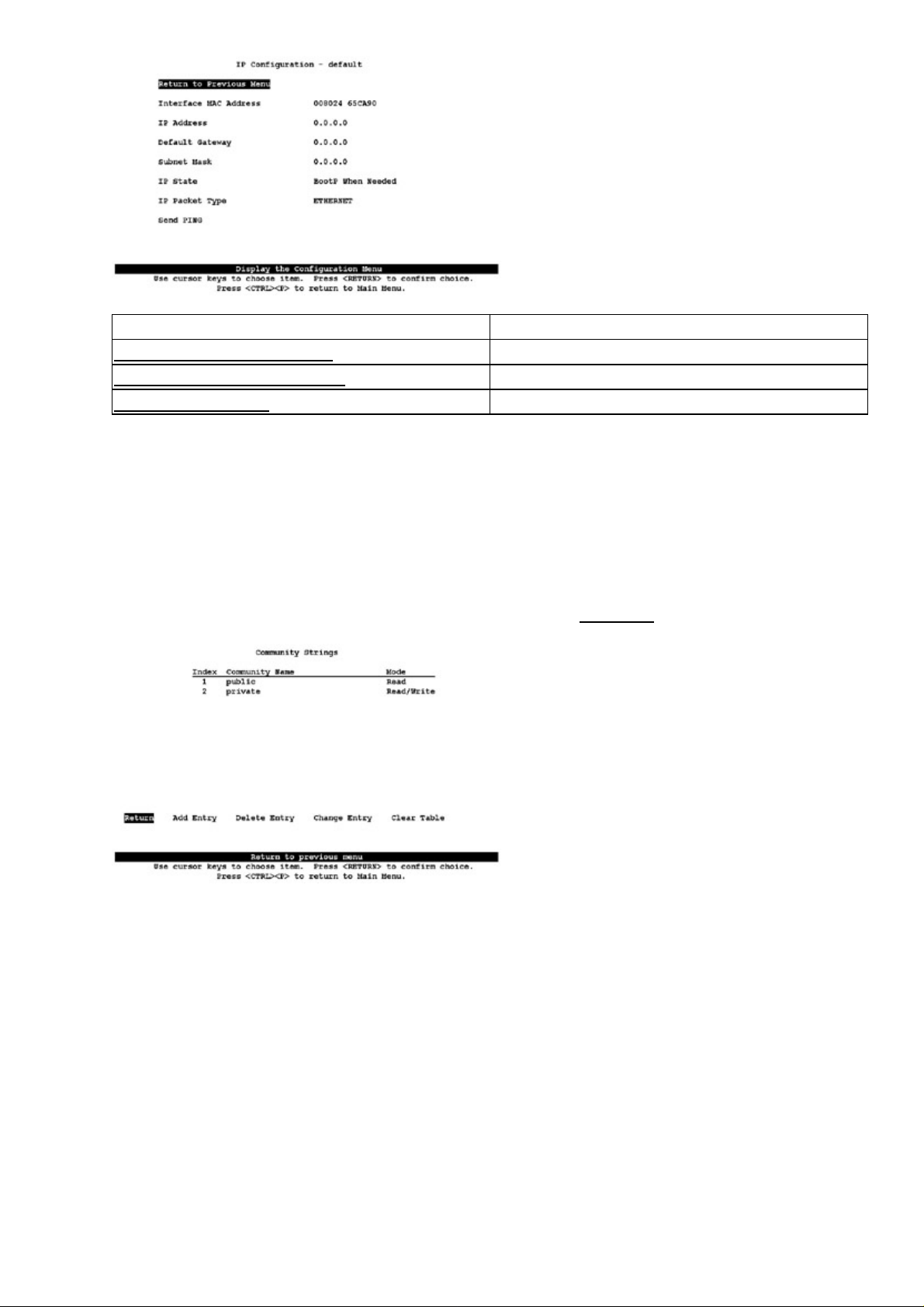

Configuring SNMP..........................................................................................................................49

Configuring ROM..................................................................................................................................50

Entering ROM Configuration Mode...............................................................................................50

Booting ROM Mode from a Flash Device......................................................................................50

Configuring SNMP..........................................................................................................................51

Configuring RMON........................................................................................................................51

Configuring RMON on a Set/Clear−Based Interface.....................................................................51

Using Set/Clear Command Set Recall Key Sequences..........................................................................52

Using IOS−Based Command Editing Keys and Functions...................................................................52

Chapter 3: WAN Switching.............................................................................................................................54

In Depth.................................................................................................................................................54

WAN Transmission Media....................................................................................................................55

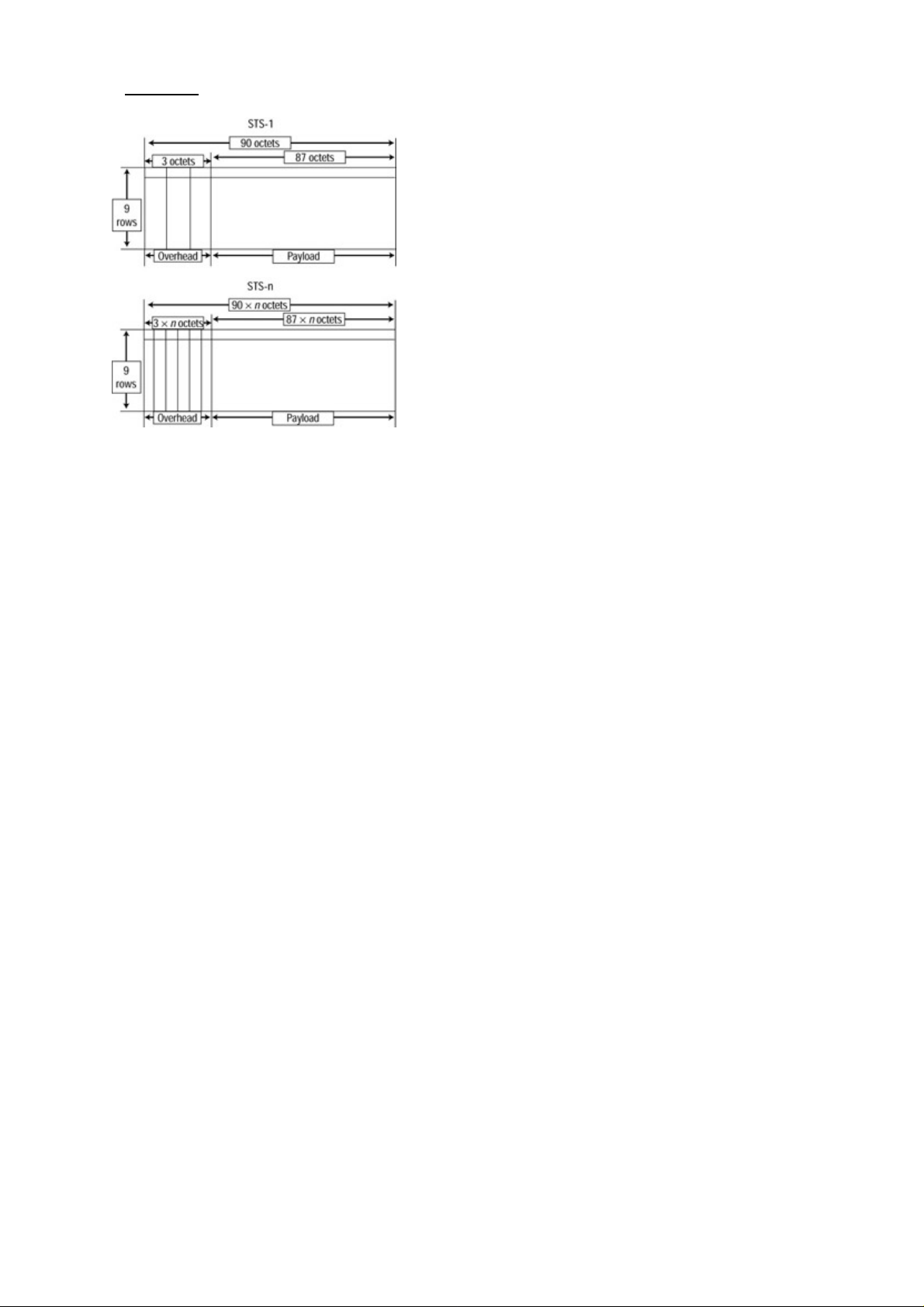

Synchronous Transport Signal (STS)..............................................................................................56

Cisco WAN Switches............................................................................................................................57

MGX 8200 Series............................................................................................................................57

IGX 8400 Series..............................................................................................................................58

BPX 8600 Series Wide-Area Switches

MGX 8800 Series Wide-Area Edge Switches

WAN Switch Hardware Overview

Cisco WAN Switch Network Topologies

Network Management

The CLI

WAN Manager

Accessing and Setting Up IGX and BPX Switches

Adding New Users

Displaying a User’s Password

Changing a User’s Password

Using the History Command

Displaying a Summary of All Card Modules

Displaying Detailed Information for a Card Module

Displaying the Power and Temperature of a Switch

Displaying the ASM Statistics for BPX

Configuring the ASM Setting for BPX

Logging Out

Resetting the Switch

Displaying Other Switches

Setting the Switch Name

Setting the Time Zone

Configuring the Time and Date

Configuring the Control and Auxiliary Ports

Modifying the Functions of the Control and Auxiliary Ports

Configuring the Printing Function

Configuring the LAN Interface

Accessing the MGX 8850 and 8220

Adding New Users

Changing Passwords

Assigning a Switch Hostname

Displaying a Summary of All Modules

Displaying Detailed Information for the Current Card

Changing the Time and Date

Displaying the Configuration of the Maintenance and Control Ports

Displaying the IP Address

Configuring the IP Interface

Displaying the Alarm Level of the Switch

Chapter 4: LAN Switch Architectures............................................................................................................68

In Depth.................................................................................................................................................68

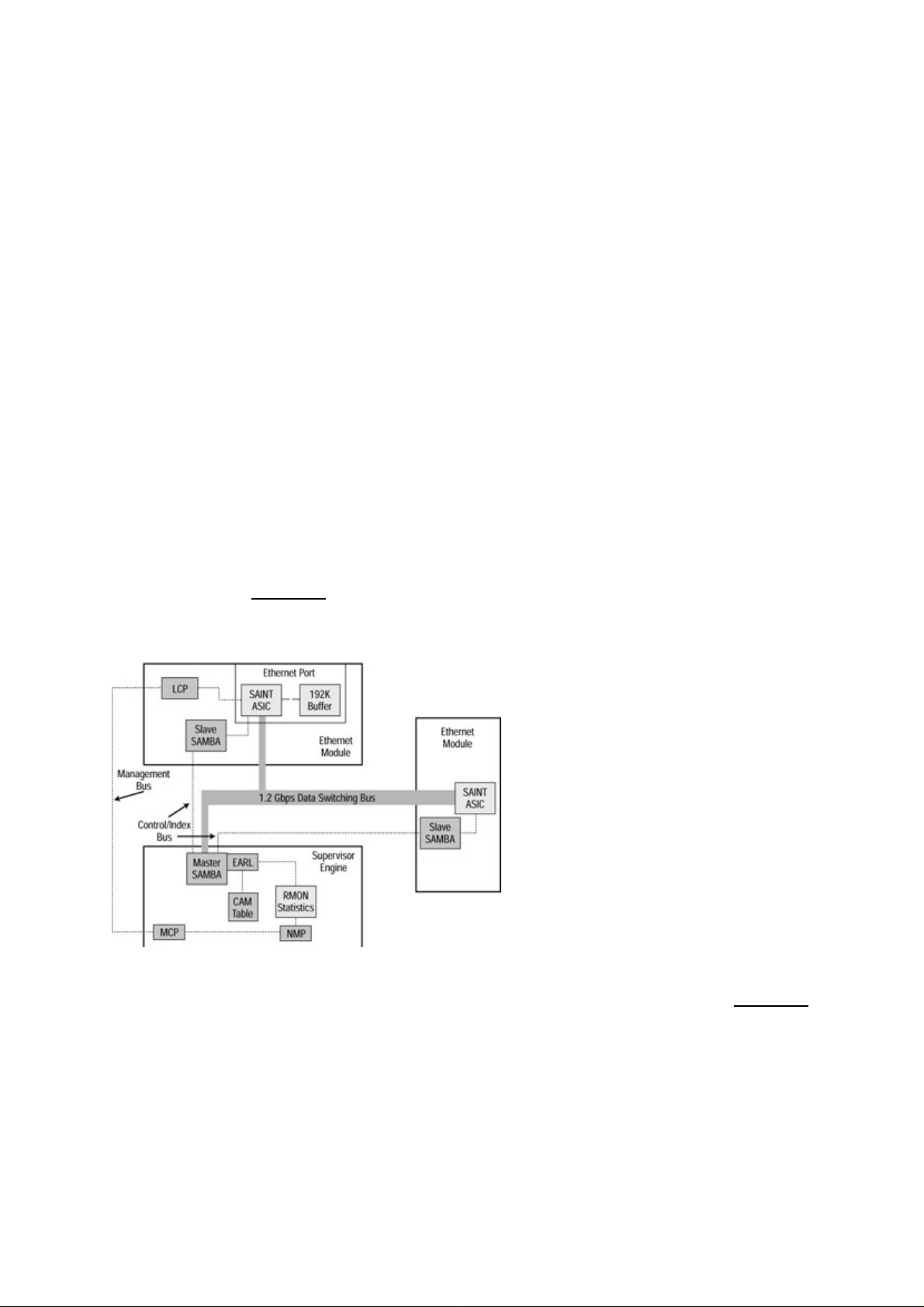

The Catalyst Crescendo Architecture....................................................................................................68

BUS.................................................................................................................................................68

ASICs..............................................................................................................................................69

The Crescendo Processors...............................................................................................................71

Crescendo Logic Units....................................................................................................................71

Other Cisco Switch Processors, Buses, ASICs, and Logic Units..........................................................72

CAM................................................................................................................................................72

AXIS Bus........................................................................................................................................72

CEF ASIC........................................................................................................................................73

Phoenix ASIC..................................................................................................................................75

LCP

SAGE ASIC

QTP ASIC

QMAC

Bridging Types

Source Route Bridging

Source Route Transparent Bridging

Source Route Translational Bridging

Transparent Bridging

Source Route Switching

Switching Paths

Process Switching

Fast Switching

Autonomous Switching

Silicon Switching

Optimum Switching

Distributed Switching

NetFlow Switching

System Message Logging

Loading an Image on the Supervisor Engine III

Booting the Supervisor Engine III from Flash

Setting the Boot Configuration Register

Configuring Cisco Express Forwarding

Enabling CEF

Disabling CEF

Enabling dCEF

Disabling dCEF

Disabling CEF on an Individual Interface

Configuring CEF Load Balancing

Disabling CEF Load Balancing

Enabling Network Accounting for CEF

Setting Network Accounting for CEF to Collect Packet Numbers

Viewing Network Accounting for CEF Statistics

Viewing CEF Packet-Dropped Statistics

Viewing Non-CEF Path Packets

Disabling Per-Destination Load Sharing

Viewing the Adjacency Table on the 8500 GSR

Clearing the Adjacency Table on the 8500 GSR

Enabling Console Session Logging on a Set/Clear Command-Based IOS

Enabling Telnet Session Logging on a Set/Clear Command-Based IOS

Disabling Console Session Logging on a Set/Clear Command-Based IOS

Disabling Telnet Session Logging on a Set/Clear Command-Based IOS

Setting the System Message Severity Levels on a Set/Clear Command-Based IOS

Enabling the Logging Time Stamp on a Set/Clear Command-Based Switch

Disabling the Logging Time Stamp on a Set/Clear Command-Based Switch

Configuring the Logging Buffer Size on a Set/Clear Command-Based Switch

Clearing the Server Logging Table

Disabling Server Logging

Displaying the Logging Configuration

Displaying System Logging Messages

Chapter 5: Virtual Local Area Networks

In Depth

The Flat Network of Yesterday

Why Use VLANs?

VLAN Basics

A Properly Switched Network

Switched Internetwork Security

Scaling with VLANs

VLAN Boundaries

VLAN Membership Types

Traffic Patterns Flowing through the Network

Cisco’s VLAN Recommendations

VLAN Trunking

Trunk Types

LAN Emulation (LANE)

VLAN Trunking Protocol (VTP)

VTP Versions

VTP Advertisements

VTP Switch Modes

Methods for VLAN Identification

Dynamic Trunking Protocol

InterVLAN Routing

Internal Route Processors

How InterVLAN Routing Works

Configuring a Static VLAN on a Catalyst 5000 Series Switch

Configuring Multiple VLANs on a Catalyst 5000 Series Switch

Creating VLANs on a Catalyst 1900EN Series

Assigning a Static VLAN to an Interface on a 1900EN Series

Viewing the VLAN Configuration on a 1900 Series

Viewing an Individual VLAN Configuration on a 1900 Series

Configuring a Trunk Port on a Cisco 5000 Series

Mapping VLANs to a Trunk Port

Configuring a Trunk Port on a Cisco 1900EN Series

Clearing VLANs from Trunk Links on a Cisco 5000 Series

Clearing VLANs from Trunk Links on a Cisco 1900EN Series

Verifying a Trunk Link Configuration on a 5000 Series

Verifying a Trunk Link Configuration on a 1900EN Series

Configuring the VTP Version on a Catalyst 5000 Switch

Configuring a VTP Domain on a Catalyst 1900 Switch

Setting a VTP Domain Password on a Catalyst Switch

Configuring a Catalyst 1900 Switch as a VTP Server

Configuring a Catalyst 1900 Switch as a VTP Client

Configuring a Catalyst 1900 Switch for Transparent Mode

Configuring VTP Pruning on a Catalyst 1900 Switch

Configuring VTP on a Set/Clear CLI Switch

Configuring VTP on a 1900 Cisco IOS CLI Switch...........................................................................110

Verifying the VTP Configuration on a Set/Clear CLI.........................................................................111

Displaying VTP Statistics....................................................................................................................111

Configuring VTP Pruning on a Set/Clear CLI Switch........................................................................112

Disabling Pruning for Unwanted VLANs............................................................................................112

Configuring IP InterVLAN Routing on an External Cisco Router......................................................112

Configuring IPX InterVLAN Routing on an External Router.............................................................113

Chapter 6: InterVLAN and Basic Module Configuration..........................................................................114

In Depth...............................................................................................................................................114

Internal Route Processors....................................................................................................................114

Available Route Processors...........................................................................................................116

Routing Protocol Assignment.......................................................................................................120

Supervisor Engine Modules.................................................................................................................120

Supervisor Engines I and II...........................................................................................................120

Supervisor Engine III....................................................................................................................121

Using the Supervisor Engine.........................................................................................................122

Etherport Modules...............................................................................................................................122

Port Security..................................................................................................................................123

Manually Configured MAC Addresses.........................................................................................123

Determining the Slot Number in Which a Module Resides................................................................123

Accessing the Internal Route Processor from the Switch....................................................................124

Configuring a Hostname on the RSM..................................................................................................124

Assigning an IP Address and Encapsulation Type to an Ethernet Interface........................................125

Setting the Port Speed and Port Name on an Ethernet Interface.........................................................125

Configuring a Default Gateway on a Catalyst 5000............................................................................126

Verifying the IP Configuration on a Catalyst 5000.............................................................................126

Enabling RIP on an RSM.....................................................................................................................126

Viewing the RSM’s Running Configuration.......................................................................................127

Configuring InterVLAN Routing on an RSM.....................................................................................127

Configuring IPX InterVLAN Routing on the RSM.............................................................................128

Configuring AppleTalk InterVLAN Routing on an RSM...................................................................128

Viewing the RSM Configuration.........................................................................................................129

Assigning a MAC Address to a VLAN...............................................................................................129

Viewing the MAC Addresses..............................................................................................................129

Configuring Filtering on an Ethernet Interface....................................................................................130

Configuring Port Security on an Ethernet Module..............................................................................130

Clearing MAC Addresses....................................................................................................................131

Configuring the Catalyst 5000 Supervisor Engine Module.................................................................131

Setting the boot config-register on the Supervisor Engine Module....................................................132

Changing the Management VLAN on a Supervisor Engine................................................................133

Viewing the Supervisor Engine Configuration....................................................................................133

Configuring the Cisco 2621 External Router for ISL Trunking..........................................................134

Configuring Redundancy Using HSRP...............................................................................................135

Chapter 7: IP Multicast..................................................................................................................................137

In Depth...............................................................................................................................................137

IP Multicasting Overview....................................................................................................................137

Broadcast.......................................................................................................................................138

Unicast...........................................................................................................................................138

Multicast........................................................................................................................................139

IP Multicasting Addresses...................................................................................................................140

The Multicast IP Structure............................................................................................................140

Delivery of Multicast Datagrams..................................................................................................142

Multicast Distribution Tree...........................................................................................................142

Multicast Forwarding....................................................................................................................143

IGMP Protocols.............................................................................................................................143

Internet Group Management Protocol (IGMP)....................................................................................145

IGMPv1.........................................................................................................................................145

IGMPv2.........................................................................................................................................146

Time to Live..................................................................................................................................147

Multicast at Layer 2.............................................................................................................................147

IGMP Snooping.............................................................................................................................147

Cisco Group Management Protocol..............................................................................................148

Router Group Management Protocol............................................................................................148

GARP Multicast Registration Protocol.........................................................................................149

Configuring IP Multicast Routing.......................................................................................................149

Disabling IP Multicast Routing.....................................................................................................149

Enabling PIM on an Interface.......................................................................................................149

Disabling PIM on an Interface......................................................................................................149

Configuring the Rendezvous Point................................................................................................150

Adding a Router to a Multicast Group.................................................................................................150

Configuring a Router to Be a Static Multicast Group Member....................................................150

Restricting Access to a Multicast Group.......................................................................................150

Changing the IGMP Version...............................................................................................................150

Changing the IGMP Host-Query Message Interval............................................................................151

Configuring Multicast Groups.............................................................................................................151

Removing Multicast Groups................................................................................................................151

Configuring Multicast Router Ports.....................................................................................................151

Displaying Multicast Routers........................................................................................................151

Removing the Multicast Router....................................................................................................152

Configuring IGMP Snooping...............................................................................................................152

Disabling IGMP Snooping............................................................................................................152

Configuring IGMP Fast-Leave Processing.........................................................................152

Disabling IGMP Fast-Leave Processing......................................................................152

Displaying IGMP Statistics.................................................................................................................153

Displaying Multicast Routers Learned from IGMP.............................................................................153

Displaying IGMP Multicast Groups....................................................................................................153

Configuring CGMP..............................................................................................................................154

Disabling CGMP...........................................................................................................................154

Enabling CGMP Fast-Leave Processing......................................................................154

Disabling CGMP Fast-Leave Processing.....................................................................154

Displaying CGMP Statistics..........................................................................................................154

Configuring RGMP on the Switch.......................................................................................................155

Disabling RGMP on the Switch....................................................................................................155

Configuring RGMP on the Router.......................................................................................................155

Disabling RGMP on the Router....................................................................................................155

Displaying RGMP Groups...................................................................................................................155

Displaying RGMP-Capable Router Ports...........................................................................156

Displaying RGMP VLAN Statistics....................................................................................................156

Configuring GMRP..............................................................................................................................156

Disabling GMRP...........................................................................................................................157

Enabling GMRP on Individual Ports.............................................................................................157

Disabling GMRP on Individual Ports...........................................................................................157

Enabling GMRP Forward-All......................................................................157

Disabling GMRP Forward-All.....................................................................157

Configuring GMRP Registration...................................................................................................157

Displaying the GMRP Configuration............................................................................................158

Setting GMRP Timers...................................................................................................................158

Displaying GMRP Timers.............................................................................................................158

Configuring Bandwidth-Based Suppression.......................................................................159

Configuring Packet-Based Suppression..............................................................................159

Disabling Multicast Suppression.........................................................................................................159

Chapter 8: WAN Cell Switching...................................................................................................................160

In Depth...............................................................................................................................................160

ATM Overview....................................................................................................................................160

LANE............................................................................................................................................161

ATM Protocols..............................................................................................................................162

ATM Circuit Switching.................................................................................................................162

ATM Cells.....................................................................................................................................162

The ATM Switch and ATM Endpoints.........................................................................................164

The ATM Reference Model..........................................................................................................164

Specifying ATM Connections.......................................................................................................166

ATM Addressing...........................................................................................................................167

Local Area Network Emulation (LANE).............................................................................................167

LANE Components.......................................................................................................................168

Integrated Local Management Interface (ILMI)...........................................................................172

LANE Communication..................................................................................................................172

LANE Configuration Guidelines...................................................................................................174

How LANE Works........................................................................................................................174

Implementing LANE.....................................................................................................................175

Configuring ATM on the 5000 Switch..........................................................................................175

Connecting in an ATM Network...................................................................................................177

Monitoring and Maintaining LANE....................................................................................................178

Accessing the ATM LANE Module....................................................................................................178

Displaying the Selector Field...............................................................................................................178

Configuring the LES/BUS...................................................................................................................179

Verifying the LES/BUS Configuration.........................................................................................179

Configuring a LEC for an ELAN.........................................................................................................179

Verifying a LEC Configuration on an ELAN...............................................................................180

Configuring the LECS...................................................................................................................181

Viewing the LANE Database........................................................................................................181

Binding the LECS Address to an Interface...................................................................................181

Verifying the LECS Configuration...............................................................................................182

Chapter 9: LightStream Switches.................................................................................................................183

In Depth...............................................................................................................................................183

LightStream 100..................................................................................................................................183

LightStream 1010................................................................................................................................184

LightStream 2020................................................................................................................................185

Neighborhood Discovery Function...............................................................................................186

Virtual Path Connections.....................................................................................................................186

LightStream Troubleshooting Tools....................................................................................................187

LightStream Boot Process.............................................................................................................187

Supported Troubleshooting Protocols...........................................................................................188

Snooping Mechanisms..................................................................................................................188

Multiprotocol Over ATM..............................................................................................................188

Configuring the Hostname...................................................................................................................189

Configuring an Enable Password.........................................................................................................189

Configuring the Processor Card Ethernet Interface.............................................................................189

Configuring Virtual Private Tunnels...................................................................................................190

Verifying an ATM Interface Connection Status..................................................................................190

Viewing the Configured Virtual Connections.....................................................................................191

Configuring the LECS ATM Address on a LightStream 1010 Switch...............................................191

Configuring the Advertised LECS Address.........................................................................................191

Viewing the LANE Configuration.......................................................................................................191

Viewing the Installed Modules............................................................................................................192

Configuring the MPC...........................................................................................................................193

Configuring the MPS...........................................................................................................................193

Changing the MPS Variables........................................................................................................193

Monitoring the MPS......................................................................................................................194

Enabling ILMI Autoconfiguration.......................................................................................................194

Configuring LANE on a LightStream 1010.........................................................................................194

Powering on the LightStream 100 ATM Switch.................................................................................195

Configuring the LS100 Switch............................................................................................................195

Recovering a Lost Password................................................................................................................196

Chapter 10: Layer 2 Redundant Links.........................................................................................................199

In Depth...............................................................................................................................................199

Layer 2 Switching Overview...............................................................................................................199

Frames..................................................................................................................................................199

Broadcast and Multicast Frames...................................................................................................200

Unknown Unicasts........................................................................................................................200

Layer 2 Network Loops.......................................................................................................................200

Danger! Data Loops!.....................................................................................................................201

Edsger Dijkstra’s Graph Theory....................................................................................................201

STP Root Bridges..........................................................................................................................202

Bridge Protocol Data Units...........................................................................................................203

Root Bridge Selection...................................................................................................................205

Spanning Tree Convergence Time................................................................................................207

STP Port States..............................................................................................................................208

Per-VLAN Spanning Tree............................................................................209

EtherChannel........................................................................................................................................209

Link Failure...................................................................................................................................210

Port Aggregation Protocol.............................................................................................................210

Fast Convergence Components of STP...............................................................................................211

PortFast..........................................................................................................................................211

UplinkFast.....................................................................................................................................211

BackboneFast................................................................................................................................212

Enabling STP on a Set/Clear Command-Based Switch......................................................................212

Enabling STP on a Set/Clear Command-Based Switch for All VLANs............................................213

Disabling STP on a Set/Clear Command-Based Switch.....................................................................213

Disabling STP on a Set/Clear Command-Based Switch by VLAN...................................................213

Viewing the STP Configuration on a Set/Clear Command-Based Switch.........................................213

Configuring STP on an IOS Command-Based Switch.......................................................................214

Disabling STP on an IOS Command-Based Switch...........................................................................214

Viewing the STP Configuration on a Command Line Switch.............................................................215

Configuring the STP Root Switch.......................................................................................................215

Configuring the STP Secondary Root Switch.....................................................................................215

Setting the Root Bridge for More than One VLAN on a Set/Clear Command-Based Switch...........216

Assigning a Port Cost to a Port Using the Set/Clear Command-Based IOS......................................216

Assigning a Port Cost to a Port Using a CLI-Based Switch...............................................................216

Verifying the Port Cost Configuration on Both a Set/Clear Command- and CLI-Based Interface...217

Configuring the Port Priority on a Set/Clear Command-Based IOS..................................................217

Configuring the Port Priority on a CLI-Based IOS............................................................................217

Verifying the STP Port Priority on a Set/Clear Command-Based Switch..........................................218

Verifying the VLAN Priority Settings.................................................................................................218

Adjusting the FwdDelay Timer on a Set/Clear Command-Based IOS...............................................218

Adjusting the Hello Timer on a Set/Clear Command-Based IOS......................................................218

Adjusting the MaxAge Timer on a Set/Clear Command-Based IOS.................................................219

Preparing to Enable EtherChannel.......................................................................................................219

Viewing the Port Setting for EtherChannel on a Set/Clear Command-Based Switch........................219

Creating an EtherChannel on a Set/Clear Command-Based Switch..................................................220

Verifying the EtherChannel Configuration..........................................................................................221

Defining an EtherChannel Administrative Group...............................................................................221

Viewing an EtherChannel Administrative Group................................................................................221

Configuring EtherChannel on an IOS-Based Switch..........................................................................222

Identifying the Template Port..............................................................................................................222

Verifying the EtherChannel Configuration on a Command Line Interface IOS.................................222

Enabling PortFast on a Set/Clear Command-Based Switch...............................................................223

Disabling PortFast on a Set/Clear Command-Based Switch..............................................................223

Enabling PortFast on a CLI-Based IOS Switch..................................................................................223

Disabling PortFast on a CLI-Based IOS Switch.................................................................................224

Verifying the PortFast Configuration..................................................................................................224

Enabling UplinkFast on a Set/Clear Command-Based Switch...........................................................224

Disabling UplinkFast on a Set/Clear Command-Based Switch..........................................................224

Verifying the UplinkFast Configuration..............................................................................................225

Enabling UplinkFast on a Cisco IOS Command-Based Switch.........................................................225

Disabling UplinkFast on a Cisco IOS Command-Based Switch........................................................225

Viewing the UplinkFast Configuration on an IOS-Based Switch......................................................226

Viewing UplinkFast Statistics on an IOS-Based Switch....................................................................226

Enabling BackboneFast on a Set/Clear Command-Based Switch......................................................226

Disabling BackboneFast on a Set/Clear Command-Based Switch.....................................................226

Viewing the BackboneFast Configuration...........................................................................................226

Chapter 11: Multilayer Switching.................................................................................................................227

In Depth...............................................................................................................................................227

How MLS Works.................................................................................................................................227

MLS Components..........................................................................................................................228

MLS Flows....................................................................................................................................230

Access List Flow Masks................................................................................................................231

MLS Troubleshooting Notes...............................................................................................................232

Configuring MLS.................................................................................................................................233

MLS Cache....................................................................................................................................234

Aging Timers.................................................................................................................................234

VLAN ID.......................................................................................................................................235

VTP Domain..................................................................................................................................235

Management Interfaces.................................................................................................................235

Configuring an External MLS Route Processor..................................................................................235

Enabling MLSP on an MLS-RP for IP.........................................................................................236

Disabling MLSP on an MLS-RP for IP........................................................................................236

Enabling MLSP on an MLS-RP for IPX......................................................................................236

Disabling MLSP on an MLS-RP for IPX.....................................................................................236

Assigning a VLAN ID...................................................................................................................236

Adding an MLS Interface to a VTP Domain................................................................................236

Enabling MLS on an Individual Interface.....................................................................................237

Disabling MLS on an External Router Interface...........................................................................237

Configuring the MLS Switch Engine..................................................................................................237

Re-enabling MLS on a Catalyst 6000..........................................................................................237

Re-enabling MLS on a Catalyst 5000..........................................................................................238

Disabling MLS on a Catalyst 6000...............................................................................................238

Disabling MLS on a Catalyst 5000...............................................................................................238

Configuring the MLS Cache on the Catalyst 5000.......................................................................238

Configuring Fast Aging on a Catalyst 5000..................................................................................238

Configuring Fast Aging on a Catalyst 6000..................................................................................238

Disabling Fast Aging on a Catalyst 6000......................................................................................238

Configuring Long Aging on the Catalyst 6000.............................................................................239

Disabling Long Aging on the Catalyst 6000.................................................................................239

Configuring Normal Aging on the Catalyst 6000.........................................................................239

Disabling Normal Aging on the Catalyst 6000.............................................................................239

Assigning MLS Management to an Interface on the Catalyst 5000..............................................239

Disabling MLS Management on an Interface on the Catalyst 5000.............................................239

Monitoring and Viewing the MLS Configuration...............................................................................240

Viewing the MLS Aging Configuration on a Catalyst 6000.........................................................240

Displaying the IP MLS Configuration..........................................................................................240

Viewing MLS-RPs.......................................................................................................................240

Viewing MLS-RP Specifics.........................................................................................................240

Displaying MLS VTP Domain Information..................................................................................241

Viewing the MLS VLAN Interface Information...........................................................................241

Viewing MLS Statistics on the Catalyst 5000...............................................................................241

Viewing MLS Statistics on the Catalyst 6000...............................................................................242

Viewing MLS Entries....................................................................................................................242

Chapter 12: Hot Standby Routing Protocol.................................................................................................243

In Depth...............................................................................................................................................243

Routing Problems................................................................................................................................243

Routing Information Protocol.......................................................................................................244

Proxy ARP.....................................................................................................................................244

ICMP Router Discovery Protocol.................................................................................................244

The Solution.........................................................................................................................................245

HSRP Message Format.................................................................................................................247

The HSRP States...........................................................................................................................247

HSRP Configuration............................................................................................................................248

HSRP Interface Tracking.....................................................................................................................248

Opening a Session on an Internal Route Processor.............................................................................249

Entering Configuration Mode on an RSM...........................................................................................249

Enabling HSRP and Assigning an IP Address to a Standby Group....................................................249

Assigning an HSRP Interface Priority.................................................................................................250

Assigning a Preempt Delay to a Standby Group.................................................................................250

Removing a Preempt Delay from a Standby Group............................................................................250

Setting the HSRP Hello and Hold Timers...........................................................................................250

Removing the HSRP Hello and Hold Timers......................................................................................251

Configuring a Clear-Text Password for HSRP Authentication..........................................................251

Configuring Two RSFC Interfaces as One HSRP Group....................................................................251

Enabling Interface Tracking................................................................................................................252

Using the show standby Command.....................................................................................................252

Using the debug Command..................................................................................................................253

Chapter 13: Policy Networking.....................................................................................................................254

In Depth...............................................................................................................................................254

Access Security Policies......................................................................................................................254

Core Layer Policies.......................................................................................................................255

Distribution Layer Policies............................................................................................................255

Security at the Access Layer................................................................................................................261

Configuring Passwords..................................................................................................................261