StorNext 3.1.4 Release Notes

Product: StorNext® 3.1.4

Date: September 2009

Contents

Purpose of This Release

New Features and Enhancements

Discontinued Support on Some Platforms

Changes From Previous Releases

StorNext Upgrade Recommendations

Configuration Requirements

Operating System Requirements

Supported Libraries and Tape Drives

Minimum Firmware Levels for StorNext Drives

Supported System Components

Hardware Requirements

Resolved Issues

Known Issues

Operating Guidelines and Limitations

Documentation

Contacting Quantum

© September 2009 Quantum Corporation. All rights reserved. Document 6-00431-25 Rev A

Quantum, DLT, DLTtape, the Quantum logo, and the DLTtape logo are all registered trademarks of Quantum Corporation.

SDLT and Super DLTtape are trademarks of Quantum Corporation. Other trademarks may

be mentioned herein which belong to other companies.


Purpose of This Release

StorNext 3.1.4 includes enhancements that extend the capabilities of StorNext

Storage Manager (SNSM) and StorNext File System (SNFS). This document

describes these enhancements, as well as supported platforms and system

components. This document also lists currently known issues, issues that were

resolved for this release, and known limitations.

Visit www.quantum.com/ServiceandSupport for additional information and updates for StorNext.

New Features and Enhancements

StorNext 3.1.4 adds new support for operating systems, libraries and tape drives.

Added Operating System Support

Support has been added for the following operating systems:

• Windows Vista Service Pack 2 (SP2) for x86 32-bit and x86 64-bit systems

• Windows Server 2008 Service Pack 2 (SP2) for x86 32-bit and x86 64-bit systems

• Red Hat Enterprise Linux 4 Update 8 (2.6.9-89 EL) for x86 32-bit and x86 64-bit systems

• Red Hat Enterprise Linux 5 Update 3 (2.6.18-128 EL) for x86 64-bit systems

Added Library and Drive Support

Support has been added for the following libraries and drives:

• Quantum DXi 7500 library

• Sun/StorageTek T10000 Rev B tape drives for Sun/StorageTek SCSI and Fibre Channel L700 libraries

• Sun/StorageTek T10000 Rev B tape drives for Sun/StorageTek ACSLS 7.3 SL3000 libraries

New Media Limiting Feature

StorNext users can now minimize the number of new tape media that can be used for stores by a single policy class. In most cases one new media will be used per policy class at a time. A new piece of media must be filled before another new media can be allocated for the policy class.

It is necessary to understand how Storage Manager allocates media to

understand when only one piece of media will be used and in what

circumstances several media might be used.


Consider the following example. In standard StorNext Storage Manager without

the new feature, suppose we have a group of 1200 files that need to be stored

for policy class pc1. Assume there are four tape drives available, and they are

currently idle. Assume also that there is a supply of blank unassigned media,

and that there are no partially used media currently assigned to policy class pc1.

Storage Manager splits its files to be stored into groups of 300 (the default

value), so in this example the list of 1200 files to be stored will be split into four

groups of 300 files each. Four fs_fmover processes can be copying the files to

four different newly allocated media. This is good because it achieves

parallelization for the copies for class pc1, but a side effect is that four different

media are now assigned to class pc1, and each of the four media might be only

partially used.
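The batching arithmetic in this example can be illustrated with a short Python sketch. The helper name split_into_groups and the file paths are hypothetical; only the group size of 300 and the total of 1200 files come from the example above. The sketch models the split only, not how Storage Manager is actually implemented.

```python
from typing import List

DEFAULT_GROUP_SIZE = 300  # default group size quoted in the example above


def split_into_groups(files: List[str], group_size: int = DEFAULT_GROUP_SIZE) -> List[List[str]]:
    """Split a store list into consecutive groups of at most group_size files."""
    if group_size <= 0:
        raise ValueError("group_size must be positive")
    return [files[i:i + group_size] for i in range(0, len(files), group_size)]


# Hypothetical store list for policy class pc1.
store_list = [f"/stornext/pc1/file{n:04d}" for n in range(1200)]
groups = split_into_groups(store_list)

# One group per idle drive: four groups of 300 files each.
print(len(groups), [len(group) for group in groups])
```

With four idle drives, each of the four groups can be handed to its own copy process, which is why four media end up in use.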

The new media limiting feature allows the StorNext administrator to select a

different media allocation strategy. The alternate allocation strategy will allocate

one new media for the policy class, and files will be written only to that media

until it fills up. Then a new media will be allocated and stores are written only to

that media. And so on. This feature maximizes storage media usage per policy

class by eliminating the possible parallelization of copy operations (stores) for

the policy class.

When preparing to store a set of files, Storage Manager looks to see if there are

any media already assigned to the policy class that can hold at least one of the

files in the list. If so, the store operation proceeds, using that piece of media. If

there are no such available media, a blank tape is requested. That blank tape will

be used for the store operation and will then be owned by the policy class.

To limit the media used per policy class, insert this line:

LIMIT_MEDIA_PER_CLASS=y;

in the file /usr/adic/TSM/config/fs_sysparm_override

You must stop and restart Storage Manager for this change to take effect. If you wish to disable the feature, remove that line from /usr/adic/TSM/config/fs_sysparm_override and then stop and restart Storage Manager.
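As an illustration only, the edit to the override file could be scripted as in the following Python sketch. The function names are hypothetical, and the demonstration runs against a scratch file rather than the live /usr/adic/TSM/config/fs_sysparm_override. The sketch does not restart Storage Manager; that step must still be performed as described above.

```python
import tempfile
from pathlib import Path

OVERRIDE_LINE = "LIMIT_MEDIA_PER_CLASS=y;"  # line quoted in these release notes


def enable_media_limit(override_file: Path) -> bool:
    """Add the override line if it is missing. Returns True if the file changed."""
    lines = override_file.read_text().splitlines() if override_file.exists() else []
    if any(line.strip() == OVERRIDE_LINE for line in lines):
        return False  # already enabled, nothing to do
    lines.append(OVERRIDE_LINE)
    override_file.write_text("\n".join(lines) + "\n")
    return True


def disable_media_limit(override_file: Path) -> bool:
    """Remove the override line if present. Returns True if the file changed."""
    if not override_file.exists():
        return False
    lines = override_file.read_text().splitlines()
    kept = [line for line in lines if line.strip() != OVERRIDE_LINE]
    if len(kept) == len(lines):
        return False  # line was not there
    override_file.write_text("\n".join(kept) + ("\n" if kept else ""))
    return True


# Demonstration on a scratch copy, not on the production configuration file.
with tempfile.TemporaryDirectory() as scratch:
    demo_file = Path(scratch) / "fs_sysparm_override"
    first_change = enable_media_limit(demo_file)
    second_change = enable_media_limit(demo_file)  # idempotent: no duplicate line
    contents = demo_file.read_text()
    removed = disable_media_limit(demo_file)
    print(first_change, second_change, removed)
```

Keeping the edit idempotent avoids duplicate entries if the script is run more than once.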

Enabling the feature prevents Storage Manager from requesting a new blank

tape if there is a piece of media owned by the policy class containing enough

space to hold at least one of the files in the current store list. However, if there

are several media already assigned to the policy class they can still be used to

store files at the same time.

You must be careful if no media are currently assigned to the policy class,

because a new piece of media is not really assigned to a policy class until a copy

operation to that media has successfully completed.

Consider the example above in which we wanted to store 1200 files. Even with

the limiting feature enabled, four media would be used because files have been

written to each of the four media. Four store operations (300 files each) would

be launched almost simultaneously. Each of these store operations will query

the media database and conclude that there are no writable media assigned to

the policy class. Each of the four store operations will request a new blank tape.

When the operations have completed, four media are now assigned to the

policy class.
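The timing issue described above can be sketched as a small Python simulation. This is a simplified model under stated assumptions, not Storage Manager code: the class and function names are hypothetical, every store is assumed to query the media database before any copy completes, and any media already assigned is assumed to have room for at least one file.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PolicyClass:
    name: str
    assigned_media: List[str] = field(default_factory=list)


def run_concurrent_stores(policy: PolicyClass, num_stores: int) -> int:
    """Simulate stores launched almost simultaneously with limiting enabled.

    Returns the number of blank tapes requested.
    """
    # Phase 1: every store queries the media database before any copy completes,
    # so all stores see the same starting state.
    saw_writable_media = [bool(policy.assigned_media) for _ in range(num_stores)]

    # Phase 2: each store that saw no writable media requests a blank tape.
    # The tape becomes owned by the class only once its copy has completed.
    blanks_requested = 0
    for saw_media in saw_writable_media:
        if not saw_media:
            blanks_requested += 1
            policy.assigned_media.append(f"blank{blanks_requested:02d}")
    return blanks_requested


# No media assigned yet: four stores each request a blank tape.
empty_class = PolicyClass("pc1")
print(run_concurrent_stores(empty_class, 4))  # 4

# One piece of media already assigned: no blank tapes are requested.
class_with_media = PolicyClass("pc1", assigned_media=["tape01"])
print(run_concurrent_stores(class_with_media, 4))  # 0
```

The model shows why the starting state of the policy class matters: the limit applies only once at least one piece of media is already owned by the class.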

This behavior can be avoided by seeding the process with one file. If we stored one file initially (with the fsstore command, for example) then there would be one piece of media assigned to the policy class. Then, if we stored 1200 files, each of the stores of 300 files would wait for that piece of media. If that piece of media fills, a new piece will be assigned and stores will be able to continue.

Other Changes

This section describes additional changes in the StorNext 3.1.4 release.

New VTOC Label Format

In StorNext 3.1.3 an alternate VTOC label format was introduced. This new label

format allows for better label compatibility between architectures in future

StorNext releases. The default label format in 3.1.3 was the original VTOC

format. Beginning with release 3.1.4, the default is the newer VTOC format.

In 3.1.x there are compatibility considerations that must be taken into account

when using VTOC labels:

1) The old VTOC format is not compatible with Solaris systems.

2) The new VTOC format is not compatible with IRIX systems on StorNext releases prior to 3.5.0.

See the cvlabel documentation for more on VTOC label formats. The '-i' and '-I'

flags indicate which VTOC format to use.

Quantum recommends against using the old VTOC format. If IRIX platforms are

included in a StorNext environment, contact Quantum regarding upgrading to

StorNext 3.5.0 or higher.
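The two compatibility rules above can be expressed as a small check. The following Python sketch is illustrative only: the function name, the "old"/"new" labels, and the client list are hypothetical, and the sketch does not call cvlabel or inspect real disk labels.

```python
from typing import Iterable, List, Tuple

Version = Tuple[int, int, int]


def vtoc_conflicts(label_format: str, clients: Iterable[Tuple[str, Version]]) -> List[str]:
    """Return a message for each client that cannot use the given VTOC format.

    label_format is "old" or "new"; each client is (platform, StorNext version).
    """
    if label_format not in ("old", "new"):
        raise ValueError("label_format must be 'old' or 'new'")
    problems = []
    for platform, version in clients:
        name = platform.strip().lower()
        if label_format == "old" and name == "solaris":
            # Rule 1: the old format is not compatible with Solaris systems.
            problems.append(f"{platform}: old VTOC format is not compatible with Solaris")
        elif label_format == "new" and name == "irix" and version < (3, 5, 0):
            # Rule 2: the new format is not compatible with IRIX before 3.5.0.
            problems.append(f"{platform}: new VTOC format is not compatible with IRIX before StorNext 3.5.0")
    return problems


# Hypothetical mixed environment, all clients on StorNext 3.1.4.
clients = [("Solaris", (3, 1, 4)), ("IRIX", (3, 1, 4)), ("Linux", (3, 1, 4))]
print(vtoc_conflicts("new", clients))
print(vtoc_conflicts("old", clients))
```

In this hypothetical environment neither format suits every client, which is the situation in which the recommendation to upgrade IRIX environments applies.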

For more information about this VTOC label change, see StorNext Product Bulletin 31 on Quantum.com.

Updated Database

StorNext 3.1.4 incorporates a new version of the database, revision 104, which corrects an issue in earlier database versions.

This issue caused truncation problems and issues in which files on several tapes

were erroneously reported as having no path. Running a fsmedinfo command

for these files reported PATH UNKNOWN despite the directories existing. This

problem occurred because running fsclean -r did not properly clean out the

oldmedia table. Because of the entries in the oldmedia table, database

queries did not return the correct number of entries.

Storage Manager Parameter Deprecated

This release includes an optimization for the Storage Manager rebuild policy. As part of this optimization, the MAPPER_MAX_THREADS system parameter has been deprecated.

When upgrading, if this parameter exists in /usr/adic/TSM/config/fs_sysparm or /usr/adic/TSM/config/fs_sysparm_override, it will automatically be removed. If the parameter is added to these files, it will have no effect.
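Before upgrading, an administrator may want to know whether the deprecated parameter is present. The following Python sketch shows one way to look for it. The function name is hypothetical, and the NAME=value; line syntax and the value 8 in the demonstration are assumptions based on the LIMIT_MEDIA_PER_CLASS example elsewhere in these notes, not a documented file format. The demonstration uses scratch files rather than the live configuration.

```python
import tempfile
from pathlib import Path
from typing import Iterable, List, Tuple

DEPRECATED_PARAMETER = "MAPPER_MAX_THREADS"


def find_deprecated(paths: Iterable[Path]) -> List[Tuple[str, int, str]]:
    """Return (file name, line number, line) for each line that sets the parameter."""
    hits = []
    for path in paths:
        if not path.exists():
            continue  # a missing file simply has no entries
        for lineno, line in enumerate(path.read_text().splitlines(), start=1):
            if line.strip().startswith(DEPRECATED_PARAMETER):
                hits.append((path.name, lineno, line.strip()))
    return hits


# Demonstration with scratch copies of the two files named above.
with tempfile.TemporaryDirectory() as scratch:
    sysparm = Path(scratch) / "fs_sysparm"
    override = Path(scratch) / "fs_sysparm_override"
    sysparm.write_text("# scratch copy\n")
    override.write_text("LIMIT_MEDIA_PER_CLASS=y;\nMAPPER_MAX_THREADS=8;\n")  # assumed syntax
    hits = find_deprecated([sysparm, override])
    print(hits)
```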


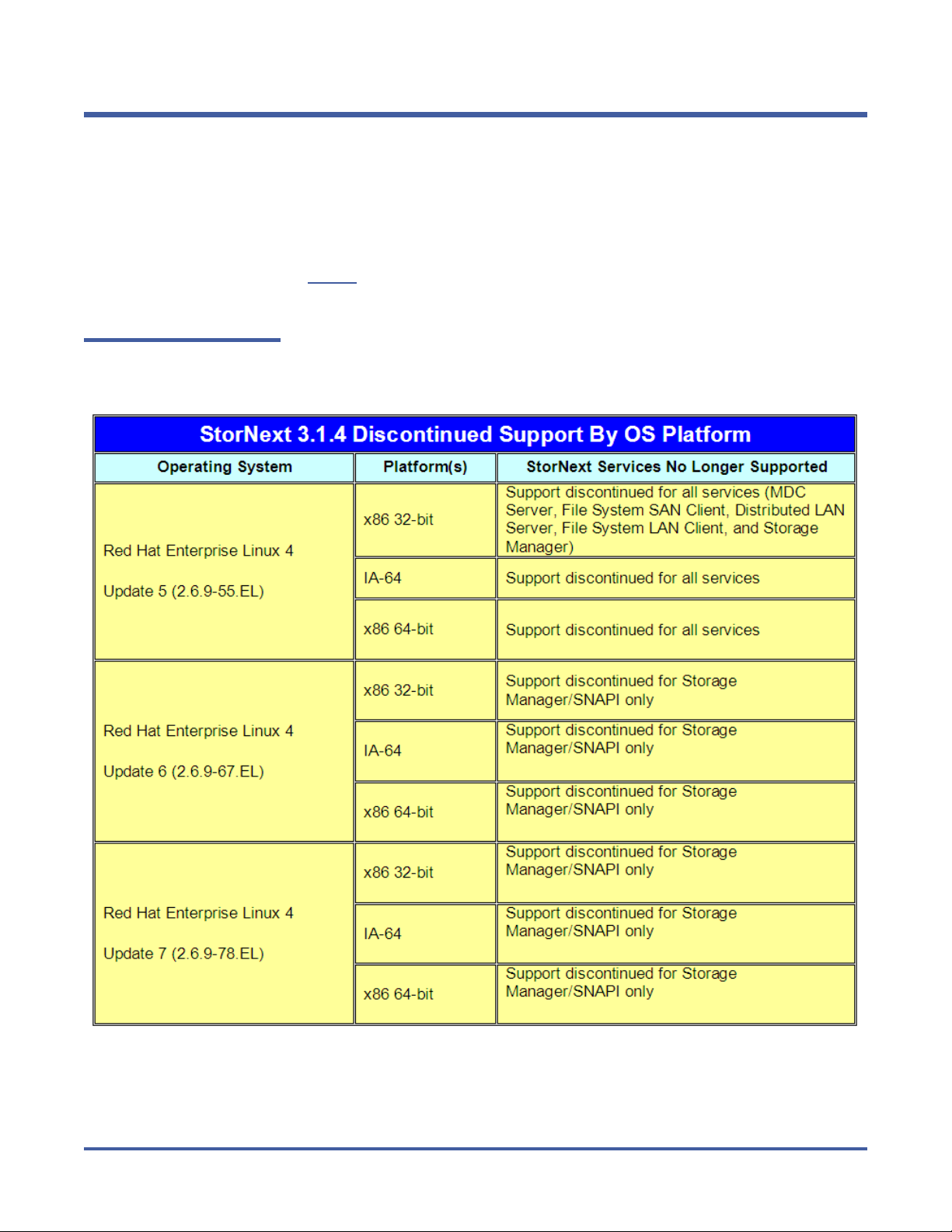

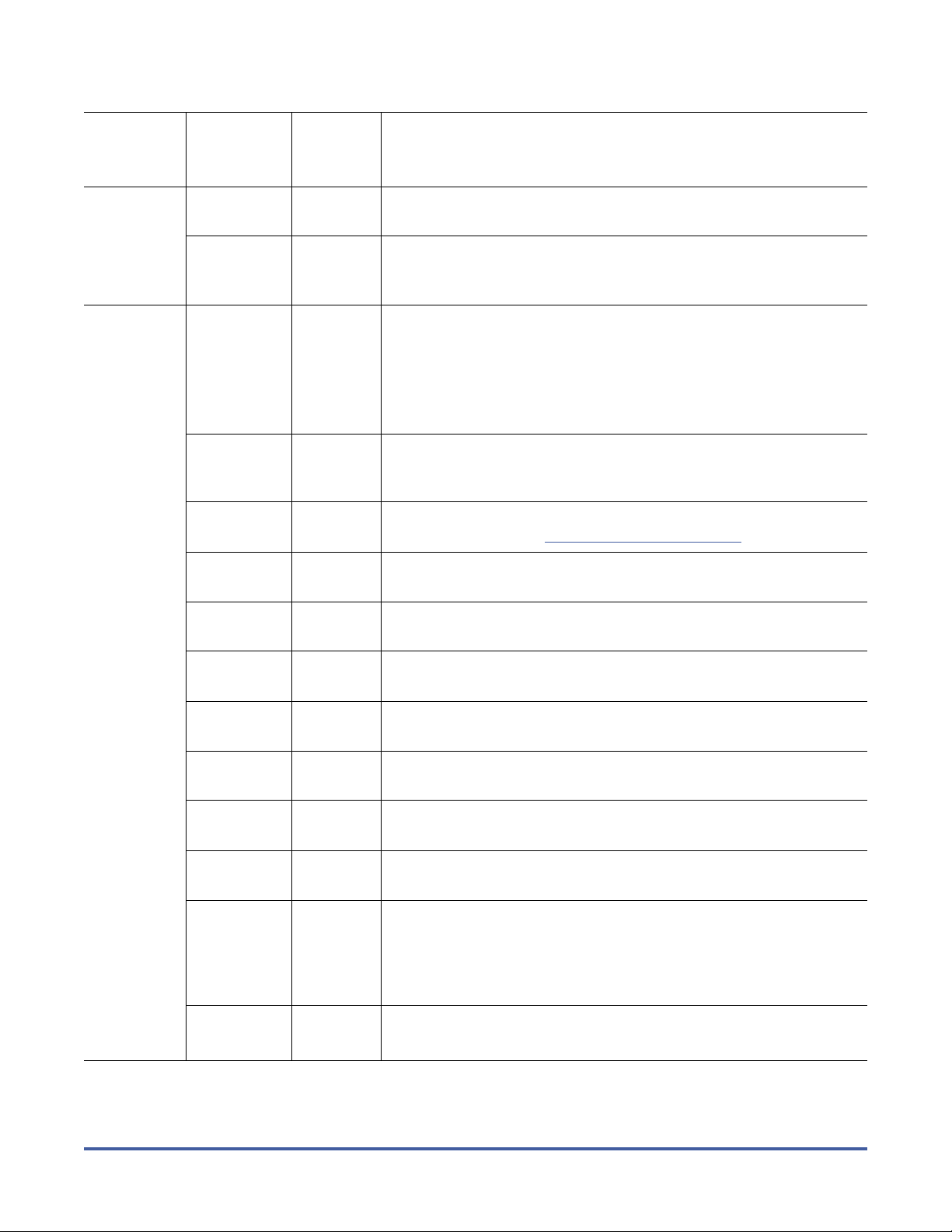

Discontinued Support on Some Platforms

Some StorNext services that were supported on various platforms in StorNext

3.1.3 and other previous releases are no longer supported in StorNext 3.1.4.

These services will continue to be supported for previous StorNext releases, but

going forward beginning with release 3.1.4 will not be supported.

StorNext 3.1.4 Release Notes

6-00431-25 Rev A

September 2009

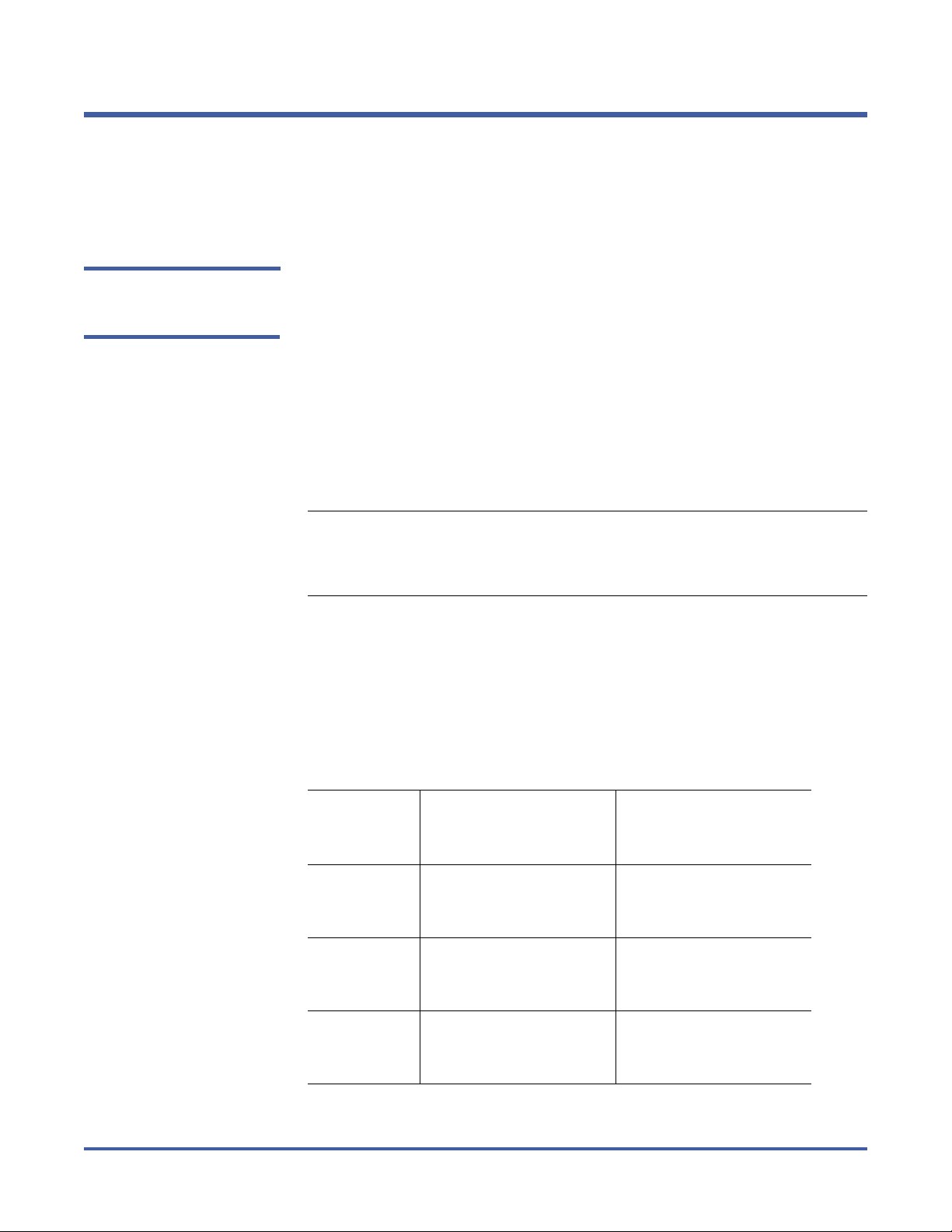

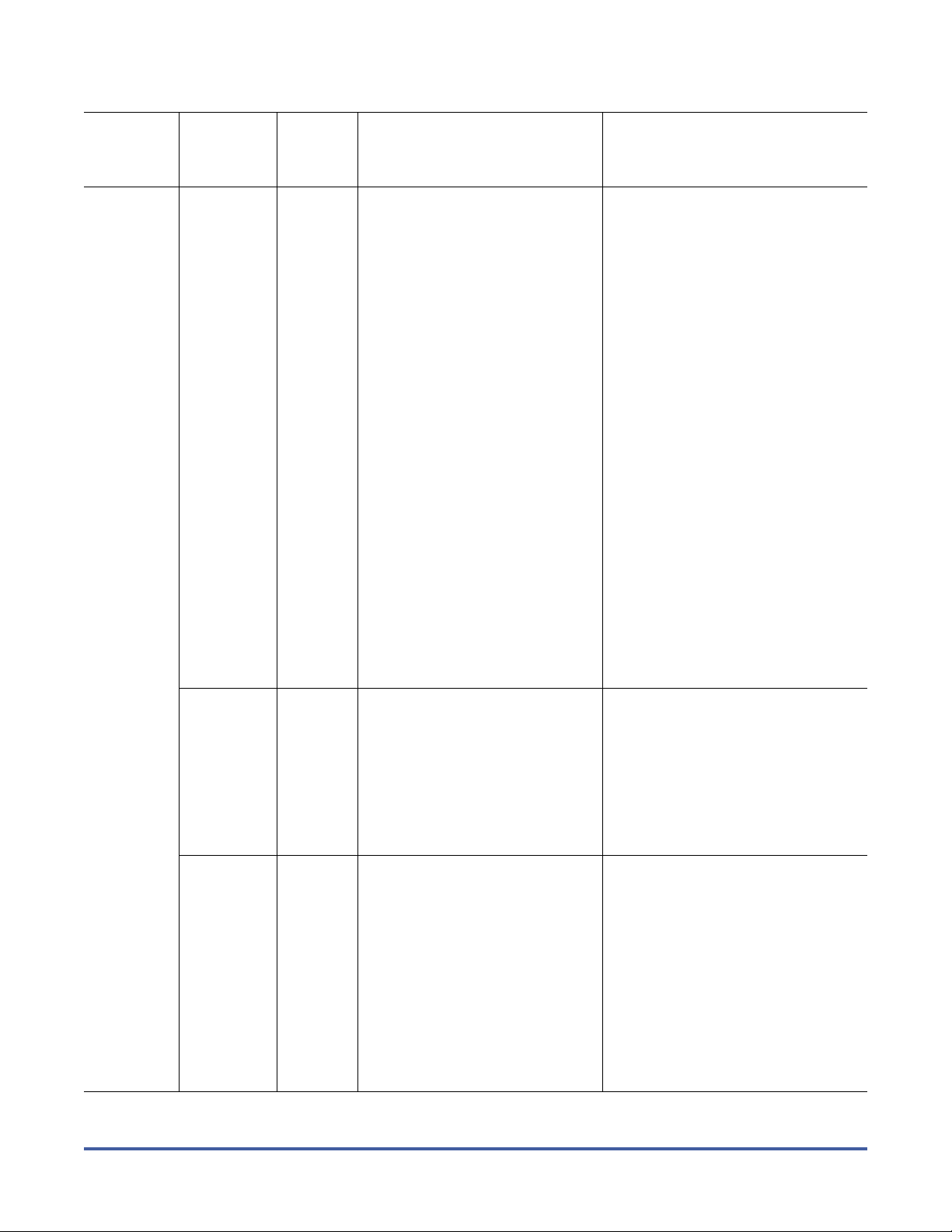

Table 1 Discontinued

Platforms

Tab l e 1

StorNext 3.1.4.

shows the StorNext services for which support is discontinued as of

Discontinued Support on Some Platforms 5


Changes From Previous Releases

The following change was instituted in a previous StorNext release and is listed

here as a reminder that important settings have been changed.

Revised FsBlockSize, Metadata Disk Size, and JournalSize Settings

The FsBlockSize (FSB), metadata disk size, and JournalSize settings all

work together. For example, the FsBlockSize must be set correctly in order for

the metadata sizing to be correct. JournalSize is also dependent on the

FsBlockSize.

For FsBlockSize the optimal settings for both performance and space utilization are in the range of 16K to 64K.

Settings greater than 64K are not recommended because performance will be

adversely impacted due to inefficient metadata I/O operations. Values less than

16K are not recommended in most scenarios because startup and failover time

may be adversely impacted. Setting FsBlockSize (FSB) to higher values is

important for multi-terabyte file systems for optimal startup and failover time.

Note: This is particularly true for slow CPU clock speed metadata servers such

as Sparc. However, values greater than 16K can severely consume

metadata space in cases where the file-to-directory ratio is low (e.g.,

less than 100 to 1).

For metadata disk size, all new installations must have a

with more space allocated depending on the number of files per directory and

the size of your file system.

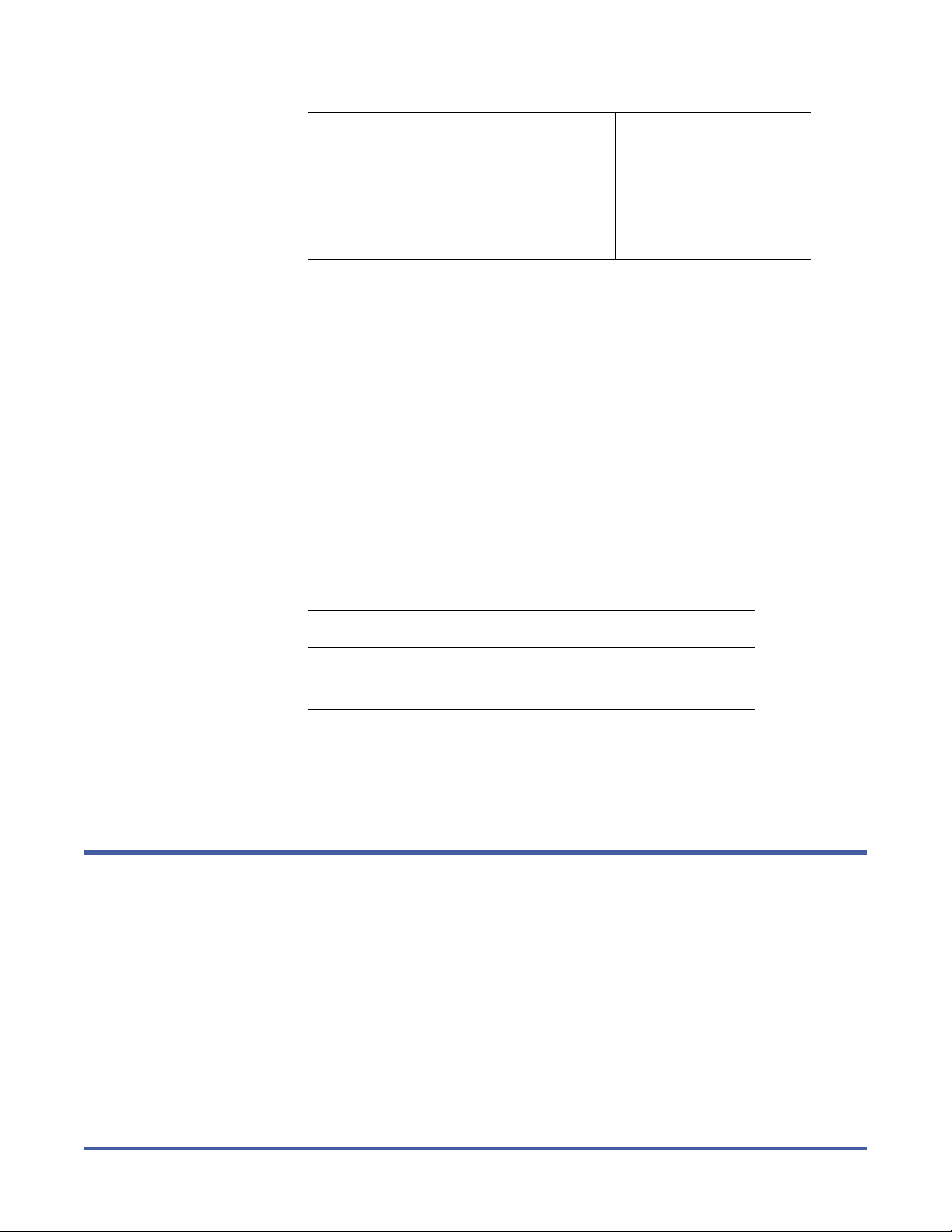

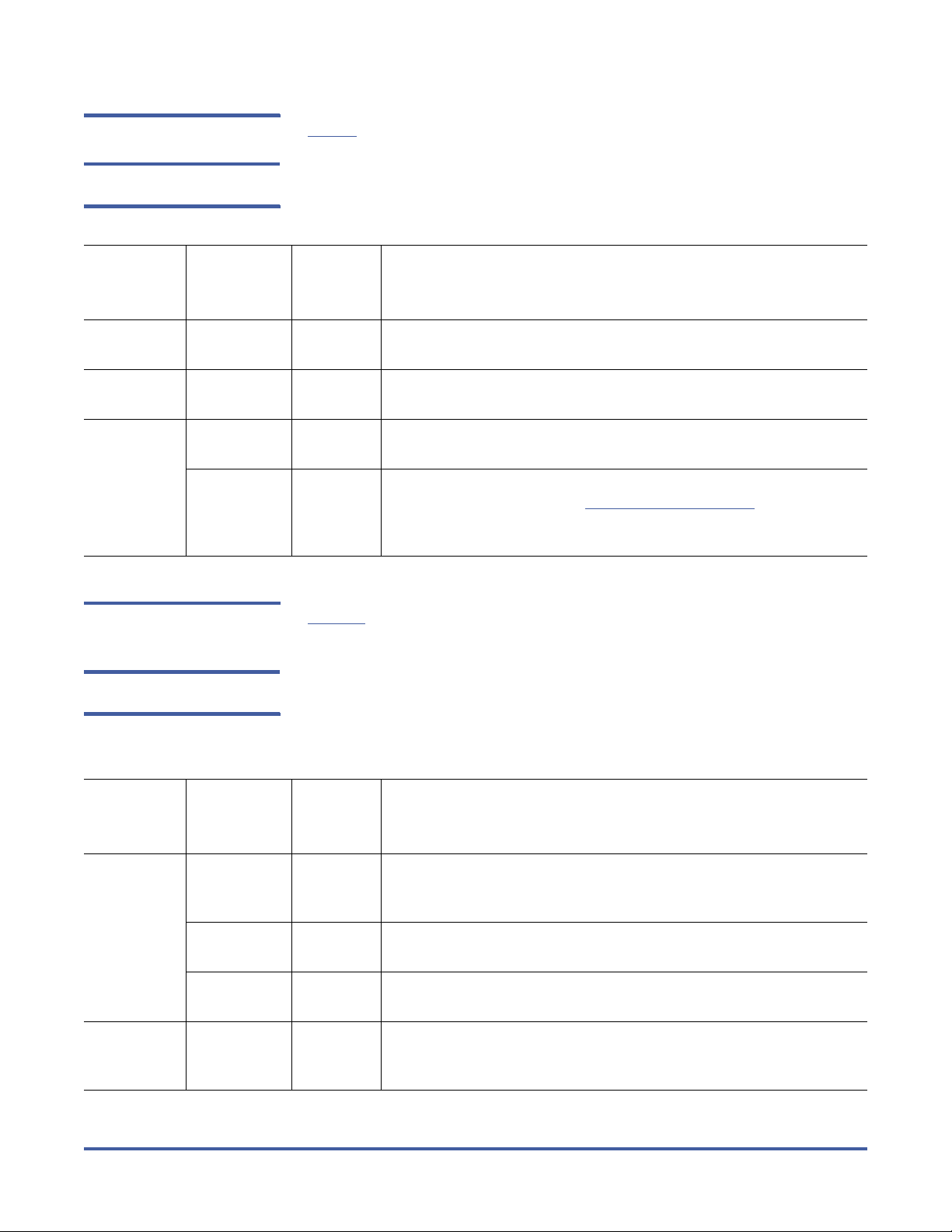

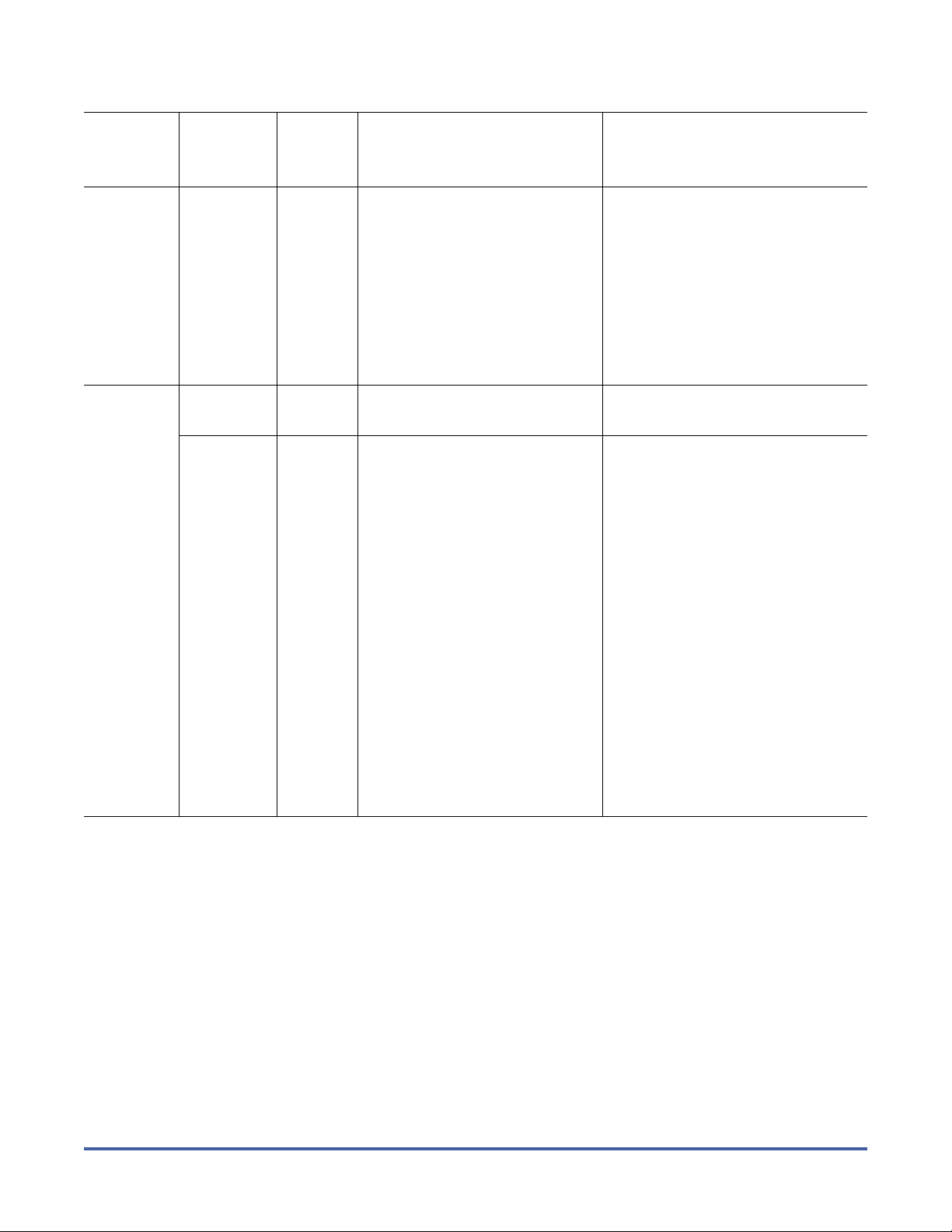

The following table shows suggested FsBlockSize (FSB) settings and

metadata disk space based on the average number of files per directory and file

system size. The amount of disk space listed for metadata is

25 GB minimum amount. Use this table to determine the setting for your

configuration.

minimum

in addition

of 25 GB,

to the

| Average No. of Files Per Directory | File System Size: Less Than 10TB | File System Size: 10TB or Larger |
|---|---|---|
| Less than 10 | FSB: 16KB; Metadata: 32 GB per 1M files | FSB: 64KB; Metadata: 128 GB per 1M files |
| 10-100 | FSB: 16KB; Metadata: 8 GB per 1M files | FSB: 64KB; Metadata: 32 GB per 1M files |
| 100-1000 | FSB: 64KB; Metadata: 8 GB per 1M files | FSB: 64KB; Metadata: 8 GB per 1M files |
| 1000 + | FSB: 64KB; Metadata: 4 GB per 1M files | FSB: 64KB; Metadata: 4 GB per 1M files |

The best rule of thumb is to use a 16K FsBlockSize unless other requirements such as directory ratio dictate otherwise.

This setting is not adjustable after initial file system creation, so it is very important to give it careful consideration during initial configuration.

Example: FsBlockSize 16K
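The sizing guidance above can be turned into a rough calculation. The following Python sketch is illustrative only: the function name is hypothetical, the table values are taken from the suggested settings above, and the treatment of boundary values (for example, exactly 100 files per directory is placed in the 100-1000 row) is an assumption, because the ranges in the table overlap at their edges.

```python
from typing import Tuple

MIN_METADATA_GB = 25  # minimum for all new installations

# Each row: (exclusive upper bound on average files per directory,
#            (FSB in KB, metadata GB per 1M files) for file systems under 10TB,
#            (FSB in KB, metadata GB per 1M files) for file systems of 10TB or larger)
SIZING_ROWS = [
    (10, (16, 32), (64, 128)),
    (100, (16, 8), (64, 32)),
    (1000, (64, 8), (64, 8)),
]
ROW_1000_PLUS = ((64, 4), (64, 4))


def suggest_metadata(avg_files_per_dir: float, fs_size_tb: float, total_files: int) -> Tuple[int, float]:
    """Return (suggested FsBlockSize in KB, suggested metadata disk space in GB)."""
    if avg_files_per_dir < 0 or fs_size_tb <= 0 or total_files < 0:
        raise ValueError("inputs must be non-negative and the file system size positive")
    small, large = ROW_1000_PLUS
    for upper_bound, row_small, row_large in SIZING_ROWS:
        if avg_files_per_dir < upper_bound:
            small, large = row_small, row_large
            break
    fsb_kb, gb_per_million = small if fs_size_tb < 10 else large
    # The per-file amount is in addition to the 25 GB minimum.
    metadata_gb = MIN_METADATA_GB + gb_per_million * total_files / 1_000_000
    return fsb_kb, metadata_gb


# Hypothetical file system: 5 TB, 2 million files, about 50 files per directory.
print(suggest_metadata(50, 5, 2_000_000))  # (16, 41.0)
```

For the hypothetical 5 TB file system, the 10-100 row applies, giving a 16K FsBlockSize and 25 GB plus 8 GB per million files, or 41 GB of metadata space.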

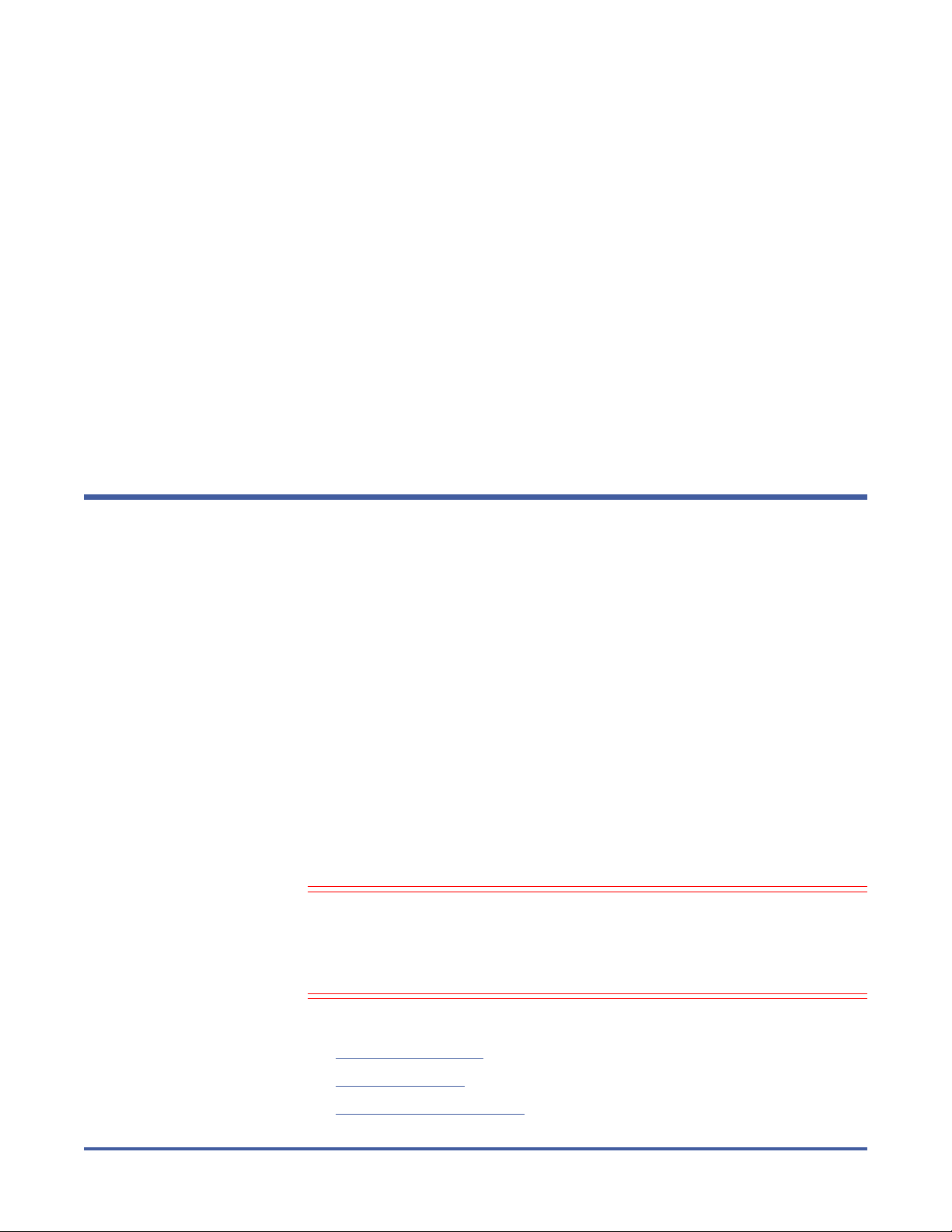

JournalSize Setting

The optimal settings for JournalSize are in the range between 16M and 64M,

depending on the FsBlockSize. Avoid values greater than 64M due to

potentially severe impacts on startup and failover times. Values at the higher

end of the 16M-64M range may improve performance of metadata operations

in some cases, although at the cost of slower startup and failover time.

The following table shows recommended settings. Choose the setting that

corresponds to your configuration.

| FsBlockSize | JournalSize |
|---|---|
| 16KB | 16MB |
| 64KB | 64MB |

This setting is adjustable using the cvupdatefs utility. For more information,

see the cvupdatefs man page.

Example: JournalSize 16M
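The pairing of FsBlockSize and JournalSize recommended above can be captured in a small lookup. This Python sketch is illustrative: the function name is hypothetical, and it covers only the two FsBlockSize values listed in the table. It prints settings in the same form as the examples in this section and does not modify any configuration file or call cvupdatefs.

```python
# Recommended JournalSize (in MB) for each FsBlockSize (in KB), from the table above.
RECOMMENDED_JOURNAL_MB = {16: 16, 64: 64}


def journal_size_setting(fs_block_size_kb: int) -> str:
    """Return the recommended JournalSize setting for a given FsBlockSize."""
    if fs_block_size_kb not in RECOMMENDED_JOURNAL_MB:
        raise ValueError(
            f"no recommendation listed for FsBlockSize {fs_block_size_kb}K; "
            f"listed values are {sorted(RECOMMENDED_JOURNAL_MB)}"
        )
    return f"JournalSize {RECOMMENDED_JOURNAL_MB[fs_block_size_kb]}M"


for fsb in (16, 64):
    print(f"FsBlockSize {fsb}K", "->", journal_size_setting(fsb))
```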

StorNext Upgrade Recommendations

Customers migrating to 3.1.4 should observe the following best practices:

• Whenever possible, StorNext systems should run the latest StorNext-supported operating system service pack or update level.

• When upgrading from StorNext 2.8 to StorNext 3.1.4 on RHEL4, you should

first upgrade the operating system to update 7. For example, if SNMS 2.8 is

installed on RHEL4U3, the upgrade procedure is:

1. Upgrade the operating system from RHEL4U3 to RHEL4U7

2. Upgrade the StorNext version from 2.8 to 3.1.4


Since StorNext 3.1.4 requires Update 5, 6 or 7 of RHEL4, these steps should

be performed one after the other to avoid running StorNext with an

unsupported Red Hat update level longer than necessary.

• StorNext 3.1.4 does not support RHEL5 GA (“update 0”). When upgrading a

system running RHEL5 GA to StorNext 3.1.4, you must first upgrade the

operating system to RHEL5U2.

• StorNext 3.1.4 does not support AIX 5.2. When upgrading clients running

AIX 5.2 to StorNext 3.1.4, perform the following steps:

1 Make a backup copy of /etc/filesystems

2 Uninstall StorNext

3 Upgrade the operating system to AIX 5.3

4 Install StorNext 3.1.4

5 Update /etc/filesystems with previously saved StorNext mount

information

Configuration Requirements

Before installing StorNext 3.1.4, note the following configuration requirements:

• In cases where gigabit networking hardware is used and maximum StorNext

performance is required, a separate, dedicated switched Ethernet LAN is

recommended for the StorNext metadata network. If maximum StorNext

performance is not required, shared gigabit networking is acceptable.

• A separate, dedicated switched Ethernet LAN is mandatory for the metadata

network if 100 Mbit/s or slower networking hardware is used.

• StorNext does not support file system metadata on the same network as

iSCSI, NFS, CIFS, or VLAN data when 100 Mbit/s or slower networking

hardware is used.

• The operating system on the metadata controller must always be run in U.S.

English.

• For Windows systems (server and client), the operating system must always

be run in U.S. English.

Caution: If a Library used by StorNext Storage Manager is connected via a

fibre switch, zone the switch to allow only the system(s) running

SNSM to have access to the library. This is necessary to ensure that

a “rogue” system does not impact the library and cause data loss or

corruption. For more information, see StorNext Product Alert 16.

This section contains the following configuration requirement topics:

• Library Requirements

• Disk Requirements

• Disk Naming Requirements


• SAN Disks on Windows Server 2008

Library Requirements

The following libraries require special configurations to run StorNext.

DAS and Scalar DLC Network-Attached Libraries

Prior to launching the StorNext Configuration Wizard, DAS and Scalar DLC network-attached libraries must have the DAS client already installed on the appropriate host control computer.

DAS Attached Libraries

For DAS attached libraries, refer to “Installation and Configuration” and “DAS Configuration File Description” in the DAS Installation and Administration Guide. The client name is either the default StorNext server host name or the name selected by the administrator.

StorNext can support LTO-3 WORM media in DAS connected libraries, but WORM media cannot be mixed with other LTO media types in one logical library.

To use LTO-3 WORM media in a logical library, before configuring the library in StorNext, set the environment variable XDI_DAS_MAP_LTO_TO_LTOW in the /usr/adic/MSM/config/envvar.config file to the name of the library. The library name must match the name given to the library when configuring it with StorNext. If defining multiple libraries with this environment variable, separate them with a space. After setting the environment variable, restart StorNext Storage Manager (SNSM).

Note: SDLC software may not correctly recognize LTO-3 WORM media in the

library and instead set it to “unknown media type.” In this case you

must manually change the media type to “LTO3” using the SDLC GUI.

Scalar DLC Attached Libraries

For Scalar 10K and Scalar 1000 DLC attached libraries, refer to “Installation and Configuration” and “Client Component Installation” in the Scalar Distributed Library Controller Reference Manual (6-00658-04).

The DAS client should be installed during the installation of the Scalar DLC attached libraries. Use this procedure to install the DAS client.

1 Select Clients > Create DAS Client.

The client name is either the default StorNext server host name or the name selected by the administrator.

2 When the DAS client is configured in Scalar DLC, select Aliasing.

3 Select sony_ait as the Media aliasing.

4 The default value is 8mm.

5 Verify that Element Type has AIT drive selected.

6 Click Change to execute the changes.


ACSLS Attached Libraries

Due to limitations in the STK ACSLS interface, StorNext supports only single ACS

configurations (ACS 0 only).

Scalar i500 (Firmware Requirements)

For Scalar i500 libraries that do not have a blade installed, the library and drives must meet the following minimum firmware requirements. (These requirements apply only to Scalar i500 libraries that do not have a blade installed.)

• Scalar i500 minimum firmware level: 420GS.GS00600

• HP LTO-4 Fibre/SAS tape device minimum firmware level: H35Z

Caution: If you do not meet the minimum firmware requirements, you

might be unable to add a library to the Scalar i500 using the

StorNext Configuration Wizard.

Disk Requirements

Disk devices must support, at minimum, the mandatory SCSI commands for block devices as defined by the SCSI Primary Commands-3 standard (SPC-3) and the SCSI Block Commands-2 (SBC-2) standard.

To ensure disk reliability, Quantum recommends that disk devices meet the

requirements specified by Windows Hardware Quality Labs (WHQL) testing.

However, there is no need to replace non-WHQL certified devices that have been

used successfully with StorNext.

Disk devices must be configured with 512-byte or 4096-byte sectors, and the

underlying operating system must support the device at the given sector size.

StorNext customers that have arrays configured with 4096-byte sectors can use

only Windows, Linux and IRIX clients. Customers with 512-byte arrays can use

clients for any valid StorNext operating system (i.e., Windows, Linux, or UNIX).

In some cases, non-conforming disk devices can be identified by examining the output of cvlabel -vvvl. For example:

/dev/rdsk/c1d0p0: Cannot get the disk physical info.

If you receive this message, contact your disk vendors to determine whether the

disk has the proper level of SCSI support.
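When many devices are listed, the message shown above can be picked out of saved cvlabel output with a short script. The following Python sketch is illustrative only: the function name is hypothetical, the second line of the sample text is invented filler, and the pattern matches only the exact message quoted above. The sketch parses text it is given and does not run cvlabel itself.

```python
import re
from typing import List

# Matches the message quoted above and captures the device path before the colon.
NONCONFORMING = re.compile(
    r"^(?P<device>\S+): Cannot get the disk physical info\.", re.MULTILINE
)


def nonconforming_devices(cvlabel_output: str) -> List[str]:
    """Return device paths reported with the 'Cannot get the disk physical info' message."""
    return [match.group("device") for match in NONCONFORMING.finditer(cvlabel_output)]


# Sample text: the first line is the message quoted in these notes,
# the second line is hypothetical output for a device without the problem.
sample_output = (
    "/dev/rdsk/c1d0p0: Cannot get the disk physical info.\n"
    "/dev/rdsk/c2d0p0: hypothetical line for a conforming device\n"
)
print(nonconforming_devices(sample_output))
```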

Disk Naming Requirements

When naming disks, names should be unique across all SANs. If a client connects to more than one SAN, a conflict will arise if the client sees two disks with the same name.

SAN Disks on Windows Server 2008


SAN policy has been introduced in Windows Server 2008 to protect shared disks

accessed by multiple servers. The first time the server sees the disk it will be

offline, so StorNext is prevented from using or labeling the disk.


To bring the disks online, use the POLICY=OnlineAll setting. If this doesn’t

set the disks online after a reboot, you may need to go to Windows Disk

Management and set each disk online.

Follow these steps to set all disks online:

1 From the command prompt, type DISKPART

2 Type SAN to view the current SAN policy of the disks.

3 To set all the disks online, type SAN POLICY=onlineall.

4 After being brought online once, the disks should stay online after

rebooting.

5 If the disks appear as “Not Initialized” in Windows Disk Management after a

reboot, this indicates the disks are ready for use.

If the disks still appear as offline in Disk Management after rebooting, you

must set each disk online by right-clicking the disk and selecting Online.

This should always leave the SAN disks online after reboot.

Note: If the disks are shared among servers, the above steps may lead to data corruption. Users are encouraged to use the proper SAN policy to protect data.

EXAMPLE:

C:\ >Diskpart

Microsoft DiskPart version 6.0.6001

Copyright (C) 1999-2007 Microsoft Corporation.

On computer: CALIFORNIA

DISKPART> SAN

SAN Policy : Offline All

DISKPART> san policy=onlineall

DiskPart successfully changed the SAN policy for the current

operating system.
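The same two DiskPart commands can be placed in a script file and passed to DiskPart with its /s option. The following Python sketch is illustrative only: the function names and the script file name are hypothetical, and the demonstration writes the script to a scratch directory without running DiskPart. The run_diskpart function must be called only on a Windows system from an elevated prompt, and the caution above about disks shared among servers applies equally to scripted use.

```python
import subprocess
import tempfile
from pathlib import Path

# The two commands typed interactively in the example above.
DISKPART_COMMANDS = ["san", "san policy=onlineall"]


def write_diskpart_script(directory: Path) -> Path:
    """Write the commands to a script file, one per line, and return its path."""
    script = directory / "san_online.txt"  # hypothetical file name
    script.write_text("\n".join(DISKPART_COMMANDS) + "\n")
    return script


def run_diskpart(script: Path) -> str:
    """Run DiskPart with the script file (Windows only, elevated prompt required)."""
    result = subprocess.run(
        ["diskpart", "/s", str(script)],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


# Demonstration: create and display the script only; DiskPart is not invoked here.
with tempfile.TemporaryDirectory() as scratch:
    script_path = write_diskpart_script(Path(scratch))
    script_lines = script_path.read_text().splitlines()
    print(script_lines)
```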

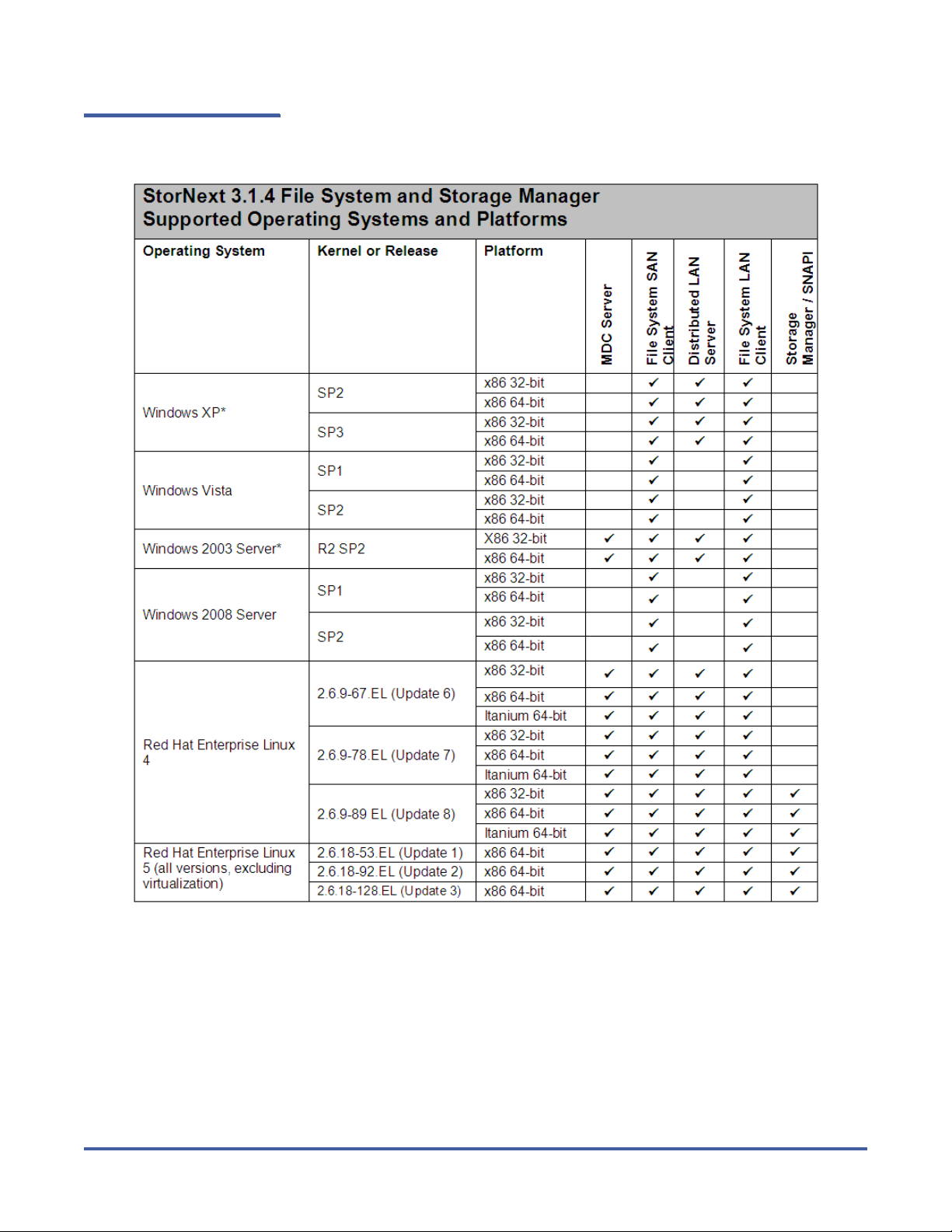

Operating System Requirements

Table 2 shows the operating systems, kernel versions, and hardware platforms that support StorNext File System, StorNext Storage Manager, and the StorNext client software.

This table also indicates the platforms that support the following:

• MDC Servers

• Distributed LAN Servers

• File System LAN Clients


Table 2 StorNext Supported

OSes and Platforms

Notes: When adding StorNext Storage Manager to a StorNext File System environment,

the metadata controller (MDC) must be moved to a supported platform. If you attempt

to install and run a StorNext 3.1.4 server that is not supported, you do so at your own

risk. Quantum strongly recommends against installing non-supported servers.

*64-bit versions of Windows support up to 128 distributed LAN clients. 32-bit versions of Windows are not recommended for MDC server or Distributed LAN due to memory limitations.


†StorNext support will transition from HP-UX 11i v2 to 11i v3, and from IBM AIX

5.3 to 6.1 on a future date.

Note: For systems running Red Hat Enterprise Linux version 4 or 5, before

installing StorNext you must first install the kernel header files (shipped

as the kernel-devel-smp or kernel-devel RPM).

For systems running SUSE Linux Enterprise Server, you must first install

the kernel source code (typically shipped as the kernel-source RPM).

Caution: Red Hat 5 ships with secure Linux kernel enabled by default. To

ensure proper StorNext operation, you must not install Red Hat 5

with secure Linux enabled. The secure Linux kernel must be off, or

the file system could fail to start.


Note: GNU tar is required on Solaris systems. In addition, for systems running

Solaris 10, install the Recommended Patch Cluster (dated March 10,

2006 or later) before installing StorNext.

To enable support for LUNs greater than 2TB on Solaris 10, the following patches are required:

• 118822-23 (or greater) Kernel Patch

• 118996-03 (or greater) Format Patch

• 119374-07 (or greater) SD and SSD Patch

• 120998-01 (or greater) SD Headers Patch
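The "or greater" wording means each patch is satisfied by the listed revision or any later revision of the same patch. The comparison can be sketched in Python as follows. The function names and the list of installed patches are hypothetical; the sketch assumes patch identifiers of the form base-revision and does not query the system for installed patches.

```python
from typing import Dict, Iterable, List, Tuple

# Minimum revisions from the list above: patch base number -> minimum revision.
REQUIRED_PATCHES: Dict[str, int] = {
    "118822": 23,  # Kernel Patch
    "118996": 3,   # Format Patch
    "119374": 7,   # SD and SSD Patch
    "120998": 1,   # SD Headers Patch
}


def parse_patch(patch_id: str) -> Tuple[str, int]:
    """Split an identifier such as '118822-23' into ('118822', 23)."""
    base, _, revision = patch_id.strip().partition("-")
    return base, int(revision)


def missing_patches(installed: Iterable[str]) -> List[str]:
    """Return the required patches whose minimum revision is not met."""
    highest: Dict[str, int] = {}
    for patch_id in installed:
        base, revision = parse_patch(patch_id)
        highest[base] = max(revision, highest.get(base, -1))
    return [
        f"{base}-{minimum:02d}"
        for base, minimum in REQUIRED_PATCHES.items()
        if highest.get(base, -1) < minimum
    ]


# Hypothetical list of installed patches.
installed = ["118822-25", "118996-03", "119374-05"]
print(missing_patches(installed))  # ['119374-07', '120998-01']
```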


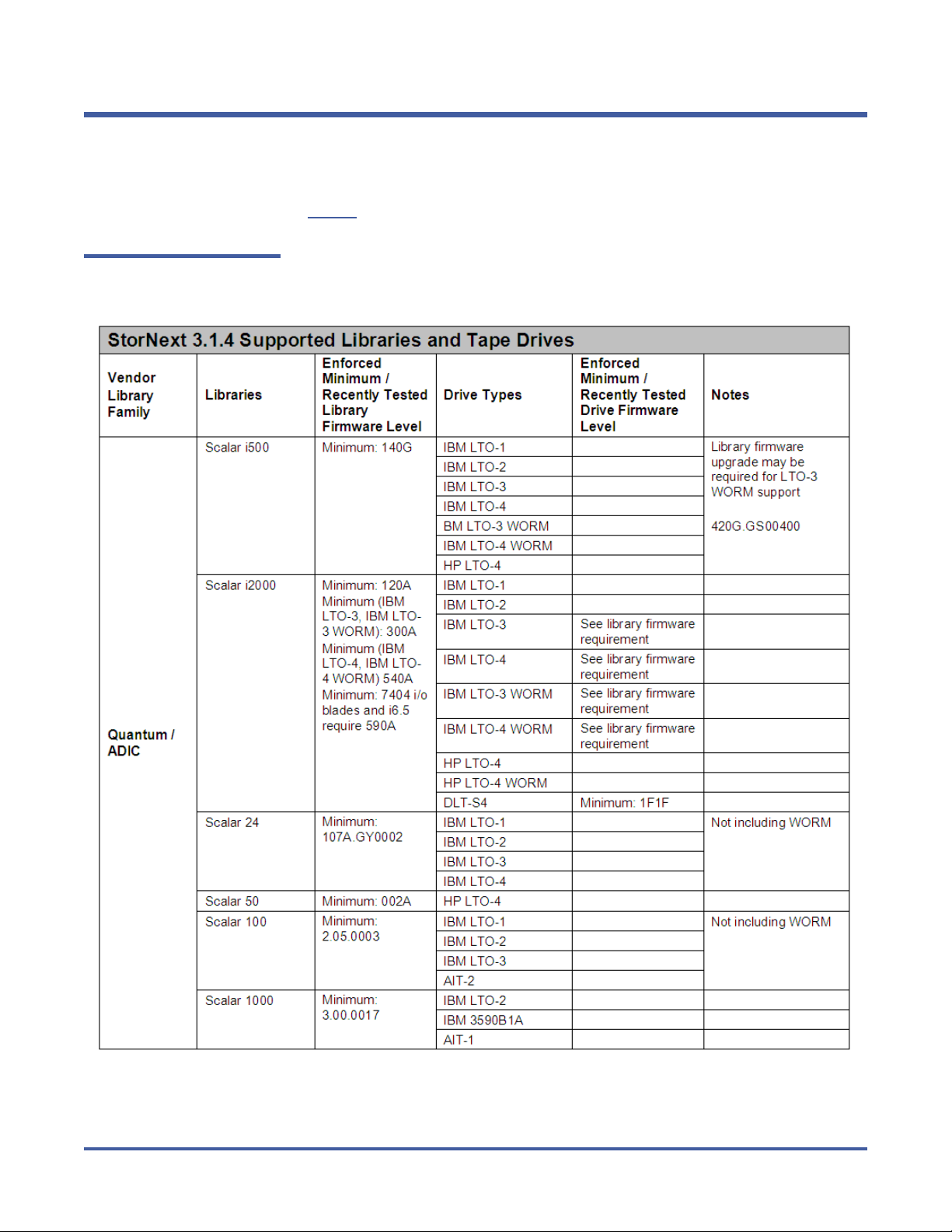

Supported Libraries and Tape Drives

Libraries and tape drives supported for use with StorNext 3.1.4 are presented in Table 3. Where applicable, minimum firmware levels for libraries are provided.

Table 3 StorNext Supported Libraries and Tape Drives

Notes:

Sun/StorageTek no longer supports ACSLS version 6.x. Version 7.0 or higher is required.

Before using DLT cleaning with DLT-S4 or SDLT 600 drives, configure the library

(Scalar i2000 or PX720) to disable reporting of the media ID. If media ID

reporting is not disabled, StorNext will not recognize the cleaning media (SDLT

type 1).

* When using T10000 drives, the STK library parameter Fastload must be set to

OFF.


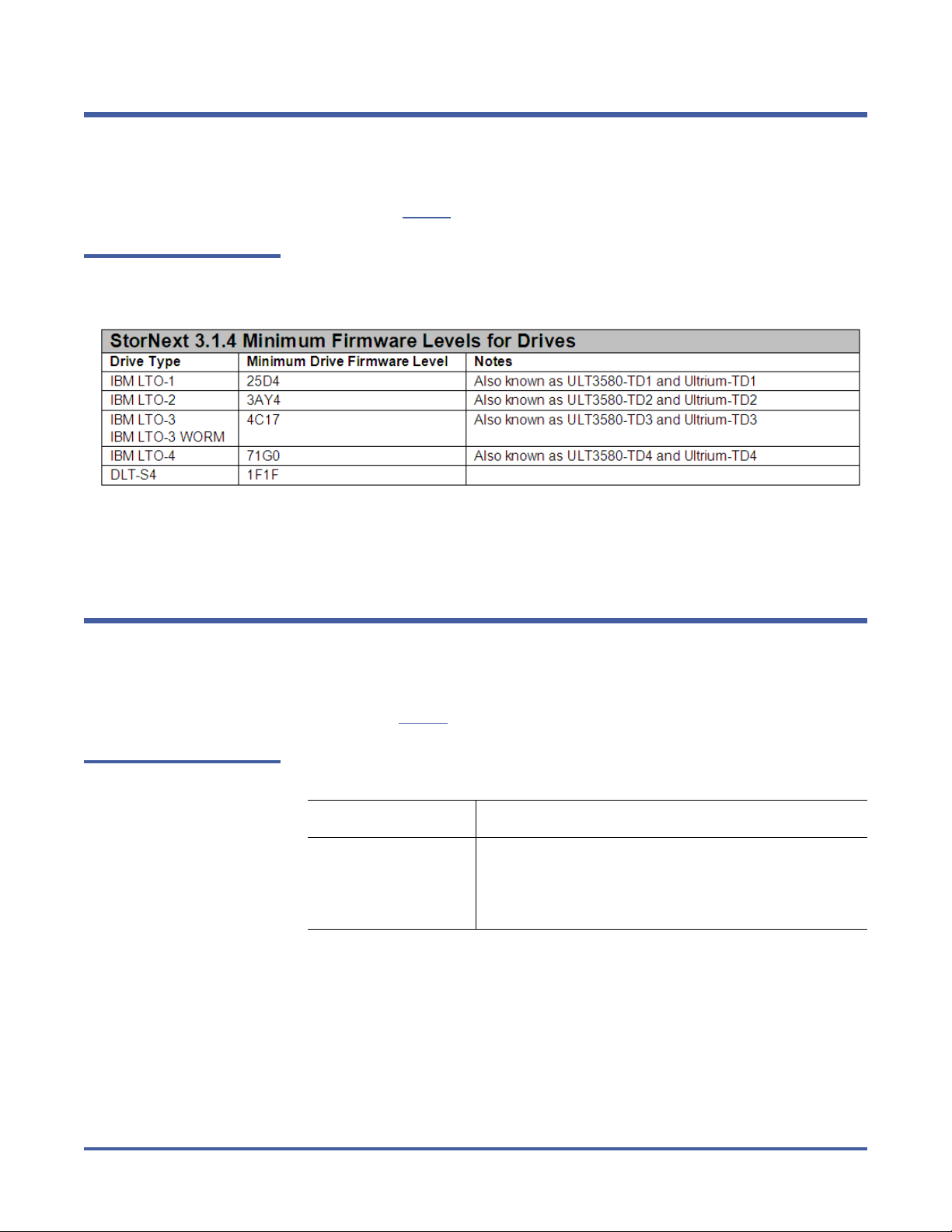

Minimum Firmware Levels for StorNext Drives

Where applicable, the minimum firmware levels for StorNext-supported drives are shown in Table 4.

Table 4 Minimum Firmware Levels for Drives

Note: When using IBM Ultrium-TD3 drives with SUSE Linux Enterprise Server 10,

you must upgrade the drive firmware to version 64D0 or later.

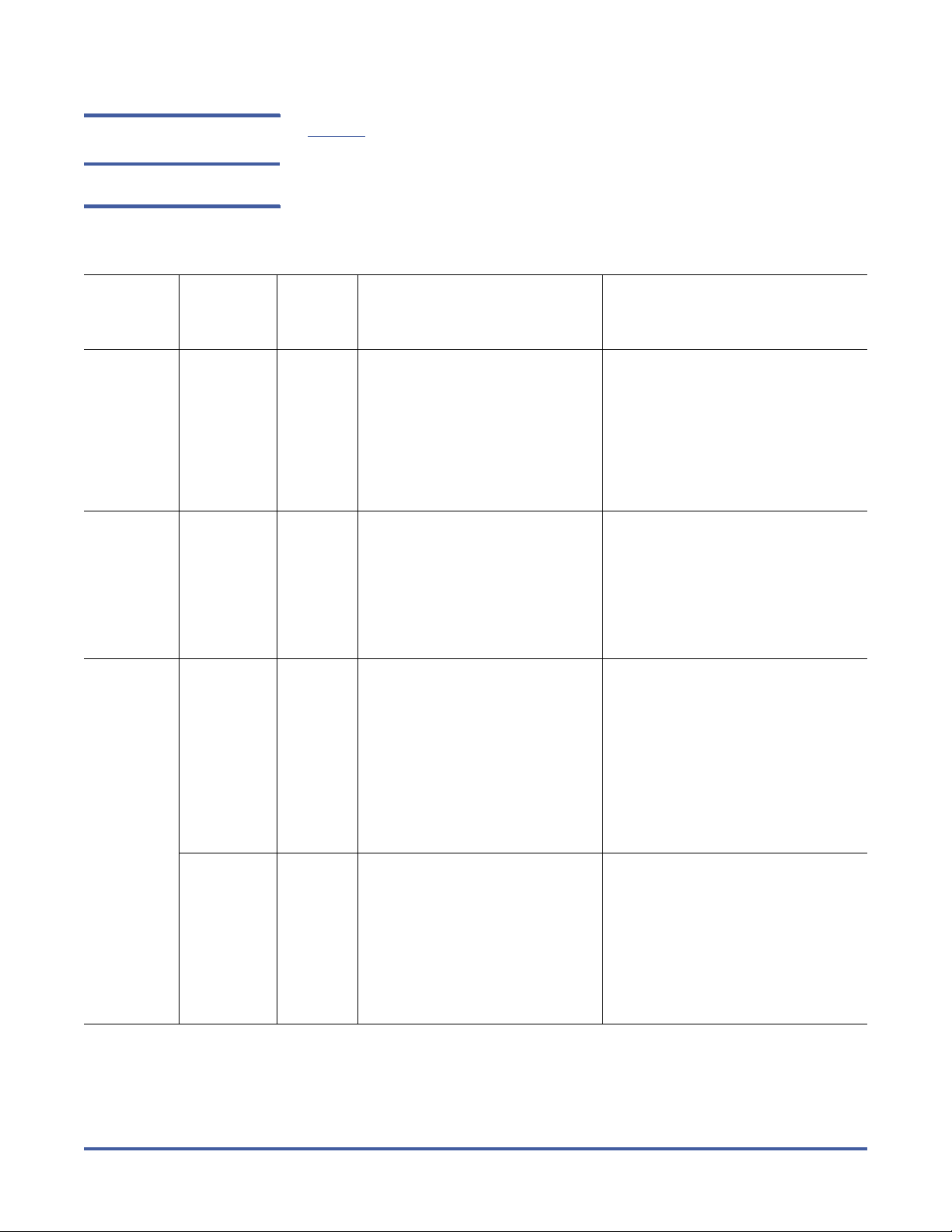

Supported System Components

System components that are supported for use with StorNext 3.1.4 are presented in Table 5.

Table 5 StorNext Supported System Components

Component: Browsers

Description: Internet Explorer 6.0 or later (including 7.0)

Mozilla Firefox 1.5 or later (including 2.0 or later)

(Minimum browser resolution: 800x600)

NOTE: Disable pop-up blockers.

Component: LTO-1 Media to LTO-3 or LTO-4 Drive Compatibility

Description: LTO-1 media in an LTO-3 or LTO-4 library (LTO-3 or LTO-4 drives) are considered for store requests unless they are logically marked as write protected. When LTO-1 media is mounted in an LTO-3 or LTO-4 drive, StorNext marks the media as write protected.

Quantum recommends circumventing LTO-1 media for store requests by following this procedure:

1 From the SNSM home page, choose Attributes from the Media menu.

2 On the Change Media Attributes window, select the LTO-1 media from the list.

3 Click the Write Protect option.

4 Click Apply to make the change.

5 Repeat the process for each piece of LTO-1 media.

Component: NFS

Description: Version 3

NOTE: An NFS server that exports a StorNext file system with the default export options may not flush data to disk immediately when an NFS client requests it. This could result in loss of data if the NFS server crashes after the client has written data, but before the data has reached the disk.

This issue will be addressed in a future StorNext release. As a workaround, add the no_wdelay option to each line in the /etc/exports file that references a StorNext file system. For example, typical export options would be (rw,sync,no_wdelay).

Component: Addressable Power Switch

Description: WTI RPS-10m

WTI IPS-800

The RPS-10m (master) is supported. The RPS-10s (slave) is not supported.

Component: LDAP

Description: LDAP (Lightweight Directory Access Protocol) support requires Windows Active Directory.

Hardware Requirements

To successfully install StorNext 3.1.4, the following hardware requirements must be met:

• StorNext File System and Storage Manager Requirements

• StorNext Client Software Requirements


Note: The following requirements are for running StorNext only. Running

additional software (including the StorNext client software) requires

additional RAM and disk space.

StorNext File System and Storage Manager Requirements

The hardware requirements for StorNext File System and Storage Manager are presented in Table 6.

Table 6 File System and Storage Manager Hardware Requirements

| No. of File Systems | RAM | File System Disk Space |
|---|---|---|
| 1–4* | 2 GB | 2 GB |
| 5–8** | 4 GB | 4 GB |

Storage Manager Disk Space:

• For application binaries, log files, and documentation: up to 30GB (depending on system activity)

• For support directories: 3 GB per million files stored

* Two or more CPU cores are recommended for best performance.

** Two or more CPU cores are required for best performance.

Note: If a file system uses deduplicated storage disks (DDisks), note the

following additional requirements:

• Requires 2 GB RAM per DDisk in addition to the base RAM noted in Table 6.

• Requires an additional 5GB of disk space for application binaries

and log files.

• Deduplication is supported only for file systems running on a Linux

operating system (x86 32-bit or x86 64-bit).

• An Intel Pentium 4 or later processor (or an equivalent AMD

processor) is required. For best performance, Quantum recommends

an extra CPU per DDisk.
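The RAM figures above combine as a base amount plus a per-DDisk amount. The following Python sketch shows the arithmetic. The function names are hypothetical; the base values come from Table 6, and the sketch covers only the 1 to 8 file system range that the table lists. As noted earlier, running additional software requires RAM beyond these figures.

```python
RAM_PER_DDISK_GB = 2  # additional RAM required per DDisk


def base_ram_gb(num_file_systems: int) -> int:
    """Base RAM from Table 6 for the given number of file systems."""
    if 1 <= num_file_systems <= 4:
        return 2
    if 5 <= num_file_systems <= 8:
        return 4
    raise ValueError("Table 6 lists requirements for 1 to 8 file systems only")


def required_ram_gb(num_file_systems: int, num_ddisks: int = 0) -> int:
    """Base RAM plus 2 GB for each deduplicated storage disk (DDisk)."""
    if num_ddisks < 0:
        raise ValueError("num_ddisks must not be negative")
    return base_ram_gb(num_file_systems) + RAM_PER_DDISK_GB * num_ddisks


# Hypothetical server: 3 file systems and 2 DDisks.
print(required_ram_gb(3, 2))  # 6
```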

StorNext Client Software Requirements

To install and run the StorNext client software, the client system must meet the

following minimum hardware requirements.

For SAN (FC-attached) clients or for Distributed LAN Clients:

• 1 GB RAM

Page 22

• 500 MB available hard disk space

For SAN clients acting as a Distributed LAN Server:

• 2 GB RAM

• 500 MB available hard disk space

Note: Distributed LAN servers may require additional RAM depending on the number of file systems, Distributed LAN Clients, and NICs used. See Distributed LAN Server Memory Tuning in the StorNext User’s Guide for tuning guidelines.

Resolved Issues

The following sections list resolved issues in this release of StorNext:

• StorNext File System Resolved Issues on page 22

• StorNext Storage Manager Resolved Issues on page 25

• StorNext GUI Resolved Issues on page 27

• StorNext Installation and Database Resolved Issues on page 27

Note: There is no change to cryptographic functionality in StorNext release 3.1.4.

StorNext File System Resolved Issues

Table 7 lists resolved issues that are specific to StorNext File System.

Table 7 StorNext File System Resolved Issues

Operating System | Change Request Number | Service Request Number | Description
Solaris | 27302 | 901210, 997028, 993390, 984914 | Changed the default value for cvlabel, which wrote VTOC labels that were incompatible with recent releases of Solaris 10.
HP-UX | 28848 | 1019994 | Corrected a condition which caused performance issues when using shared memory.

Page 23

Operating System | Change Request Number | Service Request Number | Description
Linux | 25770 | n/a | Resolved a condition in which GUI redirection did not work properly after HA failover. (cvfail did not always run when an MDC became the active MDC due to a lock file that was not cleaned up.)
Linux | 26614 | 942318 | Corrected a condition that caused an fsm panic. (PANIC: /usr/cvfs/bin/fsm "Trans_commit: t irp left locked and not DIRTY " file transaction.c, line 961)
Linux | 26634 | n/a | Corrected the following condition: A failure to stop an HA fsm could occur if the fsm has multiple IP addresses and is configured in the fsmlist to use an IP address that differs from the IP address to which the fsm's hostname resolves.
Linux | 26655 | 931830 | Corrected a condition that could cause backup failures or hangs due to the way extended attributes were processed.
Linux | 26685 | 950226 | Resolved a condition in which running the snmetadump -a command failed periodically with the following: ASSERT failed "length >= sizeof(section_header_t)" file process_metadata.c process_metadata.c, line 589
Linux | 26890 | n/a | Corrected a condition in which a process involved with database journals sometimes remained running after the database was stopped.
Linux | 27926 | n/a | Corrected an snmetadump failure that generated a “Transaction out of order” error.
Windows | 27166 | 832794 | Corrected a condition in which CVFSPM disconnected on Windows systems.
Windows | 27395 | 970490, 973766, 999134, 956410, 971086 | Corrected a condition on Windows Vista or Windows Server 2008 systems which caused a blue screen error when a remote SNFS client opened a file already open on the affected system. (OplockOpenChange appeared on the blue screen’s stack trace as a distinctive indicator.)
Windows | 27881 | 999134 | Resolved a condition in which a blue screen error resulted from “BAD_POOL_CALLER” on Windows Server 2008.
Windows | 27907 | n/a | Corrected a condition in which unlabeling a VTOC-labeled disk and then relabeling failed on a Windows system.
Windows | 27909 | 712214 | Improved cvfsck processing so that the allocation bit map is updated correctly and more efficiently.
Windows | 27910 | 712214 | The number of free inodes is now updated properly.
Windows | 28853 | 971688 | Corrected a condition in which Microsoft IIS 7 Web Hosting software did not operate correctly with StorNext File System.

Page 24

Operating System | Change Request Number | Service Request Number | Description
All | 26408 | 919864, 992906 | Resolved an issue that caused fsm panics in alloc_space_debug.
All | 26835 | 955194, 997876 | Corrected a condition that caused an FSM panic - trans_write: space reservation too small for type 6
All | 27004 | 956004 | Support for the Quality of Service feature was added for distributed LAN clients and servers.
All | 27014 | 955980 | Corrected cvfsck to correctly zero only corrupt extended attributes. (In the process of removing corruption and the associated extended attribute, cvfsck also incorrectly zeroed a different structure, requiring cvfsck to be run again to fix that corruption.)
All | 27049 | 958296 | Added the ability to place orphaned inodes into the specified directory inode.
All | 27319 | 962028 | Corrected a condition that caused data corruption in some instances when bringing UP a DOWN stripe group.
All | 27816 | 871834, 877874 | Corrected a condition in which ExtApiGetSgInfo returned static stripe group status instead of dynamic stripe group status.
All | 27875 | 968024, 985224 | Addressed snmetadump failures on systems having multiple metadata stripe groups.
All | 27928 | 892710, 1010482, 1003672, 1015538, 1028578 | Corrected a condition in which a zero-length restore journal led to backup failure.
All | 27931 | 996254 | Resolved a condition in which snmetadump incorrectly processed a directory in a multiple metadata stripe group setup.
All | 27949 | n/a | Corrected a condition in which running out of disk space for restore journals led to FSM PANIC: /usr/cvfs/bin/fsm ASSERT failed "pthread_mutex_unlock(&restore_flusher_lock) == 0" file restorejournal.c, line 2627
All | 27965 | n/a | Addressed a bad ASSERT in snmetadump restore on systems having multiple metadata stripe groups.
All | 27992 | 1003288 | Corrected an snmetadump failure that occurred during the initial optimization phase.
All | 27993 | n/a | Resolved a missing buffer flush in cvfsck which prevented repairing an arb block.

Page 25

Operating System | Change Request Number | Service Request Number | Description
All | 28257 | n/a | The Show Inode cvfsdb command has been changed to display inode times as real dates.
All | 28259, 28261 | 997926 | Corrected a condition in which running cvfsck on a file system showed dot entries that didn’t have the generation portion of the inode.
All | 28455 | 1005712, 1000514, 972122, 922532, 1019354 | Corrected a condition in which some status queries failed when a LUN had more than four paths.
All | 28554 | 914634, 875158 | Corrected a condition in which directories containing scattered inodes contributed to slow rebuild performance. (See also Storage Manager Parameter Deprecated on page 4.)
All | 28774 | 1014826 | Corrected a problem in the externals/acsapi/src/common_lib/ml_api.c module which caused a daemon core dump.

StorNext Storage Manager Resolved Issues

Table 8 lists resolved issues that are specific to StorNext Storage Manager.

Table 8 StorNext Storage Manager Resolved Issues

Operating System | Change Request Number | Service Request Number | Description
Linux | 26421 | 887578 | Corrected a condition in which Storage Manager filled tapes with wasted space if the class in the inode was incorrect.
Linux | 26604 | 875712, 933796, 963346, 937860, 935286 | Resolved a condition in which store candidates could not be stored by fsrmcopy until fsclean ran.
Linux | 27053 | 896404 | Resolved an issue in which conflicting CONF manifest numbers prevented snbackup from running.
Linux | 27807 | 951158, 969348 | Corrected a condition in which moving files from a managed directory to an unmanaged directory caused issues with fspolicy.

Page 26

Operating System | Change Request Number | Service Request Number | Description
Linux | 27911 | n/a | Corrected a condition in which fsclean -r ran slowly for files that were removed or renamed to a different directory.
Linux | 26608 | 927346, 912890 | Addressed an issue where LibManager processes could become defunct if SL_TAC_MASK was not set to the default value in /usr/adic/MSM/logs/log_params.
All | 25824 | 871834, 877874, 1000030 | Corrected a condition in which a file system containing several offline stripe groups prevented truncation from occurring. Note: If you applied the workaround for this issue before installing StorNext 3.1.4, you must “undo” the workaround by using the StorNext GUI to reset the truncation values to either their original or default values.
All | 26628 | 845714, 881622, 895620 | Corrected a condition that caused fsclean -r to hang.
All | 26647 | 914640 | A parameter was created to limit the number of drives used by a policy for stores. (See New Media Limiting Feature on page 2.)
All | 26792 | 941980 | All /usr/adic/database/db log files are now included in the snapshots.
All | 27119 | 961840 | Corrected a condition in which files with % in their name could not be recovered.
All | 27834 | n/a | Addressed tape drive reservation conflicts that occurred on failover.
All | 27835 | 985494, 983646 | Resolved an issue in which fsretrieve never completed running on a RHEL5 system due to hung fs_fmover processes.
All | 27887 | 728589 | Corrected a condition that caused TSM-Fs_fmover and fsstore to core while storing a file with special characters.
All | 28200 | 991802 | Corrected the StorNext API feature so that the correct percentage used calculation is used.
All | 28205 | 837806 | Resolved a condition in which an inefficient SQL query hindered database performance.
All | 28555 | n/a | Corrected an issue with the rebuild policy that occurred when the number of relation points exceeded the number of threads available, causing the primary rebuild policy to pick up the remaining relation points and miss any files that needed to be stored, relocated, or truncated.
All | 28669 | n/a | Corrected a condition in which Storage Manager TSM stored a bad copy of a file on archive media.

Page 27

StorNext GUI Resolved Issues

Table 9 lists resolved issues that are specific to the StorNext GUI.

Table 9 StorNext GUI Resolved Issues

Operating System | Change Request Number | Service Request Number | Description
Linux | 28369 | n/a | Corrected a condition which caused a file system configuration file to become corrupted when adding a 5GB LUN.
Windows | 27520 | 978244 | Corrected a condition in which StorNext menu selection windows did not work correctly using Microsoft Internet Explorer 8.
All | 25289 | 844668, 938488 | Resolved a condition in which file system expansion via the StorNext GUI failed.
All | 27543 | 901210, 997028, 993390, 984914 | The default VTOC label format has changed from previous StorNext releases. (See also New VTOC Label Format on page 4.)

StorNext Installation and Database Resolved Issues

Table 10 lists resolved issues that are specific to StorNext installation and the database.

Table 10 StorNext Installation and Database Resolved Issues

Operating System | Change Request Number | Service Request Number | Description
Linux | 24027 | 766930 | Corrected a condition on an HA system in which an upgrade from StorNext version 310B10 to 311B12 failed without manual intervention.
Linux | 26645 | n/a | The man pages for fsrminfo and fsclean have been updated to reflect changed functionality.
Linux | 26612 | 933846 | Corrected a condition that caused fs_fmover to hang due to a database issue.
Solaris | 28338 | n/a | Corrected a condition in the StorNext installation code for Solaris, which did not have the correct default directory path for steps 13 and 14.

Page 28

Operating System | Change Request Number | Service Request Number | Description
All | 26406 | 917570 | Updated the man pages for cvfs_failover.
All | 26802 | 948026, 946116, 958332, 955446, 961146 | Addressed a condition that caused corruption in the sl_event_details and sl_notification_email tables.
All | 27223 | 949248 | Corrected a condition in which an ljb process timeout caused out-of-order journal files and backup/restore problems.
All | 28289 | n/a | The database StorNext uses has been updated to version 104 to address underlying performance issues. (See also Updated Database on page 4.)
All | 28694 | 912136 | Corrected errors in the HA upgrade process.

Known Issues

The following sections list known issues in this release of StorNext, as well as

associated workarounds, where applicable:

• StorNext File System Known Issues on page 29

• StorNext Storage Manager Known Issues on page 34

• StorNext Installation Known Issues on page 36

Page 29

StorNext File System Known Issues

Table 11 lists known issues that are specific to StorNext File System.

Table 11 StorNext File System Known Issues

Operating System: HP-UX
Change Request Number: 24309
Service Request Number: n/a
Description: If the cvpaths file contains an invalid wild-card specification (that is, a wild-card pattern that does not include a leading '/' character), the fsmpm process could panic and the cvlabel command might fail with a core dump.
Workaround: This issue will be addressed in a future StorNext release.

Operating System: Solaris
Change Request Number: 24331
Service Request Number: 755956
Description: Running an anonymous FTP server inside of a StorNext file system could cause a system to crash with the following error:
    CVFS ASSERTION FAILED: f_cvp->cv_opencnt == 0
Workaround: This issue will be addressed in a future StorNext release. To work around this issue, install Very Secure FTP Daemon (vsftpd) and use it instead of the FTP daemon (in.ftpd) that is shipped with Solaris.

Operating System: Linux
Change Request Number: 23661
Service Request Number: 958244
Description: StorNext File System does not support the Linux sendfile() system call. This issue causes Apache web servers to deliver blank pages when content resides on StorNext file systems. This issue also affects Samba servers running on Linux.
Workaround: The workaround is to disable sendfile usage by adding the following entry into the Apache configuration file httpd.conf:
    EnableSendfile off
The workaround for Samba servers is to add the following line into the configuration file:
    sendfile=no

Operating System: Linux
Change Request Number: 24890
Service Request Number: 836284
Description: DMA I/Os may hang when the file offset is not aligned and disks configured with 4096-byte sectors are used. For example:
    dd if=/stornext/sr83628a/file2g of=/dev/null bs=1880000 count=1 skip=40
Workaround: This issue will be addressed in a future StorNext release. As a workaround, use the “memalign=4k” mount option.
Note: This mount option should NOT be used with file systems containing LUNs configured with 512-byte sectors.
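The dd example for change request 24890 can be checked with a little arithmetic. This is a minimal sketch under one assumption: that "aligned" means the starting byte offset is a multiple of the disk sector size. The helper names are hypothetical. With a single bs value, dd skips skip blocks of bs bytes, so the read starts at bs × skip.

```python
def dd_start_offset(block_size, skip):
    """Byte offset at which dd starts reading when bs=block_size and skip=skip."""
    return block_size * skip


def is_aligned(offset, sector_size):
    """True when the offset falls exactly on a sector boundary."""
    return offset % sector_size == 0


# Values taken from the dd command in the known issue.
offset = dd_start_offset(1880000, 40)      # 75,200,000 bytes
misalignment = offset % 4096               # bytes past the last 4096-byte boundary
aligned_4k = is_aligned(offset, 4096)      # False: the case that may hang
aligned_512 = is_aligned(offset, 512)      # True: 512-byte sectors are unaffected
```

The offset is 1536 bytes past a 4096-byte boundary, yet it sits exactly on a 512-byte boundary, which is consistent with the issue being limited to disks configured with 4096-byte sectors.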

Page 30

Operating System: Linux
Change Request Number: 25124
Service Request Number: 833282
Description: A “no space” error appears after you attempt to add a file to a directory known to contain sufficient space.
There has been only one known instance of this problem, which is a corrupted B-tree for one directory. Please report any additional instances to Quantum Support.
Identify this problem by looking for these indicators:
1. Attempts to add a file to an affected directory produce a “no space” error.
2. Files can be added to other directories in the same file system without error.
3. Running the command cvadmin -F <FileSystemName> -e show reports free space in the metadata stripe groups for the file system of the affected directory.
Workaround: After collecting debugging information, the following workaround can be applied:
1) Rename the corrupt directory <dir> to <dir>.corrupt
2) Create a new directory named <dir>
3) Move all files from the corrupt directory <dir>.corrupt to the newly created directory <dir>
4) Delete the empty corrupt directory <dir>.corrupt

Operating System: Linux
Change Request Number: 26321
Service Request Number: n/a
Description: Due to the way Linux handles errors, the appearance of SCSI “No Sense” messages in system logs can indicate possible data corruption on disk devices.
This affects StorNext users on Red Hat 4, Red Hat 5, SuSe 9, and SuSe 10.
Workaround: This issue is not caused by StorNext, and is described in detail in StorNext Product Alert 20.
For additional information, see Red Hat 5 CR 468088 and SuSE 10 CR 10440734121.

Operating System: Linux
Change Request Number: 25102
Service Request Number: n/a
Description: 32-bit StorNext Distributed Gateways may lock up under load. This problem is due to “highmem” kernel memory depletion and occurs under load when several distributed LAN clients perform I/O concurrently. Using a larger than default value for the transfer_buffer_size_kb parameter in the dpserver file will exacerbate the problem.
Workaround: The workaround is to use 64-bit Distributed Gateways.
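The four-step directory workaround for change request 25124 can be sketched in a few lines. This is an illustration only, not a Quantum-supplied procedure: the function name is hypothetical, the new directory is created with default permissions and ownership rather than those of the original, and whether a directory with a corrupted B-tree can be listed at all is an assumption. Collect the debugging information first, as the workaround requires.

```python
import os
import shutil


def rebuild_directory(path):
    """Move the contents of `path` into a freshly created directory of the same name."""
    path = os.path.normpath(path)
    corrupt = path + ".corrupt"
    if os.path.exists(corrupt):
        raise FileExistsError(corrupt + " already exists")

    os.rename(path, corrupt)              # 1) rename <dir> to <dir>.corrupt
    os.mkdir(path)                        # 2) create a new directory named <dir>
    for name in os.listdir(corrupt):      # 3) move every entry into the new <dir>
        shutil.move(os.path.join(corrupt, name), os.path.join(path, name))
    os.rmdir(corrupt)                     # 4) delete <dir>.corrupt (fails unless empty)
    return path
```

Because os.rmdir refuses to remove a non-empty directory, step 4 fails loudly instead of discarding anything that step 3 did not move.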

Page 31

Operating System: Linux
Change Request Number: 25864
Service Request Number: n/a
Description: An NFS server that exports a StorNext file system with the default export options may not flush data to disk immediately when an NFS client requests it. This could result in loss of data if the NFS server crashes after the client has written data, but before the data has reached the disk.
Workaround: This issue will be addressed in a future StorNext release. As a workaround, add the no_wdelay option to each line in the /etc/exports file that references a StorNext file system. For example, typical export options would be (rw,sync,no_wdelay).

Operating System: Windows
Change Request Number: 26115
Service Request Number: n/a
Description: Failover did not execute properly after an upgrade.
Workaround: This issue will be addressed in a future StorNext release.

Operating System: Windows
Change Request Number: 24034
Service Request Number: n/a
Description: Windows has different limitations regarding maximum allowable path names. The limitations depend upon whether you are using the NTFS API or the WIN32 API.
The WIN32 API has a maximum path name length of 260 characters, including all path characters (for example, c:\) and the path’s NUL termination character. The NTFS API allows 32,000 characters.
Workaround: There isn't an easy workaround for this problem.
The path name in the command del \\?\<drive letter>:\<directory>\...\<filename> must not be longer than 260 characters.
However, applications can use this path-naming syntax to access files with path names longer than 260 characters. That is, an application can use the syntax \\?\<drive letter>:\<directory>\...\<filename> on Windows APIs to access paths longer than 260 characters.
Windows has additional restrictions on file names. For more information, contact Microsoft.
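The no_wdelay workaround for change request 25864 amounts to a small edit of each affected /etc/exports line. The sketch below works on the file contents as text and leaves writing the result back to the administrator. The function names and the stornext_mounts parameter (a list of StorNext mount points) are hypothetical, and only client entries that already carry a parenthesized option list are modified.

```python
import re


def _with_no_wdelay(match):
    """Append no_wdelay to one parenthesized option list unless it is already there."""
    options = [opt for opt in match.group(1).split(",") if opt]
    if "no_wdelay" not in options:
        options.append("no_wdelay")
    return "(" + ",".join(options) + ")"


def add_no_wdelay(exports_text, stornext_mounts):
    """Return exports text with no_wdelay added to lines exporting StorNext paths."""
    result = []
    for line in exports_text.splitlines():
        fields = line.split()
        exported = fields[0] if fields and not fields[0].startswith("#") else None
        on_stornext = exported is not None and any(
            exported == mount or exported.startswith(mount.rstrip("/") + "/")
            for mount in stornext_mounts
        )
        if on_stornext:
            line = re.sub(r"\(([^)]*)\)", _with_no_wdelay, line)
        result.append(line)
    return "\n".join(result)
```

Lines for other file systems and comment lines pass through unchanged, so the function can be run repeatedly on the same input without duplicating the option.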

Page 32

Operating System: Windows
Change Request Number: 25032
Service Request Number: n/a
Description: If you are using Promise RAID controllers on a Windows Server 2008 64-bit system, you must install Promise’s PerfectPath software. (PerfectPath also helps recognize multiple-path failovers.)
If you do not install this software, you will be unable to use your Windows Server 2008 system.
Workaround: Promise is working on a solution to this problem, but in the meantime they have provided the following workaround:
1. Install the PerfectPath software on your Windows Server 2008 64-bit system.
2. Restart your system. The login prompt will not appear after you restart. Instead, the Windows Boot Manager screen appears showing an error message: “Windows cannot verify the digital signature for this file” (\Windows\system32\DRIVERS\perfectpathdsm.sys)
3. From the Windows Boot Manager screen, press Enter to continue. A second Windows Boot Manager screen appears, asking you to choose an operating system or specify an advanced option.
4. On the second Windows Boot Manager screen, press F8 to specify advanced options. The Advanced Boot Options screen appears.
5. On the Advanced Boot Options screen, use the arrow keys to choose the option Disable Driver Signature Enforcement. Choosing this option will cause the system to display the login prompt normally after you reboot.
6. Restart your system.

Page 33

Operating System: Windows
Change Request Number: 24174
Service Request Number: n/a
Description: Issues may arise due to the way Windows handles Posix-compliant file names.
NTFS and Posix subsystems allow trailing periods and spaces in file names, whereas the WIN32 subsystem does not. (MS-DOS support allows only 8.3 format.)
Even though StorNext supplies the unicode file names with a space on the end, when StorNext is called by Windows to delete the file, the file name supplied does not have the space on the end. For this reason, file names with spaces or periods as the last character cannot be deleted using Windows Explorer.
Workaround: A partial workaround for this problem is to use the MS-DOS shell instead of Windows Explorer as follows:
    del \\?\<drive letter>:\<directory>\...\<filename>
This format directs the del command to use the NTFS interface instead of the WIN32 interface.
This will not work on file names that contain the following Windows reserved characters:
    * ? \ < > " : |
In addition, you will not be able to delete a directory with a name that ends with a period or a space.

Operating System: All
Change Request Number: 24859
Service Request Number: 813172, 1001612, 994798
Description: Setting the buffer cache above 256MB can result in fragmentation. The mount option for buffercachecap should not exceed 256MB.
Workaround: This issue will be addressed in a future StorNext release. In the meantime, make sure the mount option for buffercachecap does not exceed 256MB.
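The \\?\ path syntax used in the Windows entries of Table 11 is plain string manipulation. The sketch below shows how a path could be checked against the 260-character WIN32 limit and given the prefix. The helper names are hypothetical, and only drive-letter paths of the form shown in the table are considered.

```python
MAX_PATH = 260  # WIN32 limit; counts the drive prefix and the terminating NUL

EXTENDED_PREFIX = "\\\\?\\"  # the four characters \\?\


def fits_win32(path):
    """True when the path plus its NUL terminator fits within the WIN32 limit."""
    return len(path) + 1 <= MAX_PATH


def to_extended_path(path):
    """Return the \\?\-prefixed form of an absolute drive-letter path."""
    if path.startswith(EXTENDED_PREFIX):
        return path
    return EXTENDED_PREFIX + path
```

For instance, `to_extended_path("E:\\media\\clip .mov")` yields the form that the del workaround expects for a file name containing a space.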

Page 34

StorNext Storage Manager Known Issues

Table 12 lists known issues that are specific to StorNext Storage Manager.

Table 12 StorNext Storage Manager Known Issues

Operating System: Linux
Change Request Number: 24649
Service Request Number: n/a
Description: StorNext Storage Manager in a High Availability configuration could encounter a problem reserving tape drives following a failover. A “target reset” is used by the newly activated metadata controller to release “scsi reserve” device reservations made by the former metadata controller. The target reset operation might fail due to device driver problems on systems running SUSE Linux Enterprise Server 10 with tape drives attached via an LSI fibre host bus adapter. Any such reserved drives will not be accessible by the new metadata controller.
Workaround: There are two possible workarounds, which also apply to versions of StorNext prior to 3.1.2.
1) Following a failover, release any tape drive reservations held by the former metadata controller. This must be done for each tape drive still reserved by the former metadata controller by running /usr/adic/TSM/util/fs_scsi on the metadata controller which owns the reservation:
    # /usr/adic/TSM/util/fs_scsi
    Choice==> 10 (list drives)
    Choice==> 1 (select drive, e.g. /dev/sg0)
    Choice==> 3 (select Test Ready)
    Choice==> 24 (select Release)
    Choice==> 0 (select Quit to exit fs_scsi)
OR
2) Add the following setting to the /usr/adic/TSM/config/fs_sysparm_override file:
    FS_SCSI_RESERVE=none;
(“none” means don't try to reserve tape drives.) StorNext must be restarted for this change to take effect.
WARNING: This workaround could leave tape drives exposed to unexpected access by other systems, which could lead to data loss.

Page 35

Operating System: All
Change Request Number: 12321
Service Request Number: n/a
Description: Removing affinities does not unconfigure them from managed file systems.
Workaround: This issue will be addressed in a future StorNext release.

Operating System: All
Change Request Number: 25501
Service Request Number: n/a
Description: End of Tape/Medium not detected
Workaround: This issue will be addressed in a future StorNext release.

Operating System: All
Change Request Number: 25506
Service Request Number: n/a
Description: End of tape or medium not correctly detected.
Workaround: This issue will be addressed in a future StorNext release.

Operating System: All
Change Request Number: 25766
Service Request Number: 870012, 886856
Description: MSM failed to start with “glibc double free or corruption”
Workaround: This issue will be addressed in a future StorNext release. The workaround is to remove outstanding MSM requests stored in the Request table.

Operating System: All
Change Request Number: 25837
Service Request Number: 836242
Description: Running fsmedcopy fails if the output medium does not have sufficient space to hold the copies from the input. However, the copies successfully transferred to the new media can be identified with the fsfileinfo command.
Workaround: This issue will be addressed in a future StorNext release. As a workaround, a few fsmedcopy runs will get all the files from the larger input media to the new (smaller) media.

Operating System: All
Change Request Number: 26279
Service Request Number: 945058
Description: Running the following SQL query does not produce the desired results:
    SELECT * FROM FILECOMP%s WHERE file_key=? AND version=? AND (mediandx,medgen) NOT IN (SELECT DISTINCT mediandx,mediagen FROM OLDMEDIA)
Workaround: This issue will be addressed in a future StorNext release.

Page 36

StorNext Installation Known Issues

Table 13 lists known issues that are specific to the StorNext installation process.

Table 13 StorNext Installation Known Issues

Operating System: Linux (RHEL4 and RHEL5 only)
Change Request Number: 24692
Service Request Number: n/a
Description: When you mount a CD in a Red Hat 4 or 5 system, CDs are mounted by default with a noexec (non-executable) option which prevents you from proceeding with the StorNext installation.
Workaround: Remount the CD by typing mount -o remount, exec ...
Alternatively, mount the CD to a different directory by typing the following:
    # mkdir /mnt/MOUNT_PATH
    # mount /dev/cdrom /mnt/MOUNT_PATH
    # cd /mnt/MOUNT_PATH

Operating System: Windows
Change Request Number: 27129
Service Request Number: n/a
Description: An incompatibility between StorNext 3.1.2, 3.1.3 and 3.5 installers and Altiris Agent running as a service (C:\Program Files\Altiris\Altiris Agent\AeXNSAgent.exe) could cause the StorNext installation to fail.
Workaround: This issue will be addressed in a future StorNext release. To avoid encountering this issue, disable the Altiris Agent service before installing StorNext.
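Before starting an installation from CD on Red Hat, it can help to confirm whether the CD is in fact mounted with noexec (change request 24692). The following sketch parses text in the /proc/mounts layout (device, mount point, file system type, options). The function names are hypothetical, and mount points containing spaces, which the kernel escapes in that file, are not handled.

```python
def mount_options(mounts_text, mount_point):
    """Return the option list of the last mount on `mount_point`, or None if absent."""
    options = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 4 and fields[1] == mount_point:
            options = fields[3].split(",")  # a later mount hides an earlier one
    return options


def blocks_execution(mounts_text, mount_point):
    """True when the mount point exists and carries the noexec option."""
    options = mount_options(mounts_text, mount_point)
    return options is not None and "noexec" in options
```

On a live system the text would typically come from reading /proc/mounts; if the check returns True for the CD mount point, apply one of the remount workarounds from Table 13.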

Page 37

Operating Guidelines and Limitations

Table 14 lists updated information and guidelines for running StorNext, as well as known limitations in this release.

Table 14 StorNext Operating Guidelines and Limitations

Operating System / Affected Component: Windows

Description:

When a StorNext file system is mounted to a drive letter or a directory, configure the Windows backup utility to NOT include the StorNext file system.

If you are using the StorNext client software with Windows Server 2003, Windows Server 2008, Windows XP, or Windows Vista, turn off the Recycle Bin in the StorNext file systems mapped on the Windows machine.

You must disable the Recycle Bin for the drive on which a StorNext file system is mounted. Also, each occurrence of file system remapping (unmounting/mounting) will require disabling the Recycle Bin. For example, if you mount a file system on E: (and disable the Recycle Bin for that drive) and then remap the file system to F:, you must then disable the Recycle Bin on the F: drive.

As of release 3.5, StorNext supports mounting file systems to a directory. For Windows Server 2003 and Windows XP you must disable the Recycle Bin for the root drive letter of the directory-mounted file system. (For example: For C:\MOUNT\File_System you would disable the Recycle Bin for the C: drive.) For Windows Server 2008 and Windows Vista you must disable each directory-mounted file system.

For Windows Server 2003 or Windows XP:

1 On the Windows client machine, right-click the Recycle Bin icon on the desktop and then click Properties.

2 Click Global.

3 Click Configure drives independently.

4 Click the Local Disk tab that corresponds to the mapped or directory-mounted file system.

5 Click the checkbox Do not move files to the Recycle Bin. Remove files immediately when deleted.

6 Click Apply, and then click OK.

Page 38

Operating System / Affected Component: Windows (continued)

Description:

For Windows Server 2008 and Windows Vista:

1 On the Windows client machine, right-click the Recycle Bin icon on the desktop and then click Properties.

2 Click the General tab.

3 Select the mapped drive that corresponds to the StorNext mapped file system. For directory-mounted file systems, select the file system from the list.

4 Choose the option Do not move files to the Recycle Bin. Remove files immediately when deleted.

5 Click Apply.

6 Repeat steps 3-5 for each remaining directory-mounted file system.

7 When finished, click OK.

Operating System / Affected Component: All

Description:

Be aware of the following limitations regarding file systems and stripe groups:

• The maximum number of disks per file system is 512.

• The maximum number of disks per data stripe group is 128.

• The maximum number of stripe groups per file system is 256.

• The maximum number of tape drives is 256.

For managed file systems only, the maximum directory capacity is 50,000 files per single directory. (This limitation does not apply to unmanaged file systems.)

If you use an SDisk or DDisk, you should save at least one other copy of the data to tape or to another SDisk or DDisk. Without the second copy, your stored data will be vulnerable to data loss if you ever have a hardware failure.

Documentation

The following documents are currently available for StorNext products:

Document Number Document Title

6-01658-08 StorNext User’s Guide

6-00360-17 StorNext Installation Guide

6-01376-12 StorNext File System Tuning Guide

6-01620-11 StorNext Upgrade Guide

6-01688-08 StorNext CLI Reference Guide

6-00361-33 StorNext File System Quick Reference Guide


Page 39

Document Number Document Title

6-00361-34 StorNext Storage Manager Quick Reference Guide

Contacting Quantum

More information about this product is available on the Service and Support website at www.quantum.com/support. The Service and Support Website contains a collection of information, including answers to frequently asked questions (FAQs). You can also access software, firmware, and drivers through this site.

For further assistance, or if training is desired, contact Quantum:

Quantum Technical Assistance Center in the USA: +1 800-284-5101

For additional contact information: www.quantum.com/support

To open a Service Request: www.quantum.com/esupport

For the most updated information on Quantum Global Services, please visit: www.quantum.com/support
