OFED+ Host Software

Release 1.5.4

User Guide

IB0054606-02 A

Information furnished in this manual is believed to be accurate and reliable. However, QLogic Corporation assumes no responsibility for its use, nor for any infringements of patents or other rights of third parties which may result from its use. QLogic Corporation reserves the right to change product specifications at any time without notice. Applications described in this document for any of these products are for illustrative purposes only. QLogic Corporation makes no representation nor warranty that such applications are suitable for the specified use without further testing or modification. QLogic Corporation assumes no responsibility for any errors that may appear in this document.

Document Revision History

Revision A, April 2012

Changes Sections Affected

Table of Contents

Preface

Intended Audience . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

Related Materials . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

Documentation Conventions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

License Agreements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvi

Technical Support. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvii

Training . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvii

Contact Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvii

Knowledge Database . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xviii

1 Introduction

How this Guide is Organized . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-1

Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-2

Interoperability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-3

2 Step-by-Step Cluster Setup and MPI Usage Checklists

Cluster Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-1

Using MPI. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-2

3 InfiniBand® Cluster Setup and Administration

Introduction. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-1

Installed Layout . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-2

IB and OpenFabrics Driver Overview. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-3

IPoIB Network Interface Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-3

IPoIB Administration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-5

Administering IPoIB. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-5

Stopping, Starting and Restarting the IPoIB Driver. . . . . . . . . . . 3-5

Configuring IPoIB . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-5

Editing the IPoIB Configuration File . . . . . . . . . . . . . . . . . . . . . . 3-5

IB Bonding . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-6

Interface Configuration Scripts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-6

Red Hat EL5 and EL6. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-7

SuSE Linux Enterprise Server (SLES) 10 and 11. . . . . . . . . . . . 3-8

Verify IB Bonding is Configured. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-9

Subnet Manager Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-10

QLogic Distributed Subnet Administration . . . . . . . . . . . . . . . . . . . . . . . . . . 3-12

Applications that use Distributed SA . . . . . . . . . . . . . . . . . . . . . . . . . . 3-12

Virtual Fabrics and the Distributed SA. . . . . . . . . . . . . . . . . . . . . . . . . 3-13

Configuring the Distributed SA . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-13

Default Configuration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-13

Multiple Virtual Fabrics Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-14

Virtual Fabrics with Overlapping Definitions . . . . . . . . . . . . . . . . . . . . 3-15

Distributed SA Configuration File . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-17

SID . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-18

ScanFrequency . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-18

LogFile . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-18

Dbg . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-19

Other Settings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-19

Changing the MTU Size . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-20

Managing the ib_qib Driver . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-21

Configure the ib_qib Driver State. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-22

Start, Stop, or Restart ib_qib Driver. . . . . . . . . . . . . . . . . . . . . . . . . . . 3-22

Unload the Driver/Modules Manually. . . . . . . . . . . . . . . . . . . . . . . . . . 3-23

ib_qib Driver Filesystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-23

More Information on Configuring and Loading Drivers. . . . . . . . . . . . . . . . . 3-24

Performance Settings and Management Tips . . . . . . . . . . . . . . . . . . . . . . . 3-24

Performance Tuning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-25

Systems in General (With Either Intel or AMD CPUs) . . . . . . . . 3-25

AMD CPU Systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-28

AMD Interlagos CPU Systems . . . . . . . . . . . . . . . . . . . . . . . . . . 3-28

Intel CPU Systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-28

High Risk Tuning for Intel Harpertown CPUs . . . . . . . . . . . . . . . 3-30

Additional Driver Module Parameter Tunings Available . . . . . . . 3-31

Performance Tuning using ipath_perf_tuning Tool . . . . . . . . . . . 3-34

OPTIONS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-35

AUTOMATIC vs. INTERACTIVE MODE. . . . . . . . . . . . . . . . . . . 3-36

Affected Files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-37

Homogeneous Nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-37

Adapter and Other Settings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-38

Remove Unneeded Services. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-39

Host Environment Setup for MPI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-40

Configuring for ssh . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-40

Configuring ssh and sshd Using shosts.equiv . . . . . . . . . . 3-40

Configuring for ssh Using ssh-agent . . . . . . . . . . . . . . . . . . . 3-43

Process Limitation with ssh . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-44

Checking Cluster and Software Status. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-44

ipath_control . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-44

iba_opp_query . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-45

ibstatus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-46

ibv_devinfo. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-47

ipath_checkout . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-47

4 Running MPI on QLogic Adapters

Introduction. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-1

MPIs Packaged with QLogic OFED+ . . . . . . . . . . . . . . . . . . . . . . . . . 4-1

Open MPI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-1

Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-2

Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-2

Compiling Open MPI Applications. . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-2

Create the mpihosts File. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-3

Running Open MPI Applications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-3

Further Information on Open MPI . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-4

Configuring MPI Programs for Open MPI . . . . . . . . . . . . . . . . . . . . . . 4-5

To Use Another Compiler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-5

Compiler and Linker Variables . . . . . . . . . . . . . . . . . . . . . . . . . . 4-7

Process Allocation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-7

IB Hardware Contexts on the QDR IB Adapters. . . . . . . . . . . . . 4-8

Enabling and Disabling Software Context Sharing. . . . . . . . . . . 4-9

Restricting IB Hardware Contexts in a Batch Environment . . . . 4-10

Context Sharing Error Messages . . . . . . . . . . . . . . . . . . . . . . . . 4-11

Running in Shared Memory Mode . . . . . . . . . . . . . . . . . . . . . . . 4-11

mpihosts File Details. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-12

Using Open MPI’s mpirun . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-13

Console I/O in Open MPI Programs . . . . . . . . . . . . . . . . . . . . . . . . . . 4-14

Environment for Node Programs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-15

Remote Execution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-15

Exported Environment Variables . . . . . . . . . . . . . . . . . . . . . . . . 4-16

Setting MCA Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-17

Environment Variables. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-18

Job Blocking in Case of Temporary IB Link Failures . . . . . . . . . . . . . . 4-20

Open MPI and Hybrid MPI/OpenMP Applications . . . . . . . . . . . . . . . . . . . . 4-21

Debugging MPI Programs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-22

MPI Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-22

Using Debuggers. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-22

5 Using Other MPIs

Introduction. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-1

Installed Layout . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-2

Open MPI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-3

MVAPICH . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-3

Compiling MVAPICH Applications . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-3

Running MVAPICH Applications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-3

Further Information on MVAPICH . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-4

MVAPICH2 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-4

Compiling MVAPICH2 Applications. . . . . . . . . . . . . . . . . . . . . . . . . . . 5-4

Running MVAPICH2 Applications . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-5

Further Information on MVAPICH2 . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-5

Managing MVAPICH and MVAPICH2 with the mpi-selector Utility . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-5

Platform MPI 8 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-6

Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-6

Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-6

Compiling Platform MPI 8 Applications . . . . . . . . . . . . . . . . . . . . . . . . 5-7

Running Platform MPI 8 Applications . . . . . . . . . . . . . . . . . . . . . . . . . 5-7

More Information on Platform MPI 8 . . . . . . . . . . . . . . . . . . . . . . . . . . 5-7

Intel MPI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-7

Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-8

Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-8

Compiling Intel MPI Applications. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-10

Running Intel MPI Applications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-10

Further Information on Intel MPI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-11

Improving Performance of Other MPIs Over IB Verbs . . . . . . . . . . . . . . . . . 5-12

6 SHMEM Description and Configuration

Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-1

Interoperability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-1

Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-1

SHMEM Programs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-3

Basic SHMEM Program. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-3

Compiling SHMEM Programs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-4

Running SHMEM Programs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-5

Using shmemrun . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-5

Running programs without using shmemrun . . . . . . . . . . . . . . . 6-6

QLogic SHMEM Relationship with MPI . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-7

Slurm Integration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-8

Full Integration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-8

Two-step Integration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-8

No Integration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-9

Sizing Global Shared Memory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-9

Progress Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-11

Active Progress . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-12

Passive Progress. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-12

Active versus Passive Progress . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-13

Environment Variables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-13

Implementation Behavior . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-15

Application Programming Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-17

SHMEM Benchmark Programs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-27

7 Virtual Fabric support in PSM

Introduction. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-1

Virtual Fabric Support. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-2

Using SL and PKeys . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-2

Using Service ID. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-3

SL2VL mapping from the Fabric Manager . . . . . . . . . . . . . . . . . . . . . . . . . . 7-3

Verifying SL2VL tables on QLogic 7300 Series Adapters . . . . . . . . . . . . . . 7-4

8 Dispersive Routing

9 gPXE

gPXE Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-1

Required Steps . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-2

Preparing the DHCP Server in Linux . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-2

Installing DHCP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-3

Configuring DHCP. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-4

Netbooting Over IB. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-5

Prerequisites . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-5

Boot Server Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-5

Steps on the gPXE Client . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-14

HTTP Boot Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-14

A Benchmark Programs

Benchmark 1: Measuring MPI Latency Between Two Nodes . . . . . . . . . . . A-1

Benchmark 2: Measuring MPI Bandwidth Between Two Nodes . . . . . . . . . A-4

Benchmark 3: Messaging Rate Microbenchmarks. . . . . . . . . . . . . . . . . . . . A-6

OSU Multiple Bandwidth / Message Rate test (osu_mbw_mr) . . . . . . . . . . . . . . . . . A-6

An Enhanced Multiple Bandwidth / Message Rate test (mpi_multibw) . . . . . . . . . . . . . . . . . A-7

B SRP Configuration

SRP Configuration Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-1

Important Concepts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-1

QLogic SRP Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-2

Stopping, Starting and Restarting the SRP Driver . . . . . . . . . . . . . . . . B-3

Specifying a Session . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-3

Determining the values to use for the configuration . . . . . . . . . . B-6

Specifying an SRP Initiator Port of a Session by Card and Port Indexes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-8

Specifying an SRP Initiator Port of a Session by Port GUID . . . . . B-8

Specifying an SRP Target Port . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-9

Specifying an SRP Target Port of a Session by IOCGUID . . . . . . B-10

Specifying an SRP Target Port of a Session by Profile String . . . B-10

Specifying an Adapter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-10

Restarting the SRP Module. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-11

Configuring an Adapter with Multiple Sessions . . . . . . . . . . . . . . . . . . B-11

Configuring Fibre Channel Failover. . . . . . . . . . . . . . . . . . . . . . . . . . . B-13

Failover Configuration File 1: Failing over from one SRP Initiator port to another. . . . . . . . . . . . . . . . . . . . . . . . . . . B-14

Failover Configuration File 2: Failing over from a port on the VIO hardware card to another port on the VIO hardware card. B-15

Failover Configuration File 3: Failing over from a port on a VIO hardware card to a port on a different VIO hardware card within the same Virtual I/O chassis . . . . . . . . . . . . . . . . . . . . . B-16

Failover Configuration File 4: Failing over from a port on a VIO hardware card to a port on a different VIO hardware card in a different Virtual I/O chassis . . . . . . . . . . . . . . . . . . . . B-17

Configuring Fibre Channel Load Balancing. . . . . . . . . . . . . . . . . . . . . B-18

1 Adapter Port and 2 Ports on a Single VIO. . . . . . . . . . . . . . . . B-18

2 Adapter Ports and 2 Ports on a Single VIO Module . . . . . . . . B-19

Using the roundrobinmode Parameter . . . . . . . . . . . . . . . . . . . . B-20

Configuring SRP for Native IB Storage . . . . . . . . . . . . . . . . . . . . . . . . B-21

Notes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-23

Additional Details . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-24

Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-24

OFED SRP Configuration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-24

C Integration with a Batch Queuing System

Clean Termination of MPI Processes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . C-1

Clean-up PSM Shared Memory Files. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . C-2

D Troubleshooting

Using LEDs to Check the State of the Adapter . . . . . . . . . . . . . . . . . . . . . . D-1

BIOS Settings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D-2

Kernel and Initialization Issues. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D-2

Driver Load Fails Due to Unsupported Kernel. . . . . . . . . . . . . . . . . . . D-3

Rebuild or Reinstall Drivers if Different Kernel Installed . . . . . . . . . . . D-3

InfiniPath Interrupts Not Working. . . . . . . . . . . . . . . . . . . . . . . . . . . . . D-3

OpenFabrics Load Errors if ib_qib Driver Load Fails . . . . . . . . . . . . D-4

InfiniPath ib_qib Initialization Failure. . . . . . . . . . . . . . . . . . . . . . . . D-5

MPI Job Failures Due to Initialization Problems . . . . . . . . . . . . . . . . . D-6

OpenFabrics and InfiniPath Issues . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D-6

Stop InfiniPath Services Before Stopping/Restarting InfiniPath. . . . . . D-6

Manual Shutdown or Restart May Hang if NFS in Use . . . . . . . . . . . . D-7

Load and Configure IPoIB Before Loading SDP . . . . . . . . . . . . . . . . . D-7

Set $IBPATH for OpenFabrics Scripts . . . . . . . . . . . . . . . . . . . . . . . . D-7

SDP Module Not Loading . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D-7

ibsrpdm Command Hangs when Two Host Channel Adapters are Installed but Only Unit 1 is Connected to the Switch . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D-8

Outdated ipath_ether Configuration Setup Generates Error . . . . . . . . D-8

System Administration Troubleshooting. . . . . . . . . . . . . . . . . . . . . . . . . . . . D-8

Broken Intermediate Link. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D-9

Performance Issues . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D-9

Large Message Receive Side Bandwidth Varies with Socket Affinity on Opteron Systems . . . . . . . . . . . . . . . . . . . . . . . . . D-9

Erratic Performance. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D-10

Method 1. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D-10

Method 2. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D-10

Immediately change the processor affinity of an IRQ. . . . . . . . . D-11

Performance Warning if ib_qib Shares Interrupts with eth0 . . . . . D-12

Open MPI Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D-12

Invalid Configuration Warning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D-12

E ULP Troubleshooting

Troubleshooting VirtualNIC and VIO Hardware Issues . . . . . . . . . . . . . . . . E-1

Checking the logical connection between the IB Host and the VIO hardware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . E-1

Verify that the proper VirtualNIC driver is running . . . . . . . . . . . E-2

Verifying that the qlgc_vnic.cfg file contains the correct information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . E-2

Verifying that the host can communicate with the I/O Controllers (IOCs) of the VIO hardware . . . . . . . . . . . . . . . . . . E-3

Checking the interface definitions on the host. . . . . . . . . . . . . . . . . . . E-6

Interface does not show up in output of 'ifconfig' . . . . . . . . . . . . E-6

Verify the physical connection between the VIO hardware and the Ethernet network. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . E-7

Troubleshooting SRP Issues . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . E-9

ib_qlgc_srp_stats showing session in disconnected state . . . . . E-9

Session in 'Connection Rejected' state . . . . . . . . . . . . . . . . . . . . . . . . E-11

Attempts to read or write to disk are unsuccessful . . . . . . . . . . . . . . . E-14

Four sessions in a round-robin configuration are active . . . . . . . . . . . E-15

Which port does a port GUID refer to? . . . . . . . . . . . . . . . . . . . . . . . . E-16

How does the user find a HCA port GUID?. . . . . . . . . . . . . . . . . . . . . E-17

Need to determine the SRP driver version.. . . . . . . . . . . . . . . . . . . . . E-19

F Write Combining

Introduction. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . F-1

PAT and Write Combining . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . F-1

MTRR Mapping and Write Combining . . . . . . . . . . . . . . . . . . . . . . . . . . . . . F-2

Edit BIOS Settings to Fix MTRR Issues . . . . . . . . . . . . . . . . . . . . . . . F-2

Use the ipath_mtrr Script to Fix MTRR Issues. . . . . . . . . . . . . . . . F-2

Verify Write Combining is Working . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . F-3

G Commands and Files

Check Cluster Homogeneity with ipath_checkout . . . . . . . G-1

Restarting InfiniPath. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-2

Summary and Descriptions of Commands. . . . . . . . . . . . . . . . . . . . . . . . . . G-2

dmesg . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-4

iba_opp_query. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-4

iba_hca_rev. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-9

iba_manage_switch. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-19

iba_packet_capture. . . . . . . . . . . . . . . . G-21

ibhosts . . . . . . . . . . . . . . . . . . . . . G-22

ibstatus. . . . . . . . . . . . . . . . . . . . . G-22

ibtracert . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-23

ibv_devinfo. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-24

ident . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-24

ipath_checkout. . . . . . . . . . . . . . . . . . G-25

ipath_control . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-27

ipath_mtrr. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-28

ipath_pkt_test . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-29

ipathstats. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-30

lsmod . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-30

modprobe . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-30

mpirun . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-31

mpi_stress. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-31

rpm . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-32

strings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-32

Common Tasks and Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-32

Summary and Descriptions of Useful Files . . . . . . . . . . . . . . . . . . . . . . . . . G-34

boardversion. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-34

status_str. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-35

version . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-36

Summary of Configuration Files. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-36

H Recommended Reading

References for MPI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . H-1

Books for Learning MPI Programming . . . . . . . . . . . . . . . . . . . . . . . . . . . . . H-1

Reference and Source for SLURM. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . H-1

InfiniBand® . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . H-1

OpenFabrics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . H-2

Clusters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . H-2

Networking . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . H-2

Rocks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . H-2

Other Software Packages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . H-2

List of Figures

3-1 QLogic OFED+ Software Structure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-1

3-2 Distributed SA Default Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-13

3-3 Distributed SA Multiple Virtual Fabrics Example . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-14

3-4 Distributed SA Multiple Virtual Fabrics Configured Example . . . . . . . . . . . . . . . . . . 3-15

3-5 Virtual Fabrics with Overlapping Definitions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-15

3-6 Virtual Fabrics with PSM_MPI Virtual Fabric Enabled . . . . . . . . . . . . . . . . . . . . . . . 3-16

3-7 Virtual Fabrics with all SIDs assigned to PSM_MPI Virtual Fabric. . . . . . . . . . . . . . 3-16

3-8 Virtual Fabrics with Unique Numeric Indexes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-17


List of Tables

3-1 ibmtu Values. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-20

3-2 krcvqs Parameter Settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-27

3-3 Checks Performed by ipath_perf_tuning Tool . . . . . . . . . . . . . . . . . . . . . . . . . 3-34

3-4 ipath_perf_tuning Tool Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-35

3-5 Test Execution Modes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-36

4-1 Open MPI Wrapper Scripts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-2

4-2 Command Line Options for Scripts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-3

4-3 Intel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-6

4-4 Portland Group (PGI) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-6

4-5 Available Hardware and Software Contexts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-8

4-6 Environment Variables Relevant for any PSM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-18

4-7 Environment Variables Relevant for Open MPI . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-20

5-1 Other Supported MPI Implementations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-1

5-2 MVAPICH Wrapper Scripts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-3

5-3 MVAPICH Wrapper Scripts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-4

5-4 Platform MPI 8 Wrapper Scripts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-7

5-5 Intel MPI Wrapper Scripts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-10

6-1 SHMEM Run Time Library Environment Variables . . . . . . . . . . . . . . . . . . . . . . . . . 6-13

6-2 shmemrun Environment Variables. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-15

6-3 SHMEM Application Programming Interface Calls. . . . . . . . . . . . . . . . . . . . . . . . . . 6-18

6-4 QLogic SHMEM micro-benchmarks options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-27

6-5 QLogic SHMEM random access benchmark options. . . . . . . . . . . . . . . . . . . . . . . . 6-28

6-6 QLogic SHMEM all-to-all benchmark options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-29

6-7 QLogic SHMEM barrier benchmark options. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-30

6-8 QLogic SHMEM reduce benchmark options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-31

D-1 LED Link and Data Indicators . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D-1

G-1 Useful Programs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-2

G-2 ipath_checkout Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-26

G-3 Common Tasks and Commands Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-33

G-4 Useful Files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-34

G-5 status_str File Contents . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-35

G-6 Status—Other Files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-36

G-7 Configuration Files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . G-37


Preface

The QLogic OFED+ Host Software User Guide shows end users how to use the installed software to set up the fabric. End users include both the cluster administrator and the Message-Passing Interface (MPI) application programmers, who have different but overlapping interests in the details of the technology.

For specific instructions about installing the QLogic QLE7340, QLE7342, QMH7342, and QME7342 PCI Express (PCIe®) adapters, see the QLogic InfiniBand® Adapter Hardware Installation Guide, and for the initial installation of the InfiniBand® Fabric Software, see the QLogic InfiniBand® Fabric Software Installation Guide.

Intended Audience

This guide is intended for end users responsible for administration of a cluster network as well as for end users who want to use that cluster.

This guide assumes that all users are familiar with cluster computing, that the cluster administrator is familiar with Linux® administration, and that the application programmer is familiar with MPI, vFabrics, SRP, and Distributed SA.

Related Materials

QLogic InfiniBand® Adapter Hardware Installation Guide

QLogic InfiniBand® Fabric Software Installation Guide

Release Notes

Documentation Conventions

This guide uses the following documentation conventions:

NOTE: provides additional information.

CAUTION! indicates the presence of a hazard that has the potential of causing damage to data or equipment.

WARNING!! indicates the presence of a hazard that has the potential of causing personal injury.

Text in blue font indicates a hyperlink (jump) to a figure, table, or section in this guide, and links to Web sites are shown in underlined blue. For example:

Table 9-2 lists problems related to the user interface and remote agent.

See “Installation Checklist” on page 3-6.

For more information, visit www.qlogic.com.

Text in bold font indicates user interface elements such as menu items, buttons, check boxes, or column headings. For example:

Click the Start button, point to Programs, point to Accessories, and then click Command Prompt.

Under Notification Options, select the Warning Alarms check box.

Text in Courier font indicates a file name, directory path, or command line text. For example:

To return to the root directory from anywhere in the file structure: Type cd /root and press ENTER.

Enter the following command: sh ./install.bin

Key names and key strokes are indicated with UPPERCASE:

Press CTRL+P.

Press the UP ARROW key.

Text in italics indicates terms, emphasis, variables, or document titles. For example:

For a complete listing of license agreements, refer to the QLogic Software End User License Agreement.

What are shortcut keys?

To enter the date type mm/dd/yyyy (where mm is the month, dd is the day, and yyyy is the year).

Topic titles between quotation marks identify related topics either within this manual or in the online help, which is also referred to as the help system throughout this document.

License Agreements

Refer to the QLogic Software End User License Agreement for a complete listing

of all license agreements affecting this product.


Technical Support

Customers should contact their authorized maintenance provider for technical

support of their QLogic products. QLogic-direct customers may contact QLogic

Technical Support; others will be redirected to their authorized maintenance

provider. Visit the QLogic support Web site listed in Contact Information for the

latest firmware and software updates.

For details about available service plans, or for information about renewing and extending your service, visit the Service Program web page at http://www.qlogic.com/services.

Training

QLogic offers training for technical professionals for all iSCSI, InfiniBand® (IB), and Fibre Channel products. From the main QLogic web page at www.qlogic.com, click the Support tab at the top, and then click Training and Certification on the left. The QLogic Global Training portal offers online courses, certification exams, and scheduling of in-person training.

Technical Certification courses include installation, maintenance and troubleshooting QLogic products. Upon demonstrating knowledge using live equipment, QLogic awards a certificate identifying the student as a certified professional. You can reach the training professionals at QLogic by e-mail at training@qlogic.com.

Contact Information

QLogic Technical Support for products under warranty is available during local standard working hours excluding QLogic Observed Holidays. For customers with extended service, consult your plan for available hours. For Support phone numbers, see the Contact Support link at support@qlogic.com.

Support Headquarters          QLogic Corporation
                              4601 Dean Lakes Blvd.
                              Shakopee, MN 55379 USA

QLogic Web Site               www.qlogic.com

Technical Support Web Site    http://support.qlogic.com

Technical Support E-mail      support@qlogic.com

Technical Training E-mail     training@qlogic.com

Knowledge Database

The QLogic knowledge database is an extensive collection of QLogic product

information that you can search for specific solutions. We are constantly adding to

the collection of information in our database to provide answers to your most

urgent questions. Access the database from the QLogic Support Center:

http://support.qlogic.com.


1 Introduction

How this Guide is Organized

The QLogic OFED+ Host Software User Guide is organized into these sections:

Section 1, provides an overview and describes interoperability.

Section 2, describes how to set up your cluster to run high-performance MPI jobs.

Section 3, describes the lower levels of the supplied QLogic OFED+ Host software. This section is of interest to an InfiniBand® cluster administrator.

Section 4, helps the Message Passing Interface (MPI) programmer make the best use of the Open MPI implementation. Examples are provided for compiling and running MPI programs.

Section 5, gives examples for compiling and running MPI programs with other MPI implementations.

Section 7, describes QLogic Performance Scaled Messaging (PSM) that provides support for full Virtual Fabric (vFabric) integration, allowing users to specify InfiniBand® Service Level (SL) and Partition Key (PKey), or to provide a configured Service ID (SID) to target a vFabric.

Section 8, describes dispersive routing in the InfiniBand® fabric to avoid congestion hotspots by “spraying” messages across the multiple potential paths.

Section 9, describes open-source Preboot Execution Environment (gPXE) boot including installation and setup.

Appendix A, describes how to run QLogic’s performance measurement programs.

Appendix B, describes SCSI RDMA Protocol (SRP) configuration that allows the SCSI protocol to run over InfiniBand® for Storage Area Network (SAN) usage.

Appendix C, describes two methods the administrator can use to allow users to submit MPI jobs through batch queuing systems.

Appendix D, provides information for troubleshooting installation, cluster administration, and MPI.

Appendix E, provides information for troubleshooting the upper layer protocol utilities in the fabric.

Appendix F, provides instructions for checking write combining and for using the Page Attribute Table (PAT) and Memory Type Range Registers (MTRR).

Appendix G, contains useful programs and files for debugging, as well as commands for common tasks.

Appendix H, contains a list of useful web sites and documents for a further understanding of the InfiniBand® fabric, and related information.

In addition, the QLogic InfiniBand® Adapter Hardware Installation Guide contains information on QLogic hardware installation and the QLogic InfiniBand® Fabric Software Installation Guide contains information on QLogic software installation.

Overview

The material in this documentation pertains to a QLogic OFED+ cluster. A cluster is defined as a collection of nodes, each attached to an InfiniBand®-based fabric through the QLogic interconnect.

The QLogic IB Host Channel Adapters (HCA) are InfiniBand® 4X adapters. The quad data rate (QDR) adapters (QLE7340, QLE7342, QMH7342, and QME7342) have a raw data rate of 40Gbps (data rate of 32Gbps). The QLE7340, QLE7342, QMH7342, and QME7342 adapters can also run in DDR or SDR mode.

The QLogic IB HCA utilize standard, off-the-shelf InfiniBand® 4X switches and cabling. The QLogic interconnect is designed to work with all InfiniBand®-compliant switches.

NOTE

If you are using the QLE7300 series adapters in QDR mode, a QDR switch must be used.
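The gap between the raw rate (40Gbps) and the data rate (32Gbps) quoted in the Overview can be reproduced with a little arithmetic. The following is a minimal sketch, assuming the usual InfiniBand background figures that are not stated in this guide: a 4X link has four lanes, the per-lane signaling rates are 2.5 (SDR), 5 (DDR), and 10 (QDR) Gbps, and 8b/10b encoding carries 8 data bits in every 10 line bits. The function names are illustrative only.

```shell
#!/bin/bash
# Sketch: raw and usable data rates of a 4X InfiniBand link.
# Assumptions (not from this guide): 4 lanes per 4X link, 8b/10b encoding,
# per-lane signaling rate given in tenths of Gbps (SDR=25, DDR=50, QDR=100).

ib_4x_raw_gbps() {
    # raw rate = per-lane rate * 4 lanes
    echo $(( $1 * 4 / 10 ))
}

ib_4x_data_gbps() {
    # data rate = raw rate * 8 / 10 (8b/10b encoding overhead)
    echo $(( $1 * 4 * 8 / 100 ))
}

echo "QDR raw rate:  $(ib_4x_raw_gbps 100) Gbps"
echo "QDR data rate: $(ib_4x_data_gbps 100) Gbps"
```

Passing 50 or 25 instead of 100 gives the corresponding figures for DDR or SDR mode under the same assumptions.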

QLogic OFED+ software is interoperable with other vendors’ IBTA compliant InfiniBand® adapters running compatible OFED releases. There are several options for subnet management in your cluster:

An embedded subnet manager can be used in one or more managed switches. QLogic offers the QLogic Embedded Fabric Manager (FM) for both DDR and QDR switch product lines supplied by your IB switch vendor.

A host-based subnet manager can be used. QLogic provides the QLogic Fabric Manager (FM), as a part of the QLogic InfiniBand® Fabric Suite (IFS).

Interoperability

QLogic OFED+ participates in the standard IB subnet management protocols for configuration and monitoring. Note that:

QLogic OFED+ (including Internet Protocol over InfiniBand® (IPoIB)) is interoperable with other vendors’ InfiniBand® adapters running compatible OFED releases.

In addition to supporting running MPI over verbs, QLogic provides a high-performance InfiniBand®-Compliant vendor-specific protocol, known as PSM. MPIs run over PSM will not interoperate with other adapters.

NOTE

See the OpenFabrics web site at www.openfabrics.org for more information on the OpenFabrics Alliance.

2 Step-by-Step Cluster Setup and MPI Usage Checklists

This section describes how to set up your cluster to run high-performance Message Passing Interface (MPI) jobs.

Cluster Setup

Perform the following tasks when setting up the cluster. These include BIOS, adapter, and system settings.

1. Make sure that hardware installation has been completed according to the instructions in the QLogic InfiniBand® Adapter Hardware Installation Guide and software installation and driver configuration has been completed according to the instructions in the QLogic InfiniBand® Fabric Software Installation Guide. To minimize management problems, the compute nodes of the cluster must have very similar hardware configurations and identical software installations. See “Homogeneous Nodes” on page 3-37 for more information.

2. Check that the BIOS is set properly according to the instructions in the QLogic InfiniBand® Adapter Hardware Installation Guide.

3. Set up the Distributed Subnet Administration (SA) to correctly synchronize your virtual fabrics. See “QLogic Distributed Subnet Administration” on page 3-12.

4. Adjust settings, including setting the appropriate MTU size. See “Adapter and Other Settings” on page 3-38.

5. Remove unneeded services. See “Remove Unneeded Services” on page 3-39.

6. Disable powersaving features. See “Host Environment Setup for MPI” on page 3-40.

7. Check other performance tuning settings. See “Performance Settings and Management Tips” on page 3-24.

8. Set up the host environment to use ssh. Two methods are discussed in “Host Environment Setup for MPI” on page 3-40.

9. Verify the cluster setup. See “Checking Cluster and Software Status” on page 3-44.

Using MPI

1. Verify that the QLogic hardware and software have been installed on all the nodes you will be using, and that ssh is set up on your cluster (see all the steps in the Cluster Setup checklist).

2. Set up Open MPI. See “Setup” on page 4-2.

3. Compile Open MPI applications. See “Compiling Open MPI Applications” on page 4-2.

4. Create an mpihosts file that lists the nodes where your programs will run.

See “Create the mpihosts File” on page 4-3.

5. Run Open MPI applications. See “Running Open MPI Applications” on

page 4-3.

6. Configure MPI programs for Open MPI. See “Configuring MPI Programs for Open MPI” on page 4-5.

7. To test using other MPIs that run over PSM, such as MVAPICH, MVAPICH2,

Platform MPI, and Intel MPI, see Section 5 Using Other MPIs.

8. To switch between multiple versions of MVAPICH, use the mpi-selector.

See “Managing MVAPICH, and MVAPICH2 with the mpi-selector Utility” on

page 5-5.

9. Refer to “Performance Tuning” on page 3-25 to read more about runtime

performance tuning.

10. Refer to Section 5 Using Other MPIs to learn about using other MPI

implementations.
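Steps 4 and 5 of the checklist above can be sketched as follows. This is an illustration only: the node names (node01 through node04) and the program name my_mpi_app are hypothetical, and the sketch assumes the simplest hostfile layout of one node name per line. See the referenced sections for the supported options.

```shell
#!/bin/bash
# Sketch: create an mpihosts file listing the nodes where programs will run.
# Node names below are hypothetical placeholders.

make_mpihosts() {
    # $1: output file; remaining arguments: node names, written one per line
    local out=$1
    shift
    printf '%s\n' "$@" > "$out"
}

hostfile=$(mktemp)
make_mpihosts "$hostfile" node01 node02 node03 node04
cat "$hostfile"

# With Open MPI on the PATH, a job could then be launched along these lines
# (./my_mpi_app is a hypothetical program):
#   mpirun -np 4 -hostfile "$hostfile" ./my_mpi_app
```

Keeping the node list in a file rather than on the command line makes it easier to reuse the same set of nodes across runs.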

2-2 IB0054606-02 A

Page 25

3 InfiniBand

InfiniBand®/OpenFabrics

User Verbs

MPI Applications

QLogic OFED+ Driver ib_qib

Kernel Space

uMAD API

User Space

QLogic OFED+

Communication

Library (PSM)

QLogic OFED+

Hardware

TCP/IP

IPoIB

QLogic IB adapter

Platform MPI

MVAPICH

Open MPI

MVAPICH

Open MPI

Intel MPI

QLogic FM

MVAPICH2

Common

Intel MPI

uDAPL

Platform MPI

SRP

MVAPICH2

and Administration

This section describes what the cluster administrator needs to know about the

QLogic OFED+ software and system administration.

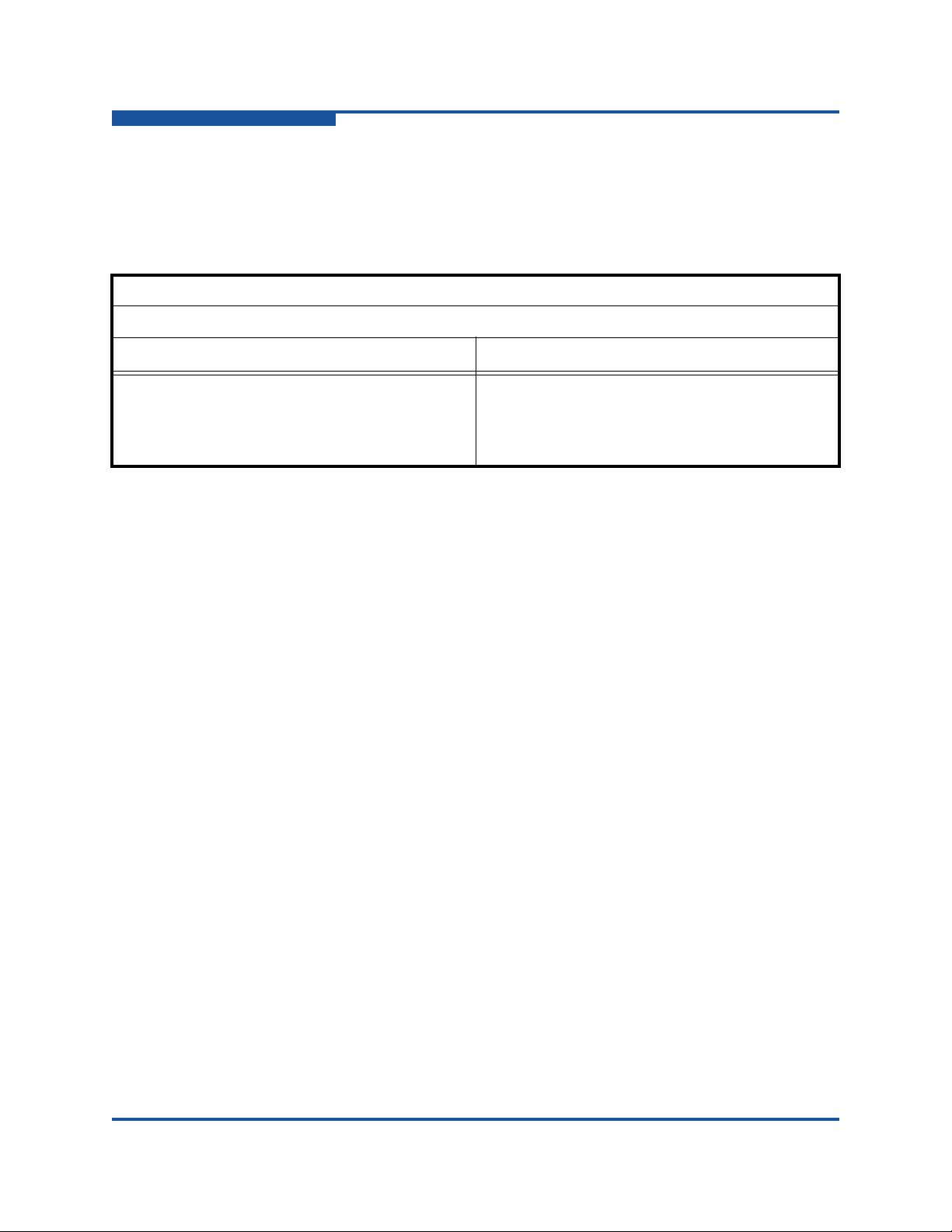

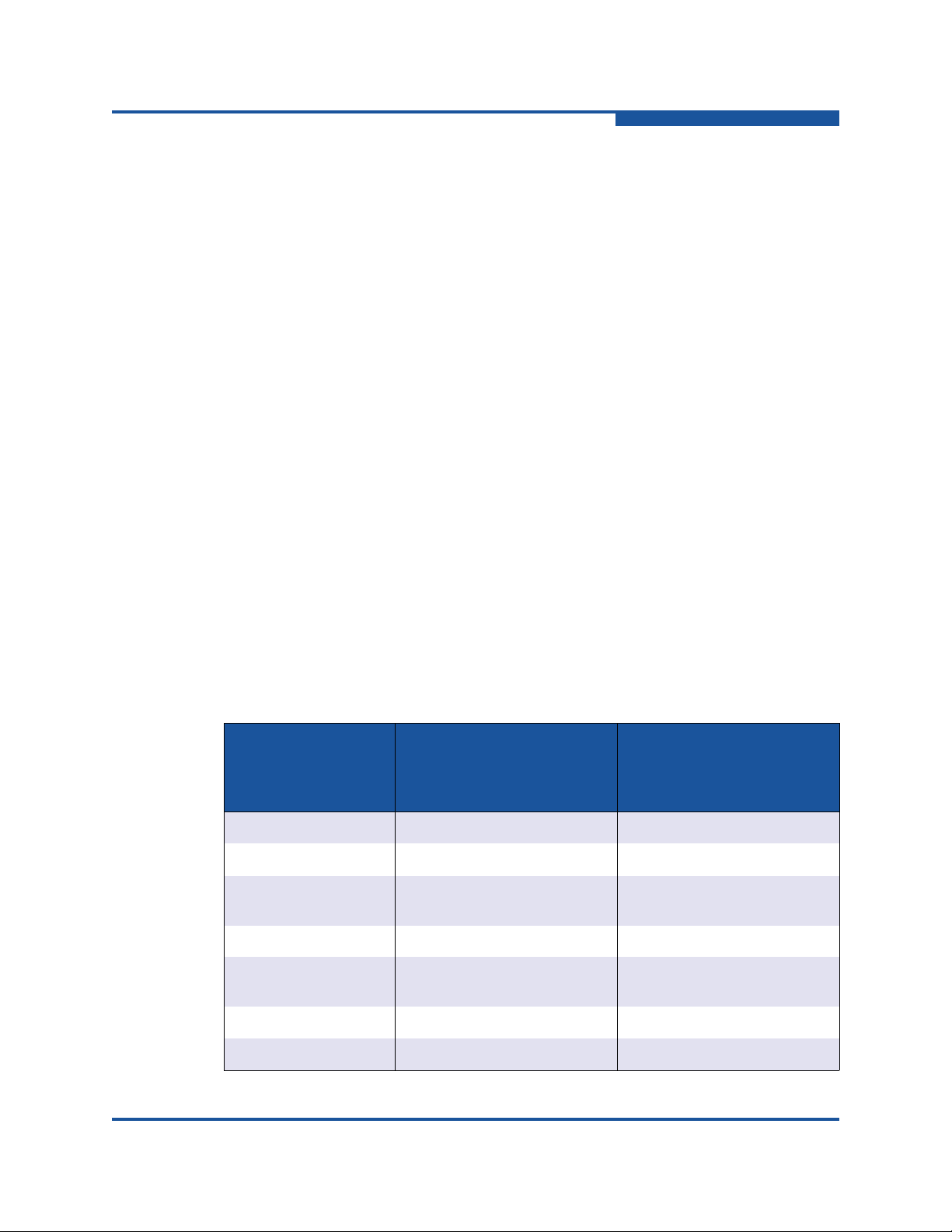

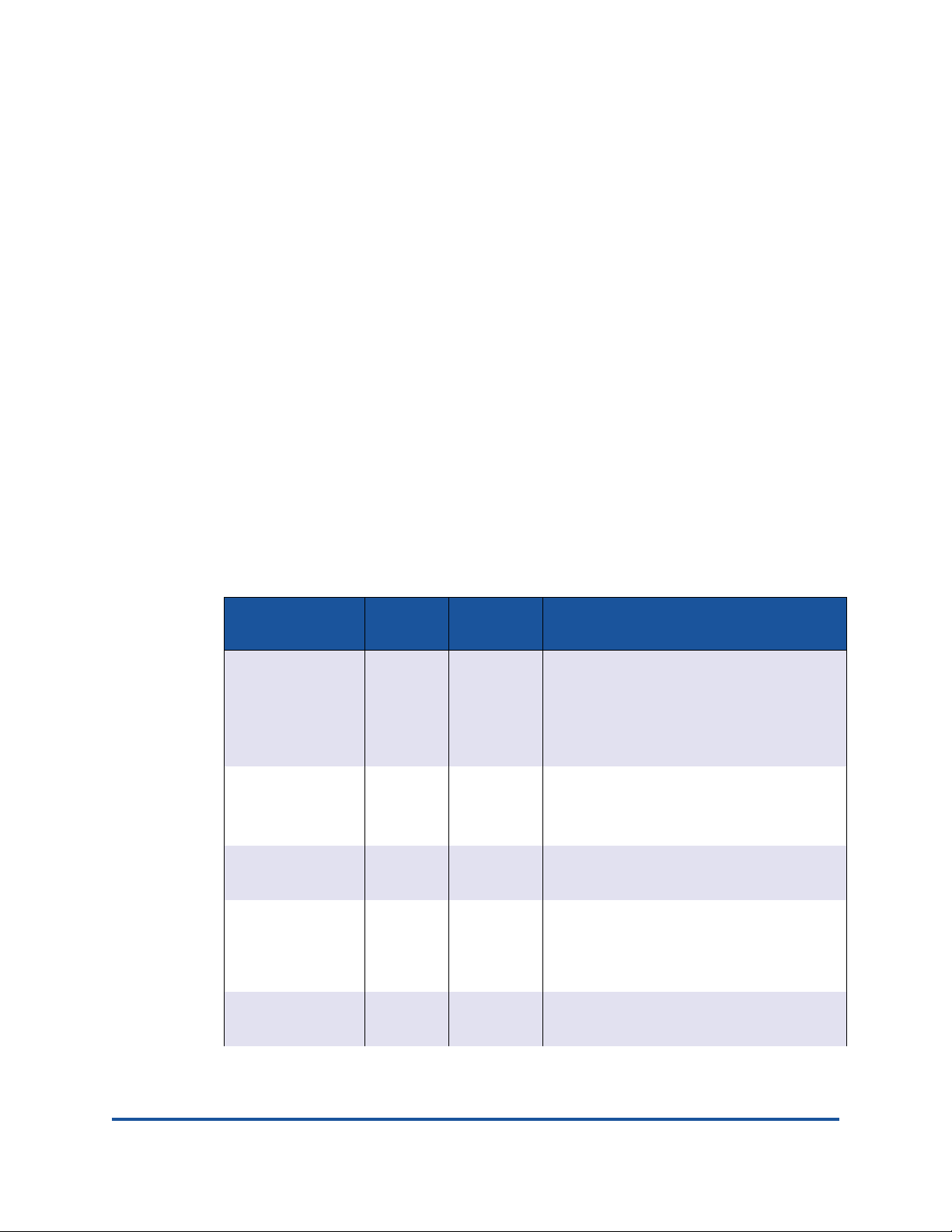

Introduction

The IB driver ib_qib, QLogic Performance Scaled Messaging (PSM), accelerated

Message-Passing Interface (MPI) stack, the protocol and MPI support libraries,

and other modules are components of the QLogic OFED+ software. This software

provides the foundation that supports the MPI implementation.

Figure 3-1 illustrates these relationships. Note that HP-MPI, Platform MPI, Intel

MPI, MVAPICH, MVAPICH2, and Open MPI can run either over PSM or

OpenFabrics

®

User Verbs.

®

Cluster Setup

Figure 3-1. QLogic OFED+ Software Structure

IB0054606-02 A 3-1

Page 26

3–InfiniBand® Cluster Setup and Administration

Installed Layout

Installed Layout

This section describes the default installed layout for the QLogic OFED+ software

and QLogic-supplied MPIs.

QLogic-supplied Open MPI, MVAPICH, and MVAPICH2 RPMs with PSM support

and compiled with GCC, PGI, and the Intel compilers are installed in directories

using the following format:

/usr/mpi/<compiler>/<mpi>-<mpi_version>-qlc

For example:

/usr/mpi/gcc/openmpi-1.4-qlc
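The directory format above can be assembled mechanically from its three parts. The following is a minimal sketch that reproduces the example path and checks whether it exists on the local node. The function name is illustrative. Only the gcc directory name appears in the example above, so the directory names for other compilers are not assumed here.

```shell
#!/bin/bash
# Sketch: build the install prefix /usr/mpi/<compiler>/<mpi>-<mpi_version>-qlc.
# mpi_prefix is an illustrative helper, not a QLogic-supplied tool.

mpi_prefix() {
    # $1: compiler directory name, $2: MPI name, $3: MPI version
    echo "/usr/mpi/$1/$2-$3-qlc"
}

prefix=$(mpi_prefix gcc openmpi 1.4)
if [ -d "$prefix" ]; then
    echo "found:     $prefix"
else
    echo "not found: $prefix"
fi
```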

QLogic OFED+ utility programs are installed in:

/usr/bin

/sbin

/opt/iba/*

Documentation is found in:

/usr/share/man

/usr/share/doc/infinipath

License information is found only in /usr/share/doc/infinipath. QLogic OFED+ Host Software user documentation can be found on the QLogic web site on the software download page for your distribution.

Configuration files are found in:

/etc/sysconfig

Init scripts are found in:

/etc/init.d

The IB driver modules in this release are installed in:

/lib/modules/$(uname -r)/updates/kernel/drivers/infiniband/hw/qib

Most of the other OFED modules are installed under the infiniband

subdirectory. Other modules are installed under:

/lib/modules/$(uname -r)/updates/kernel/drivers/net

The RDS modules are installed under:

/lib/modules/$(uname -r)/updates/kernel/net/rds


IB and OpenFabrics Driver Overview

The ib_qib module provides low-level QLogic hardware support, and is the base

driver for both MPI/PSM programs and general OpenFabrics protocols such as

IPoIB and sockets direct protocol (SDP). The driver also supplies the Subnet

Management Agent (SMA) component.

The following is a list of the optional configurable OpenFabrics components and

their default settings:

IPoIB network interface. This component is required for TCP/IP networking

for running IP traffic over the IB link. It is not running until it is configured.

OpenSM. This component is disabled at startup. QLogic recommends using

the QLogic Fabric Manager (FM), which is included with the IFS or optionally

available within the QLogic switches. QLogic FM or OpenSM can be

installed on one or more nodes with only one node being the master SM.

SRP (OFED and QLogic modules). SRP is not running until the module is

loaded and the SRP devices on the fabric have been discovered.

MPI over uDAPL (can be used by Intel MPI). IPoIB must be configured

before MPI over uDAPL can be set up.

Other optional drivers can now be configured and enabled, as described in “IPoIB

Network Interface Configuration” on page 3-3.

Complete information about starting, stopping, and restarting the QLogic OFED+ services is in “Managing the ib_qib Driver” on page 3-21.

IPoIB Network Interface Configuration

The following instructions show you how to manually configure your OpenFabrics IPoIB network interface. QLogic recommends using the QLogic OFED+ Host Software Installation package or the iba_config tool. For larger clusters, FastFabric can be used to automate installation and configuration of many nodes. These tools automate the configuration of the IPoIB network interface. This example assumes that you are using sh or bash as your shell, all required QLogic OFED+ and OpenFabrics RPMs are installed, and your startup scripts have been run (either manually or at system boot).

For this example, the IPoIB network is 10.1.17.0 (one of the networks reserved for private use, and thus not routable on the Internet), with an 8-bit host portion (a /24 network). In this case, the netmask must be specified.

This example assumes that no hosts files exist, the host being configured has the IP address 10.1.17.3, and DHCP is not used.

NOTE

Instructions are only for this static IP address case. Configuration methods for using DHCP will be supplied in a later release.

1. Type the following command (as a root user):

ifconfig ib0 10.1.17.3 netmask 0xffffff00

2. To verify the configuration, type:

ifconfig ib0

ifconfig ib1

The output from this command will be similar to:

ib0 Link encap:InfiniBand HWaddr 00:00:00:02:FE:80:00:00:00:00:00:00:00:00:00:00:00:00:00:00
inet addr:10.1.17.3 Bcast:10.1.17.255 Mask:255.255.255.0
UP BROADCAST RUNNING MULTICAST MTU:4096 Metric:1
RX packets:0 errors:0 dropped:0 overruns:0 frame:0
TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
collisions:0 txqueuelen:128
RX bytes:0 (0.0 b) TX bytes:0 (0.0 b)

3. Type:

ping -c 2 -b 10.1.17.255

The output of the ping command will be similar to the following, with a line

for each host already configured and connected:

WARNING: pinging broadcast address
PING 10.1.17.255 (10.1.17.255) 56(84) bytes of data.
64 bytes from 10.1.17.3: icmp_seq=0 ttl=64 time=0.022 ms
64 bytes from 10.1.17.1: icmp_seq=0 ttl=64 time=0.070 ms (DUP!)
64 bytes from 10.1.17.7: icmp_seq=0 ttl=64 time=0.073 ms (DUP!)

The IPoIB network interface is now configured.

4. Restart (as a root user) by typing:

/etc/init.d/openibd restart
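The hexadecimal netmask passed to ifconfig in step 1 and the dotted values reported in step 2 describe the same network. The following is a minimal sketch of that relationship, using the address and netmask from this example. The helper names are illustrative and are not part of the QLogic software.

```shell
#!/bin/bash
# Sketch: relate the hex netmask given to ifconfig to the dotted Mask and
# Bcast values it reports. Helper names are illustrative only.

ip_to_int() {
    # $1: dotted IPv4 address -> 32-bit integer
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

int_to_ip() {
    # $1: 32-bit integer -> dotted IPv4 address
    echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

broadcast_of() {
    # $1: dotted IPv4 address, $2: netmask in hex (for example 0xffffff00)
    local ip mask
    ip=$(ip_to_int "$1")
    mask=$(( $2 ))
    # Set every host bit (the bits cleared in the netmask) to 1
    int_to_ip $(( ip | (~mask & 0xffffffff) ))
}

echo "Mask:  $(int_to_ip $(( 0xffffff00 )))"
echo "Bcast: $(broadcast_of 10.1.17.3 0xffffff00)"
```

The broadcast address computed here is the one used with ping -b in step 3.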

NOTE

The configuration must be repeated each time the system is rebooted.

IPoIB-CM (Connected Mode) is enabled by default. The setting in /etc/infiniband/openib.conf is SET_IPOIB_CM=yes. To use datagram mode, change the setting to SET_IPOIB_CM=no. The setting can also be changed when asked during initial installation (./INSTALL).

IPoIB Administration

Administering IPoIB

Stopping, Starting and Restarting the IPoIB Driver

QLogic recommends using the QLogic IFS Installer TUI or iba_config command to enable autostart for the IPoIB driver. Refer to the QLogic InfiniBand® Fabric Software Installation Guide for more information. To stop, start, and restart the IPoIB driver from the command line, use the following commands.

To stop the IPoIB driver, use the following command:

/etc/init.d/openibd stop

To start the IPoIB driver, use the following command:

/etc/init.d/openibd start

To restart the IPoIB driver, use the following command:

/etc/init.d/openibd restart

Configuring IPoIB

QLogic recommends using the QLogic IFS Installer TUI, FastFabric, or iba_config command to configure the boot time and autostart of the IPoIB driver. Refer to the QLogic InfiniBand® Fabric Software Installation Guide for more information on using the QLogic IFS Installer TUI. Refer to the QLogic FastFabric User Guide for more information on using FastFabric. To configure the IPoIB driver from the command line, use the following commands.

Editing the IPoIB Configuration File

1. For each IP Link Layer interface, create an interface configuration file, /etc/sysconfig/network/ifcfg-NAME, where NAME is the value of the NAME field specified in the CREATE block. The following is an example of the ifcfg-NAME file:

DEVICE=ib1
BOOTPROTO=static
BROADCAST=192.168.18.255
IPADDR=192.168.18.120
NETMASK=255.255.255.0
ONBOOT=yes
NM_CONTROLLED=no

NOTE

For IPoIB, the INSTALL script for the adapter now helps the user create the ifcfg files.

2. After modifying the /etc/sysconfig/ipoib.cfg file, restart the IPoIB driver with the following:

/etc/init.d/openibd restart

IB Bonding

IB bonding is a high availability solution for IPoIB interfaces. It is based on the Linux Ethernet Bonding Driver and was adapted to work with IPoIB. IPoIB interfaces are supported only in active-backup mode; other modes should not be used. QLogic supports bonding across HCA ports and bonding port 1 and port 2 on the same HCA.

Interface Configuration Scripts

Create interface configuration scripts for the ibX and bondX interfaces. Once the

configurations are in place, perform a server reboot, or a service network restart.

For SLES operating systems (OS), a server reboot is required. Refer to the

following standard syntax for bonding configuration by the OS.

NOTE

For all of the following OS configuration script examples that set MTU, MTU=65520 is valid only if all IPoIB slaves operate in connected mode and are configured with the same value. For IPoIB slaves that work in datagram mode, use MTU=2044. If the MTU is not set correctly or the MTU is not set at all (set to the default value), performance of the interface may be lower.
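The MTU rule in the note above can be expressed as a small lookup. The following is a minimal sketch under stated assumptions: the function name is illustrative, and the sketch assumes the running IPoIB driver exposes the current mode of each interface in /sys/class/net/<interface>/mode, which this guide does not state. Interfaces that do not exist on the node are skipped.

```shell
#!/bin/bash
# Sketch: pick the bonding MTU that matches the IPoIB mode of the slaves.
# ipoib_mtu_for_mode is an illustrative helper, not a QLogic-supplied tool.

ipoib_mtu_for_mode() {
    # $1: IPoIB mode, either "connected" or "datagram"
    case "$1" in
        connected) echo 65520 ;;
        datagram)  echo 2044 ;;
        *) echo "unknown IPoIB mode: $1" >&2; return 1 ;;
    esac
}

# Assumption: the mode is readable from sysfs on the running kernel.
for ifc in ib0 ib1; do
    mode_file=/sys/class/net/$ifc/mode
    if [ -r "$mode_file" ]; then
        mode=$(cat "$mode_file")
        echo "$ifc: mode=$mode, suggested MTU=$(ipoib_mtu_for_mode "$mode")"
    fi
done
```

If the slaves report different modes, the note above implies that MTU=65520 should not be used for the bond.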


Red Hat EL5 and EL6

The following is an example for bond0 (master). The file is named

/etc/sysconfig/network-scripts/ifcfg-bond0:

DEVICE=bond0

IPADDR=192.168.1.1

NETMASK=255.255.255.0

NETWORK=192.168.1.0

BROADCAST=192.168.1.255

ONBOOT=yes

BOOTPROTO=none

USERCTL=no

MTU=65520

BONDING_OPTS="primary=ib0 updelay=0 downdelay=0"

The following is an example for ib0 (slave). The file is named

/etc/sysconfig/network-scripts/ifcfg-ib0:

DEVICE=ib0

USERCTL=no

ONBOOT=yes

MASTER=bond0

SLAVE=yes

BOOTPROTO=none

TYPE=InfiniBand

PRIMARY=yes


The following is an example for ib1 (slave 2). The file is named

/etc/sysconfig/network-scripts/ifcfg-ib1:

DEVICE=ib1

USERCTL=no

ONBOOT=yes

MASTER=bond0

SLAVE=yes

BOOTPROTO=none

TYPE=InfiniBand

Add the following lines to the RHEL 5.x file /etc/modprobe.conf, or the

RHEL 6.x file /etc/modprobe.d/ib_qib.conf:

alias bond0 bonding

options bond0 miimon=100 mode=1 max_bonds=1


SuSE Linux Enterprise Server (SLES) 10 and 11

The following is an example for bond0 (master). The file is named

/etc/sysconfig/network/ifcfg-bond0:

DEVICE="bond0"

TYPE="Bonding"

IPADDR="192.168.1.1"

NETMASK="255.255.255.0"

NETWORK="192.168.1.0"

BROADCAST="192.168.1.255"

BOOTPROTO="static"

USERCTL="no"

STARTMODE="onboot"

BONDING_MASTER="yes"

BONDING_MODULE_OPTS="mode=active-backup miimon=100 primary=ib0 updelay=0 downdelay=0"

BONDING_SLAVE0=ib0

BONDING_SLAVE1=ib1

MTU=65520

The following is an example for ib0 (slave). The file is named

/etc/sysconfig/network/ifcfg-ib0:

DEVICE='ib0'

BOOTPROTO='none'

STARTMODE='off'

WIRELESS='no'

ETHTOOL_OPTIONS=''

NAME=''

USERCONTROL='no'

IPOIB_MODE='connected'

The following is an example for ib1 (slave 2). The file is named

/etc/sysconfig/network/ifcfg-ib1:

DEVICE='ib1'

BOOTPROTO='none'

STARTMODE='off'

WIRELESS='no'

ETHTOOL_OPTIONS=''

NAME=''

USERCONTROL='no'

IPOIB_MODE='connected'


Verify the following line is set to the value of yes in /etc/sysconfig/boot:

RUN_PARALLEL="yes"

Verify IB Bonding is Configured

After the configuration scripts are updated, and the network service is restarted or the server is rebooted, use the following CLI commands to verify that IB bonding is configured.

# cat /proc/net/bonding/bond0

# ifconfig

Example of cat /proc/net/bonding/bond0 output:

# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.2.3 (December 6, 2007)

Bonding Mode: fault-tolerance (active-backup) (fail_over_mac)

Primary Slave: ib0

Currently Active Slave: ib0

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0


Slave Interface: ib0

MII Status: up

Link Failure Count: 0

Permanent HW addr: 80:00:04:04:fe:80

Slave Interface: ib1

MII Status: up

Link Failure Count: 0

Permanent HW addr: 80:00:04:05:fe:80
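The fields of interest in this output can be extracted with awk. The following is a minimal sketch that reports the currently active slave and any slave whose MII status is not up. The function names are illustrative. The sample file is abbreviated from the output above, with ib1 changed to down purely to exercise the second check. On a live node, the functions read /proc/net/bonding/bond0 when called without an argument.

```shell
#!/bin/bash
# Sketch: summarize a bonding status file such as /proc/net/bonding/bond0.
# Function names are illustrative only.

active_slave() {
    # Print the value of the "Currently Active Slave" line
    awk -F': ' '/^Currently Active Slave:/ { print $2 }' "${1:-/proc/net/bonding/bond0}"
}

slaves_down() {
    # Print each slave interface whose MII Status is anything other than "up"
    awk -F': ' '
        /^Slave Interface:/ { slave = $2 }
        /^MII Status:/ && slave != "" && $2 != "up" { print slave }
    ' "${1:-/proc/net/bonding/bond0}"
}

# Abbreviated sample; ib1 is marked down here for illustration only.
sample=$(mktemp)
cat > "$sample" <<'EOF'
Bonding Mode: fault-tolerance (active-backup) (fail_over_mac)
Primary Slave: ib0
Currently Active Slave: ib0
MII Status: up
Slave Interface: ib0
MII Status: up
Slave Interface: ib1
MII Status: down
EOF

echo "active slave: $(active_slave "$sample")"
echo "slaves down:  $(slaves_down "$sample")"
```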


Example of ifconfig output:

st2169:/etc/sysconfig # ifconfig

bond0 Link encap:InfiniBand HWaddr 80:00:00:02:FE:80:00:00:00:00:00:00:00:00:00:00:00:00:00:00
inet addr:192.168.1.1 Bcast:192.168.1.255 Mask:255.255.255.0
inet6 addr: fe80::211:7500:ff:909b/64 Scope:Link
UP BROADCAST RUNNING MASTER MULTICAST MTU:65520 Metric:1
RX packets:120619276 errors:0 dropped:0 overruns:0 frame:0
TX packets:120619277 errors:0 dropped:137 overruns:0 carrier:0
collisions:0 txqueuelen:0
RX bytes:10132014352 (9662.6 Mb) TX bytes:10614493096 (10122.7 Mb)

ib0 Link encap:InfiniBand HWaddr 80:00:00:02:FE:80:00:00:00:00:00:00:00:00:00:00:00:00:00:00
UP BROADCAST RUNNING SLAVE MULTICAST MTU:65520 Metric:1
RX packets:118938033 errors:0 dropped:0 overruns:0 frame:0
TX packets:118938027 errors:0 dropped:41 overruns:0 carrier:0
collisions:0 txqueuelen:256
RX bytes:9990790704 (9527.9 Mb) TX bytes:10466543096 (9981.6 Mb)

ib1 Link encap:InfiniBand HWaddr 80:00:00:02:FE:80:00:00:00:00:00:00:00:00:00:00:00:00:00:00
UP BROADCAST RUNNING SLAVE MULTICAST MTU:65520 Metric:1
RX packets:1681243 errors:0 dropped:0 overruns:0 frame:0
TX packets:1681250 errors:0 dropped:96 overruns:0 carrier:0
collisions:0 txqueuelen:256
RX bytes:141223648 (134.6 Mb) TX bytes:147950000 (141.0 Mb)

Subnet Manager Configuration

QLogic recommends using the QLogic Fabric Manager to manage your fabric.

Refer to the QLogic Fabric Manager User Guide for information on configuring the

QLogic Fabric Manager.

3-10 IB0054606-02 A

Page 35

3–InfiniBand® Cluster Setup and Administration

OpenSM is a component of the OpenFabrics project that provides a Subnet Manager (SM) for IB networks. This package can optionally be installed on any machine, but only needs to be enabled on the machine in the cluster that will act as the subnet manager. Do not use OpenSM if any of your IB switches provide a subnet manager, or if you are running a host-based SM such as the QLogic Fabric Manager.

WARNING

Do not run OpenSM and the QLogic Fabric Manager in the same fabric.

If you are using the Installer tool, you can set the OpenSM default behavior at the

time of installation.

OpenSM only needs to be enabled on the node that acts as the subnet manager. To enable OpenSM, use either the iba_config command or, as the root user, the chkconfig command on the node where it will run. The chkconfig command to enable OpenSM is:

chkconfig opensmd on

The chkconfig command to disable it on reboot is:

chkconfig opensmd off

You can start opensmd without rebooting your machine by typing:

/etc/init.d/opensmd start

You can stop opensmd by typing:

/etc/init.d/opensmd stop

To pass arguments to the OpenSM program, edit the following file and add the arguments to its OPTIONS variable:

/etc/init.d/opensmd

For example, to use the UPDN algorithm instead of the Min Hop algorithm:

OPTIONS="-R updn"

For more information on OpenSM, see the OpenSM man pages, or look on the

OpenFabrics web site.


QLogic Distributed Subnet Administration

As InfiniBand® clusters scale into the petaflop range and beyond, a more efficient method for handling queries to the Fabric Manager is required. Although the Fabric Manager can configure and operate a fabric of that size, under certain conditions it can become overloaded with queries from the nodes it manages.

For example, consider an IB fabric consisting of 1,000 nodes, each with 4 processors. When a large MPI job is started across the entire fabric, each process needs to collect IB path records for every other node in the fabric, and every process queries the subnet manager for these path records at roughly the same time. With one process per processor, that is 4,000 processes each requesting 999 path records: 3,996,000, or nearly 4 million, path queries just to start the job.
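The arithmetic behind this estimate can be checked with a short sketch. It assumes one MPI process per processor and one path query per process for each other node; the function name is illustrative and is not part of any QLogic or OFED API.

```python
def startup_path_queries(nodes: int, procs_per_node: int) -> int:
    """Estimate the path queries issued when one job spans the whole fabric.

    Assumption: every process queries once for each *other* node.
    """
    processes = nodes * procs_per_node
    return processes * (nodes - 1)


if __name__ == "__main__":
    total = startup_path_queries(nodes=1000, procs_per_node=4)
    print(f"{total:,} path queries")  # prints "3,996,000 path queries"
```

Because the count grows with the square of the node count, doubling the fabric size roughly quadruples the number of queries arriving at the subnet manager at job start.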

In the past, MPI implementations have side-stepped this problem by hand-crafting path records themselves, but this solution cannot be used if advanced fabric management techniques such as virtual fabrics and mesh/torus configurations are in use. In such cases, only the subnet manager itself has enough information to correctly build a path record between two nodes.

The Distributed Subnet Administration (SA) solves this problem by allowing each

node to locally replicate the path records needed to reach the other nodes on the

fabric. At boot time, each Distributed SA queries the subnet manager for

information about the relevant parts of the fabric, backing off whenever the subnet

manager indicates that it is busy. Once this information is in the Distributed SA's

database, it is ready to answer local path queries from MPI or other IB

applications. If the fabric changes (due to a switch failure or a node being added to

or removed from the fabric), the Distributed SA updates the affected portions of the

database. The Distributed SA can be installed and run on any node in the fabric. It

is only needed on nodes running MPI applications.

Applications that use Distributed SA

The QLogic PSM Library has been extended to take advantage of the Distributed

SA. Therefore, all MPIs that use the QLogic PSM library can take advantage of

the Distributed SA. Other applications must be modified specifically to take

advantage of it. For developers writing applications that use the Distributed SA,

refer to the header file /usr/include/Infiniband/ofedplus_path.h for

information on using Distributed SA APIs. This file can be found on any node

where the Distributed SA is installed. For further assistance please contact

QLogic Support.


[Figure 3-2 diagram. Infiniband Fabric: Virtual Fabric “Default” (Pkey 0xffff, one SID range). Distributed SA: Virtual Fabric “Default” (Pkey 0xffff, two SID ranges).]

Virtual Fabrics and the Distributed SA

The IBTA standard states that applications can be identified by a Service ID (SID).

The QLogic Fabric Manager uses SIDs to identify applications. One or more

applications can be associated with a Virtual Fabric using the SID. The Distributed

SA is designed to be aware of Virtual Fabrics, but to only store records for those

Virtual Fabrics that match the SIDs in the Distributed SA's configuration file. The

Distributed SA recognizes when multiple SIDs match the same Virtual Fabric and

will only store one copy of each path record within a Virtual Fabric. SIDs that

match more than one Virtual Fabric will be associated with a single Virtual Fabric.

The Virtual Fabrics that do not match SIDs in the Distributed SA's database will be

ignored.
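The matching rules above can be sketched as follows. This is an illustration only, not the Distributed SA's implementation: the `VirtualFabric` class, the `select_fabrics` function, and the fabric names and ranges are hypothetical, and the rule that the first matching Virtual Fabric wins is an assumption (the text says only that such a SID is associated with a single Virtual Fabric).

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

SidRange = Tuple[int, int]  # inclusive (low, high) bounds


@dataclass
class VirtualFabric:
    name: str
    sid_ranges: List[SidRange]

    def matches(self, sid: int) -> bool:
        return any(lo <= sid <= hi for lo, hi in self.sid_ranges)


def select_fabrics(
    configured_sids: List[int], fabrics: List[VirtualFabric]
) -> Tuple[Dict[int, str], List[str]]:
    """Map each configured SID to at most one fabric.

    Returns the SID-to-fabric map and the sorted names of the fabrics
    whose records would be stored; fabrics matching no SID are ignored.
    """
    sid_to_fabric: Dict[int, str] = {}
    for sid in configured_sids:
        for vf in fabrics:  # assumption: first match wins
            if vf.matches(sid):
                sid_to_fabric[sid] = vf.name
                break
    stored = sorted(set(sid_to_fabric.values()))
    return sid_to_fabric, stored


if __name__ == "__main__":
    fabrics = [
        VirtualFabric("A", [(0x1, 0xF)]),
        VirtualFabric("B", [(0x8, 0x1F)]),    # overlaps "A" on 0x8-0xf
        VirtualFabric("C", [(0x100, 0x1FF)]),  # matches no configured SID
    ]
    mapping, stored = select_fabrics([0x1, 0x8, 0x10], fabrics)
    print(mapping)  # {1: 'A', 8: 'A', 16: 'B'}
    print(stored)   # ['A', 'B']
```

Two SIDs map to fabric "A", but the fabric appears once in the stored list, which mirrors the statement that only one copy of each path record is kept per Virtual Fabric.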

Configuring the Distributed SA

To minimize the number of queries made by the Distributed SA, it is important to configure it correctly, both to match the configuration of the Fabric Manager and to exclude those portions of the fabric that will not be used by applications using the Distributed SA. The configuration file for the Distributed SA is named /etc/sysconfig/iba/qlogic_sa.conf.


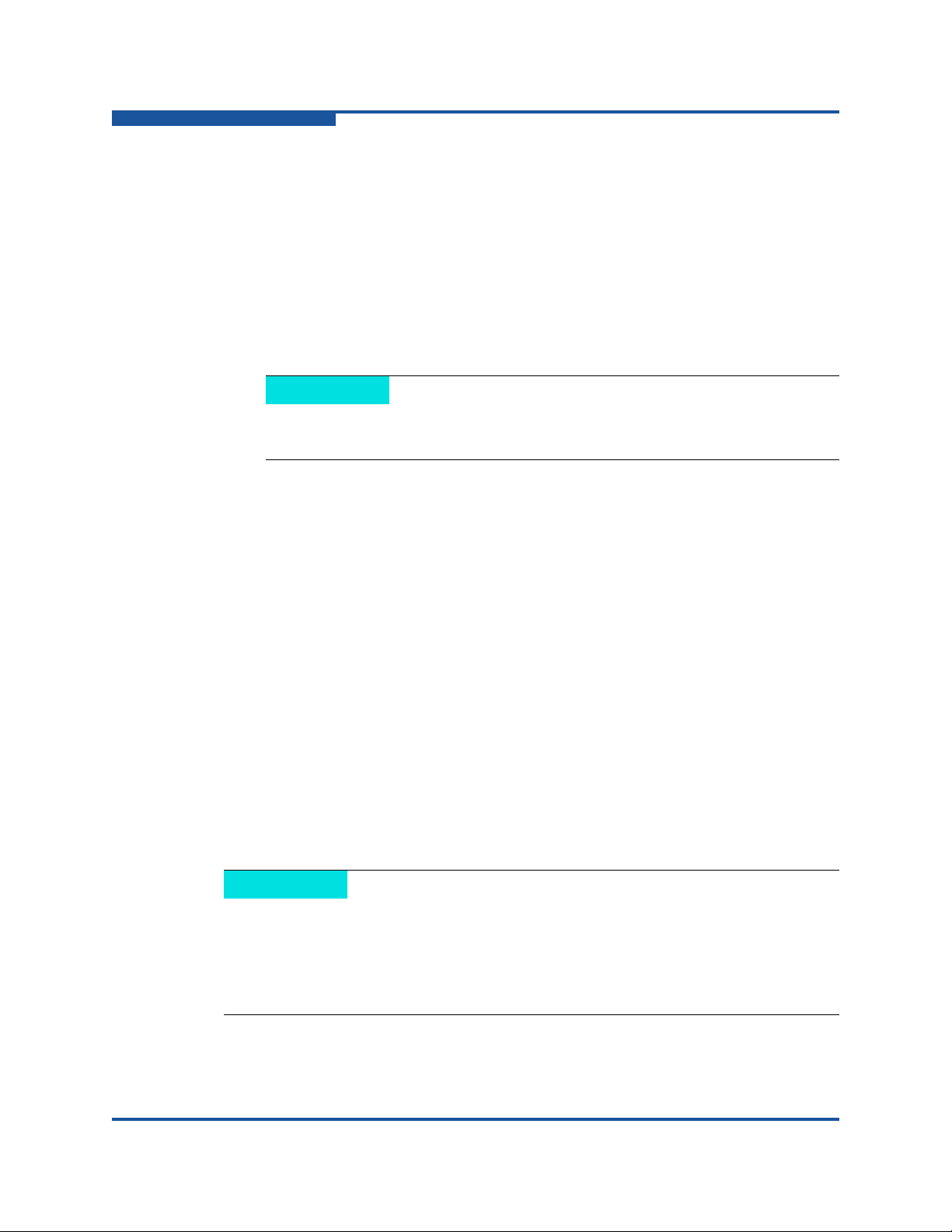

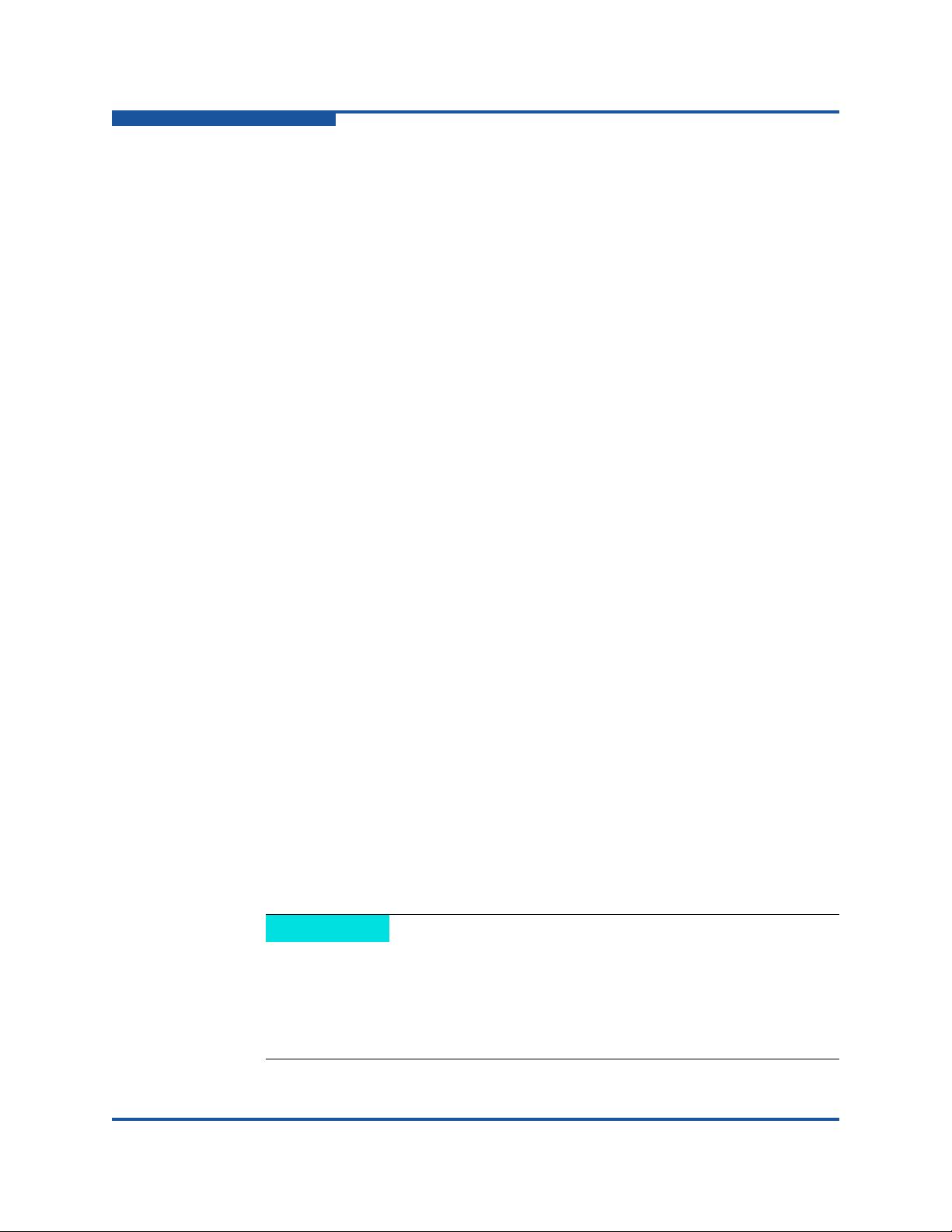

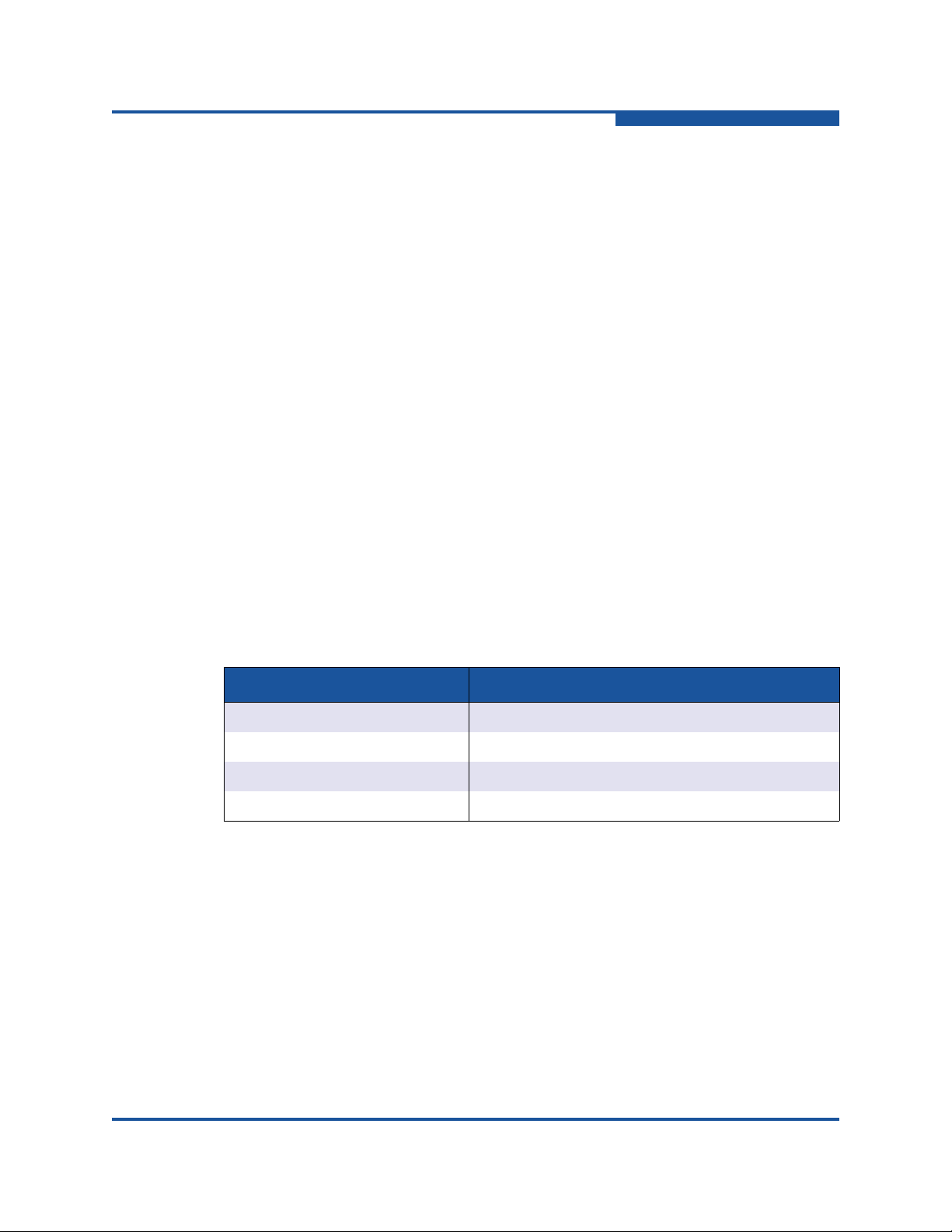

Default Configuration

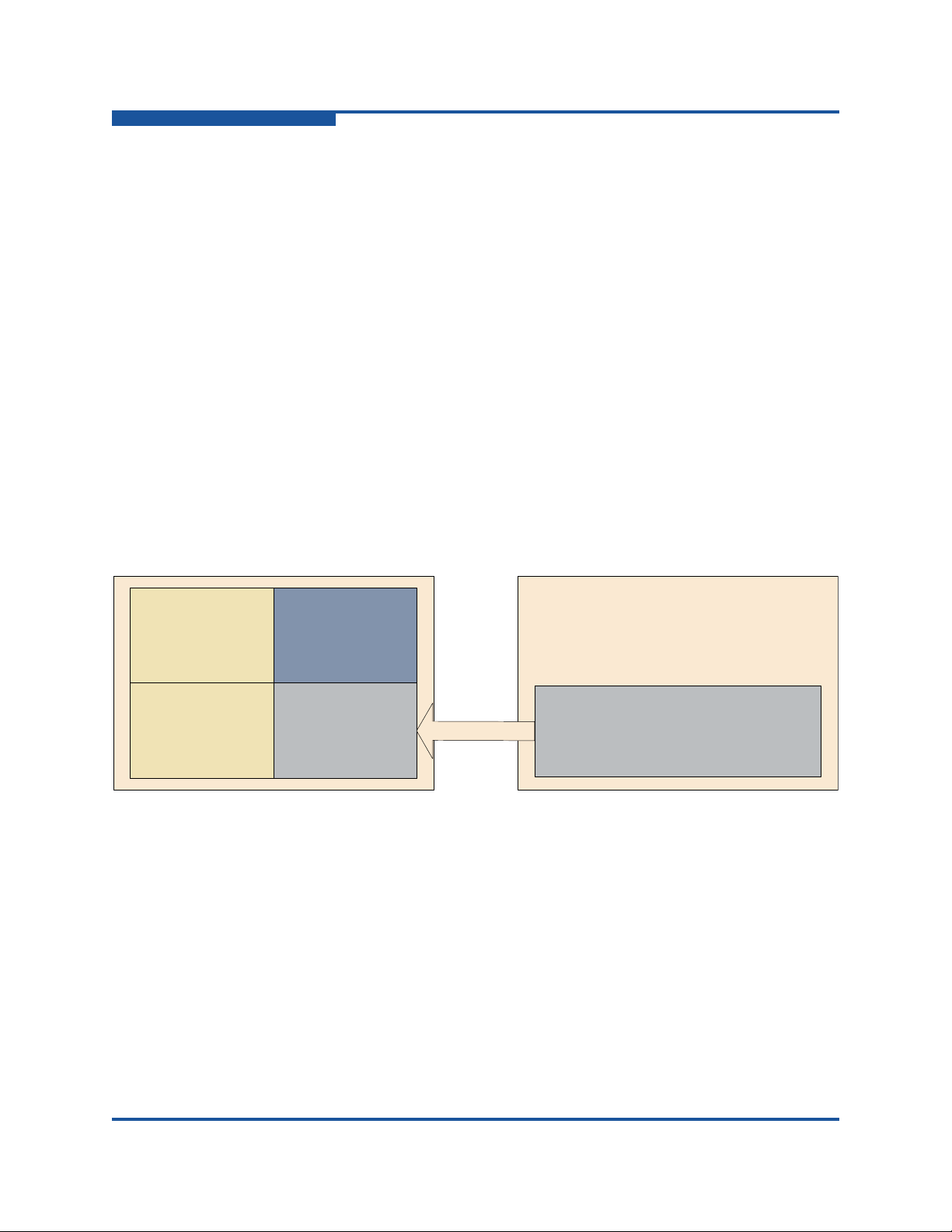

As shipped, the QLogic Fabric Manager creates a single virtual fabric, called “Default”, and maps all nodes and Service IDs to it. The Distributed SA ships with a configuration that lists a set of thirty-one SIDs: 0x1000117500000000 through 0x100011750000000f (sixteen) and 0x1 through 0xf (fifteen). This results in an arrangement like the one shown in Figure 3-2.

Figure 3-2. Distributed SA Default Configuration


[Figure 3-3 diagram. Infiniband Fabric: Virtual Fabric “Admin”; Virtual Fabric “PSM_MPI” (two SID ranges); Virtual Fabric “Storage” (one SID); Virtual Fabric “Reserved” (one SID range). Distributed SA: Virtual Fabric “PSM_MPI” (two SID ranges).]


If you are using the QLogic Fabric Manager in its default configuration, and you are using the standard QLogic PSM SIDs, this arrangement works and you do not need to modify the Distributed SA's configuration file. Note, however, that the Distributed SA restricts the SIDs it handles to those defined in its configuration file. Attempts to get path records using other SIDs will not work, even if those SIDs are valid for the fabric. With this default configuration, MPI applications must be run using one of these 31 SIDs.
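As a quick check of the default set, the sketch below enumerates the two SID ranges quoted above and tests whether a given SID falls inside them. It does not read qlogic_sa.conf; the constant and function names are illustrative only.

```python
# The two default SID ranges quoted in the text (inclusive bounds).
DEFAULT_SID_RANGES = [
    (0x1000117500000000, 0x100011750000000F),
    (0x1, 0xF),
]


def sid_count(ranges) -> int:
    """Total number of SIDs covered by a list of inclusive ranges."""
    return sum(hi - lo + 1 for lo, hi in ranges)


def is_configured(sid: int, ranges=DEFAULT_SID_RANGES) -> bool:
    """True if the SID falls inside one of the configured ranges."""
    return any(lo <= sid <= hi for lo, hi in ranges)


if __name__ == "__main__":
    print(sid_count(DEFAULT_SID_RANGES))  # 16 + 15 = 31
    print(is_configured(0x5))             # True
    print(is_configured(0x10))            # False: outside both ranges
```

A SID such as 0x10 is valid on the fabric but is not in the default set, so a path query that uses it would not be answered under the default configuration.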

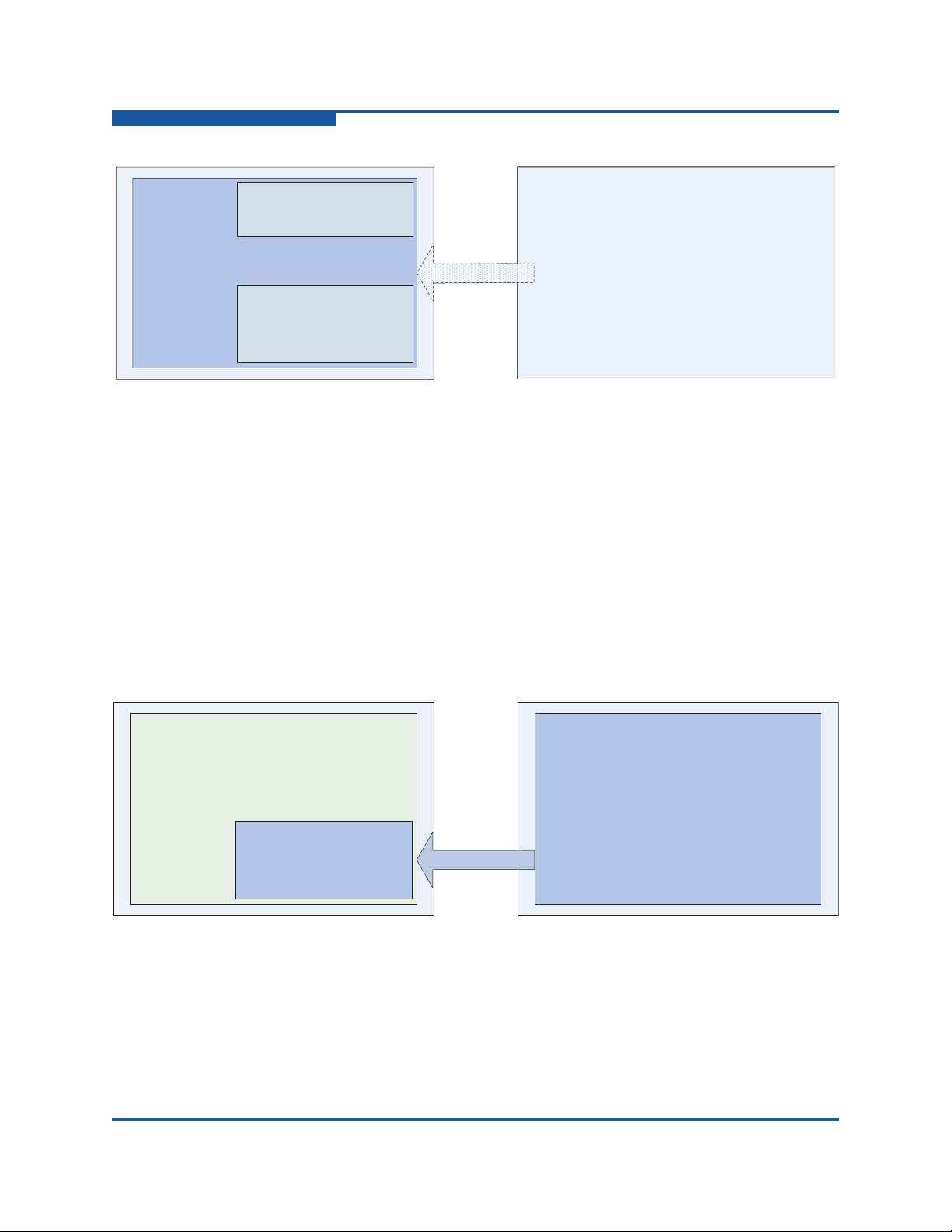

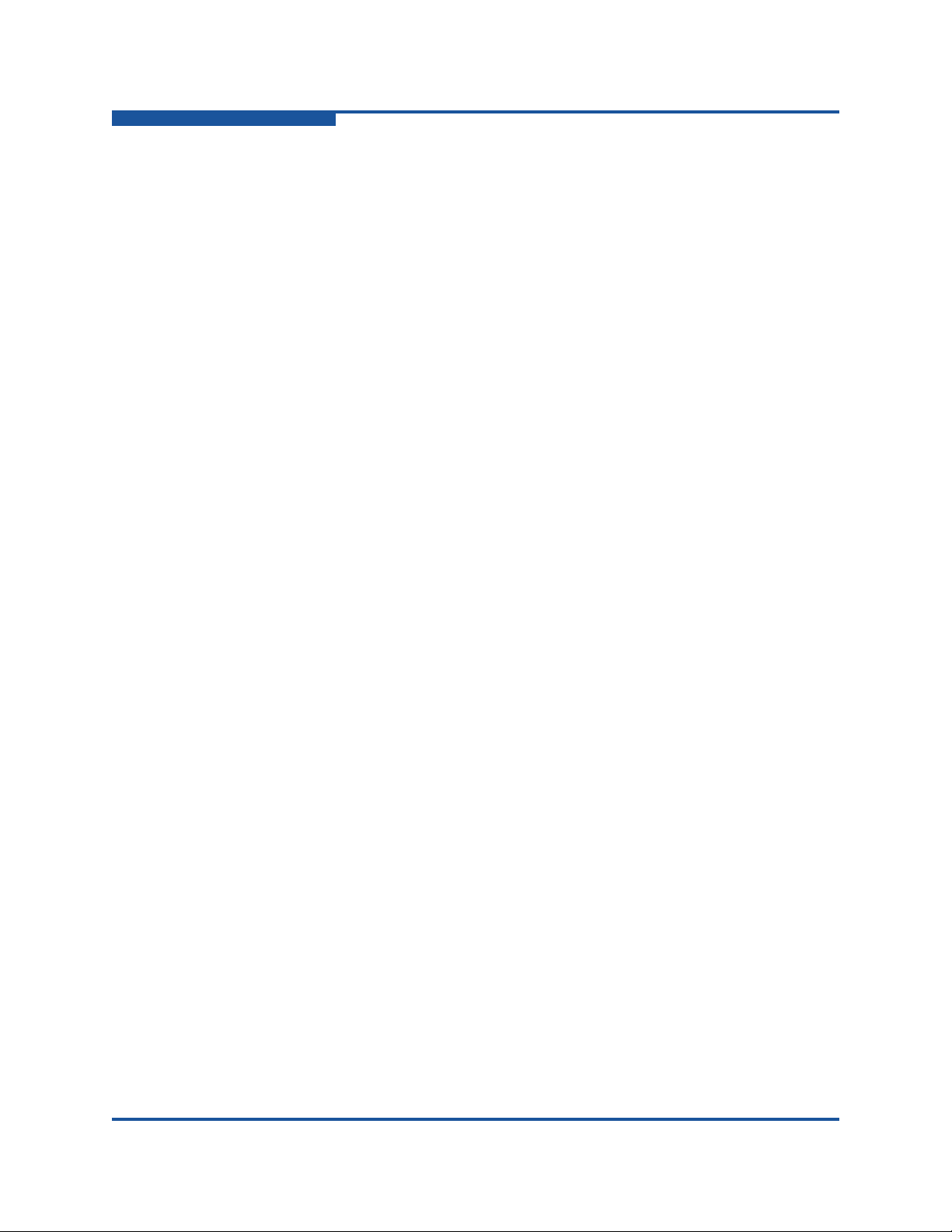

Multiple Virtual Fabrics Example

A person configuring the physical IB fabric may want to limit how much IB

bandwidth MPI applications are permitted to consume. In that case, they may

re-configure the QLogic Fabric Manager, turning off the “Default” Virtual Fabric

and replacing it with several other Virtual Fabrics.

In Figure 3-3, the administrator has divided the physical fabric into four virtual fabrics: “Admin” (used to communicate with the Fabric Manager), “Storage” (used by SRP), “PSM_MPI” (used by regular MPI jobs), and “Reserved” (for special high-priority jobs).


Figure 3-3. Distributed SA Multiple Virtual Fabrics Example

Because the Distributed SA was not configured to include the SID range 0x10 through 0x1f, it has ignored the “Reserved” VF. Adding those SIDs to the qlogic_sa.conf file solves the problem, as shown in Figure 3-4.


[Figure 3-4 diagram. Infiniband Fabric: Virtual Fabric “Admin”; Virtual Fabric “PSM_MPI” (two SID ranges); Virtual Fabric “Storage” (one SID); Virtual Fabric “Reserved” (one SID range). Distributed SA: Virtual Fabric “Reserved” (one SID range); Virtual Fabric “PSM_MPI” (two SID ranges).]

[Diagram text for “Virtual Fabrics with Overlapping Definitions”. First diagram: Infiniband Fabric with Virtual Fabric “Default” (Pkey 0xffff) and a Distributed SA looking for two SID ranges. Second diagram: Virtual Fabrics “Default” (Pkey 0xffff) and “PSM_MPI”, a Distributed SA looking for two SID ranges, and a “?”.]

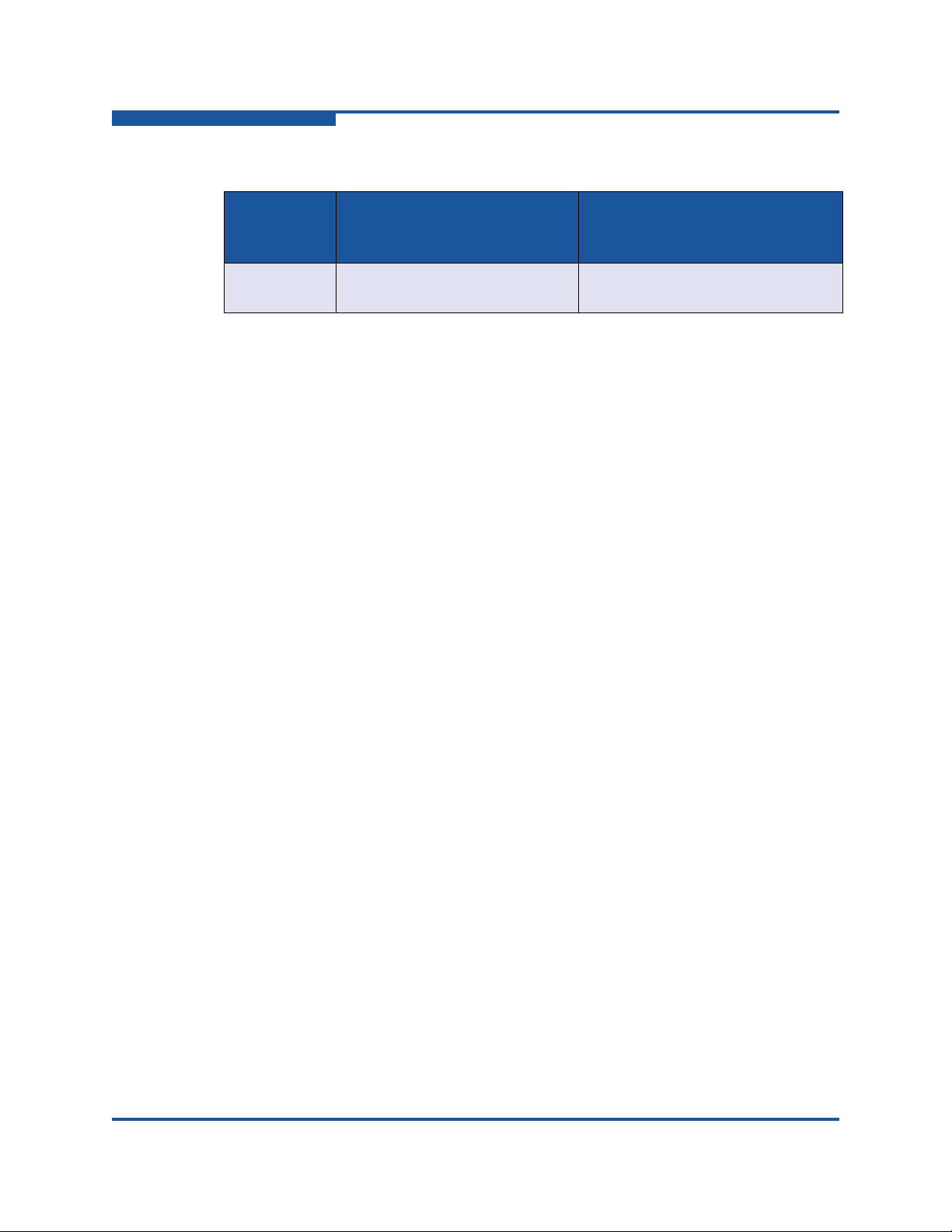

Figure 3-4. Distributed SA Multiple Virtual Fabrics Configured Example

Virtual Fabrics with Overlapping Definitions

As defined, SIDs should never be shared between Virtual Fabrics. Unfortunately,