
Q

Simplify

InfiniPath User Guide

Version 2.0

IB6054601-00 D Page i


Information furnished in this manual is believed to be accurate and reliable. However, QLogic Corporation assumes no responsibility for its use, nor for any infringements of patents or other rights of third parties which may result from its use. QLogic Corporation reserves the right to change product specifications at any time without notice. Applications described in this document for any of these products are for illustrative purposes only. QLogic Corporation makes no representation nor warranty that such applications are suitable for the specified use without further testing or modification. QLogic Corporation assumes no responsibility for any errors that may appear in this document.

No part of this document may be copied nor reproduced by any means, nor translated nor transmitted to any magnetic medium without the express written consent of QLogic Corporation. In accordance with the terms of their valid PathScale agreements, customers are permitted to make electronic and paper copies of this document for their own exclusive use.

Linux is a registered trademark of Linus Torvalds.

QLA, QLogic, SANsurfer, the QLogic logo, PathScale, the PathScale logo, and InfiniPath are registered trademarks of QLogic Corporation.

Red Hat and all Red Hat-based trademarks are trademarks or registered trademarks of Red Hat, Inc.

SuSE is a registered trademark of SuSE Linux AG.

All other brand and product names are trademarks or registered trademarks of their respective owners.

Document Revision History

Rev. 1.0, 8/20/2005
Rev. 1.1, 11/15/05
Rev. 1.2, 02/15/06
Rev. 1.3 Beta 1, 4/15/06
Rev. 1.3, 6/15/06
Rev. 2.0 Beta, 9/25/06, QLogic Rev IB6054601 A
Rev. 2.0 Beta 2, 10/15/06, QLogic Rev IB6054601 B
Rev. 2.0, 11/30/06, QLogic Rev IB6054601 C
Rev. 2.0, 3/23/07, QLogic Rev IB6054601 D

Rev. D Changes | Document Sections Affected
Added metadata to the PDF document only | PDF metadata

Rev. C Changes | Document Sections Affected
Updated Preface and Overview by combining them into a single section, now called Introduction (same as the introduction in the Install Guide). | 1
Added SLES 9 as a new supported distribution. | 1.7
Revised information about MTRR mapping in the BIOS. Some BIOSes do not have this setting, or call it something else. | C.1, C.2.1, C.2.2, C.2.3
Corrected usage of ipath_core, replacing it with ib_ipath. | 2
Added more options to the mpirun man page description. | 3.5.10
Added new section on Environment for Multiple Versions of InfiniPath or MPI. | 3.5.8.1
Added information on support for multiple MPIs. | 3.6
Added information about using MPI over uDAPL. The rdma_cm and rdma_ucm modules must be loaded. | 3.7
Added section: Error Messages Generated by mpirun. This explains more about the types of errors found in its subsections. Also added error messages related to failed connections between nodes. | C.8.12
Added mpirun error message about stray processes to the error message section. | C.8.12.2
Added driver and link error messages reported by MPI programs. | C.8.12.3
Added section about errors that occur when different run-time and compile-time MPI versions are used. | C.8.7
2.0 mpirun incompatible with 1.3 libraries. | C.8.1
Added glossary entry for MTRR. | E
Added new index entries for MPI error message formats; corrected index formatting. | Index


© 2006, 2007 QLogic Corporation. All rights reserved worldwide.

© PathScale 2004, 2005, 2006. All rights reserved.

First Published: August 2005

Printed in U.S.A.


Table of Contents

Section 1 Introduction
1.1 Who Should Read this Guide
1.2 How this Guide is Organized
1.3 Overview
1.4 Switches
1.5 Interoperability
1.6 What’s New in this Release
1.7 Supported Distributions and Kernels
1.8 Software Components
1.9 Conventions Used in this Document
1.10 Documentation and Technical Support

Section 2 InfiniPath Cluster Administration
2.1 Introduction
2.2 Installed Layout
2.3 Memory Footprint
2.4 Configuration and Startup
2.4.1 BIOS Settings
2.4.2 InfiniPath Driver Startup
2.4.3 InfiniPath Driver Software Configuration
2.4.4 InfiniPath Driver Filesystem
2.4.5 Subnet Management Agent
2.4.6 Layered Ethernet Driver
2.4.6.1 ipath_ether Configuration on Fedora and RHEL4
2.4.6.2 ipath_ether Configuration on SUSE 9.3, SLES 9, and SLES 10
2.4.7 OpenFabrics Configuration and Startup
2.4.7.1 Configuring the IPoIB Network Interface
2.4.8 OpenSM
2.5 SRP
2.6 Further Information on Configuring and Loading Drivers
2.7 Starting and Stopping the InfiniPath Software
2.8 Software Status
2.9 Configuring ssh and sshd Using shosts.equiv
2.9.1 Process Limitation with ssh
2.10 Performance and Management Tips
2.10.1 Remove Unneeded Services
2.10.2 Disable Powersaving Features
2.10.3 Balanced Processor Power
2.10.4 SDP Module Parameters for Best Performance
2.10.5 CPU Affinity
2.10.6 Hyper-Threading
2.10.7 Homogeneous Nodes
2.11 Customer Acceptance Utility

Section 3 Using InfiniPath MPI
3.1 InfiniPath MPI
3.2 Other MPI Implementations
3.3 Getting Started with MPI
3.3.1 An Example C Program
3.3.2 Examples Using Other Languages
3.4 Configuring MPI Programs for InfiniPath MPI
3.5 InfiniPath MPI Details
3.5.1 Configuring for ssh Using ssh-agent
3.5.2 Compiling and Linking
3.5.3 To Use Another Compiler
3.5.3.1 Compiler and Linker Variables
3.5.4 Cross-compilation Issues
3.5.5 Running MPI Programs
3.5.6 The mpihosts File
3.5.7 Console I/O in MPI Programs
3.5.8 Environment for Node Programs
3.5.8.1 Environment for Multiple Versions of InfiniPath or MPI
3.5.9 Multiprocessor Nodes
3.5.10 mpirun Options
3.6 Using Other MPI Implementations
3.7 MPI Over uDAPL
3.8 MPD
3.8.1 MPD Description
3.8.2 Using MPD
3.9 File I/O in MPI
3.9.1 Linux File I/O in MPI Programs
3.9.2 MPI-IO with ROMIO
3.10 InfiniPath MPI and Hybrid MPI/OpenMP Applications
3.11 Debugging MPI Programs
3.11.1 MPI Errors
3.11.2 Using Debuggers
3.12 InfiniPath MPI Limitations

Appendix A Benchmark Programs
A.1 Benchmark 1: Measuring MPI Latency Between Two Nodes
A.2 Benchmark 2: Measuring MPI Bandwidth Between Two Nodes
A.3 Benchmark 3: Messaging Rate Microbenchmarks
A.4 Benchmark 4: Measuring MPI Latency in Host Rings

Appendix B Integration with a Batch Queuing System
B.1 A Batch Queuing Script
B.1.1 Allocating Resources
B.1.2 Generating the mpihosts File
B.1.3 Simple Process Management
B.1.4 Clean Termination of MPI Processes
B.2 Lock Enough Memory on Nodes When Using SLURM

Appendix C Troubleshooting
C.1 Troubleshooting InfiniPath Adapter Installation
C.1.1 Mechanical and Electrical Considerations
C.1.2 Some HTX Motherboards May Need 2 or More CPUs in Use
C.2 BIOS Settings
C.2.1 MTRR Mapping and Write Combining
C.2.2 Incorrect MTRR Mapping
C.2.3 Incorrect MTRR Mapping Causes Unexpected Low Bandwidth
C.2.4 Change Setting for Mapping Memory
C.2.5 Issue with SuperMicro H8DCE-HTe and QHT7040
C.3 Software Installation Issues
C.3.1 OpenFabrics Dependencies
C.3.2 Install Warning with RHEL4U2
C.3.3 mpirun Installation Requires 32-bit Support
C.3.4 Installing Newer Drivers from Other Distributions
C.3.5 Installing for Your Distribution
C.4 Kernel and Initialization Issues
C.4.1 Kernel Needs CONFIG_PCI_MSI=y
C.4.2 pci_msi_quirk
C.4.3 Driver Load Fails Due to Unsupported Kernel
C.4.4 InfiniPath Interrupts Not Working
C.4.5 OpenFabrics Load Errors If ib_ipath Driver Load Fails
C.4.6 InfiniPath ib_ipath Initialization Failure
C.4.7 MPI Job Failures Due to Initialization Problems
C.5 OpenFabrics Issues
C.5.1 Stop OpenSM Before Stopping/Restarting InfiniPath
C.5.2 Load and Configure IPoIB Before Loading SDP
C.5.3 Set $IBPATH for OpenFabrics Scripts
C.6 System Administration Troubleshooting
C.6.1 Broken Intermediate Link
C.7 Performance Issues
C.7.1 MVAPICH Performance Issues
C.8 InfiniPath MPI Troubleshooting
C.8.1 Mixed Releases of MPI RPMs
C.8.2 Cross-compilation Issues
C.8.3 Compiler/Linker Mismatch
C.8.4 Compiler Can’t Find Include, Module or Library Files
C.8.5 Compiling on Development Nodes
C.8.6 Specifying the Run-time Library Path
C.8.7 Run Time Errors With Different MPI Implementations
C.8.8 Process Limitation with ssh
C.8.9 Using MPI.mod Files
C.8.10 Extending MPI Modules
C.8.11 Lock Enough Memory on Nodes When Using a Batch Queuing System
C.8.12 Error Messages Generated by mpirun
C.8.12.1 Messages from the InfiniPath Library
C.8.12.2 MPI Messages
C.8.12.3 Driver and Link Error Messages Reported by MPI Programs
C.8.13 MPI Stats
C.9 Useful Programs and Files for Debugging
C.9.1 Check Cluster Homogeneity with ipath_checkout
C.9.2 Restarting InfiniPath
C.9.3 Summary of Useful Programs and Files
C.9.4 boardversion
C.9.5 ibstatus
C.9.6 ibv_devinfo
C.9.7 ident
C.9.8 ipath_checkout
C.9.9 ipath_control
C.9.10 ipathbug-helper
C.9.11 ipath_pkt_test
C.9.12 ipathstats
C.9.13 lsmod
C.9.14 mpirun
C.9.15 rpm
C.9.16 status_str
C.9.17 strings
C.9.18 version

Appendix D Recommended Reading
D.1 References for MPI
D.2 Books for Learning MPI Programming
D.3 Reference and Source for SLURM
D.4 InfiniBand
D.5 OpenFabrics
D.6 Clusters
D.7 Rocks

Appendix E Glossary

Index

Figures
2-1 InfiniPath Software Structure

Tables
1-1 PathScale-QLogic Adapter Model Numbers
1-2 InfiniPath/OpenFabrics Supported Distributions and Kernels
1-3 Typographical Conventions
2-1 Memory Footprint of the InfiniPath Adapter on Linux x86_64 Systems
2-2 Memory Footprint, 331 MB per Node
C-1 LED Link and Data Indicators
C-2 Useful Programs and Files
C-3 status_str File
C-4 Other Files Related to Status


Section 1 Introduction

This chapter describes the objectives, intended audience, and organization of the InfiniPath User Guide.

The InfiniPath User Guide is intended to give the end users of an InfiniPath cluster what they need to know to use it. In this case, end users include both the cluster administrator and the MPI application programmers, who have different but overlapping interests in the details of the technology.

For specific instructions about installing the InfiniPath QLE7140 PCI Express™ adapter, the QMI7140 adapter, or the QHT7140/QHT7040 HTX™ adapters, and for the initial installation of the InfiniPath software, see the InfiniPath Install Guide.

1.1 Who Should Read this Guide

This guide is intended both for readers responsible for administering an InfiniPath cluster network and for readers who want to use that cluster.

This guide assumes that all readers are familiar with cluster computing, that the cluster administrator is familiar with Linux administration, and that the application programmer is familiar with MPI.

1.2 How this Guide is Organized

The InfiniPath User Guide is organized into these sections:

■ Section 1 “Introduction” (this section).
■ Section 2 “InfiniPath Cluster Administration” describes the lower levels of the supplied InfiniPath software. This section is mainly of interest to an InfiniPath cluster administrator.
■ Section 3 “Using InfiniPath MPI” helps the MPI programmer make the best use of the InfiniPath MPI implementation.
■ Appendix A “Benchmark Programs”
■ Appendix B “Integration with a Batch Queuing System”
■ Appendix C “Troubleshooting” provides information for troubleshooting installation, cluster administration, and MPI.
■ Appendix D “Recommended Reading”
■ Appendix E “Glossary” of technical terms
■ Index

In addition, the InfiniPath Install Guide contains information on InfiniPath hardware and software installation.

1.3 Overview

The material in this documentation pertains to an InfiniPath cluster, defined as a collection of nodes, each attached to an InfiniBand™-based fabric through the InfiniPath interconnect. The nodes are Linux-based computers, each having up to eight processors.

The InfiniPath interconnect is InfiniBand 4X, with a raw signaling rate of 10 Gb/s and a data rate of 8 Gb/s (the difference is due to 8b/10b line encoding).

InfiniPath uses standard, off-the-shelf InfiniBand 4X switches and cabling. InfiniPath OpenFabrics software is interoperable with other vendors’ InfiniBand HCAs running compatible OpenFabrics releases. There are two options for subnet management in your cluster:

■ Use the Subnet Manager supplied with your InfiniBand switches, running on one or more managed switches.
■ Use the OpenSM component of OpenFabrics.

1.4 Switches

The InfiniPath interconnect is designed to work with all InfiniBand-compliant switches. Use of OpenSM as a subnet manager is now supported. OpenSM is part of the OpenFabrics component of this release.

1.5 Interoperability

InfiniPath participates in the standard InfiniBand subnet management protocols for configuration and monitoring. InfiniPath OpenFabrics (including IPoIB) is interoperable with other vendors’ InfiniBand HCAs running compatible OpenFabrics releases. The InfiniPath MPI and Ethernet emulation (ipath_ether) stacks are not interoperable with other InfiniBand Host Channel Adapters (HCAs) and Target Channel Adapters (TCAs). Instead, InfiniPath uses an InfiniBand-compliant, vendor-specific protocol that is highly optimized for MPI and TCP between InfiniPath-equipped hosts.


NOTE: OpenFabrics was known as OpenIB until March 2006. All relevant references to OpenIB in this documentation have been updated to reflect this change. See the OpenFabrics website at http://www.openfabrics.org for more information on the OpenFabrics Alliance.

1.6 What’s New in this Release

QLogic Corp. acquired PathScale in April 2006. In this 2.0 release, product names and internal program and output message names now refer to QLogic rather than PathScale.

The new QLogic and former PathScale adapter model numbers are shown in the following table.

Table 1-1. PathScale-QLogic Adapter Model Numbers

Former PathScale Model Number | New QLogic Model Number | Description
HT-400 | IBA6110 | Single Port 10GBS InfiniBand to HTX ASIC ROHS
PE-800 | IBA6120 | Single Port 10GBS InfiniBand to x8 PCI Express ASIC ROHS
HT-460 | QHT7040 | Single Port 10GBS InfiniBand to HTX Adapter
HT-465 | QHT7140 | Single Port 10GBS InfiniBand to HTX Adapter
PE-880 | QLE7140 | Single Port 10GBS InfiniBand to x8 PCI Express Adapter
PE-850 | QMI7140 | Single Port 10GBS InfiniBand IBM Blade Center Adapter

This version of InfiniPath provides support for all of QLogic’s HCAs, including:

■ InfiniPath QLE7140, which is supported on systems with PCIe x8 or x16 slots
■ InfiniPath QMI7140, which runs on PowerPC systems, particularly on the IBM® BladeCenter H processor blades
■ InfiniPath QHT7040 and QHT7140, which leverage HTX™. The InfiniPath QHT7040 and QHT7140 are exclusively for motherboards that support HTX cards. The QHT7140 has a smaller form factor than the QHT7040, but is otherwise the same. Unless otherwise stated, QHT7140 refers to both the QHT7040 and QHT7140 in this documentation.

Expanded MPI scalability enhancements for PCI Express have been added. The QHT7040 and QHT7140 can support 2 processes per context, for a total of 16 processes. The QLE7140 and QMI7140 also support 2 processes per context, for a total of 8 processes.


Support for multiple versions of MPI has been added. You can use a different version of MPI and still achieve the high-bandwidth and low-latency performance that is standard with InfiniPath MPI.

Also included are expanded operating system support and support for the latest OpenFabrics software stack.

Multiple InfiniPath cards per node are supported. A single software installation works for all the cards.

Additional up-to-date information can be found on the QLogic web site:

http://www.qlogic.com

1.7 Supported Distributions and Kernels

The InfiniPath interconnect runs on AMD Opteron, Intel EM64T, and IBM Power (BladeCenter H) systems running Linux. The currently supported distributions and associated Linux kernel versions for InfiniPath and OpenFabrics are listed in the following table. The kernels are the ones that shipped with the distributions, unless otherwise noted.

Table 1-2. InfiniPath/OpenFabrics Supported Distributions and Kernels

Distribution | InfiniPath/OpenFabrics Supported Kernels
Fedora Core 3 (FC3) | 2.6.12 (x86_64)
Fedora Core 4 (FC4) | 2.6.16, 2.6.17 (x86_64)
Red Hat Enterprise Linux 4 (RHEL4) | 2.6.9-22, 2.6.9-34, 2.6.9-42 (U2/U3/U4) (x86_64)
CentOS 4.2-4.4 (Rocks 4.2-4.4) | 2.6.9 (x86_64)
SUSE Linux 9.3 (SUSE 9.3) | 2.6.11 (x86_64)
SUSE Linux Enterprise Server (SLES 9) | 2.6.5 (x86_64)
SUSE Linux Enterprise Server (SLES 10) | 2.6.16 (x86_64 and ppc64)

NOTE: IBM Power systems run only with the SLES 10 distribution.

The SUSE10 release series is no longer supported as of this InfiniPath 2.0 release. Fedora Core 4 kernels prior to 2.6.16 are also no longer supported.


1.8 Software Components

The software provided with the InfiniPath Interconnect product consists of:

■ InfiniPath driver (including OpenFabrics)
■ InfiniPath Ethernet emulation
■ InfiniPath libraries
■ InfiniPath utilities, configuration, and support tools
■ InfiniPath MPI
■ InfiniPath MPI benchmarks
■ OpenFabrics protocols, including Subnet Management Agent
■ OpenFabrics libraries and utilities

OpenFabrics kernel module support is now built and installed as part of the InfiniPath RPM install. InfiniPath release 2.0 runs on the same code base as OpenFabrics Enterprise Distribution (OFED) version 1.1, and also includes the OpenFabrics 1.1-based library and utility RPMs. InfiniBand protocols are interoperable between InfiniPath 2.0 and OFED 1.1.

This release provides support for the following protocols:

■ IPoIB (TCP/IP networking)
■ SDP (Sockets Direct Protocol)
■ OpenSM
■ UD (Unreliable Datagram)
■ RC (Reliable Connection)
■ UC (Unreliable Connection)
■ SRQ (Shared Receive Queue)
■ uDAPL (user Direct Access Programming Library)

This release includes a technology preview of:

■ SRP (SCSI RDMA Protocol)

Future releases will provide support for:

■ iSER (iSCSI Extensions for RDMA)

No support is provided for RD (Reliable Datagram).


NOTE: 32-bit OpenFabrics programs using the verbs interfaces are not supported in this InfiniPath release, but will be supported in a future release.

1.9 Conventions Used in this Document

This guide uses these typographical conventions:

Table 1-3. Typographical Conventions

Convention | Meaning
command | Fixed-space font is used for literal items such as commands, functions, programs, files and pathnames, and program output.
variable | Italic fixed-space font is used for variable names in programs and command lines.
concept | Italic font is used for emphasis and concepts.
user input | Bold fixed-space font is used for literal items in commands or constructs that you type in.
$ | Indicates a command line prompt.
# | Indicates a command line prompt as root when using bash or sh.
[ ] | Brackets enclose optional elements of a command or program construct.
... | Ellipses indicate that a preceding element can be repeated.
> | A right caret identifies the cascading path of menu commands used in a procedure.
2.0 | The current version number of the software is included in the RPM names and within this documentation.
NOTE: | Indicates important information.

Q

1.10

Documentation and Technical Support

The InfiniPath product documentation includes:

■ The InfiniPath Install Guide

■ The InfiniPath User Guide

■ Release Notes

■ Quick Start Guide


■ Readme file

The Troubleshooting appendix, covering installation, InfiniPath and OpenFabrics administration, and MPI issues, is located in the InfiniPath User Guide.

Visit the QLogic support Web site for documentation and the latest software updates.

http://www.qlogic.com


This chapter describes what the cluster administrator needs to know about the

InfiniPath software and system administration.

2.1

Introduction

The InfiniPath driver ib_ipath, layered Ethernet driver ipath_ether, OpenSM,

and other modules and the protocol and MPI support libraries are the components

of the InfiniPath software providing the foundation that supports the MPI

implementation.

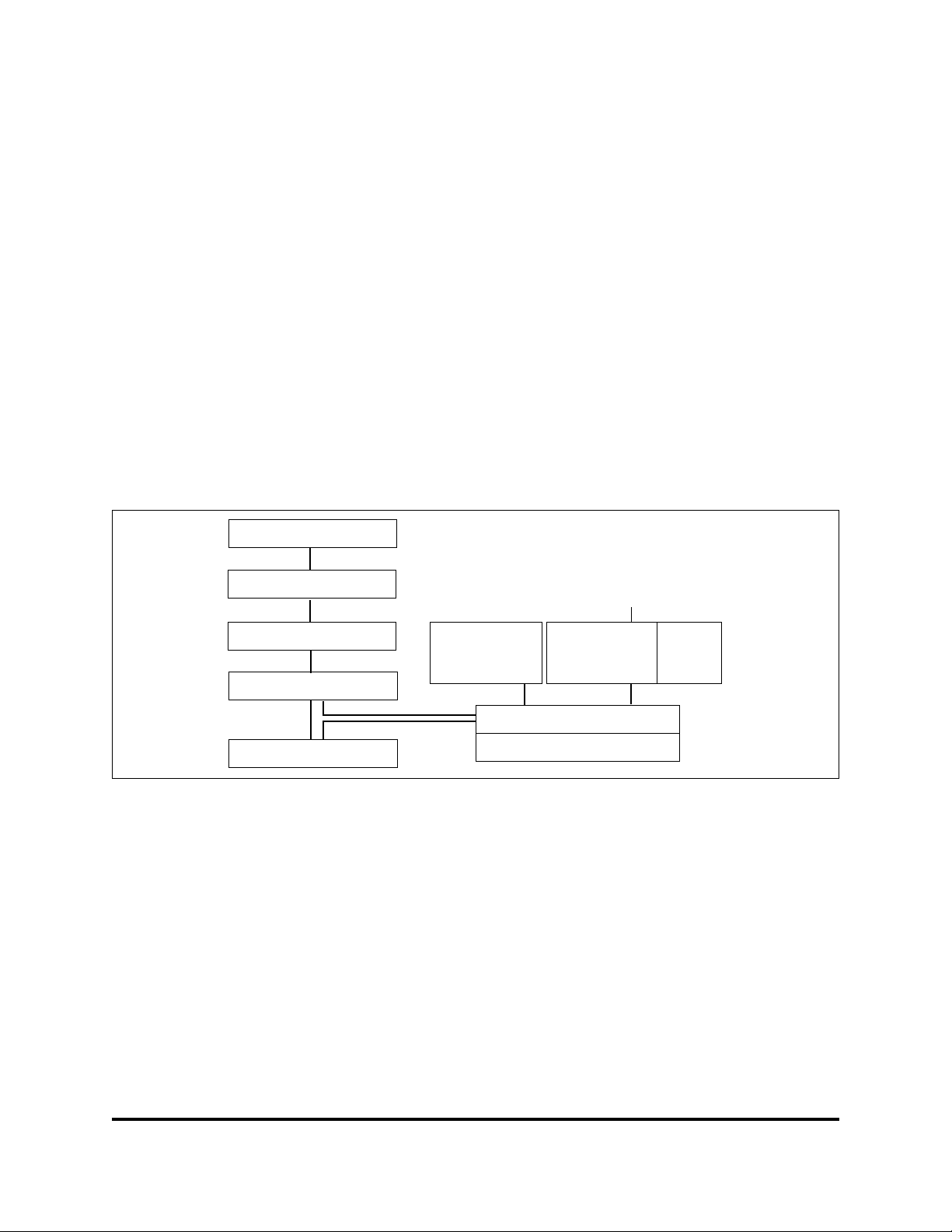

Figure 2-1, below, shows these relationships.

Section 2

InfiniPath Cluster Administration

MPI Application

InfiniPath Channel (ADI Layer)

InfiniPath Protocol Library

InfiniPath Hardw are

2.2

Installed Layout

The InfiniPath software is supplied as a set of RPM files, described in detail in the

InfiniPath Install Guide. This section describes the directory structure that the

installation leaves on each node’s file system.

The InfiniPath shared libraries are installed in:

/usr/lib for 32-bit applications

/usr/lib64 for 64-bit applications

InfiniPath MPI

TC P/IP

ipath_ether

InfiniPath driver ib_ipath

Linux Kernel

Figure 2-1. InfiniPath Software Structure

OpenFabrics com ponents

IPoIB

OpenSM


MPI include files are in:

/usr/include

MPI programming examples and source for several MPI benchmarks are in:

/usr/share/mpich/examples

InfiniPath utility programs, as well as MPI utilities and benchmarks, are installed in:

/usr/bin

The InfiniPath kernel modules are installed in the standard module locations in:

/lib/modules (version dependent)

They are compiled and installed when the infinipath-kernel RPM is installed.

They must be rebuilt and re-installed when the kernel is upgraded. This can be done

by running the script:

/usr/src/infinipath/drivers/make-install.sh

Documentation can be found in:

/usr/share/man

/usr/share/doc/infinipath

/usr/share/doc/mpich-infinipath


2.3

Memory Footprint

The following is a preliminary guideline for estimating the memory footprint of the InfiniPath adapter on Linux x86_64 systems. Memory consumption is linear, based on system configuration. OpenFabrics support is under development and has not been fully characterized. Table 2-1 summarizes the guidelines.

Table 2-1. Memory Footprint of the InfiniPath Adapter on Linux x86_64 Systems

Adapter component: InfiniPath Driver
Required/optional: Required
Memory footprint: 9 MB
Comment: Includes accelerated IP support. Includes table space to support up to 1000-node systems. Clusters larger than 1000 nodes can also be configured.

Adapter component: MPI
Required/optional: Optional
Memory footprint: 71 MB per process with default parameters: 60 MB + 512*2172 (sendbufs) + 4096*2176 (recvbufs) + 1024*1K (misc. allocations); + 32 MB per node when multiple processes communicate via shared memory; + 264 bytes per MPI node on the subnet
Comment: Several of these parameters (sendbufs, recvbufs and size of the shared memory region) are tunable if a reduced memory footprint is desired.

Adapter component: OpenFabrics
Required/optional: Optional
Memory footprint: 1~6 MB + ~500 bytes per QP + TBD bytes per MR + ~500 bytes per EE Context + OpenFabrics stack from openfabrics.org (size not included in these guidelines)
Comment: This has not been fully characterized as of this writing.
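As a rough check of the MPI row in Table 2-1, the default-parameter formula can be evaluated directly. This is a minimal sketch, assuming 1 MB = 2^20 bytes and 1K = 1024 bytes, which the table does not state; the keyword argument names are hypothetical labels for the sendbuf and recvbuf counts and sizes.

```python
# Evaluate the Table 2-1 MPI formula for one process.
# Assumption: 1 MB = 2**20 bytes and 1K = 1024 bytes (not stated in the table).
# The keyword names below are illustrative, not InfiniPath parameter names.
MB = 2**20

def mpi_bytes_per_process(sendbufs=512, sendbuf_size=2172,
                          recvbufs=4096, recvbuf_size=2176,
                          misc_allocs=1024, misc_size=1024):
    base = 60 * MB                    # fixed portion from the table
    send = sendbufs * sendbuf_size    # 512 * 2172 (sendbufs)
    recv = recvbufs * recvbuf_size    # 4096 * 2176 (recvbufs)
    misc = misc_allocs * misc_size    # 1024 * 1K (misc. allocations)
    return base + send + recv + misc

if __name__ == "__main__":
    total = mpi_bytes_per_process()
    print(f"{total / MB:.1f} MB per process")
```

Under these assumptions the result is about 70.6 MB, which rounds to the 71 MB given in the table; lowering the sendbuf or recvbuf counts shows how the tunable parameters shrink the footprint.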

Here is an example for a 1024-processor system:

■ 1024 cores over 256 nodes (each node has 2 sockets with dual-core processors)

■ 1 adapter per node

■ Each core runs an MPI process, with the 4 processes per node communicating

via shared memory.

■ Each core uses OpenFabrics to connect with storage and file system targets

using 50 QPs and 50 EECs per core.
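The per-node total given below can be reproduced from the Table 2-1 figures. This sketch assumes the upper 6 MB end of the OpenFabrics 1~6 MB range and about 500 bytes per QP and per EEC, with 1 KB taken as 1000 bytes so that 4 cores x (50 + 50) objects x 500 bytes gives the 200 KB per node quoted in Table 2-2. The small 264-bytes-per-MPI-node term is left out, as it is in Table 2-2.

```python
# Per-node memory estimate for the 1024-core example (4 cores per node).
# Assumptions: upper end (6 MB) of the OpenFabrics range, ~500 bytes per QP
# and per EEC, and 1 KB = 1000 bytes for the QP/EEC term.
DRIVER_MB = 9            # per node, required
MPI_PER_PROCESS_MB = 71  # default MPI parameters
SHM_PER_NODE_MB = 32     # shared memory when processes share a node
OFED_BASE_MB = 6         # upper end of the 1~6 MB range
BYTES_PER_QP = 500
BYTES_PER_EEC = 500

def node_footprint_mb(cores_per_node=4, qps_per_core=50, eecs_per_core=50):
    mpi = cores_per_node * MPI_PER_PROCESS_MB + SHM_PER_NODE_MB
    qp_eec_bytes = cores_per_node * (qps_per_core * BYTES_PER_QP
                                     + eecs_per_core * BYTES_PER_EEC)
    ofed = OFED_BASE_MB + qp_eec_bytes / 1_000_000   # bytes -> decimal MB
    return {"driver": DRIVER_MB, "mpi": mpi, "openfabrics": ofed,
            "total": DRIVER_MB + mpi + ofed}

if __name__ == "__main__":
    for part, mb in node_footprint_mb().items():
        print(f"{part:12s} {mb:7.1f} MB")
```

The result is 9 + 316 + 6.2, about 331 MB per node, matching Table 2-2.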


This breaks down to a memory footprint of 331 MB per node, as follows:

Table 2-2. Memory Footprint, 331 MB per Node

Component     Footprint (MB)   Breakdown
Driver        9                Per node
MPI           316              4*71 MB (MPI per process) + 32 MB (shared memory per node)
OpenFabrics   6                6 MB + 200 KB per node

2.4

Configuration and Startup

2.4.1

BIOS Settings

A properly configured BIOS is required. The BIOS settings, which are stored in non-volatile memory, contain certain parameters characterizing the system. These parameters may include date and time, configuration settings, and information about the installed hardware.

There are currently two issues concerning BIOS settings that you need to be aware of:

■ ACPI needs to be enabled

■ MTRR mapping needs to be set to “Discrete”

MTRR (Memory Type Range Registers) are used by the InfiniPath driver to enable write combining to the InfiniPath on-chip transmit buffers. Write combining improves write bandwidth to the InfiniPath chip by writing multiple words in a single bus transaction (typically 64). This applies only to x86_64 systems.

Some BIOSes do not have an MTRR mapping option. The setting may be named differently depending on chipset, vendor, BIOS, or other factors; for example, it is sometimes called "32 bit memory hole", which should be enabled.

If there is no setting for either MTRR mapping or 32 bit memory hole, contact your system or motherboard vendor and ask how write combining can be enabled.

ACPI and MTRR mapping issues are discussed in greater detail in the Troubleshooting appendix of this guide.

NOTE: BIOS settings on IBM Blade Center H (Power) systems do not need

adjustment.


You can check and adjust these BIOS settings using the BIOS Setup Utility. For

specific instructions on how to do this, follow the hardware documentation that came

with your system.
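After the BIOS has been adjusted and the driver loaded, one rough way to see whether any write-combining ranges exist on an x86_64 node is to read /proc/mtrr. The sketch below is not part of the InfiniPath software; it assumes a Linux kernel that exposes /proc/mtrr with each entry naming its memory type (for example "write-back" or "write-combining"). Finding a write-combining entry does not by itself prove that the range covers the InfiniPath transmit buffers.

```python
# Hedged sketch (not an InfiniPath tool): list write-combining MTRR entries.
# Assumption: an x86 Linux kernel that exposes /proc/mtrr, where each entry
# names its memory type, e.g. "write-back" or "write-combining".
from pathlib import Path

def write_combining_entries(text):
    """Return the /proc/mtrr lines whose memory type is write-combining."""
    return [line.strip() for line in text.splitlines()
            if "write-combining" in line]

def main(path="/proc/mtrr"):
    try:
        text = Path(path).read_text()
    except OSError as err:          # missing file or no permission
        print(f"cannot read {path}: {err}")
        return
    entries = write_combining_entries(text)
    if entries:
        print("write-combining MTRR entries:")
        for entry in entries:
            print("  " + entry)
    else:
        print("no write-combining MTRR entries found")

if __name__ == "__main__":
    main()
```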

2.4.2

InfiniPath Driver Startup

The ib_ipath module provides low-level InfiniPath hardware support. It performs hardware initialization, handles InfiniPath-specific memory management, and provides services to other InfiniPath and OpenFabrics modules. It provides the management functions for InfiniPath MPI programs, the ipath_ether Ethernet emulation, and general OpenFabrics protocols such as IPoIB and SDP. It also contains a Subnet Management Agent.

The InfiniPath driver software is generally started at system startup under control

of these scripts:

/etc/init.d/infinipath

/etc/sysconfig/infinipath


These scripts are configured by the installation. Debug messages are printed with

the function name preceding the message.

The cluster administrator does not normally need to be concerned with the

configuration parameters. Assuming that all the InfiniPath and OpenFabrics

software has been installed, the default settings upon startup will be:

■ InfiniPath ib_ipath is enabled

■ InfiniPath ipath_ether is not running until configured

■ OpenFabrics IPoIB is not running until configured

■ OpenSM is enabled on startup. Disable it on all nodes except where it will be

used as subnet manager.

2.4.3

InfiniPath Driver Software Configuration

The ib_ipath driver has several configuration variables which provide for setting reserved buffers for the software, defining events to create trace records, and setting the debug level. See the ib_ipath man page for details.

2.4.4

InfiniPath Driver Filesystem

The InfiniPath driver supplies a filesystem for exporting certain binary statistics to user applications. By default, this filesystem is mounted in the /ipathfs directory when the infinipath script is invoked with the "start" option (e.g. at system startup) and unmounted when the infinipath script is invoked with the "stop" option (e.g. at system shutdown).

The layout of the filesystem is as follows:

atomic_stats

00/

01/

...

The atomic_stats file contains general driver statistics. There is one numbered

directory per InfiniPath device on the system. Each numbered directory contains

the following files of per-device statistics:

atomic_counters

node_info

port_info

The atomic_counters file contains counters for the device; examples would be interrupts received, bytes and packets in and out, and so on. The node_info file contains information such as the device's GUID. The port_info file contains information for each port on the device; an example would be the port LID.
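A script can locate the per-device statistics files by walking the layout described above. This is a minimal sketch: it assumes the filesystem is mounted at /ipathfs and only reports which files exist and the sizes the filesystem reports; the binary record format is not documented here, so the sketch deliberately does not decode it.

```python
# Hedged sketch: list per-device statistics files in the InfiniPath driver
# filesystem. The layout (atomic_stats plus numbered directories containing
# atomic_counters, node_info and port_info) follows the text above; the files
# are binary and their record format is deliberately not decoded here.
from pathlib import Path

PER_DEVICE_FILES = ("atomic_counters", "node_info", "port_info")

def list_devices(root="/ipathfs"):
    """Return {device_dir: {file_name: size_in_bytes}}; {} if root is absent."""
    base = Path(root)
    if not base.is_dir():
        return {}
    devices = {}
    for entry in sorted(base.iterdir()):
        if entry.is_dir() and entry.name.isdigit():   # 00/, 01/, ...
            devices[entry.name] = {
                name: (entry / name).stat().st_size
                for name in PER_DEVICE_FILES
                if (entry / name).is_file()
            }
    return devices

if __name__ == "__main__":
    for device, files in list_devices().items():
        print(device, files)
```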

2.4.5

Subnet Management Agent

Each node in an InfiniPath cluster runs a Subnet Management Agent (SMA), which carries out two-way communication with the Subnet Manager (SM) running on one or more managed switches. The Subnet Manager is responsible for network initialization (topology discovery), configuration, and maintenance. The Subnet Manager also assigns and manages InfiniBand multicast groups, such as the group used for broadcast purposes by the ipath_ether driver. The primary functions of the SMA are to keep the SM informed whether a node is alive and to get the node's assigned identifier (LID) from the SM.

2.4.6

Layered Ethernet Driver

The layered Ethernet component ipath_ether provides almost complete Ethernet software functionality over the InfiniPath fabric. At startup this is bound to some Ethernet device ethx. All Ethernet functions are available through this device in a transparent way, except that Ethernet multicasting is not supported. Broadcasting is supported. You can use all the usual command line and GUI-based configuration tools on this Ethernet. Configuration of ipath_ether is optional.

These instructions are for enabling TCP/IP networking over the InfiniPath link. To enable IPoIB networking, see section 2.4.7.1.

You must create a network device configuration file for the layered Ethernet device on the InfiniPath adapter. This configuration file will resemble the configuration files for the other Ethernet devices on the nodes. Typically on servers there are two Ethernet devices present, numbered as 0 (eth0) and 1 (eth1). This example assumes we create a third device, eth2.

NOTE: When multiple InfiniPath chips are present, the configuration for eth3, eth4, and so on follows the same format as for adding eth2 in the examples below.

Two slightly different procedures are given below for the ipath_ether configuration: one for Fedora and RHEL4, and one for SUSE 9.3, SLES 9, and SLES 10.

Many of the entries that are used in the configuration directions below are explained in the file sysconfig.txt. To familiarize yourself with these, please see:

/usr/share/doc/initscripts-*/sysconfig.txt

2.4.6.1

ipath_ether Configuration on Fedora and RHEL4

These configuration steps will cause the ipath_ether network interfaces to be automatically configured when you next reboot the system. These instructions are for the Fedora Core 3, Fedora Core 4 and Red Hat Enterprise Linux 4 distributions.

Typically on servers there are two Ethernet devices present, numbered as 0 (eth0) and 1 (eth1). This example assumes we create a third device, eth2.

NOTE: When multiple InfiniPath chips are present, the configuration for eth3, eth4, and so on follows the same format as for adding eth2 in the examples below.

1. Check the number of Ethernet devices you currently have with either of the following two commands:

$ ifconfig -a

$ ls /sys/class/net

As mentioned above, we assume that two Ethernet devices (numbered 0 and 1) are already present.

2. Edit the file /etc/modprobe.conf (as root) by adding the following line:

alias eth2 ipath_ether

3. Create or edit the following file (as root):

/etc/sysconfig/network-scripts/ifcfg-eth2

If you are using DHCP (dynamic host configuration protocol), add the following lines to ifcfg-eth2:

# QLogic Interconnect Ethernet

DEVICE=eth2

ONBOOT=yes

BOOTPROTO=dhcp

If you are using static IP addresses, use the following lines instead, substituting your own IP address for the sample one given here. The normal matching netmask is shown. Keep each comment on its own line: this file is read as shell assignments, so text placed directly after a value (as in NETMASK="255.255.255.0"#comment) becomes part of the value.

# QLogic Interconnect Ethernet

DEVICE=eth2

BOOTPROTO=static

ONBOOT=yes

# Substitute your own IP address here

IPADDR=192.168.5.101

# Normal matching netmask

NETMASK=255.255.255.0

TYPE=Ethernet

This will cause the ipath_ether Ethernet driver to be loaded and configured during system startup. To check your configuration, and make the ipath_ether Ethernet driver available immediately, use the command (as root):

# /sbin/ifup eth2

4. Check whether the Ethernet driver has been loaded with:

$ lsmod | grep ipath_ether

5. Verify that the driver is up with:

$ ifconfig -a
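The Fedora/RHEL4 steps above amount to picking the next unused ethN name and writing a small key=value file. This is a minimal sketch under stated assumptions: next_eth_name and render_ifcfg are hypothetical helper names, the key/value lines mirror the DHCP and static examples above, and the device listing should be taken before ipath_ether has created its interface, otherwise the next name is one too high.

```python
# Hedged sketch: choose the next ethN name and render an ifcfg-ethN file for
# ipath_ether in the Fedora/RHEL4 style shown above. Helper names are
# hypothetical; the lines mirror the DHCP and static examples in the text.
import os
import re

def next_eth_name(existing):
    """Return eth<N+1> for the highest ethN in `existing` (eth0 if none)."""
    nums = []
    for name in existing:
        m = re.fullmatch(r"eth(\d+)", name)
        if m:
            nums.append(int(m.group(1)))
    return f"eth{max(nums) + 1}" if nums else "eth0"

def render_ifcfg(device, ipaddr=None, netmask="255.255.255.0"):
    """DHCP file when ipaddr is None, otherwise a static configuration."""
    lines = ["# QLogic Interconnect Ethernet", f"DEVICE={device}", "ONBOOT=yes"]
    if ipaddr is None:
        lines.append("BOOTPROTO=dhcp")
    else:
        lines += ["BOOTPROTO=static", f"IPADDR={ipaddr}",
                  f"NETMASK={netmask}", "TYPE=Ethernet"]
    return "\n".join(lines) + "\n"

if __name__ == "__main__":
    sysfs = "/sys/class/net"
    names = os.listdir(sysfs) if os.path.isdir(sysfs) else []
    dev = next_eth_name(names)
    print("Line for /etc/modprobe.conf:")
    print(f"alias {dev} ipath_ether")
    print(f"Contents for /etc/sysconfig/network-scripts/ifcfg-{dev}:")
    print(render_ifcfg(dev, ipaddr="192.168.5.101"), end="")
```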

2.4.6.2

ipath_ether Configuration on SUSE 9.3, SLES 9, and SLES 10

These configuration steps will cause the ipath_ether network interfaces to be automatically configured when you next reboot the system. These instructions are for the SUSE 9.3, SLES 9 and SLES 10 distributions.

Typically on servers there are two Ethernet devices present, numbered as 0 (eth0) and 1 (eth1). This example assumes we create a third device, eth2.

NOTE: When multiple InfiniPath chips are present, the configuration for eth3, eth4, and so on follows the same format as for adding eth2 in the examples below. Similarly, in step 2, add one to the unit number, so replace .../00/guid with /01/guid for the second InfiniPath interface, and so on.

Step 3 is applicable only to SLES 10; it is required because SLES 10 uses a newer version of the udev subsystem.

NOTE: The MAC address (media access control address) is a unique identifier attached to most forms of networking equipment. Step 2 below determines the MAC address to use, which is referred to as $MAC in the subsequent steps. $MAC must be replaced in each case with the string printed in step 2.

The following steps must all be executed as the root user.

1. Be sure that the ipath_ether module is loaded:

# lsmod | grep -q ipath_ether || modprobe ipath_ether

2. Determine the MAC address that will be used:

# sed 's/^\(..:..:..\):..:../\1/' \
/sys/bus/pci/drivers/ib_ipath/00/guid

NOTE: Care should be taken when cutting and pasting commands such as the above from PDF documents, as quotes are special characters and may not be translated correctly.

The output should appear similar to this (6 hex digit pairs, separated by colons):

00:11:75:04:e0:11

The GUID can also be returned by running:

# ipath_control -i

$Id: QLogic Release2.0 $ $Date: 2006-10-15-04:16 $

00: Version: Driver 2.0, InfiniPath_QHT7140, InfiniPath1 3.2,

PCI 2, SW Compat 2

00: Status: 0xe1 Initted Present IB_link_up IB_configured

00: LID=0x30 MLID=0x0 GUID=00:11:75:00:00:04:e0:11 Serial:

1236070407

Note that removing the middle two 00:00 octets from the GUID in the above output forms the MAC address.

If either step 1 or step 2 fails in some fashion, the problem must be found and

corrected before continuing. Verify that the RPMs are installed correctly, and

that infinipath has correctly been started. If problems continue, run

ipathbug-helper and report the results to your reseller or InfiniPath support

organization.

3. Skip to Step 4 if you are using SUSE 9.3 or SLES 9. This step is only done on

SLES 10 systems. Edit the file:

/etc/udev/rules.d/30-net_persistent_names.rules

If this file does not exist, skip to Step 4.


Check each of the lines starting with SUBSYSTEM=, to find the highest numbered

interface. (For standard motherboards, the highest numbered interface will

typically be 1.)

Add a new line at the end of the file, incrementing the interface number by one.

In this example, it becomes eth2. The new line will look like this:

SUBSYSTEM=="net", ACTION=="add", SYSFS{address}=="$MAC", IMPORT="/sbin/rename_netiface %k eth2"

This will appear as a single line in the file. $MAC is replaced by the string from

step 2 above.

4. Create the network module file:

/etc/sysconfig/hardware/hwcfg-eth-id-$MAC

Add the following lines to the file:

MODULE=ipath_ether

STARTMODE=auto

This will cause the ipath_ether Ethernet driver to be loaded and configured

during system startup.


5. Create the network configuration file:

/etc/sysconfig/network/ifcfg-eth2

If you are using DHCP (dynamically assigned IP addresses), add these lines

to the file:

STARTMODE=onboot

BOOTPROTO=dhcp

NAME='InfiniPath Network Card'

_nm_name=eth-id-$MAC

Proceed to Step 6.

If you are using static IP addresses (not DHCP), add these lines to the file:

STARTMODE=onboot

BOOTPROTO=static

NAME='InfiniPath Network Card'

NETWORK=192.168.5.0

NETMASK=255.255.255.0

BROADCAST=192.168.5.255

IPADDR=192.168.5.211

_nm_name=eth-id-$MAC

Make sure that you substitute your own IP address for the sample IPADDR shown here. The BROADCAST, NETMASK, and NETWORK lines must match your network.


6. To verify that the configuration files are correct, you will normally now be able

to run the commands:

# ifup eth2

# ifconfig eth2

Note that it may be necessary to reboot the system before the configuration

changes will work.
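The string handling in the SUSE/SLES steps can be checked offline. This sketch assumes the GUID format shown in the ipath_control output (eight colon-separated octets); mac_from_guid does what the sed command in step 2 does (drop octets four and five), and udev_rule and suse_files are hypothetical helpers that rebuild the step 3 rule and the step 4 and 5 file names. The sample GUID is the one from the output above, used only when the sysfs file is absent.

```python
# Hedged sketch of the SUSE/SLES steps: derive $MAC from the adapter GUID,
# then build the SLES 10 udev rule and the file names from steps 3-5.
# Helper names are hypothetical; the sample GUID comes from the text.
from pathlib import Path

def mac_from_guid(guid):
    """'00:11:75:00:00:04:e0:11' -> '00:11:75:04:e0:11' (same as the sed)."""
    octets = guid.strip().split(":")
    if len(octets) != 8:
        raise ValueError(f"expected 8 octets in GUID, got {guid!r}")
    return ":".join(octets[:3] + octets[5:])

def udev_rule(mac, ifname):
    """Single-line rule for /etc/udev/rules.d/30-net_persistent_names.rules."""
    return ('SUBSYSTEM=="net", ACTION=="add", SYSFS{address}=="%s", '
            'IMPORT="/sbin/rename_netiface %%k %s"' % (mac, ifname))

def suse_files(mac, ifname):
    return {"hwcfg": f"/etc/sysconfig/hardware/hwcfg-eth-id-{mac}",
            "ifcfg": f"/etc/sysconfig/network/ifcfg-{ifname}"}

if __name__ == "__main__":
    guid_path = Path("/sys/bus/pci/drivers/ib_ipath/00/guid")
    guid = (guid_path.read_text() if guid_path.is_file()
            else "00:11:75:00:00:04:e0:11")     # sample GUID from the text
    mac = mac_from_guid(guid)
    print(mac)
    print(udev_rule(mac, "eth2"))
    print(suse_files(mac, "eth2")["hwcfg"])
```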

2.4.7

OpenFabrics Configuration and Startup

In the prior InfiniPath 1.3 release the InfiniPath (ipath_core) and OpenFabrics

(ib_ipath) modules were separate. In this release there is now one module,

ib_ipath, which provides both low level InfiniPath support and management

functions for OpenFabrics protocols. The startup script for ib_ipath is installed

automatically as part of the software installation, and normally does not need to be

changed.


However, the IPoIB network interface and OpenSM components of OpenFabrics

can be configured to be on or off. IPoIB is off by default; OpenSM is on by default.

IPoIB and OpenSM configuration is explained in greater detail in the following

sections.

NOTE: The following instructions work for FC4, SUSE 9.3, SLES 9, and SLES 10.

2.4.7.1

Configuring the IPoIB Network Interface

Instructions are given here to manually configure your OpenFabrics IPoIB network

interface. This example assumes that you are using sh or bash as your shell, and

that all required InfiniPath and OpenFabrics RPMs are installed, and your startup

scripts have been run, either manually or at system boot.

For this example, we assume that your IPoIB network is 10.1.17.0 (one of the networks reserved for private use, and thus not routable on the Internet), with an 8-bit host portion (a /24 network). Because addresses in 10.x.x.x otherwise default to the class A netmask 255.0.0.0, the netmask must be specified explicitly.

This example assumes that no hosts files exist, that the host being configured has the IP address 10.1.17.3, and that DHCP is not being used.

NOTE: We supply instructions only for this static IP address case. Configuration

methods for using DHCP will be supplied in a later release.

Type the following commands (as root):

# ifconfig ib0 10.1.17.3 netmask 0xffffff00
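The addressing used in this example can be checked with Python's standard ipaddress module. This is a small sketch; describe is a hypothetical helper name. It shows that netmask 0xffffff00 is 255.255.255.0 (a /24 prefix with an 8-bit host portion) and that the broadcast address used in the ping test below is 10.1.17.255.

```python
# Check the IPoIB addressing used in this example with the standard library.
# describe() is a hypothetical helper, not part of InfiniPath or OpenFabrics.
import ipaddress

def describe(addr, netmask_hex):
    mask = ipaddress.IPv4Address(int(netmask_hex, 16))  # 0xffffff00 -> 255.255.255.0
    net = ipaddress.IPv4Interface(f"{addr}/{mask}").network
    return {"netmask": str(mask),
            "prefixlen": net.prefixlen,
            "network": str(net.network_address),
            "broadcast": str(net.broadcast_address),
            "private": net.is_private}

if __name__ == "__main__":
    print(describe("10.1.17.3", "0xffffff00"))
```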


To verify the configuration, type:

# ifconfig ib0

The output from this command should be similar to this:

ib0 Link encap:InfiniBand HWaddr

00:00:00:02:FE:80:00:00:00:00:00:00:00:00:00:00:00:00:00:00

inet addr:10.1.17.3 Bcast:10.1.17.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:2044 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:128

RX bytes:0 (0.0 b) TX bytes:0 (0.0 b)

Next, type:

# ping -c 2 -b 10.1.17.255

The output of the ping command should be similar to that below, with a line for

each host already configured and connected:

WARNING: pinging broadcast address

PING 10.1.17.255 (10.1.17.255) 56(84) bytes of data.

64 bytes from 10.1.17.3: icmp_seq=0 ttl=64 time=0.022 ms

64 bytes from 10.1.17.1: icmp_seq=0 ttl=64 time=0.070 ms (DUP!)

64 bytes from 10.1.17.7: icmp_seq=0 ttl=64 time=0.073 ms (DUP!)

The IPoIB network interface is now configured.

NOTE: The configuration must be repeated each time the system is rebooted.

2.4.8

OpenSM

OpenSM is an optional component of the OpenFabrics project that provides a

subnet manager for InfiniBand networks. This package can be installed on all

machines, but only needs to be enabled on the machine in your cluster that is going

to act as a subnet manager. You do not need to use OpenSM if any of your InfiniBand

switches provide a subnet manager.

After the opensm package is installed, OpenSM is configured to start at the next machine reboot. It only needs to be enabled on the node that acts as the subnet manager, so use the chkconfig command (as root) to disable it on the other nodes:

# chkconfig opensmd off

The command to enable it on reboot is:

# chkconfig opensmd on

You can start opensmd without rebooting your machine as follows:

# /etc/init.d/opensmd start

and you can stop it again like this:

# /etc/init.d/opensmd stop

If you wish to pass any arguments to the OpenSM program, modify the file:

/etc/init.d/opensmd

and add the arguments to the "OPTIONS" variable. Here is an example:

# Use the UPDN algorithm instead of the Min Hop algorithm.

OPTIONS="-u"

2.5

SRP

SRP stands for SCSI RDMA Protocol. It was originally intended to allow the SCSI

protocol to run over InfiniBand for SAN usage. SRP interfaces directly to the Linux

file system through the SRP Upper Layer Protocol. SRP storage can be treated as

just another device.

In this release SRP is provided as a technology preview. Add ib_srp to the module

list in /etc/sysconfig/infinipath to have it automatically loaded.

NOTE: SRP does not yet work with IBM Power systems. This will be fixed in a future release.

2.6

Further Information on Configuring and Loading Drivers

See the modprobe(8), modprobe.conf(5), and lsmod(8) man pages for more information. Also see the file /usr/share/doc/initscripts-*/sysconfig.txt for more general information on configuration files. Section 2.7, below, may also be useful.

2.7

Starting and Stopping the InfiniPath Software

The InfiniPath driver software runs as a system service, normally started at system

startup. Normally you will not need to restart the software, but you may wish to do

so after installing a new InfiniPath release, or after changing driver options, or if

doing manual testing.

The following commands can be used to check or configure state. These methods

will not reboot the system.

To check the configuration state, use the command:

$ chkconfig --list infinipath

To enable the driver, use the command (as root):

# chkconfig --level 2345 infinipath on


To disable the driver on the next system boot, use the command (as root):

# chkconfig infinipath off

NOTE: This does not stop and unload the driver, if it is already loaded.

You can start, stop, or restart (as root) the InfiniPath support with:

# /etc/init.d/infinipath [start | stop | restart]

This method will not reboot the system. The following set of commands shows how

this script can be used. Please take note of the following:

■ You should omit the commands to start/stop opensmd if you are not running it

on that node.

■ You should omit the ifdown and ifup step if you are not using ipath_ether

on that node.

The sequence of commands to restart infinipath is given below. Note that this

next example assumes that ipath_ether is configured as eth2.

# /etc/init.d/opensmd stop

# ifdown eth2

# /etc/init.d/infinipath stop

...


# /etc/init.d/infinipath start

# ifup eth2

# /etc/init.d/opensmd start

The ... represents whatever activity you are engaged in after InfiniPath is stopped.

An equivalent way to do this is to use the same sequence as above, except with the restart command instead of start and stop:

# /etc/init.d/opensmd stop

# ifdown eth2

# /etc/init.d/infinipath restart

# ifup eth2

# /etc/init.d/opensmd start

NOTE: Restarting InfiniPath will terminate any InfiniPath MPI processes, as well

as any OpenFabrics processes that are running at the time. Processes

using networking over ipath_ether will return errors.
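The restart sequence above follows a simple rule: services that depend on the driver are stopped first and started again in reverse order, and steps for services a node does not run are left out. This minimal sketch only builds and prints the command list for a given node; restart_commands is a hypothetical helper, and it runs nothing itself.

```python
# Hedged sketch: build the restart sequence shown above for one node.
# opensmd steps are omitted where OpenSM is not run, and ifdown/ifup steps
# where ipath_ether is not configured. Commands are printed, not executed.
def restart_commands(runs_opensm, ether_iface=None):
    cmds = []
    if runs_opensm:
        cmds.append("/etc/init.d/opensmd stop")
    if ether_iface:
        cmds.append(f"ifdown {ether_iface}")
    cmds.append("/etc/init.d/infinipath restart")
    if ether_iface:                      # bring dependents back in reverse order
        cmds.append(f"ifup {ether_iface}")
    if runs_opensm:
        cmds.append("/etc/init.d/opensmd start")
    return cmds

if __name__ == "__main__":
    for cmd in restart_commands(runs_opensm=True, ether_iface="eth2"):
        print("#", cmd)
```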

You can check whether opensmd is configured to run by using the following command; if there is no output, opensmd is not configured to run:

# /sbin/chkconfig --list opensmd | grep -w on

You can check whether the ipath_ether module is loaded by using the following command. If it prints no output, it is not loaded.

$ /sbin/lsmod | grep ipath_ether


If there is output, you should look at the output from this command to determine if

it is configured:

$ /sbin/ifconfig -a

Finally, if you need to find which InfiniPath and OpenFabrics modules are running,

try the following command:

$ lsmod | egrep 'ipath_|ib_|rdma_|findex'

2.8

Software Status

InfiniBand status can be checked by running the program ipath_control. Here is

sample usage and output:

$ ipath_control -i


$Id: QLogic Release2.0 $ $Date: 2006-09-15-04:16 $

00: Version: Driver 2.0, InfiniPath_QHT7140, InfiniPath1 3.2,

PCI 2, SW Compat 2

00: Status: 0xe1 Initted Present IB_link_up IB_configured

00: LID=0x30 MLID=0x0 GUID=00:11:75:00:00:07:11:97 Serial:

1236070407

Another useful program is ibstatus. Sample usage and output is as follows:

$ ibstatus

Infiniband device 'ipath0' port 1 status:

default gid: fe80:0000:0000:0000:0011:7500:0005:602f

base lid: 0x35

sm lid: 0x2

state: 4: ACTIVE

phys state: 5: LinkUp

rate: 10 Gb/sec (4X)

For more information on these programs, see appendix C.9.9 and appendix C.9.5.
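Because the ibstatus output shown above is line oriented, it is easy to check from a script. This is a minimal sketch, assuming the format of the sample (one header line per port followed by key: value lines); other ibstatus versions may format their output differently. parse_ibstatus and port_is_up are hypothetical helper names.

```python
# Hedged sketch: parse ibstatus output (format as in the sample above) into a
# dict per (device, port) and report whether each port is ACTIVE and LinkUp.
import re
import subprocess

HEADER = re.compile(r"Infiniband device '(?P<dev>[^']+)' port (?P<port>\d+) status:")

def parse_ibstatus(text):
    ports, current = {}, None
    for raw in text.splitlines():
        line = raw.strip()
        m = HEADER.match(line)
        if m:
            current = ports.setdefault((m["dev"], int(m["port"])), {})
        elif current is not None and ":" in line:
            key, value = line.split(":", 1)     # "state: 4: ACTIVE" -> "4: ACTIVE"
            current[key.strip()] = value.strip()
    return ports

def port_is_up(fields):
    return (fields.get("state", "").endswith("ACTIVE")
            and fields.get("phys state", "").endswith("LinkUp"))

if __name__ == "__main__":
    try:
        out = subprocess.run(["ibstatus"], capture_output=True, text=True).stdout
    except FileNotFoundError:
        out = ""
        print("ibstatus not found in PATH")
    for (dev, port), fields in parse_ibstatus(out).items():
        status = "up" if port_is_up(fields) else "NOT up"
        print(f"{dev} port {port}: {status} ({fields.get('rate', '?')})")
```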

2.9

Configuring ssh and sshd Using shosts.equiv

Running MPI programs on an InfiniPath cluster depends, by default, on secure shell ssh to launch node programs on the nodes. Jobs must be able to start up without the need for interactive password entry on every node. Here we see how the cluster administrator can lift this burden from the user through the use of the shosts.equiv mechanism. This method is recommended, provided that your cluster is behind a firewall and accessible only to trusted users.

Later, in section 3.5.1, we show how an individual user can accomplish this end through the use of ssh-agent.

This next example assumes the following:

■ Both the cluster nodes and the front end system are running the openssh

package as distributed in current Linux systems.

■ All cluster users have accounts with the same account name on the front end

and on each node, either by using NIS or some other means of distributing the

password file.

■ The front end is called ip-fe.

■ Root or superuser access is required on ip-fe and on each node in order to configure ssh.

■ ssh, including the host's key, has already been configured on the system ip-fe. See the sshd and ssh-keygen man pages for more information.

The example proceeds as follows:

1. On the system ip-fe, the front end node, change /etc/ssh/ssh_config to allow host-based authentication. Specifically, this file must contain the following four lines, set to yes. If they are already present but commented out with an initial #, remove the #.

RhostsAuthentication yes

RhostsRSAAuthentication yes

HostbasedAuthentication yes

EnableSSHKeysign yes

2. On each of the InfiniPath node systems, create or edit the file

/etc/ssh/shosts.equiv, adding the name of the front end system. You’ll need

to add the line:

ip-fe

Change the file to mode 600 when finished editing.

3. On each of the InfiniPath node systems, create or edit the file /etc/ssh/ssh_known_hosts. Copy the contents of the file /etc/ssh/ssh_host_dsa_key.pub from ip-fe into this file (as a single line), and then edit that line so that it begins with ip-fe ssh-dss. This is very similar to the standard known_hosts file for ssh. An example line might look like this (displayed as multiple lines, but a single line in the file):

ip-fe ssh-dss
AAzAB3NzaC1kc3MAAACBAPoyES6+Akk+z3RfCkEHCkmYuYzqL2+1nwo4LeTVWp
CD1QsvrYRmpsfwpzYLXiSJdZSA8hfePWmMfrkvAAk4ueN8L3ZT4QfCTwqvHVvS
ctpibf8n
aUmzloovBndOX9TIHyP/Ljfzzep4wL17+5hr1AHXldzrmgeEKp6ect1wxAAAAF
QDR56dAKFA4WgAiRmUJailtLFp8swAAAIBB1yrhF5P0jO+vpSnZrvrHa0Ok+Y9
apeJp3sessee30NlqKbJqWj5DOoRejr2VfTxZROf8LKuOY8tD6I59I0vlcQ812
E5iw1GCZfNefBmWbegWVKFwGlNbqBnZK7kDRLSOKQtuhYbGPcrVlSjuVpsfWEj
u64FTqKEetA8l8QEgAAAIBNtPDDwdmXRvDyc0gvAm6lPOIsRLmgmdgKXTGOZUZ
0zwxSL7GP1nEyFk9wAxCrXv3xPKxQaezQKs+KL95FouJvJ4qrSxxHdd1NYNR0D
avEBVQgCaspgWvWQ8cL
0aUQmTbggLrtD9zETVU5PCgRlQL6I3Y5sCCHuO7/UvTH9nneCg==

Change the file to mode 600 when finished editing.

4. On each node, the system file /etc/ssh/sshd_config must be edited so that the following four lines are uncommented (no # at the start of the line) and are set to yes. Each of these lines is normally present, but commented out and set to no by default.

RhostsAuthentication yes

RhostsRSAAuthentication yes

HostbasedAuthentication yes

PAMAuthenticationViaKbdInt yes

5. After creating or editing these three files in steps 2, 3 and 4, sshd must be restarted on each system. If you are already logged in via ssh (or any other user is logged in via ssh), their sessions or programs will be terminated, so do this only on idle nodes. Tell sshd to use the new configuration files by typing (as root):

# killall -HUP sshd

NOTE: This will terminate all ssh sessions into that system. Run from the console, or have a way to log into the console in case of any problem.

At this point, any user should be able to log in to the ip-fe front end system, and then use ssh to log in to any InfiniPath node without being prompted for a password or pass phrase.

2.9.1

Process Limitation with ssh

MPI jobs that use more than 8 processes per node may encounter an SSH throttling mechanism that limits the number of concurrent per-node connections to 10. If you need to use more processes, you or your system administrator should increase the value of MaxStartups in your sshd configuration (/etc/ssh/sshd_config). See appendix C.8.8 for an example of an error message associated with this limitation.
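Raising MaxStartups is a one-line change to sshd_config, and the edit can be scripted across nodes. This is a minimal sketch; set_max_startups is a hypothetical helper, and the value 64 is only an illustration (choose a value above the number of processes you launch per node). It replaces the first existing, possibly commented-out, MaxStartups line or appends one; it does not handle files that end in a Match block, where an appended line would not be valid. sshd must then reread its configuration, as in step 5 above.

```python
# Hedged sketch: set MaxStartups in sshd_config text, replacing the first
# existing (possibly commented-out) directive or appending a new one.
# The value 64 used below is illustrative only.
import re

DIRECTIVE = re.compile(r"^[ \t]*#?[ \t]*MaxStartups\b.*$",
                       re.MULTILINE | re.IGNORECASE)

def set_max_startups(config_text, value):
    line = f"MaxStartups {value}"
    if DIRECTIVE.search(config_text):
        return DIRECTIVE.sub(line, config_text, count=1)
    sep = "" if config_text.endswith("\n") or not config_text else "\n"
    return f"{config_text}{sep}{line}\n"

if __name__ == "__main__":
    demo = "Port 22\n#MaxStartups 10\nHostbasedAuthentication yes\n"
    print(set_max_startups(demo, 64), end="")
```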

2.10

Performance and Management Tips

The following section gives some suggestions for improving performance and

simplifying management of the cluster.

2.10.1

Remove Unneeded Services

An important step that the cluster administrator can take to enhance application performance is to minimize the set of system services running on the compute nodes. Since these are presumed to be specialized computing appliances, they do not need many of the service daemons normally running on a general Linux computer.

Following are several groups constituting a minimal necessary set of services. These are all services controlled by chkconfig. To see the list of services that are enabled, use the command:

$ /sbin/chkconfig --list | grep -w on

Basic network services:

network

ntpd

syslog

xinetd

sshd

For system housekeeping:

anacron

atd

crond

If you are using NFS or yp passwords:

rpcidmapd
ypbind
portmap
nfs
nfslock
autofs

To watch for disk problems:

smartd
readahead

The service comprising the InfiniPath driver and SMA:

infinipath

Other services may be required by your batch queuing system or user community.
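The following sketch compares the output of chkconfig --list against the groups listed above and prints each service that is enabled in some runlevel but is not in that set, as candidates to review. The KEEP list simply mirrors the groups above, and the function name extra_services is hypothetical; add whatever your batch queuing system or user community needs before disabling anything.

```shell
# Minimal set of services from the groups listed above.
KEEP="network ntpd syslog xinetd sshd anacron atd crond
      rpcidmapd ypbind portmap nfs nfslock autofs smartd readahead
      infinipath"

# Read "chkconfig --list" output on stdin and print each SysV service
# that is on in at least one runlevel but is not in KEEP.
# xinetd-based entries (lines such as "rsync: on") are ignored.
extra_services() {
    local keep name rest
    keep=" $(echo $KEEP) "          # collapse whitespace to single spaces
    while read -r name rest; do
        case "$rest" in
            *[0-6]:on*) ;;          # enabled in some runlevel
            *) continue ;;
        esac
        case "$keep" in
            *" $name "*) ;;         # part of the minimal set
            *) echo "$name" ;;      # candidate for review
        esac
    done
}

# Usage:
#   /sbin/chkconfig --list | extra_services
# then, for each service you decide to disable (as root):
#   /sbin/chkconfig <service> off
```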

2.10.2

Disable Powersaving Features

If you are running benchmarks or large numbers of short jobs, it is beneficial to disable the powersaving features of the Opteron, because these features may be slow to respond to changes in system load.

For RHEL4, FC3 and FC4, run this command as root:

# /sbin/chkconfig --level 12345 cpuspeed off

For SUSE 9.3 and 10.0, run this command as root:

# /sbin/chkconfig --level 12345 powersaved off

After running either of these commands, reboot the system for the change to take effect.

2.10.3

Balanced Processor Power

Higher processor speed is good. However, adding more processors helps only if processor speeds are balanced. Mixing processors of different speeds can result in load imbalance: when work is divided evenly, the faster processors end up waiting for the slower ones.
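One quick way to spot unbalanced processors is to list the distinct clock speeds a node reports. The sketch below assumes the usual "cpu MHz" lines in /proc/cpuinfo; more than one value suggests mismatched processors, or that a powersaving service (see the previous section) is currently scaling some of them down. The function name cpu_speeds is hypothetical.

```shell
# Print the distinct "cpu MHz" values found in a cpuinfo-style file
# (default: /proc/cpuinfo), one per line.
cpu_speeds() {
    awk -F: '/^cpu MHz/ { gsub(/[[:space:]]/, "", $2); print $2 }' \
        "${1:-/proc/cpuinfo}" | sort -u
}

# Usage, for a local node:
#   cpu_speeds
# or for a remote node:
#   ssh node01 cat /proc/cpuinfo > node01.cpuinfo; cpu_speeds node01.cpuinfo
```

Running it against saved copies from several nodes also makes it easy to compare speeds across the cluster.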

2.10.4

SDP Module Parameters for Best Performance

To get the best performance from SDP, especially for bandwidth tests, edit one of these files:

/etc/modprobe.conf (on Fedora and RHEL)
/etc/modprobe.conf.local (on SUSE and SLES)

Add the line:

options ib_sdp sdp_debug_level=4 sdp_zcopy_thrsh_src_default=10000000

This must be a single line in the file. It sets both the debug level and the zero-copy threshold.
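A minimal sketch of applying this setting: the function below appends the options line to a given file only if that exact line is not already present, so running it twice does no harm. The function name add_sdp_options, and the way the usage lines choose between the two files (by checking whether /etc/modprobe.conf.local exists), are assumptions for illustration. As with any modprobe options, the setting applies the next time the ib_sdp module is loaded.

```shell
SDP_OPTS="options ib_sdp sdp_debug_level=4 sdp_zcopy_thrsh_src_default=10000000"

# Append SDP_OPTS to the given modprobe configuration file unless an
# identical line is already there.
add_sdp_options() {
    local conf="$1"
    grep -qxF "$SDP_OPTS" "$conf" 2>/dev/null || echo "$SDP_OPTS" >> "$conf"
}

# Usage (as root):
#   if [ -f /etc/modprobe.conf.local ]; then   # assumed SUSE/SLES
#       add_sdp_options /etc/modprobe.conf.local
#   else                                       # assumed Fedora/RHEL
#       add_sdp_options /etc/modprobe.conf
#   fi
```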

2.10.5

CPU Affinity

InfiniPath will attempt to run each node program with CPU affinity set to a separate logical processor, up to the number of available logical processors. If CPU affinity is already set (with sched_setaffinity(), or with the taskset utility), then InfiniPath will not change the setting.

The taskset utility can be used with mpirun to specify the mapping of MPI processes to logical processors. This is useful, for example, to make best use of available memory bandwidth or cache locality when running on dual-core SMP cluster nodes.

In the following example we use the NAS Parallel Benchmark's MG (multi-grid) benchmark and the -c option to taskset.

$ mpirun -np 4 -ppn 2 -m $hosts taskset -c 0,2 bin/mg.B.4
$ mpirun -np 4 -ppn 2 -m $hosts taskset -c 1,3 bin/mg.B.4

The first command forces the programs to run on CPUs (or cores) 0 and 2. The second forces the programs to run on CPUs 1 and 3. See the taskset man page for more information on usage.
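The CPU lists in the example above follow a simple stride: start at 0 or 1 and step by 2. The hypothetical helper below builds such a list for any number of logical processors, which may be convenient on nodes with more cores. It is only a sketch; whether a given stride places processes on separate sockets or separate cores depends on how the kernel numbers the processors on your hardware (compare the physical id and core id fields in /proc/cpuinfo).

```shell
# cpu_stride_list NCPUS START STEP
# Print START, START+STEP, ... (all below NCPUS) as a comma-separated list.
cpu_stride_list() {
    local ncpus="$1" cpu="$2" step="$3" list=""
    while [ "$cpu" -lt "$ncpus" ]; do
        list="${list:+$list,}$cpu"   # add a comma only after the first entry
        cpu=$((cpu + step))
    done
    echo "$list"
}

# Usage, equivalent to the first command above:
#   mpirun -np 4 -ppn 2 -m $hosts taskset -c $(cpu_stride_list 4 0 2) bin/mg.B.4
```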


2.10.6

Hyper-Threading

If you are using Intel processors that support Hyper-Threading, it is recommended that you turn Hyper-Threading off in the BIOS, which provides more consistent performance. You can check and adjust this setting using the BIOS Setup Utility. For specific instructions, see the hardware documentation that came with your system.
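You can also get a rough indication from Linux of whether Hyper-Threading is active. The sketch below assumes that /proc/cpuinfo reports both the siblings field (logical processors per physical package) and the cpu cores field (cores per package); when siblings exceeds cpu cores, more than one thread is running per core. Older kernels may omit cpu cores, in which case the function prints unknown. The function name ht_status is hypothetical.

```shell
# Print "enabled", "disabled" or "unknown" based on a cpuinfo-style file
# (default: /proc/cpuinfo).
ht_status() {
    awk -F: '
        /^siblings/  { s = $2 + 0 }   # logical CPUs per package
        /^cpu cores/ { c = $2 + 0 }   # physical cores per package
        END {
            if (s == 0 || c == 0) print "unknown"
            else if (s > c)       print "enabled"
            else                  print "disabled"
        }' "${1:-/proc/cpuinfo}"
}
```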

2.10.7

Homogeneous Nodes

To minimize management problems, the compute nodes of the cluster should have very similar hardware configurations and identical software installations. A mismatch between InfiniPath software versions can also cause problems; old and new libraries should not be run within the same job. It may also be useful to distinguish between the InfiniPath-specific drivers and those that come from kernel.org or OpenFabrics, or are distribution-built. The most useful tools are:

ipathbug-helper
ipath_control
rpm
mpirun
ident
strings
ipath_checkout


NOTE: Run these tools to gather information before reporting problems and requesting support.
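Whichever of these tools you use, checking homogeneity comes down to collecting the same report from every node and comparing the results. The sketch below does that over ssh, which this chapter assumes is set up for password-less logins. The report command (rpm -qa | sort) and the function names collect_reports and differing_nodes are illustrative assumptions; you could substitute, for example, ipath_control -i. The hosts file uses the one-hostname-per-line format that ipath_checkout expects.

```shell
# collect_reports HOSTSFILE OUTDIR: run the report on each listed host
# and save its output as OUTDIR/<hostname>.
collect_reports() {
    local hostsfile="$1" outdir="$2" host
    mkdir -p "$outdir"
    while read -r host; do
        [ -n "$host" ] || continue
        # -n keeps ssh from consuming the rest of the hosts file on stdin
        ssh -n "$host" 'rpm -qa | sort' > "$outdir/$host"
    done < "$hostsfile"
}

# differing_nodes OUTDIR: print the name of every saved report that is
# not byte-for-byte identical to the alphabetically first one.
differing_nodes() {
    local outdir="$1" ref="" f
    for f in "$outdir"/*; do
        if [ -z "$ref" ]; then
            ref="$f"                 # reference report
        elif ! cmp -s "$ref" "$f"; then
            basename "$f"            # this node differs
        fi
    done
}

# Usage:
#   collect_reports hostsfile reports
#   differing_nodes reports
# then inspect any differences with diff.
```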

ipathbug-helper

The InfiniPath software includes a shell script, ipathbug-helper, which can gather status and history information for use in analyzing InfiniPath problems. This tool is also useful for verifying homogeneity. It is best to run ipathbug-helper with root privilege, since some of the queries require it. There is also a --verbose option, which greatly increases the amount of gathered information. Simply run it on several nodes and examine the output for differences.

ipath_control

Run the shell script ipath_control as follows:

% ipath_control -i
$Id: QLogic Release2.0 $ $Date: 2006-09-15-04:16 $
00: Version: Driver 2.0, InfiniPath_QHT7140, InfiniPath1 3.2, PCI 2, SW Compat 2
00: Status: 0xe1 Initted Present IB_link_up IB_configured
00: LID=0x30 MLID=0x0 GUID=00:11:75:00:00:07:11:97 Serial: 1236070407

Note that ipath_control reports whether the installed adapter is the QHT7040, QHT7140, or QLE7140. The $Id line of its output also shows whether or not the driver is InfiniPath-specific.

rpm

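To check which InfiniPath and MPI RPMs are installed, and their versions, commands such as these may be useful:

$ rpm -qa infinipath\* mpi-\*
$ rpm -q --info infinipath # (etc)

The -q option queries the named package, and -qa queries all installed packages (here, only those matching the given patterns).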

mpirun

mpirun can give information on whether the program is being run against a QLogic-built or non-QLogic-built driver. Sample commands and results are given below.

QLogic-built:

$ mpirun -np 2 -m /tmp/id1 -d0x101 mpi_latency 1 0
asus-01:0.ipath_setaffinity: Set CPU affinity to 1, port 0:2:0 (1 active chips)
asus-01:0.ipath_userinit: Driver is QLogic-built

Non-QLogic-built:

$ mpirun -np 2 -m /tmp/id1 -d0x101 mpi_latency 1 0
asus-01:0.ipath_setaffinity: Set CPU affinity to 1, port 0:2:0 (1 active chips)
asus-01:0.ipath_userinit: Driver is not QLogic-built

ident

The ident strings are available in ib_ipath.ko. Running ident (as root) will yield information similar to the following. For QLogic RPMs, it will look like:

# ident /lib/modules/$(uname -r)/updates/*ipath.ko
/lib/modules/2.6.16.21-0.8-smp/updates/ib_ipath.ko:
$Id: QLogic Release2.0 $
$Date: 2006-09-15-04:16 $
$Id: QLogic Release2.0 $
$Date: 2006-09-15-04:16 $

For non-QLogic RPMs, it will look like:

# ident /lib/modules/$(uname -r)/updates/*ipath_ether.ko
/lib/modules/2.6.16.21-0.8-smp/updates/infinipath.ko:
$Id: kernel.org InfiniPath Release 2.0 $
$Date: 2006-09-15-04:16 $
/lib/modules/2.6.16.21-0.8-smp/updates/ipath.ko:
$Id: kernel.org InfiniPath Release2.0 $
$Date: 2006-09-15-04:20 $

NOTE: ident is in the optional rcs RPM, and is not always installed.

strings

The strings command can also be used. For example:

$ strings /usr/lib/libinfinipath.so.4.0 | grep Date:

produces output like this:

Date: 2006-09-15 04:07 Release2.0 InfiniPath $

NOTE: strings is part of binutils (a development RPM), and may not be available on all machines.

ipath_checkout

ipath_checkout is a bash script used to verify that the installation is correct, and that all the nodes are functioning. It is run on a front end node and requires a hosts file:

$ ipath_checkout [options] hostsfile

More complete information on ipath_checkout is given below in section 2.11 and in section C.9.8.

2.11

Customer Acceptance Utility

ipath_checkout is a bash script used to verify that the installation is correct and that all the nodes of the network are functioning and mutually connected by the InfiniPath fabric. It is run on a front end node and requires a hosts file:

$ ipath_checkout [options] hostsfile

where hostsfile designates a file listing the hostnames of the nodes of the cluster, one hostname per line. The format of hostsfile is as follows:

hostname1
hostname2
...
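For a cluster with regularly numbered nodes, the hosts file can be generated rather than typed. In this sketch the naming scheme (a prefix followed by a two-digit number, such as node01) and the function name make_hostsfile are hypothetical; substitute the real hostnames of your compute nodes.

```shell
# make_hostsfile PREFIX COUNT: print PREFIX01 .. PREFIX<COUNT>, one per line.
make_hostsfile() {
    local prefix="$1" count="$2" i
    for i in $(seq 1 "$count"); do
        printf '%s%02d\n' "$prefix" "$i"
    done
}

# Usage:
#   make_hostsfile node 16 > hostsfile
#   ipath_checkout hostsfile
```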

ipath_checkout performs the following seven tests on the cluster:

1. ping all nodes to verify that all are reachable from the front end.

2. ssh to each node to verify correct configuration of ssh.
