Page 1

Software Manual

TMvision

Original Instructions

I627-E-06

Page 2

This Manual contains information on the Techman Robot product series (hereinafter referred to as the TM Robot). The information contained herein is the property of Techman Robot Inc. (hereinafter referred to as the Corporation). No part of this publication may be reproduced or copied in any way, shape or form without prior authorization from the Corporation. No information contained herein shall be considered an offer or commitment. It may be subject to change without notice. This Manual will be reviewed periodically. The Corporation will not be liable for any error or omission.

The logo is a registered trademark of TECHMAN ROBOT INC. in Taiwan and other countries, and the company reserves the ownership of this manual, its copies, and its copyrights.

Software Manual TMvision Software version:1.82 2

Page 3

Revision History Table .................................................................................................................................. 9

1. General................................................................................................................................................... 10

Overview ....................................................................................................................................... 10

Warning and Caution Symbols ....................................................................................................... 10

Safety Precautions ........................................................................................................................ 11

Validation and Liability ................................................................................................................... 12

Limitation of Liability ...................................................................................................................... 12

Functional Note Symbols ............................................................................................................... 12

2. Eye-in-Hand ........................................................................................................................................... 13

Overview ....................................................................................................................................... 13

Vision Base System Positioning Mode ........................................................................................... 13

Landmark ................................................................................................................................ 13

Fixed Positioning ..................................................................................................................... 15

Servoing .................................................................................................................................. 16

Object-based Calibration ......................................................................................................... 16

Camera List ................................................................................................................................... 16

Controller ....................................................................................................................................... 16

Camera Kit .................................................................................................................................... 16

Calibrate Workspace ..................................................................................................................... 17

Automatic Calibration .............................................................................................................. 17

Manual Calibration .................................................................................................................. 18

Live Video ...................................................................................................................................... 19

Task Designer ................................................................................................................................ 20

Hard Drive Setting ......................................................................................................................... 20

3. Task Designer ......................................................................................................................................... 22

Overview ....................................................................................................................................... 22

Select Application .......................................................................................................................... 23

Visual Servoing ....................................................................................................................... 24

Fixed Point .............................................................................................................................. 26

AOI-only .................................................................................................................................. 27

Vision IO ................................................................................................................................. 28

Landmark Alignment ............................................................................................................... 29

Object-based Calibration ......................................................................................................... 31

Smart-Pick .............................................................................................................................. 32

Function list ................................................................................................................................... 33

Enhance .................................................................................................................................. 34


Page 4

Contrast Enhancement ..................................................................................................... 34

Color Plane Extraction ...................................................................................................... 35

Smoothing ........................................................................................................................ 36

Thresholding ..................................................................................................................... 36

Morphology ....................................................................................................................... 37

Flip ................................................................................................................................... 37

Find ......................................................................................................................................... 38

Flow .................................................................................................................................. 38

Pattern Matching (Shape) .................................................................................................. 38

Pattern Matching (Image) ................................................................................................. 40

Blob Finder ....................................................................................................................... 41

Anchor .............................................................................................................................. 41

Fiducial Mark Matching ..................................................................................................... 42

One Shot Get All ............................................................................................................... 43

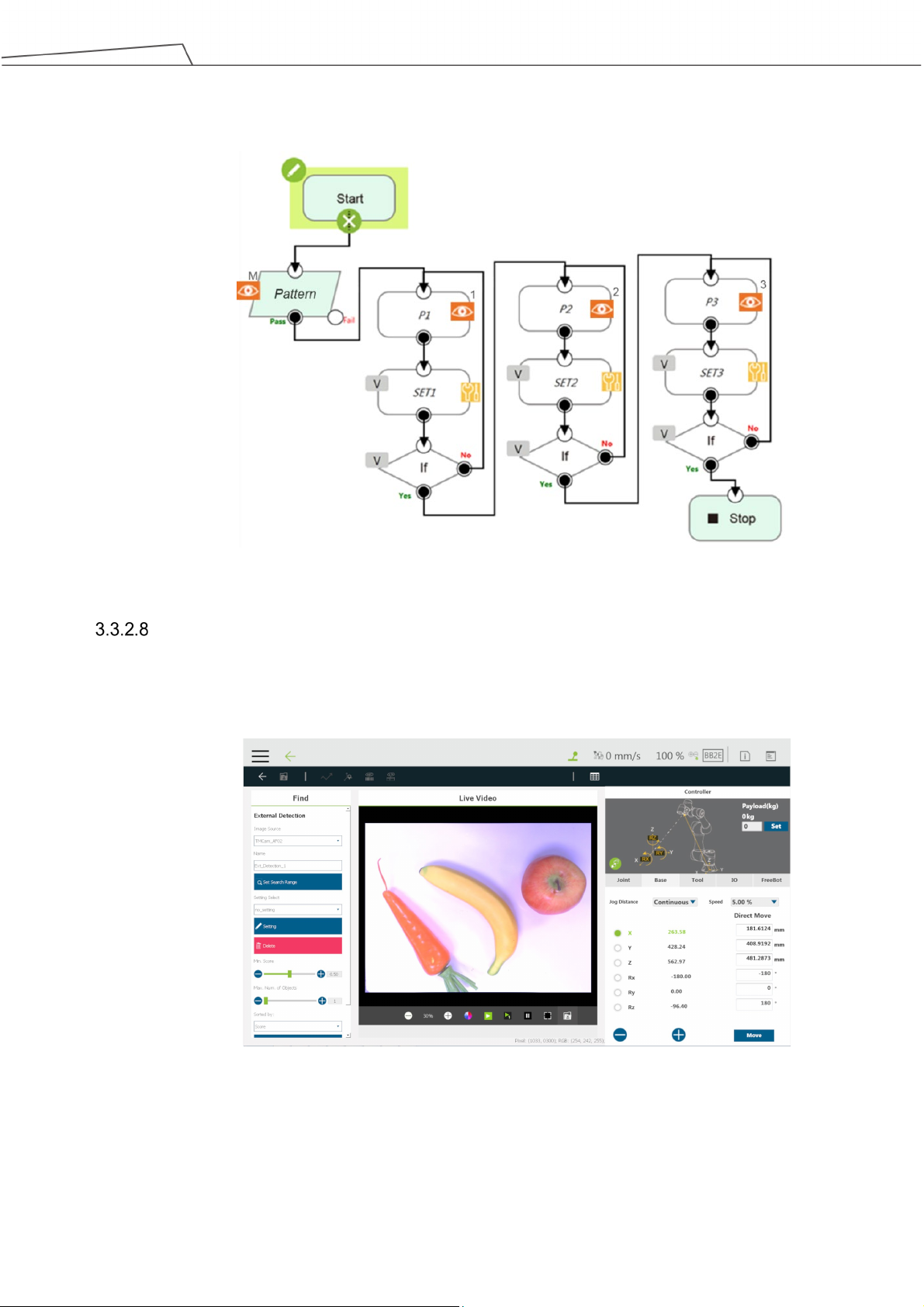

External Detection ............................................................................................................ 45

Identify .................................................................................................................................... 48

Barcode / QR Code .......................................................................................................... 48

Color Classifier ................................................................................................................. 49

String Match ..................................................................................................................... 50

External Classification ...................................................................................................... 50

4. TM External Camera ............................................................................................................................... 53

Overview ....................................................................................................................................... 53

Types of Camera Supported .......................................................................................................... 53

External Camera Installation Procedure......................................................................................... 53

Calibrating the External Camera .................................................................................................... 54

ETH Camera Calibration ......................................................................................................... 54

Upward-looking Camera Calibration ........................................................................................ 55

Lens Setting .................................................................................................................................. 56

Focus / Aperture ...................................................................................................................... 56

Eye-to-Hand .................................................................................................................................. 56

Pick'n Place ............................................................................................................................ 57

AOI-only / Vision IO ................................................................................................................. 57

Upward-Looking ............................................................................................................................ 57

Alignment Compensation ........................................................................................................ 58

AOI-only / Vision IO ................................................................................................................. 58

5. TM OCR ................................................................................................................................................. 59


Page 5

Overview ....................................................................................................................................... 59

OCR .............................................................................................................................................. 59

Support Content ...................................................................................................................... 59

Parameter Setting Interface .................................................................................................... 60

Set OCR Region ............................................................................................................... 60

Segmentation ................................................................................................................... 60

Character Selection .......................................................................................................... 61

Candidate Characters Menu ............................................................................................. 61

Number OCR ................................................................................................................................. 61

Support Content ...................................................................................................................... 61

Parameter Setting Interface .................................................................................................... 62

Setting Identification Region ............................................................................................. 62

Segmentation ................................................................................................................... 62

Font Selection ................................................................................................................... 63

6. TM Identify & Measure ............................................................................................................................ 64

Identify ........................................................................................................................................... 64

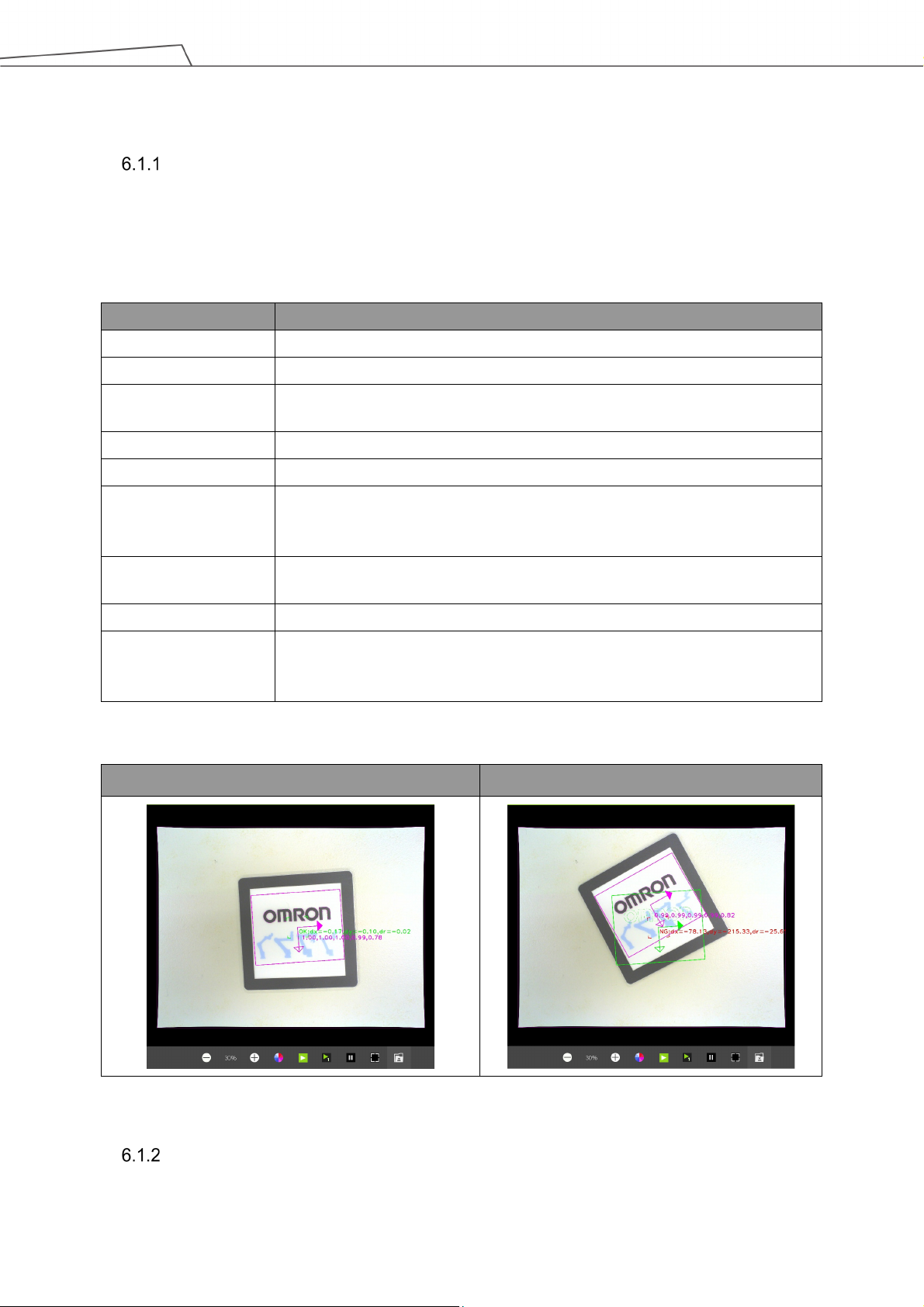

Pose Variation (Shape) ........................................................................................................... 65

Pose Variation (Image) ............................................................................................................ 65

Specific Color Area Size .......................................................................................................... 66

Subtract Reference Image ...................................................................................................... 67

Line Burr ................................................................................................................................. 68

Circle Burr ............................................................................................................................... 69

Measuring ...................................................................................................................................... 70

Counting (Shape) .................................................................................................................... 71

Counting (Image) .................................................................................................................... 72

Counting (Blobs) ..................................................................................................................... 72

Counting (Edges) .................................................................................................................... 73

Gauge ..................................................................................................................................... 74

Anchor .............................................................................................................................. 74

Line .................................................................................................................................. 75

Circle ................................................................................................................................ 76

Shape-based Pattern ........................................................................................................ 77

Image-based Pattern ........................................................................................................ 77

Calipers ................................................................................................................................... 78

Peak-to-Peak Width .......................................................................................................... 78


Page 6

Tables

Table 1: Danger, Warning, and Caution Symbols ......................................................................... 11

Table 2: Function Note Symbols ................................................................................................... 12

Table 3: Camera Kit Functions ..................................................................................................... 17

Table 4: Live Video Functions ...................................................................................................... 20

Table 5: Select Applications ......................................................................................................... 23

Table 6: Visual Servoing Settings ................................................................................................. 24

Table 7: Parameters of the Teaching ............................................................................................ 26

Table 8: Fixed Point Settings ........................................................................................................ 27

Table 9: AOI-only Settings ............................................................................................................ 28

Table 10: Vision IO Settings ......................................................................................................... 29

Table 11: The Fixed Settings ........................................................................................................ 31

Table 12: Object-Based Calibration Settings ................................................................................ 32

Table 13: Function List – Enhance ............................................................................................... 34

Table 14: Function List – Enhance (Contrast Enhancement) ........................................................ 35

Table 15: Function List – Enhance (Color Plane Extraction) ......................................................... 35

Table 16: Function List – Enhance (Color Plane Extraction – Color Plane) ................................... 36

Table 17: Function List – Enhance (Smoothing) ........................................................................... 36

Table 18: Function List – Enhance (Thresholding) ........................................................................ 37

Table 19: Function List – Enhance (Morphology) .......................................................................... 37

Table 20: Function List – Enhance (Flip) ...................................................................................... 37

Table 21: Function List – Find ...................................................................................................... 38

Table 22: Function List – Find (Pattern Matching (Shape)) ............................................................ 39

Table 23: Function List – Find (Pattern Matching (Shape)) ............................................................. 40

Table 24: Function List – Find (Pattern Matching (Image)) ............................................................ 40

Table 25: Function List – Find (Blob Finder) ................................................................................. 41

Table 26: Function List – Find (Anchor) ........................................................................................ 42

Table 27: Function List – Find (Fiducial Mark Matching) ............................................................... 42

Table 28: Function List – Identify .................................................................................................. 48

Table 29: Function List – Identify (Supported Barcodes) .............................................................. 49

Table 30: Function List – Identify (Supported QR codes) .............................................................. 49

Table 31: Types of Camera Supported ......................................................................................... 53

Table 32: OCR Supported Fonts .................................................................................................. 59

Table 33: OCR Parameter Settings .............................................................................................. 60

Table 34: OCR Parameter Settings – Segmentation..................................................................... 60

Table 35: Number OCR Supported Fonts ..................................................................................... 61


Page 7

Table 36: Number OCR Parameter Settings ................................................................................. 62

Table 37: OCR Parameter Settings – Segmentation..................................................................... 63

Table 38: Identification Functions ................................................................................................. 65

Table 39: Pose Variation (Shape) Functions ................................................................................ 65

Table 40: Pose Variation (Shape) Examples ................................................................................ 65

Table 41: Pose Variation (Image) Functions ................................................................................. 66

Table 42: Pose Variation (Image) Example .................................................................................. 66

Table 43: Specific Color Area Functions ....................................................................................... 67

Table 44: Specific Color Area Size Example ................................................................................ 67

Table 45: Subtract Reference Image Functions ............................................................................ 68

Table 46: Subtract Reference Image Example ............................................................................. 68

Table 47: Line Burr Functions ....................................................................................................... 69

Table 48: Line Burr Example ........................................................................................................ 69

Table 49: Circle Burr Functions .................................................................................................... 69

Table 50: Measuring Functions .................................................................................................... 71

Table 51: Counting (Shape) Functions ......................................................................................... 71

Table 52: Counting (Shape) Example ........................................................................................... 71

Table 53: Counting (Blobs) Functions ........................................................................................... 72

Table 54: Counting (Blobs) Example ............................................................................................ 73

Table 55: Counting (Edges) Functions ......................................................................................... 73

Table 56: Counting (Edges) Examples ......................................................................................... 73

Table 57: Gauge Functions .......................................................................................................... 74

Table 58: Anchor Functions .......................................................................................................... 75

Table 59: Line Functions .............................................................................................................. 75

Table 60: Circle Functions ............................................................................................................ 77

Table 61: Caliper Functions .......................................................................................................... 78

Table 62: Peak-to-Peak Width Functions ...................................................................................... 78

Figures

Figure 1: Landmark ...................................................................................................................... 15

Figure 2: Fixed Positioning ........................................................................................................... 15

Figure 3: Live Video...................................................................................................................... 19

Figure 4: Hard Drive Setting ......................................................................................................... 20

Figure 5: The Flow of Pick and Place ........................................................................................... 22

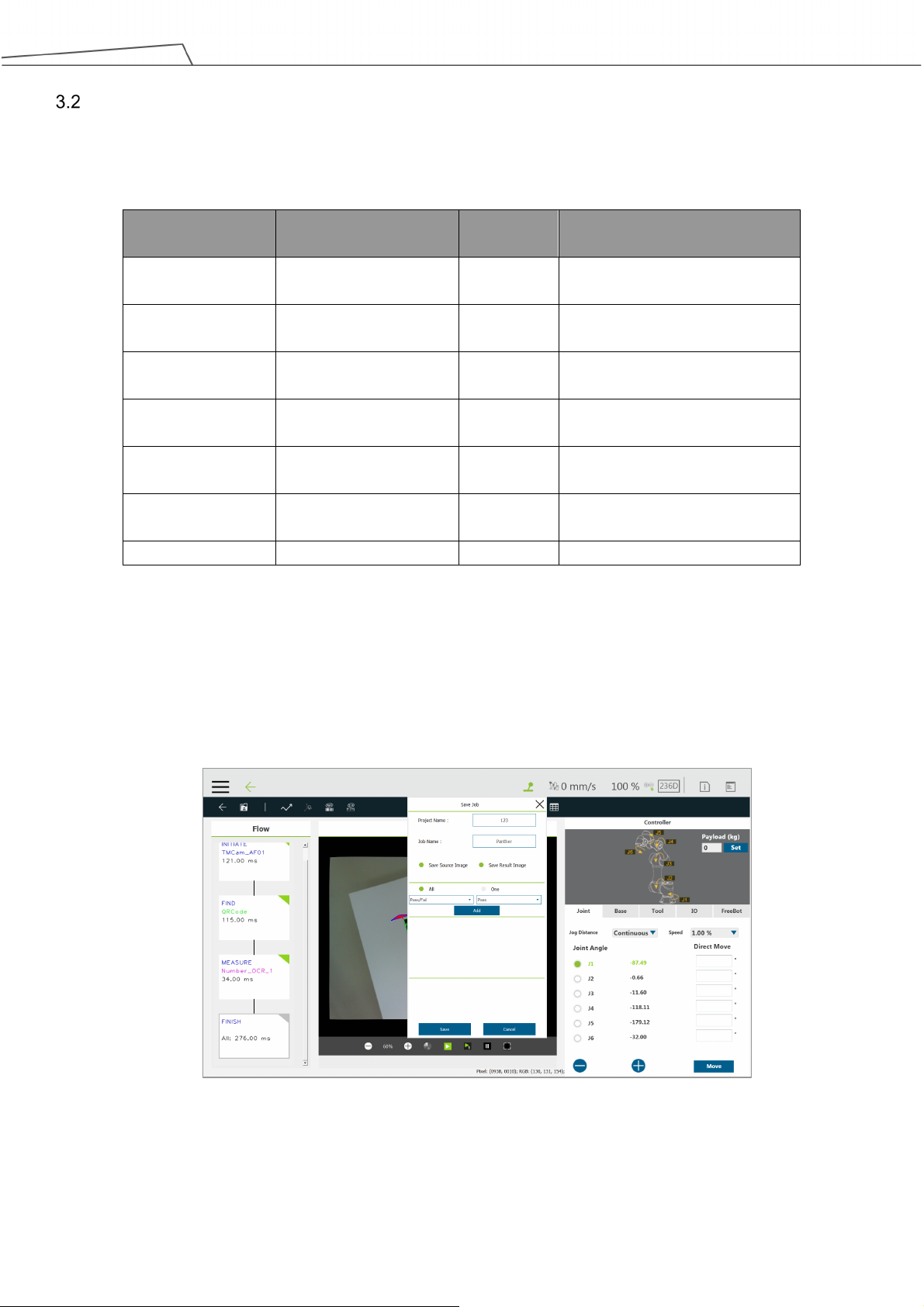

Figure 6: Save Vision Images Based on Results .......................................................................... 23

Figure 7: Visual Servoing ............................................................................................................. 25


Page 8

Figure 8: Fixed Point .................................................................................................................... 27

Figure 9: Vision IO ........................................................................................................................ 29

Figure 10: Landmark Alignment (1/2) ........................................................................................... 30

Figure 11: Landmark Alignment (2/2) ........................................................................................... 30

Figure 12: Object-Based Calibration ............................................................................................. 32

Figure 13: Anchor ......................................................................................................................... 42

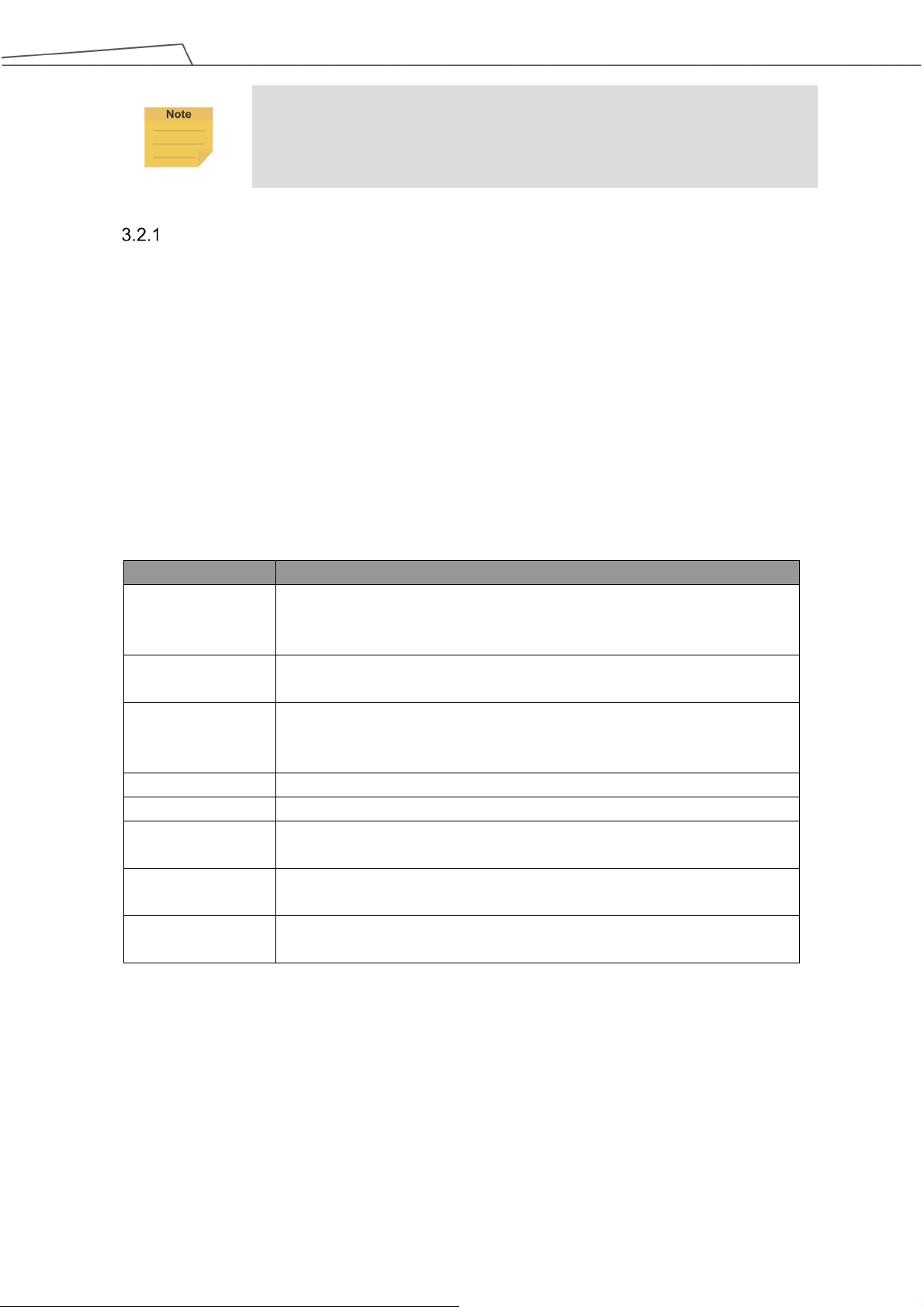

Figure 14: One Shot Get All (1/4) ................................................................................................. 43

Figure 15: One Shot Get All (2/4) ................................................................................................. 44

Figure 16: One Shot Get All (3/4) ................................................................................................. 44

Figure 17: One Shot Get All (4/4) ................................................................................................. 45

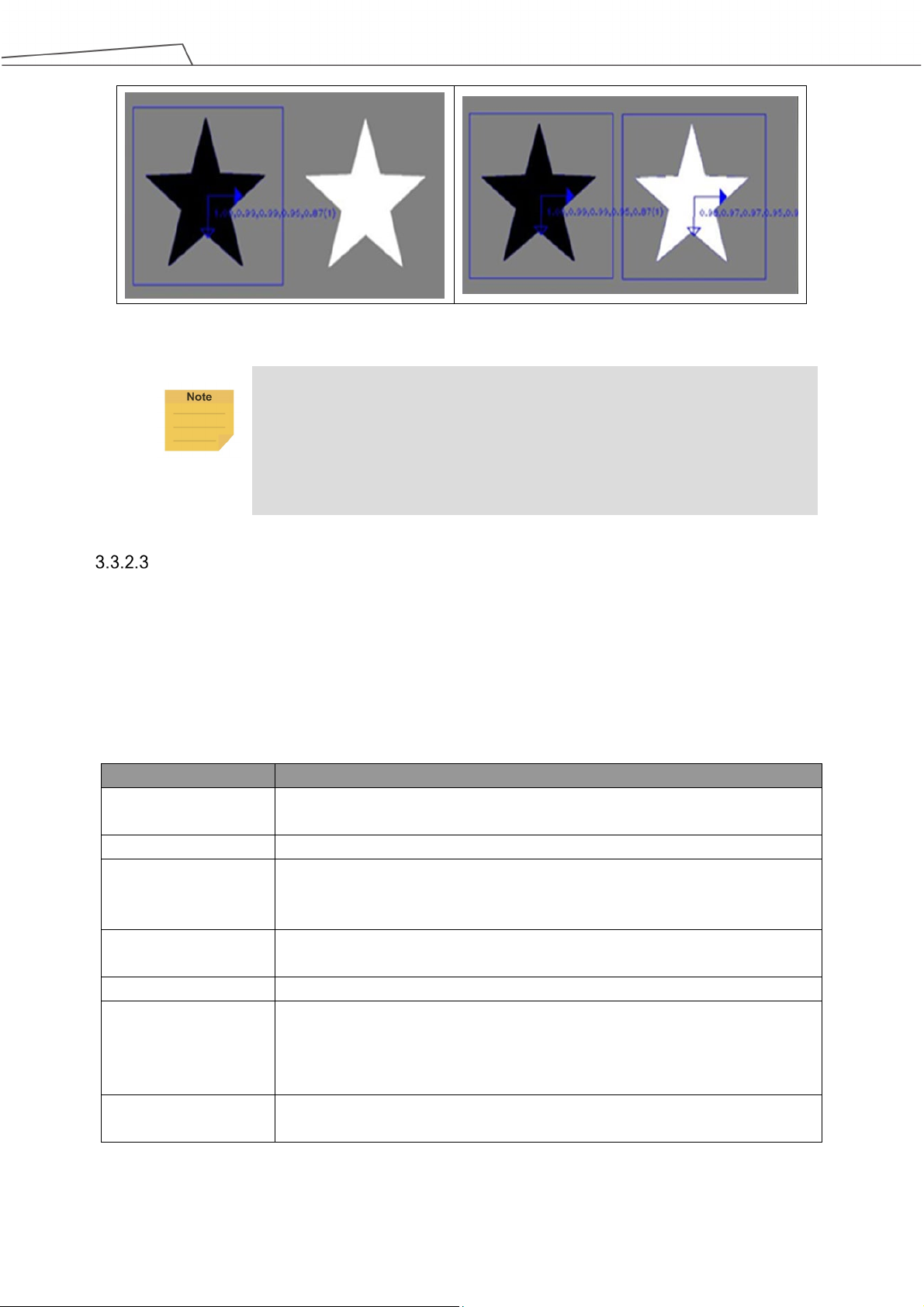

Figure 18: External Detection (1/2) ............................................................................................... 45

Figure 19: External Detection (2/2) ............................................................................................... 46

Figure 20: Color Classifier ............................................................................................................ 50

Figure 21: String Match ................................................................................................................ 50

Figure 22: External Classification (1/2) ......................................................................................... 51

Figure 23: External Classification (2/2) ......................................................................................... 51

Figure 24: Focus/Aperture ............................................................................................................ 56

Figure 25: Eye-to-Hand ................................................................................................................ 57

Figure 26: Upward-Looking .......................................................................................................... 58

Figure 27: OCR ............................................................................................................................ 59

Figure 28: Number OCR ............................................................................................................... 61

Figure 29: Circle Burr Example ..................................................................................................... 70

Figure 30: Counting (Image) Example .......................................................................................... 72

Figure 31: Gauge Example ........................................................................................................... 74

Figure 32: Anchor Example .......................................................................................................... 75

Figure 33: Line Example (1/2) ...................................................................................................... 76

Figure 34: Line Example (2/2) ...................................................................................................... 76

Figure 35: Circle Example (External) ............................................................................................ 77

Figure 36: Image-based Pattern Example .................................................................................... 78

Figure 37: Caliper (Peak-to-Peak Width) Example ....................................................................... 79


Page 9

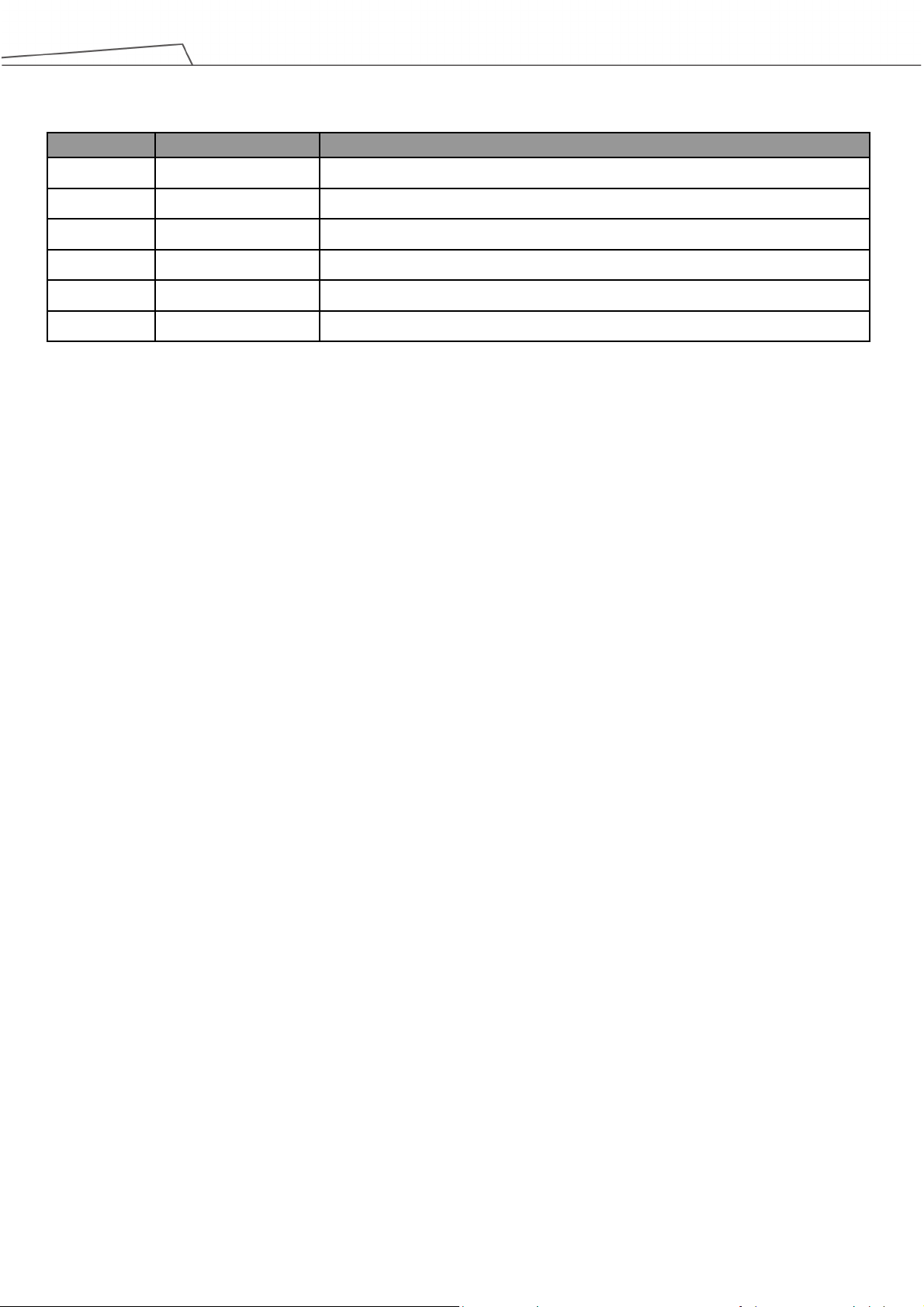

Revision History Table

| Revision | Date | Revised Content |
|---|---|---|
| 01 | October 2018 | Original release |
| 02 | July 2019 | Added 1.72.3500 features |
| 03 | October 2019 | Fixed minor text errors and added Light Intensity. Added 1.76.3300 features |
| 04 | April 2020 | Added 1.76.6300 features |
| 05 | August 2020 | Added 1.80 features |
| 06 | February 2021 | Added 1.82 features |


Page 10

1. General

Overview

TMvision is a feature built into the TM Robot that combines hardware and software. On the hardware side, a vision camera module at the end of the TM Robot gives users access to the complete set of vision software functions. On the software side, functions come in two tiers: Standard and Licensed. The Standard functions support most robot applications, while the Licensed functions consist of separate modules that may be purchased as needed.

With approvals from a variety of robot vision manufacturers, TMvision provides functions such as feature identification, object location, enhance mode, barcode identification, and color classification. These functions are integrated into TMflow so that users can design robot tasks step by step.

TM Robot's built-in Vision Designer supports Eye-in-Hand (EIH), Eye-to-Hand (ETH), and Upward-Looking cameras with a high level of integration. Because its hardware and software are integrated, the Vision Designer does away with the complex vision components of conventional systems and saves users the time otherwise needed to get familiar with robots they may know little about.

For users familiar with robots and machine vision, TMvision offers a wide range of assistance and integration tools for building diverse vision-guided robot integration platforms.

This manual begins with the built-in EIH camera and uses it to outline the TM-exclusive Task Designer system. It then describes the software and hardware integration of external cameras, and ends with an introduction to the advanced licensed functions.

This manual applies to TMflow Version 1.82. Functions and interfaces differ between software versions. Confirm the software version before using and reading this manual. To confirm the software version, click the icon at the top right of the screen for the information.

NOTE:

In this software, custom names and paths may contain only letters (both uppercase and lowercase), digits, and underscores.
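The naming rule above can be checked before a name is entered in the software. The following Python sketch is illustrative only and is not part of TMvision or TMflow: the function names `is_valid_custom_name` and `sanitize_custom_name` are hypothetical, and it assumes that "letters" means the ASCII letters A-Z and a-z and that an empty name is not accepted, neither of which this manual states. It checks a single name component and does not handle path separators.

```python
import re

# Assumption: "letters" means ASCII A-Z and a-z. The manual does not say
# whether non-ASCII letters or empty names are accepted.
_ALLOWED_NAME = re.compile(r"[A-Za-z0-9_]+")


def is_valid_custom_name(name: str) -> bool:
    """Return True if name consists only of letters, digits, and underscores."""
    return _ALLOWED_NAME.fullmatch(name) is not None


def sanitize_custom_name(name: str, replacement: str = "_") -> str:
    """Replace every character outside the allowed set with `replacement`."""
    return re.sub(r"[^A-Za-z0-9_]", replacement, name)


if __name__ == "__main__":
    for candidate in ("Pick_Job_01", "pick job", "job-01"):
        print(candidate, is_valid_custom_name(candidate))
```

A name such as `Pick_Job_01` passes the check, while names containing spaces or hyphens do not; `sanitize_custom_name` offers one possible way to convert such names by substituting underscores.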

Warning and Caution Symbols

The Table below shows the definitions of the warning and caution levels used in our manuals. Pay close

attention to them when reading each paragraph, and observe them to avoid personal injuries or

equipment damage.

Read Manual Label; Impact Warning Label

DANGER:

Identifies an imminently hazardous situation which, if not avoided, is likely to result in serious injury, and might result in death or severe property damage.

WARNING:

Identifies a potentially hazardous situation which, if not avoided, will result in minor or moderate injury, and might result in serious injury, death, or significant property damage.

CAUTION:

Identifies a potentially hazardous situation which, if not avoided, might result in minor injury, moderate injury, or property damage.

Table 1: Danger, Warning, and Caution Symbols

Safety Precautions

DANGER:

This product can cause serious injury or death, or damage to itself and other equipment, if the

following safety precautions are not observed:

All personnel who install, operate, teach, program, or maintain the system must read the Hardware

installation Manual, Software Manual, and Safety Manual according to the software and hardware

version of this product, and complete a training course for their responsibilities in regard to the

robot.

All personnel who design the robot system must read the Hardware installation Manual, Software

Manual, and Safety Manual according to the software and hardware version of this product, and

must comply with all local and national safety regulations for the location in which the robot is

installed.

The TM Robot must be used for its intended use.

Results of the risk assessment may require the use of additional risk reduction measures.

Power to the robot and its power supply must be locked out and tagged out or have means to

control hazardous energy or implement energy isolation before any maintenance is performed.

Dispose of the product in accordance with the relevant rules and regulations of the country or

area where the product is used.

Validation and Liability

The information contained herein neither includes how to design, install, and operate a complete robotic arm system, nor involves the peripherals which may affect the safety of the complete system. The integrators of the robot should understand the safety laws and regulations in their countries and prevent hazards from occurring in the complete system.

This includes but is not limited to:

Risk assessment of the whole system

Adding other machines and additional risk reduction measures based on the results of the risk assessment

Using appropriate software safety features

Ensuring the user will not modify any safety measures

Ensuring all systems are correctly designed and installed

Clearly labeling user instructions

Clearly marked symbols for installation of the robot arm and the integrator contact details

Making accessible relevant documents, including the risk assessment and this Manual

CAUTION:

This product is a partly complete machine. The design and installation of the complete system must comply with the safety standards and regulations in the country of use. The user and integrators of the robot should understand the safety laws and regulations in their countries and prevent major hazards from occurring in the complete system.

Limitation of Liability

No safety-related information shall be considered a guarantee by the Corporation that a TM Robot will

not cause personnel injury or property damage.

Functional Note Symbols

The following table defines the functional note symbols used in this manual. Read the paragraphs

carefully.

IMPORTANT:

This symbol indicates the relevant functional details to assist programming and use.

NOTE:

This symbol indicates the relevant functional use tips to assist programming efficiency.

Table 2: Function Note Symbols

2. Eye-in-Hand

Overview

The TM Robot's built-in Vision Designer system integrates the hands, eyes, and brains of conventional robots into one. This not only enables users to execute high-precision jobs but also provides flexibility for fast line changes. On the hardware side, users can move the robot to a position directly above the object and press the Vision button on the camera to generate a Vision node in TMflow for subsequent vision job programming. Refer to the relevant Hardware Installation Manual for the position of the buttons.

TMvision is designed for coordinate adjustment and vision job administration. Users can set lighting and imaging parameters in the Vision node to improve the speed and quality of identification. Refer to the following chapters for details and instructions.

NOTE:

Users should check that the connection of User Connected External Safeguard Input for Human-Machine Safety Setting on the control box is closed before proceeding with a conclusive calibration. For details of User Connected External Safeguard Input for Human-Machine Safety Setting, refer to the Safety Manual, the relevant Hardware Installation Manual, and Software Manual TMflow.

Vision Base System Positioning Mode

TM Robot comes with a 2D camera as the built-in vision system that supports the positioning model on

the object-oriented base or the robot alignment-oriented base. For the object-oriented base positioning

model, users must create a workspace and make sure the workspace is parallel to the object. Failure to

do so may result in distorted imaging and visual identification job failures. TMvision offers four

positioning methods: Landmark, fixed-point, visual servoing, and object-based calibration as described

below.

Landmark

Landmark provides a fast, simple, and flexible base system positioning method that serves as a reference to the environment. Capturing Landmark with TM Robot generates position information in six degrees of freedom (X, Y, Z, RX, RY, RZ) and builds a base system on which users can record subsequent points and motions. When the robot is repurposed or relocated and the relative position of the robot and Landmark has changed, simply have the robot take a photo of Landmark again to regain the 6 DoF of the new location and renew the Landmark base system. The points and motions recorded on the Landmark base system are then converted to the renewed base system automatically, so that the robot moves to the same positions as before.


Landmark is a 0.2 cm thick and 5x5 cm square plastic plate as shown in the figure below. By

capturing and recognizing Landmark's black and white borders and central graphic features

through TM Robot's EIH camera, the robot can create the base system in the center of the

Landmark's black and white border. Note that the accuracy of landmark positioning is not

sufficient for identification and alignment purpose. In principle, Landmark is not designed for

users to have the robot directly go to individual points or execute motions after creating a base

system. Instead, it is an alignment tool to lead the robot toward a valid visual point. Users should

use the TM Robot visual positioning function to identify and locate the object in the last step to get

the best results.

Landmark generates a base system with six degrees of freedom. The data in the RX, RY, and Z directions are not easy to obtain accurately with EIH 2D vision (that is, whether the camera plane is parallel to the object, and how far the camera plane is from the object). Landmark enhances the positioning ability of 2D vision along these axes. Although Landmark also provides data in the X, Y, and RZ directions, users may not be able to place or attach Landmark precisely in the operating environment, so using these data directly for positioning is not recommended. Because EIH 2D vision positioning compensates for these three degrees of freedom, users should use both methods together.

As a regular approach, users should use Landmark to establish the robot's relationship to the peripherals along the RX, RY, and Z axes. In other words, Landmark positioning on these three axes ensures that, after the Landmark base system is updated, the camera posture at the visual points recorded in that base system returns to being parallel to the workpiece features (RX, RY) and at the correct distance from them (Z). Users can then use this positioning as the basis for a subsequent 2D vision job and use each of the TMvision 2D functions to align the remaining X, Y, and RZ directions. Even if the relative position between the robot base and Landmark changes, users can reuse the points and motions recorded in the Landmark base system in the former project by having the robot shoot Landmark again.

When planning a project, users may place Landmark in the target task environment to create a

TM Robot vision job and perform subsequent motions with the base system. Shooting the

Landmark again in later operations will have the robot reset to the original base system

automatically, i.e. to change alignment of robot according to site conditions without being confined

to a fixed alignment.
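The reuse of recorded points after Landmark is captured again can be pictured as a change of coordinate frame. The sketch below is an illustration only, not TMvision code: the function names and numeric values are hypothetical, and to stay short it assumes RX and RY are zero and models only a rotation about Z plus a translation. A point taught relative to Landmark keeps its Landmark coordinates, and only the Landmark base in robot coordinates changes.

```python
import math


def make_base(x, y, z, rz_deg):
    """Build a 4x4 homogeneous transform for a base located at (x, y, z)
    and rotated rz_deg about Z. Simplification: RX and RY are zero."""
    c = math.cos(math.radians(rz_deg))
    s = math.sin(math.radians(rz_deg))
    return [
        [c, -s, 0.0, x],
        [s, c, 0.0, y],
        [0.0, 0.0, 1.0, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


def to_robot_base(base, point):
    """Map a point recorded in the Landmark base into robot base coordinates."""
    v = (point[0], point[1], point[2], 1.0)
    return tuple(sum(base[r][k] * v[k] for k in range(4)) for r in range(3))


# Hypothetical point taught 100 mm along Landmark X and 50 mm above Landmark.
taught_point = (100.0, 0.0, 50.0)

# Landmark base as seen from the robot before and after relocation (made-up values).
landmark_before = make_base(400.0, 0.0, 0.0, 0.0)
landmark_after = make_base(300.0, 200.0, 0.0, 90.0)

target_before = to_robot_base(landmark_before, taught_point)
target_after = to_robot_base(landmark_after, taught_point)
```

The taught point itself is never edited. Re-capturing Landmark replaces the base, and the same recorded point then resolves to a new target in robot coordinates.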

Figure 1: Landmark

NOTE:

The farther Landmark is from the camera, the less accurate the alignment will be. The tradeoff is that a bigger field of view makes it easier to capture changes in the relative alignment between the robot and Landmark. A shorter distance between the camera and Landmark gives better alignment accuracy, but at the cost of a smaller field of view, so Landmark can easily fall outside the field of view. Users are advised to edit two vision jobs when using Landmark: one nearer and the other farther. The farther one is intended to quickly detect Landmark in a workspace to create the first base system. Then, pull the robot close while setting the RX, RY, and RZ angles of the second visual point to zero (set these axes orthogonal in the original base system) and keep the camera and Landmark as close as possible, e.g. 10 cm apart from each other. Shoot the same Landmark to get a more accurate Landmark base system.

Fixed Positioning

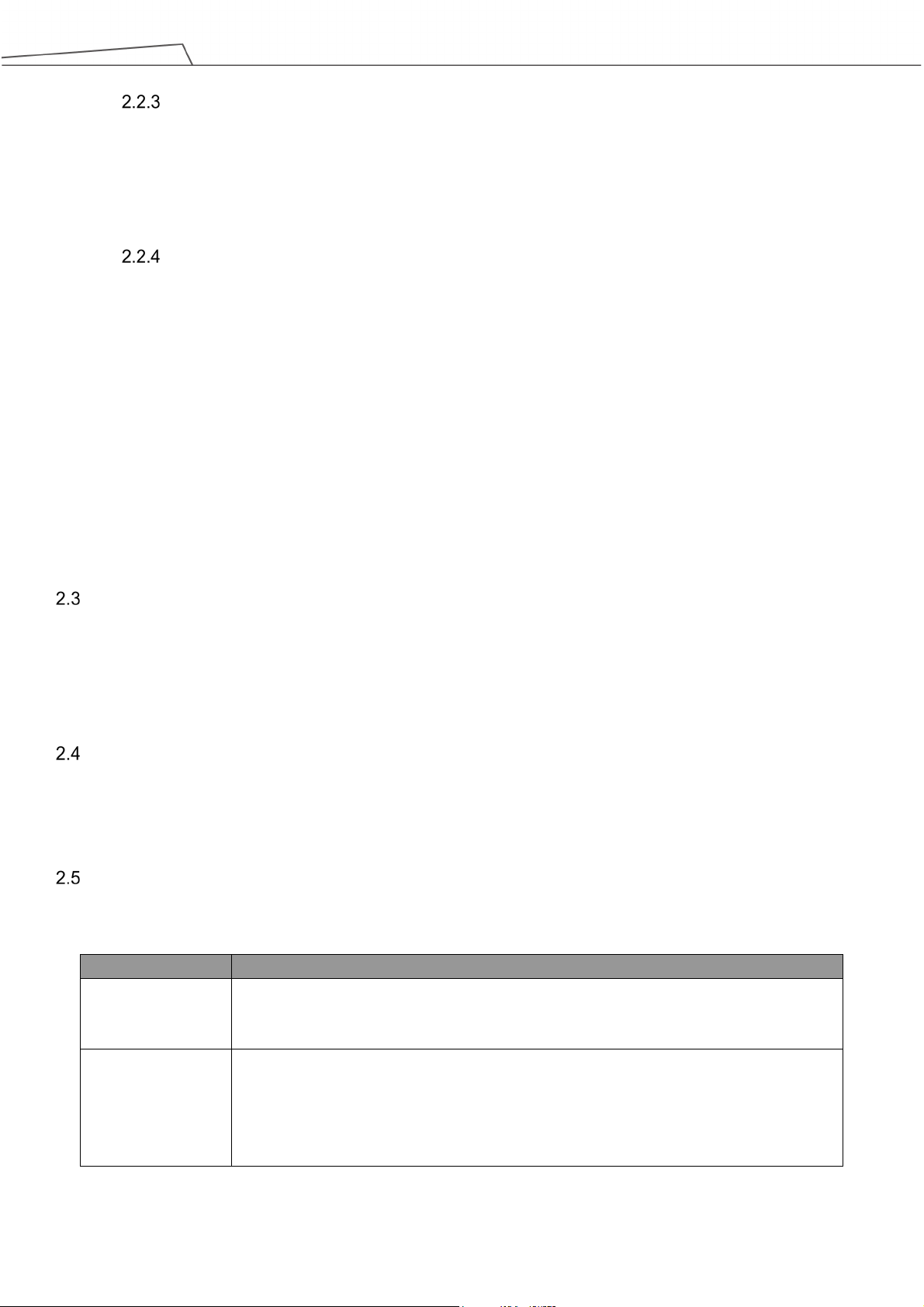

The fixed positioning function is designed with a pre-set object placement area and pre-set height

for vision jobs. Users can create a workspace with the TM calibration plate. When using the TM

calibration plate for fixed-point alignment, the relative height of the camera and the work plane is

also defined. When using fixed-point alignment to establish a workspace, users must ensure that

the absolute height of camera and object is equal to the workspace created by the TM calibration

plate.

Figure 2: Fixed Positioning

Servoing

The servoing function is for users to define the object features. In each servoing process, TMvision automatically adjusts the robot position based on the defined object to restore the relative position of the camera and object.

Object-based Calibration

The principle of object-based calibration is basically teaching as servoing and ending as fixed-point positioning. First, run the tilt correction with the calibration plate, then define the visual servoing workspace with the actual workpiece and convert it to fixed-point positioning by calculation. Since the servo calibration is used only when defining the workspace for the first time, the robot will place the workpiece at the four corners of the camera's field of view to create the workspace with four movements and make the fixed-point positioning calculation with the workspace accordingly. This combines the positioning speed of fixed positioning with the ability of servoing to work without the calibration plate. For object-based calibration, the features of the object should not be too big to fit in the field of view during the servo calibration.

Camera List

The list of cameras on the left side of TMvision shows the cameras in use and their status. Right-click any listed camera to pop up a window that lets users refresh the list of cameras or detect an external camera.

Controller

To help users control the robot movements, TMvision provides the controller interface for users to move the robot to the appropriate positions and edit vision jobs.

Camera Kit

The camera kit is used to adjust camera imaging, including the following settings:

| Name | Function |
| --- | --- |
| Camera Parameter Setting | Includes shutter and focus for the built-in camera and contrast and white balance for extracted images. All modules feature an auto once function. Click Save to validate changes made after adjustment jobs have ended. |
| Focus / Aperture | Assists in adjusting the focus and aperture of an external camera. It provides visual tools for easy regulation. Users may read the scores of the current focus and aperture on the left, which vary with changes in focus and aperture of the external camera. The calibration ends when the scores hit the Max line and stop rising even after more adjustment. |
| Brightness Setting | Includes an illuminance visualization tool that helps users adjust lighting tools for optimized illumination distribution. The left side controls the sensitivity of the visualization tool. The two track bars in the settings indicate the upper and lower limits of the visualization display. Brightness values beyond the upper and lower limits are set to those limits for display. If the illuminance in the field of view is uniform, colors shown by visualization tools may be close to each other in case of high sensitivity (upper and lower slides being farthest away from each other). |
| Tilt-Correction | Secure Landmark or the calibration plate to the target plane as a calibration tool to enable the robot's automatic adjustment to the tilt angle and vertical alignment of the camera to the target plane. Adjust camera parameter settings to ensure Landmark or the calibration plate is detectable before running tilt-correction. Keep adequate clearance around the robot, as in an automatic tilt-correction process the robot will move around its current position. |

Table 3: Camera Kit Functions

NOTE:

1. The default resolution of the camera is 5M pixels, and so is the production calibration. 5M pixels positioning is supported in Fixed Point and Landmark.

2. If the robot came with TMflow 1.68 out of the box, once upgraded to TMflow 1.72 or later, the default 5MP camera setting won't take effect. Please contact the service team to conduct 5MP calibration procedures to enable this functionality.

3. Previous vision jobs built with 1.2M pixels will retain previous settings.

Calibrate Workspace

Workspace calibration includes automatic and manual calibration to help users create workspaces for fixed-point vision jobs. Workspace calibration will generate the information of the workspace as well as the VPoint. Refer to Expression Editor and Listen Node for details of VPoint.

Automatic Calibration

The automatic workspace calibration consists of four steps:

1. Tilt-Correction

2. Confirm Workspace

3. Calibrate Workspace

4. Save Results

NOTE:

Before starting calibration: Position the identification target in the center of the field of view using the controller or manual handle. Place the camera 10 to 30 cm above the target. Determine the plane where the feature is located before placing the calibration plate on the plane. If the workpiece geometry does not allow for a calibration plate, users may replace the workspace with an object of the proper height to place the calibration plate at the same height as the identification feature. Click Yes when the message to skip tilt-correction prompts to bypass tilt-correction while calibrating a workspace with eye-in-hand.

IMPORTANT:

Keep adequate clearance around the robot as in an automatic calibration process the robot will move around the initial position.

Once set up, do not touch the calibration plate before starting the calibration process.

Step 1. Tilt-Correction: Correct tilt before workspace calibration to ensure the calibration plate is perpendicular to the camera (parallel to the camera's focal plane).

Step 2. Confirm Workspace: Visually check tilt-correction. Click the icon in the flow chart to calibrate tilt again if necessary. The position of the robot at the start of the calibration process is called the initial position of the robot in this workspace. This process also defines the VPoint.

Step 3. Calibrate Workspace: Click Start to have the robot take pictures of the calibration plate from multiple angles to calculate the relative position of the workspace created by the calibration plate to the robot.

Step 4. Save Results: Once the accuracy has been validated, save the calibration results in a workspace file to access it in fixed vision jobs.

Manual Calibration

The manual workspace calibration consists of four steps:

1. Confirm Workspace

2. Set TCP Setting

3. Calibrate Workspace

4. Save Results

NOTE:

Before starting calibration: Mount the required calibration tool on the robot tool flange. Techman Robot recommends using the calibration pin set provided by Techman Robot as the calibration tool. Using TMflow (TCP Setting), set the Z height of the calibration tool. Position the identification target in the center of the field of view using the controller or manual handle. Place the camera 10 to 30 cm above the target; determine the plane where the feature is located before placing the calibration plate on the plane. If the workpiece geometry does not allow for a calibration plate, users can replace the workspace with an object of the proper height to place the calibration plate at the same height as the identification feature. Simply click Yes when the message to skip tilt-correction prompts to bypass tilt-correction while calibrating workspace with eye-in-hand.

IMPORTANT:

Once set up, do not move the calibration plate until the completion of the calibration process.

Step 1. Confirm Workspace: The robot must be positioned at the initial position of the robot in this workspace.

Step 2. Set TCP Setting: Set the Z height, using TMflow (TCP Offset), for the calibration tool being used.

Step 3. Calibrate Workspace: Point the calibration tool to the calibration plate grid shown on the screen. When prompted, click Next. Repeat this step five times. Use the controller to manipulate the robot when performing this calibration.

Step 4. Save Results: Once the accuracy has been validated, save the calibration results in a workspace file to access it in fixed vision jobs.

Live Video

Live Video provides a live camera image with functions at the bottom (from left to right): zoom out, display ratio, zoom in, text tool, play, play once, pause, and grid.

Figure 3: Live Video

| Functions | Suitable for hand-eye relationship |
| --- | --- |
| Zoom out / Zoom in | The Eye-in-hand / eye-to-hand function is designed to change display ratio of the camera. This zooms in and out image displayed without changing the scope of extraction by the camera. |
| Text tool | Set the color, the offset, the size, the style, the prefix and the suffix of the text and the objects on the screen. |
| Play / Play Once / Pause | Set up extract mode (default = continuous extract) for users' convenience to capture the current image shown on camera; pause extract: to freeze image and stop capturing; extract once: to get the current image when pressing the extract button. |
| Grid | Turn on grid at the center of the live video to help composition. |

Table 4: Live Video Functions

NOTE:

Users can move the mouse cursor anywhere on the screen to view the

coordinates and the RGB values of the pixel in the live video.

Task Designer

TMvision provides users with a means of editing visual work. See Chapter 3 Task Designer for details.

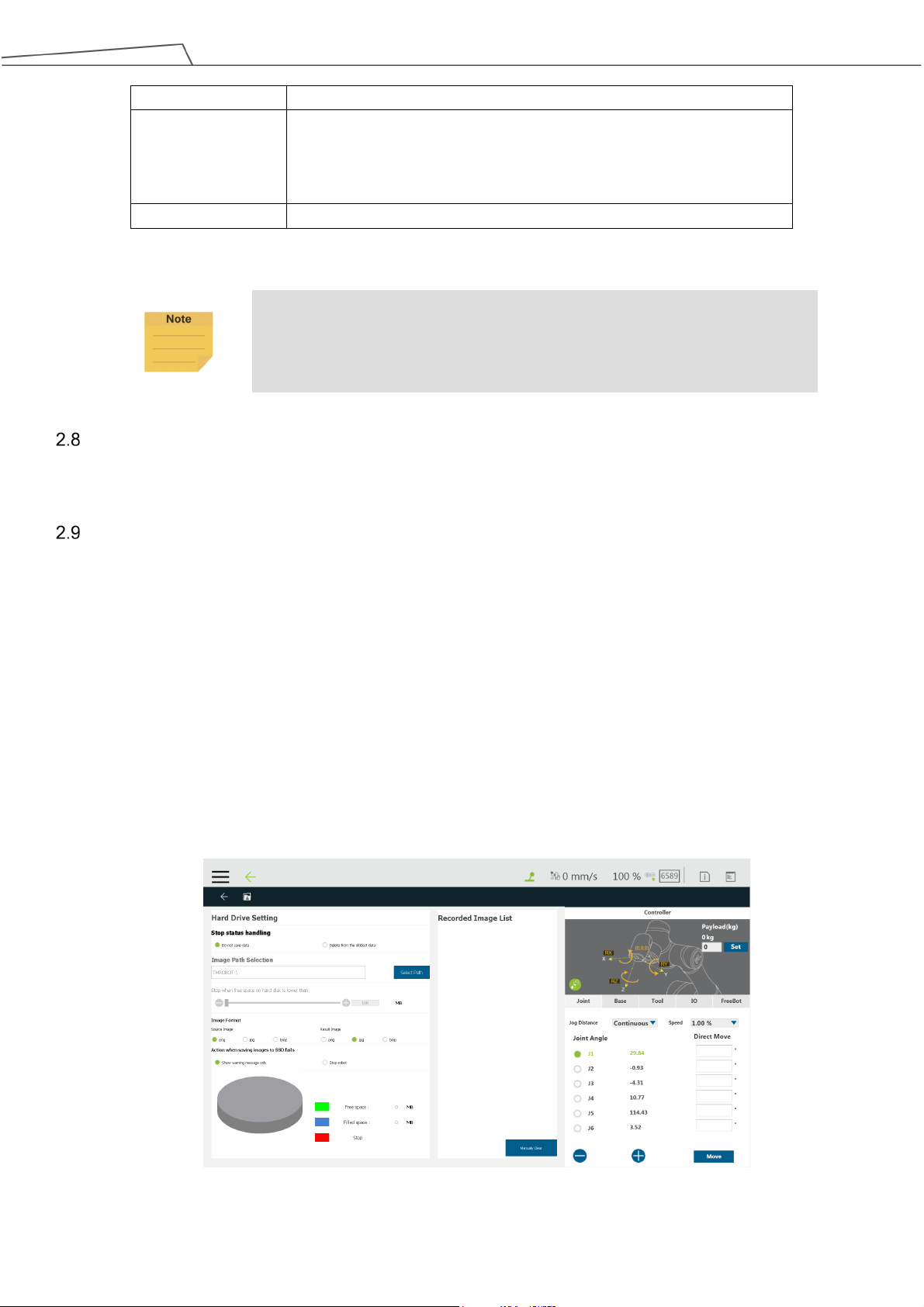

Hard Drive Setting

Hard Drive Setting lets users manage photo storage space. It requires the TM SSD (sold separately) to save source images or result images for analysis. Images can be saved in png, jpg, or bmp. By default, the Source Image is saved as png and the Result Image as jpg. The pie chart at the bottom left displays used space, available space, and reserved space. Under Stop status handling, users may select Do not save data or Delete from the oldest data. Click Select Path to assign the path to store files, and drag the slider to configure the size reserved for free space. Users may also select Show warning message only or Stop robot as the Action when saving images to SSD fails. Show warning message only displays the warning message in the TMflow log, while Stop robot stops the robot when the saving error occurs.

Figure 4: Hard Drive Setting


NOTE:

It is recommended to set the SSD reserved free space to 30% of the SSD total storage space.
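The interaction between the reserved free space and the two Stop status handling options can be reasoned about with a small calculation. The sketch below is a simplified model, not the behavior of TMvision itself: the function `plan_save`, the policy strings, and the sizes are hypothetical, and it assumes that an image is saved only if the free space after saving stays at or above the reserved amount.

```python
from typing import List, Optional


def plan_save(total: int, image: int, stored_oldest_first: List[int],
              policy: str, reserved_percent: int = 30) -> Optional[int]:
    """Return how many of the oldest stored images must be deleted so the
    new image fits without eating into the reserved space, or None if the
    image should not be saved. Sizes share one unit (for example MB)."""
    reserve = total * reserved_percent // 100
    used = sum(stored_oldest_first)
    deleted = 0
    while total - used - image < reserve:
        # "do_not_save" gives up at once; "delete_oldest" frees space
        # until nothing is left to delete.
        if policy != "delete_oldest" or deleted == len(stored_oldest_first):
            return None
        used -= stored_oldest_first[deleted]
        deleted += 1
    return deleted


# Hypothetical 1000 MB drive with 30% reserved and three 200 MB images stored.
print(plan_save(1000, 150, [200, 200, 200], "delete_oldest"))
print(plan_save(1000, 150, [200, 200, 200], "do_not_save"))
```

With these made-up numbers the first call reports that one old image has to go, while the second declines to save.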


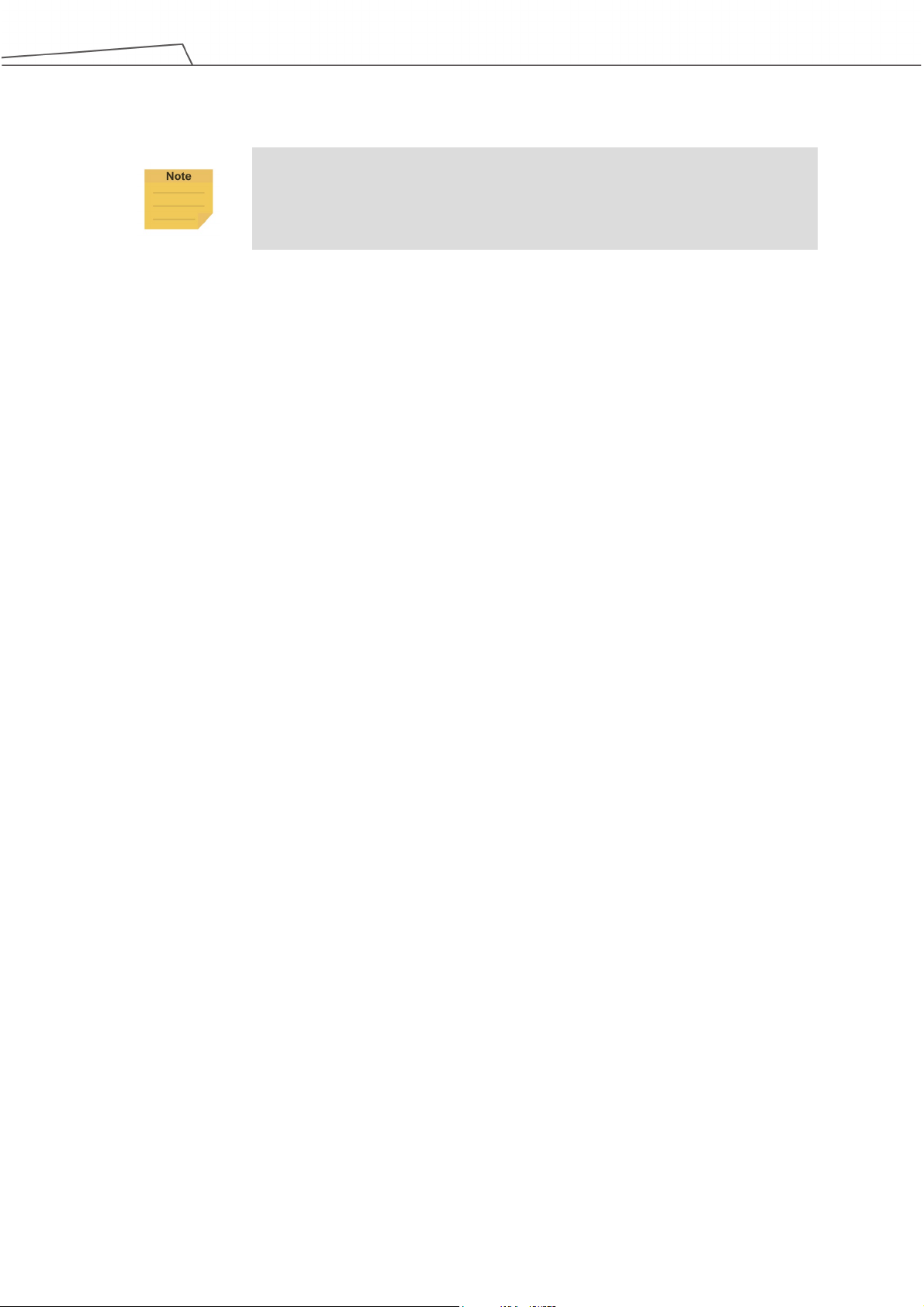

3. Task Designer

Overview

TMvision contains the following Task Designer functions: Visual Servoing, Fixed Point, AOI-only, Vision IO, Landmark Alignment, Object-based Calibration, and Smart-Pick. Users can select the application that fits their needs and execute jobs with a variety of vision algorithms.

Except for Vision IO and AOI-only identification, the applications can use the Find function to position the base system and establish the relationship between the robot motion and the visual components. As shown in the figure below, record point P1 on vision base system 2 to create a relative relationship with the object so that the robot can access the object visually.

Figure 5: The Flow of Pick and Place

IMPORTANT:

When using a vision base system, select the current base system shown at the

top right of TMflow as the vision base system.

NOTE:

In case of invalid selection, re-record the base system with "Re-record on another base" in the Point Manager.

Select Application

Select the TMvision Task Designer in the work list and choose the appropriate application according to the intended use. The basic categories are as follows:

| Applications | Suitable for hand-eye relationship | Workspace | Base system output |
| --- | --- | --- | --- |
| Fixed | Eye-in-Hand / Eye-to-Hand | ✓ | Create base system based on object position |
| Servoing | Eye-in-Hand | × | Create base system based on the robot position |
| AOI-only | Eye-in-Hand / Eye-to-Hand | × | × |
| Vision IO | Eye-in-Hand / Eye-to-Hand | × | × |
| Landmark Alignment | Eye-in-Hand | × | Create base system based on Landmark position |
| Object-based Calibration | Eye-in-Hand | × | Create base system based on object position |
| Smart-Pick | Eye-in-Hand | | |

Table 5: Select Applications

Users can save vision images by setting criteria based on the results of object detections, recognitions,

and measurements. Images available to save include the original image (source image) and the last

image taken (result image).

Figure 6: Save Vision Images Based on Results

NOTE:

The name of the selected application will be put above the flow at the left as a label.

Visual Servoing

Enter the TMvision Task Designer window and select Visual Servo to use this function. Visual servoing is only suitable for eye-in-hand. Alignment is achieved by getting continuously closer to the object's target coordinate on the image. The workspace does not need to be established. If the target angle has wide variations, use a calibration board to conduct level calibration during the initial alignment. The servoing time is determined by the region of convergence and the robot movement path. This can be applied to situations where the relationship between the camera, workspace, and the robot can easily change due to changes in human action and the environment. After the level is calibrated, select INITIATE on the left side of the Flow to make basic parameter settings. Setting parameters are as follows:

| Name | Function |
| --- | --- |
| Adjust camera parameters | Includes shutter and focus for the built-in camera and contrast and white balance for extracted images. All modules feature an auto once function. Click Save to validate changes made. |
| Switch to record image | Use the internal TM SSD images for identification. |
| Start at initial position | Check this to return the robot to its initial position before visual identification. Uncheck this and the robot will execute visual identification at the current position. |
| Move to the initial position | Move the robot to the initial position. |
| Reset initial position | Reset the initial position of the robot. |
| Lighting | Control the light source switch at the end of the robot. |
| Light Intensity* | Use the slider to set the brightness level. |
| Idle for Robot Stabilization | Set the length of time manually or automatically to have the robot self-adjust before taking pictures. |

*Available for HW 3.0 models or newer.

Table 6: Visual Servoing Settings

After the basic parameters have been set, confirm that the image is clear and can be seen. Select the Find function at the top and use the pattern matching function to match the pattern's shape feature in the selected frame.

Once the matching patterns have been determined, TMvision will compare the image in the current field of view against the one in storage to compute shape features and identify differences between them as well as give scores for similarity determination. Users may set up appropriate thresholds to determine whether the two images are of the same object.

NOTE:

TMvision provides an easy feature editing function. If the selected patterns contain unnecessary features, users can click the Edit pattern icon to modify features of the pattern.

Exit and return to the flow chart once completed. Users may set the servoing target when there is at least one Find function in the visual flow chart.

Figure 7: Visual Servoing

Parameters of the teaching page are described below:

| Name | Function |
| --- | --- |
| Move to the initial position | Move the robot to the initial position. |
| Distance (pixels) | When the feature distance between the current object and the target model falls below the set value of the distance, it is judged to be a match. |
| Angle | When the feature angle between the current object and the target object falls below the set value of the angle, it is judged to be a match. |
| Depth compensation | Whether or not to perform depth compensation based on the Scaling value of the found object. |
| Radius in X-Y plane | Stop the robot movement when the horizontal movement distance exceeds this value. |
| Distance in ± depth | Stop the robot movement when the vertical movement distance exceeds this value. |
| Set servoing target | Determine the servo target position by clicking the button and options below. (1) Use current position (2) Locate target at image center |
| Start Servoing | Click and hold to run the servoing process. Only save the results after successful servoing. |
| Stop Criterion | Use the sliders to configure the stop criteria of the Distance, the Angle, the Depth, and the length of Timeout. |
| Timeout (second) | Defaults to 45 seconds. Available from 10 ~ 45 seconds. Once triggered, the project goes to the flow where the condition is fail. |
| Moving Range | Use the sliders to configure the ranges of the limitations in the Radius, the Distance, and the Rotation angle of the camera. If the camera goes beyond the range, the system will take the fail route and leave the Vision node. |

Table 7: Parameters of the Teaching

After configuring the servoing target setting, click Start Servoing and press the (+) button on the robot stick to have TM Robot begin servoing the visual screen. Save the results once TMvision prompts that servoing completed successfully.

Fixed Point

Enter the TMvision Task Designer window and select Fixed Point to use this function. The fixed point function is designed for EIH and ETH for the robot to calculate and position objects with absolute coordinates by creating workspaces. Accuracy varies with that of workspace calibration. Refer to 2.2 Vision Base System Positioning Mode for details on creating workspaces. After choosing the workspace, use INITIATE in Flow on the left side to set basic parameters. Setting parameters are shown below:

| Name | Function |
| --- | --- |
| Adjust camera parameters | Includes shutter and focus for the built-in camera and contrast and white balance for extracted images. All modules feature an auto once function. Click Save to validate changes made. |
| Switch to record image | Use the internal TM SSD images for identification. |
| Start at initial position | Check this to return the robot to its initial position before visual identification. Uncheck this and the robot will execute visual identification at the current position. |
| Move to the initial position | Move the robot to the initial position. |
| Reset workspace | Reset the robot's workspace. |
| Lighting | Toggle camera light on or off. |
| Light Intensity* | Use the slider to set the brightness level. |
| Idle for Robot Stabilization | Set the length of time manually or automatically to have the robot self-adjust before taking pictures. |
| Snap-n-go | Improve efficiency by concurrently taking snaps and keeping the flow going to save time for non-vision tasks that follow. After the image has been captured, the system will go to the next node and keep the image processing in the background from the flow. Note that when the processes after the Vision node require the result from the Vision node and the background image processing is still running, there will be conditions and returns as follows: If the next node requires the parameters of the result, such as the Boolean variables Done and Pass generated by the Vision job, users will have to edit an If node for the system to determine how to proceed. If the next node is also a Vision node which includes a Vision base point or a Vision job, the flow will not continue until it is done with the last Vision node. |

*Available for HW 3.0 models or newer.

Table 8: Fixed Point Settings
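The Snap-n-go behavior in Table 8 resembles a background task whose result is checked later. The following is an analogy in Python, not TMflow code: `process_image` is a hypothetical stand-in for the Vision job's processing, and the dictionary keys simply mirror the Done and Pass variables named in the table. The flow continues with a non-vision task while the image is processed, and a gate playing the role of the If node decides how to proceed once the result is needed.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def process_image(image):
    """Hypothetical stand-in for the background image processing."""
    time.sleep(0.05)  # pretend the identification takes a while
    return {"Done": True, "Pass": image == "good_frame"}


def run_flow(image):
    """Run a toy flow: capture, continue with other work, then branch."""
    log = []
    with ThreadPoolExecutor(max_workers=1) as pool:
        # The Vision node hands off the captured image and returns at once.
        future = pool.submit(process_image, image)
        # The next node does not need the vision result, so it runs meanwhile.
        log.append("non-vision task")
        # Role of the If node: wait here until Done and Pass are available.
        result = future.result()
    if result["Done"] and result["Pass"]:
        log.append("pass branch")
    else:
        log.append("fail branch")
    return log


print(run_flow("good_frame"))
```

The design point is that the time saved equals the overlap between image processing and the non-vision work. A node that needs the result straight away gains nothing from running in the background.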

After configuring the basic camera parameters, select the Find function at the top and select the

pattern matching function as shown below. TMvision will use the framed shape feature to find its

alignment on the image and build the visual base on the object.

Figure 8: Fixed Point

Once the matching patterns have been determined, TMvision will compare the image in the

current field of view against the one in storage to compute shape features and identify differences

as well as give scores for matching. Users may set up thresholds to determine whether the two

images are the same object.

AOI-only

Enter the TMvision Task Designer and select AOI-only to use this function. The AOI-only identification is applicable to EIH or ETH to read Barcode and QR code, Color Classifier, and String Match without workspace and base system output. To identify a barcode, make sure there is only one clear and readable barcode in the framed region and use INITIATE on the left side of Flow to set basic parameters. The parameters are shown below:

| Name | Function |
| --- | --- |
| Adjust camera parameters | Includes shutter and focus for the built-in camera and contrast and white balance for extracted images. All modules feature an auto once function. Click Save to validate changes. |
| Switch to record image | Use the internal TM SSD images for identification. |
| Start at initial position | Check this to return the robot to its initial position before visual identification. Uncheck this and the robot will execute visual identification at the current position. |
| Move to the initial position | Move the robot to the initial position. |
| Reset workspace | Reset the robot's workspace. |
| Lighting | Control the light source switch at the end of the robot. |
| Light Intensity* | Use the slider to set the brightness level. |
| Idle for Robot Stabilization | Set the length of time manually or automatically to have the robot self-adjust before taking pictures. |
| Snap-n-go | Improve efficiency by concurrently taking snaps and keeping the flow going to save time for non-vision tasks that follow. After the image has been captured, the system will go to the next node and keep the image processing in the background from the flow. Note that when the tasks after the Vision node require the result from the Vision node and the background image processing is still running, there will be conditions and returns as follows: If the next node requires the parameters of the result, such as the Boolean variables Done and Pass generated by the Vision job, users will have to edit an If node for the system to determine how to proceed. If the next node is also a Vision node which includes a Vision base point or a Vision job, the flow will not continue until it is done with the last Vision node. |

*Available for HW 3.0 models or newer.

After setting the basic parameters, choose the pattern matching function in the Find function at

the top to proceed with matching. The identification is for a specific spot o n ly, not for the entire

field of view. Users can use the Find function to adjust the search range to find the object feature.

Once the object feature is found, the object's barcode can be accurately identified. The barcode

identification will output the identification result. Use the Display node to confirm the accuracy of

the barcode.

Vision IO

Software Manual TMvision Software version:1.82 28

Table 9: AOI-only Settings

Enter the TMvision Task Designer window and select Vision IO to use this function. When an obvious change occurs in the picture, the difference before and after the change can be used to determine whether a change has occurred in the Sensing Window. The Vision IO module treats the camera as an IO module and continuously monitors a specific area of the screen. When the content of that area changes significantly, a trigger signal is sent to TMflow.

Startup method:

Task Designer → Vision IO

Unlike the previous vision tasks in the flow, when Vision IO is selected at startup, users set it up in the prompt shown on the left of the figure below.

Figure 9: Vision IO

Name | Function
Move to Initial Position | Move the robot to the initial position.
Reset Initial Position | Reset the initial position of the robot.
TimeOut | Set the time waiting for Vision IO. If the IO is not activated within the time limit, the process exits through the Fail path.
Set sensing window | Set a region in the live video as an area to monitor. After the setting is completed, if the level of variations goes over the threshold, it means that a triggered event occurs.
Threshold | Trigger event sensitivity: The lower the threshold, the more sensitive.

Table 10: Vision IO Settings
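The interaction of the sensing window, the threshold, and the timeout can be sketched with simple frame differencing. This is a minimal illustration under stated assumptions, not the TMvision implementation: the change measure (mean absolute pixel difference against the first frame), the "Pass" return label, the function names, and counting the timeout in frames rather than seconds are all hypothetical choices made to keep the example deterministic.

```python
def window_change(reference, frame, window):
    """Mean absolute pixel difference inside window = (top, left, bottom, right)."""
    top, left, bottom, right = window
    total = 0
    count = 0
    for r in range(top, bottom):
        for c in range(left, right):
            total += abs(frame[r][c] - reference[r][c])
            count += 1
    return total / count if count else 0.0


def wait_for_trigger(frames, window, threshold, timeout_frames):
    """Return "Pass" once the window changes by more than threshold, else "Fail"."""
    frames = iter(frames)
    reference = next(frames, None)  # assumed: the first frame is the reference
    if reference is None:
        return "Fail"
    for index, frame in enumerate(frames):
        if index >= timeout_frames:  # time limit reached without a trigger
            break
        if window_change(reference, frame, window) > threshold:
            return "Pass"
    return "Fail"
```

With this measure, a lower threshold fires on smaller changes, which matches the table's statement that a lower threshold is more sensitive.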

Landmark Alignment

Enter the TMvision Task Designer window to select and use the Landmark Alignment function.

Users may run this function with the official Landmark. This is meant to build subsequent teaching

points on the base system added by the Landmark.

Figure 10: Landmark Alignment (1/2)

For points that were recorded on the robot base, users must teach all points again if the relative relationship between the robot and the object has changed. If the vision base system was created through Landmark and the aligning point is based on that vision base system, executing the Vision node is all it takes to update the Landmark vision base system when the relative relationship between the robot and the object has changed.

Figure 11: Landmark Alignment (2/2)

The Landmark Alignment parameter settings are as follows.

Name | Function
Adjust camera parameters | Includes shutter and focus for the built-in camera and contrast and white balance for extracted images. All modules feature an Auto once function. Click Save to validate changes.
Switch to record image | Use the internal TM SSD images for identification.
Start at initial position | Check this to return the robot to its initial position before visual identification. Uncheck this and the robot will execute visual identification at the current position.
Move to the initial position | Move the robot to the initial position.
Reset workspace | Reset the robot's workspace.
Lighting | Toggle camera light on or off.
Light Intensity* | Use the slider to set the brightness level.
Idle for Robot Stabilization | Set the length of time manually or automatically to have the robot self-adjust before taking pictures.
Snap-n-go | Improve efficiency by concurrently taking snaps and keeping the flow going to save time for non-vision tasks that will follow. After the image has been captured, the system will go to the next node and keep the image processing in the background from the flow. Note that when the processes after the Vision node require the result from the Vision node and the background image processing is still running, there will be conditions and returns as follows: If the next node requires the parameters of the result, such as the Boolean variables Done and Pass generated by the Vision job, users will have to edit an If node for the system to determine how to proceed. If the next node is also a Vision node which includes a Vision base point or a Vision job, the flow will not continue until it is done with the last Vision node.

*Available for HW 3.0 models or newer.

Table 11: The Fixed Settings

NOTE:

Users can add Enhance, Identify, and Measure modules to Landmark Alignment flows for added flexibility.
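Why points taught on a Landmark vision base survive a change in the robot-to-object relationship can be shown with a small coordinate-transform sketch. It is a planar (x, y, rotation) simplification of what is a full 3D pose in practice, and `to_robot` and the sample poses are hypothetical values chosen for illustration.

```python
import math


def to_robot(base_pose, point_in_base):
    """Map a point taught in the vision base into robot coordinates.

    base_pose is (x, y, theta_rad): the pose of the vision base in the robot frame.
    """
    bx, by, theta = base_pose
    px, py = point_in_base
    return (
        bx + px * math.cos(theta) - py * math.sin(theta),
        by + px * math.sin(theta) + py * math.cos(theta),
    )


# The taught point is stored relative to the Landmark base and never changes.
taught_point = (100.0, 0.0)

# Base pose before and after the object moves (assumed example values).
before = to_robot((500.0, 0.0, 0.0), taught_point)
after = to_robot((400.0, 200.0, math.pi / 2), taught_point)
```

Only the base pose is refreshed when the Vision node runs; the taught point itself is untouched, which is why no re-teaching is needed. A point recorded directly on the robot base has no such intermediate frame to update.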

Object-based Calibration

Object-based calibration is applicable to EIH only. It employs the difference in the robot servoing movement to calculate the relative relationship between the object and the robot without creating a workspace. If the angle of the positioning target varies widely, users must run the horizontal calibration with the calibration plate before determining the initial position. This function delivers high precision for objects with simpler shapes by building the fixed-point base system directly on the object, which reduces the errors in the height measurements made with the calibration plate. When the horizontal calibration is completed, click the Find function to select Pattern Matching(Shape), apart from Pattern Matching(Image), Blob Finder, Anchor, and Fiducial Mark Matching, for TMvision to frame the shape.

Once the matching patterns have been determined, TMvision will compare the image in the

current field of view against the one in storage to compute shape features and identify differences

between them as well as give scores for similarity determination. Users can set thresholds to

determine if the two images are the same object. Exit and return to the flow chart once completed.

Once edited and there is at least one Find module in the visual flow chart, click Calibration to perform object-based calibration.

Figure 12: Object-Based Calibration

Name | Function
Move to the initial position | Move the robot to the initial position.
Radius in X-Y plane | When the horizontal moving distance exceeds this value, stop the robot movement.
Distance in ± depth | When the vertical moving distance exceeds this value, stop the robot movement.
Start calibration | Click and hold the + button on the robot stick to servo the object. The robot will move four times to place the object at each of the four corners of the image field to complete the action. Only save the file after the robot successfully completes these actions.

Table 12: Object-Based Calibration Settings
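The two movement limits in the table amount to a bounds check on the displacement from the initial position. The sketch below is an assumed reading of those rows, not the controller's code: `within_limits` is a hypothetical helper, positions are (x, y, z) tuples in the same length unit as the limits, and "exceeds" is taken to mean strictly greater than.

```python
import math


def within_limits(start, current, radius_xy, depth_limit):
    """Return False when servoing should stop because a limit is exceeded."""
    dx, dy, dz = (c - s for c, s in zip(current, start))
    horizontal_ok = math.hypot(dx, dy) <= radius_xy  # Radius in X-Y plane
    vertical_ok = abs(dz) <= depth_limit             # Distance in ± depth
    return horizontal_ok and vertical_ok
```

For example, a move of 30 in x and 40 in y is a horizontal distance of 50, so it passes with a radius of 50 and stops the robot with a radius of 49.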

Smart-Pick

Smart-Pick lowers the threshold for using TMvision by using the Vision button to drive a simple, step-by-step vision job teaching process. Users can use Landmark to achieve a fixed-point vision job without the calibration plate. Smart-Pick applies to stacks of boxes, pick and place with trays (low precision requirements), and applications with extra compensation (force sensor, gripper, or object restricted position). Smart-Pick is recommended for applications with 1 to 2 mm accuracy.


in the last saved setting will be cleared.

NOTE:

To switch the Vision button at the end of the robot to Smart-Pick, go to TMflow >

☰ > Setting > End Button > Vision Button and check Smart-Pick.

Users can start using Smart-Pick by navigating to Task Designer > Please select an application to start and clicking the Smart-Pick icon, or by pressing the Vision button at the end of the robot if it is switched to Smart-Pick.

Steps to use Smart-Pick

1. Put Landmark in the robot's field of view. Move the robot if necessary. Click NEXT to automatically adjust shutter, white balance, and focus based on the current location.

2. If the automatic adjustment does not fit, click Change Settings to adjust manually.

3. Push the + button on the robot stick to perform tilt-correction. Click NEXT when done to set the Landmark base as the work platform of the object.

4. Click Camera Settings if necessary and capture the image of the background without the object. Click NEXT.

5. Capture the image of the object with the background.

6. Adjust Region of Interest parameters for the best outcome. Click Select ROI to scale ROI down.

7. Adjust the matching parameters or use Edit Pattern to edit the feature of the object. Set Search Range of the object location, rotation, and scale in the image.

8. Click Save to save the job. The default job name is SmartPick_ followed by a sequence number. Users can use the Vision button as Done, Save, and Yes in this step.

To apply extra functions such as enhance, users can click Transform Into a General Vision Job to save the job without the Smart-Pick feature. Once transformed, there is no