
NEC D3-10i SAN Storage

Quick Start Guide

For the latest information, please see http://support.necam.com/Storage/D-Series/

Please feel free to contact your NEC Corporation of America approved reseller or NEC Corporation of America Technical Support at any phase of the integration for assistance. NEC Corporation of America Technical Support can be reached by the following methods:

Web: http://support.necam.com

Email: support@necam.com

Phone: +1-877-632-0064 (toll-free)

Support contact information for other regions of the world is available at http://www.nec.com/global/support/contact/index.html


Contents

Introduction

Understanding D3-10i Array Management

Requirements

STEP 1: Planning and Preparation

Preparing For Installation

Unpacking the Array

Hardware Component Features

Array Front View

Array Rear View

STEP 2: Array Installation

Hardware Installation

Mounting the Array

Installing Disk Drives

Cable Connections

SAS Cable Connections

Array Connection Configurations

iSCSI Connections

Management Connections

Power Cable Connections

Power-On/Power-Off Procedures

Power-On Procedure When Auto Power On Mode Is Not Enabled

Power-Off Procedure

STEP 3: Software Installation

Management Server Installation

Installing Storage Manager

Application Server Installation

Control Command Installation

Preparing the Software iSCSI Initiator in a Windows Environment

Preparing the Software iSCSI Initiator in a VMware ESX Environment

Storage Manager Client Installation

Storage Manager Client Installation and Startup

STEP 4: Array Initialization and Configuration

Array Initialization

Configuring iSCSI Connections

Configuring the iSCSI Initiator in Windows

Configuring the iSCSI Initiator in VMware ESX

Configuring Logical Disks and Pools

Appendix: Configuring Multipathing

Installing PathManager


Introduction

Thank you for your purchase of the NEC D3-10i SAN Storage array. This document provides instructions designed to help experienced IT professionals install and configure the array as quickly as possible.

Since this document is a Quick Start Guide, the following assumptions have been made:

Readers have experience with network and storage terminology and configurations.

Readers have experience with Microsoft® Windows® or VMware® ESX administration.

The array will be deployed in a Microsoft Windows or VMware ESX environment.

Expansion options, such as an external battery backup unit (BBU), are not being installed with the array initially.

Throughout this document, the term “base unit” refers to the enclosure containing the array controller modules, and the term “array” refers collectively to the base unit and any attached disk enclosures. For information not covered in this document, refer to the additional documentation on the CD media that comes with the array.

To review the documentation on the Storage Manager CD when Autorun is disabled, navigate to the root directory of the CD drive and open Index.HTML. From the browser window, you can navigate to all the documentation and installation software available for use with the array.

Figure 1. Storage Manager CD Navigation Windows


Maximum Wattage

BTUs per Hour

Model

SAS

SATA

SAS

SATA

D3-10i Base Unit

610 W

540 W

2,081

1,843

Disk Enclosure

430 W

370 W

1,462

1,258

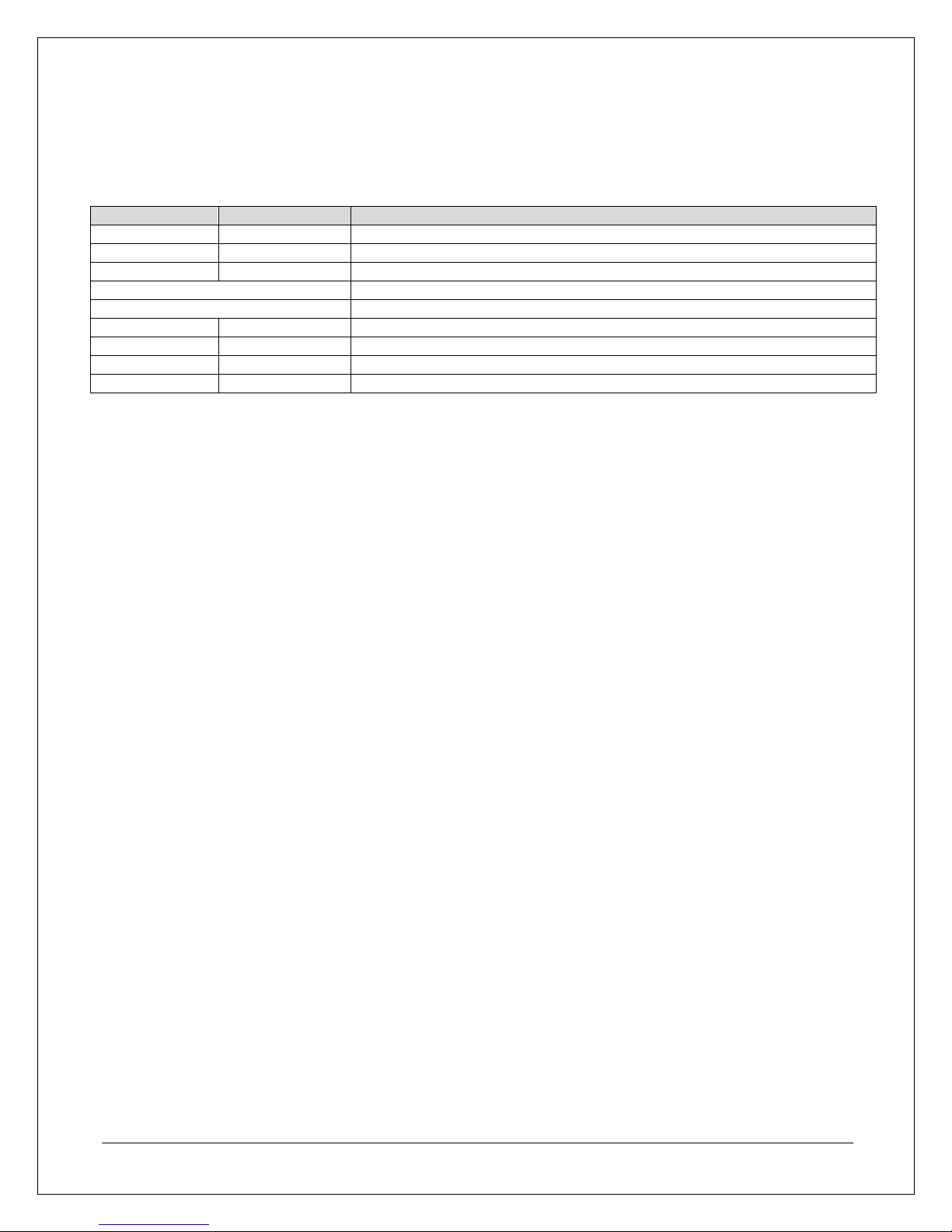

LAN

Ethernet/iSCSI

Management Server

Management Clients

Application Servers

D-Series Array

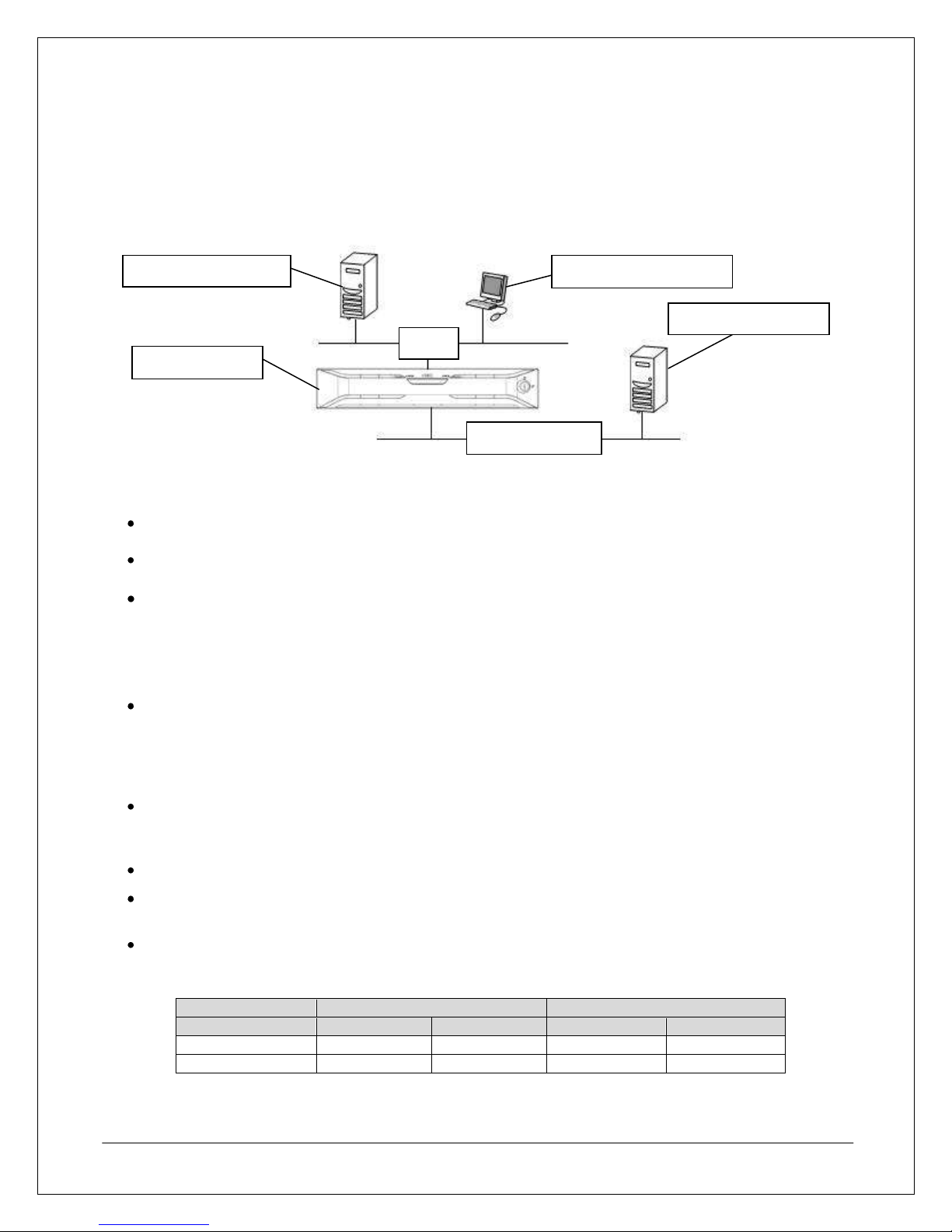

Understanding D3-10i Array Management

The D3-10i array is flexible enough to be implemented in a number of ways, from a single-server storage

solution up to a multi-server SAN infrastructure. No matter which approach is used, the array requires an

Ethernet connection to a server and client that will perform management functions and an Ethernet connection

to servers that will access the array’s storage.

Figure 2. Sample D3-10i Infrastructure Design

Even though these roles can be implemented on a single server, this document describes these as distinct

server roles as follows:

Management Server – The management server role is performed by a server with the Storage

Manager software installed. This server is attached to the array via an Ethernet connection.

Management Client – The management client role is performed by any Windows workstation or server

that connects to the management server via the Web-based management interface.

Application Server – The application server role is performed by any server that uses the array

storage via an iSCSI connection.

Requirements

The following equipment, tools, and resources are required to complete this installation.

Network Cables – At least two Ethernet cables will be required.

o 1 Ethernet cable is required for connection to the management port.

o 1 Ethernet cable is required for each iSCSI port that will be used for connections to application

servers.

IP Addresses – There is one management port and two iSCSI ports on each controller. Each port that

will be used requires a unique IP address. Those IP addresses should be reserved and all associated

network settings should be confirmed before installation.

Tools – You will need the installation CD (shipped with unit) and a #1 Phillips-head screwdriver.

Rack space – If rack-mounting, 2U of rack space is required for each base unit and an additional 2U of

space is required for each additional disk enclosure.

Power Requirements – Two available outlets providing AC 100-240V single phase 50/60Hz for a

NEMA 5-15P plug will be required for each base unit and each disk enclosure. Power and cooling

requirements are listed in the table below.

Table 1. Storage unit power requirements
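The per-unit maximums in Table 1 and the two-outlet rule apply to every base unit and every disk enclosure. The totals for a planned configuration are therefore simple sums. The following Python sketch adds them up for one base unit and its enclosures.

It rests on one assumption: the SAS and SATA columns refer to the type of drives installed in each unit. The function name and dictionary layout are illustrative only and are not part of any NEC software.

```python
# Per-unit maximums copied from Table 1: (maximum watts, BTUs per hour).
# Assumption: the SAS/SATA columns describe the drive type installed in each unit.
TABLE_1 = {
    ("base", "SAS"): (610, 2081),
    ("base", "SATA"): (540, 1843),
    ("enclosure", "SAS"): (430, 1462),
    ("enclosure", "SATA"): (370, 1258),
}
MAX_ENCLOSURES = 11   # the D3-10i supports up to 11 additional disk enclosures
OUTLETS_PER_UNIT = 2  # two outlets per base unit and per disk enclosure


def power_budget(base_drive_type, enclosure_drive_types):
    """Return (max watts, BTUs per hour, outlets) for one base unit and its enclosures."""
    if len(enclosure_drive_types) > MAX_ENCLOSURES:
        raise ValueError("the D3-10i supports at most 11 additional disk enclosures")
    watts, btu = TABLE_1[("base", base_drive_type)]
    for drive_type in enclosure_drive_types:
        enclosure_watts, enclosure_btu = TABLE_1[("enclosure", drive_type)]
        watts += enclosure_watts
        btu += enclosure_btu
    outlets = OUTLETS_PER_UNIT * (1 + len(enclosure_drive_types))
    return watts, btu, outlets


# Base unit with SAS drives plus one SAS and one SATA disk enclosure.
print(power_budget("SAS", ["SAS", "SATA"]))  # (1410, 4801, 6)
```

Because the sketch sums maximum ratings, the result is a worst-case figure. Use it for sizing circuits and cooling, not for predicting typical power draw.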


STEP 1: Planning and Preparation

This section describes the planning and preparation steps that need to occur before you install the array.

Preparing For Installation

Before installing the array, perform the following steps on the application servers that will be connected to the array:

1. Install any additional gigabit Ethernet NICs on the application servers as needed for iSCSI connectivity.

2. Install the latest driver updates for all application server NICs that will be used for iSCSI connectivity.

3. Ensure that the array and the application servers will reside on the same network subnet. Also ensure that IP addresses have been reserved for each iSCSI port that will be connected to that network.

4. Optional: Install and configure at least one Internet Storage Name Service (iSNS) server on the same network segment as the array and the application servers that will connect to it.

5. If a specialized iSCSI HBA will be used instead of a software iSCSI initiator, ensure that the iSCSI adapters on all application servers, and any iSCSI switches, are installed and configured as specified by the hardware vendor.

Note: Check with your D3-10i sales representative for the latest information about iSCSI HBAs that have been certified for use with the D3-10i array.
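Step 3 above and the IP Addresses requirement (a unique address for every port in use) together mean two things. All planned iSCSI addresses should fall inside one subnet, and none may repeat. A short Python sketch using the standard `ipaddress` module can check a planned layout before installation. The function name, the example subnet, and the addresses are hypothetical.

```python
import ipaddress


def check_iscsi_plan(subnet, array_iscsi_ips, server_ips):
    """Return a list of problems in a planned iSCSI address layout (empty if none)."""
    net = ipaddress.ip_network(subnet)
    problems = []
    if len(array_iscsi_ips) > 4:
        problems.append("an array has at most four iSCSI ports (two per controller)")
    seen = set()
    for text in array_iscsi_ips + server_ips:
        ip = ipaddress.ip_address(text)
        # Every port in use needs its own address.
        if ip in seen:
            problems.append(f"{ip} is assigned more than once")
        seen.add(ip)
        # The array and the application servers must share one subnet.
        if ip not in net:
            problems.append(f"{ip} is outside {net}")
        elif ip in (net.network_address, net.broadcast_address):
            problems.append(f"{ip} is the network or broadcast address of {net}")
    return problems


plan = check_iscsi_plan(
    "192.168.10.0/24",
    array_iscsi_ips=["192.168.10.11", "192.168.10.12"],
    server_ips=["192.168.10.101", "192.168.10.12"],
)
print(plan)  # ['192.168.10.12 is assigned more than once']
```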

Unpacking the Array

Unpacking instructions are illustrated on the array packaging materials. In addition, keep the following tips in mind when unpacking the base unit.

To prevent component damage caused by condensation from rapid temperature and humidity changes, allow the base unit to acclimate to the ambient room temperature before unpacking it.

To prevent injury, at least two people should unpack the array, lift it out of the packing materials, and move it.

To prevent damage to the base unit, hold it by the bottom when removing it from the packing material or moving it. Do not lift or carry the unit by projecting surfaces such as the power supply units or plastic bezel mounts.

Save all packing materials in case the unit needs to be returned for repairs or shipped to another location.

Record the array serial number and model number, located on the back of the array or inside the cabinet door of the array. Then visit http://www.necam.com/registration and fill in your self-support registration information to receive an additional 30 days of warranty coverage.


Power LED

Service LED

Description

ON

OFF

Normal operating condition

ON

ON

Indicates that a maintenance request or maintenance task is in process

ON

Blinking

Depending on controller LED status (which is located at the rear of the unit),

can indicate that a power-on sequence, online download sequence, or

automatic download sequence is in progress

ON

ON 5 Seconds

OFF 7 Seconds

Indicates that a condition may exist that requires immediate technical

support from NEC

Blinking

OFF

Cache flushed, okay to power down if desired

OFF

OFF

Powered off

Active/Fault LED

Status

Green

Steady ON

Normal status (Ready)

Green

Blinking

Normal status (Accessing)

Green/Orange

Alternating

Performing RAID rebuild

Orange

Blinking

HDD low power condition

Orange

Steady ON

Abnormal status

Service LED

Power LED

Activity/Fault LED

Disk Drive or Tray

00

02

03

04

08

05

01

06

0A

09

07

0B

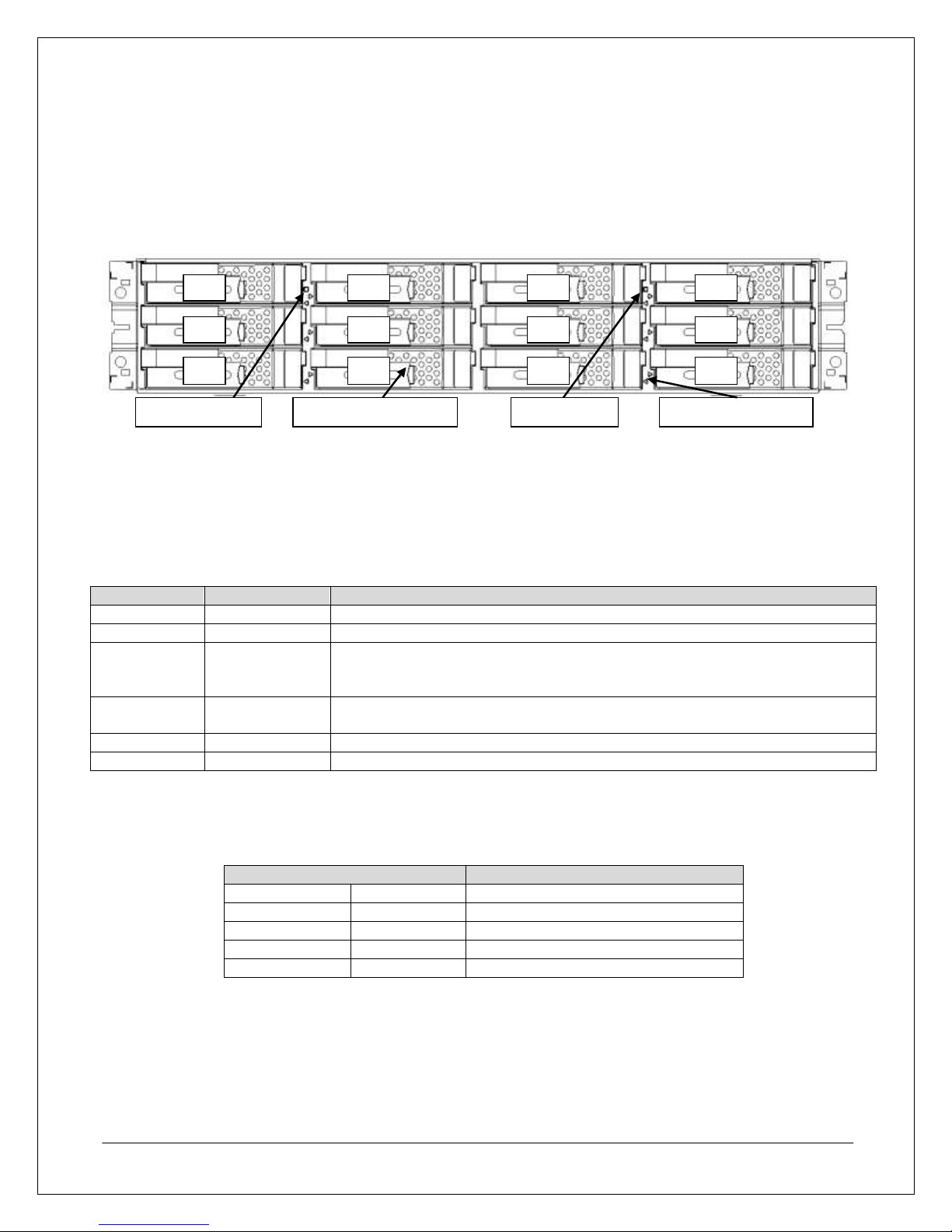

Hardware Component Features

This section describes and illustrates a few of important components that are accessible from the front and rear

of the base unit.

Array Front View

This section describes the array components accessible from the front.

Figure 3. Array Front View (with bezel removed)

Disk Drives and Dummy Trays

Base units and disk enclosures have 12 disk drive slots. To maintain proper airflow and thermal conditions,

each slot is filled with either a disk drive or dummy tray.

Figure 3, above, shows the drive numbering sequence for the slots.

Service LED and Power LED

The Service and Power indicators illuminate to indicate different operating conditions as follows.

Table 2. Power LED and Service LED Status Conditions

Active/Fault LEDs

There is a single Active/Fault LED for each disk drive slot. These indicators use different color/blinking

indicators to signal different operating conditions for each respective drive as follows.

Table 3. Active/Fault LED Status Conditions
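Table 2 is effectively a lookup keyed on a pair of LED states. When recording observations, for example in a site checklist, a Python dictionary keyed the same way keeps the interpretation consistent.

The state labels are hypothetical shorthand introduced here, not names used by Storage Manager. This includes "on5_off7" for the pattern of 5 seconds on and 7 seconds off.

```python
# Keys are (Power LED, Service LED); descriptions follow Table 2.
# The state labels are hypothetical shorthand; "on5_off7" means ON 5 s, OFF 7 s.
FRONT_LED_STATES = {
    ("on", "off"): "Normal operating condition",
    ("on", "on"): "Maintenance request or maintenance task in process",
    ("on", "blinking"): "Power-on, online download, or automatic download sequence "
                        "may be in progress; check the controller LEDs",
    ("on", "on5_off7"): "A condition may require immediate technical support from NEC",
    ("blinking", "off"): "Cache flushed; okay to power down if desired",
    ("off", "off"): "Powered off",
}


def describe_front_leds(power_led, service_led):
    """Translate observed Power/Service LED states into the Table 2 description."""
    key = (power_led.strip().lower(), service_led.strip().lower())
    return FRONT_LED_STATES.get(
        key, "Combination not listed in Table 2; see the D3-10i Disk Array Unit User Guide"
    )


print(describe_front_leds("ON", "OFF"))  # Normal operating condition
```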


Array Rear View

The rear of the base unit provides access to the base unit’s two power supply modules (PS 0 and PS 1) and two controller modules (Controller 0 and Controller 1), as seen in Figure 4 below.

Figure 4. Base Unit Rear View. Callouts: PS 0, PS 1, Power Supply Cable Clamp, Power Supply Status LEDs, Controller 0, Controller 1, SAS Ports and LEDs, Power Button, Management LAN Port, Maintenance Port, iSCSI Host Ports, and Controller Status LEDs.

Power Supply Status LEDs

Each power supply has three status LEDs. From left to right, they are a Power On LED, a Power Fault LED, and a Fan Fault LED. Together they indicate the status of each power supply module as follows.

Table 4. Power Supply LED Status Conditions

| LED | Indication | Description |
|---|---|---|
| Power On LED | Solid Green | When lit, indicates that the power supply is on |
| Power Fault LED | Solid Orange | Indicates that a fault condition exists in the power supply itself |
| Power Fault LED | Blinking Orange | Indicates an abnormal thermal condition in the power supply, or that the other power supply bay is empty |
| Fan Fault LED | Solid Orange | When lit, indicates that a fault condition exists in the power supply’s fan |

Controller SAS Port

The SAS ports are used to connect the base unit to additional disk enclosures. Each SAS port has two status LEDs: a green Link LED on the left and an orange Fault LED on the right.

iSCSI Host Ports

The ports labeled iHP on the base unit controllers are iSCSI Host Ports, which connect the array to the application servers. Each controller has two iSCSI ports, and each array can have one or two controllers, for a maximum of four iSCSI ports per array.

Management and Maintenance Ports

The management port provides the network connection for the management server. The maintenance port is only for use by NEC-authorized service professionals.


Controller Status LEDs

Each controller has three status LEDs. From left to right, they are a Backup LED, a Fault LED, and a Ready LED. The Backup LED blinks only to indicate that the battery backup is currently powering the unit. The Ready and Fault LEDs use the following signal combinations to indicate controller status.

Table 5. Controller Ready LED and Fault LED Status Conditions

| Ready LED | Fault LED | Status |
|---|---|---|
| Blinking | OFF | Normal operating condition |
| ON | OFF | Startup sequence in progress |
| Rapid Blinking | OFF | Shutdown sequence in progress |
| Blinking, asynchronous with Fault LED | Blinking, asynchronous with Ready LED | Online/automatic download sequence in progress |
| Blinking, synchronized with Fault LED | Blinking, synchronized with Ready LED | In download mode |
| ON | ON | Fault condition exists |
| ON | Blinking | Waiting for disk enclosure power-on |
| Blinking | ON | Disk interface is disabled |
| OFF | OFF | Powered off |


STEP 2: Array Installation

This section describes the steps necessary to complete the physical installation of the array. These steps should occur before you connect the array to a server.

Hardware Installation

This section describes the steps you need to perform to physically install the array in a rack and prepare it for connection to servers.

Mounting the Array

Before mounting the array in a rack enclosure, observe the following:

When installing both disk enclosures and base units in the same rack, mount the disk enclosures above the base unit.

If an optional external battery backup unit (BBU) will be installed in the same rack, reserve at least 1U of space underneath the base unit for the external BBU.

If the array is being installed in a rack containing other equipment, mount the heaviest rack units as close to the bottom as possible and place lighter units near the top.

When determining the proper positioning in the rack, note the following weights:

o A fully loaded base unit may weigh in excess of 68 lbs.

o A fully configured disk enclosure may weigh in excess of 63 lbs.

Warning: Bezel mounts are fragile. Do not use them as handles to lift the base unit, and do not expose them to any other strong forces.

Figure 5 illustrates the rail mounting points. At the front of each rail, M5 screws go in the two center screw holes. At the rear of each rail, screws are positioned above and below the inner rail tabs. The array then slides into the rails from the front and is secured by two screws on each side of the plastic bezel mount, above and below the front rail mounting screws.

Figure 5. Mounting the Array
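The mounting rules above can be turned into a bottom-to-top rack plan: BBU space at the bottom, the base unit next, and disk enclosures above it. Unit sizes come from the Requirements section (2U per base unit and per enclosure).

This Python sketch makes two assumptions. First, the external BBU occupies exactly the 1U reserved for it. Second, the array starts at a given rack position.

The weight figure sums the guide's "in excess of" values and leaves out the BBU, whose weight is not given here. Treat it as an approximate lower bound for a fully loaded configuration, not a maximum.

```python
BBU_U = 1            # space reserved under the base unit for an optional external BBU
BASE_UNIT_U = 2
ENCLOSURE_U = 2
BASE_UNIT_LBS = 68   # fully loaded base unit: "in excess of 68 lbs"
ENCLOSURE_LBS = 63   # fully configured disk enclosure: "in excess of 63 lbs"


def rack_plan(enclosures, with_bbu=False, start_u=1):
    """Return (placements bottom to top, total rack units, approximate minimum weight)."""
    if not 0 <= enclosures <= 11:
        raise ValueError("the D3-10i supports 0 to 11 additional disk enclosures")
    placements = []
    u = start_u
    if with_bbu:
        placements.append((u, "external BBU"))
        u += BBU_U
    placements.append((u, "base unit"))
    u += BASE_UNIT_U
    # Disk enclosures are mounted above the base unit.
    for n in range(1, enclosures + 1):
        placements.append((u, f"disk enclosure {n}"))
        u += ENCLOSURE_U
    weight_lbs = BASE_UNIT_LBS + enclosures * ENCLOSURE_LBS  # BBU weight not included
    return placements, u - start_u, weight_lbs


placements, total_u, weight = rack_plan(2, with_bbu=True)
for position, unit in placements:
    print(f"U{position}: {unit}")
print(total_u, weight)  # 7 194
```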


Installing Disk Drives

To remove a drive or tray:

1. Push in the tab on the drive carrier to unlock the drive latch.

2. Pull the latch out to the open position, as seen in Figure 6 below.

3. Slide the drive or tray out of the disk drive bay.

Figure 6. Array Disk Drive Latch Open Position

To install a drive or tray in an empty drive bay:

1. With the drive latch in the open position, slide the drive or tray into the bay until the latch catches on the inside edge of the drive bay.

2. Move the latch to the closed position until it locks into place with a click.

While the drive removal and insertion process is simple, keep the following guidelines in mind when installing and removing disk drives.

Note: Whenever the array has been transported or shipped with the drives installed, reseat all disk drives before the initial startup.

The disk drives in positions 00, 01, and 02 function as a system drive stripe set. For proper array operation, they must remain mounted in their original positions.

If disk drives in positions 00, 01, or 02 must be replaced, remove only one of these drives at a time.

When installing more than one drive while the system is powered on, install only one drive at a time. Wait until each drive reaches a ready state before installing the next.

When replacing failed disk drives, replace each drive with the same drive model.

Use dummy trays in any vacant slots to maintain proper unit airflow.
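The slot labels run from 00 to 0B, which is simply 0 to 11 written as two-digit hexadecimal. The Python sketch below checks a set of slots you intend to have out of the array at the same moment against the system-drive rule above. The function name is hypothetical, and the check covers only that rule and slot validity.

```python
# Slots 00..0B are 0..11 written as two-digit uppercase hexadecimal.
SLOTS = [f"{n:02X}" for n in range(12)]
SYSTEM_SLOTS = {"00", "01", "02"}  # system drive stripe set


def check_concurrent_removal(slots):
    """Return problems with removing all of `slots` at the same time (empty if allowed)."""
    labels = {s.strip().upper() for s in slots}
    problems = [f"{s} is not a valid slot (00-0B)" for s in sorted(labels) if s not in SLOTS]
    system = sorted(labels & SYSTEM_SLOTS)
    if len(system) > 1:
        problems.append(
            "remove only one of slots 00, 01, 02 at a time, not " + ", ".join(system)
        )
    return problems


print(SLOTS[-1])                               # 0B
print(check_concurrent_removal(["02", "05"]))  # []
```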

Cable Connections

This section describes the various connection points used for network and storage connectivity.

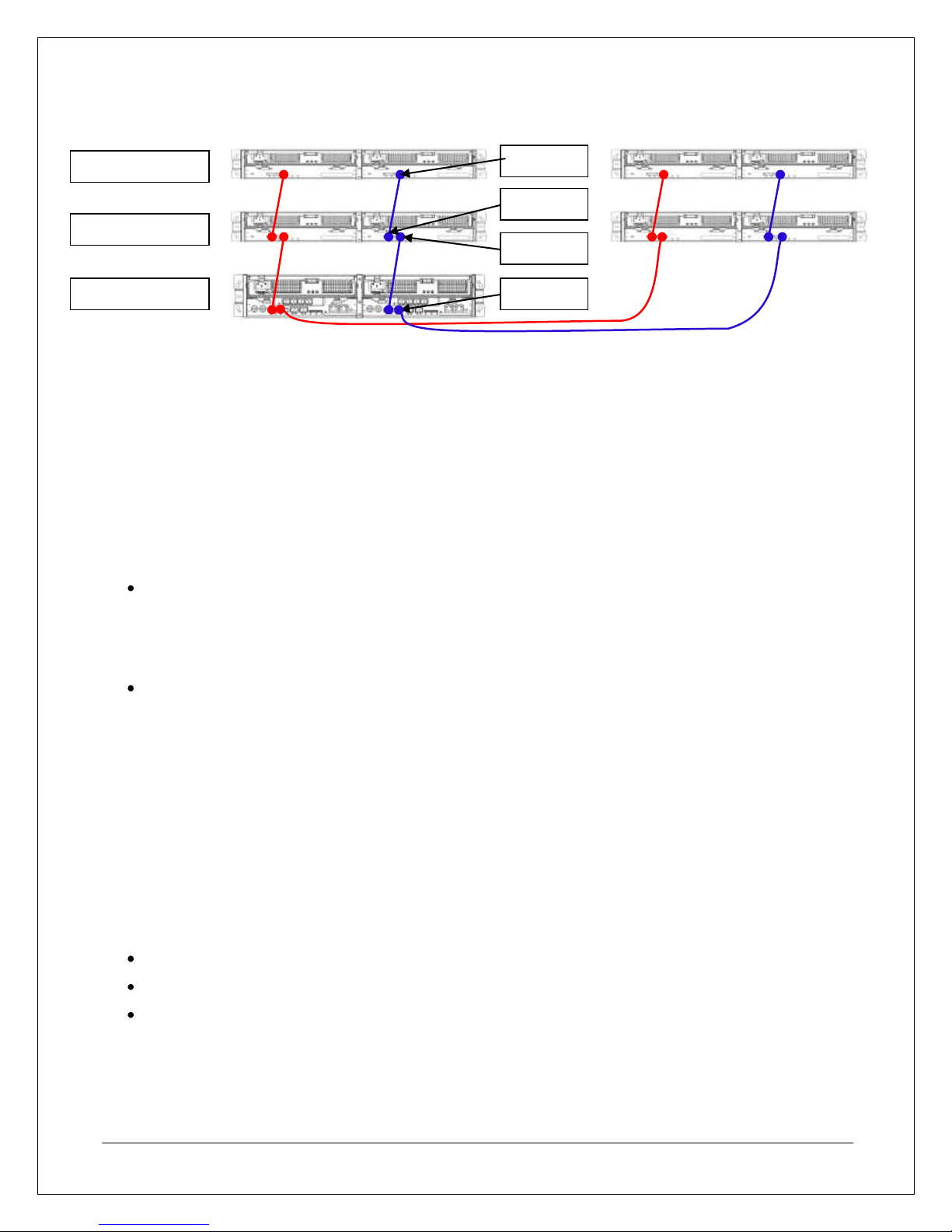

SAS Cable Connections

The array SAS ports connect the base unit to additional disk enclosures to expand storage capacity. To connect a disk enclosure to the base unit, use a SAS wide-link cable between the DP-IN port on the disk enclosure and the DP0 or DP1 port on the base unit. To connect more than one disk enclosure, connect the DP-IN port on the next disk enclosure to the DP-OUT port on the previously connected disk enclosure, as shown in Figure 7 below.


Figure 7. SAS Cable Connections (Base Unit DP0 to Disk Enclosure DP-IN; Disk Enclosure DP-OUT to the next Disk Enclosure DP-IN)

The D3-10i can support up to 11 additional disk enclosures. The documentation on the CD shipped with your base unit provides more information on the following:

o Connecting multiple disk enclosures to an array.

o Disk Enclosure (DE) and Physical Disk (PD) numbering recommendations.

o Other considerations.
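The daisy-chain rule described above can be written out as a cabling list, which is handy for labeling cables before installation. The first enclosure's DP-IN connects to base unit port DP0 or DP1. Each further enclosure's DP-IN connects to the previous enclosure's DP-OUT.

This Python sketch assumes a single chain from one base unit port. Distributing enclosures across DP0 and DP1, and the DE numbering recommendations, are covered in the CD documentation and are not modeled here.

```python
def sas_cabling_plan(enclosures, base_port="DP0"):
    """Return (from, to) pairs for a single chain of disk enclosures on one base unit port."""
    if base_port not in ("DP0", "DP1"):
        raise ValueError("base unit SAS port must be DP0 or DP1")
    if not 1 <= enclosures <= 11:
        raise ValueError("the D3-10i supports 1 to 11 additional disk enclosures")
    # The first enclosure connects to the base unit.
    plan = [(f"Base Unit {base_port}", "Disk Enclosure 1 DP-IN")]
    # Each further enclosure connects to the one before it.
    for n in range(2, enclosures + 1):
        plan.append((f"Disk Enclosure {n - 1} DP-OUT", f"Disk Enclosure {n} DP-IN"))
    return plan


for source, target in sas_cabling_plan(3):
    print(f"{source} -> {target}")
```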

Array Connection Configurations

The D3-10i SAN Storage array can be used in a number of configurations. These range from a Direct Attached Storage (DAS) configuration to a SAN configuration with the array attached to an Ethernet switch or a specialized iSCSI switch.

Additionally, the array can be used in a LUN masking, multipathing, or zoning configuration. Zoning is configured on specialized iSCSI switches and is not covered here*. LUN masking and multipathing depend on software installed on the array and the application servers.

LUN Masking – This is the simplest method for configuring an array to connect with multiple application servers through an Ethernet switch. It is managed on the array through Access Control in the Storage Manager software on the management server. While it allows multiple application servers to access array resources, it does not offer any fault tolerance unless used with multipathing.

Multipathing – This configuration requires NEC’s PathManager software and some additional configuration. In return, it offers redundant connection paths, support for clustered servers, and other traffic management features that are not available with LUN masking alone. The installation and configuration of PathManager is covered in the Appendix of this guide.*

*Note: For more information about zoning configurations, refer to the manufacturer’s documentation for your specific model of iSCSI or iSCSI/FC switch.

*Note: For information about configuring multipathing with Microsoft’s iSCSI software initiator instead of NEC’s PathManager, refer to the Microsoft documentation for the software initiator.

iSCSI Connections

As described in the previous section, there are a number of ways to connect the array to one or more application servers. In general, though, the following guidelines apply when connecting the array to an application server via iSCSI.

Use Cat5e or better Ethernet cables (purchased separately).

Connect the array to application servers via a gigabit Ethernet connection.

Do not place any routers or firewalls between the array and the application servers.


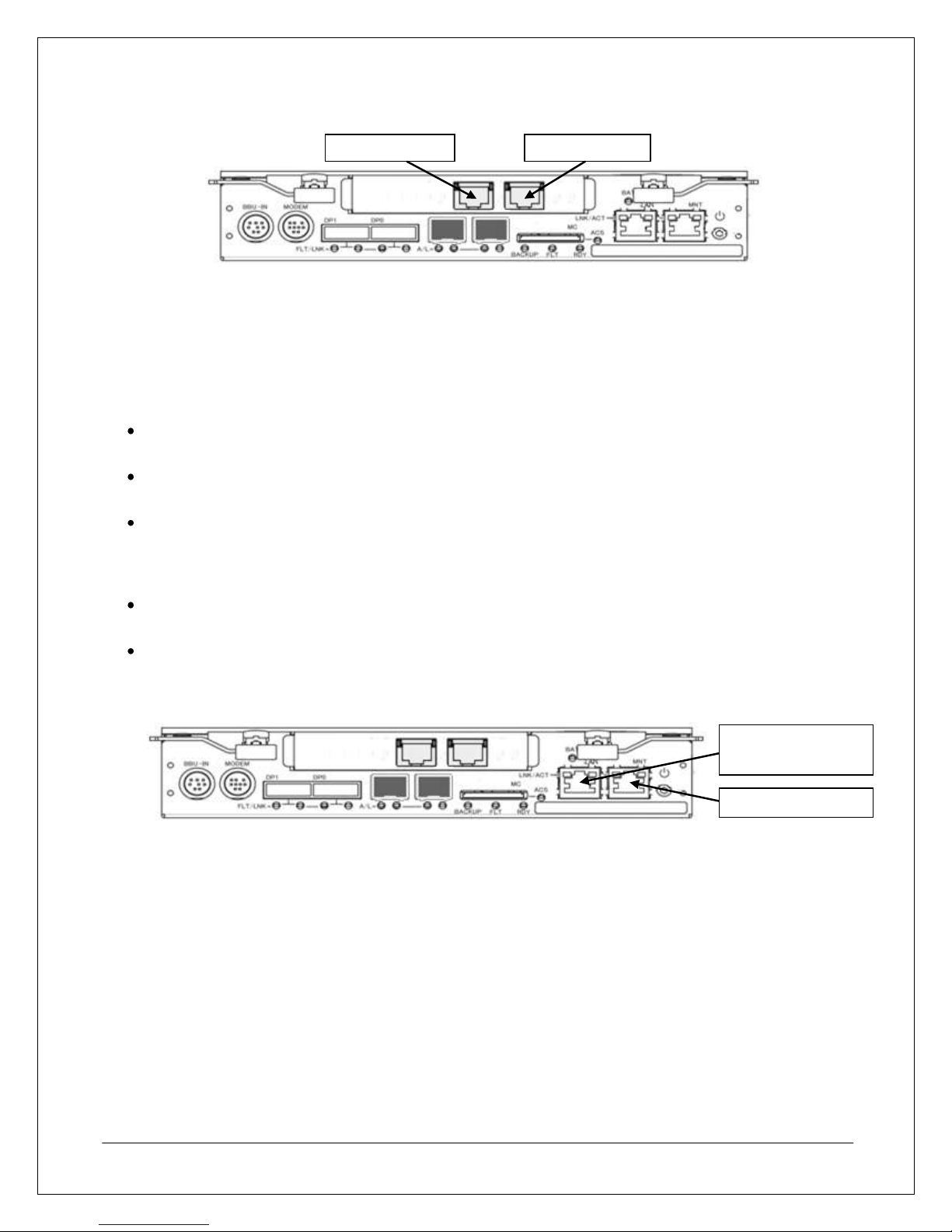

Figure 8. Controller iSCSI Ports (callouts: Ethernet Management Port, Maintenance Port, iSCSI Port 0, iSCSI Port 1)

As Figure 8 above shows, each array controller has two built-in iSCSI Host Ports, so an array with two controller modules supports a maximum of four iSCSI ports. iSCSI ports are numbered from right to left, with port 0 on the right and port 1 on the left.
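Each controller contributes iSCSI Port 0 and iSCSI Port 1, and each port in use needs its own address on the application servers' subnet. Consecutive addresses can therefore be allocated per port when filling in the IP reservation list.

This Python sketch uses the standard `ipaddress` module. The starting address, subnet, and port naming are hypothetical examples. The sketch only produces a planning list and does not configure the array.

```python
import ipaddress


def plan_iscsi_addresses(controllers, first_address, subnet):
    """Assign consecutive addresses to each controller's iSCSI Port 0 and Port 1."""
    if controllers not in (1, 2):
        raise ValueError("a D3-10i base unit has one or two controllers")
    net = ipaddress.ip_network(subnet)
    address = ipaddress.ip_address(first_address)
    plan = {}
    for controller in range(controllers):
        for port in (0, 1):
            # Reject addresses that cannot be assigned to a host on this subnet.
            if address not in net or address in (net.network_address, net.broadcast_address):
                raise ValueError(f"{address} is not a usable host address in {net}")
            plan[f"Controller {controller} iSCSI Port {port}"] = str(address)
            address += 1
    return plan


for name, ip in plan_iscsi_addresses(2, "192.168.10.11", "192.168.10.0/24").items():
    print(name, ip)
```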

Management Connections

Follow these guidelines when connecting an array to the network.

The array must be connected to a management server, where the Storage Manager software will be installed to configure the array.

If only one Ethernet connection will be used for the array, use the management port on Controller 0 (the controller on the right when viewed from the back of the unit).

Each management port (one on Controller 0 and one on Controller 1) should have its own IP address reserved on any DHCP servers. The IP address for each controller is configured manually on the array during the initialization process described in STEP 4: Array Initialization and Configuration.

The array, management server, and management client must be connected to the network before you use the Storage Manager initialization wizard to configure the array’s initial settings.

Connect the management ports on both controllers to a network switch to give the management server a redundant connection to the array. If necessary, the array can also be managed with just one controller connected, either to the network or directly to the management server with a crossover cable.

Figure 9. Array Controller Management Port


Power Cable Connections

The base unit ships in “Auto Power On” mode, so it turns on as soon as its power cables are plugged into the unit and an outlet. Therefore, complete the following before connecting any power cables to the array:

Ensure that all disk drives are properly seated.

Ensure that all Ethernet cables have been properly connected.

Ensure that any SAS cables to additional disk enclosures are properly connected.

Plug in the power cables for any additional disk enclosures attached to the base unit before connecting the base unit power cables.

To plug an AC power cable into a base unit or disk enclosure:

1. Spread the AC cable clamp.

2. Connect the power cable to the unit.

3. Loosely lock the clamp around the power cord, but do not tighten it.

4. Move the clamp in the direction indicated in Figure 10 below until it sits against the base of the AC cable plug as shown, and then tighten it.

Figure 10. Using Power Cable Management Straps

Power-On/Power-Off Procedures

The array power buttons are located on each controller module at the back of the base unit, as shown in Figure 11 below.

Figure 11. Power Button Location on Array Controller

Power-On Procedure When Auto Power On Mode Is Not Enabled

If the array has been shut down using the power button, or if Auto Power On mode is not enabled, turn the array on by pressing and holding the power button for approximately one second. If the base unit has two controllers, only one power button needs to be pressed to turn the array on or off.

When the array is first powered on, it performs a self-test, which is indicated by a flashing Service LED. After the array has successfully finished the self-test, the Power LED should remain lit and the Service LED should turn off. Application servers can be connected to the array after the self-test is complete.

*Note: If the Power LED does not remain lit, or if the Service LED does not turn off after the self-test, a controller fault may have occurred. For information about identifying and troubleshooting fault conditions, refer to the D3-10i Disk Array Unit User Guide on the Storage Manager CD or contact NEC technical support.


Power-Off Procedure

To prevent data loss or corruption, follow this process when shutting down an array.

1. Turn off or disconnect the application servers, using the prescribed shutdown process for each server’s applications and operating system.

2. Turn off the array by pressing the power button on the array controller at the rear of the base unit.

3. Turn off the external BBU, if used.

4. If the array needs to remain powered off for more than a few hours, partially eject both controllers from the base unit at the same time and then reseat them, to prevent the internal battery backup from draining.


STEP 3: Software Installation

The D3-10i SAN Storage array comes standard with Storage Manager software, which is required to use basic array features. To extend the array’s feature set, you can install additional software, depending on which features best suit your environment.

This guide covers the Storage Manager installation and configuration tasks needed to enable the common functionality of a typical enterprise environment. For more information about other software suites and the features they enable for the D-Series array, refer to the relevant documentation on the CD included with the base unit.

Management Server Installation

The Storage Manager setup CD contains installation software for systems performing the management server, application server, and management client roles.

The Storage Manager software generates a large number of files and constantly updates files during normal operation. Therefore, exclude the Storage Manager installation path from any real-time virus scanning. Also, a single management server can monitor several arrays simultaneously, but an array should not be managed by more than one management server.

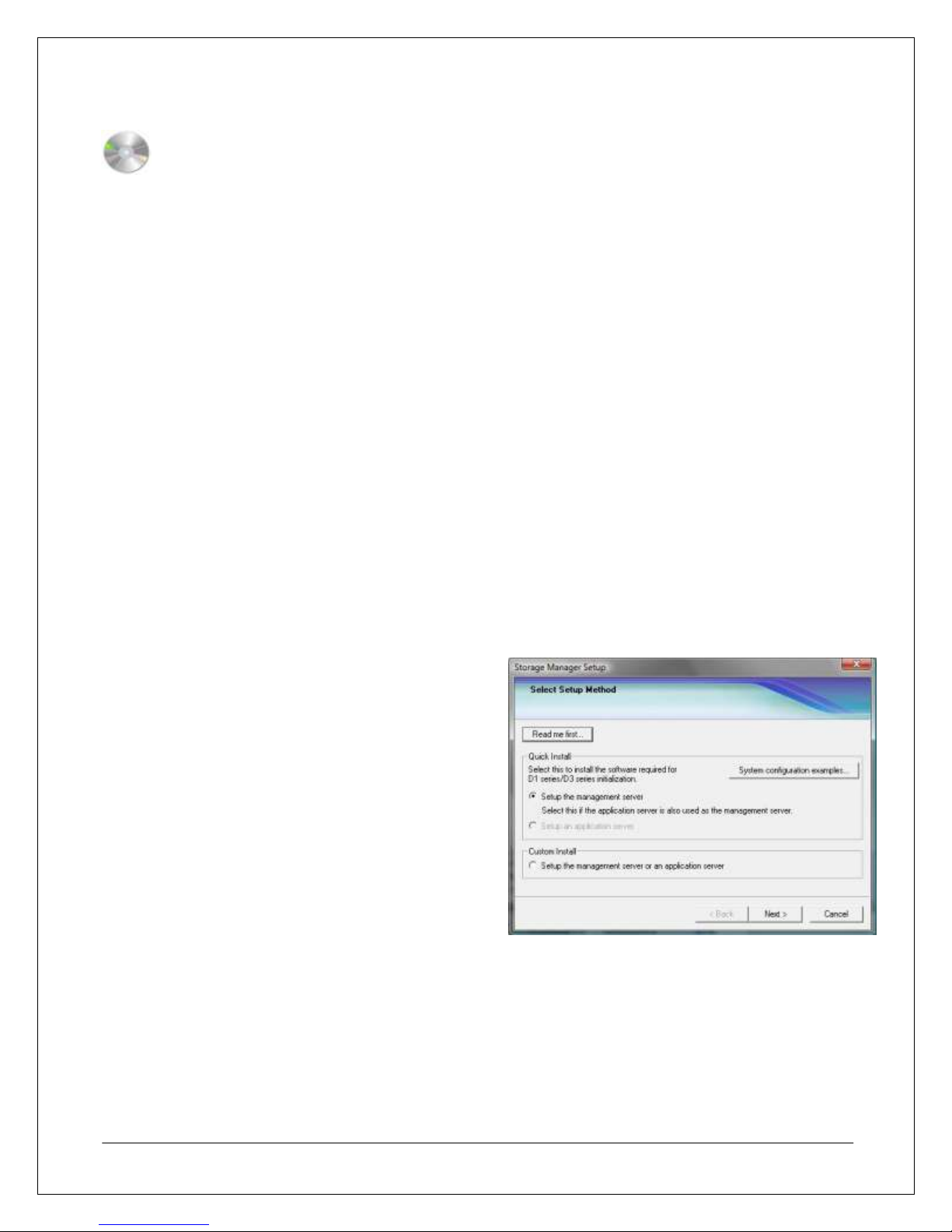

Installing Storage Manager

The following steps take place on the system designated as the management server.

1. Log on to the management server using an account with administrator privileges.

2. Insert the Storage Manager CD.

3. Start the Storage Manager setup. If the setup does not start automatically after you insert the CD, manually run <CD Drive>:\StorageManager\Install\Windows\ISMSETUP.EXE

4. At the Select Setup Method window, select Setup the management server from the Quick Install section, and then click Next.

*Note: On Windows Server 2008 with User Account Control (UAC) enabled, an additional step may be required before this step to grant permission to run the setup.


5. At the License Agreement window, select Accept, and then click Next.

6. At the Choose Destination Location window, either leave the default installation path in place or click Browse to select a new installation path, and then click Next.

7. At this point, the Storage Manager installation will attempt to install Java. Click Accept to begin installing Java.

Note: Storage Manager requires a specific version of Java that comes with the installation software. To avoid compatibility issues, ensure that Java is installed during this step even if another version of Java is already installed on this server.


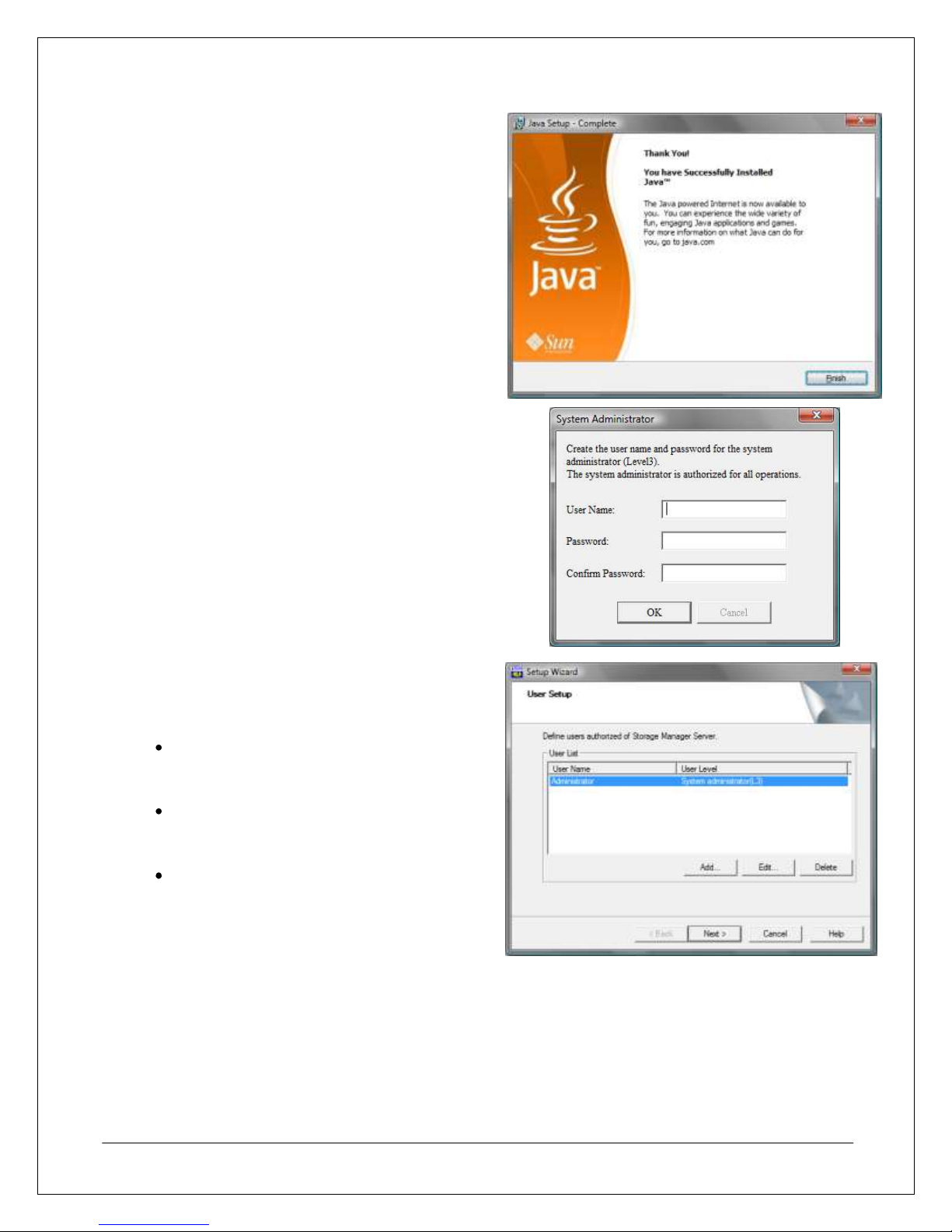

8. After the Java installation process has completed, click Finish to continue with the Storage Manager installation.

9. Allow the setup process to run until it prompts you to create a System Administrator account and password. This account will have full access to all configuration tasks and user management roles within Storage Manager. Select a User Name and Password, and then click OK.

10. At this point, you can create more user accounts and grant each account the appropriate access rights to Storage Manager settings, as follows:

Standard (L1) – Authorized for disk monitoring, state display, and other read-only tasks.

Operator (L2) – Authorized for changing optimization settings and starting snapshot or replication tasks.

Administrator (L3) – Authorized for any management task.

To add users, click Add, create the username and password, select the access level, and then click OK. After all users have been created, click Next to continue.

Page 18

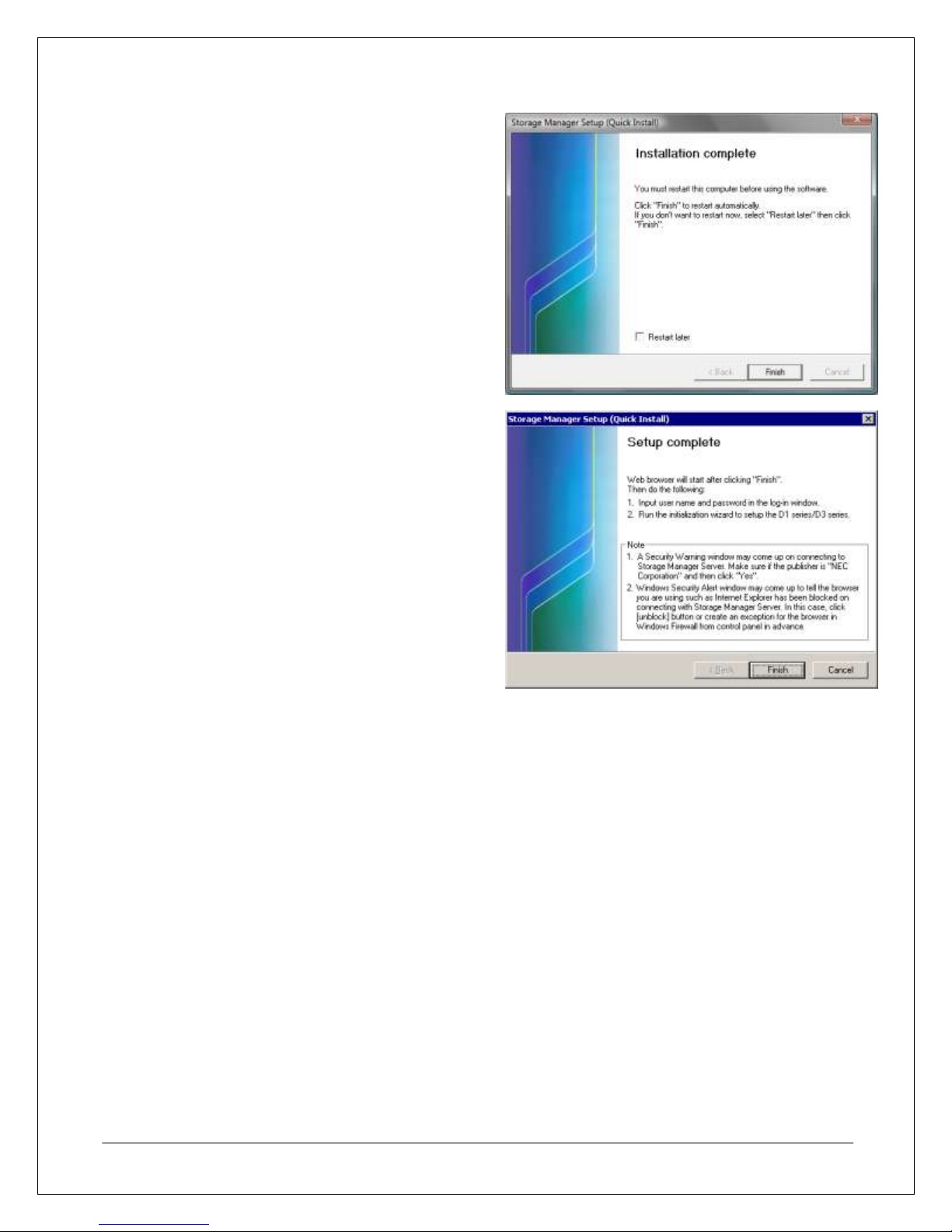

11. The installation is now complete, but the system must be restarted before Storage Manager is started for the first time. Either click Finish to close Storage Manager Setup and restart the server, or, if you do not want to restart the server now, select Restart later, and then click Finish.

12. After the management server has restarted, the Setup Complete window will appear. Clicking Finish launches a Web browser window that prompts for a login and then launches the initialization wizard for the array.

Clicking Cancel still completes the installation, but does not open a browser window or start the array initialization.
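When planning accounts in step 10, it can help to write down which tasks each level is expected to perform. The sketch below models the three levels as an ordered enumeration. The task names, and the assumption that a higher level inherits the rights of the lower ones, are illustrative only; they are not taken from Storage Manager itself.

```python
from enum import IntEnum


class AccessLevel(IntEnum):
    """The three Storage Manager user levels from step 10 (modeled here, not a product API)."""
    STANDARD = 1       # L1: disk monitoring, state display, other read-only tasks
    OPERATOR = 2       # L2: optimization settings, starting snapshot or replication tasks
    ADMINISTRATOR = 3  # L3: any management task


# Hypothetical task names mapped to the lowest level assumed to permit them.
REQUIRED_LEVEL = {
    "view_status": AccessLevel.STANDARD,
    "change_optimization": AccessLevel.OPERATOR,
    "start_snapshot": AccessLevel.OPERATOR,
    "start_replication": AccessLevel.OPERATOR,
    "bind_logical_disk": AccessLevel.ADMINISTRATOR,
    "manage_users": AccessLevel.ADMINISTRATOR,
}


def is_allowed(level: AccessLevel, task: str) -> bool:
    """Assume levels are cumulative: a higher level may do everything a lower one can."""
    return level >= REQUIRED_LEVEL[task]


# Example: an Operator account may start a snapshot but not bind logical disks.
print(is_allowed(AccessLevel.OPERATOR, "start_snapshot"))     # True
print(is_allowed(AccessLevel.OPERATOR, "bind_logical_disk"))  # False
```

Using an ordered enumeration keeps the "at least this level" comparison to a single expression.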

Application Server Installation

After installing software on the management server, prepare the application servers to connect to the array by installing an auto-discovery host agent on each of them.

The auto-discovery host agent allows hosts to be discovered automatically when logical drives are bound during the array initialization process. This host agent can be installed as part of either iSM Volume List Command or Control Command.

The iSM Volume List installation provides basic host server functionality, while the Control Command installation provides additional features that are required when using the advanced software functions of the array.

The following steps outline how to install the Control Command host agent and configure the application servers to connect with the array over iSCSI using a software iSCSI initiator. For information about installing iSM Volume List, refer to the NEC Storage Manager Manuals section on the Storage Manager CD.

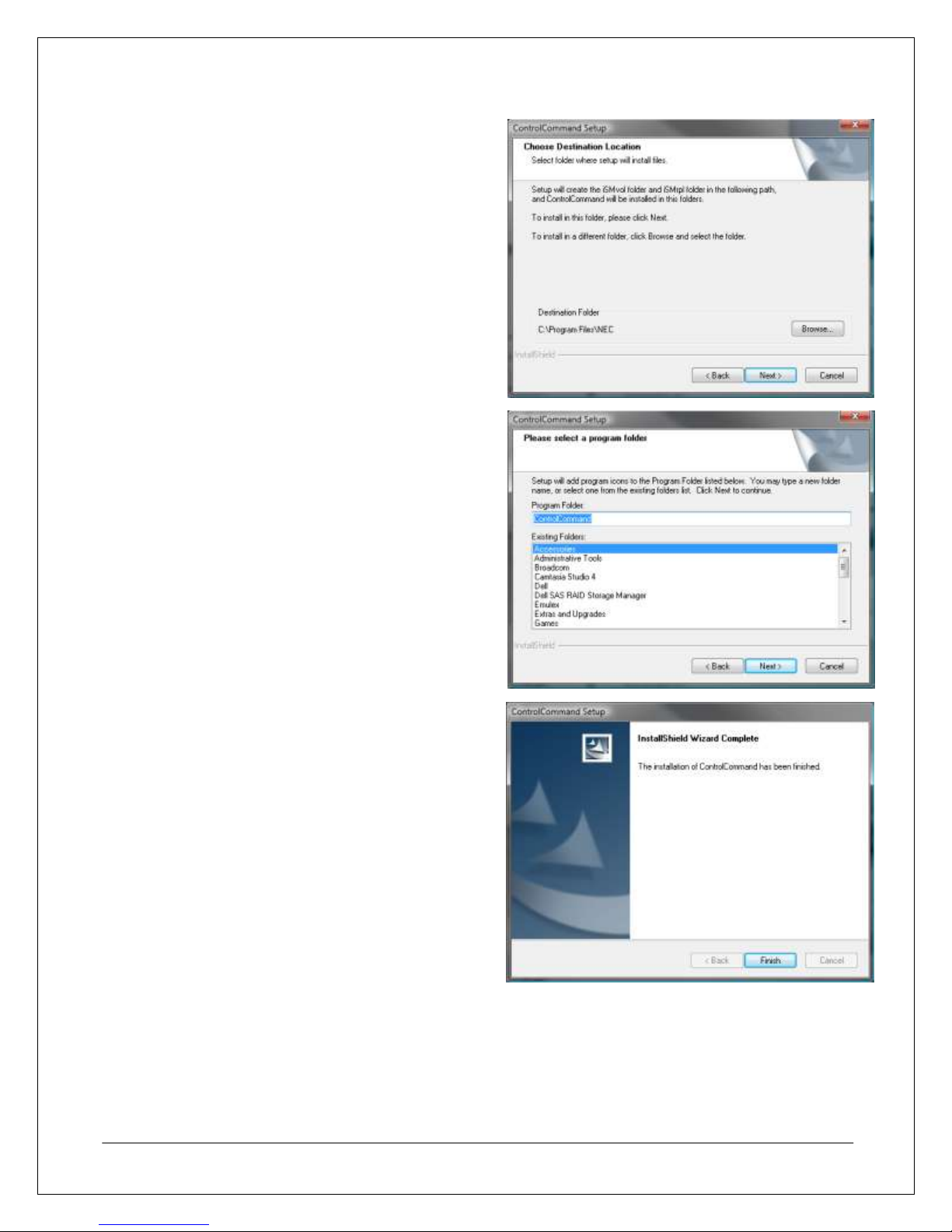

Control Command Installation

This section describes how to install the Control Command software from the Storage Manager installation CD.

1. Log on to the application server with an account that has administrative privileges.

2. Insert the Storage Manager setup CD and run ISMSETUP.EXE from the Autorun prompt or from the <CD Drive>:\ControlCommand\Install\Windows\ directory.

3. At the ControlCommand Setup Welcome window, click Next.

4. At the License Agreement window, select Yes to continue.

5. Review the information for the ControlCommand setup and click Next to continue.

6. At the Choose Destination Location window, either leave the default installation path in place or click Browse and select a different destination directory. When finished, click Next to continue.

7. Choose a program folder for the ControlCommand program icons, and then click Next to continue.

8. Allow the installation process to continue. When the installation has finished, the Installation Complete window will appear. Click Finish to close the wizard and complete the installation process.

9. The server must now be restarted to complete the installation. Either click Finish to allow the server to restart automatically, or select the Restart later check box to restart the server manually at a more convenient time.

Preparing the Software iSCSI Initiator in a Windows Environment

If you are not using a hardware-based iSCSI initiator, you will need to install and configure a software iSCSI initiator so that the application server can connect to, and be configured for use with, the D3-10i array.

Microsoft Windows Server 2008 includes a built-in iSCSI initiator that only needs to be activated. For any Microsoft Windows Server 2003 (or earlier) server that will be connected to the array, however, a software iSCSI initiator must be downloaded and installed before the array is initialized.

Installing iSCSI Initiator on Windows Server 2008

As noted above, Microsoft Windows Server 2008 and later include iSCSI capabilities. Although no software iSCSI initiator needs to be downloaded or installed, the initiator service must still be started before it can be used.

1. Log on to the server with an account that has administrative privileges.

2. Start the iSCSI Initiator by clicking Start, then Administrative Tools, then iSCSI Initiator.

3. The first time the iSCSI Initiator is started, a message reports that the Microsoft iSCSI service is not running. Click Yes to start the service and configure it to start automatically each time the server starts.

4. After you configure the iSCSI service to start automatically, you must also allow the service to communicate through Windows Firewall. Click Yes to allow the Microsoft iSCSI service to communicate over the network through Windows Firewall.

At this point, the Microsoft iSCSI initiator service is enabled and ready for use.

Installing iSCSI Initiators on Windows Server 2003

In versions of Windows Server earlier than Windows Server 2008, an iSCSI initiator must be downloaded and installed on each application server that will connect to the array via iSCSI. To download the Microsoft iSCSI initiator, visit http://www.microsoft.com/downloads.

Copy the latest version of the Microsoft iSCSI initiator installation package to each application server, and then perform the following steps on each server to install the initiator.

1. Log on to the application server with an account that has administrative privileges.

2. Start the Microsoft iSCSI Initiator installation package from the location where it was copied on the server.

3. Follow the instructions on the Microsoft iSCSI Initiator welcome screen, and then click Next to continue.

4. Select Initiator Service and Software Initiator. Do not select Microsoft MPIO Multipathing Support for iSCSI if NEC PathManager will be installed. After all needed options have been selected, click Next to continue.

5. At the License Agreement window, select I Agree, and then click Next to continue.

6. After the installation process has completed, click Finish to close the installation wizard on the application server.

7. Click Start, and then click iSCSI Initiator to open the iSCSI Initiator Properties window.

8. On the General tab of the iSCSI Initiator Properties window, you can change the initiator name, configure the CHAP authentication settings, and set up IPsec tunnel mode. (IPsec tunnel mode is not supported by the NEC D3-10i array.)

To configure CHAP authentication settings, click the Secret button.

9. Type the desired CHAP secret in the CHAP secret field, and then click OK to save it.

10. To configure the discovery settings, click the Discovery tab, and then click Add Portal.

11. In the Add Target Portal window, enter the IP address of the iSCSI array port that this application server will connect to, and then click OK to save.

12. Back in the iSCSI Initiator Properties window, click Add under iSNS servers to configure any iSNS servers that will be used on the network.

13. Enter the IP address or DNS name of each iSNS server that will be used for this application server and array, and then click OK to continue.

14. Review the settings in the iSCSI Initiator Properties window, and then click OK when all needed configuration settings have been entered correctly.
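Before logging on to a target, a quick way to confirm that the application server can reach the array port entered in step 11 is to open a TCP connection to it. The sketch below assumes the array listens on the IANA-registered iSCSI port 3260. A successful connection only shows that the port is reachable; it does not prove that discovery or CHAP authentication will succeed.

```python
import socket

ISCSI_DEFAULT_PORT = 3260  # IANA-registered iSCSI target port (assumed to be the array's setting)


def portal_reachable(ip: str, port: int = ISCSI_DEFAULT_PORT, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to the target portal can be opened within `timeout` seconds."""
    try:
        # create_connection resolves the address and connects; the socket is closed on exit.
        with socket.create_connection((ip, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable networks.
        return False
```

For example, `portal_reachable("192.168.10.50")` (a hypothetical array port address) returns False if the port is filtered by a firewall, the cable is disconnected, or the address is wrong.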


Preparing the Software iSCSI Initiator in a VMware ESX Environment

VMware ESX includes a built-in iSCSI initiator, so no additional download or installation is required. However, only the VMware host, not the guest operating systems, can access iSCSI arrays through this initiator.

Before initializing the array, perform the following configuration steps so that logical drives can be assigned to the VMware host during the array initialization process.

Creating a VMkernel Port for iSCSI

The first step in configuring the iSCSI initiator in VMware ESX is to create a VMkernel network connection for iSCSI communications.

1. Log on to the VI Client and select the application host server from the inventory.

2. Click the Configuration tab, and then click Networking.

3. In the Networking window, click Add Network.

4. In the Connection Type window of the Add Network Wizard, select the VMkernel radio button, and then click Next.

5. At the Network Access window, select the network adapter(s) that the VMkernel vSwitch will use, and then click Next to continue.

Note: Make sure that the adapters selected for iSCSI run at 1 Gb/s full duplex or faster.

6. In the Connection Settings window, you can select a network label and an optional VLAN ID for the port group, and configure the IP settings for the new VMkernel connection. After configuring all necessary settings, click Next to continue.

7. In the Summary window, review the settings, and then click Finish to create the new network configuration.
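The IP settings entered in step 6 determine whether the VMkernel port can reach the array host port directly. A common arrangement, assumed here rather than required by this guide, is to place both on the same subnet so that iSCSI traffic does not pass through a router. The sketch below checks this with Python’s standard ipaddress module; the addresses are hypothetical.

```python
import ipaddress


def same_subnet(vmkernel_ip: str, netmask: str, array_port_ip: str) -> bool:
    """Return True if the array port lies in the VMkernel port's IPv4 subnet."""
    # strict=False lets us pass the host address rather than the network address.
    network = ipaddress.IPv4Network(f"{vmkernel_ip}/{netmask}", strict=False)
    return ipaddress.IPv4Address(array_port_ip) in network


print(same_subnet("192.168.10.21", "255.255.255.0", "192.168.10.50"))  # True
print(same_subnet("192.168.10.21", "255.255.255.0", "192.168.20.50"))  # False
```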

Configuring a Service Console Connection

This procedure is only necessary for VMware ESX Server version 3. For other versions of ESX Server, skip to Enabling the iSCSI Initiator for VMware.

1. Log on to the VI Client and select the application host server from the inventory.

2. Click the Configuration tab, and then click Networking.

3. On the right side of the window, click Properties for the VMkernel vSwitch that you just created for iSCSI.

4. On the Ports tab, click Add to open the Add Network Wizard.

5. In the Connection Type window of the Add Network Wizard, select Service Console, and then click Next to continue.

6. In the Service Console window, type a name for the connection in the Network Label field and configure the IP address settings for the connection. You can also configure an optional VLAN ID if necessary.

When all needed settings have been configured, click Next to continue.

7. In the Summary window, review the configuration settings for the service console connection, and then click Finish to save.

Enabling the iSCSI Initiator for VMware

After configuring a new VMkernel port for iSCSI, you will need to enable the iSCSI initiator and configure any additional settings, such as CHAP, as needed.
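CHAP lets the host and the array prove that they share a secret without sending the secret over the network. The sketch below shows the MD5-based response calculation defined in RFC 1994, which iSCSI uses as its CHAP algorithm. It illustrates the mechanism only; it is not how ESX or the array is configured, and the secret and identifier values are hypothetical.

```python
import hashlib
import hmac
import os


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """CHAP with MD5 (RFC 1994): MD5(identifier octet || secret || challenge)."""
    if not 0 <= identifier <= 255:
        raise ValueError("the CHAP identifier is a single octet (0-255)")
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


def verify_response(identifier: int, secret: bytes, challenge: bytes, response: bytes) -> bool:
    """Authenticator side: recompute the expected value and compare in constant time."""
    expected = chap_response(identifier, secret, challenge)
    return hmac.compare_digest(expected, response)


if __name__ == "__main__":
    shared_secret = b"example-secret1"                      # hypothetical secret set on both sides
    challenge = os.urandom(16)                              # random challenge from the authenticator
    answer = chap_response(7, shared_secret, challenge)     # computed by the initiator
    print(verify_response(7, shared_secret, challenge, answer))  # True
```

Only the identifier, the challenge, and the 16-byte digest travel over the wire. This is why both ends must be configured with exactly the same secret.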

1. In the VI Client, select the Configuration tab, and then select Storage Adapters.

2. In the Storage Adapters window, highlight the iSCSI Software Adapter, and then click Properties in the Details window.

3. In the iSCSI Initiator Properties window, you can enable the software iSCSI initiator and configure CHAP Authentication, Static Discovery, and Dynamic Discovery settings.

To enable the software iSCSI initiator, click the General tab, and then click the Configure button.

4. In the Software Initiator General Properties window, select Enabled and make sure that a properly formatted iSCSI name is entered in the iSCSI Name field. Then click OK to enable the iSCSI initiator.

5. If you plan to use CHAP authentication, click the CHAP Authentication tab after enabling the iSCSI initiator, and then click Configure.

6. In the CHAP Authentication window, select the Use the following CHAP credentials radio button, and then enter the CHAP name and CHAP secret that will be used to connect the VMware host server to the iSCSI array. After the settings have been configured, click OK to save and continue.

7. Before starting the array initialization process, it may also be necessary to configure a static discovery entry for the disk array so that the VMware host server can find the array during the configuration process.

To configure a static discovery setting, click the Static Discovery tab, and then click Add.

8. In the Add Static Targets Server window, enter the IP address of the disk array port that the VMware ESX host server will connect to, set the port (the default is recommended), and enter the iSCSI target name for this static discovery entry.

After the configuration settings have been entered, click OK to save, and then click Close to exit the iSCSI Initiator Properties window.
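Step 4 asks for a properly formatted iSCSI name, and step 8 asks for the target’s iSCSI name. RFC 3720 defines two common formats: iqn.yyyy-mm.reversed-domain[:unique-string], and eui. followed by 16 hexadecimal digits. The checker below is a simplified sketch of those rules. It requires lowercase iqn names (the form the standard normalizes to) and ignores the rarer naa. format. The example names are hypothetical.

```python
import re

# iqn.<yyyy-mm>.<reversed domain>[:<unique string>], lowercase (simplified from RFC 3720)
_IQN = re.compile(
    r"iqn\.\d{4}-(0[1-9]|1[0-2])"          # year and month the naming authority owned the domain
    r"\.[a-z0-9]([a-z0-9-]*[a-z0-9])?"     # first label of the reversed domain name
    r"(\.[a-z0-9]([a-z0-9-]*[a-z0-9])?)*"  # remaining labels
    r"(:\S+)?"                             # optional colon and unique string
)
# eui.<16 hex digits> (EUI-64 identifier)
_EUI = re.compile(r"eui\.[0-9A-Fa-f]{16}")

MAX_NAME_BYTES = 223  # RFC 3720 maximum length of an iSCSI name


def is_valid_iscsi_name(name: str) -> bool:
    """Return True if `name` looks like a valid iqn. or eui. iSCSI name."""
    if len(name.encode("utf-8")) > MAX_NAME_BYTES:
        return False
    return bool(_IQN.fullmatch(name) or _EUI.fullmatch(name))


print(is_valid_iscsi_name("iqn.1998-01.com.vmware:esx01-1a2b3c4d"))  # True
print(is_valid_iscsi_name("eui.02004567A425678D"))                   # True
print(is_valid_iscsi_name("iqn.1998-13.com.vmware:esx01"))           # False (month 13)
```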


Storage Manager Client Installation

The Storage Manager client is a Web browser-based GUI that can be used on the management server or on any other system that has network access to the management server. The Storage Manager client is supported only on Microsoft Internet Explorer® 6 and later, and Internet Explorer must be configured before use, as described below.

Storage Manager Client Installation and Startup

Perform the following steps on each system that will run the Storage Manager client to configure Internet Explorer:

1. Open the Start Menu, select Control Panel, then Internet Options, and then select the Security tab.

2. Highlight Trusted sites, and then click the Sites button.

3. In the Trusted Sites window, type the URL or IP address of the management server, and then click the Add button.

Note: If the Storage Manager client is running on the same machine as the management server, type the loopback address (127.0.0.1), and then click Add.

4. Clear the Require server verification (https:) for all sites in this zone check box, and then click Close. Do not close the Internet Options window.

5. On the Security tab of the Internet Options window, highlight Trusted sites, and then click Custom level.

6. In the Security Settings window, scroll down to the Run ActiveX controls and plug-ins entry, select Enable if it is not already selected, and then click OK.

7. Start Internet Explorer and navigate to the management server’s network address and port (default port 8070). If the server has an associated DNS entry, navigate to that name; if the server is identified only by an IP address, navigate to that address. Follow the prompts to install the client software.

At this point, the software installation process is complete, and you can manage the array with the Storage Manager client by navigating to the management server address in the Web browser.

Everything should now be ready for the array to be initialized and configured. However, if Storage Manager fails to launch and prompt for a user name and password, go back through the configuration steps to make sure that everything is correct.
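If the client does not launch, it can help to separate browser configuration problems from connectivity problems. The sketch below can be run from the client system. It builds the address used in step 7 and checks whether anything answers HTTP requests on the management server’s port. It assumes plain HTTP on the default port 8070 and uses a hypothetical host name, and it does not test the Storage Manager login itself.

```python
import http.client

DEFAULT_PORT = 8070  # default Storage Manager port given in step 7


def management_url(host: str, port: int = DEFAULT_PORT) -> str:
    """Build the address to type into Internet Explorer (IPv4 address or DNS name)."""
    return f"http://{host}:{port}/"


def server_responds(host: str, port: int = DEFAULT_PORT, timeout: float = 5.0) -> bool:
    """Return True if anything answers an HTTP GET on host:port."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", "/")
        conn.getresponse()  # any status line means a Web server is listening
        return True
    except (OSError, http.client.HTTPException):
        return False
    finally:
        conn.close()


print(management_url("mgmt01.example.com"))  # http://mgmt01.example.com:8070/
```

If `server_responds` returns True but the browser still fails, the Trusted sites and ActiveX settings above are the more likely cause.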


STEP 4: Array Initialization and Configuration

This section describes how to initialize and configure the array for use in a Microsoft Windows environment.

Array Initialization

This section describes the step-by-step process of using the Initialization Wizard in the management client to initialize the array. Array initialization is required only for newly deployed arrays. An array that was previously deployed and has been moved to a new network or location does not need to be initialized again. Instead, reconfigure it by configuring logical disks and pools as described on page 49 of this guide.

If the array is being used with an iSCSI switch, configure the switch before starting this step so that the array can connect with the application servers during host information collection. Make sure that all application servers are operational and connected to the switch and/or array before beginning the initialization process.
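Steps 11 through 15 of the wizard ask for controller addresses, host port addresses, and up to four iSNS servers. Before starting, you may want to check the planned values offline. The sketch below flags malformed addresses, any address used more than once, and more than four iSNS servers. Treating a collision between a controller address and a host port address as an error is an assumption, and all addresses shown are hypothetical.

```python
import ipaddress
from collections import Counter
from typing import Iterable, List

MAX_ISNS_SERVERS = 4  # limit stated in step 15 of the wizard


def check_address_plan(controller_ips: Iterable[str],
                       host_port_ips: Iterable[str],
                       isns_servers: Iterable[str] = ()) -> List[str]:
    """Return a list of problems found in the planned addresses (empty if none)."""
    problems = []
    parsed = []
    for text in list(controller_ips) + list(host_port_ips):
        try:
            parsed.append(ipaddress.ip_address(text))
        except ValueError:
            problems.append(f"invalid address: {text}")
    # Every controller and host port address is expected to be unique.
    duplicates = sorted(str(ip) for ip, count in Counter(parsed).items() if count > 1)
    if duplicates:
        problems.append("duplicate addresses: " + ", ".join(duplicates))
    isns = list(isns_servers)
    if len(isns) > MAX_ISNS_SERVERS:
        problems.append(f"{len(isns)} iSNS servers planned; at most {MAX_ISNS_SERVERS} can be registered")
    return problems


print(check_address_plan(["192.168.1.10", "192.168.1.11"], ["10.0.0.21", "10.0.0.22"]))  # []
print(check_address_plan(["192.168.1.10", "192.168.1.10"], ["10.0.0.21"]))
# ['duplicate addresses: 192.168.1.10']
```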

1. Start Internet Explorer and navigate to the management server’s network address and port (default port 8070). If the server has an associated DNS entry, navigate to that name; if the server is identified only by an IP address, navigate to that address.

Note: If the client browser cannot connect to the Storage Manager host over the network, make sure that the management server is attached to the network, that the Storage Manager service is running, and that any intervening network firewalls or client firewalls have a rule that permits UDP port 2730 traffic to pass through.

2. Log on to the Storage Manager client with a previously created user name that has administrative privileges.

3. In the Storage Manager client browser window, select File from the top toolbar, and then click Initialization Wizard from the drop-down menu.

4. At the Initialization Wizard start screen, make sure that the listed prerequisite steps have been performed, and then click Next when everything is ready for initialization.

5. At the Disk Array Selection window, select the array to be initialized, and then click Next.

Note: To be initialized, an array must be in the Not Initialized state. Arrays in a Suspended or Finished state have already been initialized and should be configured by using the steps in the Configuring Logical Disks and Pools section on page 49.

Note: The wizard initializes only one array at a time. If multiple arrays are selected, the wizard will initialize the first array and then restart the initialization process for each subsequent array until all selected arrays have been initialized.

6. After you select the array, a confirmation window will appear. Confirm that the correct array was selected and click Yes to continue. Otherwise, click No to go back and select the correct array.

7. The Disk Array Connection State Confirmation window displays an example of how an array should be cabled. Verify that the array is cabled correctly and click Next, or click Cancel if there is a problem with your array’s connections.

Note: This screen shows only a fully configured array. It does not show your specific configuration.

8. After an initialization has been completed, an initialization file can be generated and used to configure other arrays or to reconfigure the existing array. In this step, you choose between using the initialization wizard and using an initialization file previously generated from another array to configure this array.

For the initial setup of an array, choose Use this wizard. Otherwise, select Use an initialization file and type the location of that file in the field below.

After the appropriate option has been selected, click Next to continue.

9. At the Host Information Collection window, you can select Automatic collection as long as the application servers to be configured have not previously been configured for use with an array, and the Volume List Command or ControlCommand software has been installed on those application servers.

If these conditions have not been met, the host information must be gathered and typed in manually during the initialization process.

After selecting or clearing the Automatic collection option as appropriate, click Next to continue.

10. If automatic collection was selected, an initialization warning will appear, noting that the operating system of the application server that the array automatically connects to can affect how the array is configured during initialization. If the array is being installed in an environment other than Windows, refer to the array user manual before continuing. Otherwise, click OK to continue.

11. At the Set Network Parameters window, type the network addressing information for each controller that will be used on the array. The IP address for each controller should be unique and, if a DHCP server is present on the network, should be reserved on that server before you configure it. After typing the network information for the array, click Next to continue.

12. After you type the network information, a confirmation window will appear. Verify that the information entered is correct, and then click Set to continue.

If the information is not correct, click Back and type the information again before continuing.

13. At the Set Host Connection Port Parameters window, review the port parameters for the array. To change a port’s parameters, highlight the port and click Edit on the right of the port list. If an iSCSI Naming Service (iSNS) server is to be used, configure the iSNS server settings by clicking the Set iSNS Server button on the left side of the window. After all ports are configured correctly, click Next to continue.

14. If you clicked Edit in the previous step, the port editing window will appear. Here you can modify the host port MTU, IP address, subnet mask, and gateway address.

When you have finished editing port settings, click OK to return to the Set Host Connection Port Parameters window.

15. If you clicked Set iSNS Server in step 13, the Set iSNS Server window will appear. To register an iSNS server, click Add and enter the IP address and port settings for each iSNS server the array can connect to. Up to four iSNS servers can be registered on each array.

When all iSNS servers have been added, click OK to continue.

16. After you set the host connection port parameters, a confirmation window will appear. Review the port settings and click Set to continue if they are correct. Otherwise, click Back to return to the previous step and modify the port settings as needed.

17. At the Enter License Keys window, type the Base Product License key from the NEC Storage software license code provided on the Program Product License Sheet that was shipped with the array. After typing the code in the License Key field, click Enter, and then click Next to continue.

Note: Do not enter any other license codes at this time. In particular, if you enter the Access Control license key, you will need to set up Access Control, or servers will not be able to access the logical disks. Setting up Access Control is not covered in this Quick Start Guide.

18. In the Select Logical Disk Binding Method window, you can choose between binding logical disks within the initialization wizard and creating logical disks after the initialization process has been completed.

The Bind logical disks using the wizard option lets you use the wizard to create logical disks using RAID1, RAID6 (4+PQ), or RAID6 (8+PQ) and bind them to application servers during array initialization. If another type of logical disk is required, skip this step by choosing the Bind logical disks later option.

After choosing the appropriate option, click Next to continue.

19. If the Bind logical disks using the wizard option was selected in the previous step, the Set the LD Set window will be shown. Here you can specify the operating system platform that the logical disk set will be used with, and name the logical disk set.

From this window, you can also configure iSCSI CHAP authentication settings and set access restrictions on a per-portal basis by clicking the Authentication Settings or Access Restriction per Portal button.

After you have finished modifying the settings for the logical disk set, click Next to continue.

20. After setting the logical disk set name and platform type, you can configure the initiator node that the logical disk set will be bound to. Select the initiator in the Assignable Initiators section, and then click Add to assign it to the specified Destination LD Set, or click the Type In/Edit button to enter an initiator’s information manually.

Once an initiator has been selected for the destination logical disk set, click Next to continue.

21. The Select LD Binding Parameters window allows you to create logical disks and bind them to application servers.

The following options are available when binding logical disks from the wizard:

Bind/Select – Opens the Bind/Select window, where you can create and select the pool in which the logical disks will be bound.

LD Capacity – Sets the capacity of the logical disks.

Number of LDs – Sets the number of logical disks to create.

LD Name Prefix – Adds a prefix to the logical disk names.

Quick Format – Performs a quick format of any logical disks created.

GPT Disk Type – Enables the GPT disk type; use this option if a volume is larger than 2 TB.

Create Hot Spare – Assigns a physical disk as a hot spare drive for the logical disk. (Recommended)

When all settings are correct, click Next to continue.

Note: If you change the Number of LDs, you must adjust the LD Capacity manually.

22. If you clicked Bind/Select in step 21, the Bind/Select window lets you create a pool and assign physical drives to that pool in a specified RAID configuration.

When the needed logical drives and pools have been configured, click OK to continue.

23. After you set the logical disk binding parameters, a confirmation window will appear. Review the settings and license information listed, and if everything is correct, click Set. Otherwise, click Back and configure the proper settings before continuing.

24. After you confirm the settings, the array will begin applying the configuration and building any logical disks that were configured. When the initialization process is complete, the Initialization Complete window will appear.

Click Finish to complete the initialization, and then use the Storage Manager client to confirm that all configured logical drives and application servers are connected.
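Step 18 limits the wizard to RAID1, RAID6 (4+PQ), and RAID6 (8+PQ), and the note in step 21 says that LD Capacity must be adjusted by hand when Number of LDs changes. The arithmetic below is a rough planning sketch for those values. It assumes whole RAID groups, decimal gigabytes, and an even split across logical disks, and it ignores array metadata and hot spares, so treat the capacity the wizard reports as authoritative.

```python
# Data disks and total disks per RAID group for the types offered by the wizard.
RAID_GROUPS = {
    "RAID1": (1, 2),         # one disk's worth of data mirrored on two disks
    "RAID6(4+PQ)": (4, 6),   # 4 data disks + 2 parity (P and Q) disks
    "RAID6(8+PQ)": (8, 10),  # 8 data disks + 2 parity (P and Q) disks
}


def usable_capacity_gb(raid: str, disks: int, disk_gb: float) -> float:
    """Approximate usable capacity, ignoring metadata, formatting and hot spares."""
    data, group = RAID_GROUPS[raid]
    if disks <= 0 or disks % group:
        raise ValueError(f"{raid} needs a positive multiple of {group} disks")
    return (disks // group) * data * disk_gb


def per_ld_capacity_gb(usable_gb: float, number_of_lds: int) -> float:
    """Capacity to enter for each LD if the pool is split evenly."""
    if number_of_lds < 1:
        raise ValueError("number_of_lds must be at least 1")
    return usable_gb / number_of_lds


usable = usable_capacity_gb("RAID6(4+PQ)", 6, 300)
print(usable)                         # 1200
print(per_ld_capacity_gb(usable, 3))  # 400.0
```

Doubling Number of LDs halves the value to enter for LD Capacity, which is why the two fields must be kept in step.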


Configuring iSCSI Connections

After the array has been initialized and all needed logical drives have been configured, configure the iSCSI initiators on the application servers so that each server can mount the logical drives assigned to it, including any created during the initialization process.

If logical drives were not created during the initialization process, or if additional logical drives and pools are needed, refer to the Configuring Logical Disks and Pools section later in this document before continuing with these steps.

Configuring the iSCSI Initiator in Windows

Before configuring the iSCSI initiator on a Windows server, make sure you have recorded the IP address of the array port that the application server will connect to and, if CHAP is used, the CHAP secret.
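A small record like the one below can hold the values this section asks you to note down, and can catch obvious mistakes before you reach the Log On to Target window. The 12-to-16-character CHAP secret range is an assumption based on the Microsoft iSCSI initiator’s requirements; confirm it against the documentation for your initiator and array. The class and the values shown are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional

# Assumed limits: the Microsoft iSCSI initiator accepts CHAP secrets of 12 to 16 characters.
MIN_CHAP_SECRET = 12
MAX_CHAP_SECRET = 16


@dataclass
class TargetConnection:
    """Details to record before configuring the Windows initiator (illustrative only)."""
    portal_ip: str
    chap_secret: Optional[str] = None  # None when CHAP is not used

    def problems(self) -> List[str]:
        issues = []
        try:
            ipaddress.ip_address(self.portal_ip)
        except ValueError:
            issues.append(f"not an IP address: {self.portal_ip}")
        if self.chap_secret is not None and not (
            MIN_CHAP_SECRET <= len(self.chap_secret) <= MAX_CHAP_SECRET
        ):
            issues.append(
                f"CHAP secret is {len(self.chap_secret)} characters; "
                f"expected {MIN_CHAP_SECRET} to {MAX_CHAP_SECRET}"
            )
        return issues


print(TargetConnection("192.168.10.50", "appsrv01secret").problems())  # []
print(TargetConnection("192.168.10.50", "short").problems())
```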

1. Open the iSCSI Initiator Properties window by clicking Start and then iSCSI Initiator.

2. In the iSCSI Initiator Properties window, select the Targets tab. Select the appropriate array, and then click Log On.

3. At the Log On to Target window, select the Automatically restore this connection when the computer starts option, and then click Advanced. If you will be using NEC PathManager for multipathing, do not select Enable multi-path. Otherwise, refer to Microsoft’s documentation for information about enabling multipathing through the Microsoft iSCSI software initiator.

4. In the Advanced Settings window, make sure that all settings are correct, and then click OK.

Then click OK in the Log On to Target window to return to the iSCSI Initiator Properties window.

5. In the iSCSI Initiator Properties window, make sure that the configured target’s status is Connected, and then click OK to close the iSCSI Initiator Properties window and save the settings.

Verifying Windows iSCSI Connectivity

After confirming from the Storage Manager client that the array and application server are connected, the next step is to confirm that any logical disks created during the initialization process are available to the application server.

1. Log on to the application server with an account that has administrative privileges.

2. From the Start Menu, open the Computer Management console by clicking Administrative Tools and then Computer Management, or by right-clicking Computer and then clicking Manage.

3. In the Computer Management window, select Device Manager, and then expand Disk drives in the right pane to make sure that the logical disks are visible from the application server.

4. In the Computer Management window, select Disk Management. If the logical drives bound to this application server have not been initialized, a Windows disk initialization prompt will appear.

If the Initialize Disk prompt appears, select the disks to initialize, choose the partition style to be used for the new logical drives, and then click OK.

5. After any necessary disk initialization has been completed, the new logical drives should be visible in the Disk Management window. The logical disks are now ready to be configured and formatted as needed for use on the application server.

If the logical drives do not appear on the application server, check all physical connections and restart the application server. If they still do not appear, or if they were not created during the array initialization process, create and bind logical drives through the Storage Manager client as described in the Configuring Logical Disks and Pools section later in this guide.
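The partition style chosen in step 4 matters for large logical disks. The MBR style records partition positions and lengths as 32-bit sector counts, so with the usual 512-byte sectors it can address at most 2^32 × 512 bytes, which is 2 TiB (roughly the "2 TB" mentioned for the GPT Disk Type option). Larger disks need GPT. The sketch below shows that arithmetic, assuming 512-byte logical sectors unless another size is passed.

```python
MBR_MAX_SECTORS = 2 ** 32  # MBR partition entries store sector counts in 32-bit fields


def mbr_limit_bytes(sector_size: int = 512) -> int:
    """Largest disk size MBR can address with the given logical sector size."""
    return MBR_MAX_SECTORS * sector_size


def needs_gpt(disk_bytes: int, sector_size: int = 512) -> bool:
    """True if the disk is too large to be fully used with the MBR partition style."""
    return disk_bytes > mbr_limit_bytes(sector_size)


print(mbr_limit_bytes())       # 2199023255552 (2 TiB)
print(needs_gpt(3 * 10**12))   # True: a 3 TB logical disk
print(needs_gpt(500 * 10**9))  # False: a 500 GB logical disk
```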


Configuring the iSCSI Initiator in VMware ESX

After the array has been initialized and any logical drives that will be used by the VMware ESX host server have been configured, configure the iSCSI storage settings on the VMware host server so that the drives can be used by guest operating systems.

Note: This section details only the basic steps needed to configure VMware ESX to work with the NEC D3-10i storage array. For information about advanced VMware ESX configuration, or about configuring other versions of VMware to use an iSCSI array, refer to the VMware ESX iSCSI SAN documentation at http://www.vmware.com/support/pubs/.

1. Log on to the VI Client and select the host server from the inventory.

2. Click the Configuration tab, and then click Storage.

3. In the Storage window, click Add Storage.

4. At the Select Storage Type window, select the Disk/LUN option, and then click Next.

5. In the Device Location window, highlight the logical disk that you want to configure, and then click Next.

6. At the Current Disk Layout window, click Next to continue.

7. At the Disk/LUN Properties window, assign a datastore name to the logical disk, and then click Next to continue.

8. On the Disk/LUN Formatting page, you can adjust the file system values for the logical disk. By default, the entire storage space of the logical disk should be available for use.

Click Next to continue after the desired configuration has been entered.

9. At the Ready to Complete window, review the configuration information for the logical disk, and then click Finish to complete the logical disk assignment.

At this point, the logical disk is ready for use by the VMware ESX server.
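On ESX 3.x and 4.x, the file system values on the Disk/LUN Formatting page include the VMFS-3 block size. The block size fixes the largest virtual disk file the datastore can hold: 1 MB allows 256 GB, 2 MB allows 512 GB, 4 MB allows 1 TB, and 8 MB allows about 2 TB. Because the block size cannot be changed without reformatting the datastore, it is worth choosing it based on the largest virtual disk you expect to create. The helper below sketches that choice. It applies only to VMFS-3, and the sizes passed to it are hypothetical.

```python
# VMFS-3 (ESX 3.x/4.x) block size in MB -> approximate maximum file size in GB.
# The 8 MB limit is actually 2 TB minus 512 bytes.
VMFS3_MAX_FILE_GB = {1: 256, 2: 512, 4: 1024, 8: 2048}


def smallest_block_size_mb(largest_vmdk_gb: float) -> int:
    """Return the smallest VMFS-3 block size whose file size limit fits the largest planned virtual disk."""
    for block_mb in sorted(VMFS3_MAX_FILE_GB):
        if largest_vmdk_gb <= VMFS3_MAX_FILE_GB[block_mb]:
            return block_mb
    raise ValueError("VMFS-3 files are limited to about 2 TB")


print(smallest_block_size_mb(100))   # 1
print(smallest_block_size_mb(300))   # 2
print(smallest_block_size_mb(1500))  # 8
```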


Configuring Logical Disks and Pools

If the step to bind logical disks was skipped during the array initialization, if the logical disks that were created are not visible from an application server, or if a new application server is being added to the array, logical disks or pools may need to be created and bound to that specific server or cluster through the Configuration Settings wizards available in the Storage Manager client.

Creating and Configuring Pools

The first step in creating a set of logical drives is to create a pool to which those logical drives will be assigned. The following procedure shows how to configure an individual pool. For more information about the pool configuration process, refer to the documentation that accompanied your array.

1. Navigate to the Storage Manager network address in an Internet Explorer window, and then log on to the client with an account that has administrative privileges.

2. Select the array where logical disks and pools need to be created, click File, highlight Configuration Settings/References, and then click Configuration Setting.

3. A window will appear warning you that array monitoring will be stopped while configuration settings are being adjusted. Click Yes to continue.

4. At the Configuration Setting menu, you can view basic array information and configure basic array functionality.

To create an individual pool from the Configuration Setting menu, click Storage Configuration Setting.

Note: For more information about the other configuration options available through the Configuration Setting menu, refer to the documentation on the Storage Manager installation CD.


5. At the LD Individual Bind/Unbind window, select Pool Binding under the Binding Wizard section at the bottom.

6. At the Welcome window for the Pool Binding Wizard, click Next.

7. At the Select Physical Disk Type window, select the desired physical disk type to be used in this pool, and then click Next.


8. At the Select Pool Type window, select either a dynamic or basic pool type, and then click Next.

9. At the Select RAID Type window, select the RAID type that will be used for the logical disks in this pool. The available RAID options depend on whether you chose a dynamic or basic pool type in the previous window.

After selecting the appropriate RAID type, click Next to continue.

10. At the Select Physical Drive window, highlight each physical drive to be added to the pool and click Add, repeating until the number of drives required for the selected RAID type has been added.


11. At the Set Detailed Parameters for Pool Binding window, set the pool name and the desired rebuild time for that pool, and then click Next.

12. At the Confirm Pool Binding Parameters window, review the settings listed. If they are correct, click Next. If changes need to be made, click Back to change the settings made in previous steps, or click Cancel to exit the Pool Binding Wizard.

13. At the Pool Binding Wizard confirmation pop-up, click Yes to continue or No to quit.


14. After the Pool Binding Complete window appears, click Finish to complete the new pool binding process.

The pool is now ready for logical drives to be configured and bound to application servers.
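When choosing a RAID type at the Select RAID Type window and adding drives at the Select Physical Drive window, it can help to estimate how much usable capacity the pool will provide. The following is a minimal sketch using generic RAID arithmetic for equal-sized drives (mirroring for RAID1 and RAID10, one drive of parity for RAID5, two for RAID6). The minimum drive counts and the function name are assumptions for illustration; the D3-10i's actual supported drive counts, which differ between dynamic and basic pools, are defined in the array documentation.

```python
# Assumed generic minimum drive counts; the array's own rules may be stricter.
MIN_DRIVES = {"RAID1": 2, "RAID5": 3, "RAID6": 4, "RAID10": 4}


def usable_capacity_gb(raid: str, drives: int, drive_gb: float) -> float:
    """Estimate usable pool capacity for equal-sized drives, before formatting overhead."""
    if raid not in MIN_DRIVES:
        raise ValueError(f"unsupported RAID type: {raid}")
    if drives < MIN_DRIVES[raid]:
        raise ValueError(f"{raid} needs at least {MIN_DRIVES[raid]} drives")
    if raid in ("RAID1", "RAID10"):
        if drives % 2:
            raise ValueError(f"{raid} needs an even number of drives")
        data_drives = drives // 2  # half of the drives hold mirror copies
    elif raid == "RAID5":
        data_drives = drives - 1  # one drive's worth of capacity holds parity
    else:  # RAID6
        data_drives = drives - 2  # two drives' worth of capacity hold parity
    return data_drives * drive_gb


if __name__ == "__main__":
    # Five 300 GB drives in RAID5 leave four drives' worth of data capacity.
    print(usable_capacity_gb("RAID5", 5, 300))
```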


Creating and Configuring Logical Drives

With a pool created and physical drives configured for use in that pool, logical drives can be created within the pool and bound to application servers. The following steps use the Logical Drive Binding wizard to create logical drives and bind them to a host application server.

1. Navigate to the Storage Manager server address in an Internet Explorer window, and then log on to the Storage Manager client with a user account that has administrative privileges.

2. Select the array where logical disks and pools need to be created, click File, highlight Configuration Settings/References, then Quick Configuration, and then click LD Binding (iSCSI).

Note: For more information about the other configuration options available through the Configuration Settings menu, refer to the documentation on the Storage Manager installation CD.

3. A window will appear warning you that array monitoring will be stopped while configuration settings are being adjusted. Click Yes to continue.

4. At the iSCSI Setting menu, you can edit or delete existing logical disk sets, or create new ones.

To create an individual pool and logical disk set from the iSCSI Setting menu, click Create.

5. At the iSCSI Setup Wizard Welcome screen, click Next to begin the logical disk binding wizard.

6. At the Set the LD Set window, you can specify which platform the logical disk set will be used with, name the set, configure CHAP authentication settings for the set, and configure access restrictions for each portal.

When all required settings have been configured, click Next to continue.

7. The Set initiators window allows you to assign host initiators to the logical disk set. Select each initiator that this logical disk set will be assigned to from the Assignable Initiators list in the lower part of the window, and then click Add. If an initiator is not detected automatically, you can enter its details manually by clicking Type In/Edit.

When all initiators have been assigned to the logical disk set, click Next to continue.

8. At the Select logical disk binding method window, choose whether to create new logical disks now using the wizard, assign existing logical disks, or bind logical disks later.

To create new logical disks and assign them to the set, select the Bind logical disks using the wizard radio button, and then click Next.

9. At the Bind/Select window, you can select pools for the logical disk set and specify the RAID type, the type of physical drives, and the number of physical drives to assign to that logical disk set.

Once all pool and logical disk settings have been selected, click Next to continue.

10. The Select LD Binding Parameters window allows you to create logical disks and bind them to application servers.

The following options are available when binding logical disks from the wizard:

Bind/Select – Configure and select a pool to be bound to logical disks in the Bind Pool and Bind Select window.

LD Capacity – Set the capacity of the logical disks.

Number of LDs – Set the number of logical disks to create.

LD Name Prefix – Add a prefix to the logical disk names.

Quick Format – Perform a quick format of any logical disks created.

GPT Disk Type – Enable the GPT disk type if a volume is larger than 2 TB.

Create Hot Spare – Assign a physical disk as a hot spare drive for the logical disk (recommended).

When all settings are correct, click Next to continue.

Note: If you change the Number of LDs, the LD Capacity must be adjusted manually.

11. At the Setup Details window, review and confirm that the configuration settings are correct, and then click Next to continue.

12. Once the setup has completed successfully, click Finish to close the wizard, and then configure the application server to connect to the new logical drive(s).
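When an initiator is not detected automatically in the Set initiators window and must be entered with Type In/Edit, its name is usually an iSCSI qualified name (IQN) of the form iqn.yyyy-mm.reversed-domain[:unique-string], as defined in RFC 3720. The following is a minimal sketch of a pre-check that catches typos before they are entered; the function name is hypothetical, and the pattern covers only the common IQN form, not the full RFC 3722 stringprep rules or the alternative eui. format.

```python
import re

# Simplified IQN pattern (assumption: common form only):
# iqn.<yyyy-mm>.<reversed domain name>[:<unique string>]
_LABEL = r"[a-z0-9](?:[a-z0-9-]*[a-z0-9])?"
_IQN_RE = re.compile(
    rf"^iqn\.\d{{4}}-(?:0[1-9]|1[0-2])\.{_LABEL}(?:\.{_LABEL})*(?::.+)?$"
)
MAX_ISCSI_NAME_BYTES = 223  # RFC 3720 length limit for iSCSI names


def looks_like_iqn(name: str) -> bool:
    """Return True if name resembles a valid IQN-format iSCSI initiator name."""
    normalized = name.strip().lower()  # iSCSI stringprep (RFC 3722) folds case
    if len(normalized.encode("utf-8")) > MAX_ISCSI_NAME_BYTES:
        return False
    return _IQN_RE.match(normalized) is not None


if __name__ == "__main__":
    # Example names are illustrative only.
    for candidate in ("iqn.1991-05.com.microsoft:host01.example.com", "iqn.2001-13.com.example"):
        print(candidate, looks_like_iqn(candidate))
```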
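The Set the LD Set window includes CHAP authentication settings. In iSCSI, CHAP (RFC 1994, with MD5 as the algorithm RFC 3720 requires implementations to support) lets one side prove it knows a shared secret without sending the secret itself: the responder returns MD5(identifier || secret || challenge), and the authenticator recomputes and compares it. The following is a minimal sketch of that exchange; the function names and secret value are hypothetical, and it does not show how Storage Manager or the initiator stores secrets.

```python
import hashlib
import secrets


def chap_md5_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """Compute the RFC 1994 CHAP response: MD5 over identifier octet, secret, challenge."""
    if not 0 <= identifier <= 255:
        raise ValueError("CHAP identifier must fit in one octet")
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


def verify_chap_response(identifier: int, secret: bytes, challenge: bytes, response: bytes) -> bool:
    """Authenticator side: recompute the expected response and compare in constant time."""
    expected = chap_md5_response(identifier, secret, challenge)
    return secrets.compare_digest(expected, response)


if __name__ == "__main__":
    shared_secret = b"hypothetical-chap-secret"  # configured on both initiator and target
    challenge = secrets.token_bytes(16)          # authenticator sends a fresh random challenge
    response = chap_md5_response(1, shared_secret, challenge)  # responder's answer
    print(verify_chap_response(1, shared_secret, challenge, response))
```

Because each challenge is random and single-use, a captured response cannot simply be replayed later.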
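Two of the Select LD Binding Parameters options interact with sizing: LD Capacity must be adjusted manually when Number of LDs changes, and GPT Disk Type is needed for volumes larger than 2 TB. The 2 TB figure comes from MBR partition tables, which store sector addresses and counts as 32-bit values; with 512-byte sectors that is 2^32 × 512 bytes, or 2 TiB. The following is a minimal sketch, with hypothetical helper names and an assumed 512-byte sector size, that splits free pool capacity evenly across a chosen number of logical disks and flags which sizes need GPT; the array's own capacity units and rounding may differ.

```python
SECTOR_BYTES = 512  # assumption: 512-byte logical sectors
# Conservative rule: at 2**32 sectors (2 TiB with 512-byte sectors) or more,
# MBR's 32-bit sector fields can no longer describe the whole disk, so use GPT.
GPT_THRESHOLD_BYTES = 2**32 * SECTOR_BYTES


def needs_gpt(size_bytes: int) -> bool:
    """Return True if a disk of this size should use the GPT disk type."""
    return size_bytes >= GPT_THRESHOLD_BYTES


def plan_logical_disks(pool_free_bytes: int, count: int) -> list[tuple[int, bool]]:
    """Split free pool capacity evenly into `count` LDs; return (size_bytes, needs_gpt) per LD."""
    if count < 1:
        raise ValueError("count must be at least 1")
    size = pool_free_bytes // count  # any remainder stays unallocated in the pool
    return [(size, needs_gpt(size)) for _ in range(count)]


if __name__ == "__main__":
    TIB = 2**40
    # Changing the number of LDs changes each LD's capacity, and possibly its disk type.
    print(plan_logical_disks(6 * TIB, 2))
    print(plan_logical_disks(6 * TIB, 4))
```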


Your storage array is now ready for use.

Thank you for selecting NEC.


Appendix: Configuring Multipathing

NEC Storage PathManager provides multipath connectivity to the array, allowing you to configure path failover for redundancy, load balancing across paths for improved performance, and clustering support.

PathManager consists of the following components:

PathManager Driver – A driver that manages and controls basic PathManager functionality, such as redundant paths and load balancing.

Operation Command (spscmd) – A command-line utility that allows you to check the status of paths and configure PathManager settings.

Path Patrol Service – A service that automatically monitors path status and collects information from the PathManager driver for log collection activities.

Log Service – A service that writes events related to PathManager activity to the Event Log.

PathManager can be installed in a single-server direct connection configuration, a multi-server loop or fabric configuration, or a clustered server configuration, where it eliminates the need for failover to be configured on the cluster nodes.
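The following is a generic illustration of how round-robin load balancing and path failover fit together: I/O requests rotate across healthy paths, and a path marked as failed is skipped until it is restored. This is not PathManager's actual algorithm, which is not described in this guide; the class name and path labels are hypothetical.

```python
class RoundRobinPaths:
    """Generic round-robin path selector with failover (illustrative, not PathManager's algorithm)."""

    def __init__(self, paths: list[str]):
        if not paths:
            raise ValueError("at least one path is required")
        self.paths = list(paths)
        self.healthy = set(self.paths)
        self._next = 0  # index of the next path to try in the rotation

    def mark_failed(self, path: str) -> None:
        self.healthy.discard(path)  # stop sending I/O down this path

    def mark_restored(self, path: str) -> None:
        if path in self.paths:
            self.healthy.add(path)  # path rejoins the rotation

    def next_path(self) -> str:
        """Return the next healthy path in rotation; raise if every path is down."""
        for _ in range(len(self.paths)):
            path = self.paths[self._next]
            self._next = (self._next + 1) % len(self.paths)
            if path in self.healthy:
                return path
        raise RuntimeError("no healthy paths to the array")


if __name__ == "__main__":
    selector = RoundRobinPaths(["ctrl0-port0", "ctrl1-port0"])
    print([selector.next_path() for _ in range(4)])  # alternates between both paths
    selector.mark_failed("ctrl0-port0")
    print([selector.next_path() for _ in range(2)])  # all I/O fails over to ctrl1-port0
```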

Installing PathManager