Intel® Server Board

SE7520JR2

Technical Product Specification

Revision 1.0

October 2004

Enterprise Platforms and Services Marketing

Revision History

Date            Revision Number   Modifications

December 2003   0.5               Preliminary Release

June 2004       0.9               Memory Sub-system rewrite, BIOS Chapter Updated, Management Chapter re-write, Error Handling chapter re-write, several changes made to better reflect final design

October 2004    1.0               First non-NDA release; Updated IRQ routing diagrams, Updated mBMC Sensor tables, Updates to Regulatory Information, Updated Sensor data tables

C78844-002

Disclaimers

Information in this document is provided in connection with Intel® products. No license, express or implied, by estoppel or otherwise, to any intellectual property rights is granted by this document. Except as provided in Intel's Terms and Conditions of Sale for such products, Intel assumes no liability whatsoever, and Intel disclaims any express or implied warranty, relating to sale and/or use of Intel products including liability or warranties relating to fitness for a particular purpose, merchantability, or infringement of any patent, copyright or other intellectual property right. Intel products are not intended for use in medical, life saving, or life sustaining applications. Intel may make changes to specifications and product descriptions at any time, without notice.

Designers must not rely on the absence or characteristics of any features or instructions marked "reserved" or "undefined." Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them.

This document contains information on products in the design phase of development. Do not finalize a design with this information. Revised information will be published when the product is available. Verify with your local sales office that you have the latest datasheet before finalizing a design.

The Intel® Server Board SE7520JR2 may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request.

This document and the software described in it are furnished under license and may only be used or copied in accordance with the terms of the license. The information in this manual is furnished for informational use only, is subject to change without notice, and should not be construed as a commitment by Intel Corporation. Intel Corporation assumes no responsibility or liability for any errors or inaccuracies that may appear in this document or any software that may be provided in association with this document. Except as permitted by such license, no part of this document may be reproduced, stored in a retrieval system, or transmitted in any form or by any means without the express written consent of Intel Corporation.

Intel Corporation server boards contain a number of high-density VLSI and power delivery components that need adequate airflow to cool. Intel’s own chassis are designed and tested to meet the intended thermal requirements of these components when the fully integrated system is used together. It is the responsibility of the system integrator that chooses not to use Intel developed server building blocks to consult vendor datasheets and operating parameters to determine the amount of air flow required for their specific application and environmental conditions. Intel Corporation cannot be held responsible if components fail or the server board does not operate correctly when used outside any of their published operating or non-operating limits.

Intel, Pentium, Itanium, and Xeon are trademarks or registered trademarks of Intel Corporation.

*Other brands and names may be claimed as the property of others.

Copyright © Intel Corporation 2004.

Table of Contents

1. Introduction
1.1 Chapter Outline
1.2 Server Board Use Disclaimer
2. Server Board Overview
2.1 Server Board SE7520JR2 SKU Availability
2.2 Server Board SE7520JR2 Feature Set
3. Functional Architecture
3.1 Processor Sub-system
3.1.1 Processor Voltage Regulators
3.1.2 Reset Configuration Logic
3.1.3 Processor Module Presence Detection
3.1.4 GTL2006
3.1.5 Common Enabling Kit (CEK) Design Support
3.1.6 Processor Support
3.1.6.1 Processor Mis-population Detection
3.1.6.2 Mixed Processor Steppings
3.1.6.3 Mixed Processor Models
3.1.6.4 Mixed Processor Families
3.1.6.5 Mixed Processor Cache Sizes
3.1.6.6 Jumperless Processor Speed Settings
3.1.6.7 Microcode
3.1.6.8 Processor Cache
3.1.6.9 Hyper-Threading Technology
3.1.6.10 Intel® SpeedStep® Technology
3.1.6.11 EM64T Technology Support
3.1.7 Multiple Processor Initialization
3.1.8 CPU Thermal Sensors
3.1.9 Processor Thermal Control Sensor
3.1.10 Processor Thermal Trip Shutdown
3.1.11 Processor IERR
3.2 Intel® E7520 Chipset
3.2.1 Memory Controller Hub (MCH)
3.2.1.1 Front Side Bus (FSB)
3.2.1.2 MCH Memory Sub-System Overview
3.2.1.3 PCI Express
3.2.1.4 Hub Interface
3.2.2 PCI-X Hub (PXH)
3.2.2.1 Full-height Riser Slot
3.2.2.2 Low Profile Riser Slot
3.2.2.3 I/OxAPIC Controller
3.2.2.4 SMBus Interface
3.2.3 I/O Controller Hub (ICH5-R)
3.2.3.1 PCI Interface
3.2.3.2 IDE Interface (Bus Master Capability and Synchronous DMA Mode)
3.2.3.3 SATA Controller
3.2.3.4 Low Pin Count (LPC) Interface
3.2.3.5 Compatibility Modules (DMA Controller, Timer/Counters, Interrupt Controller)
3.2.3.6 Advanced Programmable Interrupt Controller (APIC)
3.2.3.7 Universal Serial Bus (USB) Controller
3.2.3.8 RTC
3.2.3.9 General Purpose I/O (GPIO)
3.2.3.10 Enhanced Power Management
3.2.3.11 System Management Bus (SMBus 2.0)
3.3 Memory Sub-System
3.3.1 Memory Sizing
3.3.2 Memory Population
3.3.3 ECC Memory Initialization
3.3.4 Memory Test
3.3.5 Memory Monitoring
3.3.6 Memory RASUM Features
3.3.6.1 DRAM ECC – Intel® x4 Single Device Data Correction (x4 SDDC)
3.3.6.2 Integrated Memory Scrub Engine
3.3.6.3 Retry on Uncorrectable Error
3.3.6.4 Integrated Memory Initialization Engine
3.3.6.5 DIMM Sparing Function
3.3.6.6 Memory Mirroring
3.3.6.7 Logging Memory RAS Information to the SEL
3.4 I/O Sub-System
3.4.1 PCI Subsystem
3.4.1.1 P32-A: 32-bit, 33-MHz PCI Subsystem
3.4.1.2 P64-A and P64-B: 64-bit, 100MHz PCI Subsystem
3.4.1.3 P64-Express: Dual x4 PCI Bus Segment
3.4.1.4 PCI Riser Slots
3.4.1.5 PCI Scan Order
3.4.1.6 PCI Bus Numbering
3.4.1.7 Device Number and IDSEL Mapping
3.4.1.8 Resource Assignment
3.4.1.9 Automatic IRQ Assignment
3.4.1.10 Option ROM Support
3.4.1.11 PCI APIs
3.4.2 Split Option ROM
3.4.3 Interrupt Routing
3.4.3.1 Legacy Interrupt Routing
3.4.3.2 APIC Interrupt Routing
3.4.3.3 Legacy Interrupt Sources
3.4.3.4 Serialized IRQ Support
3.4.3.5 IRQ Scan for PCIIRQ
3.4.4 SCSI Support
3.4.4.1 LSI* 53C1030 Dual Channel Ultra320 SCSI Controller
3.4.4.2 Zero Channel RAID
3.4.5 IDE Support
3.4.5.1 Ultra ATA/100
3.4.5.2 IDE Initialization
3.4.6 SATA Support
3.4.6.1 SATA RAID
3.4.6.2 Intel® RAID Technology Option ROM
3.4.7 Video Support
3.4.7.1 Video Modes
3.4.7.2 Video Memory Interface
3.4.7.3 Dual video
3.4.8 Network Interface Controller (NIC)
3.4.8.1 NIC Connector and Status LEDs
3.4.9 USB 2.0 Support
3.4.10 Super I/O Chip
3.4.10.1 GPIOs
3.4.10.2 Serial Ports
3.4.10.3 Removable Media Drives
3.4.10.4 Floppy Disk Support
3.4.10.5 Keyboard and Mouse Support
3.4.10.6 Wake-up Control
3.4.11 BIOS Flash
3.5 Configuration and Initialization
3.5.1 Memory Space
3.5.1.1 DOS Compatibility Region
3.5.1.2 Extended Memory
3.5.1.3 Memory Shadowing
3.5.1.4 System Management Mode Handling
3.5.2 I/O Map
3.5.3 Accessing Configuration Space
3.5.3.1 CONFIG_ADDRESS Register
3.6 Clock Generation and Distribution
4. System BIOS
4.1 BIOS Identification String
4.2 Flash Architecture and Flash Update Utility
4.3 BIOS Power On Self Test (POST)
4.3.1 User Interface
4.3.1.1 System Activity Window
4.3.1.2 Splash Screen/Diagnostic Window
4.3.1.3 POST Activity Window
4.3.2 BIOS Boot Popup Menu
4.4 BIOS Setup Utility
4.4.1 Localization
4.4.2 Entering BIOS Setup
4.4.2.1 Main Menu
4.4.2.2 Advanced Menu
4.4.2.3 Boot Menu
4.4.2.4 Security Menu
4.4.2.5 Server Menu
4.4.2.6 Exit Menu
4.5 Rolling BIOS and On-line Updates
4.5.1 Flash Update Utility
4.5.1.1 Flash BIOS
4.5.1.2 User Binary Area
4.5.1.3 Recovery Mode
4.5.1.4 BIOS Recovery
4.5.2 Configuration Reset
4.6 OEM Binary
4.7 Security
4.7.1 Operating Model
4.7.2 Password Clear Jumper
4.8 Extensible Firmware Interface (EFI)
4.8.1 EFI Shell
4.9 Operating System Boot, Sleep, and Wake
4.9.1 Microsoft* Windows* Compatibility
4.9.2 Advanced Configuration and Power Interface (ACPI)
4.9.2.1 Sleep and Wake Functionality
4.9.2.2 Power Switch Off to On
4.9.2.3 On to Off (OS absent)
4.9.2.4 On to Off (OS present)
4.9.2.5 On to Sleep (ACPI)
4.9.2.6 Sleep to On (ACPI)
4.9.2.7 System Sleep States
4.10 PXE BIOS Support
4.11 Console Redirection
5. Platform Management
5.1 Platform Management Architecture Overview
5.1.1 5V Standby
5.1.2 IPMI Messaging, Commands, and Abstractions
5.1.3 IPMI ‘Sensor Model’
5.1.4 Private Management Busses
5.1.5 Management Controllers
5.2 On-Board Platform Management Features and Functionality
5.2.1 Server Management I2C Buses
5.2.2 Power Control Interfaces
5.2.3 External Interface to the mBMC
5.3 mBMC Hardware Architecture
5.3.1 Power Supply Interface Signals
5.3.2 Power Control Sources
5.3.3 Power-up Sequence
5.3.4 Power-down Sequence
5.3.5 System Reset Control
5.3.5.1 Reset Signal Output
5.3.5.2 Reset Control Sources
5.3.5.3 Control Panel System Reset
5.3.5.4 Control Panel Indicators
5.3.5.5 Control Panel Inputs
5.3.6 Secure Mode Operation
5.3.7 Baseboard Fan Control
5.3.8 mBMC Peripheral SMBus
5.3.9 Watchdog Timer
5.3.10 System Event Log (SEL)
5.3.10.1 SEL Erasure
5.3.10.2 Timestamp Clock
5.3.11 Sensor Data Record (SDR) Repository
5.3.11.1 Initialization Agent
5.3.12 Field Replaceable Unit (FRU) Inventory Devices
5.3.12.1 mBMC FRU Inventory Area Format
5.3.13 NMI Generation
5.3.14 SMI Generation
5.3.15 Event Message Reception
5.3.16 mBMC Self Test
5.3.17 Messaging Interfaces
5.3.17.1 Channel Management
5.3.17.2 User Model
5.3.17.3 Request/Response Protocol
5.3.17.4 Host to mBMC Communication Interface
5.3.17.5 LAN Interface
5.3.18 Event Filtering and Alerting
5.3.18.1 Platform Event Filtering (PEF)
5.3.18.2 Alert over LAN
5.3.19 mBMC Sensor Support
5.3.20 IMM BMC Sensor Support
5.4 Wired For Management (WFM)
5.5 Vital Product Data (VPD)
5.6 System Management BIOS (SMBIOS)
6. Error Reporting and Handling
6.1 Fault Resilient Booting (FRB)
6.1.1 FRB1 – BSP Self-Test Failures
6.1.2 FRB2 – BSP POST Failures
6.1.3 FRB3 – BSP Reset Failures
6.1.4 AP Failures
6.1.5 Treatment of Failed Processors
6.1.6 Memory Error Handling in RAS Mode
6.1.7 Memory Error Handling in non-RAS Mode
6.1.8 DIMM Enabling
6.1.9 Single-bit ECC Error Throttling Prevention
6.2 Error Logging
6.2.1.1 PCI Bus Error
6.2.1.2 Processor Bus Error
6.2.1.3 Memory Bus Error
6.2.1.4 System Limit Error
6.2.1.5 Processor Failure
6.2.1.6 Boot Event
6.3 Error Messages and Error Codes
6.3.1 POST Error Messages
6.3.2 POST Error Codes
6.3.3 BIOS Generated POST Error Beep Codes
6.3.4 Boot Block Error Beep Codes
6.3.5 BMC Generated Beep Codes (Professional/Advanced only)
6.4 Checkpoints
6.4.1 System ROM BIOS POST Task Test Point (Port 80h Code)

6.4.2 Diagnostic LEDs .................................................................................................. 167

6.4.3 POST Code Checkpoints..................................................................................... 168

6.4.4 Bootblock Initialization Code Checkpoints........................................................... 170

6.4.5 Bootblock Recovery Code Checkpoint ................................................................ 171

6.4.6 DIM Code Checkpoints........................................................................................ 172

6.4.7 ACPI Runtime Checkpoints ................................................................................. 173

6.4.8 POST Progress FIFO (Professional / Advanced only)......................................... 173

6.4.9 Memory Error Codes ........................................................................................... 173

6.5 Light Guided Diagnostics.....................................................................................174

7. Connectors and Jumper Blocks ..................................................................................... 175

7.1 Power Connectors ............................................................................................... 175

7.2 Riser Slots ...........................................................................................................176

7.2.1 Low Profile PCI-X Riser Slot................................................................................ 176

7.2.2 Full Height PCI-X Riser Slot ................................................................................179

7.3 System Management Headers ............................................................................ 184

7.3.1 Intel® Management Module Connector ............................................................... 184

7.3.2 ICMB Header ....................................................................................................... 187

7.3.3 IPMB Header ....................................................................................................... 187

7.3.4 OEM RMC Connector (J3B2) ..............................................................................189

7.4 Control Panel Connectors.................................................................................... 189

7.5 I/O Connectors..................................................................................................... 192

7.5.1 VGA Connector.................................................................................................... 192

7.5.2 NIC Connectors ................................................................................................... 193

7.5.3 SCSI Connectors ................................................................................................. 193

7.5.4 ATA-100 Connector ............................................................................................. 194

7.5.5 SATA Connectors ................................................................................................ 195

7.5.6 Floppy Controller Connector................................................................................ 195

7.5.7 Serial Port Connectors......................................................................................... 196

7.5.8 Keyboard and Mouse Connector ......................................................................... 197

7.5.9 USB Connector.................................................................................................... 197

7.6 Fan Headers ........................................................................................................ 198

7.7 Misc. Headers and Connectors ...........................................................................200

7.7.1 Chassis Intrusion Header ....................................................................................200

7.7.2 Hard Drive Activity LED Header........................................................................... 200

7.8 Jumper Blocks ..................................................................................................... 201


8. Design and Environmental Specifications..................................................................... 202

8.1 Server Board SE7520JR2 Design Specification.................................................. 202

8.2 Power Supply Requirements ............................................................................... 202

8.2.1 Output Connectors............................................................................................... 202

8.2.2 Grounding ............................................................................................................ 205

8.2.3 Remote Sense ..................................................................................................... 206

8.2.4 Standby Outputs .................................................................................................. 206

8.2.5 Voltage Regulation ..............................................................................................207

8.2.6 Dynamic Loading ................................................................................................. 207

8.2.7 Capacitive Loading .............................................................................................. 208

8.2.8 Closed Loop Stability ........................................................................................... 208

8.2.9 Common Mode Noise .......................................................................................... 208

8.2.10 Ripple / Noise ......................................................................................................208

8.2.11 Soft Starting ......................................................................................................... 209

8.2.12 Zero Load Stability Requirements .......................................................................209

8.2.13 Timing Requirements........................................................................................... 209

8.2.14 Residual Voltage Immunity in Standby Mode ...................................................... 211

8.3 Product Regulatory Compliance .......................................................................... 212

8.3.1 Product Safety Compliance ................................................................................. 212

8.3.2 Product EMC Compliance – Class A Compliance ............................................... 212

8.3.3 Certifications / Registrations / Declarations ......................................................... 213

8.3.4 Product Regulatory Compliance Markings .......................................................... 213

8.4 Electromagnetic Compatibility Notices ................................................................214

8.4.1 FCC (USA)........................................................................................................... 214

8.4.2 Industry Canada (ICES-003) ............................................................................... 214

8.4.3 Europe (CE Declaration of Conformity) ............................................................... 215

8.4.4 Taiwan Declaration of Conformity (BSMI)............................................................ 215

8.4.5 Korean Compliance (RRL)................................................................................... 215

9. Miscellaneous Board Information................................................................................... 216

9.1 Updating the System Software ............................................................................ 216

9.2 Programming FRU and SDR Data....................................................................... 216

9.3 Clearing CMOS.................................................................................................... 217

9.3.1 CMOS Clear Using J1H2 Jumper Block .............................................................. 217

9.3.2 CMOS Clear using Control Panel ........................................................................ 217

9.4 BIOS Recovery Operation ................................................................................... 218


Appendix A: Integration and Usage Tips.............................................................................. 221

Glossary................................................................................................................................... 222

Reference Documents ............................................................................................................225


List of Figures

Figure 1. SE7520JR2 Board Layout ........................................................................................... 23

Figure 2. Server Board Dimensions............................................................................................ 25

Figure 3. Server Board SE7520JR2 Block Diagram ................................................................... 26

Figure 4. CEK Processor Mounting ............................................................................................28

Figure 5. Identifying Banks of Memory .......................................................................................38

Figure 6. Four DIMM Memory Mirror Configuration .................................................................... 45

Figure 7. Six DIMM Memory Mirror Configuration (DDR2 Only) ................................................. 46

Figure 8. Interrupt Routing Diagram (ICH5-R Internal) ............................................................... 55

Figure 9. Interrupt Routing Diagram ...........................................................................................56

Figure 10. PCI Interrupt Mapping Diagram ................................................................................. 57

Figure 11. PCI Interrupt Mapping Diagram for 2U Active Riser Card .........................................57

Figure 12. Serial Port Mux Logic................................................................................................. 68

Figure 13. RJ45 Serial B Port Jumper Block Location and Setting............................................. 68

Figure 14. Intel® Xeon™ Processor Memory Address Space..................................................... 70

Figure 15. DOS Compatibility Region ......................................................................................... 71

Figure 16. Extended Memory Map.............................................................................................. 73

Figure 17. BIOS Identification String...........................................................................................80

Figure 18. POST Console Interface............................................................................................ 82

Figure 19. On-Board Platform Management Architecture......................................................... 115

Figure 20. mBMC in a Server Management System................................................................. 121

Figure 21. External Interfaces to mBMC................................................................................... 123

Figure 22. mBMC Block Diagram .............................................................................................124

Figure 23. Power Supply Control Signals ................................................................................. 125

Figure 24. Location of Diagnostic LEDs on Baseboard ............................................................ 168

Figure 25. 34-Pin SSI Compliant Control Panel Header........................................................... 192

Figure 26. System Configuration (J1H2) Jumper Block Settings.............................................. 201

Figure 27. Power Harness Specification Drawing..................................................................... 203

Figure 28. Output Voltage Timing ............................................................................................. 210

Figure 29. Turn On/Off Timing (Power Supply Signals)............................................................ 211


List of Tables

Table 1: Baseboard Layout Reference ....................................................................................... 24

Table 2: Processor Support Matrix .............................................................................................28

Table 3: Supported DDR-266 DIMM Populations ....................................................................... 39

Table 4: Supported DDR-333 DIMM Populations ....................................................................... 39

Table 5: Supported DDR2-400 DIMM Populations ..................................................................... 40

Table 6: Memory Monitoring Support by Server Management Level.......................................... 41

Table 7: PCI Bus Segment Characteristics................................................................................. 48

Table 8: PCI Configuration IDs and Device Numbers................................................................. 51

Table 9: PCI Interrupt Routing/Sharing....................................................................................... 53

Table 10: Interrupt Definitions.....................................................................................................54

Table 11: Video Modes ............................................................................................................... 63

Table 12: Video Memory Interface.............................................................................................. 63

Table 13: Super I/O GPIO Usage Table ..................................................................................... 65

Table 14: Serial A Header Pin-out .............................................................................................. 67

Table 15: SMM Space Table ......................................................................................................75

Table 16: I/O Map ....................................................................................................................... 76

Table 17: Sample BIOS Popup Menu........................................................................................ 84

Table 18: BIOS Setup Keyboard Command Bar Options .......................................................... 84

Table 19: BIOS Setup, Main Menu Options............................................................................... 85

Table 20: BIOS Setup, Advanced Menu Options....................................................................... 86

Table 21: BIOS Setup, Processor Configuration Sub-menu Options ........................................ 87

Table 22: BIOS Setup IDE Configuration Menu Options ........................................................... 88

Table 23: Mixed P-ATA-S-ATA Configuration with only Primary P-ATA.................................... 89

Table 24: BIOS Setup, IDE Device Configuration Sub-menu Selections .................................. 90

Table 25: BIOS Setup, Floppy Configuration Sub-menu Selections.......................................... 91

Table 26: BIOS Setup, Super I/O Configuration Sub-menu....................................................... 91

Table 27: BIOS Setup, USB Configuration Sub-menu Selections ............................................. 92

Table 28: BIOS Setup, USB Mass Storage Device Configuration Sub-menu Selections.......... 92

Table 29: BIOS Setup, PCI Configuration Sub-menu Selections .............................................. 93

Table 30: BIOS Setup, Memory Configuration Sub-menu Selections........................................ 94

Table 31: BIOS Setup, Boot Menu Selections ........................................................................... 95

Table 32: BIOS Setup, Boot Settings Configuration Sub-menu Selections ............................... 96


Table 33: BIOS Setup, Boot Device Priority Sub-menu Selections ...........................................97

Table 34: BIOS Setup, Hard Disk Drive Sub-Menu Selections.................................................. 97

Table 35: BIOS Setup, Removable Drives Sub-menu Selections.............................................. 97

Table 36: BIOS Setup, CD/DVD Drives Sub-menu Selections.................................................. 97

Table 37: BIOS Setup, Security Menu Options.......................................................................... 98

Table 38: BIOS Setup, Server Menu Selections........................................................................ 99

Table 39: BIOS Setup, System Management Sub-menu Selections....................................... 100

Table 40: BIOS Setup, Serial Console Features Sub-menu Selections .................................. 101

Table 41: BIOS Setup, Event Log Configuration Sub-menu Selections .................................. 101

Table 42: BIOS Setup, Exit Menu Selections ..........................................................................102

Table 43: Security Features Operating Model ......................................................................... 106

Table 44: Supported Wake Events .......................................................................................... 111

Table 45: Supported Management Features by Tier .................................................................113

Table 46: Server Management I2C Bus ID Assignments .......................................................... 122

Table 47: Power Control Initiators............................................................................................. 126

Table 48: System Reset Sources and Actions.......................................................................... 127

Table 49: SSI Power LED Operation ........................................................................................ 128

Table 50: Fault / Status LED.....................................................................................................129

Table 51: Chassis ID LED......................................................................................................... 129

Table 52: Supported Channel Assignments ...............................................................................134

Table 53: LAN Channel Capacity.............................................................................................. 135

Table 54: PEF Action Priorities ................................................................................................. 137

Table 55: Platform Sensors for On-Board Platform Instrumentation ........................................ 138

Table 56: Platform Sensors for Intel Management Modules - Professional and Advanced...... 142

Table 57: Memory Error Handling mBMC vs Sahalee .............................................................. 153

Table 58: Memory Error Handling in non-RAS mode................................................................ 154

Table 59: Memory BIOS Messages ......................................................................................... 156

Table 60: Boot BIOS Messages............................................................................................... 157

Table 61: Storage Device BIOS Messages .............................................................................157

Table 62: Virus Related BIOS Messages ................................................................................160

Table 63: System Configuration BIOS Messages.................................................................... 160

Table 64: CMOS BIOS Messages ........................................................................................... 161

Table 65: Miscellaneous BIOS Messages ............................................................................... 161

Table 66: USB BIOS Error Messages...................................................................................... 161

Table 67: SMBIOS BIOS Error Messages ............................................................................... 162


Table 68: Error Codes and Messages ...................................................................................... 162

Table 69: Error Codes Sent to the Management Module ......................................................... 164

Table 70: BIOS Generated Beep Codes................................................................................... 165

Table 71: Troubleshooting BIOS Beep Codes.......................................................................... 166

Table 72: Boot Block Error Beep Codes .................................................................................. 166

Table 73: BMC Beep Code ....................................................................................................... 167

Table 74: POST Progress Code LED Example ........................................................................167

Table 75: POST Code Checkpoints.......................................................................................... 168

Table 76: Bootblock Initialization Code Checkpoints ................................................................ 170

Table 77: Bootblock Recovery Code Checkpoint .....................................................................171

Table 78: DIM Code Checkpoints ............................................................................................. 172

Table 79: ACPI Runtime Checkpoints ......................................................................................173

Table 80: Memory Error Codes................................................................................................. 173

Table 81: Power Connector Pin-out.......................................................................................... 175

Table 82: 12V Power Connector (J4J1).................................................................................... 175

Table 83: Power Supply Signal Connector (J1G1) ................................................................... 176

Table 84: IDE Power Connector Pinout (U2E1)........................................................................ 176

Table 85: Low Profile Riser Slot Pinout ....................................................................................176

Table 86: Full-height Riser Slot Pinout .....................................................................................180

Table 87: IMM Connector Pinout (J1C1) ..................................................................................184

Table 88: ICMB Header Pin-out (J1D1) .................................................................................... 187

Table 89: IPMB Connector Pin-out (J3F1)................................................................................ 188

Table 90: OEM RMC Connector Pinout (J3B2) ........................................................................ 189

Table 91: 100-Pin Flex Cable Connector Pin-out (For Intel Chassis w/Backplane) (J2J1)....... 189

Table 92: 50-Pin Control Panel Connector (Intel Chassis w/No Backplane) (J1J2) ................. 190

Table 93: Control Panel SSI Standard 34-Pin Header Pin-out ................................................. 191

Table 94: VGA Connector Pin-out ............................................................................................192

Table 95: RJ-45 10/100/1000 NIC Connector Pin-out .............................................................. 193

Table 96: Internal/External 68-pin VHDCI SCSI Connector Pin-out .........................................193

Table 97: ATA-100 40-pin Connector Pin-out (J3K1) ............................................................... 194

Table 98: SATA Connector Pin-out (J1H1 and J1H5) ..............................................................195

Table 99: Legacy 34-pin Floppy Drive Connector Pin-out (J3K2)............................................. 196

Table 100: External RJ-45 Serial B Port Pin-out....................................................................... 196

Table 101: Internal 9-pin Serial A Header Pin-out (J1A3)......................................................... 196

Table 102: Stacked PS/2 Keyboard and Mouse Port Pin-out ................................................... 197


Table 103: External USB Connector Pin-out ............................................................................197

Table 104: Internal 1x10 USB Connector Pin-out (J1F1) .........................................................198

Table 105: Internal 2x5 USB Connector (J1G1) ....................................................................... 198

Table 106: CPU1/CPU2 Fan Connector Pin-out (J5F2, J7F1) ................................................. 199

Table 107: Intel Server Chassis Fan Header Pin-out (J3K6).................................................... 199

Table 108: 3-Pin Fan Speed Controlled Fan Header (J3K3) .................................................... 200

Table 109: Chassis Intrusion Header (J1A1) ............................................................................ 200

Table 110: Hard Drive Activity LED Header(J1A2) ................................................................... 200

Table 111: Jumper Block Definitions ........................................................................................201

Table 112: Board Design Specifications ................................................................................... 202

Table 113: P1 Main Power Connector ...................................................................................... 204

Table 114: P2 Processor Power Connector.............................................................................. 204

Table 115: P3 Baseboard Signal Connector............................................................................. 205

Table 116: Peripheral Power Connectors ................................................................................. 205

Table 117: P7 Hard Drive Power Connector............................................................................. 205

Table 118: Voltage Regulation Limits ....................................................................................... 207

Table 119: Transient Load Requirements................................................................................. 207

Table 120: Capacitive Loading Conditions ................................................................................ 208

Table 121: Ripple and Noise..................................................................................................... 208

Table 122: Output Voltage Timing ............................................................................................ 209

Table 123: Turn On/Off Timing ................................................................................................. 210

Table 124: Product Certification Markings ............................................................................. 213


1. Introduction

This Technical Product Specification (TPS) details the architecture and feature set of the Intel® Server Board SE7520JR2.

The target audience for this document is anyone who needs more in-depth detail about the server board than the board’s User's Guide provides. It is a technical document meant to help readers understand the specific features of the board.

This is one of several technical documents available for this server board. All of the functional

sub-systems that make up the board are described in this document. However, some low-level

detail of specific sub-systems is not included. Design level information for specific sub-systems

can be obtained by ordering the External Product Specification (EPS) for a given sub-system.

The EPS documents available for this server board include the following:

• Intel® Server Board SE7520JR2 BIOS EPS

• Intel® Server Board SE7520JR2 Baseboard Management Controller EPS

• mini Baseboard Management Controller (mBMC) Core EPS for IPMI-based Systems

• Sahalee Core BMC EPS for IPMI v1.5

These documents are not publicly available and must be ordered through your local Intel representative.

1.1 Chapter Outline

This document is divided into the following chapters:

• Chapter 1 – Introduction

• Chapter 2 – Server Board Overview

• Chapter 3 – Board Architecture

• Chapter 4 – System BIOS

• Chapter 5 – Platform Management Architecture

• Chapter 6 – Error Reporting and Handling

• Chapter 7 – Connectors and Jumper Blocks

• Chapter 8 – Design and Environmental Specifications

• Chapter 9 – Miscellaneous Board Information

• Appendix A – Integration and Usage Tips


1.2 Server Board Use Disclaimer

Intel Corporation server boards contain a number of high-density VLSI and power delivery

components that need adequate airflow to cool. Intel ensures through its own chassis

development and testing that when Intel server building blocks are used together, the fully

integrated system will meet the intended thermal requirements of these components. It is the

responsibility of the system integrator who chooses not to use Intel developed server building

blocks to consult vendor datasheets and operating parameters to determine the amount of

airflow required for their specific application and environmental conditions. Intel Corporation

cannot be held responsible if components fail or the server board does not operate correctly

when used outside any of their published operating or non-operating limits.


2. Server Board Overview

The Intel® Server Board SE7520JR2 is a monolithic printed circuit board with features that were

designed to support the high density 1U and 2U server markets.

2.1 Server Board SE7520JR2 SKU Availability

In this document, the name SE7520JR2 is used to describe the family of boards that are made

available under a common product name. The core features are common to all boards;

however, each board has the following distinctions:

| Product Code | Feature Distinctions |
|---|---|
| SE7520JR2SCSID2 | Onboard SCSI + Onboard SATA (RAID) + DDR2 – 400 MHz |
| SE7520JR2SCSID1 | Onboard SCSI + Onboard SATA (RAID) + DDR – 266/333 MHz |
| SE7520JR2ATAD2 | Onboard SATA (RAID) + DDR2 – 400 MHz |
| SE7520JR2ATAD1 | Onboard SATA (RAID) + DDR – 266/333 MHz |

Throughout this document, all references to the Server Board SE7520JR2 refer to all four

board SKUs unless specifically noted otherwise. Depending on the board differences listed

above, the board you select may not have all of the features described.
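The SKU distinctions above can be captured as a small lookup table. The following is a minimal sketch only: the `SkuFeatures` type, the `SKUS` dictionary, and the `describe` helper are hypothetical names introduced for illustration, and the values are transcribed from the product code table in this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SkuFeatures:
    """Feature distinctions for one board SKU (illustrative structure)."""
    onboard_scsi: bool        # True for the SCSI SKUs
    memory_type: str          # "DDR2" or "DDR"
    dimm_speeds_mhz: tuple    # DIMM speeds listed for the SKU


# Values transcribed from the product code table; all SKUs include onboard SATA (RAID).
SKUS = {
    "SE7520JR2SCSID2": SkuFeatures(True, "DDR2", (400,)),
    "SE7520JR2SCSID1": SkuFeatures(True, "DDR", (266, 333)),
    "SE7520JR2ATAD2": SkuFeatures(False, "DDR2", (400,)),
    "SE7520JR2ATAD1": SkuFeatures(False, "DDR", (266, 333)),
}


def describe(product_code: str) -> str:
    """Return a one-line summary of the distinctions for a product code."""
    sku = SKUS[product_code]
    storage = "SCSI + SATA (RAID)" if sku.onboard_scsi else "SATA (RAID)"
    speeds = "/".join(str(s) for s in sku.dimm_speeds_mhz)
    return f"{product_code}: {storage}, {sku.memory_type} {speeds} MHz"
```

A lookup of this kind makes it easy to check, for example, whether a feature marked "SCSI SKU only" applies to a given board.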

2.2 Server Board SE7520JR2 Feature Set

• Dual processor slots supporting 800 MHz Front Side Bus (FSB) Intel® Xeon™ processors
• Intel E7520 Chipset (MCH, PXH, ICH5-R)
• Two PCI riser slots
o Riser Slot 1: Supports low profile PCI-X 66/100 MHz cards
o Riser Slot 2: Using Intel® adaptive slot technology and different riser cards, this slot is capable of supporting full height PCI-X 66/100/133 or PCI-Express cards.
• Six DIMM slots supporting DDR2 – 400 MHz DIMMs or DDR – 266/333 MHz DIMMs¹
• Dual channel LSI* 53C1030 Ultra320 SCSI Controller with integrated RAID 0/1 support (SCSI SKU only)
• Dual Intel® 82546GB 10/100/1000 Network Interface Controllers (NICs)
• On-board ATI* Rage XL video controller with 8MB SDRAM
• On-board platform instrumentation using a National* PC87431M mini-BMC
• External IO connectors
o Stacked PS/2 ports for keyboard and mouse
o RJ45 Serial B Port
o Two RJ45 NIC connectors
o 15-pin video connector
o Two USB 2.0 ports
o U320 High density SCSI connector (Channel B) (SCSI SKU only)
• Internal IO Connectors / Headers
o Two onboard USB port headers. Each header is capable of supporting two USB 2.0 ports.
o One 10-pin DH10 Serial A Header
o One Ultra320 68-pin SCSI Connector (Channel A) (SCSI SKU only)
o Two SATA connectors with integrated chipset RAID 0/1 support
o One ATA100 connector
o One floppy connector
o SSI-compliant (34-pin) and custom control panel headers (50-pin and 100-pin)
o SSI-compliant 24-pin main power connector. The first 20 pins support the ATX-12V standard.
o Intel® Management Module (IMM) connector supporting both Professional Edition and Advanced Edition management modules
• Intel® Light-Guided Diagnostics on all FRU devices (processors, memory, power)
• Port-80 Diagnostic LEDs displaying POST codes

¹ The use of DDR2 – 400 MHz or DDR – 266/333 MHz DIMMs depends on which board SKU is used. DDR2 DIMMs cannot be used on a board designed to support DDR, and DDR DIMMs cannot be used on a board designed to support DDR2.

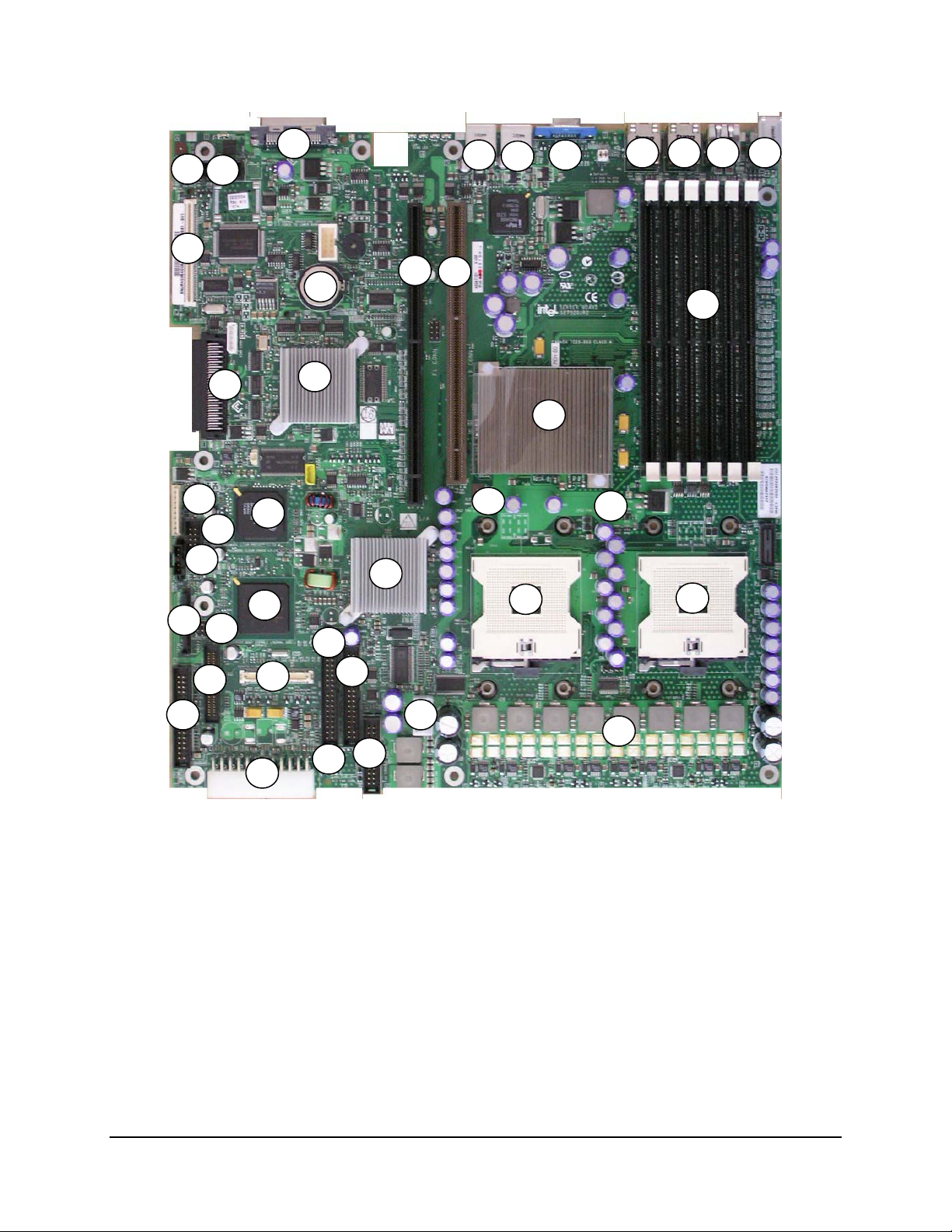

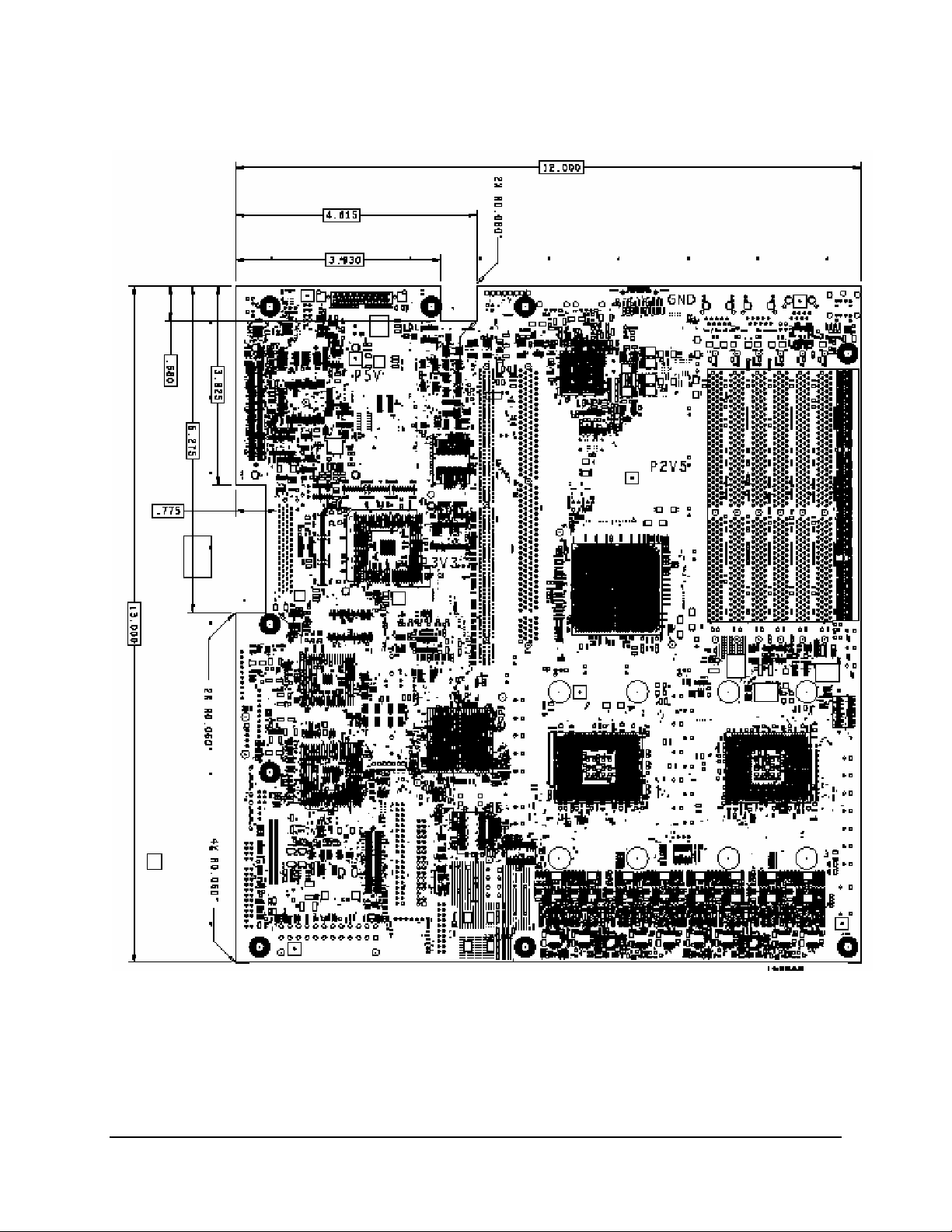

The following figure shows the board layout of the Server Board SE7520JR2. Each connector

and major component is labeled with a reference number and described in Table 1.


Figure 1. SE7520JR2 Board Layout


Table 1: Baseboard Layout Reference

| Ref # | Description | Ref # | Description |
|---|---|---|---|
| 1 | (J1A1) 2-Pin Chassis Intrusion Header; (J1A2) 2-Pin Hard Drive Act LED Header; (J1A4) Rolling BIOS Jumper | 22 | CPU #2 Fan Header |
| 2 | 10-Pin DH10 Serial A Header | 23 | CPU #1 Fan Header |
| 3 | Ext SCSI Channel B Connector | 24 | 5-pin Power Sense Header |
| 4 | USB Port 2 | 25 | PXH – Chipset Component |
| 5 | USB Port 1 | 26 | CPU #2 Socket |
| 6 | Video Connector | 27 | CPU #1 Socket |
| 7 | NIC #2 | 28 | ICH5-R – Chipset Component |
| 8 | NIC #1 | 29 | SATA Ports |
| 9 | RJ-45 Serial B Port | 30 | (J1H2) Recovery Boot Jumper; (J1H3) Password Clear Jumper; (J1H4) CMOS Clear Jumper |
| 10 | Stacked PS/2 Keyboard and Mouse Ports | 31 | Legacy ATA-100 connector |
| 11 | Intel Management Module Connector | 32 | 50-pin Control Panel Header |
| 12 | CMOS Battery | 33 | 100-pin Control Panel, Floppy, IDE Connector |
| 13 | Full Height Riser Card Slot | 34 | Legacy Floppy Connector |
| 14 | Low Profile Riser Card Slot | 35 | SSI 34-pin Control Panel Header |
| 15 | DIMM Slots | 36 | 8-Pin AUX Power Connector |
| 16 | 68-pin SCSI Channel A Connector | 37 | 24-Pin Main Power Connector |
| 17 | LSI 53C1030 SCSI Controller | 38 | SSI System Fan Header |
| 18 | MCH – Chipset Component | 39 | SR1400/SR2400 System Fan Header |
| 19 | 1x10 USB Header | 40 | Processor Voltage Regulator Circuitry |
| 20 | 2x5 USB Header | | |
| 21 | ATI RageXL Video Controller | | |


The following mechanical drawing shows the physical dimensions of the baseboard.

Figure 2. Server Board Dimensions


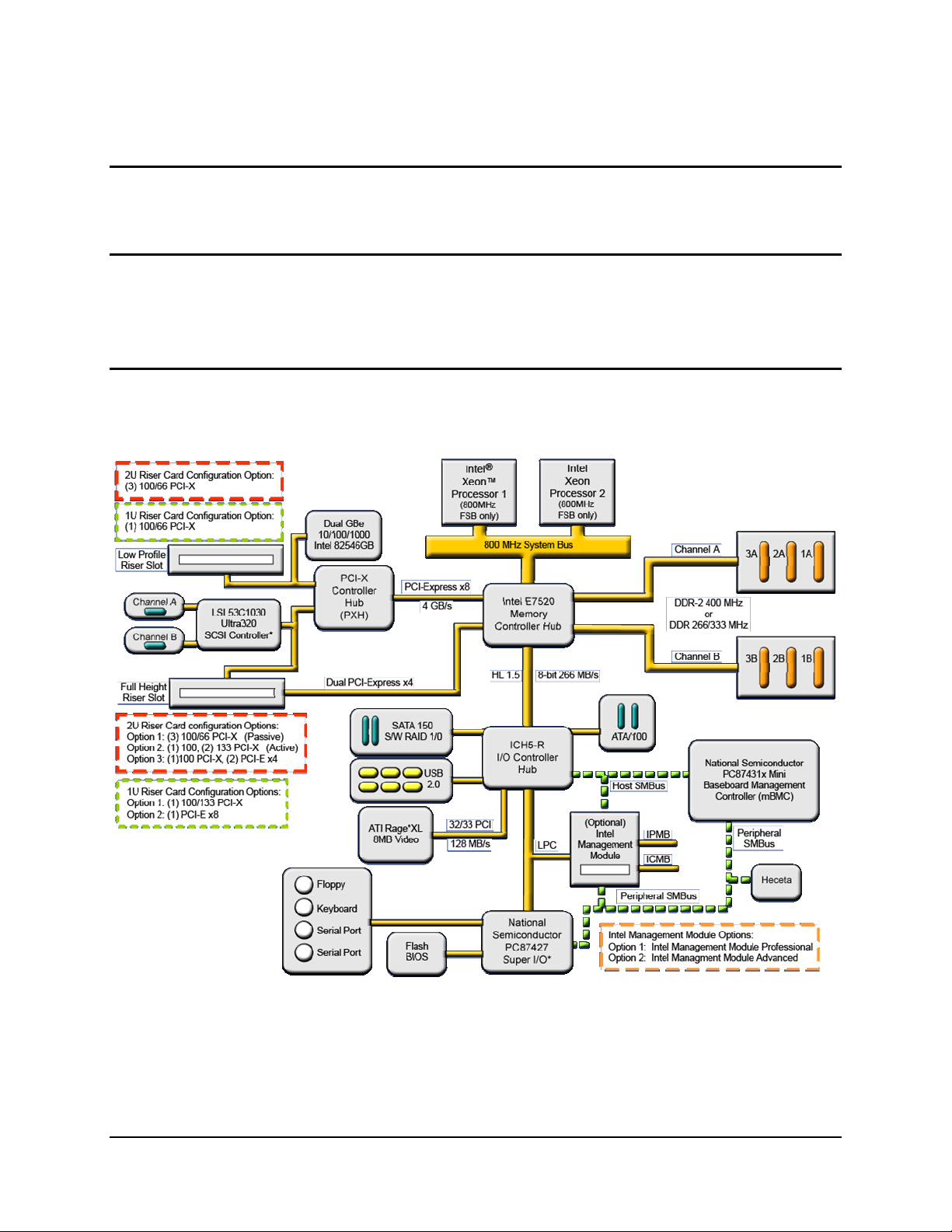

3. Functional Architecture

This chapter provides a high-level description of the functionality associated with the

architectural blocks that make up the Intel Server Board SE7520JR2.

Note: This document describes the features and functionality of the Server Board SE7520JR2

when using standard on-board platform instrumentation. Some functionality and feature

descriptions change when using either the Professional Edition or Advanced Edition Intel

Management Modules. Functional changes that apply when either of these two options is used are

described in a separate document.

Figure 3. Server Board SE7520JR2 Block Diagram


3.1 Processor Sub-system

The support circuitry for the processor sub-system consists of the following:

• Dual 604-pin zero insertion force (ZIF) processor sockets

• Processor host bus AGTL+ support circuitry

• Reset configuration logic

• Processor module presence detection logic

• BSEL detection capabilities

• CPU signal level translation

• Common Enabling Kit (CEK) CPU retention support

3.1.1 Processor Voltage Regulators

The baseboard has two VRDs (Voltage Regulator Devices) providing the appropriate voltages to the installed processors. Each VRD is compliant with the VRD 10.1 specification and is designed to support Intel® Xeon™ processors that require up to a sustained maximum of 105 A and a peak of 120 A.

The baseboard supports the current requirements and processor speed requirements defined in the Flexible Mother Board (FMB) specification for all 800 MHz FSB Intel Xeon processors. FMB is an estimate of the maximum values that the 800 MHz FSB versions of the Intel Xeon processors will have over their lifetime. The value is only an estimate, and actual specifications for future processors may differ. At present, the current demand per FMB is a sustained maximum of 105 A and a peak of 120 A.
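As a quick illustration of the current limits stated in this section, the sketch below checks a processor's current demand against the 105 A sustained and 120 A peak figures. The function and constant names are hypothetical and chosen only for this example; real processor current requirements come from the processor datasheet.

```python
# Per-VRD limits quoted in this section (amps).
SUSTAINED_MAX_A = 105.0
PEAK_MAX_A = 120.0


def within_vrd_budget(sustained_a: float, peak_a: float) -> bool:
    """Return True if both the sustained and peak demand fit the VRD limits."""
    return sustained_a <= SUSTAINED_MAX_A and peak_a <= PEAK_MAX_A
```

For example, a processor that needs 100 A sustained and 119 A peak fits the budget, while one that needs 121 A peak does not.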

3.1.2 Reset Configuration Logic

The BIOS determines the processor stepping, cache size, and other attributes through the CPUID instruction. All processors in the system must operate at the same frequency, have the same cache size, and have the same VID. No mixing of product families is supported. Processors run at a fixed speed and cannot be programmed to operate at a lower or higher speed.
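The matching rule above (same frequency, same cache size, same VID, same product family) can be sketched as a simple equality check. This is an illustration only, not the BIOS implementation: the `ProcessorInfo` type and its field names are hypothetical, and the values are assumed to have already been read from each processor.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ProcessorInfo:
    """Attributes that must be identical across installed processors (illustrative)."""
    core_mhz: int   # operating frequency
    cache_kb: int   # cache size
    vid: int        # voltage identification code
    family: str     # product family


def processors_match(procs: Sequence[ProcessorInfo]) -> bool:
    """Return True if every installed processor has identical attributes."""
    # A frozen dataclass is hashable, so identical processors collapse to one set entry.
    return len(set(procs)) <= 1
```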

3.1.3 Processor Module Presence Detection

Logic is provided on the baseboard to detect the presence and identity of installed processors.

In dual-processor configurations, the on-board mini Baseboard Management Controller (mBMC)

must read the processor voltage identification (VID) bits for each processor before turning on

the VRD. If the VIDs of the two processors are not identical, then the mBMC will not turn on the

VRD. Prior to enabling the embedded VRD, circuitry on the baseboard ensures that the

following criteria are met:

• In a uni-processor configuration, CPU 1 is installed

• Only supported processors are installed in the system to prevent damage to the MCH

• In dual processor configurations, both processors support the same FSB frequency
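The VRD enable conditions listed above can be summarized as a single decision function. This is a minimal sketch under stated assumptions, not the mBMC firmware or the baseboard logic itself: `SocketState` and `may_enable_vrd` are hypothetical names, and an empty board is assumed to leave the VRD off.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SocketState:
    """State of one populated CPU socket (illustrative fields)."""
    vid: int          # VID bits read from the processor
    fsb_mhz: int      # FSB frequency the processor supports
    supported: bool   # True if the processor is a supported type


def may_enable_vrd(cpu1: Optional[SocketState], cpu2: Optional[SocketState]) -> bool:
    """Return True if the criteria in this section allow the VRD to be enabled.

    A socket is passed as None when it is empty.
    """
    if cpu1 is None:
        # CPU 1 must be installed; this also rejects a lone processor in socket 2.
        # Treating a fully empty board as "do not enable" is an assumption.
        return False
    installed = [cpu1] if cpu2 is None else [cpu1, cpu2]
    if not all(p.supported for p in installed):
        return False  # only supported processors may be installed
    if cpu2 is not None:
        if cpu1.fsb_mhz != cpu2.fsb_mhz:
            return False  # both processors must support the same FSB frequency
        if cpu1.vid != cpu2.vid:
            return False  # the mBMC will not turn on the VRD if the VIDs differ
    return True
```

Passing `None` for an empty socket keeps the uni-processor and dual-processor cases in one function, so each criterion appears exactly once.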

3.1.4 GTL2006

The GTL2006 is a 13-bit translator designed for 3.3V to GTL/GTL+ translations to the system

bus. The translator incorporates all the level shifting and logic functions required to interface

between the processor subsystem and the rest of the system.


3.1.5 Common Enabling Kit (CEK) Design Support

The baseboard has been designed to comply with Intel’s Common Enabling Kit (CEK)

processor mounting and heat sink retention solution. The baseboard will ship with a CEK spring

snapped onto the bottom side of the board beneath each processor socket. The CEK spring is

removable, allowing for the use of non-Intel heat sink retention solutions.

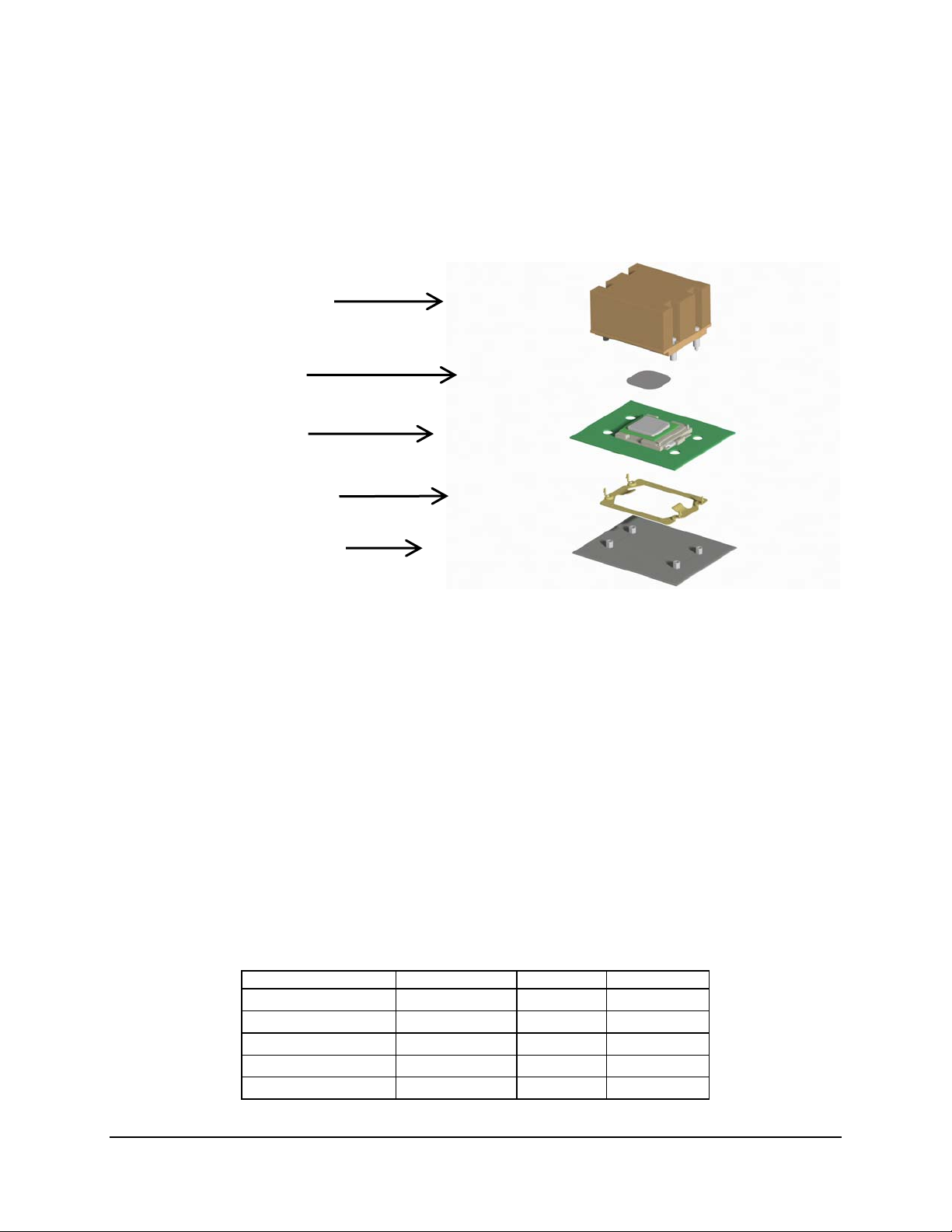

[Figure labels: heatsink assembly with integrated hardware, Thermal Interface Material (TIM), baseboard, CEK spring, chassis]

Figure 4. CEK Processor Mounting

3.1.6 Processor Support

The Server Board SE7520JR2 is designed to support one or two Intel® Xeon™ processors utilizing an 800 MHz front side bus with frequencies starting at 2.8 GHz. Previous generations of Intel Xeon processors are not supported on the Server Board SE7520JR2.

The server board is designed to provide up to 120 A peak per processor. Processors with higher current requirements are not supported.

Note: Only Intel® Xeon™ processors that support an 800 MHz Front Side Bus are supported on the Server Board SE7520JR2. See the following table for a list of supported processors and their operating frequencies.

Table 2: Processor Support Matrix

| Processor Family | FSB Frequency | Processor Frequency | Supported |
|---|---|---|---|
| Intel® Xeon™ | 533 MHz | 2.8 GHz | No |
| Intel® Xeon™ | 533 MHz | 3.06 GHz | No |
| Intel® Xeon™ | 533 MHz | 3.2 GHz | No |
| Intel® Xeon™ | 800 MHz | 2.8 GHz | Yes |
| Intel® Xeon™ | 800 MHz | 3.0 GHz | Yes |
| Intel® Xeon™ | 800 MHz | 3.2 GHz | Yes |
| Intel® Xeon™ | 800 MHz | 3.4 GHz | Yes |
| Intel® Xeon™ | 800 MHz | 3.6 GHz | Yes |
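The support matrix can also be expressed as a lookup keyed on FSB and processor frequency. This is a sketch for illustration: `SUPPORT_MATRIX` and `is_supported` are hypothetical names, frequencies are written in MHz to avoid floating-point keys, and treating any combination not listed in the table as unsupported is an assumption.

```python
# (FSB MHz, processor MHz) -> supported, transcribed from Table 2.
SUPPORT_MATRIX = {
    (533, 2800): False,
    (533, 3060): False,
    (533, 3200): False,
    (800, 2800): True,
    (800, 3000): True,
    (800, 3200): True,
    (800, 3400): True,
    (800, 3600): True,
}


def is_supported(fsb_mhz: int, core_mhz: int) -> bool:
    """Return the Table 2 entry; combinations not listed are assumed unsupported."""
    return SUPPORT_MATRIX.get((fsb_mhz, core_mhz), False)
```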

3.1.6.1 Processor Mis-population Detection

The processors must be populated in the correct order for the processor front-side bus to be

correctly terminated. CPU socket 1 must be populated before CPU socket 2. Baseboard logic

will prevent the system from powering up if a single processor is present but it is not in the

correct socket. This protects the logic against voltage swings or unreliable operation that could

occur on an incorrectly terminated front-side bus.