Intel® Server Board Set

SE8500HW4

Technical Product Specification

Intel order number D22893-001

Enterprise Platforms and Services – Marketing

Revision 1.0

May, 2005

Revision History

Date        Revision Number    Modifications
May 2005    1.0                Initial release.

Disclaimers

Information in this document is provided in connection with Intel® products. No license, express or implied, by estoppel or otherwise, to any intellectual property rights is granted by this document. Except as provided in Intel's Terms and Conditions of Sale for such products, Intel assumes no liability whatsoever, and Intel disclaims any express or implied warranty, relating to sale and/or use of Intel products including liability or warranties relating to fitness for a particular purpose, merchantability, or infringement of any patent, copyright or other intellectual property right. Intel products are not intended for use in medical, life saving, or life sustaining applications. Intel may make changes to specifications and product descriptions at any time, without notice.

Designers must not rely on the absence or characteristics of any features or instructions marked "reserved" or "undefined." Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them.

This document contains information on products in the design phase of development. Do not finalize a design with this information. Revised information will be published when the product is available. Verify with your local sales office that you have the latest datasheet before finalizing a design.

The Intel® Server Board Set SE8500HW4 may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request.

Intel Corporation server baseboards contain a number of high-density VLSI and power delivery components that need adequate airflow to cool. Intel's own chassis are designed and tested to meet the intended thermal requirements of these components when the fully integrated system is used together. It is the responsibility of the system integrator that chooses not to use Intel-developed server building blocks to consult vendor datasheets and operating parameters to determine the amount of airflow required for their specific application and environmental conditions. Intel Corporation cannot be held responsible if components fail or the Server Board does not operate correctly when used outside any of their published operating or non-operating limits.

Intel, Xeon and XScale are trademarks or registered trademarks of Intel Corporation.

*Other brands and names may be claimed as the property of others.

Copyright © Intel Corporation 2005.

Table of Contents

1. Product Overview.................................................................................................................1

1.1 Board Set Features..................................................................................................2

2. Processor and Chipset ........................................................................................................5

2.1 Processors Supported.............................................................................................5

2.1.1 Heat Sink.................................................................................................................6

2.1.2 Installation Order .....................................................................................................7

2.2 Intel® E8500 Chipset................................................................................................8

2.2.1 North Bridge (NB)....................................................................................................8

2.2.2 eXtended Memory Bridge (XMB).............................................................................8

2.2.3 Intel® IOP332 Storage I/O Processor.......................................................................9

2.2.4 Intel® 82801EB I/O Controller Hub 5 (ICH5)............................................................9

2.2.5 Intel® 6700 PXH 64-bit Hub (PXH)..........................................................................9

3. I/O Subsystems ..................................................................................................................11

3.1 PCI Subsystem......................................................................................................11

3.1.1 PCI Interrupts.........................................................................................................11

3.1.2 PCI IDSEL Signal ..................................................................................................12

3.1.3 Bus Arbitration Signals ..........................................................................................13

3.1.4 Wake On LAN........................................................................................................13

3.1.5 PCI Hot Plug* Support...........................................................................................14

3.2 Ultra320 SCSI Subsystem.....................................................................................16

3.3 Intel® RAID On Motherboard (ROMB) ..................................................................17

3.3.1 Intel® RAID Activation Key (RAK)..........................................................................17

3.3.2 DDR2 RAID DIMM.................................................................................................17

3.3.3 Intel® RAID Smart Battery (RSB)...........................................................................17

3.4 Gigabit Ethernet.....................................................................................................18

3.5 Serial ATA (SATA).................................................................................................18

3.6 Fibre Channel........................................................................................................18

3.7 Firmware Hubs ......................................................................................................18

3.8 Video......................................................................................................................19

3.9 USB 2.0 .................................................................................................................19

3.10 Serial......................................................................................................................19

4. Intel® Server Board Set SE8500HW4 Memory Board ......................................................21

4.1 DDR2 DIMM Support.............................................................................................22

4.2 Installation Order ...................................................................................................22

4.3 Memory Initialization..............................................................................................22

4.4 Data Correction and Scrubbing .............................................................................23

4.5 Memory Board Components..................................................................................23

4.5.1 Button, Retention Latch and LEDs ........................................................................24

4.5.2 Temperature Sensors and FRU.............................................................................25

4.5.3 I2C..........................................................................................................................25

4.5.4 Independent Memory Interface (IMI) .....................................................................25

4.5.5 Serial Presence Detect (SPD)...............................................................................25

4.5.6 Power.....................................................................................................................26

4.6 Memory Hot Plug...................................................................................................26

4.6.1 Prerequisite for Memory Hot Plug..........................................................................26

4.6.2 Memory Board Hot Remove ..................................................................................26

4.6.3 Memory Board Hot Add .........................................................................................26

5. Server Management ...........................................................................................................27

5.1 Sahalee Baseboard Management Controller (BMC) .............................................29

5.1.1 Sensor Data Record (SDR) Repository..............................................................30

5.1.2 Field Replaceable Unit (FRU) Inventory Devices ..................................................30

5.1.3 System Event Log (SEL) .......................................................................................31

5.1.4 Rolling BIOS..........................................................................................................31

5.1.5 First Boot with a New IMM.....................................................................................31

5.2 Fan Control and Temperature Monitoring..............................................................32

5.2.1 Memory Throttling..................................................................................................33

5.2.2 Processor Throttling...............................................................................................33

5.3 ACPI Power Control...............................................................................................34

5.3.1 S1 Sleep State Support.........................................................................................34

5.3.2 S5 Sleep State Support.........................................................................................34

5.3.3 Secure Mode Operation.........................................................................................34

5.4 Fault Resilient Booting (FRB)................................................................................35

5.4.1 FRB3......................................................................................................................35

5.4.2 FRB2......................................................................................................................36

5.5 Reset Control.........................................................................................................36

5.5.1 Front Panel Reset..................................................................................................36

5.5.2 Warm Reset...........................................................................................................36

5.6 Remote Management and External Interfaces to the BMC ...................................37

5.6.1 Intelligent Platform Management Buses (IPMB)....................................................38

5.6.2 Keyboard Controller Style (KCS)/Low Pin Count (LPC) Bus.................................38

5.6.3 Inter-Chassis Management Bus (ICMB)................................................................38

5.6.4 Serial Over LAN (SOL) ..........................................................................................39

5.6.5 Emergency Management Port (EMP) Interface.....................................................39

5.7 Event Filtering and Alerting....................................................................................40

5.7.1 Platform Event Filtering (PEF)...............................................................................40

5.7.2 Dial Page Alerting..................................................................................................41

5.7.3 Alert over LAN .......................................................................................................41

5.7.4 Alert over Serial/PPP.............................................................................................42

6. Jumpers ..............................................................................................................................43

6.1 Mainboard..............................................................................................................43

6.1.1 Circuit Breaker Type Jumper.................................................................................44

6.1.2 Intel® Management Module....................................................................................45

7. Connectors .........................................................................................................................47

7.1 SCSI ......................................................................................................................47

7.2 100-pin Front Panel...............................................................................................48

7.3 COM2 Serial Port...................................................................................................49

7.4 USB .......................................................................................................................50

7.5 SATA .....................................................................................................................50

7.6 Power.....................................................................................................................51

7.7 Rear Panel Connectors .........................................................................................52

7.7.1 Video......................................................................................................................52

7.7.2 Network..................................................................................................................52

7.7.3 COM1 Serial Port...................................................................................................54

7.7.4 USB .......................................................................................................................55

7.8 Server Management and Diagnostics....................................................................55

7.8.1 5-pin ICMB Header................................................................................................55

7.8.2 3-pin IPMB Header................................................................................................55

7.8.3 3-pin Chassis Intrusion..........................................................................................56

7.8.4 I2C POST Code Headers.......................................................................................56

8. Electrical Specifications....................................................................................................57

8.1 Power Generation..................................................................................................57

8.2 Power Timing.........................................................................................................58

8.2.1 Power-Up Sequence..............................................................................................58

8.2.2 Power-Down Sequence.........................................................................................59

8.3 Reset .....................................................................................................................60

8.4 Interrupts................................................................................................................61

8.5 Clocks....................................................................................................................62

8.6 Programmable Logic Devices................................................................................63

9. Mechanical and Thermal Specifications ..........................................................................65

9.1 Mechanical Specifications .....................................................................................65

9.1.1 Mainboard..............................................................................................................65

9.1.2 Memory Board .......................................................................................................67

9.2 Thermal Specifications ..........................................................................................68

10. System BIOS.......................................................................................................................69

10.1 Advanced Memory Modes.....................................................................................69

10.1.1 Sparing ..................................................................................................................70

10.1.2 Maximum Compatibility..........................................................................................70

10.1.3 Maximum Performance..........................................................................................71

10.1.4 Memory Mirroring...................................................................................................71

10.1.5 Memory RAID ........................................................................................................71

10.2 Rolling BIOS..........................................................................................................73

10.3 Initialization............................................................................................................73

10.3.1 Processors.............................................................................................................73

10.3.2 Memory..................................................................................................................75

10.3.3 I/O Devices............................................................................................................77

10.3.4 Operating System..................................................................................................78

10.4 Remote Management ............................................................................................78

10.4.1 Serial Configuration Settings.................................................................................78

10.4.2 Keystroke Mappings..............................................................................................79

10.4.3 Limitations..............................................................................................................79

10.4.4 Interface to Server Management ...........................................................................79

10.5 IPMI Serial/Modem Interface .................................................................................80

10.5.1 Channel Access Modes.........................................................................................80

10.5.2 Interaction with BIOS Console Redirection............................................................80

10.5.3 Serial Over LAN.....................................................................................................80

10.6 Wired For Management.........................................................................................81

10.6.1 PXE BIOS Support ................................................................................................81

10.7 System Management BIOS ...................................................................................81

10.8 Security..................................................................................................................81

11. BIOS User Interface............................................................................................................83

11.1 Overview................................................................................................................83

11.1.1 System State Window............................................................................................83

11.1.2 Logo/Diagnostic Window.......................................................................................83

11.1.3 Current Activity Window.........................................................................................84

11.2 System Diagnostic Screen.....................................................................................84

11.3 Systems Options Menu Screen .............................................................................85

11.4 Error Manager........................................................................................................85

11.5 Boot Maintenance Manager...................................................................................86

11.6 BIOS Setup Utility..................................................................................................94

11.6.1 Setup Utility Layout................................................................................................94

11.6.2 Keyboard Commands............................................................................................95

11.6.3 Server Platform Formset........................................................................................96

12. Error Handling ..................................................................................................................111

12.1 LEDs....................................................................................................................111

12.1.1 POST Progress LEDs..........................................................................................111

12.1.2 CPU Diagnostic LEDs..........................................................................................113

12.2 Beeps...................................................................................................................114

12.2.1 BIOS Recovery Beep Codes ...............................................................................114

12.3 POST Messages..................................................................................................115

Reference Documents............................................................................................................120

List of Figures

Figure 1. Intel® Server Board Set SE8500HW4, Populated.........................................................2

Figure 2. Intel® Server Board Set SE8500HW4 Interconnect Diagram........................................3

Figure 3. 64-bit Intel® Xeon™ Processors MP............................................................................5

Figure 4. Memory Board Outline Diagram .................................................................................21

Figure 5. Memory Board Component Diagram..........................................................................22

Figure 6. Memory Board Block Diagram....................................................................................23

Figure 7. Server Management Block Diagram...........................................................................28

Figure 8. I2C Block Diagram.......................................................................................................29

Figure 9. External Interfaces to the BMC...................................................................................37

Figure 10. Mainboard Jumper Locations ...................................................................................43

Figure 11. 68-Pin SCSI Connector ............................................................................................47

Figure 12. Stacked Ethernet Connector.....................................................................................52

Figure 13. Power Distribution Block Diagram............................................................................57

Figure 14. Typical Power-Up Sequence....................................................................................58

Figure 15. Typical Power-Down Sequence................................................................................59

Figure 16. Reset Block Diagram................................................................................................60

Figure 17. Interrupt Block Diagram............................................................................................61

Figure 18. PLD Connections......................................................................................................63

Figure 19. Mainboard Outline and Hole Location Drawing........................................................65

Figure 20. Mainboard Pin 1 Location Drawing...........................................................................66

Figure 21. Memory Board Mechanical Outline Drawing ............................................................67

Figure 22. Memory Board Pin 1 Location Drawing ....................................................................67

Figure 23. BIOS Display ............................................................................................................83

Figure 24. System Diagnostics Display ....................................................................................84

List of Tables

Table 1. Processor Feature Overview .........................................................................................5

Table 2. Processor Installation Order ..........................................................................................7

Table 3. PCI Expansion Slot Features.......................................................................................11

Table 4. PCI Interrupt Mapping..................................................................................................11

Table 5. IDSEL Mapping............................................................................................................12

Table 6. Arbitration Connections................................................................................................13

Table 7. PCI Hot Plug LEDs ......................................................................................................14

Table 8. Memory Board LEDs....................................................................................................25

Table 9. FRU Device Location and Size....................................................................................30

Table 10. Example Rolling BIOS Behavior with a New IMM......................................................31

Table 11. Fan States..................................................................................................................32

Table 12. Secure Mode Effect on ACPI States..........................................................................34

Table 13. System Reset Sources and Actions...........................................................................36

Table 14. Platform I2C Buses.....................................................................................................38

Table 15. Default Event Filters...................................................................................................40

Table 16. Mainboard Jumpers...................................................................................................44

Table 17. 68-Pin SCSI Connector Pinout ..................................................................................47

Table 18. 100-pin Front Panel Connector Pinout.......................................................................48

Table 19. COM2 Serial Header Pinout ......................................................................................49

Table 20. 4-pin Internal USB Header.........................................................................................50

Table 21. SATA Connector Pinout.............................................................................................50

Table 22. 12-pin Power Connector Pinout.................................................................................51

Table 23. 30-pin Power Signal Header Pinout...........................................................................51

Table 24. Video Connector Pinout.............................................................................................52

Table 25. Stacked Ethernet Connector Pinout...........................................................................53

Table 26. Server Management Ethernet Connector Pinout.......................................................54

Table 27. COM1 Serial Port Pinout............................................................................................54

Table 28. Stacked USB Connector Pinout.................................................................................55

Table 29. 5-pin ICMB Header Pinout.........................................................................................55

Table 30. 3-pin IPMB Header Pinout.........................................................................................55

Table 31. 3-pin Chassis Intrusion Pinout...................................................................................56

Table 32. 5-pin I2C POST Code Header Pinout.........................................................................56

Table 33. Power Budget ............................................................................................................58

Table 34. Typical Power-Up Timings.........................................................................................59

Table 35. Typical Power-Down Timings ....................................................................................60

Table 36. Reset Types...............................................................................................................61

Table 37. PLD Functions ...........................................................................................................63

Table 38. Thermal Specifications...............................................................................................68

Table 39. Memory Hot Plug Support Under Different Memory Modes.......................................70

Table 40. Memory Modes Supporting Sparing ..........................................................................70

Table 41. System Options Menu................................................................................................85

Table 42. Error Manager Menu...................................................................................................85

Table 43. Boot Maintenance Manager Menu.............................................................................86

Table 44. Boot Options Menu....................................................................................................86

Table 45. Change Boot Order Menu...........................................................................................87

Table 46. Add Boot Option Menu...............................................................................................87

Table 47. Delete Boot Option Menu...........................................................................................88

Table 48. Select Legacy Floppy Order Menu ............................................................................88

Table 49. Select Legacy Hard Drive Order Menu......................................................................89

Table 50. Select Legacy CD-ROM Order Menu.........................................................................89

Table 51. Set Embedded NIC Order Menu................................................................................90

Table 52. Select Legacy BEV Order Menu................................................................................90

Table 53. Driver Options Menu..................................................................................................90

Table 54. Add Driver Option Menu .............................................................................................91

Table 55. Add Driver Option Using File Menu ...........................................................................91

Table 56. Add Driver Option Using Handle Menu......................................................................92

Table 57. Delete Driver Option Menu ........................................................................................93

Table 58. Change Driver Order Menu........................................................................................93

Table 59. Set Time Out Value Menu..........................................................................................94

Table 60. BIOS Setup Utility Layout ..........................................................................................94

Table 61. BIOS Setup: Keyboard Command Bar.......................................................................95

Table 62. Main Menu.................................................................................................................96

Table 63. Time and Date Menu .................................................................................................97

Table 64. Processor Menu.........................................................................................................97

Table 65. Processor #n Information Menu.................................................................................98

Table 66. Memory Menu............................................................................................................98

Table 67. Configure System RAS and Performance Menu........................................................99


Table 68. View Memory Configure Details Menu.....................................................................100

Table 69. Memory Board #n Menu ..........................................................................................101

Table 70. DIMM Labels Menu...................................................................................................102

Table 71. Devices Menu..........................................................................................................102

Table 72. IDE Controller Menu ................................................................................................102

Table 73. Mass Storage Menu.................................................................................................103

Table 74. LAN Menu................................................................................................................104

Table 75. Video Menu..............................................................................................................104

Table 76. USB Menu................................................................................................................104

Table 77. Serial Ports Menu .....................................................................................................105

Table 78. PCI Menu.................................................................................................................105

Table 79. Server Management Menu ......................................................................................106

Table 80. Console Redirection Menu.......................................................................................106

Table 81. COM1 Console Redirection Menu...........................................................................107

Table 82. FRU Information Menu..............................................................................................107

Table 83. LAN Management Menu..........................................................................................108

Table 84. SEL Menu................................................................................................................108

Table 85. FRB Information Menu.............................................................................................109

Table 86. Security Menu..........................................................................................................109

Table 87. Save, Restore and Exit Menu..................................................................................110

Table 88. POST Progress LED Location and Example ...........................................................111

Table 89. POST Progress LED Codes ....................................................................................111

Table 90. Processor Diagnostic LED Locations.......................................................................113

Table 91. Beep Codes.............................................................................................................114

Table 92. BIOS Recovery Beep Codes ...................................................................................114

Table 93. POST Messages......................................................................................................115


1. Product Overview

The Intel® Server Board Set SE8500HW4 is the fourth generation of four-way Intel® IA32 Server Boards. The board set uses the Intel® E8500 Chipset, and the next generation of memory and processor technologies. This product diverges from other Intel® server boards and platforms in the following ways:

Addition of PCI Express* technology

Addition of Double Data Rate Two (DDR2) memory

Memory implemented across up to four Memory Boards, with enhanced performance and reliability features

Optional mass storage expansion for Fibre Channel and RAID

Removal of IDE, floppy, and PS/2* ports

The Intel® Server Board Set SE8500HW4 supports up to four 64-bit Intel® Xeon™ Processors MP with up to 8MB L3 cache and incorporates features that clearly differentiate it as a high availability server. Building on previous server platforms, the Intel® Server Board Set SE8500HW4 introduces redundant memory, networking, and the BIOS flash in addition to the enterprise features of hot-swap PCI slots, standards-based server management and server-oriented embedded I/O. Remote monitoring and management features are also included, providing a new level of user tools for server administration.

The Intel® Server Board Set SE8500HW4 consists of two primary boards: Main and Memory. Up to four Memory Boards plug vertically into the Mainboard. The board set was designed to work with the Intel® Server Platform SR4850HW4, a 4U chassis, and the Intel® Server Platform SR6850HW4, a 6U chassis. The board set may also be used in a non-Intel chassis that meets the power and cooling requirements found in this specification. Please refer to the Intel® Server Platform SR4850HW4 Technical Product Specification and Intel® Server Platform SR6850HW4 Technical Product Specification for more information on these products.


This document describes the Mainboard and Memory Board components of the Intel® Server

Board Set SE8500HW4.

Figure 1. Intel® Server Board Set SE8500HW4, Populated

1.1 Board Set Features

This section discusses the features of the Intel® Server Board Set SE8500HW4, which include:

Up to four 64-bit Intel® Xeon™ Processors MP with 1MB L2 cache or 64-bit Intel® Xeon™ Processors MP with up to 8MB L3 cache

Intel® E8500 Chipset:

- Intel® E8500 Chipset North Bridge (NB): provides two processor buses and connection to I/O and memory subsystems

- Intel® E8500 eXtended Memory Bridge (XMB): provides hot-plug support for up to 64GB of DDR2 memory

- Intel® 6700 PXH 64-bit PCI Hub: provides support for PCI-X* I/O

- Intel® IOP332 Storage I/O Processor: provides support for PCI-X adapters and contains Intel® XScale™ technology to support optional RAID On Motherboard (ROMB)

- Intel® 82801EB I/O Controller Hub 5 (ICH5): provides support for the system BIOS, video, USB 2.0, and Serial ATA (SATA)

Advanced I/O slots including PCI Express* and PCI-X and support circuits:

- One hot-plug PCI Express x8 slot

- Three hot-plug PCI Express x4 slots

- One hot-plug 64-bit PCI-X 133MHz, 1.0 slot

- Two 64-bit PCI-X 100MHz, 1.0 slots (not hot-plug)


Server management with either the Intel® Management Module Professional or Intel® Management Module Advanced

ATI* Radeon* 7000 video controller, with 16MB SDRAM

Broadcom* BCM5704 NetXtreme* Gigabit Ethernet controller: provides two ports on the rear of the Mainboard

LSI Logic* 53C1030 Ultra320* SCSI Controller: provides two independent Ultra320 SCSI interfaces

Optional ROMB support: provides two channels of RAID 0, 1, 5, 10 or 50

Optional custom Intel® Fibre Channel Module: provides two 2Gbps optical connectors

[Block diagram labels: Mainboard with CPU 1 through CPU 4 on FSB 0 and FSB 1; E8500 North Bridge (NB); IMI A through IMI D, each to a Memory Board with an XMB and DDR2 DIMMs; IOP332 Storage I/O Processor, LSI* 53C1030 Ultra320* SCSI Controller (SCSI Channels A and B), Fibre Channel Module, PCI-X 100 MHz slots, DDR2 RAID DIMM, RAID Activation Key and RAID Smart Battery; 6700 PXH, PCI-X 133 MHz hot-plug slot and BCM5704 Ethernet Controller; hot-plug PCI Express x8 and x4 slots; 82801EB ICH5 with SATA, USB, LPC, SIO, FWH, PCI-33 and the ATI* Radeon* 7000 Video Controller; IMM; rear connectors for Ethernet, USB, video, COM1/EMP and optional external SCSI.]

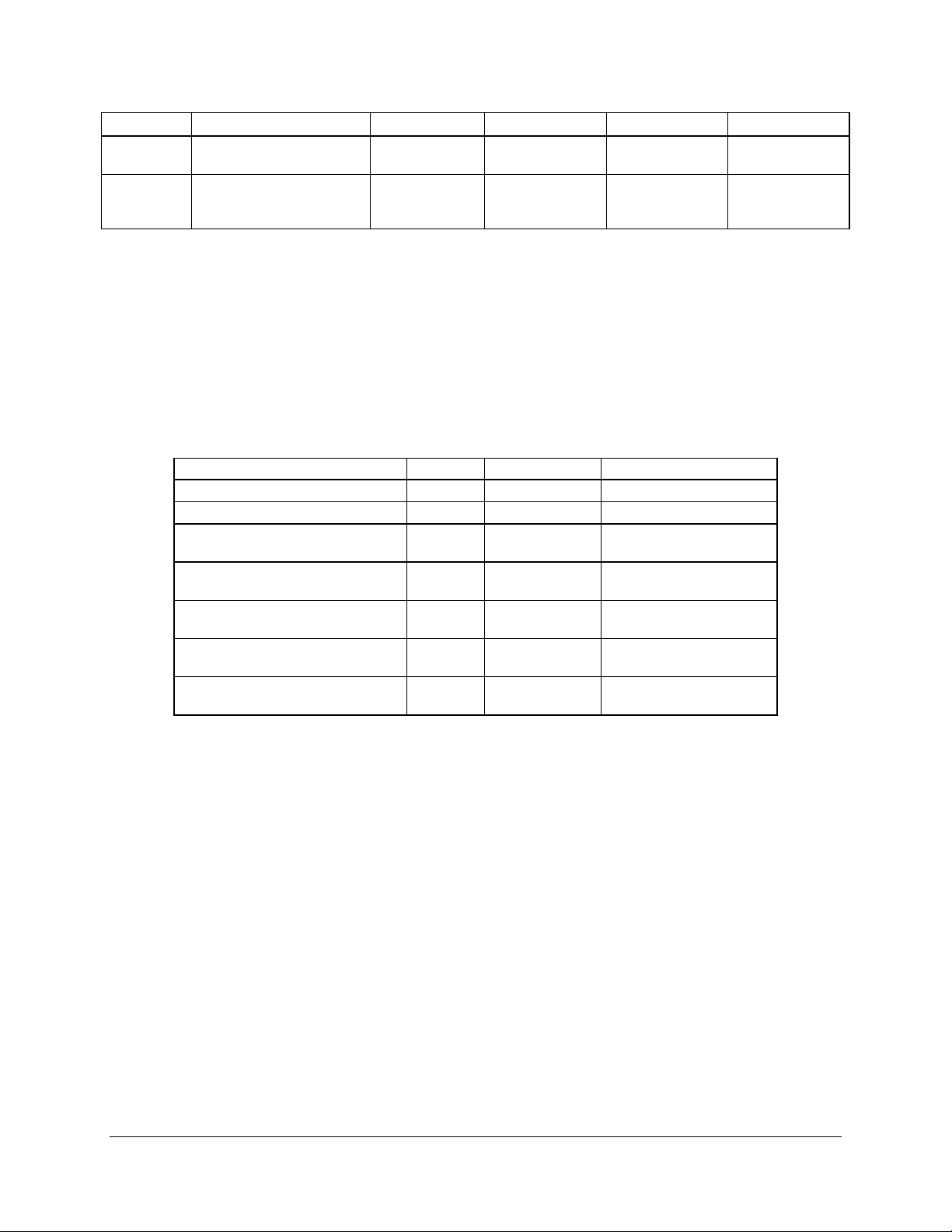

Figure 2. Intel® Server Board Set SE8500HW4 Interconnect Diagram


2. Processor and Chipset

2.1 Processors Supported

The Intel® Server Board Set SE8500HW4 supports 64-bit Intel® Xeon™ Processors MP which are based on the Intel® NetBurst™ microarchitecture. Several architectural and microarchitectural enhancements have been added to this processor, including an increased L2 cache size and, for some models, an integrated L3 cache. Table 1 provides a feature set overview of the 64-bit Intel® Xeon™ Processors MP.

Figure 3. 64-bit Intel® Xeon™ Processors MP

Table 1. Processor Feature Overview

Columns: 64-bit Intel® Xeon™ Processors MP with 1MB L2 cache, and 64-bit Intel® Xeon™ Processors MP with up to 8MB L3 cache.

Feature                   With 1MB L2 cache    With up to 8MB L3 cache
Package                   FC-mPGA4             FC-mPGA4
L2 cache size             1MB                  1MB
L3 cache size             N/A                  4MB or 8MB
Core operating voltage    1.0975 to 1.4V       1.171 to 1.3250V
Cache operating voltage   N/A                  1.1 to 1.25V
Front side bus            667MHz with data-bus Error Correcting Code (ECC), bandwidth up to 5.33GB/s (both processors)


The 64-bit Intel® Xeon™ Processors MP include the following advanced features:

Intel® Extended Memory 64 Technology (EM64T) for executing both 32-bit and 64-bit applications simultaneously

Intel® Hyper-Threading (HT) technology providing two logical processors

Intel® Demand-Based Switching (DBS) for power savings

Execute-Disable Bit for hardware support of security features

Quad-channel DDR2 400MHz memory support

PCI Express for faster serial interconnects

Streaming Single Instruction, Multiple Data (SIMD) Extensions 2 and 3 (SSE2, SSE3)

For more information, please refer to the 64-bit Intel® Xeon™ Processors MP with 1MB L2 cache Datasheet, 64-bit Intel® Xeon™ Processors MP with up to 8MB L3 cache Datasheet, 64-bit Intel® Xeon™ Processors MP with 1MB L2 cache Specification Update, and 64-bit Intel® Xeon™ Processors MP with up to 8MB L3 cache Specification Update.

2.1.1 Heat Sink

The Intel® Server Board Set SE8500HW4 uses the reference design Common Enabling Kit (CEK) heatsinks, which meet the 64-bit Intel® Xeon™ Processors MP thermal performance targets. Each CEK heatsink consists of the following components:

Passive heatsink (with captive standoff and screws)

Thermal Interface Material (TIM-2): covers the entire processor Integrated Heat Spreader (IHS) and the heatsink base

Hat spring: mounted below the Intel® Server Board Set SE8500HW4 Mainboard


2.1.2 Installation Order

Some processor signals do not have on-die termination and must be terminated at an end agent. The Intel® Server Board Set SE8500HW4 Mainboard was designed with two separate Front Side Buses (FSBs). For each bus with a processor installed, the first socket on that bus must be used to ensure proper signal termination. A processor must be installed in socket 1 before socket 2, and socket 3 before socket 4. Refer to Table 2 for processor installation order.

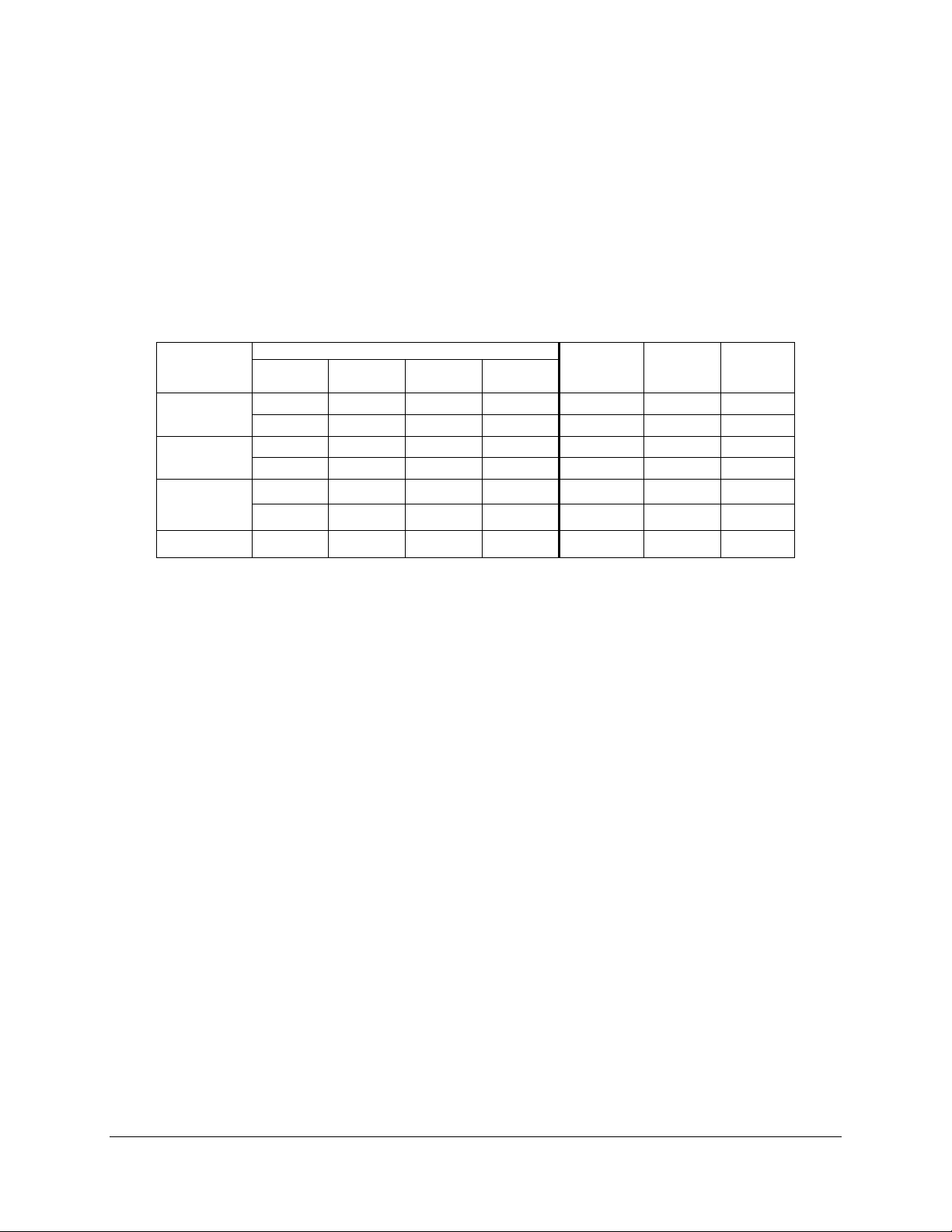

Table 2. Processor Installation Order

Sockets Number of

Processors

Four Installed Installed Installed Installed Installed Installed Installed

1 2 3 4

Installed One

Installed Installed Installed

Installed Installed Two1

Installed Installed Installed Installed

Installed Installed Installed Installed Installed Three1

Installed Installed Installed Installed Installed Installed

VRM 10.2

J1F1

VRM 9.1

J1H22

VRM

10.2

J3F1

1. There is no performance gained by splitting the processors across the FSBs. Intel has validated sequential processor installation, with a one-processor configuration using socket 1; a two-processor configuration using sockets 1 and 2; and a three-processor configuration using sockets 1, 2 and 3.

2. The 9.1 VRM is only required when installing 64-bit Intel® Xeon™ Processors MP with up to 8MB of L3 cache.
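
The installation-order rule above can be expressed as a small check. This is only an illustrative sketch: the function names are hypothetical, and the pairing of sockets 1/2 and 3/4 is taken from the sentence "socket 1 before socket 2, and socket 3 before socket 4".

```python
def termination_ok(installed_sockets):
    """True if the first socket of every populated socket pair is filled."""
    sockets = set(installed_sockets)
    pair_12_ok = 2 not in sockets or 1 in sockets  # socket 1 before socket 2
    pair_34_ok = 4 not in sockets or 3 in sockets  # socket 3 before socket 4
    return pair_12_ok and pair_34_ok


def validated_configuration(processor_count):
    """Sockets used by the sequential configurations described in note 1."""
    if not 1 <= processor_count <= 4:
        raise ValueError("the Mainboard has four processor sockets")
    return list(range(1, processor_count + 1))


if __name__ == "__main__":
    print(termination_ok([1, 3]))       # both pairs start at their first socket
    print(termination_ok([2]))          # socket 2 populated without socket 1
    print(validated_configuration(3))   # sequential three-processor layout
```

Note that `termination_ok` only captures the termination rule; the configurations Intel validated are the sequential ones returned by `validated_configuration`.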


2.2 Intel® E8500 Chipset

The Intel® E8500 Chipset is the highest performance, most scalable platform offering in the 64-bit Intel® Xeon™ Processor MP family. The chipset represents the sixth-generation Intel four-way multi-processor platform, is architected for multi-core processors and includes these advanced features:

Support for up to four 64-bit Intel® Xeon™ Processors MP, with the FSB operating at 667 MHz

Maintains coherency across both buses

Double-pumped 40-bit address buses with a total address bandwidth of 167 million addresses/second

Quad-pumped, 64-bit data bus providing a bandwidth of 5.3 GB/s per bus

x8 Single Device Data Correction (x8 SDDC) technology for memory error correction

Hardware memory initialization

ECC protection on data signals and parity protection on address signals

Support for hot-plug memory and performance operations

This section provides an overview of the chipset components. For more detailed information, refer to the Intel® E8500 Chipset Datasheets referenced in the Appendix.
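
As a quick check of the per-bus data bandwidth quoted above, the sketch below multiplies the transfer rate by the bus width. It assumes that "667 MHz" means roughly 667 million transfers per second on a quad-pumped bus with a base clock of about 166.67 MHz, and that 1 GB is 10^9 bytes. The names are illustrative only.

```python
def peak_bandwidth_gb_s(transfers_per_second, bus_width_bits):
    """Peak bandwidth of one bus in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second * bytes_per_transfer / 1e9


BASE_CLOCK_HZ = 500e6 / 3        # assumed base bus clock, about 166.67 MHz
DATA_TRANSFERS_PER_CLOCK = 4     # quad-pumped data bus
DATA_BUS_WIDTH_BITS = 64

fsb_gb_s = peak_bandwidth_gb_s(
    BASE_CLOCK_HZ * DATA_TRANSFERS_PER_CLOCK, DATA_BUS_WIDTH_BITS
)

if __name__ == "__main__":
    print(f"{fsb_gb_s:.2f} GB/s per bus")  # prints "5.33 GB/s per bus"
```

Under these assumptions the result agrees with both the 5.3 GB/s figure here and the 5.33GB/s figure in Table 1.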

2.2.1 North Bridge (NB)

The Intel® E8500 Chipset North Bridge (NB) is the center of the system architecture and provides interconnection to:

Up to four 64-bit Intel® Xeon™ Processors MP via two 667 MHz FSBs optimized for server applications

Up to 64GB memory via four Independent Memory Interfaces (IMI)

I/O subsystem components via one PCI Express and the Intel® 82801EB I/O Controller Hub 5 (ICH5)

2.2.2 eXtended Memory Bridge (XMB)

The Intel® E8500 Chipset eXtended Memory Bridge (XMB) provides the interface between the NB and DDR2 400MHz DIMMs. The Intel® Server Board Set SE8500HW4 includes up to four Memory Boards, each with an XMB and four DDR2 400MHz DIMM locations.
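
The memory topology described here can be sanity-checked with simple arithmetic. The sketch below is illustrative only; the per-DIMM capacity it derives is an inference from the 64GB maximum and the slot count, not a figure stated in this section.

```python
MEMORY_BOARDS = 4            # up to four Memory Boards
DIMM_SLOTS_PER_BOARD = 4     # four DDR2 400MHz DIMM locations per board
MAX_MEMORY_GB = 64           # maximum memory supported through the four IMIs

total_dimm_slots = MEMORY_BOARDS * DIMM_SLOTS_PER_BOARD

# Derived value (assumption): DIMM size needed in every slot to reach 64GB.
implied_dimm_size_gb = MAX_MEMORY_GB / total_dimm_slots

if __name__ == "__main__":
    print(total_dimm_slots, "DIMM slots,", implied_dimm_size_gb, "GB per DIMM at maximum")
```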


2.2.3 Intel® IOP332 Storage I/O Processor

The Intel® IOP332 Storage I/O Processor contains a PCI Express-to-PCI-X bridge and performs bridging functions between the PCI Express interface of the NB and PCI-X devices. The Intel® Server Board Set SE8500HW4 contains one Intel® IOP332 Storage I/O Processor that has two PCI bus interfaces which provide:

Slots 6 and 7 (PCI-X 100MHz, non-Hot Plug)

LSI Logic 53C1030 Ultra320 SCSI controller

Intel® Fibre Channel Module connector

2.2.4 Intel® 82801EB I/O Controller Hub 5 (ICH5)

The Intel® 82801EB I/O Controller Hub 5 (ICH5) provides a hub interface-to-PCI bridge, PCI-to-LPC bridge and legacy I/O controllers. Some of the features of the ICH5 are not used in this board set. The Intel® Server Board Set SE8500HW4 contains one ICH5 which provides:

Integrated Serial ATA (SATA) controller

High-speed USB 2.0 host controller

ATI Radeon 7000 video controller

Support for System Management Bus (SMBus) specification, version 2.0 and I2C

ACPI power management logic support

Firmware Hub (FWH) interface support

2.2.5 Intel® 6700 PXH 64-bit Hub (PXH)

The Intel® 6700 PXH 64-bit Hub performs bridging functions between the PCI Express interface of the NB and PCI-X devices. The Intel® Server Board Set SE8500HW4 contains one PXH that has two PCI bus interfaces which provide:

Slot 2 (PCI-X 133MHz Hot Plug)

Broadcom BCM5704C dual channel Gigabit Ethernet controller


3. I/O Subsystems

3.1 PCI Subsystem

The PCI subsystem consists of eight slots, seven available to standard PCI adapters and one for the Intel® Server Board Set SE8500HW4-specific Intel® Fibre Channel Module.

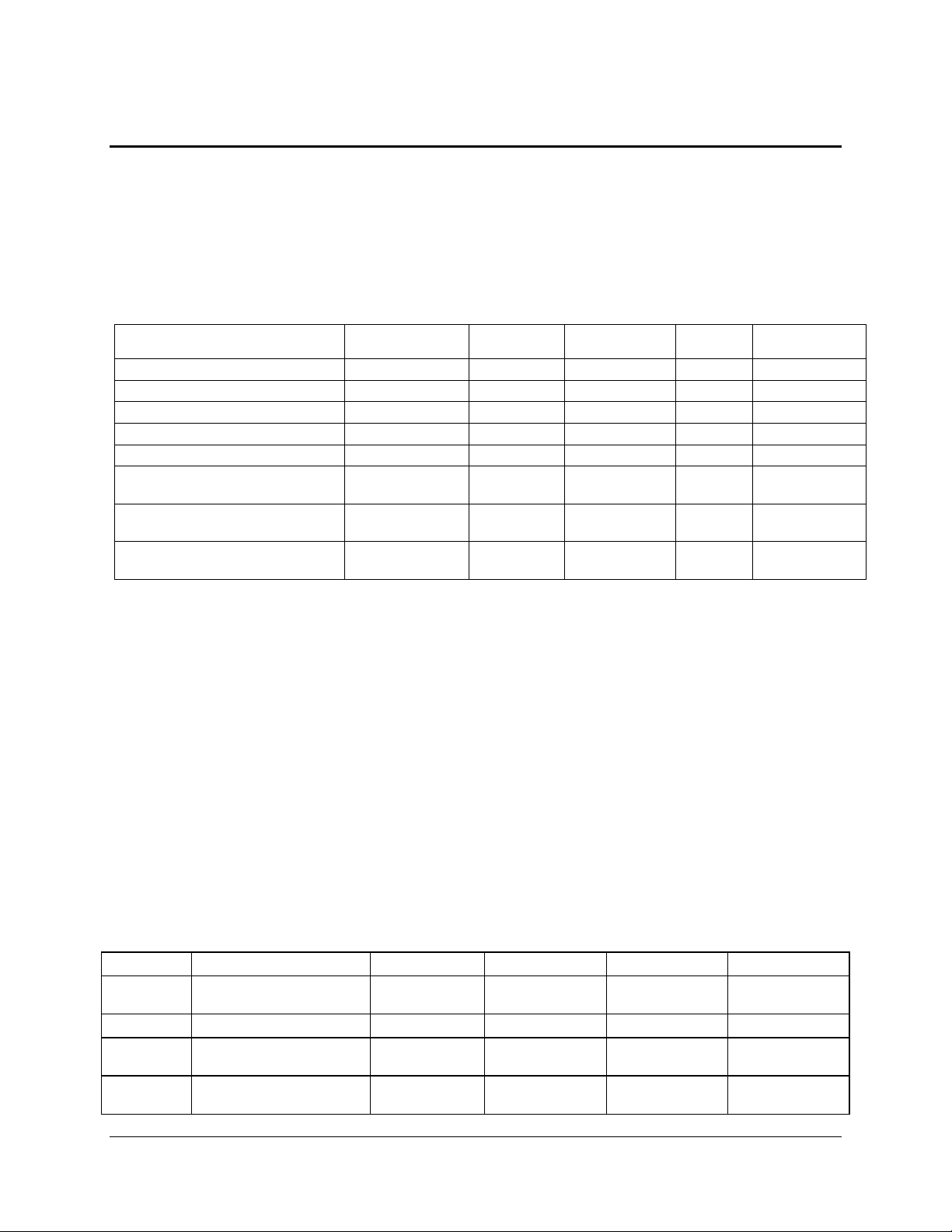

Table 3. PCI Expansion Slot Features

Segment                                   Slot           Hot Plug  Technology    Width   Bandwidth (GB/s)
North Bridge (C)                          1              Yes       PCI Express*  x8      4
PXH (A)                                   2              Yes       PCI-X* 133    64-bit  1
North Bridge (D)                          3              Yes       PCI Express   x4      2
North Bridge (B)                          4              Yes       PCI Express   x4      2
North Bridge (B)                          5              Yes       PCI Express   x4      2
Intel® IOP332 Storage I/O Processor (B)   6              No        PCI-X 100     64-bit  0.8
Intel IOP332 Storage I/O Processor (B)    7              No        PCI-X 100     64-bit  0.8
Intel IOP332 Storage I/O Processor (A)    Fibre Channel  No        PCI-X 100     64-bit  0.8
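
The bandwidth column of Table 3 can be reproduced from the bus parameters. The sketch below is a rough check rather than a statement of how the figures were derived: it assumes PCI Express 1.0a signaling (2.5 GT/s per lane with 8b/10b encoding), assumes that the PCI Express figures count both directions of the link, and treats 1 GB as 10^9 bytes. The function names are illustrative.

```python
def pcix_bandwidth_gb_s(clock_mhz, width_bits=64):
    """Peak PCI-X bandwidth: one transfer of width_bits per clock."""
    return clock_mhz * 1e6 * width_bits / 8 / 1e9


def pcie_gen1_bandwidth_gb_s(lanes):
    """Aggregate (both directions) PCI Express 1.x bandwidth for a link."""
    raw_bits_per_second = 2.5e9                 # 2.5 GT/s per lane, per direction
    payload_bits = raw_bits_per_second * 8 / 10  # 8b/10b encoding overhead
    per_lane_per_direction = payload_bits / 8 / 1e9  # 0.25 GB/s
    return lanes * per_lane_per_direction * 2    # assumption: both directions counted


if __name__ == "__main__":
    print("PCI-X 133:", round(pcix_bandwidth_gb_s(133), 2))
    print("PCI-X 100:", round(pcix_bandwidth_gb_s(100), 2))
    print("PCIe x4:  ", pcie_gen1_bandwidth_gb_s(4))
    print("PCIe x8:  ", pcie_gen1_bandwidth_gb_s(8))
```

With these assumptions the PCI-X 133 slot comes out slightly above 1 GB/s, which the table rounds to 1.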

3.1.1 PCI Interrupts

PCI Express interrupts are delivered in-band over the PCI Express bus via the Message Signaled Interrupt (MSI) mechanism.

PCI and PCI-X devices can deliver interrupts either by asserting IRQ signals that are routed to the PXH or Intel® IOP332 Storage I/O Processor IOxAPIC, or over the PCI-X bus via MSI. In either case, the PXH and/or Intel® IOP332 Storage I/O Processor forward the interrupt to the NB as an Inbound Write for the processor to handle the event.

Table 4 describes how the interrupts for each of the PCI devices are mapped to the PXH and Intel® IOP332 Storage I/O Processor.

Table 4. PCI Interrupt Mapping

Device                        APIC                                      INTA#         INTB#         INTC#         INTD#
Broadcom* BCM5704             PXH (B)                                   PX2B_IRQ0_N   PX2B_IRQ1_N
Slot 2                        PXH (A)                                   PX2A_IRQ0_N   PX2A_IRQ1_N   PX2A_IRQ2_N   PX2A_IRQ3_N
Slot 6                        Intel® IOP332 Storage I/O Processor (B)   PX1B_XINT4_N  PX1B_XINT5_N  PX1B_XINT6_N  PX1B_XINT7_N
Slot 7                        Intel IOP332 Storage I/O Processor (B)    PX1B_XINT6_N  PX1B_XINT7_N  PX1B_XINT4_N  PX1B_XINT5_N
LSI Logic* 53C1030            Intel IOP332 Storage I/O Processor (A)    PX1A_XINT0_N  PX1A_XINT1_N  -             -
Intel® Fibre Channel Module   Intel IOP332 Storage I/O Processor (A)    PX1A_XINT2_N  PX1A_XINT3_N  -             -
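
The slot 6 and slot 7 rows of Table 4 follow a rotation pattern: both slots share the four PX1B_XINT4_N to PX1B_XINT7_N inputs, with slot 7 offset by two positions relative to slot 6. The sketch below captures that observation. The rotation constants and function name are hypothetical and are derived only from the table values.

```python
PINS = ["INTA#", "INTB#", "INTC#", "INTD#"]
XINT_BASE = 4                                  # first XINT input used on bus PX1B
SLOT_ROTATION = {"Slot 6": 0, "Slot 7": 2}     # offsets observed in Table 4


def xint_signal(slot, pin):
    """Return the PX1B XINT signal that a slot's interrupt pin is routed to."""
    index = (PINS.index(pin) + SLOT_ROTATION[slot]) % len(PINS)
    return f"PX1B_XINT{XINT_BASE + index}_N"


# Table 4 values for the two slots, used here only to check the pattern.
TABLE_4 = {
    "Slot 6": ["PX1B_XINT4_N", "PX1B_XINT5_N", "PX1B_XINT6_N", "PX1B_XINT7_N"],
    "Slot 7": ["PX1B_XINT6_N", "PX1B_XINT7_N", "PX1B_XINT4_N", "PX1B_XINT5_N"],
}

if __name__ == "__main__":
    for slot, expected in TABLE_4.items():
        routed = [xint_signal(slot, pin) for pin in PINS]
        print(slot, routed, routed == expected)
```

Rotating the pins between slots is a common way to spread interrupt load when most adapters use only INTA#.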

3.1.2 PCI IDSEL Signal

The IDSEL signal is used as a chip-select for devices during read and write transactions. The PXH and Intel® IOP332 Storage I/O Processor assert a specific address bit on a given PCI bus to toggle the IDSEL signal to the PCI device. For the Intel® Server Board Set SE8500HW4 Mainboard the address bit to IDSEL mapping is shown in Table 5.

Table 5. IDSEL Mapping

Device                                               Device #  IDSEL         Host Bridge
Broadcom* BCM5704                                    2         PX2B_AD<18>   PXH (B)
Slot 2                                               2         PX2A_AD<18>   PXH (A)
Slot 6                                               6         PX1B_AD<22>   Intel® IOP332 Storage I/O Processor (B)
Slot 7                                               7         PX1B_AD<23>   Intel IOP332 Storage I/O Processor (B)
LSI Logic* 53C1030                                   5         PX1A_AD<21>   Intel IOP332 Storage I/O Processor (A)
Intel® Fibre Channel Module                          15        PX1A_AD<31>   Intel IOP332 Storage I/O Processor (A)
ROMB enabled on Intel IOP332 Storage I/O Processor   14        n/a           Internal to Intel IOP332 Storage I/O Processor

Note: When the ROMB solution is enabled, the IDSEL to the LSI Logic 53C1030 is inhibited by the Intel® IOP332 Storage I/O Processor. This effectively hides the SCSI controller from the system and the Intel® IOP332 Storage I/O Processor acts as the SCSI (or RAID) controller. Since the Intel® Fibre Channel Module is attached to the same bus as the SCSI controller, the Intel® Fibre Channel Module is set to device 15 so that it is not affected by the device hiding operation required for the ROMB solution.
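
Every external device row in Table 5 follows the same pattern: the IDSEL line is the address bit numbered 16 plus the device number. The sketch below checks that observation against the table. The helper name is hypothetical, and the rule is inferred from the table rather than stated by it.

```python
# (device, device number, IDSEL signal) rows copied from Table 5.
TABLE_5 = [
    ("Broadcom BCM5704", 2, "PX2B_AD<18>"),
    ("Slot 2", 2, "PX2A_AD<18>"),
    ("Slot 6", 6, "PX1B_AD<22>"),
    ("Slot 7", 7, "PX1B_AD<23>"),
    ("LSI Logic 53C1030", 5, "PX1A_AD<21>"),
    ("Intel Fibre Channel Module", 15, "PX1A_AD<31>"),
]


def idsel_signal(bus_prefix, device_number):
    """IDSEL signal name for a device, assuming address bit = 16 + device number."""
    return f"{bus_prefix}_AD<{16 + device_number}>"


if __name__ == "__main__":
    for device, number, signal in TABLE_5:
        bus_prefix = signal.split("_")[0]
        print(device, idsel_signal(bus_prefix, number) == signal)
```

Device 15 maps to AD<31>, the highest address bit, which is consistent with the Fibre Channel Module being placed at the top of the device range on its bus.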


3.1.3 Bus Arbitration Signals

Request (REQ#) signals indicate to the bus arbiter that an agent/device desires use of the bus.

The Grant (GNT#) signal indicates to the agent/device that access to the bus has been granted.

Every master has its own REQ#, which must be tri-stated while RST# is asserted. These are

point-to-point signals which are assigned to every bus master.

In the Intel® Server Board Set SE8500HW4 there is one arbiter for each PCI bus on the PXH and Intel® IOP332 Storage I/O Processor. The PXH contains an arbiter for slot 2 and the BCM5704 and the Intel® IOP332 Storage I/O Processor contains an arbiter for slots 6 and 7, LSI Logic 53C1030, and the Intel® Fibre Channel Module.

Table 6. Arbitration Connections

Device                        REQ#          GNT#          Host Bridge
Broadcom* BCM5704             PX2B_REQ0_N   PX2B_GNT0_N   PXH (B)
Slot 2                        PX2A_REQ0_N   PX2A_GNT0_N   PXH (A)
Slot 6                        PX1B_REQ1_N   PX1B_GNT1_N   Intel® IOP332 Storage I/O Processor (B)
Slot 7                        PX1B_REQ0_N   PX1B_GNT0_N   Intel IOP332 Storage I/O Processor (B)
LSI Logic* 53C1030            PX1A_REQ0_N   PX1A_GNT0_N   Intel IOP332 Storage I/O Processor (A)
Intel® Fibre Channel Module   PX1A_REQ1_N   PX1A_GNT1_N   Intel IOP332 Storage I/O Processor (A)

3.1.4 Wake On LAN

Wake On LAN (WOL) is supported on the Intel® Server Board Set SE8500HW4 either from PCI

devices through the PME# signal, or PCI Express via the WAKE# signal.

Any PCI Express adapter can generate a wake event by asserting the WAKE# signal. This signal is OR’d with all other PCI Express WAKE# signals and routed to the ICH5 after being qualified with intrusion and a prior graceful shutdown. The assertion of a WAKE# signal will cause the system to return to the ACPI S0 (working) state. Once system power is up and the PCI Express devices are configured, a PME message is sent to the NB identifying the device that woke the system.

For all the PCI devices or the Ethernet controller, PME# is handled similarly to the PCI Express WAKE# signal. All PME# signals are OR’d together and routed to the ICH5 after being qualified with intrusion and a prior graceful shutdown. The PME assertion wakes the system but does not generate an interrupt from the ICH5. Once the system is powered up, the PXH or Intel® IOP332 Storage I/O Processor generates a PME interrupt message to the operating system. The operating system determines which slot is the PME source by polling the PXH and Intel® IOP332 Storage I/O Processor.


3.1.5 PCI Hot Plug* Support

PCI Hot Plug* is the concept of removing a standard PCI adapter card from a system without

stopping the software or powering down the system as a whole.

In the Intel® Server Board Set SE8500HW4, PCI Slot 2 supports the PCI Hot-Plug Specification, Revision 1.1 and is configured so that the PXH isolates the slot from the PCI bus when no adapter is present. The four PCI Express slots support the PCI Express Base Specification, Revision 1.0a.

3.1.5.1 Hardware Components

The Intel® Server Board Set SE8500HW4 contains buttons and LEDs to assist the user with hot plug operations. Buttons provide isolation circuitry to physically disconnect the hot plug adapter from the PCI buses while LEDs provide slot power and status. The LEDs have enough luminous intensity to pass through system-level light pipes and be visible at the top of a system. An attention button can be used to invoke a hot-plug sequence to remove or add an adapter without the use of an operating system/software interface.

Table 7. PCI Hot Plug LEDs

LED                State     Meaning
Power (Green)      Off       Power off: All main rails have been removed from slot. Card can be inserted or removed.
Power (Green)      On        Power on: Slot is powered on. Card cannot be inserted or removed.
Power (Green)      Blinking  Power transition: Slot is in the process of changing state. Card cannot be inserted or removed.
Attention (Amber)  Off       Normal: Normal operation.
Attention (Amber)  On        Attention: Power fault or operational problem at this slot.
Attention (Amber)  Blinking  Locate: Slot is being identified at the user’s request.
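
For tooling that reports slot status, Table 7 can be held as a simple lookup. The sketch below is illustrative; the dictionary and function names are hypothetical and the strings paraphrase the table.

```python
# (LED, state) -> meaning, paraphrased from Table 7.
HOT_PLUG_LEDS = {
    ("power", "off"): "Power off: card can be inserted or removed",
    ("power", "on"): "Power on: card cannot be inserted or removed",
    ("power", "blinking"): "Power transition: card cannot be inserted or removed",
    ("attention", "off"): "Normal operation",
    ("attention", "on"): "Attention: power fault or operational problem at this slot",
    ("attention", "blinking"): "Locate: slot is being identified at the user's request",
}


def describe_led(led, state):
    """Return the Table 7 meaning for an LED ('power' or 'attention') and its state."""
    return HOT_PLUG_LEDS[(led.lower(), state.lower())]


def card_can_be_handled(power_led_state):
    """A card may be inserted or removed only while the power LED is off."""
    return power_led_state.lower() == "off"


if __name__ == "__main__":
    print(describe_led("Attention", "Blinking"))
    print(card_can_be_handled("Blinking"))
```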

3.1.5.2 Software Components

PCI hot plug operations are supported by the system BIOS, an operating system driver and an optional operating system administrative interface. The Intel® Server Board Set SE8500HW4 BIOS provides initialization of the hot plug hardware components, logging of hot plug events through server management and ACPI table generation. Microsoft* Windows* Server 2003, Enterprise Edition includes support for PCI hot plug through the taskbar “Unplug or Eject Hardware” interface but may require an updated adapter device driver. Refer to other operating systems’ manuals for more information on how to perform hot-plug operations. Reference the PCI adapter release notes for specific information on support and driver requirements.


3.1.5.3 Hot Removal Example

3.1.5.3.1 Under Microsoft Windows Server 2003, Enterprise Edition:

1. Open the cover of the system to access the adapters and status LEDs.

2. Double-click “Unplug/Eject” in the taskbar to open the “Unplug or Eject Hardware” menu.

3. Select the device to be removed and click “Stop”.

4. Wait for the power LED to turn off.

5. Disengage rocker, retention, and/or safety devices.

6. Remove the adapter.

3.1.5.3.2 Under other operating systems:

1. Open the cover of the system to access the adapters and status LEDs.

2. Press the attention button for the slot. (Press the attention button again within five seconds to abort the hot plug operation.)

3. Wait for the power LED to turn off.

4. Disengage rocker, retention, and/or safety devices.

5. Remove the adapter.

3.1.5.4 Hot Addition Example

3.1.5.4.1 Under Microsoft Windows Server 2003, Enterprise Edition:

1. Open the cover of the system to access adapters and view the status LEDs.

2. Install the adapter into the slot.

3. Engage rocker, retention, and/or safety devices.

4. Wait for the software user interface to open. Confirm the device to be enabled.

5. Wait for the power LED to turn on.

Note: If the attention LED is blinking, a power fault has occurred. The user may need to remove

the adapter, wait for the LED to turn off, and re-start the hot add operation.

3.1.5.4.2 Under other operating systems:

1. Open the cover of the system to access adapters and view the status LEDs.

2. Install the adapter into the slot.

3. Engage rocker, retention, and/or safety devices.

4. Press the attention button for the slot. (Press the attention button again within five seconds to abort the hot plug operation.)

5. Wait for the power LED to turn on.

6. Enable the device in your operating system.

Note: If the attention LED is blinking, a power fault has occurred. The user may need to remove

the adapter, wait for the LED to turn off, and re-start the hot add operation.
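
The attention-button steps above include a five-second abort window. The sketch below models only that timing decision. It assumes that a second press of the same slot's attention button within the window cancels the request, and it treats a press at exactly five seconds as an abort, which the text does not specify. The function name is hypothetical.

```python
ABORT_WINDOW_SECONDS = 5.0  # "within five seconds" in the steps above


def attention_button_outcome(first_press_s, second_press_s=None):
    """Return "aborted" if a second press lands inside the abort window, else "proceed".

    Times are in seconds on any monotonic clock.
    """
    if second_press_s is None:
        return "proceed"
    if second_press_s < first_press_s:
        raise ValueError("second press cannot precede the first")
    if second_press_s - first_press_s <= ABORT_WINDOW_SECONDS:
        return "aborted"
    return "proceed"


if __name__ == "__main__":
    print(attention_button_outcome(0.0))        # single press: operation continues
    print(attention_button_outcome(0.0, 3.0))   # second press inside the window
    print(attention_button_outcome(0.0, 8.0))   # second press after the window
```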


3.2 Ultra320 SCSI Subsystem

A single LSI Logic* 53C1030 controller provides the on-board Ultra320 SCSI interface. The

controller resides on the PCI Bus Segment A (PX1A), off the Intel® IOP332 Storage I/O

Processor. For optimal performance, the controller is configured as a 64-bit PCI-X 100MHz

device.

The LSI Logic 53C1030 supports two Ultra320 SCSI channels, both validated for LVDS operation. In the Intel® Server Platform SR4850HW4 the first channel is routed to the internal hot-swap hard disk drive bay and the second is optionally connected to an external connector. In the Intel® Server Platform SR6850HW4 both channels are routed to the internal hot-swap hard disk drive bay. Intel has not validated Single Ended (SE) operation for this device.

The Mainboard provides active terminators, termination voltage, auto re-sealable fuse, and protection diode for both SCSI channels. The SCSI ROM allows for the configuration of on-board termination.

PCI Express and PCI-X adapter cards based on an LSI Logic 53C1030 controller should have the option ROM for the slot turned off in the system BIOS setup. This will allow the embedded LSI Logic 53C1030 controller firmware to manage the add-in adapters. The Intel® Server Board Set SE8500HW4 Mainboard does not have a physical flash device, so the system BIOS loads the required RISC F/W into the embedded LSI Logic 53C1030 controller during POST. A 53C1030-based adapter cannot take control of the embedded SCSI controller since those cards do not have the required RISC F/W to start the embedded SCSI device. Starting with the LSI Logic Fusion-MPT* SCSI BIOS 5.10.02, the embedded LSI Logic 53C1030 SCSI controller can control additional LSI Logic 53C1030-based adapter cards.


3.3 Intel® RAID On Motherboard (ROMB)

The Intel® IOP332 Storage I/O Processor, in conjunction with the LSI Logic 53C1030, provides

an optional RAID On Motherboard (ROMB) solution which supports RAID levels 0, 1, 5, 10, and

50. A 2MB flash component and a non-volatile SRAM store the code and hardware

configuration information.

To activate the ROMB solution, a physical Intel® RAID Activation Key (RAK) and DDR2 400MHz

RAID DIMM must be installed on the Intel® Server Board Set SE8500HW4 Mainboard. The RAK

contains a registration code required to unlock the LSI Mega RAID* solution. The DDR2

400MHz RAID DIMM serves as memory for the Intel® IOP332 Storage I/O Processor and a disk

cache to store write data for the drives. In addition to these components an Intel® RAID Smart

Battery (RSB) may also be installed to refresh the RAID DIMM when system power drops below

specifications.

After installing a RAK and DDR2 400MHz RAID DIMM, and optional RSB, the system BIOS

setup allows the user to enable the ROMB solution. During option ROM scan, an option to

configure the RAID is displayed. The following three sections provide an overview of the Intel

ROMB solution; for more information, refer to the Intel® RAID Smart Battery Technical

Product Specification.
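The activation requirements above can be summarized as a simple predicate. This is a minimal sketch only: the class and function names are hypothetical and do not correspond to any BIOS or firmware interface.

```python
from dataclasses import dataclass


@dataclass
class RombHardware:
    rak_installed: bool          # Intel RAID Activation Key
    raid_dimm_installed: bool    # DDR2 400MHz RAID DIMM
    rsb_installed: bool = False  # Intel RAID Smart Battery (optional)


def romb_can_be_enabled(hw: RombHardware) -> bool:
    """ROMB needs both the RAK and the RAID DIMM; the RSB is optional."""
    return hw.rak_installed and hw.raid_dimm_installed


print(romb_can_be_enabled(RombHardware(True, True)))         # True
print(romb_can_be_enabled(RombHardware(True, False, True)))  # False
```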

3.3.1 Intel® RAID Activation Key (RAK)

The RAK is a round one-wire serial EEPROM device programmed by Intel. This key has a

registration code required to enable the LSI Mega RAID* solution.

3.3.2 DDR2 RAID DIMM

The ROMB solution only supports DDR2 400MHz registered ECC DIMMs with a CAS latency of

four clock cycles. Please refer to the Intel® Server Board SE8500HW4 Memory Qualification

List for supported memory.

3.3.3 Intel® RAID Smart Battery (RSB)

The RSB keeps the contents of the DDR2 400MHz RAID DIMM preserved if power drops below

specifications. When the Intel® IOP332 Storage I/O Processor senses power has dropped below

specifications, it initiates a power fail sequence that safely puts the RAID DIMM into self-refresh

state. The power subsystem generates enough of a delay to allow the Intel® IOP332 Storage I/O

Processor to complete its power fail sequence, even in the event of total system power loss.

After the power fail sequence is completed, additional logic keeps the RAID DIMM in

self-refresh mode. When power is restored, data from the RAID DIMM is safely written to the disk

array.
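The power fail behavior described for the RSB can be read as a small state machine. The sketch below is an illustrative model only: the state and event names are hypothetical labels for the steps in the text, not signals or firmware states defined by the IOP332.

```python
from enum import Enum, auto


class State(Enum):
    NORMAL = auto()               # power within specifications
    POWER_FAIL_SEQUENCE = auto()  # I/O processor is running its power fail sequence
    SELF_REFRESH = auto()         # RAID DIMM held in self-refresh by the RSB
    WRITING_TO_ARRAY = auto()     # cached data being written to the disk array


# (current state, event) -> next state; any other event is ignored.
TRANSITIONS = {
    (State.NORMAL, "power_below_spec"): State.POWER_FAIL_SEQUENCE,
    (State.POWER_FAIL_SEQUENCE, "sequence_complete"): State.SELF_REFRESH,
    (State.SELF_REFRESH, "power_restored"): State.WRITING_TO_ARRAY,
    (State.WRITING_TO_ARRAY, "write_complete"): State.NORMAL,
}


def step(state: State, event: str) -> State:
    return TRANSITIONS.get((state, event), state)


def run(events, state: State = State.NORMAL) -> State:
    for event in events:
        state = step(state, event)
    return state


print(run(["power_below_spec", "sequence_complete"]).name)  # SELF_REFRESH
```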


3.4 Gigabit Ethernet

A single Broadcom* BCM5704C controller provides the on-board Gigabit Ethernet interface.

This controller has two ports that can independently operate at 1000/100/10 Mbps and support

failover and teaming for greater reliability and performance. The two Media Access Controllers

support full-duplex and half-duplex modes at all speeds and have their own PCI configuration

space and on-chip memory for higher performance with load balancing and packet buffering.

For optimal performance, the controller is configured as a 64-bit PCI-X 133MHz device. The

ICH5 contains an Ethernet controller, but this device is not used by the Intel® Server Board Set

SE8500HW4.

3.5 Serial ATA (SATA)

The ICH5 provides a Serial ATA (SATA) interface with a transfer rate of up to 1.5Gb/s. The

Intel® Server Board Set SE8500HW4 Mainboard has a standard 7-pin vertical connector for this

feature. SATA cables should be 1m (40 inches) or less in length.

3.6 Fibre Channel

The Intel® Fibre Channel Module seats into a custom-wired PCI Express x16 slot on the Intel®

Server Board Set SE8500HW4 Mainboard, which is attached to the Intel® IOP332 Storage I/O

Processor. The module uses a Qlogic* ISP2322 FC-PCI-X controller and has the following

features:

Two independent 2 Gbps Fibre Channel ports

Support for Fibre Channel virtual interface (VI) protocol

Automatically negotiates Fibre Channel bit rate (1 or 2 Gbps)

Supports up to 400 MBps sustained Fibre Channel data transfer rate

1 MB SRAM per port

Data and code parity protection

Host intervention not required to execute complete SCSI, IP, or VI operations

LC-style optical connectors

Works with the Qlogic SANsurfer* Management Suite and other Qlogic FC cards

For more information, please refer to the Intel® Fibre Channel Module User Guide.

3.7 Firmware Hubs

The Intel® Server Board Set SE8500HW4 Mainboard has a combined total of 4MB flash

memory that serves as the firmware hub (FWH) for the system BIOS. The system BIOS fits into

2MB of flash, but twice that is required to support the rolling BIOS feature. See Chapter 5 for

more information on the rolling BIOS.


3.8 Video

A single ATI* Radeon* 7000 video controller provides the on-board video interface. The ATI

Radeon 7000 features the following technologies:

2D/3D video accelerator

Dual DAC for integrated, cost-effective multi-panel support

Resolutions from VGA up to UXGA (1600x1200)

16MB SDRAM video memory

32-bit/33MHz PCI host interface

Using the default operating system video driver options, the VGA signal is mirrored between the

rear panel and the front panel connector. This design consideration was made to facilitate user

debug of an operating system hard failure. When the system is in a failure state a portable

monitor can be attached to the front of the system to determine root cause. Since this is an

enterprise server, Intel has not validated the video driver configured with the front panel I/O

board VGA connector in a non-mirrored, extended desktop, state.

3.9 USB 2.0

The ICH5 provides four USB 2.0 interfaces with one internal connector on the Intel® Server

Board Set SE8500HW4 Mainboard, a dual-stack USB connector on the rear panel, and one

interface routed to the front panel connector.

3.10 Serial

The SIO provides two RS232 serial communication ports (COM1 and COM2). COM1 is

provided through a DB9 connector on the rear panel of the Intel® Server Board Set SE8500HW4

Mainboard while COM2 is internal to the chassis and available as an unshielded 9-pin header (2

x 5, with pin 10 removed for keying). COM1 is available as an Emergency Management Port

(EMP) for remote server management, and when used in this mode, it is unavailable to the

BIOS/operating system. When server management is set up for Serial Over LAN (SOL) remote

server management, COM2 is unavailable to the BIOS/operating system.
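The serial port sharing rules can be sketched as a small helper that reports which COM ports remain visible to the BIOS/operating system. The function and parameter names are hypothetical and are used here only to restate the rules in the text.

```python
from typing import Set


def ports_available_to_os(emp_enabled: bool, sol_enabled: bool) -> Set[str]:
    """Return the COM ports left to the BIOS/operating system."""
    ports = {"COM1", "COM2"}
    if emp_enabled:
        ports.discard("COM1")  # COM1 is claimed by the Emergency Management Port
    if sol_enabled:
        ports.discard("COM2")  # COM2 is claimed by Serial Over LAN
    return ports


print(sorted(ports_available_to_os(emp_enabled=True, sol_enabled=False)))  # ['COM2']
```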


4. Intel® Server Board Set SE8500HW4 Memory Board

One to four Intel® Server Board Set SE8500HW4 Memory Boards plug vertically into the Intel®

Server Board Set SE8500HW4 Mainboard. The Memory Board has the following features:

Intel® E8500 Chipset eXtended Memory Bridge (XMB)

Two DDR2 400MT/s buses

Four 240-pin DDR2 400MHz registered ECC DIMM sockets

Support for both single-rank and dual-rank DIMMs

Independent Memory Interface (IMI), a high-speed differential bus

PCI Express x16 card edge connector that plugs into the Intel® Server Board Set SE8500HW4 Mainboard

LED error indicators for each DIMM and an attention LED for hot plug events

LED indicator for both memory mirroring and RAID configurations

Memory hot plug at the card level, based on the PCI Hot Plug model

On board power converters for 0.9V, 1.5V, and 1.8V

Field Replaceable Unit (FRU) device

Two temperature sensors

Safety mechanism for instant power shut-down to the Memory Board

Figure 4. Memory Board Outline Diagram


Figure 5. Memory Board Component Diagram

4.1 DDR2 DIMM Support

DDR2 memory effectively doubles the data rate over DDR memory at the same memory core

clock: the I/O bus runs at twice the core clock frequency and, as with DDR, data transfers

happen on both the rising and falling edge of the bus clock (double pumped). Due to the

lower core clock frequency, and improved manufacturing technology, a significant power

savings can be achieved, especially when the data bus is not active.

The Intel® Server Board Set SE8500HW4 Memory Board supports DDR2 400MHz (also referred

to as PC2-3200) registered ECC SDRAM with On Die Termination (ODT). Both single-rank and

dual-rank technologies are supported; however, unbuffered and non-ECC DIMMs will not function

in the Intel® Server Board Set SE8500HW4. Within a single bank, both DIMMs must be identical.

(The DIMMs must be identical in size and in the number of devices on the DIMM.)
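As a worked illustration of the PC2-3200 designation, the number corresponds to the theoretical peak transfer rate in MB/s of one 64-bit DDR2 data bus running at 400 mega-transfers per second. The function name below is hypothetical, and the 64-bit width counts data bits only (ECC bits excluded).

```python
def ddr2_peak_mb_per_s(mega_transfers_per_s: int, data_width_bits: int = 64) -> int:
    """Theoretical peak rate in MB/s for one DDR2 data bus."""
    return mega_transfers_per_s * data_width_bits // 8


per_bus = ddr2_peak_mb_per_s(400)  # one DDR2 400MT/s bus
per_board = 2 * per_bus            # each Memory Board has two such buses
bus_clock_mhz = 400 // 2           # two transfers per bus clock cycle

print(per_bus, per_board, bus_clock_mhz)  # 3200 6400 200
```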

Speeds less than DDR2 400MHz may be used, but performance will be reduced. Intel has only

validated DDR2 400MHz SDRAM for specific memory parts; refer to the Intel® Server Board Set

SE8500HW4 Board Memory Qualification List.

4.2 Installation Order

When only using two memory DIMMs, the first pair of sockets, DIMM_1A and DIMM_1B, must

be populated. When using a mixture of single-rank and dual-rank memory DIMMs on one

Memory Board, the dual-rank DIMMs must be installed in the first pair of sockets.
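The population rules for one Memory Board can be sketched as a checker. This is an illustration under stated assumptions: the second pair's socket names (DIMM_2A, DIMM_2B) are assumed by analogy with the first pair, DIMMs are assumed to be installed in pairs, and the class, function, and example DIMM values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Dimm:
    size_mb: int
    devices: int  # number of memory devices on the DIMM
    ranks: int    # 1 = single-rank, 2 = dual-rank


FIRST_PAIR = ("DIMM_1A", "DIMM_1B")
SECOND_PAIR = ("DIMM_2A", "DIMM_2B")  # assumed names, not taken from the text


def _installed(sockets: Dict[str, Optional[Dimm]], pair) -> List[Dimm]:
    return [sockets[s] for s in pair if sockets.get(s) is not None]


def check_population(sockets: Dict[str, Optional[Dimm]]) -> List[str]:
    """Return a list of rule violations; an empty list means the layout is valid."""
    problems = []
    first = _installed(sockets, FIRST_PAIR)
    second = _installed(sockets, SECOND_PAIR)
    for label, dimms in (("first", first), ("second", second)):
        if len(dimms) == 1:
            problems.append(f"{label} pair is only half populated")
        elif len(dimms) == 2:
            a, b = dimms
            # Both DIMMs in a bank must match in size and device count.
            if (a.size_mb, a.devices) != (b.size_mb, b.devices):
                problems.append(f"{label} pair DIMMs are not identical")
    if second and len(first) < 2:
        problems.append("first pair must be populated before the second pair")
    if any(d.ranks == 2 for d in second) and any(d.ranks == 1 for d in first):
        problems.append("dual-rank DIMMs must be installed in the first pair")
    return problems


dual = Dimm(size_mb=2048, devices=36, ranks=2)    # example values only
single = Dimm(size_mb=1024, devices=18, ranks=1)  # example values only
print(check_population({"DIMM_1A": dual, "DIMM_1B": dual,
                        "DIMM_2A": single, "DIMM_2B": single}))  # []
```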

4.3 Memory Initialization

The XMB provides hardware memory initialization. The initialization engine performs two

passes. On the first pass, it writes the entire segment. On the second pass, it reads and tests

the entire segment. Any errors are logged with the failing DIMM being flagged for BIOS.
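The two-pass initialization can be modeled in software as a write pass followed by a read-and-test pass. The XMB performs this in hardware, so the sketch below is only an illustration: the memory model, test pattern, fault injection, and address-to-DIMM mapping are all assumptions made for the example.

```python
from typing import Callable, Iterable, List


class ModelMemory:
    """Toy memory segment; cells listed in stuck_at_zero always store 0."""

    def __init__(self, size: int, stuck_at_zero: Iterable[int] = ()):
        self._cells = [0] * size
        self._stuck = set(stuck_at_zero)

    def __len__(self) -> int:
        return len(self._cells)

    def write(self, addr: int, value: int) -> None:
        self._cells[addr] = 0 if addr in self._stuck else value

    def read(self, addr: int) -> int:
        return self._cells[addr]


def example_dimm_of(addr: int) -> str:
    # Hypothetical interleave: even addresses on DIMM_1A, odd on DIMM_1B.
    return "DIMM_1A" if addr % 2 == 0 else "DIMM_1B"


def initialize_segment(mem: ModelMemory,
                       dimm_of: Callable[[int], str] = example_dimm_of,
                       pattern: int = 0xA5) -> List[str]:
    """Return the DIMMs flagged as failing after the two passes."""
    # Pass 1: write the entire segment.
    for addr in range(len(mem)):
        mem.write(addr, pattern)
    # Pass 2: read and test the entire segment, logging failing DIMMs.
    failing = set()
    for addr in range(len(mem)):
        if mem.read(addr) != pattern:
            failing.add(dimm_of(addr))
    return sorted(failing)


print(initialize_segment(ModelMemory(8, stuck_at_zero={3})))  # ['DIMM_1B']
```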


4.4 Data Correction and Scrubbing

The XMB employs a Single Device Data Correction (x8 SDDC) algorithm for the memory