
Intel® Server Board S5500WB

Revision 1.9

February 2012

Enterprise Platforms and Services Division

Technical Product Specification

Intel order number E53971-008


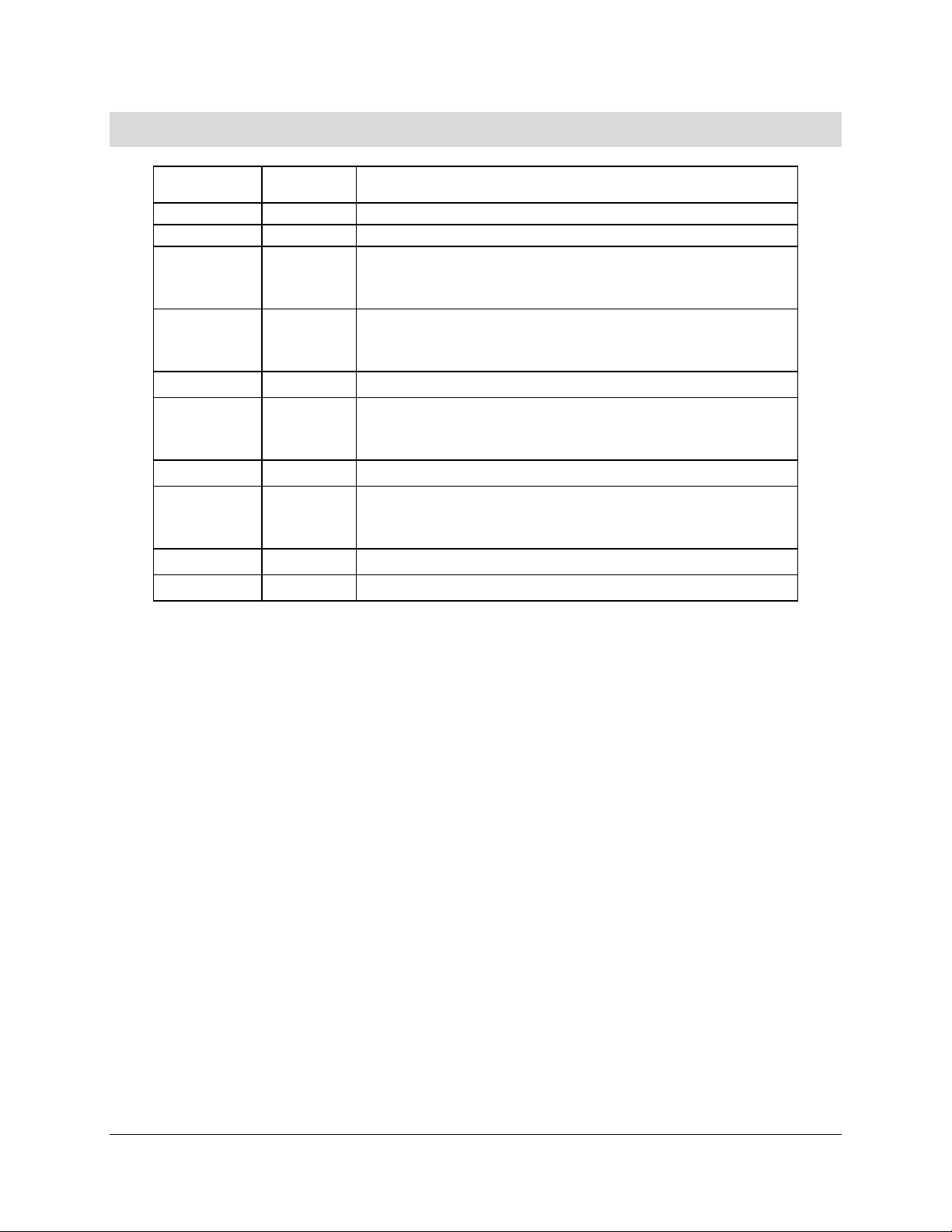

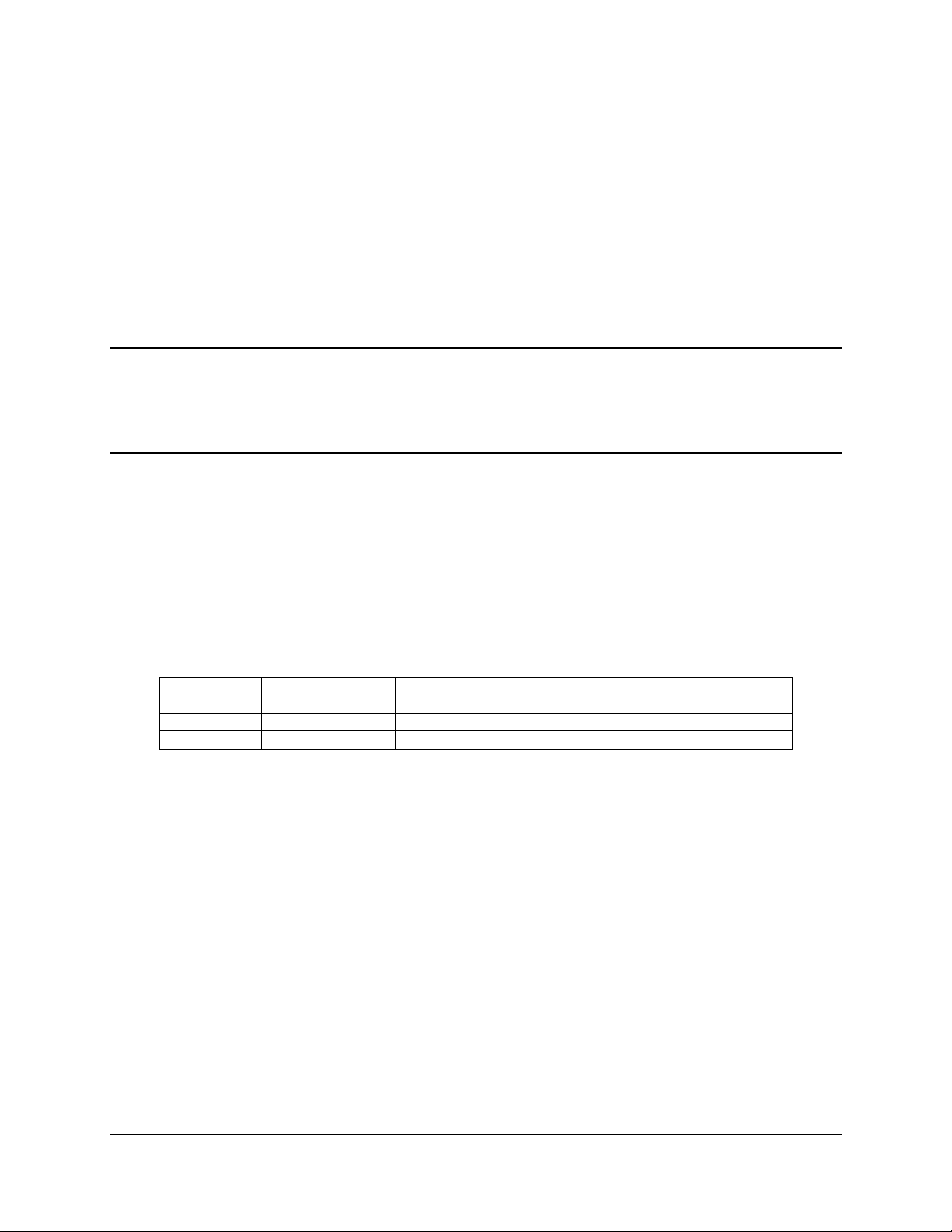

Revision History

| Date | Revision Number | Modifications |
|---|---|---|
| 03/30/2009 | 1.0 | Initial release. |
| 04/29/2009 | 1.1 | Formatting corrections. |
| 05/20/2009 | 1.2 | Updated heatsink installation steps. Corrected processor fault table. Added jumper location figure. |
| 08/03/2009 | 1.3 | Updated memory support. Corrected PCIe slot speed. Removed S4 support. |
| 01/12/2010 | 1.4 | Corrected USB header pin-out. |
| 03/09/2010 | 1.5 | Updated power supply communication bus requirements. Increased maximum supported memory to 128GB. Added support for 5600 series processors. |
| 04/21/2010 | 1.6 | Updated 12V SKU board picture (Figure 1). |
| 07/18/2010 | 1.7 | Removed Rapid Boot Toolkit section. Updated NIC LEDs. Updated video resolution. |
| 03/21/2010 | 1.8 | Corrected a typo in the board feature set. |
| 02/16/2012 | 1.9 | Corrected a typo in the board feature set. |


Disclaimers

Information in this document is provided in connection with Intel® products. No license, express or implied, by estoppel or otherwise, to any intellectual property rights is granted by this document. Except as provided in Intel's Terms and Conditions of Sale for such products, Intel assumes no liability whatsoever, and Intel disclaims any express or implied warranty, relating to sale and/or use of Intel products including liability or warranties relating to fitness for a particular purpose, merchantability, or infringement of any patent, copyright or other intellectual property right. Intel products are not intended for use in medical, life saving, or life sustaining applications. Intel may make changes to specifications and product descriptions at any time, without notice.

Designers must not rely on the absence or characteristics of any features or instructions marked "reserved" or "undefined." Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them.

This document contains information on products in the design phase of development. Do not finalize a design with this information. Revised information will be published when the product is available. Verify with your local sales office that you have the latest datasheet before finalizing a design.

This document may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request.

This document and the software described in it are furnished under license and may only be used or copied in accordance with the terms of the license. The information in this manual is furnished for informational use only, is subject to change without notice, and should not be construed as a commitment by Intel Corporation. Intel Corporation assumes no responsibility or liability for any errors or inaccuracies that may appear in this document or any software that may be provided in association with this document.

Except as permitted by such license, no part of this document may be reproduced, stored in a retrieval system, or transmitted in any form or by any means without the express written consent of Intel Corporation.

Intel and Xeon are trademarks or registered trademarks of Intel Corporation.

*Other brands and names may be claimed as the property of others.

Copyright © Intel Corporation 2011


Table of Contents

1. Introduction

1.1 Section Outline

1.2 Server Board Use Disclaimer

2. Server Board Overview

2.1 Intel® Server Board S5500WB Server Board

2.2 Server Board Connector and Component Layout

2.2.1 Board Rear Connector Placement

2.2.2 Server Board Mechanical Drawings

3. Functional Architecture

3.1 High Level Product Features

3.2 Functional Block Diagram

3.3 Processor Subsystem

3.3.1 Processor Support

3.3.2 Processor Population Rules

3.3.3 Installing or Replacing the Processor

3.3.4 Intel® QuickPath Interconnect (Intel® QPI)

3.4 Intel® QuickPath Memory Controller

3.4.1 Supported Memory

3.4.2 Memory Subsystem Nomenclature

3.4.3 ECC Support

3.4.4 Memory Reservation for Memory-mapped Functions

3.4.5 High-Memory Reclaim

3.4.6 Memory Population Rules

3.4.7 Installing and Removing Memory

3.4.8 Channel-Independent Mode

3.4.9 Memory RAS

3.4.10 Memory Error LED

3.5 Intel® 5500 Chipset IOH

3.5.1 IOH24D PCI Express*

3.6 Management Engine

3.7 Intel® 82801Jx I/O Controller Hub (ICH10R)

3.7.1 Serial ATA Support


3.7.2 USB 2.0 Support

3.8 Network Interface Controller (NIC)

3.8.1 MAC Address Definition

3.8.2 LAN Connector Ordering

3.9 Integrated Baseboard Management Controller

3.9.1 Integrated BMC Embedded LAN Channel

3.9.2 RMM3 Advanced Management Board

3.10 Serial Ports

3.11 Wake-up Control

3.12 Integrated Video Support

3.12.1 Video Modes

3.12.2 Dual Video

3.12.3 Front Panel Video

3.13 I/O Slots

3.13.1 x16 Riser Slot Definition

3.13.2 PEWIDTH Strapping

3.13.3 Slot 1 PCI Express* x8 Connector

3.13.4 I/O Module Connector

4. Intel® I/O Expansion Modules

5. Platform Management Features

5.1 BIOS Feature Overview

5.1.1 EFI Support

5.1.2 BIOS Recovery

5.2 BMC Feature Overview

5.2.1 Server Engines Pilot II Controller

5.2.2 BMC Firmware

5.2.3 BMC Basic Features

5.2.4 BMC Advanced Features

5.3 Management Engine (ME)

5.3.1 Overview

5.3.2 BMC - Management Engine Interaction

5.4 Data Center Manageability Interface

5.5 Other Platform Management

5.5.1 Wake On LAN (WOL)


5.5.2 PCI Express* Power management ....................................................................... 43

5.5.3 PMBus* ................................................................................................................ 43

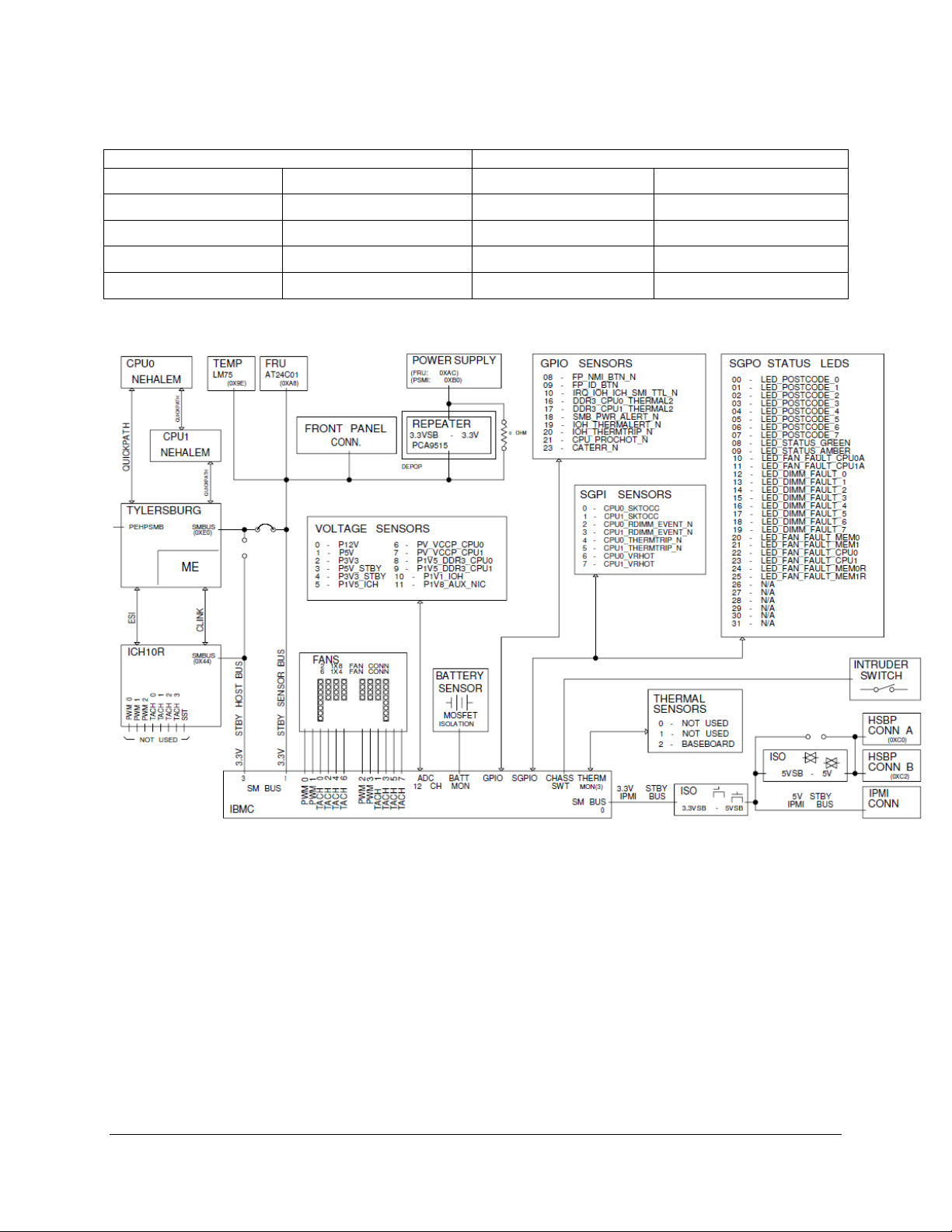

5.6 I2C\SMBUS Architecture Block ............................................................................ 44

5.6.1 I2C\SMBUS Device Addresse .............................................................................. 44

6. Configuration Jumpers .................................................................................................... 46

6.1.1 Force IBMC Update (J1B5) .................................................................................. 47

6.1.2 Password Clear (J1C2) ........................................................................................ 48

6.1.3 BIOS Recovery Mode (J1C3) ............................................................................... 49

6.1.4 Reset BIOS Configuration (J1B4) ........................................................................ 50

6.1.5 Video Master (J6A3) ............................................................................................ 50

6.1.6 ME Firmware Force Update (J7A2) ...................................................................... 51

6.1.7 Serial Interface (J6A2) ......................................................................................... 51

7. Connector/Header Locations and Pin-out ...................................................................... 52

7.1 Power Connectors ................................................................................................ 52

7.2 System Management Headers ............................................................................. 54

7.2.1 Intel® Remote Management Module 3 (Intel® RMM3) Connector .......................... 54

7.2.2 BMC Power Cycle Header (12V Only) .................................................................. 54

7.2.3 Hard Drive Activity (Input) LED Header ................................................................ 55

7.2.4 IPMB Header........................................................................................................ 55

7.2.5 SGPIO Header ..................................................................................................... 55

7.3 SSI Control Panel Connector ............................................................................... 55

7.3.1 Power Button ....................................................................................................... 56

7.3.2 Reset Button ........................................................................................................ 56

7.3.3 NMI Button ................................................................ ........................................... 56

7.3.4 Chassis Identify Button ........................................................................................ 56

7.3.5 Power LED ........................................................................................................... 57

7.3.6 System Status LED .............................................................................................. 57

7.3.7 Chassis ID LED .................................................................................................... 59

7.4 I/O Connectors ..................................................................................................... 59

7.4.1 PCI Express* Connectors ..................................................................................... 59

7.4.2 VGA Connectors .................................................................................................. 61

7.4.3 NIC Connectors.................................................................................................... 62

7.4.4 SATA Connectors ................................................................................................ 64

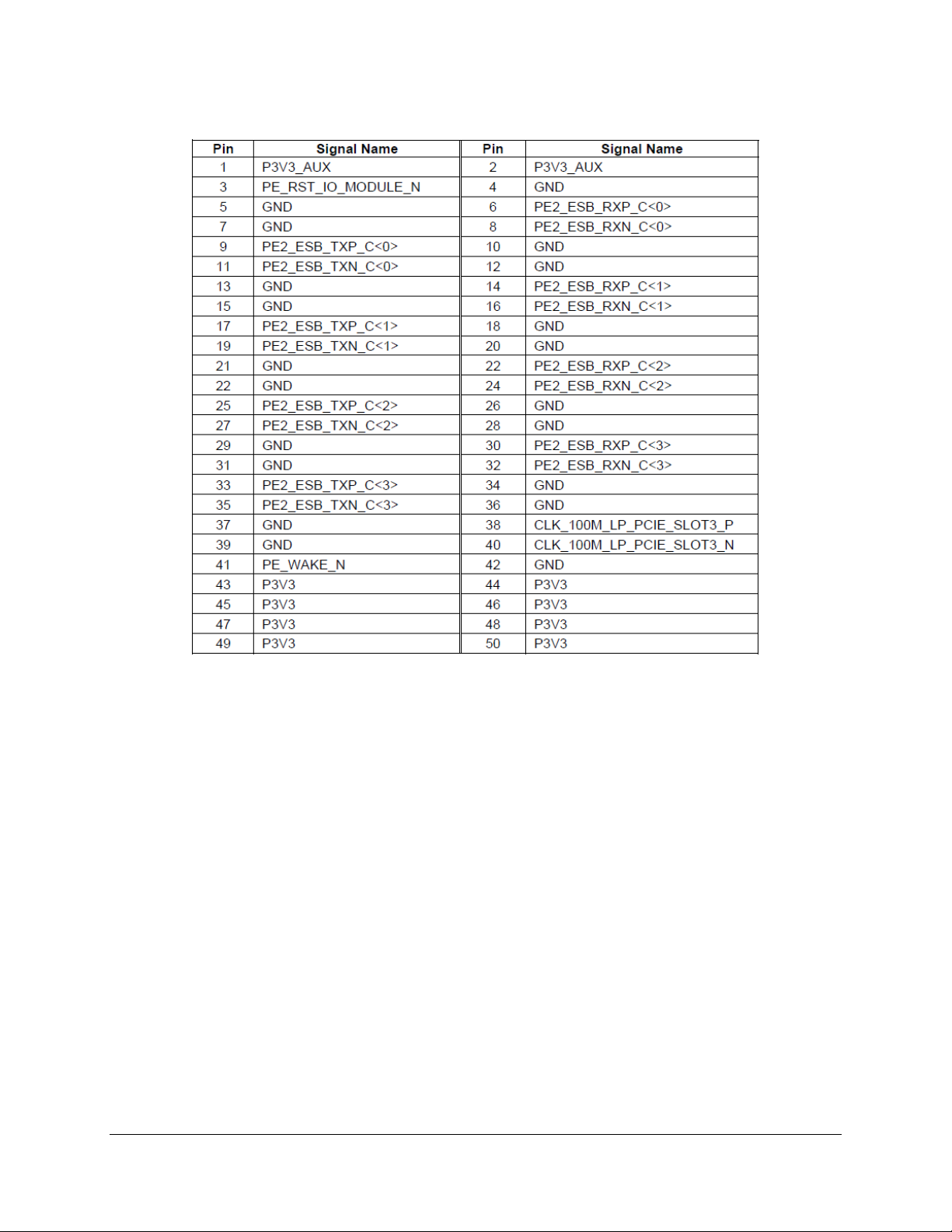

7.4.5 Intel® I/O Expansion Module Connector ............................................................... 64


7.4.6 Serial Port Connectors ......................................................................................... 66

7.4.7 USB Connectors .................................................................................................. 66

7.5 Fan Headers ........................................................................................................ 67

8. Intel® Light-Guided Diagnostics ...................................................................................... 68

8.1 5-V Standby LED ................................................................................................. 68

8.2 Fan Fault LEDs .................................................................................................... 69

8.3 System Status LED .............................................................................................. 69

8.4 DIMM Fault LEDs ................................................................................................. 73

8.5 POST Code Diagnostic LEDs .............................................................................. 74

8.6 Front Panel Support ............................................................................................. 75

9. Design and Environmental Specifications ..................................................................... 76

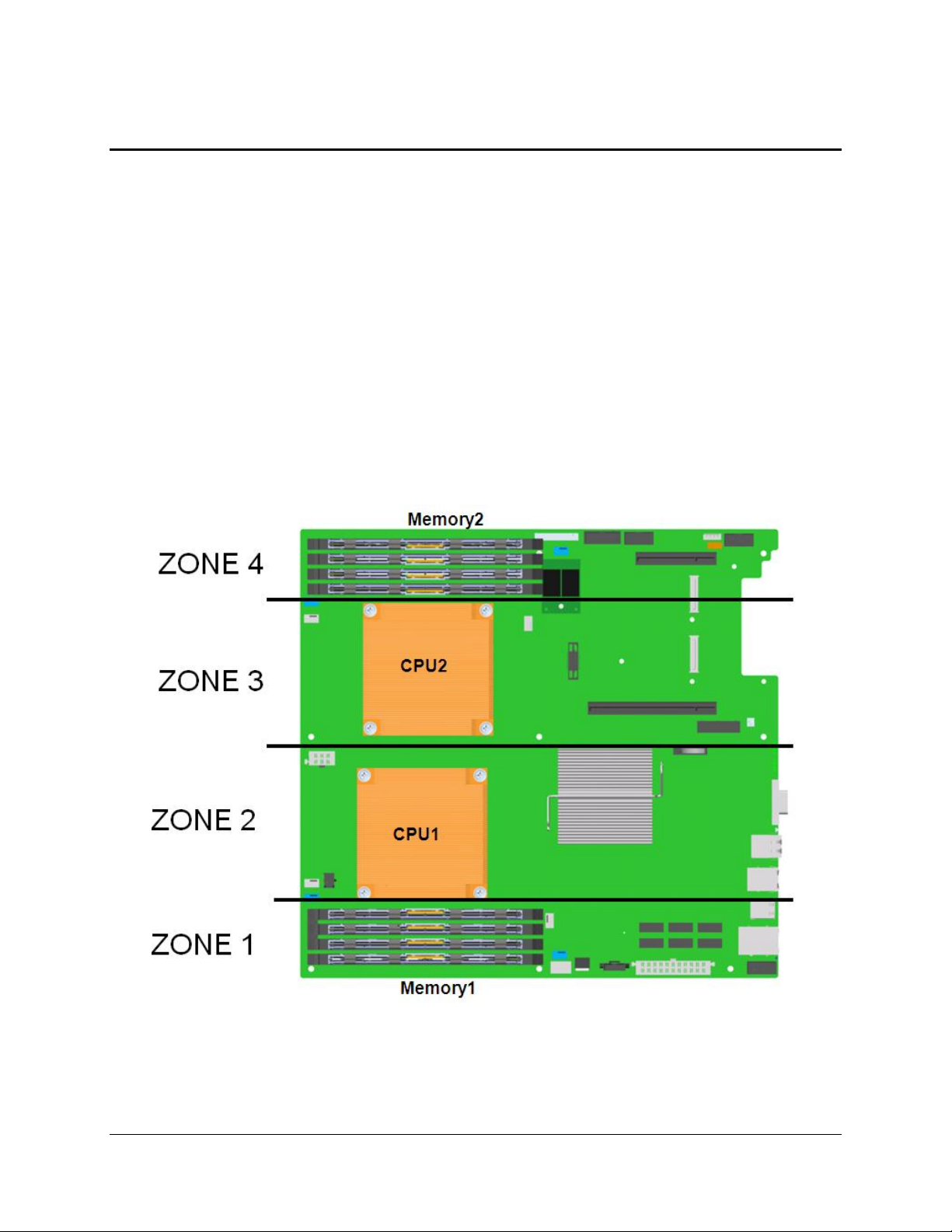

9.1 Fan Speed Control Thermal Management ........................................................... 76

9.2 Thermal Sensors .................................................................................................. 78

9.2.1 Processor PECI Temperature Sensor .................................................................. 78

9.2.2 Memory Temperature Sensor .............................................................................. 79

9.2.3 Board Temperature Sensor .................................................................................. 79

9.2.4 Thermals Sensor Placement ................................................................................ 79

9.3 Heatsinks ............................................................................................................. 80

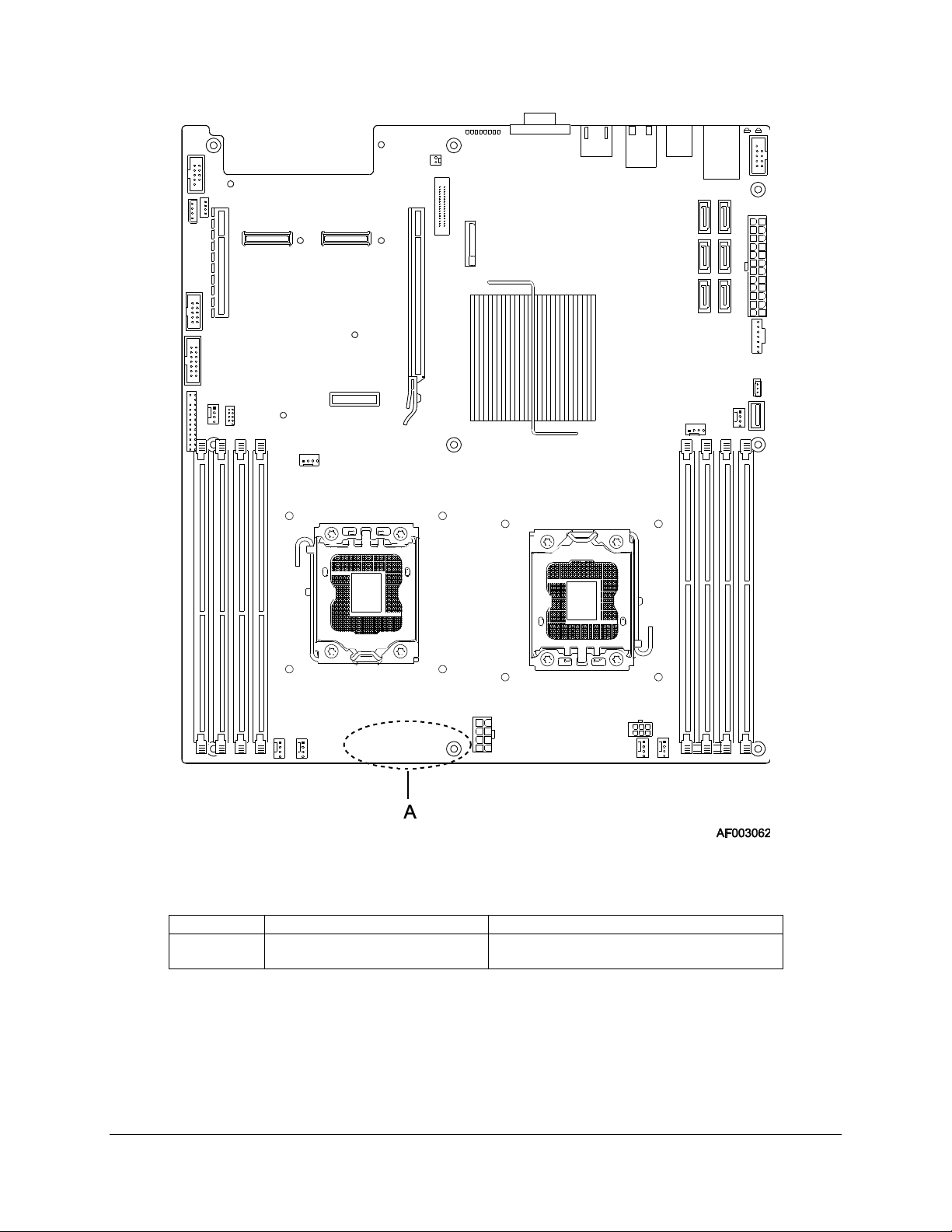

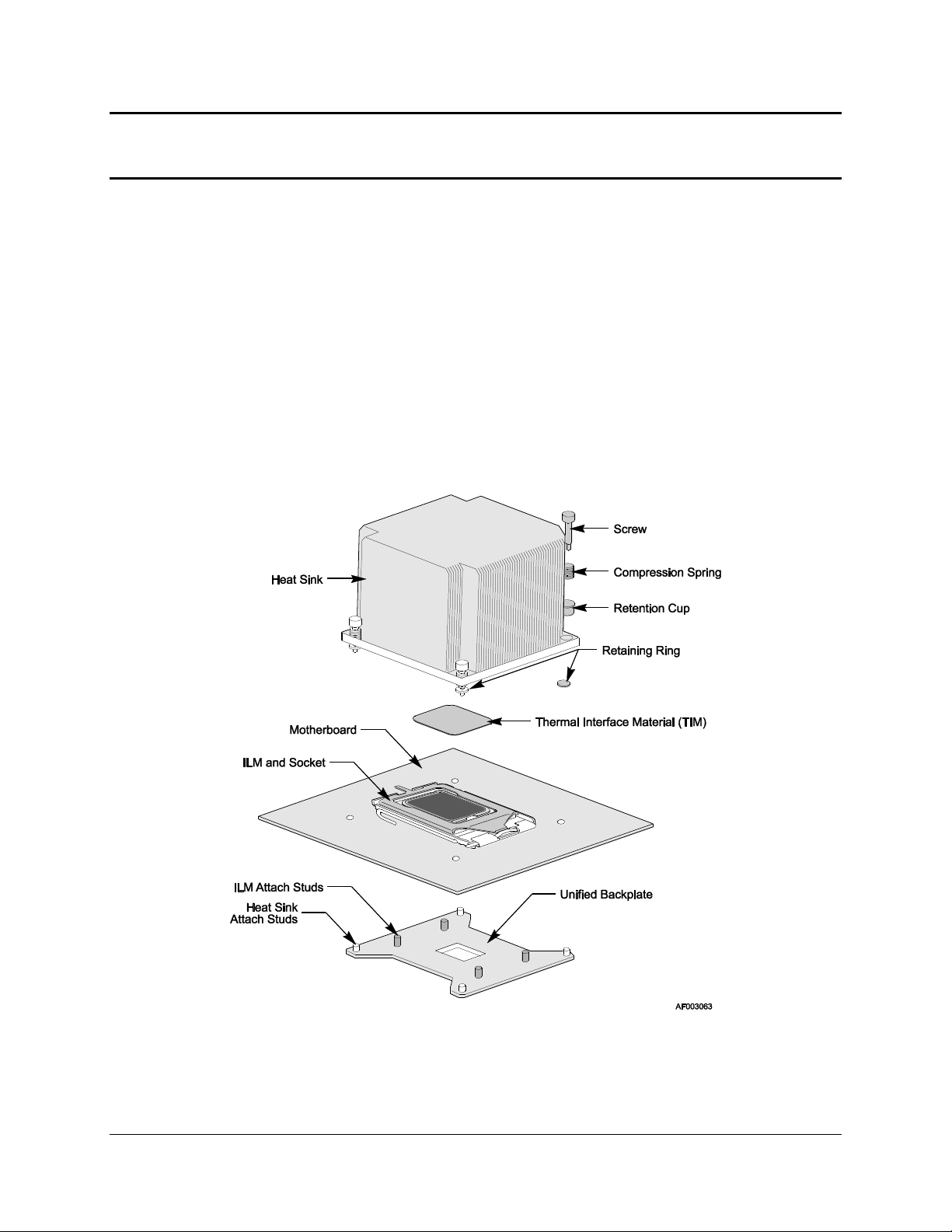

9.3.1 Unified Retention System Support ....................................................................... 81

9.4 Errors ................................................................................................................... 82

9.4.1 PROCHOT# ......................................................................................................... 82

9.4.2 THERMTRIP# ...................................................................................................... 82

9.4.3 CATERR# ............................................................................................................ 82

10. Power Subsystem............................................................................................................. 83

10.1 Server Board Power Distribution .......................................................................... 83

10.2 Power Supply Compatibility .................................................................................. 83

10.3 Power Sequencing and Reset Distribution ........................................................... 84

11. Regulatory and Certification Information ....................................................................... 85

11.1 Product Regulation Requirements ........................................................................ 85

11.1.1 Product Safety Compliance .................................................................................. 85

11.1.2 Product EMC Compliance – Class A Compliance ................................................ 85

11.1.3 Certifications / Registrations / Declarations .......................................................... 85

11.2 Product Regulatory Compliance Markings ........................................................... 86

11.3 Electromagnetic Compatibility Notices ................................................................. 86


11.3.1 FCC Verification Statement (USA)

11.3.2 ICES-003 (Canada)

11.3.3 Europe (CE Declaration of Conformity)

11.3.4 BSMI (Taiwan)

11.3.5 KCC (Korea)

Appendix A: POST Code LED Decoder

Appendix B: Video POST Code Errors

Glossary

Reference Documents


List of Figures

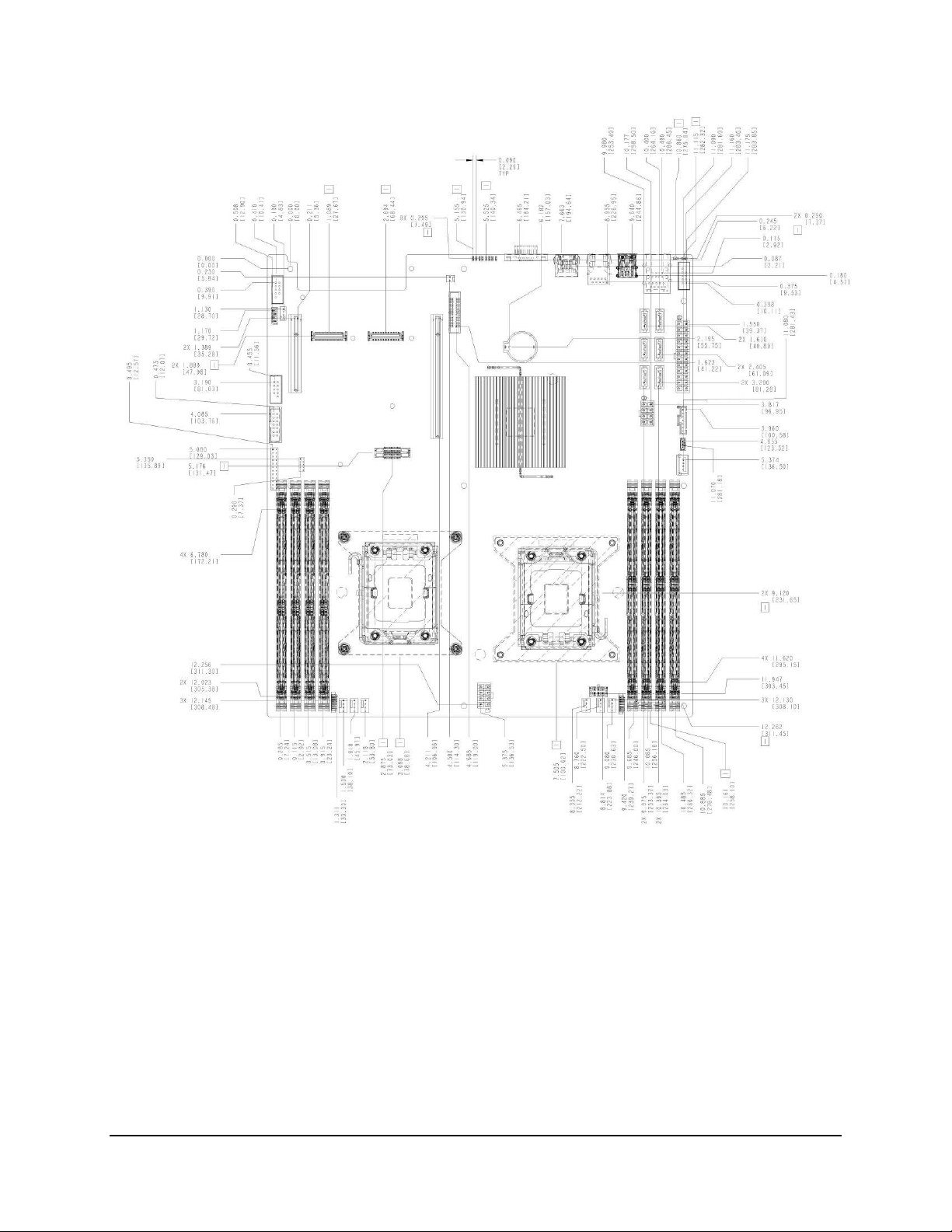

Figure 1. Intel® Server Board S5500WB 12V .............................................................................. 4

Figure 2. Intel Server Board S5500WB SSI ................................................................................ 5

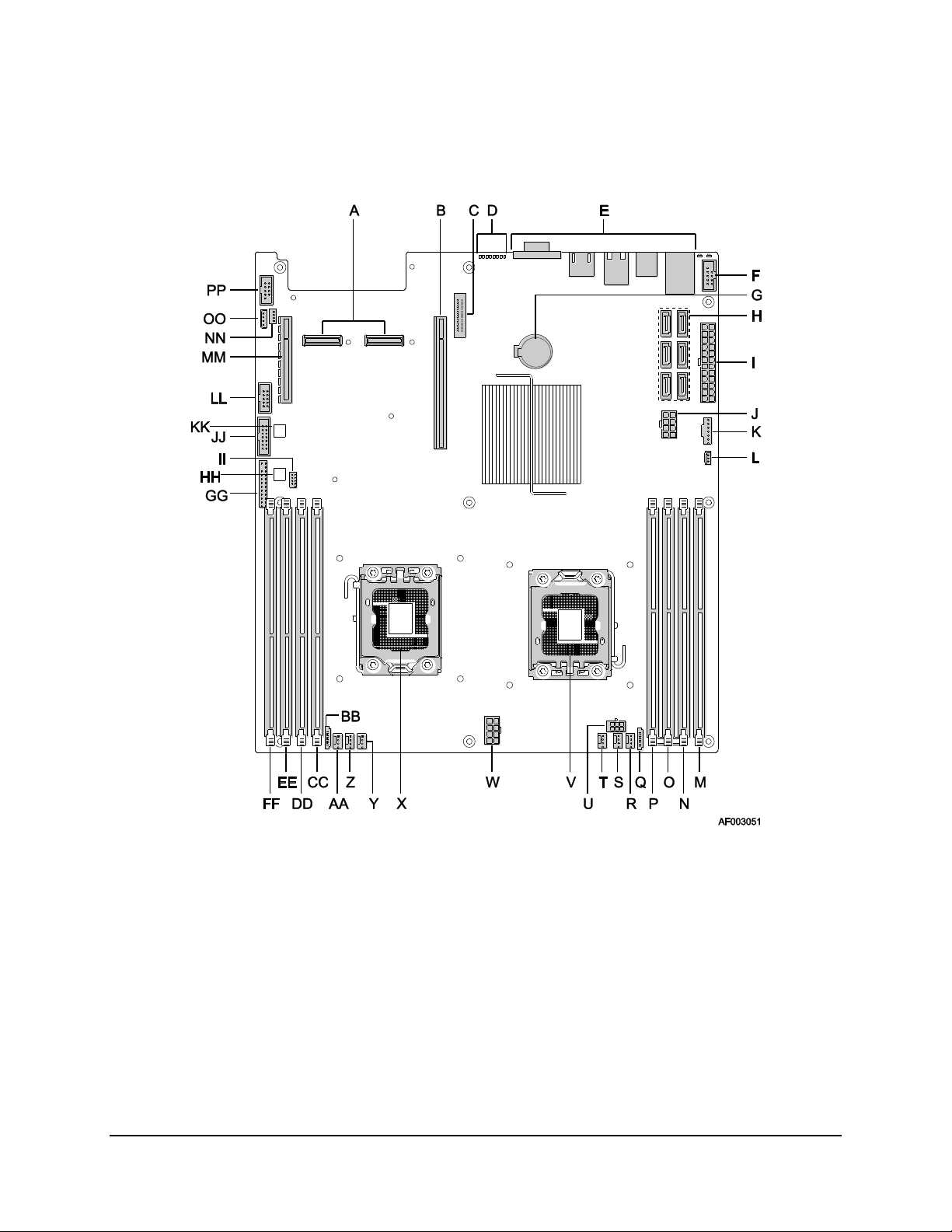

Figure 3. Intel® Server Board S5500WB Components (both SKUs are shown) ........................... 6

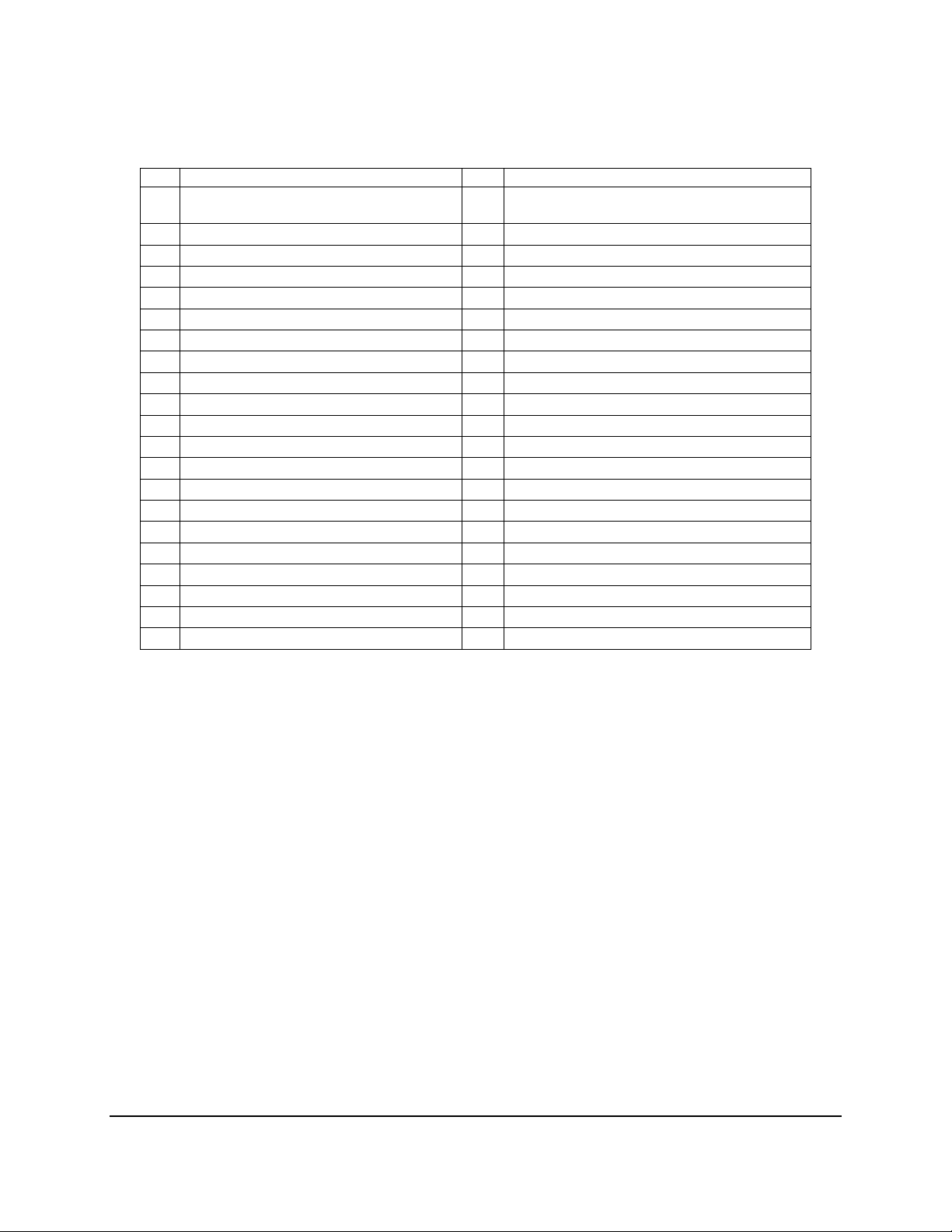

Figure 4. Rear Panel Connector Placement: ............................................................................... 8

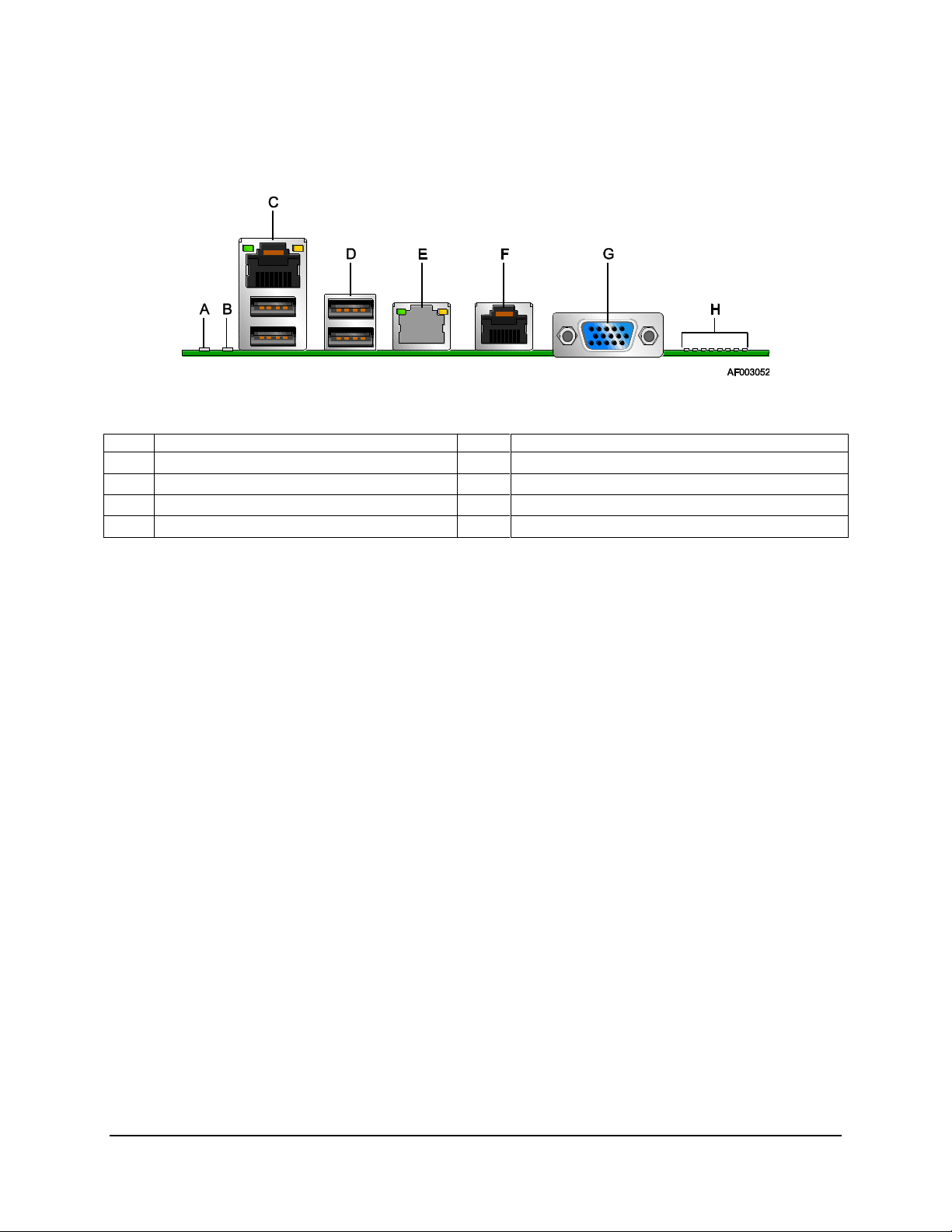

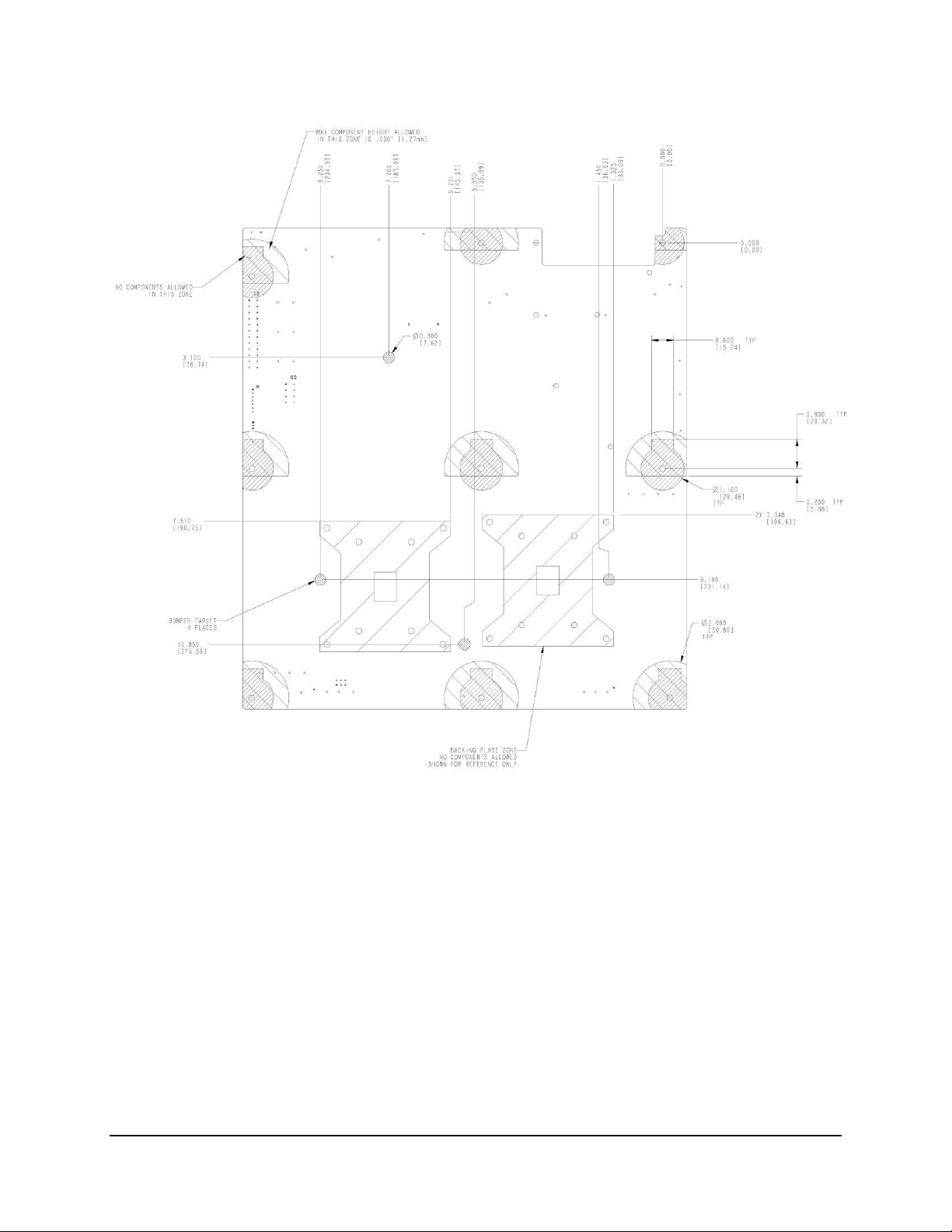

Figure 5. Baseboard and Mounting holes .................................................................................... 9

Figure 6. Connector Locations .................................................................................................. 10

Figure 7. Primary Side Height Restrictions ................................................................................ 11

Figure 8. Secondary Side Height Restrictions ........................................................................... 12

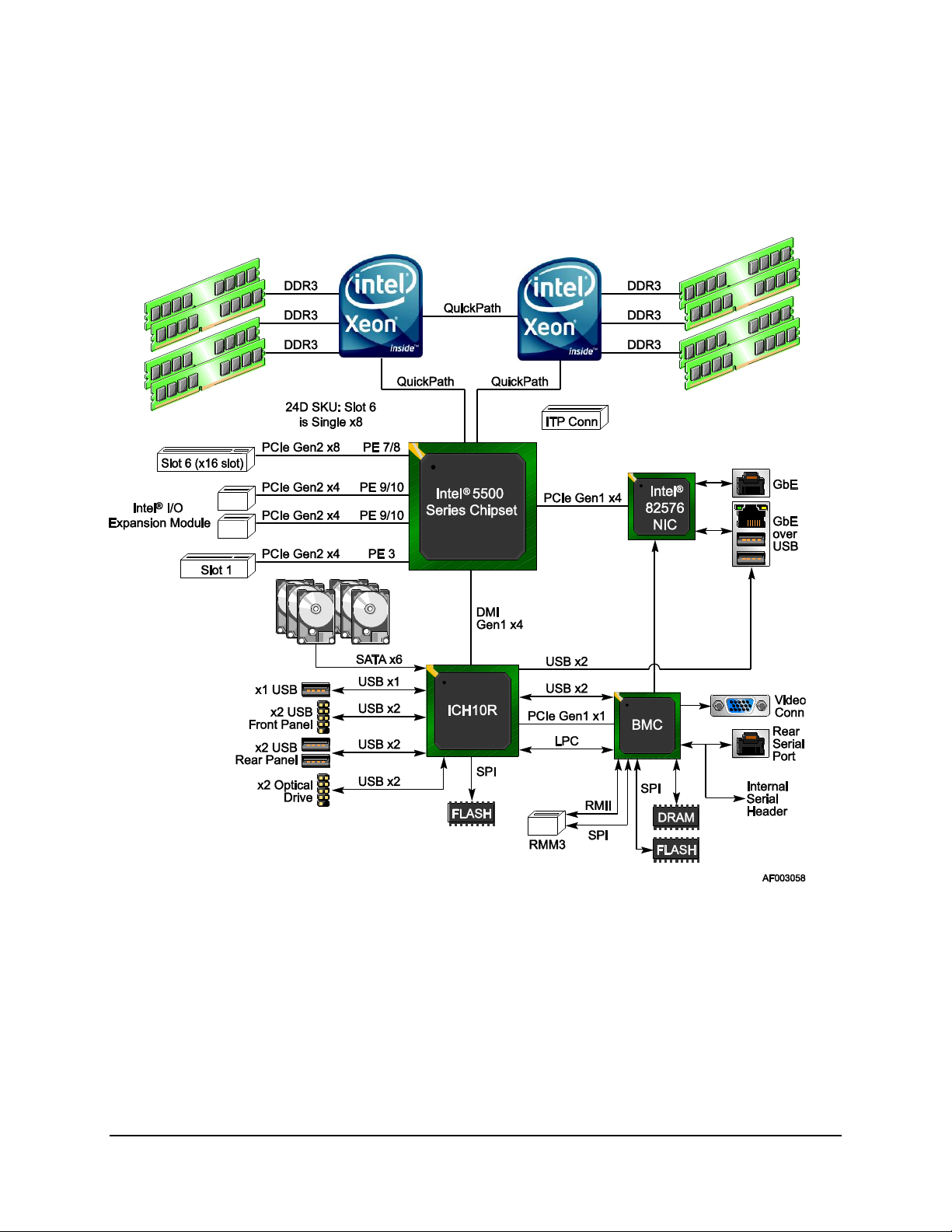

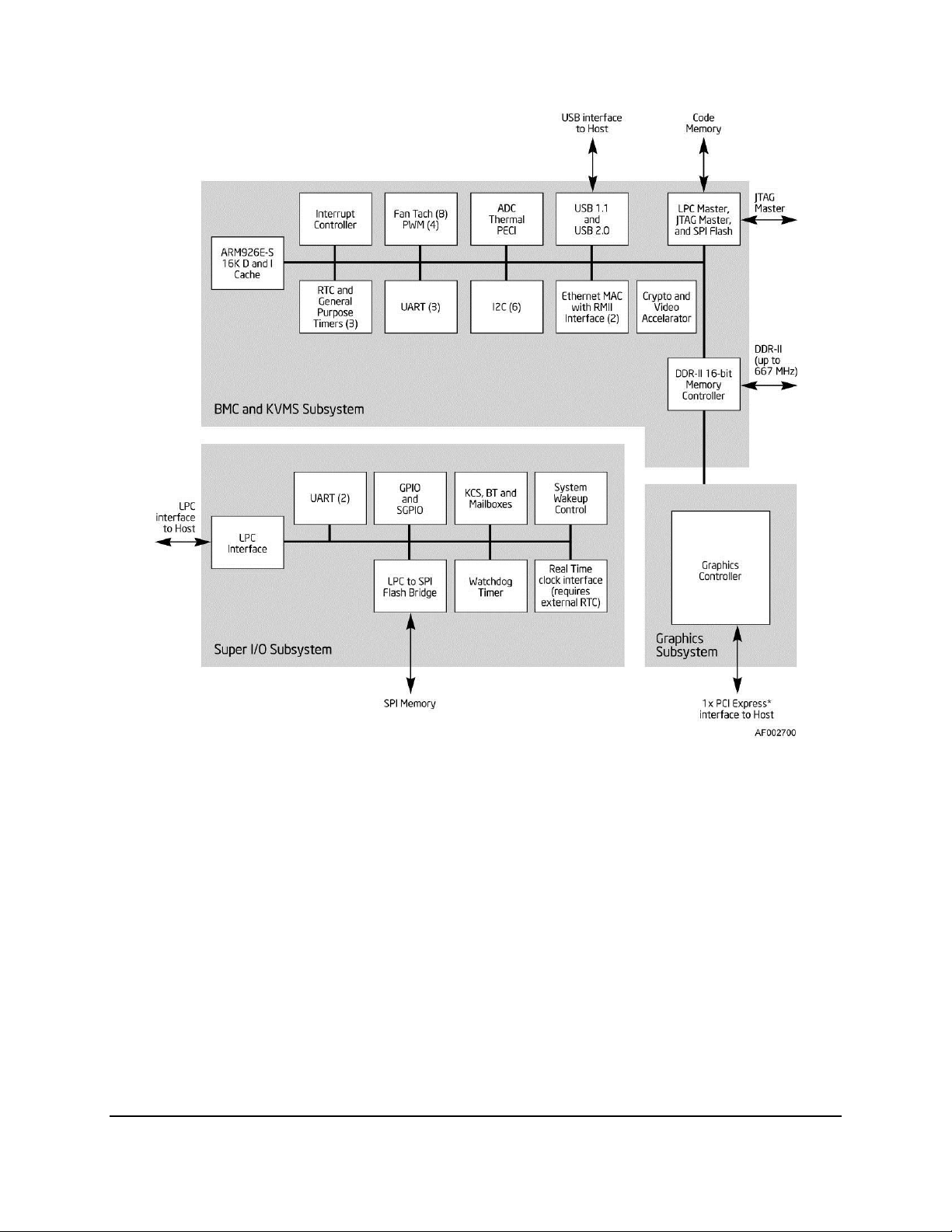

Figure 9. Intel® Server Board S5500WB Functional Block Diagram .......................................... 14

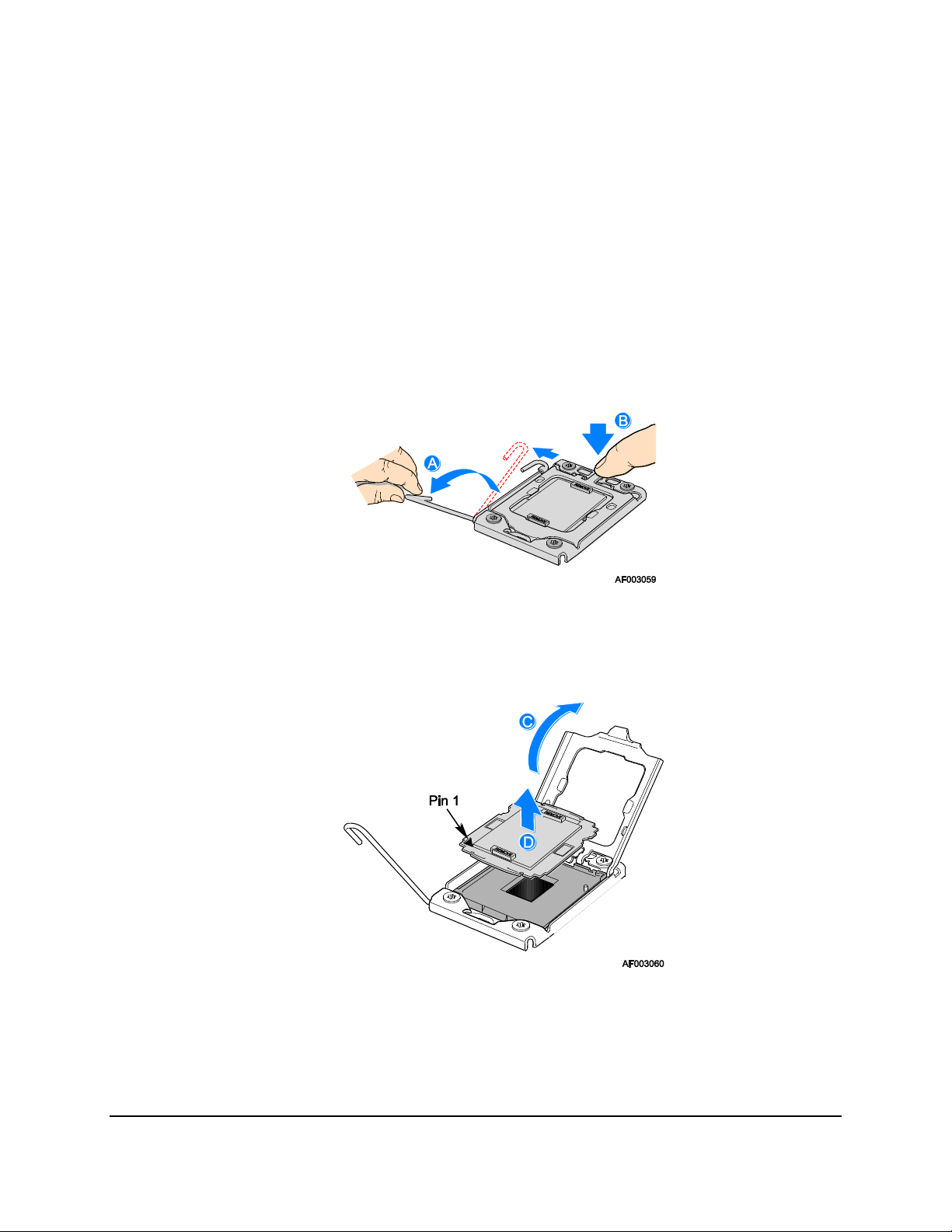

Figure 10. Lifting the load lever of ILM cover ............................................................................ 17

Figure 11. Removing the socket cover ...................................................................................... 17

Figure 12. Installing processor .................................................................................................. 18

Figure 13. Package Installation/Remove Feature ..................................................................... 19

Figure 14. Installing/Removing Heatsink ................................................................................... 20

Figure 15. Intel® QPI Link.......................................................................................................... 20

Figure 16. Memory Channel Population .................................................................................... 23

Figure 17. Installing Memory ..................................................................................................... 24

Figure 18. Mirroring Memory Configuration ............................................................................... 26

Figure 19. Integrated BMC Hardware ....................................................................................... 33

Figure 20. S5500WB I2C\SMBUS Block Diagram .................................................................... 44

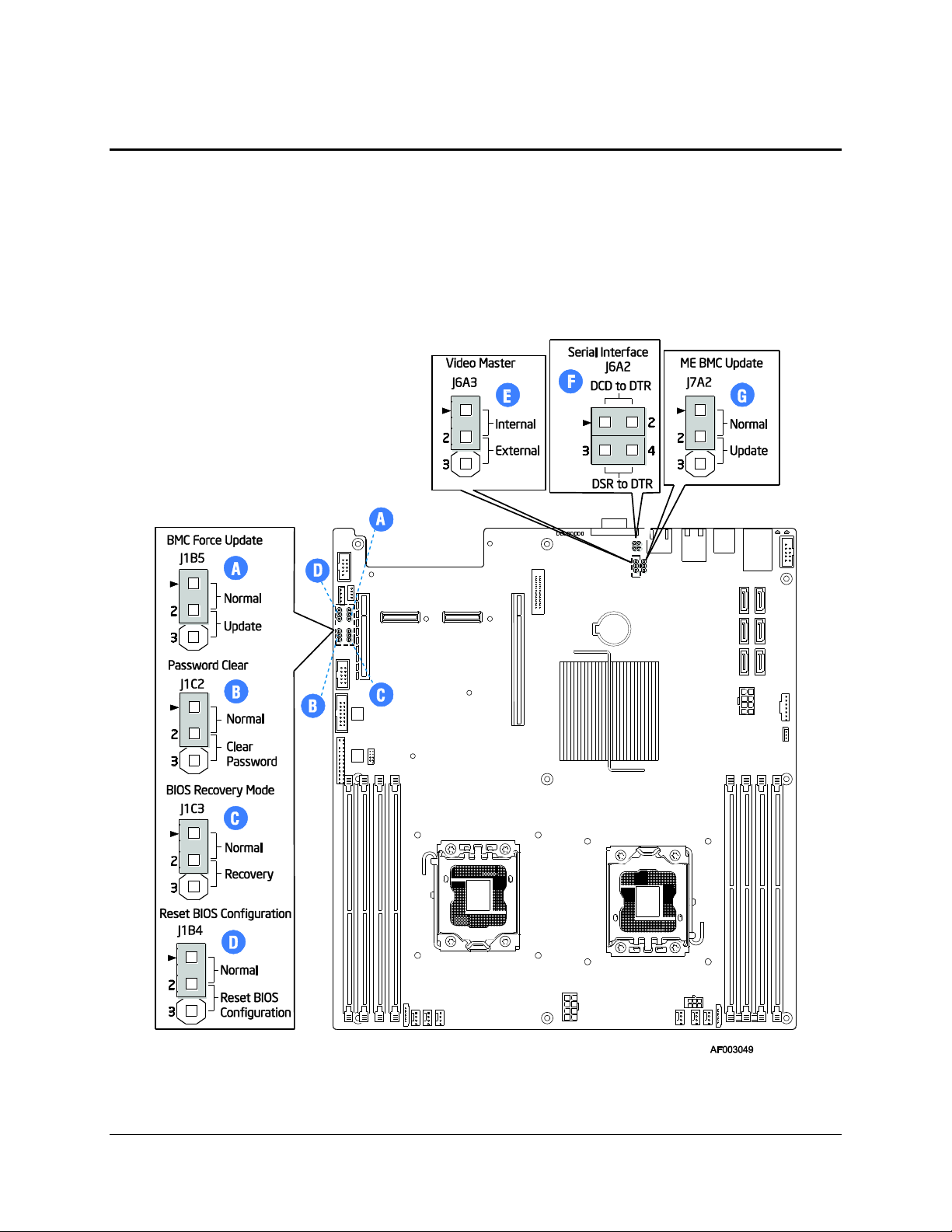

Figure 21: Jumper Blocks (J1B5, J1C2, J1C3, J1B4, J6A3, J6A2, J7A2) ................................. 46

Figure 22: 5-V Standby Status LED Location ............................................................................ 68

Figure 23. Fan Fault LED Locations .......................................................................................... 69

Figure 24. System Status LED Location ................................................................................... 70

Figure 25. DIMM Fault LEDs Locations ..................................................................................... 73

Figure 26. Rear Panel Diagnostic LEDs .................................................................................... 74

Figure 27: Thermal Zones ......................................................................................................... 76

Figure 28: Location of Fan Connectors ..................................................................................... 77

Figure 29. Fans and Sensors Block Diagram ............................................................................ 78

Figure 30: Temp Sensor Location ............................................................................................. 80

Figure 31. Unified Retention System and Unified Backplate Assembly ..................................... 81


Figure 32. Power Distribution Diagram

Figure 33. Diagnostic LED Placement Diagram


List of Tables

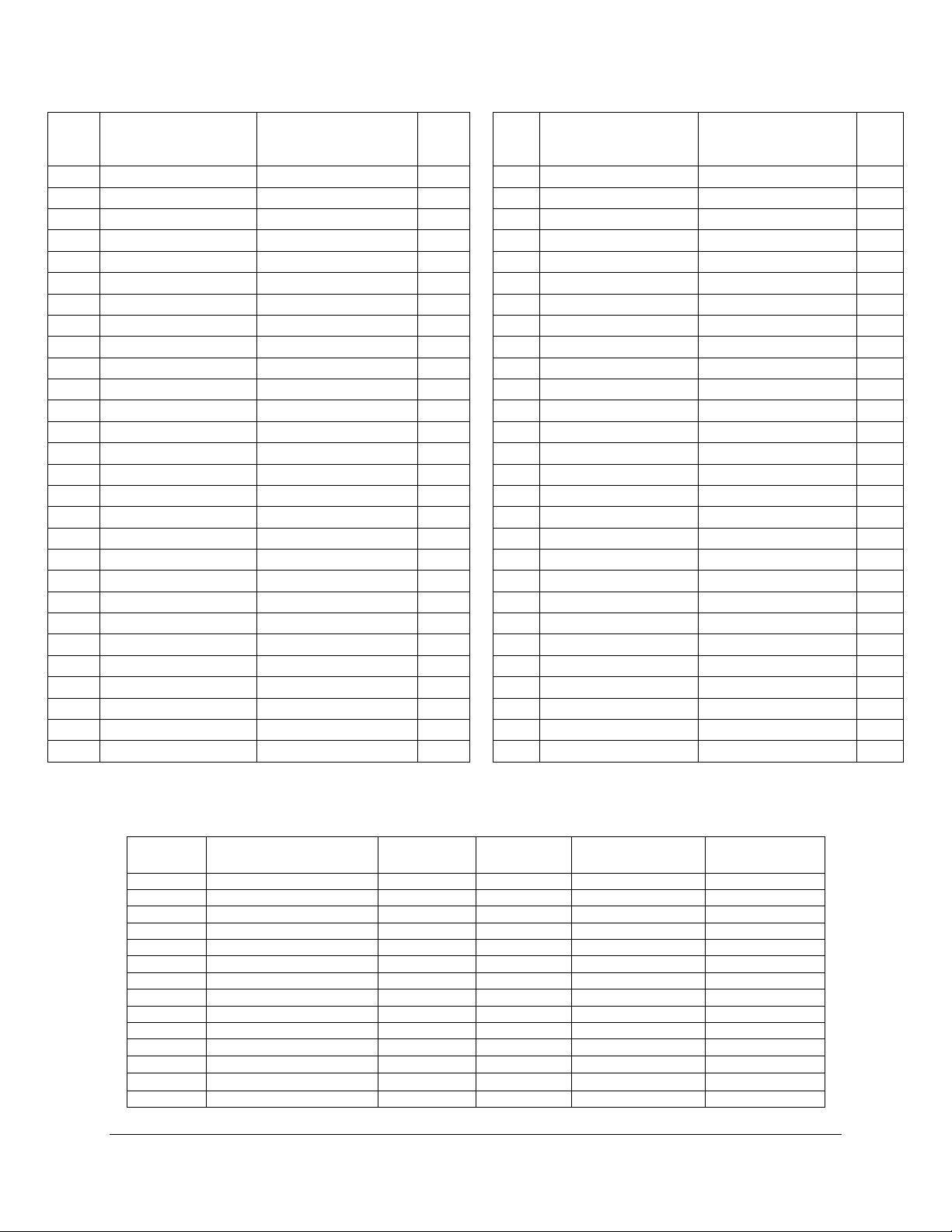

Table 1. Intel® Server Board S5500WB Feature Set ................................................................... 2

Table 2. Intel® Server Board S5500WB System Interconnects ................................................... 7

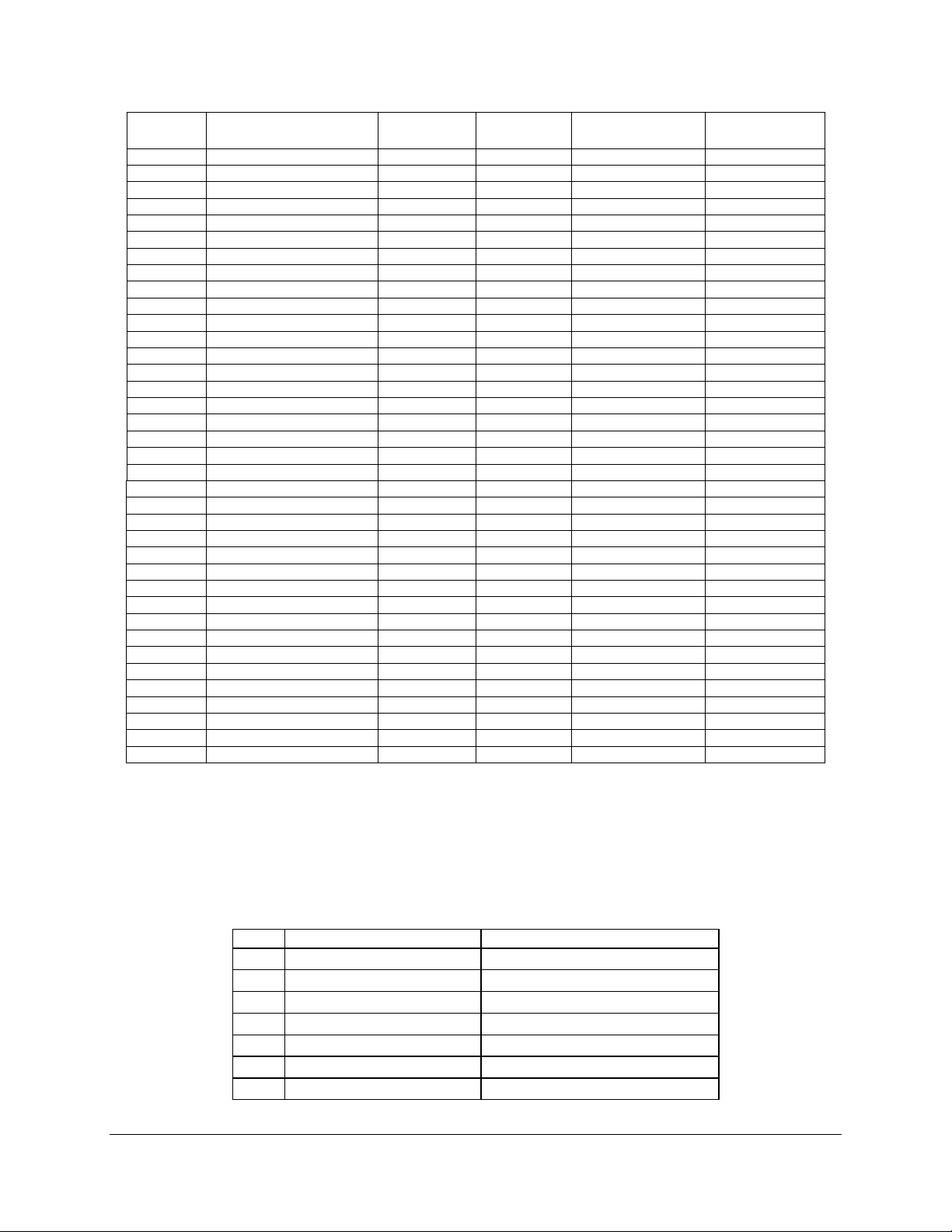

Table 3. Intel® Server Board S5500WB Features ..................................................................... 13

Table 4. Mixed Processor Configurations .................................................................................. 16

Table 5. DIMM Nomenclature ................................................................................................ ... 22

Table 6. IOH24D PCI Express* Bus Segments ......................................................................... 27

Table 7. NIC 1 Status LED ........................................................................................................ 30

Table 8. RMM3 Features .......................................................................................................... 34

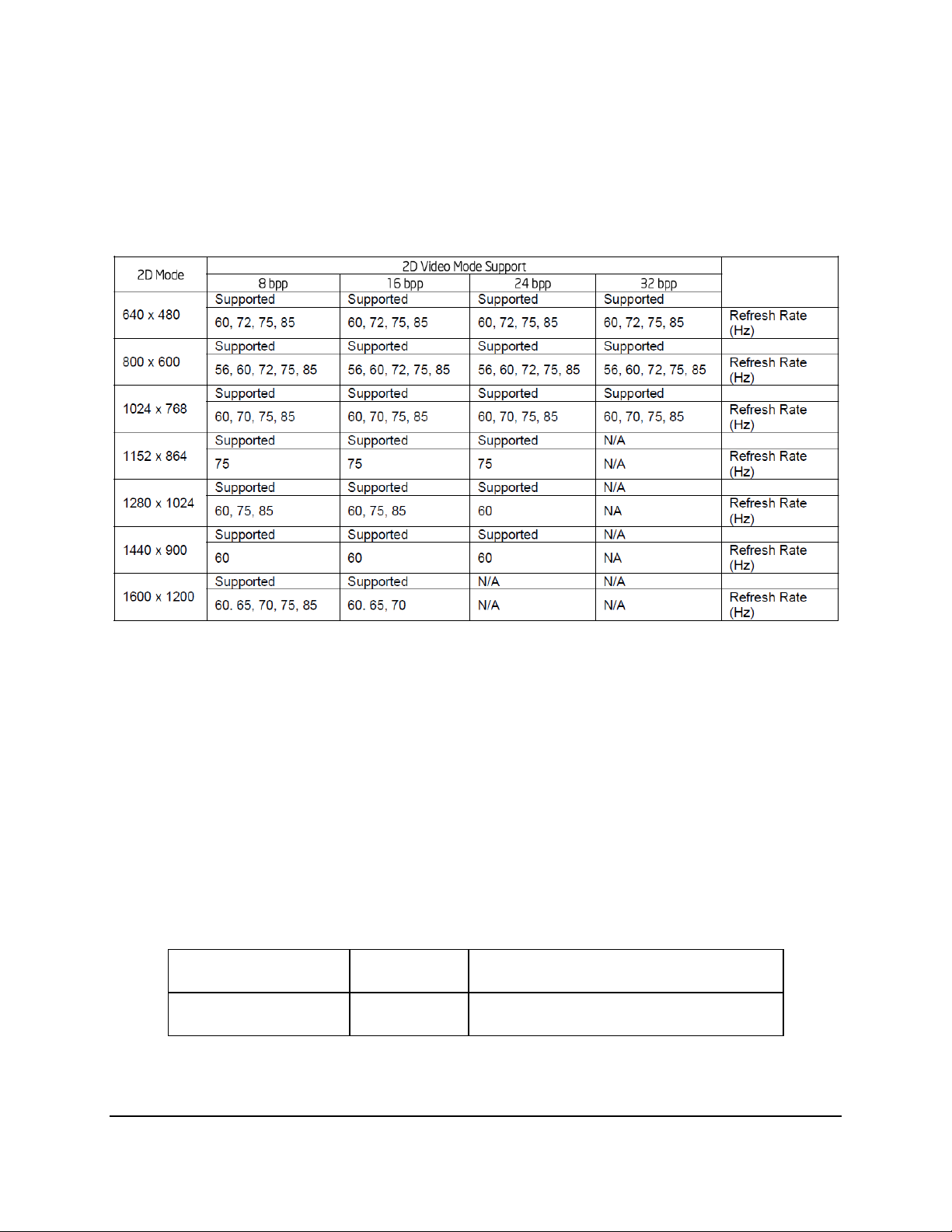

Table 9. Supported Video Modes .............................................................................................. 35

Table 10. Dual Video Options ................................................................ ................................... 35

Table 11. PEWIDTH Strapping Bits .......................................................................................... 36

Table 12. Intel® I/O Expansion Module Bus PEWIDTH Bits ...................................................... 37

Table 13. Intel® I/O Expansion Module Product Codes ............................................................. 38

Table 14: BMC Basic Features ................................................................................................. 41

Table 15. Advanced Features ................................................................................................... 42

Table 16. I2C/SMBus Device Address Assignment ................................................................... 44

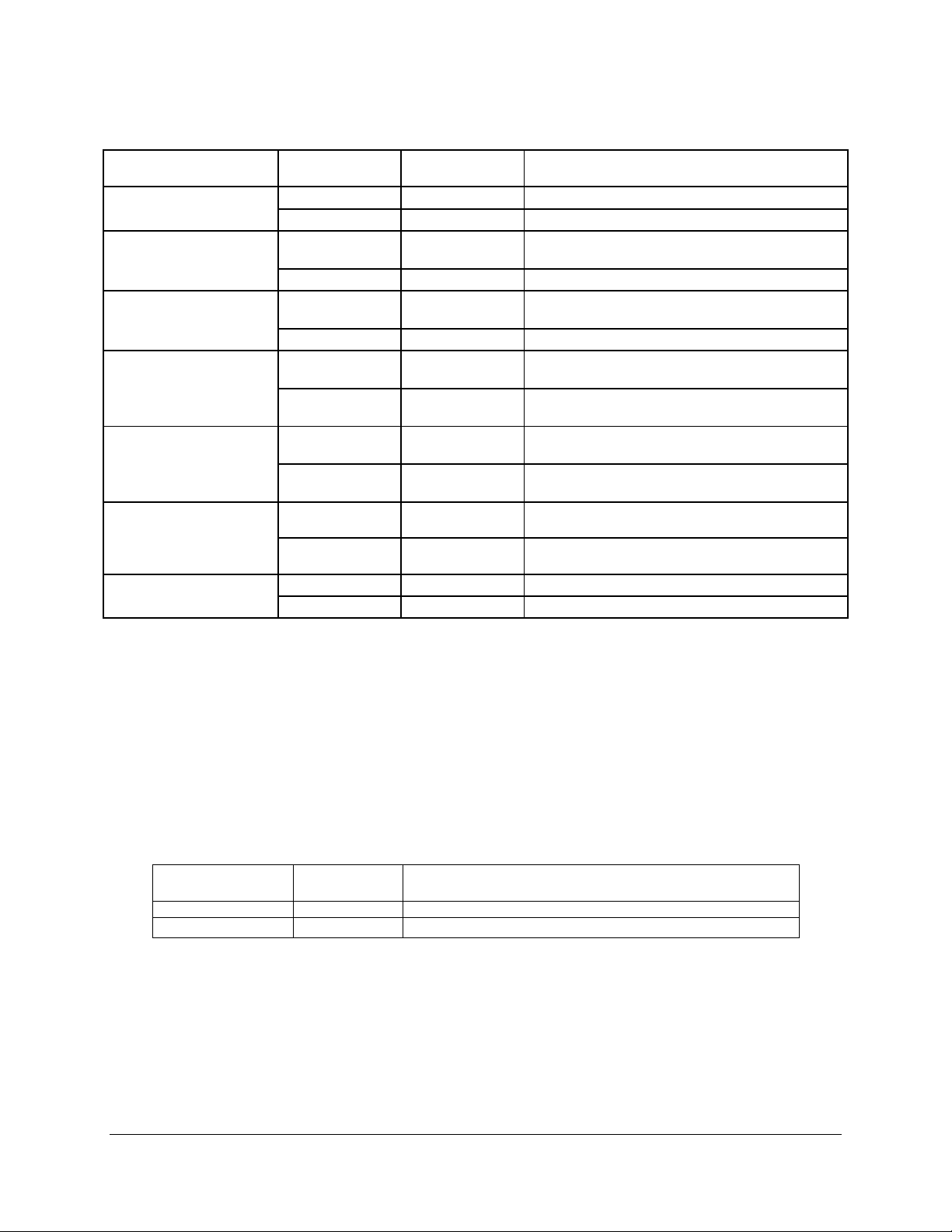

Table 17: Server Board Jumpers (J1B5, J1C2, J1C3, J1B4, J6A3, J6A2) ................................ 47

Table 18. Force IBMC Update Jumper ...................................................................................... 47

Table 19. Password Clear Jumper ................................................................ ............................ 48

Table 20. BIOS Recovery Mode Jumper ................................................................................... 49

Table 21. Reset BIOS Jumper .................................................................................................. 50

Table 22. Video Master Jumper ................................................................................................ 50

Table 23. SSI SKU 24-pin 2x12 Connector (J9B3) .................................................................... 52

Table 24. CPU 12V Power 2x4 Connector (J5K1) ..................................................................... 52

Table 25. SSI Power Control (J9D1) ......................................................................................... 52

Table 26. 12-V only 2x4 Connector (replaces EPSD12V 2x12 connector) (J9D2) .................... 53

Table 27. 12-V Only Power Control (replaces the 1x5 power control) (J9D1) (FOXCONN

ELECTRONICS INC HF1107V-P1 or TYCO ELECTRONICS CORPORATION 5-104809-6)53

Table 28. Peripheral Power (Only for 12-V only SKU) (J8K2) (iPN: C22293-003 MOLEX

CONNECTOR CORPORATION 43045-0627 ) ................................................................... 53

Table 29. Intel® RMM3 Connector Pin-out (J5B1) ..................................................................... 54

Table 30. BMC Power Cycle Header (J1D2) ............................................................................. 54

Table 31. IPMB Header 4-pin (J1B2) ........................................................................................ 55


Table 32. SGPIO Header (J1B1) .............................................................................................. 55

Table 33. Front Panel SSI Standard 24-pin Connector Pin-out (J1E2) ...................................... 55

Table 34. Power LED Indicator States ...................................................................................... 57

Table 35. System Status LED ................................................................................................... 58

Table 36. Chassis ID LED Indicator States ............................................................................... 59

Table 37. Slot 6 Riser Connector (J4B1) ................................................................................... 59

Table 38. Slot 1 PCI Express* x8 Connector (J1B3) ................................................................. 60

Table 39. VGA External Video Connector (J6A1) ...................................................................... 61

Table 40. VGA Internal Video Connector (J1D1) ...................................................................... 62

Table 41. RJ-45 10/100/1000 NIC Connector Pin-out (J8A2, J9A1) .......................................... 63

Table 42. SATA Connectors ..................................................................................................... 64

Table 43. 50-pin Intel® I/O Expansion Module Connector Pin-out (J2B1, J3B1) ........................ 65

Table 44. External RJ-45 Serial Port A (COM1) (J7A1)............................................................. 66

Table 45. Internal 9-pin Serial B (COM2) (J1A2) ....................................................................... 66

Table 46. External USB Connector (J8A1, J9A1) ..................................................................... 66

Table 47. Internal USB Connector (J1C1 and J9A2) ................................................................. 66

Table 48. Low-Profile Internal USB Connector (J1E3) .............................................................. 67

Table 49. SSI 4-pin Fan Connector (J2K2, J2K3, J3K1, J7K1, J8K4, J8K5) ............................. 67

Table 50. 8-pin Fan Connector (J2K1 & J8K3) (MOLEX CONNECTOR CORPORATION 53398-0890 or 53398-0871) ..... 67

Table 51. System Status LED ................................................................................................... 71

Table 52. Standard Front Panel Functionality ........................................................................... 75

Table 53. Fan Connector Location & Detail ............................................................................... 77

Table 54. Fan Connector Location & Detail ............................................................................... 78

Table 55. Product Regulatory Compliance Markings ................................................................ 86

Table 56. POST Progress Code LED Example ......................................................................... 90

Table 57. Diagnostic LED POST Code Decoder ....................................................................... 91

Table 58. POST Error Messages and Handling ........................................................................ 96

Table 59. Glossary .................................................................................................................. 100


1. Introduction

The Intel® Server Board S5500WB is a dual-socket server board that uses Intel® Xeon® processor 5500 series and 5600 series processors, in combination with the Intel® 5500 Chipset IOH and the ICH10R, to provide a feature set that balances technology leadership and cost.

1.1 Section Outline

This document is divided into the following chapters:

Section 1 – Introduction

Section 2 – Server Board Overview

Section 3 – Functional Architecture

Section 4 – I/O Expansion Modules

Section 5 – Platform Management Features

Section 6 – Configuration Jumpers

Section 7 – Connector and Header Location and Pin-out

Section 8 – Intel® Light-Guided Diagnostics

Section 9 – Design and Environmental Specifications

Section 10 – Power Subsystem

Section 11 – Regulatory and Certification Information

Appendix A – POST Code LED Decoder

Appendix B – Video POST Code Errors

Glossary

Reference Documents

1.2 Server Board Use Disclaimer

Intel Corporation server boards contain a number of high-density VLSI and power delivery components that need adequate airflow to cool. Intel ensures through its own chassis development and testing that when Intel server building blocks are used together, the fully integrated system will meet the intended thermal requirements of these components. It is the responsibility of the system integrator who chooses not to use Intel-developed server building blocks to consult vendor datasheets and operating parameters to determine the amount of airflow required for their specific application and environmental conditions. Intel Corporation cannot be held responsible if components fail or the server board does not operate correctly when used outside any of their published operating or non-operating limits.


2. Server Board Overview

The Intel® Server Board S5500WB is a monolithic printed circuit board (PCB) with features designed for the Internet Portal Data Center (IPDC) market. Table 1 lists the high-level product features.

Table 1. Intel® Server Board S5500WB Feature Set

| Feature | Description |
|---|---|
| Processors | Support for one or two Intel® Xeon® processor 5500 series or 5600 series processors in the FC-LGA 1366 Socket B package with up to 95 W Thermal Design Power (TDP); supports future processor compatibility guidelines; 4.8 GT/s, 5.86 GT/s, and 6.4 GT/s Intel® QuickPath Interconnect (Intel® QPI); meets EVRD 11.1 |
| Memory | Support for 800/1066/1333 MT/s ECC registered (RDIMM) or unbuffered (UDIMM) DDR3 memory; 8 DIMMs total across six memory channels (three channels per processor in a 2:1:1 configuration); VRD optimized to support QR x8 DIMMs; no support for QR x4 DIMMs |
| Chipset | Intel® 5500 Chipset IOH; Intel® 82801Jx I/O Controller Hub (ICH10R) |
| I/O Control | External connections: one DB-15 video connector; one RJ-45 Serial Port A connector; one RJ-45 connector for 10/100/1000 LAN; one dual USB 2.0 connector; one combined RJ-45 10/100/1000 LAN and dual USB 2.0 connector. Internal connections: two 2x5-pin USB headers supporting four USB 2.0 ports; one low-profile 2x5-pin USB header; one DH-10 Serial Port B header; one 2x8-pin VGA header with presence detection to switch from the rear I/O video connector; six SATA II connectors; two Intel® I/O Expansion Module connectors; one RMM3 connector to support the optional Intel® Remote Management Module 3; one SATA SW RAID 5 activation key connector; one SSI-EEB compliant front panel header |
| Power Connections | SSI SKU: one SSI-EEB compliant 24-pin main power connector (SSI-only SKU); one SSI compliant 8-pin CPU power connector; one SSI compliant 5-pin power control connector (SSI-only SKU). 12-V-only SKU: one 8-pin power connector; one 6-pin auxiliary power connector for 3.3 V and 5 V; one 7-pin power control connector |
| System Fan Support | Two 8-pin fan headers for dual-rotor memory fans and six 4-pin fan headers, supporting two processor zones and two memory zones in a redundant fashion |
| Add-in Adapter Support | One riser slot supporting both full-height and low-profile 1U and 2U MD2 PCI Express* x16 riser cards (PCI Express* Gen2 x8 link in a x16 connector); one riser slot supporting PCI Express* x8 riser cards (PCI Express* Gen2 x4 link in a x8 connector); two Intel® I/O Expansion Module connectors supporting single-wide and double-wide I/O modules |
| Video | Onboard ServerEngines* LLC Pilot II controller; Matrox* G200 2D video graphics controller; uses 8 MB of the BMC's 32 MB of DDR2 memory |
| Hard Drive | Support for six ICH10R SATA II ports; optional support for SW RAID 5 with activation key |
| LAN | Two 10/100/1000 ports provided by the Intel® 82576 Gigabit Ethernet controller with Intel® I/O Acceleration Technology 2 support |
| Server Management | Onboard ServerEngines* LLC Pilot II controller; Integrated Baseboard Management Controller (Integrated BMC), IPMI 2.0 compliant. Basic: BMC controller: ARM 926E-S microcontroller; Super I/O: serial port logic, legacy interfaces, LPC interface, Port 80; hardware monitoring: fan speed control and voltage monitoring. Advanced: video and USB compression and redirection; NC-SI port, a high-speed sideband management interface; integrated Super I/O on LPC interface |

2.1 Intel® Server Board S5500WB Server Board

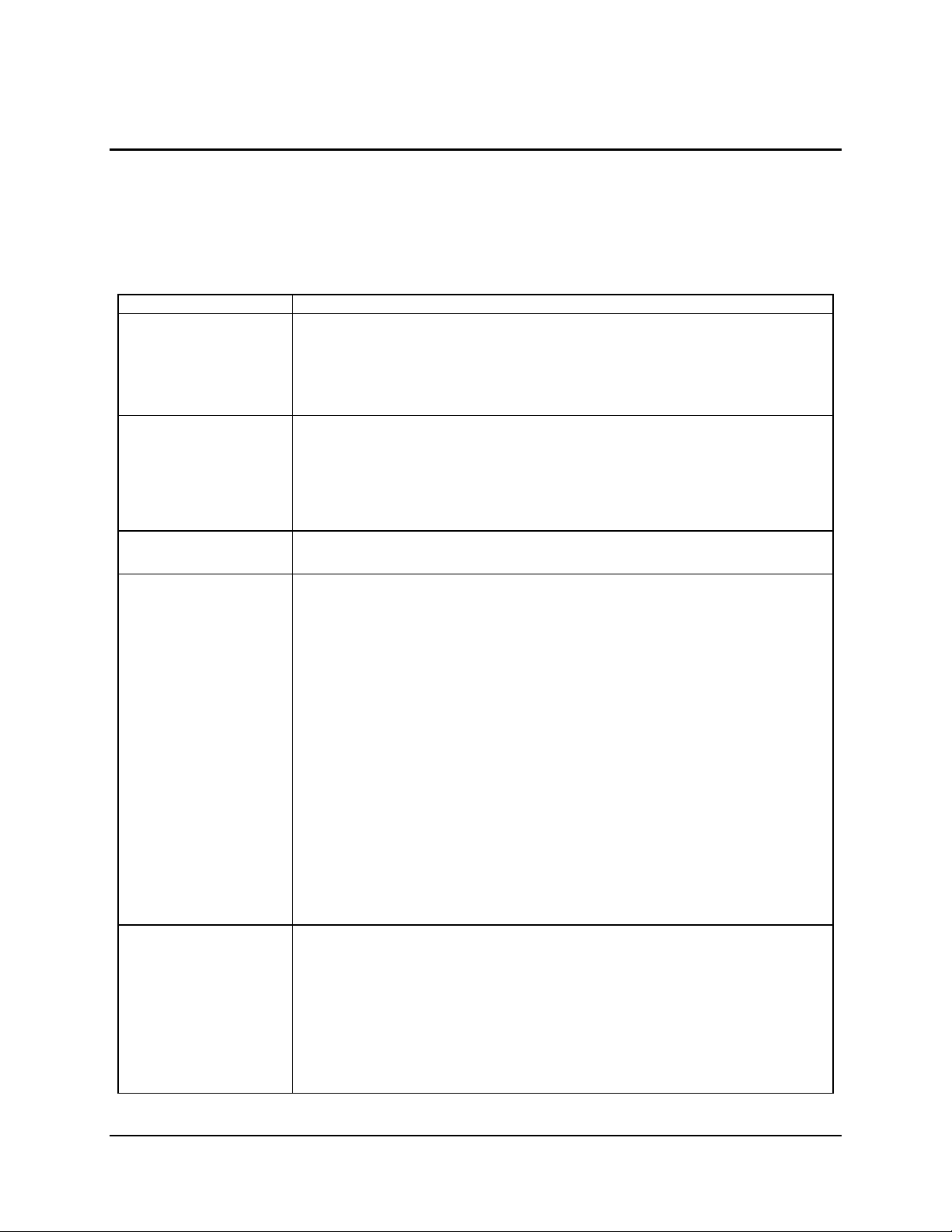

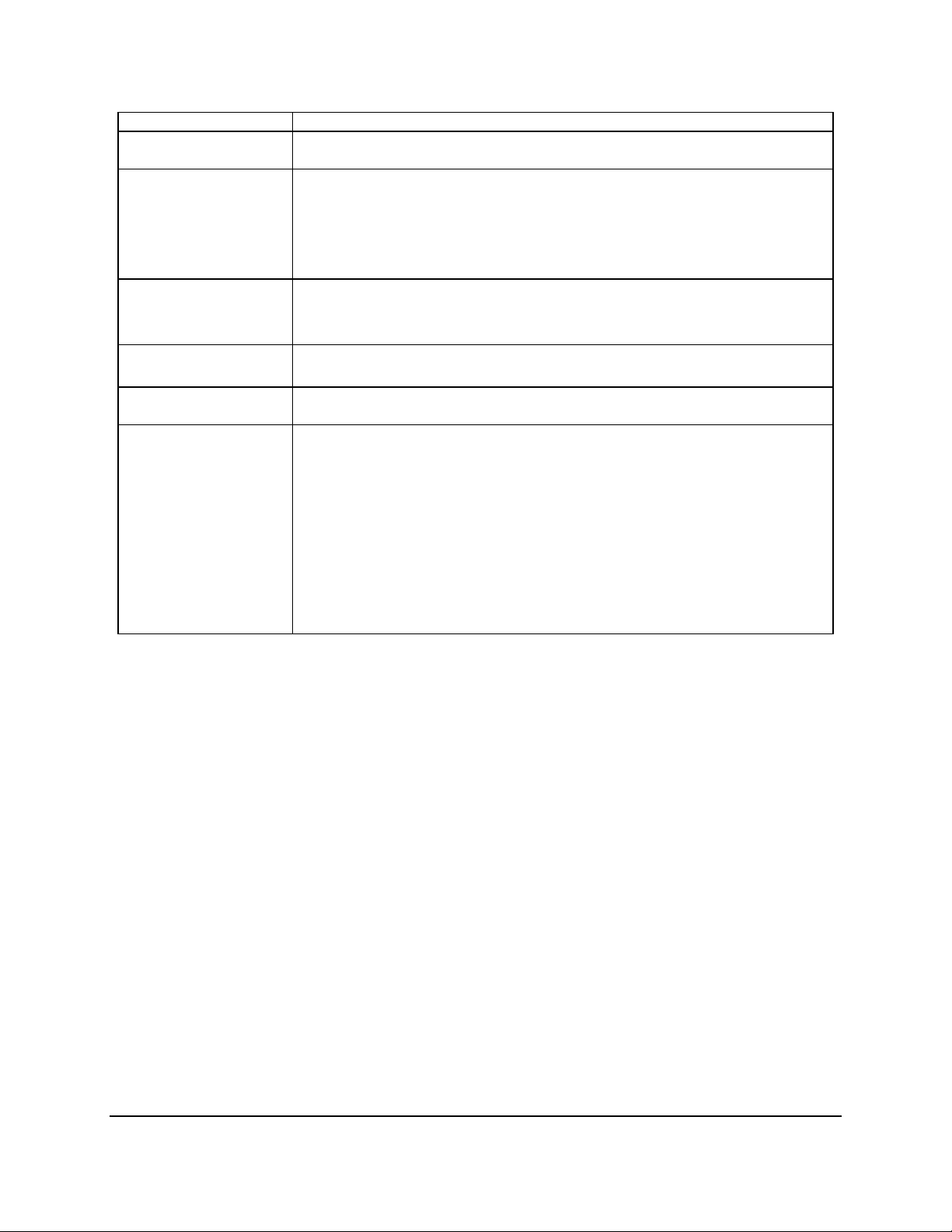

The Intel® Server Board S5500WB is available in two board SKUs: an SSI-compliant SKU and a 12-V-only SKU. Figure 1 and Figure 2 show the board layouts of the two SKUs.

Figure 1. Intel® Server Board S5500WB 12V


Figure 2. Intel® Server Board S5500WB SSI


2.2 Server Board Connector and Component Layout

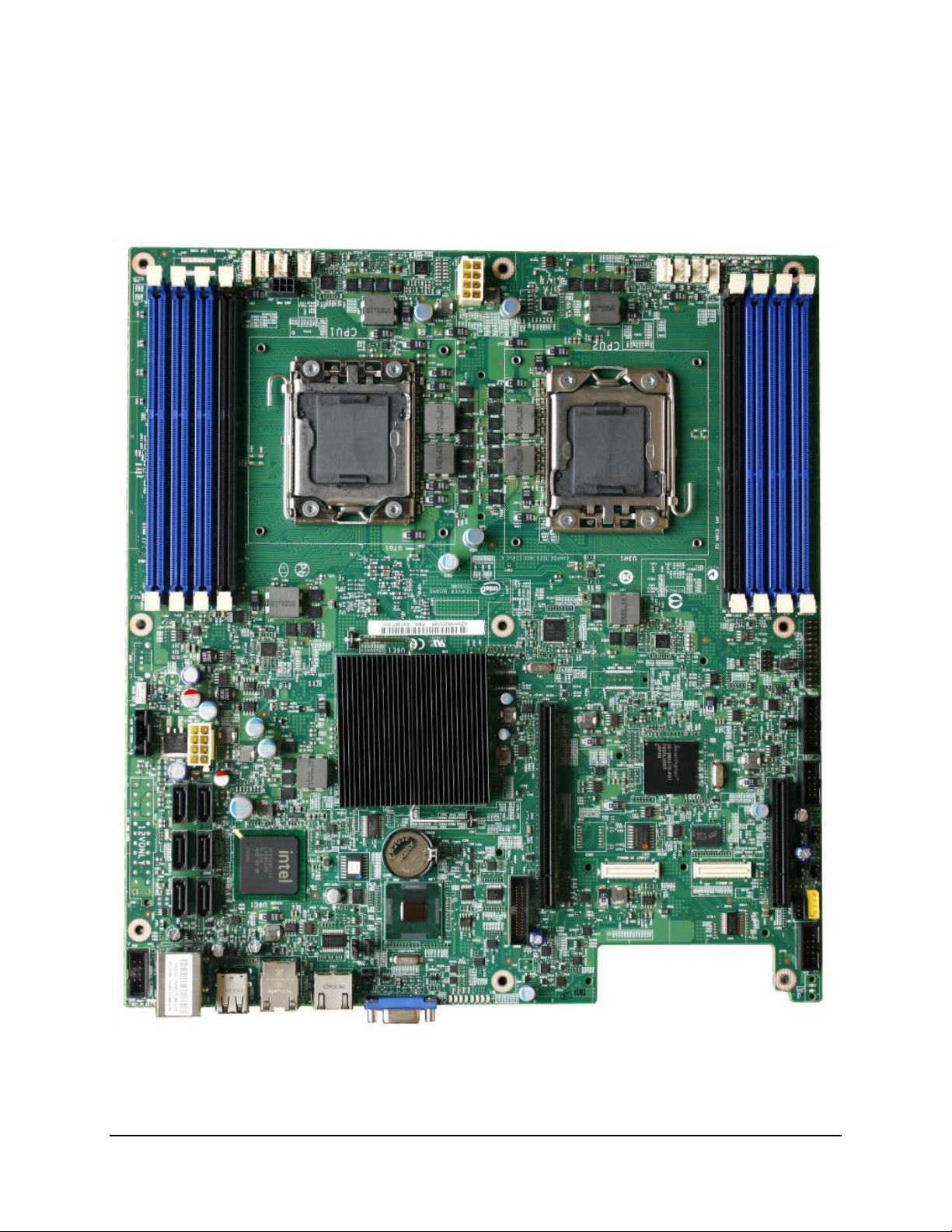

Figure 3. Intel® Server Board S5500WB Components (both SKUs are shown)


Table 2. Intel® Server Board S5500WB System Interconnects

| Item | Description | Item | Description |
|---|---|---|---|
| A | Dual Intel® I/O Expansion Module Connectors | V | Processor Socket 1 |
| B | PCI Express* x16 Gen2 | W | 8-pin CPU Connector |
| C | Remote Management Module 3 | X | Processor Socket 2 |
| D | POST Code LEDs | Y | 4-pin Fan Connector (CPU2) |
| E | External I/O | Z | 4-pin Fan Connector (CPU2A) |
| F | USB Connector | AA | 4-pin Fan Connector (MEM2) |
| G | Battery | BB | 8-pin Fan Connector (MEM2R) |
| H | SATA Connectors | CC | DIMM Slot D2 |
| I | 24-pin Connector (SSI only) | DD | DIMM Slot D1 |
| J | 8-pin Connector (12V only) | EE | DIMM Slot E1 |
| K | Aux Power (5-pin or 7-pin) | FF | DIMM Slot F1 |
| L | RAID Key | GG | Front Panel Connector |
| M | DIMM Slot C1 | HH | HDD LED Header |
| N | DIMM Slot B1 | II | Low-Profile USB Connector |
| O | DIMM Slot A1 | JJ | Internal VGA Connector |
| P | DIMM Slot A2 | KK | BMC Power Cycle Header (12V only) |
| Q | 8-pin Fan Connector (MEM1R) | LL | USB Connector |
| R | 4-pin Fan Connector (MEM1) | MM | Slot 1 PCI Express* x8 Gen2 |
| S | 4-pin Fan Connector (CPU1A) | NN | SGPIO Connector |
| T | 4-pin Fan Connector (CPU1) | OO | IPMB Connector |
| U | HDD Power Connector (12V only) | PP | Serial Port B |


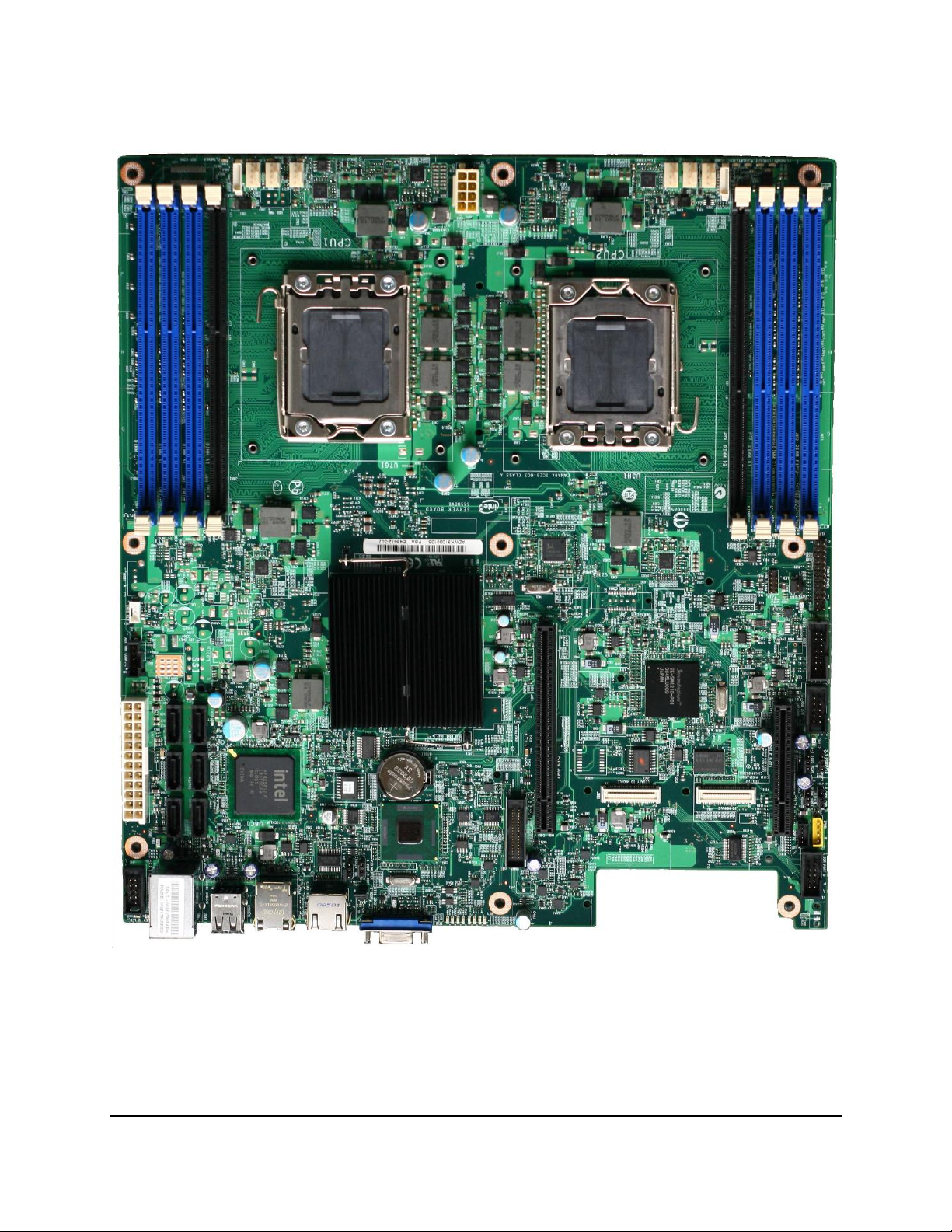

Description

Description

A

ID LED

E

RJ-45 GbE LAN connector

B

Status LED

F

RJ-45 Serial port connector

C

RJ-45 GbE/Dual USB connector

G

DB15 Video

D

Dual USB connector

H

Diagnostic LEDs

2.2.1 Board Rear Connector Placement

The Intel® Server Board S5500WB has the following board rear connector placement:

Figure 4. Rear Panel Connector Placement:

2.2.2 Server Board Mechanical Drawings

The following figures are mechanical drawings for the Intel® Server Board S5500WB.


Figure 5. Baseboard and Mounting Holes


Figure 6. Connector Locations


Figure 7. Primary Side Height Restrictions


Figure 8. Secondary Side Height Restrictions


3. Functional Architecture

The Intel® Server Board S5500WB is a purpose-built, power-optimized server board for use in a 1U rack server. The memory and processor sockets are placed to minimize the fan power required to cool these components. The voltage regulators (VRDs) are optimized for the range of memory and processor power that suits the target Internet Portal Data Center (IPDC) market segment. The VRDs are also designed to be highly power-efficient while remaining small and cost-effective. There are two SKUs: a 12-V-only SKU and an SSI-compliant SKU.

3.1 High Level Product Features

Table 3. Intel® Server Board S5500WB Features

| Feature | S5500WB 12V | S5500WB SSI |
|---|---|---|
| Form Factor | EATX 12" x 13" | EATX 12" x 13" |
| CPU Socket | B | B |
| Chipset | Intel® 5500 Chipset IOH; Intel® 82801Jx I/O Controller Hub (ICH10R) | Intel® 5500 Chipset IOH; Intel® 82801Jx I/O Controller Hub (ICH10R) |
| Memory | 8 RDIMMs or 8 UDIMMs DDR3 | 8 RDIMMs or 8 UDIMMs DDR3 |
| Slots | 1 PCI Express* x8 w/ x16 connector; 1 PCI Express* x4 w/ x8 connector | 1 PCI Express* x8 w/ x16 connector; 1 PCI Express* x4 w/ x8 connector |
| Ethernet | Dual GbE, Intel® 82576 Gigabit Ethernet | Dual GbE, Intel® 82576 Gigabit Ethernet |
| Storage | Six SATA II ports (3 Gb/s) | Six SATA II ports (3 Gb/s) |
| SAS | One (1) 4-port SAS module on IOM connector (optional) | One (1) 4-port SAS module on IOM connector (optional) |
| I/O Module | Yes, single- and double-wide | Yes, single- and double-wide |
| SW RAID | LSI SW RAID 0, 1, 5, 10 | LSI SW RAID 0, 1, 5, 10 |
| Processor Support | 95 W, optimized for 80 W | 95 W, optimized for 80 W |
| Video | Integrated in BMC | Integrated in BMC |
| ISM | iBMC w/ IPMI 2.0 support | iBMC w/ IPMI 2.0 support |
| Chassis* | Reference | Reference |
| Power Supply | 12 V and 5 VSB, PMBus* | 12 V, 5 V, 3.3 V, 5 VSB, PMBus* |

Note:

*Referenced chassis: Chenbro RM13204 chassis and Intel® Server System SR1690WB.


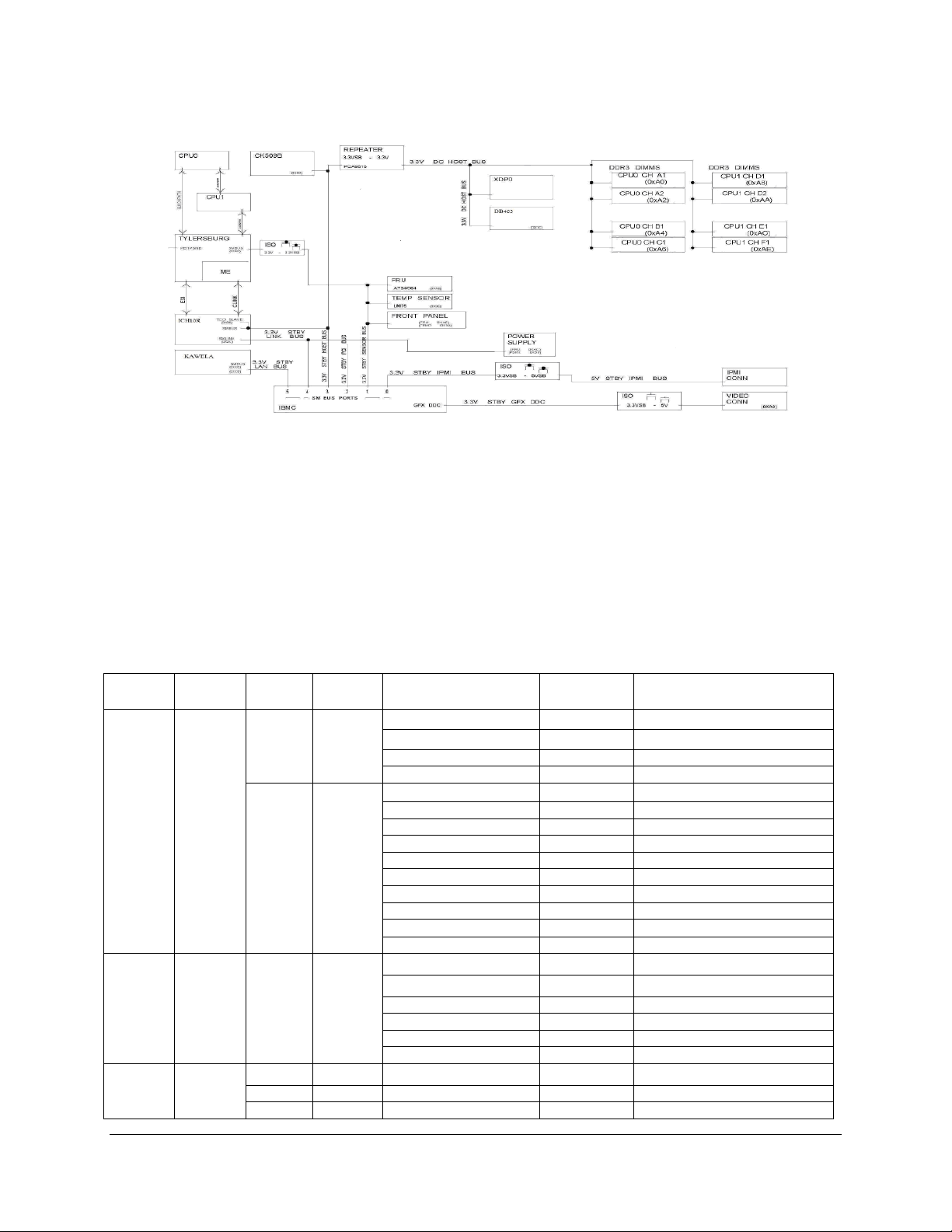

3.2 Functional Block Diagram

Figure 9. Intel® Server Board S5500WB Functional Block Diagram


3.3 Processor Subsystem

The Intel® 5500 series and the next-generation Intel® 5600 series processors support the following key technologies:

Intel® Integrated Memory Controller

Point-to-point link interface based on the Intel® QuickPath Interconnect (Intel® QPI), which was formerly known as the Common System Interface (CSI)

The Intel® 5500 series processor is a multi-core processor based on 45 nm process technology. Processor features vary by SKU and include up to two Intel® QPI point-to-point links capable of up to 6.4 GT/s, up to 8 MB of shared cache, and an integrated memory controller.

The Intel® 5600 series processor is the next generation of multi-core processors, based on 32 nm process technology. Processor features vary by SKU and include up to six cores and up to 12 MB of shared cache.

3.3.1 Processor Support

The Intel® Server Board S5500WB supports the following processors:

One or two Intel® 5500 series or 5600 series processors in the FC-LGA 1366 Socket B package with 4.8 GT/s, 5.86 GT/s, or 6.4 GT/s Intel® QPI

Up to 95 W Thermal Design Power (TDP)

Low Voltage (LV) processors

3.3.2 Processor Population Rules

For optimum performance, when two processors are installed, both must be of identical revision and have the same core voltage and Intel® QPI/core speed. When only one processor is installed, it must be in the socket labeled CPU1, and the other socket must be empty. Processors must be populated in sequential order; therefore, you must populate processor socket 1 (CPU1) before processor socket 2 (CPU2).

When a single processor is installed, no terminator is required in the second processor socket.

3.3.2.1 Mixed Processor Configurations

The following table describes mixed processor conditions and recommended actions for all Intel® server boards and systems that use the Intel® 5500 Chipset. The errors fall into one of the following two categories:

Fatal: If the system can boot, it goes directly to the error manager, regardless of whether the POST Error Pause setup option is enabled or disabled.

Major: If the POST Error Pause setup option is enabled, the system goes directly to the error manager. Otherwise, the system continues to boot and no prompt is given for the error. The error is logged to the error manager.


Table 4. Mixed Processor Configurations

| Error | Severity | System Action |
|---|---|---|
| Processor family not identical | Fatal | The BIOS detects the error condition and responds as follows: logs the error into the system event log (SEL); alerts the Integrated BMC of the configuration error with an IPMI command; does not disable the processor; displays the "0194: Processor family mismatch detected" message in the error manager; halts the system. |
| Processor cache not identical | Fatal | The BIOS detects the error condition and responds as follows: logs the error into the SEL; alerts the Integrated BMC of the configuration error with an IPMI command; does not disable the processor; displays the "0192: Cache size mismatch detected" message in the error manager; halts the system. |
| Processor frequency (speed) not identical | Major | The BIOS detects the error condition and responds as follows: adjusts all processor frequencies to the lowest common denominator and continues to boot the system successfully. If the frequencies for all processors cannot be adjusted to be the same, the BIOS logs the error into the SEL, displays the "0197: Processor speeds mismatched" message in the error manager, and halts the system. |
| Processor microcode missing | Minor | The BIOS detects the error condition and responds as follows: logs the error into the SEL; does not disable the processor; displays the "816x: Processor 0x unable to apply microcode update" message in the error manager. The system continues to boot in a degraded state, regardless of the POST Error Pause setting in Setup. |
| Processor Intel® QuickPath Interconnect speeds not identical | Halt | The BIOS detects the error condition and responds as follows: adjusts all processor interconnect frequencies to the lowest common denominator; logs the error into the SEL; alerts the Integrated BMC about the configuration error; does not disable the processor; displays the "0195: Processor 0x Intel(R) QPI speed mismatch" message in the error manager. If POST Error Pause is disabled in Setup, the system continues to boot in a degraded state. If POST Error Pause is enabled in Setup, the system pauses but can continue to boot if the operator directs. |
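The population rules in Section 3.3.2 and the checks in Table 4 can be modeled as a small decision procedure. The Python sketch below is a hypothetical illustration, not BIOS code: the `Cpu` fields and the `check_processors` and `boot_action` names are invented here, and it assumes that differing core frequencies can always be resolved by running both processors at the lower frequency, which is the normal case the table describes.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Cpu:
    """Hypothetical processor descriptor; the fields are illustrative only."""
    family: str
    cache_mb: int
    core_mhz: int
    qpi_gts: float
    microcode_ok: bool = True


def check_processors(cpu1: Optional[Cpu], cpu2: Optional[Cpu]) -> List[Tuple[str, str]]:
    """Return (severity, message) findings modeled on Section 3.3.2 and Table 4."""
    if cpu1 is None:
        # A single processor must sit in CPU1, and CPU1 is populated before CPU2.
        raise ValueError("populate processor socket 1 (CPU1) first")
    findings = []
    for number, cpu in ((1, cpu1), (2, cpu2)):
        if cpu is not None and not cpu.microcode_ok:
            findings.append(("Minor", f"816x: Processor 0{number} unable to apply microcode update"))
    if cpu2 is None:
        return findings  # single processor: no terminator needed in CPU2
    if cpu1.family != cpu2.family:
        findings.append(("Fatal", "0194: Processor family mismatch detected"))
    if cpu1.cache_mb != cpu2.cache_mb:
        findings.append(("Fatal", "0192: Cache size mismatch detected"))
    if cpu1.qpi_gts != cpu2.qpi_gts:
        # Both links drop to the lowest common rate; the mismatch is still reported.
        findings.append(("Halt", "0195: Processor 0x Intel(R) QPI speed mismatch"))
    # Differing core frequencies are assumed adjustable to the lowest common
    # value, so this sketch records no finding for them.
    return findings


def boot_action(severity: str, post_error_pause: bool) -> str:
    """Map a Table 4 severity to the POST behavior described in the text."""
    if severity == "Fatal":
        return "halt"
    if severity in ("Major", "Halt"):
        return "pause" if post_error_pause else "continue"
    return "continue"  # Minor: boots degraded regardless of POST Error Pause
```

For example, pairing a 5.86 GT/s processor with a 6.4 GT/s processor of the same family and cache size yields a single "Halt" finding, which pauses POST only when POST Error Pause is enabled.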


3.3.3 Installing or Replacing the Processor

3.3.3.1 Installing the Processor

To install a processor, follow these instructions:

1. Turn off all peripheral devices connected to the server.

2. Turn off the server.

3. Disconnect the AC power cord from the server.

4. Remove the server’s cover. See the documentation that came with your server chassis for instructions on removing the server’s cover.

5. Locate the processor socket and raise the load lever of the ILM cover completely (see letter "A" in Figure 10).

Figure 10. Lifting the load lever of ILM cover

6. Open the load plate (see letter "B" in Figure 10 and letter "C" in Figure 11).

Figure 11. Removing the socket cover

7. Remove the protective socket cover (see letter "D" in Figure 11).

8. Align the processor with the socket and insert the processor into the socket.


Figure 12. Installing processor

9. Lower the load plate and load lever of the ILM cover completely.

Note: Make sure the alignment triangle mark and the alignment triangle cutout align correctly.

To assist in package orientation and alignment with the socket:

A. The package Pin1 triangle and the socket Pin1 chamfer provide a visual reference for proper orientation.

B. The package substrate has orientation notches along two opposing edges of the package, offset from the centerline. The socket has two corresponding orientation posts to physically prevent mis-orientation of the package. These orientation features also provide an initial rough alignment of the package to the socket.

C. The socket has alignment walls at the four corners to provide final alignment of the package.


Figure 13. Package Installation/Removal Features

3.3.3.2 Installing the Processor Heatsink(s)

CAUTION: The heatsink has Thermal Interface Material (TIM) on its bottom surface. Use caution when you unpack the heatsink so that you do not damage the TIM.

To install the heatsink, follow these steps:

1. Remove the protective film on the TIM, if present.

2. Orient the heatsink over the processor as shown in Figure 14. The heatsink fins must be positioned as shown to provide correct airflow through the system.

3. Set the heatsink over the processor, lining up the four captive screws with the four posts surrounding the processor.

4. Loosely screw in the captive screws on the heatsink corners in a diagonal sequence, according to the numbers shown in Figure 14, as follows:

a) Starting with the screw at location 1, engage the screw threads by giving it two rotations in the clockwise direction and stop. (IMPORTANT: Do not fully tighten.)

b) Proceed to the screw at location 2 and engage the screw threads by giving it two rotations and stop.

c) Engage the screws at locations 3 and 4 by giving each screw two rotations and then stop.

d) Repeat steps 4a through 4c, giving each screw two rotations each time, until all screws are lightly tightened to a maximum torque of 8 inch-lbs.


Figure 14. Installing/Removing Heatsink

3.3.3.3 Removing the Processor Heatsink

To remove the heatsink, follow these steps:

1. Loosen the four captive screws on the heatsink corners in a diagonal sequence, according to the numbers shown in Figure 14, as follows:

a) Starting with the screw at location 1, loosen it by giving it two rotations in the counterclockwise direction and stop. (IMPORTANT: Do not fully loosen.)

b) Proceed to the screw at location 2 and loosen it by giving it two rotations and stop.

c) Loosen the screws at locations 3 and 4 by giving each screw two rotations and then stop.

d) Repeat steps 1a through 1c, giving each screw two rotations each time, until all screws are loosened.

2. Lift the heatsink from the board.

3.3.4 Intel® QuickPath Interconnect (Intel® QPI)

Intel® QPI is a cache-coherent, link-based interconnect specification for processor, chipset, and I/O bridge components. It can be used in a wide variety of desktop, mobile, and server platforms spanning the IA-32 and Intel® Itanium® architectures. Intel® QPI also provides support for high-performance I/O transfer between I/O nodes. It allows connection to standard I/O buses such as PCI Express*, PCI-X*, PCI (including peer-to-peer communication support), AGP (Accelerated Graphics Port), and so forth, through the appropriate bridges.

Each Intel® QPI link consists of 20 pairs of unidirectional differential lanes for the transmitter and receiver, plus a differential forwarded clock. A full-width Intel® QPI link pair therefore consists of 84 signals: 20 differential data pairs plus one forwarded differential clock pair in each direction. Each Intel® 5500 series and 5600 series processor supports two Intel® QPI links, one going to the second processor and one going to the Intel® 5500 Chipset IOH.

Figure 15. Intel® QPI Link

In the current implementation, Intel® QPI ports are capable of operating at transfer rates of up to 6.4 GT/s. Intel® QPI ports operate at multiple lane widths (full: 20 lanes, half: 10 lanes, and quarter: 5 lanes) independently in each direction between a pair of devices communicating via Intel® QPI. The server boards support full-width communication only.

For more information, see the Intel® QPI Overview Rev 1.04 (Document#: 380531).
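The 84-signal count follows directly from counting wires. The sketch below is a hypothetical helper, not part of any Intel tool; it assumes two wires per differential pair and one forwarded clock pair per direction, exactly as described above.

```python
# Physical composition of one full-width Intel QPI link pair, per the text above.
DATA_PAIRS_PER_DIRECTION = 20
CLOCK_PAIRS_PER_DIRECTION = 1
WIRES_PER_DIFFERENTIAL_PAIR = 2
DIRECTIONS = 2  # transmit and receive


def qpi_link_signals() -> int:
    """Count the signals in one full-width Intel QPI link pair (both directions)."""
    pairs_per_direction = DATA_PAIRS_PER_DIRECTION + CLOCK_PAIRS_PER_DIRECTION
    return DIRECTIONS * pairs_per_direction * WIRES_PER_DIFFERENTIAL_PAIR


print(qpi_link_signals())  # 2 directions x (20 + 1) pairs x 2 wires = 84
```

Operating at half or quarter width reduces the number of active lanes, not the number of physical wires, which is why the count is fixed at full width here.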

3.4 Intel® QuickPath Memory Controller

The Intel® 5500 series and 5600 series processors have an integrated memory controller in the processor package. Each processor provides up to three channels of DDR3 memory. The Intel® QPI Memory Controller supports DDR3 800, DDR3 1066, and DDR3 1333 memory technologies. The memory controller supports both Registered DIMMs (RDIMMs) and Unbuffered DIMMs (UDIMMs).

Mixing of RDIMMs and UDIMMs is not supported.

3.4.1 Supported Memory

The Intel® Server Board S5500WB supports six DDR3 memory channels (three per processor socket), with two DIMMs on the first channel and one DIMM on each of the second and third channels of each processor. Therefore, with both processor sockets populated, the server board supports up to 8 DIMMs and a maximum memory capacity of 128 GB.

The server board supports DDR3 800, DDR3 1066, and DDR3 1333 memory technologies. Memory modules of mixed speeds are supported; the system automatically selects the highest frequency common to all installed memory modules.
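The automatic speed selection described above amounts to taking the slowest installed DIMM and rounding down to a supported DDR3 speed. A minimal sketch, assuming each DIMM is described only by its rated speed in MT/s; `common_memory_speed` is a hypothetical name, and real BIOS speed selection can also depend on the processor SKU and DIMMs per channel, which this sketch does not model.

```python
from typing import Iterable

# DDR3 data rates (MT/s) supported by this board, per Section 3.4.1.
SUPPORTED_MTS = (800, 1066, 1333)


def common_memory_speed(dimm_speeds: Iterable[int]) -> int:
    """Return the highest supported speed that every installed DIMM can run."""
    speeds = list(dimm_speeds)
    if not speeds:
        raise ValueError("no DIMMs installed")
    slowest = min(speeds)
    candidates = [s for s in SUPPORTED_MTS if s <= slowest]
    if not candidates:
        raise ValueError(f"slowest DIMM ({slowest} MT/s) is below DDR3 800")
    return max(candidates)


print(common_memory_speed([1333, 1066, 1333]))  # 1066
```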

The following configurations are not supported, validated, or recommended:

Mixing of RDIMMs and UDIMMs is not supported

Mixing of memory type, size, speed, and/or rank has not been validated and is not supported

Mixing memory vendors has not been validated and is not recommended

Non-ECC memory has not been validated and is not supported in a server environment

Note: Mixed memory is not tested or supported. Non-ECC memory is not tested and is not recommended for use in a server environment.

The Intel® Server Board S5500WB uses a 2:1:1 DIMM layout. The 2:1:1 layout was chosen because it offers the lowest power for a given bandwidth and because it still allows the maximum possible bandwidth when memory is populated 1:1:1 (one DIMM per channel).

3.4.2 Memory Subsystem Nomenclature

DIMMs are organized into physical slots on DDR3 memory channels that belong to processor sockets.


Table 5. DIMM Nomenclature

| Processor Socket | Channel | DIMM Slots |
|---|---|---|
| Processor Socket 1 | Channel A | A1, A2 |
| Processor Socket 1 | Channel B | B1 |
| Processor Socket 1 | Channel C | C1 |
| Processor Socket 2 | Channel D | D1, D2 |
| Processor Socket 2 | Channel E | E1 |
| Processor Socket 2 | Channel F | F1 |

The memory channels from socket 1 are identified as Channels A, B, and C. The memory channels from socket 2 are identified as Channels D, E, and F.

The DIMM identifiers on the board silkscreen indicate the channel, and therefore the processor, to which each slot belongs. For example, DIMM_A1 is the first slot on Channel A on processor 1; DIMM_D1 is the first slot on Channel D on processor 2.
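Because the silkscreen label encodes both channel and slot, the processor that owns a DIMM can be derived mechanically from Table 5. The sketch below uses a hypothetical `decode_dimm` helper and assumes labels follow the `DIMM_<channel><slot>` pattern shown on the board; it also derives the per-DIMM size implied by the 128 GB maximum across 8 slots.

```python
import re
from typing import Tuple

# Channel-to-socket mapping from Table 5; only channels A and D have a second slot.
SOCKET_OF_CHANNEL = {"A": 1, "B": 1, "C": 1, "D": 2, "E": 2, "F": 2}
VALID_SLOTS = {"A1", "A2", "B1", "C1", "D1", "D2", "E1", "F1"}

# 128 GB maximum across 8 slots implies 16 GB DIMMs at full capacity.
MAX_GB_PER_DIMM = 128 // len(VALID_SLOTS)


def decode_dimm(label: str) -> Tuple[int, str, int]:
    """Split a silkscreen label such as "DIMM_D1" into (socket, channel, slot)."""
    match = re.fullmatch(r"DIMM_([A-F])([12])", label)
    if match is None or match.group(1) + match.group(2) not in VALID_SLOTS:
        raise ValueError(f"not a DIMM slot on this board: {label!r}")
    channel = match.group(1)
    return SOCKET_OF_CHANNEL[channel], channel, int(match.group(2))


print(decode_dimm("DIMM_D1"))  # (2, 'D', 1)
```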

If a processor socket is not populated, the memory slots associated with that socket are unavailable.

You can install a processor without populating its associated memory slots, provided a second processor is installed with associated memory. In this case, the memory is shared by the processors. However, the platform suffers performance degradation and higher latency because all memory accesses from the processor without local memory are remote.

Sockets are self-contained and autonomous. However, all BIOS setup configurations, such as RAS, error management, and so forth, are applied commonly across sockets.

3.4.3 ECC Support

If at least one non-ECC DIMM is present in the system, the system reverts to non-ECC mode. UDIMMs can be ECC or non-ECC; RDIMMs are always ECC enabled. Non-ECC DIMMs are not validated and are not recommended for server use.
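The ECC rule above is an "all or nothing" check across every installed DIMM. A minimal sketch, using a hypothetical `Dimm` record with only the two attributes the rule depends on:

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Dimm:
    """Hypothetical DIMM descriptor used only for this illustration."""
    kind: str          # "RDIMM" or "UDIMM"
    ecc: bool = True   # only meaningful for UDIMMs; RDIMMs always carry ECC


def ecc_mode_enabled(dimms: Iterable[Dimm]) -> bool:
    """ECC stays on only if every DIMM supports it; one non-ECC UDIMM disables it."""
    return all(d.kind == "RDIMM" or d.ecc for d in dimms)
```

For example, two ECC UDIMMs keep ECC mode on, while adding a single non-ECC UDIMM turns it off for the whole system.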

3.4.4 Memory Reservation for Memory-mapped Functions

A 40 MB region of memory below 4 GB is always reserved for mapping chipset, processor, and BIOS (flash) memory-mapped I/O regions. This region appears to the operating system as lost memory. In addition, the BIOS creates another reserved region for memory-mapped PCI Express* functions. This region includes the standard PCI Express* Memory Mapped I/O (MMIO) configuration space, which is 64 MB or 256 MB depending on the MAX_BUS_NUMBER setup selection offered by the Intel® 5500 Chipset IOH (codenamed Tylersburg), and a variably sized MMIO region for the PCI Express* functions.

All these reserved regions are reclaimed by the operating system if Physical Address Extension (PAE) is turned on in the operating system.
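To make the size of these reserved regions concrete, the sketch below adds them up. The 1 MB-per-bus figure comes from the PCI Express* enhanced configuration mechanism (32 devices x 8 functions x 4 KB per bus), which is why 64 MB and 256 MB correspond to 64 and 256 buses. The size of the variable PCI Express* MMIO region is an assumed input, the helper names are hypothetical, and the sketch assumes all regions sit below 4 GB.

```python
MB = 1 << 20

FIXED_RESERVED = 40 * MB  # chipset, processor, and BIOS flash MMIO


def config_space_bytes(max_buses: int) -> int:
    """PCI Express configuration space: 32 devices x 8 functions x 4 KB per bus."""
    return max_buses * 32 * 8 * 4 * 1024


def reserved_below_4gb(max_buses: int, pcie_mmio_bytes: int) -> int:
    """Total address space below 4 GB that is not backed by DRAM (sketch)."""
    return FIXED_RESERVED + config_space_bytes(max_buses) + pcie_mmio_bytes


# Hypothetical example: 256 buses and a 512 MB PCI Express MMIO window.
hole = reserved_below_4gb(256, 512 * MB)
print(hole // MB)  # 808
```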

3.4.5 High-Memory Reclaim

When 4 GB or more of physical memory is installed (physical memory is the memory installed as DDR3 DIMMs), the physical memory that lies behind the reserved regions is lost. However, the Intel® 5500 Series Chipset provides a feature called high-memory reclaim, which allows the BIOS and operating system to remap the lost physical memory into system memory above 4 GB (system memory is the memory that can be seen by the processor).

The BIOS always enables high-memory reclaim if it discovers installed physical memory equal to or greater than 4 GB. The reclaimed memory is recoverable by the operating system only when the operating system supports and enables the PAE feature in the processor. Most operating systems support this feature. For details, see the relevant operating system manuals.
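The effect of high-memory reclaim can be sketched as a simple address-map calculation. This assumes a single contiguous reserved hole just below 4 GB whose size is known (such as the regions described in Section 3.4.4); `memory_map` and `os_visible` are hypothetical helpers, not BIOS interfaces.

```python
from typing import Tuple

MB = 1 << 20
GB = 1 << 30
FOUR_GB = 4 * GB


def memory_map(installed: int, hole: int) -> Tuple[int, int]:
    """Return (bytes mapped below 4 GB, bytes mapped at or above 4 GB)."""
    low_window = FOUR_GB - hole
    if installed < FOUR_GB:
        # The BIOS enables reclaim only at 4 GB or more of physical memory.
        return min(installed, low_window), 0
    # DRAM hidden behind the hole is remapped above 4 GB, together with any
    # memory that already lay above 4 GB.
    return low_window, installed - low_window


def os_visible(installed: int, hole: int, pae: bool) -> int:
    """Memory usable by the OS; the portion above 4 GB requires PAE."""
    low, high = memory_map(installed, hole)
    return low + (high if pae else 0)


print(os_visible(8 * GB, 808 * MB, pae=False) // MB)  # 3288
print(os_visible(8 * GB, 808 * MB, pae=True) // MB)   # 8192
```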

3.4.6 Memory Population Rules

You should populate the memory slots of each DDR3 channel starting with the slot farthest from the processor. Therefore, if A1 is empty, you cannot populate or use A2.

Figure 16. Memory Channel Population
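The population rules in Sections 3.4.1 through 3.4.6 can be collected into a single validator. This is a sketch under stated assumptions: `population_errors` is a hypothetical name, slots are given as names such as "A1" mapped to "RDIMM" or "UDIMM", and the "fill slot 1 before slot 2" rule stated for channel A is assumed to apply equally to channel D.

```python
from typing import Dict, List, Set

# Channel-to-socket mapping from Table 5.
SOCKET_OF = {"A": 1, "B": 1, "C": 1, "D": 2, "E": 2, "F": 2}
# Second slot on a channel -> slot that must be filled first.
FILL_FIRST = {"A2": "A1", "D2": "D1"}


def population_errors(populated: Dict[str, str], cpu_sockets: Set[int]) -> List[str]:
    """Check a DIMM population against the board's population rules.

    populated maps slot names such as "A1" to "RDIMM" or "UDIMM";
    cpu_sockets holds the processor socket numbers (1, 2) that are populated.
    """
    errors = []
    for slot in sorted(populated):
        socket = SOCKET_OF[slot[0]]
        if socket not in cpu_sockets:
            errors.append(f"{slot}: processor socket {socket} is empty")
        first = FILL_FIRST.get(slot)
        if first is not None and first not in populated:
            errors.append(f"{slot}: populate {first} first")
    if len(set(populated.values())) > 1:
        errors.append("RDIMMs and UDIMMs are mixed")
    return errors
```

An empty list means the population satisfies these rules; it does not check the vendor, size, or rank mixing guidance, which is a recommendation rather than a hard rule.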

3.4.7 Installing and Removing Memory

The silkscreen on the board next to CPU1 displays DIMM_A2, DIMM_A1, DIMM_B1, and DIMM_C1, and next to CPU2 displays DIMM_D2, DIMM_D1, DIMM_E1, and DIMM_F1, starting from the inside of the board. DIMM_A1 is the blue socket closest to the CPU 1 socket. For memory channel A, the server board requires DDR3 DIMMs within a channel to be populated starting with the DIMM farthest from the processor. The DIMM farthest from the processor in each channel is blue.

3.4.7.1 Installing DIMMs

To install DIMMs, follow these steps:

1. Turn off the server.

2. Disconnect the AC power cord from the server.

3. Remove the server’s cover and locate the DIMM sockets (see Figure 17).


Figure 17. Installing Memory

4. Make sure the clips at either end of the DIMM socket(s) are pushed outward to the open position (see letter "A" in Figure 17).

5. Holding the DIMM by the edges, remove it from its anti-static package.

6. Position the DIMM above the socket. Align the two small notches in the bottom edge of the DIMM with the keys in the socket (see letter "B" in Figure 17).

7. Insert the bottom edge of the DIMM into the socket (see letter "C" in Figure 17).

8. When the DIMM is inserted, push down on the top edge of the DIMM until the retaining clips snap into place (see letter "D" in Figure 17). Make sure the clips are firmly in place (see letter "E" in Figure 17).

9. Replace the server’s cover and reconnect the AC power cord.

3.4.7.2 Removing DIMMs

To remove a DIMM, follow these steps:

1. Turn off all peripheral devices connected to the server.

2. Turn off the server.

3. Remove the AC power cord from the server.

4. Remove the server’s cover.

5. Gently spread the retaining clips at each end of the socket. The DIMM lifts from the socket.

6. Holding the DIMM by the edges, lift it from the socket and store it in an anti-static package.

7. Reinstall and reconnect any parts you removed or disconnected to reach the DIMM sockets.

8. Replace the server’s cover and reconnect the AC power cord.


3.4.8 Channel-Independent Mode

In the Independent Channel mode, you can populate multiple channels in any order (for example, you can populate channels B and C while channel A is empty). Also, DIMMs on adjacent channels do not need to have identical parameters. Therefore, all DIMMs are enabled and used in the Independent Channel mode.

Adjacent slots on channels A and D do not need matching size and organization. However, the speed of the channel is configured to the maximum common speed of the DIMMs.

The single channel mode is established using the Independent Channel mode by populating DIMM slots from channel A only.

3.4.9 Memory RAS

The memory RAS features of the Intel® 5500 series and 5600 series processors operate at the channel level (for example, during mirroring, channel B mirrors channel A). All DIMM matching requirements apply slot-to-slot across adjacent channels. For example, to enable mirroring, corresponding slots on channels A and B must hold DIMMs with identical parameters.

If one socket fails to meet the population requirements for RAS, the BIOS sets all six channels to Independent Channel mode. The one exception to this rule is a socket whose DIMM slots are all empty (for example, when only DIMM slots A1, B1, and C1 are populated, mirroring is possible on the platform).

3.4.9.1 Memory Population for Channel Mirroring Mode

The mirrored configuration is a redundant image of the memory and can continue to operate despite the presence of sporadic uncorrectable errors.

Channel mirroring is a RAS feature in which two identical images of memory data are maintained, providing maximum redundancy. On Intel® 5500 series-based Intel server boards, mirroring is achieved across channels: active channels hold the primary image, and the other channels hold the secondary image of the system memory. The integrated memory controller in the processor alternates between both channels for read transactions. Under normal circumstances, write transactions are issued to both channels.

Mirroring is supported only between channels A and B and between channels D and E. A DIMM on channel C or F causes the BIOS to disable mirroring and revert to Independent Channel mode.


Figure 18. Mirroring Memory Configuration
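The population rules for mirroring can be expressed as a small decision function. The Python sketch below is a hypothetical model, not Intel BIOS code: it assumes each channel is a list of slots holding either a tuple of DIMM parameters or None, and it applies the rules stated in this section (mirroring only on A/B and D/E, no DIMM on C or F, slot-by-slot matching, fully empty sockets ignored, otherwise all channels fall back to Independent Channel mode). The two-slots-per-channel layout in the example is an illustrative assumption.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical model: a DIMM is described by a tuple of its parameters
# (for example size in GB, ranks, speed); None marks an empty slot.
Dimm = Optional[Tuple[int, int, int]]
Population = Dict[str, List[Dimm]]

# Channels per socket: (primary, mirror, third channel).
SOCKET_CHANNELS = {1: ("A", "B", "C"), 2: ("D", "E", "F")}


def socket_is_empty(pop: Population, socket: int) -> bool:
    return all(d is None for ch in SOCKET_CHANNELS[socket] for d in pop.get(ch, []))


def socket_can_mirror(pop: Population, socket: int) -> bool:
    primary, mirror, third = SOCKET_CHANNELS[socket]
    # A DIMM on channel C or F disables mirroring.
    if any(d is not None for d in pop.get(third, [])):
        return False
    p, m = pop.get(primary, []), pop.get(mirror, [])
    # Corresponding slots on the paired channels must match exactly.
    return len(p) == len(m) and all(a == b for a, b in zip(p, m))


def select_memory_mode(pop: Population) -> str:
    populated = [s for s in SOCKET_CHANNELS if not socket_is_empty(pop, s)]
    if not populated:
        raise ValueError("no DIMMs installed")
    # A socket with every slot empty is ignored; any other socket that
    # fails the rules drops all six channels to Independent Channel mode.
    if all(socket_can_mirror(pop, s) for s in populated):
        return "mirroring"
    return "independent"


dimm = (4, 2, 1333)
empty = [None, None]
pop = {"A": [dimm, None], "B": [dimm, None], "C": empty,
       "D": empty, "E": empty, "F": empty}
print(select_memory_mode(pop))                          # mirroring
print(select_memory_mode({**pop, "C": [dimm, None]}))   # independent
```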

3.4.10 Memory Error LED

Each DIMM has an LED that, when lit, indicates a DIMM failure. The BIOS identifies failed DIMMs during the boot process and sends a message to the BMC indicating which DIMM LED to turn on.

3.5 Intel® 5500 Chipset IOH

The Intel® 5500 Chipset component is an I/O Hub (IOH). The Intel® 5500 Chipset provides a connection point between various I/O components and Intel processors using the Intel® QPI interface.

The Intel® 5500 Chipset IOH is capable of interfacing with up to 24 PCI Express* lanes, which can be configured in various combinations of x4, x8, x16 and limited x2 and x1 devices.

The Intel® 5500 Chipset IOH is responsible for providing a path to the legacy bridge. In addition, the Intel® 5500 Chipset supports a x4 DMI (Direct Media Interface) link interface for the legacy bridge and interfaces with other devices through SMBus, Controller Link, and RMII (Reduced Media Independent Interface) manageability interfaces. The Intel® 5500 Chipset supports the following features and technologies:

• Intel® QuickPath Interconnect (Intel® QPI)

• PCI Express* Gen2

• Intel® Virtualization Technology (Intel® VT) for Directed I/O 2 (Intel® VT-d2)

• Manageability Engine (ME) subsystem

3.5.1 IOH24D PCI Express*

PCI Express* Gen1 and Gen2 are dual-simplex, point-to-point, serial, differential, low-voltage interconnects. The signaling bit rate per lane in each direction is 2.5 Gb/s for Gen1 and 5.0 Gb/s for Gen2. Each port consists of a transmitter and receiver pair. A link between the ports of two devices is a collection of lanes (x1, x2, x4, x8, x16, and so forth). All lanes within a port must transmit data at the same frequency. The following table lists the usage of the IOH24D PCI Express* bus segments.


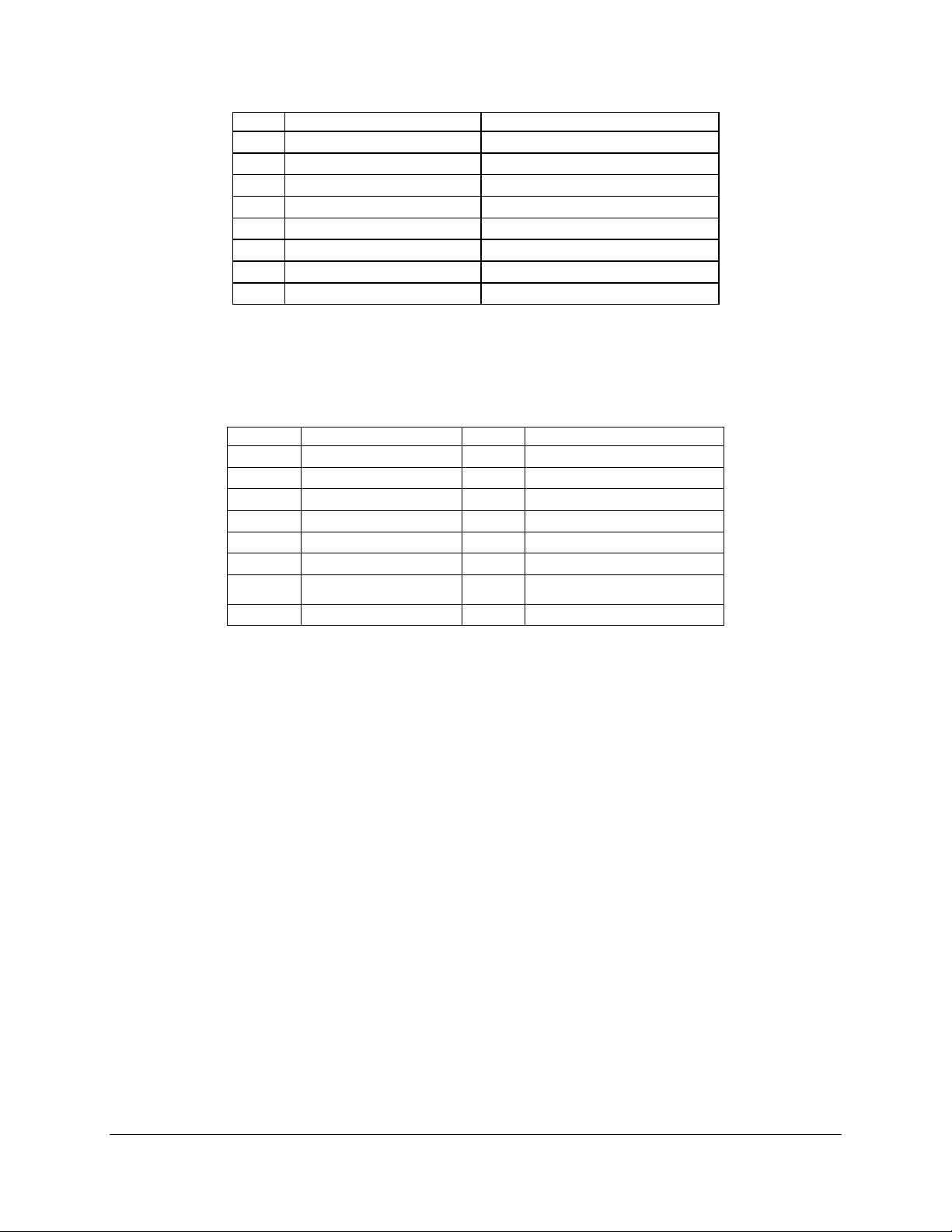

| PCI Bus Segment | Width | Speed | Type | PCI I/O Card Slots |
|---|---|---|---|---|
| Port 0 ICH10R | x4 | 10 Gb/s | PCI Express* Gen1 | x4 PCI Express* Gen1 throughput to the ICH10R southbridge |
| PE1, PE2 Intel® 5500 Chipset IOH PCI Express* | x4 | 10 Gb/s | PCI Express* Gen1 | x4 PCI Express* Gen1 throughput to an onboard NIC |
| PE3 Intel® 5500 Chipset IOH PCI Express* | x4 | 20 Gb/s | PCI Express* Gen2 | x4 PCI Express* Gen2 throughput to slot 1 |
| PE7, PE8 Intel® 5500 Chipset IOH PCI Express* | x8 | 40 Gb/s | PCI Express* Gen2 | x8 PCI Express* Gen2 throughput to the slot 6 riser |
| PE9, PE10 Intel® 5500 Chipset IOH PCI Express* | x8 | 40 Gb/s | PCI Express* Gen2 | x4 PCI Express* Gen2 throughput to each of the two Intel® I/O Expansion Module connectors |

Table 6. IOH24D PCI Express* Bus Segments
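The Speed column in Table 6 is the raw per-direction signaling rate: the per-lane rate given above multiplied by the link width. The Python sketch below reproduces that arithmetic. It also shows the usable data rate after the 8b/10b line encoding that PCI Express* Gen1 and Gen2 links use, a figure the table itself does not list. The function names are illustrative only.

```python
# Per-lane signaling rate in Gb/s, in each direction (Gen1 and Gen2).
LANE_RATE_GBPS = {1: 2.5, 2: 5.0}


def link_rate_gbps(gen: int, lanes: int) -> float:
    """Raw signaling rate of a link in one direction."""
    return LANE_RATE_GBPS[gen] * lanes


def link_data_rate_gbps(gen: int, lanes: int) -> float:
    """Data rate after 8b/10b encoding (8 data bits per 10 line bits)."""
    return link_rate_gbps(gen, lanes) * 8 / 10


for label, gen, lanes in [("x4 Gen1", 1, 4), ("x4 Gen2", 2, 4), ("x8 Gen2", 2, 8)]:
    print(label, link_rate_gbps(gen, lanes), link_data_rate_gbps(gen, lanes))
```

For the x4 Gen1 link to the ICH10R this gives 10 Gb/s raw and 8 Gb/s of data per direction; the x8 Gen2 segments give 40 Gb/s raw and 32 Gb/s of data per direction.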

3.5.1.1 Direct Cache Access (DCA)

The DCA mechanism is a system-level protocol in a multiprocessor system that improves I/O and network performance. It is designed to minimize cache misses when a demand read is executed. It does this by placing data from I/O devices directly into the CPU cache, through hints that prompt the processor to prefetch the data and install it in its local caches. The Intel® 5500 series and 5600 series processors support Direct Cache Access (DCA). You can enable or disable DCA in the BIOS processor setup menu.

3.5.1.2 Intel® Virtualization Technology for Directed I/O (Intel® VT-d)

Intel® Virtualization Technology is designed to support multiple software environments sharing the same hardware resources. Each software environment may consist of an operating system and applications. You can enable or disable Intel® Virtualization Technology in BIOS setup; it is disabled by default.

Note: If you change the Virtualization Technology setting for the processor in BIOS setup, you must perform an AC power cycle for the change to take effect.

The Intel® 5500 Chipset IOH supports DMA remapping from inbound PCI Express* memory Guest Physical Addresses (GPA) to Host Physical Addresses (HPA). This allows PCI Express* devices to be assigned directly to a virtual machine, enabling robust and efficient virtualization.
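To make GPA-to-HPA remapping concrete, the Python sketch below models it as a per-device lookup: each PCI Express* requester is assigned to a domain (for example, one virtual machine), and each domain has its own map from guest page numbers to host page numbers. This is a deliberately simplified, hypothetical model; real VT-d hardware walks root, context, and multi-level page tables, and the class and method names here are not an Intel API. The 4 KiB page size is an assumption for the example.

```python
PAGE_SHIFT = 12                  # assumed 4 KiB pages
PAGE_MASK = (1 << PAGE_SHIFT) - 1


class DmaRemapper:
    """Toy model of DMA remapping: device -> domain -> GPA page -> HPA page."""

    def __init__(self):
        self.device_domain = {}  # requester ID (bus, dev, fn) -> domain id
        self.domain_pages = {}   # domain id -> {guest page: host page}

    def assign(self, requester_id, domain):
        """Assign a device to a domain (for example, one virtual machine)."""
        self.device_domain[requester_id] = domain
        self.domain_pages.setdefault(domain, {})

    def map_page(self, domain, gpa_page, hpa_page):
        """Map one guest physical page to one host physical page."""
        self.domain_pages.setdefault(domain, {})[gpa_page] = hpa_page

    def translate(self, requester_id, gpa):
        """Translate an inbound DMA address, or fault if it is not allowed."""
        domain = self.device_domain.get(requester_id)
        if domain is None:
            raise PermissionError("device not assigned to any domain")
        hpa_page = self.domain_pages[domain].get(gpa >> PAGE_SHIFT)
        if hpa_page is None:
            raise PermissionError("DMA fault: GPA not mapped for this domain")
        # Keep the offset within the page; replace only the page number.
        return (hpa_page << PAGE_SHIFT) | (gpa & PAGE_MASK)


remapper = DmaRemapper()
remapper.assign((3, 0, 0), "vm1")          # bus 3, device 0, function 0
remapper.map_page("vm1", 0x10, 0x8A3)      # guest page 0x10 -> host page 0x8A3
print(hex(remapper.translate((3, 0, 0), 0x10123)))  # 0x8a3123
```

The fault path matters as much as the translation: a device can only reach host pages that were explicitly mapped into its own domain, which is what makes direct assignment safe.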

3.6 Management Engine

The Management Engine (ME) is an embedded ARC controller within the IOH. The IOH ME performs manageability functions called Intel® Server Platform Services (SPS) for the discrete Baseboard Management Controller (BMC).


The functionality provided by the SPS firmware differs from Intel® Active Management Technology (Intel® AMT), which the ME provides on client platforms.

Server Platform Services are value-added platform management options for Intel platforms and their component ingredients (CPUs, chipsets, and I/O components). Each service is designed to function independently wherever possible, or to be grouped with one or more other features in flexible combinations, allowing OEMs (Original Equipment Manufacturers) to differentiate their platforms. The following is a high-level view of the Intel® Server Board S5500WB SPS functions:

• Node Management Features:

o NPTM Policy Manager

o Power Supply Monitoring Service

o Inlet Temperature Monitoring Service

o CPU Power Limiting Service

• Provide Access to ICH10R Devices: The ME has control of ICH10R platform instrumentation. SPS provides a mechanism for the BMC to access this instrumentation through IPMI OEM commands. Use of this capability on Intel servers is platform-/SKU-specific.

o ICH10 temperature monitoring

• PECI 2.0 Proxy: SPS offers a means for a BMC without a PECI 2.0 interface to use the ME as a PECI proxy. The BMC on Intel servers already has a PECI 2.0 interface, so this SPS capability is not used.

3.7 Intel® 82801Jx I/O Controller Hub (ICH10R)

The Intel® 82801Jx I/O Controller Hub (ICH10R) provides extensive I/O support and supports the following features and specifications:

• PCI Express* Base Specification, Revision 1.1 support

• ACPI Power Management Logic Support, Revision 3.0a

• Enhanced DMA controller, interrupt controller, and timer functions

• Integrated Serial ATA host controllers with independent DMA operation on up to six ports and AHCI support

• USB host interface with support for up to 12 USB ports, six UHCI host controllers, and two EHCI high-speed USB 2.0 host controllers

• System Management Bus (SMBus) Specification, Version 2.0 with additional support for I2C devices

• Low Pin Count (LPC) interface support

• Serial Peripheral Interface (SPI) support

3.7.1 Serial ATA Support

The ICH10R has an integrated Serial ATA (SATA) controller that supports independent DMA operation on six ports and data transfer rates of up to 3.0 Gb/s. The six SATA ports on the server board are numbered SATA-1 through SATA-6. You can enable or disable the SATA ports and/or configure them by accessing the BIOS setup utility during POST.

3.7.1.1 Intel® Embedded Server RAID Technology II

The onboard storage capability of these server boards includes support for Intel® Embedded Server RAID Technology II (Intel® ESRTII), which provides three standard software RAID levels: data striping (RAID Level 0), data mirroring (RAID Level 1), and data striping with mirroring (RAID Level 10). For higher performance, you can use data striping to alleviate disk bottlenecks by taking advantage of the dual independent DMA engines that each SATA port offers. Data mirroring is used for data security: if a disk fails, a mirrored copy of the failed disk is brought online. Because the RAID capability is embedded, it consumes no PCI resources (request/grant pair) and no add-in card slots.
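The three RAID levels differ in how a logical block maps onto the member disks. The Python sketch below shows the common textbook mappings for illustration only; the stripe size, disk ordering, and function names are assumptions, and this document does not specify the on-disk layout that Intel® ESRTII actually uses.

```python
def raid0_map(lba, num_disks, stripe_blocks):
    """RAID 0 (striping): map a logical block to (disk, physical block)."""
    stripe = lba // stripe_blocks      # index of the stripe unit overall
    offset = lba % stripe_blocks       # block within that stripe unit
    disk = stripe % num_disks          # stripe units rotate across disks
    row = stripe // num_disks          # stripe-unit row on each disk
    return disk, row * stripe_blocks + offset


def raid1_map(lba, num_disks=2):
    """RAID 1 (mirroring): every disk holds the block at the same address."""
    return [(disk, lba) for disk in range(num_disks)]


def raid10_map(lba, num_pairs, stripe_blocks):
    """RAID 10: stripe across mirrored pairs; pair n is disks 2n and 2n+1."""
    pair, block = raid0_map(lba, num_pairs, stripe_blocks)
    return [(2 * pair, block), (2 * pair + 1, block)]


# Assumed example: 4-block stripe units.
print(raid0_map(5, num_disks=2, stripe_blocks=4))     # (1, 1)
print(raid1_map(7))                                   # [(0, 7), (1, 7)]
print(raid10_map(5, num_pairs=2, stripe_blocks=4))    # [(2, 1), (3, 1)]
```

Striping spreads consecutive stripe units across disks so that several SATA ports work in parallel, while mirroring writes every block twice so that either copy can serve reads after a disk failure; RAID 10 combines the two by striping across mirrored pairs.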

With the addition of an optional Intel

®