International Technical Support Organization

SG24-4690-00

A Technical Introduction to PCI-Based RS/6000 Servers

April 1996

Take Note!

Before using this information and the product it supports, be sure to read the general information under “Special Notices” on

page xv.

First Edition (April 1996)

This edition applies to the PCI-based RS/6000 servers, Model E20 and Model F30, for use with the AIX Version 4.1.4 Operating

System.

Order publications through your IBM representative or the IBM branch office serving your locality. Publications are not stocked at the

address given below.

An ITSO Technical Bulletin Evaluation Form for reader's feedback appears facing Chapter 1. If the form has been removed,

comments may be addressed to:

IBM Corporation, International Technical Support Organization

Dept. JN9B Building 045 Internal Zip 2834

11400 Burnet Road

Austin, Texas 78758-3493

When you send information to IBM, you grant IBM a non-exclusive right to use or distribute the information in any way it believes

appropriate without incurring any obligation to you.

Copyright International Business Machines Corporation 1996. All rights reserved.

Note to U.S. Government Users — Documentation related to restricted rights — Use, duplication or disclosure is subject to

restrictions set forth in GSA ADP Schedule Contract with IBM Corp.

Abstract

After the successful introduction of the PCI-based RS/6000 workstations (40P/43P),

the RISC System/6000 family of products has been expanded to include a new line

of workgroup servers based on the PowerPC microprocessor, the Peripheral

Component Interconnect (PCI) and the PowerPC Reference Platform Specification

(PReP). These servers, which offer large memory and internal disk capacities, PC

I/O compatibility and flexible configurations, use “industry standard” components

and subsystems. Their configuration flexibility and enhanced Reliability,

Availability and Serviceability (RAS) features are what chiefly distinguish

these servers from the previously announced PCI-based RS/6000 workstations.

To support these new systems, new adapters and devices had to be provided, and

the AIX Version 4.1 operating system had to be enhanced dramatically.

All these enhancements present new environments and new configuration tasks to

system engineers, system administrators and customer engineers. This book is

intended to describe this new environment and to assist the support personnel in

accomplishing these new tasks.

(194 pages)

Copyright IBM Corp. 1996 iii

Contents

Abstract . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . iii

Special Notices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

Preface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvii

How This Document is Organized .......................... xvii

Related Publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xviii

International Technical Support Organization Publications ........... xviii

How Customers Can Get Redbooks and Other ITSO Deliverables ....... xix

How IBM Employees Can Get Redbooks and ITSO Deliverables ........ xx

Acknowledgments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xxi

Chapter 1. Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1 Rationale for the PowerPC Reference Platform Specification ......... 2

1.2 Introducing the PowerPC Reference Platform Specification .......... 4

1.3 The PowerPC Microprocessor Common Hardware Reference Platform (CHRP) . . . 6

1.3.1 PowerPC Platform - Introduction and History ............... 6

1.3.2 The PowerPC Platform Document ..................... 6

1.3.3 PowerPC Platform Goals ........................... 7

1.3.4 CHRP Certification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

Chapter 2. PCI-Based RS/6000 Server Hardware ............... 11

2.1 The Hardware Design .............................. 11

2.1.1 The PCI Bus Architecture ......................... 12

2.1.2 The ISA Bus Architecture ......................... 14

2.2 The Hardware Main Components ........................ 15

2.2.1 The Processor Subsystem ......................... 15

2.2.2 The L2 Cache ................................ 16

2.2.3 The Memory Controller and PCI Bridge ................. 17

2.2.4 The System Memory ............................ 17

2.2.5 The Primary PCI Bus ............................ 17

2.2.6 The Secondary PCI Bus .......................... 18

2.2.7 The EISA Bus ................................ 18

2.2.8 The X-Bus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

2.3 Electronics Partitioning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

2.4 RS/6000 Model E20 Product Description ................... 20

2.4.1 Standard Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

2.4.2 Supported Optional Features ........................ 23

2.4.3 RS/6000 Model E20 Limitations ...................... 31

2.5 RS/6000 Model F30 Product Description ................... 31

2.5.1 Standard Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

2.5.2 Supported Optional Features ........................ 34

2.5.3 RS/6000 Model F30 Limitations ...................... 38

2.6 Hardware Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

2.7 Performance Positioning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

2.7.1 SPEC95 Software Benchmark ....................... 40

Chapter 3. Hot-Swap Subsystem . . . . . . . . . . . . . . . . . . . . . . . . . 41

3.1 Components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

3.1.1 Backplanes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

3.1.2 Hot-Swap Bays and Banks ......................... 43

3.2 Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

3.2.1 Accessing the Hot-Swap Banks ...................... 44

3.2.2 Installing Drives in Bank C ........................ 45

3.2.3 Installing Drives in Bank D ......................... 45

3.2.4 Installing Drives in Bank E ......................... 46

3.2.5 Disk Carriers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

3.3 Using the Hot-Swap Subsystem ........................ 47

3.3.1 Adding a New Drive to a Live System .................. 47

3.3.2 Removing a Drive from a Live System .................. 48

3.3.3 Replacing a Previously Defined Drive ................... 49

3.3.4 Mirroring . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

Chapter 4. Boot Support and Firmware ..................... 53

4.1 Boot Record . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

4.2 Boot Components Specifically for AIX 4 on PCI-Based RS/6000 Systems 55

4.2.1 Firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

4.2.2 Software ROS for AIX ............................ 56

4.2.3 Non-Volatile RAM (NVRAM) ........................ 57

4.3 Boot Image . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

4.3.1 Platform-Specific Components . . . . . . . . . . . . . . . . . . . . . . . 59

4.3.2 Structure of the AIX Boot image on PCI-Based RS/6000 Systems .. 61

4.3.3 Boot Image Creation on PCI-Based RS/6000 Systems ......... 63

4.4 Understanding the Firmware .......................... 64

4.4.1 Firmware Boot Sequence .......................... 65

4.4.2 Firmware Passwords . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

4.4.3 Firmware Flash Update ........................... 68

4.4.4 Firmware Recovery . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

4.4.5 Power-On Self Test (POST) ........................ 69

4.5 LCD Panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

4.6 System Management Service (SMS) ...................... 72

4.6.1 SMS Graphical Main Menu ......................... 73

4.6.2 SMS ASCII Main Menu ........................... 73

4.6.3 Start-Up Menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

4.6.4 Test Menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

4.6.5 Tools Menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

Chapter 5. AIX Version 4.1.4 Support ...................... 75

5.1 Electronic Key-Switch Function ......................... 75

5.1.1 Boot-Device Order List ........................... 75

5.1.2 Function Keys - Key-Switches at Boot Time ............... 77

5.1.3 bootlist Command . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

5.2 Entering Debug Mode .............................. 78

5.3 System Dump Support .............................. 79

5.3.1 Defining a Directory for the System Dump ................ 79

5.3.2 Creating a Logical Volume for the System Dump ............ 80

5.3.3 Initiating a System Dump .......................... 80

5.3.4 Dump LCD Support ............................. 82

5.4 Managing System Backups ........................... 82

5.4.1 The bootinfo Command ........................... 83

5.4.2 Creating a System Backup ......................... 83

5.4.3 Testing Your System Backup ....................... 85

5.4.4 Restoring Your System Backup ...................... 86

5.4.5 Creating System Backups on Microchannel-Based RS/6000 Systems 87

5.4.6 Cloning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

5.5 Configuration Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

5.6 Graphics Support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

5.6.1 Installing the S15 Graphics Adapter .................... 93

5.6.2 Resolution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

5.6.3 Configuring the Adapter ........................... 94

5.6.4 Multiple Adapter Support .......................... 96

5.6.5 Accessing the Second Display ....................... 97

5.6.6 Support for Other Adapters ......................... 98

Chapter 6. Adapter and Device Configuration on PCI-Based RS/6000 Servers . . . 99

6.1 Device Types . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

6.1.1 Device Configuration Database ..................... 100

6.1.2 Device Location Codes .......................... 101

6.2 PCI Adapter Configuration ........................... 102

6.2.1 Configuring Non-Graphic PCI Adapters ................. 103

6.2.2 Configuring SCSI Devices ........................ 103

6.3 ISA Adapter Configuration ........................... 104

6.3.1 Recording Settings of ISA Adapters Already Configured ....... 104

6.3.2 Selecting ISA Adapter Hardware Parameters ............. 106

6.3.3 Installing Device Drivers ......................... 106

6.3.4 Defining ISA Adapters at AIX Level ................... 106

6.3.5 Setting the Adapter DIP Switches .................... 107

6.3.6 Making ISA Adapters Available ..................... 108

6.4 8-Port EIA-232 Asynchronous ISA Adapter (FC 2931) ........... 108

6.4.1 DIP-Switch Setting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

6.4.2 Configuring the Adapter .......................... 109

6.5 128-Port EIA-232 Asynchronous ISA Adapter (FC 2933) ......... 111

6.5.1 DIP-Switch Setting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

6.5.2 Configuring the Adapter .......................... 112

6.6 4-Port Multi-Protocol ISA Adapter (FC 2701) ................ 115

6.6.1 Cable Selections for 4-Port Multiprotocol Interface .......... 115

6.6.2 DIP-Switch Setting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

6.6.3 Configuring the Adapter .......................... 117

6.7 X.25 Interface Co-Processor ISA Adapter (FC 2961) ........... 120

6.7.1 DIP-Switch Setting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

6.7.2 Configuring the Adapter .......................... 122

6.8 Ethernet and Token-Ring ISA Adapters ................... 125

6.8.1 Configuring an ISA Ethernet Adapter .................. 126

6.8.2 Setting ISA Ethernet Adapter Parameters at Firmware Level .... 129

6.8.3 Configuring the Auto 16/4 Token-Ring ISA Adapter ......... 130

6.8.4 Setting Auto 16/4 Token-Ring ISA Adapter Parameters at Firmware Level . . . 134

Chapter 7. SCSI Cabling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

7.1 General SCSI Considerations ......................... 137

7.1.1 SCSI Bus Length ............................. 137

7.1.2 SCSI Terminators . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 138

7.1.3 SCSI Device Addresses ......................... 138

7.1.4 SCSI Bus Width .............................. 138

7.2 Cabling the SCSI-2 Fast/Wide Single-Ended PCI Adapter ........ 139

7.2.1 Adapter-to-First Device Cables ..................... 139

7.2.2 Device-to-Device Cables . . . . . . . . . . . . . . . . . . . . . . . . . 141

7.2.3 Terminators for Use with this Adapter ................. 141

7.3 Cabling the SCSI-2 Fast/Wide Differential PCI Adapter .......... 142

7.3.1 Adapter-to-First Device Cables ..................... 142

7.3.2 Device-to-Device Cables . . . . . . . . . . . . . . . . . . . . . . . . . 143

7.3.3 SCSI-2 F/W Differential PCI Adapter Terminators ........... 144

7.3.4 High-Availability Multi-Initiator SCSI-2 Fast/Wide Differential Cabling 144

Chapter 8. Diagnostics Support . . . . . . . . . . . . . . . . . . . . . . . . . 147

8.1 Diagnostics Operating Considerations .................... 147

8.2 Stand-Alone Diagnostics . . . . . . . . . . . . . . . . . . . . . . . . . . . . 148

8.2.1 Booting the Stand-Alone Diagnostics CD-ROM ............ 149

8.2.2 Running Stand-Alone Diagnostics in Concurrent Mode ........ 151

8.3 Online Diagnostics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 152

8.3.1 Concurrent Mode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 152

8.3.2 Service Mode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 153

8.3.3 Maintenance Mode . . . . . . . . . . . . . . . . . . . . . . . . . . . . 154

8.4 Using NIM to Run Online Diagnostics over the Network .......... 154

Chapter 9. Network Installation Management (NIM) Support ....... 157

9.1 NIM Concepts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 157

9.1.1 NIM Environment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 157

9.1.2 NIM Objects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 158

9.2 Configuring a PCI-Based RS/6000 Server as NIM Master ........ 161

9.2.1 NIM Master Requirements ........................ 161

9.2.2 Filesystem Creation . . . . . . . . . . . . . . . . . . . . . . . . . . . . 161

9.2.3 NIM Master Activation ........................... 162

9.2.4 Network Objects Definition ....................... 163

9.2.5 NIM Client Machine Definition ...................... 166

9.2.6 Resource Objects Definition ....................... 169

9.2.7 Allocating Resources for the Stand-Alone Installation ........ 172

9.2.8 Initiating the BOS Installation ...................... 173

9.3 Using a PCI-Based RS/6000 Server as a NIM Client ........... 174

9.4 Post-Installation Operations . . . . . . . . . . . . . . . . . . . . . . . . . . 177

9.4.1 PCI-Based RS/6000 Systems Network Installation Limitations ... 178

Chapter 10. Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . 179

10.1 Power-Up Problems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

10.1.1 Firmware Problems . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

10.2 Booting Problems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 180

10.2.1 Booting From CD-ROM ......................... 180

10.2.2 System Hangs During AIX Boot Process ............... 180

10.2.3 Recovering a System with no Bootable Media ............ 181

10.3 Installation Problems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 182

10.3.1 AIX Boot Device Order List ....................... 182

10.3.2 NIM Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 182

10.4 Accessing Diagnostics With NIM ...................... 183

10.5 Miscellaneous . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 183

10.6 Hints and Tips ................................. 183

10.6.1 Accessing System Management Services with an ASCII Terminal 183

10.6.2 Power-On Passwords . . . . . . . . . . . . . . . . . . . . . . . . . . 183

10.6.3 ISA Adapter Configuration ....................... 184

10.6.4 Configuration Information . . . . . . . . . . . . . . . . . . . . . . . . 185

10.6.5 Using the Error Logging Facility .................... 185

10.6.6 Operator Panel F30 LED Status .................... 185

10.6.7 Dealing With Power Failures ...................... 186

Appendix A. Firmware Checkpoint Three-Digit Codes ........... 189

List of Abbreviations ................................ 195

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 197

Figures

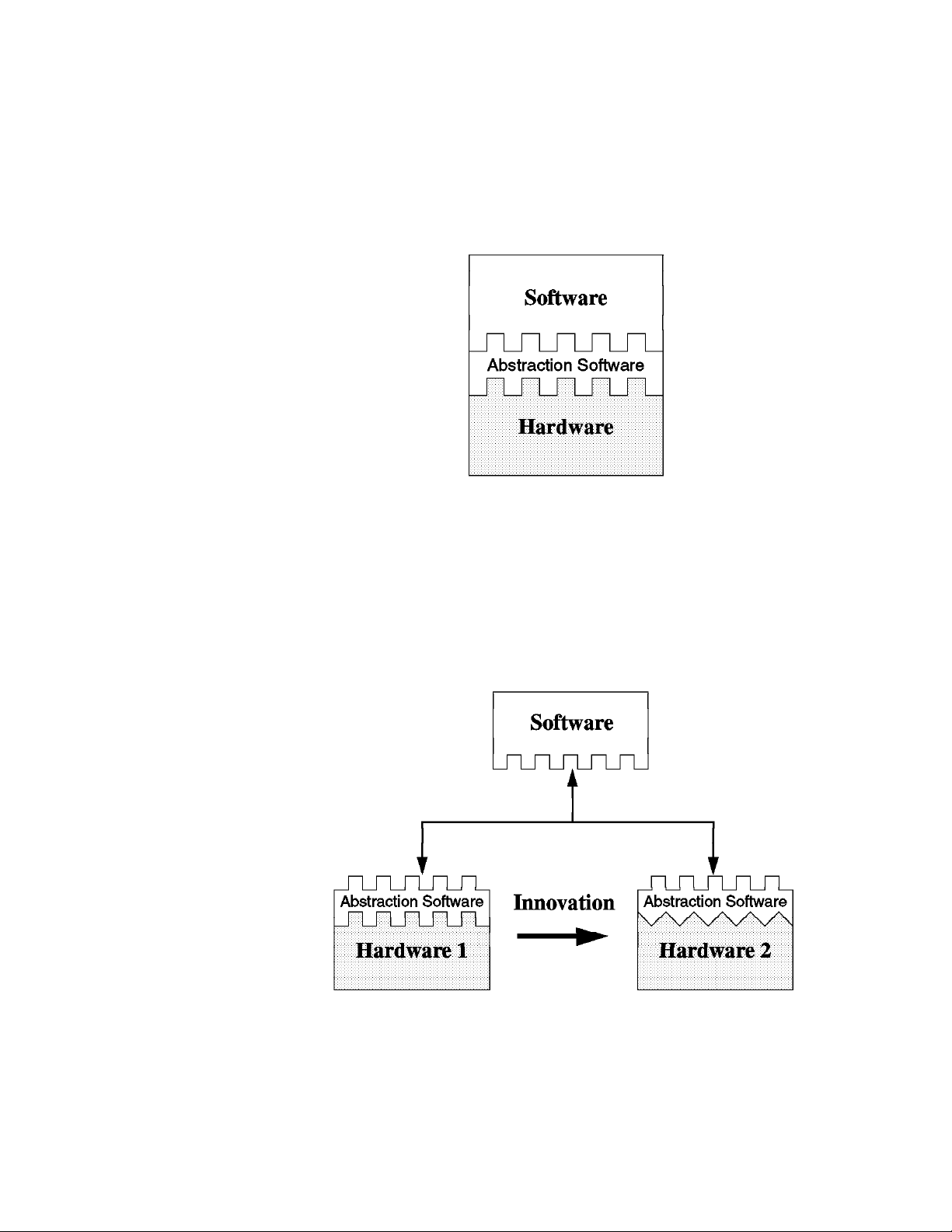

1. Old Compatibility Model .............................. 2

2. Innovation Within The Old Compatibility Model ................ 2

3. PReP Specification Compatibility Model .................... 3

4. PReP Specification Design Environment .................... 3

5. PCI RS/6000 Entry Server Logical Block Diagram ............. 12

6. PowerPC 604 Microprocessor Logical Block Diagram ........... 16

7. PCI-Based RS/6000 Server's Electronics Partitioning ............ 19

8. RISC System/6000 Model 7024-E20 ..................... 20

9. RS/6000 Model E20 Front View ........................ 22

10. RS/6000 Model F30 Bays ........................... 33

11. Components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

12. Installing Hot-Swap . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

13. Front Bays . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

14. Removing the Front Panel ........................... 45

15. Disk Drive Light ................................. 46

16. Boot Structure on PCI-Based RS/6000 Systems and Microchannel-Based RS/6000 Systems . . . 53

17. Hardfile Layout on Microchannel-Based RS/6000 Systems ........ 54

18. Hardfile Layout on PReP Specification .................... 54

19. Hardfile Layout on Microchannel-Based RS/6000 Systems ........ 55

20. NVRAM Layout . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

21. Relation Map of Base Proto and Proto Extension Files ........... 60

22. Boot Image Layout on PCI-Based RS/6000 Systems ............ 62

23. Structure of the Boot Image on PCI-Based RS/6000 Systems ...... 62

24. AIX Version 4 Boot Image Creation ...................... 64

25. Firmware Boot Sequence ............................ 65

26. Boot Sequence Selection Submenu ...................... 76

27. Copying a System Dump on Reboot ..................... 79

28. Creating a mksysb ................................ 84

29. Editing the mksysb Script ............................ 88

30. Changing the Display Type ........................... 95

31. Devices' Location Codes ........................... 102

32. List of Configured ISA Adapters ....................... 105

33. Attributes Listed Using the lsresource Command ............. 105

34. Attributes Listed Using the lsattr Command ................ 105

35. SMIT ISA Menu ................................ 107

36. SMIT Configuration Menu for Adding an 8-Port Asynchronous ISA Adapter . . . 110

37. SMIT Configuration Menu for Adding a 128-Port Asynchronous ISA Adapter . . . 114

38. SMIT Configuration Menu for Adding a 4-Port Multi-Protocol ISA Adapter . . . 119

39. SMIT Configuration Menu for Adding an X.25 Interface Co-Processor ISA Adapter . . . 124

40. SMIT Configuration Menu for Adding an ISA Ethernet Adapter ..... 128

41. SMIT Configuration Menu for Adding an Auto 16/4 Token-Ring ISA Adapter . . . 133

42. Example of HACMP Cabling ......................... 145

43. ISA Adapter Service AID Diagnostic Menu ................. 150

44. ISA Adapter Attribute Selection Diagnostic Menu ............. 151

45. Sample Network Topology .......................... 160

46. Network Topology Used in Our Lab ..................... 164

47. Defining the Third Network .......................... 165

48. Defining the Route Between First and Second Networks ......... 166

49. Machine Object Definition Menu ....................... 168

50. Resource Object Definition Menu (SPOT Resource) ........... 171

51. Initial Boot Screen ............................... 175

52. System Management Services Menu .................... 175

53. System Management Services Utilities Menu ............... 176

54. Adapter Parameters Submenu ........................ 176

55. Operator Panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 186

Tables

1. PowerPC and Bus Specification ........................ 15

2. Optional Disk Drives on Model E20 ...................... 23

3. Optional Tape Drives on Model E20 ..................... 23

4. Optional CD-ROM drive on Model E20 .................... 24

5. Supported Monitors on Model E20 ...................... 25

6. SCSI Adapter Connections on Model E20 .................. 26

7. Optional Disk Drives on Model F30 ...................... 35

8. Optional Tape Drives on Model F30 ..................... 35

9. SCSI Adapter Connections on Model F30 .................. 36

10. PCI-based RS/6000 Comparison Performance Table ............ 39

11. Status Lights . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

12. Platform Types . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

13. Checkpoint Codes on the LCD Panel ..................... 72

14. Dump Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

15. bootinfo -T . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

16. Accessing a Second Display .......................... 97

17. 8-Port Asynchronous ISA Adapter DIP Switches ............. 109

18. 128-Port Asynchronous ISA Adapter DIP Switches ............ 112

19. Physical Interfaces on 4-Port Multiprotocol Interface Cable ....... 116

20. Maximum Cable Length ............................ 116

21. DIP-Switches on 4-Port Multi-Protocol ISA Adapter ............ 116

22. 4-Port Multiprotocol Adapter: DIP-Switch Suggested Settings ...... 117

23. DIP Switches on the X.25 Interface Co-Processor ISA Adapter ..... 121

24. X.25 Adapter: DIP-Switch Suggested Settings ............... 121

25. Adapter Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 130

26. SCSI-2 Fast/Wide Single-Ended Adapter-to-First Device Cables .... 140

27. Device-to-Device Cables for Single-Ended Installations ......... 141

28. Terminators for Single-Ended Installations ................. 141

29. SCSI-2 Fast/Wide Differential Adapter-to-First Device Cables ...... 142

30. Device-to-Device Cables for Differential Installations ........... 143

31. Terminators for Differential Installations ................... 144

32. HACMP/6000 Cabling Features and Part Numbers ............ 145

33. NIM Client Configurations ........................... 158

34. NIM Object Classes .............................. 158

35. LED Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 186

36. Firmware Checkpoint Three-Digit Codes .................. 189

Special Notices

This publication is intended to help system engineers, system administrators,

customer personnel and users to support, configure and manage the PCI-based

RS/6000 Servers, RS/6000 Model E20 and RS/6000 Model F30. The information

in this publication is not intended as the product specification for these systems.

See the PUBLICATIONS section of the IBM Hardware Announcement for the IBM

RS/6000 Model E20 and the IBM RS/6000 Model F30 for more information about

what publications are considered to be product documentation.

References in this publication to IBM products, programs or services do not imply

that IBM intends to make these available in all countries in which IBM operates.

Any reference to an IBM product, program, or service is not intended to state or

imply that only IBM's product, program, or service may be used. Any functionally

equivalent program that does not infringe any of IBM's intellectual property rights

may be used instead of the IBM product, program or service.

Information in this book was developed in conjunction with use of the equipment

specified, and is limited in application to those specific hardware and software

products and levels.

IBM may have patents or pending patent applications covering subject matter in

this document. The furnishing of this document does not give you any license to

these patents. You can send license inquiries, in writing, to the IBM Director of

Licensing, IBM Corporation, 500 Columbus Avenue, Thornwood, NY 10594 USA.

The information contained in this document has not been submitted to any formal

IBM test and is distributed AS IS. The information about non-IBM (VENDOR)

products in this manual has been supplied by the vendor and IBM assumes no

responsibility for its accuracy or completeness. The use of this information or the

implementation of any of these techniques is a customer responsibility and depends

on the customer's ability to evaluate and integrate them into the customer's

operational environment. While each item may have been reviewed by IBM for

accuracy in a specific situation, there is no guarantee that the same or similar

results will be obtained elsewhere. Customers attempting to adapt these

techniques to their own environments do so at their own risk.

Any performance data contained in this document was determined in a controlled

environment, and therefore, the results that may be obtained in other operating

environments may vary significantly. Users of this document should verify the

applicable data for their specific environment.

Reference to PTF numbers that have not been released through the normal

distribution process does not imply general availability. The purpose of including

these reference numbers is to alert IBM customers to specific information relative to

the implementation of the PTF when it becomes available to each customer

according to the normal IBM PTF distribution process.

The following terms are trademarks of the International Business Machines

Corporation in the United States and/or other countries:

AIX                   AIX/6000

AIXwindows            AT

IBM                   InfoExplorer

OS/2                  Power Series

PowerPC               PowerPC Reference Platform

PowerPC 604           PS/2

RISC System/6000      RS/6000

SP                    400

The following terms are trademarks of other companies:

C-bus is a trademark of Corollary, Inc.

PC Direct is a trademark of Ziff Communications Company and is

used by IBM Corporation under license.

UNIX is a registered trademark in the United States and other

countries licensed exclusively through X/Open Company Limited.

Microsoft, Windows, and the Windows 95 logo are trademarks or registered

trademarks of Microsoft Corporation.

Apple, LocalTalk, Macintosh      Apple Computer, Inc.

Compaq                           Compaq Computer Corporation

DEC, VAX, VMS, VT100             Digital Equipment Corporation

Intel                            Intel Corporation

Windows NT                       Microsoft Corporation

Motorola                         Motorola, Inc.

NFS, Solaris, SunSoft            Sun Microsystems, Inc.

Novell                           Novell, Inc.

NuBus                            Texas Instruments, Inc.

Racal-Vadic                      Racal-Vadic Corporation

SCSI                             Security Control Systems, Inc.

Other trademarks are trademarks of their respective companies.

Preface

This document is intended to assist system engineers, customer engineers, system

administration personnel, and customers in configuring, managing and using AIX

Version 4.1 on the PCI-based RS/6000 servers, RS/6000 Model E20 and RS/6000

Model F30.

It contains descriptions of processes which are unique to AIX Version 4.1 on

PCI-based RS/6000 servers. Practical configuration and environment examples are

provided as well as hints and tips to address "how-to" issues involving PCI-based

RS/6000 servers.

How This Document is Organized

This document is organized as follows:

Chapter 1, “Introduction”

This chapter includes an introduction to the PCI-based RS/6000 servers and to

the PowerPC Reference Platform Specification (PReP). It also includes a

section on the PowerPC Microprocessor Common Hardware Reference

Platform (CHRP).

Chapter 2, “PCI-Based RS/6000 Server Hardware”

This chapter introduces the PCI-based RS/6000 server's hardware design.

This includes a description of the main components around the Peripheral

Component Interconnect (PCI) bus and the electronics partitioning. The

RS/6000 Model E20 and RS/6000 Model F30 standard and optional features

are also described in this chapter.

Chapter 3, “Hot-Swap Subsystem”

This chapter includes an overview of the hot-swap subsystem provided with the

RS/6000 Model F30. Component descriptions and installation procedures are

explained along with the features of AIX which can take advantage of this

hot-swap capability.

Chapter 4, “Boot Support and Firmware”

The first part of this chapter explains the components involved in the boot

process. The boot process performed by the firmware is described. This

chapter also includes an introduction to the System Management Services

(SMS) programs.

Chapter 5, “AIX Version 4.1.4 Support”

AIX Version 4.1.4 is the first version of AIX supported on the PCI-based

RS/6000 servers, E20 and F30. This chapter explains the enhancements

included in AIX Version 4.1.4 that support these new machines. Important

topics such as system backup are described in detail, and hints and tips are

included.

Chapter 6, “Adapter and Device Configuration on PCI-Based RS/6000 Servers”

This chapter includes step-by-step procedures on how to configure devices and

adapters. It also includes the cable types and cabling information needed

for most adapters.

Chapter 7, “SCSI Cabling”

This chapter describes the main SCSI cabling features used with the

PCI-based RS/6000 servers. It addresses frequently asked questions, such as

how many SCSI devices can be attached to a single SCSI adapter and which

cable features are needed to attach more SCSI devices to the same SCSI chain.

Chapter 8, “Diagnostics Support”

This chapter describes the diagnostics support provided by the PCI-based

RS/6000 servers. It explains how to run the diagnostics programs in both

stand-alone and online modes, locally and over the network.

Chapter 9, “Network Installation Management (NIM) Support”

This chapter describes the steps required to configure your PCI-based RS/6000

server as a Network Installation Management (NIM) Master and as a NIM

Client.

Chapter 10, “Troubleshooting”

There are some differences between the PCI-based RS/6000 servers and the

microchannel-based RS/6000 systems which require different approaches to

problem determination. This chapter addresses some of the problems which

can occur and how to prevent or solve them. This chapter also includes a hints

and tips section.

Related Publications

The publications listed in this section are considered particularly suitable for a more

detailed discussion of the topics covered in this redbook.

IBM RISC System/6000 7024 E Series User's Guide, SA38-0501

IBM RISC System/6000 7024 E Series Service Guide, SA38-0502

IBM RISC System/6000 7025 F Series User's Guide, SA38-0504

IBM RISC System/6000 7025 F Series Service Guide, SA38-0505

IBM RISC System/6000 7024 and 7025 Diagnostic Information, SA38-0509

AIX Version 4.1 Network Installation Management Guide and Reference, SC23-2627

International Technical Support Organization Publications

Managing AIX Version 4.1 on PCI-Based RS/6000 System Workstations

(40P/43P), SG24-2581

A complete list of International Technical Support Organization publications, known

as redbooks, with a brief description of each, may be found in:

International Technical Support Organization Bibliography of Redbooks,

GG24-3070.

How Customers Can Get Redbooks and Other ITSO Deliverables

Customers may request ITSO deliverables (redbooks, BookManager BOOKs, and

CD-ROMs) and information about redbooks, workshops, and residencies in the

following ways:

IBMLINK

Registered customers have access to PUBORDER to order hardcopy, to

REDPRINT to obtain BookManager BOOKs

IBM Bookshop — send orders to:

usib6fpl@ibmmail.com (USA)

bookshop@dk.ibm.com (Outside USA)

Telephone orders

1-800-879-2755 (USA) 0256-478166 (UK)

354-9408 (Australia) 32-2-225-3738 (Belgium)

359-2-731076 (Bulgaria) 1-800-IBM-CALL (Canada)

42-2-67106-250 (Czech Republic) 45-934545 (Denmark)

593-2-5651-00 (Ecuador) 01805-5090 (Germany)

03-69-78901 (Israel) 0462-73-6669 (Japan)

905-627-1163 (Mexico) 31-20513-5100 (Netherlands)

064-4-57659-36 (New Zealand) 507-639977 (Panama)

027-011-320-9299 (South Africa)

Mail Orders — send orders to:

IBM Publications

P.O. Box 9046

Boulder, CO 80301-9191

USA

IBM Direct Services

Sortemosevej 21,

3450 Allerod

Denmark

Fax — send orders to:

1-800-445-9269 (USA) 0256-843173 (UK)

32-2-225-3478 (Belgium) 359-2-730235 (Bulgaria)

905-316-7210 (Canada) 42-2-67106-402 (Czech Republic)

593-2-5651-45 (Ecuador) 07032-15-3300 (Germany)

03-69-59985 (Israel) 0462-73-7313 (Japan)

31-20513-3296 (Netherlands) 064-4-57659-16 (New Zealand)

507-693604 (Panama) 027-011-320-9113 (South Africa)

1-800-IBM-4FAX (USA only) — ask for:

Index # 4421 Abstracts of new redbooks

Index # 4422 IBM redbooks

Index # 4420 Redbooks for last six months

Direct Services

Send note to softwareshop@vnet.ibm.com

Redbooks Home Page on the World Wide Web

http://www.redbooks.ibm.com/redbooks

E-mail (Internet)

Send note to redbook@vnet.ibm.com

Internet Listserver

With an Internet E-mail address, anyone can subscribe to an IBM

Announcement Listserver. To initiate the service, send an E-mail note to

announce@webster.ibmlink.ibm.com with the keyword subscribe in the body of

the note (leave the subject line blank). A category form and detailed

instructions will be sent to you.

How IBM Employees Can Get Redbooks and ITSO Deliverables

Employees may request ITSO deliverables (redbooks, BookManager BOOKs, and

CD-ROMs) and information about redbooks, workshops, and residencies in the

following ways:

PUBORDER — to order hardcopies in USA

GOPHER link to the Internet

Type GOPHER

Select IBM GOPHER SERVERS

Select ITSO GOPHER SERVER for Redbooks

Tools disks

To get LIST3820s of redbooks, type one of the following commands:

TOOLS SENDTO EHONE4 TOOLS2 REDPRINT GET GG24xxxx PACKAGE

TOOLS SENDTO CANVM2 TOOLS REDPRINT GET GG24xxxx PACKAGE (Canadian users only)

To get lists of redbooks:

TOOLS SENDTO WTSCPOK TOOLS REDBOOKS GET REDBOOKS CATALOG

TOOLS SENDTO USDIST MKTTOOLS MKTTOOLS GET ITSOCAT TXT

TOOLS SENDTO USDIST MKTTOOLS MKTTOOLS GET LISTSERV PACKAGE

To register for information on workshops, residencies, and redbooks:

TOOLS SENDTO WTSCPOK TOOLS ZDISK GET ITSOREGI 1996

For a list of product area specialists in the ITSO:

TOOLS SENDTO WTSCPOK TOOLS ZDISK GET ORGCARD PACKAGE

Redbooks Home Page on the World Wide Web

http://w3.itso.ibm.com/redbooks/redbooks.html

ITSO4USA category on INEWS

IBM Bookshop — send orders to:

USIB6FPL at IBMMAIL or DKIBMBSH at IBMMAIL

Internet Listserver

With an Internet E-mail address, anyone can subscribe to an IBM

Announcement Listserver. To initiate the service, send an E-mail note to

announce@webster.ibmlink.ibm.com with the keyword subscribe in the body of

the note (leave the subject line blank). A category form and detailed

instructions will be sent to you.

Acknowledgments

This project was designed and managed by:

Miguel Crisanto

International Technical Support Organization, Austin Center

The authors of this document are:

Alexandre Bonfim de Azevedo

IBM Brazil

Giampiero Galli

IBM Italy

Simon M. Robertson

IBM UK

Miguel Crisanto

IBM Austin

Thanks also to our editor:

Marcus Brewer

Editor, ITSO Austin Center

This publication is the result of a residency conducted at the International Technical

Support Organization, Austin Center.

Chapter 1. Introduction

IBM offers a family of powerful workgroup servers, the RS/6000 Model E20 and the

RS/6000 Model F30, that are ideal for running small business and departmental

applications. These servers use the PowerPC processor architecture and offer

large memory capacities, PC I/O compatibility and flexible configurations.

The RS/6000 Model E20 is the lowest-cost entry server and is intended to be the

RS/6000 family's competitive product for the price-conscious entry server market.

The RS/6000 Model F30 is a system targeted toward those customers looking for

investment protection, with a reliable, highly expandable system.

In order to keep development costs as low as possible, the PCI-based RS/6000

servers use components and subsystems developed in other areas of IBM as well

as the "PC Clone" industry at large. The design of this server family is intended to

have much in common with the PC Server line of IBM products produced in Boca

Raton and Raleigh. The power and mechanical packaging of the PCI-based

RS/6000 servers is the same as that used for packaging the IBM PC Server

products.

The electronics partitioning of the PCI-based RS/6000 servers (see 2.3, “Electronics

Partitioning” on page 19 for more information) has been chosen so as to allow a

fast and easy upgrade to more powerful processors, and even to multiprocessor

systems.

The PCI-based RS/6000 servers are based on the PowerPC Reference Platform

(PReP) system architecture; thus they have the capability to run several different

operating systems. Currently, only AIX and Microsoft's Windows NT have been

announced for this platform; however, the PReP-based hardware design for

memory and I/O subsystems allows for the support of other operating systems that

may be announced in the future. See 1.2, “Introducing the PowerPC Reference

Platform Specification” on page 4 for more information about the PReP

specification.

Their orientation to the PReP specification makes the PCI-based RS/6000 servers

different from the "classical" microchannel-based RS/6000 systems. As yet,

microchannel is not available, but the PCI and ISA bus architectures have been

implemented on these types of machines. The AIX operating system was

enhanced in several areas in order to manage the new hardware architecture.

Those areas include support for stand-alone and online diagnostics on machines

without a physical mode key as well as configuration helpers for ISA adapters.

Although most changes made to AIX to enable support for the PCI-based RS/6000

servers are transparent to the end-user, in some areas the system administrator will

be confronted with platform-specific issues. This will be the case, for example,

when configuring a PCI-based RS/6000 server as a Network Installation

Management (NIM) Master. This book is intended to help end-users and system

administrators in understanding these platform-specific AIX issues and provide the

“how to” information required to handle these differences.

In this chapter, we include a brief introduction to the PReP specification. For a

more detailed overview of the specification, you may refer to the Managing AIX V4

on PCI-Based RS/6000 Workstations, SG24-2581, redbook.

The PowerPC Microprocessor Common Hardware Reference Platform, previously

known as the Common Hardware Reference Platform (CHRP), is a superset of the

PReP Specification. Although IBM has not yet announced any system based on

this new specification, it is becoming very popular, and many companies have

published their intentions to develop CHRP-compliant systems. For this reason, we

include a description of the CHRP specification in this chapter.

1.1 Rationale for the PowerPC Reference Platform Specification

Computer systems today span a wide range of environments, from hand-held

portables to room-size mainframes. The largest percentage of systems are based

on the IBM PC/AT, Apple Macintosh or a variety of workstation-level RISC

architectures.

These machines cover the needs of personal productivity, entry engineering design,

entry commercial data management, information analysis, and database, file, and

application servers. Today, despite their high levels of performance and

functionality, existing architectures limit the system designer's ability to add

innovative new features without jeopardizing operating systems and applications.

These limitations restrict the use of hardware and software enhancements which

promise improved user interfaces, faster system performance and broader

operating environments. Many times, system designers must carry obsolete

hardware structures to maintain compatibility.

Figure 1. Old Compatibility Model. Software communicates directly with hardware.

Figure 1 shows the old compatibility model, where the software communicates

directly with the hardware. Changes applied to the hardware require changes to

software and vice-versa, as shown in Figure 2.

Figure 2. Innovation Within The Old Compatibility Model

To be sustainable and to continue growing, the computer industry must define

computer architectures which allow system and application designs to utilize the

latest silicon, interface, storage, display, and software technologies. The key to

these new computer architectures is the ability of the software to abstract the

hardware from the operating system kernel and applications without sacrificing

compatibility or performance.

Figure 3. PReP Specification Compatibility Model. Abstraction layer separates hardware

and software.

Figure 3 shows the new model specified in the PowerPC Reference Platform

Specification. An abstraction layer separates the hardware from the software. The

advantage of this model is that hardware designers now have room to innovate

without jeopardizing the ability of their platform to run as many operating systems

as possible.

Figure 4. PReP Specification Design Environment

Figure 4 shows that changing the hardware, for example from hardware level 1 to

hardware level 2, only requires a change in the abstraction layer. No changes are

required in the operating system or in the application itself.
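The model in Figure 3 and Figure 4 can be illustrated with a minimal Python sketch. All names here (AbstractionLayer, HardwareLevel1, HardwareLevel2, read_timer_ticks and the register values) are hypothetical and chosen only for illustration; they are not taken from the PReP specification. The point is that the operating-system-level function stays the same while only the abstraction layer differs between hardware levels.

```python
from abc import ABC, abstractmethod


class AbstractionLayer(ABC):
    """Hypothetical interface that operating system code programs against."""

    @abstractmethod
    def read_timer_ticks(self) -> int:
        """Return the current timer value in ticks."""


class HardwareLevel1(AbstractionLayer):
    """Illustrative hardware level 1: the timer lives in one 16-bit register."""

    def __init__(self) -> None:
        self._register = 0x1234  # stand-in for a real device register

    def read_timer_ticks(self) -> int:
        return self._register & 0xFFFF


class HardwareLevel2(AbstractionLayer):
    """Illustrative hardware level 2: the timer is split across two registers."""

    def __init__(self) -> None:
        self._high, self._low = 0x0001, 0x1234  # stand-ins for device registers

    def read_timer_ticks(self) -> int:
        return (self._high << 16) | self._low


def uptime_seconds(hal: AbstractionLayer, ticks_per_second: int = 1000) -> float:
    """Operating-system-level code: identical for every hardware level."""
    return hal.read_timer_ticks() / ticks_per_second


if __name__ == "__main__":
    for hal in (HardwareLevel1(), HardwareLevel2()):
        print(type(hal).__name__, uptime_seconds(hal))
```

Moving from HardwareLevel1 to HardwareLevel2 changes only the class that implements the interface; uptime_seconds, which stands in for the operating system and application, is untouched.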

Independent software vendors (ISVs) would like to develop for a large, installed base

of hardware systems and on as few operating system platforms as possible. For

this to happen, an industry standard computer architecture is required. The time

has come to define a new architecture in this area which has the following key

features:

The ability to allow hardware vendors to differentiate

The ability to use industry standard components and interfaces

The ability to support optimization of application performance

Compatible Operating Systems

This type of open system architecture allows hardware system vendors to

develop differentiated, yet compatible, systems. Each system is able to run any

of the compatible operating systems as well as the applications written for these

operating systems and system architecture.

1.2 Introducing the PowerPC Reference Platform Specification

The PowerPC Reference Platform Specification provides a description of the

devices, interfaces and data formats required to design and build a PowerPC-based

industry standard computer system. It is written to define a hardware platform which, when

coupled with the hardware abstraction software provided by the operating system or

hardware-system vendors, allows the computer industry to build PowerPC systems

which all run the same shrink-wrapped operating systems and the same

shrink-wrapped applications for those operating environments.

It gives system developers the freedom to choose the level of market differentiation

and enhanced features required in a given computer environment without carrying

obsolete interfaces or losing compatibility.

This specification defines the minimum functional requirements for a compliant

PReP implementation. It also provides a list of recommended hardware

subsystems, devices and interfaces.

Operating system vendors may use this specification as a reference to determine

the level of functionality required in a hardware abstraction layer. The specification

shows the hardware subsystems that are likely to change and therefore may need

hardware abstractions.

The PowerPC Reference Platform Specification is written primarily for system

developers. It contains operating-system-specific descriptions and references to

their hardware abstraction approach.

This specification also describes a reference implementation which is a fully

functional PReP system design supporting all operating systems and applications

that are being ported to this reference platform. This reference implementation

provides an example to which system developers can compare and gives them a

better understanding of their own design goals.

This specification supports all 32-bit PowerPC processors and is intended to cover

the following systems:

Portables

Medialess

Desktop

Workstations

Servers

Because PReP requires machine abstractions, the specification accommodates the

evolution of software and hardware technologies without losing system

compatibility.

The PReP specification covers:

Hardware Configuration

The hardware configuration defines the minimum and recommended

hardware standards and capacities required to be PowerPC Reference

Platform-compliant and compatible with targeted operating

environments.

Architecture

The system architecture defines the minimum and recommended system

attributes required to design a compatible computer system. This

section describes the key hardware and software architecture attributes

and restrictions defined for PReP compliance.

Machine Abstraction

To enable the same operating system to run on different

PReP-compliant platforms, the operating system must be designed to

use abstraction software to interface with the hardware.

Abstraction software concentrates operating system

hardware-dependent code into a collection of code that has well-defined

interfaces with the operating system kernel and may be modified to

meet the hardware interface.

Boot Process

This section describes the boot process, the format and the contents of

boot information, and the state of the system at the end of the boot

process. It also mentions “Open Firmware,” the IEEE standard P1275

for Boot Firmware, as the goal for a PReP-compliant firmware

implementation.

Open Firmware is defined in one of the appendixes included in the

specification.

Reference Implementation

The PReP specification describes a reference implementation of a

PReP-compliant system. This description may be used as a high-level

design for vendors wanting to produce a compatible system, or it may be

used as an example for vendors who want to produce a different

system.

Power Management

Power Management is used for saving electrical power. There are two

types of Power Management techniques:

Micro Power Management

This is hardware-managed power control.

Macro Power Management

This uses system software to control the hardware. Macro Power

Management is, by far, the more powerful technique and thus is the

basis for the PReP Power Management model.

1.3 The PowerPC Microprocessor Common Hardware Reference

Platform (CHRP)

This section introduces the PowerPC Microprocessor Common Hardware

Reference Platform, previously referred to within the industry as Common Hardware

Reference Platform (CHRP), and describes the purposes and the goals of this

platform.

To avoid using the platform's long name, the industry has assigned a nickname to

this specification, PowerPC Platform.

Before talking about the platform itself, we want to give you a brief introduction

about the history, rationale and reasons why this platform had to be developed.

1.3.1 PowerPC Platform - Introduction and History

The PowerPC family of microprocessors, which is being jointly developed by Apple,

IBM and Motorola, is the foundation for an established and rapidly expanding

market for RISC-based systems. Apple Computer has shipped well over one

million Power Macintosh computers since March 1994. IBM has made major

announcements for a full line of PowerPC systems, thus completing its successful

PowerPC-based workstation and server products. Motorola has introduced a broad

range of desktop and server systems. Other companies, such as Bull, Canon and

FirePower, have announced or shipped PowerPC-based systems.

The PowerPC systems shipped by Apple retain many legacy characteristics of

Macintosh hardware and software. The existing PowerPC systems shipped by IBM

and Motorola retain many legacy characteristics of Intel-based PC design. The

operating systems on which the applications run are not compatible with the

different hardware platforms. This incompatibility causes hardware manufacturers

and software developers to have to choose platform families, and this limits the

options available to users.

To correct the problems facing customers and developers, Apple, IBM and Motorola

looked at various ways of combining the two hardware architectures into a common

system architecture. In November 1994, Apple, IBM and Motorola agreed to

develop a specification for a common hardware platform with the purpose of

defining a system which will become the pervasive open industry standard for

single users and on up through to server configurations. Finally, on November 13,

1995, the three companies announced the availability of the PowerPC Platform.

1.3.2 The PowerPC Platform Document

The PowerPC Platform is a set of specifications that defines a unified personal

computer architecture and brings the combined advantages of the Power Macintosh

platform and the standard PC environment to both system vendors and users.

These open specifications will make it easier for system vendors to design

computers capable of running multiple operating systems. Operating systems from

Apple, IBM, Microsoft, Novell, and SunSoft are planned to support the PowerPC

Platform.

The PowerPC Platform, combined with the superior performance of the PowerPC

RISC microprocessor, will support today's advanced applications and drive the next

generation of applications which address emerging customer requirements for

video, multimedia, virtual reality, speech recognition, 3D graphics, and enhanced

communications. PowerPC Platform-compliant systems will also benefit from the

availability of components designed to industry specifications which can help

reduce costs.

The PowerPC Platform specification is a blueprint for system vendors and

independent hardware vendors. It specifies the kinds of input/output interfaces, bus

standards and other system-level functional elements required to implement a

single, unified architecture around the PowerPC microprocessor. The PowerPC

alliance companies (Apple, IBM and Motorola) are publishing the PowerPC Platform

specifications as part of their initiative to create a superior, industry-wide

RISC-based alternative to the CISC-based X86 offerings. The PowerPC Platform

provides a standard architecture for the next generation of personal computing - an

architecture that is open, multi-OS capable, scalable from portables to

high-performance servers, and free from the limitations of CISC-based

microprocessor architectures, which have had to incorporate RISC-like features to

avoid reaching their performance peak.

The PowerPC Platform, an open, publicly available reference architecture for the

industry, leverages industry standard component designs. System vendors

choosing to implement the PowerPC Platform will benefit not only from the

specifications but also from the reference designs and infrastructure, including

chipsets, peripherals and firmware from leading vendors. Thus, the PowerPC

Platform provides a lower-cost, standard PC foundation for a broad range of

computing systems from multiple system vendors which can help to increase PC

volumes and enables system vendors to differentiate their PowerPC systems.

Additionally, users will benefit from broader access to software applications.

PowerPC Platform Document

The PowerPC Platform document is a superset of the PowerPC Reference

Platform Specification, Version 1.1, the Apple RISC Architecture (Power

Macintosh) and IBM RISC server systems.

1.3.3 PowerPC Platform Goals

The goals of this specification are as follows:

To create an open industry standard to be used for the implementation of

PowerPC-based systems. The architecture document is available to the

industry and can be used by any hardware or software vendor to develop

compliant products.

To allow compatible differentiation through the use of abstracted hardware

interfaces, defined minimum hardware, and extension mechanisms.

To leverage existing and future industry-standard buses and interfaces.

Existing bus architectures have a proven level of performance and functionality.

Established industry-standard interfaces (SCSI, IDE, LocalTalk, Ethernet, and

so on) and newer bus architectures, interfaces and protocols (PCI, PC Card,

IrDA, and so forth) provide higher levels of performance or utility that are not

achievable by the older standards. The architecture allows platform and

system designers to determine which buses, interfaces and protocols best suit

their target environment.

To provide a flexible address map. Another key attribute of this specification is

the relocatability of devices and subsystems within the PowerPC address

space. Subsystem address information, which defines where I/O devices

reside, is detected by the Open Firmware and passed to the operating systems

in the device tree. The architecture accommodates the use of multiple identical

buses and adapters in the same platform without address conflicts.

To build upon the Open Firmware boot environment defined in IEEE 1275,

IEEE Standard for Boot (Initialization Configuration) Firmware, Core

Requirements and Practices. Currently, the abstraction approach for some

operating systems uses platform description information discovered by a legacy

boot process and passed to the operating system in data structures. With

these systems, operating systems and platforms will migrate to the Open

Firmware boot process and device tree.

To architect the control of power management by different operating systems.

It is important that the combination of hardware and software be allowed to

minimize power consumption through automatic or programmed power-saving

methods. Power management of systems will reduce the operational cost for

the user and reduce the impact of the system on the environment.

To provide an architecture which can evolve as technology changes. The

creators of the architecture invite industry participation in evolving future

versions of it.

To minimize the support cost for multiple operating systems through the

definition of common platform abstraction techniques. Common and compatible

approaches to the abstraction of hardware will reduce the burden on hardware

vendors who produce differentiated machines.

To architect a mechanism for error handling, error reporting and fault isolation.

The architecture provides for the implementation of more robust systems if

desired by the system developers.
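The flexible address map goal listed above can be pictured with a short Python sketch. This is only an illustration under stated assumptions: the dictionary layout, the node names, the base_address field and every address value are hypothetical, and real Open Firmware device trees use their own property encodings. The sketch shows how an operating system could look up device addresses in a tree handed over by the firmware, so that two identical adapters on two identical buses do not conflict.

```python
# Hypothetical device tree, shaped the way firmware might hand it to an
# operating system. Names and addresses are invented for illustration.
device_tree = {
    "name": "root",
    "children": [
        {"name": "pci", "base_address": 0x80000000, "children": [
            {"name": "scsi", "base_address": 0x80001000, "children": []},
            {"name": "ethernet", "base_address": 0x80002000, "children": []},
        ]},
        {"name": "pci", "base_address": 0xA0000000, "children": [
            {"name": "scsi", "base_address": 0xA0001000, "children": []},
        ]},
    ],
}


def find_devices(node, name, path=""):
    """Yield (path, base_address) for every node called `name`."""
    address = node.get("base_address")
    unit = "" if address is None else f"@{address:x}"
    here = f"{path}/{node['name']}{unit}"
    if node["name"] == name and address is not None:
        yield here, address
    for child in node.get("children", []):
        yield from find_devices(child, name, here)


if __name__ == "__main__":
    # The operating system discovers both SCSI adapters without any
    # hard-coded addresses.
    for device_path, base in find_devices(device_tree, "scsi"):
        print(device_path, hex(base))
```

Because the addresses come from the tree rather than from constants compiled into the operating system, relocating a device only changes the data the firmware reports.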

PowerPC Platform on the Internet

A “Personal Use Only” copy of the PowerPC Platform can be found on the

following Internet site:

http://www.austin.ibm.com/tech/chrp/chrp_book.html

Morgan Kaufmann Publishers provides hardcopies of the specification which

has the number ISBN 1-55860-394-8.

1.3.4 CHRP Certification

Currently, a team is in place to address the CHRP certification process. The

definition of this process is not yet finished; thus the information included below is

preliminary and subject to change.

Requirements for PowerPC Platform (CHRP) systems are established by the AIM

(Apple, IBM, Motorola) Alliance CHRP Specification Committee and defined in the

PowerPC Microprocessor Common Hardware Reference Platform: A System

Architecture Specification.

The AIM Alliance has established the CHRP certification process to accomplish the

following specific goals:

To establish concrete testing criteria for determining the compliance with the

requirements stated in the document titled, PowerPC Microprocessor Common

Hardware Reference Platform: A System Architecture.

To ensure multiple operating system interchangeability on certified CHRP

computer systems.

To establish a clearly defined set of criteria and procedures for the use of the

CHRP logo.

To make the testing criteria available to the computer industry in an open, fair

and easily accessible manner.

To encourage broad industry participation in the CHRP program through easy

and fair accessibility to testing requirements and procedures.

Any system being certified as a CHRP system will be certified under one of two

classes. These classes are:

CHRP Certified

Intended for systems that will be general user, major market desktop or

Client systems. For this class, a system must be tested and achieve

four approvals from the CHRP Certification Authority. The approvals are

for CHRP hardware systems, the MacOS, Windows NT, and OS/2

operating systems.

CHRP Server Certified

Intended for those systems that will be sold and operated as servers.

For this class, three approvals must be received from the CHRP

Certification Authority. The approvals are for CHRP hardware systems

and for any two of the six CHRP-ported operating systems (AIX,

MacOS, Netware, OS/2, Solaris, and Windows NT).
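The approval rules for the two classes can be summarized in a short Python sketch. It only encodes the preliminary rules as stated above; the function name eligible_classes and its parameters are hypothetical, and the real certification process may differ once its definition is finished.

```python
# The six CHRP-ported operating systems named in the text.
CHRP_OPERATING_SYSTEMS = frozenset(
    {"AIX", "MacOS", "Netware", "OS/2", "Solaris", "Windows NT"}
)
# Operating system approvals required for the "CHRP Certified" class.
CLIENT_OPERATING_SYSTEMS = frozenset({"MacOS", "Windows NT", "OS/2"})


def eligible_classes(hardware_approved, os_approvals):
    """Return the classes whose approval requirements are met.

    hardware_approved: True if the CHRP hardware approval was granted.
    os_approvals: names of operating systems approved on the system.
    """
    approved = set(os_approvals) & CHRP_OPERATING_SYSTEMS
    classes = []
    # Four approvals: hardware plus MacOS, Windows NT and OS/2.
    if hardware_approved and CLIENT_OPERATING_SYSTEMS <= approved:
        classes.append("CHRP Certified")
    # Three approvals: hardware plus any two of the six operating systems.
    if hardware_approved and len(approved) >= 2:
        classes.append("CHRP Server Certified")
    return classes


if __name__ == "__main__":
    print(eligible_classes(True, {"AIX", "Windows NT"}))
    # prints ['CHRP Server Certified']
```

A system with the three client operating system approvals also meets the server requirements in this sketch; the text states that a system is certified under one of the two classes, so the vendor would choose the class that matches the intended market.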

Chapter 2. PCI-Based RS/6000 Server Hardware

The PCI-based RS/6000 server's hardware design is driven by accepted “industry

standards” both formal and de-facto. This means that open, standard interfaces

are used whenever possible, and much of the expansion of the system will be

performed by the end user utilizing standard adapters and controllers.

The design of the PCI-based RS/6000 servers is intended to have much in

common with the PC Server line of products from Boca Raton/Raleigh. The power

and mechanical packaging is the same as that used in the PC Company. In

addition, the electronics partitioning explained in 2.3, “Electronics Partitioning” on

page 19 was chosen so as to allow “processor card agility,” the ability to use either

an Intel x86 or PowerPC architecture processor card.

While the PCI-based RS/6000 servers use a PowerPC Reference Platform (PReP)

compatible basic system architecture, they also build upon the PReP architecture in

order to provide features that are demanded by systems used as servers. These

servers give the customer a basic set of features that make these systems different

from Client systems. These features enhance the performance of the system and

provide a higher level of RAS (Reliability/Availability/Serviceability) than is

commonly found on Client workstations. They include support for future SMP

configurations, standard use of fast L2 caches, large capacity for memory

expansion, higher performance memory subsystems, error-correcting code (ECC)

memory, additional I/O card expansion capability, and higher performance I/O

buses (PCI & ISA). Moreover, the PCI-based RS/6000 servers provide support for

a wide range of devices and features.

2.1 The Hardware Design

The PCI-based RS/6000 servers, packaged in an industry standard tower, are

based on the PowerPC 604 processor.

Figure 5 on page 12 shows the logical block diagram for these servers. The

processor bus runs at 66 MHz and the L2 cache and the Memory Controller are

attached to it. The Memory Controller chip also acts as a PCI Bridge to the primary

PCI bus. Notice that the peripheral units are separated from the PowerPC

processor, the L2 cache and memory through the Processor Memory Controller &

PCI Bridge chip. This allows the Processor Local Bus to run at 66 MHz, while the

PCI bus runs at 33 MHz.

The primary PCI is a 32-bit bus. It drives two PCI expansion cards as well as the

secondary PCI bus bridge and the Extended Industry Standard Architecture (EISA)

bus bridge. The system flash EPROM (IPL ROS) is also connected to the primary

PCI bus.

The SCSI-2 Interface Controller and the other PCI slots are connected to the

secondary PCI bus, while the EISA bus allows the connection of on-board ISA

subsystems, such as standard I/O. ISA slots are provided on this bus for a

selection of ISA adapters.

Figure 5. PCI RS/6000 Entry Server Logical Block Diagram

2.1.1 The PCI Bus Architecture

The Peripheral Component Interconnect (PCI) is a specification standard for

computer bus implementation developed by the PCI Special Interest Group

(PCI-SIG), led by a group of companies including Compaq, Digital, IBM, Intel and

NCR. There are now over 300 companies in the PCI-SIG supporting the

architecture and currently producing PCI products.

The goal was to provide a common system-board bus that could be used in

personal computers, from laptops to servers. It was envisioned as a local system

board bus that would serve as a common design point, supporting different system

processors as the various processors evolved over time. This is much like

operating systems which have defined Application Binary Interfaces (ABIs) so that

applications need not change with each generation of the operating system. The

PCI Local Bus would serve as a common hardware interface that would not change

with different versions of microprocessors.

The group defined PCI to support the high-performance basic system I/O devices,

such as the graphics adapter, hardfile controller and/or LAN adapter. In the original

definition, these would be mounted on the planar and would communicate through

the PCI bus. Current I/O buses (ISA, EISA and Micro Channel) would be used to

attach pluggable features to configure the system for the desired use. The first

release of PCI Specification was made available in June of 1992.

The PCI Special Interest Group (SIG) soon realized that the PCI bus needed the

capability to support connectors. For example, display controller evolution doesn't

necessarily match planar development; so providing for an upgrade of the display

controller became a requirement. The next release of the PCI Specification

(Version 2.0 in April of 1993) included upgrade capability through expansion

connectors.

According to PCI Specification Version 2.0, the PCI bus operates on 32 or 64 bits

of data at a clock speed of 33 MHz. This yields a local bus performance of 132

MB/sec for 32-bit transfers and 264 MB/sec for 64-bit transfers. The next PCI

Specification (Version 2.1) is expected to include a definition of 66 MHz PCI

capability, increasing local bus performance to 528 MB/sec for 64-bit transfers.
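The bandwidth figures quoted above follow from multiplying the bus clock by the bus width. The short Python sketch below reproduces that arithmetic; the function name is hypothetical, and the calculation assumes one full-width transfer on every clock cycle, which is a peak figure rather than a sustained rate.

```python
def peak_bandwidth_mb_per_s(clock_mhz, width_bits):
    """Peak rate in MB/sec, assuming one full-width transfer per clock cycle."""
    return clock_mhz * width_bits // 8


# The three cases mentioned in the text.
print(peak_bandwidth_mb_per_s(33, 32))  # 132
print(peak_bandwidth_mb_per_s(33, 64))  # 264
print(peak_bandwidth_mb_per_s(66, 64))  # 528
```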

Though each PCI bus is restricted to a maximum of four slots, the addition of

multiple PCI-to-PCI bridges allows multiple PCI buses to be included in a system as

a means for providing additional slots when needed. Each PCI-to-PCI bridge adds

another PCI bus, which in turn can handle up to four slots.

2.1.1.1 PCI Features and Benefits

The PCI bus architecture offers advantages in the following areas:

High data transfer speed

Processor independence

Cross-platform compatibility

Plug and Play

Investment protection

High Data Transfer Speed

The high-speed data transfer is implemented by the following functions:

Buffering and asynchronous data transfer

The PCI chip can support the processing and buffering of data and commands

sent from the processor or from the peripherals in case the peripheral or the

processor is not yet ready to receive the information.

Burst mode transfer

Variable length linear or toggle mode bursting for both reads and writes

improves write-dependent graphics performance.

Caching

To reduce the access time, the PCI bus architecture supports caching of data

which is frequently used.

DMA

The Direct Memory Access (DMA) function is used to enable peripheral units to

read from and write to memory without sending a memory request to the

processor. This function is very useful for peripherals that need to receive

large amounts of data, such as video adapters, hard disks and network

adapters.

Processor Independence

Processor independence allows manufacturers to implement PCI buses on any

computer. Any PCI-compliant peripheral will work on any PCI-compliant bus

implementation.

Cross-Platform Compatibility

The key to cross-platform compatibility is processor independence. Until PCI,

different systems used different buses, such as ISA, EISA, NuBus, and so forth.

Now, different systems can use one bus.

Multi-bus Support

An important aspect to PCI-based system architecture is support for multiple PCI

buses, operating transparently to existing software.

Plug and Play

PCI peripherals, following the PCI standard, load the appropriate set of installation,

configuration and booting information to the host CPU without user intervention.

This provides a greater ease of use for the system integrator or end-user.

Investment Protection

The PCI bus is designed for 64-bit addressing support.

2.1.2 The ISA Bus Architecture

The Industry Standard Architecture (ISA) is the most widely used system bus in the

PC industry. Originally, there was no official definition or standard for it. Later

on, its specifications were defined by the Institute of Electrical and Electronics

Engineers (IEEE) standards group.

The ISA bus, if implemented, allows a transfer rate of up to 8.3 MB/s. Transfers

over the ISA bus are synchronized around 8 MHz, and they usually take a

minimum of two cycles of the bus clock to perform a data transfer. Since the data

path of an ISA bus is 16 bits wide, up to two bytes may be transferred during each

transaction.
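The 8.3 MB/s figure can be checked from the numbers in this paragraph. The sketch below is only a worked example: the function name and parameters are hypothetical, the 8.3 MHz clock is taken from Table 1, and the two-cycle minimum and two-byte data path are taken from the text.

```python
def isa_peak_mb_per_s(clock_mhz=8.3, cycles_per_transfer=2, bytes_per_transfer=2):
    """Peak ISA rate in MB/s: transfers per second times bytes per transfer."""
    transfers_per_microsecond = clock_mhz / cycles_per_transfer
    return transfers_per_microsecond * bytes_per_transfer


# 8.3 MHz / 2 cycles per transfer * 2 bytes per transfer
print(isa_peak_mb_per_s())
```

Because each transfer needs at least two clock cycles and moves at most two bytes, the peak rate in MB/s works out to the same number as the clock rate in MHz.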

Since ISA is the most widely used bus architecture in the PC industry, it makes

sense to provide users with the possibility to use hardware peripherals they already

use with other systems, rather than having to spend additional money for these

peripherals.

Moreover, by supporting the ISA bus architecture, the system provider has access to a

wide spectrum of adapters and devices already available in the marketplace and

does not have to wait for adapters to be built for a specific system bus. The

provider has to ensure that the device driver for the specific operating system is

also available.

The problem when connecting the processor to the ISA bus directly is that the

processor's speed has to be reduced to match the slow ISA bus speed; thus the

systems cannot take advantage of a fast processor.

Table 1 shows the bus specification for different architectures and compares them

to the PowerPC processor's speed.

Table 1. PowerPC and Bus Specification

Specification               PowerPC      PCI         ISA(8)    ISA(16)   EISA
Processor Speed (601-604)   66-132 MHz   -           -         -         -
Databus (bits)              64           64          8         16        16/32
Address Bus (bits)          32           64          20        24        24/32
Bus Clock                   66 MHz       33 MHz      4.7 MHz   8.3 MHz   8.3 MHz
Interrupts                  -            4           6         11        11
DMA Channel                 -            busmaster   3         7         7

The solution to the problem is to use the PCI local bus as the primary system bus

and the ISA bus as an expansion bus. This way, the system can take advantage

of the high-speed data transfer provided by the PCI bus when communicating with

the processor and memory. On the other side, through the PCI-ISA Bridge, the bus

clock can be reduced to match the ISA bus requirements.

2.2 The Hardware Main Components

The PCI-based RS/6000 servers include the following main hardware components:

Processor Subsystem

L2 Cache

Memory Controller and PCI Bridge

System Memory

Primary PCI Bus

Secondary PCI Bus

EISA Bus

X-bus

No Power Management Controller

Note that the currently available PCI-based RS/6000 servers (E20 and F30) do

not include a power-management controller.

2.2.1 The Processor Subsystem

The PCI-based RS/6000 servers feature the PowerPC 604 microprocessor. The

superscalar multiprocessor-enabled chip issues up to four instructions in parallel

every clock cycle. Its three-stage, double-precision floating point unit provides

tremendous performance capabilities that were previously available only through

expensive add-on hardware.

Figure 6 on page 16 shows the PowerPC 604 microprocessor architecture which is

defined by the following specifications:

PowerPC 604 microprocessor running at:

– 100 MHz on RS/6000 Model E20

– 133 MHz on RS/6000 Model F30

Up to 66 MHz bus clock

Superscalar design with integrated integer, floating-point and branch units

16 KB four-way set-associative instruction cache

16 KB four-way set-associative data cache

64-bit memory interface with 32-bit addressing

Virtual memory support for up to four petabytes (2^52 bytes)

Real memory support for up to four gigabytes

Support for Big-Endian and Little-Endian modes

Nap power management mode

Figure 6. PowerPC 604 Microprocessor Logical Block Diagram
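The memory limits and cache sizes in the specification list can be related to address widths with a little arithmetic. The Python sketch below is a worked example only; the function names are hypothetical, and the 32-byte cache line size is an assumption that is not stated in this section.

```python
PIB = 1 << 50  # bytes in a petabyte (binary)
GIB = 1 << 30  # bytes in a gigabyte (binary)


def address_space_bytes(address_bits):
    """Number of bytes addressable with the given number of address bits."""
    return 1 << address_bits


def cache_sets(size_bytes, ways, line_bytes):
    """Number of sets in a set-associative cache."""
    return size_bytes // (ways * line_bytes)


# 52-bit virtual addresses give four petabytes.
print(address_space_bytes(52) // PIB)  # 4
# 32-bit real addresses give four gigabytes.
print(address_space_bytes(32) // GIB)  # 4
# 16 KB, four-way cache; the 32-byte line size is an assumption.
print(cache_sets(16 * 1024, ways=4, line_bytes=32))  # 128
```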

2.2.2 The L2 Cache

The L2 cache subsystem is directly attached to the processor bus which runs at 66

MHz. It is managed by a Write-Through Look-Aside controller which interfaces to

two Cache Tag RAM modules and eight synchronous Static Random

Access Memory (SRAM) modules to form a 512 KB L2 cache assembly.

The cache controller supports disable, inhibit and invalidate functions in addition to

the expected L2 memory caching operations.
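To illustrate what a write-through policy means, the following toy Python model keeps main memory up to date on every write and uses the cache only to speed up reads. It is a conceptual sketch, not a description of the actual controller: the class and method names are hypothetical, the cache is modeled per address rather than per line, the look-aside bus attachment and the inhibit function are not modeled, and updating only already-cached addresses on a write is an assumption.

```python
class WriteThroughCache:
    """Toy write-through cache in front of a dictionary used as main memory."""

    def __init__(self, memory):
        self.memory = memory   # backing store: address -> value
        self.lines = {}        # cached copies: address -> value
        self.enabled = True

    def read(self, address):
        if self.enabled and address in self.lines:
            return self.lines[address]       # cache hit
        value = self.memory[address]         # cache miss: go to memory
        if self.enabled:
            self.lines[address] = value      # keep a copy for next time
        return value

    def write(self, address, value):
        self.memory[address] = value         # write-through: memory is always updated
        if self.enabled and address in self.lines:
            self.lines[address] = value      # keep the cached copy consistent

    def invalidate(self):
        self.lines.clear()                   # drop all cached copies

    def disable(self):
        self.enabled = False
        self.lines.clear()                   # no stale data if re-enabled later


memory = {0x100: 1}
cache = WriteThroughCache(memory)
cache.read(0x100)        # miss, then cached
cache.write(0x100, 7)    # updates memory and the cached copy
print(memory[0x100], cache.read(0x100))  # 7 7
```

Because memory is updated on every write, invalidating or disabling the cache in this model never loses data.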

2.2.3 The Memory Controller and PCI Bridge

The Memory Controller chip is directly attached to the processor bus and acts as a

PCI Bridge to the primary PCI bus as well.

It provides two different bus interfaces:

The CPU bus interface that runs at 66 MHz

The PCI bus interface that runs at 33 MHz

The memory controller supports address and data-bus parity generation and

checking. It provides support for Big- and Little-Endian modes and for the

604 MESI protocol, which is used as cache synchronization logic in SMP systems.
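Parity generation and checking can be sketched in a few lines. The Python example below computes one parity bit per byte of a bus word and verifies it on the receiving side. It is a conceptual illustration only: the function names are hypothetical, and both the per-byte organization and the odd parity sense are assumptions that this section does not state.

```python
def byte_parity_bits(word, width_bytes=8, odd=True):
    """Return one parity bit per byte of `word`, most significant byte first."""
    bits = []
    for i in reversed(range(width_bytes)):
        byte = (word >> (8 * i)) & 0xFF
        ones = bin(byte).count("1")
        # Odd parity: the parity bit makes the total count of ones odd.
        # Even parity: the parity bit makes the total count of ones even.
        bits.append((ones + 1) % 2 if odd else ones % 2)
    return bits


def parity_ok(word, parity_bits, width_bytes=8, odd=True):
    """Check received parity bits against parity recomputed from the data."""
    return byte_parity_bits(word, width_bytes, odd) == parity_bits


data = 0x0123456789ABCDEF
sent = byte_parity_bits(data)        # generated by the sender
print(parity_ok(data, sent))         # True: data arrived intact
print(parity_ok(data ^ 1, sent))     # False: a single flipped bit is detected
```

A single parity bit per byte detects any odd number of flipped bits in that byte, but cannot correct them or detect an even number of flips.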

The PCI Bridge provides a low-latency path through which the processor may

directly access PCI devices mapped anywhere in the memory or I/O address

spaces. It also provides a high-bandwidth path giving the PCI bus masters direct

access to main memory.

2.2.4 The System Memory

The PCI-based RS/6000 servers use JEDEC-standard, 168-pin, 5-volt, 70 ns,

single-bank, eight-byte parity or ECC memory DIMMs (Dual Inline Memory

Modules). By using DIMMs, you get the benefit of a 64-bit wide memory without

having to use paired or quad memory modules. In this way, you can upgrade

system memory using one DIMM module at a time, while maintaining maximum