HPSS

Management

Guide

High Performance Storage System

Release 7.3

November 2009 (Revision 1.0)

HPSS Management Guide November 2009

Release 7.3 (Revision 1.0) 1

Copyright © 1992, 2009 International Business Machines Corporation, The Regents of the University of

California, Los Alamos National Security, LLC, Lawrence Livermore National Security, LLC, Sandia Corporation,

and UT-Battelle.

All rights reserved.

Portions of this work were produced by Lawrence Livermore National Security, LLC, Lawrence Livermore National

Laboratory (LLNL) under Contract No. DE-AC52-07NA27344 with the U.S. Department of Energy (DOE); by the

University of California, Lawrence Berkeley National Laboratory (LBNL) under Contract No. DE-AC02-05CH11231 with DOE; by Los Alamos National Security, LLC, Los Alamos National Laboratory (LANL) under

Contract No. DE-AC52-06NA25396 with DOE; by Sandia Corporation, Sandia National Laboratories (SNL) under

Contract No. DE-AC04-94AL85000 with DOE; and by UT-Battelle, Oak Ridge National Laboratory (ORNL) under

Contract No. DE-AC05-00OR22725 with DOE. The U.S. Government has certain reserved rights under its prime

contracts with the Laboratories.

DISCLAIMER

Portions of this software were sponsored by an agency of the United States Government. Neither the United States,

DOE, The Regents of the University of California, Los Alamos National Security, LLC, Lawrence Livermore

National Security, LLC, Sandia Corporation, UT-Battelle, nor any of their employees, makes any warranty, express

or implied, or assumes any liability or responsibility for the accuracy, completeness, or usefulness of any

information, apparatus, product, or process disclosed, or represents that its use would not infringe privately owned

rights.

Printed in the United States of America.

HPSS Release 7.3

November 2009 (Revision 1.0)

High Performance Storage System is a trademark of International Business Machines Corporation.

IBM is a registered trademark of International Business Machines Corporation.

IBM, DB2, DB2 Universal Database, AIX, RISC/6000, pSeries, and xSeries are trademarks or registered trademarks

of International Business Machines Corporation.

UNIX is a registered trademark of the Open Group.

Linux is a registered trademark of Linus Torvalds in the United States and other countries.

Kerberos is a trademark of the Massachusetts Institute of Technology.

Java is a registered trademark of Sun Microsystems, Incorporated in the United States and other countries.

ACSLS is a trademark of Sun Microsystems, Incorporated.

Microsoft Windows is a registered trademark of Microsoft Corporation.

NFS, Network File System, and ACSLS are trademarks of Sun Microsystems, Inc.

DST is a trademark of Ampex Systems Corporation.

Other brands and product names appearing herein may be trademarks or registered trademarks of third parties.

Table of Contents

Chapter 1. HPSS 7.1 Configuration Overview.....................................................................................15

1.1. Introduction...................................................................................................................................15

1.2. Starting the SSM GUI for the First Time.......................................................................................15

1.3. HPSS Configuration Roadmap (New HPSS Sites)........................................................................15

1.4. Initial HPSS Startup Roadmap (All Sites).....................................................................................16

1.5. Additional Configuration Roadmap (All Sites).............................................................................17

1.6. Verification Checklists (All Sites).................................................................................................17

1.6.1. Configuration Checklists.........................................................................................................................17

1.6.2. Operational Checklists.............................................................................................................................19

1.6.3. Performance Checklist.............................................................................................................................20

Chapter 2. Security and System Access................................................................................................21

2.1. Security Services...........................................................................................................................21

2.1.1. Security Services Configuration..............................................................................................................21

2.1.2. Security Mechanisms...............................................................................................................................22

2.1.2.1. UNIX...............................................................................................................................................22

2.1.2.2. Kerberos 5.......................................................................................................................................22

2.1.2.3. LDAP..............................................................................................................................................23

2.1.2.3.1. LDAP Administrative Tasks..................................................................................................23

2.2. HPSS Server Security ACLs..........................................................................................................25

2.3. SSM User Security........................................................................................................................26

2.4. Location Policy..............................................................................................................................26

2.4.1. Configuring/Updating a Location Policy.................................................................................................27

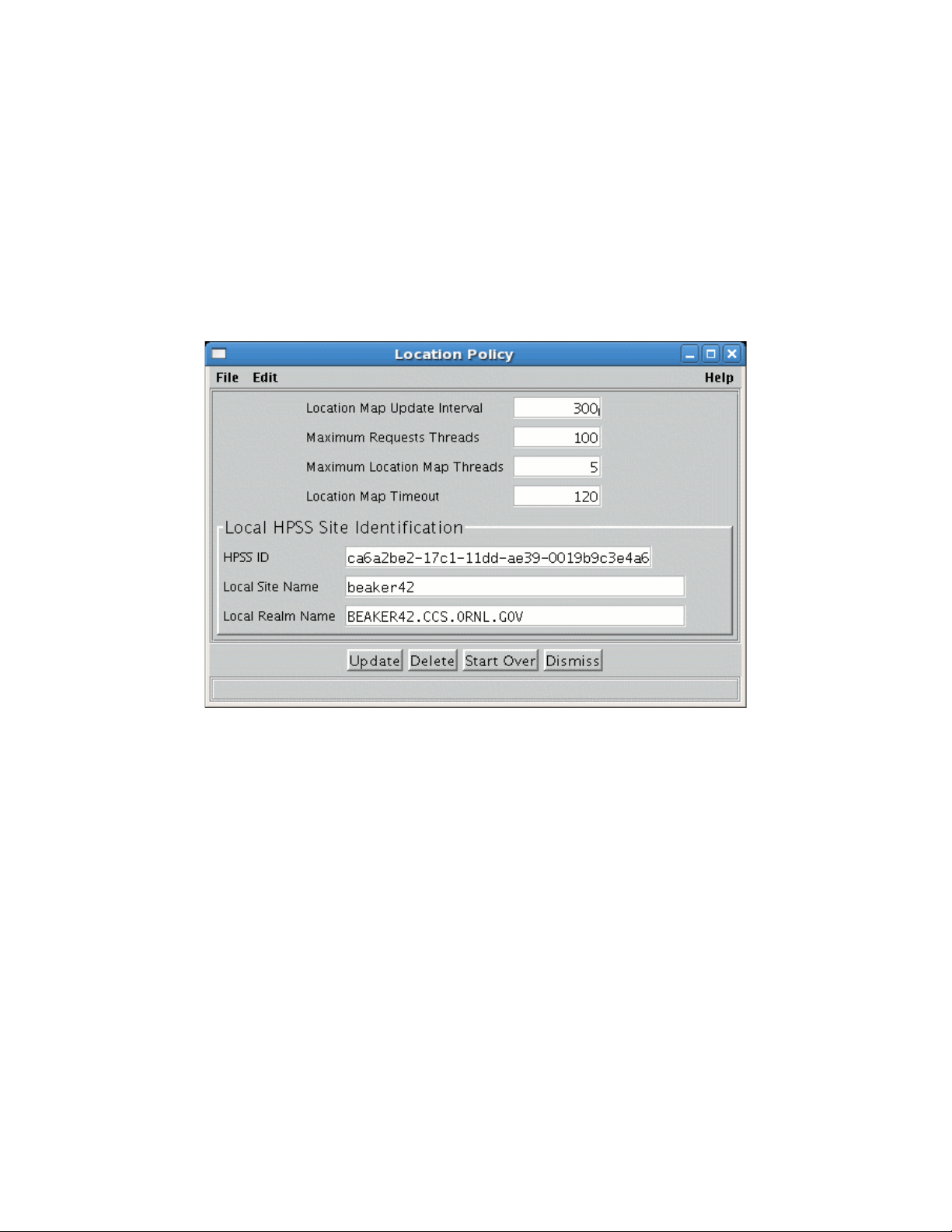

2.4.2. Location Policy Configuration Window..................................................................................................27

2.4.3. Deleting a Location Policy......................................................................................................................28

2.5. Restricting User Access to HPSS.................................................................................................28

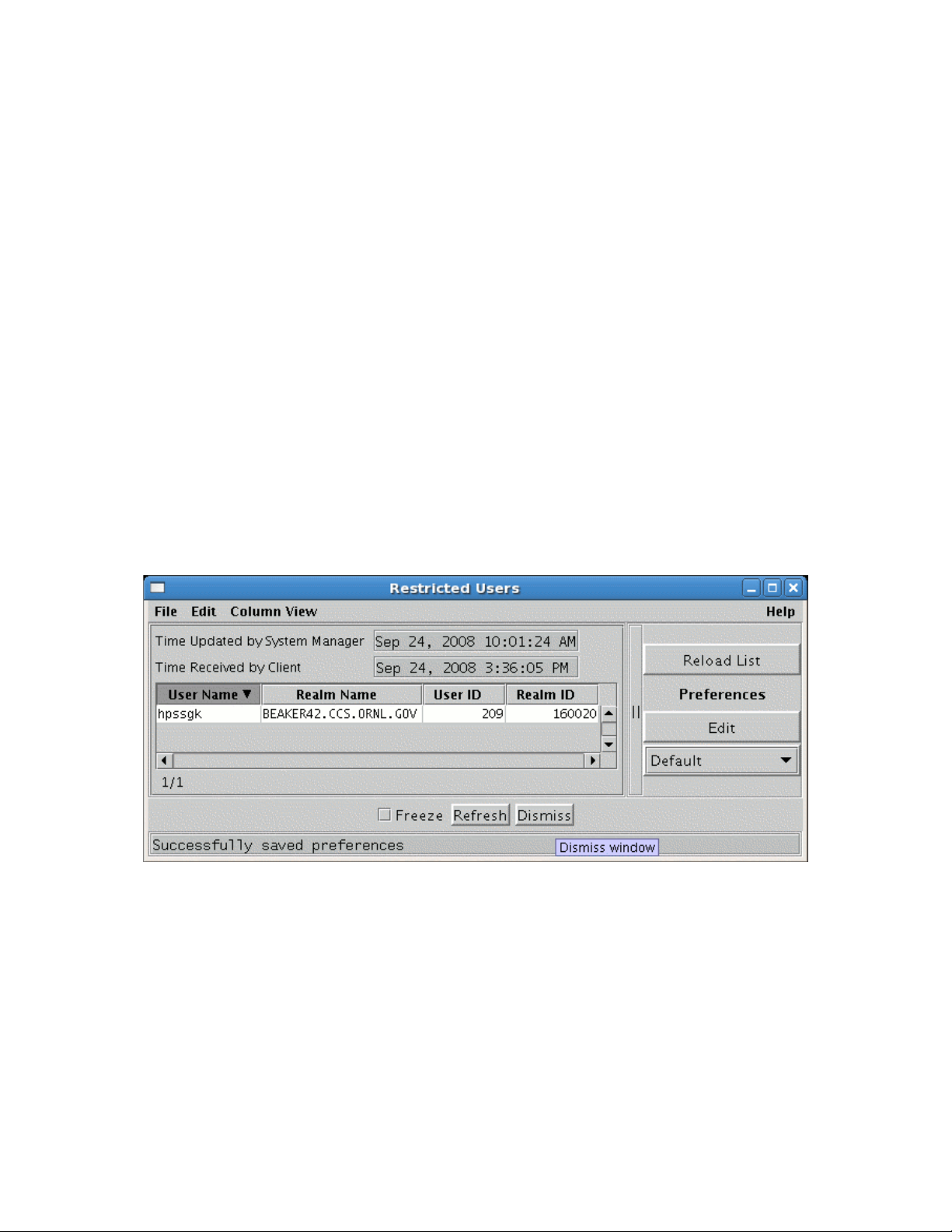

2.5.1. Restricted Users Window........................................................................................................................29

Chapter 3. Using SSM............................................................................................................................31

3.1. The SSM System Manager............................................................................................................31

3.1.1. Starting the SSM System Manager..........................................................................................................31

3.1.2. Tuning the System Manager RPC Thread Pool and Request Queue Sizes.............................................31

3.1.3. Labeling the System Manager RPC Program Number ...........................................................................32

3.2. Quick Startup of hpssgui...............................................................................................................33

3.3. Configuration and Startup of hpssgui and hpssadm.......................................................................34

3.3.1. Configuring the System Manager Authentication for SSM Clients.........................................................35

3.3.2. Creating the SSM User Accounts............................................................................................................35

3.3.2.1. The hpssuser Utility........................................................................................................................35

3.3.2.2. SSM User Authorization.................................................................................................................36

3.3.2.3. User Keytabs (For Use with hpssadm Only)...................................................................................37

3.3.2.3.1. Keytabs for Kerberos Authentication: hpss_krb5_keytab......................................................38

3.3.2.3.2. Keytabs for UNIX Authentication: hpss_unix_keytab...........................................................38

3.3.3. SSM Configuration File...........................................................................................................................39

3.3.3.1. login.conf........................................................................................................................................41

3.3.3.2. krb5.conf (For Use with Kerberos Authentication Only)................................................................41

3.3.4. SSM Help Files (Optional)......................................................................................................................42

3.3.5. SSM Desktop Client Packaging...............................................................................................................42

3.3.5.1. Automatic SSM Client Packaging and Installation.........................................................................43

3.3.5.2. Manual SSM Client Packaging and Installation..............................................................................43

3.3.6. Using SSM Through a Firewall...............................................................................................................44

3.3.6.1. The Firewall Problem......................................................................................................................44

3.3.6.2. Solutions for Operating Through a Firewall..................................................................................45

3.3.6.3. Example: Using hpssgui Through a Firewall..................................................................................45

3.4. Multiple SSM Sessions..................................................................................................................47

3.5. SSM Window Conventions...........................................................................................................47

3.6. Common Window Elements..........................................................................................................50

3.7. Help Menu Overview....................................................................................................................52

3.8. Monitor, Operations and Configure Menus Overview...................................................................53

3.8.1. Monitor Menu..........................................................................................................................................53

3.8.2. Operations Menu.....................................................................................................................................54

3.8.3. Configure Menu.......................................................................................................................................55

3.9. SSM Specific Windows.................................................................................................................56

3.9.1. HPSS Login.............................................................................................................................................56

3.9.2. About HPSS.............................................................................................................................................58

3.9.3. HPSS Health and Status...........................................................................................................................58

3.9.3.1. SM Server Connection Status Indicator .........................................................................................59

3.9.3.2. HPSS Status....................................................................................................................................60

3.9.3.3. HPSS Statistics................................................................................................................................61

3.9.3.4. Menu Tree.......................................................................................................................................62

3.9.3.5. File Menu........................................................................................................................................62

3.9.3.6. View Menu......................................................................................................................................62

3.9.4. SSM Information Windows.....................................................................................................................63

3.9.4.1. System Manager Statistics Window................................................................................................63

3.9.4.2. User Session Information Window.................................................................................................67

3.10. SSM List Preferences..................................................................................................................69

Chapter 4. Global & Subsystem Configuration...................................................................................72

4.1. Global Configuration Window......................................................................................................72

4.2. Storage Subsystems ......................................................................................................................74

4.2.1. Subsystems List Window.........................................................................................................................74

4.2.2. Creating a New Storage Subsystem.........................................................................................................76

4.2.3. Storage Subsystem Configuration Window.............................................................................................76

4.2.3.1. Create Storage Subsystem Metadata...............................................................................................79

4.2.3.2. Create Storage Subsystem Configuration........................................................................................79

4.2.3.3. Create Storage Subsystem Servers..................................................................................................80

4.2.3.4. Assign a Gatekeeper if Required.....................................................................................................80

4.2.3.5. Assign Storage Resources to the Storage Subsystem......................................................................80

4.2.3.6. Create Storage Subsystem Fileset and Junction..............................................................................80

4.2.3.7. Migration and Purge Policy Overrides............................................................................................81

4.2.3.8. Storage Class Threshold Overrides.................................................................................................81

4.2.4. Modifying a Storage Subsystem..............................................................................................................81

4.2.5. Deleting a Storage Subsystem..................................................................................................................81

Chapter 5. HPSS Servers.......................................................................................................................83

5.1. Server List.....................................................................................................................................83

5.1. Server Configuration.....................................................................................................................87

5.1.1. Common Server Configuration................................................................................................................89

5.1.1.1. Basic Controls.................................................................................................................................89

5.1.1.1. Execution Controls..........................................................................................................................90

5.1.1.2. Interface Controls............................................................................................................................92

5.1.1.1. Security Controls.............................................................................................................................92

5.1.1.1. Audit Policy....................................................................................................................................94

5.1.1.1. Log Policy.......................................................................................................................................95

5.1.1. Core Server Specific Configuration.........................................................................................................96

5.1.1.1. Additional Core Server Configuration............................................................................................97

5.1.2. Gatekeeper Specific Configuration..........................................................................................................98

5.1.3. Location Server Additional Configuration..............................................................................................99

5.1.4. Log Client Specific Configuration.........................................................................................................100

5.1.1. Log Daemon Specific Configuration.....................................................................................................101

5.1.2. Migration/Purge Server (MPS) Specific Configuration........................................................................101

5.1.3. Mover Specific Configuration...............................................................................................................102

5.1.3.1. Mover Specific Configuration Window........................................................................................102

5.1.3.1. Additional Mover Configuration...................................................................................................104

5.1.3.1.1. /etc/services, /etc/inetd.conf, and /etc/xinetd.d.....................................................................104

5.1.3.1.2. The Mover Encryption Key Files.........................................................................................105

5.1.3.1.3. /var/hpss/etc Files Required for Remote Mover...................................................................106

5.1.3.1.1. System Configuration Parameters on IRIX, Solaris, and Linux...........................................106

5.1.3.1.1. Setting Up Remote Movers with mkhpss.............................................................................108

5.1.3.1.2. Mover Configuration to Support Local File Transfer..........................................................108

5.1.1. Physical Volume Repository (PVR) Specific Configuration.................................................................109

5.1.1.1. Operator PVR Specific Configuration Window............................................................................110

5.1.1.1. 3494 PVR Specific Configuration................................................................................................111

5.1.1.1.1. 3494 PVR Specific Configuration Window.........................................................................111

5.1.1.1.1. 3494 PVR Additional Information.......................................................................................112

5.1.1.2. AML PVR Specific Configuration................................................................................................113

5.1.1.2.1. AML PVR Specific Configuration Window........................................................................113

5.1.1.2.1. AML PVR Additional Information......................................................................................115

5.1.1.1. LTO PVR Specific Configuration.................................................................................................116

5.1.1.1.1. LTO PVR Specific Configuration Window.........................................................................116

5.1.1.1.1. LTO PVR Additional Information.......................................................................................118

5.1.1.2. SCSI PVR Specific Configuration Window.................................................................................118

5.1.1.1. STK PVR Specific Configuration Window..................................................................................120

5.1.1.1.1. STK PVR Additional Information........................................................................................121

5.1.1. Deleting a Server Configuration............................................................................................................123

5.1. Monitoring Server Information....................................................................................................125

5.1.1. Basic Server Information.......................................................................................................................125

5.1.1. Specific Server Information...................................................................................................................127

5.1.1.1. Core Server Information Window.................................................................................................127

5.1.1.1. Gatekeeper Information Window.................................................................................................130

5.1.1.1. Location Server Information Window..........................................................................................132

5.1.1.2. Migration/Purge Server Information Window..............................................................................133

5.1.1.3. Mover Information Window.........................................................................................................133

5.1.1.1. Physical Volume Library (PVL) Information Window.................................................................134

5.1.1.2. Physical Volume Repository (PVR) Information Windows.........................................................135

5.1.1.2.1. Operator PVR Information Window....................................................................................135

5.1.1.2.1. 3494 PVR Information Window..........................................................................................136

5.1.1.2.1. AML PVR Information Window..........................................................................................138

5.1.1.2.1. LTO PVR Information Window...........................................................................................139

5.1.1.2.1. SCSI PVR Information Window..........................................................................................141

5.1.1.2.1. STK PVR Information Window...........................................................................................143

5.1. Real-Time Monitoring (RTM).....................................................................................................144

5.1.1. RTM Summary List...............................................................................................................................145

5.1.2. RTM Detail............................................................................................................................................146

5.2. Starting HPSS..............................................................................................................................149

5.2.1. Starting HPSS Prerequisite Software.....................................................................................................149

5.2.2. Starting HPSS Servers...........................................................................................................................149

5.2.2.1. Starting the Startup Daemons .......................................................................................................149

5.2.2.2. Starting SSM.................................................................................................................................150

5.2.2.3. Starting Other HPSS Servers........................................................................................................150

5.2.2.4. Automatic Server Restart..............................................................................................................151

5.1. Stopping HPSS............................................................................................................................151

5.1.1. Shutting Down an HPSS Server............................................................................................................151

5.1.2. Shutting Down All HPSS Servers..........................................................................................................152

5.1.3. Halting an HPSS Server.........................................................................................................................152

5.1.4. Shutting Down the SSM Server.............................................................................................................152

5.1.5. Shutting Down the Startup Daemon......................................................................................................153

5.1.6. Stopping the Prerequisite Software........................................................................................................153

5.2. Server Repair and Reinitialization...............................................................................................153

5.2.1. Repairing an HPSS Server.....................................................................................................................153

5.2.2. Reinitializing a Server...........................................................................................................................154

5.1. Forcing an SSM Connection........................................................................................................156

Chapter 6. Storage Configuration.......................................................................................................157

6.1. Storage Classes ...........................................................................................................................157

6.1.1. Configured Storage Classes Window....................................................................................................157

6.1.2. Disk Storage Class Configuration..........................................................................................................158

6.1.3. Tape Storage Class Configuration.........................................................................................................162

6.1.4. Storage Class Subsystem Thresholds.....................................................................................................166

6.1.4.1. Disk Storage Subsystem-Specific Thresholds...............................................................................166

6.1.4.2. Tape Storage Subsystem-Specific Thresholds..............................................................................168

6.1.5. Changing a Storage Class Definition.....................................................................................................169

6.1.6. Deleting a Storage Class Definition.......................................................................................................170

6.2. Storage Hierarchies .....................................................................................................................170

6.2.1. Hierarchies Window..............................................................................................................................170

6.2.2. Storage Hierarchy Configuration Window............................................................................................172

6.2.3. Changing a Storage Hierarchy Definition..............................................................................................173

6.2.4. Deleting a Storage Hierarchy Definition...............................................................................................173

6.3. Classes of Service........................................................................................................................174

6.3.1. Classes of Service Window...................................................................................................................174

6.3.2. Class of Service Configuration Window...............................................................................................175

6.3.3. Changing a Class of Service Definition.................................................................................................178

6.3.4. Deleting a Class of Service Definition...................................................................................................179

6.3.5. Changing a File's Class of Service.........................................................................................................180

6.3.6. Canceling a Class of Service Change Request.......................................................................................180

6.4. Migration Policies.......................................................................................................................180

6.4.1. Migration Policies Window...................................................................................................................180

6.4.2. Migration Policy Configuration.............................................................................................................182

6.4.2.1. Disk Migration Policy Configuration............................................................................................182

6.4.2.2. Tape Migration Policy Configuration...........................................................................................185

6.4.2.3. Changing a Migration Policy........................................................................................................188

6.4.2.4. Deleting a Migration Policy..........................................................................................................188

6.5. Purge Policies..............................................................................................................................189

6.5.1. Purge Policies Window..........................................................................................................................189

6.5.2. Purge Policy Configuration...................................................................................................................190

6.5.3. Changing a Purge Policy........................................................................................................................192

6.5.4. Deleting a Purge Policy.........................................................................................................................193

6.6. File Families ...............................................................................................................................193

6.6.1. File Family Configuration......................................................................................................................194

6.6.2. Changing a File Family..........................................................................................................................194

6.6.3. Deleting a File Family...........................................................................................................................194

Chapter 7. Device and Drive Management ........................................................................................196

7.1. Configure a New Device & Drive................................................................................................196

7.1.1. Devices and Drives Window.................................................................................................................202

7.1.2. Enable Variable Block Sizes for Tape Devices.....................................................................................207

7.1.3. Changing a Drive's Configuration..........................................................................................................207

7.1.4. Deleting a Drive's Configuration...........................................................................................................208

7.2. Monitoring Devices and Drives...................................................................................................209

7.2.1. Mover Device Information Window......................................................................................................209

7.2.2. PVL Drive Information Window...........................................................................................................214

7.3. Drive Pools..................................................................................................................................218

7.3.1. Tape Drive Configuration......................................................................................................................219

7.3.2. Client Application Tape Read Requests................................................................................................219

7.3.3. Drive Pool Considerations.....................................................................................................................219

7.4. Changing Device and Drive State................................................................................................220

7.4.1. Unlocking a Drive..................................................................................................................................220

7.4.2. Locking a Drive.....................................................................................................................................220

7.4.3. Repairing the State of a Device or Drive...............................................................................................221

7.4.4. Resetting Drive Statistics.......................................................................................................................221

Chapter 8. Volume and Storage Management...................................................................................223

8.1. Adding Storage Space..................................................................................................................223

8.1.1. Importing Volumes into HPSS .............................................................................................................223

8.1.1.1. Import Tape Volumes Window.....................................................................................................225

8.1.1.2. Selecting Import Type for Tape Cartridges..................................................................................229

8.1.1.3. Import Disk Volumes Window.....................................................................................................230

8.1.1.4. Selecting Import Type for Disk Volumes.....................................................................................234

8.1.2. Creating Storage Resources...................................................................................................................234

8.1.2.1. Create Tape Resources Window...................................................................................................235

8.1.2.2. Create Disk Resources Window....................................................................................................237

8.2. Removing Storage Space.............................................................................................................240

8.2.1. Deleting Storage Resources...................................................................................................................240

8.2.1.1. Rules for Deleting Resources........................................................................................................241

8.2.1.2. Delete Resources Window............................................................................................................241

8.2.2. Exporting Volumes from HPSS.............................................................................................................243

8.2.2.1. Rules for Exporting Volumes........................................................................................................244

8.2.2.2. Export Volumes Window..............................................................................................................244

8.3. Monitoring Storage Space...........................................................................................................247

8.3.1. Active Storage Classes Window............................................................................................................248

8.3.2. MPS Disk Storage Class Information....................................................................................................251

8.3.3. MPS Tape Storage Class Information...................................................................................................256

8.4. Dealing with a Space Shortage....................................................................................................258

8.4.1. Forcing Migration..................................................................................................................................259

8.4.2. Forcing Purge.........................................................................................................................................259

8.4.3. Repacking and Reclaiming Volumes.....................................................................................................259

8.4.3.1. Repack Virtual Volumes Window................................................................................................260

8.4.3.2. Reclaim Virtual Volumes Window...............................................................................................262

8.5. Volume Management...................................................................................................................263

8.5.1. Lookup Cartridges & Volumes Window...............................................................................................263

8.5.2. PVL Volume Information Window.......................................................................................................264

8.5.3. PVR Cartridge Information Window.....................................................................................................265

8.5.4. Core Server Volume and Segment Windows........................................................................................269

8.5.4.1. Core Server Disk Volume Information Window..........................................................................269

8.5.4.2. Core Server Tape Volume Information Window..........................................................................273

8.5.5. Changing Core Server Volume Condition.............................................................................................278

8.5.6. Moving PVR Cartridges to Another PVR.............................................................................................280

8.5.6.1. Move Cartridges Window.............................................................................................................280

8.6. Monitoring and Managing Volume Mounts................................................................................283

8.6.1. PVL Job Queue Window ......................................................................................................................284

8.6.2. PVL Request Information Window.......................................................................................................286

8.6.3. Canceling Queued PVL Requests .........................................................................................................288

8.6.4. Tape Check-In Requests Window.........................................................................................................288

8.6.5. Tape Mount Requests Window..............................................................................................................289

8.6.6. Administrative Tape Dismounts............................................................................................................291

8.7. New Storage Technology Insertion..............................................................................................291

Chapter 9. Logging and Status...........................................................................................................294

9.1. Logging Overview.......................................................................................................................294

9.2. Log Policies.................................................................................................................................295

9.2.1. Creating a Log Policy............................................................................................................................295

9.2.2. Logging Policies Window......................................................................................................................296

9.2.2.1. Logging Policy Configuration Window.......................................................................................297

9.2.3. Changing a Log Policy...........................................................................................................................298

9.2.4. Deleting a Log Policy............................................................................................................................299

9.3. Managing the Central Log...........................................................................................................299

9.3.1. Configuring Central Log Options..........................................................................................................299

9.3.2. Viewing the Central Log (Delogging)...................................................................................................300

9.4. Log Files Information..................................................................................................................300

9.5. Managing Local Logging.............................................................................................................301

9.5.1. Configuring Local Logging Options......................................................................................................302

9.5.2. Viewing the Local Log..........................................................................................................................302

9.6. Managing SSM Alarms and Events ............................................................................................302

9.6.1. Alarms and Events Window..................................................................................................................302

9.6.2. Alarm/Event Information.......................................................................................................................303

9.6.3. Diagnosing HPSS Problems with Alarms and Events...........................................................................305

9.6.4. Controlling SSM Log Message Handling.............................................................................................305

9.6.4.1. Controlling the System Manager Log Message Cache.................................................................305

9.6.4.2. Controlling Log Messages Displayed by hpssgui and hpssadm....................................................306

Chapter 10. Filesets and Junctions......................................................................................................308

10.1. Filesets & Junctions List............................................................................................................308

10.2. Creating an HPSS Fileset...........................................................................................................310

10.2.1. Create Fileset Window.........................................................................................................................310

10.3. Managing Existing Filesets........................................................................................................312

10.3.1. Core Server Fileset Information Window............................................................................................312

10.4. Deleting Filesets........................................................................................................................314

10.5. Creating a Junction....................................................................................................................315

10.5.1. Create Junction Window......................................................................................................................315

10.6. Deleting a Junction....................................................................................................................316

Chapter 11. Files, Directories and Objects by SOID.........................................................................317

11.1. Files & Directories Window......................................................................................................317

11.1.1. File/Directory Information Window....................................................................................................318

11.2. Objects by SOID Window.........................................................................................................322

Chapter 12. Tape Aggregation............................................................................................................323

12.1. Overview of Tape Aggregation..................................................................................................323

12.2. Tape Aggregation Performance Considerations........................................................................323

12.3. Configuring Tape Aggregation..................................................................................................323

Chapter 13. User Accounts and Accounting.......................................................................................325

13.1. Managing HPSS Users...............................................................................................................325

13.1.1. Adding HPSS Users.............................................................................................................................325

13.1.1.1. Add All User ID Types...............................................................................................................325

13.1.1.2. Add a UNIX User ID..................................................................................................................326

13.1.1.3. Add a Kerberos User ID.............................................................................................................327

13.1.1.4. Add an LDAP User ID................................................................................................................327

13.1.1.5. Add an FTP User ID...................................................................................................................327

13.1.1.6. Add an SSM User ID..................................................................................................................328

13.1.2. Deleting HPSS Users...........................................................................................................................328

13.1.3. Listing HPSS Users.............................................................................................................................329

13.1.4. Create an SSM Client Package............................................................................................................329

13.2. Accounting................................................................................................................................330

13.2.1. Accounting Policy Window.................................................................................................................330

13.2.2. Accounting Reports and Status............................................................................................................332

13.2.2.1. Generating an Accounting Report...............................................................................................332

13.2.2.2. Accounting Status Window.........................................................................................................332

13.2.2.3. Interpreting the Accounting Report.............................................................................................333

13.2.3. Accounting Procedures........................................................................................................................335

13.2.3.1. Site Defined Accounting Configuration Files and Procedures...................................................336

13.2.3.1.1. Site Defined Account Maps...............................................................................................336

13.2.3.1.2. Site Defined Account Apportionment Table......................................................................337

13.2.3.1.3. Maintaining Site Defined Accounting Files.......................................................................337

13.2.3.2. Accounting Intervals and Charges..............................................................................................338

Chapter 14. User Interfaces.................................................................................................................339

14.1. Client API Configuration ..........................................................................................................339

14.2. FTP/PFTP Daemon Configuration............................................................................................341

14.3. HPSS VFS Interface Configuration ..........................................................................................345

14.3.1. HPSS VFS Interface Overview............................................................................................................345

14.3.2. Supported Linux Versions...................................................................................................................346

14.3.3. Installation and Configuration of VFS.................................................................................................346

14.3.3.1. Extracting from HPSS Source Tree............................................................................................346

14.3.3.2. Compiling/Building.....................................................................................................................347

14.3.3.3. Modifying the Kernel..................................................................................................................347

14.3.3.4. Client API – Pre-Requisite..........................................................................................................348

14.3.3.5. Other System Configuration Details...........................................................................................350

14.4. Mounting VFS Filesystems.......................................................................................................351

14.4.1. Mounting via the Command Line........................................................................................................351

14.4.2. Mounting via the ‘/etc/fstab’ File.........................................................................................................351

14.4.3. Mount Options.....................................................................................................................................352

14.4.4. Un-mounting an HPSS Filesystem.......................................................................................................354

14.4.5. Linux ‘proc’ Filesystem Statistics........................................................................................................354

14.5. Additional VFS Notes...............................................................................................................355

14.5.1. Building an RPM Package...................................................................................................................355

Chapter 15. Backup and Recovery......................................................................................................356

15.1. HPSS Metadata Backup and Recovery......................................................................................356

15.1.1. HPSS Administrator Responsibilities for DB2....................................................................................356

15.1.2. Overview of the DB2 Backup Process................................................................................................357

15.1.2.1. Configuring DB2 for Online Backup..........................................................................................358

15.1.3. Overview of the DB2 Recovery Process.............................................................................................359

15.2. HPSS System Environmental Backup........................................................................................359

15.2.1. HPSS Filesystem Backup....................................................................................................................359

15.2.2. Operating System Backup....................................................................................................................360

15.2.3. Kerberos Backup ................................................................................................................................360

15.3. HPSS User Data Recovery.........................................................................................................360

15.3.1. Recovering HPSS Files from Damaged HPSS Volumes.....................................................................360

15.3.1.1. Recover Partially Damaged Disk or Tape Volume.....................................................................362

15.3.1.1.1. With Secondary Copies......................................................................................................362

15.3.1.1.2. Without Secondary Copies.................................................................................................363

15.3.1.2. Cleanup Totally Damaged Disk or Tape.....................................................................................364

15.4. DB2 Monitoring........................................................................................................................365

15.5. DB2 Space Shortage..................................................................................................................365

15.5.1. DMS Table Spaces..............................................................................................................................366

15.5.2. SMS Table Spaces...............................................................................................................................366

Chapter 16. Management Tools..........................................................................................................368

16.1. Utility Overview........................................................................................................................368

16.1.1. Fileset and Junction Management........................................................................................................368

16.1.2. Tape Management...............................................................................................................................368

16.1.3. System Info..........................................................................................................................................368

16.1.4. System Management............................................................................................................................369

16.1.5. User Interfaces.....................................................................................................................................370

16.1.6. Testing/Debugging...............................................................................................................................370

16.1.7. Unsupported Tools..............................................................................................................................370

Appendix A. Glossary of Terms and Acronyms.................................................................................373

Appendix B. References.......................................................................................................................385

Appendix C. Developer Acknowledgments.........................................................................................387

List of Tables

Table 1. SSM General Options..............................................................................................................39

Table 2. HPSSGUI Specific Options.....................................................................................................40

Table 3. HPSSADM Specific Options...................................................................................................40

Table 4. Mover TCP Pathname Options.............................................................................................103

Table 1. IRIX System Parameters.......................................................................................................107

Table 2. Solaris System Parameters....................................................................................................107

Table 3. Linux System Parameters......................................................................................................108

Table 1. Auto Restart Count Values...................................................................................................151

Table 1. Server Reinitialization Behavior...........................................................................................154

Table 2. Recommended Settings for Tape Devices............................................................................201

Table 3. Tape Import Types.................................................................................................................229

Table 4. Disk Import Types.................................................................................................................234

Table 5. Banner Keywords..................................................................................................................344

Table 5. HPSS VFS Interface Mount Options....................................................................................352

Preface

Who Should Read This Book

The HPSS Management Guide is intended as a resource for HPSS administrators. For those performing the initial

configuration for a new HPSS system, Chapter 1 provides a configuration roadmap. For both new systems and those

upgraded from a previous release, Chapter 1 provides a configuration, operational, and performance checklist which

should be consulted before bringing the system into production. The remaining chapters contain the details for

configuring, reconfiguring, monitoring, and managing an HPSS system.

Conventions Used in This Book

Example commands that should be typed at a command line will be preceded by a percent sign (‘%’) and be

presented in a boldface courier font:

% sample command

Any text preceded by a pound sign (‘#’) should be considered comment lines:

# This is a comment

Angle brackets (‘<>’) denote a required argument for a command:

% sample command <argument>

Square brackets (‘[]’) denote an optional argument for a command:

% sample command [optional argument]

Vertical bars (‘|’) denote different choices within an argument:

% sample command <argument1 | argument2>

A byte is an eight bit data octet. A kilobyte, KB, is 1024 bytes (2^10 bytes). A megabyte, MB, is 1048576

bytes (2^20 bytes). A gigabyte, GB, is 1073741824 bytes (2^30 bytes), a terabyte, TB, is 1099511627776

bytes (2^40 bytes), and a petabyte, PB, is 1125899906842624 bytes (2^50 bytes).
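The unit definitions above are all powers of two, so they can be checked with shell arithmetic. The following is a minimal sketch, not an HPSS utility: the function name bytes_in is hypothetical, and the shell is assumed to support 64-bit integer arithmetic.

```shell
#!/bin/sh
# Hypothetical helper (not part of HPSS): print the number of bytes in one
# unit, using the binary definitions given in the text above.
bytes_in() {
    case "$1" in
        KB) exp=10 ;;
        MB) exp=20 ;;
        GB) exp=30 ;;
        TB) exp=40 ;;
        PB) exp=50 ;;
        *)  echo "unknown unit: $1" >&2; return 1 ;;
    esac
    # 2^exp computed with a left shift; assumes 64-bit shell arithmetic.
    echo $((1 << exp))
}

# Print one line per unit defined in the text.
for unit in KB MB GB TB PB; do
    echo "1 $unit = $(bytes_in "$unit") bytes"
done
```

Each printed value should match the corresponding byte count in the paragraph above.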

Chapter 1. HPSS 7.1 Configuration Overview

1.1. Introduction

This chapter defines the high-level steps necessary to configure, start, and verify correct operation of a

new 7.1 HPSS system, whether that system is created from scratch or created by conversion from a 6.2

HPSS system.

To create or modify the HPSS configuration, we recommend that the administrator first be familiar with

the information described in the HPSS Installation Guide, Chapter 2: HPSS Basics and Chapter 3: HPSS

Planning.

Before performing the procedures described in this chapter, be certain that the appropriate system

preparation steps have been performed. See the HPSS Installation Guide, Chapter 4: System Preparation

for more information. For a system created from scratch, be certain that the HPSS installation and

infrastructure configuration have been completed. See the HPSS Installation Guide, Chapter 5: HPSS

Installation and Infrastructure Configuration for more information. To convert from a 6.2 system, see

the HPSS Conversion Guide for HPSS release 7.1.

1.2. Starting the SSM GUI for the First Time

The HPSS system is ready to be configured using SSM once the HPSS software is installed on the node

and the HPSS infrastructure components are configured. In order to start the SSM GUI you must first

start all infrastructure components and the SSM System Manager as follows:

% /opt/hpss/bin/rc.hpss -m start

Next, add an SSM Admin user by invoking the hpssuser utility as follows:

% /opt/hpss/bin/hpssuser -add hpss -<unix|krb> -ssm

The above commands must be run as root.

Once the SSM Admin user has been created, you can invoke the SSM GUI as follows (for hpssgui.pl

options, see the hpssgui man page):

% /opt/hpss/bin/hpssgui.pl

Note: This command may be run as an HPSS user.

When the SSM GUI is running you can begin to configure the rest of HPSS (servers, devices, etc.) as

described in the following sections. For more information on SSM, see Chapter 3: Using SSM on page

31.

1.3. HPSS Configuration Roadmap (New HPSS Sites)

The following steps summarize the configuration of an HPSS system when creating the 7.1 system from

scratch (not upgrading from a previous release). It is important that the steps be performed in the order

listed. Each step is required unless otherwise indicated. Each step is discussed in more detail in the

referenced section.

1. Configure storage subsystems (Section 4.2.2: Creating a New Storage Subsystem on page 76)

Subsystems can be configured only partially at this time. The Gatekeeper, Default COS, and

Allowed COS fields will be updated in a later step.

2. Configure HPSS storage policies

· Accounting Policy (Section 13.2.1: on page 330)

· Log Policies (Section 9.2: Log Policies on page 295)

· Location Policy (Section 2.4: Location Policy on page 26)

· Migration Policies (Section 6.4: Migration Policies on page 180)

· Purge Policies (Section 6.5: Purge Policies on page 189)

3. Configure HPSS storage characteristics

· Storage Classes (Section 6.1.1: Configured Storage Classes on page 157)

· Storage Hierarchies (Section 6.2: Storage Hierarchies on page 170)

· Classes of Service (Section 6.3: Classes of Service on page 174)

4. Configure HPSS servers (Section 5.1: Server Configuration on page 87)

5. Create global configuration (Section 4.1: Global Configuration Window on page 72)

6. Configure MVR devices and PVL drives (Section 7.1: Configure a New Device & Drive on page

196)

7. Configure file families, if used (Section 6.6: File Families on page 193)

8. Update storage subsystem configurations with Gatekeeper and COS information (Section 4.2.4:

Modifying a Storage Subsystem on page 81 and Section 4.2.3: Storage Subsystem Configuration

Window on page 76)

9. Create the endpoint map (Section 5.1.3: Location Server Additional Configuration on page 99).

1.4. Initial HPSS Startup Roadmap (All Sites)

This section provides instructions for starting the HPSS servers and performing post-startup

configuration. For sites which are converting from 6.2, only step 1 may be necessary. For sites

configuring a new 7.1 system from scratch, all steps are necessary:

1. Start the HPSS servers (Section 5.2.2: Starting HPSS Servers on page 149)

2. Unlock the PVL drives (Section 7.4.2: Locking a Drive on page 220)

3. Create HPSS storage space:

A. Import volumes into HPSS (Section 8.1.1: Importing Volumes into HPSS on page 223)

B. Create storage resources (Section 8.1.2: Creating Storage Resources on page 234)

4. Create additional HPSS Users (Section 13.1.1: Adding HPSS Users on page 325)

5. Create Filesets and Junctions (Section 10.1: Filesets & Junctions List on page 308 and Section

10.5: Creating a Junction on page 315)

6. Create HPSS /log Directory

If log archiving is enabled, use an HPSS namespace tool such as scrub or ftp to create the /log

directory in HPSS. This directory must be owned by hpsslog and have permissions rwxr-xr-x.

The /log directory can be created by the root user using ftp as follows:

% ftp <node> <HPSS Port> # login as root user

ftp> mkdir /log

ftp> quote site chown hpsslog /log

ftp> quote site chmod 755 /log
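The octal mode 755 passed to chmod above corresponds to the symbolic permissions rwxr-xr-x required for the /log directory. As a quick way to confirm that mapping outside HPSS, here is a small sketch using Python's standard stat module; mode_to_symbolic is an illustrative helper name.

```python
import stat


def mode_to_symbolic(octal_mode: str) -> str:
    """Translate an octal permission string such as "755" to rwx notation."""
    bits = int(octal_mode, 8)
    # stat.filemode() prefixes the file type character ("d" for a directory);
    # drop it to leave only the nine permission characters.
    return stat.filemode(stat.S_IFDIR | bits)[1:]


print(mode_to_symbolic("755"))
```

This prints rwxr-xr-x: full access for the owner (hpsslog), and read and search access for group and others.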

1.5. Additional Configuration Roadmap (All Sites)

This section provides a high-level roadmap for additional HPSS configuration.

1. Configure HPSS User Interfaces (Chapter 14: User Interfaces on page 339)

2. Set up Backup for DB2 and Other Infrastructure (Chapter 15: Backup and Recovery on page 356)

3. Set up High Availability, if desired (HPSS Installation Guide, Chapter 3: HPSS Planning)

4. Optionally configure support for both authentication mechanisms (HPSS Installation Guide,

Section 5.9: Supporting Both Unix and Kerberos Authentication for SSM)

1.6. Verification Checklists (All Sites)

This section provides a number of checklists regarding configuration, operational and performance

issues.

1.6.1. Configuration Checklists

After HPSS is running, the administrator should use the following checklists to verify that HPSS was

configured or converted correctly:

Global Configuration

• Verify that a Default Class of Service has been selected.

• Verify that a Root Core Server has been selected.

Storage Subsystem Configuration

• Verify that a Default Class of Service has been selected if desired.

• Verify that a Gatekeeper has been selected if gatekeeping or account validation is required.

• Verify that the COS Name list has been filled in correctly.

• Verify that a Core Server and Migration Purge Server have been configured for each storage

subsystem.

• Verify that each storage subsystem is accessible by using lsjunctions and ensuring that there is at

least one junction to the Root fileset of each subsystem. (The root fileset for a given subsystem

can be found in the specific configuration for the subsystem’s Core Server.)

Servers

• Verify that all required HPSS servers are configured and running.

• Verify that all servers are configured with the intended level of logging, whether using their

server specific policies or the default policy. Also, verify that all Core Server and Mover log

policies have the DEBUG flag turned on to aid in the diagnostics of any future problems.

Devices and Drives

• Verify that all devices/drives are configured and each is assigned to an appropriate PVR/Mover.

• For tape devices, verify that the “Locate Support” option is enabled if supported.

• For tape devices, verify that the “NO-DELAY” option is enabled if supported by the device.

• For disk devices, verify that the “Bytes on Device” and “Starting Offset” values are correct.

• Verify that all configured drives are unlocked.

Storage Classes

• Verify that all storage classes are defined and each has sufficient free storage space.

• Verify that each storage class that will be migrated and purged is configured with the appropriate

migration and purge policy.

• Verify that no storage class at the lowest level in a hierarchy is configured with a migration or

purge policy.

• To support repack and recover of tape volumes, verify that the stripe width of each tape storage

class is less than half of the number of available drives of the appropriate drive type.
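The stripe width rule in the last item above is a simple inequality that can be checked for each tape storage class. The sketch below assumes the administrator supplies the stripe width and the count of available drives of the matching type; the function name is hypothetical and no HPSS interface is queried.

```python
def stripe_width_supports_repack(stripe_width: int, available_drives: int) -> bool:
    """Return True if the stripe width is less than half the available drives."""
    # "Less than half" is strict: stripe_width < available_drives / 2,
    # written with integers to avoid floating-point division.
    return 2 * stripe_width < available_drives
```

For example, a stripe width of 2 passes with 5 available drives but fails with only 4, since 2 is not less than half of 4.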

Storage Hierarchies

• Verify that all storage hierarchies are defined.

Classes of Service (COS)

• Verify that all classes of service are defined.

• Verify that each COS is associated with the appropriate storage hierarchy.

• Verify that the COS is configured to use the characteristics of the hierarchy and the underlying

storage classes. In addition, verify that the classes of service have the correct Minimum File Size

and Maximum File Size values. If these sizes overlap, the file placement may be indeterminate

when the user creates a file using the size hints. For classes of service that are not to be used as

part of standard file placement, set their Force Selection flag to ON so that they will only be

chosen if specified by their COS ID.

• Verify that classes of service with multiple copies have the Retry Stage Failures from

Secondary Copy flag enabled.
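The check for overlapping Minimum File Size and Maximum File Size values can be done by comparing every pair of classes of service. The following is a minimal sketch, assuming the size ranges have been collected by hand into a dictionary and that both bounds are inclusive; the function name and data layout are illustrative only.

```python
from itertools import combinations


def find_overlaps(cos_ranges):
    """Return pairs of COS names whose [min, max] file size ranges overlap.

    cos_ranges maps a COS name to a (min_size, max_size) tuple in bytes.
    Bounds are treated as inclusive, which is an assumption of this sketch.
    """
    overlaps = []
    for (a, (a_min, a_max)), (b, (b_min, b_max)) in combinations(cos_ranges.items(), 2):
        # Two closed intervals overlap when each starts before the other ends.
        if a_min <= b_max and b_min <= a_max:
            overlaps.append((a, b))
    return overlaps


example = {
    "small": (0, 2**20 - 1),
    "medium": (2**20, 2**30 - 1),
    "large": (2**30 - 1, 2**40),  # overlaps "medium" by one byte
}
print(find_overlaps(example))
```

Classes of service that have Force Selection set to ON are not part of standard file placement, so they would normally be left out of the dictionary before running the check.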

File Families, Filesets, and Junctions

• Verify that file families and filesets are created according to the site’s requirements.

• Verify that each fileset is associated with the appropriate file family and/or COS.

• Verify that each fileset has an associated junction.

User Interfaces

• Verify that the desired HPSS user interfaces (FTP, PFTP, Client API, etc.) are configured

(Chapter 14: User Interfaces on page 339).

1.6.2. Operational Checklists

The administrator should follow these checklists to ensure that HPSS is operating properly.

Configured user interfaces

• Create files of various sizes on each defined COS.

• Verify that the files are created in the expected storage class with acceptable transfer rates.

• If necessary, redefine the associated storage class to improve throughput.

• Update the characteristics fields (Transfer Rate, Latency, etc.) in the storage class and class of service

definitions to reflect actual performance results.

• After the files are created on the correct storage class, verify that the files are created with correct

file ownerships and permissions.

• Verify that other file operations (delete, chmod, copy, etc.) work.

• If accounting is configured, verify that the files are created with the correct accounting indices.

• If file families, filesets and junctions are configured, verify that they work as intended.

Devices and Drives

• Ensure that each drive mounts, reads, and writes.

Storage Management

• Verify that migration and purge operations work as intended.

• Start Migration and Purge operations manually to verify that files are migrated and purged

correctly.

• Verify that files can be accessed after being migrated/purged.

• Monitor free space from the top level storage class in each hierarchy to verify that the migration

and purge policies are maintaining adequate free space.

1.6.3. Performance Checklist

Measure data transfer rates in each COS for:

• Client writes to disk

• Migration from disk to tape

• Staging from tape to disk

• Client reads from disk

Transfer rates should be close to the speed of the underlying hardware. The actual hardware speeds can

be obtained from their specifications and by testing directly from the operating system (e.g., using dd to

read and write to each device). Keep in mind that transfer performance can be limited by factors external

to HPSS. For example, HPSS file read performance may be limited by the performance of the UNIX file

system writing the file rather than by limits inside HPSS.
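To compare a measured transfer against the hardware rate, the arithmetic can be sketched as follows. The function names are illustrative, the byte count and elapsed time are assumed to come from the site's own timing of a transfer, and MB is taken as 2^20 bytes as defined in this guide.

```python
MB = 2**20  # megabyte as defined in this guide


def transfer_rate_mb_s(bytes_moved: int, seconds: float) -> float:
    """Average transfer rate in MB per second."""
    return bytes_moved / seconds / MB


def fraction_of_hardware(measured_mb_s: float, hardware_mb_s: float) -> float:
    """Measured rate as a fraction of the rated or dd-measured hardware rate."""
    return measured_mb_s / hardware_mb_s


# Hypothetical numbers: 100 MB moved in 2 seconds on a device rated at 80 MB/s.
rate = transfer_rate_mb_s(100 * MB, 2.0)
print(rate, fraction_of_hardware(rate, 80.0))
```

A fraction well below 1.0 suggests looking for a bottleneck, which may lie outside HPSS as noted above.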

Chapter 2. Security and System Access

2.1. Security Services

As of release 6.2, HPSS no longer uses DCE security services. The new approach to security divides

services into two APIs, known as mechanisms, each of which has multiple implementations.

Configuration files control which implementation of each mechanism is used in the security realm

(analogous to a DCE cell) for an HPSS system. Security mechanisms are implemented in shared object

libraries and are described to HPSS by a configuration file. HPSS programs that need to use the

mechanism dynamically link the library to the program when the program starts.

The first type of mechanism is the authentication mechanism. This API is used to acquire credentials

and to verify the credentials of clients. Authentication verifies that a client really is who it claims to be.

The second type of mechanism is the authorization mechanism. Once a client's identity has been

verified, this API is used to obtain the authorization details associated with the client such as uid, gid,

group membership, etc., that are used to determine the privileges accorded to the client and the resources

to which it has access.
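The ordering of the two mechanisms, authentication first and authorization lookup only afterwards, can be illustrated with a toy sketch. Everything here is hypothetical: the in-memory tables, the plain-text credential comparison, and the function names stand in for the real shared-library implementations and do not reflect the actual HPSS security APIs.

```python
from dataclasses import dataclass


@dataclass
class AuthzDetails:
    uid: int
    gid: int
    groups: tuple


# Hypothetical stand-ins for the two mechanisms' backing stores.
CREDENTIALS = {"alice": "secret"}
DIRECTORY = {"alice": AuthzDetails(uid=1001, gid=100, groups=(100, 200))}


def authenticate(principal, credential):
    """Authentication mechanism: is the client who it claims to be?"""
    return principal in CREDENTIALS and CREDENTIALS[principal] == credential


def authorize(principal):
    """Authorization mechanism: look up uid, gid, and group membership."""
    return DIRECTORY[principal]


def admit(principal, credential):
    """Consult authorization only after identity has been verified."""
    if not authenticate(principal, credential):
        return None
    return authorize(principal)
```

Keeping the two stages behind separate functions mirrors the point made above: each mechanism can have more than one implementation, chosen independently of the other.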

2.1.1. Security Services Configuration

Ordinarily, the configuration files that control HPSS's access to security services are set up either by the

installation tool, mkhpss, or by the metadata conversion tools. This section is provided purely for

reference. Each of the files below is stored by default in /var/hpss/etc.

• auth.conf, authz.conf

These files define which shared libraries provide implementations of the authentication and