Page 1

Front cover

Draft Document for Review May 4, 2007 11:35 am REDP-4285-00

Linux Performance and

Tuning Guidelines

Operating system tuning methods

Performance monitoring tools

Peformance analysis

ibm.com/redbooks

Eduardo Ciliendo

Takechika Kunimasa

Redpaper

Page 2

Page 3

Draft Document for Review May 4, 2007 11:35 am 4285edno.fm

International Technical Support Organization

Linux Performance and Tuning Guidelines

April 2007

REDP-4285-00

Page 4

4285edno.fm Draft Document for Review May 4, 2007 11:35 am

Note: Before using this information and the product it supports, read the information in “Notices” on

page vii.

First Edition (April 2007)

This edition applies to kernel 2.6 Linux distributions.

This document created or updated on May 4, 2007.

© Copyright International Business Machines Corporation 2007. All rights reserved.

Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule

Contract with IBM Corp.

Page 5

Draft Document for Review May 4, 2007 11:35 am 4285TOC.fm

Contents

Notices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . vii

Trademarks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . viii

Preface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ix

How this Redpaper is structured . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ix

The team that wrote this Redpaper . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .x

Become a published author . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xi

Comments welcome. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xi

Chapter 1. Understanding the Linux operating system. . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1 Linux process management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.1.1 What is a process? . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.1.2 Lifecycle of a process . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.1.3 Thread. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.1.4 Process priority and nice level . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

1.1.5 Context switching . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.1.6 Interrupt handling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.1.7 Process state . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

1.1.8 Process memory segments. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

1.1.9 Linux CPU scheduler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

1.2 Linux memory architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

1.2.1 Physical and virtual memory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

1.2.2 Virtual memory manager. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

1.3 Linux file systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

1.3.1 Virtual file system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

1.3.2 Journaling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

1.3.3 Ext2 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

1.3.4 Ext3 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

1.3.5 ReiserFS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

1.3.6 Journal File System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

1.3.7 XFS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

1.4 Disk I/O subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

1.4.1 I/O subsystem architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

1.4.2 Cache . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

1.4.3 Block layer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

1.4.4 I/O device driver . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

1.4.5 RAID and Storage system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

1.5 Network subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

1.5.1 Networking implementation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

1.5.2 TCP/IP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

1.5.3 Offload . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

1.5.4 Bonding module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

1.6 Understanding Linux performance metrics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

1.6.1 Processor metrics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

1.6.2 Memory metrics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

1.6.3 Network interface metrics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

1.6.4 Block device metrics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

Chapter 2. Monitoring and benchmark tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

© Copyright IBM Corp. 2007. All rights reserved. iii

Page 6

4285TOC.fm Draft Document for Review May 4, 2007 11:35 am

2.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

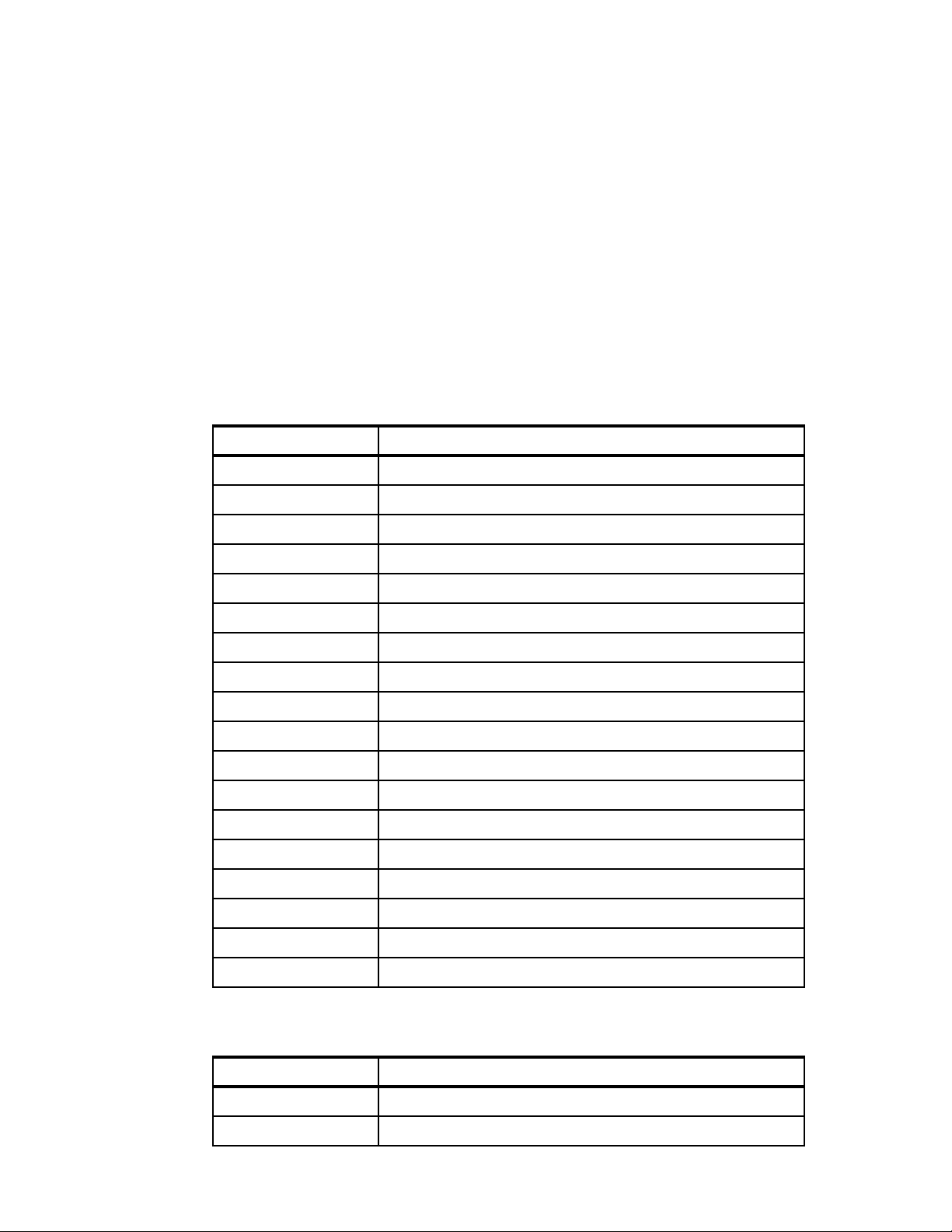

2.2 Overview of tool function . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

2.3 Monitoring tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

2.3.1 top . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

2.3.2 vmstat . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

2.3.3 uptime . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

2.3.4 ps and pstree . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

2.3.5 free . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

2.3.6 iostat . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

2.3.7 sar . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

2.3.8 mpstat . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

2.3.9 numastat . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

2.3.10 pmap . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

2.3.11 netstat . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

2.3.12 iptraf . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

2.3.13 tcpdump / ethereal . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

2.3.14 nmon . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

2.3.15 strace . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

2.3.16 Proc file system. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

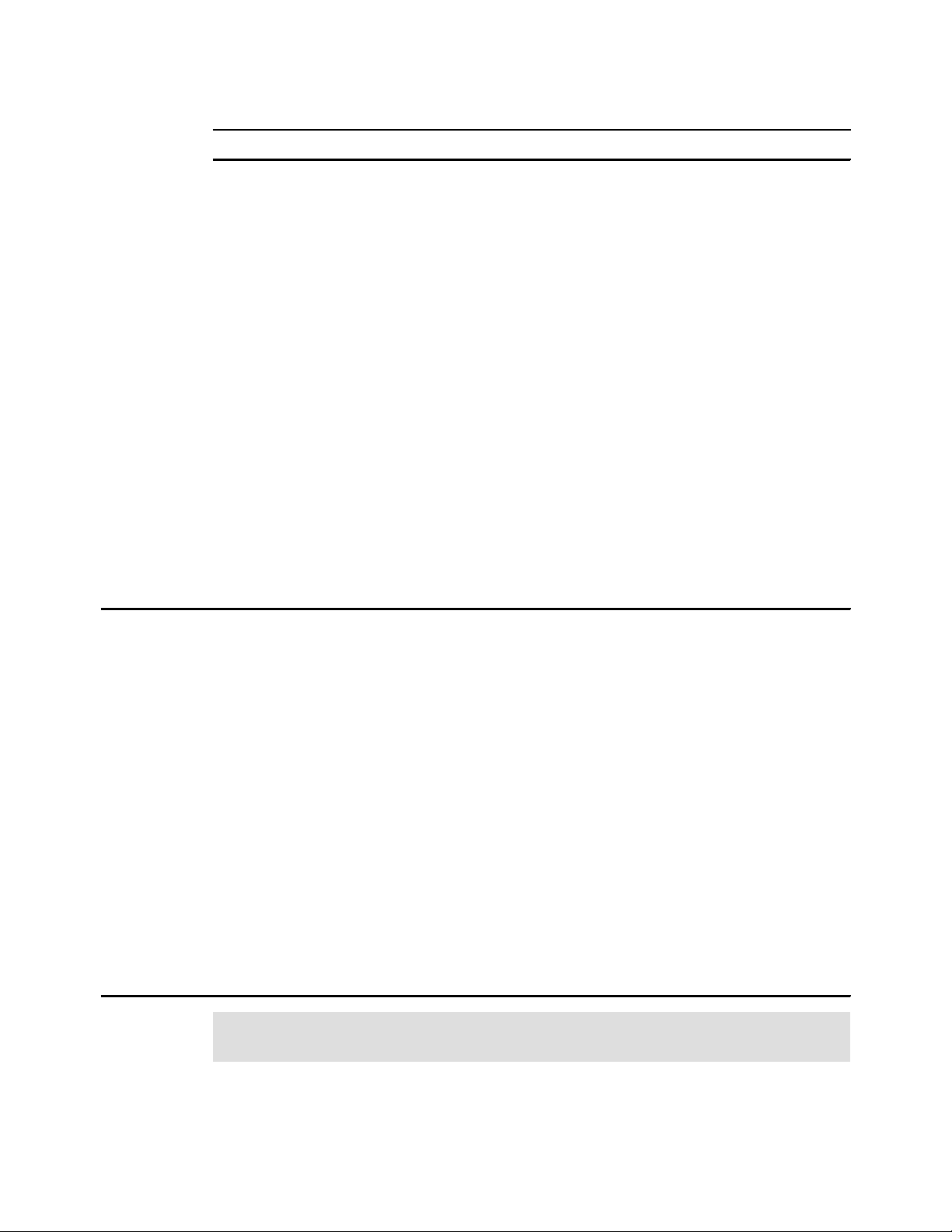

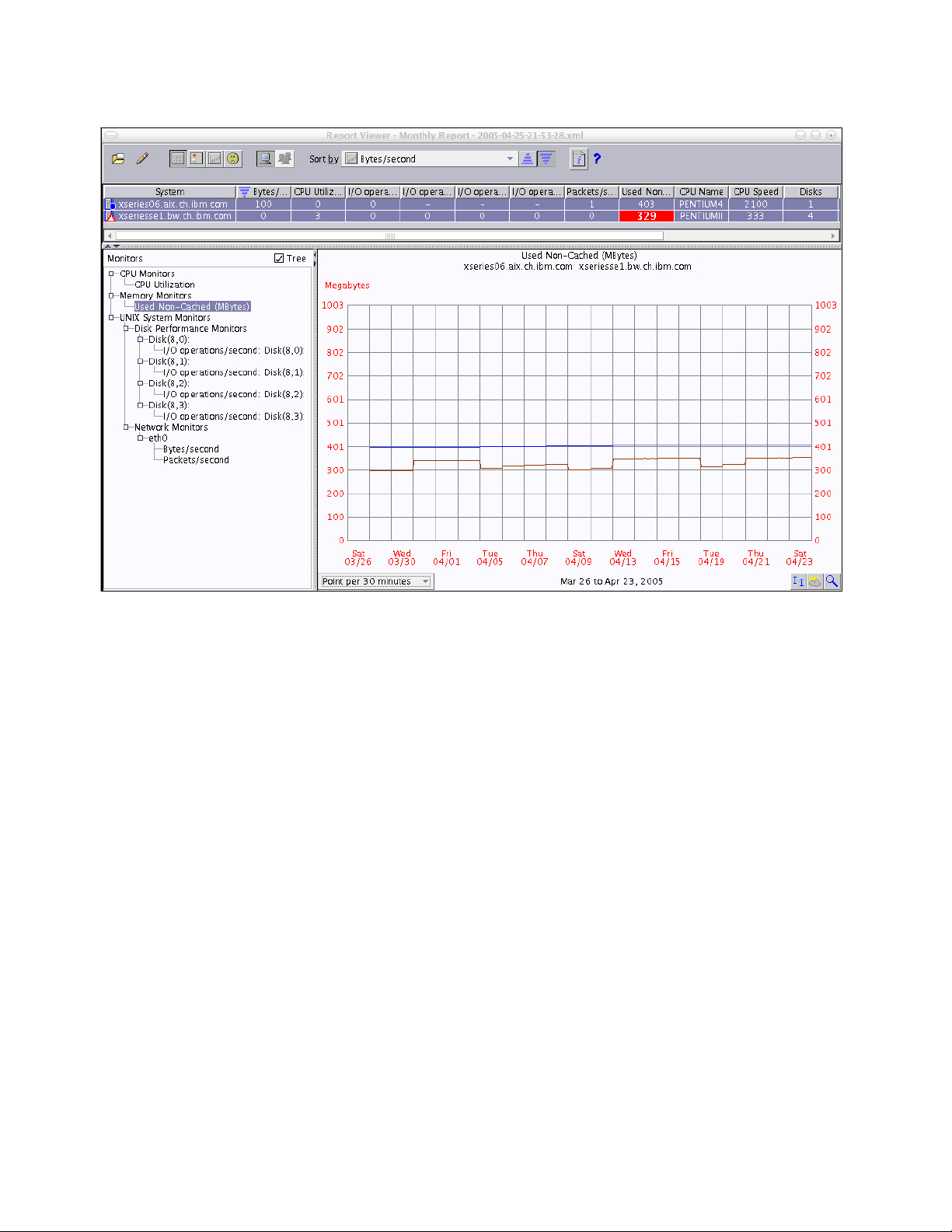

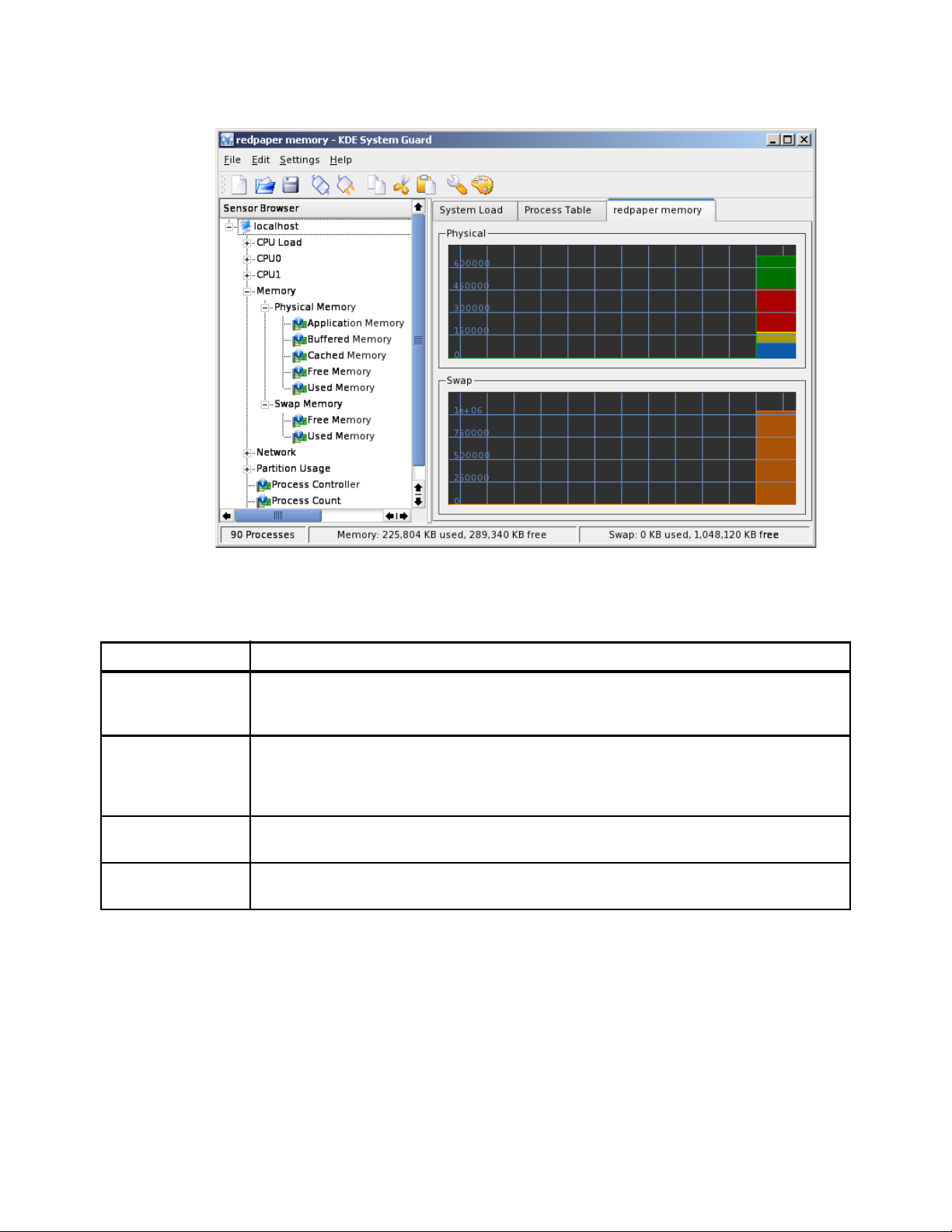

2.3.17 KDE System Guard. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

2.3.18 Gnome System Monitor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

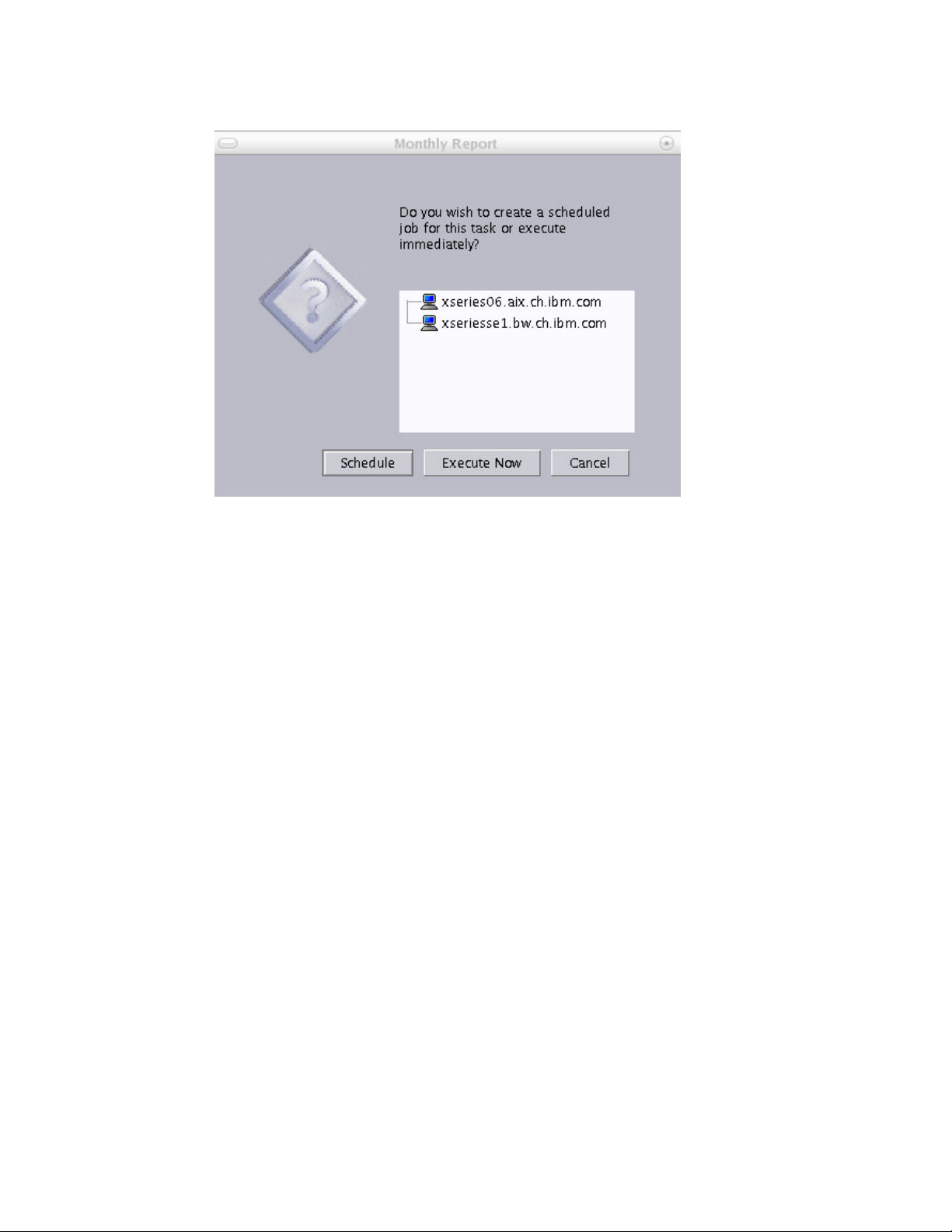

2.3.19 Capacity Manager . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

2.4 Benchmark tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

2.4.1 LMbench . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

2.4.2 IOzone . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

2.4.3 netperf . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

2.4.4 Other useful tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

Chapter 3. Analyzing performance bottlenecks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

3.1 Identifying bottlenecks. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

3.1.1 Gathering information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

3.1.2 Analyzing the server’s performance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

3.2 CPU bottlenecks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

3.2.1 Finding CPU bottlenecks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

3.2.2 SMP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

3.2.3 Performance tuning options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

3.3 Memory bottlenecks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

3.3.1 Finding memory bottlenecks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

3.3.2 Performance tuning options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

3.4 Disk bottlenecks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

3.4.1 Finding disk bottlenecks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

3.4.2 Performance tuning options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

3.5 Network bottlenecks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

3.5.1 Finding network bottlenecks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

3.5.2 Performance tuning options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

Chapter 4. Tuning the operating system. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

4.1 Tuning principals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

4.1.1 Change management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

4.2 Installation considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

4.2.1 Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

4.2.2 Check the current configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

4.2.3 Minimize resource use . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

iv Linux Performance and Tuning Guidelines

Page 7

Draft Document for Review May 4, 2007 11:35 am 4285TOC.fm

4.2.4 SELinux. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

4.2.5 Compiling the kernel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

4.3 Changing kernel parameters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

4.3.1 Where the parameters are stored . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

4.3.2 Using the sysctl command . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

4.4 Tuning the processor subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

4.4.1 Tuning process priority . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

4.4.2 CPU affinity for interrupt handling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

4.4.3 Considerations for NUMA systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

4.5 Tuning the vm subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

4.5.1 Setting kernel swap and pdflush behavior . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

4.5.2 Swap partition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

4.5.3 HugeTLBfs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

4.6 Tuning the disk subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

4.6.1 Hardware considerations before installing Linux. . . . . . . . . . . . . . . . . . . . . . . . . 114

4.6.2 I/O elevator tuning and selection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

4.6.3 File system selection and tuning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

4.7 Tuning the network subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

4.7.1 Considerations of traffic characteristics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

4.7.2 Speed and duplexing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 126

4.7.3 MTU size . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

4.7.4 Increasing network buffers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

4.7.5 Additional TCP/IP tuning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

4.7.6 Performance impact of Netfilter. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

4.7.7 Offload configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

4.7.8 Increasing the packet queues . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

4.7.9 Increasing the transmit queue length . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

4.7.10 Decreasing interrupts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

Appendix A. Testing configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

Hardware and software configurations. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

Linux installed on guest IBM z/VM systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

Linux installed on IBM System x servers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

Abbreviations and acronyms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

Related publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

Other publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

Online resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

How to get IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

Help from IBM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 149

Contents v

Page 8

4285TOC.fm Draft Document for Review May 4, 2007 11:35 am

vi Linux Performance and Tuning Guidelines

Page 9

Draft Document for Review May 4, 2007 11:35 am 4285spec.fm

Notices

This information was developed for products and services offered in the U.S.A.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult

your local IBM representative for information on the products and services currently available in your area. Any

reference to an IBM product, program, or service is not intended to state or imply that only that IBM product,

program, or service may be used. Any functionally equivalent product, program, or service that does not

infringe any IBM intellectual property right may be used instead. However, it is the user's responsibility to

evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document. The

furnishing of this document does not give you any license to these patents. You can send license inquiries, in

writing, to:

IBM Director of Licensing, IBM Corporation, North Castle Drive, Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such

provisions are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION

PROVIDES THIS PUBLICATION "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR

IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT,

MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of

express or implied warranties in certain transactions, therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made

to the information herein; these changes will be incorporated in new editions of the publication. IBM may make

improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time

without notice.

Any references in this information to non-IBM Web sites are provided for convenience only and do not in any

manner serve as an endorsement of those Web sites. The materials at those Web sites are not part of the

materials for this IBM product and use of those Web sites is at your own risk.

IBM may use or distribute any of the information you supply in any way it believes appropriate without incurring

any obligation to you.

Information concerning non-IBM products was obtained from the suppliers of those products, their published

announcements or other publicly available sources. IBM has not tested those products and cannot confirm the

accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the

capabilities of non-IBM products should be addressed to the suppliers of those products.

This information contains examples of data and reports used in daily business operations. To illustrate them

as completely as possible, the examples include the names of individuals, companies, brands, and products.

All of these names are fictitious and any similarity to the names and addresses used by an actual business

enterprise is entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrate programming

techniques on various operating platforms. You may copy, modify, and distribute these sample programs in

any form without payment to IBM, for the purposes of developing, using, marketing or distributing application

programs conforming to the application programming interface for the operating platform for which the sample

programs are written. These examples have not been thoroughly tested under all conditions. IBM, therefore,

cannot guarantee or imply reliability, serviceability, or function of these programs.

© Copyright IBM Corp. 2007. All rights reserved. vii

Page 10

4285spec.fm Draft Document for Review May 4, 2007 11:35 am

Trademarks

The following terms are trademarks of the International Business Machines Corporation in the United States,

other countries, or both:

Redbooks (logo) ®

eServer™

xSeries®

z/OS®

AIX®

DB2®

DS8000™

IBM®

POWER™

Redbooks®

ServeRAID™

System i™

System p™

System x™

System z™

System Storage™

TotalStorage®

The following terms are trademarks of other companies:

Java, JDBC, Solaris, and all Java-based trademarks are trademarks of Sun Microsystems, Inc. in the United

States, other countries, or both.

Excel, Microsoft, Windows, and the Windows logo are trademarks of Microsoft Corporation in the United

States, other countries, or both.

Intel, Itanium, Intel logo, Intel Inside logo, and Intel Centrino logo are trademarks or registered trademarks of

Intel Corporation or its subsidiaries in the United States, other countries, or both.

UNIX is a registered trademark of The Open Group in the United States and other countries.

Linux is a trademark of Linus Torvalds in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.

viii Linux Performance and Tuning Guidelines

Page 11

Draft Document for Review May 4, 2007 11:35 am 4285pref.fm

Preface

Linux® is an open source operating system developed by people all over the world. The

source code is freely available and can be used under the GNU General Public License. The

operating system is made available to users in the form of distributions from companies such

as Red Hat and Novell. Some desktop Linux distributions can be downloaded at no charge

from the Web, but the server versions typically must be purchased.

Over the past few years, Linux has made its way into the data centers of many corporations

all over the globe. The Linux operating system has become accepted by both the scientific

and enterprise user population. Today, Linux is by far the most versatile operating system.

You can find Linux on embedded devices such as firewalls and cell phones and mainframes.

Naturally, performance of the Linux operating system has become a hot topic for both

scientific and enterprise users. However, calculating a global weather forecast and hosting a

database impose different requirements on the operating system. Linux has to accommodate

all possible usage scenarios with the most optimal performance. The consequence of this

challenge is that most Linux distributions contain general tuning parameters to accommodate

all users.

IBM® has embraced Linux, and it is recognized as an operating system suitable for

enterprise-level applications running on IBM systems. Most enterprise applications are now

available on Linux, including file and print servers, database servers, Web servers, and

collaboration and mail servers.

With use of Linux in an enterprise-class server comes the need to monitor performance and,

when necessary, tune the server to remove bottlenecks that affect users. This IBM Redpaper

describes the methods you can use to tune Linux, tools that you can use to monitor and

analyze server performance, and key tuning parameters for specific server applications. The

purpose of this redpaper is to understand, analyze, and tune the Linux operating system to

yield superior performance for any type of application you plan to run on these systems.

The tuning parameters, benchmark results, and monitoring tools used in our test environment

were executed on Red Hat and Novell SUSE Linux kernel 2.6 systems running on IBM

System x servers and IBM System z servers. However, the information in this redpaper

should be helpful for all Linux hardware platforms.

How this Redpaper is structured

To help readers new to Linux or performance tuning get a fast start on the topic, we have

structured this book the following way:

Understanding the Linux operating system

This chapter introduces the factors that influence systems performance and the way the

Linux operating system manages system resources. The reader is introduced to several

important performance metrics that are needed to quantify system performance.

Monitoring Linux performance

The second chapter introduces the various utilities that are available for Linux to measure

and analyze systems performance.

Analyzing performance bottlenecks

This chapter introduces the process of identifying and analyzing bottlenecks in the system.

© Copyright IBM Corp. 2007. All rights reserved. ix

Page 12

4285pref.fm Draft Document for Review May 4, 2007 11:35 am

Tuning the operating system

With the basic knowledge of the operating systems way of working and the skills in a

variety of performance measurement utilities, the reader is now ready to go to work and

explore the various performance tweaks available in the Linux operating system.

The team that wrote this Redpaper

This Redpaper was produced by a team of specialists from around the world working at the

International Technical Support Organization, Raleigh Center.

The team: Byron, Eduardo, Takechika

Eduardo Ciliendo is an Advisory IT Specialist working as a performance specialist on

IBM Mainframe Systems in IBM Switzerland. He has over than 10 years of experience in

computer sciences. Eddy studied Computer and Business Sciences at the University of

Zurich and holds a post-diploma in Japanology. Eddy is a member of the zChampion team

and holds several IT certifications including the RHCE title. As a Systems Engineer for

IBM System z™, he works on capacity planning and systems performance for z/OS® and

Linux for System z. Eddy has made several publications on systems performance and

Linux.

Takechika Kunimasa is an Associate IT Architect in IBM Global Service in Japan. He studied

Electrical and Electronics engineering at Chiba University. He has more than 10 years of

experience in IT industry. He worked as network engineer for 5 years and he has been

working for Linux technical support. His areas of expertise include Linux on System x™,

System p™ and System z, high availability system, networking and infrastructure architecture

design. He is Cisco Certified Network Professional and Red Hat Certified Engineer.

Byron Braswell is a Networking Professional at the International Technical Support

Organization, Raleigh Center. He received a B.S. degree in Physics and an M.S. degree in

x Linux Performance and Tuning Guidelines

Page 13

Draft Document for Review May 4, 2007 11:35 am 4285pref.fm

Computer Sciences from Texas A&M University. He writes extensively in the areas of

networking, application integration middleware, and personal computer software. Before

joining the ITSO, Byron worked in IBM Learning Services Development in networking

education development.

Thanks to the following people for their contributions to this project:

Margaret Ticknor

Carolyn Briscoe

International Technical Support Organization, Raleigh Center

Roy Costa

Michael B Schwartz

Frieder Hamm

International Technical Support Organization, Poughkeepsie Center

Christian Ehrhardt

Martin Kammerer

IBM Böblingen, Germany

Erwan Auffret

IBM France

Become a published author

Join us for a two- to six-week residency program! Help write an IBM Redbook dealing with

specific products or solutions, while getting hands-on experience with leading-edge

technologies. You will have the opportunity to team with IBM technical professionals,

Business Partners, and Clients.

Your efforts will help increase product acceptance and customer satisfaction. As a bonus,

you'll develop a network of contacts in IBM development labs, and increase your productivity

and marketability.

Find out more about the residency program, browse the residency index, and apply online at:

ibm.com/redbooks/residencies.html

Comments welcome

Your comments are important to us!

We want our papers to be as helpful as possible. Send us your comments about this

Redpaper or other Redbooks® in one of the following ways:

Use the online Contact us review redbook form found at:

ibm.com/redbooks

Send your comments in an e-mail to:

redbooks@us.ibm.com

Mail your comments to:

IBM Corporation, International Technical Support Organization

Dept. HYTD Mail Station P099

Preface xi

Page 14

4285pref.fm Draft Document for Review May 4, 2007 11:35 am

2455 South Road

Poughkeepsie, NY 12601-5400

xii Linux Performance and Tuning Guidelines

Page 15

Draft Document for Review May 4, 2007 11:35 am 4285ch01.fm

1

Chapter 1. Understanding the Linux

operating system

We begin this Redpaper with a quick overview of how the Linux operating system handles its

tasks to complete interacting with its hardware resources. Performance tuning is a difficult

task that requires in-depth understanding of the hardware, operating system, and application.

If performance tuning were simple, the parameters we are about to explore would be

hard-coded into the firmware or the operating system and you would not be reading these

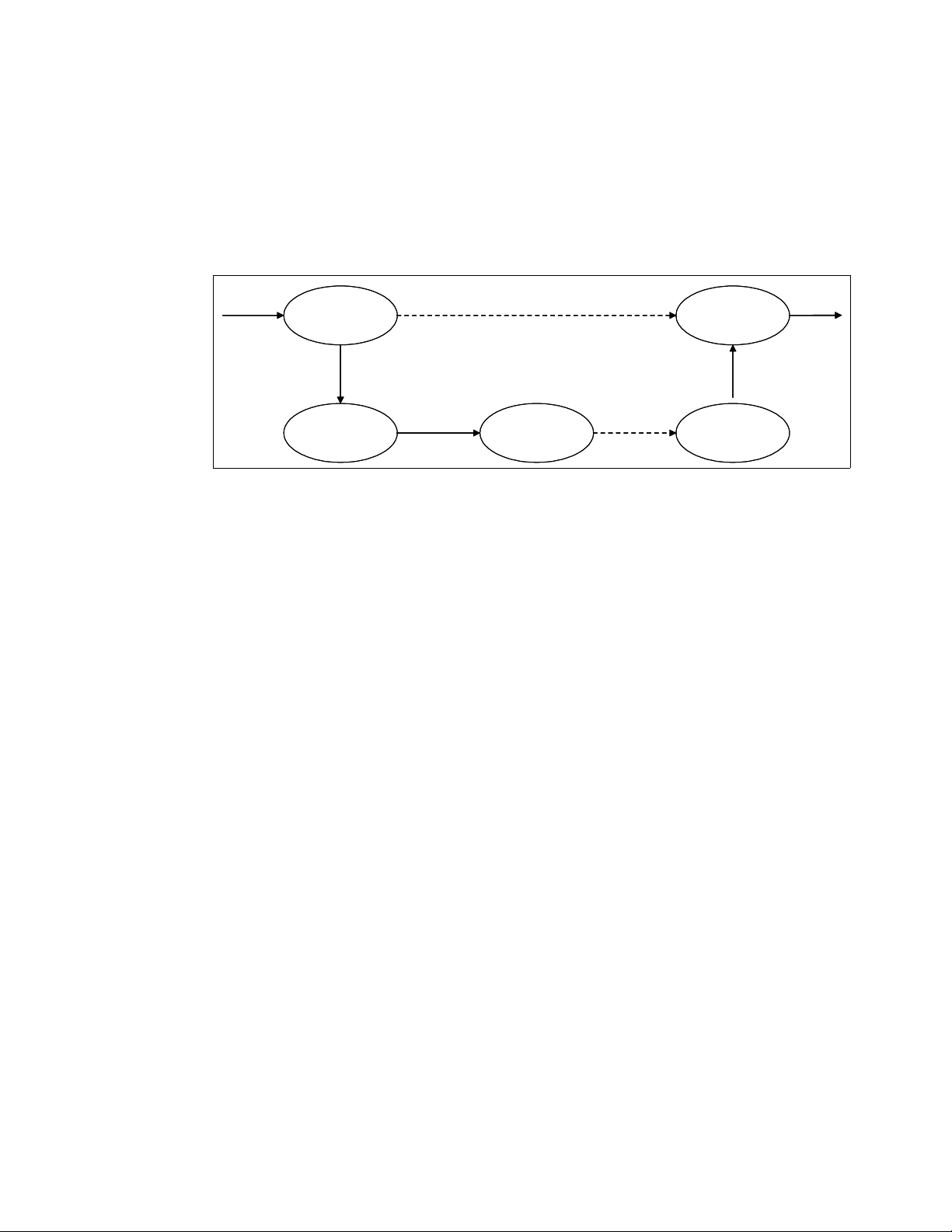

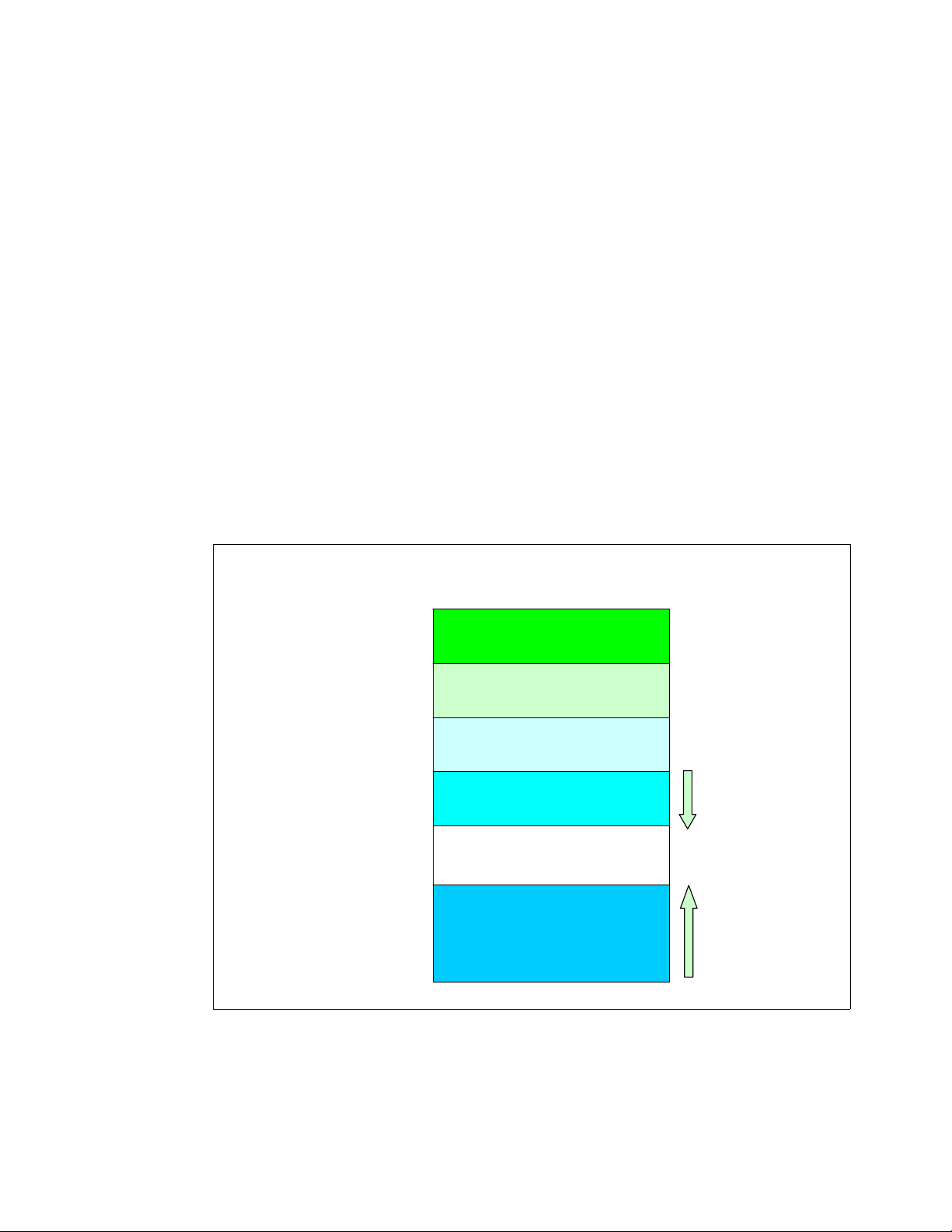

lines. However, as shown in the following figure, server performance is affected by multiple

factors.

Applications

Applications

Libraries

Libraries

Kernel

Kernel

Drivers

Drivers

Firmware

Firmware

Hardware

Hardware

Figure 1-1 Schematic interaction of different performance components

© Copyright IBM Corp. 2007. All rights reserved. 1

Page 16

4285ch01.fm Draft Document for Review May 4, 2007 11:35 am

We can tune the I/O subsystem for weeks in vain if the disk subsystem for a 20,000-user

database server consists of a single IDE drive. Often a new driver or an update to the

application will yield impressive performance gains. Even as we discuss specific details,

never forget the complete picture of systems performance. Understanding the way an

operating system manages the system resources aids us in understanding what subsystems

we need to tune, given a specific application scenario.

The following sections provide a short introduction to the architecture of the Linux operating

system. A complete analysis of the Linux kernel is beyond the scope of this Redpaper. The

interested reader is pointed to the kernel documentation for a complete reference of the Linux

kernel. Once you get a overall picture of the Linux kernel, you can go further depth into the

detail more easily.

Note: This Redpaper focuses on the performance of the Linux operating system.

In this chapter we cover:

1.1, “Linux process management” on page 3

1.2, “Linux memory architecture” on page 11

1.3, “Linux file systems” on page 15

1.4, “Disk I/O subsystem” on page 19

1.5, “Network subsystem” on page 26

1.6, “Understanding Linux performance metrics” on page 34

2 Linux Performance and Tuning Guidelines

Page 17

Draft Document for Review May 4, 2007 11:35 am 4285ch01.fm

1.1 Linux process management

Process management is one of the most important roles of any operating system. Effective

process management enables an application to operate steadily and effectively.

Linux process management implementation is similar to UNIX® implementation. It includes

process scheduling, interrupt handling, signaling, process prioritization, process switching,

process state, process memory and so on.

In this section, we discuss the fundamentals of the Linux process management

implementation. It helps to understand how the Linux kernel deals with processes that will

have an effect on system performance.

1.1.1 What is a process?

A process is an instance of execution that runs on a processor. The process uses any

resources Linux kernel can handle to complete its task.

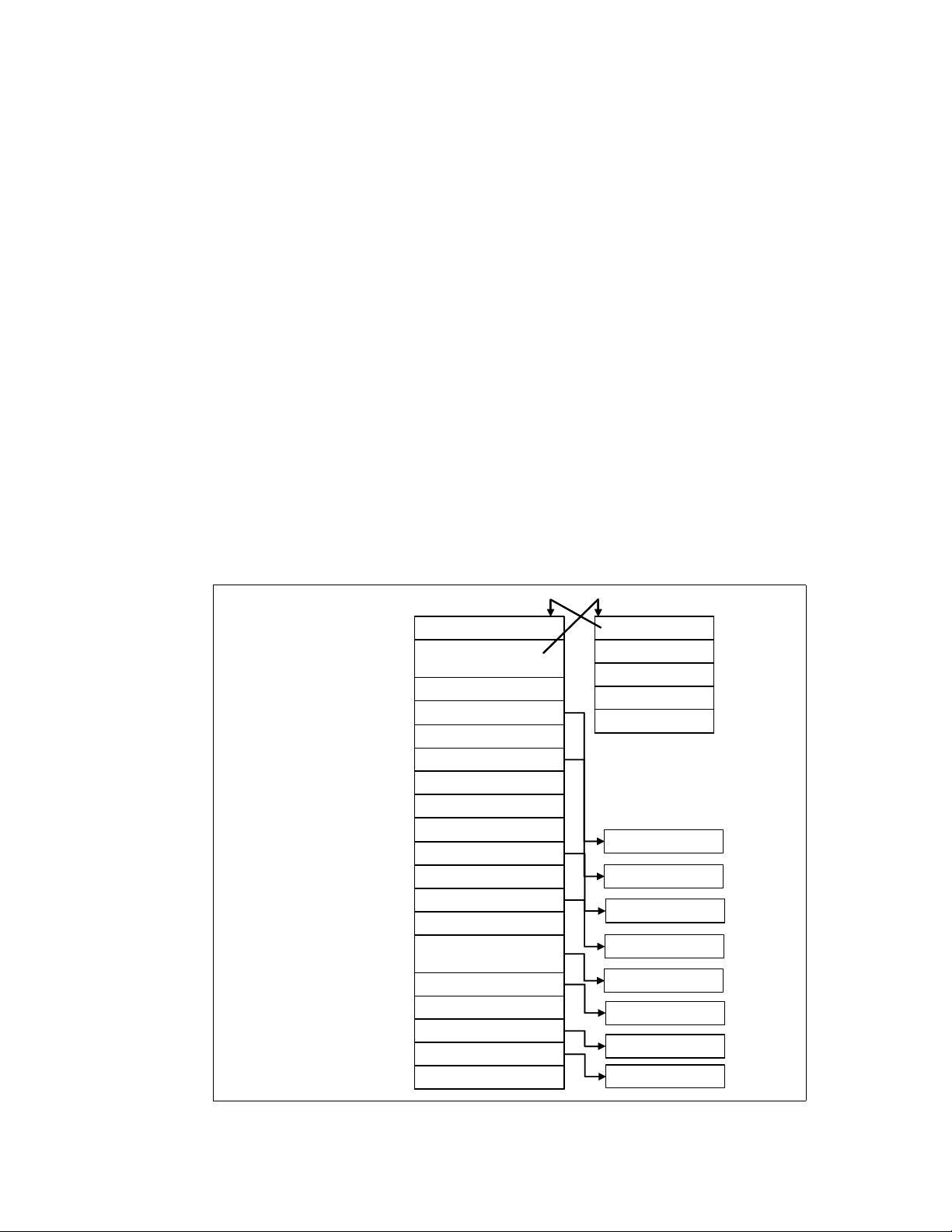

All processes running on Linux operating system are managed by the task_struct structure,

which is also called

necessary for a single process to run such as process identification, attributes of the process,

resources which construct the process. If you know the structure of the process, you can

understand what is important for process execution and performance. Figure 1-2 shows the

outline of structures related to process information.

process descriptor. A process descriptor contains all the information

task_struct structure

kernel stack

kernel stack

Root directory

Root directory

thread_info structure

task

stateProcess state

stateProcess state

thread_infoProcess information and

thread_infoProcess information and

:

:

run_list, arrayFor process scheduling

run_list, arrayFor process scheduling

:

:

mmProcess address space

mmProcess address space

:

:

pidProcess ID

pidProcess ID

:

:

group_infoGroup management

group_infoGroup management

:

:

userUser management

userUser management

:

:

fsWorking directory

fsWorking directory

fliesFile descripter

fliesFile descripter

:

:

signalSignal information

signalSignal information

sighandSignal handler

sighandSignal handler

:

:

task

exec_domain

exec_domain

flags

flags

status

status

Kernel stack

Kernel stack

the other structures

runqueue

mm_struct

group_info

user_struct

fs_struct

files_struct

signal_struct

sighand_struct

Figure 1-2 task_struct structure

Chapter 1. Understanding the Linux operating system 3

Page 18

4285ch01.fm Draft Document for Review May 4, 2007 11:35 am

1.1.2 Lifecycle of a process

Every process has its own lifecycle such as creation, execution, termination and removal.

These phases will be repeated literally millions of times as long as the system is up and

running. Therefore, the process lifecycle is a very important topic from the performance

perspective.

Figure 1-3 shows typical lifecycle of processes.

wait()

wait()

parent

parent

process

process

fork()

fork()

parent

parent

process

process

child

child

process

process

Figure 1-3 Lifecycle of typical processes

exec() exit()

exec() exit()

child

child

process

process

zombie

zombie

process

process

When a process creates new process, the creating process (parent process) issues a fork()

system call. When a fork() system call is issued, it gets a process descriptor for the newly

created process (child process) and sets a new process id. It then copies the values of the

parent process’s process descriptor to the child’s. At this time the entire address space of the

parent process is not copied; both processes share the same address space.

The exec() system call copies the new program to the address space of the child process.

Because both processes share the same address space, writing new program data causes a

page fault exception. At this point, the kernel assigns the new physical page to the child

process.

This deferred operation is called the

Copy On Write. The child process usually executes their

own program rather than the same execution as its parent does. This operation is a

reasonable choice to avoid unnecessary overhead because copying an entire address space

is a very slow and inefficient operation which uses much processor time and resources.

When program execution has completed, the child process terminates with an exit() system

call. The exit() system call releases most of the data structure of the process, and notifies

the parent process of the termination sending a certain signal. At this time, the process is

called a

zombie process (refer to “Zombie processes” on page 8).

The child process will not be completely removed until the parent process knows of the

termination of its child process by the wait() system call. As soon as the parent process is

notified of the child process termination, it removes all the data structure of the child process

and release the process descriptor.

1.1.3 Thread

A thread is an execution unit which is generated in a single process and runs in parallel with

other threads in the same process. They can share the same resources such as memory,

address space, open files and so on. They can access the same set of application data. A

thread is also called

should take care not to change their shared resources at the same time. The implementation

of mutual exclusion, locking and serialization etc. are the user application’s responsibility.

4 Linux Performance and Tuning Guidelines

Light Weight Process (LWP). Because they share resources, each thread

Page 19

Draft Document for Review May 4, 2007 11:35 am 4285ch01.fm

resource

e

source

Threa

d

Threa

d

e

source

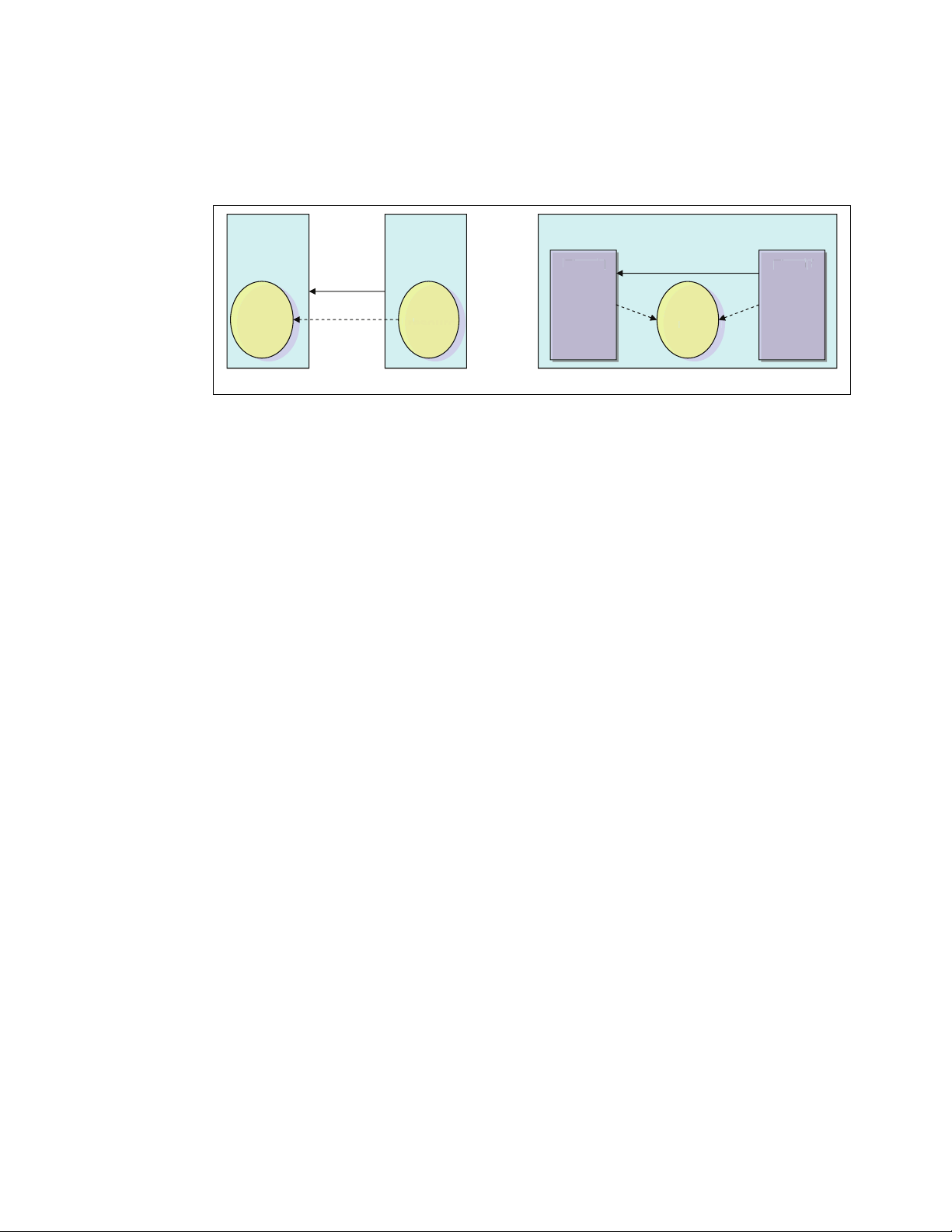

From the performance perspective, thread creation is less expensive than process creation

because a thread does not need to copy resources on creation. On the other hand, processes

and threads have similar characteristics in term of scheduling algorithm. The kernel deals

with both of them in the similar manner.

Process Process

resource

resource

r

copy

resource

resource

Thread

Thread

Process

resource

resource

share share

r

Thread

Thread

Process creation Thread creation

Figure 1-4 process and thread

In current Linux implementations, a thread is supported with the POSIX (Portable Operating

System Interface for UNIX) compliant library (

pthread). There are several thread

implementations available in the Linux operating system. The following are the widely used.

LinuxThreads

LinuxThreads have been the default thread implementation since Linux kernel 2.0 was

available. The LinuxThread has some noncompliant implementations with the POSIX

standard. NPTL is taking the place of LinuxThreads. The LinuxThreads will not be

supported in future release of Enterprise Linux distributions.

Native POSIX Thread Library (NPTL)

The NPTL was originally developed by Red Hat. NPTL is more compliant with POSIX

standards. Taking advantage of enhancements in kernel 2.6 such as the new clone()

system call, signal handling implementation etc., it has better performance and scalability

than LinuxThreads.

There is some incompatibility with LinuxThreads. An application which has a dependence

on LinuxThread may not work with the NPTL implementation.

Next Generation POSIX Thread (NGPT)

NGPT is an IBM developed version of POSIX thread library. It is currently under

maintenance operation and no further development is planned.

Using the LD_ASSUME_KERNEL environment variable, you can choose which threads library the

application should use.

1.1.4 Process priority and nice level

Process priority is a number that determines the order in which the process is handled by the

CPU and is determined by dynamic priority and static priority. A process which has higher

process priority has higher chances of getting permission to run on processor.

The kernel dynamically adjusts dynamic priority up and down as needed using a heuristic

algorithm based on process behaviors and characteristics. A user process can change the

static priority indirectly through the use of the

higher static priority will have longer time slice (how long the process can run on processor).

nice level of the process. A process which has

Chapter 1. Understanding the Linux operating system 5

Page 20

4285ch01.fm Draft Document for Review May 4, 2007 11:35 am

Linux supports nice levels from 19 (lowest priority) to -20 (highest priority). The default value

is 0. To change the nice level of a program to a negative number (which makes it higher

priority), it is necessary to log on or su to root.

1.1.5 Context switching

During process execution, information of the running process is stored in registers on

processor and its cache. The set of data that is loaded to the register for the executing

process is called the

stored and the context of the next running process is restored to the register. The process

descriptor and the area called kernel mode stack are used to store the context. This switching

process is called

because the processor has to flush its register and cache every time to make room for the

new process. It may cause performance problems.

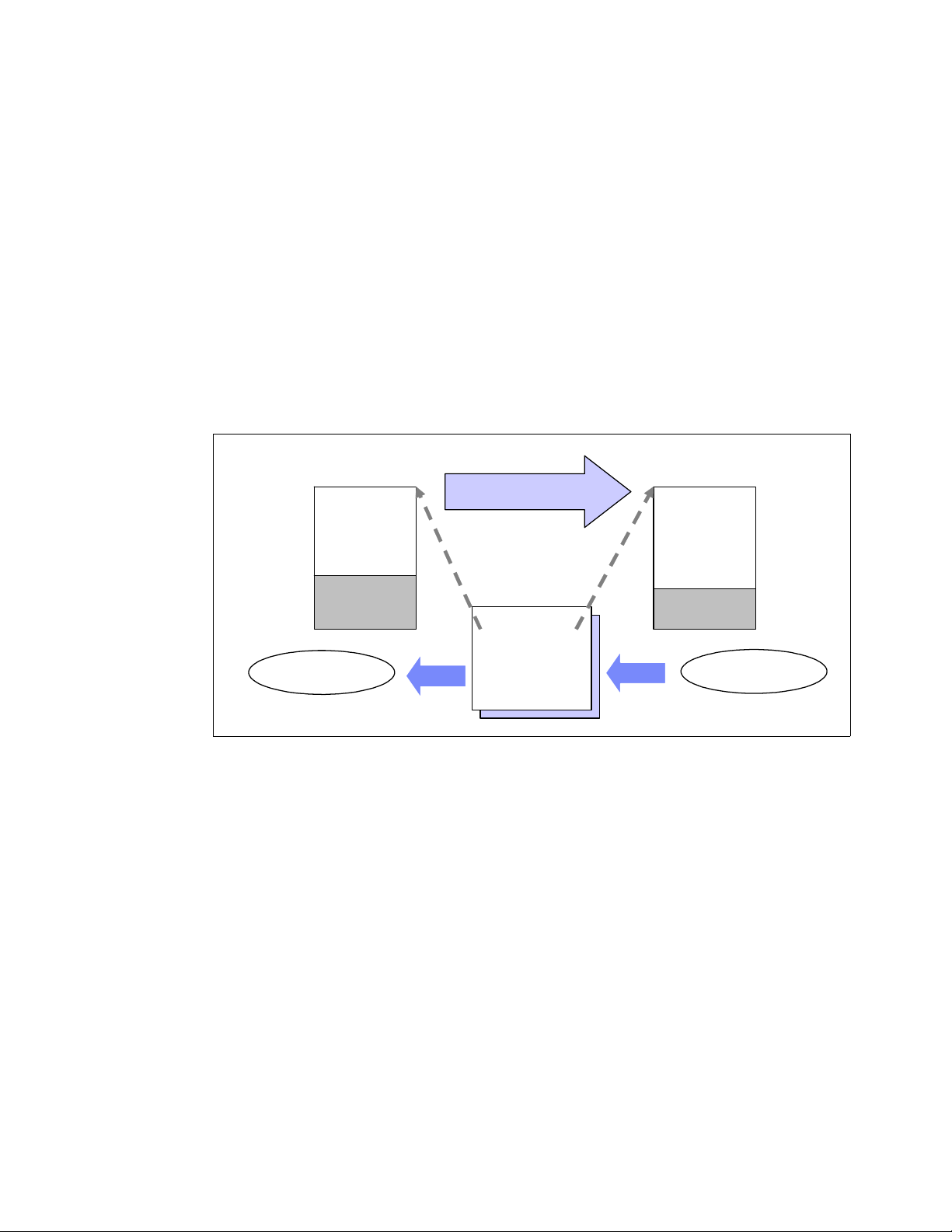

Figure 1-5 illustrates how the context switching works.

context. To switch processes, the context of the running process is

context switching. Having too much context switching is undesirable

task_struct

(Process A)

Figure 1-5 Context switching

1.1.6 Interrupt handling

Interrupt handling is one of the highest priority tasks. Interrupts are usually generated by I/O

devices such as a network interface card, keyboard, disk controller, serial adapter, and so on.

The interrupt handler notifies the Linux kernel of an event (such as keyboard input, ethernet

frame arrival, and so on). It tells the kernel to interrupt process execution and perform

interrupt handling as quickly as possible because some device requires quick

responsiveness. This is critical for system stability. When an interrupt signal arrives to the

kernel, the kernel must switch a currently execution process to new one to handle the

interrupt. This means interrupts cause context switching, and therefore a significant amount

of interrupts may cause performance degradation.

Address space

of process A

stack

Suspend

Context switch

CPU

stack pointer

other registers

EIP register

etc.

Address space

of process B

stack

task_struct

(Process B)

Resume

In Linux implementations, there are two types of interrupt. A

devices which require responsiveness (disk I/O interrupt, network adapter interrupt, keyboard

interrupt, mouse interrupt). A

deferred (TCP/IP operation, SCSI protocol operation etc.). You can see information related to

hard interrupts at /proc/interrupts.

6 Linux Performance and Tuning Guidelines

hard interrupt is generated for

soft interrupt is used for tasks which processing can be

Page 21

Draft Document for Review May 4, 2007 11:35 am 4285ch01.fm

In a multi-processor environment, interrupts are handled by each processor. Binding

interrupts to a single physical processor may improve system performance. For further

details, refer to 4.4.2, “CPU affinity for interrupt handling”.

1.1.7 Process state

Every process has its own state to show what is currently happening in the process. Process

state changes during process execution. Some of the possible states are as follows:

TASK_RUNNING

In this state, a process is running on a CPU or waiting to run in the queue (run queue).

TASK_STOPPED

A process suspended by certain signals (ex. SIGINT, SIGSTOP) is in this state. The process

is waiting to be resumed by a signal such as SIGCONT.

TASK_INTERRUPTIBLE

In this state, the process is suspended and waits for a certain condition to be satisfied. If a

process is in TASK_INTERRUPTIBLE state and it receives a signal to stop, the process

state is changed and operation will be interrupted. A typical example of a

TASK_INTERRUPTIBLE process is a process waiting for keyboard interrupt.

TASK_UNINTERRUPTIBLE

Similar to TASK_INTERRUPTIBLE. While a process in TASK_INTERRUPTIBLE state can

be interrupted, sending a signal does nothing to the process in

TASK_UNINTERRUPTIBLE state. A typical example of TASK_UNINTERRUPTIBLE

process is a process waiting for disk I/O operation.

TASK_ZOMBIE

After a process exits with exit() system call, its parent should know of the termination. In

TASK_ZOMBIE state, a process is waiting for its parent to be notified to release all the

data structure.

TASK_ZOMBIE

fork()

TASK_RUNNING

TASK_RUNNING

(READY) TASK_RUNNING

(READY)

TASK_STOPPED

TASK_STOPPED

Scheduling

Preemption

TASK_ZOMBIE

exit()

TASK_RUNNING

Processor

TASK_UNINTERRUPTIBLE

TASK_UNINTERRUPTIBLE

Figure 1-6 Process state

TASK_INTERRUPTIBLE

TASK_INTERRUPTIBLE

Chapter 1. Understanding the Linux operating system 7

Page 22

4285ch01.fm Draft Document for Review May 4, 2007 11:35 am

Zombie processes

When a process has already terminated, having received a signal to do so, it normally takes

some time to finish all tasks (such as closing open files) before ending itself. In that normally

very short time frame, the process is a

After the process has completed all of these shutdown tasks, it reports to the parent process

that it is about to terminate. Sometimes, a zombie process is unable to terminate itself, in

which case it shows a status of Z (zombie).

It is not possible to kill such a process with the kill command, because it is already

considered “dead.” If you cannot get rid of a zombie, you can kill the parent process and then

the zombie disappears as well. However, if the parent process is the init process, you should

not kill it. The init process is a very important process and therefore a reboot may be needed

to get rid of the zombie process.

zombie.

1.1.8 Process memory segments

A process uses its own memory area to perform work. The work varies depending on the

situation and process usage. A process can have different workload characteristics and

different data size requirements. The process has to handle any of varying data sizes. To

satisfy this requirement, the Linux kernel uses a dynamic memory allocation mechanism for

each process. The process memory allocation structure is shown in Figure 1-7.

Text

segment

Data

segment

Heap

segment

Stack

segment

Figure 1-7 Process address space

Process address space

0x0000

Text

Executable instruction (Read-only

Data

Initialized data

BSS

Zero-ininitialized data

Heap

Dynamic memory allocation

by malloc()

Stack

Local variables

Function parameters,

Return address etc.

The process memory area consist of these segments

Text segment

The area where executable code is stored.

8 Linux Performance and Tuning Guidelines

Page 23

Draft Document for Review May 4, 2007 11:35 am 4285ch01.fm

Data segment

The data segment consist of these three area.

– Data: The area where initialized data such as static variables are stored.

– BSS: The area where zero-initialized data is stored. The data is initialized to zero.

– Heap: The area where malloc() allocates dynamic memory based on the demand.

The heap grows toward higher addresses.

Stack segment

The area where local variables, function parameters, and the return address of a function

is stored. The stack grows toward lower addresses.

The memory allocation of a user process address space can be displayed with the pmap

command. You can display the total size of the segment with the ps command. Refer to

2.3.10, “pmap” on page 52 and 2.3.4, “ps and pstree” on page 44.

1.1.9 Linux CPU scheduler

The basic functionality of any computer is, quite simply, to compute. To be able to compute,

there must be a means to manage the computing resources, or processors, and the

computing tasks, also known as threads or processes. Thanks to the great work of Ingo

Molnar, Linux features a kernel using a O(1) algorithm as opposed to the O(n) algorithm used

to describe the former CPU scheduler. The term O(1) refers to a static algorithm, meaning

that the time taken to choose a process for placing into execution is constant, regardless of

the number of processes.

The new scheduler scales very well, regardless of process count or processor count, and

imposes a low overhead on the system. The algorithm uses two process priority arrays:

active

expired

As processes are allocated a timeslice by the scheduler, based on their priority and prior

blocking rate, they are placed in a list of processes for their priority in the active array. When

they expire their timeslice, they are allocated a new timeslice and placed on the expired array.

When all processes in the active array have expired their timeslice, the two arrays are

switched, restarting the algorithm. For general interactive processes (as opposed to real-time

processes) this results in high-priority processes, which typically have long timeslices, getting

more compute time than low-priority processes, but not to the point where they can starve the

low-priority processes completely. The advantage of such an algorithm is the vastly improved

scalability of the Linux kernel for enterprise workloads that often include vast amounts of

threads or processes and also a significant number of processors. The new O(1) CPU

scheduler was designed for kernel 2.6 but backported to the 2.4 kernel family. Figure 1-8

illustrates how the Linux CPU scheduler works.

Chapter 1. Understanding the Linux operating system 9

Page 24

4285ch01.fm Draft Document for Review May 4, 2007 11:35 am

active

active

expired

expired

priority0

priority0

priority 139

priority 139

priority0

priority0

priority 139

priority 139

:

:

:

:

array[0]

array[0]

array[1]

array[1]

P

P

:

:

P

P

P

P

P

P

P P

P P

P

P

:

:

P P

P P

Figure 1-8 Linux kernel 2.6 O(1) scheduler

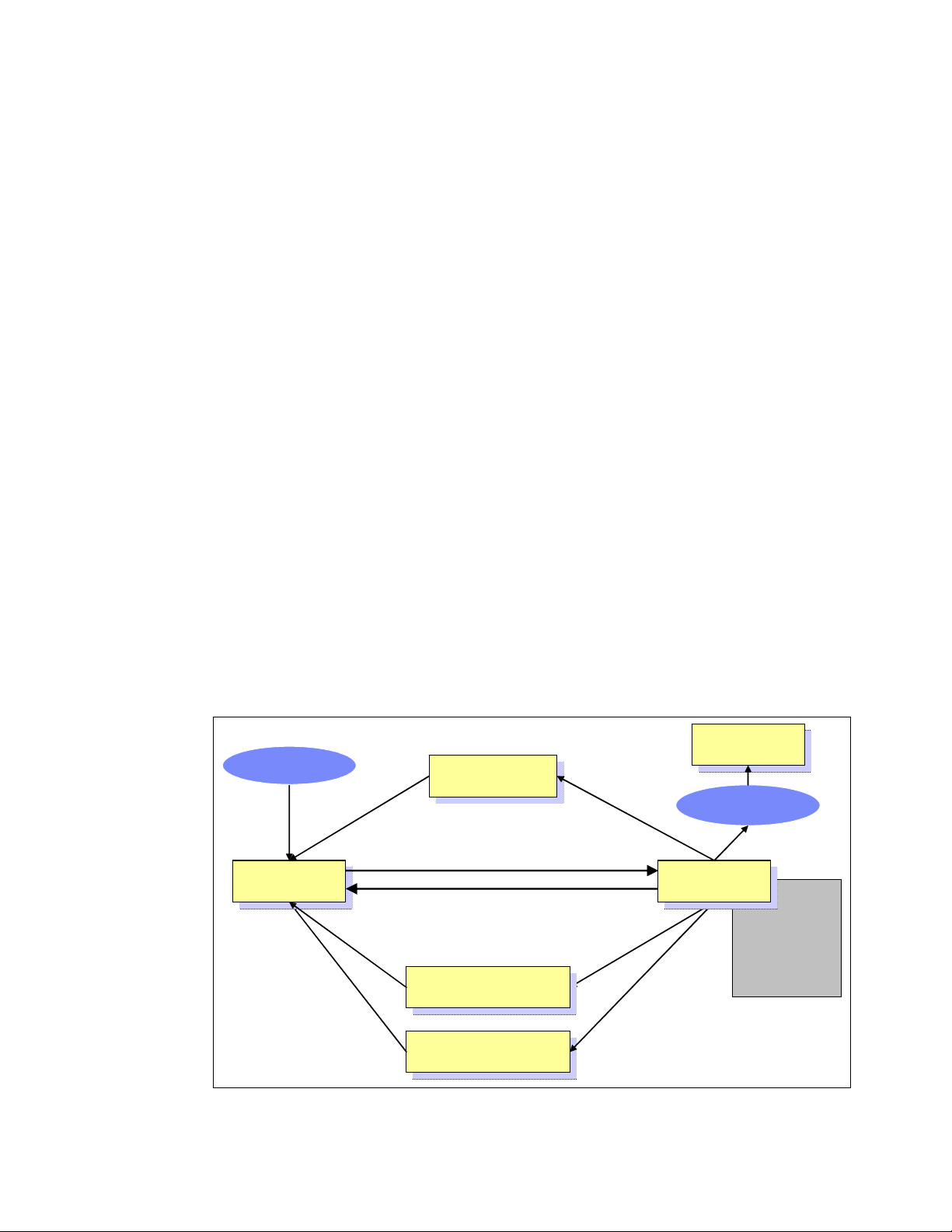

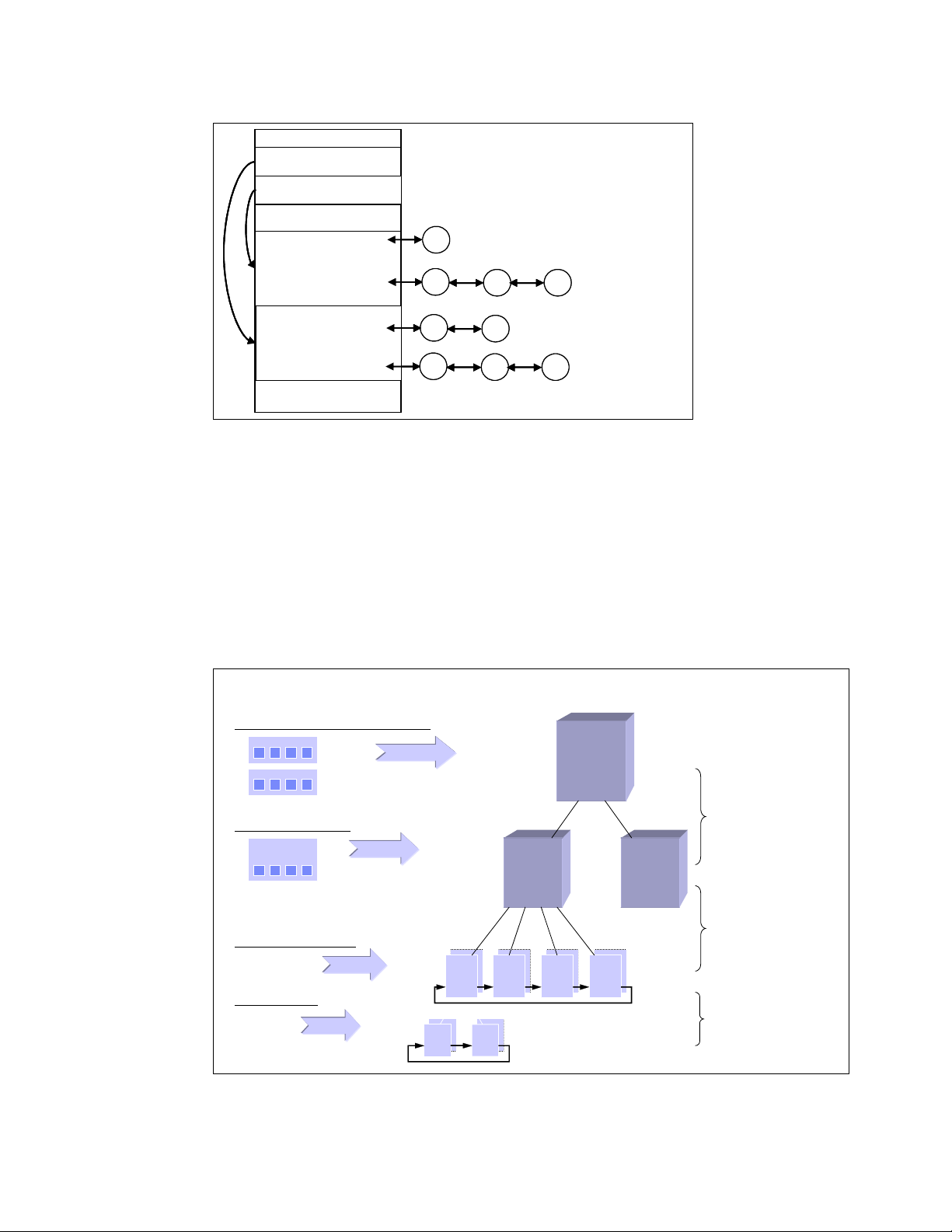

Another significant advantage of the new scheduler is the support for Non-Uniform Memory

Architecture (NUMA) and symmetric multithreading processors, such as Intel®

Hyper-Threading technology.

The improved NUMA support ensures that load balancing will not occur across NUMA nodes

unless a node gets overburdened. This mechanism ensures that traffic over the comparatively

slow scalability links in a NUMA system are minimized. Although load balancing across

processors in a scheduler domain group will be load balanced with every scheduler tick,

workload across scheduler domains will only occur if that node is overloaded and asks for

load balancing.

Parent

Scheduler

Domain

Two node xSeries 445 (8 CPU)

One CEC (4 CPU)

One Xeon MP (HT)

One HT CPU

Logical

CPU

Scheduler

Domain

Group

1

2

…

1

2

…

1

2

…

Child

Scheduler

Domain

1

2

3

…

1

2

…

1

2

3

…

Load balancing

only if a child

is overburdened

1

2

3

…

Load balancing

via scheduler_tick()

and time slice

1

2

…

1

2

…

Load balancing

via scheduler_tick()

Figure 1-9 Architecture of the O(1) CPU scheduler on an 8-way NUMA based system with

Hyper-Threading enabled

10 Linux Performance and Tuning Guidelines

Page 25

Draft Document for Review May 4, 2007 11:35 am 4285ch01.fm

1.2 Linux memory architecture

To execute a process, the Linux kernel allocates a portion of the memory area to the

requesting process. The process uses the memory area as workspace and performs the

required work. It is similar to you having your own desk allocated and then using the desktop

to scatter papers, documents and memos to perform your work. The difference is that the

kernel has to allocate space in more dynamic manner. The number of running processes

sometimes comes to tens of thousands and amount of memory is usually limited. Therefore,

Linux kernel must handle the memory efficiently. In this section, we describe the Linux

memory architecture, address layout, and how the Linux manages memory space efficiently.

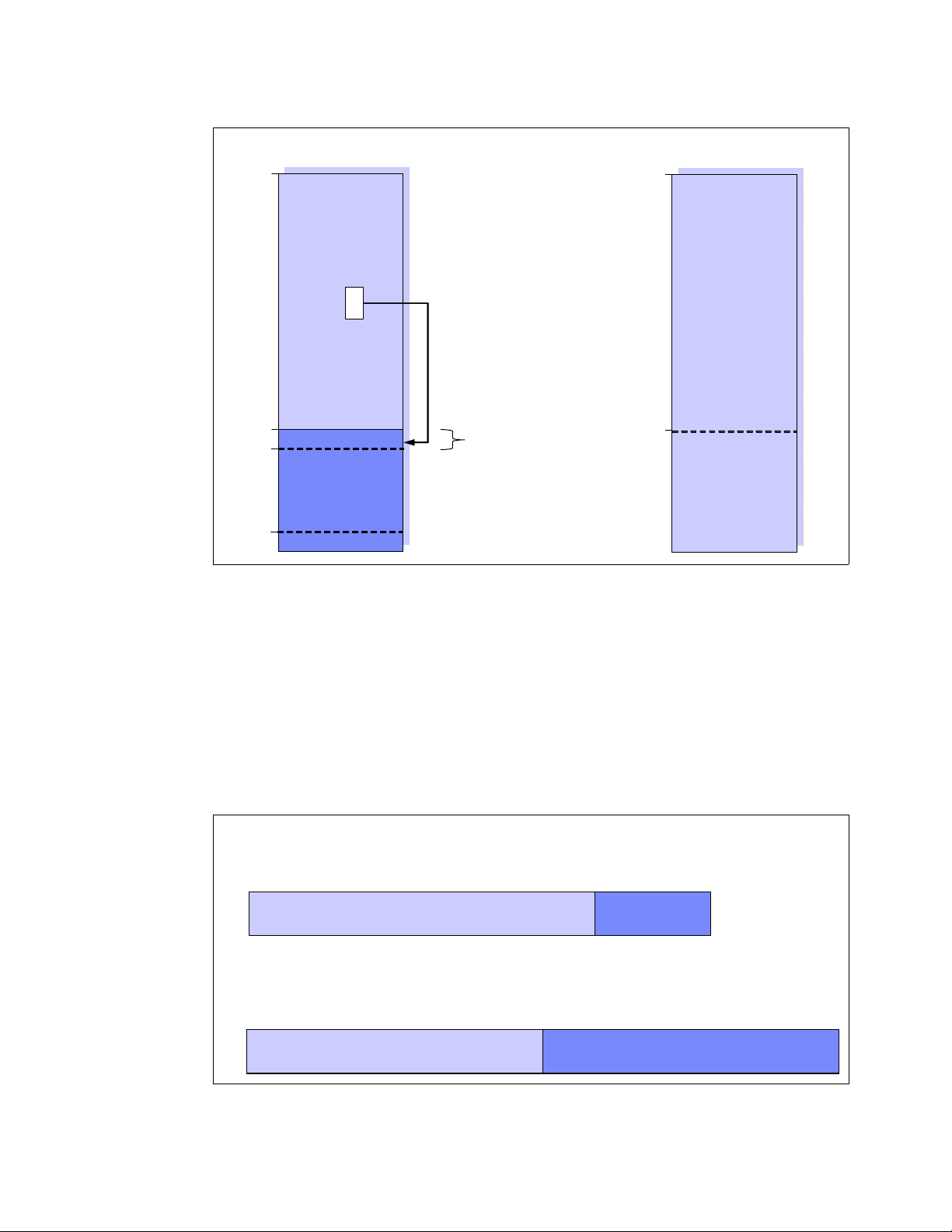

1.2.1 Physical and virtual memory

Today we are faced with the choice of 32-bit systems and 64-bit systems. One of the most

important differences for enterprise-class clients is the possibility of virtual memory

addressing above 4 GB. From a performance point of view, it is therefore interesting to

understand how the Linux kernel maps physical memory into virtual memory on both 32-bit

and 64-bit systems.

As you can see in Figure 1-10 on page 12, there are obvious differences in the way the Linux

kernel has to address memory in 32-bit and 64-bit systems. Exploring the physical-to-virtual

mapping in detail is beyond the scope of this paper, so we highlight some specifics in the

Linux memory architecture.

On 32-bit architectures such as the IA-32, the Linux kernel can directly address only the first

gigabyte of physical memory (896 MB when considering the reserved range). Memory above

the so-called ZONE_NORMAL must be mapped into the lower 1 GB. This mapping is

completely transparent to applications, but allocating a memory page in ZONE_HIGHMEM

causes a small performance degradation.

On the other hand, with 64-bit architectures such as x86-64 (also x64), ZONE_NORMAL

extends all the way to 64GB or to 128 GB in the case of IA-64 systems. As you can see, the

overhead of mapping memory pages from ZONE_HIGHMEM into ZONE_NORMAL can be

eliminated by using a 64-bit architecture.

Chapter 1. Understanding the Linux operating system 11

Page 26

4285ch01.fm Draft Document for Review May 4, 2007 11:35 am

32-bit Architecture 64-bit Architecture

64GB

1GB

896MB

16MB

ZONE_HIGHMEM

~~

~~

128MB

“Reserved”

ZONE_NORMAL

ZONE_DMA

Pages in ZONE_HIGHMEM

must be mapped into

ZONE_NORMAL

Reserved for Kernel

data structures

64GB

1GB

ZONE_NORMAL

ZONE_DMA

Figure 1-10 Linux kernel memory layout for 32-bit and 64-bit systems

Virtual memory addressing layout

Figure 1-11 shows the Linux virtual addressing layout for 32-bit and 64-bit architecture.

On 32-bit architectures, the maximum address space that single process can access is 4GB.

This is a restriction derived from 32-bit virtual addressing. In a standard implementation, the

virtual address space is divided into a 3GB user space and a 1GB kernel space. There is

some variants like 4G/4G addressing layout implementing.

On the other hand, on 64-bit architecture such as x86_64 and ia64, no such restriction exits.

Each single process can enjoy the vast and huge address space.

32-bit Architecture

3G/1G kernel

0GB

User space

3GB

Kernel space

4GB

64-bit Architecture

x86_64

0GB

User space

Figure 1-11 Virtual memory addressing layout for 32bit and 64-bit architecture

512GB or more

Kernel space

12 Linux Performance and Tuning Guidelines

Page 27

Draft Document for Review May 4, 2007 11:35 am 4285ch01.fm

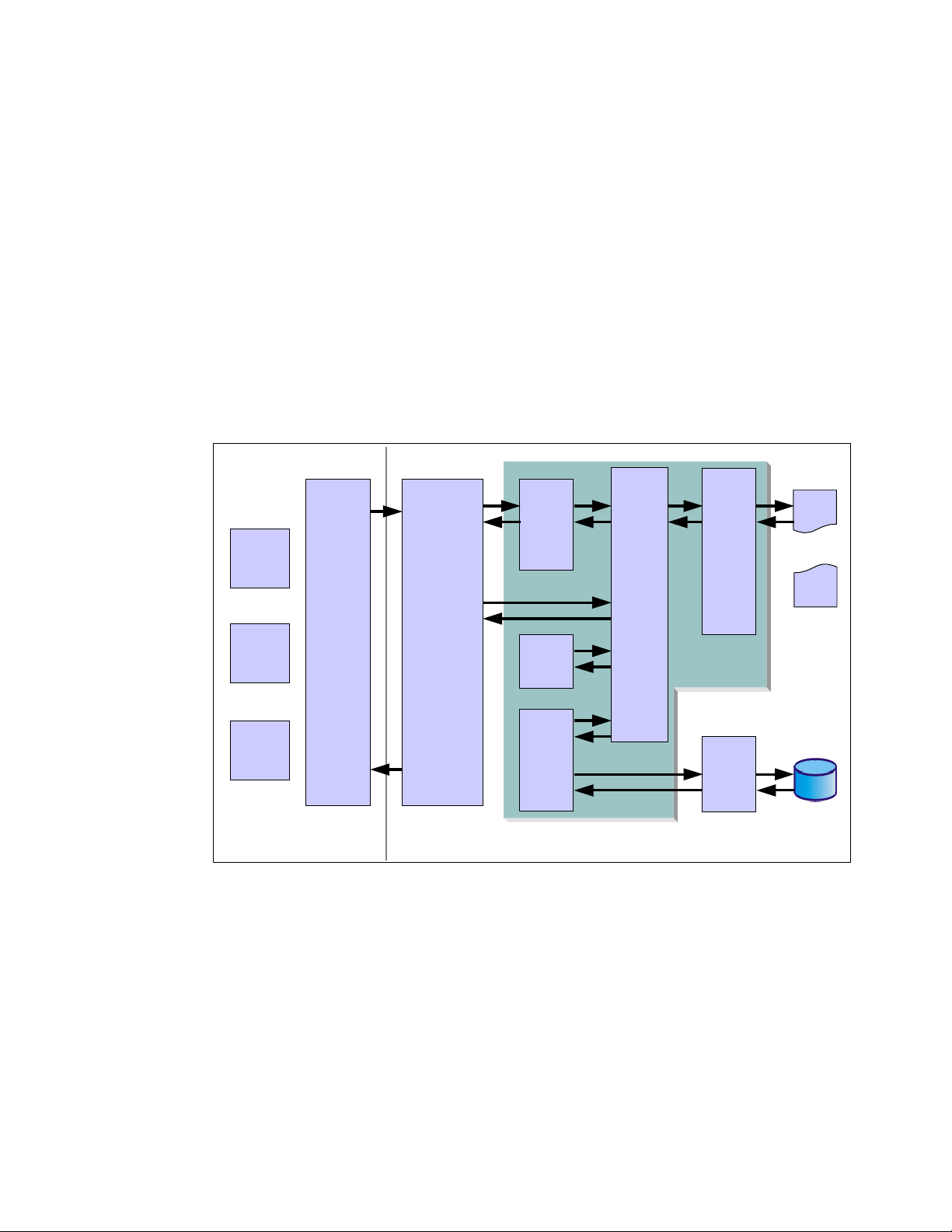

1.2.2 Virtual memory manager

The physical memory architecture of an operating system usually is hidden to the application

and the user because operating systems map any memory into virtual memory. If we want to

understand the tuning possibilities within the Linux operating system, we have to understand

how Linux handles virtual memory. As explained in 1.2.1, “Physical and virtual memory” on

page 11, applications do not allocate physical memory, but request a memory map of a

certain size at the Linux kernel and in exchange receive a map in virtual memory. As you can

see in Figure 1-12 on page 13, virtual memory does not necessarily have to be mapped into

physical memory. If your application allocates a large amount of memory, some of it might be

mapped to the swap file on the disk subsystem.

Another enlightening fact that can be taken from Figure 1-12 on page 13 is that applications

usually do not write directly to the disk subsystem, but into cache or buffers. The

kernel threads then flushes out data in cache/buffers to the disk whenever it has time to do so

(or, of course, if a file size exceeds the buffer cache). Refer to “Flushing dirty buffer” on

page 22

pdflush

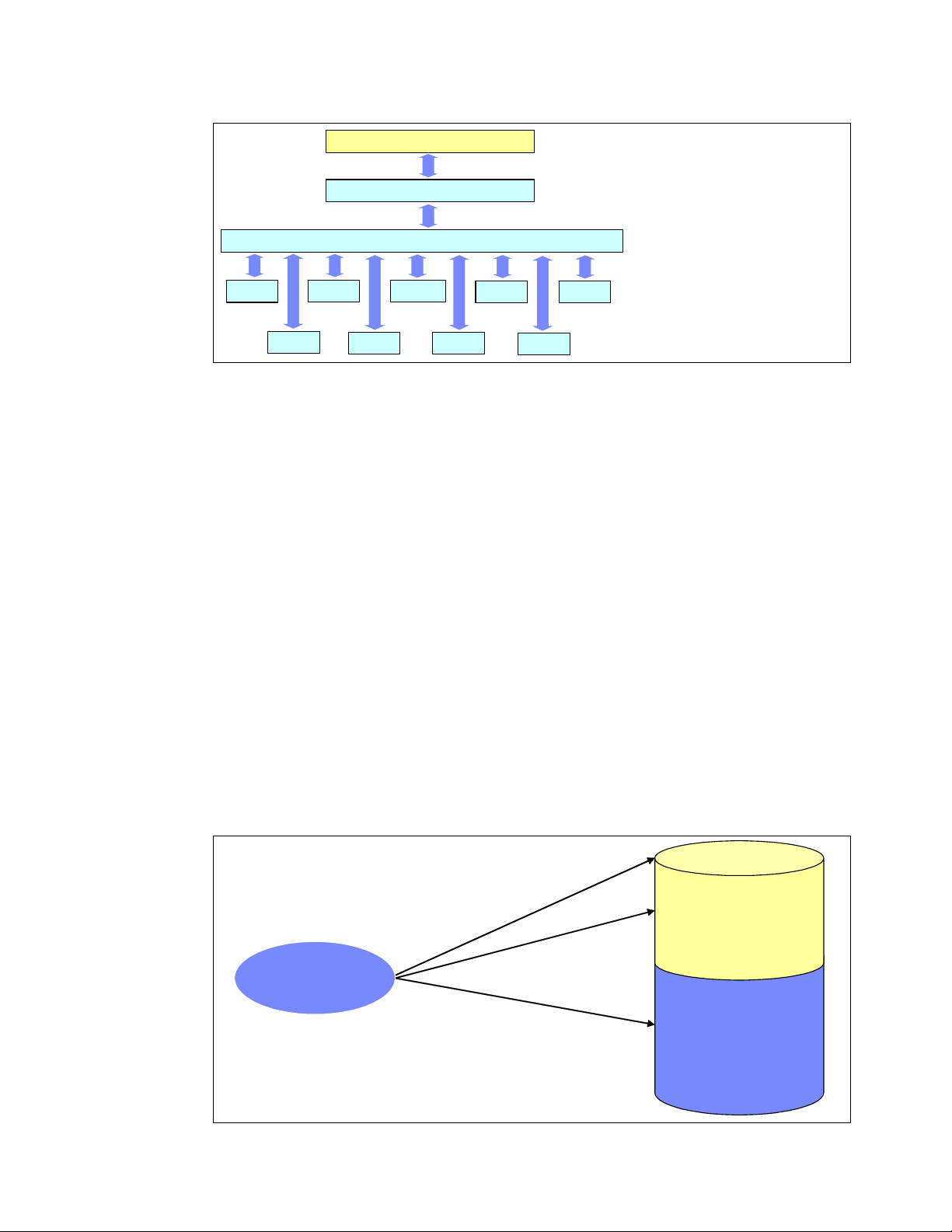

Physical

sh

Kernel

Standard

httpd

mozilla

User Space

Processes

Figure 1-12 The Linux virtual memory manager

C Library

(glibc)

Subsystems

Slab Allocator

kswapd

bdflush

VM Subsystem

MMU

zoned

buddy

allocator

Disk Driver

Memory

Disk

Closely connected to the way the Linux kernel handles writes to the physical disk subsystem

is the way the Linux kernel manages disk cache. While other operating systems allocate only

a certain portion of memory as disk cache, Linux handles the memory resource far more

efficiently. The default configuration of the virtual memory manager allocates all available free

memory space as disk cache. Hence it is not unusual to see productive Linux systems that

boast gigabytes of memory but only have 20 MB of that memory free.

In the same context, Linux also handles swap space very efficiently. The fact that swap space

is being used does not mean a memory bottleneck but rather proves how efficiently Linux

handles system resources. See “Page frame reclaiming” on page 14 for more detail.

Chapter 1. Understanding the Linux operating system 13

Page 28

4285ch01.fm Draft Document for Review May 4, 2007 11:35 am

Page frame allocation

A page is a group of contiguous linear addresses in physical memory (page frame) or virtual

memory. The Linux kernel handles memory with this page unit. A page is usually 4K bytes in

size. When a process requests a certain amount of pages, if there are available pages, the

Linux kernel can allocate them to the process immediately. Otherwise pages have to be taken

from some other process or page cache. The kernel knows how many memory pages are

available and where they are located.

Buddy system

The Linux kernel maintains its free pages by using the mechanism called buddy system. The

buddy system maintains free pages and tries to allocate pages for page allocation requests. It

tries to keep the memory area contiguous. If small pages are scattered without consideration,

it may cause memory fragmentation and it’s more difficult to allocate large portion of pages

into a contiguous area. It may lead to inefficient memory use and performance decline.

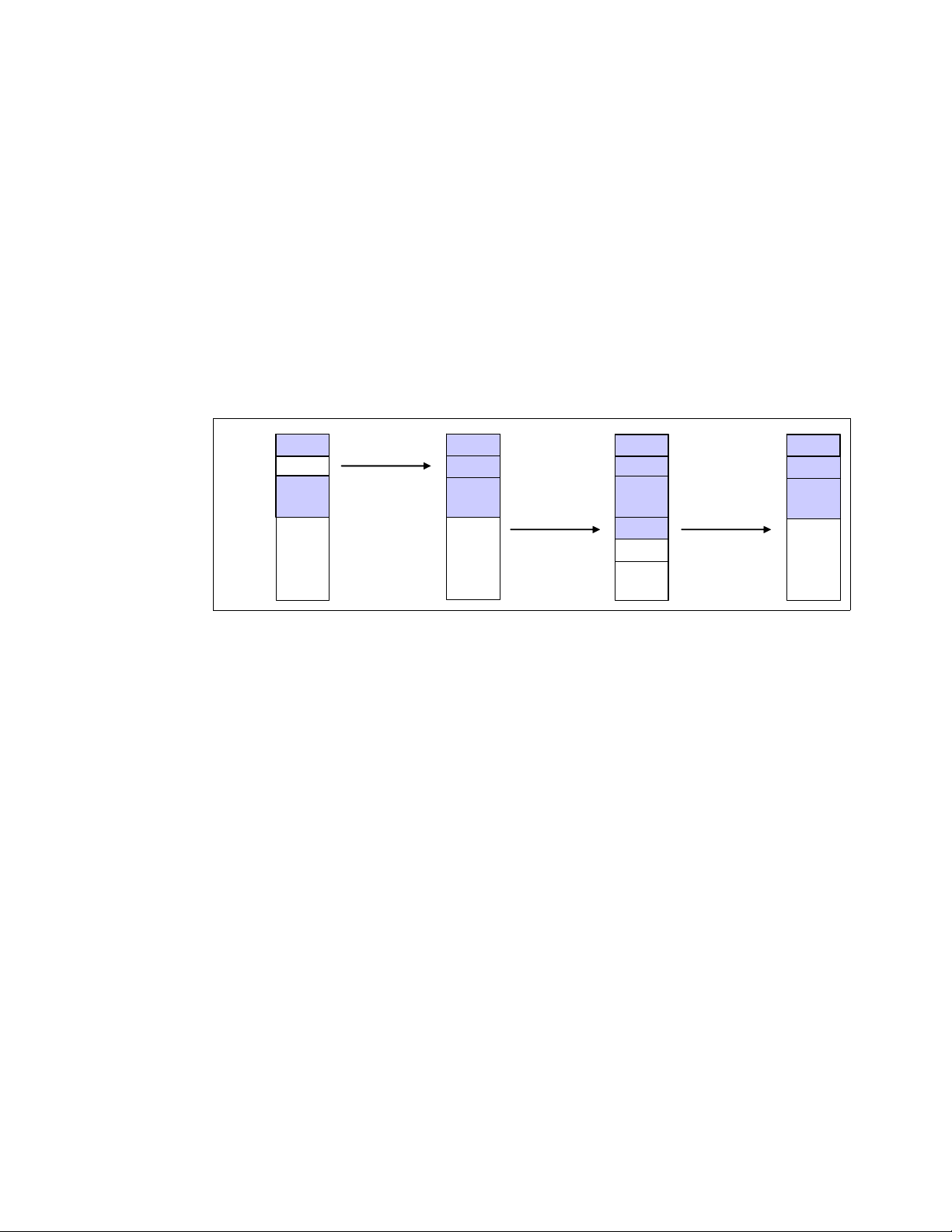

Figure 1-13 illustrates how the buddy system allocates pages.

2 pages

Used

chunk

Used

8 pages

chunk

Figure 1-13 Buddy System

Request

for 2pages

8 pages

chunk

Used

Used

Used

Request

for 2 pages

2 pages

chunk

4 pages

chunk

Used

Used

Used

Used

Release

2 pages

8 pages

chunk

Used

Used

Used

When the attempt of pages allocation failed, the page reclaiming will be activated. Refer to

“Page frame reclaiming” on page 14.

You can find information on the buddy system through /proc/buddyinfo. For detail, please

refer to “Memory used in a zone” on page 47.

Page frame reclaiming

If pages are not available when a process requests to map a certain amount of pages, the

Linux kernel tries to get pages for the new request by releasing certain pages which are used

before but not used anymore and still marked as active pages based on certain principals and

allocating the memory to new process. This process is called

thread and try_to_free_page() kernel function are responsible for page reclaiming.

page reclaiming. kswapd kernel

While kswapd is usually sleeping in task interruptible state, it is called by the buddy system

when free pages in a zone fall short of a certain threshold. It then tries to find the candidate

pages to be gotten out of active pages based on the Least Recently Used (

This is relatively simple. The pages least recently used should be released first. The active list

and the inactive list are used to maintain the candidate pages. kswapd scans part of the

active list and check how recently the pages were used then the pages not used recently is

put into inactive list. You can take a look at how much memory is considered as active and

inactive using vmstat -a command. For detail refer to 2.3.2, “vmstat”.

kswapd also follows another principal. The pages are used mainly for two purpose;

and process address space. The page cache is pages mapped to a file on disk. The

cache

pages belonging to a process address space is used for heap and stack (called anonymous

memory because it‘s not mapped to any files, and has no name) (refer to 1.1.8, “Process

14 Linux Performance and Tuning Guidelines

LRU) principal.

page

Page 29

Draft Document for Review May 4, 2007 11:35 am 4285ch01.fm

memory segments” on page 8). When kswapd reclaims pages, it would rather shrink the page

cache than page out (or swap out) the pages owned by processes.

Note: The phrase “page out” and “swap out” is sometimes confusing. “page out” means

take some pages (a part of entire address space) into swap space while “swap out” means

taking entire address space into swap space. They are sometimes used interchangeably.

The good proportion of page cache reclaimed and process address space reclaimed may

depend on the usage scenario and will have certain effects on performance. You can take

some control of this behavior by using /proc/sys/vm/swappiness. Please refer to 4.5.1,

“Setting kernel swap and pdflush behavior” on page 110 for tuning detail.

swap

As we stated before, when page reclaiming occurs, the candidate pages in the inactive list

which belong to the process address space may be paged out. Having swap itself is not

problematic situation. While swap is nothing more than a guarantee in case of over allocation

of main memory in other operating systems, Linux utilizes swap space far more efficiently. As

you can see in Figure 1-12, virtual memory is composed of both physical memory and the

disk subsystem or the swap partition. If the virtual memory manager in Linux realizes that a

memory page has been allocated but not used for a significant amount of time, it moves this

memory page to swap space.

Often you will see daemons such as getty that will be launched when the system starts up but

will hardly ever be used. It appears that it would be more efficient to free the expensive main

memory of such a page and move the memory page to swap. This is exactly how Linux

handles swap, so there is no need to be alarmed if you find the swap partition filled to 50%.

The fact that swap space is being used does not mean a memory bottleneck but rather proves

how efficiently Linux handles system resources.

1.3 Linux file systems

One of the great advantages of Linux as an open source operating system is that it offers

users a variety of supported file systems. Modern Linux kernels can support nearly every file

system ever used by a computer system, from basic FAT support to high performance file

systems such as the journaling file system JFS. However, because Ext2, Ext3 and ReiserFS

are native Linux file systems and are supported by most Linux distributions (ReiserFS is

commercially supported only on Novell SUSE Linux), we will focus on their characteristics

and give only an overview of the other frequently used Linux file systems.

For more information on file systems and the disk subsystem, see 4.6, “Tuning the disk

subsystem” on page 113.

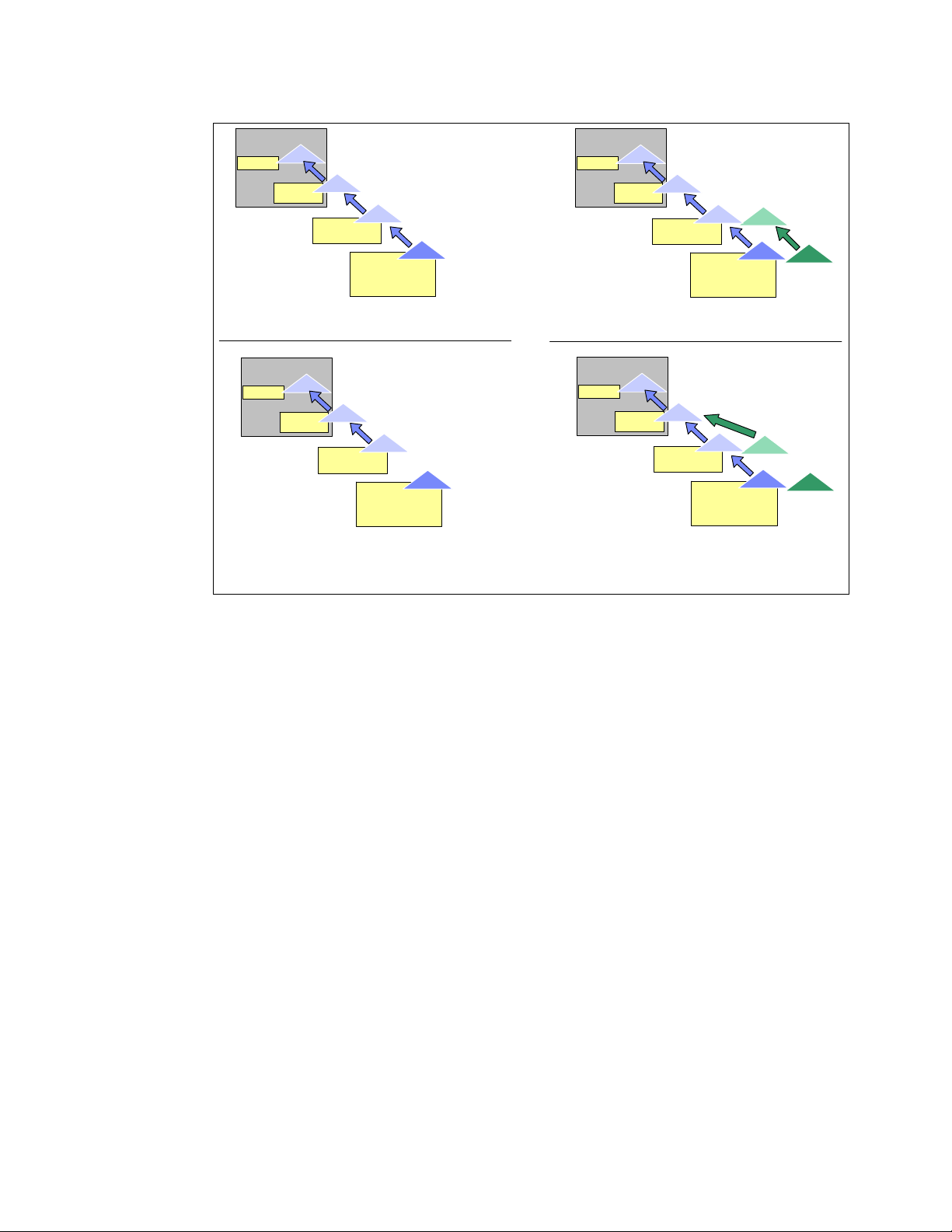

1.3.1 Virtual file system

Virtual Files System (VFS) is an abstraction interface layer that resides between the user

process and various types of Linux file system implementations. VFS provides common

object models (i.e. i-node, file object, page cache, directory entry etc.) and methods to access

file system objects. It hides the differences of each file system implementation from user

processes. Thanks to VFS, user processes do not need to know which file system to use, or

which system call should be issued for each file system. Figure 1-14 illustrates the concept of

VFS.

Chapter 1. Understanding the Linux operating system 15

Page 30

4285ch01.fm Draft Document for Review May 4, 2007 11:35 am

ext2

Figure 1-14 VFS concept

1.3.2 Journaling

In a non-journaling file system, when a write is performed to a file system the Linux kernel

makes changes to the file system metadata first and then writes actual user data next. This

operations sometimes causes higher chances of losing data integrity. If the system suddenly

crashes for some reason while the write operation to file system metadata is in process, the

file system consistency may be broken.

metadata and recover the consistency at the time of next reboot. But it takes way much time

to be completed when the system has large volume. The system is not operational during this

process.

NFS

ext3

AFS VFAT

User Process

System call

VFS

Reiserfs

cp

open(), read(), write()

translation for each file system

XFS

JFS

proc

fsck will fix the inconsistency by checking all the

A Journaling file system solves this problem by writing data to be changed to the area called

the journal area before writing the data to the actual file system. The journal area can be

placed both in the file system itself or out of the file system. The data written to the journal

area is called the journal log. It includes the changes to file system metadata and the actual

file data if supported.

As journaling write journal logs before writing actual user data to the file system, it may cause

performance overhead compared to no-journaling file system. How much performance

overhead is sacrificed to maintain higher data consistency depends on how much information

is written to disk before writing user data. We will discuss this topic in 1.3.4, “Ext3” on

page 18.

s

g

o

l

l

a

n

r

u

o

j

e

t

write

i

r

w

.

1

e

l

e

d

.

3

2

.

M

a

k

e

c

h

a

f

i

l

e

s

n

y

s

t

e

m

n

r

u

o

j

e

t

g

e

s

t

o

s

g

o

l

l

a

a

c

t

u

a

l

Journal area

File system

Figure 1-15 Journaling concept

16 Linux Performance and Tuning Guidelines

Page 31

Draft Document for Review May 4, 2007 11:35 am 4285ch01.fm

1.3.3 Ext2

The extended 2 file system is the predecessor of the extended 3 file system. A fast, simple file

system, it features no journaling capabilities, unlike most other current file systems.

Figure 1-16 shows the Ext2 file system data structure. The file system starts with boot sector

and followed by block groups. Splitting entire file system into several small block groups

contributes performance gain because i-node table and data blocks which hold user data can

resides closer on disk platter, then seek time can be reduced. A block group consist of:

Super block: Information on the file system is stored here. The exact copy of a

super block is placed in the top of every block group.

Block group descriptor: Information on the block group is stored.

Data block bitmaps: Used for free data block management.

i-node bitmaps: Used for free i-node management.

i-node tables: inode tables are stored here. Every file has a corresponding i-node

table which holds meta-data of the file such as file mode, uid, gid,

atime, ctime, mtime, dtime and pointer to the data block.

Data blocks: Where actual user data is stored.

boot sector

boot sector

BLOCK

BLOCK

Ext2

Figure 1-16 Ext2 file system data structure

GROUP 0

GROUP 0

BLOCK

BLOCK

GROUP 1

GROUP 1

BLOCK

BLOCK

GROUP 2

GROUP 2

:

:

:

:

BLOCK

BLOCK

GROUP N

GROUP N

super block

super block

block group

block group

descriptors

descriptors

data-block

data-block

bitmaps

bitmaps

inode

inode

bitmaps

bitmaps

inode-table

inode-table

Data-blocks

Data-blocks

To find data blocks which consist of a file, the kernel searches the i-node of the file first. When

a request to open /var/log/messages comes from a process, the kernel parses the file path

and searches a directory entry of / (root directory) which has the information about files and

directories under itself (root directory). Then the kernel can find the i-node of /var next and

takes a look at the directory entry of /var, and it also has the information of files and

directories under itself as well. The kernel gets down to the file in same manner until it finds

Chapter 1. Understanding the Linux operating system 17

Page 32

4285ch01.fm Draft Document for Review May 4, 2007 11:35 am

i-node of the file. The Linux kernel uses file object cache such as directory entry cache,

i-node cache to accelerate finding the corresponding i-node.

Now the Linux kernel knows i-node of the file then it tries to reach actual user data block. As

we described, i-node has the pointer to the data block. By referring to it, the kernel can get to

the data block. For large files, Ext2 implements direct/indirect reference to data block.

Figure 1-17 illustrates how it works.

ext2 disk inode

ext2 disk inode

i_size

direct

direct

indirect

indirect

double indirect

double indirect

trebly indirect

trebly indirect

i_size

:

:

i_blocks

i_blocks

i_blocks[0]

i_blocks[0]

i_blocks[1]

i_blocks[1]

i_blocks[2]

i_blocks[2]

i_blocks[3]

i_blocks[3]

i_blocks[4]

i_blocks[4]

i_blocks[5]

i_blocks[5]

i_blocks[6]

i_blocks[6]

i_blocks[7]

i_blocks[7]

i_blocks[8]

i_blocks[8]

i_blocks[9]

i_blocks[9]

i_blocks[10]

i_blocks[10]

i_blocks[11]

i_blocks[11]

i_blocks[12]

i_blocks[12]

i_blocks[13]

i_blocks[13]

i_blocks[14]

i_blocks[14]

Indirect

Indirect

Indirect

Indirect

block

block

block

block

Data

Data

block

block

Indirect

Indirect

Indirect

Indirect

block

block

block

block

Indirect

Indirect

Indirect

Indirect

block

block

block

block

Indirect

Indirect

Indirect

Indirect

block

block

block

block

Data

Data

block

block

Indirect

Indirect

Indirect

Indirect

block

block

block

block

Indirect

Indirect

Indirect

block

block

block

Data

block

Data

Data

block

block

1.3.4 Ext3

Figure 1-17 Ext2 file system direct / indirect reference to data block

The file system structure and file access operations differ by file system. This makes different

characteristics of each file system.

The current Enterprise Linux distributions support the extended 3 file system. This is an

updated version of the widely used extended 2 file system. Though the fundamental

structures are quite similar to Ext2 file system, the major difference is the support of

journaling capability. Highlights of this file system include:

Availability: Ext3 always writes data to the disks in a consistent way, so in case of an

unclean shutdown (unexpected power failure or system crash), the server does not have

to spend time checking the consistency of the data, thereby reducing system recovery

from hours to seconds.

Data integrity: By specifying the journaling mode data=journal on the mount command, all

data, both file data and metadata, is journaled.

Speed: By specifying the journaling mode data=writeback, you can decide on speed

versus integrity to meet the needs of your business requirements. This will be notable in

environments where there are heavy synchronous writes.