Veritas 5.1 SP1 Installation Guide

HP-UX 11i v3

HP Part Number: 5900-1514

Published: September 2011

Edition: 2.0

© Copyright 2009, 2011 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial

Computer Software, Computer Software Documentation and Technical Data for Commercial Items are licensed to the U.S. Government under

vendor’s standard commercial license.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express

warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall

not be liable for technical or editorial errors or omissions contained herein.

UNIX is a registered trademark of The Open Group.

Veritas is a registered trademark of Symantec Corporation. Copyright © 2011 Symantec Corporation. All rights reserved. Symantec, the Symantec

Logo, Veritas, and Veritas Storage Foundation are trademarks or registered trademarks of Symantec Corporation or its affiliates in the U.S. and

other countries. Other names may be trademarks of their respective owners.

Java is a registered trademark of Sun Microsystems, Inc.

Intel and Itanium are registered trademarks of Intel Corporation or its subsidiaries in the United States or other countries.

Contents

About this Document
    Intended Audience
    Document Organization
    Typographic Conventions
    Related Information
    Technical Support
    HP Welcomes Your Comments
1 Introduction
    Overview
    Volume Managers Supported on HP-UX 11i v3
    Veritas Volume Manager (VxVM)
        Introduction
        VxVM Features
        VxVM 5.1 SP1 on HP-UX 11i v3
        Architecture of VxVM
        VxVM Daemons
        VxVM Objects
        Volume Layouts in VxVM
        VxVM Storage Layouts
            Concatenation and Spanning
            Striping (RAID-0)
            Mirroring (RAID-1)
            Striping Plus Mirroring (Mirrored-Stripe or RAID-0+1)
            Mirroring Plus Striping (Striped-Mirror, RAID-1+0 or RAID-10)
            RAID-5 (Striping with Parity)
        VxVM Interfaces
            Command-Line Interface
            Menu-driven utility
            Veritas Enterprise Administrator
    File Systems Supported on HP-UX 11i v3
    Veritas File System (VxFS)
        Introduction
        VxFS Features
        VxFS 5.1 SP1 on HP-UX 11i v3
        Architecture of VxFS
        Extent Based Allocation
2 System Requirements
    Software Dependency
    OS Version
    Patch Requirements
    Required Software
    Required Packages for Veritas Enterprise Administrator
    Software Depot Content
    License Bundles
    Disk Space Requirements
        Disk Space Requirements for VxFS 5.1 SP1
        Disk Space Requirements for VxVM 5.1 SP1
        Disk Space Requirements for CVM
3 Installing the Veritas 5.1 SP1 Products
    Mounting the HP Serviceguard Storage Management Suite Media
    Installing Veritas 5.1 SP1 Products
    Installing VxFS 5.1 SP1
        Installing Base-VxFS-51
            Installing Base-VxFS-51 in Non-Interactive Mode
            Installing Base-VxFS-51 in Interactive Mode
            Verifying Base-VxFS-51 Installation
        Installing HP OnlineJFS (B3929HB)
            Installing HP OnlineJFS (B3929HB) in Non-Interactive Mode
            Installing HP OnlineJFS (B3929HB) in the Interactive Mode
            Verifying HP OnlineJFS (B3929HB) Installation
    Installing VxVM 5.1 SP1
        Installing Base-VxVM-51
            Installing Base-VxVM-51 in Non-Interactive Mode
            Installing Base-VxVM-51 in the Interactive Mode
            Verifying the Base-VxVM-51 Installation
        Installing Full VxVM (B9116EB)
            Installing Full VxVM in Non-Interactive mode
            Installing Full VxVM in Interactive Mode
            Verifying the Full VxVM (B9116EB) Installation
    Installing CVM [B9117EB] on HP-UX 11i v3
    Cold-Installing VxVM 5.1 SP1 and VxFS 5.1 SP1 with HP-UX 11i v3
        Preparing the Ignite-UX Server
        Cold-Installing the Client
        Confirming the Client
    Updating HP-UX and Veritas Products Using the update-ux Command
4 Setting up the Veritas 5.1 SP1 Products
    Configuring Your System after the Installation
    Converting to a VxVM Root Disk
    Starting and Enabling the Configuration Daemon
    Starting the Volume I/O Daemon
    Enabling the Intelligent Storage Provisioning Feature
    Enabling Cluster Support in VxVM
        Configuring New Shared Disks
        Verifying Existing Shared Disks
        Converting Existing VxVM Disk Groups to Shared Disk Groups
        Upgrading in a Clustered Environment with FastResync
    Setting Up VxVM 5.1 SP1
        Initializing VxVM Using the vxinstall Utility
        Moving Disks Under VxVM Control
        Setting Up a Veritas Enterprise Administrator Server
        Setting Up a Veritas Enterprise Administrator Client
    Setting up and Managing VxFS 5.1 SP1
        Creating a VxFS File System
        Identifying the Type of File System
        Converting a File System to VxFS
        Mounting a VxFS File System
        Displaying Information on Mounted File System
        Unmounting a VxFS File System
    Setting Environment Variables
    Cluster Environment Requirements
5 Upgrading from Previous Versions of VxFS to VxFS 5.1 SP1
    Upgrading from VxFS 3.3 or 3.5 on HP-UX 11i v1 to VxFS 5.1 SP1 on HP-UX 11i v3
    Upgrading from VxFS 3.5 on HP-UX 11i v2 to VxFS 5.1 SP1 on HP-UX 11i v3
    Upgrading from VxFS 4.1 on HP-UX 11i v2 or HP-UX 11i v3 to VxFS 5.1 SP1 on HP-UX 11i v3
    Upgrading from VxFS 5.0 on HP-UX 11i v2 or HP-UX 11i v3 to VxFS 5.1 SP1 on HP-UX 11i v3
    Upgrading from VxFS 5.0.1 on HP-UX 11i v3 to VxFS 5.1 SP1 on HP-UX 11i v3
6 Upgrading from Previous Versions of VxVM to VxVM 5.1 SP1
    Determining VxVM Disk Group Version
    Native Multipathing with Veritas Volume Manager
    Upgrading from VxVM 3.5 on HP-UX 11i v1 to VxVM 5.1 SP1 on HP-UX 11i v3
        To Upgrade from VxVM 3.5 on HP-UX 11i v1 to VxVM 5.1 SP1 on HP-UX 11i v3
    Upgrading from VxVM 3.5 on HP-UX 11i v2 to VxVM 5.1 SP1 on HP-UX 11i v3
        To Upgrade from VxVM 3.5 on HP-UX 11i v2 to VxVM 5.1 SP1 on HP-UX 11i v3
    Upgrading from VxVM 4.1 on HP-UX 11i v2 to VxVM 5.1 SP1 on HP-UX 11i v3
        To Upgrade from VxVM 4.1 on HP-UX 11i v2 to VxVM 5.1 SP1 on HP-UX 11i v3
    Upgrading from VxVM 4.1 on HP-UX 11i v3 to VxVM 5.1 SP1 on HP-UX 11i v3
        To Upgrade from VxVM 4.1 on HP-UX 11i v3 to VxVM 5.1 SP1 on HP-UX 11i v3
    Upgrading from VxVM 5.0 on HP-UX 11i v2 to VxVM 5.1 SP1 on HP-UX 11i v3
        To Upgrade from VxVM 5.0 on HP-UX 11i v2 to VxVM 5.1 SP1 on HP-UX 11i v3
        To upgrade to VxVM 5.1 SP1 on HP-UX 11i v3 without removing VxVM 5.0 on HP-UX 11i v2
    Upgrading From VxVM 5.0 on HP-UX 11i v3 to VxVM 5.1 SP1 Using Integrated VxVM 5.1 SP1 Package for HPUX 11i v3
    Upgrading From VxVM 5.0.1 on HP-UX 11i v3 to VxVM 5.1 SP1 Using Integrated VxVM 5.1 SP1 Package for HPUX 11i v3
    I/O Robustness Recommendations
7 Post Upgrade Tasks
    Optional Configuration Steps
    Upgrading Disk Layout Versions
        Upgrading VxFS Disk Layout Versions
        Using the vxfsconvert Command
        Using the vxupgrade Command
        Requirements for Upgrading to Disk Layout Version 7
    Upgrading the VxVM Cluster Protocol Version
    Upgrading VxVM Disk Group Versions
    Updating Variables
    Setting the Default Disk Group
    Upgrading the Array Support Library
    Converting from QuickLog to Multi-Volume Support
8 Removing Veritas 5.1 SP1 Products
    Removing VxVM
        Moving VxVM Volumes to LVM Volumes
        Removing Plexes
        Shutting Down VxVM
        Removing Full VxVM (B9116EB)
        Removing Base-VxVM-51
    Removing VxFS
        Removing HP OnlineJFS (B3929HB)
        Removing Base-VxFS-51
    Removing CVM
    Removing the Veritas Enterprise Administrator (VEA) Client
A Files Added and Modified After VxFS Installation

About this Document

This document provides information on the Veritas 5.1 Service Pack 1 (SP1) suite of products on systems running HP-UX 11i v3. The Veritas 5.1 SP1 suite of products includes Base-VxFS, Base-VxVM, OnlineJFS, Full VxVM, and Cluster Volume Manager (CVM). This document also includes a product overview, system requirements, and installation, basic configuration, and removal steps for the Veritas 5.1 SP1 suite of products on HP-UX 11i v3.

Intended Audience

This document is intended for system administrators responsible for installing and configuring HP-UX

systems with the Veritas suite of products. Readers are expected to have knowledge of the following:

• HP-UX operating system concepts

• System administration concepts

• Veritas Volume Manager concepts

• Veritas File System concepts

Document Organization

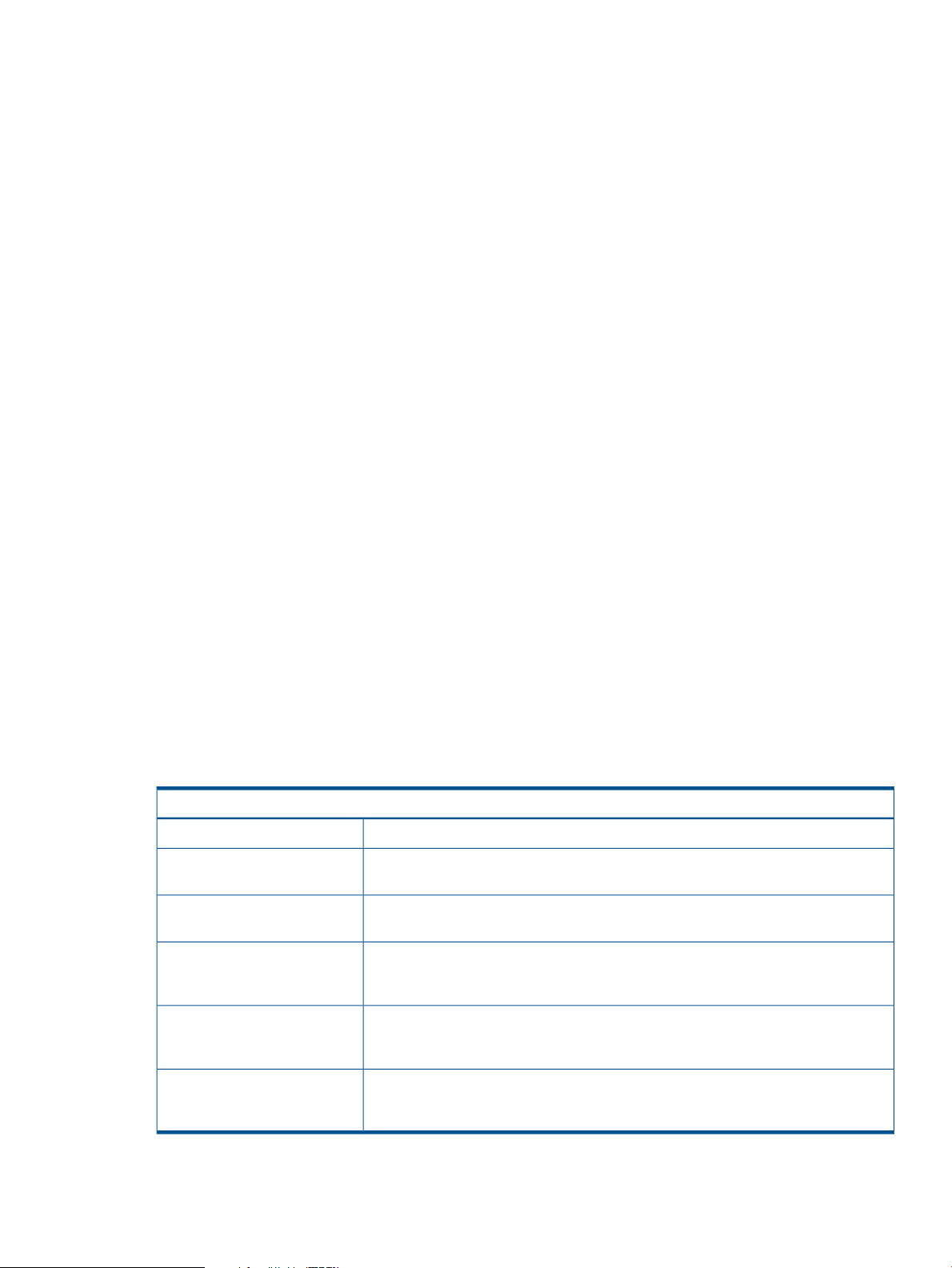

Table 1 Document Organization

Chapter 1: Introduction

Chapter 2: System Requirements

Chapter 3: Installing the Veritas 5.1 SP1 Products

Chapter 4: Setting up the Veritas 5.1 SP1 Products

Chapter 5: Upgrading from Previous Versions of VxFS to VxFS

5.1 SP1

Chapter 6: Upgrading from Previous Versions of VxVM to VxVM

5.1 SP1

Chapter 8: Removing Veritas 5.1 SP1 Products

DescriptionChapter Title

Describes the Veritas 5.1 SP1 suite of products for

systems running HP-UX 11i v3.

Describes the OS version, software depot contents,

license bundles, disk space requirements, and

supported upgrade paths for the Veritas 5.1 SP1 suite

of products.

Describes how to install the Veritas 5.1 SP1 suite of

products on systems running HP-UX 11i v3.

Describes how to set up the Veritas 5.1 SP1 suite of

products on HP-UX 11i v3.

Describes how to upgrade the VxFS disk layout

version.

Describes how to upgrade the VxVM disk group

version.

Discusses the post upgrade tasks for VxVM.“Post Upgrade Tasks” (page 64)

Describes how to remove the Veritas 5.1 SP1 suite

of products from an HP-UX 11i v3 system.

Typographic Conventions

This document uses the following typographic conventions:

monospace Computer output, files, directories, software elements such as command options,

function names, and parameters.

Read tunables from the /etc/vx/tunefstab file.

italic New terms, book titles, emphasis, variables replaced with a name or value

See “Veritas 5.1 SP1 Installation Guide” for more information.

% C shell prompt

$ Bourne/Korn shell prompt

# Superuser prompt (all shells)

\ Continued input on the following line; you do not type this character

[ ] In command synopsis, brackets indicate an optional argument.

ls [ -a ]

| In command synopsis, a vertical bar separates mutually exclusive arguments.

mount [ suid | nosuid ]

blue text An active hypertext link

In PDF and HTML files, click on the links to move to the specified location.

Related Information

Additional information on the Veritas suite of products is available at:

HP Business Support Center.

This website contains the following documents:

• Veritas File System 5.1 SP1 Release Notes

• Veritas File System 5.1 SP1 Administrator's Guide

• Veritas Volume Manager 5.1 SP1 Release Notes

• Veritas Volume Manager 5.1 SP1 Administrator's Guide

• Veritas Volume Manager 5.1 SP1 Troubleshooting Guide

• Veritas Enterprise Administrator User's Guide

• Veritas Storage Foundation and High Availability Solutions 5.1 SP1 Getting Started Guide

• Veritas Storage Foundation 5.1 SP1 Advanced Features Administrator's Guide

• Veritas Storage Foundation 5.1 SP1: Storage and Availability Management for Oracle Databases

• Veritas Storage Foundation 5.1 SP1 Cluster File System Release Notes

• Veritas Storage Foundation 5.1 SP1 Cluster File System Installation Guide

• Veritas Storage Foundation 5.1 SP1 for Oracle RAC Release Notes

• Veritas Storage Foundation 5.1 SP1 for Oracle RAC Administrator's Guide

To locate these documents, go to the HP-UX Core docs page at: www.hp.com/go/hpux-core-docs.

On this page, select HP-UX 11i v3.

Technical Support

For license information, contact:

• Software License Manager:

http://licensing.hp.com/welcome.slm

• HP Licensing Services:

http://licensing.hp.com/licenseAdmins.slm

◦ (Europe)

Phone: +353.(0)91.75.40.06

Email: codeword_europe@hp.com

◦ (U.S. and Canada)

Phone: +1 650.960.5111

Email: hplicense.na@hp.com

◦ (Asia Pacific)

Phone: 0120.42.1231 or 0426-48-9310 (Inside Japan)

+81.426.48.9312 (Outside Japan)

Email: sw_codeword@hp.com

• For the latest information on available patches, see:

http://itrc.hp.com

• For technical support, see:

http://welcome.hp.com/country/us/en/support.html

HP Welcomes Your Comments

HP welcomes your comments concerning this document. HP is committed to providing documentation

that meets your needs.

Please send comments to: docsfeedback@hp.com

Please include the document title, the manufacturing part number, and any comments, errors found, or suggestions for improvement that you have concerning this document. Also, please tell us what we did right so that we can incorporate it into other documents.

1 Introduction

This chapter introduces the Veritas 5.1 SP1 suite of products and describes the features of each product in the suite.

This chapter addresses the following topics:

• “Overview”

• “Volume Managers Supported on HP-UX 11i v3”

• “Veritas Volume Manager (VxVM)”

• “File Systems Supported on HP-UX 11i v3”

• “Veritas File System (VxFS)”

Overview

The Veritas 5.1 SP1 suite of products includes Base-VxFS, Base-VxVM, OnlineJFS, VxVM-Full, and CVM. Veritas Volume Manager (VxVM) is a storage management subsystem that enables you to manage physical disks as logical devices called volumes. A volume is a logical device that appears to a data management system as a physical disk. Veritas File System (VxFS) is an extent-based, intent-logging file system designed for UNIX environments that require high performance and availability and that handle large volumes of data.
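To illustrate the term "extent based", the following Python sketch collapses a list of block numbers into extents, where each extent is a run of adjacent blocks recorded as a starting block plus a length. This is only a conceptual sketch: the function name and the block numbers are hypothetical, and the code does not represent the VxFS on-disk format or any VxFS interface.

```python
from typing import List, Tuple

# An extent is modeled here as (starting block, length in blocks).
Extent = Tuple[int, int]


def blocks_to_extents(blocks: List[int]) -> List[Extent]:
    """Collapse block numbers into (start, length) runs of adjacent blocks."""
    extents: List[Extent] = []
    for block in sorted(set(blocks)):
        if extents and block == extents[-1][0] + extents[-1][1]:
            # The block directly follows the last extent, so extend that extent.
            start, length = extents[-1]
            extents[-1] = (start, length + 1)
        else:
            # The block is not adjacent, so start a new extent.
            extents.append((block, 1))
    return extents


if __name__ == "__main__":
    # Hypothetical file occupying six blocks in two contiguous runs.
    print(blocks_to_extents([100, 101, 102, 103, 500, 501]))
```

In this sketch, six block numbers are described by two extents, which is why an extent-based layout can describe large contiguous files compactly.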

The Cluster Volume Manager (CVM) allows up to 32 nodes in a cluster to simultaneously access and manage a set of disks under VxVM control (VM disks). The same logical view of the disk configuration, and of any changes to it, is available on all nodes. For more information on CVM, see the Managing Serviceguard manual or the Veritas Volume Manager Administrator's Guide.

The HP Serviceguard Storage Management Suite integrates HP Serviceguard with Symantec’s

Veritas Storage Foundation. This combination provides powerful database and storage management

capabilities while maintaining the mission-critical reliability that HP Serviceguard customers have

come to expect. For more information about HP Serviceguard Storage Management Suite, see HP

Serviceguard Storage Management Suite Version A.04.00 Release Notes.

Volume Managers Supported on HP-UX 11i v3

HP-UX 11i v3 supports the following volume managers:

• HP Logical Volume Manager (HP LVM)

The HP LVM is a disk management subsystem that enables you to allocate disk space according

to the specific or projected size of your file system or raw data. For more information on HP

LVM, see HP-UX System Administrator's Guide: Logical Volume Management on HP Business

Support Center.

• Veritas Volume Manager (VxVM)

VxVM is a storage management subsystem that enables you to manage physical disks as

logical devices called volumes. A volume is a virtual device that appears to a data management

system as a physical disk.

Veritas Volume Manager (VxVM)

Introduction

VxVM is a storage management subsystem that removes the physical limitations of disk storage so

that you can configure, share, manage, and optimize storage I/O performance online without

interrupting data availability. VxVM also provides easy-to-use, online storage management tools

to reduce planned and unplanned system downtime, and online disk storage management for

computing environments and Storage Area Network (SAN) environments. Through RAID support,

VxVM protects against disk and hardware failure. Additionally, VxVM provides features that offer

fault tolerance and fast recovery from disk failure.

VxVM overcomes the physical restrictions imposed by hardware disk devices by providing a logical volume management layer. This enables volumes to span multiple disks. VxVM also dynamically configures disk storage while the system is active.
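As a rough illustration of how a logical volume can span multiple disks, the following Python sketch maps a logical block of a volume to a disk and an offset on that disk by simple concatenation. The disk names, the disk sizes, and the function name are hypothetical and are not VxVM interfaces; VxVM performs the real mapping internally through its own objects.

```python
from typing import List, Tuple

# Hypothetical disks as (name, size in blocks). These are not real device names.
DISKS: List[Tuple[str, int]] = [("diskA", 1000), ("diskB", 500), ("diskC", 2000)]


def locate_block(logical_block: int,
                 disks: List[Tuple[str, int]] = DISKS) -> Tuple[str, int]:
    """Map a logical volume block to (disk name, block offset on that disk)."""
    if logical_block < 0:
        raise ValueError("logical block must be non-negative")
    remaining = logical_block
    for name, size in disks:
        if remaining < size:
            # The block falls inside this disk.
            return name, remaining
        # Skip past this disk and continue with the next one.
        remaining -= size
    raise ValueError("logical block is beyond the end of the volume")


if __name__ == "__main__":
    for block in (0, 999, 1000, 1499, 1500, 3499):
        print(block, "->", locate_block(block))
```

The caller sees one address space of 3500 blocks, while the data actually resides on three separate disks.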

VxVM Features

Veritas Volume Manager supports the following features:

• Veritas Enterprise Administrator (VEA)

A Java™-based graphical user interface for administering VxVM.

• Concatenation

Concatenation maps data in a linear manner onto one or more subdisks in a plex.

• Striping

Striping maps data so that it is interleaved among two or more physical disks.

• Mirroring

Mirroring uses multiple mirrors to duplicate information contained in a volume.

• Mirrored Stripes

VxVM supports striping plus mirroring (mirrored-stripe, or RAID-0+1).

• Striped Mirrors

VxVM supports mirroring plus striping (striped-mirror, RAID-1+0, or RAID-10).

• RAID-5

RAID-5 provides data redundancy using parity.

• Online Resizing of Volumes

You can dynamically resize VxVM volumes while the data remains available to the user.

• Hot-relocation

The hot-relocation feature in VxVM automatically detects disk failures and notifies system administrators of the failure by email. Hot-relocation also attempts to use spare disks and free disk space to restore redundancy and to preserve access to mirrored and RAID-5 volumes.

• Volume Resynchronization

When data is mirrored, volume resynchronization ensures that all copies of the data match.

• Online Relayout

Online relayout enables you to convert between storage layouts in VxVM, with uninterrupted

data access.

• Volume Snapshot

Volume snapshots are point-in-time images of VxVM volumes.

VxVM 5.1 SP1 does not support snapshots of RAID-5 volumes.

• Dirty Region Logging

Dirty Region Logging (DRL) keeps track of the regions that have changed because of I/O writes to a mirrored volume. DRL uses this information to recover only those portions of the volume that need to be recovered, thereby speeding up recovery after a system crash.

• SmartMove™ Feature

SmartMove reduces the time and I/O required to attach or reattach a plex to an existing

VxVM volume, in the specific case where a VxVM volume has a VxFS file system mounted on

it. The SmartMove feature uses the VxFS information to detect free extents and avoids copying

them.

• Enhancements to the Dynamic Multipathing Feature

This release provides a number of enhancements to the DMP features of VxVM. These

enhancements simplify administration and improve display of detailed information about the

connected storage. Following are the enhancements to the DMP feature:

◦ Dynamic multipathing attributes are now persistent

◦ Improved dynamic multipathing device naming

◦ Default behavior modified for I/O throttling

◦ Specifying a minimum number of active paths

◦ Enhanced listing of subpaths

◦ Enhanced I/O statistics

◦ Making DMP restore options persistent

◦ New log file location for DMP events

◦ Extended device attributes displayed in the vxdisk list command

◦ Displaying the use_all_paths attribute for an enclosure

◦ Viewing information about the ASLs installed on the system

◦ Displaying the number of LUNs in an enclosure

◦ Displaying the LUN serial number

◦ Displaying HBA details

◦ New exclude and include options for the vxdmpadm command

◦ New command for reporting DMP node information

◦ Setting attributes for all enclosures

◦ Support for ALUA JBOD devices

• VxVM Powerfail Timeout (PFTO) Feature Disabled on HP-UX Native Multipathing Devices

By default, the use of PFTO is now disabled on HP-UX native multipathing devices. As a result, native multipathing disk I/O can take additional service time to complete successfully. For DMP devices, the use of PFTO is enabled by default.

For recommendations on maintaining high levels of I/O robustness, see “I/O Robustness Recommendations”.

• Support for LVM version 2 Volume Groups

The LVM version 2 volume groups are now partially supported. VxVM now identifies and

protects the LVM version 2 volume groups. However, the LVM version 2 volume groups cannot

be initialized or converted.

• Distributed Volume Recovery

In a Cluster Volume Manager (CVM) cluster, upon a node failure, the mirror recovery is initiated

by the CVM master. Prior to this release, the CVM master performed all the recovery I/O

tasks. Starting from this release, the CVM master can distribute recovery tasks to other nodes

in the cluster. Distributing the recovery tasks is desirable in some situations so that the CVM

master can avoid an I/O or CPU bottleneck.

• Campus Cluster enhancements

The campus cluster feature provides the capability of mirroring volumes across sites, with hosts

connected to storage at all sites through a Fibre Channel network. In this release, the following

enhancements have been made to the campus cluster feature:

◦ Site Tagging of disks or Enclosures

◦ Automatic Site Tagging

◦ Site Renaming

• Estimated Required Time Displayed During Volume Conversion

During a volume conversion operation, before the conversion is committed, the vxvmconvert

command displays the estimated time required.

• The vxsited daemon Renamed to vxattachd

The vxsited daemon is renamed as the vxattachd daemon. The vxattachd daemon

now also handles automatic reattachment and resynchronization for plexes.

• Automatic Plex Attachment

When a mirror plex encounters irrecoverable errors, VxVM detaches the plex from the mirrored

volume. By default, VxVM automatically reattaches the affected mirror plexes when the

underlying failed disk or LUN becomes visible.

• Persisted Attributes

The vxassist command allows you to define a set of named volume allocation rules, which

can be referenced in volume allocation requests. The vxassist command also allows you

to record certain volume allocation attributes for a volume. These attributes are called persisted

attributes. You can record the persisted attributes and use them in later allocation operations

on the volume, such as increasing the volume.

• Automatic recovery of volumes during disk group import

VxVM allows automatic recovery of volumes during disk group import. After a disk group is

imported, disabled volumes can be enabled and started by default.

• Cross-platform data sharing support for disks greater than 1 TB

In releases prior to VxVM 5.1 SP1, the cdsdisk format was supported only on disks up to

1 TB in size. Therefore, cross-platform disk sharing (CDS) was limited to disks of size up to 1

TB. VxVM 5.1 SP1 removes this restriction. It introduces CDS support for disks of size greater

than 1 TB as well.

NOTE: The disk group version must be at least 160 to create and use the cdsdisk format

on disks of size greater than 1 TB.

IMPORTANT: VxVM uses the GUID Partition Table (GPT) format to initialize disks of size

greater than 1 TB in the cdsdisk format. HP Logical Volume Manager (LVM) and the

diskowner command do not recognize disks formatted with the GPT layout. As a result, LVM

and the diskowner command do not recognize cdsdisk-format disks of size greater than 1 TB.

For more information, refer to the “Known Problems and Workarounds” section of the Veritas

Volume Manager 5.1 SP1 Release Notes.

• Default format for auto-configured disks has changed

VxVM will initialize all auto-configured disks with the cdsdisk format, by default. To change

the default format, use the vxdiskadm command to update the /etc/default/vxdisk

file.

• Default naming scheme for devices is Enclosure Based Naming Scheme (ebn)

Starting with the VxVM 5.1 SP1 release, the default naming scheme for devices has changed

to the Enclosure Based Naming Scheme (ebn). The following example shows some

sample device names on a system running VxVM 5.1 SP1:

Example 1 Sample device names on a system using the Enclosure Based Naming

Scheme (ebn) (default in VxVM 5.1 SP1)

DEVICE     TYPE            DISK          GROUP     STATUS
disk_0     auto:cdsdisk    c4t0d0        dg1       online
disk_1     auto:LVM        -             -         LVM
disk_2     auto:LVM        -             -         LVM
disk_3     auto:LVM        -             -         LVM
disk_4     auto:hpdisk     rootdisk01    rootdg    online

To change the default naming scheme to the Legacy Device Naming Scheme, use the

following command:

# vxddladm set namingscheme=osn mode=legacy

The following example shows some sample device names on a system using the Legacy

Device Naming Scheme:

Example 2 Sample device names on a system using the Legacy Device Naming Scheme

DEVICE     TYPE            DISK          GROUP     STATUS
c0t6d0     auto:hpdisk     rootdisk01    rootdg    online
c3t6d0     auto:LVM        -             -         LVM
c4t0d0     auto:cdsdisk    c4t0d0        dg1       online
c4t3d0     auto:LVM        -             -         LVM
c4t9d0     auto:LVM        -             -         LVM

To change the default naming scheme to the Agile Device Naming Scheme, use the

following command:

# vxddladm set namingscheme=osn mode=new

The following example shows some sample device names on a system using the Agile

Device Naming Scheme:

Example 3 Sample device names on a system using the Agile Device Naming Scheme

DEVICE     TYPE            DISK          GROUP     STATUS
disk6      auto:hpdisk     rootdisk01    rootdg    online
disk7      auto:LVM        -             -         LVM
disk11     auto:cdsdisk    c4t0d0        dg1       online
disk10     auto:LVM        -             -         LVM
disk9      auto:LVM        -             -         LVM

The ebn default applies to new installations only. If you upgrade from an earlier version to

this version, the Operating System Native Naming Scheme (osn) or the setting from the earlier

release overrides ebn, so the system continues to use the earlier naming scheme.

• Issuing CVM commands from the slave node

In releases prior to VxVM 5.1 SP1, CVM required that you issue configuration commands for

shared disk groups from the master node of the cluster. Configuration commands change the

object configuration of a CVM shared disk group. Examples of configuration changes include

creating disk groups, importing disk groups, deporting disk groups, and creating volumes.

Starting with the VxVM 5.1 SP1 release, you can issue commands from any node, even when

the command changes the configuration of the shared disk group.

• Changing the CVM master online

CVM now supports changing the CVM master from one node in the cluster to another node,

while the cluster is online. CVM migrates the master node, and re-configures the cluster. After

the master change operation starts re-configuring the cluster, other commands that require

configuration changes will fail.

For more information on changing the CVM master while the cluster is online, refer to the

Veritas Volume Manager 5.1 SP1 Administrator's Guide. To locate this document, go to the

HP-UX Core docs page at: www.hp.com/go/hpux-core-docs. On this page, select HP-UX 11i

v3.
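The three naming schemes described in the feature list above can be told apart by the shape of the device names shown in Examples 1 to 3. The following is a minimal sketch only: the function name guess_naming_scheme is hypothetical, and the patterns cover just the name shapes that appear in those examples. Enclosure-based names on a real system can take other forms.

```shell
#!/bin/sh
# Guess the naming scheme from the shape of a device name.
# Assumption: only the shapes shown in Examples 1 to 3 are recognized.
guess_naming_scheme() {
    case "$1" in
        c[0-9]*t[0-9]*d[0-9]*) echo "osn (legacy)" ;;   # for example c4t0d0
        disk_[0-9]*)           echo "ebn" ;;            # for example disk_0
        disk[0-9]*)            echo "osn (agile)" ;;    # for example disk11
        *)                     echo "unknown" ;;
    esac
}

# Classify one sample name from each example
for dev in disk_0 c4t0d0 disk11; do
    printf '%s -> %s\n' "$dev" "$(guess_naming_scheme "$dev")"
done
```

A check of this kind is useful only as a quick visual aid after an upgrade; the naming scheme in effect is set with the vxddladm command shown above.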

VxVM 5.1 SP1 on HP-UX 11i v3

For more information on features that VxVM 5.1 SP1 supports on HP-UX 11i v3, refer to the Veritas

Volume Manager 5.1 SP1 Release Notes. To locate this document, go to the HP-UX Core docs

page at: www.hp.com/go/hpux-core-docs. On this page, select HP-UX 11i v3.

Architecture of VxVM

VxVM operates as a subsystem between the HP-UX operating system and other data management

systems, such as file systems and database management systems. VxVM is layered on top of the

operating system and is dependent on it for the following:

• Physical access to disks

• Device handles

• VM disks

• Multipathing

VxVM Daemons

VxVM relies on the following daemons for its operation:

• vxconfigd – The VxVM configuration daemon maintains disk and disk group configuration

information, communicates configuration changes to the kernel, and modifies the configuration

information stored on the disks.

• vxiod – The VxVM I/O daemon provides extended I/O operations without blocking the

calling processes.

• vxrelocd – The hot-relocation daemon monitors VxVM for events that affect redundancy,

and performs hot-relocation to restore redundancy.

• vxattachd – The vxattachd daemon handles automatic reattachment and resynchronization

for plexes.

VxVM Objects

VxVM supports the following types of objects:

• Physical Objects

Physical disks or other hardware with block and raw operating system device interfaces that

are used to store data.

• Virtual Objects

The virtual objects in VxVM include the following:

◦ Disk Group

A group of disks that share a common configuration. A configuration consists of a set of

records describing objects (including disks, volumes, plexes, and subdisks) that are

associated with one particular disk group. Each disk group has an administrator-assigned

name, which can be used by the administrator to reference that disk group. Each disk

group also has an internally defined unique disk group ID, which is used to differentiate

two disk groups with the same administrator-assigned name.

◦ VM Disks

When you place a physical disk under VxVM control, a VM disk is assigned to the physical

disk. Each VM disk corresponds to one physical disk. A VM disk is under VxVM control

and is usually in a disk group.

◦ Subdisks

A VM disk can be divided into one or more subdisks. Each subdisk represents a specific

portion of a VM disk, which in turn is mapped to a specific region in a physical disk.

VxVM allocates a set of contiguous blocks for a subdisk.

◦ Plexes

VxVM uses subdisks to build virtual objects called plexes. A plex consists of one or more

subdisks located on one or more physical disks.

◦ Volumes

A volume is a virtual disk device that appears like a physical disk device to applications,

databases, and file systems. However, VxVM volumes do not have the physical limitations

of a physical disk device. A volume consists of one or more plexes, each holding a copy

of the selected data in the volume.

Volume Layouts in VxVM

A volume layout is defined by the association of a volume to one or more plexes, each of which

maps to a subdisk. VxVM supports two different types of volume layout:

• Non-Layered

• Layered

Non-Layered

In a non-layered volume layout, a subdisk maps directly to a VM disk. This enables the subdisk to

define a contiguous extent of storage space backed by the public region of a VM disk.

Layered Volumes

A layered volume is constructed by mapping its subdisks to the underlying volumes. The subdisks

in the underlying volumes must map to VM disks, and hence to the attached physical storage.

VxVM Storage Layouts

Data in virtual objects is organized to create volumes by using the following layouts:

• Concatenation and Spanning

• Striping (RAID-0)

• Mirroring (RAID-1)

• Striping Plus Mirroring (Mirrored-Stripe or RAID-0+1)

• Mirroring Plus Striping (Striped-Mirror, RAID-1+0 or RAID-10)

• RAID-5 (Striping with Parity)

Concatenation and Spanning

Concatenation maps data in a linear manner onto one or more subdisks in a plex. To access the

data in a concatenated plex sequentially, data is first accessed from the first subdisk from beginning

to end and then accessed in the remaining subdisks sequentially from beginning to end, until the

end of the last subdisk.
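The linear mapping described above can be sketched in a few lines of shell arithmetic. This is an illustration only, not VxVM code: the function name concat_locate and the subdisk sizes are hypothetical, and offsets are counted in blocks.

```shell
#!/bin/sh
# Locate a plex offset in a concatenated plex.
# Usage: concat_locate OFFSET SIZE1 [SIZE2 ...]
# Prints "<subdisk index> <offset within that subdisk>", both zero-based.
concat_locate() {
    offset=$1; shift
    index=0
    for size in "$@"; do
        if [ "$offset" -lt "$size" ]; then
            echo "$index $offset"
            return 0
        fi
        # Skip past this subdisk and continue with the next one
        offset=$((offset - size))
        index=$((index + 1))
    done
    echo "offset beyond end of plex" >&2
    return 1
}

# Three subdisks of 100, 200, and 300 blocks; block 250 falls in the second one
concat_locate 250 100 200 300   # prints "1 150"
```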

Striping (RAID-0)

Striping maps data so that the data is interleaved among two or more physical disks. A striped

plex contains two or more subdisks, spread out over two or more physical disks.
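The interleaving can be illustrated with the usual round-robin stripe arithmetic. The sketch below assumes equal-sized stripe units distributed across the columns in order; the function name stripe_locate and the sample values are hypothetical, and this is not a description of VxVM internals.

```shell
#!/bin/sh
# Locate a volume block in a striped plex.
# Usage: stripe_locate BLOCK NCOLUMNS STRIPE_UNIT
# Prints "<column> <offset within that column>", both zero-based, in blocks.
stripe_locate() {
    block=$1; ncols=$2; unit=$3
    stripe=$((block / unit))     # which stripe unit the block falls in
    column=$((stripe % ncols))   # stripe units rotate across the columns
    row=$((stripe / ncols))      # full rows of stripe units before this one
    echo "$column $((row * unit + block % unit))"
}

# Three columns with a stripe unit of 4 blocks
stripe_locate 10 3 4   # prints "2 2"
stripe_locate 13 3 4   # prints "0 5"
```

Consecutive stripe units land on different columns, which is what allows sequential I/O to be spread across several physical disks.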

Mirroring (RAID-1)

Mirroring uses multiple mirrors (plexes) to duplicate the information contained in a volume. In the

event of a physical disk failure, the plex on the failed disk becomes unavailable.

When striping or spanning across a large number of disks, failure of any one of the disks can

make the entire plex unusable. As disks can fail, you must consider mirroring to improve the

reliability (and availability) of a striped or spanned volume.

Striping Plus Mirroring (Mirrored-Stripe or RAID-0+1)

VxVM supports the combination of mirroring above striping. This combined layout is called a

mirrored-stripe layout. A mirrored-stripe layout offers the dual benefits of striping, which spreads

data across multiple disks, and mirroring, which provides redundancy of data.

Mirroring Plus Striping (Striped-Mirror, RAID-1+0 or RAID-10)

VxVM supports the combination of striping above mirroring. This combined layout is called a

striped-mirror layout. Putting mirroring below striping mirrors each column of the stripe. If there

are multiple subdisks per column, each subdisk can be mirrored individually instead of each column.

RAID-5 (Striping with Parity)

Although both mirroring (RAID-1) and RAID-5 provide redundancy of data, they use different

methods. Mirroring provides data redundancy by maintaining multiple complete copies of the data

in a volume. Data being written to a mirrored volume is reflected in all copies. If a portion of the

mirrored volume fails, the system continues to use the other copies of the data. RAID-5 provides

data redundancy by using parity. Parity is a calculated value used to reconstruct data, after a

failure. If a portion of a RAID-5 volume fails, the data that was on that portion of the failed volume

can be recreated from the remaining data and parity information. It is also possible to mix

concatenation and striping in the layout.
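The way parity allows lost data to be recreated can be shown with a small worked example. The guide describes parity only as a calculated value; the sketch below assumes bitwise XOR, which is the usual RAID-5 parity calculation. The function name xor_all and the sample byte values are hypothetical.

```shell
#!/bin/sh
# XOR all arguments together and print the result.
xor_all() {
    result=0
    for value in "$@"; do
        result=$((result ^ value))
    done
    echo "$result"
}

# One byte from each of three data columns
d0=165; d1=60; d2=15

# Parity is the XOR of all the data values
parity=$(xor_all "$d0" "$d1" "$d2")

# If the column holding d1 fails, XOR of the survivors and the parity recreates it
rebuilt=$(xor_all "$d0" "$d2" "$parity")

echo "parity=$parity rebuilt_d1=$rebuilt"   # prints "parity=150 rebuilt_d1=60"
```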

VxVM Interfaces

VxVM provides the following interfaces:

• Command-Line Interface

• Menu-driven vxdiskadm utility

• Veritas Enterprise Administrator

Command-Line Interface

As a superuser, you can administer and configure volumes and other VxVM objects using the

supported vx* commands.

Menu-driven utility

The vxdiskadm utility provides an easy-to-use, menu-driven interface for common high-level

operations on disks and disk groups.

Veritas Enterprise Administrator

The Veritas™ Enterprise Administrator (VEA) is the graphical user interface for administering disks,

volumes, and file systems on local and remote machines.

File Systems Supported on HP-UX 11i v3

Table 2 lists the file systems that are supported on HP-UX 11i v3.

Table 2 Supported File Systems on HP-UX 11i v3

File System Type                   Description
Hierarchical File System (HFS)     HFS is derived from the UNIX File System, the original BSD file system.
Veritas File System (VxFS)         The Veritas File System is an extent-based, intent logging file system from Symantec Corporation.
Compact Disk File System (CDFS)    The CD file system enables you to read and write to compact disc media.
AutoFS                             AutoFS/Automounter mounts directories automatically when users or processes request access to them. AutoFS also unmounts the directories automatically if they remain idle for a specified period of time.
Network File System (NFS)          Network File System (NFS) provides transparent access to files on the network. An NFS server makes a directory available to other hosts on the network by “sharing” the directory.
CacheFS                            The Cache File System (CacheFS) is a general purpose file system caching mechanism that improves server performance and scalability by reducing server and network load.

Veritas File System (VxFS)

Introduction

The Veritas File System (VxFS) is a high availability, high performance, commercial grade file

system that provides features such as transaction based journaling, fast recovery, extent-based

allocation, and online administrative operations, such as backup, resizing, and defragmentation

of the file system. It provides high performance and easy manageability required by mission-critical

applications, where high availability is critical. It increases the I/O performance and provides

structural integrity.

The Veritas File System (VxFS) is the vxfs file system for the HP-UX 11i v3 release.

VxFS Features

VxFS supports the following features:

• Extent-Based Allocation

An extent is defined as one or more adjacent blocks of data within the file system. VxFS

allocates storage in groups of extents rather than a block at a time, thereby resulting in faster

read-write operations.

• Extent Attributes

Extent attributes are the extent allocation policies associated with a file. VxFS allocates disk

space to files in groups of one or more extents. VxFS enables applications to control extent

allocation.

The setext and getext commands enable the administrator to set or view extent attributes

associated with a file, as well as to preallocate space for a file.

• Fast File System Recovery

Most file systems rely on full structural verification by the fsck utility as the only means to

recover from a system failure. For large disk configurations, this involves a time-consuming

process of checking the entire structure, verifying that the file system is intact, and correcting

any inconsistencies.

VxFS reduces system failure recovery time by tracking file system activity in the VxFS intent

log. This feature records pending changes to the file system structure in a circular intent log.

• Access Control Lists (ACLs)

An Access Control List (ACL) stores a series of entries that identify specific users or groups,

and their access privileges for a directory or file.

• Online Administration

A VxFS file system can be defragmented and resized while it remains online and available

to users. The online administration operations supported are backup, resizing, and

defragmentation.

• File Snapshot

VxFS provides online data backup using the snapshot feature. An image of a mounted file

system instantly becomes an exact read-only copy of the file system at a specific point in time.

The original file system is called the snapped file system and the copy is called the snapshot.

• Expanded Application Interface

VxFS supports the following specific features for commercial applications:

◦ Pre-allocates space for files

◦ Specifies fixed extent size for files

◦ Bypasses the system buffer cache for file I/O

◦ Specifies the expected access pattern for a file

• Extended Mount Options

The extended mount options supported by VxFS include the following:

◦ Enhanced data integrity modes

◦ Enhanced performance modes

◦ Temporary file system modes

◦ Improved synchronous writes

◦ Large file sizes

• Large File and File System Sizes

File systems up to 40 TB and files up to 16 TB in size are supported on HP-UX 11i v3. For

more information on files and file system sizes supported by VxFS, see the Supported File and

File System Sizes white paper on HP Business Support Center.

• Enhanced I/O Performance

VxFS provides enhanced I/O performance by applying an aggressive I/O clustering policy,

integrating with VxVM, and allowing application specific parameters to be set on a per-file

system basis. However, clustering support is not available with the current release.

• Storage Checkpoints

To increase availability, recoverability, and performance, VxFS offers on-disk and online

backup and restore utilities that facilitate frequent and efficient backup of the file system.

Backup and restore applications can leverage the Storage Checkpoint, a disk and I/O-efficient

copying technology for creating periodic frozen images of a file system. Storage Checkpoints

present a view of a file system at a point in time, and subsequently identify and maintain

copies of the original file system blocks. Instead of using a disk-based mirroring method,

Storage Checkpoints save disk space and significantly reduce I/O overhead by using the free

space pool available to a file system.

• Quotas

VxFS supports per-user quotas, which limit the use of two principal

resources: files and data blocks.

• Multi-Volume Support

The Multi-Volume support enables several volumes to be encapsulated into a single virtual

object called a volume set. This volume set can then be used to create a file system, thereby

enabling advanced features such as Dynamic Storage Tiering.

• SmartMove™ Feature

SmartMove reduces the time and I/O required to attach or reattach a plex to an existing

VxVM volume, in the specific case where a VxVM volume has a VxFS file system mounted on

it. The SmartMove feature uses VxFS information to detect free extents and avoids copying

them.

• Dynamic Storage Tiering Enhancements

The Dynamic Storage Tiering (DST) feature provides the following enhancements:

◦ Enhanced DST APIs to provide a new interface for managing allocation policies of storage

checkpoints during creation and later, and for managing named data stream allocation

policies

◦ fsppadm support for user ID (UID), group ID (GID), and tagging (TAG) elements in the

placement policy XML file

◦ Improved scan performance in the fsppadm command

◦ Suppressed processing of the chosen RULE

◦ Parser support for UID, GID, and TAG elements in a DST policy

◦ What-if support for analyzing and enforcing without requiring the policy to be assigned

◦ Storage Checkpoint data placement support in a DST policy

◦ Shared DB thread handle support

◦ CPU and I/O throttling support for DST scans

◦ New command, fstag, for file tagging

◦ New command, fsppmk, for creating XML policies

• Availability of the mntlock and mntunlock Mount Options

You can specify the mntlock option with the mount command to prevent a file system from

being unmounted by an application.

• Autolog replay on mount

Starting with the VxFS 5.1 SP1 release, when the mount command detects a dirty log in the

file system, it will automatically run the VxFS command fsck to clean up the intent log. This

functionality is only supported on file systems mounted on a Veritas Volume Manager (VxVM)

volume.

• FileSnap

FileSnaps provide the ability to snapshot objects that are smaller in granularity than a file

system or a volume. This is an Enterprise-level feature. It is supported only on DLV 8

file systems.

• SmartTier sub-file movement

The Dynamic Storage Tiering (DST) feature is now rebranded as SmartTier. With the SmartTier

feature, you can now manage the placement of file objects as well as entire files on individual

volumes.

• Tuning performance optimization of inode allocation

Starting with the VxFS 5.1 SP1 release, you can optimize the way in which inodes are reused

in the inode cache by setting the delicache_enable tunable parameter. It specifies whether

performance optimization of inode allocation and reuse during a new file creation is turned

on or not.

• Veritas File System is more thin-friendly

Thin Provisioning is a storage array feature that optimizes storage use by automating storage

provisioning. Administrators do not have to estimate how much storage an application requires.

Instead, Thin Provisioning lets administrators provision large thin or thin reclaim capable LUNs

to a host. Physical storage capacity is allocated from a thin pool to the thin/thin reclaim

capable LUNs only after application I/O writes.

Starting with the VxFS 5.1 SP1 release, you can tune VxFS to enable or disable thin-friendly

allocations. This feature is only supported on file systems mounted on a VxVM volume.

• Partitioned Directories

VxFS 5.1 SP1 allows you to create partitioned directories. For every new create, delete, or

lookup thread that is created, VxFS searches for the thread's respective hash directory and

performs the operation in that directory. This allows uninterrupted access to the parent directory

inode and its other hash directories, which significantly improves the read/write performance

of cluster file systems.

This feature is supported only on file systems with DLV 8 or later.

For more information, refer to the Veritas File System 5.1 SP1 Administrator's Guide. To locate

this document, go to the HP-UX Core docs page at: www.hp.com/go/hpux-core-docs. On

this page, select HP-UX 11i v3.

VxFS 5.1 SP1 on HP-UX 11i v3

For more information on features supported with VxFS 5.1 SP1 on HP-UX 11i v3, refer to the Veritas

File System 5.1 SP1 Release Notes. To locate this document, go to the HP-UX Core docs page at:

www.hp.com/go/hpux-core-docs. On this page, select HP-UX 11i v3.

Architecture of VxFS

HP-UX supports various file systems. In order for the kernel to be able to access these different file

system types, there is a layer of indirection above them called Virtual File System (VFS).

Without the VFS layer, the kernel must know the specifics of each file system type and maintain

distinct code to handle each.

The VFS layer enables the kernel to possess a single set of routines that are common to all file

system types. Handling of the specifics of a file system type are passed down to the file system

specific modules. The following sections describe the VxFS file system specific structures.

The following are the VxFS on-disk structures:

• Superblock

A superblock (SB) resides approximately 8 KB from the beginning of the storage and tracks the status of the

file system. It supports maps of free space and other resources (inodes, allocation units, and

so on).

• Intent Log

VxFS reduces system failure recovery time by tracking file system activity in the VxFS intent

log. This feature records pending changes to the file system structure in a circular intent log.

The intent log recovery feature is not readily apparent to users or a system administrator,

except during a system failure. During system failure recovery, the VxFS fsck utility performs

an intent log replay, which scans the intent log, and nullifies or completes file system operations

that were active when the system failed. The file system can then be mounted without completing

a full structural check of the entire file system. Replaying the intent log may not completely

recover the damaged file system structure if there was a disk hardware failure. Hardware

problems may require a complete system check using the fsck utility provided with VxFS.

• Allocation Unit

Allocation units are made up of a series of data blocks. Each allocation unit typically consists

of 32k contiguous blocks. Several contiguous data blocks make up an extent. The extents are

used for file data storage.
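The size of an allocation unit follows from the file system block size. The arithmetic below is a sketch under two assumptions that the guide does not state: that "32k" means 32,768 blocks, and that the block size is one of 1024, 2048, 4096, or 8192 bytes. The function name au_size_mb is hypothetical.

```shell
#!/bin/sh
# Size of one allocation unit in MB for a given block size in bytes.
# Assumption: an allocation unit holds 32768 contiguous blocks.
au_size_mb() {
    echo $(( 32768 * $1 / 1048576 ))
}

for bs in 1024 2048 4096 8192; do
    printf 'block size %s bytes -> allocation unit %s MB\n' "$bs" "$(au_size_mb "$bs")"
done
```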

Extent Based Allocation

An extent is defined as one or more adjacent blocks of data within the file system. Extent based

allocation offers the following advantages:

• Allows large I/Os for efficiency

• Supports dynamic resizing of disk space

2 System Requirements

This chapter discusses the various system requirements for the Veritas 5.1 SP1 suite of products.

This chapter addresses the following topics:

• Software Dependency

• OS Version

• Patch Requirements

• Required Packages for VEA

• Software Depot Content

• License Bundles

• Disk Space Requirements

Software Dependency

• VxFS 5.1 SP1 works with both HP LVM and VxVM 5.1 SP1 on HP-UX 11i v3

• VxVM 5.1 SP1 works only when VxFS 5.1 SP1 is installed

OS Version

This release can only be installed on a system running the HP-UX 11i v3 March 2011 Operating

Environment Upgrade Release (OEUR) or later on the PA-RISC or Itanium platforms.

To verify the operating system version, use the swlist command as follows:

# swlist | grep HPUX11i

HPUX11i-DC-OE B.11.31.1103 HP-UX Data Center Operating Environment
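The revision check can also be scripted. The sketch below works on one line of output in the format shown above and assumes that the last four digits of the revision encode the release date (1103 for March 2011), so that a numeric comparison is meaningful. The function name oe_meets_minimum is hypothetical.

```shell
#!/bin/sh
# Return 0 if the Operating Environment revision on the given swlist output
# line is B.11.31.1103 or later, 1 if it is earlier, 2 if it cannot be parsed.
oe_meets_minimum() {
    rev=$(printf '%s\n' "$1" | awk '{ print $2 }')
    case "$rev" in
        B.11.31.[0-9][0-9][0-9][0-9]) ;;
        *) return 2 ;;
    esac
    stamp=${rev#B.11.31.}        # keep only the four-digit suffix
    [ "$stamp" -ge 1103 ]
}

# Sample line taken from the output shown above
line='HPUX11i-DC-OE B.11.31.1103 HP-UX Data Center Operating Environment'
if oe_meets_minimum "$line"; then
    echo "OE revision is sufficient"
else
    echo "OE revision is too old or could not be parsed"
fi
```

On a live system, the line would come from the swlist command shown above rather than from a fixed string.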

Patch Requirements

VxFS 5.1 SP1 and VxVM 5.1 SP1 for HP-UX 11i v3 require certain patches to function correctly.

In addition, certain patches are recommended for all installations.

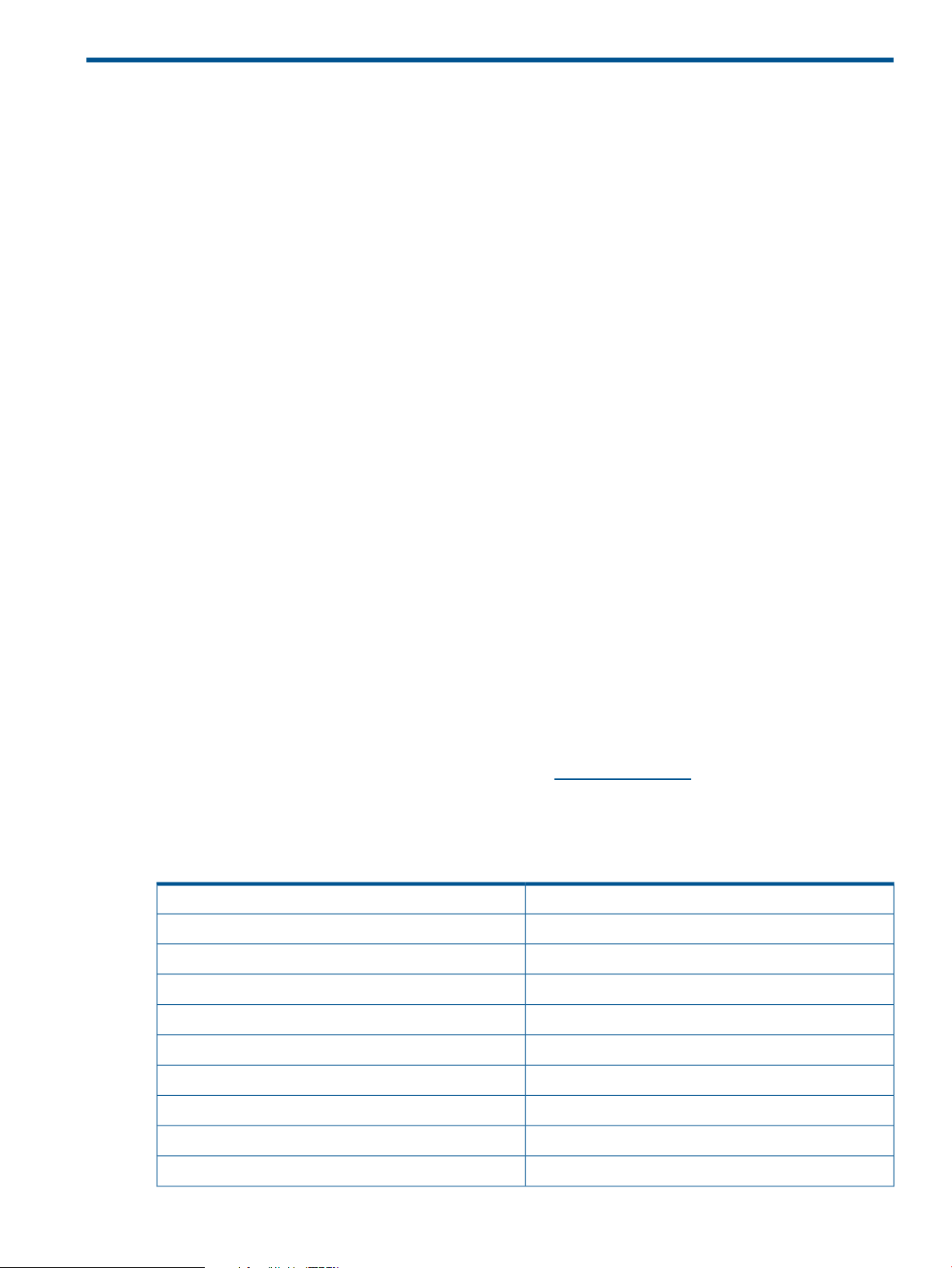

Table 3 lists all the required and recommended patches for VxFS 5.1 SP1 and VxVM 5.1 SP1 for

HP-UX 11i v3. You can obtain all these patches from http://itrc.hp.com. However, some of these

patches may already be available to you in the HP-UX 11i v3 OEUR. Table 3 lists each patch and

mentions if the patch or a superseding patch is already included in a given OEUR. If the OEUR

does not contain a particular patch, you must download the patch accordingly.
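A short script can report which of the patches that Table 3 marks as not included in the March 2011 OEUR are still absent. This is a sketch under stated assumptions: the function name missing_patches is hypothetical, the installed-patch text is supplied by the caller (for example, captured from swlist output, whose exact format is not assumed here), and a superseding patch with a different name is not detected.

```shell
#!/bin/sh
# Patches that Table 3 lists with "No" in the March 2011 OEUR column
NOT_IN_OEUR="PHCO_41903 PHKL_40130 PHKL_40377 PHKL_41005 PHKL_41087"

# Print each patch from NOT_IN_OEUR that does not appear in the text passed
# as the first argument. Superseding patches are not recognized.
missing_patches() {
    for patch in $NOT_IN_OEUR; do
        printf '%s\n' "$1" | grep -q "$patch" || echo "$patch"
    done
}

# Hypothetical list of installed patches, one per line
installed='PHCO_41903
PHKL_40130'

missing_patches "$installed"
```

Any patch that the script reports must be downloaded and installed, or confirmed to be covered by a superseding patch.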

Table 3 Required and Recommended Patches

Required Patches

Recommended Patches

Patch         Available in HP-UX 11i v3 March 2011 OEUR
PHKL_38651    Yes
PHKL_38952    Yes
PHKL_40944    Yes
PHKL_41086    Yes
PHSS_39898    Yes
PHCO_41903    No
PHKL_40130    No
PHKL_40377    No
PHKL_41005    No
PHKL_41083    Yes
PHKL_41087    No
PHKL_41442    Yes

Required Software

In addition to the recommended patches listed in Table 3, the software products listed in Table 4

are required for use with VxFS 5.1 SP1 and VxVM 5.1 SP1 installations. You can download these

products from http://www.software.hp.com.

Table 4 Required Software Products

Product Name    Revision
Ignite-UX       C.7.12 or later
BaseLVM         B.11.31.1104 or later

IMPORTANT: Ignite-UX version C.7.12 does not support archiving of disk groups with a disk

group version of 160. Archive creation (when disk groups with a disk group version of 160 are

present on the system) does not display any error or warning to indicate that the restore

operation will fail. For more information, refer to the “Known Problems and Workarounds”

section in the Veritas Volume Manager 5.1 SP1 Release Notes.

Required Packages for Veritas Enterprise Administrator

To use the VEA with VxVM 5.1 SP1, the following software products are required:

• Veritas Enterprise Administrator Service (VRTSob)

• Veritas Enterprise Administrator (VRTSobgui)

The minimum memory requirement for the VEA client is 128 MB. The software products mentioned

in this section are installed as part of VxVM.

Software Depot Content

Table 5 and Table 6 list the composition of the Base-VxFS-51 and Base-VxVM-51 bundles.

Table 5 Base-VxFS-51 Bundle Components

Package                    Description
Base-VxFS-51               Veritas File System Bundle 5.1 for HP-UX
Base-VxFS-51.VRTSvlic      Symantec License Utilities
Base-VxFS-51.VRTSvxfs      VERITAS File System

Table 6 Base-VxVM-51 Bundle Components

Package                    Description
Base-VxVM-51               Base VERITAS Volume Manager Bundle 5.1 for HP-UX
Base-VxVM-51.VRTSaslapm    Array Support Libraries and Array Policy Modules for Veritas Volume Manager
Base-VxVM-51.VRTSvxvm      Veritas Volume Manager by Symantec
Base-VxVM-51.VRTSvlic      Symantec License Utilities

Table 7 lists the Base-VxTools-51 bundle components.

Table 7 Base-VxTools-51 Bundle Components

Package                      Description
Base-VxTools-51              VERITAS Infrastructure Bundle 5.1 for HP-UX
Base-VxTools-51.VRTSat       Symantec Product Authentication Service
Base-VxTools-51.VRTSvlic     Symantec License Utilities
Base-VxTools-51.VRTSsfmh     Veritas Storage Foundation Managed Host by Symantec
Base-VxTools-51.VRTSperl     Perl 5.10.0 for Veritas
Base-VxTools-51.VRTSobgui    Veritas Enterprise Administrator
Base-VxTools-51.VRTSob       VERITAS Enterprise Administrator Service

Table 8 lists the VxFS-SDK-51 bundle components.

Table 8 VxFS-SDK-51 Bundle Components

Package                  Description
VxFS-SDK-51              VERITAS SDK Bundle 5.1 for HP-UX
VxFS-SDK-51.VRTSfssdk    Veritas File System Software Developer Kit

Table 9 (page 25) lists the CVM bundle components.

Table 9 B9117EB Bundle Components

Package              Description
B9117EB              VERITAS Cluster Volume Manager 5.1 for HP-UX
B9117EB.VRTSwl       VERITAS Product Enabler
B9117EB.VRTSvxfen    Veritas Fencing by Symantec
B9117EB.VRTSllt      Veritas Low Latency Transport by Symantec
B9117EB.VRTSgab      Veritas Group Membership and Atomic Broadcast by Symantec

License Bundles

The following license bundles are available for the Veritas 5.1 SP1 suite of products on HP-UX 11i v3:

• OnlineJFS (B3929HB): OnlineJFS for Veritas File System 5.1 SP1 Bundle

• Full VxVM (B9116EB): Full VxVM License for Veritas Volume Manager 5.1 SP1

• CVM License (B9117EB): VERITAS Cluster Volume Manager 5.1 SP1 for HP-UX

For more information on these licenses and the HP Serviceguard Storage Management Licenses,

see HP Serviceguard Storage Management Suite Version A.04.00 Release Notes.


Page 26

Disk Space Requirements

Table 10 and Table 11 list the disk space requirements for VxFS 5.1 SP1 and VxVM 5.1 SP1,

respectively.

Disk Space Requirements for VxFS 5.1 SP1

Table 10 lists the disk space required by VxFS 5.1 SP1.

Table 10 Minimum Space Required for Each Directory for VxFS 5.1 SP1

Package     /stand    /sbin    /usr        /opt        /etc    /var    Total
VRTSvxfs    -         -        74044 KB    49791 KB    -       4 KB    123839 KB
VRTSvlic    -         -        3774 KB     1979 KB     -       -       5753 KB

NOTE: Ensure that the /opt directory exists and has write permissions for root.

Disk Space Requirements for VxVM 5.1 SP1

Table 11 lists the disk space required by VxVM 5.1 SP1.

Table 11 Minimum Space Required for Each Directory for VxVM 5.1 SP1

Package                      /            /stand    /usr         /opt         /var     Total
Base-VxVM-51.VRTSaslapm      1403 KB      -         4699 KB      -            -        6102 KB
Base-VxVM-51.VRTSvxvm        463872 KB    -         363805 KB    28030 KB     18 KB    855725 KB
Base-VxVM-51.VRTSvlic        4366 KB      -         3774 KB      1979 KB      -        10119 KB
Base-VxTools-51.VRTSat       1371 KB      -         -            207359 KB    29 KB    208759 KB
Base-VxTools-51.VRTSsfmh     -            -         -            142756 KB    -        142756 KB
Base-VxTools-51.VRTSperl     -            -         -            89245 KB     -        89245 KB
Base-VxTools-51.VRTSobgui    -            -         -            126034 KB    -        126034 KB
Base-VxTools-51.VRTSob       205 KB       -         -            126034 KB    -        126239 KB

Disk Space Requirements for CVM

To install CVM, a minimum of 434000 KB of space is required.
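The per-directory minimums in Table 10 can be compared with the free space on a target system before installation. The following is a minimal sketch and not part of the documented procedure. The function names enough_space, free_kb, and check_dir are hypothetical, and the sketch assumes that `df -kP` prints POSIX-format output with the available space (in KB) in the fourth column.

```shell
#!/bin/sh
# Hypothetical helpers for comparing free space with the Table 10 minimums.

# enough_space AVAIL_KB REQUIRED_KB: succeed if AVAIL_KB >= REQUIRED_KB.
enough_space() {
    [ "$1" -ge "$2" ]
}

# free_kb DIR: print the KB available on the file system that holds DIR.
# Assumption: `df -kP` emits one header line, then one data line whose
# fourth field is the available space in KB.
free_kb() {
    df -kP "$1" | awk 'NR == 2 { print $4 }'
}

# check_dir DIR REQUIRED_KB: report whether DIR has the required free space.
check_dir() {
    dir=$1
    required=$2
    avail=$(free_kb "$dir")
    if enough_space "$avail" "$required"; then
        echo "OK: $dir has $avail KB free (need $required KB)"
    else
        echo "SHORT: $dir has $avail KB free (need $required KB)"
        return 1
    fi
}
```

For the VRTSvxfs row of Table 10, the calls would be `check_dir /usr 74044`, `check_dir /opt 49791`, and `check_dir /var 4`.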


Page 27

3 Installing the Veritas 5.1 SP1 Products

This chapter describes how to install VxFS 5.1 SP1 and VxVM 5.1 SP1 with the swinstall (1M)

command. This chapter addresses the following topics:

• “Mounting the HP Serviceguard Storage Management Suite Media” (page 27)

• “Installing Veritas 5.1 SP1 Products” (page 27)

• “Installing VxFS 5.1 SP1” (page 28)

• “Installing VxVM 5.1 SP1” (page 29)

• “Installing CVM [B9117EB] on HP-UX 11i v3” (page 31)

• “Cold-Installing VxVM 5.1 SP1 and VxFS 5.1 SP1 with HP-UX 11i v3” (page 31)

• “Updating HP-UX and Veritas Products Using the update-ux Command” (page 33)

Before installing Veritas 5.1 SP1 products, ensure that the following conditions are met:

• All the required patches are installed.

• OnlineJFS 4.1 (B3929EA), OnlineJFS 5.0 (B3929FB), and OnlineJFS 5.0.1 (B3929GB) are removed from the system (if installed).
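One way to check the second condition is to look for the older OnlineJFS bundle tags in the output of `swlist -l bundle`. The sketch below is illustrative only. The function name installed_old_ojfs is hypothetical, and it assumes that each installed bundle appears with its tag as the first field of a line.

```shell
#!/bin/sh
# installed_old_ojfs: read `swlist -l bundle` output on standard input and
# print the tag of every older OnlineJFS bundle that is listed.
# Assumption: the bundle tag is the first whitespace-separated field.
installed_old_ojfs() {
    awk '$1 == "B3929EA" || $1 == "B3929FB" || $1 == "B3929GB" { print $1 }'
}
```

A possible use is `swlist -l bundle | installed_old_ojfs`. Any bundle tag that is printed can then be removed with swremove (1M) before you install the 5.1 SP1 products.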

Mounting the HP Serviceguard Storage Management Suite Media

For a media-based installation, you must mount the media before starting the installation process.

To mount the media, complete the following steps:

1. Insert the media into the drive, and log in as root by entering the following command:

$ su root

For more information on the supported options, see su (1).

2. Scan for the device name, as follows:

If you are using the legacy I/O format, enter the following command:

# ioscan -fnC disk

For more information on the supported options, see ioscan (1M).

If you are using the new I/O format, enter the following command:

# ioscan -fNC disk

3. Create a mount point for the media, by entering the following command:

# mkdir -p /cdrom

For more information on the supported options, see mkdir (1).

4. Mount the media, by entering the following command:

# mount <absolute device-path> /cdrom

where <absolute device-path> is the device path for the CDROM.

For more information on the supported options, see mount_vxfs (1M).

5. Verify that the media is mounted, by entering the following command:

# mount

For more information on supported options, see mount_vxfs (1M).
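The verification in step 5 can also be scripted. The following sketch is not part of the documented procedure. The function name is_mounted is hypothetical, and it assumes that each line of `mount` output begins with the mount point followed by the word "on", which should be confirmed on the target system.

```shell
#!/bin/sh
# is_mounted MOUNT_POINT: read `mount` output on standard input and succeed
# if MOUNT_POINT is listed.
# Assumption: each line has the form "<mount point> on <device> ...".
is_mounted() {
    awk -v mp="$1" '
        $1 == mp && $2 == "on" { found = 1 }
        END { exit (found ? 0 : 1) }
    '
}
```

For example, `mount | is_mounted /cdrom && echo "media is mounted on /cdrom"` prints a confirmation only when /cdrom appears in the list of mounted file systems.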

Installing Veritas 5.1 SP1 Products

Veritas 5.1 SP1 products are supported with HP-UX 11i v3 March 2011 OEUR and later. If you do not have the March 2011 OEUR, it is recommended that you upgrade the HP-UX version on your system before installing the 5.1 SP1 version of the Veritas software. Because the HP-UX 11i v3 March 2011 OEUR release contains the VxVM 5.0 and VxFS 5.0 products by default, you cannot directly install the 5.1 SP1 products. You must upgrade the Veritas products from 5.0 to 5.1 SP1 after installing the HP-UX 11i v3 March 2011 OEUR release.

Installing VxFS 5.1 SP1

VxFS 5.1 SP1 consists of the Base-VxFS-51 and HP OnlineJFS (B3929HB) bundles. The following

sections discuss the installation of these bundles using the HP-UX Software Distributor (SD) commands.

NOTE: You cannot install the Base-VxFS and the Base-VxVM 5.1 SP1 products on a system where the Veritas 4.1 versions (for HP-UX 11i v2) of the HP Serviceguard Storage Management or HP Serviceguard Cluster File System product suites are installed. The AVXFS and AVXVM products (included in VxFS 5.1 SP1 and VxVM 5.1 SP1, respectively) check the installed products and cause the installation of the Veritas 5.1 SP1 products to fail on such systems. This issue applies only to systems that have been upgraded from HP-UX 11i v2 to HP-UX 11i v3.

Tools such as AVXFS, AVXVM, AVXTOOLS, AFULLVXVM, and AONLINEJFS help pull in dependent products. For example, when you select VxVM 5.1 SP1, these tools automatically pull in VxFS 5.1 SP1. These tools also address issues of mutual exclusion.

Installing Base-VxFS-51

You can install Base-VxFS-51 either in the non-interactive mode or in the interactive mode.

Installing Base-VxFS-51 in Non-Interactive Mode

Enter the swinstall command in the non-interactive mode, as follows:

# swinstall -x autoreboot=true -s <depot-path> Base-VxFS-51

For more information on the supported options, see swinstall (1M).

NOTE: If you are installing VxFS 5.1 SP1 on a system that contains the HP-UX Data Center

Operating Environment (DC-OE), HP-UX Virtual Server Operating Environment (VSE-OE) or HP-UX

High Availability Operating Environment (HA-OE), you must either include OnlineJFS 5.1 (B3929HB)

in the swinstall command line or remove OnlineJFS 5.0.1 (B3929GB) prior to installing VxFS

5.1 SP1.
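The first option in the preceding note, including B3929HB in the swinstall command line, can be illustrated with a small helper that only prints the command so that it can be reviewed before it is run. This is a sketch. The function name vxfs_install_cmd and its second argument are hypothetical, and the depot path is supplied by the caller.

```shell
#!/bin/sh
# vxfs_install_cmd DEPOT [yes|no]: print (do not run) the swinstall command
# line for Base-VxFS-51. Pass "yes" as the second argument to add the
# OnlineJFS bundle B3929HB to the same command line.
vxfs_install_cmd() {
    depot=$1
    with_onlinejfs=${2:-no}
    cmd="swinstall -x autoreboot=true -s $depot Base-VxFS-51"
    if [ "$with_onlinejfs" = yes ]; then
        cmd="$cmd B3929HB"
    fi
    echo "$cmd"
}
```

On a DC-OE, VSE-OE, or HA-OE system, calling the helper with the depot path and `yes` prints a command line that installs both bundles in one swinstall session.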

Installing Base-VxFS-51 in Interactive Mode

To install Base-VxFS-51 in interactive mode, complete the following steps:

1. Enter the swinstall command in interactive mode, as follows:

# swinstall -x autoreboot=true -s <depot-path>

For more information on the supported options, see swinstall (1M).

2. Mark the Base-VxFS-51 bundle in the SD Install window.

NOTE: If you are installing VxFS 5.1 SP1 on a system that includes the HP-UX Data Center

Operating Environment (DC-OE), HP-UX Virtual Server Operating Environment (VSE-OE) or

HP-UX High Availability Operating Environment (HA-OE), you must either mark OnlineJFS 5.1

(B3929HB) in the SD install dialog box or remove OnlineJFS 5.0.1 (B3929GB) prior to

installing VxFS 5.1 SP1.

3. Select Actions, and click Install. Follow the on-screen instructions to complete the installation.

The system reboots automatically after the installation is complete. You can monitor the installation process for warnings and notes. For more information on installation, see the log file /var/adm/sw/swagent.log.
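After the installation, the log file can be summarized rather than read in full. The sketch below is an illustration only. The function name summarize_sd_log is hypothetical, and it assumes that SD log messages of interest start at the beginning of a line with the keyword ERROR:, WARNING:, or NOTE:.

```shell
#!/bin/sh
# summarize_sd_log FILE: count the ERROR, WARNING, and NOTE lines in an SD
# log file and print the totals on one line.
# Assumption: each such message starts a line with its keyword and a colon.
summarize_sd_log() {
    awk '
        /^ERROR:/   { e++ }
        /^WARNING:/ { w++ }
        /^NOTE:/    { n++ }
        END { printf "ERROR=%d WARNING=%d NOTE=%d\n", e, w, n }
    ' "$1"
}
```

For example, `summarize_sd_log /var/adm/sw/swagent.log` gives a quick indication of whether the log needs a closer look.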


Page 29

Verifying Base-VxFS-51 Installation

Verify the Base-VxFS-51 installation on your system by entering the following command:

# swverify Base-VxFS-51

For more information on the supported options, see swverify (1M).

Installing HP OnlineJFS (B3929HB)

The HP OnlineJFS (B3929HB) license enables additional VxFS functionality not available with

Base-VxFS-51.

You can install HP OnlineJFS (B3929HB) either in the non-interactive or in the interactive mode.

NOTE: Base-VxFS-51 must be installed on your system before installing HP OnlineJFS. Otherwise

the installation of HP OnlineJFS may fail with an error.

Installing HP OnlineJFS (B3929HB) in Non-Interactive Mode

Enter the swinstall command in non-interactive mode, as follows:

# swinstall -s <depot-path> B3929HB

For more information on the supported options, see swinstall(1M).

Installing HP OnlineJFS (B3929HB) in the Interactive Mode

To install HP OnlineJFS (B3929HB) in the interactive mode, complete the following steps:

1. Enter the swinstall command in interactive mode, as follows:

# swinstall -s <depot-path>

2. Mark the B3929HB bundle in the SD Install window.

3. Select Actions, and click Install. Follow the on-screen instructions to complete the installation.

Verifying HP OnlineJFS (B3929HB) Installation

Verify the installation of OnlineJFS (B3929HB) on your system by entering the following command:

# swverify B3929HB

For more information on the supported options, see swverify(1M).
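When both VxFS bundles are installed, the two swverify checks can be run in one pass. The following is a minimal sketch. The function name verify_bundles and the SWVERIFY override variable are hypothetical, and the sketch assumes that swverify returns a nonzero exit status when verification fails.

```shell
#!/bin/sh
# verify_bundles NAME...: run swverify on each bundle and report the result.
# The SWVERIFY variable (hypothetical) can name a substitute command, which
# allows a dry run on a system without the SD commands.
verify_bundles() {
    failed=0
    for bundle in "$@"; do
        if ${SWVERIFY:-swverify} "$bundle"; then
            echo "verified: $bundle"
        else
            echo "FAILED: $bundle"
            failed=1
        fi
    done
    return "$failed"
}
```

For example, `verify_bundles Base-VxFS-51 B3929HB` reports on both bundles and returns a nonzero status if either check fails.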

Installing VxVM 5.1 SP1

VxVM 5.1 SP1 consists of the Base-VxVM-51 and Full VxVM (B9116EB) bundles. The following

sections discuss the installation of these bundles using the HP-UX SD commands.

NOTE: After installing VxVM 5.1 SP1, if there were any prior VxVM versions that had unbundled

Array Support Library (ASL) packages installed, the new unbundled ASL packages will not be

automatically installed with 5.1 SP1. You must download and install 5.1 SP1 unbundled ASLs

separately or swcopy VxVM 5.1 SP1 together with VxFS 5.1 SP1 and ASL packages into a single

depot and install the depot. The ASLs bundled together with the base product will be updated

automatically. For more information on ASLs, see the Hardware Compatibility List (HCL) available

on HP Business Support Center.

NOTE: When installing VxVM 5.1 SP1 on a system containing an earlier version of VxVM, any

earlier version of VxVM will be removed automatically as part of the post-install configuration.

Installing Base-VxVM-51

You can install Base-VxVM-51 either in the non-interactive mode or in the interactive mode.


Page 30

NOTE: If your system does not already have Base-VxVM-51, ensure that you select the complete Base-VxVM-51 bundle for installation when you install the Full VxVM bundle (B9116EB).

Also, verify that the bundles Base-VxVM-51, Base-VxFS-51, Base-VxTools-51 and B9116EB are

present on your system after installing B9116EB.
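The presence check described in this note can be sketched as a filter over the output of `swlist -l bundle`. The function name missing_bundles is hypothetical, and the sketch assumes that each installed bundle is listed with its tag as the first field of a non-comment line.

```shell
#!/bin/sh
# missing_bundles NAME...: read `swlist -l bundle` output on standard input
# and print every NAME that is not listed.
# Assumption: the bundle tag is the first field; lines starting with "#"
# are header comments.
missing_bundles() {
    awk -v want="$*" '
        BEGIN { n = split(want, names, " ") }
        $1 !~ /^#/ { seen[$1] = 1 }
        END {
            for (i = 1; i <= n; i++)
                if (!(names[i] in seen))
                    print names[i]
        }
    '
}
```

For example, `swlist -l bundle | missing_bundles Base-VxVM-51 Base-VxFS-51 Base-VxTools-51 B9116EB` prints nothing when all four bundles are present.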

Installing Base-VxVM-51 in Non-Interactive Mode

Enter the swinstall command in non-interactive mode, as follows:

# swinstall -x autoreboot=true -s <depot-path> Base-VxVM-51

For more information on the supported options, see swinstall(1M).

NOTE: If you are installing VxFS 5.1 SP1 in a system that includes the HP-UX Data Center

Operating Environment (DC-OE), HP-UX Virtual Server Operating Environment (VSE-OE) or HP-UX