Page 1

HP StorageWorks

P2000 G3 MSA System

SMU Reference Guide

Part number: 500911-005

First edition: September 2010

Page 2

Legal and notice information

© Copyright 2010 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Acknowledgements

Microsoft and Windows are U.S. registered trademarks of Microsoft Corporation.

UNIX is a registered trademark of The Open Group.

Page 3

Contents

About this guide. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

Intended audience . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

Prerequisites. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

Related documentation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

Document conventions and symbols . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

HP technical support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

Product warranties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

Subscription service . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

HP web sites . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

Documentation feedback . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

1 Getting started . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

Configuring and provisioning a new storage system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

Browser setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

Signing in . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Tips for signing in and signing out . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Tips for using the main window . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Tips for using the help window . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

System concepts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

About user accounts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

Related topics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

About vdisks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Related topics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

About spares. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

Related topics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

About volumes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

Related topics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

About hosts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

Related topics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

About volume mapping . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

About volume cache options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

Using write-back or write-through caching . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

Optimizing read-ahead caching . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

Related topics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

About managing remote systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

Related topics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

About the Snapshot feature. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Related topics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

About the Volume Copy feature. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

Related topics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

About the VDS and VSS hardware providers. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

About RAID levels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

About size representations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

Related topics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

About the system date and time. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

Related topics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

About storage-space color codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

About Configuration View icons . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

About vdisk reconstruction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

About data protection in a single-controller storage system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

Page 4

2 Configuring the system. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

Using the Configuration Wizard. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

Step 1: Starting the wizard . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

Step 2: Changing default passwords . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

Step 3: Configuring network ports . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

Step 4: Enabling system-management services. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

Step 5: Setting system information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Step 6: Configuring event notification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Step 7: Configuring host ports. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Step 8: Confirming configuration changes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

Installing a license . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

Configuring system services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

Changing management interface settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

Configuring email notification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

Configuring SNMP notification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

Configuring user accounts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

Adding users. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

Modifying users. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

Removing users . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

Configuring system settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

Changing the system date and time . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

Changing host interface settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

Changing network interface settings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

Setting system information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

Configuring advanced settings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

Changing disk settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

Configuring SMART . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

Configuring drive spin down for available disks and global spares. . . . . . . . . . . . . . . . . . . . . . . . 49

Scheduling drive spin down for all disks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

Configuring dynamic spares . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

Configuring the EMP polling rate . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

Changing system cache settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

Changing the synchronize-cache mode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

Changing the missing LUN response . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

Controlling host access to the system's write-back cache setting . . . . . . . . . . . . . . . . . . . . . . . . . . 52

Changing auto-write-through cache triggers and behaviors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

Configuring partner firmware update . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

Configuring system utilities . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

Configuring background scrub for vdisks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

Configuring background scrub for disks not in vdisks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

Configuring utility priority . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

Configuring remote systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

Adding a remote system. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

Removing a remote system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

Configuring a vdisk . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

Managing dedicated spares . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

Changing a vdisk's name . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

Changing a vdisk's owner . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

Configuring drive spin down for a vdisk . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

Configuring a volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

Changing a volume's name or OpenVMS UID . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

Changing a volume's cache settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Configuring a snapshot. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Changing a snapshot’s name . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Configuring a snap pool . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Changing a snap pool’s name . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Page 5

3 Provisioning the system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Using the Provisioning Wizard. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Step 1: Starting the wizard . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Step 2: Specifying the vdisk name and RAID level . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Step 3: Selecting disks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Step 4: Defining volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Step 5: Setting the default mapping . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

Step 6: Confirming vdisk settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

Creating a vdisk. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

Deleting vdisks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

Managing global spares . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

Creating a volume set . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

Creating a volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

Deleting volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

Changing default mapping for multiple volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

Explicitly mapping multiple volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

Changing a volume's default mapping . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

Changing a volume's explicit mappings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

Unmapping volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

Expanding a volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

Creating multiple snapshots . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

Creating a snapshot . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

Deleting snapshots . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

Resetting a snapshot . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

Creating a volume copy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

Aborting a volume copy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

Rolling back a volume. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

Creating a snap pool . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

Deleting snap pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

Adding a host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

Removing hosts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

Changing a host's name . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

Changing host mappings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

Configuring CHAP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

Modifying a schedule . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

Deleting schedules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

4 Using system tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

Updating firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

Updating controller module firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

Updating expansion module firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

Updating disk firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

Saving logs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

Resetting a host port . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

Rescanning disk channels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

Restoring system defaults . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

Clearing disk metadata . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

Restarting or shutting down controllers. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

Restarting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

Shutting down . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

Testing event notification . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

Expanding a vdisk . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

Verifying a vdisk. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

Scrubbing a vdisk. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

Removing a vdisk from quarantine . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

Expanding a snap pool. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

Checking links to a remote system. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

Page 6

5 Viewing system status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

Viewing information about the system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

System properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

Enclosure properties. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

Disk properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

Vdisk properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

Volume properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

Snap-pool properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

Snapshot properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

Schedule properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

Configuration limits . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

Licensed features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

Version properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

Viewing the system event log . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

Viewing information about all vdisks. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

Viewing information about a vdisk . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

Vdisk properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

Disk properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

Volume properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

Snap-pool properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

Viewing information about a volume. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

Volume properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

Mapping properties. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 95

Schedule properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 95

Viewing information about a snapshot . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

Snapshot properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

Mapping properties. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

Schedule properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

Viewing information about a snap pool. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

Snap-pool properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

Volume properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

Snapshot properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

Viewing information about all hosts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

Viewing information about a host. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

Host properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

Mapping properties. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

Viewing information about an enclosure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

Enclosure properties. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

Disk properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

Power supply properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

Fan properties. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

Controller module properties. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

Controller module: network port properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

Controller module: host port properties. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

Controller module: expansion port properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

Controller module: CompactFlash properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

Drive enclosure: I/O module properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

I/O module: In port properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

I/O module: Out port properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

Viewing information about a remote system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

6 Using Remote Snap to replicate volumes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

About the Remote Snap replication feature . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

Replication actions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

Performing initial replication locally or remotely. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

Remote replication disaster recovery . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

Remote replication licensing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

Related topics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

Page 7

Using the Replication Setup Wizard . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

Step 1: Starting the wizard . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

Step 2: Selecting the primary volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

Step 3: Selecting the replication mode . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

Step 4: Selecting the secondary volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

Step 5: Confirming replication settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

Replicating a volume. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

Replicating a snapshot . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

Removing replication from a volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

Suspending replication . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

Resuming replication. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

Aborting replication . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

Detaching a secondary volume. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

Stopping a vdisk. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

Starting a vdisk . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

Reattaching a secondary volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

Exporting a replication image to a snapshot . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

Changing the primary volume for a replication set . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

Viewing replication properties, addresses, and images for a volume. . . . . . . . . . . . . . . . . . . . . . . . . . . 118

Replication properties. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

Replication addresses. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

Replication images. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

Viewing information about a subordinate replication volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

Replication properties. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

Replication addresses. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

Replication image properties. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

Viewing information about a replication image . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

Replication status properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

Primary volume snapshot properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

Secondary volume snapshot properties. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

A SNMP reference. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

Standard MIB-II behavior. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

Enterprise traps . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

FA MIB 2.2 SNMP behavior . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 122

External details for certain FA MIB 2.2 objects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

External details for connUnitRevsTable . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

External details for connUnitSensorTable. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

External details for connUnitPortTable. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

Configuring SNMP event notification in SMU . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

SNMP management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

Enterprise trap MIB . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

FA MIB 2.2 and 4.0 differences. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

B Using FTP to download logs and update firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

Downloading system logs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

Updating controller module firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

Updating expansion module firmware. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

Updating disk firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

Installing a license file . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 138

Glossary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

Page 8

Page 9

Figures

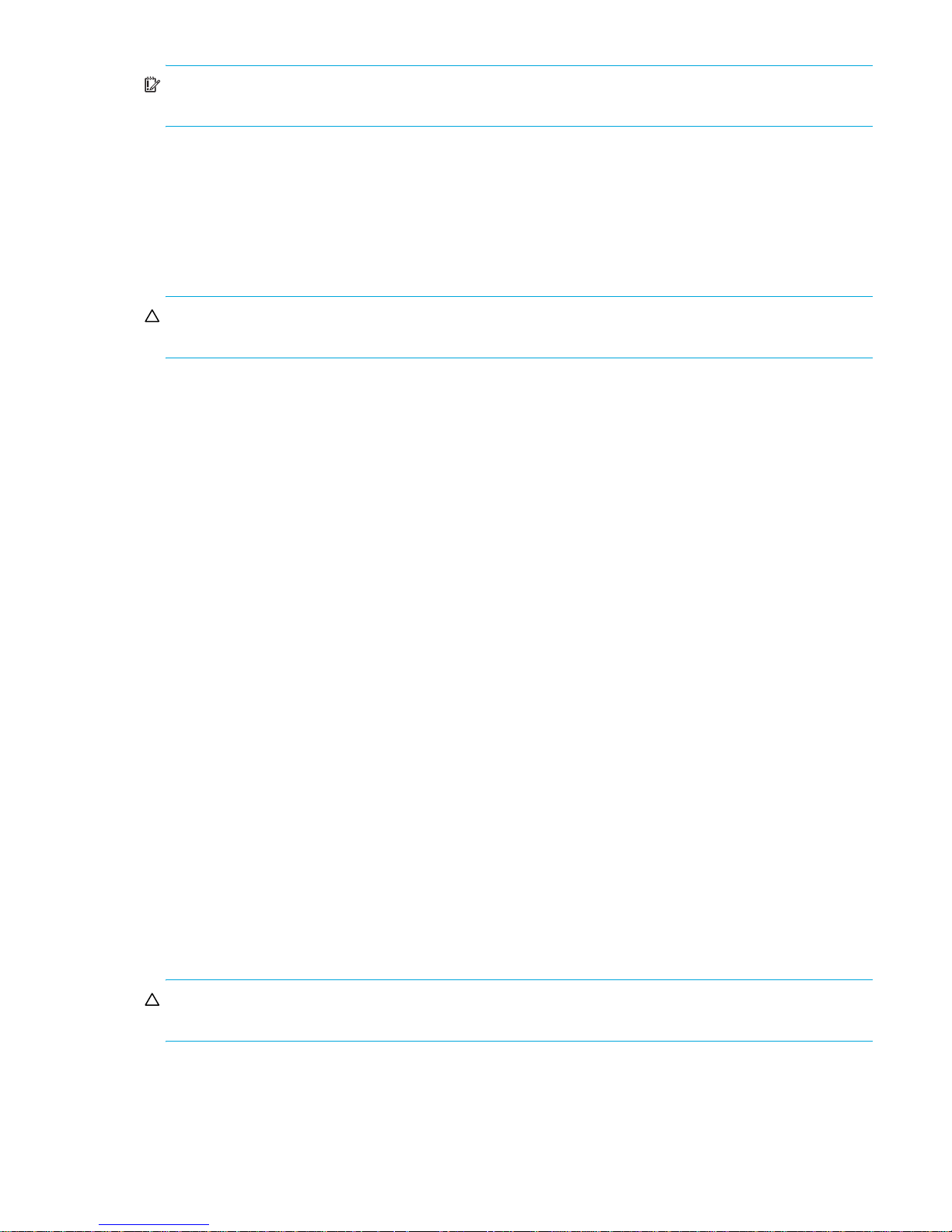

1 Relationship between a master volume and its snapshots and snap pool. . . . . . . . . . . . . . . . . . . . . . 27

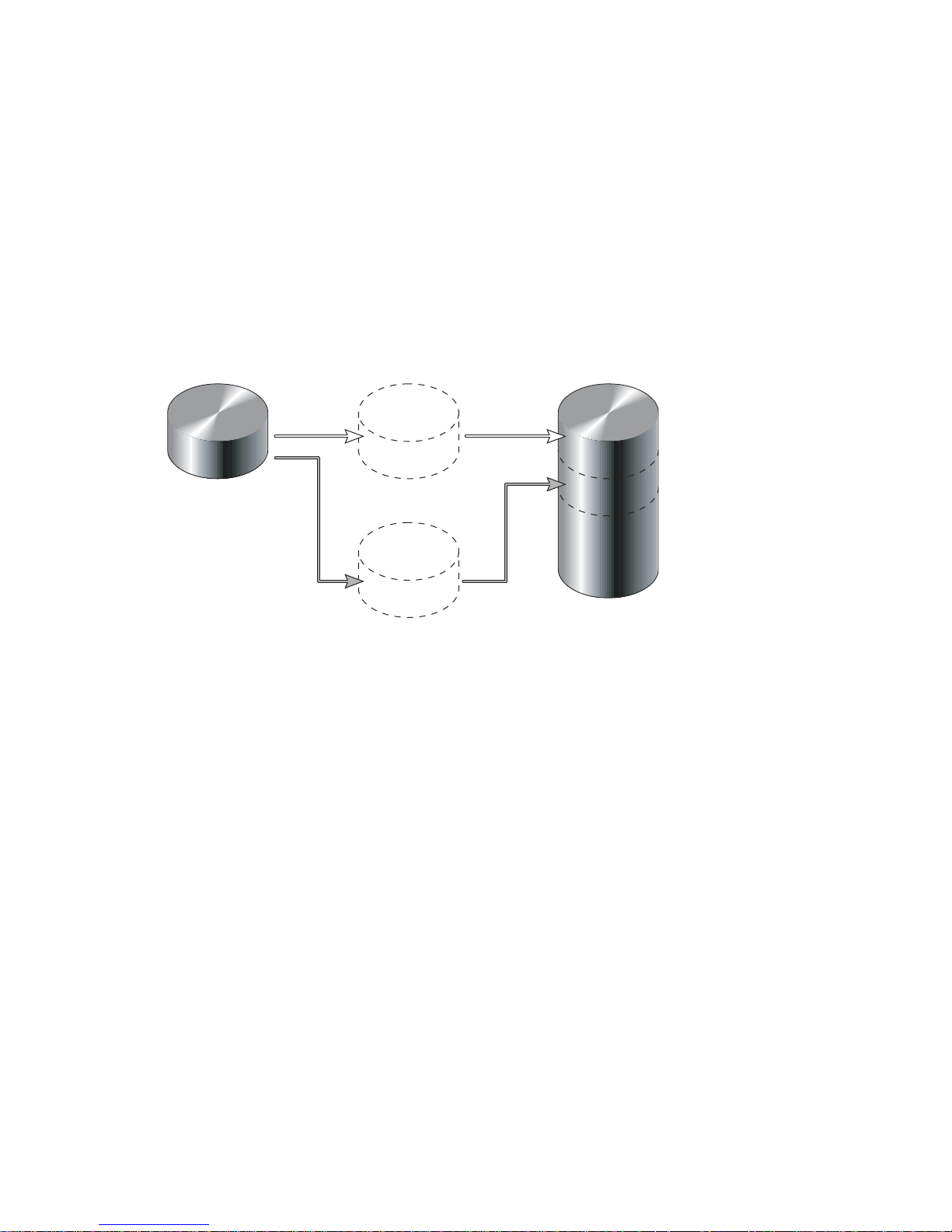

2 Rolling back a master volume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

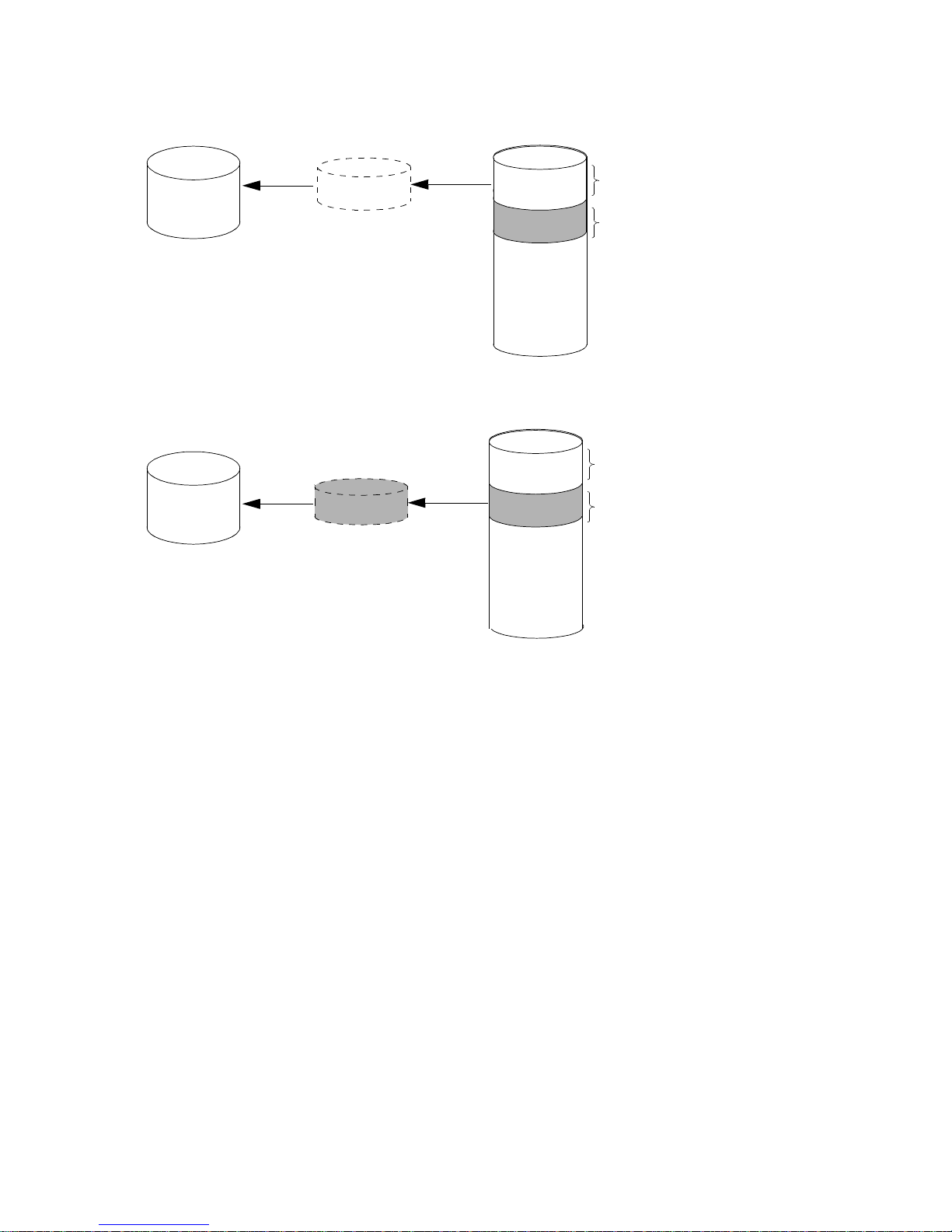

3 Creating a volume copy from a master volume or a snapshot . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

4 Intersite and intrasite replication sets . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

5 Actions that occur during a series of replications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

6 Example of primary-volume failure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

Page 10

Page 11

Tables

1 Document conventions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

2 SMU communication status icons . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

3 Settings for default users . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

4 Example applications and RAID levels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

5 RAID level comparison . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

6 Vdisk expansion by RAID level . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

7 Size representations in base 2 and base 10 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

8 Decimal (radix) point character by locale . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

9 Storage-space color codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

10 Configuration View icons . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

11 FA MIB 2.2 objects, descriptions, and values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 122

12 connUnitRevsTable index and description values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

13 connUnitSensorTable index, name, type, and characteristic values. . . . . . . . . . . . . . . . . . . . . . . . . . 128

14 connUnitPortTable index and name values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

Page 12

Page 13

About this guide

This guide provides information about managing an HP StorageWorks P2000 G3 MSA System by using its

web interface, Storage Management Utility (SMU).

Intended audience

This guide is intended for storage system administrators.

Prerequisites

Prerequisites for using this product include knowledge of:

• Network administration

• Storage system configuration

• Storage area network (SAN) management and direct attach storage (DAS)

• Fibre Channel, Serial Attached SCSI (SAS), Internet SCSI (iSCSI), and Ethernet protocols

Related documentation

In addition to this guide, please refer to other documents for this product:

• HP StorageWorks P2000 G3 MSA System Racking Instructions

• HP StorageWorks P2000 G3 MSA System Installation Instructions

• HP StorageWorks P2000 G3 MSA System Cable Configuration Guide

• HP StorageWorks P2000 G3 MSA System FC User’s Guide

• HP StorageWorks P2000 G3 MSA System FC/iSCSI User’s Guide

• HP StorageWorks P2000 G3 MSA System SAS User’s Guide

• HP StorageWorks P2000 G3 MSA System iSCSI User’s Guide

• HP StorageWorks P2000 G3 MSA System CLI Reference Guide

• HP StorageWorks P2000 G3 MSA System Event Descriptions Reference Guide

• Online help for HP StorageWorks P2000 G3 MSA System management interfaces

You can find these documents on the Manuals page of the HP Business Support Center web site:

http://www.hp.com/support/manuals.

Page 14

Document conventions and symbols

Table 1 Document conventions

| Convention | Element |
|---|---|
| Medium blue text: Figure 1 | Cross-reference links and e-mail addresses |
| Medium blue, underlined text (http://www.hp.com) | Web site addresses |
| Bold font | Key names; text typed into a GUI element, such as into a box; GUI elements that are clicked or selected, such as menu and list items, buttons, and check boxes |
| Italics font | Text emphasis |
| Monospace font | File and directory names; system output; code; text typed at the command line |
| Monospace, italic font | Code variables; command-line variables |
| Monospace, bold font | Emphasis of file and directory names, system output, code, and text typed at the command line |

CAUTION: Indicates that failure to follow directions could result in damage to equipment or data.

IMPORTANT: Provides clarifying information or specific instructions.

NOTE: Provides additional information.

TIP: Provides helpful hints and shortcuts.

HP technical support

Telephone numbers for worldwide technical support are listed on the HP support web site:

http://www.hp.com/support/

Collect the following information before calling:

• Technical support registration number (if applicable)

• Product serial numbers

• Product model names and numbers

• Applicable error messages

• Operating system type and revision level

• Detailed, specific questions

For continuous quality improvement, calls may be recorded or monitored.

Page 15

Product warranties

For information about HP StorageWorks product warranties, see the warranty information website:

http://www.hp.com/go/storagewarranty

Subscription service

HP strongly recommends that customers sign up online using the Subscriber's choice web site:

http://www.hp.com/go/e-updates

• Subscribing to this service provides you with e-mail updates on the latest product enhancements, newest

versions of drivers, and firmware documentation updates as well as instant access to numerous other

product resources.

• After signing up, you can quickly locate your products by selecting Business support and then Storage

under Product Category.

HP web sites

For other product information, see the following HP web sites:

• http://www.hp.com

• http://www.hp.com/go/storage

• http://www.hp.com/support/manuals

• http://www.hp.com/support/downloads

• http://www.hp.com/storage/whitepapers

• http://www.hp.com/go/p2000

Documentation feedback

HP welcomes your feedback.

To make comments and suggestions about product documentation, please send a message to

storagedocs.feedback@hp.com. All submissions become the property of HP.

Page 16

Page 17

1 Getting started

Storage Management Utility (SMU) is a web-based application for configuring, monitoring, and managing

the storage system.

Each controller module in the storage system contains a web server, which you access when you sign in to

SMU. In a dual-controller system, you can access all functions from either controller. If one controller

becomes unavailable, you can continue to manage the storage system from the partner controller.

SMU is also referred to as the web-browser interface (WBI).

NOTE: It is possible to upgrade an MSA2000 storage system by replacing its controllers with P2000 G3 controllers, which use the version of SMU described in this guide. For upgrade information go to http://www.hp.com/go/p2000, click Resource Library, and view the white paper “Upgrading the HP StorageWorks MSA2000 G2 to the P2000 G3 MSA.”

Configuring and provisioning a new storage system

To configure and provision a storage system for the first time:

1. Configure your web browser for SMU and sign in, as described in Browser setup and Signing in below.

2. Set the system date and time, as described in Changing the system date and time on page 46.

3. Use the Configuration Wizard to configure other system settings, as described in Using the Configuration Wizard on page 37.

4. Use the Provisioning Wizard to create a virtual disk (vdisk) containing storage volumes, and optionally to map the volumes to hosts, as described in Using the Provisioning Wizard on page 59.

5. Use the Replication Setup Wizard to configure replication for a primary volume to a remote system, as described in Using the Replication Setup Wizard on page 110.

6. If you mapped volumes to hosts, verify the mappings by mounting the volumes from each host and performing simple read/write tests to the volumes.

7. Verify that controller modules and expansion modules have the latest firmware, as described in Viewing information about the system on page 89 and Updating firmware on page 79.

You can then make additional configuration and provisioning changes and view system status, as

described in later chapters of this guide.

Browser setup

• Use Mozilla Firefox 3 or later, or Microsoft Internet Explorer 7 or later.

• To see the help window, you must enable pop-up windows.

• To optimize the display, use a color monitor and set its color quality to the highest setting.

• To navigate beyond the Sign In page (with a valid user account):

• Set the browser's local-intranet security option to medium or medium-low.

• Verify that the browser is set to allow cookies at least for the IP addresses of the storage-system

network ports.

Page 18

Signing in

To sign in:

1. In the web browser’s address field, type the IP address of a controller network port and press Enter. The

SMU Sign In page is displayed. If the Sign In page does not display, verify that you have entered the

correct IP address.

2. On the Sign In page, enter the name and password of a configured user. The default user name and

password are manage and !manage. If you are logging in to SMU for the first time, the Language

field displays user setting or English, either of which results in English.

Language preferences can be configured for the system and for individual users.

3. Click Sign In. If the system is available, the System Overview page is displayed; otherwise, a message

indicates that the system is unavailable.

Tips for signing in and signing out

• Do not include a leading zero in an IP address. For example, enter 10.1.4.6 not 10.1.4.06.

• Multiple users can be signed in to each controller simultaneously.

• For each active SMU session an identifier is stored in the browser. Depending on how your browser

treats this session identifier, you might be able to run multiple independent sessions simultaneously.

Each instance of Internet Explorer can run a separate SMU session; however, all instances of Firefox

share the same session.

• If you end a SMU session without clicking the Sign Out link near the top of the SMU window, the

session automatically ends when the user's automatic sign-out time expires. If this preference is set to

Never, the session ends after 9999 minutes.
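
The tip about leading zeros can be applied automatically before an address is pasted into the browser. The following is a minimal sketch, not part of SMU; the function name normalize_ipv4 is hypothetical, and it assumes a dotted-decimal IPv4 address.

```python
def normalize_ipv4(address: str) -> str:
    """Return a dotted-decimal IPv4 address with leading zeros removed from each octet."""
    parts = address.strip().split(".")
    if len(parts) != 4:
        raise ValueError(f"expected four octets, got {address!r}")
    octets = []
    for part in parts:
        if not part.isdigit():
            raise ValueError(f"non-numeric octet in {address!r}")
        value = int(part)  # int() drops any leading zeros
        if value > 255:
            raise ValueError(f"octet out of range in {address!r}")
        octets.append(str(value))
    return ".".join(octets)


print(normalize_ipv4("10.1.4.06"))  # 10.1.4.6
```

An address that is already in the recommended form is returned unchanged.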

Tips for using the main window

• The Configuration View panel displays logical and physical components of the storage system. To

perform a task, select the component to act on and then either:

• Right-click to display a context menu and select the task to perform. This is the method that help

topics describe.

• Click a task category in the main panel and select the task to perform.

• The System Status panel shows how many events of each severity have occurred in the system. To view

event details, click a severity icon. For more information see Viewing the system event log on page 90.

• Many tables can be sorted by a specific column. To do so, click the column heading to sort low to high;

click again to sort high to low.

• Do not use the browser's Back, Forward, Reload, or Refresh buttons. SMU has a single page whose

content changes as you perform tasks and automatically updates to show current data.

• An asterisk (*) identifies a required setting.

• The icon in the upper right corner of the main window shows the status of communication between

SMU, the Management Controller (MC), and the Storage Controller (SC), as described in the following

table.

Page 19

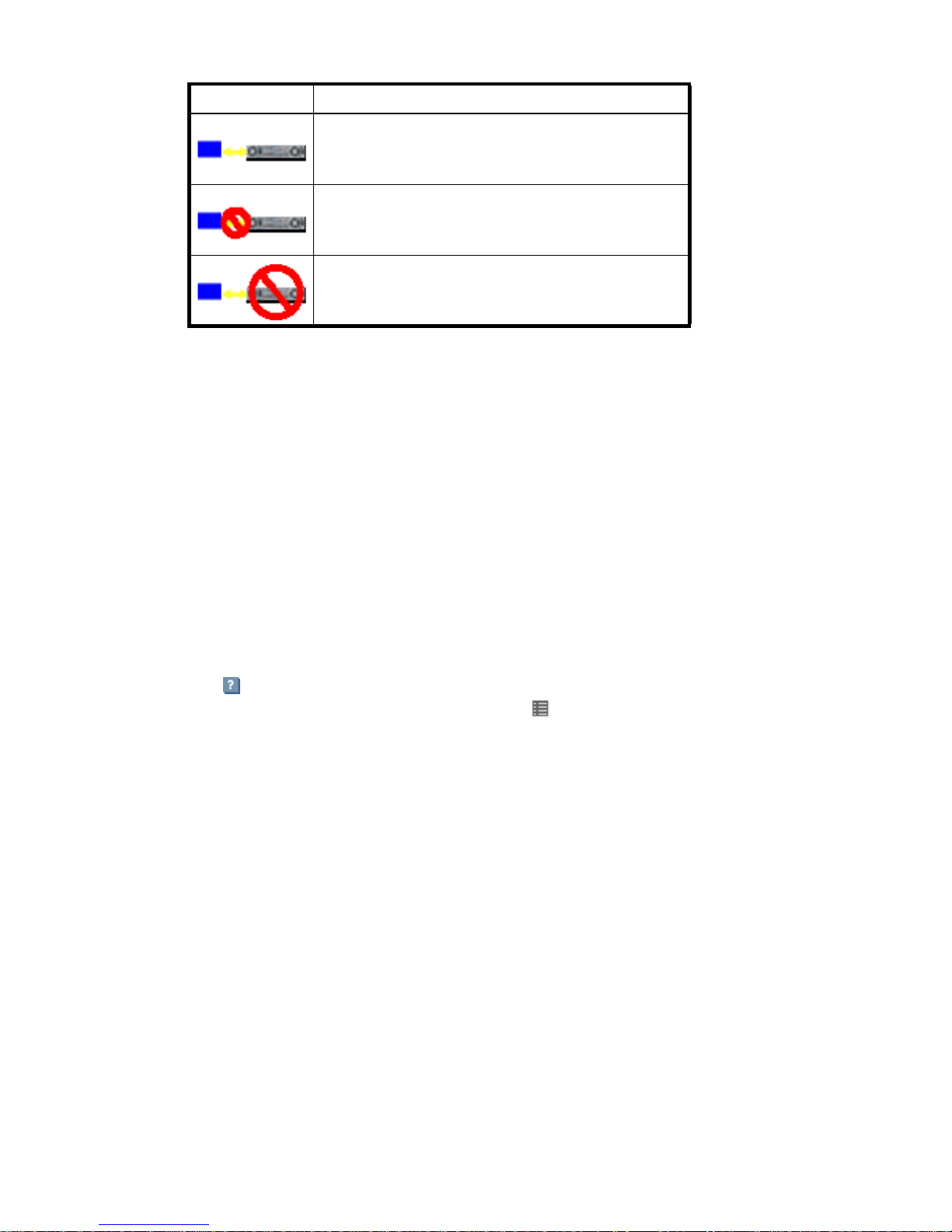

Table 2 SMU communication status icons

Icon Meaning

SMU can communicate with the Management Controller,

which can communicate with the Storage Controller.

SMU cannot communicate with the Management Controller.

SMU can communicate with the Management Controller,

which cannot communicate with the Storage Controller.

• Below the communication status icon, a timer shows how long the session can be idle until you are

automatically signed out. This timer resets after each action you perform. One minute before automatic

sign-out you are prompted to continue using SMU. The timer does not appear if the current user's Auto

Sign Out preference is set to Never.

• If a SMU session is active on a controller and the controller is power cycled or is forced offline by the

partner controller or certain other events occur, the session might hang. SMU might say that it is

“Connecting” but stop responding, or the page may become blank with the browser status “Done.”

After the controller comes back online, the session will not restart. To continue using SMU, close and

reopen the browser and start a new SMU session.

• Colors that identify how storage space is used are described in About storage-space color codes on

page 33.

• Icons shown in the Configuration View panel are described in About Configuration View icons on

page 34.

Tips for using the help window

• To display help for a component in the Configuration View panel, right-click the component and select

Help. To display help for the content in the main panel, click either Help in the menu bar or the help

icon in the upper right corner of the panel.

• In the help window, click the table of contents icon to show or hide the Contents pane.

• A help topic remains displayed until you browse to another topic in the help window, display help for a

different item in the main window, or close the help window.

• If you have viewed more than one help topic, you can click the arrow icons to display the previous or

next topic.

Page 20

System concepts

About user accounts

The system provides three default user accounts and allows a maximum of 12 user accounts to be configured. Any account can be modified or removed, except that you cannot remove the account you are signed in as.

User accounts have these options:

• User Name. A user name is case sensitive and cannot already exist in the system. A name cannot

include a comma, double quote, or backslash.

• Password. A password is case sensitive. A password cannot include a comma, double quote, or

backslash. Though optional, passwords are highly recommended to ensure system security.

• User Roles. Select Monitor to let the user view system settings, or Manage to let the user view and

change system settings. You cannot change the role of user manage.

• User Type. Select Standard to allow access to standard functions, or Advanced to allow access to all

functions except diagnostic functions, or Diagnostic to allow access to all functions.

NOTE: This release has no functions that require Advanced or Diagnostic access; a Standard user can

access all functions.

• WBI Access. Allows access to the web-based management interface.

• CLI Access. Allows access to the command-line management interface.

• FTP Access. Allows access to the file transfer protocol interface, which provides a way to install

firmware updates and download logs.

• Base Preference. The base for entry and display of storage-space sizes. In base 2, sizes are shown as

powers of 2, using 1024 as a divisor for each magnitude. In base 10, sizes are shown as powers of 10,

using 1000 as a divisor for each magnitude. Operating systems usually show volume size in base 2.

Disk drives usually show size in base 10. Memory (RAM and ROM) size is always shown in base 2.

• Precision Preference. The number of decimal places (1–10) for display of storage-space sizes.

• Unit Preference. Sets the unit for display of storage-space sizes. The Auto option lets the system

determine the proper unit for a size. Based on the precision setting, if the selected unit is too large to

meaningfully display a size, the system uses a smaller unit for that size. For example, if the unit is set to

TB and the precision is set to 1, the size 0.11709 TB is shown as 119.9 GB.

• Temperature Preference. Specifies whether temperature values are shown in the Celsius scale or the Fahrenheit scale.

• Auto Sign Out. Select the amount of time that the user's session can be idle before the user is

automatically signed out: 5, 15, or 30 minutes, or Never (9999 minutes). The default is 30 minutes.

• Locale. The user’s preferred display language, which overrides the system’s default display language.

Installed language sets include Chinese-simplified, Chinese-traditional, Dutch, English, French,

German, Italian, Japanese, Korean, and Spanish.
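
The interaction of the Base, Precision, and Unit preferences can be illustrated with a short sketch. This is not SMU code: the function name format_size and the unit list are hypothetical, and the fallback rule (drop to the next smaller unit while the value is below 1) is an assumption, because this guide does not state the exact rule the system applies.

```python
UNITS = ["B", "KB", "MB", "GB", "TB"]  # assumed set of display units


def format_size(num_bytes: int, base: int = 10, precision: int = 1, unit: str = "Auto") -> str:
    """Format a size using a base (2 or 10), a precision, and a preferred unit."""
    if base not in (2, 10):
        raise ValueError("base must be 2 or 10")
    divisor = 1024 if base == 2 else 1000

    # Auto starts at the largest unit; otherwise start at the requested unit.
    index = len(UNITS) - 1 if unit == "Auto" else UNITS.index(unit)
    value = num_bytes / divisor ** index

    # Assumed rule: a value below 1 is too small to display meaningfully,
    # so step down to a smaller unit.
    while value < 1 and index > 0:
        index -= 1
        value = num_bytes / divisor ** index

    return f"{value:.{precision}f} {UNITS[index]}"


size = round(0.11709 * 1024 ** 4)  # 0.11709 TB expressed in bytes, base 2
print(format_size(size, base=2, precision=1, unit="TB"))  # 119.9 GB
```

Under these assumptions the sketch reproduces the example above when sizes are computed in base 2, where each magnitude is a factor of 1024.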

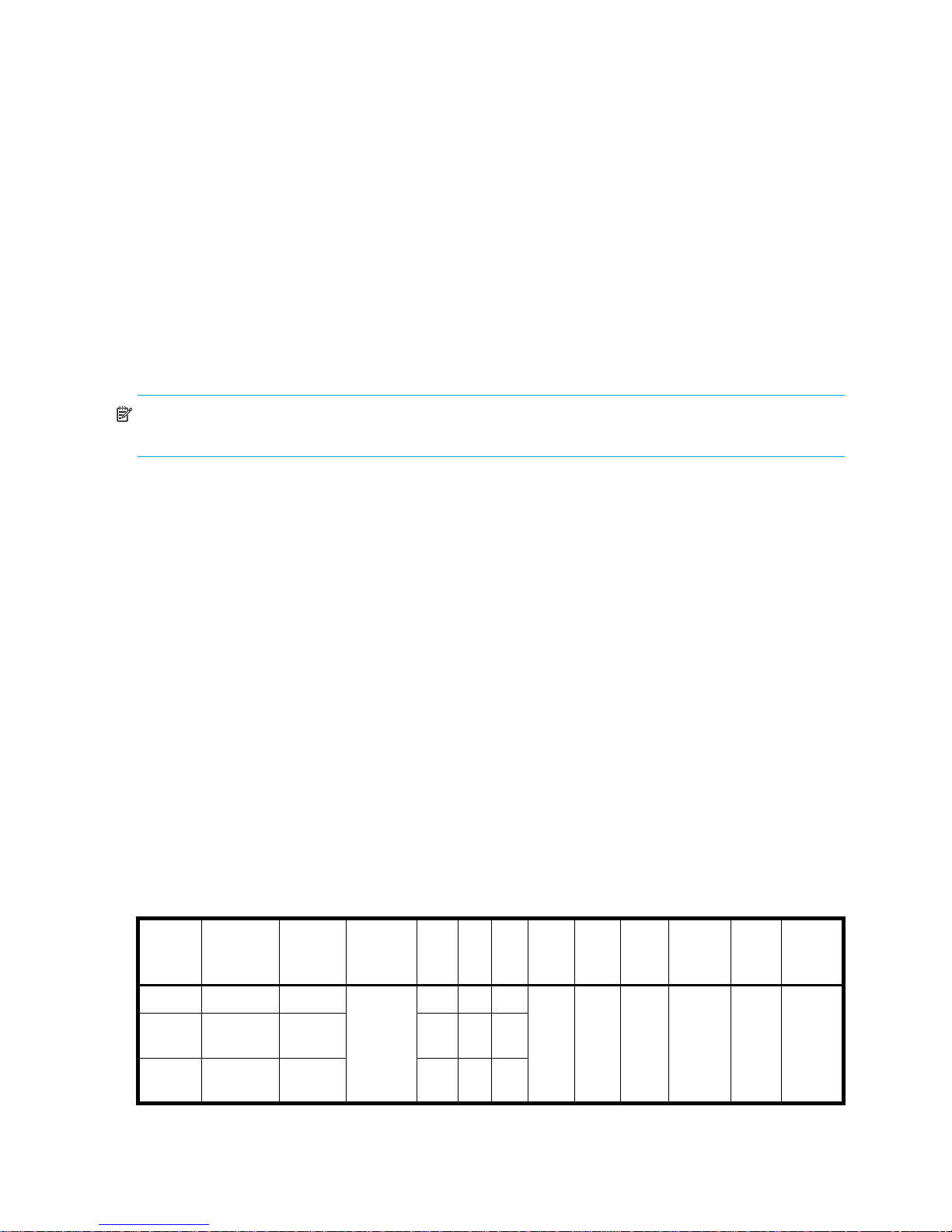

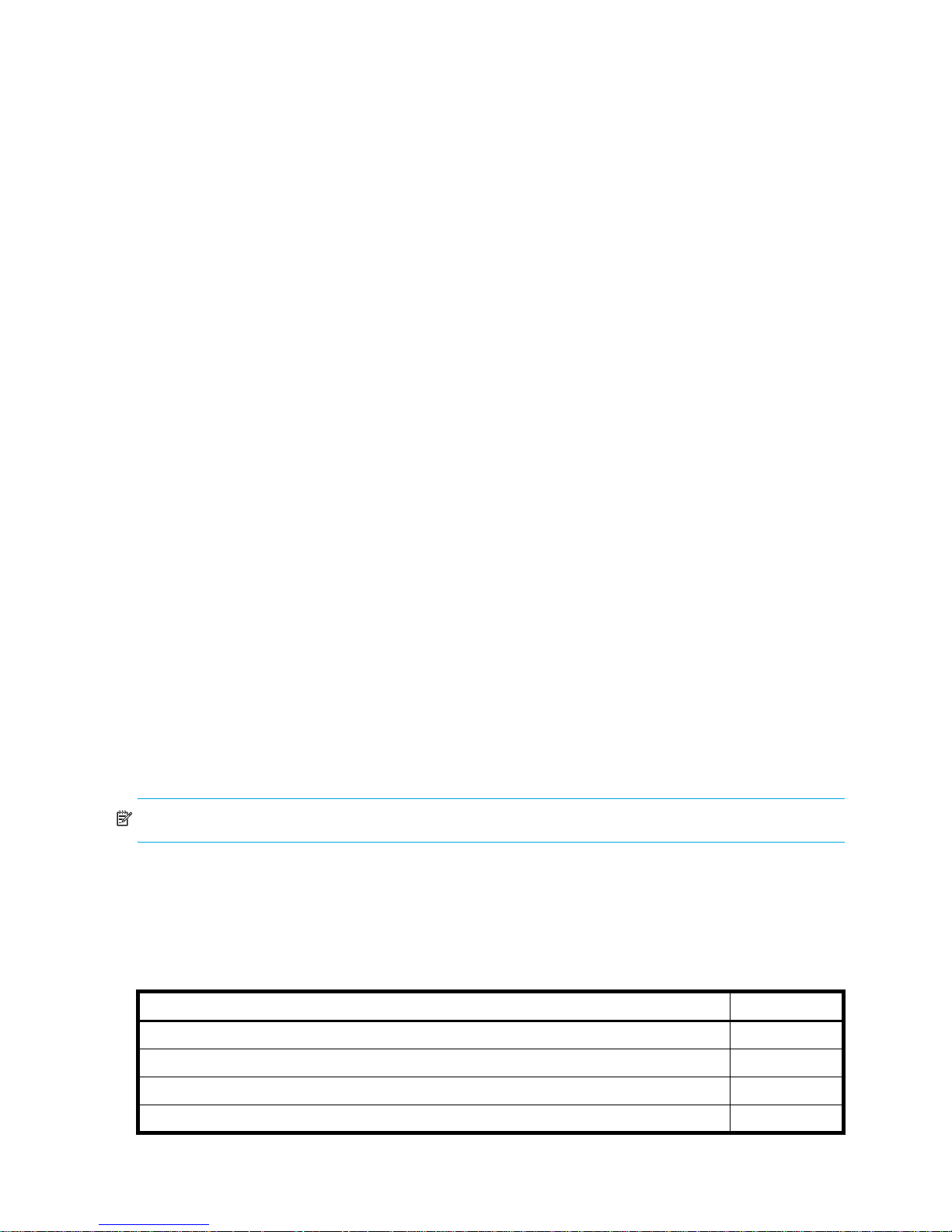

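Table 3 Settings for default users

| Name | Password | Roles | Type | WBI | CLI | FTP | Base | Prec. | Units | Temp. | Auto Sign Out | Locale |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| monitor | !monitor | Monitor | Standard | Yes | Yes | No | 10 | 1 | Auto | Celsius | 30 Min. | English |
| manage | !manage | Monitor, Manage | Standard | Yes | Yes | Yes | 10 | 1 | Auto | Celsius | 30 Min. | English |
| ftp | !ftp | Monitor, Manage | Standard | No | No | Yes | 10 | 1 | Auto | Celsius | 30 Min. | English |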

Page 21

NOTE: To secure the storage system, set a new password for each default user.

Related topics

• Configuring user accounts on page 44

About vdisks

A vdisk is a “virtual” disk that is composed of one or more disks, and has the combined capacity of those

disks. The number of disks that a vdisk can contain is determined by its RAID level. All disks in a vdisk must

be the same type (SAS or SATA, small or large form-factor). A maximum of 16 vdisks per controller can

exist.

A vdisk can contain different models of disks, and disks with different capacities. For example, a vdisk can

include a 500-GB disk and a 750-GB disk. If you mix disks with different capacities, the smallest disk

determines the logical capacity of all other disks in the vdisk, regardless of RAID level. For example, if a

RAID-0 vdisk contains one 500-GB disk and four 750-GB disks, the capacity of the vdisk is equivalent to

approximately five 500-GB disks.
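
The RAID-0 example above can be checked with a few lines of arithmetic. This is a minimal sketch that covers only the non-parity case described in the example; the function name striped_capacity_gb is hypothetical, and real usable capacity is slightly lower because of metadata.

```python
def striped_capacity_gb(disk_sizes_gb):
    """Approximate capacity of a RAID-0 vdisk: every member is treated as the smallest disk."""
    if not disk_sizes_gb:
        raise ValueError("a vdisk needs at least one disk")
    return min(disk_sizes_gb) * len(disk_sizes_gb)


disks = [500, 750, 750, 750, 750]
usable = striped_capacity_gb(disks)
print(usable)               # 2500, equivalent to five 500-GB disks
print(sum(disks) - usable)  # 1000 GB of raw capacity left unused
```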

Each disk has metadata that identifies whether the disk is a member of a vdisk, and identifies other

members of that vdisk. This enables disks to be moved to different slots in a system; an entire vdisk to be

moved to a different system; and a vdisk to be quarantined if disks are detected missing.

In a single-controller system, all vdisks are owned by that controller. In a dual-controller system, when a

vdisk is created the system automatically assigns the owner to balance the number of vdisks each controller

owns; or, you can select the owner. Typically it does not matter which controller owns a vdisk.

In a dual-controller system, when a controller fails, the partner controller assumes temporary ownership of

the failed controller's vdisks and resources. If a fault-tolerant cabling configuration is used to connect the

controllers to drive enclosures and hosts, both controllers' LUNs are accessible through the partner.

When you create a vdisk you can use the default chunk size or one that better suits your application. The chunk size is the amount of contiguous data that is written to a disk before moving to the next disk. After a vdisk is created its chunk size cannot be changed. For example, if the host is writing data in 16-KB transfers, a 16-KB chunk size would be a good choice for random transfers because one host read would generate the read of exactly one disk in the volume. If the requests are random, they would be spread evenly over all of the disks, which is good for performance. If you have 16-KB accesses from the host and a 64-KB chunk size, then some of the host's accesses would hit the same disk; each chunk contains four possible 16-KB groups of data that the host might want to read, which is not an optimal solution. Alternatively, if the host accesses were 128 KB with the same 64-KB chunk size, then each host read would have to access two disks in the vdisk. For random patterns, that ties up twice as many disks.
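
The effect of chunk size on how many disks a host request touches can be sketched as follows. This is an illustration only: it assumes simple striping in which consecutive chunks go to consecutive disks with no parity rotation, and the function name disks_touched and its parameters are hypothetical.

```python
def disks_touched(offset_kb, length_kb, chunk_kb, disk_count):
    """Return the indexes of the disks that a single host request reads."""
    first_chunk = offset_kb // chunk_kb
    last_chunk = (offset_kb + length_kb - 1) // chunk_kb
    # Chunk n is assumed to live on disk n modulo the number of disks.
    return sorted({chunk % disk_count for chunk in range(first_chunk, last_chunk + 1)})


# 16-KB reads with a 16-KB chunk size: each read lands on exactly one disk,
# and neighbouring reads land on different disks.
print([disks_touched(off, 16, 16, 4) for off in (0, 16, 32, 48)])  # [[0], [1], [2], [3]]

# 16-KB reads with a 64-KB chunk size: four neighbouring reads hit the same disk.
print([disks_touched(off, 16, 64, 4) for off in (0, 16, 32, 48)])  # [[0], [0], [0], [0]]

# A 128-KB read with a 64-KB chunk size: every read occupies two disks.
print(disks_touched(0, 128, 64, 4))  # [0, 1]
```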

When you create a vdisk you can also create volumes within it. A volume is a logical subdivision of a

vdisk, and can be mapped to controller host ports for access by hosts. The storage system presents only

volumes, not vdisks, to hosts.

You can create vdisks with or without volumes by using the Provisioning Wizard, or you can create vdisks

manually.

Best practices for creating vdisks include:

• To maximize capacity, use disks of similar size.

• For greatest reliability, use disks of the same size and rotational speed.

• For storage configurations using many disks, create a few vdisks each containing many disks instead of

many vdisks each containing a few disks.

• To maximize capacity and disk usage (but not performance), you can create vdisks larger than 2 TB

and divide them into multiple volumes each having a capacity of 2 TB or less. This increases the usable

capacity of storage configurations by reducing the total number of parity disks required when using

parity-protected RAID levels. This differs from using a volume larger than 2 TB, which requires specific

support by the host operating system, I/O adapter, and application.

Page 22

• For maximum use of a dual-controller system’s resources, each controller should own a similar number

of vdisks.

• Set the chunk size to match the transfer block size of the host application.

Related topics

• About RAID levels on page 30

• About spares on page 22

• About volumes on page 23

• Vdisk topics in Provisioning the system on page 59

• Configuring a vdisk on page 55

• Verifying a vdisk on page 85

• Scrubbing a vdisk on page 85

• Viewing information about a vdisk (page 92), all vdisks (page 91), or the system (page 89)

• Removing a vdisk from quarantine on page 86

About spares

A controller automatically reconstructs a redundant (fault-tolerant) vdisk (RAID 1, 3, 5, 6, 10, 50) when one or more of its disks fail and a compatible spare disk is available. A compatible disk has enough capacity to replace the failed disk and is the same type (SAS or SATA).

There are three types of spares:

• Dedicated spare. Reserved for use by a specific vdisk to replace a failed disk. This is the most secure way to provide spares for vdisks, but it is expensive to reserve a spare for each vdisk.

• Global spare. Reserved for use by any redundant vdisk to replace a failed disk.

• Dynamic spare. An available compatible disk that is automatically assigned to replace a failed disk in

a redundant vdisk.

When a disk fails, the system looks for a dedicated spare first. If it does not find a dedicated spare, it looks

for a global spare. If it does not find a compatible global spare and the dynamic spares option is enabled,

it takes any available compatible disk. If no compatible disk is available, reconstruction cannot start.
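
The search order described above can be summarized in a short sketch. It is a model of the documented behavior, not controller firmware; the Disk class, its role values, and the pick_spare function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Disk:
    name: str
    disk_type: str      # "SAS" or "SATA"
    capacity_gb: int
    role: str           # assumed values: "member", "dedicated", "global", "available"
    vdisk: Optional[str] = None  # owning vdisk for members and dedicated spares


def is_compatible(candidate: Disk, failed: Disk) -> bool:
    """A compatible disk is the same type and has enough capacity."""
    return candidate.disk_type == failed.disk_type and candidate.capacity_gb >= failed.capacity_gb


def pick_spare(failed: Disk, vdisk: str, disks: List[Disk], dynamic_spares: bool) -> Optional[Disk]:
    """Try dedicated spares, then global spares, then (if enabled) any available disk."""
    tiers = [
        lambda d: d.role == "dedicated" and d.vdisk == vdisk,
        lambda d: d.role == "global",
    ]
    if dynamic_spares:
        tiers.append(lambda d: d.role == "available")
    for matches in tiers:
        for disk in disks:
            if matches(disk) and is_compatible(disk, failed):
                return disk
    return None  # no compatible disk: reconstruction cannot start


failed = Disk("1.3", "SAS", 500, "member", "vd01")
pool = [
    Disk("1.10", "SAS", 750, "dedicated", "vd02"),  # dedicated to another vdisk
    Disk("1.11", "SATA", 1000, "global"),           # wrong type
    Disk("1.12", "SAS", 750, "available"),
]
print(pick_spare(failed, "vd01", pool, dynamic_spares=True).name)  # 1.12
print(pick_spare(failed, "vd01", pool, dynamic_spares=False))      # None
```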

A best practice is to designate spares for use if disks fail. Dedicating spares to vdisks is the most secure

method, but it is also expensive to reserve spares for each vdisk. Alternatively, you can enable dynamic

spares or assign global spares.

Related topics

• Configuring dynamic spares on page 50

• Managing dedicated spares on page 55

• Managing global spares on page 62

• Using the Provisioning Wizard on page 59

• Creating a vdisk on page 61

• Viewing information about a vdisk (page 92) or all vdisks (page 91)

Page 23

About volumes

A volume is a logical subdivision of a vdisk, and can be mapped to controller host ports for access by

hosts. A mapped volume provides the storage for a file system partition you create with your operating

system or third-party tools. The storage system presents only volumes, not vdisks, to hosts. A vdisk can have

a maximum of 128 volumes.

You can create a vdisk that has one volume or multiple volumes.

• Single-volume vdisks work well in environments that need one large, fault-tolerant storage space for data on one host. An example is a large database accessed by users on a single host that is used only for that application.

• Multiple-volume vdisks work well when you have very large disks and you want to make the most

efficient use of disk space for fault tolerance (parity and spares). For example, you could create one

10-TB RAID-5 vdisk and dedicate one spare to the vdisk. This minimizes the amount of disk space

allocated to parity and spares compared to the space required if you created five 2-TB RAID-5 vdisks.

However, I/O to multiple volumes in the same vdisk can slow system performance.
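
The saving in the multiple-volume example can be worked through numerically. The sketch below assumes 1-TB disks and one dedicated spare per vdisk in both layouts; these figures and the function name raid5_disk_count are assumptions made for illustration. It relies only on the fact that RAID 5 uses one disk's worth of capacity per vdisk for parity.

```python
import math


def raid5_disk_count(usable_tb_per_vdisk, disk_tb, vdisk_count, spares_per_vdisk=1):
    """Total disks needed: data disks plus one parity disk plus spares, for each vdisk."""
    data_disks = math.ceil(usable_tb_per_vdisk / disk_tb)
    return (data_disks + 1 + spares_per_vdisk) * vdisk_count


one_large = raid5_disk_count(10, disk_tb=1, vdisk_count=1)  # 10 data + 1 parity + 1 spare
five_small = raid5_disk_count(2, disk_tb=1, vdisk_count=5)  # 5 x (2 data + 1 parity + 1 spare)
print(one_large, five_small)  # 12 20
```

Both layouts provide 10 TB of usable space, but under these assumptions the single vdisk spends 2 disks on parity and spares while the five smaller vdisks spend 10.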

When you create volumes you can specify their sizes. If the total size of a vdisk's volumes equals the size

of the vdisk, you will not have any free space. Without free space, you cannot add or expand volumes. If

you need to add or expand a volume in a vdisk without free space, you can delete a volume to create free

space. Or, you can expand the vdisk and then either add a volume or expand a volume to use the new

free space.

You can use a volume's default name or change it to identify the volume's purpose. For example, a volume

used to store payroll information can be named Payroll.

You can create vdisks with volumes by using the Provisioning Wizard, or you can create volumes manually.

Related topics

• About vdisks on page 21

• About volume mapping on page 24

• About volume cache options on page 25

• Volume topics in Provisioning the system on page 59

• Changing a volume's name or OpenVMS UID on page 56

• Changing a volume's cache settings on page 57

• Viewing information about a volume on page 94

About hosts

A host identifies an external port that the storage system is attached to. The external port may be a port in

an I/O adapter (such as an FC HBA) in a server, or a port in a network switch.

The controllers automatically add hosts that have sent an inquiry command or a report luns command

to the storage system. Hosts typically do this when they boot up or rescan for devices. When the command

from the host occurs, the system saves the host ID. The ID for an FC or SAS host is its WWPN. The ID for an

iSCSI host is typically, but not limited to, its IQN.

You must assign a name to an automatically added host to have the system retain it after a restart. Naming

hosts also makes them easy to recognize for volume mapping. A maximum of 64 names can be assigned.

The Configuration View panel lists hosts by name, or if they are unnamed, by ID.

A storage system with iSCSI ports can be protected from unauthorized access via iSCSI by enabling

Challenge Handshake Authentication Protocol (CHAP). CHAP authentication occurs during an attempt by

a host to log in to the system. This authentication requires an identifier for the host and a shared secret

between the host and the system. Optionally, the storage system can also be required to authenticate itself

to the host; this is called mutual CHAP. Steps involved in enabling CHAP include:

• Decide on host node names (identifiers) and secrets. The host node name is typically, but not limited to,

its IQN. A secret must have 12–16 characters.

• Define CHAP entries in the storage system. If the node name is a host name, then it may be useful to

display the hosts that are known to the system.

• Enable CHAP on the storage system. Note that this applies to all iSCSI hosts, in order to avoid security

exposures.

• Define the CHAP secret in the host iSCSI initiator.

• Request host login to the storage system. The system should then be able to display the host and the

ports through which connections were made.
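Before defining CHAP entries, it can help to check each planned node name and secret against the length rule given in the steps above. The following is a minimal sketch: `chap_entry_problems` is a hypothetical helper, not part of the storage system's interface, and it checks only the 12–16 character rule stated in this guide.

```python
from typing import List


def chap_entry_problems(node_name: str, secret: str) -> List[str]:
    """Return a list of problems with a planned CHAP entry (empty if none found)."""
    problems = []
    if not node_name.strip():
        problems.append("node name is empty")
    # The guide states that a secret must have 12-16 characters.
    if not 12 <= len(secret) <= 16:
        problems.append("secret must have 12-16 characters, got %d" % len(secret))
    return problems
```

For example, calling the helper with an illustrative IQN such as `iqn.1991-05.com.example:host1` and a 12-character secret returns an empty list, while a 5-character secret returns one problem.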

If it becomes necessary to add more hosts after CHAP is enabled, additional CHAP node names and

secrets can be added. If a host attempts to log in to the storage system, it will become visible to the system,

even if the full login is not successful due to incompatible CHAP definitions. This information may be useful

in configuring CHAP entries for new hosts. This information becomes visible when an iSCSI discovery

session is established, because the storage system does not require discovery sessions to be authenticated.

Related topics

• Using the Configuration Wizard on page 37

• Changing host interface settings on page 46

• Adding a host on page 74

• Removing hosts on page 75

• Changing a host's name on page 75

• Changing host mappings on page 75

• Viewing information about a host (page 99) or all hosts (page 98)

About volume mapping

Each volume has default host-access settings that are set when the volume is created; these settings are

called the default mapping. The default mapping applies to any host that has not been explicitly mapped

using different settings. Explicit mappings for a volume override its default mapping.

Default mapping enables all attached hosts to see a volume using a specified LUN and access permissions

set by the administrator. This means that when the volume is first created, all connected hosts can

immediately access the volume using the advertised default mapping settings. This behavior is expected by

some operating systems, such as Microsoft Windows, which can immediately discover the volume. The

advantage of a default mapping is that all connected hosts can discover the volume with no additional

work by the administrator. The disadvantage is that all connected hosts can discover the volume with no

restrictions. Therefore, this process is not recommended for specialized volumes that require restricted

access.

You can change a volume's default mapping, and create, modify, or delete explicit mappings. A mapping

can specify read-write, read-only, or no access through one or more controller host ports to a volume.

When a mapping specifies no access, the volume is masked. You can apply access privileges to one or

more of the host ports on either controller. To maximize performance, map a volume to at least one host

port on the controller that owns it. To sustain I/O in the event of controller failure, map to at least one host

port on each controller.

For example, a payroll volume could be mapped with read-write access for the Human Resources host and

be masked for all other hosts. An engineering volume could be mapped with read-write access for the

Engineering host and read-only access for other departments’ hosts.
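The relationship between default and explicit mappings in these examples can be modeled as a lookup with a fallback. This is an illustrative sketch only: the access labels, host names, and `effective_access` function are hypothetical, and the engineering example assumes that read-only access for other hosts is provided by the volume's default mapping.

```python
from typing import Dict


def effective_access(default_access: str, explicit: Dict[str, str], host: str) -> str:
    """An explicit mapping for the host overrides the volume's default mapping."""
    return explicit.get(host, default_access)


# Payroll volume: masked by default, read-write for the Human Resources host.
payroll_default = "no-access"
payroll_explicit = {"HumanResources": "read-write"}

# Engineering volume: read-only by default, read-write for the Engineering host.
engineering_default = "read-only"
engineering_explicit = {"Engineering": "read-write"}

hr_on_payroll = effective_access(payroll_default, payroll_explicit, "HumanResources")
sales_on_payroll = effective_access(payroll_default, payroll_explicit, "Sales")
sales_on_engineering = effective_access(engineering_default, engineering_explicit, "Sales")
```

Removing a host's entry from the explicit dictionary makes the lookup fall back to the default, which mirrors how a volume's default mapping takes effect when an explicit mapping is deleted.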

A LUN identifies a mapped volume to a host. Both controllers share a set of LUNs, and any unused LUN

can be assigned to a mapping; however, each LUN can only be used once per volume as its default LUN.

For example, if LUN 5 is the default for Volume1, no other volume in the storage system can use LUN 5 as

its default LUN. For explicit mappings, the rules differ: LUNs used in default mappings can be reused in

explicit mappings for other volumes and other hosts.
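The default-LUN rule above can be checked when planning a configuration. The sketch below covers only the rule that no two volumes may share a default LUN. It does not model explicit mappings, and `default_lun_conflicts` is a hypothetical helper rather than a system function.

```python
from collections import defaultdict
from typing import Dict, List


def default_lun_conflicts(default_luns: Dict[str, int]) -> Dict[int, List[str]]:
    """Map each default LUN claimed by more than one volume to those volumes."""
    volumes_by_lun = defaultdict(list)
    for volume, lun in default_luns.items():
        volumes_by_lun[lun].append(volume)
    # Keep only LUNs that appear as the default for two or more volumes.
    return {lun: sorted(vols) for lun, vols in volumes_by_lun.items() if len(vols) > 1}
```

An empty result means that every planned default LUN is unique across the volumes supplied.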

TIP: When an explicit mapping is deleted, the volume’s default mapping takes effect. Therefore, it is

recommended to use the same LUN for explicit mappings as for the default mapping.

IMPORTANT: In an FC/iSCSI combo system, do not connect hosts or map volumes to host ports used for

replication. Attempting to do so could interfere with replication operation.

Volume mapping settings are stored in disk metadata. If enough of the disks used by a volume are moved

into a different enclosure, the volume's vdisk can be reconstructed and the mapping data is preserved.

About volume cache options

You can set options that optimize reads and writes performed for each volume.

Using write-back or write-through caching

CAUTION: Disable write-back caching only if you fully understand how the host operating system,

application, and adapter move data. Disabling it inappropriately might hinder system performance.

You can change a volume's write-back cache setting. Write-back is a cache-writing strategy in which the

controller receives the data to be written to disks, stores it in the memory buffer, and immediately sends the

host operating system a signal that the write operation is complete, without waiting until the data is actually

written to the disk. Write-back cache mirrors all of the data from one controller module cache to the other.

Write-back cache improves the performance of write operations and the throughput of the controller.

When write-back cache is disabled, write-through becomes the cache-writing strategy. Using write-through

cache, the controller writes the data to the disks before signaling the host operating system that the process

is complete. Write-through cache has lower write operation and throughput performance than write-back,

but it is the safer strategy, with minimum risk of data loss on power failure. However, write-through cache

does not mirror the write data because the data is written to the disk before posting command completion

and mirroring is not required. You can set conditions that cause the controller to change from write-back

caching to write-through caching.

In both caching strategies, active-active failover of the controllers is enabled.

You can enable and disable the write-back cache for each volume. By default, volume write-back cache is

enabled. Because controller cache is backed by super-capacitor technology, if the system loses power,

data is not lost. For most applications, this is the correct setting. However, because cache mirroring uses

back-end bandwidth, if you are writing large chunks of

sequential data (as would be done in video editing, telemetry acquisition, or data logging), write-through

cache has much better performance. Therefore, you might want to experiment with disabling the write-back

cache. You might see large performance gains (as much as 70 percent) if you are writing data under the

following circumstances:

• Sequential writes

• Large I/Os in relation to the chunk size

• Deep queue depth

If you are doing random access to this volume, leave the write-back cache enabled.
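The guidance above can be summarized as a simple rule of thumb. The sketch below is an interpretation, not a tuning tool. It treats an I/O as "large" when it is at least one chunk in size, it uses a hypothetical queue-depth threshold of 16, and it suggests experimenting only when all three circumstances apply. None of these choices comes from the guide.

```python
def suggest_write_policy(sequential: bool, io_size_kb: int, chunk_size_kb: int,
                         queue_depth: int, deep_queue_threshold: int = 16) -> str:
    """Suggest whether disabling write-back cache is worth an experiment."""
    large_io = io_size_kb >= chunk_size_kb           # assumed meaning of "large"
    deep_queue = queue_depth >= deep_queue_threshold  # assumed threshold
    if sequential and large_io and deep_queue:
        return "try write-through"
    # Random access, small I/Os, or shallow queues: leave the default enabled.
    return "keep write-back"
```

Any suggestion to try write-through should be confirmed by measuring the actual workload, because the gains described in the text depend on the application.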

The best practice for a fault-tolerant configuration is to use write-back caching.

Optimizing read-ahead caching

CAUTION: Only change read-ahead cache settings if you fully understand how the host operating

system, application, and adapter move data so that you can adjust the settings accordingly.

You can optimize a volume for sequential reads or streaming data by changing its read-ahead cache

settings. Read ahead is triggered by two back-to-back accesses to consecutive LBA ranges, whether

forward (increasing LBAs) or reverse (decreasing LBAs).
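The trigger condition can be illustrated with a small check on two back-to-back accesses. This is a sketch of the rule as stated, with each access represented as a hypothetical (starting LBA, block count) pair. It is not the controller's implementation.

```python
from typing import Tuple

Access = Tuple[int, int]  # (starting LBA, number of blocks)


def triggers_read_ahead(previous: Access, current: Access) -> bool:
    """True if two back-to-back accesses cover consecutive LBA ranges."""
    prev_start, prev_len = previous
    curr_start, curr_len = current
    forward = curr_start == prev_start + prev_len  # increasing LBAs
    reverse = curr_start + curr_len == prev_start  # decreasing LBAs
    return forward or reverse
```

For example, an access to LBAs 0 through 7 followed by one to LBAs 8 through 15 is a forward match, while two accesses separated by a gap do not trigger read ahead under this rule.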

You can change the amount of data read in advance after two back-to-back reads are made. Increasing

the read-ahead cache size can greatly improve performance for multiple sequential read streams; however,

increasing read-ahead size will likely decrease random read performance.

• The Default option works well for most applications: it sets one chunk for the first access in a sequential

read and one stripe for all subsequent accesses. The size of the chunk is based on the chunk size used

when you created the vdisk (the default is 64 KB). Non-RAID and RAID-1 vdisks are considered to have

a stripe size of 64 KB.

• Specific size options let you select an amount of data for all accesses.

• The Maximum option lets the controller dynamically calculate the maximum read-ahead cache size for

the volume. For example, if a single volume exists, this setting enables the controller to use nearly half

the memory for read-ahead cache.

Only use Maximum when disk latencies must be absorbed by cache. For example, for read-intensive

applications, you will want data that is most often read to be in cache so that the response to the read

request is very fast; otherwise, the controller has to locate which disks the data is on, move it up to

cache, and then send it to the host. Do not use Maximum if more than two volumes are owned by the

controller on which the read-ahead setting is being made. If there are more than two volumes, there is

contention on the cache as to which volume’s read data should be held and which has the priority;

each volume constantly overwrites the other volume’s data in cache, which can consume much of

the controller’s processing power.

• The Disabled option turns off read-ahead cache. This is useful if the host is triggering read ahead for

what are random accesses. This can happen if the host breaks up the random I/O into two smaller

reads, triggering read ahead.
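The sizes used by the Default option described earlier in this list can be estimated as follows. The sketch assumes that a stripe equals the chunk size multiplied by the number of data disks, which is the usual RAID definition but is not stated in this guide. The `default_read_ahead_kb` function and its parameters are hypothetical.

```python
from typing import Optional

NON_STRIPED_STRIPE_KB = 64  # per the guide: non-RAID and RAID-1 vdisks


def default_read_ahead_kb(first_access: bool, chunk_kb: int = 64,
                          data_disks: Optional[int] = None) -> int:
    """Estimate read-ahead size for the Default option.

    data_disks is None for non-RAID and RAID-1 vdisks.
    """
    if first_access:
        return chunk_kb  # one chunk for the first access in a sequential read
    if data_disks is None:
        return NON_STRIPED_STRIPE_KB
    return chunk_kb * data_disks  # one stripe (assumed: chunk size x data disks)
```

For a vdisk with a 64-KB chunk size and four data disks, this gives 64 KB for the first access and 256 KB for each subsequent access.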