HP MSR Router Series

IP Multicast

Configuration Guide (V5)

Part number: 5998-8182

Software version: CMW520-R2513

Document version: 6PW106-20150808

Legal and notice information

© Copyright 2015 Hewlett-Packard Development Company, L.P.

No part of this documentation may be reproduced or transmitted in any form or by any means without prior written consent of Hewlett-Packard Development Company, L.P.

The information contained herein is subject to change without notice.

HEWLETT-PACKARD COMPANY MAKES NO WARRANTY OF ANY KIND WITH REGARD TO THIS MATERIAL, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. Hewlett-Packard shall not be liable for errors contained herein or for incidental or consequential damages in connection with the furnishing, performance, or use of this material.

The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Contents

Multicast overview ······················································································································································· 1

Overview ············································································································································································ 1

Multicast overview ···················································································································································· 1

Multicast features ······················································································································································ 3

Common notations in multicast ······························································································································· 4

Multicast advantages and applications ················································································································· 4

Multicast models ································································································································································ 5

Multicast architecture ························································································································································ 5

Multicast addresses ·················································································································································· 6

Multicast protocols ··················································································································································· 9

Multicast packet forwarding mechanism ····················································································································· 11

Multicast support for VPNs ············································································································································ 12

Introduction to VPN instances ······························································································································ 12

Multicast application in VPNs ······························································································································ 12

Configuring IGMP ······················································································································································ 14

Overview ········································································································································································· 14

IGMP versions ························································································································································ 14

IGMPv1 overview ·················································································································································· 14

IGMPv2 overview ·················································································································································· 16

IGMPv3 overview ·················································································································································· 16

IGMP SSM mapping ············································································································································· 18

IGMP proxying ······················································································································································ 19

IGMP support for VPNs ········································································································································ 20

Protocols and standards ······································································································································· 20

IGMP configuration task list ·········································································································································· 20

Configuring basic IGMP functions ······························································································································· 21

Configuration prerequisites ·································································································································· 21

Enabling IGMP ······················································································································································ 21

Specifying the IGMP version ································································································································ 22

Configuring an interface as a static member interface ····················································································· 22

Configuring a multicast group filter ····················································································································· 23

Setting the maximum number of multicast groups that an interface can join ················································· 23

Adjusting IGMP performance ······································································································································· 24

Configuration prerequisites ·································································································································· 24

Configuring Router-Alert option handling methods ···························································································· 24

Configuring IGMP query and response parameters ·························································································· 25

Enabling IGMP fast-leave processing ·················································································································· 27

Enabling the IGMP host tracking function ·········································································································· 28

Configuring IGMP SSM mapping ································································································································ 28

Configuration prerequisites ·································································································································· 29

Enabling SSM mapping ········································································································································ 29

Configuring SSM mappings ································································································································· 29

Configuring IGMP proxying ········································································································································· 29

Configuration prerequisites ·································································································································· 30

Enabling IGMP proxying ······································································································································ 30

Configuring multicast forwarding on a downstream interface ········································································· 30

Displaying and maintaining IGMP ······························································································································· 31

IGMP configuration examples ······································································································································ 32

Basic IGMP functions configuration example ····································································································· 32

SSM mapping configuration example ················································································································ 34

IGMP proxying configuration example ··············································································································· 37

Troubleshooting IGMP ··················································································································································· 39

No membership information exists on the receiver-side router ········································································ 39

Membership information is inconsistent on the routers on the same subnet ··················································· 40

Configuring PIM ························································································································································· 41

Overview ········································································································································································· 41

PIM-DM overview ·················································································································································· 41

PIM-SM overview ··················································································································································· 44

BIDIR-PIM overview ················································································································································ 49

Administrative scoping overview ························································································································· 52

PIM-SSM overview ················································································································································· 54

Relationship among PIM protocols ······················································································································ 55

PIM support for VPNs ············································································································································ 56

Protocols and standards ······································································································································· 56

Configuring PIM-DM ······················································································································································ 56

PIM-DM configuration task list ······························································································································ 57

Configuration prerequisites ·································································································································· 57

Enabling PIM-DM ··················································································································································· 57

Enabling state-refresh capability ·························································································································· 58

Configuring state-refresh parameters ·················································································································· 58

Configuring PIM-DM graft retry period ··············································································································· 59

Configuring PIM-SM ······················································································································································· 59

PIM-SM configuration task list ······························································································································ 60

Configuration prerequisites ·································································································································· 60

Enabling PIM-SM ··················································································································································· 61

Configuring an RP ················································································································································· 62

Configuring a BSR ················································································································································· 64

Configuring administrative scoping ···················································································································· 67

Configuring multicast source registration ············································································································ 69

Configuring switchover to SPT ····························································································································· 70

Configuring BIDIR-PIM ··················································································································································· 71

BIDIR-PIM configuration task list ··························································································································· 71

Configuration prerequisites ·································································································································· 71

Enabling PIM-SM ··················································································································································· 72

Enabling BIDIR-PIM ················································································································································ 72

Configuring an RP ················································································································································· 73

Configuring a BSR ················································································································································· 75

Configuring administrative scoping ···················································································································· 78

Configuring PIM-SSM ···················································································································································· 80

PIM-SSM configuration task list ···························································································································· 80

Configuration prerequisites ·································································································································· 80

Enabling PIM-SM ··················································································································································· 81

Configuring the SSM group range ······················································································································ 81

Configuring common PIM features ······························································································································· 82

Configuration task list ··········································································································································· 82

Configuration prerequisites ·································································································································· 82

Configuring a multicast data filter ······················································································································· 83

Configuring a hello message filter ······················································································································ 83

Configuring PIM hello options ····························································································································· 84

Setting the prune delay timer ······························································································································· 85

Configuring common PIM timers ························································································································· 86

Configuring join/prune message sizes ··············································································································· 87

Displaying and maintaining PIM ·································································································································· 88

PIM configuration examples ········································································································································· 89

PIM-DM configuration example ··························································································································· 89

PIM-SM non-scoped zone configuration example ····························································································· 92

PIM-SM admin-scoped zone configuration example ························································································· 98

BIDIR-PIM configuration example ······················································································································· 104

PIM-SSM configuration example ························································································································ 108

Troubleshooting PIM ···················································································································································· 111

A multicast distribution tree cannot be built correctly ······················································································ 111

Multicast data abnormally terminated on an intermediate router ·································································· 112

RPs cannot join SPT in PIM-SM ·························································································································· 113

RPT establishment failure or source registration failure in PIM-SM ································································ 114

Configuring multicast routing and forwarding ······································································································ 115

Overview ······································································································································································· 115

RPF check mechanism ········································································································································· 115

Static multicast routes ·········································································································································· 117

Multicast forwarding across unicast subnets ···································································································· 119

Multicast traceroute ············································································································································· 119

Configuration task list ·················································································································································· 120

Enabling IP multicast routing ······································································································································· 120

Configuring multicast routing and forwarding ·········································································································· 121

Configuration prerequisites ································································································································ 121

Configuring static multicast routes ····················································································································· 121

Configuring a multicast routing policy ·············································································································· 122

Configuring a multicast forwarding range ······································································································· 123

Configuring the multicast forwarding table size ······························································································ 123

Tracing a multicast path ····································································································································· 124

Displaying and maintaining multicast routing and forwarding ··············································································· 125

Configuration examples ·············································································································································· 126

Changing an RPF route ······································································································································· 126

Creating an RPF route ········································································································································· 128

Multicast forwarding over GRE tunnels ············································································································· 130

Troubleshooting multicast routing and forwarding ··································································································· 133

Static multicast route failure ······························································································································· 133

Multicast data fails to reach receivers ··············································································································· 134

Configuring IGMP snooping ·································································································································· 135

Hardware compatibility ··············································································································································· 135

Overview ······································································································································································· 135

Basic concepts in IGMP snooping ····················································································································· 135

How IGMP snooping works ······························································································································· 137

IGMP snooping proxying ··································································································································· 138

Protocols and standards ····································································································································· 140

IGMP snooping configuration task list ······················································································································· 140

Configuring basic IGMP snooping functions ············································································································ 141

Configuration prerequisites ································································································································ 141

Enabling IGMP snooping ··································································································································· 141

Specifying the version of IGMP snooping ········································································································ 141

Configuring IGMP snooping port functions ··············································································································· 142

Configuration prerequisites ································································································································ 142

Setting aging timers for dynamic ports ············································································································· 142

Configuring static ports ······································································································································· 143

Configuring a port as a simulated member host ····························································································· 144

Enabling IGMP snooping fast-leave processing ······························································································· 145

Disabling a port from becoming a dynamic router port ················································································· 145

Configuring IGMP snooping querier ························································································································· 146

Configuration prerequisites ································································································································ 146

Enabling IGMP snooping querier ······················································································································ 146

Configuring parameters for IGMP queries and responses ············································································· 147

Configuring source IP addresses for IGMP queries ························································································· 148

Configuring IGMP snooping proxying ······················································································································ 148

Configuration prerequisites ································································································································ 148

Enabling IGMP snooping proxying ··················································································································· 148

Configuring the source IP addresses for the IGMP messages sent by the proxy ·········································· 149

Configuring IGMP snooping policies ························································································································· 149

Configuration prerequisites ································································································································ 149

Configuring a multicast group filter ··················································································································· 149

Configuring multicast source port filtering ········································································································ 150

Enabling dropping unknown multicast data ····································································································· 151

Enabling IGMP report suppression ···················································································································· 152

Setting the maximum number of multicast groups that a port can join ························································· 153

Enabling multicast group replacement ·············································································································· 153

Setting the 802.1p precedence for IGMP messages ······················································································ 154

Enabling the IGMP snooping host tracking function ······················································································· 155

Displaying and maintaining IGMP snooping ············································································································ 155

IGMP snooping configuration examples ··················································································································· 156

Group policy and simulated joining configuration example ·········································································· 156

Static port configuration example ····················································································································· 158

IGMP snooping querier configuration example ······························································································· 161

IGMP snooping proxying configuration example ···························································································· 163

Troubleshooting IGMP snooping ································································································································ 166

Layer 2 multicast forwarding cannot function ·································································································· 166

Appendix ······································································································································································ 166

Processing of multicast protocol messages ······································································································· 166

Configuring MSDP ·················································································································································· 168

Overview ······································································································································································· 168

How MSDP works ··············································································································································· 168

MSDP support for VPNs ······································································································································ 173

Protocols and standards ····································································································································· 173

MSDP configuration task list ······································································································································· 174

Configuring basic MSDP functions ····························································································································· 174

Configuration prerequisites ································································································································ 174

Enabling MSDP ···················································································································································· 174

Creating an MSDP peer connection ·················································································································· 175

Configuring a static RPF peer ···························································································································· 175

Configuring an MSDP peer connection ····················································································································· 176

Configuration prerequisites ································································································································ 176

Configuring MSDP peer description ·················································································································· 176

Configuring an MSDP mesh group ··················································································································· 176

Configuring MSDP peer connection control ····································································································· 177

Configuring SA message related parameters ··········································································································· 178

Configuration prerequisites ································································································································ 178

Configuring SA message content ······················································································································ 178

Configuring SA request messages ····················································································································· 179

Configuring SA message filtering rules ············································································································· 179

Configuring the SA cache mechanism ·············································································································· 180

Displaying and maintaining MSDP ···························································································································· 181

MSDP configuration examples ···································································································································· 181

PIM-SM Inter-domain multicast configuration ··································································································· 181

Inter-AS multicast configuration by leveraging static RPF peers ····································································· 186

Anycast RP configuration ···································································································································· 191

SA message filtering configuration ···················································································································· 195

Troubleshooting MSDP ················································································································································ 199

MSDP peers stay in down state ························································································································· 199

No SA entries exist in the router's SA cache ··································································································· 199

No SA entries exist in the router's SA cache ··································································································· 200

Configuring MBGP ·················································································································································· 201

MBGP overview ···························································································································································· 201

Protocols and standards ·············································································································································· 201

MBGP configuration task list ······································································································································· 201

Configuring basic MBGP functions ···························································································································· 202

Configuration prerequisites ································································································································ 202

Configuration procedure ···································································································································· 202

Controlling route advertisement and reception ········································································································· 202

Configuration prerequisites ································································································································ 202

Configuring MBGP route redistribution ············································································································· 203

Configuring default route redistribution into MBGP ························································································ 203

Configuring MBGP route summarization ·········································································································· 204

Advertising a default route to an IPv4 MBGP peer or peer group ································································ 204

Configuring outbound MBGP route filtering ····································································································· 205

Configuring inbound MBGP route filtering ······································································································· 206

Configuring MBGP route dampening ··············································································································· 207

Configuring MBGP route attributes ···························································································································· 208

Configuration prerequisites ································································································································ 208

Configuring MBGP route preferences ··············································································································· 208

Configuring the default local preference ·········································································································· 208

Configuring the MED attribute ··························································································································· 209

Configuring the NEXT_HOP attribute ················································································································ 209

Configuring the AS_PATH attribute ··················································································································· 210

Optimizing MBGP networks ······································································································································· 210

Configuration prerequisites ································································································································ 211

Configuring MBGP soft reset ······························································································································ 211

Enabling the MBGP ORF capability ·················································································································· 212

Configuring the maximum number of MBGP routes for load balancing ······················································· 213

Configuring a large scale MBGP network ················································································································ 213

Configuration prerequisites ································································································································ 213

Configuring IPv4 MBGP peer groups ················································································································ 213

Configuring MBGP community ·························································································································· 214

Configuring an MBGP route reflector ··············································································································· 215

Displaying and maintaining MBGP ··························································································································· 216

Displaying MBGP ················································································································································ 216

Resetting MBGP connections ······························································································································ 217

Clearing MBGP information ······························································································································· 217

MBGP configuration example ····································································································································· 218

Configuring multicast VPN ····································································································································· 222

Overview ······································································································································································· 222

MD-VPN overview ··············································································································································· 224

Protocols and standards ····································································································································· 227

How MD-VPN works ···················································································································································· 227

Share-MDT establishment ··································································································································· 227

Share-MDT-based delivery ·································································································································· 231

MDT switchover ··················································································································································· 234

Multi-AS MD VPN ················································································································································ 235

Multicast VPN configuration task list ·························································································································· 236

Configuring MD-VPN ··················································································································································· 236

Configuration prerequisites ································································································································ 236

Enabling IP multicast routing in a VPN instance ······························································································ 237

Configuring a share-group and an MTI binding ······························································································ 237

Configuring MDT switchover parameters ········································································································· 238

Enabling switch-group reuse logging ················································································································ 238

Configuring BGP MDT ················································································································································· 239

Configuration prerequisites ································································································································ 239

Configuring BGP MDT peers or peer groups ··································································································· 239

Configuring a BGP MDT route reflector ············································································································ 240

Displaying and maintaining multicast VPN ··············································································································· 240

Multicast VPN configuration examples ······················································································································ 241

Single-AS MD VPN configuration example ······································································································ 241

Multi-AS MD VPN configuration example ········································································································ 254

Troubleshooting MD-VPN ············································································································································ 266

A share-MDT cannot be established ·················································································································· 266

An MVRF cannot be created ······························································································································ 267

Configuring MLD ····················································································································································· 268

Overview ······································································································································································· 268

MLD versions ························································································································································ 268

How MLDv1 works ·············································································································································· 268

How MLDv2 works ·············································································································································· 270

MLD message types ············································································································································· 271

MLD SSM mapping ············································································································································· 274

MLD proxying ······················································································································································ 275

Protocols and standards ····································································································································· 275

MLD configuration task list ·········································································································································· 276

Configuring basic MLD functions ······························································································································· 276

Configuration prerequisites ································································································································ 276

Enabling MLD ······················································································································································ 277

Configuring the MLD version ····························································································································· 277

Configuring static joining ··································································································································· 277

Configuring an IPv6 multicast group filter ········································································································ 278

Setting the maximum number of IPv6 multicast groups that an interface can join ······································· 278

Adjusting MLD performance ······································································································································· 279

Configuration prerequisites ································································································································ 279

Configuring Router-Alert option handling methods ·························································································· 279

Configuring MLD query and response parameters ·························································································· 280

Enabling MLD fast-leave processing ·················································································································· 282

Enabling the MLD host tracking function ·········································································································· 283

Configuring MLD SSM mapping ································································································································ 283

Configuration prerequisites ································································································································ 283

Enabling MLD SSM mapping ····························································································································· 284

Configuring MLD SSM mapping entries ··········································································································· 284

Configuring MLD proxying ········································································································································· 284

Configuration prerequisites ································································································································ 284

Enabling MLD proxying ······································································································································ 285

Configuring IPv6 multicast forwarding on a downstream interface ······························································ 285

Displaying and maintaining MLD ······························································································································· 286

MLD configuration examples ······································································································································ 287

Basic MLD functions configuration example ····································································································· 287

MLD SSM mapping configuration example ····································································································· 289

MLD proxying configuration example ··············································································································· 292

Troubleshooting MLD ··················································································································································· 294

No member information exists on the receiver-side router ············································································· 294

Membership information is inconsistent on the routers on the same subnet ················································· 295

Configuring IPv6 PIM ·············································································································································· 296

Overview ······································································································································································· 296

IPv6 PIM-DM overview ········································································································································ 296

IPv6 PIM-SM overview ········································································································································ 299

IPv6 BIDIR-PIM overview ····································································································································· 305

IPv6 administrative scoping overview ··············································································································· 308

IPv6 PIM-SSM overview ······································································································································ 310

Relationship among IPv6 PIM protocols ············································································································ 312

Protocols and standards ····································································································································· 312

Configuring IPv6 PIM-DM ············································································································································ 312

IPv6 PIM-DM configuration task list ··················································································································· 312

Configuration prerequisites ································································································································ 313

Enabling IPv6 PIM-DM ········································································································································ 313

Enabling state-refresh capability ························································································································ 313

Configuring state-refresh parameters ················································································································ 314

Configuring IPv6 PIM-DM graft retry period ···································································································· 314

Configuring IPv6 PIM-SM ············································································································································ 315

IPv6 PIM-SM configuration task list ···················································································································· 315

Configuration prerequisites ································································································································ 315

Enabling IPv6 PIM-SM ········································································································································· 316

Configuring an RP ··············································································································································· 316

Configuring a BSR ··············································································································································· 319

Configuring IPv6 administrative scoping ·········································································································· 322

Configuring IPv6 multicast source registration ································································································· 323

Configuring switchover to SPT ··························································································································· 324

Configuring IPv6 BIDIR-PIM ········································································································································· 325

IPv6 BIDIR-PIM configuration task list ················································································································ 325

Configuration prerequisites ································································································································ 325

Enabling IPv6 PIM-SM ········································································································································· 326

Enabling IPv6 BIDIR-PIM ····································································································································· 326

Configuring an RP ··············································································································································· 326

Configuring a BSR ··············································································································································· 328

Configuring IPv6 administrative scoping ·········································································································· 332

Configuring IPv6 PIM-SSM ·········································································································································· 333

IPv6 PIM-SSM configuration task list ················································································································· 333

Configuration prerequisites ································································································································ 333

Enabling IPv6 PIM-SM ········································································································································· 334

Configuring the IPv6 SSM group range ··········································································································· 334

Configuring common IPv6 PIM features ···················································································································· 334

Configuration task list ········································································································································· 335

Configuration prerequisites ································································································································ 335

Configuring an IPv6 multicast data filter ··········································································································· 335

Configuring a hello message filter ···················································································································· 336

Configuring IPv6 PIM hello options ··················································································································· 336

Setting the prune delay timer ····························································································································· 338

Configuring common IPv6 PIM timers ··············································································································· 338

Configuring join/prune message sizes ············································································································· 340

Configuring IPv6 PIM to work with BFD ············································································································ 340

Displaying and maintaining IPv6 PIM ························································································································ 341

IPv6 PIM configuration examples ······························································································································· 342

IPv6 PIM-DM configuration example ················································································································· 342

IPv6 PIM-SM non-scoped zone configuration example ··················································································· 345

IPv6 PIM-SM admin-scoped zone configuration example ··············································································· 350

IPv6 BIDIR-PIM configuration example ·············································································································· 362

IPv6 PIM-SSM configuration example ··············································································································· 367

Troubleshooting IPv6 PIM ············································································································································ 370

A multicast distribution tree cannot be built correctly ······················································································ 370

IPv6 multicast data is abnormally terminated on an intermediate router ······················································ 371

RPs cannot join the SPT in IPv6 PIM-SM ············································································································ 372

RPT cannot be established or a source cannot register in IPv6 PIM-SM ························································ 372

Configuring IPv6 multicast routing and forwarding ····························································································· 374

Overview ······································································································································································· 374

RPF check mechanism ········································································································································· 374

RPF check implementation in IPv6 multicast ····································································································· 375

IPv6 multicast forwarding across IPv6 unicast subnets ···················································································· 376

Configuration task list ·················································································································································· 377

Enabling IPv6 multicast routing ··································································································································· 377

Configuring IPv6 multicast routing and forwarding ································································································· 377

Configuration prerequisites ································································································································ 377

Configuring an IPv6 multicast routing policy ··································································································· 377

Configuring an IPv6 multicast forwarding range ····························································································· 378

Configuring the IPv6 multicast forwarding table size ······················································································ 379

Displaying and maintaining IPv6 multicast routing and forwarding ······································································ 380

IPv6 multicast forwarding over GRE tunnel configuration example ········································································ 381

Troubleshooting abnormal termination of IPv6 multicast data ······································································· 384

Configuring MLD snooping ···································································································································· 386

Hardware compatibility ··············································································································································· 386

Overview ······································································································································································· 386

Basic MLD snooping concepts ··························································································································· 387

How MLD snooping works ································································································································· 388

MLD snooping proxying ····································································································································· 389

Protocols and standards ····································································································································· 391

MLD snooping configuration task list ························································································································· 391

Configuring basic MLD snooping functions ·············································································································· 392

Configuration prerequisites ································································································································ 392

Enabling MLD snooping ····································································································································· 392

Specifying the version of MLD snooping ·········································································································· 392

Configuring MLD snooping port functions ················································································································· 393

Configuration prerequisites ································································································································ 393

Configuring aging timers for dynamic ports ···································································································· 393

Configuring static ports ······································································································································· 394

Configuring a port as a simulated member host ····························································································· 395

Enabling MLD snooping fast-leave processing ································································································· 395

Disabling a port from becoming a dynamic router port ················································································· 396

Configuring MLD snooping querier ··························································································································· 397

Configuration prerequisites ································································································································ 397

Enabling MLD snooping querier ························································································································ 397

Configuring parameters for MLD queries and responses ··············································································· 398

Configuring the source IPv6 addresses for MLD queries ················································································ 398

Configuring MLD snooping proxying ························································································································ 399

Configuration prerequisites ································································································································ 399

Enabling MLD snooping proxying ····················································································································· 399

Configuring the source IPv6 addresses for the MLD messages sent by the proxy ······································· 399

Configuring an MLD snooping policy ························································································································ 400

Configuration prerequisites ································································································································ 400

Configuring an IPv6 multicast group filter ········································································································ 400

Configuring IPv6 multicast source port filtering ······························································································· 401

Enabling dropping unknown IPv6 multicast data ···························································································· 402

Enabling MLD report suppression ······················································································································ 403

Setting the maximum number of multicast groups that a port can join ························································· 403

Enabling IPv6 multicast group replacement ····································································································· 404

Setting the 802.1p precedence for MLD messages ························································································ 405

Enabling the MLD snooping host tracking function ························································································· 406

Displaying and maintaining MLD snooping ·············································································································· 406

MLD snooping configuration examples ····················································································································· 407

IPv6 group policy and simulated joining configuration example ·································································· 407

Static port configuration example ····················································································································· 409

MLD snooping querier configuration example ································································································· 413

MLD snooping proxying configuration example ······························································································ 414

Troubleshooting MLD snooping ·································································································································· 417

Layer 2 multicast forwarding cannot function ·································································································· 417

Configured IPv6 multicast group policy fails to take effect ············································································· 417

Appendix ······································································································································································ 418

Processing of IPv6 multicast protocol messages ······························································································· 418

Configuring IPv6 MBGP ········································································································································· 419

IPv6 MBGP overview ··················································································································································· 419

IPv6 MBGP configuration task list ······························································································································ 419

Configuring basic IPv6 MBGP functions ···················································································································· 420

Configuration prerequisites ································································································································ 420

Configuring an IPv6 MBGP peer ······················································································································· 420

Configuring a preferred value for routes from a peer or a peer group ························································ 420

Controlling route distribution and reception ············································································································· 421

Configuration prerequisites ································································································································ 421

Injecting a local IPv6 MBGP route ····················································································································· 421

Configuring IPv6 MBGP route redistribution ···································································································· 421

Configuring IPv6 MBGP route summarization ································································································· 422

Advertising a default route to a peer or peer group ······················································································· 422

Configuring outbound IPv6 MBGP route filtering ···························································································· 423

Configuring inbound IPv6 MBGP route filtering ······························································································ 423

Configuring IPv6 MBGP route dampening ······································································································· 424

Configuring IPv6 MBGP route attributes ···················································································································· 425

Configuration prerequisites ································································································································ 425

Configuring IPv6 MBGP route preferences ······································································································· 425

Configuring the default local preference ·········································································································· 425

Configuring the MED attribute ··························································································································· 425

Configuring the NEXT_HOP attribute ················································································································ 426

Configuring the AS_PATH attribute ··················································································································· 426

Optimizing IPv6 MBGP networks ······························································································································· 427

Configuration prerequisites ································································································································ 427

Configuring IPv6 MBGP soft reset ····················································································································· 427

Enabling the IPv6 MBGP ORF capability ········································································································· 428

Configuring the maximum number of equal-cost routes for load-balancing ················································· 429

Configuring a large scale IPv6 MBGP network ········································································································ 430

Configuration prerequisites ································································································································ 430

Configuring an IPv6 MBGP peer group ··········································································································· 430

Configuring IPv6 MBGP community ·················································································································· 430

Configuring an IPv6 MBGP route reflector ······································································································· 431

Displaying and maintaining IPv6 MBGP ··················································································································· 432

Displaying IPv6 MBGP ········································································································································ 432

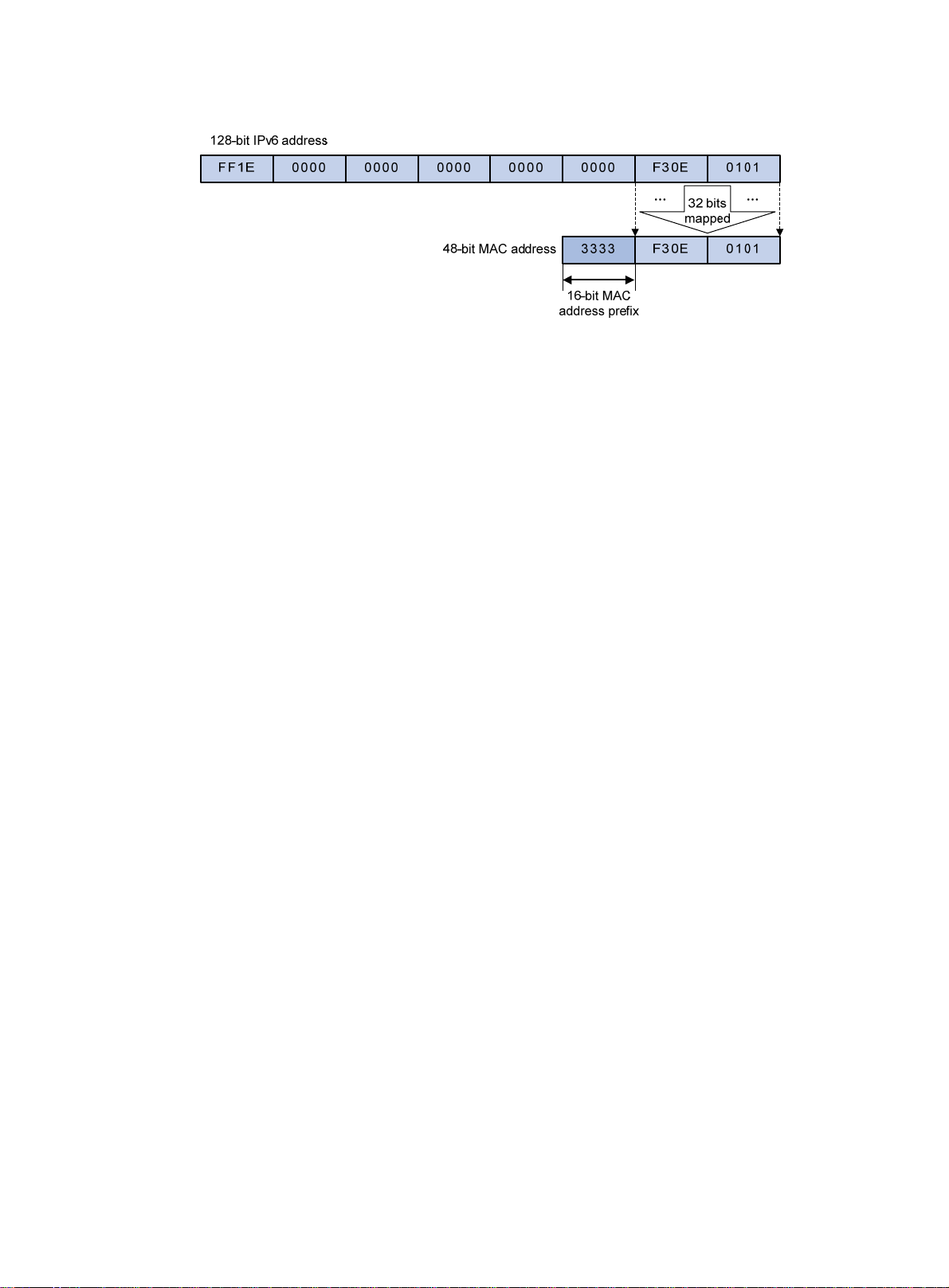

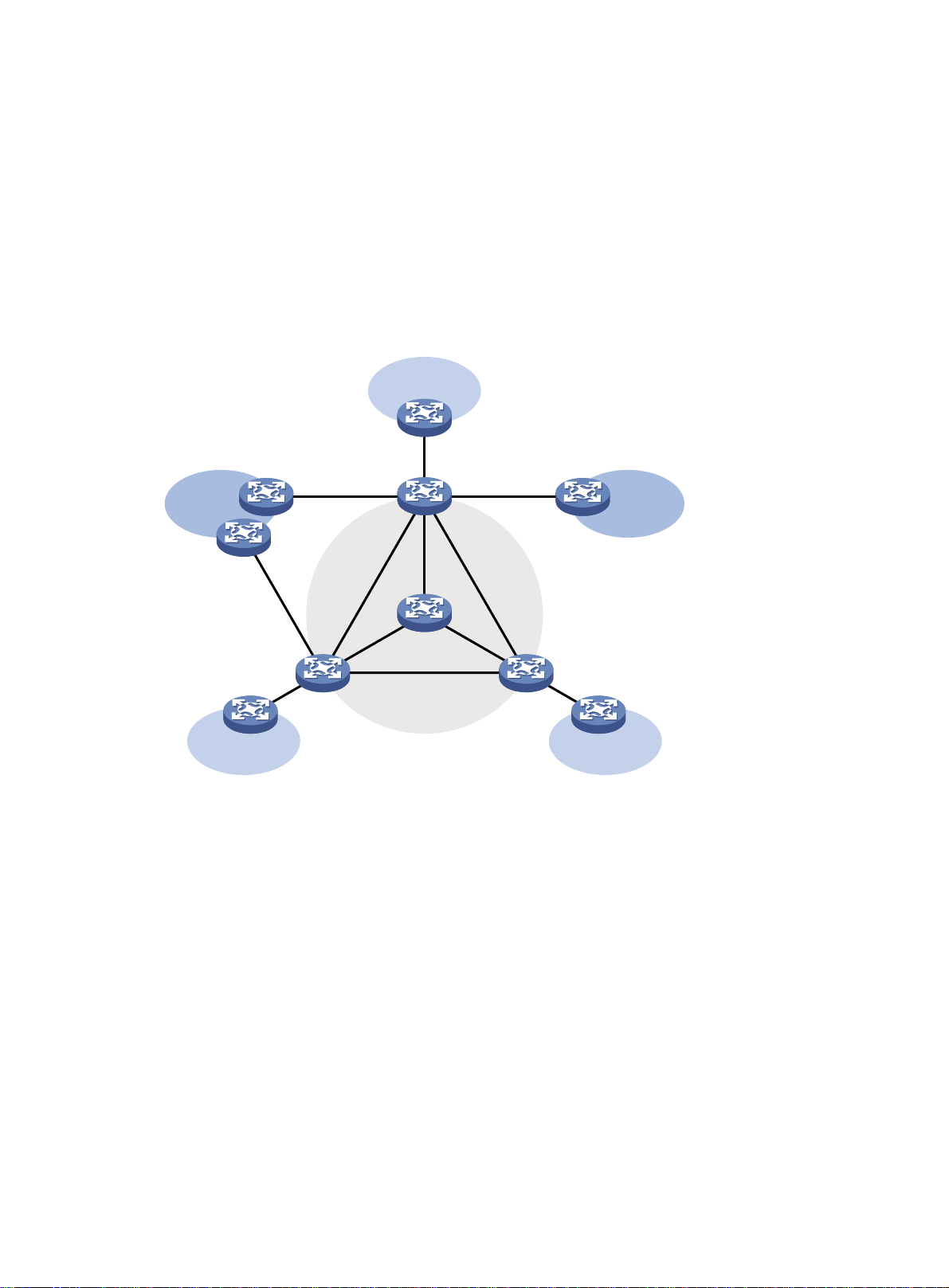

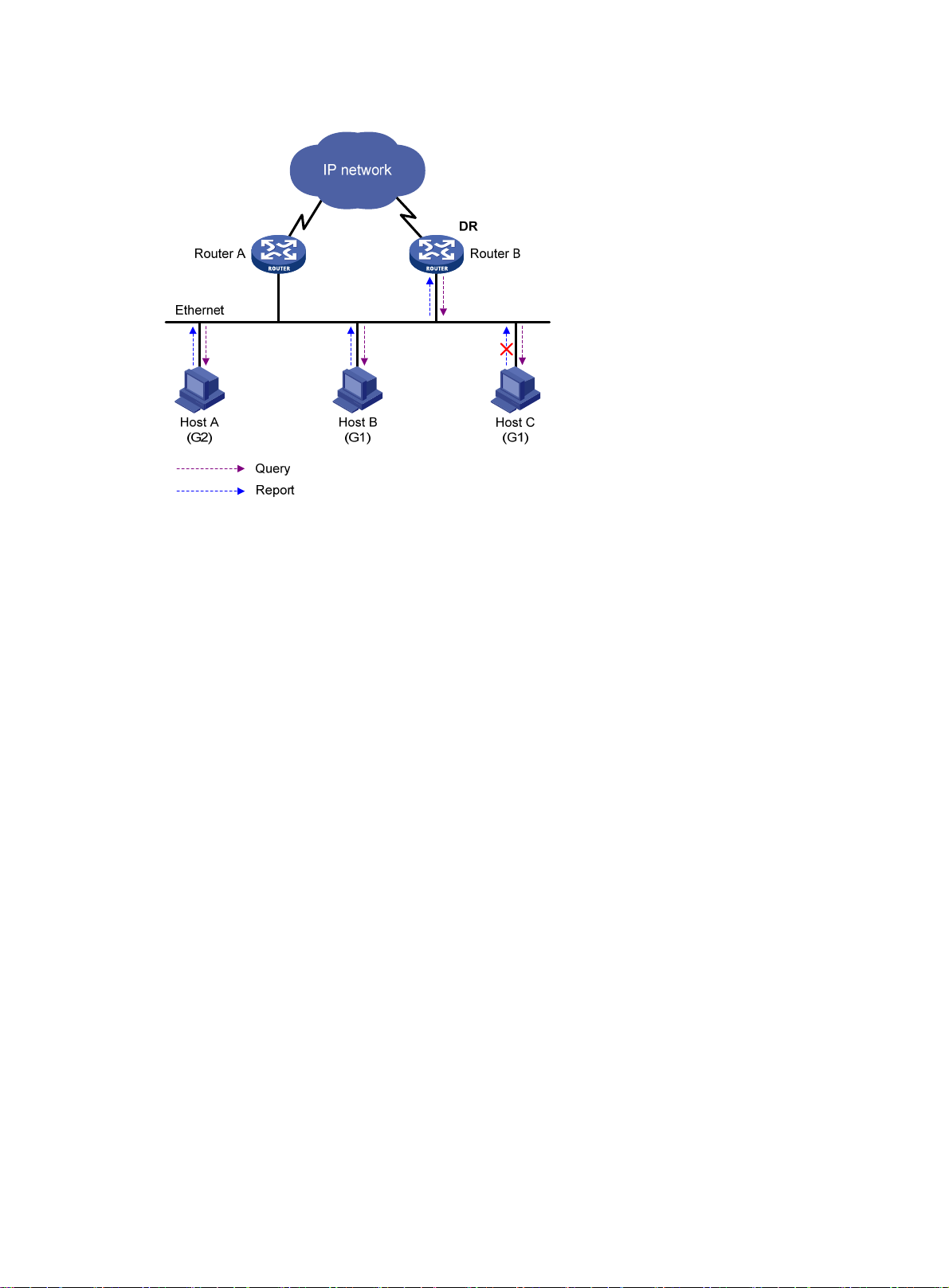

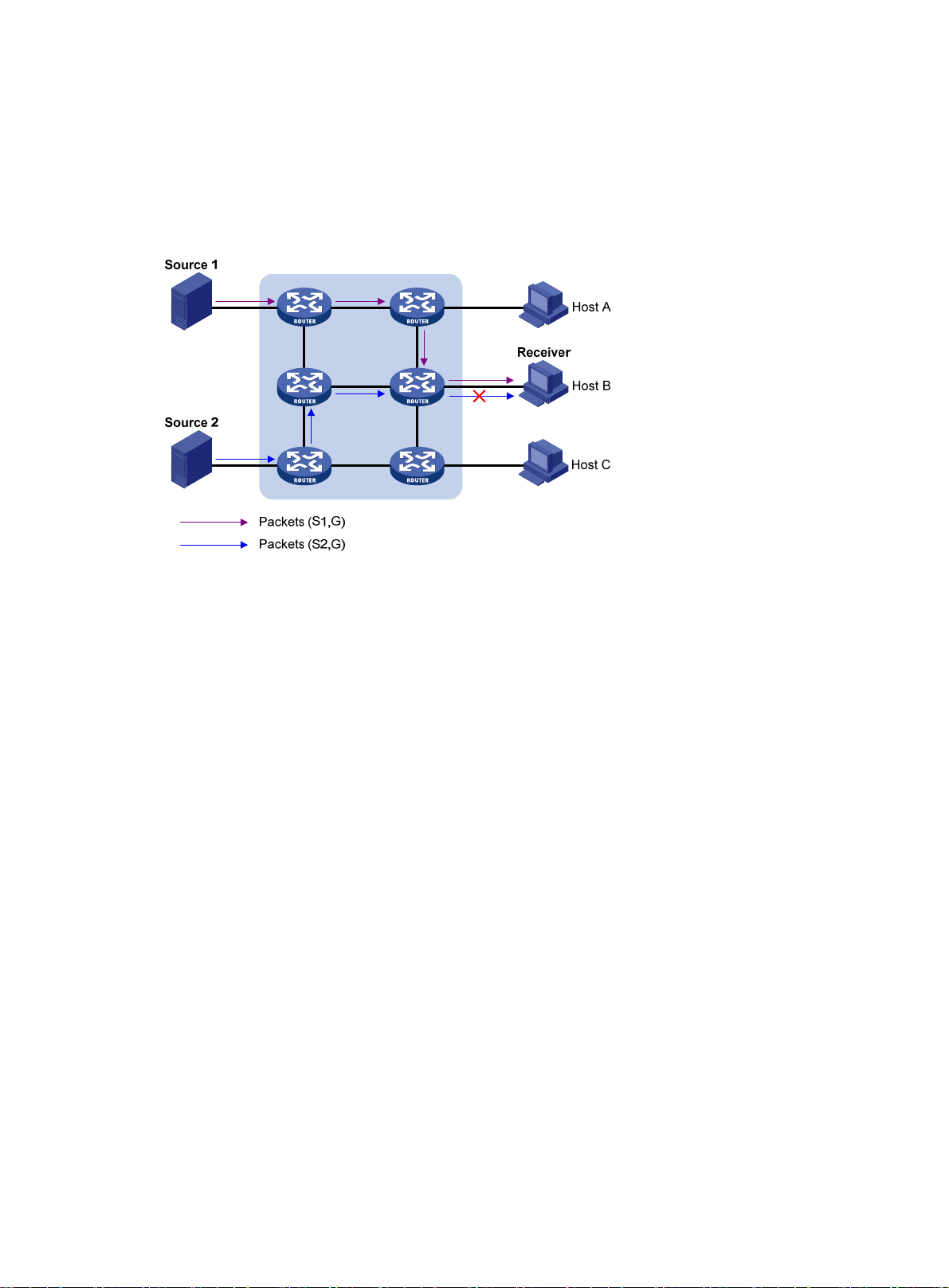

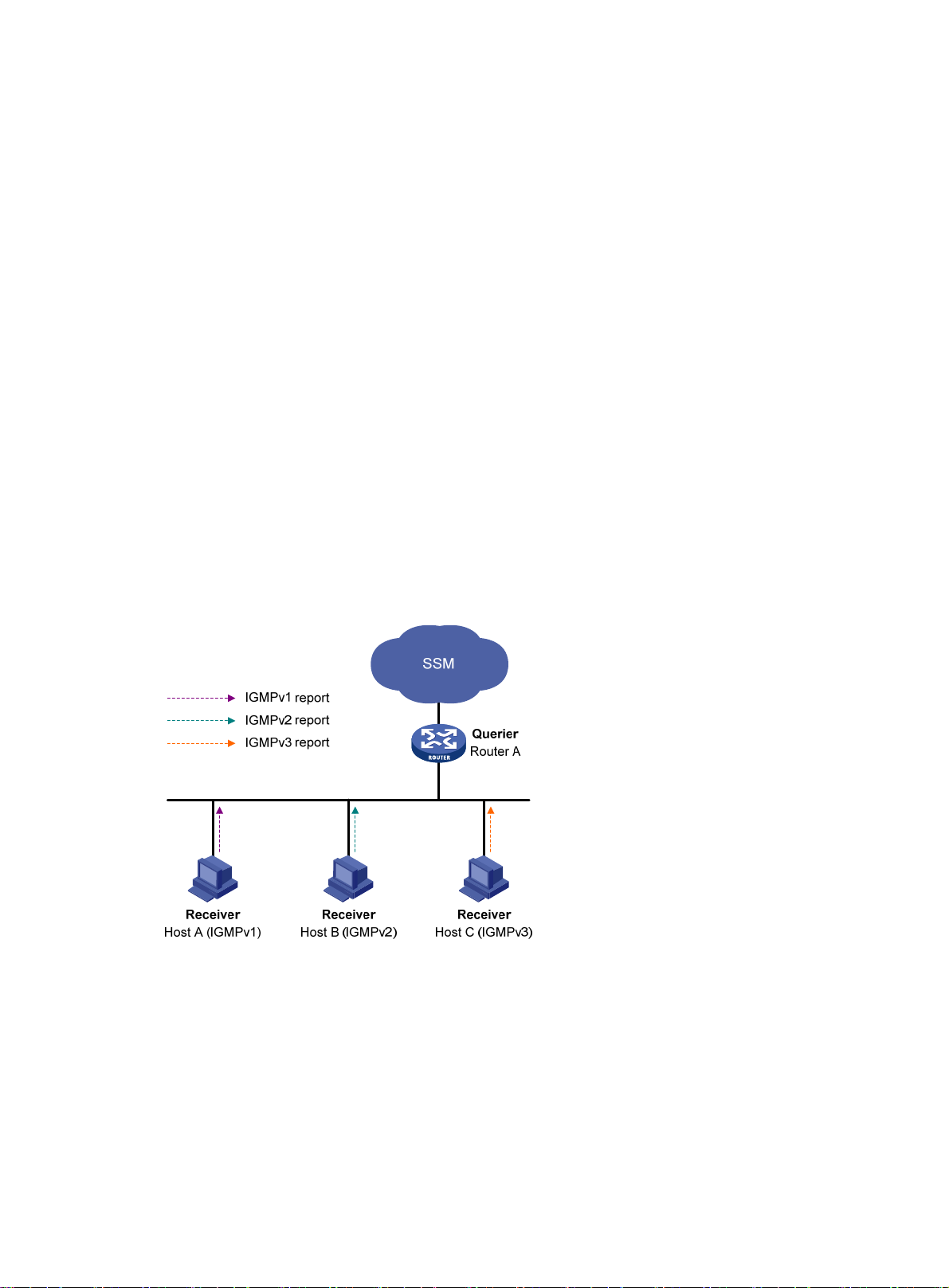

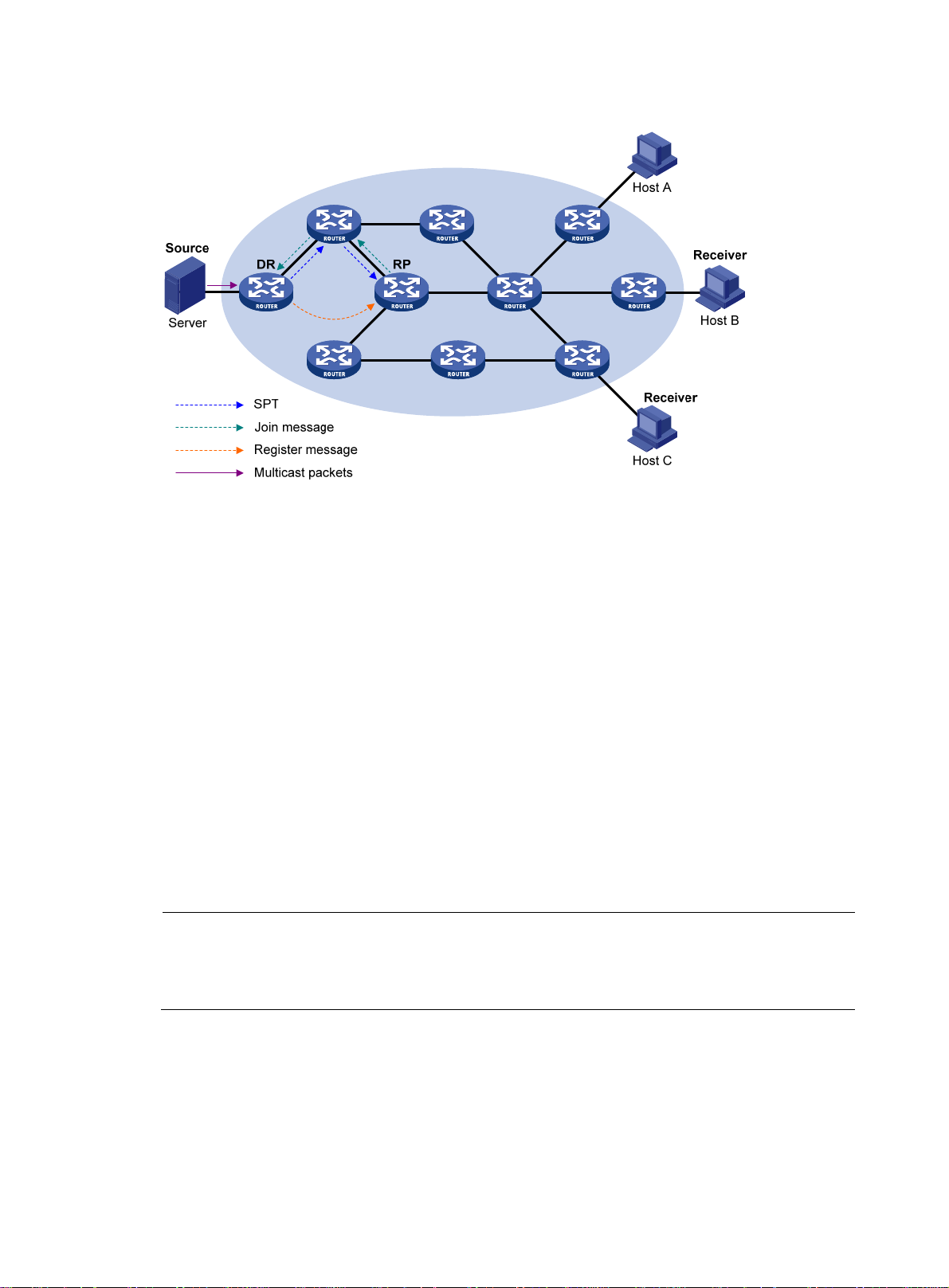

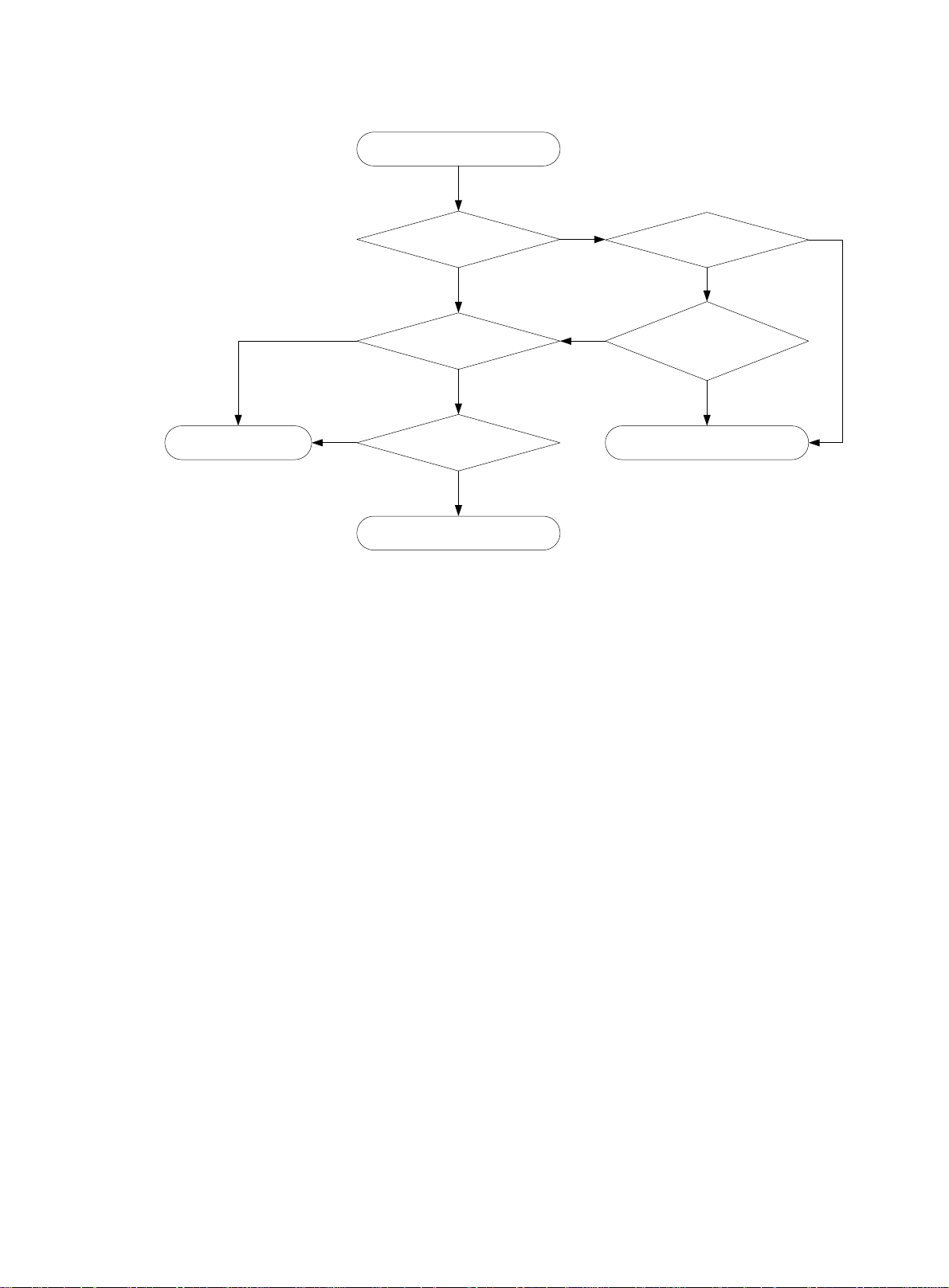

Resetting IPv6 MBGP connections ····················································································································· 433