
HPE Converged Architecture 700 with Nimble Storage Deployment Guide


Contents

Executive Summary
  Solution Overview
  Target Audience
  Business Need
  Terms and Abbreviations
System Setup
  Validated Software and Firmware Levels
    HPE Service Pack for ProLiant
    Nimble Storage Software
    HPE Infrastructure Firmware
    HPE Infrastructure Software
    VMware Infrastructure Software
  Resources for Physical Deployment
    Compute Physical Deployment
    Network Physical Deployment
Cabling for Networking, Storage, and Power
  Network Cabling
  Power Cabling
Preparing to Install and Configure the HPE Converged Architecture 700 with Nimble Storage
  The Configuration Worksheet
  Fully Qualified Domain Names Versus IP Addresses
  HPE Resources for Automation Efforts
  Connecting to the Components in the Solution
    HPE iLO
    HPE Onboard Administrator
    Network Switches
    VMware Host Client
    VMware vSphere Client for Windows
    VMware vSphere Web Client
    VMware vSphere Administration Portal
    VMware VM Console: vSphere Web Client
    VMware VM Console: vSphere Client for Windows
    HPE OneView Web Administration Portal

Copyright©2017 by Nimble Storage, Inc. All rights reserved.


    HPE OneView for vCenter Plugin Administrator Console
    Nimble Storage Array CLI
    Nimble Storage Administrator Console
    HPE Insight Control Server Provisioning Web Administration Page
Configuring the Ethernet Network
  Set Up IRF Configuration
  Configure Multi-Active Detection and Remote Access to the Switch
  Configure IRF Priority
  Convert the Chassis Working Mode
  Configure NTP
  Configure the VLANs Needed
  Convert Interfaces to Use FC Protocol
  Add Individual Port Descriptions for Troubleshooting
  Configure Global FCoE Parameters
  Configure Interface FCoE Parameters
  Configure the FCoE Mode and VSANs
  Add FC Interfaces to the Appropriate VSANs
  Configure SNMPv3
  Create Bridge Aggregations
  Uplink into the Existing Network Infrastructure
Configuring the Computing Infrastructure and the Management Servers
  Configuring iLO
    Install iLO Advanced Licenses
    Configure iLO
  Configuring the c7000 Enclosures
    Assign IP Addresses to the c7000 Enclosures from the Insight Display
    Configure Enclosures with the OA Wizard

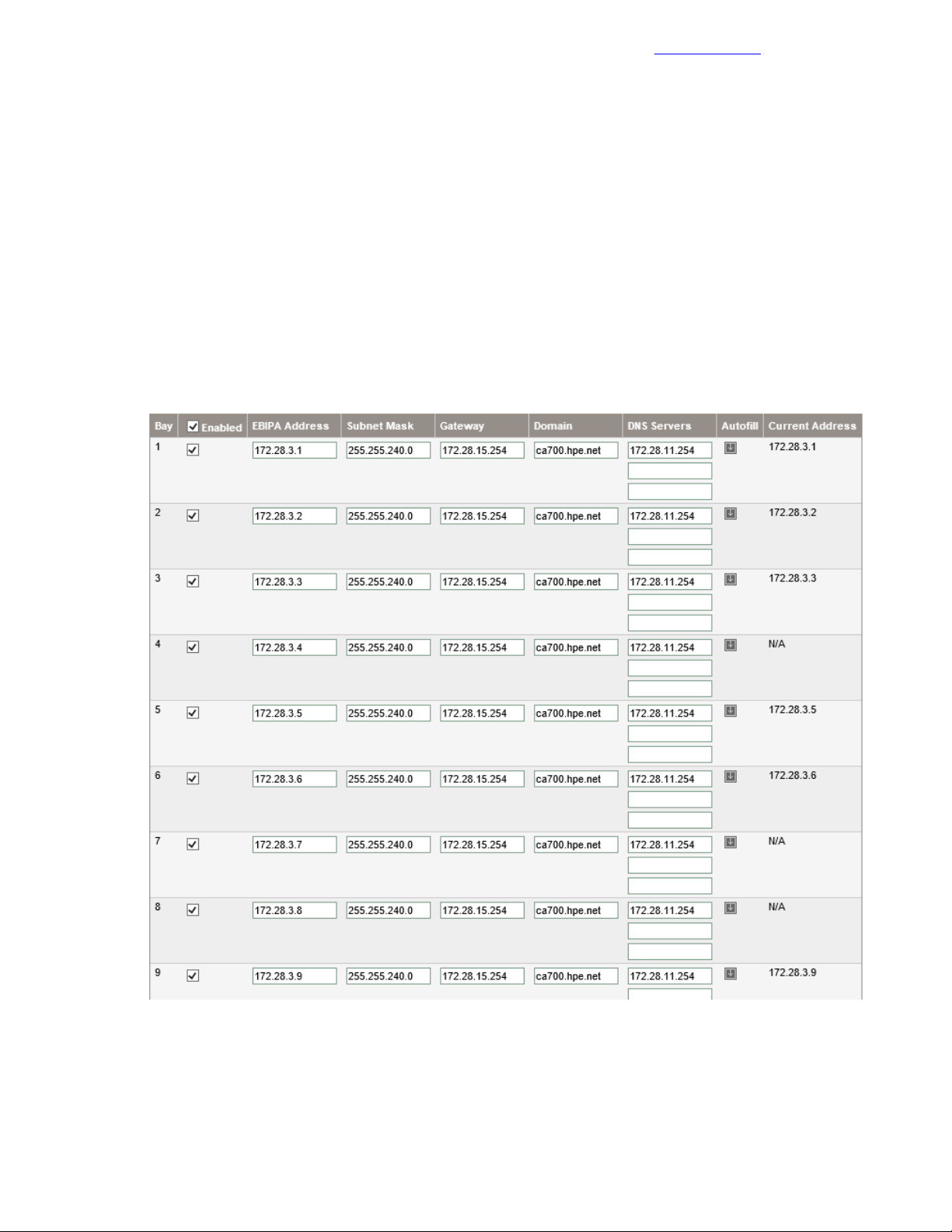

    Configure Enclosure Bay IP Addressing
    Create Admin User for All iLOs
    Repeat c7000 Configuration Procedures for Each Enclosure in the Solution
  Configuring the Server and Installing the Hypervisor for the Management Servers
    Launch iLO Remote Console and Power On the Server
    Enter Intelligent Provisioning and Configure the Smart Array
    Continue Intelligent Provisioning Configuration and Install Wizard to Install Hypervisor
  Configuring the Management Server After Hypervisor Installation
    Configure vSphere Host Network Parameters Through the ESXi Host UI
    Configure the vSwitch Failover Policy
    Download the VMware vSphere Client for Windows
    Log in to VMware ESXi Hosts with the VMware vSphere Client for Windows
    Set Up VMkernel Ports and Virtual Switches


    Rename the Local Datastore
    Set Date and Time and Configure NTP
  Deploying the VMware vCenter Management Appliance
    Prepare for the VMware vCenter Server Appliance Installer
    Install the Platform Services Controller
    Install VMware vCenter Management Appliance
    Create vCenter Data Center and Management Cluster
    Add Hosts to Management Cluster
    Configure vCenter Power Management
  Building the Management VM
    Deploy Base Windows VM
    Install VMware Tools
    Enable the Desktop Experience Role
    Configure the Second Hard Drive
    Set Host Name
    Set IP Addresses
    Disable the Firewall
    Enable Remote Desktop Access
    Configure NTP
    Run Updates and Install Software
    Configuring the IIS or ICsp Media Server
Installing and Configuring the Nimble Storage Array
  Initializing and Configuring the Nimble Storage Array
    Initialize the Array with NWT
    Configure the Nimble Array
  Update NimbleOS
  Configure Nimble Storage to Communicate with VMware vCenter
Configuring the FCoE SAN and Presenting the Storage Arrays to the Management Host
  Zoning the Nimble Storage to the Management Servers
    Create and Configure the vFC Interfaces for the Management Servers
    Create the Alias for the Nimble Storage Array and Management Systems
    Create the Zone Definitions for the Nimble Storage Array to the Management Systems
    Create and Activate the Zone Set
  Present Storage to the Management Servers
    Migrate the Virtual Machines on the Management Hosts to Shared Storage
Deploying the HPE Infrastructure Management Software
  Deploying and Configuring HPE OneView
    Deploy the HPE OneView VM


    Perform the Initial Configuration of the HPE OneView VM
    Apply Patches to the HPE OneView VM
    Complete the HPE OneView Configuration from the Web UI
    Upload the HPE Service Pack for ProLiant
    Add HPE OneView Licenses to the HPE OneView Appliance
    Import the DL Management Servers
    Create Networks
    Create Network Sets
    Create Logical Interconnect Groups
    Create an Enclosure Group
    Import the HPE BladeSystem c7000 Enclosures
    Creating Profiles for the HPE DL360 Gen9 Management Servers
    Creating Profile Templates for the HPE ProLiant BL460c Gen9 Servers
    Creating Profile Templates for the HPE ProLiant BL660c Gen9 Servers
    Complete Additional HPE OneView Configuration
  Deploying and Configuring HPE ICsp
    Deploy the HPE ICsp VM
    Perform the Initial Configuration of the HPE ICsp VM
    Apply Patches to the HPE ICsp VM
    Connect to the Management VM and Obtain the ICsp Media Server Setup Utility
    Configure the Media Server and Load the HPE Service Pack for ProLiant
    Load the VMware ESXi Image
    Load VMware VIBs
    Configure the ICsp Media Server
    Configure the HPE ICsp OneView Integration
    Configure OS Deployment Settings
    Adding a New Build Plan for Enhanced HPE OneView for VMware vCenter Builds

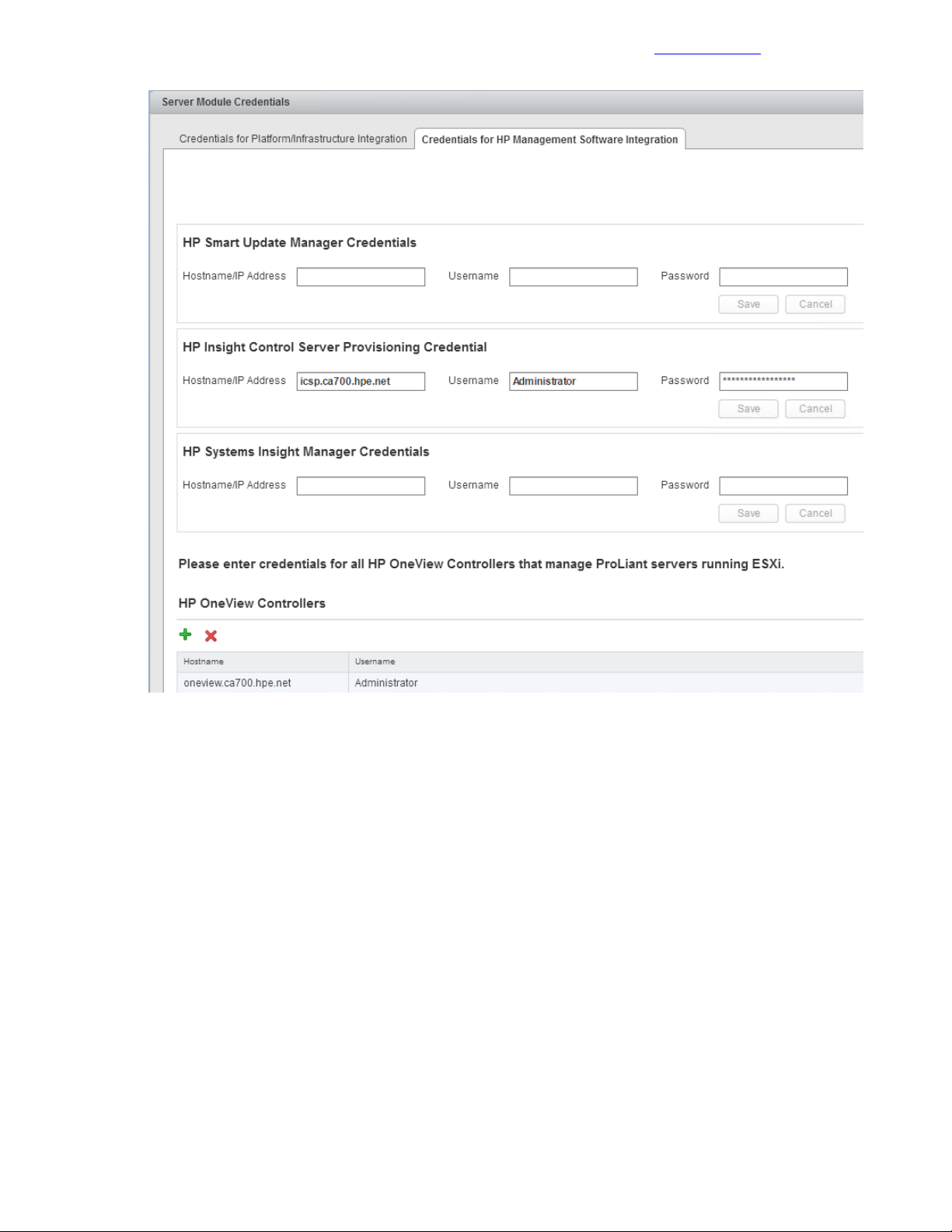

  Installing and Configuring the HPE OneView for VMware vCenter Software
    Deploy the HPE OneView for VMware vCenter VM
    Perform the Initial Configuration of the HPE OneView for vCenter Plugin
    Access the HPE OneView for vCenter Plugin in vCenter
    Configure Server Module Credentials
    Generate a Self-Signed Certificate
    Upload the Self-Signed Certificate to the Onboard Administrator of Each Enclosure
    Restart HPE OneView for VMware vCenter Services and Initialize the Plugin
    Set Host Network Configuration Preferences

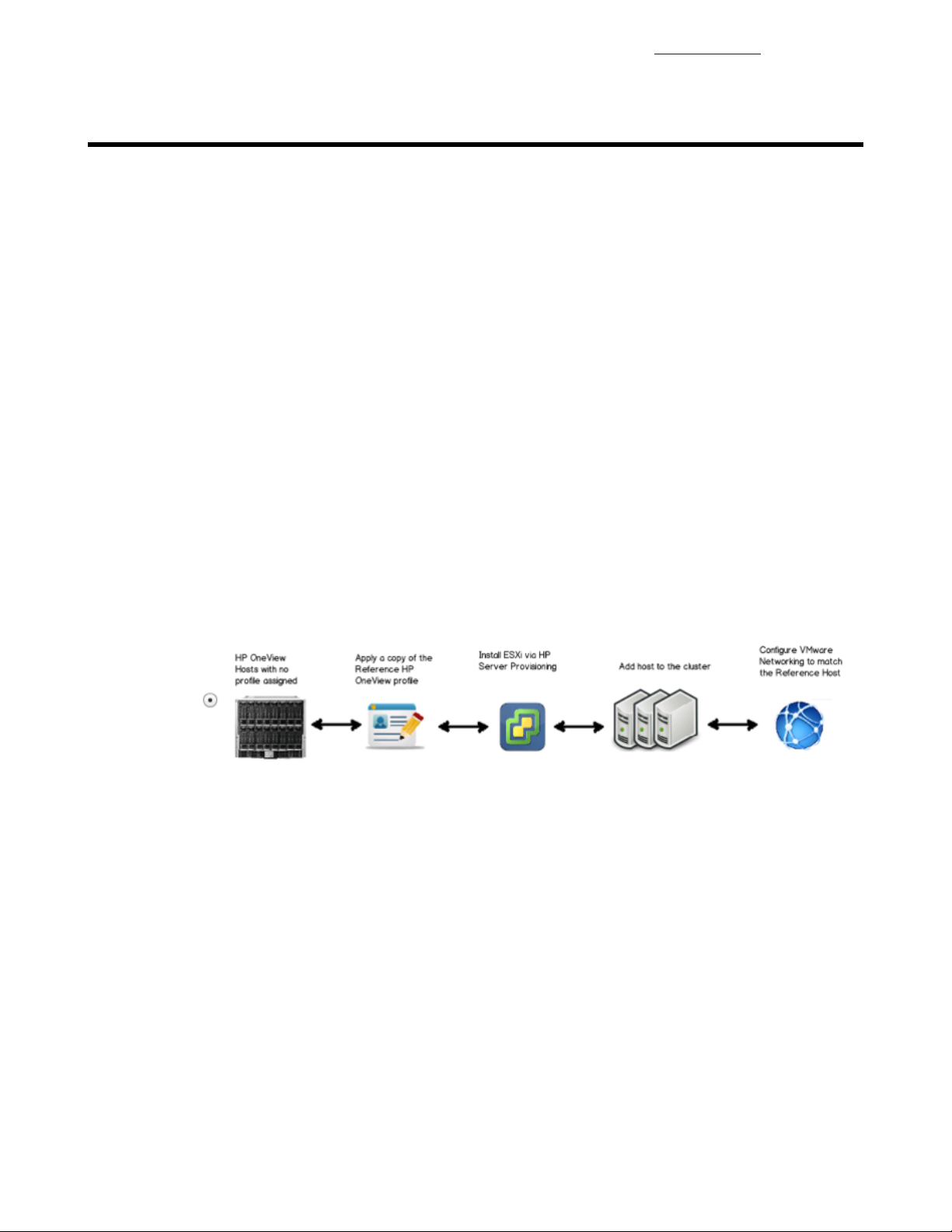

Building the Compute Servers
  Build Compute Servers with HPE Grow Cluster

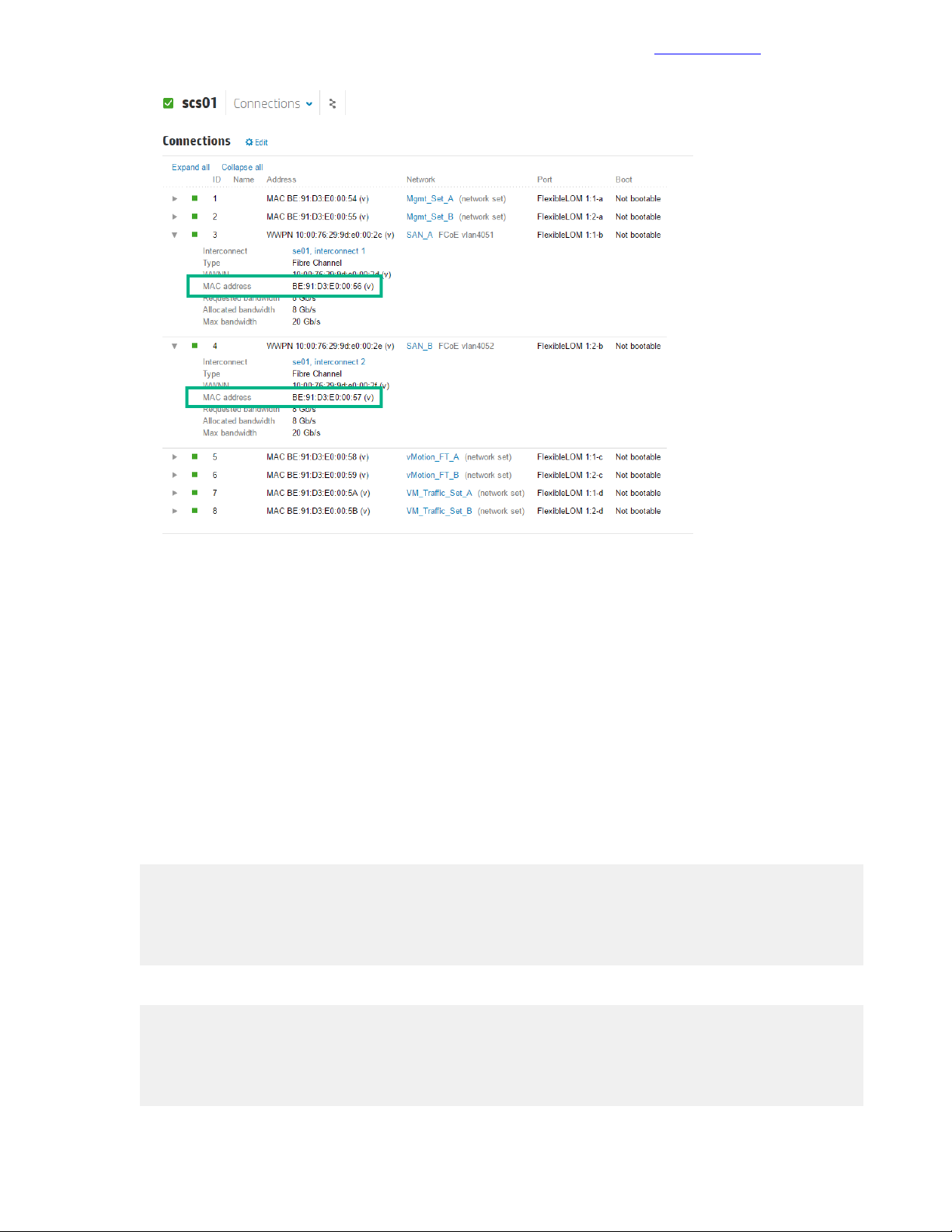

  Post Installation: Configure Compute Server for OneView
  Post Installation: Configure Compute Server Networking
  Post Installation: Configure Compute Server for VMware vCenter
  Post Installation: Configuring Compute Server for Storage
    Gather the WWN of the Compute Server from OneView


    Create and Configure the vFC Interfaces for the Compute Hosts
    Create Datastores for the Compute Cluster
Appendixes
  Appendix A: Configuration Worksheet
  Appendix B: ESXi 6.5 Kickstart File for Grow Cluster
  Appendix C: Factory Resetting a Blade to Prepare for a New OV4VC Build or After a Failed Deployment on a Blade
    Remove Server Entry from ICsp
    Remove Profile from OneView
    Reset the Blade Through Intelligent Provisioning
Additional Resources
About the Authors
  David Fogaren
  Julian Cates
Version History



Legal Notices

Copyright 2010-2017 Hewlett Packard Enterprise Development LP. All rights reserved worldwide.

No part of this document may be reproduced, stored in a retrieval system, or transmitted in any form or by electronic, mechanical, recording, photocopy, scanning or other means without prior written permission from Nimble Storage, Inc.

The product described in this documentation may be protected by US Patent 8,285,918, US Patent 8,832,330, US Patent 8,924,607, US Patent 8,949,502, US Patent 9,003,113, US Patent 9,015,406, US Patent 9,081,670, US Patent 9,098,405, US Patent 9,116,630, and other pending patent applications.

Nimble Storage, Incorporated (Nimble), has used the latest information that is available in producing this document. Nimble Storage makes no warranty, expressed or implied, with regard to accuracy and completeness.

Information in this document is subject to change without notice.

Nimble Storage Inc. Nimble Storage, the "Nimble Storage" logo, InfoSight, SmartStack, CASL, NimbleConnect, Timeless Storage, Data Velocity Delivered, Unified Flash Fabric, and other names are registered trademarks or trademarks of Nimble Storage in the United States and/or other jurisdictions. Other trade names, trademarks, and service marks are the property of their respective owners.

InfoSight® is a registered trademark in Japan of Intage Inc. of Japan. Usage of InfoSight® in Japan is permitted pursuant to a trademark license agreement between Nimble Storage, Inc. and Intage Inc.

Nimble Storage, Inc.

211 River Oaks Parkway

San Jose, CA 95134

U.S.A.

Tel: +1 408.432.9600

Website: http://www.nimblestorage.com

Sales: sales@nimblestorage.com

Publication Date: Tuesday June 20, 2017 07:30:37

Support

All documentation and knowledge base articles are available on the Nimble Storage Support site at https://infosight.nimblestorage.com. To register on InfoSight, click the Enroll Now link on the main page.

Email: support@nimblestorage.com

For all other general support contact information, go to http://www.nimblestorage.com/support.


Executive Summary

In the last several years, integrated infrastructures have emerged as a more efficient, lower-risk way to deploy IT equipment. HPE introduces a new best-in-class solution in this space: the HPE Converged Architecture 700 with Nimble Storage.

The HPE Converged Architecture 700 with Nimble Storage delivers a scalable, converged infrastructure platform for virtualization that is tuned for running workloads and applications. The solution is available through certified channel partners and provides infrastructure your way, delivered as one integrated stack that saves you time and resources.

The HPE Converged Architecture 700 with Nimble Storage includes Hewlett Packard Enterprise lab-tested components, such as HPE network switches, HPE industry-leading x86 servers, and storage arrays from Nimble Storage, a Hewlett Packard Enterprise company, plus the VMware® vSphere® hypervisor. This foundation can be used to support a wide variety of enterprise workloads:

• Data center server consolidation and cloud solutions
• Business-critical applications, such as databases and applications from Oracle, Microsoft, and SAP
• Virtual desktop infrastructure (VDI) solutions, such as Citrix® VDI and VMware Horizon® View
• Workforce-enablement applications, such as Microsoft® Exchange Server, SharePoint® Server, and Lync Server

The HPE Converged Architecture 700 with Nimble Storage is a robust, fault-tolerant, scalable, high-performance, high-availability solution. HPE has validated it in lab testing against these criteria, so customers can purchase and deploy the solution with confidence that it has undergone stringent design and testing.

Solution Overview

The HPE Converged Architecture 700 with Nimble Storage can be thought of as a solution template whose components have been prevalidated together. The template indicates which families of hardware and software to deploy and how to connect and configure them. The HPE Converged Architecture 700 with Nimble Storage simplifies and accelerates deployment with a prescribed and validated deployment guide that produces predictable results and reduces the risk of failure caused by a lack of knowledge of the hardware and software interdependencies.

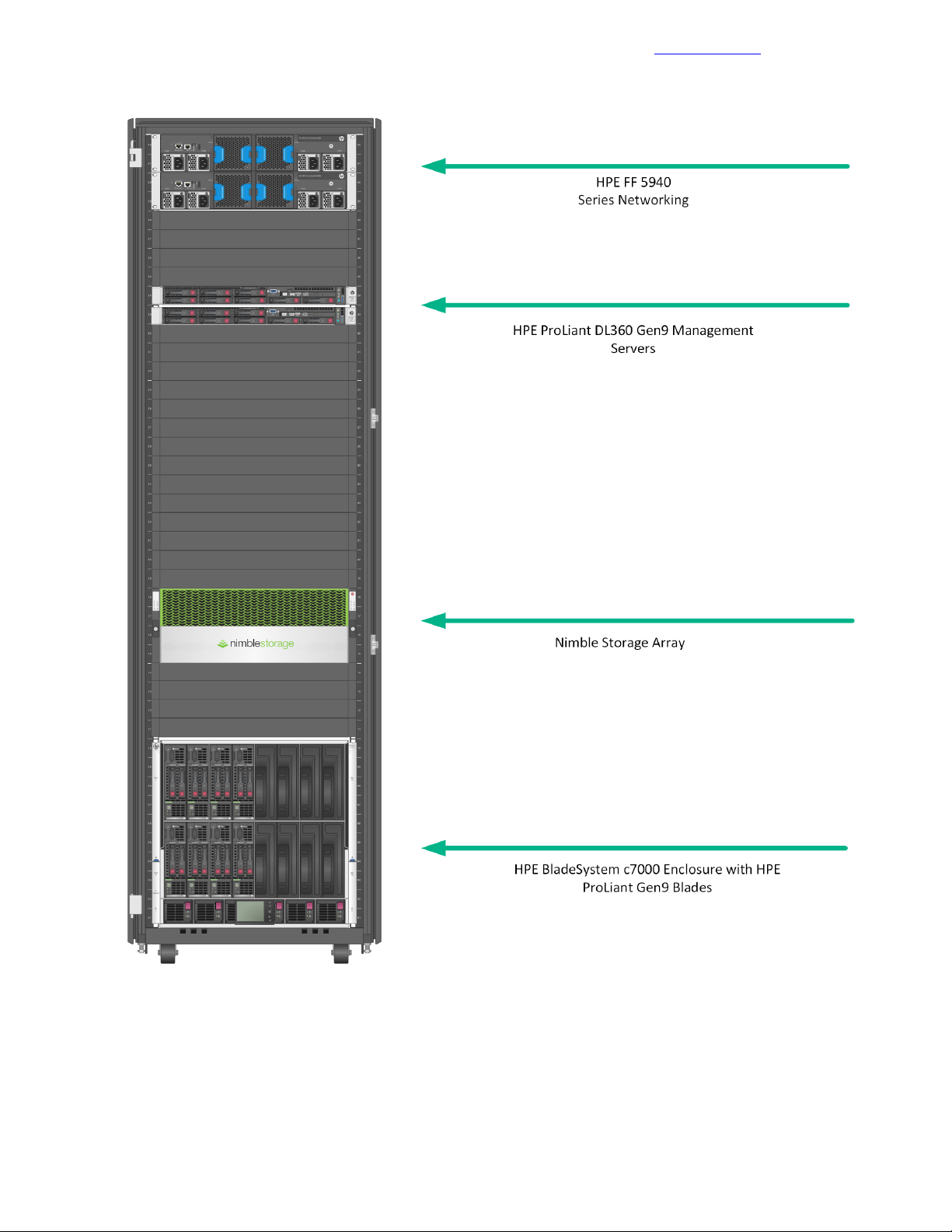

Every HPE Converged Architecture 700 with Nimble Storage deployment contains the following components:

• Ethernet switches: A pair of HPE FlexFabric 5940 10/40 GbE switches
• Storage: Nimble Storage arrays with a converged fabric-attached topology
• Computing resources:
  • Industry-leading HPE ProLiant Gen9 server blades in the HPE BladeSystem c7000 chassis form factor
  • A pair of standalone HPE ProLiant Gen9 rack mount servers for solution management
• Hypervisor: VMware vSphere 6.5

The testing described in this deployment guide was performed in May 2017.

Target Audience

The target audience for this deployment guide is solution engineers, distributors, and value-added resellers who are HPE authorized partners. Readers can use this document to achieve the following goals:

• Gain insight into the value proposition for the HPE Converged Architecture 700 with Nimble Storage solution.


• Better understand the component requirements for the solution.

• Better understand the recommended software and features that are part of the solution.

• Leverage design guidance to architect the solution to fit a particular set of business cases.

• Better understand the design considerations related to fault tolerance, performance, and scalability when architecting the solution.

The HPE Converged Architecture 700 with Nimble Storage is intended for midsize businesses, large enterprises, and IT service providers that want the combination of consolidation, efficiency, and consistency that the solution enables.


Business Need

One of the biggest challenges for IT is to provide a wide variety of software services with appropriate service levels and performance for the applications and services that customers need. New workloads and business demands are forcing customers to reevaluate the way they buy and manage infrastructure.

Rather than build do-it-yourself systems or integrate components from multiple vendors, customers want pre-engineered systems and support from a single vendor. They need repeatable and flexible building blocks that are tuned to handle unpredictable workloads. These systems must deliver fast time-to-value and must include software-defined management of servers, storage, and networking that automates all layers of the data center and streamlines routine tasks.

The HPE Converged Architecture 700 with Nimble Storage combines industry-leading HPE x86 servers, storage arrays from Nimble Storage, HPE 5940 Series network switches, and a validated management software stack to provide a highly available, scalable, and high-performing virtualization platform from one vendor, HPE. These components are configured according to HPE best practices, providing a prescriptively defined foundation onto which IT workloads can be deployed.

Terms and Abbreviations

The following terms and abbreviations appear frequently in this deployment guide. You can use the definitions in this list as a quick reference for how the terms apply to the HPE Converged Architecture 700 with Nimble Storage.

bridge aggregation

The HPE name for link aggregation. Link aggregation combines multiple network interface connections on a network device to increase throughput beyond the level that a single connection can sustain. It also provides redundancy if a link fails.
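To make the throughput and redundancy claims concrete, here is a minimal Python sketch that models a bridge aggregation as a list of member links. The `MemberLink` class, the link names, and the 40 GbE speeds are hypothetical illustrations, not HPE switch objects or APIs. The model assumes that aggregate capacity is simply the sum of the member links that are up; in practice, traffic is distributed per flow, so a single flow is still limited to the speed of one member link.

```python
from dataclasses import dataclass


@dataclass
class MemberLink:
    """Hypothetical model of one physical link in a bridge aggregation."""
    name: str
    speed_gbps: int
    up: bool = True


def aggregate_capacity_gbps(members: list[MemberLink]) -> int:
    # Aggregate capacity is the sum of the speeds of the member links that are up.
    return sum(link.speed_gbps for link in members if link.up)


def survives_single_failure(members: list[MemberLink]) -> bool:
    # Traffic keeps flowing after one more link fails only if at least two members are up now.
    return sum(1 for link in members if link.up) >= 2


if __name__ == "__main__":
    uplinks = [MemberLink("link-1", 40), MemberLink("link-2", 40)]
    print(aggregate_capacity_gbps(uplinks))   # 80
    print(survives_single_failure(uplinks))   # True
    uplinks[0].up = False                     # simulate a failed member link
    print(aggregate_capacity_gbps(uplinks))   # 40
    print(survives_single_failure(uplinks))   # False
```

With one of two links down, the aggregation keeps forwarding at half its capacity but no longer has a spare link, which is the redundancy trade-off the definition describes.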

HPE Insight Control server provisioning (ICsp)

A virtual appliance that is used to install and configure HPE ProLiant servers. ICsp uses resources such as OS build plans and scripts to run deployment jobs.

HPE Integrated Lights-Out (iLO)

The proprietary HPE embedded server management technology that provides out-of-band management capabilities.

HPE Intelligent Resilient Framework (IRF)

A technology in HPE Comware-based switches that converges up to nine network devices into a single fabric (on both the management and control planes) through physical IRF ports. All devices that participate in the IRF configuration are managed through a single IP address, and the IRF member switches appear to other network components as one device.

HPE OneView

A powerful converged management appliance that simplifies the deployment and management of converged infrastructure services. HPE OneView is an appliance virtual machine (VM) that runs on the VMware management server cluster.


HPE ROM-Based Setup Utility (RBSU)

A utility that has a menu-driven interface and a BIOS serial CLI that allow users to perform configuration activities on the server.

HPE Service Pack for ProLiant (SPP)

A consolidated set of solution-tested HPE ProLiant system software (firmware, drivers, agents, and utilities) that is available as a single download from an easy-to-use website. Using the SPP reduces the time spent on maintenance and provides confidence in the stability of the update.

logical interconnect group

A group of Virtual Connect interconnects that share a common network and storage configuration. HPE OneView administrators create logical interconnect groups.

management servers

The HPE ProLiant DL360 Gen9 servers, running VMware ESXi™ 6.5, that host the VMs for management and for other infrastructure.

management VM

The Windows Server® 2012 R2-based VM that is used as the HPE ICsp media server and that runs the HPE OneView for vCenter plugin software. This VM can also be the primary management system for the solution, managing the VMware vSphere hosts and the Nimble Storage arrays.

multi-active detection (MAD)

A mechanism that manages the switches when an IRF fabric splits, for example after an IRF link failure. MAD detects other switches in the IRF stack that come online as masters and keeps online only the switch with the lowest master IRF ID. The other switches shut down their interfaces, which effectively removes them from the network and prevents network loops.
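The MAD behavior described above can be sketched in a few lines of Python. This is a simplified model that follows only the rule stated in this guide (the fabric whose master has the lowest IRF member ID stays active); the `IrfFabric` class and the `resolve_mad_collision` function are hypothetical names, not a switch API, and a real switch may weigh additional criteria before it compares IRF IDs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IrfFabric:
    """Hypothetical model of one active IRF fabric after the IRF stack splits."""
    master_id: int               # IRF member ID of this fabric's master
    member_ids: tuple[int, ...]  # IRF member IDs that belong to this fabric


def resolve_mad_collision(fabrics: list[IrfFabric]) -> tuple[IrfFabric, list[int]]:
    """Return the fabric that stays active and the member IDs that must shut down their interfaces."""
    if not fabrics:
        raise ValueError("at least one active fabric is required")
    # Simplified rule from this guide: the fabric with the lowest master IRF ID wins.
    winner = min(fabrics, key=lambda f: f.master_id)
    # Every member of every other fabric shuts down its interfaces, removing it from the network.
    shut_down = sorted(m for f in fabrics if f is not winner for m in f.member_ids)
    return winner, shut_down


if __name__ == "__main__":
    # A two-switch IRF stack has split, and each switch has elected itself master.
    split = [IrfFabric(master_id=2, member_ids=(2,)), IrfFabric(master_id=1, member_ids=(1,))]
    winner, shut_down = resolve_mad_collision(split)
    print(winner.master_id)  # 1
    print(shut_down)         # [2]
```

The sketch returns the losing members rather than acting on them, which keeps the election rule separate from the interface shutdown that the switch performs.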

virtual local area network (VLAN)

A method for segmenting a network into related groups, which improves the efficiency of traffic flow and limits the propagation of multicast and broadcast messages. Traffic between VLANs is blocked unless the VLANs are connected by a router; this isolation increases security.

virtual storage area network (VSAN)

The virtual SAN fabric that carries storage traffic throughout the solution.


System Setup

To deploy the HPE Converged Architecture 700 with Nimble Storage solution, you must complete the following tasks:

• Lay out the physical infrastructure.

• Complete the configuration worksheet.

• Configure the network.

• Configure the HPE compute infrastructure.

• Configure the management servers:

  ◦ Configure the hypervisor for the management server.

  ◦ Deploy the VMware vCenter Management Appliance.

  ◦ Build the management VM.

• Configure the storage arrays:

  ◦ Deploy the Nimble Storage arrays.

  ◦ Configure the converged SAN network.

  ◦ Configure the Nimble Storage vCenter integration.

  ◦ Deploy the Nimble Storage datastores to the management hosts.

• Configure the HPE management software:

  ◦ Deploy HPE OneView.

  ◦ Deploy HPE ICsp.

  ◦ Deploy the HPE OneView for VMware vCenter plugin.

• Configure the compute servers:

  ◦ Build the compute servers.

  ◦ Deploy the Nimble Storage datastores to the compute hosts.

11Copyright©2017 by Nimble Storage, Inc. All rights reserved.

Page 12

Validated Software and Firmware Levels

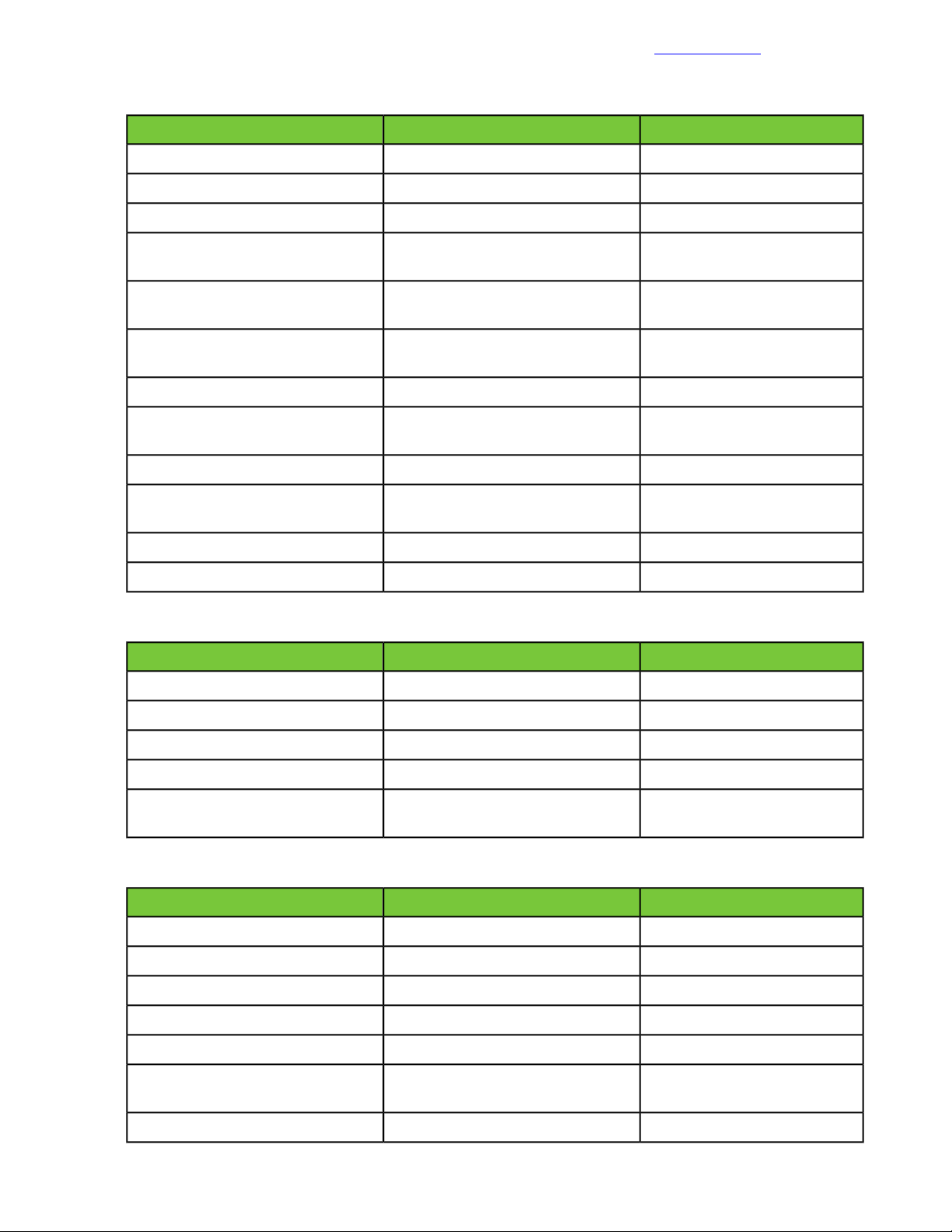

Figure 1: Configuration tested and verified by HPE and Nimble Storage

Documentation Feedback

Validated Software and Firmware Levels

The HPE Converged Architecture 700 with Nimble Storage solution was thoroughly tested to validate the

design and the interoperability of the components. The precise software and firmware versions that were

tested in the lab are presented in the sections that follow.


HPE Service Pack for ProLiant

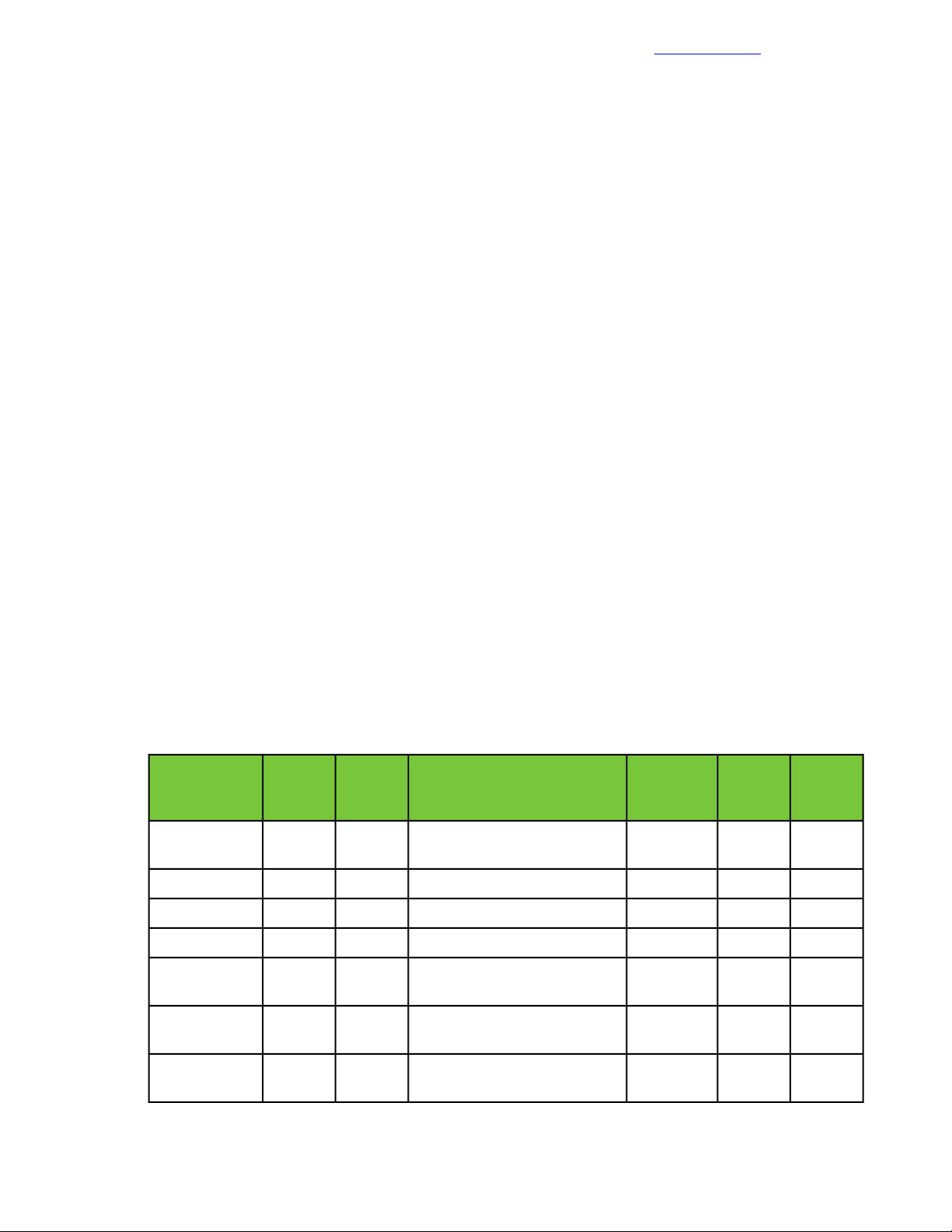

The following table lists the HPE Service Pack for ProLiant (SPP) firmware versions for the infrastructure components that were verified for the solution. Downloading the SPP requires validation through the HPE Support Center and an active warranty or HPE Support agreement. Unless otherwise noted, the listed components are included in the HPE SPP.

Table 1: HPE Service Pack for ProLiant

| Component | Verified Version |
|---|---|
| HPE Service Pack for ProLiant | 2017.04 |
| HPE Onboard Administrator | 4.60 |
| HPE Virtual Connect | 4.45 |
| HPE ProLiant BL460c Gen9 | ROM Version: I36 v2.40 (02/17/2017) |
| HPE ProLiant DL360 Gen9 | ROM Version: P89 v2.40 (02/17/2017) |
| HPE Integrated Lights-Out 4 (iLO 4) | 2.53* |
| HPE Smart Array P440ar/2G FIO Controller (DL360 Gen9) | 5.04 |
| HPE Smart Array P244br/1G FIO Controller (BL460c) | 5.04 |
| HPE Ethernet 1Gb 4-port 331i Adapter (Broadcom) | bc 1.46 ncsi 1.3.16.0 |
| HPE FlexFabric 10Gb 2P 556FLR-SFP+ Adapter (Emulex) | 11.1.183.62 |
| HPE FlexFabric 20Gb 2P 650FLB Adapter (Emulex) | 11.1.183.62 |

Note Components marked with an asterisk (*) are not included in the SPP. For these components, you must build a custom firmware bundle. For instructions on how to create the bundle, see Create a Custom SPP in the HPE OneView 3.0 User Guide.

Nimble Storage Software

The following table lists the Nimble Storage software components and versions that were verified for the solution.

Table 2: Nimble Storage software

| Component | Verified Version |
|---|---|
| NimbleOS | 4.3 |
| Nimble Windows Toolkit | 4.1.0.132 |
| Nimble Connection Manager for VMware ESXi 6.5 | 4.1.0 |

HPE Infrastructure Firmware

The following table lists the infrastructure firmware components and versions that were verified for the solution. The firmware versions noted in the table are not contained in the SPP; they must be downloaded separately to a local repository. For update procedures for these components, see the documentation for the respective component.


Table 3: HPE infrastructure firmware

| Component | Verified Version |
|---|---|
| HPE Intelligent Provisioning | 2.50 |
| HPE FF 5940-4Slot | 7.1.070, Release 2606 |
| HPE metered and switched power distribution unit | 2.00.0038 |

HPE Infrastructure Software

The following table lists the HPE infrastructure software components and versions that were verified for the solution.

Table 4: HPE infrastructure software

| Component | Verified Version |
|---|---|
| HPE OneView | 3.0.07 |
| HPE Insight Control server provisioning (ICsp) | 7.6 |

VMware Infrastructure Software

The following table lists the infrastructure software components and versions related to VMware vSphere that were verified for the solution.

Table 5: VMware infrastructure software

| Component | Current Supported Version |
|---|---|
| VMware vCenter Server | 6.5 (Build: 4602587) (ISO Build: 4602587) |
| VMware vSphere | 6.5 (Build: 5224529) |
| HPE Custom Image for VMware ESXi 6.5 | VMware-ESXi-6.5.0-OS-Release-5146846HPE-650.9.6.5.27-May2017 (6.5) |
| HPE OneView for VMware vCenter | 8.2.1 |
| Windows Server (Windows® management VMs) | 2012 R2 (Standard Edition) |

HPE Customized VMware Image

HPE publishes a separate VMware recipe specific to HPE ProLiant hardware to ensure that driver and firmware releases are compatible with the VMware ESXi hypervisor. The recipe details the HPE customized VMware image and the components necessary for a successful deployment of ESXi. The relevant components in the recipe are listed in Table 5.

HPE publishes a new version of the HPE customized image in the following scenarios:

• When HPE publishes a new SPP

• When HPE publishes a new Maintenance Supplement Bundle (MSB)

• When VMware publishes a new OS release

• When VMware publishes a new update release

• When HPE releases a hotfix for a critical driver or firmware issue

The drivers for the network and storage controllers in the ProLiant servers are integrated in the HPE customized image and are not part of the generic ESXi image that is distributed by VMware. ESXi requires the drivers for these key controllers to be integrated into the base image; the ESXi installer does not prompt you to install them separately.

For more information about the HPE customized image, see Getting Started with HPE Customized Images on the Software Delivery Repository - vibsdepot (also known as HPE Online Depot) site.

For more information about deploying and updating VMware software on HPE ProLiant servers, including adding VMware patches or hotfixes to the HPE customized image, see the technical white paper Deploying and updating VMware vSphere on HPE ProLiant servers.

Windows Server VM

The Windows Server VM is used as a centralized management server within the solution and as a place to install Windows components, such as the Windows-based VMware vCenter deployment. HPE recommends applying Microsoft updates to the VM in accordance with your data center's operations policies.

For information about available hotfixes, visit the Microsoft Support site.
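Checking a deployed environment against the validated levels in the tables above can be scripted. The following Python sketch is illustrative only: the VALIDATED dictionary transcribes a subset of the verified versions from Tables 1 through 4, find_drift is a hypothetical helper, and the installed inventory is assumed to be collected separately (for example, through the component REST APIs). It compares exact version strings because the solution was validated at exact versions, so any difference, newer or older, is reported.

```python
from typing import Dict, Tuple

# A subset of the verified versions from Tables 1 through 4; extend as needed.
VALIDATED: Dict[str, str] = {
    "HPE Onboard Administrator": "4.60",
    "HPE Virtual Connect": "4.45",
    "HPE Integrated Lights-Out 4 (iLO 4)": "2.53",
    "NimbleOS": "4.3",
    "HPE OneView": "3.0.07",
    "HPE FF 5940-4Slot": "7.1.070, Release 2606",
}


def find_drift(
    installed: Dict[str, str], validated: Dict[str, str] = VALIDATED
) -> Dict[str, Tuple[str, str]]:
    """Return {component: (installed, validated)} for every mismatch.

    Components absent from the inventory are reported with "missing" as the
    installed value. Versions are compared as exact strings, not parsed.
    """
    drift = {}
    for component, expected in validated.items():
        actual = installed.get(component, "missing")
        if actual != expected:
            drift[component] = (actual, expected)
    return drift
```

An inventory that matches every entry returns an empty dictionary; an inventory that reports a different NimbleOS release and omits Virtual Connect returns both components, with "missing" as the installed value for Virtual Connect.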


Resources for Physical Deployment

Compute Physical Deployment

HPE BladeSystem c7000 Enclosure

For site requirements, installation instructions, and other general reference materials, see HPE BladeSystem c7000 Enclosures on the HPE Support Site.

HPE ProLiant BL460c Gen9 Servers

For site requirements, installation instructions, and other general reference materials, see HPE ProLiant BL460c Gen9 Servers on the HPE Support Site.

HPE ProLiant WS460c Gen9 Graphics Server Blade

For site requirements, installation instructions, and other general reference materials, see HPE ProLiant WS460c Gen9 Graphics Server Blade on the HPE Support Site.

HPE ProLiant WS460c Gen9 Graphics Expansion Blade

For site requirements, installation instructions, and other general reference materials, see HPE ProLiant WS460c Gen9 Graphics Server Blade on the HPE Support Site.

HPE ProLiant BL660c Gen9 Servers

For site requirements, installation instructions, and other general reference materials, see HPE ProLiant BL660c Gen9 Servers on the HPE Support Site.

HPE ProLiant DL360 Gen9 Servers

For site requirements, installation instructions, and other general reference materials, see HPE ProLiant DL360 Gen9 Servers on the HPE Support Site.

Network Physical Deployment

HPE FlexFabric 5940 Switch Series

For site requirements, installation instructions, and other general reference materials, see HPE FlexFabric 5940 Switch Series on the HPE Support Site.


Cabling for Networking, Storage, and Power

Network Cabling

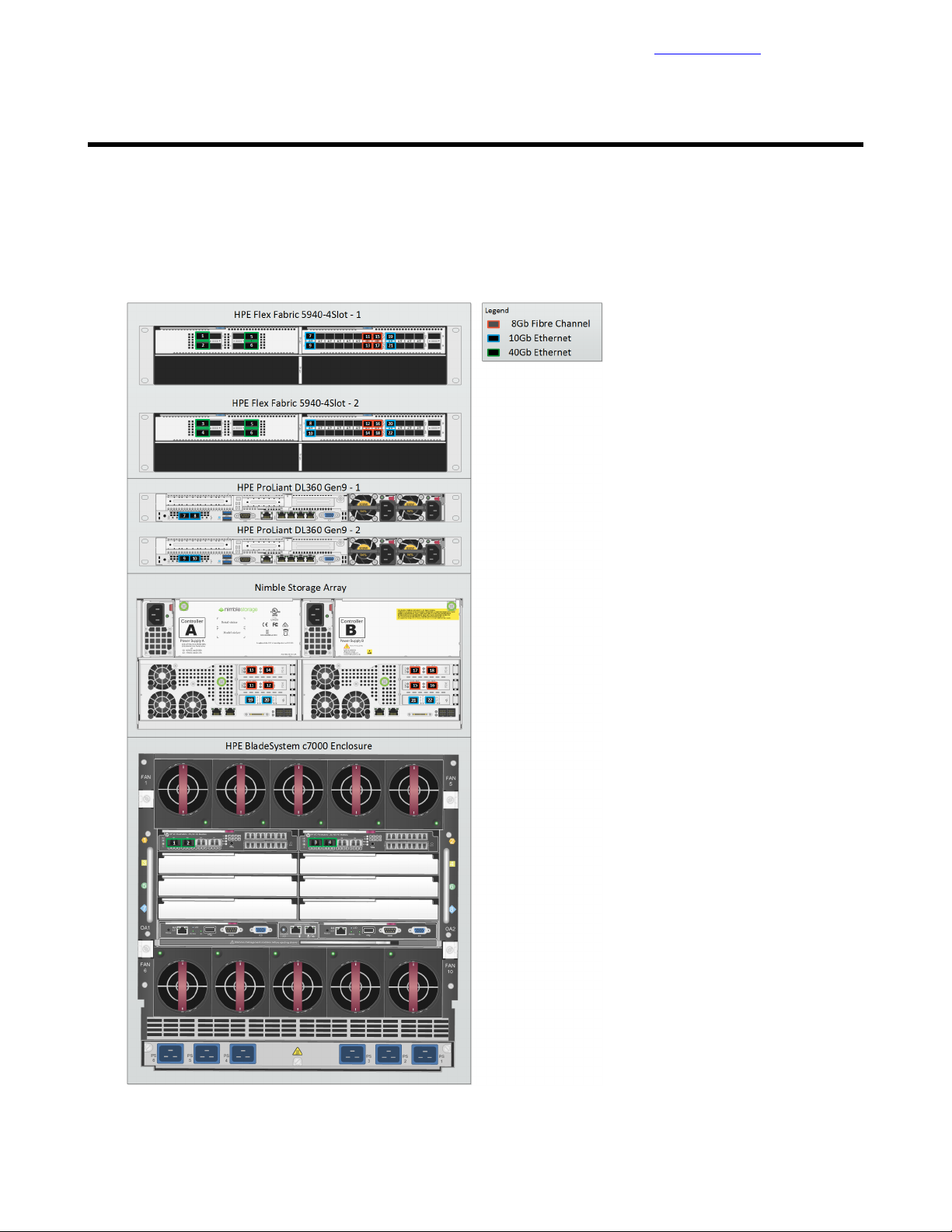

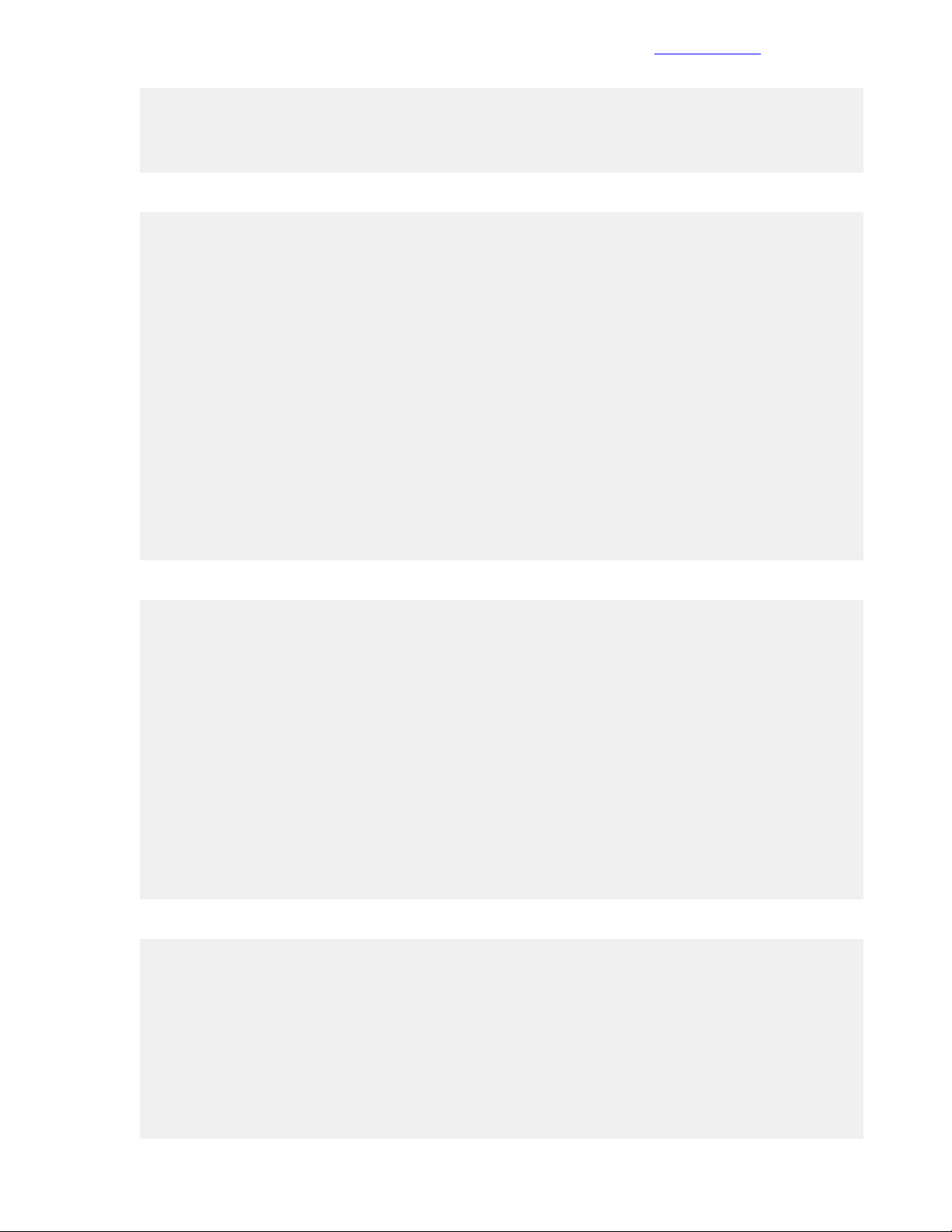

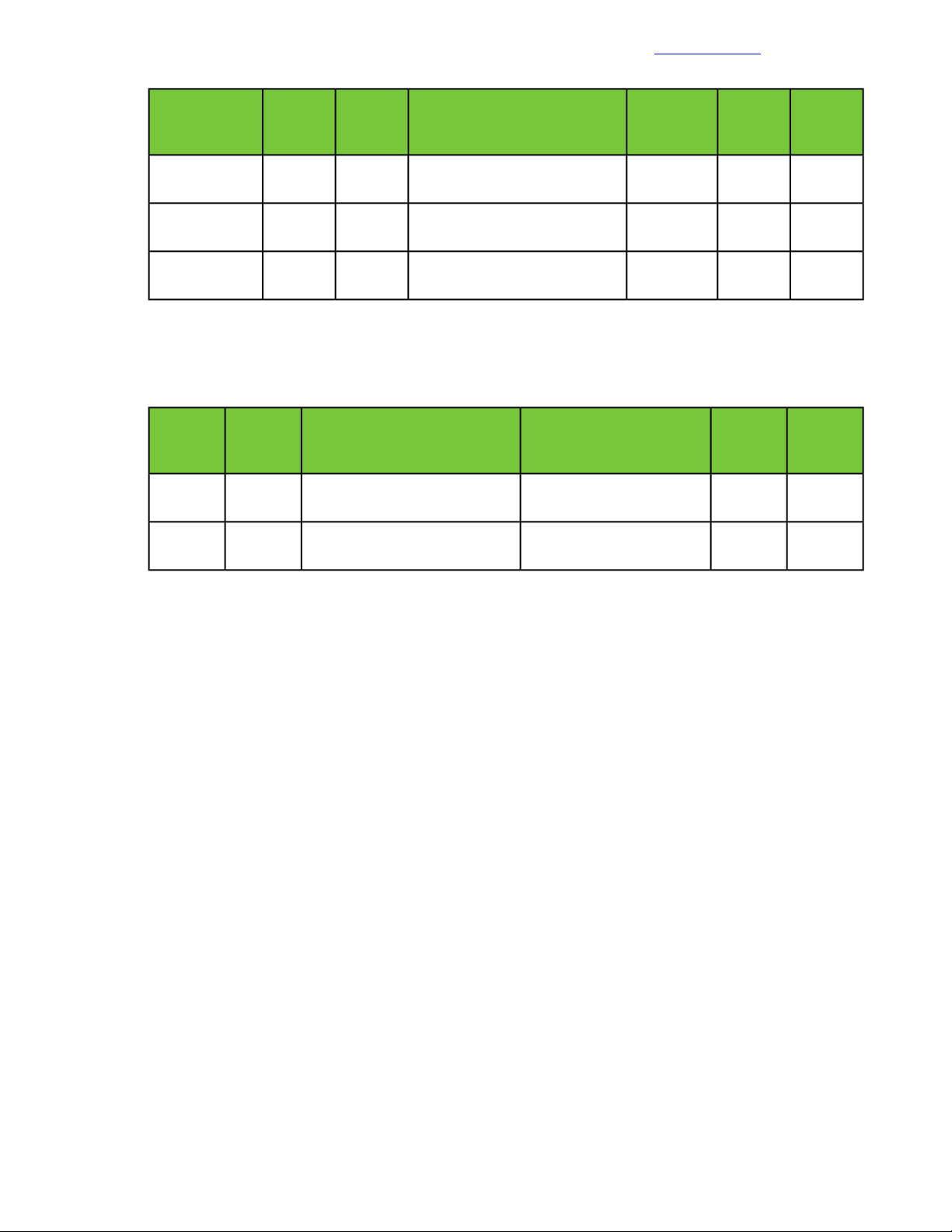

The following diagram shows the SAN connections for the HPE Converged Architecture 700 with Nimble Storage solution.

Figure 2: Cabling overview: SAN connections


Detailed cabling information for each connection is provided in the following table.

Table 6: SAN connections with the HPE FF 5940-4Slot switch and the Nimble Storage array

| Cabling Code | Switch | Switch Port | Device | Device Port |
|---|---|---|---|---|
| 1 | HPE FF 5940-4Slot-1 | 1/1/1 | HPE BladeSystem c7000 enclosure | Interconnect 1 Q1 |
| 2 | HPE FF 5940-4Slot-1 | 1/1/2 | HPE BladeSystem c7000 enclosure | Interconnect 1 Q2 |
| 3 | HPE FF 5940-4Slot-2 | 2/1/1 | HPE BladeSystem c7000 enclosure | Interconnect 2 Q1 |
| 4 | HPE FF 5940-4Slot-2 | 2/1/2 | HPE BladeSystem c7000 enclosure | Interconnect 2 Q2 |
| 5 | HPE FF 5940-4Slot-1 | 1/1/7 | HPE FF 5940-4Slot-2 | 2/1/7 |
| 6 | HPE FF 5940-4Slot-1 | 1/1/8 | HPE FF 5940-4Slot-2 | 2/1/8 |
| 7 | HPE FF 5940-4Slot-1 | 1/2/1 | HPE ProLiant DL360 Gen9 -1 | PCI 1-1 |
| 8 | HPE FF 5940-4Slot-2 | 2/2/1 | HPE ProLiant DL360 Gen9 -1 | PCI 1-2 |
| 9 | HPE FF 5940-4Slot-1 | 1/2/2 | HPE ProLiant DL360 Gen9 -2 | PCI 1-1 |
| 10 | HPE FF 5940-4Slot-2 | 2/2/2 | HPE ProLiant DL360 Gen9 -2 | PCI 1-2 |
| 11 | HPE FF 5940-4Slot-1 | 1/2/13 | Nimble Storage controller A | FC5 |
| 12 | HPE FF 5940-4Slot-2 | 2/2/13 | Nimble Storage controller A | FC6 |
| 13 | HPE FF 5940-4Slot-1 | 1/2/14 | Nimble Storage controller A | FC9 |
| 14 | HPE FF 5940-4Slot-2 | 2/2/14 | Nimble Storage controller A | FC10 |
| 15 | HPE FF 5940-4Slot-1 | 1/2/15 | Nimble Storage controller B | FC5 |
| 16 | HPE FF 5940-4Slot-2 | 2/2/15 | Nimble Storage controller B | FC6 |
| 17 | HPE FF 5940-4Slot-1 | 1/2/16 | Nimble Storage controller B | FC9 |
| 18 | HPE FF 5940-4Slot-2 | 2/2/16 | Nimble Storage controller B | FC10 |
| 19 | HPE FF 5940-4Slot-1 | 1/2/17 | Nimble Storage controller A | tg1 |
| 20 | HPE FF 5940-4Slot-2 | 2/2/17 | Nimble Storage controller A | tg2 |
| 21 | HPE FF 5940-4Slot-1 | 1/2/18 | Nimble Storage controller B | tg1 |
| 22 | HPE FF 5940-4Slot-2 | 2/2/18 | Nimble Storage controller B | tg2 |

The following diagram shows the 1 GbE connections that are used for management.


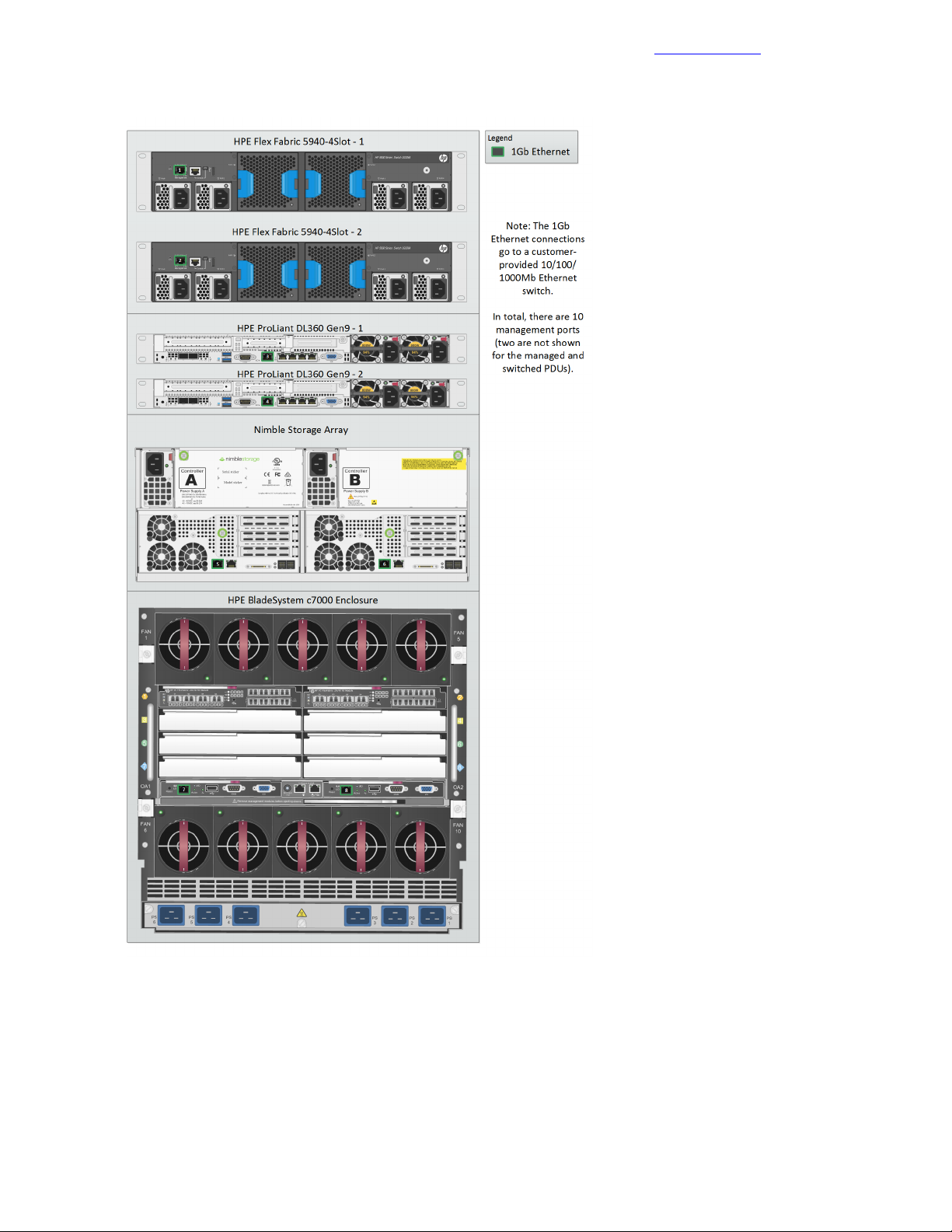

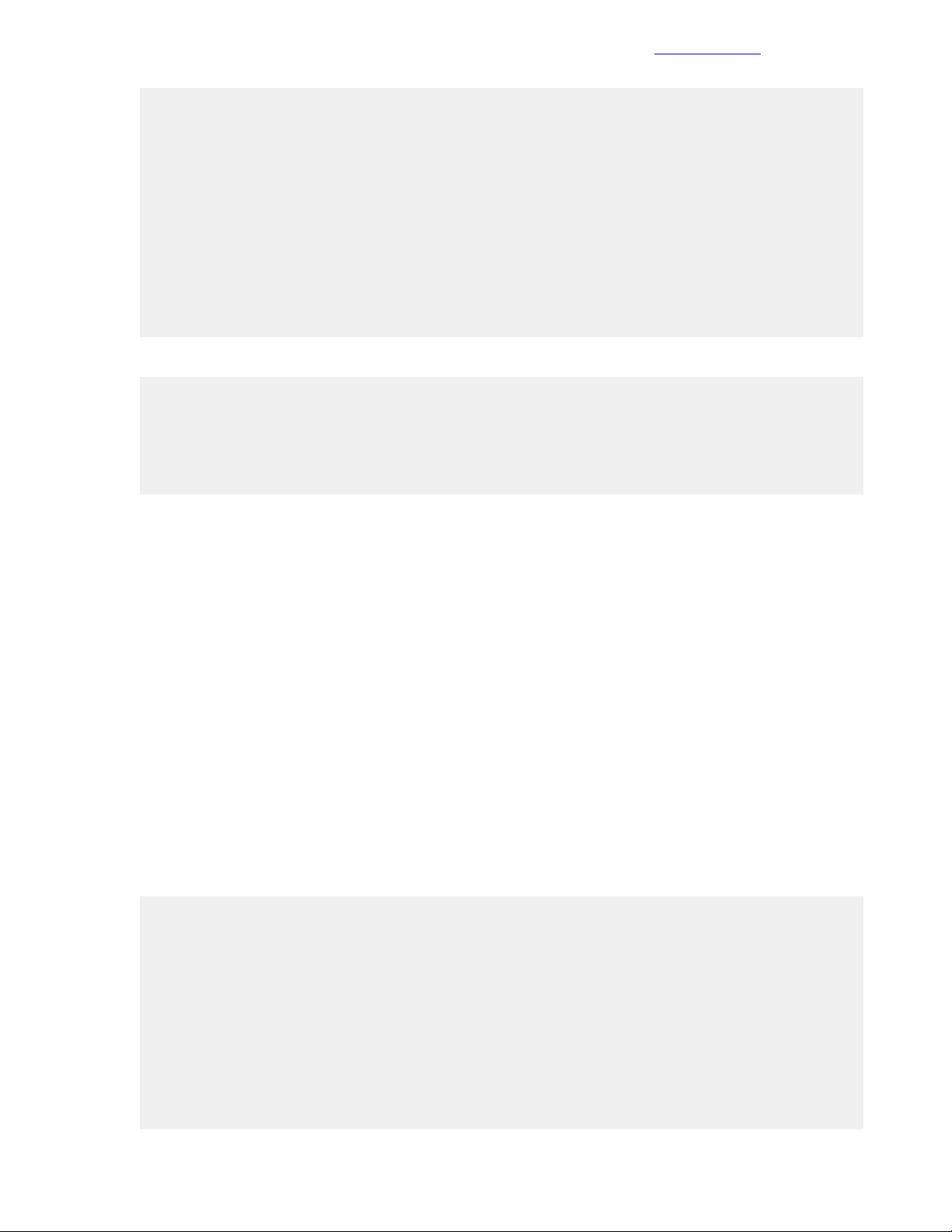

Figure 3: Cabling overview: 1 GbE connections for management


Power Cabling

The following diagram shows the power connections for the HPE Converged Architecture 700 with Nimble Storage solution.


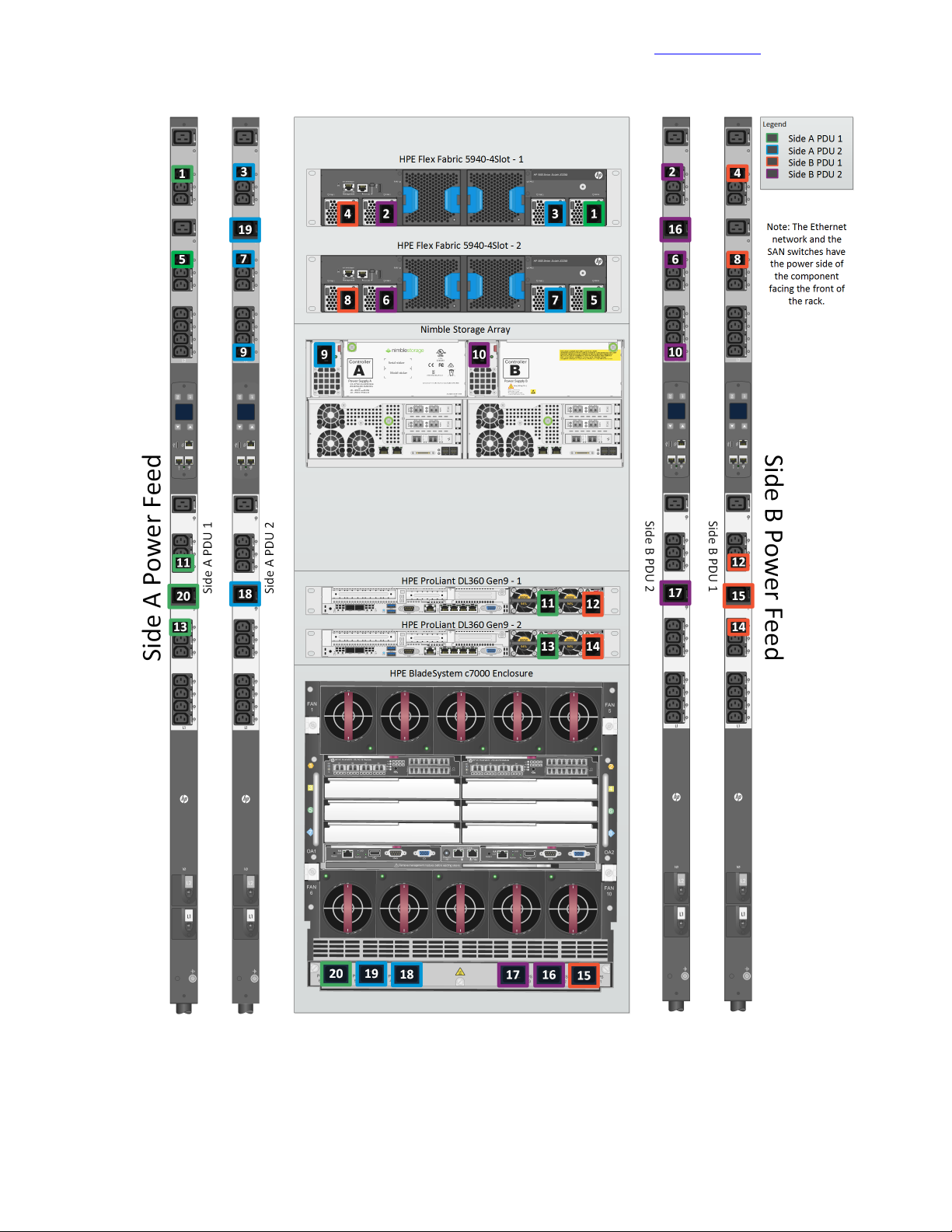

Figure 4: Power cabling overview


Detailed information for each power connection is provided in the following table.


Table 7: Power configuration with HPE switching and 4 x HPE 4.9kVA 208 Volt L6-30 input (20xC13/4xC19) NA/JP managed PDU (H8B50A)

| Cabling Code | Device | Device Port | PDU | PDU Port |
|---|---|---|---|---|
| 1 | HPE FF 5940-4Slot-1 | PS 1 | Side A PDU 1 | L2 – 11 |
| 2 | HPE FF 5940-4Slot-1 | PS 2 | Side B PDU 2 | L2 – 11 |
| 3 | HPE FF 5940-4Slot-1 | PS 3 | Side A PDU 2 | L2 – 11 |
| 4 | HPE FF 5940-4Slot-1 | PS 4 | Side B PDU 1 | L2 – 11 |
| 5 | HPE FF 5940-4Slot-2 | PS 1 | Side A PDU 1 | L2 – 7 |
| 6 | HPE FF 5940-4Slot-2 | PS 2 | Side B PDU 2 | L2 – 7 |
| 7 | HPE FF 5940-4Slot-2 | PS 3 | Side A PDU 2 | L2 – 7 |
| 8 | HPE FF 5940-4Slot-2 | PS 4 | Side B PDU 1 | L2 – 7 |
| 9 | Nimble Storage array controller A | PS 1 | Side A PDU 2 | L2 – 1 |
| 10 | Nimble Storage array controller B | PS 1 | Side B PDU 2 | L2 – 1 |
| 11 | HPE ProLiant DL360 Gen9 – 1 | PS 2 | Side A PDU 1 | L2 – 9 |
| 12 | HPE ProLiant DL360 Gen9 – 1 | PS 1 | Side B PDU 1 | L2 – 9 |
| 13 | HPE ProLiant DL360 Gen9 – 2 | PS 2 | Side A PDU 1 | L1 – 7 |
| 14 | HPE ProLiant DL360 Gen9 – 2 | PS 1 | Side B PDU 1 | L1 – 7 |
| 15 | HPE BladeSystem c7000 enclosure | PS 1 | Side B PDU 1 | L1 – 8 |
| 16 | HPE BladeSystem c7000 enclosure | PS 2 | Side B PDU 2 | L2 – 8 |
| 17 | HPE BladeSystem c7000 enclosure | PS 3 | Side B PDU 2 | L1 – 8 |
| 18 | HPE BladeSystem c7000 enclosure | PS 4 | Side A PDU 2 | L1 – 8 |
| 19 | HPE BladeSystem c7000 enclosure | PS 5 | Side A PDU 2 | L2 – 8 |
| 20 | HPE BladeSystem c7000 enclosure | PS 6 | Side A PDU 1 | L1 – 8 |
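Table 7 spreads each device's power supplies across the Side A and Side B PDUs. The following Python sketch, offered as an illustration rather than an HPE tool, transcribes the table and flags any device whose supplies all land on one side. The function and variable names are hypothetical, and the cabling codes and PDU outlets are omitted because the check does not use them.

```python
from collections import defaultdict

# (device, device port, PDU) rows transcribed from Table 7.
POWER_ROWS = [
    ("HPE FF 5940-4Slot-1", "PS 1", "Side A PDU 1"),
    ("HPE FF 5940-4Slot-1", "PS 2", "Side B PDU 2"),
    ("HPE FF 5940-4Slot-1", "PS 3", "Side A PDU 2"),
    ("HPE FF 5940-4Slot-1", "PS 4", "Side B PDU 1"),
    ("HPE FF 5940-4Slot-2", "PS 1", "Side A PDU 1"),
    ("HPE FF 5940-4Slot-2", "PS 2", "Side B PDU 2"),
    ("HPE FF 5940-4Slot-2", "PS 3", "Side A PDU 2"),
    ("HPE FF 5940-4Slot-2", "PS 4", "Side B PDU 1"),
    ("Nimble Storage array controller A", "PS 1", "Side A PDU 2"),
    ("Nimble Storage array controller B", "PS 1", "Side B PDU 2"),
    ("HPE ProLiant DL360 Gen9 - 1", "PS 2", "Side A PDU 1"),
    ("HPE ProLiant DL360 Gen9 - 1", "PS 1", "Side B PDU 1"),
    ("HPE ProLiant DL360 Gen9 - 2", "PS 2", "Side A PDU 1"),
    ("HPE ProLiant DL360 Gen9 - 2", "PS 1", "Side B PDU 1"),
    ("HPE BladeSystem c7000 enclosure", "PS 1", "Side B PDU 1"),
    ("HPE BladeSystem c7000 enclosure", "PS 2", "Side B PDU 2"),
    ("HPE BladeSystem c7000 enclosure", "PS 3", "Side B PDU 2"),
    ("HPE BladeSystem c7000 enclosure", "PS 4", "Side A PDU 2"),
    ("HPE BladeSystem c7000 enclosure", "PS 5", "Side A PDU 2"),
    ("HPE BladeSystem c7000 enclosure", "PS 6", "Side A PDU 1"),
]


def sides_per_device(rows):
    """Map each device to the set of power sides ("A", "B") that feed it."""
    sides = defaultdict(set)
    for device, _port, pdu in rows:
        sides[device].add(pdu.split()[1])  # "Side A PDU 1" -> "A"
    return dict(sides)


def single_sided_devices(rows):
    """Return, sorted, the devices whose power supplies are all on one side."""
    return sorted(d for d, s in sides_per_device(rows).items() if len(s) < 2)


if __name__ == "__main__":
    print(single_sided_devices(POWER_ROWS))
```

Run against Table 7, the check reports only the two Nimble Storage array controllers, because each is listed with a single supply; taken together, the array is fed from Side A (controller A) and Side B (controller B).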


Preparing to Install and Configure the HPE Converged Architecture 700 with Nimble Storage

The Configuration Worksheet

Appendix A: Configuration Worksheet on page 97 lists the variables that are required to complete the tasks in this deployment guide. Before you begin deploying an HPE Converged Architecture 700 with Nimble Storage, make sure that the configuration worksheet is filled in with correct, validated values. You can expand the configuration worksheet to suit your needs.
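Because the commands in this guide use <<name>> placeholders for worksheet values, a small script can fill them in and catch gaps before anything is pasted into a device. The following Python sketch assumes that the worksheet has been exported to a simple name-to-value dictionary; the export step and the fill_placeholders name are hypothetical. It reports every missing value at once rather than stopping at the first.

```python
import re

# Worksheet placeholders in this guide look like <<mgmt_net_vlan>>.
PLACEHOLDER = re.compile(r"<<([A-Za-z0-9_]+)>>")


def fill_placeholders(template: str, worksheet: dict) -> str:
    """Substitute every <<name>> in template with worksheet[name].

    Raises KeyError naming all placeholders that have no worksheet value,
    so that an incomplete worksheet is caught before configuration starts.
    """
    names = {m.group(1) for m in PLACEHOLDER.finditer(template)}
    missing = sorted(names - set(worksheet))
    if missing:
        raise KeyError("worksheet has no value for: " + ", ".join(missing))
    # A function replacement keeps re from interpreting backslashes in values.
    return PLACEHOLDER.sub(lambda m: str(worksheet[m.group(1)]), template)
```

For example, fill_placeholders("vlan <<mgmt_net_vlan>>", {"mgmt_net_vlan": "100"}) returns "vlan 100", where 100 is a made-up value.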

Fully Qualified Domain Names Versus IP Addresses

All components that are deployed with the HPE Converged Architecture 700 with Nimble Storage must have correct forward and reverse domain name system (DNS) entries in the network DNS server. This guide assumes that the site where the HPE Converged Architecture 700 with Nimble Storage will be deployed already has a DNS server and that the server configuration will be modified to accommodate the HPE Converged Architecture 700 with Nimble Storage. You can also deploy and configure a DNS server specifically for use with this solution; however, the deployment steps for a DNS server are not covered in the guide.

Throughout the guide, when you are asked to enter the IP address of a component, you can usually use its DNS name or fully qualified domain name (FQDN) instead. Although you can use IP addresses alone, HPE recommends using DNS names whenever possible. Even if you use only IP addresses, the solution requires a valid DNS server with host name entries for every component.

HPE Resources for Automation Efforts

This deployment guide covers the manual steps that are required to build a verified configuration of the HPE Converged Architecture 700 with Nimble Storage. It does not cover automation, apart from the automation that is built into the products; for example, the HPE OneView for VMware vCenter Enhanced Grow Cluster feature.

APIs and libraries that you can use for automation (for example, REST APIs and Python, Java, and PowerShell libraries) are available for most of the components of the HPE Converged Architecture 700 with Nimble Storage. You can use these resources to streamline deployments.

HPE OneView

HPE OneView API Reference and HP OneView 2.0 REST API Reference

HPE OneView REST API scripting help

HPE OneView SDK and Libraries on GitHub

HPE Insight Control server provisioning

HP Insight Control server provisioning API Reference:

https://<<icsp_mgmt_ip>>/api-docs/current/#about

Note To access this reference, you must be logged in to a deployed instance of HPE ICsp.

HPE Insight Control server provisioning SDK and Libraries on GitHub

HPE Integrated Lights Out

iLO RESTful API Data Management Model Reference (iLO 4)


Managing Hewlett Packard Enterprise Servers Using the RESTful API

HPE Integrated Lights Out SDK and Libraries on GitHub

Nimble Storage Array

Nimble Storage REST API Reference

VMware

VMware API and SDK Documentation
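As a starting point for automation, the following Python sketch builds, but does not send, the session-login requests for two of the REST APIs listed above, using only the standard library. The endpoints reflect our understanding of those APIs and should be confirmed in the references above: HPE OneView issues a session ID from POST /rest/login-sessions (returned later in an Auth header, with an X-API-Version header on every call), and NimbleOS issues a session token from POST /v1/tokens on port 5392 (returned later in an X-Auth-Token header). The API version value 300 for HPE OneView 3.0 is an assumption, and the function names are hypothetical.

```python
import json
import urllib.request

# Assumption: REST API version 300 corresponds to HPE OneView 3.0.
ONEVIEW_API_VERSION = "300"


def oneview_login_request(host: str, user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) an HPE OneView login-sessions request."""
    body = json.dumps({"userName": user, "password": password}).encode("utf-8")
    return urllib.request.Request(
        f"https://{host}/rest/login-sessions",
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "X-API-Version": ONEVIEW_API_VERSION},
    )


def nimble_token_request(host: str, user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a NimbleOS REST token request on port 5392."""
    body = json.dumps({"data": {"username": user, "password": password}}).encode("utf-8")
    return urllib.request.Request(
        f"https://{host}:5392/v1/tokens",
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )
```

To send a request, pass it to urllib.request.urlopen. Both appliances typically present self-signed certificates, so you must either trust their certificates or supply an ssl.SSLContext configured for your environment.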


Connecting to the Components in the Solution

As you deploy the HPE Converged Architecture 700 with Nimble Storage solution, you will need to connect to various components. Use the information in this section for guidance on how to connect to a specific component during the solution build or as a general reference.

HPE iLO

Perform the steps in this procedure to log in to the HPE Integrated Lights-Out (iLO) of an HPE ProLiant server.

Procedure

1 Using a web browser, navigate to the iLO to which you want to connect.

For example, to connect to the iLO of the first management server, navigate to https://<<mgmt_server_1_ilo_ip>>.

2 Accept any security warnings to continue to the website.

3 Log in with the user name admin and the password <<mgmt_server_ilo_password>>.

4 To access the Integrated Remote Console, click the .NET link or the Java link on the Overview page.

HPE Onboard Administrator

Perform the steps in this procedure to log in to the active HPE Onboard Administrator of an enclosure.

Procedure

1 Using a web browser, navigate to https://<<enclosure_1_oa_1_ip>>.

2 Accept any security warnings to continue to the website.

3 Log in with the user name admin and the password <<enclosure_1_password>>.

4 Alternatively, connect to the Onboard Administrator CLI through an SSH connection, using PuTTY, TeraTerm, or another SSH client.

Network Switches

How you connect to the network switches depends on whether networking has been configured on them. A serial connection is always possible. If the switch is available on the network, you can use an SSH connection instead of a serial connection.

Procedure

1 Connect a serial cable between the deployment PC and the switch.

2 Using a terminal emulation program that supports serial connections (for example, TeraTerm), select the correct serial port.

3 Configure the port settings for 9600 baud, 8-bits, no parity, 1 stop bit, and no flow control.

4 Log in:

• If the switch has not been configured yet, no user name or password is required.

• If the switch has been configured, log in with the user name admin and the password <<net_switch_admin_password>>.

5 If the switch has been configured and you want to connect through SSH, open an SSH connection to the switch by using an SSH client, such as TeraTerm, and log in with the user name admin and the password <<net_switch_admin_password>>.


VMware Host Client

Perform the steps in this procedure to open the VMware Host Client to manage a local VMware ESXi node.

Procedure

1 Open a web browser on the management workstation and navigate to the ESXi node management IP address; for example, <<mgmt_server_1_ip>>.

2 Accept any security warnings to continue to the website.

3 Click Open the VMware Host Client.

4 Log in with the user name root and the password <<mgmt_server_root_password>>.

5 Clear the Join CEIP checkbox and click OK.

VMware vSphere Client for Windows

Perform the steps in this procedure to download and install the vSphere Client for Windows application and to use it to connect to VMware hosts or to vCenter.

Procedure

1 Download the client:

a Using a web browser, navigate to http://vsphereclient.vmware.com/vsphereclient/5/1/1/2/5/0/8/VMware-viclient-all-6.0.0-5112508.exe.

b Download and install the vSphere Client for Windows.

Later, you will use the vSphere Web Client for administration tasks; the thick client installed in this step is used only to simplify the installation.

c After you download and install the thick client, run the application.

A shortcut to the vSphere Client for Windows may have been added to the desktop.

2 Connect to a VMware host:

a In the vSphere Client application, enter the IP address or the host name of the host; for example, <<mgmt_server_1_ip>>.

b Log in with the user name root and the password <<mgmt_server_root_password>>.

c Click Login.

3 Connect to the VMware vCenter instance:

a Enter the IP address or the FQDN for vCenter; for example, <<vcenter_fqdn>>.

b Log in with the user name administrator@<<mgmt_net_domain_name>> and the password <<vcenter_administrator_password>>.

VMware vSphere Web Client

Perform the steps in this procedure to connect to the vSphere Web Client.

Procedure

1 Using a web browser, navigate to https://<<vcenter_fqdn>>/vsphere-client.


2 Accept any certificate warnings.

3 Log in with the user name administrator@<<mgmt_net_domain_name>> and the password <<vcenter_administrator_password>>.


VMware vSphere Administration Portal

Perform the steps in this procedure to connect to the VMware vSphere Administration Portal.

Procedure

1 Using a web browser, navigate to https://<<vcenter_mgmt_ip>>:5480.

2 Log in with the user name root.

3 Enter the password:

• If this is the first time that you log in to the portal, the default password is vmware.

• Otherwise, the password is <<vcenter_root_password>>.

VMware VM Console: vSphere Web Client

Perform the steps in this procedure to open a VM console from the vSphere Web Client.

Procedure

1 Log in to the vSphere Web Client.

2 From the Home page, click VMs and Templates.

3 In the left pane, expand the data tree to display the VM for which you want to open the console.

4 Click the VM to select it:

a Click the Summary tab for the VM.

b Click Launch Console.

You may have to allow pop-up windows in the browser for the console window to open.

c Accept any security warnings in the new browser window or tab that opens.

VMware VM Console: vSphere Client for Windows

Perform the steps in this procedure to open a VM console from the vSphere Client for Windows application.

Procedure

1 Log in to the vSphere Client for Windows.

2 From the Home page, click VMs and Templates.

3 In the left pane, expand the data tree to display the VM for which you want to open the console.

4 Right-click the VM and choose Open Console:

a To enter console commands, click anywhere in the console window or use the mouse in the console.

b To release the keyboard and the mouse from the console, press and release Ctrl+Alt.

c To send a Ctrl-Alt-Del sequence to the VM, click VM > Guest > Send Ctrl+Alt+Del from the console toolbar.

HPE OneView Web Administration Portal

Perform the steps in this procedure to log in to the HPE OneView Web Administration Portal.

If you are uploading a file to HPE OneView, such as a patch update or the HPE Service Pack for ProLiant, do not use Microsoft Internet Explorer, because it cannot handle large file sizes. Use Google Chrome or Mozilla Firefox instead.


Procedure

1 Using a web browser, navigate to https://<<oneview_fqdn>>.

2 Accept any security warnings to continue to the website.

3 Log in with the user name Administrator and the password <<oneview_administrator_password>>.


HPE OneView for vCenter Plugin Administrator Console

Perform the steps in this procedure to log in to the HPE OneView for vCenter Plugin Administrator Console.

Procedure

1 Using a web browser, navigate to https://<<ov4vc_fqdn>>/ui/index.html.

2 Accept any security warnings to continue to the website.

3 Log in with the user name Admin and the password <<ov4vc_admin_password>>.

Nimble Storage Array CLI

Perform the steps in this procedure to access the CLI of the Nimble Storage array through SSH by using a terminal emulator.

Procedure

1 Open a terminal emulator that supports SSH connections and connect to <<nimble_fqdn>>.

2 Log in with the user name admin and the password <<nimble_adm_pwd>>.

Nimble Storage Administrator Console

Perform the steps in this procedure to log in to the Nimble Storage array Administrator Console.

Procedure

1 Using a web browser, navigate to https://<<nimble_fqdn>>.

2 Accept any security warnings to continue to the website.

3 Log in with the user name admin and the password <<nimble_adm_pwd>>.

HPE Insight Control Server Provisioning Web Administration Page

Perform the steps in this procedure to log in to the HPE ICsp Web Administration page.

Procedure

1 Using a web browser, navigate to https://<<icsp_fqdn>>.

2 Accept any security warnings to continue to the website.

3 Log in with the user name Administrator and the password <<icsp_administrator_password>>.


Configuring the Ethernet Network

The HPE Converged Architecture 700 with Nimble Storage has been validated with the HPE FlexFabric 5940-4Slot switch. The HPE FlexFabric 5940-slot-2QSFP+ switch can also be used for deployments, but the steps for that switch are not documented in this guide.

The following procedures describe how to configure the HPE FlexFabric 5940-4Slot switches for use in a base HPE Converged Architecture 700 with Nimble Storage environment. Before configuring the switches, make sure that they are running the HPE Comware version specified in Validated Software and Firmware Levels on page 12. A base HPE Converged Architecture 700 with Nimble Storage deployment must use at least two network switches of the same model.

Set Up IRF Configuration

The procedure for configuring the Intelligent Resilient Framework (IRF) begins at switch A, moves to switch B, and concludes back at switch A.

Procedure

1 Starting at the serial port of HPE FlexFabric 5940-4Slot switch A, configure the switch.

At initial boot and connection to the serial or console port of the switch, the Comware setup should automatically start and attempt to enter automatic configuration.

Note When instructions call for network configuration in system-view context, if you are at the <HPE> prompt, issue the system-view command to get to the [HPE] prompt.

```
Startup configuration file does not exist.
Started automatic configuration, press CTRL_C or CTRL_D to break.
Automatic configuration attempt: 1.
Not ready for automatic configuration: no interface available.
Waiting for the next...
Automatic configuration attempt: 2.
Interface used: M-GigabitEthernet0/0/0.
Enable DHCP client on M-GigabitEthernet0/0/0.
M-GigabitEthernet0/0/0 failed to obtain IP address.
Interface used: Vlan-interface1.
Enable DHCP client on Vlan-interface1.
Vlan-interface1 failed to obtain IP address.
Waiting for the next... Line aux0 is available.
Press ENTER to get started.
<HPE> system-view
System View: return to User View with Ctrl+Z.
```

2 Configure the IRF ports.

```
[HPE] interface range FortyGigE 1/1/7 to FortyGigE 1/1/8
[HPE-if-range] shutdown
[HPE-if-range] quit
[HPE] irf-port 1/1
[HPE-irf-port1/1] port group interface FortyGigE 1/1/7
[HPE-irf-port1/1] port group interface FortyGigE 1/1/8
[HPE-irf-port1/1] quit
[HPE] save
The current configuration will be written to the device. Are you sure? [Y/N]:y
Please input the file name(*.cfg)[flash:/startup.cfg]
(To leave the existing filename unchanged, press the enter key):
```

3 Moving to the serial port of HPE FlexFabric 5940-4Slot switch B, configure the switch.

```
Startup configuration file does not exist.
Started automatic configuration, press CTRL_C or CTRL_D to break.
Automatic configuration attempt: 1.
Not ready for automatic configuration: no interface available.
Waiting for the next...
Automatic configuration attempt: 2.
Interface used: M-GigabitEthernet0/0/0.
Enable DHCP client on M-GigabitEthernet0/0/0.
M-GigabitEthernet0/0/0 failed to obtain IP address.
Interface used: Vlan-interface1.
Enable DHCP client on Vlan-interface1.
Vlan-interface1 failed to obtain IP address.
Waiting for the next...
Line aux0 is available.
Press ENTER to get started.
<HPE> system-view
System View: return to User View with Ctrl+Z.
```

4 Change the IRF member ID and reboot the switch.

```
[HPE] irf member 1 renumber 2
Renumbering the member ID may result in configuration change or loss.
Continue?[Y/N] y
[HPE] save
The current configuration will be written to the device. Are you sure? [Y/N]:y
Please input the file name(*.cfg)[flash:/startup.cfg]
(To leave the existing filename unchanged, press the enter key):
Validating file. Please wait...
Saved the current configuration to mainboard device successfully.
[HPE] quit
<HPE> reboot
Start to check configuration with next startup configuration file, please
wait.........DONE!
This command will reboot the device.
Continue? [Y/N]:y
Now rebooting, please wait...
```

5 When the switch reboot is complete, configure the IRF ports.

```
<HPE> system-view
[HPE] interface range FortyGigE 2/1/7 to FortyGigE 2/1/8
[HPE-if-range] shutdown
[HPE-if-range] quit
[HPE] irf-port 2/2
[HPE-irf-port2/2] port group interface FortyGigE 2/1/7
[HPE-irf-port2/2] port group interface FortyGigE 2/1/8
[HPE-irf-port2/2] quit
[HPE] irf-port-configuration active
[HPE] interface range FortyGigE 2/1/7 to FortyGigE 2/1/8
[HPE-if-range] undo shutdown
[HPE-if-range] quit
[HPE] save
The current configuration will be written to the device. Are you sure? [Y/N]:y
Please input the file name(*.cfg)[flash:/startup.cfg]
(To leave the existing filename unchanged, press the enter key):
flash:/startup.cfg exists, overwrite? [Y/N]:y
Validating file. Please wait...
Saved the current configuration to mainboard device successfully.
[HPE]
```

6 Back at HPE FlexFabric 5940-4Slot switch A, enable the IRF ports on switch A.

```
<HPE> system-view
[HPE] irf-port-configuration active
[HPE] interface range FortyGigE 1/1/7 to FortyGigE 1/1/8
[HPE-if-range] undo shutdown
[HPE-if-range] quit
```

7 Allow switch B to reboot to merge into the IRF fabric.

From this point on, all configuration happens only on switch A. No further configuration is needed on switch B because the control and management planes have been merged as part of the IRF configuration.

Configure Multi-Active Detection and Remote Access to the Switch

HPE recommends implementing a multi-active detection (MAD) mechanism, which is useful for these purposes:

• To detect the presence of multiple identical IRF fabrics

• To handle collisions

• To recover from faults in the unlikely event of an IRF split or failure

For more information, see the HPE FlexFabric 5940 IRF Configuration Guide, which explains how to use the management links to configure Bidirectional Forwarding Detection (BFD) MAD.
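The collision-handling rule given in this guide's definition of multi-active detection (only the master with the lowest IRF member ID stays online) can be modeled in a few lines. The following is a hypothetical Python model for illustration, not Comware code; the Fabric class and resolve_collision function are invented names, and depending on the software release Comware may apply additional criteria, so treat the IRF Configuration Guide as authoritative.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Fabric:
    """Hypothetical model of one active IRF fabric after a split."""
    master_member_id: int
    member_ids: List[int] = field(default_factory=list)


def resolve_collision(fabrics: List[Fabric]) -> Tuple[Fabric, List[Fabric]]:
    """Apply the rule as this guide states it: the fabric whose master has the
    lowest IRF member ID stays active; every other fabric shuts down its
    interfaces and leaves the network (Comware calls this the Recovery state)."""
    winner = min(fabrics, key=lambda f: f.master_member_id)
    return winner, [f for f in fabrics if f is not winner]
```

In the two-switch design of this guide, a split leaves member 1 (switch A) and member 2 (switch B) each acting as master, so the model keeps switch A active and shuts down switch B's interfaces.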

Note The switch configuration code in this procedure does not include switch prompts.

Procedure

1 From system-view, run the following commands, substituting the values from the configuration worksheet.

```
interface M-GigabitEthernet 0/0/0
ip address <<net_switch_mgmt_ip>> <<mgmt_net_netmask>>
mad bfd enable
mad ip address <<net_switch1_mad_ip>> <<mad_net_netmask>> member 1
mad ip address <<net_switch2_mad_ip>> <<mad_net_netmask>> member 2
quit
ip route-static 0.0.0.0 0.0.0.0 <<mgmt_net_gw>>
local-user admin
password simple <<net_switch_admin_password>>
authorization-attribute user-role network-admin
service-type ssh terminal
quit
ssh server enable
user-interface vty 0 63
authentication-mode scheme
protocol inbound ssh
quit
user-interface aux 0 1
authentication-mode scheme
quit
save
```

2 Create the public keys on the switch.

public-key local create rsa

Input the modulus length [default = 1024]:Enter

public-key local create dsa

Input the modulus length [default = 1024]:Enter

public-key local create ecdsa secp256r1


3 SSH to the switch at <<net_switch_mgmt_ip>> with the user name admin and the password <<net_switch_admin_password>>.
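Every procedure in this section asks you to substitute worksheet values for the <<name>> placeholders. A small script can do the substitution and also catch any placeholder that has no value. The following Python sketch is a hypothetical helper, not part of any HPE tooling. It assumes that placeholder names contain only letters, digits, and underscores, as they do in this guide.

```python
import re

# Matches worksheet placeholders such as <<mgmt_net_gw>>.
PLACEHOLDER = re.compile(r"<<([A-Za-z0-9_]+)>>")


def fill_worksheet(template: str, values: dict[str, str]) -> str:
    """Replace every <<name>> placeholder; fail loudly if a value is missing."""
    missing = sorted({name for name in PLACEHOLDER.findall(template) if name not in values})
    if missing:
        raise KeyError(f"worksheet values missing for: {', '.join(missing)}")
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


template = """interface M-GigabitEthernet 0/0/0
ip address <<net_switch_mgmt_ip>> <<mgmt_net_netmask>>
quit
ip route-static 0.0.0.0 0.0.0.0 <<mgmt_net_gw>>"""

# Hypothetical example values; use the ones from your configuration worksheet.
values = {
    "net_switch_mgmt_ip": "10.10.10.5",
    "mgmt_net_netmask": "255.255.255.0",
    "mgmt_net_gw": "10.10.10.1",
}
print(fill_worksheet(template, values))
```

The function raises an error on a missing value instead of leaving the placeholder in place. This prevents a literal <<...>> string from ever reaching the switch prompt.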

Configure IRF Priority

Configure the domain and IRF parameters. The <<net_switch_domain_id>> value is an arbitrary number, but it must differ from the domain IDs of any other IRF fabrics on the network.

Procedure

1 From system-view, run the following commands:

irf domain <<net_switch_domain_id>>

irf member 1 priority 32

irf member 2 priority 30

irf mac-address persistent always
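IRF elects one member as master, and a larger priority value makes a member more likely to win. This is why member 1 is given priority 32 and member 2 is given priority 30. The following Python sketch is a simplified model of that election. It assumes the commonly documented order: a running master keeps its role, then the higher priority wins, then the lowest bridge MAC address breaks a tie. The real election also considers system uptime, which this sketch omits. The MAC addresses are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class IrfMember:
    member_id: int
    priority: int                 # from "irf member <id> priority <value>"; larger wins
    bridge_mac: str               # tie-breaker in this sketch
    is_current_master: bool = False


def elect_master(members: list[IrfMember]) -> IrfMember:
    """Simplified IRF master election (the system uptime rule is omitted)."""
    for member in members:
        if member.is_current_master:
            return member  # a running master keeps its role
    # Highest priority wins; the lowest bridge MAC breaks a tie.
    return min(members, key=lambda m: (-m.priority, m.bridge_mac.lower()))


# Priorities from the procedure above; the MAC addresses are hypothetical.
fabric = [
    IrfMember(1, 32, "00e0-fc00-0002"),
    IrfMember(2, 30, "00e0-fc00-0001"),
]
print(elect_master(fabric).member_id)  # 1
```

The sketch also shows why the priority does not take effect immediately on a fabric that is already running. A member that is already master keeps that role until the fabric re-elects a master.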

Convert the Chassis Working Mode

To configure the interfaces and the switch to use FC or FCoE, you must convert the system working mode.

Before running the commands, make sure that both switches are merged into the IRF fabric.

Procedure

1 From system-view, run the following commands:

system-working-mode advance

Do you want to change the system working mode? [Y/N]:y

The system working mode is changed, please save the configuration and reboot the system to make it effective.

return

save

reboot

Configure NTP

Network Time Protocol (NTP) must be configured on the switch.

Procedure

1 From system-view, run the following commands. In the clock datetime command, replace the example date and time with the current date and time:

clock protocol none

return

clock datetime 13:28:00 02/17/2016

system-view

ntp-service unicast-server <<mgmt_net_ntp1>> priority

clock protocol ntp


save
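The clock datetime command above uses the example time 13:28:00 on 02/17/2016. The following hypothetical Python helper prints the same command for the current local time. It assumes the HH:MM:SS MM/DD/YYYY layout shown in the example.

```python
from datetime import datetime


def clock_datetime_command(now: datetime) -> str:
    """Build the user-view clock command in the HH:MM:SS MM/DD/YYYY layout shown above."""
    return f"clock datetime {now.strftime('%H:%M:%S %m/%d/%Y')}"


print(clock_datetime_command(datetime.now()))
```

The manual clock value only needs to be roughly correct. After clock protocol ntp is set, the switch follows the NTP server.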

Configure the VLANs Needed

Create the necessary VLANs. Because the switches are merged into a single IRF fabric, running this procedure once on switch A creates the VLANs on both switches.

Procedure

1 From system-view, run the following commands:

vlan <<mgmt_net_vlan>>

name MGMT-VLAN

quit

vlan <<deployment_net_vlan>>

name Native-Deployment-VLAN

quit

vlan <<mgmt_vmotion_net_vlan>>

name MGMT-vMotion-VLAN

quit

vlan <<mgmt_ft_net_vlan>>

name MGMT-FT-VLAN

quit

vlan <<compute_vmotion_net_vlan>>

name Compute-vMotion-VLAN

quit

vlan <<compute_ft_net_vlan>>

name Compute-FT-VLAN

quit


vlan <<vm_production_net_1_vlan>>

name VM-Production-VLAN1

quit

vlan <<fcoe_san_a_vlan>>

name FCoE-SAN-A-VLAN

description FCOE SAN A (VSAN <<net_fcoe_vsan_a_id>>)

quit

vlan <<fcoe_san_b_vlan>>

name FCoE-SAN-B-VLAN

description FCOE SAN B (VSAN <<net_fcoe_vsan_b_id>>)

quit

save
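Every VLAN follows the same pattern: vlan, name, an optional description, and quit. The whole list can therefore be generated from one table. The following hypothetical Python helper reproduces the commands above with the worksheet placeholders left in place, so they can be filled from the worksheet before use. It also refuses a plan that repeats a VLAN ID. Duplicates are detected reliably only after the placeholders are replaced with real IDs.

```python
from typing import Optional

# (VLAN ID placeholder, name, optional description), in the order used above.
VLANS: list[tuple[str, str, Optional[str]]] = [
    ("<<mgmt_net_vlan>>", "MGMT-VLAN", None),
    ("<<deployment_net_vlan>>", "Native-Deployment-VLAN", None),
    ("<<mgmt_vmotion_net_vlan>>", "MGMT-vMotion-VLAN", None),
    ("<<mgmt_ft_net_vlan>>", "MGMT-FT-VLAN", None),
    ("<<compute_vmotion_net_vlan>>", "Compute-vMotion-VLAN", None),
    ("<<compute_ft_net_vlan>>", "Compute-FT-VLAN", None),
    ("<<vm_production_net_1_vlan>>", "VM-Production-VLAN1", None),
    ("<<fcoe_san_a_vlan>>", "FCoE-SAN-A-VLAN", "FCOE SAN A (VSAN <<net_fcoe_vsan_a_id>>)"),
    ("<<fcoe_san_b_vlan>>", "FCoE-SAN-B-VLAN", "FCOE SAN B (VSAN <<net_fcoe_vsan_b_id>>)"),
]


def vlan_commands(vlans: list[tuple[str, str, Optional[str]]]) -> list[str]:
    """Emit vlan/name/[description]/quit blocks followed by save."""
    ids = [vlan_id for vlan_id, _, _ in vlans]
    if len(ids) != len(set(ids)):
        raise ValueError("the same VLAN ID appears more than once")
    lines = []
    for vlan_id, name, description in vlans:
        lines.append(f"vlan {vlan_id}")
        lines.append(f"name {name}")
        if description:
            lines.append(f"description {description}")
        lines.append("quit")
    lines.append("save")
    return lines


print("\n".join(vlan_commands(VLANS)))
```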

Convert Interfaces to Use FC Protocol

Convert the needed ports to serve as Fibre Channel (FC) ports.


Procedure

1 From system-view, run the following commands:

interface range Ten-GigabitEthernet 1/2/1 to Ten-GigabitEthernet 1/2/6 Ten-GigabitEthernet 2/2/1 to Ten-GigabitEthernet 2/2/6

port-type fc
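An interface range can cover ports on both IRF members, so it helps to list exactly which ports a range converts. The following hypothetical Python helper expands each "A to B" pair into member/slot/port numbers. It assumes that converted ports take names of the form Fc<member>/<slot>/<port>, as in the port-description procedure. Comparing this list with the FC interfaces named in the port-description procedure is a quick way to confirm that the ports you convert are the ones you later describe and cable.

```python
import re

# Matches "<type> M/S/P to <type> M/S/Q" with the same type, member, and slot.
RANGE = re.compile(
    r"(?P<kind>[A-Za-z-]+) (?P<member>\d+)/(?P<slot>\d+)/(?P<first>\d+)"
    r" to (?P=kind) (?P=member)/(?P=slot)/(?P<last>\d+)"
)


def expand_ranges(spec: str) -> list[str]:
    """Expand every 'A to B' pair in an interface range into M/S/P port numbers."""
    ports = []
    for m in RANGE.finditer(spec):
        first, last = int(m["first"]), int(m["last"])
        if first > last:
            raise ValueError(f"range runs backwards: {m.group(0)}")
        ports.extend(f"{m['member']}/{m['slot']}/{p}" for p in range(first, last + 1))
    return ports


spec = ("Ten-GigabitEthernet 1/2/1 to Ten-GigabitEthernet 1/2/6 "
        "Ten-GigabitEthernet 2/2/1 to Ten-GigabitEthernet 2/2/6")
# Assumption: converted ports are named Fc<member>/<slot>/<port>.
print([f"Fc{port}" for port in expand_ranges(spec)])
```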

Add Individual Port Descriptions for Troubleshooting

Add individual port descriptions to aid troubleshooting and verification. The following examples use a single Nimble Storage array with dual controllers.

Procedure

1 From system-view, run the following commands:

interface FortyGigE 1/1/1

description <<enclosure_1_name>>-VC1:Q1

quit

interface FortyGigE 1/1/2

description <<enclosure_1_name>>-VC1:Q2

quit

interface FortyGigE 2/1/1

description <<enclosure_1_name>>-VC2:Q1

quit

interface FortyGigE 2/1/2

description <<enclosure_1_name>>-VC2:Q2

quit

interface FortyGigE 1/1/7

description Switch1-IRF-Switch2-IRF-2/1/7

quit

interface FortyGigE 1/1/8


description Switch1-IRF-Switch2-IRF-2/1/8

quit

interface FortyGigE 2/1/7

description Switch2-IRF-Switch1-IRF-1/1/7

quit

interface FortyGigE 2/1/8

description Switch2-IRF-Switch1-IRF-1/1/8

quit

interface Fc1/2/13

description <<nimble_system_name>> CA-fc5

quit

interface Fc1/2/14

description <<nimble_system_name>> CA-fc9

quit

interface Fc1/2/15

description <<nimble_system_name>> CB-fc5

quit

interface Fc1/2/16

description <<nimble_system_name>> CB-fc9

quit

interface Fc2/2/13

description <<nimble_system_name>> CA-fc6

quit

interface Fc2/2/14

description <<nimble_system_name>> CA-fc10

quit

interface Fc2/2/15

description <<nimble_system_name>> CB-fc6

quit

interface Fc2/2/16

description <<nimble_system_name>> CB-fc10

quit


interface Ten-GigabitEthernet 1/2/1

description <<mgmt_server_1_hostname>>-LOM1

quit

interface Ten-GigabitEthernet 1/2/2

description <<mgmt_server_2_hostname>>-LOM1

quit

interface Ten-GigabitEthernet 2/2/1

description <<mgmt_server_1_hostname>>-LOM2

quit

interface Ten-GigabitEthernet 2/2/2

description <<mgmt_server_2_hostname>>-LOM2

quit

save
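The FC port descriptions record which array controller port is cabled to which switch, so they can also be checked mechanically. The following hypothetical Python check assumes that IRF member 1 and member 2 carry the two separate SAN fabrics. It reports any controller that is not cabled to both members. The <<nimble_system_name>> prefix is dropped from the descriptions for brevity.

```python
from collections import defaultdict

# FC port descriptions from the procedure above: interface -> "<controller>-<port>".
FC_DESCRIPTIONS = {
    "Fc1/2/13": "CA-fc5", "Fc1/2/14": "CA-fc9",
    "Fc1/2/15": "CB-fc5", "Fc1/2/16": "CB-fc9",
    "Fc2/2/13": "CA-fc6", "Fc2/2/14": "CA-fc10",
    "Fc2/2/15": "CB-fc6", "Fc2/2/16": "CB-fc10",
}


def controllers_without_redundancy(descriptions: dict[str, str]) -> list[str]:
    """Return controllers that are not cabled to both IRF members (1 and 2)."""
    members_by_controller = defaultdict(set)
    for interface, description in descriptions.items():
        member = interface.removeprefix("Fc").split("/")[0]  # "Fc2/2/13" -> "2"
        controller = description.split("-")[0]              # "CA-fc6" -> "CA"
        members_by_controller[controller].add(member)
    return sorted(c for c, members in members_by_controller.items() if members != {"1", "2"})


print(controllers_without_redundancy(FC_DESCRIPTIONS))  # []
```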

Configure Global FCoE Parameters

Configuring Fibre Channel over Ethernet (FCoE) mode requires configuring quality of service (QoS) for Data Center Bridging Exchange (DCBX) on the switch. This QoS configuration also prevents FCoE traffic from being blocked in the switch and ensures that FCoE traffic is lossless.

Procedure

1 On the switch, run the following commands from system-view to configure the Enhanced Transmission Selection local precedence QoS table.

The 802.1p priority 3 (Critical Applications) must be mapped to the highest local precedence in the table so that FCoE traffic is given the highest priority.

qos map-table dot1p-lp

import 0 export 0

import 1 export 0

import 2 export 0

import 3 export 1

import 4 export 0

import 5 export 0

import 6 export 0

import 7 export 0

quit

2 Create an access control list (ACL) on the switch to allow FCoE (EtherType 0x8906) and FCoE Initialization Protocol (FIP) (EtherType 0x8914) packets to pass through the switch.

This ACL is also applied to the DCBX traffic classifier (created in a later step). FCoE and FIP packets on the switch receive the highest priority because they are mapped to 802.1p priority value 3.

acl number 4000 name DCBX

rule 0 permit type 8906 ffff

rule 5 permit type 8914 ffff

quit


3 Create a traffic classifier named DCBX that uses the OR operator, and map it to ACL 4000, which was created in the previous step.

traffic classifier DCBX operator or

if-match acl 4000

quit

4 Create a traffic behavior named DCBX and configure it to mark packets with an 802.1p priority of 3.

traffic behavior DCBX

remark dot1p 3

quit

5 Create a QoS policy that associates the traffic classifier created in step 3 with the traffic behavior created in step 4, and specify DCBX mode for this classifier-behavior association.

qos policy DCBX

classifier DCBX behavior DCBX mode dcbx

quit

save
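Taken together, steps 1 through 5 send FCoE and FIP frames to 802.1p priority 3, and the map table places that priority in local precedence 1. The following Python sketch is a hypothetical model of that intent only. It treats the "permit type <value> ffff" rules as exact EtherType matches because the mask is ffff. It does not model how the DCBX-mode policy is advertised to the attached adapters through LLDP.

```python
FCOE_ETHERTYPE = 0x8906  # Fibre Channel over Ethernet
FIP_ETHERTYPE = 0x8914   # FCoE Initialization Protocol

# ACL 4000 as (value, mask) pairs, mirroring "rule ... permit type <value> ffff".
ACL_4000 = [(FCOE_ETHERTYPE, 0xFFFF), (FIP_ETHERTYPE, 0xFFFF)]

# qos map-table dot1p-lp from step 1: priority 3 -> local precedence 1, all others -> 0.
DOT1P_TO_LP = {dot1p: (1 if dot1p == 3 else 0) for dot1p in range(8)}


def acl_permits(ethertype: int) -> bool:
    """A frame matches the ACL if any permit rule matches its masked EtherType."""
    return any((ethertype & mask) == (value & mask) for value, mask in ACL_4000)


def classify(ethertype: int, dot1p: int) -> tuple[int, int]:
    """Return (802.1p priority, local precedence) for a frame."""
    if acl_permits(ethertype):
        dot1p = 3  # behavior DCBX: remark dot1p 3
    return dot1p, DOT1P_TO_LP[dot1p]


print(classify(FCOE_ETHERTYPE, 0))  # (3, 1)
print(classify(0x0800, 0))          # (0, 0), an untagged-priority IPv4 frame
```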

Configure Interface FCoE Parameters

To pass FCoE traffic to the converged network adapters (CNAs) of the management servers and to each enclosure, you must configure the interfaces with LLDP and DCBX support and apply the previously created QoS policy.

Procedure

1 In system-view, configure priority-based flow control and spanning tree.

To prevent FCoE packets from being dropped, enable priority-based flow control (PFC) and ensure that any packet with 802.1p priority 3 is forwarded rather than dropped. Configuring the interfaces as spanning tree edge ports also ensures that, if spanning tree is enabled on the switch as part of the configuration, these ports transition directly to the forwarding state.

interface range FortyGigE 1/1/1 to FortyGigE 1/1/3 FortyGigE 2/1/1 to FortyGigE 2/1/3 Ten-GigabitEthernet 1/2/17 to Ten-GigabitEthernet 1/2/18 Ten-GigabitEthernet 2/2/17 to Ten-GigabitEthernet 2/2/18

priority-flow-control auto

priority-flow-control no-drop dot1p 3

qos trust dot1p

stp edged-port

2 Configure LLDP and DCBX TLV advertising on the interfaces.

Run the following commands while still in the interface range view from step 1 of this procedure:

lldp tlv-enable dot1-tlv dcbx

qos apply policy DCBX outbound

3 Enable weighted round robin (WRR) on the interfaces. Allocate 50 percent of the bandwidth to network traffic in the best-effort (be) queue and 50 percent to storage traffic in the assured forwarding (af1) queue.

Set the remaining queues to strict priority (sp) because they are not used. Run the following commands while still in the interface range view from step 1 of this procedure:

qos wrr be group 1 byte-count 5

qos wrr af1 group 1 byte-count 5

qos wrr af2 group sp

qos wrr af3 group sp

qos wrr af4 group sp

qos wrr ef group sp

qos wrr cs6 group sp

qos wrr cs7 group sp

quit

save
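WRR byte-count values act as relative weights. Each queue's share of the bandwidth is its weight divided by the total weight of its group, so equal values of 5 for be and af1 give the 50/50 split. The following Python sketch is a simplified model under that assumption. Strict-priority queues are served before the WRR group, so the split holds only while those queues are idle, which this procedure expects because they are unused.

```python
# Queue settings from the qos wrr commands above: queue -> (group, byte_count).
# "sp" queues are strict priority and take no WRR weight.
WRR_CONFIG = {
    "be": ("1", 5),
    "af1": ("1", 5),
    "af2": ("sp", None),
    "af3": ("sp", None),
    "af4": ("sp", None),
    "ef": ("sp", None),
    "cs6": ("sp", None),
    "cs7": ("sp", None),
}


def wrr_shares(config: dict, group: str = "1") -> dict[str, float]:
    """Each WRR queue's share of the bandwidth left over after strict-priority queues."""
    weights = {q: w for q, (g, w) in config.items() if g == group}
    total = sum(weights.values())
    return {q: w / total for q, w in weights.items()}


print(wrr_shares(WRR_CONFIG))  # {'be': 0.5, 'af1': 0.5}
```

The same calculation shows how a different split would look. For example, weights of 6 and 4 would give 60 and 40 percent. This is an illustration only, not a recommendation.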

Configure the FCoE Mode and VSANs

This procedure enables FCoE mode on the switch and sets it to Fibre Channel Forwarder (FCF) mode. It also creates the VSANs for FCoE SAN A and FCoE SAN B and attaches them to the appropriate VLAN IDs.

Procedure

1 In system-view, set the FCoE mode to FCF.

fcoe-mode fcf

2 Create the VSAN for FCoE SAN A and set the domain ID.

vsan <<net_fcoe_vsan_a_id>>

domain-id <<net_fcoe_vsan_a_domain_id>> preferred

Non-disruptive reconfiguration or isolating the switch may be performed.

Continue? [Y/N]:y

quit


3 Create the VSAN for FCoE SAN B and set the domain ID.

vsan <<net_fcoe_vsan_b_id>>

domain-id <<net_fcoe_vsan_b_domain_id>> preferred

Non-disruptive reconfiguration or isolating the switch may be performed.

Continue? [Y/N]:y

quit

4 Associate the VSAN for FCoE SAN A with the VLAN for SAN A.

vlan <<fcoe_san_a_vlan>>

fcoe enable vsan <<net_fcoe_vsan_a_id>>

quit

5 Associate the VSAN for FCoE SAN B with the VLAN for SAN B.

vlan <<fcoe_san_b_vlan>>

fcoe enable vsan <<net_fcoe_vsan_b_id>>

quit
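Each FCoE SAN needs its own VSAN bound to its own VLAN, and Fibre Channel domain IDs are limited to the range 1 through 239. The following Python sketch is a hypothetical pre-flight check of the worksheet values before they are entered. The VSAN, VLAN, and domain ID values in the example are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SanFabric:
    name: str
    vsan_id: int
    vlan_id: int
    domain_id: int


def vsan_plan_problems(fabrics: list[SanFabric]) -> list[str]:
    """Return a list of problems with the VSAN/VLAN/domain plan (empty if none)."""
    problems = []
    vsan_owner: dict[int, str] = {}
    vlan_owner: dict[int, str] = {}
    for f in fabrics:
        if not 1 <= f.domain_id <= 239:
            problems.append(f"{f.name}: FC domain ID {f.domain_id} is outside 1-239")
        if not 1 <= f.vlan_id <= 4094:
            problems.append(f"{f.name}: VLAN {f.vlan_id} is outside 1-4094")
        if f.vsan_id in vsan_owner:
            problems.append(f"{f.name}: VSAN {f.vsan_id} already used by {vsan_owner[f.vsan_id]}")
        if f.vlan_id in vlan_owner:
            problems.append(f"{f.name}: VLAN {f.vlan_id} already used by {vlan_owner[f.vlan_id]}")
        vsan_owner.setdefault(f.vsan_id, f.name)
        vlan_owner.setdefault(f.vlan_id, f.name)
    return problems


# Hypothetical worksheet values, for illustration only.
plan = [
    SanFabric("SAN-A", vsan_id=101, vlan_id=1001, domain_id=1),
    SanFabric("SAN-B", vsan_id=102, vlan_id=1002, domain_id=2),
]
print(vsan_plan_problems(plan))  # []
```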

Add FC Interfaces to the Appropriate VSANs


This procedure associates all FC interfaces on HPE FlexFabric 5940-4Slot switch A with the VSAN for FCoE