Page 1

HP Integrity NonStop BladeSystem Planning Guide

HP Part Number: 545740-002

Published: May 2008

Edition: J06.03 and subsequent J-series RVUs

Page 2

© Copyright 2008 Hewlett-Packard Development Company, L.P.

Legal Notice

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial

Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under

vendor’s standard commercial license.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express

warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP

shall not be liable for technical or editorial errors or omissions contained herein.

Export of the information contained in this publication may require authorization from the U.S. Department of Commerce.

Microsoft, Windows, and Windows NT are U.S. registered trademarks of Microsoft Corporation.

Intel, Pentium, and Celeron are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other

countries.

Java is a U.S. trademark of Sun Microsystems, Inc.

Motif, OSF/1, UNIX, X/Open, and the "X" device are registered trademarks, and IT DialTone and The Open Group are trademarks of The Open

Group in the U.S. and other countries.

Open Software Foundation, OSF, the OSF logo, OSF/1, OSF/Motif, and Motif are trademarks of the Open Software Foundation, Inc.

OSF MAKES NO WARRANTY OF ANY KIND WITH REGARD TO THE OSF MATERIAL PROVIDED HEREIN, INCLUDING, BUT NOT

LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE.

OSF shall not be liable for errors contained herein or for incidental consequential damages in connection with the furnishing, performance, or

use of this material.

© 1990, 1991, 1992, 1993 Open Software Foundation, Inc. The OSF documentation and the OSF software to which it relates are derived in part

from materials supplied by the following:

© 1987, 1988, 1989 Carnegie-Mellon University. © 1989, 1990, 1991 Digital Equipment Corporation. © 1985, 1988, 1989, 1990 Encore Computer

Corporation. © 1988 Free Software Foundation, Inc. © 1987, 1988, 1989, 1990, 1991 Hewlett-Packard Company. © 1985, 1987, 1988, 1989, 1990,

1991, 1992 International Business Machines Corporation. © 1988, 1989 Massachusetts Institute of Technology. © 1988, 1989, 1990 Mentat Inc. ©

1988 Microsoft Corporation. © 1987, 1988, 1989, 1990, 1991, 1992 SecureWare, Inc. © 1990, 1991 Siemens Nixdorf Informationssysteme AG. ©

1986, 1989, 1996, 1997 Sun Microsystems, Inc. © 1989, 1990, 1991 Transarc Corporation.

OSF software and documentation are based in part on the Fourth Berkeley Software Distribution under license from The Regents of the University

of California. OSF acknowledges the following individuals and institutions for their role in its development: Kenneth C.R.C. Arnold, Gregory S.

Couch, Conrad C. Huang, Ed James, Symmetric Computer Systems, Robert Elz. © 1980, 1981, 1982, 1983, 1985, 1986, 1987, 1988, 1989 Regents of

the University of California.

Page 3

Table of Contents

About This Document.......................................................................................................11

Supported Release Version Updates (RVUs)........................................................................................11

Intended Audience................................................................................................................................11

New and Changed Information in This Edition...................................................................................11

Document Organization.......................................................................................................................11

Notation Conventions...........................................................................................................................11

General Syntax Notation.................................................................................................................11

Publishing History................................................................................................................................13

HP Encourages Your Comments..........................................................................................................14

1 NonStop BladeSystem Overview................................................................................15

NonStop NB50000c BladeSystem..........................................................................................................15

NonStop Multicore Architecture (NSMA)......................................................................................16

NonStop NB50000c BladeSystem Hardware.............................................................................17

c7000 Enclosure.....................................................................................................................17

NonStop Server Blade...........................................................................................................19

IP CLuster I/O Module (CLIM).............................................................................................19

Storage CLuster I/O Module (CLIM)....................................................................................19

SAS Disk Enclosure ..............................................................................................................20

IOAM Enclosure...................................................................................................................20

Fibre Channel Disk Module (FCDM)...................................................................................20

Maintenance Switch..............................................................................................................20

BladeSystem Connections to Maintenance Switch...............................................................21

CLIM Connections to Maintenance Switch..........................................................................21

IOAM Enclosure Connections to Maintenance Switch........................................................21

System Console.....................................................................................................................21

UPS and ERM (Optional)......................................................................................................21

Enterprise Storage System (Optional)..................................................................................22

Tape Drive and Interface Hardware (Optional)....................................................................23

Preparation for Other Server Hardware...............................................................................................23

Management Tools for NonStop BladeSystems ...................................................................................23

OSM Package...................................................................................................................................24

Onboard Administrator (OA)..........................................................................................................24

Integrated Lights Out (iLO).............................................................................................................24

Cluster I/O Protocols (CIP) Subsystem............................................................................................24

Subsystem Control Facility (SCF) Subsystem.................................................................................24

Component Location and Identification...............................................................................................24

Terminology.....................................................................................................................................25

Rack and Offset Physical Location..................................................................................................26

ServerNet Switch Group-Module-Slot Numbering........................................................................26

NonStop Server Blade Group-Module-Slot Numbering.................................................................27

CLIM Enclosure Group-Module-Slot-Port-Fiber Numbering.........................................................27

IOAM Enclosure Group-Module-Slot Numbering.........................................................................27

Fibre Channel Disk Module Group-Module-Slot Numbering........................................................29

System Installation Document Packet..................................................................................................30

Technical Document for the Factory-Installed Hardware Configuration.......................................30

Configuration Forms for the ServerNet Adapters and CLIMs.......................................................30

2 Site Preparation Guidelines........................................................................................31

Page 4

Modular Cabinet Power and I/O Cable Entry......................................................................................31

Emergency Power-Off Switches...........................................................................................................31

EPO Requirement for NonStop BladeSystems................................................................................31

EPO Requirement for HP R12000/3 UPS.........................................................................................31

Electrical Power and Grounding Quality.............................................................................................31

Power Quality..................................................................................................................................31

Grounding Systems.........................................................................................................................32

Power Consumption........................................................................................................................32

Uninterruptible Power Supply (UPS)...................................................................................................32

Cooling and Humidity Control............................................................................................................33

Weight...................................................................................................................................................34

Flooring.................................................................................................................................................34

Dust and Pollution Control...................................................................................................................34

Zinc Particulates....................................................................................................................................34

Space for Receiving and Unpacking the System..................................................................................34

Operational Space.................................................................................................................................35

3 System Installation Specifications...............................................................................37

Modular Cabinets.................................................................................................................................37

NonStop BladeSystem Power Distribution...........................................................................................37

Power Feed Setup for the NonStop BladeSystem...........................................................................38

North America/Japan Power Setup With Rack-Mounted UPS.......................................................38

North America/Japan Power Setup Without Rack-Mounted UPS..................................................39

International Power Setup With Rack-Mounted UPS.....................................................................40

International Power Setup Without Rack-Mounted UPS................................................................41

Power Distribution Units (PDUs).........................................................................................................42

AC Input Power for Modular Cabinets................................................................................................44

North America and Japan: 208 V AC PDU Power..........................................................................44

International: 400 V AC PDU Power...............................................................................................44

Branch Circuits and Circuit Breakers..............................................................................................44

Enclosure AC Input.........................................................................................................................45

Phase Load Balancing......................................................................................................................45

Enclosure Power Loads...................................................................................................................46

Dimensions and Weights......................................................................................................................47

Plan View of the 42U Modular Cabinet...........................................................................................47

Service Clearances for the Modular Cabinets.................................................................................47

Unit Sizes.........................................................................................................................................47

42U Modular Cabinet Physical Specifications.................................................................................48

Enclosure Dimensions.....................................................................................................................48

Modular Cabinet and Enclosure Weights With Worksheet ...........................................................49

Modular Cabinet Stability.....................................................................................................................49

Environmental Specifications...............................................................................................................50

Heat Dissipation Specifications and Worksheet..............................................................................50

Operating Temperature, Humidity, and Altitude...........................................................................50

Nonoperating Temperature, Humidity, and Altitude.....................................................................51

Cooling Airflow Direction...............................................................................................................51

Blanking Panels................................................................................................................................51

Typical Acoustic Noise Emissions...................................................................................................51

Tested Electrostatic Immunity.........................................................................................................51

Calculating Specifications for Enclosure Combinations.......................................................................51

4 System Configuration Guidelines...............................................................................53

Internal ServerNet Interconnect Cabling..............................................................................................53

Page 5

Dedicated Service LAN Cables........................................................................................................53

Length Restrictions for Optional Cables.........................................................................................53

Cable Product IDs............................................................................................................................54

ServerNet Fabric and Supported Connections.....................................................................................54

ServerNet Cluster Connections ......................................................................................................54

ServerNet Fabric Cross-Link Connections......................................................................................55

Interconnections Between c7000 Enclosures...................................................................................55

I/O Connections (Standard and High I/O ServerNet Switch Configurations)................................55

Connections to IOAM Enclosures...................................................................................................56

Connections to CLIMs.....................................................................................................................56

NonStop BladeSystem Port Connections..............................................................................................56

Fibre Channel Ports to Fibre Channel Disk Modules......................................................................56

Fibre Channel Ports to Fibre Tape Devices......................................................................................57

SAS Ports to SAS Disk Enclosures...................................................................................................57

SAS Ports to SAS Tape Devices........................................................................................................57

Storage CLIM Devices...........................................................................................................................57

Factory-Default Disk Volume Locations for SAS Disk Devices......................................................58

Configuration Restrictions for Storage CLIMs................................................................................58

Configurations for Storage CLIM and SAS Disk Enclosures..........................................................58

Two Storage CLIMs, Two SAS Disk Enclosures.........................................................................58

Two Storage CLIMs, Four SAS Disk Enclosures........................................................................59

Fibre Channel Devices..........................................................................................................................60

Factory-Default Disk Volume Locations for FCDMs......................................................................61

Configurations for Fibre Channel Devices......................................................................................62

Configuration Restrictions for Fibre Channel Devices....................................................................62

Recommendations for Fibre Channel Device Configuration..........................................................62

Example Configurations of the IOAM Enclosure and Fibre Channel Disk Module......................63

Two FCSAs, Two FCDMs, One IOAM Enclosure......................................................................64

Four FCSAs, Four FCDMs, One IOAM Enclosure.....................................................................64

Two FCSAs, Two FCDMs, Two IOAM Enclosures....................................................................65

Four FCSAs, Four FCDMs, Two IOAM Enclosures...................................................................66

Daisy-Chain Configurations......................................................................................................67

Four FCSAs, Three FCDMs, One IOAM Enclosure...................................................................69

Ethernet to Networks............................................................................................................................70

Managing NonStop BladeSystem Resources........................................................................................71

Changing Customer Passwords......................................................................................................71

Change the Onboard Administrator (OA) Password................................................................72

Change the CLIM iLO Password...............................................................................................72

Change the Maintenance Interface (Eth0) Password ................................................................72

Change the NonStop ServerBlade MP (iLO) Password.............................................................73

Change the Remote Desktop Password.....................................................................................73

Default Naming Conventions..........................................................................................................73

Possible Values of Disk and Tape LUNs..........................................................................................75

5 Hardware Configuration in Modular Cabinets.........................................................77

Maximum Number of Modular Components......................................................................................77

Enclosure Locations in Cabinets...........................................................................................................77

Typical Configuration...........................................................................................................................78

6 Maintenance and Support Connectivity....................................................................81

Dedicated Service LAN.........................................................................................................................81

Basic LAN Configuration................................................................................................................81

Fault-Tolerant LAN Configuration .................................................................................................83

Page 6

IP Addresses....................................................................................................................................84

Ethernet Cables................................................................................................................................88

SWAN Concentrator Restrictions....................................................................................................88

Dedicated Service LAN Links Using G4SAs...................................................................................88

Dedicated Service LAN Links Using IP CLIMs..............................................................................89

Initial Configuration for a Dedicated Service LAN.........................................................................89

System Consoles...................................................................................................................................89

System Console Configurations......................................................................................................90

One System Console Managing One System (Setup Configuration)........................................90

Primary and Backup System Consoles Managing One System.................................................90

Multiple System Consoles Managing One System....................................................................91

Managing Multiple Systems Using One or Two System Consoles............................................91

Cascading Ethernet Switch or Hub Configuration....................................................................91

A Cables...........................................................................................................................93

Cable Types, Connectors, Lengths, and Product IDs...........................................................................93

Cable Length Restrictions.....................................................................................................................94

B Operations and Management Using OSM Applications........................................95

System-Down OSM Low-Level Link....................................................................................................95

AC Power Monitoring...........................................................................................................................95

AC Power-Fail States............................................................................................................................97

C Default Startup Characteristics...................................................................................99

Index...............................................................................................................................103

Page 7

List of Figures

1-1 Example of a NonStop NB50000c BladeSystem............................................................................16

1-2 c7000 Enclosure Features...............................................................................................................18

1-3 Connections Between Storage CLIMs and ESS.............................................................................23

3-1 North America/Japan 3-Phase Power Setup With Rack-Mounted UPS........................................39

3-2 North America/Japan Power Setup...............................................................................................40

3-3 International 3-Phase Power Setup With UPS...............................................................................41

3-4 International Power Setup Without Rack-Mounted UPS..............................................................42

3-5 Bottom AC Power Feed.................................................................................................................43

3-6 Top AC Power Feed.......................................................................................................................43

4-1 ServerNet Switch Standard I/O Supported Connections .............................................................55

4-2 ServerNet Switch High I/O Supported Connections ...................................................................56

4-3 Two Storage CLIMs, Two SAS Disk Enclosure Configuration.....................................................59

4-4 Two Storage CLIMs, Four SAS Disk Enclosure Configuration.....................................................60

5-1 42U Configuration.........................................................................................................................79

6-1 Example of a Basic LAN Configuration With One Maintenance Switch......................................82

6-2 Example of a Fault-Tolerant LAN Configuration With Two Maintenance Switches...................84

Page 9

List of Tables

3-1 Example of Cabinet Load Calculations.........................................................................................52

4-1 Default User Names and Passwords.............................................................................................72

Page 11

About This Document

This guide describes the HP Integrity NonStop™ BladeSystem and provides examples of system

configurations to assist you in planning for installation of a new HP Integrity NonStop™ NB50000c

BladeSystem.

Supported Release Version Updates (RVUs)

This publication supports J06.03 and all subsequent J-series RVUs until otherwise indicated in

a replacement publication.

Intended Audience

This guide is written for those responsible for planning the installation, configuration, and

maintenance of a NonStop BladeSystem and the software environment at a particular site.

Appropriate personnel must have completed HP training courses on system support for NonStop

BladeSystems.

New and Changed Information in This Edition

This is a new manual.

Document Organization

Section                 Contents

Chapter 1 (page 15)     This chapter provides an overview of the Integrity NonStop NB50000c BladeSystem.

Chapter 2 (page 31)     This chapter outlines topics to consider when planning or upgrading the installation site.

Chapter 3 (page 37)     This chapter provides the installation specifications for a fully populated NonStop BladeSystem enclosure.

Chapter 4 (page 53)     This chapter describes the guidelines for implementing the NonStop BladeSystem.

Chapter 5 (page 77)     This chapter shows recommended locations for hardware enclosures in the NonStop BladeSystem.

Chapter 6 (page 81)     This chapter describes the connectivity options, including ISEE, for maintenance and support of a NonStop BladeSystem.

Appendix A (page 93)    This appendix identifies the cables used with the NonStop BladeSystem hardware.

Appendix B (page 95)    This appendix describes how to use the OSM applications to manage a NonStop BladeSystem.

Appendix C (page 99)    This appendix describes the default startup characteristics for a NonStop BladeSystem.

Notation Conventions

General Syntax Notation

This list summarizes the notation conventions for syntax presentation in this manual.

Page 12

UPPERCASE LETTERS Uppercase letters indicate keywords and reserved words. Type these

items exactly as shown. Items not enclosed in brackets are required.

For example:

MAXATTACH

Italic Letters

Italic letters, regardless of font, indicate variable items that you

supply. Items not enclosed in brackets are required. For example:

file-name

Computer Type

Computer type letters indicate:

• C and Open System Services (OSS) keywords, commands, and

reserved words. Type these items exactly as shown. Items not

enclosed in brackets are required. For example:

Use the cextdecs.h header file.

• Text displayed by the computer. For example:

Last Logon: 14 May 2006, 08:02:23

• A listing of computer code. For example:

if (listen(sock, 1) < 0)
{
   perror("Listen Error");
   exit(-1);
}

Bold Text

Bold text in an example indicates user input typed at the terminal.

For example:

ENTER RUN CODE

?123

CODE RECEIVED: 123.00

The user must press the Return key after typing the input.

[ ] Brackets Brackets enclose optional syntax items. For example:

TERM [\system-name.]$terminal-name

INT[ERRUPTS]

A group of items enclosed in brackets is a list from which you can

choose one item or none. The items in the list can be arranged either

vertically, with aligned brackets on each side of the list, or

horizontally, enclosed in a pair of brackets and separated by vertical

lines. For example:

FC [ num  ]
   [ -num ]
   [ text ]

K [ X | D ] address

{ } Braces A group of items enclosed in braces is a list from which you are

required to choose one item. The items in the list can be arranged

either vertically, with aligned braces on each side of the list, or

horizontally, enclosed in a pair of braces and separated by vertical

lines. For example:

LISTOPENS PROCESS { $appl-mgr-name }
                  { $process-name  }

ALLOWSU { ON | OFF }

Page 13

| Vertical Line A vertical line separates alternatives in a horizontal list that is enclosed

in brackets or braces. For example:

INSPECT { OFF | ON | SAVEABEND }

… Ellipsis An ellipsis immediately following a pair of brackets or braces indicates

that you can repeat the enclosed sequence of syntax items any number

of times. For example:

M address [ , new-value ]…

[ - ] {0|1|2|3|4|5|6|7|8|9}…

An ellipsis immediately following a single syntax item indicates that

you can repeat that syntax item any number of times. For example:

"s-char…"

Punctuation Parentheses, commas, semicolons, and other symbols not previously

described must be typed as shown. For example:

error := NEXTFILENAME ( file-name ) ;

LISTOPENS SU $process-name.#su-name

Quotation marks around a symbol such as a bracket or brace indicate

the symbol is a required character that you must type as shown. For

example:

"[" repetition-constant-list "]"

Item Spacing Spaces shown between items are required unless one of the items is

a punctuation symbol such as a parenthesis or a comma. For example:

CALL STEPMOM ( process-id ) ;

If there is no space between two items, spaces are not permitted. In

this example, no spaces are permitted between the period and any

other items:

$process-name.#su-name

Line Spacing If the syntax of a command is too long to fit on a single line, each

continuation line is indented three spaces and is separated from the

preceding line by a blank line. This spacing distinguishes items in a

continuation line from items in a vertical list of selections. For

example:

ALTER [ / OUT file-spec / ] LINE

   [ , attribute-spec ]…

Publishing History

| Part Number | Product Version | Publication Date |
| --- | --- | --- |
| 545740-002 | N.A. | May 2008 |

Page 14

HP Encourages Your Comments

HP encourages your comments concerning this document. We are committed to providing

documentation that meets your needs. Send any errors found, suggestions for improvement, or

compliments to:

pubs.comments@hp.com

Include the document title, part number, and any comment, error found, or suggestion for

improvement you have concerning this document.

Page 15

1 NonStop BladeSystem Overview

NOTE: This document describes products and features that are not yet available on systems

running J-series RVUs. These products and features include:

• CLuster I/O Modules (CLIMs)

• The Cluster I/O Protocols (CIP) subsystem

• Serial attached SCSI (SAS) disk drives and their enclosures

The Integrity NonStop BladeSystem provides an integrated infrastructure with consolidated

server, network, storage, power, and management capabilities. The NonStop BladeSystem

implements the BladeSystem c-Class architecture and is optimized for enterprise data center

applications. The NonStop NB50000c BladeSystem is introduced as part of the J06.03 RVU.

NonStop NB50000c BladeSystem

The NonStop NB50000c BladeSystem combines the NonStop operating system and HP Integrity

NonStop BL860c Server Blades in a single footprint as part of the “NonStop Multicore Architecture

(NSMA)” (page 16).

The characteristics of an Integrity NonStop NB50000c BladeSystem are:

| Characteristic | Value |
| --- | --- |
| Processor | Intel Itanium |
| Processor model | NSE-M |
| Chassis | c7000 enclosure (one enclosure for 2 to 8 processors; two enclosures for 10 to 16 processors) |
| Cabinet | 42U, 19 inch rack |
| Minimum/maximum main memory per logical processor | 8 GB to 48 GB |
| Minimum/maximum processors | 2 to 16 |
| Supported processor configurations | 2, 4, 6, 8, 10, 12, 14, or 16 |
| Maximum CLuster I/O Modules (CLIMs) in a NonStop BladeSystem with 16 processors | 24 CLIMs (IP and Storage) |
| Minimum CLIMs | 0 CLIMs (if there are IOAM enclosures); 2 Storage CLIMs and 2 IP CLIMs (if there are no IOAM enclosures) |
| Maximum SAS disk enclosures per Storage CLIM pair | 4 |
| Maximum SAS disk drives per Storage CLIM pair | 100 |
| Maximum Fibre Channel disk modules (FCDMs) through IOAM enclosure | 4 FCDMs daisy-chained with 14 disk drives in each FCDM |
| Maximum IOAM enclosures ¹ | 6 IOAMs for 10 to 16 processors; 4 IOAMs for 2 to 8 processors |
| Enterprise Storage System (ESS) support available through Storage CLIMs or IOAM enclosures | Supported |
| Connection to NonStop ServerNet Clusters | Supported |
| M8201R Fibre Channel to SCSI router support | Not supported |
| Connection to NonStop S-series I/O | Not supported |

¹ When CLIMs are also included in the configuration, the maximum number of IOAMs might be smaller. Check with

your HP representative to determine your system's maximum for IOAMs.
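
The limits listed above lend themselves to a quick sanity check during planning. The following Python sketch is illustrative only: the function and constant names are hypothetical and are not part of any HP tool, and the 25-drive figure is taken from the SAS disk enclosure description later in this section. It encodes only the supported processor counts, the enclosure rule, the IOAM upper bound, the CLIM minimum, and the SAS drive arithmetic.

```python
"""Sanity checks for the NB50000c limits listed above (illustrative sketch)."""

SUPPORTED_PROCESSOR_COUNTS = tuple(range(2, 17, 2))  # 2, 4, ..., 16
SAS_DRIVES_PER_ENCLOSURE = 25          # from the SAS disk enclosure description
MAX_SAS_ENCLOSURES_PER_CLIM_PAIR = 4   # per Storage CLIM pair


def c7000_enclosures_needed(processors):
    """One c7000 enclosure for 2 to 8 processors, two for 10 to 16."""
    if processors not in SUPPORTED_PROCESSOR_COUNTS:
        raise ValueError(f"unsupported processor count: {processors}")
    return 1 if processors <= 8 else 2


def max_ioam_enclosures(processors):
    """Upper bound only; it might be smaller when CLIMs are also configured."""
    return 4 if c7000_enclosures_needed(processors) == 1 else 6


def meets_clim_minimum(storage_clims, ip_clims, ioam_enclosures):
    """No CLIMs are required when IOAM enclosures exist; otherwise 2 + 2."""
    if ioam_enclosures > 0:
        return True
    return storage_clims >= 2 and ip_clims >= 2


def max_sas_drives_per_clim_pair():
    """Four enclosures of 25 drives each."""
    return MAX_SAS_ENCLOSURES_PER_CLIM_PAIR * SAS_DRIVES_PER_ENCLOSURE
```

A check such as `meets_clim_minimum(2, 2, 0)` confirms the smallest CLIM-only configuration. Your HP representative remains the authority on what a given system supports.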

Page 16

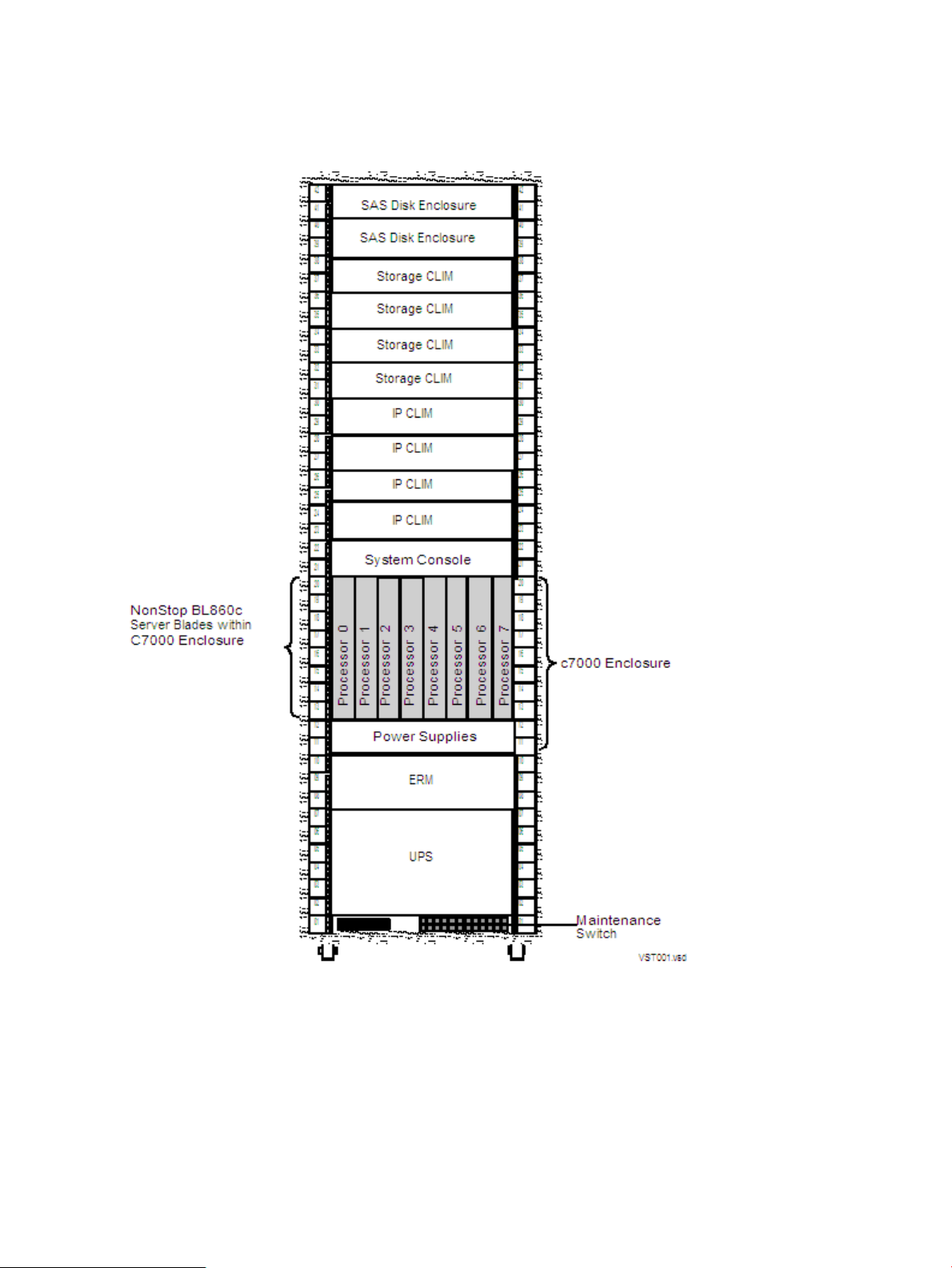

Figure 1-1 “Example of a NonStop NB50000c BladeSystem” shows the front view of an example

NonStop NB50000c BladeSystem with eight server blades in a 42U modular cabinet with the

optional HP R12000/3 UPS and the HP AF434A extended runtime module (ERM).

Figure 1-1 Example of a NonStop NB50000c BladeSystem

NonStop Multicore Architecture (NSMA)

The NonStop BladeSystem employs the HP NonStop Multicore Architecture (NSMA) to achieve

full software fault tolerance by running the NonStop operating system on NonStop Server Blades.

With the NSMA's multicore microprocessor architecture, a set of cores composed of instruction

processing units (IPUs) shares the same memory map (except in low-level software). The NSMA

extends the traditional NonStop logical processor to a multiprocessor and includes:

• No hardware lockstep checking

• Itanium fault detection

Page 17

• High-end scalability

• Application virtualization

• Cluster programming transparency

The NonStop NB50000c BladeSystem can be configured with 2 to 16 processors, communicates

with other NonStop BladeSystems using Expand, and achieves ServerNet connectivity using a

ServerNet mezzanine, PCI Express (PCIe) interface card installed in the server blade.

NonStop NB50000c BladeSystem Hardware

A large number of enclosure combinations is possible within the modular cabinets of a NonStop

NB50000c BladeSystem. The applications and purpose of any NonStop BladeSystem determine

the number and combinations of hardware within the cabinet.

Standard hardware for a NonStop BladeSystem includes:

• “c7000 Enclosure”

• “NonStop Server Blade” (page 19)

• “Storage CLuster I/O Module (CLIM)” (page 19)

• “SAS Disk Enclosure ” (page 20)

• “IP CLuster I/O Module (CLIM)” (page 19)

• “IOAM Enclosure” (page 20)

• “Fibre Channel Disk Module (FCDM)” (page 20)

• “Maintenance Switch” (page 20)

• “System Console” (page 21)

Optional hardware for a NonStop BladeSystem includes:

• “UPS and ERM (Optional)” (page 21)

• “Enterprise Storage System (Optional)” (page 22)

• “Tape Drive and Interface Hardware (Optional)” (page 23)

All NonStop BladeSystem components are field-replaceable units that can only be serviced by

service providers trained by HP.

Because of the number of possible configurations, you can calculate the total power consumption,

heat dissipation, and weight of each modular cabinet based on the hardware configuration that

you order from HP. For site preparation specifications for the modular cabinets and the individual

enclosures, see Chapter 3 (page 37).

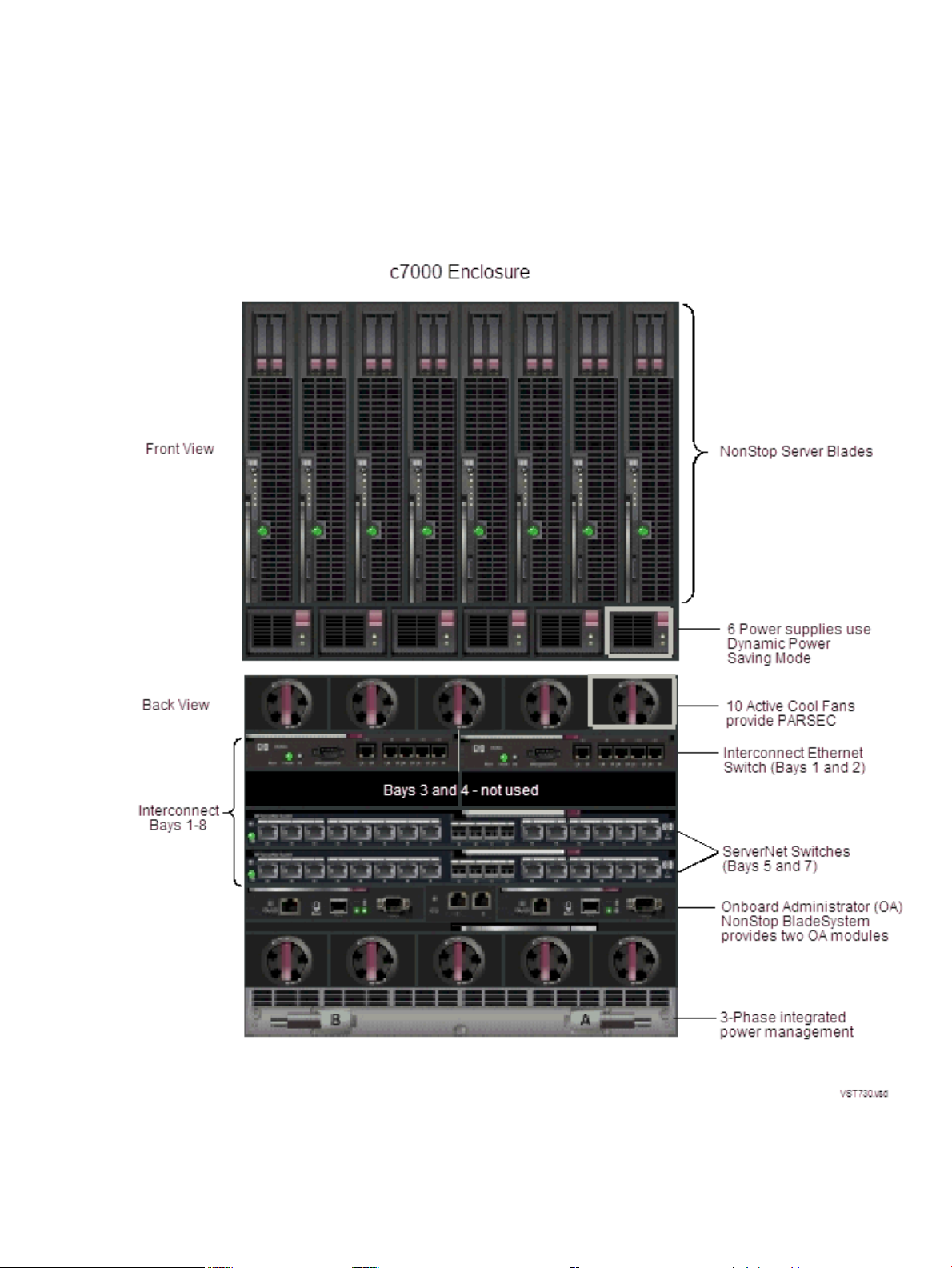

c7000 Enclosure

The three-phase c7000 enclosure provides integrated processing, power, and cooling capabilities

along with connections to the I/O infrastructure. The c7000 enclosure features include:

• Up to 8 NonStop Server Blades per c7000 enclosure – populated in pairs

• Two Onboard Administrator (OA) management modules that provide detection,

identification, management, and control services for the NonStop BladeSystem.

• The HP Insight Display provides information about the health and operation of the enclosure.

For more information about the HP Insight Display, which is the visual interface located at

the bottom front of the OA, see the HP BladeSystem Onboard Administrator User Guide.

• Two Interconnect Ethernet switches that download Halted State Services (HSS) bootcode

via the maintenance LAN.

• Two ServerNet switches that provide ServerNet connectivity between processors, between

processors and I/O, and between systems (through connections to cluster switches). There

are two types of ServerNet switches: Standard I/O or High I/O.

Page 18

• Six power supplies that implement Dynamic Power Saving Mode. This mode is enabled by

the OA module, and when enabled, monitors the total power consumed by the c7000

enclosure in real-time and automatically adjusts to changes in power demand.

• Ten Active Cool fans use the parallel, redundant, scalable, enclosure-based cooling (PARSEC)

architecture where fresh, cool air flows over all the blades (in the front of the enclosure) and

all the interconnect modules (in the back of the enclosure).

Figure 1-2 shows all of these c7000 features, except the HP Insight Display:

Figure 1-2 c7000 Enclosure Features

For information about the LEDs associated with the c7000 enclosure components, see the HP

BladeSystem c7000 Enclosure Setup and Installation Guide.

Page 19

NonStop Server Blade

The NonStop BL860c Server Blade is a two-socket, full-height server blade featuring an Intel®

Itanium® dual-core processor. Each server blade contains a ServerNet interface mezzanine card

with PCI-Express x4 to PCI-x bridge connections to provide ServerNet fabric connectivity. Other

features include four integrated Gigabit Ethernet ports for redundant network boot paths and

12 DIMM slots providing a maximum of 48 GB of memory per server blade.

IP CLuster I/O Module (CLIM)

The IP CLIM is a rack-mounted server that is part of some NonStop BladeSystem configurations.

The IP CLIM functions as a ServerNet Ethernet adapter providing HP standard Gigabit Ethernet

Network Interface Cards (NICs) to implement one of the IP CLIM configurations (either IP CLIM

A or IP CLIM B):

IP CLIM A Configuration (5 Copper Ports)

• Slot 1 contains a NIC that provides four copper Ethernet ports

• Eth01 port (between slots 1 and 2) provides one copper Ethernet port

• Slot 3 contains a ServerNet PCIe interface card, which provides the ServerNet fabric

connections

IP CLIM B Configuration (3 Copper/2 Fiber Ports)

• Slot 1 contains a NIC that provides three copper Ethernet ports

• Slot 2 contains a NIC that provides one fiber-optical Ethernet port

• Slot 3 contains a ServerNet interface PCIe card, which provides the ServerNet fabric

connections

• Slot 4 contains a NIC that provides one fiber-optical Ethernet port

For an illustration of the IP CLIM slots, see “Ethernet to Networks” (page 70).

NOTE: Both the IP and Storage CLIMs use the Cluster I/O Protocols (CIP) subsystem. For more

information about the CIP subsystem, see the Cluster I/O Protocols Configuration and Management

Manual.

Storage CLuster I/O Module (CLIM)

The Storage CLuster I/O Module (CLIM) is part of some NonStop BladeSystem configurations.

The Storage CLIM is a rack-mounted server and functions as a ServerNet I/O adapter providing:

• Dual ServerNet fabric connections

• A Serial Attached SCSI (SAS) interface for the storage subsystem via a SAS Host Bus Adapter

(HBA) supporting SAS disk drives and SAS tapes

• A Fibre Channel (FC) interface for ESS and FC tape devices via a customer-ordered FC HBA.

A Storage CLIM can have 0, 2, or 4 FC ports.

The Storage CLIM contains 5 PCIe HBA slots with these characteristics:

| Storage CLIM HBA Slot | Configuration | Provides |
| --- | --- | --- |
| 5 | Part of base configuration | One SAS external and internal connector with four SAS links per connector and 3 Gbps per link is provided by the PCIe 8x slot. |
| 4 | Part of base configuration | One SAS external connector with four SAS links per connector and 3 Gbps per link is provided by the PCIe 8x slot. |
| 3 | Part of base configuration | ServerNet fabric connections via a PCIe 4x adapter. |
| 2 | Optional customer order | SAS or Fibre Channel |
| 1 | Optional customer order | SAS or Fibre Channel |

Page 20

Connections to FCDMs are not supported.

For an illustration of the Storage CLIM HBA slots, see “Storage CLIM Devices” (page 57).

SAS Disk Enclosure

The SAS disk enclosure is a rack-mounted disk enclosure and is part of some NonStop

BladeSystem configurations. The SAS disk enclosure supports up to 25 SAS disk drives, 3Gbps

SAS protocol, and a dual SAS domain from Storage CLIMs to dual port SAS disk drives. The

SAS disk enclosure supports connections to SAS disk drives. Connections to FCDMs are not

supported. For more information about the SAS disk enclosure, see the manual for your SAS

disk enclosure model (for example, the HP StorageWorks 70 Modular Smart Array Enclosure

Maintenance and Service Guide).

The SAS disk enclosure contains:

• 25, 2.5” disk drive slots with size options:

— 72GB, 15K rpm

— 146GB, 10K rpm

• Two independent I/O modules:

— SAS Domain A

— SAS Domain B

• Two fans

• Two power supplies

IOAM Enclosure

The IOAM enclosure is part of some NonStop BladeSystem configurations. The IOAM enclosure

uses Gigabit Ethernet 4-port ServerNet adapters (G4SAs) for networking connectivity and Fibre

Channel ServerNet adapters (FCSAs) for Fibre Channel connectivity between the system and

Fibre Channel disk modules (FCDMs), ESS, and Fibre Channel tape.

Fibre Channel Disk Module (FCDM)

The Fibre Channel disk module (FCDM) is a rack-mounted enclosure that can only be used with

NonStop BladeSystems that have IOAM enclosures. The FCDM connects to an FCSA in an

IOAM enclosure and contains:

• Up to 14 Fibre Channel arbitrated loop disk drives (enclosure front)

• Environmental monitoring unit (EMU) (enclosure rear)

• Two fans and two power supplies

• Fibre Channel arbitrated loop (FC-AL) modules (enclosure rear)

You can daisy-chain together up to four FCDMs with 14 drives in each one.

Maintenance Switch

The HP ProCurve 2524 maintenance switch provides communication among the NonStop

BladeSystem components (the Onboard Administrator, c7000 enclosure interconnect Ethernet switch,

Storage and IP CLIMs, and IOAM enclosures), the optional UPS, and the system console running HP

NonStop Open System Management (OSM). For a general description of the maintenance switch,

refer to the NonStop NS14000 Planning Guide. Details about the use or implementation of the

maintenance switch that are specific to a NonStop BladeSystem are presented here.

Page 21

The NonStop BladeSystem requires multiple connections to the maintenance switch. The following

describes the required connections for each hardware component.

BladeSystem Connections to Maintenance Switch

• One connection per Onboard Administrator on the NonStop BladeSystem

• One connection per Interconnect Ethernet switch on the NonStop BladeSystem

• One connection to the optional UPS module

• One connection for the system console running OSM

CLIM Connections to Maintenance Switch

• One connection to the iLO port on a CLIM

• One connection to an eth0 port on a CLIM

IOAM Enclosure Connections to Maintenance Switch

• One connection to each of the two ServerNet switch boards in one I/O adapter module

(IOAM) enclosure.

• At least two connections to any two Gigabit Ethernet 4-port ServerNet adapters (G4SAs), if

the NonStop BladeSystem maintenance LAN is implemented through G4SAs.
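
The connection rules above can be tallied to estimate how many maintenance switch ports a configuration needs. This Python sketch is a planning aid under stated assumptions, not an HP utility: the function name and parameters are hypothetical, it assumes two Onboard Administrators and two Interconnect Ethernet switches per c7000 enclosure (as described under "c7000 Enclosure"), one connection per UPS and per system console, and it leaves the number of G4SA links to the caller.

```python
def maintenance_switch_connections(c7000_enclosures, clims=0, ioam_enclosures=0,
                                   ups_modules=0, system_consoles=1, g4sa_links=0):
    """Count the maintenance switch connections a configuration requires."""
    # One connection per Onboard Administrator (two per c7000 enclosure).
    oa = 2 * c7000_enclosures
    # One connection per Interconnect Ethernet switch (two per c7000 enclosure).
    ethernet_switches = 2 * c7000_enclosures
    # Each CLIM needs its iLO port and an eth0 port connected.
    clim = 2 * clims
    # Each IOAM enclosure has two ServerNet switch boards to connect.
    ioam = 2 * ioam_enclosures
    # g4sa_links should be at least 2 when the maintenance LAN uses G4SAs.
    return (oa + ethernet_switches + clim + ioam
            + ups_modules + system_consoles + g4sa_links)
```

For example, one c7000 enclosure with four CLIMs, one UPS, and one system console gives 2 + 2 + 8 + 1 + 1 = 14 connections.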

System Console

A system console is a personal computer (PC) purchased from HP that runs maintenance and

diagnostic software for NonStop BladeSystems. When supplied with a new NonStop BladeSystem,

system consoles have factory-installed HP and third-party software for managing the system.

You can install software upgrades from the HP NonStop System Console Installer DVD image.

Some system console hardware, including the PC system unit, monitor, and keyboard, can be

mounted in the NonStop BladeSystem's 19-inch rack. Other PCs are installed outside the rack

and require separate provisions or furniture to hold the PC hardware.

For more information on the system console, refer to “System Consoles” (page 89).

UPS and ERM (Optional)

An uninterruptible power supply (UPS) is optional but recommended where a site UPS is not

available. HP supports the HP model R12000/3 UPS because it utilizes the power fail support

provided by the OSM. For information about the requirements for installing a UPS, see

“Uninterruptible Power Supply (UPS)” (page 32).

There are two different versions of the R12000/3 UPS:

• For North America and Japan, the HP AF429A is utilized and uses an IEC309 560P9 (60A)

input connector with 208V three phase (120V phase-to-neutral)

• For International, the HP AF430A is utilized and uses an IEC309 532P6 (32A) input connector

with 400V three phase (230V phase-to-neutral).

Cabinet configurations that include the HP UPS can also include extended runtime modules

(ERMs). An ERM is a battery module that extends the overall battery-supported system run time.

Page 22

Up to four ERMs can be used for even longer battery-supported system run time. HP supports

the HP AF434A ERM.

WARNING! UPSs and ERMs must be mounted in the lowest portion of the NonStop

BladeSystem to avoid tipping and stability issues.

NOTE: The R12000/3 UPS has two output connectors. For I/O racks, only the output connector

to the rack level PDU is used. For processor racks, one output connector goes to the c7000 chassis

and the other to the rack PDU. For power feed setup instructions, see “NonStop BladeSystem

Power Distribution” (page 37) and “Power Feed Setup for the NonStop BladeSystem” (page 38).

For the R12000/3 UPS power and environmental requirements, refer to Chapter 3 (page 37). For

planning, installation, and emergency power-off (EPO) instructions, refer to the HP 3 Phase UPS

User Guide. This guide is available at:

http://bizsupport.austin.hp.com/bc/docs/support/SupportManual/c01079392/c01079392.pdf

For other UPSs, refer to the documentation shipped with the UPS.

Enterprise Storage System (Optional)

An Enterprise Storage System (ESS) is a collection of magnetic disks, their controllers, and a disk

cache in one or more standalone cabinets. ESS connects to the NonStop BladeSystem via the

Storage CLIM's Fibre Channel HBA ports (direct connect), Fibre Channel ports on the IOAM

enclosures (direct connect), or through a separate storage area network (SAN) using a Fibre

Channel SAN switch (switched connect). For more information about these connection types,

see your service provider.

NOTE: The Fibre Channel SAN switch power cords might not be compatible with the modular

cabinet PDU. Contact your service provider to order replacement power cords for the SAN switch

that are compatible with the modular cabinet PDU.

Cables and switches vary, depending on whether the connection is direct, switched, or a

combination:

| Connection | Fibre Channel Switches | Cables |
| --- | --- | --- |
| Direct connect | 0 | 2 Fibre Channel ports on IOAM (LC-LC) |
| Direct connect | 0 | 2 Fibre Channel HBA ports on Storage CLIM (LC-MMF) ¹ |
| Switched | 1 or more | 4 Fibre Channel ports (LC-LC) |
| Switched | 1 or more | 4 Fibre Channel HBA ports on Storage CLIM (LC-MMF) |
| Combination of direct and switched | 1 | 2 Fibre Channel ports for each direct connection |
| Combination of direct and switched | 1 | 4 Fibre Channel ports for each switched connection |

¹ Customer must order the FC HBA ports on the Storage CLIM.

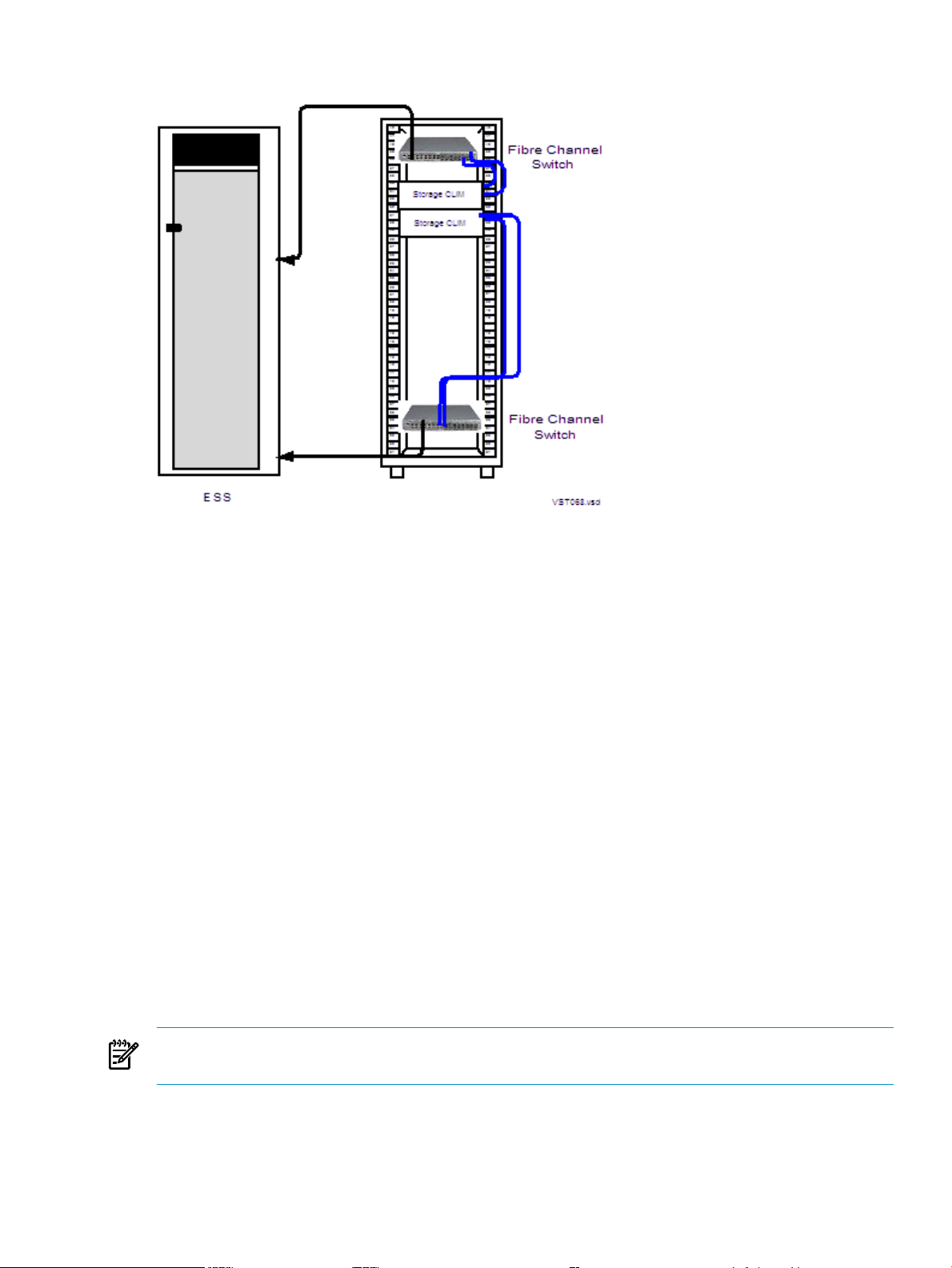

Figure 1-3 shows an example of connections between two Storage CLIMs and an ESS via separate

Fibre Channel switches:

Page 23

Figure 1-3 Connections Between Storage CLIMs and ESS

For fault tolerance, the primary and backup paths to an ESS logical device (LDEV) must go

through different Fibre Channel switches.

Some storage area procedures, such as reconfiguration, can cause the affected switches to pause.

If the pause is long enough, I/O failure occurs on all paths connected to that switch. If both the

primary and the backup paths are connected to the same switch, the LDEV goes down.
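
The fault-tolerance rule above (primary and backup paths through different switches) is easy to verify against a planned path list. The Python sketch below is illustrative: the `LdevPaths` record, the LDEV names, and the switch names are hypothetical placeholders for whatever your configuration records use.

```python
from typing import NamedTuple


class LdevPaths(NamedTuple):
    """Planned paths for one ESS logical device (names are placeholders)."""
    ldev: str
    primary_switch: str
    backup_switch: str


def ldevs_sharing_a_switch(paths):
    """Return the LDEVs whose primary and backup paths use the same switch.

    A pause on that one switch would take such an LDEV down.
    """
    return [p.ldev for p in paths if p.primary_switch == p.backup_switch]


planned = [
    LdevPaths("LDEV-00", "FC-SWITCH-A", "FC-SWITCH-B"),  # fault tolerant
    LdevPaths("LDEV-01", "FC-SWITCH-A", "FC-SWITCH-A"),  # single point of failure
]
at_risk = ldevs_sharing_a_switch(planned)
```

An empty result means every listed LDEV has its two paths on different Fibre Channel switches.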

Refer to the documentation that accompanies the ESS.

Tape Drive and Interface Hardware (Optional)

For an overview of tape drives and the interface hardware, see “Fibre Channel Ports to Fibre

Tape Devices” (page 57) or “SAS Ports to SAS Tape Devices” (page 57).

For a list of supported tape devices, ask your service provider to refer to the NonStop BladeSystem

Hardware Installation Manual.

Preparation for Other Server Hardware

This guide provides the specifications only for the NonStop BladeSystem modular cabinets and

enclosures identified earlier in this section. For site preparation specifications for other HP

hardware that will be installed with the NonStop BladeSystems, consult your HP account team.

For site preparation specifications relating to hardware from other manufacturers, refer to the

documentation for those devices.

Management Tools for NonStop BladeSystems

NOTE: For information about changing the default passwords for NonStop BladeSystem

components and associated software, see “Changing Customer Passwords” (page 71).

This subsection describes the management tools available on your NonStop BladeSystem:

• “OSM Package” (page 24)

• “Onboard Administrator (OA)” (page 24)

• “Integrated Lights Out (iLO)” (page 24)

Page 24

• “Cluster I/O Protocols (CIP) Subsystem” (page 24)

• “Subsystem Control Facility (SCF) Subsystem” (page 24)

OSM Package

The HP Open System Management (OSM) product is the required system management tool for

NonStop BladeSystems. OSM works together with the Onboard Administrator (OA) and

Integrated Lights Out (iLO) management interfaces to manage c7000 enclosures. A new

client-based component, the OSM Certificate Tool, facilitates communication between OSM and

the OA.

For more information on the OSM package, including a description of the individual applications,

see the OSM Migration and Configuration Guide and the OSM Service Connection User's Guide.

Onboard Administrator (OA)

The Onboard Administrator (OA) is the enclosure's management processor, subsystem, and

firmware base and supports the c7000 enclosure and NonStop Server Blades. The OA software

is integrated with OSM and the Integrated Lights Out (iLO) management interface.

Integrated Lights Out (iLO)

iLO allows you to perform activities on the NonStop BladeSystem from a remote location and

provides anytime access to system management information, such as hardware health, event logs,

and configuration, that you can use to troubleshoot and maintain the NonStop Server Blades.

Cluster I/O Protocols (CIP) Subsystem

The Cluster I/O Protocols (CIP) subsystem provides a configuration and management interface

for I/O on NonStop BladeSystems. The CIP subsystem has several tools for monitoring and

managing the subsystem. For more information about these tools and the CIP subsystem, see

the Cluster I/O Protocols (CIP) Configuration and Management Manual.

Subsystem Control Facility (SCF) Subsystem

The Subsystem Control Facility (SCF) also provides monitoring and management of the CIP

subsystem on the NonStop BladeSystem. See the Cluster I/O Protocols (CIP) Configuration and

Management Manual for more information about using these two subsystems with NonStop BladeSystems.

Component Location and Identification

This subsection includes these topics:

• “Terminology” (page 25)

• “Rack and Offset Physical Location” (page 26)

• “ServerNet Switch Group-Module-Slot Numbering” (page 26)

• “NonStop Server Blade Group-Module-Slot Numbering” (page 27)

• “CLIM Enclosure Group-Module-Slot-Port-Fiber Numbering” (page 27)

• “IOAM Enclosure Group-Module-Slot Numbering” (page 27)

• “Fibre Channel Disk Module Group-Module-Slot Numbering” (page 29)

Page 25

Terminology

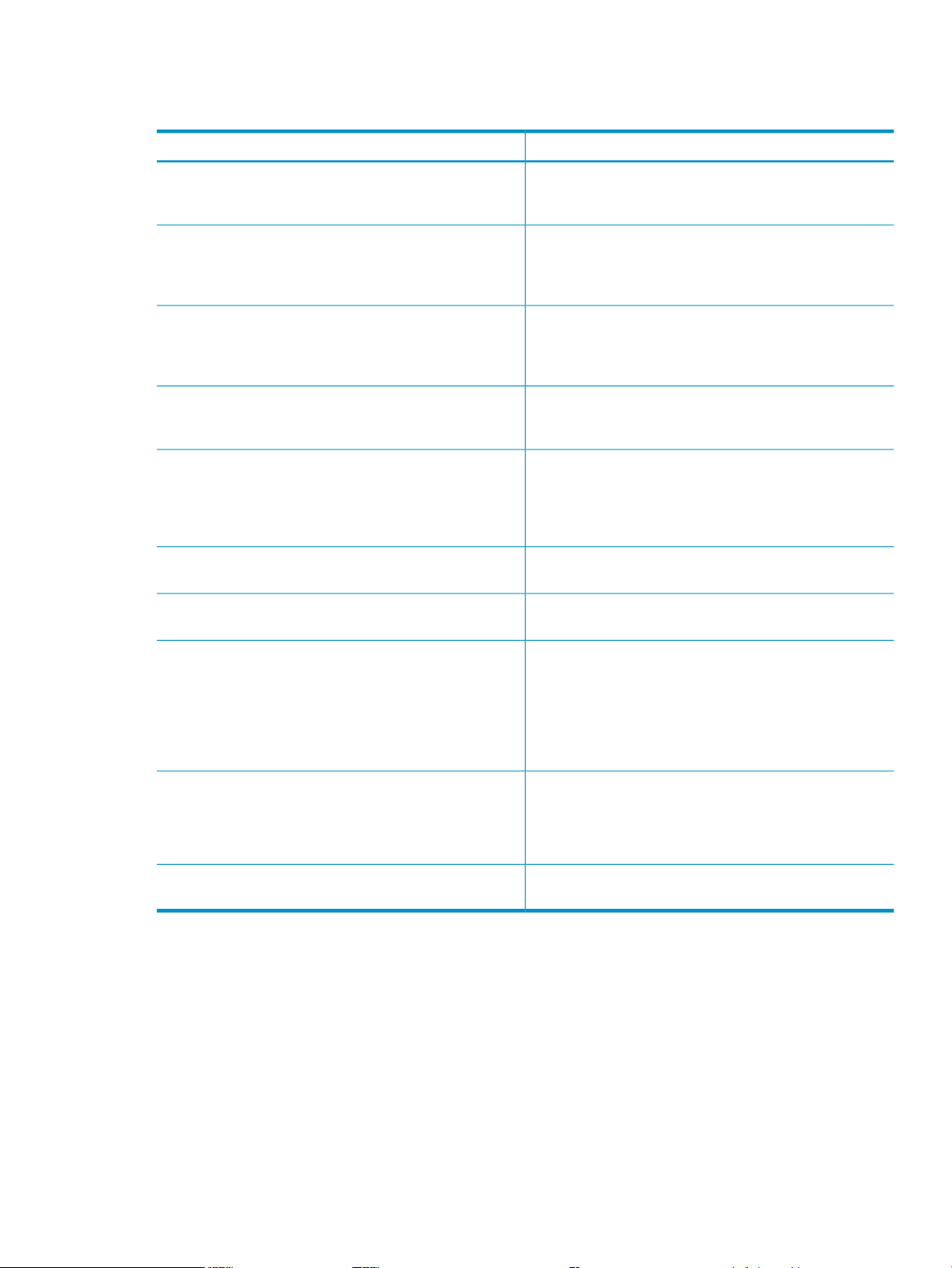

These are terms used in locating and describing components:

| Term | Definition |
| --- | --- |
| Cabinet | Computer system housing that includes a structure of external panels, front and rear doors, internal racking, and dual PDUs. |
| Rack | Structure integrated into the cabinet into which rackmountable components are assembled. The rack uses this naming convention: system-name-racknumber |
| Rack Offset | The physical location of components installed in a modular cabinet, measured in U values numbered 1 to 42, with U1 at the bottom of the cabinet. A U is 1.75 inches (44 millimeters). |
| Group | A subset of a system that contains one or more modules. A group does not necessarily correspond to a single physical object, such as an enclosure. |
| Module | A subset of a group that is usually contained in an enclosure. A module contains one or more slots (or bays). A module can consist of components sharing a common interconnect, such as a backplane, or it can be a logical grouping of components performing a particular function. |
| Slot (or Bay or Position) | A subset of a module that is the logical or physical location of a component within that module. |
| Port | A connector to which a cable can be attached and which transmits and receives data. |
| Fiber | Number (one to four) of the fiber pair (LC connector) within an MTP-LC fiber cable. An MTP-LC fiber cable has a single MTP connector on one end and four LC connectors, each containing a pair of fibers, at the other end. The MTP connector connects to the ServerNet switch in the c7000 enclosure and the LC connectors connect to the CLIM. |
| Group-Module-Slot (GMS); Group-Module-Slot-Bay (GMSB); Group-Module-Slot-Port (GMSP); Group-Module-Slot-Port-Fiber (GMSPF) | A notation method used by hardware and software in NonStop systems for organizing and identifying the location of certain hardware components. |
| NonStop Server Blade | A server blade that provides processing and ServerNet connections. |

On NonStop BladeSystems, locations of the modular components are identified by:

• Physical location:

— Rack number

— Rack offset

• Logical location: group, module, and slot (GMS) notation as defined by their position on

the ServerNet rather than the physical location

OSM uses GMS notation in many places, including the Tree view and Attributes window, and

it uses rack and offset information to create displays of the server and its components.

Page 26

Rack and Offset Physical Location

Rack name and rack offset identify the physical location of components in a NonStop BladeSystem.

The rack name is located on an external label affixed to the rack, which includes the system name

plus a 2-digit rack number.

Rack offset is labeled on the rails in each side of the rack. These rails are measured vertically in

units called U, with one U measuring 1.75 inches (44 millimeters). The rack is 42U with U1 located

at the bottom and U42 at the top. The rack offset is the lowest number on the rack that the

component occupies.
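
To make the rack offset definition concrete, the following Python sketch converts an offset and a component height into the U positions the component occupies and checks two placements for overlap. It is a minimal illustration: the function names are hypothetical, and any component heights you pass in are your own planning figures, not values stated in this guide.

```python
U_INCHES = 1.75   # one U, as defined above
RACK_UNITS = 42   # U1 at the bottom, U42 at the top


def occupied_units(rack_offset, height_u):
    """U positions used by a component whose lowest position is rack_offset."""
    if height_u < 1 or rack_offset < 1 or rack_offset + height_u - 1 > RACK_UNITS:
        raise ValueError("component does not fit within U1 to U42")
    return range(rack_offset, rack_offset + height_u)


def bottom_edge_inches(rack_offset):
    """Distance from the bottom of U1 to the bottom edge of the component."""
    return (rack_offset - 1) * U_INCHES


def overlaps(a, b):
    """True if two ranges returned by occupied_units share any U position."""
    return a.start < b.stop and b.start < a.stop
```

For instance, a component at rack offset 11 has its bottom edge 17.5 inches above the bottom of U1.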

ServerNet Switch Group-Module-Slot Numbering

• Group (100-101):

— Group 100 is the first c7000 processor enclosure containing logical processors 0-7.

— Group 101 is the second c7000 processor enclosure containing logical processors 8-15.

• Module (2-3):

— Module 2 is the X fabric.

— Module 3 is the Y fabric.

• Slot (5 or 7):

— Slot 5 contains the double-wide ServerNet switch for the X fabric.

— Slot 7 contains the double-wide ServerNet switch for the Y fabric.

NOTE: There are two types of c7000 ServerNet switches: Standard I/O and High I/O. For

more information and illustrations of the ServerNet switch ports, refer to “I/O Connections

(Standard and High I/O ServerNet Switch Configurations)” (page 55).

• Port (1-18):

— Ports 1 through 2 support the inter-enclosure links. Port 1 is marked GA. Port 2 is

marked GB.

— Ports 3 through 8 support the I/O links (IP CLIM, Storage CLIM, and IOAM)

NOTE: IOAMs must use Ports 4 through 7. These ports support 4-way IOAM links.

— Ports 9 and 10 support the cross links between two ServerNet switches in the same

enclosure.

— Ports 11 and 12 support the links to a cluster switch. SH on Port 11 stands for short haul.

LH on Port 12 stands for long haul.

— Ports 13 through 18 are not supported.

• Fiber (1-4)

These fibers support up to 4 ServerNet links on ports 3-8 of the c7000 enclosure ServerNet

switch.
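
The ServerNet switch numbering above can be summarized as a small lookup. This Python sketch is illustrative only; the function names and role strings are hypothetical and simply restate the group, module, slot, and port assignments listed in this subsection.

```python
# Module and slot of the ServerNet switch for each fabric.
FABRIC_TO_MODULE_SLOT = {"X": (2, 5), "Y": (3, 7)}


def switch_gms(group, fabric):
    """Return (group, module, slot) for a c7000 ServerNet switch."""
    if group not in (100, 101):
        raise ValueError("ServerNet switch groups are 100 and 101")
    module, slot = FABRIC_TO_MODULE_SLOT[fabric]
    return (group, module, slot)


def port_role(port):
    """Describe what a ServerNet switch port (1-18) is used for."""
    if port in (1, 2):
        return "inter-enclosure link"
    if 3 <= port <= 8:
        return "I/O link"
    if port in (9, 10):
        return "cross link"
    if port == 11:
        return "cluster switch link (short haul)"
    if port == 12:
        return "cluster switch link (long haul)"
    if 13 <= port <= 18:
        return "not supported"
    raise ValueError("ports are numbered 1 through 18")


def valid_ioam_port(port):
    """IOAMs must use ports 4 through 7."""
    return 4 <= port <= 7
```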

Page 27

NonStop Server Blade Group-Module-Slot Numbering

These tablesshow the default numberingfor the NonStop Server Blades of a NonStop BladeSystem

when the server blades are powered on and functioning:

GMS Numbering For the Logical Processors:

Slot*ModuleGroup*Processor ID

111000

211001

311002

411003

511004

611005

711006

811007

111018

211019

*In the OSM Service Connection, the term Enclosure is used for the group and the term Bay is used for the slot.

CLIM Enclosure Group-Module-Slot-Port-Fiber Numbering

This table shows the valid values for GMSPF numbering for the X1 ServerNet switch connection

point to a CLIM:

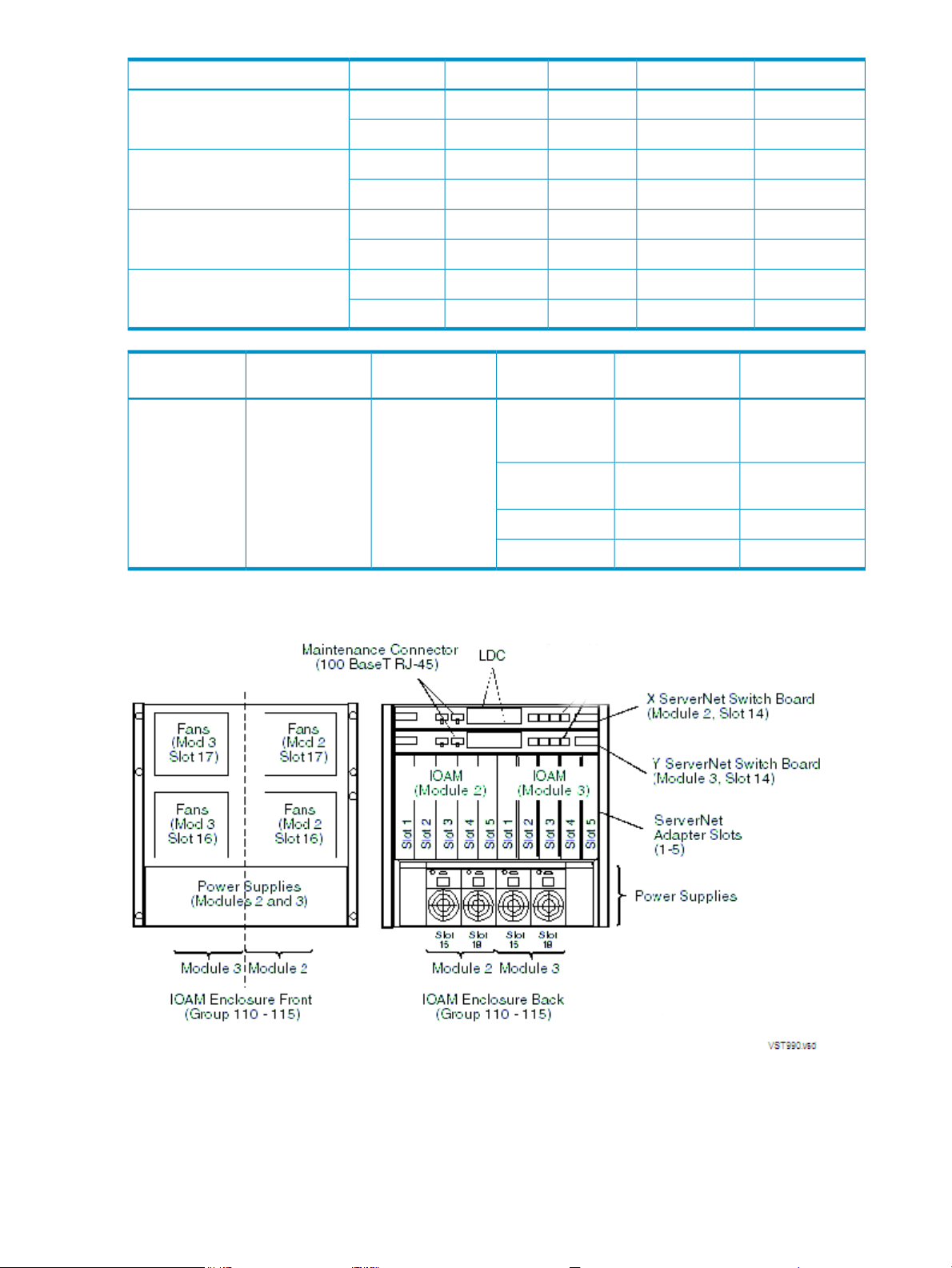

IOAM Enclosure Group-Module-Slot Numbering

A NonStop BladeSystem supports IOAM enclosures, identified as group 110 through 115:

3110110

4110111

5110112

6110113

7110114

8110115

FibersPortsSlotsModuleGroup

1 - 43 to 85, 72, 3100-101ServerNet switch

FiberPortSlotModuleGroupIOAM

1 - 44 (EA)52100110

1 - 44 (EA)73100

1 - 46 (EC)52100111

1 - 46 (EC)73100

Component Location and Identification 27

Page 28

FiberPortSlotModuleGroupIOAM

1 - 45 (EB)52100112

1 - 45 (EB)73100

1 - 47 (ED)52100113

1 - 47 (ED)73100

1 - 44 (EA)52101114

1 - 44 (EA)73101

1 - 46 (EC)52101115

1 - 46 (EC)73101
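
The default numbering in the tables above follows a regular pattern, which the following Python sketch restates. It is illustrative only: the function and dictionary names are hypothetical, and the values are read from the logical processor and IOAM tables in this subsection, so they describe defaults rather than every possible system.

```python
def processor_gms(processor_id):
    """Default (group, module, slot) for logical processors 0 through 15."""
    if not 0 <= processor_id <= 15:
        raise ValueError("processor IDs range from 0 to 15")
    group = 100 + processor_id // 8   # 0-7 in group 100, 8-15 in group 101
    slot = processor_id % 8 + 1       # slots 1 through 8 in each enclosure
    return (group, 1, slot)


# IOAM group -> (ServerNet switch group, switch port, port label)
IOAM_SWITCH_PORT = {
    110: (100, 4, "EA"),
    111: (100, 6, "EC"),
    112: (100, 5, "EB"),
    113: (100, 7, "ED"),
    114: (101, 4, "EA"),
    115: (101, 6, "EC"),
}


def ioam_connections(ioam_group):
    """Return the X and Y fabric (group, module, slot, port) for an IOAM group."""
    group, port, _label = IOAM_SWITCH_PORT[ioam_group]
    return [(group, 2, 5, port),   # X fabric switch: module 2, slot 5
            (group, 3, 7, port)]   # Y fabric switch: module 3, slot 7
```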

| IOAM Group | X ServerNet Module | Y ServerNet Module | Slot | Item | Port |
| --- | --- | --- | --- | --- | --- |
| 110 - 115 (See preceding table.) | 2 | 3 | 1 to 5 | ServerNet adapters | 1 - n: where n is number of ports on adapter |
|  |  |  | 14 | ServerNet switch logic board | 1 - 4 |
|  |  |  | 15, 18 | Power supplies | - |
|  |  |  | 16, 17 | Fans | - |

This illustration shows the slot locations for the IOAM enclosure:

Page 29

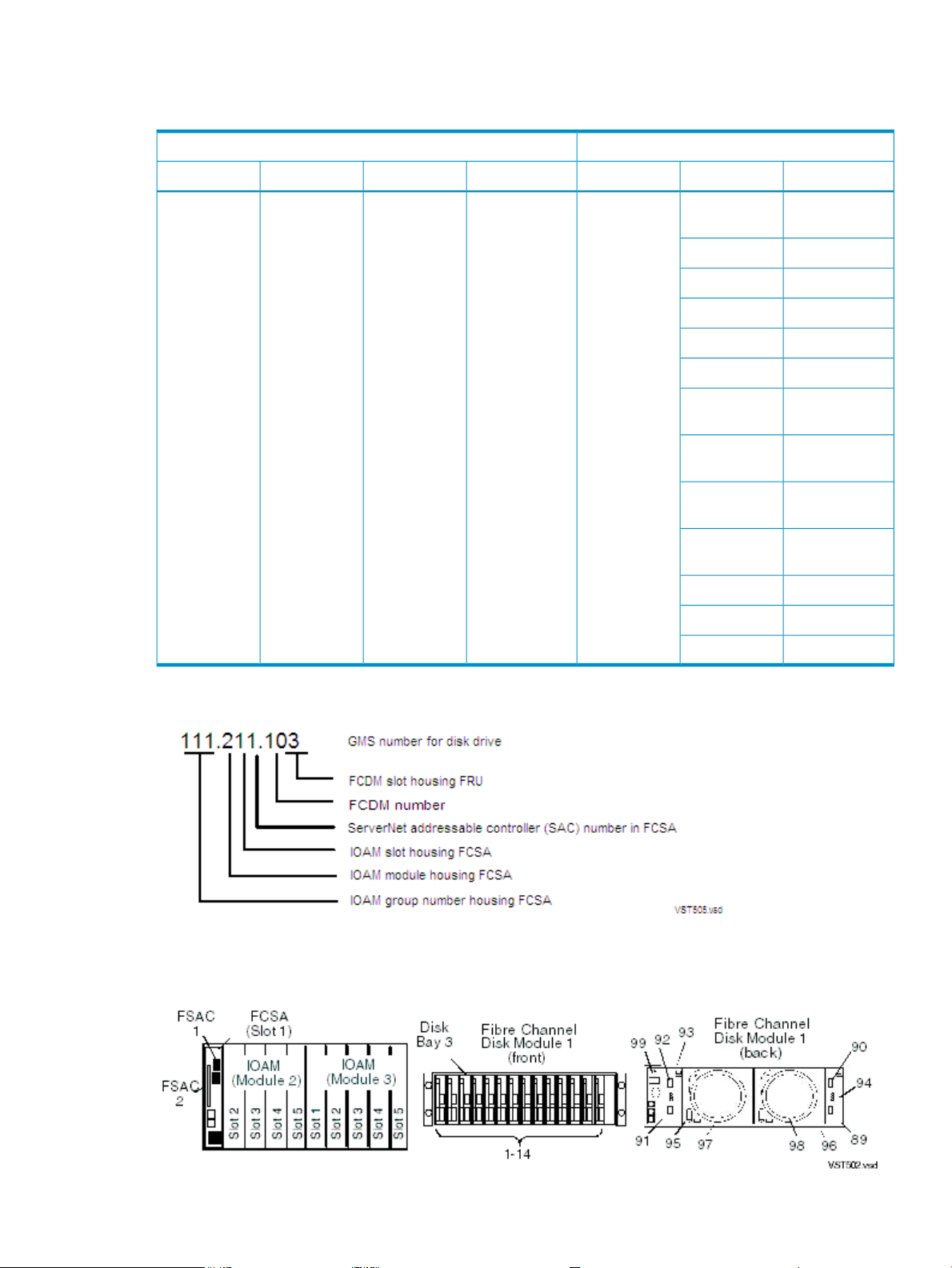

Fibre Channel Disk Module Group-Module-Slot Numbering

This table shows the default numbering for the Fibre Channel disk module:

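| IOAM Enclosure Group | IOAM Enclosure Module | IOAM Enclosure Slot | FCSA F-SACs | FCDM Shelf | FCDM Slot | FCDM Item |
| --- | --- | --- | --- | --- | --- | --- |
| 110-115 | 2 - X fabric; 3 - Y fabric | 1 - 5 | 1, 2 | 1 - 4 if daisy-chained; 1 if single disk enclosure | 0 | Fibre Channel disk module |
|  |  |  |  |  | 1-14 | Disk drive bays |
|  |  |  |  |  | 89 | Transceiver A1 |
|  |  |  |  |  | 90 | Transceiver A2 |
|  |  |  |  |  | 91 | Transceiver B1 |
|  |  |  |  |  | 92 | Transceiver B2 |
|  |  |  |  |  | 93 | Left FC-AL board |
|  |  |  |  |  | 94 | Right FC-AL board |
|  |  |  |  |  | 95 | Left power supply |
|  |  |  |  |  | 96 | Right power supply |
|  |  |  |  |  | 97 | Left blower |
|  |  |  |  |  | 98 | Right blower |
|  |  |  |  |  | 99 | EMU |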

The form of the GMS numbering for a disk in a Fibre Channel disk module is:

This example shows the disk in bay 03 of the Fibre Channel disk module that connects to the

FCSA in the IOAM group 111, module 2, slot 1, FSAC 1:

Page 30

System Installation Document Packet

To keep track of the hardware configuration, internal and external communications cabling, IP

addresses, and connected networks, assemble an Installation Document Packet and retain it as

the system's records. This packet can include:

• “Technical Document for the Factory-Installed Hardware Configuration”

• “Configuration Forms for the ServerNet Adapters and CLIMs”

Technical Document for the Factory-Installed Hardware Configuration

Each new NonStop BladeSystem includes a document that describes:

• The cabinet included with the system

• Each hardware enclosure installed in the cabinet

• Cabinet U location of the bottom edge of each enclosure

• Each ServerNet cable with:

— Source and destination enclosure, component, and connector

— Cable part number

— Source and destination connection labels

This document is called a technical document and serves as the physical location and connection

map for the system.

Configuration Forms for the ServerNet Adapters and CLIMs

To add configuration forms for ServerNet adapters or CLIMs to your Installation Document

Packet, copy the necessary forms from the adapter manuals or the CLuster I/O Module (CLIM)

Installation and Configuration Guide. Follow any planning instructions in these manuals.

Page 31

2 Site Preparation Guidelines

This section describes power, environmental, and space considerations for your site.

Modular Cabinet Power and I/O Cable Entry

Power and I/O cables can enter the NonStop BladeSystem from either the top or the bottom rear

of the modular cabinets, depending on how the cabinets are ordered from HP and the routing

of the AC power feeds at the site. NonStop BladeSystem cabinets can be ordered with the AC

power cords for the PDUs exiting either:

• Top: Power and I/O cables are routed from above the modular cabinet.

• Bottom: Power and I/O cables are routed from below the modular cabinet.

For information about modular cabinet power and cable options, refer to “AC Input Power for

Modular Cabinets” (page 44).

Emergency Power-Off Switches

Emergency power off (EPO) switches are required by local codes or other applicable regulations

when computer equipment contains batteries capable of supplying more than 750 volt-amperes

(VA) for more than five minutes. Systems that have these batteries also have internal EPO hardware

for connection to a site EPO switch or relay. In an emergency, activating the EPO switch or relay

removes power from all electrical equipment in the computer room (except that used for lighting

and fire-related sensors and alarms).

EPO Requirement for NonStop BladeSystems

NonStop BladeSystems without an optional UPS (such as an HP R12000/3 UPS) installed in the

modular cabinet do not contain batteries capable of supplying more than 750 volt-amperes (VA)

for more than five minutes, so they do not require connection to a site EPO switch.
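
The threshold described above can be written as a simple check. This is a minimal sketch of the rule as stated in this section, not a substitute for local codes; the function name and parameters are hypothetical.

```python
EPO_VA_THRESHOLD = 750.0       # volt-amperes, from the rule stated above
EPO_MINUTES_THRESHOLD = 5.0    # minutes, from the rule stated above


def epo_connection_required(battery_va: float, runtime_minutes: float) -> bool:
    """Return True when batteries can supply more than 750 VA for more than
    five minutes (hypothetical helper; local codes remain authoritative)."""
    return battery_va > EPO_VA_THRESHOLD and runtime_minutes > EPO_MINUTES_THRESHOLD
```

A cabinet without a UPS has no such batteries, so `epo_connection_required(0, 0)` is false. For a cabinet with a UPS, supply the battery figures from the UPS documentation.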

EPO Requirement for HP R12000/3 UPS

The rack-mounted HP R12000/3, 12kVA UPS can be optionally installed in a modular cabinet,

contains batteries, and has a remote EPO (REPO) port. For site EPO switches or relays, consult

your HP site preparation specialist or electrical engineer regarding requirements.

If an EPO switch or relay connector is required for your site, contact your HP representative or

refer to the HP 3 Phase UPS User Guide for connector and wiring information for the 12kVA model. This guide

is available at:

http://bizsupport.austin.hp.com/bc/docs/support/SupportManual/c01079392/c01079392.pdf

Electrical Power and Grounding Quality

Proper design and installation of a power distribution system for a NonStop BladeSystem requires

specialized skills, knowledge, and understanding of appropriate electrical codes and the limitations

of the power systems for computer and data processing equipment. For power and grounding

specifications, refer to “AC Input Power for Modular Cabinets” (page 44).

Power Quality

This equipment is designed to operate reliably over a wide range of voltages and frequencies,

described in “Enclosure AC Input” (page 45). However, damage can occur if these ranges are exceeded.

Page 32

Severe electrical disturbances can exceed the design specifications of the equipment.

Common sources of such disturbances are:

• Fluctuations occurring within the facility’s distribution system

• Utility service low-voltage conditions (such as sags or brownouts)

• Wide and rapid variations in input voltage levels

• Wide and rapid variations in input power frequency

• Electrical storms

• Large inductive sources (such as motors and welders)

• Faults in the distribution system wiring (such as loose connections)

Computer systems can be protected from the sources of many of these electrical disturbances by

using:

• A dedicated power distribution system

• Power conditioning equipment

• Lightning arresters on power cables to protect equipment against electrical storms

For steps to take to ensure proper power for the servers, consult with your HP site preparation

specialist or power engineer.

Grounding Systems

The site building must provide a power distribution safety ground/protective earth for each AC

service entrance to all NonStop BladeSystem equipment. This safety grounding system must

comply with local codes and any other applicable regulations for the installation locale.

For proper grounding/protective earth connection, consult with your HP site preparation specialist

or power engineer.

Power Consumption

In a NonStop BladeSystem, the power consumption and inrush currents per connection can vary

because of the unique combination of enclosures housed in the modular cabinet. Thus, the total

power consumption for the hardware installed in the cabinet should be calculated as described

in “Enclosure Power Loads” (page 46).

Uninterruptible Power Supply (UPS)

Modular cabinets do not have built-in batteries to provide power during power failures. To

ride through a power failure, NonStop BladeSystems require either an optional UPS (HP supports

the HP model R12000/3 UPS) installed in each modular cabinet or a site UPS. This

system operation support can include a planned orderly shutdown at a predetermined time in

the event of an extended power failure. A timely and orderly shutdown prevents an uncontrolled

and asymmetric shutdown of the system resources from depleted UPS batteries.

OSM provides this ride-through support during a power failure. When OSM detects a power

failure, it triggers a ride-through timer. To set this timer, you must configure the ride-through

time in SCF. For this information, refer to the SCF Reference Manual for the Kernel Subsystem. If

AC power is not restored before the configured ride-through time period ends, OSM initiates

an orderly shutdown of I/O operations and processors. For additional information, see “AC

Power Monitoring” (page 95).
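
The ride-through decision described above can be sketched as follows. This models only the timing rule stated in this section; it does not call OSM or SCF, and the function name and parameters are hypothetical.

```python
def ride_through_outcome(ride_through_seconds: float, outage_seconds: float) -> str:
    """Model the outcome of one AC power failure (hypothetical helper).

    ride_through_seconds: the ride-through time configured in SCF.
    outage_seconds: how long AC power stays off.
    """
    if outage_seconds < ride_through_seconds:
        # AC power returned before the ride-through period ended.
        return "continue operation"
    # AC power was not restored in time, so an orderly shutdown of
    # I/O operations and processors follows.
    return "orderly shutdown"
```

Choosing the ride-through time is a planning decision: it should be short enough that the UPS batteries are not depleted before the orderly shutdown completes.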

Page 33

NOTE: Retrofitting a system in the field with a UPS and ERMs will likely require moving all

installed enclosures in the rack to provide space for the new hardware. One or more of the

enclosures that formerly resided in the rack might be displaced and therefore have to be installed

in another rack that would also need a UPS and ERMs installed. Additionally, lifting equipment

might be required to lift heavy enclosures to their new location.

For information and specifications on the R12000/3 UPS, see Chapter 3 (page 37) and refer to

the HP 3 Phase UPS User Guide. This guide is available at:

http://bizsupport.austin.hp.com/bc/docs/support/SupportManual/c01079392/c01079392.pdf

If you install a UPS other than the HP model R12000/3 UPS in each modular cabinet of a NonStop

BladeSystem, these requirements must be met to ensure the system can survive a total AC power

failure:

• The UPS output voltage can support the HP PDU input voltage requirements.

• The UPS phase output matches the PDU phase input. For NonStop BladeSystems, 3-phase

output UPSs and 3-phase input HP PDUs are supported. For details, refer to Chapter 3

(page 37).

• The UPS output can support the targeted system in the event of an AC power failure.

Calculate each cabinet load to ensure the UPS can support a proper ride-through time in the

event of a total AC power failure. For more information, refer to “Enclosure Power Loads”

(page 46).

NOTE: A UPS other than the HP model R12000/3 UPS will not be able to utilize the power

fail support of the Configure a Power Source as UPS OSM action.
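
The three requirements above can be captured as a planning checklist. This is a minimal sketch under stated assumptions: the `Ups` and `Pdu` types and their fields are hypothetical, and the third check compares only UPS capacity with the calculated cabinet load, which simplifies the ride-through requirement. Take real values from the UPS documentation and from “Enclosure Power Loads” (page 46).

```python
from dataclasses import dataclass


@dataclass
class Ups:
    output_voltage: float   # volts
    output_phases: int
    capacity_va: float      # volt-amperes


@dataclass
class Pdu:
    min_input_voltage: float  # volts
    max_input_voltage: float  # volts
    input_phases: int


def unmet_ups_requirements(ups: Ups, pdu: Pdu, cabinet_load_va: float) -> list[str]:
    """Return the requirements that a candidate UPS does not meet."""
    problems = []
    if not (pdu.min_input_voltage <= ups.output_voltage <= pdu.max_input_voltage):
        problems.append("UPS output voltage is outside the PDU input voltage range")
    if ups.output_phases != pdu.input_phases:
        problems.append("UPS phase output does not match the PDU phase input")
    if ups.capacity_va < cabinet_load_va:
        problems.append("UPS capacity is below the calculated cabinet load")
    return problems
```

An empty list means that none of the three checks failed. It does not confirm that the ride-through time is adequate, which still has to be calculated for each cabinet.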

If your applications require a UPS that supports the entire system or even a UPS or motor

generator for all computer and support equipment in the site, you must plan the site’s electrical

infrastructure accordingly.

Cooling and Humidity Control

Do not rely on an intuitive approach to design cooling or to simply achieve an energy

balance, that is, summing up the total power dissipation from all the hardware and sizing a

comparable air conditioning capacity. Today’s high-performance NonStop BladeSystems use

semiconductors that integrate multiple functions on a single chip with very high power densities.

These chips, plus high-power-density mass storage and power supplies, are mounted in ultra-thin

system and storage enclosures, and then deployed into computer racks in large numbers. This

higher concentration of devices results in localized heat, which increases the potential for hot

spots that can damage the equipment.

Additionally, variables in the installation site layout can adversely affect air flows and create hot

spots by allowing hot and cool air streams to mix. Studies have shown that above 70°F (21°C),

every increase of 18°F (10°C) reduces long-term electronics reliability by 50%.
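
To get a feel for this rule of thumb, the following sketch treats each 18°F step above 70°F as a halving of long-term reliability. Treating the reduction as compounding is an assumption, because the text states only the per-step figure. The function name is hypothetical.

```python
BASE_TEMP_F = 70.0   # temperature above which the rule of thumb applies
STEP_F = 18.0        # each increase of this size halves long-term reliability


def relative_reliability(temp_f: float) -> float:
    """Return long-term reliability relative to operation at or below 70°F,
    assuming the 50% reduction compounds with each 18°F step."""
    if temp_f <= BASE_TEMP_F:
        return 1.0
    return 0.5 ** ((temp_f - BASE_TEMP_F) / STEP_F)
```

Under this assumption, a hot spot at 88°F halves the relative reliability and one at 106°F quarters it, which is why localized hot spots matter even when the room average looks acceptable.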

Cooling airflow through each enclosure in the NonStop BladeSystem is front-to-back. Because

of high heat densities and hot spots, an accurate assessment of air flow around and through the

system equipment and specialized cooling design is essential for reliable system operation. For

an airflow assessment, consult with your HP cooling consultant or your heating, ventilation, and

air conditioning (HVAC) engineer.

Page 34

NOTE: Failure of site cooling with the NonStop BladeSystem continuing to run can cause rapid

heat buildup and excessive temperatures within the hardware. Excessive internal temperatures

can result in full or partial system shutdown. Ensure that the site’s cooling system remains fully

operational when the NonStop BladeSystem is running.

Because each modular cabinet houses a unique combination of enclosures, use the “Heat

Dissipation Specifications and Worksheet” (page 50) to calculate the total heat dissipation for

the hardware installed in each cabinet. For air temperature levels at the site, refer to “Operating

Temperature, Humidity, and Altitude” (page 50).

Weight

Because modular cabinets for NonStop BladeSystems house a unique combination of enclosures,

total weight must be calculated based on what is in the specific cabinet, as described in “Modular

Cabinet and Enclosure Weights With Worksheet” (page 49).
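
The per-cabinet total is a simple sum over the installed enclosures. The sketch below shows the arithmetic only: the function name is hypothetical, and the weights must come from the worksheet referenced above, not from this example.

```python
def cabinet_total_weight(empty_cabinet_weight: float,
                         enclosures: list[tuple[float, int]]) -> float:
    """Sum the weight of one modular cabinet and its contents.

    enclosures: (unit weight, quantity) pairs, one per enclosure type,
    all in the same unit as empty_cabinet_weight.
    """
    return empty_cabinet_weight + sum(weight * qty for weight, qty in enclosures)
```

Repeat the calculation for each cabinet, because each one houses a different combination of enclosures.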

Flooring

NonStop BladeSystems can be installed either on the site’s floor with the cables entering from

above the equipment or on raised flooring with power and I/O cables entering from underneath.

Because cooling airflow through each enclosure in the modular cabinets is front-to-back, raised

flooring is not required for system cooling.

The site floor structure and any raised flooring (if used) must be able to support the total weight