Hitachi AMS 2000 Family TrueCopy Extended Distance User Guide

FAST FIND LINKS

Document organization

Release notes and readme

Getting help

Table of Contents

MK-97DF8054-23

© 2008-2015 Hitachi, Ltd. All rights reserved.

No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying and recording, or stored in a database or retrieval system for any purpose without the express written permission of Hitachi, Ltd. and Hitachi Data Systems Corporation (hereinafter referred to as “Hitachi”).

Hitachi, Ltd. and Hitachi Data Systems reserve the right to make changes to this document at any time without notice and assume no responsibility for its use. Hitachi, Ltd. and Hitachi Data Systems products and services can only be ordered under the terms and conditions of Hitachi Data Systems' applicable agreements.

Not all of the features described in this document may be currently available. Refer to the most recent product announcement or contact your local Hitachi Data Systems sales office for information on feature and product availability.

Notice: Hitachi Data Systems products and services can be ordered only under the terms and conditions of Hitachi Data Systems’ applicable agreements. The use of Hitachi Data Systems products is governed by the terms of your agreements with Hitachi Data Systems.

Hitachi is a registered trademark of Hitachi, Ltd. in the United States and other countries. Hitachi Data Systems is a registered trademark and service mark of Hitachi in the United States and other countries.

All other trademarks, service marks, and company names are properties of their respective owners.

Export authorization is required for the AMS 2000 Data At Rest Encryption

• Import/Use regulations may restrict export of the AMS2000 SED to certain countries

• China – AMS2000 is eligible for import but the License Key and SED may not be sent to China

• France – Import pending completion of registration formalities

• Hong Kong – Import pending completion of registration formalities

• Israel – Import pending completion of registration formalities

• Russia – Import pending completion of notification formalities

• Distribution Centers – IDC, EDC and ADC cleared for exports

Table of Contents

Preface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ix

Intended audience . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .x

Product version . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .x

Release notes and readme . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .x

Product Abbreviations. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .x

Document revision level . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xi

Changes in this release . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xii

Document organization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xii

Document conventions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .xiv

Convention for storage capacity values. . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

Related documents. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

Getting help. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xx

Comments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xx

1 Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-1

How TCE works . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-2

Typical environment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-2

Volume pairs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-3

Data pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-4

Guaranteed write order and the update cycle. . . . . . . . . . . . . . . . . . . . . . 1-4

Extended update cycles . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-5

Consistency groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-6

Differential Management LUs (DMLU) . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-7

TCE interfaces . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-7

2 Plan and design — sizing data pools and bandwidth . . . . . . . . . 2-1

Plan and design workflow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-2

Assessing business needs — RPO and the update cycle . . . . . . . . . . . . . . . . 2-2

Measuring write-workload . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-3

Collecting write-workload data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-3

Calculating data pool size . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-4

Data pool key points . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2-7

Determining bandwidth . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .2-8

3 Plan and design — remote path . . . . . . . . . . . . . . . . . . . . . . . . . . .3-1

Remote path requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3-2

Management LAN requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3-3

Remote data path requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3-3

WAN optimization controller (WOC) requirements . . . . . . . . . . . . . . . . . . .3-4

Remote path configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3-5

Fibre channel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3-5

Direct connection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3-6

Single FC switch, network connection . . . . . . . . . . . . . . . . . . . . . . . . . .3-7

Double FC switch, network connection . . . . . . . . . . . . . . . . . . . . . . . . .3-8

Fibre channel extender connection . . . . . . . . . . . . . . . . . . . . . . . . . . . .3-9

Port transfer rate for Fibre channel. . . . . . . . . . . . . . . . . . . . . . . . . . .3-10

iSCSI. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3-11

Direct connection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .3-11

Single LAN switch, WAN connection . . . . . . . . . . . . . . . . . . . . . . . . . .3-12

Multiple LAN switch, WAN connection. . . . . . . . . . . . . . . . . . . . . . . . .3-13

Single LAN switch, WOC, WAN connection. . . . . . . . . . . . . . . . . . . . . .3-14

Multiple LAN switch, WOC, WAN connection . . . . . . . . . . . . . . . . . . . .3-15

Multiple array, LAN switch, WOC connection with single WAN . . . . . . . .3-16

Multiple array, LAN switch, WOC connection with two WANs. . . . . . . . .3-17

Supported connections between various types of arrays . . . . . . . . . . . . . . .3-18

Notes when Connecting Hitachi AMS2000 Series to other arrays . . . . . . . .3-18

Using the remote path — best practices. . . . . . . . . . . . . . . . . . . . . . . . . . .3-20

4 Plan and design—arrays, volumes and operating systems. . . . .4-1

Planning workflow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .4-2

Planning arrays—moving data from earlier AMS models. . . . . . . . . . . . . . . . .4-2

Planning logical units for TCE volumes. . . . . . . . . . . . . . . . . . . . . . . . . . . . .4-3

Volume pair and data pool recommendations . . . . . . . . . . . . . . . . . . . . . .4-3

Operating system recommendations and restrictions. . . . . . . . . . . . . . . . . . .4-4

Host time-out. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .4-4

P-VOL, S-VOL recognition by same host on VxVM, AIX®, LVM . . . . . . . . . .4-4

HP server. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .4-4

Windows Server 2000 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .4-7

Windows Server 2003/2008. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .4-7

Identifying P-VOL and S-VOL LUs on Windows. . . . . . . . . . . . . . . . . . . .4-8

Windows 2000 or Windows Server and TCE Configuration . . . . . . . . . . .4-9

Dynamic Disk in Windows 2000/Windows Server . . . . . . . . . . . . . . . . .4-10

VMWare and TCE Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . .4-10

Concurrent Use of Dynamic Provisioning. . . . . . . . . . . . . . . . . . . . . . .4-11

User Data Area of Cache Memory . . . . . . . . . . . . . . . . . . . . . . . . . . . .4-16

Formatting the DMLU in the Event of a Drive Failure. . . . . . . . . . . . . . . . .4-22

Maximum supported capacity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .4-23

TCE and SnapShot capacity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .4-24

TCE, SnapShot, ShadowImage concurrent capacity . . . . . . . . . . . . . . . . .4-26

Maximum Supported Capacity of P-VOL and Data Pool . . . . . . . . . . . . . . .4-28

No SnapShot-TCE cascade configuration . . . . . . . . . . . . . . . . . . . . . . .4-29

SnapShot-TCE cascade configuration. . . . . . . . . . . . . . . . . . . . . . . . . .4-30

Cache limitations on data and data pool volumes . . . . . . . . . . . . . . . . . . .4-31

Cautions for Reconfiguring the Cache Memory. . . . . . . . . . . . . . . . . . . . .4-32

5 Requirements and specifications . . . . . . . . . . . . . . . . . . . . . . . . . .5-1

TCE system requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-2

Displaying the hardware revision number . . . . . . . . . . . . . . . . . . . . . . . . 5-3

TCE system specifications. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-3

6 Installation and setup. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .6-1

Installation procedures. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-2

Installing TCE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-3

Enabling or disabling TCE. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-6

Un-installing TCE. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-8

Setup procedures. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .6-11

Setting up DMLUs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .6-11

Setting up data pools. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .6-12

Adding or changing the remote port CHAP secret. . . . . . . . . . . . . . . . . . .6-14

Setting up the remote path . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .6-15

7 Pair operations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .7-1

Operations work flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-2

TCE operations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-3

Checking pair status. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-3

Creating the initial copy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-3

Prerequisites and best practices for pair creation . . . . . . . . . . . . . . . . . . . 7-4

TCE setup wizard . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-4

Create pair procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-5

Splitting a pair. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-6

Resynchronizing a pair . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-7

Swapping pairs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-9

8 Example scenarios and procedures . . . . . . . . . . . . . . . . . . . . . . . .8-1

CLI scripting procedure for S-VOL backup. . . . . . . . . . . . . . . . . . . . . . . . . . 8-2

Scripted TCE, SnapShot procedure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8-4

Procedure for swapping I/O to S-VOL when maintaining local array . . . . . . . 8-9

Procedure for moving data to a remote array. . . . . . . . . . . . . . . . . . . . . . .8-10

Example procedure for moving data . . . . . . . . . . . . . . . . . . . . . . . . . . . .8-11

Process for disaster recovery . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .8-11

Takeover processing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .8-11

Swapping P-VOL and S-VOL. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .8-12

Failback to the local array . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .8-12

9 Monitoring and maintenance. . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-1

Monitoring pair status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-2

Monitoring data pool capacity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-5

Monitoring data pool usage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-5

Expanding data pool capacity. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-6

Changing data pool threshold value . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-6

Monitoring the remote path . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-7

Changing remote path bandwidth. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-7

Monitoring cycle time. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-7

Changing cycle time . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-8

Changing copy pace. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-8

Checking RPO — Monitoring P-VOL/S-VOL time difference. . . . . . . . . . . . . . .9-9

Routine maintenance. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-9

Deleting a volume pair . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-9

Deleting data pools. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-10

Deleting a DMLU. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-10

Deleting the remote path. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-11

TCE tasks before a planned remote array shutdown. . . . . . . . . . . . . . . . .9-11

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .9-11

10 Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .10-1

Troubleshooting overview. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .10-2

Correcting data pool shortage. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .10-2

Correcting array problems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .10-3

Delays in settling of S-VOL Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . .10-4

Correcting resynchronization errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .10-4

Using the event log . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .10-7

Recovering a TrueCopy path. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .10-8

Miscellaneous troubleshooting. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .10-10

A Operations using CLI. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-1

Installation and setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .A-2

Installing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .A-3

Enabling and disabling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .A-5

Un-installing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .A-8

Setting the Differential Management Logical Unit. . . . . . . . . . . . . . . . . . .A-10

Release a DMLU . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-10

Setting the data pool. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-10

Setting the LU ownership. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-12

Setting the cycle time . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-13

Setting mapping information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-13

Setting the remote port CHAP secret. . . . . . . . . . . . . . . . . . . . . . . . . . . A-14

Setting the remote path. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-15

Deleting the remote path. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-17

Pair operations. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-20

Displaying status for all pairs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-20

Displaying detail for a specific pair . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-20

Creating a pair . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-21

Splitting a pair . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-21

Resynchronizing a pair. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-22

Swapping a pair . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-22

Deleting a pair . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-22

Changing pair information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-23

Monitoring pair status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-23

Confirming Consistency Group (CTG) status. . . . . . . . . . . . . . . . . . . . . . A-24

Procedures for failure recovery . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-25

Displaying the event log. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-25

Reconstructing the remote path . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-25

Sample script. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-26

B Operations using CCI. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-1

Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-2

Setting the command device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-2

Setting LU mapping. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-3

Defining the configuration definition file . . . . . . . . . . . . . . . . . . . . . . . . . B-4

Setting the environment variable . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-6

Pair operations. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-7

Checking pair status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-7

Creating a pair (paircreate) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-8

Splitting a pair (pairsplit) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-8

Resynchronizing a pair (pairresync). . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-9

Suspending pairs (pairsplit -R) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-9

Releasing pairs (pairsplit -S). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-10

Splitting TCE S-VOL/SnapShot V-VOL pair (pairsplit -mscas) . . . . . . . . . . B-10

Confirming data transfer when status is PAIR . . . . . . . . . . . . . . . . . . . . B-13

Pair creation/resynchronization for each CTG. . . . . . . . . . . . . . . . . . . . . B-13

Response time of pairsplit command. . . . . . . . . . . . . . . . . . . . . . . . . . . B-15

Pair, group name differences in CCI and Navigator 2. . . . . . . . . . . . . . . . . B-18

C Cascading with SnapShot. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . C-1

Cascade configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .C-2

Replication operations supported . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .C-2

TCE operations supported . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .C-3

SnapShot operations supported . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .C-4

Status combinations, Read/write supported . . . . . . . . . . . . . . . . . . . . . . . . .C-4

Guidelines and restrictions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .C-6

Cascading with SnapShot on the remote side . . . . . . . . . . . . . . . . . . . . . .C-6

TCE, SnapShot behaviors compared . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .C-7

D Installing TCE when Cache Partition Manager is in use . . . . . . D-1

Initializing Cache Partition when TCE and SnapShot are installed . . . . . . . . . D-2

E Wavelength Division Multiplexing (WDM) and dark fibre. . . . . . .E-1

WDM and dark fibre. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .E-2

Glossary

Index

Preface

This document provides instructions for planning, setting up, and operating TrueCopy Extended Distance.

This preface includes the following information:

Intended audience

Product version

Release notes and readme

Changes in this release

Document organization

Document conventions

Convention for storage capacity values

Related documents

Getting help

Intended audience

This document is intended for system administrators, Hitachi Data Systems representatives, and Authorized Service Providers who install, configure, and operate Hitachi Adaptable Modular Storage (AMS) 2000 Family storage systems.

Product version

This document applies to Hitachi AMS 2000 Family firmware version 08D1/B or later.

Release notes and readme

Read the release notes and readme file before installing and using this product. They may contain requirements or restrictions that are not fully described in this document, as well as updates or corrections to this document.

Product Abbreviations

| Product Abbreviation | Product Full Name |
|---|---|
| ShadowImage | ShadowImage In-system Replication |
| Snapshot | Copy-on-Write Snapshot |
| TrueCopy Remote | TrueCopy Remote Replication |
| TCE | TrueCopy Extended Distance |
| TCMD | TrueCopy Modular Distributed |
| Windows Server | Windows Server 2003, Windows Server 2008, and Windows Server 2012 |

Changes in this release

• In Table 5-2 (page 5-3), added the parameter Remote Copy over iSCSI in the WAN environment.

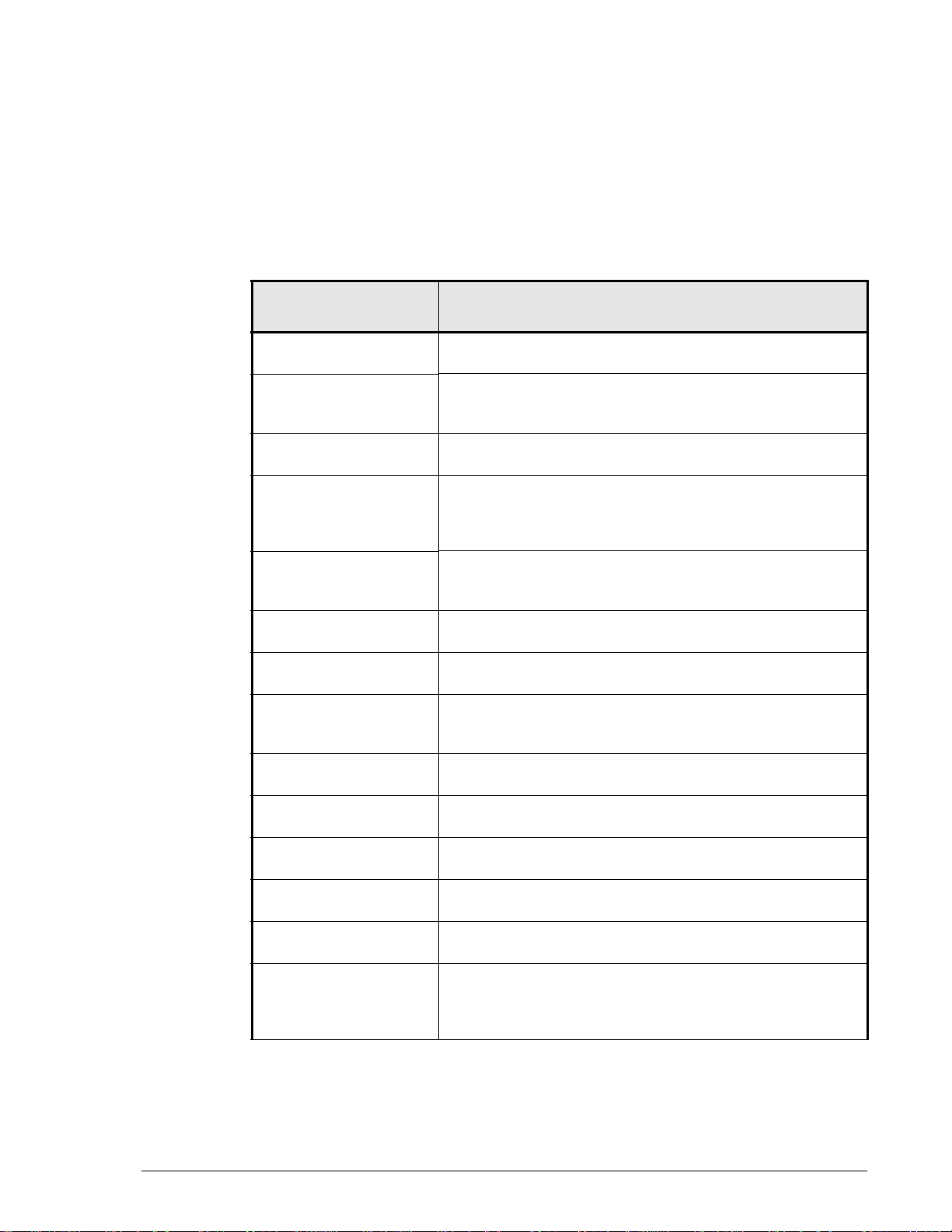

Document organization

Thumbnail descriptions of the chapters are provided in the following table.

Click the chapter title in the first column to go to that chapter. The first page

of every chapter or appendix contains links to the contents.

Chapter/Appendix

Title

Chapter 1, Overview Provides descriptions of TrueCopy Extended Distance

components and how they work together.

Chapter 2, Plan and

design — sizing data

pools and bandwidth

Chapter 3, Plan and

design — remote path

Chapter 4, Plan and

design—arrays,

volumes and operating

systems

Chapter 5,

Requirements and

specifications

Chapter 6, Installation

and setup

Chapter 7, Pair

operations

Chapter 8, Example

scenarios and

procedures

Chapter 9, Monitoring

and maintenance

Chapter 10,

Troubleshooting

Appendix A,

Operations using CLI

Appendix B,

Operations using CCI

Appendix C, Cascading

with SnapShot

Appendix D, Installing

TCE when Cache

Partition Manager is in

use

Provides instructions for measuring write-workload,

calculating data pool size and bandwidth.

Provides supported iSCSI and Fibre Channel

configurations, with information on WDM and dark fibre.

Discusses the arrays and volumes you can use for TCE.

Provides TCE system requirements and specifications.

Provides procedures for installing and setting up the TCE

system and creating the initial copy.

Provides information and procedures for TCE operations.

Provides backup, data moving, and disaster recovery

scenarios and procedures.

Provides monitoring and maintenance information.

Provides troubleshooting information.

Provides detailed Command Line Interface instructions for

configuring and using TCE.

Provides detailed Command Line Interface instructions for

configuring and using TCE.

Provides supported configurations, operations, etc. for

cascading TCE with SnapShot.

Provides required information when using Cache Partition

Manager.

Description

Preface xi

Hitachi AMS 2000 Family TrueCopy Extended Distance User Guide

Page 12

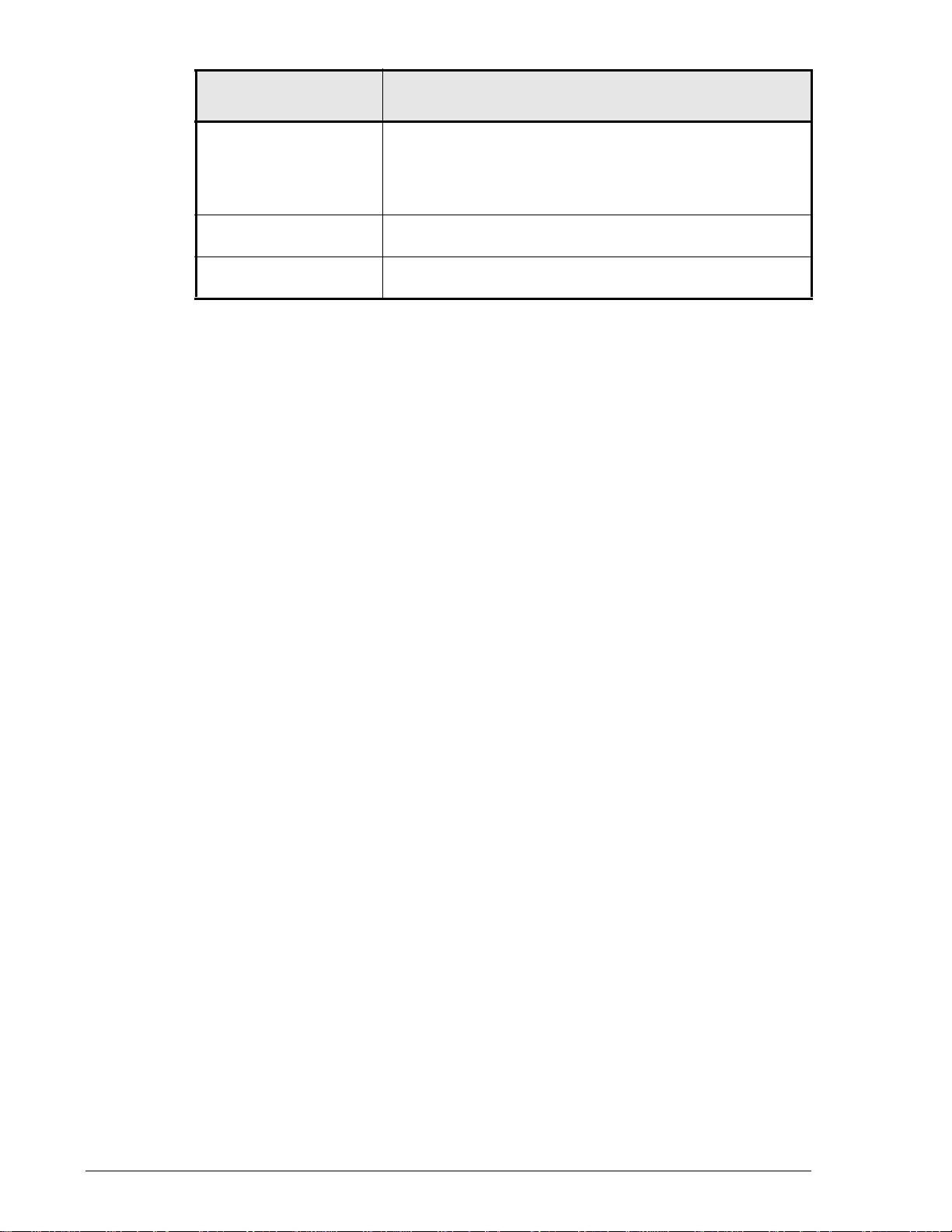

Chapter/Appendix

Title

Description

Appendix E,

Wavelength

Provides a discussion of WDM and dark fibre for channel

extender.

Division

Multiplexing (WDM)

and dark fibre

Glossary Provides definitions for terms and acronyms found in this

document.

Index Provides links and locations to specific information in this

document.

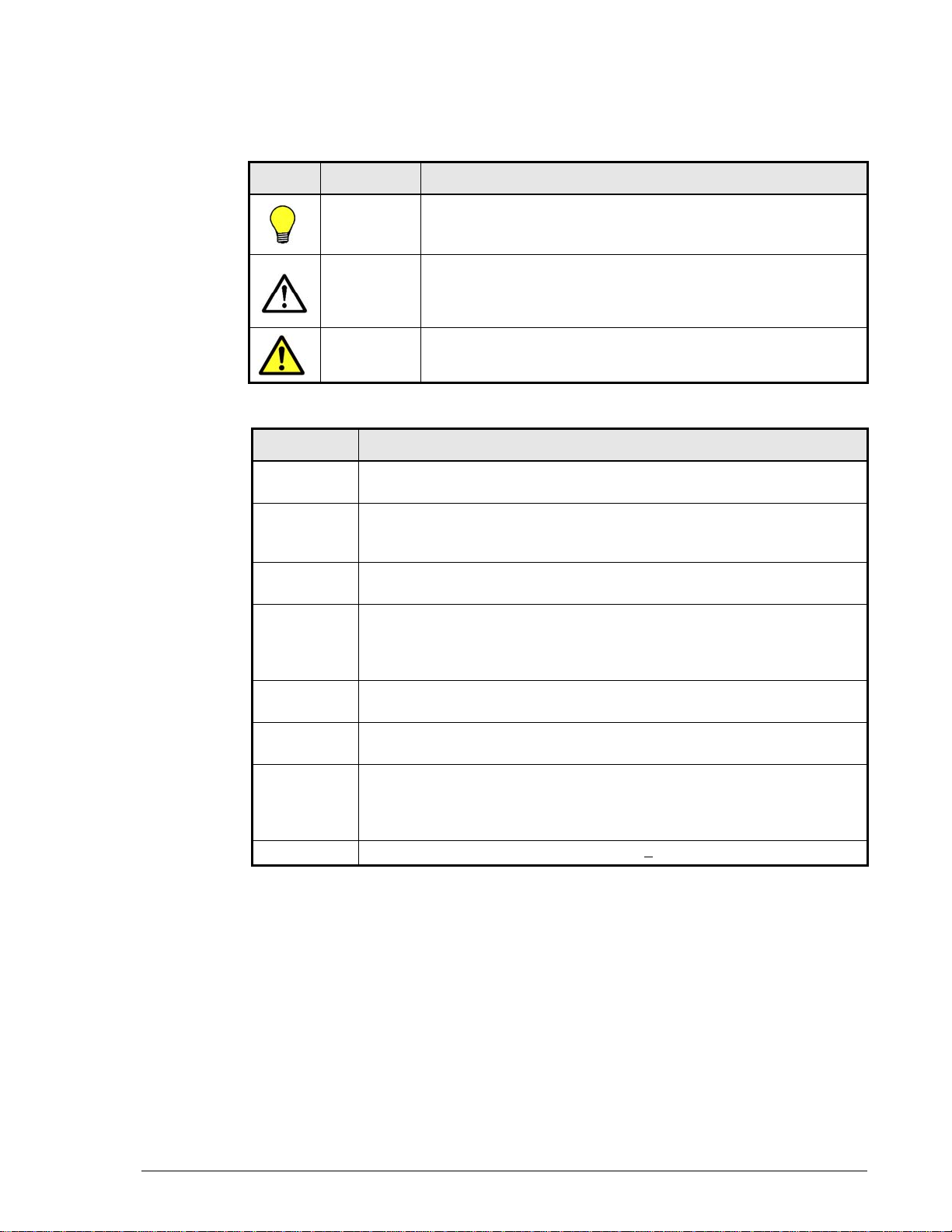

Document conventions

This document uses the following symbols to draw attention to important safety and operational information.

| Meaning | Description |
|---|---|
| Tip | Tips provide helpful information, guidelines, or suggestions for performing tasks more effectively. |
| Note | Notes emphasize or supplement important points of the main text. |
| Caution | Cautions indicate that failure to take a specified action could result in damage to the software or hardware. |

The following typographic conventions are used in this document.

| Convention | Description |
|---|---|
| Bold | Indicates text on a window, other than the window title, including menus, menu options, buttons, fields, and labels. Example: Click **OK**. |
| Italic | Indicates a variable, which is a placeholder for actual text provided by the user or system. Example: copy *source-file* *target-file*. Angled brackets (< >) are also used to indicate variables. |
| screen/code | Indicates text that is displayed on screen or entered by the user. Example: `# pairdisplay -g oradb` |
| < > angled brackets | Indicates a variable, which is a placeholder for actual text provided by the user or system. Example: `# pairdisplay -g <group>`. Italic font is also used to indicate variables. |
| [ ] square brackets | Indicates optional values. Example: [ a \| b ] indicates that you can choose a, b, or nothing. |
| { } braces | Indicates required or expected values. Example: { a \| b } indicates that you must choose either a or b. |
| \| vertical bar | Indicates that you have a choice between two or more options or arguments. Examples: [ a \| b ] indicates that you can choose a, b, or nothing; { a \| b } indicates that you must choose either a or b. |
| underline | Indicates the default value. Example: [ a \| b ] |

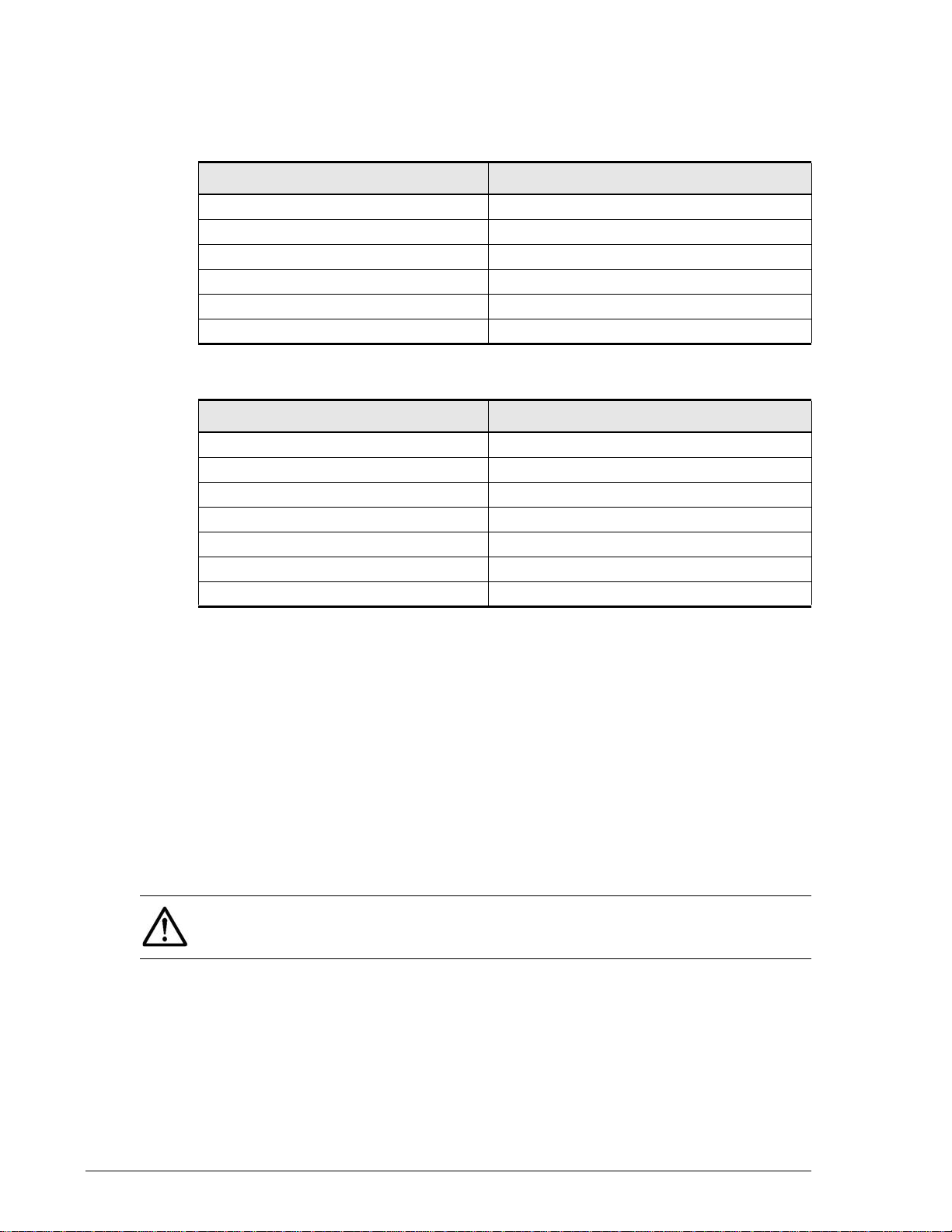

Convention for storage capacity values

Physical storage capacity values (e.g., disk drive capacity) are calculated based on the following values:

| Physical capacity unit | Value |
|---|---|
| 1 KB | 1,000 bytes |
| 1 MB | 1,000 KB or 1,000² bytes |
| 1 GB | 1,000 MB or 1,000³ bytes |
| 1 TB | 1,000 GB or 1,000⁴ bytes |
| 1 PB | 1,000 TB or 1,000⁵ bytes |
| 1 EB | 1,000 PB or 1,000⁶ bytes |

Logical storage capacity values (e.g., logical device capacity) are calculated based on the following values:

| Logical capacity unit | Value |
|---|---|
| 1 block | 512 bytes |
| 1 KB | 1,024 (2¹⁰) bytes |
| 1 MB | 1,024 KB or 1,024² bytes |
| 1 GB | 1,024 MB or 1,024³ bytes |
| 1 TB | 1,024 GB or 1,024⁴ bytes |
| 1 PB | 1,024 TB or 1,024⁵ bytes |
| 1 EB | 1,024 PB or 1,024⁶ bytes |
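To make the difference between the two conventions concrete, the following Python sketch applies the physical and logical unit tables above to a single byte count. The names `PHYSICAL`, `LOGICAL`, `to_bytes`, `from_bytes`, and `to_blocks` are hypothetical helpers for illustration only; they are not part of Navigator 2, CCI, or any other Hitachi tool.

```python
# Unit tables taken from the physical and logical capacity conventions.
# These helper names are hypothetical and exist only for this illustration.
PHYSICAL = {"KB": 1000**1, "MB": 1000**2, "GB": 1000**3,
            "TB": 1000**4, "PB": 1000**5, "EB": 1000**6}
LOGICAL = {"KB": 1024**1, "MB": 1024**2, "GB": 1024**3,
           "TB": 1024**4, "PB": 1024**5, "EB": 1024**6}
BLOCK_SIZE = 512  # 1 logical block = 512 bytes


def to_bytes(value, unit, table):
    """Convert a capacity expressed in `unit` to bytes using `table`."""
    return value * table[unit]


def from_bytes(num_bytes, unit, table):
    """Express a byte count in `unit` using `table`."""
    return num_bytes / table[unit]


def to_blocks(num_bytes):
    """Return the number of whole 512-byte logical blocks in num_bytes."""
    return num_bytes // BLOCK_SIZE


if __name__ == "__main__":
    drive = to_bytes(1, "TB", PHYSICAL)  # a drive labeled 1 TB (physical)
    print(drive)                                       # 1000000000000
    print(round(from_bytes(drive, "GB", LOGICAL), 2))  # 931.32
    print(to_blocks(drive))                            # 1953125000
```

Under these assumptions, the same 10¹² bytes that the physical convention reports as 1 TB reads as roughly 931.32 GB under the logical convention. The sketch covers only the unit arithmetic; it does not model RAID, formatting, or any other capacity overhead.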

Related documents

The AMS 2000 Family user documentation is available on the Hitachi Data Systems Portal: https://portal.hds.com. Please check this site for the most current documentation, including important updates that may have been made after the release of the product.

This documentation set consists of the following documents.

Release notes

• Adaptable Modular Storage System Release Notes

• Storage Navigator Modular 2 Release Notes

Please read the release notes before installing and/or using this product. They may contain requirements and/or restrictions not fully described in this document, along with updates and/or corrections to this document.

Installation and getting started

The following documents provide instructions for installing an AMS 2000 Family storage system. They include rack information, safety information, site-preparation instructions, getting-started guides for experienced users, and host connectivity information. The symbol identifies documents that contain initial configuration information about Hitachi AMS 2000 Family storage systems.

AMS2100/2300 Getting Started Guide, MK-98DF8152

Provides quick-start instructions for getting an AMS 2100 or AMS 2300 storage system up and running as quickly as possible.

AMS2500 Getting Started Guide, MK-97DF8032

Provides quick-start instructions for getting an AMS 2500 storage system up and running as quickly as possible.

AMS 2000 Family Site Preparation Guide, MK-98DF8149

Contains site planning and pre-installation information for AMS 2000 Family storage systems, expansion units, and high-density expansion units. This document also covers safety precautions, rack information, and product specifications.

AMS 2000 Family Fibre Channel Host Installation Guide, MK-08DF8189

Describes how to prepare Hitachi AMS 2000 Family Fibre Channel storage systems for use with host servers running supported operating systems.

AMS 2000 Family iSCSI Host Installation Guide, MK-08DF8188

Describes how to prepare Hitachi AMS 2000 Family iSCSI storage systems for use with host servers running supported operating systems.

Storage and replication features

The following documents describe how to use Storage Navigator Modular 2 (Navigator 2) to perform storage and replication activities.

Storage Navigator 2 Advanced Settings User’s Guide, MK-97DF8039

Contains advanced information about launching and using Navigator 2 in various operating systems, IP addresses and port numbers, server certificates and private keys, boot and restore options, outputting configuration information to a file, and collecting diagnostic information.


Storage Navigator Modular 2 User’s Guide, MK-99DF8208

Describes how to use Navigator 2 to configure and manage storage on an AMS 2000 Family storage system.

AMS 2000 Family Dynamic Provisioning Configuration Guide, MK-09DF8201

Describes how to use virtual storage capabilities to simplify storage additions and administration.

Storage Navigator 2 Storage Features Reference Guide for AMS, MK-97DF8148

Contains concepts, preparation, and specifications for Account Authentication, Audit Logging, Cache Partition Manager, Cache Residency Manager, Data Retention Utility, LUN Manager, Performance Monitor, SNMP Agent, and Modular Volume Migration.

AMS 2000 Family Copy-on-write SnapShot User Guide, MK-97DF8124

Describes how to create point-in-time copies of data volumes in AMS 2100, AMS 2300, and AMS 2500 storage systems, without impacting host service and performance levels. Snapshot copies are fully read/write compatible with other hosts and can be used for rapid data restores, application testing and development, data mining and warehousing, and nondisruptive backup and maintenance procedures.

AMS 2000 Family ShadowImage In-system Replication User Guide, MK-97DF8129

Describes how to perform high-speed nondisruptive local mirroring to create a copy of mission-critical data in AMS 2100, AMS 2300, and AMS 2500 storage systems. ShadowImage keeps data RAID-protected and fully recoverable, without affecting service or performance levels. Replicated data volumes can be split from host applications and used for system backups, application testing, and data mining applications while business continues to operate at full capacity.

AMS 2000 Family TrueCopy Remote Replication User Guide, MK-97DF8052

Describes how to create and maintain multiple duplicate copies of user data across multiple AMS 2000 Family storage systems to enhance your disaster recovery strategy.

AMS 2000 Family TrueCopy Extended Distance User Guide, MK-97DF8054 (this document)

Describes how to perform bi-directional remote data protection that copies data over any distance without interrupting applications, and provides failover and recovery capabilities.


AMS 2000 Data Retention Utility User’s Guide, MK-97DF8019

Describes how to lock disk volumes as read-only for a certain period of time to ensure authorized-only access and facilitate immutable, tamperproof record retention for storage-compliant environments. After data is written, it can be retrieved and read only by authorized applications or users, and cannot be changed or deleted during the specified retention period.

Storage Navigator Modular 2 online help

Provides topic and context-sensitive help information accessed through the Navigator 2 software.

Hardware maintenance and operation

The following documents describe how to operate, maintain, and administer an AMS 2000 Family storage system. They also provide a wide range of technical information and specifications for the AMS 2000 Family storage systems. The symbol identifies documents that contain initial configuration information about Hitachi AMS 2000 Family storage systems.

AMS 2100/2300 Storage System Hardware Guide, MK-97DF8010

Provides detailed information about installing, configuring, and maintaining an AMS 2100/2300 storage system.

AMS 2500 Storage System Hardware Guide, MK-97DF8007

Provides detailed information about installing, configuring, and maintaining an AMS 2500 storage system.

AMS 2000 Family Storage System Reference Guide, MK-97DF8008

Contains specifications and technical information about power cables, system parameters, interfaces, logical blocks, RAID levels and configurations, and regulatory information about AMS 2100, AMS 2300, and AMS 2500 storage systems. This document also contains remote adapter specifications and regulatory information.

AMS 2000 Family Storage System Service and Upgrade Guide, MK-97DF8009

Provides information about servicing and upgrading AMS 2100, AMS 2300, and AMS 2500 storage systems.

AMS 2000 Family Power Savings User Guide, MK-97DF8045

Describes how to spin down volumes in selected RAID groups when they are not being accessed by business applications to decrease energy consumption and significantly reduce the cost of storing and delivering information.


Command and Control (CCI)

The following documents describe how to install the Hitachi AMS 2000 Family Command Control Interface (CCI) and use it to perform TrueCopy and ShadowImage operations.

AMS 2000 Family Command Control Interface (CCI) Installation Guide, MK-97DF8122

Describes how to install CCI software on open-system hosts.

AMS 2000 Family Command Control Interface (CCI) Reference Guide, MK-97DF8121

Contains reference, troubleshooting, and maintenance information related to CCI operations on AMS 2100, AMS 2300, and AMS 2500 storage systems.

AMS 2000 Family Command Control Interface (CCI) User’s Guide, MK-97DF8123

Describes how to use CCI to perform TrueCopy and ShadowImage operations on AMS 2100, AMS 2300, and AMS 2500 storage systems.

Command Line Interface (CLI)

The following documents describe how to use Hitachi Storage Navigator Modular 2 to perform management and replication activities from a command line.

Storage Navigator Modular 2 Command Line Interface (CLI) Unified Reference Guide, MK-97DF8089

Describes how to interact with all Navigator 2 bundled and optional software modules by typing commands at a command line.

Storage Navigator 2 Command Line Interface Replication Reference Guide for AMS, MK-97DF8153

Describes how to interact with Navigator 2 to perform replication activities by typing commands at a command line.


Dynamic Replicator documentation

The following documents describe how to install, configure, and use Hitachi Dynamic Replicator to provide AMS Family storage systems with continuous data protection, remote replication, and application failover in a single, easy-to-deploy, easy-to-manage platform.

Hitachi Dynamic Replicator - Scout Release Notes, RN-99DF8211

Hitachi Dynamic Replicator - Scout Host Upgrade Guide, MK-99DF8267

Hitachi Dynamic Replicator - Scout Host User Guide, MK-99DF8266

Hitachi Dynamic Replicator - Scout Installation and Configuration Guide, MK-98DF8213

Hitachi Dynamic Replicator - Scout Quick Install/Upgrade Guide, MK-98DF8222

Getting help

If you need to contact the Hitachi Data Systems support center, please provide as much information about the problem as possible, including:

• The circumstances surrounding the error or failure.

• The exact content of any messages displayed on the host systems.

• The exact content of any messages displayed on Storage Navigator Modular 2.

• The Storage Navigator Modular 2 configuration information. This information is used by service personnel for troubleshooting purposes.

The Hitachi Data Systems customer support staff is available 24 hours a day, seven days a week. If you need technical support, please log on to the Hitachi Data Systems Portal for contact information: https://portal.hds.com

Comments

Please send us your comments on this document: doc.comments@hds.com. Include the document title, number, and revision, and refer to specific sections and paragraphs whenever possible.

Thank you! (All comments become the property of Hitachi Data Systems.)


1

Overview

This manual provides instructions for designing, planning, implementing, using, monitoring, and troubleshooting TrueCopy Extended Distance (TCE). This chapter consists of:

How TCE works

Typical environment

TCE interfaces


How TCE works

With TrueCopy Extended Distance (TCE), you create a copy of your data at a remote location. After the initial copy is created, only changed data transfers to the remote location.

You create a TCE copy when you:

• Select a volume on the production array that you want to replicate.

• Create a volume on the remote array that will contain the copy.

• Establish a Fibre Channel or iSCSI link between the local and remote arrays.

• Make the initial copy across the link to the remote array.

During and after the initial copy, the primary volume (P-VOL) on the local side continues to be updated with data from the host application. When the host writes data to the P-VOL, the local array immediately returns a response to the host, which completes the I/O processing. The array performs the subsequent processing independently of host I/O processing.

Updates are periodically sent to the secondary volume (S-VOL) on the remote side at the end of the “update cycle”, a time period established by the user. The cycle time is based on the recovery point objective (RPO), which is the maximum amount of data, expressed in time (for example, two hours’ worth or four hours’ worth), that can be lost in a disaster before the operation is irreparably damaged. If the RPO is two hours, the business must be able to recover all data up to two hours before the disaster occurred.

When a disaster occurs, storage operations are transferred to the remote site and the secondary volume becomes the production volume. All of the original data, as of the last completed update, is available in the S-VOL. The update cycle is determined by your RPO and by measuring write-workload during the TCE planning and design process.

For a detailed discussion of the disaster recovery process using TCE, please refer to Process for disaster recovery on page 8-11.

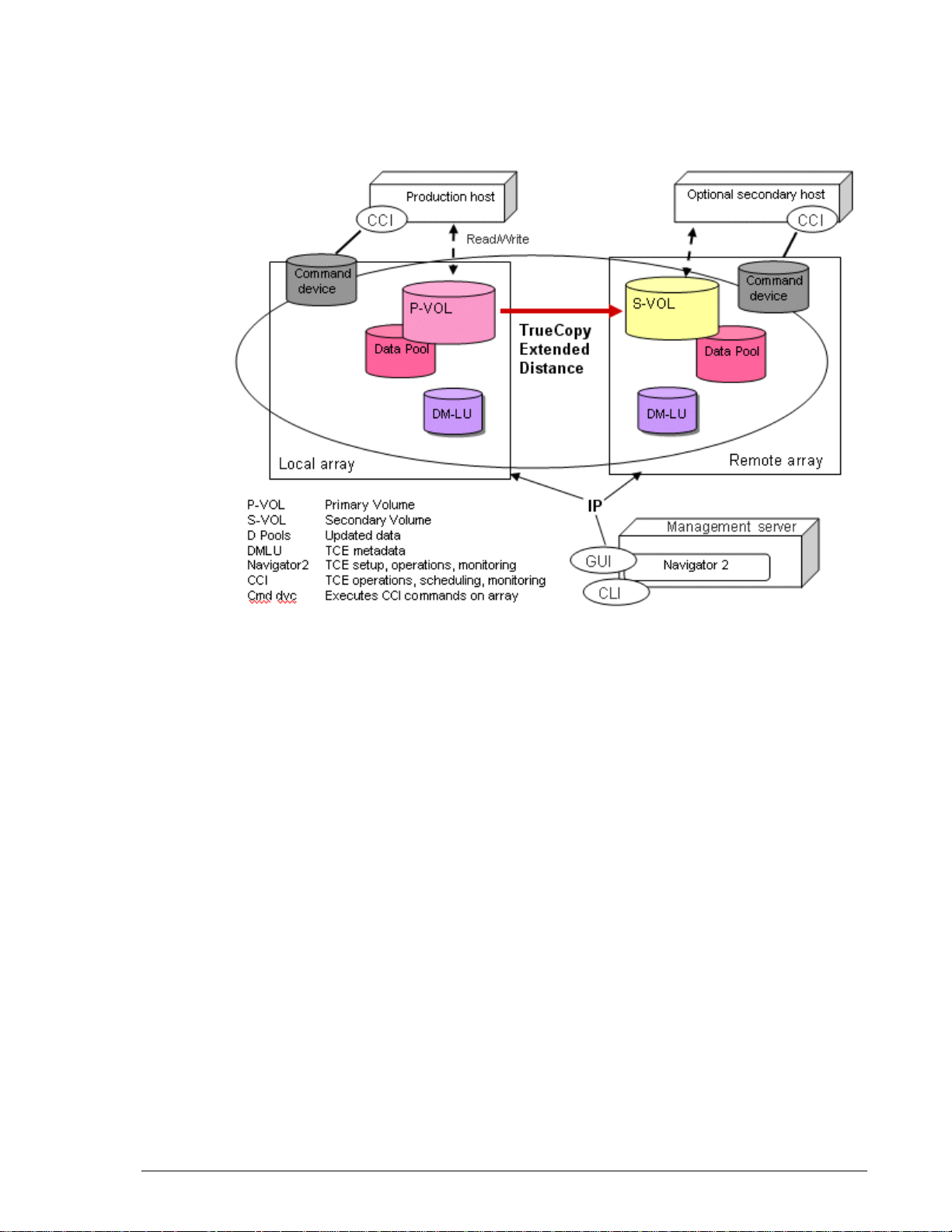

Typical environment

A typical configuration consists of the following elements. Many, but not all, require user setup.

• Two AMS arrays: one on the local side connected to a host, and one on the remote side connected to the local array. Connections are made via Fibre Channel or iSCSI.

• A primary volume on the local array that is to be copied to the secondary volume on the remote side.

• A differential management LU (DMLU) on the local and remote arrays, which holds TCE information when the array is powered down.


• Interface and command software, used to perform TCE operations. Command software uses a command device (volume) to communicate with the arrays.

Figure 1-1 shows a typical TCE environment.


Volume pairs

When the initial TCE copy is completed, the production and backup volumes are said to be “Paired”. The two paired volumes are referred to as the primary volume (P-VOL) and secondary volume (S-VOL). Each TCE pair consists of one P-VOL and one S-VOL. When the pair relationship is established, data flows from the P-VOL to the S-VOL.

While in the Paired status, new data is written to the P-VOL and then periodically transferred to the S-VOL, according to the user-defined update cycle.

When a pair is “split”, the data flow between the volumes stops. At this time, all the differential data that has accumulated in the local array since the last update is copied to the S-VOL. This ensures that the S-VOL data is the same as the P-VOL data and is consistent and usable.

During normal TCE operations, the P-VOL remains available for read/write from the host. When the pair is split, the S-VOL is also available for read/write operations from a host.

Figure 1-1: Typical TCE Environment


Data pools

Data from the host is continually updated to the P-VOL as it occurs. The data pool on the local side stores the changed data that accumulates before the next update cycle. The local data pool is used to update the S-VOL. Data that accumulates in the data pool is referred to as differential data because it contains the differences between the P-VOL and S-VOL.

The data in the S-VOL following an update is complete, consistent, and usable. When the next update begins, this consistent data is copied to the remote data pool. This data pool is used to maintain previous point-in-time copies of the S-VOL, which are used in the event of failback.

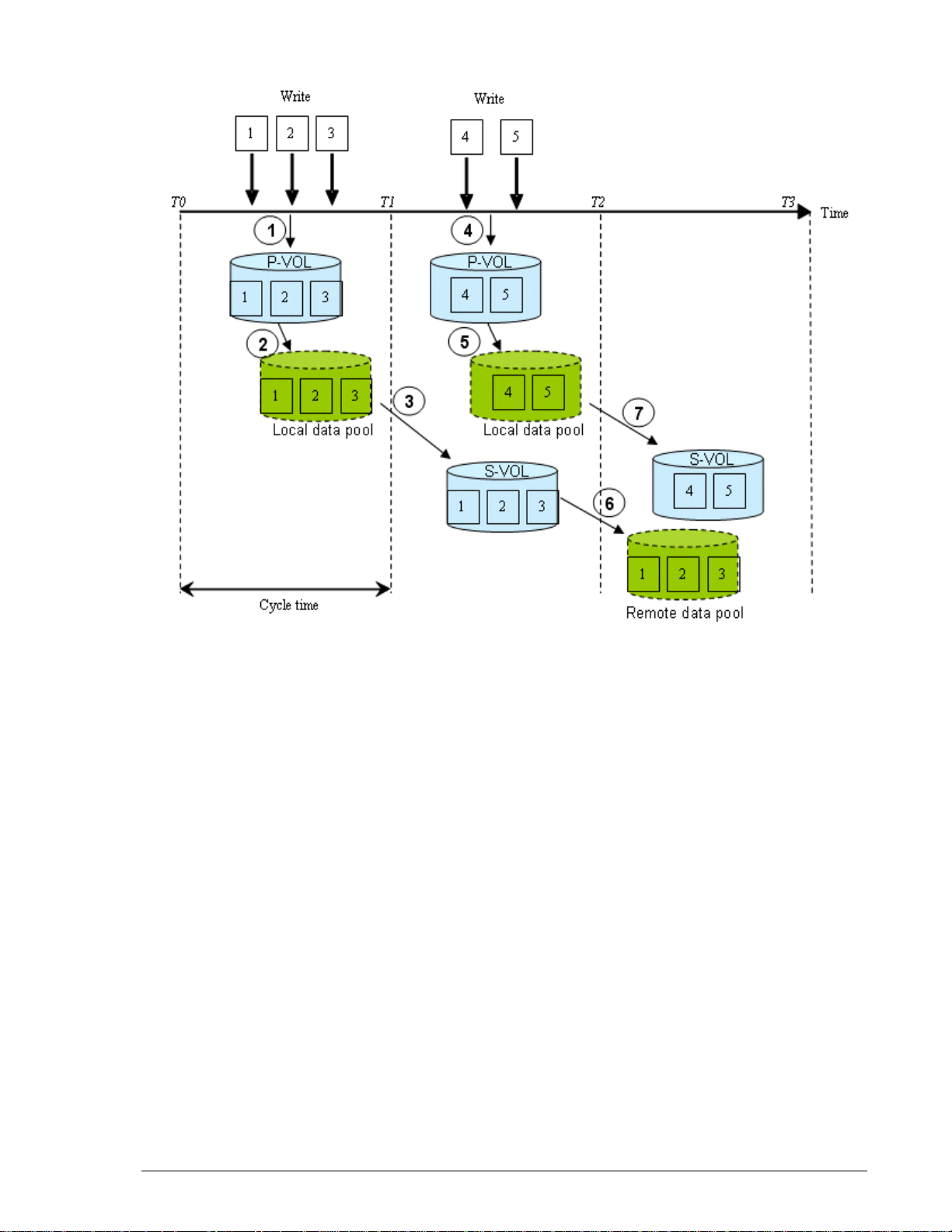

Guaranteed write order and the update cycle

S-VOL data must be updated in the same order in which the host updates the P-VOL. When write order is guaranteed, the S-VOL has data consistency with the P-VOL.

As explained in the previous section, data is copied from the P-VOL and local data pool to the S-VOL following the update cycle. When the update is complete, S-VOL data is identical to P-VOL data at the end of the cycle. Because the P-VOL continues to be updated during and after the S-VOL update, S-VOL data and P-VOL data are generally not identical.

However, the S-VOL and P-VOL can be made identical when the pair is split. During this operation, all differential data in the local data pool is transferred to the S-VOL, as well as all cached data in host memory. This cached data is flushed to the P-VOL, then transferred to the S-VOL as part of the split operation, thus ensuring that the two are identical.

If a failure occurs during an update cycle, the data in that update is inconsistent. Write order in the S-VOL is nevertheless guaranteed at the point in time of the previous update cycle, which is stored in the remote data pool.

Figure 1-2 shows how S-VOL data is maintained one update cycle behind P-VOL data.
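The rule that the S-VOL always holds the last complete cycle can be illustrated with a small Python model. This is a simplified sketch under stated assumptions, not the array's internal logic: each cycle is assumed to fix the P-VOL differential at its start time, and the S-VOL is assumed to reflect that point in time only after the cycle's transfer has finished. The function name `svol_recovery_point` and the tuple layout are hypothetical.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical representation of one update cycle:
# (start_time_s, completion_time_s); completion_time_s is None if the
# transfer to the S-VOL never finished.
Cycle = Tuple[float, Optional[float]]


def svol_recovery_point(cycles: Sequence[Cycle], failure_time_s: float) -> Optional[float]:
    """Return the P-VOL point in time the S-VOL is consistent with at a failure.

    Simplified model (assumption): a cycle fixes its differential at its start
    time, and the S-VOL reflects that start time only once the cycle's transfer
    completes. A cycle interrupted by the failure is ignored, so the S-VOL stays
    at the previous completed cycle. Returns None if no cycle had completed.
    """
    recovery_point: Optional[float] = None
    for start_s, completed_s in cycles:
        if completed_s is not None and completed_s <= failure_time_s:
            if recovery_point is None or start_s > recovery_point:
                recovery_point = start_s
    return recovery_point


# Cycles start every 300 s (T1 = 300, T2 = 600, T3 = 900). The cycle started
# at T2 is still transferring when the primary site fails just before T3.
cycles = [(0, 120), (300, 420), (600, None)]
print(svol_recovery_point(cycles, failure_time_s=899))  # 300 (T1)
```

Under these assumptions the S-VOL holds P-VOL data as of T1, which matches the Figure 1-2 example described under Extended update cycles.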


Extended update cycles

If inflow to the P-VOL increases, not all of the update data may be sent within the cycle time. This causes the cycle to extend beyond the user-specified cycle time.

As a result, more update data accumulates in the P-VOL to be copied at the next update. Also, the time difference between the P-VOL data and S-VOL data increases, which degrades the recovery point. In Figure 1-2, for example, if a failure occurs at the primary site immediately before time T3, the S-VOL data available at takeover is consistent with the P-VOL data at time T1.

When inflow decreases, updates again complete within the cycle time. Cycle time should be determined according to a realistic assessment of write workload, as discussed in Chapter 2, Plan and design — sizing data pools and bandwidth.

Figure 1-2: Update Cycles and Differential Data
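How a burst of inflow stretches the update cycle, and how cycles shrink back once inflow drops, can be sketched with a toy simulation. The model is an assumption for illustration only: host writes that arrive during one cycle form the differential for the next cycle, the remote path sustains a fixed throughput, and protocol overhead, compression, and WOC effects are ignored. The function name and the 2 MB/s link figure are hypothetical.

```python
from typing import List, Sequence


def simulate_cycle_durations(write_rates_mb_per_s: Sequence[float],
                             cycle_time_s: float,
                             link_mb_per_s: float) -> List[float]:
    """Estimate the actual length of each update cycle.

    Simplified model (assumption): writes arriving while a cycle runs become
    the differential sent in the next cycle; the link sustains a fixed
    throughput; a cycle lasts at least the configured cycle time and is
    extended whenever its transfer takes longer than that.
    """
    if link_mb_per_s <= 0:
        raise ValueError("link throughput must be positive")
    durations: List[float] = []
    pending_mb = 0.0  # differential waiting to be sent when this cycle starts
    for rate in write_rates_mb_per_s:
        transfer_s = pending_mb / link_mb_per_s
        duration_s = max(cycle_time_s, transfer_s)
        durations.append(duration_s)
        # Writes that arrive while this cycle runs form the next differential.
        pending_mb = rate * duration_s
    return durations


# 300-second cycles over a link assumed to sustain 2 MB/s. Host inflow rises
# from 1 MB/s to 3 MB/s for two cycles, then drops back to 1 MB/s.
print(simulate_cycle_durations([1, 3, 3, 1, 1, 1], cycle_time_s=300, link_mb_per_s=2))
```

In this run the cycles stretch to 450 s and then 675 s while the backlog drains, and return to the 300-second cycle time once inflow has been low for a couple of cycles, which is the behavior this section describes.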


Consistency groups

Application data often spans more than one volume. With TCE, it is possible to manage operations spanning multiple volumes as a single group. In a consistency group (CTG), all primary logical volumes are treated as a single entity.

Managing primary volumes as a consistency group allows TCE operations to be performed on all volumes in the group concurrently. Write order in secondary volumes is guaranteed across application logical volumes.

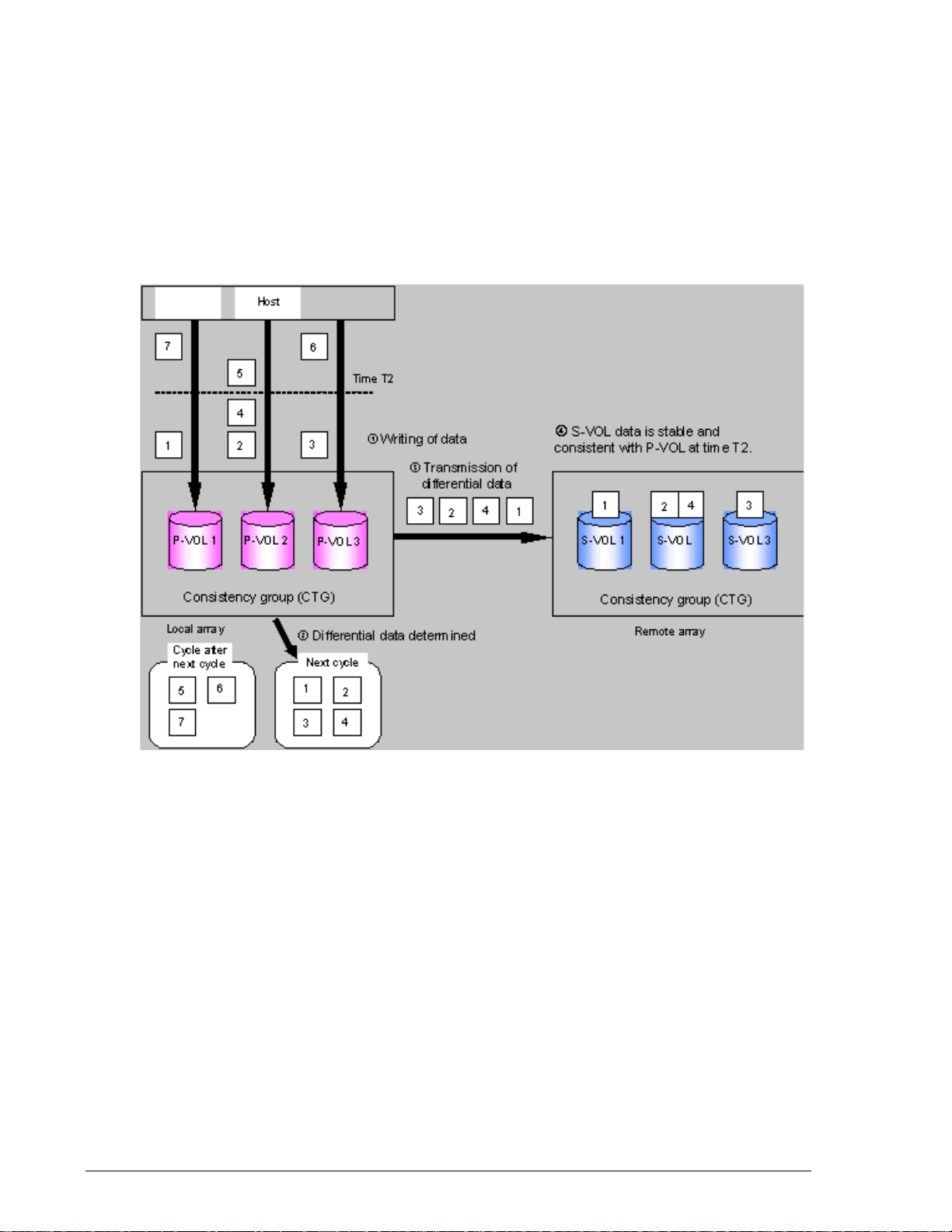

Figure 1-3 shows TCE operations with a consistency group.


Figure 1-3: TCE Operations with Consistency Groups

In this illustration, observe the following:

• The P-VOLs belong to the same consistency group. The host updates the P-VOLs as required (1).

• The local array atomically identifies the differential data in the P-VOLs when the cycle is started (2). The differential data of the group of P-VOLs is determined at time T2.

• The local array transfers the differential data to the corresponding S-VOLs (3). When all differential data is transferred, each S-VOL is identical to its P-VOL at time T2 (4).

• If pairs are split or deleted, the local array stops the cycle update for the consistency group. Differential data between the P-VOLs and S-VOLs is determined at that time. All differential data is sent to the S-VOLs, and the split or delete operations on the pairs complete. The S-VOLs maintain data consistency across the pairs in the consistency group. Pairs that use different data pools can belong to the same consistency group.

Differential Management LUs (DMLU)

The DMLU is an exclusive volume used for storing TrueCopy information when the local or remote array is powered down. The DMLU is hidden from a host. User setup is required on the local and remote arrays.

TCE interfaces

TCE can be set up, used, and monitored using the following interfaces:

• The GUI (Hitachi Storage Navigator Modular 2 Graphical User Interface), which is a browser-based interface from which TCE can be set up, operated, and monitored. The GUI provides the simplest method for performing operations, requiring no previous experience. Scripting is not available.

• CLI (Hitachi Storage Navigator Modular 2 Command Line Interface), from which TCE can be set up and all basic pair operations can be performed: create, split, resynchronize, restore, swap, and delete. The GUI also provides these functions. The CLI also has scripting capability.

• CCI (Hitachi Command Control Interface), which is used to display volume information and perform all copying and pair-managing operations. CCI provides a full scripting capability that can be used to automate replication operations. CCI requires more experience than the GUI or CLI. CCI is required for performing failover and failback operations and, on Windows 2000 Server, mount/unmount operations.

HDS recommends that new users with no CLI or CCI experience begin operations with the GUI. Users who are new to replication software but have CLI experience in managing arrays may want to continue using CLI, though the GUI is an option. The same recommendation applies to CCI users.


2

Plan and design — sizing data pools and bandwidth

This chapter provides instructions for measuring write-workload and sizing data pools and bandwidth.

Plan and design workflow

Assessing business needs — RPO and the update cycle

Measuring write-workload

Calculating data pool size

Determining bandwidth


Plan and design workflow

You design your TCE system around the write-workload generated by your host application. Data pools and bandwidth must be sized to accommodate write-workload. This chapter helps you perform these tasks as follows:

• Assess business requirements regarding how much data your operation must recover in the event of a disaster.

• Measure write-workload. This metric is used to ensure that data pool size and bandwidth are sufficient to hold and pass all levels of I/O.

• Calculate data pool size. Instructions are included for matching data pool capacity to the production environment.

• Calculate remote path bandwidth. This ensures that you can copy your data to the remote site within your update cycle.

Assessing business needs — RPO and the update cycle

In a TCE system, the S-VOL will contain nearly all of the data that is in the P-VOL. The difference between them at any time will be the differential data that accumulates during the TCE update cycle.

This differential data accumulates in the local data pool until the update cycle starts; it is then transferred over the remote data path.

Update cycle time is a uniform interval of time during which differential data is copied to the S-VOL. You define the update cycle time when creating the TCE pair.

The update cycle time is based on:

• the amount of data written to your P-VOL

• the maximum amount of data loss your operation could survive during a disaster.

The data loss that your operation can survive and remain viable determines to what point in the past you must recover.

An hour’s worth of data loss means that your recovery point is one hour ago. If a disaster occurs at 10:00 am, upon recovery you will resume operations with data from 9:00 am.

Fifteen minutes’ worth of data loss means that your recovery point is 15 minutes prior to the disaster.

You must determine your recovery point objective (RPO). You can do this by measuring your host application’s write-workload, which shows the amount of data written to the P-VOL over time. You or your organization’s decision-makers can use this information to decide the number of business transactions that can be lost, the number of hours required to key in lost data, and so on. The result is the RPO.
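The recovery-point examples above are simple time subtraction, and pairing the RPO with a measured average write rate gives a rough figure for how much written data falls inside the loss window, the kind of number decision-makers weigh. A minimal Python sketch; the function names, the date, and the 3 MB/s write rate are illustrative assumptions.

```python
from datetime import datetime, timedelta


def recovery_point(disaster_time: datetime, rpo: timedelta) -> datetime:
    """Point in time from which operations resume if the full RPO is lost."""
    return disaster_time - rpo


def data_at_risk_mb(avg_write_mb_per_s: float, rpo: timedelta) -> float:
    """Rough amount of data written during the RPO window (rate x time)."""
    return avg_write_mb_per_s * rpo.total_seconds()


disaster = datetime(2015, 6, 1, 10, 0)  # hypothetical disaster at 10:00 am
print(recovery_point(disaster, timedelta(hours=1)).strftime("%H:%M"))     # 09:00
print(recovery_point(disaster, timedelta(minutes=15)).strftime("%H:%M"))  # 09:45
print(data_at_risk_mb(3.0, timedelta(minutes=15)))                        # 2700.0
```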


Measuring write-workload

Bandwidth and data pool size are determined by understanding the write-workload placed on the primary volume from the host application.

• After the initial copy, TCE only copies changed data to the S-VOL.

• Data is changed when the host application writes to storage.

• Write-workload is a measure of changed data over a period of time.

When you know how much data is changing, you can plan the size of your data pools and bandwidth to support your environment.

Collecting write-workload data

Workload data is collected using your operating system’s performance monitoring feature. Collection should be performed during the busiest times of the month, quarter, and year so you can be sure your TCE implementation will support your environment when demand is greatest. The following procedure is provided to help you collect write-workload data.

To collect workload data

1. Using your operating system’s performance monitoring software, collect the following:

- Disk-write bytes-per-second for every physical volume that will be replicated.

- Collect this data at 10-minute intervals and over as long a period as possible. Hitachi recommends a 4-6 week period in order to accumulate data over all workload conditions, including times when the demands on the system are greatest.

2. At the end of the collection period, convert the data to MB/second and import it into a spreadsheet tool. In Figure 2-1, Write-Workload Spreadsheet, column C shows an example of collected raw data over 10-minute segments.


Figure 2-1: Write-Workload Spreadsheet

Fluctuations in write-workload can be seen from interval to interval. To calculate data pool size, the interval data is first averaged and then used in an equation. (Your spreadsheet at this point would have only columns B and C populated.)
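Step 2 of the collection procedure converts the raw samples to MB/second. When several volumes will be replicated, one way to build a single write-workload column is to total the samples for each 10-minute interval before converting, as in this Python sketch. The dictionary layout, the volume names, and the use of decimal megabytes are assumptions; check which unit your performance monitor and spreadsheet use.

```python
from typing import Dict, List, Sequence

# Assumption: decimal megabytes (1 MB = 1,000,000 bytes), consistent with the
# MB-to-GB step later in this chapter, which divides by 1,000.
BYTES_PER_MB = 1_000_000


def combined_write_mb_per_s(samples_by_volume: Dict[str, Sequence[float]]) -> List[float]:
    """Sum per-volume disk-write bytes/sec for each interval, in MB/sec.

    Each volume's list must hold one sample per 10-minute interval, in the
    same order for every volume.
    """
    series = list(samples_by_volume.values())
    if not series:
        return []
    intervals = len(series[0])
    if any(len(s) != intervals for s in series):
        raise ValueError("every volume needs the same number of intervals")
    return [sum(s[i] for s in series) / BYTES_PER_MB for i in range(intervals)]


# Hypothetical samples for two replicated volumes over three intervals.
samples = {
    "vol_a": [1_200_000, 2_500_000, 800_000],
    "vol_b": [300_000, 500_000, 200_000],
}
print(combined_write_mb_per_s(samples))  # [1.5, 3.0, 1.0]
```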

Calculating data pool size

In addition to write-workload data, cycle time must be known. Cycle time is the interval at which updates are sent to the remote array. This is a user-defined value that can range from 30 seconds to 1 hour. The default cycle time is 5 minutes (300 seconds). If consistency groups are used, the minimum is 30 seconds for one CTG, increasing by 30 seconds for each additional CTG, up to 16. Because the data pool stores all updated data that accumulates during the cycle time, the longer the cycle time, the larger the data pool must be. For more information on cycle time, see the discussion in Assessing business needs — RPO and the update cycle on page 2-2, and also Changing cycle time on page 9-8.
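The cycle-time limits in the paragraph above can be written as a quick planning check. A minimal Python sketch: the constant and function names are hypothetical, and treating zero CTGs like a single CTG (30-second minimum) is an assumption. This is only a planning aid; the values the array actually accepts are governed by its own configuration rules.

```python
MIN_CYCLE_S = 30        # shortest cycle time
MAX_CYCLE_S = 3600      # longest cycle time (1 hour)
DEFAULT_CYCLE_S = 300   # default cycle time (5 minutes)
MAX_CTGS = 16           # CTG count covered by the 30-seconds-per-CTG rule


def minimum_cycle_s(ctg_count: int) -> int:
    """Smallest cycle time: 30 s for one CTG, plus 30 s per additional CTG.

    Assumption: ctg_count == 0 (no consistency groups) uses the 30 s minimum.
    """
    if not 0 <= ctg_count <= MAX_CTGS:
        raise ValueError(f"ctg_count must be between 0 and {MAX_CTGS}")
    return MIN_CYCLE_S * max(1, ctg_count)


def is_valid_cycle_time(cycle_s: int, ctg_count: int = 0) -> bool:
    """Check a proposed cycle time against the documented range."""
    return minimum_cycle_s(ctg_count) <= cycle_s <= MAX_CYCLE_S


print(is_valid_cycle_time(DEFAULT_CYCLE_S))   # True
print(minimum_cycle_s(16))                    # 480
print(is_valid_cycle_time(60, ctg_count=3))   # False: 3 CTGs need at least 90 s
```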

To calculate TCE data pool capacity

1. Using the write-workload data imported into a spreadsheet tool and your cycle time, calculate rolling averages of the write-workload, as follows. (Most spreadsheet tools have an average function.)

- If cycle time is 1 hour, calculate 60-minute rolling averages, each covering six consecutive 10-minute intervals.

- If cycle time is 30 minutes, calculate 30-minute rolling averages, each covering three consecutive 10-minute intervals.


Example rolling-average procedure for cycle time in Microsoft Excel

Cycle time in the following example is 1 hour; rolling averages are calculated using six 10-minute intervals.

a. After importing the workload data into the spreadsheet (Figure 2-1, Write-Workload Spreadsheet), type =average(b2:b7) in cell E4, and press Enter.

This instructs the tool to calculate the average value in cells B2 through B7 (six 10-minute intervals) and populate cell E4 with the result. (The calculations used here are for example purposes only. Base your calculations on your cycle time.)

b. Copy cell E4.

c. Select cells E5 through the E cell in the last row of workload data in the spreadsheet.

d. Right-click the selected cells and select the Paste option.

Excel adjusts the cell references in the formula entered in E4 for each row. It then calculates the 60-minute average for every 10-minute increment and populates the E cells, as shown in Figure 2-2.


Figure 2-2: Rolling Averages Calculated Using 60 Minute Cycle Time


For another perspective, you can graph the data, as shown in Figure 2-3.

Figure 2-3: 60-Minute Rolling Averages Graphed Over Raw Data

2. From the spreadsheet or graph, locate the largest value in the E column. This is your Peak Rolling Average (PRA) value. Use the PRA to calculate the cumulative peak data change over cycle time. The following formula calculates the largest expected data change over the cycle time, which ensures that you do not overflow your data pool.

(PRA in MB/sec) x (cycle time in seconds) = (cumulative peak data change)

For example, if the PRA is 3 MB/sec and the cycle time is 3600 seconds (1 hour), then:

3 MB/sec x 3600 seconds = 10,800 MB

This shows the maximum amount of changed data (pool data) that you can expect in a 60-minute time period. This is the base data pool size required for TCE.

3. Hitachi recommends a 20-percent safety factor for data pools. Calculate a safety factor with the following formula:

(Combined base data pool size) x 1.2. For example:

529,200 MB x 1.2 = 635,040 MB


4. It is also recommended that annual increases in data transactions be factored into data pool sizing, to minimize reconfiguration in the future. Do this by multiplying the pool size (including the safety factor) by 1 plus the expected annual growth rate. For example:

635,040 MB x 1.2 (20 percent growth per year) = 762,048 MB

Repeat this step for each year the solution will be in place.

5. Convert to gigabytes by dividing by 1,000. For example:

762,048 MB / 1,000 = 762 GB

This is the size of the example data pool with safety and growth (2nd year) factored in.
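Steps 1 through 5 can also be scripted instead of done in a spreadsheet. The Python sketch below mirrors the procedure: trailing rolling averages over one cycle time, the PRA, cumulative peak change, the 20-percent safety factor, and compound annual growth. The function names and sample values are hypothetical, and falling back to a one-sample window for cycle times shorter than the 10-minute sampling interval is an assumption the procedure does not cover.

```python
from typing import List, Sequence


def rolling_averages(samples_mb_per_s: Sequence[float], window: int) -> List[float]:
    """Trailing averages over `window` consecutive samples (step 1)."""
    if not 1 <= window <= len(samples_mb_per_s):
        raise ValueError("window must be between 1 and the number of samples")
    return [sum(samples_mb_per_s[i - window + 1:i + 1]) / window
            for i in range(window - 1, len(samples_mb_per_s))]


def peak_rolling_average(samples_mb_per_s: Sequence[float], cycle_time_s: int,
                         sample_interval_s: int = 600) -> float:
    """PRA (step 2): the largest rolling average over one cycle time.

    With 10-minute samples, a 1-hour cycle uses a window of six samples.
    Assumption: cycle times shorter than one sample use a one-sample window.
    """
    window = max(1, int(cycle_time_s // sample_interval_s))
    return max(rolling_averages(samples_mb_per_s, window))


def data_pool_size_mb(pra_mb_per_s: float, cycle_time_s: float,
                      safety_factor: float = 1.2, annual_growth: float = 1.2,
                      years: int = 1) -> float:
    """Steps 2-4: cumulative peak change, safety factor, then yearly growth."""
    base_mb = pra_mb_per_s * cycle_time_s           # step 2
    with_safety_mb = base_mb * safety_factor        # step 3
    return with_safety_mb * annual_growth ** years  # step 4, once per year


# Hypothetical workload: seven 10-minute samples (MB/s), 1-hour cycle time.
samples = [2, 3, 4, 3, 2, 4, 1]
pra = peak_rolling_average(samples, cycle_time_s=3600)  # 3.0 MB/s
size_mb = data_pool_size_mb(pra, cycle_time_s=3600)
print(pra, round(size_mb), round(size_mb / 1000, 2))   # 3.0 15552 15.55
```

With a PRA of 3 MB/s and a 1-hour cycle, the base size is the 10,800 MB shown in step 2; the safety factor and one year of 20 percent growth bring it to about 15,552 MB, or roughly 15.6 GB (step 5).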

Data pool key points

• Data pools must be set up on the local array and the remote array.

• The data pool must be on the same controller as the P-VOL and VVOL(s).

• Up to 64 LUs can be assigned to a data pool.

• Plan for highest workload and multi-year growth.

• For setup information, see Setting up data pools on page 6-12.


Determining bandwidth

The purpose of this section is to ensure that you have sufficient bandwidth between the local and remote arrays to copy all your write data in the time frame you prescribe. The goal is to size the network so that it is capable of transferring estimated future write workloads.

TCE requires two remote paths, each with a minimum bandwidth of 1.5 Mb/s.

To determine the bandwidth

1. Graph the data in column “C” in the Write-Workload Spreadsheet on page 2-4.

2. Locate the highest peak. Based on your write-workload measurements, this is the greatest amount of data that will need to be transferred to the remote array. Bandwidth must accommodate the maximum possible workload to ensure that the system’s capacity is not exceeded. Exceeding capacity causes further problems, such as new write data backing up in the data pool and update cycles becoming extended.

3. Though the highest peak in your workload data should be used for determining bandwidth, you should also take note of extremely high peaks. In some cases a batch job, defragmentation, or other process could be driving workload to abnormally high levels. It is sometimes worthwhile to review the processes that are running. After careful analysis, it may be possible to lower or even eliminate some spikes by optimizing or streamlining high-workload processes. Changing the timing of a process may also lower workload.

4. Although bandwidth can be increased later, Hitachi recommends that the projected growth rate be factored in over a 1-, 2-, or 3-year period.

Table 2-1 shows TCE bandwidth requirements.

Table 2-1: Bandwidth Requirements

| Average inflow | Bandwidth requirement | WAN type |
|---|---|---|
| 0.08 - 0.149 MB/s | 1.5 Mb/s or more | T1 |
| 0.15 - 0.299 MB/s | 3 Mb/s or more | T1 x two lines |
| 0.3 - 0.599 MB/s | 6 Mb/s or more | T2 |
| 0.6 - 1.199 MB/s | 12 Mb/s or more | T2 x two lines |
| 1.2 - 4.499 MB/s | 45 Mb/s or more | T3 |
| 4.5 - 9.999 MB/s | 100 Mb/s or more | Fast Ethernet |
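Table 2-1 can be turned into a lookup, combined with the growth projection from step 4. The table is keyed on average inflow, while the procedure above recommends planning around the highest peak, so pass whichever figure you are sizing for. A minimal Python sketch: the names are hypothetical, inflow below 0.08 MB/s is mapped to the first row because every remote path needs at least 1.5 Mb/s, and values that fall between two rows (for example 0.1495 MB/s) are rounded up to the larger row.

```python
from typing import List, Tuple

# Table 2-1 as (upper bound of average inflow in MB/s, required Mb/s, WAN type).
BANDWIDTH_TABLE: List[Tuple[float, float, str]] = [
    (0.149, 1.5, "T1"),
    (0.299, 3.0, "T1 x two lines"),
    (0.599, 6.0, "T2"),
    (1.199, 12.0, "T2 x two lines"),
    (4.499, 45.0, "T3"),
    (9.999, 100.0, "Fast Ethernet"),
]


def projected_inflow(avg_inflow_mb_per_s: float, annual_growth: float = 1.2,
                     years: int = 0) -> float:
    """Inflow after `years` of compound growth (step 4)."""
    return avg_inflow_mb_per_s * annual_growth ** years


def required_bandwidth(avg_inflow_mb_per_s: float) -> Tuple[float, str]:
    """Return (minimum Mb/s, WAN type) for an inflow, using Table 2-1."""
    if avg_inflow_mb_per_s < 0:
        raise ValueError("inflow cannot be negative")
    for upper_mb_per_s, mbit_per_s, wan_type in BANDWIDTH_TABLE:
        if avg_inflow_mb_per_s <= upper_mb_per_s:
            return mbit_per_s, wan_type
    raise ValueError("inflow is above Table 2-1; size the link separately")


print(required_bandwidth(1.0))                              # (12.0, 'T2 x two lines')
print(required_bandwidth(projected_inflow(1.0, years=2)))   # (45.0, 'T3'), about 1.44 MB/s
```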


3

Plan and design — remote path

A remote path is required for transferring data from the local array to the remote array. This chapter provides network and bandwidth requirements, and supported remote path configurations.

Remote path requirements

Remote path configurations

Using the remote path — best practices


Remote path requirements

The remote path is the connection used to transfer data between the local array and remote array. TCE supports Fibre Channel and iSCSI port connectors and connections. The connections you use must be one or the other; they cannot be mixed.

The following kinds of networks are used with TCE:

• Local Area Network (LAN), for system management. Fast Ethernet is required for the LAN.

• Wide Area Network (WAN), for the remote path. For best performance:

- A Fibre Channel extender is required.

- iSCSI connections may require a WAN Optimization Controller (WOC).

Figure 3-1 shows the basic TCE configuration with a LAN and WAN.


Figure 3-1: Remote Path Configuration

Requirements are provided in the following:

• Management LAN requirements on page 3-3

• Remote data path requirements on page 3-3

• WAN optimization controller (WOC) requirements on page 3-4

• Fibre channel extender connection on page 3-9.


Management LAN requirements

Fast Ethernet is required for an IP LAN.

Remote data path requirements

This section discusses the TCE remote path requirements for a WAN connection, including the following:

• Types of lines
• Bandwidth
• Distance between local and remote sites
• WAN Optimization Controllers (WOC) (optional)

For instructions on assessing your system’s I/O and bandwidth requirements, see:

• Measuring write-workload on page 2-3

• Determining bandwidth on page 2-8

Table 3-1 provides remote path requirements for TCE. A WOC may also be required, depending on the distance between the local and remote sites and other factors listed in Table 3-3.

Table 3-1: Remote Data Path Requirements

Bandwidth
• Bandwidth must be guaranteed.
• Bandwidth must be 1.5 Mb/s or more for each pair; 100 Mb/s is recommended.
• Bandwidth requirements depend on the average inflow from the host into the array. See Table 2-1 on page 2-8 for bandwidth requirements.

Remote path sharing
• The remote path must be dedicated to TCE pairs.
• When two or more pairs share the same path, a WOC is recommended for each pair.
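The rules in Table 3-1 can be applied as a planning checklist. The Python sketch below separates hard requirements ("must") from recommendations. `RemotePathPlan` and `review_remote_path` are hypothetical names. The sketch assumes that `bandwidth_mbit_s` is the guaranteed bandwidth available to a single TCE pair. This is one reading of "1.5 Mb/s or more for each pair".

```python
from dataclasses import dataclass

MIN_MBIT_S_PER_PAIR = 1.5   # Table 3-1 minimum for each pair
RECOMMENDED_MBIT_S = 100.0  # Table 3-1 recommended bandwidth


@dataclass
class RemotePathPlan:
    bandwidth_mbit_s: float     # bandwidth available to one TCE pair (assumed input)
    bandwidth_guaranteed: bool  # True for a guaranteed (for example, dedicated) line
    dedicated_to_tce: bool      # path carries only TCE pair traffic
    pairs_sharing_path: int     # number of TCE pairs using the same path


def review_remote_path(plan: RemotePathPlan) -> tuple[list[str], list[str]]:
    """Return (errors, warnings) for a plan, checked against Table 3-1."""
    errors: list[str] = []
    warnings: list[str] = []
    if not plan.bandwidth_guaranteed:
        errors.append("bandwidth must be guaranteed")
    if plan.bandwidth_mbit_s < MIN_MBIT_S_PER_PAIR:
        errors.append(f"{plan.bandwidth_mbit_s} Mb/s is below the 1.5 Mb/s per-pair minimum")
    elif plan.bandwidth_mbit_s < RECOMMENDED_MBIT_S:
        warnings.append("bandwidth is below the recommended 100 Mb/s")
    if not plan.dedicated_to_tce:
        errors.append("remote path must be dedicated to TCE pairs")
    if plan.pairs_sharing_path >= 2:
        warnings.append("two or more pairs share the path: a WOC is recommended for each pair")
    return errors, warnings


errors, warnings = review_remote_path(RemotePathPlan(45.0, True, True, 2))
print(errors)    # []
print(warnings)  # two warnings: below 100 Mb/s, and shared path
```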

Table 3-2 shows the types of WAN cabling and protocols that TCE supports and does not support.

Table 3-2: Supported, Not Supported WAN Types

Supported: Dedicated line (T1, T2, T3, etc.)
Not supported: ADSL, CATV, FTTH, ISDN


WAN optimization controller (WOC) requirements

A WAN Optimization Controller (WOC) is a network appliance that enhances WAN performance by accelerating long-distance TCP/IP communications. TCE copy performance over longer distances is significantly increased when a WOC is used. A WOC guarantees bandwidth for each line.

• Use Table 3-3 to determine whether your TCE system requires a WOC.
• Table 3-4 shows the requirements for WOCs.

Table 3-3: Conditions Requiring a WOC

Latency, distance
• If the round-trip time is 5 ms or more, or the distance between the local site and the remote site is 100 miles (160 km) or more, a WOC is highly recommended.

WAN sharing
• If two or more pairs share the same WAN, a WOC is recommended for each pair.
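The two conditions in Table 3-3 are independent, and either one is enough to recommend a WOC. The Python sketch below returns the conditions that apply to a site pair. The function name `woc_recommendations` is hypothetical. The distance threshold uses the 160 km figure from the table.

```python
def woc_recommendations(round_trip_ms: float, distance_km: float, pairs_sharing_wan: int) -> list[str]:
    """Return the Table 3-3 conditions that apply; an empty list means none apply."""
    reasons: list[str] = []
    # Latency, distance: 5 ms round trip or 100 miles (160 km) triggers a strong recommendation.
    if round_trip_ms >= 5.0 or distance_km >= 160.0:
        reasons.append("latency/distance: a WOC is highly recommended")
    # WAN sharing: two or more pairs on the same WAN.
    if pairs_sharing_wan >= 2:
        reasons.append("WAN sharing: a WOC is recommended for each pair")
    return reasons


print(woc_recommendations(round_trip_ms=8.0, distance_km=120.0, pairs_sharing_wan=1))
# ['latency/distance: a WOC is highly recommended']
```

In this example, the latency condition alone triggers the recommendation, even though the distance is under 160 km.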

Table 3-4: WOC Requirements

LAN interface
• Gigabit Ethernet or Fast Ethernet must be supported.

Performance
• Data transfer capability must be equal to or greater than the WAN bandwidth.

Functions
• Traffic shaping, bandwidth throttling, or rate limiting must be supported. These functions limit the data transfer rate to a value specified by the user.
• Data compression must be supported.
• TCP acceleration must be supported.
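When you compare candidate appliances, you can check Table 3-4 mechanically against a vendor datasheet. The Python sketch below is illustrative only. `WocSpec` and `woc_gaps` are hypothetical names, and the datasheet values are assumed to be entered by hand.

```python
from dataclasses import dataclass

ACCEPTED_LAN = {"gigabit ethernet", "fast ethernet"}  # Table 3-4 LAN interface options


@dataclass
class WocSpec:
    lan_interfaces: set[str]  # interface names from the datasheet, e.g. {"Gigabit Ethernet"}
    throughput_mbit_s: float  # stated data transfer capability
    rate_limiting: bool       # traffic shaping, bandwidth throttling, or rate limiting
    compression: bool         # data compression
    tcp_acceleration: bool    # TCP acceleration


def woc_gaps(spec: WocSpec, wan_bandwidth_mbit_s: float) -> list[str]:
    """List every Table 3-4 requirement the candidate WOC does not meet."""
    gaps: list[str] = []
    # Case-insensitive match on interface names.
    if not {name.lower() for name in spec.lan_interfaces} & ACCEPTED_LAN:
        gaps.append("LAN interface: Gigabit Ethernet or Fast Ethernet is required")
    if spec.throughput_mbit_s < wan_bandwidth_mbit_s:
        gaps.append("performance: transfer capability is below the WAN bandwidth")
    if not spec.rate_limiting:
        gaps.append("functions: traffic shaping, throttling, or rate limiting is required")
    if not spec.compression:
        gaps.append("functions: data compression is required")
    if not spec.tcp_acceleration:
        gaps.append("functions: TCP acceleration is required")
    return gaps


candidate = WocSpec({"Gigabit Ethernet"}, 155.0, True, True, True)
print(woc_gaps(candidate, wan_bandwidth_mbit_s=100.0))  # []
```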


Remote path configurations

TCE supports both Fibre Channel and iSCSI connections for the remote path.

• Two remote paths must be set up between the local and remote arrays, one per controller. This ensures that an alternate path is available if a link fails during copy operations.

• Paths can be configured from:
- Local controller 0 to remote controller 0 or 1
- Local controller 1 to remote controller 0 or 1

• A path can connect different ports, for example port A on one array with port B on the other. For simplicity, Hitachi recommends connecting the same controller and port on both arrays, such as port 0B to 0B and 1B to 1B. Ports can be used for both host I/O and replication data.
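The path rules above can be checked before configuration. The Python sketch below assumes that port names follow the "0B" / "1B" pattern used in the text, with the controller number first and the port letter second. `RemotePath` and `review_paths` are hypothetical names. Mismatched ports are reported as notes rather than errors, because they are supported.

```python
from typing import NamedTuple


class RemotePath(NamedTuple):
    local_port: str   # controller digit + port letter, e.g. "0B" (naming assumed)
    remote_port: str  # same convention on the remote array


def review_paths(paths: list[RemotePath]) -> tuple[list[str], list[str]]:
    """Return (errors, notes) for a proposed set of remote paths."""
    errors: list[str] = []
    notes: list[str] = []
    if len(paths) != 2:
        errors.append("set up two remote paths, one per controller")
    # Each local controller (0 and 1) should own exactly one path.
    if sorted(p.local_port[:1] for p in paths) != ["0", "1"]:
        errors.append("local controllers 0 and 1 each need one path")
    for p in paths:
        # Either local controller may connect to remote controller 0 or 1.
        if p.remote_port[:1] not in ("0", "1"):
            errors.append(f"{p.remote_port}: remote controller must be 0 or 1")
        # Different ports are allowed; matching ports are only recommended for simplicity.
        if p.local_port != p.remote_port:
            notes.append(f"{p.local_port} -> {p.remote_port}: supported, but matching ports are simpler")
    return errors, notes


errors, notes = review_paths([RemotePath("0B", "0B"), RemotePath("1B", "1A")])
print(errors)  # []
print(notes)   # ['1B -> 1A: supported, but matching ports are simpler']
```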

The following sections describe supported Fibre Channel and iSCSI path configurations. Recommendations and restrictions are included.

Fibre channel

The Fibre Channel remote data path can be set up in the following

configurations:

• Direct connection

• Single Fibre Channel switch and network connection

• Double FC switch and network connection

• Wavelength Division Multiplexing (WDM) and dark fibre extender

The array supports direct or switch connections only. Hub connections are not supported.

General recommendations

The following is recommended for all supported configurations:

• TCE requires one path between the host and the local array. However, two paths are recommended, so that the second path can be used if a path fails.


Direct connection

Figure 3-2 illustrates two remote paths directly connecting the local and remote arrays. This configuration can be used when the distance is very short, for example when creating the initial copy or performing data recovery while both arrays are installed at the local site.


Figure 3-2: Direct FC Connection


Single FC switch, network connection

Switch connections increase throughput between the arrays. Figure 3-3 illustrates two remote paths routed through one FC switch and one FC network to make the connection to the remote site.


Figure 3-3: Single FC Switch, Network Connection

Recommendations

• This configuration is supported but not recommended, because a failure in the FC switch or the network would halt copy operations.
• Set up separate switches for host I/O to the local array and for data transfer between the arrays. Using one switch for both functions degrades performance.


Double FC switch, network connection

Figure 3-4 illustrates two remote paths using two FC switches and two FC networks to make the connection to the remote site.


Figure 3-4: Double FC Switches, Networks Connection

Recommendations

• Set up separate switches for host I/O to the local array and for data transfer between the arrays. Using one switch for both functions degrades performance.


Fibre channel extender connection

Channel extenders convert Fibre Channel to FCIP or iFCP. This allows you to use IP networks and significantly improves performance over longer distances.

Figure 3-5 illustrates two remote paths using two FC switches, a Wavelength Division Multiplexing (WDM) extender, and dark fibre to make the connection to the remote site.


Figure 3-5: Fibre Channel Switches, WDM, Dark Fibre Connection

Recommendations

• Only qualified components are supported.

For more information on WDM, see Appendix E, Wavelength Division Multiplexing (WDM) and dark fibre.


Port transfer rate for Fibre channel

The communication speed of the Fibre Channel port on the array must match the speed specified on the host port. These two ports (the Fibre Channel port on the array and the host port) are connected by a Fibre Channel cable. Each port on the array must be set separately.

Table 3-5: Setting Port Transfer Rates

Mode | If the host port is set to | Set the remote array port to
Manual | 1 Gbps | 1 Gbps
Manual | 2 Gbps | 2 Gbps
Manual | 4 Gbps | 4 Gbps
Manual | 8 Gbps | 8 Gbps
Auto | 2 Gbps | Auto, with max of 2 Gbps
Auto | 4 Gbps | Auto, with max of 4 Gbps
Auto | 8 Gbps | Auto, with max of 8 Gbps

Maximum speed is ensured using the manual settings.
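Table 3-5 maps a host port rate and a mode to the setting for the remote array port. The Python sketch below follows the column reading shown in the table above. `remote_port_setting` is a hypothetical helper, not a Navigator 2 function. It defaults to manual mode because the guide states that manual settings ensure maximum speed.

```python
MANUAL_RATES_GBPS = (1, 2, 4, 8)  # manual-mode rows in Table 3-5
AUTO_RATES_GBPS = (2, 4, 8)       # auto-mode rows in Table 3-5


def remote_port_setting(host_port_gbps: int, mode: str = "manual") -> str:
    """Return the Table 3-5 remote array port setting for a host port rate."""
    if mode == "manual":
        if host_port_gbps not in MANUAL_RATES_GBPS:
            raise ValueError(f"{host_port_gbps} Gbps is not listed for manual mode")
        # Manual mode: the remote array port uses the same fixed rate as the host port.
        return f"{host_port_gbps} Gbps"
    if mode == "auto":
        if host_port_gbps not in AUTO_RATES_GBPS:
            raise ValueError(f"{host_port_gbps} Gbps is not listed for auto mode")
        # Auto mode: the table lists Auto with a maximum equal to the host port rate.
        return f"Auto, with max of {host_port_gbps} Gbps"
    raise ValueError("mode must be 'manual' or 'auto'")


print(remote_port_setting(4))          # 4 Gbps
print(remote_port_setting(8, "auto"))  # Auto, with max of 8 Gbps
```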

You can specify the port transfer rate in the Navigator 2 GUI, on the Edit FC Port screen (Settings/FC Settings/port/Edit Port button).


NOTE: If your remote path is a direct connection, make sure that the array power is off when you modify the transfer rate, to prevent remote path blockage.

Find details on communication settings in the Hitachi AMS 2100/2300 Storage System Hardware Guide.


iSCSI

The iSCSI remote data path can be set up in the following configurations:

• Direct connection

• Local Area Network (LAN) switch connections

• Wide Area Network (WAN) connections

• WAN Optimization Controller (WOC) connections

Recommendations

The following is recommended for all supported configurations:

• Two paths should be configured from the host to the array. This provides a backup path if a path fails.

Direct connection

Figure 3-6 illustrates two remote paths directly connecting the local and remote arrays. Direct connections are used when the local and remote arrays are set up at the same site. In this case, category 5e or 6 copper LAN cable is recommended.


Figure 3-6: Direct iSCSI Connection

Recommendations

• When a large amount of data is to be copied to the remote site, the initial copy between the local and remote arrays may be performed with both arrays at the same location. In this case, category 5e or 6 copper LAN cable is recommended.


Single LAN switch, WAN connection

Figure 3-7 illustrates two remote paths using one LAN switch and network to the remote array.


Figure 3-7: Single-Switch Connection

Recommendations

• This configuration is not recommended because a failure in the LAN switch or the WAN would halt operations.