Dell PowerEdge Configuration Guide for the M I/O Aggregator

9.5(0.1)

Notes, Cautions, and Warnings

NOTE: A NOTE indicates important information that helps you make better use of your computer.

CAUTION: A CAUTION indicates either potential damage to hardware or loss of data and tells you how to avoid the problem.

WARNING: A WARNING indicates a potential for property damage, personal injury, or death.

Copyright © 2014 Dell Inc. All rights reserved. This product is protected by U.S. and international copyright and intellectual property laws. Dell™ and the Dell logo are trademarks of Dell Inc. in the United States and/or other jurisdictions. All other marks and names mentioned herein may be trademarks of their respective companies.

2014 - 07

Rev. A00

Contents

1 About this Guide..................................................................................................13

Audience.............................................................................................................................................. 13

Conventions.........................................................................................................................................13

Related Documents.............................................................................................................................14

2 Before You Start.................................................................................................. 15

IOA Operational Modes.......................................................................................................................15

Standalone mode...........................................................................................................................15

Stacking mode............................................................................................................................... 15

VLT mode.......................................................................................................................................15

Programmable MUX mode............................................................................................................15

Default Settings................................................................................................................................... 16

Other Auto-Configured Settings........................................................................................................ 16

Data Center Bridging Support.............................................................................................................17

FCoE Connectivity and FIP Snooping.................................................................................................17

iSCSI Operation....................................................................................................................................17

Link Aggregation..................................................................................................................................18

Link Tracking........................................................................................................................................18

Configuring VLANs.............................................................................................................................. 18

Uplink LAG..................................................................................................................................... 18

Server-Facing LAGs....................................................................................................................... 19

Where to Go From Here......................................................................................................................19

3 Configuration Fundamentals........................................................................... 20

Accessing the Command Line........................................................................................................... 20

CLI Modes........................................................................................................................................... 20

Navigating CLI Modes....................................................................................................................21

The do Command...............................................................................................................................22

Undoing Commands...........................................................................................................................23

Obtaining Help.................................................................................................................................... 23

Entering and Editing Commands....................................................................................................... 24

Command History...............................................................................................................................25

Filtering show Command Outputs.....................................................................................................25

Multiple Users in Configuration Mode............................................................................................... 26

4 Data Center Bridging (DCB)..............................................................................27

Ethernet Enhancements in Data Center Bridging..............................................................................27

Priority-Based Flow Control...............................................................................................................28

Configuring Priority-Based Flow Control.................................................................................... 29

Enhanced Transmission Selection......................................................................................................31

Configuring Enhanced Transmission Selection........................................................................... 33

Configuring DCB Maps and their Attributes........................................................................................ 33

DCB Map: Configuration Procedure............................................................................................ 33

Important Points to Remember....................................................................................................34

Applying a DCB Map on a Port..................................................................................................... 35

Configuring PFC without a DCB Map...........................................................................................35

Configuring Lossless Queues....................................................................................................... 36

Data Center Bridging Exchange Protocol (DCBx)..............................................................................37

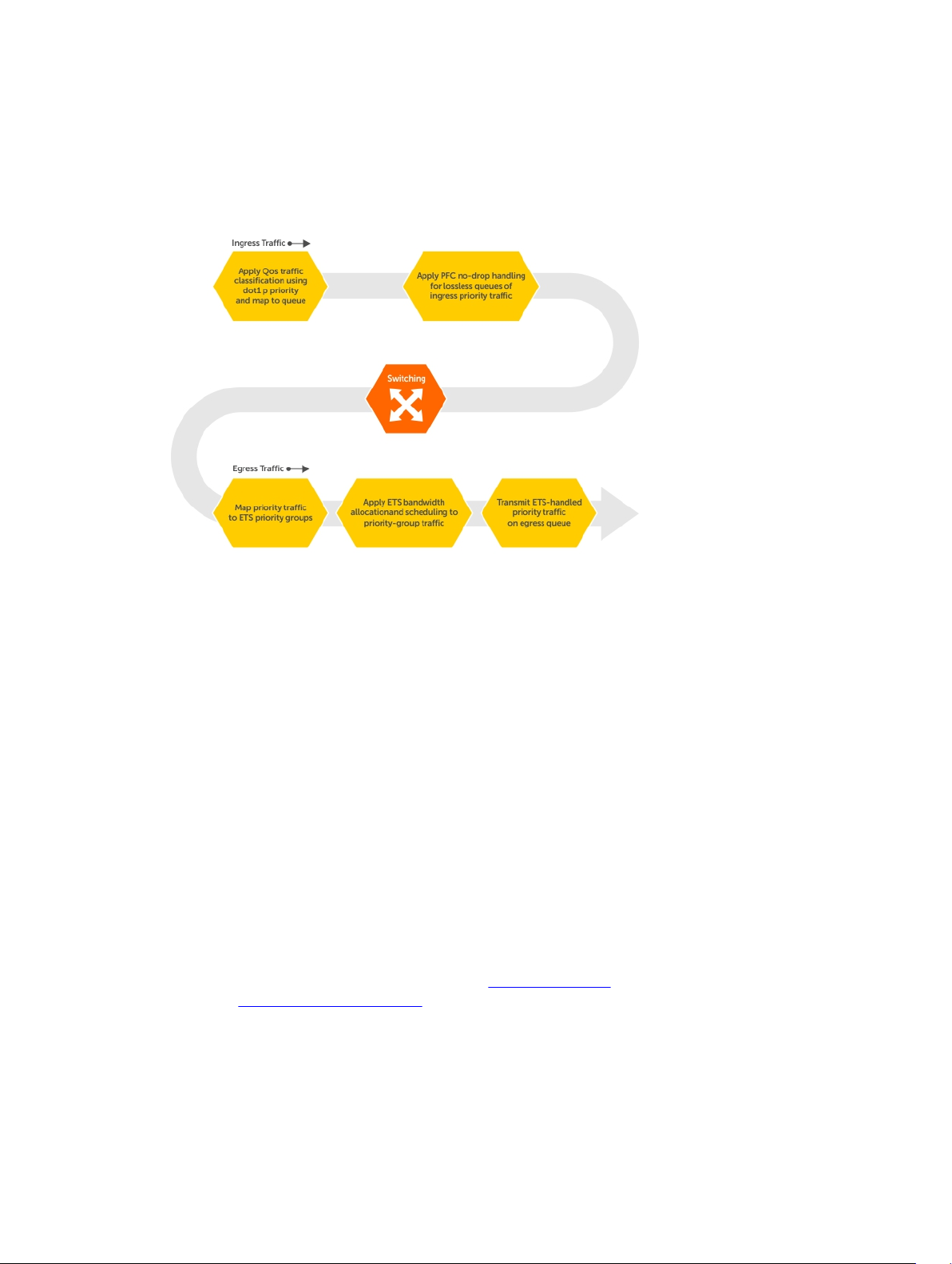

Data Center Bridging in a Traffic Flow............................................................................................... 38

Enabling Data Center Bridging........................................................................................................... 38

Data Center Bridging: Auto-DCB-Enable Mode................................................................................39

QoS dot1p Traffic Classification and Queue Assignment..................................................................41

How Priority-Based Flow Control is Implemented........................................................................... 42

How Enhanced Transmission Selection is Implemented..................................................................42

ETS Operation with DCBx.............................................................................................................43

Bandwidth Allocation for DCBX CIN............................................................................................44

DCBX Operation..................................................................................................................................44

DCBx Operation............................................................................................................................44

DCBx Port Roles............................................................................................................................45

DCB Configuration Exchange...................................................................................................... 46

Configuration Source Election..................................................................................................... 46

Propagation of DCB Information..................................................................................................47

Auto-Detection of the DCBx Version...........................................................................................47

DCBX Example.............................................................................................................................. 48

DCBX Prerequisites and Restrictions............................................................................................49

DCBX Error Messages................................................................................................................... 49

Debugging DCBX on an Interface................................................................................................49

Verifying the DCB Configuration........................................................................................................50

Hierarchical Scheduling in ETS Output Policies................................................................................ 59

5 Dynamic Host Configuration Protocol (DHCP)............................................ 61

Assigning an IP Address using DHCP..................................................................................................61

Debugging DHCP Client Operation................................................................................................... 63

DHCP Client........................................................................................................................................ 65

How DHCP Client is Implemented.....................................................................................................65

DHCP Client on a Management Interface......................................................................................... 66

DHCP Client on a VLAN......................................................................................................................66

DHCP Packet Format and Options.....................................................................................................67

Option 82............................................................................................................................................ 68

Releasing and Renewing DHCP-based IP Addresses........................................................................69

Viewing DHCP Statistics and Lease Information............................................................................... 69

6 FIP Snooping........................................................................................................71

Fibre Channel over Ethernet............................................................................................................... 71

Ensuring Robustness in a Converged Ethernet Network...................................................................71

FIP Snooping on Ethernet Bridges......................................................................................................72

FIP Snooping in a Switch Stack...........................................................................................................75

How FIP Snooping is Implemented....................................................................................................75

FIP Snooping on VLANs.................................................................................................................75

FC-MAP Value................................................................................................................................75

Bridge-to-FCF Links...................................................................................................................... 76

Impact on other Software Features..............................................................................................76

FIP Snooping Prerequisites........................................................................................................... 76

FIP Snooping Restrictions............................................................................................................. 76

Displaying FIP Snooping Information................................................................................................. 77

FIP Snooping Example........................................................................................................................ 83

Debugging FIP Snooping....................................................................................................................84

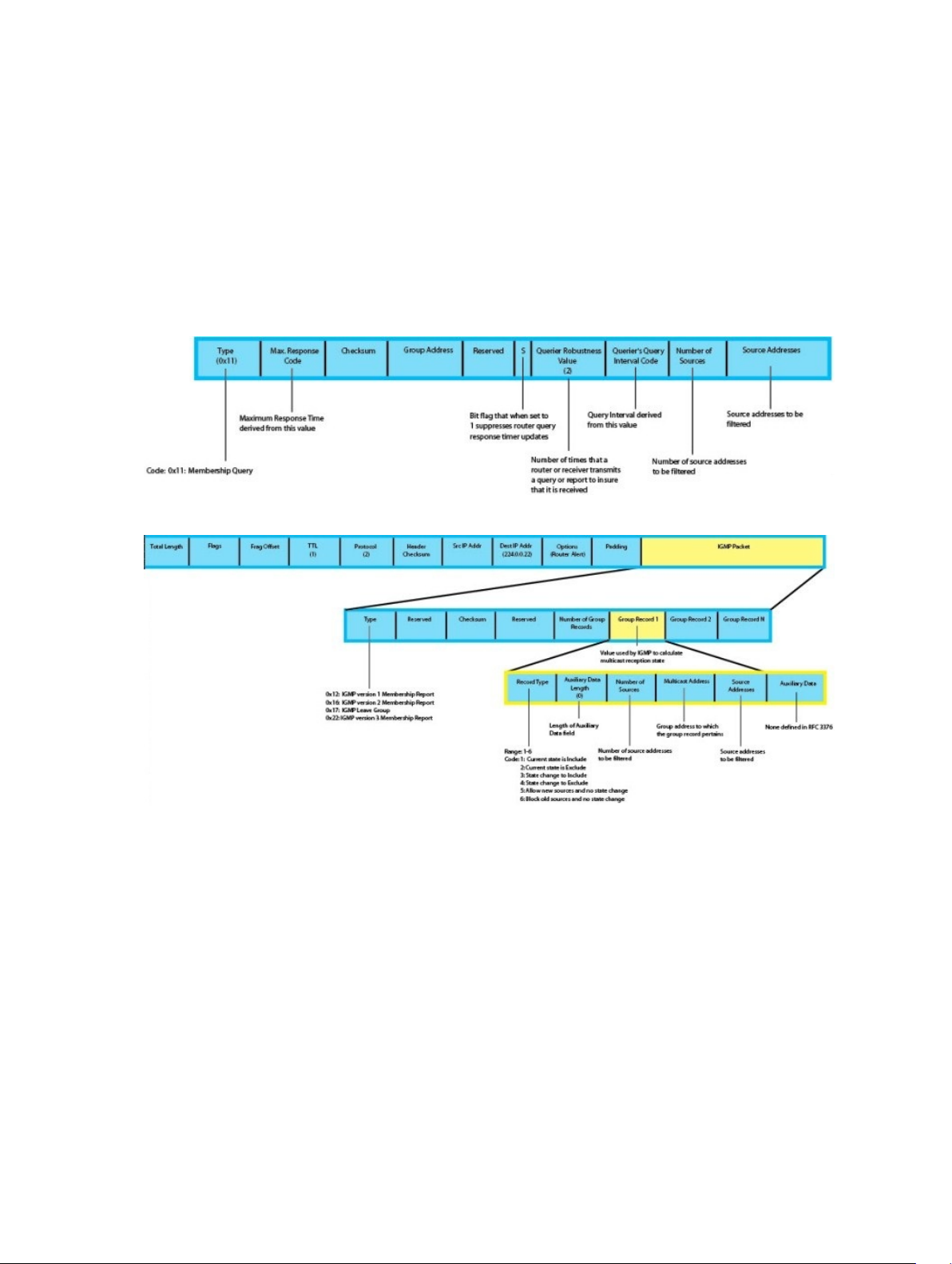

7 Internet Group Management Protocol (IGMP)............................................. 85

IGMP Overview....................................................................................................................................85

IGMP Version 2....................................................................................................................................85

Joining a Multicast Group.................................................................................................................. 86

Leaving a Multicast Group..................................................................................................................86

IGMP Version 3....................................................................................................................................86

Joining and Filtering Groups and Sources.........................................................................................87

Leaving and Staying in Groups...........................................................................................................88

IGMP Snooping................................................................................................................................... 89

How IGMP Snooping is Implemented on an Aggregator..................................................................89

Disabling Multicast Flooding.............................................................................................................. 90

Displaying IGMP Information............................................................................................................. 90

8 Interfaces............................................................................................................. 92

Basic Interface Configuration.............................................................................................................92

Advanced Interface Configuration..................................................................................................... 92

Interface Auto-Configuration.............................................................................................................92

Interface Types....................................................................................................................................93

Viewing Interface Information............................................................................................................93

Disabling and Re-enabling a Physical Interface.................................................................................95

Layer 2 Mode.......................................................................................................................................95

Management Interfaces......................................................................................................................96

Accessing an Aggregator.............................................................................................................. 96

Configuring a Management Interface..........................................................................................96

Configuring a Static Route for a Management Interface.............................................................97

VLAN Membership.............................................................................................................................. 98

Default VLAN................................................................................................................................. 98

Port-Based VLANs.........................................................................................................................98

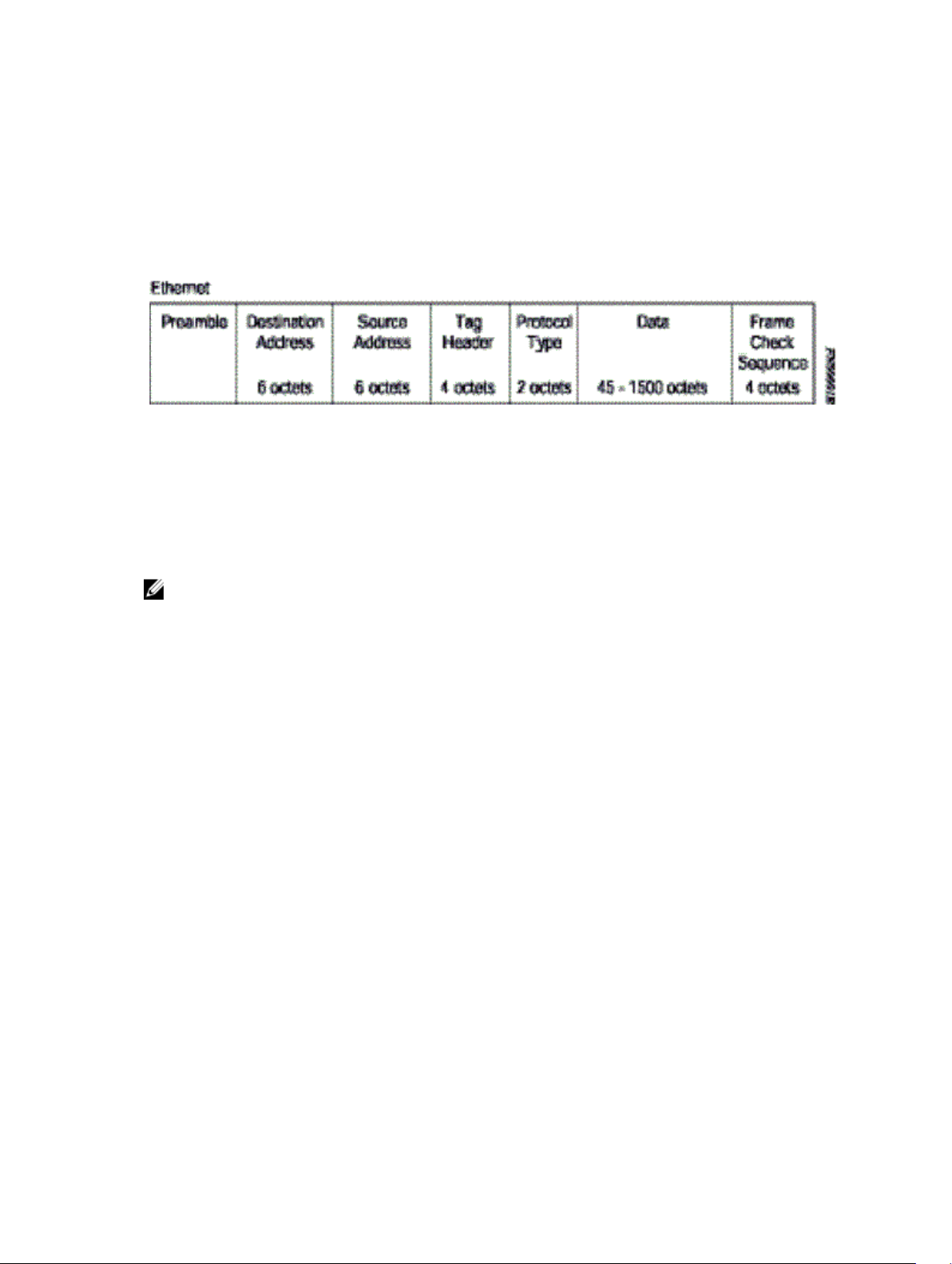

VLANs and Port Tagging............................................................................................................... 99

Configuring VLAN Membership....................................................................................................99

Displaying VLAN Membership.................................................................................................... 100

Adding an Interface to a Tagged VLAN.......................................................................................101

Adding an Interface to an Untagged VLAN................................................................................ 101

Port Channel Interfaces....................................................................................................................102

Port Channel Definitions and Standards.................................................................................... 102

Port Channel Benefits................................................................................................................. 102

Port Channel Implementation....................................................................................................102

1GbE and 10GbE Interfaces in Port Channels............................................................................103

Uplink Port Channel: VLAN Membership................................................................................... 103

Server-Facing Port Channel: VLAN Membership.......................................................................103

Displaying Port Channel Information.........................................................................................104

Interface Range................................................................................................................................. 105

Bulk Configuration Examples......................................................................................................105

Monitor and Maintain Interfaces.......................................................................................................107

Maintenance Using TDR............................................................................................................. 108

Flow Control Using Ethernet Pause Frames.................................................................................... 108

MTU Size............................................................................................................................................109

Auto-Negotiation on Ethernet Interfaces........................................................................................ 110

Setting Auto-Negotiation Options..............................................................................................112

Viewing Interface Information.......................................................................................................... 113

Clearing Interface Counters........................................................................................................114

Enabling the Management Address TLV on All Interfaces of an Aggregator.................................. 115

Enhanced Validation of Interface Ranges.........................................................................................115

9 iSCSI Optimization........................................................................................... 116

iSCSI Optimization Overview............................................................................................................ 116

Monitoring iSCSI Traffic Flows.......................................................................................................... 117

Information Monitored in iSCSI Traffic Flows.................................................................................. 118

Detection and Auto-Configuration for Dell EqualLogic Arrays........................................................ 118

iSCSI Optimization: Operation..........................................................................................................118

Displaying iSCSI Optimization Information...................................................................................... 119

10 Isolated Networks for Aggregators.............................................................121

Configuring and Verifying Isolated Network Settings......................................................................121

11 Link Aggregation.............................................................................................122

Supported Modes..............................................................................................................................122

How the LACP is Implemented on an Aggregator...........................................................................122

Uplink LAG................................................................................................................................... 123

Server-Facing LAGs..................................................................................................................... 123

LACP Modes.................................................................................................................................123

Auto-Configured LACP Timeout................................................................................................ 123

LACP Example................................................................................................................................... 124

Link Aggregation Control Protocol (LACP)...................................................................................... 125

Configuration Tasks for Port Channel Interfaces.......................................................................125

Creating a Port Channel..............................................................................................................125

Adding a Physical Interface to a Port Channel...........................................................................125

Reassigning an Interface to a New Port Channel.......................................................................127

Configuring the Minimum Oper Up Links in a Port Channel.................................................... 128

Deleting or Disabling a Port Channel......................................................................................... 129

Configuring the Minimum Number of Links to be Up for Uplink LAGs to be Active..................... 130

Optimizing Traffic Disruption Over LAG Interfaces On IOA Switches in VLT Mode.......................131

Preserving LAG and Port Channel Settings in Nonvolatile Storage.................................................131

Enabling the Verification of Member Links Utilization in a LAG Bundle..........................................131

Monitoring the Member Links of a LAG Bundle...............................................................................132

Verifying LACP Operation and LAG Configuration.......................................................................... 133

12 Layer 2...............................................................................................................137

Managing the MAC Address Table....................................................................................................137

Clearing the MAC Address Entries.............................................................................................. 137

Displaying the MAC Address Table.............................................................................................138

Network Interface Controller (NIC) Teaming.................................................................................. 138

MAC Address Station Move.........................................................................................................139

MAC Move Optimization.............................................................................................................140

13 Link Layer Discovery Protocol (LLDP).........................................................141

Overview............................................................................................................................................ 141

Protocol Data Units..................................................................................................................... 141

Optional TLVs.................................................................................................................................... 143

Management TLVs.......................................................................................................................143

LLDP Operation.................................................................................................................................143

Viewing the LLDP Configuration...................................................................................................... 144

Viewing Information Advertised by Adjacent LLDP Agents.............................................................144

Clearing LLDP Counters....................................................................................................................145

Debugging LLDP............................................................................................................................... 146

Relevant Management Objects.........................................................................................................147

14 Port Monitoring.............................................................................................. 153

Configuring Port Monitoring.............................................................................................................153

Important Points to Remember........................................................................................................154

Port Monitoring................................................................................................................................. 155

15 Security for M I/O Aggregator......................................................................156

Understanding Banner Settings........................................................................................................ 156

Accessing the I/O Aggregator Using the CMC Console Only.........................................................156

AAA Authentication............................................................................................................................157

Configuration Task List for AAA Authentication.........................................................................157

RADIUS...............................................................................................................................................159

RADIUS Authentication............................................................................................................... 160

Configuration Task List for RADIUS............................................................................................160

TACACS+...........................................................................................................................................163

Configuration Task List for TACACS+........................................................................................ 163

TACACS+ Remote Authentication..............................................................................................167

Enabling SCP and SSH...................................................................................................................... 168

Using SCP with SSH to Copy a Software Image........................................................................ 169

Secure Shell Authentication........................................................................................................170

Troubleshooting SSH...................................................................................................................173

Telnet................................................................................................................................................. 173

VTY Line and Access-Class Configuration....................................................................................... 173

VTY Line Local Authentication and Authorization..................................................................... 174

VTY Line Remote Authentication and Authorization................................................................. 174

VTY MAC-SA Filter Support......................................................................................................... 175

16 Simple Network Management Protocol (SNMP)...................................... 176

Implementation Information............................................................................................................ 176

Configuring the Simple Network Management Protocol................................................................176

Important Points to Remember..................................................................................................176

Setting up SNMP.......................................................................................................................... 177

Creating a Community................................................................................................................ 177

Reading Managed Object Values...................................................................................................... 177

Displaying the Ports in a VLAN using SNMP.....................................................................................178

Fetching Dynamic MAC Entries using SNMP...................................................................................180

Deriving Interface Indices..................................................................................................................181

Monitor Port-Channels.....................................................................................................................182

Entity MIBs.........................................................................................................................................183

Example of Sample Entity MIBs Outputs.................................................................................... 183

Standard VLAN MIB........................................................................................................................... 185

Enhancements.............................................................................................................................185

Page 9

Fetching the Switchport Configuration and the Logical Interface Configuration .................. 186

SNMP Traps for Link Status...............................................................................................................187

17 Stacking............................................................................................................188

Stacking Aggregators........................................................................................................................188

Stack Management Roles............................................................................................................189

Stack Master Election..................................................................................................................190

Failover Roles.............................................................................................................................. 190

MAC Addressing...........................................................................................................................191

Stacking LAG................................................................................................................................191

Stacking VLANs............................................................................................................................ 191

Stacking Port Numbers..................................................................................................................... 192

Configuring a Switch Stack...............................................................................................................194

Stacking Prerequisites................................................................................................................. 194

Cabling Stacked Switches...........................................................................................................194

Accessing the CLI........................................................................................................................ 195

Configuring and Bringing Up a Stack......................................................................................... 195

Adding a Stack Unit..................................................................................................................... 196

Resetting a Unit on a Stack.........................................................................................................196

Removing an Aggregator from a Stack and Restoring Quad Mode..........................................197

Configuring the Uplink Speed of Interfaces as 40 Gigabit Ethernet...............................................197

Verifying a Stack Configuration........................................................................................................199

Using Show Commands............................................................................................................. 199

Troubleshooting a Switch Stack.......................................................................................................201

Failure Scenarios.........................................................................................................................203

Upgrading a Switch Stack.................................................................................................................205

Upgrading a Single Stack Unit..........................................................................................................206

18 Broadcast Storm Control..............................................................................208

Disabling Broadcast Storm Control................................................................................................. 208

Displaying Broadcast-Storm Control Status................................................................................... 208

Configuring Storm Control.............................................................................................................. 208

19 System Time and Date...................................................................................209

Setting the Time for the Software Clock......................................................................................... 209

Setting the Timezone....................................................................................................................... 209

Setting Daylight Savings Time.......................................................................................................... 210

Setting Daylight Saving Time Once............................................................................................210

Setting Recurring Daylight Saving Time......................................................................................211

20 Uplink Failure Detection (UFD)....................................................................213

Feature Description...........................................................................................................................213

Page 10

How Uplink Failure Detection Works............................................................................................... 214

UFD and NIC Teaming...................................................................................................................... 216

Important Points to Remember........................................................................................................216

Configuring Uplink Failure Detection (PMUX mode)....................................................................... 217

Clearing a UFD-Disabled Interface (in PMUX mode).......................................................................218

Displaying Uplink Failure Detection.................................................................................................220

Sample Configuration: Uplink Failure Detection.............................................................................222

Uplink Failure Detection (SMUX mode)............................................................................................223

21 PMUX Mode of the IO Aggregator.............................................................. 224

Introduction...................................................................................................................................... 224

I/O Aggregator (IOA) Programmable MUX (PMUX) Mode.............................................................. 224

Configuring and Changing to PMUX Mode.....................................................................................224

Configuring the Commands without a Separate User Account.....................................................225

Multiple Uplink LAGs.........................................................................................................................225

Multiple Uplink LAGs with 10G Member Ports................................................................................ 226


Multiple Uplink LAGs with 40G Member Ports................................................................................ 227


Uplink Failure Detection (UFD).........................................................................................................229


Virtual Link Trunking (VLT) in PMUX Mode......................................................................................230


Stacking in PMUX Mode....................................................................................................................232


Configuring an NPIV Proxy Gateway............................................................................................... 233

Enabling Fibre Channel Capability on the Switch............................................................................233

Creating a DCB Map......................................................................................................................... 234

Important Points to Remember....................................................................................................... 234

Applying a DCB Map on Server-Facing Ethernet Ports...................................................................234

Creating an FCoE VLAN....................................................................................................................235

Creating an FCoE Map .....................................................................................................................235

Applying a DCB Map on Server-Facing Ethernet Ports...................................................................236

Applying an FCoE Map on Fabric-Facing FC Ports......................................................................... 236

Sample Configuration.......................................................................................................................237


Displaying NPIV Proxy Gateway Information...................................................................................237

Link Layer Discovery Protocol (LLDP)..............................................................................................238

Configure LLDP...........................................................................................................................238

CONFIGURATION versus INTERFACE Configurations..............................................................239

Enabling LLDP............................................................................................................................. 239

Advertising TLVs..........................................................................................................................240

Page 11

Viewing the LLDP Configuration................................................................................................ 241

Viewing Information Advertised by Adjacent LLDP Agents.......................................................242

Configuring LLDPDU Intervals....................................................................................................243

Configuring a Time to Live......................................................................................................... 243

Debugging LLDP.........................................................................................................................244

Virtual Link Trunking (VLT)................................................................................................................245

Overview..................................................................................................................................... 246

VLT Terminology.........................................................................................................................247

Configure Virtual Link Trunking..................................................................................................247

Verifying a VLT Configuration.....................................................................................................252

Additional VLT Sample Configurations.......................................................................................255

Troubleshooting VLT...................................................................................................................257

22 FC Flex IO Modules........................................................................................ 259

FC Flex IO Modules...........................................................................................................................259

Understanding and Working of the FC Flex IO Modules.................................................................259

FC Flex IO Modules Overview.................................................................................................... 259

FC Flex IO Module Capabilities and Operations........................................................................ 261

Guidelines for Working with FC Flex IO Modules...................................................................... 261

Processing of Data Traffic.......................................................................................................... 263

Installing and Configuring the Switch........................................................................................264

Interconnectivity of FC Flex IO Modules with Cisco MDS Switches.........................................267

Fibre Channel over Ethernet for FC Flex IO Modules..................................................................... 268

NPIV Proxy Gateway for FC Flex IO Modules..................................................................................269

NPIV Proxy Gateway Configuration on FC Flex IO Modules ................................................... 269

NPIV Proxy Gateway Operations and Capabilities.................................................................... 269

Configuring an NPIV Proxy Gateway..........................................................................................273

Displaying NPIV Proxy Gateway Information............................................................................ 280

23 Upgrade Procedures......................................................................................286

Get Help with Upgrades................................................................................................................... 286

24 Debugging and Diagnostics.........................................................................287

Debugging Aggregator Operation................................................................................................... 287

All interfaces on the Aggregator are operationally down......................................................... 287

Broadcast, unknown multicast, and DLF packets switched at a very low rate........................ 288

Flooded packets on all VLANs are received on a server........................................................... 288

Software show Commands..............................................................................................................289

Offline Diagnostics............................................................................................................................291

Important Points to Remember..................................................................................................291

Running Offline Diagnostics....................................................................................................... 291

Trace Logs.........................................................................................................................................292

Page 12

Auto Save on Crash or Rollover................................................................................................. 292

Using the Show Hardware Commands........................................................................................... 293

Environmental Monitoring............................................................................................................... 294

Recognize an Over-Temperature Condition.............................................................................295

Troubleshoot an Over-Temperature Condition........................................................................296

Recognize an Under-Voltage Condition................................................................................... 297

Troubleshoot an Under-Voltage Condition...............................................................................297

Buffer Tuning.................................................................................................................................... 298

Deciding to Tune Buffers............................................................................................................299

Sample Buffer Profile Configuration.......................................................................................... 302

Troubleshooting Packet Loss...........................................................................................................303

Displaying Drop Counters.......................................................................................................... 303

Dataplane Statistics.....................................................................................................................304

Displaying Stack Port Statistics...................................................................................................305

Displaying Drop Counters.......................................................................................................... 306

Restoring the Factory Default Settings............................................................................................ 307

Important Points to Remember..................................................................................................307

25 Standards Compliance..................................................................................309

IEEE Compliance.............................................................................................................................. 309

RFC and I-D Compliance................................................................................................................. 309

General Internet Protocols......................................................................................................... 310

General IPv4 Protocols............................................................................................................... 310

Network Management.................................................................................................................311

MIB Location..................................................................................................................................... 314

Page 13

1

About this Guide

This guide describes the supported protocols and software features, and provides configuration

instructions and examples, for the Dell Networking M I/O Aggregator running Dell Networking OS version

9.5(0.1).

The M I/O Aggregator is installed in a Dell PowerEdge M1000e Enclosure. For information about how to

install and perform the initial switch configuration, refer to the Getting Started Guides on the Dell Support

website at http://www.dell.com/support/manuals

Though this guide contains information about protocols, it is not intended to be a complete reference.

This guide is a reference for configuring protocols on Dell Networking systems. For complete information

about protocols, refer to other documentation, including IETF requests for comment (RFCs). The

instructions in this guide cite relevant RFCs, and Standards Compliance contains a complete list of the

supported RFCs and management information base files (MIBs).

NOTE: You can perform some of the configuration tasks described in this document by using either

the Dell command line or the chassis management controller (CMC) graphical interface. Tasks

supported by the CMC interface are shown with the CMC icon: CMC

Audience

This document is intended for system administrators who are responsible for configuring and maintaining

networks and assumes knowledge of Layer 2 and Layer 3 networking technologies.

Conventions

This guide uses the following conventions to describe command syntax.

Keyword Keywords are in Courier (a monospaced font) and must be entered in the CLI as listed.

parameter Parameters are in italics and require a number or word to be entered in the CLI.

{X} Keywords and parameters within braces must be entered in the CLI.

[X] Keywords and parameters within brackets are optional.

x|y Keywords and parameters separated by a bar require you to choose one option.

x||y Keywords and parameters separated by a double bar allow you to choose any or all of the options.


Page 14

Related Documents

For more information about the Dell PowerEdge M I/O Aggregator,

refer to the following documents:

• Dell Networking OS Command Line Reference Guide for the M I/O Aggregator

• Dell Networking OS Getting Started Guide for the M I/O Aggregator

• Release Notes for the M I/O Aggregator


Page 15

2

Before You Start

To install the Aggregator in a Dell PowerEdge M1000e Enclosure, use the instructions in the Dell

PowerEdge M I/O Aggregator Getting Started Guide that is shipped with the product. The I/O Aggregator

(also known as Aggregator) installs with zero-touch configuration. After you power it on, an Aggregator

boots up with default settings and auto-configures with software features enabled. This chapter describes

the default settings and software features that are automatically configured at startup. To reconfigure the

Aggregator for customized network operation, use the tasks described in the other chapters.

IOA Operational Modes

IOA supports four operational modes. Select the operational mode that meets your deployment needs.

To enable a new operational mode, reload the switch.

Standalone mode

stack-unit unit iom-mode standalone

This is the default mode for IOA. It is a fully automated zero-touch mode that allows you to configure

VLAN memberships. (Supported in CMC)

Stacking mode

stack-unit unit iom-mode stacking

Select this mode to stack up to six IOA stack units as a single logical switch. The stack units can be in the

same or on different chassis. This is a low-touch mode where all configuration except VLAN membership

is automated. To enable VLAN, you must configure it. In this operational mode, base module links are

dedicated to stacking.

VLT mode

stack-unit unit iom-mode vlt

Select this mode to multi-home server interfaces to different IOA modules. This is a low-touch mode

where all configuration except VLAN membership is automated. To enable VLAN, you must configure it.

In this mode, port 9 links are dedicated to VLT interconnect.

Programmable MUX mode

stack-unit unit iom-mode programmable-mux

Select this mode to configure PMUX mode CLI commands.
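The mode-selection commands listed above all share one shape. As a minimal sketch only (this is not a Dell tool), the hypothetical Python helper below builds the command line for a given stack unit and mode. The helper name and the idea of generating the command from a script are assumptions made for illustration; the mode keywords are the ones listed in this section. The new mode still takes effect only after the switch is reloaded.

```python
# Mode keywords exactly as listed in this section.
IOM_MODES = ("standalone", "stacking", "vlt", "programmable-mux")


def iom_mode_command(unit: int, mode: str) -> str:
    """Return the CLI line that selects an operational mode.

    Hypothetical helper for illustration; it only formats text and
    does not talk to a switch.
    """
    if mode not in IOM_MODES:
        raise ValueError(f"unknown operational mode: {mode!r}")
    if unit < 0:
        raise ValueError("stack-unit number must be non-negative")
    return f"stack-unit {unit} iom-mode {mode}"


if __name__ == "__main__":
    # Print the command for every mode on stack unit 0.
    for name in IOM_MODES:
        print(iom_mode_command(0, name))
```

Rejecting unknown keywords before the command is sent is a design choice of the sketch; the CLI itself performs its own validation.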


Page 16

Default Settings

The I/O Aggregator provides zero-touch configuration with the following default configuration settings:

• default user name (root)

• password (calvin)

• VLAN (vlan1) and IP address for in-band management (DHCP)

• IP address for out-of-band (OOB) management (DHCP)

• read-only SNMP community name (public)

• broadcast storm control (enabled in Standalone mode and disabled in VLT mode)

• IGMP multicast flooding (enabled)

• VLAN configuration (in Standalone mode, all ports belong to all VLANs)

You can change any of these default settings using the CLI. Refer to the appropriate chapter for details.

NOTE: You can also change many of the default settings using the chassis management controller

(CMC) interface. For information about how to access the CMC to configure the aggregator, refer to the

Dell Chassis Management Controller (CMC) User’s Guide on the Dell Support website at

http://support.dell.com/

Other Auto-Configured Settings

After the Aggregator powers on, it auto-configures and is operational with software features enabled,

including:

• Ports: Ports are administratively up and auto-configured to operate as hybrid ports to transmit tagged

and untagged VLAN traffic.

Ports 1 to 32 are internal server-facing ports, which can operate in 10GbE mode. Ports 33 to 56 are

external ports auto-configured to operate by default as follows:

– The base-module ports operate in standalone 4x10GbE mode. You can configure these ports to

operate in 40GbE stacking mode. When configured for stacking, you cannot use 40GbE base-module ports for uplinks.

– Ports on the 2-Port 40-GbE QSFP+ module operate only in 4x10GbE mode. You cannot use

them for stacking.

– Ports on the 4-Port 10-GbE SFP+ and 4-Port 10GBASE-T modules operate only in 10GbE mode.

For more information about how ports are numbered, refer to Port Numbering.

• Link aggregation: All uplink ports are configured in a single LAG (LAG 128).

• VLANs: All ports are configured as members of all (4094) VLANs. All VLANs are up and can send or

receive layer 2 traffic. For more information, refer to VLAN Membership.

• Data center bridging capability exchange protocol (DCBx): Server-facing ports auto-configure in

auto-downstream port roles; uplink ports auto-configure in auto-upstream port roles.

• Fibre Channel over Ethernet (FCoE) connectivity and FCoE initiation protocol (FIP) snooping: The

uplink port channel (LAG 128) is enabled to operate in Fibre channel forwarder (FCF) port mode.

• Link layer discovery protocol (LLDP): Enabled on all ports to advertise the management TLV and system

name to neighboring devices.


Page 17

• Internet small computer system interface (iSCSI) optimization.

• Internet group management protocol (IGMP) snooping.

• Jumbo frames: Ports are set to a maximum MTU of 12,000 bytes by default.

• Link tracking: Uplink-state group 1 is automatically configured. In uplink state-group 1, server-facing

ports auto-configure as downstream interfaces; the uplink port-channel (LAG 128) auto-configures as

an upstream interface. Server-facing links are auto-configured to be brought up only if the uplink

port-channel is up.

• In stacking mode, base module ports are automatically configured as stack ports.

• In VLT mode, port 9 is automatically configured as the VLT interconnect port.
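The default port roles in the list above can be summarized in a few lines. The following Python sketch is illustrative only: the function names are hypothetical, and it assumes that every external port is used as an uplink (that is, not dedicated to stacking or to the VLT interconnect).

```python
# Port ranges as stated above: 1-32 internal, 33-56 external.
INTERNAL_PORTS = range(1, 33)
EXTERNAL_PORTS = range(33, 57)


def port_role(port: int) -> str:
    """Classify a port number as server-facing or external."""
    if port in INTERNAL_PORTS:
        return "server-facing"
    if port in EXTERNAL_PORTS:
        return "external"
    raise ValueError(f"port {port} is outside the range 1-56")


def default_dcbx_role(port: int) -> str:
    """Default DCBx port role, assuming external ports act as uplinks."""
    if port_role(port) == "server-facing":
        return "auto-downstream"
    return "auto-upstream"


if __name__ == "__main__":
    for p in (1, 32, 33, 56):
        print(p, port_role(p), default_dcbx_role(p))
```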

Data Center Bridging Support

To eliminate packet loss and provision links with required bandwidth, Data Center Bridging (DCB)

enhancements for data center networks are supported.

The aggregator provides zero-touch configuration for DCB. The aggregator auto-configures DCBx port

roles as follows:

• Server-facing ports are configured as auto-downstream interfaces.

• Uplink ports are configured as auto-upstream interfaces.

In operation, DCBx auto-configures uplink ports to match the DCB configuration in the ToR switches to

which they connect.

The Aggregator supports DCB only in standalone mode.

FCoE Connectivity and FIP Snooping

Many data centers use Fibre Channel (FC) in storage area networks (SANs). Fibre Channel over Ethernet

(FCoE) encapsulates Fibre Channel frames over Ethernet networks.

On an Aggregator, the internal ports support FCoE connectivity and connect to the converged network

adapter (CNA) in servers. FCoE allows Fibre Channel to use 10-Gigabit Ethernet networks while

preserving the Fibre Channel protocol.

The Aggregator also provides zero-touch configuration for FCoE connectivity. The Aggregator auto-configures to match the FCoE settings used in the switches to which it connects through its uplink ports.

FIP snooping is automatically configured on an Aggregator. The auto-configured port channel (LAG 128)

operates in FCF port mode.

iSCSI Operation

Support for iSCSI traffic is turned on by default when the aggregator powers up. No configuration is

required.

When an aggregator powers up, it monitors known TCP ports for iSCSI storage devices on all interfaces.

When a session is detected, an entry is created and monitored as long as the session is active.


Page 18

An aggregator also detects iSCSI storage devices on all interfaces and auto-configures to optimize

performance. Performance optimization operations are applied automatically, such as Jumbo frame size

support on all interfaces, disabling storm control, and enabling spanning-tree port fast on

interfaces connected to an iSCSI EqualLogic (EQL) storage device.

Link Aggregation

All uplink ports are configured in a single LAG (LAG 128). Server-facing ports are auto-configured as part

of link aggregation groups if the corresponding server is configured for LACP-based network interface

controller (NIC) teaming. Static LAGs are not supported.

NOTE: The recommended LACP timeout is Long-Timeout mode.

Link Tracking

By default, all server-facing ports are tracked by the operational status of the uplink LAG. If the uplink LAG

goes down, the aggregator loses its connectivity and is no longer operational; all server-facing ports are

brought down after the specified defer-timer interval, which is 10 seconds by default. If you have

configured VLAN, you can reduce the defer time by changing the defer-timer value or remove it by using

the no defer-timer command from UPLINK-STATE-GROUP mode.
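The timing described above can be written as a one-line calculation. The Python sketch below is illustrative only: the function name is hypothetical, it models the removal of the defer timer (the no defer-timer command) as a zero-second delay, and it does not model what happens if the uplink LAG recovers before the timer expires.

```python
from typing import Optional

# Default defer-timer interval, in seconds, as stated above.
DEFAULT_DEFER_TIMER = 10.0


def server_ports_down_at(uplink_down_time: float,
                         defer_timer: Optional[float] = DEFAULT_DEFER_TIMER) -> float:
    """Return the time at which server-facing ports are brought down.

    uplink_down_time: time (in seconds) at which the uplink LAG went down.
    defer_timer: configured interval in seconds; None stands for
    "no defer-timer" and is assumed to mean no delay at all.
    """
    delay = 0.0 if defer_timer is None else float(defer_timer)
    if delay < 0:
        raise ValueError("defer timer cannot be negative")
    return uplink_down_time + delay


if __name__ == "__main__":
    print(server_ports_down_at(100.0))        # default timer
    print(server_ports_down_at(100.0, 3))     # reduced timer
    print(server_ports_down_at(100.0, None))  # timer removed
```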

NOTE: If installed servers do not have connectivity to a switch, check the Link Status LED of uplink

ports on the aggregator. If all LEDs are on, check the LACP configuration on the ToR switch that is

connected to the aggregator to ensure that LACP is correctly configured.

Configuring VLANs

By default, in Standalone mode, all aggregator ports belong to all 4094 VLANs and are members of

untagged VLAN 1. To configure only the required VLANs on a port, use the CLI or CMC interface.

You can configure VLANs only on server ports. The uplink LAG will automatically get the VLANs, based on

the VLAN configuration of the server ports.

When you configure VLANs on server-facing interfaces (ports from 1 to 32), you can assign VLANs to a

port or a range of ports by entering the vlan tagged or vlan untagged commands in Interface

Configuration mode; for example:

Dell(conf)# interface range tengigabitethernet 0/2 - 4

Dell(conf-if-range-te-0/2-4)# vlan tagged 5,7,10-12

Dell(conf-if-range-te-0/2-4)# vlan untagged 3
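The VLAN list in the example above mixes single IDs and ranges. As a rough illustration of how such a list expands into individual VLAN IDs, the Python sketch below parses the same notation. The function name is hypothetical and the code is not the parser used by Dell Networking OS; the 1 to 4094 bound follows the VLAN count given in this chapter.

```python
from typing import List

MAX_VLAN_ID = 4094


def expand_vlan_list(spec: str) -> List[int]:
    """Expand a list such as "5,7,10-12" into sorted VLAN IDs."""
    vlans = set()
    for item in spec.split(","):
        item = item.strip()
        if not item:
            raise ValueError("empty entry in VLAN list")
        if "-" in item:
            # A range such as "10-12" covers both end points.
            first_text, last_text = item.split("-", 1)
            first, last = int(first_text), int(last_text)
        else:
            first = last = int(item)
        if not 1 <= first <= last <= MAX_VLAN_ID:
            raise ValueError(f"invalid VLAN entry: {item!r}")
        vlans.update(range(first, last + 1))
    return sorted(vlans)


if __name__ == "__main__":
    print(expand_vlan_list("5,7,10-12"))  # [5, 7, 10, 11, 12]
```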

Uplink LAG

The tagged VLAN membership of the uplink LAG is automatically configured based on the VLAN

configuration of all server-facing ports (ports from 1 to 32).

The untagged VLAN used for the uplink LAG is always the default VLAN.
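One way to picture the automatic uplink membership is as a union over the server-facing ports. The Python sketch below is an illustration under stated assumptions, not the switch's actual algorithm: it assumes that every VLAN configured on a server-facing port (tagged or untagged) is carried tagged on the uplink LAG, except the default VLAN, which stays untagged. The function name, the data layout, and the use of VLAN 1 as the default VLAN are choices made for the example.

```python
from typing import Dict, List, Set, Tuple

DEFAULT_VLAN = 1  # assumed default VLAN for the example

# port number -> (untagged VLAN, set of tagged VLANs)
PortVlans = Dict[int, Tuple[int, Set[int]]]


def uplink_lag_membership(server_ports: PortVlans) -> Tuple[int, List[int]]:
    """Return (untagged VLAN, tagged VLANs) for the uplink LAG."""
    carried: Set[int] = set()
    for untagged, tagged in server_ports.values():
        carried.add(untagged)
        carried.update(tagged)
    # The default VLAN is the untagged VLAN of the uplink LAG.
    carried.discard(DEFAULT_VLAN)
    return DEFAULT_VLAN, sorted(carried)


if __name__ == "__main__":
    ports: PortVlans = {
        2: (3, {5, 7, 10, 11, 12}),
        3: (3, {5, 7, 10, 11, 12}),
        9: (1, {20}),
    }
    print(uplink_lag_membership(ports))
```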


Page 19

Server-Facing LAGs

The tagged VLAN membership of a server-facing LAG is automatically configured based on the server-facing ports that are members of the LAG.

The untagged VLAN of a server-facing LAG is configured based on the untagged VLAN to which the

lowest numbered server-facing port in the LAG belongs.

NOTE: Dell Networking recommends configuring the same VLAN membership on all LAG member

ports.
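The rule for server-facing LAGs can be sketched in the same way. The Python example below is illustrative only: it takes the untagged VLAN from the lowest-numbered member port, as described above, and assumes that the tagged membership is the union of the members' tagged VLANs. The second helper checks the recommendation that all member ports share the same VLAN membership. All names are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# port number -> (untagged VLAN, set of tagged VLANs)
PortVlans = Dict[int, Tuple[int, Set[int]]]


def server_lag_membership(members: PortVlans) -> Tuple[int, List[int]]:
    """Return (untagged VLAN, tagged VLANs) for a server-facing LAG."""
    if not members:
        raise ValueError("a LAG needs at least one member port")
    lowest_port = min(members)
    untagged = members[lowest_port][0]
    tagged: Set[int] = set()
    for _, port_tagged in members.values():
        tagged.update(port_tagged)
    return untagged, sorted(tagged)


def membership_is_consistent(members: PortVlans) -> bool:
    """True when every member port has identical VLAN membership."""
    settings = list(members.values())
    return all(s == settings[0] for s in settings)


if __name__ == "__main__":
    lag = {4: (3, {5, 7}), 2: (3, {5, 7})}
    print(server_lag_membership(lag), membership_is_consistent(lag))
```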

Where to Go From Here

You can customize the Aggregator for use in your data center network as necessary. To perform

additional switch configuration, do one of the following:

• For remote out-of-band management, enter the OOB management interface IP address into a Telnet

or SSH client and log in to the switch using the user ID and password to access the CLI.

• For local management using the CLI, use the attached console connection.

• For remote in-band management from a network management station, enter the IP address of the

default VLAN and log in to the switch to access the CLI.

To check that an Aggregator is running the latest Dell Networking OS version, enter the

show version command. To download the latest version, go to http://support.dell.com

For detailed information about how to reconfigure specific software settings, refer to the appropriate

chapter.


3

Configuration Fundamentals

The Dell Networking Operating System (OS) command line interface (CLI) is a text-based interface you

can use to configure interfaces and protocols.

The CLI is structured in modes for security and management purposes. Different sets of commands are

available in each mode, and you can limit user access to modes using privilege levels.

In Dell Networking OS, after you enable a command, it is entered into the running configuration file. You

can view the current configuration for the whole system or for a particular CLI mode. To save the current

configuration, copy the running configuration to another location. For more information, refer to Save

the Running-Configuration.

NOTE: You can use the chassis management controller (CMC) out-of-band management interface

to access and manage an Aggregator using the Dell Networking OS command-line reference. For

more information about how to access the CMC to configure an Aggregator, refer to the Dell

Chassis Management Controller (CMC) User’s Guide on the Dell Support website at http://support.dell.com/support/edocs/systems/pem/en/index.htm.

Accessing the Command Line

Access the command line through a serial console port or a Telnet session (refer to Logging into the

System using Telnet). When the system boots successfully, you enter the command line in EXEC mode.

Logging into the System using Telnet

telnet 172.31.1.53

Trying 172.31.1.53...

Connected to 172.31.1.53.

Escape character is '^]'.

Login: username

Password:

Dell>

CLI Modes

Different sets of commands are available in each mode.

A command found in one mode cannot be executed from another mode, except for EXEC mode

commands preceded by the do command (refer to the do Command section).

The Dell Networking OS CLI is divided into three major mode levels:

• EXEC mode is the default mode and has a privilege level of 1, which is the most restricted level. Only a

limited selection of commands is available, notably the show commands, which allow you to view

system information.


• EXEC Privilege mode has commands to view configurations, clear counters, manage configuration

files, run diagnostics, and enable or disable debug operations. The privilege level is 15, which is

unrestricted. You can configure a password for this mode.

• CONFIGURATION mode allows you to configure security features and time settings, set logging and

SNMP functions, configure static ARP and MAC addresses, and set line cards on the system.

Beneath CONFIGURATION mode are submodes that apply to interfaces, protocols, and features. The

following example shows the submode command structure. Two sub-CONFIGURATION modes are

important when configuring the chassis for the first time:

• INTERFACE submode is the mode in which you configure Layer 2 protocols and IP services specific to

an interface. An interface can be physical (10 Gigabit Ethernet) or logical (Null, port channel, or virtual

local area network [VLAN]).

• LINE submode is the mode in which you configure the console and virtual terminal lines.

NOTE: At any time, entering a question mark (?) displays the available command options. For

example, when you are in CONFIGURATION mode, entering the question mark first lists all available

commands, including the possible submodes.

The CLI modes are:

EXEC
EXEC Privilege
CONFIGURATION
    INTERFACE
        10 GIGABIT ETHERNET
        INTERFACE RANGE
        MANAGEMENT ETHERNET
    LINE
        CONSOLE
        VIRTUAL TERMINAL
    MONITOR SESSION

Navigating CLI Modes

The Dell prompt changes to indicate the CLI mode.

The following table lists each CLI mode, its prompt, and information about how to access and exit the

mode. You move linearly through the command modes, with two exceptions: the end command takes you

directly to EXEC Privilege mode, and the exit command moves you up one command mode level.

NOTE: Sub-CONFIGURATION modes all have the letters “conf” in the prompt with more modifiers

to identify the mode and slot/port information.

Table 1. Dell Command Modes

CLI Command Mode                Prompt                        Access Command
EXEC                            Dell>                         Access the router through the console or Telnet.
EXEC Privilege                  Dell#                         • From EXEC mode, enter the enable command.
                                                              • From any other mode, use the end command.
CONFIGURATION                   Dell(conf)#                   • From EXEC Privilege mode, enter the configure command.
                                                              • From every mode except EXEC and EXEC Privilege, enter the exit command.

NOTE: Access all of the following modes from CONFIGURATION mode.

10 Gigabit Ethernet Interface   Dell(conf-if-te-0/1)#         interface (INTERFACE modes)
Interface Range                 Dell(conf-if-range)#          interface (INTERFACE modes)
Management Ethernet Interface   Dell(conf-if-ma-0/0)#         interface (INTERFACE modes)
MONITOR SESSION                 Dell(conf-mon-sess)#          monitor session
IP COMMUNITY-LIST               Dell(config-communitylist)#   ip community-list
CONSOLE                         Dell(config-lineconsole)#     line (LINE Modes)
VIRTUAL TERMINAL                Dell(config-line-vty)#        line (LINE Modes)

The following example shows how to change the command mode from CONFIGURATION mode to

INTERFACE configuration mode.

Example of Changing Command Modes

Dell(conf)#interface tengigabitethernet 0/2
Dell(conf-if-te-0/2)#
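The effect of the exit and end commands on the current mode can be sketched as a small Python model. This is an illustration of the navigation rules described in this section, not a Dell tool. The PARENT table and function names are hypothetical, and the behavior of exit and end in EXEC and EXEC Privilege modes is deliberately left unmodeled.

```python
# Parent of each mode, following the mode hierarchy listed in this chapter.
PARENT = {
    "CONFIGURATION": "EXEC Privilege",
    "INTERFACE": "CONFIGURATION",
    "LINE": "CONFIGURATION",
    "MONITOR SESSION": "CONFIGURATION",
}


def exit_mode(mode: str) -> str:
    """exit moves up one command mode level."""
    if mode not in PARENT:
        # Assumption: only CONFIGURATION and its submodes are modeled here.
        raise ValueError(f"exit is not modeled for mode {mode!r}")
    return PARENT[mode]


def end_mode(mode: str) -> str:
    """end returns directly to EXEC Privilege mode from any modeled mode."""
    if mode not in PARENT:
        raise ValueError(f"end is not modeled for mode {mode!r}")
    return "EXEC Privilege"


print(exit_mode("INTERFACE"))      # CONFIGURATION
print(exit_mode("CONFIGURATION"))  # EXEC Privilege
print(end_mode("INTERFACE"))       # EXEC Privilege
```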

The do Command

You can enter an EXEC mode command from any CONFIGURATION mode (CONFIGURATION,

INTERFACE, and so on) without having to return to EXEC mode by preceding the EXEC mode command

with the do command.

The following example shows the output of the do command.

Dell(conf)#do show system brief
Stack MAC : 00:01:e8:00:ab:03
-- Stack Info --
Slot  UnitType    Status       ReqTyp          CurTyp          Version    Ports
---------------------------------------------------------------------------------------
0     Member      not present
1     Management  online       I/O-Aggregator  I/O-Aggregator  8-3-17-38  56
2     Member      not present
3     Member      not present
4     Member      not present
5     Member      not present
Dell(conf)#

Undoing Commands

When you enter a command, the command line is added to the running configuration file (running-config).

To disable a command and remove it from the running-config, enter the no command, then the original

command. For example, to delete an IP address configured on an interface, use the no ip address

ip-address command.

NOTE: Use the help or ? command as described in Obtaining Help.

Example of Viewing Disabled Commands

Dell(conf)# interface managementethernet 0/0

Dell(conf-if-ma-0/0)# ip address 192.168.5.6/16

Dell(conf-if-ma-0/0)#

Dell(conf-if-ma-0/0)#

Dell(conf-if-ma-0/0)#show config

!

interface ManagementEthernet 0/0

ip address 192.168.5.6/16

no shutdown

Dell(conf-if-ma-0/0)#

Dell(conf-if-ma-0/0)# no ip address

Dell(conf-if-ma-0/0)#

Dell(conf-if-ma-0/0)# show config

!

interface ManagementEthernet 0/0

no ip address

no shutdown

Dell(conf-if-ma-0/0)#

Obtaining Help

Obtain a list of keywords and a brief functional description of those keywords at any CLI mode using

the ? or help command:

• To list the keywords available in the current mode, enter ? at the prompt or after a keyword.

• Entering ? after a prompt lists all of the available keywords. The output is the same as that of

the help command.

Dell#?

start Start Shell

capture Capture Packet

cd Change current directory

clear Reset functions

clock Manage the system clock

configure Configuring from terminal

copy Copy from one file to another

--More--

• Entering ? after a partial keyword lists all of the keywords that begin with the specified letters.

Dell(conf)#cl?

clock

Dell(conf)#cl


• Entering [space]? after a keyword lists all of the keywords that can follow the specified keyword.

Dell(conf)#clock ?

summer-time Configure summer (daylight savings) time

timezone Configure time zone

Dell(conf)#clock

Entering and Editing Commands

Keep the following in mind when entering commands:

• The CLI is not case-sensitive.

• You can enter partial CLI keywords.

– Enter the minimum number of letters to uniquely identify a command. For example, you cannot

enter cl as a partial keyword because both the clock and class-map commands begin with the

letters “cl.” You can enter clo, however, as a partial keyword because only one command begins

with those three letters.

• The TAB key auto-completes keywords in commands. Enter the minimum number of letters to

uniquely identify a command.

• The UP and DOWN arrow keys display previously entered commands (refer to Command History).

• The BACKSPACE and DELETE keys erase the previous letter.

• Key combinations are available to move quickly across the command line. The following table

describes these short-cut key combinations.

Short-Cut Key Combination   Action
CNTL-A                      Moves the cursor to the beginning of the command line.
CNTL-B                      Moves the cursor back one character.
CNTL-D                      Deletes character at cursor.
CNTL-E                      Moves the cursor to the end of the line.
CNTL-F                      Moves the cursor forward one character.
CNTL-I                      Completes a keyword.
CNTL-K                      Deletes all characters from the cursor to the end of the command line.
CNTL-L                      Re-enters the previous command.
CNTL-N                      Returns to more recent commands in the history buffer after recalling commands
                            with CTRL-P or the UP arrow key.
CNTL-P                      Recalls commands, beginning with the last command.
CNTL-R                      Re-enters the previous command.
CNTL-U                      Deletes the line.
CNTL-W                      Deletes the previous word.
CNTL-X                      Deletes the line.
CNTL-Z                      Ends continuous scrolling of command outputs.
Esc B                       Moves the cursor back one word.
Esc F                       Moves the cursor forward one word.
Esc D                       Deletes all characters from the cursor to the end of the word.
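The partial-keyword rule described under Entering and Editing Commands (enter the minimum number of letters that uniquely identifies a command) can be sketched in Python as follows. The keyword list is a hypothetical sample, and the function names are illustrative only. The real CLI resolves abbreviations against the commands available in the current mode.

```python
def matching_keywords(partial, keywords):
    """List every keyword that begins with the given letters (case-insensitive)."""
    prefix = partial.lower()
    return sorted(k for k in keywords if k.lower().startswith(prefix))


def resolve_keyword(partial, keywords):
    """Return the single keyword identified by a partial entry, or raise ValueError."""
    matches = matching_keywords(partial, keywords)
    if not matches:
        raise ValueError(f"unknown keyword: {partial!r}")
    # A fully typed keyword always resolves to itself.
    exact = [k for k in matches if k.lower() == partial.lower()]
    if exact:
        return exact[0]
    if len(matches) > 1:
        raise ValueError(f"ambiguous keyword {partial!r}: {', '.join(matches)}")
    return matches[0]


# Hypothetical sample of keywords, for illustration only.
KEYWORDS = ["clock", "class-map", "configure", "copy"]

print(matching_keywords("cl", KEYWORDS))  # ['class-map', 'clock']
print(resolve_keyword("clo", KEYWORDS))   # clock
```

Calling resolve_keyword("cl", KEYWORDS) raises ValueError because both clock and class-map begin with those letters.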

Command History

Dell Networking OS maintains a history of previously-entered commands for each mode. For example:

• When you are in EXEC mode, the UP and DOWN arrow keys display the previously-entered EXEC

mode commands.

• When you are in CONFIGURATION mode, the UP and DOWN arrow keys recall the previously-entered

CONFIGURATION mode commands.
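As a rough illustration of a per-mode history, the following Python sketch keeps a separate list of commands for each mode and recalls them most recent first. The class and method names are hypothetical. How Dell Networking OS stores its history buffer is not described here.

```python
from collections import defaultdict


class CommandHistory:
    """Hypothetical per-mode command history."""

    def __init__(self):
        self._by_mode = defaultdict(list)

    def record(self, mode, command):
        """Remember a command under the mode in which it was entered."""
        self._by_mode[mode].append(command)

    def recall(self, mode):
        """Return the commands for one mode, most recent first."""
        return list(reversed(self._by_mode.get(mode, [])))


history = CommandHistory()
history.record("EXEC", "show version")
history.record("CONFIGURATION", "interface tengigabitethernet 0/2")
history.record("EXEC", "enable")

print(history.recall("EXEC"))           # ['enable', 'show version']
print(history.recall("CONFIGURATION"))  # ['interface tengigabitethernet 0/2']
```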

Filtering show Command Outputs

Filter the output of a show command to display specific information by adding | [except | find |

grep | no-more | save] specified_text after the command.

The variable specified_text is the text for which you are filtering. It is case-sensitive unless you

use the ignore-case sub-option.

Starting with Dell Networking OS version 7.8.1.0, the grep command accepts an ignore-case sub-option that makes the search case-insensitive. For example, the commands:

• show run | grep Ethernet returns a search result with instances containing a capitalized

“Ethernet,” such as

• show run | grep ethernet does not return that search result because it only searches for

instances containing a non-capitalized “ethernet.”

• show run | grep Ethernet ignore-case returns instances containing both “Ethernet” and

“ethernet.”
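The case-sensitivity behavior described above can be sketched in Python as a simple line filter. This is a model for illustration only: it assumes plain substring matching, and the sample lines (apart from the interface ManagementEthernet 0/0 line that appears elsewhere in this chapter) are hypothetical.

```python
def grep(lines, text, ignore_case=False):
    """Keep only the lines containing text; matching is case-sensitive by default."""
    if ignore_case:
        needle = text.lower()
        return [line for line in lines if needle in line.lower()]
    return [line for line in lines if text in line]


# Sample lines for illustration; the second and third are hypothetical.
sample = [
    "interface ManagementEthernet 0/0",
    "description ethernet uplink",
    "no shutdown",
]

print(grep(sample, "Ethernet"))
# ['interface ManagementEthernet 0/0']
print(grep(sample, "ethernet"))
# ['description ethernet uplink']
print(grep(sample, "Ethernet", ignore_case=True))
# ['interface ManagementEthernet 0/0', 'description ethernet uplink']
```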

The grep command displays only the lines containing the specified text. The following example shows this

command used in combination with the do show stack-unit all stack-ports all pfc details command.

Dell(conf)#do show stack-unit all stack-ports all pfc details | grep 0

stack unit 0 stack-port all

0 Pause Tx pkts, 0 Pause Rx pkts

0 Pause Tx pkts, 0 Pause Rx pkts

0 Pause Tx pkts, 0 Pause Rx pkts

0 Pause Tx pkts, 0 Pause Rx pkts

0 Pause Tx pkts, 0 Pause Rx pkts

0 Pause Tx pkts, 0 Pause Rx pkts